Validated Profile: University Vertical

Solution overview

This document provides guidance and serves as a validated reference for deploying university networks using Catalyst Center and Cisco Software-Defined Access (SD-Access) solutions.

The education industry is undergoing significant transformations, including the adoption of smart campus technologies, automation, hybrid learning environments, and secure distance learning. The exponential increase in connected endpoints, driven by students and faculty bringing personal devices to campus, poses unique challenges. Additionally, university students and faculty often travel globally and require immediate access to research materials.

Like other industries, educational networks demand advanced network services, seamless mobility, high availability, and efficient management. However, university networks have unique requirements, including enhanced security for residential services, support for wireless-heavy infrastructures, and robust wireless mobility.

This document highlights key considerations tailored to address the specific needs of the education sector.

Scope

This guide serves as a comprehensive roadmap for understanding the challenges faced by university networks, exploring common use cases, and demonstrating how Cisco SD-Access can address these needs. While it does not delve into detailed configuration steps, it provides valuable insights to support the development of an effective university network strategy.

Traditional network versus Cisco SD-Access

This section provides an overview of the key differences between traditional networks and Cisco SD-Access.

Traditional networks:

● Traditional networks require network devices to be configured manually.

● They often require a separate overlay network for segmentation.

● Security policies are typically enforced between network boundaries.

● Scaling the network can be complex and time-consuming.

● Troubleshooting is often reactive and requires manual intervention.

● Visibility into network traffic and application performance is limited.

Cisco SD-Access:

● SD-Access automates network provisioning and management through intent-based automation.

● It simplifies network design by carrying Virtual Network (VN) and Security Group Tag (SGT) information in the VXLAN overlay while using a single underlay network for both connectivity and segmentation.

● Security policies are applied dynamically based on user and device identity.

● SD-Access scales more easily through automation and centralized control.

● Troubleshooting is proactive with network-wide visibility and analytics.

● SD-Access provides detailed insights into network traffic and application performance.

In summary, Cisco SD-Access offers a more streamlined and flexible approach compared to traditional networks, with centralized management, improved scalability, and enhanced security features.

Challenges in traditional networks

Managing a traditional network is challenging because of manual configuration and fragmented tools. Manual operations are slow and error-prone, and a constantly changing environment makes these issues worse. Growth in the number of users and device types makes it more complex to configure and maintain a consistent user policy across the network.

● Network deployment challenges:

Setup or deployment of a single network switch can take several hours due to scheduling requirements and the need to work with different infrastructure groups. In some cases, deploying a batch of switches can take several weeks.

● Network security challenges:

Security is a critical component of managing modern networks. Organizations need to protect resources and make changes efficiently in response to real-time needs. In traditional networks, it can be challenging to track VLANs, access control lists (ACLs), and IP addresses to ensure optimal policy and security compliance.

● Wireless and wired network challenges:

Disparate networks are common in many organizations, because different systems are managed by different departments. Typically, the main IT network is operated separately from building management systems, security systems, and other production systems. This leads to duplication of network hardware procurement and inconsistency in management practices.

● Network operations challenges:

IT teams often contend with outdated change management tools, difficulty in maintaining productivity, and slow issue resolution.

Advantages of Cisco SD-Access

Cisco SD-Access is designed to address the demands of rapid digitization. The core philosophy of the Cisco SD-Access architecture revolves around policy-based automation, enabling secure user and device segmentation across both wired and wireless connectivity.

Automation and simplicity boost productivity, allowing IT staff to innovate quickly and improve operational effectiveness. A consistent segmentation framework that aligns with business policies, regardless of transport medium (wired or wireless), is central to that effectiveness.

Cisco SD-Access provides technological advantages, including:

● Simplified operations:

Simplifies network operations by providing a single, intuitive interface for managing the entire infrastructure, reducing complexity and operational overhead.

● Automation:

Automates routine network operations such as configuration, provisioning, and management. This reduces the risk of human error and increases efficiency. Catalyst Center streamlines deployment, minimizing the need to interact with the command-line interface (CLI).

● Agility:

Network operations become more agile and align with business requirements by minimizing manual configuration steps.

● Security:

Provides a strong framework for securing and managing complex enterprise networks through macro-segmentation with Virtual Networks (VNs) and micro-segmentation with Security Group Tags (SGTs).

● Consistent policies for wired and wireless:

Extends segmentation, visibility, and policy from wired to wireless networks. Distributed wireless termination scales network throughput while centralizing management and troubleshooting.

● Support for business analytics:

Aggregates analytics and telemetry information into a single platform, aiding business decisions and facilitating growth or diversification planning.
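The two segmentation layers described under Security above can be illustrated with a minimal Python sketch. The endpoint names, VN names, SGT names, and the allow-list are hypothetical and exist only for illustration. The sketch also assumes that traffic between different VNs is blocked and that traffic within a VN is denied unless explicitly allowed. Actual behavior depends on the policy configured in Catalyst Center and Cisco ISE.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    name: str
    vn: str   # Virtual Network: the macro-segmentation boundary
    sgt: str  # Security Group Tag: the micro-segmentation group


# Hypothetical group-based policy: (source SGT, destination SGT) pairs
# that are permitted. Everything else is denied in this sketch.
ALLOWED_SGT_PAIRS = {
    ("Students", "Printers"),
    ("Faculty", "Printers"),
    ("Faculty", "ResearchServers"),
}


def is_permitted(src: Endpoint, dst: Endpoint) -> bool:
    """Return True if traffic from src to dst passes both segmentation layers."""
    # Macro-segmentation: endpoints in different VNs are isolated here.
    if src.vn != dst.vn:
        return False
    # Micro-segmentation: within a VN, the SGT pair must be allowed.
    return (src.sgt, dst.sgt) in ALLOWED_SGT_PAIRS


student = Endpoint("student-laptop", vn="CAMPUS", sgt="Students")
printer = Endpoint("library-printer", vn="CAMPUS", sgt="Printers")
server = Endpoint("lab-server", vn="CAMPUS", sgt="ResearchServers")
camera = Endpoint("door-camera", vn="FACILITIES", sgt="Cameras")

print(is_permitted(student, printer))  # same VN, allowed SGT pair
print(is_permitted(student, server))   # same VN, SGT pair not allowed
print(is_permitted(student, camera))   # different VN
```

The VN check runs first because it is the coarser boundary. The SGT lookup refines access only among endpoints that already share a VN.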

University network overview

The following sections provide guidance and recommendations for building a new greenfield deployment of the Cisco SD-Access fabric tailored to the challenges and use cases of a university network. They explore the SD-Access fabric components in depth and describe the benefits that Cisco SD-Access solutions provide in addressing the unique requirements and challenges of the education sector.

Traditional networks can be managed using Cisco Prime Infrastructure or Catalyst Center. Catalyst Center offers advanced automation, monitoring, and telemetry capabilities for both traditional networks and SD‑Access environments. If you are currently managing a network with Cisco Prime Infrastructure and plan to migrate to Catalyst Center, see Cisco Prime Infrastructure to Cisco Catalyst Center Migration.

To transition existing Cisco Catalyst legacy networks to a Cisco SD-Access fabric, see Migration to Cisco SD-Access, which outlines options for migrating existing networks with both wired and wireless endpoints.

Cisco Catalyst Center

Catalyst Center (formerly known as Cisco DNA Center) is a centralized network management and orchestration platform designed to simplify network operations and management. It provides a single dashboard to manage and monitor your network infrastructure, including switches, routers, and wireless access points (APs).

Using Catalyst Center, network administrators can perform various tasks, including:

● Automate network provisioning:

Easily deploy network devices and services using automated workflows, reducing the time and effort required for configuration.

● Monitor network health:

Gain visibility into the entire network, including device status, traffic patterns, and performance metrics, to quickly identify and resolve issues.

● Implement security policies:

Define and enforce security policies across the network, ensuring compliance and protecting against threats.

● Manage software updates:

Simplify the process of updating device software and firmware, ensuring that network devices are up to date with the latest features and security patches.

● Troubleshoot network problems:

Use built-in tools and analytics to diagnose and resolve network issues quickly, minimizing downtime and disruption.

Overall, Catalyst Center helps organizations streamline network operations, improve efficiency, and enhance security, making it an essential tool for managing modern network infrastructures.

The Catalyst Center platform is available in various form factors, including physical and virtual appliances. For details, see these resources:

● Cisco Catalyst Center Data Sheet (for supported platform and scale).

● Cisco Catalyst Center Installation Guide

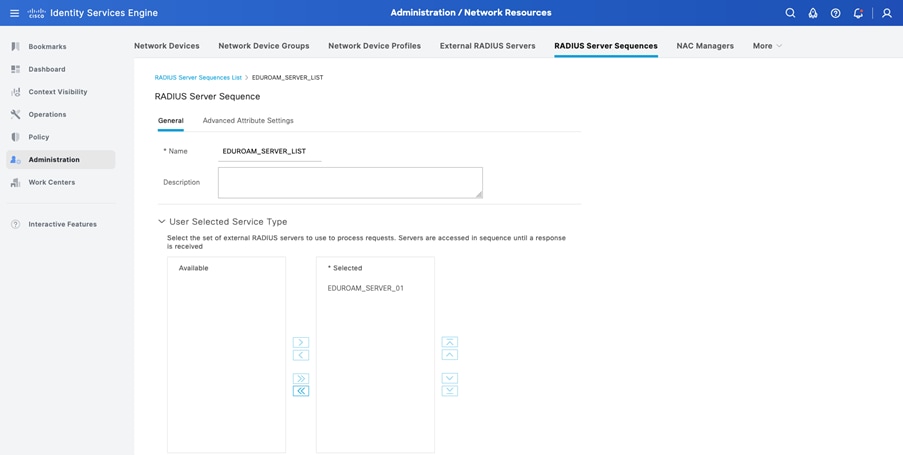

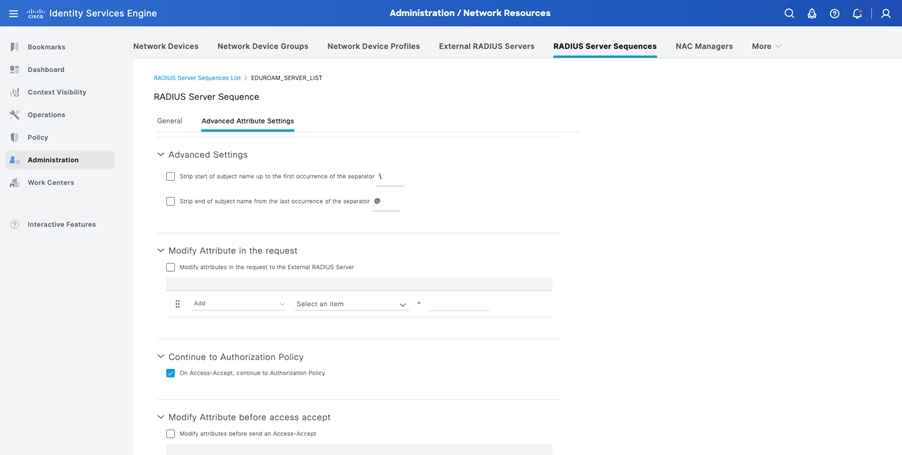

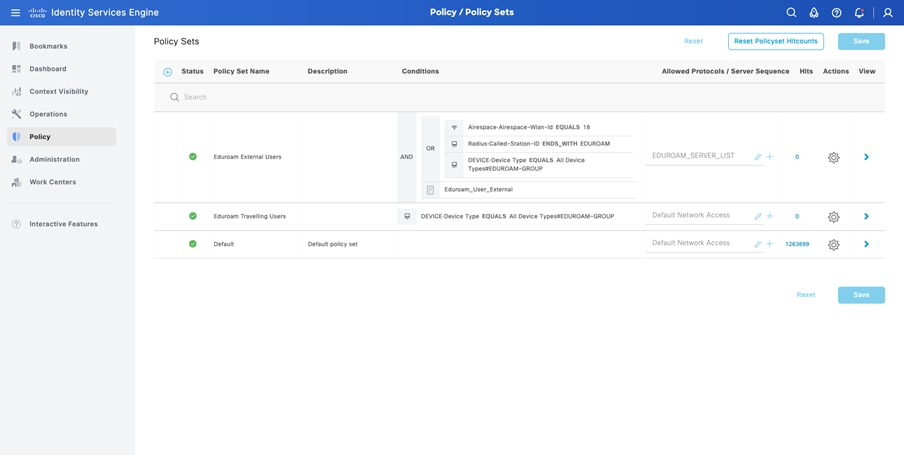

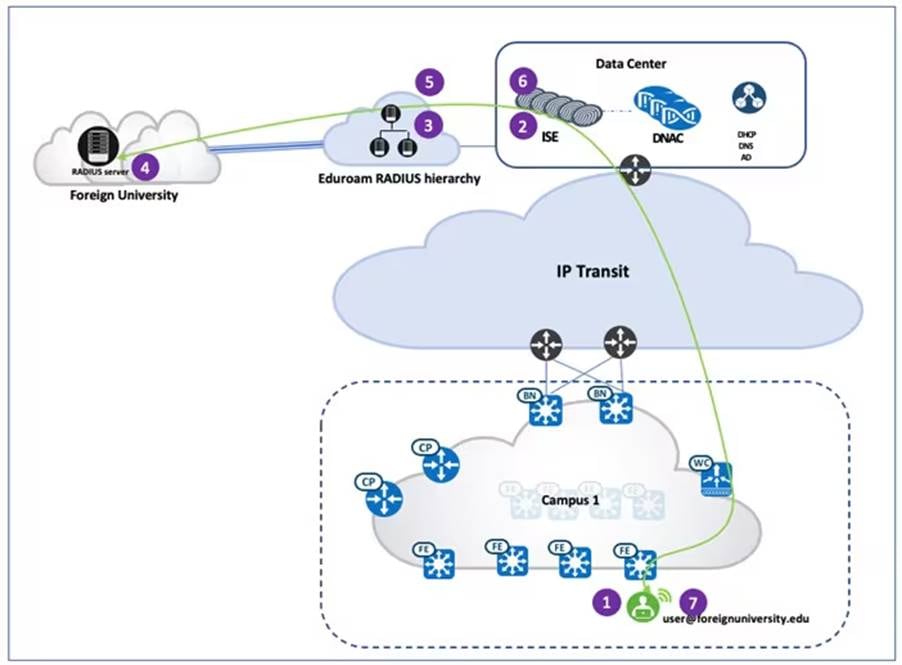

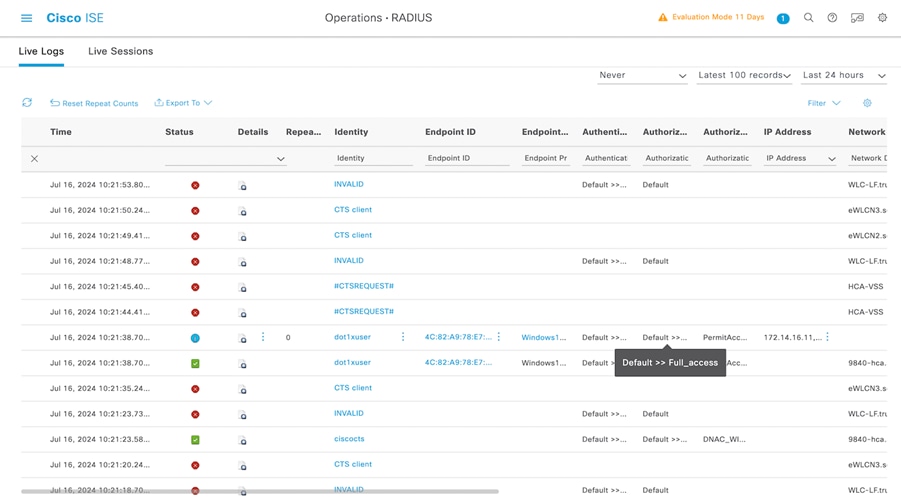

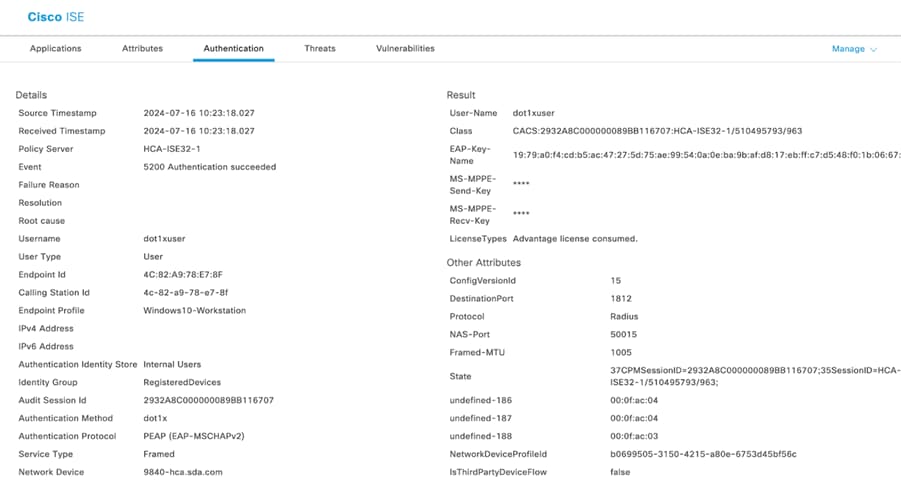

Cisco Identity Services Engine

Cisco Identity Services Engine (ISE) is a security policy management and control platform. It automates and simplifies access control and security compliance for wired, wireless, and VPN connectivity. Cisco ISE offers secure access to network resources, enforces security policies, and delivers comprehensive visibility into network access.

The key features of Cisco ISE include:

● Policy-based access control:

Define and enforce policies based on user roles, device types, and other contextual information.

● Authentication and authorization:

Supports various authentication methods (for example, 802.1X, MAB, and web authentication) and enables dynamic authorization based on changing conditions.

● Endpoint compliance:

Assess the compliance of endpoints with security policies and enforce remediation actions, if necessary.

● Guest access:

Provide secure guest access to the network with customizable guest portals and sponsor approval workflows.

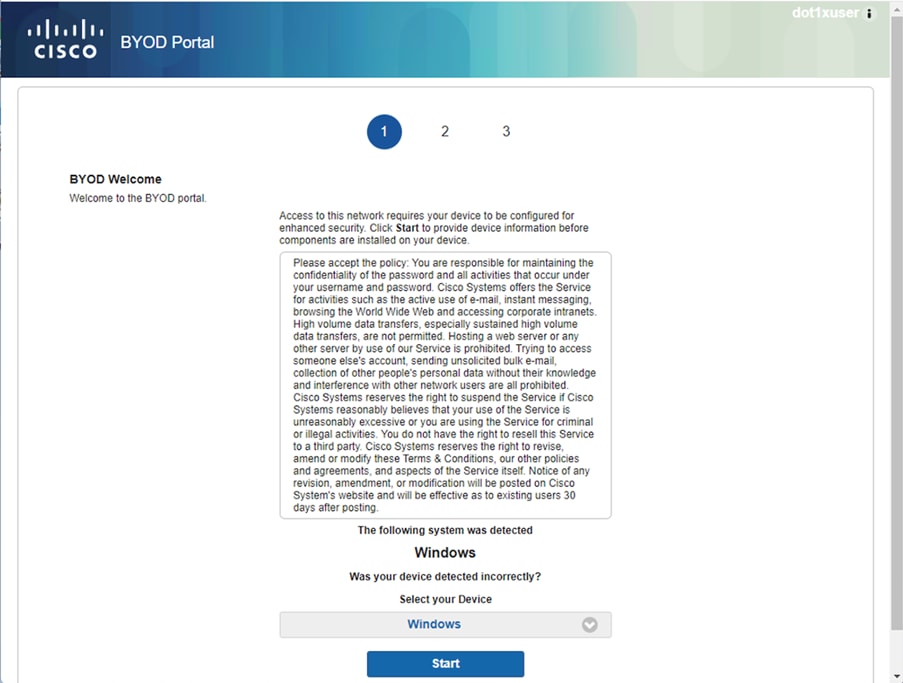

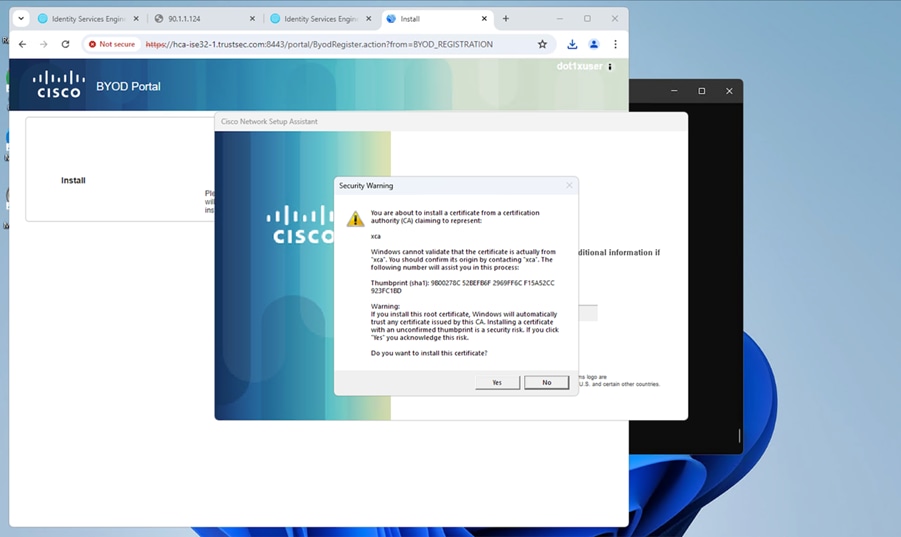

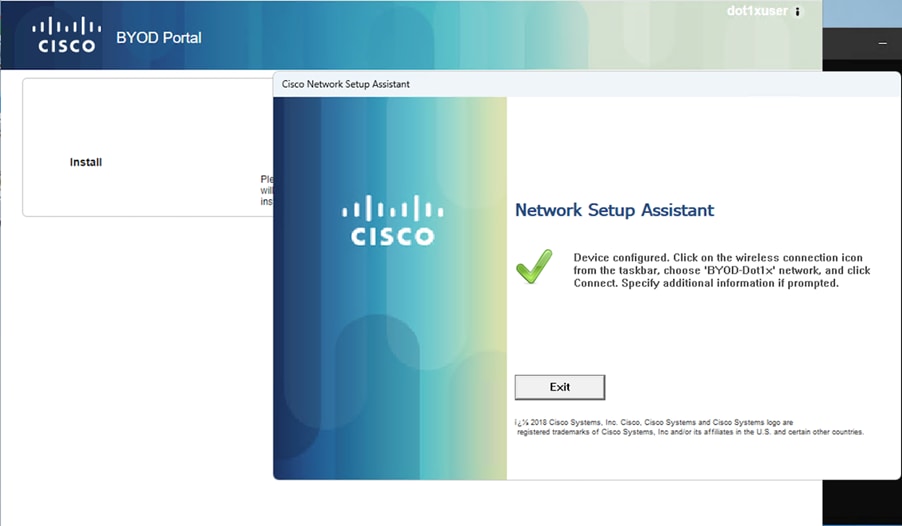

● Bring Your Own Device (BYOD) support:

Enable secure BYOD initiatives with device onboarding and policy enforcement.

● Integration and ecosystem:

Integrate with other security and networking technologies through APIs and a partner ecosystem.

● Visibility and reporting:

Gain insights into network access and security posture through comprehensive reporting and analytics.

Cisco ISE is a critical component of Cisco's security and network access control portfolio, providing organizations with a centralized and scalable solution to address their security and access control needs. Cisco ISE supports both standalone and distributed deployment models. Multiple distributed nodes can be deployed together to enhance failover resiliency and scalability. At a minimum, a two-node ISE deployment is recommended for SD-Access single-site deployments, with each ISE node running all services (personas) for redundancy.

For more details, see:

● Cisco Identity Services Engine Administrator Guides

● Performance and Scalability Guide for Cisco Identity Services Engine

Cisco SD-Access fabric

Cisco SD-Access fabric is a networking architecture that uses software-defined networking (SDN) concepts to automate network provisioning, segmentation, and policy enforcement. It aims to simplify network operations, enhance security, and improve user experiences in modern digital workplaces.

Key features of Cisco SD-Access fabric include:

● Unified wired and wireless automation:

One of the standout features of SD-Access is its ability to integrate wired and wireless networks into a single, automated management framework.

● Network segmentation:

Divides the network into virtual segments based on user and device identity, enabling granular control over access and security policies.

● Centralized policy management:

Policies are defined centrally and enforced consistently across the entire network, reducing the risk of misconfiguration and policy conflicts.

● ISE:

Provides authentication and authorization services, ensuring that only authorized users and devices can access the network.

● Catalyst Center:

Serves as the management and orchestration platform for SD-Access, providing a single pane of glass for network management and troubleshooting.

● Scalability:

Supports large-scale deployments, enabling organizations to easily scale their networks as their needs expand.

● Enhanced security:

Improves network security by dynamically segmenting the network and enforcing security policies based on user and device identity.

Overall, Cisco SD-Access fabric aims to simplify network management, improve security, and enhance scalability, making it an attractive option for organizations looking to modernize their network infrastructure.

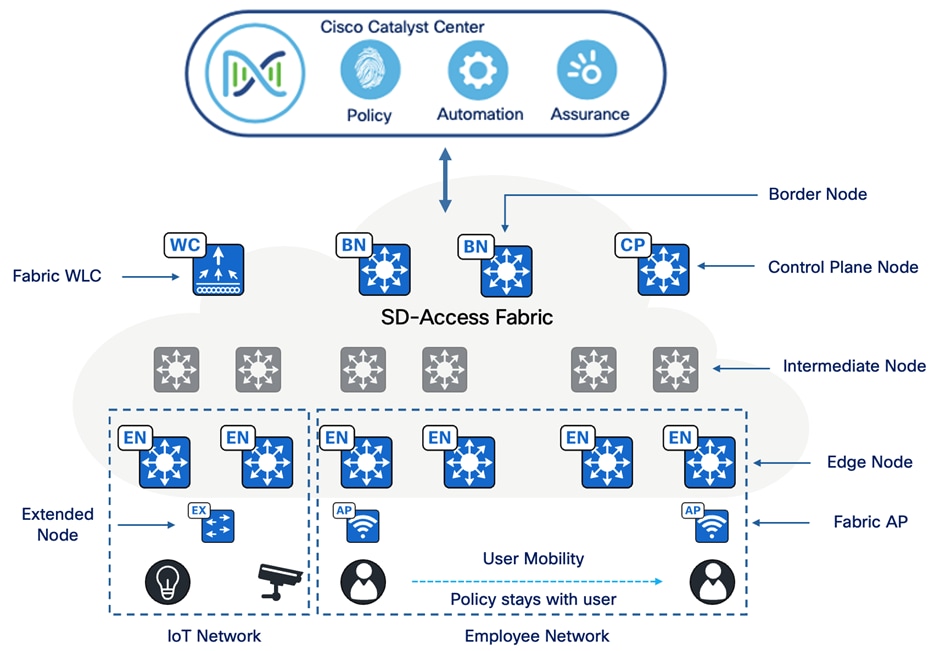

Fabric architecture overview

Cisco SD-Access fabric architecture is designed to simplify network operations, enhance security, and improve user experiences. It is based on the principles of software‑defined networking (SDN) and incorporates various components to achieve these goals:

● Underlay network:

The physical network infrastructure that provides basic connectivity between devices. It typically consists of switches, routers, and cables.

● Overlay network:

A logical network built on top of the underlay network that provides virtualized connectivity between devices. It enables network segmentation and policy enforcement without the need for physical reconfiguration.

● Control plane:

Tracks where endpoints are located in the fabric so that traffic can be routed and forwarded to the correct fabric node. In SD-Access, this function is provided by the control plane nodes using LISP, while Catalyst Center automates their configuration.

● Data plane:

Handles the actual forwarding of data packets within the network. It is implemented on network devices, such as switches and routers, and operates based on the instructions provided by the control plane.

● Policy plane:

Defines and enforces network policies, such as access control and segmentation. It ensures that network resources are used efficiently and securely.

● Management plane:

Provides tools and interfaces for managing and monitoring the network. It includes features such as configuration management, monitoring, and troubleshooting.

Overall, Cisco SD-Access fabric architecture offers a comprehensive solution for modernizing network infrastructure, providing scalability, security, and automation capabilities to meet the evolving needs of digital businesses.

Network architecture

Fabric technology supports the SD-Access architecture on campus, enabling the use of VNs (overlay networks) running on a physical network (underlay network) to create alternative topologies for connecting devices. In SD-Access, the user-defined overlay networks are provisioned as virtual routing and forwarding (VRF) instances that provide separation of routing tables.

Fabric roles

A fabric role is an SD-Access software construct running on physical hardware. These software constructs are designed with modularity and flexibility in mind. For example, a device can run either a single role or multiple roles. Care should be taken to provision SD-Access fabric roles in alignment with the underlying network architecture, ensuring a distributed function approach. Separating roles across different devices provides the highest level of availability, resilience, deterministic convergence, and scalability.

The SD-Access fabric roles include:

● Control plane node

● Border node

● Edge node

● Intermediate node

● Fabric wireless controller

● Fabric-mode APs

Control plane node

The SD-Access fabric control plane node combines LISP map-server and map-resolver functionality on a single node. It maintains a database that tracks all endpoints within the fabric site and maps them to fabric nodes. This design decouples an endpoint’s identity (its IP or MAC address) from its physical location (the nearest router), so an endpoint can move without changing its address.

Key functions of the control plane node:

● Host Tracking Database (HTDB):

Acts as a central repository for EID-to-RLOC bindings, where the routing locator (RLOC) is the Loopback 0 IP address of a fabric node. It functions similarly to a traditional LISP site, storing endpoint registrations.

● Endpoint Identifier (EID):

Identifies endpoint devices using MAC, IPv4, or IPv6 addresses in the SD-Access network.

● Map server:

Receives endpoint registrations, associates them with their corresponding RLOCs, and updates the HTDB accordingly.

● Map resolver:

Responds to queries from fabric devices, providing EID-to-RLOC mappings from the HTDB. This allows devices to determine the appropriate fabric node for forwarding traffic.
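The relationship between the HTDB, the map server, and the map resolver can be sketched as a small in-memory lookup table. This is an illustrative Python model only, not how LISP is implemented on Cisco devices. The class name, method names, the `vn_id` key, and all addresses are hypothetical.

```python
from typing import Dict, Optional, Tuple


class HostTrackingDatabase:
    """Toy model of the HTDB: EID-to-RLOC bindings kept per virtual network."""

    def __init__(self) -> None:
        # Key: (hypothetical VN identifier, EID). Value: RLOC of the fabric
        # node where the endpoint was last registered.
        self._bindings: Dict[Tuple[int, str], str] = {}

    def map_register(self, vn_id: int, eid: str, rloc: str) -> None:
        """Map-server role: record or update the RLOC for an endpoint."""
        self._bindings[(vn_id, eid)] = rloc

    def map_resolve(self, vn_id: int, eid: str) -> Optional[str]:
        """Map-resolver role: return the RLOC for an EID, or None if unknown."""
        return self._bindings.get((vn_id, eid))


htdb = HostTrackingDatabase()

# An edge node with RLOC 192.0.2.1 registers an endpoint it has detected.
htdb.map_register(vn_id=4099, eid="10.10.1.25", rloc="192.0.2.1")
print(htdb.map_resolve(4099, "10.10.1.25"))

# The endpoint moves to another edge node. The EID stays the same and
# only the RLOC binding changes.
htdb.map_register(vn_id=4099, eid="10.10.1.25", rloc="192.0.2.2")
print(htdb.map_resolve(4099, "10.10.1.25"))
```

Keying the table on the EID rather than on the location is what lets an endpoint keep its address when it moves between fabric nodes.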

Border node

The SD-Access fabric border node serves as the gateway between a fabric site and external networks, handling network virtualization interworking and the propagation of SGTs beyond the fabric.

Key functions of border nodes:

● EID subnet advertisement:

Uses the Border Gateway Protocol (BGP) to advertise endpoint prefixes outside the fabric, ensuring return traffic is directed correctly.

● Fabric site exit point:

Functions as the default gateway for edge nodes using LISP Proxy Tunnel Router (PxTR). Internal border nodes can register known subnets with the control plane node.

● Network virtualization extension:

Extends segmentation beyond the fabric using VRF-lite and VRF-aware routing protocols.

● Policy mapping:

Maintains SGT information outside the fabric via SGT Exchange Protocol (SXP) or inline tagging in Cisco metadata.

● VXLAN encapsulation and de-encapsulation:

Converts external traffic into VXLAN for the fabric and removes VXLAN for outgoing traffic, acting as a bridge between the fabric and non-fabric networks.

Edge node

SD-Access fabric edge nodes function like access layer switches in a traditional campus LAN. They operate based on ingress and egress tunnel routers (xTR) in LISP and must be deployed using a Layer 3 routed access design. These edge nodes perform several key functions:

● Endpoint registration:

Each edge node maintains a LISP control plane session with all control plane nodes. When an endpoint is detected, it is added to a local database called the EID-table. The edge node then sends a LISP map-register message to update the control plane’s HTDB (Host Tracking Database).

● Anycast Layer 3 gateway:

All edge nodes sharing the same EID subnet use a common IP and MAC address for seamless mobility and optimal forwarding. The anycast gateway is implemented as a Switched Virtual Interface (SVI) with a uniform MAC address across all edge nodes in the fabric.

● Layer 2 bridging:

Edge nodes handle Layer 2 traffic for endpoints within the same VLAN. They determine whether to bridge or route packets and use VXLAN Layer 2 VNIs (equivalent to VLANs) to bridge traffic to the correct destination. If traffic needs to exit the fabric, a Layer 2 border node is used.

● User-to-VN mapping:

Endpoints are assigned to VNs by associating them with VLANs linked to an SVI and VRF. This mapping ensures fabric segmentation at both the Layer 2 and Layer 3 LISP VNIs, even at the control plane level.

● AAA authentication:

Edge nodes can statically or dynamically assign endpoints to VLANs using 802.1X authentication. Acting as a Network Access Device (NAD), they collect authentication credentials, send them to an authentication server, and enforce access policies.

● VXLAN encapsulation and de-encapsulation:

When an edge node receives traffic from an endpoint (directly connected, via an extended node, or through an AP), it encapsulates it in VXLAN and forwards it across the fabric. Depending on the destination, the traffic is sent to another edge node or a border node. When encapsulated traffic arrives at an edge node, it is de-encapsulated and delivered to the endpoint. This mechanism enables endpoint mobility, allowing devices to move between edge nodes without changing their IP addresses.
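The forwarding choice described in the last item above (deliver locally, tunnel to another edge node, or tunnel to a border node) can be sketched as follows. This is a simplified Python illustration under stated assumptions: the function name, the dictionary-based map cache, and all addresses are hypothetical, and real edge nodes resolve destinations through LISP rather than a static table.

```python
from typing import Dict, Optional, Set


def select_tunnel_destination(
    dest_eid: str,
    local_eids: Set[str],
    map_cache: Dict[str, str],
    border_rloc: str,
) -> Optional[str]:
    """Return the RLOC to which traffic is VXLAN-encapsulated.

    Returns None when the destination is attached to this edge node, in
    which case no fabric encapsulation is needed.
    """
    if dest_eid in local_eids:
        return None
    # Known fabric endpoint: tunnel to the edge node that registered it.
    # Unknown destination: fall back to the border node, which acts as the
    # fabric site exit point.
    return map_cache.get(dest_eid, border_rloc)


local = {"10.10.1.25"}
cache = {"10.10.1.40": "192.0.2.2"}  # learned EID-to-RLOC mapping
border = "192.0.2.254"

print(select_tunnel_destination("10.10.1.25", local, cache, border))
print(select_tunnel_destination("10.10.1.40", local, cache, border))
print(select_tunnel_destination("203.0.113.7", local, cache, border))
```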

Intermediate node

Intermediate nodes are part of the Layer 3 network used for interconnections among devices operating in fabric roles, such as the connections between border nodes and edge nodes. These interconnections are established in the global routing table on the devices and are collectively known as the underlay network. For example, in a three-tier campus deployment where the core switches are provisioned as border nodes and the access switches as edge nodes, the distribution switches function as the intermediate nodes.

Intermediate nodes do not require VXLAN encapsulation/de-encapsulation, LISP control plane messaging, or SGT awareness. Their primary function is to provide IP reachability and physical connectivity, while also supporting the increased maximum transmission unit (MTU) to accommodate larger IP packets encapsulated with fabric VXLAN information. Essentially, intermediate nodes route and transport IP traffic between devices operating in fabric roles.
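The MTU requirement follows from simple header arithmetic. The Python sketch below assumes an IPv4 underlay and an untagged inner Ethernet frame. The function name is hypothetical. Treat the result as a lower bound rather than a recommended setting, and consult the platform documentation for the MTU value to configure.

```python
# Bytes added when an endpoint's frame is carried in VXLAN over IPv4.
OUTER_IPV4_HEADER = 20
OUTER_UDP_HEADER = 8
VXLAN_HEADER = 8
INNER_ETHERNET_HEADER = 14  # the original frame header travels inside the tunnel

VXLAN_OVERHEAD = (
    OUTER_IPV4_HEADER + OUTER_UDP_HEADER + VXLAN_HEADER + INNER_ETHERNET_HEADER
)


def required_underlay_ip_mtu(endpoint_ip_mtu: int = 1500) -> int:
    """Smallest underlay IP MTU that avoids fragmenting encapsulated packets."""
    return endpoint_ip_mtu + VXLAN_OVERHEAD


print(VXLAN_OVERHEAD)                  # 50
print(required_underlay_ip_mtu(1500))  # 1550
```

With the common 1500-byte endpoint MTU, every underlay link, including those on intermediate nodes, must carry IP packets of at least 1550 bytes.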

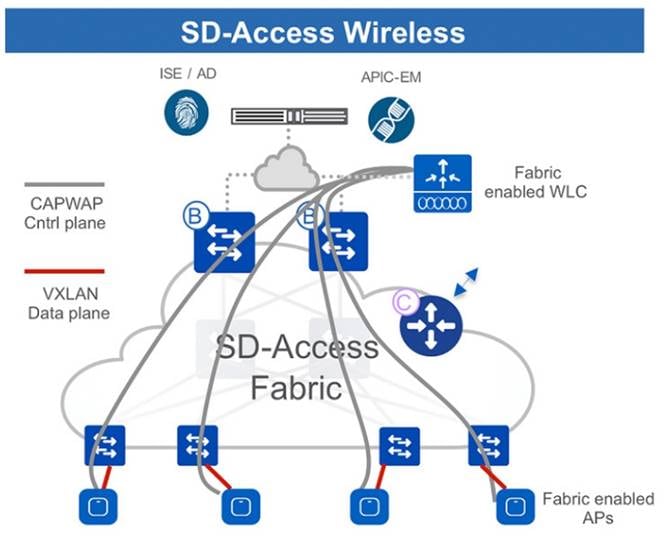

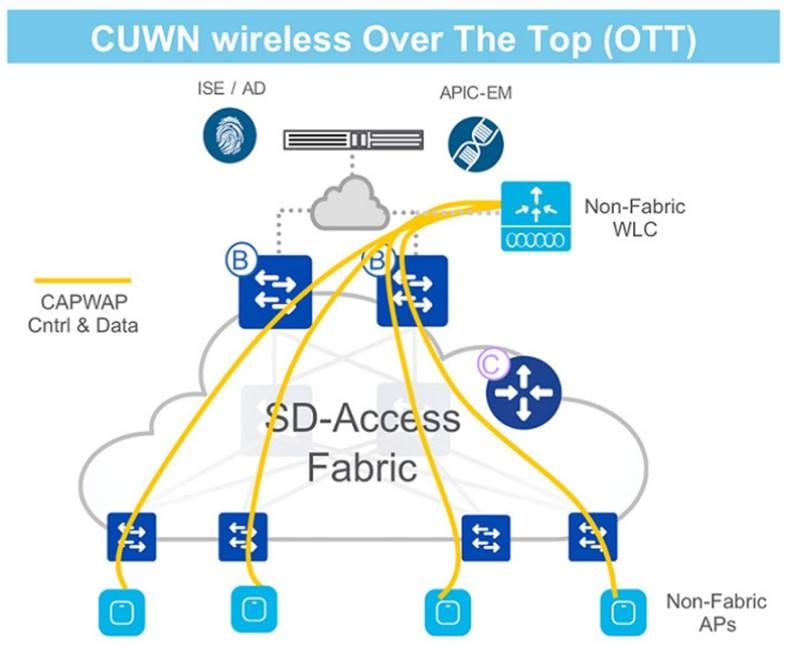

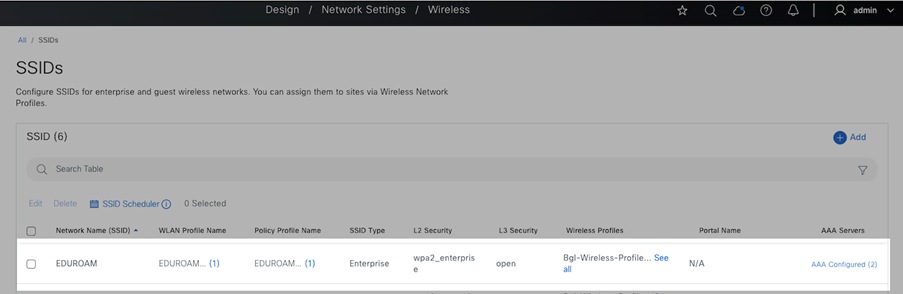

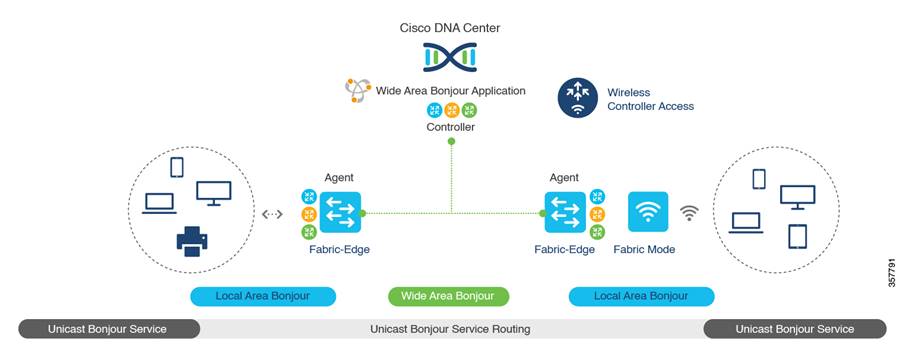

Fabric wireless controller

Both fabric wireless controllers and nonfabric wireless controllers provide AP image and configuration management, client session management, and mobility services. Fabric wireless controllers offer additional services for fabric integration, such as registering MAC addresses of wireless clients into the HTDB of the fabric control plane nodes during wireless client join events and supplying fabric edge node RLOC‑association updates to the HTDB during client roam events. Fabric integration with a wireless controller occurs on a per-SSID basis. Fabric-enabled SSID traffic is tunneled by the AP using VXLAN encapsulation to the fabric edge node, while centrally switched SSID traffic is tunneled by the AP using the Control and Provisioning of Wireless Access Points (CAPWAP) protocol to the wireless controller. Thus, the wireless controller can operate in a hybrid or mixed mode, where some SSIDs are fabric‑enabled while others are centrally switched.
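The per-SSID behavior described above can be summarized in a short Python sketch. The enum and function names are hypothetical and serve only to make the two data paths explicit.

```python
from enum import Enum
from typing import Tuple


class SsidMode(Enum):
    FABRIC_ENABLED = "fabric-enabled"
    CENTRALLY_SWITCHED = "centrally switched"


def client_data_tunnel(mode: SsidMode) -> Tuple[str, str]:
    """Return (encapsulation, tunnel endpoint) for client data on an SSID."""
    if mode is SsidMode.FABRIC_ENABLED:
        # The AP tunnels client data to its first-hop fabric edge node.
        return ("VXLAN", "fabric edge node")
    # The AP tunnels client data back to the wireless controller.
    return ("CAPWAP", "wireless controller")


# A controller in mixed mode serves both kinds of SSID at once.
for mode in SsidMode:
    print(mode.value, "->", client_data_tunnel(mode))
```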

● Traditional vs. SD-Access data handling:

In a traditional Cisco Unified Wireless network or nonfabric deployment, both control traffic and data traffic are tunneled back to the wireless controller using CAPWAP. From a CAPWAP control plane perspective, AP management traffic is generally lightweight, while client data traffic is the larger bandwidth consumer. Wireless standards have enabled progressively larger data rates for wireless clients, resulting in more client data being tunneled to the wireless controller. This requires a larger wireless controller with multiple high-bandwidth interfaces to support the increase in client traffic.

In nonfabric wireless deployments, wired and wireless traffic have different enforcement points in the network. The wireless controller addresses quality of service and security when bridging the wireless traffic onto the wired network. For wired traffic, enforcement occurs at the first-hop access layer switch. This paradigm shifts entirely with SD-Access Wireless. In SD-Access Wireless, the CAPWAP tunnels between the wireless controllers and APs are used only for control traffic. Data traffic from wireless endpoints is tunneled to the first-hop fabric edge node, where security and policy can be applied in the same manner as for wired traffic.

● Network connectivity and wireless controller placement:

Typically, fabric wireless controllers connect to a shared services network through a distribution block or data center network that is located outside the fabric and fabric border, with the wireless controller management IP address existing in the global routing table. For wireless APs to establish a CAPWAP tunnel for wireless controller management, the APs must be in a VN with access to this external device. This means that the APs are deployed in the global routing table, and the wireless controller’s management subnet or specific prefix must be present in the Global Routing Table (GRT) within the fabric site.

In the SD-Access solution, Cisco Catalyst Center configures wireless APs to reside within an overlay VN named INFRA_VN, which maps to the global routing table. This setup eliminates the need for route leaking or fusion routing (a multi-VRF device selectively sharing routing information) to establish connectivity between the wireless controllers and the APs. Each fabric site must have a wireless controller unique to that site. Most deployments place the wireless controller within the local fabric site itself, rather than across a WAN, due to latency requirements for local mode APs.

● Latency requirements and deployment considerations:

Fabric APs operate in local mode, which requires a Round-Trip Time (RTT) of 20 ms or less between the AP and the wireless controller. This typically means that the wireless controller is deployed in the same physical site as the APs. However, if this latency requirement is met through dedicated dark fiber or other very low‑latency circuits between physical sites, and the wireless controllers are deployed physically elsewhere, such as in a centralized data center, the wireless controllers and APs can be in different physical locations.

This deployment type, where fabric APs are located separately from their fabric wireless controllers, is commonly used in metro area networks and SD-Access for Distributed Campus environments. APs should not be deployed over WAN or other high-latency circuits from their wireless controllers in an SD‑Access network. Maintaining a maximum RTT of 20 ms between these devices is crucial for performance.
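A simple way to reason about the latency requirement during planning is to compare measured RTT samples against the 20 ms limit. The Python sketch below is illustrative only: the function name and sample values are hypothetical, and using the worst-case sample instead of an average is a conservative assumption, not a Cisco-defined method.

```python
from typing import Sequence

MAX_AP_TO_CONTROLLER_RTT_MS = 20.0  # limit stated for local-mode fabric APs


def placement_meets_rtt_limit(rtt_samples_ms: Sequence[float]) -> bool:
    """Return True if every measured AP-to-controller RTT is within the limit."""
    if not rtt_samples_ms:
        raise ValueError("at least one RTT sample is required")
    # Judge on the worst case so that occasional spikes are not hidden.
    return max(rtt_samples_ms) <= MAX_AP_TO_CONTROLLER_RTT_MS


dark_fiber_samples = [1.8, 2.1, 2.4]   # hypothetical metro dark-fiber circuit
wan_samples = [18.5, 24.0, 31.2]       # hypothetical WAN circuit

print(placement_meets_rtt_limit(dark_fiber_samples))
print(placement_meets_rtt_limit(wan_samples))
```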

Fabric mode APs

Fabric mode APs are Cisco Wi-Fi 7 (802.11be), Wi-Fi 6 (802.11ax) and 802.11ac Wave 2 APs associated with the fabric wireless controller that have been configured with one or more fabric-enabled SSIDs. These fabric mode APs continue to support the same wireless media services as traditional APs, such as applying Application Visibility and Control (AVC), Quality of Service (QoS), and other wireless policies. Fabric APs establish a CAPWAP control plane tunnel to the fabric wireless controller and join as local-mode APs. They must be directly connected to the fabric edge node or extended node switch within the fabric site. For their data plane, fabric APs establish a VXLAN tunnel to their first-hop fabric edge switch, where wireless client traffic is terminated and placed on the wired network.

Fabric APs are considered special case wired hosts. Edge nodes use the Cisco Discovery Protocol to recognize APs as these wired hosts, apply specific port configurations, and assign the APs to a unique overlay network called INFRA_VN. As wired hosts, APs have a dedicated EID space and are registered with the control plane node. This EID space is associated with the predefined INFRA_VN overlay network in the Cisco Catalyst Center UI. It is a common EID space (prefix space) and VN for all fabric APs within a fabric site. The assignment to this overlay VN simplifies management by using a single subnet to cover the AP infrastructure within a fabric site.

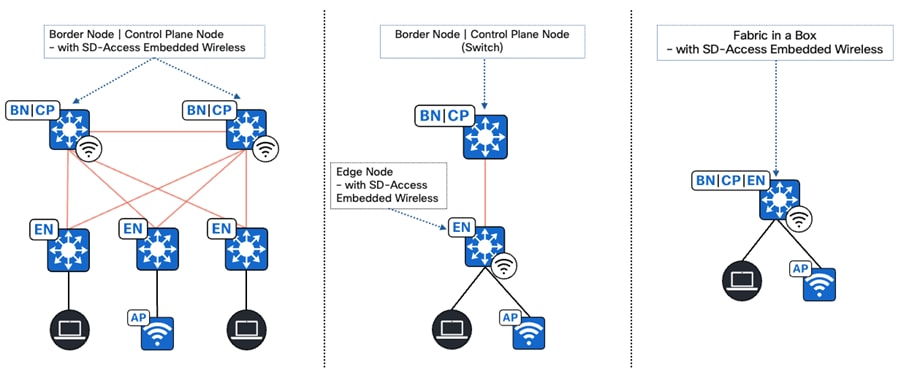

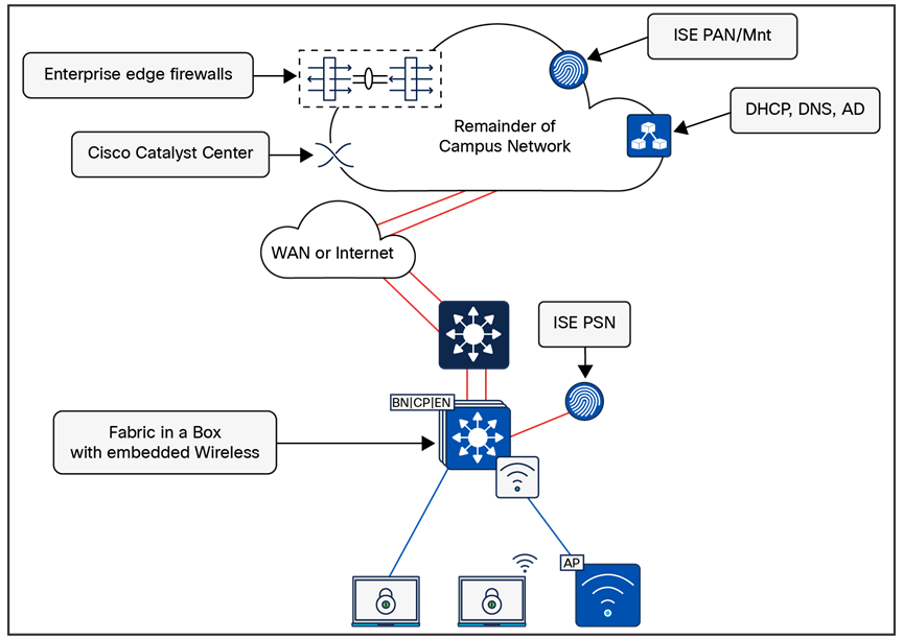

Fabric in a Box

Fabric in a Box (FIAB) integrates the functions of a traditional SD-Access network, such as the border node, control plane node, and edge node, into a single physical device. This device can be a single switch, a switch with hardware stacking capabilities, or part of a StackWise Virtual deployment.

The benefits of FIAB include:

● Simplicity

● Cost-effectiveness

● Faster deployment

● Ideal for branches and small-sized deployments

For more details, see the StackWise Virtual White Paper.

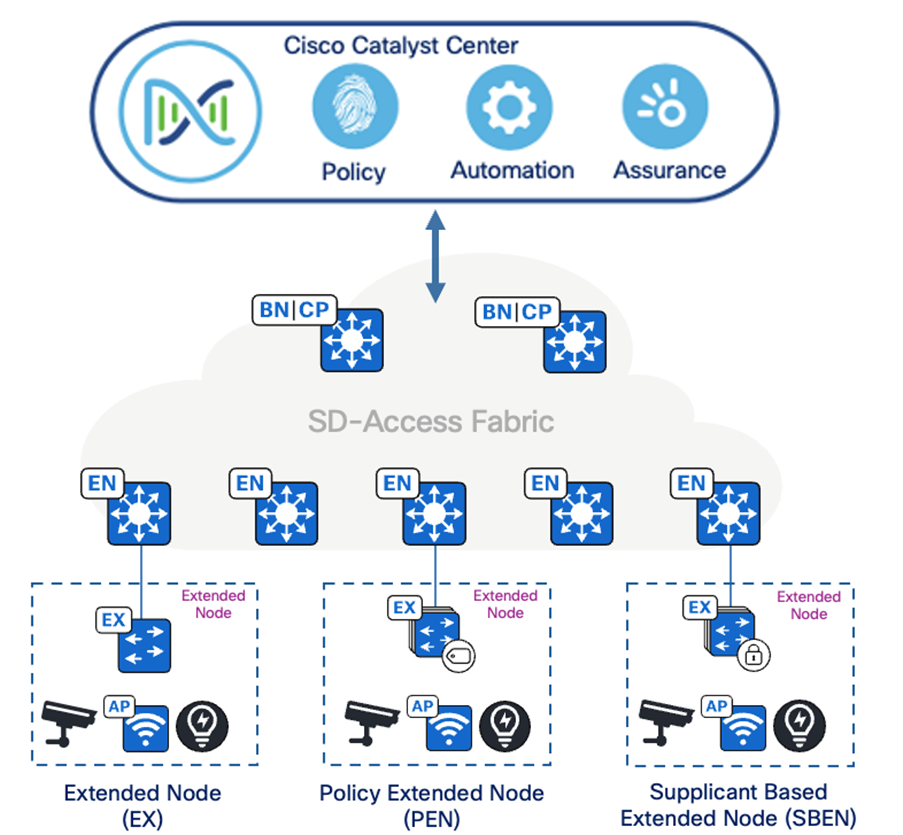

Extended nodes

SD-Access extended nodes enable the extension of the enterprise network to non-carpeted areas. Extended nodes provide a Layer 2 port extension to a fabric edge node while ensuring segmentation and applying group-based policies to the connected endpoints. Using extended nodes, organizations can extend the benefits of SD-Access, such as enhanced security, simplified management, and consistent policy application, to a broader range of devices and endpoints within their network.

For more details, see extended node design.

Fabric wireless controllers and APs

Fabric wireless controllers and traditional wireless controllers both manage AP images and configurations, handle client sessions, and offer mobility services. Fabric wireless controllers additionally support fabric integration by registering MAC addresses of wireless clients into the host tracking database of fabric control plane nodes.

Fabric-mode APs are Cisco Wi-Fi 7 (802.11be), Wi-Fi 6 (802.11ax), and 802.11ac Wave 2 APs that are associated with the fabric wireless controller and configured with one or more fabric-enabled SSIDs. These fabric-mode APs retain support for the same wireless media services as traditional APs, such as AVC, quality of service (QoS), and other wireless policies.

For more details, see SD-Access Wireless Design and Deployment Guide.

SD-Access embedded wireless

For distributed branches and small campuses, wireless controller functionality can be achieved without a hardware wireless controller through the Cisco Catalyst 9800 Embedded Wireless Controller, available as a software package for Catalyst 9000 Series switches.

The Catalyst 9800 Embedded Wireless Controller supports SD-Access deployments in three topologies:

● Cisco Catalyst 9000 Series switches function as co-located border and control plane nodes.

● Cisco Catalyst 9000 Series switches function as an edge node when the border and control plane node are on a routing platform.

● Cisco Catalyst 9000 Series switches function as a fabric consolidation node.

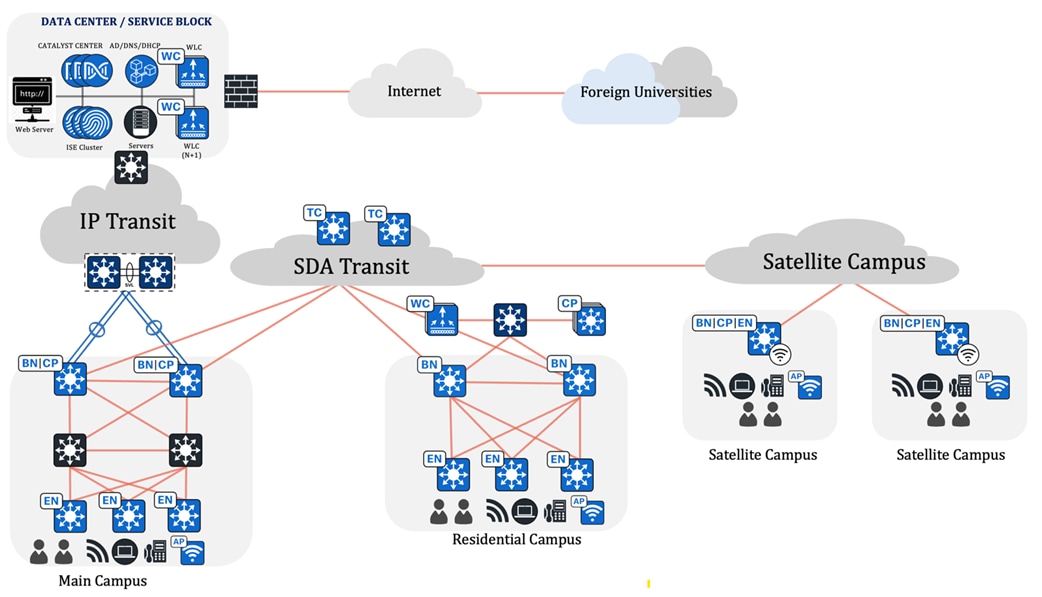

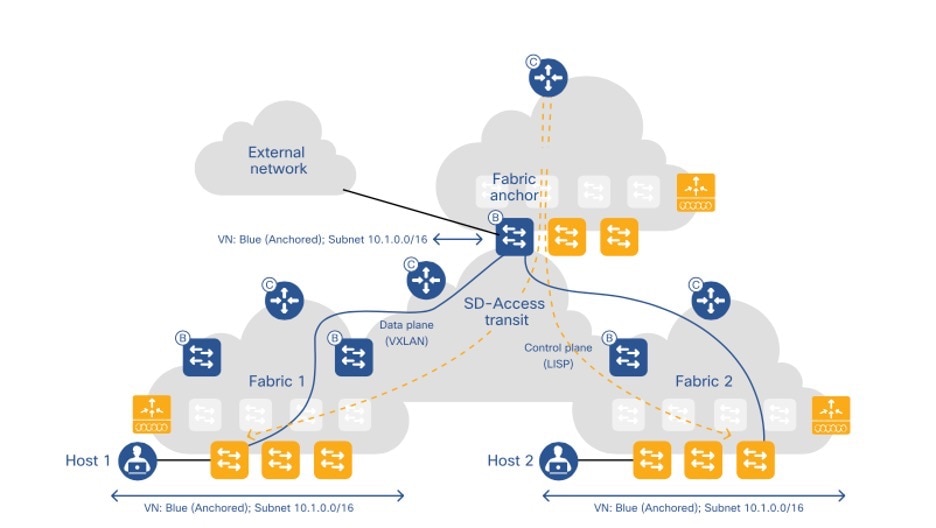

Transits

Transits can connect multiple fabric sites or link a fabric site to non-fabric domains such as a data center or the internet. Transits are a Cisco SD-Access construct that defines how Catalyst Center automates the border node configuration for connections between fabric sites or between a fabric site and an external domain. The two types of transits are:

● IP-based transit:

With IP-based transits, the fabric VXLAN header is removed, leaving the original native IP packet. Once in native IP form, packets are forwarded using traditional routing and switching protocols between fabric sites. Unlike an SD-Access transit, an IP-based transit is provisioned with a VRF-lite connection to an upstream peer device. IP-based transits typically connect to a data center, WAN, or the internet. Use an IP-based transit to connect to shared services using a VRF-aware peer.

● SD-Access transit:

An SD-Access transit uses VXLAN encapsulation and does not rely on a VRF-lite connection to an upstream peer. Similar to IP-based transits, packets are forwarded using traditional routing and switching protocols between fabric sites. However, unlike IP-based transits, an SD-Access transit is an overlay that operates on top of a WAN/MAN network, much like SD-WAN and DMVPN.

For more details about Cisco SD-Access components and architecture, see Cisco SD-Access.

IP-based transit and SD-Access transit comparison

IP-based transit:

● Leverages existing IP infrastructure:

Uses traditional IP-based routing protocols to connect fabric sites.

● Requires VRF remapping:

VRFs and Security Group Tags (SGTs) must be remapped between sites, which adds complexity.

● Supports existing IP networks:

This approach is ideal if you already have an established IP-based WAN infrastructure.

● Offers flexibility:

Provides more flexibility in terms of routing protocols and traffic engineering options.

SD-Access transit:

● Is the native SD-Access fabric:

Uses LISP, VXLAN, and CTS for inter-site communication.

● Preserves SGTs:

Maintains SGTs across fabric sites, enhancing security and policy enforcement.

● Centralizes control:

Uses a domain-wide Control Plane node for simplified management.

● Requires dedicated infrastructure:

Requires additional infrastructure for the SD-Access transit control plane.

Cisco Catalyst 9000 series switches

Cisco Catalyst 9000 series switches offer flexible and highly scalable design options. Switches supported in different fabric roles offer secure, fast, and reliable connectivity to users and endpoints within the network.

For more details, see Catalyst 9000 switches.

Cisco Catalyst wireless controller and AP

Cisco Catalyst 9800 series wireless controllers and APs provide seamless management and deployment of wireless networks in both on-premises and cloud environments.

See the data sheet for Catalyst 9800 and Catalyst 9100 devices:

● Cisco Access Point and Wireless Controller Selector

Compatibility matrix

Catalyst Center provides coverage for Cisco enterprise switching, routing, and mobility products.

For a complete list of supported Cisco products, see the compatibility matrix:

● Cisco Catalyst Center Compatibility Matrix

● Cisco SD-Access Compatibility Matrix

University deployment and design solutions

Profile deployment

This section provides design guidance for the education sector, emphasizing the requirements and the use of Cisco SD-Access to create a network that is simple, secure, and flexible. This section discusses topologies, use cases, and solutions tailored to meet standard deployment options for universities while also addressing the specific themes and requirements.

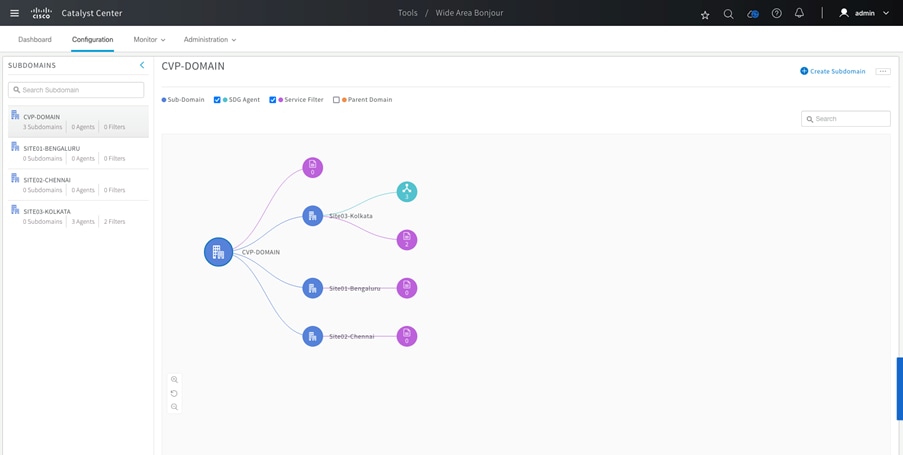

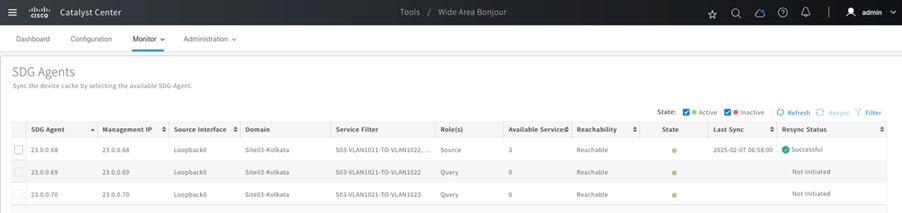

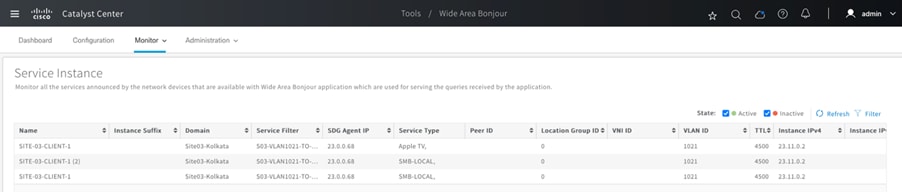

Solution topology

University business outcomes and challenges

A modern network infrastructure tailored to specific business outcomes empowers universities to remain competitive, drive innovation, and meet the evolving expectations of students, faculty, and the broader academic community. Universities increasingly rely on advanced and dependable network infrastructures to achieve their educational and operational goals. The main challenges and potential outcomes for universities fall into these areas:

● Security

● Compliance

● Operational

● Financial

● Experience

Security

For universities, enhancing security measures, mitigating risks, and ensuring regulatory compliance can be accomplished by implementing robust security protocols, conducting regular risk assessments, and adhering to relevant industry regulations and standards. Given the open and dynamic nature of university environments, which must balance accessibility for students, faculty, and researchers with safeguarding sensitive data, universities are particularly vulnerable to security threats. If not properly managed, malicious actors can exploit vulnerabilities, leading to substantial financial and reputational harm.

Operational

For universities, maintaining network uptime is crucial for seamless operations and the achievement of business objectives. Given the mission-critical nature of university networks, the primary goal is to approach 100% availability. Achieving five-nines availability (99.999% uptime) significantly advances this objective, permitting only 5 minutes and 16 seconds of downtime annually. Uninterrupted services are vital for ensuring the productivity of students and researchers, as well as supporting overall institutional success. By leveraging automation, monitoring, load balancing, and failover mechanisms, universities can attain or even exceed the five-nines availability benchmark.
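The downtime budget behind an availability target is simple arithmetic. The following minimal sketch converts an availability percentage into an annual downtime allowance. The function names are illustrative, and the calculation assumes an average year of 365.25 days.

```python
# Average year length in seconds, including leap days (an assumption of this sketch).
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60


def annual_downtime_seconds(availability_percent: float) -> float:
    """Return the yearly downtime budget, in seconds, for an availability target."""
    if not 0.0 <= availability_percent <= 100.0:
        raise ValueError("availability must be between 0 and 100 percent")
    unavailable_fraction = (100.0 - availability_percent) / 100.0
    return SECONDS_PER_YEAR * unavailable_fraction


def format_downtime(seconds: float) -> str:
    """Format a duration as whole minutes and seconds, rounded to the nearest second."""
    minutes, remaining = divmod(round(seconds), 60)
    return f"{minutes} min {remaining} s"


if __name__ == "__main__":
    for target in (99.9, 99.99, 99.999):
        print(f"{target}% -> {format_downtime(annual_downtime_seconds(target))} per year")
```

For 99.999% availability, this yields roughly 5 minutes and 16 seconds per year, which matches the figure quoted above.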

Financial

Implementing a modern network infrastructure provides substantial financial advantages for universities by optimizing resource utilization, reducing costs, and creating opportunities for innovative revenue generation. For example, automating deployments across multiple campuses helps streamline expenses and increase efficiency. By embracing modern network solutions, universities can enhance operational effectiveness and service delivery while positioning themselves for long-term financial growth in an increasingly digital and competitive educational environment.

Experience

Optimize user and application experiences by leveraging modern technologies that enable critical business capabilities. While security, compliance, and availability are vital, a network with slow performance or inconsistent Quality of Service (QoS) can negatively impact user satisfaction and productivity. In time-sensitive environments, where delays can be critical, ensuring low latency and reliable QoS is essential to meet institutional demands effectively.

Solutions to university business outcomes

This section outlines solutions to help achieve the business outcomes defined for a university network deployment.

Security challenges

The education sector encounters substantial security challenges due to its intricate and ever-evolving environments. These include expanded attack surfaces, data breaches, insider threats, regulatory compliance requirements, advanced cyberattacks, and securing remote work. The SD-Access framework effectively tackles these issues with a robust suite of tools and capabilities:

● Macrosegmentation

● Microsegmentation

● Policy enforcement models

● Group-Based Policy Analytics

● AI endpoint analytics

● Endpoint security with zero-trust solution

● Isolation of guest users

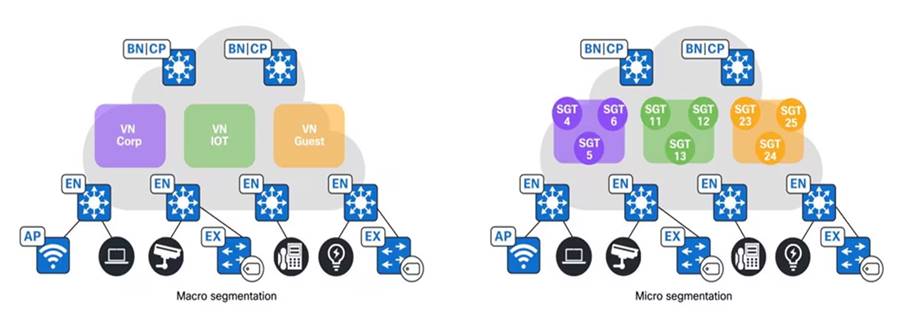

Macrosegmentation

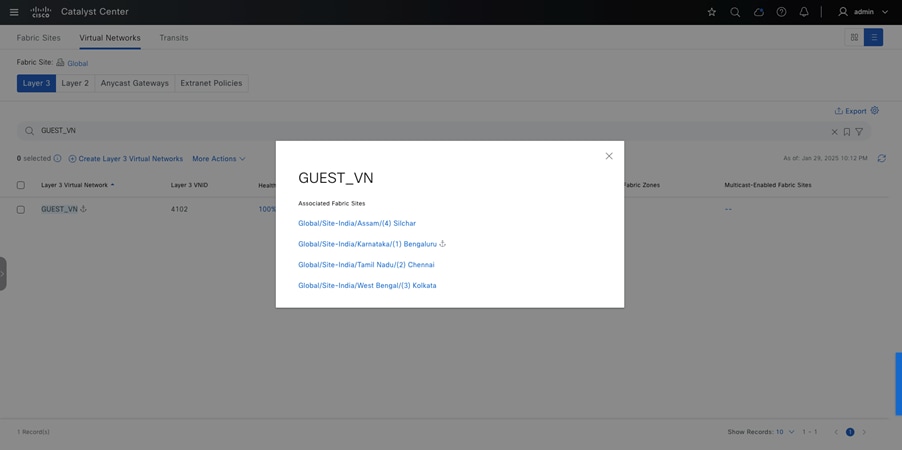

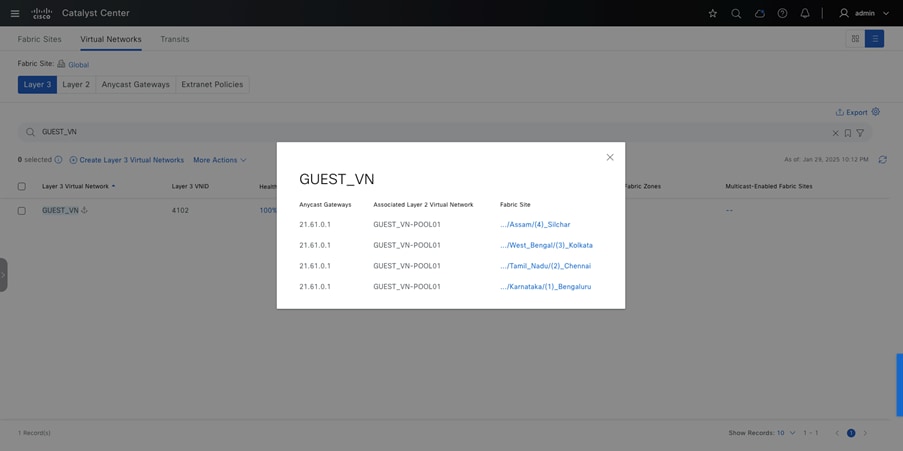

For university networks, assign different VRF instances to network endpoints, such as students, monitoring devices, and guests, to implement a recommended segmentation strategy. SD-Access provides the ability to macrosegment endpoints into distinct VRFs, which can be configured within the network using Catalyst Center.

Examples demonstrating the implementation of VNs include:

● INFRA VN:

This VN is exclusively for APs, classic extended nodes, and policy extended nodes to ensure connectivity. It is mapped to the global routing table.

● Student VN:

Use this VN for regular student access, ensuring secure and segregated connectivity for all internal users.

● Guest VN:

This VN provides internet access to visitors and guests while ensuring they cannot access the internal network.

● Monitoring VN:

Dedicate this VN to network monitoring and management devices, ensuring they are isolated from regular user traffic.

● Resident VN:

Use this VN for resident users, ensuring secure and segregated connectivity for all internal users.

By implementing VNs in an SD-Access network, a university can effectively segment and secure diverse types of traffic, enhancing overall network performance and security.

Microsegmentation

Microsegmentation simplifies the provisioning and management of network access control by using security groups to classify traffic and enforce policies, allowing for more granular security within SD‑Access VNs.

Typically, within a single VN, you should further segment by grouping employees based on their department or placing devices such as printers in different security groups. Traditionally, this was done by placing groups in different subnets enforced by IP ACLs. However, Cisco SD-Access provides the flexibility of microsegmentation, allowing the use of the same subnet with a user and endpoint-centric approach. Dynamic authorization assigns different SGTs based on authentication credentials, and Security Group Access Control Lists (SGACLs) enforce these SGT-based rules.

When users connect to the network, they are authenticated using methods such as 802.1X and MAC authentication bypass (MAB). Network authorization then classifies the user’s traffic using information such as identity, LDAP group membership, location, and access type. This classification information is propagated to a network device that enforces the dynamically downloaded policy, determining whether the traffic should be allowed or denied.
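The classification step can be pictured as an ordered rule list that maps session attributes to a security group. The following is a conceptual sketch only, not how Cisco ISE is implemented or configured. The `Session` fields, the group names, and the rule order are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Session:
    """Attributes learned when an endpoint authenticates (hypothetical model)."""
    username: str
    auth_method: str      # "dot1x" or "mab"
    directory_group: str  # for example, an LDAP group name; empty if unknown


# Hypothetical authorization rules, evaluated top to bottom: (condition, security group).
AUTHZ_RULES = [
    (lambda s: s.auth_method == "dot1x" and s.directory_group == "faculty", "Faculty"),
    (lambda s: s.auth_method == "dot1x" and s.directory_group == "students", "Students"),
    (lambda s: s.auth_method == "mab", "Printers"),
]
DEFAULT_GROUP = "Unknown"


def classify(session: Session) -> str:
    """Return the security group of the first matching rule, or the default group."""
    for condition, group in AUTHZ_RULES:
        if condition(session):
            return group
    return DEFAULT_GROUP


if __name__ == "__main__":
    print(classify(Session("alice", "dot1x", "faculty")))
    print(classify(Session("printer-01", "mab", "")))
```

Because classification follows identity rather than subnet, two endpoints in the same subnet can receive different groups and therefore different policies.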

For more details, see the Software-Defined Access Macro Segmentation Deployment Guide.

Policy enforcement models

Cisco TrustSec is a security solution designed to simplify network access provisioning and management while enforcing security policies across an organization. It enables comprehensive segmentation and access control based on roles and policies rather than traditional IP-based methods, enhancing security and operational efficiency across wired and wireless environments.

In computing and network security enforcement, policy enforcement models generally fall into two categories:

● Deny-list model (default permit IP):

The default action permits IP traffic, and any restrictions must be explicitly configured using Security Group Access Control Lists (SGACLs). Use this model when there is an incomplete understanding of traffic flows within the network. It is relatively easy to implement.

● Allow-list model (default deny IP):

The default action denies IP traffic, so the required traffic must be explicitly permitted using SGACLs. Use this model when the customer has a good understanding of traffic flows within the network. This requires a detailed study of the control plane traffic, as it can block all traffic upon activation.

For more details, see Cisco ISE TrustSec Allow-List Model (Default Deny IP) with SD-Access.
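The difference between the two models comes down to what happens when no explicit rule matches a source and destination group pair. The following sketch models the policy matrix as a dictionary with a configurable default action. The group names and matrix entries are hypothetical, and the sketch does not reflect actual SGACL syntax.

```python
from enum import Enum


class Action(Enum):
    PERMIT = "permit"
    DENY = "deny"


def evaluate(matrix: dict, default_action: Action, src_group: str, dst_group: str) -> Action:
    """Return the explicit action for a group pair, or the model's default action."""
    return matrix.get((src_group, dst_group), default_action)


# Deny-list model: default permit, restrictions listed explicitly.
deny_list_matrix = {("Students", "Finance"): Action.DENY}

# Allow-list model: default deny, required traffic listed explicitly.
allow_list_matrix = {("Students", "Printers"): Action.PERMIT}

if __name__ == "__main__":
    # Unlisted pair under each model.
    print(evaluate(deny_list_matrix, Action.PERMIT, "Students", "Labs"))
    print(evaluate(allow_list_matrix, Action.DENY, "Students", "Labs"))
```

Under the allow-list model, every pair missing from the matrix is dropped, which is why unlisted control plane traffic can be blocked the moment the model is activated.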

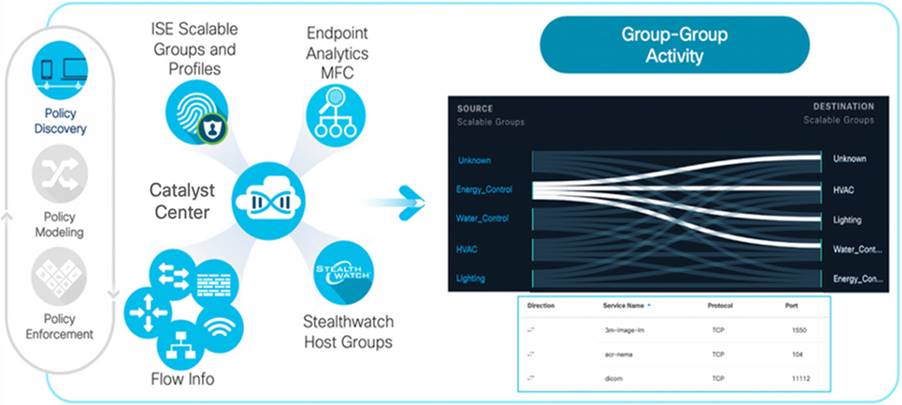

Group-Based Policy Analytics

High-profile cyber-attack news is driving universities to move beyond perimeter security and implement internal network segmentation. However, the lack of visibility into user and device behavior within the network makes it difficult to create effective segmentation policies. Businesses are seeking solutions to navigate this complex landscape.

Cisco offers a solution on Catalyst Center that addresses these challenges by providing Group-Based Policy Analytics (GBPA). GBPA provides capabilities including:

● Discover and visualize group interactions:

GBPA analyzes network traffic flows to identify how different network groups communicate, such as departments, functions, and so on.

● Identify communication patterns:

GBPA pinpoints the specific ports and protocols used by different groups, providing granular insights into network behavior.

● Simplify policy creation:

GBPA streamlines the process of building effective security policies to control communication between groups based on the discovered information.

GBPA leverages information from these sources to create a holistic view of your network:

● Cisco ISE:

When integrated with ISE, GBPA learns about network groups defined as Scalable Groups (SGTs) and Profile Groups, which categorize different types of connected devices.

● Endpoint Analytics:

Endpoint Analytics leverages machine learning and multifactor classification to reduce unidentified devices on the network and provides more accurate profile groups for segmentation.

● Cisco Secure Network Analytics (Optional):

Integration with Cisco Secure Network Analytics (SNA) allows GBPA to learn about Host Groups identified by SNA, further enriching network visibility.

● NetFlow data integration:

GBPA incorporates NetFlow data from network devices to provide context for group information. This combined data is then visualized through graphs and tables, enabling administrators to clearly understand network behavior based on group interactions.
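The idea of combining flow records with group membership can be sketched as a simple aggregation. This is an illustration of the concept only, not the GBPA implementation. The flow tuple layout, the IP-to-group mapping, and the function name are assumptions.

```python
from collections import defaultdict


def summarize_group_flows(flows, ip_to_group):
    """Collect the (protocol, destination port) pairs seen between each pair of groups.

    flows: iterable of (src_ip, dst_ip, protocol, dst_port) tuples.
    ip_to_group: dict mapping an IP address to a group name.
    """
    summary = defaultdict(set)
    for src_ip, dst_ip, protocol, dst_port in flows:
        src_group = ip_to_group.get(src_ip, "Unknown")
        dst_group = ip_to_group.get(dst_ip, "Unknown")
        summary[(src_group, dst_group)].add((protocol, dst_port))
    return dict(summary)


if __name__ == "__main__":
    groups = {"10.1.0.5": "Students", "10.2.0.9": "Printers"}
    sample_flows = [
        ("10.1.0.5", "10.2.0.9", "tcp", 9100),
        ("10.1.0.5", "10.2.0.9", "tcp", 631),
        ("10.1.0.5", "192.0.2.1", "udp", 53),
    ]
    for pair, services in summarize_group_flows(sample_flows, groups).items():
        print(pair, sorted(services))
```

A summary of this kind shows which ports and protocols each group pair actually uses, which is the input needed to draft a group-based policy.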

GBPA empowers network administrators with network discovery, visualization, and the tools to analyze security policy requirements. This comprehensive approach leads to the creation of more effective and targeted security policies for today's dynamic threat landscape.

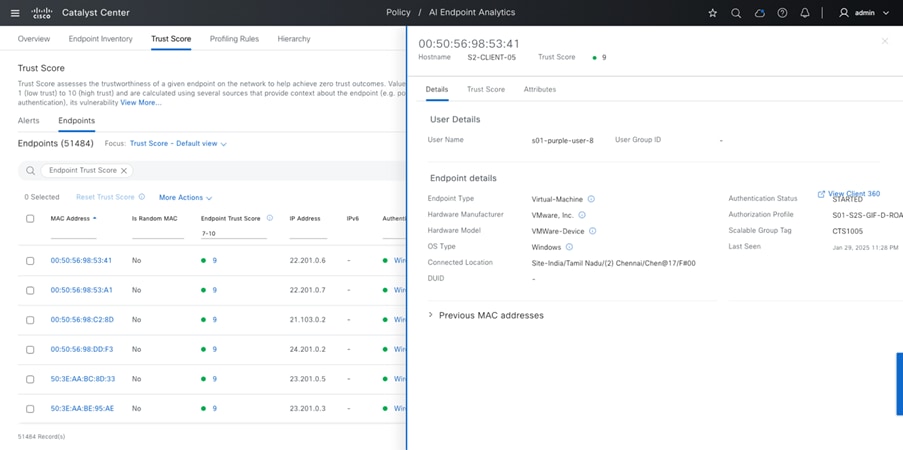

AI Endpoint Analytics

Cisco AI Endpoint Analytics, a next-generation endpoint visibility solution, provides deeper insights from your network and IT ecosystem, making all endpoints visible and searchable. It detects and reduces the number of unknown endpoints in your enterprise using the following techniques:

● Deep packet inspection (DPI):

Gathers deeper endpoint context by scanning and understanding applications and communication protocols for IT, Building Automation, and Healthcare endpoints.

● Machine learning (ML):

Intuitively groups endpoints with common attributes and helps IT administrators label them. These unique labels are then anonymously shared with other organizations as suggestions, assisting in reducing the number of unknown endpoints and grouping them based on new labels.

● Integrations with Cisco and third-party products:

Provides additional network and non-network context to profile endpoints.

In summary, Cisco AI Endpoint Analytics addresses a critical challenge faced by many customers when implementing security policies: the lack of high-fidelity endpoint visibility. It is available in Catalyst Center Release 2.1.2.x and higher as a new application. Customers with a subscription level of Cisco Catalyst Advantage and higher have access to Cisco AI Endpoint Analytics.

For more details, see these resources:

● Cisco SD-Access AI Endpoint Analytics

● Cisco Catalyst Center Guide - AI Endpoint Analytics

Endpoint security with zero-trust solutions

Endpoint security with zero-trust solutions in SD-Access is a comprehensive approach to network security that aims to protect endpoints, such as laptops, smartphones, and IoT devices, within an SD-Access environment. Applying zero-trust principles means that no device or user is automatically trusted, even if they are inside the network perimeter. Before granting access to network resources, each device is verified and authenticated.

The Cisco SD-Access zero-trust security solution provides the capability to automate network access policies using these features:

● Endpoint visibility:

You can identify and group endpoints. You can map their interactions through traffic flow analysis and define access policies.

● Trust monitoring:

You can continuously monitor the endpoint behavior, scan for vulnerabilities, verify trustworthiness for continued access, and isolate rogue or compromised endpoints.

● Network segmentation:

You can enforce group-based access policies and secure the network through multilevel segmentation.

Cisco SD-Access can enforce the secure onboarding of network devices such as APs and switches using IEEE 802.1X mechanisms. This protects the network from unauthorized device attachment by maintaining closed authentication on all edge node access ports. Switches onboarded securely using closed authentication are called supplicant-based extended nodes (SBENs).

SBENs are provisioned as policy extended nodes by Catalyst Center to have a supplicant with EAP-TLS authentication on their uplink to the edge node. The EAP-TLS certificate is provisioned by Catalyst Center using the Catalyst Center Certificate Authority (CA). After successful onboarding, access to the port is purely based on authentication status. If the device or port goes down, the authentication session is cleared, and traffic is not allowed on the port. When the port comes back, it goes through dot1x authentication to regain access to the Cisco SD-Access network.

Secure AP onboarding authorizes the AP on a closed authentication port, allowing limited access to DHCP/DNS and Catalyst Center for the PnP workflow. The PnP workflow on Catalyst Center is enhanced to enable a dot1x supplicant on the AP, which the AP uses to authenticate with Cisco ISE.

For more details about SBEN, see the “Steps to Configure Supplicant-Based Extended Nodes” section in the Cisco Catalyst Center User Guide.

Isolation of guest users

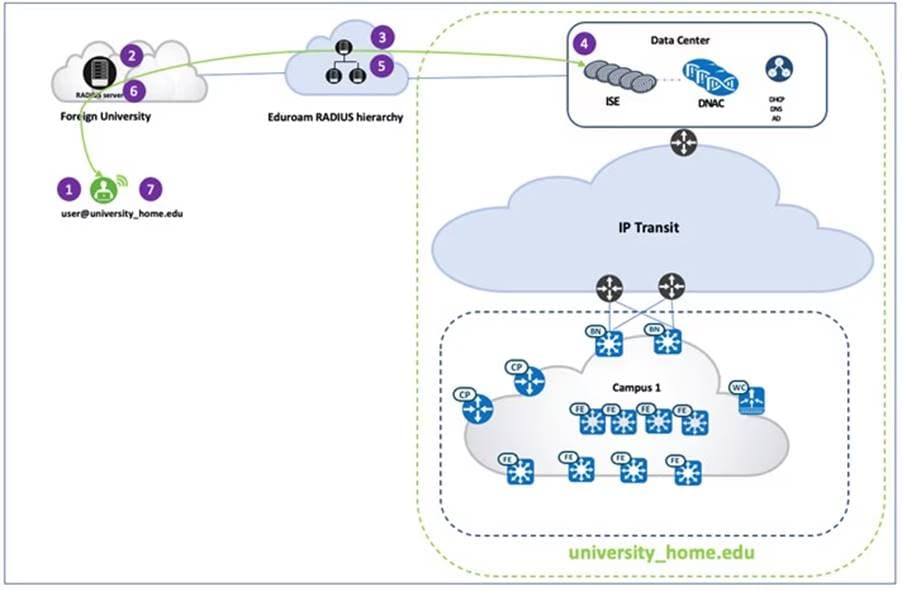

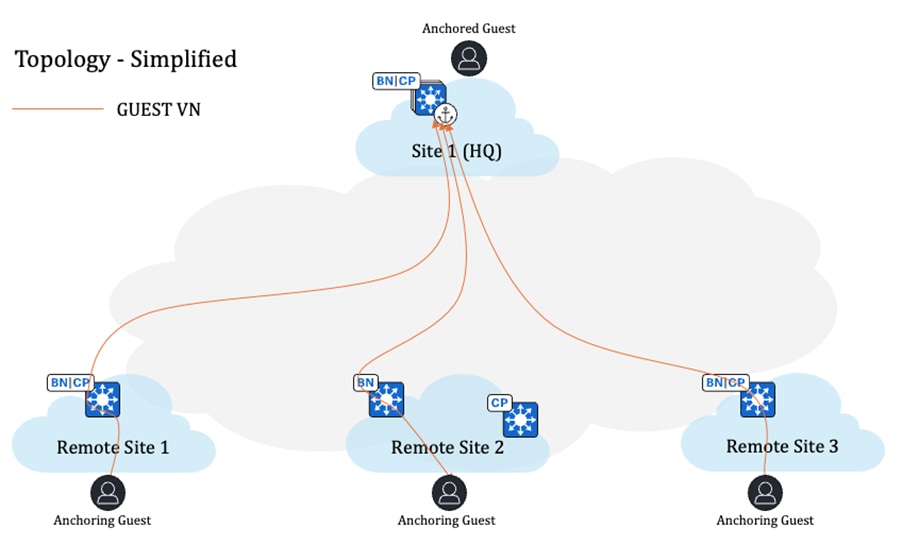

Guest wireless isolation is a crucial security feature that ensures guest users remain completely separated from university networks while providing controlled access to the internet. To address this requirement, SD-Access introduces the Multisite Remote Border (MSRB) solution.

This solution allows traffic from a VN spanning multiple dispersed sites to be aggregated at a central location, known as the anchor site. Instead of configuring separate subnets for each site, the anchor site leverages a single, shared subnet for the guest VN. By implementing a centralized and streamlined subnet structure, VN anchors simplify guest service deployments across multiple locations while maintaining consistent and secure traffic segmentation in university environments.

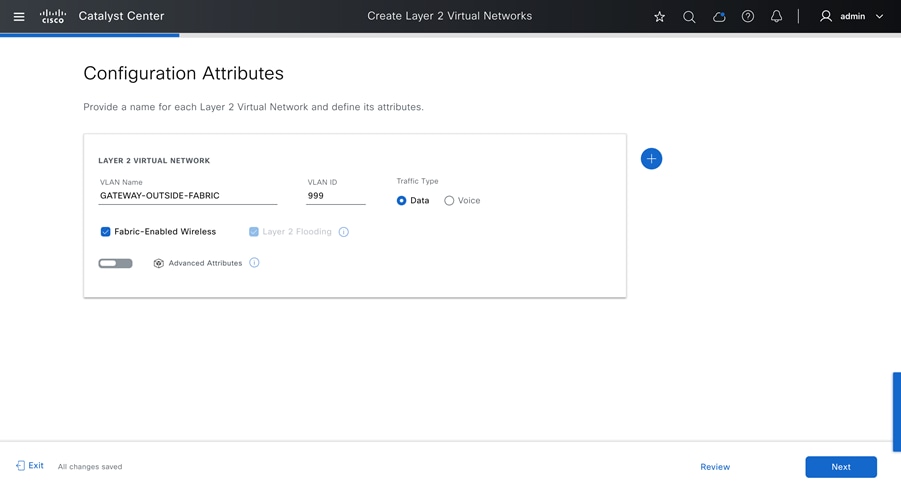

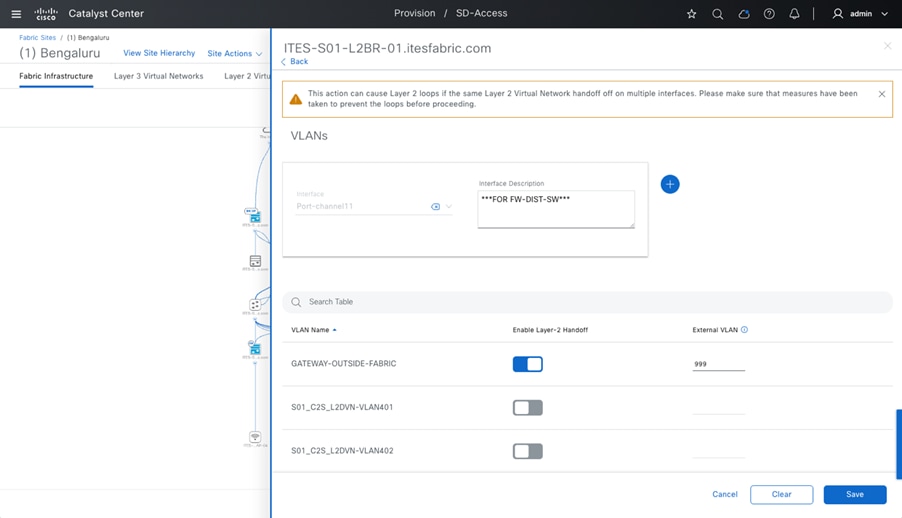

Enhancing security with external gateways

In SD-Access, a default gateway is present on all edge nodes for each subnet in a VN within a given fabric site. Traffic destined for a remote subnet is processed by the default gateway on the edge node and then routed to the appropriate destination.

In many networks, the default gateway needs to be on an external firewall rather than on the local edge node. Firewall traffic inspection is a common security and compliance requirement in such networks. By enabling the gateway outside of the fabric functionality, the default gateway is not provisioned on the edge nodes. Instead, it can be provisioned on an external device, such as a firewall, allowing traffic to be inspected before reaching its destination.

Seamless and secure campus network access

The increasing use of personal mobile devices and the growing dependence on cloud-based services have reshaped how universities handle technology and network access. Students and faculty now expect uninterrupted access to university resources from their own devices, regardless of location.

This evolution has transformed network security, access control, and resource management. Traditional approaches that rely on institution-owned devices and restricted networks are becoming outdated. To keep pace with these changes, universities are embracing BYOD strategies to provide flexibility while maintaining data security and regulatory compliance.

By integrating BYOD with SD-Access, universities can create a secure, adaptable network environment. SD-Access enforces access controls, protects sensitive data through network segmentation, and automates device onboarding, reducing the burden on IT teams. This approach strengthens security, improves operational efficiency, and fosters a more connected, technology-driven learning experience.

Compliance regulations

Compliance regulations are the rules and standards that institutions must follow to operate lawfully within a specific domain or jurisdiction. Advanced technologies assist in ensuring compliance by automating regulatory processes, improving data security, and enabling real-time monitoring and reporting to meet regulatory requirements effectively.

Staying compliant with industry regulations can be a complex task. Cisco SD-Access offers several features that can simplify this process:

● Audit logs

● Configuration compliance

● Configuration drift

Role-Based Access Control

Role-Based Access Control (RBAC) in Catalyst Center provides a way to control access to features and operations based on the roles of individual users within the organization. RBAC helps enforce the principle of least privilege, ensuring that users have access only to the resources necessary for their roles. Catalyst Center supports the flexibility to assign permissions to users based on either a local or external RADIUS/TACACS database. You can assign roles to users and also grant access to specific applications within Catalyst Center.

Audit logs

Audit logs are a record of events or actions that have occurred within the Catalyst Center application. These logs typically include details such as who performed the action, what action was taken, and when it occurred. Audit logs are important for security and compliance purposes, as they help administrators track changes made to the network infrastructure, identify potential security breaches, and ensure that users are following proper procedures. By reviewing audit logs, administrators can gain insight into the activities within the Catalyst Center application and take appropriate actions as needed.

For more details, see View audit logs.

Configuration compliance

Compliance helps in identifying any intent deviation or out-of-band changes in the network that may be injected or reconfigured without affecting the original content. A network administrator can conveniently identify devices in Catalyst Center that do not meet compliance requirements for the various aspects of compliance, such as software images, PSIRT, network profiles, and so on.

You can automate compliance checks or run on-demand compliance checks using these schedule options:

● Automated compliance check:

Uses the latest data collected from devices in Catalyst Center. This compliance check listens to the traps and notifications from various services, such as inventory and SWIM, to assess data.

● Manual compliance check:

Lets you manually trigger the compliance check in Catalyst Center.

● Scheduled compliance check:

A scheduled compliance job runs every day at 11:00 pm and triggers the compliance check for devices that have not undergone a compliance check in the past seven days.
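The selection rule for the scheduled job can be expressed in a few lines. The following sketch illustrates the seven-day rule described above and is not Catalyst Center code. The function name and the shape of the input dictionary are assumptions.

```python
from datetime import datetime, timedelta

# Devices whose last check is older than this are picked up by the scheduled job.
STALE_AFTER = timedelta(days=7)


def devices_due_for_check(last_checked: dict, now: datetime) -> list:
    """Return device names with no compliance check in the past seven days.

    last_checked maps a device name to the datetime of its last check,
    or to None if the device has never been checked.
    """
    return sorted(
        name
        for name, checked_at in last_checked.items()
        if checked_at is None or now - checked_at > STALE_AFTER
    )


if __name__ == "__main__":
    run_time = datetime(2025, 1, 10, 23, 0)  # the daily 11:00 pm run
    history = {
        "edge-1": datetime(2025, 1, 9, 8, 30),
        "edge-2": datetime(2025, 1, 1, 12, 0),
        "edge-3": None,
    }
    print(devices_due_for_check(history, run_time))
```

Devices checked recently by the automated or manual options are skipped, so the scheduled job acts as a safety net rather than a full daily scan.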

Catalyst Center currently supports the following types of compliance checks:

● Flag compliance errors when the running configuration on network devices differs from the startup configuration view that Catalyst Center has for the device.

● Software image compliance flag to indicate if the golden image is not running on network devices.

● Flag fabric compliance errors if the configurations deployed by the SD-Access fabric workflows were tampered with out of band, and flag PSIRT compliance issues to alert network administrators to existing vulnerabilities in the network.

● Network compliance alerts if the devices are not running configuration per the intent called out for the given site in Catalyst Center.
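A running-versus-startup comparison is, at its core, a line-by-line difference between two configuration texts. The following sketch uses the Python standard library `difflib` module to list the lines that appear in only one of the two configurations. It illustrates the concept and is not how Catalyst Center performs the check. The function name and sample configurations are hypothetical.

```python
import difflib


def config_drift(startup_config: str, running_config: str) -> list:
    """Return (side, line) pairs for lines that differ between the two configurations."""
    changes = []
    comparison = difflib.ndiff(startup_config.splitlines(), running_config.splitlines())
    for line in comparison:
        # ndiff prefixes each line with a two-character code.
        if line.startswith("- "):
            changes.append(("only in startup", line[2:]))
        elif line.startswith("+ "):
            changes.append(("only in running", line[2:]))
    return changes


if __name__ == "__main__":
    startup = "hostname edge-1\ninterface Gi1/0/1\n description AP\n"
    running = "hostname edge-1\ninterface Gi1/0/1\n description Printer\n"
    for side, text in config_drift(startup, running):
        print(f"{side}: {text}")
```

An empty result means the two configurations match. Any entry indicates a change that was made on the device but not saved, or not known to the controller.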

For more details, see Compliance User Guide.

Operational efficiency

Operational efficiency is crucial for universities, as it directly impacts productivity, cost management, and the quality of services provided to students and staff. By optimizing workflows and leveraging digital transformation, universities can maximize faculty and staff output, enhance administrative processes, and strengthen their reputation and institutional value. SD-Access addresses critical aspects of operational efficiency, including:

● High availability (HA)

● System resiliency

● Reports

● Efficient troubleshooting

High availability

High availability (HA) is a critical component that ensures systems and applications remain operational and accessible to users with minimal interruption, even during technical setbacks such as hardware failures or software glitches. The following building blocks contribute to HA:

● Disaster recovery

● Resilient network architecture

● Fallback segments

Disaster Recovery

Universities have a low tolerance for disruptions in critical systems, including management, control, or data operations. Catalyst Center ensures both intracluster and intercluster resiliency to safeguard operations. Its Disaster Recovery framework consists of three key components: the primary site, the recovery site, and the witness site. At any time, the primary and recovery sites operate in active or standby roles. The active site oversees network management, while the standby site maintains a continuously updated replica of the active site's data and services. In the event of an active site failure, Catalyst Center automatically initiates a failover, transitioning the standby site into the active role to ensure continuity.

For more details, see Implement Disaster Recovery.

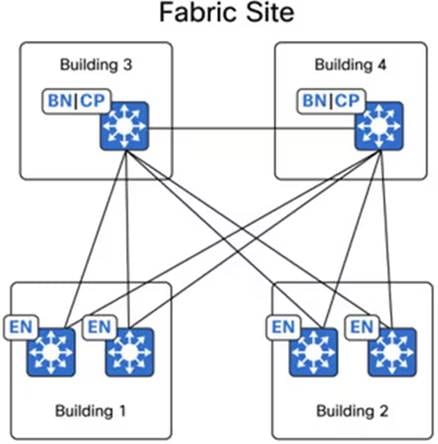

Resilient network architecture

Resilient network architecture in Cisco SD-Access is designed to ensure a highly available and reliable infrastructure, allowing critical university services to remain operational even during disruptions.

● Similar to the Virtual Switching System (VSS), StackWise Virtual (SVL) simplifies Layer 2 operations by combining two physical switches into a single logical switch at the control and management plane levels. This eliminates the need for spanning tree protocols and first-hop redundancy configurations, streamlining network management.

● By implementing Layer 3 routed access, the boundary between Layer 2 and Layer 3 shifts from the distribution layer to the access layer. This shift reduces the reliance on the distribution and collapsed core layers to manage Layer 2 adjacency and redundancy.

In university networks, traditional resilience techniques such as stacking and StackWise Virtual are complemented by strategies to safeguard regional hubs and campus headquarters against building-level failures. These measures ensure uninterrupted connectivity to data centers for critical academic and administrative applications.

Cisco SD-Access offers a flexible deployment model, enabling fabric borders to span multiple physical sites while integrating them seamlessly within a single fabric site, as illustrated in Figure 6:

Cisco SD-Access offers the flexibility to designate priorities to these border node deployments. This allows for the prioritization of a border node or its exclusive use as the active border for traffic. In the event of a building failure, the border node in the alternate building can seamlessly assume all traffic from the edge nodes.
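The effect of border priorities during a building failure can be sketched as a selection over the reachable border nodes. This sketch assumes that a lower priority value is preferred, following the usual LISP convention. Verify the actual behavior against the product documentation. The node names, buildings, and priority values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BorderNode:
    name: str
    building: str
    priority: int          # assumption: a lower value is preferred
    reachable: bool = True


def active_borders(borders: list) -> list:
    """Return the reachable border nodes that share the best (lowest) priority."""
    candidates = [b for b in borders if b.reachable]
    if not candidates:
        return []
    best = min(b.priority for b in candidates)
    return [b for b in candidates if b.priority == best]


if __name__ == "__main__":
    normal = [
        BorderNode("border-a", "Building A", priority=1),
        BorderNode("border-b", "Building B", priority=10),
    ]
    print([b.name for b in active_borders(normal)])

    # Building A fails: the border in Building B takes over all traffic.
    failed = [
        BorderNode("border-a", "Building A", priority=1, reachable=False),
        BorderNode("border-b", "Building B", priority=10),
    ]
    print([b.name for b in active_borders(failed)])
```

Giving both borders the same priority in this model would make both active, which corresponds to sharing traffic rather than using one border exclusively.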

Fallback segments

Cisco SD-Access supports the Critical VLAN feature, which ensures that endpoints maintain a minimum level of network connectivity even when they lose connectivity to their ISE server because of an outage such as a WAN failure.

For wired clients (not applicable to wireless clients) that have already been onboarded, if the connection to the ISE Policy Service Node (PSN) is lost, the system pauses periodic re-authorization to prevent disruptions in the authentication path from affecting the data plane. For clients that have not yet been onboarded, the Critical VLAN feature assigns them to a specific VLAN if connectivity to ISE is lost, providing them with limited network access.

Critical VLANs can use microsegmentation to enforce policies in the absence of ISE. To achieve this, assign a security group to the critical VLAN when provisioning its anycast gateway (a VLAN-to-SGT mapping), and configure the appropriate policy matrix so that it is downloaded onto the switches.

In summary, Critical VLAN in SD-Access ensures that even when devices cannot authenticate properly, they are not entirely disconnected from the network but are given limited access for remediation and troubleshooting purposes.
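The fallback behavior for wired clients can be summarized as a small decision function. The following sketch mirrors the three cases described in this section. It is a conceptual model, not switch configuration, and the enum and function names are hypothetical.

```python
from enum import Enum


class PortAction(Enum):
    AUTHENTICATE = "authenticate against ISE as usual"
    KEEP_SESSION = "keep the existing session and pause periodic re-authorization"
    CRITICAL_VLAN = "place the client in the critical VLAN with limited access"


def wired_port_action(ise_reachable: bool, already_onboarded: bool) -> PortAction:
    """Decide how a wired access port treats a client, based on ISE reachability."""
    if ise_reachable:
        return PortAction.AUTHENTICATE
    if already_onboarded:
        return PortAction.KEEP_SESSION
    return PortAction.CRITICAL_VLAN


if __name__ == "__main__":
    for reachable in (True, False):
        for onboarded in (True, False):
            action = wired_port_action(reachable, onboarded)
            print(f"ISE reachable={reachable}, onboarded={onboarded}: {action.value}")
```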

System resiliency

To ensure system resiliency, it is important to implement high availability and redundancy solutions for critical components of the network infrastructure. This section gives an overview of how to achieve resiliency for these components:

● Catalyst Center HA

● ISE HA

● Cisco Wireless LAN Controller Redundancy

Catalyst Center HA

Catalyst Center HA is a feature designed to minimize downtime and increase network resilience. It achieves this by ensuring that critical services remain available in the event of hardware or software failures. HA in Catalyst Center typically involves deploying redundant hardware and software configurations to provide seamless failover and continuous operation. This helps organizations maintain network stability and reliability, even during unexpected events.

For more details, see Cisco Catalyst Center High Availability Guide.

ISE HA

Cisco ISE can be deployed in two main configurations: Standalone and Distributed.

● Standalone deployment:

In a standalone deployment, a single ISE node serves all the necessary functions, including administration, policy services, and monitoring. This configuration is suitable for smaller networks where a single node can handle the workload and redundancy is not a critical requirement.

● Distributed deployment:

In a distributed deployment, ISE nodes are distributed across multiple physical or virtual machines to provide scalability, redundancy, and high availability. This configuration is suitable for larger networks where scalability and redundancy are important.

Each deployment option has its own advantages and is chosen based on the specific requirements of the network in terms of scalability, redundancy, and performance. To support failover and to improve performance, you can set up a deployment with multiple Cisco ISE nodes in a distributed fashion.

For more details, see "Distributed Deployment Scenarios" in the Cisco Identity Services Engine Installation Guide.

Cisco Wireless Controller redundancy

Cisco Wireless Controller redundancy is essential for maintaining continuous wireless network services. In an HA pair setup, two wireless controllers are configured as a pair. One wireless controller functions as the primary (active) controller, managing all wireless clients and traffic, while the other serves as the secondary (standby) controller. The secondary controller stays synchronized with the primary controller's configuration and state.

If the primary controller encounters an issue, the secondary controller seamlessly takes over, ensuring uninterrupted wireless service. This redundancy feature significantly improves the reliability of wireless networks, providing failover capabilities in the event of wireless controller hardware or software failures. Consequently, users experience minimal disruption and maintain connectivity to the wireless network.

For more details, see Cisco Catalyst 9800 Series Wireless Controllers HA SSO Deployment Guide.

Reports

The Catalyst Center Reports feature provides a comprehensive suite of tools for deriving actionable insights into your network's operational efficiency. This feature enables data generation in multiple formats, with flexible scheduling and configuration options, allowing for tailored customization to meet your specific operational needs.

The Reports feature supports various use cases that include:

● Capacity planning:

Understanding device utilization within your network.

● Pattern change analysis:

Tracking changes in usage patterns, including clients, devices, bands, and applications.

● Operational reporting:

Reviewing reports on network operations, such as upgrade completions and provisioning failures.

● Network health assessment:

Evaluating the overall health of your network through detailed reports.

By leveraging Catalyst Center's reporting capabilities, you can significantly enhance your network's operational efficiency, ensuring a smooth-running, high-performing network environment.

For more details, see the Cisco Catalyst Center Platform User Guide.

Efficient troubleshooting

Efficient troubleshooting is a critical aspect of maintaining uninterrupted operations within a university IT infrastructure. Catalyst Center offers advanced debugging features tailored to meet these requirements effectively. These tools enable IT administrators to swiftly identify, diagnose, and resolve Catalyst Center-related issues, ensuring optimal performance of the university network systems. The tools include:

● Validation Tool:

Before Catalyst Center version 2.3.5.x, the Audit and Upgrade Readiness Analyzer (AURA) tool was used to evaluate upgrade readiness. With the introduction of the restricted shell in version 2.3.5.x, most AURA upgrade checks are now integrated into Catalyst Center. The Validation Tool assesses both the Catalyst Center appliance hardware and its connected external systems, identifying potential issues before they impact the university network.

For more details, see:

● Validate Cisco DNA Center Upgrade Readiness

The Catalyst Center Validation Tool provides valuable support for university network administrators. It enables proactive maintenance, efficient troubleshooting, and improved network stability, enhancing the operational efficiency of university IT services.

Financial efficiency

Reducing operational expenses and increasing revenue are key priorities for universities striving for financial sustainability. By automating the deployment and monitoring of large-scale, multisite networks, universities can significantly lower operational costs, streamline administrative processes, and maintain efficient IT operations. This approach allows for the management of complex campus networks with minimal manual intervention, enhancing overall efficiency and enabling better allocation of resources. Some strategies adopted by universities to achieve financial efficiency include:

● Automation and monitoring

● IP Address Management (IPAM) integration

● IT Service Management (ITSM) integration

● SD-Access extension

Automation and monitoring

Automation and monitoring are essential components of modern IT infrastructure management. Automation can include tasks such as software deployment, configuration management, system provisioning, and workflow orchestration. By automating repetitive and time-consuming tasks, organizations can improve efficiency, reduce errors, and free up human resources to focus on more strategic activities. Monitoring, on the other hand, involves continuously observing and analyzing the performance and health of IT systems, networks, applications, and services. Catalyst Center supports these strategies through the following components:

● LAN automation

● Plug and Play and Return Material Authorization

● Software Image Management

● Intelligent Capture

● Assurance and visibility

LAN automation

LAN automation in Catalyst Center is a feature designed to simplify the deployment and management of network infrastructure by automating the configuration and provisioning of network devices. This automation reduces the complexity and potential for errors associated with manual configuration, resulting in more efficient and reliable network operations.

Cisco LAN automation provides these key benefits:

● Zero-touch provisioning:

Network devices are dynamically discovered, onboarded, and taken from their factory-default state to full integration in the network.

● End-to-end topology:

Dynamic discovery of new network systems and their physical connectivity can be modelled and programmed. These new systems can be automated with Layer 3 IP addressing and routing protocols to dynamically build end-to-end routing topologies.

● Resilience:

LAN automation integrates system and network configuration parameters that optimize forwarding topologies and redundancy. LAN automation enables system-level redundancy and automates best practices to enable best-in-class resiliency during planned or unplanned network outages.

● Security:

Cisco-recommended network access and infrastructure protection parameters are automated, providing security from the initial deployment.

● Compliance:

LAN automation helps eliminate human errors, misconfiguration, and inconsistent rules and settings that drain IT resources. During new system onboarding, LAN automation provides compliance across the network infrastructure by automating globally managed parameters from Catalyst Center.
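To make the Layer 3 addressing step more concrete, the following minimal sketch carves point-to-point link addresses out of an address pool. It rests on two assumptions that are not stated in this document: that each routed link is numbered from its own /31 subnet, and that the pool `10.255.0.0/28` is available. The function name `p2p_links` is hypothetical and is not part of any Catalyst Center API.

```python
import ipaddress
from itertools import islice


def p2p_links(pool_cidr, link_count):
    """Carve /31 subnets out of a pool, one per routed point-to-point link."""
    pool = ipaddress.ip_network(pool_cidr)
    subnets = list(islice(pool.subnets(new_prefix=31), link_count))
    if len(subnets) < link_count:
        raise ValueError(f"{pool_cidr} cannot supply {link_count} /31 links")
    # A /31 holds exactly two addresses, one for each end of the link.
    return [(str(net[0]), str(net[1])) for net in subnets]


if __name__ == "__main__":
    # Assumed pool for illustration only.
    for near_end, far_end in p2p_links("10.255.0.0/28", 2):
        print(f"{near_end} <-> {far_end}")
```

Deriving link addresses from a single pool in a fixed order is one way to keep addressing consistent across devices, which is the kind of repeatability that the automation described above aims for.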

For more details, see the Cisco Catalyst Center SD-Access LAN Automation Deployment Guide.

Plug and Play and Return Material Authorization

Catalyst Center features Plug and Play (PnP) functionality, which simplifies the deployment of Cisco Catalyst switches, routers, and wireless APs. With PnP, network administrators can easily onboard new devices to the network without the need for manual configuration. Devices with PnP capability can automatically download the required software image and configuration from a PnP server, such as Catalyst Center, making the deployment process faster and more efficient.

Catalyst Center also supports Return Material Authorization (RMA) processes. When hardware fails or needs to be replaced, the RMA feature allows administrators to manage the return and replacement of faulty devices. This includes generating RMA requests, tracking the status of RMAs, and managing the replacement process through a centralized interface. Overall, the PnP and RMA features in Catalyst Center help streamline device deployment and replacement processes, reducing complexity and enhancing network management efficiency.

For more details, see the Network Device Onboarding for Cisco Catalyst Center Deployment Guide.

Software Image Management

The Catalyst Center Software Image Management (SWIM) feature simplifies and automates the process of managing software images across Catalyst switches, routers, and wireless devices in the network. Network administrators who wish to automate the upgrade of a Catalyst 9000 series switch at a branch or campus can use the Catalyst Center SWIM solution.

Catalyst Center stores all unique software images according to image type and version for the devices in your network. It allows you to view, import, and delete software images and push them to your network's devices. The software upgrade can be optimized by decoupling software distribution and activation to minimize downtime within the maintenance window. Overall, SWIM enhances operational efficiency, reduces downtime, and helps ensure network security and compliance by simplifying and automating the management of software images across Catalyst devices.
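The benefit of decoupling distribution from activation can be sketched as a simple schedule: the image copy happens ahead of the maintenance window, so only the activation has to fit inside it. This is a minimal planning model, not SWIM itself. The names `UpgradePlan` and `plan_upgrade`, the 24-hour lead time, and the sample device are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class UpgradePlan:
    device: str
    distribute_at: datetime  # image copy, scheduled before the window
    activate_at: datetime    # switch to the new image, inside the window


def plan_upgrade(device, window_start, window_end, activation_time,
                 lead_time=timedelta(hours=24)):
    """Schedule distribution ahead of the window and activation inside it."""
    if window_start + activation_time > window_end:
        raise ValueError("activation does not fit in the maintenance window")
    return UpgradePlan(device, window_start - lead_time, window_start)


if __name__ == "__main__":
    plan = plan_upgrade(
        "dorm-edge-07",                      # hypothetical device name
        datetime(2025, 1, 11, 2, 0),
        datetime(2025, 1, 11, 4, 0),
        activation_time=timedelta(minutes=30),
    )
    print(plan)
```

Because the transfer time no longer counts against the window, the window only needs to be sized for activation.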

For more details, see the SWIM Deployment Guide.

Intelligent Capture

Catalyst Center Intelligent Capture (iCAP) is a feature designed to enhance network troubleshooting and performance monitoring. It leverages advanced analytics and machine learning to provide deep insights into network traffic and client behaviors. iCAP supports a direct communication link between Catalyst Center and APs, so each AP can communicate with Catalyst Center directly. Using this channel, Catalyst Center can receive packet capture (PCAP) data, AP and client statistics, and spectrum data. Because the AP connects directly to Catalyst Center through gRPC, iCAP allows you to access AP data that is not available from wireless controllers.

For more details, see the Cisco Intelligent Capture Deployment Guide.

Assurance and visibility

Catalyst Center not only manages your network by automating network devices and services but also provides network assurance and analytics capabilities. Catalyst Center collects telemetry from network devices, Cisco ISE, users and endpoints, applications, and other integrations across the network. Catalyst Center Network Analytics correlates data from these sources to give administrators and operators comprehensive network insights through these capabilities:

● Device 360 and Client 360:

Provides the ability to view device or client connectivity, which includes information on topology, throughput, and latency from various times and different applications.

● Network time travel:

Provides the ability to go back in time and see the cause of a network issue.

● Application experience:

Provides unparalleled visibility and performance control on the applications critical to your core business on a per-user basis.

● Network analytics:

Provides recommendations for corrective actions for issues found in the network. These actions can involve guided remediation, where the engine specifies the steps for a network administrator to perform.

For details, see Cisco Catalyst Assurance.

IP Address Management integration

IP Address Management (IPAM) integration in Catalyst Center streamlines the process of managing IP addresses within a network. This integration provides a centralized platform to automate and simplify IP address allocation, tracking, and management. In SD-Access deployments, IPAM integration gives Catalyst Center access to existing IP address scopes. When you configure new IP address pools in Catalyst Center, it automatically updates the IPAM server, reducing manual IP address management tasks.

Catalyst Center includes two third-party integration modules, one for the IPAM provider Infoblox and one for BlueCat. Other IPAM providers may be configured for use with Catalyst Center by providing an IPAM provider REST API service that meets the Catalyst Center IPAM provider specification.
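The relationship between an existing IPAM scope and a new address pool can be sketched with a simple consistency check: a new pool should fall inside the scope and should not overlap a pool that is already allocated. This is an illustrative model only. The function `validate_new_pool` and the sample prefixes are hypothetical, and the sketch does not call the Catalyst Center or any IPAM provider API.

```python
import ipaddress


def validate_new_pool(scope_cidr, existing_pools, new_pool_cidr):
    """Check that a new pool sits inside the scope and overlaps no existing pool."""
    scope = ipaddress.ip_network(scope_cidr)
    new_pool = ipaddress.ip_network(new_pool_cidr)
    if not new_pool.subnet_of(scope):
        return False, f"{new_pool} is outside scope {scope}"
    for cidr in existing_pools:
        if new_pool.overlaps(ipaddress.ip_network(cidr)):
            return False, f"{new_pool} overlaps existing pool {cidr}"
    return True, "ok"


if __name__ == "__main__":
    # Assumed scope and pools for illustration only.
    scope = "10.20.0.0/16"
    pools = ["10.20.0.0/24"]
    print(validate_new_pool(scope, pools, "10.20.1.0/24"))
    print(validate_new_pool(scope, pools, "10.20.0.128/25"))
```

Keeping this kind of check in one system of record is the main reason to integrate an IPAM server rather than track pools separately in each tool.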

For more details, see Configure an IP Address Manager.

IT Service Management integration

IT Service Management (ITSM) refers to the implementation and management of quality IT services that meet the needs of a business. ServiceNow is a popular ITSM platform that provides a suite of applications to help organizations automate and streamline their IT services.

Catalyst Center and ServiceNow integration supports these capabilities:

● Integrating Catalyst Center into ITSM processes of incident, event, change, and problem management.

● Integrating Catalyst Center into ITSM approval and preapproval chains.

● Integrating Catalyst Center with formal change and maintenance window schedules.

The scope of the integration is mainly to check your network for assurance and maintenance issues and for events requiring software image updates for compliance, security, or any other operational triggers. Details about these issues are then published to an ITSM (ServiceNow) system or any REST endpoint.
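For the generic REST endpoint case, the following minimal sketch shows how a receiving integration might be exercised with a JSON event. The endpoint URL, the event field names, and the function `build_event_request` are hypothetical. They do not represent the event schema that Catalyst Center publishes, and the request is only constructed, not sent.

```python
import json
import urllib.request

# Hypothetical event. Field names and values are illustrative and are not
# the Catalyst Center event schema.
SAMPLE_EVENT = {
    "category": "software-image-compliance",
    "severity": "warning",
    "device": "lib-edge-01",
    "summary": "Running image differs from the designated golden image",
}


def build_event_request(endpoint_url, event):
    """Wrap an event in a JSON POST request without sending it."""
    body = json.dumps(event).encode("utf-8")
    return urllib.request.Request(
        endpoint_url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Assumed endpoint for illustration only.
    request = build_event_request("https://itsm.example.edu/api/events", SAMPLE_EVENT)
    print(request.get_method(), request.full_url)
    print(request.data.decode("utf-8"))
```

A receiver built this way could map the category and severity fields to incident or change records in the ITSM system.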

For more details, see the Cisco Catalyst Center ITSM Integration Guide.

SD-Access extension

SD-Access extension is a critical capability that allows organizations to extend the reach of their SD-Access fabric, ensuring consistent policy enforcement, enhanced security, simplified management, and improved network performance across a broader range of environments and devices.

An extended node connects to the SD-Access fabric in Layer 2 mode. It facilitates the connection of IoT endpoints but does not itself support fabric technology. Using Catalyst Center, the extended node can be onboarded from a factory reset state through the PnP method, enabling security controls on the extended network and enforcing fabric policies for endpoints connected to the extended node.

To implement SD-Access extension, deploy extended nodes, which are available in three different types:

● Extended Node (EX):

Extended Node is a Layer 2 switch that connects to a fabric edge node in a Cisco SD-Access network. It provides connectivity for IoT endpoints and other devices that do not support full SD-Access capabilities. Extended nodes are typically managed and configured through a centralized controller like Catalyst Center. They rely on the fabric edge for advanced network functions like LISP, VXLAN, and SGACL enforcement.

● Policy Extended Node (PEN):

Policy Extended Node is a specific type of extended node that offers additional capabilities. It can perform 802.1X/MAB authentication, dynamically assign VLANs and SGTs to endpoints, and enforce SGACLs. This type of node provides a more granular level of policy control compared to a standard extended node, allowing for more flexible network segmentation and security.

● Supplicant-Based Extended Node (SBEN):

Supplicant-Based Extended Node is an extended node that undergoes a stricter onboarding process. It requires an IEEE 802.1X supplicant configuration and completes a full authentication and authorization process before being allowed into the SD-Access network. This approach enhances security by ensuring that only authorized devices can access the network. SBENs are often used in environments with heightened security requirements.

Key points:

● Extended Nodes provide connectivity for endpoints that cannot directly participate in SD-Access.

● Policy Extended Nodes offer enhanced policy enforcement capabilities.

● Supplicant-Based Extended Nodes implement stricter security measures through 802.1X authentication.
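The distinctions between the three types can be summarized as a small decision helper. This is a simplified sketch based only on the descriptions in this section. The function `choose_node_type`, its two inputs, and the order of precedence are assumptions, and a real selection also depends on factors such as hardware and software support.

```python
from enum import Enum


class ExtendedNodeType(Enum):
    EX = "Extended Node"
    PEN = "Policy Extended Node"
    SBEN = "Supplicant-Based Extended Node"


def choose_node_type(needs_local_policy, needs_authenticated_onboarding):
    """Map two requirements from this section onto an extended node type."""
    # Assumption: authenticated (802.1X supplicant) onboarding takes precedence.
    if needs_authenticated_onboarding:
        return ExtendedNodeType.SBEN
    # Local 802.1X/MAB authentication, SGT assignment, and SGACL enforcement.
    if needs_local_policy:
        return ExtendedNodeType.PEN
    # Plain Layer 2 connectivity that relies on the fabric edge.
    return ExtendedNodeType.EX


if __name__ == "__main__":
    print(choose_node_type(False, False).value)
    print(choose_node_type(True, False).value)
    print(choose_node_type(True, True).value)
```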

For more details, see:

● Cisco SD-Access Solution Design Guide

● Connected Communities Infrastructure - General Solution Design Guide

Experience improvement

Enhancing user and customer experiences through strategic use of modern technologies involves prioritizing Quality of Service (QoS), leveraging application visibility, and implementing video streaming, particularly in environments where performance directly impacts business operations and customer satisfaction. In today's competitive landscape, prioritizing QoS is not merely an option but a necessity for delivering exceptional user and customer experiences.

Strategies for enhancing these areas include:

● QoS

● Application Visibility

● Video streaming across sites

QoS

QoS refers to the network capability to prioritize or differentiate service for selected types of network traffic. Configuring QoS ensures that network resources are utilized efficiently while meeting business objectives, such as ensuring enterprise-grade voice quality or delivering a high Quality of Experience (QoE) for video. Catalyst Center facilitates QoS configuration in your network through application policies.

These policies are defined by core parameters, including: