1.2T line cards

This section describes the supported card modes, card configurations, and procedures to configure the card modes on the 1.2T line card.

A 1.2T line card:

- supports both module and slice configurations, and
- provides flexible trunk and client port assignment.

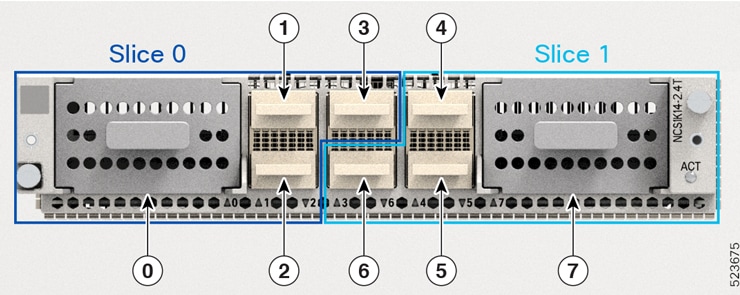

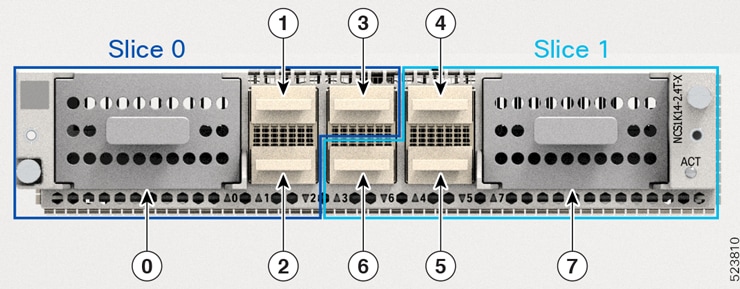

The line cards are equipped with trunk and client ports:

- Two trunk ports (0 and 1), and
- 12 client ports (2 through 13).

Configuration modes

You can configure the line cards in these two modes:

- Muxponder mode: both trunk ports are configured with the same trunk rate. The client-to-trunk mapping is sequential.
- Muxponder slice mode: the client-to-trunk mapping is fixed.

This table lists the client ports assigned to each trunk for the 1.2T card in muxponder slice mode.

| Card | Trunk 0 client ports | Trunk 1 client ports |
|---|---|---|
| 1.2T | 2 to 7 | 8 to 13 |

Supported data rates on the 1.2T line card

The 1.2T line card supports 100GE and OTU4 client data rates. This table shows the trunk and client ports that are enabled in the muxponder and muxponder slice modes for each trunk data rate.

| Trunk data rate (Gbps) | Client data rate | Muxponder mode: trunk ports | Muxponder mode: client ports | Muxponder slice mode: client ports for trunk 1 | Muxponder slice mode: client ports for trunk 0 |
|---|---|---|---|---|---|
| 100 | 100GE, OTU4 | 0 | 2 | 8 | 2 |
| 200 | 100GE, OTU4 | 0, 1 | 2, 3, 4, 5 | 8, 9 | 2, 3 |
| 300 | 100GE, OTU4 | 0, 1 | 2, 3, 4, 5, 6, 7 | 8, 9, 10 | 2, 3, 4 |
| 400 | 100GE, OTU4 | 0, 1 | 2, 3, 4, 5, 6, 7, 8, 9 | 8, 9, 10, 11 | 2, 3, 4, 5 |
| 500 | 100GE, OTU4 | 0, 1 | 2, 3, 4, 5, 6, 7, 8, 9, 10, 11 | 8, 9, 10, 11, 12 | 2, 3, 4, 5, 6 |
| 600 | 100GE, OTU4 | 0, 1 | 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13 | 8, 9, 10, 11, 12, 13 | 2, 3, 4, 5, 6, 7 |
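The port assignments in this table follow a regular pattern: one 100GE or OTU4 client per 100G of trunk capacity, numbered sequentially from client port 2 in muxponder mode and from the fixed first port of each trunk (2 or 8) in slice mode. The following Python sketch derives the enabled ports from the trunk data rate under that reading of the table. The function names are hypothetical and are not part of any Cisco tool.

```python
"""Sketch: client ports enabled on the 1.2T line card, derived from the table."""

SUPPORTED_RATES_GBPS = (100, 200, 300, 400, 500, 600)


def muxponder_ports(trunk_rate_gbps: int) -> tuple[list[int], list[int]]:
    """Return (trunk_ports, client_ports) enabled in muxponder mode."""
    if trunk_rate_gbps not in SUPPORTED_RATES_GBPS:
        raise ValueError(f"unsupported trunk rate: {trunk_rate_gbps}")
    if trunk_rate_gbps == 100:
        # The table lists a single trunk (0) and a single client (2) at 100G.
        return [0], [2]
    # Both trunks run at the same rate; one 100G client per 100G of capacity,
    # assigned sequentially from client port 2.
    client_count = 2 * trunk_rate_gbps // 100
    return [0, 1], list(range(2, 2 + client_count))


def slice_client_ports(trunk: int, trunk_rate_gbps: int) -> list[int]:
    """Return the client ports enabled for one trunk in muxponder slice mode."""
    if trunk not in (0, 1):
        raise ValueError("trunk must be 0 or 1")
    if trunk_rate_gbps not in SUPPORTED_RATES_GBPS:
        raise ValueError(f"unsupported trunk rate: {trunk_rate_gbps}")
    # Fixed mapping: trunk 0 uses ports 2-7, trunk 1 uses ports 8-13.
    first_port = 2 if trunk == 0 else 8
    return list(range(first_port, first_port + trunk_rate_gbps // 100))


if __name__ == "__main__":
    print(muxponder_ports(300))        # ([0, 1], [2, 3, 4, 5, 6, 7])
    print(slice_client_ports(1, 400))  # [8, 9, 10, 11]
```

Computing the ports, rather than hard-coding each row, makes it easy to spot a table row that does not fit the pattern.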

You can set up all of these configurations by specifying the appropriate client and trunk data rates in the hw-module command.

This table shows the trunk parameter ranges for the 1.2T line card.

| Trunk payload | FEC | Min BPS | Max BPS | Min GBd | Max GBd |
|---|---|---|---|---|---|
| 50G | 15% | 1 | 1.3125 | 24.0207911 | 31.5272884 |
| 50G | 27% | 1 | 1.4453125 | 24.0207911 | 34.7175497 |
| 100G | 15% | 1 | 2.625 | 24.0207911 | 63.0545768 |
| 100G | 27% | 1 | 2.890625 | 24.0207911 | 69.4350994 |
| 150G | 15% | 1.3203125 | 3.9375 | 24.0207911 | 71.6359689 |
| 150G | 27% | 1.453125 | 4.3359375 | 24.0207911 | 71.6749413 |
| 200G | 15% | 1.7578125 | 5.25 | 24.0207911 | 71.7420962 |
| 200G | 27% | 2 | 4.40625 | 31.51 | 69.43 |
| 250G | 15% | 2.1953125 | 6 | 26.2727403 | 71.8059237 |
| 250G | 27% | 2.4140625 | 6 | 28.9312914 | 71.9068991 |
| 300G | 15% | 2.6328125 | 6 | 31.5272884 | 71.8485385 |
| 300G | 27% | 2.8984375 | 6 | 34.7175497 | 71.8681352 |
| 350G | 15% | 3.0703125 | 6 | 36.7818364 | 71.8790086 |
| 350G | 27% | 3.3828125 | 6 | 40.503808 | 71.8404724 |
| 400G | 15% | 3.5078125 | 6 | 42.0363845 | 71.9018782 |
| 400G | 27% | 3.8671875 | 6 | 46.2900663 | 71.8197392 |
| 450G | 15% | 3.9453125 | 6 | 47.2909326 | 71.9196757 |
| 450G | 27% | 4.34375 | 6 | 52.0763245 | 71.9327648 |
| 500G | 15% | 4.3828125 | 6 | 52.5454806 | 71.93392 |
| 500G | 27% | 4.8281250 | 6 | 57.8625828 | 71.9068991 |
| 550G | 15% | 4.8203125 | 6 | 57.8000287 | 71.9455787 |
| 550G | 27% | 5.3125 | 6 | 63.6488411 | 71.88575 |
| 600G | 15% | 5.2578125 | - | - | 71.9552971 |

| Trunk payload | FEC | Min BPS | Max BPS | Min GBd | Max GBd |
|---|---|---|---|---|---|
| 100G | 15% | 1 | 2.625 | 24.0207911 | 63.0545768 |
| 100G | 27% | 1 | 2.890625 | 24.0207911 | 69.4350994 |
| 150G | 15% | 1.3203125 | 3.9375 | 24.0207911 | 71.6359689 |
| 150G | 27% | 1.453125 | 4.3359375 | 24.0207911 | 71.6749413 |
| 200G | 15% | 2 | 4 | 31.5272884 | 63.0545768 |
| 200G | 27% | 2 | 4.40625 | 31.51664088 | 69.43509943 |
| 250G | 15% | 2.1953125 | 4.5 | 35.0303204 | 71.8059237 |
| 250G | 27% | 2.4140625 | 4.5 | 38.5750552 | 71.9068991 |
| 300G | 15% | 2.6328125 | 4.5 | 42.0363845 | 71.8485385 |
| 300G | 27% | 2.8984375 | 4.5 | 46.2900662857142 | 71.86813526 |
| 350G | 15% | 3.0703125 | 4.5 | 49.0424486 | 71.8790086 |
| 350G | 27% | 3.3828125 | 4.5 | 54.0050773 | 71.8404724 |
| 400G | 15% | 3.5078125 | 4.5 | 56.0485127 | 71.9018782 |
| 400G | 27% | 3.8671875 | 4.5 | 61.72008838 | 71.81973921 |
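The ranges in the trunk parameter tables are consistent with the product of baud rate and BPS being constant for a given payload and FEC: for example, 100G with 15% FEC gives 63.0545768 GBd at 1 BPS and 24.0207911 GBd at 2.625 BPS, and both products equal 63.0545768. The following Python sketch uses that observation to estimate a baud rate. The constants are read from the 100G rows, and the linear scaling with payload is an assumption that the other rows agree with; it is not a formula published for the card.

```python
"""Sketch: estimate the 1.2T trunk baud rate from payload, FEC, and BPS.

Assumption: baud rate x bits per symbol is constant for a given payload and
FEC. The constants are the 100G rows of the trunk parameter tables.
"""

# GBd x BPS for a 100G payload (100G row: 1 BPS at the maximum GBd).
BAUD_X_BPS_PER_100G = {"15%": 63.0545768, "27%": 69.4350994}


def baud_rate_gbd(payload_gbps: float, fec: str, bps: float) -> float:
    """Return the estimated baud rate in GBd for the given trunk settings."""
    if bps <= 0:
        raise ValueError("bits per symbol must be positive")
    return BAUD_X_BPS_PER_100G[fec] * (payload_gbps / 100) / bps


if __name__ == "__main__":
    # 300G with 15% FEC at the table's Min BPS and Max BPS (6).
    print(f"{baud_rate_gbd(300, '15%', 2.6328125):.4f}")  # close to Max GBd 71.8485385
    print(f"{baud_rate_gbd(300, '15%', 6):.4f}")          # close to Min GBd 31.5272884
```

Lower BPS values give higher baud rates; across the tables, the BPS limits keep the baud rate between about 24 GBd and 72 GBd.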

You can configure the sub 50G or coupled mode on the 1.2T line card only in the muxponder mode.

This table shows the port configuration for the supported data rates in the muxponder mode.

| Trunk data rate (per trunk) | Total configured data rate | Trunk ports | Client ports for trunk 0 (100G) | Shared client port (50G per trunk) | Client ports for trunk 1 (100G) |
|---|---|---|---|---|---|
| 50G | 100G | 0, 1 | - | 2 | - |
| 150G | 300G | 0, 1 | 2 | 3 | 4 |
| 350G | 700G | 0, 1 | 2, 3, 4 | 5 | 6, 7, 8 |
| 450G | 900G | 0, 1 | 2, 3, 4, 5 | 6 | 7, 8, 9, 10 |
| 550G | 1.1T | 0, 1 | 2, 3, 4, 5, 6 | 7 | 8, 9, 10, 11, 12 |

The 1.2T line card also supports an alternate port configuration for sub 50G rates, called split client port mapping, which you configure using the CLI.

This table shows the port configuration for the supported data rates in the split client port mapping mode.

| Trunk data rate (per trunk) | Total configured data rate | Trunk ports | Client ports for trunk 0 (100G) | Shared client port (50G per trunk) | Client ports for trunk 1 (100G) |
|---|---|---|---|---|---|
| 50G | 100G | 0, 1 | - | 7 | - |
| 150G | 300G | 0, 1 | 2 | 7 | 8 |
| 250G | 500G | 0, 1 | 2, 3 | 7 | 8, 9 |
| 350G | 700G | 0, 1 | 2, 3, 4 | 7 | 8, 9, 10 |
| 450G | 900G | 0, 1 | 2, 3, 4, 5 | 7 | 8, 9, 10, 11 |
| 550G | 1.1T | 0, 1 | 2, 3, 4, 5, 6 | 7 | 8, 9, 10, 11, 12 |
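Both port tables follow one rule: each trunk carries (trunk rate - 50G) / 100G full 100G clients plus half of one shared client port. In the default mapping the shared port directly follows the trunk 0 clients; in the split mapping it is fixed at port 7 and the trunk 1 clients start at port 8. The following Python sketch encodes that rule, assuming the per-trunk rates listed in each table are the only valid inputs for that mode (250G appears only in the split table). The function and dictionary key names are hypothetical.

```python
"""Sketch: client port map for x50G (coupled) trunk rates on the 1.2T card."""

# Per-trunk rates listed in the default and split mapping tables.
DEFAULT_RATES_GBPS = (50, 150, 350, 450, 550)
SPLIT_RATES_GBPS = (50, 150, 250, 350, 450, 550)


def x50g_port_map(trunk_rate_gbps: int, split: bool = False) -> dict[str, list[int]]:
    """Return trunk 0, shared, and trunk 1 client ports for an x50G rate."""
    allowed = SPLIT_RATES_GBPS if split else DEFAULT_RATES_GBPS
    if trunk_rate_gbps not in allowed:
        raise ValueError(f"{trunk_rate_gbps}G is not listed for this mapping mode")
    full_clients = (trunk_rate_gbps - 50) // 100  # 100G clients per trunk
    if split:
        # Shared port fixed at 7; trunk 1 clients start at port 8.
        shared = 7
        trunk1_start = 8
    else:
        # Shared port follows the trunk 0 clients; trunk 1 clients follow it.
        shared = 2 + full_clients
        trunk1_start = shared + 1
    return {
        "trunk0": list(range(2, 2 + full_clients)),
        "shared": [shared],
        "trunk1": list(range(trunk1_start, trunk1_start + full_clients)),
    }


if __name__ == "__main__":
    print(x50g_port_map(350))              # default mapping
    print(x50g_port_map(350, split=True))  # split client port mapping
```

At 550G both modes produce the same port map, which matches the last row of each table.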

Note: In all x50G configurations, client traffic on the middle port is affected by ODUK-BDI and LF alarms after a power cycle or a link flap on the trunk side. This issue occurs when the two network lanes work in coupled mode and move from low to high power. To resolve it, create a new frame at either the near end or the far end by performing a shut and then a no shut on the trunk ports.

Coupled mode restrictions

These restrictions apply to the coupled mode configuration:

- Both trunk ports must be configured with the same bits-per-symbol or baud rate, and both trunk signals must be sent over the same fiber in the same direction.
- The chromatic dispersion must be configured to the same value for both trunk ports.
- When trunk internal loopback is configured, you must configure it on both trunk ports. If you configure internal loopback on only one trunk, traffic loss occurs.
- A fault on one trunk port of a coupled pair may cause errors on all clients, including clients that run only on the unaffected trunk port.
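The configuration-level restrictions above can be checked before a change is committed. The following Python sketch compares two trunk settings records and reports each violated restriction. `TrunkSettings` and its fields are a hypothetical data model, not a Cisco API; the sketch treats the pair as mismatched if either the BPS or the baud rate differs, and it does not check the same-fiber, same-direction requirement.

```python
"""Sketch: check a coupled trunk pair against the coupled mode restrictions."""

from dataclasses import dataclass


@dataclass(frozen=True)
class TrunkSettings:
    """Hypothetical snapshot of one trunk port's coupled-mode settings."""
    bits_per_symbol: float
    baud_rate_gbd: float
    chromatic_dispersion_ps_nm: int
    internal_loopback: bool


def coupled_pair_problems(trunk0: TrunkSettings, trunk1: TrunkSettings) -> list[str]:
    """Return a description of each restriction the pair violates."""
    problems = []
    if (trunk0.bits_per_symbol != trunk1.bits_per_symbol
            or trunk0.baud_rate_gbd != trunk1.baud_rate_gbd):
        problems.append("trunks use different bits-per-symbol or baud rate")
    if trunk0.chromatic_dispersion_ps_nm != trunk1.chromatic_dispersion_ps_nm:
        problems.append("trunks use different chromatic dispersion values")
    if trunk0.internal_loopback != trunk1.internal_loopback:
        problems.append("internal loopback is configured on only one trunk")
    # Not checked here: both signals must use the same fiber and direction.
    return problems


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    t0 = TrunkSettings(4.5, 60.0, 0, True)
    t1 = TrunkSettings(4.5, 60.0, 0, False)
    print(coupled_pair_problems(t0, t1))
```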

Configure split client port mapping

Use this task to configure the trunk port to client port mapping for sub 50G data rates in the default mode or in the split client port mapping mode.

Procedure

SUMMARY STEPS

- Perform one of these steps to configure or remove the split client port mapping mode:
  - To configure the trunk port to client port mapping for sub 50G rates in the split client port mapping mode, run the split-client-port-mapping command.
  - To remove the split client port mapping configuration, run the no split-client-port-mapping command.
- Run the commit command to apply the changes.
- Verify the port mapping using the show hw-module location location mxponder command.
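If you provision several cards, the commands in this task can be generated per location. The following Python sketch only assembles the command text used in this task, in order; it does not connect to the router, and the function name is hypothetical. How the lines are delivered (console, SSH, or an automation tool) is outside its scope.

```python
"""Sketch: build the CLI line sequence for the split client port mapping task."""


def split_mapping_commands(location: str, enable: bool = True) -> list[str]:
    """Return the commands, in order, to add or remove split client port mapping."""
    mapping = "split-client-port-mapping"
    return [
        "configure",
        f"hw-module location {location} mxponder",
        mapping if enable else f"no {mapping}",
        "commit",
        "end",
        # Back in EXEC mode: verify the resulting port mapping.
        f"show hw-module location {location} mxponder",
    ]


if __name__ == "__main__":
    for line in split_mapping_commands("0/1/NXR0"):
        print(line)
```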

DETAILED STEPS

Step 1: Perform one of these steps to configure or remove the split client port mapping mode.

Step 2: Run the commit command to apply the changes.

Step 3: Verify the port mapping using the show hw-module location location mxponder command.

Example: This example shows how to verify the split client port mapping configuration.

The split client port mapping is configured.

This is a sample in which split-client-port-mapping is configured with a 450G trunk payload.

RP/0/RP0/CPU0:ios#configure

RP/0/RP0/CPU0:ios(config)#hw-module location 0/1/NXR0 mxponder

RP/0/RP0/CPU0:ios(config-hwmod-mxp)#split-client-port-mapping

RP/0/RP0/CPU0:ios(config-hwmod-mxp)#commit

RP/0/RP0/CPU0:ios(config-hwmod-mxp)#end

This is a sample in which split-client-port-mapping is removed.

RP/0/RP0/CPU0:ios#configure

RP/0/RP0/CPU0:ios(config)#hw-module location 0/1/NXR0 mxponder

RP/0/RP0/CPU0:ios(config-hwmod-mxp)#no split-client-port-mapping

RP/0/RP0/CPU0:ios(config-hwmod-mxp)#commit

RP/0/RP0/CPU0:ios(config-hwmod-mxp)#end

Configure the card mode

Use this task to configure the 1.2T line card in the module (muxponder) or slice configuration (muxponder slice), specifying its client and trunk data rates.

Procedure

Step 1: Configure the client and trunk rates for the muxponder at the specified location.

Example:

This is a sample in which the card is configured in the muxponder mode with a 550G trunk payload.

This is a sample in which the card is configured in the muxponder mode with a 500G trunk payload.

Step 2: Use these commands to configure the client and trunk data rates of the card in the muxponder slice mode.

Example:

This is a sample in which the card is configured in the muxponder slice 0 mode with a 500G trunk payload.

This is a sample in which the card is configured in the muxponder slice 1 mode with a 400G trunk payload.

This is a sample in which the card is configured with mixed client rates in the muxponder slice mode.

Verify the 1.2T line card configuration

Use this task to verify the configured settings of the 1.2T line card in either muxponder or muxponder slice mode.

Procedure

Use the show hw-module location <location> mxponder command to verify the card configuration.

Example:

This is a sample output of the coupled mode configuration where the shared client port is highlighted.

This is a sample output of all the muxponder slice 0 configurations.

This is a sample output of all the muxponder slice 1 configurations.

This is a sample output of the muxponder slice 1 configuration with the client configured as OTU4.

This is a sample to verify the mixed client rate configuration in the muxponder slice mode.

Clear alarm statistics

Use this task to clear alarm statistics on the optics or coherent DSP controller.

Procedure

Run the clear counters controller controllertype R/S/I/P command to clear alarm statistics on the optics or coherent DSP controller.

Example: This is a sample in which the alarm statistics are cleared on the coherent DSP controller.

Regeneration mode

In an optical transmission system, 3R regeneration helps extend the reach of the optical communication links by reamplifying, reshaping, and retiming the data pulses.

Regeneration corrects distortion of an optical signal by converting it to an electrical signal, processing the electrical signal, and then retransmitting it as an optical signal.

In Regeneration (Regen) mode, the 1.2T line card receives the OTN signal on one trunk port and sends the regenerated OTN signal on the other trunk port, in both directions. In this mode, only the trunk optics and coherentDSP controllers are created.

Regeneration can be configured only on the 1.2T line card.

Configure the card in Regen mode

Use this task to configure the regeneration mode on the 1.2T line card. The supported trunk rates are 100G to 600G in multiples of 100G.

Procedure

Step 1: Run the hw-module location location regen trunk-rate command to configure the regeneration mode on the 1.2T line card.

Example: This is a sample that configures the regeneration mode on the 1.2T line card with the trunk rate 300.

Step 2: Commit and exit the configuration.
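Because Regen mode accepts only 100G to 600G in multiples of 100G, a provisioning script can reject other rates before sending the command. The following Python sketch validates the rate and builds the command text. The function name is hypothetical, and passing the rate as a bare number (for example, 300) is an assumption based on the trunk-rate 300 sample in this task.

```python
"""Sketch: validate a Regen trunk rate and build the configuration command."""

# Supported Regen trunk rates: 100G to 600G in multiples of 100G.
REGEN_TRUNK_RATES_GBPS = range(100, 601, 100)


def regen_command(location: str, trunk_rate_gbps: int) -> str:
    """Return the hw-module regen command for a supported trunk rate."""
    if trunk_rate_gbps not in REGEN_TRUNK_RATES_GBPS:
        raise ValueError(f"Regen supports 100G-600G in 100G steps, not {trunk_rate_gbps}G")
    # Assumption: the rate is given as a bare number, as in the 300 sample.
    return f"hw-module location {location} regen trunk-rate {trunk_rate_gbps}"


if __name__ == "__main__":
    print(regen_command("0/1/NXR0", 300))
```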

Verify the Regen mode

Use this task to verify the regeneration mode configuration.

Procedure

Run the show hw-module location location regen command to verify the Regen mode.

Example: The terms East Port and West Port are used to represent OTN signal regeneration at the same layer.

Configure the BPS

This section provides instructions for configuring the Bits-Per-Symbol (BPS) parameter and viewing BPS and baud rate ranges on supported optical interfaces.

The bits-per-symbol parameter allows you to configure the modulation format on optical interfaces. This setting directly affects the spectral efficiency and data rate on a per-wavelength basis.

| Traffic rate (Gbps) | Minimum baud rate (GBd) | Maximum baud rate (GBd) |
|---|---|---|
| 400 | 43.34518 | 130.4647 |
| 600 | 59.53435 | 148.0555 |
| 800 | 79.37913 | 148.0555 |
| 1000 | 99.22392 | 148.0555 |
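Before you change the BPS or baud rate, you can check the resulting baud rate against the limits for the traffic rate. The following Python sketch copies the limits from the table, assuming the traffic rate is in Gbps and the baud rates are in GBd; the names are hypothetical.

```python
"""Sketch: check a baud rate against the per-traffic-rate limits in the table."""

# Traffic rate (Gbps) -> (minimum, maximum) baud rate (GBd), copied from the table.
BAUD_LIMITS_GBD = {
    400: (43.34518, 130.4647),
    600: (59.53435, 148.0555),
    800: (79.37913, 148.0555),
    1000: (99.22392, 148.0555),
}


def baud_within_limits(traffic_rate_gbps: int, baud_gbd: float) -> bool:
    """Return True if the baud rate lies inside the listed range (inclusive)."""
    if traffic_rate_gbps not in BAUD_LIMITS_GBD:
        raise KeyError(f"no baud limits listed for {traffic_rate_gbps}G")
    low, high = BAUD_LIMITS_GBD[traffic_rate_gbps]
    return low <= baud_gbd <= high


if __name__ == "__main__":
    print(baud_within_limits(400, 100.0))
    print(baud_within_limits(800, 60.0))
```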

Use this task to set the BPS value to 3.4375 on the 1.2T and 2-QDD-C line cards to support 300G trunk configurations on 75 GHz networks.

Procedure

Step 1: Run the controller optics R/S/I/P bits-per-symbol value command to configure the BPS to 3.4375 on the 1.2T and 2-QDD-C line cards. This configuration supports 300G trunk configurations on 75 GHz networks.

Example: This is a sample in which the BPS is configured to 3.4375.

Step 2: Run these commands to view the BPS and baud rate for a specific range.
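For the 1.2T line card, you can estimate the baud rate that 3.4375 BPS produces for a 300G trunk from the 1.2T trunk parameter table earlier in this section. The following Python sketch assumes that baud rate x BPS is constant for a given payload and FEC (the table rows are consistent with this) and uses the 100G rows as constants. It does not cover the 2-QDD-C line card, whose ranges are not listed here, and the names are hypothetical.

```python
"""Sketch: estimate the 300G trunk baud rate on the 1.2T card at 3.4375 BPS.

Assumption: baud rate x BPS is constant for a given payload and FEC, using
the 100G rows of the 1.2T trunk parameter table as the constants.
"""

BAUD_X_BPS_PER_100G = {"15%": 63.0545768, "27%": 69.4350994}
# Min and Max BPS for a 300G payload in the 1.2T trunk parameter table.
BPS_RANGE_300G = {"15%": (2.6328125, 6.0), "27%": (2.8984375, 6.0)}


def baud_for_300g(fec: str, bps: float = 3.4375) -> float:
    """Return the estimated 300G baud rate in GBd for the given FEC and BPS."""
    low, high = BPS_RANGE_300G[fec]
    if not low <= bps <= high:
        raise ValueError(f"{bps} BPS is outside the 300G range for {fec} FEC")
    return BAUD_X_BPS_PER_100G[fec] * 3 / bps


if __name__ == "__main__":
    for fec in ("15%", "27%"):
        print(fec, round(baud_for_300g(fec), 2))
```

Under these assumptions, 3.4375 BPS gives roughly 55.0 GBd with 15% FEC and 60.6 GBd with 27% FEC, both inside the 300G baud ranges in the table.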

Trunk rates and BPSK modulation

Trunk rates on the 1.2T and 2-QDD-C line cards can be configured to 50G, 100G, and 150G to support Binary Phase-Shift Keying (BPSK) modulation.

This configuration optimizes the efficiency of carrying information over optical signals.

Configuration methods

You can configure trunk rates for BPSK modulation using these methods:

- Command-Line Interface (CLI)
- NETCONF YANG
- OpenConfig (OC) models

Supported trunk rates and BPSK modulation

This table lists the trunk rates with the supported BPSK modulation:

| Trunk rate | BPS range for BPSK modulation |
|---|---|
| 50G | 1 to 1.4453125 |
| 100G | 1 to 2.890625 |
| 150G | 1.453125 to 4.3359375 |
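The following Python sketch checks a BPS value against the ranges in this table and builds the trunk-rate command used in the next task (hw-module location location mxponder trunk-rate {50G | 100G | 150G}). The function names are hypothetical, and the sketch only produces command text; it does not apply the configuration.

```python
"""Sketch: check a BPS value against the BPSK table and build the trunk-rate command."""

# Trunk rate (Gbps) -> (lowest, highest) BPS value listed in the BPSK table.
BPSK_BPS_RANGE = {
    50: (1.0, 1.4453125),
    100: (1.0, 2.890625),
    150: (1.453125, 4.3359375),
}


def bpsk_bps_supported(trunk_rate_gbps: int, bps: float) -> bool:
    """Return True if the trunk rate and BPS value fall within the BPSK table."""
    if trunk_rate_gbps not in BPSK_BPS_RANGE:
        return False
    low, high = BPSK_BPS_RANGE[trunk_rate_gbps]
    return low <= bps <= high


def bpsk_trunk_rate_command(location: str, trunk_rate_gbps: int) -> str:
    """Return the mxponder trunk-rate command for a BPSK trunk rate."""
    if trunk_rate_gbps not in BPSK_BPS_RANGE:
        raise ValueError("BPSK trunk rates are 50G, 100G, and 150G")
    return f"hw-module location {location} mxponder trunk-rate {trunk_rate_gbps}G"


if __name__ == "__main__":
    print(bpsk_bps_supported(150, 1.0))
    print(bpsk_trunk_rate_command("0/1/NXR0", 50))
```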

Configure trunk rate for BPSK modulation

Use this task to configure the trunk rate for BPSK modulation.

Procedure

Run the configure command, and then run the hw-module location location mxponder trunk-rate {50G | 100G | 150G} command to configure the trunk rate for BPSK modulation.

Example: This example shows how to configure the trunk rate to 50G:

View BPSK trunk rate ranges

Use this task to view the trunk rate configured for BPSK modulation.

Procedure

Run the show command to view the trunk rate configured for BPSK modulation.
