Tenant Routed Multicast

Tenant Routed Multicast (TRM) is a VXLAN EVPN multicast forwarding solution that

- enables efficient multicast delivery across a multi-tenant VXLAN fabric using a BGP-based EVPN control plane,
- supports multicast forwarding between senders and receivers in the same or different subnets, attached to the same or different VTEPs, and
- improves Layer-3 overlay multicast scalability and efficiency for modern data center networks.

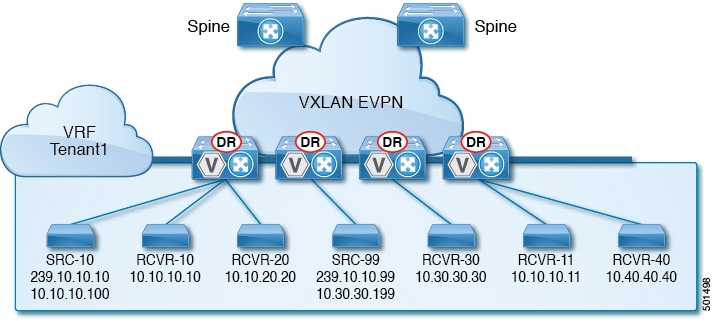

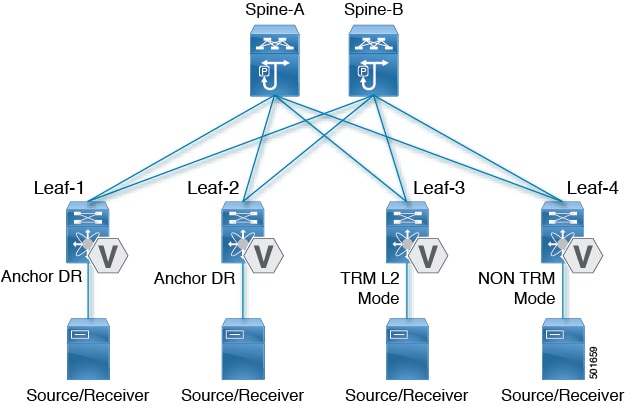

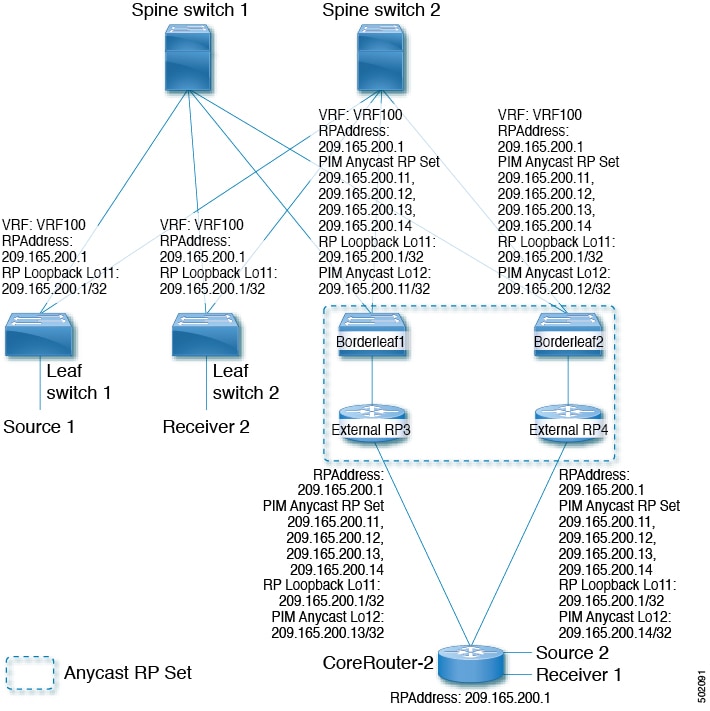

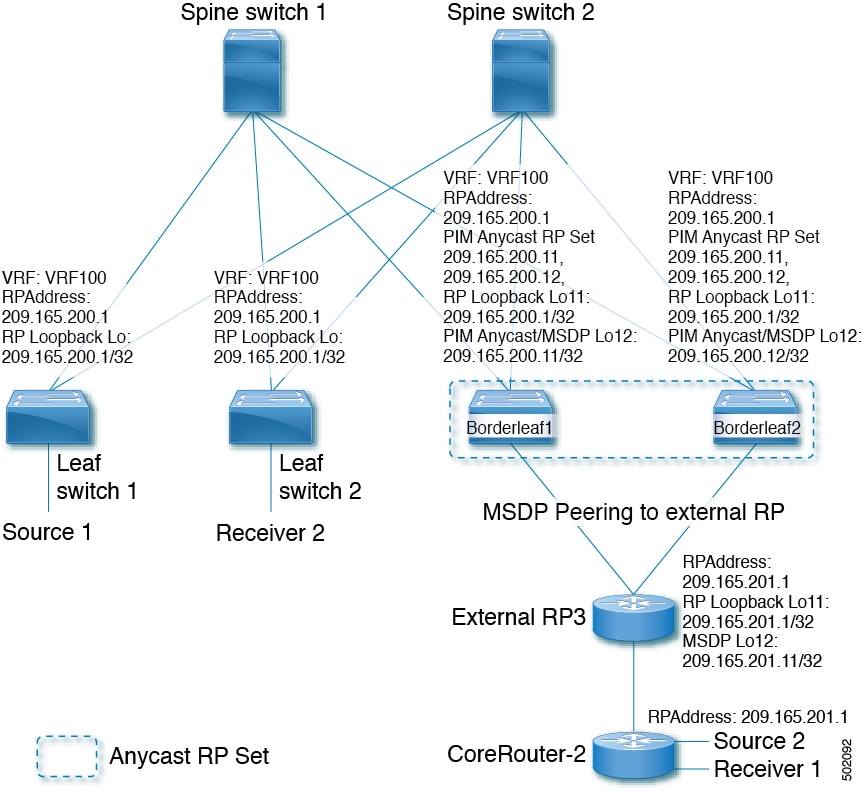

TRM brings standards-based multicast capabilities to the VXLAN overlay network by leveraging next-generation multicast VPN (ngMVPN) as described in IETF RFC 6513 and RFC 6514. TRM allows each edge device (VTEP) with a distributed IP Anycast Gateway to also act as a Designated Router (DR) for multicast forwarding. Bridged multicast forwarding is optimized through IGMP snooping, so that only interested receivers at the edge receive the multicast traffic, while all non-local multicast traffic is routed for efficient delivery.
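The IGMP snooping behavior described above can be illustrated with a small Python model. This is a minimal sketch, not how any VTEP implements snooping: the `SnoopingTable` class, its method names, and the port and VLAN values are all hypothetical and exist only to show that bridged multicast is delivered to ports with an interested receiver and to no others.

```python
from collections import defaultdict


class SnoopingTable:
    """Toy model of IGMP snooping state on one VTEP (hypothetical, illustrative only)."""

    def __init__(self):
        # (vlan, group) -> set of local ports that reported interest in the group
        self._members = defaultdict(set)

    def join(self, vlan, group, port):
        """Record an IGMP membership report seen on a local port."""
        self._members[(vlan, group)].add(port)

    def leave(self, vlan, group, port):
        """Record an IGMP leave seen on a local port."""
        self._members[(vlan, group)].discard(port)

    def egress_ports(self, vlan, group, ingress_port=None):
        """Return the local ports that should receive traffic for (vlan, group).

        Ports without an interested receiver are never included, and the
        port the traffic arrived on is excluded.
        """
        ports = set(self._members.get((vlan, group), set()))
        ports.discard(ingress_port)
        return sorted(ports)


table = SnoopingTable()
table.join(10, "239.1.1.1", "eth1/1")
table.join(10, "239.1.1.1", "eth1/2")
local_ports = table.egress_ports(10, "239.1.1.1", ingress_port="eth1/1")
```

In this model, a group with no local members yields an empty port list, which corresponds to the traffic not being bridged to any edge port on that VTEP.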

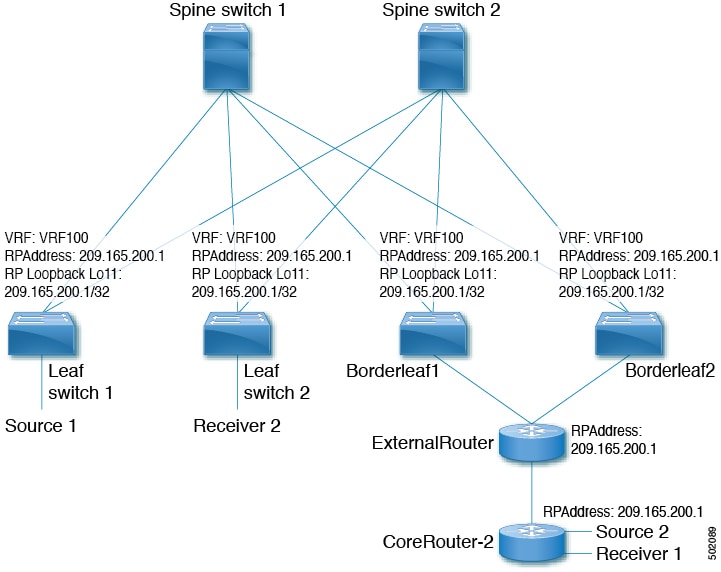

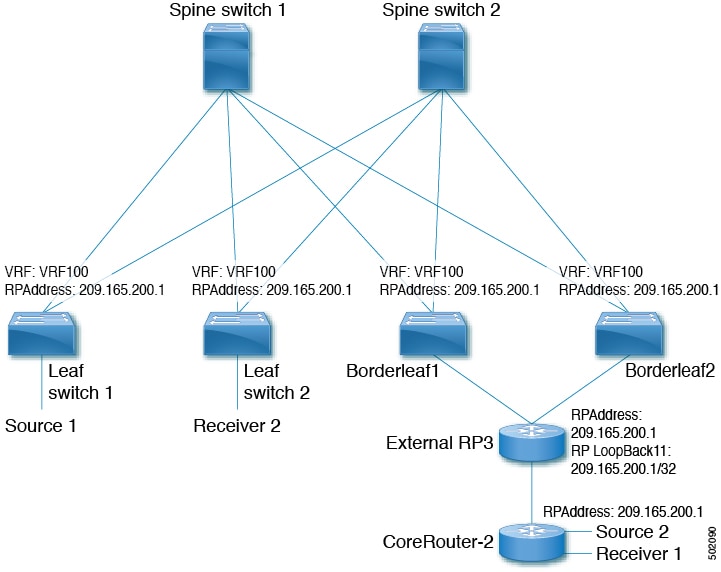

When TRM is enabled, multicast forwarding in the underlay network is used to replicate VXLAN-encapsulated routed multicast traffic. A Default Multicast Distribution Tree (Default-MDT) is built per VRF, which supplements the existing underlay multicast groups used for Layer-2 VNI broadcast, unknown unicast, and multicast (BUM) replication. Overlay multicast groups are mapped to corresponding underlay multicast addresses for scalable transport. The BGP-based approach allows the fabric to distribute the rendezvous point (RP) functionality, making every VTEP an RP for multicast.
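The per-VRF Default-MDT mapping can be pictured with a short Python sketch. Everything here is an assumption made for illustration: the `TenantVrf` type, the `make_vrf` and `encapsulate` helpers, the VRF name, the VNI, and the group addresses are hypothetical, and the use of the VRF's Layer-3 VNI in the VXLAN header is an assumption of this model rather than something stated above. The sketch only shows that every overlay group in a VRF is carried toward that VRF's single underlay group.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class TenantVrf:
    """Hypothetical record tying a tenant VRF to its Default-MDT underlay group."""
    name: str
    l3_vni: int
    default_mdt: ipaddress.IPv4Address


def make_vrf(name, l3_vni, default_mdt):
    """Build a TenantVrf, rejecting a Default-MDT that is not a multicast address."""
    group = ipaddress.IPv4Address(default_mdt)
    if not group.is_multicast:
        raise ValueError(f"{default_mdt} is not an IPv4 multicast address")
    return TenantVrf(name, l3_vni, group)


def encapsulate(vrf, source, overlay_group):
    """Return the header fields this model would use for one routed multicast flow.

    The outer destination is always the VRF's Default-MDT group, regardless
    of which overlay group the tenant traffic is addressed to.
    """
    inner = ipaddress.IPv4Address(overlay_group)
    if not inner.is_multicast:
        raise ValueError(f"{overlay_group} is not an IPv4 multicast address")
    return {
        "outer_dst": str(vrf.default_mdt),  # underlay multicast group
        "vni": vrf.l3_vni,                  # assumption: L3 VNI of the VRF
        "inner_src": source,
        "inner_dst": str(inner),            # tenant (overlay) multicast group
    }


vrf = make_vrf("tenant-a", 50001, "239.100.0.1")
header = encapsulate(vrf, "10.1.1.10", "226.1.1.1")
```

Two different overlay groups in `tenant-a` produce the same `outer_dst` in this model, which is the sense in which a single Default-MDT serves the whole VRF.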

TRM enables seamless integration with existing multicast-enabled networks, supporting external multicast rendezvous points and tenant-aware external connectivity through Layer-3 physical or subinterfaces.

In a data center fabric using TRM, multicast sources and receivers can be located within the data center, in a separate campus network, or reachable externally via the WAN. TRM ensures multicast traffic reaches only interested receivers, even across different sites and tenants, while using underlay multicast replication to optimize bandwidth and resiliency.
