VXLAN EVPN multi-sites

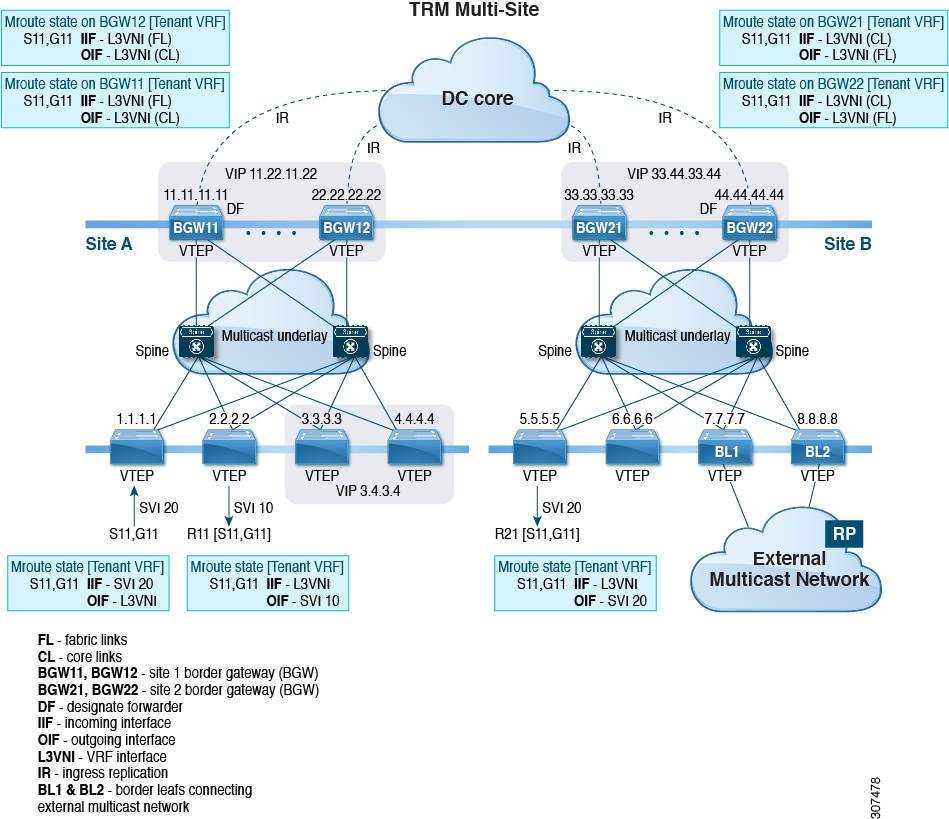

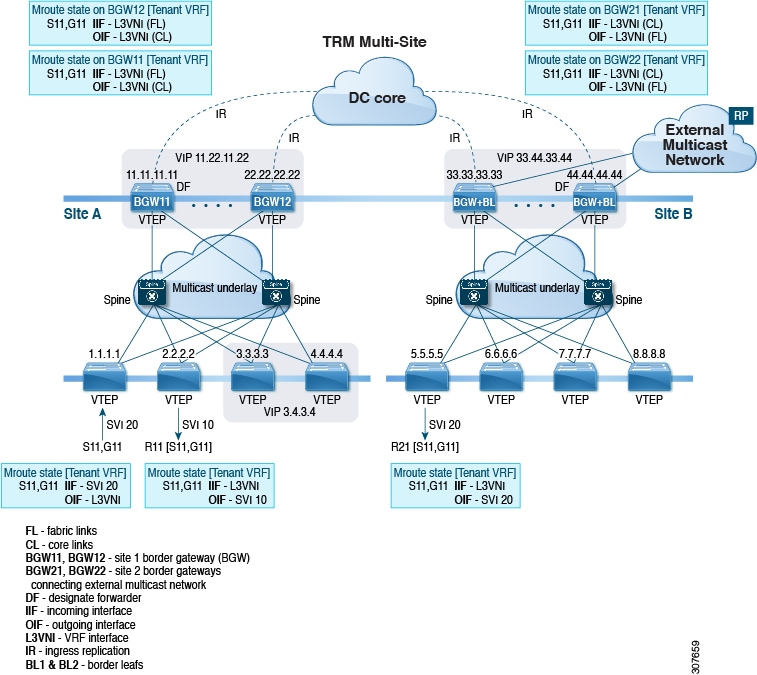

A VXLAN EVPN multi-site is a data center network solution that

- interconnects two or more BGP-based Ethernet VPN (EVPN) sites or overlay domains over an IP-only network,
- uses border gateways (BGWs) in anycast or vPC mode to terminate and interconnect sites, and
- enforces scalable traffic control and failure containment across domains.

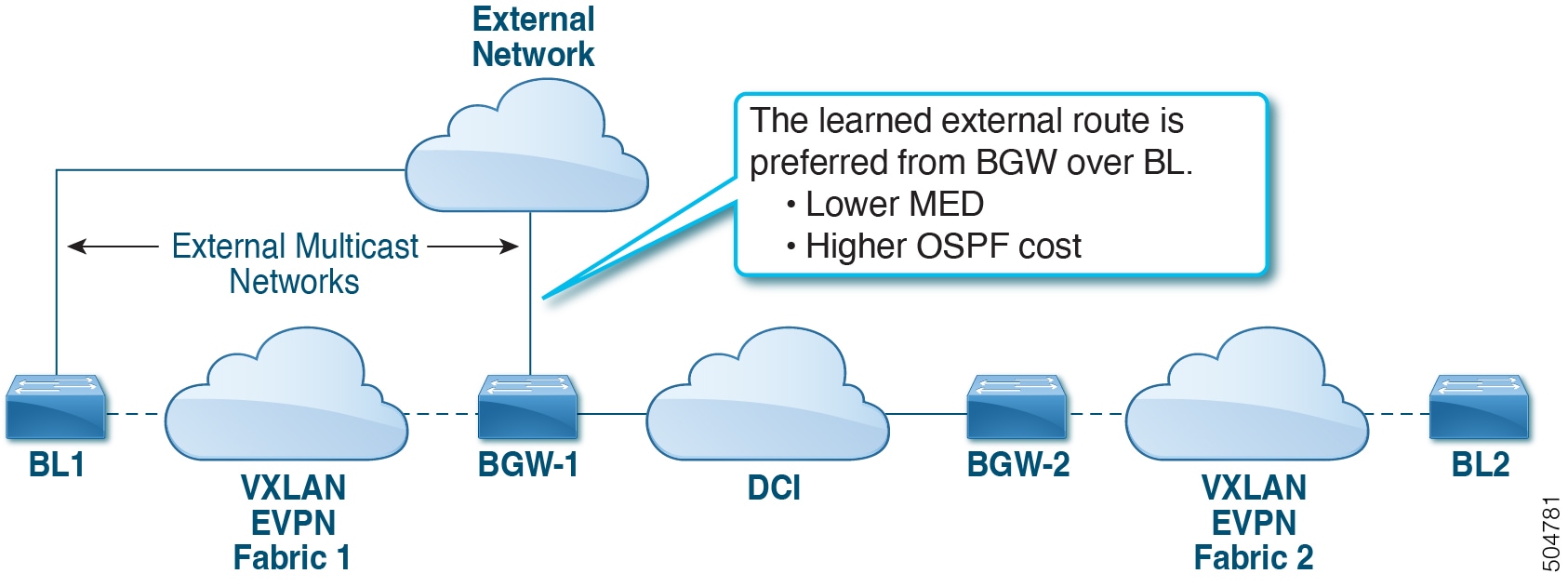

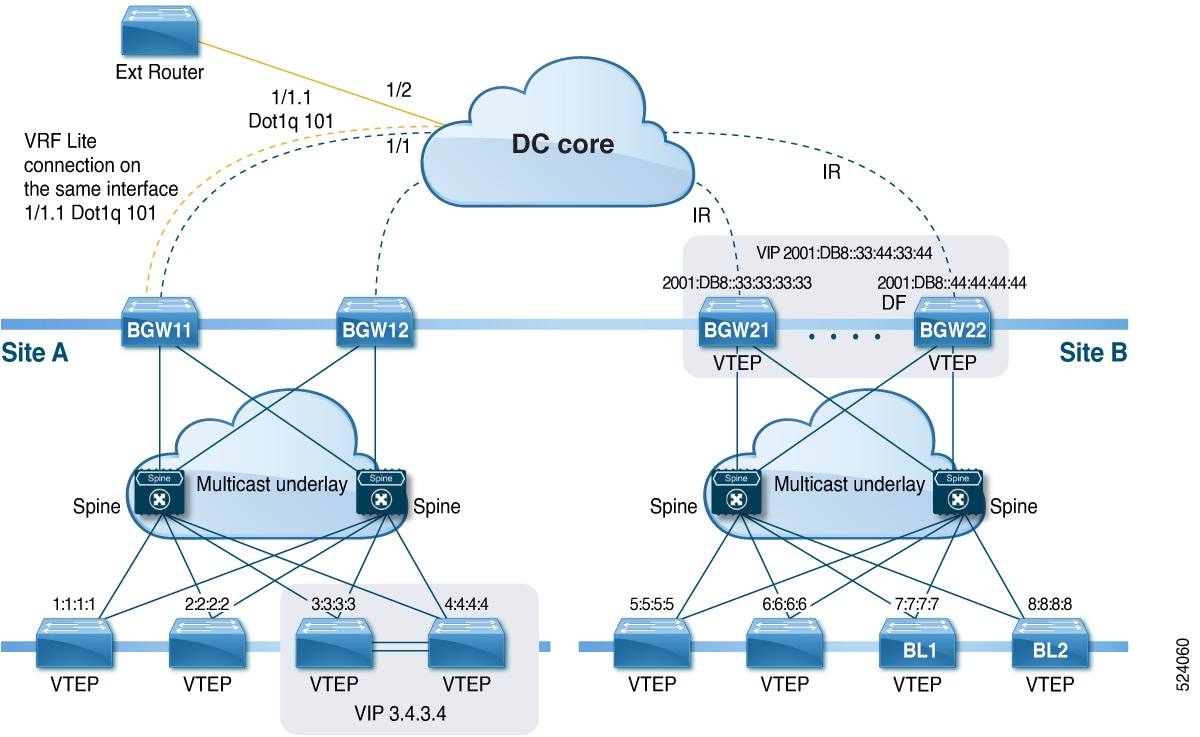

All routes that reach destinations outside a fabric have a next hop on the BGW, for both Layer 2 and Layer 3 traffic. The BGW serves as the node that interacts both with local site nodes and with nodes external to the site. In a leaf-spine data center fabric, BGWs can be leaf switches, spine switches, or dedicated gateway devices.
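The next-hop behavior described above can be sketched as a small model. This is only an illustration, not device behavior or configuration: the `Node` class, the `overlay_hops` function, and the node names are hypothetical, each site is simplified to a single BGW (an anycast BGW group would share one virtual address), and only overlay next hops are modeled, not the underlay path through spines.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    name: str
    site_id: int          # hypothetical site identifier
    is_bgw: bool = False  # True for border gateways


def overlay_hops(src: Node, dst: Node, bgw_by_site: dict[int, Node]) -> list[str]:
    """Return the overlay next hops that traffic takes from src to dst."""
    if src.site_id == dst.site_id:
        # Intra-site traffic stays inside the local fabric.
        return [src.name, dst.name]
    # Inter-site traffic: the next hop is the local BGW, which hands the
    # traffic to the remote site's BGW before it reaches the destination.
    hops = [
        src.name,
        bgw_by_site[src.site_id].name,
        bgw_by_site[dst.site_id].name,
        dst.name,
    ]
    # Collapse adjacent duplicates in case src or dst is itself a BGW.
    deduped = [hops[0]]
    for hop in hops[1:]:
        if hop != deduped[-1]:
            deduped.append(hop)
    return deduped


leaf1 = Node("leaf1", site_id=1)
leaf2 = Node("leaf2", site_id=1)
leaf3 = Node("leaf3", site_id=2)
bgws = {1: Node("bgw1", 1, is_bgw=True), 2: Node("bgw2", 2, is_bgw=True)}

print(overlay_hops(leaf1, leaf2, bgws))  # ['leaf1', 'leaf2']
print(overlay_hops(leaf1, leaf3, bgws))  # ['leaf1', 'bgw1', 'bgw2', 'leaf3']
```

In this model a leaf never sees a remote leaf as its next hop, which is the property that lets the BGW act as the single point of interaction between a site and everything outside it.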

The VXLAN EVPN multi-site approach creates multiple site-local EVPN control planes and IP forwarding domains, interconnected by a single, common EVPN control and IP forwarding domain.

- Each EVPN node is assigned a site-scope identifier that is unique to its site. A site-local EVPN domain consists of the nodes that share the same identifier; BGWs belong both to their site’s EVPN domain and to the common multi-site EVPN domain.
- Site-local bridging, routing, and flood domains connect to the corresponding domains in other sites only through BGWs.
- Selective advertisement is determined by the per-tenant information configured on the BGW, such as IP VRF or MAC VRF (EVPN instance). When external connectivity (VRF-lite) and EVPN Multi-Site share a BGW, advertisements remain enabled.
- In the BGP control plane, for releases prior to Cisco NX-OS Release 9.3(5), BGWs rewrite next hop information for EVPN routes and reoriginate them. Beginning with Cisco NX-OS Release 9.3(5), reorigination is always enabled (with either single or dual route distinguishers), and rewrite is not performed. For more information, see .
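The domain membership rules above can be made concrete with a short sketch. It is a simplified model under stated assumptions, not NX-OS behavior: `build_domains` and the `(name, site_id, is_bgw)` tuples are hypothetical, and the site identifiers are arbitrary integers.

```python
from collections import defaultdict


def build_domains(nodes):
    """Group nodes into site-local domains and the common multi-site domain.

    nodes: iterable of (name, site_id, is_bgw) tuples (hypothetical inventory).
    """
    site_domains = defaultdict(set)
    multisite_domain = set()
    for name, site_id, is_bgw in nodes:
        # Every node belongs to the site-local domain of its site identifier.
        site_domains[site_id].add(name)
        # Only BGWs additionally join the common multi-site domain.
        if is_bgw:
            multisite_domain.add(name)
    return dict(site_domains), multisite_domain


nodes = [
    ("leaf1", 1, False),
    ("bgw1", 1, True),
    ("leaf2", 2, False),
    ("bgw2", 2, True),
]
site_domains, multisite_domain = build_domains(nodes)
print(sorted(site_domains[1]))   # ['bgw1', 'leaf1']
print(sorted(multisite_domain))  # ['bgw1', 'bgw2']
```

The BGWs are the only names that appear in both a site-local set and the multi-site set, which mirrors their role as the sole connection between sites.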

| Attribute | VXLAN EVPN single-site | VXLAN EVPN multi-sites |
|---|---|---|
| Scope of control | Single overlay domain | Multiple overlay domains |
| Inter-site gateway | Not required | Required (BGWs) |
| Failure containment | Fabric-wide | Site-specific, enforced at BGWs |

A VXLAN EVPN multi-site is like a group of office buildings (sites), each with its own staffed main entrance (the BGWs). Visitors and deliveries pass between buildings only through these entrances, which keeps each building secure and controls the interactions between locations.
