A flexible collaborative workplace designed to improve productivity and optimize workspace resources.

Prior to the 2020 pandemic, more than 70% of employees worked from home at least one day a week. As a result, on any given day only 50% of our office space was occupied. We redesigned the office, using advanced wireless and collaboration technologies, to accommodate different work styles efficiently.

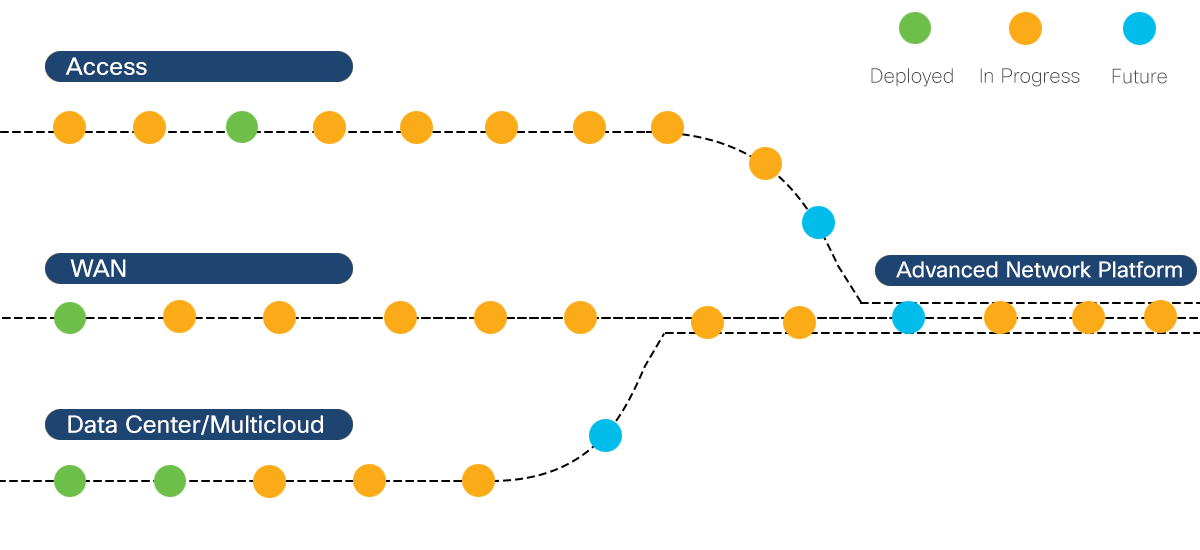

The icon signifies an area of technology that is continuously refreshed.