Packet classification

Packet classification is a process that

- sorts network traffic into specific categories or classes based on predefined criteria,
- categorizes a packet within a specific class and assigns it a traffic descriptor to implement Quality of Service (QoS) policies where different traffic types (such as voice, video, or data) are treated according to their priority, and
- ensures traffic flows meet the required network performance metrics, such as minimal delay, high throughput, or low packet loss.

A traffic descriptor is a set of specific attributes attached to a packet that

- indicates the forwarding treatment (QoS) that the packet should receive, such as priority, bandwidth allocation, and queuing treatment,
- is used by traffic policers and traffic shapers to ensure that the packet adheres to a specified traffic profile or contract (such as rate limits or traffic priority), and
- facilitates QoS handling throughout the network, allowing routers, switches, and other devices to apply the appropriate traffic management policies based on the packet’s markings (such as DSCP and IP Precedence).

| Feature Name | Release Information | Feature Description |
|---|---|---|
| Ingress and Egress Packet Classification | Release 25.1.1 | Introduced in this release on: Fixed Systems (8010 [ASIC: A100]) (select variants only\*) \*This feature is supported on Cisco 8011-4G24Y4H-I routers. |
| Ingress and Egress Packet Classification | Release 24.4.1 | Introduced in this release on: Fixed Systems (8700 [ASIC: K100]) (select variants only\*) \*This feature is supported on Cisco 8712-MOD-M routers. |
| Ingress and Egress Packet Classification | Release 24.3.1 | Introduced in this release on: Modular Systems (8800 [LC ASIC: P100]) (select variants only\*), Fixed Systems (8200) (select variants only\*), Fixed Systems (8700 (P100, K100)) (select variants only\*) You can categorize packets into specific groups or classes and assign them traffic descriptors for QoS to classify and manage network traffic effectively. \*This feature is supported on: |
| Ingress and Egress Packet Classification | Release 24.2.1 | Introduced in this release on: Modular Systems (8800 [LC ASIC: P100]) (select variants only\*) Categorizing packets into specific groups or classes and assigning them traffic descriptors for QoS helps classify and manage network traffic effectively. At the ingress, the QoS map and TCAM are used for classification. The QoS map is used for classification at the egress when policy matches only on DSCP, while TCAM is used for other criteria such as MPLS. \*This feature is supported on 88-LC1-36EH. |

Your router uses packet classification in a staged approach to identify, group, and assign QoS actions. The table summarizes these key stages and the corresponding sections for more detail.

| Stage | Description | See section |
|---|---|---|
| Packet classification technique | Determines how traffic is identified using methods such as IP Precedence and Differentiated Services Code Point. | |
| Packet handling mode | Determines which packet header fields are visible to classification based on the configured DiffServ tunneling mode. Packet handling models influence how QoS markings are interpreted during packet traversal but do not perform marking. | |
| Traffic class definition | Defines match criteria and logic for grouping similar packets. | |
| Traffic policy application | Applies QoS actions (mark, shape, police, queue) based on traffic classes. | |

Types of packet classification

Your router offers several advanced packet classification techniques to manage network traffic effectively. These methods help prioritize and control traffic flows based on network parameters. They ensure efficient resource use and QoS adherence. Also see Packet classification systems on your router.

Classification identifies traffic, while marking writes QoS values. For marking actions, see Packet marking.

|

Classification technique |

What it is |

Used for |

|---|---|---|

|

IP Precedence |

A 3-bit field in the IPv4 header, allowing traffic to be categorized into one of 8 priority levels, ranging from 0 (lowest priority) to 7 (highest priority). |

Prioritizing traffic at the network edge when basic prioritization (like voice or video) is required. |

|

Differentiated Services Code Point (DSCP) |

A 6-bit field in the IP header used to classify traffic more granularly than IP precedence. Common DSCP Values are:

|

Fine-grained control over traffic, especially in large-scale networks where different types of traffic require varied treatment, such as real-time voice versus bulk data. |

|

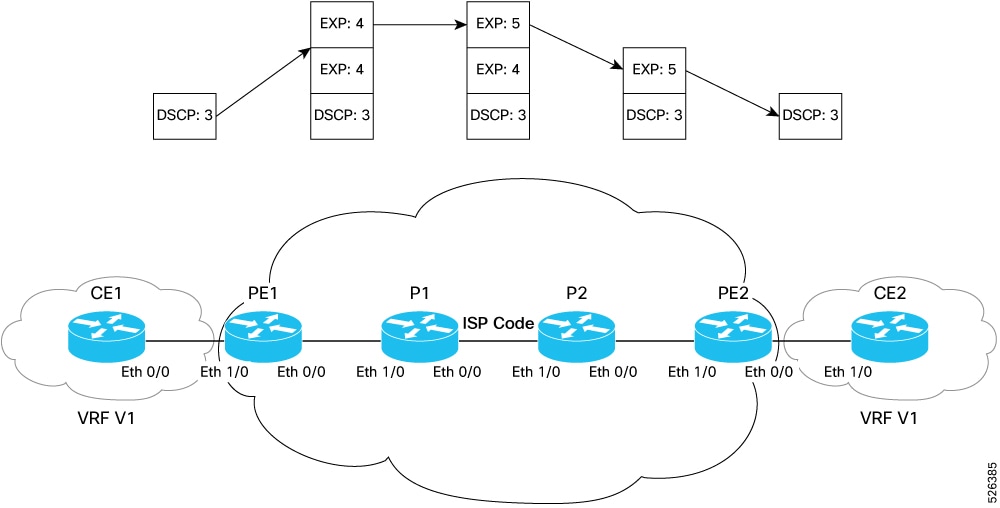

Experimental (EXP) bits in MPLS |

A part of the MPLS label which uses 3 bits, allowing for 8 levels of priority. |

MPLS VPNs and traffic engineering where precise control over traffic routing and prioritization is required. |

|

Priority Code Point (PCP) |

A 3-bit field in the Class of Service (CoS) portion of an Ethernet frame. It is used to prioritize Layer 2 (Ethernet) traffic. PCP values range from 0 to 7, with 0 being the lowest and 7 being the highest priority. |

Ethernet networks and VLANs where Layer 2 traffic needs to be prioritized, such as voice traffic in a VLAN. |

|

Drop Eligibility Indicator (DEI) |

A part of the CoS field in the Ethernet header which indicates whether a packet is eligible to be dropped during congestion. |

Ethernet networks where low-priority traffic (such as best-effort data) should be dropped first during periods of congestion. |

|

QoS group |

A technique to classify traffic based on QoS group numbers. |

Grouping traffic flows for specialized handling or service-level agreements |

|

Access Control Lists (ACLs) |

A technique to classify traffic by matching specific attributes such as IP address, protocol type, and port numbers. |

Custom traffic filtering or when you need to define very specific conditions for traffic classification. |
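The header fields listed in the table can be read with simple bit operations. The following Python sketch is illustrative only and is not router configuration; the function names are hypothetical. It assumes the standard bit layouts: DSCP in the upper six bits of the IPv4 ToS (or IPv6 Traffic Class) byte, PCP and DEI in the top four bits of the 16-bit 802.1Q Tag Control Information (TCI), and EXP in bits 9 to 11 of a 32-bit MPLS label stack entry.

```python
from typing import Tuple


def dscp(ds_byte: int) -> int:
    """Return the 6-bit DSCP from the IPv4 ToS / IPv6 Traffic Class byte."""
    return (ds_byte & 0xFF) >> 2


def pcp_dei(tci: int) -> Tuple[int, int]:
    """Return (PCP, DEI) from a 16-bit 802.1Q TCI.

    Layout assumed: PCP (3 bits) | DEI (1 bit) | VLAN ID (12 bits).
    """
    return (tci >> 13) & 0x7, (tci >> 12) & 0x1


def mpls_exp(label_entry: int) -> int:
    """Return the 3-bit EXP field from a 32-bit MPLS label stack entry.

    Layout assumed: label (20 bits) | EXP (3 bits) | S (1 bit) | TTL (8 bits).
    """
    return (label_entry >> 9) & 0x7
```

For example, a ToS byte of `0xB8` carries DSCP 46, and a TCI of `0xA064` carries PCP 5 with DEI 0 on VLAN 100.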

The sections that follow focus on IP Precedence as an illustration of how typical packet classification techniques work.

IP Precedence to prioritize traffic

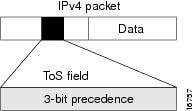

IP Precedence is a method for prioritizing network traffic by assigning a priority level to IP packets. It uses the three most significant bits of the Type of Service (ToS) field in the IPv4 header, which allows 8 different priority levels (0-7). Each precedence level has a corresponding name defined in RFC 791.
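As a minimal sketch of the bit layout described above, the following Python functions read and rewrite the precedence bits of a ToS byte. The function names are hypothetical and chosen for illustration; the level names follow RFC 791.

```python
# Precedence names from RFC 791; the tuple index is the precedence value.
PRECEDENCE_NAMES = (
    "Routine",               # 0
    "Priority",              # 1
    "Immediate",             # 2
    "Flash",                 # 3
    "Flash Override",        # 4
    "CRITIC/ECP",            # 5
    "Internetwork Control",  # 6
    "Network Control",       # 7
)


def ip_precedence(tos: int) -> int:
    """Return the 3-bit IP Precedence from an 8-bit ToS byte."""
    if not 0 <= tos <= 0xFF:
        raise ValueError("ToS must fit in one byte")
    return tos >> 5


def set_ip_precedence(tos: int, precedence: int) -> int:
    """Return the ToS byte with its precedence bits replaced.

    The lower five bits of the ToS byte are left unchanged.
    """
    if not 0 <= tos <= 0xFF:
        raise ValueError("ToS must fit in one byte")
    if not 0 <= precedence <= 7:
        raise ValueError("IP Precedence must be in the range 0-7")
    return (precedence << 5) | (tos & 0x1F)


def precedence_name(precedence: int) -> str:
    """Return the RFC 791 name for a precedence value."""
    return PRECEDENCE_NAMES[precedence]
```

For example, `ip_precedence(0xB8)` yields 5, and `set_ip_precedence(0x00, 5)` yields `0xA0`.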

Advantages of IP Precedence

- Differentiated Services (DiffServ): You can assign higher priority to critical traffic (for example, VoIP) and lower priority to less time-sensitive traffic. You can do this by setting precedence levels and combining them with QoS queuing features to ensure service differentiation.
- End-to-end QoS: When you set IP Precedence at the edge of your network, core network devices can enforce QoS policies based on those markings, ensuring consistent prioritization across the network.
- Integration with QoS features: You can use IP Precedence with features like Low Latency Queuing (LLQ) and traffic shaping to manage congestion and bandwidth allocation.
- Layer 2 mapping: You can map IP Precedence (Layer 3) values to Class of Service (CoS) values at Layer 2 (in the 802.1Q VLAN tag), extending QoS policies across different network segments.

Considerations for deploying IP Precedence in packet classification

- Edge deployment: IP Precedence is usually deployed as close to the edge of the network or administrative domain as possible. This allows core network devices to implement QoS based on the precedence already set.
- Reserved values: IP Precedence values 6 and 7 are reserved for network control traffic, such as routing updates. These values must not be used for user traffic.
- Class-based marking and LLQ: Class-based unconditional packet marking and Low Latency Queuing (LLQ) features can use IP Precedence to classify and prioritize traffic.

How IP Precedence works

Summary

The key components involved in applying IP Precedence are:

- Edge router: Classifies and marks incoming traffic by setting the IP Precedence value in the packet header.
- Policy map: Defines QoS policies that specify how traffic is handled based on its precedence level.
- Network devices (such as core routers and switches): Reference the IP Precedence value to enforce traffic handling policies such as prioritization, bandwidth allocation, and congestion management.

The IP Precedence process involves marking packets at the edge of the network and applying QoS policies throughout the network path. The edge router assigns a precedence value to each packet based on policy configurations. This value is used by downstream devices to prioritize traffic, allocate bandwidth appropriately, and manage congestion.

Higher-precedence traffic is given preferential treatment in queuing and transmission, ensuring that critical applications, such as voice and video, are delivered with lower latency and better performance.

The process occurs in real time as packets are received and forwarded through the network. This ensures consistent traffic prioritization from edge to core.

Workflow

These stages describe the IP Precedence classification process:

- Edge router sets the IP Precedence value in the packet’s IPv4 header based on configured classification policies.
- Policy map defines how traffic should be treated based on the assigned IP Precedence value. This includes prioritization, bandwidth allocation, and congestion behavior.
- Network devices (such as core routers and switches) read the IP Precedence value and determine the appropriate handling of the packet:
  - Prioritize higher-precedence packets in the queue.
  - Allocate bandwidth as defined by the policy map.
  - Drop lower-precedence packets first during congestion.
- All network devices continue to forward packets through the network, consistently applying QoS actions based on the IP Precedence value set at the edge.
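The workflow can be sketched as a toy simulation. The following Python example is a simplified model and does not represent how the router implements queuing. The `EDGE_POLICY` port-to-precedence mapping, the `Packet` and `CoreQueue` classes, and the queue capacity are all hypothetical names and values chosen for illustration. The core queue serves the highest precedence first and, when it is full, drops the lowest-precedence packet. Bandwidth allocation is not modeled.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Packet:
    name: str
    dst_port: int
    precedence: int = 0  # set by the edge router


# Hypothetical edge policy: destination port -> IP Precedence.
EDGE_POLICY = {5060: 5, 554: 4}


def mark_at_edge(pkt: Packet) -> Packet:
    """Edge router: set IP Precedence from the policy (default 0)."""
    pkt.precedence = EDGE_POLICY.get(pkt.dst_port, 0)
    return pkt


class CoreQueue:
    """Core device: trusts the edge marking and acts on it."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.packets: List[Packet] = []

    def enqueue(self, pkt: Packet) -> Optional[Packet]:
        """Add a packet; on overflow, drop and return the lowest-precedence one."""
        self.packets.append(pkt)
        if len(self.packets) <= self.capacity:
            return None
        victim = min(self.packets, key=lambda p: p.precedence)
        self.packets.remove(victim)
        return victim

    def dequeue(self) -> Optional[Packet]:
        """Forward the highest-precedence packet (oldest first within a level)."""
        if not self.packets:
            return None
        best = max(self.packets, key=lambda p: p.precedence)
        self.packets.remove(best)
        return best
```

With a capacity of 2, enqueuing a web packet (port 80), a voice packet (port 5060), and a video packet (port 554) in that order drops the web packet, and the queue then forwards voice before video.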
