Stages of Priority Flow Control configuration

The following table summarizes each stage of the configuration flow so that you can locate the section that matches your operational goal, such as selecting a PFC buffer mode, tuning Explicit Congestion Notification (ECN) thresholds, or enabling congestion protection for High Bandwidth Memory (HBM).

|

Stage |

Description |

See Section |

|---|---|---|

|

Understand PFC fundamentals |

Learn how PFC uses pause frames to prevent packet loss on congested queues. |

|

|

Select a PFC mode |

Compare buffer-internal, buffer-extended, and buffer-extended hybrid modes and identify which one best suits your traffic profile, link distance, and latency targets. |

|

|

Configure buffer-internal mode |

Configure pause-threshold, headroom, and ECN values; adjust ECN thresholds and marking probability. |

|

|

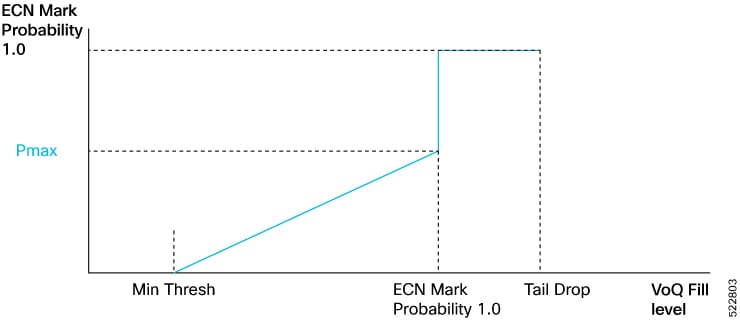

Configure ECN threshold and marking probability values |

Optimize congestion handling by defining ECN minimum and maximum thresholds and marking probability for buffer-internal PFC. |

|

|

Configure buffer-extended and hybrid modes |

Apply interface-level ECN and queue limit settings, define hybrid buffer partitions, and follow tuning guidelines for supported hardware. |

|

|

Configure PFC on an interface |

Enable PFC on specific interfaces so the router can send and respond to 802.1Qbb pause frames, generate pause-duration telemetry, and operate with PFC watchdog monitoring. |

|

|

Detect and manage HBM congestion |

Enable High Bandwidth Memory (HBM) congestion detection in the buffer-extended mode to monitor when VOQs spill into external memory and trigger pause protection if required. |

|

|

Enable global pause protection |

Configure global X-off frames to prevent packet drops when HBM congestion occurs on buffer-extended devices. |
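
To illustrate how the ECN minimum threshold, maximum threshold, and marking probability mentioned in the table can interact, here is a minimal Python sketch. It assumes a simple linear ramp between the two thresholds, which is a common model for ECN marking but is not confirmed here as the exact behavior of any specific platform. The function name, parameter names, and the choice to mark every packet at or above the maximum threshold are illustrative assumptions.

```python
def ecn_mark_probability(queue_bytes: int,
                         min_threshold: int,
                         max_threshold: int,
                         max_probability: float) -> float:
    """Return the assumed probability of ECN-marking a packet.

    Hypothetical model (not taken from the platform documentation):
      - at or below min_threshold: never mark
      - between the thresholds: probability rises linearly up to max_probability
      - at or above max_threshold: always mark
    """
    if min_threshold >= max_threshold:
        raise ValueError("min_threshold must be less than max_threshold")
    if not 0.0 <= max_probability <= 1.0:
        raise ValueError("max_probability must be between 0.0 and 1.0")

    if queue_bytes <= min_threshold:
        return 0.0
    if queue_bytes >= max_threshold:
        return 1.0

    # Fraction of the way from the minimum to the maximum threshold.
    fraction = (queue_bytes - min_threshold) / (max_threshold - min_threshold)
    return fraction * max_probability
```

Under this model, a queue halfway between the thresholds with a 10 percent maximum marking probability marks about 5 percent of packets. Raising the minimum threshold delays the onset of marking, while lowering the maximum threshold makes marking ramp up sooner.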
