Crosswork Cluster Requirements

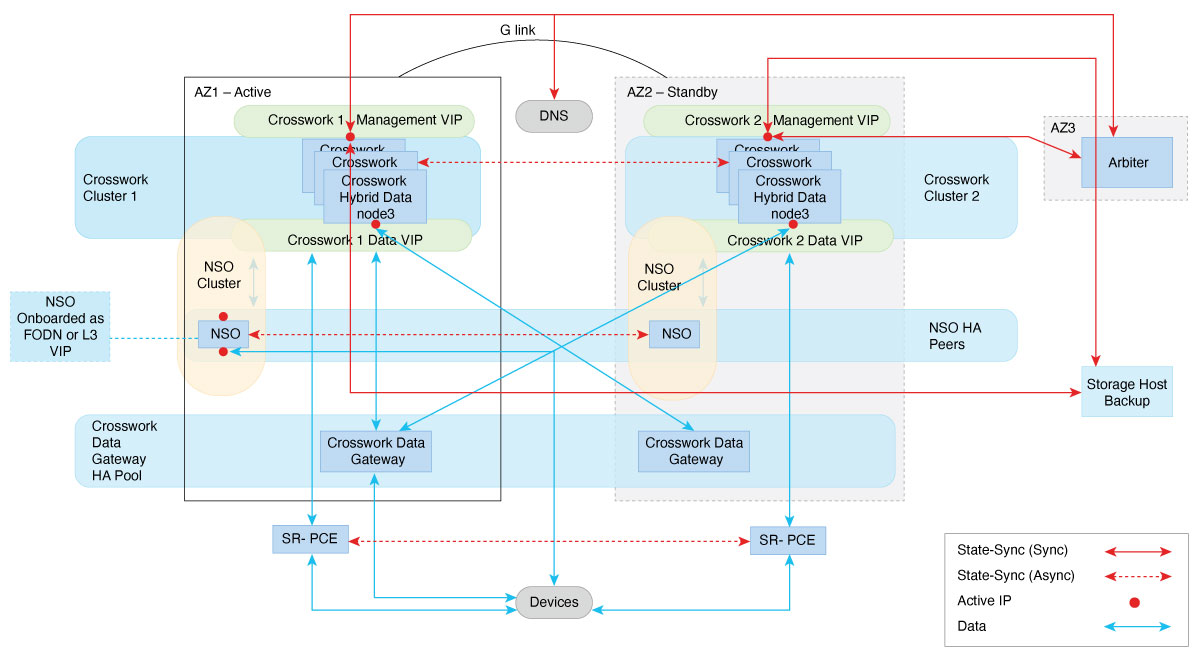

The geo redundancy solution requires twice the number of VMs needed for a regular Crosswork cluster installation. For more information, see Installation Prerequisites for VMware vCenter.

Important Notes

-

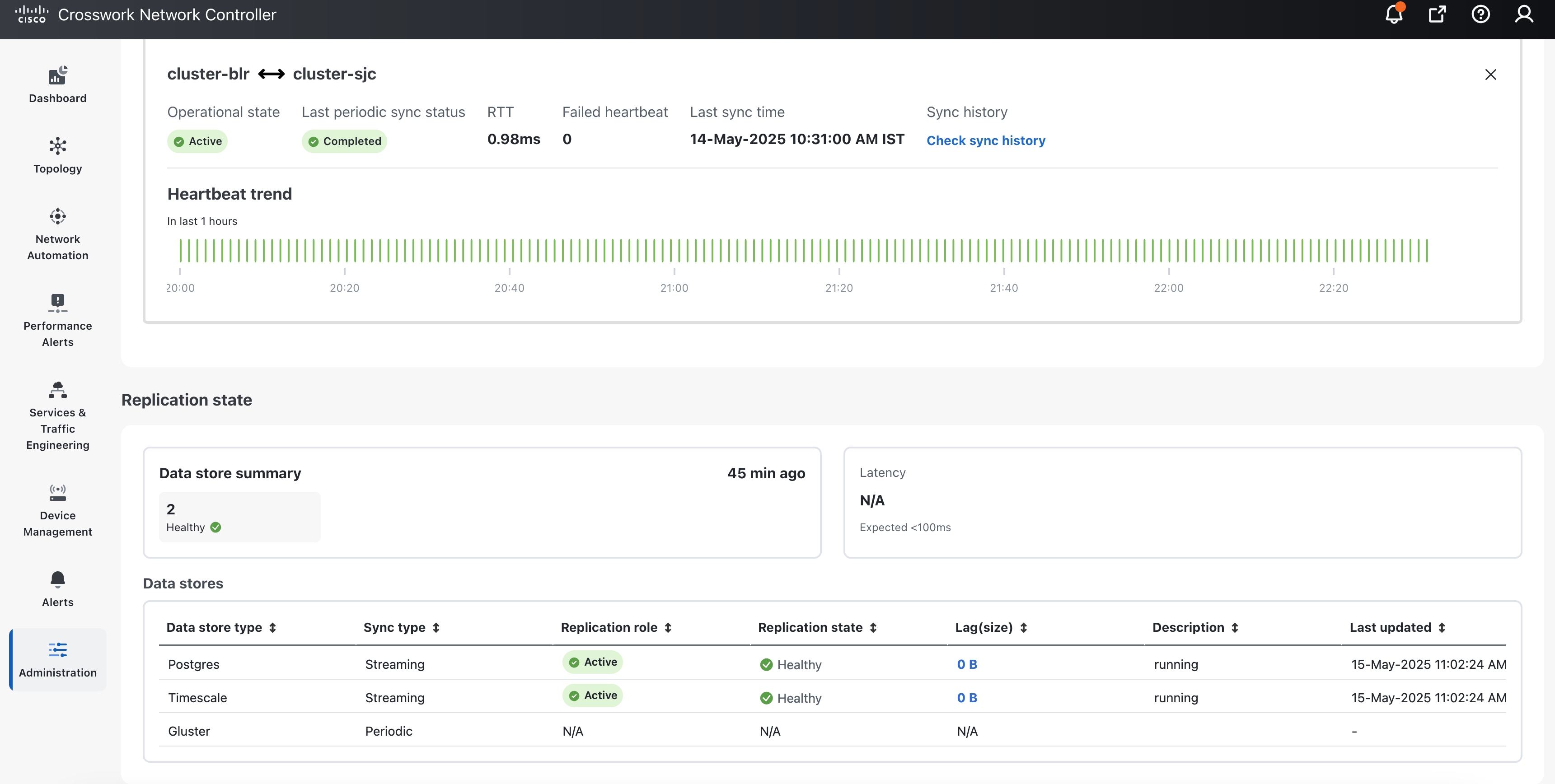

To ensure synchronization between the clusters, the network connection between the data centers should be set up with a minimum bandwidth of 5 Gbps and a latency of less than 100 milliseconds.

- When preparing a geo inventory file, you must include details of the cluster constituents along with connectivity information.

- Configure the DNS server to resolve the unified multi-cluster FQDN (data and management) domain (for example, *.cw.cisco) that you want to use. The DNS server must be reachable from both clusters, the Crosswork Data Gateway, NSO, and SR-PCE. For more information on the DNS setup procedure, see the Cisco Prime Network Registrar Caching and Authoritative DNS User Guide.

- The DNS server should forward queries for any outside domains to the external DNS servers.

- In dual stack deployments, the Crosswork cluster and Crosswork Data Gateway should be configured in dual stack mode, with the geo FQDN (data and management) pointing to the dual stack IP addresses.

- Bring up the active cluster, standby cluster, and arbiter VM sequentially, using the existing installer mechanism. Use the previously identified DNS server during the installation of the Crosswork cluster, Crosswork Data Gateway, and NSO. Multiple DNS servers are recommended, with one in each data center.

-

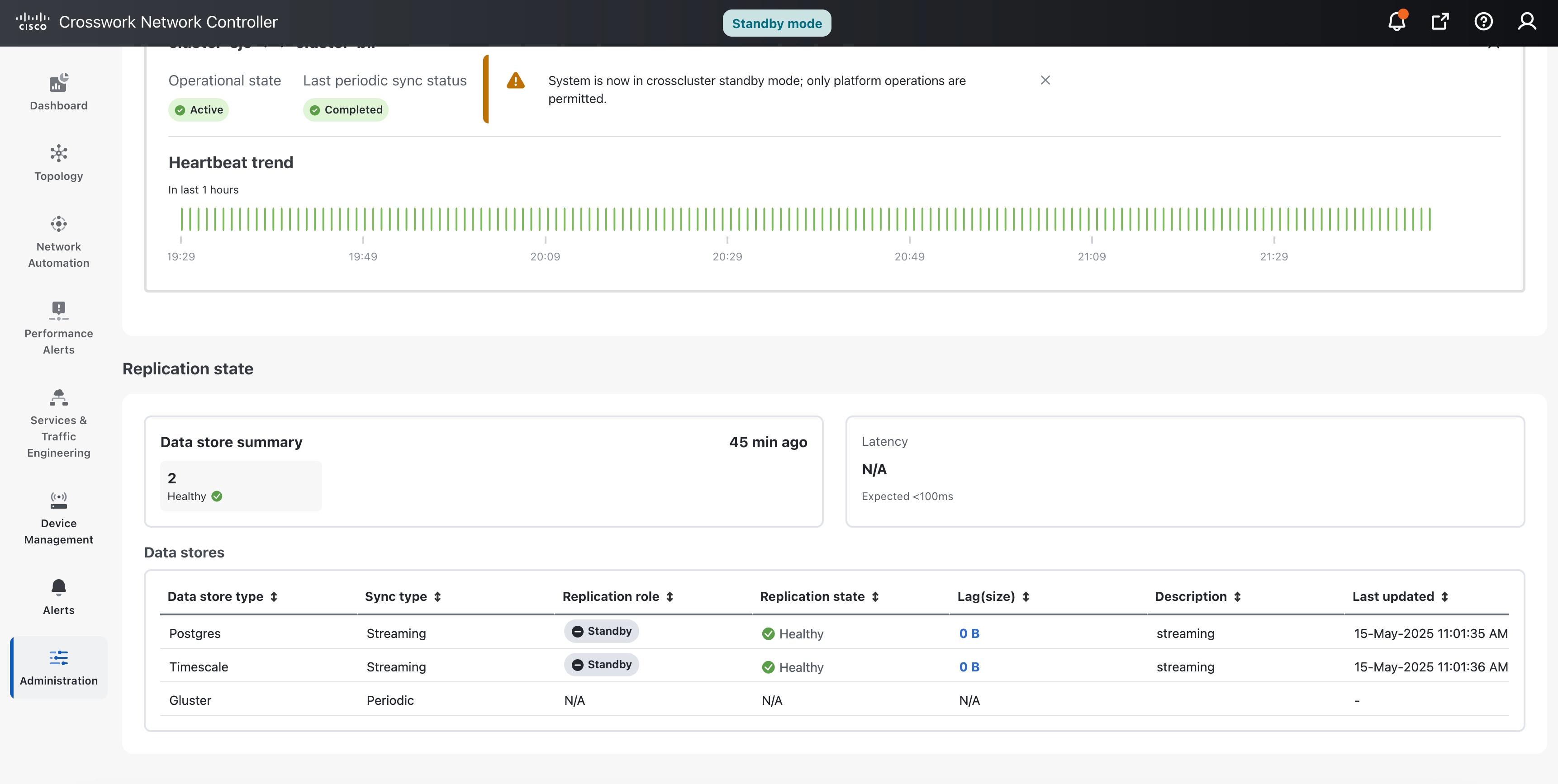

Applications should be installed only after enabling geo redundancy and completing the initial on-demand synchronization. Begin by installing applications on the active cluster, followed by the standby cluster. Other configuration information (such as devices, providers, or destinations) must be onboarded only on the active cluster and will be synchronized between the clusters as part of the activation process.

- Ensure that the Day 0 inventory is onboarded before enabling geo redundancy.

- NSO in HA mode must be onboarded as a provider in Crosswork Network Controller with connectivity through an L3 VIP or FQDN.

- Before enabling geo redundancy mode, it is recommended that you back up both the active and standby clusters.

- An arbiter VM requires a resource footprint of 8 vCPUs, 48 GB of RAM, and 650 GB of storage. For installation instructions, see Deploy an arbiter VM.
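The numeric thresholds in the notes above (the inter-data-center link and the arbiter VM footprint) can be captured in a small pre-flight check. The following is a minimal sketch and is not part of the Crosswork installer: the function names, the dictionary keys, and the idea of passing in measured values are assumptions made for illustration. How you measure bandwidth, latency, and available host resources depends on your environment.

```python
# Hypothetical pre-flight helper; not part of any Cisco tool.
# The threshold values are taken from the notes above.
MIN_BANDWIDTH_GBPS = 5.0   # minimum bandwidth between the data centers
MAX_LATENCY_MS = 100.0     # latency must be strictly below this value
ARBITER_FOOTPRINT = {"vcpus": 8, "ram_gb": 48, "storage_gb": 650}


def check_link(bandwidth_gbps, latency_ms):
    """Return a list of problems with the measured inter-data-center link."""
    problems = []
    if bandwidth_gbps < MIN_BANDWIDTH_GBPS:
        problems.append(
            f"bandwidth {bandwidth_gbps} Gbps is below {MIN_BANDWIDTH_GBPS} Gbps"
        )
    if latency_ms >= MAX_LATENCY_MS:
        problems.append(
            f"latency {latency_ms} ms is not below {MAX_LATENCY_MS} ms"
        )
    return problems


def check_arbiter_host(available):
    """Return the resources that fall short of the arbiter VM footprint.

    `available` is a dict using the same (assumed) keys as ARBITER_FOOTPRINT.
    """
    problems = []
    for resource, required in ARBITER_FOOTPRINT.items():
        have = available.get(resource, 0)
        if have < required:
            problems.append(f"{resource}: need {required}, have {have}")
    return problems
```

An empty list from either function means the corresponding requirement is met; any entries describe what falls short.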

Warning

Once geo redundancy mode is set up, it cannot be undone, because the certificates are regenerated using a common root CA. To revert to non-geo redundancy mode, you must restore the backup made before enabling geo redundancy mode.
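Because the DNS requirements in the notes above must hold before installation, it can help to confirm that the geo FQDNs resolve from each host that needs them. The sketch below is an illustration only, using Python's standard `socket.getaddrinfo`. The function names and the `resolver` parameter (which exists so the lookup can be swapped out for testing) are assumptions, and any host names you pass in, such as names under the example `*.cw.cisco` domain, are placeholders for your own.

```python
import socket


def resolved_families(fqdn, resolver=socket.getaddrinfo):
    """Return the set of address families ("ipv4", "ipv6") that fqdn resolves to."""
    families = set()
    try:
        results = resolver(fqdn, None)
    except socket.gaierror:
        return families  # the name does not resolve at all
    for family, _type, _proto, _canonname, _sockaddr in results:
        if family == socket.AF_INET:
            families.add("ipv4")
        elif family == socket.AF_INET6:
            families.add("ipv6")
    return families


def check_geo_fqdns(fqdns, dual_stack=False, resolver=socket.getaddrinfo):
    """Return a dict mapping each failing FQDN to a short reason.

    With dual_stack=True, every name must resolve to both an IPv4 and an
    IPv6 address; otherwise any successful resolution is accepted.
    """
    required = {"ipv4", "ipv6"}
    failures = {}
    for fqdn in fqdns:
        found = resolved_families(fqdn, resolver)
        if not found:
            failures[fqdn] = "does not resolve"
        elif dual_stack and not required <= found:
            failures[fqdn] = "missing " + ", ".join(sorted(required - found))
    return failures
```

Since the DNS server must be reachable from both clusters, the Crosswork Data Gateway, NSO, and SR-PCE, a check like this is only meaningful when run from each of those locations, or from hosts on the same networks. An empty dictionary means every name resolved as expected.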
