Introduction

The chapters in this part explain the requirements and processes for installing or upgrading Geo Redundancy in the Crosswork Network Controller solution.

Attention

Geo Redundancy is an optional functionality offered by the Crosswork Network Controller solution. For any assistance, contact the Cisco Customer Experience team.

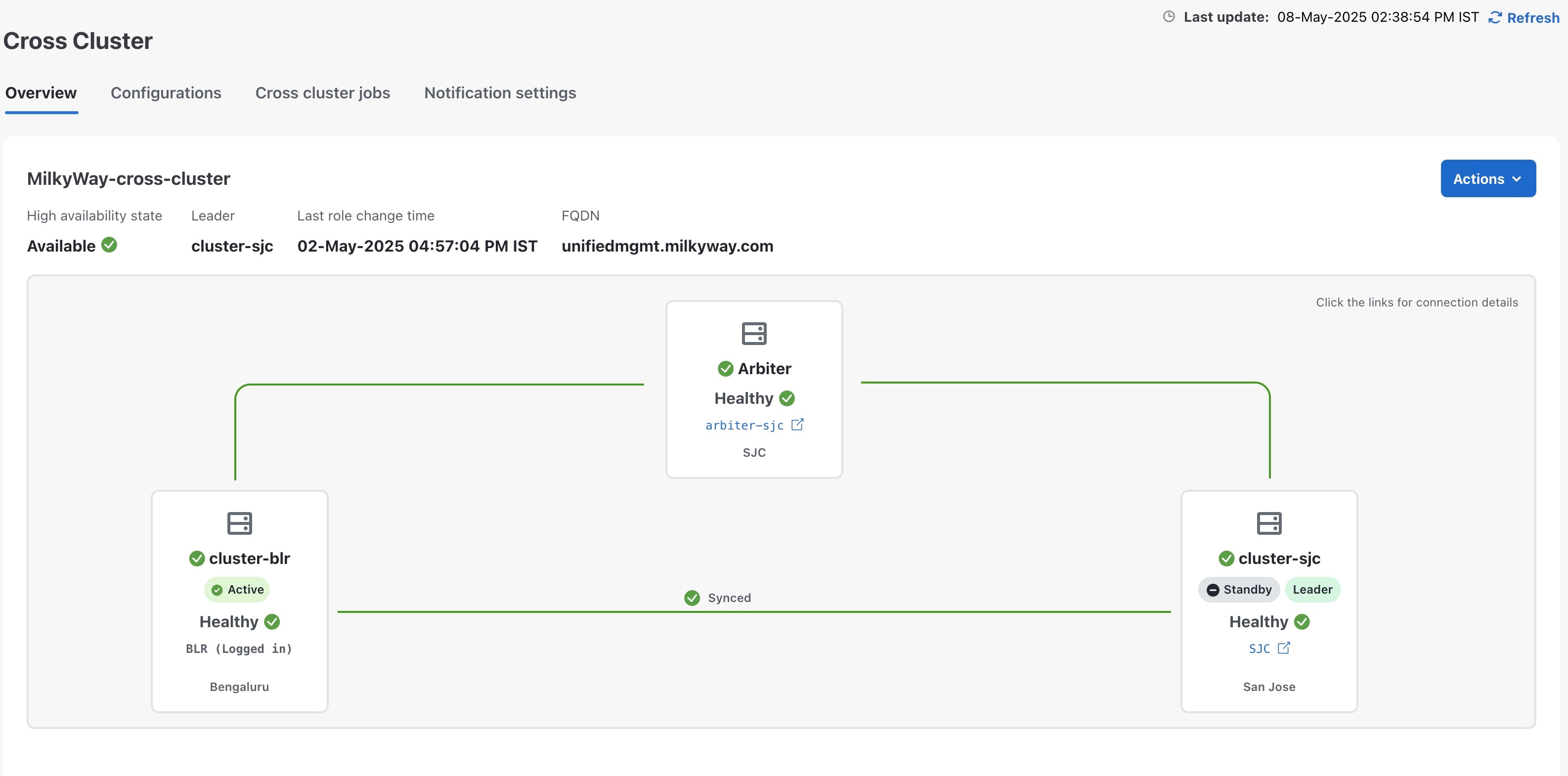

The geo redundancy solution ensures business continuity for on-premises deployments if a region or data center fails. It adds another layer of protection to the Crosswork high availability stack through geographical (site) redundancy. Geo redundancy protects against the failure of an entire site, reduces disruption during system upgrades, and reduces overall data loss.

Geo redundancy involves placing physical servers in geographically diverse availability zones (AZ) or data centers (DC) to safeguard against catastrophic events and natural disasters.

Key factors

Some of the key factors that ensure geo redundancy are:

- VM node availability: Ensure that the active and standby clusters are configured with the same number of virtual machines (cluster nodes, NSO, Crosswork Data Gateway, and so on) and maintain the same level of network accessibility between the clusters and the network nodes.

- Geo availability of nodes: Physical data centers must not share any common infrastructure, such as power or network connectivity. Place them in different availability zones (AZ) or regions to avoid a single point of failure that could affect all the VM nodes.

- Network availability: To keep the clusters synchronized, the network link between the data centers must meet the availability and latency requirements detailed later in this chapter.
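The VM node availability factor above can be pictured as a simple parity check between the two clusters. The following is a minimal sketch, not a Crosswork tool: the function name `inventory_mismatches` and the role labels (`cluster-node`, `nso`, `data-gateway`) are hypothetical, and the inventories are hand-written lists rather than data read from a real deployment.

```python
from collections import Counter


def inventory_mismatches(active, standby):
    """Return {role: (active_count, standby_count)} for roles whose counts differ.

    `active` and `standby` are lists of role labels, one entry per VM.
    The labels are illustrative assumptions, not Crosswork identifiers.
    """
    a, s = Counter(active), Counter(standby)
    return {
        role: (a[role], s[role])
        for role in sorted(set(a) | set(s))
        if a[role] != s[role]
    }


# Hypothetical inventories: the standby cluster is missing its data gateway VM.
active = ["cluster-node", "cluster-node", "cluster-node", "nso", "data-gateway"]
standby = ["cluster-node", "cluster-node", "cluster-node", "nso"]

print(inventory_mismatches(active, standby))  # {'data-gateway': (1, 0)}
```

An empty result means both clusters carry the same number of VMs for every role. The check covers VM counts only; it says nothing about network accessibility or link latency.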

Deployment scenarios

- Traditional geo redundancy: Active and standby sites.

- Arbiter-based geo redundancy: Active, standby, and arbiter sites.

Important

You can deploy a geo-HA setup with AZ1 alone, without AZ2 or the arbiter node (AZ3). AZ1 starts successfully in this scenario, but the setup defeats the purpose of HA, which requires at least AZ1 and AZ2. This configuration significantly limits the geo-HA functionality offered by Crosswork Network Controller and results in these issues:

- Attempting to save the cross-cluster settings triggers an error pop-up indicating that the settings failed to save on the peer cluster(s).

- The sync and switchover buttons remain active in the drop-down menu of the cross-cluster UI window, regardless of whether peer clusters are present. However, if you click either button to start a job, the operation fails with error messages indicating that the peer cluster(s) are absent.
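The site combinations described in this section can be summarized as a small decision rule. The sketch below is illustrative only: `classify_deployment` is a hypothetical helper, not part of Crosswork Network Controller, and it assumes the sites are identified by the labels AZ1, AZ2, and AZ3 used above.

```python
def classify_deployment(sites):
    """Map the set of deployed sites to the scenarios described in this section.

    `sites` is any iterable of site labels ("AZ1", "AZ2", "AZ3").
    Hypothetical helper for illustration; it does not query a real deployment.
    """
    present = set(sites)
    if not {"AZ1", "AZ2"} <= present:
        # Without both AZ1 and AZ2 there is no peer cluster to sync with.
        return "limited: no peer cluster, sync and switchover jobs will fail"
    if "AZ3" in present:
        return "arbiter-based geo redundancy"
    return "traditional geo redundancy"


print(classify_deployment(["AZ1"]))
print(classify_deployment(["AZ1", "AZ2"]))
print(classify_deployment(["AZ1", "AZ2", "AZ3"]))
```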
