Cisco Application Centric Infrastructure (ACI) Design Guide

Available Languages

Bias-Free Language

The documentation set for this product strives to use bias-free language. For the purposes of this documentation set, bias-free is defined as language that does not imply discrimination based on age, disability, gender, racial identity, ethnic identity, sexual orientation, socioeconomic status, and intersectionality. Exceptions may be present in the documentation due to language that is hardcoded in the user interfaces of the product software, language used based on RFP documentation, or language that is used by a referenced third-party product. Learn more about how Cisco is using Inclusive Language.

- US/Canada 800-553-2447

- Worldwide Support Phone Numbers

- All Tools

Feedback

Feedback

Table of Contents

Cisco Nexus 9000 Series Hardware

Cisco Application Policy Infrastructure Controller (APIC)

Fabric with Mixed Hardware or Software

Fabric with Different Spine Types

Fabric with Different Leaf Switch Types

Fabric with Different Software Versions

Leaf and Spine Switch Functions

Multi-tier Design Considerations

Per Leaf RBAC (Role-based Access Control)

Virtual Port Channel Hardware Considerations

Hardware Compatibility Between vPC Pairs

Placement of Outside Connectivity

Border Leaf Switches with VRF-lite, SR/MPLS Handoff and GOLF

Using Border Leaf Switches for Server Attachment

Limit the use of L3Out for Server Connectivity

Service Leaf Switch Considerations

In-band and out-of-band Management Connectivity

Multiple Locations Data Centers Design Considerations

Fabric Infrastructure (Underlay) Design

Choosing the Leaf Switch Forwarding Profile

Common Reserved VLANs on External Devices

Hardening the Infrastructure VLAN

BGP Autonomous System Number Considerations

BGP Route-Reflector Placement Considerations

Network Time Protocol (NTP) configuration

In-Band and Out-of-Band Management

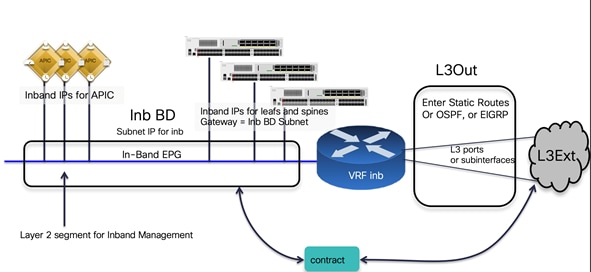

In-band Connectivity to the Outside

In-band Management Configuration

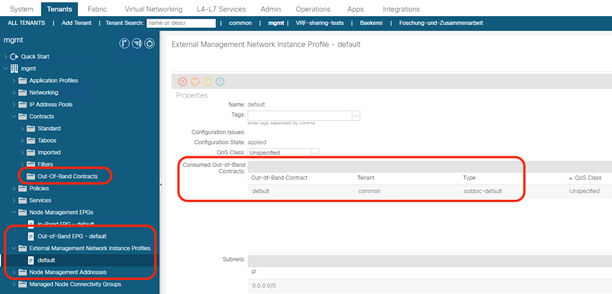

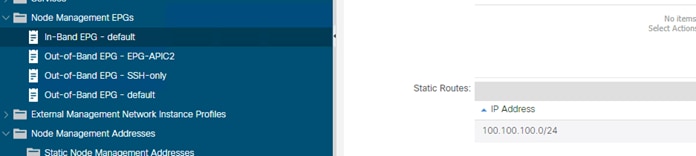

Out-of-band Management Configuration

Management Connectivity for VMM Integration

In-band Management Requirements for Telemetry

IS-IS Metric for Redistributed Routes

Configuring the Fabric Infrastructure for Faster Convergence

Cisco APIC Design Considerations

Port tracking and Cisco APIC Ports

In-Band and Out-of-Band Management of Cisco APIC

Internal IP Address Used for Apps

Summary of Cisco APIC design considerations

Cisco ACI Objects Design Considerations

Fabric Infrastructure Configurations

Objects with Overlapping Names in Different Tenants

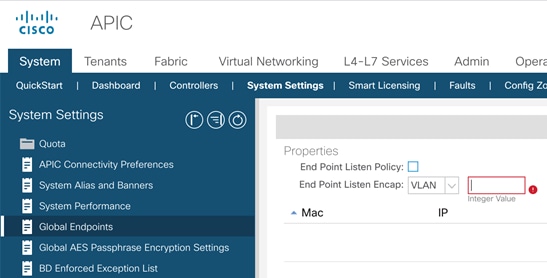

Connectivity Instrumentation Policy

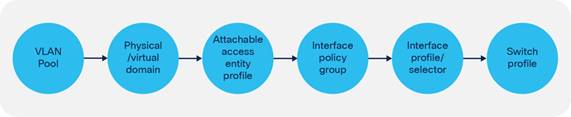

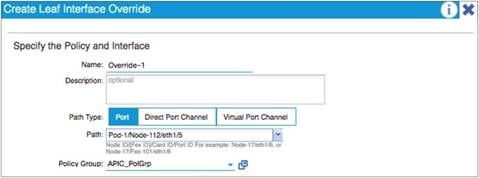

Fabric-access Policy Configuration Model

Defining VLAN Pools and Domains

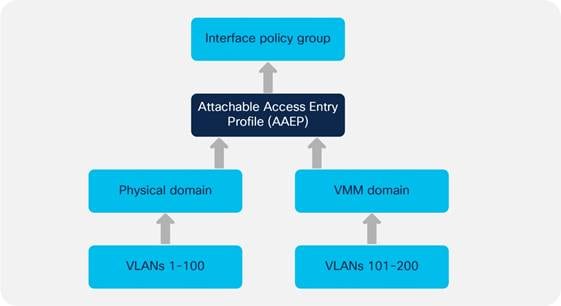

Attachable Access Entity Profiles (AAEPs)

Understanding VLAN Use in Cisco ACI and to Which VXLAN They Are Mapped

Domain and EPG VLAN Validations

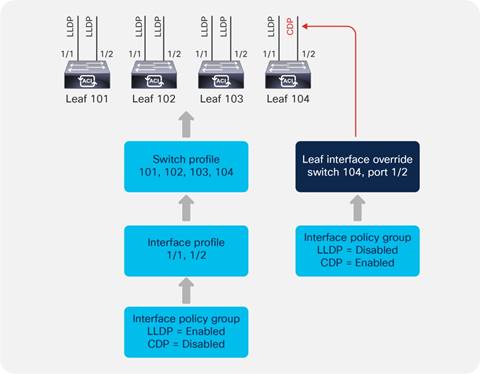

Cisco Discovery Protocol, LLDP, and Policy Resolution

Port Channels and Virtual Port Channels

Static Port Channel, LACP Active, LACP Passive

Configuration for Faster Convergence with VPCs

Port Channels and Virtual Port Channels Configuration Model in Cisco ACI

Interaction with Cisco APIC Ports

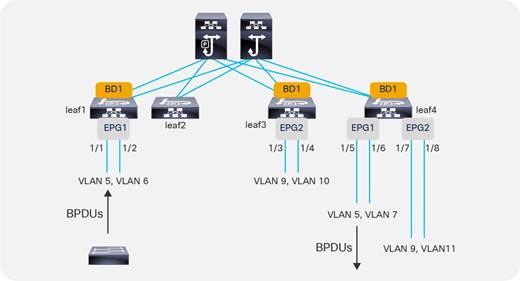

Loop Mitigation Features Overview

LLDP for Mis-Cabling Protection

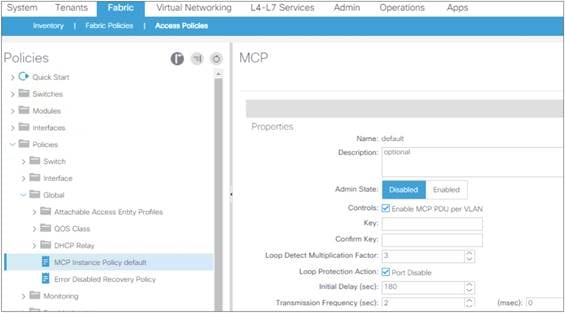

Mis-Cabling Protocol (MCP) Overview

Link Aggregation Control Protocol (LACP) Suspend Individual Ports

Interface-level Control Plane Policing (CoPP)

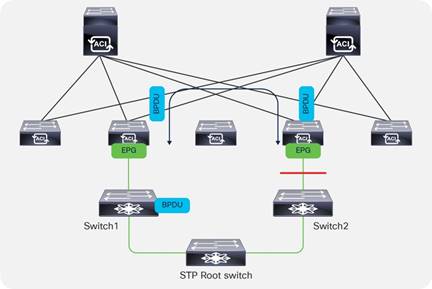

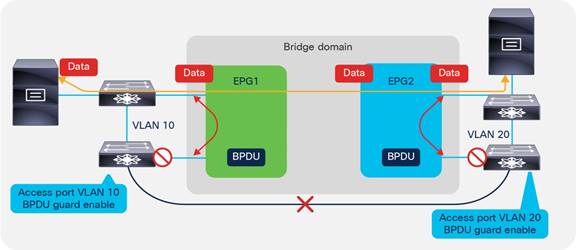

Spanning Tree Protocol Considerations

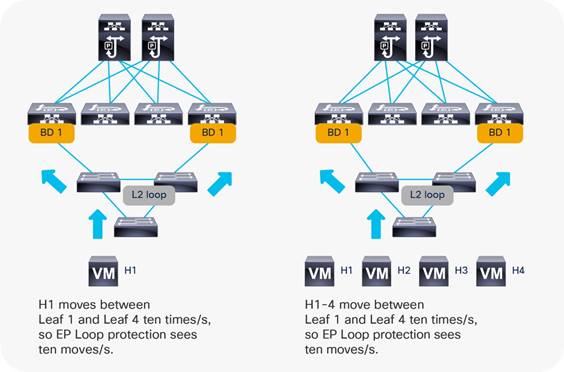

Endpoint Move Dampening, Endpoint Loop Protection, and Rogue Endpoint Control

Rogue Endpoint Control Exceptions

Summary Best Practices for Layer 2 Loop Mitigation

Network-centric and Application-centric Designs (and EPGs Compared with ESGs)

Implementing a Network-centric Topology

Default Gateway for the Servers

Assigning Servers to Endpoint Groups

Layer 2 Connectivity to the Outside with Network Centric Deployments

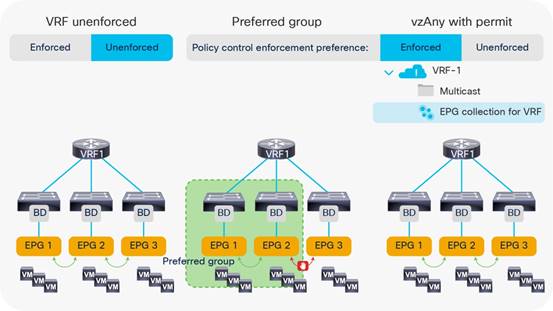

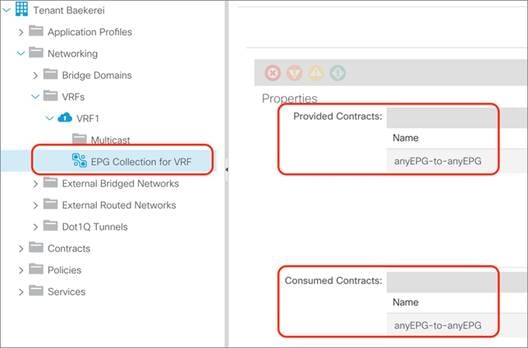

Using VRF Unenforced Mode or Preferred Groups or vzAny with Network Centric Deployments

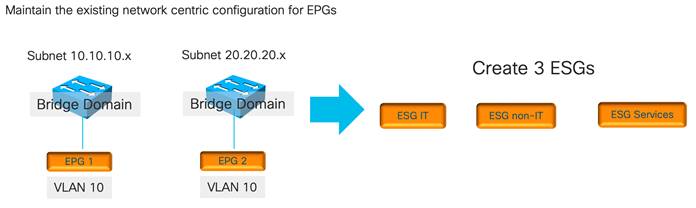

Using ESGs to Create the Equivalent of Multiple Preferred Groups

Implementing a Tenant Design With Segmentation Using EPGs or ESGs (Application-centric)

Adding EPGs to Existing Bridge Domains

Merging Bridge Domains and Subnets (with Flood in Encapsulation)

Using Endpoint Security Groups

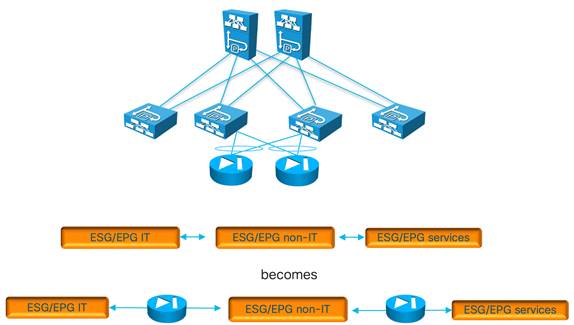

Adding Filtering Rules with Contracts and Firewalls with vzAny and Service Graph Redirect

Default Gateway (Subnet) Design Considerations

Bridge Domain Subnet, SVI, Pervasive Gateway

Subnet Configuration: Under the Bridge Domain and Why Not Under the EPG

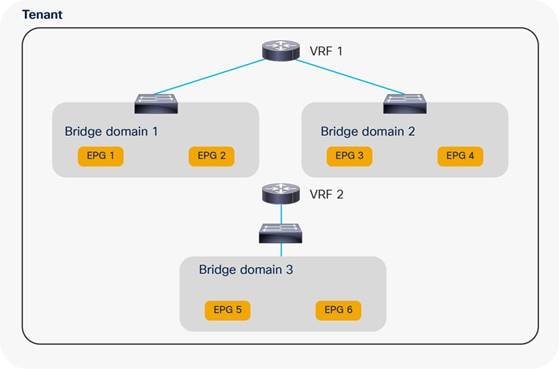

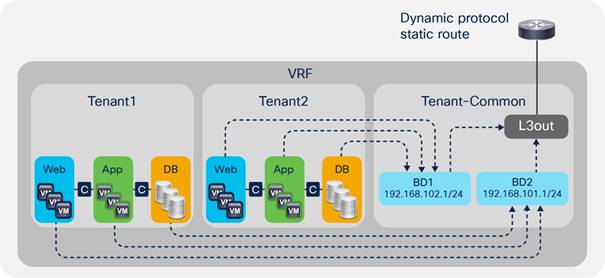

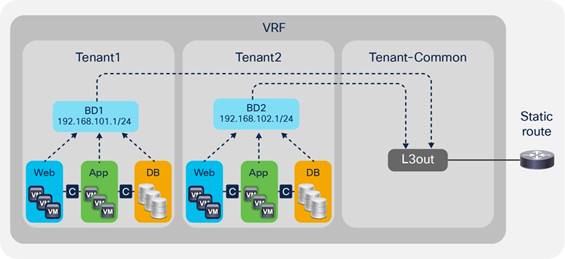

VRF Instances and Bridge Domains in the Common Tenant

VRF Instances in the Common Tenant and Bridge Domains in User Tenants

VRF Ingress Versus VRF Egress Filtering Design Considerations

Bridge Domain Design Considerations

Bridge Domain Configuration for Migration Topologies

BPDU Handling in the Bridge Domain

Using Hardware-Proxy to Reduce Flooding

Layer 2 Multicast and IGMP Snooping in the Bridge Domain

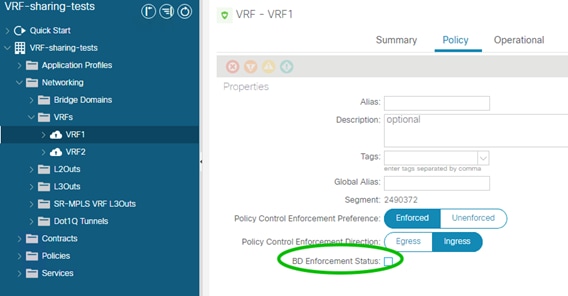

Bridge Domain Enforcement Status

Summary of Bridge Domain Recommendations

Configuring Trunk Ports with Nexus 9300-EX and Newer

Configuring Trunk Ports with First Generation Leaf switches

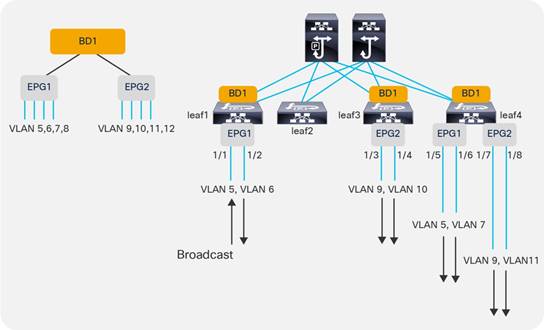

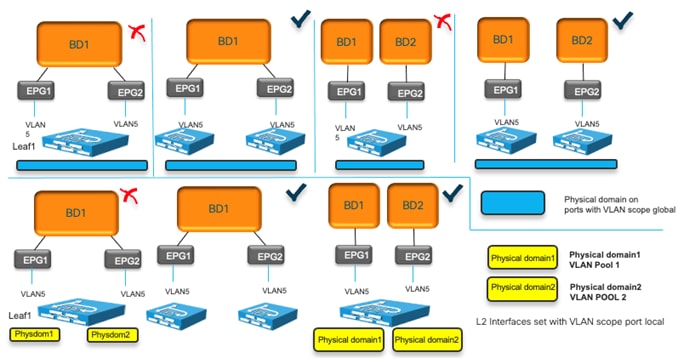

EPGs, Bridge Domains, and VLAN mapping

EPGs, Physical and VMM Domains, and VLAN Mapping on a Specific Port (or Port Channel or vPC)

Internal VLANs on the Leaf Switches: EPGs and Bridge Domains Scale

Assigning Physical Hosts to EPGs

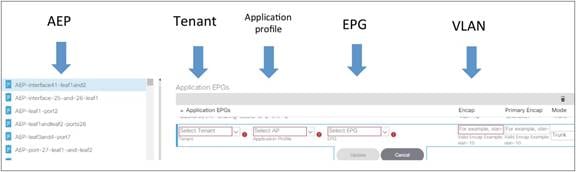

Using the Application Profile EPG

Assigning Hosts to EPGs from the Attachable Access Entity Profile (AAEP)

Assigning Virtual Machines to EPGs

EPG Configuration Workflow with VMM Integration

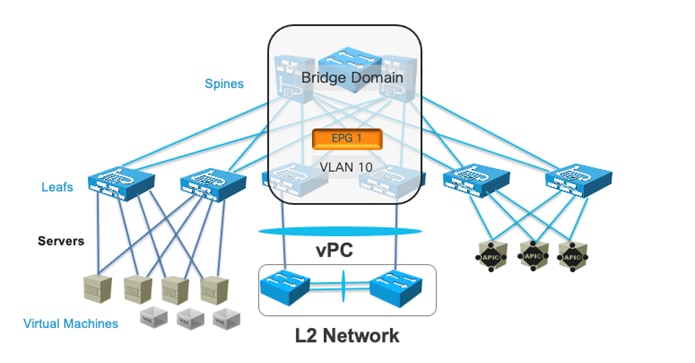

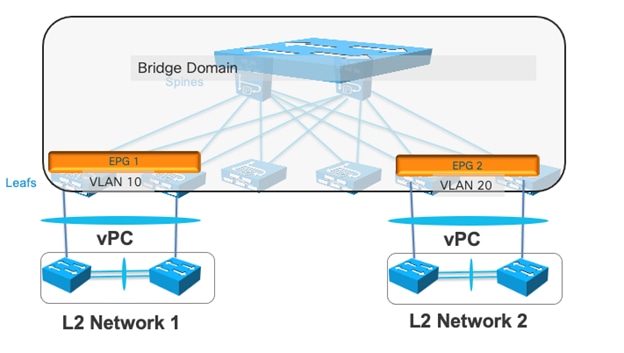

Connecting EPGs to External Switches

Using EPGs to connect Cisco ACI to External Layer 2 Networks

EPG and Fabric Access Configurations for Multiple Spanning Tree

Minimize the scope of Spanning Tree Topology Changes

Using EPGs to Connect Cisco ACI to External Layer 2 Networks Using vPCs

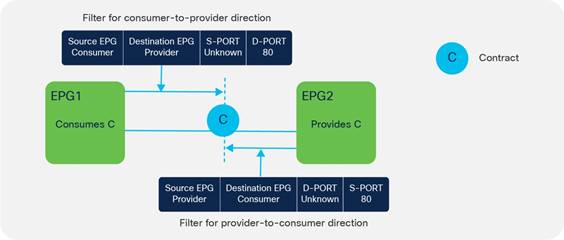

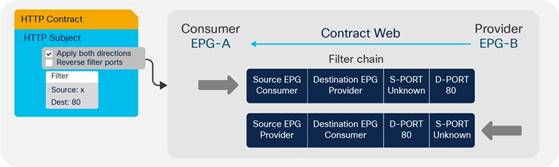

Contracts Design Considerations

Security Contracts are ACLs Without IP Addresses

Permit, Deny, Redirect, and Copy

Concept of Direction in Contracts

Understanding the Bidirectional and Reverse Filter Options

Configuring a Stateful Contract

Configuring a Single Contract Between EPG/ESGs

Contracts and Filters in the Common Tenant

Setting the Contract Scope Correctly

Saving Policy-CAM Space with Compression

Pros and Cons of using Contracts from Tenant Common

Unenforced VRF Instances, Preferred Groups, vzAny

Contracts and Filtering Rule Priorities

Resolution and Deployment Immediacy of VRF Instances, Bridge Domains, EPGs, and Contracts

EPG Resolution Immediacy and Deployment Immediacy Options

EPG Resolution Immediacy and Deployment Immediacy Considerations for Virtualized Servers

Endpoint Learning Considerations

Local Endpoint Learning on the Leaf Switches

Endpoint Aging with Multiple IP Addresses for the Same MAC Address

Endpoint Retention Policy at the Bridge Domain and VRF Level

Dataplane Learning from ARP packets

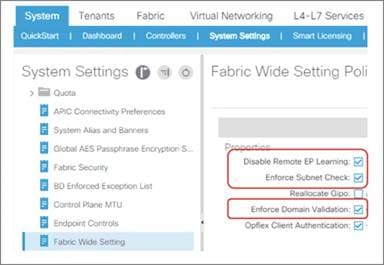

When and How to disable Remote Endpoint Learning (for Border Leaf Switches)

Floating IP Address Considerations

When and How to Disable IP Dataplane Learning

Stale Entries and Endpoint Announce Delete

Server Connectivity and NIC Teaming Design Considerations

Design Model for IEEE 802.3ad with a vPC

NIC Teaming Configurations for Non-Virtualized Servers

Server Active/Active (802.3ad Dynamic Link Aggregation) Teaming with vPC

NIC Teaming Active/Active non-Port Channel-based (non-vPC)

NIC Teaming Configurations for Virtualized Servers (Without the Use of VMM Integration)

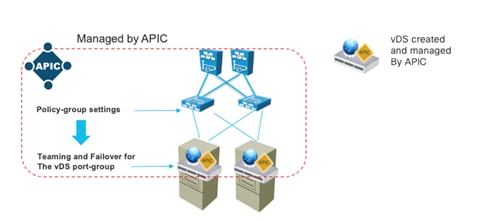

NIC Teaming Configurations for Virtualized Servers with VMM Integration

CDP and LLDP in the Policy Group Configuration

Configuring Teaming using the Cisco ACI VMM Integration

Teaming Options with VMM Integration

Choosing between Policy-Group type Access Leaf Port and vPC

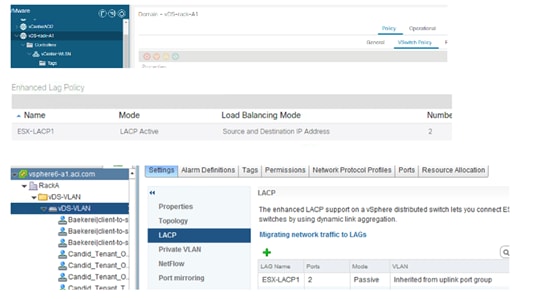

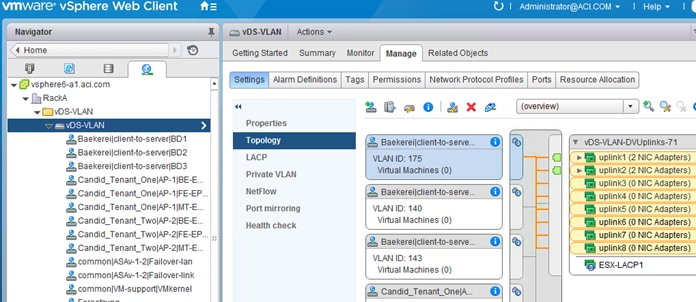

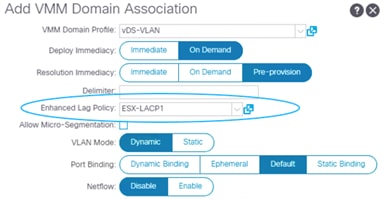

Using LACP Between the Virtualized Host and the Cisco ACI Leaf switches

Teaming Configuration with Servers Not Directly Attached to the Cisco ACI Leaf switches

UCS connectivity with Fabric Interconnect

Designing External Layer 3 Connectivity

The evolution of L3Out: VRF-lite, GOLF and SR/MPLS handoff

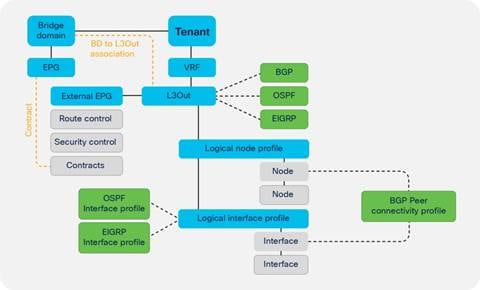

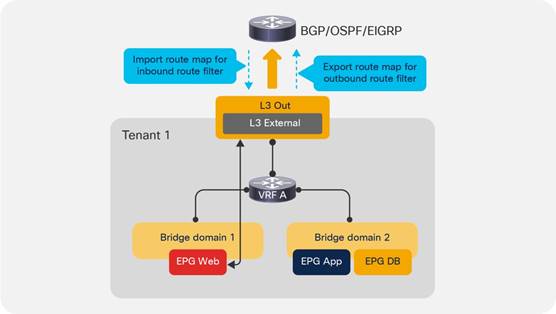

Layer 3 Outside (L3Out) and External Routed Networks

L3Out Router ID Considerations

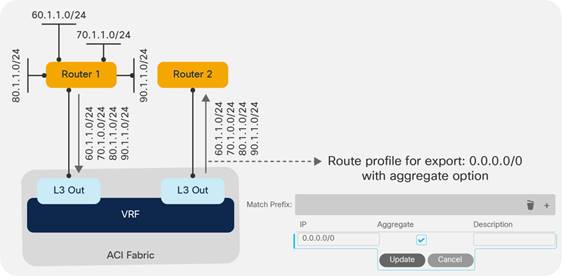

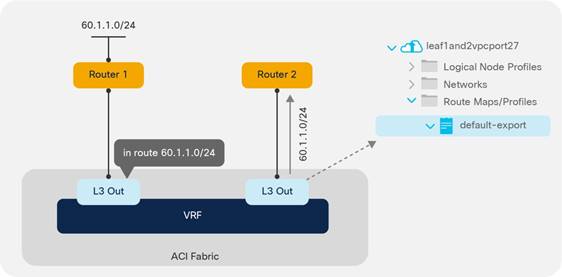

Route Announcement Options for the Layer 3 Outside (L3Out)

Route Map Handling Differences Between OSPF, EIGRP and BGP

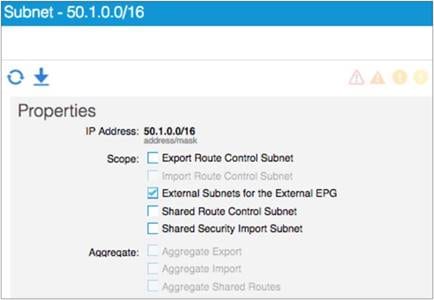

External Network (External EPG) Configuration Options

Advertisement of Bridge Domain Subnets

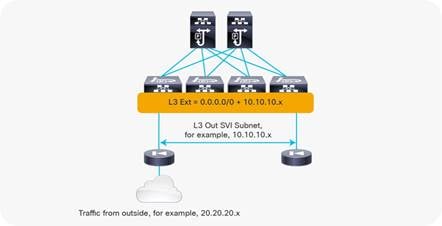

Add L3Out SVI Subnets to the External EPG

Bidirectional Forwarding Detection (BFD) for L3Out

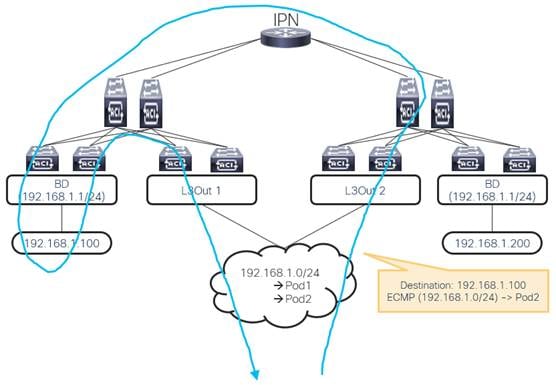

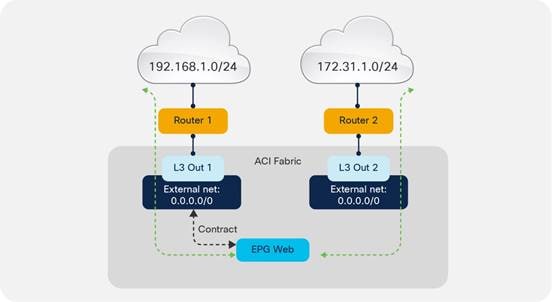

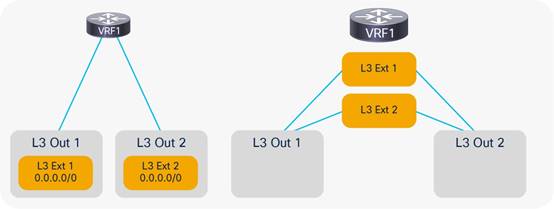

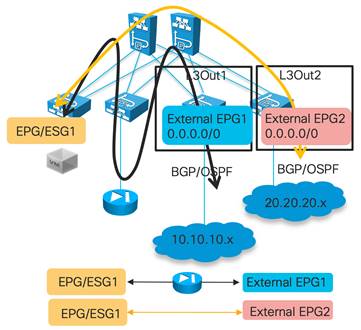

Considerations for Multiple L3Outs

External EPGs Have a VRF Scope

Using Dynamic L3Out EPG Classification (DEC)

Considerations When Using More Than Two Border Leaf Switches

Using BGP for External Connectivity

BGP Autonomous System (AS) number

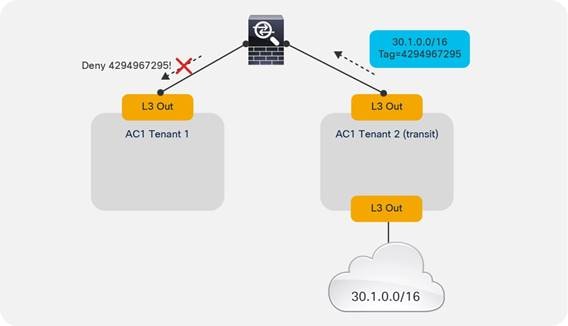

Supported Combinations for Transit Routing

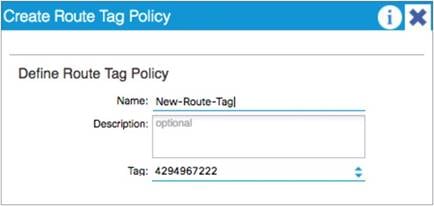

Loop Prevention in Transit Routing Scenarios

Quality of Service (QoS) In Cisco ACI

Quality of Service for Traffic Going to an IPN

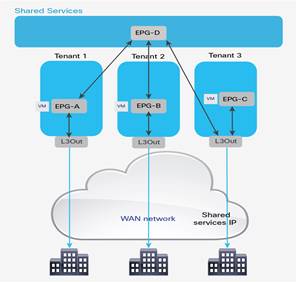

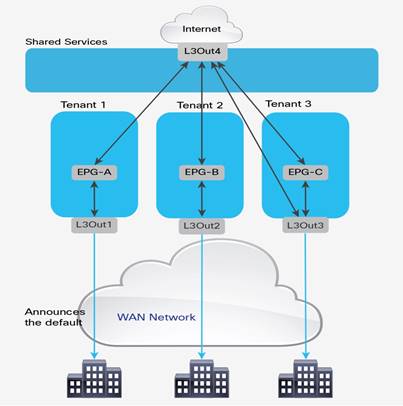

VRF Sharing Design Considerations

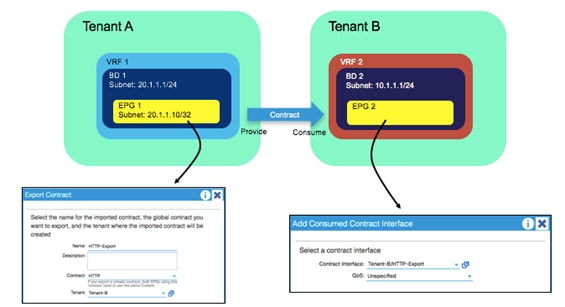

Inter-Tenant and Inter-VRF Communication

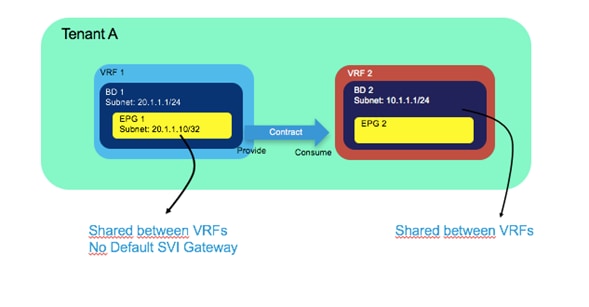

Inter-VRF Communication using EPGs

Inter-VRF Communication using ESGs

Configuration of the Subnet: When to Enter the Subnet Under the EPG

Policy Enforcement with Inter-VRF Traffic

Special Considerations and Restrictions for VRF Sharing Designs

Reducing the Cisco APIC Upgrade Time

Reducing Traffic Disruption During Upgrades

Graceful Upgrades Versus Graceful Insertion and Removal

Features That Must be Disabled Before an Upgrade or a Downgrade

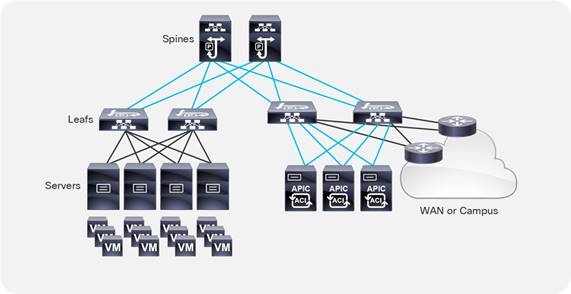

Cisco Application Centric Infrastructure (Cisco ACI™) technology enables you to integrate virtual and physical workloads in a programmable, multi-hypervisor fabric to build a multiservice or cloud data center. The Cisco ACI fabric consists of discrete components connected in a spine and leaf switch topology that it is provisioned and managed as a single entity.

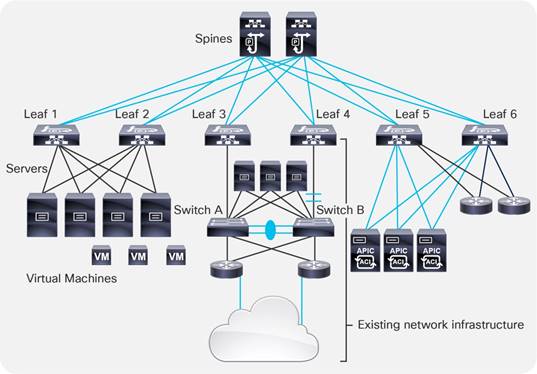

This document describes how to implement a fabric such as the one depicted in Figure 1.

The design described in this document is based on the following reference topology:

● Two spine switches interconnected to several leaf switches

● Top-of-Rack (ToR) leaf switches for server connectivity, with a mix of front-panel port speeds: 1/10/25/40/50/100/200/400-Gbps

● Physical and virtualized servers dual-connected to the leaf switches

● A pair of border leaf switches connected to the rest of the network with a configuration that Cisco ACI calls a Layer 3 Outside (L3Out) connection

● A cluster of three Cisco Application Policy Infrastructure Controllers (APICs) dual-attached to a pair of leaf switches in the fabric

The network fabric in this design provides the following main services:

● Connectivity for physical and virtual workloads

● Partitioning of the fabric into multiple tenants, which may represent departments or hosted customers

● The ability to create shared-services partitions (tenant) to host servers or virtual machines whose computing workloads provide infrastructure services such as Network File System (NFS) and Microsoft Active Directory to the other tenants

● Capability to provide dedicated or shared Layer 3 routed connections to the tenants present in the fabric

A Cisco ACI fabric can be built using a variety of Layer 3 switches that, while compatible with each other, differ in terms of form factors and ASICs to address multiple requirements.

You can find the list of available leaf and spine switches at the following URL:

https://www.cisco.com/c/en/us/products/switches/nexus-9000-series-switches/models-comparison.html

This document is based on features that are present in Cisco ACI release 6.0(1g).

Cisco ACI can integrate with every virtualized server using physical domains and the EPG Static Port configuration for "static binding" (more on this later) and with many external controllers using direct API integration, which is called Virtual Machine Manager (VMM) integration. Cisco APIC can integrate using VMM integration with VMware ESXi hosts with VMware vSphere, Hyper-V servers with Microsoft SCVMM, RedHat Virtualization, Kubernetes, OpenStack, OpenShift, and more. Cisco ACI 5.1(1) and later releases can integrate with VMware NSX-T Data Center (NSX).

The integration using static binding doesn’t require any special software version, whereas for the integration using Virtual Machine Manager you need specific Cisco ACI versions to integrate with specific Virtual Machine Manager versions.

VMware ESXi hosts with VMware vSphere 7.0 can be integrated with Cisco ACI release 4.2(4o) or later using VMM. VMware ESXi hosts integrate with Cisco ACI using the VMware vSphere Distributed Switch (vDS).

Note: This design guide explains design considerations related to teaming with specific reference to the VMM integration with VMware vSphere and it does not include the integration with VMware NSX-T.

For information about the support for virtualization products with Cisco ACI, see the ACI Virtualization Compatibility Matrix.

For more information about integrating virtualization products with Cisco ACI, see the virtualization documentation.

Cisco Nexus 9000 Series Hardware

For a list of available Cisco ACI Nexus 9000 series switches, see Cisco Nexus 9000 Series Switches.

This section provides some clarification about the naming conventions used for the leaf and spine switches referred to in this document:

● N9K-C93xx refers to the Cisco ACI leaf switches

● N9K-C95xx refers to the Cisco modular chassis

● N9K-X97xx refers to the Cisco ACI spine switch line cards

The trailing -E and -X signify the following:

● -E: Enhanced. This refers to the ability of the switch to classify traffic into endpoint groups (EPGs) based on the source IP address of the incoming traffic.

● -X: Analytics. This refers to the ability of the hardware to support analytics functions. The hardware that supports analytics includes other enhancements in the policy CAM, in the buffering capabilities, and in the ability to classify traffic to EPGs.

● -F: Support for MAC security.

● -G: Support for 400 Gigabit Ethernet.

For simplicity, this document refers to any switch without a suffix or with without the -X suffix as a first generation switch, and any switch with -EX, -FX, -GX, or any later suffix as a second generation switch.

Note: The Cisco ACI leaf switches with names ending in -GX have hardware that is capable of operating as either a spine or leaf switch. The software support for either option comes in different releases. For more information, see Cisco Nexus 9300-GX Series Switches Data Sheet.

For port speeds, the naming conventions are as follows:

● G: 100M/1G

● P: 1/10-Gbps Enhanced Small Form-Factor Pluggable (SFP+)

● T: 100-Mbps, 1-Gbps, and 10GBASE-T copper

● Y: 10/25-Gbps SFP+

● Q: 40-Gbps Quad SFP+ (QSFP+)

● L: 50-Gbps QSFP28

● C: 100-Gbps QSFP28

● D: 400-Gbps QSFP-DD

● E: 800-Gbps

For the taxonomy, see Taxonomy for Cisco Nexus 9000 Series Part Numbers.

For more information about Cisco Nexus 400 Gigabit Ethernet switches hardware (which includes Cisco ACI leaf and spine switches switches), see 400G Data Center and Cloud Networking.

In Cisco ACI, all workloads connect to leaf switches. The leaf switches used in a Cisco ACI fabric are Top-of-the-Rack (ToR) switches. A number of leaf switch choices differ based on function:

● Port speed and medium type

● Buffering and queue management: All leaf switches in Cisco ACI provide advanced capabilities to load balance traffic more precisely, including dynamic packet prioritization, to prioritize short-lived, latency-sensitive flows (sometimes referred to as mouse flows) over long-lived, bandwidth-intensive flows (also called elephant flows). The newest hardware also introduces more sophisticated ways to keep track and measure elephant and mouse flows and prioritize them, as well as more efficient ways to handle buffers.

● Policy CAM size and handling: The policy CAM is the hardware resource that allows filtering of traffic between EPGs. It is a TCAM resource in which Access Control Lists (ACLs) are expressed in terms of which EPG (security zone) can talk to which EPG (security zone). The policy CAM size varies depending on the hardware. The way in which the policy CAM handles Layer 4 operations and bidirectional contracts also varies depending on the hardware. -FX and -GX leaf switches offer more capacity compared with -EX and -FX2.

● Multicast routing support in the overlay: A Cisco ACI fabric can perform multicast routing for tenant traffic (multicast routing in the overlay).

● Support for analytics: The newest leaf switches and spine switch line cards provide flow measurement capabilities for the purposes of analytics and application dependency mappings.

● Support for link-level encryption: The newest leaf switches and spine switch line cards provide line-rate MAC security (MACsec) encryption.

● Scale for endpoints: One of the major features of Cisco ACI is the endpoint database, which maintains the information about which endpoint is mapped to which Virtual Extensible LAN (VXLAN) tunnel endpoint (VTEP), in which bridge domain, and so on.

Ability to change the allocation of hardware resources, such as to support more Longest Prefix Match entries, or more policy CAM entries, or more IPv4 entries. This concept is called "tile profiles," and it was introduced in Cisco ACI 3.0. For more information, see Cisco APIC Forwarding Scale Profiles and Verified Scalability Guide.

The -GX hardware can be deployed both as leaf or as a spine switch, and in case of high density 100 or 400 ports leaf switches you can use breakout cables to connect lower speed ports. For more information, see Nexus 9300 400 GE Switches.

For more information about the differences between the Cisco Nexus® 9000 series switches, see the following documents:

● https://www.cisco.com/c/en/us/products/switches/nexus-9000-series-switches/models-comparison.html

The spine switches are available in several form factors both for modular switches as well as for fixed form factors. Cisco ACI leaf switches with name ending in -GX have hardware that can operate both as spine and as leaf switch.

The differences among spine switches with different hardware are as follows:

● Port speeds

● Support for analytics: although this capability is primarily a leaf switch function and it may not be necessary in the spine switch, in the future there may be features that use this capability in the spine switch.

● Support for link-level encryption and for CloudSec. For information, see Cisco ACI Multi-Site Configuration Guide, Release 2.0(x).

● Support for Cisco ACI Multi-Pod and Cisco ACI Multi-Site: Refer to the specific documentation on Cisco ACI Multi-Pod and Cisco ACI Multi-Site, including the respective release notes, for more details.

At the time of this writing, the speed of ports used for spine switches was moving more and more to 400 Gigabit Ethernet density and the same -GX hardware can be used as a leaf or spine switch. For more information, see Nexus 9300 400 GE Switches.

Note: For information about Cisco ACI Multi-Site hardware requirements, see Cisco ACI Multi-Site Hardware Requirements Guide, Release 2.0(x).

The Cisco ACI fabric forwards traffic based on host lookups (when doing routing): all known endpoints in the fabric are programmed in the spine switches. The endpoints saved in the leaf switch forwarding table are only those that are used by the leaf switch in question, thus preserving hardware resources at the leaf switch. As a consequence, the overall scale of the fabric can be much higher than the individual scale of a single leaf switch.

The spine switch models also differ in the number of endpoints that can be stored in the spine proxy table, which depends on the type and number of fabric modules installed.

You should use the verified scalability limits for the latest Cisco ACI release and see how many endpoints can be used per fabric. See the Verified Scalability Guide for your release.

According to the verified scalability limits, the following spine switch configurations have the indicated endpoint scalabilities:

● Max. 450,000 Proxy Database Entries with four (4) fabric line cards

● Max. 180,000 Proxy Database Entries with the fixed spine switches

The above numbers represent the sum of the number of MAC, IPv4, and IPv6 addresses; for instance, in the case of a Cisco ACI fabric with fixed spine switches, this translates into:

● 180,000 MAC-only EPs (each EP with one MAC only)

● 90,000 IPv4 EPs (each EP with one MAC and one IPv4)

● 60,000 dual-stack EPs (each EP with one MAC, one IPv4, and one IPv6)

The number of supported endpoints is a combination of the capacity of the hardware tables, what the software allows you to configure, and what has been tested.

Refer to the Verified Scalability Guide for a given release and to the Capacity Dashboard in the Cisco APIC GUI for this information.

Detailed guidelines about which type of transceivers and cables you should use is outside of the scope of this document. The Transceiver Compatibility Matrix is a great tool to help with this task: https://tmgmatrix.cisco.com/

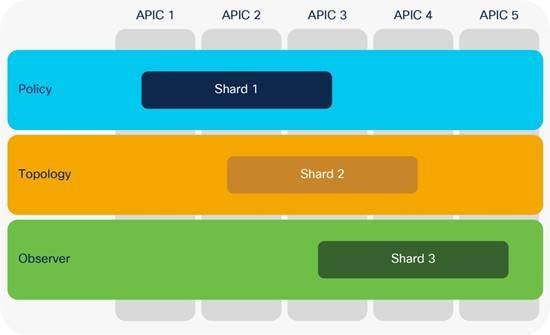

Cisco Application Policy Infrastructure Controller (APIC)

The Cisco APIC is the point of configuration for policies and the place where statistics are archived and processed to provide visibility, telemetry, and application health information and enable overall management of the fabric. The controller is a physical appliance based on a Cisco UCS® rack server with two interfaces for connectivity to the leaf switches. The Cisco APIC is also equipped with Gigabit Ethernet interfaces for out-of-band management.

For more information about the Cisco APIC models, see Cisco Application Policy Infrastructure Controller Data Sheet.

Note: A cluster may contain a mix of different Cisco APIC models; however, the scalability will be that of the least powerful cluster member.

Note: The naming of the Cisco APICs, such as M3 or L3, is independent of the UCS series names.

Fabric with Mixed Hardware or Software

Fabric with Different Spine Types

In Cisco ACI, you can mix new and old generations of hardware for the spine and leaf switches. For instance, you could have first-generation hardware leaf switches and new-generation hardware spine switches, or vice versa. The main considerations with spine hardware are as follows:

● Uplink bandwidth between leaf and spine switches

● Scalability of the spine proxy table (which depends primarily on the type of fabric line card that is used in the spine)

● Cisco ACI Multi-Site requires spine switches based on the Cisco Nexus 9500 platform cloud-scale line cards to connect to the intersite network

You can mix spine switches of different types, but the total number of endpoints that the fabric supports is the minimum common denominator.

Fabric with Different Leaf Switch Types

When mixing leaf switches of different hardware types in the same fabric, you may have varying support of features and different levels of scalability.

In Cisco ACI, the processing intelligence resides primarily on the leaf switches, so the choice of leaf switch hardware determines which features may be used (for example, multicast routing in the overlay, or FCoE). Not all leaf switches provide the same hardware capabilities to implement all features.

Cisco APIC pushes the managed object to the leaf switches regardless of the ASIC that is present. If a leaf switch does not support a given feature, it raises a fault. For multicast routing you should ensure that the bridge domains and Virtual Routing and Forwarding (VRF) instances configured with the feature are deployed only on the leaf switches that support the feature.

Fabric with Different Software Versions

The Cisco ACI fabric is designed to operate with the same software version on all the APICs and switches. During upgrades, there may be different versions of the OS running in the same fabric.

If the leaf switches are running different software versions, the following behavior applies: Cisco APIC pushes features based on what is implemented in its software version. If the leaf switch is running an older version of software and the Cisco APIC does not understand a feature, the Cisco APIC will reject the feature; however, the Cisco APIC may not raise a fault.

For more information about which configurations are allowed with a mixed OS version in the fabric, see the software and firmware installation and upgrade guides.

Running a Cisco ACI fabric with different software versions is meant to be just a temporary configuration to facilitate upgrades, and minimal or no configuration changes should be performed while the fabric runs with mixed OS versions.

You can connect fabric extenders (FEXes) to the Cisco ACI leaf switches; the main purpose of doing so should be to simplify migration from an existing network with fabric extenders. If the main requirement for the use of FEX is the Fast Ethernet port speeds, you may want to consider the Cisco ACI leaf switch models with -G or -T in the product name, such as Cisco Nexus N9K-C9348GC-FXP, N9K-C93108TC-FX, N9K-C93108TC-FX-24, N9K-C93108TC-EX, N9K-C93108TC-EX-24, N9K-C93216TC-FX2, and N9K-93108TC-FX3P.

To connect a FEX to a Cisco ACI leaf switch, you must assign a FEX ID to each FEX, and this number has leaf scope, so the same FEX ID can be re-used on a different leaf switch.

A FEX can be connected to Cisco ACI using a port channel with what is known as a straight-through topology, and vPCs can be configured between hosts and the FEX, but not between the FEX and Cisco ACI leaf switches.

A FEX can be connected to leaf switch front-panel ports as well as converted downlinks (since Cisco ACI release 3.1).

A FEX has many limitations compared to attaching servers and network devices directly to a leaf switch. The main limitations as follows:

● No support for L3Out on a FEX

● No Rate limiters support on a FEX

● No Traffic Storm Control on a FEX

● No Port Security support on a FEX

● A FEX should not be used to connect routers or Layer 4 to Layer 7 devices with service graph redirect

● The use in conjunction with microsegmentation works, but if microsegmentation is used, then Quality of Service (QoS) does not work on FEX ports because all microsegmented traffic is tagged with a specific class of service. Microsegmentation and a FEX is a feature that at the time of this writing has not been extensively validated.

Support for FCoE on a FEX was added in Cisco ACI release 2.2. See Cisco Application Policy Infrastructure Controller, Release 2.2(1), Release Notes.

When using Cisco ACI with a FEX, you want to verify the verified scalability limits; in particular, the limits related to the number of ports multiplied by the number of VLANs configured on the ports (commonly referred to as P, V). For more information, see the Verified Scalability Guide for your release.

With regard to scalability, you should keep in mind the following points:

● The total scale for VRF instances, bridge domains (BDs), endpoints, and so on is the same whether you are using FEX attached to a leaf switch or whether you are connecting endpoints directly to a leaf switch. This means that, when using FEX, the amount of hardware resources that the leaf switch provides is divided among more ports than just the leaf switch ports.

● The total number of VLANs that can be used on each FEX port is limited by the maximum number of P,V pairs that are available per leaf switch for host-facing ports on FEX. For the latest supported scale numbers, see the Verified Scalability Guide.

● The maximum number of EPGs per FEX port is the maximum number of encapsulations per FEX port as specified in the Verified Scalability Guide.

● For the maximum number of FEXes per leaf switch, see the Verified Scalability Guide.

Note: For more information about which leaf switch is compatible with which fabric extender, refer to the following link:

For more information about how to connect a fabric extender to Cisco ACI, see Nexus 9000 Series Switch FEX Support.

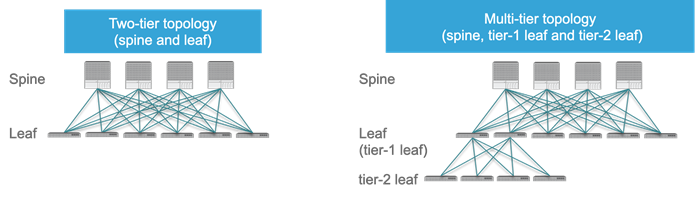

As of release 4.1, a Cisco ACI fabric can be built as a two-tier fabric or as a multi-tier (three-tiers) fabric.

Prior to Cisco ACI 4.1, the Cisco ACI fabric allowed only the use of a two-tier (spine and leaf switch) topology, in which each leaf switch is connected to every spine switch in the network with no interconnection between leaf switches or spine switches.

Starting from Cisco ACI 4.1, the Cisco ACI fabric allows also the use of two tiers of leaf switches, which provides the capability for vertical expansion of the Cisco ACI fabric. This is useful to migrate a traditional three-tier architecture of core-aggregation-access that have been a common design model for many enterprise networks and is still required today. The primary reason for this is cable reach, where many hosts are located across floors or across buildings; however, due to the high pricing of fiber cables and the limitations of cable distances, it is not ideal in some situations to build a full-mesh two-tier fabric. In those cases, it is more efficient for customers to build a spine-leaf-leaf switch topology and continue to benefit from the automation and visibility of Cisco ACI.

Leaf and Spine Switch Functions

The Cisco ACI fabric is based on a two-tier (spine and leaf switch) or three-tier (spine switch, tier-1 leaf switch and tier-2 leaf switch) architecture in which the leaf and spine switches provide the following functions:

● Leaf switches: These devices have ports connected to classic Ethernet devices, such as servers, firewalls, and router ports. Leaf switches are at the edge of the fabric and provide the VXLAN Tunnel Endpoint (VTEP) function. In Cisco ACI terminology, the IP address that represents the leaf switch VTEP is called the Physical Tunnel Endpoint (PTEP). The leaf switches are responsible for routing or bridging tenant packets and for applying network policies.

● Spine switches: These devices interconnect leaf switches. They can also be used to build a Cisco ACI Multi-Pod fabric by connecting a Cisco ACI pod to an IP network, or they can connect to a supported WAN device (see more details in the "Designing external layer 3 connectivity" section). Spine switches also store all the endpoints-to-VTEP mapping entries (spine switch proxies).

Within a pod, all tier-1 leaf switches connect to all spine switches, and all spine switches connect to all tier-1 leaf switches, but no direct connectivity is allowed between spine switches, between tier-1 leaf switches, or between tier-2 leaf switches. If you incorrectly cable spine switches to each other or leaf switches in the same tier to each other, the interfaces will be disabled. You may have topologies in which certain leaf switches are not connected to all spine switches (such as in stretched fabric designs), but traffic forwarding may be suboptimal in this scenario.

Up until Cisco ACI 3.1, fabric ports on leaf switches were hard-coded as fabric (iVXLAN) ports and could connect only to spine switches. Starting with Cisco ACI 3.1, you can change the default configuration and make ports that would normally be fabric links, be downlinks, or vice-versa. For more information, see Cisco Application Centric Infrastructure Fundamentals.

Note: For information about the optics supported by Cisco ACI leaf and spine switches, use the following tool:

https://tmgmatrix.cisco.com/home

Multi-tier Design Considerations

Only Cisco Cloudscale switches are supported for multi-tier spine and leaf switches.

● Spine: EX/FX/C/GX spine switches (Cisco Nexus 9332C, 9364C, and 9500 with EX/FX/GX line cards)

● Tier-1 leaf: EX/FX/FX2/GX except Cisco Nexus 93180LC-EX

● Tier-2 leaf: EX/FX/FX2/GX

Design considerations for multi-tier topology include the following:

● All switch-to-switch links must be configured as fabric ports. For example, Tier-2 leaf switch fabric ports are connected to tier-1 leaf switch fabric ports.

● A tier-2 leaf switch can connect to more than two tier-1 leaf switches, in comparison to a traditional double-sided vPC design, which has only two upstream switches. The maximum number of ECMP links supported by a tier-2 leaf switch to tier-1 leaf switch is 18.

● An EPG, L3Out, Cisco APIC, or FEX can be connected to tier-1 leaf switches or to tier-2 leaf switches.

● Tier-1 leaf switches can have both hosts and tier-2 leaf switches connected on it.

● Changing from a tier-1 to a tier-2 leaf switch and back requires decommissioning and recommissioning the switch.

● Multi-tier architectures are compatible with Cisco ACI Multi-Pod and Cisco ACI Multi-Site.

● Tier-2 leaf switches cannot be connected to remote leaf switches (tier-1 leaf switches).

● Scale: The maximum number of tier-1 leaf switches and tier-2 leaf switches combined must be less than or equal to the maximum number of leaf switches that have been validated for a given release. (400 per pod; 500 per Cisco ACI Multi-Pod as of Cisco ACI release 6.0(1)).

More information about Cisco ACI multi-tier can be found at the following link: https://www.cisco.com/c/en/us/solutions/data-center-virtualization/application-centric-infrastructure/white-paper-c11-742214.html

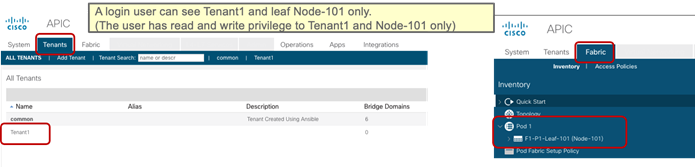

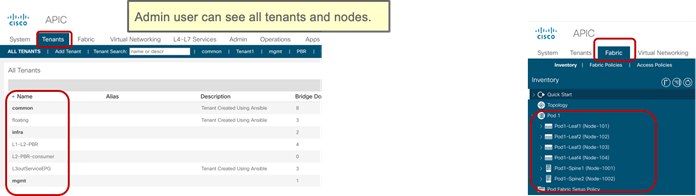

Per Leaf RBAC (Role-based Access Control)

Up until Cisco ACI 5.0, a Cisco ACI fabric administrator could assign a tenant to a security domain to let users have read/write privilege for a specific tenant assigned to that security domain, but that RBAC feature was not applicable to specific leaf switch.

Starting from Cisco ACI 5.0, a leaf switch can be assigned to a security domain so that only specific users can configure leaf switches assigned to that security domain and users in other security domains have no access to the leaf switches assigned to the security domain. For example, a user in Figure 3 can see tenant1 and leaf switch Node-101 only, and can’t see other user tenants or leaf switches, whereas the admin user in Figure 4 Figure 4can see everything. This is useful for allocating leaf switches for different tenants, customers, or organizations.

More information can be found at the following link: https://www.cisco.com/c/en/us/td/docs/switches/datacenter/aci/apic/sw/5-x/security/cisco-apic-security-configuration-guide-50x/m-restricted-access-security-domains.html

Virtual Port Channel Hardware Considerations

Cisco ACI provides a routed fabric infrastructure with the capability to perform equal-cost multipathing for Layer 2 and Layer 3 traffic.

In addition, Cisco ACI supports the virtual port channel (vPC) technology on leaf switch ports to optimize server connectivity to the fabric. The purpose of this section is not to describe vPC in detail, but to highlight the relevant considerations for the planning of the physical topology. For more information about vPC, refer to the "Designing the fabric access / Port Channels and Virtual Port Channels" section.

It is very common for servers connected to Cisco ACI leaf switches to be connected through a vPC (that is, a port channel on the server side) to increase throughput and resilience. This is true for both physical and virtualized servers.

vPCs can also be used to connect to existing Layer 2 infrastructure or for L3Out connections (vPC plus a Layer 3 switch virtual interface [SVI]).

Hardware Compatibility Between vPC Pairs

You must decide which pairs of leaf switches in the fabric should be configured as part of the same vPC domain, which in the Cisco ACI configuration is called an "explicit vPC protection group."

When creating a vPC domain between two leaf switches, both switches must be of the same switch generation. Switches not of the same generation are not compatible vPC peers. For example, you cannot have a vPC consisting of a N9K-C9372TX and -EX or -FX leaf switches.

● Generation 1 switches are compatible only with other generation 1 switches. These switch models can be identified by the lack of the "EX," "FX, "FX2," "FX3," "GX" or later suffix at the end of the switch name: for example, N9K-9312TX is a generation 1 switch.

● Generation 2 and later switches can be mixed together in a vPC domain. These switch models can be identified by the "EX," "FX, "FX2," "FX3," "GX" or later suffix at the end of the switch name: for example N9K-93108TC-EX, or N9K-9348GC-FXP are generation 2 switches.

Note When using two different models of the same generation, if there is a difference of scale in terms of forwarding tables, buffers, and so on, you should design your fabric according to the minimum common denominator. We recommend that you use two identical models to be part of the same vPC domain.

Even if two leaf switches of different hardware generation are not meant to be vPC peers, the Cisco ACI software is designed to make the migration from one leaf switch to another compatible switch by using a vPC. Assume that the fabric has Cisco Nexus 9372PX leaf switch pairs (called 9372PX-1 and 9372PX-2 in the following example), and they need to be replaced with Cisco Nexus N9K-C93180YC-EX leaf switches (called 93180YC-EX-1 and 93180YC-EX-2).

The insertion of newer leaf switches works as follows:

● When 93180YC-EX-2 replaces 9372PX-2 in a vPC pair, 9372PX-1 can synchronize the endpoints with 93170YC-EX2.

● The vPC member ports on 93180YC-EX-2 stay down.

● If you remove 9372PX-1, the vPC member ports on 93180YC-EX-2 go up after 10 to 20s.

● 93180YC-EX-1 then replaces 9372PX-1, and 93180YC-EX-2 synchronizes the endpoints with 93180YC-EX-1.

● The vPC member ports on both 93180YC-EX-1 and 93180YC-EX-2 go up.

Members of a vPC must be configured with the same scale profile, however if you need to modify the scale profile on a vPC pair you may need to have two different scale profiles for a transient period required to change the configuration on both.

If you need to modify the scale profile on vPC leaf switches proceed as follows:

● On APIC, configure/enable the new scale profile on a vPC pair. The configuration is pushed to both vPC peers.

● Reload one vPC member at a time (to bring-up the leaf switch with the new profile). During the downtime the other member acts as an active switch.

● Reload the second member vPC leaf switch.

For more information, see the following document:

When configuring vPC pairs, they must be running the same software version. This means that configuration changes should not be performed with different versions, but traffic forwarding for existing configurations still works even with different software versions.

Note: ACI supports certain operations with mixed software versions, but two leaf switches that are part of the same vPC must run the same software release. For more information, see the following document:

With Cisco ACI, you can configure a total of 32 ports as part of the same vPC port channel, with 16 ports on each leaf switch. This capability was introduced in Cisco ACI 3.2. Previously, you could have a total of 16 ports in the vPC with 8 ports per leaf switch.

A FEX can be connected to Cisco ACI with what is known as a straight-through topology, and vPCs can be configured between hosts and FEX.

Different from NX-OS, a FEX cannot be connected to Cisco ACI leaf switches using a vPC.

Placement of Outside Connectivity

The external routed connection, also known as an L3Out, is the Cisco ACI building block that defines the way that the fabric connects to the external world. This can be the point of connectivity of the fabric to a campus core, to the WAN, to the MPLS-VPN cloud, and so on. This topic is extensively covered in the "Designing external layer 3 connectivity" section. The purpose of this section is to highlight physical level design choices related to the external routing technology that you plan to deploy.

Border Leaf Switches with VRF-lite, SR/MPLS Handoff and GOLF

Layer 3 connectivity to the outside can be implemented in one of two ways: by attaching routers to leaf switches (normally designated as border leaf switches) or directly to spine switches. Connectivity using border leaf switches can be further categorized in VRF-lite connectivity and SR/MPLS handoff.

● Connectivity through border leaf switches using VRF-lite: This type of connectivity can be established with any routing-capable device that supports static routing, OSPF, Enhanced Interior Gateway Routing Protocol (EIGRP), or Border Gateway Protocol (BGP), as shown in Figure 5.Figure 5 Leaf switch interfaces connecting to the external router are configured as Layer 3 routed interfaces, subinterfaces, or SVIs.

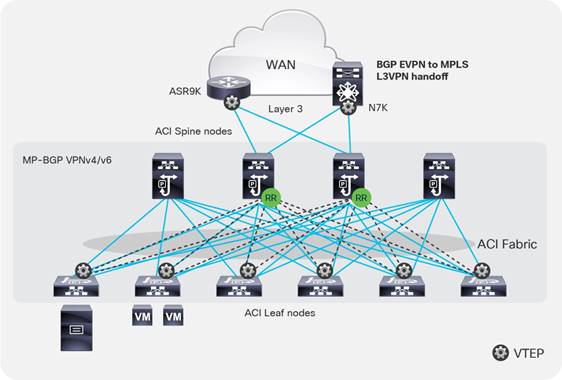

● Connectivity through border leaf switches using SR/MPLS handoff: This type of connectivity requires -FX or later type of leaf switches (it doesn’t work with first generation leaf switches nor with -EX leaf switches). The router attached to the border leaf switch must be BGP-LU and MP-BGP EVPN-capable. For more information about the SR/MPLS handoff solution, refer to the following document: https://www.cisco.comc/en/us/solutions/collateral/data-center-virtualization/application-centric-infrastructure/white-paper-c11-744107.html#SRMPLSlabelexchangeandpacketwalk

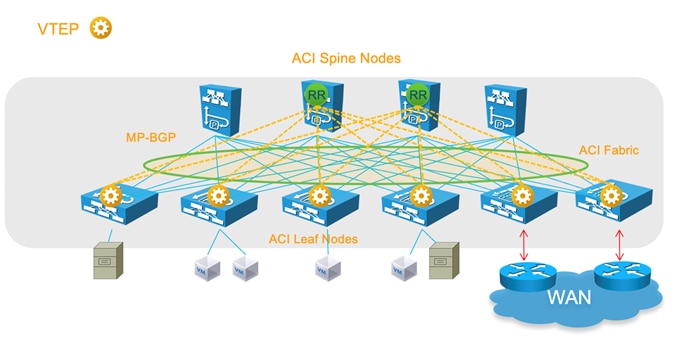

● Connectivity through spine ports with multiprotocol BGP (MP-BGP) EVPN and VXLAN (also known as GOLF): This connectivity option requires that the WAN device that communicates with the spine switches is MP-BGP EVPN-capable and that it optionally supports the OpFlex protocol. This feature uses VXLAN to send traffic to the spine ports as illustrated in Figure 6.Figure 6 This topology is possible only with Cisco Nexus 7000 series and 7700 platform (F3) switches, Cisco® ASR 9000 series Aggregation Services Routers, or Cisco ASR 1000 series Aggregation Services Routers. In this topology, there is no need for direct connectivity between the WAN router and the spine switch. For example, there could be an OSPF-based network in between.

The topology in Figure 5 illustrates the use of border leaf switches to connect to the outside.

The topology in Figure 6 illustrates the connectivity for a GOLF L3Out solution. This requires that the WAN routers support MP-BGP EVPN, OpFlex protocol, and VXLAN. With the topology in Figure 6, the fabric infrastructure is extended to the WAN router, which effectively becomes the equivalent of a border leaf switch in the fabric.

For designs based on the use of a border leaf switch, you can either dedicate leaf switches to border leaf switch functions or use a leaf switch as both a border switch and a computing switch. Using a dedicated border leaf switch is usually considered beneficial, compared to using a leaf switch for both computing and L3Out purposes, for scalability reasons.

For more details about L3Outs based on VRF-lite, or border leaf switches with SR/MPLS handoff or GOLF, refer to the "Designing external layer 3 connectivity" section.

Using Border Leaf Switches for Server Attachment

Attachment of endpoints to border leaf switches is fully supported when all leaf switches in the Cisco ACI fabric are second generation leaf switches or later, such as the Cisco Nexus 9300-EX and Cisco 9300-FX platform switches.

If the topology contains first-generation leaf switches, and regardless of whether the border leaf switch is a first- or second-generation leaf switch, you need to consider the following options:

● If VRF ingress policy is enabled (which is the default configuration), you need to make sure that the software is Cisco ACI release 2.2(2e) or later.

● If you deploy a topology that connects to the outside through border leaf switches that are also used as computing leaf switches, you should disable remote endpoint learning on the border leaf switches.

The recommendation at the time of this writing is that starting with Cisco ACI 3.2 and with topologies that include only -EX leaf switches and newer you don’t need to disable remote endpoint learning.

The "When and How to disable Remote Endpoint Learning" section provides additional information.

Limit the use of L3Out for Server Connectivity

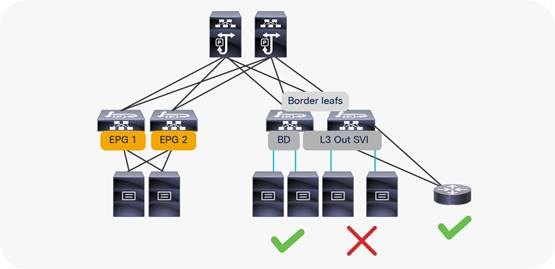

Border leaf switches can be configured with three types of interfaces to connect to an external router:

● Layer 3 (routed) interface

● Subinterface with IEEE 802.1Q tagging

● Switch Virtual Interface (SVI)

When configuring an SVI on an interface of a L3Out, you specify a VLAN encapsulation. Specifying the same VLAN encapsulation on multiple border leaf switches on the same L3Out results in the configuration of an external bridge domain.

The L3out is meant to attach routing devices including servers that run dynamic routing protocols. It is not meant to attach server interfaces that send Layer 2 traffic directly on the SVI of an L3Out. Sometimes it necessary to use L3Out for server connectivity, when servers run dynamic routing protocols, but except for this scenario, servers should be attached to EPGs and bridge domains.

There are multiple reasons for this:

● The Layer 2 domain created by an L3Out with SVIs is not equivalent to a regular bridge domain.

● The traffic classification into external EPGs is designed for hosts multiple hops away.

You can configure static or dynamic routing protocol peering over a vPC for an L3Out without any special design considerations.

Service Leaf Switch Considerations

When attaching firewalls, load balancers, or other Layer 4 to Layer 7 devices to the Cisco ACI fabric, you have the choice of whether to dedicate a leaf switch or leaf switch pair to aggregate all service devices, or to connect firewalls and load balancers to the same leaf switches that are used to connect servers.

This is a consideration of scale. For large data centers, it may make sense to have leaf switches dedicated to the connection of Layer 4 to Layer 7 services.

For deployment of service graphs with the service redirect feature, dedicated service leaf switches must be used if the leaf switches are first-generation Cisco ACI leaf switches. With Cisco Nexus 9300-EX and newer switches, you do not have to use dedicated leaf switches for the Layer 4 to Layer 7 service devices for the service graph redirect feature.

Cisco ACI has several types of SPAN as the following ones:

● Access SPAN

o Source: access port, port channel (downlink) on a leaf switch

o Destination: local leaf switch interface or an endpoint IP address anywhere in the fabric (ERSPAN)

● Fabric SPAN

o Source: fabric port (fabric link) on a leaf or spine switch

o Destination: an endpoint IP address anywhere in the fabric (ERSPAN)

● Tenant SPAN

o Source: EPGs anywhere in the fabric

o Destination: an endpoint IP address anywhere in the fabric (ERSPAN)

In case of ERSPAN, your SPAN destination can be connected as an endpoint anywhere in the Cisco ACI fabric, which gives more flexibility about where to attach the traffic analyzer (SPAN destination), but it uses bandwidth from the fabric uplinks.

Starting with ACI 4.1 you can use a port channel as a SPAN destination on ACI -EX leaf switches or newer.

Thus, if you need to monitor traffic wherever it’s connected to the Cisco ACI fabric, you might want to consider having a SPAN destination (analyzer) on every single leaf switch. Starting with Cisco ACI 4.2(3), the number of span sessions has increased to 63, which means that you can potentially configure local access span for all front panel ports of a Cisco ACI leaf switch.

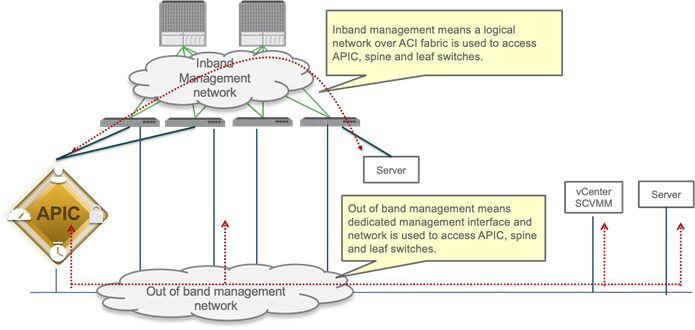

In-band and out-of-band Management Connectivity

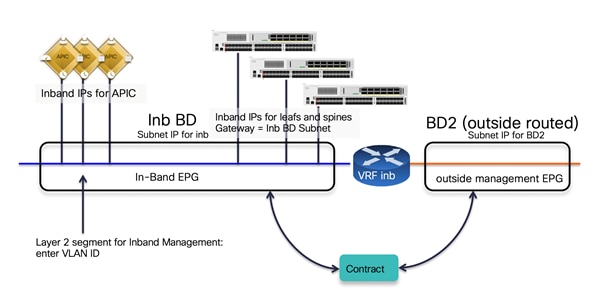

An administrator can connect to the Cisco APICs, leaf and spine switches of a Cisco ACI fabric using in-band or out-of-band connectivity for management purposes.

Out-of-band management is mandatory for the Cisco APIC initial setup and requires additional cabling on the management interfaces on the leaf and spine switches (interface mgmt0), whereas in-band management doesn’t require additional cabling as the traffic traverses Cisco ACI fabric.

In-band management is necessary if you plan to use Cisco Nexus Insights: it must be configured on each leaf and spine switch to export telemetry data.

Note: For more information about telemetry, refer to the Cisco Nexus Insight documentation:

https://www.cisco.com/c/en/us/products/data-center-analytics/nexus-insights/index.html

However, an administrator might not be able to connect to leaf and spine switches using an in-band management network if there is something wrong with the Cisco ACI fabric. Thus, the general recommendation is to use out-of-band management or use both in-band and out-of-band managements for critical network connectivity.

If both in-band and out-of-band managements are available, Cisco APIC uses the following forwarding logic:

● Packets that come in an interface go out from the same interface

● Packets sourced from the Cisco APIC, destined to a directly-connected network, go out the directly-connected interface

● Packets sourced from the Cisco APIC, destined to a remote network, prefer in-band, followed by out-of-band by default.

The third bullet needs attention if you have communication sourced from the Cisco APIC, such as VMM domain integration, external logging, export, or import configuration. The preference can be changed at System > System Settings > APIC Connectivity Preferences. Another option is to configure static route on the Cisco APIC, which is available starting from Cisco ACI release 5.1.

For more information about in-band and out-of-band management, refer to the "Fabric Infrastructure (Underlay) / In-Band and Out-of-Band Management" section.

Multiple Locations Data Centers Design Considerations

When having multiple data centers that need to be interconnected with each other, you have the choice of whether to manage network in each location separately, or take advantage of the "Cisco ACI Anywhere" solution that includes Cisco ACI Multi-Pod, Cisco ACI Multi-Site, Remote Leaf, vPod and public cloud integrations.

A detailed description of Cisco ACI Anywhere is outside of the scope of this document, but it is important to keep into account the high-level requirements for extending Cisco ACI when designing and setting up the fabric such as IP addressing used in the infrastructure (TEP pool), Round Trip Time requirements, requirement for Multicast Routing (or not), MTU requirements and so on.

The following solutions are the deployment options to extend multiple on-premises data centers and centrally manage separate physical Cisco ACI fabrics:

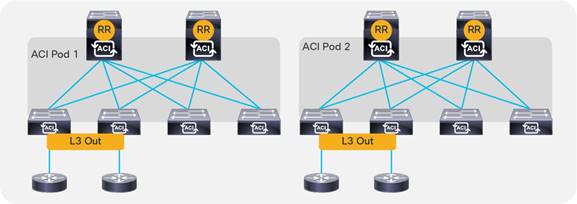

● Cisco ACI Multi-Pod: Enables a single Cisco APIC cluster to manage the different Cisco ACI fabrics that are interconnected over a private IP network that must be configured for PIM bidir. Those separate Cisco ACI fabrics are named "pods", and each pod is a regular two-tier or three-tier topology. The same Cisco APIC cluster can manage multiple pods. The main advantage of the Cisco ACI Multi-Pod design is operational simplicity, with multiple separate pods managed as if they were logically a single entity.

● Cisco ACI Multi-Site: Addresses the need for fault domain isolation across different Cisco ACI fabrics that are interconnected over an IP network, which may as well be a WAN without the need for multicast routing in the IP network. Those separate Cisco ACI fabrics are named "Sites", and each site is a regular two-tier or three-tier topology with independent Cisco APIC clusters. Separate Cisco ACI sites are managed by a Cisco ACI Multi-Site Orchestrator (MSO) that provides centralized policy definition and management.

● Remote Leaf Switch: Addresses the need to extend connectivity and consistent policies to remote locations that are connected using a private or a public network (such as a WAN) where it’s not possible or desirable to deploy a full Cisco ACI pod (with leaf and spine switches). The Cisco APIC cluster in the main location can manage the remote leaf switches connected over an IP network as if they were local leaf switches.

Figure 9 provides an example of how to physically connect spine switches and remote leaf switches to the IP network between locations. All of these solutions can be deployed together. The spine and remote leaf switch interfaces are connected to the IP network devices through point-to-point routed interfaces with an 802.1q VLAN 4 value.

The hardware and software requirements are as follows:

● Cisco ACI Multi-Pod requires Cisco ACI 2.0 or later.

● Cisco ACI Multi-Site requires Cisco ACI 3.0 or later, and a second-generation spine switch or later in each site.

● Remote leaf switch requires Cisco ACI 3.1 or later, a second-generation spine switch or later in the main location, and a second-generation leaf switch or later in the remote location.

● First-generation spine switches and second-generation spine switches can be part of the same Cisco ACI fabric. However, only second-generation spine switches should connect to the IP network for Cisco ACI Multi-Site and the remote leaf switch.

● Use of Cisco ACI Multi-Site and a remote leaf switch requires Cisco ACI 4.1(2) or later.

The following design requirements/considerations apply to the IP network between locations:

● MTU (this topic is covered also in the Fabric Infrastructure (undelay) design):

o MTU of the frames generated by the endpoints connected to the fabric: VXLAN encapsulation overhead needs to be taken into consideration. VXLAN data-plane traffic adds 50 bytes of overhead (54 bytes if the IEEE 802.1q header of the original frame is preserved), so you must be sure that all the Layer 3 interfaces in the IP network between locations can accept packets with the increased MTU size. A generic recommendation is to add at least 100 bytes to the MTU configuration on network interfaces for the case where CloudSec encryption is also enabled. For example, if the endpoints are configured with the default 1500-byte value, then the IP network MTU size should be set to 1600 bytes.

o MTU of the MP-BGP control-plane communication between locations: By default, the spine switches generate 9000-byte packets for exchanging endpoint routing information. If that default value is not modified, the IP network between locations must support an MTU size of at least 9000 bytes, otherwise the exchange of control plane information across sites would not succeed (despite being able to establish MP-BGP adjacencies). The default value can be tuned by modifying the corresponding system settings at System > System Settings > Control Plane MTU.

● OSPFv2 is required on external routers that are connected to the spine switch or to a remote leaf switch.

● PIM-Bidir is required for Cisco ACI Multi-Pod.

● DHCP relay is required for Cisco ACI Multi-Pod and a remote leaf switch.

● Ensure that the maximum latency between pods is within the validated limits.

● We recommend that you configure a proper CoS-to-DSCP mapping on Cisco APIC to ensure that traffic received on the destination spine switch or remote leaf switch in a remote location can be assigned to its proper Class of Service (CoS) based on the DSCP value in the outer IP leader of inter-pod VXLAN traffic. This is because the IP network devices between locations are external to the Cisco ACI fabric and may not be possible to assume that the 802.1p values are properly preserved across the IP network and that the DSCP values set by the spine switches before sending the traffic into the IP network can then be used to differentiate and prioritize the different types of traffic. For more information about Cisco ACI QoS, refer to the "Quality of Service (QoS) in ACI" section.

● TEP pool addresses (this topic is covered also in the Fabric Infrastructure (underlay) design):

o Cisco ACI Multi-Pod: Each pod is assigned a separate and non-overlapping infra TEP pool prefix that needs to be routable in the IPN (Interpod Network).

o Cisco ACI Multi-Site: The infra TEP pool prefixes used within each site do not need to be exchanged across sites to allow intersite communication. Instead, the following TEP addresses (which are not from the infra TEP pool): BGP-EVPN Router-ID (EVPN-RID), Overlay Unicast TEP (O-UTEP), and Overlay Multicast TEP (O-MTEP) need to be routable across the Inter-Site Network (ISN) connecting the fabrics. If sites are connected over a WAN, they need to be public routable IP addresses.

o Remote Leaf: Each remote leaf switch location is assigned a remote leaf switch TEP pool that needs to be reachable from all the pods and other remote leaf switches within the same Cisco ACI fabric. Since a Cisco ACI pod could make use of an infra TEP pool that may not be routable across the network infrastructure connecting to the remote leaf switches, you must assign an additional external TEP pool to each Cisco ACI pod part of the fabric. Cisco APICs, spine switches and border leaf switches are automatically allocated TEP IP addresses from these external TEP pools. Due to the fact that the infra TEP pool is meant to be a private network, we strongly recommend that you always configure an external TEP pool.

For more information about each architecture, refer to the white papers:

Fabric Infrastructure (Underlay) Design

The purpose of this section is to describe the initial design choices for the setting up the fabric infrastructure or underlay: the choice of infra VLAN, TEP pool, MP-BGP configuration, hardware profile for the leaf switches, and so on.

This not a replacement to the Cisco APIC Getting Started Guide, which you should consult prior to deploying Cisco ACI:

Choosing the Leaf Switch Forwarding Profile

The hardware of -EX, -FX, FX2, -GX leaf switches or later is based on a programmable hardware architecture. The hardware is made of multipurpose "tiles" where each tile can be used to perform routing functions or filtering functions and so on. Starting with the Cisco ACI 3.0 release, the administrator can choose to which function to allocate more tiles based on predefined profiles.

Note The profile functionality is available on the -EX, -FX, -FX2, and -GX leaf switches, but not on the Nexus 9358GY-FXP switch.

The functions whose scale is configurable using the use of tiles are:

● The MAC address table scalability

● The IPv4 scalability

● The IPv6 scalability

● The Longest Prefix Match table scalability

● The Policy Cam scalability (for contracts/filtering)

● The space for Routed Multicast entries

The default profile (called also "Dual Stack") allocates the hardware as follows:

● MAC address table scalability: 24k entries

● The IPv4 scalability: 24k entries

● The IPv6 scalability: 12k entries

● The Longest Prefix Match table scalability: 20k entries

● The Policy Cam scalability (for contracts/filtering): 64k entries

● Multicast: 8k entries

Table 1 provides the information about the scale of different profiles and in which release they were introduced. The rows in the table that do not specify the type of leaf switch are applicable to -EX, -FX, -FX2, and -GX leaf switches.

| Tile profile |

Cisco ACI Release when first introduced |

EP MAC

|

EP IPv4 |

EP IPv6 |

LPM |

Policy |

Multicast |

| Default |

Release 3.0 |

24K |

24K |

12K |

20K (IPv4) 10k (IPv6) |

61K (Cisco ACI 3.0) 64K (Cisco ACI 3.2) |

8K (Cisco ACI 3.0) |

| IPv4 |

Release 3.0 |

48K |

48K |

0 |

38K (IPv4) 0 (IPv6) |

61K (Cisco ACI 3.0) 64K (Cisco ACI 3.2) |

8K (Cisco ACI 3.0)) |

| High Dual Stack for -EX, -FX2 |

Release 3.1 |

64k |

64k |

24K |

38K (IPv4) 19K (IPv6) |

8k (Cisco ACI 3.1) |

0 (in Cisco ACI 3.1) 512 (in Cisco ACI 3.2) |

| High Dual Stack for -FX, -GX |

Release 3.1 (FX only) |

64K |

64K |

24K (ACI3.1) 48K (Cisco ACI 3.2) |

38K (IPv4) 19K (IPv6) |

8k (Cisco ACI 3.1) 128K (Cisco ACI 3.2) |

0 (in Cisco ACI 3.1) 512 (in Cisco ACI 3.2) 32k (in Cisco ACI 4.0) |

| High LPM |

Release 3.2 |

24K |

24K |

12K |

128k (IPv4) 64k (IPv6) |

8K |

8K |

| High Policy (N9K-C93180YC-FX and N9K-C93600CD-GX with 32GB of RAM only) |

Release 4.2 |

24K |

24K |

12K |

20K (IPv4) 10k (IPv6) |

256K |

8K |

Note: Cisco Nexus 9300-FX2 with the High Dual Stack profile cannot compress policy-cam rules.

When deploying the fabric, you may want to define from the very beginning which forwarding profile is more suitable for the requirements of your data center.

The default profile configures the leaf switch for support of both IPv4 and IPv6 and Layer 3 multicast capacity. But, if you plan to use Cisco ACI primarily as a Layer 2 infrastructure, the IPv4 profile with more MAC address entries and no IPv6 entries may be more suitable. If, instead, you plan on using IPv6, the high dual-stack profile may be more suitable for you. Some profiles offer more capacity for the Longest Prefix Match table for designs where, for instance, Cisco ACI is a transit routing network, in which case the fabric offers less capacity for IPv4 and IPv6.

The profile configuration is done per leaf switch, so you can potentially define different scale profiles for leaf switches that are used for different purposes. For example, you may want to configure a leaf switch that is used as a dedicated border leaf switch with a bigger Longest Prefix Match table.

The configuration of the hardware profiles can be performed from Fabric > Access > Leaf Switches > Policy-Groups > Forwarding Scale Profile Policy as illustrated in the following picture:

Note You need to reboot the leaf switch after changing the hardware profile.

There is also the possibility to set the forwarding scale profile from the capacity dashboard. You should use this second approach with caution, because when you modify the leaf switch profile from the capacity dashboard, the UI selects the profile that is already associated with the leaf switch that you chose. Normally the profile that is associated with all leaf switches is the "default" profile. Hence, if you modify a profile, you will modify the hardware profile for all the leaf switches. To prevent this operational mistake, you should configure a non-default policy group for all the leaf switches or per group of leaf switches that share the same use/characteristics.

For more information about the configurable forwarding profiles, see the following document:

When configuring a Cisco ACI fabric, you need to give a fabric-id to it. The fabric-id should not be confused with the pod-id or the site-id. You should just use "fabric-id 1," unless there is some specific reason not to, such as if you plan to use GOLF with Auto-RT, and all sites belong to the same ASN. Refer to the Cisco ACI Multi-Site Architecture white paper for more information:

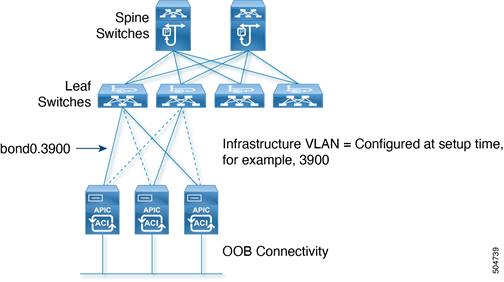

The Cisco APIC communicates with the Cisco ACI fabric through a VLAN that is associated with the tenant called infrastructure, which appears in the Cisco APIC User Interface as tenant "infra". This VLAN is used for internal control communication between fabric switches (leaf and spine switches and Cisco APICs).

The infrastructure VLAN number is chosen at the time of fabric provisioning. This VLAN is used for internal connectivity between the Cisco APIC and the leaf switches.

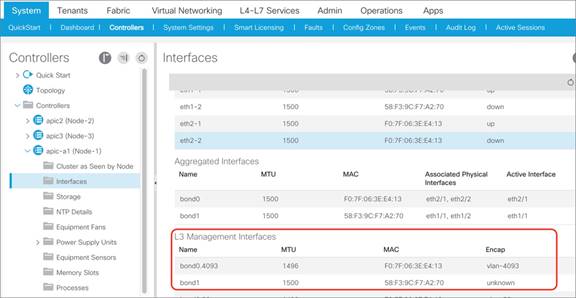

From the GUI, you can see which infrastructure VLAN is in use, as in Figure 11. From the command-line interface, you can find the infrastructure VLAN; for instance, by using this command on a leaf switch:

leaf1# show system internal epm vlan all | grep Infra

The infrastructure VLAN is also used to extend the Cisco ACI fabric to another device. For example, when using Cisco ACI with Virtual Machine Manager (VMM) integration, the infrastructure VLAN can be used by Cisco ACI Virtual Edge to send DHCP requests and get an address dynamically from the Cisco ACI fabric TEP pool and to send VXLAN traffic.

In a scenario in which the infrastructure VLAN is extended beyond the Cisco ACI fabric (for example, when using Cisco ACI Virtual Edge, OpenStack integration with OpFlex protocol, or Hyper-V integration), this VLAN may need to traverse other (that is, not Cisco ACI) devices.

Note: To enable the transport of the infrastructure VLAN on Cisco ACI leaf switch ports, you just need to select the checkbox in the Attachable Access Entity Profile (AAEP) that is going to be associated with a given set of ports.

Common Reserved VLANs on External Devices

Some platforms (for example, Cisco Nexus 9000, 7000, and 5000 series switches) reserve a range of VLAN IDs, typically 3968 to 4095.

In Cisco UCS, the VLANs that can be reserved are the following:

● FI-6200/FI-6332/FI-6332-16UP/FI-6324: 4030–4047. Note that vlan 4048 is being used by VSAN 1.

● FI-6454: 4030-4047 (fixed), 3915–4042 (can be moved to a different 128 contiguous block VLAN, but requires a reboot).

To avoid conflicts, we highly recommend that you choose an infrastructure VLAN that does not fall within the reserved range of other platforms. For example, choose a VLAN < 3915.

Hardening the Infrastructure VLAN

Starting with Cisco ACI 5.0 it is possible to harden the infrastructure VLAN to limit the traffic that is allowed on the infra VLAN from the front panel ports by restricting it to the traffic generated by the Cisco APICs, or OpFlex or VXLAN-encapsulated traffic generated by hypervisors.

You can configure Cisco ACI for this from System Settings > Fabric-Wide Settings > Restrict Infra VLAN Traffic.

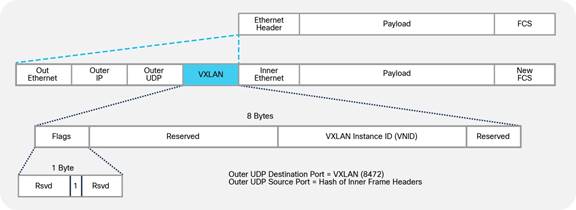

Cisco ACI forwarding is based on a VXLAN overlay. Leaf switches are virtual tunnel endpoints (VTEPs), which, in Cisco ACI terminology, are known as PTEPs (physical tunnel endpoints).

Cisco ACI maintains an endpoint database containing information about where (that is, on which TEP) an endpoint's MAC and IP addresses reside.

Cisco ACI can perform Layer 2 or Layer 3 forwarding on the overlay. Layer 2 switched traffic carries a VXLAN network identifier (VNID) to identify bridge domains, whereas Layer 3 (routed) traffic carries a VNID with a number to identify the VRF.

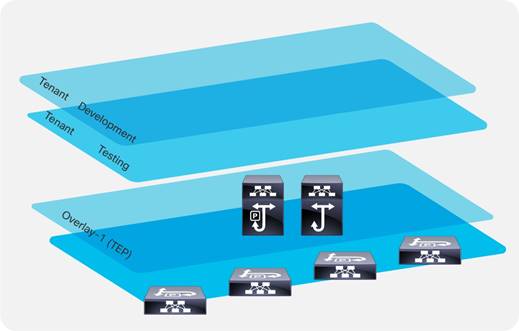

Cisco ACI uses a dedicated VRF and a subinterface of the uplinks as the infrastructure to carry VXLAN traffic. In Cisco ACI terminology, the transport infrastructure for VXLAN traffic is known as Overlay-1, which exists as part of the tenant "infra".

The Overlay-1 VRF contains /32 routes to each VTEP, vPC virtual IP address, Cisco APIC, and spine-proxy IP address.

The VTEPs representing the leaf and spine switches in Cisco ACI are called physical tunnel endpoints, or PTEPs. In addition to their individual PTEP addresses, spine switches can be addressed by a proxy TEP. This is an anycast IP address that exists across all spine switches and is used for forwarding lookups. Each VTEP address exists as a loopback on the Overlay-1 VRF.

vPC loopback VTEP addresses are the IP addresses that are used when leaf switches forward traffic to and from a vPC port.

The fabric is also represented by a fabric loopback TEP (FTEP), used to encapsulate traffic in VXLAN to a vSwitch VTEP if present. Cisco ACI defines a unique FTEP address that is identical on all leaf switches to allow mobility of downstream VTEP devices.

All these TEP IP addresses are assigned by the Cisco APIC to leaf and spine switches using DHCP addressing. The pool of these IP addresses is called TEP pool, and it is configured by the administrator at the fabric initial setup.

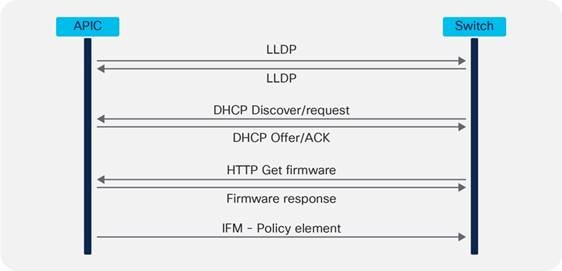

The Cisco ACI fabric is brought up in a cascading manner, starting with the leaf switches that are directly attached to the Cisco APIC. Link Layer Discover Protocol (LLDP) and control-plane IS-IS protocol convergence occurs in parallel to this boot process. The Cisco ACI fabric uses LLDP-based and DHCP-based fabric discovery to automatically discover the fabric switch switches, assign the infrastructure TEP addresses, and install the firmware on the switches.

Figure 12 shows how bootup and autoprovisioning works for the Cisco ACI switches. The switch gets an IP address from the Cisco APIC. Then, the switch asks to download the firmware through an HTTP GET request.

Although TEPs are located inside the fabric, there are some scenarios where the TEP range may be extended beyond the fabric. As an example, when you use Cisco ACI Virtual Edge, fabric TEP addresses are allocated to the virtual switch. Therefore, it is not advisable to use overlapping addresses between the internal TEP range and the external network in your data center. Furthermore, when planning for the TEP pool you, should also keep into account the requirements of Cisco ACI Multi-Pod or Cisco ACI Multi-Site and so on if you plan to deploy a Cisco ACI in multiple data centers as described in the "Multiple locations Data Centers design considerations" section.

It is important to distinguish the following types of TEP pools:

● The infra TEP pool: This is the pool of IP addresses used for the loopbacks on spine switches, leaf switches, vPCs, and so on, and the pool is typically just a private IP address space, which may need to be routable on a private network (for instance on an IPN for Cisco ACI Multi-Pod), but doesn’t need to be externally routable on a WAN. The infra TEP pool is defined at provisioning time (day 0).

● The remote TEP pool: This is a pool to provide addressing for remote leaf switches that you don’t need to configure at the fabric bring up time. The pool has to be a routable pool of IP addresses and not just a private pool, as it is possibly used over a WAN. This pool is configured when and if there is a need to connect remote leaf switches. The configuration can be found at: Fabric > Inventory > Pod Fabric Setup Policy > Physical Pods > Remote Pools.

● The external TEP pool: This is a pool that doesn’t need to be configured at the fabric bring up. The purpose of this pool is to provide externally routable IP addresses for the Cisco APICs, spine switches, and border leaf switches for scenarios where some TEP addresses need to be routable over a public network. Examples are the use of remote leaf switches and the Inter-Site L3Out. This feature has been added from Cisco ACI 4.1(2). The configuration can be found at: Fabric > Inventory > Pod Fabric Setup Policy > Physical Pods > External TEP. The external TEP pool feature gives more freedom in the design of the IP network (to connect to remote leaf switches for instance) in that you don’t need to plan to carry infra TEP addresses on it, instead Cisco ACI uses the external TEP pool addresses for traffic that needs to be sent over the WAN. You can find more information in the following document:

● Other External TEP addresses: You need addresses such as the Control-Plane External Tunnel Endpoint, the Data-Plane ETEP, the Head-End Replication ETEP when and if deploying Cisco ACI Multi-Site. The addresses can be external, public routable IP addresses that are not from the infra TEP pool nor from the external TEP pool. You can configure the addresses using the Cisco ACI Multi-Site Orchestrator.

For the purpose of this design guide, the focus is on the infra TEP pool.

The number of addresses required for the infra TEP address pool depends on a number of factors, including the following:

● Number of Cisco APICs

● Number of leaf and spine switches

● Number of Cisco ACI Virtual Edge instances, Hyper-V hosts or, more generally, virtualized hosts managed using VMM integration and integrated with OpFlex

● Number of vPCs required

Note: In this calculation, you do not need to include the count of switches of a different pod because each pod uses its own TEP pool that should not overlap with other pod pools, as described in the following document:

To avoid issues with address exhaustion in the future, we strongly recommend that you allocate a /16 or /17 range, if possible. If this is not possible, a /19 range should be considered the absolute minimum. However, this may not be sufficient for larger deployments. It is critical for you to size the TEP range appropriately, because you cannot easily modify the size later.

You can verify the TEP pool after the initial configuration by using the following command:

Apic1# moquery –c dhcpPool

If you are planning to use Cisco ACI Multi-Pod, Cisco ACI Multi-Site, a remote leaf switch, and vPod in the future, the following list summarizes the TEP address-related points:

● Cisco ACI Multi-Pod: You need to make sure the pool you define is nonoverlapping with other existing or future pods. However, to count the infra TEP pool range, you do not need to include the count of switches of a pod other than the one you are configuring, because each pod uses its own infra TEP pool that should not overlap with other pod pools, as described in the following document:

● Cisco ACI Multi-Site: With Cisco ACI Multi-Site, each site uses an independent TEP pool, so you could potentially re-use the same infra TEP pool as another site. Quoting https://www.cisco.com/c/en/us/solutions/collateral/data-center-virtualization/application-centric-infrastructure/white-paper-c11-739609.pdf: "The TEP pool prefixes used within each site do not need to be exchanged across sites to allow intersite communication. As a consequence, there are no technical restrictions regarding how those pools should be assigned. However, the strong recommendation is not to assign overlapping TEP pools across separate sites so that your system is prepared for future functions that may require the exchange of TEP pool summary prefixes."

● Cisco ACI Multi-Site uses these public routable TEP addresses in addition to the infra TEP pool: The Control-Plane External Tunnel Endpoint (one per spine connected to the Inter-Site Network), the Data-Plane ETEP (one per site per pod) and the Head-End Replication ETEP (one per site).

● The support for Intersite L3Out mandates the deployment of an "external TEP pool" for each site that is part of the Cisco ACI Multi-Site domain. These addresses are added to the border leaf switch infra TEP address. For more information, refer to the following document:

● For remote leaf switches, you need to consider the need to configure a routable TEP pool for the Cisco APICs, spine switches, and border leaf switches, but starting from Cisco ACI 4.1(2) you can use the external TEP pool feature instead. You can find more information in the following document:

Note You can view the infra TEP pool as well as the external TEP pools from Fabric > Inventory > Pod Fabric Setup Policy.

In the bring up phase, you need to provide a multicast range that Cisco ACI uses as an external multicast destination for traffic in a bridge domain. This address can be any address in the range 225.0.0.0/15 to 231.254.0.0/15, and it should be a /15. This address range is needed for Cisco ACI to forward multidestination traffic on bridge domains because Cisco ACI implements routed multicast trees in the underlay for this type of traffic.

Each bridge domain is assigned a group IP outer (GIPo) address (as opposed to group IP inner [GIPi] or the multicast address in the overlay). This is also referred to as the flood GIPo for the bridge domain and is used for all multidestination traffic on the bridge domain inside the fabric. The multicast tree in the underlay is set up automatically without any user configuration. The roots of the trees are always the spine switches, and traffic can be distributed along multiple trees according to a tag, known as the forwarding tag ID (FTAG).

With Cisco ACI Multi-Pod, the scope of this multicast address range encompasses all pods, hence multicast routing must be configured on the Inter-Pod Network.

Routing in the infrastructure VRF is based on IS-IS. Routing within each tenant VRF is based on host routing for endpoints that are directly connected to the Cisco ACI fabric, or Longest Prefix Match (LPM) with bridge domain subnets or routes from external routers learned from a border leaf switch. A border leaf switch is where Layer 3 Outs (L3Outs) are deployed.

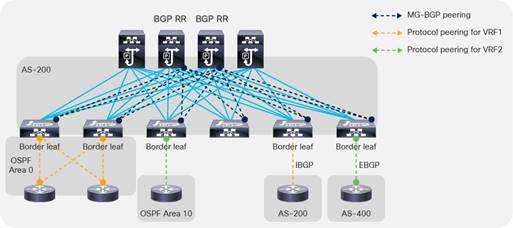

Cisco ACI uses MP-BGP VPNv4/VPNv6 to propagate external routes in tenant VRF instances within a pod.

In the case of Cisco ACI Multi-Pod and Cisco ACI Multi-Site, Cisco ACI uses MP-BGP VPNv4/VPNv6/EVPN to propagate endpoint IP/MAC addresses and external routes in tenant VRF instances between pods or sites.

Cisco ACI uses BGP route reflectors to optimize the number of BGP peers.

There are two types of route reflectors in Cisco ACI:

● Regular BGP route reflectors are used for VPNv4/VPNv6 within a pod between leaf and spine switches.

● External BGP route reflectors are used for VPNv4/VPNv6/EVPN across pods between spine switches for Cisco ACI Multi-Pod, or sites for Cisco ACI Multi-Site.

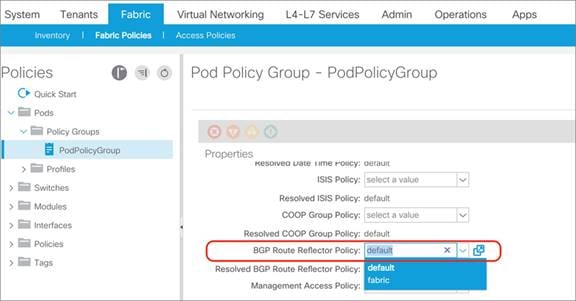

The BGP Route Reflector Policy controls which spine switches should operate as BGP reflectors within a pod (regular) and between pods/sites (external).

Regular BGP route reflectors must be configured per pod while external BGP route reflectors are optional.

When using Cisco ACI Multi-Pod or Cisco ACI Multi-Site, if external BGP route reflectors are not configured, spine switches between pods or sites will form a full mesh of iBGP peers.

It is important to note that the BGP Autonomous System (AS) number is a fabric-wide configuration setting that applies across all Cisco ACI pods that are managed by the same Cisco APIC cluster (Cisco ACI Multi-Pod).

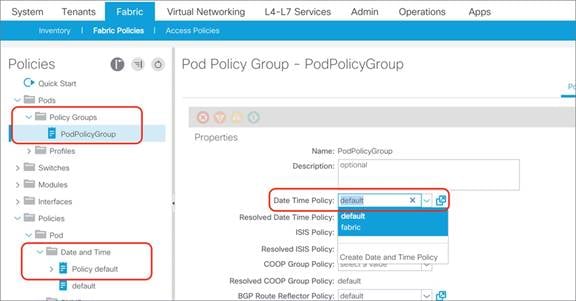

To enable and configure MP-BGP within the fabric, you can find the configuration depending on the release as follows:

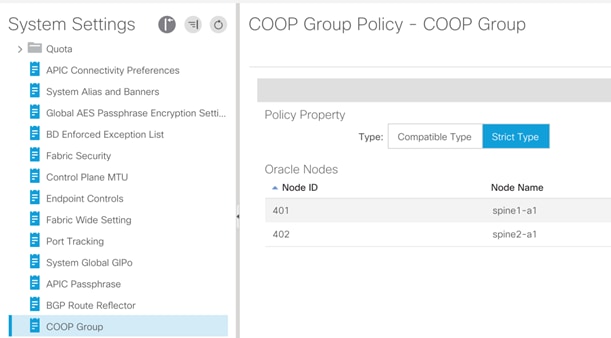

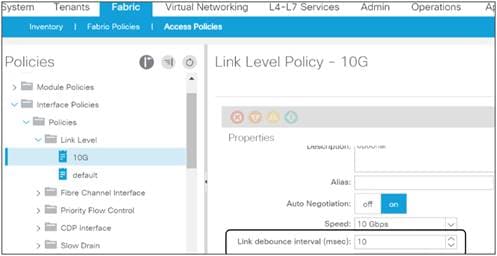

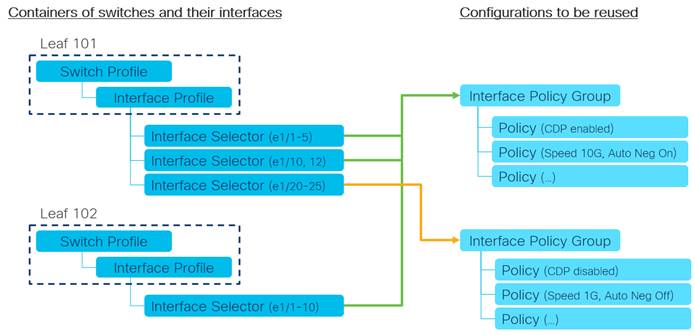

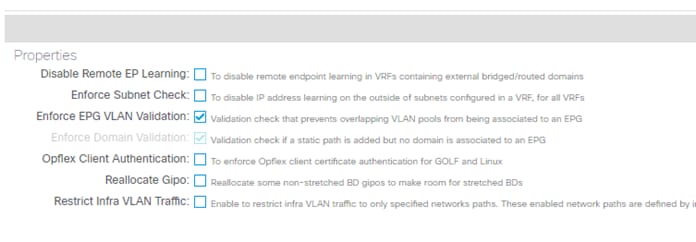

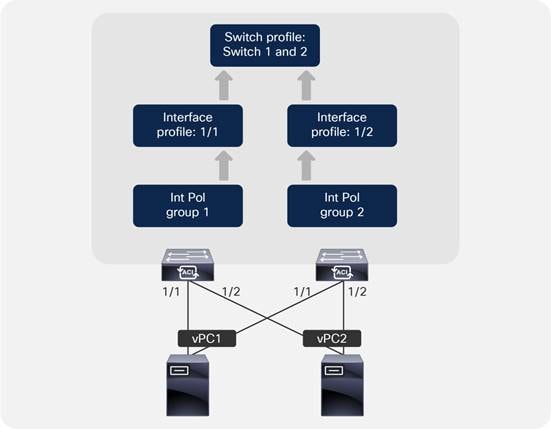

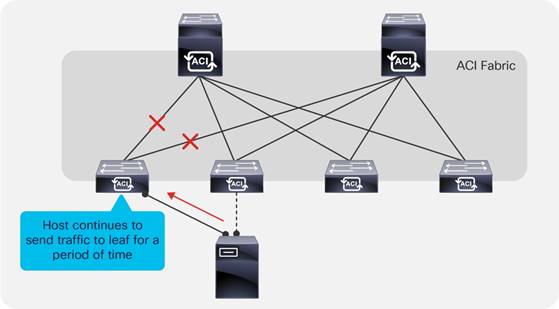

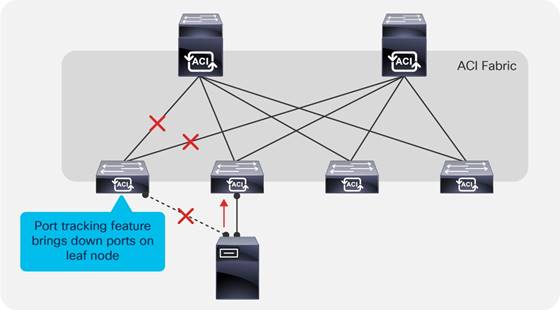

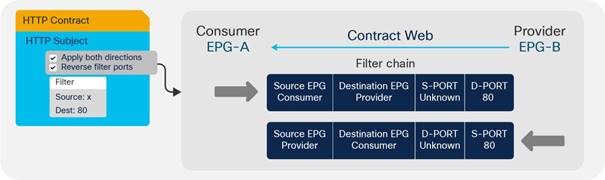

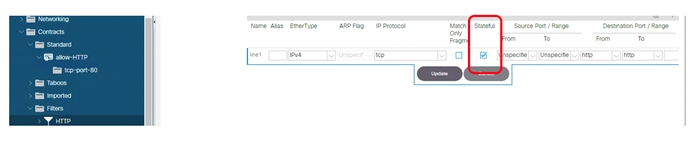

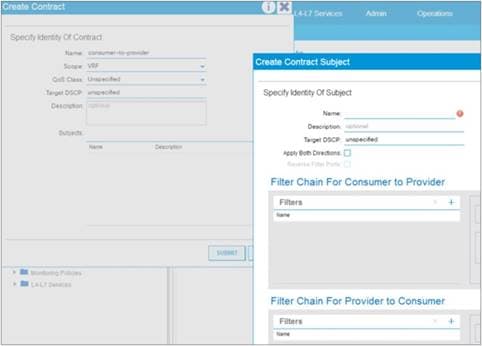

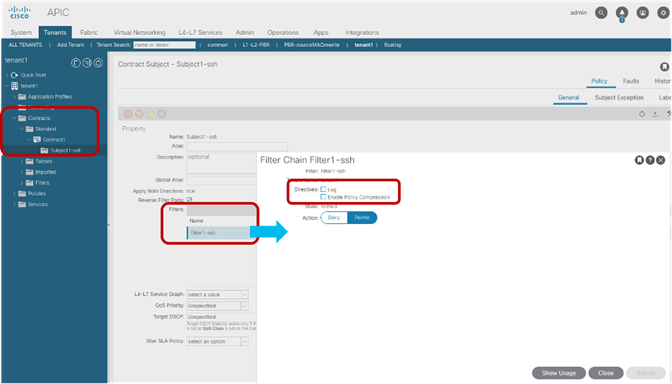

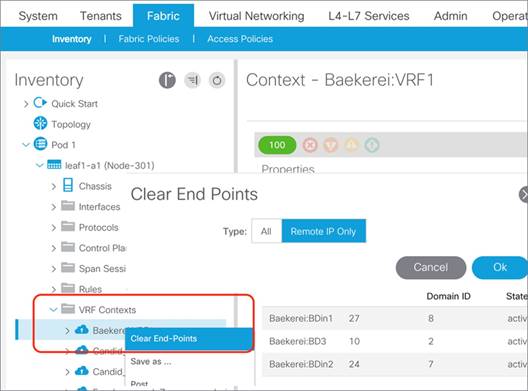

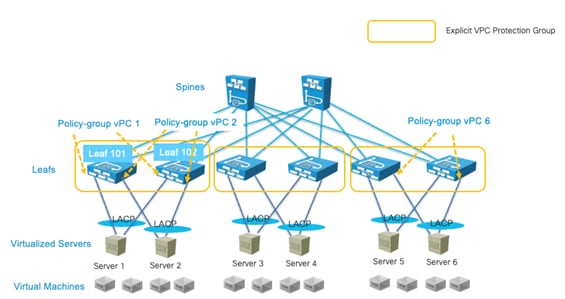

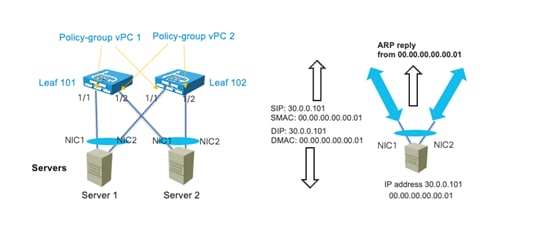

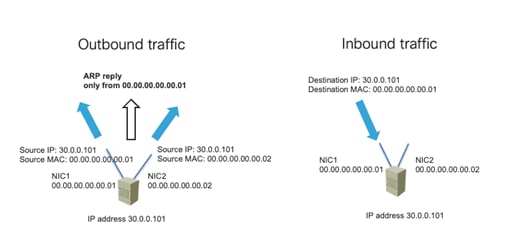

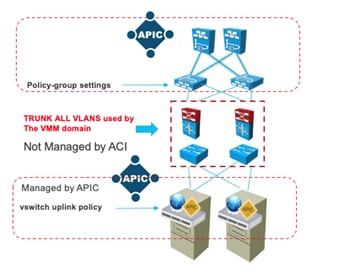

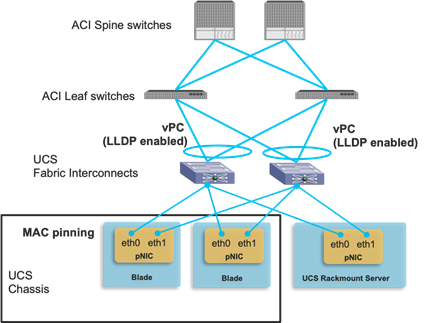

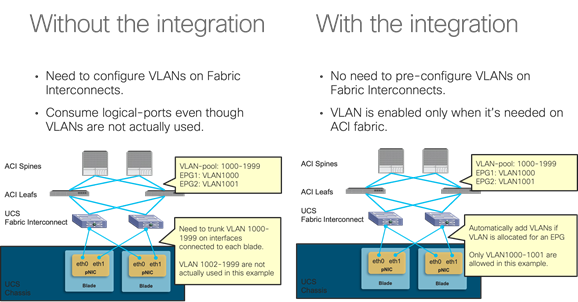

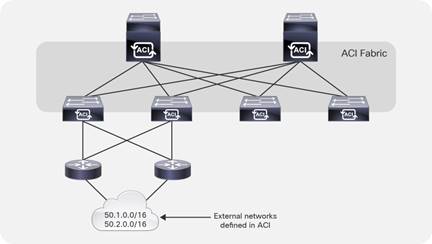

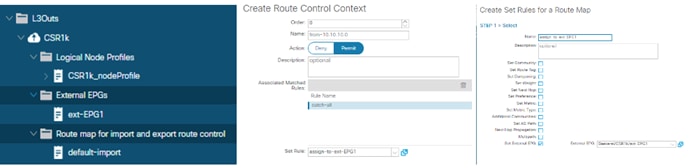

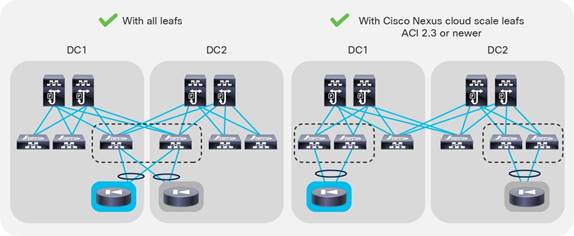

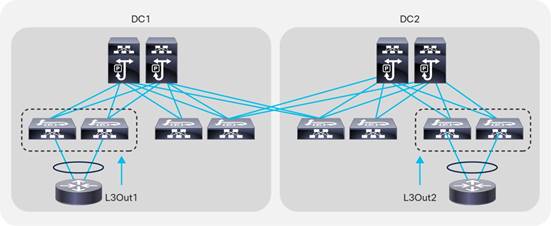

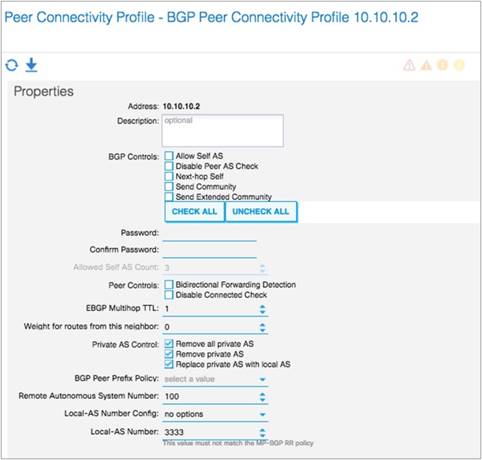

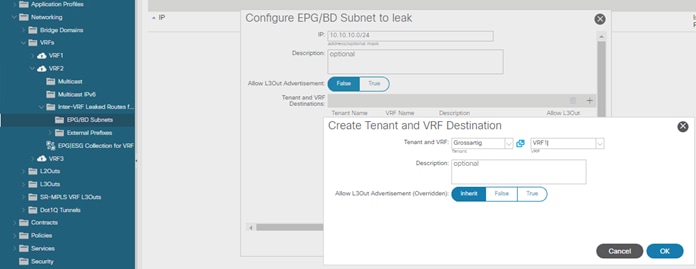

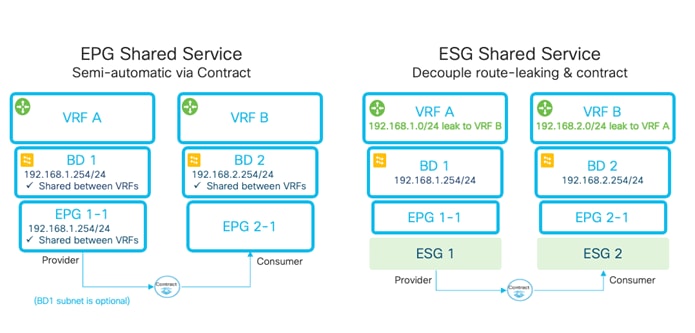

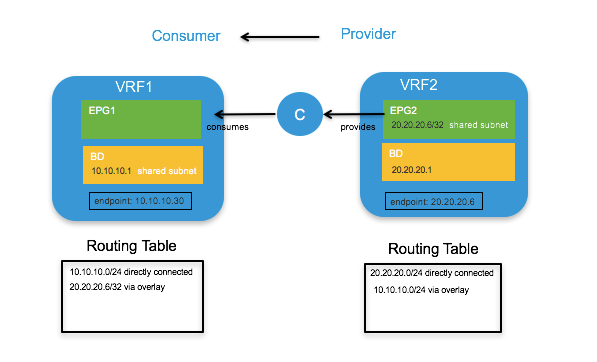

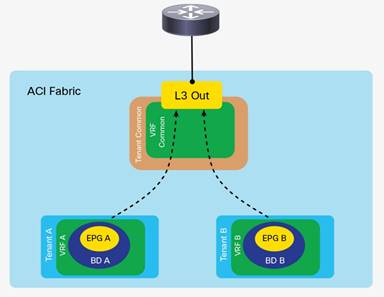

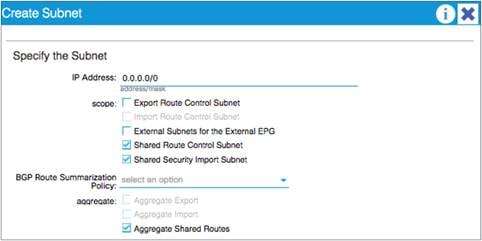

● Under Fabric > Fabric Policies > Pod Policies > BGP Route Reflector default