Cisco Application Centric Infrastructure Policy-Based Redirect Service Graph Design White Paper


Cisco Application Centric Infrastructure (Cisco ACI) technology provides the capability to insert Layer 4 through Layer 7 (L4-L7) functions using an approach called a service graph. One of the main features of the service graph is Policy-Based Redirect (PBR).

With PBR, the Cisco ACI fabric can redirect traffic between security zones to L4-L7 devices, such as a firewall, Intrusion-Prevention System (IPS), or load balancer, without the need for the L4-L7 device to be the default gateway for the servers or the need to perform traditional networking configuration such as Virtual Routing and Forwarding (VRF) sandwiching or VLAN stitching. Cisco ACI can selectively send traffic to L4-L7 devices based, for instance, on the protocol and the Layer 4 port. Firewall inspection can be transparently inserted in a Layer 2 domain with almost no modification to existing routing and switching configurations.

This document provides PBR service graph design and configuration guidance using a variety of use cases and options.

This document assumes that the reader has a basic knowledge of Cisco ACI and service graphs and how these work. For more information, see the Cisco ACI white papers available at Cisco.com: https://www.cisco.com/c/en/us/solutions/data-center-virtualization/application-centric-infrastructure/white-paper-listing.html.

This document uses the following terms with which you must be familiar:

● BD: Bridge domain

● EPG: Endpoint group

● Class ID: Tag that identifies an EPG

● Policy: In Cisco ACI, “policy” can mean configuration in general, but in the context of this document, “policy” refers specifically to the Access Control List (ACL)–like Ternary Content-Addressable Memory (TCAM) lookup used to decide whether a packet sourced from one security zone (EPG) and destined for another security zone (EPG) is permitted, redirected, or dropped

● PBR node: L4-L7 device that is used for a PBR destination

● Consumer connector: PBR node interface facing the consumer side

● Provider connector: PBR node interface facing the provider side

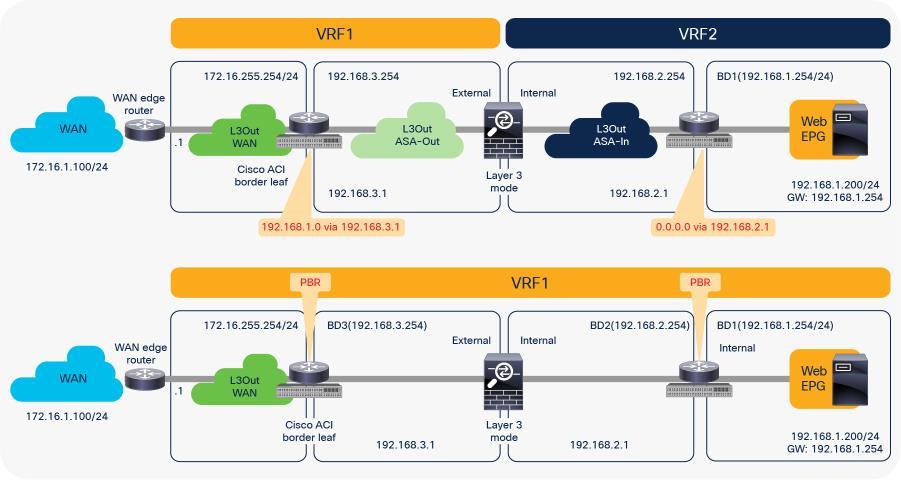

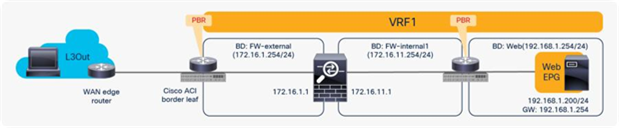

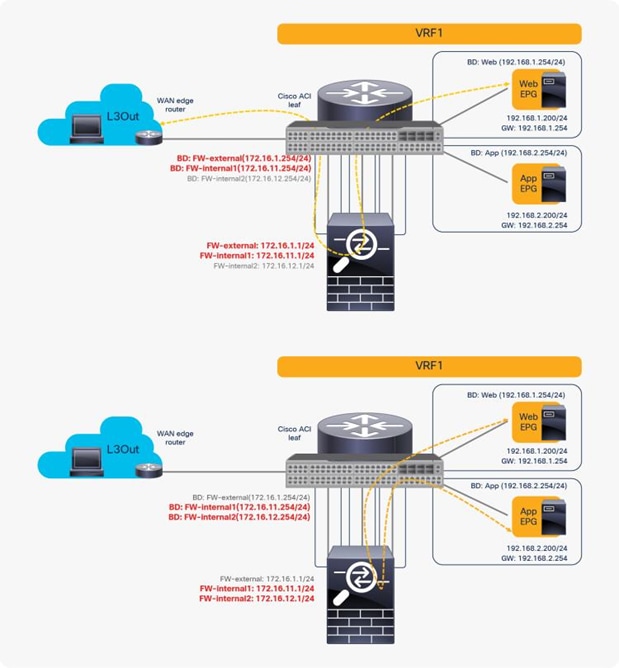

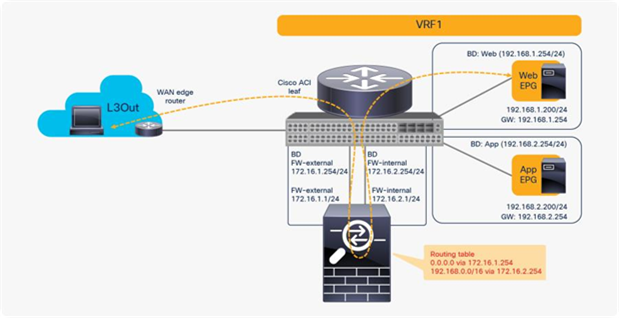

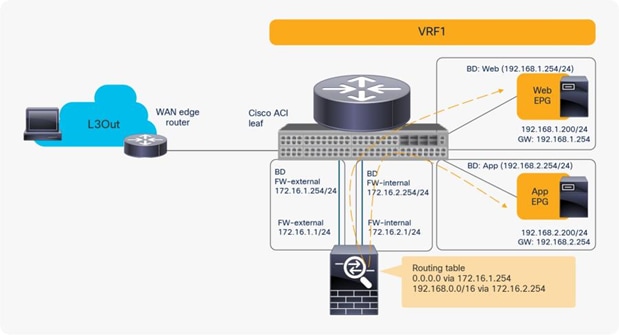

For example, Figure 1 illustrates the difference between a routing-based design (a classic VRF sandwich) and PBR in Cisco ACI. In a routing-based design, Layer 3 outside (L3Out) connections are established between the fabric and the internal and external firewall interfaces. A classic VRF sandwich configuration hence must enforce traffic through the routed firewall: the web subnet and the IP subnet of the firewall internal interface are associated with a firewall inside VRF2 instance. The firewall outside interface and the Layer 3 interface facing the WAN edge router are instead part of a separate firewall outside VRF1 instance. Otherwise, traffic is carried directly between two endpoints, because the destination endpoint IP address can be resolved in the VRF instance.

The use of PBR simplifies configuration, because the previously described VRF sandwich configuration is now not required to insert a Layer 3 firewall between security zones. The traffic instead is redirected to the node based on the PBR policy.

Comparison: VRF sandwich design and PBR design

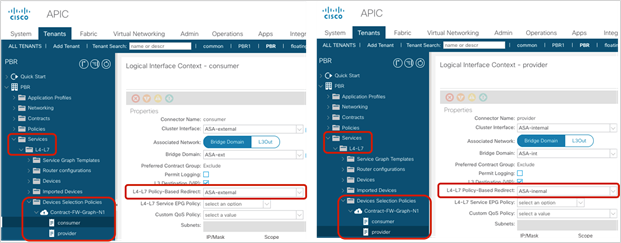

PBR requires a service graph attached to the contract between endpoint groups (EPGs). Traffic redirection is based on the source EPG, destination EPG, and filter (protocol, source Layer 4 port, and destination Layer 4 port) configuration in the contract.

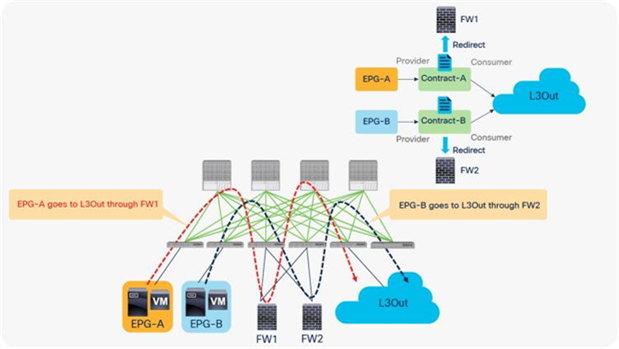

For example, if you have Contract-A with a PBR service graph between the L3Out EPG and EPG-A, only the traffic between the L3Out EPG subnet and an endpoint in EPG-A will be redirected to service node FW1. If you have another EPG, EPG-B, that uses another contract, Contract-B, to communicate with the same L3Out interface, you can configure a different action, such as redirection to a different service node, FW2, or traffic forwarding to the L3Out interface directly (Figure 2).

Example: Use of different PBR policy based on the source and destination EPG combination

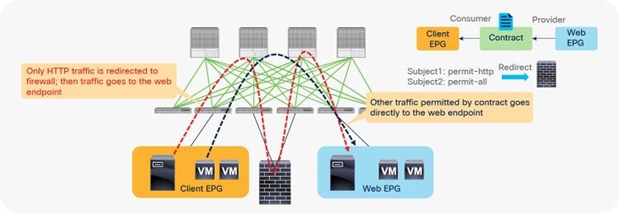

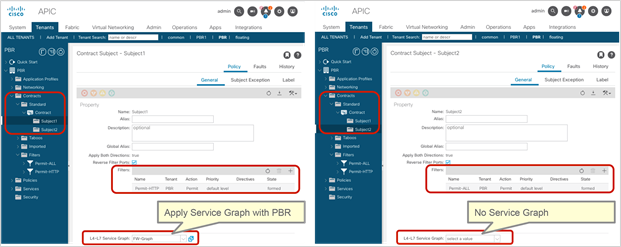

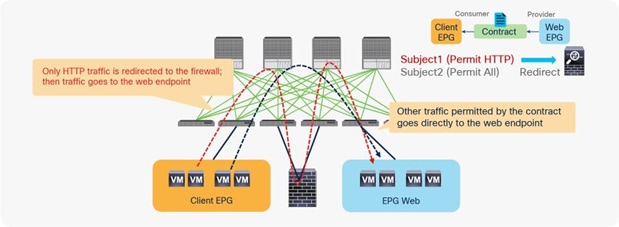

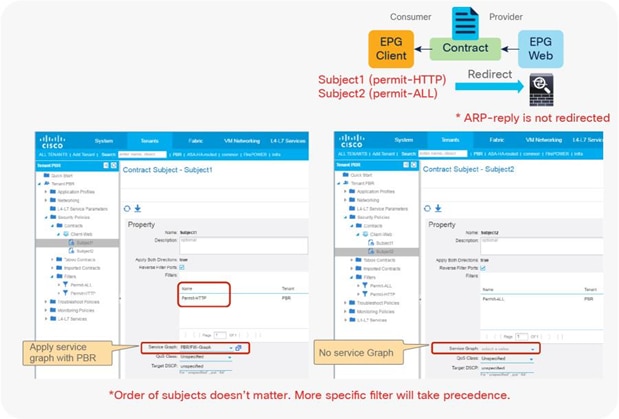

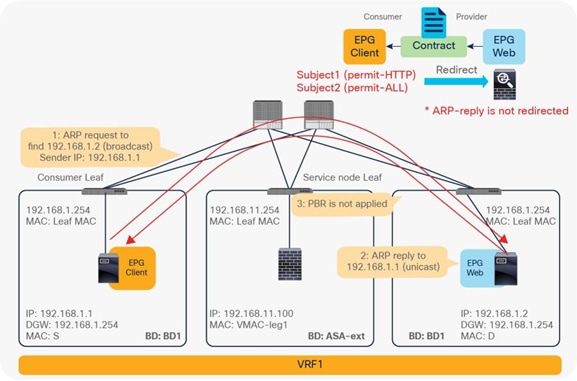

In addition, you can use different filters in a contract to send traffic to different L4-L7 devices. In Cisco ACI, filters are organized into subjects, and a contract is a collection of subjects. The service graph always is deployed by applying it to a subject under a contract. If you have Contract1 that has Subject1 that permits HTTP with a PBR service graph and Subject2 that permits all without a PBR service graph, only HTTP traffic will be redirected. A typical use case is the insertion of an IPS or Deep Packet Inspection (DPI) device that needs to examine the data inside a packet. If the data is encrypted, redirecting the traffic to an IPS would just consume service device resources without any benefit. With service graph redirection, you can configure the contract to redirect only the unencrypted traffic (Figure 3).

Example: Use of different PBR policy based on the contract filter
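The Contract1 example above can be sketched as a small lookup. This is a minimal illustration, not the fabric's actual rule-priority logic: it assumes that the most specific matching subject wins and that traffic matching no subject is dropped. The names `Subject`, `lookup`, and `IPS1` are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Subject:
    name: str
    protocol: Optional[str]     # None matches any protocol
    dst_port: Optional[int]     # None matches any destination port
    redirect_to: Optional[str]  # hypothetical PBR node name; None means plain permit


def specificity(subject: Subject) -> int:
    # Count how many fields the filter pins down (assumed tie-breaker).
    return int(subject.protocol is not None) + int(subject.dst_port is not None)


def lookup(subjects, protocol: str, dst_port: int) -> str:
    matches = [
        s for s in subjects
        if s.protocol in (None, protocol) and s.dst_port in (None, dst_port)
    ]
    if not matches:
        return "drop"  # assumption: no matching subject means no permit
    best = max(matches, key=specificity)
    return f"redirect:{best.redirect_to}" if best.redirect_to else "permit"


# Contract1: Subject1 redirects HTTP, Subject2 permits everything else.
contract1 = [
    Subject("Subject1", "tcp", 80, redirect_to="IPS1"),
    Subject("Subject2", None, None, redirect_to=None),
]

http_action = lookup(contract1, "tcp", 80)
https_action = lookup(contract1, "tcp", 443)
```

With this model, HTTP is redirected to the hypothetical `IPS1` node, while encrypted traffic on port 443 falls through to the permit-all subject and bypasses the service device.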

Requirements and design considerations

This section presents the requirements and design considerations for Cisco ACI PBR. Note that this document refers to a service graph device with the PBR feature as a PBR node, and it refers to a bridge domain that contains a PBR node interface as a PBR node bridge domain.

The main Cisco ACI PBR capabilities are as follows:

● PBR works with both physical and virtual service appliances.

● PBR works with service graphs in both managed mode (service-policy mode) and unmanaged mode (network-policy mode).

● PBR works with both bidirectional and unidirectional contracts.

● PBR can be used between L3Out EPG and EPGs, between EPGs, and between L3Out EPGs. PBR is not supported if L2Out EPG is part of the contract.

● PBR is supported in Cisco ACI Multi-Pod, Multi-Site, and Remote Leaf environments.

● The load can be distributed across multiple L4-L7 devices (symmetric PBR).
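The load-distribution capability in the last bullet can be illustrated with a direction-independent hash. This is only a sketch of the general idea: this section does not describe the hashing that leaf switches actually perform, so the use of SHA-256 over the sorted IP pair, the function name `pick_destination`, and the device names are all assumptions made for illustration.

```python
import hashlib


def pick_destination(src_ip: str, dst_ip: str, destinations: list) -> str:
    # Sort the IP pair so that both directions of a flow yield the same key.
    key = "|".join(sorted([src_ip, dst_ip])).encode()
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:4], "big") % len(destinations)
    return destinations[index]


firewalls = ["FW1", "FW2", "FW3"]  # hypothetical PBR destinations
forward = pick_destination("192.168.1.1", "192.168.2.1", firewalls)
reverse = pick_destination("192.168.2.1", "192.168.1.1", firewalls)
```

Because the key does not depend on direction, `forward` and `reverse` always name the same device, which is the property a stateful device needs when several devices share the load.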

The main use cases for Cisco ACI PBR are as follows:

● Use PBR to insert firewalls or load balancers in the path between endpoints while keeping the default gateway on the Cisco ACI fabric to use distributed routing.

● Use PBR to insert an L4-L7 device in the path between endpoints that are in the same subnet.

● Use PBR to send traffic selectively to L4-L7 devices based on protocol and port filtering.

● Use Symmetric PBR to horizontally scale the performance of L4-L7 devices.

The main requirements for Cisco ACI PBR with routed mode device (L3 PBR) are as follows:

● You should use Cisco APIC Release 2.0(1m) or later.

● The Cisco ACI fabric must be the gateway for the servers and for the PBR node.

● The L4-L7 device must be deployed in go-to mode (routed mode).

● The PBR node interface must be connected to a leaf downlink interface, not to a FEX host interface. Consumer and provider endpoints can be connected to FEX host interfaces.

● PBR node interfaces must be in a bridge domain and not in an L3Out. For releases newer than APIC Release 5.2, this requirement is not mandatory for L3 PBR. The L3 PBR node interface can be in an L3Out.

● The PBR node bridge domain must not be the consumer or provider bridge domain. Therefore, you need a dedicated service bridge domain. For releases later than APIC Release 3.1, this requirement is not mandatory. The PBR node bridge domain can be the same as the consumer or provider bridge domain.

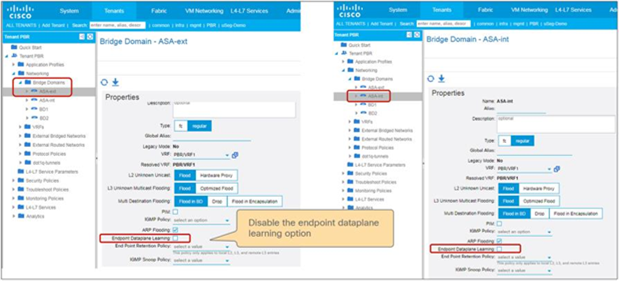

● Prior to APIC Release 3.1, the admin needed to disable Dataplane learning for the bridge domain where the PBR node is attached. For releases later than APIC Release 3.1 with Cisco Nexus 9300-EX and -FX platform leaf switches onward, there is no need for the admin to disable dataplane IP learning for the BD where the PBR node interface is attached.

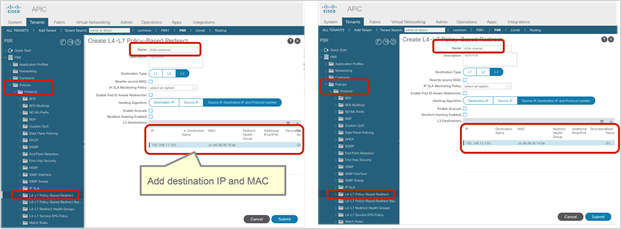

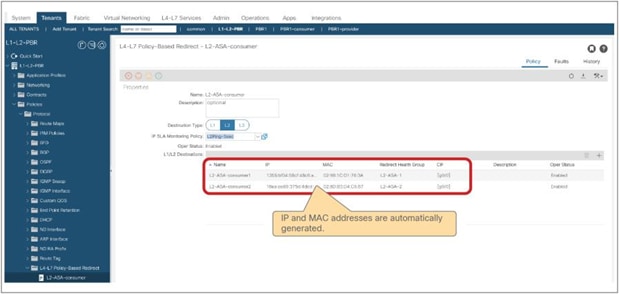

● The administrator must enter the PBR node IP address and MAC address in the APIC configuration. For releases later than APIC Release 5.2, the MAC address configuration is not mandatory for L3 PBR if IP-SLA tracking is enabled.

● Symmetric PBR (more than one PBR destination per PBR policy) requires Cisco Nexus 9300-EX and -FX platform leaf switches onward.

● The PBR node bridge domain and the L3Out for PBR node must belong to the same VRF instance as either the consumer bridge domain (EPG) or provider bridge domain (EPG).
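The release-dependent requirements above can be sketched as a pre-deployment check. This is a hedged illustration: the `L3PbrDesign` fields and the `check_l3_pbr` function are hypothetical names, releases are reduced to `(major, minor)` tuples (so the `(1m)` patch level is ignored), and "later than" is read as inclusive, in line with the "Starting from" wording used elsewhere in this document.

```python
from dataclasses import dataclass


@dataclass
class L3PbrDesign:
    apic_release: tuple                 # (major, minor), e.g. (5, 2)
    node_in_l3out: bool                 # PBR node interface is in an L3Out
    shares_consumer_or_provider_bd: bool
    destinations_per_policy: int
    leaf_is_ex_fx_or_later: bool
    mac_configured: bool
    ip_sla_tracking: bool


def check_l3_pbr(d: L3PbrDesign) -> list:
    problems = []
    if d.apic_release < (2, 0):
        problems.append("L3 PBR needs APIC Release 2.0(1m) or later")
    if d.node_in_l3out and d.apic_release < (5, 2):
        problems.append("PBR node in an L3Out needs APIC Release 5.2")
    if d.shares_consumer_or_provider_bd and d.apic_release < (3, 1):
        problems.append("Dedicated service BD is required before APIC Release 3.1")
    if d.destinations_per_policy > 1 and not d.leaf_is_ex_fx_or_later:
        problems.append("Symmetric PBR needs -EX/-FX or later leaf switches")
    mac_optional = d.apic_release >= (5, 2) and d.ip_sla_tracking
    if not d.mac_configured and not mac_optional:
        problems.append("PBR destination MAC address must be configured")
    return problems


old_fabric = L3PbrDesign((3, 0), False, True, 1, False, True, False)
new_fabric = L3PbrDesign((5, 2), True, False, 2, True, False, True)
old_problems = check_l3_pbr(old_fabric)
new_problems = check_l3_pbr(new_fabric)
```

Here the hypothetical 3.0 fabric is flagged only for sharing the consumer or provider bridge domain, while the 5.2 design passes even without a static MAC address because IP-SLA tracking is enabled.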

Design considerations for Cisco ACI PBR with routed mode device (L3 PBR) include the following:

● If the fabric consists of first-generation Cisco Nexus 9300 platform switches such as Cisco Nexus 93128TX, 93120TX, 9396TX, 9396PX, 9372PX, 9372PX-E, 9372TX and 9372TX-E, the PBR node must not be under the same leaf node as either the consumer or provider EPG.

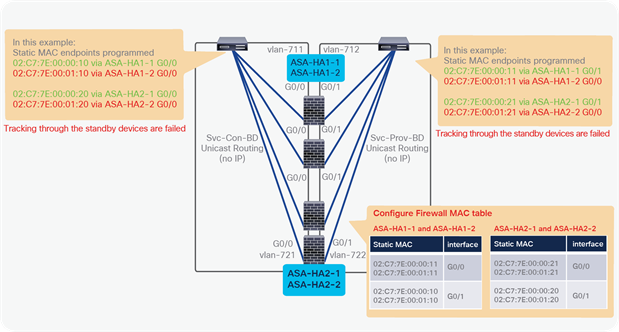

● Prior to APIC Release 5.2, which does not support dynamic PBR destination MAC address detection, in a high-availability active/standby deployment, you need to configure the L4-L7 device with a virtual IP and virtual MAC address. A virtual IP and virtual MAC address is defined as a floating IP and MAC address that, when the active L4-L7 node goes down, is taken over by the standby node.

● It’s recommended to enable GARP-based detection on the PBR node bridge domain because GARP is commonly used for L4-L7 device failover.

● If PBR nodes exchange link-local multicast packets such as HSRP, VRRP, and IPv6 NS, the PBR nodes in each pair that exchanges these packets must be connected to different leaf nodes because of CSCvq57414 and CSCvq76504.

● Prior to APIC Release 3.2, PBR can be used for only one node of a service graph. For releases later than APIC Release 3.2, PBR can be used for multiple nodes of a service graph.

● Prior to APIC Release 3.2, PBR was not supported for Cisco ACI Multi-Site environments. (PBR was not supported in the contract between EPGs in different sites.) For APIC Release 3.2, the one-node Firewall PBR is supported in Cisco ACI Multi-Site environments. The two-node PBR service graph, for example Firewall and Load Balancer, is supported in APIC Release 4.0.

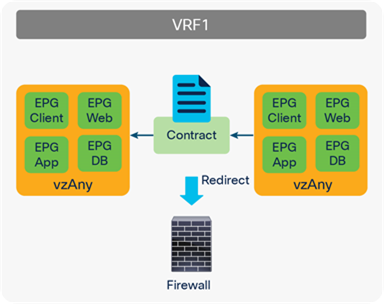

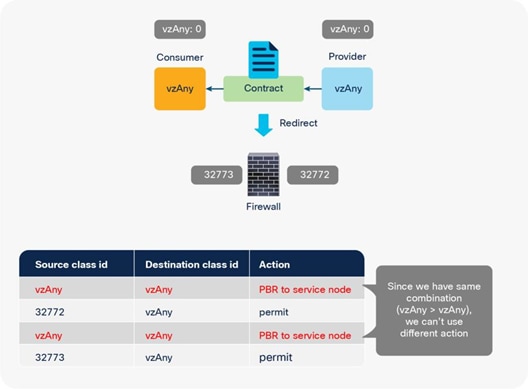

● Prior to APIC Release 3.2, you cannot associate a service graph with PBR with a contract with vzAny as provider. For releases later than APIC Release 3.2, PBR with a contract with vzAny as provider is supported. Note that vzAny cannot be the provider for an inter-VRF contract, regardless of whether a service graph is used.

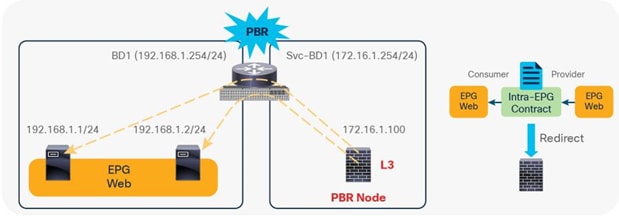

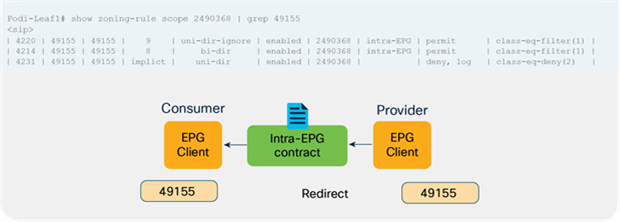

● Prior to APIC Release 4.0, you could not associate a service graph with an intra-EPG contract. For releases later than APIC Release 4.0, PBR with an intra-EPG contract is supported. Starting with APIC Release 5.2 onward, PBR with an intra Ext-EPG contract is supported.
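The release milestones in the considerations above can be collected into a lookup table. The sketch below is illustrative only: the feature labels and function names are hypothetical, releases are `(major, minor)` tuples, and "later than Release X" is assumed to include Release X itself.

```python
# First APIC release that supports each feature, per the bullets above.
FIRST_RELEASE = {
    "multinode PBR": (3, 2),
    "one-node PBR in Multi-Site": (3, 2),
    "two-node PBR in Multi-Site": (4, 0),
    "vzAny as provider with PBR": (3, 2),
    "intra-EPG contract with PBR": (4, 0),
    "intra Ext-EPG contract with PBR": (5, 2),
}


def supported(feature: str, release: tuple) -> bool:
    return release >= FIRST_RELEASE[feature]


def unsupported_features(release: tuple) -> list:
    # Features that the given release does not yet support, in sorted order.
    return sorted(f for f, first in FIRST_RELEASE.items() if release < first)


missing_on_4_0 = unsupported_features((4, 0))
```

For example, a fabric on Release 4.0 supports everything in the table except PBR with an intra Ext-EPG contract.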

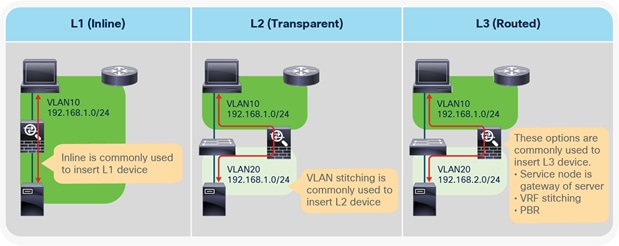

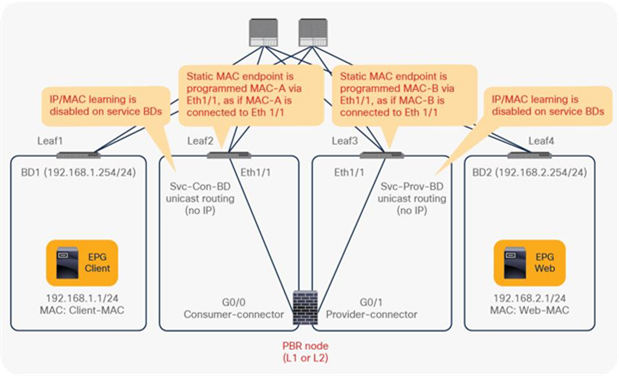

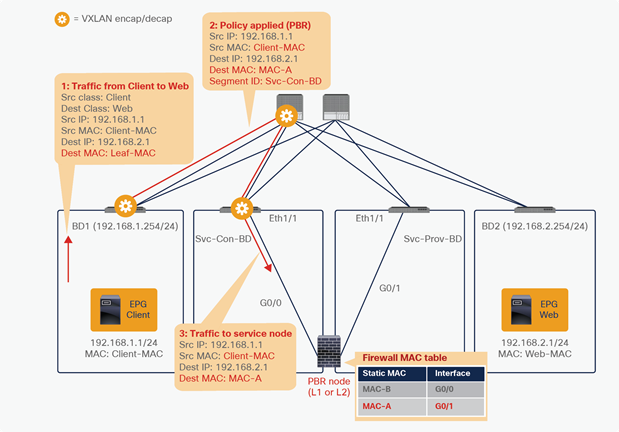

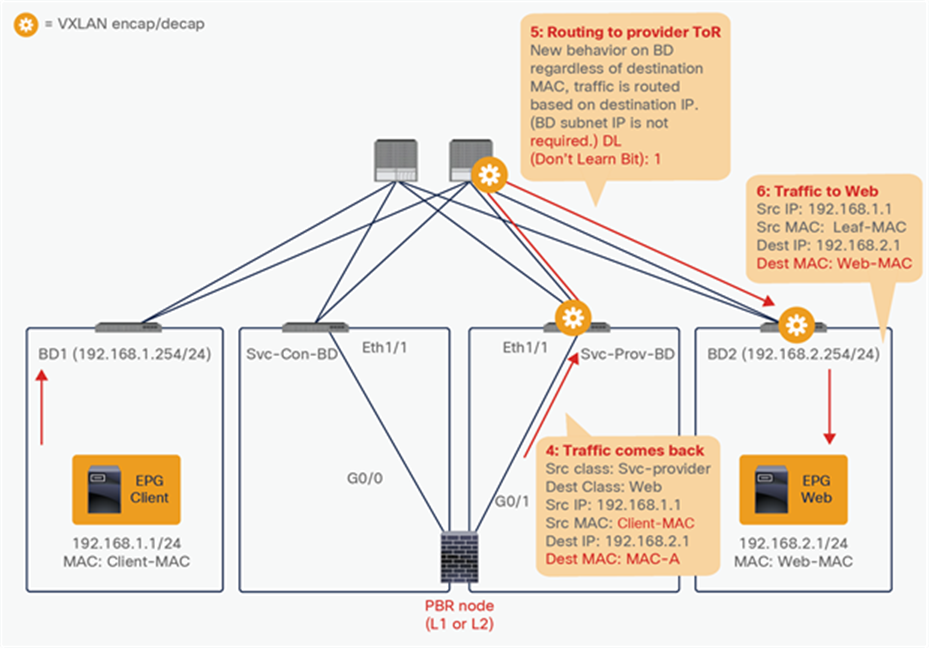

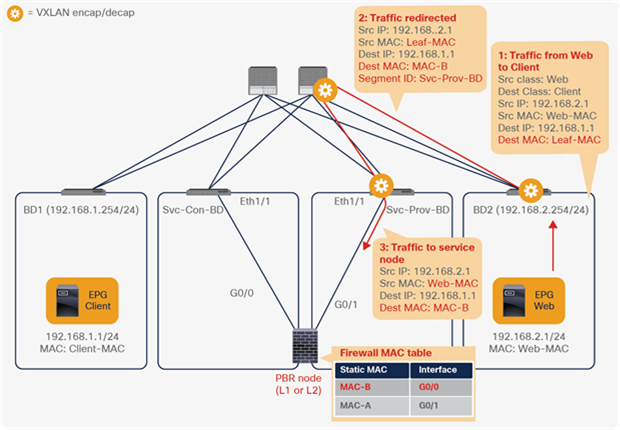

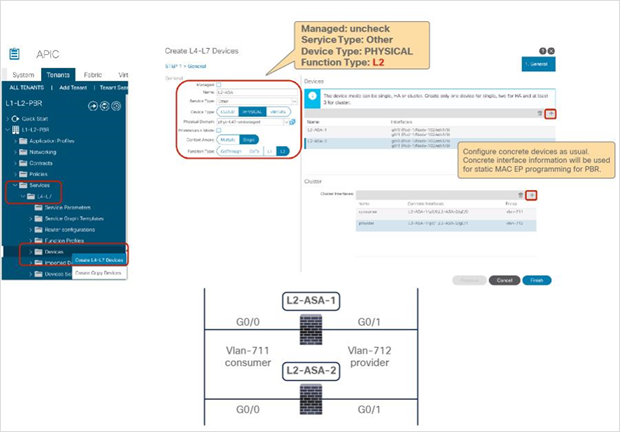

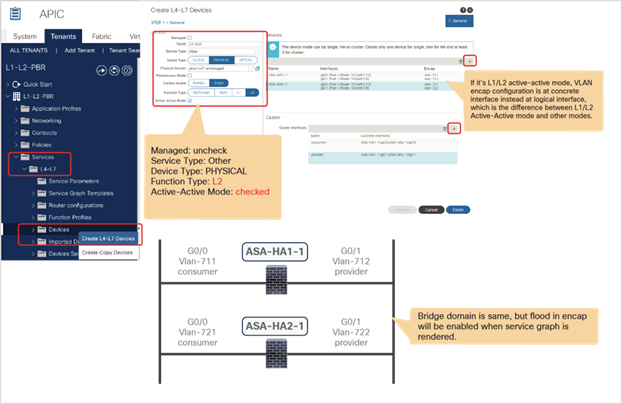

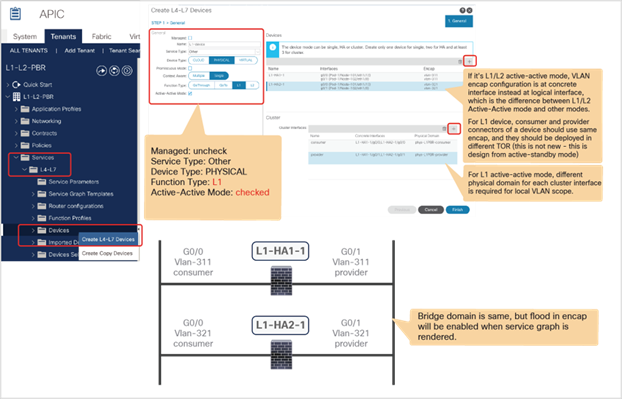

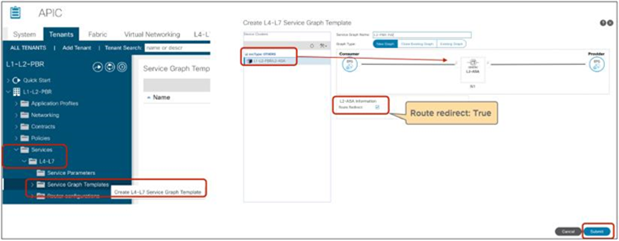

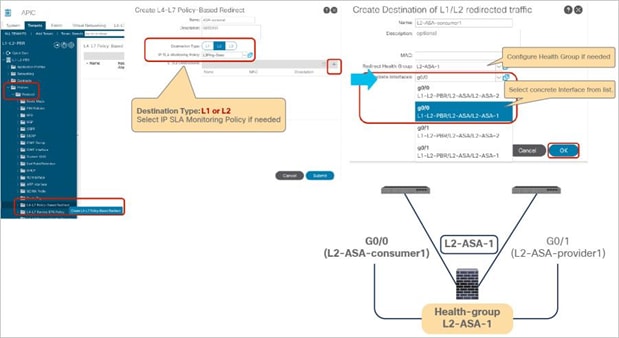

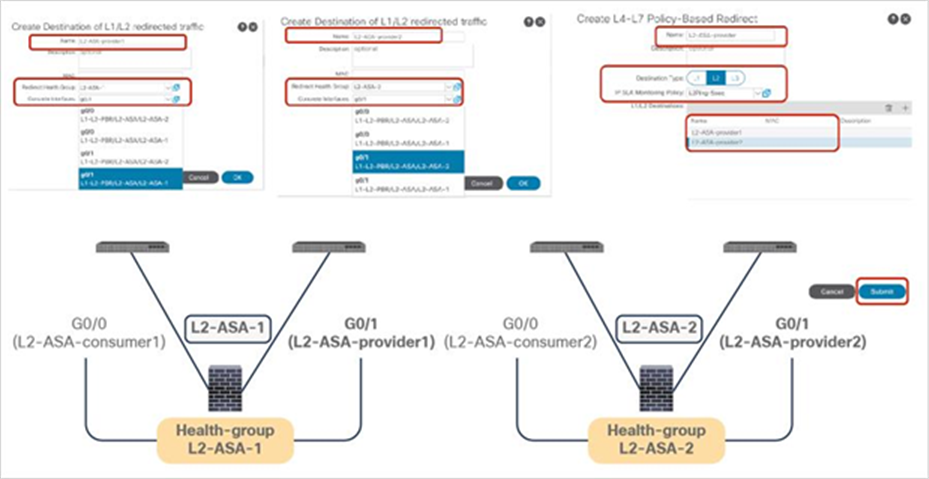

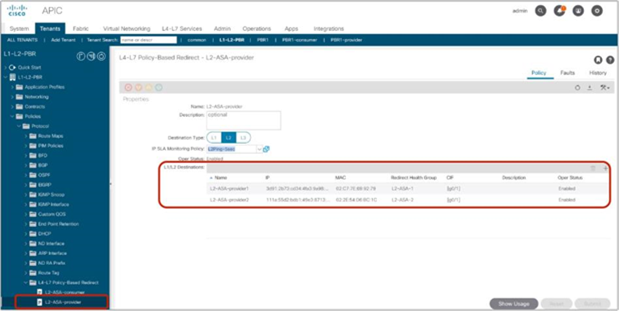

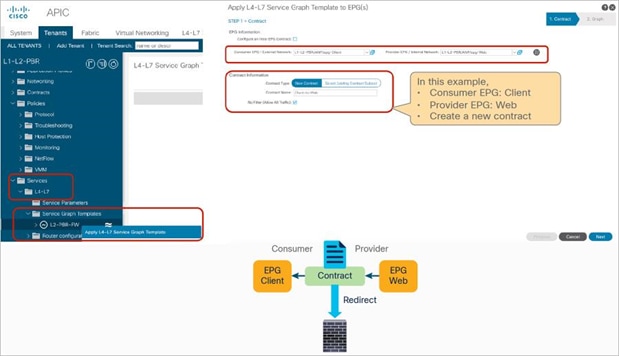

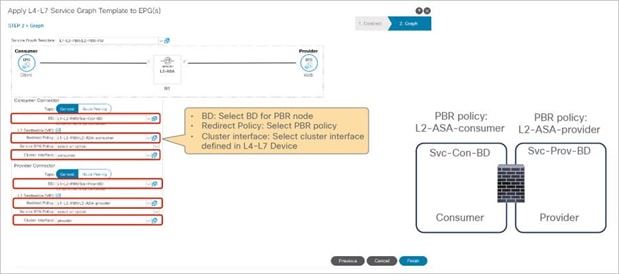

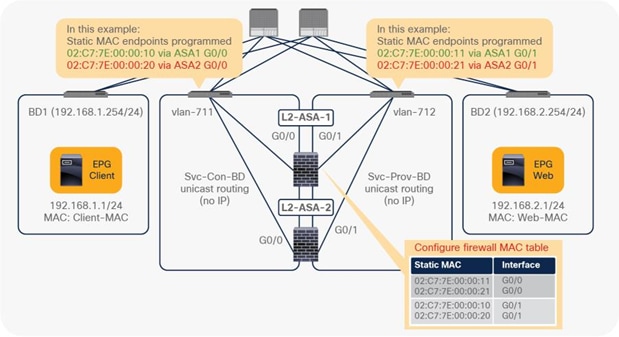

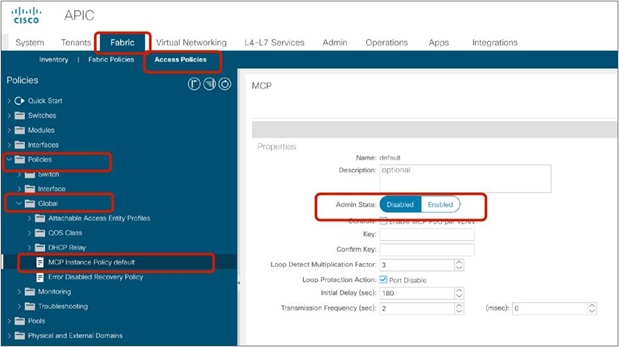

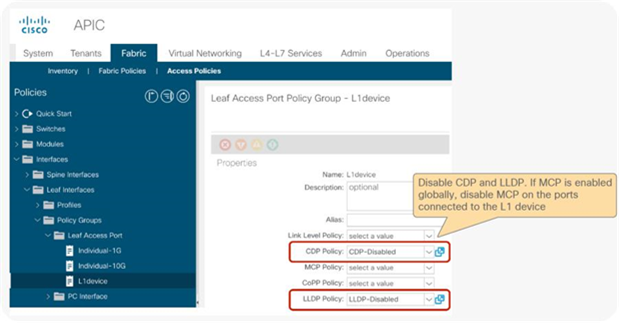

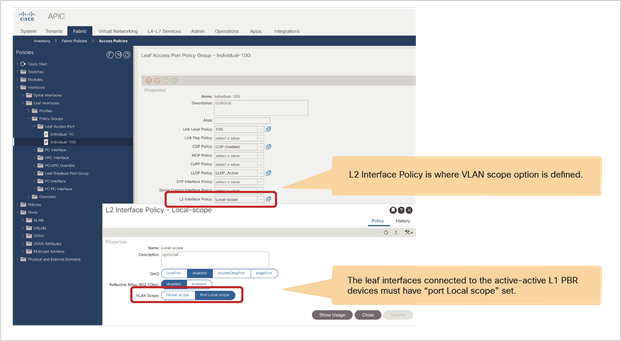

Starting from APIC Release 4.1, PBR can be used with L1 or L2 devices; for example, inline IPS, transparent firewall (FW), etc. The main requirements for Cisco ACI with L1/L2 mode device (L1/L2 PBR) are as follows:

● You should use APIC Release 4.1 or later.

● L1/L2 PBR requires Cisco Nexus 9300-EX and -FX platform leaf switches onward.

● The Cisco ACI fabric must be the gateway for the servers and for the PBR node.

● The L4-L7 device must be deployed in L1 or L2 mode in a physical domain.

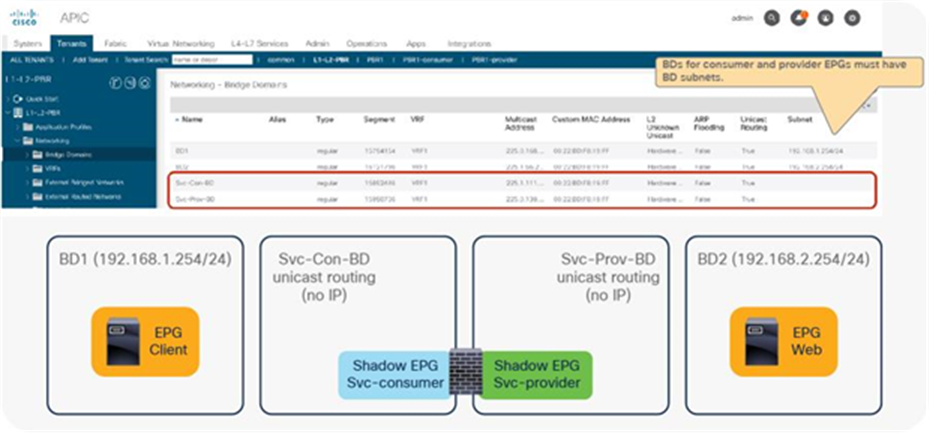

● L1/L2 PBR node interfaces must be in a bridge domain and not in an L3Out. The PBR node bridge domain must be a dedicated BD that cannot be shared with other endpoints or other L4-L7 devices’ interfaces.

● The PBR node bridge domain must belong to the same VRF instance as either the consumer bridge domain (EPG) or provider bridge domain (EPG).

● L1/L2 device must be in two-arm mode. The consumer and provider connectors of the L1/L2 device must be in different BDs.

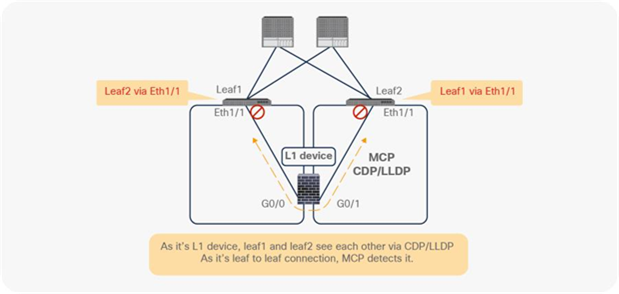

● Consumer and provider connectors of the L1 device must be connected to different leaf nodes. Per port VLAN is not supported. The L2 device doesn’t have this consideration.

Design considerations for Cisco ACI with an L1/L2 mode device (L1/L2 PBR) include the following:

● L1/L2 PBR is supported with unmanaged mode Service Graph only.

● L2 Unknown Unicast option in the service bridge domains must be set to Hardware Proxy for L1/L2 PBR.

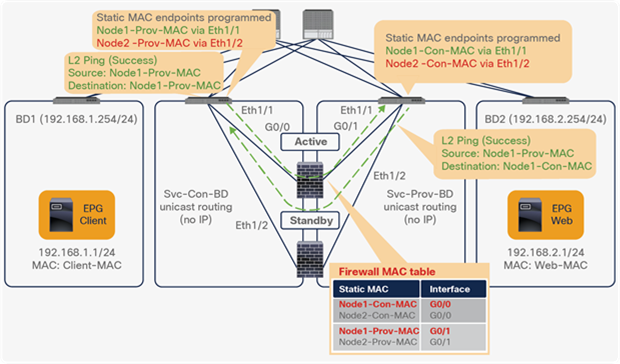

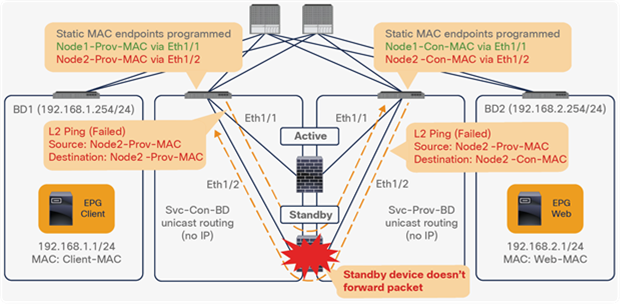

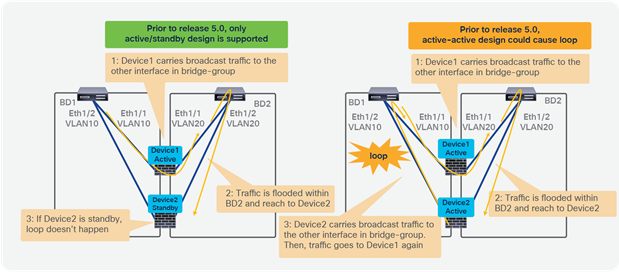

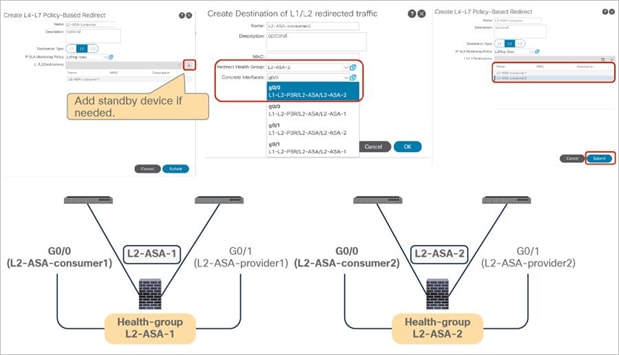

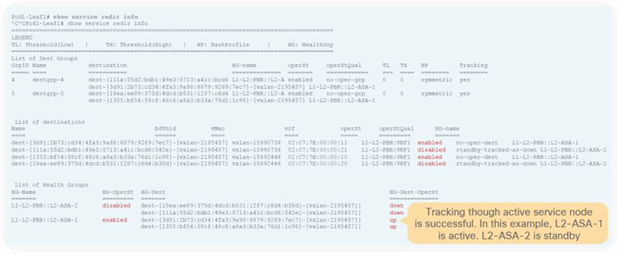

● Prior to APIC Release 5.0, L1/L2 PBR supports active/standby mode only; unlike L3 PBR, there is no support for active/active deployment. This means that you can configure up to two L1/L2 destinations (meaning up to two L4-L7 devices) per PBR destination group. More than two L4-L7 devices in the same PBR destination group are not supported in APIC Release 4.1 and 4.2. PBR tracking is required for active/standby mode. Because active/active is not supported, the threshold is not applicable. The down action is deny when tracking is enabled; a down action of permit cannot be set in APIC Release 4.1.

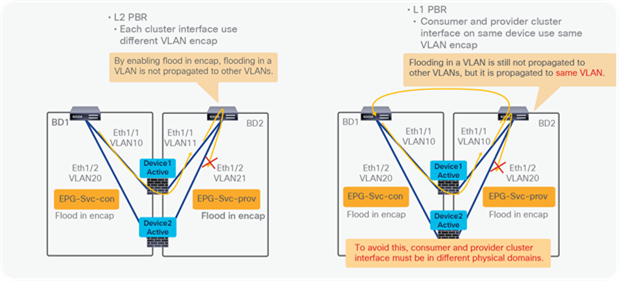

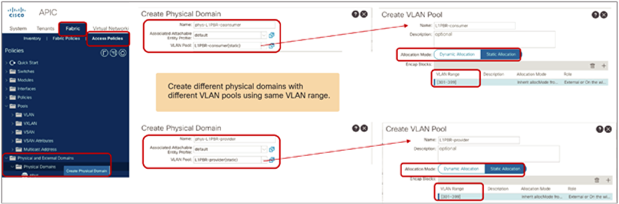

● Starting from APIC Release 5.0, L1/L2 PBR also supports active/active Symmetric PBR deployment. Symmetric PBR related features such as threshold, down action, and backup PBR policy (N+M high availability) are also supported in APIC Release 5.0. For L1 PBR active/active mode, the consumer and provider interfaces of each L4-L7 device (also known as consumer and provider connectors) must be in different physical domains.

● Note: Multiple active/standby pairs with L1/L2 PBR active/active design is not supported with backup PBR policy.

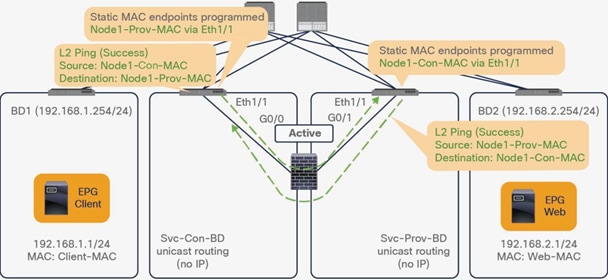

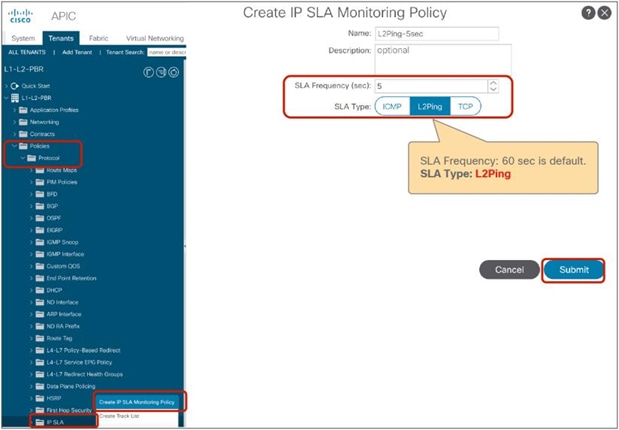

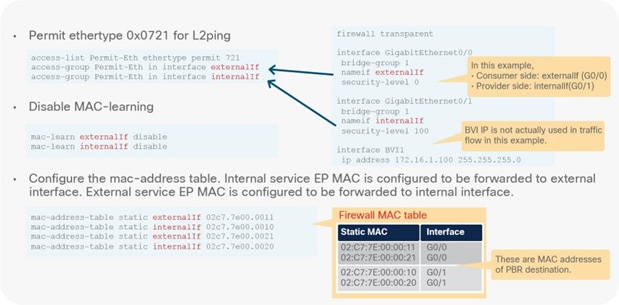

● L2 Ping (Ethertype 0x0721) is used for tracking. L2 Ping is exchanged between leaf nodes and passes through the service device. Thus, the L4-L7 device operating in L1/L2 mode needs to permit Ethertype 0x0721.

● If an intermediate switch is connected between the leaf port and the L1/L2 PBR destination, the intermediate switch must be able to carry the traffic with the PBR destination MACs. Static MAC configuration or promiscuous mode configuration might be required on the intermediate switch, in addition to permitting Ethertype 0x0721 for L2 Ping.

● L1/L2 PBR can be used with Multi-Pod, Multi-Site, and Remote Leaf deployments. For L1/L2 PBR active-active design, PBR destinations can’t be connected to remote leaf as Flood in Encap is not supported on remote leaf. Provider and consumer can still be connected to remote leaf.

● Multinode PBR is supported. The L4-L7 devices operating in L1/L2 mode and L3 mode can be mixed in a service graph.

● PBR with vzAny or intra-EPG contract is not supported as it requires one-arm mode.
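Several of the L1/L2 PBR requirements and considerations above lend themselves to a simple design check. The following is a minimal sketch under stated assumptions: `L1L2PbrDesign` and `check_l1l2_pbr` are hypothetical names, releases are `(major, minor)` tuples, and the tracking requirement is applied only to pre-5.0 releases because that is where the text above states it.

```python
from dataclasses import dataclass


@dataclass
class L1L2PbrDesign:
    apic_release: tuple        # (major, minor)
    managed_mode: bool         # service graph in managed mode
    consumer_bd: str
    provider_bd: str
    l2_unknown_unicast: str    # "proxy" (Hardware Proxy) or "flood"
    active_active: bool
    destinations: int          # devices per PBR destination group
    tracking_enabled: bool


def check_l1l2_pbr(d: L1L2PbrDesign) -> list:
    problems = []
    if d.apic_release < (4, 1):
        problems.append("L1/L2 PBR needs APIC Release 4.1 or later")
    if d.managed_mode:
        problems.append("Only unmanaged mode service graphs are supported")
    if d.consumer_bd == d.provider_bd:
        problems.append("Two-arm mode: connectors must be in different BDs")
    if d.l2_unknown_unicast != "proxy":
        problems.append("L2 Unknown Unicast must be Hardware Proxy")
    if d.apic_release < (5, 0):
        if d.active_active:
            problems.append("Active/active needs APIC Release 5.0")
        if d.destinations > 2:
            problems.append("At most two destinations before Release 5.0")
        if not d.tracking_enabled:
            problems.append("Tracking is required for active/standby")
    return problems


pair_4_2 = L1L2PbrDesign((4, 2), False, "BD-cons", "BD-prov", "proxy", False, 2, True)
cluster_4_2 = L1L2PbrDesign((4, 2), False, "BD-cons", "BD-prov", "proxy", True, 3, True)
pair_result = check_l1l2_pbr(pair_4_2)
cluster_result = check_l1l2_pbr(cluster_4_2)
```

The active/standby pair on Release 4.2 passes, while the three-device active/active group is flagged twice because both conditions require Release 5.0.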

Design considerations for Cisco ACI PBR that are applicable to both L1/L2 PBR and L3 PBR include the following:

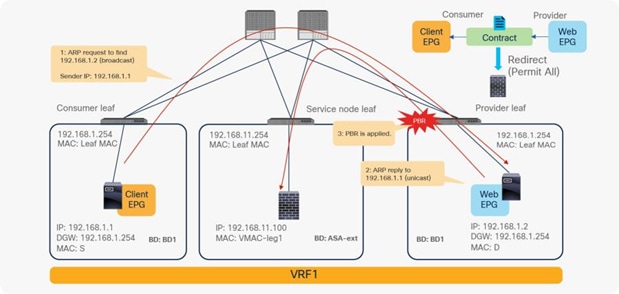

● Multicast and broadcast traffic redirection are not supported because the contract is applied to unicast traffic only.

● User-defined contract actions, such as redirect, copy, and deny, cannot be applied to specific types of packets. See the frequently asked questions (FAQ) in the ACI Contract Guide for more details.

● PBR should not be applied to non-IP traffic or to control plane traffic such as ARP, ND-Sol ICMPv6, and ND-Advt ICMPv6 traffic. Thus, a common default filter that includes ARP, Ethernet traffic, and other non-IP traffic should not be used for PBR. One of the examples is described later in this document. In the case of IPv6 traffic, you need to make sure that ND-Sol ICMPv6 and ND-Advt ICMPv6 traffic are excluded from a contract subject with PBR even if you use a non-default filter, because the IP and IPv6 ethertypes include ICMPv6.

● Stateful Service device is supposed to be inserted for both consumer to provider and provider to consumer directions. For example:

◦ Firewall (or a device that doesn’t perform IP translation) is inserted by using PBR for both directions.

◦ Load Balancer (or a device that performs IP translation) is inserted by using unidirectional PBR; traffic in the other direction reaches the device without PBR because the destination IP (VIP or NAT’d IP) is owned by the device.

● Although each service device model has different HA/clustering mechanism, it’s generally recommended to use separate segments (BDs) for HA/clustering communication and data traffic where PBR is enforced.

● It’s generally recommended to use vzAny contract to enable PBR for many EPGs to many EPGs traffic instead of many EPGs consuming and providing the same contract.*

● PBR can be applied to bridged traffic as well, where source and destination endpoints are in the same subnet if they are in an L3 bridge domain. Even though source and destinations are in the same subnet, the original source MAC is not preserved and TTL is decremented because ACI fabric routes traffic when PBR policy is applied (ACI fabric rewrites the destination MAC address to the PBR destination MAC address, which means routing).

● PBR is not supported for traffic that includes the Out-of-Band Management EPG or In-Band Management EPG, regardless of whether it is in the predefined oob VRF, the inb VRF, or a user-defined VRF, because only permit and deny contract actions are supported for the Management EPGs.

● L4-L7 devices (also referred to as PBR destinations or PBR nodes) used in the same service graph must not be distributed between remote leaf nodes and the main location.

● If multiple PBR policies have the same PBR destination IP in the same VRF, they must use the same IP-SLA policy, health-group, and Pod-ID-aware redirection configurations for the PBR destination. This is because the PBR destination uses (VRF, IP) as the key for tracking status and configuration. Examples are described later in this document.

● TCAM Compression (“Enable Policy Compression” formerly known as “no stats” option in the contract filter) does not take effect on a zoning rule with a redirect rule. This means that the ability to optimize TCAM utilization with contracts/filters doesn’t apply for contract/filter rules that are used for the purpose of service graph redirection (PBR).

● Starting from APIC Release 4.2(6) and 5.0(1), contract inheritance with service graph is supported if the contract and EPGs are in the same tenant.

● The use of copy service with a PBR node in the same service graph is not supported.

*Note: This recommendation exists because of the possible impact of changing the configuration of a contract that has many provider and consumer EPGs. If one configuration change on APIC triggers many zoning-rule changes at the same time, it takes time to finish programming the hardware of a given leaf node. Please see the Scalability Consideration section in the ACI Contract Guide.
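The consideration above about PBR policies that share a destination IP in the same VRF can be checked mechanically, because the destination is keyed by (VRF, IP). The sketch below is an illustration only: the dictionary layout, field names, and policy names are hypothetical and do not correspond to the APIC object model.

```python
from collections import defaultdict


def find_conflicts(policies: list) -> list:
    # Collect the distinct tracking settings seen for each (VRF, IP) key.
    seen = defaultdict(set)
    for policy in policies:
        for dest in policy["destinations"]:
            key = (policy["vrf"], dest["ip"])
            settings = (
                dest["ip_sla_policy"],
                dest["health_group"],
                dest["pod_id_aware"],
            )
            seen[key].add(settings)
    # A key with more than one distinct settings tuple is inconsistent.
    return sorted(key for key, settings in seen.items() if len(settings) > 1)


policies = [
    {"name": "PBR-pol1", "vrf": "VRF1", "destinations": [
        {"ip": "172.16.1.1", "ip_sla_policy": "icmp-5s",
         "health_group": "FW1", "pod_id_aware": False}]},
    {"name": "PBR-pol2", "vrf": "VRF1", "destinations": [
        {"ip": "172.16.1.1", "ip_sla_policy": "icmp-5s",
         "health_group": "FW1-alt", "pod_id_aware": False}]},
    {"name": "PBR-pol3", "vrf": "VRF2", "destinations": [
        {"ip": "172.16.1.1", "ip_sla_policy": "tcp-10s",
         "health_group": "FW9", "pod_id_aware": True}]},
]

conflicts = find_conflicts(policies)
```

Only the destination in `VRF1` is reported: the two policies there disagree on the health group, whereas the same IP in `VRF2` is a different key and may use different settings.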

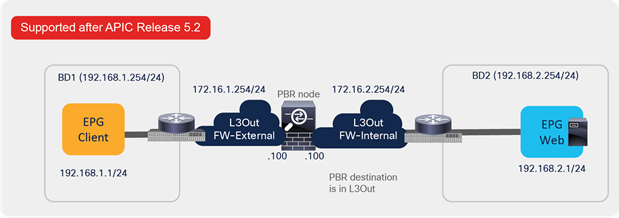

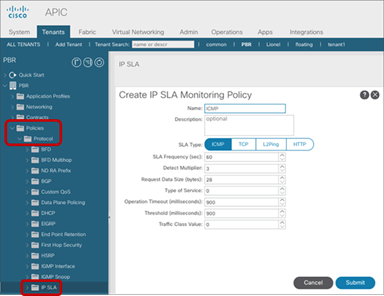

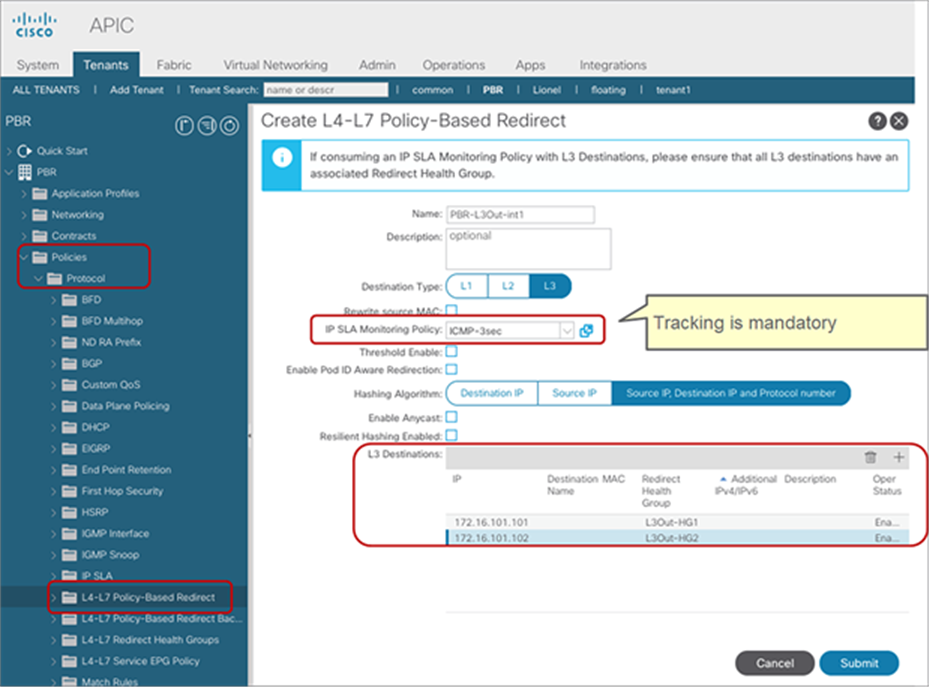

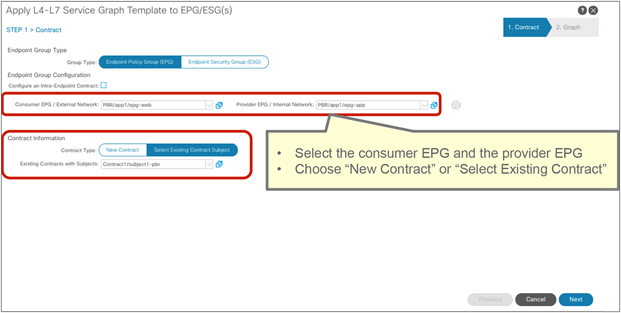

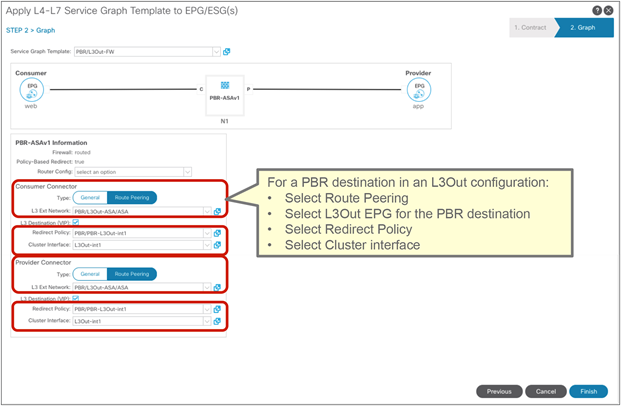

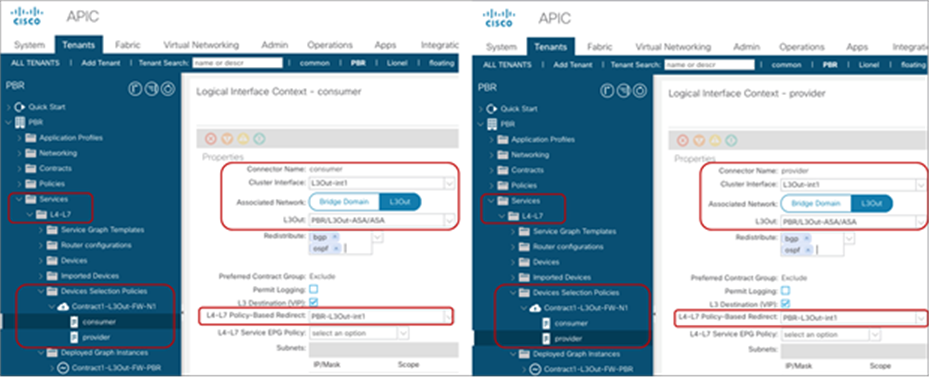

Starting from APIC Release 5.2, L3 PBR destinations can be in an L3Out instead of an L3 bridge domain. The main requirements for PBR destinations in an L3Out are:

● You should use APIC Release 5.2 or later.

● The L3Out for the PBR destinations must belong to the same VRF instance as either the consumer bridge domain (EPG) or provider bridge domain (EPG).

● IP-SLA Tracking is mandatory.

● An L3Out EPG with 0.0.0.0/0 or 0::0 cannot be used for the L3Out EPG for PBR destinations.

Design considerations for PBR destinations in an L3Out include:

● L3Out with SVI, routed sub-interface, or routed interface is supported. (Infra L3Out, GOLF L3Out, SDA L3Out, and L3Out using floating SVI are not supported for a PBR destination.)

● Single pod, Multi-Pod, and Remote Leaf are supported. Multi-Site is not supported as of APIC Release 5.2.

● Multinode PBR is supported.

● If the consumer/provider EPG is an L3Out EPG, it must not be under the same L3Out for PBR destinations.

● If the consumer/provider EPG is an L3Out EPG, it must not be under the service leaf nodes where the L3Out for PBR destinations resides. If the consumer/provider EPG is a regular EPG (not an L3Out EPG), the consumer, provider, and the L3Out for PBR destinations can be under the same leaf. This consideration also applies when a consumer/provider EPG communicates with an L3Out EPG for a service device via another service device where PBR destination in an L3Out is enabled. For example, PBR destination in an L3Out is enabled on the firewall to redirect traffic between the consumer EPG and the VIP of the load balancer behind the L3Out:

◦ Two node service graph that has a firewall as the first node and a load balancer as the second node.

◦ The firewall and the load balancer are connected via L3Outs: L3Out-FW and L3Out-LB.

◦ The traffic between the consumer EPG and the VIP of the load balancer hits this consideration because PBR destination in an L3Out is enabled for the traffic between the consumer EPG and the VIP (L3Out-LB EPG). L3Out-FW and L3Out-LB must not be under the same leaf nodes.

● If the service device is in two-arm mode and one of the L3Outs for the PBR destinations learns 0.0.0.0/0 or 0::0 route, both arms of the service device must be connected to the same leaf node or the same vPC pair.

● Mixing of PBR destinations in an L3 bridge domain and PBR destinations in an L3Out within the same function node in the service graph is not supported. For example:

◦ These configurations are not supported:

◦ Consumer connector of Function Node1 is in BD1 (PBR is enabled)

◦ Provider connector of Function Node1 is in an L3Out1 (PBR is enabled)

◦ These configurations are supported:

◦ Consumer connector of Function Node1 is in BD1 (PBR is NOT enabled)

◦ Provider connector of Function Node1 is in an L3Out1 (PBR is enabled)

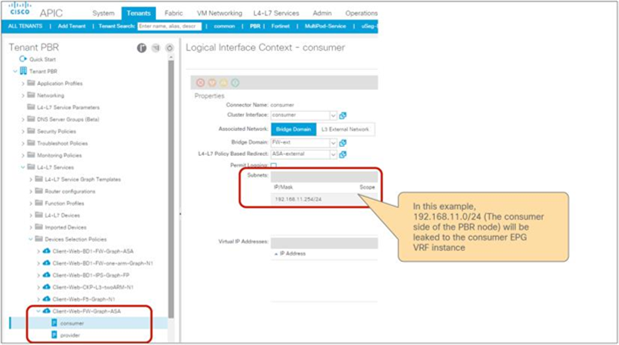

● The inter-VRF contract has the following considerations:

◦ EPG contract: If the L3Out for a PBR destination is in the provider VRF for inter-VRF contracts, the L3Out EPG subnet must be leaked to the consumer VRF. Otherwise, the consumer VRF doesn’t have the route to the PBR destination and the provider VRF doesn’t have a permit rule for the traffic from the PBR destination in the provider VRF to the consumer EPG. (In the case of a PBR destination in a BD, the service BD for the PBR destination does not have to be leaked to the consumer VRF.)

◦ ESG contract: Regardless of whether the L3Out EPG is in the consumer or provider VRF, the L3Out EPG subnet must be leaked to the other VRF.

● The Bypass feature has a known caveat: CSCvy31805

● vzAny-to-vzAny contract with PBR destination in an L3Out is supported. Because the L3Out EPG for the PBR destination is also part of the vzAny in the VRF, another contract that has a higher priority than one for vzAny-to-vzAny contract is required to avoid redirecting traffic whose source IP is matched with the L3Out EPG for the PBR destination.
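The rule above about not mixing PBR destinations in a bridge domain and in an L3Out within one function node reduces to a one-line check. This is a small sketch with hypothetical names: each connector is represented as an `(attachment, pbr_enabled)` pair, where the attachment is either `"bd"` or `"l3out"`.

```python
def mixing_supported(connectors: list) -> bool:
    # Only connectors with PBR enabled count toward the mixing restriction.
    pbr_attachments = {attachment for attachment, pbr_enabled in connectors if pbr_enabled}
    return len(pbr_attachments) <= 1


# Function Node1 with PBR enabled on both BD1 and L3Out1: not supported.
both_enabled = mixing_supported([("bd", True), ("l3out", True)])

# Function Node1 with PBR enabled only on the L3Out1 connector: supported.
l3out_only = mixing_supported([("bd", False), ("l3out", True)])
```

The two calls mirror the unsupported and supported Function Node1 configurations listed above.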

Unless otherwise indicated, the topology and design examples in this document use L3 PBR.

This document mainly covers single pod design considerations. For Multi-Pod and Multi-Site environment details, please see the Multi-Pod Service integration white paper.

This section shows topology examples for PBR. More information is provided later in this document.

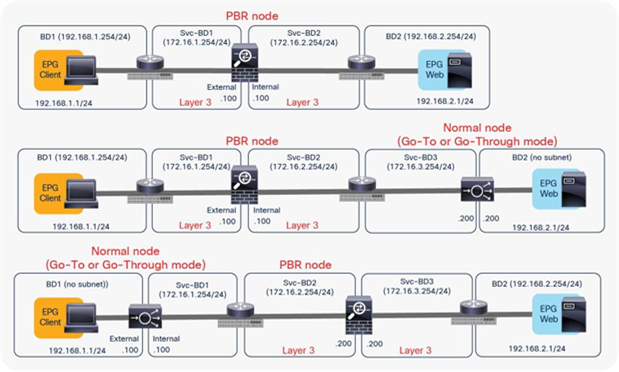

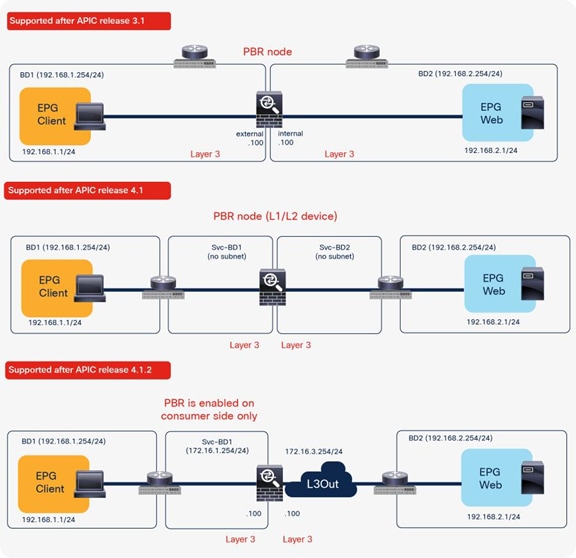

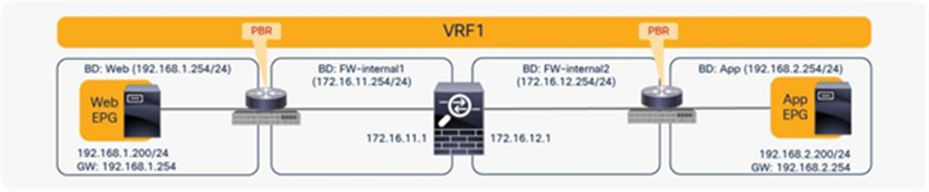

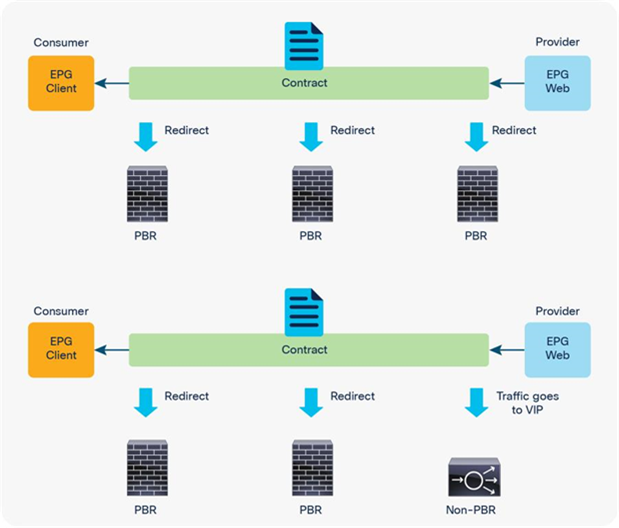

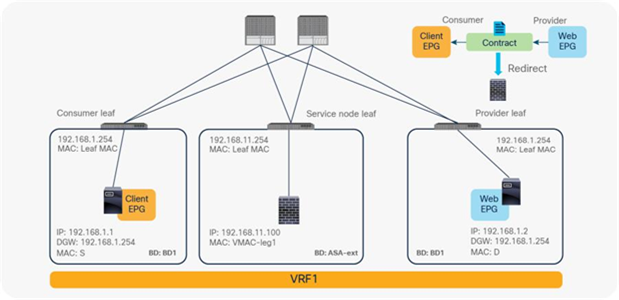

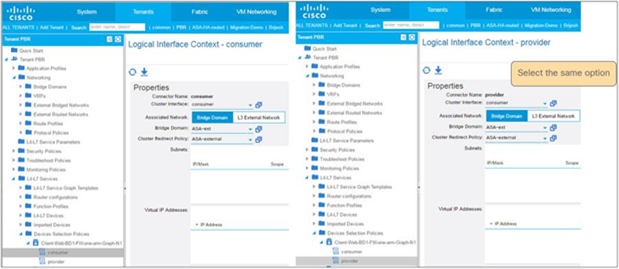

The first example in Figure 4 shows the typical use case of one-node firewall insertion. The PBR node is a Layer 3 node. Prior to APIC Release 3.1, the PBR node bridge domain must not be the consumer or provider bridge domain that contains the consumer or provider EPG. Therefore, a different bridge domain and subnet range were required for the PBR node, such as in Figure 4, below. Starting from APIC Release 3.1, this requirement is no longer mandatory. Please see the section “Design with PBR node and consumer and provider EPGs in the same subnet” for details.

The second and third examples are two-node service graphs. Prior to APIC Release 3.2, if you have a two-node service graph, either the first node or the second node can be a PBR node. A non-PBR node can be in the same bridge domain as the consumer or provider EPG, but prior to APIC Release 3.1, the PBR node must be in a dedicated service bridge domain. The fourth example is a PBR node in a nondedicated service bridge domain. Starting from APIC Release 3.2, multinode PBR is introduced. It enables you to use PBR multiple times in a service graph. Please see the section “Multinode service graph with PBR” for details.

The fifth example is L1/L2 PBR. Prior to APIC Release 4.1, the PBR node must be an L3 device. Starting from APIC Release 4.1, PBR to an L1/L2 device is introduced. Please see the section “L1/L2 PBR” for details.

The sixth example is unidirectional PBR with the other connector in L3Out. Prior to APIC Release 4.1.2, both consumer and provider connectors of a PBR node must be in a bridge domain and not in an L3Out even though PBR is enabled on one of the connectors only. Starting from APIC Release 4.1.2, this requirement is no longer mandatory. L3Out can be used for a connector where PBR is not enabled. Please see the section “Unidirectional PBR with the other connector in L3Out” for details.

The seventh example is PBR destination in an L3Out. Prior to APIC Release 5.2, the PBR destination must be in a bridge domain and not in an L3Out if PBR is enabled on the connector. Starting from APIC 5.2, this requirement is no longer mandatory. L3 PBR destinations can be in an L3Out. See the section, “PBR destination in L3Out”, for more details.

These examples show two-arm-mode PBR nodes, but you can also deploy a one-arm-mode PBR node except in L1/L2 PBR. More information about service graph designs is provided later in this document.

Examples of supported topologies

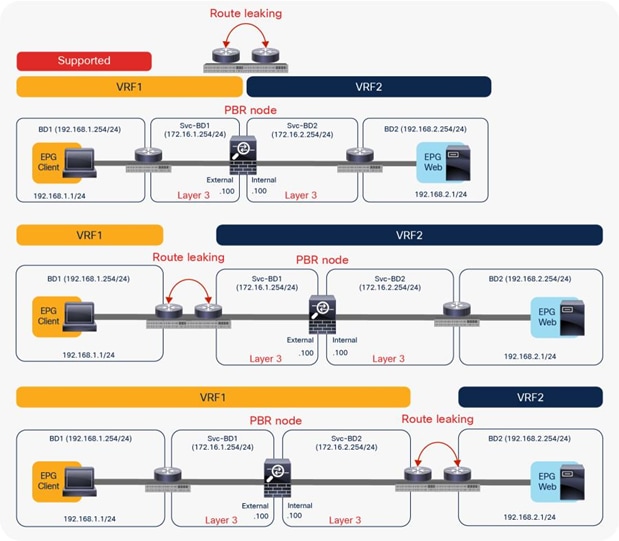

The PBR node can be between VRF instances or within one of the VRF instances. The PBR node must be in either the consumer or provider VRF instance (Figure 5). For example, you cannot put the PBR node in VRF3, which is neither a consumer nor a provider VRF instance.

Examples of supported topologies (VRF sandwich design)

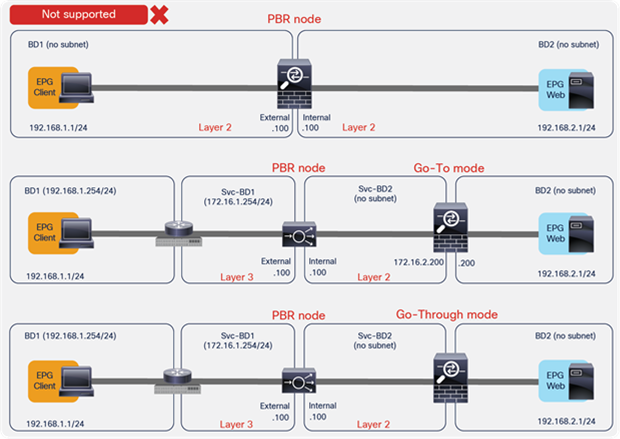

Figure 6 shows examples of unsupported topologies. The PBR node must be in an L3 bridge domain, not in an L2 bridge domain.

Examples of unsupported topologies (PBR node must be in L3 bridge domain)

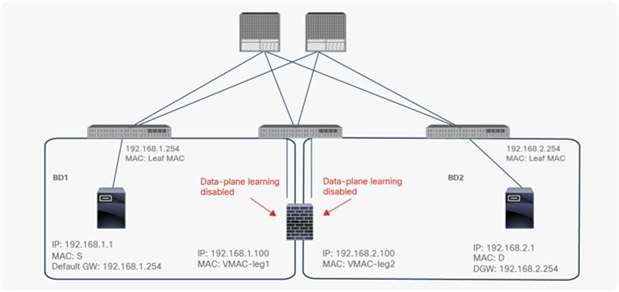

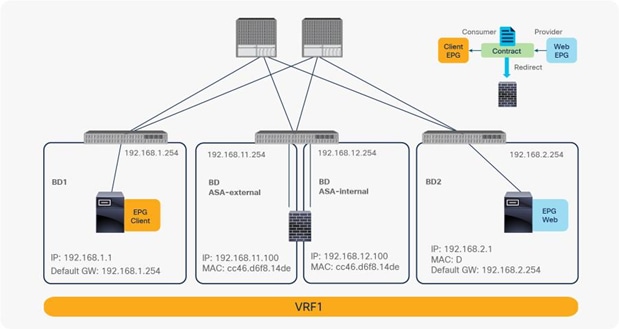

Endpoint Dataplane Learning configuration for PBR node

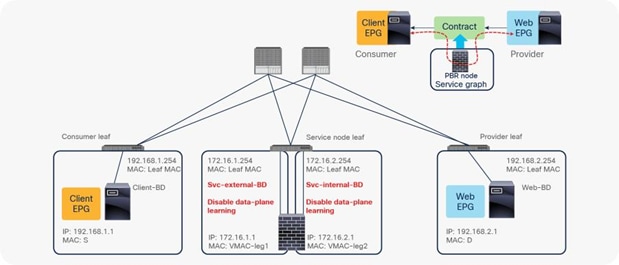

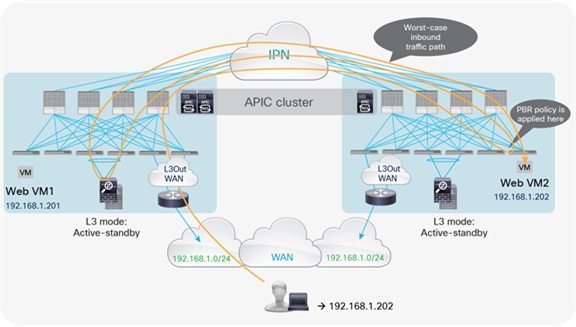

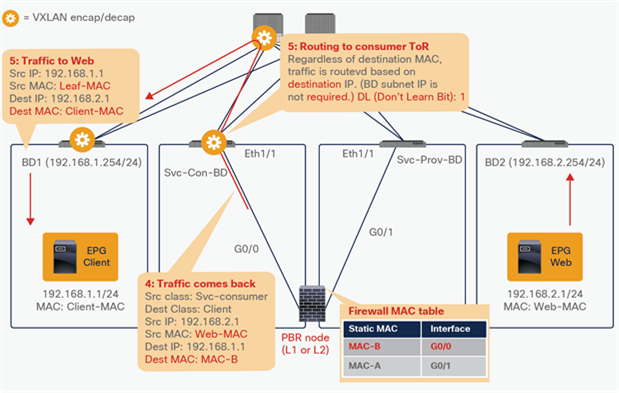

When you deploy a service graph with PBR, the L4-L7 device must be connected to an L3 bridge domain or an L3Out. This bridge domain must be configured with Endpoint Dataplane IP Learning disabled. Figure 7 illustrates this point. This figure depicts bidirectional PBR with the PBR node, a firewall, inserted between the Client and Web EPGs.

This section explains why you must disable Endpoint Dataplane IP Learning for a PBR node bridge domain. It’s not applicable to PBR destinations in an L3Out because IP addresses are not learned from the data plane in an L3Out domain.

PBR design example

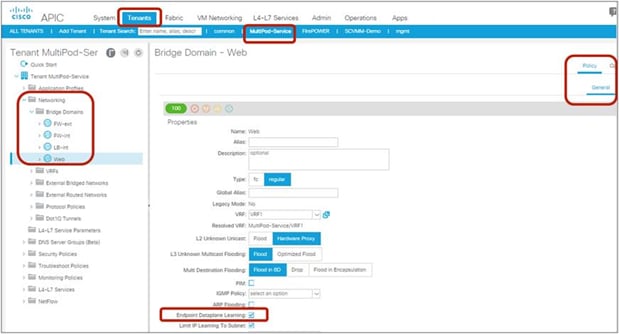

The Endpoint Dataplane Learning option is located in Tenants > Networking > Bridge Domains (Figure 8). The default configuration is enabled. The setting enables and disables Endpoint Dataplane IP Learning. Starting from APIC Release 5.0(1), this option is moved under the “Advanced/Troubleshooting” tab within the Policy tab of a bridge domain.

Enable and disable endpoint data-plane learning for the bridge domain

Note: Prior to APIC Release 3.1, disabling the Endpoint Dataplane Learning setting in the PBR node bridge domain was mandatory. After APIC Release 3.1, the configuration in the PBR node bridge domain is not mandatory. The Endpoint Dataplane Learning setting on the PBR node EPG is automatically disabled during service graph instantiation.

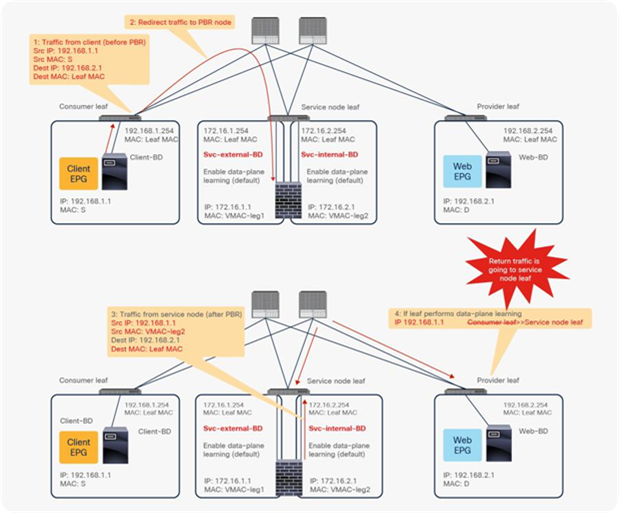

The reason that you must disable endpoint data-plane IP learning for a service graph with PBR is that leaf nodes involved in the PBR traffic flow may experience unwanted endpoint learning behavior if you leave the Endpoint Dataplane Learning setting enabled in the PBR node bridge domains.

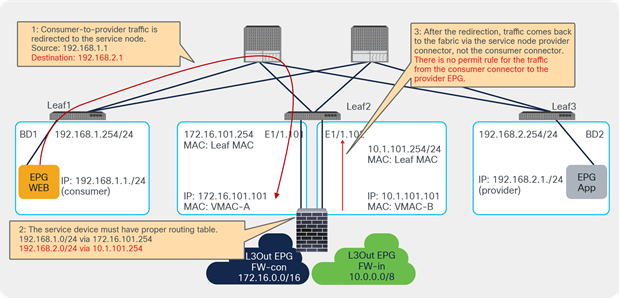

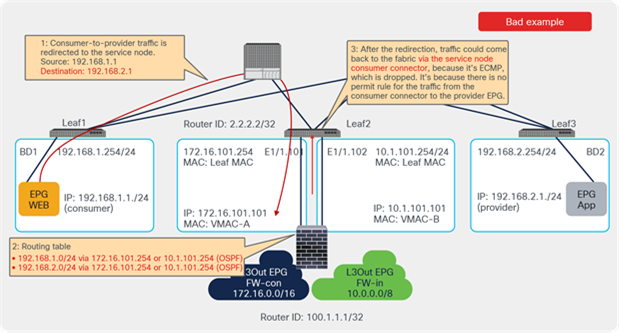

For example, as shown in Figure 9, the source IP address of traffic returning from the PBR node is still 192.168.1.1 even after PBR is enforced. Therefore, the provider leaf node will receive packets with 192.168.1.1 as the inner source IP address and the service node leaf Virtual Extensible LAN (VXLAN) Tunnel Endpoint (VTEP) as the outer source IP address. So the provider leaf node will learn 192.168.1.1 through the service node leaf VTEP IP address, even though 192.168.1.1 is actually under a different leaf node.

If you disable Endpoint Dataplane Learning on Svc-internal-BD, the bridge domain for the provider side of the PBR node, the provider leaf node doesn’t learn 192.168.1.1 through the traffic from the PBR node.

To maintain symmetric traffic, PBR for the return traffic is also required in this example. The Endpoint Dataplane Learning option must be disabled for Svc-external-BD as well to prevent the consumer leaf node from learning 192.168.2.1 through the service leaf node after PBR is enforced.

Why data-plane learning must be disabled in the PBR node bridge domain

Note: Although the provider leaf node does not learn the consumer endpoint, the traffic can be forwarded by using the spine proxy node.
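The learning problem described above can be reproduced with a toy model of a leaf's remote endpoint table. This sketch makes several simplifying assumptions: the `learn` attribute on the packet stands in for the effect of the bridge domain setting and is not a description of the actual encapsulation, and the VTEP name is a hypothetical label.

```python
from dataclasses import dataclass, field


@dataclass
class Packet:
    inner_src_ip: str
    outer_src_vtep: str
    learn: bool  # models whether the ingress BD has dataplane IP learning enabled


@dataclass
class Leaf:
    name: str
    remote_ip_table: dict = field(default_factory=dict)

    def receive(self, pkt: Packet) -> None:
        # Learn the inner source IP behind the outer source VTEP if allowed.
        if pkt.learn:
            self.remote_ip_table[pkt.inner_src_ip] = pkt.outer_src_vtep


def traffic_from_pbr_node(svc_bd_learning_enabled: bool) -> Packet:
    # After PBR, the inner source is still the client, but the outer source
    # is the service leaf VTEP because the firewall sits behind that leaf.
    return Packet("192.168.1.1", "service-leaf-vtep", svc_bd_learning_enabled)


# Learning left enabled on Svc-internal-BD: the provider leaf mislearns the client.
leaf_enabled = Leaf("provider-leaf")
leaf_enabled.receive(traffic_from_pbr_node(True))

# Learning disabled on Svc-internal-BD: the provider leaf learns nothing.
leaf_disabled = Leaf("provider-leaf")
leaf_disabled.receive(traffic_from_pbr_node(False))
```

In the first case the provider leaf records 192.168.1.1 behind the service leaf even though the client is attached elsewhere. In the second case the table stays empty and, as the note above says, traffic can still be forwarded through the spine proxy.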

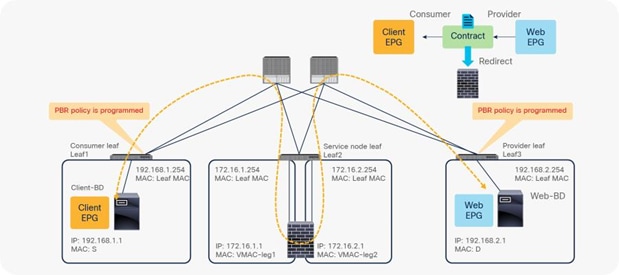

This section explains how a policy is updated in the Cisco ACI fabric when a service graph with PBR is deployed.

PBR policy is programmed on consumer and provider leaf nodes. For example, if you have consumer, provider, and service leaf nodes as shown in Figure 10, the PBR policy is configured on Leaf1 and Leaf3, but not on Leaf2.

Topology example

Before a service graph is applied to the contract between the Client EPG (class ID 32774) and the Web EPG (class ID 32771), Permit entries between them are programmed on leaf nodes as shown in Figure 11 and Table 1 (scope ID 2621442 is the VRF ID).

Before service graph is deployed

Table 1. Permit rule without service graph

| Source class ID | Destination class ID | Filter ID | Action |
| --- | --- | --- | --- |
| 32771 (Web EPG) | 32774 (Client EPG) | 38 (The filter used in the contract subject) | Permit |
| 32774 (Client EPG) | 32771 (Web EPG) | 39 (The reverse filter of the filter used in the contract subject) | Permit |

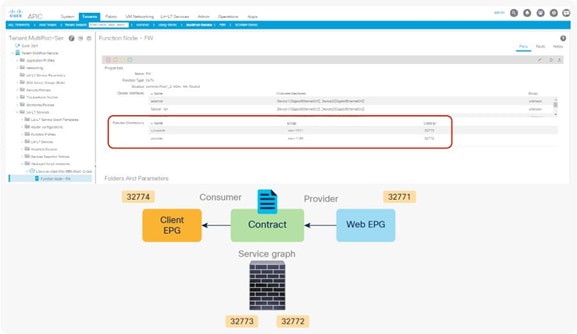

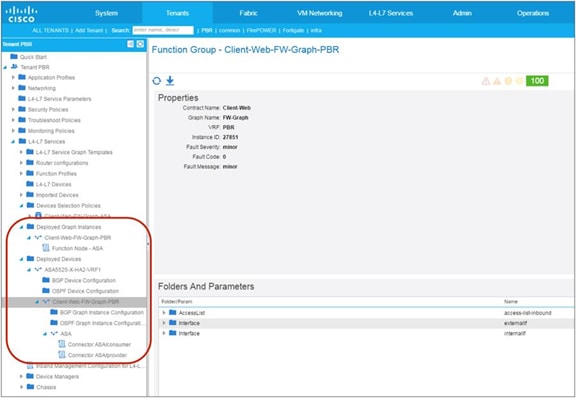

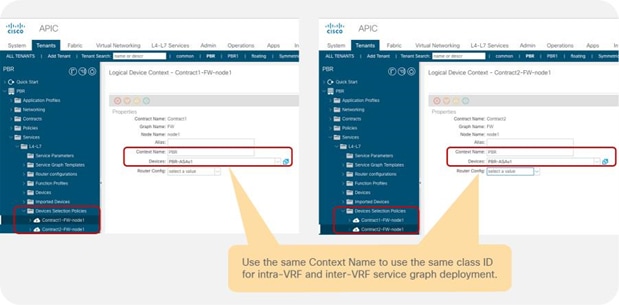

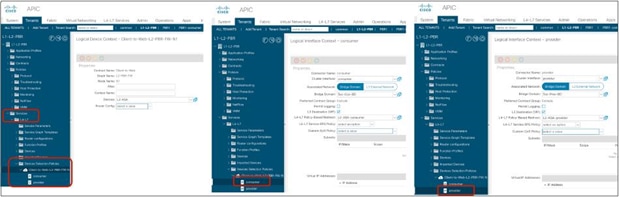

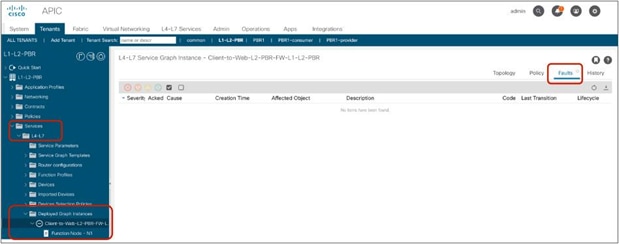

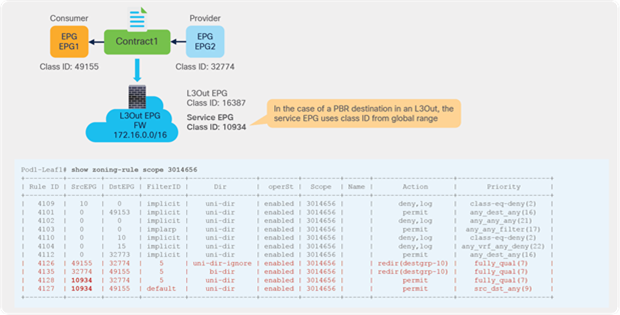

When the service graph is deployed, the EPGs for the consumer and provider service node connectors are created internally. The class ID for the service node can be found in the function node under the deployed graph instance. The location is Tenant > L4-L7 Services > Deployed Graph Instances > Function Node (Figure 12).

Class ID for service node

When you add the service graph, the permit rule is updated as shown in Table 2. Because the intention of the service graph is to insert service devices between the consumer and provider EPGs, the consumer and provider connectors for the service node are inserted between the consumer and provider EPGs.

Table 2. Permit rule with service graph (without PBR)

| Source class ID | Destination class ID | Filter ID | Action |
| --- | --- | --- | --- |
| 32774 (Client EPG) | 32773 (consumer connector of service node) | The filter used in the contract subject | Permit |
| 32772 (provider connector of service node) | 32771 (Web EPG) | default | Permit |
| 32771 (Web EPG) | 32772 (provider connector of service node) | The reverse filter of the filter used in the contract subject | Permit |
| 32773 (consumer connector of service node) | 32774 (Client EPG) | The reverse filter of the filter used in the contract subject | Permit |

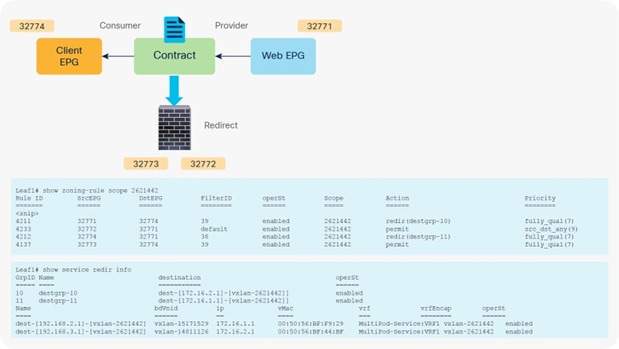

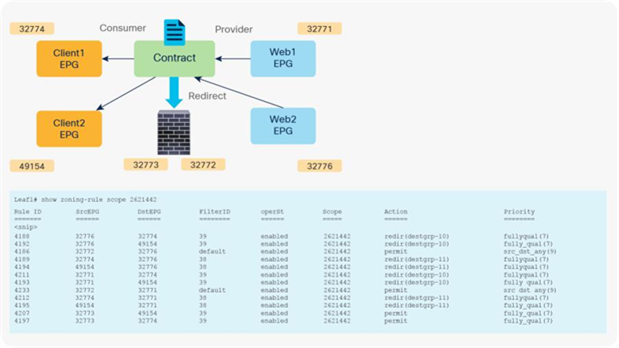

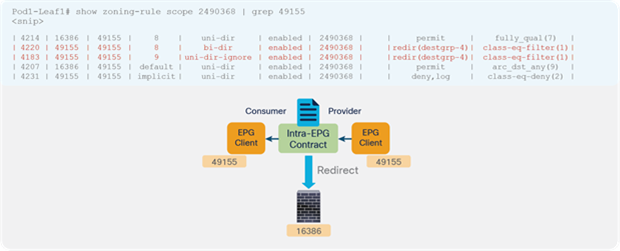

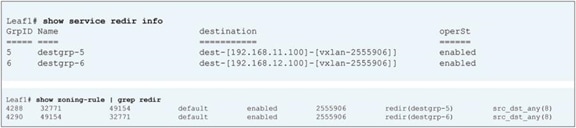

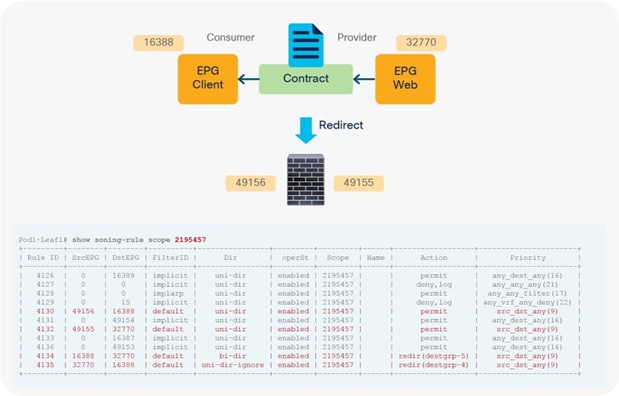

When you add the service graph with PBR, the redirect policy is programmed on the switches on which the consumer or provider EPG is located. In this example, PBR destination 172.16.1.1 is the consumer connector of the firewall node, and 172.16.2.1 is the provider connector of the firewall node. If the source class is 32774 (Client EPG) and the destination class is 32771 (Web EPG), traffic is redirected to the consumer connector of the PBR node. The traffic is then routed by the PBR node and returns to the Cisco ACI fabric. At that point the source class is 32772 (provider connector of the PBR node) and the destination class is 32771, which is permitted. Return traffic is also redirected, in this case to the provider connector of the PBR node, because the source class is 32771 and the destination class is 32774. After PBR for return traffic is performed and traffic returns to the Cisco ACI fabric from the PBR node, the source class is 32773 (consumer connector of the PBR node) and the destination class is 32774, which is permitted (Figure 13 and Table 3).

After service graph with PBR is deployed

Table 3. Permit and redirect rules with service graph (with PBR)

| Source EPG | Destination EPG | Filter ID | Action |
| --- | --- | --- | --- |
| 32774 (Client EPG) | 32771 (Web EPG) | 38 (The filter used in the contract subject) | Redirect |
| 32772 (provider connector of service node) | 32771 (Web EPG) | default | Permit |
| 32771 (Web EPG) | 32774 (Client EPG) | 39 (The reverse filter of the filter used in the contract subject) | Redirect |
| 32773 (consumer connector of service node) | 32774 (Client EPG) | 39 (The reverse filter of the filter used in the contract subject) | Permit |
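The rules in Table 3 can be read as a lookup keyed by source and destination class ID. The following is a minimal sketch under simplifying assumptions: filter matching (protocol and port) is ignored, any pair without a rule is treated as denied, and the names `ZONING_RULES` and `lookup` are hypothetical and not part of any Cisco tool.

```python
# (source class ID, destination class ID) -> (filter ID, action), from Table 3.
ZONING_RULES = {
    (32774, 32771): ("38", "redirect"),      # Client EPG -> Web EPG
    (32772, 32771): ("default", "permit"),   # provider connector -> Web EPG
    (32771, 32774): ("39", "redirect"),      # Web EPG -> Client EPG
    (32773, 32774): ("39", "permit"),        # consumer connector -> Client EPG
}


def lookup(src_class: int, dst_class: int) -> str:
    """Return the action for a class ID pair, or 'deny' if no rule matches.

    Simplification: the filter is not evaluated; only the class IDs are used.
    """
    _filter_id, action = ZONING_RULES.get((src_class, dst_class), (None, "deny"))
    return action
```

For example, `lookup(32774, 32771)` returns `"redirect"`, while `lookup(32771, 32772)` returns `"deny"` because Table 3 has no rule from the provider EPG to the provider connector.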

Note: The filter ID in the show zoning-rule output in Figure 13 shows that the default filter (permit all) is applied in a rule for the PBR node provider connector to the provider EPG (Table 3). This same behavior applies to a regular service graph without PBR (Table 2). Cisco ACI uses the default filter for zoning rules that don’t include a consumer EPG class ID as a source or destination, even with a specific filter used in the contract subject for which you applied a service graph. The assumption is that security enforcement has already been performed on the external (consumer) side. Starting from APIC Release 4.2(3), the filters-from-contract option is available at a service graph template level to use the specific filter of the contract subject instead of the default filter (Table 4). See the “Filters-from-contract option” section for details.

Table 4. Permit and redirect rules with service graph (with PBR and the filters-from-contract option)

| Source EPG | Destination EPG | Filter ID | Action |
| --- | --- | --- | --- |
| 32774 (Client EPG) | 32771 (Web EPG) | 38 (The filter used in the contract subject) | Redirect |
| 32772 (provider connector of service node) | 32771 (Web EPG) | 38 (The filter used in the contract subject) | Permit |
| 32771 (Web EPG) | 32774 (Client EPG) | 39 (The reverse filter of the filter used in the contract subject) | Redirect |
| 32773 (consumer connector of service node) | 32774 (Client EPG) | 39 (The reverse filter of the filter used in the contract subject) | Permit |

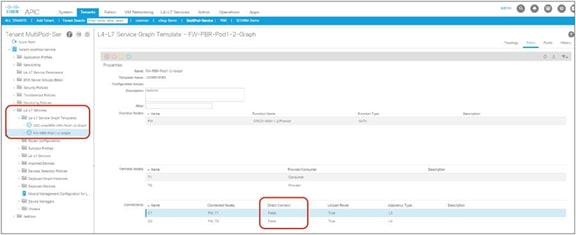

If you deploy a service graph with PBR with the default configuration, the keepalive messages that L4-L7 devices send to servers to monitor their availability fail, because there is no permit entry for the traffic from the provider EPG to the provider connector of the PBR node. In the preceding example, traffic from the consumer EPG (32774) to the consumer connector of the PBR node (32773) and from the provider EPG (32771) to the provider connector of the PBR node (32772) is not permitted. For situations in which you require permit entries for this traffic, you can set the Direct Connect option to True.

This configuration is located in Tenant > L4-L7 Services > L4-L7 Service Graph Templates > Policy (Figure 14). The default setting is False.

Direct Connect option in L4-L7 service graph template

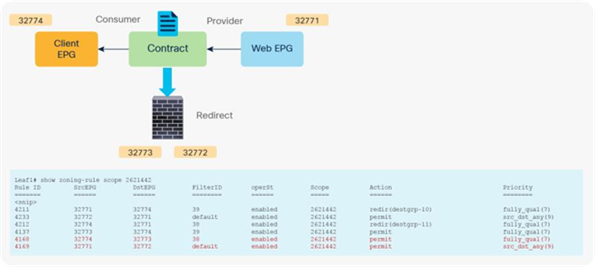

Figure 15 shows an example in which Direct Connect is set to True on both connections. In this case, traffic from the consumer EPG (32774) to the consumer side of the PBR node (32773) and from the provider EPG (32771) to the provider side of the PBR node (32772) is permitted (Table 5).

After service graph with PBR is deployed (Direct Connect set to True)

Table 5. Permit and redirect rules with service graph (with PBR and Direct Connect set to True)

| Source class ID | Destination class ID | Filter ID | Action |
| --- | --- | --- | --- |
| 32774 (Client EPG) | 32771 (Web EPG) | 38 (The filter used in the contract subject) | Redirect |
| 32772 (provider connector of service node) | 32771 (Web EPG) | default | Permit |
| 32771 (Web EPG) | 32774 (Client EPG) | 39 (The reverse filter of the filter used in the contract subject) | Redirect |
| 32773 (consumer connector of service node) | 32774 (Client EPG) | 39 (The reverse filter of the filter used in the contract subject) | Permit |
| 32774 (Client EPG) | 32773 (consumer connector of service node) | 38 (The filter used in the contract subject) | Permit |
| 32771 (Web EPG) | 32772 (provider connector of service node) | default | Permit |
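The differences among Tables 3, 4, and 5 can be summarized in a small rule builder. This is a sketch for reasoning about the tables, not how the APIC computes rules internally; `build_rules` and its parameters are hypothetical names. The tables do not show filters-from-contract combined with Direct Connect, so the sketch rejects that combination rather than guess.

```python
def build_rules(consumer, provider, cons_conn, prov_conn,
                contract_filter="38", reverse_filter="39",
                filters_from_contract=False, direct_connect=False):
    """Return (source, destination, filter, action) tuples for a one-node PBR graph."""
    if filters_from_contract and direct_connect:
        # Not covered by Tables 3 to 5; left unmodeled on purpose.
        raise ValueError("combination not modeled in this sketch")

    # Table 4: with filters-from-contract, the connector-to-provider rule
    # uses the contract filter instead of the default filter.
    inner_filter = contract_filter if filters_from_contract else "default"
    rules = [
        (consumer, provider, contract_filter, "redirect"),
        (prov_conn, provider, inner_filter, "permit"),
        (provider, consumer, reverse_filter, "redirect"),
        (cons_conn, consumer, reverse_filter, "permit"),
    ]
    if direct_connect:
        # Table 5: Direct Connect adds permit rules toward the connectors.
        rules += [
            (consumer, cons_conn, contract_filter, "permit"),
            (provider, prov_conn, "default", "permit"),
        ]
    return rules


base = build_rules(32774, 32771, 32773, 32772)
with_direct_connect = build_rules(32774, 32771, 32773, 32772, direct_connect=True)
```

Here `base` reproduces the four rows of Table 3, and `with_direct_connect` appends the two extra permit rows of Table 5.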

Service EPG selector for endpoint security groups (ESGs)

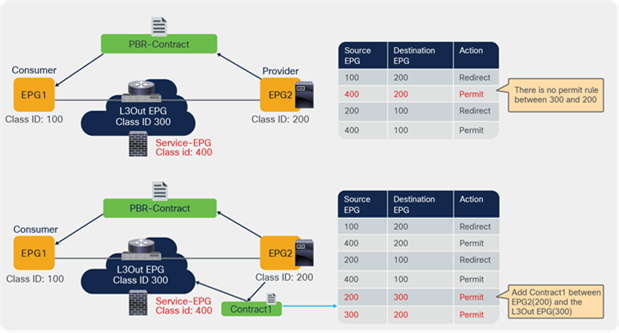

Prior to APIC Release 5.2(4), users could not manually create a contract with a service EPG that was created through a service graph, which posed some challenges. For example:

● Direct Connect can be used to add a permit rule for the traffic from the service EPG to the consumer/provider EPG. However, an EPG that is not either the consumer or provider EPG cannot communicate with the service EPG unless a vzAny contract or a preferred group is configured.

● Because vzAny includes the service EPG, a vzAny-to-vzAny contract can permit traffic between the service EPG and other EPGs in the VRF. However, this lets all other EPGs in the VRF talk to the service EPG, rather than only specific EPGs.

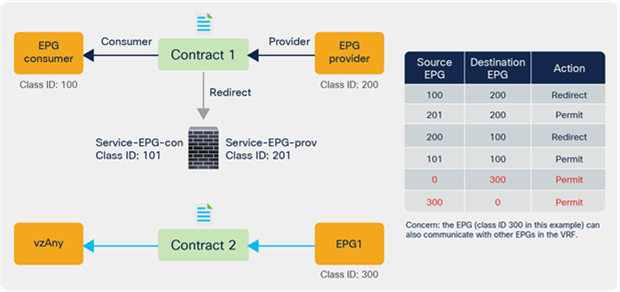

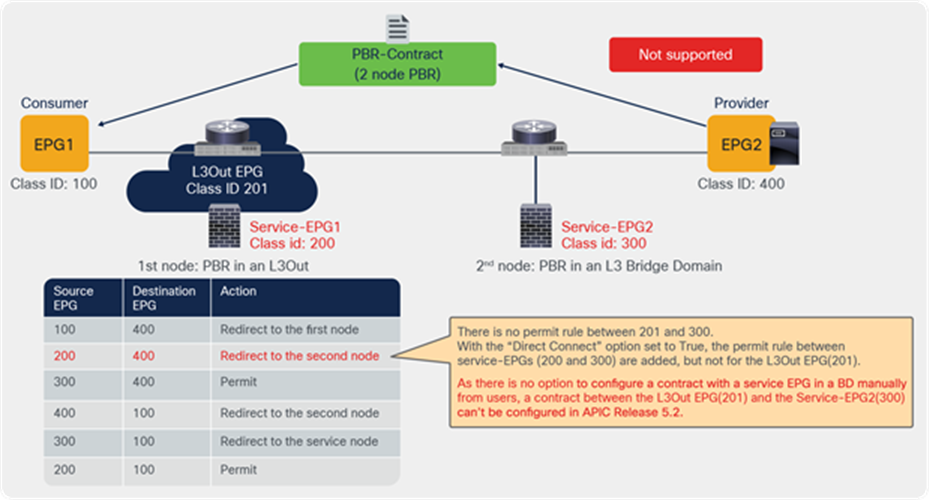

The figure below illustrates the second example.

Use case example without service EPG selector for ESGs

Starting from APIC Release 5.2(4), the service EPG selector for ESGs allows users to map a service EPG to an ESG and create a contract with the ESG. The figure below illustrates a use case. In addition to a vzAny-to-vzAny permit contract, you can add a deny contract between the service ESG and other ESGs so that only specific ESGs can communicate with the service ESG.

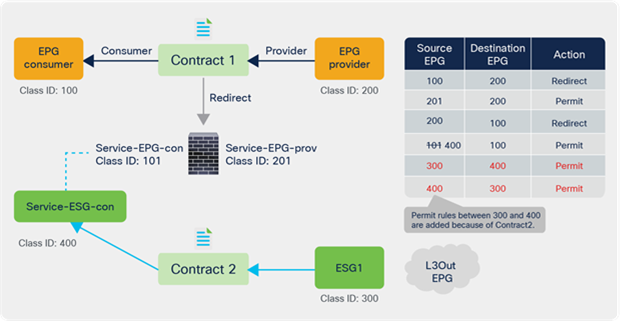

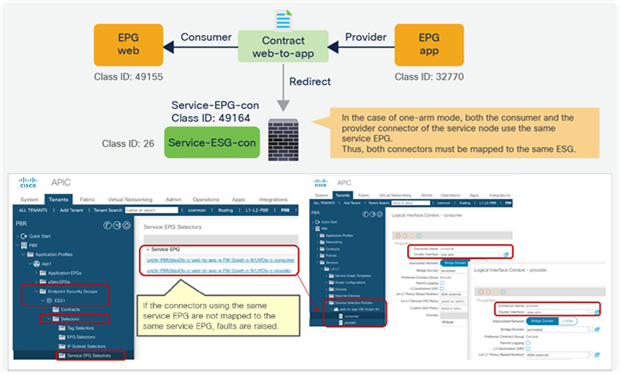

The figure below illustrates an example. Service EPG “Service-EPG-con” for the firewall consumer connector is mapped to ESG “Service-ESG-con” that has a contract with ESG1 and/or an L3Out EPG. Zoning-rules that involve service EPGs are inherited when the service EPG class ID gets changed to the ESG class ID. It’s important to note that the ESG for the service device interface (Service-ESG-con in this example) can have a contract with an ESG or an L3Out EPG, not an EPG because contracts between an EPG and an ESG are not supported.

Use case example 1 with service EPG selector for ESGs

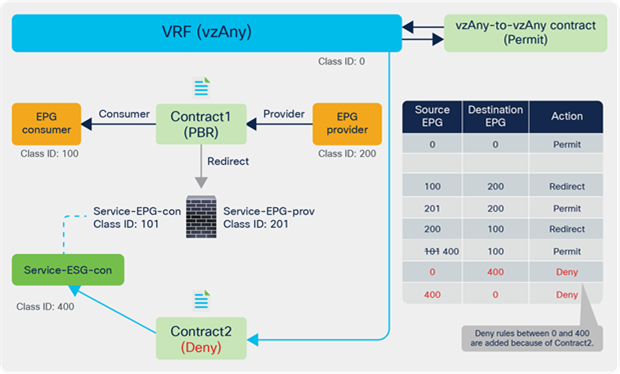

The figure below illustrates another use case. A vzAny-to-vzAny contract is used to permit all traffic within the VRF. By adding a deny contract between vzAny and the ESG for the service-device interface (Service-ESG-con in this example), only specific EPGs can communicate with the service-device interface.

Use case example 2 with service EPG selector for ESGs

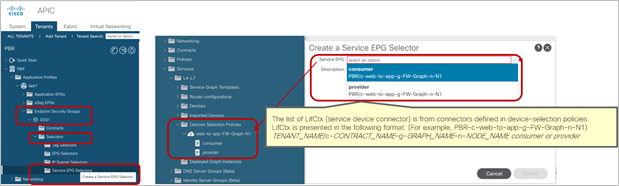

This configuration is located in Tenant > Endpoint Security Groups > ESG_NAME > Selectors > Service EPG Selectors. The list of LifCtx (service-device connector, representing the service EPG) defined in device selection policies in the tenant shows up in the dropdown menu. By selecting a LifCtx, the service EPG is mapped to the ESG.

Service EPG selector for ESGs

Service EPG selector for ESGs has the following considerations:

● Contracts between an EPG and an ESG are not supported.

● Although zoning-rules that involve service EPGs are inherited, the class ID of the service EPG will be changed to a global class ID because it is mapped to an ESG that uses a global class ID. Because the class ID gets changed, traffic loss will occur.

● All the LifCtx in the same device using the same BD should be mapped to the same ESG. For example:

◦ One-arm mode PBR. (Please see the example in Figure 20 below.)

◦ Reuse the service device interface for multiple service graph deployments.

● The Service EPG and the ESG must be in the same VRF.

◦ If the service EPG and ESG are in different tenants, there are additional considerations. (Please see the example in figures 21 and 22 below.)

● Multi-Site is not supported. (NDO does not support ESG as of this writing.)

● Only L3 PBR with a PBR destination in a BD is supported.

◦ PBR destination in an L3Out is not supported. (Contracts can be manually configured with an L3Out EPG.)

◦ L1/L2 PBR is not supported. (L1/L2 device interfaces are not supposed to communicate with servers directly.)

All the LifCtx in the same device using the same BD should be mapped to the same ESG (one-arm mode)

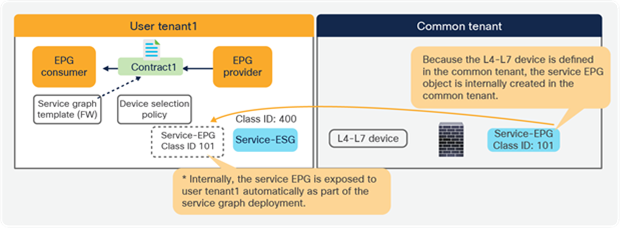

Figures 21 and 22 below illustrate a consideration if the service EPG and the ESG are in different tenants. It’s important to note that a service EPG object is internally created in the tenant where the L4-L7 device is defined. If an L4-L7 device is defined in a different tenant, the service EPG object is internally created in the tenant where the L4-L7 device resides. If the service EPG to an ESG mapping is defined only in one tenant, as illustrated in Figure 21, it is supported.

Multiple tenants consideration (supported)

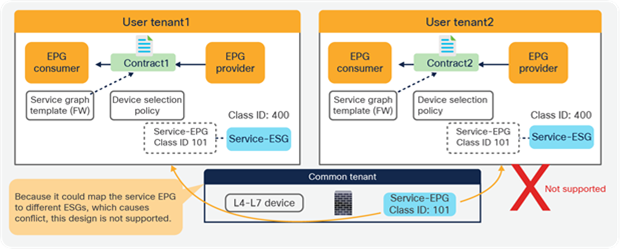

However, if the service EPG to an ESG mapping is defined in multiple tenants, as illustrated in Figure 22, it is NOT supported because it could cause conflict.

Multiple tenants consideration (not supported)

Multiple consumer and provider EPGs

Service graphs are applied to contracts, and contracts can be placed between multiple pairs of EPGs. When you use service graphs with L4-L7 devices in routed (Go-To) mode or bridge (Go-Through) mode, the reuse of a graph must take into account the bridge domain to which the L4-L7 device is attached. When you use a service graph with PBR, you have more flexibility in attaching the contract between any two pairs of EPGs across multiple bridge domains, as long as this approach is compatible with the VRF instance to which the L4-L7 device belongs.

If you have two consumer EPGs and two provider EPGs, as in the previous example, policy is programmed as shown in Figure 23. If traffic is between one of the consumer EPGs and one of the provider EPGs, it is redirected to the PBR node.

After service graph with PBR is deployed (multiple consumer and provider EPGs)

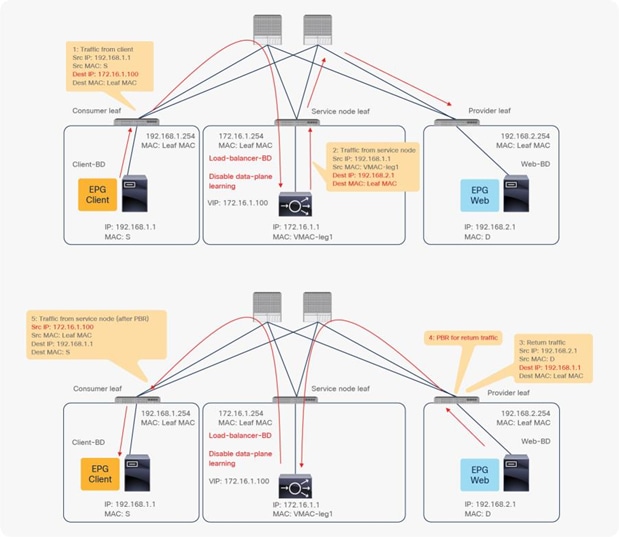

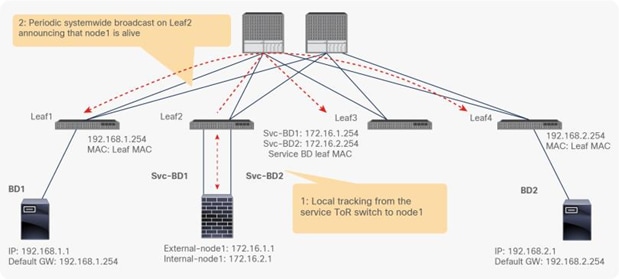

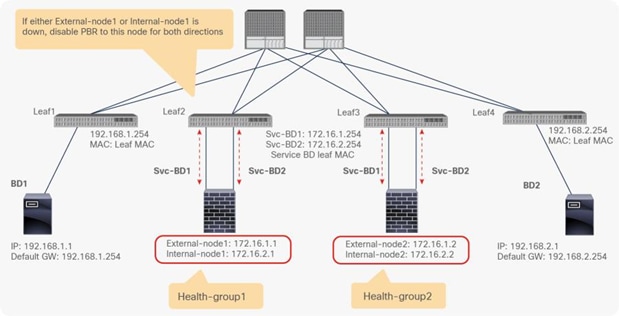

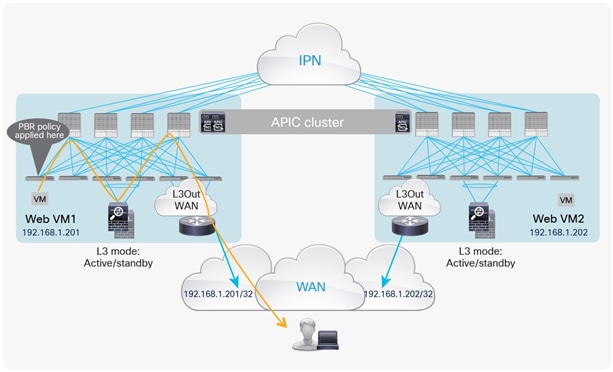

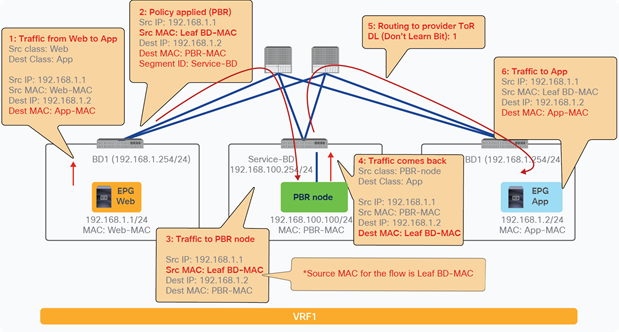

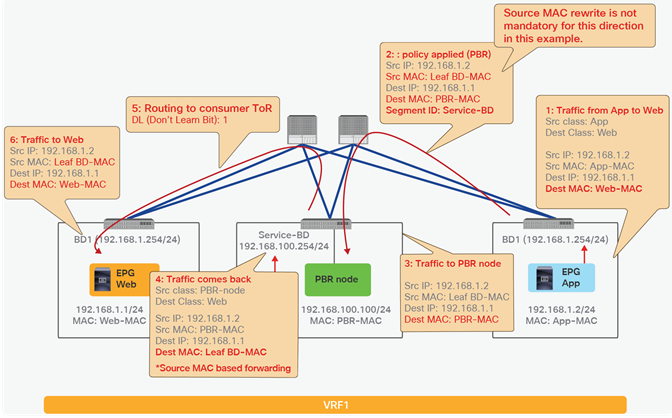

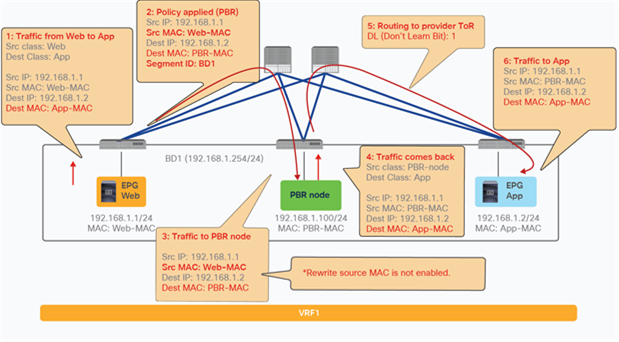

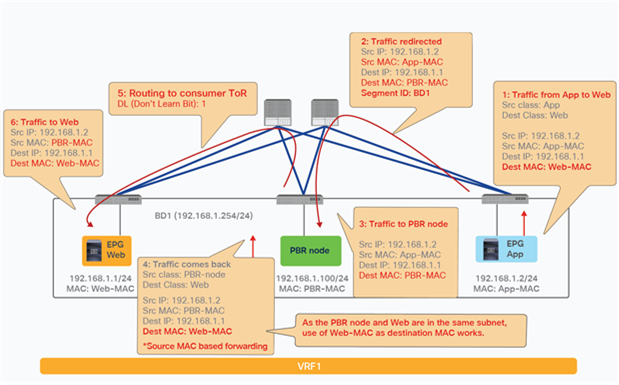

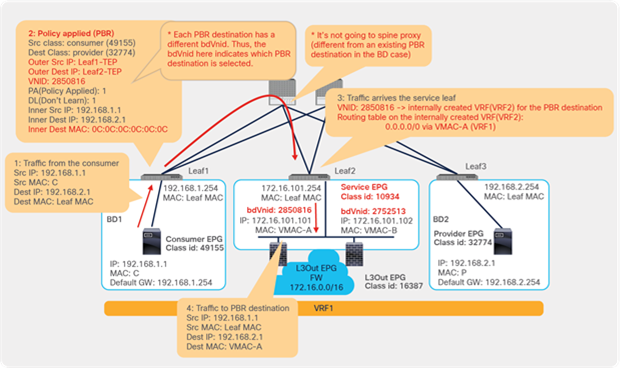

This section explains PBR end-to-end packet flow using a PBR destination in an L3 bridge domain. For a PBR destination in an L3Out, refer to the section, “PBR destination in an L3Out”. Note that because several designs and traffic flows are possible, the example used in this discussion may not exactly reflect your environment.

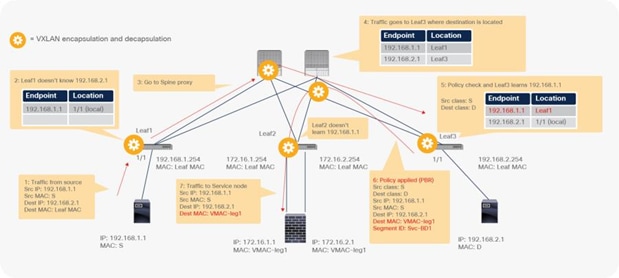

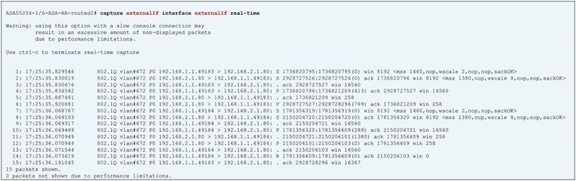

Figure 24 shows an example in which the Client EPG is a consumer EPG, the Web EPG is a provider EPG with a contract with the PBR service graph, and the client endpoint generates traffic destined for the web endpoint. If Leaf1 hasn’t learned the destination endpoint, Leaf1 can’t resolve the destination EPG class ID. Therefore, the traffic goes to the spine proxy, and the spine node forwards the traffic to Leaf3, to which the destination endpoint is connected. Leaf3 learns the source endpoint from this traffic. Then Leaf3 can resolve the source and destination EPG class IDs, so PBR is performed on Leaf3. Here, the destination segment ID (VNID) is rewritten to the bridge domain VNID of the PBR node bridge domain, and the destination MAC address is rewritten to the PBR node MAC address that is configured in the APIC. If Leaf3 doesn’t know where the destination MAC address is connected, the traffic goes to the spine proxy, and the spine node forwards the traffic to Leaf2, to which the PBR node is connected. Leaf2 doesn’t learn the client IP address from this traffic because Endpoint Dataplane Learning is disabled for the PBR node bridge domain.

End-to-end packet flow example (client to web)

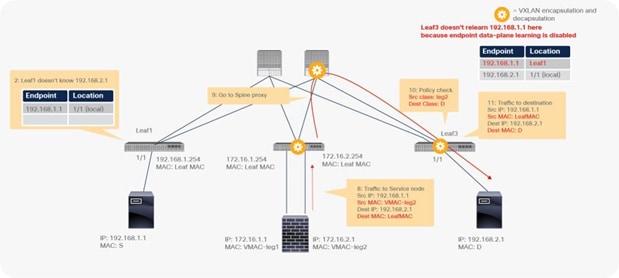

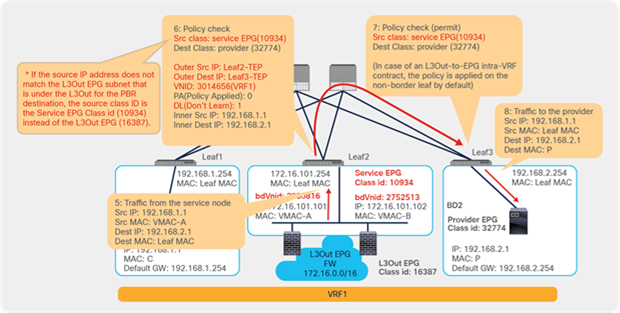

Traffic is routed on the PBR node based on the routing table of the PBR node, and traffic returns to the Cisco ACI fabric. Because Leaf2 does not know the destination endpoint, the traffic goes to the spine proxy again and then to Leaf3. Here the source EPG is the PBR node provider connector class ID, and the destination is the provider EPG class ID. The traffic is only permitted and arrives at the web endpoint. The key point here is that Leaf3 does not learn the client IP address from this traffic because Endpoint Dataplane Learning is disabled for the PBR node bridge domain (Figure 25).

End-to-end packet flow example (PBR node to web)

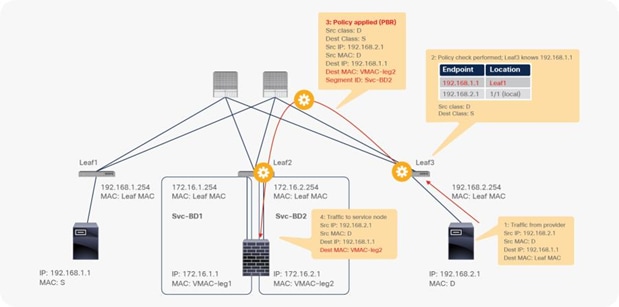

For the return traffic, because Leaf3 can resolve both the source and destination EPG class IDs, PBR is performed on Leaf3. The destination MAC address is rewritten, and the traffic goes to the PBR node on the provider side (Figure 26).

End-to-end packet flow example (web to client)

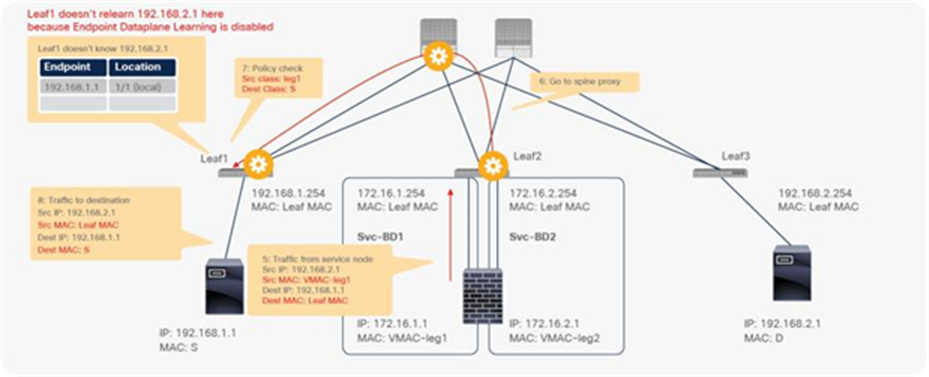

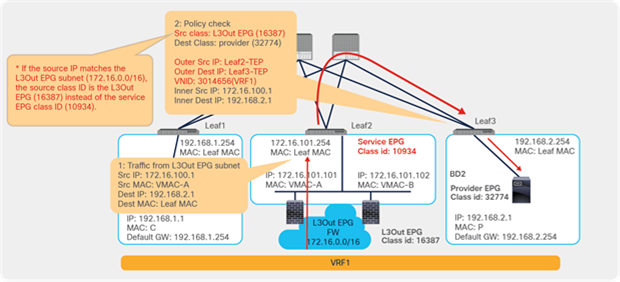

The traffic returns to the Cisco ACI fabric from the consumer side of the PBR node. Because Leaf2 does not know the destination endpoint, the traffic goes to the spine proxy again and then to Leaf1. Leaf1 performs policy enforcement, and the traffic is permitted because the source EPG is the PBR node consumer connector class ID, and the destination is the consumer EPG class ID. Leaf1 does not learn the web endpoint IP address from this traffic because Endpoint Dataplane Learning for the PBR node bridge domain is disabled (Figure 27).

End-to-end packet flow example (PBR node to client)

The rest of the traffic will also be redirected on Leaf3 because Leaf1 does not learn the web endpoint IP address in this example. Cisco ACI enforces policies depending on whether the source and destination class IDs can be determined, which depends on the traffic flow. If traffic is generated from the web endpoint first, or if other traffic lets Leaf1 learn the web endpoint IP address, PBR policy can be performed on Leaf1.
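The dependency between endpoint learning and the enforcement point in this flow can be sketched as follows. This is a deliberately simplified model that considers only whether the ingress leaf can resolve the destination class ID; other factors that influence where policy is enforced are ignored. The function name and the `learned` table layout are hypothetical.

```python
def enforcement_leaf(ingress: str, egress: str, dst_ip: str, learned: dict) -> str:
    """Return the leaf that applies the PBR policy in this simplified model.

    learned maps a leaf name to {endpoint IP: class ID} known on that leaf.
    If the ingress leaf cannot resolve the destination class ID, the policy
    is applied on the egress leaf instead.
    """
    if dst_ip in learned.get(ingress, {}):
        return ingress
    return egress


# State after the first client-to-web packet in the example: Leaf3 knows its
# local web endpoint and has learned the client; Leaf1 knows only the client.
learned = {
    "Leaf1": {"192.168.1.1": 32774},
    "Leaf3": {"192.168.2.1": 32771, "192.168.1.1": 32774},
}
client_to_web = enforcement_leaf("Leaf1", "Leaf3", "192.168.2.1", learned)
web_to_client = enforcement_leaf("Leaf3", "Leaf1", "192.168.1.1", learned)

# If other traffic lets Leaf1 learn the web endpoint, Leaf1 can enforce PBR.
learned["Leaf1"]["192.168.2.1"] = 32771
client_to_web_later = enforcement_leaf("Leaf1", "Leaf3", "192.168.2.1", learned)
```

In this model both directions are initially enforced on Leaf3, and client-to-web enforcement moves to Leaf1 only once Leaf1 has learned the web endpoint.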

Because redirected traffic is routed at a leaf, its TTL is decremented. If you run a traceroute, an ACI leaf IP address therefore appears in the traceroute output. Because a network device sends an ICMP "Time Exceeded" message back to the source by using its closest IP as the source IP, you may see the same subnet range twice, depending on your network design.

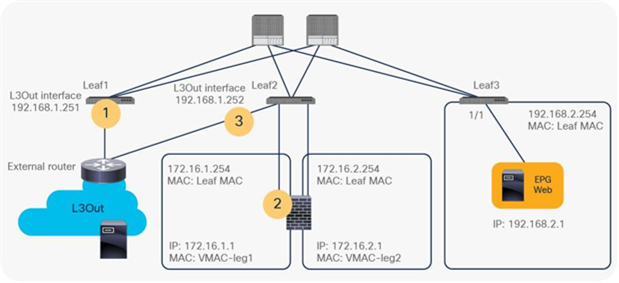

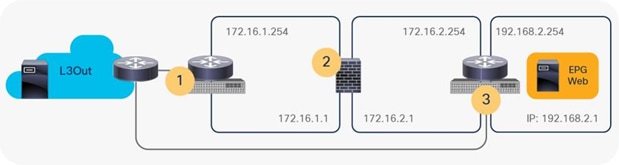

For example, if ICMP traffic is redirected and you run a traceroute from an external client behind L3Out to the destination endpoint at 192.168.2.1 (Figure 28), you would see the following hops in traceroute output:

1. IP of L3Out interface on either Leaf1 or Leaf2 (192.168.1.251 or 192.168.1.252)

2. IP of external connector of PBR node (172.16.1.1) if PBR node decreases TTL*

3. IP of L3Out interface on Leaf2 (192.168.1.252)

Traceroute consideration (topology)

This is because Leaf2 uses its L3Out interface IP as the source IP for the ICMP “Time Exceeded” message back to the external client. Figure 29 illustrates the logical network topology.

Traceroute consideration (logical network topology)

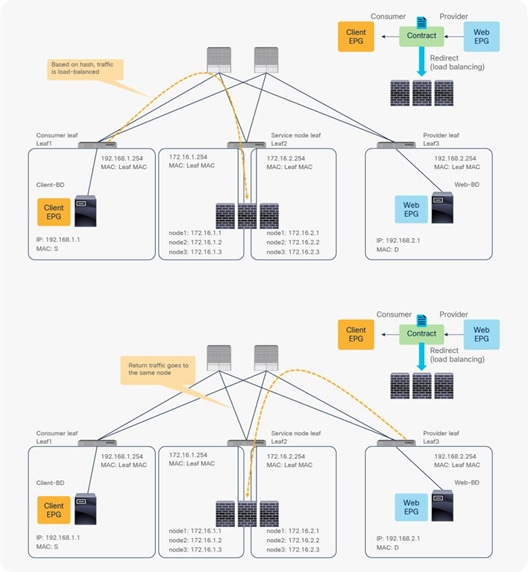

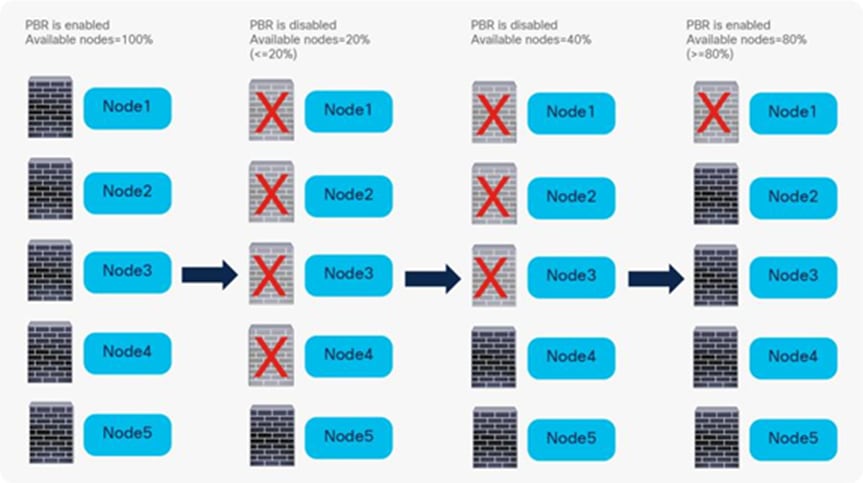

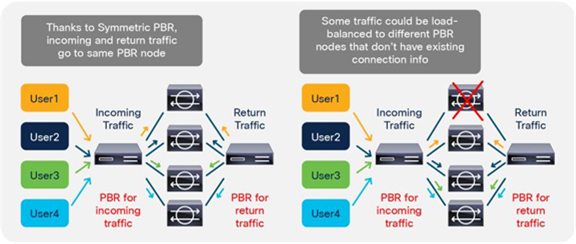

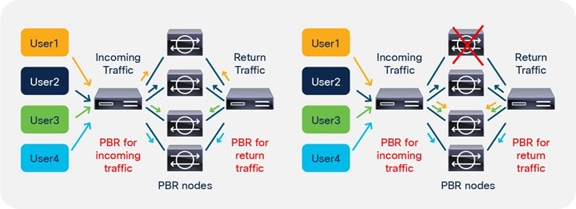

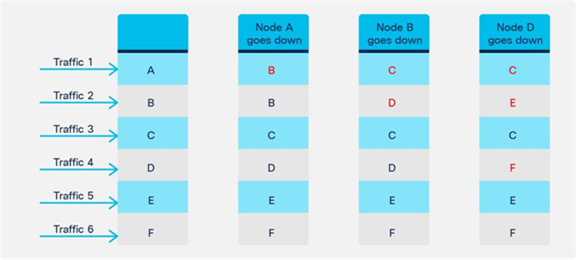

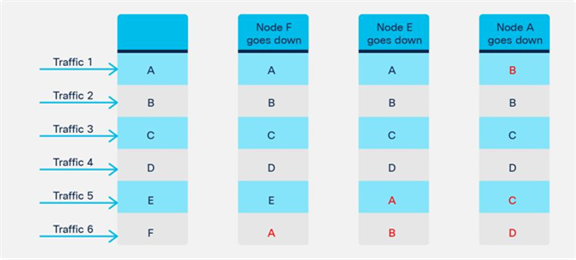

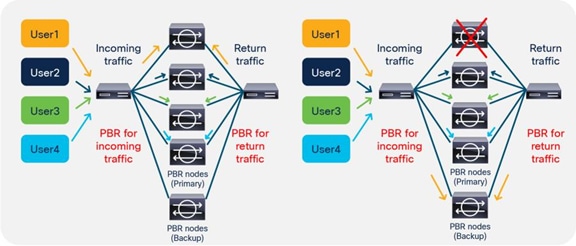

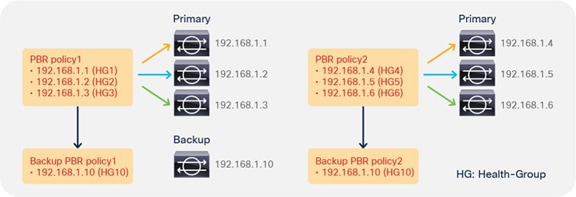

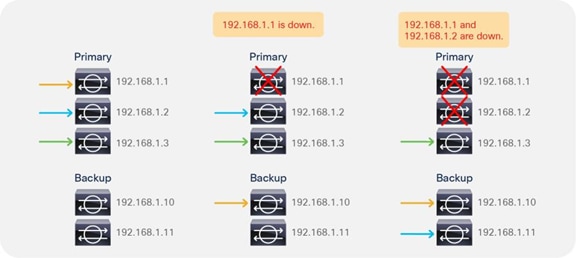

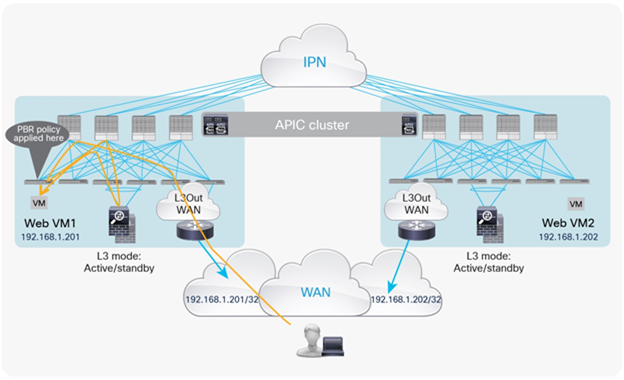

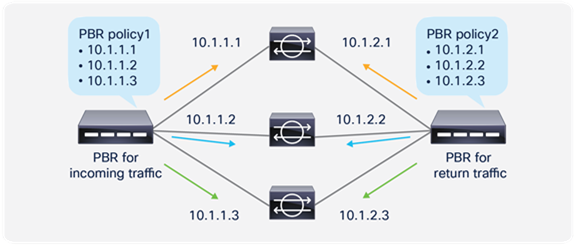

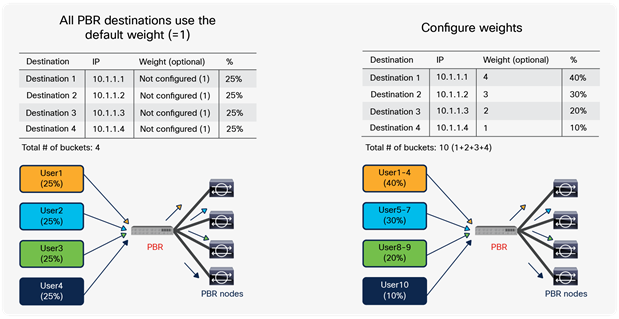

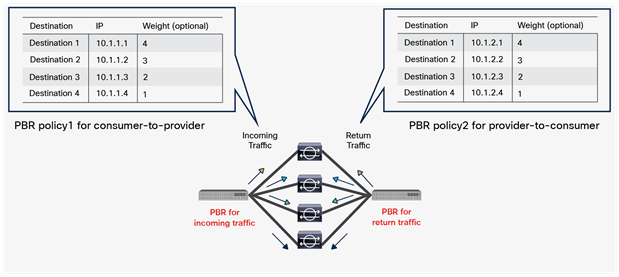

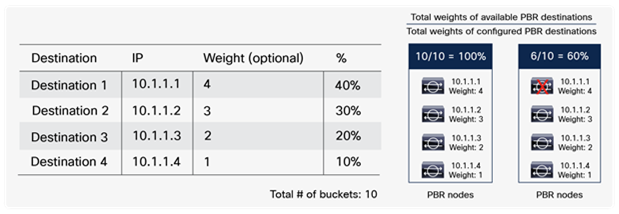

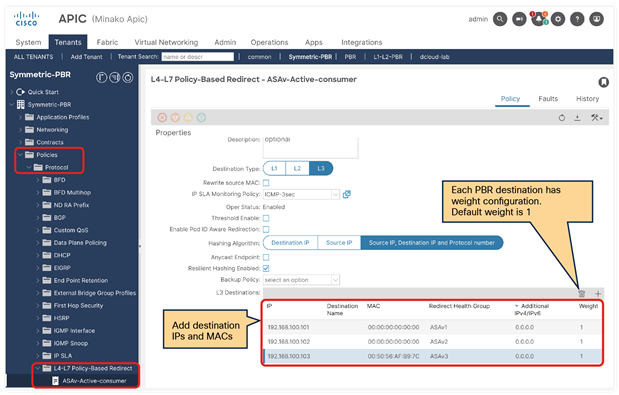

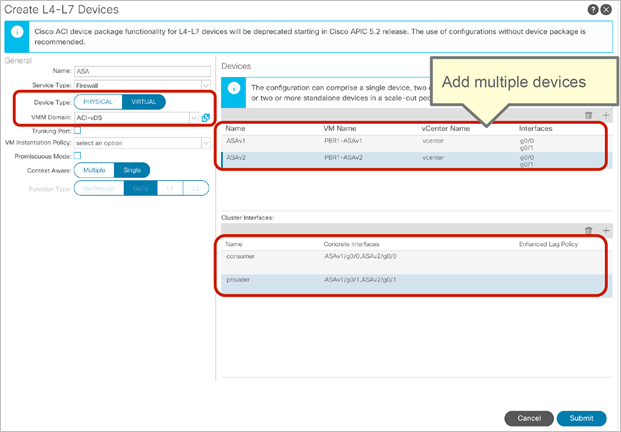

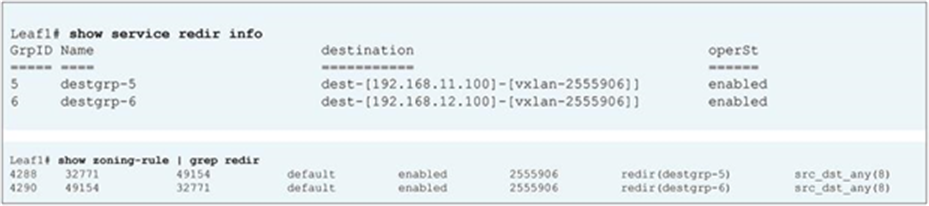

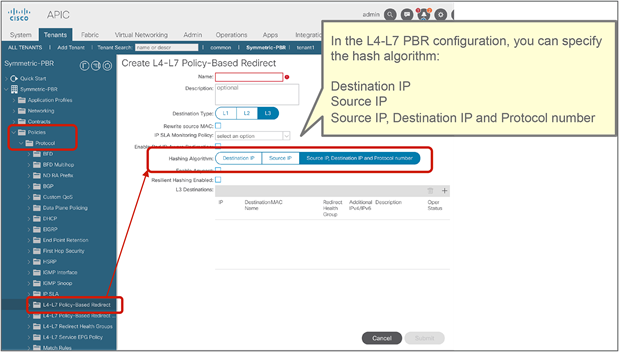

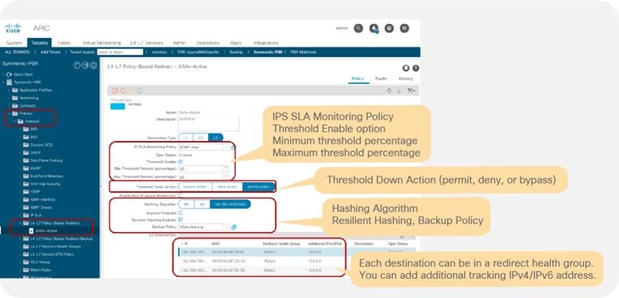

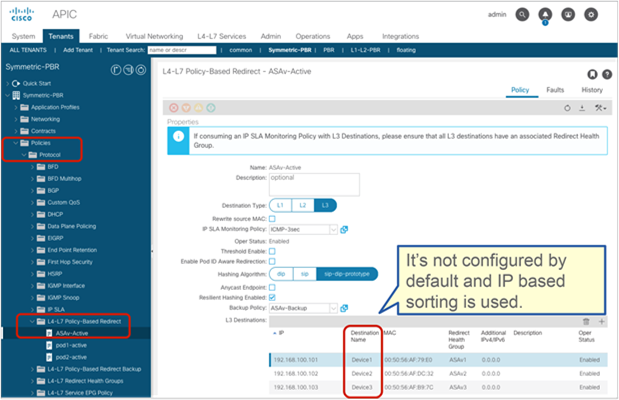

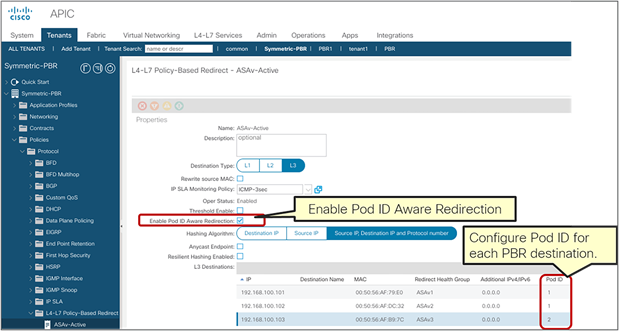

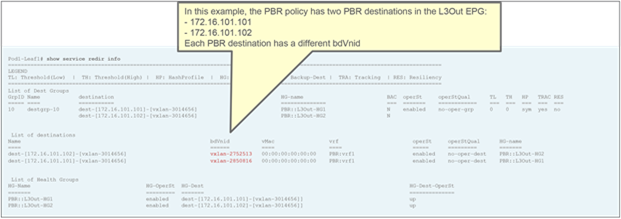

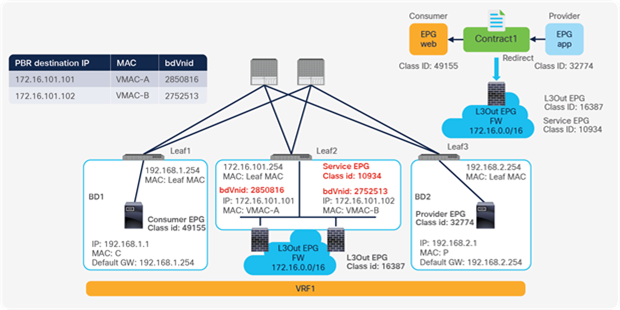

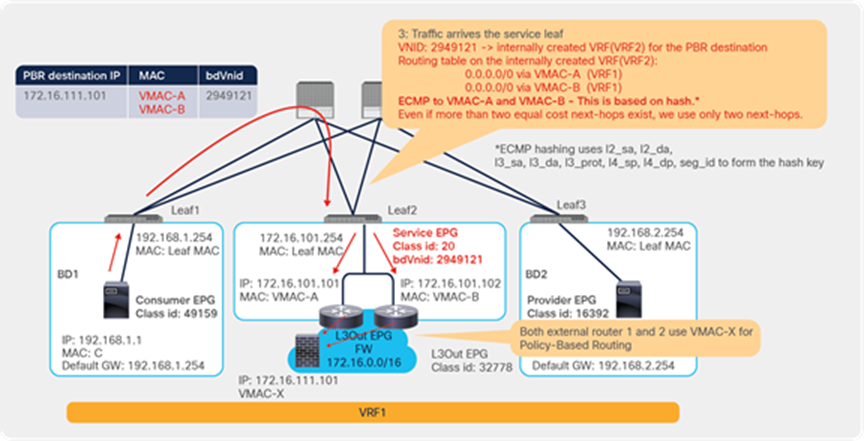

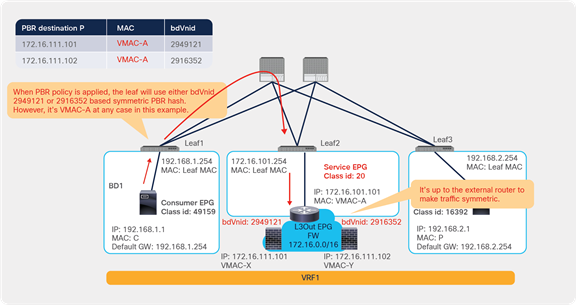

So far, this document has discussed PBR based on the assumption that the PBR destination is a single L4-L7 device. However, PBR can load-balance traffic to more than just one PBR destination such as an individual firewall. If, for example, you have three PBR destinations, IP and MAC address pairs are configured in a PBR policy, and traffic is redirected to one of the three PBR nodes based on hashing. The hash tuple is the source IP address, destination IP address, and protocol number by default. Because L4-L7 devices perform connection tracking, they must see both directions of a flow. Therefore, you need to make sure that incoming and return traffic are redirected to the same PBR node. Symmetric PBR is the feature that enables this capability (Figure 30).

Symmetric PBR is useful for inserting multiple service nodes to scale a system. It requires Cisco Nexus 9300-EX and -FX platform leaf switches onward.
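The requirement that both directions of a flow reach the same PBR node can be illustrated with an order-independent hash. The actual hashing algorithm used by the leaf hardware is not described in this document; the sketch below substitutes SHA-256 over a sorted address pair purely as a stand-in, and the node names and `pick_node` function are hypothetical.

```python
import hashlib

PBR_NODES = ["fw1", "fw2", "fw3"]  # hypothetical PBR destinations


def pick_node(src_ip: str, dst_ip: str, protocol: int, nodes=PBR_NODES) -> str:
    """Pick a PBR node from the default tuple (source IP, destination IP, protocol).

    Assumption: sorting the two addresses makes A->B and B->A hash to the
    same key, which is one simple way to obtain symmetry.
    """
    low, high = sorted([src_ip, dst_ip])
    key = f"{low}|{high}|{protocol}".encode()
    digest = hashlib.sha256(key).digest()
    return nodes[int.from_bytes(digest[:4], "big") % len(nodes)]


incoming = pick_node("192.168.1.1", "192.168.2.1", 6)
returning = pick_node("192.168.2.1", "192.168.1.1", 6)
```

Whatever node is selected, `incoming` and `returning` are always equal, so a stateful device sees both directions of the connection.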

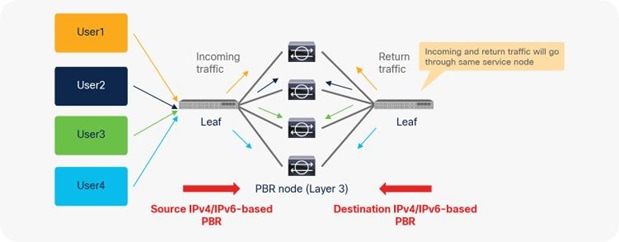

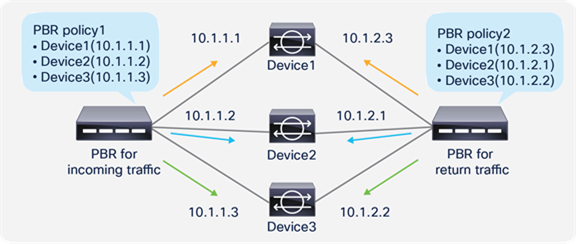

Symmetric PBR

Starting from APIC Release 2.2(3j) and 3.1, the hash tuple is user configurable. You can use the source IP address only; the destination IP address only; or a combination of the source IP address, destination IP address, and protocol number (default). If you use the source IP address only or the destination IP address only option, you need to configure options for both directions to keep traffic symmetric. For example, if you use the source IP address only option for incoming traffic, you must use the destination IP address only option for return traffic to keep traffic symmetric, as shown in Figure 31.

The use case for symmetric PBR with the source IP only or the destination IP only is a scenario in which the traffic from a source IP address (user) always needs to go through the same service node.
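The pairing of the source IP only option with the destination IP only option can be sketched as below. As before, SHA-256 is only a stand-in for the undocumented hardware hash, and the packet dictionaries, node names, and `pick_node_by_field` function are hypothetical.

```python
import hashlib


def pick_node_by_field(packet: dict, hash_field: str, nodes: list) -> str:
    """Pick a PBR node by hashing a single field, 'src' or 'dst', of the packet."""
    digest = hashlib.sha256(packet[hash_field].encode()).digest()
    return nodes[int.from_bytes(digest[:4], "big") % len(nodes)]


nodes = ["fw1", "fw2", "fw3"]
forward = {"src": "192.168.1.1", "dst": "192.168.2.1"}
reverse = {"src": "192.168.2.1", "dst": "192.168.1.1"}

# Incoming traffic hashes on the source IP (the user); return traffic hashes
# on the destination IP, which is the same user address.
forward_node = pick_node_by_field(forward, "src", nodes)
return_node = pick_node_by_field(reverse, "dst", nodes)

# A second flow from the same user to another server lands on the same node.
other_flow = {"src": "192.168.1.1", "dst": "192.168.2.2"}
other_node = pick_node_by_field(other_flow, "src", nodes)
```

Both directions hash on the same address (192.168.1.1), so `forward_node`, `return_node`, and `other_node` are identical, which is the per-user stickiness this option is meant to provide.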

Example with only source IP address and destination IP address

This section describes various deployment options you can use with PBR.

EPGs in a different subnet in the same VRF instance

The basic, common deployment of PBR consists of EPGs and PBR nodes in the same VRF instance, with each EPG in a different bridge domain, as shown in Figure 32 and Figure 33. The gateway for the endpoints is the Cisco ACI fabric, which is required for PBR.

Intra-VRF design (L3Out EPG to Web EPG)

Intra-VRF design (Web EPG to App EPG)

Consumer and provider EPGs in the same subnet

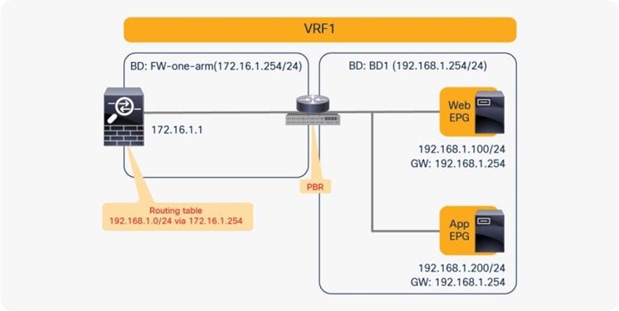

PBR can redirect traffic even if the endpoints are in the same bridge domain.

For example, even though the Web and App EPGs are in the same bridge domain and the same subnet, PBR can be enforced. This design requires the use of the same interface on the PBR node unless the PBR node has a more specific static route. Such a scenario is called a one-arm mode deployment (Figure 34). Though this example uses a dedicated bridge domain for the PBR node, L3 PBR destination can be in the same bridge domain and the same subnet with Web and App EPGs after APIC Release 3.1.

Consumer and provider EPGs in the same subnet

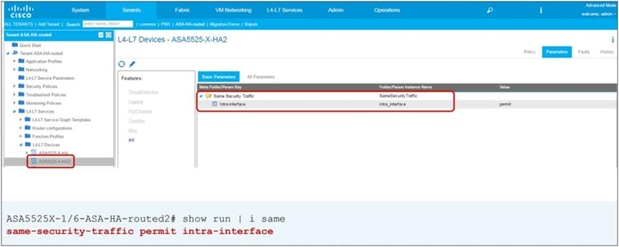

Note: The firewall may prevent traffic from entering and leaving through the same interface. Therefore, the firewall must be configured appropriately to permit intra-interface traffic. See the Cisco Adaptive Security Appliance (ASA) configuration example later in this document.

Prior to APIC Release 4.0, you could not associate a service graph with an intra-EPG contract. In APIC Release 4.0 and later, PBR with an intra-EPG contract is supported. Starting with APIC Release 5.2, PBR with an intra Ext-EPG contract is also supported.

PBR can be deployed as bidirectional PBR or unidirectional PBR.

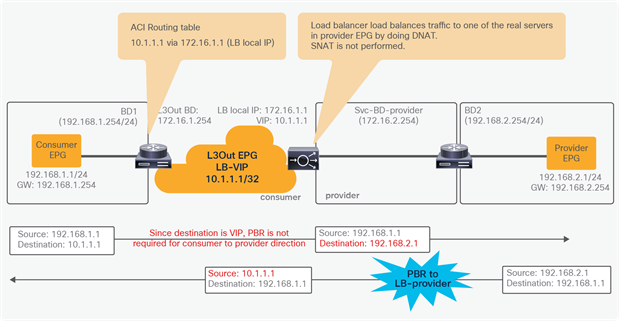

Unidirectional PBR for load balancer without source NAT

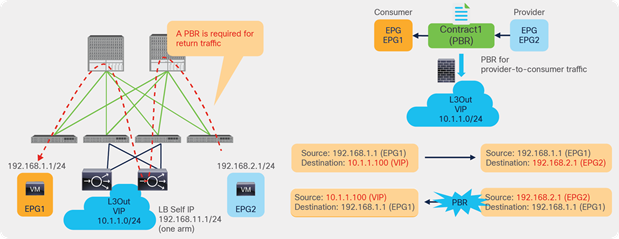

One use case for unidirectional PBR is load-balancer integration without source Network Address Translation (NAT).

For example, as shown in Figure 35, because the destination IP address from the client is the virtual IP address on the load balancer, PBR is not required for client-to-web traffic. If the load balancer doesn’t translate the source IP address, PBR is required for return traffic; otherwise, the return traffic won’t come back to the load balancer.

Unidirectional PBR example

Note: You must set Direct Connect to True to allow keepalive messages from the load-balancer endpoint to the web endpoint.

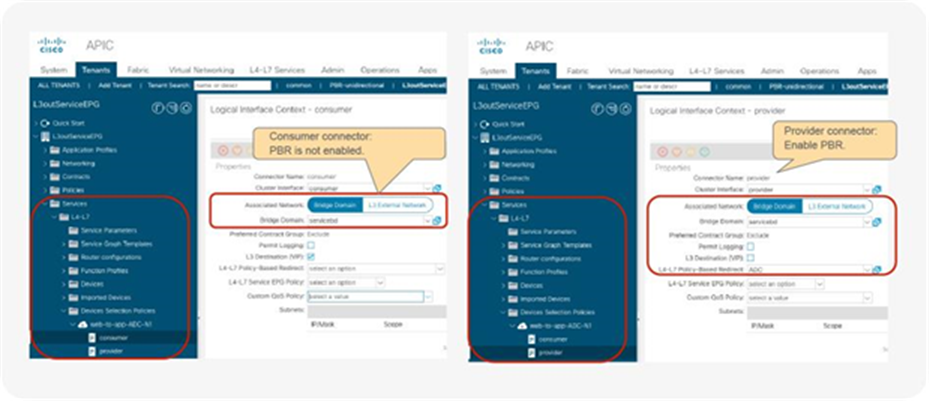

Unidirectional PBR with the other connector in L3Out

Prior to APIC Release 4.1.2, both the consumer and provider connectors of a PBR node had to be in a bridge domain and not in an L3Out, even with unidirectional PBR. Starting from APIC Release 4.1.2, this is no longer required. An L3Out can be used to connect one interface of the L4-L7 device, while the other interface is connected to a bridge domain and receives traffic through PBR redirection.

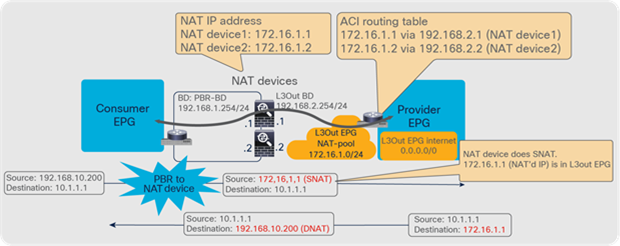

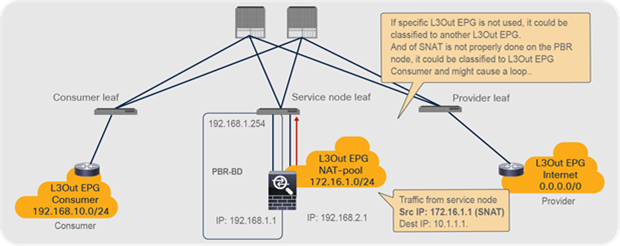

One use case for unidirectional PBR with the other connector in L3Out is a NAT IP-pool outside the local subnet. Figure 36 illustrates an example. Consumer-to-provider traffic is redirected to one of the PBR nodes. The PBR node performs source NAT, and the NAT IP addresses are outside of the local subnet. Thus, L3Out is required to add the route to the NAT IP addresses that are the destination IP addresses of the return traffic from the provider. PBR is not required on the provider connector of the PBR node because the return traffic is destined to the NAT IP address.

Design example of unidirectional PBR with the provider connector in a L3Out

Prior to APIC Release 5.0, L3Out was supported only on the provider connector (the provider-side interface of an L4-L7 device) of the last node in a service graph, as exemplified in Figure 36.

Starting from APIC Release 5.0, this requirement is no longer mandatory. Figure 37 illustrates an example of unidirectional PBR for the provider-to-consumer direction with the other connector in an L3Out. The use case is a load balancer VIP outside the local subnet. Consumer-to-provider traffic goes to the VIP through the L3Out, which doesn’t require PBR because the traffic is destined to the VIP. If the load balancer doesn’t perform NAT, PBR is required for return traffic. In this example, the L3Out is used on the consumer connector.

Design example of unidirectional PBR for provider to consumer direction with the consumer connector in a L3Out

Note: You need to make sure that IP translation is performed properly on the PBR node, and make sure that the specific L3Out EPG subnet is configured if there are other L3Out EPGs in the same VRF. Otherwise, a loop could occur, because L3Out EPG classification is per VRF, not per interface.

Design consideration for unidirectional PBR with the other connector

Starting with APIC Release 5.2, PBR destinations can be in an L3Out instead of an L3 bridge domain. Refer to the section, “PBR destination in an L3Out” for details.

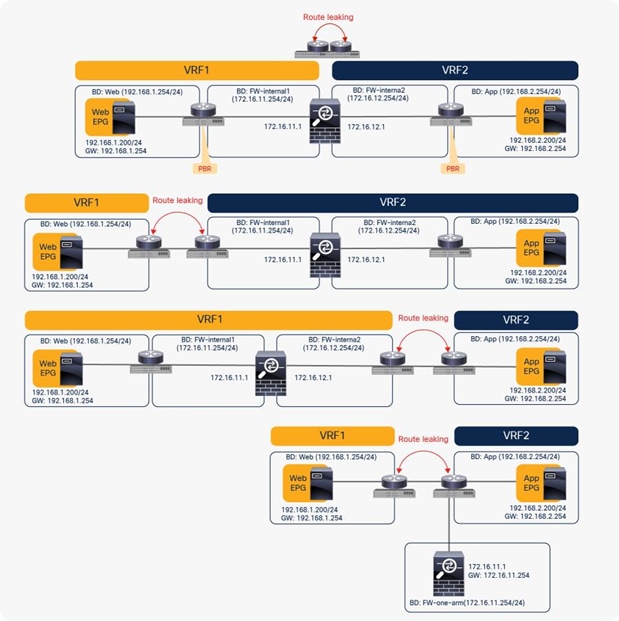

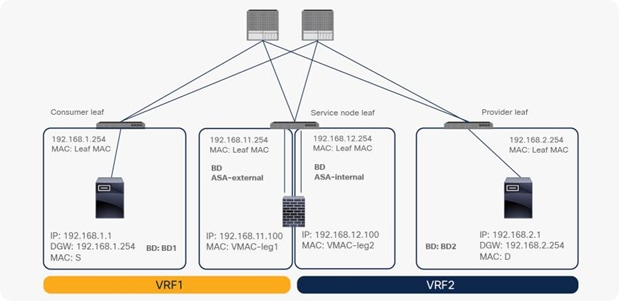

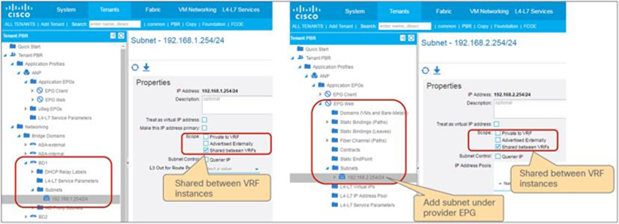

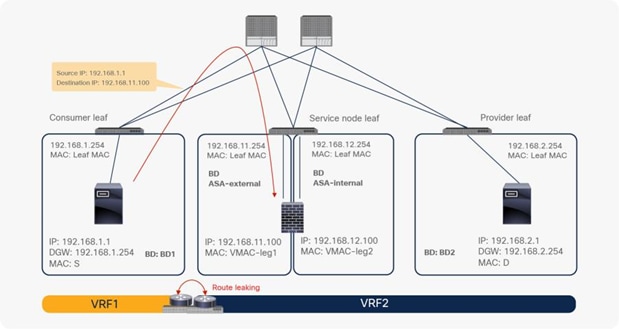

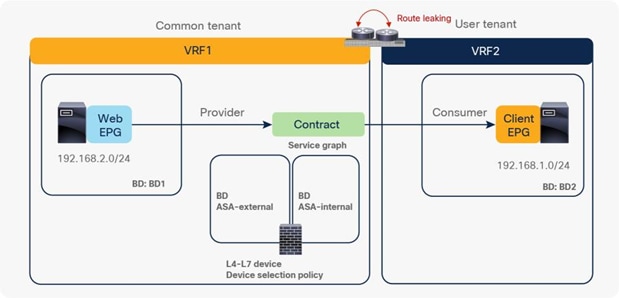

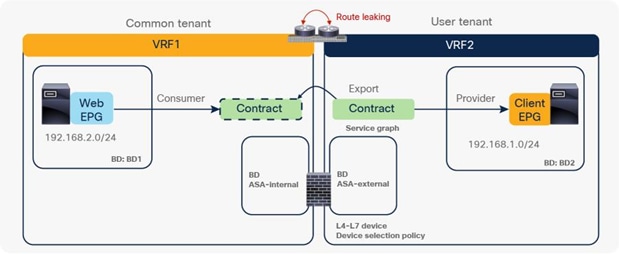

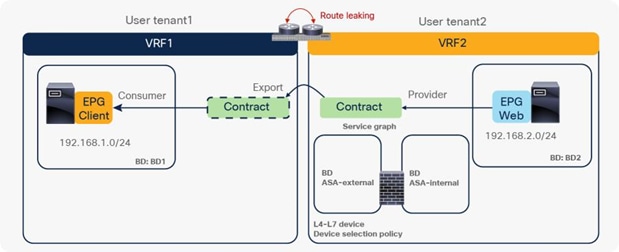

PBR across VRF instances

PBR can be deployed between EPGs in different VRF instances. One use case for this design is a service in one VRF instance shared by endpoints in different VRF instances.

A PBR device can be between consumer and provider VRF instances or in either instance, as shown in Figure 39. The PBR node bridge domain must be in either the consumer or provider EPG VRF instance. It must not be in another VRF instance.

Inter-VRF design

Note: Consumer and provider VRF instances can be in the same tenant or in different tenants.

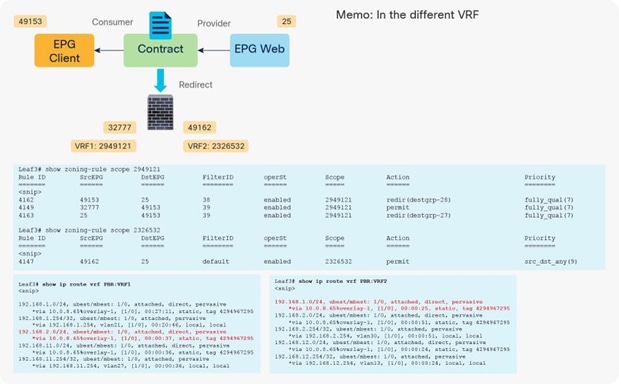

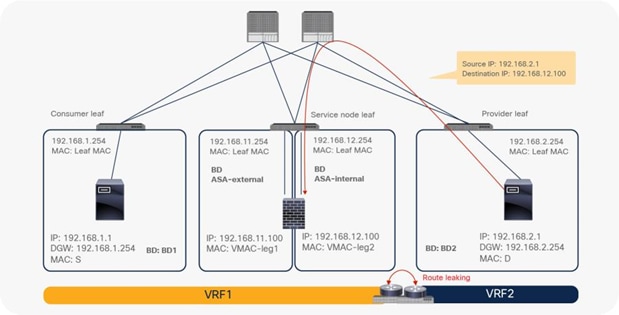

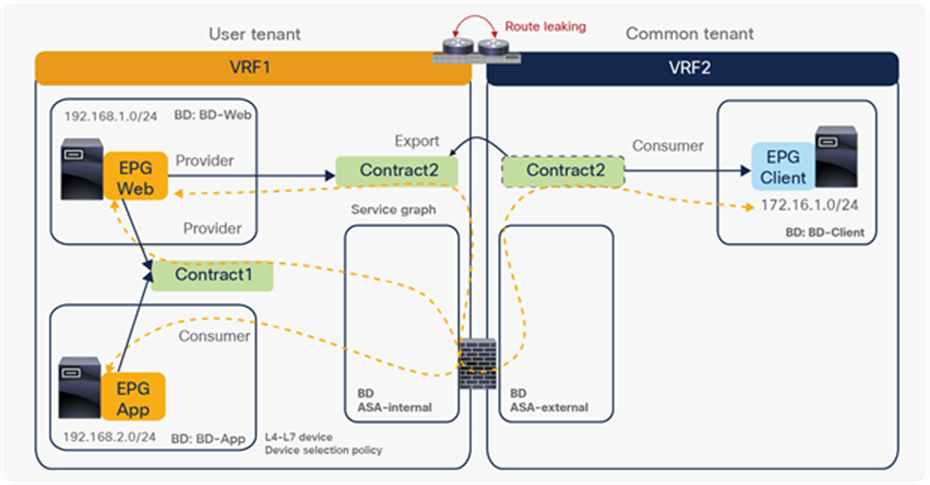

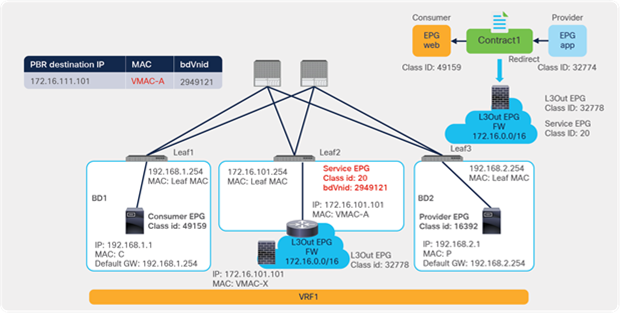

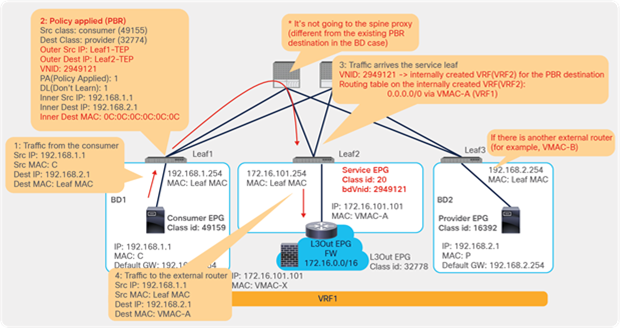

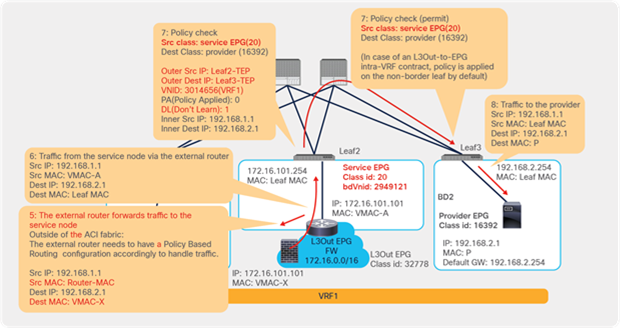

In the case of an inter-VRF contract, provider and consumer routes are leaked between VRF instances, and the consumer VRF instance enforces the Cisco ACI contract policy. Similarly, route leaking across VRF instances is required with PBR. (A route-leaking configuration example is presented later in this document.) For example, VRF1 must contain provider EPG subnet 192.168.2.0/24 that is leaked from VRF2, and VRF2 must contain consumer EPG subnet 192.168.1.0/24 that is leaked from VRF1. After the service graph is deployed, the consumer VRF instance (scope 2949121) has permit and redirect rules for inter-VRF traffic, and the provider VRF instance (scope 2326532) has a permit rule for intra-VRF traffic (Figure 40 and Table 6).

Inter-VRF design with permit and redirect rules

Table 6. Permit and redirect rules (inter-VRF instance)

| VRF instance | Source class ID | Destination class ID | Filter ID | Action |
| --- | --- | --- | --- | --- |
| VRF1 | 49153 (Client EPG) | 25 (Web EPG) | 38 (The filter used in the contract subject) | Redirect |
| VRF1 | 32777 (consumer connector of service node) | 49153 (Client EPG) | 39 (The reverse filter of the filter used in the contract subject) | Permit |
| VRF1 | 25 (Web EPG) | 49153 (Client EPG) | 39 (The reverse filter of the filter used in the contract subject) | Redirect |
| VRF2 | 49162 (provider connector of service node) | 25 (Web EPG) | default | Permit |
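The route-leaking precondition for this inter-VRF example (each VRF must contain the other side's EPG subnet) can be checked with a short helper. This is a sketch that operates on plain lists of prefixes supplied by the caller; it does not query a fabric, and `missing_leaks` and the sample route lists are hypothetical.

```python
import ipaddress


def missing_leaks(vrf_routes: dict, required: list) -> list:
    """Return the (vrf, subnet) pairs from `required` that are absent in vrf_routes."""
    missing = []
    for vrf, subnet in required:
        wanted = ipaddress.ip_network(subnet)
        present = {ipaddress.ip_network(route) for route in vrf_routes.get(vrf, [])}
        if wanted not in present:
            missing.append((vrf, subnet))
    return missing


# Requirement from the example: VRF1 needs the provider subnet leaked from
# VRF2, and VRF2 needs the consumer subnet leaked from VRF1.
required = [("VRF1", "192.168.2.0/24"), ("VRF2", "192.168.1.0/24")]

# Hypothetical state in which only one direction has been leaked so far.
vrf_routes = {
    "VRF1": ["192.168.1.0/24", "192.168.2.0/24"],
    "VRF2": ["192.168.2.0/24"],
}
gaps = missing_leaks(vrf_routes, required)
```

With the sample state above, `gaps` reports that VRF2 still lacks 192.168.1.0/24.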

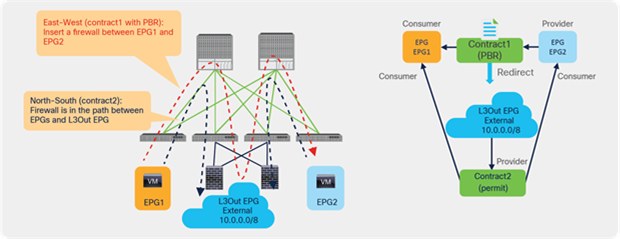

Two-node service graph (firewall with PBR plus load balancer with NAT)

If you want to insert two service nodes, for example, a firewall followed by a load balancer, between EPGs, you will likely need PBR to insert the firewall because the traffic is destined for the load balancer’s virtual IP address, which doesn’t require redirection.

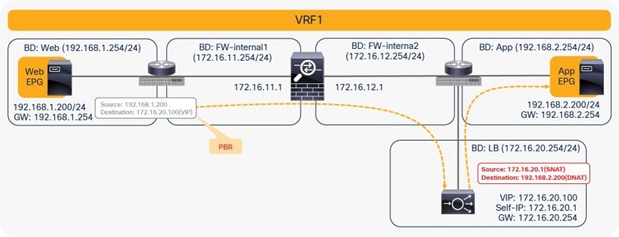

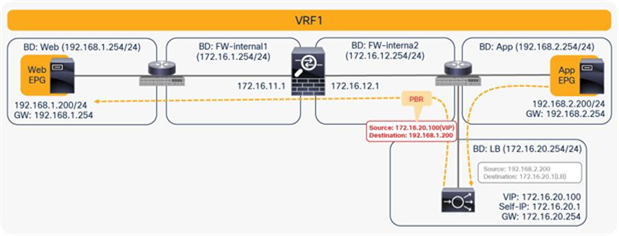

For example, the first node is the firewall, which is a PBR node, and the second node is the load balancer, which is not a PBR node. The consumer endpoint generates traffic destined for the virtual IP address of the load balancer. The traffic will be redirected to the firewall, where PBR policy is applied on the traffic from the Web EPG (the provider EPG) to the load-balancer EPG (the consumer connector of the second node). Then the traffic will go to the load balancer, and the source and destination IP addresses are translated by the load balancer. Finally, it will go to the destination (Figure 41).

Two-node service graph (incoming traffic)

For return traffic, because source NAT was performed by the load balancer, the destination IP address is the load balancer’s IP address. Traffic goes back to the load balancer, and the IP addresses will be translated. Then PBR policy is applied again between the load-balancer EPG (the consumer side of the second node) and the Web EPG (Figure 42).

Prior to APIC Release 3.2, only one node in a service graph, either the first or the second, can be a PBR node. Therefore, NAT is required on the second node in this example.

Two-node service graph (return traffic)

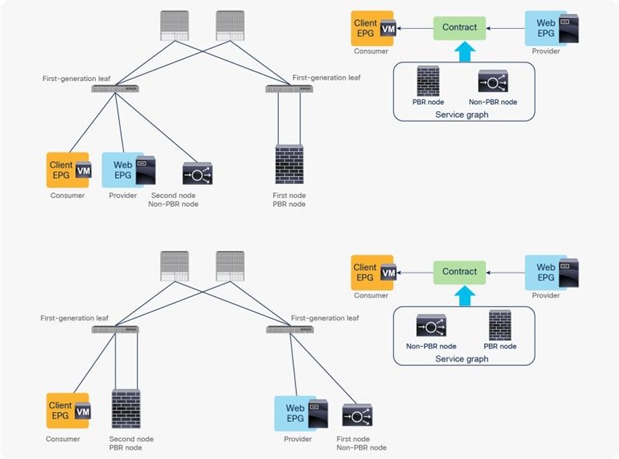

Note: If you use Cisco Nexus 9300 platform switches (except Cisco Nexus 9300-EX and -FX platform switches onward), the first node (the PBR node) must be under a different leaf node than the leaf node to which the consumer endpoint and the second node are connected. However, the consumer endpoint, the provider endpoint, and the second node can be under the same leaf node. If the second node is a PBR node, the PBR node must be under a different leaf node than the leaf node to which the provider side of the first node and the provider EPG are connected, but the consumer endpoint and the PBR node can be under the same leaf node.

Cisco Nexus 9300-EX and -FX platform leaf switches onward do not have this requirement (Figure 43).

Cisco Nexus 9300 platform (except Cisco Nexus 9300-EX and -FX platforms onward) leaf node considerations

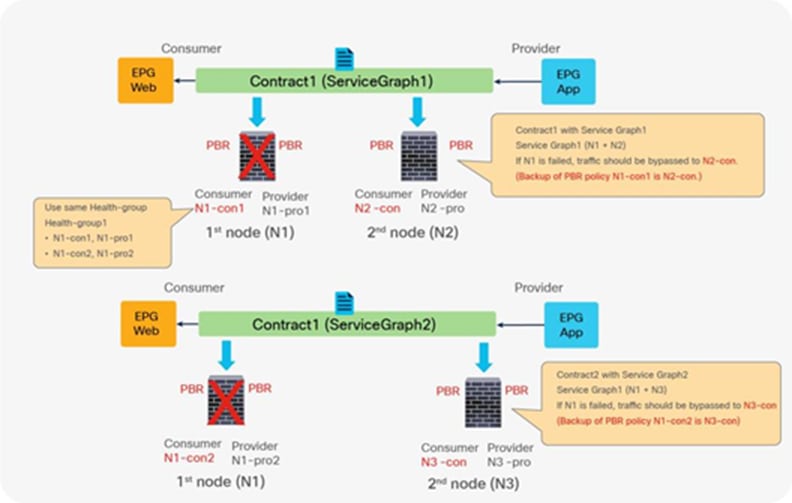

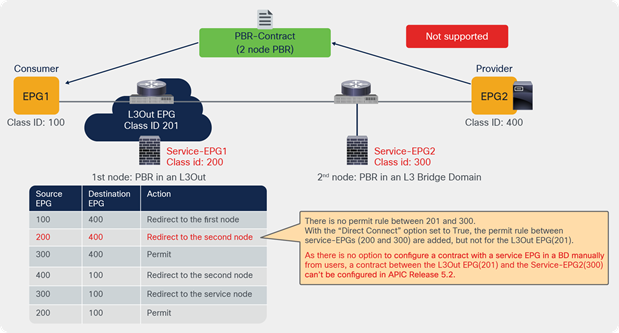

Multinode service graph with PBR

Multinode PBR was introduced in APIC Release 3.2. It enables you to use PBR multiple times in a service graph, which simplifies insertion of multiple service functions in a specific order without VRF or BD sandwich considerations.

PBR nodes and non-PBR nodes can be mixed in the same service graph, for example:

● FW (PBR) + IPS (PBR) + TCP optimizer (PBR)

● FW (PBR) + IPS (PBR) + Load Balancer (non-PBR)

Multinode PBR examples

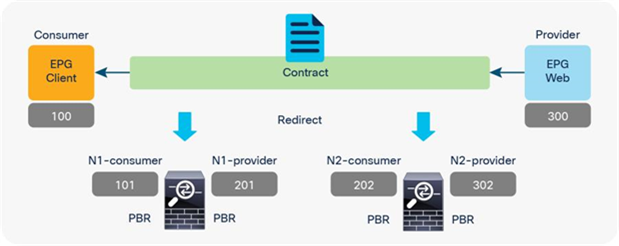

Multinode PBR without non-PBR node

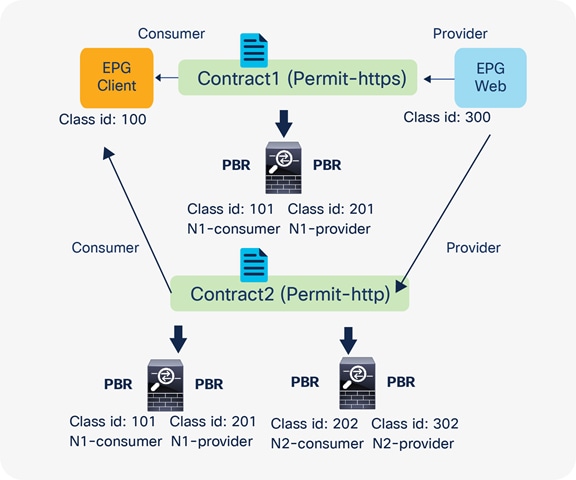

Figure 45 and Table 7 illustrate an example of what policies are programmed for two-node PBR. If all of the service nodes are PBR nodes, it will perform similarly to single-node PBR. The destination class ID is always the consumer or provider EPG class ID.

● Traffic from Client EPG (class ID: 100) to Web EPG (class ID: 300) is redirected to the consumer connector of N1.

● Traffic from provider connector N1 (class ID: 201) to Web EPG (class ID: 300) is redirected to the consumer connector of N2.

● Traffic from provider connector N2 (class ID: 302) to Web EPG (class ID: 300) is permitted.

● Traffic from Web EPG (class ID: 300) to Client EPG (class ID: 100) is redirected to the provider connector of N2.

● Traffic from consumer connector N2 (class ID: 202) to Client EPG (class ID: 100) is redirected to the provider connector of N1.

● Traffic from consumer connector N1 (class ID: 101) to Client EPG (class ID: 100) is permitted.

Two-node PBR

Table 7. Permit and redirect rules (two-node PBR)

| Source class ID | Destination class ID | Filter ID | Action |
| --- | --- | --- | --- |
| 100 (Client EPG) | 300 (Web EPG) | The filter used in the contract subject | Redirect to N1-consumer |
| 201 (provider connector of N1) | 300 (Web EPG) | Default | Redirect to N2-consumer |
| 302 (provider connector of N2) | 300 (Web EPG) | Default | Permit |
| 300 (Web EPG) | 100 (Client EPG) | The reverse filter of the filter used in the contract subject | Redirect to N2-provider |
| 202 (consumer connector of N2) | 100 (Client EPG) | The reverse filter of the filter used in the contract subject | Redirect to N1-provider |
| 101 (consumer connector of N1) | 100 (Client EPG) | The reverse filter of the filter used in the contract subject | Permit |
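The chain of lookups in Table 7 can be followed with a small Python sketch. It is an illustration only, not how the leaf switches implement zoning rules: filters are ignored (the packet is assumed to match them), and the `EGRESS_CLASS` helper table is a hypothetical construct that records which connector class ID the traffic carries after it leaves each service node.

```python
# Zoning rules from Table 7, keyed by (source class ID, destination class ID).
# Filters are omitted; this sketch assumes the packet matches them.
RULES = {
    (100, 300): ("redirect", "N1-consumer"),
    (201, 300): ("redirect", "N2-consumer"),
    (302, 300): ("permit", None),
    (300, 100): ("redirect", "N2-provider"),
    (202, 100): ("redirect", "N1-provider"),
    (101, 100): ("permit", None),
}

# Hypothetical helper: class ID of the connector through which traffic
# leaves a node after entering it on the named connector.
EGRESS_CLASS = {
    "N1-consumer": 201,  # in via N1 consumer, out via N1 provider
    "N2-consumer": 302,  # in via N2 consumer, out via N2 provider
    "N2-provider": 202,  # in via N2 provider, out via N2 consumer
    "N1-provider": 101,  # in via N1 provider, out via N1 consumer
}


def trace(src, dst):
    """Return the ordered redirect targets, ending with 'permit'."""
    hops = []
    while True:
        action, target = RULES[(src, dst)]
        if action == "permit":
            hops.append("permit")
            return hops
        hops.append(target)
        # The destination class ID never changes; only the source does.
        src = EGRESS_CLASS[target]


print(trace(100, 300))  # Client EPG to Web EPG
print(trace(300, 100))  # Web EPG to Client EPG
```

Note that only the source class ID changes between lookups, which is why the destination class ID in every row of Table 7 is the consumer or provider EPG.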

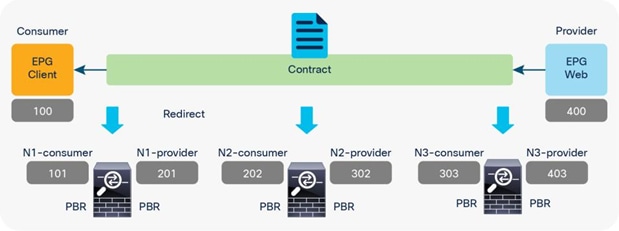

Figure 46 and Table 8 illustrate an example of what policies are programmed for three-node PBR. Similar to the two-node PBR case, the destination class ID is always the consumer or provider EPG class ID.

Three-node PBR

Table 8. Permit and redirect rules (three-node PBR)

| Source class ID | Destination class ID | Filter ID | Action |
| --- | --- | --- | --- |
| 100 (Client EPG) | 400 (Web EPG) | The filter used in the contract subject | Redirect to N1-consumer |
| 201 (provider connector of N1) | 400 (Web EPG) | Default | Redirect to N2-consumer |
| 302 (provider connector of N2) | 400 (Web EPG) | Default | Redirect to N3-consumer |
| 403 (provider connector of N3) | 400 (Web EPG) | Default | Permit |
| 400 (Web EPG) | 100 (Client EPG) | The reverse filter of the filter used in the contract subject | Redirect to N3-provider |
| 303 (consumer connector of N3) | 100 (Client EPG) | The reverse filter of the filter used in the contract subject | Redirect to N2-provider |
| 202 (consumer connector of N2) | 100 (Client EPG) | The reverse filter of the filter used in the contract subject | Redirect to N1-provider |
| 101 (consumer connector of N1) | 100 (Client EPG) | The reverse filter of the filter used in the contract subject | Permit |
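The pattern behind Tables 7 and 8 generalizes to any number of PBR nodes. The following Python sketch derives the rows from an ordered list of nodes; the function name `pbr_rules`, the tuple layout, and the short filter labels (`contract`, `default`, `reverse`) are assumptions made for this illustration and are not APIC object names.

```python
def pbr_rules(consumer_epg, provider_epg, nodes):
    """Build (source, destination, filter, action) rows for an all-PBR chain.

    nodes: list of (name, consumer_connector_class, provider_connector_class)
    in consumer-to-provider order.
    """
    rules = []

    # Consumer-to-provider direction: only the first rule uses the contract
    # filter; later rules have a connector as source and use the default filter.
    src = consumer_epg
    for i, (name, _cons, prov) in enumerate(nodes):
        flt = "contract" if i == 0 else "default"
        rules.append((src, provider_epg, flt, f"redirect to {name}-consumer"))
        src = prov  # traffic leaves the node through its provider connector
    rules.append((src, provider_epg, "default", "permit"))

    # Provider-to-consumer direction: nodes are visited in reverse order and
    # every rule uses the reverse of the contract filter.
    src = provider_epg
    for name, cons, _prov in reversed(nodes):
        rules.append((src, consumer_epg, "reverse", f"redirect to {name}-provider"))
        src = cons  # traffic leaves the node through its consumer connector
    rules.append((src, consumer_epg, "reverse", "permit"))
    return rules


# Class IDs taken from Figure 46 / Table 8.
three_node = pbr_rules(100, 400, [("N1", 101, 201), ("N2", 202, 302), ("N3", 303, 403)])
for row in three_node:
    print(row)
```

Calling the same function with two nodes and a provider EPG class ID of 300 yields the six rows of Table 7.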

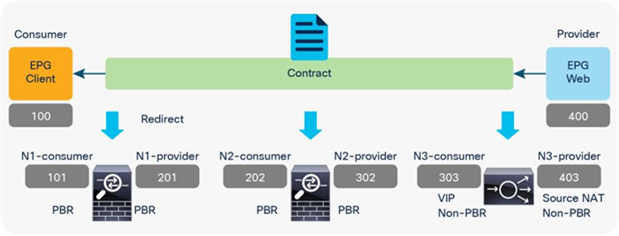

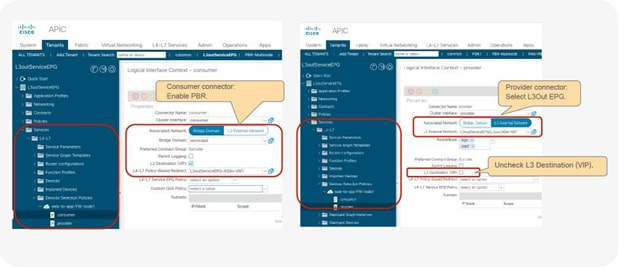

Multinode PBR with a combination of PBR and non-PBR nodes

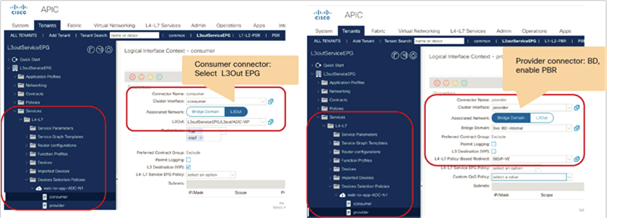

If a service graph contains both PBR and non-PBR nodes, the programmed policies differ from those in Tables 7 and 8, because non-PBR nodes (for example, a load balancer VIP or a firewall with NAT) do not require redirection: traffic is destined to them. When PBR is required, it is important to identify whether a connector of a service node is a traffic destination. For a combination of PBR and non-PBR nodes, a flag called “L3 Destination (VIP)” was introduced on the Device Selection Policy to identify where the traffic is destined in the service chain.

Figure 47 and Table 9 illustrate an example of the policies programmed for a three-node service graph in which N1 and N2 are PBR nodes (for example, a firewall and an IPS without address translation) and N3 is a load balancer with source NAT.

Since traffic from Client EPG is destined to Load Balancer VIP, the destination class ID is the consumer connector of N3 where the VIP is located, until the traffic goes through N3.

● Traffic from Client EPG (class ID: 100) to the consumer connector of N3 (class ID: 303) is redirected to the consumer connector of N1.

● Traffic from the provider connector of N1 (class ID: 201) to the consumer connector of N3 (class ID: 303) is redirected to the consumer connector of N2.

● Traffic from the provider connector of N2 (class ID: 302) to the consumer connector of N3 (class ID: 303) is permitted.

● Traffic from the provider connector of N3 (class ID: 403) to Web EPG (class ID: 400) is permitted.

For return traffic, the destination class ID is the provider connector of N3, where the source NAT address is located, until the traffic goes through N3. Traffic from the Web EPG (class ID: 400) to the provider connector of N3 (class ID: 403) is permitted. After N3, the traffic is redirected to the provider connector of N2 and then to the provider connector of N1, mirroring the Client-to-Web traffic flow.

Combination of PBR and non-PBR nodes (Node 3 is Load Balancer with Source NAT.)

Table 9. Permit and redirect rules (combination of PBR and non-PBR nodes)

| Source class ID | Destination class ID | Filter ID | Action |
| --- | --- | --- | --- |
| 100 (Client EPG) | 303 (consumer connector of N3; VIP on LB) | The filter used in the contract subject | Redirect to N1-consumer |
| 201 (provider connector of N1) | 303 (consumer connector of N3; VIP on LB) | Default | Redirect to N2-consumer |
| 302 (provider connector of N2) | 303 (consumer connector of N3; VIP on LB) | Default | Permit |
| 403 (provider connector of N3) | 400 (Web EPG) | Default | Permit |
| 400 (Web EPG) | 403 (provider connector of N3; SNAT address) | Default | Permit |
| 303 (consumer connector of N3) | 100 (Client EPG) | The reverse filter of the filter used in the contract subject | Redirect to N2-provider |
| 202 (consumer connector of N2) | 100 (Client EPG) | The reverse filter of the filter used in the contract subject | Redirect to N1-provider |
| 101 (consumer connector of N1) | 100 (Client EPG) | The reverse filter of the filter used in the contract subject | Permit |

In this example, the “L3 Destination (VIP)” flag must be set on both the consumer and provider connectors of N3 in the Device Selection Policy, so that the PBR policy is programmed accordingly.
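A rough Python sketch of how the rows of Table 9 follow from the chain layout is shown below. It covers only the shape of this example (one or more PBR nodes followed by a single non-PBR load balancer whose consumer connector hosts the VIP and whose provider connector hosts the source NAT address). The function name `mixed_rules` and the filter labels are assumptions for illustration.

```python
def mixed_rules(consumer_epg, provider_epg, pbr_nodes, lb):
    """Rows for PBR nodes followed by one non-PBR load balancer with SNAT.

    pbr_nodes: list of (name, consumer_connector_class, provider_connector_class)
               in consumer-to-provider order; assumed to be non-empty.
    lb:        (name, consumer_connector_class, provider_connector_class) of the
               non-PBR node whose connectors are L3 destinations.
    """
    _lb_name, lb_cons, lb_prov = lb
    rules = []

    # Client to VIP: the destination class is the LB consumer connector.
    src = consumer_epg
    for i, (name, _cons, prov) in enumerate(pbr_nodes):
        flt = "contract" if i == 0 else "default"
        rules.append((src, lb_cons, flt, f"redirect to {name}-consumer"))
        src = prov
    rules.append((src, lb_cons, "default", "permit"))

    # LB to server and server back to the SNAT address: no redirection.
    rules.append((lb_prov, provider_epg, "default", "permit"))
    rules.append((provider_epg, lb_prov, "default", "permit"))

    # VIP back to client: PBR nodes are traversed in reverse order.
    src = lb_cons
    for name, cons, _prov in reversed(pbr_nodes):
        rules.append((src, consumer_epg, "reverse", f"redirect to {name}-provider"))
        src = cons
    rules.append((src, consumer_epg, "reverse", "permit"))
    return rules


# Class IDs taken from Figure 47 / Table 9.
table9 = mixed_rules(100, 400, [("N1", 101, 201), ("N2", 202, 302)], ("N3", 303, 403))
for row in table9:
    print(row)
```

The destination class ID changes at N3 because the load balancer terminates the client connection and opens a new one toward the Web EPG.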

The filters-from-contract option in the service graph template was introduced in APIC Release 4.2(3). It enables you to use the specific filter of the contract subject to which the service graph is attached, instead of the default filter, for zoning rules that don’t include the consumer EPG class ID as a source or destination. (This option is disabled by default. Refer to the “Dataplane programming” section for the default behavior.)

Figure 48, Table 10, and Table 11 show a use case example. One-node and two-node service graphs are attached to contracts with different filters between the same consumer and provider EPG pair. Contract1, with a one-node service graph, uses a permit-https filter, and Contract2, with a two-node service graph, uses a permit-http filter. Both service graphs use the same first service node interfaces. With the default behavior, which uses the default filter for zoning rules that don’t include a consumer EPG class ID as a source or destination, the result is a duplicated zoning rule: the two service graphs generate rules with exactly the same source class, destination class, and filter (the default filter) but different actions (Permit in Table 10 versus Redirect to N2-consumer in Table 11), even though the filters in the contracts are different. Hence, the filters-from-contract option is required for this use case to enforce different policies.

One-node PBR and two-node PBR using the same service node

Note: If the source or destination class ID is unique, the filters-from-contract option is not mandatory. For example, Contract1 and Contract2 have different provider EPGs or the provider connector of the first service node is different.

Table 10. Permit and redirect rules for the one-node PBR (without the filters-from-contract option)

| Source class ID | Destination class ID | Filter ID | Action |
| --- | --- | --- | --- |
| 100 (Client EPG) | 300 (Web EPG) | The filter used in the contract subject (source port: any; destination port: 443) | Redirect to N1-consumer |
| 201 (provider connector of N1) | 300 (Web EPG) | Default | Permit |
| 300 (Web EPG) | 100 (Client EPG) | The reverse filter of the filter used in the contract subject (source port: 443; destination port: any) | Redirect to N1-provider |
| 101 (consumer connector of N1) | 100 (Client EPG) | The reverse filter of the filter used in the contract subject (source port: 443; destination port: any) | Permit |

Table 11. Permit and redirect rules for the two-node PBR (without the filters-from-contract option)

| Source class ID | Destination class ID | Filter ID | Action |
| --- | --- | --- | --- |
| 100 (Client EPG) | 300 (Web EPG) | The filter used in the contract subject (source port: any; destination port: 80) | Redirect to N1-consumer |
| 201 (provider connector of N1) | 300 (Web EPG) | Default | Redirect to N2-consumer |
| 302 (provider connector of N2) | 300 (Web EPG) | Default | Permit |
| 300 (Web EPG) | 100 (Client EPG) | The reverse filter of the filter used in the contract subject (source port: 80; destination port: any) | Redirect to N2-provider |
| 202 (consumer connector of N2) | 100 (Client EPG) | The reverse filter of the filter used in the contract subject (source port: 80; destination port: any) | Redirect to N1-provider |
| 101 (consumer connector of N1) | 100 (Client EPG) | The reverse filter of the filter used in the contract subject (source port: 80; destination port: any) | Permit |

When the filters-from-contract option is enabled on either or both service graph templates, the zoning rules become unique and different policies can be enforced. Tables 12 and 13 show the zoning-rule examples with the filters-from-contract option enabled on both service graph templates.

Table 12. Permit and redirect rules for the one-node PBR (with the filters-from-contract option)

| Source class ID | Destination class ID | Filter ID | Action |
| --- | --- | --- | --- |
| 100 (Client EPG) | 300 (Web EPG) | The filter used in the contract subject (source port: any; destination port: 443) | Redirect to N1-consumer |
| 201 (provider connector of N1) | 300 (Web EPG) | The filter used in the contract subject (source port: any; destination port: 443) | Permit |
| 300 (Web EPG) | 100 (Client EPG) | The reverse filter of the filter used in the contract subject (source port: 443; destination port: any) | Redirect to N1-provider |
| 101 (consumer connector of N1) | 100 (Client EPG) | The reverse filter of the filter used in the contract subject (source port: 443; destination port: any) | Permit |

Table 13. Permit and redirect rules for the two-node PBR (with the filters-from-contract option)

| Source class ID | Destination class ID | Filter ID | Action |
| --- | --- | --- | --- |
| 100 (Client EPG) | 300 (Web EPG) | The filter used in the contract subject (source port: any; destination port: 80) | Redirect to N1-consumer |
| 201 (provider connector of N1) | 300 (Web EPG) | The filter used in the contract subject (source port: any; destination port: 80) | Redirect to N2-consumer |
| 302 (provider connector of N2) | 300 (Web EPG) | The filter used in the contract subject (source port: any; destination port: 80) | Permit |
| 300 (Web EPG) | 100 (Client EPG) | The reverse filter of the filter used in the contract subject (source port: 80; destination port: any) | Redirect to N2-provider |
| 202 (consumer connector of N2) | 100 (Client EPG) | The reverse filter of the filter used in the contract subject (source port: 80; destination port: any) | Redirect to N1-provider |
| 101 (consumer connector of N1) | 100 (Client EPG) | The reverse filter of the filter used in the contract subject (source port: 80; destination port: any) | Permit |
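The duplicated zoning rule described for Tables 10 and 11, and its absence in Tables 12 and 13, can be checked with a short Python sketch. Only the consumer-to-provider rows are modeled, and the filter labels `tcp/443`, `tcp/80`, and `default` are shorthand chosen for this illustration rather than actual filter IDs.

```python
from collections import defaultdict


def conflicts(*rule_sets):
    """Return (source, destination, filter) keys that map to more than one action."""
    actions = defaultdict(set)
    for rules in rule_sets:
        for src, dst, flt, action in rules:
            actions[(src, dst, flt)].add(action)
    return {key: acts for key, acts in actions.items() if len(acts) > 1}


# Consumer-to-provider rows of Tables 10 and 11 (option disabled).
one_node_default = [
    (100, 300, "tcp/443", "redirect to N1-consumer"),
    (201, 300, "default", "permit"),
]
two_node_default = [
    (100, 300, "tcp/80", "redirect to N1-consumer"),
    (201, 300, "default", "redirect to N2-consumer"),
    (302, 300, "default", "permit"),
]

# Consumer-to-provider rows of Tables 12 and 13 (option enabled).
one_node_ffc = [
    (100, 300, "tcp/443", "redirect to N1-consumer"),
    (201, 300, "tcp/443", "permit"),
]
two_node_ffc = [
    (100, 300, "tcp/80", "redirect to N1-consumer"),
    (201, 300, "tcp/80", "redirect to N2-consumer"),
    (302, 300, "tcp/80", "permit"),
]

print(conflicts(one_node_default, two_node_default))  # one conflicting key
print(conflicts(one_node_ffc, two_node_ffc))          # no conflicting keys
```

With the option disabled, the key (201, 300, default) carries both a permit and a redirect action. With the option enabled, the contract filter makes each key unique.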

Reuse of service graph with PBR

The service graph template and L4-L7 device can be reused in multiple contracts. For example, if you want to insert a firewall in multiple inter-EPG traffic flows in a tenant, you probably want to use the same firewall with either the same or different interfaces. Both designs are possible.

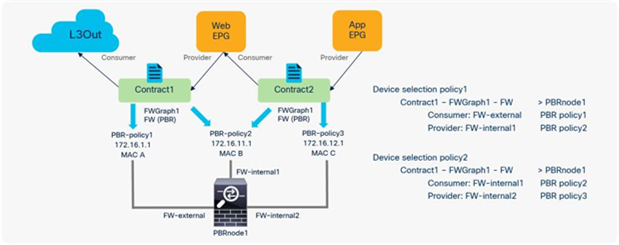

Reuse the same PBR node with different interfaces

You can reuse the same PBR node with a different interface for each tier. From the L3Out EPG to the Web EPG, traffic is redirected to FW-external, and return traffic is redirected to FW-internal1. From the Web EPG to the App EPG, traffic is redirected to FW-internal1, and return traffic is redirected to FW-internal2 (Figure 49).

Reuse the same PBR node (using different interfaces)

In this case, you can reuse the service graph template and the L4-L7 device. To redirect traffic to a different interface based on the source and destination EPG pair, a different PBR policy and a device selection policy are required. (For basic information about the service graph configuration with PBR, see the later part of this document.)

Here is a configuration example (Figure 50):

● Contract (Tenant > Security Policies > Contracts)

◦ Contract1: Between L3Out EPG and Web EPG

◦ Contract2: Between Web EPG and App EPG

● L4-L7 device (Tenant > L4-L7 Services > L4-L7 Devices)

◦ PBRnode1 has three cluster interfaces

◦ FW-external: Security zone for L3Out connection

◦ FW-internal1: Security zone for Web EPG

◦ FW-internal2: Security zone for App EPG

● Service graph template (Tenant > L4-L7 Services > L4-L7 Service Graph Templates)

◦ FWGraph1: Node1 is the firewall function node that is PBR enabled

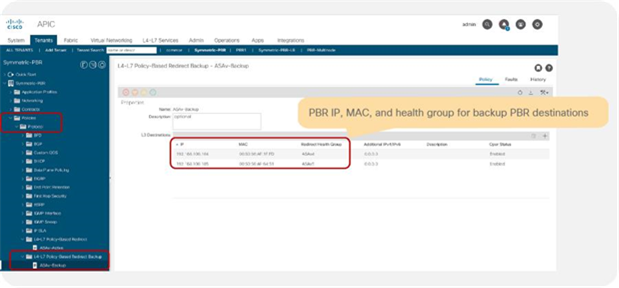

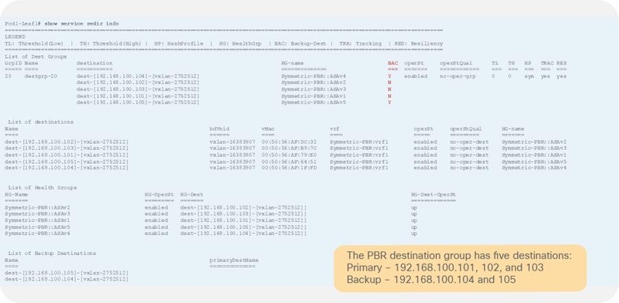

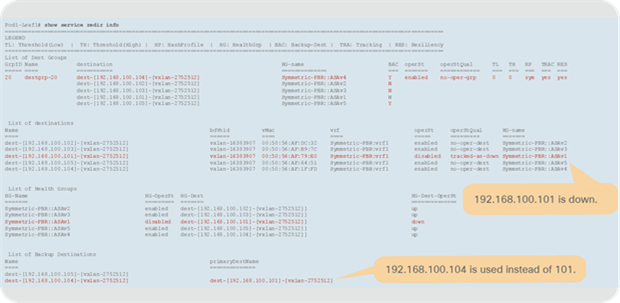

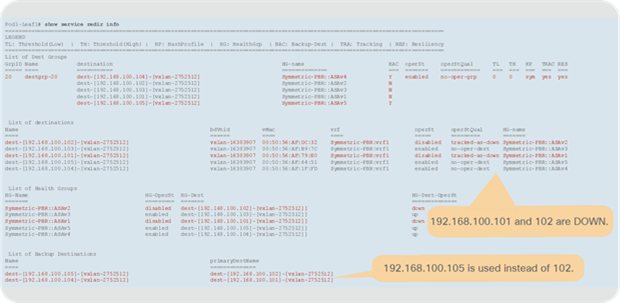

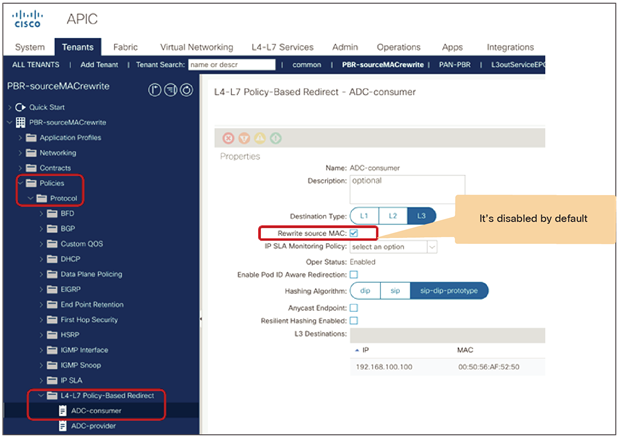

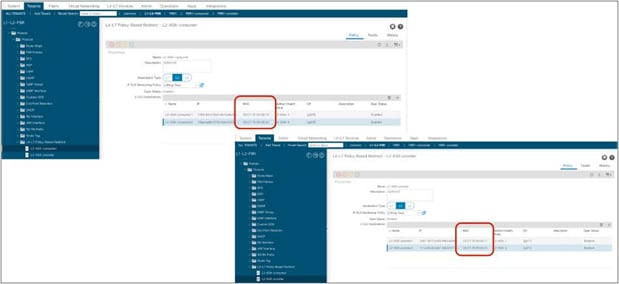

● PBR policies (Tenant > Networking > Protocol Policies > L4-L7 Policy Based Redirect)

◦ PBR-policy1 (172.16.1.1 with MAC A)

◦ PBR-policy2 (172.16.11.1 with MAC B)

◦ PBR-policy3 (172.16.12.1 with MAC C)

● Device selection policy (Tenant > L4-L7 Services > Device Selection Policies)

◦ Contract1-FWGraph1-FW (If FWGraph1 is applied to Contract1, the firewall function node will be this node.)

◦ Node: PBRnode1

◦ Consumer: FW-external with PBR-policy1

◦ Provider: FW-internal1 with PBR-policy2

◦ Contract2-FWGraph1-FW (If FWGraph1 is applied to Contract2, the firewall function node will be this node.)

◦ Node: PBRnode1

◦ Consumer: FW-internal1 with PBR-policy2

◦ Provider: FW-internal2 with PBR-policy3

Configuration example: Reuse the same PBR node (using different interfaces)
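The relationship among the contract, the service graph template, and the device selection policy in this example can be pictured as a lookup table. The following Python sketch is a mental model only: the dictionary layout and the `redirect_target` helper are assumptions for illustration, not APIC objects, and the MAC addresses of the PBR policies are left out.

```python
# PBR policies from the configuration example (destination IP only).
PBR_POLICIES = {
    "PBR-policy1": "172.16.1.1",
    "PBR-policy2": "172.16.11.1",
    "PBR-policy3": "172.16.12.1",
}

# Device selection policies keyed by (contract, graph template, function node).
DEVICE_SELECTION = {
    ("Contract1", "FWGraph1", "FW"): {
        "device": "PBRnode1",
        "consumer": ("FW-external", "PBR-policy1"),
        "provider": ("FW-internal1", "PBR-policy2"),
    },
    ("Contract2", "FWGraph1", "FW"): {
        "device": "PBRnode1",
        "consumer": ("FW-internal1", "PBR-policy2"),
        "provider": ("FW-internal2", "PBR-policy3"),
    },
}


def redirect_target(contract, direction):
    """Return (cluster interface, redirect IP) for a contract and direction.

    direction: 'consumer-to-provider' or 'provider-to-consumer'.
    """
    policy = DEVICE_SELECTION[(contract, "FWGraph1", "FW")]
    # Consumer-to-provider traffic is redirected to the consumer connector,
    # and return traffic to the provider connector.
    side = "consumer" if direction == "consumer-to-provider" else "provider"
    interface, pbr_policy = policy[side]
    return interface, PBR_POLICIES[pbr_policy]


print(redirect_target("Contract1", "consumer-to-provider"))
print(redirect_target("Contract2", "provider-to-consumer"))
```

The same service graph template and L4-L7 device appear in both entries; only the device selection policy and the PBR policies differ per contract.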

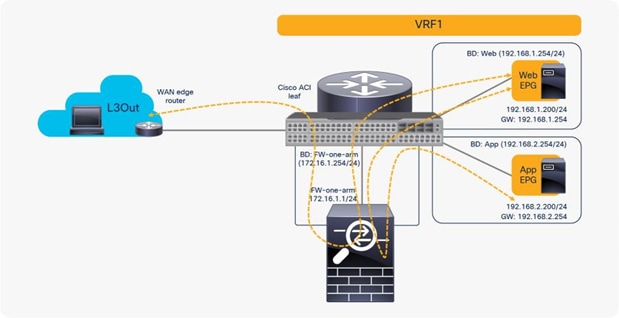

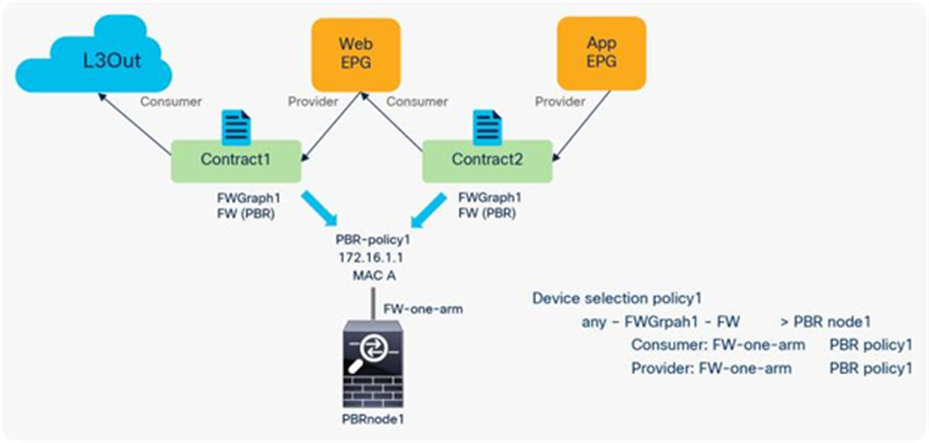

Reuse the same PBR node and the same interface

If you want to use the same PBR node and its interfaces, you can reuse the service graph template, L4-L7 device, PBR policy, and device selection policy. In this example, traffic is redirected to FW-one-arm if it is between the L3Out EPG and the Web EPG, or between the Web EPG and the App EPG (Figure 51).

Reuse the same PBR node (using the same interfaces in one-arm mode)

Here is a configuration example (Figure 52):

● Contract (Tenant > Security Policies > Contracts)

◦ Contract1: Between L3Out EPG and Web EPG

◦ Contract2: Between Web EPG and App EPG

● L4-L7 device (Tenant > L4-L7 Services > L4-L7 Devices)

◦ PBRnode1 has one cluster interface

◦ FW-one-arm

● Service graph template (Tenant > L4-L7 Services > L4-L7 Service Graph Templates)

◦ FWGraph1: Node1 is the firewall function node that is PBR enabled

● PBR policies (Tenant > Networking > Protocol Policies > L4-L7 Policy Based Redirect)

◦ PBR-policy1 (172.16.1.1 with MAC A)

● Device selection policy (Tenant > L4-L7 Services > Device Selection Policies)

◦ any-FWGraph1-FW (If FWGraph1 is applied to any contract, the firewall function node will be this node.)

◦ Node: PBRnode1

◦ Consumer: FW-one-arm with PBR-policy1

◦ Provider: FW-one-arm with PBR-policy1

Configuration example: Reuse the same PBR node (using the same interface)
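In the one-arm example, a single device selection policy with the contract name "any" serves every contract that uses FWGraph1. A minimal Python sketch of that matching is shown below. The `select` helper is hypothetical, and the order of trying an exact contract name before falling back to "any" is an assumption of this sketch rather than documented behavior.

```python
# One device selection policy that applies to any contract using FWGraph1.
DEVICE_SELECTION = {
    ("any", "FWGraph1", "FW"): {
        "device": "PBRnode1",
        "consumer": ("FW-one-arm", "PBR-policy1"),
        "provider": ("FW-one-arm", "PBR-policy1"),
    },
}


def select(contract, graph, node):
    """Look up a device selection policy; fall back to the 'any' contract entry.

    The exact-then-any order is an assumption made for this illustration.
    """
    exact = DEVICE_SELECTION.get((contract, graph, node))
    if exact is not None:
        return exact
    return DEVICE_SELECTION.get(("any", graph, node))


for contract in ("Contract1", "Contract2"):
    policy = select(contract, "FWGraph1", "FW")
    print(contract, policy["consumer"], policy["provider"])
```

Both contracts resolve to the same interface and PBR policy in both directions, which is what makes the one-arm design reusable without per-contract configuration.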

You may wonder whether you can use a firewall with two interfaces rather than use one-arm mode or a different interface for each EPG. For example, you may want consumer-to-provider traffic to always be redirected to the FW-external interface, and you may want provider-to-consumer traffic to always be redirected to the FW-internal interface, regardless of which EPG is a consumer or a provider (Figure 53).

Reuse the same PBR node (using two-arm mode for north-south traffic)

The problem with such a design is the routing configuration on the firewall. The firewall probably has a 0.0.0.0/0 route through 172.16.1.254 in the FW-external bridge domain and a 192.168.1.0/24 route through 172.16.2.254 in the FW-internal bridge domain, which is fine for the traffic between the L3Out EPG and the Web EPG. However, for the traffic between the Web and App EPGs, the firewall would also have 192.168.2.0/24 routed through 172.16.2.254 in the FW-internal bridge domain. If traffic from the App EPG destined for the Web EPG is redirected to FW-internal, the firewall will send it back using 172.16.2.254 as the next hop, because both 192.168.1.0/24 and 192.168.2.0/24 use 172.16.2.254 as the next hop. The result is a traffic path like that of a one-arm design with intra-interface traffic forwarding. Therefore, you can use a two-arm design for north-south traffic, but you should use a one-arm design for the east-west traffic because of the routing table on the PBR node (Figure 54).
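The routing argument can be reproduced with a longest-prefix-match lookup over the firewall routes named above. This Python sketch uses the standard `ipaddress` module; the host addresses 192.168.1.10, 192.168.2.10, and 198.51.100.7 are hypothetical endpoints chosen for illustration.

```python
import ipaddress

# Firewall routing table from the text: (prefix, next hop, egress interface).
ROUTES = [
    (ipaddress.ip_network("0.0.0.0/0"), "172.16.1.254", "FW-external"),
    (ipaddress.ip_network("192.168.1.0/24"), "172.16.2.254", "FW-internal"),
    (ipaddress.ip_network("192.168.2.0/24"), "172.16.2.254", "FW-internal"),
]


def egress(dst):
    """Return (interface, next hop) chosen by longest-prefix match."""
    addr = ipaddress.ip_address(dst)
    matches = [route for route in ROUTES if addr in route[0]]
    _net, next_hop, interface = max(matches, key=lambda route: route[0].prefixlen)
    return interface, next_hop


# North-south: traffic toward an external host leaves through FW-external.
print(egress("198.51.100.7"))

# East-west: traffic redirected to FW-internal and destined for either the
# Web subnet or the App subnet leaves through FW-internal again.
print(egress("192.168.1.10"))
print(egress("192.168.2.10"))
```

Because both internal subnets resolve to the same interface and next hop, east-west traffic enters and leaves on FW-internal, which is effectively a one-arm path.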

Reuse the same PBR node (using one-arm mode for east-west traffic)

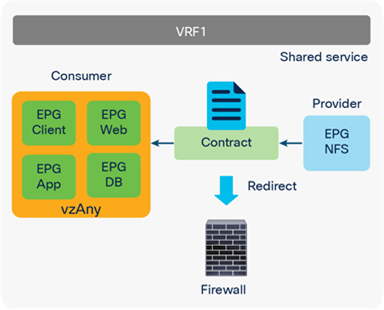

The vzAny managed object is a collection of all EPGs in a VRF instance. It is useful if you have a security requirement that is applied to all EPGs in a VRF; it also helps to reduce policy TCAM consumption.

Prior to APIC Release 3.2, you cannot associate a service graph with PBR with a contract that has vzAny as provider, but you can associate it with a contract that has vzAny as consumer. This is helpful for inserting service nodes for traffic between shared service providers and all EPGs in a VRF acting as consumers. Figure 55 illustrates an example. If you have a contract with PBR between vzAny as consumer and an NFS (Network File System) EPG as provider in VRF1, NFS access from all endpoints in VRF1 can be inspected by the firewall without consuming policy TCAM for multiple consumer EPGs.
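The TCAM saving can be approximated by counting the (source class, destination class) pairs that need a redirect entry. The Python sketch below is deliberately simplified: it counts one entry per direction per consumer class and ignores the additional permit rules for the service node connectors. The class IDs are hypothetical, and representing vzAny with class ID 0 is an assumption of this sketch.

```python
VZANY_CLASS_ID = 0  # assumption: vzAny is represented by a single "any" class


def redirect_pairs(consumer_classes, provider_class):
    """Return the set of (source, destination) pairs that need a redirect entry."""
    pairs = set()
    for consumer in consumer_classes:
        pairs.add((consumer, provider_class))  # consumer-to-provider
        pairs.add((provider_class, consumer))  # provider-to-consumer
    return pairs


NFS_EPG = 49153                                # hypothetical provider class ID
CONSUMER_EPGS = [16386, 16387, 16388, 16389]   # hypothetical consumer class IDs

per_epg = redirect_pairs(CONSUMER_EPGS, NFS_EPG)
with_vzany = redirect_pairs([VZANY_CLASS_ID], NFS_EPG)

print(len(per_epg))     # grows with the number of consumer EPGs
print(len(with_vzany))  # independent of the number of consumer EPGs
```

Under these assumptions, the number of redirect pairs with per-EPG contracts grows linearly with the number of consumer EPGs, while the vzAny design stays constant.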

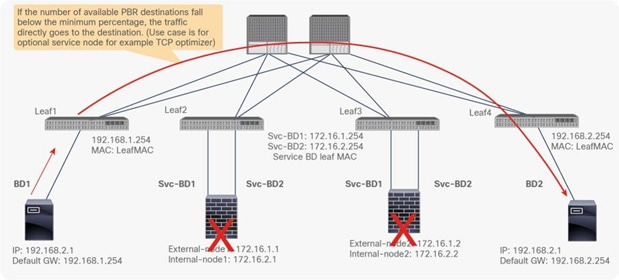

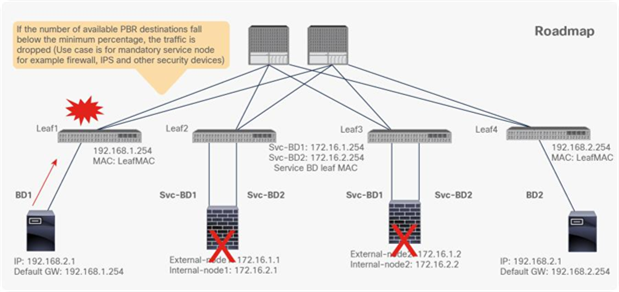

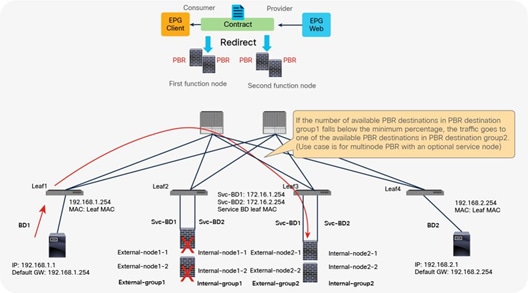

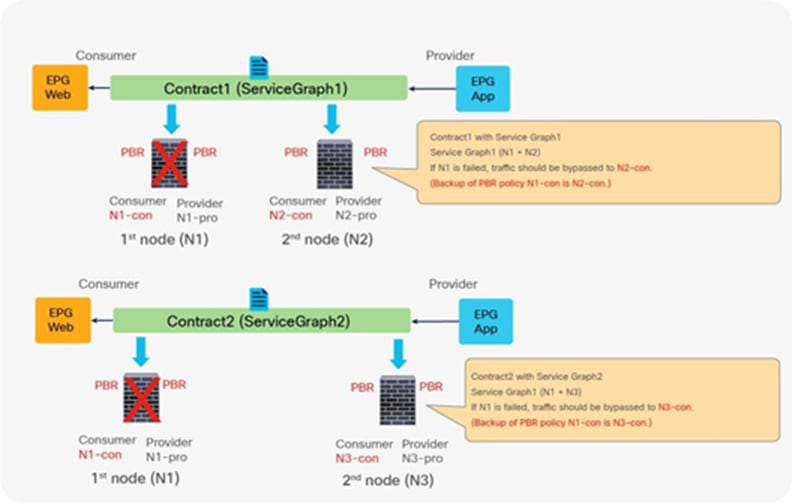

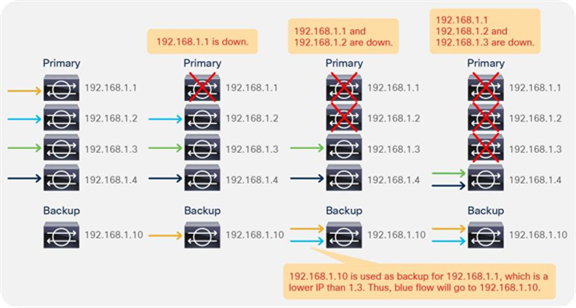

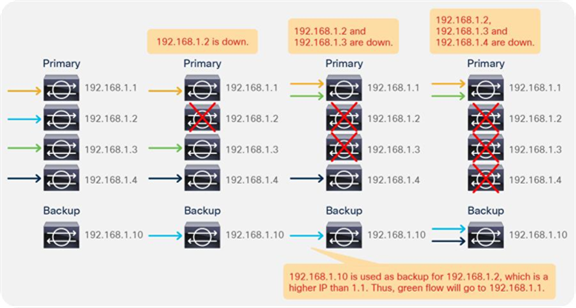

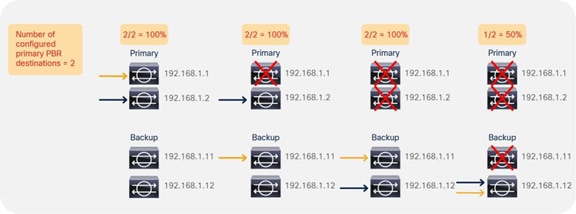

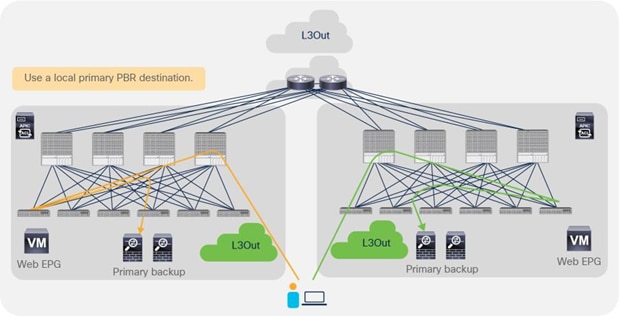

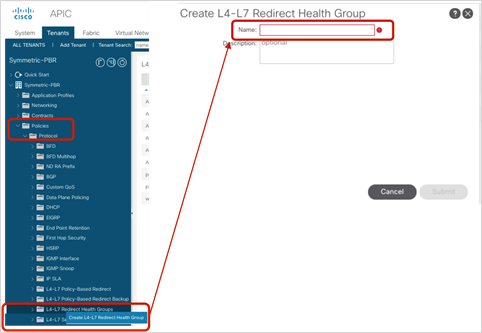

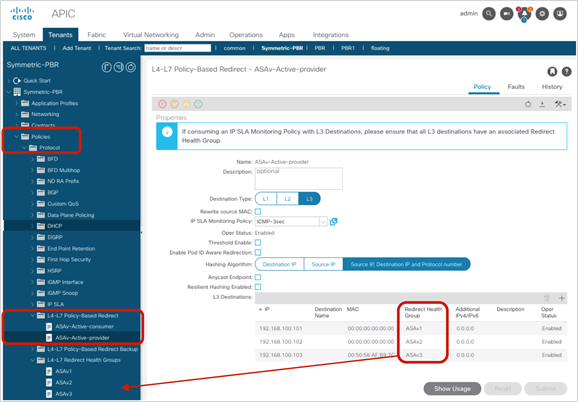

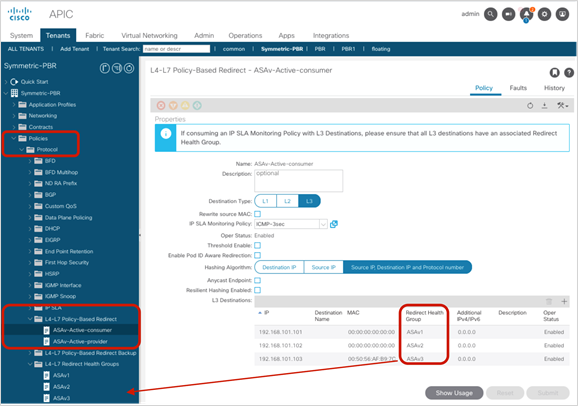

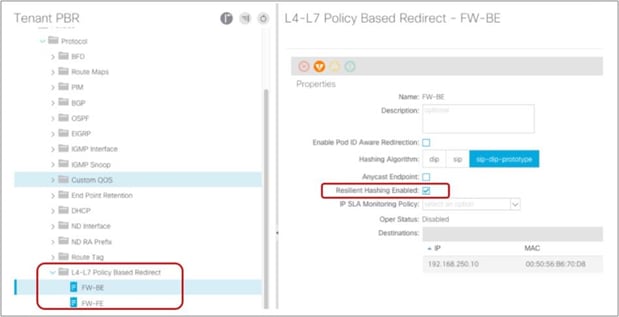

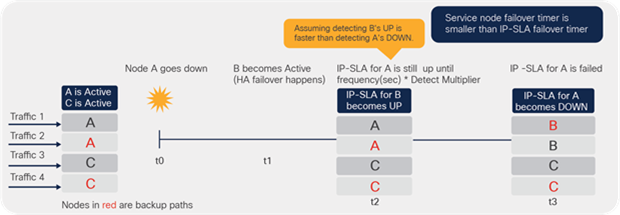

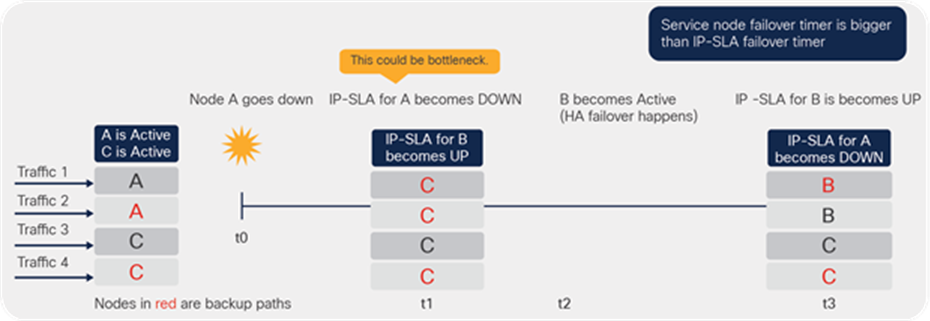

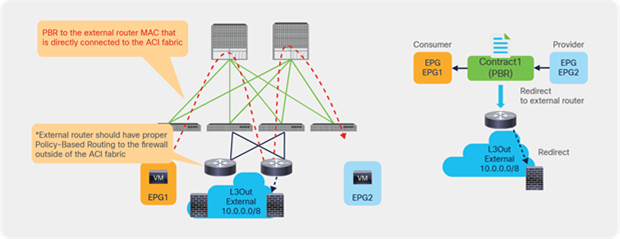

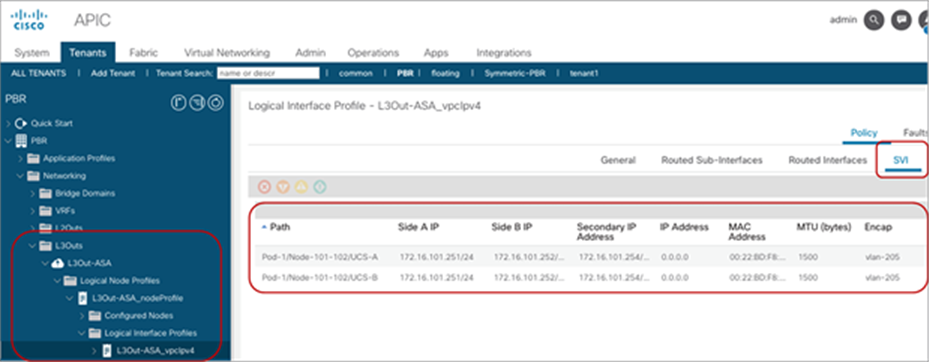

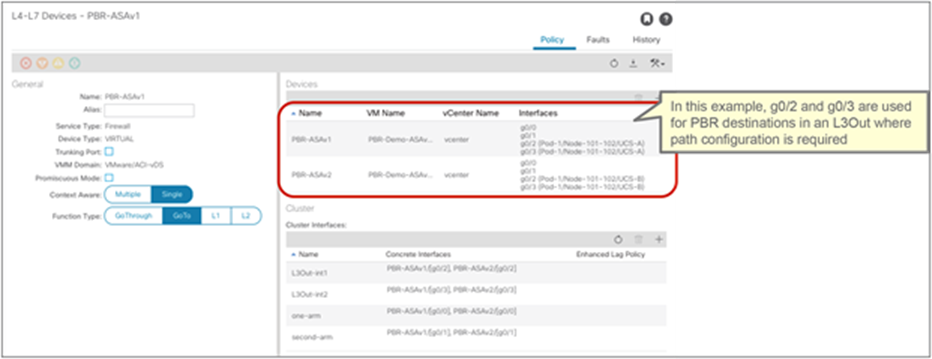

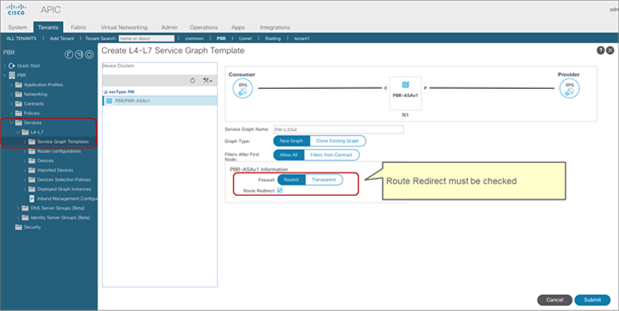

vzAny as consumer (shared service-provider use case)