New and changed information

The following table provides an overview of the significant changes up to the current release. It is not an exhaustive list of all changes or new features up to this release.

| Release Version | Feature | Description |
|---|---|---|
| Nexus Dashboard 4.1.1 | Improved navigation and workflow when editing AI Data Center Routed fabric settings. | Beginning with Nexus Dashboard 4.1.1, the navigation and workflow for editing AI Data Center Routed fabric settings have been enhanced. |

Editing AI Data Center Routed fabric settings

An AI Data Center Routed fabric is a type of fabric that provides automated provisioning of AI-ready BGP Layer 3 networks on Cisco Nexus (NX-OS) devices.

When you first create an AI Data Center Routed fabric using the procedures provided in Creating LAN and ACI Fabrics and Fabric Groups, the standard workflow lets you create the fabric quickly using only the minimum required settings. Use the procedures in this article to configure your routed fabric in more detail.

- Navigate to the main Fabrics window:

  Manage > Fabrics

- Locate the fabric that you want to edit.

- Click the circle next to the routed fabric that you want to edit to select it, then click Actions > Edit Fabric Settings.

  The Edit fabric_name Settings window appears.

- Click the appropriate tab to edit the settings for the fabric:

  - General
  - Fabric Management
  - Telemetry (if the Telemetry feature is enabled for the fabric)

General

Use the information in this section to edit the settings in the General window for your routed fabric.

Change the general parameters that you configured previously for the routed fabric, if necessary, or click another tab to leave these settings unchanged.

| Field | Description |
|---|---|
| Name | The name for the fabric. This field is not editable. |
| Type | The fabric type for this fabric. This field is not editable. |
| Location | Choose the location for the fabric. |
| BGP ASN for Spines | Enter the BGP autonomous system number (ASN) for the fabric's spine switches. |
| AI QOS & Queuing policy | Choose the queuing policy from the drop-down list based on the predominant fabric link speed for certain switches in the fabric. For more information, see AI QoS classification and queuing policies. |
| License tier | Choose the licensing tier for the fabric. Click the information icon (i) next to License tier to see what functionality is enabled for each license tier. |
| Enabled features | Check the Telemetry check box to enable telemetry for the fabric. This is the equivalent of enabling the Nexus Dashboard Insights service in previous releases. |
| Telemetry collection | This option becomes available if you enable Telemetry in the Enabled features field above. Choose either Out-of-band or In-band for telemetry collection. |
| Telemetry streaming | This option becomes available if you enable Telemetry in the Enabled features field above. Choose either IPv4 or IPv6 for telemetry streaming. |
| Security domain | Choose the security domain for the fabric. |
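The BGP ASN fields take an autonomous system number, but this page does not say which notations are accepted. The following Python sketch shows one way to pre-check a value before entering it, assuming the field accepts 4-byte ASNs in either asplain (for example, 65001) or asdot (for example, 1.10) notation as defined in RFC 5396. The helper `parse_asn` is hypothetical and is not the validation that Nexus Dashboard performs.

```python
def parse_asn(value: str) -> int:
    """Convert an asplain ("65001") or asdot ("1.10") ASN string to an integer.

    Assumption: the field accepts 4-byte ASNs in either RFC 5396 notation.
    This is a hypothetical pre-check, not the validation Nexus Dashboard runs.
    """
    text = value.strip()
    if "." in text:
        # asdot notation: <high 16 bits>.<low 16 bits>
        high_text, low_text = text.split(".", 1)
        high, low = int(high_text), int(low_text)
        if not (0 <= high <= 65535 and 0 <= low <= 65535):
            raise ValueError(f"asdot component out of range: {value!r}")
        asn = high * 65536 + low
    else:
        asn = int(text)
    # ASN 0 is reserved; 4294967295 is the largest 4-byte value.
    if not 1 <= asn <= 4294967295:
        raise ValueError(f"ASN out of range: {value!r}")
    return asn


print(parse_asn("65001"))  # 65001
print(parse_asn("1.10"))   # 65546
```

Converting both notations to one integer makes it easy to compare, for example, the spine ASN against the leaf ASNs regardless of how each was typed.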

Fabric Management

Use the information in this section to edit the settings in the Fabric Management window for your AI Data Center Routed fabric. The tabs in this window contain the fabric-level parameters; each tab and its fields are explained in the following sections.

General Parameters

The General Parameters tab is displayed by default. The fields in this tab are described in the following table.

| Field | Description |
|---|---|
| BGP ASN for Super Spines | Enter the ASN used for super spine and border super spine switches, if the fabric contains any. |
| BGP ASN for Leafs | Enter the ASN used for leaf switches, if the fabric contains any. |
| BGP ASN for Border and Border Gateway switches | Enter the ASN used for border and border gateway switches, if the fabric contains any. |
| BGP AS Mode | Choose Multi-AS or Same-Tier-AS. In both modes, all spine switches in a fabric share one unique AS number, and the fabric is identified by the spine switch ASN. |
| Allow Same ASN On Leafs | Uses the same ASN on all leaf switches, even when Multi-AS mode is configured. |
| Enable IPv6 routed fabric or VXLAN with IPv6 underlay | Enables an IPv6 routed fabric or IPv6 underlay. When the check box is cleared, the system configures an IPv4 routed fabric or IPv4 underlay. To configure an IPv6 underlay, you must also configure the VXLAN overlay parameters in the EVPN tab. |
| Underlay Subnet IP Mask | Specifies the subnet mask for the fabric interface IP addresses. |
| Manual Underlay IP Address Allocation | Check the check box to disable dynamic underlay IP address allocation. |
| Underlay Routing Loopback IP Range | Specifies the loopback IPv4 addresses for protocol peering. |
| Underlay Subnet IP Range | Specifies the IPv4 addresses for underlay P2P routing traffic between interfaces. |
| Underlay Routing Loopback IPv6 Range | Specifies the loopback IPv6 addresses for protocol peering. |
| Disable Route-Map Tag | Disables subnet redistribution. |
| Route-Map Tag | Configures a route tag for redistributing subnets. When enabled, the default tag value is 12345. |
| Subinterface Dot1q Range | Specifies the subinterface range when Layer 3 subinterfaces are used. |
| Enable Performance Monitoring | Check the check box to enable performance monitoring. Performance monitoring is supported on switches with NX-OS Release 9.3.6 and later. Ensure that you do not clear interface counters from the command-line interface of the switches; clearing interface counters can cause the Performance Monitor to display incorrect data for traffic utilization. If you must clear the counters and the switch has both |
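The Underlay Subnet IP Range and Underlay Subnet IP Mask fields together determine how point-to-point subnets for spine-leaf links can be carved. The sketch below illustrates that relationship with Python's standard `ipaddress` module, assuming a /30 or /31 link mask. The function `carve_p2p_links` is hypothetical, the pool 10.4.0.0/16 is only an example value, and sequential carving is an assumption rather than a description of Nexus Dashboard's allocator.

```python
import ipaddress
from itertools import islice


def carve_p2p_links(pool: str, mask: int, count: int) -> list:
    """Return (subnet, side_a, side_b) tuples for `count` point-to-point links.

    Hypothetical helper: carves subnets sequentially from the pool, which may
    differ from how Nexus Dashboard actually allocates underlay addresses.
    """
    if mask not in (30, 31):
        raise ValueError("this sketch supports only /30 or /31 link masks")
    network = ipaddress.ip_network(pool)
    links = []
    for subnet in islice(network.subnets(new_prefix=mask), count):
        addresses = list(subnet)
        if mask == 31:
            side_a, side_b = addresses  # RFC 3021: both /31 addresses are usable
        else:
            side_a, side_b = addresses[1], addresses[2]  # skip network and broadcast
        links.append((str(subnet), str(side_a), str(side_b)))
    if len(links) < count:
        raise ValueError("pool is too small for the requested number of links")
    return links


for link in carve_p2p_links("10.4.0.0/16", 31, 3):
    print(link)
```

A /31 mask uses half as many addresses per link as a /30, which is why the same pool supports twice as many links.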

EVPN

| Field | Description |
|---|---|
| Enable EVPN VXLAN Overlay | Enables VXLAN overlay provisioning for the fabric. Selecting this option converts a routed fabric to a VXLAN-enabled fabric, in which you can create and deploy overlay networks or VRF instances. The procedure for creating and deploying networks or VRFs is the same as in Data Center VXLAN EVPN; for more information, see the "Creating Network for Standalone Fabrics" and "Creating VRF" sections in Editing Data Center VXLAN EVPN Fabric Settings. To create a routed fabric (an IP fabric with no VXLAN encapsulation), uncheck the Enable EVPN VXLAN Overlay check box. In a routed fabric, you can create and deploy networks; for more information, see the section "Overview of Networks in a Routed Fabric" in Editing Routed Fabric Settings. Whether you create an eBGP routed or eBGP VXLAN fabric, the fabric uses eBGP as the control plane to build intra-fabric connectivity. Links between spine and leaf switches are auto-configured with point-to-point (P2P) numbered IP addresses, with eBGP peering built on top. If a network or VRF has been created in the fabric, you cannot switch between VXLAN EVPN mode and routed fabric mode by changing the Enable EVPN VXLAN Overlay check box; you must first delete these networks or VRFs. The Routed_Network_Universal template applies to routed fabrics only. When you convert a routed fabric to a VXLAN EVPN fabric, set the network template and network extension template to the ones defined for VXLAN EVPN: Default_Network_Universal and Default_Network_Extension_Universal. If you have a customized template for a VXLAN EVPN fabric, you can choose it instead. |
| First Hop Redundancy Protocol | This field is available only if you did not select the Enable EVPN VXLAN Overlay option above, and it applies only to routed fabrics. Specify the First Hop Redundancy Protocol that you want to use. After a network has been created, you cannot change this fabric setting; delete all networks first, and then change the First Hop Redundancy Protocol setting. |
| The following fields in the EVPN tab apply only if you selected the Enable EVPN VXLAN Overlay option above. | |
| Anycast Gateway MAC | Specifies the anycast gateway MAC address for the leaf switches. |
| Enable VXLAN OAM | Enables the VXLAN operations, administration, and maintenance (OAM) function for existing switches. This option is enabled by default; uncheck the check box to disable the VXLAN OAM feature. If you want to enable VXLAN OAM on specific switches and disable it on other switches in the fabric, use freeform configurations to enable OAM and disable OAM in the fabric settings. The VXLAN OAM feature in Nexus Dashboard is supported on a single fabric or site only; it is not supported with multi-site fabrics. |
| Enable Tenant DHCP | Enables tenant DHCP support. |
| vPC advertise-pip | Check the check box to enable the advertise PIP (primary IP address) feature on vPC-enabled leaf or border leaf switches. |
| vPC advertise-pip on Border only | Check the check box to enable advertise-pip on vPC border switches and border gateways only. This option applies only when the vPC advertise-pip option is not enabled. |
| Replication Mode | Specifies the replication mode used in the fabric: ingress replication or multicast. |
| Multicast Group Subnet | Specifies the IP address prefix used for multicast communication. A unique IP address is allocated from this group for each overlay network. This field is used when the underlay is IPv4 and the replication mode is multicast. If you change this pool while the old multicast group pool is still in use and referenced by existing networks (and potentially VRFs, if TRM is enabled), we recommend adding the old pool to the spine or leaf freeform configuration and entering the new or updated pool in the Multicast Group Subnet field. This ensures that the new or updated multicast pool is used for every new network. |
| IPv6 Multicast Group Subnet | Specifies the IPv6 address prefix used for multicast communication. A unique IP address is allocated from this group for each overlay network. This field is used when the underlay is IPv6 and the replication mode is multicast. |
| Enable IPv4 Tenant Routed Multicast | Check the check box to enable Tenant Routed Multicast (TRM) for IPv4 support for VXLAN EVPN fabrics. For more information, see the section "Creating a VXLAN EVPN Fabric Using the Data Center VXLAN EVPN template" in Editing Data Center VXLAN EVPN Fabric Settings. |
| Enable IPv6 Tenant Routed Multicast (TRMv6) | Check the check box to enable Tenant Routed Multicast (TRM) for IPv6 support for VXLAN EVPN fabrics. For more information, see the section "Configuring VXLAN EVPN Fabrics with a PIMv6 Underlay and TRMv6" in Editing Data Center VXLAN EVPN Fabric Settings. |
| Default MDT Address for TRM VRFs | Indicates the multicast address for TRMv4 traffic. By default, this address is taken from the IP prefix specified in the Multicast Group Subnet field. When you update either field, ensure that this address is within the IP prefix specified in the Multicast Group Subnet field. |
| Default MDT IPv6 Address for TRM VRFs | Indicates the multicast address for TRMv6 traffic. By default, this address is taken from the IP prefix specified in the IPv6 Multicast Group Subnet field. When you update either field, ensure that this address is within the IP prefix specified in the IPv6 Multicast Group Subnet field. For more information, see the section "Overview of Tenant Routed Multicast" in Editing Data Center VXLAN EVPN Fabric Settings. |
| Rendezvous-Points | Enter the number of spine switches acting as rendezvous points. |
| RP mode | Choose one of the two supported multicast modes of replication: ASM (Any-Source Multicast) or BiDir (Bidirectional PIM [BIDIR-PIM]). When you choose a multicast mode, only the fields for that mode are enabled, and the fields for the other mode are disabled. BIDIR-PIM is supported on Cisco Cloud Scale Family platforms 9300-EX and 9300-FX/FX2 with NX-OS Release 9.2(1) and later. |
| Underlay RP Loopback ID | Specifies the loopback ID used for the rendezvous point (RP). The default is 254. |
| The following fields are enabled if you choose bidir as the RP mode. Depending on the RP count, either 2 or 4 phantom RP loopback ID fields are enabled. | |
| Underlay Primary RP Loopback ID | Specifies the primary loopback ID used for phantom RP. |
| Underlay Backup RP Loopback ID | Specifies the secondary (or backup) loopback ID used for Fallback Bidir-PIM phantom RP. |
| The following loopback ID options apply only when the RP count is 4 and bidir is chosen. | |
| Underlay Second Backup RP Loopback ID | Specifies the second backup loopback ID used for phantom RP. |
| Underlay Third Backup RP Loopback ID | Specifies the third backup loopback ID used for phantom RP. |
| Enable MVPN VRI ID Generation | This field was previously named Allow L3VNI w/o VLAN. In an IPv4 underlay, enabling this option generates an MVPN VRI ID if there is a VRF with TRM or TRMv6 and no VRF with a Layer 3 VNI VLAN. In an IPv6 underlay, an MVPN VRI ID is generated once TRM or TRMv6 is enabled, whether or not this option is enabled. For more information, see Layer 3 VNI without VLAN. |
| MVPN VRI ID Range | Use this field to allocate a unique MVPN VRI ID per vPC. The MVPN VRI ID cannot be the same as any site ID within a multi-site fabric; the VRI ID must be unique across all sites within an MSD. |
| Enable MVPN VRI ID Re-allocation | Check this check box to perform a one-time VRI ID reallocation. Nexus Dashboard automatically allocates a new MVPN ID within the MVPN VRI ID range above for each applicable switch. Because this is a one-time operation, this field is turned off after the operation is performed. Changing the VRI ID is disruptive, so plan accordingly. |
| VRF Template, VRF Extension Template | Specifies the VRF template for creating VRFs, and the VRF extension template for enabling VRF extension to other fabrics. |
| Network Template, Network Extension Template | Specifies the network template for creating networks, and the network extension template for extending networks to other fabrics. |
| Overlay Mode | Specify the VRF/network configuration mode: config-profile or cli. The default option is config-profile. You can change the overlay mode only before deploying overlay configurations to the switches; after the overlay configuration is deployed, you cannot change the mode unless all VRF and network attachments are removed. For a brownfield import, if the overlay is deployed as config-profile, it can be imported only in config-profile mode; if the overlay is deployed as cli, it can be imported in either config-profile or cli mode. |
| The Allow L3VNI w/o VLAN option has been renamed to Enable MVPN VRI ID Generation. | |
| Enable L3VNI w/o VLAN | Check the check box to set the default value for VRFs. The setting of this fabric-level field is the default value of Enable L3VNI w/o VLAN at the VRF level. |
| Site Id | eBGP fabrics are supported as child fabrics in a VXLAN EVPN Multi-Site fabric. For more information, see Border Gateway Support. Enter the site ID for the BGP fabric in the VXLAN EVPN Multi-Site fabric. Valid values range from 1 to 281474976710655. If you leave this field empty, it defaults to the fabric ASN. |
| Anycast Border Gateway advertise-pip | Check the check box to advertise the Anycast Border Gateway PIP as a Virtual Tunnel End Point (VTEP) when adding a BGP fabric as a child fabric in a VXLAN EVPN Multi-Site fabric. This setting takes effect when you click Recalculate Config as part of the VXLAN EVPN Multi-Site fabric configuration process. For more information, see Border Gateway Support. |
| Underlay VTEP Loopback IP Range | Specifies the loopback IP address range for VTEPs, typically the Loopback1 IP address range. |
| Underlay RP Loopback IP Range | Specifies the anycast or phantom RP IP address range. |
| Underlay VTEP Loopback IPv6 Range | Specifies the loopback IPv6 address range for VTEPs, typically the Loopback1 and anycast loopback IPv6 address range. |
| Layer 2 VXLAN VNI Range, Layer 3 VXLAN VNI Range | Specify the VXLAN VNI IDs for the fabric. |
| Network VLAN Range, VRF VLAN Range | Specify the VLAN ranges for the overlay networks and Layer 3 VRFs. |
| VRF Lite Deployment | Specifies the VRF Lite method for extending inter-fabric connections. Only Manual is supported. |
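The Multicast Group Subnet row states that a unique group address is allocated from that prefix for each overlay network, and the Default MDT Address for TRM VRFs rows require the MDT address to stay inside the matching prefix. This Python sketch expresses both rules with the standard `ipaddress` module. The helper names, the example prefix 239.1.1.0/25, and the sequential allocation order are assumptions for illustration only; they do not describe how Nexus Dashboard picks group addresses.

```python
from __future__ import annotations

import ipaddress
from typing import Iterable


def mdt_in_group_subnet(mdt_address: str, group_subnet: str) -> bool:
    """True if the default MDT address lies inside the multicast group prefix."""
    return ipaddress.ip_address(mdt_address) in ipaddress.ip_network(group_subnet)


def allocate_groups(group_subnet: str, network_names: list[str],
                    reserved: Iterable[str] = ()) -> dict[str, str]:
    """Give each overlay network a distinct group address (hypothetical, sequential)."""
    skip = set(reserved)
    pool = (str(addr) for addr in ipaddress.ip_network(group_subnet)
            if str(addr) not in skip)
    allocation = {}
    for name in network_names:
        try:
            allocation[name] = next(pool)
        except StopIteration:
            raise ValueError(f"multicast group subnet {group_subnet} is exhausted") from None
    return allocation


print(mdt_in_group_subnet("239.1.1.0", "239.1.1.0/25"))  # True
print(allocate_groups("239.1.1.0/25", ["net-a", "net-b"], reserved=["239.1.1.0"]))
# {'net-a': '239.1.1.1', 'net-b': '239.1.1.2'}
```

Running the MDT check after editing either field is a quick way to catch an MDT address that no longer falls inside an updated group prefix.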

vPC

| Field | Description |
|---|---|
| vPC Peer Link VLAN | VLAN used for the vPC peer link SVI. |
| Make vPC Peer Link VLAN as Native VLAN | Enables the vPC peer link VLAN as the native VLAN. |
| vPC Peer Keep Alive option | From the drop-down list, choose management or loopback. To use IP addresses assigned to the management port and the management VRF, choose management. To use IP addresses assigned to loopback interfaces (in a non-management VRF), choose loopback. If you use IPv6 addresses, you must use loopback IDs. |
| vPC Auto Recovery Time | Specifies the vPC auto recovery time-out period, in seconds. |
| vPC Delay Restore Time | Specifies the vPC delay restore period, in seconds. |
| vPC Peer Link Port Channel Number | Specifies the port-channel ID for the vPC peer link. The default value is 500. |
| vPC IPv6 ND Synchronize | Enables IPv6 Neighbor Discovery synchronization between vPC switches. The check box is checked by default; uncheck it to disable the function. |
| Fabric wide vPC Domain Id | Enables the use of the same vPC domain ID on all vPC pairs in the fabric. When you select this field, the vPC Domain Id field becomes editable. |
| vPC Domain Id | Specifies the vPC domain ID to be used on all vPC pairs. If Fabric wide vPC Domain Id is not selected, unique vPC domain IDs are used for each vPC pair, in increments of 1. |
| Enable Qos for Fabric vPC-Peering | Enables QoS on spines for guaranteed delivery of vPC Fabric Peering communication. The QoS for vPC fabric peering and queuing policies options in the fabric settings are mutually exclusive. |
| Qos Policy Name | Specifies the QoS policy name, which must be the same on all spines. |
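The vPC Domain Id rows describe two behaviors: one shared domain ID for every vPC pair when Fabric wide vPC Domain Id is selected, or unique IDs that increase by 1 per pair otherwise. The sketch below models that choice. The starting value for per-pair numbering is not stated on this page, so the `start` parameter is a hypothetical input, as are the switch names; the 1 to 1000 bound reflects the NX-OS `vpc domain` ID range.

```python
def assign_vpc_domain_ids(pairs, fabric_wide=False, domain_id=1, start=1):
    """Map each vPC pair (a tuple of switch names) to a vPC domain ID.

    fabric_wide=True mirrors the Fabric wide vPC Domain Id option: every pair
    gets `domain_id`. Otherwise IDs increase by 1 per pair, beginning at
    `start`, a hypothetical starting value that this page does not specify.
    """
    ids = {}
    for index, pair in enumerate(pairs):
        vpc_id = domain_id if fabric_wide else start + index
        if not 1 <= vpc_id <= 1000:  # NX-OS vPC domain IDs range from 1 to 1000
            raise ValueError(f"vPC domain ID {vpc_id} is out of range")
        ids[pair] = vpc_id
    return ids


pairs = [("leaf1", "leaf2"), ("leaf3", "leaf4")]
print(assign_vpc_domain_ids(pairs))                              # unique IDs 1 and 2
print(assign_vpc_domain_ids(pairs, fabric_wide=True, domain_id=10))  # both pairs use 10
```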

Protocols

| Field | Description |
|---|---|
| Routing Loopback Id | The loopback interface ID, populated as 0 by default, used as the BGP router ID. |
| VTEP Loopback Id | The loopback interface ID, populated as 1 by default, used for VTEP peering. |
| BGP Maximum Paths | Specifies the maximum number of BGP routes for the same prefix to be installed on the switches for ECMP. |
| Enable BGP Authentication | Check the check box to enable BGP authentication. When you enable this field, the BGP Authentication Key Encryption Type and BGP Authentication Key fields are enabled. |
| BGP Authentication Key Encryption Type | Choose 3 for the 3DES encryption type or 7 for the Cisco encryption type. |
| BGP Authentication Key | Enter the encrypted key based on the encryption type. Plain-text passwords are not supported. Log in to the switch, retrieve the encrypted key, and enter it in the BGP Authentication Key field. For more information, see Retrieve the encrypted BFD authentication key. |
| Enable PIM Hello Authentication | Enables PIM hello authentication. |
| PIM Hello Authentication Key | Specifies the PIM hello authentication key. |
| Enable BFD | Check the Enable BFD check box to enable feature bfd on all switches in the fabric. This feature is valid only on an IPv4 underlay, and its scope is within a fabric. Nexus Dashboard supports BFD within a fabric. BFD is disabled by default in the fabric settings. If enabled, BFD is enabled for the underlay protocols with the default settings. Any custom BFD configuration must be deployed through the per-switch freeform or per-interface freeform policies. After you enable BFD, the following configuration is pushed: 'feature bfd'. With BFD enabled, Nexus Dashboard also pushes the following configuration on all P2P fabric interfaces: 'no ip redirects' and 'no ipv6 redirects'. For information about BFD feature compatibility, refer to your platform documentation; for the supported software versions, see the Cisco Nexus Dashboard Fabric Controller Compatibility Matrix. |
| Enable BFD for BGP | Check the check box to enable BFD for the BGP neighbor. This option is disabled by default. |
| Enable BFD Authentication | Check the check box to enable BFD authentication. When you enable this field, the BFD Authentication Key ID and BFD Authentication Key fields are enabled. |
| BFD Authentication Key ID | Specifies the BFD authentication key ID for interface authentication. |
| BFD Authentication Key | Specifies the BFD authentication key. For information about how to retrieve the BFD authentication parameters, see Retrieve the encrypted BFD authentication key. |
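The Enable BFD row names the configuration that is pushed when BFD is enabled: 'feature bfd' globally, plus 'no ip redirects' and 'no ipv6 redirects' on every P2P fabric interface. The following sketch renders only those lines for a hypothetical list of interface names, which can help when comparing against a switch's running configuration. It is not the template Nexus Dashboard uses, which may generate additional BFD-related commands.

```python
def render_bfd_config(p2p_interfaces):
    """Render the BFD-related lines listed on this page for the given interfaces.

    The interface names are hypothetical inputs; only the commands named in the
    Enable BFD description are produced.
    """
    lines = ["feature bfd"]
    for name in p2p_interfaces:
        lines.append(f"interface {name}")
        lines.append("  no ip redirects")
        lines.append("  no ipv6 redirects")
    return "\n".join(lines)


print(render_bfd_config(["Ethernet1/1", "Ethernet1/2"]))
```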

Security

| Field | Description |
|---|---|
| Enable MACsec | Check the check box to enable MACsec in the fabric. MACsec configuration is not generated until MACsec is enabled on an intra-fabric link. Perform a Recalculate and deploy operation to generate the MACsec configuration and deploy it on the switch. |
| MACsec Cipher Suite | Choose the MACsec cipher suite for the MACsec policy. The default value is GCM-AES-XPN-256. |
| MACsec Primary Key String | Specify a Cisco Type 7 encrypted octet string that is used to establish the primary MACsec session. For AES_256_CMAC, the key string length must be 130; for AES_128_CMAC, it must be 66. If these values are not specified correctly, an error is displayed when you save the fabric. The default key lifetime is infinite. |
| MACsec Primary Cryptographic Algorithm | Choose the cryptographic algorithm used for the primary key string: AES_128_CMAC or AES_256_CMAC. The default value is AES_128_CMAC. You can configure a fallback key on the device to initiate a backup session if the primary session fails. |
| MACsec Fallback Key String | Specify a Cisco Type 7 encrypted octet string that is used to establish a fallback MACsec session. For AES_256_CMAC, the key string length must be 130; for AES_128_CMAC, it must be 66. If these values are not specified correctly, an error is displayed when you save the fabric. |
| MACsec Fallback Cryptographic Algorithm | Choose the cryptographic algorithm used for the fallback key string: AES_128_CMAC or AES_256_CMAC. The default value is AES_128_CMAC. |
| MACsec Status Report Timer | Specify the MACsec operational status periodic report timer, in minutes. |
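The key-string rows above tie the required length to the chosen algorithm: 66 characters for AES_128_CMAC and 130 for AES_256_CMAC. A quick local check like the following sketch can catch a mismatch before you save the fabric. It checks length only and does not verify that the string is a valid Type 7 encryption; `macsec_key_problems` is a hypothetical helper.

```python
from __future__ import annotations

# Required key-string lengths, taken from the MACsec key-string field descriptions.
REQUIRED_KEY_LENGTH = {"AES_128_CMAC": 66, "AES_256_CMAC": 130}


def macsec_key_problems(key_string: str, algorithm: str = "AES_128_CMAC") -> list[str]:
    """Return a list of problems; an empty list means the length check passed.

    AES_128_CMAC is the default algorithm, matching the fabric setting default.
    """
    expected = REQUIRED_KEY_LENGTH.get(algorithm)
    if expected is None:
        return [f"unsupported cryptographic algorithm: {algorithm}"]
    if len(key_string) != expected:
        return [f"{algorithm} needs a {expected}-character key string, got {len(key_string)}"]
    return []


print(macsec_key_problems("0" * 66))                  # []
print(macsec_key_problems("0" * 66, "AES_256_CMAC"))  # length mismatch reported
```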

Advanced

| Field | Description |
|---|---|
| Intra Fabric Interface MTU | Specifies the MTU for intra-fabric interfaces. This value must be an even number. |
| Layer 2 Host Interface MTU | Specifies the MTU for Layer 2 host interfaces. This value must be an even number. |
| Power Supply Mode | Choose the appropriate power supply mode. |
| CoPP Profile | From the drop-down list, choose the appropriate Control Plane Policing (CoPP) profile policy for the fabric. By default, strict is selected. |
| VTEP HoldDown Time | Specifies the NVE source interface hold-down time. |
| VRF Lite Subnet IP Range, VRF Lite Subnet Mask | These fields are prefilled with the DCI subnet details; update them as needed. The values shown on the page are automatically generated. If you update the IP address ranges, VXLAN Layer 2/Layer 3 network ID ranges, or VRF/network VLAN ranges, ensure that each fabric has its own unique range, distinct from any underlay range, to avoid possible duplication. Update only one range of values at a time; to update more than one range, do so in separate instances. For example, to update both the Layer 2 and Layer 3 ranges, update and save the Layer 2 range first, and then edit the fabric settings again to update and save the Layer 3 range. |
| Enable CDP for Bootstrapped Switch | Check the check box to enable CDP for switches discovered using bootstrap. |
| Enable NX-API | Check the check box to enable NX-API on HTTPS. This check box is checked by default. |
| Enable NX-API on HTTP | Enables NX-API on HTTP. Check both the Enable NX-API on HTTP and Enable NX-API check boxes to use HTTP. This check box is checked by default. If you uncheck it, applications that use NX-API and are supported by Nexus Dashboard, such as Endpoint Locator (EPL), Layer 4-Layer 7 services (L4-L7 services), and VXLAN OAM, use HTTPS instead of HTTP. If you check both the Enable NX-API and Enable NX-API on HTTP check boxes, applications use HTTP. |
| Enable Strict Config Compliance | Check this check box to enable the Strict Configuration Compliance feature. For more information, see the section "Strict configuration compliance" in Configuration Compliance. |
| Enable AAA IP Authorization | Enables AAA IP authorization. Make sure that IP authorization is enabled on the AAA server. |
| Enable Nexus Dashboard as Trap Host | Check the check box to enable Nexus Dashboard as a trap host. |
| Enable TCAM Allocation | When enabled, TCAM commands are automatically generated for VXLAN and vPC Fabric Peering. |
| Greenfield Cleanup Option | Enables the switch cleanup option for greenfield switches without performing a switch reload. This option is typically recommended only for data centers with Cisco Nexus 9000v switches. |
| Enable Default Queuing Policies | Check the check box to apply QoS policies on all switches in this fabric. To remove the QoS policies that you applied on all switches, uncheck this check box, update all configurations to remove the references to the policies, and deploy the configuration. Predefined QoS configurations are included that can be used for various Cisco Nexus 9000 Series switches. When you check this check box, the appropriate QoS configurations are pushed to the switches in the fabric. The system queuing is updated when configurations are deployed to the switches. If required, you can perform interface marking with the defined queuing policies by adding the necessary configuration to the peer interface freeform block. To review the actual queuing policies, open the policy file in the template editor: from the Nexus Dashboard web UI, choose Operations > Template, search for the queuing policy by its file name (for example, queuing_policy_default_8q_cloudscale), choose the file, and click the Modify/View template icon to edit the policy. See the Cisco Nexus 9000 Series NX-OS Quality of Service Configuration Guide for platform-specific details. |
| N9K Cloud Scale Platform Queuing Policy | Choose the queuing policy from the drop-down list to be applied to all Cisco Nexus 9200 Series switches and to Cisco Nexus 9000 Series switches whose model names end with EX, FX, or FX2 in the fabric. Valid values are queuing_policy_default_4q_cloudscale and queuing_policy_default_8q_cloudscale. Use the queuing_policy_default_4q_cloudscale policy for FEXs. You can change from the queuing_policy_default_4q_cloudscale policy to the queuing_policy_default_8q_cloudscale policy only when FEXs are offline. |
| N9K R-Series Platform Queuing Policy | Choose the queuing policy from the drop-down list to be applied to all Cisco Nexus switches whose model names end with R in the fabric. The valid value is queuing_policy_default_r_series. |
| Other N9K Platform Queuing Policy | Choose the queuing policy from the drop-down list to be applied to all switches in the fabric other than those covered by the two preceding options. The valid value is queuing_policy_default_other. |
| Priority flow control watch-dog interval | When enabling the AI feature, the |
| Enable Real Time Interface Statistics Collection | Valid for NX-OS fabrics only. Check the check box to enable the collection of real-time interface statistics. |
| Interface Statistics Load Interval | Enter the interval for the interface statistics load, in seconds (minimum 5, maximum 300). |
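The VRF Lite Subnet IP Range row asks that each fabric's ranges be unique and distinct from any underlay range. Before editing those fields, you could check a planned set of IPv4 ranges for overlap with a sketch like the one below, which uses `overlaps()` from Python's `ipaddress` module. The range names and CIDR values are hypothetical examples, not Nexus Dashboard defaults.

```python
from __future__ import annotations

import ipaddress
from itertools import combinations


def find_overlaps(ranges: dict[str, str]) -> list[tuple[str, str]]:
    """Return the names of every pair of planned ranges that overlap."""
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in ranges.items()}
    return [(a, b) for a, b in combinations(nets, 2) if nets[a].overlaps(nets[b])]


# Hypothetical plan; the last entry deliberately overlaps the underlay subnet range.
planned = {
    "underlay_loopback": "10.2.0.0/22",
    "underlay_subnet": "10.4.0.0/16",
    "vrf_lite_subnet": "10.33.0.0/16",
    "vrf_lite_typo": "10.4.128.0/24",
}
print(find_overlaps(planned))  # [('underlay_subnet', 'vrf_lite_typo')]
```

Checking every pair is quadratic in the number of ranges, which is negligible for the handful of pools a fabric defines.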

Freeform

The fields in this tab are described in the following table. For more information, see "Enabling Freeform Configurations on Fabric Switches" in Configuring Switches for LAN and IPFM Fabrics.

| Field | Description |
|---|---|
| Leaf Pre-Interfaces Freeform Config | Enter additional CLIs, added before interface configurations, for all Leafs and Tier2 Leafs as captured from Show Running Configuration. |
| Spine Pre-Interfaces Freeform Config | Enter additional CLIs, added before interface configurations, for all Spines as captured from Show Running Configuration. |
| Leaf Post-Interfaces Freeform Config | Enter additional CLIs, added after interface configurations, for all Leafs and Tier2 Leafs as captured from Show Running Configuration. |
| Spine Post-Interfaces Freeform Config | Enter additional CLIs, added after interface configurations, for all Spines as captured from Show Running Configuration. |
| Intra-fabric Links Additional Config | Add CLIs that should be added to the intra-fabric links. |

Manageability

| Field | Description |
|---|---|
| DNS Server IPs | Specifies a comma-separated list of IP addresses (IPv4/IPv6) of the DNS servers. |
| DNS Server VRFs | Specifies one VRF for all DNS servers or a comma-separated list of VRFs, one per DNS server. |
| NTP Server IPs | Specifies a comma-separated list of IP addresses (IPv4/IPv6) of the NTP servers. |
| NTP Server VRFs | Specifies one VRF for all NTP servers or a comma-separated list of VRFs, one per NTP server. |
| Syslog Server IPs | Specifies a comma-separated list of IP addresses (IPv4/IPv6) of the syslog servers, if used. |
| Syslog Server Severity | Specifies a comma-separated list of syslog severity values, one per syslog server. The minimum value is 0 and the maximum value is 7. Lower values are more severe (0 is emergencies, 7 is debugging), so a higher number also logs less severe messages. |
| Syslog Server VRFs | Specifies one VRF for all syslog servers or a comma-separated list of VRFs, one per syslog server. |
| AAA Freeform Config | Specifies the AAA freeform configurations. If AAA configurations are specified in the fabric settings, a switch_freeform PTI with source UNDERLAY_AAA and description "AAA Configurations" is created. NOTE: If you enter a clear-text password while editing the AAA freeform configuration, it is converted to an encrypted password and is displayed as an encrypted password when you run the show run command on the switch. |
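The DNS, NTP, and syslog rows share one rule: a comma-separated list of servers is paired with either a single VRF for all servers or exactly one VRF per server, and syslog severities are given one per server in the range 0 through 7. The sketch below applies those rules to field values before you enter them. `pair_servers` is a hypothetical helper, and the addresses and VRF names are documentation examples.

```python
from __future__ import annotations


def split_csv(text: str) -> list[str]:
    """Split a comma-separated field value, ignoring blanks and extra spaces."""
    return [item.strip() for item in text.split(",") if item.strip()]


def pair_servers(server_ips: str, vrfs: str, severities: str | None = None) -> list[tuple]:
    """Pair each server with its VRF and, for syslog, its severity (hypothetical helper)."""
    servers = split_csv(server_ips)
    vrf_list = split_csv(vrfs)
    if len(vrf_list) == 1:
        vrf_list = vrf_list * len(servers)  # one VRF applies to every server
    elif len(vrf_list) != len(servers):
        raise ValueError("enter one VRF for all servers or exactly one VRF per server")
    if severities is None:
        levels = [None] * len(servers)
    else:
        levels = [int(level) for level in split_csv(severities)]
        if len(levels) != len(servers):
            raise ValueError("enter exactly one severity per syslog server")
        if any(not 0 <= level <= 7 for level in levels):
            raise ValueError("syslog severity must be between 0 and 7")
    return list(zip(servers, vrf_list, levels))


print(pair_servers("192.0.2.10, 192.0.2.11", "management"))
print(pair_servers("192.0.2.20,192.0.2.21", "management,default", "5,7"))
```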

Bootstrap

| Field | Description |
|---|---|
| Enable Bootstrap | Check the Enable Bootstrap check box to enable the bootstrap feature. After you enable bootstrap, you can enable the DHCP server for automatic IP address assignment using one of the following methods: the local DHCP server on Nexus Dashboard (check Enable Local DHCP Server), or a remote or external DHCP server (leave Enable Local DHCP Server unchecked). |
| Enable Local DHCP Server | Check the Enable Local DHCP Server check box to enable DHCP service on Nexus Dashboard and initiate automatic IP address assignment. When you check this check box, the DHCP Scope Start Address and DHCP Scope End Address fields become editable. If you do not check this check box, Nexus Dashboard uses the remote or external DHCP server for automatic IP address assignment. |
| DHCP Version | Select DHCPv4 or DHCPv6 from this drop-down list. When you select DHCPv4, the Switch Mgmt IPv6 Subnet Prefix field is disabled. When you select DHCPv6, the Switch Mgmt IP Subnet Prefix field is disabled. Nexus Dashboard IPv6 POAP is not supported with Cisco Nexus 7000 Series Switches. Cisco Nexus 9000 and 3000 Series Switches support IPv6 POAP only when the switches are either Layer 2 adjacent (eth1 or the out-of-band subnet must be a /64) or Layer 3 adjacent and residing in an IPv6 /64 subnet. Subnet prefixes other than /64 are not supported. |
| DHCP Scope Start Address | Specifies the first IP address of the IP address range. IP addresses from this scope are allocated to the switches during the POAP bootstrap process. |
| DHCP Scope End Address | Specifies the last IP address of the IP address range. IP addresses from this scope are allocated to the switches during the POAP bootstrap process. |
| Switch Mgmt Default Gateway | Specifies the default gateway for the DHCP scope. |
| Switch Mgmt IP Subnet Prefix | Specifies the prefix length for the DHCP scope. |
| DHCP scope and management default gateway IP address specification | If you specify the management default gateway IP address 10.0.1.1 and the subnet prefix 24, ensure that the DHCP scope is within the specified subnet, between 10.0.1.2 and 10.0.1.254. |
| Switch Mgmt IPv6 Subnet Prefix | Specifies the IPv6 prefix for the Mgmt0 interface on the switch. The prefix must be between 112 and 126. This field is editable if you enable IPv6 for DHCP. |
| Enable AAA Config | Check the check box to include AAA configs from the Manageability tab during device bootup. |
| Bootstrap Freeform Config | Enter additional commands as needed. For example, if you are using AAA or remote authentication-related configurations, add these configurations in this field to save the intent. After the devices boot up, they contain the intent defined in the Bootstrap Freeform Config field. Copy and paste the running configuration into this field with the same indentation as in the running configuration on the NX-OS switches; the freeform config must match the running config. For more information, see the section "Resolve freeform config errors in switches" in Working with Inventory in Your Nexus Dashboard LAN or IPFM Fabrics. |
| DHCPv4/DHCPv6 Multi Subnet Scope | Enter one subnet scope per line. This field is editable after you check the Enable Local DHCP Server check box. Define each scope in this format: DHCP Scope Start Address, DHCP Scope End Address, Switch Management Default Gateway, Switch Management Subnet Prefix. For example: 10.6.0.2, 10.6.0.9, 10.6.0.1, 24 |
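The Multi Subnet Scope line format and the gateway rule in this table can be checked with Python's standard `ipaddress` module. This is a minimal sketch under stated assumptions. `parse_scope_line` and `parse_multi_scope` are hypothetical helpers. The checks (the scope lies inside the gateway's subnet and excludes the gateway and the network and broadcast addresses) follow the 10.0.1.1/24 example above and are not Nexus Dashboard's documented validation logic.

```python
import ipaddress


def parse_scope_line(line):
    """Hypothetical helper: parse 'start, end, gateway, prefix' and validate it."""
    parts = [part.strip() for part in line.split(",")]
    if len(parts) != 4:
        raise ValueError(f"expected 4 comma-separated fields, got {len(parts)}")
    start, end, gateway = (ipaddress.ip_address(part) for part in parts[:3])
    subnet = ipaddress.ip_network(f"{gateway}/{int(parts[3])}", strict=False)

    for addr in (start, end):
        if addr not in subnet:  # also rejects mixing IPv4 and IPv6
            raise ValueError(f"{addr} is not in {subnet}")
    if start > end:
        raise ValueError("scope start address is after the end address")
    if {start, end} & {subnet.network_address, subnet.broadcast_address}:
        raise ValueError("scope must not include the network or broadcast address")
    if start <= gateway <= end:
        raise ValueError("scope must not include the default gateway")
    return start, end, gateway, subnet


def parse_multi_scope(text):
    """Validate every non-empty line of the Multi Subnet Scope field."""
    return [parse_scope_line(line) for line in text.splitlines() if line.strip()]


for scope in parse_multi_scope("10.6.0.2, 10.6.0.9, 10.6.0.1, 24\n10.0.1.2, 10.0.1.254, 10.0.1.1, 24"):
    print(scope)
```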

Configuration Backup

| Field | Description |
|---|---|
| Hourly Fabric Backup | Check the Hourly Fabric Backup check box to enable an hourly backup of fabric configurations and the intent. If there was a configuration push in the previous hour, Nexus Dashboard takes a backup. Intent refers to configurations that are saved in Nexus Dashboard but not yet provisioned on the switches. |
| Scheduled Fabric Backup | Check the check box to enable a daily backup. This backup tracks changes in running configurations on the fabric devices that are not tracked by configuration compliance. |
| Scheduled Time | Specifies the scheduled backup time in 24-hour format. This field is enabled if you check the Scheduled Fabric Backup check box. |
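The hourly backup condition and the 24-hour time format can be illustrated as follows. This Python sketch rests on two assumptions: an hourly run takes a backup only when a configuration push occurred during the previous hour, and Scheduled Time is entered as HH:MM. Both function names are hypothetical.

```python
from datetime import datetime, time, timedelta
from typing import Optional


def hourly_backup_due(now: datetime, last_push: Optional[datetime]) -> bool:
    """Hypothetical check: True if a configuration push happened in the hour before `now`."""
    if last_push is None:
        return False
    return now - timedelta(hours=1) <= last_push <= now


def parse_scheduled_time(value: str) -> time:
    """Parse a 24-hour HH:MM value (assumed format); raises ValueError for values such as 24:00."""
    return datetime.strptime(value, "%H:%M").time()


print(hourly_backup_due(datetime(2024, 1, 1, 12, 0), datetime(2024, 1, 1, 11, 15)))
print(parse_scheduled_time("23:30"))
```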

Flow Monitor

| Field | Description |
|---|---|
| Enable Netflow | Check the Enable Netflow check box to enable Netflow on VTEPs for this fabric. By default, Netflow is disabled. When Netflow is enabled on the fabric, you can exclude a particular switch from Netflow by creating a dummy no_netflow PTI for that switch. If Netflow is not enabled at the fabric level, an error message is generated when you enable Netflow at the interface, network, or VRF level. For information about Netflow support for Nexus Dashboard, see the "Configuring Netflow support" section in Creating LAN and ACI Fabrics and Fabric Groups. |
| Netflow Exporter | To add Netflow exporters for receiving Netflow data: |
| Netflow Record | To add Netflow records: |
| Netflow Monitor | To add Netflow monitors: The record name and exporters referenced in each Netflow monitor must be defined in the Netflow Record and Netflow Exporter configuration sections of the Flow Monitor tab. Click Save to configure the flow monitor. |
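The requirement that every Netflow monitor refer only to defined records and exporters amounts to a reference check. This Python sketch is a hypothetical pre-save check with invented names (`NetflowMonitor`, `undefined_references`), not Nexus Dashboard code.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class NetflowMonitor:
    """Hypothetical in-memory view of one Netflow Monitor entry."""
    name: str
    record: str
    exporters: List[str] = field(default_factory=list)


def undefined_references(monitors: List[NetflowMonitor], records: Set[str], exporters: Set[str]) -> List[str]:
    """List every record or exporter name that a monitor uses but that is not defined."""
    errors = []
    for monitor in monitors:
        if monitor.record not in records:
            errors.append(f"{monitor.name}: record {monitor.record!r} is not defined")
        for exporter in monitor.exporters:
            if exporter not in exporters:
                errors.append(f"{monitor.name}: exporter {exporter!r} is not defined")
    return errors


monitors = [NetflowMonitor("mon1", "rec1", ["exp1"]), NetflowMonitor("mon2", "rec2", ["exp1", "exp9"])]
print(undefined_references(monitors, records={"rec1"}, exporters={"exp1"}))
```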

Telemetry

The telemetry feature in Nexus Dashboard allows you to collect, manage, and monitor real-time telemetry data from your network. This data provides valuable insights into the performance and health of your network infrastructure, enabling you to troubleshoot proactively and optimize operations. When you enable telemetry, you gain enhanced visibility into network operations and can manage your fabrics more efficiently.

Follow these steps to enable telemetry for a specific fabric.

-

Navigate to the Fabrics page.

Go to Manage > Fabrics.

-

Choose the fabric for which you want to enable telemetry.

-

From the Actions drop-down list, choose Edit fabric settings.

The Edit fabric-name settings page displays.

You can also access the Edit fabric-name settings page for a fabric from the Fabric Overview page. In the Fabric Overview page, click the Actions drop-down list and choose Edit fabric settings.

-

In the Edit fabric-name settings page, click the General tab.

-

Under the Enabled features section, check the Telemetry check box.

-

Click Save.

When you navigate back to the Edit fabric-name settings page, the Telemetry tab is displayed.

NOTE: The Telemetry tab appears only when you enable the Telemetry option under the General tab in the Edit fabric-name settings page.

The Telemetry tab includes these options.

-

Configuration — allows you to manage telemetry settings and parameters.

-

NAS — provides Network Analytics Service (NAS) features for advanced insights.

Edit configuration settings

The Configuration tab includes these settings.

-

General — allows you to enable analysis.

You can enable these settings.

-

Enable assurance analysis: enables collection of telemetry data from devices to ensure network reliability and performance.

-

Enable Microburst sensitivity: allows you to monitor traffic to detect unexpected data bursts within a very small time window (microseconds). Choose the sensitivity type from the Microburst Sensitivity Level drop-down list. The options are High sensitivity, Medium sensitivity, and Low sensitivity.

The Enable Microburst sensitivity option is available only for ACI fabrics.


-

Flow collection modes — allows you to choose the mode for telemetry data collection. Modes include NetFlow, sFlow, and Flow Telemetry.

For more information, see Flow collection and Configure flows.

-

Flow collection rules — allows you to define rules for monitoring specific subnets or endpoints. These rules are pushed to the relevant devices, enabling detailed telemetry data collection.

For more information, see Flow collection.

Edit NAS settings

Nexus Dashboard allows you to export captured flow records to a remote NAS device using the Network File System (NFS) protocol. Nexus Dashboard defines the directory structure on NAS where the flow records are exported.

You can choose between the two export modes.

-

Full — exports the complete data for each flow record.

-

Base — exports only the essential 5-tuple data for each flow record.

Nexus Dashboard needs both read and write permissions on the NAS to perform the export successfully. If Nexus Dashboard cannot write to the NAS, it will generate an alert to notify you of the issue.

Disable Telemetry

You can uncheck the Telemetry check box on your fabric’s Edit Fabric Settings > General page to disable the telemetry feature for your fabric. Disabling telemetry first puts the feature into a transition phase, after which the feature is disabled.

In certain situations, the disable telemetry workflow can fail, and you may see the Force disable telemetry option on your fabric’s Edit Fabric Settings page.

If you disable telemetry using the instructions provided in Perform force disable telemetry on your fabric, Nexus Dashboard acknowledges your intent to disable the telemetry feature for your fabric and ignores any failures.

The Force disable telemetry option allows you to force-disable the telemetry configuration on your fabric. Use this option when the telemetry disable workflow has failed and you still need to disable the telemetry feature on your fabric.

Using the Force disable telemetry feature may leave switches in your fabric with stale telemetry configurations. You must manually clean up these stale configurations on the switches before re-enabling telemetry on your fabric.
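The disable and force-disable behavior described above can be modeled as a small state machine. This Python sketch is a hypothetical model of the documented workflow, not Nexus Dashboard code. The state and event names are invented for illustration.

```python
from enum import Enum


class TelemetryState(Enum):
    """Hypothetical states of the telemetry feature on one fabric."""
    ENABLED = "enabled"
    DISABLING = "disabling"            # transition phase after you uncheck Telemetry
    DISABLE_FAILED = "disable failed"  # Force disable telemetry becomes available
    DISABLED = "disabled"


# (current state, event) -> next state
TRANSITIONS = {
    (TelemetryState.ENABLED, "uncheck telemetry"): TelemetryState.DISABLING,
    (TelemetryState.DISABLING, "disable succeeded"): TelemetryState.DISABLED,
    (TelemetryState.DISABLING, "disable failed"): TelemetryState.DISABLE_FAILED,
    # Force disable records the intent and ignores failures, so stale
    # telemetry configuration may remain on the switches afterward.
    (TelemetryState.DISABLE_FAILED, "force disable"): TelemetryState.DISABLED,
}


def next_state(state: TelemetryState, event: str) -> TelemetryState:
    try:
        return TRANSITIONS[(state, event)]
    except KeyError:
        raise ValueError(f"event {event!r} is not valid in state {state.name}") from None


state = TelemetryState.ENABLED
for event in ("uncheck telemetry", "disable failed", "force disable"):
    state = next_state(state, event)
print(state)
```

In this model, the stale configuration that force disable can leave behind is the reason to clean up the switches before you move back to the enabled state.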

Perform force disable telemetry on your fabric

Follow these steps to perform a force disable telemetry on your fabric.

-

(Optional) Before triggering a force disable of telemetry configuration, resolve any telemetry configuration anomalies flagged on the fabric.

-

On the Edit Fabric Settings page of your fabric, a banner appears to alert you that telemetry cannot be disabled gracefully, and a Force Disable option is provided with the alert message.

-

Disable telemetry from the Nexus Dashboard UI using one of these options.

-

Click the Force disable option in the banner that appears at the top of your fabric’s Edit Fabric Settings page to force-disable telemetry for your fabric.

-

Navigate to your fabric’s Overview page and click the Actions drop-down list to choose Telemetry > Force disable telemetry option.

Once the force disable action is executed, the Telemetry configuration appears as disabled in Edit Fabric Settings > General > Enabled features > Telemetry area, that is, the Telemetry check box is unchecked.


-

Clean up any stale telemetry configurations from the fabric before re-enabling telemetry on Nexus Dashboard.

NAS

You can export flow records captured by Nexus Dashboard on a remote Network Attached Storage (NAS) with NFS.

Nexus Dashboard defines the directory structure on NAS where the flow records are exported.

You can export the flow records in Base or Full mode. In Base mode, only 5-tuple data for the flow record is exported. In Full mode, the entire data for the flow record is exported.

Nexus Dashboard requires read and write permission to NAS in order to export the flow record. A system issue is raised if Nexus Dashboard fails to write to NAS.

Guidelines and limitations for network attached storage

-

For Nexus Dashboard to export the flow records to external storage, the Network Attached Storage added to Nexus Dashboard must be used exclusively by Nexus Dashboard.

-

Network Attached Storage with Network File System (NFS) version 3 must be added to Nexus Dashboard.

-

Flow Telemetry and Netflow records can be exported.

-

Export of Flow Telemetry Events (FTE) is not supported.

-

Average Network Attached Storage requirements for 2 years of data storage at 20,000 flows per second:

-

Base Mode: 500 TB data

-

Full Mode: 2.8 PB data


-

If there is not enough disk space, new records will not be exported and an anomaly is generated.
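The published averages imply a per-record storage cost that you can use to scale the estimate to your own flow rate and retention period. This Python sketch makes several assumptions: the helper names are hypothetical, units are decimal (1 TB is 10^12 bytes), storage scales linearly, and a year is 365 days. Under those assumptions, the figures above work out to roughly 400 bytes per record in Base mode and about 2.2 KB per record in Full mode.

```python
# Published averages: 2 years of storage at 20,000 flows per second.
BASELINE_FLOWS_PER_SEC = 20_000
BASELINE_YEARS = 2
BASELINE_BYTES = {"base": 500e12, "full": 2.8e15}  # 500 TB and 2.8 PB (decimal units assumed)
SECONDS_PER_YEAR = 365 * 24 * 3600                  # leap days ignored


def bytes_per_record(mode: str) -> float:
    """Average stored bytes per flow record implied by the published figures."""
    records = BASELINE_FLOWS_PER_SEC * BASELINE_YEARS * SECONDS_PER_YEAR
    return BASELINE_BYTES[mode] / records


def estimate_nas_bytes(flows_per_sec: float, years: float, mode: str) -> float:
    """Hypothetical estimate: scale the published figure linearly with rate and retention."""
    return (BASELINE_BYTES[mode]
            * (flows_per_sec / BASELINE_FLOWS_PER_SEC)
            * (years / BASELINE_YEARS))


print(round(bytes_per_record("base")), round(bytes_per_record("full")))
print(estimate_nas_bytes(10_000, 1, "full") / 1e12, "TB")
```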

Add network attached storage to export flow records

The workflow to add Network Attached Storage (NAS) to export flow records includes the following steps:

-

Add NAS to Nexus Dashboard.

-

Add the onboarded NAS to Nexus Dashboard to enable export of flow records.

Add NAS to Nexus Dashboard

Follow these steps to add NAS to Nexus Dashboard.

-

Navigate to Admin > System Settings > General.

-

In the Remote storage area, click Edit.

-

Click Add Remote Storage Locations.

-

Complete the following fields to add NAS to Nexus Dashboard.

-

Enter the name of the Network Attached Storage and a description, if desired.

-

In the Remote storage location type field, click NAS Storage.

-

In the Type field, choose Read Write.

Nexus Dashboard requires read and write permission to export the flow record to NAS. A system issue is raised if Nexus Dashboard fails to write to NAS.

-

In the Hostname field, enter the IP address of the Network Attached Storage.

-

In the Port field, enter the port number of the Network Attached Storage.

-

In the Export path field, enter the export path.

Using the export path, Nexus Dashboard creates the directory structure in NAS for exporting the flow records.

-

In the Alert threshold field, enter the alert threshold.

Nexus Dashboard uses the alert threshold to send an alert when NAS usage exceeds a certain limit.

-

In the Limit (Mi/Gi) field, enter the storage limit in Mi/Gi.

-

Click Save.
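The Limit field uses binary units: Mi is 2^20 bytes and Gi is 2^30 bytes. The following Python sketch converts a limit to bytes and checks usage against the alert threshold. It assumes, without confirmation from this document, that the alert threshold is a percentage of the configured limit. Both helper names are hypothetical.

```python
import re

BINARY_UNITS = {"Mi": 2**20, "Gi": 2**30}


def limit_to_bytes(limit: str) -> int:
    """Convert a limit such as '500Gi' or '512Mi' to bytes (hypothetical helper)."""
    match = re.fullmatch(r"\s*(\d+)\s*(Mi|Gi)\s*", limit)
    if not match:
        raise ValueError(f"unrecognized limit {limit!r}; expected a value such as '500Gi'")
    return int(match.group(1)) * BINARY_UNITS[match.group(2)]


def alert_threshold_reached(used_bytes: int, limit: str, threshold_percent: float) -> bool:
    """Assumption: the alert threshold is a percentage of the configured limit."""
    return used_bytes >= limit_to_bytes(limit) * threshold_percent / 100


print(limit_to_bytes("500Gi"))
print(alert_threshold_reached(85 * 2**30, "100Gi", 80))
```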


Add the onboarded NAS to Nexus Dashboard

Follow these steps to add the onboarded NAS to Nexus Dashboard.

-

Navigate to the Fabrics page:

Manage > Fabrics

-

Choose the fabric with the telemetry feature enabled.

-

Choose Actions > Edit Fabric Settings.

-

Click Telemetry.

-

Click the NAS tab in the Telemetry window.

-

Make the necessary configurations in the General settings area.

-

Enter the name in the Name field.

-

In the NAS server field, choose the NAS server added to Nexus Dashboard from the drop-down list.


-

In the Collection settings area, choose the flow from the Flows drop-down list.

-

In Base mode, only 5-tuple data for the flow record is exported.

-

In Full mode, the entire data for the flow record is exported.


-

Click Save.

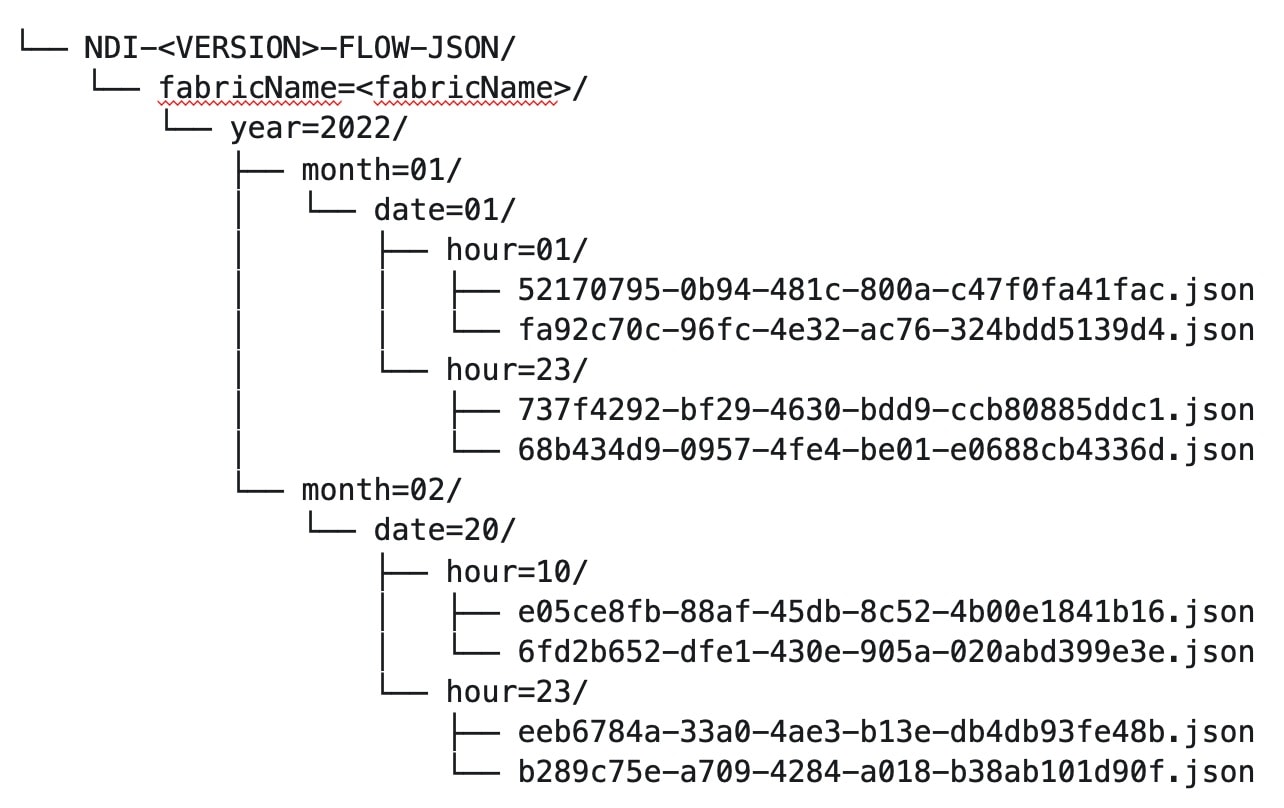

The traffic from the flows displayed in the Flows page is exported as a JSON file to the external NAS in the following directory hierarchy.

Navigate to Analyze > Flows to view the flows that will be exported.

Each flow record is written as line-delimited JSON, with one JSON object per line.

JSON output file format for a flow record in base mode

```json
{"fabricName":"myapic","terminalTs":1688537547433,"originTs":1688537530376,"srcIp":"2000:201:1:1::1","dstIp":"2000:201:1:1::3","srcPort":1231,"dstPort":1232,"ingressVrf":"vrf1","egressVrf":"vrf1","ingressTenant":"FSV1","egressTenant":"FSV1","protocol":"UDP"}
{"fabricName":"myapic","terminalTs":1688537547378,"originTs":1688537530377,"srcIp":"201.1.1.127","dstIp":"201.1.1.1","srcPort":0,"dstPort":0,"ingressVrf":"vrf1","egressVrf":"","ingressTenant":"FSV2","egressTenant":"","protocol":"ANY-HOST"}
```

JSON output file format for a flow record in full mode

```json
{"fabricName":"myapic","terminalTs":1688538023562,"originTs":1688538010527,"srcIp":"201.1.1.121","dstIp":"201.1.1.127","srcPort":0,"dstPort":0,"ingressVrf":"vrf1","egressVrf":"vrf1","ingressTenant":"FSV2","egressTenant":"FSV2","protocol":"ANY-HOST","srcEpg":"ext-epg","dstEpg":"ext-epg1","latencyMax":0,"ingressVif":"eth1/15","ingressVni":0,"latency":0,"ingressNodes":"Leaf1-2","ingressVlan":0,"ingressByteCount":104681600,"ingressPktCount":817825,"ingressBurst":0,"ingressBurstMax":34768,"egressNodes":"Leaf1-2","egressVif":"po4","egressVni":0,"egressVlan":0,"egressByteCount":104681600,"egressPktCount":817825,"egressBurst":0,"egressBurstMax":34768,"dropPktCount":0,"dropByteCount":0,"dropCode":"","dropScore":0,"moveScore":0,"latencyScore":0,"burstScore":0,"anomalyScore":0,"hashCollision":false,"dropNodes":"[]","nodeNames":"[\"Leaf1-2\"]","nodeIngressVifs":"[\"Leaf1-2,eth1/15\"]","nodeEgressVifs":"[\"Leaf1-2,po4\"]","srcMoveCount":0,"dstMoveCount":0,"moveCount":0,"prexmit":0,"rtoOutside":false,"events":"[[\\\"1688538010527,Leaf1-2,0,3,1,no,no,eth1/15,,po4,po4,,,,,0,64,0,,,,,,,,\\\"]]"}
```
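Because each exported file holds one JSON object per line, a consumer can read it line by line with Python's standard `json` module. This sketch is illustrative and makes several assumptions. The set of Full-only keys was inferred by comparing the two samples above and is not a published schema. The helper name is hypothetical. The sample lines are trimmed copies of the records shown above. Some Full-mode fields, such as `nodeNames`, hold JSON-encoded lists as strings, so the sketch decodes them a second time. The more heavily escaped `events` field is left as a string.

```python
import json
from typing import Dict, Iterable, Iterator

# Keys seen only in the Full-mode sample above (an inference, not a schema).
FULL_ONLY_KEYS = {"srcEpg", "dstEpg", "ingressByteCount", "egressByteCount", "nodeNames"}
# Full-mode fields whose values are JSON-encoded lists stored as strings.
NESTED_JSON_KEYS = ("dropNodes", "nodeNames", "nodeIngressVifs", "nodeEgressVifs")


def read_flow_records(lines: Iterable[str]) -> Iterator[Dict]:
    """Yield one dict per non-empty line of an exported flow-record file."""
    for line in lines:
        line = line.strip()
        if not line:
            continue
        record = json.loads(line)
        record["mode"] = "base" if FULL_ONLY_KEYS.isdisjoint(record) else "full"
        for key in NESTED_JSON_KEYS:
            if isinstance(record.get(key), str):
                record[key] = json.loads(record[key])
        yield record


SAMPLE = [
    r'{"fabricName":"myapic","srcIp":"201.1.1.127","dstIp":"201.1.1.1","protocol":"ANY-HOST"}',
    r'{"fabricName":"myapic","srcIp":"201.1.1.121","dstIp":"201.1.1.127","srcEpg":"ext-epg","nodeNames":"[\"Leaf1-2\"]"}',
]

for rec in read_flow_records(SAMPLE):
    print(rec["mode"], rec["srcIp"], rec.get("nodeNames"))
```

To process an exported file instead of the sample list, pass the open file object, because iterating over a text file yields one line at a time.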

Flow collection

Understanding flow telemetry

Flow telemetry allows you to see the path taken by different flows in detail. It also allows you to identify the EPG and VRF instance of the source and destination. You can see the switches in the flow with the help of flow table exports from the nodes. Nexus Dashboard generates the flow path by stitching together all the exports in the order of the flow.

You can configure the Flow Telemetry rule for the following interface types:

-

VRF instances

-

Physical interfaces

-

Port channel interfaces

-

Routed sub-interfaces (Cisco ACI fabric)

-

SVIs (Cisco ACI fabric)

In a Cisco ACI fabric, if you want to configure routed sub-interfaces from the UI, select L3 Out.

In an NX-OS fabric, physical or port channel flow rules are supported only on routed interfaces.

Flow telemetry monitors flows for each fabric separately, because there is no stitching across the fabrics in a fabric group. Therefore, each fabric reports its portion of the traffic as an individual flow. For example, if there are two fabrics (fabric A and fabric B) within a fabric group and traffic flows between them, Nexus Dashboard displays two separate flows: one from fabric A that shows where the flow originates and where it exits fabric A, and one from fabric B that shows where the flow enters and exits fabric B.

Flow telemetry guidelines and limitations

-

All flows are monitored as a consolidated view in a unified pipeline for Cisco ACI and NX-OS fabrics, and the flows are aggregated under the same umbrella.

-

Even if a particular node (for example, a third-party switch) is not supported for Flow Telemetry, Nexus Dashboard will use LLDP information from the previous and next nodes in the path to identify the switch name and the ingress and egress interfaces.

-

Nexus Dashboard supports Kafka export for Flow anomalies. However, Kafka export is not currently supported for Flow Event anomalies.

Flow telemetry guidelines and limitations for NX-OS fabrics

-

Ensure that you have configured NTP and enabled PTP in Nexus Dashboard. See Cisco Nexus Dashboard Deployment Guide and Precision Time Protocol (PTP) for Cisco Nexus Dashboard Insights for more information. You are responsible for configuring the switches with external NTP servers.

-

In the Edit Flow page, you can enable all three telemetry types. sFlow is the most restrictive, Netflow has more capabilities, and Flow Telemetry has the most capabilities. We recommend that you enable Flow Telemetry if it is available for your configuration. If Flow Telemetry is not available, use Netflow. If Netflow is not available, use sFlow.

-

If there are multiple Nexus Dashboard clusters onboarded to Nexus Dashboard, partial paths will be generated for each fabric.

-

If you manually configure the fabric to use with Nexus Dashboard and Flow Telemetry support, the Flows Exporter port changes from 30000 to 5640. To prevent a breakage of the Flows Exporter, adjust the automation.


-

Flow telemetry is supported in -FX3 platform switches for the following NX-OS versions:

-

9.3(7) and later

-

10.1(2) and later

-

Flow telemetry is not supported in -FX3 platform switches for NX-OS version 10.1(1).


-

Interface-based Flow Telemetry is supported only on modular chassis with -FX and -GX line cards, for physical port and port channel rules.

-

If interface-based Flow Telemetry is pushed from Nexus Dashboard for Classic LAN and External Connectivity Network fabrics, perform the following steps:

-

Choose the fabric.

-

Choose Policies > Action > Add policy > Select all > Choose template > host_port_resync and click Save.

-

In the Fabric Overview page, choose Actions > Recalculate and deploy.


-

For VXLAN fabrics, interface-based Flow Telemetry is not supported on switch links between spine switch and leaf switch.

-

If you want to use the default VRF instance for flow telemetry, you must create the VRF instance with a name of "default" in lowercase. Do not enter the name with any capital letters.

-

Flow telemetry is not supported in classic LAN topologies with 2-level VPC access layers.

-

If you want to enable Flow Telemetry, ensure that there are no pre-existing Netflow configurations on the switches. If there are any pre-existing configurations, the switch configuration may fail.

To enable Flow Telemetry without configuration issues, follow these steps:

-

Ensure that there are no pre-existing Netflow configurations on the switches. If such configurations exist, enabling Flow Telemetry might result in a system anomaly with the error message "invalid command match IP source address".

-

If you encounter the error, disable Flow Telemetry.

-

Remove any existing Netflow configurations from the switches.

-

Re-enable Flow Telemetry.

-

For some flows, latency information is not available, which could happen due to latency issues. In these cases, latency information will be reported as 0.
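The -FX3 release rules in this list can be encoded as a comparison of parsed NX-OS version numbers. In this Python sketch, `parse_nxos_version` and `fx3_flow_telemetry_supported` are hypothetical helpers. The sketch assumes that "9.3(7) and later" covers every later release except the 10.1 train before 10.1(2), and it ignores any letter suffix on the maintenance number.

```python
import re
from typing import Tuple


def parse_nxos_version(version: str) -> Tuple[int, ...]:
    """Parse strings such as '9.3(7)' or '10.1(2)' into (major, minor, maintenance)."""
    match = re.match(r"\s*(\d+)\.(\d+)\((\d+)", version)
    if not match:
        raise ValueError(f"unrecognized NX-OS version {version!r}")
    return tuple(int(part) for part in match.groups())


def fx3_flow_telemetry_supported(version: str) -> bool:
    """Hypothetical check of the -FX3 Flow Telemetry release rules."""
    major, minor, maintenance = parse_nxos_version(version)
    if (major, minor) == (10, 1):
        return maintenance >= 2  # 10.1(1) is not supported; 10.1(2) and later are
    return (major, minor, maintenance) >= (9, 3, 7)


for release in ("9.3(6)", "9.3(7)", "10.1(1)", "10.1(2)"):
    print(release, fx3_flow_telemetry_supported(release))
```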


Flow telemetry rules guidelines and limitations for NX-OS fabrics

-

If you configure an interface rule (physical or port channel) on a subnet, it can monitor only incoming traffic. It cannot monitor outgoing traffic on the configured interface rule.

-

If a configured port channel contains two physical ports, only the port channel rule applies. Even if you configure physical interface rules on those ports, the port channel rule takes precedence.

-

For NX-OS release 10.3(2) and earlier, if a flow rule is configured on an interface, global flow rules are not matched.

-

For NX-OS release 10.3(3) and later, a flow rule configured on an interface is matched first and then the global flow rules are matched.
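The version-dependent matching order for interface and global flow rules can be sketched as follows. This is a hypothetical Python illustration of the two rules above, with invented helper names. It does not model how the switch evaluates TCAM entries internally.

```python
import re
from typing import List, Tuple


def parse_nxos_version(version: str) -> Tuple[int, ...]:
    """Parse strings such as '10.3(2)' into (major, minor, maintenance)."""
    match = re.match(r"\s*(\d+)\.(\d+)\((\d+)", version)
    if not match:
        raise ValueError(f"unrecognized NX-OS version {version!r}")
    return tuple(int(part) for part in match.groups())


def rules_evaluated(version: str, interface_rules: List[str], global_rules: List[str]) -> List[str]:
    """Hypothetical helper: flow rules evaluated for traffic on one interface, in match order."""
    if interface_rules and parse_nxos_version(version) <= (10, 3, 2):
        # 10.3(2) and earlier: an interface rule prevents global rules from matching.
        return list(interface_rules)
    # 10.3(3) and later, or no interface rule: interface rules first, then global rules.
    return list(interface_rules) + list(global_rules)


print(rules_evaluated("10.3(2)", ["eth1/1-rule"], ["global-rule"]))
print(rules_evaluated("10.3(3)", ["eth1/1-rule"], ["global-rule"]))
```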

Configure flows

Configure flow collection modes

Follow these steps to configure flow collection modes.

-

Navigate to Admin > System Settings > Flow collection.

-

In the Flow collection mode area, choose Flow telemetry.

Enabling Flow Telemetry automatically activates Flow Telemetry Events. Whenever a compatible event takes place, an anomaly will be generated, and the What’s the impact? section in the Anomaly page will display the associated flows. You can manually configure a Flow Telemetry rule to acquire comprehensive end-to-end information about the troublesome flow.

Configure flow collection rules in an NX-OS fabric

Follow these steps to configure flow collection rules in an NX-OS fabric.

-

Navigate to the Telemetry window for your fabric.

-

Navigate to the main Fabrics page:

Manage > Fabrics

-

In the table showing all of the Nexus Dashboard fabrics that you have already created, locate the LAN or IPFM fabric where you want to configure telemetry settings.

-

Click that fabric.

The Overview page for that fabric appears.

-

Click Actions > Edit Fabric Settings.

The Edit fabric_name Settings window appears.

-

Verify that the Telemetry option is enabled in the Enabled features area.

The Telemetry tab doesn’t become available unless the Telemetry option is enabled in the Enabled features area.

-

Click the Telemetry tab to access the telemetry settings for this fabric.


-

Click the Flow collection tab in the Telemetry window.

-

In the Mode area, click Flow telemetry.

-

In the Flow collection rules area, determine which type of flow collection rule you want to add.

VRF

To add a VRF rule:

-

Click the VRF tab.

A table with already-configured VRF flow collection rules is displayed.

For any VRF flow collection rule in this table, click the ellipsis (…), then click Edit rule to edit that rule or Delete rule to delete it.

-

Add a new rule by clicking Create flow collection rule.

-

In the General area, complete the following:

-

Enter the name of the rule in the Rule Name field.

-

The VRF field is disabled. The flow rule applies to all the VRF instances.

-

In the Flow Properties area, select the protocol for which you intend to monitor the flow traffic.

-

Enter the source and destination IP addresses. Enter the source and destination port.

-

Click Save.


-

Physical interface

To add a physical interface rule:

-

Click the Physical interface tab.

A table with already-configured physical interface flow collection rules is displayed.

For any physical interface flow collection rule in this table, click the ellipsis (…), then click Edit rule to edit that rule or Delete rule to delete it.

-

Add a new rule by clicking Create flow collection rule.

-

In the General area, complete the following:

-

Enter the name of the rule in the Rule Name field.

-

Check the Enabled check box to enable the status. If you enable the status, the rule will take effect. Otherwise, the rule will be removed from the switches.

-

In the Flow Properties area, select the protocol for which you intend to monitor the flow traffic.

-

Enter the source and destination IP addresses. Enter the source and destination port.

-

In the Interface List area, click Select a Node. Use the search box to select a node.

-

From the drop-down list, select an interface. You can add more than one row (node and interface combination) by clicking Add Interfaces. However, a node can appear only once within a rule; the configuration is rejected if the same node is added more than once.

-

Click Save.


-

Port channel

To add a port channel rule:

-

Click the Port channel tab.

A table with already-configured port channel flow collection rules is displayed.

For any port channel flow collection rule in this table, click the ellipsis (…), then click Edit rule to edit that rule or Delete rule to delete it.

-

Add a new rule by clicking Create flow collection rule.

-

In the General area, enter the name of the rule in the Rule Name field.

-

Select the Enabled check box to enable the status. If you enable the status, the rule will take effect. Otherwise, the rule will be removed from the switches.

-

In the Flow Properties area, select the protocol for which you intend to monitor the flow traffic.

-

Enter the source and destination IP addresses. Enter the source and destination port.

-

From the drop-down list, select an interface. You can add more than one row (node and interface combination) by clicking Add Interfaces. However, a node can appear only once within a rule; the configuration is rejected if the same node is added more than once.

-

Click Save.


-

Click Done.
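The fields that the procedures above collect for a flow collection rule, and the one-node-per-rule constraint, can be checked with a small validator. This Python sketch is hypothetical. `FlowCollectionRule` and `validate_rule` are invented names that mirror the UI fields, not a Nexus Dashboard data model or API.

```python
import ipaddress
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class FlowCollectionRule:
    """Hypothetical representation of the Create flow collection rule fields."""
    name: str
    protocol: str
    src_ip: str
    dst_ip: str
    enabled: bool = True
    interfaces: List[Tuple[str, str]] = field(default_factory=list)  # (node, interface) rows


def validate_rule(rule: FlowCollectionRule) -> List[str]:
    errors = []
    if not rule.name.strip():
        errors.append("Rule Name is required")
    for label, value in (("source", rule.src_ip), ("destination", rule.dst_ip)):
        try:
            ipaddress.ip_network(value, strict=False)  # accepts a host or a subnet
        except ValueError:
            errors.append(f"invalid {label} address {value!r}")
    seen = set()
    for node, _interface in rule.interfaces:
        if node in seen:
            errors.append(f"node {node} appears more than once in the rule")
        seen.add(node)
    return errors


rule = FlowCollectionRule("web", "TCP", "10.1.0.0/16", "10.2.0.10",
                          interfaces=[("leaf1", "eth1/1"), ("leaf1", "eth1/2")])
print(validate_rule(rule))
```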

Monitor the subnet for flow telemetry

In the following example, the configured rule for a flow monitors the specific subnet provided. The rule is pushed to the fabric which pushes it to the switches. So, when the switch sees traffic coming from a source IP or the destination IP, and if it matches the subnet, the information is captured in the TCAM and exported to the Nexus Dashboard service. If there are 4 nodes (A, B, C, D), and the traffic moves from A > B > C > D, the rules are enabled on all 4 nodes and the information is captured by all the 4 nodes. Nexus Dashboard stitches the flows together. Data such as the number of drops and the number of packets, anomalies in the flow, and the flow path are aggregated for the 4 nodes.
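The subnet match and the stitching of per-node exports described in this example can be illustrated in a few lines. This Python sketch uses hypothetical helper names and simplifies the aggregation. It assumes that drops are summed across nodes and that the packet count is taken from the first (ingress) node, so that the same packets are not counted once per hop.

```python
import ipaddress
from typing import Dict, List


def matches_rule(rule_subnet: str, src_ip: str, dst_ip: str) -> bool:
    """A switch captures a flow if its source or destination IP is in the rule's subnet."""
    subnet = ipaddress.ip_network(rule_subnet)
    return (ipaddress.ip_address(src_ip) in subnet
            or ipaddress.ip_address(dst_ip) in subnet)


def stitch(exports: List[Dict]) -> Dict:
    """Hypothetical aggregation of per-node exports, listed in path order."""
    return {
        "path": [export["node"] for export in exports],
        "ingress_packets": exports[0]["packets"],
        "total_drops": sum(export["drops"] for export in exports),
    }


exports = [
    {"node": "A", "packets": 100, "drops": 0},
    {"node": "B", "packets": 100, "drops": 2},
    {"node": "C", "packets": 98, "drops": 1},
    {"node": "D", "packets": 97, "drops": 0},
]
if matches_rule("10.1.0.0/16", "10.1.2.3", "192.0.2.1"):
    print(stitch(exports))
```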

Follow these steps to monitor the subnet for flow telemetry.

-

Navigate to Manage > Fabrics.

-

Choose a fabric.

-

Verify that the Fabric and Snapshot values are appropriate. The default snapshot value is 15 minutes. Nexus Dashboard monitors all the flows in the chosen fabric for the chosen snapshot.

-

Navigate to Connectivity > Flows to view a summary of all the flows that are being captured based on the snapshot that you chose.

The related anomaly score, record time, the nodes sending the flow telemetry, flow type, ingress and egress nodes, and additional details are displayed in a table format. If you click a specific flow in the table, specific details are displayed in the sidebar for the particular flow telemetry. In the sidebar, if you click the Details icon, the details are displayed in a larger page. In this page, in addition to other details, the Path Summary is also displayed with specifics related to source and destination. If there are flows in the reverse direction, that will also be visible in this location.

For a bidirectional flow, you can choose to reverse the flow and view its path summary. If any packet drops generate a flow event, you can view them in the Anomaly dashboard.

Understanding Netflow

Netflow is an industry-standard technology in which Cisco routers monitor and collect network traffic on an interface. Netflow version 9 is supported.

Netflow enables the network administrator to determine information such as source, destination, class of service, and causes of congestion. Netflow is configured on the interface to monitor every packet on the interface and provide telemetry data. You cannot filter on Netflow.

Netflow in Nexus series switches is based on intercepting the packet processing pipeline to capture summary information of network traffic.

The components of a flow monitoring setup are as follows:

-

Exporter: Aggregates packets into flows and exports flow records to one or more collectors.

-

Collector: Receives, stores, and pre-processes flow data from a flow exporter.

-

Analysis: Uses the flow data for traffic profiling or network intrusion detection.
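The exporter sends flow records to the collector in Netflow version 9 export packets. As an illustration only, this Python sketch shows the first step a collector performs: decoding the 20-byte packet header defined in RFC 3954, which contains the version, record count, system uptime, UNIX seconds, sequence number, and source ID. It is not Nexus Dashboard code, and it does not decode the template and data FlowSets that follow the header.

```python
import struct
from typing import NamedTuple

# RFC 3954 packet header: six big-endian fields, 20 bytes in total.
HEADER = struct.Struct("!HHIIII")


class NetflowV9Header(NamedTuple):
    version: int        # always 9
    count: int          # number of records in this export packet
    sys_uptime_ms: int  # exporter uptime in milliseconds
    unix_secs: int      # export time, seconds since the UNIX epoch
    sequence: int       # incremental sequence counter of export packets
    source_id: int      # identifies the exporter observation domain


def parse_v9_header(datagram: bytes) -> NetflowV9Header:
    if len(datagram) < HEADER.size:
        raise ValueError("datagram is shorter than a Netflow v9 header")
    header = NetflowV9Header(*HEADER.unpack_from(datagram))
    if header.version != 9:
        raise ValueError(f"expected Netflow version 9, got {header.version}")
    return header


packet = HEADER.pack(9, 2, 1000, 1688537547, 42, 7) + b"\x00" * 8
print(parse_v9_header(packet))
```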


The following interfaces are supported for Netflow:

| Interfaces | 5 Tuple | Nodes | Ingress | Egress | Path | Comments |
|---|---|---|---|---|---|---|
| Routed Interface/Port Channel | Yes | Yes | Yes | No | Yes | Ingress node is shown in path |
| Sub Interface/Logical (Switch Virtual Interface) | Yes | Yes | No | No | No | No |

In an NX-OS fabric, port channel support is available if you monitor only the host-facing interfaces.

Understanding Netflow types

You can use these Netflow types:

Full Netflow

With Full Netflow, all packets on the configured interfaces are captured into flow records in a flow table. Flows are sent to the supervisor module. Records are aggregated over configurable intervals and exported to the collector. Except in the case of aliasing (multiple flows hashing to the same entry in the flow table), all flows can be monitored regardless of their packet rate.

Nexus 9000 Series switches with the Fabric Controller type as well as switches in a Cisco ACI fabric support Full Netflow.

Sampled Netflow

With Sampled Netflow, packets on configured interfaces are time sampled. Flows are sent to the supervisor or a network processor for aggregation. Aggregated flow records are exported at configured intervals. The probability of a record for a flow being captured depends on the sampling frequency and packet rate of the flow relative to other flows on the same interface.

Nexus 7000 and Nexus 7700 Series switches with F/M line cards and the Fabric Controller type support Sampled Netflow.
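A simplified model shows why capture depends on both the sampling rate and a flow's packet rate. This Python sketch swaps in random 1-in-N packet sampling as an assumption; the switches described above use time-based sampling. Under this model, a flow that sends k packets in an export interval is captured with probability 1 - (1 - 1/N)^k. The helper name is hypothetical.

```python
def capture_probability(packets: int, one_in_n: int) -> float:
    """Chance that at least one of `packets` packets is sampled at a 1-in-N rate."""
    if one_in_n < 1:
        raise ValueError("sampling rate must be 1-in-N with N >= 1")
    if packets < 0:
        raise ValueError("packet count cannot be negative")
    return 1.0 - (1.0 - 1.0 / one_in_n) ** packets


for packets in (1, 100, 1000, 10000):
    print(packets, round(capture_probability(packets, 1000), 3))
```

Under this model, a low-rate flow is easily missed, while a flow sending many times N packets per interval is almost always recorded.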

Netflow guidelines and limitations

-

In Cisco Nexus 9000 Series switches, Netflow supports a small subset of the export fields published in the Netflow version 9 RFC (RFC 3954).

-

Netflow is captured only on the ingress port of a flow, because only the ingress switch exports the flow. Netflow cannot be captured on fabric ports.

-

You must configure persistent IP addresses under the cluster configuration, including 7 IP addresses in the same subnet as the data network.

Netflow guidelines and limitations for Cisco ACI fabrics

-

We recommend that you enable Flow Telemetry. If that is not available for your configuration, use Netflow. However, you can determine which mode of flow to use based upon your fabric configuration.

-

Enabling both Flow Telemetry and Netflow is not supported.

-

After you enable Netflow, you must obtain the Netflow collector IP address and configure Cisco APIC with the collector IP address. See Cisco APIC and NetFlow.

To obtain the Netflow collector IP address, navigate to Admin > System Settings > General, then locate the External Pools area. Click View all at the bottom left area of the External Pools tile; the Telemetry-collector persistent IP addresses listed in the table are used for the Netflow collector IP address.

-

The Netflow and sFlow flow collection modes do not support anomalies.

Netflow guidelines and limitations for NX-OS fabrics

-

In the Edit Flow page, you can enable all three modes. Choose the best possible mode for a product. sFlow is the most restrictive, Netflow has more capabilities, and Flow Telemetry has the most capabilities. We recommend that you enable Flow Telemetry if it is available for your configuration. If Flow Telemetry is not available, then use Netflow. If Netflow is not available, use sFlow.

-

In Nexus 7000 and Nexus 9000 Series switches, only ingress host-facing interfaces configured for Netflow are supported (in either VXLAN or Classic LAN fabrics).

-

Netflow is supported in Classic LAN and VXLAN fabrics. In VXLAN fabrics, Netflow is not supported on fabric ports.

-

Netflow configurations will not be pushed. However, if a fabric is managed, the software sensors will be pushed.

-

If you manually configure the fabric to use with Nexus Dashboard and Netflow support, the Flows Exporter port changes from 30000 to 5640. To prevent a breakage of the Flows Exporter, adjust the automation.

-

To configure Netflow on fabric switches, see the Configuring Netflow section in the Cisco Nexus 9000 Series NX-OS System Management Configuration Guide.
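The recommendation order above (Flow Telemetry, then Netflow, then sFlow) can be expressed as a simple preference list. This Python sketch is illustrative only. The `FlowMode` enum and both helper functions are hypothetical, and the modes that are actually available still depend on your switches and fabric type. The second helper encodes the Cisco ACI rule that Flow Telemetry and Netflow cannot both be enabled.

```python
from __future__ import annotations

from enum import Enum
from typing import Optional


class FlowMode(Enum):
    FLOW_TELEMETRY = "Flow Telemetry"
    NETFLOW = "Netflow"
    SFLOW = "sFlow"


# Most capable mode first, matching the recommendation order above.
PREFERENCE = (FlowMode.FLOW_TELEMETRY, FlowMode.NETFLOW, FlowMode.SFLOW)


def pick_flow_mode(available: set[FlowMode]) -> Optional[FlowMode]:
    """Return the most capable available mode, or None if none is available."""
    for mode in PREFERENCE:
        if mode in available:
            return mode
    return None


def aci_modes_valid(enabled: set[FlowMode]) -> bool:
    """Cisco ACI fabrics: enabling both Flow Telemetry and Netflow is not supported."""
    return not {FlowMode.FLOW_TELEMETRY, FlowMode.NETFLOW} <= enabled


print(pick_flow_mode({FlowMode.NETFLOW, FlowMode.SFLOW}).value)  # prints Netflow
```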

Configure Netflow

Follow these steps to configure Netflow.

-

Navigate to the Telemetry page for your LAN or IPFM fabric.

-

Click the Flow collection tab on the Telemetry page.

-

In the Mode area, make the following choices:

-

Choose Netflow.

-

Choose Flow Telemetry.

-

-

Click Save.

Understanding sFlow

sFlow is an industry-standard technology for monitoring traffic in data networks containing switches and routers. Nexus Dashboard supports sFlow version 5 on Cisco Nexus 3000 series switches.

sFlow provides the visibility needed for performance optimization, usage accounting and billing, and defense against security threats.

The following interfaces are supported for sFlow:

| Interfaces | 5 Tuple | Nodes | Ingress | Egress | Path | Comments |
|---|---|---|---|---|---|---|
| Routed Interface | Yes | Yes | Yes | Yes | Yes | Ingress node is shown in path |

Guidelines and limitations for sFlow

-

Nexus Dashboard supports sFlow with Cisco Nexus 3000 series switches.

-

We recommend that you enable Flow Telemetry if it is available for your configuration. If it is not available, use Netflow. If Netflow is not available, use sFlow.

-

For sFlow, you must configure persistent IP addresses under the cluster configuration, including 6 IP addresses in the same subnet as the data network.

-

Nexus Dashboard does not push sFlow configurations. However, if a fabric is managed, Nexus Dashboard pushes the software sensors.

-

If you manually configure the fabric for use with Nexus Dashboard with sFlow support, the Flows Exporter port changes from 30000 to 5640. To prevent the Flows Exporter from breaking, adjust your automation accordingly.

-

Nexus Dashboard does not support sFlow in the following Cisco Nexus 3000 Series switches:

-

Cisco Nexus 3600-R Platform Switch (N3K-C3636C-R)

-

Cisco Nexus 3600-R Platform Switch (N3K-C36180YC-R)

-

Cisco Nexus 3100 Platform Switch (N3K-C3132C-Z)

-

-

Nexus Dashboard does not support sFlow in the following Cisco Nexus 9000 Series fabric modules:

-

Cisco Nexus 9508-R fabric module (N9K-C9508-FM-R)

-

Cisco Nexus 9504-R fabric module (N9K-C9504-FM-R)

-

-

To configure sFlow on fabric switches, see the Configuring sFlow section in the Cisco Nexus 9000 Series NX-OS System Management Configuration Guide.
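If you keep a device inventory outside Nexus Dashboard, a lookup table of the unsupported models listed above can flag devices before you choose sFlow. This Python sketch is hypothetical. The inventory format (device name mapped to product ID) and the `sflow_blockers` helper are assumptions, and the table contains only the models named in this section.

```python
from __future__ import annotations

# Product IDs listed above as not supporting sFlow in Nexus Dashboard.
SFLOW_UNSUPPORTED = {
    "N3K-C3636C-R": "Cisco Nexus 3600-R Platform Switch",
    "N3K-C36180YC-R": "Cisco Nexus 3600-R Platform Switch",
    "N3K-C3132C-Z": "Cisco Nexus 3100 Platform Switch",
    "N9K-C9508-FM-R": "Cisco Nexus 9508-R fabric module",
    "N9K-C9504-FM-R": "Cisco Nexus 9504-R fabric module",
}


def sflow_blockers(inventory: dict[str, str]) -> dict[str, str]:
    """Map each device whose product ID is on the unsupported list to a reason."""
    blockers = {}
    for device, product_id in inventory.items():
        pid = product_id.strip().upper()  # tolerate case and whitespace differences
        if pid in SFLOW_UNSUPPORTED:
            blockers[device] = f"{pid} ({SFLOW_UNSUPPORTED[pid]}) does not support sFlow"
    return blockers


# Hypothetical inventory; "EXAMPLE-PID" stands in for any model not listed above.
inventory = {"leaf-101": "n3k-c3132c-z", "leaf-102": "EXAMPLE-PID"}
print(sflow_blockers(inventory))
```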

Configure sFlow telemetry


Follow these steps to configure sFlow telemetry.

-

Navigate to the Telemetry page for your LAN or IPFM fabric.

-

Click the Flow collection tab on the Telemetry page.

-

In the Mode area, make the following choices:

-

Choose sFlow.

-

Choose Flow Telemetry.

-

-

Click Save.

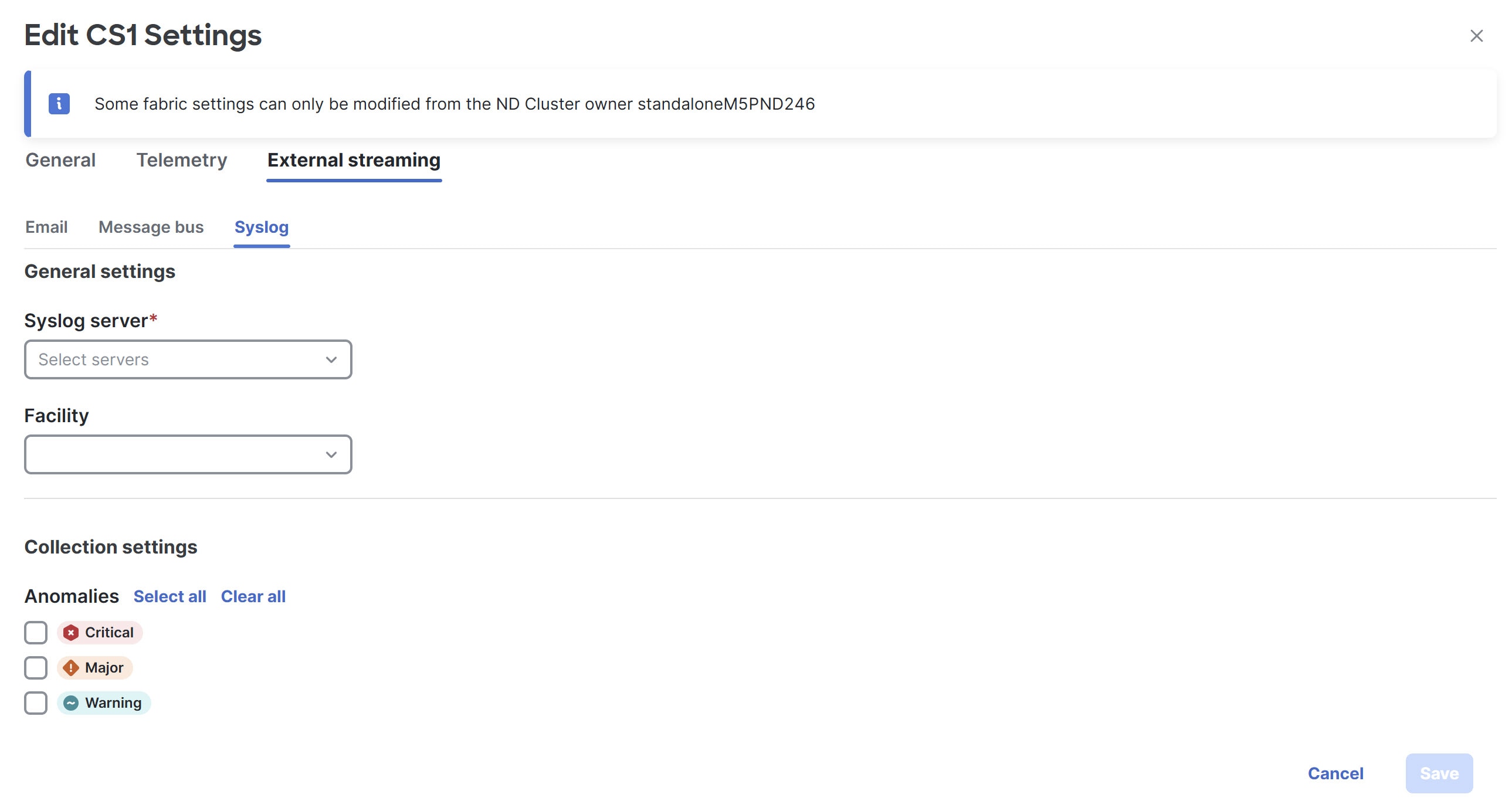

External streaming

The External streaming tab in Nexus Dashboard allows you to export data that Nexus Dashboard collects over Kafka, email, and syslog. Nexus Dashboard generates data such as advisories, anomalies, audit logs, faults, statistical data, and risk and conformance reports. When you configure a Kafka broker, Nexus Dashboard writes all data to a topic. By default, Nexus Dashboard collects export data every 30 seconds or at a less frequent interval.

For ACI fabrics, you can also collect data for specific resources (CPU, memory, and interface utilization) every 10 seconds from the leaf and spine switches using a separate data pipeline. To export this data, select the Usage option under Collection Type in the Message bus export settings. Additionally, CPU and memory data is collected for the controllers.

Nexus Dashboard does not store the collected data in Elasticsearch. Instead, it exports the data directly to your Kafka broker, from which you can push it into your repository or data lake for further use.
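Kafka export requires that Nexus Dashboard can reach the external Kafka broker over the network. As a rough pre-check, the following Python sketch attempts a TCP connection to the broker using only the standard `socket` module. It is an approximation under several assumptions. It runs from whatever host you execute it on, not from the Nexus Dashboard nodes. Port 9092 is only the conventional Kafka listener port, so use your broker's actual port. A successful connection does not verify Kafka authentication or topic settings. The helper name `broker_reachable` is hypothetical.

```python
import socket


def broker_reachable(host: str, port: int = 9092, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port opens within `timeout` seconds.

    9092 is only the conventional Kafka port; pass your broker's real port.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, unreachable, or name not resolved
        return False
```

For example, `broker_reachable("198.51.100.20", 9092)` returns False when nothing at that hypothetical documentation-range address accepts the connection within the timeout.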

You can configure an email scheduler to define the type of data and the frequency at which you want to receive information by email. You can also export anomaly records to an external syslog server. To do this, select the Syslog option in the External streaming tab.
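On the receiving side, a syslog server needs to listen for messages and decode their priority field. The following Python sketch is a minimal illustration, not a production collector. It assumes that the anomaly records arrive over UDP with a standard `<PRI>` prefix as defined in RFC 5424 (PRI = facility * 8 + severity), which this section does not confirm for Nexus Dashboard. The names `decode_pri`, `AnomalyHandler`, and `serve` are hypothetical.

```python
from __future__ import annotations

import re
import socketserver

# RFC 5424 severity names, indexed by the numeric severity (PRI % 8).
SEVERITIES = ("emergency", "alert", "critical", "error",
              "warning", "notice", "informational", "debug")

_PRI = re.compile(r"<(\d{1,3})>")


def decode_pri(message: str) -> tuple[int, str, str]:
    """Split '<PRI>rest' into (facility, severity name, rest)."""
    match = _PRI.match(message)
    if match is None:
        raise ValueError("message does not start with a <PRI> field")
    pri = int(match.group(1))
    if pri > 191:  # facility 0-23, severity 0-7
        raise ValueError(f"PRI {pri} is out of range")
    return pri // 8, SEVERITIES[pri % 8], message[match.end():]


class AnomalyHandler(socketserver.BaseRequestHandler):
    """Print each received datagram with its decoded facility and severity."""

    def handle(self) -> None:
        data, _sock = self.request  # for UDP servers, request is (bytes, socket)
        text = data.decode("utf-8", errors="replace").strip()
        try:
            facility, severity, rest = decode_pri(text)
        except ValueError:
            print(f"unparsed: {text}")
            return
        print(f"facility={facility} severity={severity} {rest}")


def serve(port: int = 5514) -> None:
    """Listen on UDP `port` until interrupted (514 is the standard syslog port)."""
    with socketserver.UDPServer(("0.0.0.0", port), AnomalyHandler) as server:
        server.serve_forever()
```

Call `serve()` on the host that you configure as the external syslog server. The default port 5514 is an arbitrary unprivileged choice for testing.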

Configure external streaming settings

Follow these steps to configure external streaming settings.

-

Navigate to the Fabrics page.

Go to Manage > Fabrics.

-

Choose the fabric for which you want to configure streaming settings.

-

From the Actions drop-down list, choose Edit fabric settings.

The Edit fabric-name settings page displays.

You can also access the Edit fabric-name settings page for a fabric from the Fabric Overview page. In the Fabric Overview page, click the Actions drop-down list and choose Edit fabric settings.

-

In the Edit fabric-name settings page, click the External streaming tab.

The following options appear:

-

Email

-

Message bus

-

Syslog

-

Guidelines and limitations

-

Intersight connectivity is required to receive the reports by email.

-

You can configure up to five emails per day for periodic job configurations.

-

A maximum of six exporters is supported for export across all types of exporters including email, message bus, and syslog. You must provide unique names for each export.

-

Kafka exports support up to 20 exporters per cluster. However, you can select statistics for at most six of these exporters.

-

Before configuring your Kafka export, you must add the external Kafka IP address as a known route in your Nexus Dashboard cluster configuration and verify that Nexus Dashboard can reach the external Kafka IP address over the network.

-

The anomalies in Kafka and email messages include categories such as Resources, Environmental, Statistics, Endpoints, Flows, and Bugs.

-

Export data is not supported for snapshot fabrics.

-

You must provide a unique name for each exporter. You cannot reuse a name between Kafka export for Alerts and Events and Kafka export for Usage.

-

Nexus Dashboard supports Kafka export for flow anomalies. However, Kafka export is not currently supported for flow Event anomalies.
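You can check some of these limits before you add exporters. The following Python sketch is hypothetical: `Exporter` and `validate_exporters` are illustrative names. It checks only three of the rules above:

- Names must be unique across all exporters, including across Kafka export for Alerts and Events and Kafka export for Usage.
- A cluster supports at most 20 Kafka exporters.
- Statistics can be selected on at most six exporters.

Adjust the constants if your release documents different limits.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass

MAX_KAFKA_EXPORTERS = 20       # Kafka exporters per cluster
MAX_STATISTICS_EXPORTERS = 6   # exporters with statistics selected


@dataclass(frozen=True)
class Exporter:
    name: str
    kind: str                 # hypothetical labels: "email", "kafka", or "syslog"
    statistics: bool = False  # True if statistics are selected for this exporter


def validate_exporters(exporters: list[Exporter]) -> list[str]:
    """Return human-readable rule violations; an empty list means none were found."""
    errors = []
    # Names must be unique across every exporter, whatever its type or collection.
    for name, count in sorted(Counter(e.name for e in exporters).items()):
        if count > 1:
            errors.append(f"name {name!r} is used {count} times")
    kafka = sum(1 for e in exporters if e.kind == "kafka")
    if kafka > MAX_KAFKA_EXPORTERS:
        errors.append(f"{kafka} Kafka exporters exceed the limit of {MAX_KAFKA_EXPORTERS}")
    with_stats = sum(1 for e in exporters if e.statistics)
    if with_stats > MAX_STATISTICS_EXPORTERS:
        errors.append(
            f"statistics selected on {with_stats} exporters, limit is {MAX_STATISTICS_EXPORTERS}"
        )
    return errors
```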

Guidelines and limitations in NX-OS fabrics

-

Remove all configurations in the Message Bus Configuration and Email page before you disable Software Telemetry on any fabric and remove the fabric from Nexus Dashboard.

The email scheduler feature in Nexus Dashboard automates the distribution of summarized data collected from Nexus Dashboard. It lets you customize the email recipients, the email format, the scheduling frequency, and the types of alerts and reports to include.

To configure email at the system settings level, see the section "Email" in Working with System Settings.

Follow these steps to configure an email scheduler.

-

Navigate to the Fabrics page.

Go to Manage > Fabrics.

-

Choose the fabric for which you want to configure streaming settings.

-

From the Actions drop-down list, choose Edit fabric settings.

The Edit fabric-name settings page displays.

-

In the Edit fabric-name settings page, click the External streaming tab.

-

Click the Email tab.

-

Review the existing email configurations listed in the Email tab.

The following details appear in the Email tab.

| Field | Description |
|---|---|
| Name | The name of the email configuration. |
| Email | The email addresses used in the email configuration. |
| Start time | The start date used in the email configuration. |
| Frequency | The frequency in days or weeks set in the email configuration. |
| Anomalies | The severity levels for anomalies set in the email configuration. |
| Advisories | The severity levels for advisories set in the email configuration. |
| Risk and conformance reports | The status of the overall inventory for a fabric, including software release, hardware platform, and a combination of software and hardware conformance. |

To add a new email configuration, click Add email in the Email page.

-

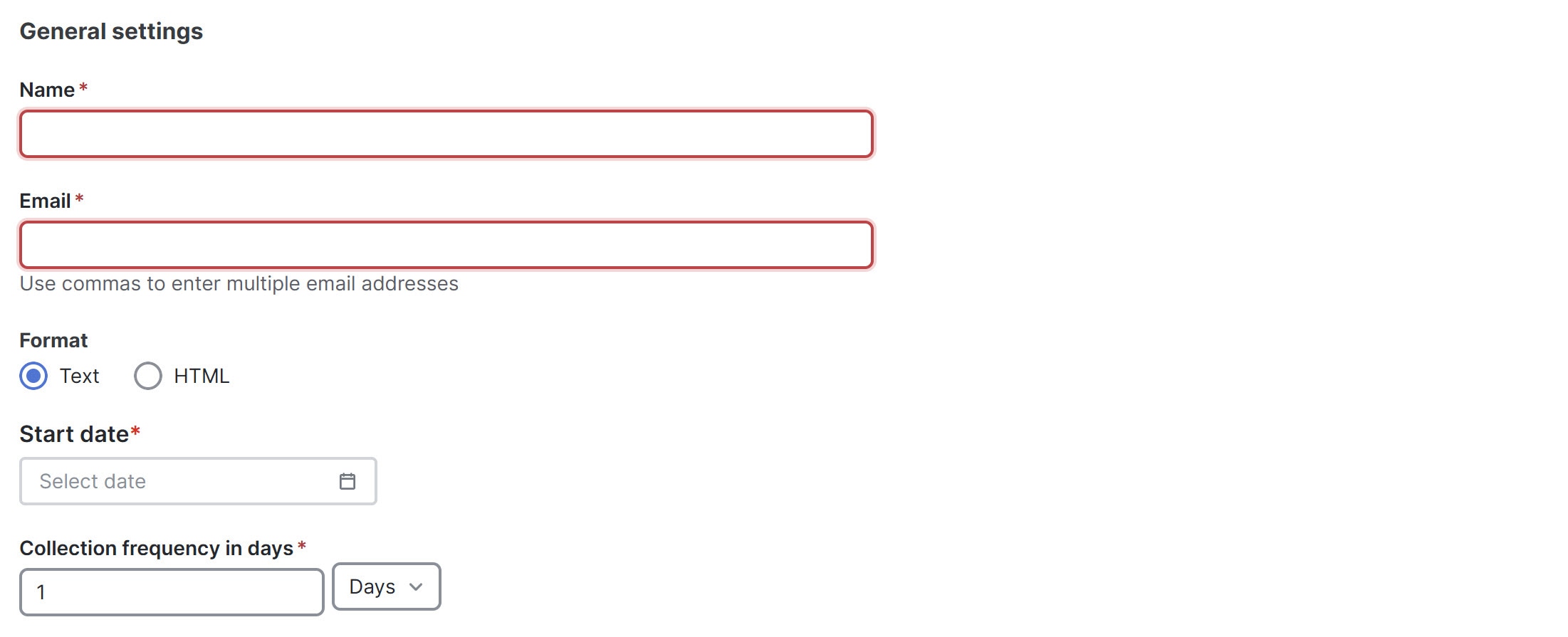

Follow these steps to configure General Settings.

-

In the Name field, enter the name of the email scheduler.

-

In the Email field, enter one or more email addresses separated by commas.

-

In the Format field, choose Text or HTML email format.

-

In the Start date field, choose the start date when the scheduler should begin sending emails.

-

In the Collection frequency in days field, specify how often the summary is sent. You can choose days or weeks.

-

-

Follow these steps to configure Collection Settings.

-

In the Mode field, choose one of the following modes.

-

Basic — displays the severity levels for anomalies and advisories.

-

Advanced — displays the categories and severity levels for anomalies and advisories.

-

-

Check the Only include active alerts in email check box to include only active anomaly alerts.

-

Under Anomalies, choose the categories and severity levels for the anomalies.

-

Under Advisories, choose the categories and severity levels for the advisories.

-

Under Risk and Conformance Reports, choose from the following options.

-

Software

-

Hardware

-

-

-

Click Save.

The Email area displays the configured email schedulers.
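You can model the General Settings and Collection Settings above in a few lines to preview what a schedule would send. This Python sketch rests on assumptions that this section does not confirm:

- The first email goes out on the start date and then once per interval.
- Alerts carry a kind, category, severity, and active flag.

All names (`parse_recipients`, `send_dates`, `Alert`, `Selection`, `select_for_email`) and the severity labels are hypothetical. A `Selection` without categories approximates Basic mode (severity only), and one with categories approximates Advanced mode.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


def parse_recipients(field: str) -> list[str]:
    """Split the comma-separated Email field into trimmed, non-empty addresses."""
    return [part.strip() for part in field.split(",") if part.strip()]


def send_dates(start: date, every: int, unit: str, count: int) -> list[date]:
    """First `count` send dates, assuming the first email goes out on `start`."""
    if unit not in ("days", "weeks"):
        raise ValueError("unit must be 'days' or 'weeks'")
    if every < 1:
        raise ValueError("frequency must be at least 1")
    step = timedelta(days=every) if unit == "days" else timedelta(weeks=every)
    return [start + i * step for i in range(count)]


@dataclass(frozen=True)
class Alert:
    kind: str       # "anomaly" or "advisory"
    category: str
    severity: str
    active: bool


@dataclass(frozen=True)
class Selection:
    severities: frozenset
    categories: Optional[frozenset] = None  # None approximates Basic mode


def select_for_email(alerts: list[Alert], anomalies: Selection,
                     advisories: Selection, only_active: bool) -> list[Alert]:
    """Keep the alerts that match the chosen severities (and categories, if any)."""
    picked = []
    for alert in alerts:
        # "Only include active alerts in email" applies to anomaly alerts.
        if only_active and alert.kind == "anomaly" and not alert.active:
            continue
        rule = anomalies if alert.kind == "anomaly" else advisories
        if alert.severity not in rule.severities:
            continue
        if rule.categories is not None and alert.category not in rule.categories:
            continue
        picked.append(alert)
    return picked
```

For example, `send_dates(date(2025, 1, 6), 1, "weeks", 3)` returns January 6, 13, and 20, 2025.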