Feature Summary and Revision History

Summary Data

|

Applicable Product(s) or Functional Area |

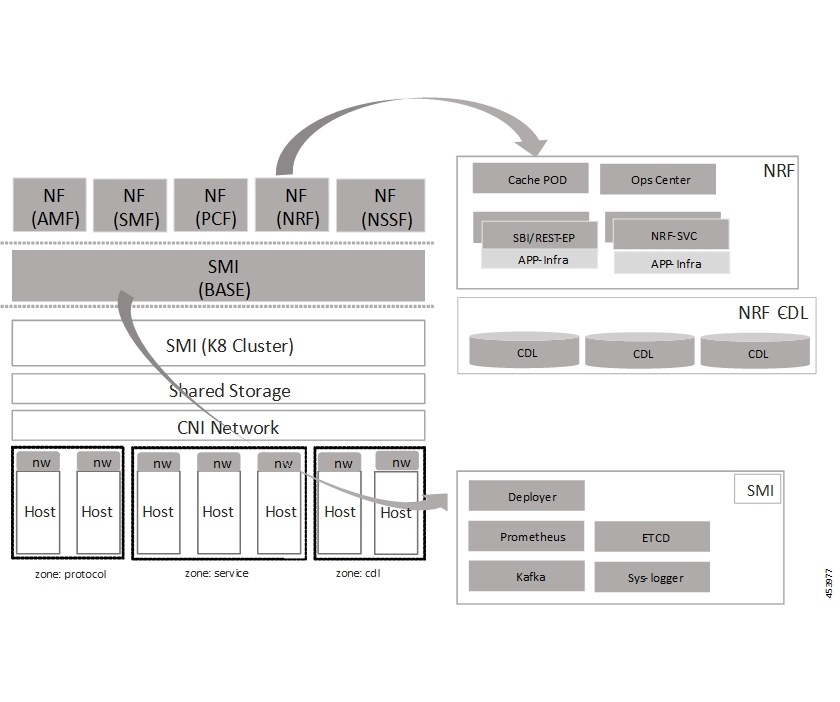

5G-NRF |

|

Applicable Platform(s) |

SMI |

|

Feature Default Setting |

Enabled - Always-on |

|

Related Changes in this Release |

Not Applicable |

|

Related Documentation |

Not Applicable |

Revision History

| Revision Details | Release |
|---|---|
| First introduced. | 2026.01 |
