Supported GPUs and Server Firmware Requirements

The following table lists the minimum server firmware versions for the supported GPU cards.

|

GPU Card |

PID |

Type |

Number of Supported GPUs* |

Cisco IMC/BIOS Minimum Version Required |

|---|---|---|---|---|

|

NVIDIA Tesla A10 |

UCSC-GPU-A10 or HX-GPU-A10= |

Single-wide |

5 |

4.2(2f) |

|

NVIDIA Tesla A16 |

UCSC-GPU-A16=or HX-GPU-A16= |

Double-wide |

3 |

4.2(2f) |

|

NVIDIA Tesla A30 |

UCSC-GPU-A30=or HX-GPU-A30= |

Double-wide |

3 |

4.2(2f) |

|

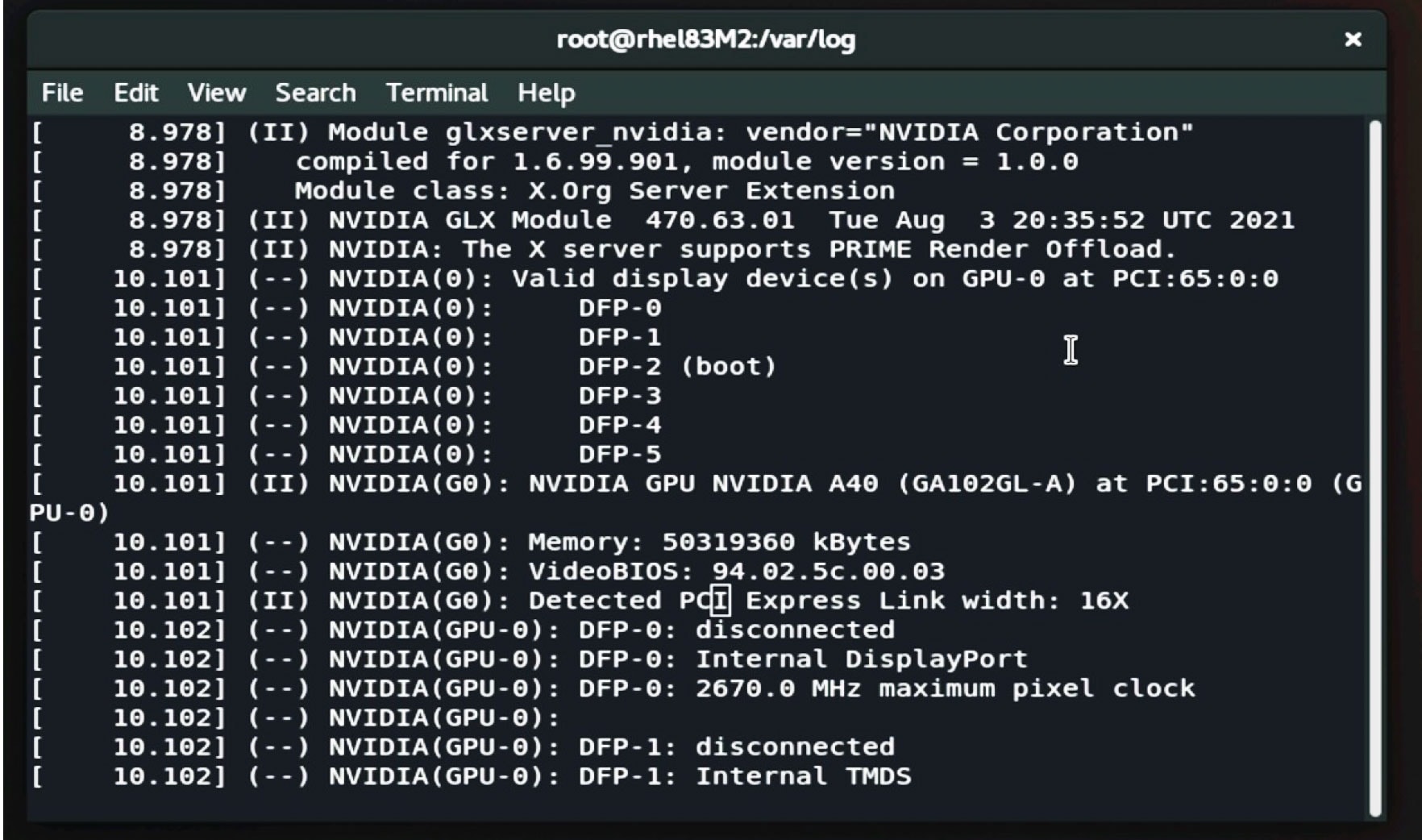

NVIDIA Tesla A40 |

UCSC-GPU-A40 or HX-GPU-A40= |

Double-wide |

3 |

4.2(2f) |

|

NVIDIA Tesla A100 |

UCSC-GPU-A100= |

Double-wide |

3 |

4.2(2f) |

|

NVIDIA Tesla A100-80 |

UCSC-GPU-A100-80= |

Double-wide |

3 |

4.2(2f) |

*The NVMe server supports only 2 double-wide GPUs or 4 single-wide GPUs because it has only two risers.
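The limits in the table and its footnote can be checked programmatically before ordering or installing cards. The following is a minimal Python sketch, not a Cisco tool: the names `GPU_REQUIREMENTS`, `NVME_LIMITS`, `parse_version`, and `check_config` are hypothetical, and the version comparison assumes that strings such as `4.2(2f)` order by major, minor, patch number, and then patch letter alphabetically.

```python
import re

# Per-card data copied from the table: (card type, maximum GPUs, minimum firmware).
GPU_REQUIREMENTS = {
    "A10": ("single-wide", 5, "4.2(2f)"),
    "A16": ("double-wide", 3, "4.2(2f)"),
    "A30": ("double-wide", 3, "4.2(2f)"),
    "A40": ("double-wide", 3, "4.2(2f)"),
    "A100": ("double-wide", 3, "4.2(2f)"),
    "A100-80": ("double-wide", 3, "4.2(2f)"),
}

# Reduced limits for the NVMe server, taken from the table footnote.
NVME_LIMITS = {"single-wide": 4, "double-wide": 2}

# Assumed version format: major.minor(patch + optional letters), e.g. 4.2(2f).
_VERSION_RE = re.compile(r"^(\d+)\.(\d+)\((\d+)([a-z]*)\)$")


def parse_version(version):
    """Split a string such as '4.2(2f)' into a comparable tuple (4, 2, 2, 'f')."""
    match = _VERSION_RE.match(version.strip())
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    major, minor, patch, letter = match.groups()
    return (int(major), int(minor), int(patch), letter)


def check_config(gpu, count, firmware, nvme_server=False):
    """Return a list of problems; an empty list means the plan fits the table."""
    card_type, max_gpus, min_firmware = GPU_REQUIREMENTS[gpu]
    if nvme_server:
        # The NVMe server has only two risers, so the footnote limit applies.
        max_gpus = min(max_gpus, NVME_LIMITS[card_type])
    problems = []
    if count > max_gpus:
        problems.append(f"{count} x {gpu} exceeds the limit of {max_gpus}")
    if parse_version(firmware) < parse_version(min_firmware):
        problems.append(f"firmware {firmware} is older than {min_firmware}")
    return problems
```

For example, `check_config("A10", 5, "4.2(2f)")` returns an empty list, while the same call with `nvme_server=True` reports that five cards exceed the NVMe server limit of four. Always confirm the installed firmware version in Cisco IMC itself; the ordering rule used here is an assumption for illustration.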
