Cisco Solution for Renewable Energy:

Offshore Wind Farm 1.2

Design Guide

January 2025

Cisco Systems, Inc. www.cisco.com

THE SPECIFICATIONS AND INFORMATION REGARDING THE PRODUCTS DESCRIBED IN THIS DOCUMENT ARE SUBJECT TO CHANGE WITHOUT NOTICE. THIS DOCUMENT IS PROVIDED “AS IS.”

ALL STATEMENTS, INFORMATION, AND RECOMMENDATIONS IN THIS DOCUMENT ARE PRESENTED WITHOUT WARRANTY OF ANY KIND, EXPRESS, IMPLIED, OR STATUTORY INCLUDING, WITHOUT LIMITATION, THOSE OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, PUNITIVE, EXEMPLARY, OR INCIDENTAL DAMAGES UNDER ANY THEORY OF LIABILITY, INCLUDING WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OF OR INABILITY TO USE THIS DOCUMENT, EVEN IF CISCO HAS BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

All printed copies and duplicate soft copies of this document are considered uncontrolled. See the current online version for the latest version.

Cisco has more than 200 offices worldwide. Addresses, phone numbers, and fax numbers are listed on the Cisco website at www.cisco.com/go/offices.

©2025 CISCO SYSTEMS, INC. ALL RIGHTS RESERVED

Contents

Introduction to Cisco Solution for Renewable Energy: Offshore Wind Farm

Cisco Solution for Renewable Energy Offshore Wind Farms

Offshore Wind Farm Cisco Validated Design

Scope of Wind Farm Release 1.2 CVD

New Capabilities in Offshore Wind Farm Release 1.2

Chapter 2: Wind Farm Use Cases

Offshore Wind Farm Places in the Network

Wind Farm Actors in the Network

Chapter 3: Solution Architecture

Solution Hardware and Software Compatibility

Chapter 4: Solution Design Considerations

TAN Non-HA Design Considerations

TAN High Availability Design with REP

Offshore Substation Network and Building Blocks

OSS DMZ and Third-Party Network

Network VLANs and Routing Design

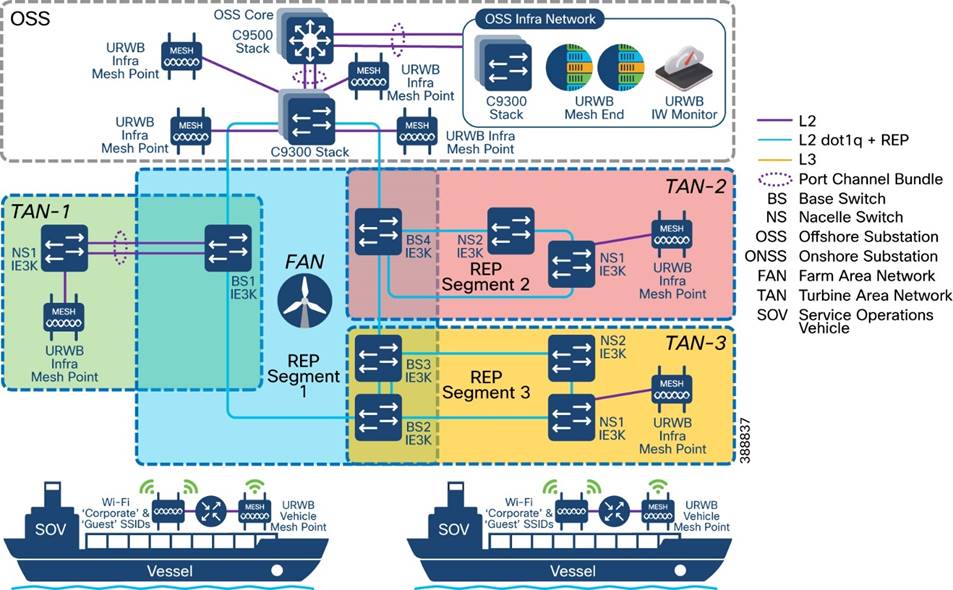

Use case for Service Operations Vessel Wireless Backhaul within a Wind Farm

URWB: Terminology and Miscellaneous Configurations

URWB Mobility Architecture: Layer 2 Fluidity

URWB Access Layer: Fast Convergence on Failure

Onboard Radio Redundancy: Failover and Recovery

SCADA Applications and Protocols

Open Platform Communications Unified Architecture

Turbine Operator Network Design

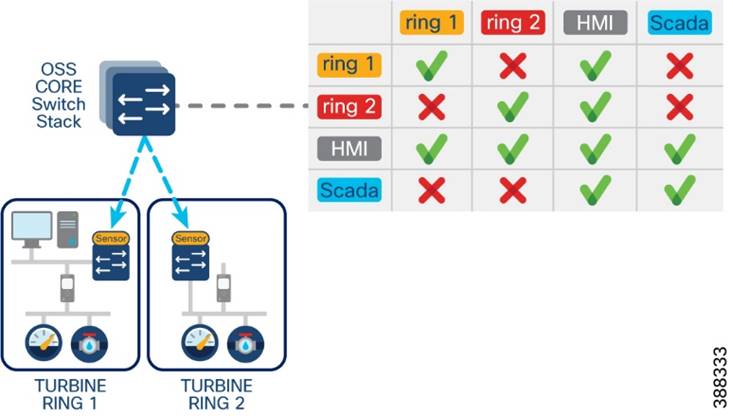

OSS (third-party) Turbine Operator Core Network Ring Design

OSS (third-party) Turbine Operator Aggregation Network Design

Farm Area SCADA Network (FSN) Design

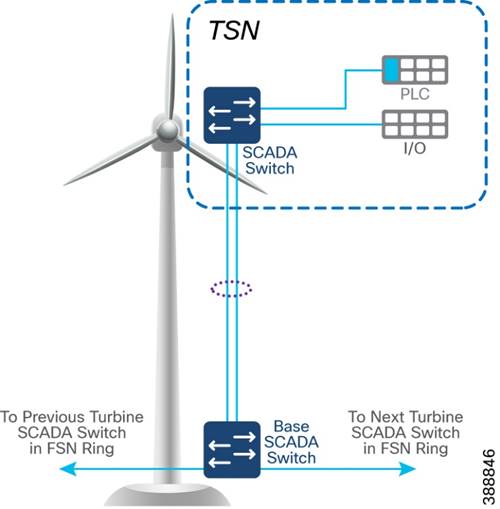

Turbine SCADA Network (TSN) Design

TSN High Availability Design with REP

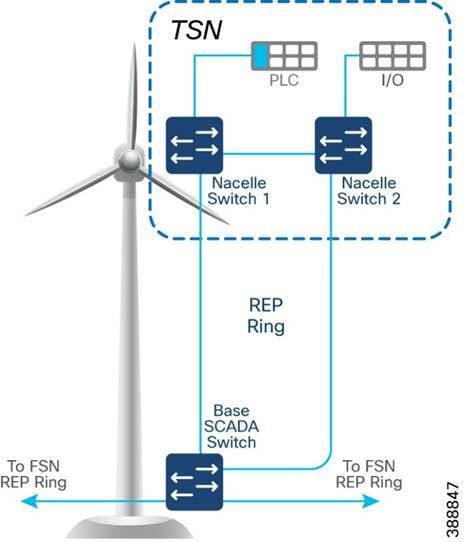

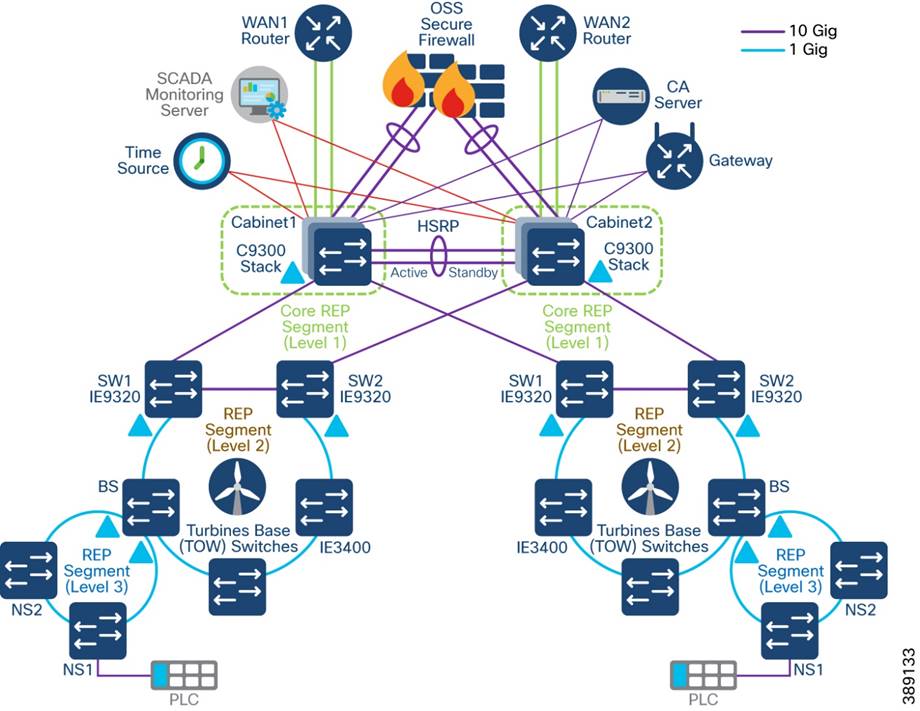

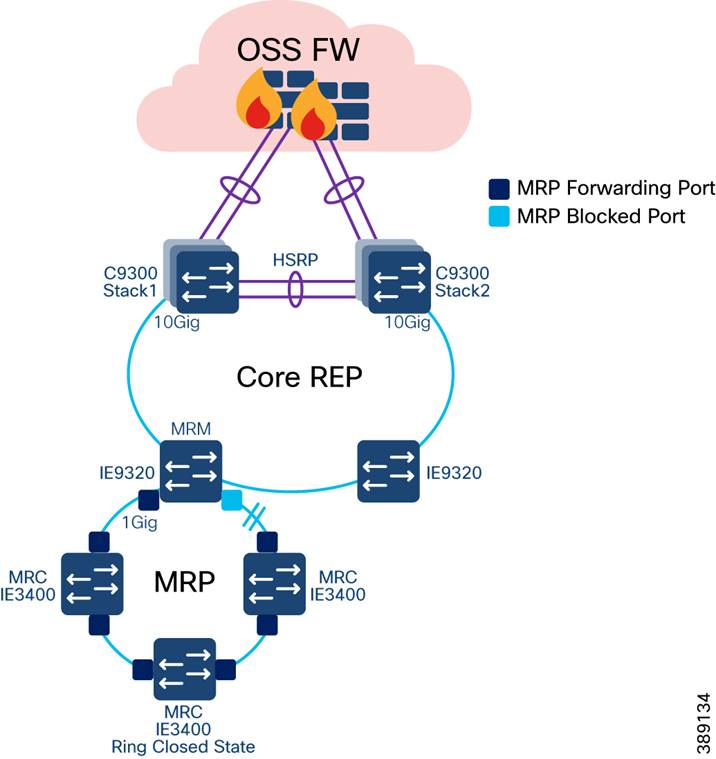

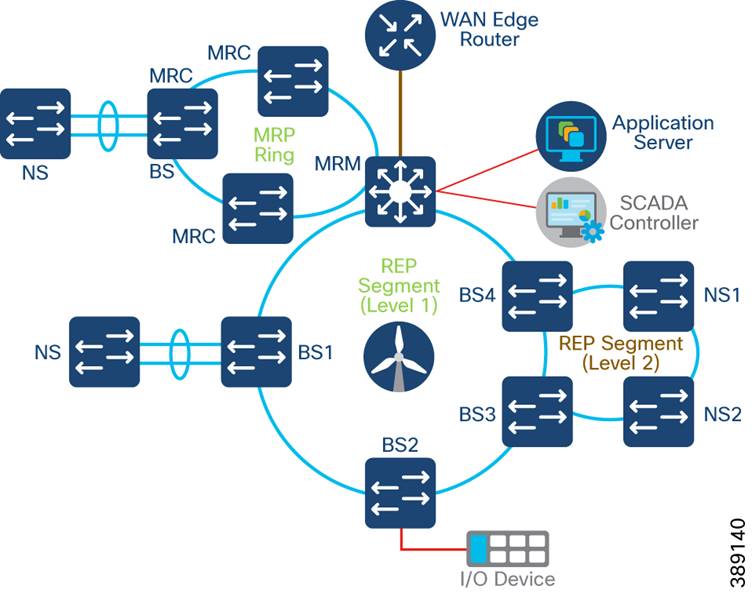

Multi-level advanced REP rings design across Cabinets

Media Redundancy Protocol (MRP) Ring design for FSN

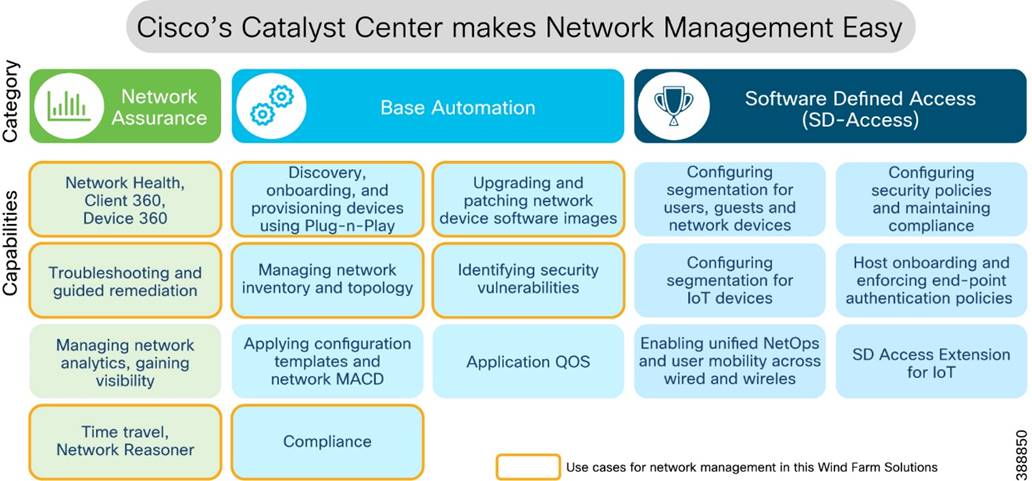

Chapter 5: Network Management and Automation

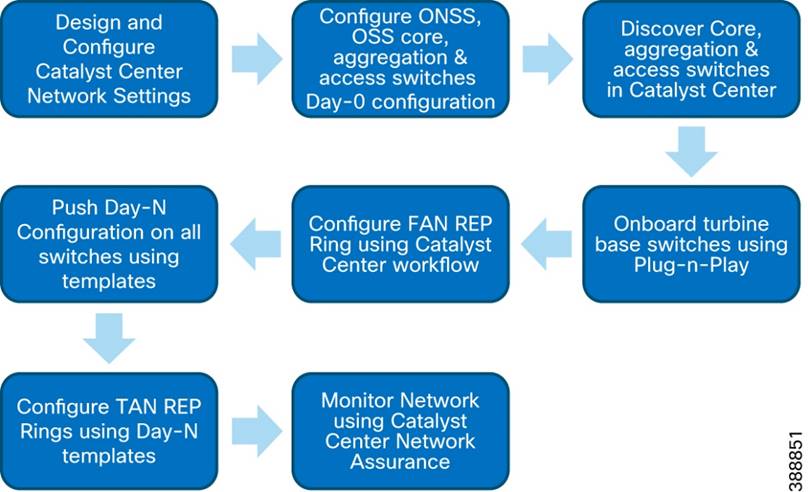

Device Discovery and Onboarding

Device Plug-n-Play Onboarding Using Catalyst Center

FAN REP Ring Provisioning using Catalyst Center REP Automation Workflow

REP Ring Design Considerations, Limitations, and Restrictions

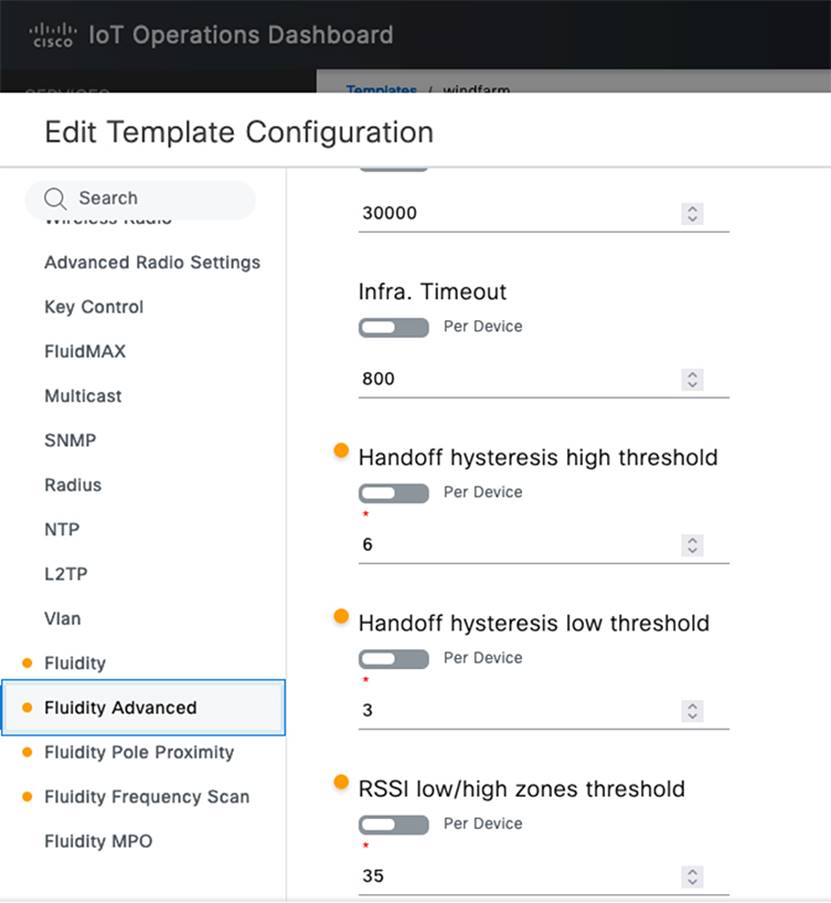

Day N Operations and Templates

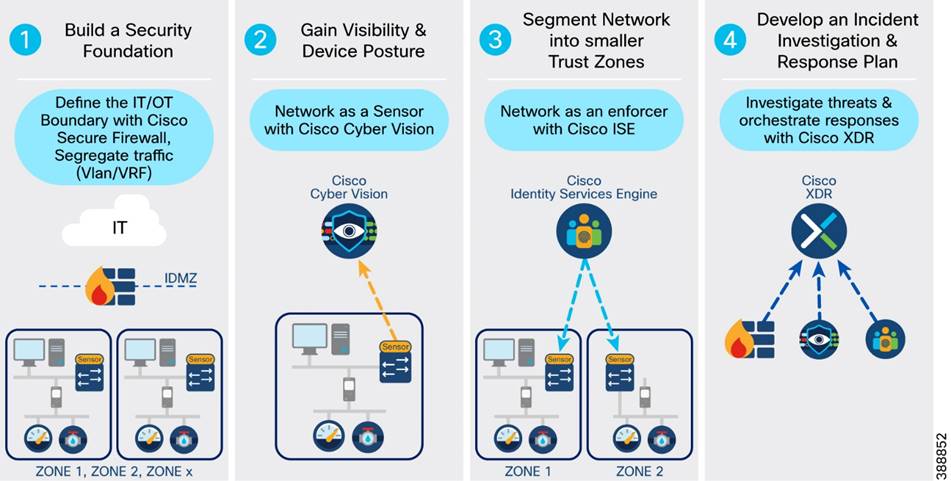

Chapter 6: Security Design Considerations

Security Approach and Philosophy

Wind Farm Network Security Use Cases and Features

Advantages of Network Segmentation

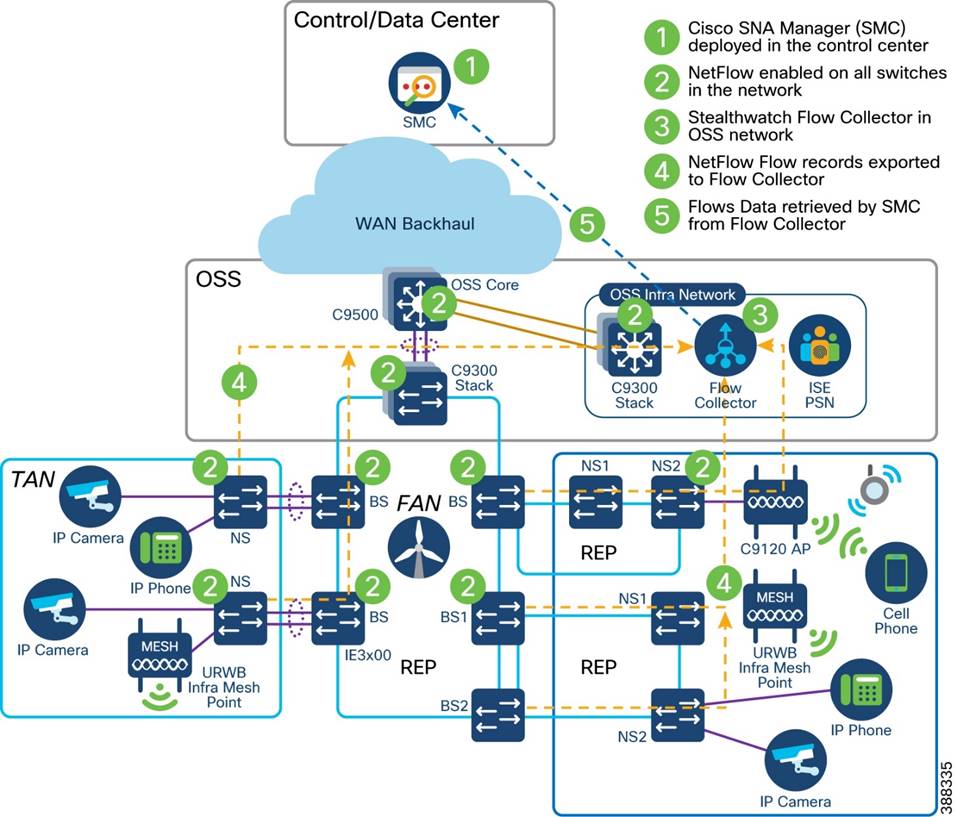

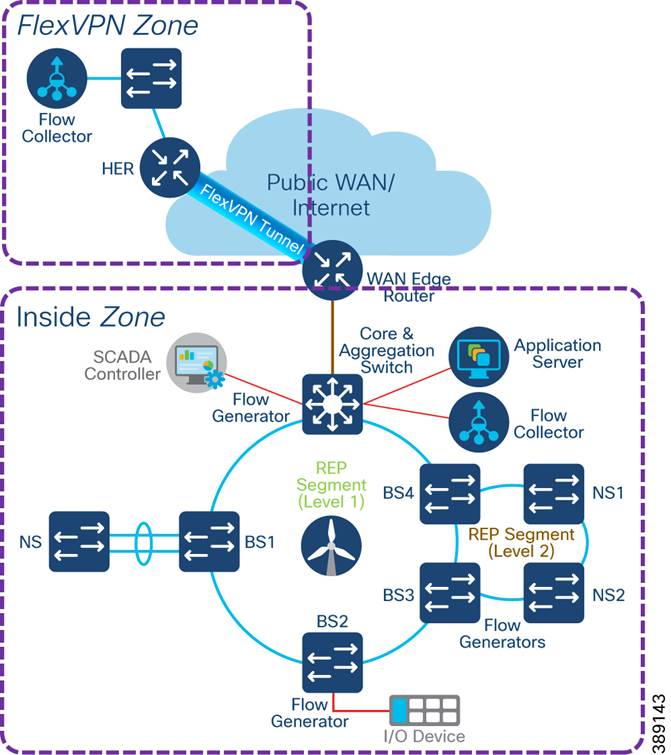

Cisco Secure Network Analytics (Stealthwatch)

Flexible NetFlow Data Collection

Cisco Secure Network Analytics for Windfarm Network Security

Cisco Secure Network Analytics for Abnormal Traffic Detection

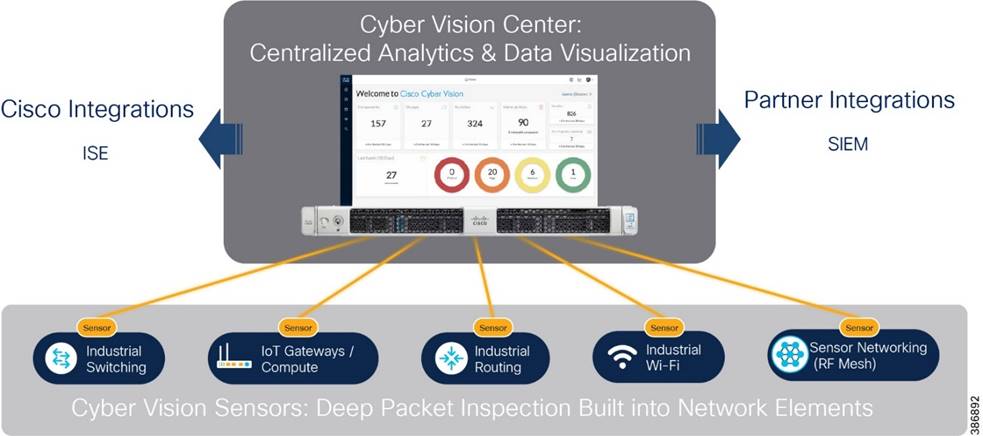

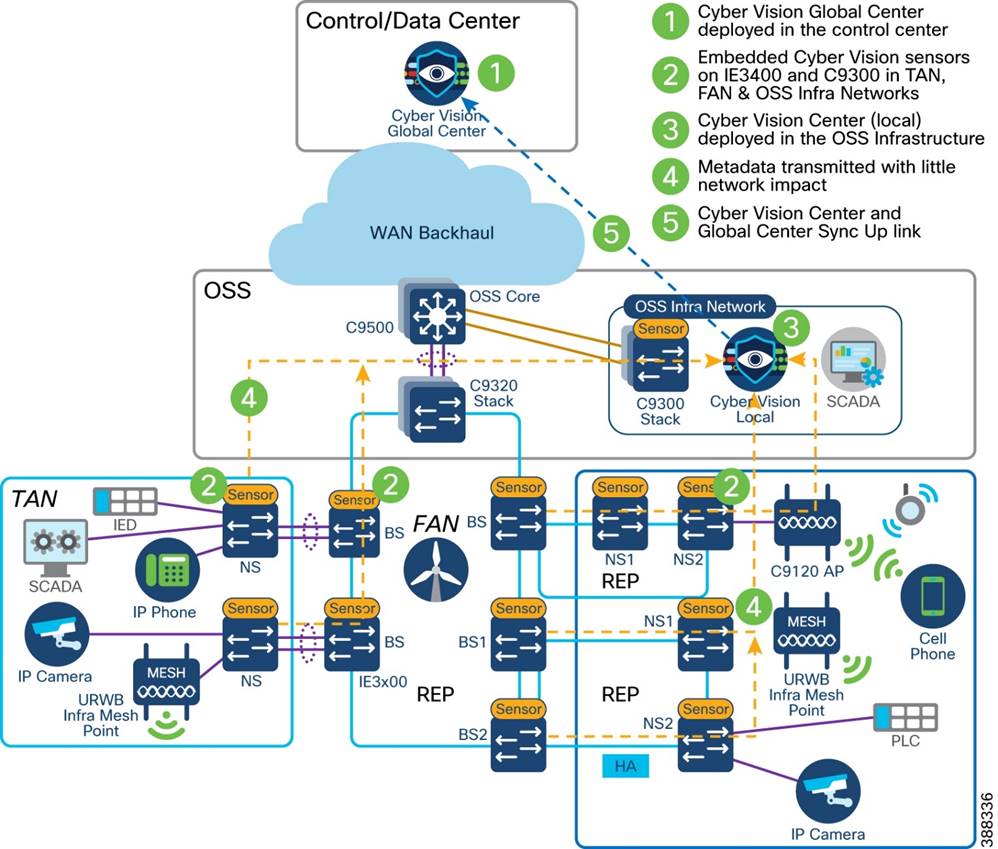

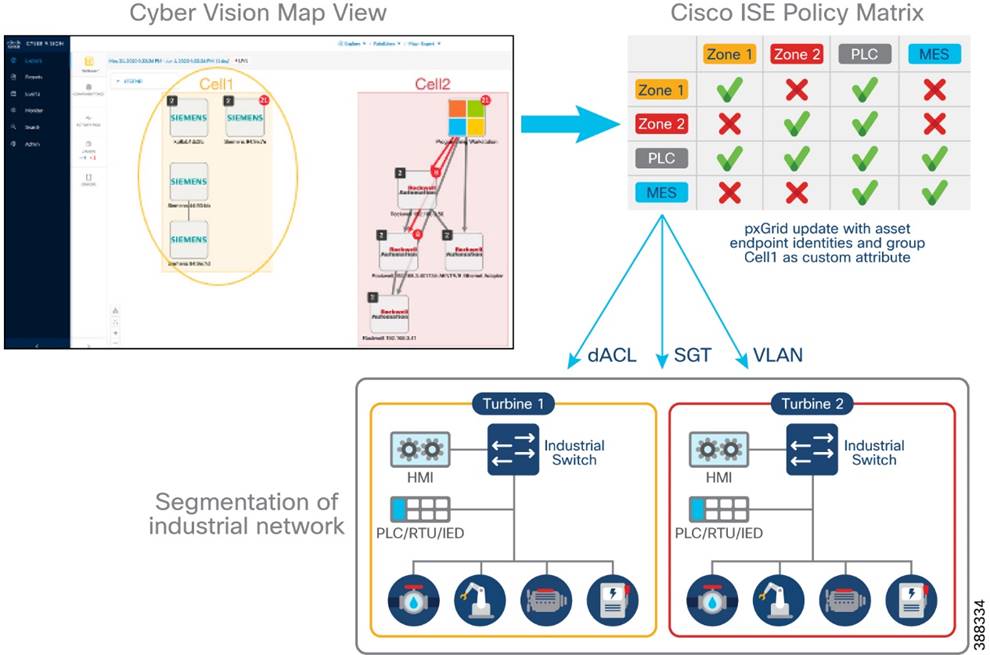

Operational Technology Flow and Device Visibility using Cisco Cyber Vision

Cyber Vision Design Considerations

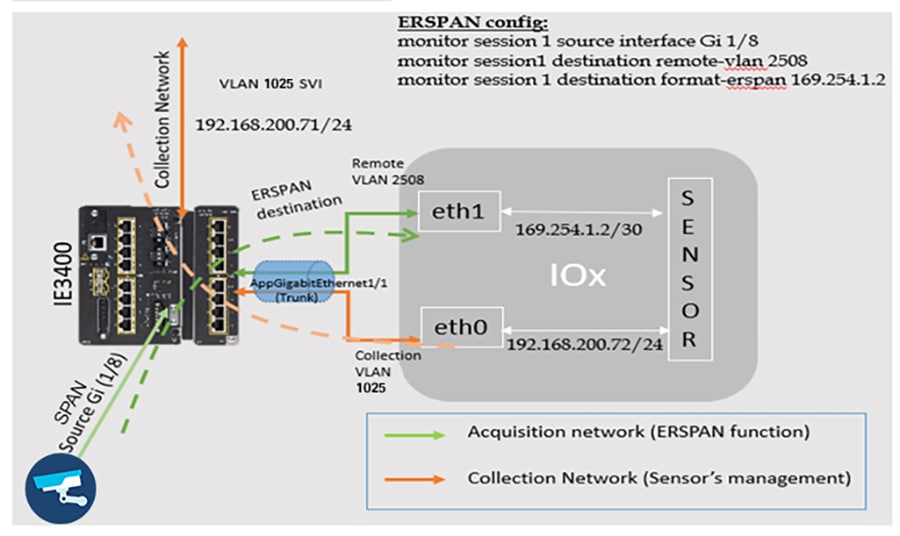

Wind Farm Cyber Vision Network Sensors

Turbine Operator Network Security Design

MACsec Encryption in Turbine Operator Network

Network micro-segmentation using Private VLAN

NERC CIP Compliance Features and Guidance

CIP-005-7 - Electronic Security Perimeter(s)

CIP-007-6 – System Security Management

CIP-008-6 – Incident Reporting and Response Planning

CIP-010-4 – Configuration Change Management and Vulnerability Assessments

CIP-011-3 – Information Protection

CIP-013-2 – Supply Chain Risk Management

Chapter 7: Network Scale and High Availability Summary

Wind Farm Operator Network High Availability Summary

FAN Aggregation High Availability

OSS and ONSS Core High Availability

Turbine operator Network High Availability Summary

SCADA Core Network High Availability

Chapter 8: Turbine Operator Compact Onshore Substation

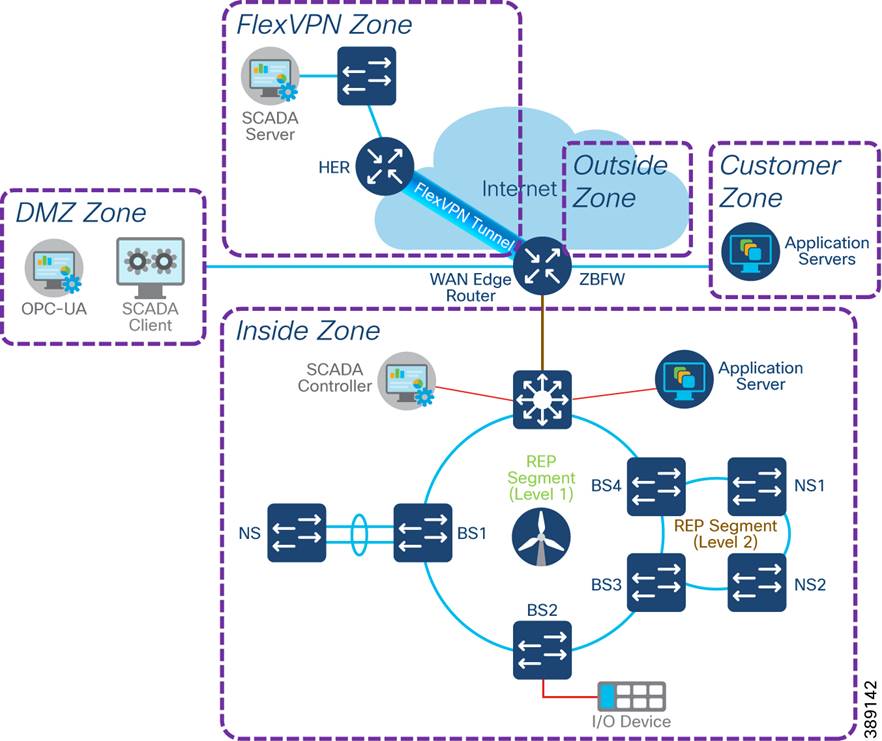

Compact Onshore Substation Use Cases

Compact Onshore Substation Network Architecture

Core Network and Routing design considerations

WAN Edge with FlexVPN network design

Farm Area SCADA Network (FSN) REP Ring Design

FSN with MRP and REP Rings Design

Turbine SCADA Network (TSN) Design with REP

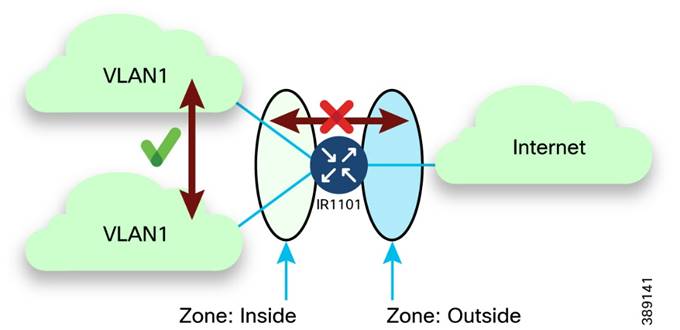

Compact Substation Network Security

Compact Substation Network Segmentation using Private VLAN

Zone Based Firewall (ZBFW) Design

Network Visibility using NetFlow

Compact Substation Quality of Service

Introduction to Cisco Solution for Renewable Energy: Offshore Wind Farm

Most countries are investing in renewable energy generation to accelerate the move toward carbon neutrality. The following technologies are growing steadily and being deployed at scale:

§ Onshore and offshore wind

§ Onshore solar farms

§ Onshore battery storage

Other renewable technologies, such as wave, tidal, and new energy storage technologies, are also being researched and developed, and more innovative renewable energy deployments are expected in the future.

Some countries are leading the push to integrate renewable energy into the grid. China and the UK, for example, are leading the way with large deployments of both onshore and offshore wind farms. European countries in general are setting ambitious targets for offshore wind, and the United States is predicted to become a major offshore wind energy producer in the coming decade. Cisco can help with renewable energy technologies in onshore and offshore wind farms, onshore solar farms, and onshore battery storage facilities. This document focuses on the complexities that offshore wind farms face and the solutions that Cisco offers.

Deploying and operating renewable energy technologies can be challenging: they need to operate in harsh and remote locations, a secure and reliable network is required, and that network needs to work flawlessly with the various OT and IT technologies that form the solution.

Chapter 1: Solution Overview

As digital technology is increasingly required to operate remote distributed energy resource locations, networking and communication equipment must be installed with close attention paid to ease of operations, management, and security. Cisco validated designs are simple, scalable, and flexible. They focus on operational processes that are field-friendly and don’t require a technical wizard. Our centralized network device management (Cisco Catalyst Center) and strong networking asset operation capabilities eliminate the need for manual asset tracking and the inconsistencies in field deployments from one site to another. Integration with operations ensures that field technicians can easily deploy and manage devices without the need for IT support, while IT and OT teams have full visibility and control of the deployed equipment.

Additionally, Cisco provides a wide range of connectivity options, ranging from fiber to cellular or high-speed wireless where hardwired connections are not available.

Cisco has launched a complete validated design for offshore wind farms. This design focuses on an end-to-end architecture for the asset operator’s network, including both onshore and offshore locations.

This document refers to the following stakeholders:

§ Wind farm operator: The asset operator responsible for the daily operations and administration of a wind farm as a power-producing entity. Many operators are also involved in the development, ownership, and construction of the wind farm. Operators sell power that is produced to public utility companies, typically with long-term fixed price contracts in place.

§ Wind farm owner (asset owner): Typically, a consortium of parties such as public utilities, oil and gas companies, and financing companies. Dedicated renewable energy companies, and the renewable energy branches of traditional utilities or oil and gas companies, also invest in this area. Many wind farm owners are also operators.

§ Turbine supplier: Wind turbine suppliers design, test, and manufacture wind turbine equipment, including wind turbine generators (WTGs) and ancillary systems such as supervisory control and data acquisition (SCADA) and power automation. These suppliers also provide ongoing operations and maintenance (O&M) support services for many wind farm operators. The monitoring and maintenance of the wind turbine network is typically outsourced to these same suppliers.

§ Offshore transmission owner (OFTO): Offshore wind farms are connected to the onshore grid by an export cable system. Regulatory requirements in many countries prohibit power generators from owning transmission assets. Therefore, the export cable is often owned and operated by a third party, the OFTO. Developers, who are often also the wind farm owners or operators, divest the export cable system to a third party through a regulated auction. In some regions, divesting the transmission assets (export cable systems) is not required.

§ Grid utility: A traditional power grid operator that provides the connection point for the power exported from a wind farm. It can be a transmission system operator (TSO), a distribution network operator (DNO), or a distribution system operator (DSO). Public utilities that are also wind farm owners usually separate their grid and renewable businesses because of strict regulatory requirements.

This chapter includes the following topics:

§ Cisco Solution for Renewable Energy Offshore Wind Farms

§ Offshore Wind Farm Cisco Validated Design

§ Scope of Wind Farm Release 1.2 CVD

Cisco Solution for Renewable Energy Offshore Wind Farms

Offshore wind farms are large infrastructure deployments with multiple locations. They include the following:

§ Onshore substation

§ Offshore substation (platform offshore)

§ Offshore wind turbines (ranging from 50 to 300 turbines)

§ Onshore operations and maintenance offices

§ Offshore service operations vessels (SOV), which provide worker accommodations, offices, and workshops while offshore

Reliable and secure connectivity is key for providing monitoring and control of these offshore and therefore remote assets. Without a reliable and secure communications infrastructure, monitoring and control would be challenging.

From the offshore wind farm operator’s viewpoint, the network needs to be easy to deploy, monitor, upgrade, and troubleshoot. The network design also needs to be standardized to simplify specification and procurement at the early stages of a project. Avoiding bespoke work and project-specific architectures should shorten the project delivery phase.

A standardized solution is required that provides the flexibility to meet these needs while facilitating a clear path forward as complexity and scale evolve (for example, larger wind farms, increased number of devices and applications, and increased reliability).

Offshore Wind Farm Cisco Validated Design

Cisco has developed a complete Cisco Validated Design (CVD) for offshore wind farm projects. It provides a blueprint solution for all phases of a project, from specification and procurement to deployment.

The CVD covers the networking infrastructure from the offshore wind turbines through the offshore platforms to the onshore WAN interface point, as well as connectivity to the wind farm operator’s control center.

The CVD is modular to allow for varying wind farm sizes, so it can be adapted for any number of turbines. It provides resilient architectures to allow for fault conditions, and includes cyber security built in from the start.
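As a rough illustration of that modularity, the following sketch estimates how many base-switch rings a farm of a given size needs, using the per-ring limits listed in Table 3-1 (up to 20 switches in a REP ring and up to 50 in an FSN MRP ring). It assumes one base switch per turbine and a uniform target ring size; both are simplifying assumptions, and the function name is hypothetical.

```python
import math

# Per-ring limits taken from Table 3-1 of this guide.
REP_MAX_SWITCHES = 20  # REP ring of base switches
MRP_MAX_SWITCHES = 50  # FSN MRP ring


def rings_needed(turbines: int, switches_per_ring: int, max_per_ring: int) -> int:
    """Estimate the ring count, assuming one base switch per turbine."""
    if turbines < 0:
        raise ValueError("turbine count cannot be negative")
    if not 1 <= switches_per_ring <= max_per_ring:
        raise ValueError(f"switches_per_ring must be between 1 and {max_per_ring}")
    # Round up: a partially filled last ring still counts as a ring.
    return math.ceil(turbines / switches_per_ring)


if __name__ == "__main__":
    # 300 turbines (the upper end of the range given in this chapter).
    print(rings_needed(300, 10, REP_MAX_SWITCHES))  # 30 REP rings of 10 switches
    print(rings_needed(300, 50, MRP_MAX_SWITCHES))  # 6 MRP rings of 50 switches
```

Keeping the ring size well below the maximum (the 8 to 10 switches that Table 3-1 describes as common) trades a few more aggregation ports for smaller failure domains.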

This CVD offers the following key benefits:

§ Flexible deployment options: Support for simple to advanced solutions that cover various deployment options, scalable from small to large wind farms; a modular design that adapts to wind farms of different sizes; and a flexible platform for deploying future services and applications.

§ Rugged and reliable network equipment: Network equipment designed for harsh offshore environments where required. The ability for network equipment to operate in space-constrained locations and tough environmental conditions.

§ Simplified provisioning: Automation and simple onboarding, monitoring, and management of remote networking assets with centralized monitoring and management of multiple wind farm networks.

§ Simplified operations: Increased operational visibility, minimized outages, and faster remote issue resolution. Compliance checking of network device configurations and firmware (changes from a known baseline are flagged), and powerful analytics that provide deep visibility of the network assets.

§ Multi-level security: End-to-end robust security capabilities to protect the infrastructure and associated services, monitor traffic flows, and provide control points for interfacing to third-party networks and equipment. Vulnerability information for discovered assets and asset reporting to aid regulatory compliance (for example, NIS 2 and NERC CIP).
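The configuration compliance idea in the list above (flagging changes from a known baseline) can be illustrated with a line-level diff. The following is a minimal sketch using Python's standard `difflib` module; it is not how Catalyst Center implements compliance checking, and the sample switch configuration lines are hypothetical.

```python
import difflib
from typing import List

# Hypothetical baseline and running configurations for a turbine base switch.
BASELINE = """hostname WTG01-BASE
snmp-server community example RO
ip ssh version 2"""

RUNNING = """hostname WTG01-BASE
snmp-server community example RW
ip ssh version 2"""


def config_drift(baseline: str, running: str) -> List[str]:
    """Return unified-diff lines; an empty list means no drift from the baseline."""
    return list(
        difflib.unified_diff(
            baseline.splitlines(),
            running.splitlines(),
            fromfile="baseline",
            tofile="running",
            lineterm="",
        )
    )


def changed_lines(diff: List[str]) -> List[str]:
    """Keep only removed (-) and added (+) configuration lines, dropping headers."""
    return [
        line
        for line in diff
        if line.startswith(("+", "-")) and not line.startswith(("+++", "---"))
    ]


if __name__ == "__main__":
    for line in changed_lines(config_drift(BASELINE, RUNNING)):
        print(line)
```

Here the SNMP community changing from read-only to read-write is the kind of deviation a compliance check would flag for review.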

The validated design is built on the following functional blocks:

§ Wind farm operator data center

§ Wind farm wide area network (WAN)

§ Onshore DMZ

§ Onshore substation

§ Offshore DMZ

§ Offshore substation

§ Turbine Operator network

§ Power control and metering (PCM) network

§ Turbine plant IT network (for example, enterprise and plant services)

§ Service operations vessels (SOVs)

§ Operations and maintenance buildings (O&M)

§ Turbine Operator Compact Onshore Substation

The validated design allows customers or partners to select the parts that are applicable to a particular project or deployment, or to use the complete end-to-end architecture.

Scope of Wind Farm Release 1.2 CVD

This Design Guide provides network architecture and design guidance for the planning and subsequent implementation of a Cisco Renewable Energy Wind Farm solution. In addition to this Design Guide, the Cisco Wind Farm Solution Implementation Guide provides more specific implementation and configuration guidance, with sample configurations.

This Release 1.2 supersedes and replaces the Cisco Offshore Wind Farm Release 1.1 Design Guide.

New Capabilities in Offshore Wind Farm Release 1.2

The turbine operator in an offshore wind farm is responsible for the daily operations and administration of the turbines in a wind farm as a power-producing entity. Many operators are also involved in the development, ownership, and construction of the wind farm. Operators sell the power that is produced to public utility companies, typically under long-term fixed-price contracts. Turbine operators such as Siemens and Vestas monitor and maintain the wind turbine network with the help of turbine suppliers.

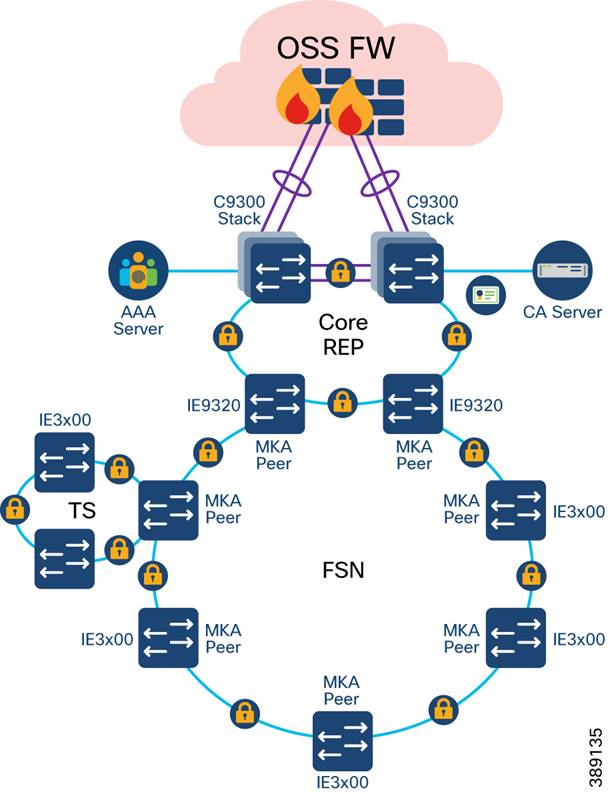

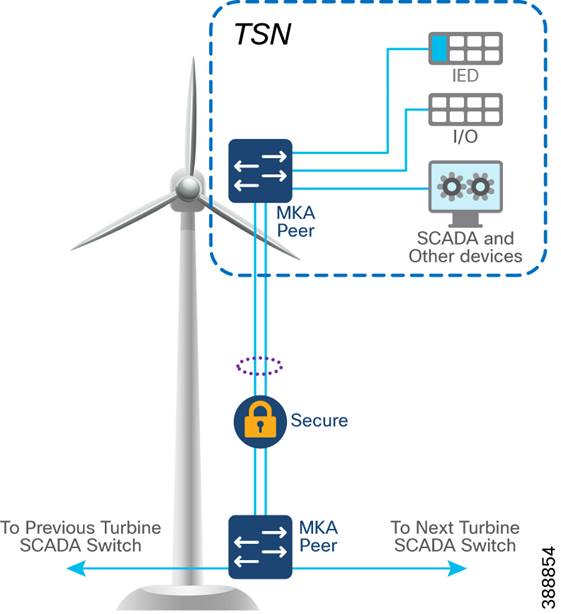

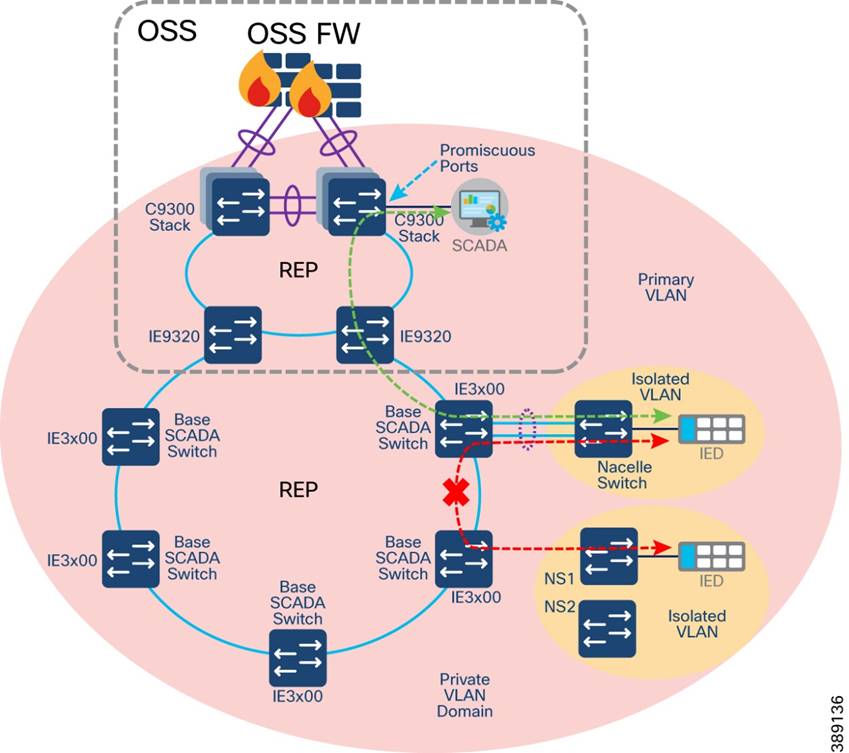

§ Turbine operator network design in the offshore substation (OSS), including the Farm Area SCADA Network (FSN) and the Turbine SCADA Network (TSN), with a highly available and resilient network architecture across different OSS geo-locations in the OSS third-party network

§ Turbine Operator network design with:

o A core network that consists of a stack of two Catalyst 9300 Series switches in an OSS cabinet

o An open REP ring design spanning two OSS cabinets in two different geo-locations, along with Industrial Ethernet (IE) 9320 switches in the core network

o Multi-level REP rings from the turbine operator core network to the Turbine SCADA Network (TSN) for network resiliency

o Coexistence of the FSN Media Redundancy Protocol (MRP) ring with the core and FSN REP rings on the IE9320 switch

§ Turbine Operator standalone Compact Onshore substation (ONSS) network architecture design with:

o Cisco Industrial Router 1101 (IR1101) as WAN edge router for control/data center connectivity using FlexVPN over public WAN backhaul

o Cisco IE3400 Series switch as turbine operator core network and FSN aggregation switch

o IP routing design using dynamic routing protocols like BGP and OSPF between data center, WAN Edge router (IR1101) and core switch

o Coexistence of the FSN MRP ring with REP rings on the core IE switch

o Security using Zone-Based Firewall (ZBFW) for Layer 3 security, Private VLANs for Layer 2 network segmentation, and first-hop Layer 2 security for turbine SCADA endpoints

o Quality-of-service (QoS) and network visibility using NetFlow
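The Private VLAN segmentation listed above follows the standard PVLAN forwarding rules: isolated ports reach only promiscuous ports, community ports reach ports in their own community and promiscuous ports, and promiscuous ports reach every port. The following sketch models those rules so that a proposed port plan can be sanity-checked before configuration. The port names and VLAN IDs are hypothetical, and the ZBFW and first-hop security features are not modeled.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class PortType(Enum):
    PROMISCUOUS = "promiscuous"  # for example, the uplink toward the gateway
    ISOLATED = "isolated"        # host port in the isolated secondary VLAN
    COMMUNITY = "community"      # host port in a community secondary VLAN


@dataclass(frozen=True)
class PvlanPort:
    name: str
    kind: PortType
    community_vlan: Optional[int] = None  # only meaningful for COMMUNITY ports


def can_communicate(a: PvlanPort, b: PvlanPort) -> bool:
    """Layer 2 reachability between two ports in the same primary private VLAN."""
    # Promiscuous ports exchange traffic with every port type.
    if PortType.PROMISCUOUS in (a.kind, b.kind):
        return True
    # Isolated ports reach only promiscuous ports, which were handled above.
    if PortType.ISOLATED in (a.kind, b.kind):
        return False
    # Both are community ports: allowed only within the same community VLAN.
    return a.community_vlan == b.community_vlan


if __name__ == "__main__":
    uplink = PvlanPort("core-uplink", PortType.PROMISCUOUS)
    scada_a = PvlanPort("scada-endpoint-a", PortType.ISOLATED)
    scada_b = PvlanPort("scada-endpoint-b", PortType.ISOLATED)
    plc_1 = PvlanPort("plc-1", PortType.COMMUNITY, community_vlan=101)
    plc_2 = PvlanPort("plc-2", PortType.COMMUNITY, community_vlan=101)
    for x, y in [(uplink, scada_a), (scada_a, scada_b), (plc_1, plc_2), (plc_1, scada_a)]:
        print(f"{x.name} <-> {y.name}: {can_communicate(x, y)}")
```

Placing turbine SCADA endpoints in an isolated VLAN means they can reach the core uplink but not each other at Layer 2.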

The following architectural blocks are out of the scope of this CVD:

§ Backhaul design for WAN handoff routers

§ Typical service provider or customer MPLS network or other fixed connectivity options (microwave, fiber, and so on).

§ Data center network design

Chapter 2: Wind Farm Use Cases

This chapter includes the following topics:

§ Offshore Wind Farm Places in the Network

§ Use Cases

§ Wind Farm Actors in the network

§ Traffic Types and Flows

Offshore Wind Farm Places in the Network

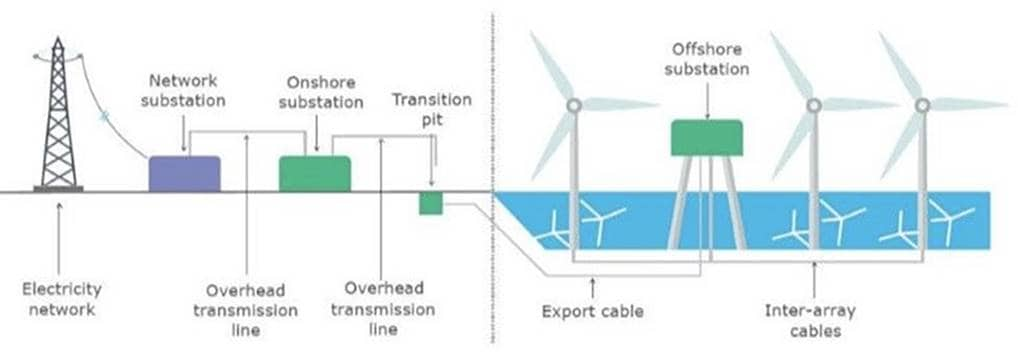

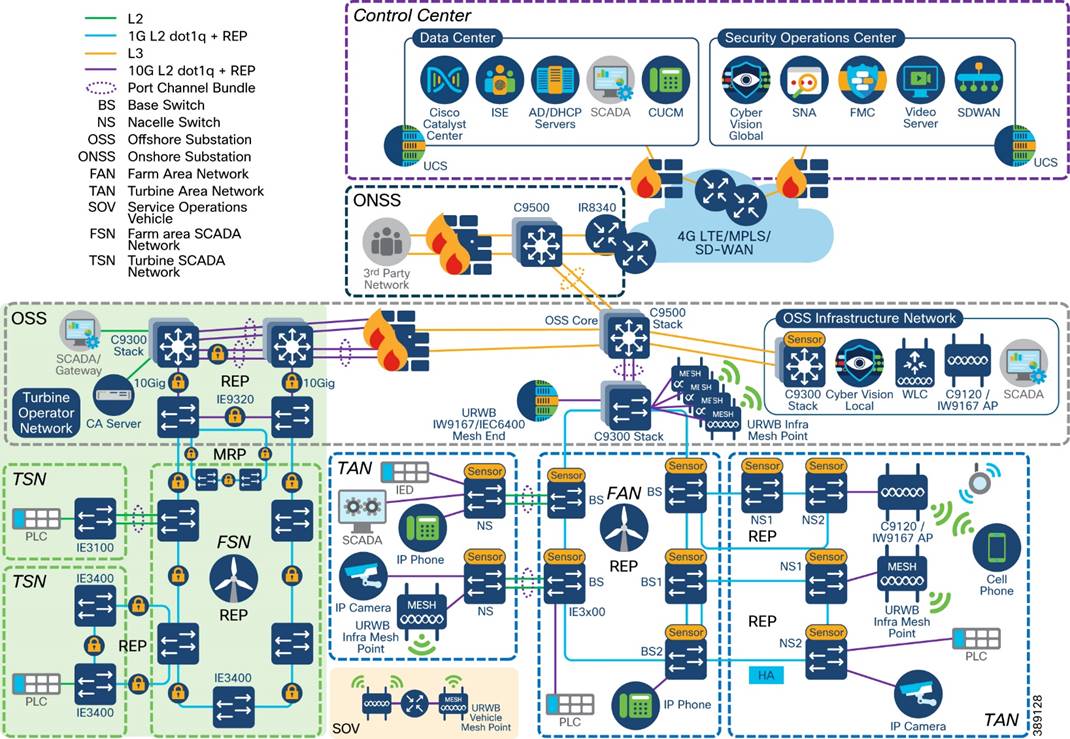

Figure 2-1 shows the places in the network for an offshore wind farm.

Figure 2-1 Wind Farm Places in a Network

§ Wind turbine generator (WTG)

§ Offshore substation (OSS)

§ Onshore substation (ONSS)

§ Service operations vessel (SOV)

§ Crew transfer vessel (CTV)

The network building blocks of the wind farm solution architecture are defined based on these places in the offshore wind farm.

Use Cases

The communications options that are available at a given site greatly influence the outcomes and capabilities of any use case. Dependable, low-latency, high-bandwidth connectivity (such as fiber, LTE/5G cellular, and Wi-Fi) allows for more advanced network and data services, while sites with bandwidth constraints may be limited to simpler use cases such as remote management and monitoring. Table 2-1 lists the key use cases in an offshore wind farm.

Table 2-1 Wind Farm Use Cases

| Use Case | Type of Services | Description |
| --- | --- | --- |
| WTG SCADA | § Turbine telemetry § Fire detection § Turbine ancillary systems § Weather systems | § Telemetry data collection associated with turbine systems and components. § Detection of smoke and fire within the turbine. § Telemetry data collection associated with ancillary systems (for example, elevator, navigation lights). § Data from weather-related systems, such as radar for offshore farms and wind speed anemometers. |
| Process and control systems (ONSS, OSS, other miscellaneous systems) | § Heating and ventilation systems § Public announcement and general alarm (PAGA) systems § Backup generators § Fire detection systems | § Heating, ventilation, and air conditioning (HVAC) systems. § Audio systems for announcements and alarms. § Generators for emergency power. § Fire detection systems. |
| Marine-related systems | § TETRA, VHF, and UHF radio § Automatic identification system (AIS) § Radar systems | § Ship and worker radio systems. § Shipping identification system. § Radar for SOV management. |
| Enterprise services | § IP telephony § Corporate network access § Guest network access | § Enterprise voice communications for workers. § Fixed and mobile handsets (Wi-Fi). § General network access for enterprise services such as email, file sharing, video, and web. § Basic internet access for subcontractors. |
| Physical security | § Closed circuit television (CCTV) § Access control | § Physical security monitoring of turbine assets and areas around turbines for safety and security. § Intrusion detection and entry control for areas such as O&M offices and turbine towers. |
| Miscellaneous systems | § Bat and bird monitors § Radar § Lightning detection systems § Lidar (turbine monitoring) | § Detection of protected wildlife. § Additional radar equipment as specified by certain bodies (military, Coast Guard, and so on). § Detection of lightning strikes. § Monitoring of turbine performance and blade dynamics. |
| SOV connectivity | § IP telephony § Wireless network access (Wi-Fi) | § Enterprise voice communications for workers. § Fixed and mobile handsets. § Wi-Fi access points within SOVs to provide enterprise network access for staff and IP telephony coverage. |
| Environmental sensors | § Heat and humidity § Door open and close § Machine temperature | § Turbine nacelle or tower and external measurements. § Turbine tower and external ancillary cabinets. § Machine casing or transformer case temperature. |
| Location-based services | § Personnel location and man down (for lone workers) | § Wi-Fi access points providing Bluetooth capability for short-range personnel devices, used for location and man-down worker safety. |
| Turbine operator network services | § Turbine operational telemetry data collection § Turbine telemetry data (SCADA) translation to OPC-UA § Provide turbine monitoring and operational data to the wind farm operator using OPC-UA | § Turbine monitoring and operational data collection using SCADA systems § OPC-UA gateway/server that translates turbine telemetry data (for example, SCADA MODBUS) into OPC-UA protocol messages § Provides turbine telemetry data as OPC-UA protocol messages to the asset operator on demand |
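The OPC-UA gateway described in Table 2-1 translates turbine telemetry (for example, MODBUS registers) into OPC-UA messages. The following sketch shows only the first half of that translation: turning raw 16-bit MODBUS register values into named, typed tag values that an OPC-UA server could then expose. The register map, tag names, and high-word-first word order are assumptions; a real gateway follows the turbine vendor's register documentation, and no OPC-UA library is used here.

```python
import struct
from typing import Dict, List, Tuple, Union

# Hypothetical register map: tag name -> (register offset, data type).
REGISTER_MAP: Dict[str, Tuple[int, str]] = {
    "Turbine01.WindSpeed_mps": (0, "float32"),
    "Turbine01.ActivePower_kW": (2, "float32"),
    "Turbine01.StatusWord": (4, "uint16"),
}


def decode_registers(
    registers: List[int], register_map: Dict[str, Tuple[int, str]]
) -> Dict[str, Union[int, float]]:
    """Convert raw 16-bit holding-register values into named tag values."""
    values: Dict[str, Union[int, float]] = {}
    for tag, (offset, dtype) in register_map.items():
        if dtype == "float32":
            # IEEE 754 float split across two registers, high word first
            # (an assumption: word order is device-specific).
            raw = struct.pack(">HH", registers[offset], registers[offset + 1])
            values[tag] = struct.unpack(">f", raw)[0]
        elif dtype == "uint16":
            values[tag] = registers[offset]
        else:
            raise ValueError(f"unsupported data type {dtype!r} for {tag}")
    return values


if __name__ == "__main__":
    # 12.5 m/s is 0x41480000 and 2500.0 kW is 0x451C4000 as IEEE 754 float32.
    raw_registers = [0x4148, 0x0000, 0x451C, 0x4000, 0x0001]
    print(decode_registers(raw_registers, REGISTER_MAP))
```

The resulting dictionary of tag names and values is what an OPC-UA server would publish as variable nodes for the asset operator to read on demand.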

Wind Farm Actors in the Network

Different wind farm use cases and places require different endpoints, or actors, in the network. The following actors are needed in a wind farm network to deliver the use cases described in the previous section. These actors are usually key to operating a renewable energy site, providing both monitoring and control capabilities.

§ CCTV cameras: Physical safety and security IP cameras

§ IP phones: Voice over IP (VoIP) telephony devices

§ Programmable logic controller (PLC) devices and input and output (I/O) controllers: Power systems protection and control

§ Intelligent electronic devices (IED): Power systems protection and control

§ Wi-Fi access points: Provide corporate IT Wi-Fi access

§ Cisco Ultra Reliable Wireless Backhaul (URWB) access points: Provide wireless backhaul for SOV connectivity

§ Wind turbine monitoring and control: SCADA and monitoring systems

§ Fire detection and alarming devices or sensors

§ HVAC systems

§ Environmental and weather systems: Sensors that are associated with monitoring weather and environmental conditions

§ Lightning detection: Sensors that are associated with lightning detection

§ Marine systems (radar, radio)

Traffic Types and Flows

Each actor in an offshore wind farm requires network communication with other actors or with application servers in the offshore substation (OSS) and the control or operations center, depending on use case requirements. Table 2-2 lists the traffic types and flows in the places in the network of an offshore wind farm.

Table 2-2 Offshore Wind Farm Traffic Types and Flows

| Traffic Type | Traffic Flows in the Network |
| --- | --- |
| Video traffic (CCTV cameras) | § Turbine nacelle to control center or OSS: CCTV camera connected to the turbine nacelle switch streaming live video to a video server in the control center or OSS infrastructure § Turbine base switch to control center: CCTV camera connected to the base switch streaming live video to a video server in the control center or OSS infrastructure |
| SCADA data for monitoring and control (PLCs and I/O devices that are external to the turbine supplier’s dedicated SCADA network, that is, wind farm operator SCADA) | § Traffic within turbine nacelle: PLC and I/O device communication using SCADA protocols (for example, DNP3, MODBUS, or T104) § Turbine base switch to nacelle: PLC on the turbine base switch to an I/O device in the nacelle using SCADA protocols (for example, DNP3, MODBUS, or T104) § Traffic within turbine base switch: Communication between PLC and I/O devices on a turbine base switch § Turbine base switch to base switch: Communication between a PLC on one turbine base switch and an I/O device on another turbine base switch |
| IP telephony voice traffic (IP phones) | § Maintenance and operations personnel voice communication between turbine base switch or nacelle and OSS § Maintenance and operations personnel voice communication between turbine base switch networks § Maintenance and operations personnel voice communication between an SOV and turbine base switch or OSS networks |
| Wi-Fi traffic (Wi-Fi APs) | § Turbine nacelle to OSS infrastructure or control center: Workforce AP in the nacelle to WLC in the OSS network § Turbine base switch to OSS infrastructure or control center: Workforce AP on a turbine base switch to WLC in the OSS network § Workforce corporate network access from the offshore wind farm § Guest internet wireless access from offshore and onshore substations |
| Offshore and onshore power automation and control SCADA traffic (IEDs, switchgear, other substation OT devices if any) | § Turbine nacelle switch to OSS infrastructure § Turbine base switch to OSS infrastructure § Management traffic between IEDs and SCADA systems in the OSS and IEDs and SCADA devices connected to turbine nacelle or turbine base switches |
| Cisco Ultra Reliable Wireless Backhaul (URWB) traffic | § SOV wireless connectivity using a URWB network from the turbine nacelle switch, turbine base switch, or OSS network |
| Turbine operator network traffic (dedicated turbine operator SCADA network provided by a turbine manufacturer) | § SCADA OPC-UA protocol traffic between the OSS DMZ and the OSS infrastructure network § Translation of SCADA OT protocols (DNP3/MODBUS IP) to OPC-UA protocol messages (OPC-UA gateway) between IEDs, switchgear, and the OPC-UA server in the OSS |
| SD-WAN and network management traffic (OMP, SSH, SNMP, network control protocols, and so on) | § Management traffic from the ONSS and OSS via the WAN to the control center § Management traffic related to Cisco SD-WAN and the Catalyst Center network management platform |
| Auxiliary systems traffic (HVAC, fire, lightning detection, environmental sensors) | § Turbine nacelle and base switch to OSS infrastructure or control center |
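When reviewing flow records (for example, NetFlow exports) against Table 2-2, it can help to label flows by the default ports of the protocols the table names. The following sketch uses the registered default ports for Modbus/TCP (502), DNP3 (20000), IEC 60870-5-104 (2404, the "T104" protocol), OPC UA (4840), SSH (22), and SNMP (161/UDP). Deployments can use non-default ports, so this is an illustrative heuristic under that assumption, not a classification method defined by this CVD; the labels are hypothetical.

```python
from typing import Dict, Optional, Tuple

# Default (IANA-registered) ports for some protocols named in Table 2-2.
DEFAULT_PORTS: Dict[Tuple[str, int], str] = {
    ("tcp", 502): "SCADA (Modbus/TCP)",
    ("tcp", 20000): "SCADA (DNP3)",
    ("udp", 20000): "SCADA (DNP3)",
    ("tcp", 2404): "SCADA (IEC 60870-5-104)",
    ("tcp", 4840): "Turbine operator (OPC UA)",
    ("tcp", 22): "Network management (SSH)",
    ("udp", 161): "Network management (SNMP)",
}


def classify_flow(protocol: str, src_port: int, dst_port: int) -> Optional[str]:
    """Label a flow by a known default port on either end; None if unrecognized."""
    # Check the destination first, since clients usually use ephemeral source ports.
    for port in (dst_port, src_port):
        label = DEFAULT_PORTS.get((protocol.lower(), port))
        if label is not None:
            return label
    return None


if __name__ == "__main__":
    print(classify_flow("tcp", 49152, 502))  # PLC poll to a Modbus/TCP server
    print(classify_flow("udp", 161, 55000))  # SNMP response from an agent
```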

Chapter 3: Solution Architecture

This chapter includes the following topics:

§ Overall Network Architecture

§ Solution Components

§ Solution Hardware and Software Compatibility

Overall Network Architecture

Offshore wind farm solution architecture is built on the following functional blocks:

§ Wind farm operator control center (includes data center and security operations center): Hosts wind farm IT and OT data center applications and servers such as Cisco Catalyst Center, Cisco ISE, AD server, DHCP server, Cisco Cyber Vision Center (CVC), and more.

§ Wind farm wide area network (WAN): A backhaul network for interconnecting a wind farm onshore substation with a control center. It can be a privately owned MPLS network, a service provider LTE network, or a Cisco SD-WAN managed network.

§ Onshore substation (ONSS): A remote site in a wind farm that interconnects an offshore substation with a control center via a WAN.

§ Offshore substation (OSS): Consists of the offshore core network and infrastructure applications that provide network connectivity and application access to wind turbine base and nacelle switches and their IT and OT endpoints.

§ Farm area network (FAN): An aggregation network that connects the base switches of multiple wind turbines to their aggregation switches.

§ Turbine area network (TAN): A switched layer 2 network typically formed by one or more nacelle switches in a wind turbine.

§ SOV: A wind farm operation and maintenance vessel that moves around the offshore wind farm and connects to the TAN and to the OSS or ONSS networks to provide network communication for service operations personnel.

§ Third-party networks or turbine operator networks: The turbine vendor’s SCADA control network, which runs separately from the wind farm operator’s network. This CVD also covers the third-party turbine operator network architecture, which includes:

o Farm Area SCADA Network (FSN) - A dedicated network that links multiple turbines to the offshore substation, commonly a fiber ring that aggregates at the offshore substation.

o Turbine SCADA Network (TSN) - A dedicated network within the turbine itself (tower and nacelle), providing connectivity for the turbine SCADA devices.

These building blocks and their design considerations are discussed in detail in Chapter 4: Solution Design Considerations.
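The functional blocks above form an upstream chain for most wind farm operator traffic: a turbine's TAN connects to the FAN, the FAN aggregates at the OSS, and the OSS reaches the control center through the ONSS and the WAN. The following sketch encodes that chain as a simple lookup so the hop sequence from any block can be listed. It is a simplification that omits the SOV (which can attach at the TAN, OSS, or ONSS), the DMZs, and the separate turbine operator networks; the names used are hypothetical labels for the blocks.

```python
from typing import Dict, List

# Upstream neighbor of each functional block (simplified from the list above).
UPSTREAM: Dict[str, str] = {
    "TAN": "FAN",
    "FAN": "OSS",
    "OSS": "ONSS",
    "ONSS": "WAN",
    "WAN": "Control center",
}


def path_to_control_center(block: str) -> List[str]:
    """Return the chain of blocks from `block` up to the control center."""
    path = [block]
    while path[-1] in UPSTREAM:
        nxt = UPSTREAM[path[-1]]
        if nxt in path:  # guard against a mis-edited map that contains a loop
            raise ValueError(f"loop detected at {nxt}")
        path.append(nxt)
    return path


if __name__ == "__main__":
    # Prints: TAN -> FAN -> OSS -> ONSS -> WAN -> Control center
    print(" -> ".join(path_to_control_center("TAN")))
```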

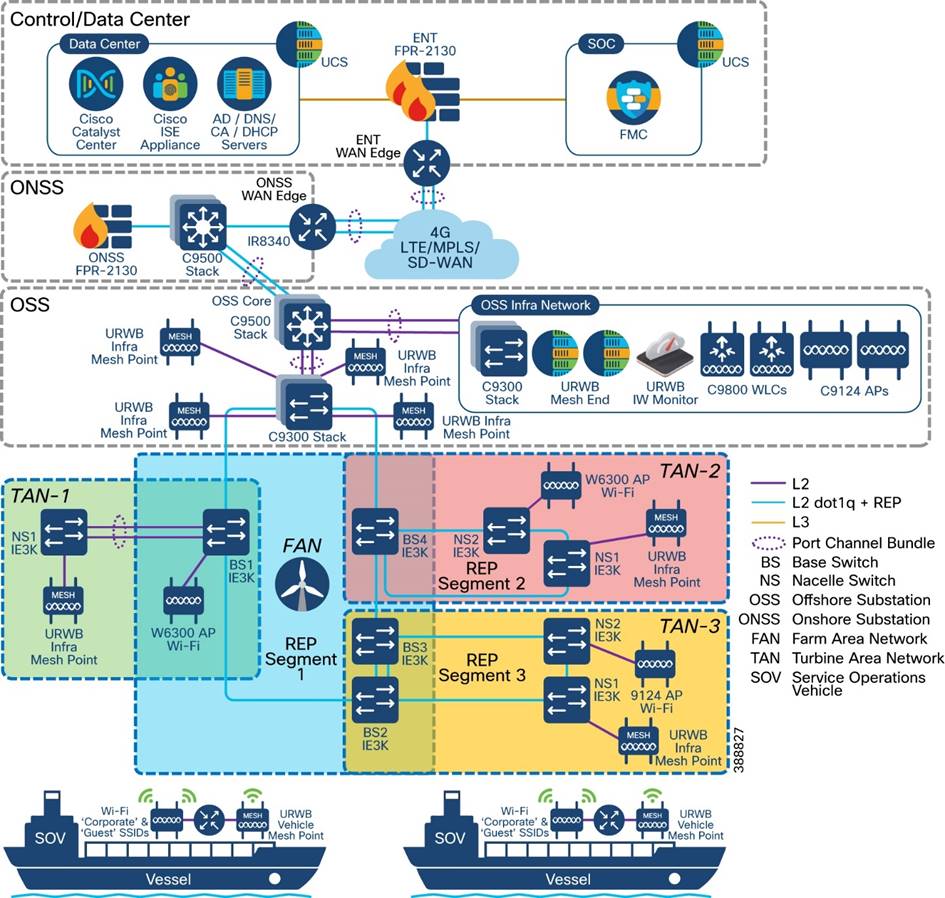

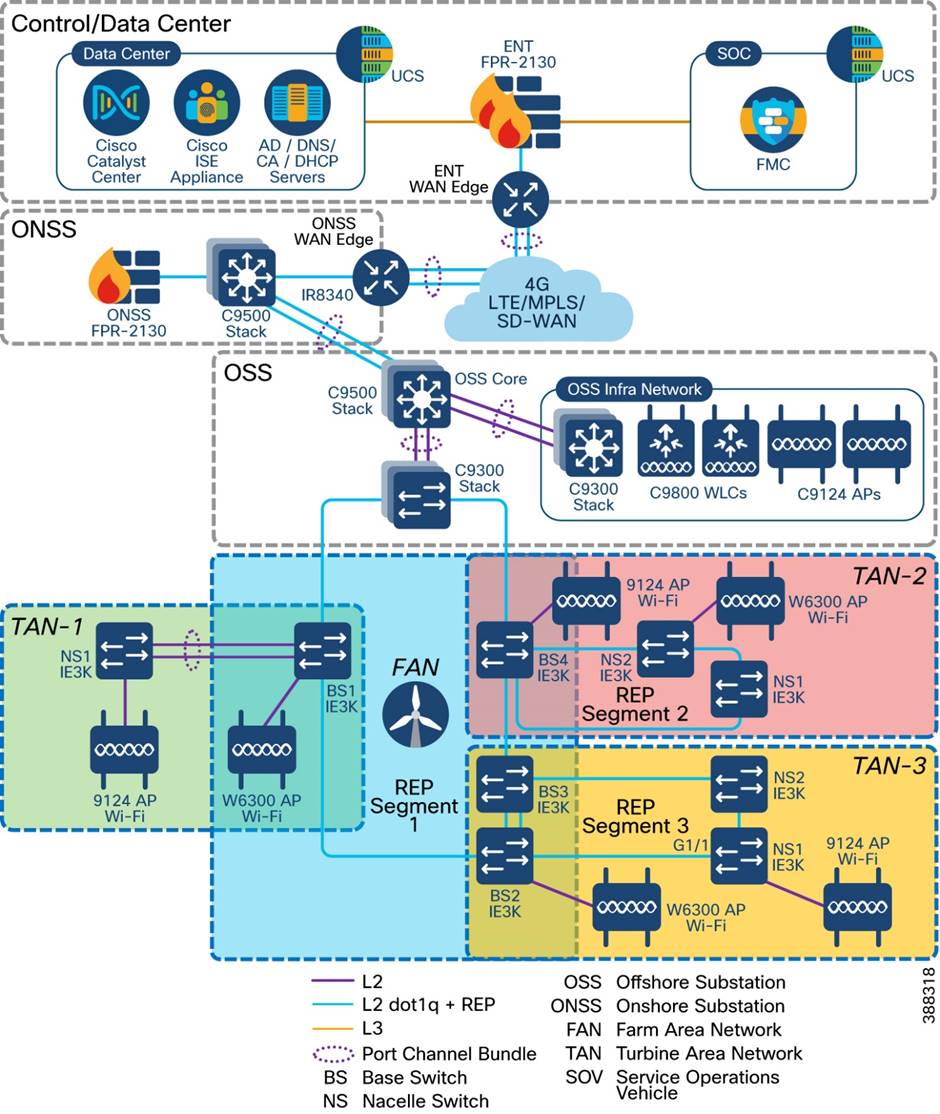

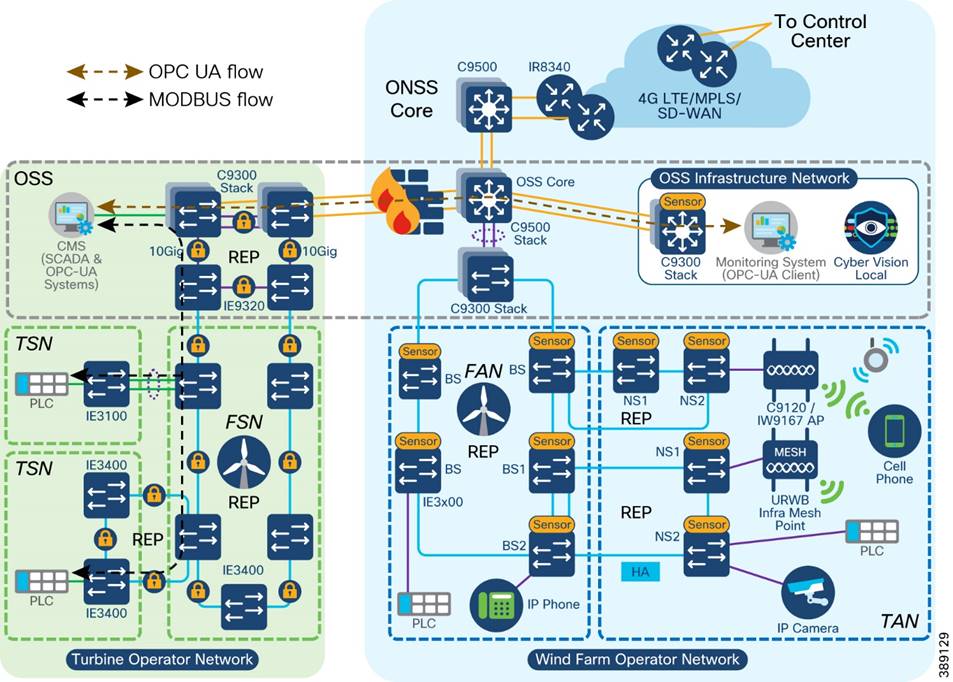

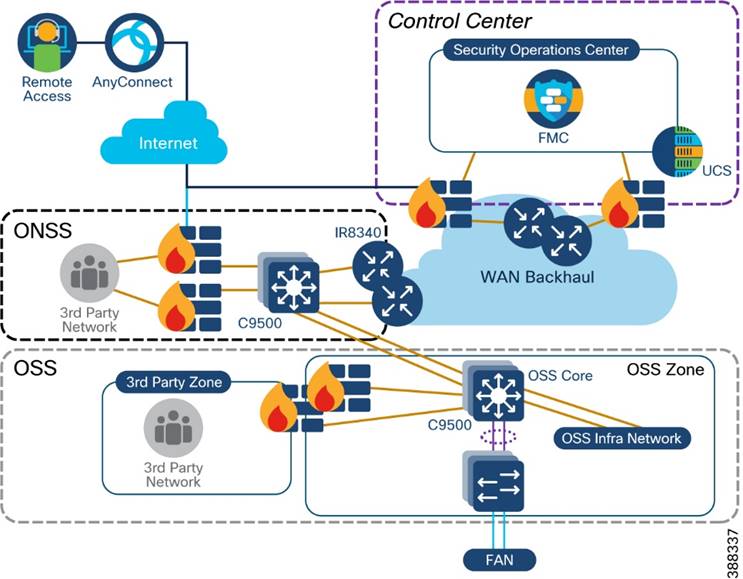

Figure 3-1 shows the end-to-end solution network architecture of a wind farm.

Figure 3-1 Offshore Wind Farm Solution Network Architecture

In Figure 3-1, the section highlighted in green is the turbine operator network, which contains the new capabilities for an offshore wind farm discussed in “Scope of Wind Farm Release 1.2 CVD”.

Solution Components

This section describes the components of a wind farm network. Several device models can be used at each layer of the network. The device models that are suitable for each role in the network and the corresponding CVD software versions are described in Solution Hardware and Software Compatibility.

You can choose a device model to suit specific deployment requirements such as network size, cabling and power options, and access requirements. Table 3-1 describes device models that are used for components in the architecture for a wind farm solution.

Table 3-1 Components and Device Models in Wind Farm Architecture

| Component Role |

Component |

Description |

| Turbine nacelle switch, no HA |

Cisco Catalyst Industrial Ethernet (IE) 3400 Series Switch |

1G fiber ring with port channel connectivity to base switch. |

| Turbine nacelle switch, with HA |

Cisco Catalyst Industrial Ethernet (IE) 3400 Series Switch |

1G fiber ring for nacelle switch redundancy. |

| Turbine base switch |

Cisco Catalyst Industrial Ethernet (IE) 3400 Series Switch and/or Cisco Catalyst Industrial Ethernet (IE) 3100 Series Switch |

1G fiber ring for base switches in the FAN. Up to 20 switches can be in the ring; deployments commonly have 8, 9, or 10 switches in a ring. |

| Farm area aggregation |

Cisco Catalyst 9300 Series switch stack |

REP ring aggregation switch. Stack for HA. |

| OSS and ONSS core switch, with HA |

Cisco Catalyst 9500 Series switches with StackWise Virtual (SVL) |

Offshore IT network core. Deployed with SVL for HA. |

| OSS IT network access switch |

Cisco Catalyst 9300 Series switch stack |

Consists of two switches for HA to provide access connectivity to OSS network infrastructure devices. |

| OSS firewall |

Cisco Secure Firewall 2100 or 4100 Series |

OSS network firewall. |

| ONSS WAN router |

Cisco Catalyst IR8300 Rugged Series Router or Cisco Catalyst 8000 Series Edge Platform |

Onshore substation WAN router |

| OT network sensor |

Cisco Cyber Vision network sensor on IE3400 Series Switches |

CV network sensors on all IE switches in the ring and FAN. |

| OT security dashboard |

Cisco Cyber Vision Center global and local virtual appliances |

CVC deployed globally and locally in control center and OSS network infrastructures, respectively. |

| Wireless LAN controller |

Cisco Catalyst 9800 Wireless Controller (WLC) |

Catalyst Wi-Fi network controller in OSS network infrastructure. |

| SCADA application server |

SCADA application server |

SCADA application server in OSS network infrastructure. |

| URWB gateway |

URWB IW9167E or IEC6400 Edge Compute Appliance |

URWB wireless network mesh end |

| Network management |

Cisco Catalyst Center |

Wind farm network management application in control center and DC. |

| Authentication, authorization, and accounting (AAA) |

Cisco ISE |

AAA and network policy administration. |

| IT and OT security management |

Cisco Secure Network Analytics (Stealthwatch) Manager and Flow Collector Virtual Edition |

Network flow analytics and security dashboard in control center. |

| Physical safety video server |

Cisco or third-party video server for IP cameras |

Cisco or third-party video server for IP cameras in control center |

| 4G-LTE or 5G connectivity for SOV |

Cisco Industrial Router 1101 with 4G-LTE or 5G SIM |

4G-LTE or 5G connectivity for SOV when in range of cellular connectivity close to shore. |

| OSS, FAN, or TAN wireless backhaul |

URWB IW9167E |

URWB infrastructure AP on OSS, FAN, or TAN for SOV wireless backhaul connectivity. |

| OSS vessel wireless backhaul |

URWB IW9167E |

OSS vessel mesh point for connectivity into OSS or TAN. |

| Wi-Fi network access point |

C9120 AP or IW9167I |

Catalyst Wi-Fi network AP in TAN and OSS. |

| Hardened Wi-Fi access point |

Cisco IW6300 |

Catalyst Wi-Fi network AP in TAN and OSS. |

| Turbine operator SCADA network core switch |

Cisco Catalyst 9300 Series switches |

Consists of two switches in a stack with HSRP for Core network HA. Provides access connectivity to OSS turbine operator SCADA network infrastructure devices. |

| Farm area SCADA Network (FSN) rings aggregation switch |

Cisco Catalyst Industrial Ethernet 9300 Rugged Series switches |

Turbine operator Farm area SCADA network aggregation switch. Also provides 10G uplink core network resiliency using REP. |

| Farm area SCADA Network (FSN) switch |

Cisco Catalyst Industrial Ethernet (IE) 3400 Series Switch or Cisco Catalyst Industrial Ethernet (IE) 3100 Series Switch |

1G fiber ring for SCADA network base switches in FSN. Up to 20 switches can be in the REP ring or up to 50 switches in an MRP ring |

| Turbine SCADA Network (TSN) switch |

Cisco Catalyst Industrial Ethernet (IE) 3400 Series Switch or Cisco Catalyst Industrial Ethernet (IE) 3100 Series Switch |

Turbine SCADA network nacelle switches in in a HA topology (ring or port channel) |

| Compact ONSS WAN Edge router |

Cisco Catalyst IR1101 Rugged Series Router |

Compact Onshore substation WAN edge router for data center connectivity |

| Compact ONSS WAN Headend Router |

Cisco Catalyst 8500 Series Edge platform |

Compact Onshore substation data center headend router for FlexVPN tunnels |

Solution Hardware and Software Compatibility

Table 3-2 and Table 3-3 list the Cisco products and software versions validated in this CVD for the wind farm operator network and the turbine operator network, respectively. Table 3-4 lists the third-party products that are used in the validation.

Table 3-2 Wind Farm Operator Network Cisco Hardware and Software Versions Validated in this CVD

| Component Role | Hardware Model | Version |
|---|---|---|
| Turbine nacelle switch, no HA | IE3400-8P2S, IE3400-8T2S, IE3100-8T4S | 17.16.1 |
| Turbine nacelle switch, with HA | IE3400-8P2S, IE3400-8T2S, IE3100-8T4S | 17.16.1 |
| Turbine base switch | IE3400-8P2S, IE3400-8T2S, IE3100-8T4S | 17.16.1 |
| Farm area aggregation | C9300-24UX | 17.16.1 |
| OSS core switch, with HA | C9500-16X | 17.16.1 |
| OSS IT network access switch | C9300-24UX | 17.16.1 |
| ONSS core switch | C9300-24UX | 17.16.1 |
| OSS and ONSS DMZ firewall | Cisco Secure Firewall 2140 | 7.0.1 |
| Firewall management application | Cisco Secure Firewall Management Center Virtual Appliance | 7.0.1 |
| ONSS WAN edge router | Cisco Catalyst IR8340 Rugged Series Router | 17.16.1 |
| Network management application | Cisco Catalyst Center Appliance DN2-HW-APL | 2.3.6.0 |
| Unified Computing System (UCS) | UCS-C240-M5S | 3.1.3c |
| Authentication, authorization, and accounting (AAA) server | Cisco ISE Virtual Appliance | 3.2 |
| CV network sensors | IoX Sensor App | 5.04.0 |
| OT security dashboard | Cisco Cyber Vision Center global and local virtual appliance | 5.04.0 |
| Wireless LAN controller | C9800-L-C-K9 | 17.16.1 |
| Cisco IW6300 ruggedized AP for Wi-Fi access | IW6300-AP | 17.16.1 |
| Cisco AP for Wi-Fi access | AIR-AP9120 or IW9167I | 17.16.1 |
| URWB mesh point | URWB IW9167E | 17.16.1 |
| URWB mesh gateway | URWB IW9167E or IEC6400 | 17.16.1 |
| URWB IW-Monitor | URWB IW-Monitor VM | v2.0 |
| IT and OT security management | Cisco Secure Network Analytics (Stealthwatch) Manager and Flow Collector Virtual Edition | 7.4.1 |
| Control center headend router | ASR-1002-HX | 17.3.4a |
| WAN management | Cisco SD-WAN: SD-WAN Manager, SD-WAN Controller, and SD-WAN Validator virtual appliances | 20.8.1 |

Table 3-3 Turbine Operator Network Cisco Hardware and Software versions Validated in this CVD

| Component Role | Hardware Model | Version |
|---|---|---|
| Turbine operator network core switch | C9300-24UX | 17.16.1 |
| Farm area SCADA network rings aggregation switch | IE-9320-22S2C4X | 17.16.1 |
| Turbine SCADA switch, no HA | IE3400-8P2S, IE3400-8T2S, IE3100-8T4S | 17.16.1 |
| Turbine SCADA switch, with HA | IE3400-8P2S, IE3400-8T2S, IE3100-8T4S | 17.16.1 |
| Turbine base switch in FSN | IE3400-8P2S, IE3400-8T2S, IE3100-8T4S | 17.16.1 |
| Compact onshore substation WAN edge router | IR1101-K9 | 17.16.1 |
| Compact onshore substation headend router | C8500-12X | 17.15.1a |

Table 3-4 Third-party Hardware and Software Versions Validated in this CVD

| Component Role | Hardware Model | Version |
|---|---|---|
| Turbine physical security (CCTV) camera | AXIS P3717-PLE | 10.3.0 |
| Video server for CCTV camera | Axis Device Manager (ADM) | 5.9.42 |
| CA, AD, DHCP, and DNS servers in control center | Microsoft Windows Server 2016 | Windows Server 2016 |

Note: Enable appropriate licenses for each of the wind farm network components. See the component’s data sheets for information about enabling the features and functions that you need.

Chapter 4: Solution Design Considerations

This chapter includes the following topics:

§ Turbine Area Network Design

§ Turbine Base Network Design

§ Farm Area Network Design

§ Offshore Substation Network and Building Blocks

§ Onshore Substation Network

§ WAN Network Design

§ Control Center Design

§ Network VLANs and Routing Design

§ Wireless Network Design

§ URWB Wireless Backhaul

§ SCADA Applications and Protocols

§ Quality of Service Design

§ Turbine Operator Network Design

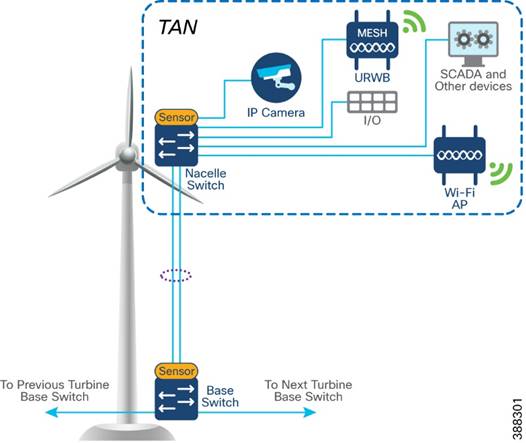

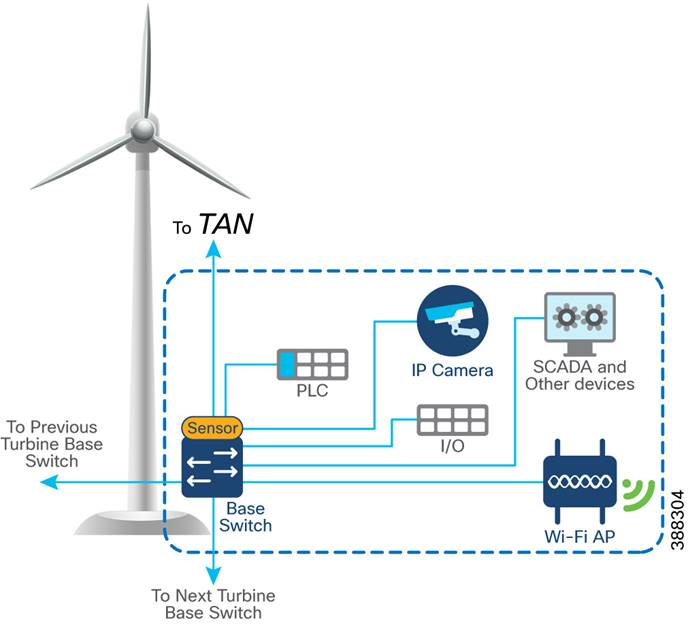

Turbine Area Network Design

In offshore wind farms, each wind turbine has a Cisco IE3400 switch deployed at the turbine nacelle to provide offshore substation (OSS) network connectivity to various endpoints in the turbine. These endpoints include SCADA devices, PLCs, I/O devices, CCTV cameras, and so on. The IE switch deployed in the turbine nacelle is also called a nacelle switch (NS). The NS with its OT and IT endpoints forms a turbine area network (TAN) in the wind farm solution architecture, as shown in Figure 4-1.

Figure 4-1 TAN Design

Table 4-1 lists the actors and traffic types in a TAN.

Table 4-1 TAN Actors and Traffic Types

| Actors | Traffic Type |
|---|---|
| CCTV camera | TAN to control center: a CCTV camera on the nacelle switch streams live video to the video server in the OSS infrastructure or control center. |
| PLC and I/O | Within the TAN: OT traffic between the PLC and I/O in the nacelle. TAN to base: PLC in the turbine base to I/O in the nacelle. |
| Wi-Fi access point | TAN to OSS infrastructure and control center: workforce AP in the nacelle to the WLC in the OSS network. Provides corporate network access and guest Internet access. |
| SCADA | TAN to OSS infrastructure: management traffic to and from SCADA endpoints in the OSS. |
| URWB | Offshore vessel wireless connectivity from the TAN using the URWB network. |

TAN Non-HA Design Considerations

§ A single Cisco IE3400 switch is deployed in each turbine nacelle as the turbine nacelle Ethernet switch, as shown in Figure 4-1.

§ Nacelle switches connect to the turbine base switch in a layer 2 star topology (non-HA), as shown in Figure 4-1.

§ An LACP port channel with two member links to the base switch provides link-level redundancy for the TAN.

§ Multiple VLANs are configured on the NS to segment TAN devices. Examples include a CCTV camera VLAN, OT VLAN for SCADA endpoints, Wi-Fi AP management VLAN, management VLAN (FTP/SSH), URWB VLAN for vessel connectivity, voice VLAN for VoIP phones, and marine systems VLAN.

§ First-hop security features, with device authentication using MAC Authentication Bypass (MAB) or IEEE 802.1X, secure TAN endpoints.

§ The layer 3 gateway for all NS VLANs is configured on the OSS core switch (Catalyst 9500).
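The uplink side of the non-HA design can be expressed as configuration text. The following Python sketch renders IOS XE-style lines for an LACP trunk (`channel-group ... mode active`) that carries the TAN VLANs listed above. The VLAN IDs, VLAN names, interface names, and the `render_uplink_config` helper are hypothetical and chosen only for illustration; check any generated configuration against the platform configuration guide before use.

```python
"""Render an LACP uplink trunk for a non-HA nacelle switch (illustrative sketch).

All VLAN IDs, names, and interface names below are hypothetical examples,
not values from the validated design.
"""

# Hypothetical TAN VLAN plan: segment name -> VLAN ID
TAN_VLANS = {
    "CCTV": 10,
    "OT_SCADA": 20,
    "WIFI_AP_MGMT": 30,
    "DEVICE_MGMT": 40,
    "URWB": 50,
    "VOICE": 60,
    "MARINE": 70,
}


def render_uplink_config(members, channel_id, vlans):
    """Return IOS XE-style lines that bundle `members` into one LACP trunk."""
    allowed = ",".join(str(v) for v in sorted(vlans.values()))
    trunk = [" switchport mode trunk", f" switchport trunk allowed vlan {allowed}"]
    lines = [f"interface Port-channel{channel_id}", *trunk]
    for intf in members:
        # "mode active" makes the member port initiate LACP negotiation
        lines += [f"interface {intf}", *trunk, f" channel-group {channel_id} mode active"]
    return lines


if __name__ == "__main__":
    # Two member links toward the turbine base switch (hypothetical ports)
    print("\n".join(render_uplink_config(
        ["GigabitEthernet1/1", "GigabitEthernet1/2"], 1, TAN_VLANS)))
```

Keeping the allowed-VLAN list identical on the port channel and its members avoids a mismatch that would keep a member link from joining the bundle.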

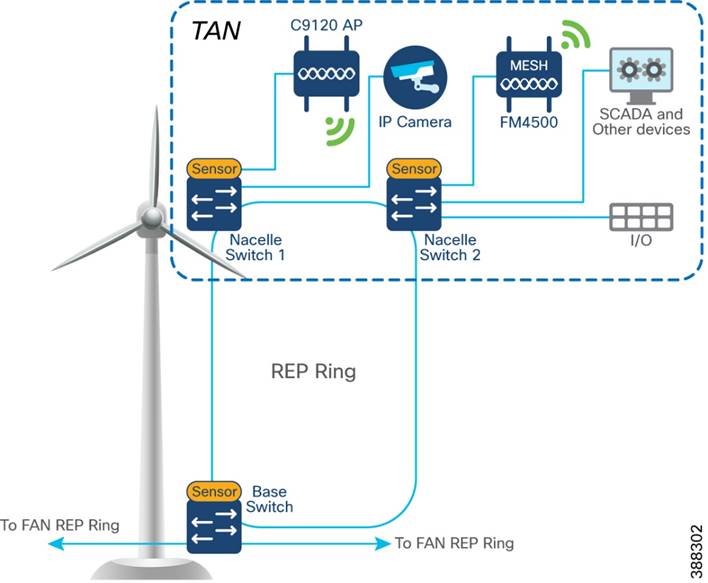

TAN High Availability Design with REP

A single IE3400 nacelle switch in the TAN is a single point of failure for TAN endpoints. To make the TAN highly available, two nacelle switches are deployed to provide network connectivity for TAN endpoints, and a redundancy protocol is configured between them.

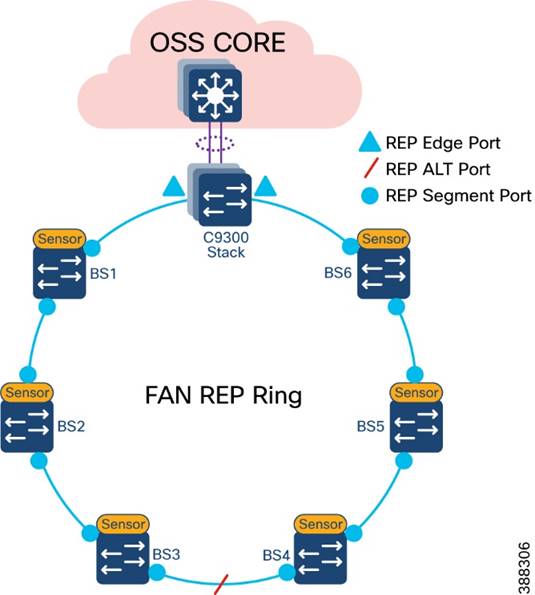

Resilient Ethernet Protocol Ring

Resilient Ethernet Protocol (REP) is a Cisco proprietary protocol that provides an alternative to Spanning Tree Protocol (STP) for controlling network loops, handling link failures, and improving convergence time. REP controls a group of ports that are connected in a segment, ensures that the segment does not create bridging loops, and responds to link failures within the segment. REP provides a basis for constructing complex networks and supports VLAN load balancing. It is the preferred resiliency protocol for IoT applications.

A REP segment is a chain of ports that are connected to each other and configured with the same segment ID. Each segment consists of standard (non-edge) segment ports and two user-configured edge ports. During normal operation of the ring, REP blocks one port, the preferred alternate port, so that no loop forms. If a link in the REP segment fails, REP unblocks the alternate port, which provides an alternate path around the failure. When the failed link recovers, REP blocks a port on the recovered link, which becomes the new alternate port; if preemption is configured, REP later moves the block back to the preferred alternate port. In this way, recovery happens with minimal convergence time.
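The blocking and recovery behavior described above can be illustrated with a toy model. The following Python sketch treats a closed REP ring as switches joined by links and models the alternate port as blocking one link. It is a hypothetical simplification: it does not model REP messages, timers, edge-port roles, or VLAN load balancing, and it handles only one failure at a time.

```python
"""Toy model of REP blocking on a closed ring (not the real protocol).

Switches are nodes 0..n-1; link i joins node i and node (i + 1) % n.
Blocking exactly one working link keeps the active topology loop-free.
"""
from collections import deque


class RepRing:
    def __init__(self, n, preferred_alt_link):
        self.n = n
        self.preferred = preferred_alt_link  # link holding the preferred alternate port
        self.blocked = preferred_alt_link    # link currently blocked by REP
        self.failed = set()                  # links that are physically down

    def active_links(self):
        """Links that forward traffic: not failed and not blocked."""
        return {i for i in range(self.n) if i not in self.failed and i != self.blocked}

    def reachable(self, src=0):
        """Breadth-first search over forwarding links from node `src`."""
        adj = {v: [] for v in range(self.n)}
        for i in self.active_links():
            a, b = i, (i + 1) % self.n
            adj[a].append(b)
            adj[b].append(a)
        seen, queue = {src}, deque([src])
        while queue:
            for nbr in adj[queue.popleft()]:
                if nbr not in seen:
                    seen.add(nbr)
                    queue.append(nbr)
        return seen

    def link_down(self, link):
        """A link fails: the failure itself breaks the loop, so REP unblocks."""
        self.failed.add(link)
        self.blocked = None

    def link_up(self, link):
        """The link recovers: REP blocks a port on it to avoid a loop."""
        self.failed.discard(link)
        self.blocked = link

    def preempt(self):
        """Preemption moves the block back to the preferred alternate port."""
        self.blocked = self.preferred
```

For example, in a 10-switch ring with the preferred alternate on link 5, failing link 2 still leaves all 10 switches reachable over 9 forwarding links; after link 2 recovers, it stays blocked until preemption returns the block to link 5.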

Each of the two nacelle switches deployed for TAN HA has an uplink port that connects to a turbine base switch.

There are two options for TAN high availability design. In the first option, shown in Figure 4-2, a closed ring topology of two nacelle switches connects to a single turbine base switch. This arrangement forms a subtended REP ring to the FAN main ring.

Figure 4-2 TAN HA Design, Option 1

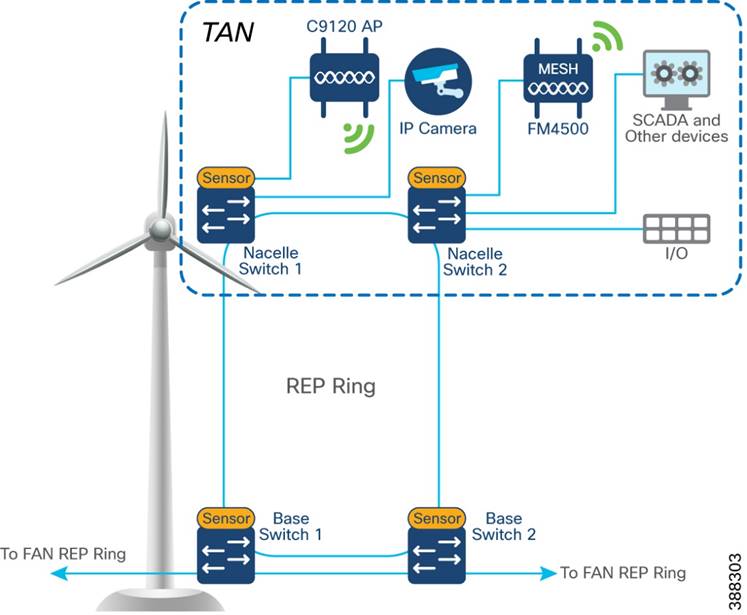

In the second option, shown in Figure 4-3, the uplinks from the two nacelle switches connect to two different base switches, which provides redundancy for both the turbine base switch network and the TAN. In this option:

§ An open ring topology of two nacelle switches connects to two turbine base switches.

§ The open ring forms a subtended REP ring off the FAN main REP ring of base switches.

Figure 4-3 TAN HA Design, Option 2

Turbine Base Network Design

In offshore wind farms, each wind turbine has an IE3400 switch deployed at the turbine base to provide OSS network connectivity to various endpoints in the turbine base. These endpoints include SCADA devices, PLCs, I/O devices, CCTV cameras, the TAN, and so on. The IE switch deployed in the turbine base is also called the base switch (BS). The BS, with its OT and IT endpoints, forms a Turbine Base Network (TBN) in the wind farm solution architecture, as shown in Figure 4-4.

Figure 4-4 Turbine Base Network Design

Table 4-2 lists the actors and traffic types in a turbine base switch network.

Table 4-2 Turbine Base Network Actors and Traffic Types

| Actors | Traffic Type |
|---|---|
| CCTV camera | Base to control center: a CCTV camera on the base switch streams live video to the video server in the OSS infrastructure or control center. |
| PLC and I/O controller | Within the base: OT traffic between the PLC and I/O in the base. Base to base: PLC in one turbine base to I/O in another turbine base. |
| Wi-Fi access point | Base to OSS infrastructure or control center: workforce AP on the base switch communicating with the WLC in the OSS infrastructure. Provides connectivity to the OSS and DC networks as needed and provides guest Internet access. |
| SCADA | Base to OSS infrastructure: management traffic to and from SCADA endpoints in the OSS. |

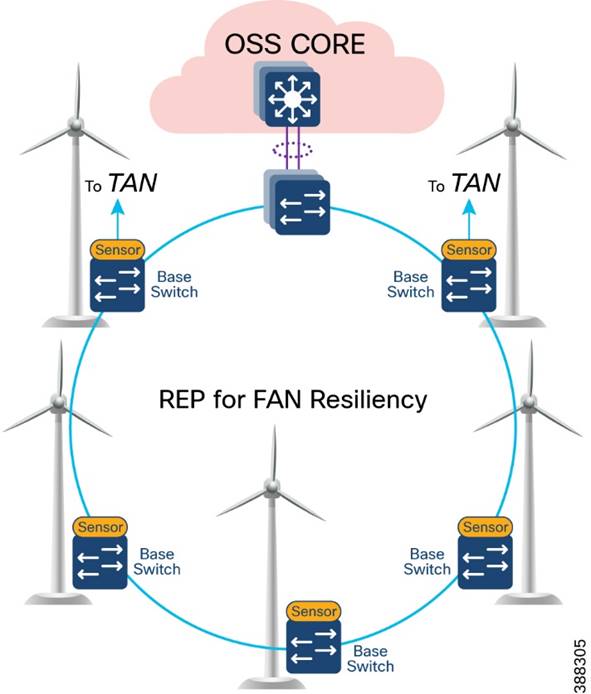

Farm Area Network Design

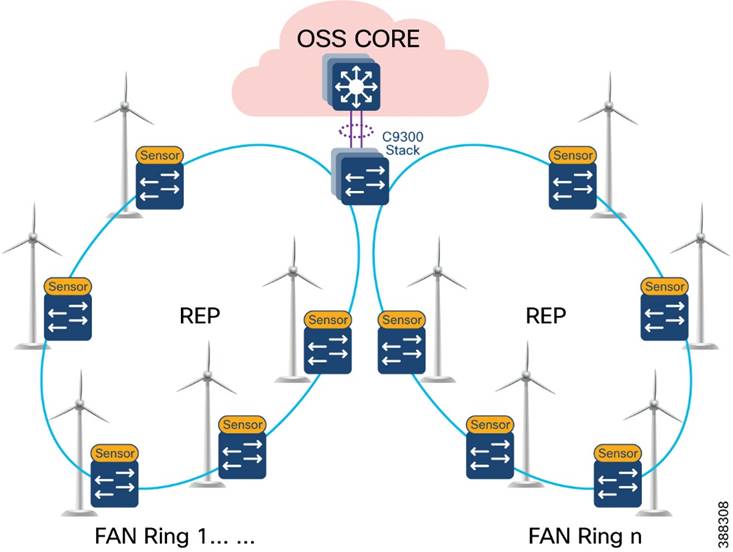

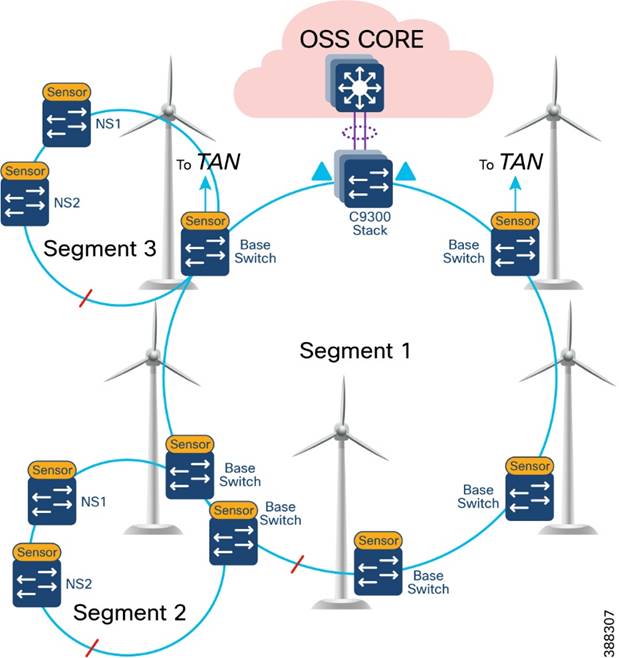

In offshore wind farms, the base switches of the wind turbines are connected in a ring topology over 1G fiber and terminate on a Catalyst 9300 switch stack, forming a farm area network (FAN) ring. REP is configured in the FAN ring to provide resiliency and fast network convergence if a link or switch in the ring fails.

Figure 4-5 shows a FAN ring aggregating to a pair of Cisco Catalyst 9300 switches in a stack configuration. A Catalyst 9300 stack aggregates all FAN rings in an offshore wind farm.

Figure 4-5 FAN Design

Design Considerations

§ Cisco Industrial Ethernet 3400 switches serve as turbine base Ethernet switches.

§ A layer 2 closed ring of turbine base switches connected via 1G fiber forms a FAN.

§ FAN base switches aggregate the subtended REP rings that carry traffic from TANs deployed with HA.

§ A FAN ring consists of a maximum of 18 base switches. A Catalyst 9300 stack aggregates up to 10 FAN rings, depending on the Catalyst 9300 model and port density.

§ REP provides base switch and FAN resiliency; REP edge ports are configured on the Catalyst 9300 stack in the OSS aggregation layer.

§ Multiple VLANs are configured for network segmentation of TAN and FAN devices. Examples include a CCTV camera VLAN, OT VLAN for SCADA endpoints, management VLAN (FTP and SSH), URWB VLAN for vessel connectivity, Wi-Fi AP management VLAN, voice VLAN for VoIP phones, and marine systems VLAN.

FAN REP Ring Design

A closed FAN REP ring forms the main REP segment, which forwards all VLAN traffic in an offshore wind farm network. Primary and secondary REP edge ports are configured on the OSS aggregation switch stack (Catalyst 9300), and a preferred alternate port is configured in the middle of the ring. See Figure 4-6.
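To illustrate the edge and alternate port placement described above, the following Python sketch renders per-device REP interface lines for one main FAN ring using the IOS XE `rep segment` interface command with its `edge primary`, `edge`, and `preferred` keywords. The segment ID, device names, and interface names are hypothetical, and the sketch omits other settings a production ring needs (allowed VLANs, REP admin VLAN, preemption). It is not the output of the Catalyst Center REP workflow.

```python
"""Render REP interface lines for a FAN main ring (illustrative sketch).

Hypothetical assumptions: segment ID 1, every base switch uses
GigabitEthernet1/1 toward the previous hop and GigabitEthernet1/2 toward
the next hop, and the aggregation stack terminates the ring on two ports.
"""


def rep_port(intf, segment, role=""):
    """Return lines for one REP port; role is "", "edge primary", "edge", or "preferred"."""
    rep_cmd = f" rep segment {segment}" + (f" {role}" if role else "")
    return [f"interface {intf}", " switchport mode trunk", rep_cmd]


def fan_ring_config(base_switches, agg_ports, segment=1):
    """Return {device: [lines]} with edge ports on the stack and the
    preferred alternate port near the middle of the ring."""
    config = {"agg-stack": rep_port(agg_ports[0], segment, "edge primary")
              + rep_port(agg_ports[1], segment, "edge")}
    middle = len(base_switches) // 2
    for idx, name in enumerate(base_switches):
        role = "preferred" if idx == middle else ""
        config[name] = (rep_port("GigabitEthernet1/1", segment)
                        + rep_port("GigabitEthernet1/2", segment, role))
    return config


if __name__ == "__main__":
    ring = fan_ring_config([f"bs{i}" for i in range(1, 11)],
                           ["TenGigabitEthernet1/0/1", "TenGigabitEthernet2/0/1"])
    for device, lines in ring.items():
        print(f"! {device}")
        print("\n".join(lines))
```

The stack port configured with `edge` alone acts as the secondary edge. Placing the two edge ports on different stack members, as in the example, is one way to keep the ring terminated if a single stack member fails.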

A FAN REP ring can be provisioned by using the Cisco Catalyst Center REP workflow, which automates REP configuration on daisy-chained IE switches. For more detailed information about FAN REP ring and subtended REP ring provisioning using Cisco Catalyst Center, see Chapter 5: Network Management and Automation.

Figure 4-6 FAN REP Design

FAN Subtended REP Ring Design

A TAN REP ring aggregating to a turbine base switch or a pair of base switches, as discussed in TAN High Availability Design with REP, creates a subtended REP ring or ring of REP rings in an offshore wind farm network. A closed or open REP ring configured with REP Topology Change Notification (TCN) within a REP segment notifies REP neighbors of any topology changes. At the edge, REP can propagate the TCN to other REP segments.
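For a subtended TAN ring with both edges on one base switch (Option 1), an edge port can forward segment topology change notices into the main FAN segment with the IOS XE `rep stcn segment` interface command. The following Python sketch renders those edge-port lines. Segment IDs 1 and 2, the interface names, and the choice to configure STCN on the primary edge port only are hypothetical assumptions for illustration.

```python
"""Render edge-port lines for a subtended REP ring (illustrative sketch).

Hypothetical assumptions: the subtended TAN ring uses its own segment ID,
both edge ports sit on one base switch, and STCNs are sent from the primary
edge port into the main FAN segment.
"""


def subtended_edge_config(edge_ports, sub_segment, main_segment):
    """Return config lines for the two edge ports of a subtended REP segment."""
    if sub_segment == main_segment:
        raise ValueError("a subtended ring needs its own REP segment ID")
    if len(edge_ports) != 2:
        raise ValueError("a REP segment has exactly two edge ports")
    lines = []
    for i, intf in enumerate(edge_ports):
        lines += [f"interface {intf}", " switchport mode trunk"]
        if i == 0:
            lines.append(f" rep segment {sub_segment} edge primary")
            # Propagate topology changes in the TAN ring to the FAN main ring
            lines.append(f" rep stcn segment {main_segment}")
        else:
            lines.append(f" rep segment {sub_segment} edge")
    return lines


if __name__ == "__main__":
    print("\n".join(subtended_edge_config(
        ["GigabitEthernet1/3", "GigabitEthernet1/4"], sub_segment=2, main_segment=1)))
```

Giving each subtended ring its own segment ID keeps a failure inside one TAN ring from reconverging the main FAN segment; only the notification crosses the boundary.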

Figure 4-7 shows the FAN main REP ring and subtended REP rings design in the wind farm solution architecture.

Figure 4-7 FAN main REP Ring and Subtended REP Rings Design

FAN Aggregation

An offshore wind farm can have more than 200 turbines, and each turbine can have more than two base switches that connect to the OSS network. We recommend a FAN ring size of no more than 20 IE switches. Multiple FAN rings should be aggregated to provide access to the offshore substation (OSS) and onshore substation (ONSS) IT networks.

The FAN aggregation infrastructure is composed of Cisco Catalyst 9300 Series switches, typically with two of these switches in a physical stack, that can provide 10G uplinks to the OSS and ONSS networks. A stack of two Catalyst 9300 switches physically located in the OSS network connects turbine base switches in a ring via fiber cables (turbine string cables) and aggregates layer 2 traffic from each ring to the upper layers of the wind farm network infrastructure, for example, to an OSS core switch.

Figure 4-8 shows the FAN aggregation design using a stack of Catalyst 9300 Series switches to aggregate FAN rings and their traffic from and to offshore wind turbines.

Figure 4-8 FAN Aggregation Design

We recommend aggregating between one and nine FAN rings on a stack of Catalyst 9300 switches for optimal network performance. If a wind farm has more than 200 turbines or base switches, another stack of two Catalyst 9300 switches can be added to the FAN aggregation network in the OSS.
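The ring and stack counts follow from simple ceiling arithmetic. The following Python sketch assumes one base switch per turbine (an assumption; adjust `base_switches_per_turbine` for turbines with more), at most 18 base switches per FAN ring as stated in the design considerations, and at most nine rings per Catalyst 9300 stack, the recommended range above.

```python
"""Estimate FAN rings and Catalyst 9300 aggregation stacks (illustrative sketch)."""
import math


def fan_aggregation_size(turbines, base_switches_per_turbine=1,
                         max_per_ring=18, max_rings_per_stack=9):
    """Return (rings, stacks) needed for the given number of turbines."""
    base_switches = turbines * base_switches_per_turbine
    rings = math.ceil(base_switches / max_per_ring)
    stacks = math.ceil(rings / max_rings_per_stack)
    return rings, stacks


if __name__ == "__main__":
    for count in (150, 200):
        rings, stacks = fan_aggregation_size(count)
        print(f"{count} turbines -> {rings} FAN rings on {stacks} stack(s)")
```

Under these assumptions, 150 turbines fit in nine rings on one stack, while 200 turbines need 12 rings and therefore a second stack.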

Offshore Substation Network and Building Blocks

This section discusses the design for a wind farm OSS network. The OSS network has the following building blocks:

§ OSS core network: Provides network layer 3 routing across offshore and onshore substations

§ OSS DMZ and third-party or other networks: Provides secure remote access for corporate employees and third-party vendors to OSS assets

§ OSS infrastructure network: Hosts OSS infrastructure services and application servers

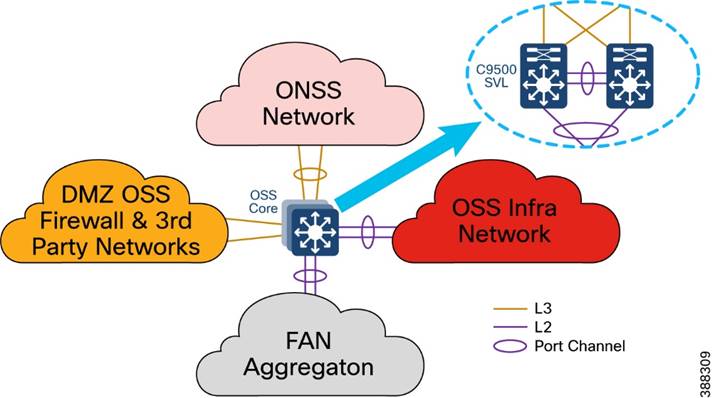

OSS Core Network Design

An offshore substation core network is composed of a pair of suitably sized layer 3 devices that provide resilient core networking and routing capabilities. Multilayer switches, which combine layer 2 switching with layer 3 routing, can serve as core switches. In the wind farm solution architecture, Cisco Catalyst 9500 Stackwise Virtual (SVL) switches are used as OSS core network switches.

The OSS core connects to multiple components; these connections should be resilient, high-bandwidth (10 Gbps) layer 3 links. The OSS core network connects the following building blocks in an OSS network and provides connectivity through fiber uplinks to the onshore substation (ONSS) network, as shown in Figure 4-9.

§ ONSS network: Connects to ONSS core switches.

§ OSS infrastructure network: Provides layer 2 access switch connectivity to infrastructure applications such as CVC, SCADA servers, WLC, and so on.

§ FAN aggregation: Aggregates FAN rings in a wind farm

§ OSS DMZ and firewall: Connects to third-party networks (for example, turbine vendor SCADA networks such as GE, Vestas, and others, and substation automation networks for export cable HVDC and AC systems).

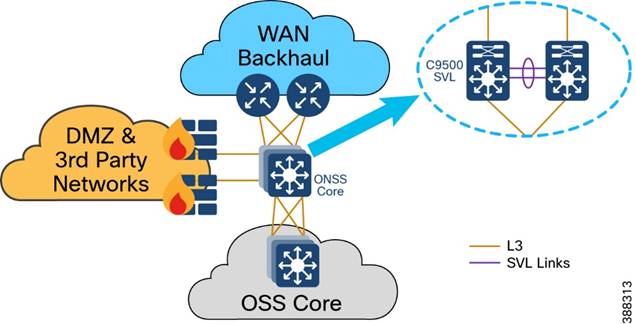

Figure 4-9 OSS Core Network Design

In Figure 4-9, a pair of Cisco Catalyst 9500 switches in Stackwise Virtual (SVL) configuration provides core network high availability across OSS networks with layer 3 links to the OSS DMZ firewall and ONSS core. These layer 3 links can be configured as Equal-Cost Multi-Path (ECMP) routing links or bundled into a layer 3 port channel.

The C9500 SVL pair connects to the OSS infrastructure and FAN aggregation switches using layer 2 port channels, each bundling two 10-Gb Ethernet interfaces.
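Both ECMP routing and port channels spread traffic across links by hashing flow fields, so all packets of one flow take the same link and stay in order. The following Python sketch illustrates per-flow selection with a SHA-256 hash of the 5-tuple. The hash function and link names are hypothetical stand-ins; Catalyst switches use their own platform-specific hardware hash.

```python
"""Per-flow link selection, as used by ECMP and port channels (illustrative sketch).

The SHA-256 hash is a stand-in; switch hardware uses its own hash function.
"""
import hashlib


def pick_link(flow, links):
    """Map a (src_ip, dst_ip, proto, src_port, dst_port) tuple to one link."""
    key = "|".join(str(field) for field in flow).encode()
    bucket = int.from_bytes(hashlib.sha256(key).digest()[:4], "big")
    return links[bucket % len(links)]


if __name__ == "__main__":
    links = ["Te1/0/1", "Te2/0/1"]  # hypothetical member links
    flow = ("10.20.1.10", "10.30.1.5", "tcp", 49152, 502)
    # The same flow always maps to the same link, preserving packet order.
    print(pick_link(flow, links), pick_link(flow, links))
```

Because selection is per flow rather than per packet, a single large flow cannot use more than one link's bandwidth; many flows are needed to load both links.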

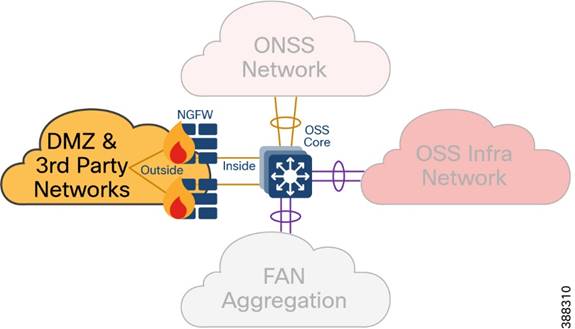

OSS DMZ and Third-Party Network

A DMZ in a wind farm OSS network provides a layer of security for the internal network by terminating externally connected services at the DMZ and allowing only permitted services to reach the internal network nodes.

Any network service that runs as a server communicating with an external network or the Internet is a candidate for placement in the DMZ. Alternatively, these servers can be placed in a data center and made reachable from the external network only through the DMZ.
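The DMZ principle of allowing only permitted services can be illustrated with first-match rule evaluation and an implicit deny. The following Python sketch is hypothetical: the addresses (from documentation ranges), ports, and rules are examples, and it ignores protocols and connection state, which a Cisco Secure Firewall access control policy does take into account.

```python
"""First-match rule evaluation with an implicit deny (illustrative sketch)."""
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import Optional


@dataclass(frozen=True)
class Rule:
    action: str          # "permit" or "deny"
    src: str             # source network in CIDR form
    dst: str             # destination network in CIDR form
    port: Optional[int]  # destination port; None matches any port


# Hypothetical policy: the vendor network may reach only a DMZ relay host,
# and only the relay may reach the internal OT network.
RULES = [
    Rule("permit", "198.51.100.0/24", "192.0.2.10/32", 443),
    Rule("permit", "192.0.2.10/32", "10.20.0.0/16", 443),
]


def evaluate(rules, src, dst, port):
    """Return the action of the first matching rule, or "deny" if none match."""
    for rule in rules:
        if (ip_address(src) in ip_network(rule.src)
                and ip_address(dst) in ip_network(rule.dst)
                and (rule.port is None or rule.port == port)):
            return rule.action
    return "deny"
```

With these rules, a vendor host can reach the relay on port 443 but not the internal OT network directly; internal access happens only through the DMZ host.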

Cisco Next-Generation Firewall (NGFW) is deployed with outside interface connectivity to third-party turbine vendor networks and inside interface connectivity to the OSS core network, as shown in Figure 4-10. A Cisco Secure Firewall Management Center in the control center centrally manages all Cisco Secure Firewall instances in the OSS and ONSS networks.

Figure 4-10 OSS DMZ Network

The OSS DMZ is composed of a resilient pair of Cisco NGFW Secure Firewall 2100 or 4100 Series appliances. The Cisco Secure Firewall in OSS DMZ provides OSS network security protection from outside vendor networks.

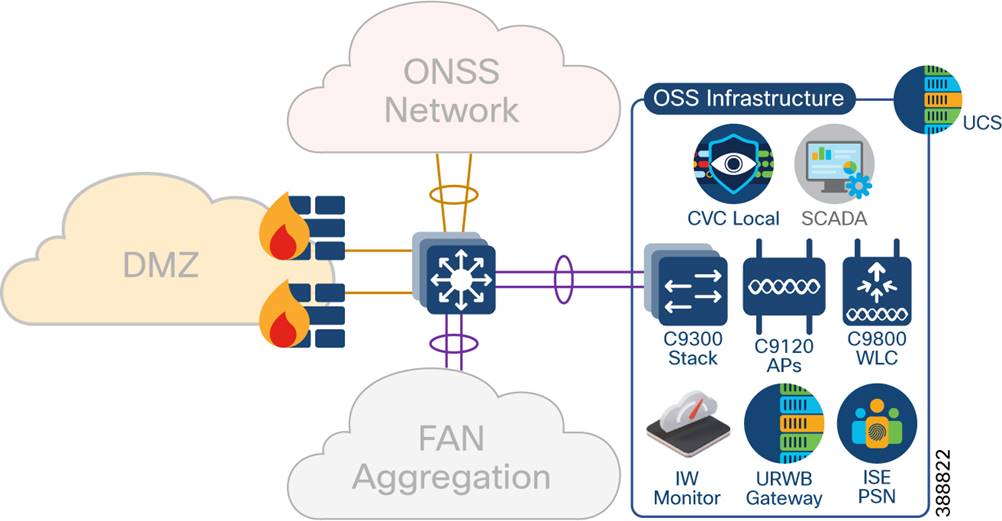

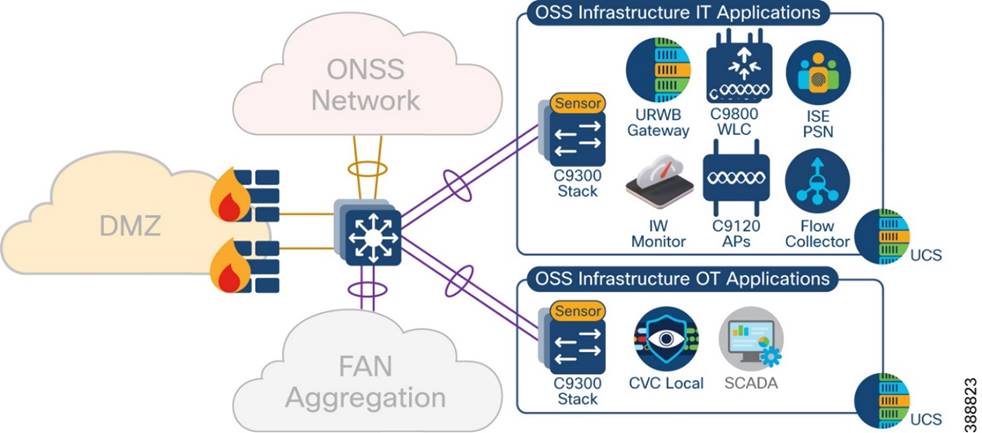

OSS Infrastructure Network

This section covers various infrastructure components and application servers in a wind farm network. The OSS infrastructure is composed of a set of resources that are accessible by devices or endpoints across the FAN and TAN. The OSS infrastructure is deployed with a pair of Cisco Catalyst 9300 Series switches in a stack to extend access to various applications and servers, as shown in Figure 4-11 as Option 1.

You can also deploy the OSS infrastructure with separate access switch stacks and UCS servers for wind farm OSS IT applications and OT applications, as shown in Figure 4-12 as Option 2. The OSS infrastructure includes:

§ One or more Cisco Unified Computing System (UCS) servers for hosting virtual machines for the applications

§ One Cisco Cyber Vision Center (CVC) Local

§ One or more ISE PSNs*

§ Two URWB gateways (IW9167E or IEC6400)

§ Two Catalyst 9800 WLCs

§ Four IW9167E devices with 90-degree horn antennas

§ IW6300 or C9120 APs for Wi-Fi access

§ One URWB IW monitor

§ SCADA server application

Figure 4-11 shows the wind farm OSS infrastructure network and its components.

Figure 4-11 OSS Infrastructure Network Deployment Option 1

*ISE PSN may optionally be deployed at the OSS infrastructure network for the distributed deployment of ISE with PAN at the control center. You also may choose to decentralize PSNs if there is a latency concern.

Figure 4-12 shows the wind farm OSS infrastructure network option in which IT and OT applications are separated into two access switch stacks and UCS servers.

Figure 4-12 OSS Infrastructure Network Deployment Option 2

Onshore Substation Network

In a wind farm network, an onshore substation (ONSS) is a renewable energy site that is normally located in a remote area where communication networks are not readily available.

Generally, offshore substations connect to ONSSs in rural locations where access to backhaul technologies is limited. While offshore to onshore connectivity is served by fiber optic cable, the backhaul from the onshore location is more challenging and often relies on service provider network availability for services such as fiber, MPLS, metro Ethernet, and so on.

ONSS Network Design

In the wind farm solution architecture, Cisco Catalyst 9500 Stackwise Virtual (SVL) switches are used as ONSS core network switches. The ONSS core connects to multiple components; these connections should be resilient, high-bandwidth (10 Gbps) layer 3 links for scalable routing.

Figure 4-13 shows the building blocks in an ONSS network that the ONSS core network connects to.

Figure 4-13 ONSS Network and its Building Blocks

ONSS network building blocks include:

§ OSS network: Connects to an OSS core switch via 10 Gb fiber links

§ ONSS DMZ and firewall: Connects to third-party networks (for example, turbine vendor SCADA networks, power control and metering networks, and export cable HVAC and DC systems)

§ WAN backhaul: Connects wind farm data center and control center to the ONSS via service provider MPLS, 4G LTE, and so on.

The ONSS DMZ is similar to the OSS DMZ that is discussed in OSS DMZ and Third-Party Network. WAN backhaul and the control center are discussed in detail in the following sections.

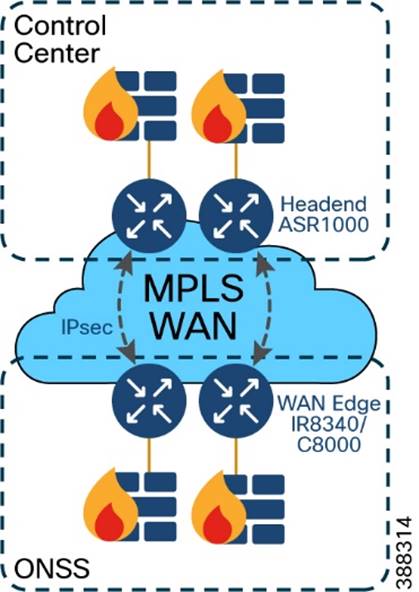

WAN Network Design

This section discusses wind farm WAN backhaul connectivity in an onshore substation. The wind farm WAN is often a dedicated WAN infrastructure that connects the transmission system operator (TSO) control center with various substations and other field networks and assets. Wind farm WAN connections can use a variety of technologies, such as cellular LTE or 5G for public backhaul, fiber ports to connect wind farm operator-owned or utility-owned private networks, leased lines or MPLS PE connectivity, and legacy multilink PPP backhaul aggregating multiple T1/E1 circuits.

The ONSS WAN router can provide inline firewall (zone-based firewall) functionality, or a dedicated firewall can be placed beyond the substation router to protect wind farm assets. This approach results in a unique design in which a DMZ is required at the substation edge. All communications into and out of the substation network must pass through the DMZ firewall. Traffic from each zone that egresses the substation edge should be encrypted using IPsec and kept in separate logical networks using Layer 3 virtual private network (L3VPN) technology, as shown in Figure 4-14.
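The forwarding rules of the zone-based firewall option can be summarized in a few lines. The following Python sketch encodes the commonly documented IOS ZBF defaults: traffic between interfaces in the same zone passes; traffic between different zones is dropped unless a zone pair with an inspect or pass action exists; traffic between a zoned and an unzoned interface is dropped; and traffic between two unzoned interfaces passes. The zone names and zone-pair policy are hypothetical, and the sketch omits the self zone and stateful return traffic.

```python
"""Simplified IOS zone-based firewall forwarding decision (illustrative sketch)."""
from typing import Optional

# Hypothetical zone-pair policy: (source zone, destination zone) -> action
ZONE_PAIRS = {
    ("SUBSTATION", "WAN"): "inspect",
    ("DMZ", "SUBSTATION"): "pass",
}


def zbf_allows(src_zone: Optional[str], dst_zone: Optional[str], zone_pairs) -> bool:
    """Return True if a new packet from src_zone to dst_zone is forwarded."""
    if src_zone is None and dst_zone is None:
        return True   # neither interface is in a zone
    if src_zone is None or dst_zone is None:
        return False  # zoned to unzoned (or the reverse) is dropped
    if src_zone == dst_zone:
        return True   # intra-zone traffic passes by default
    # Inter-zone traffic needs a zone pair whose policy inspects or passes it
    return zone_pairs.get((src_zone, dst_zone)) in ("inspect", "pass")
```

In this model, new sessions from the WAN into the substation zone are dropped; on a real router, return traffic for sessions that the SUBSTATION-to-WAN pair inspects is still allowed by stateful inspection, which the sketch does not model.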

A WAN tier aggregates the wind farm operator’s control center and onshore and offshore substations. A Cisco IR8340 or C8000 Series Router deployed as an ONSS WAN edge router serves as an interface between the onshore substation and the control center.

Figure 4-14 Example Wind farm WAN Backhaul

WAN circuits and backhaul failover options can be designed, provisioned, and managed efficiently using Cisco SD-WAN. For more information, see Cisco SD-WAN Design Guide:

https://www.cisco.com/c/en/us/td/docs/solutions/CVD/SDWAN/cisco-sdwan-design-guide.html

The wind farm WAN backhaul design is similar to the Cisco Substation Automation Solution WAN backhaul design. For more information about WAN backhaul design, see Substation Automation Design Guide – The New Digital Substation:

https://www.cisco.com/c/en/us/td/docs/solutions/Verticals/Utilities/SA/3-0/CU-3-0-DIG.pdf

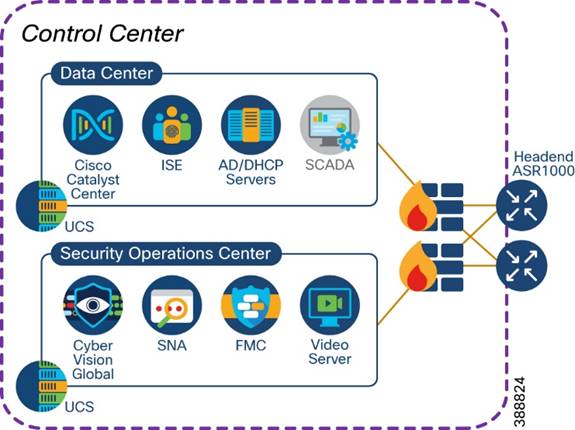

Control Center Design

A wind farm asset operator’s control center hosts multiple IT and OT applications along with other network infrastructure servers. All communications to the control center are secured by a pair of firewalls in an HA deployment and a pair of Cisco ASR 1000 Series routers acting as headend (hub) routers. The ASR 1000 Series routers terminate all IPsec tunnels from the remote substation WAN edge routers.

Figure 4-15 shows a wind farm control center with its IT and OT applications and servers.

Figure 4-15 Wind farm Control Center

The control center network consists of:

§ One or more Cisco ASR1000 Series routers for WAN headend

§ One or more Cisco Secure Firewall 2100 or 4100 Series firewalls

§ One or more Cisco Unified Computing System (UCS) servers for hosting virtual machines for applications

§ One Cisco Catalyst Center for network management

§ One or more Cisco ISE policy administration nodes (PANs)*

§ One centralized Active Directory

§ One centralized DHCP server

§ One network time protocol (NTP) server

§ One SCADA server application for wind farm turbine control

§ One video server for CCTV

§ One Cyber Vision Global

§ One centralized Cisco Secure Firewall Management Center

§ One Cisco Secure Network Analytics Manager (SMC)

* PSN may optionally be deployed at the OSS Infrastructure network for the distributed deployment of ISE with the PAN located at the control center. You may also choose to decentralize the PSN whenever there is a concern about latency.

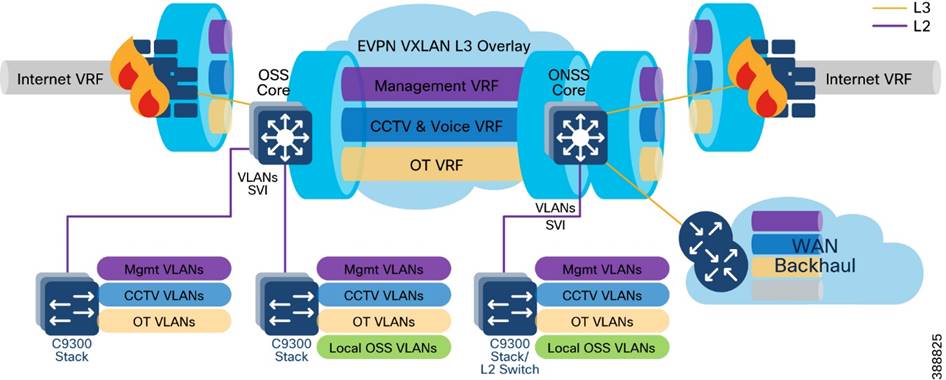

Network VLANs and Routing Design

This section covers the different VLANs in a wind farm network and virtual routing and forwarding (VRF) for layer 3 routing between OSS and ONSS core networks. The wind farm network is segmented by using VLANs for various endpoints and applications traffic. There is a dedicated VLAN and VRF for each service, endpoint, or application traffic in the network. Table 4-3 summarizes the design guidance for creating multiple VRFs and VLANs in the network.

Table 4-3 VLANs and VRFs in the Wind Farm Network Design

| VRF | VLAN Description |
|---|---|
| Management VRF (VRF for network management traffic) | Network device management VLAN(s); Wi-Fi and URWB AP management VLAN(s); FAN and TAN REP ring administrative VLAN(s); Cyber Vision (CV) collection network VLAN(s) |
| Video and voice VRF (VRF for CCTV cameras and IP telephony voice traffic) | VLANs for CCTV cameras in FAN and TAN; IP telephony voice VLAN |
| Wi-Fi access VRF | Employee and contractor Wi-Fi access VLAN; guest Wi-Fi access VLAN |
| URWB traffic VRF | URWB traffic VLAN |
| Operational technology (OT) VRF (VRF for all renewables and OT traffic in the network) | VLANs for SCADA traffic such as weather systems, HVAC, fire detection, lightning detection, and other systems such as wildlife monitoring; VLANs for automation systems such as I/O controllers, PLCs, and so on |
| Internet VRF (VRF for device Internet access from the wind farm network) | DMZ VLANs for Internet traffic routing in OSS and ONSS networks |
| Global routing table (GRT) | VLANs local to the OSS network (not routed); VLANs local to the ONSS network (not routed) |

A VRF creates a separate routing and forwarding table in the network for IP routing, which is used instead of a default global routing table (GRT). A VRF provides high-level network segmentation across multiple services or traffic in the network. Each VLAN layer 3 interface (SVI) is created and assigned to a VRF for layer 3 routing in the OSS and ONSS core switches.
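Because each VRF has its own routing table, the same prefix can exist in two VRFs with different next hops, and a lookup in one VRF never falls through to another. The following Python sketch models that with per-VRF longest-prefix matching; the VRF names, prefixes, and next-hop labels are hypothetical.

```python
"""Per-VRF routing tables with longest-prefix match (illustrative sketch)."""
from ipaddress import ip_address, ip_network


class VrfRib:
    def __init__(self):
        self.tables = {}  # VRF name -> {network: next hop}

    def add(self, vrf, prefix, next_hop):
        self.tables.setdefault(vrf, {})[ip_network(prefix)] = next_hop

    def lookup(self, vrf, dst):
        """Longest-prefix match within one VRF only; None if no route."""
        addr = ip_address(dst)
        table = self.tables.get(vrf, {})
        matches = [net for net in table if addr in net]
        if not matches:
            return None
        return table[max(matches, key=lambda net: net.prefixlen)]


if __name__ == "__main__":
    rib = VrfRib()
    # Overlapping prefixes are allowed because the VRFs are separate tables
    rib.add("OT", "10.10.0.0/16", "oss-core")
    rib.add("OT", "10.10.5.0/24", "onss-core")
    rib.add("VIDEO_VOICE", "10.10.5.0/24", "video-server-gw")
    print(rib.lookup("OT", "10.10.5.20"), rib.lookup("VIDEO_VOICE", "10.10.5.20"))
```

A lookup in a VRF that has no matching route returns nothing rather than consulting the GRT, which is what keeps, for example, guest Wi-Fi traffic from reaching OT subnets.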

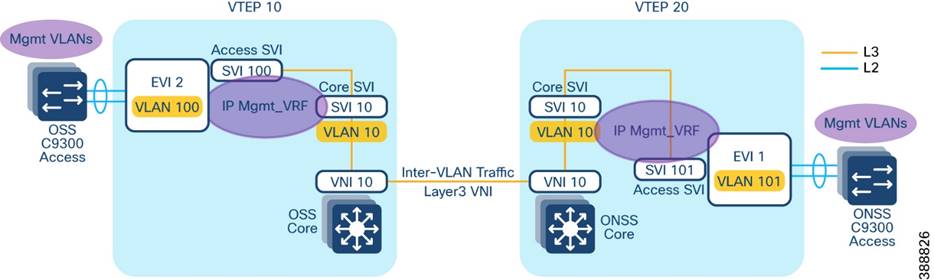

The VRF-lite feature is used along with Ethernet VPN - Virtual Extensible LAN (EVPN-VXLAN) to provide a unified overlay network solution for per-VRF layer 3 routing between the OSS and ONSS core Catalyst 9500 Stackwise Virtual (SVL) switches. This provides a more scalable solution for per-VRF traffic segregation while using a single control-plane routing protocol (BGP) to exchange VRF prefixes.

Figure 4-16 illustrates the layer 3 IP routing design in the wind farm architecture.

Figure 4-16 Wind Farm Network IP Routing Design

For more information about the VRF-lite feature, see “Information about VRF-lite” in IP Routing Configuration Guide, Cisco IOS XE Dublin 17.10.x (Catalyst 9500 Switches):

Ethernet VPN (EVPN) is a control plane for VXLAN. Without a control plane, VXLAN relies on a flood-and-learn mechanism, which causes flooding and limits scalability. EVPN addresses this by managing MAC address learning and VXLAN Tunnel End Point (VTEP) discovery in the control plane. In BGP EVPN VXLAN deployments, the EVPN control plane exchanges both MAC address and IP address information, using Multiprotocol BGP (MP-BGP) to distribute reachability information for the VXLAN overlay network, including endpoint MAC addresses, endpoint IP addresses, and subnet reachability information.
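The key difference from flood-and-learn is that remote reachability is installed from BGP updates before any data traffic flows. The following Python sketch models two EVPN route types, type 2 (MAC/IP advertisement, RFC 7432) and type 5 (IP prefix, RFC 9136), and a VTEP that installs them. It is a hypothetical data model, not a BGP implementation; the addresses and VNI values are examples.

```python
"""Control-plane learning with EVPN routes (illustrative data model, not BGP)."""
from dataclasses import dataclass


@dataclass(frozen=True)
class MacIpRoute:       # EVPN route type 2: MAC/IP advertisement (RFC 7432)
    mac: str
    ip: str
    vni: int
    next_hop_vtep: str


@dataclass(frozen=True)
class IpPrefixRoute:    # EVPN route type 5: IP prefix advertisement (RFC 9136)
    prefix: str
    l3vni: int
    next_hop_vtep: str


class Vtep:
    def __init__(self, name):
        self.name = name
        self.mac_table = {}   # (vni, mac) -> remote VTEP address
        self.prefixes = {}    # (l3vni, prefix) -> remote VTEP address

    def receive(self, route):
        """Install reachability learned from BGP instead of data-plane flooding."""
        if isinstance(route, MacIpRoute):
            self.mac_table[(route.vni, route.mac)] = route.next_hop_vtep
        elif isinstance(route, IpPrefixRoute):
            self.prefixes[(route.l3vni, route.prefix)] = route.next_hop_vtep
```

In the L3 overlay used in this design, type 5 routes carrying VRF prefixes are the ones that matter most; type 2 routes additionally carry individual host MAC and IP bindings.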

Refer to the following URL for more details on BGP EVPN VXLAN design:

An EVPN VXLAN Layer 3 overlay network allows host devices in different Layer 2 networks to send Layer 3 (routed) traffic to each other. The network forwards the routed traffic using a Layer 3 virtual network instance (VNI) and an IP VRF. In the wind farm asset operator's network, the OSS and ONSS core switches are configured as two VTEPs in a topology without a spine switch, as shown in Figure 4-17.

In this design, a layer 3 VXLAN overlay between the OSS and ONSS core switches is configured per VRF (with BGP routing) to route inter-subnet traffic between these core switches using a layer 3 VXLAN Network Identifier (L3VNI). One L3VNI is configured per VRF on the VTEP of each core switch. Figure 4-17 shows the movement of traffic in an EVPN VXLAN Layer 3 overlay network using an L3VNI for a VRF in the wind farm network.
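Each L3VNI travels in the 24-bit VNI field of the VXLAN header defined in RFC 7348, which is carried in a UDP datagram (IANA-assigned destination port 4789). The following Python sketch packs and parses that 8-byte header for a per-VRF L3VNI. The VRF names and VNI values are hypothetical assignments, not values from the validated design.

```python
"""Pack and parse the 8-byte VXLAN header (RFC 7348) for per-VRF L3VNIs."""
import struct

# Hypothetical VRF -> L3VNI plan; each VNI must be unique and fit in 24 bits
L3VNI_BY_VRF = {"MGMT": 50001, "VIDEO_VOICE": 50002, "WIFI": 50003,
                "URWB": 50004, "OT": 50005, "INTERNET": 50006}

VXLAN_UDP_PORT = 4789  # IANA-assigned destination port
I_FLAG = 0x08          # "VNI present" flag in the first byte


def vxlan_header(vni):
    """Flags byte, 3 reserved bytes, then VNI (3 bytes) and 1 reserved byte."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!B3xI", I_FLAG, vni << 8)


def parse_vni(header):
    """Return the VNI carried in an 8-byte VXLAN header."""
    flags, word = struct.unpack("!B3xI", header)
    if not flags & I_FLAG:
        raise ValueError("I flag not set; VNI is not valid")
    return word >> 8


if __name__ == "__main__":
    hdr = vxlan_header(L3VNI_BY_VRF["OT"])
    print(hdr.hex(), parse_vni(hdr))
```

The receiving VTEP uses the VNI to select the VRF for the routed lookup, which is why each VRF needs a distinct L3VNI on both core switches.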

Figure 4-17 EVPN VXLAN Overlay L3VNI Routing design between OSS and ONSS Core

Refer to the following URL for more information about EVPN VXLAN Layer 3 overlay network.

https://www.cisco.com/c/en/us/td/docs/switches/lan/catalyst9500/software/release/17-13/configuration_guide/vxlan/b_1713_bgp_evpn_vxlan_9500_cg/configuring_evpn_vxlan_layer_3_overlay_network.html

Wireless Network Design

Figure 4-18 shows the offshore wind farm wireless architecture. This architecture includes URWB on the OSS and TAN for SOV connectivity, and enterprise Wi-Fi on the OSS, TAN, FAN, and vessel. The following sections provide details about the enterprise Wi-Fi and URWB architectures.

Figure 4-18 Offshore Wind Farm Wireless Architecture (Wi-Fi and URWB)

Enterprise Wi-Fi Network

This section provides an overview of the Cisco Wi-Fi deployment at an offshore wind farm for employee, contractor, and guest access on the OSS, FAN, and TAN. The wireless deployment uses Cisco's next-generation wireless controller, the Cisco Catalyst 9800 WLC, which is deployed within the OSS infra network and managed centrally by Cisco Catalyst Center at the control center. Cisco ISE and TrustSec provide micro-segmentation.

Cisco Wi-Fi Architecture for Offshore Wind Farm

Figure 4-19 shows the Wi-Fi architecture for an offshore wind farm deployment, which enables Wi-Fi access for employees, contractors, and guests on the OSS network, FAN, and TAN.

Figure 4-19 Offshore Wind Farm Wi-Fi Access Architecture

The Cisco Catalyst Center is located onshore within the control center and connects to the Cisco Catalyst 9800 WLC over a WAN connection. Catalyst Center is used to centrally manage and configure the WLCs and APs, and to view health metrics for the wired and wireless networks within the offshore wind farm network. Catalyst Center is also used to configure the TrustSec matrix that segments user traffic.

A Microsoft Windows server is located within the control center and provides the following functionality:

§ Employee user identity store (group, username, password)

§ Contractor user identity store (group, username, password)

§ Certificate authority (CA)

§ DNS server

§ DHCP server (DHCP scopes for employee and guest wireless access)

The ISE server is co-located in the control center. It acts as the central identity and policy management server for wireless IEEE 802.1X authentication and authorization, and it assigns security group tags (SGTs) to clients for micro-segmentation. The ISE server is integrated with Cisco Catalyst Center and the Catalyst 9800 WLC.

ISE also hosts the wireless guest portal for guest wireless access.

The appropriate firewall ports need to be opened on the enterprise firewall at the boundary of the control center for Catalyst Center to WLC communications (configuration and telemetry), ISE to WLC communication (IEEE 802.1X, TrustSec), and WLC to AD connectivity (DHCP, DNS ports).
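One way to keep track of these firewall openings is a simple checklist of required flows. The sketch below is illustrative only: it lists just the well-known ports for RADIUS authentication and accounting, DNS, and the DHCP server. It deliberately omits the Catalyst Center-to-WLC configuration and telemetry ports and any RADIUS Change of Authorization (CoA) flow from ISE to the WLC, which should be taken from Cisco's product port references. The system names and the `missing_rules` helper are hypothetical.

```python
from typing import List, NamedTuple, Set

class Flow(NamedTuple):
    src: str    # initiating system
    dst: str    # receiving system
    proto: str  # "udp" or "tcp"
    port: int   # destination port

# Well-known ports only; Catalyst Center-to-WLC ports are intentionally omitted.
REQUIRED: List[Flow] = [
    Flow("WLC", "ISE", "udp", 1812),  # RADIUS authentication (IEEE 802.1X, TrustSec)
    Flow("WLC", "ISE", "udp", 1813),  # RADIUS accounting
    Flow("WLC", "AD", "udp", 53),     # DNS
    Flow("WLC", "AD", "udp", 67),     # DHCP server
]

def missing_rules(required: List[Flow], opened: Set[Flow]) -> List[Flow]:
    """Return the required flows that the control center firewall does not yet permit."""
    return [flow for flow in required if flow not in opened]

# Hypothetical current state of the firewall rule base.
opened = {Flow("WLC", "ISE", "udp", 1812), Flow("WLC", "AD", "udp", 53)}
for flow in missing_rules(REQUIRED, opened):
    print(f"open {flow.proto}/{flow.port} from {flow.src} to {flow.dst}")
```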

The Cisco Catalyst 9800 WLCs are deployed as a redundant stateful switchover (SSO) high-availability pair within the OSS infra network, connected to the Cisco Catalyst 9300 switches. The Wi-Fi deployment is managed using Catalyst Center, with Cisco ISE providing IEEE 802.1X wireless and guest access. As a good practice, use separate WLC interfaces for wireless management (access port), wireless client traffic (trunk port), and guest user traffic (access port).

Cisco IW6300 (ruggedized) APs, Catalyst 9124 (enterprise) APs, and Catalyst IW9167E/I APs are deployed in local mode on the OSS, FAN BS, or TAN NS to provide wireless access where needed. The Catalyst IW9167 provides more throughput capacity at the PHY level, with speeds of up to 5 Gbps on the Ethernet port, up to 10 Gbps on the SFP port, and a maximum data rate of 7.96 Gbps. In addition to offering dual operating modes (Wi-Fi and URWB) for flexibility, the IW9167 can operate in the 6-GHz band with its tri-radio design. Wireless traffic is carried over the CAPWAP tunnel from the APs to the WLC and dropped off in the appropriate client VLAN on the Catalyst 9300 switch within the OSS infra network.

A dedicated VLAN and subnet needs to be assigned for wireless AP management, one or more VLANs and subnets for wireless client traffic, and a dedicated VLAN and subnet for guest wireless traffic. The AP management VLAN needs to be trunked to every switch that has APs connected to it, and it also needs to exist on the switches to which the WLCs are connected. The AP switch port can be configured as an access port in the AP management VLAN with spanning-tree portfast enabled.

The SVIs for these VLANs and subnets need to exist on the Cisco Catalyst 9500 stack.
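The VLAN and subnet rules above can be captured as a small validation check before the SVIs are configured. This is a sketch under stated assumptions: the VLAN IDs, roles, and subnets in `PLAN` are hypothetical, and "dedicated" is read as exactly one AP management VLAN and exactly one guest VLAN, with one or more client VLANs.

```python
import ipaddress
from dataclasses import dataclass
from typing import List

@dataclass(frozen=True)
class Vlan:
    vlan_id: int
    role: str    # "ap-mgmt", "client", or "guest"
    subnet: str  # subnet whose SVI lives on the Catalyst 9500 stack

def validate_plan(vlans: List[Vlan]) -> List[str]:
    """Return a list of problems with a wireless VLAN plan (empty if it is valid)."""
    errors: List[str] = []
    roles = [v.role for v in vlans]
    if roles.count("ap-mgmt") != 1:
        errors.append("exactly one dedicated AP management VLAN is required")
    if roles.count("client") < 1:
        errors.append("at least one wireless client VLAN is required")
    if roles.count("guest") != 1:
        errors.append("exactly one dedicated guest VLAN is required")
    ids = [v.vlan_id for v in vlans]
    if len(ids) != len(set(ids)):
        errors.append("VLAN IDs must be unique")
    nets = [ipaddress.ip_network(v.subnet) for v in vlans]
    for i, a in enumerate(nets):
        for b in nets[i + 1:]:
            if a.overlaps(b):
                errors.append(f"subnets {a} and {b} overlap")
    return errors

# Hypothetical plan for the OSS infra network.
PLAN = [
    Vlan(110, "ap-mgmt", "10.30.10.0/24"),
    Vlan(120, "client", "10.30.20.0/23"),
    Vlan(130, "guest", "10.30.30.0/24"),
]
print(validate_plan(PLAN))  # []
```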

Separate user groups need to be created for employees and contractors within Microsoft Active Directory (AD) or another LDAP server of your choice. Employee and contractor users need to be created in Microsoft AD or the LDAP server and assigned to the appropriate groups. Unique scalable group tags (SGTs) need to be assigned to the employee, contractor, and guest user groups within ISE.

The employee SGT is usually configured to provide full access to all the enterprise services that employees require to perform their job functions. The contractor SGT usually provides limited access to only the services that contractors need, or only internet access if that is all they require. The guest SGT is provided with internet access only. These policies can be defined and configured in Cisco Catalyst Center and pushed to ISE, which then pushes them to the WLC.

When a wireless client connects and is authenticated by ISE, the controller generates an IP-SGT binding for that client.
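The interaction between IP-SGT bindings and the TrustSec matrix can be modeled in a few lines. This is a conceptual sketch, not the ISE or WLC implementation: the SGT names, destination group names, and permit entries are hypothetical, and the default-deny behavior for unlisted pairs is an assumption chosen for illustration. It follows the policy intent described above: employees get broad access, contractors get limited services plus internet, and guests get internet only.

```python
from typing import Dict, Tuple

# Hypothetical TrustSec matrix: (source SGT, destination group) -> action.
# Pairs that are not listed fall through to a default deny (an assumption here).
POLICY: Dict[Tuple[str, str], str] = {
    ("Employees", "Enterprise_Services"): "permit",
    ("Employees", "Contractor_Services"): "permit",
    ("Employees", "Internet"): "permit",
    ("Contractors", "Contractor_Services"): "permit",
    ("Contractors", "Internet"): "permit",
    ("Guests", "Internet"): "permit",
}

# IP-SGT bindings generated on the controller after ISE authorizes a client.
ip_sgt_bindings: Dict[str, str] = {}

def on_authenticated(client_ip: str, sgt: str) -> None:
    """Record the SGT that ISE assigned to an authenticated client."""
    ip_sgt_bindings[client_ip] = sgt

def enforce(src_ip: str, dst_group: str) -> str:
    """Look up the source SGT by IP, then apply the matrix with default deny."""
    sgt = ip_sgt_bindings.get(src_ip)
    if sgt is None:
        return "deny"  # no binding: the client has not been authenticated
    return POLICY.get((sgt, dst_group), "deny")

on_authenticated("10.30.30.20", "Guests")
print(enforce("10.30.30.20", "Internet"))             # permit
print(enforce("10.30.30.20", "Enterprise_Services"))  # deny
```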

URWB Wireless Backhaul

Use case for Service Operations Vessel Wireless Backhaul within a Wind Farm

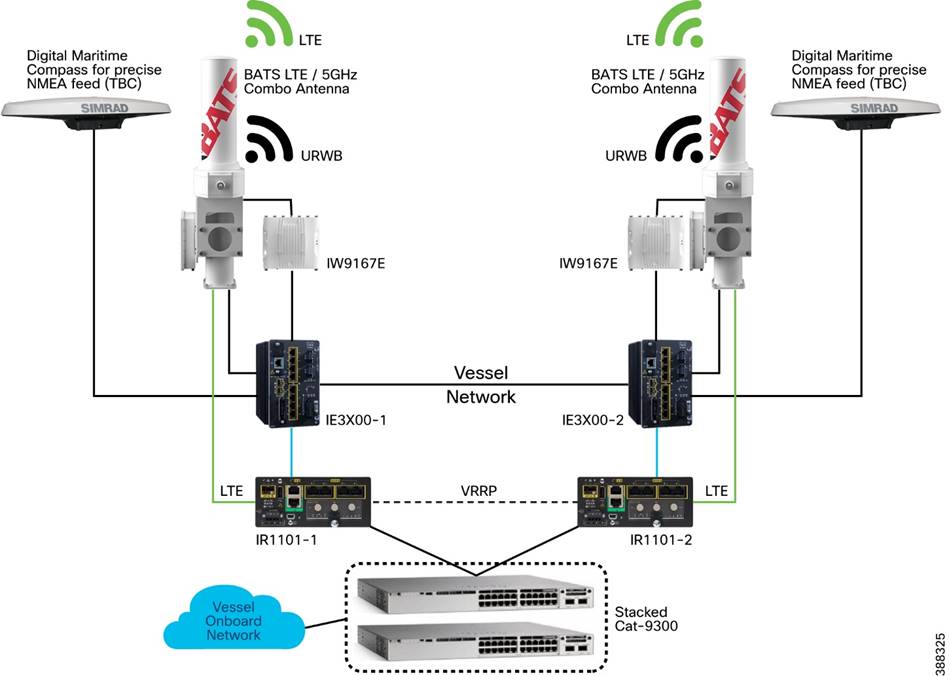

There is a need for a reliable, high-bandwidth wireless backhaul solution that connects to the large Service Operations Vessels (SOVs) and smaller crew transfer vessels (CTVs), both of which move staff around an offshore wind farm estate. During periods near shore, a vessel should use public cellular connectivity.

This section provides an overview of URWB technology, the wireless network components needed to build out the wind farm solution, and the high-level and low-level architecture for connecting SOVs and CTVs to the OSS network.

The following high-level requirements are met by this CVD:

§ Reliable wireless backhaul connectivity to vessels that are within a 10 km radius of an OSS platform using URWB radios.

§ The head end or wayside is on the OSS.

§ Vessels can switch to cellular connectivity when in range of onshore cellular networks.

§ Vessels have specialist antennas with appropriate radios and modems.

§ Antennas on vessels use a GPS feed to dynamically steer their beam direction, optimizing radio signal quality for best performance.

§ Antennas on vessels are combination antennas that support 5 GHz URWB wireless and public LTE.

§ Target throughput: 30 to 50 Mbps for vessels that are within a 10 km radius of an OSS.

§ Support for connectivity to a public LTE network when a vessel is going to or from a harbor, extending to a few miles offshore.

§ IP telephony extended to a vessel, with Cisco Survivable Remote Site Telephony (SRST) preferred onboard for periods when no OSS connectivity is available.

§ Corporate and guest user networks to be extended to the SOV using fixed and Wi-Fi connections.
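The connectivity requirements for vessels can be summarized as a small decision function. This is a hypothetical sketch, not a Cisco feature: it assumes URWB to the OSS is preferred whenever that link is up, public LTE is used otherwise when coverage exists near shore, and onboard SRST takes over call control whenever OSS connectivity is unavailable, as the last requirements state. The `LinkState` fields and return strings are illustrative names.

```python
from dataclasses import dataclass

@dataclass
class LinkState:
    urwb_up: bool        # URWB link to the OSS wayside is established
    lte_available: bool  # public LTE coverage, typically near shore

def select_uplink(state: LinkState) -> str:
    """Assumed preference: URWB to the OSS first, then public LTE, else no uplink."""
    if state.urwb_up:
        return "URWB"
    if state.lte_available:
        return "LTE"
    return "NONE"

def call_control(state: LinkState) -> str:
    """Onboard SRST handles calls whenever OSS connectivity is unavailable."""
    return "central call control via OSS" if state.urwb_up else "SRST (onboard)"

# Vessel leaving harbor: no URWB yet, but LTE coverage is present.
harbor = LinkState(urwb_up=False, lte_available=True)
print(select_uplink(harbor), "/", call_control(harbor))  # LTE / SRST (onboard)
```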

URWB Overview

The Catalyst IW9167 Series addresses the growing need for reliable wireless connectivity for mission-critical applications as organizations automate processes and operations. It has three 4x4 radios in a heavy-duty, IP67-rated design with advanced features. Cisco URWB provides up to 99.995% stability with less than 10 ms latency, zero packet loss, and seamless roaming. For a detailed overview of Cisco URWB, see the IW9167 data sheet.

URWB: Key Technology Pillars

The following key technologies form the foundation of the URWB solution:

§ Prodigy 2.0: MPLS-based transmission protocol built to overcome the limits of standard wireless protocols.

§ Fluidity: Proprietary fast-roaming algorithm for vehicle-to-wayside communication with a 0 ms roam delay and no roam loss at speeds up to 360 km/h (about 224 mph).

§ Fast failover: High-availability mechanism that provides hardware redundancy and carrier-grade availability.

Prodigy 2.0: MPLS Overlay

URWB uses Prodigy, a proprietary wireless-based MPLS transmission protocol, to discover and create label-switched paths (LSPs) between mesh-point radios and one or more mesh ends. Prodigy makes wireless mesh, fixed, and mobility networks more resilient. MPLS provides an end-to-end packet delivery service that operates between Layer 2 and Layer 3 of the OSI network stack. It relies on label identifiers, rather than on the network destination address used in traditional IP routing, to determine the sequence of nodes to traverse to reach the end of the path.

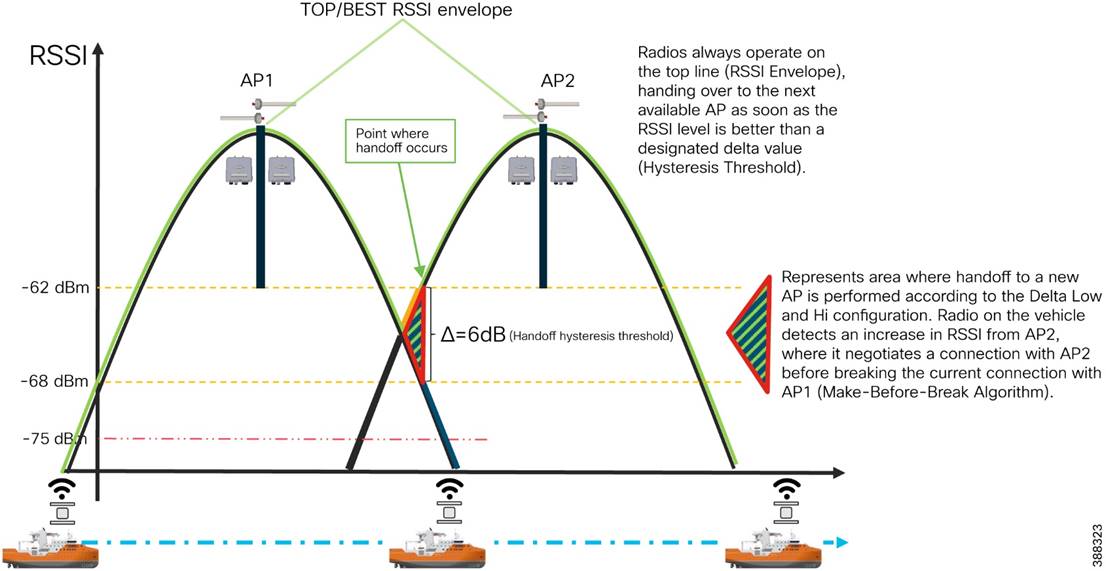

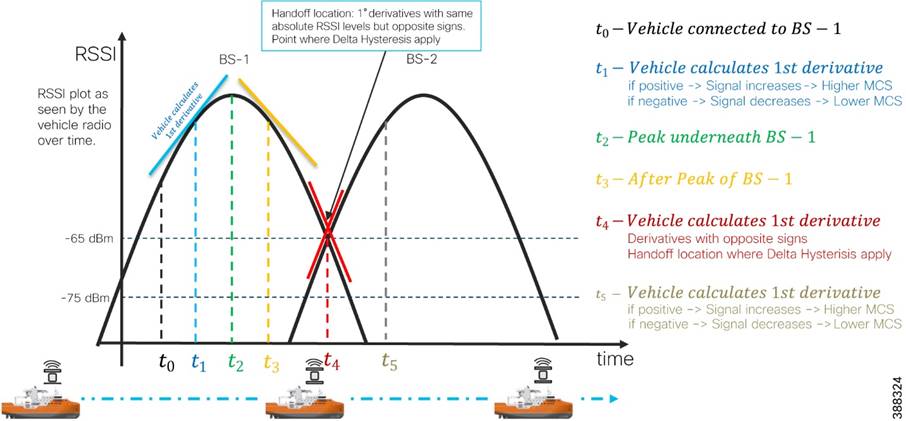

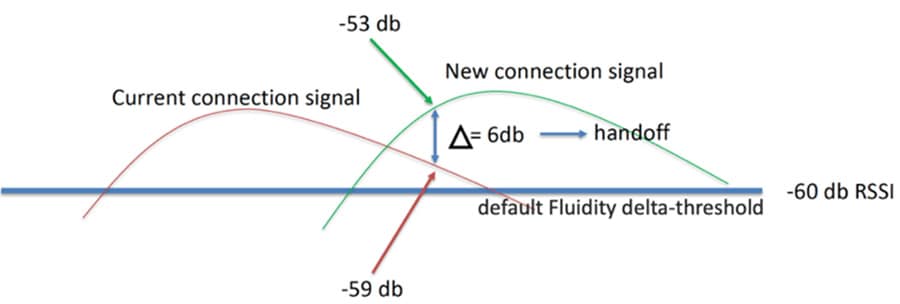

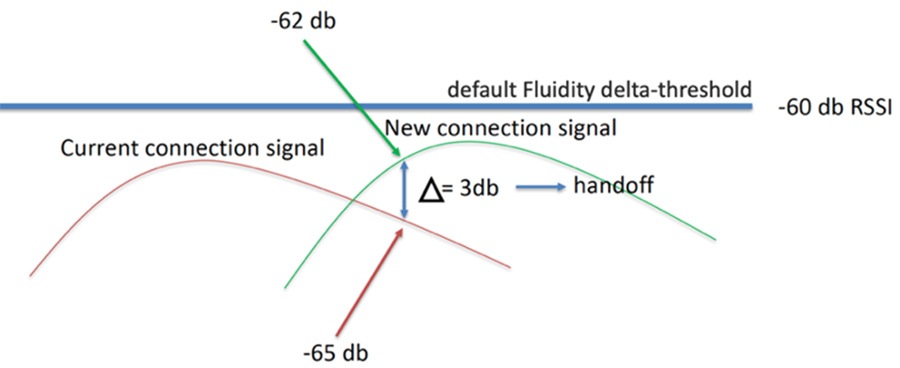
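The label-based forwarding described above can be illustrated with a generic MPLS label forwarding table. This sketch shows standard MPLS swap and pop behavior only; Prodigy's internal implementation is proprietary and is not represented here. The labels and node names (`mesh-point-B`, `mesh-end-1`) are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical label forwarding table on one mesh-point radio:
# incoming top label -> (action, outgoing label, next hop).
LFIB: Dict[int, Tuple[str, Optional[int], str]] = {
    100: ("swap", 200, "mesh-point-B"),
    201: ("pop", None, "mesh-end-1"),
}

def forward(labels: List[int]) -> Tuple[List[int], str]:
    """Forward on the top label only; the IP payload is never inspected."""
    action, out_label, next_hop = LFIB[labels[0]]  # KeyError means no LSP for this label
    if action == "swap" and out_label is not None:
        return [out_label] + labels[1:], next_hop
    if action == "pop":
        return labels[1:], next_hop
    raise ValueError(f"unsupported LFIB entry for label {labels[0]}")

print(forward([100]))       # ([200], 'mesh-point-B')
print(forward([201, 300]))  # ([300], 'mesh-end-1')
```

Because the lookup key is the label rather than the destination address, each hop does a single exact-match lookup along the LSP.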

Fluidity

Fluidity enables a vehicle that is moving between multiple infrastructure APs to maintain end-to-end connectivity with seamless handoffs between APs. Vehicle radios negotiate with the infrastructure APs and form a new wireless connection to a more favorable infrastructure AP with better signal quality before breaking or losing their currently active wireless connections.
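The make-before-break behavior can be sketched as a handoff decision with hysteresis. This is a generic illustration, not the proprietary Fluidity algorithm: it assumes the vehicle radio compares signal strength (RSSI) per infrastructure AP, uses a hypothetical 6 dB margin to avoid ping-pong handoffs, and switches its active AP only after the new link is confirmed, so the old link stays in use until that point. AP names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Optional

HANDOFF_MARGIN_DB = 6.0  # hypothetical hysteresis margin

@dataclass
class VehicleRadio:
    active_ap: Optional[str] = None

    def evaluate(self, rssi_dbm: Dict[str, float]) -> Optional[str]:
        """Return the AP to hand off to, or None to stay on the current AP."""
        if not rssi_dbm:
            return None
        best_ap = max(rssi_dbm, key=lambda ap: rssi_dbm[ap])
        if self.active_ap is None:
            return best_ap  # initial association
        current = rssi_dbm.get(self.active_ap, float("-inf"))
        if best_ap != self.active_ap and rssi_dbm[best_ap] >= current + HANDOFF_MARGIN_DB:
            return best_ap
        return None

    def handoff(self, new_ap: str, link_established: bool) -> None:
        """Make before break: switch only after the new link is up."""
        if link_established:
            self.active_ap = new_ap  # the old link is released only after this point

radio = VehicleRadio(active_ap="AP-OSS-1")
print(radio.evaluate({"AP-OSS-1": -70.0, "AP-OSS-2": -66.0}))  # None (within margin)
target = radio.evaluate({"AP-OSS-1": -75.0, "AP-OSS-2": -66.0})
radio.handoff(target, link_established=True)
print(radio.active_ap)  # AP-OSS-2
```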

Hardware Redundancy and High-Availability

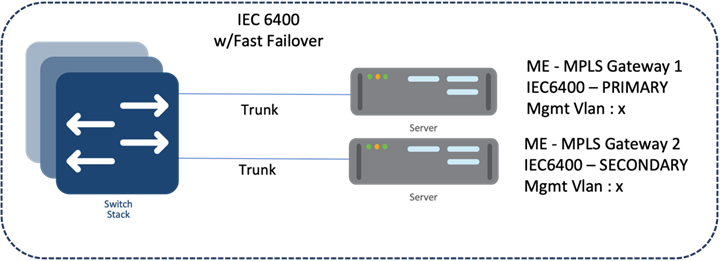

Fast failover is a proprietary feature that provides high availability and protection against hardware failures. It virtually guarantees uninterrupted service for mission-critical applications where safety or operations would otherwise be compromised by the failure of a single radio or gateway device. Using an MPLS-based protocol, URWB achieves device failover within 500 ms in both Layer 2 and Layer 3 networks. The IW9167 supports both Gateway + MP (Mesh Point) – MP (with the same tower ID) and ME (Mesh End) – ME fast-failover scenarios.
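The 500 ms failover target can be reasoned about as a timing budget. The sketch below models a generic active/standby pair with heartbeat-based failure detection; it is not the proprietary URWB fast-failover mechanism. The heartbeat interval, missed-heartbeat threshold, and takeover time are hypothetical values chosen only to show how detection time plus takeover time must fit within the stated target.

```python
HEARTBEAT_INTERVAL_MS = 100  # hypothetical keepalive period
MISSED_HEARTBEATS = 3        # hypothetical loss threshold
FAILOVER_BUDGET_MS = 500     # failover target stated in this guide

DETECTION_MS = HEARTBEAT_INTERVAL_MS * MISSED_HEARTBEATS

def active_unit(now_ms: int, last_primary_heartbeat_ms: int) -> str:
    """The standby takes over once the primary is silent for longer than DETECTION_MS."""
    silent_for = now_ms - last_primary_heartbeat_ms
    return "secondary" if silent_for > DETECTION_MS else "primary"

def fits_budget(takeover_ms: int) -> bool:
    """Detection time plus takeover time must stay within the failover target."""
    return DETECTION_MS + takeover_ms <= FAILOVER_BUDGET_MS

print(active_unit(now_ms=1000, last_primary_heartbeat_ms=800))  # primary
print(active_unit(now_ms=1000, last_primary_heartbeat_ms=650))  # secondary
print(fits_budget(takeover_ms=150))                              # True
```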

URWB Network Components

URWB Mesh End Gateway

All Fluidity and fixed infrastructure deployments need a mesh end, which functions as a gateway between the wireless and wired networks. We recommend that all systems using Fluidity use a redundant pair of mesh end gateways to terminate the MPLS tunnels, aggregate traffic, and act as the interface between the wired and wireless networks. Mesh end gateways can also be thought of as MPLS label edge routers (LERs) on the infrastructure network. The mesh end gateway encapsulates traffic from the wired network into the Fluidity MPLS overlay network, and it decapsulates MPLS traffic from the overlay and delivers standard datagrams onto the wired network.

URWB gateways are rugged, industrial-grade network appliances that make setup and management of medium- and large-scale URWB Fluidity and fixed infrastructure deployments fast and easy.

Cisco URWB mesh end gateways are deployed as a redundant pair within an OSS infrastructure network, using either IW9167s or the Cisco IEC6400 Edge Compute Appliance. The IEC6400 is based on the Cisco UCS C220 M6 Rack Server. It extends the benefits of URWB to large-scale, high-demand wireless networks and also acts as an aggregation point for all MPLS-over-wireless communications in networks with up to hundreds of IW devices.

Figure 4-20 URWB Gateway Models Comparison

| Feature | IW9167E | IEC6400 |
|---|---|---|
| Scalability | Up to 5 Gbps | Up to 40 Gbps |
| Core | 2048 MB DRAM | 3rd Gen Intel Xeon |
| Ports: RJ45 | 1x 100M/1000M/2.5G/5G | Dual 10GBASE-T X550 Ethernet |
| Ports: fiber | 1x SFP (copper) 100M/1000M/10G or 1x SFP (fiber) 1G/10G | UCS VIC 1455 quad-port 10/25G SFP28 CNA PCIe |
| Power supply | Single (AC/DC), PoE+, PoE++, UPOE, power injector | UCS 1050W AC power |

URWB IW9167E Radio Unit

Figure 4-21 IW9167E Radio Unit

The IW9167E is deployed to create point-to-point, point-to-multipoint, mesh, and mobility networks for outdoor and industrial environments. It is designed with external antenna ports to provide flexibility in choosing the right antenna based on the use case.

It is especially suitable for wayside-to-vehicle communication in industries where reliable, stable, and low-latency communications are essential for safe operations and optimal productivity. The IW9167 has all the benefits of Wi-Fi 6 including higher density, throughput, channels, power efficiency and improved security.

For the offshore wind farm deployment, the IW9167E can be used as an infrastructure radio on the OSS, turbines, and vessels. IW9167 models support Wi-Fi 6 and the 6-GHz band, and their tri-radio design can boost capacity and help mitigate interference. The IW9167 can also be used on board the SOV as a mesh point operating in vehicle mode, which is intended for vessels with roaming intent. Note that the IW9167I does not support URWB mode at the time of this writing.

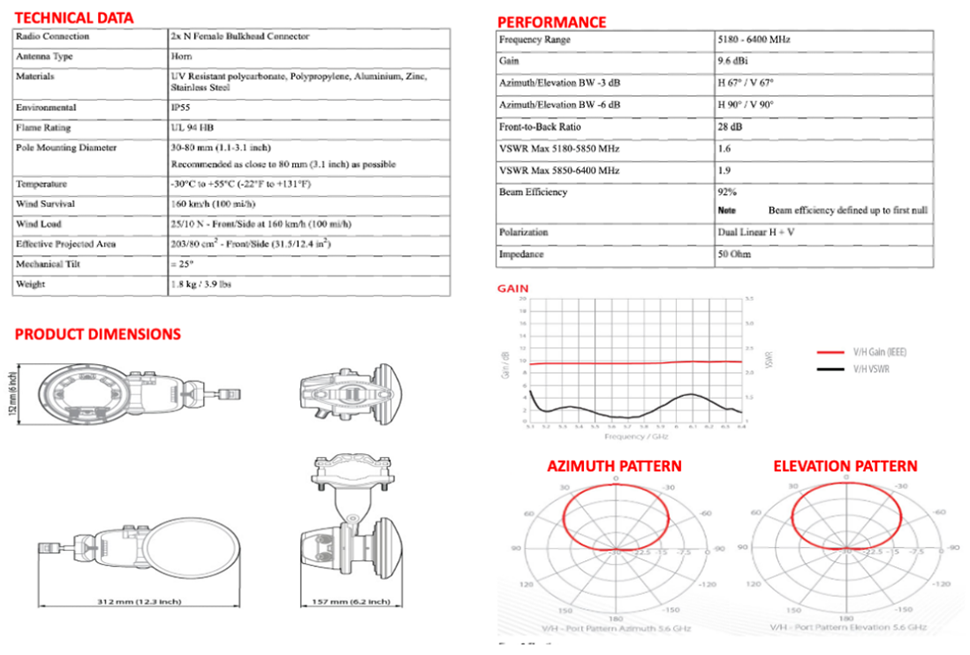

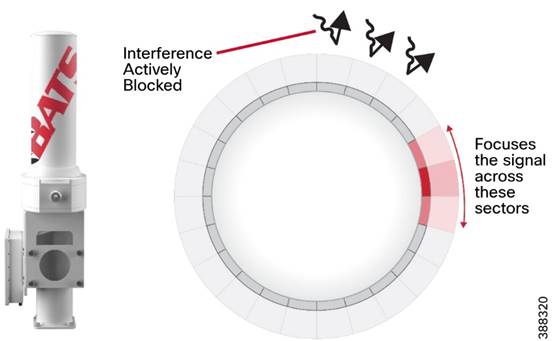

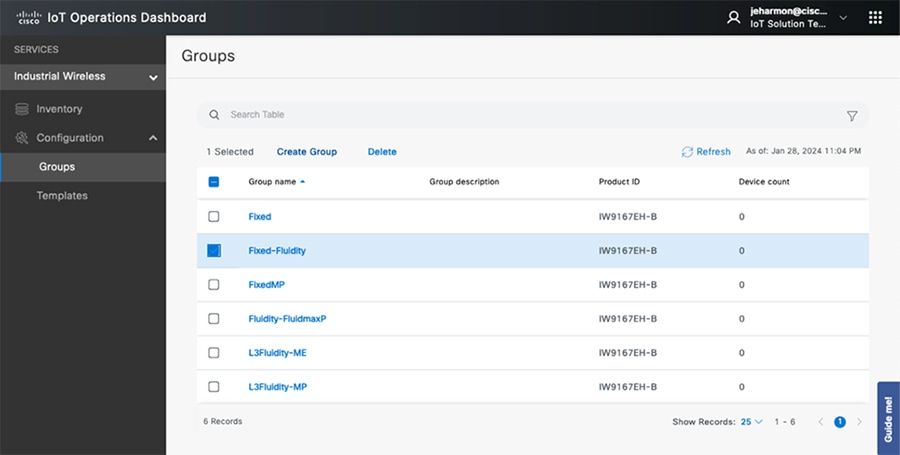

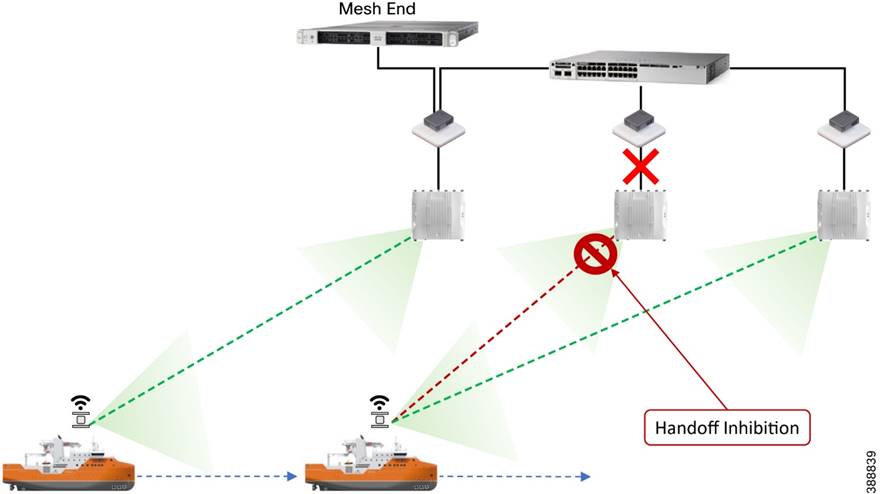

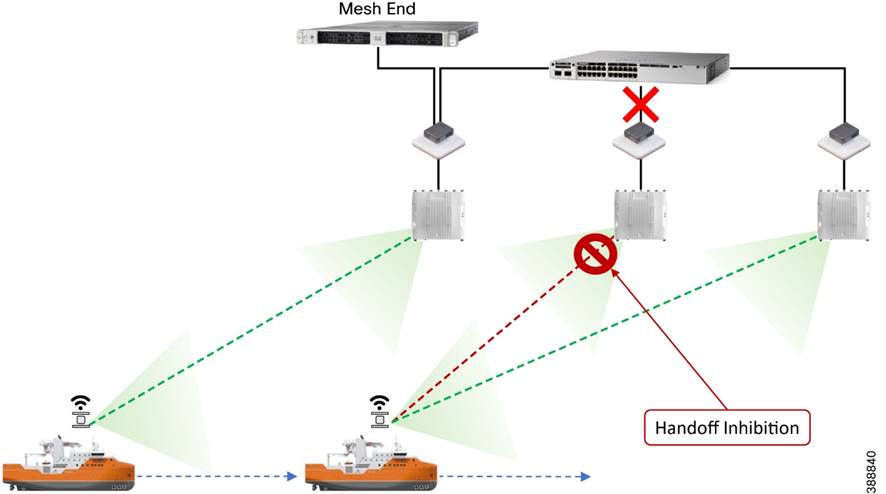

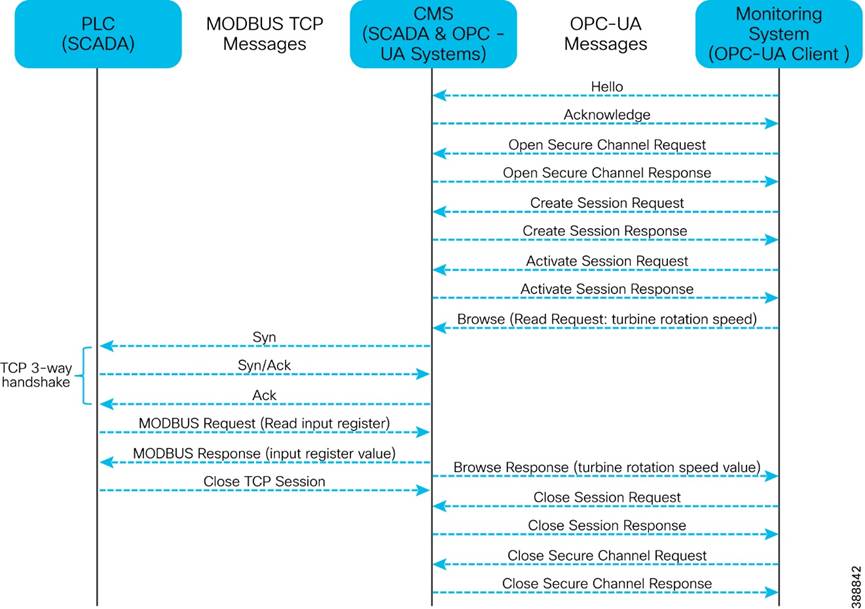

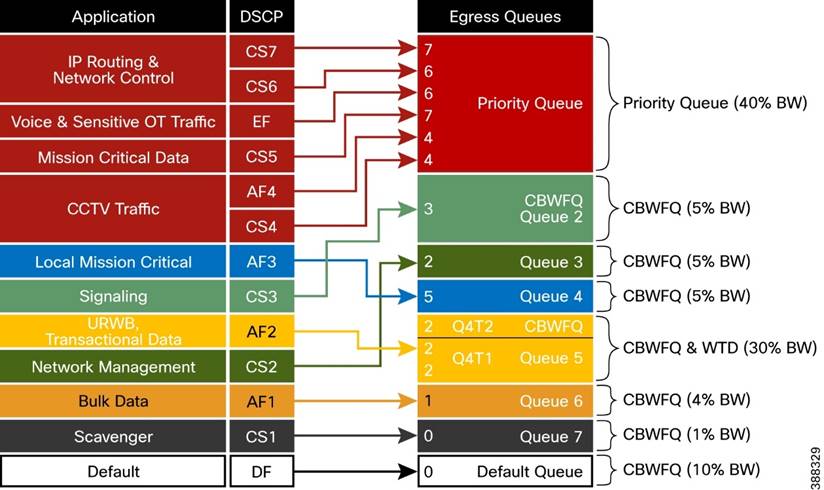

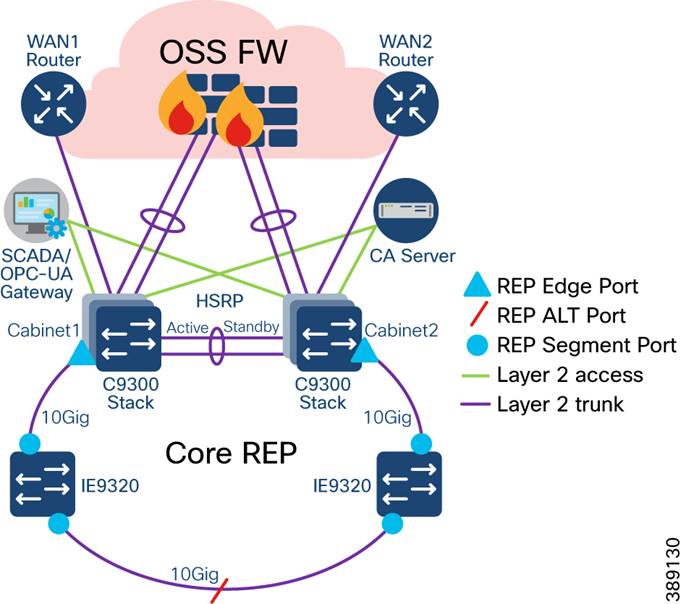

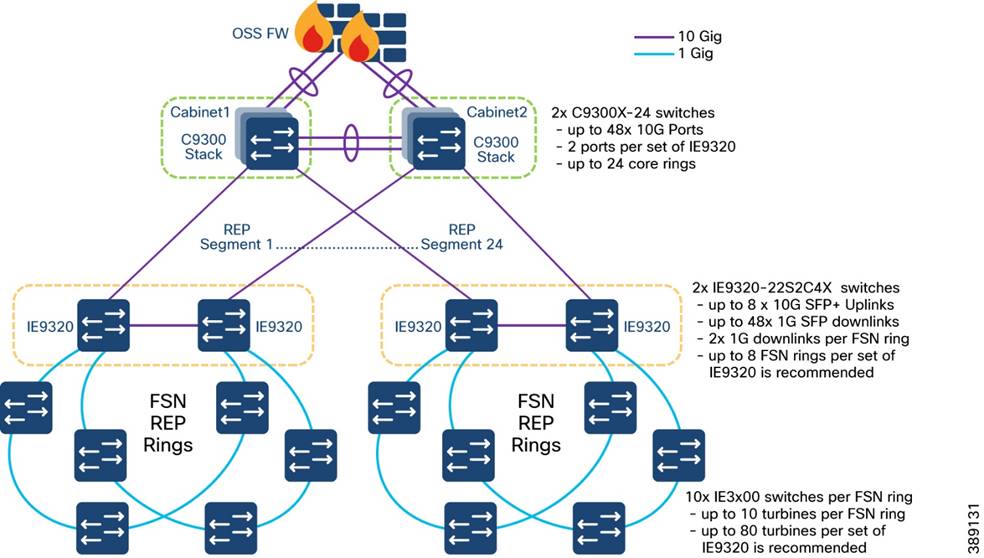

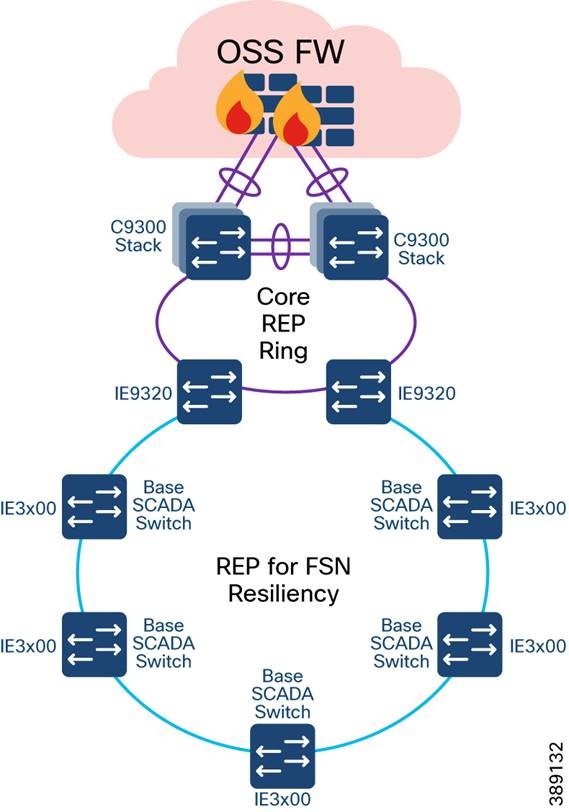

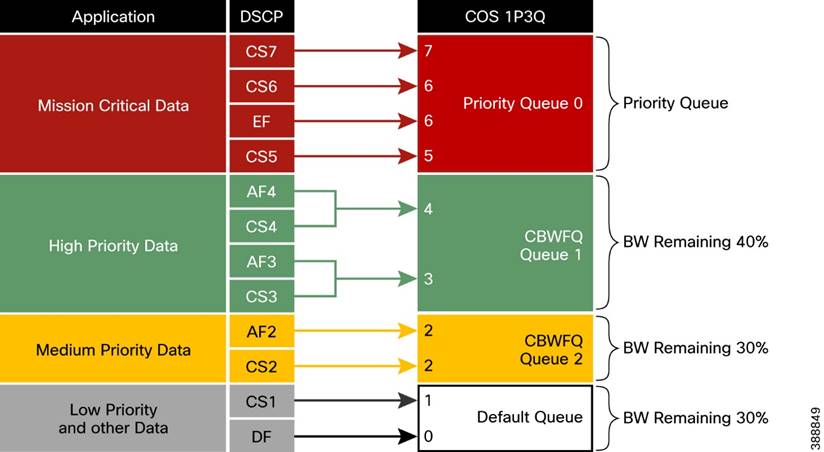

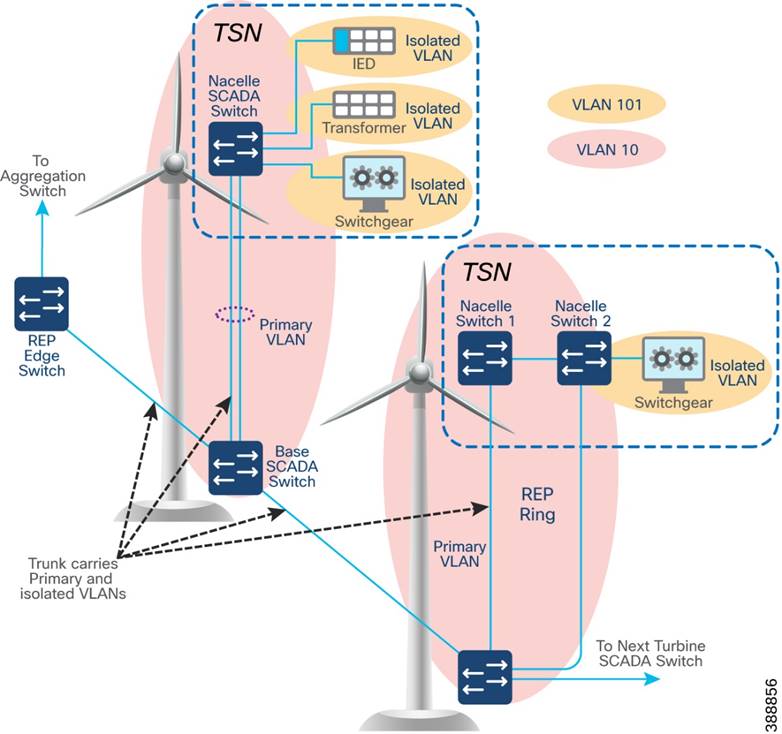

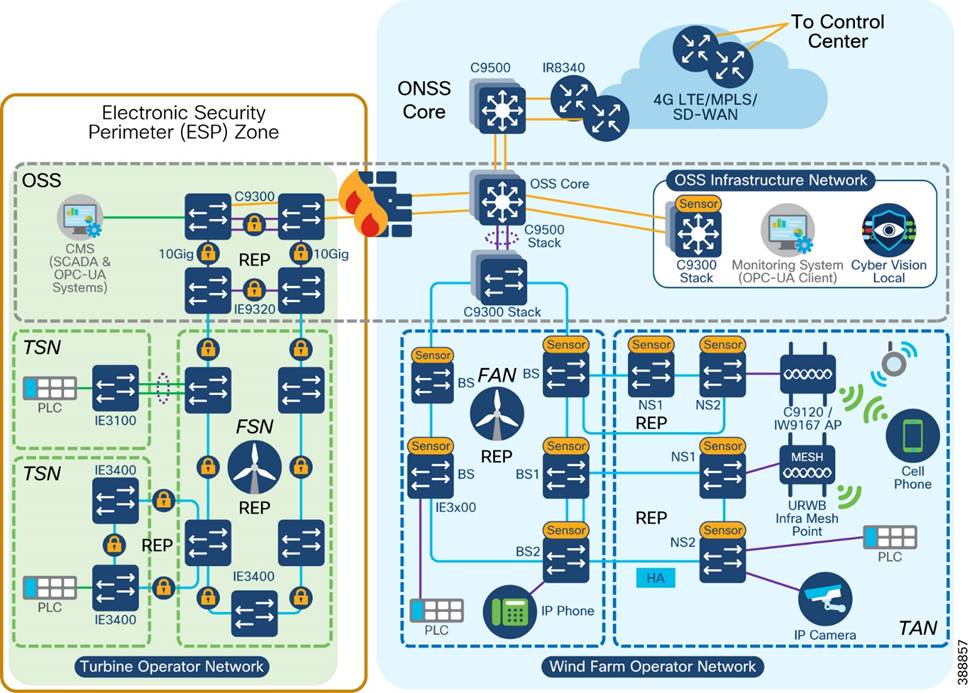

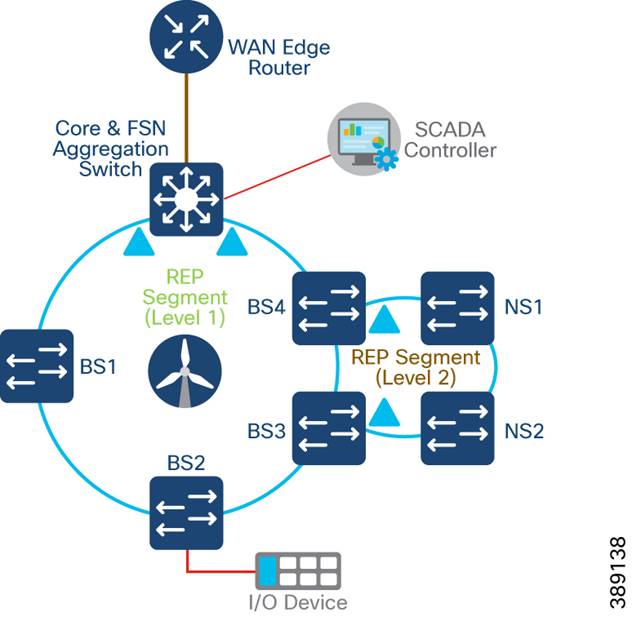

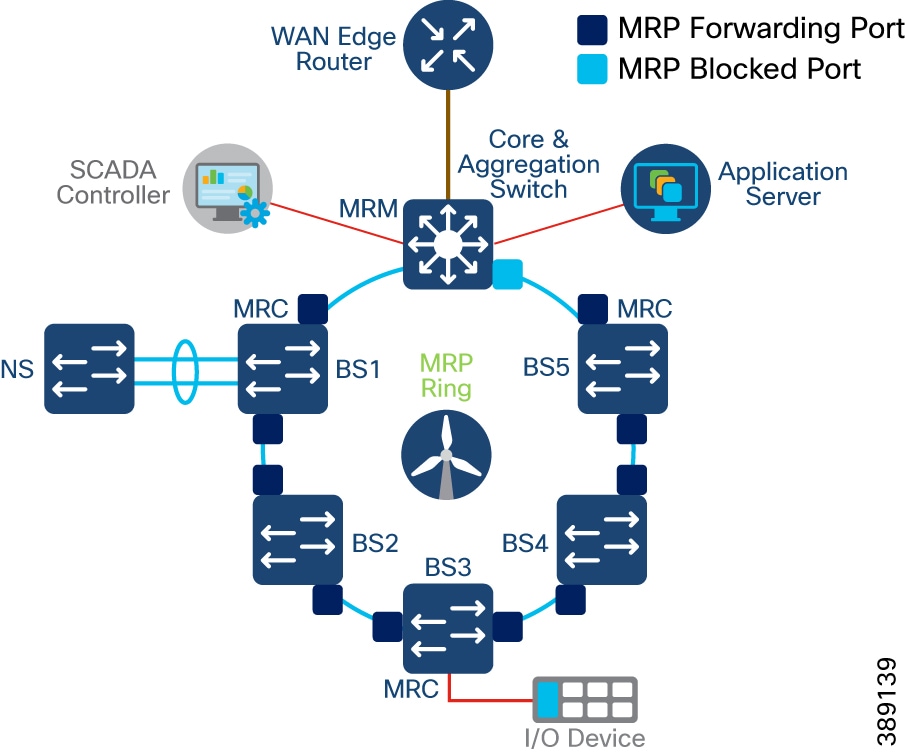

§ IW-ANT-H90-510-N Antenna