|

Static RP

|

ip pim rp-address

|

Configures a PIM static RP address for a multicast group range. You can specify an optional route-map policy that lists multicast

group ranges for the static RP. If no route-map is configured, the static RP will apply to all multicast group ranges excluding

any configured SSM group ranges.

The mode is ASM.

|

|

Fabric RP

|

n/a

|

Configures an anycast RP on all multicast enabled border leaf switches in the fabric. Anycast RP is implemented using PIM

anycast RP. You can specify an optional route-map policy that lists multicast group ranges for the static RP.

|

|

Auto-RP Forward Auto-RP Updates

|

ip pim auto-rp forward

|

Enables the forwarding of Auto-RP messages. The default is disabled.

|

|

Auto-RP Listen to Auto-RP Updates

|

ip pim auto-rp listen

|

Enables listening for Auto-RP messages. The default is disabled.

|

|

Auto-RP MA Filter *

|

ip pim auto-rp mapping-agent-policy

|

Enables Auto-RP discover messages to be filtered by the border leaf based on a route-map policy where you can specify mapping

agent source addresses. This feature is used when the border leaf is configured to listen for Auto-RP messages. The default

is no filtering of Auto-RP messages.

|

|

BSR Forward BSR Updates

|

ip pim bsr forward

|

Enables forwarding of BSR messages. The default is disabled, which means that the leaf does not forward BSR messages.

|

|

BSR Listen to BSR Updates

|

ip pim bsr listen

|

Enables listening for BSR messages. The default is disabled, which means that the leaf does not listen for BSR messages.

|

|

BSR Filter

|

ip pim bsr bsr-policy

|

Enables BSR messages to be filtered by the border leaf based on a route-map policy where you can specify BSR source addresses. This

command can be used when the border leaf is configured to listen to BSR messages. The default is no filtering of BSR messages.

|

|

ASM Source, Group Expiry Timer Policy *

|

ip pim sg-expiry-timer <timer> sg-list

|

Applies a route map to the ASM Source, Group Expiry Timer to specify the group or range of groups that the adjusted expiry timer applies to.

|

|

ASM Source, Group Expiry Timer Expiry (sec)

|

ip pim sg-expiry-timer

|

Adjusts the (S,G) expiry timer interval for Protocol Independent Multicast sparse mode (PIM-SM) (S,G) multicast routes.

This command keeps the SPT (source-based tree) in place beyond the default 180 seconds for intermittent sources. The range

is from 180 to 604801 seconds.

|

|

Register Traffic Policy: Max Rate

|

ip pim register-rate-limit

|

Configures the rate limit in packets per second. The range is from 1 to 65,535. The default is no limit.

|

|

Register Traffic Policy: Source IP

|

ip pim register-source

|

Configures the source IP address of register messages. This feature can be used when the source address of register messages

is not routed in the network where the RP can send messages. This may happen if the bridge domain where the source is connected

is not configured to advertise its subnet outside of the fabric.

|

|

SSM Group Range Policy*

|

ip pim ssm route-map

|

Specifies SSM group ranges other than the default range 232.0.0.0/8. This command is not required if

you only want to use the default group range. You can configure a maximum of four ranges for SSM multicast, including the default

range.

|

|

Fast Convergence

|

n/a

|

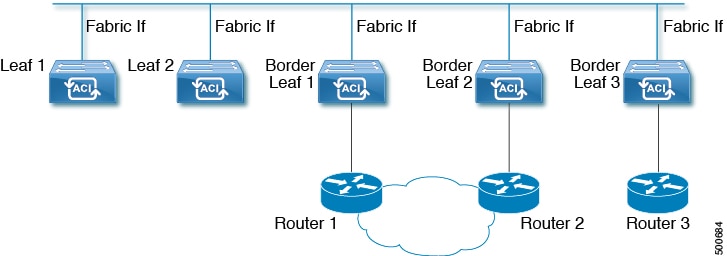

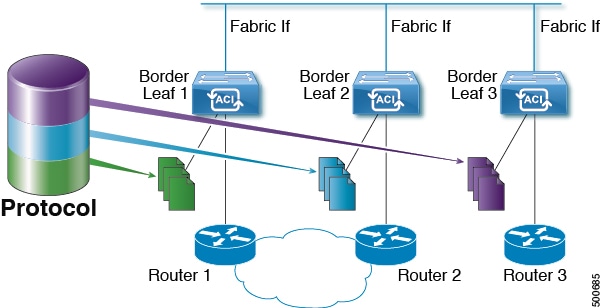

When fast convergence mode is enabled, every border leaf in the fabric will send PIM joins towards the root (the RP for (*,G)

and the source for (S,G)) in the external network. This allows all PIM enabled BLs in the fabric to receive the multicast traffic

from external sources but only one BL will forward traffic onto the fabric. The BL that forwards the multicast traffic onto

the fabric is the designated forwarder. The stripe winner BL decides on the DF. The advantage of the fast-convergence mode

is that when the stripe winner changes due to a BL failure, no latency is incurred in the external network

by having the new BL send joins to create multicast state.

Note: Fast convergence mode can be disabled in deployments where the cost of additional bandwidth outweighs the convergence

time saving.

|

|

Strict RFC Compliant

|

ip pim strict-rfc-compliant

|

When configured, the switch will not process joins from unknown neighbors and will not send PIM joins to unknown neighbors.

|

|

MTU Port

|

ip pim mtu

|

Enables larger frame sizes for PIM control plane traffic, which improves convergence. The range is from 1500 to 9216 bytes.

|

|

Resource Policy Maximum Limit

|

ip pim state-limit

|

Sets the maximum number of (*,G)/(S,G) entries allowed per VRF. The range is from 1 to 4294967295.

|

|

Resource Policy Reserved Route Map*

|

ip pim state-limit <limit> reserved <route-map>

|

Configures a route-map policy matching multicast groups or groups and sources to be applied to the Resource Policy Maximum

Limit reserved entries.

|

|

Resource Policy Reserved Multicast Entries

|

ip pim state-limit <limit> reserved <route-map> <limit>

|

Maximum reserved (*, G) and (S, G) entries allowed in this VRF. Must be less than or equal to the maximum states allowed.

Used with the Resource Policy Reserved Route Map policy.

|
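
The numeric limits listed in the table can be checked before a policy is pushed. The following is a minimal sketch only, not part of any Cisco tool or API: the `PimVrfSettings` class, its field names, and the `validate` helper are hypothetical names introduced for illustration. The ranges are the ones stated in the table. The rule that reserved entries require a maximum limit is an assumption inferred from the `ip pim state-limit <limit> reserved <route-map> <limit>` syntax.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Ranges as stated in the table above. All names here are hypothetical.
SG_EXPIRY_RANGE = (180, 604801)       # seconds
REGISTER_RATE_RANGE = (1, 65535)      # packets per second
PIM_MTU_RANGE = (1500, 9216)          # bytes
STATE_LIMIT_RANGE = (1, 4294967295)   # (*,G)/(S,G) entries per VRF
MAX_SSM_RANGES = 4                    # includes the default 232.0.0.0/8


@dataclass
class PimVrfSettings:
    """Hypothetical container for the per-VRF values discussed above."""
    sg_expiry_sec: Optional[int] = None
    register_rate_pps: Optional[int] = None
    mtu_bytes: Optional[int] = None
    state_limit: Optional[int] = None
    reserved_entries: Optional[int] = None
    ssm_range_count: int = 1  # the default SSM range counts as one


def _check(name: str, value: Optional[int],
           bounds: Tuple[int, int], errors: List[str]) -> None:
    """Record an error if a configured value falls outside its range."""
    low, high = bounds
    if value is not None and not (low <= value <= high):
        errors.append(f"{name}={value} is outside {low}..{high}")


def validate(settings: PimVrfSettings) -> List[str]:
    """Return a list of problems; an empty list means no range was violated."""
    errors: List[str] = []
    _check("sg_expiry_sec", settings.sg_expiry_sec, SG_EXPIRY_RANGE, errors)
    _check("register_rate_pps", settings.register_rate_pps,
           REGISTER_RATE_RANGE, errors)
    _check("mtu_bytes", settings.mtu_bytes, PIM_MTU_RANGE, errors)
    _check("state_limit", settings.state_limit, STATE_LIMIT_RANGE, errors)

    # Reserved entries must not exceed the maximum states allowed.
    if settings.reserved_entries is not None:
        if settings.state_limit is None:
            # Assumption: reserved entries only make sense with a maximum.
            errors.append("reserved_entries requires state_limit")
        elif settings.reserved_entries > settings.state_limit:
            errors.append("reserved_entries exceeds state_limit")

    if settings.ssm_range_count > MAX_SSM_RANGES:
        errors.append(
            f"ssm_range_count={settings.ssm_range_count} exceeds {MAX_SSM_RANGES}"
        )
    return errors
```

For example, `validate(PimVrfSettings(sg_expiry_sec=60))` reports the timer as out of range, because 60 is below the 180-second minimum.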
