Guidelines and Limitations

The specific hardware used for ASA Virtual deployments can vary, depending on the number of instances deployed and usage requirements. Each virtual appliance you create requires a minimum resource allocation—memory, number of CPUs, and disk space—on the host machine.

Important: The ASA Virtual deploys with a disk storage size of 8GB. It is not possible to change the resource allocation of the disk space.

Note: Starting with ASA Virtual Version 9.16.x, when you downgrade from the ASAv100 (device configuration of 16 vCPUs and 32GB RAM) to the ASAv10, you must configure the device with 1 vCPU and 4GB RAM.

Review the following guidelines and limitations before you deploy the ASA Virtual.

ASA Virtual on KVM System Requirements

Make sure to conform to the specifications below to ensure optimal performance. The ASA Virtual has the following requirements:

- The host CPU must be a server-class x86-based Intel or AMD CPU with virtualization extensions.

  For example, ASA Virtual performance test labs use, at a minimum, the following: Cisco Unified Computing System™ (Cisco UCS®) C series M4 server with the Intel® Xeon® CPU E5-2690v4 processors running at 2.6GHz.
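One way to confirm that a Linux host exposes hardware virtualization extensions is to look for the `vmx` (Intel VT-x) or `svm` (AMD-V) CPU flag in `/proc/cpuinfo`. The following is a minimal sketch rather than a Cisco-supplied procedure; the function name `count_virt_cpus` is hypothetical.

```shell
#!/bin/sh
# Count the logical CPUs whose "flags" line advertises vmx (Intel VT-x)
# or svm (AMD-V). The optional argument (a cpuinfo-style file) exists
# only to make the function easy to try against sample data.
count_virt_cpus() {
    cpuinfo="${1:-/proc/cpuinfo}"
    # grep -c prints the number of matching lines; "|| true" keeps a
    # zero count from being treated as a failure by callers.
    grep -E -c '^flags.*[[:space:]](vmx|svm)([[:space:]]|$)' "$cpuinfo" || true
}

n=$(count_virt_cpus)
if [ "${n:-0}" -gt 0 ]; then
    echo "virtualization extensions reported on $n logical CPU(s)"
else
    echo "no vmx/svm flag found; check the BIOS/UEFI virtualization setting"
fi
```

A count of zero usually means that the extensions are disabled in the host firmware or that the CPU does not provide them.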

Recommended vNICs

The following vNICs are recommended in order of optimum performance.

- i40e in PCI passthrough: Dedicates the server's physical NIC to the VM and transfers packet data between the NIC and the VM via DMA (Direct Memory Access). No CPU cycles are required for moving packets.

- i40evf/ixgbe-vf (SR-IOV virtual functions): Effectively the same as above (packets are transferred by DMA between the NIC and the VM), but allows the NIC to be shared across multiple VMs. SR-IOV is generally preferred because it has more deployment flexibility. See

- virtio: A para-virtualized network driver that supports 10Gbps operation but also requires CPU cycles.

Note: An ASA Virtual instance running on a KVM system might encounter data connectivity issues with the SR-IOV interface when using the vNIC driver i40e version 2.17.4. As a workaround, we recommend that you change this driver to a different version.
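To see whether a host interface uses the driver version named in the note, you can inspect the `driver` and `version` fields that `ethtool -i` prints. The sketch below assumes that output format; the function name `is_affected_i40e` and the interface name in the usage comment are hypothetical.

```shell
#!/bin/sh
# Read `ethtool -i <interface>` output on stdin and succeed (exit 0)
# only when the driver is i40e and its version is exactly 2.17.4.
is_affected_i40e() {
    awk -F': ' '
        $1 == "driver"  { drv = $2 }
        $1 == "version" { ver = $2 }
        END { exit !(drv == "i40e" && ver == "2.17.4") }
    '
}

# Usage on the KVM host (the interface name is an assumption):
#   ethtool -i enp59s0f0 | is_affected_i40e && echo "i40e 2.17.4 in use"
```

Some kernels report the kernel release instead of a standalone driver version for in-tree drivers, so treat a non-match as a hint rather than proof.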

Performance Optimizations

To achieve the best performance from the ASA Virtual, you can make adjustments to both the VM and the host. See Performance Tuning for more information.

-

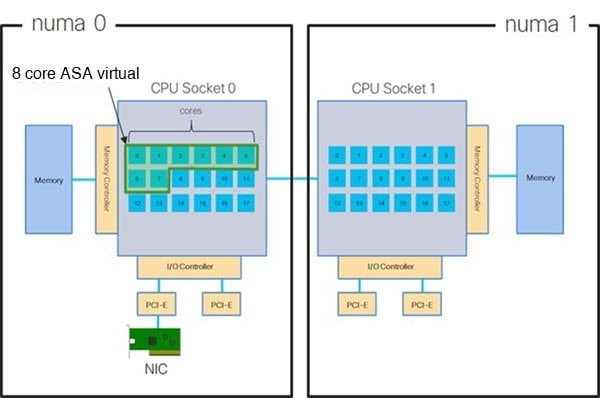

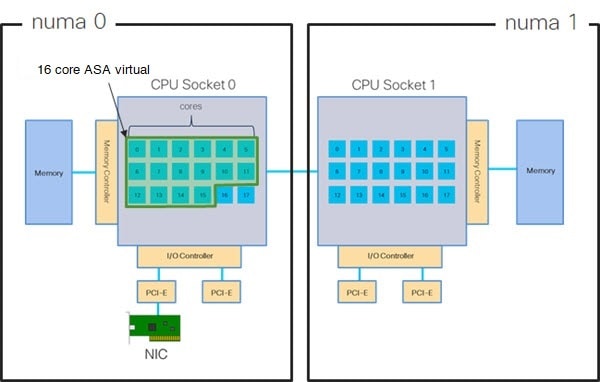

NUMA—You can improve performance of the ASA Virtual by isolating the CPU resources of the guest VM to a single non-uniform memory access (NUMA) node. See NUMA Guidelines for more information.

-

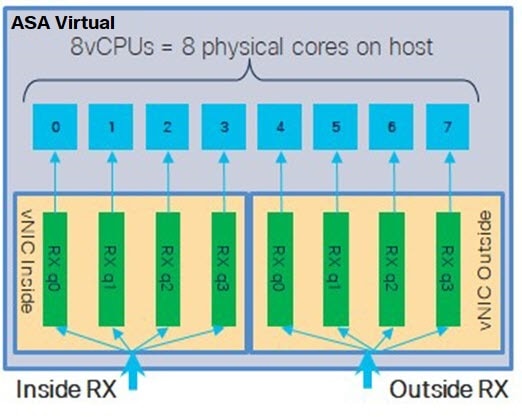

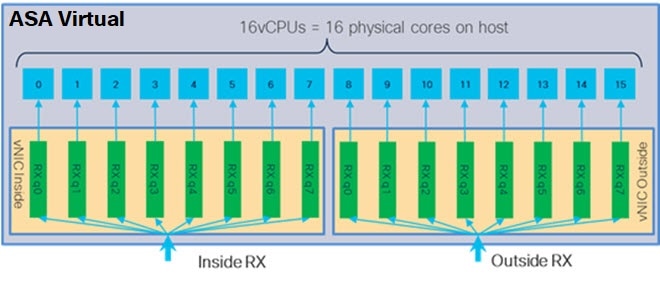

Receive Side Scaling—The ASA Virtual supports Receive Side Scaling (RSS), which is a technology utilized by network adapters to distribute network receive traffic to multiple processor cores. See Multiple RX Queues for Receive Side Scaling (RSS) for more information.

-

VPN Optimization—See VPN Optimization for additional considerations for optimizing VPN performance with the ASA Virtual.

Clustering

Starting with Version 9.17, clustering is supported on ASA Virtual instances deployed on KVM. See ASA Cluster for the ASAv for more information.

CPU Pinning

CPU pinning is required for the ASA Virtual to function in a KVM environment; see Enable CPU Pinning.
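As an illustration of what pinning involves, the sketch below prints one `virsh vcpupin` command per vCPU, mapping vCPU 0 to the first host CPU listed, vCPU 1 to the second, and so on. It only prints the commands so that you can review them first. The domain name `asav` and the host CPU numbers are assumptions, and the function name `pin_commands` is hypothetical; follow Enable CPU Pinning for the supported procedure.

```shell
#!/bin/sh
# Print (not run) the virsh commands that pin each vCPU of a domain
# to one host CPU. Arguments: domain name, then one host CPU per vCPU.
pin_commands() {
    domain="$1"
    shift
    vcpu=0
    for host_cpu in "$@"; do
        echo "virsh vcpupin $domain $vcpu $host_cpu"
        vcpu=$((vcpu + 1))
    done
}

# Hypothetical 4-vCPU domain pinned to host CPUs 2 through 5.
pin_commands asav 2 3 4 5
```

To keep the guest on a single NUMA node, choose host CPUs that `lscpu` lists under the same `NUMA node` entry. After reviewing the printed commands, you can pipe them to `sh` to apply them.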

Failover for High Availability Guidelines

For failover deployments, make sure that the standby unit has the same license entitlement; for example, both units should have the 2Gbps entitlement.

Important: When you create a high availability pair using the ASA Virtual, you must add the data interfaces to each ASA Virtual in the same order. If the same interfaces are added to each ASA Virtual but in a different order, errors may appear at the ASA Virtual console, and failover functionality may also be affected.

ASA Virtual on Proxmox VE

Proxmox Virtual Environment (VE) is an open-source server virtualization platform that can manage KVM virtual machines. Proxmox VE also provides a web-based management interface.

When you deploy the ASA Virtual on Proxmox VE, you need to configure the VM to have an emulated serial port. Without the serial port, the ASA Virtual will go into a loop during the bootup process. All management tasks can be done using the Proxmox VE web-based management interface.

Note: For advanced users who are used to the comfort of the Unix shell or Windows PowerShell, Proxmox VE provides a command line interface to manage all the components of your virtual environment. This command line interface has intelligent tab completion and full documentation in the form of UNIX man pages.

For the ASA Virtual to boot properly, the VM must have a serial device configured:

- In the main management center, select the ASA Virtual machine in the left navigation tree.

- Power off the virtual machine.

- Choose and add a serial port.

- Power on the virtual machine.

- Access the ASA Virtual machine using Xterm.js.

See the Proxmox Serial Terminal page for information on how to set up and activate the terminal on the guest/server.
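The same steps can be sketched with the Proxmox VE `qm` command line tool instead of the web interface. The function below only prints the commands so that you can review them before running anything. The VM ID `100` is an assumption, and the function name `serial_port_commands` is hypothetical.

```shell
#!/bin/sh
# Print (not run) the qm commands that power off a VM, add an emulated
# serial port (serial0, backed by a socket), and power the VM on again.
serial_port_commands() {
    vmid="$1"
    echo "qm stop $vmid"
    echo "qm set $vmid -serial0 socket"
    echo "qm start $vmid"
}

# Hypothetical VM ID; substitute the ID of your ASA Virtual machine.
serial_port_commands 100
```

After the VM restarts, `qm terminal 100` attaches to the serial console from the Proxmox host shell, as an alternative to Xterm.js.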

IPv6 Support

To create vNICs with IPv6 support on KVM, you must create an XML file for each interface that contains the IPv6 configuration parameters. You can then install the vNICs with the IPv6 network protocol configuration by passing each XML file to the command virsh net-create <interface configuration XML file name>.

For each interface, you can create the following XML file:

- Management interface - mgmt-vnic.xml

- Diagnostic interface - diag-vnic.xml

- Inside interface - inside-vnic.xml

- Outside interface - outside-vnic.xml

Example:

    <network>
      <name>mgmt-vnic</name>
      <bridge name='mgmt-vnic' stp='on' delay='0' />
      <ip family='ipv6' address='2001:db8::a111:b220:0:abcd' prefix='96'/>
    </network>

To verify the virtual networks and bridges on the host, run the following commands:

    virsh net-list
    brctl show
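The four per-interface files listed above share the same structure, so they can be generated from a small template. The following is a minimal sketch under stated assumptions: the function name `write_vnic_xml` is hypothetical, and only the mgmt-vnic address and prefix come from the example. The other addresses and prefixes are placeholders from the 2001:db8::/32 documentation range that you must replace with your own.

```shell
#!/bin/sh
# Write <dir>/<name>.xml containing a libvirt network definition with
# one IPv6 address. Arguments: name, IPv6 address, prefix length, dir.
write_vnic_xml() {
    name="$1"
    address="$2"
    prefix="$3"
    dir="${4:-.}"
    cat > "$dir/$name.xml" <<EOF
<network>
  <name>$name</name>
  <bridge name='$name' stp='on' delay='0' />
  <ip family='ipv6' address='$address' prefix='$prefix'/>
</network>
EOF
}

outdir=$(mktemp -d)
write_vnic_xml mgmt-vnic    2001:db8::a111:b220:0:abcd 96 "$outdir"
# The next three addresses are placeholders, not values from this guide.
write_vnic_xml diag-vnic    2001:db8:0:1::1 64 "$outdir"
write_vnic_xml inside-vnic  2001:db8:0:2::1 64 "$outdir"
write_vnic_xml outside-vnic 2001:db8:0:3::1 64 "$outdir"

# Print the commands that would create each network, for review.
for name in mgmt-vnic diag-vnic inside-vnic outside-vnic; do
    echo "virsh net-create $outdir/$name.xml"
done
```

The script writes the files to a temporary directory and prints the `virsh net-create` commands without running them, so nothing changes on the host until you run those commands yourself.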

UEFI and Secure Boot Limitations

On KVM, UEFI firmware and UEFI Secure Boot are supported only for greenfield (fresh) deployments and must be configured at deployment time.

Existing brownfield deployments using legacy BIOS mode can be upgraded to Version 9.24 without impact. Switching to UEFI firmware or enabling UEFI Secure Boot after deployment is not supported.

Upgrade Restrictions and Limitations

Revert upgrade restrictions

Caution: Reverting an upgrade is not supported.

Ensure that you take a backup before upgrading. After upgrading to ASA Virtual 9.24, you cannot roll back to a previous software version. To return to an earlier version, you must redeploy the Management Center.
