EVPN multiple services per Ethernet segment

An Ethernet segment is a network infrastructure component that

- supports multiple services on the same physical hardware resource,
- provides traffic segregation among these services, and
- enables users to manage traffic configurations effectively.

| Feature Name | Release Information | Feature Description |
|---|---|---|
| EVPN Multiple Services per Ethernet Segment | Release 25.1.1 | Introduced in this release on: Fixed Systems (8010 [ASIC: A100]) (select variants only*) * This feature is now supported on the Cisco 8011-4G24Y4H-I routers. |
| EVPN Multiple Services per Ethernet Segment | Release 24.4.1 | Introduced in this release on: Fixed Systems (8700 [ASIC: P100]) (select variants only*) * The EVPN multiple services per Ethernet segment functionality is now extended to the Cisco 8712-MOD-M routers. |
| EVPN Multiple Services per Ethernet Segment | Release 24.3.1 | Introduced in this release on: Fixed Systems (8200 [ASIC: P100], 8700 [ASIC: P100]) (select variants only*); Modular Systems (8800 [LC ASIC: P100]) (select variants only*) * The EVPN multiple services per Ethernet segment functionality is now extended to: |
| EVPN Multiple Services per Ethernet Segment | Release 24.2.11 | Introduced in this release on: Modular Systems (8800 [LC ASIC: Q200, P100]) (select variants only*) You can configure EVPN to run multiple services on a single Ethernet Segment (ES), which enables the efficient use of network resources. While the services run on the same physical hardware resource, each service can be associated with a different EVPN instance and separated from each other. This allows traffic segregation, which enables users to employ their own traffic management configurations. * This feature is supported only on routers with the Q200 and 88-LC1-36EH line cards. |

Highlights and benefits of EVPN multiple services per Ethernet segment

- Enables consolidation of diverse services on a shared Ethernet Segment without compromising service isolation.
- Supports independent traffic policies and configurations for each service, enhancing operational control.
- Facilitates efficient use of physical infrastructure by allowing multiple services to coexist on the same hardware.
- Improves network scalability and flexibility by reducing the need for separate physical segments.
- Simplifies maintenance and upgrades by centralizing service management on a single Ethernet segment.

These capabilities help network operators optimize resource utilization while maintaining clear separation and control of service traffic, leading to streamlined operations and reduced costs.

Services supported on a single Ethernet bundle

You can configure multiple services on a single Ethernet bundle, with one service assigned to each sub-interface. The supported services include:

-

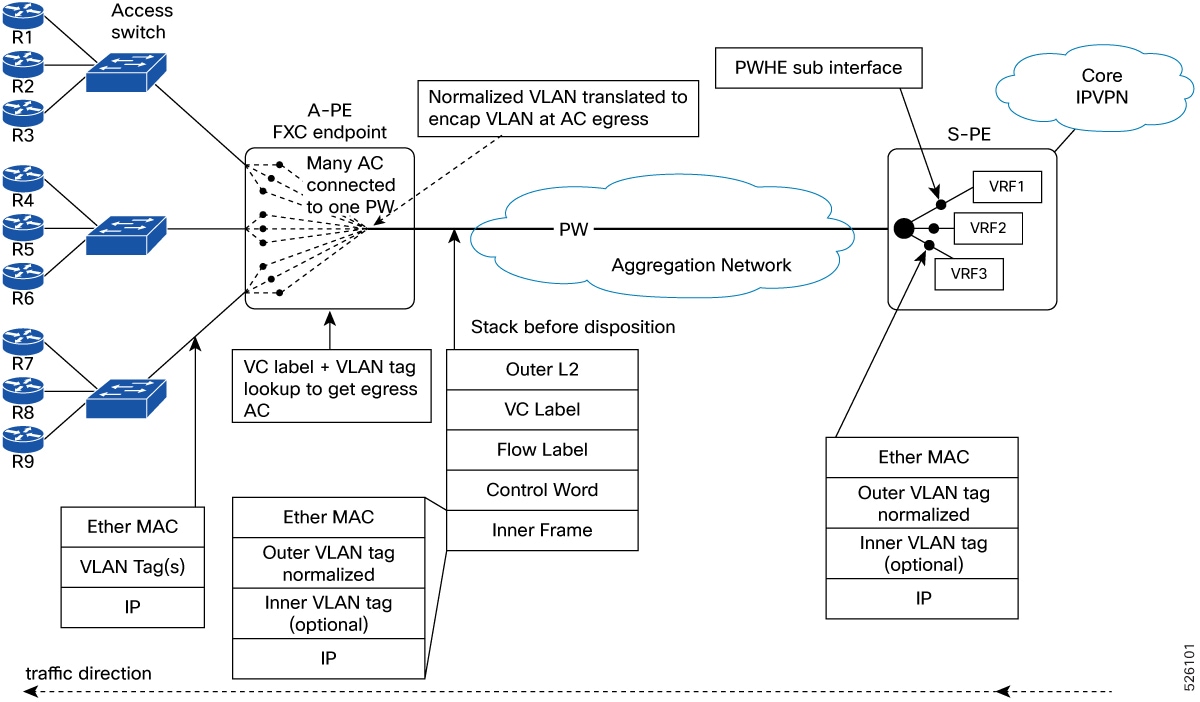

EVPN E-Line xconnect service

-

Native EVPN E-LAN service

These services are supported only in all-active multihoming mode.

Configure EVPN multiple services per Ethernet segment

Configure multiple EVPN services on bundle-Ethernet sub-interfaces to support diverse customer services over a single Ethernet segment.

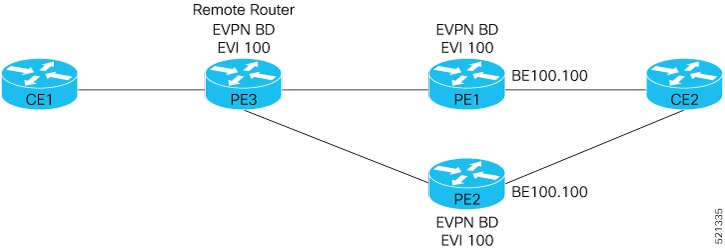

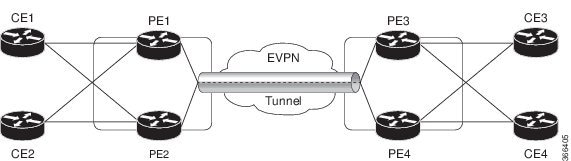

Consider a CE device connected to two PE devices through bundle-Ethernet interface 22001. In this topology, you configure multiple services on the bundle-Ethernet sub-interfaces.
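
As a rough illustration of this topology, the following IOS XR sketch shows how the bundle might be declared as an Ethernet segment on each PE. The ESI value is a placeholder chosen for this example and does not come from this document. All-active is the default load-balancing mode for an Ethernet segment, so the sketch carries no explicit mode command.

```
! Sketch only: apply on both PE devices that share the bundle.
! The ESI below is a hypothetical placeholder; both PEs use the same value.
evpn
 interface Bundle-Ether22001
  ethernet-segment
   identifier type 0 00.11.22.33.44.55.66.77.88
  !
 !
!
```

Because both PEs attach to the same CE bundle, they are expected to advertise the same ESI so that remote PEs treat the two attachment points as one multihomed segment.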

Procedure

| Step | Action |
|---|---|
| Step 1 | Configure attachment circuits. On the Bundle-Ether22001 Ethernet segment, configure one sub-interface for each service. |
| Step 2 | Configure the EVPN E-Line xconnect service. |
| Step 3 | Configure native EVPN. |
| Step 4 | View the running configuration of EVPN multiple services per Ethernet segment. |
| Step 5 | Use the show l2vpn xconnect summary and show l2vpn xconnect group xg22001 xc-name evpn-vpws-mclag-22001 commands to verify that each service is configured on its sub-interface. |
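
The configuration steps above can be sketched roughly as follows. This is an illustrative IOS XR fragment under stated assumptions, not the configuration from the original guide: the names Bundle-Ether22001, xg22001, and evpn-vpws-mclag-22001 come from the procedure, while the sub-interface numbers, VLAN tags, EVI and attachment-circuit IDs, and bridge group and bridge-domain names are hypothetical values picked for the example.

```
! Sketch only. Sub-interface numbers, VLAN tags, EVI/AC IDs, and the
! bridge group and bridge-domain names are hypothetical.

! Step 1: attachment circuits, one l2transport sub-interface per service
interface Bundle-Ether22001.11 l2transport
 encapsulation dot1q 11
!
interface Bundle-Ether22001.12 l2transport
 encapsulation dot1q 12
!
l2vpn
 ! Step 2: EVPN E-Line xconnect service on sub-interface .11
 xconnect group xg22001
  p2p evpn-vpws-mclag-22001
   interface Bundle-Ether22001.11
   neighbor evpn evi 22101 target 220101 source 220301
  !
 !
 ! Step 3: native EVPN (E-LAN) bridge domain on sub-interface .12
 bridge group bg-evpn
  bridge-domain bd-evpn-12
   interface Bundle-Ether22001.12
   !
   evi 22021
   !
  !
 !
!
```

Each service uses its own sub-interface and its own EVPN instance, which is what keeps the two services separated on the shared bundle. Depending on platform and release, additional per-EVI settings under the evpn configuration may be needed; the sketch omits them. After committing the configuration on both PEs, the show commands named in Step 5 can be used to check the state of the xconnect.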
