eBook - Cisco Catalyst 9000 Switching Family


This book represents a collaborative effort between Technical Marketing, Product Management, Engineering and Sales teams during a week-long intensive session in 2017 at Cisco Headquarters in San Jose, CA.

● Bob Sayle — Sales

● Dave Zacks — Technical Marketing

● Dimitar Hristov — Technical Marketing

● Fabrizio Maccioni — Technical Marketing

● Ivor Diedricks — Product Management

● Jay Yoo — Engineering

● Kenny Lei — Technical Marketing

● Mahesh Nagireddy — Technical Marketing

● Minhaj Uddin — Technical Marketing

● Muhammad Imam — Technical Marketing

● Sai Zeya — Technical Marketing

● Shawn Wargo — Technical Marketing

The following authors contributed to the 2nd revision of this book in April 2019:

● Gary M Davis — Customer Experience

● Ginger Liu — Product Management

● Jay Sharma — Technical Marketing

● Jeffrey Meek — Marketing

● Kenny Lei — Technical Marketing

● Minhaj Uddin — Technical Marketing

● Sai Zeya — Technical Marketing

● Shawn Wargo — Technical Marketing

The following authors contributed to the 3rd revision of this book in April 2022:

● Arun Bhat — Product Management

● Ivor Diedricks — Product Management

● Jay Sharma — Product Management

● Jeff Meek — Product Marketing

● Jeremy Cohoe — Technical Marketing

● Kenny Lei — Technical Marketing

● Minhaj Uddin — Technical Marketing

● Ninad Diwakar — Technical Marketing

● Rajesh Edamula — Engineering

● Raj Kumar Goli - Technical Marketing

● Sai Zeya — Technical Marketing

● Shawn Wargo — Technical Marketing

● Siddharth Krishna — Technical Marketing

● With contribution from Manas Pati — Engineering.

A special thanks to Cisco’s Enterprise Networking Business Product Management, Engineering and Sales teams who supported the realization of this book. We are also genuinely appreciative of our Book Sprints (www.booksprints.net) team:

● Faith Bosworth (Facilitator)

● Henrik van Leeuwen and Lennart Wolfert (Illustrators)

● Raewyn Whyte and Christine Davis (Proofreaders)

● Manuel Vazquez (Book Designer)

Faith and the team created an enabling environment that allowed us to exercise our collaborative and technical skills to produce this technical publication to meet growing demand.

This book is best read in the order presented. However, based on the roles of the reader and their interests, some chapters can be reviewed out of sequence. The book is organized into chapters, with each chapter having multiple sections.

First, we introduce the Cisco Catalyst 9000 Switching Family, review the business drivers for enterprises and illustrate how Catalyst 9000 switches address the challenges enterprise IT faces. Next, we review the architectural foundations of the Catalyst 9000 Switching Platform from both a hardware perspective, with the innovative Cisco Unified Access Data Plane (UADP) and Silicon One ASICs, and a software perspective, with the cutting-edge capabilities provided by Cisco IOS XE software. These foundational elements enable the Catalyst 9000 Switching Family to address the many demands of hybrid work placed on enterprise networks today.

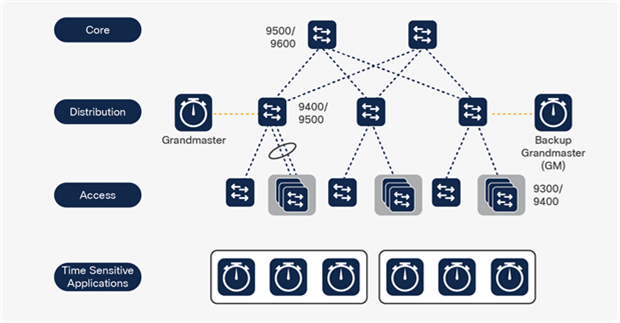

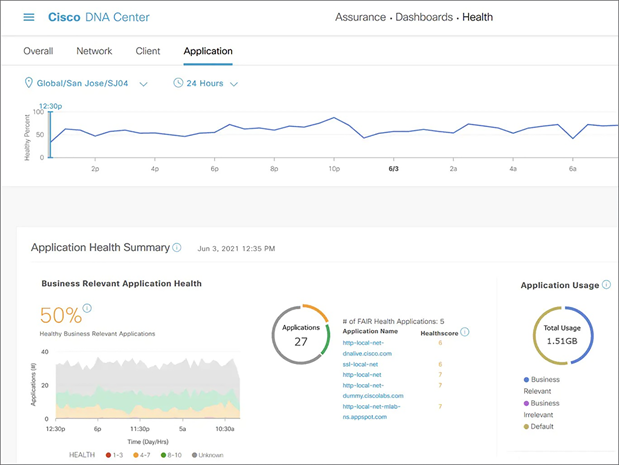

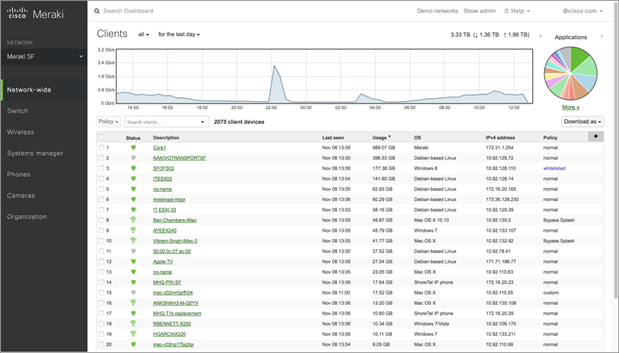

Subsequent sections outline how the Cisco IOS XE software on the Catalyst 9000 Switching Family meets these demands, covering High Availability, Security and Identity, Quality of Service, Network Visibility, Time-Sensitive Networks, Smart and Sustainable Buildings and Application Hosting. The open and model-based approach to network management is discussed in the Network Management chapter, in addition to Cisco DNA Center, Cisco ISE and Cisco Meraki.

Finally, the book examines the present state and future evolution of Campus Network Design and how the Catalyst 9000 Switching Family leads the way toward the ongoing transformation of enterprise network architectures.

IT and OT administrators, engineers, architects and integrators are constantly under pressure to meet their organizations' business and sustainability needs. This book focuses on the innovative Cisco Catalyst 9000 Family of Switches and how they help solve the many challenges that networking professionals face today.

The Catalyst 9000 Switching Family provides state-of-the-art technologies driven by open, flexible and powerful hardware and software. Networking professionals will be able to utilize this book to understand the Catalyst 9000 Switching Family, delve deep into its architecture and understand how it provides a strong foundation for next-generation networks.

This book assists customers and partners, network professionals, IT managers, executives and anyone interested in the latest and greatest networking technologies that the Catalyst 9000 Switching Family enables.

Fix your eyes on perfection and you make almost everything speed towards it

W.E. Channing

A group of Cisco Engineers from diverse backgrounds accepted the challenge of writing a book about a platform that changes the paradigm of enterprise networking. At the end of day one, the task seemed even more daunting, given the breadth of capabilities that Catalyst 9000 switches bring to networks. However, the team persisted and after hundreds of hours of diligent penmanship, this book was born!

The Book Sprints (www.booksprints.net) methodology captured each of our unique strengths, fostered a team-oriented environment and accelerated the overall time to completion.

#CiscoCatalyst

#Catalyst9000

#cat9k

#CiscoHybridWork

#YourNetworkYourWay

#smartbuilding

#sustainability

What is new in this edition of the book?

This book has been updated to reflect several new and improved features available with the Cisco Catalyst 9000 Family of Switches.

Here are the highlights of this revision:

Catalyst 9200CX compact switches — the latest addition to the Catalyst 9000 fixed enterprise access-layer switching portfolio. The Catalyst 9200CX compact switches offer full PoE+, copper and fiber uplinks in a compact, fan-less design.

Catalyst 9300X and 9300LM switches — new high-performance fixed enterprise access/distribution switches. The Catalyst 9300 Series with UADP 2.0sec provides security, resiliency and performance at scale with a comprehensive set of industry-leading Layer 2 and Layer 3 features.

Catalyst 9400X line cards and supervisors — new modular enterprise access/distribution switching platform. The Catalyst 9400 Series with UADP 3.0sec provides security, resiliency and performance at scale with a comprehensive set of industry-leading Layer 2 and Layer 3 features.

Catalyst 9500X switches — new fixed enterprise core/distribution switches, using the new Silicon One Q200 ASIC and introducing up to 400G interfaces. The Catalyst 9500 Series provides security, resiliency and performance at scale, with a comprehensive set of industry-leading Layer 2 and Layer 3 features.

Catalyst 9600X line cards and supervisors — new modular enterprise core/distribution switching platform, using the new Silicon One Q200 ASIC and introducing up to 400G interfaces. The Catalyst 9600 Series provides security, resiliency and performance at scale with a comprehensive set of industry-leading Layer 2 and Layer 3 features.

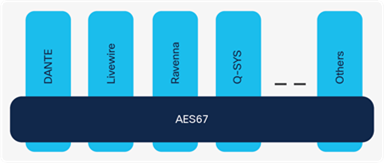

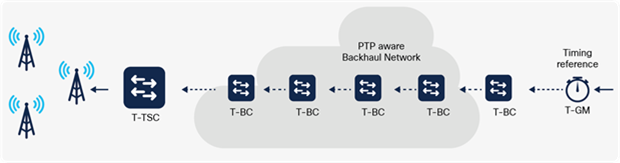

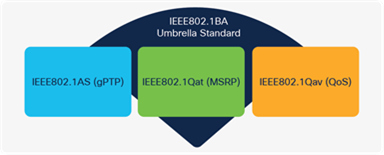

Time-Sensitive Networks — A look into Cisco’s solutions for time-sensitive networks driven by use cases across media and service provider networks.

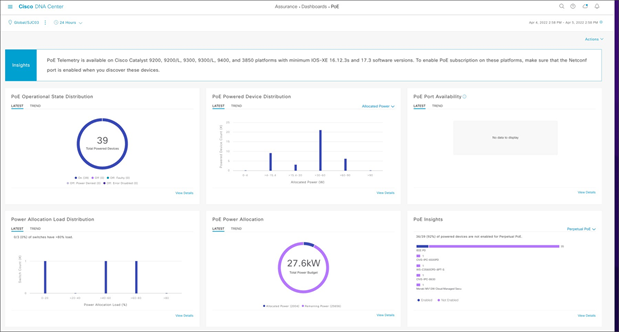

Smart and Sustainable Buildings — As the world gears up to a hybrid work model, Cisco Smart Building solutions offer reimagined workspaces that are safe, efficient and secure to meet every organization’s hybrid work business goals.

Note: There have also been many updates to all the chapters throughout the book, including Zero Trust, Cloud Security, Edge Networking, BGP EVPN and many more.

This revised edition of the book addresses all of the areas and capabilities listed above.

Continue reading to learn more!

The world is changing rapidly. The demands of hybrid work require ubiquitous mobility, network visibility and pervasive security. IT managers need to rethink how their networks are designed to adapt to evolving IoT, manage cloud adoption and mitigate rapidly advancing security threats.

Enterprises of all sizes are replacing their legacy systems with new and evolving technologies to create a competitive advantage and to enable higher productivity, greater sustainability, workplace health and lower operating costs. Businesses cannot build networks the same way they have for the past 30 years. Organizations need to create flexible networks that can constantly learn, adapt, protect and evolve.

This book explores the Catalyst 9000 Family of Switches and examines how these platforms meet the quickly evolving needs of the hybrid enterprise and extended network, today and well into the future.

As an expansive single family of fixed and modular LAN switches, the Catalyst 9000 Switch Family runs a single software code base across every platform in campus and branch networks. Design considerations can now be focused entirely on the scale and feature requirements for different places in the network. This allows IT operators to design the network to meet the evolving needs and reduce the Total Cost of Ownership (TCO) for enterprise networks.

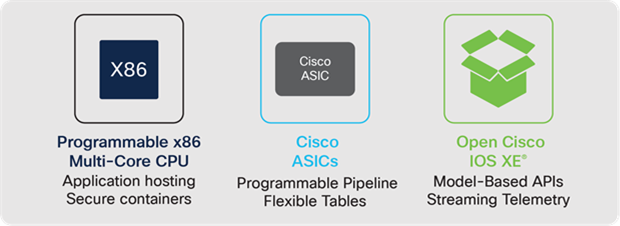

The Catalyst 9000 Switch Family is based on three foundational aspects:

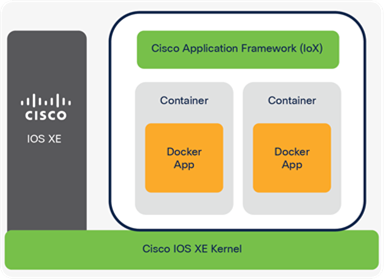

1. Multi-Core x86 CPU — built to support application hosting.

2. Cisco Custom ASICs — built with a flexible, programmable ASIC architecture.

3. Common software — built with an open, modular operating system, with simple feature licenses.

Foundational attributes of the Catalyst 9000 Switch Family

The Catalyst 9000 Switch Family is built on a custom Cisco ASIC architecture, powered by the Cisco Silicon One and Cisco Unified Access Data Plane (UADP) ASICs. This serves as an innovative, programmable and flexible foundation. The Silicon One and UADP ASICs enable network infrastructures to adapt to new technologies, trends and business needs over time. The Catalyst 9000 Switching Family is also built on a Multi-Core 64-bit x86 CPU architecture. This complementary CPU architecture provides predictable software processing and control plane management, delivers the horsepower to tackle next-generation network architectures and offers a platform for application hosting. The Catalyst 9200 Series switches use an ARM CPU integrated into the UADP, for greater cost efficiency and lower power consumption.

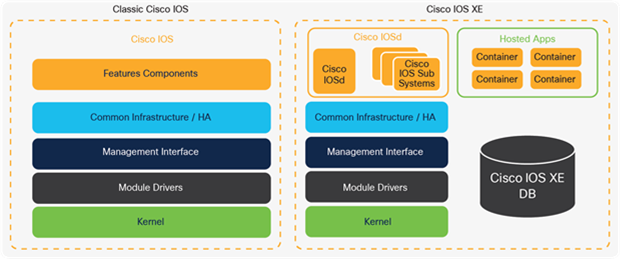

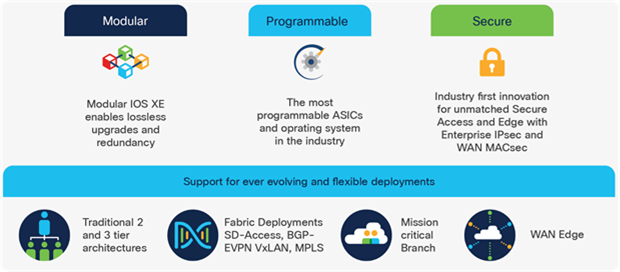

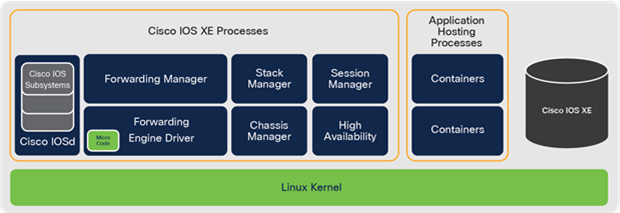

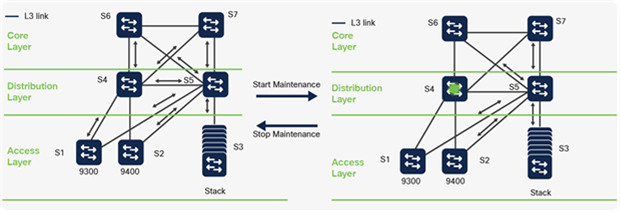

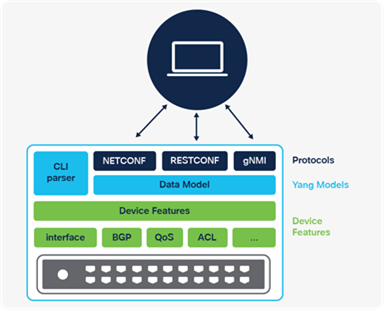

Every Catalyst 9000 switch runs on the open and modular Cisco IOS XE. This improves portability across Cisco enterprise platforms including Catalyst 9000 switches, wireless LAN controllers, access points and the Catalyst 8000 family of edge routers. It increases feature development velocity, improves High Availability and enables consistent deployment of features across the campus network. IOS XE provides a well-defined set of open APIs that improves management, increases visibility and simplifies network programmability and automation.

| The bottom line |

| Catalyst Switches are built on complementary hardware and common software and the switches are the foundation of the enterprise network. |

The significant trends seen in the industry today fall into four main categories — Hybrid Work and mobility, Smart Buildings and IoT, Cloud and Security.

Hybrid Work and mobility

The need for untethered, uninterrupted access, enabled by new wireless and mobility technologies, is driving the enterprise network infrastructure market. Hybrid Work and mobile applications make it possible for workers to access corporate assets from nearly anywhere, and from any device. High-definition video collaboration and applications such as Augmented Reality (AR), Virtual Reality (VR) and metaverse experiences add further demands for higher speeds, capacity and scale. This creates significant challenges for back-end IT infrastructures and those who manage them. Mobility is now not just a cost of doing business, but a strategic business asset, making it an integral part of the future enterprise network.

Smart Buildings and IoT

The digital transformation of business processes and operations includes powering and interconnecting new devices, sensors and endpoints to improve productivity, reduce risk and increase security. Organizations want smarter outcomes from their workplaces. They need to add extra intelligence to their infrastructure to implement density monitoring, contact tracing and environmental monitoring, all while acting without manual intervention. Billions of machine-to-machine connections will emerge over the next several years that require machine learning intelligence based on analytics and business policy. Enterprise campus networks will be required to support this influx of machine connectivity.

Cloud

Enterprises are augmenting internal IT with cloud services, whether on-premises, in a colocated private cloud or in a public cloud. Campus networks must not only interface with private and public clouds but also ensure the same application performance, security and policy adherence for those workloads as if they were still on-premises.

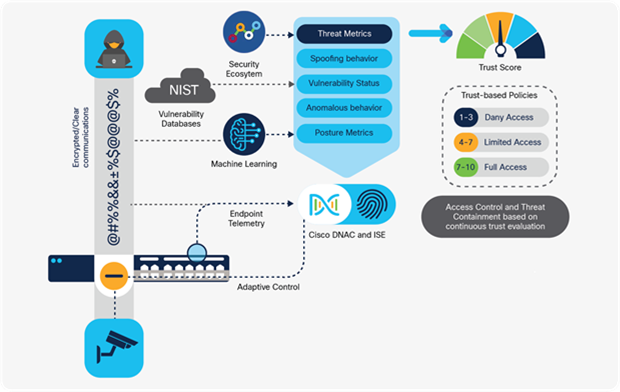

Security

All these new connections have profound security implications on the network. Each new connection is a potential attack vector. Attacks are becoming more and more sophisticated, and worse, they are often obscured via encryption. Campus networks must be able to secure these new connections by detecting anomalies and recognizing potentially malicious behaviors and patterns in real-time at scale.

The Catalyst 9000 Family of Switches extends Cisco networking leadership with breakthrough innovations to address the emerging trends.

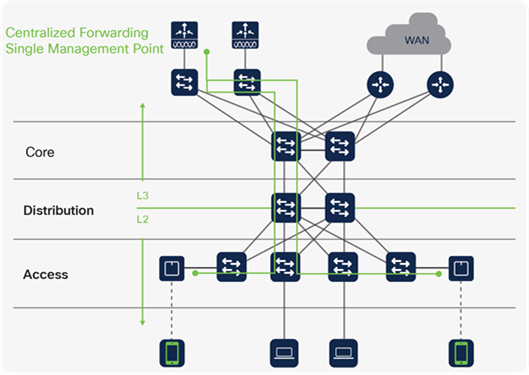

Enabling Hybrid Work

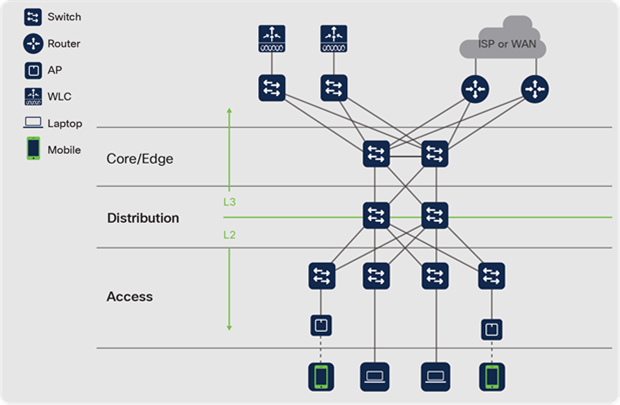

The hybrid workplace is a heterogeneous mix of people working in various locations, with device types such as corporate-issued laptops and BYOD phones and tablets. Hybrid work requires collaboration with high-definition video streaming, at home and in the office. This drives the need for high-density and high-bandwidth wireless, requiring higher bandwidths in the Access layer, with cascading effects on the Distribution and Core. Add the rapidly increasing number of IoT and OT devices, and IT has their hands full with managing access permissions and monitoring for intrusions.

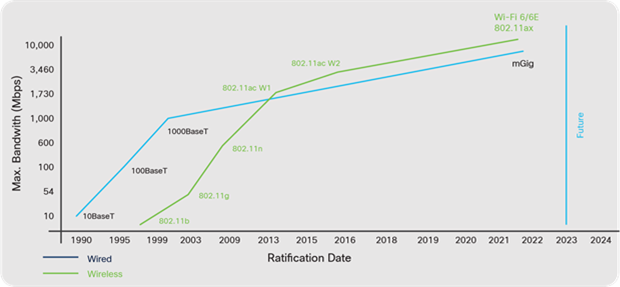

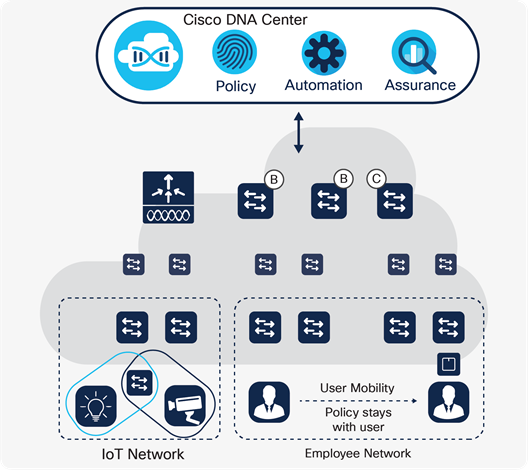

The Catalyst 9000 Family of Switches, enhanced by the new Catalyst 9000X models, delivers industry-leading multiGigabit (mGig) and Power over Ethernet (PoE) density and performance, enabling customers to build the densest wireless and IoT environments. These platforms also enhance scale, capacity and flexibility at every layer, while delivering on the promise of longer-term investment protection. The Catalyst 9000 Switch Family, along with the Cisco SD-Access Zero Trust solution for the workplace, connects and automates the management of network devices and endpoints, enabling a secure and scalable hybrid workplace.

| The bottom line |

| The Catalyst 9000 Switch Family along with SD-Access offers the optimal foundation for Hybrid Work. |

Enabling Smart Buildings and IoT

Many new devices are being connected to the network such as sensors, alarm systems, HVAC systems, LED lighting, UHD cameras, PoE dongles, smart desks and badge readers, which have not traditionally been connected to the same IT network or have been using proprietary protocols.

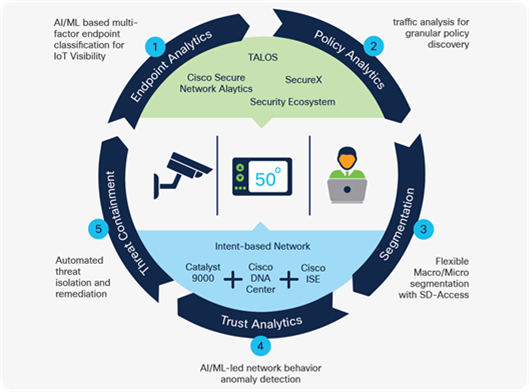

The Catalyst 9000 Switch Family platforms, together with Cisco DNA Center and Cisco Identity Services Engine (ISE), can automatically profile devices, provide security and segmentation, apply policies and monitor trust.

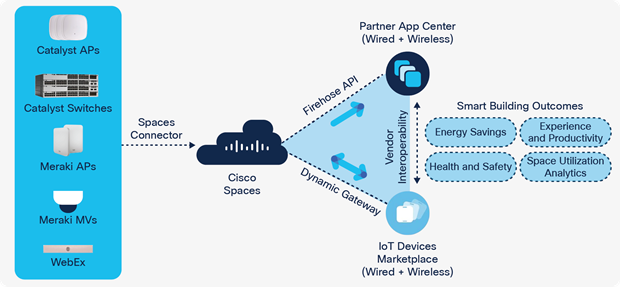

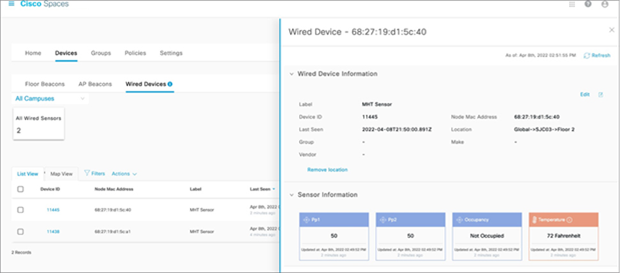

All these IoT devices and services are brought together under Cisco Spaces: a powerful, end-to-end, indoor location services cloud platform that helps customers enable business outcomes at scale, by providing wired and wireless customers with rich location-based services, including location analytics, business insights, customer experience management, asset tracking, safety and compliance, IoT fleet management and cloud APIs.

Some IoT devices, such as LED lighting, require always-on Power over Ethernet (PoE). The Catalyst 9000 Switch Family supports perpetual PoE and fast PoE to keep the lights on, even while the switch reloads.

Multicast DNS (mDNS) protocol, known as Bonjour, continues to gain popularity and many devices now support and use it for advertising or consuming services over the network. Cisco DNA Service for Bonjour delivers visibility to these services across locations and segments of the network, assigns policy based on these services, and orchestrates all this from a centralized point with Cisco DNA Center.

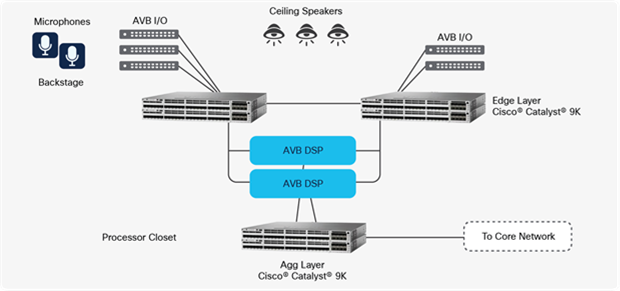

For professional media (audio-video) and precision time applications and endpoints, Catalyst 9000 switches support Audio Video Bridging (AVB) and IEEE 1588 timing.

| The bottom line |

| Catalyst 9000 switches are the ideal platform for Smart Buildings and connecting to the Internet of Things. |

Enabling Cloud

Applications are transitioning to the cloud at a rapid pace and are no longer only hosted at a central location. Traditional methods of protecting the network perimeter from security threats don’t work for the cloud. With the continued growth of cloud services, the boundaries between cloud-hosted applications and the endpoints at the edge of the network will continue to blur. Users accessing applications and network services across multiple clouds expect the same performance as on a LAN.

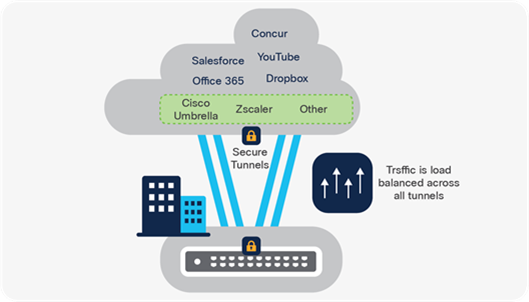

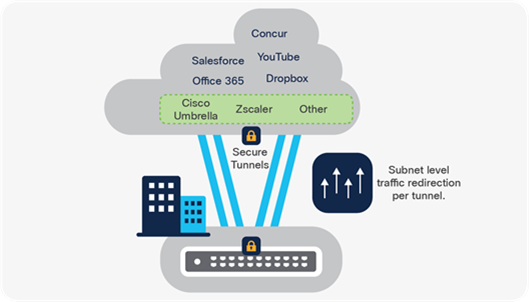

Catalyst 9000X switches deliver services securely to the user with high-speed encryption capabilities. For example, IPsec encryption enables a lean branch-in-a-box solution for traditional (without SD-WAN) Internet-only branches, where services are present in the cloud. The Catalyst 9000 Switch Family offers a consolidated solution for secure point-to-point connectivity from the branch to the edge, enabling cloud-based Security services such as Umbrella SIG. Cisco DNA Center provides full life-cycle service automation and assurance for these lean branches.

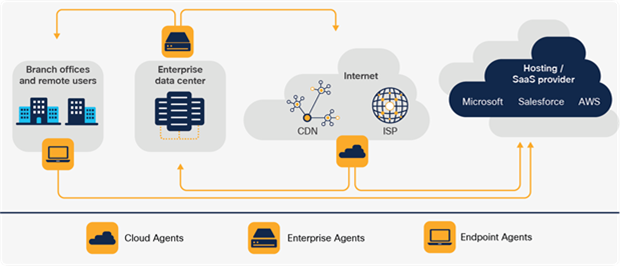

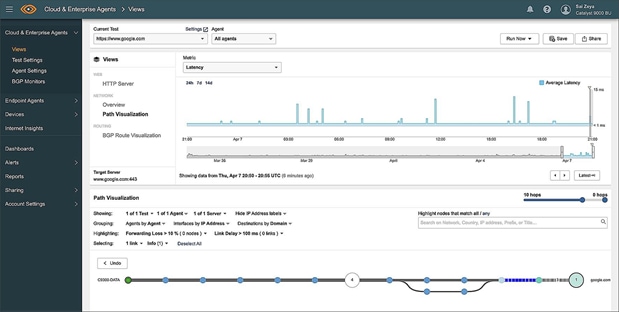

The Catalyst 9000 Switch Family supports Application Hosting with local storage enabling fog computing and network function virtualization. This supports distributed intelligent agents embedded into the network for analytics, assurance, security and cloud-connected applications. Customers can host third-party applications on the Catalyst 9000 Switch Family, making this the most flexible platform in the industry.

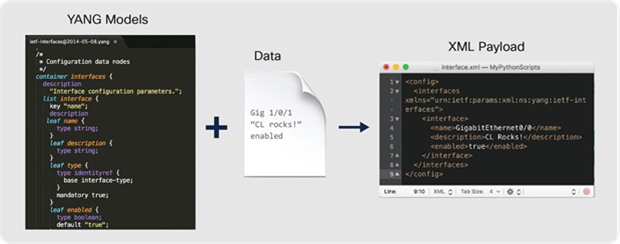

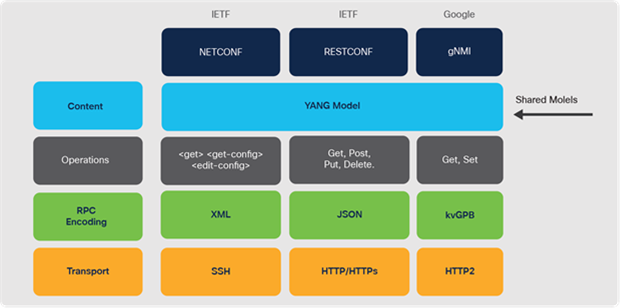

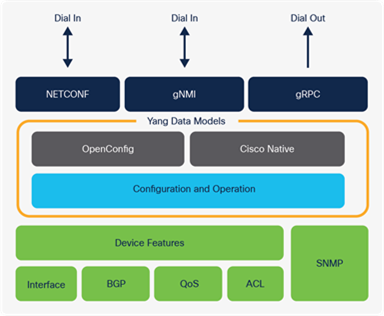

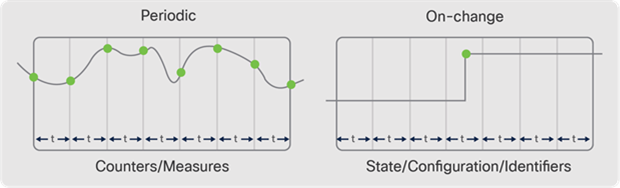

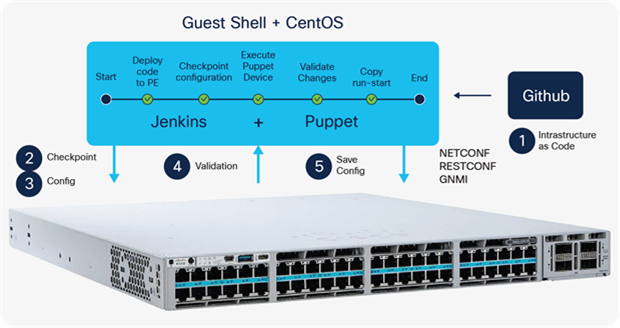

To make deployment and operation of the network more agile, Cisco has added a programmatic framework and tools that drive automation through NETCONF, RESTCONF, gNMI and gNOI APIs with YANG models. Cisco has also added integrations for Infrastructure as Code (IaC) software tools, such as Terraform, that can be used to automate infrastructure from LAN to cloud. For more information about IOS XE programmability, refer to https://www.cisco.com/c/dam/en/us/products/collateral/enterprise-networks/nb-06-ios-xe-prog-ebook-cte-en.pdf
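As a rough illustration of what model-driven access over RESTCONF can look like, the sketch below builds (but does not send) an HTTPS GET request for a YANG-modeled resource. It assumes the conventional `/restconf/data/` resource root and the standard `ietf-interfaces` YANG module; the host name, credentials and function name are hypothetical placeholders, and a real deployment may expose different paths or authentication methods.

```python
import base64
import urllib.request


def build_restconf_request(host: str, username: str, password: str,
                           resource: str = "ietf-interfaces:interfaces") -> urllib.request.Request:
    """Build, without sending, a RESTCONF GET request for a YANG-modeled resource."""
    # Assumption: the device exposes its datastore under the common /restconf/data/ root.
    url = f"https://{host}/restconf/data/{resource}"
    # HTTP Basic authentication is used here only to keep the sketch short.
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    headers = {
        # Ask for JSON-encoded YANG data (the media type defined for RESTCONF).
        "Accept": "application/yang-data+json",
        "Authorization": f"Basic {token}",
    }
    return urllib.request.Request(url, headers=headers, method="GET")


# Hypothetical host and credentials, for illustration only.
request = build_restconf_request("switch.example.net", "admin", "example-password")
print(request.get_method(), request.full_url)
```

Passing the resulting object to `urllib.request.urlopen` would send the request; that step is left out so the sketch runs without a reachable device.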

| The bottom line |

| The Catalyst 9000 Switch Family is the ideal platform for accessing cloud-native infrastructure securely. |

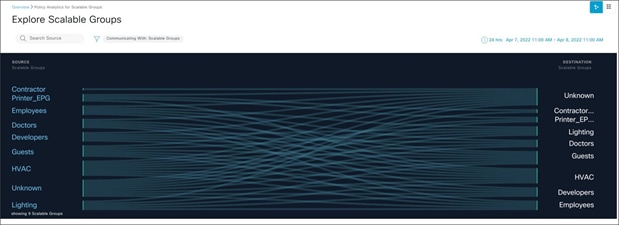

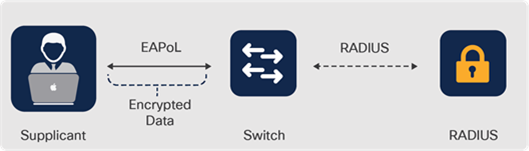

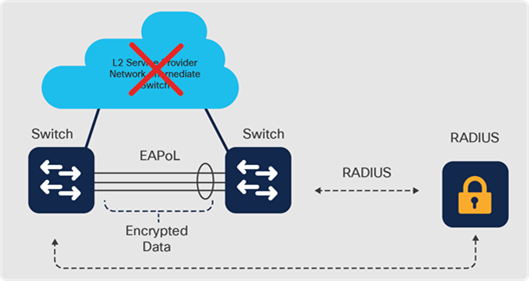

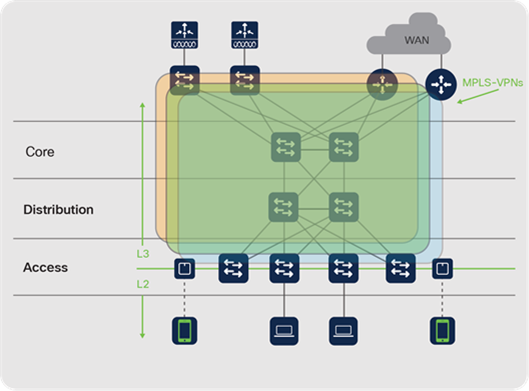

Enabling Security

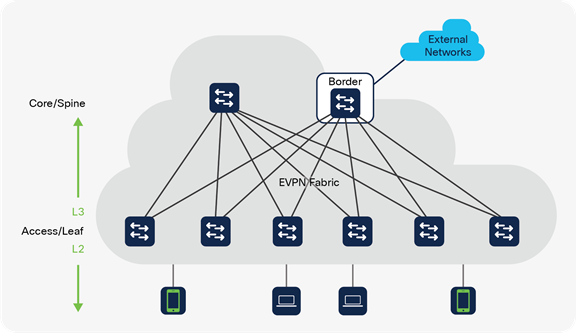

A diverse and growing set of devices is connected to enterprise networks. Network segmentation may be used to constrain devices and users so that communication is only possible once allowed. The Catalyst 9000 Switch Family supports numerous segmentation capabilities at a macro (network segment) and micro (user or device group) level with support for Virtual Routing and Forwarding (VRF) with VRF-Lite or MPLS, Virtual Network Instances (VNIs) with VXLAN, BGP EVPN, SD-Access or Cisco TrustSec.
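The two levels of segmentation can be pictured with a small default-deny check. This is a conceptual sketch only, not how any of the listed technologies is implemented: the endpoint names, virtual network names and group names are hypothetical, and it ignores cases such as deliberately permitted traffic between virtual networks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    name: str
    virtual_network: str  # macro segment, for example a VRF or VNI (hypothetical names)
    group: str            # micro segment, a user or device group (hypothetical names)


# Explicitly permitted (source group, destination group) pairs.
# Any pair that is not listed is denied.
ALLOWED_GROUP_PAIRS = {
    ("employees", "printers"),
    ("cameras", "video-recorders"),
}


def may_communicate(src: Endpoint, dst: Endpoint) -> bool:
    """Return True only if both the macro and the micro check allow the traffic."""
    # Macro level: endpoints in different virtual networks are isolated.
    if src.virtual_network != dst.virtual_network:
        return False
    # Micro level: within a virtual network, traffic needs an explicit permit.
    return (src.group, dst.group) in ALLOWED_GROUP_PAIRS


laptop = Endpoint("laptop-1", "corporate", "employees")
printer = Endpoint("printer-1", "corporate", "printers")
camera = Endpoint("camera-1", "iot", "cameras")

print(may_communicate(laptop, printer))  # same virtual network, permitted pair
print(may_communicate(camera, printer))  # different virtual networks
```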

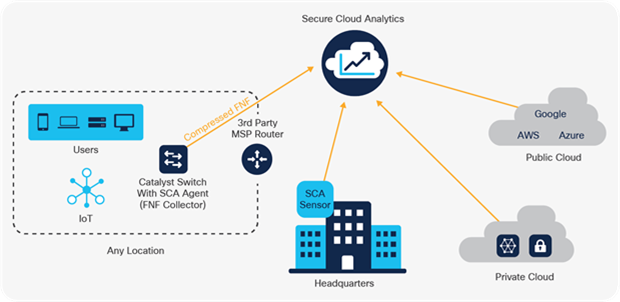

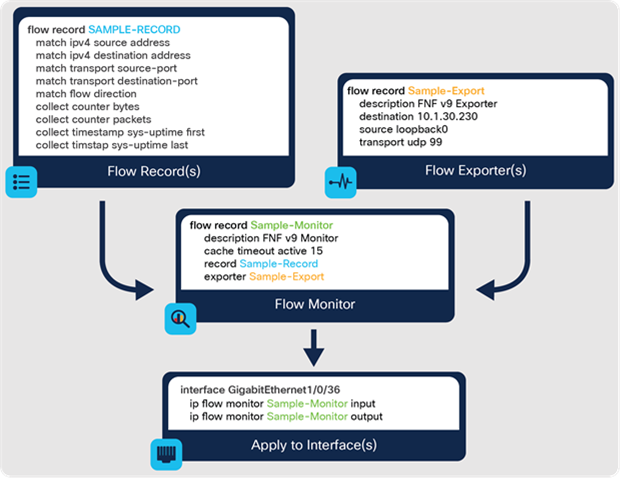

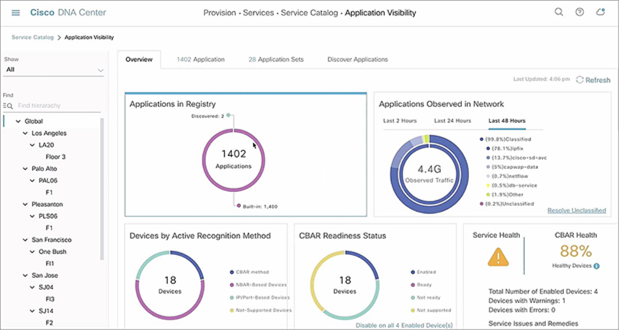

The Catalyst 9000 Switch Family collects metadata about all flows traversing the network, using Full Flexible NetFlow (FNF), without affecting network performance. Software-Defined Application Visibility and Control (SD-AVC) uses this to enable the detection of applications running in the network and optimize bandwidth with application-aware policies. Also, combining this FNF data with Cisco security solutions, such as Cisco AI Endpoint Analytics and Cisco Secure Network Analytics (formerly Stealthwatch), provides detection of denial-of-service attacks and other malicious activity.
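To make "metadata about flows" concrete, the sketch below groups packets that share the same key fields into flow records with packet and byte counters. It is a simplified software illustration under stated assumptions: the five key fields are one common choice of flow key, the addresses are documentation examples, and the switch itself performs this kind of accounting in hardware with configurable record formats.

```python
from collections import defaultdict
from typing import Dict, Iterable, NamedTuple, Tuple


class Packet(NamedTuple):
    src_ip: str
    dst_ip: str
    protocol: str
    src_port: int
    dst_port: int
    size_bytes: int


FlowKey = Tuple[str, str, str, int, int]


def aggregate_flows(packets: Iterable[Packet]) -> Dict[FlowKey, Dict[str, int]]:
    """Group packets by their key fields and count packets and bytes per flow."""
    flows: Dict[FlowKey, Dict[str, int]] = defaultdict(lambda: {"packets": 0, "bytes": 0})
    for p in packets:
        # Packets with identical key fields belong to the same flow.
        key = (p.src_ip, p.dst_ip, p.protocol, p.src_port, p.dst_port)
        flows[key]["packets"] += 1
        flows[key]["bytes"] += p.size_bytes
    return dict(flows)


sample = [
    Packet("192.0.2.10", "198.51.100.5", "tcp", 50000, 443, 1500),
    Packet("192.0.2.10", "198.51.100.5", "tcp", 50000, 443, 400),
    Packet("192.0.2.11", "198.51.100.5", "udp", 50001, 53, 80),
]
for key, counters in aggregate_flows(sample).items():
    print(key, counters)
```

Only the counters and key fields are kept, not the payload, which is why flow records can describe traffic without storing it.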

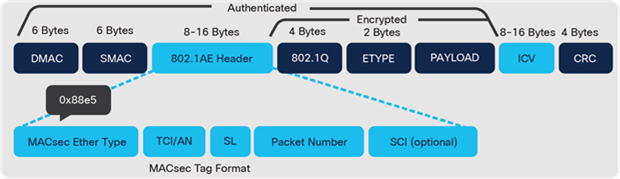

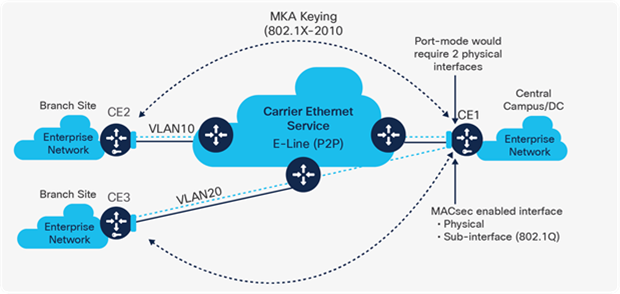

With the Catalyst 9000 Family of Switches, the links between switches can be encrypted using up to 256-bit AES MACsec, operating at line-rate. This encryption can also be used for connections between the switch and endpoints in the LAN. For advanced hardware-based encryption over the WAN, Catalyst 9000X switches have introduced support for WAN MACsec and IPsec, enabling secured site-to-site and site-to-cloud interconnects.

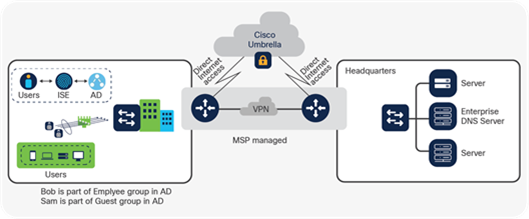

The Catalyst 9000 Switch Family runs on-box agents that enable integrations with Cisco Umbrella and Cisco Secure Cloud Analytics. As more and more network traffic becomes encrypted, it is critical that threats hidden in that traffic are detected and mitigated upon entry to the network. The Catalyst 9000 Switch Family can detect and mitigate malware hiding in encrypted traffic using Encrypted Traffic Analytics (ETA) without the need for decryption.

Finally, Cisco Trustworthy Solutions protects the switches themselves. A holistic approach provides comprehensive verification of hardware and software integrity, by securing the device and hosted applications.

| The bottom line |

| The Catalyst 9000 Switch Family provides the most secure switching environment. |

The Catalyst 9000 Family of Switches

The Cisco Catalyst 9000 Switching Family is the next generation of the Cisco Catalyst portfolio of enterprise LAN Access, Distribution and Core switches. Catalyst 9000 switches extend Cisco networking leadership with breakthrough innovations in Hybrid Work, IoT, Cloud and Security.

The Catalyst 9000 Switching Family addresses the challenges of today's always-on hybrid work to help you focus on the needs of the business, not on the network — now and for whatever the future brings. These switches are designed from the ground up for higher performance, greater flexibility, security and resiliency.

Catalyst 9000 Family of Switches

Catalyst 9000 switches are built on a common architecture with a strong hardware and software foundation. This commonality and consistency bring simplicity and ease of operations for IT and OT staff, reducing total operational cost and creating a better user experience.

Consistent hardware architecture

The Catalyst 9000 switching hardware uses a consistent internal and external design.

Internally, the hardware uses either Cisco Unified Access Data Plane (UADP) or the Cisco Silicon One ASICs, providing flexibility and capacity for packet handling. The hardware also uses a common onboard x86-based CPU to allow the switch to host additional applications (beyond those normally possible on a network switch).

Externally, the hardware is designed by one of the best industrial design firms globally — Pininfarina, whose customers include Ferrari and Maserati. This level of design focus brings an enhanced user experience to the Catalyst 9000 Switching Family. It provides user-centric, ergonomic design and common attributes that simplify device operations to improve usability, thus helping reduce the total cost of ownership. Catalyst 9000 switches add many usability improvements to the device, such as RFID, blue beacon and Bluetooth console.

Common software architecture

The Catalyst 9000 Family of Switches runs a common operating system, Cisco IOS XE. Cisco IOS XE is an enhanced, open and programmable OS. With a 30-year history and thousands of features, it is inarguably the most feature-rich OS in the networking industry. A common code base shared across the switching platforms enables end-to-end feature support and feature parity throughout the network.

Catalyst 9000 switching has five family members, broadly segregated into three types of network design models:

● Simple branch deployment

● Secure branch deployment

● Business-critical campus deployment

More details are provided in the chapter Campus Network Design.

● Catalyst 9200 Switches — fixed, stackable and compact access (simple branch)

● Catalyst 9300 Switches — fixed, stackable access and distribution (secure branch and business-critical campus)

● Catalyst 9400 Switches — modular access and distribution (secure, resilient campus)

● Catalyst 9500 Switches — fixed edge, core and distribution (secure, resilient campus)

● Catalyst 9600 Switches — modular edge, core and distribution (secure, resilient campus)

These platforms are discussed in further detail in the following chapters.

Catalyst 9200 switches focus on offering right-sized switching for simple branch and compact deployments. With its Catalyst 9000 family pedigree, the Catalyst 9200 Series offers simplicity without compromise: it is secure, always-on and IT-simplified. The Catalyst 9200 and 9200L models offer full PoE+, power and fan redundancy, modular and fixed uplink options, stacking bandwidth up to 160 Gbps, Layer 3 feature support and patching. Catalyst 9200 switches are purpose-built for cost-effective branch office access and space-constrained deployments. Catalyst 9200CX compact fanless models are ideal for fiber to the edge, high-speed data connectivity and Power over Ethernet (PoE+) connectivity in places where space is at a premium.

Catalyst 9200 Series Switches

Platform overview

The Catalyst 9200 Switches offer three model options:

● Catalyst 9200 — with modular uplinks and fans

● Catalyst 9200L — with fixed uplinks and fans

● Catalyst 9200CX — for compact, fanless deployments

The Catalyst 9200 and 9200L models have 24 and 48 port copper options with three configurations:

● Data-only 1G — optimized for devices that primarily require 10/100/1000 Mbps

● PoE+ 1G — provides all capabilities of the data-only models with support for PoE (15.4W) and PoE+ (30W) power. All ports provide PoE+ power simultaneously with dual power supplies.

● MultiGigabit — provides speeds up to 10 Gbps on mGig ports. The 1G and mGig ports are PoE+ capable and full PoE+ is supported with dual power supplies.

The Catalyst 9200CX compact models have 8 and 12 port copper options in 1G Data and PoE+ configurations.

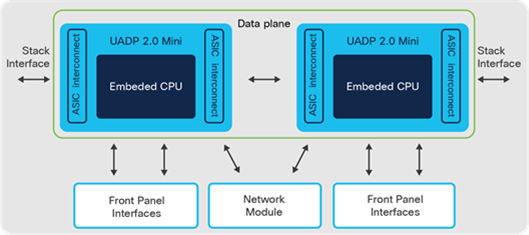

Architecture

Catalyst 9200 switches have been designed for a simple branch deployment with a simple but powerful architecture. The switch architecture consists of two main components:

● UADP ASIC

● ASIC interconnects/Stack interface

UADP ASIC

Catalyst 9200 switches have an embedded 4-core ARM CPU on the Cisco UADP 2.0 mini ASIC to run the operating system. The Cisco IOS XE operating system has been optimized as Cisco IOS XE Lite, providing a smaller image size and faster boot time, accommodating the hardware without compromising the benefits of Cisco IOS XE.

Catalyst 9200 and 9200L models with mGig have two UADP 2.0 mini ASICs and all other models are powered by a single UADP 2.0 mini ASIC. All Catalyst 9200 switch ports are line-rate for all packet sizes.

ASIC interconnect

Catalyst 9200 switches include an internal stack interface of 160 Gbps (80 Gbps full-duplex) on the Catalyst 9200 and 9200L models, which acts as the ASIC interconnect on switches with dual ASICs.

Catalyst 9200 Switch architecture

StackWise-160/80

Catalyst 9200 and 9200L models can stack up to eight switches, providing a centralized control plane while allowing distribution of the data plane. The modular uplink Catalyst 9200 models support 160 Gbps, whereas fixed uplink Catalyst 9200L models have a stacking bandwidth of 80 Gbps.

Note: Catalyst 9200CX models do not support stacking.
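The stacking rules stated above can be captured in a small planning check. This is a minimal sketch that encodes only what this section says (up to eight members, 160 Gbps for Catalyst 9200, 80 Gbps for Catalyst 9200L, no stacking on Catalyst 9200CX). The function name and the short model labels are hypothetical, and the sketch does not check whether different model types may be combined in one stack.

```python
from typing import List

MAX_STACK_MEMBERS = 8

# Stacking bandwidth in Gbps per model type, as stated in this section.
# Catalyst 9200CX is deliberately absent because it does not support stacking.
STACK_BANDWIDTH_GBPS = {"9200": 160, "9200L": 80}


def validate_stack(models: List[str]) -> List[str]:
    """Return a list of problems found in a proposed stack (empty if none)."""
    problems = []
    if len(models) > MAX_STACK_MEMBERS:
        problems.append(
            f"{len(models)} members requested, but at most {MAX_STACK_MEMBERS} are supported"
        )
    for model in models:
        if model not in STACK_BANDWIDTH_GBPS:
            problems.append(f"{model} is not listed as a stackable model in this section")
    return problems


print(validate_stack(["9200"] * 8))          # a full stack of modular-uplink models
print(validate_stack(["9200L", "9200CX"]))   # includes a model that cannot stack
```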

Additional details about Cisco StackWise can be found in the chapter High Availability.

Network modules

Catalyst 9200 modular models have an optional slot for uplink network modules. There are four variants of uplink modules: 4x 1G SFP ports, 4x 10G SFP ports, 2x 25G SFP ports and 2x 40G QSFP ports.

Uplink modules are Field-Replaceable Units (FRUs) that enable a swap of network modules without interrupting switch operations, providing investment protection without compromising on availability.

Note: Catalyst 9200L and 9200CX models have fixed uplink configurations for each model.

Power supply and fans

All Catalyst 9200 and 9200L switch models support dual redundant power supplies. The data-only model uses a 125W AC power supply; 24-port PoE+ and mGig models use 600W AC, while the 48-port PoE+ and mGig models use a 1000W AC supply. All three power supplies are highly efficient and rated 80 Plus Silver (125W) and Platinum (600W, 1000W) efficiency. Such high efficiency and innovations such as Energy Efficient Ethernet (EEE) lead to a significantly lower cost of ownership.
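A quick piece of arithmetic shows why full PoE+ on every port is tied to dual power supplies. The sketch multiplies the port count by the 30W PoE+ figure given earlier and compares the result with the nameplate rating of one and two supplies. This is an illustration under assumptions: nameplate wattage is not the same as the available PoE budget, because the switch itself also consumes power, and that overhead is not stated in this section.

```python
POE_PLUS_WATTS_PER_PORT = 30  # PoE+ per-port power stated earlier in this chapter


def poe_demand_watts(ports: int, watts_per_port: int = POE_PLUS_WATTS_PER_PORT) -> int:
    """Total power requested if every port delivers the given wattage at once."""
    return ports * watts_per_port


# (port count, nameplate watts of one AC supply) for the PoE+ models described above.
for ports, supply_watts in [(24, 600), (48, 1000)]:
    demand = poe_demand_watts(ports)
    print(
        f"{ports} ports: PoE+ demand {demand} W, "
        f"one supply {supply_watts} W, two supplies {2 * supply_watts} W"
    )
```

The demand works out to 720 W for 24 ports and 1440 W for 48 ports. In both cases that exceeds the rating of a single supply but falls within the combined rating of two.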

Catalyst 9200CX provides flexible options to power the switch. In addition to an external AC power adapter, select SKUs also support the PoE passthrough capability, allowing the switch to be powered via an upstream UPOE switch.

Catalyst 9200 and 9200L models are equipped with dual variable-speed fans to accommodate variance in temperature and altitude. All models can cool the switch with a single fan in the event of a fan failure. On modular uplink models, the fans can be replaced on an operational switch without downtime. On fixed uplink models, the fans are fixed, whereas all Catalyst 9200CX models are fanless.

Cisco Catalyst 9300 Series switches are the leading business-critical stackable enterprise fixed Access and Distribution switching platform. These Access switches are ideal for business-critical branch and campus environments where scale, optimal security, resiliency and programmability are necessary. They offer up to 1 Tbps of stacking bandwidth for eight devices in a stack. They provide a variety of copper and fiber downlink speeds and flexible high-speed uplink options, and are built to future-proof next-generation access networks. They also offer enhanced scale, compute resources, dense Cisco UPOE+, Cisco StackPower, Multigigabit (mGig) connectivity, strong security and built-in resiliency features.

Catalyst 9300 Series switches

Platform overview

All models of the Catalyst 9300 Series are 1RU units with dual power supplies and redundant fans. The Catalyst 9300 and 9300X modular models have an optional slot for uplink network modules, UPOE+ (90W), StackPower and StackWise-480/1T. The Catalyst 9300L and 9300LM models support fixed uplinks, UPOE (60W) and StackWise-320.

Different models offer a variety of connectivity and scale. These can be organized into various configurations, each with 24 and 48 port copper or 12 and 24 port fiber options:

● Data-only — optimized for devices that just need data connectivity, without PoE, with speeds from 10 Mbps to 10 Gbps

● PoE/PoE+ — provide the same capability as the copper data models plus support for up to 30W of PoE. All the ports support PoE/PoE+ and all ports can be active simultaneously with PoE+.

● Universal PoE (UPOE) — provides the same capability as the PoE+ models with the support of 60W of PoE. Any of the ports can be configured with UPOE, but the maximum available PoE power is dependent on the power supplies used.

● Universal PoE+ (UPOE+) — provides the same capability as the UPOE models with the added support of 90W of PoE. Any of the ports can be configured with UPOE+, but the maximum available PoE power is dependent on the power supplies used.

● 1G/mGig/10G Copper — provides connectivity at multiple speeds from 100 Mbps to 10 Gbps on RJ45 ports. Different models address varied port density requirements.

● 1G/10G/25G Fiber — provides fiber downlink connectivity options at 1G (SFP), 10G (SFP+) and 25G (SFP28) options to enable Fiber To The Desk (FTTD) and collapsed-core deployments.

● High-Scale models — provides higher MAC, IP route, ACL scale and deeper buffers to address the requirements of rich multimedia delivery and larger forwarding tables.

Catalyst 9300X models are the first switches in the industry with up to 1 Tbps stacking and 100G hardware IPsec. With double the local compute (CPU cores, memory, CPU Interconnect and storage) for Application Hosting, Catalyst 9300X models can serve many diverse needs for Hybrid Work and Smart Buildings. These switches can also be used as a Branch-in-a-Box solution for lean branch sites not using SD-WAN. Catalyst 9300X models offer the industry’s densest mGig fixed access portfolio that can also provide 90W power on each port, with 100G uplink options.

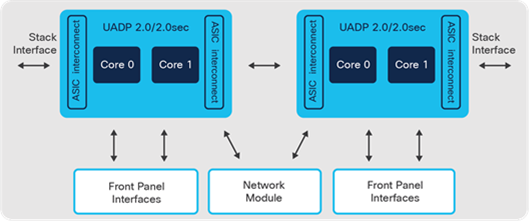

Architecture

Catalyst 9300 Series switches operate at line-rate, non-blocking performance and offer configurable system resources to optimize support for specific features. The switch architecture consists of three main components:

● UADP ASIC

● x86 CPU complex

● ASIC interconnect/Stack interface

UADP ASIC

Catalyst 9300, 9300L and 9300LM models are built with UADP 2.0 ASIC. The mGig models are equipped with two UADP 2.0 ASICs. The models without mGig are powered by a single Cisco UADP 2.0 ASIC.

Catalyst 9300-B (High-Scale) models use the UADP 2.0XL ASIC that supports double the buffers (i.e., 32MB, 16MB per core) per ASIC. It also supports double the MAC and IP route scale and a higher ACL scale when compared to the non-XL version. mGig models are built using 2 ASICs, whereas 1G models use a single ASIC.

Catalyst 9300X models are built with UADP 2.0sec ASIC that offers higher stack bandwidth, enhanced scale (2x routes, higher ACLs), 2x 10G AppGigabit ports (for application hosting) and L3 encryption in hardware up to 100G.

x86 CPU complex

Catalyst 9300, 9300L and 9300LM models are equipped with a 1.8 GHz x86 4-core CPU and 8 GB of DRAM. Catalyst 9300X models have a 2.4 GHz x86 4-core CPU that supports Quick Assist Technology (QAT) for software security performance acceleration and 16 GB of DRAM. All switches support 16 GB of internal flash storage and an external USB 3.0 SSD storage for application hosting and general-purpose use.

ASIC interconnect

Catalyst 9300 switches include an internal stack interface that provides 540, 240 and 160 Gbps on the Catalyst 9300X, 9300 and 9300L models respectively. On switches with multiple ASICs, this interface also acts as the ASIC interconnect.

Catalyst 9300 Switch architecture

StackWise-1T, StackWise-480 and StackWise-320

Catalyst 9300 switches provide the ability to stack up to eight switches using dedicated cables on the back, combining them to operate as a single, logical switch.

Catalyst 9300X models use the same stack cables as existing Catalyst 9300 models. Catalyst 9300X switches can be stacked together and are backward-compatible with existing Catalyst 9300 models. These platforms enable flexible design options, allowing for a mix of 10/25G fiber and copper models in the same stack.

Note: Stacking between Catalyst 9300/9300X and Catalyst 9300-B, Catalyst 9300L or 9300LM models is not supported.
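The stacking rules above can be captured in a small validation helper. This is a minimal sketch rather than a Cisco tool: the function name `check_stack` and the family labels are illustrative assumptions, and only the two rules stated in this section are modeled (a maximum of eight members, and no mixing of Catalyst 9300/9300X with Catalyst 9300-B, 9300L or 9300LM).

```python
# Illustrative sketch only: encodes the two stacking rules stated in the text.
# The family labels and the function name are hypothetical, not a Cisco API.

MAX_STACK_MEMBERS = 8

# Families the text says can be stacked together.
MIXABLE = {"9300", "9300X"}
# Families the text says cannot be stacked with 9300/9300X.
NOT_MIXABLE_WITH_9300 = {"9300-B", "9300L", "9300LM"}


def check_stack(members):
    """Return a list of rule violations for a proposed stack (empty if none)."""
    problems = []
    if len(members) > MAX_STACK_MEMBERS:
        problems.append(
            f"{len(members)} members exceeds the limit of {MAX_STACK_MEMBERS}"
        )
    families = set(members)
    if families & MIXABLE and families & NOT_MIXABLE_WITH_9300:
        mixed = sorted(families & NOT_MIXABLE_WITH_9300)
        problems.append(f"9300/9300X cannot be stacked with {', '.join(mixed)}")
    return problems


print(check_stack(["9300X", "9300", "9300"]))  # no violations
print(check_stack(["9300", "9300L"]))          # mixing violation
```

Whether the 9300-B, 9300L and 9300LM families can be combined with each other is not stated here, so the sketch deliberately does not check it.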

Additional details about Cisco StackWise can be found in the chapter High Availability.

Network modules

Catalyst 9300 and 9300X modular models have an optional slot for uplink network modules. The ports on these modules can be used for both uplink and downlink connectivity.

Catalyst 9300X uplink module options:

● 4x 40G/100G QSFP ports

● 2x 40G/100G QSFP ports

● 8x 10G-mGig RJ45 ports (no PoE)

● 8x 1G/10G/25G SFP28 ports

Catalyst 9300 uplink module options:

● 4x 1G RJ45 ports (10/100/1000Mbps)

● 4x 10G-mGig RJ45 ports (no PoE)

● 8x 10G SFP+ ports

● 2x 25G SFP28 ports

● 2x 40G QSFP ports

Note: The Catalyst 9300X uplink modules are only compatible with Catalyst 9300X models.

Note: Catalyst 9300 switches are compatible with Catalyst 3850 switch uplink modules. However, Catalyst 9300 switch uplink modules are not compatible with Catalyst 3850 switches.

Uplink modules are Field Replaceable Units (FRUs) that can be swapped without interrupting switch operations, thereby providing investment protection without compromising on availability.

Power supply and fans

Catalyst 9300 switches support dual redundant power supplies. These 80 Plus platinum-rated AC power supplies are available in 350W, 715W, 1100W and 1900W and provide maximum energy efficiency. A DC power supply variant is also available in 715W. The power supplies can be mixed in any combination, for example, AC and DC.

Catalyst 9300 switches are equipped with three field-replaceable variable speed fans to accommodate variance in temperature and altitude. These fans are operated in an N+1 redundant mode.

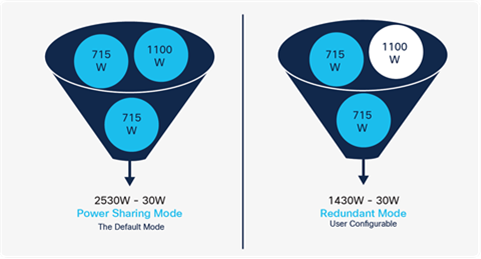

StackPower

Catalyst 9300 switches provide the ability to create a shared pool of power using dedicated stack power cables. In the event of power supply failure or more PoE power draw, the switch can utilize the power from the shared pool to support the extra load.

StackPower can be deployed in two modes: power-sharing and redundant mode. Additional details are provided in the chapter High Availability.

Cisco Catalyst 9400 Series switches are the leading business-critical modular enterprise Access and Distribution switching platform. Catalyst 9400 switches provide unparalleled investment protection with a flexible chassis architecture capable of supporting up to 9 Tbps of system bandwidth. They also offer unmatched power delivery for high-density PoE deployments, delivering dense 90W Cisco UPOE+ to endpoints. Catalyst 9400 switches deliver state-of-the-art High Availability with capabilities such as dual supervisors and N+1/N+N power supply redundancy. The platform is campus-optimized with an innovative dual-serviceable fan tray design, side-to-side airflow and is closet-friendly with a 16-inch depth. A single Catalyst 9400 switch can scale up to 384 access ports.

Catalyst 9400 Series Switches

Platform overview

Catalyst 9400 switches provide up to 480 Gbps per slot bandwidth. Three models offer different densities to fit different size requirements: 4-slot, 7-slot and 10-slot chassis.

All three chassis options provide dual supervisor slots for maximum availability. Each chassis is designed to support up to 720G of bandwidth between the two supervisor slots, allowing supervisors to support multiple 100G ports. With the growing need for increased PoE, the chassis has the capability of providing more than 4,800W of PoE power per slot delivering a max of 260 ports powered at 90W.
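The PoE figures above can be sanity-checked with simple arithmetic. The sketch below uses the per-slot and per-port numbers from the text; the 48-port line card size is an assumption taken from the line card descriptions later in this chapter, and the variable names are illustrative.

```python
# Figures from the text, except where marked as an assumption.
POE_PER_SLOT_W = 4800      # "more than 4,800W of PoE power per slot"
UPOE_PLUS_PORT_W = 90      # UPOE+ power per port
MAX_PORTS_AT_90W = 260     # stated chassis maximum at 90W
PORTS_PER_LINE_CARD = 48   # ASSUMPTION: a 48-port PoE line card

# Power needed to run every port of one line card at 90W.
per_card_demand_w = PORTS_PER_LINE_CARD * UPOE_PLUS_PORT_W
# Remaining per-slot PoE budget after powering all ports.
headroom_w = POE_PER_SLOT_W - per_card_demand_w
# Total PoE power implied by the stated chassis maximum.
chassis_poe_w = MAX_PORTS_AT_90W * UPOE_PLUS_PORT_W

print(f"One 48-port card at 90W per port needs {per_card_demand_w} W")
print(f"Headroom against the 4,800W per-slot figure: {headroom_w} W")
print(f"260 ports at 90W draw {chassis_poe_w} W in total")
```

Under these assumptions a fully loaded 48-port UPOE+ card fits within the per-slot PoE budget, which is consistent with all ports in a slot delivering their rated power simultaneously.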

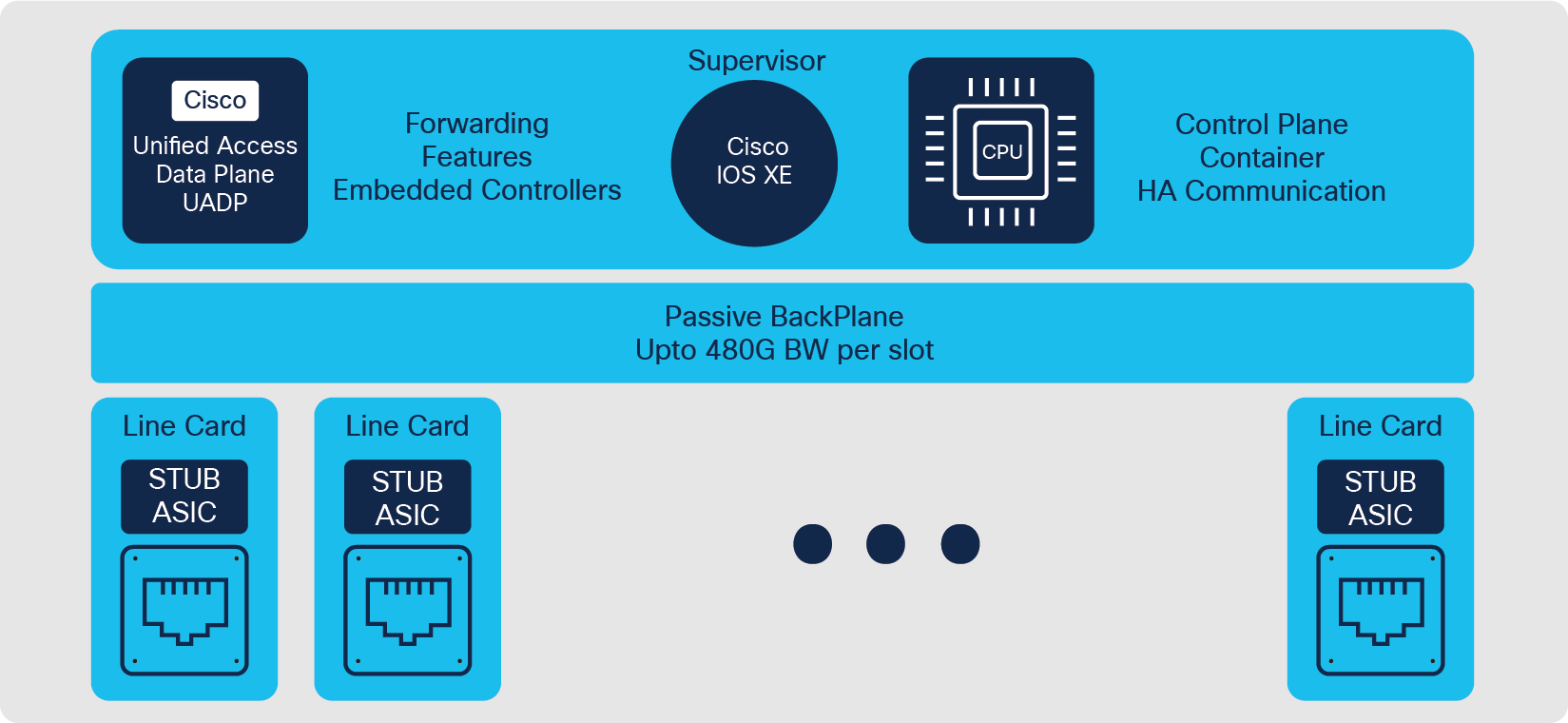

Architecture

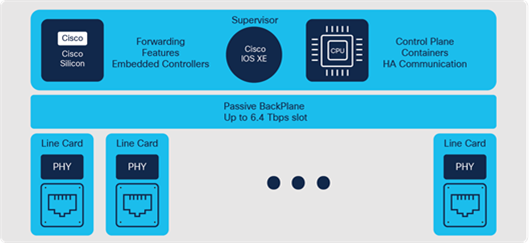

Catalyst 9400 switches are based on a centralized architecture, which means all forwarding, service and queuing are done on the supervisor, while the line cards are considered transparent, containing only stub ASICs and PHYs. The simplicity of this centralized design allows easy upgrade of bandwidth and features by just upgrading the supervisor while retaining existing line cards. This provides significant investment protection.

Catalyst 9400 Series Switch architecture

Supervisors

The Catalyst 9400 Series comes with multiple supervisor offerings that address a varied set of port speed, slot capacity and scale requirements. The supervisors are categorized based on their generation:

Catalyst 9400 Generation 2 supervisors:

● Catalyst 9400X-SUP-2

● Catalyst 9400X-SUP-2XL

Catalyst 9400 Generation 1 supervisors:

● Catalyst 9400-SUP-1

● Catalyst 9400-SUP-1XL

● Catalyst 9400-SUP-1XL-Y

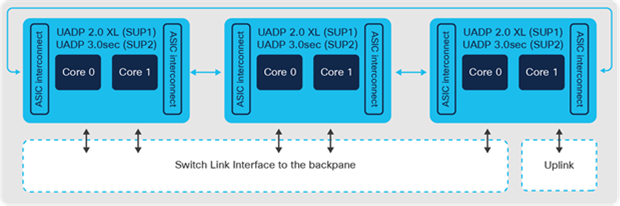

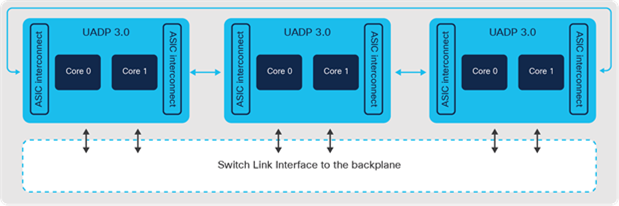

The Catalyst 9400 Gen-2 supervisors are powered by three UADP 3.0sec ASICs and a 2.3 GHz 8-core x86 CPU. Each ASIC provides up to 1.6 Tbps bandwidth, increased routing capabilities, higher buffer capacity and hardware support for IPsec and WAN MACsec. The three ASICs are interconnected with a 1.6 Tbps ASIC interconnect. The UADP 3.0sec ASIC comes with 36MB of unified packet buffers shared between the ASIC cores, which improves microburst absorption. The Gen-2 supervisors support 4x 10/25G and 4x 40/100G uplink ports that can be used in mixed combinations, with an overall uplink bandwidth of 400 Gbps. Catalyst 9400X-SUP-2XL and 9400X-SUP-2 enable 480G and 240G of per-slot bandwidth respectively, on all chassis types.

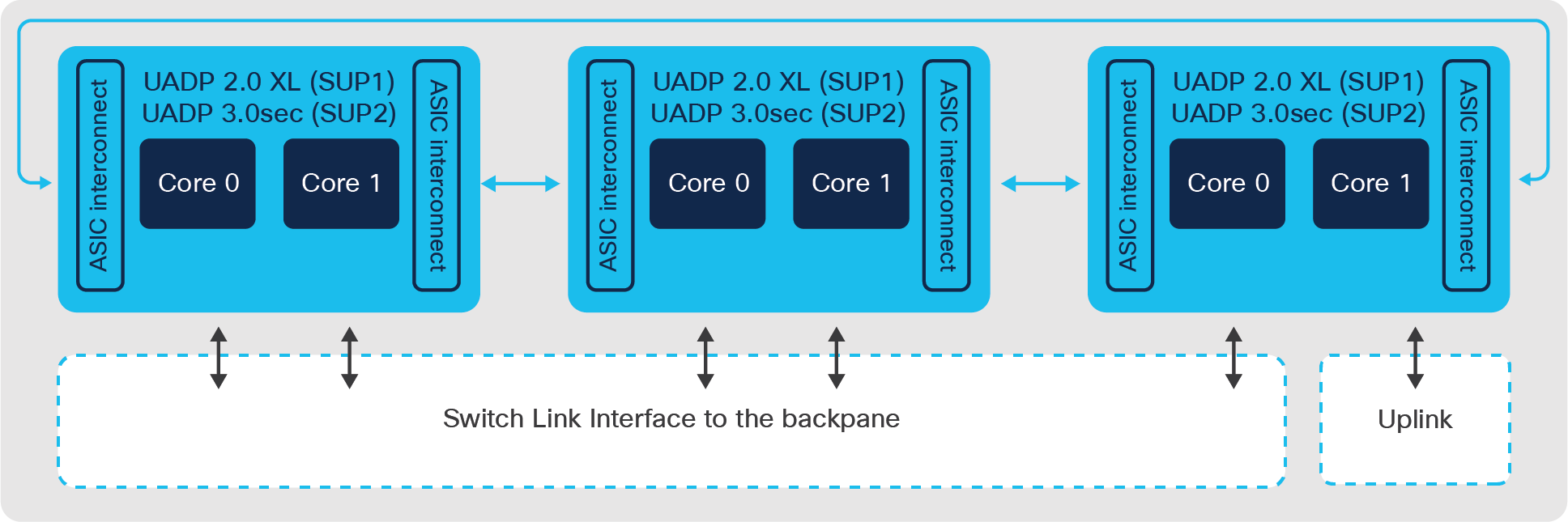

All Catalyst 9400 Gen-1 supervisors are powered by three UADP 2.0 XL ASICs and a 2.4 GHz 4-core x86 CPU. The three ASICs are interconnected through a 720G ASIC interconnect. The Gen-1 supervisors have 8x SFP/SFP+ interfaces (ports 1 and 5 are capable of 25G on 9400-SUP-1XL-Y) and 2x QSFP interfaces on the front, providing a total of 80G uplink bandwidth shared between the interfaces in various combinations. SUP-1 provides 80 Gbps of bandwidth per slot for all chassis models. SUP-1XL/1XL-Y provides 80 Gbps of bandwidth per slot in the 10-slot chassis, 120 Gbps per slot in the 7-slot chassis and 240 Gbps per slot in the 4-slot chassis.

All Catalyst 9400 switch Gen-1/Gen-2 supervisors come with 16 GB DRAM and 16 GB of internal flash storage. For application hosting or general-purpose storage, these switches support front-panel USB 3.0 SSD storage and additionally support onboard M2 SATA SSD up to 960 GB.

All supervisors support different ASIC templates to accommodate various deployment models, as discussed in chapter 13, Campus Network Design. SUP-2/2XL hardware is also capable of supporting customized ASIC templates that allow users to carve forwarding resources.

Catalyst 9400 switch supervisors use Switch Link Interfaces (SLIs) to connect line card stub devices through the backplane. The Catalyst 9400-SUP1 models support 10G SLI speeds for ASIC to switch backplane interconnects. The Catalyst 9400X-SUP2 models support 30G SLI speeds for Gen-2 line cards and 10G SLI speeds for Gen-1 line cards.

Catalyst 9400 Switch Supervisor-1XL and Supervisor-2XL architecture

Line cards

The Catalyst 9400 Series offer options of 1G, mGig (1/2.5/5/10G), Cisco PoE+, UPOE, UPOE+ and 1G/10G fiber line cards for different connectivity requirements.

● 48-port Data-only line card — all 48 ports on this module support 10/100 Mbps and 1 Gbps

● 48-port PoE+/UPOE/UPOE+ line cards — all speeds of the data-only line card with support for PoE+ (30W), UPOE (60W) and UPOE+ (90W) respectively. All 48 ports within the slot can provide the rated PoE power simultaneously.

● mGig line cards — various combinations from 100 Mbps to 10 Gbps and PoE up to 90W on RJ45 ports are supported based on requirements. The three variants are:

◦ 24 ports 10G mGig + 24 ports 1G with 60W UPOE

◦ 48 ports 5G mGig with 90W UPOE+

◦ 48 ports 10G mGig with 90W UPOE+ (Gen-2 Sup only)

● SFP/SFP+ Fiber line cards — the three variants are:

◦ 24/48 ports 1G/SFP

◦ 24 ports 10G/SFP+

◦ 48 ports 10G/SFP+ (Gen-2 Sup only)

ASIC — Line Card mapping

The table below shows the mapping of the ASICs to the line card slots for the 4-slot, 7-slot and 10-slot chassis.

Table 1. Catalyst 9400 Switch ASIC — Line Card mapping

| ASIC # | 4-slot | 7-slot | 10-slot |
|---|---|---|---|
| UADP #1 | Slot 1 | Slots 2 and 7 | Slots 1, 9 and 10 |
| UADP #2 | Slot 4 | Slots 1 and 5 | Slots 2, 3 and 4 |
| UADP #3 | Uplinks | Slot 6 and uplinks | Slots 7 and 8 and uplinks |
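The mapping in Table 1 lends itself to a simple lookup structure, for example when planning which line cards share an ASIC. The following is a minimal sketch; the dictionary layout and the `asic_for` helper are illustrative assumptions, and the values are transcribed from the table.

```python
# ASIC-to-slot mapping transcribed from Table 1.
# "uplinks" denotes the supervisor uplink ports.
ASIC_MAP = {
    "4-slot": {"UADP #1": [1], "UADP #2": [4], "UADP #3": ["uplinks"]},
    "7-slot": {"UADP #1": [2, 7], "UADP #2": [1, 5], "UADP #3": [6, "uplinks"]},
    "10-slot": {
        "UADP #1": [1, 9, 10],
        "UADP #2": [2, 3, 4],
        "UADP #3": [7, 8, "uplinks"],
    },
}


def asic_for(chassis, slot):
    """Return the ASIC serving a slot (or "uplinks"), or None if not listed."""
    for asic, served in ASIC_MAP[chassis].items():
        if slot in served:
            return asic
    return None


print(asic_for("7-slot", 5))           # UADP #2
print(asic_for("10-slot", "uplinks"))  # UADP #3
```

Slots that the table does not list return `None` in this sketch.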

Line card oversubscription

All Gen-2 line cards (48x mGig, 48x 10G SFP+) are non-blocking and line-rate with SUP-2XL, regardless of the chassis type, and 2:1 oversubscribed with SUP-2. Gen-1 line cards (with the exception of 24x mGig + 24x 1G, which is 1.1:1 oversubscribed) are line-rate with both SUP-2XL and SUP-2 on all chassis types.

The Gen-1 10G fiber and mGig line cards are oversubscribed with SUP-1, 1XL and 1XL-Y (24XS is line-rate with SUP-1XL on 4-slot chassis). All variants of 1G line cards operate at line-rate for all packet sizes.

All Gen-1 line cards get a significant bandwidth boost when operating with the Gen-2 supervisors (i.e., 3x bandwidth uplift on 10-Slot Chassis with SUP-2XL and 2x on 7-slot chassis with SUP-2XL), helping customers make the most of their existing infrastructure and investments.
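The oversubscription ratios and uplift figures above follow from dividing a line card's front-panel bandwidth by the bandwidth available to its slot. The sketch below reproduces that arithmetic. The per-slot values for SUP-2XL, SUP-2 and SUP-1XL come from the Supervisors section; the 240G per-slot figure for Gen-1 line cards on Gen-2 supervisors is an assumption inferred from the stated 1.1:1 ratio and the 3x and 2x uplift, not a number given in the text.

```python
def oversubscription(front_panel_gbps, slot_gbps):
    """Return the oversubscription ratio; 1.0 means line-rate."""
    return max(1.0, front_panel_gbps / slot_gbps)


# Per-slot bandwidth for Gen-2 line cards, as stated in the text.
SUP_2XL_SLOT_GBPS = 480
SUP_2_SLOT_GBPS = 240
# ASSUMPTION: Gen-1 line cards get 240G per slot with either Gen-2 supervisor.
GEN1_CARD_ON_GEN2_SUP_GBPS = 240
# Per-slot bandwidth with SUP-1XL, as stated in the text.
SUP_1XL_SLOT_GBPS = {"4-slot": 240, "7-slot": 120, "10-slot": 80}

gen2_48x10g = 48 * 10                 # 480G of front-panel bandwidth
gen1_24mgig_24x1g = 24 * 10 + 24 * 1  # 264G of front-panel bandwidth

ratios = {
    "48x 10G with SUP-2XL": oversubscription(gen2_48x10g, SUP_2XL_SLOT_GBPS),
    "48x 10G with SUP-2": oversubscription(gen2_48x10g, SUP_2_SLOT_GBPS),
    "24x mGig + 24x 1G with Gen-2 sup": oversubscription(
        gen1_24mgig_24x1g, GEN1_CARD_ON_GEN2_SUP_GBPS
    ),
}
# Bandwidth uplift for Gen-1 line cards when moving from SUP-1XL to Gen-2.
uplift = {
    chassis: GEN1_CARD_ON_GEN2_SUP_GBPS / bw
    for chassis, bw in SUP_1XL_SLOT_GBPS.items()
}

for name, ratio in ratios.items():
    print(f"{name}: {ratio:.1f}:1")
for chassis, factor in uplift.items():
    print(f"{chassis}: {factor:.0f}x uplift over SUP-1XL")
```

With these inputs the sketch yields 1:1, 2:1 and 1.1:1 for the three line card cases, and 3x and 2x uplift for the 10-slot and 7-slot chassis, matching the figures quoted above.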

Power supply

The power supplies for Catalyst 9400 switches come in small form-factor while providing high capacity and efficient output — 3200W AC with 240V input (1570W with 120V input), 2100W AC PS with 240V input (940W with 120V input) and 3200W DC PS. All AC power supplies are 80 Plus platinum-rated, providing the highest efficiency in converting AC power to DC.

The 7-slot and 10-slot chassis provide eight power supply bays while the 4-slot chassis provides four power supply bays. The Catalyst 9400 switch combines N+1 and N+N redundant options for power supplies.

Additional details are provided in the chapter High Availability.
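To illustrate the difference between the two redundancy options, the sketch below applies the common textbook definitions of N+1 (one spare supply) and N+N (half of the supplies held in reserve) to the bay counts and supply ratings mentioned above. The function name and the simplified model are assumptions; the platform's actual power budgeting is covered in the High Availability chapter.

```python
def usable_power_w(num_supplies, supply_w, mode):
    """Power that remains available after the failures each mode tolerates.

    Simplified model: "n+1" reserves one supply, "n+n" reserves half of them.
    """
    if mode == "n+1":
        return (num_supplies - 1) * supply_w
    if mode == "n+n":
        return (num_supplies // 2) * supply_w
    raise ValueError(f"unknown redundancy mode: {mode}")


# Eight bays (7-slot or 10-slot chassis) populated with 3200W AC supplies.
print(usable_power_w(8, 3200, "n+1"))  # 22400
print(usable_power_w(8, 3200, "n+n"))  # 12800
```

N+1 protects against the loss of a single supply while keeping most of the capacity usable, whereas N+N trades capacity for protection against the loss of an entire power feed.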

Fan tray

The fan tray of Catalyst 9400 switches contains multiple individual fans operating in an N+1 redundant mode. Fans operate at variable speeds based on the system temperature and altitude. This makes efficient use of the power and provides lower noise levels. The field-replaceable fan tray can be replaced from the front or the rear of the chassis. This is a tremendous help with operations and reduces downtime since the cable management in a typical wiring closet could become unwieldy when removing the cables from the front of the chassis.

Catalyst 9400 Switch fan tray

Cisco Catalyst 9500 Series switches are purpose-built business-critical fixed 40G/100G/400G Core and Distribution layer switches for the campus. These switches deliver exceptional table scales, buffering capabilities, up to 12 Tbps of switching capacity and up to 8 Bpps of forwarding performance. The platform offers non-blocking 100/200/400G QSFPDD, 100G QSFP28, 40G QSFP, 25G SFP28 and 10G SFP+ switches with high port densities.

Catalyst 9500 Series Switches

Platform overview

Catalyst 9500 Series switches are 1RU fixed-configuration switches for Core, Distribution and Edge deployments. The platform supports all the foundational High Availability capabilities, including dual redundant 80Plus platinum-rated power supplies and variable-speed, high-efficiency redundant fans.

Catalyst 9500 switches are powered by either the Cisco UADP or Cisco Silicon One ASICs depending on the model. Cisco Silicon One is the first network silicon to offer switching capacity up to 25.6 Tbps in the enterprise. Catalyst 9500X powered by the Silicon One Q200 along with Catalyst 9600 SUP-2 herald the entrance of 400G into the campus Core. The architectures of both ASICs are similar but differ in switching capacity, port density, port speeds, buffering capability and forwarding scalability.

Different models offer a variety of connectivity and scale. These can be organized into various configurations of QSFP or SFP fiber options:

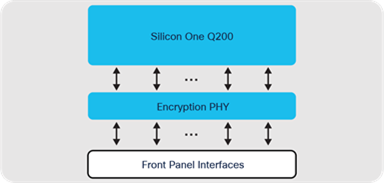

Note: Catalyst 9500X models with Silicon One Q200 leverage a new 800 Gbps PHY with built-in hardware encryption, IEEE 1588 time-stamping, as well as standard electrical and optical physical conversion.

● 400G QSFPDD — Catalyst 9500X (Silicon One Q200 ASIC) switch with 28x 40/100G ports + 8x 40/100/200/400G ports

● 100G QSFP28 — Catalyst 9500 high-performance (UADP 3.0) switch with 32x 40/100GE ports

● 40/100G QSFP28 — Catalyst 9500 high-performance (UADP 3.0) switch with 32x 40GE or 16x 100G ports

● 40G QSFP — Catalyst 9500 (UADP 2.0 XL) switch with 24x 40GE ports, Catalyst 9500 (UADP 2.0 XL) switch with 12x 40G ports

● 10/25G SFP28 — Catalyst 9500 high-performance (UADP 3.0) switch with 48x 25GE + 4x40/100 GE ports, Catalyst 9500 high-performance (UADP 3.0) switch with 24x 25GE + 4x40/100 GE ports

● 10G SFP+ — Catalyst 9500 (UADP 2.0 XL) switch with 40x 1/10GE ports, Catalyst 9500 (UADP 2.0 XL) switch with 16x 1/10GE ports

Architecture

Catalyst 9500 switches operate at line-rate and offer configurable system resources to optimize support for specific features.

The switch architecture consists of three main components:

● ASIC

● x86 CPU complex

● ASIC interconnect

ASIC

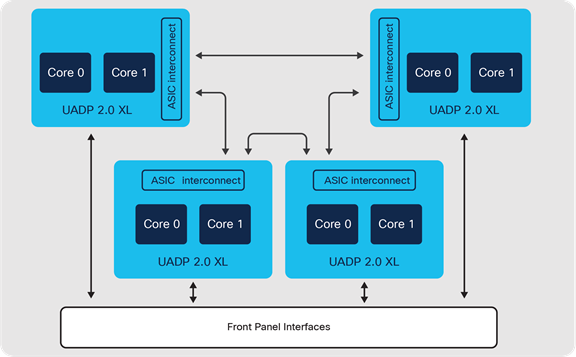

Cisco UADP 2.0 XL ASIC is built using 28-nanometer technology with two cores, capable of supporting up to 240 Gbps with a maximum forwarding capacity of 375 Mpps.

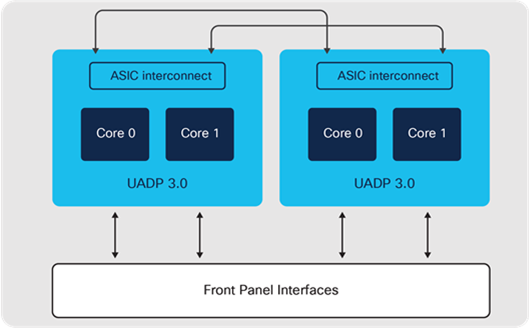

Cisco UADP 3.0 ASIC is built on 16-nanometer technology using two cores, capable of supporting up to 1.6 Tbps with a maximum forwarding capacity of 1 Bpps.

Cisco Silicon One Q200 ASIC is built on 7-nanometer technology using six slices, capable of supporting up to 12.8 Tbps, with a maximum forwarding capacity of up to 8 Bpps. The Silicon One Q200 ASIC features 80 MB of dedicated low latency buffers and 8 GB of High Bandwidth memory. Catalyst 9500X models with Silicon One Q200 also introduce 400G into the enterprise.

x86 CPU complex

Catalyst 9500 switches come with a 2.4 GHz x86 4-core CPU and 16 GB DRAM, while the Catalyst 9500X Switches come equipped with a 2.4 GHz x86 8-core CPU and 32 GB DRAM. In addition to 16GB of onboard flash storage, all models support up to 960GB USB 3.0 SSD drive for general-purpose storage and application hosting.

ASIC interconnect

Catalyst 9500 switches with Cisco UADP use high-speed ASIC interconnect links for inter-ASIC communication. UADP 2.0 XL has up to 720 Gbps (360 Gbps full-duplex) of interconnect bandwidth and UADP 3.0 has up to 1.6 Tbps (800 Gbps full-duplex). Packets destined to local ports within the ASIC do not use ASIC interconnect links.

Note: With 25.6 Tbps (12.8 Tbps full-duplex) of forwarding capacity per ASIC, all Silicon One Q200 models can be powered by a single ASIC, which eliminates the need for ASIC interconnects.

Cisco Catalyst 9500 Switch block diagram — Cisco UADP 2.0 XL

Cisco Catalyst 9500 high-performance switch block diagram — Cisco UADP 3.0

Cisco Catalyst 9500X switch block diagram — Cisco Silicon One Q200

Network modules

Only the Catalyst 9500 UADP 2.0 XL models support uplink modules. Two variants of uplink modules are available, and their ports can be used either to connect to an upstream switch or to connect hosts.

● 8x 10G SFP ports

● 2x 40G QSFP ports

Uplink modules are field-replaceable and can be swapped without interrupting switch operations, thereby providing investment protection without compromising availability.

Power supply

Catalyst 9500 switches support up to two AC 80 Plus platinum-rated or DC small form-factor power supply units: 650W AC, 930W DC, 950W AC/DC, 1500W AC/DC and 1600W AC/DC. Power supplies can be installed in the following combinations: two AC, two DC or an AC and DC combination. The power supplies work together in a redundant (1:1) load-sharing mode, in which each power supply operates at approximately 50 percent of its capacity. In case of power supply failure, the other power supply can provide power for the entire system.

Fans and fan tray

Catalyst 9500 Series switches have up to six variable speed independent fan units or dual fan trays depending on the SKU. Each fan operates at variable speeds to accommodate variance in temperature and altitude.

Catalyst 9500X switches feature two sets of six variable speed independent fan units to support reversible airflow, an important requirement enabling customers to delineate hot and cold aisles in their environment, for optimal cooling. One type is for port-side intake fans and the other type is for port-side exhaust fans.

The Catalyst 9500 Series switch can accommodate a failure of up to one individual fan or fan tray.

Cisco Catalyst 9600 Series switches are leading business-critical modular enterprise campus Core, Distribution and Edge platforms. The Catalyst 9606R chassis supports a switching capacity of up to 25.6 Tbps. Catalyst 9600 switches provide high port densities that fit diverse campus needs, from 1G to 400G non-blocking speeds. The platform delivers High Availability with field-replaceable dual supervisors, redundant power supplies and fans. The platform is campus-optimized with an innovative dual-serviceable fan tray design, side-to-side airflow and is closet-friendly with about 16-inch depth. A single Catalyst 9600 switch can scale up to 192 core ports.

Cisco Catalyst 9606R chassis

Platform overview

Catalyst 9606R is a 6-slot chassis, with two middle slots dedicated for the supervisors and four slots dedicated for the line cards. Each line card slot has a dedicated total bandwidth of up to 6.4 Tbps (3.2 Tbps full-duplex). The Catalyst 9606R chassis can provide up to 32x 400G (QSFPDD) or 128 x 40G/100G (QSFP) or 192 x 1G/10G/25G (SFP) ports.
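The chassis-level figures follow directly from the per-slot numbers. The short calculation below is a sketch using only values stated in this section (four line card slots, 6.4 Tbps per slot and the maximum port counts); the variable names are illustrative.

```python
LINE_CARD_SLOTS = 4   # six slots minus two supervisor slots
SLOT_GBPS = 6400      # 6.4 Tbps of bandwidth per line card slot

# Aggregate bandwidth across all line card slots.
chassis_gbps = LINE_CARD_SLOTS * SLOT_GBPS

# Maximum chassis port counts stated in the text.
max_ports = {"400G QSFPDD": 32, "40G/100G QSFP": 128, "1G/10G/25G SFP": 192}
# Ports per line card slot implied by those maximums.
ports_per_slot = {kind: n // LINE_CARD_SLOTS for kind, n in max_ports.items()}

# Eight 400G ports per slot carry 3.2 Tbps in each direction.
per_slot_400g_gbps = ports_per_slot["400G QSFPDD"] * 400

print(f"Chassis bandwidth: {chassis_gbps / 1000} Tbps")
print(f"Ports per slot: {ports_per_slot}")
print(f"400G ports per slot, one direction: {per_slot_400g_gbps / 1000} Tbps")
```

The result, 25.6 Tbps across four slots, matches the switching capacity quoted for the Catalyst 9606R chassis, and eight 400G ports per slot line up with the 3.2 Tbps full-duplex per-slot figure.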

Architecture

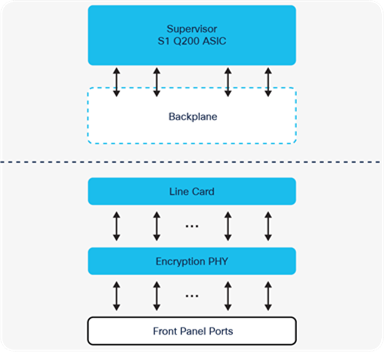

Catalyst 9600 switches are based on a centralized architecture. The supervisor does all forwarding, security and queueing, while the line cards are considered transparent, containing only PHYs and control logic. The simplicity of this centralized design allows easy upgrade of features and additional bandwidth, by upgrading the supervisor while keeping the existing line cards. The centralized architecture and transparent line card combination also provides uninterrupted supervisor switchover as the foundation for In-Service Software Upgrade (ISSU).

Catalyst 9600 Series Switching architecture

Supervisors

Catalyst 9600 comes with multiple supervisor offerings that address a varied set of port speed, slot capacity and scale requirements. The supervisors are categorized based on their generation:

Catalyst 9600 Generation 2 supervisor:

● Catalyst 9600X-SUP-2

Catalyst 9600 Generation 1 supervisor:

● Catalyst 9600-SUP-1

The Catalyst 9600 Gen-1 Supervisor is powered by three UADP 3.0 ASICs, 2.0 GHz x86 8-core CPU, 16 GB DRAM and 16 GB of internal flash storage. Each ASIC provides up to 1.6 Tbps bandwidth. The three ASICs are interconnected with a 3.2 Tbps ASIC interconnect. The Catalyst 9600 Gen-1 Supervisor provides 2.4 Tbps (1.2 Tbps full-duplex) of bandwidth per slot for all the line card slots.

Catalyst 9600 Switch Supervisor-1 architecture

The Catalyst 9600 Gen-2 supervisor introduces the Cisco Silicon One Q200 to the Enterprise Modular Core family, delivering the full potential of the Catalyst 9600 chassis with 6.4 Tbps (3.2 Tbps full-duplex) bandwidth per slot for all the line card slots, for a total of 25.6 Tbps switching bandwidth. The Gen-2 supervisor has a 2.7 GHz x86 8-core CPU, 32 GB DRAM and 16 GB of internal flash storage. New line cards unlock the full potential of the new supervisor, while existing line cards get a 2x bandwidth boost with a supervisor upgrade, delivering unmatched value and investment protection.

Catalyst 9600X Switch - Supervisor-2 and LC diagram

Both Gen-1 and Gen-2 supervisors support up to 960GB M2 SATA SSD options for application hosting or general-purpose storage.

Line cards

Catalyst 9600 switches offer five types of line cards for different connectivity requirements. Catalyst 9600 line cards can be leveraged for both uplink and downlink connectivity, including the new Gen-2 combo (SFP and QSFP) line cards that provide 100/200/400G uplink ports.

● Gen-2 Fiber Combo line card:

◦ With SUP-2: 40x 10/25/50G SFP56, 2x 40/100/200G QSFP56 uplinks and 2x 40/100/200/400G QSFPDD uplinks

◦ With SUP-1: 40x 1/10/25G SFP and 2x 40/100G QSFP Uplinks

Note: Gen-2 line cards leverage a new 800 Gbps PHY with built-in hardware encryption, IEEE 1588 time-stamping, as well as standard electrical and optical physical conversion.

● Gen-1 Fiber QSFP line card:

◦ 24-port QSFP28 line card

◦ With SUP-2: 24x 40/100G

◦ With SUP-1: 24x 40G or 12x 100G

● Gen-1 Fiber SFP line cards:

◦ 48-port SFP56 line card

◦ With SUP-2: 48x 10/25/50G

◦ With SUP-1: 48x 1/10/25G

◦ 48-port SFP line card

◦ 48x 1G fiber (only supported on SUP-1)

● Gen-1 Copper mGig line card:

◦ 48-port RJ45 copper

◦ With SUP-2: 48x 10G ports

◦ With SUP-1: 48x 100M/1/2.5/5/10G ports

Note: Gen-1 line cards leverage a PHY without hardware encryption. When operating with the Gen-2 supervisor, hardware encryption is not supported.

Power supply

The power supplies for Catalyst 9600 switches come in a small form factor while providing high capacity and efficient output. Catalyst 9600 switches support up to four 80 Plus platinum-rated AC or DC power supply units of 2kW (AC and DC) and 3kW (AC). The platform supports both combined and N+1 redundant modes.

Additional details are provided in chapter 5, High Availability.

Fans and fan tray

Catalyst 9600 Series switches contain a single fan tray with multiple individual fans operating in an N+1 redundant mode. The fans operate at variable speeds based on the system temperature and altitude. This makes efficient use of the power and provides a reduced noise level. The fan tray on Catalyst 9600 switches can be replaced from the front or the rear of the chassis. This is a tremendous help with operations and reduces downtime since the cable management in a typical wiring closet could make it unwieldy to remove the cables from the front of the chassis to service the fan tray.

Catalyst 9600 Series Switch fan tray

ASICs — the power of programmable silicon

An Application-Specific Integrated Circuit (ASIC) is a custom silicon microchip designed for a specific task such as forwarding network packets, rather than for general-purpose processing such as a CPU.

In a network switch, an ASIC handles packet recognition and Layer 2/Layer 3 processing at extremely high speeds (hundreds of Gigabits per second, trending towards Terabits per second). In addition to this, an ASIC also handles a rich set of network services, including prioritization with QoS, traffic filtering and enforcement with ACLs, segmentation with VRFs and SGTs, accounting with NetFlow and many more.

ASIC microchips are built using a process that is designated by its minimum feature size, for example, 7-nanometer technology. This translates to the size of various components used, including transistors and memory. The three main reasons for driving towards these smaller ASICs are:

● Increased speed, as electrons have shorter distances to travel

● Lower power consumption and more efficiency, with less energy wasted as heat

● Lower cost, by improving the yield (number per wafer) and quality of chips

Modern ASICs are developed with several technologies that range from 45 nm to as small as 7 nm. Newer ASICs are also packaged with multiple additional memory modules to achieve deeper buffers and scale of lookup tables.

Why do we need ASICs?

A general-purpose CPU is too slow for forwarding networking traffic. While a general-purpose CPU might be fast at running random access applications on a laptop or server, processing and forwarding network traffic is a different matter. Traffic handling requires constant lookups against large memory tables, including L2 for MAC addresses, L3 for IP routes, L4 for ACLs, Security and QoS.

In a general-purpose CPU, these tables are held in off-chip memories and incur significant performance losses for frequent access. There are also limited data paths and buffers to handle packets that are being processed at millions or billions of packets per second. Once packets have been received and queued, the CPU must perform the actual processing functions of finding destination ports and rewriting packet formats. For these reasons, a general-purpose CPU is not well-suited for network processing.

| The bottom line |

| CPUs are flexible but slow. ASICs are necessary to meet the requirements of enterprise networks. |

The following sections examine the traditional and programmable network ASICs that are central to how a switch operates and that form the foundation of an enterprise network capable of handling current and future requirements.

Traditional ASICs

Many different ASICs have been used in Cisco switches and routers over the years. Each of these was designed and developed for the specific features, speed and scale needed for different roles in the campus network.

This class of networking ASICs is known as fixed ASICs. All aspects of these ASICs (behavior, speed, scale, etc.) are hard-wired (fixed) into them as part of the manufacturing process and cannot be changed without creating a new version of the ASIC.

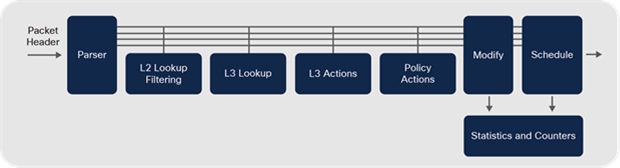

Another reason they are called fixed ASICs is their processing behavior. As the name suggests, all incoming packets are subject to a fixed series of steps, known as a processing pipeline. A typical fixed processing pipeline’s stages are similar to the following:

1. Parse incoming packets (examine headers).

2. Layer 2 processing (e.g., MAC lookup).

3. Layer 3 processing (e.g., IP lookup).

4. Policy processing (e.g., ACL lookup).

5. Packet rewrite and traffic counters.

6. Queue scheduling and transmission.

Traditional ASIC — processing pipeline

Fixed ASICs are very cost-effective and efficient but are not flexible or adaptable. They are only able to handle the types of packets that the chip is hard-wired to process.
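The fixed pipeline described above can be modeled conceptually in a few lines of code. This is a software analogy only, not a representation of how any ASIC is implemented: the stage names mirror the six steps listed earlier, while the `HARDWIRED_FORMATS` set and the `process` function are hypothetical names chosen for illustration.

```python
# Packet formats the hypothetical fixed chip was built to understand.
# A frozenset is used to stress that this cannot change after "manufacturing".
HARDWIRED_FORMATS = frozenset({"ipv4", "ipv6"})

# The six stages from the text, always executed in this order.
FIXED_PIPELINE = (
    "parse",
    "l2_lookup",
    "l3_lookup",
    "policy_lookup",
    "rewrite_and_count",
    "queue_and_transmit",
)


def process(packet_format):
    """Run a packet through every stage in order; return the stages visited."""
    trace = []
    for stage in FIXED_PIPELINE:
        if stage == "parse" and packet_format not in HARDWIRED_FORMATS:
            # A fixed ASIC has no logic for formats it was not designed for.
            return trace + ["unsupported_in_hardware"]
        trace.append(stage)
    return trace


print(process("ipv4"))   # traverses all six stages
print(process("vxlan"))  # rejected at the parse stage
```

Supporting a new format in this model means changing `HARDWIRED_FORMATS` and the stage logic itself, which for a fixed ASIC corresponds to building a new chip.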

Network and protocol evolution

Why do ASICs need to change? To provide an example, the ASIC in Catalyst 3750 can only forward IPv4 and IPv6 packets in hardware. It was designed before VXLAN was developed, and since it is a fixed ASIC, it cannot handle VXLAN in hardware. An entirely new ASIC is needed for this purpose.

This lack of flexibility may have been acceptable when networks, and related protocols, did not change much. In the new era of networking, however, everything is software-defined, with ever-evolving protocols and scale requirements. This requires ASICs to support new packet formats and encapsulations such as VXLAN-GPO, GPE and NSH.

| The bottom line |

| Traditional fixed ASICs are not conducive to the demands of the new Software-Defined world. |

Programmable ASICs

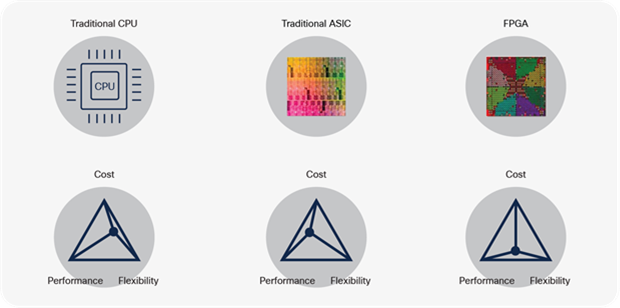

How do we get the best of both worlds? How do we get the speed we need for Gigabits or Terabits of bandwidth and deliver the flexibility to keep pace with new network innovations? These questions led to the concept of programmable ASICs — flexible network microchips designed to adapt to new capabilities and offer the performance that modern networks demand.

Early attempts led to the development of the Field Programmable Gate Array (FPGA). These are processors with fully reprogrammable logic gates that allow changing original behavior after manufacturing. Although FPGAs do provide a level of flexibility, their use as a primary switch-forwarding engine is typically cost-prohibitive due to design, manufacturing costs, board space, heat and power considerations.

These limitations typically relegate FPGAs to a special-purpose role in most network devices. For instance, an FPGA may be used to augment the packet forwarding of a fixed ASIC for that one “special” feature the fixed chip does not have, such as providing VXLAN encapsulation not supported on the switch ASIC. But this raises the total cost of a switch, using combined FPGA and ASIC designs to achieve flexibility.

Traditional CPUs, ASICs and FPGAs

| The bottom line |

| CPUs are flexible and inexpensive but do not scale for high-speed forwarding. Fixed ASICs are fast and scalable, but inflexible. FPGAs are flexible and scalable, but expensive. |

To summarize, programmable ASICs should offer:

● A flexible processing pipeline

● An option to use deep or shallow packet buffers

● An option to scale lookup tables (on-die or on-package memories)

● A single architecture that scales from low to high bandwidth, with single or multiple devices in a mesh or fabric

Cisco saw this need coming several years ago and developed the programmable Cisco Unified Access Data Plane (UADP) ASIC family.

The Cisco UADP ASIC combines the flexibility to address new and emerging networking protocols and encapsulations, with the speed of a fixed ASIC and the cost and scalability to address multiple different areas of the campus network, such as Access, Distribution, Core and more.

This approach continued with the new Cisco Silicon One ASIC family to achieve higher scale and bandwidth. The Silicon One ASIC architecture brings a scalable slice-based processing pipeline, with support for industry-standard programmable P4 microcode for datapath pipelines and support for optional on-package memories. This results in a flexible ASIC for either Switching, Routing, Data Center or Service Provider designs.

The rest of this chapter explores the Cisco UADP and Silicon One ASICs, which are at the heart of the Catalyst 9000 Family of Switches.

Cisco Programmable Switching Silicon

Flexibility in programming sets the ideal foundation for the world's most advanced switches. This enables Catalyst 9000 switches to:

● Handle new frame encapsulations, allowing new features and protocols

● Reprogram their memory tables, allowing switches to adapt to changing needs

● Support multiple interface types and chassis configurations to address evolving network designs

● Maintain consistent high performance to address a growing diversity of applications

● Provide a rich, integrated set of flexible traffic handling and accounting services

Flexibility at every stage

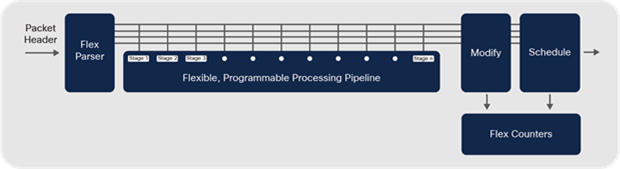

In Cisco programmable switching silicon, almost every processing stage is made flexible and programmable, unlike a fixed-pipeline chip.

The first stage of the ASIC is a parser, whose job is to recognize packet types and headers and analyze them for further processing in the ASIC pipeline. In traditional fixed ASICs, the parser stage is fixed, making it impossible to upgrade the ASIC to recognize or process new packet types and headers in hardware.
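The difference between a fixed and a programmable parser can be sketched in a few lines of Python. This is an illustrative toy model, not how the ASIC microcode is written: the `HeaderDef` structure, the parse-graph dictionary and the helper names are all hypothetical, and IPv4 headers are assumed to carry no options. The point it shows is that when header definitions are data rather than fixed logic, recognizing VXLAN (UDP destination port 4789) only requires updating the table.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class HeaderDef:
    """One node of a parse graph (hypothetical structure for illustration)."""
    length: int                                    # fixed header length in bytes
    next_header: Callable[[bytes], Optional[str]]  # picks the next node, if any


ParseGraph = Dict[str, HeaderDef]


def base_graph() -> ParseGraph:
    """A parser that only knows Ethernet, IPv4 (no options) and UDP."""
    return {
        "ethernet": HeaderDef(14, lambda h: "ipv4" if h[12:14] == b"\x08\x00" else None),
        "ipv4": HeaderDef(20, lambda h: "udp" if h[9] == 17 else None),
        "udp": HeaderDef(8, lambda h: None),
    }


def with_vxlan(graph: ParseGraph) -> ParseGraph:
    """'Reprogram' the parser: UDP destination port 4789 now leads to VXLAN."""
    updated = dict(graph)
    updated["udp"] = HeaderDef(
        8, lambda h: "vxlan" if int.from_bytes(h[2:4], "big") == 4789 else None
    )
    updated["vxlan"] = HeaderDef(8, lambda h: None)
    return updated


def parse(graph: ParseGraph, packet: bytes, start: str = "ethernet") -> List[str]:
    """Walk the parse graph and return the header names that were recognized."""
    recognized: List[str] = []
    offset = 0
    current: Optional[str] = start
    while current is not None and current in graph:
        node = graph[current]
        header = packet[offset:offset + node.length]
        if len(header) < node.length:
            break  # truncated packet: stop parsing
        recognized.append(current)
        offset += node.length
        current = node.next_header(header)
    return recognized


def sample_vxlan_packet() -> bytes:
    """Build a minimal Ethernet/IPv4/UDP/VXLAN header stack (payload omitted)."""
    ethernet = bytes(12) + b"\x08\x00"          # zeroed MACs, EtherType IPv4
    ipv4 = bytearray(20)
    ipv4[0] = 0x45                              # version 4, header length 5 words
    ipv4[9] = 17                                # protocol = UDP
    udp = (49152).to_bytes(2, "big") + (4789).to_bytes(2, "big") + bytes(4)
    vxlan = b"\x08\x00\x00\x00" + (5000).to_bytes(3, "big") + b"\x00"
    return ethernet + bytes(ipv4) + udp + vxlan
```

With the base graph, `parse(base_graph(), sample_vxlan_packet())` stops at the UDP header, which mirrors a fixed parser that predates VXLAN. After `with_vxlan(...)` is applied, the same packet is recognized through to the VXLAN header without any change to the `parse` function itself.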

Network Processing Unit (NPU)

This stage is the most similar to a CPU, where the network forwarding and service processing occur. Unlike fixed-processing pipelines, the multi-stage flexible pipelines of Cisco programmable ASICs (L2, L3 forwarding, policy, rewrite, queuing, etc.) are also completely reprogrammable via firmware microcode.

NPUs can be a single processor or multiple processors (cores) integrated into a single package to share the load and expand capacity. There is an ingress NPU pipeline to process incoming packets and an egress NPU pipeline to process outgoing packets.

Cisco’s programmable ASICs are also capable of reallocating and customizing memory resources through different configurable templates, unlike fixed ASICs where the resources are fixed to a specific stage or function.

Programmable Cisco ASIC — processing pipeline and NPU

Integrated micro-engines

Certain functions executed by a programmable ASIC may be very processing-intensive. Several basic tasks are based on well-known fixed algorithms, and it does not make sense to spend cycles in the ASIC pipeline on them. In such cases, an on-chip micro-engine can process these functions in parallel, preserving valuable ASIC performance.

Some examples of micro-engine functions built into the ASIC include:

● Encryption and decryption

● Fragmentation

● Packet replication

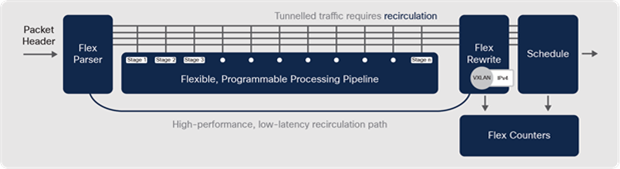

Packet recirculation

Traffic tunneling is a common requirement in modern networks. IPsec, GRE, MPLS and VXLAN are common tunneling protocols. They add an additional header outside the original packet when sending (known as encapsulation or encryption) and remove the outer header when the packet is received (known as decapsulation or decryption).

Any time packets need to be tunneled in an ASIC, the original packet may need to be processed more than once, to add or remove additional headers. This is known as recirculation.

Programmable Cisco ASIC — packet recirculation

The bandwidth available for recirculation is flexible, meaning recirculation can also use the spare bandwidth not currently being used by the front-panel ports. When tunneling is required, the impact on forwarding performance is minimal. A packet can be recirculated multiple times, but only two or three passes are normally required.
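As a rough illustration of why tunneled traffic typically needs two or three passes, the following Python sketch models a pipeline in which each pass performs a single action on the outermost header: strip it, push a new outer header, or make the final forwarding decision. It is a simplified toy model under stated assumptions, not a description of the actual UADP or Silicon One pipeline; the function name, its parameters and the `max_passes` guard are all hypothetical.

```python
from typing import Dict, List, Optional, Set, Tuple


def forward(
    headers: List[str],
    terminate_here: Optional[Set[str]] = None,
    encapsulate: Optional[Dict[str, str]] = None,
    max_passes: int = 4,
) -> Tuple[List[str], int]:
    """Return the final header stack (outermost first) and the passes used.

    terminate_here: tunnel header types that end on this switch (decapsulate).
    encapsulate:    maps an outermost header type to the tunnel header to push.
    max_passes:     hypothetical guard against endless recirculation.
    """
    terminate_here = terminate_here or set()
    encapsulate = encapsulate or {}
    stack = list(headers)
    for passes in range(1, max_passes + 1):
        if not stack:
            raise ValueError("no headers left to process")
        outer = stack[0]
        if outer in terminate_here:
            stack = stack[1:]                     # strip outer header, recirculate
            continue
        if outer in encapsulate:
            stack = [encapsulate[outer]] + stack  # push outer header, recirculate
            continue
        return stack, passes                      # final pass: forwarding lookup
    raise RuntimeError("recirculation limit exceeded")
```

In this model, plain forwarding of `["ethernet"]` takes one pass, VXLAN encapsulation (`encapsulate={"ethernet": "vxlan"}`) or decapsulation (`terminate_here={"vxlan"}`) takes two, and stripping one tunnel header before pushing another takes three.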

ASIC Interconnects

Depending on the required port types and densities, a switch may be built around a single ASIC or multiple interconnected ASICs.

For this purpose, dedicated Input/Outputs (I/O) on the ASIC can be used to interconnect multiple ASICs in either a point-to-point (mesh or fabric) or a ring (stack) topology, expanding the total scale of the system. These interconnects can be within the switch or use external cabling outside the switch.

CPU Interconnects

Enterprise networks are now dealing with massive volumes of data and there is a need to collect and analyze this data to respond faster and deliver insightful context. Traditional approaches using remote servers will no longer work.

Edge computing (enabled by Application Hosting) can greatly reduce the data sent to the cloud or a remote server by collecting and analyzing the data at the Edge and making decisions locally, which reduces latency and network bandwidth consumption. To meet this requirement, ASICs should provide dedicated links for application traffic from the ASIC to the CPU, to maximize the performance of such hosted applications.

Cisco UADP and Silicon One ASICs support one or more application ports, at speeds of up to 10 Gbps each, that directly map front-panel ports to the CPU. For more details on container networking and how it can be utilized, refer to Application Hosting.

Programming ASICs with microcode

Cisco IOS XE is a multi-layered operating system. Some lower software layers are closely associated with hardware. Meanwhile, hardware drivers and infrastructure pieces of the software (known as microcode) directly interact with the hardware. This microcode layer of the software programs the ASIC. Refer to the chapter Cisco IOS XE for more details about the software architecture.

The microcode for programming the ASIC is included in the Cisco IOS XE image. Any changes to the microcode are included with the image that runs on a Catalyst 9000 switch. Microcode upgrades allow flexible allocation of ASIC resources.

Programmable ASICs in the Catalyst 9000 Switch Family

The Catalyst 9000 family consists of two main variants of programmable ASICs:

● Cisco UADP family: UADP is a feature-rich ASIC primarily used on platforms positioned for the Access, Distribution and Core layers of the enterprise campus, where Internet routing scale is not required.

● Cisco Silicon One family: Silicon One is a high-performance ASIC, primarily used on platforms positioned for Core and (ISP/WAN) Edge, where higher scale and buffer memory are essential.

The following sections examine each ASIC family in detail.

The Cisco UADP ASIC family began in 2013 with UADP 1.0 and has progressed significantly in terms of technology and has incorporated more transistors and memories with each generation. Each additional transistor means that additional performance, scalability, features and functionalities can be built into the ASIC.

The Cisco Silicon One ASIC family began in 2017 with Q200, with the acquisition of Leaba Networks, and represents a new class of programmable multi-slice ASICs designed for Service Provider, Enterprise and Data Center Core networks.

Catalyst 9000 switches are built on the next generations of UADP (UADP 2.0 and 3.0) and Silicon One (Q200) ASICs.

Cisco UADP 2.0

The Cisco UADP 2.0 is a dual-core 28 nm ASIC providing aggregate bandwidth up to 240 Gbps (full-duplex). UADP 2.0 also has large shared, flexible memory tables that can be reprogrammed using SDM templates, giving the option to deploy the same device in multiple network areas, as discussed in Campus Network Design.

Cisco UADP 2.0 ASICs have four variants: UADP 2.0, 2.0sec, 2.0 XL and 2.0 mini. Both 2.0 and 2.0 XL have the same architecture, but the UADP 2.0 bandwidth, table scale and overall performance have been optimized for business-critical access layer switches. Cisco UADP 2.0sec has a similar architecture to UADP 2.0, but provides higher bandwidth for front-panel ports and stacking, as well as 100G of hardware encryption for IP security.

Cisco UADP 2.0 XL has been optimized for Modular Access and Distribution layer switches. It has larger memory table sizes (hence the XL designation) with greater aggregate bandwidth and overall performance for port speeds and density needed for these roles. UADP 2.0 XL also has inter-ASIC connectivity using dual datapaths of 720 Gbps, to support platforms where multiple ASICs are required. The first-generation Catalyst 9500 Series switches and the Catalyst 9400 Series Supervisor-1 and 1XL use UADP 2.0 XL.

Cisco UADP 2.0 mini has a modified single-core architecture with an integrated quad-core ARM CPU. Its bandwidth, table scale, overall performance and power consumption have been optimized for simple access layer switches.

Table 2. Cisco UADP 2.0 product comparison

| | UADP 2.0 mini (Catalyst 9200L/Catalyst 9200) | UADP 2.0 (Catalyst 9300) | UADP 2.0sec (Catalyst 9300X) | UADP 2.0 XL (Catalyst 9400 SUP1/SUP1-XL, Catalyst 9500) |
| --- | --- | --- | --- | --- |
| MAC entries | 16K/32K | 32K | 32K | 64K |
| Host routes IPv4 | 8K/10K | 24K | 24K | 48K |
| LPM routes IPv4 | 3K/4K | 8K | 15K | 64K |
| LPM routes IPv6 | 1.5K/2K | 4K | 7.5K | 32K |
| Multicast routes | 1K | 8K | 8K | 16K |
| QoS entries | 1K | 5K | 4K | 18K |
| ACL entries | 1K | 5K | 8K | 18K |
| NetFlow entries (per ASIC) | 16K | 64K | 64K | 128K |
| SGT entries | 2K | 8K | 8K | 16K |
| Buffers | 6 MB | 16 MB | 16 MB | 32 MB |
| Inter-ASIC bandwidth | 80 Gbps | 240 Gbps | 540 Gbps | 720 Gbps |

Cisco UADP 3.0

The Cisco UADP 3.0 is a dual-core 16 nm ASIC that significantly increases aggregate bandwidth, to up to 1.6 Tbps (full-duplex). UADP 3.0 is designed to address the requirements of new interface speeds of 25G and 100G. The increased bandwidth and performance make it the ideal ASIC for campus Core and Distribution layer switches.

Cisco UADP 3.0 has larger memory tables and greater reprogramming flexibility, with larger shared packet buffers (36 MB) to support interface speed increases. It also has double-wide memory table sizes to store both IPv4 (32-bit) and IPv6 (128-bit) addresses in a single entry, thus allowing the same scale for IPv4 and IPv6 networks.

Cisco UADP 3.0sec has a similar architecture to UADP 3.0 with the main addition of the 100G hardware encryption capability and extra scale for IP security. Catalyst 9400X models with Supervisor 2 and 2XL use the UADP 3.0sec ASIC.

Table 3. Cisco UADP 3.0 product comparison

| | UADP 3.0 (Catalyst 9500 High Performance, Catalyst 9600 SUP1) | UADP 3.0sec (Catalyst 9400 SUP2/2XL) |
| --- | --- | --- |
| MAC entries | up to 128K | up to 128K |
| Host routes (IPv4/v6) | up to 256K/256K | up to 256K/256K |
| LPM routes (IPv4/v6) | up to 256K/256K | up to 256K/256K |
| Multicast routes (IPv4/v6) | up to 32K/32K | up to 32K/32K |
| QoS entries | up to 16K | up to 16K |
| ACL entries | up to 27K | up to 27K |
| NetFlow entries (per ASIC) | up to 128K | up to 128K |
| SGT entries | up to 64K | up to 64K |
| Buffers | 36 MB | 36 MB |
| Inter-ASIC bandwidth | up to 1600 Gbps | up to 1600 Gbps |

For more details about the supported combination of features and scales, please refer to the configuration guide: cisco.com/go/sdmtemplates.

Cisco Silicon One Q200

The Cisco Silicon One architecture enables a single multi-slice ASIC to be used in both switching and routing platforms, to achieve higher flexibility, performance and better power efficiency. There are several variants of Silicon One ASICs depending on the bandwidth and buffer requirements, including the Q200 that is used on Catalyst 9000 switches.

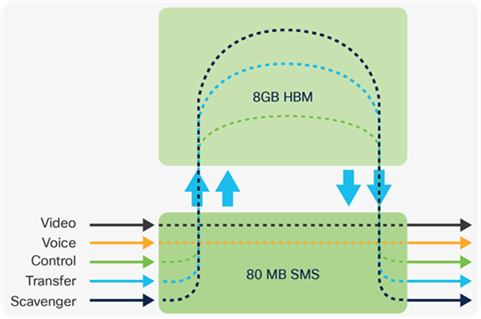

The Q200 is a six-slice 7 nm ASIC with an overall throughput of 12.8 Tbps (full-duplex). The smaller size and high bandwidth per ASIC help achieve a better than 40% improvement in power consumption for the same bandwidth compared to similar ASICs. In addition to 80 MB of on-die Shared Memory System (SMS) buffers, the on-package High Bandwidth Memory (HBM) of 8 GB allows deep buffers and expands the L3 table scale. Silicon One ASICs also support the standardized P4 programmable microcode language, which allows flexibility in processing pipeline programming.
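To give a feel for what "shallow" and "deep" buffers mean at this bandwidth, the short Python calculation below estimates how long each memory could absorb traffic before filling. It is a back-of-the-envelope sketch under loud assumptions: traffic arrives at the full 12.8 Tbps with nothing draining, MB and GB are taken as decimal units, and the entire HBM is treated as buffer even though part of it serves L3 table scale. The function and constant names are hypothetical.

```python
def absorb_time_us(buffer_bytes: float, arrival_bps: float, drain_bps: float = 0.0) -> float:
    """Microseconds until a buffer fills when arrivals exceed the drain rate."""
    excess_bps = arrival_bps - drain_bps
    if excess_bps <= 0:
        return float("inf")  # the buffer never fills
    return buffer_bytes * 8 / excess_bps * 1e6


# Figures from the text; decimal units are an assumption.
SMS_BYTES = 80e6         # 80 MB on-die Shared Memory System
HBM_BYTES = 8e9          # 8 GB on-package High Bandwidth Memory (upper bound)
ARRIVAL_BPS = 12.8e12    # worst case: full 12.8 Tbps arriving, nothing leaving

sms_us = absorb_time_us(SMS_BYTES, ARRIVAL_BPS)
hbm_us = absorb_time_us(HBM_BYTES, ARRIVAL_BPS)
print(f"SMS absorbs about {sms_us:.0f} microseconds")
print(f"HBM absorbs about {hbm_us / 1000:.0f} milliseconds (upper bound)")
```

Under these assumptions the on-die SMS covers roughly 50 microseconds of worst-case burst, while the HBM extends that to the order of 5 milliseconds, which is the practical difference between shallow and deep buffering.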

The Q200 ASIC supports flexible input and output (I/O) speeds enabling interfaces to operate from 10 Gbps up to 400 Gbps.

Table 4. Cisco Silicon One Q200 product details

| | Cisco Silicon One Q200 (Catalyst 9500X, Catalyst 9600X SUP2) |
| --- | --- |
| MAC entries | up to 256K |
| Host routes IPv4/v6 | up to 256K/128K |
| LPM routes IPv4/v6 | up to 2M/1M |
| Multicast routes IPv4/v6 | up to 32K/16K |
| QoS entries | up to 10K |
| ACL entries | up to 10K |
| SGT entries | up to 32K |
| MPLS labels | up to 512K |
| Buffers (SMS/HBM) | 80 MB/8 GB |

Additional details and block diagrams are available at:

Catalyst 9500 Series — cisco.com/go/cat9500architecture

Catalyst 9600 Series — cisco.com/go/cat9600architecture

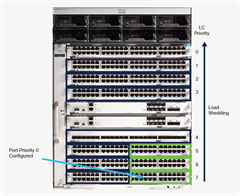

Catalyst 9000 switches use a Switching Database Manager (SDM) template to define how ASIC memory resources (MAC and IP addresses, Security and QoS ACLs, FNF cache entries, etc.) should be allocated. Cisco IOS XE allows the user to define the SDM template to be used, enabling the switch to be used in different roles in the network.
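The idea behind an SDM template can be sketched as dividing one shared pool of table entries among features according to the switch's role. The Python below is only a conceptual model: the template names, feature names, share ratios and the 64,000-entry pool are all hypothetical and do not correspond to real Cisco SDM templates or ASIC table sizes.

```python
from typing import Dict

# Hypothetical shared pool of table entries (not a real ASIC figure).
TOTAL_ENTRIES = 64_000

# Hypothetical templates: relative shares of the pool per feature.
TEMPLATES: Dict[str, Dict[str, int]] = {
    "example-access": {"mac": 4, "ip_routes": 1, "acl": 2, "netflow": 1},
    "example-core": {"mac": 1, "ip_routes": 5, "acl": 1, "netflow": 1},
}


def allocate(template: str, total_entries: int = TOTAL_ENTRIES) -> Dict[str, int]:
    """Split the shared pool among features according to the chosen template."""
    shares = TEMPLATES[template]
    units = sum(shares.values())
    return {
        feature: total_entries * share // units
        for feature, share in shares.items()
    }


for name in TEMPLATES:
    print(name, allocate(name))
```

In this sketch the same pool yields 32,000 MAC entries under the access-style template but 40,000 route entries under the core-style template, which is the sense in which one device can serve different roles in the network.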