EIBGP policy-based multipath with equal-cost multipath

EIBGP policy-based multipath with Equal-Cost Multipath (ECMP) is a BGP feature that

- enables routers to use multiple best paths for forwarding traffic,

- allows load balancing across both internal (iBGP) and external (eBGP) BGP sessions, and

- supports advanced policies like policy-based multipath, ECMP, resilient hashing, and selective multipath.

By using BGP communities, nexthops, path types, and consistent hashing, the solution increases flexibility and reliability in managing traffic distribution across large-scale networks.

- ECMP: A routing strategy that allows load balancing over multiple paths with the same cost.

- eBGP/iBGP/eiBGP: External/Internal/External-Internal BGP sessions, defining the relationship between the BGP peers.

- BGP community: An attribute used to tag and group routes for policy application.

|

Feature Name |

Release Name |

Description |

|---|---|---|

|

EIBGP policy-based multipath with equal-cost multipath |

Release 25.1.1 |

Introduced in this release on: Fixed Systems (8700 [ASIC: K100], 8010 [ASIC: A100])(select variants only*) *This feature is supported on:

|

|

EIBGP policy-based multipath with equal-cost multipath |

Release 24.4.1 |

Introduced in this release on: Fixed Systems (8200 [ASIC: P100], 8700 [ASIC: P100])(select variants only*); Modular Systems (8800 [LC ASIC: P100])(select variants only*) *This feature is supported on:

|

|

EIBGP policy-based multipath with equal-cost multipath |

Release 7.10.1 |

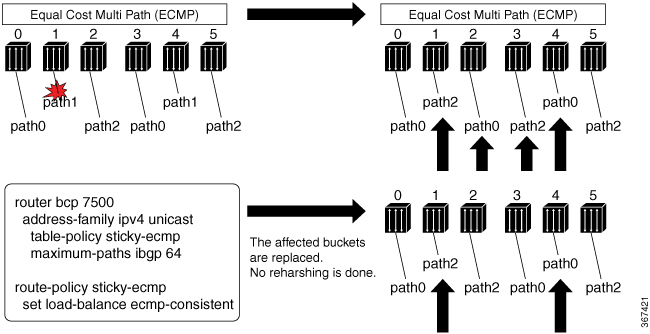

You can control traffic distribution and load balancing in BGP by enabling policy-based multipath selection for iBGP, eBGP, and eiBGP sessions. This approach uses BGP communities, nexthops, and path types to define how paths are selected. Additionally, by using the Equal-Cost Multipath (ECMP) option in eiBGP, you can balance traffic across multiple eligible iBGP paths chosen for eiBGP. This feature gives you greater flexibility and efficiency in managing BGP routing and load balancing. The feature introduces these changes: CLI: The keywords route-policy and equal-cost are added to the maximum-paths command. YANG Data Model:

(see GitHub, YANG Data Models Navigator) |

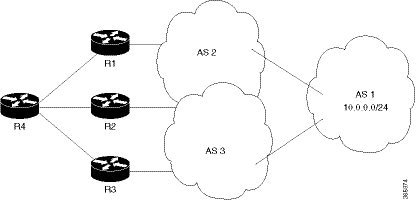

Enhanced policy-based multipath selection in BGP now operates at the default Virtual Routing and Forwarding (VRF) level for iBGP, eBGP, and eiBGP, and uses communities as the underlying mechanism. Communities let you select multiple paths according to more specific policy criteria, which gives you better control over routing decisions within the BGP network.

eiBGP traditionally uses the unequal-cost multipath (UCMP) capability so that both iBGP and eBGP paths can be used. With the equal-cost multipath (ECMP) option, the nexthop IGP metric must be the same across the chosen iBGP paths. The metric is not compared between eBGP and iBGP paths, because they are different path types.
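The difference between the classic UCMP behavior and the ECMP option can be illustrated with a small model. The following Python sketch is not router code: the `Path` type, its fields, the `select_eibgp_multipaths` function, and the next-hop addresses are hypothetical names chosen for this illustration. It assumes that the candidate paths have already passed the multipath route policy and that the reference iBGP metric is the lowest one among the candidates.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Path:
    next_hop: str    # BGP next hop (hypothetical addresses in the usage below)
    path_type: str   # "ebgp" or "ibgp"
    igp_metric: int  # IGP metric to reach the next hop


def select_eibgp_multipaths(paths: List[Path], equal_cost: bool) -> List[Path]:
    """Return the paths eligible for the eiBGP multipath set (illustrative model)."""
    ebgp = [p for p in paths if p.path_type == "ebgp"]
    ibgp = [p for p in paths if p.path_type == "ibgp"]
    if equal_cost and ibgp:
        # ECMP option: keep only iBGP paths that share the lowest IGP metric.
        lowest = min(p.igp_metric for p in ibgp)
        ibgp = [p for p in ibgp if p.igp_metric == lowest]
    # The metric is never compared between eBGP and iBGP paths.
    return ebgp + ibgp


candidates = [
    Path("192.0.2.4", "ebgp", 0),
    Path("192.0.2.1", "ibgp", 10),
    Path("192.0.2.2", "ibgp", 10),
    Path("192.0.2.5", "ibgp", 20),
]
ucmp_set = select_eibgp_multipaths(candidates, equal_cost=False)  # all four paths
ecmp_set = select_eibgp_multipaths(candidates, equal_cost=True)   # drops the metric-20 path
```

In this model, the iBGP path with metric 20 is used under UCMP but excluded once the equal-cost option is on, while the eBGP path is unaffected in both cases.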

Example topology and behavior

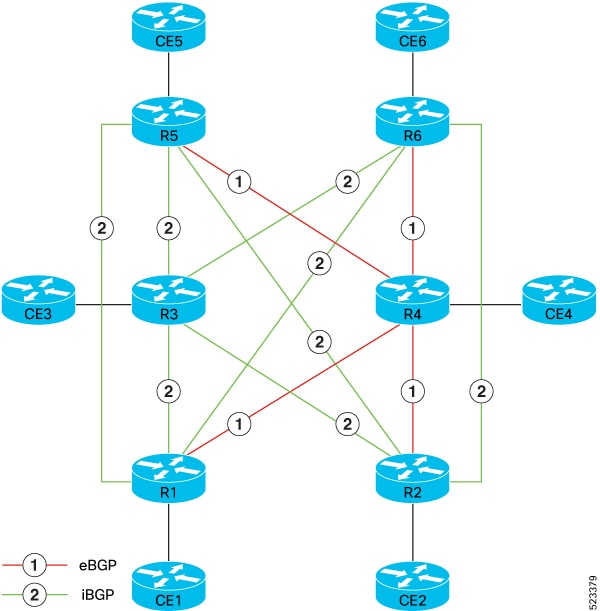

This topology illustrates a network comprising BGP peers denoted as R1 through R6.

Consider a scenario where there is a specific need to transition from using eBGP multipaths to iBGP multipaths. Throughout this transition, you require the simultaneous operation of both eBGP and iBGP to facilitate a seamless migration.

This topology shows two path types: eBGP paths are drawn as red lines labeled 1, and iBGP paths are drawn as green lines labeled 2.

For the CE routers, CE1 to CE6, the preferred path for prefixes is the eBGP path, specifically from the R4 router. Best-path selection prioritizes eBGP multipaths from R4, although paths might also exist from the R5 and R6 routers and, through iBGP, from the R1 and R2 routers. This is the classic behavior. Classic eiBGP uses unequal-cost paths and therefore disregards metrics, even though optimal performance depends on the IGP metric.

The iBGP paths with the shortest AS-PATH length are chosen for R5 and R6. The same iBGP multipath selection process applies to paths from R1 and R2. As a result, R1 and R2 establish iBGP peering sessions with R3. This setup creates a combination of eBGP and iBGP paths, called eiBGP, which are available for prefixes advertised to hosts beyond the CE devices. The CE routers must balance prefixes between R3 and R4, and you should exclude paths from R5, R6, R1, and R2. To do this, configure the additive community attribute on R1 and R2 for routes advertised toward R5 and R6.
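The effect of setting a community additively can be shown in a few lines: the new value is appended to the communities that a route already carries instead of replacing them, so existing tags survive. The following Python sketch only models that set operation. The `set_community` function and the community values are hypothetical and chosen for illustration.

```python
from typing import FrozenSet, Iterable


def set_community(existing: Iterable[str], new: Iterable[str], additive: bool) -> FrozenSet[str]:
    """Model of setting communities on a route, with or without 'additive'."""
    if additive:
        # Additive: keep what the route already carries and add the new values.
        return frozenset(existing) | frozenset(new)
    # Non-additive: the new values replace the existing communities.
    return frozenset(new)


route_communities = {"65000:10"}  # hypothetical community already on the route
tagged = set_community(route_communities, {"100:1"}, additive=True)
replaced = set_community(route_communities, {"100:1"}, additive=False)
```

Here `tagged` carries both communities, whereas `replaced` carries only the new one. A multipath policy that matches on the new community works in both cases, but only the additive form preserves tags that other policies may depend on.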

With this topology, you can run both eBGP and iBGP, allowing a smooth transition between eBGP and iBGP multipaths. Include the default VRF in policy-based multipath selection to apply route policies that control how your network distributes traffic. Use BGP attributes such as communities, next hops, and path types in these policies to select paths. For example, use BGP communities to prioritize routes or change next hops to direct traffic over specific paths. This approach helps you optimize routing decisions, control traffic distribution, and improve load balancing across all BGP types in your network.

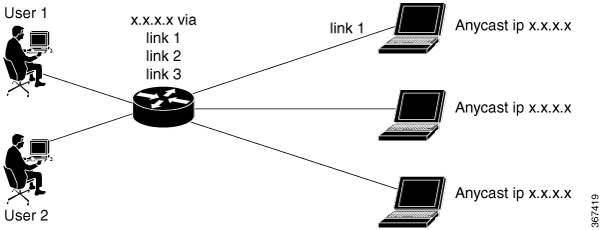

Enable ECMP to distribute traffic evenly across multiple equal-cost paths. This prevents overloading any single path and improves load balancing. With the ECMP option in eiBGP, the router can use multiple iBGP paths with equal cost for traffic distribution.

Benefits of EIBGP policy-based multipath with ECMP

- Optimizes traffic distribution and network utilization across iBGP, eBGP, and eiBGP sessions.

- Enables seamless migration between eBGP and iBGP multipath scenarios.

- Provides granular control over path selection using communities, nexthops, and path types.

- Ensures efficient load balancing and prevents single-path overload by leveraging ECMP.

Caveats of multipath selection without BGP attributes and ECMP

- If BGP communities, nexthops, or path types are not used in policy-based multipath selection, control over routing is reduced, leading to suboptimal load balancing.

- Not enabling ECMP in eiBGP causes the router to rely on classic best-path selection and forgo the benefits of multipath load balancing.

- Avoid comparing IGP metrics between eBGP and iBGP paths, as ECMP only considers iBGP paths with equal cost.

Restrictions and guidelines for eiBGP policy-based multipath with ECMP

eiBGP multipath configuration restrictions

- You cannot configure eiBGP along with either eBGP or iBGP.

- The maximum-paths route policy checks only the community, next hop, and path type.

- The OpenConfig model is not supported.

eiBGP multipath configuration guidelines

- Use the Accumulated Interior Gateway Protocol (AIGP) metric attribute only with equal-cost eiBGP paths.

- When you configure both eBGP and iBGP multipath, you can assign the same or different route policies to each. The router automatically applies the policy for the best path type of each prefix:

  - If iBGP provides the best path for a prefix, the iBGP route policy is applied.

  - If eBGP provides the best path, the eBGP route policy is applied.
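The rule for choosing between the iBGP and eBGP multipath policies can be expressed as a simple lookup on the best path type of each prefix. The following Python sketch is an illustration only. The `policy_for_prefix` function and the policy names `IBGP_MP` and `EBGP_MP` are hypothetical.

```python
from typing import Optional


def policy_for_prefix(best_path_type: str,
                      ibgp_policy: Optional[str],
                      ebgp_policy: Optional[str]) -> Optional[str]:
    """Return the multipath route policy that applies to a prefix.

    The policy follows the type of the prefix's best path.
    """
    if best_path_type == "ibgp":
        return ibgp_policy
    if best_path_type == "ebgp":
        return ebgp_policy
    raise ValueError(f"unknown path type: {best_path_type!r}")


# Hypothetical policy names; the same policy could also be assigned to both types.
applied_for_ibgp_best = policy_for_prefix("ibgp", "IBGP_MP", "EBGP_MP")
applied_for_ebgp_best = policy_for_prefix("ebgp", "IBGP_MP", "EBGP_MP")
```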

How eiBGP policy-based multipath with ECMP works

Summary

The key components involved in the process are:

- BGP communities: Used for tagging and grouping routes according to policy.

- Multipath selection policy: Determines which iBGP, eBGP, or eiBGP paths are included based on community, nexthop, and path type.

- ECMP option: Ensures that equal-cost iBGP paths are included in eiBGP multipath selection.

Enhanced policy-based multipath selection operates at the default VRF for iBGP, eBGP, and eiBGP. The solution uses BGP communities to enable flexible and granular selection of multiple paths according to policy.

Workflow

These stages describe how eiBGP policy-based multipath with ECMP works:

- The router receives routes from eBGP and iBGP peers.

- Route policies using communities are applied to select eligible multipaths.

- With ECMP enabled, the router balances traffic across all equal-cost iBGP paths that are chosen as part of the eiBGP multipath set.

- The router installs the selected multipaths and forwards traffic accordingly.
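The stages above can be sketched as a small pipeline in which each stage narrows the set of candidate routes. This Python model is a simplification under stated assumptions, not the router's implementation. The `Route` type, the stage functions, the community value `100:1`, the next-hop addresses, and the `max_paths` limit are all hypothetical, and the policy stage matches on communities only.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class Route:
    next_hop: str
    path_type: str               # "ebgp" or "ibgp"
    igp_metric: int
    communities: FrozenSet[str]


def apply_policy(routes: List[Route], allowed: FrozenSet[str]) -> List[Route]:
    """Policy stage: keep routes that carry at least one allowed community."""
    return [r for r in routes if r.communities & allowed]


def apply_ecmp(routes: List[Route]) -> List[Route]:
    """ECMP stage: among iBGP routes, keep only those with the lowest IGP metric."""
    ibgp_metrics = [r.igp_metric for r in routes if r.path_type == "ibgp"]
    if not ibgp_metrics:
        return routes
    lowest = min(ibgp_metrics)
    return [r for r in routes if r.path_type != "ibgp" or r.igp_metric == lowest]


def install(routes: List[Route], max_paths: int) -> List[str]:
    """Install stage: return up to max_paths next hops used for forwarding."""
    return [r.next_hop for r in routes[:max_paths]]


# Receive stage: routes learned from eBGP and iBGP peers (hypothetical values).
received = [
    Route("192.0.2.4", "ebgp", 0, frozenset({"100:1"})),
    Route("192.0.2.1", "ibgp", 10, frozenset({"100:1"})),
    Route("192.0.2.2", "ibgp", 10, frozenset({"100:1"})),
    Route("192.0.2.5", "ibgp", 10, frozenset({"200:2"})),  # fails the community match
    Route("192.0.2.6", "ibgp", 30, frozenset({"100:1"})),  # higher IGP metric
]
eligible = apply_policy(received, frozenset({"100:1"}))
installed = install(apply_ecmp(eligible), max_paths=8)
```

Keeping the stages as separate functions mirrors the order in the workflow: a route that fails the community match never reaches the metric comparison, and a route with a higher metric is dropped even though it matched the policy.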

Result

Traffic distribution and load balancing are controlled and optimized, supporting seamless transitions between eBGP and iBGP multipath operation.

Configure eiBGP policy-based multipath with ECMP

Enable eiBGP policy-based multipath routing with ECMP by applying route policies that use communities, path types, or next hops.

Use this task to configure ECMP for eiBGP, allowing your routers to consider multiple eligible paths for load balancing, based on policy.

Before you begin

- Ensure that BGP and required address families are enabled on all participating routers.

- Have appropriate community values and route policies planned.

Follow these steps to configure eiBGP policy-based multipath with ECMP.

Procedure

| Step | Action |
|---|---|
| Step 1 | Define a community set for R1 and R2 routers. Example: |
| Step 2 | Create a route policy named EIBGP on R1 and R2. This policy checks the BGP communities of received routes and takes action based on the community value. Example: |
| Step 3 | Configure BGP to use ECMP for eiBGP and apply the route policy. Example: |
| Step 4 | Verify the running configuration. Example: |
| Step 5 | Verify that eiBGP multipath and the route policy are working as intended. Review the output to confirm that multiple eligible paths are installed and used according to the route policy and ECMP configuration. The router selects a path for multipath only if it matches the community criteria and has the same metric as the best path of its own path type, iBGP or eBGP. A path with a higher metric than the best path of its type is not selected, even if it meets the community constraint. Example: |

The router uses ECMP for eiBGP routes that meet your route policy conditions, improving traffic distribution and load balancing.
