About Multi-Pod

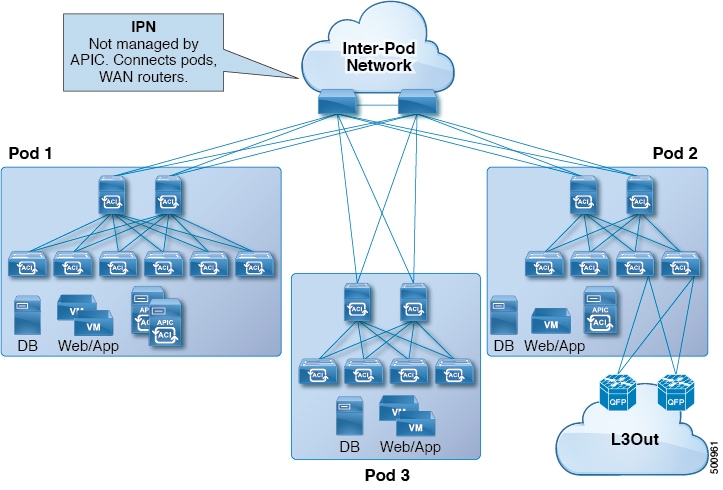

Multi-Pod enables provisioning a more fault-tolerant fabric composed of multiple pods with isolated control plane protocols. Multi-Pod also provides more flexibility for the full-mesh cabling between leaf and spine switches. For example, if leaf switches are spread across different floors or different buildings, Multi-Pod enables provisioning multiple pods per floor or building and providing connectivity between the pods through spine switches.

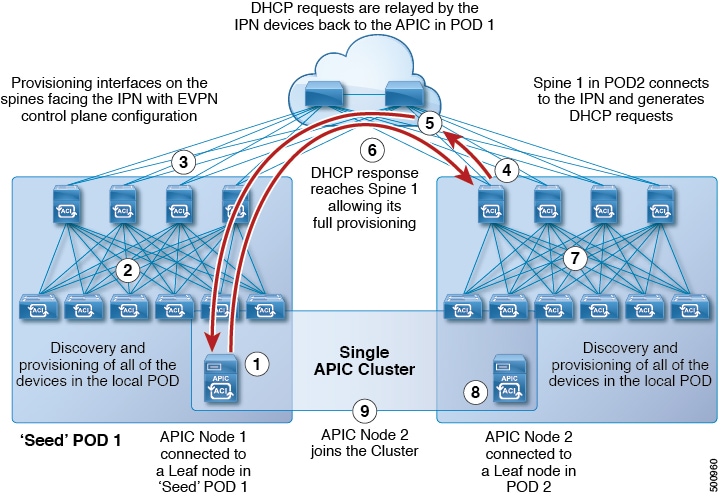

Multi-Pod uses MP-BGP EVPN as the control-plane communication protocol between the ACI spines in different pods.

In releases before Cisco APIC Release 5.2(3), OSPF is used in the underlay to peer between the physical spines and the IPN. Beginning with Cisco APIC Release 5.2(3), the underlay protocol can be OSPF or BGP (eBGP only) or a mixture, with some pods using OSPF and some using BGP.
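The release-dependent underlay rule above can be sketched as a small check. This is only an illustration of the rule as stated in this section, not an APIC API: the function names, the `"ospf"`/`"ebgp"` labels, and the pod-to-protocol mapping are hypothetical, and the parser assumes release strings shaped like `5.2(3)` or `5.2(3e)`.

```python
import re

# First release that, per the text above, allows BGP (eBGP only) in the underlay.
BGP_UNDERLAY_MIN_RELEASE = (5, 2, 3)


def parse_release(release: str) -> tuple:
    """Parse a release string such as '5.2(3)' or '5.2(3e)' into (5, 2, 3)."""
    match = re.fullmatch(r"(\d+)\.(\d+)\((\d+)[a-z]*\)", release.strip())
    if match is None:
        raise ValueError(f"unrecognized release format: {release!r}")
    return tuple(int(part) for part in match.groups())


def allowed_underlays(release: str) -> set:
    """Return the underlay protocols permitted between spines and the IPN."""
    if parse_release(release) >= BGP_UNDERLAY_MIN_RELEASE:
        return {"ospf", "ebgp"}  # either protocol, or a per-pod mixture
    return {"ospf"}  # earlier releases: OSPF only


def invalid_pods(release: str, pod_underlays: dict) -> dict:
    """Return the pods whose chosen underlay is not permitted in this release."""
    allowed = allowed_underlays(release)
    return {
        pod: proto
        for pod, proto in pod_underlays.items()
        if proto.lower() not in allowed
    }
```

For example, `invalid_pods("5.1(4)", {1: "ospf", 2: "ebgp"})` flags pod 2, while the same mapping passes for any release at or after 5.2(3).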

WAN routers can be provisioned in the Inter-Pod Network (IPN), directly connected to spine switches, or connected to border leaf switches. Spine switches connected to the IPN are connected to at least one leaf switch in the pod.

Multi-Pod uses a single APIC cluster for all the pods; all the pods act as a single fabric. Individual APICs are placed across the pods, but they are all part of a single APIC cluster.

Note |

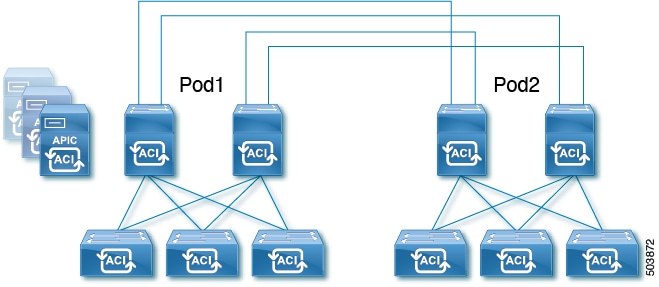

Beginning with Cisco APIC Release 5.2(3), a fabric consisting of only two pods can be connected directly, without an IPN. For information about this Multi-Pod Spines Back-to-Back topology, see About Multi-Pod Spines Back-to-Back. |
