Migrate User Applications between Kubernetes Clusters Using Velero and Restic

Cisco Intersight (https://intersight.com) is an API-driven, cloud-based, Software-as-a-Service (SaaS) infrastructure lifecycle management platform. It is designed to help organizations manage and operate their IT infrastructure with a higher level of automation, simplicity, and operational efficiency. It is a new generation of global management tool for the Cisco Unified Computing System (Cisco UCS), Cisco HyperFlex systems, other Cisco Intersight-connected devices, and third-party Intersight-connected devices. It provides a holistic and unified approach to managing customers’ distributed and virtualized environments. As a result, customers can achieve significant TCO savings and deliver applications faster in support of new business initiatives.

Cisco Intersight provides installation wizards to install, configure, and deploy the Intersight-connected devices including Cisco UCS and HyperFlex systems. The wizard constructs a pre-configuration definition called a profile. The profiles are built on policies in which administrators define sets of rules and operating characteristics. These policies can be created once and used any number of times to simplify deployments, resulting in improved productivity and compliance, and lower risk of failures due to inconsistent configurations.

Cisco Intersight Kubernetes Service (IKS) is a feature of the Cisco Intersight cloud platform. IKS integrates Kubernetes lifecycle management capabilities into the Intersight orchestration platform to offer Kubernetes as a service. It enables customers to quickly and easily deploy Kubernetes clusters and manage their lifecycle across the globe using a single cloud portal, Cisco Intersight. IKS also provides full-stack visibility, monitoring, and logging for Kubernetes management. With IKS, Cisco Intersight delivers a turnkey SaaS solution for deploying and operating consistent, production-grade Kubernetes clusters anywhere. However, Cisco will end-of-life IKS in Fall 2022, and customers must move to a well-known Kubernetes distribution as soon as possible.

Cisco Container Platform (CCP) is an easy-to-use, lightweight, multi-cluster container management software platform for deploying production-class upstream Kubernetes clusters and managing their lifecycle across on-premises and public cloud environments. CCP delivers the capability to install and operate a lightweight Containers-as-a-Service (CaaS) on-premises platform. Although CCP has been on the market for some years, helping customers overcome the challenges of deploying Kubernetes clusters, Cisco will end-of-life CCP in Fall 2022, and customers must move to a well-known Kubernetes distribution as soon as possible.

Full migration of entire CCP clusters and IKS clusters is not supported, but migrating user-installed applications from CCP tenant clusters and IKS tenant clusters to a well-known Kubernetes distribution or cluster is feasible.

This document describes a specific example of user application migration from CCP to IKS. Use it as a conceptual description of the migration, and apply the appropriate steps to move user applications from CCP or IKS to a well-known Kubernetes distribution or cluster.

This example provides a step-by-step procedure for migrating user applications from CCP tenant clusters to IKS tenant clusters using the backup/restore methodology of Velero and Restic. As mentioned, the procedure is not limited to CCP or IKS and can be applied to migration between any Kubernetes clusters.

Velero is an open-source tool to safely back up and restore, perform disaster recovery, and migrate cluster resources and persistent volumes. Velero uses Restic to back up any kind of persistent volume you have. Velero supports using different providers for backup storage options.

For simplicity in this example, the solution uses a MinIO storage server, an AWS S3 compatible object storage system, as the backup storage location. The MinIO server runs on an Ubuntu 18.04 host. For the user guide on how to install and utilize MinIO servers, please refer to the MinIO documentation.

Prerequisites:

● Access to your S3 object storage system

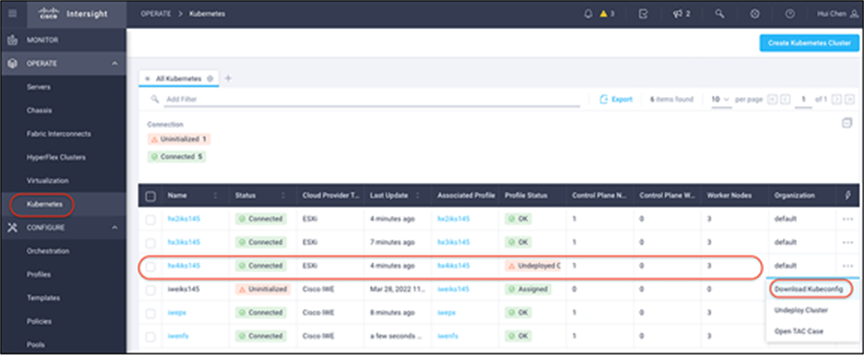

● A workstation with kubectl installed, which you can use to interact with the Kubernetes clusters. You may choose to manage the CCP tenant clusters and the IKS clusters separately from different workstations.

This section describes the migration of user applications from CCP to IKS clusters. However, you can use the same concept with appropriate steps to migrate user applications from CCP or IKS to Kubernetes clusters of your choice.

1. Install Velero. The Velero client can be installed on Linux, Windows, or macOS and is used to create and manage the Velero backup artifacts in your Kubernetes clusters, such as CRDs, Pods, and DaemonSets. Make sure that you download a version of Velero that works with the Kubernetes version that you are running.

2. Create an S3 bucket on your object storage system that is used by Velero to store backup files. This guide will use MinIO object storage, but you are free to use any S3 bucket.

3. Create an S3 credentials file on your workstation that will be used by Velero to store the key ID and key secret to access your S3 object storage system.

4. Run a “velero install” command to install the Velero application into your CCP tenant cluster. Make sure your $KUBECONFIG is pointing to your CCP tenant. Specify the appropriate parameters for the application to meet the configurations in your environment.

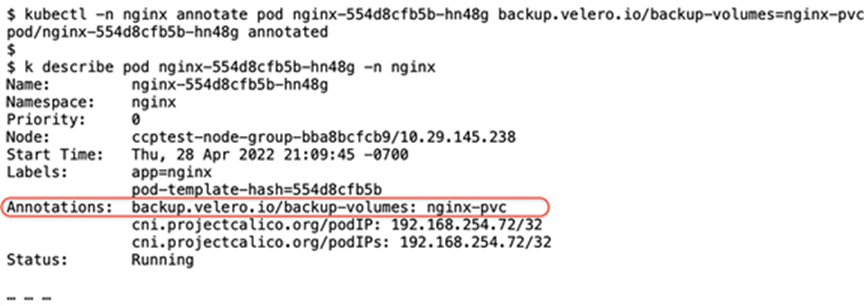

5. Annotate every pod that contains a volume to be backed up using Restic with the volume’s name, using the backup.velero.io/backup-volumes annotation. Then run a “velero backup create” command to let Velero take the backup of the resources for your user applications on the CCP tenant clusters. Wait for the command to complete.

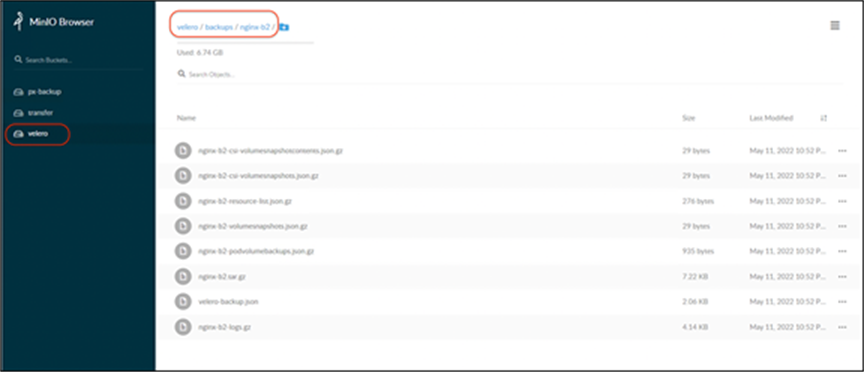

6. Go to the management console of your S3 object storage system. Verify that the backup objects have been created successfully.

7. Switch your $KUBECONFIG to point to your IKS tenant cluster.

8. Run the same “velero install” command used at Step 4 to install the Velero application into your IKS cluster.

9. Run a “velero get backups” command to verify that you can see the backup that you created at Step 5.

10. Run a “velero restore create” command to restore the user application to your IKS cluster.

11. Run the appropriate Kubernetes commands to verify that the user application is restored on your IKS cluster successfully.

1. OPTIONAL: Install MinIO. Several installation options are available; refer to the MinIO documentation.

If you have Docker available, all you need to do is:

docker run -p 9000:9000 minio/minio server /data

Create a Velero-specific S3 bucket (“velero”) on the MinIO object storage server.

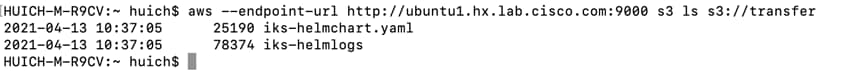
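If you prefer the command line to the MinIO console, the bucket can also be created with the AWS CLI. The following is a minimal sketch rather than part of the validated procedure: the helper name make_velero_bucket and its arguments are hypothetical, and it assumes the AWS CLI is installed and that "aws configure" has already been run with your MinIO access key and secret.

```shell
# Hypothetical helper: create the Velero bucket on an S3-compatible endpoint.
# $1 = endpoint URL (for example, http://<minio-host>:9000)
# $2 = bucket name (optional, defaults to "velero" as used in this document)
make_velero_bucket() {
  endpoint="$1"
  bucket="${2:-velero}"
  # "s3 mb" (make bucket) is a standard AWS CLI subcommand.
  aws --endpoint-url "$endpoint" s3 mb "s3://$bucket"
}

# Example (not executed here; the address is a placeholder):
#   make_velero_bucket http://192.0.2.10:9000 velero
```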

2. Verify connectivity to your S3 storage server from the workstation that is used to interact with CCP tenant clusters and IKS tenant clusters. You can use the AWS CLI client for this:

sudo snap install aws-cli --classic

aws configure

aws --endpoint-url http://<minio-ip-address or minio-server-name>:9000 s3 ls s3://<velero-bucket>

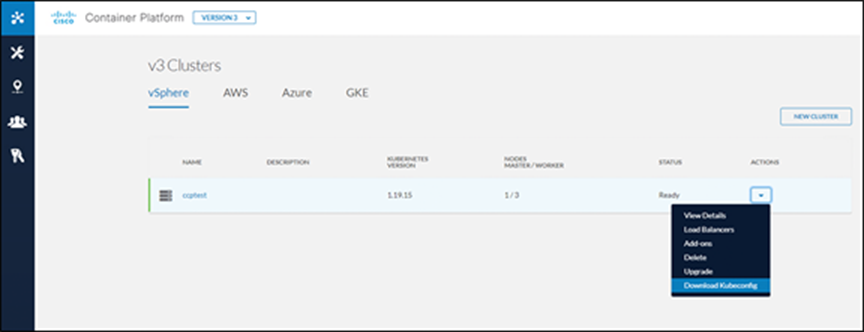

3. From the CCP administrative portal, download the kubeconfig file and save it to your workstation that is used to manage your CCP tenant clusters.

4. Log in to the workstation and set the KUBECONFIG environment variable to the kubeconfig file you just downloaded.
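Setting the variable can be sketched as follows. The helper name use_kubeconfig and the example file name are assumptions for illustration; the only real requirement is that KUBECONFIG holds the path of the downloaded file.

```shell
# Hypothetical helper: point kubectl at a downloaded kubeconfig file.
# $1 = path to the kubeconfig file
use_kubeconfig() {
  # Fail early if the path is wrong, instead of getting confusing kubectl errors later.
  if [ ! -f "$1" ]; then
    echo "kubeconfig not found: $1" >&2
    return 1
  fi
  export KUBECONFIG="$1"
}

# Example (the file name is an assumption):
#   use_kubeconfig "$HOME/Downloads/ccp-tenant-kubeconfig.yaml"
```

Because the function exports the variable in the current shell, it must be defined or sourced in the same terminal session where you run kubectl and velero.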

5. Verify that you are now able to communicate with the API server of the CCP tenant cluster.

kubectl get node

$ kubectl get node

NAME STATUS ROLES AGE VERSION

ccptest-master-gro-2f9940efcd Ready master 26d v1.19.15

ccptest-node-group-274b392643 Ready <none> 26d v1.19.15

ccptest-node-group-4e816c4ae9 Ready <none> 26d v1.19.15

ccptest-node-group-bba8bcfcb9 Ready <none> 26d v1.19.15

6. Download a Velero release that is compatible with your Kubernetes version from the Velero website (this example uses v1.8.1) and save the tar file on the workstation.

7. Extract the tar file.

tar -xzvf velero-v1.8.1-darwin-amd64.tar.gz

$ tar xzvf velero-v1.8.1-darwin-amd64.tar.gz

x velero-v1.8.1-darwin-amd64/LICENSE

x velero-v1.8.1-darwin-amd64/examples/README.md

x velero-v1.8.1-darwin-amd64/examples/minio

x velero-v1.8.1-darwin-amd64/examples/minio/00-minio-deployment.yaml

x velero-v1.8.1-darwin-amd64/examples/nginx-app

x velero-v1.8.1-darwin-amd64/examples/nginx-app/README.md

x velero-v1.8.1-darwin-amd64/examples/nginx-app/base.yaml

x velero-v1.8.1-darwin-amd64/examples/nginx-app/with-pv.yaml

x velero-v1.8.1-darwin-amd64/velero

8. The directory you extracted will have the prefix velero-. Go to this directory and check the contents.

cd velero-v1.8.1-darwin-amd64/

ls -lts

$ ls -lts

total 139312

0 drwxr-xr-x 6 huich staff 192 May 9 18:14 examples

139288 -rwxr-xr-x@ 1 huich staff 71314368 Mar 14 19:07 velero

24 -rw-r--r--@ 1 huich staff 10255 Mar 10 01:45 LICENSE

9. Create an S3 credentials file (“credentials-velero”) in the local directory that is used by Velero to store the key ID and key secret to access your S3 object storage system.

[default]

aws_access_key_id = <your_S3_access_key>

aws_secret_access_key = <your_S3_access_secret>

For a default MinIO installation, you can use the default MinIO credentials.
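The credentials file can also be generated from the shell. This is a sketch under stated assumptions: the helper name write_velero_credentials is hypothetical, and the key values are passed as arguments rather than read from any particular secret store.

```shell
# Hypothetical helper: write the Velero credentials file.
# $1 = S3 access key, $2 = S3 secret key
# $3 = output path (optional, defaults to ./credentials-velero)
write_velero_credentials() {
  out="${3:-./credentials-velero}"
  # The subshell limits the umask change to this write, so a newly
  # created file is readable and writable only by its owner.
  ( umask 077
    printf '[default]\naws_access_key_id = %s\naws_secret_access_key = %s\n' \
      "$1" "$2" > "$out" )
}

# Example (placeholders, not real keys):
#   write_velero_credentials <your_S3_access_key> <your_S3_access_secret>
```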

10. Run the following “velero install” command to install the Velero application into your CCP tenant cluster. Specify the appropriate parameters for the application to meet the configurations in your environment.

./velero install \

--provider aws \

--plugins velero/velero-plugin-for-aws:v1.4.0 \

--bucket velero \

--secret-file ./credentials-velero \

--use-volume-snapshots=false \

--backup-location-config region=minio,s3ForcePathStyle="true",s3Url=http://<minio-ip-address or minio-server-name>:9000 \

--use-restic

CustomResourceDefinition/backups.velero.io: attempting to create resource

CustomResourceDefinition/backups.velero.io: created

……

DaemonSet/restic: attempting to create resource

DaemonSet/restic: created

Velero is installed! Use 'kubectl logs deployment/velero -n velero' to view the status.

In this example, the aws provider is specified and S3 object storage is used for backup-location-config. The use-restic option enables Restic support. Our sample cluster does not have a volume provider capable of snapshots, so use-volume-snapshots is set to false.

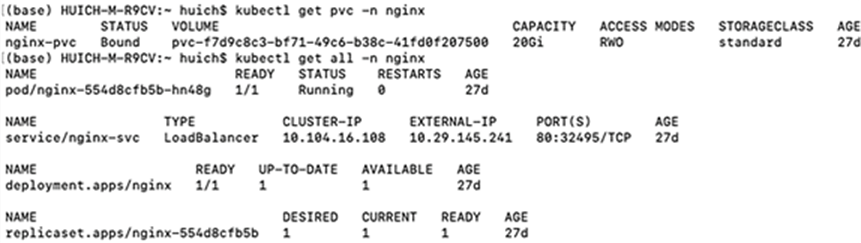
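After the installation, it can be useful to confirm that Velero can actually reach the bucket before taking a backup. The sketch below uses the "velero backup-location get" subcommand and assumes that its output reports a phase of "Available" for a reachable location, which may differ between Velero versions. The helper name and the VELERO variable are hypothetical.

```shell
# Path to the velero binary; defaults to the extracted binary in the current directory.
VELERO="${VELERO:-./velero}"

# Hypothetical helper: succeed only if a backup storage location reports "Available".
backup_location_available() {
  # -w matches whole words only, so "Unavailable" is not counted as a match.
  "$VELERO" backup-location get | grep -qw "Available"
}

# Example (not executed here):
#   backup_location_available && echo "backup location is reachable"
```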

11. Now check the resources for the user application (“nginx”) that we are going to migrate.

kubectl get pvc -n <application namespace>

kubectl get all -n <application namespace>

12. Annotate the pod with the volume’s name using the backup.velero.io/backup-volumes annotation, so that the volume can be backed up using Restic.
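The annotation can be applied with "kubectl annotate". The following is a minimal sketch: the helper name annotate_for_restic is hypothetical, and the pod and volume names in the example are placeholders that you must replace with the values from your own application.

```shell
# Hypothetical helper: mark one or more pod volumes for Restic backup.
# $1 = namespace, $2 = pod name, $3 = comma-separated volume names
annotate_for_restic() {
  ns="$1"
  pod="$2"
  volumes="$3"
  # --overwrite allows the command to be re-run if the annotation already exists.
  kubectl -n "$ns" annotate "pod/$pod" \
    "backup.velero.io/backup-volumes=$volumes" --overwrite
}

# Example (not executed here; names are placeholders):
#   annotate_for_restic nginx <pod-name> <volume-name>
```

The volume name is the name given under spec.volumes in the pod specification, not the name of the PersistentVolumeClaim.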

13. Run a “velero backup create” command to let Velero take the backup of the resources for your user applications on the CCP tenant clusters. Wait for the command to complete.

$ ./velero backup create nginx-b2 --include-namespaces nginx --wait

Backup request "nginx-b2" submitted successfully.

Waiting for backup to complete. You may safely press ctrl-c to stop waiting - your backup will continue in the background.

..

Backup completed with status: Completed. You may check for more information using the commands `velero backup describe nginx-b2` and `velero backup logs nginx-b2`.

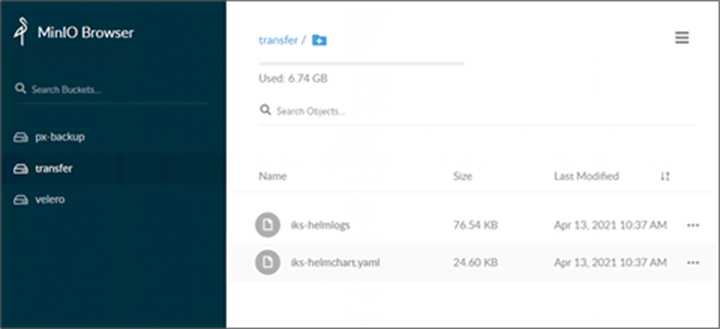

14. Go to the management console of your S3 object storage system. Verify that the backup objects have been created successfully.

15. Now let us work on the IKS side. From the Cisco Intersight cloud platform, click Operate > Kubernetes and select the IKS cluster to which you want to migrate the user applications. Download the kubeconfig file and save it to the workstation used to manage your IKS tenant clusters.

16. Point your KUBECONFIG environment variable to the kubeconfig file you just downloaded.

export KUBECONFIG=~/Downloads/<iks-kubeconfig.yaml>

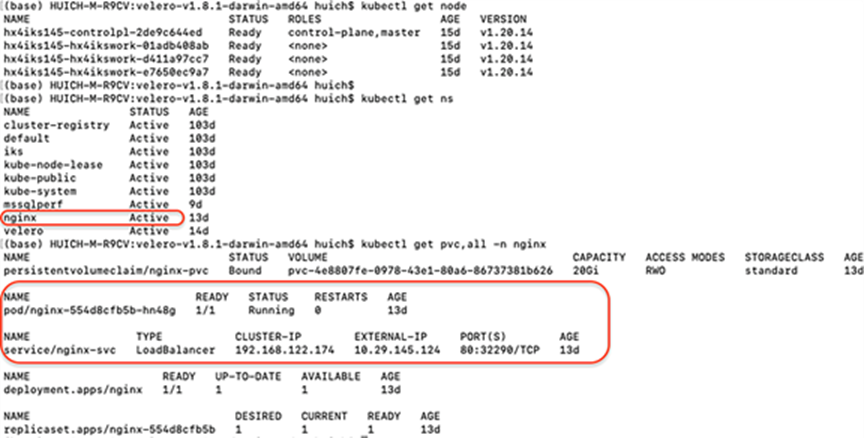

17. Verify that you are able to communicate with the API server of the IKS tenant cluster.

kubectl get node

$ kubectl get node

NAME STATUS ROLES AGE VERSION

hx4iks145-controlpl-2de9c644ed Ready control-plane,master 15d v1.20.14

hx4iks145-hx4ikswork-01adb408ab Ready <none> 15d v1.20.14

hx4iks145-hx4ikswork-d411a97cc7 Ready <none> 15d v1.20.14

hx4iks145-hx4ikswork-e7650ec9a7 Ready <none> 15d v1.20.14

18. Run the same “velero install” command used at Step 10 to install the Velero application into your IKS tenant cluster.

./velero install \

--provider aws \

--plugins velero/velero-plugin-for-aws:v1.4.0 \

--bucket velero \

--secret-file ./credentials-velero \

--use-volume-snapshots=false \

--backup-location-config region=minio,s3ForcePathStyle="true",s3Url=http://<minio-ip-address or minio-server-name>:9000 \

--use-restic

19. Verify the status of Velero and Restic:

kubectl get all -n velero

$ kubectl get all -n velero

NAME READY STATUS RESTARTS AGE

pod/restic-6lg7q 1/1 Running 0 14d

pod/restic-k62md 1/1 Running 0 14d

pod/restic-wsztf 1/1 Running 0 14d

pod/velero-879fff5fc-qm8hx 1/1 Running 0 14d

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/restic 3 3 3 3 3 <none> 14d

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/velero 1/1 1 1 14d

NAME DESIRED CURRENT READY AGE

replicaset.apps/velero-879fff5fc 1 1 1 14d

20. Run a “velero get backups” command on your workstation to verify you can see the backup you created at Step 13.

./velero get backups

$ ./velero get backups

NAME STATUS ERRORS WARNINGS CREATED EXPIRES STORAGE LOCATION SELECTOR

nginx-b1 Completed 0 1 2022-05-11 18:35:55 -0700 PDT 15d default <none>

nginx-b2 Completed 0 0 2022-05-11 22:52:21 -0700 PDT 16d default <none>

sql-b1 Completed 0 0 2022-05-16 14:17:30 -0700 PDT 20d default <none>

sql-b2 Completed 0 0 2022-05-16 14:52:15 -0700 PDT 20d default <none>

21. Run the following “velero restore create” command to restore the user application to your IKS cluster.

./velero restore create --from-backup nginx-b2

$ ./velero restore create --from-backup nginx-b2

Restore request "nginx-b2-20220511112328" submitted successfully.

Run `velero restore describe nginx-b2-20220511112328` or `velero restore logs nginx-b2-20220511112328` for more details.

22. Verify that the user application has been restored on your IKS cluster successfully.
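One way to confirm from a script that the restore has finished is to poll the phase of the Restore custom resource that Velero creates in the velero namespace. This is a sketch, not part of the validated procedure: the helper name wait_for_restore and its polling defaults are assumptions.

```shell
# Hypothetical helper: poll a Velero restore until it reaches a final phase.
# $1 = restore name
# $2 = maximum number of polls (optional, default 60)
# $3 = seconds to sleep between polls (optional, default 5)
wait_for_restore() {
  name="$1"
  tries="${2:-60}"
  pause="${3:-5}"
  i=0
  while [ "$i" -lt "$tries" ]; do
    # Read the phase from the status of the Restore custom resource.
    phase=$(kubectl -n velero get restores.velero.io "$name" \
      -o jsonpath='{.status.phase}')
    case "$phase" in
      Completed)
        echo "restore $name: Completed"
        return 0 ;;
      PartiallyFailed|Failed|FailedValidation)
        echo "restore $name: $phase" >&2
        return 1 ;;
    esac
    i=$((i + 1))
    sleep "$pause"
  done
  echo "restore $name: timed out (last phase: ${phase:-unknown})" >&2
  return 1
}

# Example (not executed here):
#   wait_for_restore nginx-b2-20220511112328 && kubectl get all,pvc -n nginx
```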

23. Access the external IP of the restored Nginx instance and verify that the index page saved on the Pod’s volume was restored successfully.

Note: Consult with your application providers if additional configurations are needed to restore the full services after the migration of the applications from CCP to IKS. In this document, both tenant clusters use the standard vSphere storage class. Consult with your storage providers if different storage classes are applied in your Kubernetes clusters.

Below is the list of the components that are used for validation testing in the lab:

● Cisco Intersight cloud-management platform

● Cisco Container Platform (CCP): Release 9.0.0

● Kubernetes API server on CCP: Version 1.19.15

● Kubernetes API server on IKS: Version 1.20.14

● Kubernetes client (kubectl): Version 1.20.14

● Velero: Version 1.8.1

● macOS workstation to manage Kubernetes cluster: Monterey Version 12.3.1

Note: The Kubernetes versions listed here are for reference only. The solution is not limited to these versions and may also work with more recent versions of Kubernetes.

This document has described the end-to-end validation of a specific example of user application migration from CCP to IKS using Velero. Use it as a conceptual description of the migration, and apply the appropriate steps (using the backup/restore methodology of Velero and Restic) to move user applications from CCP or IKS to a well-known Kubernetes distribution or cluster.

For additional information, see the following:

● Cisco Intersight Kubernetes Service (IKS): https://www.cisco.com/c/en/us/products/cloud-systems-management/cloud-operations/intersight-kubernetes-service.html

● Terraform provider for Cisco Intersight: https://github.com/CiscoDevNet/terraform-provider-intersight

● To download Velero: https://velero.io/