FlashStack with Nutanix Cloud Infrastructure Software

About the Cisco Validated Design Program

The Cisco Validated Design (CVD) program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, go to: http://www.cisco.com/go/designzone

Executive Summary

Business demands are rapidly outpacing the capabilities of existing infrastructure choices. Organizations are seeking infrastructure solutions that are simplified, flexible, independently scalable, powerful, and agile, that avoid locking them into a specific software suite, and that enable them to repurpose existing hardware investments. In addition, with recent rapid changes in virtualization, many customers are reevaluating their current virtualization strategies and looking for new paths forward.

FlashStack, originally developed by Cisco and Pure Storage, offers a pre-engineered Converged Infrastructure solution that integrates compute, storage, and networking, reducing IT risk by validating the architecture and ensuring compatibility across all components. FlashStack’s success is rooted in its adaptability and ongoing evolution, consistently incorporating the latest technology and product innovations in management, compute, storage, and networking.

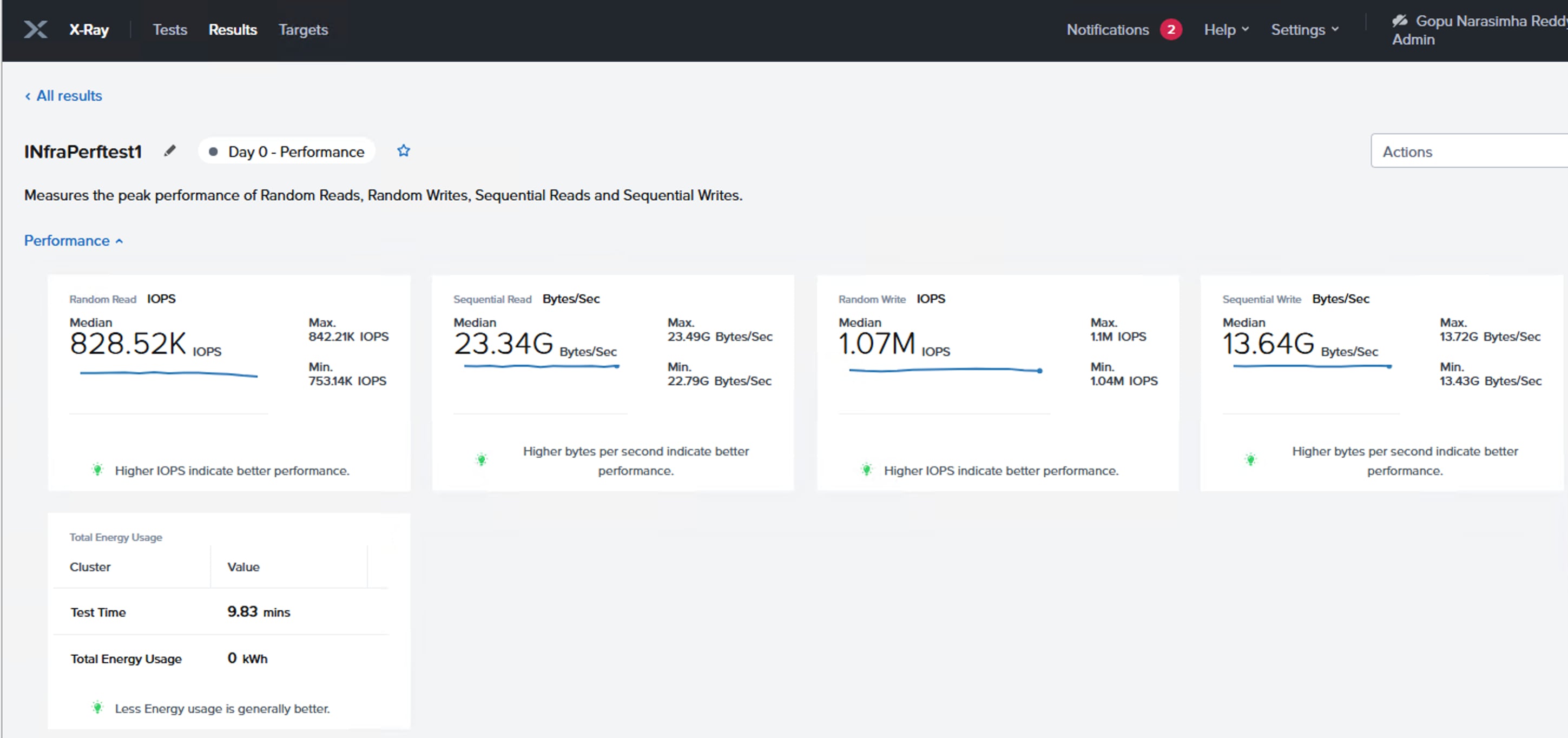

To address these evolving needs, Nutanix joined the decade-long partnership of Cisco and Pure Storage to bring Nutanix hypervisor (AHV) support to FlashStack. By combining industry-leading Cisco UCS compute, Pure Storage, and the Nutanix Cloud Platform, this solution offers a robust virtualization platform purpose-built to address modern customer challenges. The architecture is ideally suited for hosting enterprise-critical workloads that demand consistent performance, support for mixed workloads with varying I/O requirements, large virtual machine farms, and independent scalability of compute and storage resources.

This document presents validated best-practice design and deployment details for FlashStack with Nutanix AOS and AHV, built with Cisco UCS, Cisco Networking, and Pure Storage FlashArray. It explains two deployment types: greenfield deployment, for customers with no existing Cisco UCS or Pure Storage infrastructure, and brownfield deployment, for customers who have already invested in Cisco UCS or Pure Storage and wish to repurpose their existing hardware investments.

Solution Overview

This chapter contains the following:

● Audience

FlashStack with Nutanix Cloud Platform represents a cohesive and flexible validated converged infrastructure solution that combines compute, network, and storage resources into a single, integrated architecture. Designed as a collaborative effort between Cisco, Pure Storage, and Nutanix, this converged infrastructure platform is engineered to deliver high levels of efficiency, scalability, and performance, suitable for a multitude of data center workloads. By standardizing on a validated design, organizations can accelerate deployment, reduce operational complexities, and confidently scale their IT operations to meet evolving business demands.

The FlashStack architecture leverages Cisco's Unified Computing System (Cisco UCS) servers, Cisco Nexus networking, Pure’s innovative storage systems, and Nutanix Cloud Platform (NCP) software, providing a robust foundation for virtualized environments.

The intended audience for this document includes, but is not limited to, IT architects, sales engineers, field consultants, professional services, Cloud Native teams, IT managers, IT engineers, partners, and customers who are interested in taking advantage of an infrastructure built to deliver IT efficiency and enable IT innovation.

This document provides deployment guidance for setting up the FlashStack solution with NCP. It introduces the design elements and explains the considerations and best practices for successfully deploying a Nutanix Compute Cluster using Cisco UCS and Pure Storage FlashArray.

Some of the highlights of FlashStack with Nutanix are:

● Alternate and Flexible Virtualization Infrastructure: FlashStack with Nutanix offers an alternative full-stack virtual infrastructure that is modern, scalable, and ready to handle most mission-critical, data-intensive workloads. This integrated solution combines the operational simplicity of Nutanix Cloud Infrastructure (NCI) solution with the high-performance and modular FlashStack architecture, delivering improved and consistent application performance. This solution stands out as the standard virtualization infrastructure choice for customers who are looking to re-evaluate and modernize their virtualization strategies.

● Repurpose the existing infrastructure Investments: This solution enhances the value of existing Cisco UCS servers and Pure Storage FlashArrays. By leveraging the stateless computing architecture of Cisco UCS alongside the modular design of Pure Storage FlashArrays, organizations can repurpose previous-generation hardware and maximize their infrastructure investments. This approach enables seamless modernization without the need to replace current assets, allowing organizations to upgrade and innovate while fully utilizing their existing technology resources.

● Seamless Solution Integration: All the components of the solution are deeply integrated to enable seamless Nutanix cluster provisioning, cluster expansion and lifecycle management of the entire solution. Cisco Intersight Device Connector for Nutanix Prism Central, combined with Pure Storage FlashArray integration, delivers a unified view of the entire solution through a single management console.

● Scalability and Consistent performance: HyperConverged Infrastructure (HCI) offers linear and predictable scaling, which suits some workloads; however, some customers need the flexibility to scale hardware resources independently. The modular architecture of FlashStack enables independent scaling of compute and storage resources, allowing organizations to meet the ongoing business demands of modern IT applications. Cisco UCS’s modular and stateless computing combined with Pure Storage FlashArray’s modular NVMe architecture delivers a high-performance solution that is ideally suited for mission-critical workloads with varying I/O requirements. In addition, this architecture uses the NVMe over Fabrics (NVMe-oF) protocol over TCP (NVMe/TCP), extending the high-performance benefits of the FlashArray NVMe architecture across standard Ethernet networks using TCP/IP.

● Operational efficiency and Consistent infrastructure configuration: The solution provides unified management through Cisco Intersight, Prism Central, and Pure1. This integrated approach enables centralized monitoring, management, and automation across compute, storage, and virtualization layers, simplifying operations and enhancing visibility for IT administrators. Intersight integration with Nutanix Foundation Central (FC) automates deployment so that administrators do not have to switch between multiple management points, which reduces human configuration errors. In addition to the compute-specific hardware and software innovations, integration of the Cisco Intersight cloud platform with Pure Storage FlashArray and Cisco Nexus delivers monitoring, orchestration, and workload optimization capabilities for different layers of the FlashStack solution.

Technology Overview

This chapter contains the following:

● FlashStack with Nutanix Cloud Platform

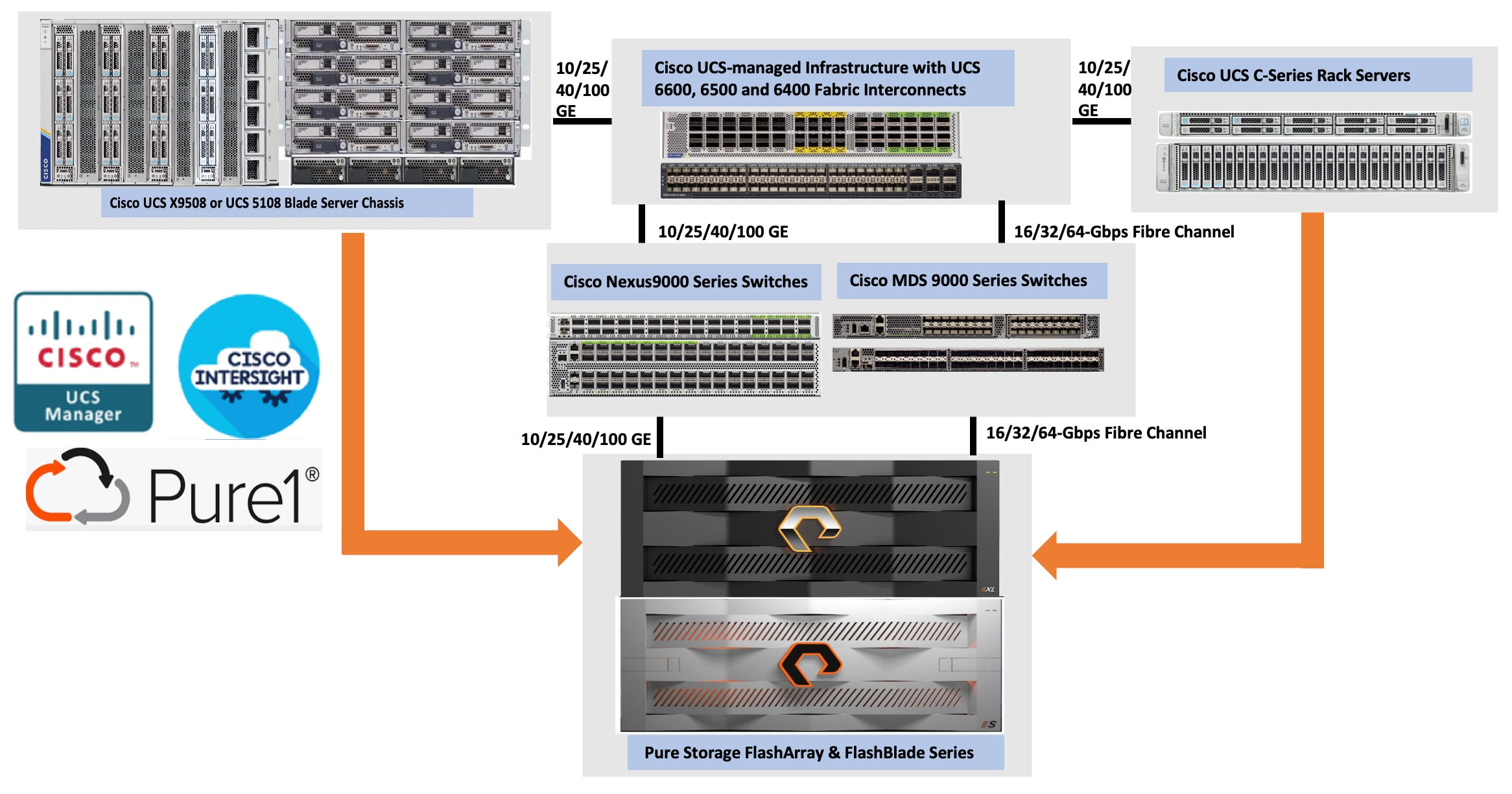

All FlashStack components are integrated, allowing you to deploy the solution quickly and economically while eliminating many of the risks associated with researching, designing, building, and deploying similar solutions from the foundation. One of the main benefits of FlashStack is its ability to maintain consistency at scale. Figure 1 illustrates the series of hardware components used for building the generic FlashStack architectures. Each of the component families, namely Cisco Unified Computing System (Cisco UCS), Cisco Nexus, Cisco MDS, and Pure Storage FlashArray systems, offers platform and resource options to scale up or scale out the infrastructure while supporting the same features and functions. For more details on the FlashStack architecture, go to: FlashStack.

FlashStack with Nutanix Cloud Platform

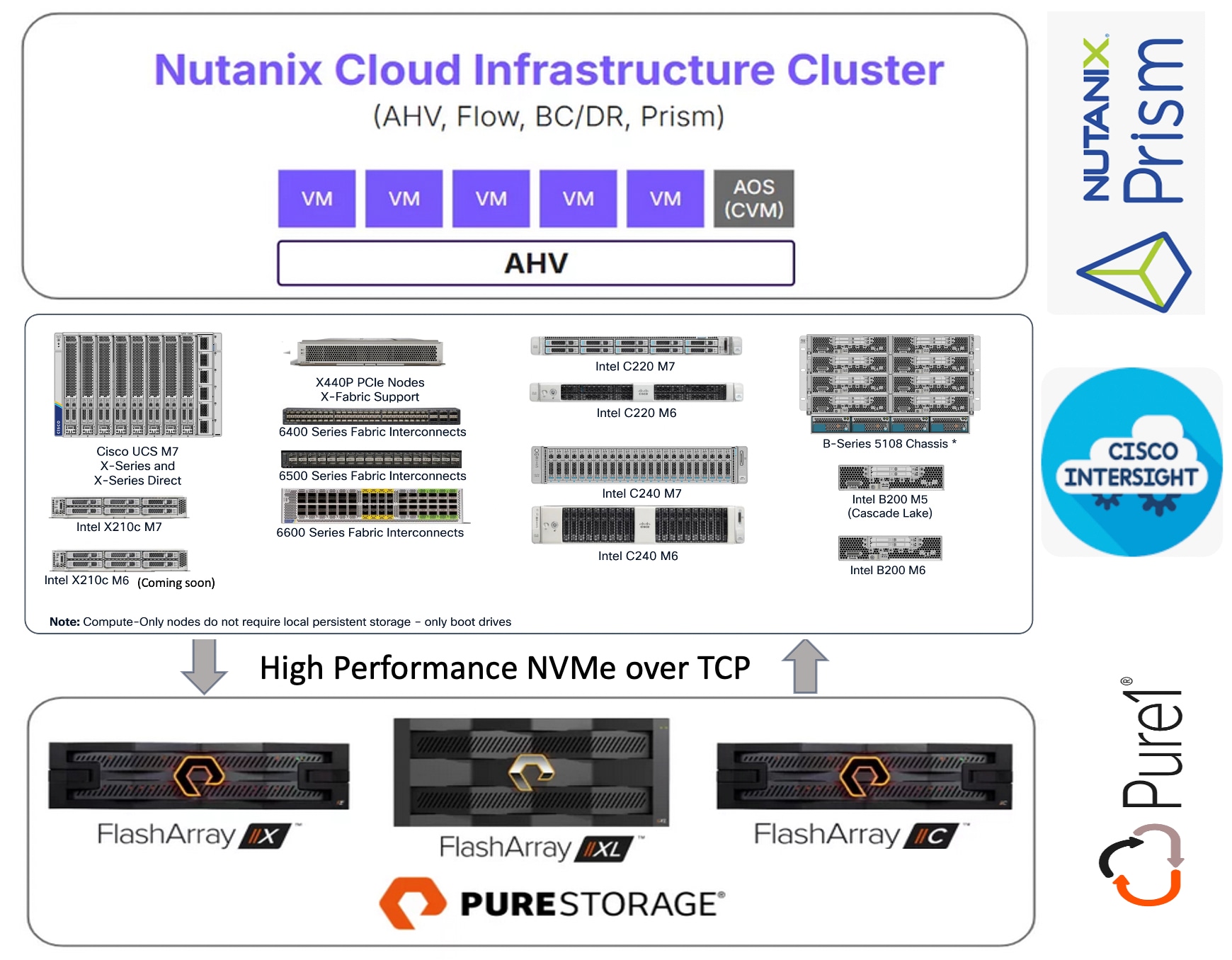

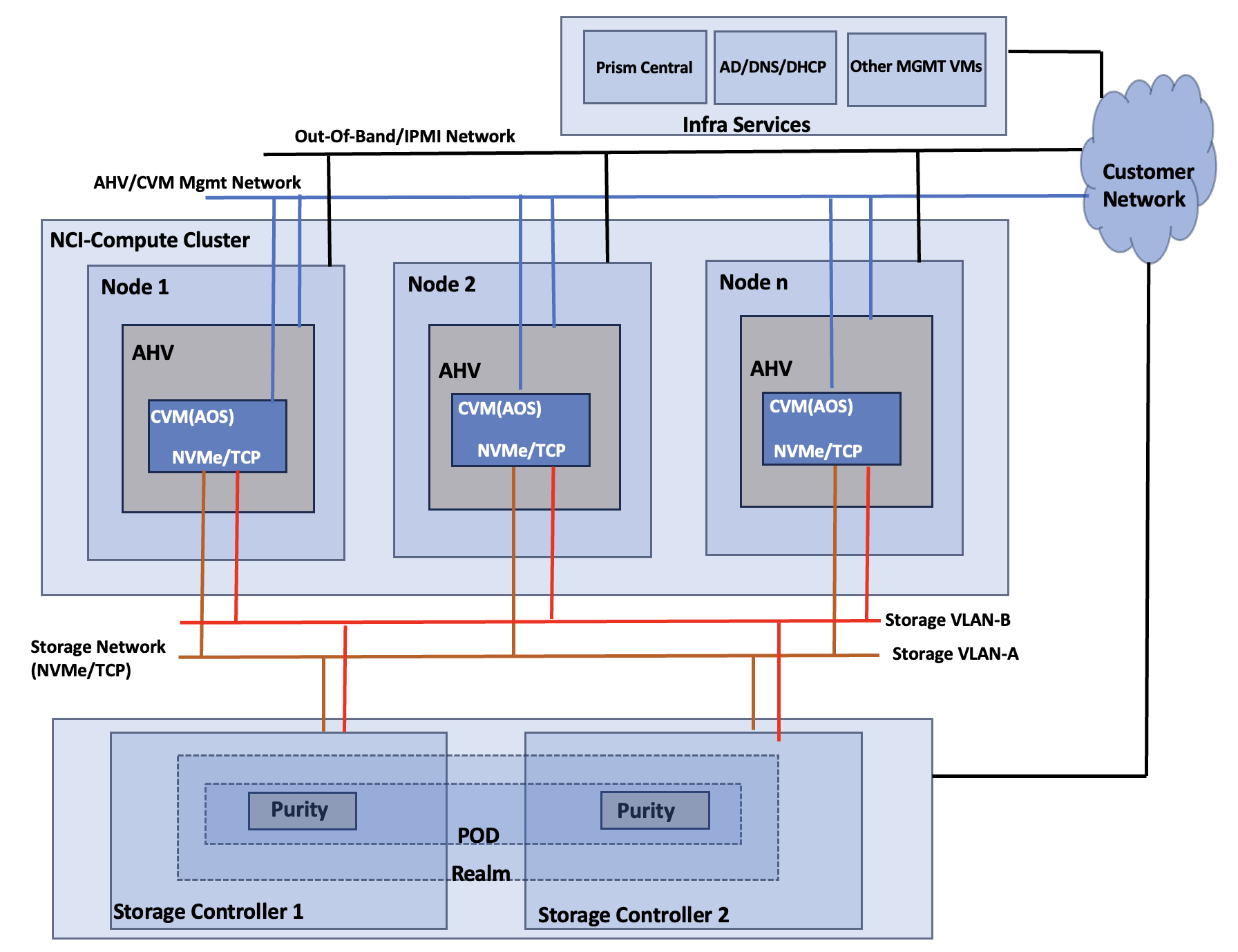

Nutanix Cloud Platform (NCP) is a comprehensive hybrid cloud infrastructure solution. The NCP combines the core elements of its HCI technology with additional cloud services, automation, and integrations, enabling organizations to build, manage, and optimize their IT infrastructure across on-premises, edge, and public cloud environments. The high-level design of the solution is described in Figure 2.

Nutanix Cloud Infrastructure (NCI) is the core software stack of NCP. It unifies compute, storage, and networking into a single, scalable software-defined system, simplifying management across data centers, edge sites, and public clouds in hybrid multicloud environments. It simplifies operations by using AHV for virtualization, AOS for storage and data services, and Prism Central for centralized management across environments.

Nutanix Acropolis Hypervisor (AHV) is a native, enterprise-grade virtualization solution built directly into the Nutanix Cloud Infrastructure, eliminating the complexity and cost of third-party virtualization while delivering enterprise-grade performance and scalability. VM high availability, live migration, dynamic scheduling, and integrated networking are the core features of AHV. Nutanix Flow adds policy-driven microsegmentation and virtual networking, providing granular control over east-west traffic and helping meet compliance requirements. Designed around virtual machines, AHV enables fast provisioning, easy scaling, responsive performance, and built-in resilience for business-critical applications.

Nutanix Cloud Infrastructure – Compute Cluster is part of the NCP and provides core compute capabilities, while storage is facilitated by the external storage array. The NCI Compute Cluster runs Nutanix AHV and AOS software on a set of Compute-Only (CO) nodes like Cisco UCS x86 standard servers and forms a compute cluster backed by an external Pure Storage FlashArray. The NCI compute cluster provides compute resources to the VMs, delivers VM availability, security, disaster recovery, and lifecycle management functionalities. The cluster does not include any directly attached storage; instead, it consumes storage from an externally connected Pure Storage FlashArray through the Nutanix AOS storage controller.

The role of Nutanix Acropolis Operating System (AOS) in this NCI Compute Cluster architecture differs from its role in traditional HCI architectures. All the code changes required for enabling and integrating external storage into the solution are made within the AOS controller VM, not at the AHV hypervisor level. Some of the key responsibilities of the AOS controller VM are:

● External Storage Array connectivity: The AOS controller VM acts as a translator, connecting the AHV hypervisor to the external storage via NVMe-over-Fabric (NVMe-oF) over TCP. NVMe-oF over TCP is a protocol that enables NVMe storage devices to connect and communicate across a standard Ethernet network using TCP/IP. This approach delivers the high-speed and low-latency benefits of NVMe storage without requiring specialized hardware, making it a simple, scalable, and cost-effective high-performance storage solution. The increase in Ethernet speeds (25/40/100 GbE and beyond) significantly accelerates adoption of NVMe-oF over TCP.

● Data Path Redundancy: AOS ensures that if a network path to the storage fails, the VM’s storage access is automatically rerouted (autopathing).

● Snapshots and Clones: AOS delegates these operations to the external storage but presents them to the administrator as standard Nutanix VM-level operations.

● DR Automation: AOS manages disaster recovery orchestration and replication for VMs even though the actual data blocks reside on the external storage array.

● Unified Operations via Prism: Even without local storage to manage, AOS enables you to manage VMs, networking, and security policies from the same standard Prism interface used for standard HCI clusters.
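The data path redundancy behavior described in the list above can be pictured with a small Python sketch. This is only an illustration of the general idea of failing over to a healthy path; it is not the AOS implementation, and the `StoragePath` class, its fields, the path names, and the IP addresses (taken from the documentation address range) are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class StoragePath:
    """Hypothetical model of one network path to the external array."""
    name: str
    target_ip: str
    healthy: bool = True


def select_active_path(paths: List[StoragePath]) -> Optional[StoragePath]:
    """Return the first healthy path, or None if every path is down."""
    for path in paths:
        if path.healthy:
            return path
    return None


paths = [
    StoragePath("fabric-a", "192.0.2.10"),
    StoragePath("fabric-b", "192.0.2.11"),
]
primary = select_active_path(paths)    # fabric-a while it is healthy
paths[0].healthy = False               # simulate a failed path
fallback = select_active_path(paths)   # access is rerouted to fabric-b
```

The point of the sketch is that the consumer of storage never chooses a path itself; it simply asks for an active path and receives whichever one is currently healthy.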

Cisco UCS is an integrated data center platform that combines compute, networking, and storage into a single, centrally managed system, simplifying management, reducing complexity, and improving efficiency for diverse workloads like virtualization, cloud, and AI. Key components include servers (blades, rack, modular) and Fabric Interconnects (FIs) for unified connectivity.

FlashStack with Nutanix integrates Cisco UCS servers (Cisco UCS C-Series, Cisco UCS B-Series, and Cisco UCS X-Series) as compute-only nodes with Nutanix’s Cloud Platform and external Pure Storage FlashArray, creating a flexible, disaggregated infrastructure managed by Cisco Intersight.

● The stateless computing model (where every compute aspect is virtualized and abstracted) and midplane-free modular design of Cisco UCS set it apart as a unique server platform. This approach enables you to repurpose your existing servers by converting them into fully supported Nutanix nodes, maximizing investment and flexibility.

● Currently, the solution supports the last two generations of blade and rack server platforms: Cisco UCS B200 M5, Cisco UCS B200 M6, Cisco UCS C220 M6, Cisco UCS C240 M6, Cisco UCS X210c M6 (coming soon), Cisco UCS X210c M7, Cisco UCS C220 M7, and Cisco UCS C240 M7. Support for Cisco UCS M8 server platforms will be available in future releases of the solution.

● The CO nodes do not require local disks for persistent storage. Local M.2 SSDs are used only for booting the AHV hypervisor and storing the AOS Controller VM binaries on the Nutanix node. The CO nodes connect to the Pure Storage FlashArray for virtual machine storage using the NVMe over Fabrics (NVMe-oF) protocol over TCP.

● NVMe over TCP provides a highly simplified and fast way to access storage across IP networks, delivering high performance and low latency without requiring specialized network infrastructure. As Ethernet network speeds continue to increase, the adoption of NVMe over TCP is further accelerated, enabling even faster data transfer and improved performance for storage solutions over standard IP networks.
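Because NVMe over TCP runs over ordinary TCP/IP, basic reachability of a storage portal can be checked with a plain TCP connection. The sketch below assumes the IANA-registered NVMe-oF ports (4420 for I/O controllers and 8009 for NVMe/TCP discovery); the ports actually configured in a given environment should be verified against the array configuration, and the function name is illustrative. A successful connection only shows that the port accepts TCP connections, not that NVMe-oF itself is working.

```python
import socket

NVME_TCP_IO_PORT = 4420         # IANA-registered NVMe-oF port (assumed in use)
NVME_TCP_DISCOVERY_PORT = 8009  # IANA-registered NVMe/TCP discovery port


def tcp_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False
```

For example, `tcp_port_open("192.0.2.50", NVME_TCP_IO_PORT)` would test a placeholder portal address; substitute the storage interface addresses of the actual array.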

Pure Storage FlashArray provides storage services such as user data persistence, data resiliency, data availability, data security, and data efficiency.

● The Pure Storage Platform delivers a unified, cloud-like storage-as-a-service foundation that consolidates block, file, and object with simple, centralized management, cutting complexity and accelerating outcomes.

● FlashArray//X, //XL, and //C series arrays are supported, and support for other series will be available in future phases of the program. Built on the Evergreen architecture for non-disruptive upgrades (including in-place upgrades) and zero-downtime operations, FlashArray future-proofs the estate while driving cost, space, and energy efficiency so teams can focus on innovation instead of migrations.

● Its always-on global compression and deduplication deliver industry-leading data efficiency, often requiring significantly less hardware than alternatives. The platform’s modular NVMe architecture enables true disaggregated simplicity, separating compute and storage for sub-millisecond latency across all workloads.

● With full integration into the Nutanix Prism control plane, customers gain VM-level snapshots, and seamless Day 1 and Day 2 operations, all from a single interface. FlashArray delivers six-nines availability, even during in-place upgrades, and scales with DirectFlash Modules up to 150TB per drive (300TB announced), offering more usable capacity with fewer devices.
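To put the six-nines availability figure in perspective, the following sketch converts an availability expressed as a number of nines into the downtime it permits per year. It assumes a 365-day year, and the function name is illustrative.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # assumes a non-leap year


def allowed_downtime_seconds(nines: int) -> float:
    """Yearly downtime permitted by an availability of `nines` nines."""
    unavailability = 10.0 ** -nines    # six nines -> 0.000001
    return unavailability * SECONDS_PER_YEAR


six_nines = allowed_downtime_seconds(6)  # roughly 31.5 seconds per year
```

By the same arithmetic, five nines would allow roughly 315 seconds (a little over five minutes) per year.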

Nutanix Prism provides central access to configure, monitor, and manage virtual environments. Nutanix Prism uses machine learning to mine large volumes of system data easily and quickly, generating actionable insights for optimizing all aspects of virtual infrastructure management. Included as a part of every Nutanix deployment, Nutanix Prism has two core components:

● Prism Element: Prism Element is a service built into the platform for every deployed Nutanix cluster. Prism Element fully configures, manages, and monitors Nutanix clusters running any hypervisor.

● Prism Central: Because Prism Element manages only the cluster that it’s part of, each deployed Nutanix cluster has a unique Prism Element instance for management. With Prism Central, you can manage different clusters across separate physical locations on one screen and gain an organizational view into a distributed Nutanix environment.

Foundation Central (FC) is Nutanix software that allows you to create clusters from factory-imaged nodes and to remotely reimage existing nodes that are already registered with Foundation Central. It can be run from Prism Central or from a standalone Foundation Central appliance VM.

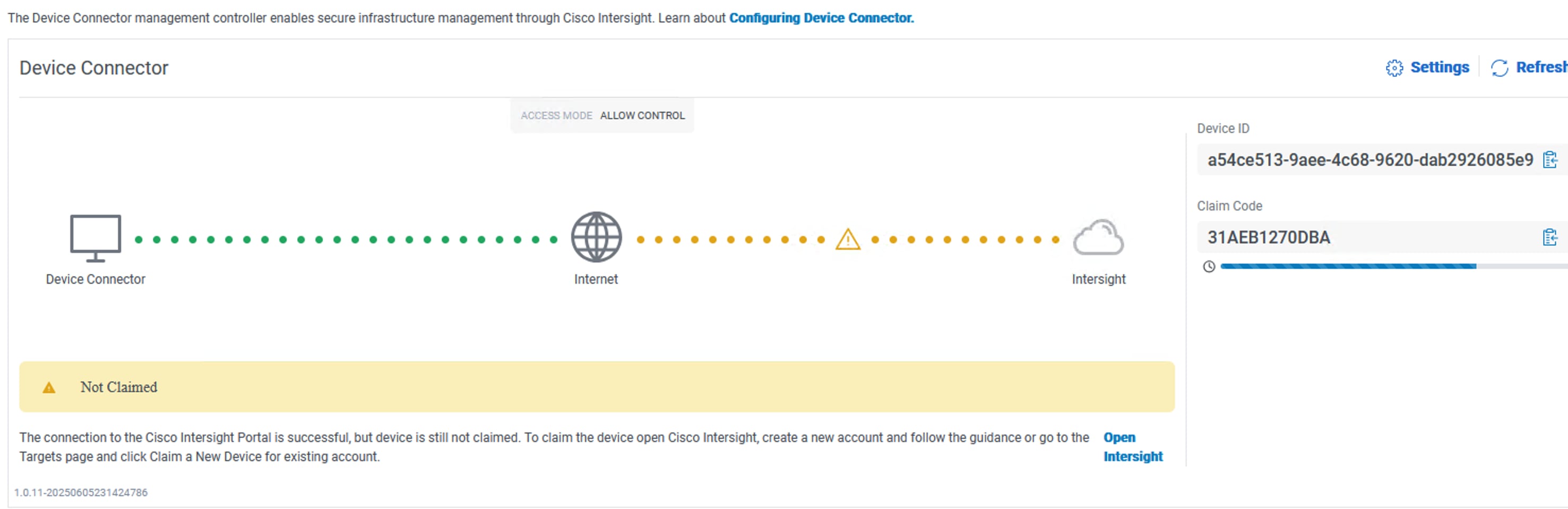

Cisco Intersight Device Connector in Prism Central is an application installed via the Nutanix Marketplace that enables secure, bi-directional communication between Nutanix clusters and the Cisco Intersight portal. It facilitates centralized management, monitoring, and proactive alerts for Nutanix infrastructure within the Intersight dashboard. The following list provides key capabilities and functionality.

● Unified Management Interface: A “single pane of glass” view through a dedicated Nutanix cluster dashboard and aggregated data views across multiple Prism Centrals.

● Comprehensive Visibility and Monitoring: Provides deep visibility and detailed monitoring capabilities including:

◦ Alarms at the cluster-level

◦ Detailed Information about nodes, Virtual Machines, software versions, license information (for both Intersight and Nutanix), and storage utilization.

◦ Support for Inventory at the cluster, node, VM, and Cluster GPU levels.

● Simplified Lifecycle Management: Facilitates easy upgrades and lifecycle management through Nutanix Life Cycle Manager (LCM).

Cisco Intersight

Cisco Intersight is a lifecycle management platform for your infrastructure, regardless of where it resides. In your enterprise data center, at the edge, in remote and branch offices, at retail and industrial sites—all these locations present unique management challenges and have typically required separate tools. Cisco Intersight Software as a Service (SaaS) unifies and simplifies your experience of Cisco UCS.

The Cisco Intersight Managed Mode (also referred to as Cisco IMM) is an architecture that manages Cisco UCS fabric interconnect–attached systems through a Redfish-based standard model. Cisco IMM standardizes both policy and operation management for Cisco UCS C-Series, Cisco UCS X-Series, and Cisco UCS B-Series compute nodes used in this deployment guide.

For this release, all Cisco UCS servers intended for use as compute-only nodes in the Nutanix cluster must be connected to a pair of Fabric Interconnects and managed exclusively with Cisco Intersight using IMM. Support for other management options will be available in future releases.

Cisco Intersight integration with Prism Central allows Foundation Central to communicate directly with Intersight, automatically creating the necessary Intersight pools, policies, and server profiles. This automation streamlines and simplifies the deployment of Nutanix clusters on Cisco UCS servers, significantly enhancing the overall customer experience.

In addition to the Cisco Intersight SaaS platform, the air-gapped Cisco Intersight Private Virtual Appliance (PVA) and the Cisco Intersight Connected Virtual Appliance (CVA) are also supported for managing the hardware infrastructure and for deploying Nutanix clusters on FlashStack. If an air-gapped Cisco Intersight Private Virtual Appliance is used, updates and downloads must be managed manually. The firmware bundles for all the supported hardware platforms, such as Cisco UCS B-Series, Cisco UCS C-Series, and Cisco UCS X-Series, must be uploaded to the PVA software repository. The Nutanix Phoenix AHV and AOS software bundles must also be uploaded to the PVA. If the Fabric Interconnects need to be upgraded, their firmware bundles must also be uploaded to the PVA. For more information about downloading firmware bundles into PVA, see: Creating an Appliance Account for Downloading Software Packages. For more information about deploying CVA, see: Installing Cisco Intersight Virtual Appliance and Intersight Assist on VMware vSphere.

Note: Cisco Intersight connected or air-gapped virtual appliances (CVA and PVA) version 1.1.5-1 or above must be used for installing Nutanix AHV on FlashStack. For more information on the specific differences when using CVA/PVA (compared to Cisco Intersight SaaS) for the Nutanix AHV deployment, see the Cisco FlashStack with Nutanix Installation Field Guide.
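The manual upload requirements for an air-gapped PVA can be summarized as a checklist. The following sketch builds that checklist from the server families in use; the function name and the checklist labels are illustrative strings, not actual file or package names, and limiting the firmware bundles to the families present in the deployment is an assumption.

```python
from typing import Iterable, List

SUPPORTED_FAMILIES = ("B-Series", "C-Series", "X-Series")


def pva_upload_checklist(server_families: Iterable[str],
                         upgrade_fabric_interconnects: bool) -> List[str]:
    """List what must be uploaded manually to an air-gapped PVA."""
    checklist = []
    for family in server_families:
        if family not in SUPPORTED_FAMILIES:
            raise ValueError(f"unsupported server family: {family}")
        checklist.append(f"Cisco UCS {family} firmware bundle")
    # The Nutanix software is needed regardless of the server family.
    checklist.append("Nutanix Phoenix AHV and AOS software bundles")
    if upgrade_fabric_interconnects:
        checklist.append("Fabric Interconnect firmware bundle")
    return checklist


items = pva_upload_checklist(["X-Series", "C-Series"],
                             upgrade_fabric_interconnects=True)
```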

Pure Storage Pure1

Pure Storage Pure1 is a cloud-based, AI-driven SaaS platform designed to simplify and optimize data storage management for Pure Storage arrays. It offers features such as proactive monitoring, predictive analytics, self-service upgrades, and automated tasks.

● Provides a single, intuitive interface for monitoring and managing all your Pure Storage FlashArrays, FlashBlades, and Portworx, integrating capabilities for capacity management, security monitoring, data protection, and troubleshooting, all in one place.

● Provides proactive recommendations before the storage array faces an issue. The SelfService upgrade feature enables customers to upgrade their storage arrays on their own schedule, providing comprehensive health checks and step-by-step wizards to ensure smooth, non-disruptive upgrades.

● Pure1’s Genealogy feature tracks your hardware evolution from installation through upgrades and sends reminders about upcoming renewals, helping you avoid lapses in support coverage.

● Offers robust support for identifying bottlenecks across virtual disks, datastores, hosts, and physical arrays, whether the issues are in the storage or virtualization layer.

Note: Pure Storage FlashArray must be upgraded to Purity 6.10.3 or later to support the Nutanix AHV deployment on a FlashStack environment. This version is a global requirement for the Nutanix Cloud Platform on Pure Storage, applicable across all integrations – whether deployed as a FlashStack solution or on independent server hardware.

Solution Design

This chapter contains the following:

● FlashStack Design Considerations

● Considerations and Recommendations for FlashStack with Nutanix

● Supported Hardware and Software Components

● FlashStack Physical Topology

● FlashStack with Nutanix Networking

● FlashStack with Nutanix Storage Layout

FlashStack Design Considerations

FlashStack with Cisco UCS and Cisco Intersight meets the following design requirements:

● Resilient design across all the layers of infrastructure with no single point of failure

● Scalable design with the flexibility to add compute capacity, storage, or network bandwidth as needed

● Modular design that can be replicated to expand and grow as the needs of the business grow

● Flexible design that can support different models of various components with ease

● Simplified design with the ability to integrate and automate with external automation tools

● AI-Ready design to support required NVIDIA GPUs for running AI/ML based workloads

● Cloud-enabled design which can be configured, managed, and orchestrated from the cloud using GUI or APIs

● Unified full-stack visibility for real-time monitoring, faster troubleshooting, and improved digital resilience by correlating metrics, logs, and traces across infrastructure and applications

To deliver a solution that meets all these design requirements, various solution components are connected and configured as explained in later sections.

Considerations and Recommendations for FlashStack with Nutanix

Consider the following design aspects, recommendations, and limitations of Nutanix Cloud Platform with Pure Storage FlashArray.

● It is recommended to use 480G M.2 SSDs configured with RAID1 as the boot device for storing the AHV/AOS OS binaries. 480G SSDs provide enough storage for additional files that are created on a daily basis (such as log files) and for future upgrades. M.2 240G SSDs are also supported with certain configurations.

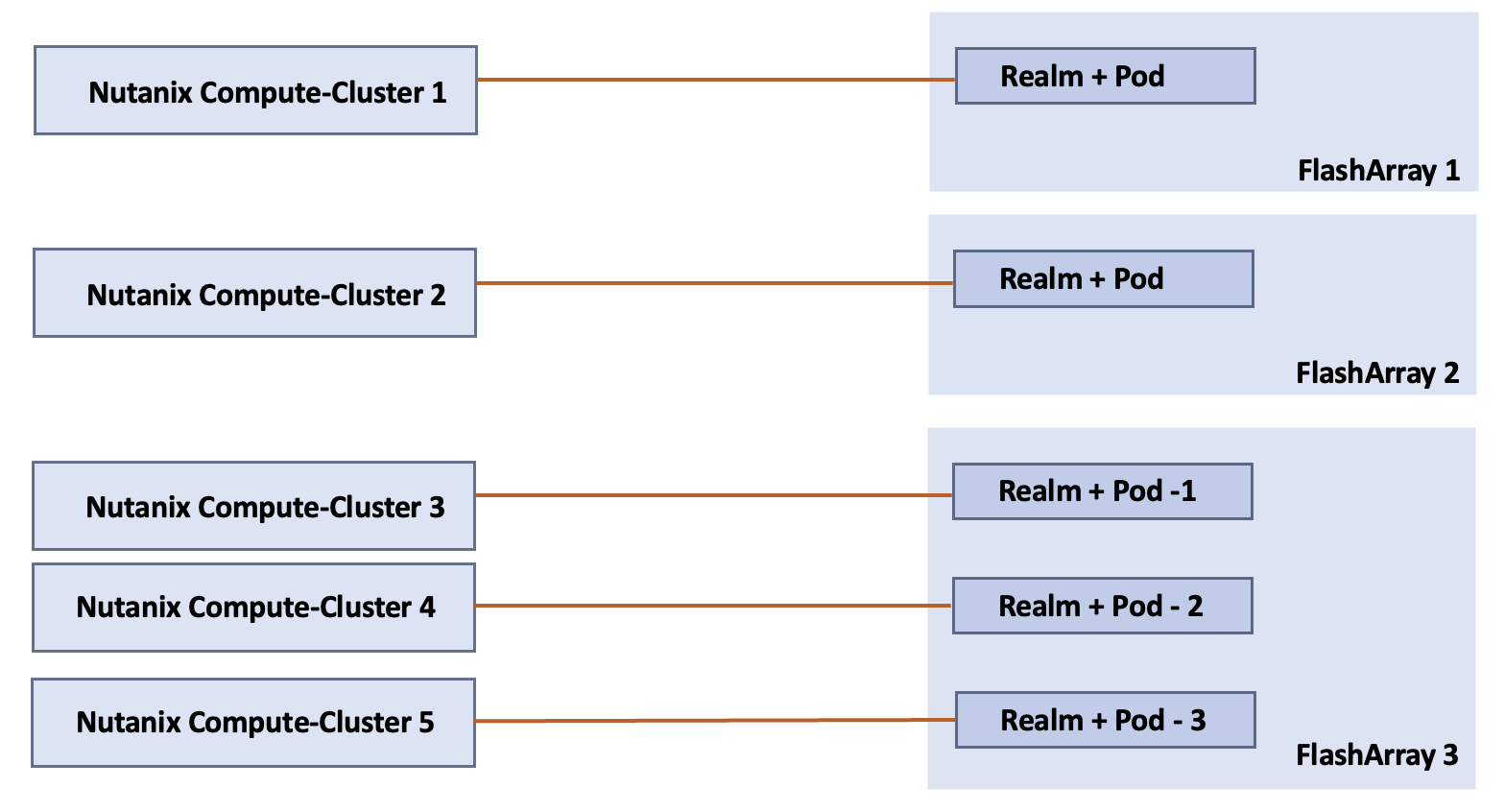

● A Nutanix Cloud Infrastructure (NCI) compute cluster can connect to only one external Pure Storage FlashArray. However, a single FlashArray can provide storage to multiple NCI compute clusters. Pure Storage FlashArray supports data-at-rest encryption for the storage consumed by the NCI compute clusters. For more details, see: Pure Storage FlashArray data security and compliance.

● Production deployments require a minimum of five compute-only nodes and can scale up to 32 nodes per NCI compute cluster. For non-production deployments, a Nutanix cluster can be deployed with as few as three compute-only nodes. Single-node and two-node NCI compute clusters are not supported in either production or non-production environments.

● In the FlashStack design, which is standardized for enterprise workloads, it is recommended to connect the storage array to the Top-of-Rack (ToR) switches (like the Nexus 9000 series) rather than directly to the Cisco UCS Fabric Interconnects (FIs). Connecting the storage array to the ToR switches provides significantly better scalability, flexibility, and resource utilization. Connecting the FlashArray directly to the FIs limits storage array access to the servers connected to those FIs rather than making it a centralized shared resource. For this validation, a pair of Cisco Nexus 93600CD-GX switches is used as ToR switches. All the compute nodes (including blades and racks) are connected to the Nexus switches via Fabric Interconnects, and the Pure Storage FlashArray is directly connected to the ToR switches. While attaching a storage array to Fabric Interconnects (FIs) is not the recommended deployment method, it remains a technically supported option for customers who have specific constraints or are confident that they can manage the associated risks.

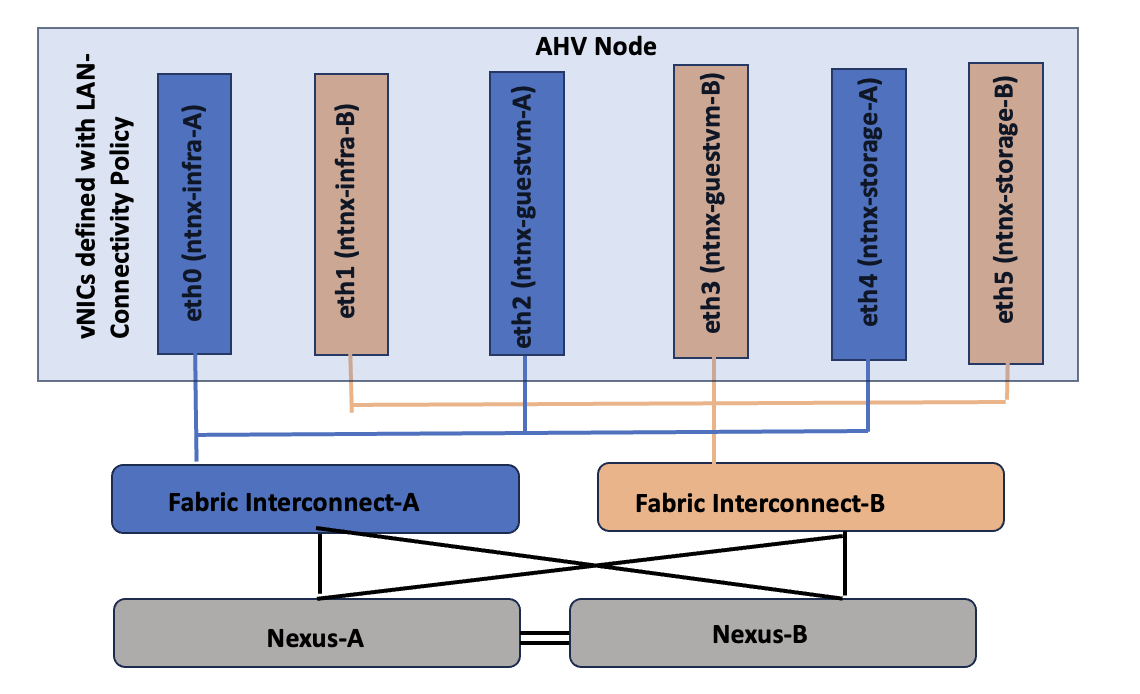

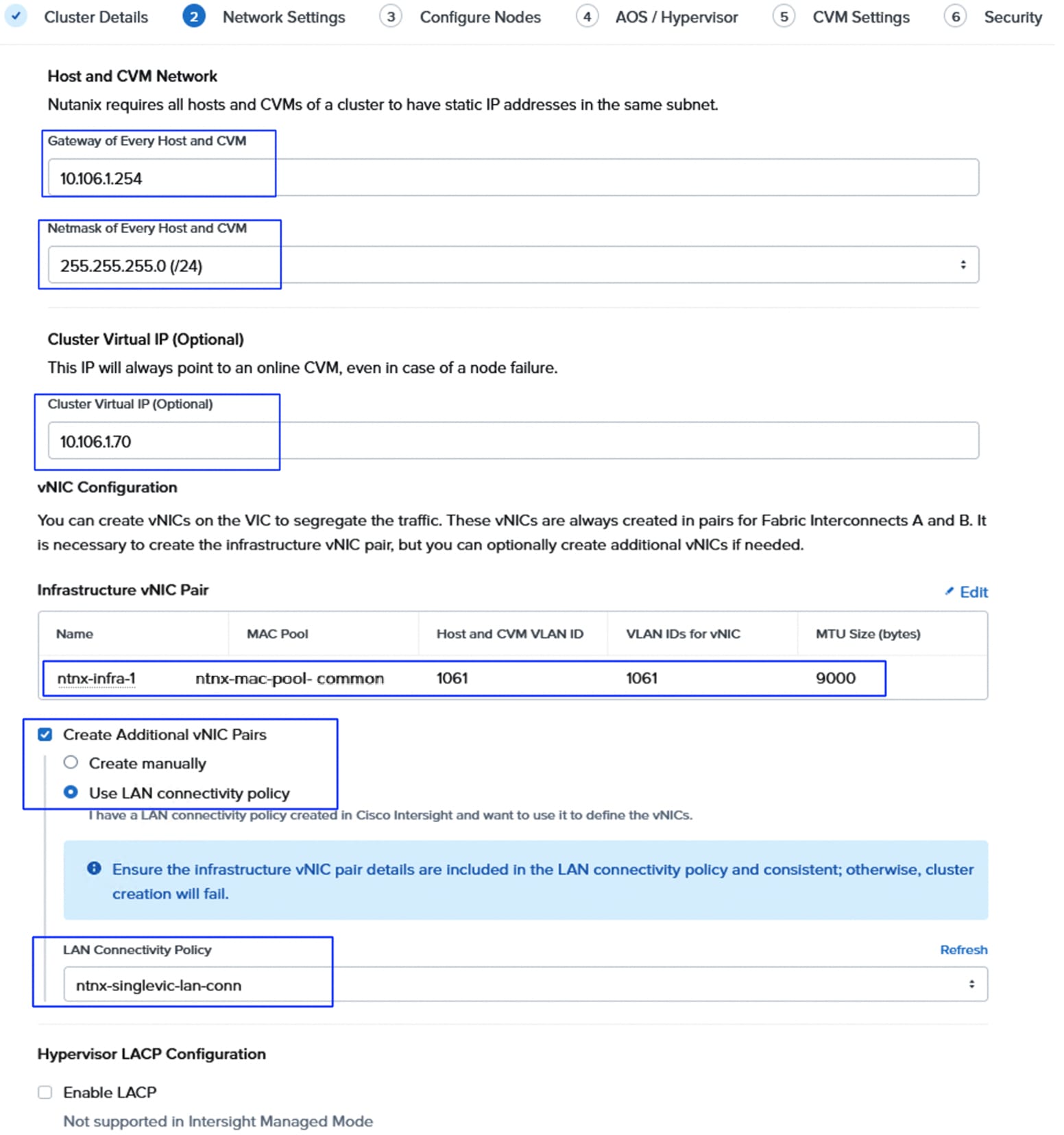

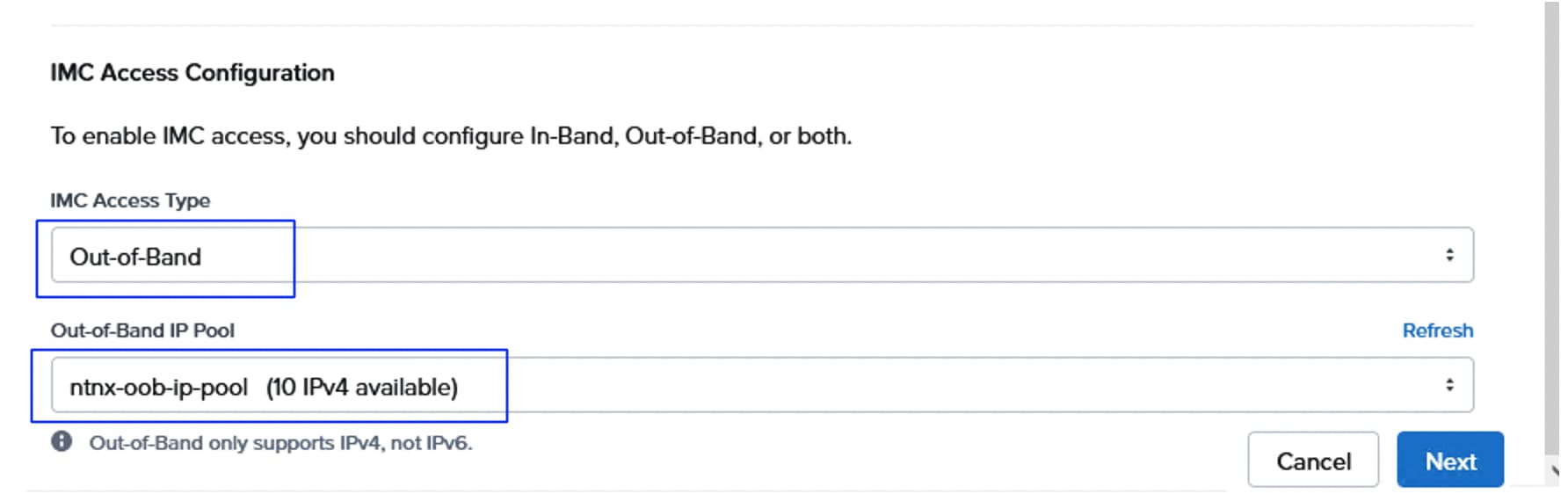

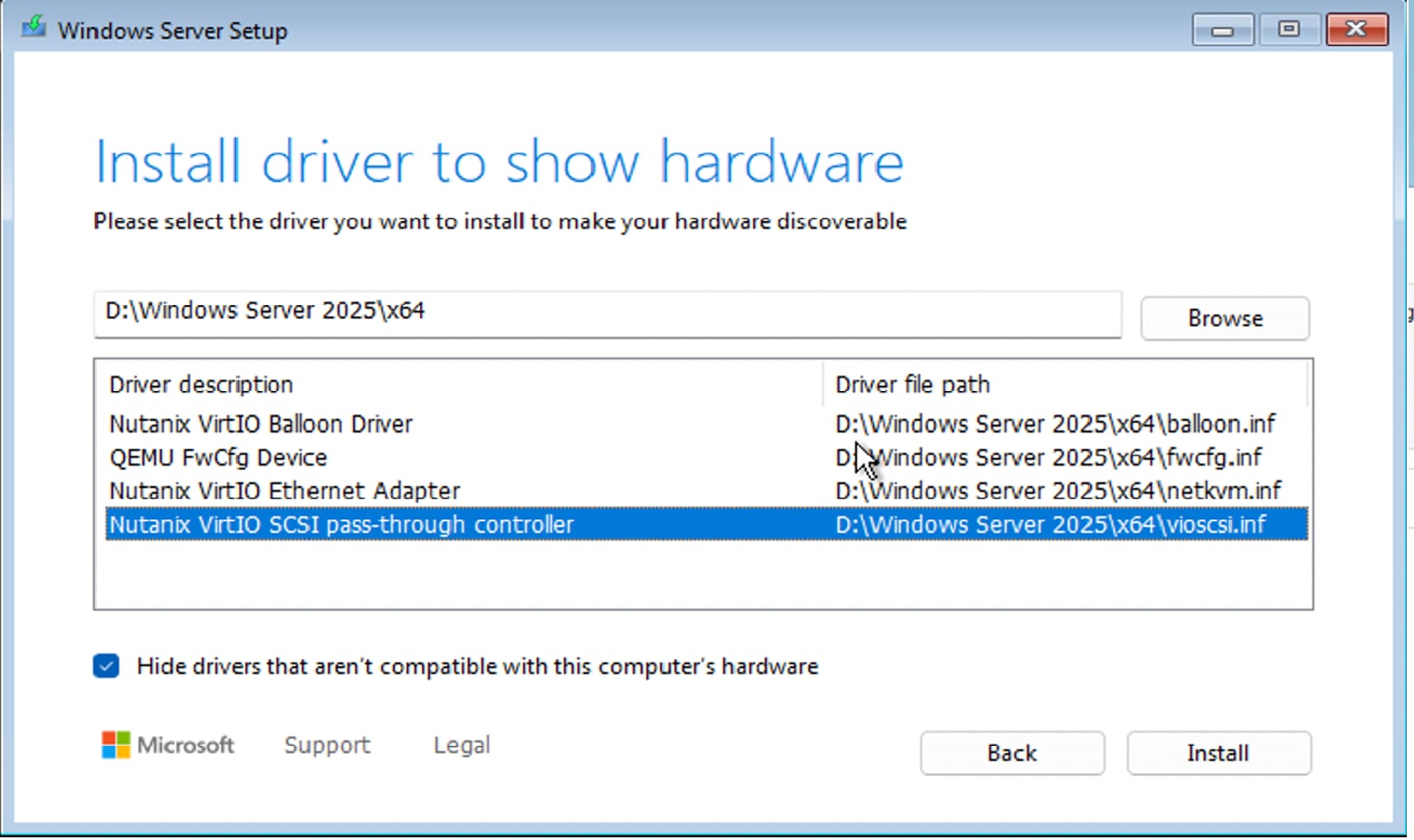

● A separate pair of vNIC interfaces must be created on each node for each type of traffic that needs to be segregated. For instance, external storage traffic that uses NVMe-oF needs to be segregated by creating a dedicated pair of vNICs. Instead of defining the required vNIC pairs from Foundation Central (FC) at the time of deployment, a Cisco UCS LAN Connectivity policy can be used to define the required vNICs with advanced configuration options including vNIC placement, VLAN ID, PCI order, and so on.

● Every vDisk that is created in an NCI compute cluster is directly mapped to a corresponding volume pair (data and metadata volumes) in the FlashArray. At the time of writing this document, no more than 5000 vDisks are supported per NCI compute cluster. For more information, go to: Nutanix and Pure Storage Requirements, Limits and Feature Compatibility.

● After the NCI compute cluster is deployed, it is necessary to configure the external storage from the Prism Element of the cluster before creating virtual machines, as AHV boot disk cannot be used to store virtual machine vDisks.

● Ensure that you do not destroy, connect, or disconnect any volumes and do not delete or modify the hosts or host ports in Purity//FA UI that begin with prefix "nx-" as these storage constructs are fully controlled by the NCI Compute Cluster. Manual intervention can lead to data unavailability or management desynchronization.
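Several of the limits in the list above lend themselves to simple pre-deployment checks. The following sketch encodes the node-count limits, the vDisk-to-volume mapping, and the "nx-" naming rule as stated in this document at the time of writing. The function names are hypothetical, applying the 32-node maximum to non-production clusters is an assumption, and treating the prefix match as case-sensitive is also an assumption.

```python
MIN_NODES_PRODUCTION = 5
MIN_NODES_NON_PRODUCTION = 3
MAX_NODES_PER_CLUSTER = 32      # assumed to apply to non-production as well
MAX_VDISKS_PER_CLUSTER = 5000   # limit at the time of writing
VOLUMES_PER_VDISK = 2           # one data volume plus one metadata volume
MANAGED_PREFIX = "nx-"          # objects controlled by the NCI compute cluster


def node_count_supported(nodes: int, production: bool) -> bool:
    """Check a compute-only node count against the documented limits."""
    minimum = MIN_NODES_PRODUCTION if production else MIN_NODES_NON_PRODUCTION
    return minimum <= nodes <= MAX_NODES_PER_CLUSTER


def flasharray_volumes_for(vdisks: int) -> int:
    """Number of FlashArray volumes backing the given number of vDisks."""
    if not 0 <= vdisks <= MAX_VDISKS_PER_CLUSTER:
        raise ValueError(f"vDisk count must be 0..{MAX_VDISKS_PER_CLUSTER}")
    return vdisks * VOLUMES_PER_VDISK


def is_cluster_managed(object_name: str) -> bool:
    """True if a Purity//FA object name must not be modified manually."""
    return object_name.startswith(MANAGED_PREFIX)
```

A cluster at the vDisk limit therefore corresponds to 10,000 volumes on the array, which is worth keeping in mind when a single FlashArray serves several NCI compute clusters.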

Supported Hardware and Software Components

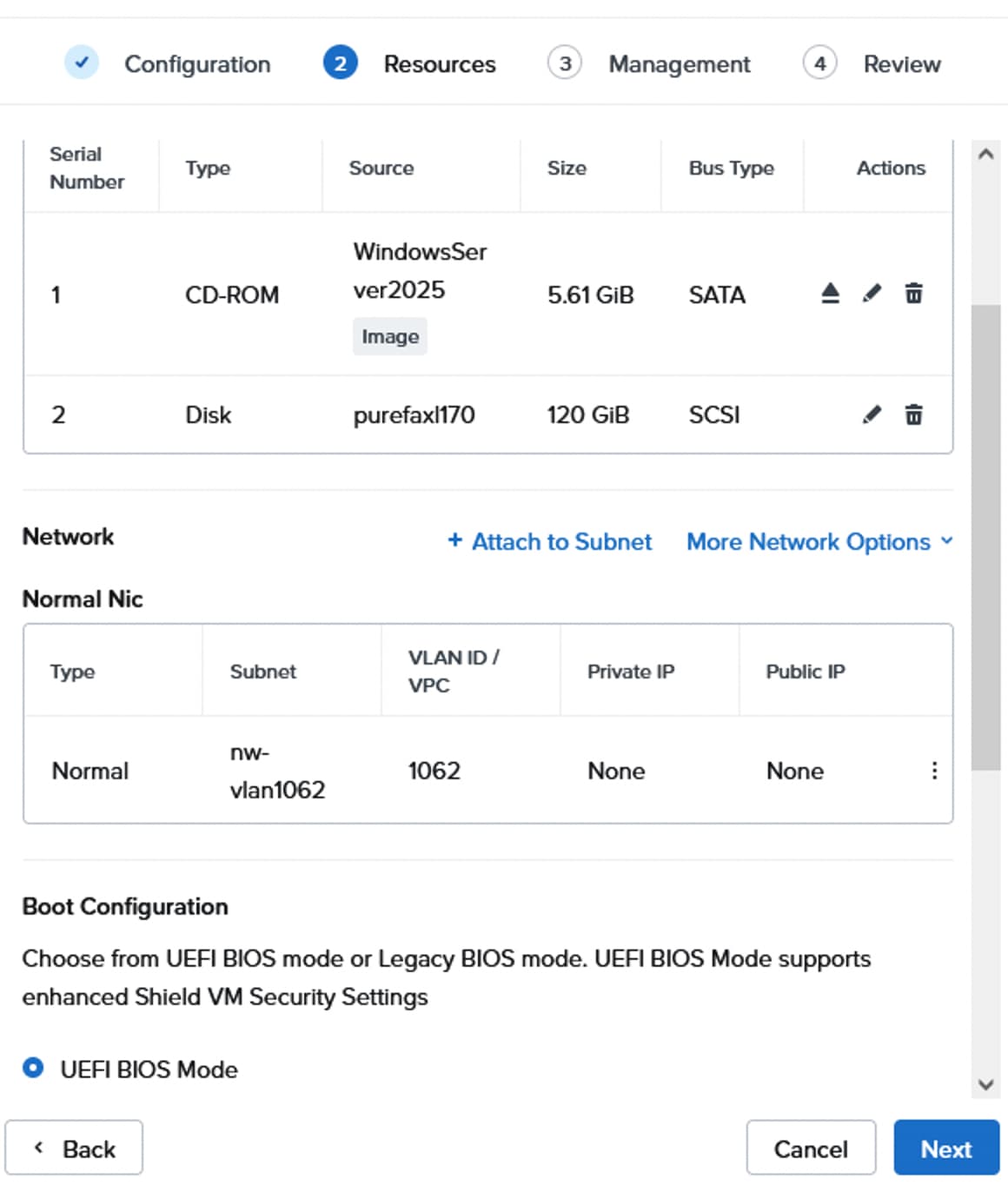

This document covers two types of deployment options.

● Greenfield Deployment: This option is for the customers with no existing Cisco UCS or Pure Storage infrastructure. For this type of deployment, customers can acquire supported hardware and software components for deploying FlashStack with Nutanix Cloud Platform. Table 1 lists supported server and storage platforms for greenfield deployments.

Table 1. Supported Cisco UCS and Pure Storage platforms for Greenfield deployments

| Platform | Details |
| --- | --- |
| Cisco UCS Fabric Interconnects | 6500 Series Fabric Interconnects (FI 6536); 6600 Series Fabric Interconnects (FI 6664) |
| Server Platform | Cisco UCS X-Series Blade Chassis: Cisco UCS X9508 and UCS X9508 Direct. Cisco UCS X-Series Blades: Cisco UCS X210c M7, Cisco UCS X210c M6 (coming soon). Cisco UCS C-Series Rack Servers (with single or dual VICs): UCSC-C220-M7S, UCSC-C220-M7N, UCSC-C240-M7SX, UCSC-C240-M7SN |
| Pure Storage | Pure Storage FlashArray//X, //XL and //C Series |

● Brownfield Deployment: This option is for the customers who have already invested in Cisco UCS and Pure Storage and wish to repurpose the existing hardware. Table 2 lists the Cisco UCS servers and Pure Storage platforms supported to deploy Nutanix clusters.

Table 2. Supported Cisco UCS and Pure Storage platforms for Brownfield deployments

| Platform | Details |
| --- | --- |
| Cisco UCS Fabric Interconnects | 6400 Series Fabric Interconnects (FI-6454 and FI-64108); 6500 Series Fabric Interconnects (FI 6536) |
| Server Platform | Cisco UCS B-Series Blade Chassis: UCS 5108. Cisco UCS B-Series Blades: Cisco UCS B200 M5 and Cisco UCS B200 M6, each with multiple VIC combinations. Cisco UCS C-Series Rack Servers (with single or dual VICs): UCSC-C220-M6S, UCSC-C220-M6SN, UCSC-C240-M6SX |
| Pure Storage | Pure Storage FlashArray//X, //XL and //C Series |

Note: In both deployment types, supported server platforms must be configured with two identical M.2 SSDs (240/480/960 GB) in RAID1 using the Cisco Boot-Optimized M.2 RAID Controller.

Note: Table 2 lists the hardware platforms supported in the first release of Nutanix support for FlashStack. Support for new platforms will be added in future releases of the solution. For the complete, up-to-date list of supported hardware platforms, see: Cisco Compute Hyperconverged with Nutanix and Cisco UCS Compute Server Hardware Compatibility.
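The M.2 boot-drive requirement noted above (two identical drives, a supported capacity, RAID1) can be expressed as a small validation routine. This is a minimal sketch: the `M2Drive` type, the model strings, and the `"RAID1"` label are hypothetical stand-ins for whatever an inventory source actually reports.

```python
from dataclasses import dataclass
from typing import List

SUPPORTED_M2_SIZES_GB = {240, 480, 960}


@dataclass(frozen=True)
class M2Drive:
    model: str        # hypothetical model string, used to test "identical"
    capacity_gb: int


def boot_config_issues(drives: List[M2Drive], raid_level: str) -> List[str]:
    """Return a list of problems; an empty list means the rules are met."""
    issues = []
    if len(drives) != 2:
        issues.append("exactly two M.2 SSDs are required")
    if len(set(drives)) > 1:
        issues.append("M.2 SSDs must be identical")
    unsupported = sorted({d.capacity_gb for d in drives} - SUPPORTED_M2_SIZES_GB)
    if unsupported:
        issues.append(f"unsupported capacity (GB): {unsupported}")
    if raid_level.upper() != "RAID1":
        issues.append("boot drives must be configured as RAID1")
    return issues
```

Returning a list of issues rather than a single boolean makes it easier to report every problem with a node in one pass.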

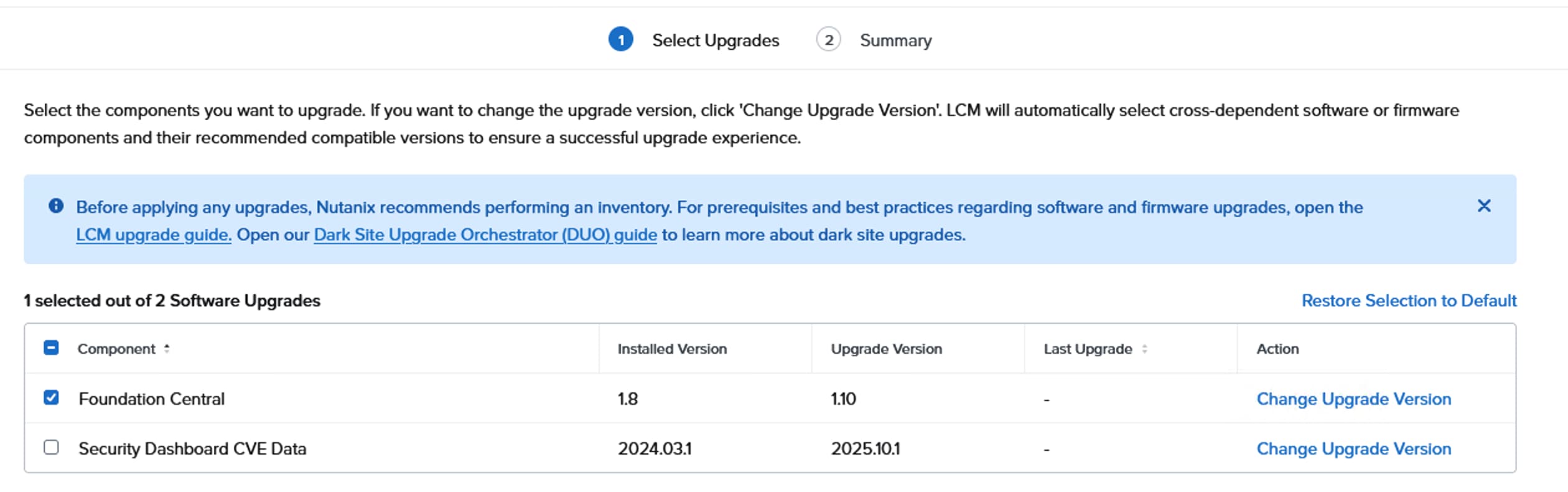

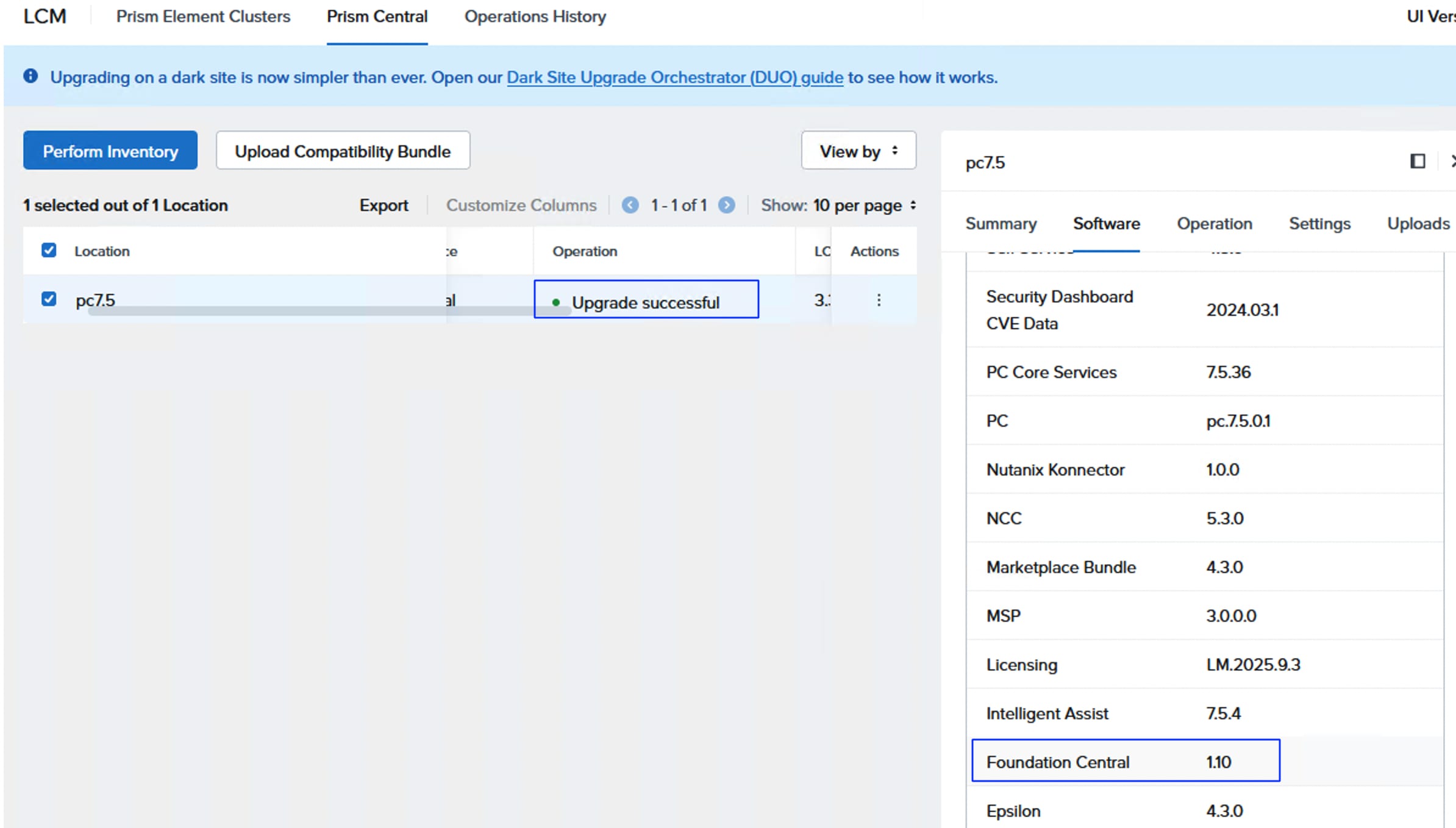

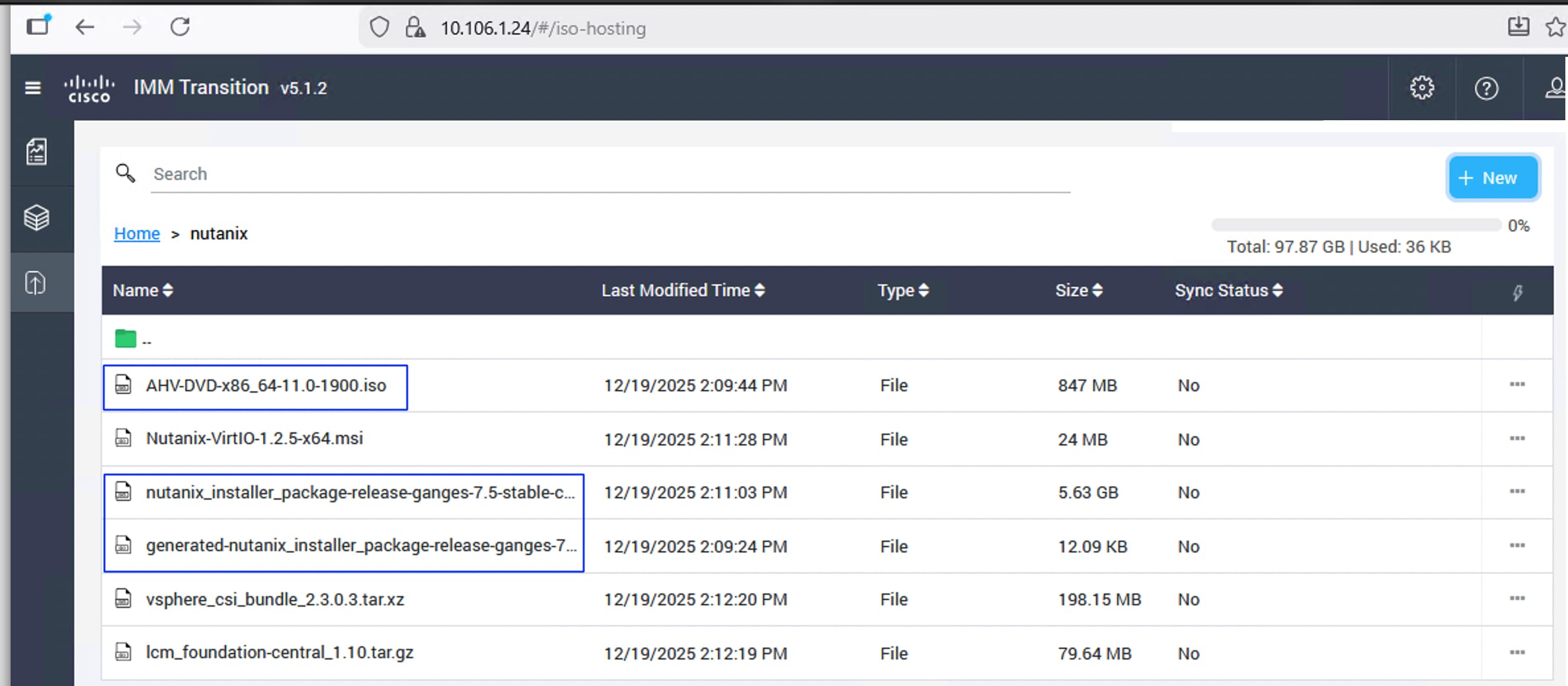

Table 3. Supported software components and their versions

| Component | Version Details |
| --- | --- |
| Nutanix Acropolis Operating System (AOS) | AOS 7.5 or above |
| Prism Central | Prism 7.5 or above with Integrated FC version 1.10 |
| Foundation Central Appliance VM | FC version 1.10 |
| Life Cycle Manager (LCM) | 3.3 |
| Nutanix Cluster Check (NCC) | 5.3 |
| Nutanix Acropolis Hypervisor (AHV) | 11.0 or above |
| Cisco Fabric Interconnect Firmware | 4.3(4.240066) or later |
| Cisco Intersight Connected Virtual Appliance (CVA) or Private Virtual Appliance (PVA) | 1.1.5-1 or later |
| Pure Storage Purity//FA | 6.10.3 or later |
| Cisco UCS X210c M7 Modular Server Firmware | 5.4(0.250048) or later |
| Cisco UCS C-Series M6 and M7 Server Firmware | 4.3(6.250053) or later |
| Cisco UCS B-Series M5 and M6 Blade Server Firmware | 5.3(0.250021) or later |

For more details on supported hardware and software components and their versions, see: Cisco UCS Compute Server Hardware Compatibility. This link provides additional details on supported Cisco UCS server platforms, supported Virtual Interface Card (VIC) options, and the minimum hardware requirements for each type of deployment.

The following physical topologies illustrate the reference architectures that were built and validated as part of this CVD.

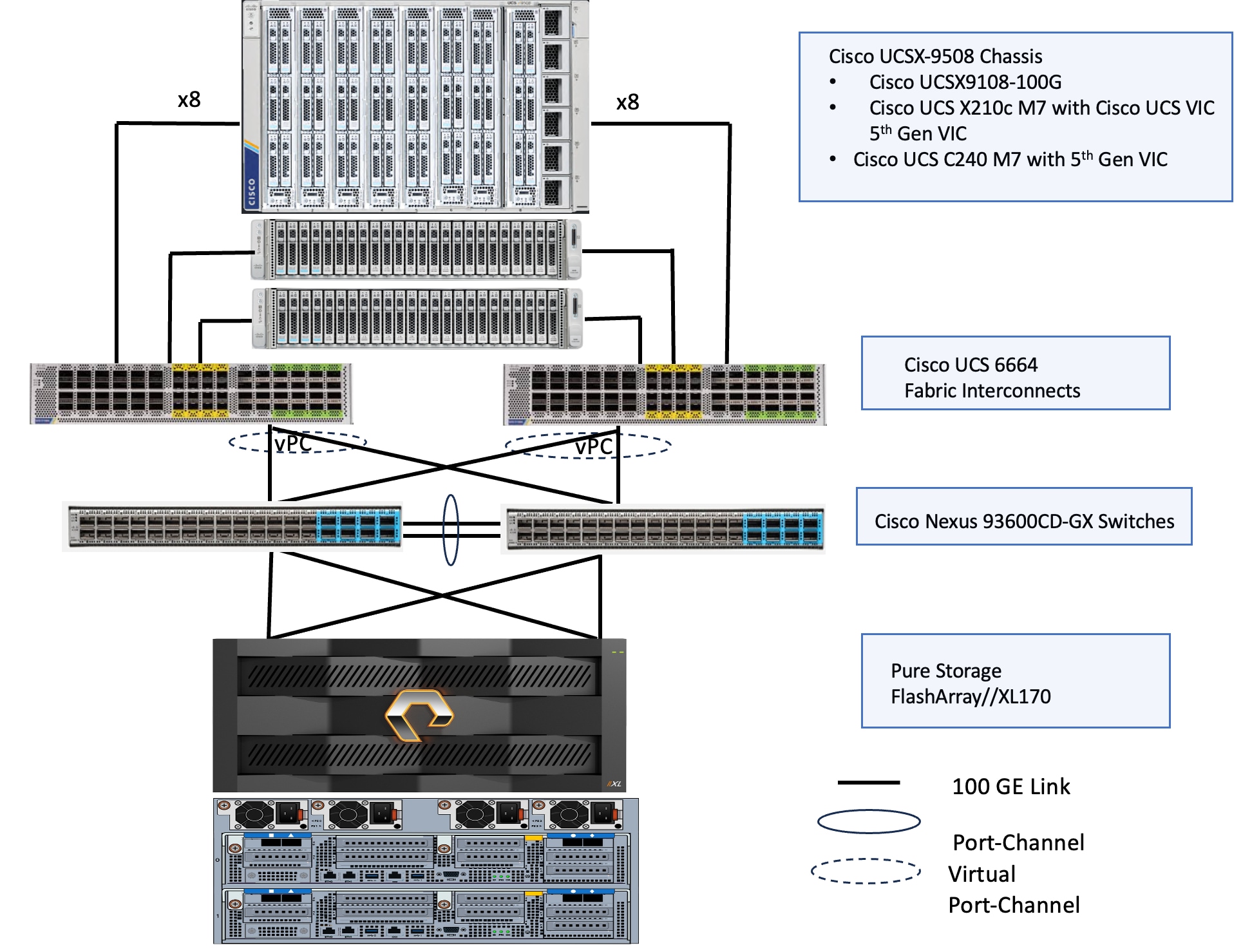

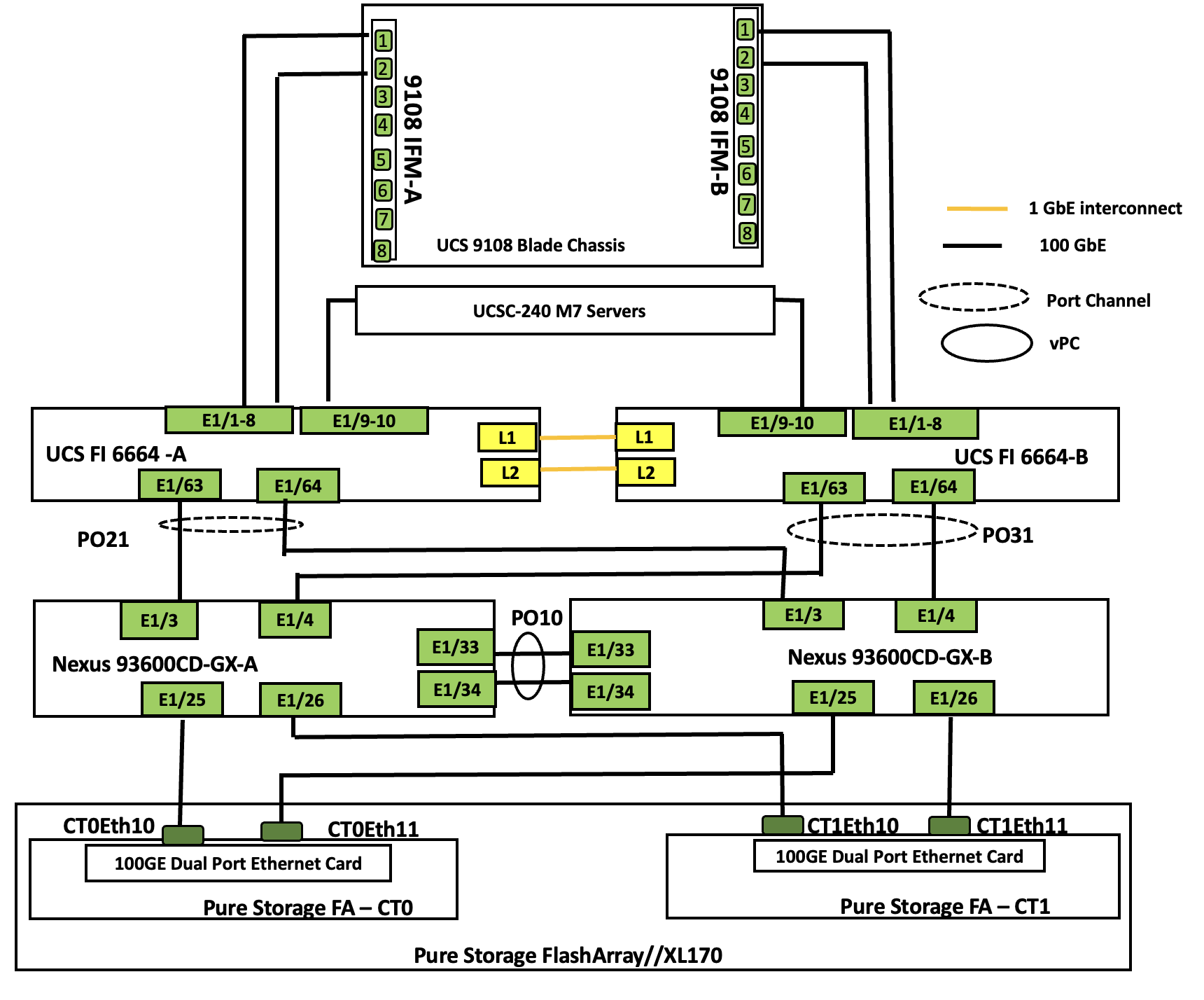

The topology for greenfield deployment is validated using Cisco UCS X210c M7 and Cisco UCS C240 M7 servers connected to Pure Storage FlashArray//XL170 through Nexus 93600CD-GX series switches, using the NVMe over Fabrics (NVMe-oF) protocol over TCP transport.

The reference hardware configuration includes:

● Cisco UCS X9508 chassis, equipped with a pair of Cisco UCS X9108 100G IFMs and Cisco UCS X210c M7 compute nodes. Each compute node is equipped with a fifth-generation Cisco UCS VIC 15231 card providing 100G Ethernet connectivity on each side of the fabric. The Cisco UCS VIC is configured with the required vNICs to enable network and storage access using NVMe-oF over TCP.

● Cisco UCS C240 M7 servers are validated for this solution. The Cisco UCS C240 M7 is a dual-socket server that supports up to 32 memory DIMMs and a maximum of 128 cores per node. Cisco UCS C-Series servers are well suited to CPU-intensive and memory-intensive workloads that benefit from dual-CPU configurations. Both Cisco UCS C240 servers are equipped with a Cisco UCS 5th Gen VIC 15237 dual-port 40/100Gbps mLOM network card. The Cisco UCS VIC is configured with the required vNICs to enable network and storage access using NVMe-oF over TCP.

● Cisco 6th generation 6664 Fabric Interconnects (FIs) provide connectivity to both blade and rack servers. These FIs are configured in End-Host mode, in which they appear to the upstream network as a host rather than a traditional switch, optimizing traffic flow and simplifying network management. The FIs are connected to the upstream Nexus switches using 100Gbps ports for both management and storage traffic.

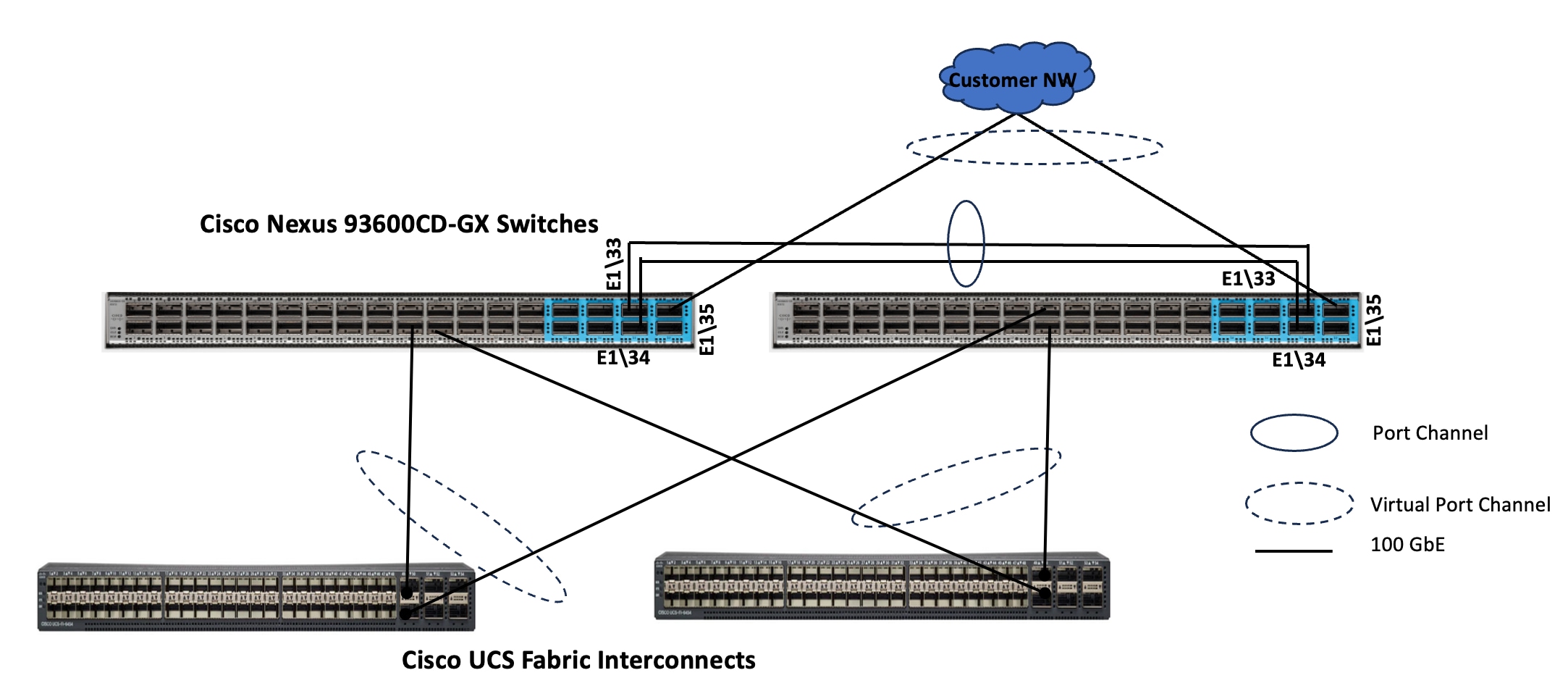

● A pair of Nexus C93600CD-GX switches is used in Virtual Port Channel (vPC) mode. This high-speed Cisco NX-OS-based Nexus C93600CD-GX switching design supports 100GE and 400GE connectivity.

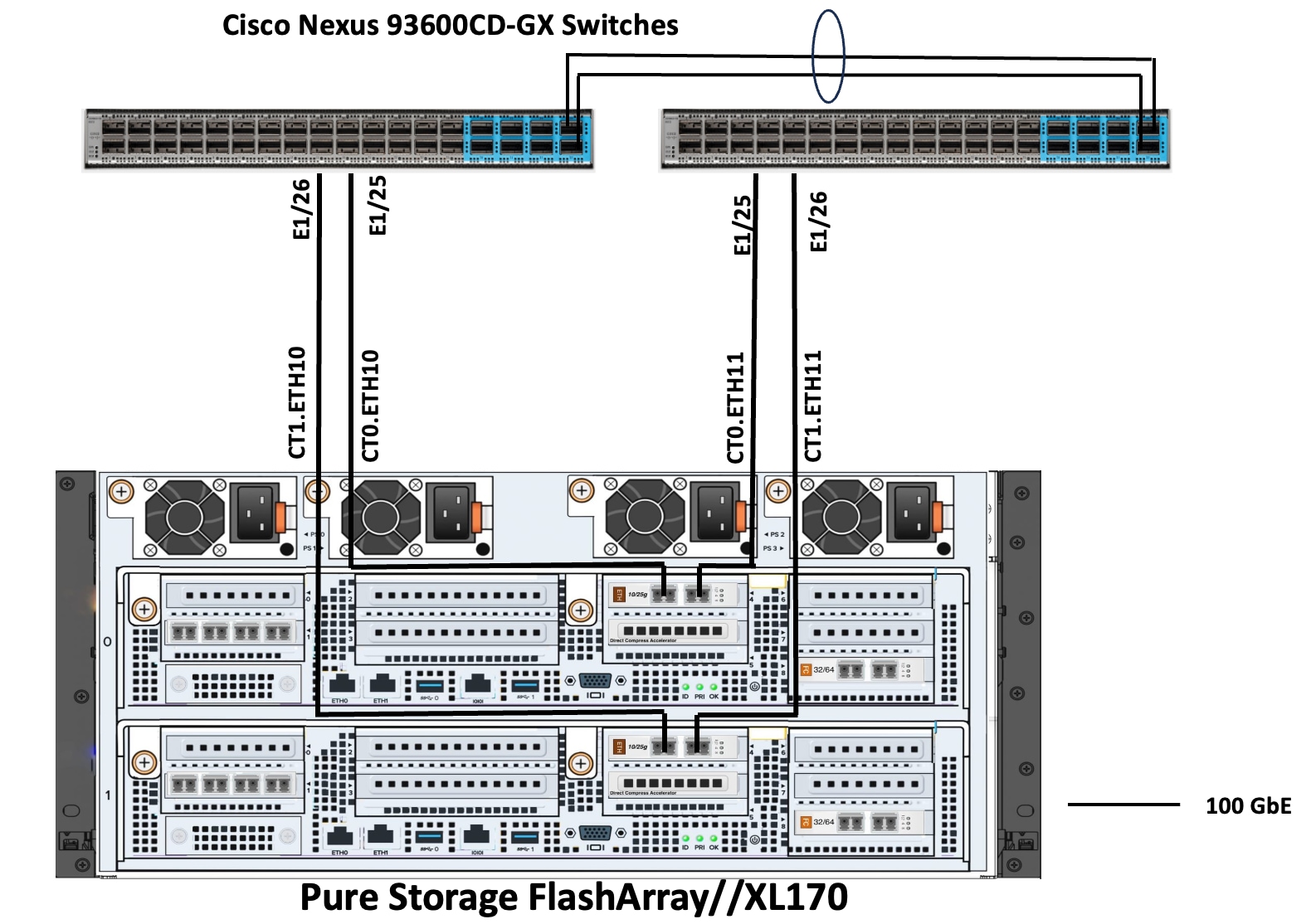

● Pure Storage FlashArray//XL170 is used as external storage, providing persistent storage for virtual machines hosted on the Nutanix cluster. The storage array controllers are connected to the Nexus switches using dual-port 100Gbps network cards for storage access using NVMe-oF over TCP.

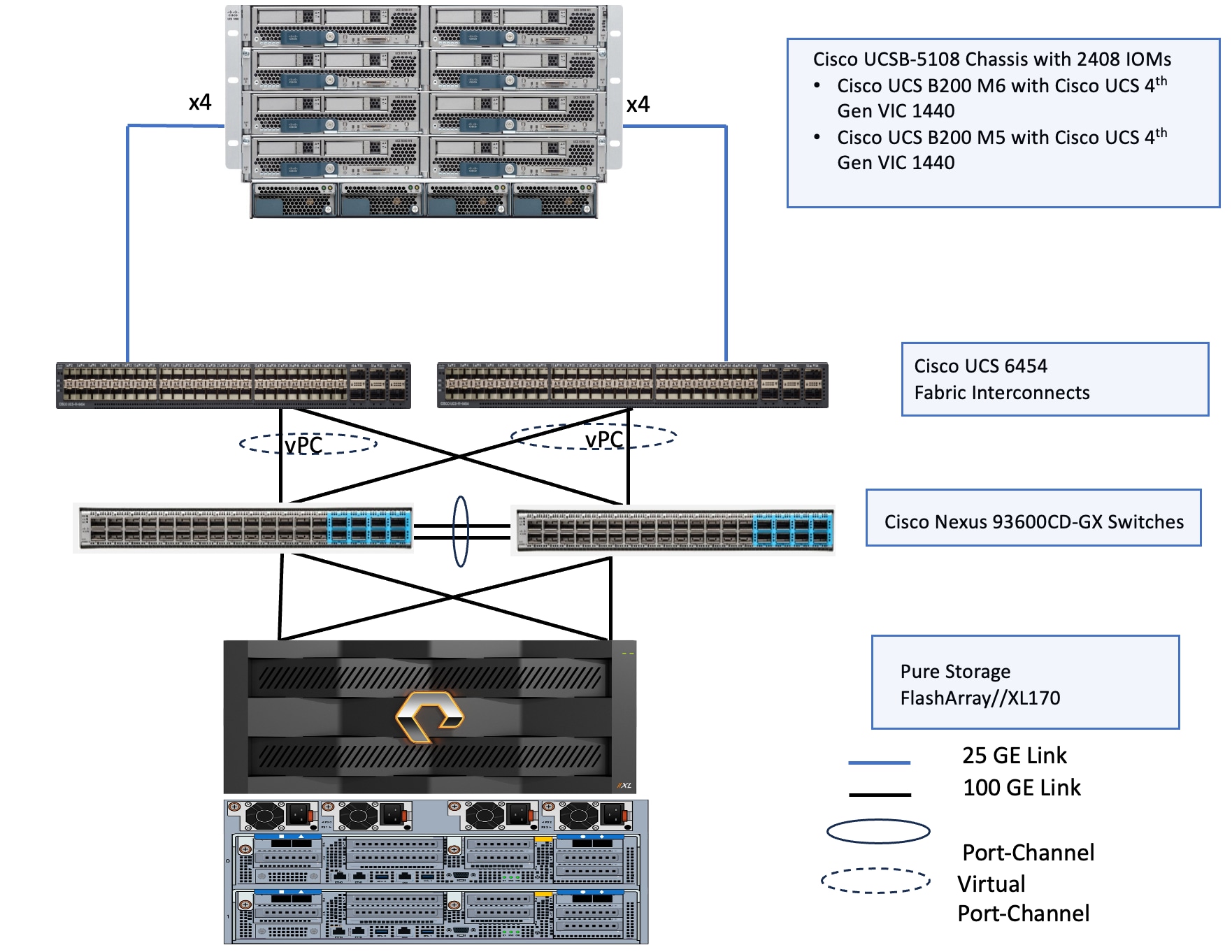

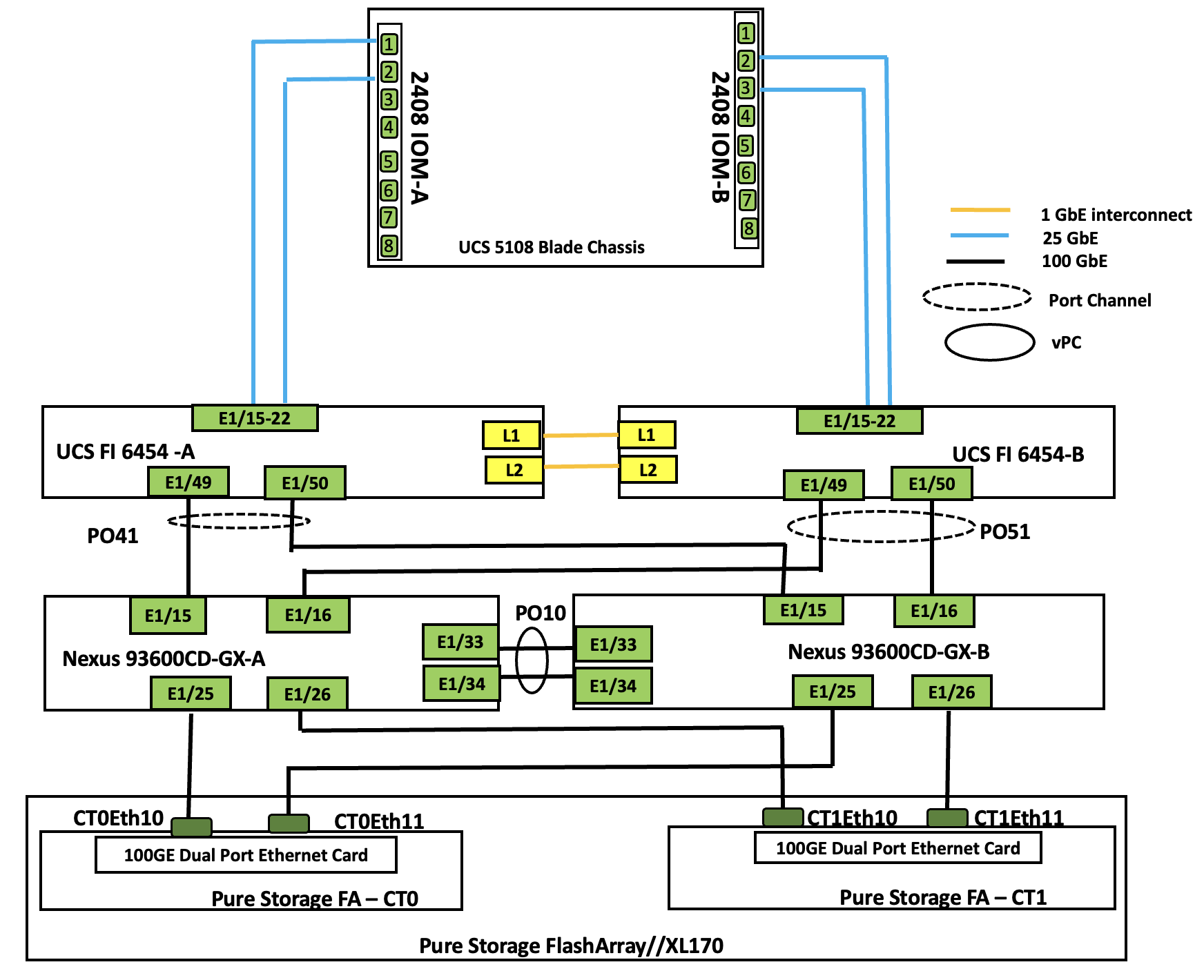

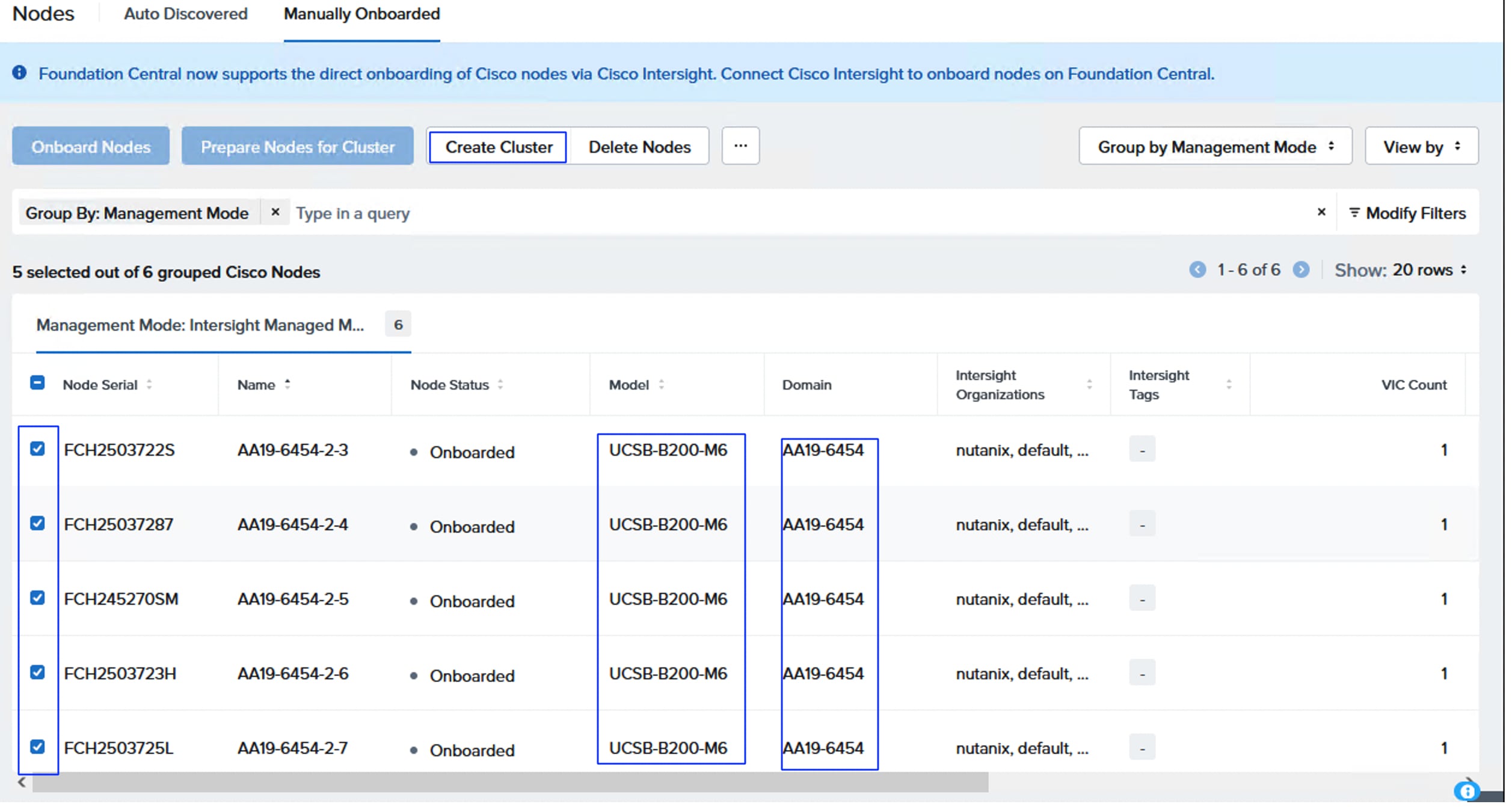

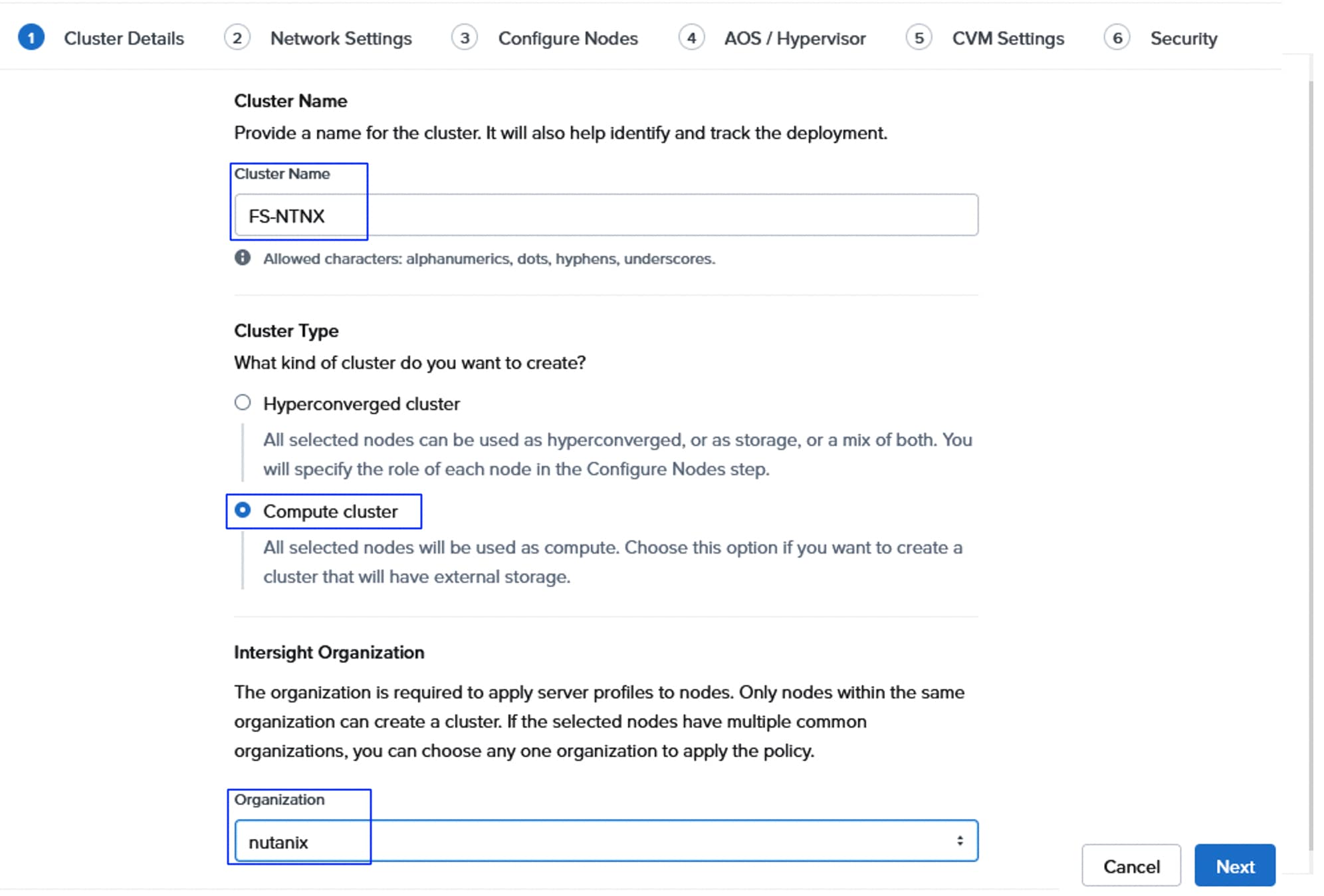

The topology for brownfield deployments is built using existing Cisco UCS B200 M5 or Cisco UCS B200 M6 blade servers. Figure 4 shows the brownfield deployment validated using Cisco UCS B200 M5 and B200 M6 blades connected to Pure Storage FlashArray//XL170 through Nexus 9000 series switches, using the NVMe over Fabrics (NVMe-oF) protocol over TCP.

The reference hardware configuration includes:

● Cisco UCSB-5108 chassis, equipped with a pair of Cisco UCS-IOM-2408 modules and up to eight Cisco UCS B200 M6 and M5 compute nodes. Each compute node is equipped with a 4th generation Cisco UCS VIC 1440 card providing 40Gbps Ethernet connectivity to the blade on each side of the fabric. The Cisco UCS VIC is configured with the required vNICs to enable network and storage access using NVMe-oF over TCP.

● Cisco 4th generation 6454 Fabric Interconnects (FIs) are used to provide connectivity to both blade and rack servers. These FIs are configured in End-Host mode acting like a host (not a traditional switch) to the upstream network, optimizing traffic flow and simplifying network management. The FIs are connected to the upstream Nexus switches using 100Gbps ports (from ports 49 onwards) for both management and storage traffic.

● A pair of Nexus C93600CD-GX switches is used in Virtual Port Channel (vPC) mode. This high-speed Cisco NX-OS-based Nexus C93600CD-GX switching design supports 100GE and 400GE connectivity.

● Pure Storage FlashArray//XL170 is used as external storage, providing persistent storage for virtual machines hosted on the Nutanix cluster. The storage array controllers are connected to the Nexus switches through dual-port 100Gbps network interfaces for high-performance storage access using NVMe-oF over TCP.

Note: Additional 1Gb management connections are needed for out-of-band management and KVM console access to the hardware. Each Cisco UCS C-Series server, Fabric Interconnect, and Pure Storage controller must be connected to dedicated network switches. These out-of-band connections and switches are not shown in the above figures.

The information in this section is provided as a reference for cabling the physical equipment in a FlashStack environment.

The compute infrastructure in FlashStack solution consists of the following:

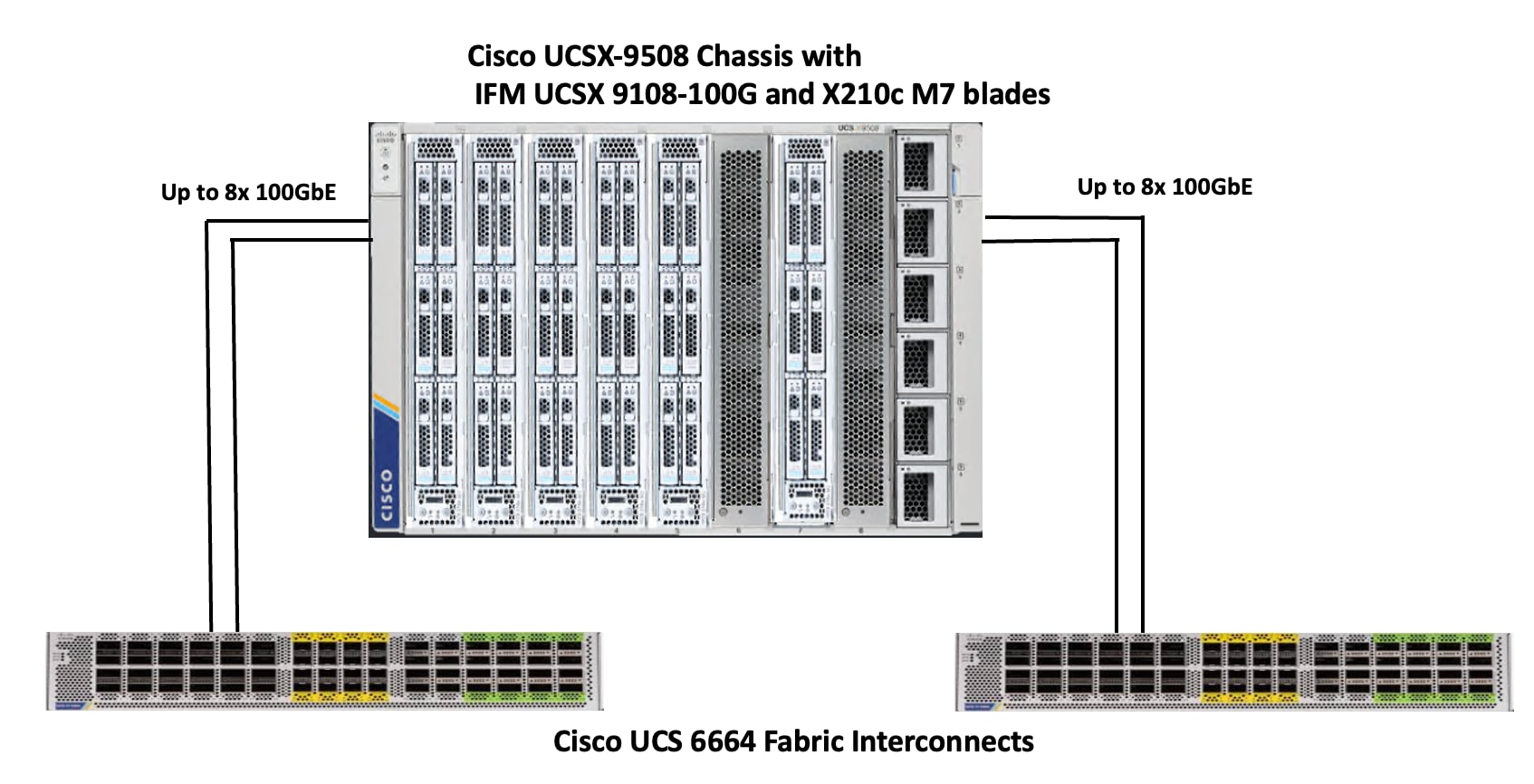

● Cisco UCS X-Series Chassis with Cisco UCSX-9108 Intelligent Fabric Modules and Cisco UCS X210c M7 blades

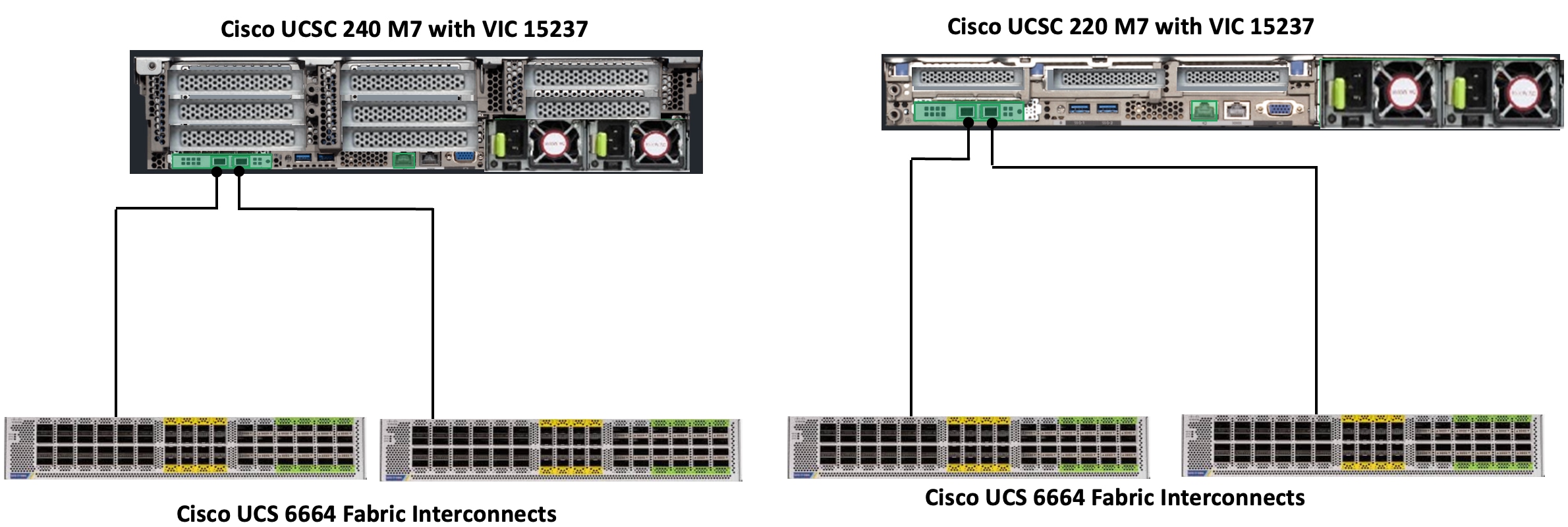

● Cisco UCS C240 M7 and Cisco UCS C220 M7 C-Series Rack servers with Cisco UCS VIC 15237

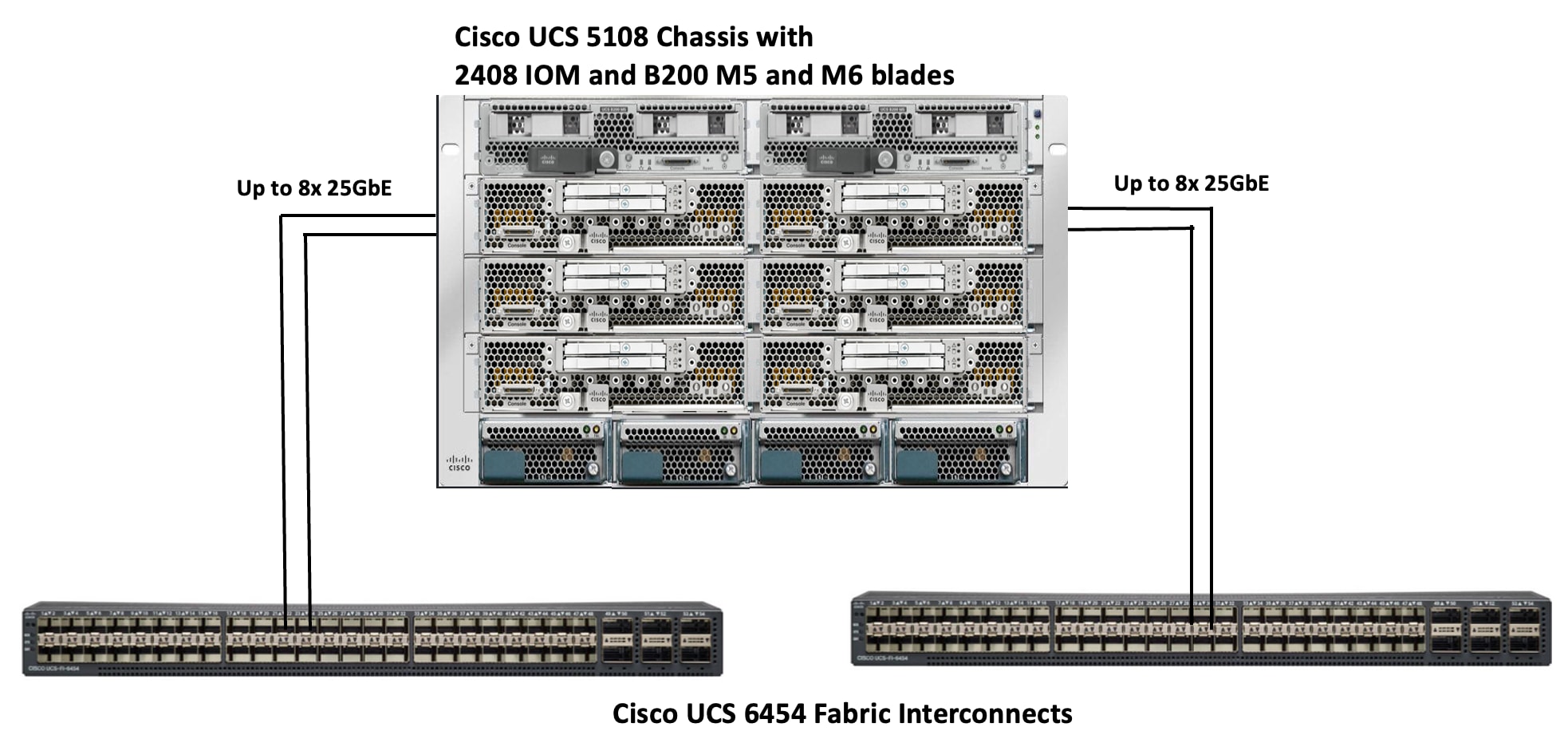

● Cisco UCSC 5108 blade Chassis with IOM 2408 module and Cisco UCS B200 M5 and Cisco UCS B200 M6 blades

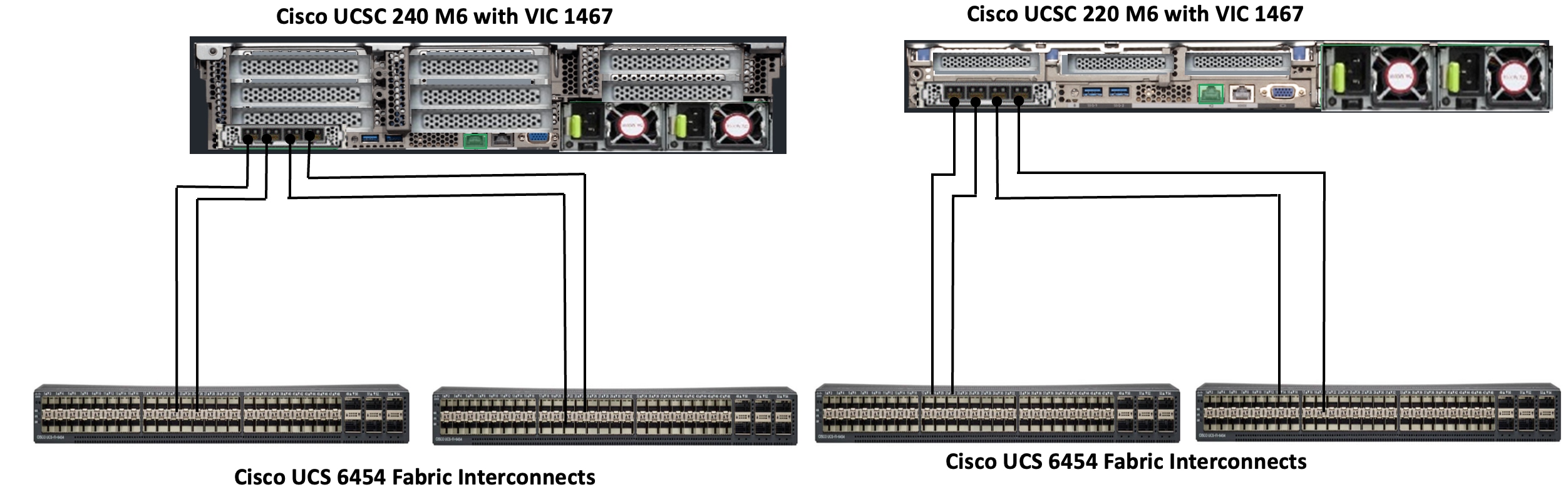

● Cisco UCS C240 M6 and Cisco UCS C220 M6 C-Series Rack servers with Cisco UCS VIC 1467

● Cisco UCS Fabric Interconnects (Cisco UCS-FI-6664 and Cisco UCS-FI-6454)

The Cisco UCS X9508 Chassis is equipped with Cisco UCS X9108-100G intelligent fabric modules (IFMs). The Cisco UCS X9508 Chassis connects to each Cisco UCS 6664 FI using up to eight 100GE ports, as shown in Figure 5. You can start with a minimum of one connection and scale up to eight connections for additional network bandwidth.

Cisco UCS C240 and Cisco UCS C220 M7 C-Series servers are equipped with Cisco UCS 5th Gen VIC 15237 dual port 40/100Gbps mLOM network card. Each Cisco UCS C-Series server is connected to Cisco UCS 6664 FIs using two 100GE ports as shown in Figure 6.

The Cisco UCS 5108 blade chassis is equipped with Cisco UCS 2408-25G IO modules. The Cisco UCS 5108 chassis connects to each Cisco UCS 6454 FI using up to eight 25GE ports, as shown in Figure 7. You can start with a minimum of one connection and scale up to eight connections for additional network bandwidth.

Cisco UCS C240 and Cisco UCS C220 M6 C-Series servers are equipped with Cisco UCS 4th Gen VIC 1467 dual port 10/25Gbps mLOM network card. Each Cisco UCS C-Series server is connected to Cisco UCS 6454 FIs using two 25GE ports as shown in Figure 8.

Note: Multiple options are available for connecting Cisco UCS C-Series rack servers to the Fabric Interconnects. See the FlashStack with Nutanix Installation Field Guide for more details on Cisco UCS C-series connectivity options.

Compute UCS Fabric Interconnect Connectivity

For both deployment types, Cisco UCS Fabric Interconnects are connected to upstream Cisco Nexus switches configured in virtual Port Channel (vPC) mode. This setup provides link redundancy, load balancing, and high availability for network connectivity between the UCS domain and the upstream switches. Each FI is connected to both Cisco Nexus 93600CD-GX switches using 100G connections; additional links can be added to the port channel to increase bandwidth as needed. Figure 9 shows the Fabric Interconnect connectivity to the upstream Nexus switches.

Note: On 6454 Fabric Interconnects, use the last 6x 100GbE ports (49 to 54), and on 64108 FIs, use the last 12x 100GbE ports (97 to 108), to connect to the upstream Cisco Nexus switches. On the 6600 or 6500 series Fabric Interconnects, use any 100GbE non-unified ports for upstream network connectivity.
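The port guidance in the note above can be captured as a small lookup. The following Python sketch is illustrative only: the function name `uplink_ports` and the model keys are assumptions made for this example and are not part of any Cisco tool.

```python
# Hypothetical helper: maps a 6400 series Fabric Interconnect model to the
# 100GbE port numbers that the note above recommends for upstream links.
UPLINK_PORT_RANGES = {
    "6454": range(49, 55),    # last 6x 100GbE ports: 49 to 54
    "64108": range(97, 109),  # last 12x 100GbE ports: 97 to 108
}


def uplink_ports(model: str) -> list:
    """Return the recommended uplink port numbers for a 6400 series FI."""
    if model not in UPLINK_PORT_RANGES:
        # 6500/6600 series FIs can use any 100GbE non-unified port,
        # so there is no fixed range to return for them.
        raise ValueError(f"no fixed uplink range defined for model {model!r}")
    return list(UPLINK_PORT_RANGES[model])


print(uplink_ports("6454"))
print(len(uplink_ports("64108")))
```

A lookup like this could feed a cabling checklist so that uplinks are not planned on ports outside the recommended range.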

Pure Storage FlashArray//XL170 Ethernet Connectivity

Pure Storage FlashArray controllers are connected to Cisco Nexus 93600CD-GX switches using redundant 100Gbps ports. Figure 10 illustrates the physical connectivity details.

Figure 11 details the cable connections used in the validation lab for the FlashStack topologies.

FlashStack with Nutanix Networking

Figure 13 shows the high-level logical architecture of the FlashStack with NCI compute cluster. The AOS Controller VMs are connected to the Pure Storage FlashArray over a dedicated network using two different VLANs/subnets. Both AHV nodes and Controller VMs are connected to a management network for in-band management. All hardware platforms, including servers and storage controllers, are connected to a dedicated out-of-band management or IPMI network for KVM console access and management.

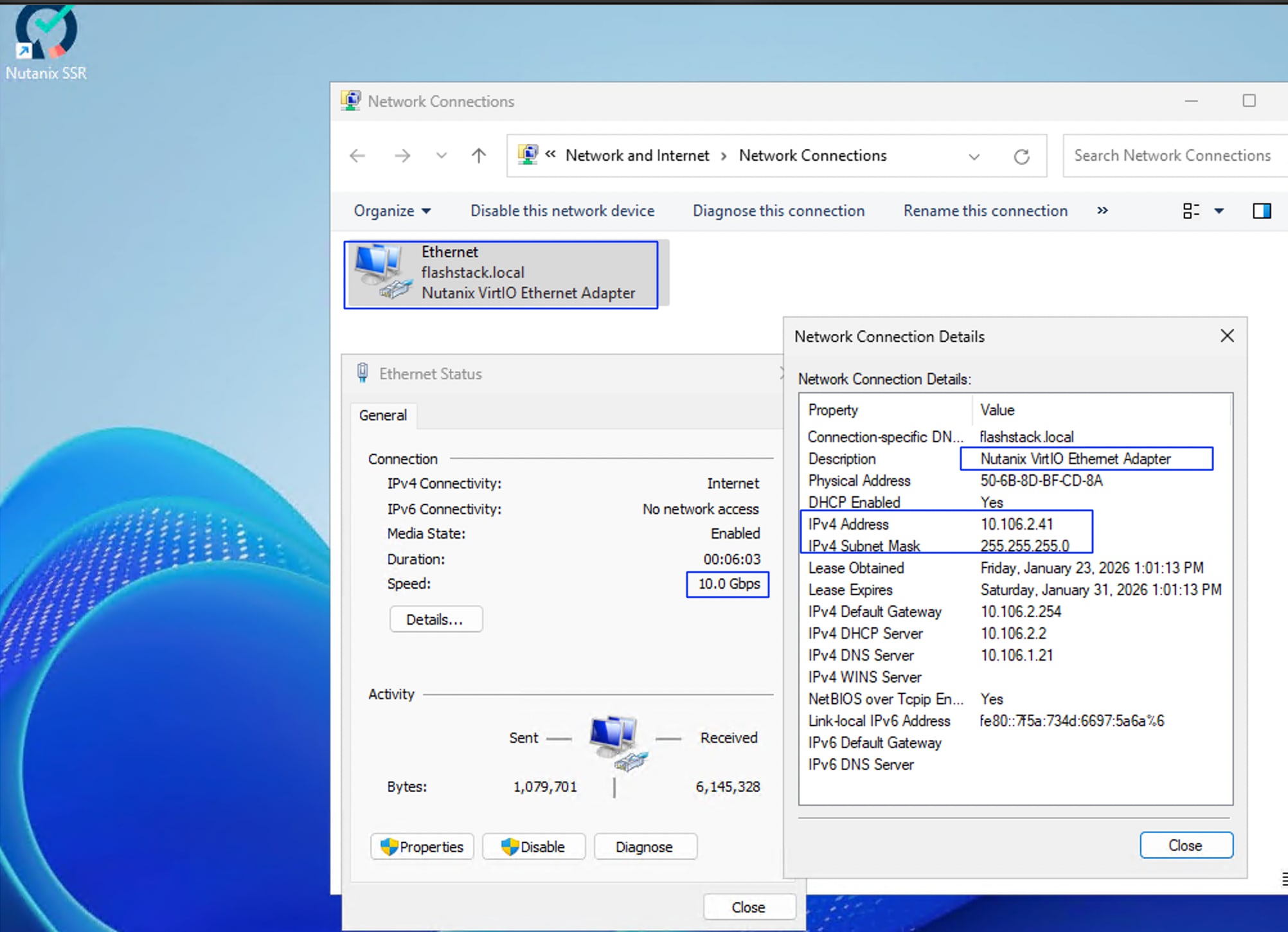

NCI Compute Cluster Node Networking

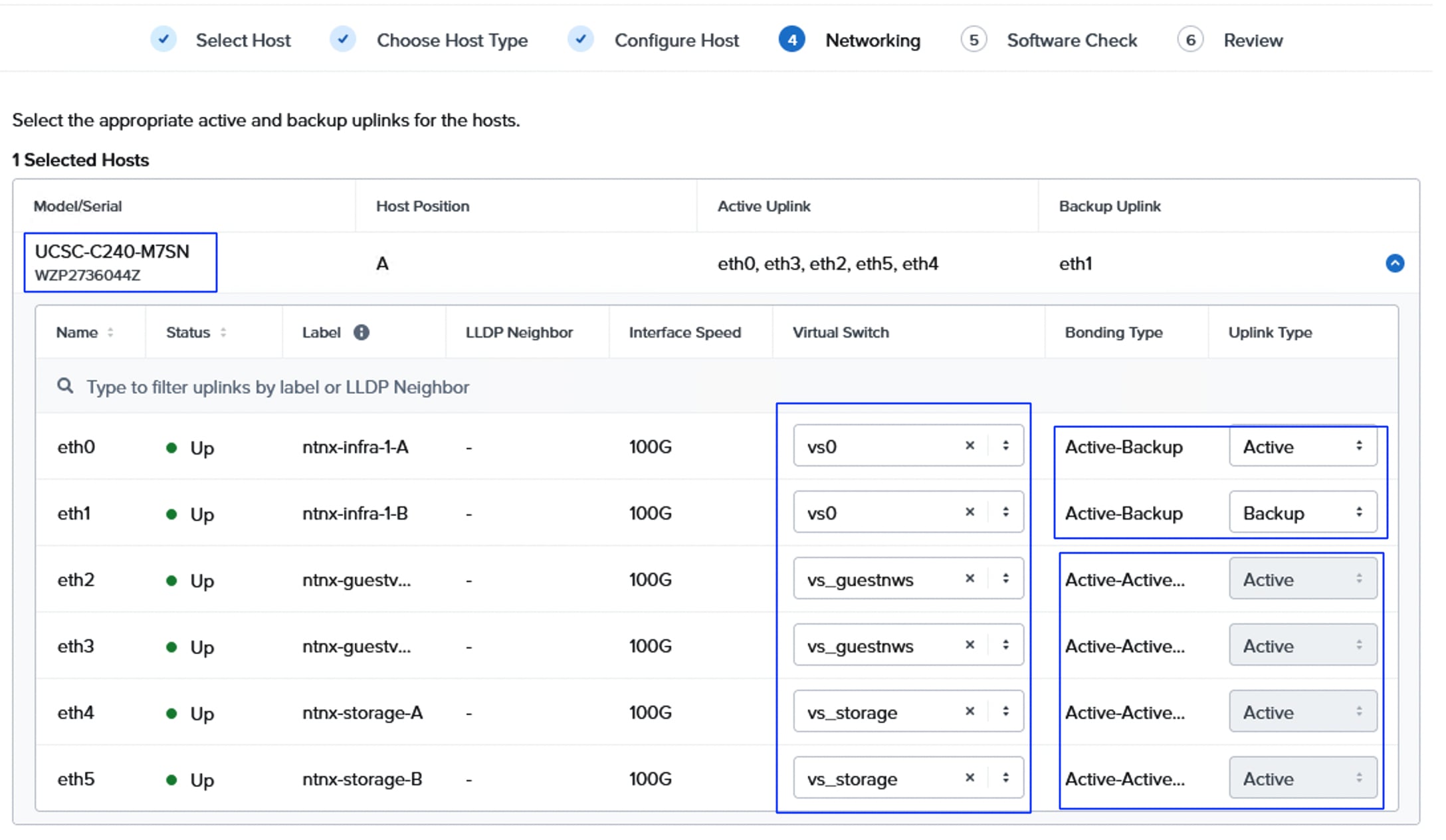

The networking topology uses Cisco UCS VIC technology to provide a granular and secure networking environment for Nutanix clusters. By defining multiple pairs of vNICs in the Cisco UCS Service Profile, different types of traffic can be isolated before they reach the hypervisor. For instance, external storage traffic can be segregated from guest VM traffic by creating a dedicated pair of vNICs. Similarly, guest VM traffic can be segregated from the hypervisor (AHV) and controller VM (CVM) management traffic.

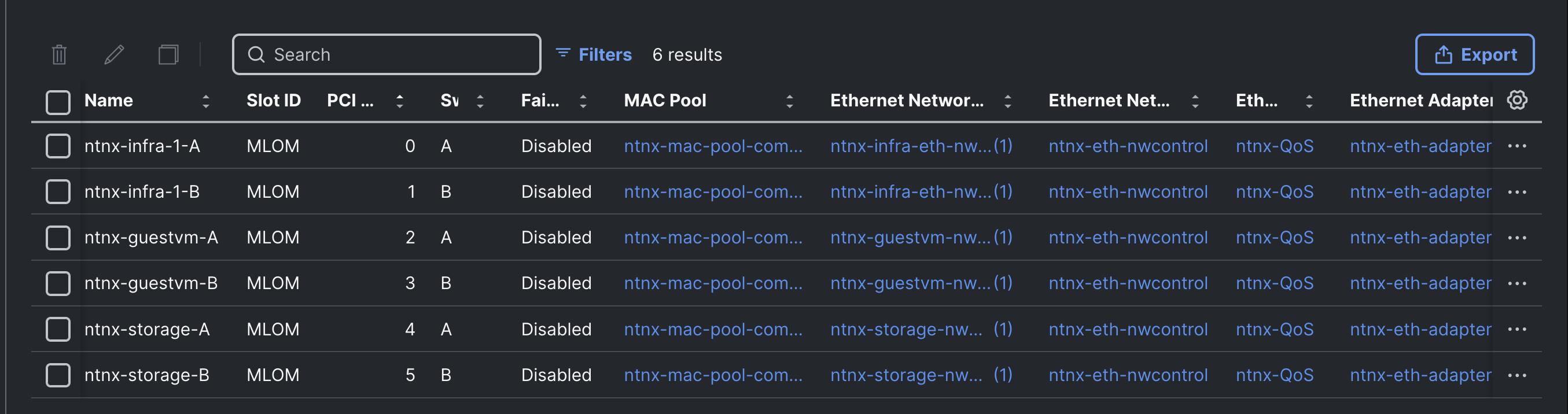

Using a UCS LAN connectivity policy, six vNICs are created to carry different types of traffic. Table 4 lists the details of the vNICs configured for each Nutanix node. For detailed steps to create these policies, see the Install and Configure chapter.

Table 4. LAN Connectivity Policy and vNICs

| vNIC Name | ntnx-infra-1-A | ntnx-infra-1-B | ntnx-guestvm-A | ntnx-guestvm-B | ntnx-storage-A | ntnx-storage-B |
| --- | --- | --- | --- | --- | --- | --- |
| Purpose | Management traffic of Nutanix nodes and Controller VMs, VM live migration | Management traffic of Nutanix nodes and Controller VMs, VM live migration | Guest VM management and application traffic | Guest VM management and application traffic | External storage traffic via Fabric-A | External storage traffic via Fabric-B |
| Name of the emulated vNIC inside AHV node | eth0 | eth1 | eth2 | eth3 | eth4 | eth5 |
| Switch ID | A | B | A | B | A | B |
| PCI Order of the vNIC | 0 | 1 | 2 | 3 | 4 | 5 |
| CDN Source setting | vNIC Name | vNIC Name | vNIC Name | vNIC Name | vNIC Name | vNIC Name |
| Fabric Failover setting | Disabled | Disabled | Disabled | Disabled | Disabled | Disabled |
| MAC Pools | All vNICs: Name: ntnx-mac-pool-common (same pool used for all pairs of vNICs) | | | | | |
| Network Group Policy name, Allowed VLANs and Native VLAN | Name: ntnx-infra-eth-nwgrp; Allowed VLAN: 1061 | Name: ntnx-infra-eth-nwgrp; Allowed VLAN: 1061 | Name: ntnx-guestvm-nwgrp; Allowed VLANs: 1062,1063,1064 | Name: ntnx-guestvm-nwgrp; Allowed VLANs: 1062,1063,1064 | Name: ntnx-storage-nwgrp; Allowed VLANs: 3010,3020 | Name: ntnx-storage-nwgrp; Allowed VLANs: 3010,3020 |
| Network Control Policy name and CDP and LLDP settings | All vNICs: Name: ntnx-eth-nwcontrol; CDP: Enabled; LLDP (Tx and Rx): Enabled | | | | | |
| QoS Policy name and settings | All vNICs: Name: Ntnx-QoS; Priority: Best Effort; Class of Service: 0; MTU: 9000; Rate Limit (Mbps): 0 | | | | | |
| Ethernet Adapter Policy name and settings | All vNICs: Name: ntnx-eth-adapter-policy; uses the system-defined policy Linux-V2 with the following changes: EtherChannel Pinning: Enabled; Transmit Queues: 2; Completion Queues: 10 | | | | | |

Note: Additional vNICs can be configured in the LAN Connectivity policy for other traffic types, such as DR replication or backup. Alternatively, the default vs0 switch can be configured to carry this additional traffic.
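The per-vNIC values in Table 4 follow a regular pattern: each traffic type has one vNIC on each fabric, and the AHV interface number matches the PCI order. The following Python sketch encodes the table and checks that pattern. It is an illustration only; the `Vnic` class and the `check_layout` helper are hypothetical names introduced here, and the rule that `ethN` follows PCI order is simply what the table shows for this validation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vnic:
    name: str
    switch_id: str  # Fabric Interconnect side: "A" or "B"
    pci_order: int
    vlans: tuple


# Values copied from Table 4.
VNICS = [
    Vnic("ntnx-infra-1-A", "A", 0, (1061,)),
    Vnic("ntnx-infra-1-B", "B", 1, (1061,)),
    Vnic("ntnx-guestvm-A", "A", 2, (1062, 1063, 1064)),
    Vnic("ntnx-guestvm-B", "B", 3, (1062, 1063, 1064)),
    Vnic("ntnx-storage-A", "A", 4, (3010, 3020)),
    Vnic("ntnx-storage-B", "B", 5, (3010, 3020)),
]


def ahv_interface(vnic: Vnic) -> str:
    """In Table 4 the AHV interface name follows the PCI order (0 -> eth0)."""
    return f"eth{vnic.pci_order}"


def check_layout(vnics) -> None:
    """Check unique PCI order and that each vNIC pair spans fabrics A and B."""
    orders = [v.pci_order for v in vnics]
    assert len(set(orders)) == len(orders), "duplicate PCI order"
    for a, b in zip(vnics[0::2], vnics[1::2]):
        assert (a.switch_id, b.switch_id) == ("A", "B"), "pair must span both fabrics"
        assert a.vlans == b.vlans, "pair members must allow the same VLANs"


check_layout(VNICS)
for v in VNICS:
    print(v.name, ahv_interface(v), v.switch_id, v.vlans)
```

Because fabric failover is disabled on every vNIC, redundancy comes from pairing an A-side and a B-side vNIC in each AHV bond, which is why the check insists that each pair spans both fabrics.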

Figure 14 depicts the vNICs configured for the NCI Nutanix node for this validation.

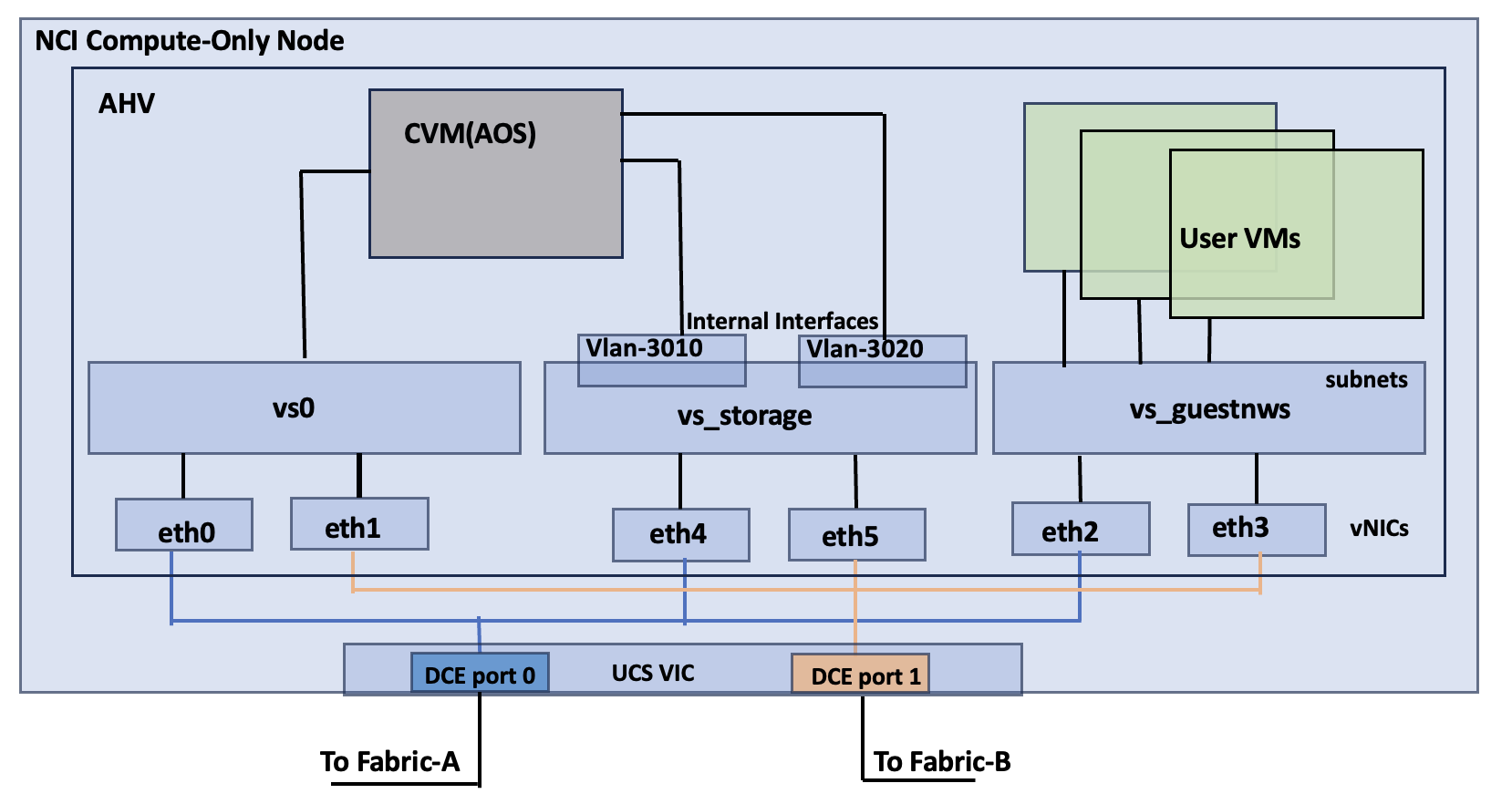

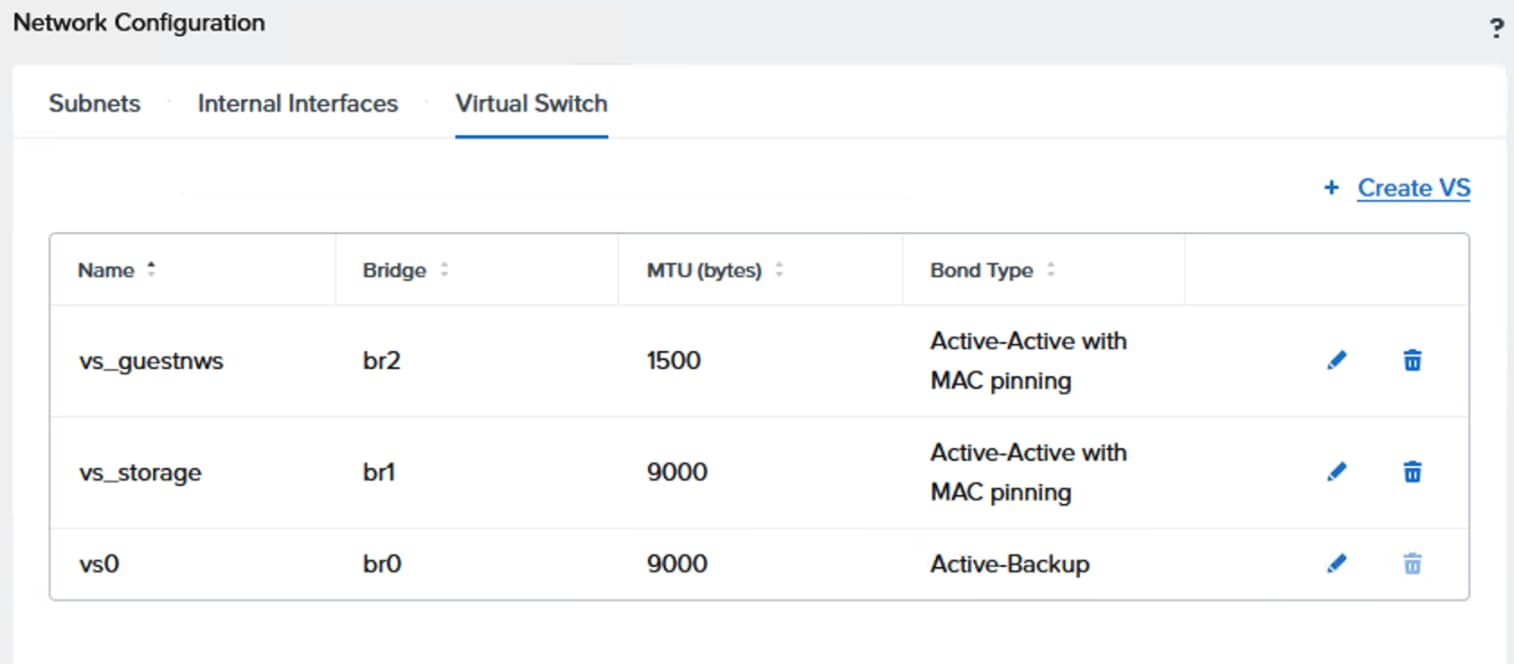

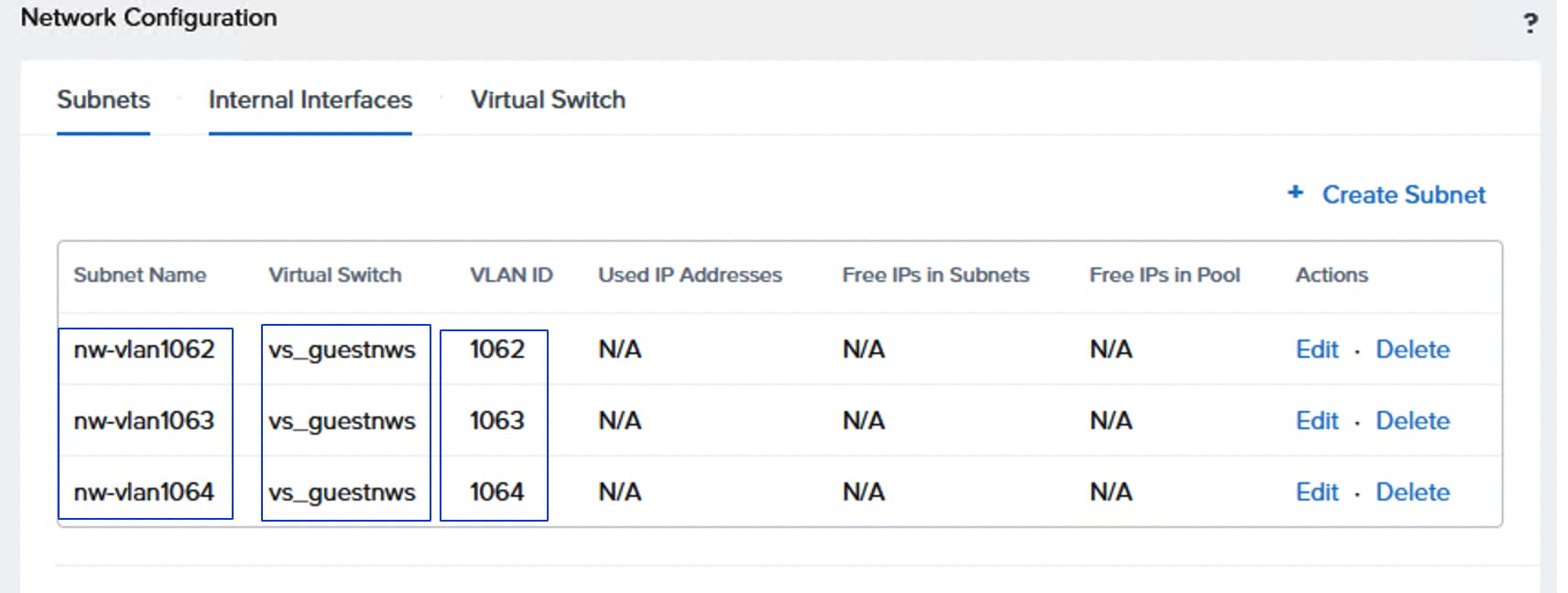

Table 5 lists the details about the virtual switch configuration within the NCI Compute Cluster node.

Table 5. Nutanix AHV Networking

| vNIC Name |

Enumerated AHV Interface Name |

vSwitch Configuration |

| ntnx-infra-1-A |

eth0 |

vSwitch Name: vs0 Bond Type: Active-Backup VLANs: 1061 |

| ntnx-infra-1-B |

eth1 |

|

| ntnx-guestvm-A |

eth2 |

vSwitch Name: vs_guestnws MTU: 1500 Bond Type: Active-Backup or Active-Active with MAC Pinning VLANs: 1062,1063,1064 etc. |

| ntnx- guestvm -B |

eth3 |

|

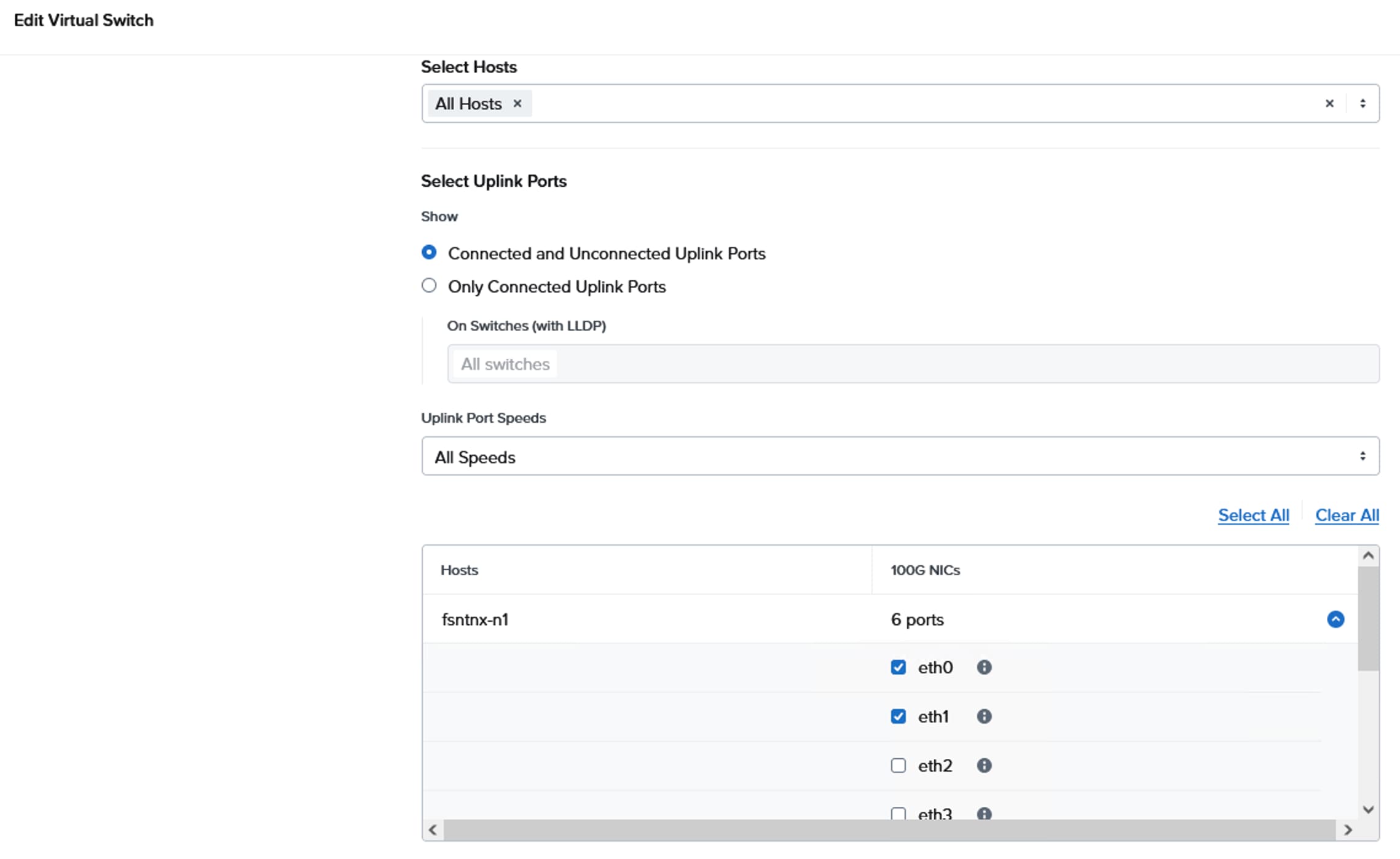

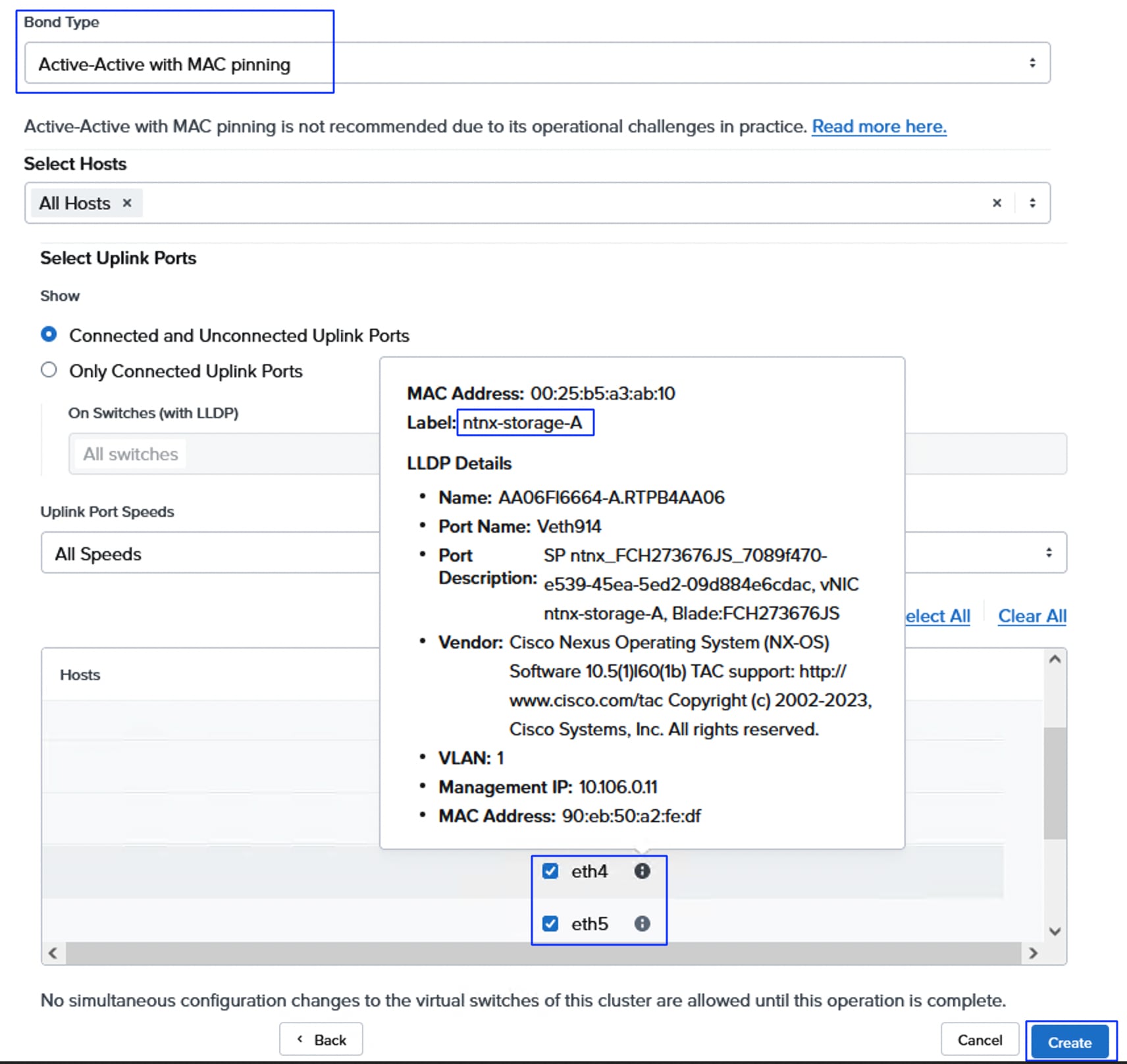

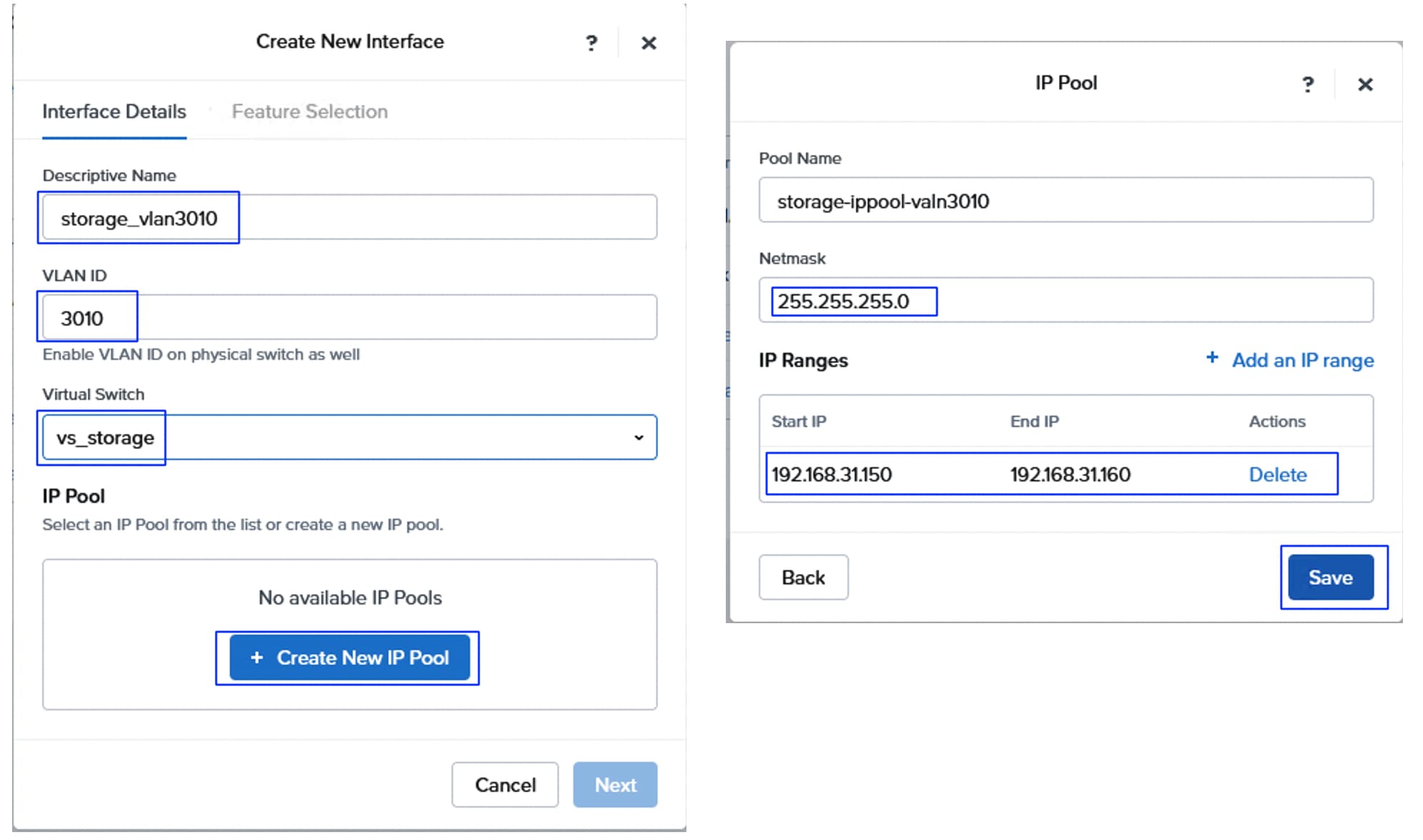

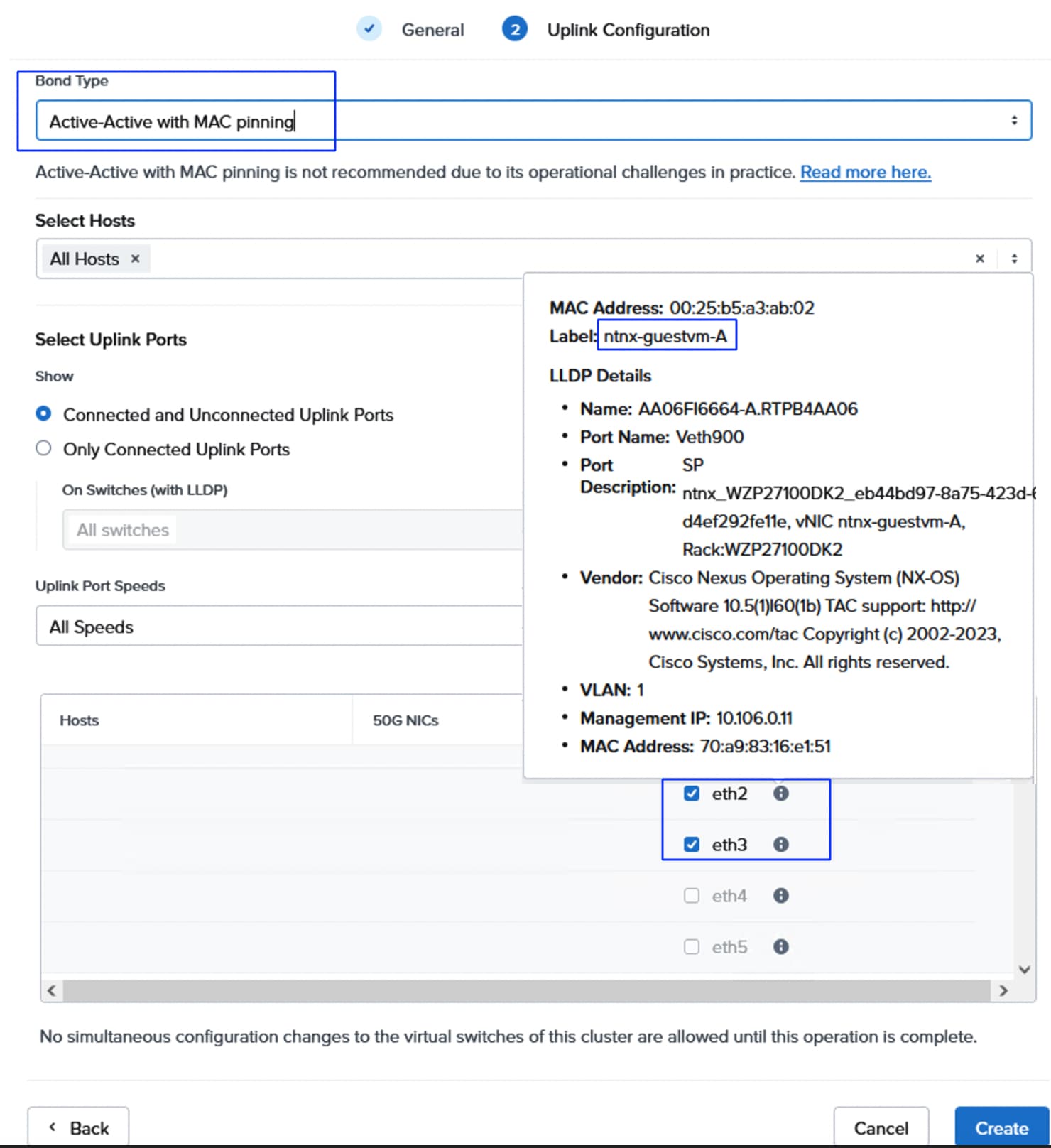

| ntnx-storage-A |

eth4 |

vSwitch Name: vs_storage Bond Type: Active-Active with MAC Pinning MTU: 9000 VLANS: 3010 (eth4), 3020 (eth5) |

| ntnx- storage -B |

eth5 |

Note: Within the UCS domain (between the Cisco UCS servers and FIs), the Nutanix virtual switches cannot be configured with the Active-Active (LACP) bond type. Either Active-Backup or Active-Active with MAC Pinning must be used.

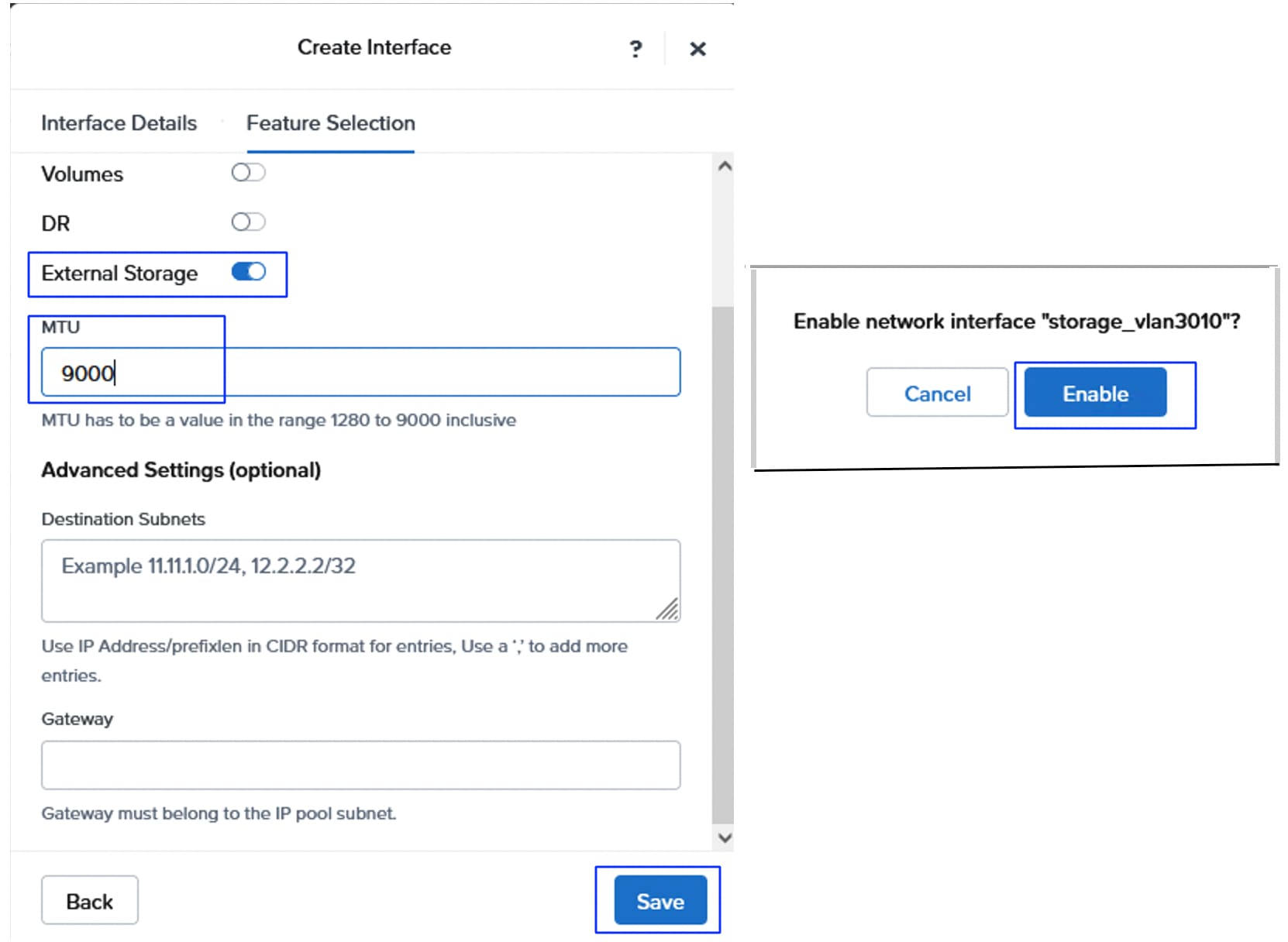

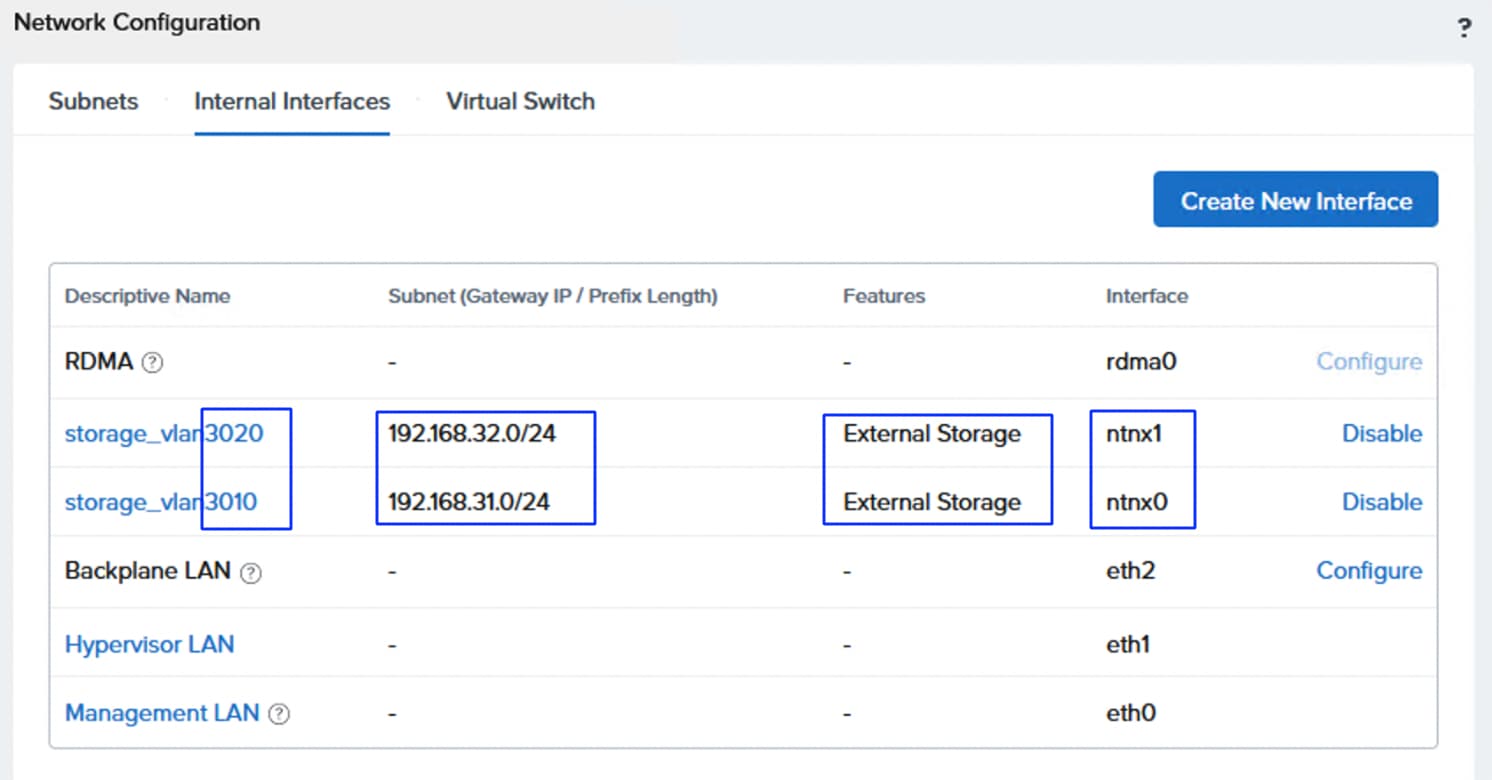

Figure 15 illustrates the internal networking configuration of each NCI Compute Cluster node. Within each Nutanix AHV node, the virtual switch vs0 is created using the eth0 and eth1 uplink interfaces with an Active-Backup bond type. For FlashArray storage traffic, a dedicated vSwitch (vs_storage) is created using the eth4 and eth5 uplink interfaces with the Active-Active with MAC Pinning option. Within the vs_storage vSwitch, two internal interfaces are created for VLANs 3010 and 3020 to establish highly available and performant storage data paths. For guest VM management and application traffic, a third virtual switch is created using uplink interfaces eth2 and eth3 with an Active-Backup or Active-Active with MAC Pinning configuration.
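The virtual switch layout described above, together with the bond-type restriction inside a UCS domain, can be expressed as a simple data structure with a validation pass. The Python sketch below is illustrative only: the dictionary layout and the `validate` function are assumptions made for this example, not a Nutanix API, and the guest VM switch is shown with Active-Backup, one of the two options the text allows.

```python
# Bond types that the note above allows between Cisco UCS servers and FIs.
ALLOWED_BOND_TYPES = {"Active-Backup", "Active-Active with MAC Pinning"}

# Virtual switch layout from Table 5 (one entry per vSwitch).
VSWITCHES = {
    "vs0": {"uplinks": ["eth0", "eth1"], "bond": "Active-Backup", "vlans": [1061]},
    "vs_guestnws": {
        "uplinks": ["eth2", "eth3"],
        "bond": "Active-Backup",
        "vlans": [1062, 1063, 1064],
    },
    "vs_storage": {
        "uplinks": ["eth4", "eth5"],
        "bond": "Active-Active with MAC Pinning",
        "vlans": [3010, 3020],
    },
}


def validate(vswitches: dict) -> list:
    """Return a list of problems: disallowed bond types or reused uplinks."""
    problems = []
    owner = {}  # uplink name -> vSwitch that already uses it
    for name, cfg in vswitches.items():
        if cfg["bond"] not in ALLOWED_BOND_TYPES:
            problems.append(f"{name}: bond type {cfg['bond']!r} is not allowed in a UCS domain")
        for uplink in cfg["uplinks"]:
            if uplink in owner:
                problems.append(f"{uplink}: used by both {owner[uplink]} and {name}")
            owner[uplink] = name
    return problems


print(validate(VSWITCHES))
```

An empty result means every vSwitch uses a permitted bond type and no uplink is shared between switches.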

FlashStack with Nutanix Storage Layout

In the FlashStack with Nutanix architecture, an NCI compute cluster is connected to a “Realm+Pod” combination on the Pure Storage FlashArray. In the Purity//FA operating system, Realms and Pods are the fundamental building blocks for achieving Secure Multi-Tenancy (SMT) and Advanced Data Protection. While they are often used together, they serve two distinct purposes:

● Realm: A Realm allows multiple organizations or departments to share a single high-performance FlashArray without seeing each other’s data or accidentally deleting each other’s volumes. It lets administrators carve up a FlashArray into multiple independent tenants (for example, Department A, Project B, Client C). A Realm logically groups volumes, users and roles, resource quotas, Quality of Service (QoS) settings, and network interfaces. Pure Storage implements Role-Based Access Control (RBAC) by granting permissions to roles, which are then assigned to users or groups. Each Realm can have its own administrator and an independent Active Directory/LDAP/NIS authentication configuration.

● Pod: A Pod is a management container that provides data consistency and mobility (replication). Each tenant can have more than one Pod (for example, K8s-cluster, Oracle-cluster, and so on).

By placing a Pod inside a Realm, an application’s data is highly available (through the Pod) while remaining securely isolated and quota-restricted (through the Realm).

Note: NCI Compute clusters support a one-to-one or many-to-one relationship with FlashArrays. A cluster is restricted to a single Realm+Pod combination on one FlashArray; however, a FlashArray can serve multiple NCI Compute Clusters using different Realm+Pod combinations.
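The relationship rules in the note above can be checked mechanically when planning several clusters against shared arrays. The Python sketch below is one reading of those rules, not a Pure Storage or Nutanix API: the function `check_mappings` and the array, Realm, and Pod names are hypothetical, while the cluster names are the ones used in this validation.

```python
from collections import Counter


def check_mappings(mappings):
    """Check a list of (cluster, flasharray, realm, pod) tuples.

    Rules taken from the note above:
    - a cluster uses exactly one Realm+Pod combination on one FlashArray;
    - clusters sharing a FlashArray use different Realm+Pod combinations.
    """
    errors = []
    cluster_counts = Counter(m[0] for m in mappings)
    for cluster, n in cluster_counts.items():
        if n > 1:
            errors.append(f"{cluster}: mapped to {n} Realm+Pod combinations, expected 1")
    target_counts = Counter(m[1:] for m in mappings)
    for target, n in target_counts.items():
        if n > 1:
            errors.append(f"{'/'.join(target)}: shared by {n} clusters")
    return errors


# Hypothetical plan: two clusters on one array, each with its own Realm.
plan = [
    ("fs-ntnx", "fa-1", "realm-a", "pod-1"),
    ("fs-m6-ntnx", "fa-1", "realm-b", "pod-1"),
]
print(check_mappings(plan))
```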

The mapping between NCI Compute Cluster, FlashArray and Realm+Pod combinations is illustrated in Figure 16.

NCI Compute Cluster Storage Internal Networking

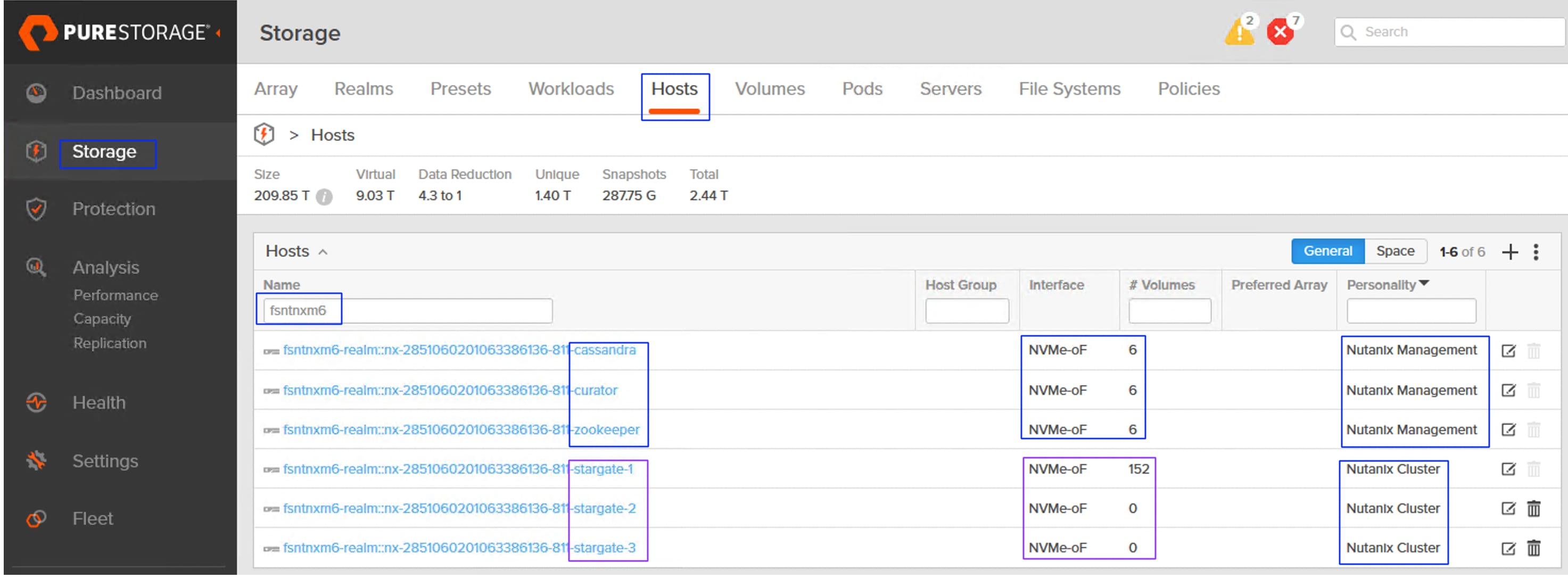

Stargate, Curator, Zookeeper and Cassandra are some of the key software components that run as services within each AOS Nutanix Controller VM (CVM), working together to manage data, cluster configuration, and background tasks in the distributed storage fabric. For more details about these services, see The Nutanix Cloud Bible. The storage for the Zookeeper, Curator and Cassandra services is typically created on the M.2 boot disk of each Nutanix node during Nutanix cluster provisioning.

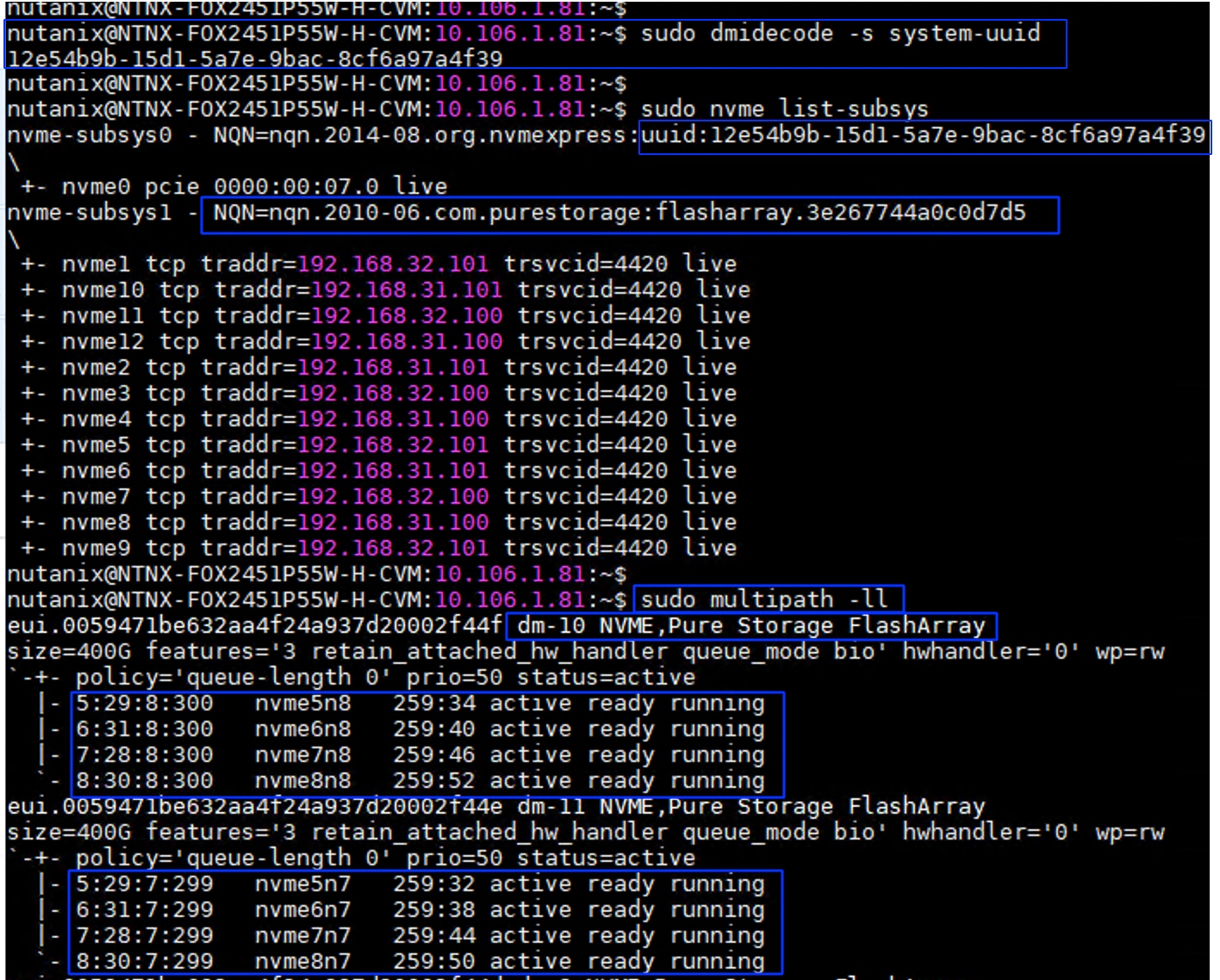

However, in the NCI Compute Cluster architecture, to maintain a stateless footprint on the Cisco UCS compute nodes, the storage for these core metadata and configuration services (Zookeeper, Curator and Cassandra) is offloaded to the Pure Storage FlashArray. One volume for each service is created per node on the FlashArray. These volumes are then attached to the corresponding individual CVMs through the Pure Storage host/initiator group, providing a highly available and scalable environment for the cluster’s metadata and management services. These volumes are referred to as kernel volumes and are attached to the corresponding controller VMs using NVMe/TCP initiators running in kernel space.

In the NCI Compute Cluster, each virtual disk (vDisk) is represented on the Pure Storage FlashArray by a pair of volumes: a primary data volume (-dt) and a metadata volume (-md). This 1:2 mapping supports granular data management and integrity. Connectivity to guest VMs is established through the Controller VM (CVM), which uses high-performance NVMe/TCP initiators running in user space. By bypassing kernel overhead, this design delivers near-native storage performance to the guest VMs while maintaining the full suite of Nutanix enterprise data services.

A total of eighteen (6x3) service volumes are created and mapped to the corresponding service types on a 6-node NCI Compute Cluster, as shown below. Three Stargate hosts/initiator groups are created to map the user volumes to the guest VMs.

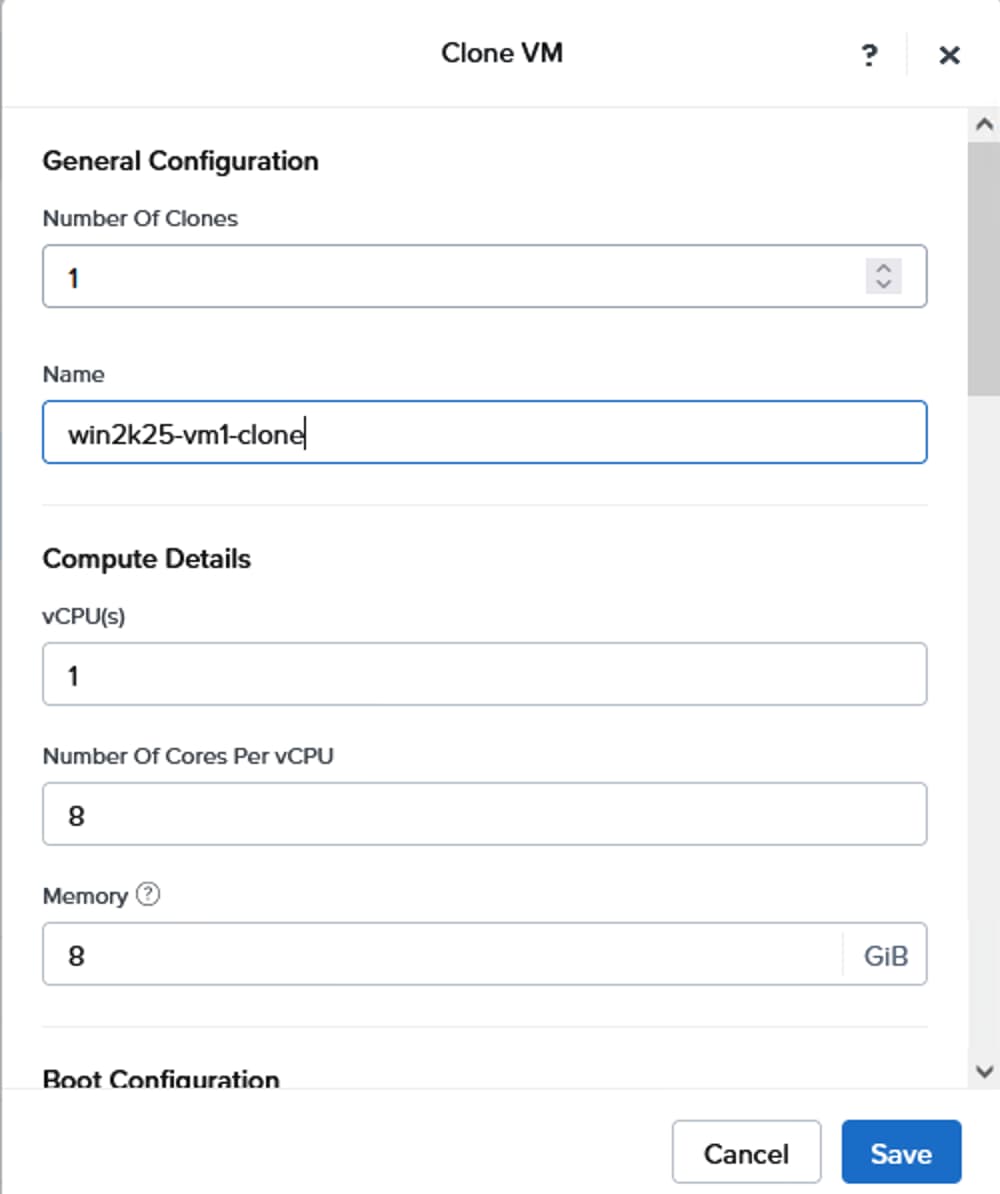

When a user volume is cloned (by cloning a VM through Prism Central/Element), two corresponding volumes (-dt and -md) are created on the FlashArray. A snapshot of a volume (taken by snapshotting the VM from Prism Central), by contrast, creates just one volume on the FlashArray.
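The volume counts described in this section follow simple arithmetic: three service volumes per node, two volumes per vDisk (including cloned vDisks), and one volume per snapshot. The Python sketch below works through that arithmetic. The function name and the example vDisk and snapshot counts are assumptions made for illustration, and the result is a rough estimate based only on the rules stated here; an array may hold other objects as well.

```python
# Services whose storage is offloaded to the FlashArray, one volume per node each.
SERVICES = ("Zookeeper", "Curator", "Cassandra")


def flasharray_volume_count(nodes: int, vdisks: int, snapshots: int = 0) -> int:
    """Estimate FlashArray volumes for one NCI Compute Cluster.

    nodes:     cluster nodes (each gets one volume per service)
    vdisks:    user vDisks, including clones (each gets a -dt and a -md volume)
    snapshots: volume snapshots (each creates one volume)
    """
    service_volumes = nodes * len(SERVICES)
    data_volumes = 2 * vdisks
    return service_volumes + data_volumes + snapshots


# The 6-node cluster from the text: 6 x 3 = 18 service volumes.
print(flasharray_volume_count(nodes=6, vdisks=0))
# Hypothetical workload: 10 vDisks and 4 snapshots on the same cluster.
print(flasharray_volume_count(nodes=6, vdisks=10, snapshots=4))
```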

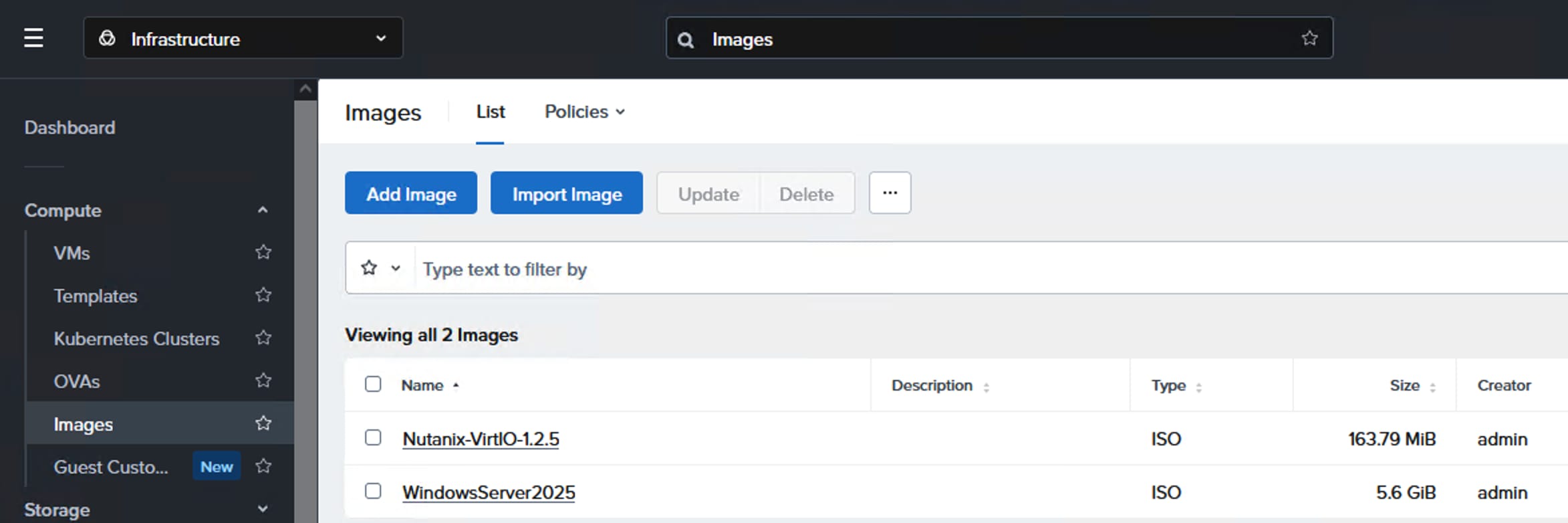

This chapter contains the following:

● Cisco Nexus Switch Configuration

● Claim Cisco Nexus Switches into Cisco Intersight

● Pure Storage FlashArray Configuration

● Cisco Intersight Configuration

● Fabric Interconnect Domain Profile and Policies

● Create Pools, Policies for LAN Connectivity Policy

● Select Nutanix Installation Method

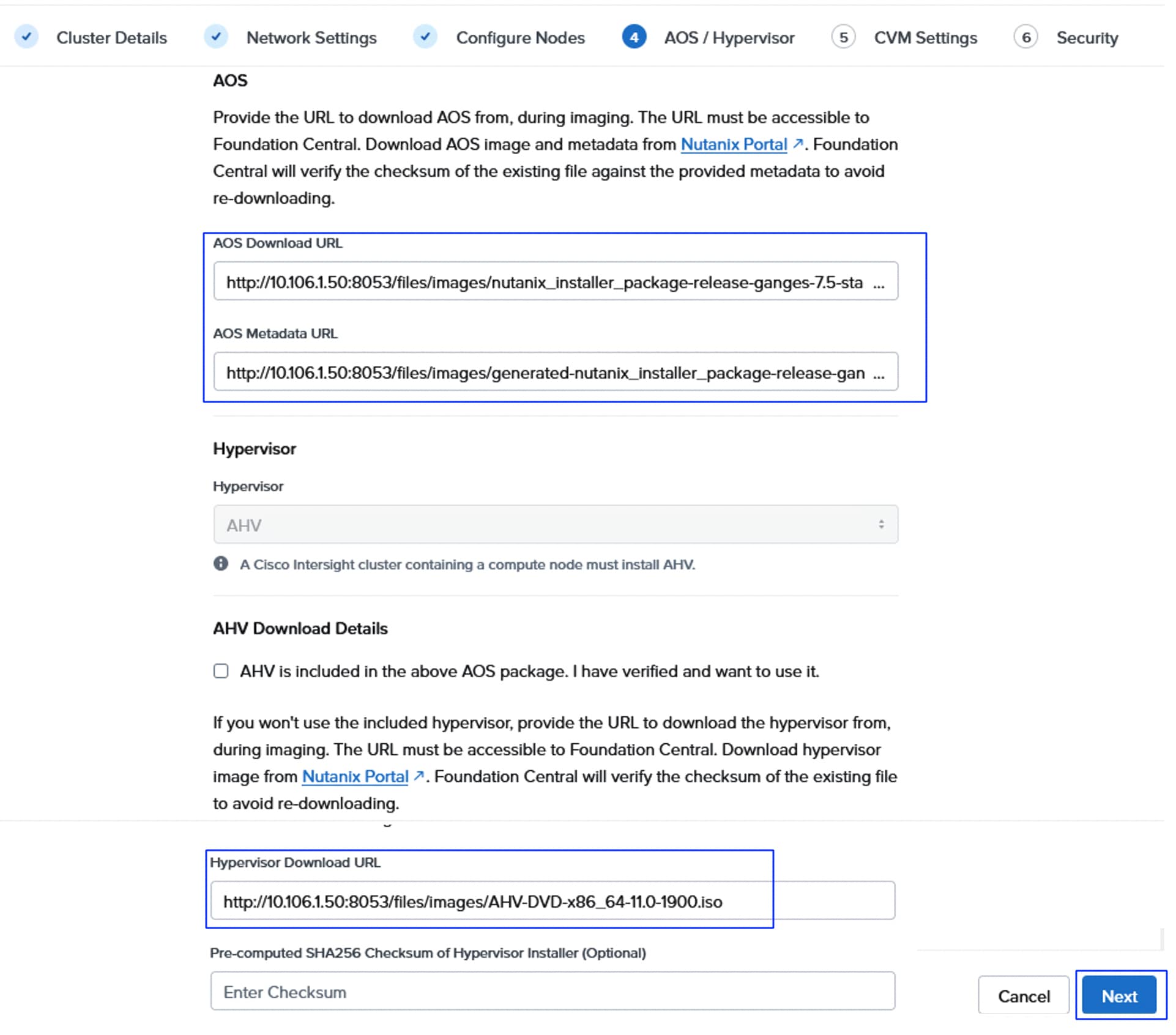

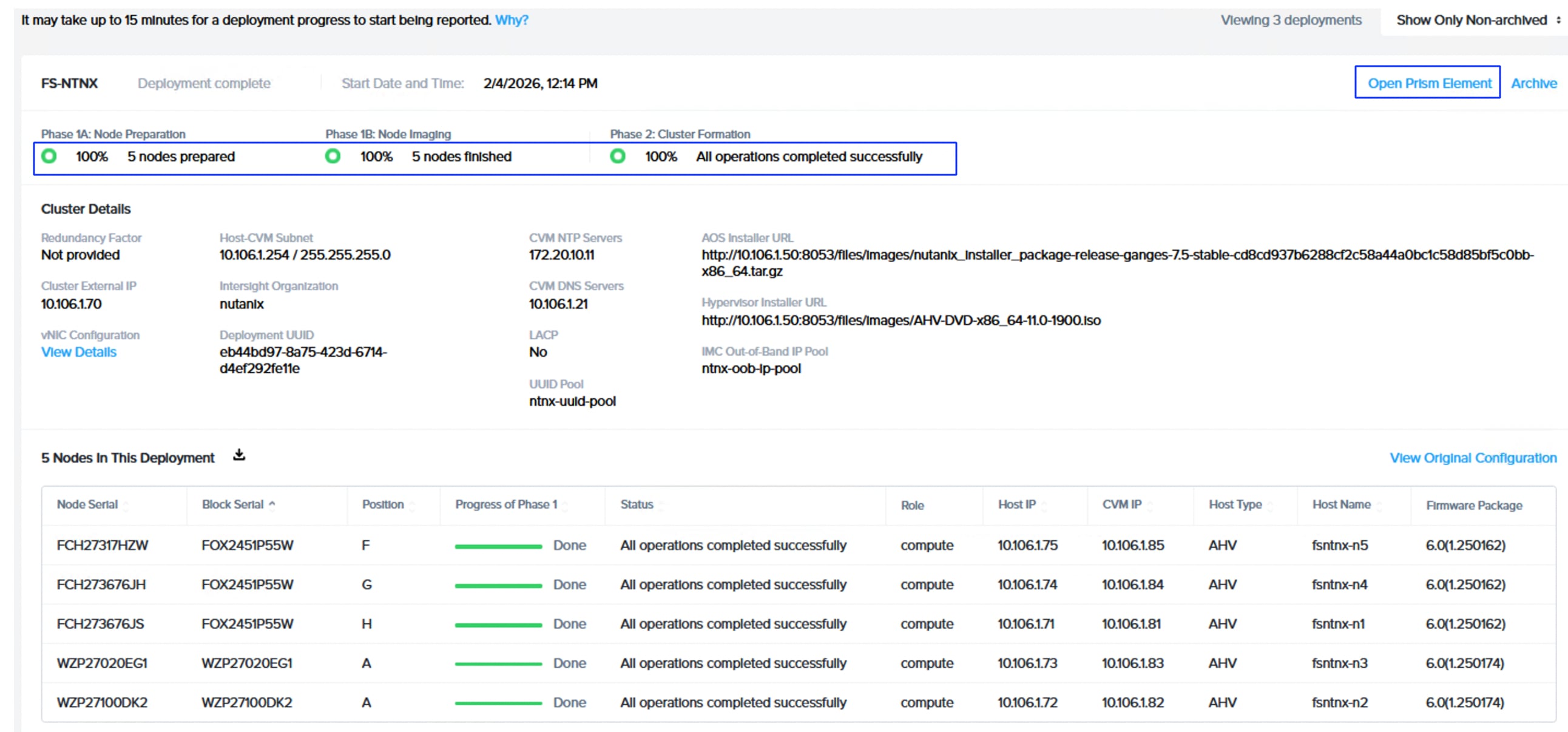

● Install NCI Compute Cluster on FlashStack

● Configure External Storage Array Connectivity

● Configure Virtual Machine Networking

● Modify Default Passwords on AHV and CVMs

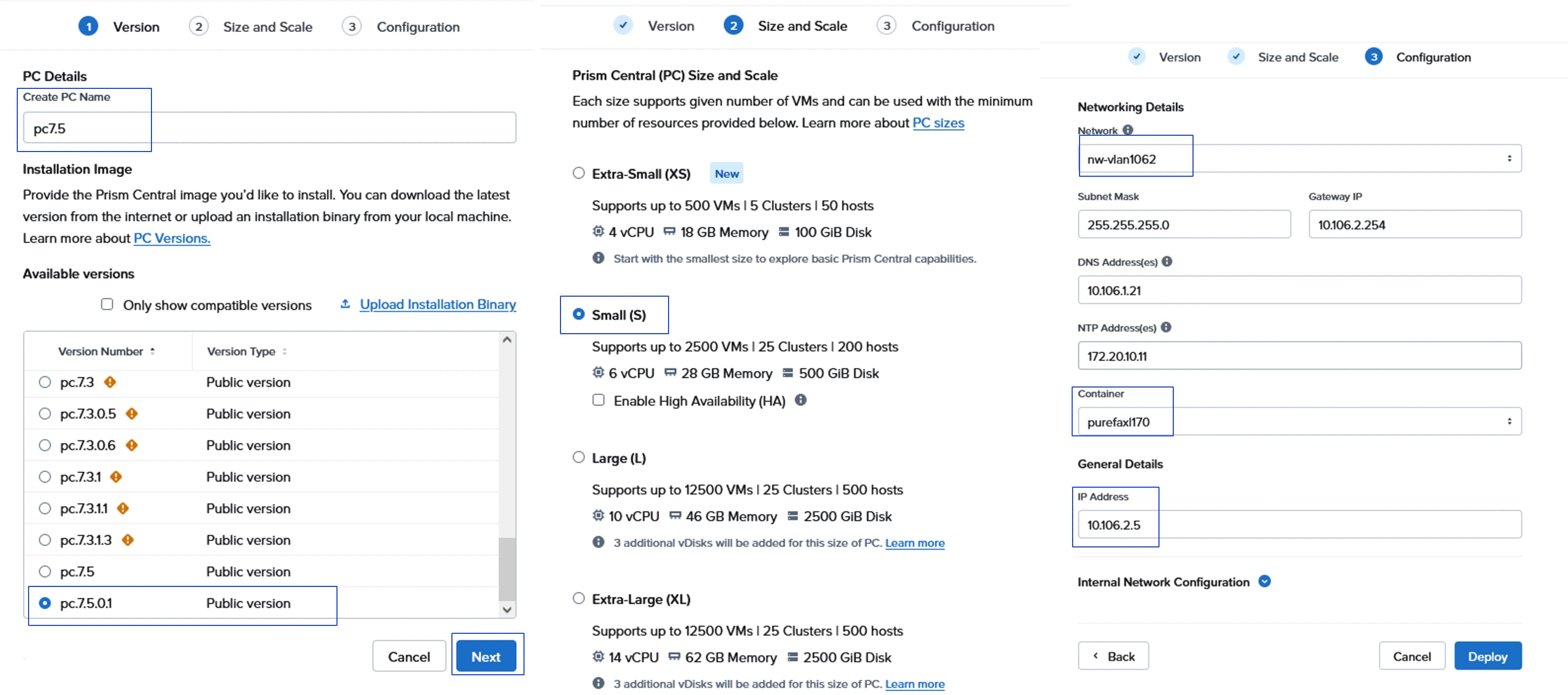

● Prism Central Installation and Configuration

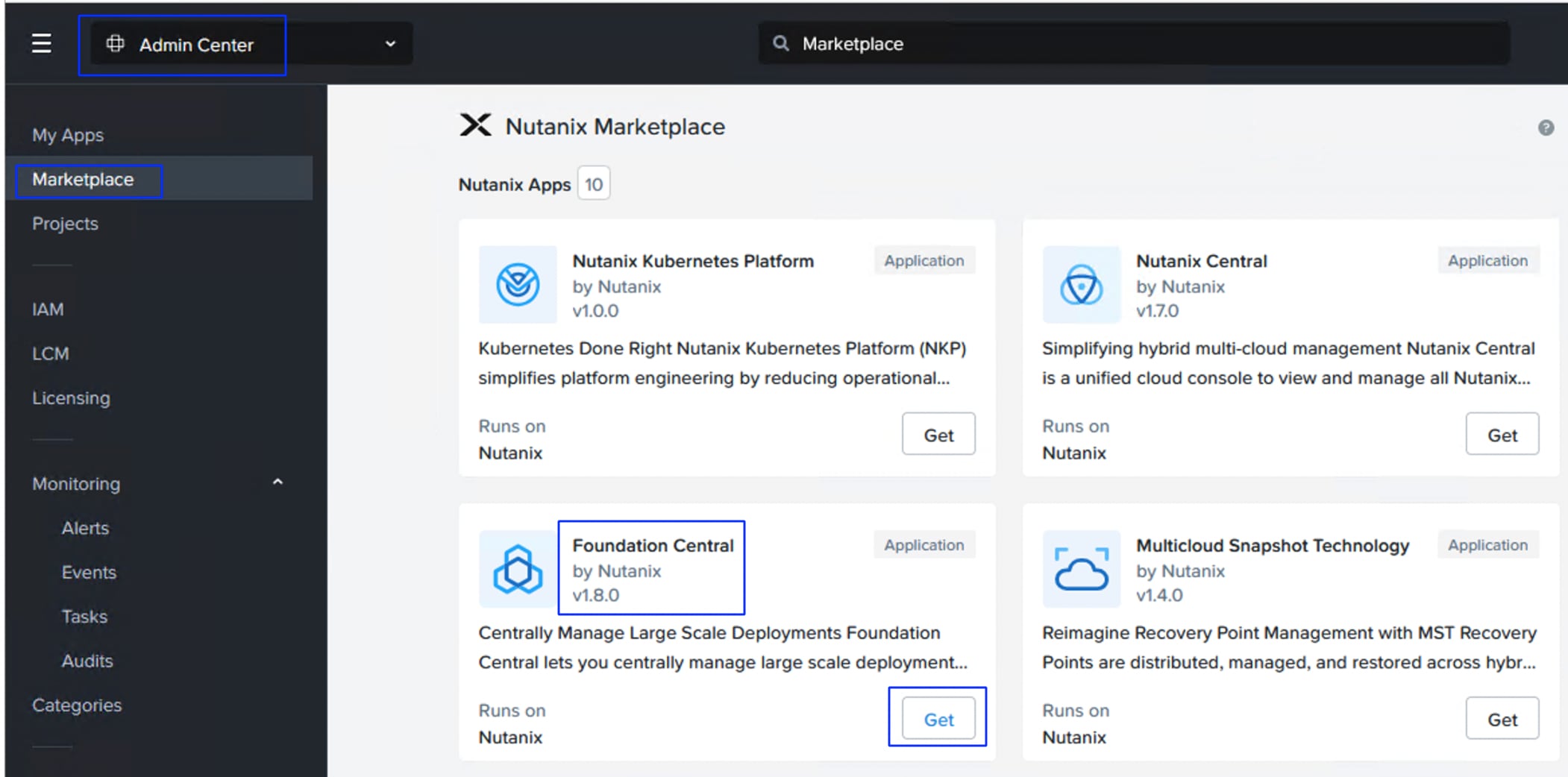

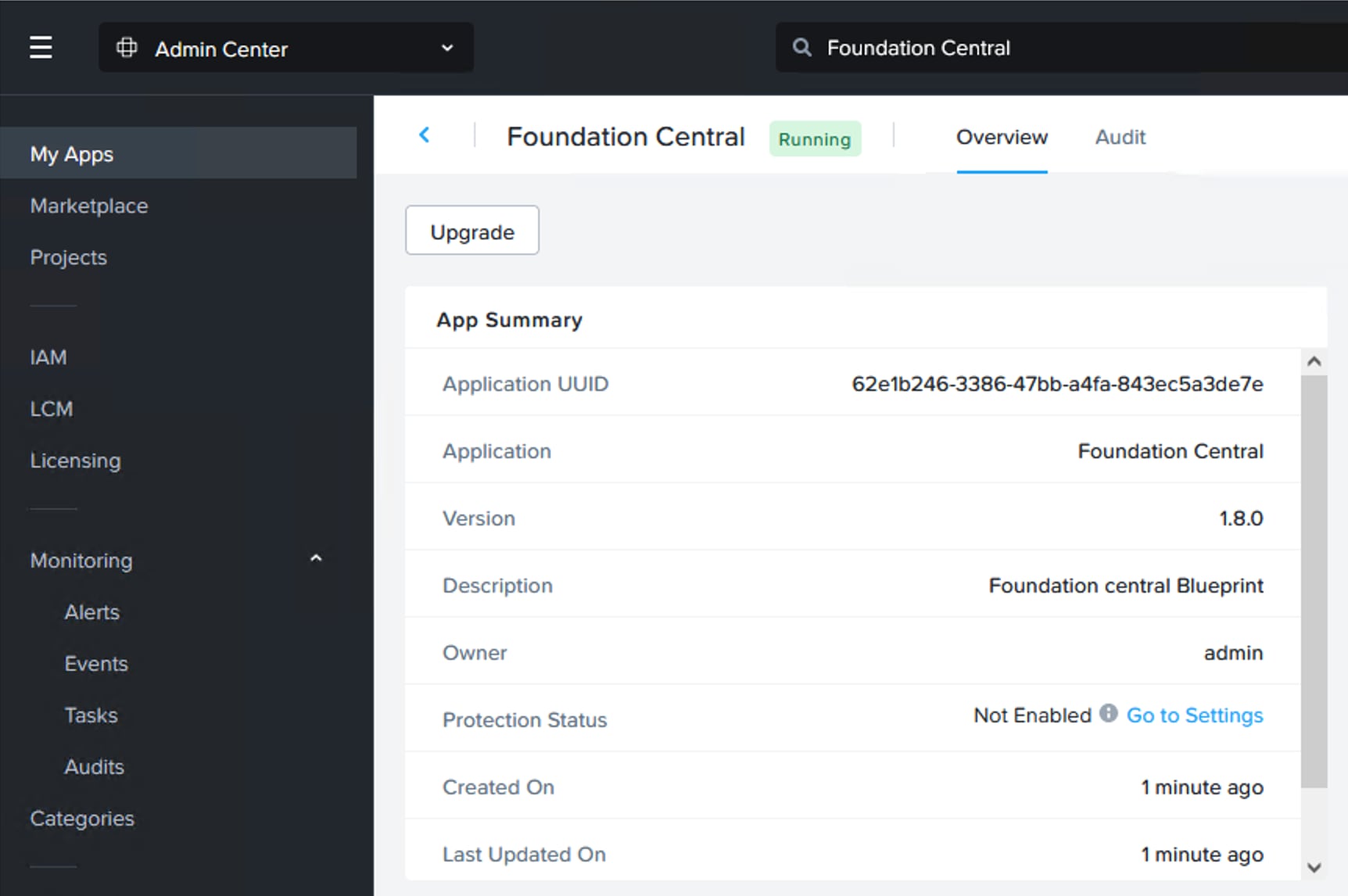

● Install Device Connector in Prism Central

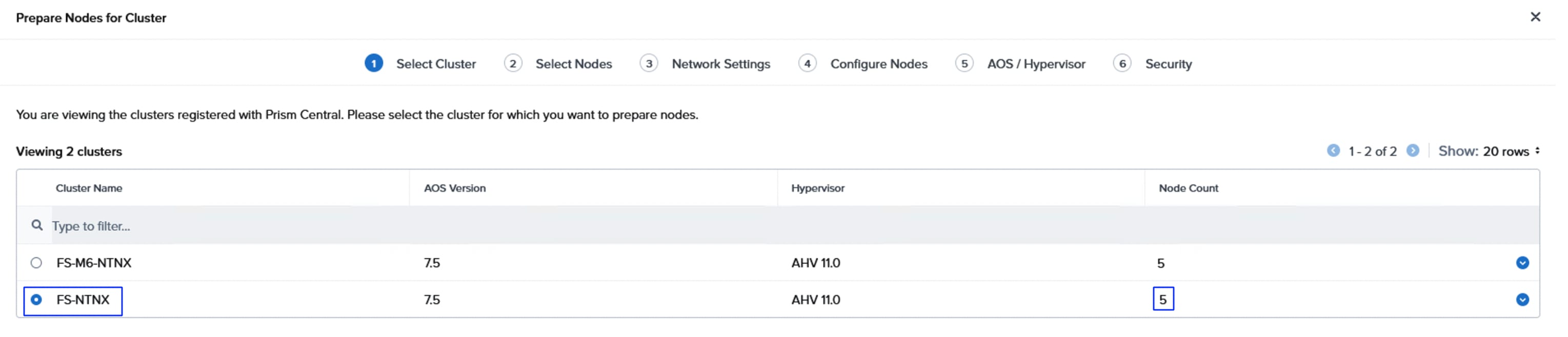

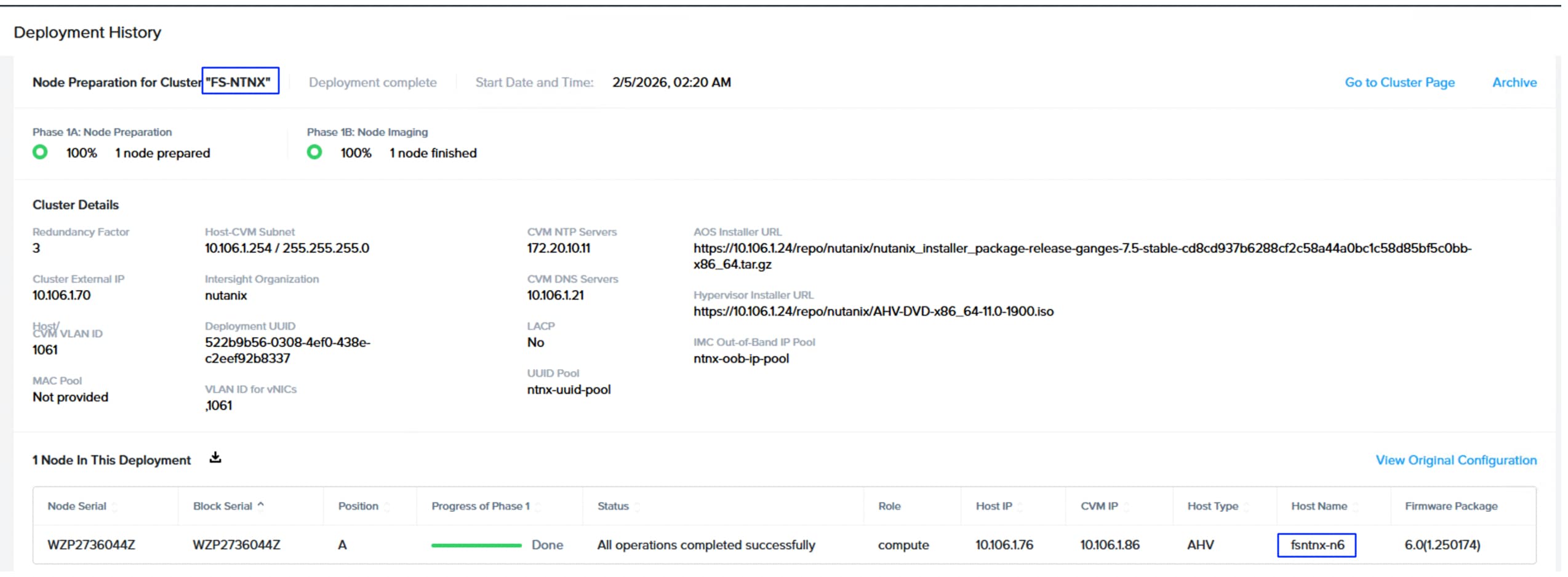

● NCI Compute Cluster Expansion using Prism Central

● Physical cabling should be completed by following the diagram and table references in section FlashStack Cabling. The following procedures assume that all the FlashStack components are connected to a dedicated Out-Of-Band/IPMI network for KVM session access.

● The procedures in this chapter describe how to configure the Cisco Nexus 93600CD-GX switches for use in a FlashStack environment. These procedures assume that the Fabric Interconnect firmware and the Cisco Nexus switch NX-OS software have been upgraded to the supported versions.

● The procedure includes the setup of NTP distribution on both the mgmt0 port and the in-band management VLAN. The interface-vlan feature and ntp commands are used to set this up.

● This document assumes that the initial day-0 switch configuration has already been completed using the switch console ports and that the switches are reachable using their management IPs.

● This document assumes that the initial day-0 Pure Storage FlashArray configuration has already been completed using the console ports and that the Pure Storage Management Console is reachable using its virtual management IP.

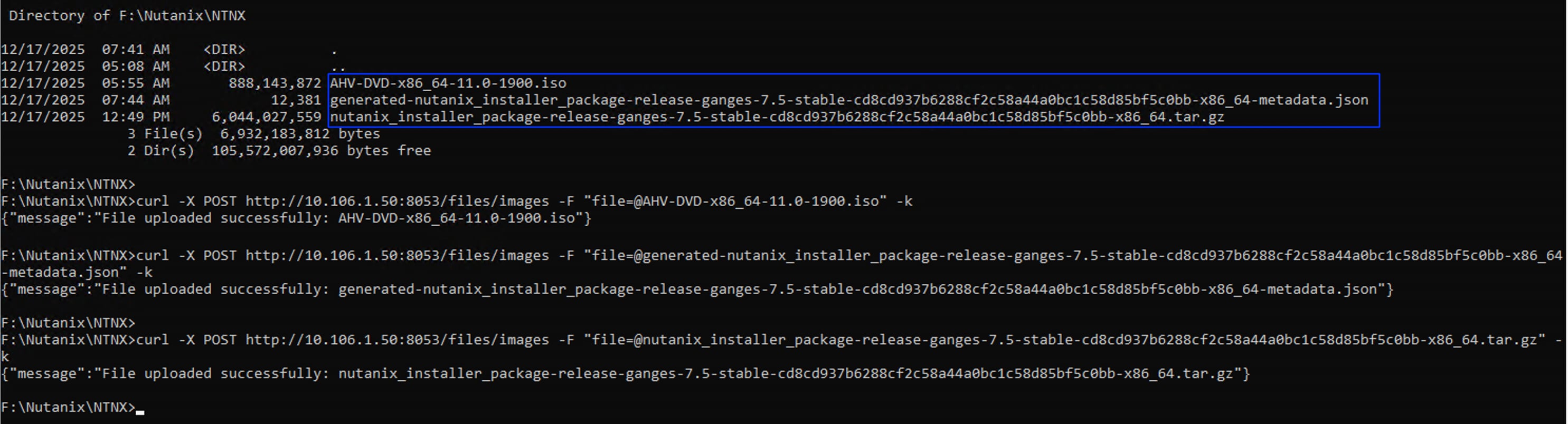

● It is recommended to list and note all VLANs and IP addresses required for Nutanix cluster provisioning. Table 6 lists the supporting components or modules used for deploying the solution.

Table 6. Supporting Components used for NCI Compute Cluster Deployments

| Component or Module Name |

IP address |

| Pure Storage FlashArray Virtual IP(VIP) |

10.103.0.55 |

| FlashArrays Controller’s Ethernet Ports IP subnets |

192.168.31.0/24 (CT0.Eth10,CT1.Eth10) |

| Foundation Central VM appliance |

10.106.1.50 |

| DNS IP |

10.106.1.21 |

| NTP IP |

172.10.20.11 |

| Prism Central VM IP |

10.106.2.5 |

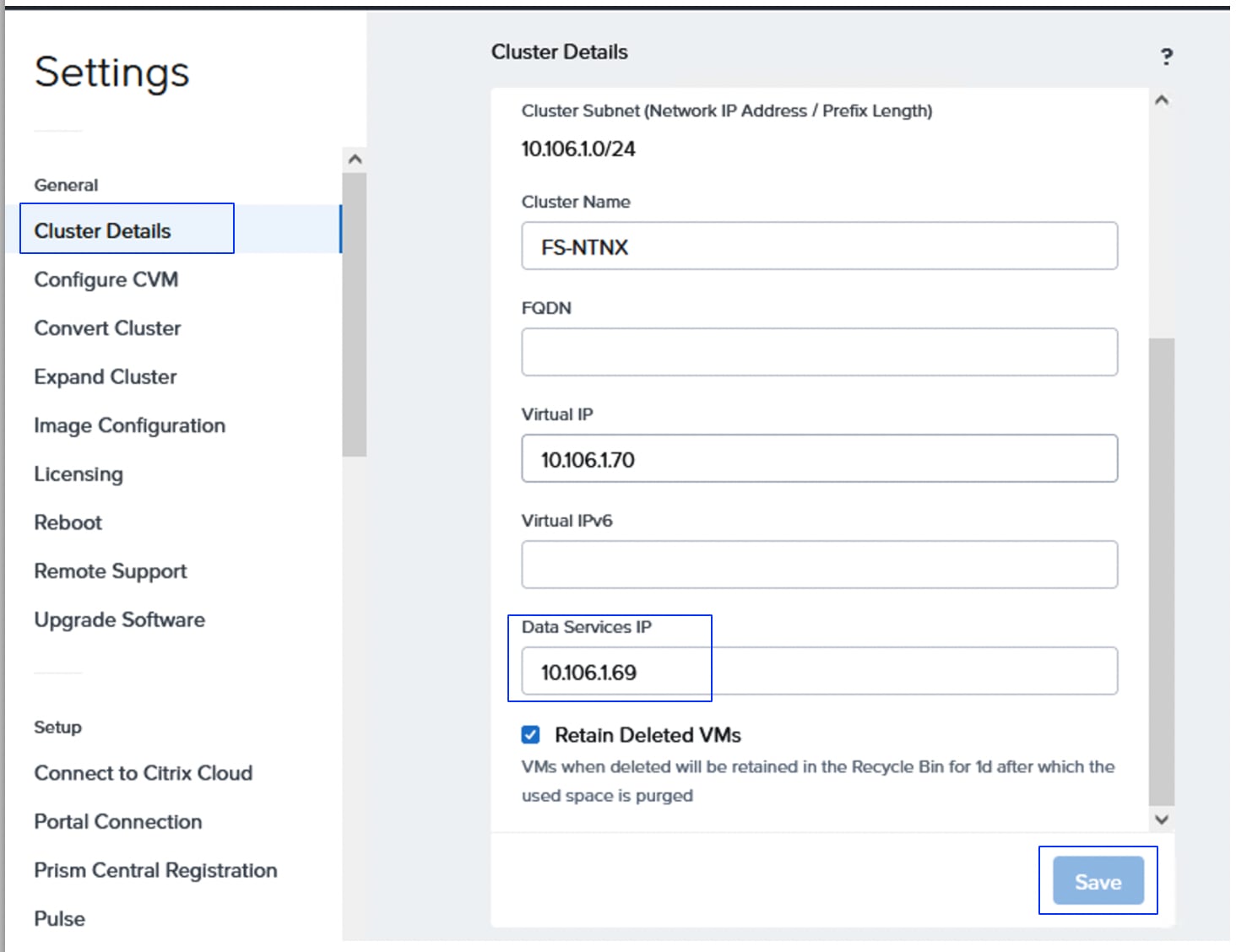

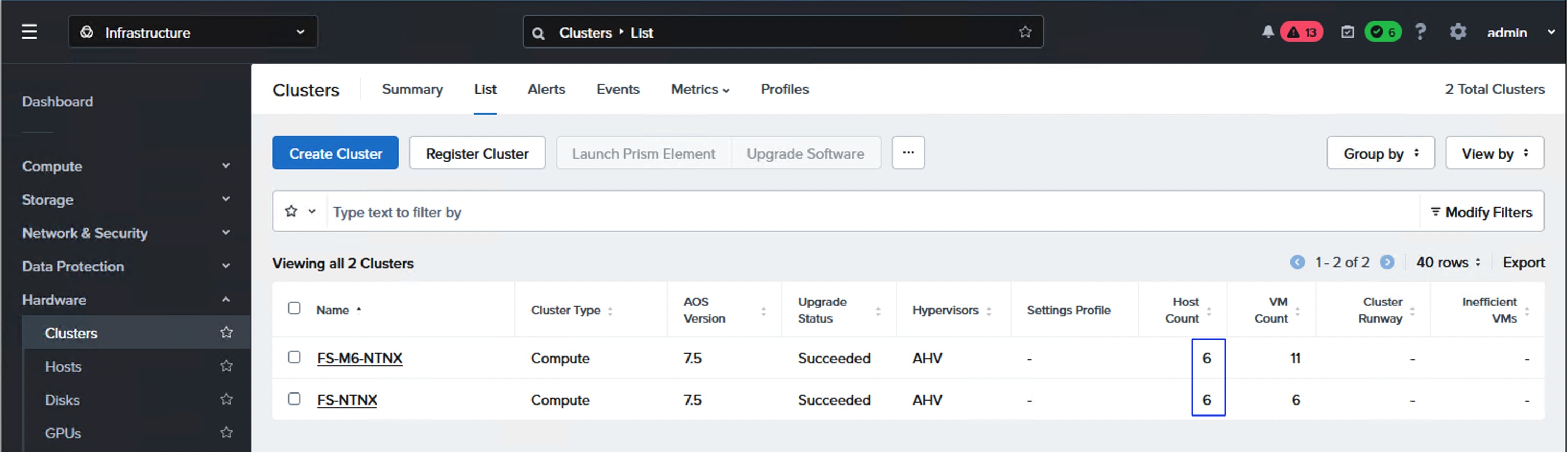

| NCI Compute Cluster Virtual IP (Greenfield-M7 setup) Cluster Name: fs-ntnx |

10.106.1.70 |

| Data services IP (M7 setup) |

10.106.1.69 |

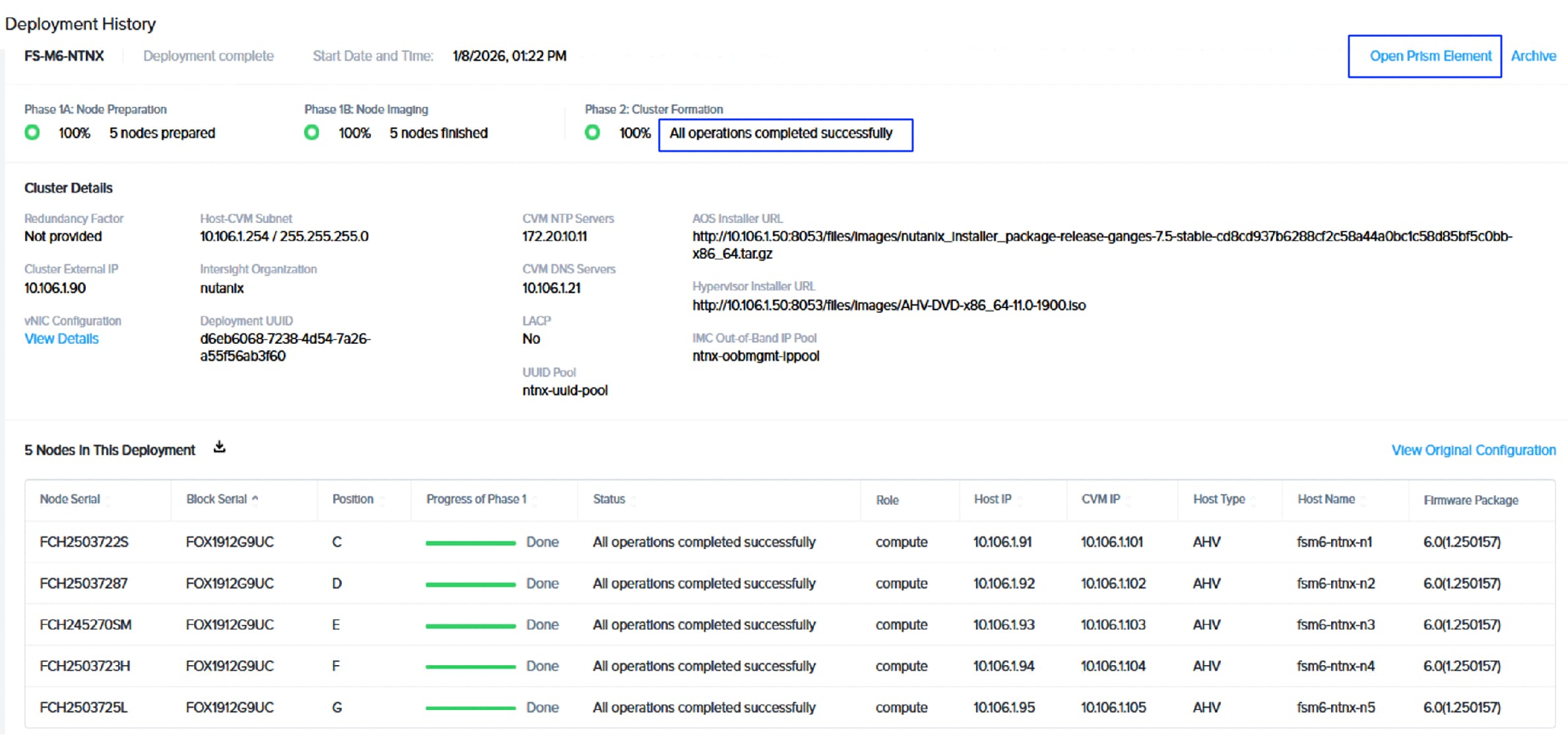

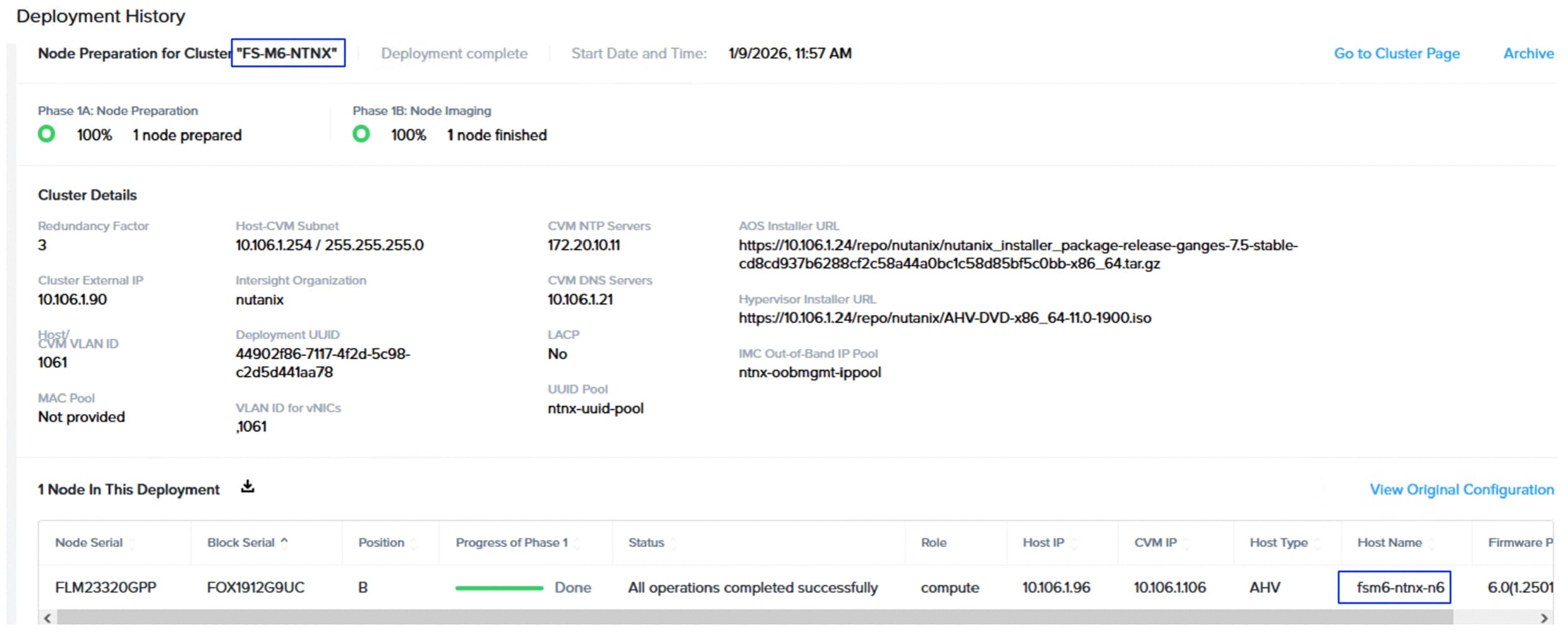

| NCI Compute Cluster Virtual IP (Brownfield-M5/M6 setup) Cluster Name: fs-m6-ntnx |

10.106.1.90 |

| Data services IP (M5/M6 setup) |

10.106.1.89 |

| Intersight Assist |

10.106.1.51 |

| Cisco UCS IMM Transition Toolkit |

10.106.1.24 |

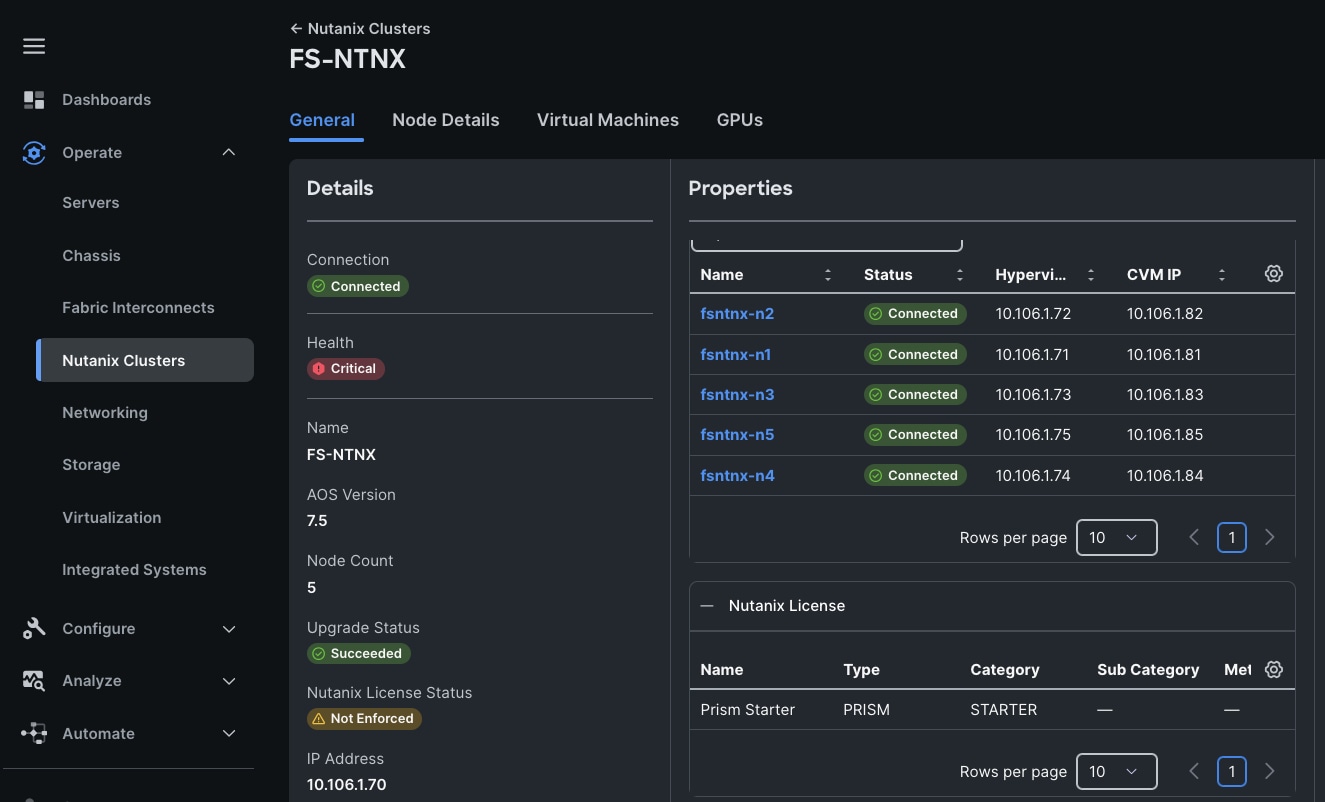

Table 7 lists the IP addresses used for the two deployment types (greenfield and brownfield).

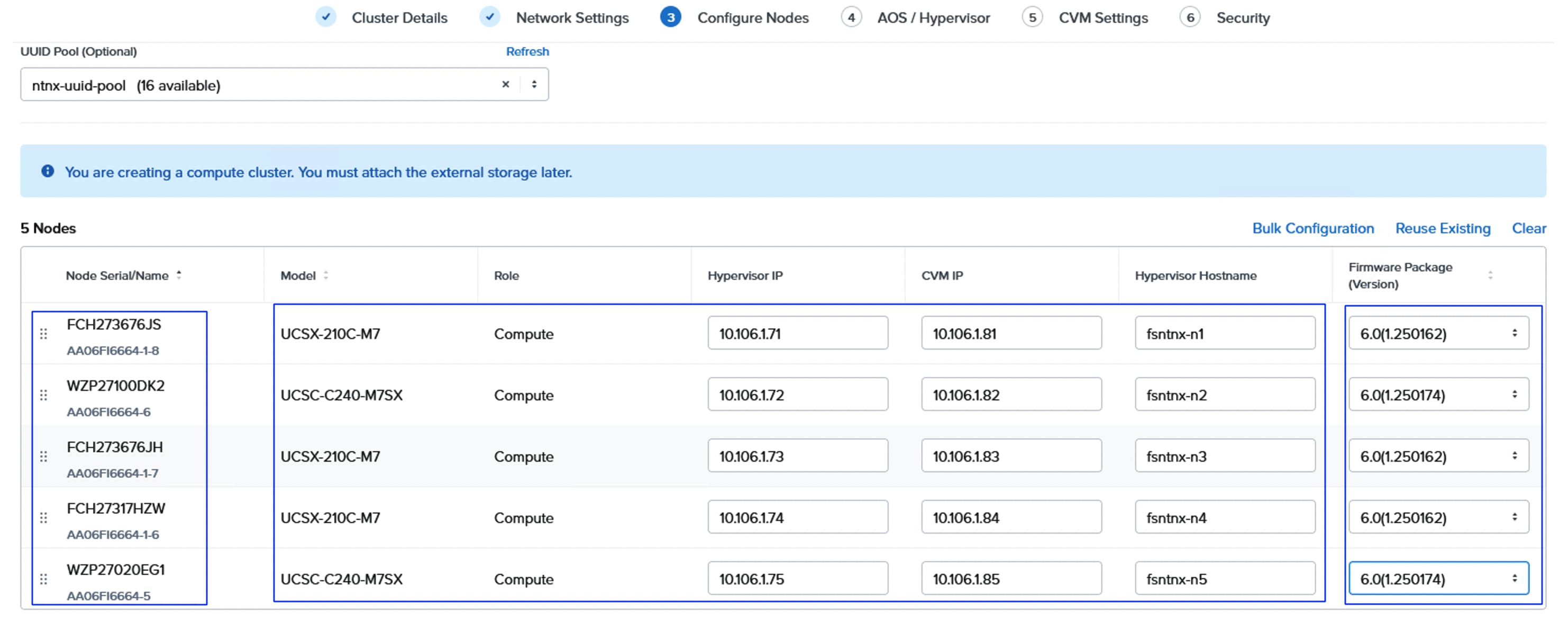

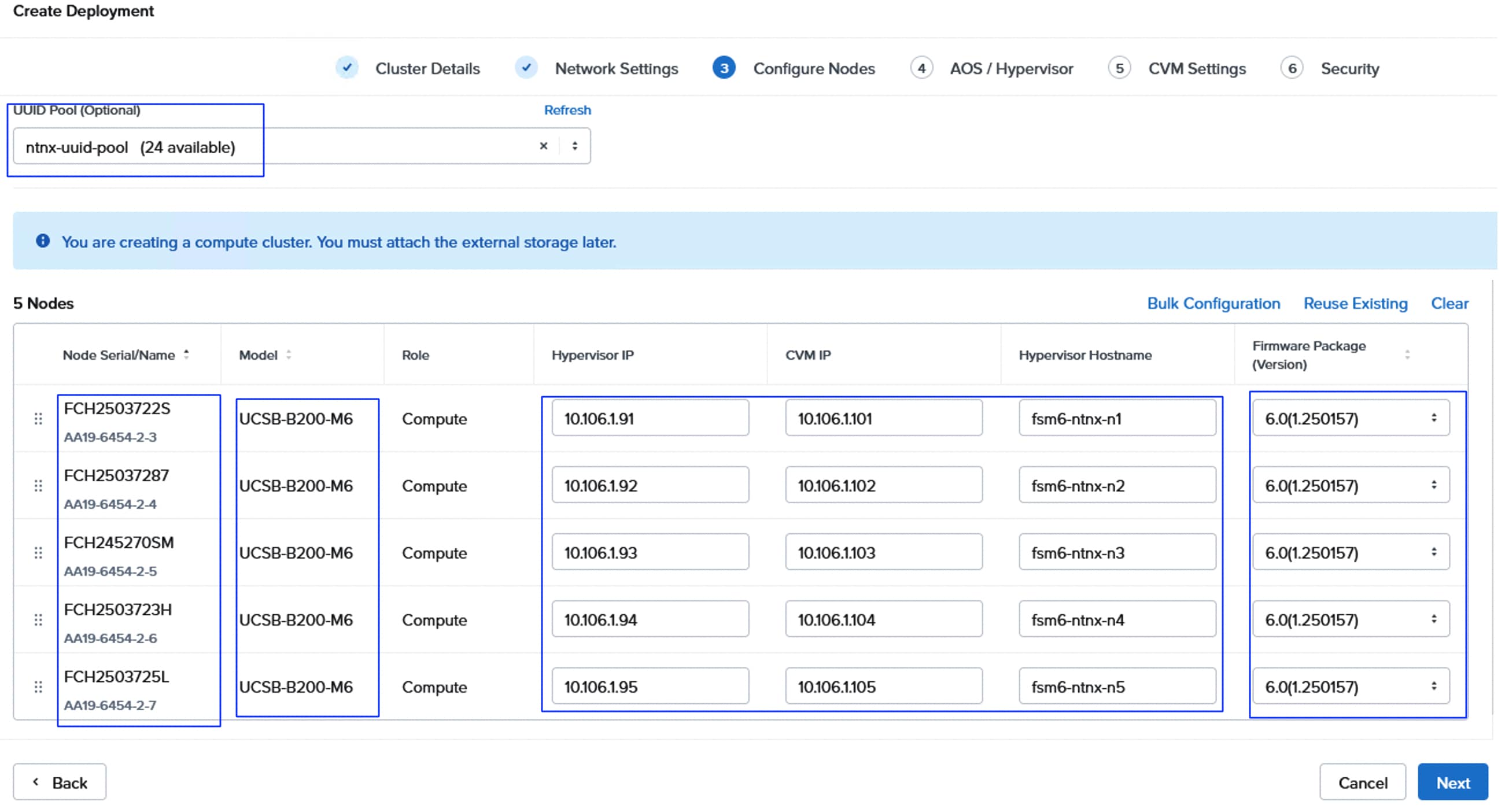

Table 7. IP Addresses used for the NCI Compute Cluster Deployments

| Host Name | AHV Hypervisor IP | CVM IP | Out-Of-Band IP Address |
| --- | --- | --- | --- |
| Greenfield Deployment: fs-ntnx (10.106.1.70), Gateway: 10.106.1.254 and Netmask: 255.255.255.0 | | | |
| fsntnx-n1 | 10.106.1.71/24 | 10.106.1.81/24 | 10.106.0.60/24 |
| fsntnx-n2 | 10.106.1.72/24 | 10.106.1.82/24 | 10.106.0.61/24 |
| fsntnx-n3 | 10.106.1.73/24 | 10.106.1.83/24 | 10.106.0.62/24 |
| fsntnx-n4 | 10.106.1.74/24 | 10.106.1.84/24 | 10.106.0.63/24 |
| fsntnx-n5 | 10.106.1.75/24 | 10.106.1.85/24 | 10.106.0.64/24 |
| fsntnx-n6 (used for cluster expansion) | 10.106.1.76/24 | 10.106.1.86/24 | 10.106.0.65/24 |
| Brownfield Deployment: fs-m6-ntnx (10.106.1.90), Gateway: 10.106.1.254 and Netmask: 255.255.255.0 | | | |
| fs-m6-ntnx-n1 | 10.106.1.91/24 | 10.106.1.101/24 | 10.103.0.101/24 |
| fsm6-ntnx-n2 | 10.106.1.92/24 | 10.106.1.102/24 | 10.103.0.102/24 |
| fsm6-ntnx-n3 | 10.106.1.93/24 | 10.106.1.103/24 | 10.103.0.103/24 |
| fsm6-ntnx-n4 | 10.106.1.94/24 | 10.106.1.104/24 | 10.103.0.104/24 |
| fsm6-ntnx-n5 | 10.106.1.95/24 | 10.106.1.105/24 | 10.103.0.105/24 |
| fsm6-ntnx-n6 (used for cluster expansion) | 10.106.1.96/24 | 10.106.1.106/24 | 10.103.0.106/24 |
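The addresses in Table 7 are assigned sequentially per node, so a plan of this shape can be generated and sanity-checked before provisioning. The Python sketch below reproduces the greenfield rows using the standard `ipaddress` module. The helper names `node_plan` and `all_in_subnet` are assumptions made for this example; the addresses come from Tables 6 and 7.

```python
import ipaddress


def node_plan(prefix, count, ahv_start, cvm_start, oob_start):
    """Build (host name, AHV IP, CVM IP, out-of-band IP) rows with sequential IPs."""
    ahv = ipaddress.ip_address(ahv_start)
    cvm = ipaddress.ip_address(cvm_start)
    oob = ipaddress.ip_address(oob_start)
    return [
        (f"{prefix}-n{i + 1}", str(ahv + i), str(cvm + i), str(oob + i))
        for i in range(count)
    ]


def all_in_subnet(addresses, subnet):
    """Return True if every address belongs to the given subnet."""
    net = ipaddress.ip_network(subnet)
    return all(ipaddress.ip_address(a) in net for a in addresses)


# Greenfield cluster fs-ntnx: six nodes, the sixth used for cluster expansion.
greenfield = node_plan("fsntnx", 6, "10.106.1.71", "10.106.1.81", "10.106.0.60")
for row in greenfield:
    print(*row)

# AHV and CVM addresses, the cluster VIP, and the gateway share one /24.
in_band = [ip for _, ahv_ip, cvm_ip, _ in greenfield for ip in (ahv_ip, cvm_ip)]
print(all_in_subnet(in_band + ["10.106.1.70", "10.106.1.254"], "10.106.1.0/24"))
```

The same helper could be called with the brownfield starting addresses to generate that half of the table.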

Cisco Nexus Switch Configuration

This section assumes that a pair of Nexus switches is already configured and accessible using their management IPs. Follow the procedures listed below to configure the Nexus switches.

Note: For brownfield deployments, it is assumed that the Top-Of-Rack (ToR) switches are already configured to support the existing infrastructure. Therefore, switch configuration steps for the brownfield deployment type are not included in the following procedures. Ensure that all the VLANs required for management, storage, and guest traffic are defined on the switches.

Procedure 1. Enable Features on Cisco Nexus Switches A and B

Step 1. Log into both Nexus switches as admin using ssh.

Step 2. Enable the switch features as described:

config t

feature nxapi

cfs eth distribute

feature udld

feature interface-vlan

feature netflow

feature hsrp

feature lacp

feature vpc

feature lldp

Procedure 2. Set Global Configurations on Cisco Nexus Switches A and B

Step 1. Run the following commands to set the global configurations:

spanning-tree port type edge bpduguard default

spanning-tree port type edge bpdufilter default

spanning-tree port type network default

system default switchport

system default switchport shutdown

port-channel load-balance src-dst l4port

ntp server <Global-ntp-server-ip> use-vrf management

ntp master 3

clock timezone <timezone> <hour-offset> <minute-Offset>

clock summer-time <timezone> <start-week> <start-day> <start-month> <start-time> <end-week> <end-day> <end-month> <end-time> <offset-minutes>

ip route 0.0.0.0/0 <IB-Mgmt-VLAN-gatewayIP>

copy run start

Note: For more information on configuring the timezone and daylight savings time or summer time, see: Cisco Nexus 9000 Series NX-OS Fundamentals Configuration Guide, Release 10.3(x) - Basic Device Management.

Procedure 3. Create VLANs on Cisco Nexus Switches A and B

Step 1. From the global configuration mode, run the following:

Vlan <oob-mgmt-vlan-id> #1060

name OOB-Mgmt-VLAN

Vlan <iB-mgmt-vlan-id> #1061

name IB-Mgmt-VLAN

Vlan <native-vlan-id> #2

name Native-VLAN

Vlan <NVMe-TCP_A-vlan-id> #3010

name NVMe-TCP_A

Vlan <NVMe-TCP_B-vlan-id> #3020

name NVMe-TCP_B

Vlan <vm-mgmt1-vlan-id> #1062

name VM-Mgmt1

Vlan <vm-mgmt2-vlan-id> #1063

name VM-Mgmt2

Vlan <vm-mgmt3-vlan-id> #1064

name VM-Mgmt3
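When the same VLAN list has to be applied to both switches, generating the commands from a single table helps keep IDs and names consistent. The Python sketch below renders the VLAN definitions used in this procedure. It is only a text generator: the function `render_vlans` is a hypothetical helper, and the output still has to be pasted into, or pushed to, the switches by whatever method is in use.

```python
# VLAN IDs and names used in this validation (from the procedure above).
VLANS = {
    1060: "OOB-Mgmt-VLAN",
    1061: "IB-Mgmt-VLAN",
    2: "Native-VLAN",
    3010: "NVMe-TCP_A",
    3020: "NVMe-TCP_B",
    1062: "VM-Mgmt1",
    1063: "VM-Mgmt2",
    1064: "VM-Mgmt3",
}


def render_vlans(vlans: dict) -> str:
    """Render 'vlan <id>' / 'name <name>' command pairs, sorted by VLAN ID."""
    lines = []
    for vlan_id, name in sorted(vlans.items()):
        # IEEE 802.1Q usable VLAN IDs are 1 to 4094.
        if not 1 <= vlan_id <= 4094:
            raise ValueError(f"VLAN ID out of range: {vlan_id}")
        lines.append(f"vlan {vlan_id}")
        lines.append(f"  name {name}")
    return "\n".join(lines)


print(render_vlans(VLANS))
```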

Procedure 4. Define Port Channels on Cisco Nexus A and B

Step 1. From the global configuration mode, run the following:

##This Port Channel (PO) is for VPC configuration; Execute the below commands on both the switches A & B

interface port-channel 10

description vPC Peer Link

switchport mode trunk

switchport trunk native vlan 2

switchport trunk allowed vlan 1060-1064,3010,3020

spanning-tree port type network

## This PO is for FI-6664-A/B to Nexus Switches connectivity for greenfield deployment type; Execute the ##below commands on both the switches A & B

interface port-channel 21

switchport mode trunk

switchport trunk native vlan 2

switchport trunk allowed vlan 1060-1064,3010,3020

spanning-tree port type edge trunk

mtu 9216

interface port-channel 31

switchport mode trunk

switchport trunk native vlan 2

switchport trunk allowed vlan 1060-1064,3010,3020

spanning-tree port type edge trunk

mtu 9216

### Optional: The port channel below connects the Nexus switches to the existing customer network; execute the commands below on both switches A & B

interface port-channel 106

description connecting-to-customer-Core-Switches

switchport mode trunk

switchport trunk native vlan 2

switchport trunk allowed vlan 1060-1064

spanning-tree port type normal

mtu 9216

Procedure 5. Configuring Virtual Port Channel (VPC) Domain on Cisco Nexus Switches A and B

Step 1. Run the following commands to configure the vPC domain:

## Execute the following commands on Nexus-A

vpc domain <nexus-vpc-domain-id>

peer-switch

role priority 10

peer-keepalive destination <Switch-B-Mgmt-IP> source <Switch-A-Mgmt-IP>

delay restore 150

peer-gateway

auto-recovery

ip arp synchronize

## Execute the following commands on Nexus-B

vpc domain <nexus-vpc-domain-id>

peer-switch

role priority 20

peer-keepalive destination <Switch-A-Mgmt-IP> source <Switch-B-Mgmt-IP>

delay restore 150

peer-gateway

auto-recovery

ip arp synchronize
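The two vPC domain blocks above differ only in role priority and in the direction of the peer-keepalive addresses. The Python sketch below renders both from one template so that the shared settings cannot drift apart. The function `render_vpc_domain` is a hypothetical helper, and the domain ID and management IPs in the example are placeholder values (the IPs are taken from the 192.0.2.0/24 documentation range), not values from this validation.

```python
def render_vpc_domain(domain_id, role_priority, local_mgmt_ip, peer_mgmt_ip):
    """Render the vPC domain commands for one switch of the pair."""
    return "\n".join([
        f"vpc domain {domain_id}",
        "  peer-switch",
        f"  role priority {role_priority}",
        f"  peer-keepalive destination {peer_mgmt_ip} source {local_mgmt_ip}",
        "  delay restore 150",
        "  peer-gateway",
        "  auto-recovery",
        "  ip arp synchronize",
    ])


# Placeholder values for illustration only.
SWITCH_A_MGMT = "192.0.2.11"
SWITCH_B_MGMT = "192.0.2.12"

# Nexus-A gets role priority 10 and Nexus-B gets 20, as in the procedure above.
switch_a = render_vpc_domain(10, 10, SWITCH_A_MGMT, SWITCH_B_MGMT)
switch_b = render_vpc_domain(10, 20, SWITCH_B_MGMT, SWITCH_A_MGMT)
print(switch_a)
print()
print(switch_b)
```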

Procedure 6. Configure individual Interfaces on Switches A and B

Step 1. From the global configuration mode, run the following:

## Execute the below commands on Switch-A

### FI-6664 Ports for greenfield configuration

interface Ethernet1/3

description FI6664-A-uplink-Eth63

channel-group 21 mode active

no shutdown

interface Ethernet1/4

description FI6664-B-uplink-Eth63

channel-group 31 mode active

no shutdown

## Configuration for FA//XL170 Storage Ports

interface Ethernet1/25

description PureFAXL170-CT0.ETH10

switchport access vlan 3010

spanning-tree port type edge

mtu 9216

no shutdown

interface Ethernet1/26

description PureFAXL170-CT1.ETH10

switchport access vlan 3010

spanning-tree port type edge

mtu 9216

no shutdown

## Optional: Configuration for interfaces connected to the customer's existing management network

interface Ethernet1/35/1

description customer-Core-1:Eth1/37

channel-group 106 mode active

no shutdown

interface Ethernet1/35/2

description customer-Core-2:Eth1/37

channel-group 106 mode active

no shutdown

### Execute the below commands on Switch-B

### FI-6664 Ports for greenfield configuration

interface Ethernet1/3

description FI6664-A-uplink-Eth64

channel-group 21 mode active

no shutdown

interface Ethernet1/4

description FI6664-B-uplink-Eth64

channel-group 31 mode active

no shutdown

## Configuration for FA//XL170 Storage Ports

interface Ethernet1/25

description PureFAXL170-CT0.ETH11

switchport access vlan 3020

spanning-tree port type edge

mtu 9216

no shutdown

interface Ethernet1/26

description PureFAXL170-CT1.ETH11

switchport access vlan 3020

spanning-tree port type edge

mtu 9216

no shutdown

## Optional: Configuration for interfaces connected to the customer's existing management network

interface Ethernet1/35/1

description customer-Core-1:Eth1/38

channel-group 106 mode active

no shutdown

interface Ethernet1/35/2

description customer-Core-2:Eth1/38

channel-group 106 mode active

no shutdown

Procedure 7. Update the port channels

Step 1. From the global configuration mode, run the following:

## Execute the following commands on Switch A & B

interface port-channel 10

vpc peer-link

interface port-channel 21

vpc 21

interface port-channel 31

vpc 31

interface port-channel 41

vpc 41

interface port-channel 51

vpc 51

interface port-channel 106

vpc 106

copy run start

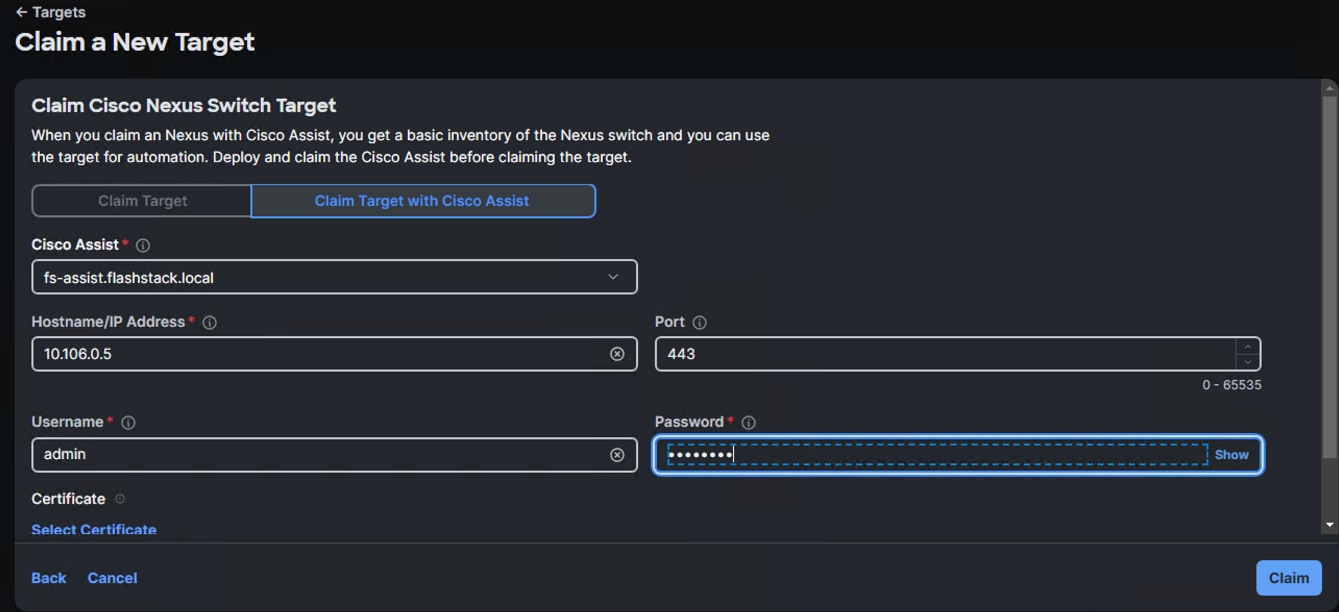

Claim Cisco Nexus Switches into Cisco Intersight

Cisco Nexus switches can be claimed into Cisco Intersight either through Cisco Intersight Assist or by direct claim using the Device ID and Claim Code.

This section provides the steps to claim the Cisco Nexus switches using Cisco Intersight Assist.

Note: This procedure assumes that Cisco Intersight Assist is already hosted in the datacenter and claimed into Intersight.com. Refer to this video for deploying and claiming Intersight Assist into the Intersight SaaS platform.

Procedure 1. Claim Cisco Nexus Switches into Cisco Intersight using Cisco Intersight Assist

Cisco Nexus - A

Step 1. Log into Nexus Switches and confirm the nxapi feature is enabled:

show nxapi

nxapi enabled

NXAPI timeout 10

HTTPS Listen on port 443

Certificate Information:

Issuer: issuer=C = US, ST = CA, L = San Jose, O = Cisco Systems Inc., OU = dcnxos, CN = nxos

Expires: Sep 12 06:08:58 2024 GMT

Step 2. Log into Cisco Intersight with your login credentials. From the drop-down list select System.

Step 3. Under Admin, click Target then click Claim a New Target. Under Categories, select Network, click Cisco Nexus Switch and then click Start.

Step 4. Select the name of the Cisco Intersight Assist that is already deployed and configured. Provide the Cisco Nexus switch management IP address, username, and password, and click Claim.

Step 5. Repeat steps 1 through 4 to claim the remaining Switch B.

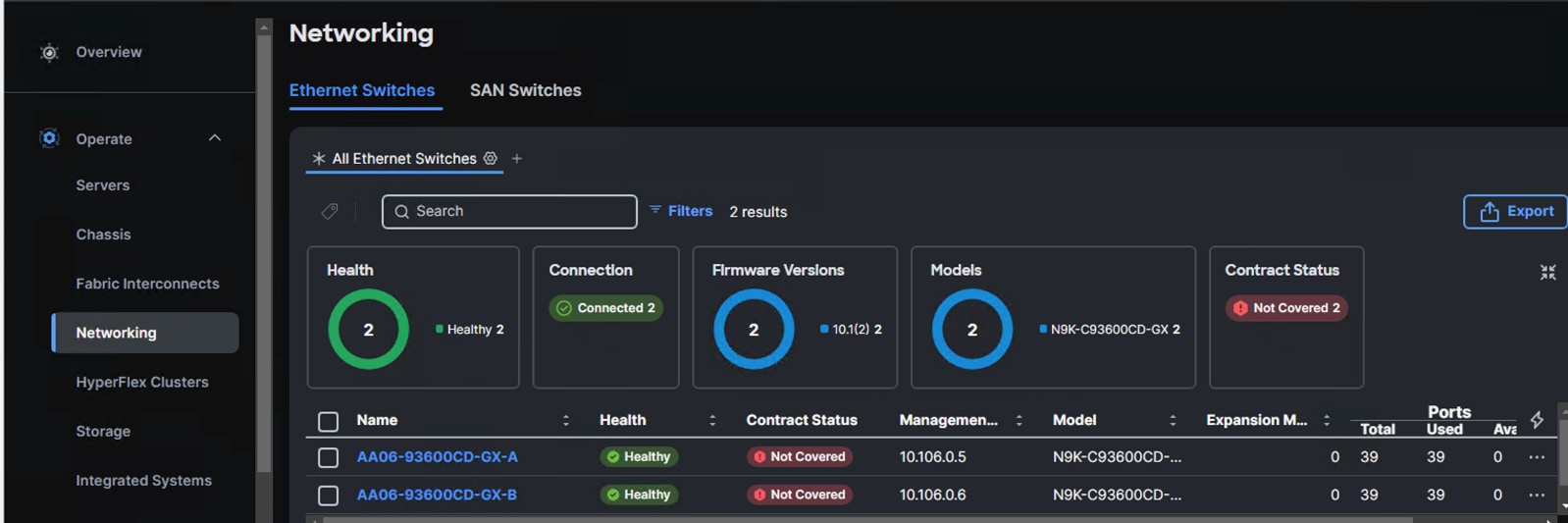

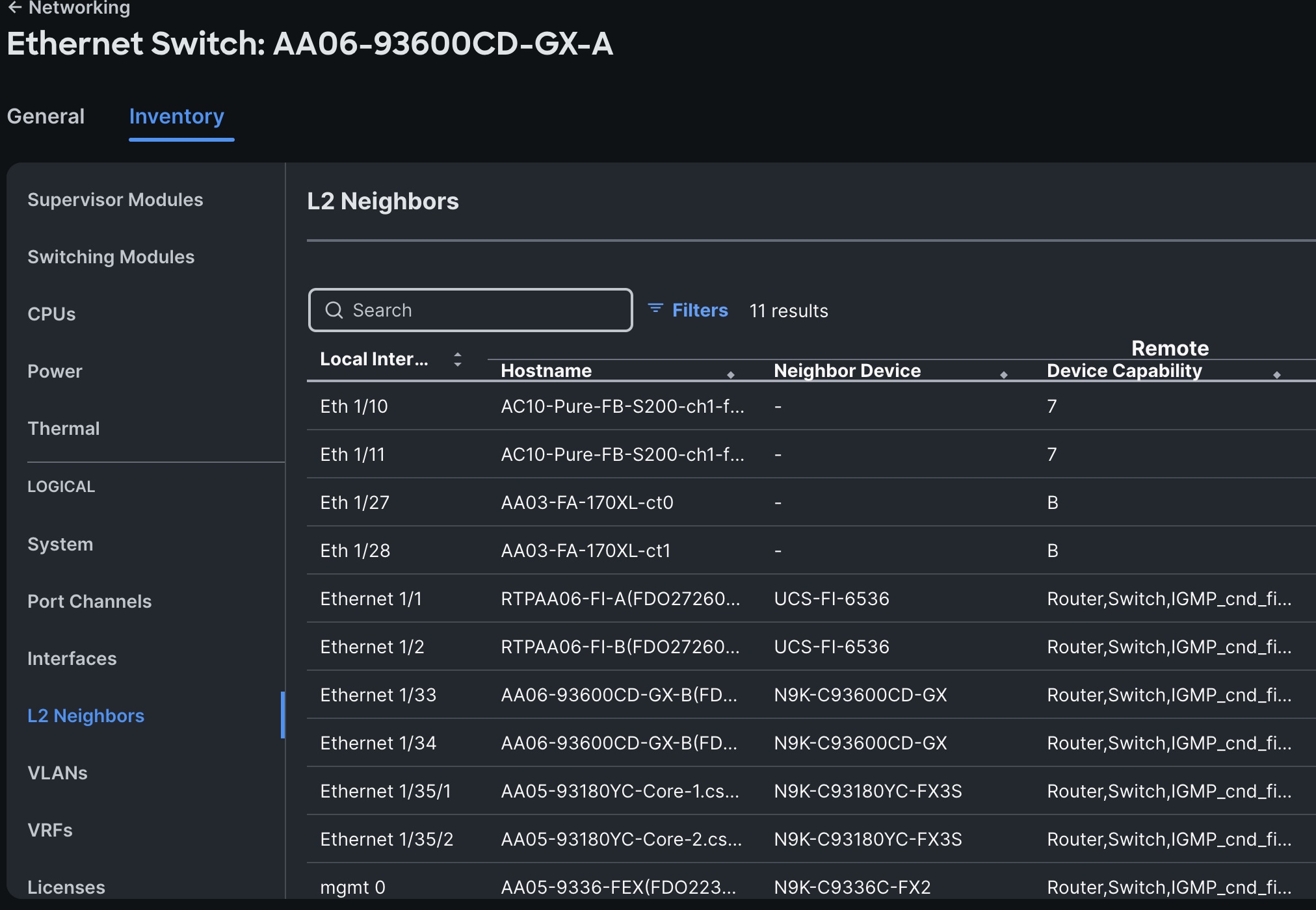

Step 6. When the switches are successfully claimed, select Infrastructure Services from the drop-down list. Under Operate, click the Networking tab. The newly claimed Cisco Nexus switches are listed on the right; select a switch to view its inventory details.

The L2 neighbors of Cisco Nexus Switch-A are shown below:

Pure Storage FlashArray Configuration

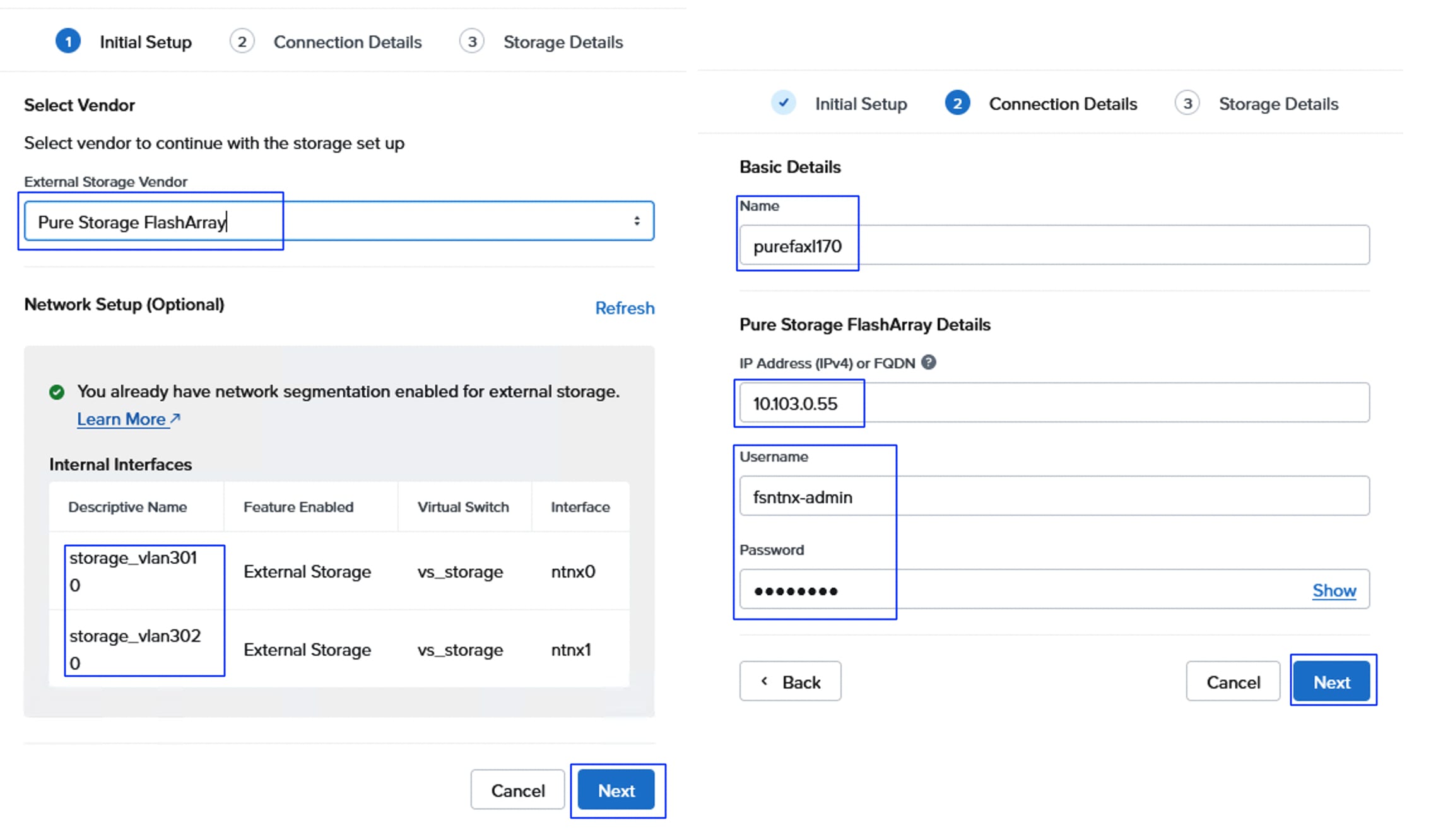

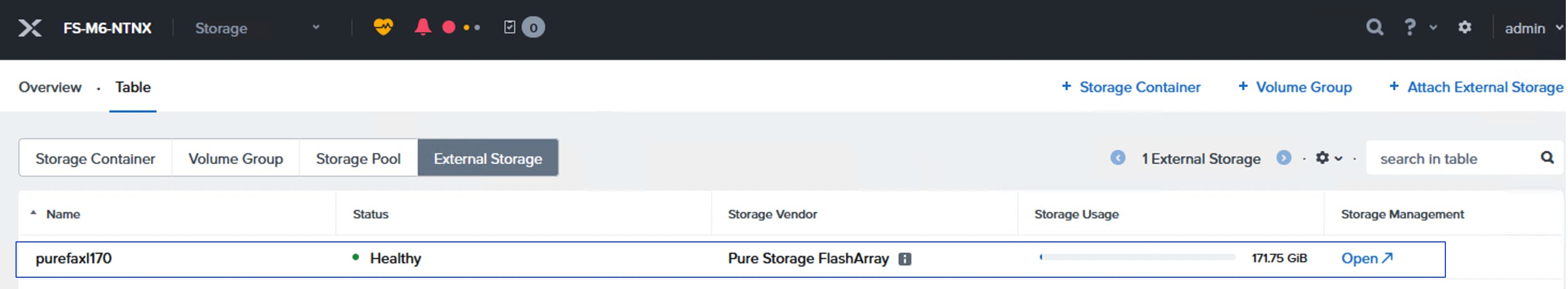

In this solution, Pure Storage FlashArray//XL170 provides storage for all the workloads running on the NCI Compute Clusters for both deployment types (greenfield and brownfield). This chapter describes the high-level steps to configure the Pure Storage FlashArray//XL170 network interfaces required for storage connectivity using NVMe-oF over TCP. While these procedures detail the configuration steps for FlashArray//XL170 specifically, the steps are substantially similar for other supported models, including the FlashArray//X and //C series.

Note: This document assumes that the day-0 initial configuration to set up the storage array is already completed and that the FlashArray is accessible using its virtual IP.

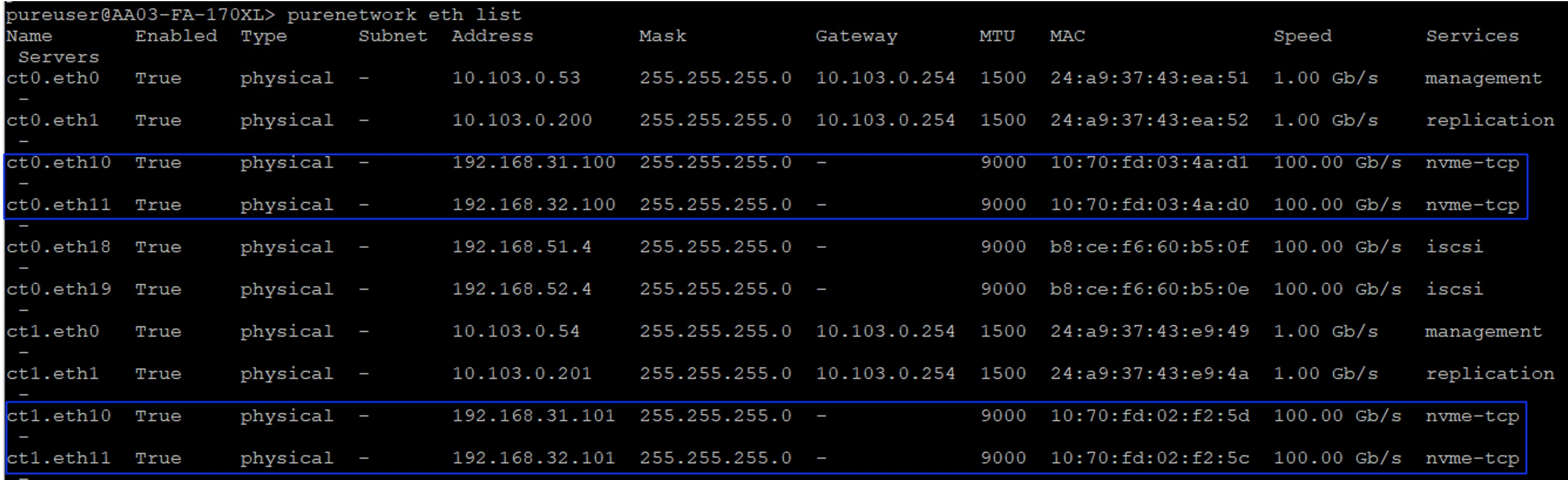

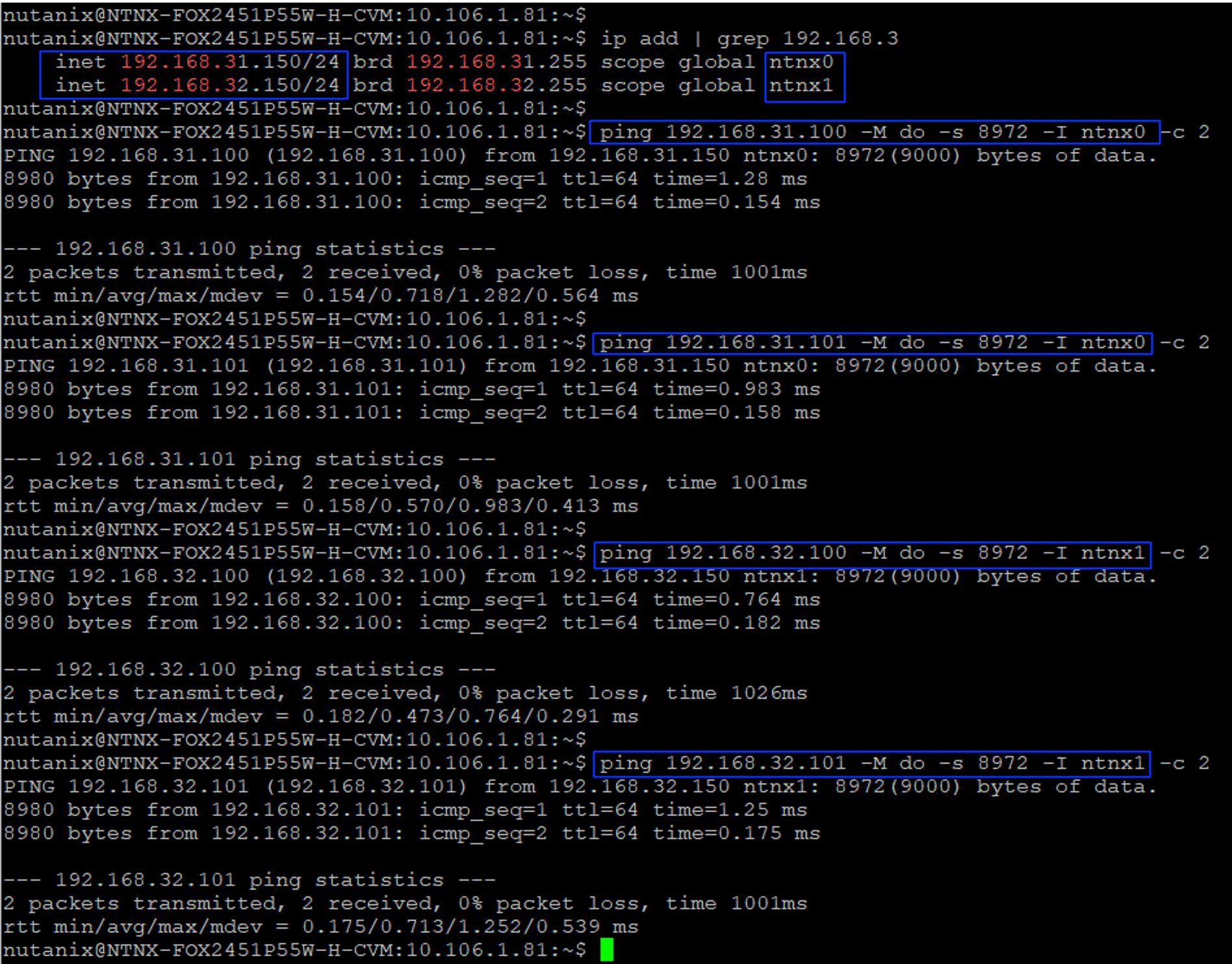

As discussed, each FlashArray storage controller is connected to the pair of Nexus switches using 2x 100GbE ports, for an aggregate network bandwidth of 400Gbps across the two controllers. The Pure Storage FlashArray network settings were configured with three subnets across three VLANs. Storage interfaces CT0.Eth0 and CT1.Eth0 were configured for storage management access on VLAN 1030. Storage interfaces CT0.Eth10, CT1.Eth10, CT0.Eth11, and CT1.Eth11 are configured with the “nvme-tcp” service. The CT0.Eth10 and CT1.Eth10 interfaces are connected to ports 1/25-26 on the Nexus 93600CD-GX-A switch, which are configured with access VLAN 3010, while the CT0.Eth11 and CT1.Eth11 interfaces are connected to ports 1/25-26 on the Nexus 93600CD-GX-B switch, which are configured with access VLAN 3020.

The following tables list the IP addressing configured on the FlashArray//XL170 controller interfaces used for storage access.

Table 8. Pure Storage FlashArray//XL170 Interface Configuration Settings (Eth10 interfaces, VLAN 3010)

| FlashArray Controller | Port | IP Address | Subnet Mask |
| --- | --- | --- | --- |
| FlashArray//XL170 Controller 0 | CT0.ETH10 | 192.168.31.100 | 255.255.255.0 |
| FlashArray//XL170 Controller 1 | CT1.ETH10 | 192.168.31.101 | 255.255.255.0 |

Table 9. Pure Storage FlashArray//XL170 Interface Configuration Settings (Eth11 interfaces, VLAN 3020)

| FlashArray Controller | Port | IP Address | Subnet Mask |
| --- | --- | --- | --- |
| FlashArray//XL170 Controller 0 | CT0.ETH11 | 192.168.32.100 | 255.255.255.0 |
| FlashArray//XL170 Controller 1 | CT1.ETH11 | 192.168.32.101 | 255.255.255.0 |

Note: The networking configuration shown above is the simplest option; it is not the only way to configure FlashArray networking. The appropriate configuration depends on the customer's existing infrastructure. For instance, FlashArray networking can be configured with LACP using a single subnet or multiple subnets, or with multiple VLANs in trunk mode.
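As a quick sanity check on the addressing plan, the layout in Tables 8 and 9 can be expressed as data and verified with Python's standard `ipaddress` module. This is only a sketch under stated assumptions: the helper names (`group_by_subnet`, `check_layout`, `aggregate_bandwidth_gbps`) are hypothetical, and the rule that each storage subnet should hold one port from each controller is inferred from the tables rather than stated as a requirement.

```python
import ipaddress

# Values copied from Tables 8 and 9 (port -> (IP address, subnet mask)).
INTERFACES = {
    "CT0.ETH10": ("192.168.31.100", "255.255.255.0"),
    "CT1.ETH10": ("192.168.31.101", "255.255.255.0"),
    "CT0.ETH11": ("192.168.32.100", "255.255.255.0"),
    "CT1.ETH11": ("192.168.32.101", "255.255.255.0"),
}


def group_by_subnet(interfaces):
    """Map each network (CIDR notation) to the ports addressed inside it."""
    groups = {}
    for port, (addr, mask) in interfaces.items():
        iface = ipaddress.ip_interface(f"{addr}/{mask}")
        groups.setdefault(str(iface.network), []).append(port)
    return groups


def check_layout(interfaces):
    """Return a list of problems; an empty list means the layout looks consistent."""
    problems = []
    addresses = [addr for addr, _ in interfaces.values()]
    if len(set(addresses)) != len(addresses):
        problems.append("duplicate IP address")
    for subnet, ports in group_by_subnet(interfaces).items():
        controllers = {port.split(".")[0] for port in ports}
        eth_names = {port.split(".")[1] for port in ports}
        # Assumption: one port per controller in each storage subnet.
        if controllers != {"CT0", "CT1"}:
            problems.append(f"{subnet} does not span both controllers")
        if len(eth_names) != 1:
            problems.append(f"{subnet} mixes interface numbers {sorted(eth_names)}")
    return problems


def aggregate_bandwidth_gbps(controllers=2, ports_per_controller=2, port_speed_gbps=100):
    """Aggregate storage bandwidth: controllers x ports per controller x port speed."""
    return controllers * ports_per_controller * port_speed_gbps


if __name__ == "__main__":
    print(group_by_subnet(INTERFACES))
    print("Problems:", check_layout(INTERFACES))
    print("Aggregate bandwidth (Gbps):", aggregate_bandwidth_gbps())
```

The bandwidth helper reproduces the arithmetic behind the 400Gbps figure quoted earlier: two controllers, each with 2x 100GbE ports.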

Procedure 1. Configure Storage Interfaces for NVMe-TCP

The following steps configure the controller ports to use the nvme-tcp protocol for accessing storage targets.

Step 1. SSH to the Pure Storage FlashArray//XL170 using its management IP address and the pureuser credentials.

Step 2. Enable the nvme-tcp service on all four Ethernet interfaces as shown below:

purenetwork eth enable ct0.eth10

purenetwork eth enable ct0.eth11

purenetwork eth enable ct1.eth10

purenetwork eth enable ct1.eth11

purenetwork eth setattr --address 192.168.31.100/24 --mtu 9000 --servicelist nvme-tcp ct0.eth10

purenetwork eth setattr --address 192.168.32.100/24 --mtu 9000 --servicelist nvme-tcp ct0.eth11

purenetwork eth setattr --address 192.168.31.101/24 --mtu 9000 --servicelist nvme-tcp ct1.eth10

purenetwork eth setattr --address 192.168.32.101/24 --mtu 9000 --servicelist nvme-tcp ct1.eth11

purenetwork eth list

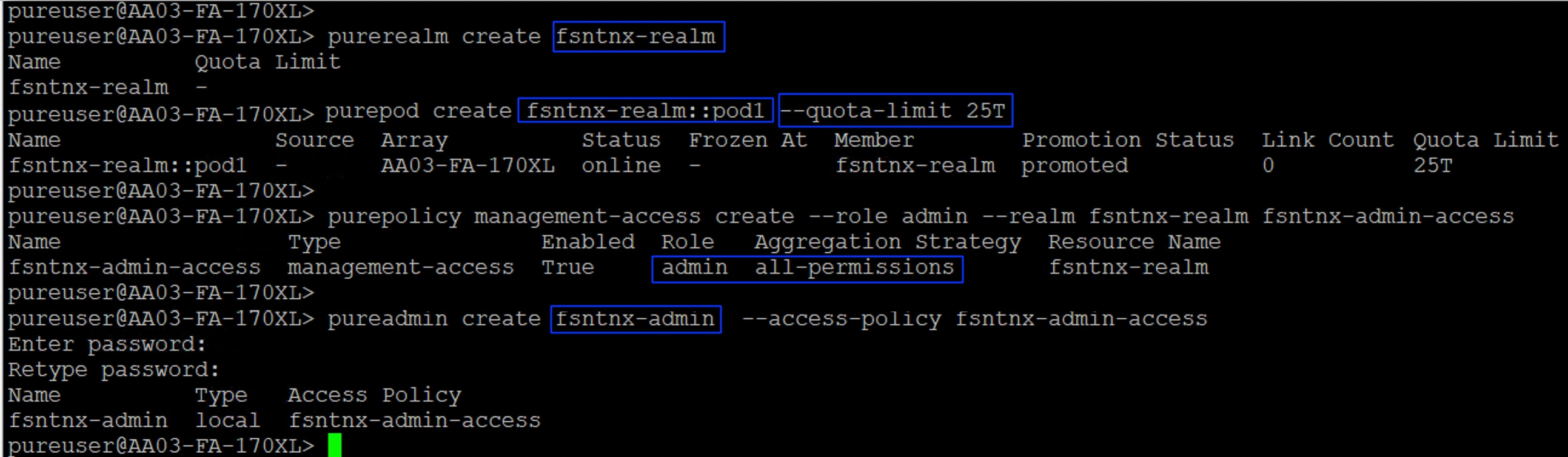
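Because the same two commands are repeated for each of the four interfaces, the command list can be generated from a small table instead of being typed by hand. The sketch below only builds the command strings shown in Step 2; it does not connect to the array, and the function name `build_commands` is a hypothetical helper rather than part of any Pure Storage tooling.

```python
# Interface names and addresses copied from Step 2 of this procedure.
INTERFACES = [
    ("ct0.eth10", "192.168.31.100/24"),
    ("ct0.eth11", "192.168.32.100/24"),
    ("ct1.eth10", "192.168.31.101/24"),
    ("ct1.eth11", "192.168.32.101/24"),
]
MTU = 9000
SERVICE = "nvme-tcp"


def build_commands(interfaces, mtu=MTU, service=SERVICE):
    """Return the CLI lines in the same order as Step 2: enable, setattr, list."""
    commands = [f"purenetwork eth enable {name}" for name, _ in interfaces]
    commands += [
        f"purenetwork eth setattr --address {address} --mtu {mtu} "
        f"--servicelist {service} {name}"
        for name, address in interfaces
    ]
    commands.append("purenetwork eth list")
    return commands


if __name__ == "__main__":
    # Print the commands for review before pasting them into the SSH session.
    for line in build_commands(INTERFACES):
        print(line)
```

Keeping the addresses in one list makes it easier to compare the generated commands against Tables 8 and 9 before they are run on the array.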

Procedure 2. Configure Realm and Pod on FlashArray

As discussed in the FlashStack with Nutanix storage layout section, this architecture uses a combination of Realms and Pods to expose the external FlashArray storage to the NCI compute cluster. Follow this procedure to create the Realm+Pod combinations for both deployment types.

Step 1. SSH to the Pure Storage FlashArray//XL170 using its management IP address and the pureuser credentials. Run the following commands to create the Realm+Pod combination for each deployment type:

## For the greenfield deployment type

purerealm create fsntnx-realm

purepod create fsntnx-realm::pod1 --quota-limit 25T

purepolicy management-access create --role admin --realm fsntnx-realm fsntnx-admin-access

pureadmin create fsntnx-admin --access-policy fsntnx-admin-access

## Create Realm and Pods for the brownfield deployment type, by following the above steps.

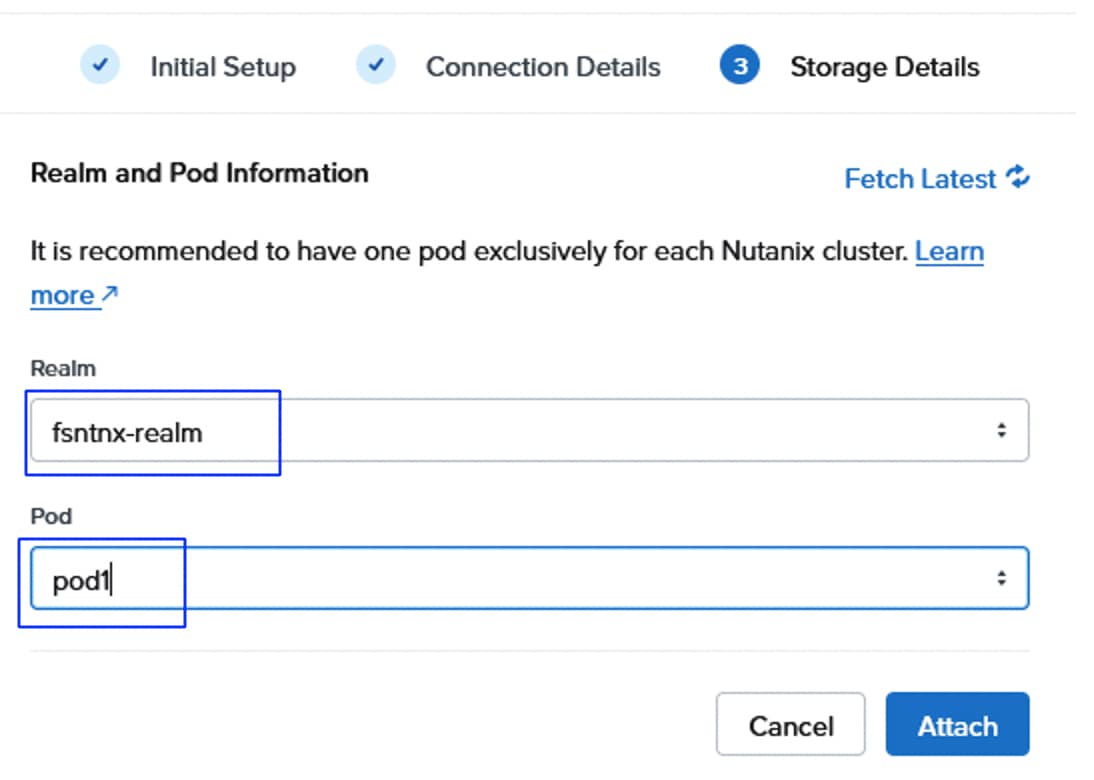

The following screenshot shows the configuration of the Realm+Pod combination for the greenfield deployment type:

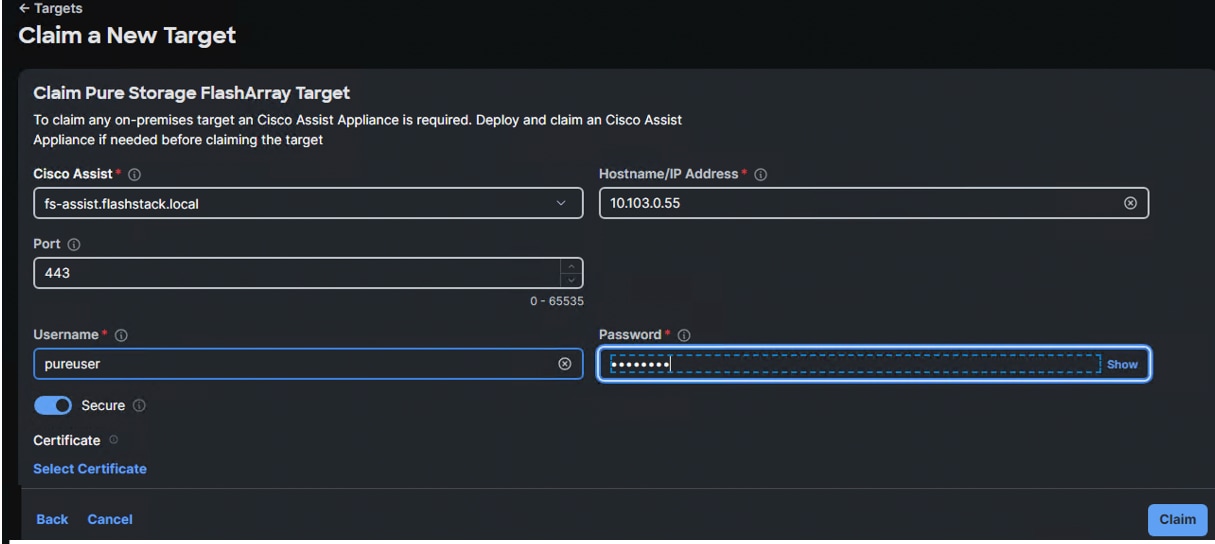
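Since the brownfield deployment repeats the same four commands with different names, one way to avoid copy and paste mistakes is to render the commands from parameters. The sketch below is illustrative only: `realm_pod_commands` is a hypothetical helper, the greenfield values are the ones used in Step 1, and every brownfield name and the brownfield quota are placeholders invented for the example, not values from this validation.

```python
def realm_pod_commands(realm, pod, quota, policy, admin, role="admin"):
    """Render the four CLI lines from Step 1 for one Realm+Pod combination."""
    return [
        f"purerealm create {realm}",
        f"purepod create {realm}::{pod} --quota-limit {quota}",
        f"purepolicy management-access create --role {role} --realm {realm} {policy}",
        f"pureadmin create {admin} --access-policy {policy}",
    ]


# Greenfield values as used in Step 1 of this procedure.
GREENFIELD = dict(
    realm="fsntnx-realm",
    pod="pod1",
    quota="25T",
    policy="fsntnx-admin-access",
    admin="fsntnx-admin",
)

# Hypothetical placeholder names and quota for the brownfield deployment.
BROWNFIELD_EXAMPLE = dict(
    realm="example-bf-realm",
    pod="pod1",
    quota="25T",
    policy="example-bf-admin-access",
    admin="example-bf-admin",
)

if __name__ == "__main__":
    for label, params in (("greenfield", GREENFIELD), ("brownfield", BROWNFIELD_EXAMPLE)):
        print(f"## {label}")
        for line in realm_pod_commands(**params):
            print(line)
```

Replace the placeholder brownfield values with the names and quota chosen for your environment before running the generated commands.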

Procedure 3. Claim Pure Storage FlashArray//XL170 into Intersight

Note: This procedure assumes that Cisco Intersight Assist is already deployed and claimed into Intersight.

Step 1. To claim the Pure Storage FlashArray into Intersight, log into Cisco Intersight using your login credentials. From the drop-down list select System.

Step 2. Under Admin, select Target and click Claim a New Target. Under Categories, select Storage, click Pure Storage FlashArray and then click Start.

Step 3. Select the Cisco Assist name which is already deployed and configured. Provide the Pure Storage FlashArray management IP address, username, and password details and click Claim.

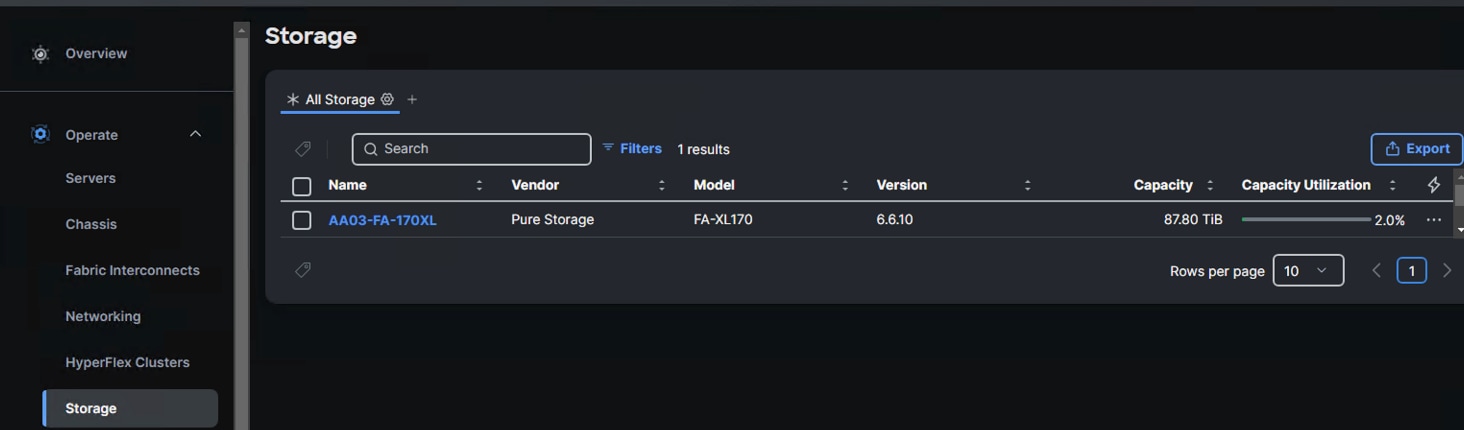

Step 4. When the storage is successfully claimed, select Infrastructure Services. Under Operate, click Storage. The newly claimed Pure Storage FlashArray is listed; select it to view the inventory details.

Cisco Intersight Configuration

The procedures in this chapter describe how to configure a Cisco UCS domain for use in a FlashStack environment. A Cisco UCS domain is defined as a pair of Cisco UCS FIs and all Cisco UCS B-Series, Cisco UCS X-Series, and Cisco UCS C-Series servers connected to it. All servers intended to be used as NCI compute cluster nodes must be connected and managed through Cisco Intersight Managed Mode (IMM).

Note: This deployment guide assumes an Intersight account is already created, configured with required licenses and ready to use. A dedicated Resource Group and Organization will be created for managing the Nutanix servers used for this validation.

Note: This deployment guide assumes that the initial day-0 configuration of the Fabric Interconnects is already complete and that they are updated with the latest available firmware. The minimum supported FI firmware is 4.3(4.240066). See the Cisco UCS Fabric Interconnect Initialization Guide for the initial configuration.
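The minimum firmware requirement can be checked programmatically when an inventory of FI versions is available. The following sketch assumes that version strings follow the `major.minor(maintenance.build)` shape of the value quoted above and that comparing the four numbers in order reflects release order; both are assumptions, and strings in other formats (for example, with letter suffixes) are rejected rather than guessed at. The function names are hypothetical.

```python
import re

# Minimum supported FI firmware as stated in this guide.
MIN_FI_FIRMWARE = "4.3(4.240066)"

# Assumed format: major.minor(maintenance.build), all numeric.
_VERSION_PATTERN = re.compile(r"^(\d+)\.(\d+)\((\d+)\.(\d+)\)$")


def parse_fi_version(version):
    """Convert a string such as '4.3(4.240066)' into a tuple of four integers."""
    match = _VERSION_PATTERN.match(version.strip())
    if match is None:
        raise ValueError(f"unrecognized firmware version format: {version!r}")
    return tuple(int(part) for part in match.groups())


def meets_minimum(version, minimum=MIN_FI_FIRMWARE):
    """Return True when version is at or above the minimum (tuple comparison)."""
    return parse_fi_version(version) >= parse_fi_version(minimum)


if __name__ == "__main__":
    # The second and third versions are made-up examples for illustration.
    for candidate in ("4.3(4.240066)", "4.3(3.240001)", "4.3(5.250001)"):
        print(candidate, "->", "OK" if meets_minimum(candidate) else "upgrade required")
```

Treat the result as a first-pass filter only; the compatibility documentation referenced in this guide remains the authority on supported firmware.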

Fabric Interconnect Domain Profile and Policies

This section contains the procedures to claim the FIs into the Intersight account and create fabric interconnect domain profiles for each type of deployment.

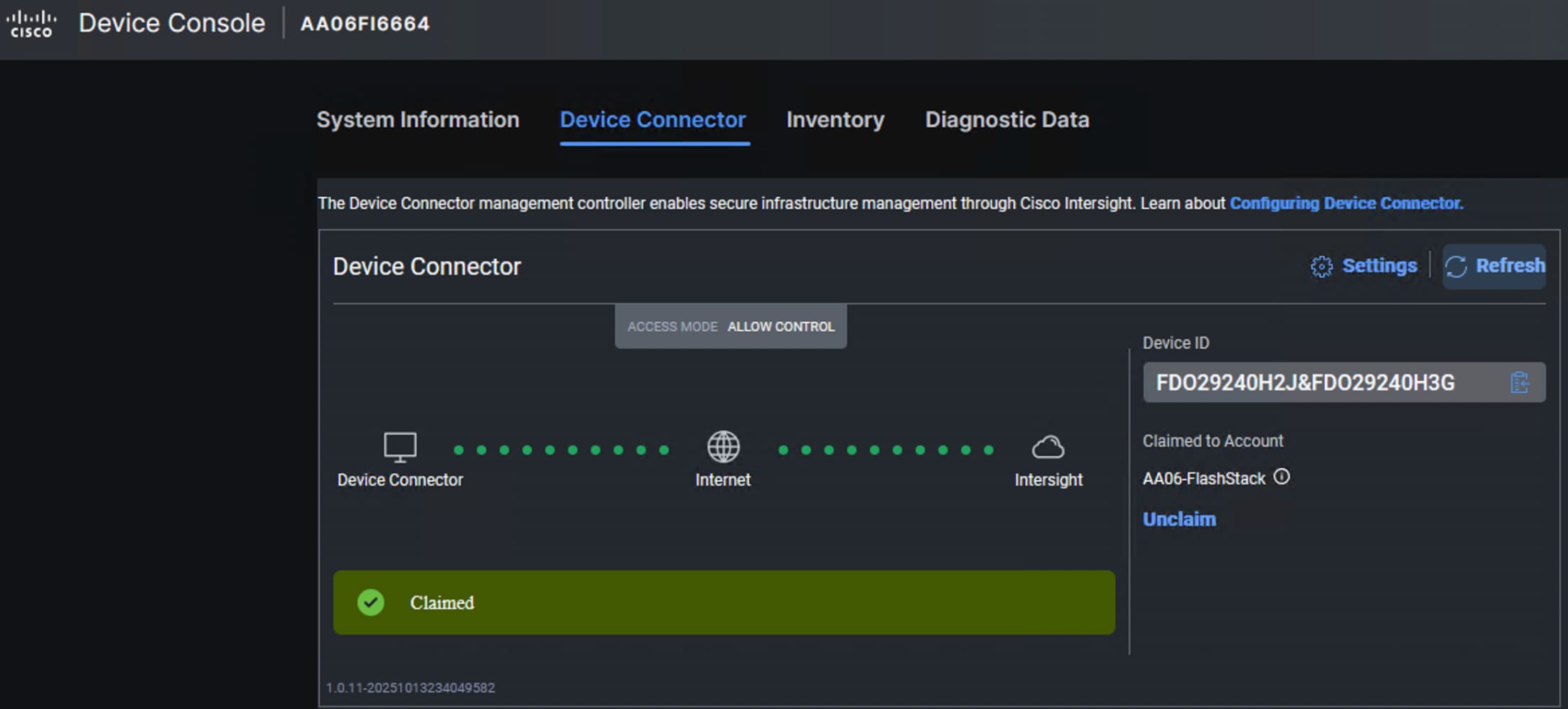

Procedure 1. Claim Fabric Interconnect into Intersight

Step 1. Log into your Intersight account with your credentials. Go to System > Targets and click Claim a New Target.

Step 2. Select the Cisco UCS Domain (Intersight Managed) option and click Start.

Step 3. Retrieve the Device ID and Claim Code for the Fabric Interconnect from its web console, enter the details, and click Claim. The FIs are claimed into the Default Resource Group of your Intersight account. Custom Resource Groups (RGs) and Organizations can be created later, and the FIs can then be added to the custom Resource Groups.

Procedure 2. Upgrade Server Firmware

Step 1. It is recommended to upgrade the server firmware to the supported version in Cisco Intersight ahead of the Nutanix cluster deployment. Doing so prevents a firmware upgrade failure from causing the Nutanix cluster deployment to fail. See the Cisco UCS Nutanix Compatibility for the supported firmware for each server type.

Procedure 3. Create Organization and Resource Groups

It is recommended to create dedicated Organizations and custom Resource Groups for managing Nutanix cluster nodes. This approach simplifies management and enhances the security of server access. Follow these steps to create a new Organization and Resource Group and add the FI to the newly created Resource Group.

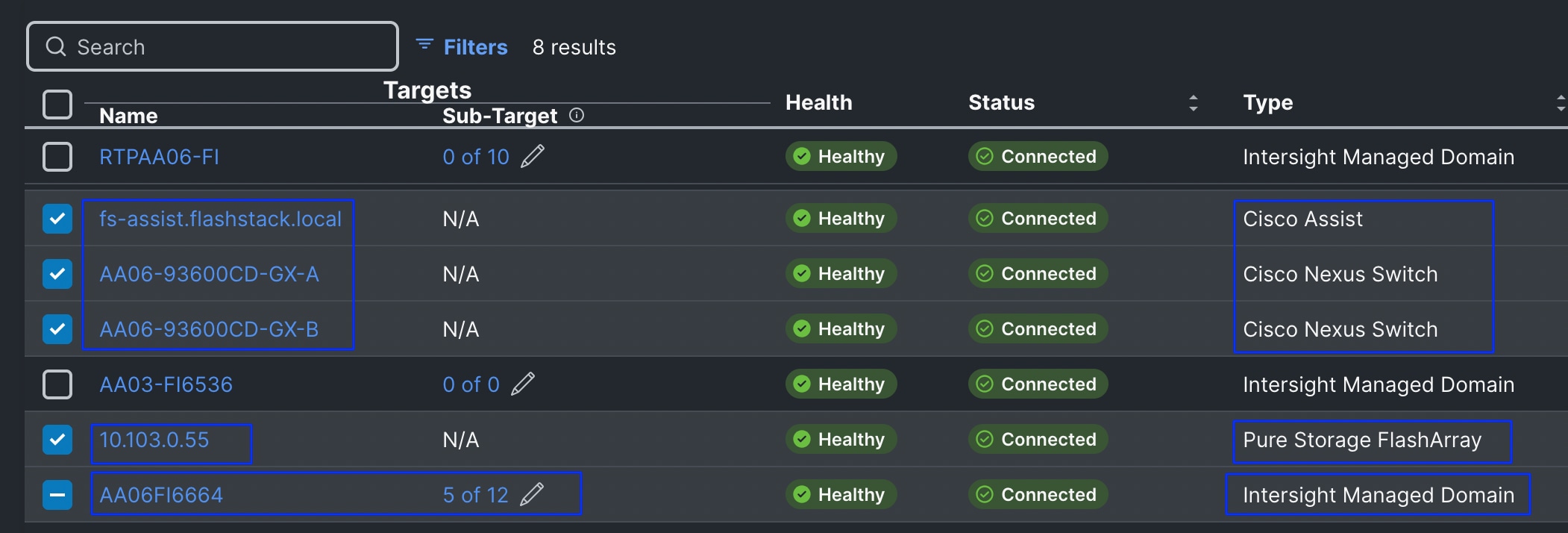

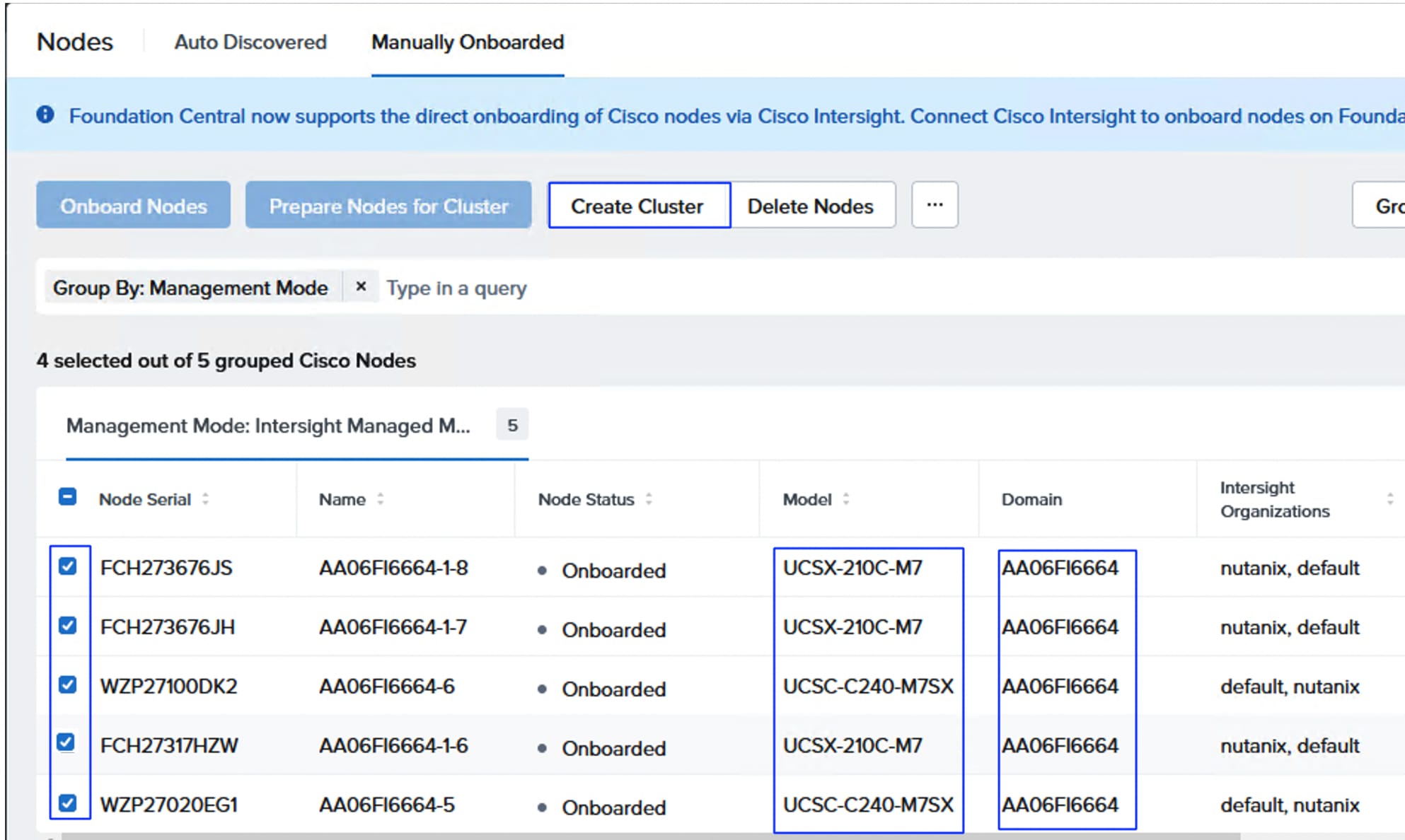

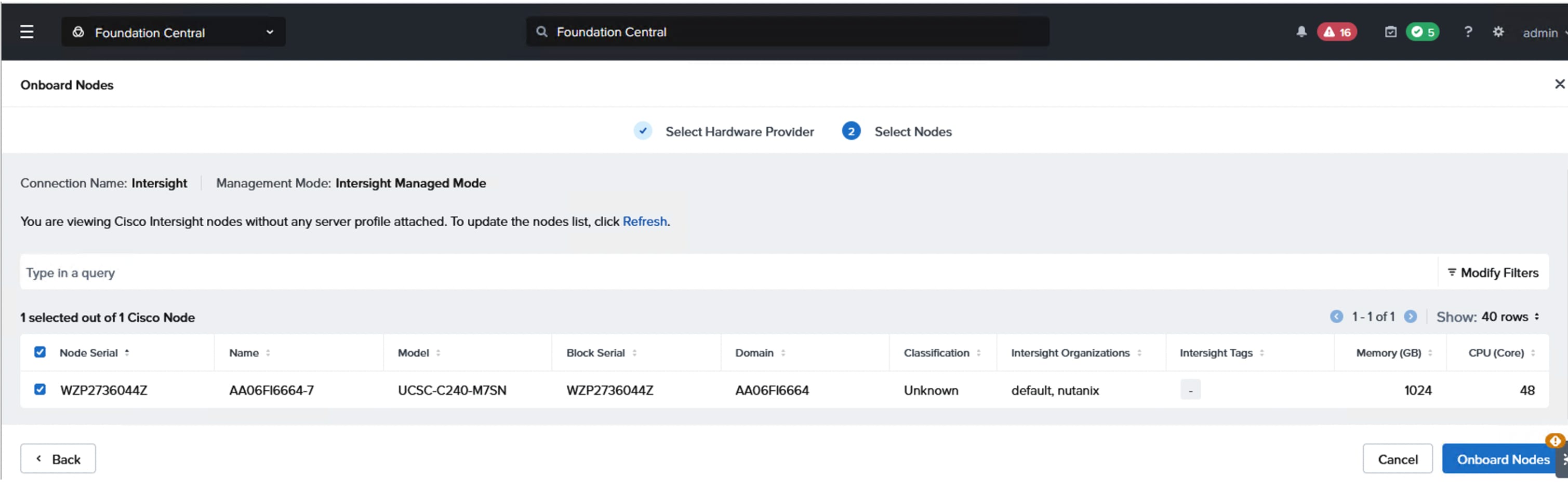

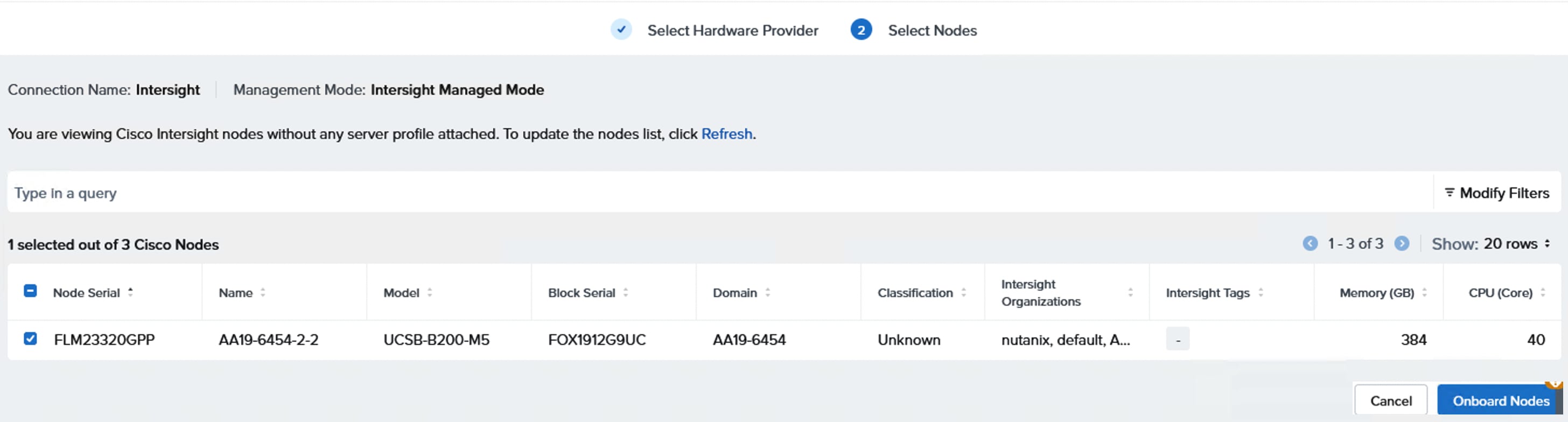

Step 1. Log into the Intersight portal and select System > Resource Groups, then click Create Resource Group. Enter nutanix-rg as the name and set Resources to Custom. Select the 6664 FI and click the pen symbol, then select all the servers that will be part of the Nutanix cluster. Along with the FIs, select the remaining hardware components that will be part of your Nutanix cluster, such as the Nexus switches, FlashArray, and Intersight Assist, as shown below. The following screenshot shows 5 out of 12 servers selected along with other hardware components such as the switches, Pure Storage, and Intersight Assist. Once the required components are selected, click Create.

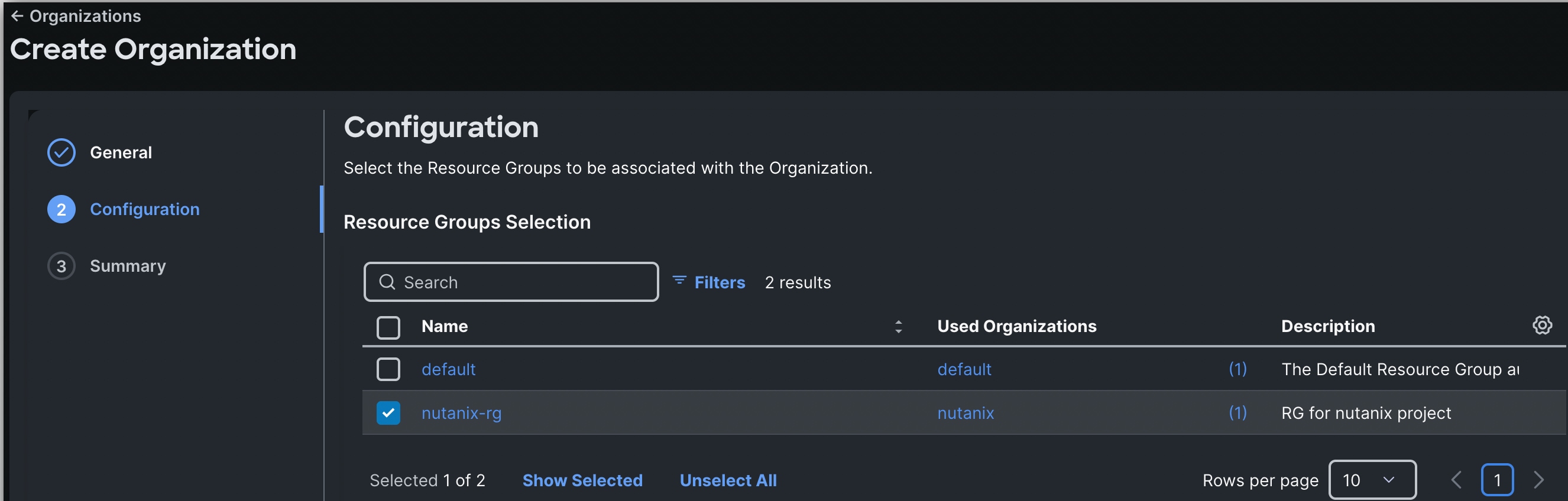

Step 2. Go to System > Organizations and click Create Organization. Enter Nutanix as the name and click Next. Select the nutanix-rg Resource Group created in the previous step and click Next. Review the summary and click Create.

Procedure 4. Create Fabric Interconnect Domain Profile and Policies for greenfield deployment (FI6664)

As discussed in the previous sections, Cisco UCS 6th generation 6664 Fabric Interconnects are used for the greenfield deployment. Follow this procedure to create a UCS domain profile for the 6664 FIs. For this greenfield deployment validation, a dedicated Intersight account, “AA06-FlashStack”, was created and the 6664 FIs were claimed into this account under the default Organization.

Step 1. Log into the Intersight portal, select Configure > Profiles then select UCS Domain Profiles > Create Domain Profile.

Step 2. Set the Organization to default, provide a name for the FI domain profile (AA06FI6664-UCSDomain/), and click Next. Click Assign Later to assign this domain profile to an FI later. Click Next.

Step 3. Click Next to go to VLAN & VSAN Configuration.

Step 4. Under VLAN & VSAN Configuration > VLAN Configuration, click Select Policy then click Create New.

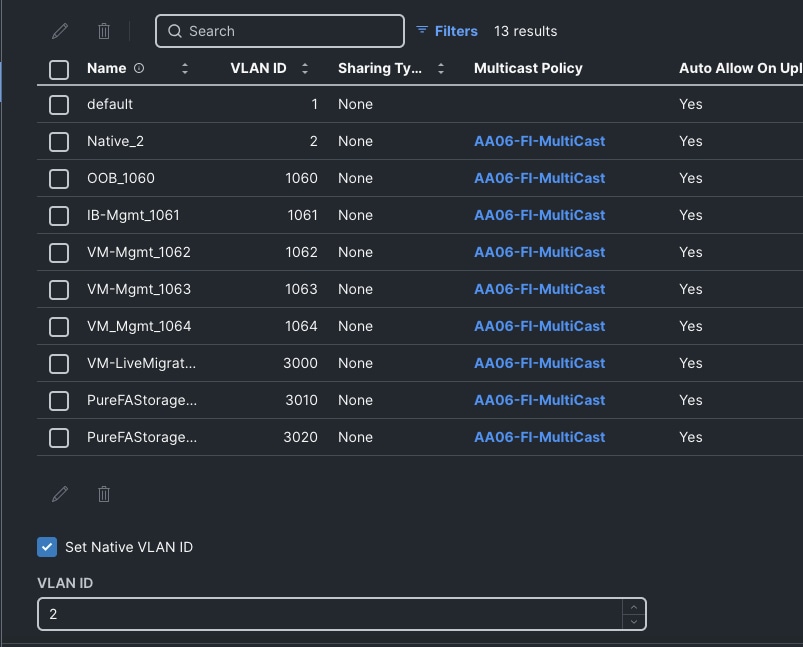

Step 5. On the Create VLAN page, enter a name (AA06-FI6664-VLANs) and click Next. To add a VLAN, click Add VLANs.

Step 6. For the Prefix, enter OOB-Mgmt-VLAN as the VLAN name. For the VLAN ID, enter 1060. Leave Auto Allow on Uplinks enabled and Enable VLAN Sharing disabled.

Step 7. Under Multicast Policy, click Select Policy and select Create New to create a Multicast policy.

Step 8. On the Create Multicast Policy page, enter the name (AA06-FI-MultiCast) of the policy and click Next to go to Policy Details. Leave the Snooping State and Source IP Proxy state checked/enabled and click Create. Select the newly created Multicast policy.

Step 9. Repeat steps 6 through 8 to add all the required VLANs to the VLAN policy.

Step 10. After adding all the VLANs, click Set Native VLAN ID, enter the native VLAN ID (for example, 2), and click Create. The VLANs used for this solution are shown below:

Step 11. Select the newly created VLAN policy for both Fabric Interconnects A and B. Click Next to go to the Port configuration.

Step 12. Create a new Ports Configuration Policy for each Fabric Interconnect. Provide a name (AA06FI6664-A-PortConf) and select the UCS-FI-6664 from Fabric Interconnect model drop-down list. Click Next and go to Port Roles.

Step 13. Select the ports to which the X-Series M7 and C-Series M7 servers are connected, then click Configure and set Role to Server to configure them as server ports.

Step 14. Click the Port Channels tab and click Create Port Channel. Select ports 63 and 64 for FI6664, set Role to Ethernet Uplink Port Channel, and set the Port Channel ID to 100, the admin speed to 100Gbps, and FEC to Cl91. Under Link Control, create a new link control policy with the settings described in the following table. Once created, click Save to complete the Port policy configuration.

Step 15. Repeat steps 12 to 14 to create another port configuration policy (AA06FI6664-B-PortConf) for Fabric Interconnect B with Ethernet Uplink Port Channel ID set to 200. Select the corresponding Port configuration policies created for FI-A and FI-B.

| Policy Name | Setting Name |
| --- | --- |
| AA06-FI-LinkControll | UDLD Admin State: True; UDLD mode: Normal |

Step 16. Once the two Port configuration policies are selected for FI-A and FI-B, click Next to go to the UCS Domain Configuration page. The following tables list the management and network related policies created and used for this validation. Create the NTP, Network Connectivity, and QoS policies as described below and complete the UCS Domain Profile creation for the 6664 Fabric Interconnects used in the greenfield deployment option.

| Policy Name | Setting Name |
| --- | --- |
| AA06-FI-OCP-NTP | Enable ntp: on; Server list: 172.20.10.11, 172.20.10.12, 172.20.10.13; Timezone: America/New_York |

Table 12. Network Connectivity Policy

| Policy Name | Setting Name |
| --- | --- |
| AA06-FS-OCP-NWPolicy | Preferred IPv4 DNS Server: 10.106.1.21; Alternate IPv4 DNS Server: 10.106.1.22 |

| Policy Name | Setting Name |
| --- | --- |
| AA06-FS-OCP-SystemQoS | Best Effort: Enable; Weight: 5; MTU: 9216 |

Step 17. Select the UCS domain profile created using the above steps and assign it to the Fabric Interconnects used for the greenfield deployment. Ensure the profile is assigned to the FIs cleanly and that its Status changes to OK. After the domain profile is deployed, all modular chassis, the blades in the chassis, and the rackmount servers are discovered. Once all the chassis, blades, and rackmounts have finished discovery, the next steps can be completed.

Note: For brownfield deployments, it is assumed that the Fabric Interconnects are already configured with required Domain Profiles to support the existing infrastructure. Therefore, Domain Profile configuration steps are not included in the following procedures. Ensure all the required VLANs for management, storage and guest traffic are defined in the Domain Profiles.
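The domain-level settings from the greenfield procedure above can be captured as plain data and checked for obvious mistakes before they are entered in the Intersight UI. The sketch below is a hedged illustration, not an Intersight API client: the dictionary layout and the `validate` helper are hypothetical, only the VLAN named in the steps is listed, and using ports 63 and 64 on FI-B is an assumption based on the instruction to repeat the FI-A steps. The storage MTU of 9000 comes from the FlashArray interface configuration earlier in this guide.

```python
import ipaddress

DOMAIN_SETTINGS = {
    "vlans": {"OOB-Mgmt-VLAN": 1060},  # only the VLAN named in the steps
    "native_vlan": 2,                   # example value from Step 10
    "uplink_port_channels": {
        "A": {"policy": "AA06FI6664-A-PortConf", "pc_id": 100, "ports": [63, 64],
              "speed": "100Gbps", "fec": "Cl91"},
        # Assumption: FI-B uses the same uplink ports as FI-A.
        "B": {"policy": "AA06FI6664-B-PortConf", "pc_id": 200, "ports": [63, 64],
              "speed": "100Gbps", "fec": "Cl91"},
    },
    "ntp": {"policy": "AA06-FI-OCP-NTP",
            "servers": ["172.20.10.11", "172.20.10.12", "172.20.10.13"],
            "timezone": "America/New_York"},
    "dns": {"policy": "AA06-FS-OCP-NWPolicy",
            "preferred": "10.106.1.21", "alternate": "10.106.1.22"},
    "qos": {"policy": "AA06-FS-OCP-SystemQoS",
            "best_effort": {"weight": 5, "mtu": 9216}},
    "storage_mtu": 9000,  # MTU set on the FlashArray nvme-tcp interfaces
}


def validate(settings):
    """Return a list of problems found in the settings; empty means none found."""
    problems = []
    vlan_ids = list(settings["vlans"].values()) + [settings["native_vlan"]]
    for vlan_id in vlan_ids:
        if not 1 <= vlan_id <= 4094:  # valid IEEE 802.1Q VLAN ID range
            problems.append(f"VLAN ID {vlan_id} is outside 1-4094")
    addresses = settings["ntp"]["servers"] + [
        settings["dns"]["preferred"], settings["dns"]["alternate"]]
    for address in addresses:
        try:
            ipaddress.ip_address(address)
        except ValueError:
            problems.append(f"{address} is not a valid IP address")
    for fabric, pc in settings["uplink_port_channels"].items():
        if not pc["ports"]:
            problems.append(f"fabric {fabric} port channel has no member ports")
    if settings["qos"]["best_effort"]["mtu"] < settings["storage_mtu"]:
        problems.append("QoS MTU is smaller than the storage interface MTU")
    return problems


if __name__ == "__main__":
    print("Problems:", validate(DOMAIN_SETTINGS))
```

The MTU comparison reflects the general expectation that the fabric MTU (9216) should be at least as large as the jumbo-frame MTU (9000) configured on the storage interfaces.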

Create Pools, Policies for LAN Connectivity Policy

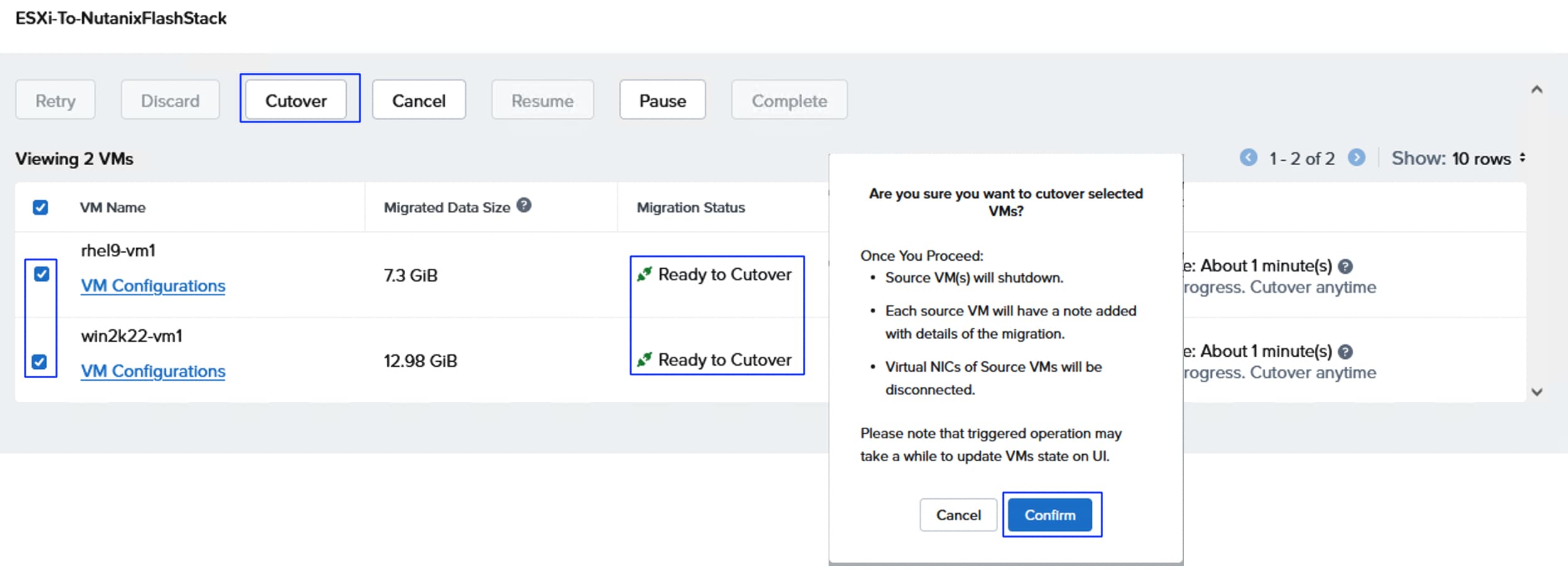

As discussed in the section Considerations and Recommendations for FlashStack with Nutanix, a separate pair of vNIC interfaces must be created for each node to segregate the different types of traffic. The required vNIC pairs can be configured during the cluster deployment using Foundation Central. However, a Cisco UCS LAN Connectivity Policy (LCP) can be used to define the required vNICs with advanced configuration options including vNIC placement, VLAN ID, PCI order, and so on. If you do not wish to create the LAN Connectivity Policy manually, skip the LAN Connectivity Policy creation steps and define the vNIC pairs for each type of traffic at the time of Nutanix cluster deployment.