Feature Summary and Revision History

Summary Data

|

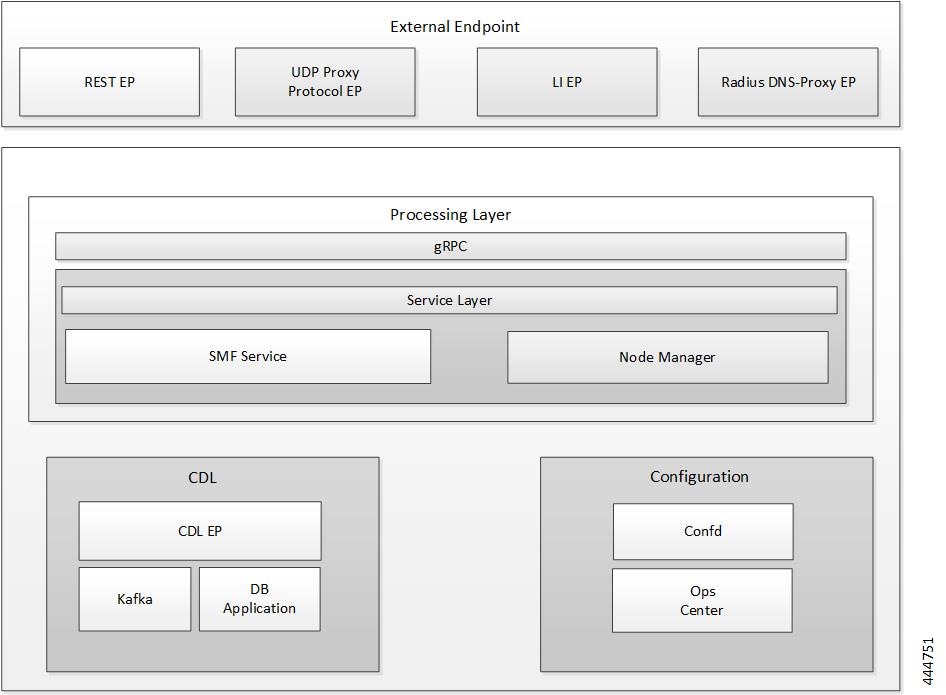

Applicable Products or Functional Area |

SMF |

|

Applicable Platform(s) |

SMI |

|

Feature Default Setting |

Enabled – Always-on |

|

Related Changes in this Release |

Not Applicable |

|

Related Documentation |

Not Applicable |

Revision History

| Revision Details | Release |
|---|---|
| The node-monitor pod is supported to enable monitoring of all K8 pods. | 2021.02.0 |
| The grafana-dashboard-app-infra pod is removed. | 2021.02.3.t3 |
| First introduced. | Pre-2020.02.0 |
