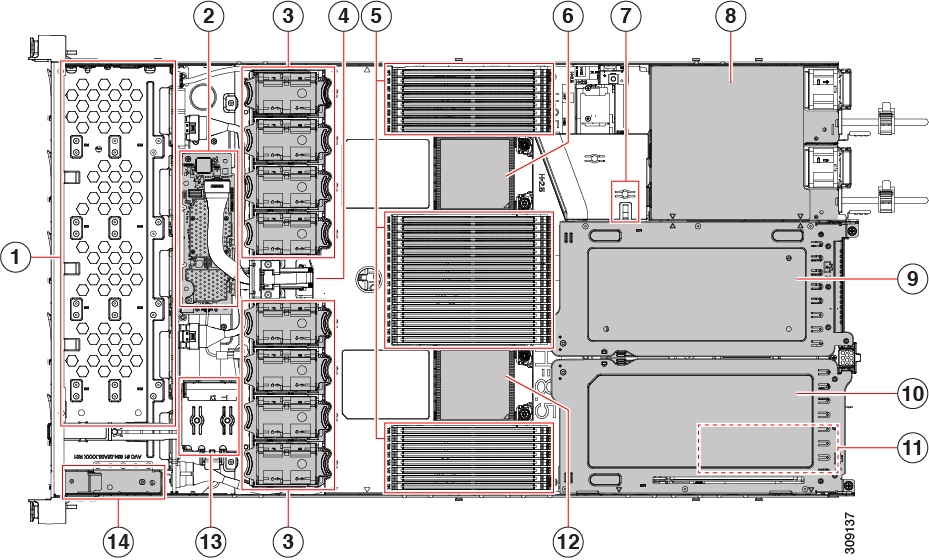

Status LEDs and Buttons

This section describes how to interpret the states of the front-panel, rear-panel, and internal LEDs.

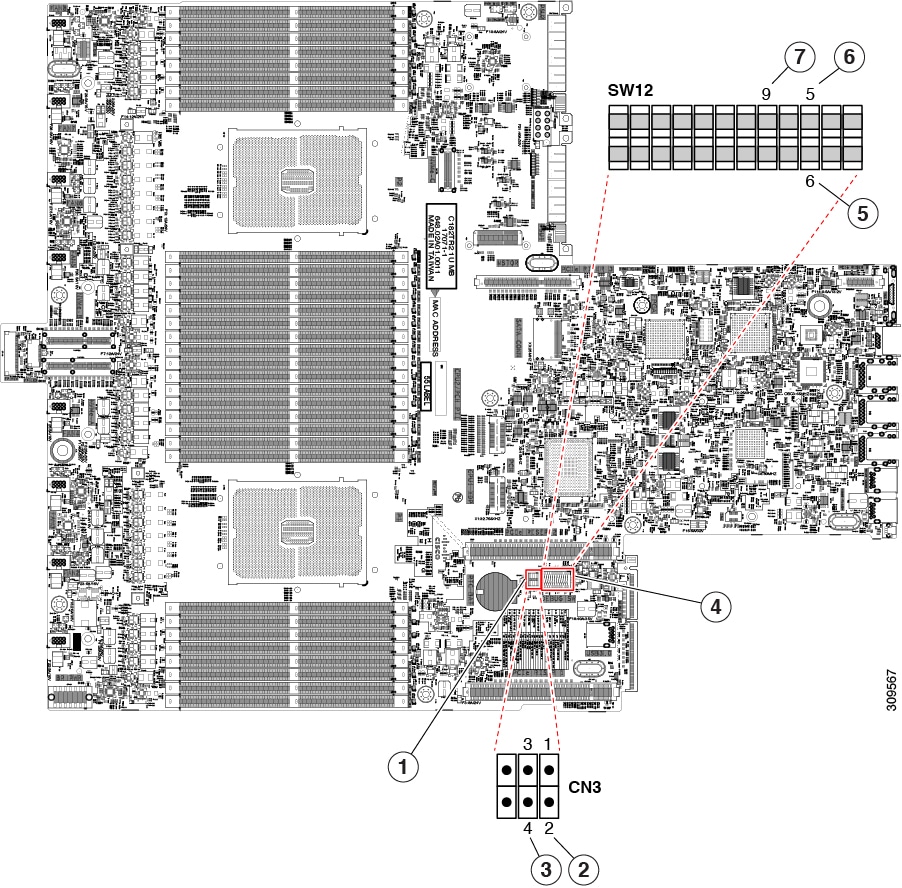

Front-Panel LEDs

| | LED Name | States |
|---|---|---|
| 1 | Power button/LED | |
| 2 | Unit identification | |
| 3 | System health | |
| 4 | Power supply status | |
| 5 | Fan status | |
| 6 | Network link activity | |
| 7 | Temperature status | |

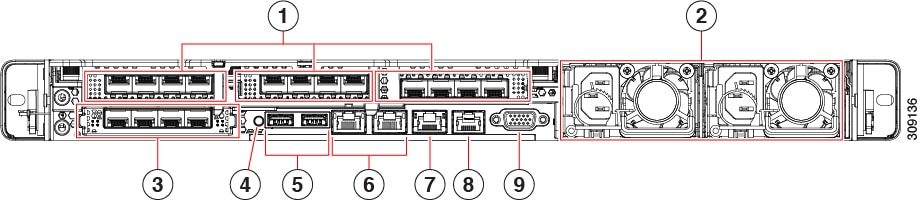

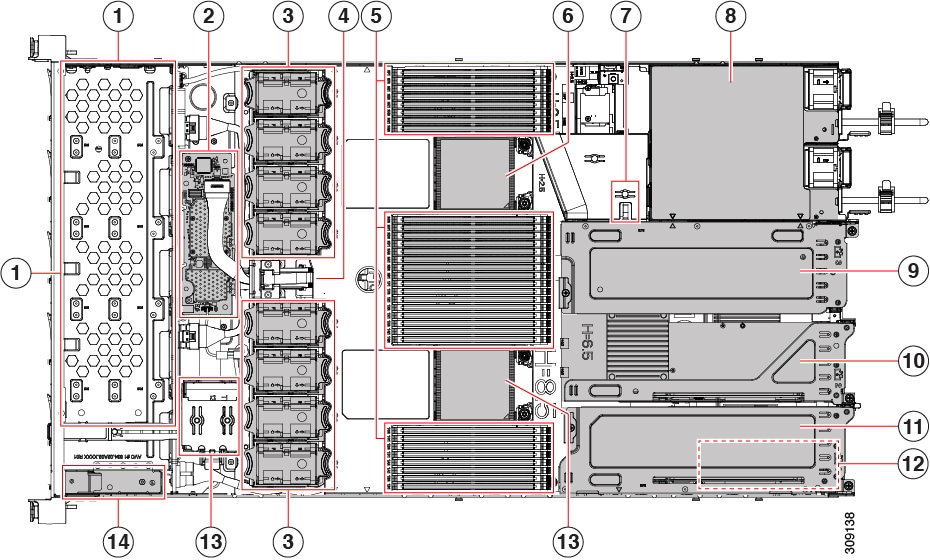

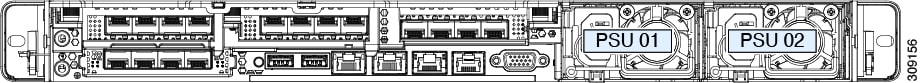

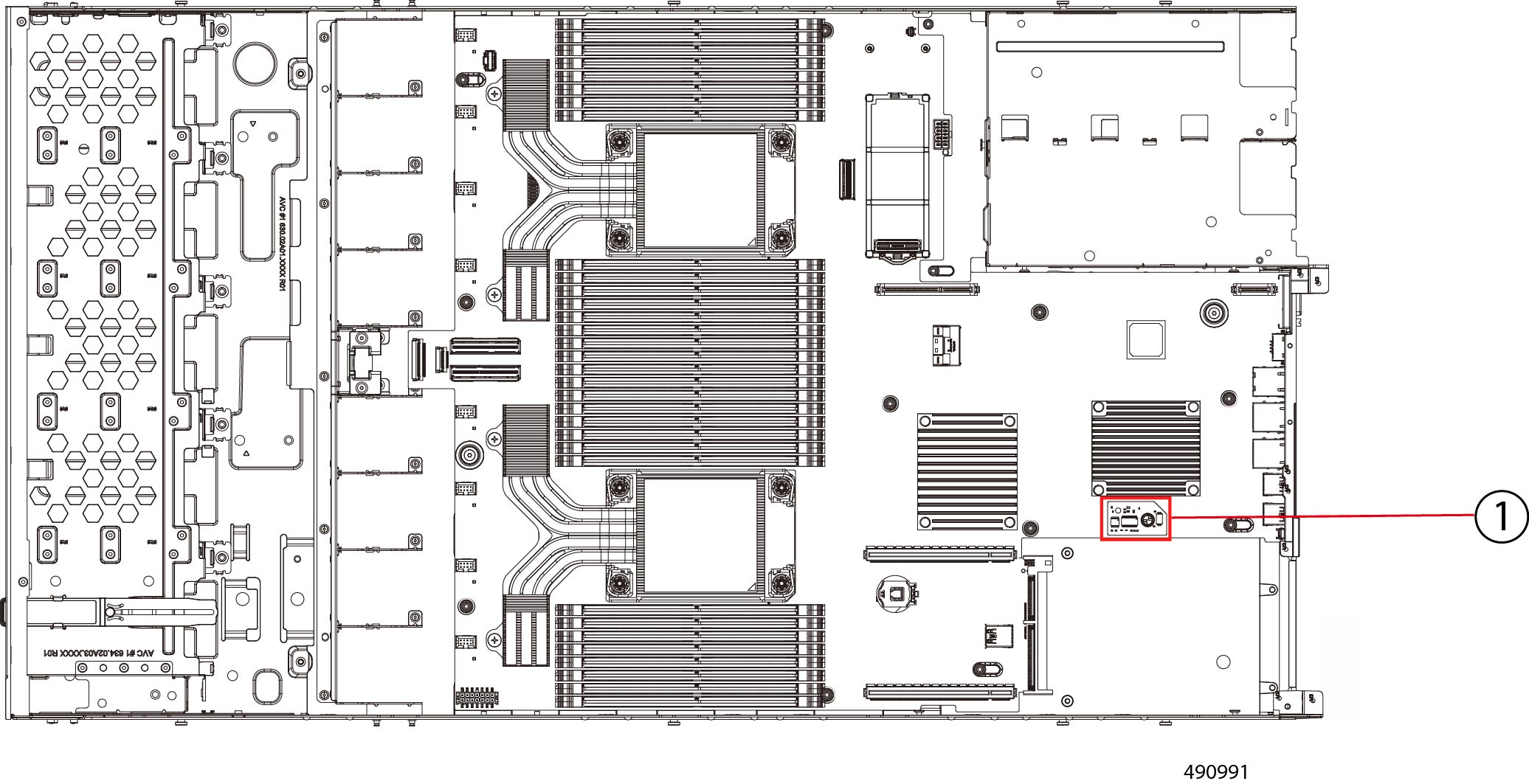

Rear-Panel LEDs

|

LED Name |

States |

|

|

1 |

1-Gb/10-Gb Ethernet link speed (on both LAN1 and LAN2) |

|

|

2 |

1-Gb/10-Gb Ethernet link status (on both LAN1 and LAN2) |

|

|

3 |

1-Gb Ethernet dedicated management link speed |

|

|

4 |

1-Gb Ethernet dedicated management link status |

|

|

5 |

Rear unit identification |

|

|

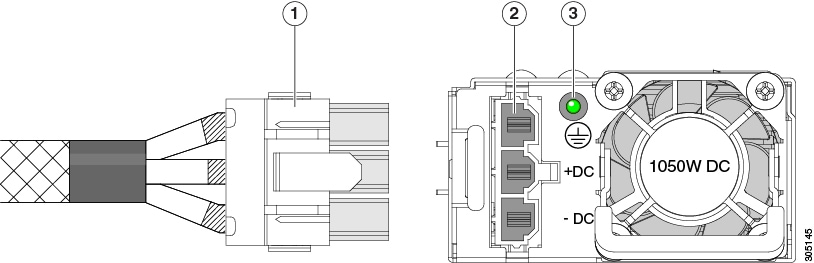

6 |

Power supply status (one LED each power supply unit) |

AC power supplies:

DC power supplies:

|

|

7 |

1-Gb Ethernet dedicated management port |

|

|

8 |

COM port (RJ-45 connector) |

- |

|

9 |

VGA display port (DB15 connector) |

- |

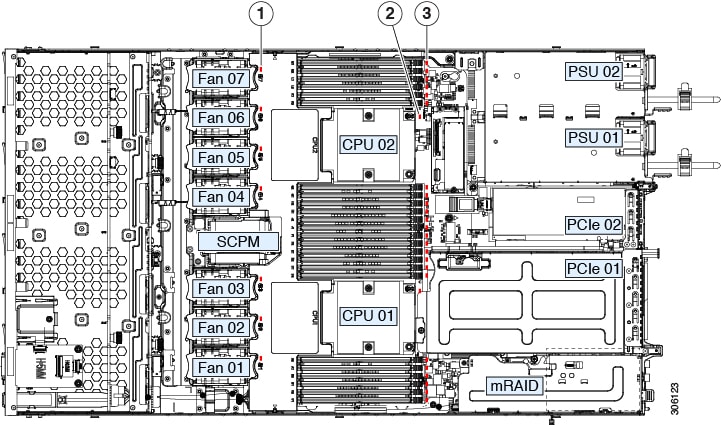

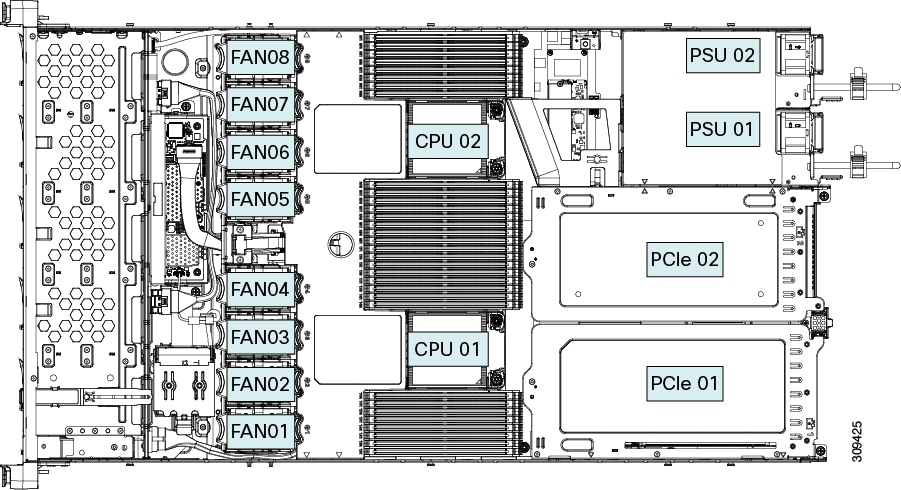

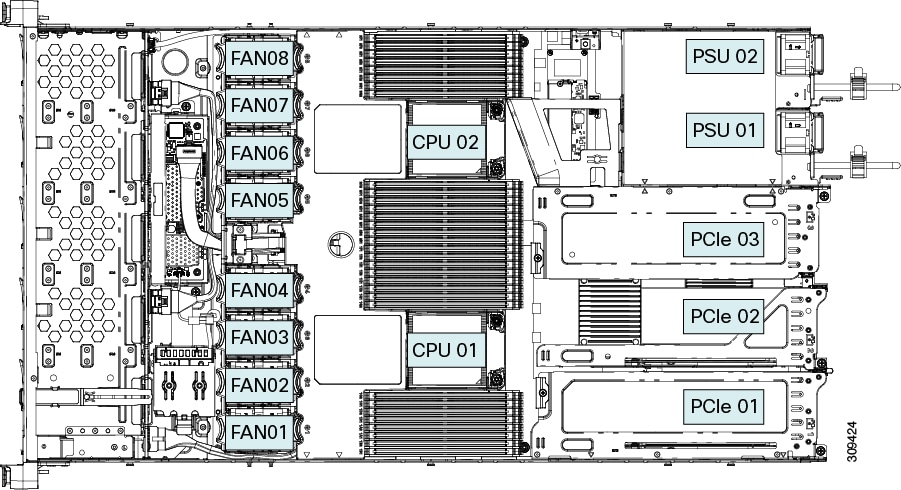

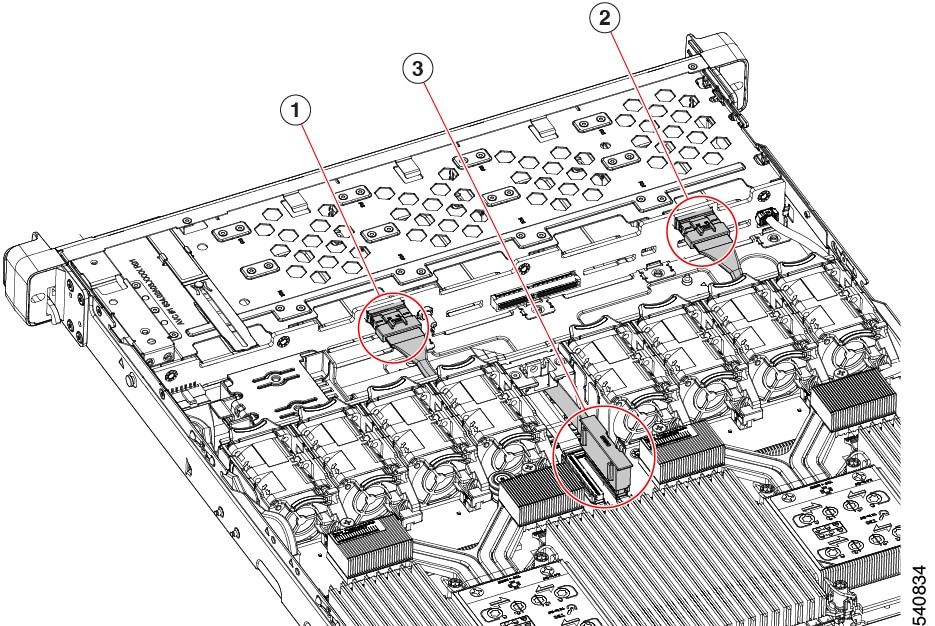

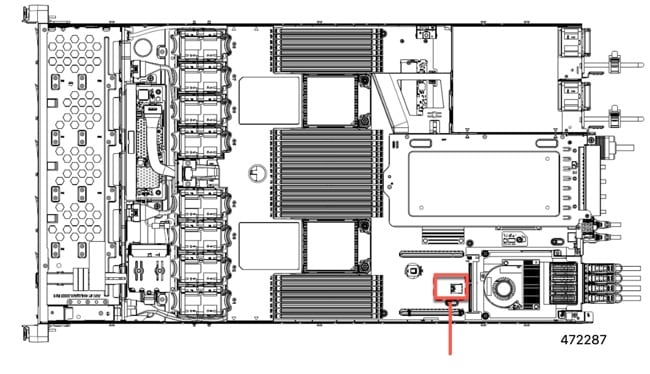

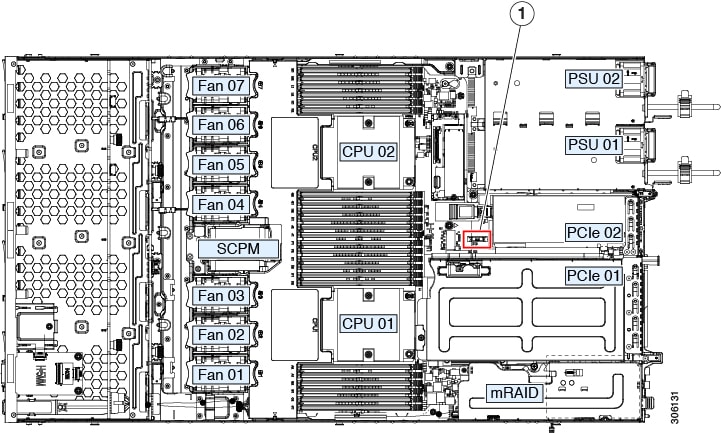

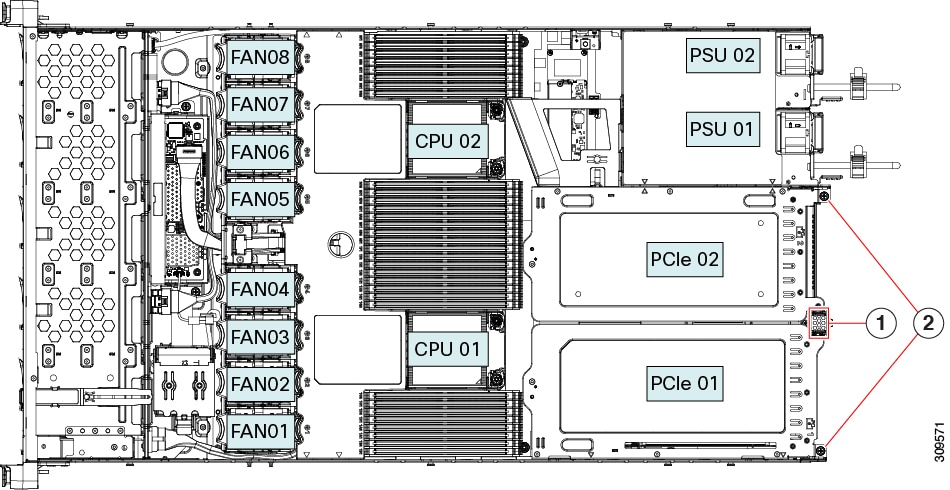

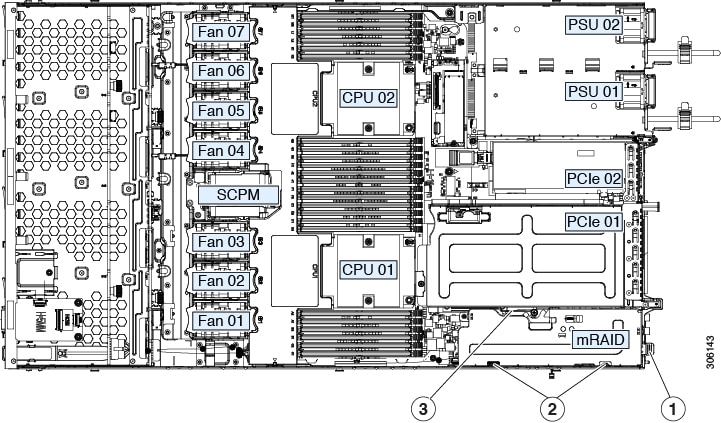

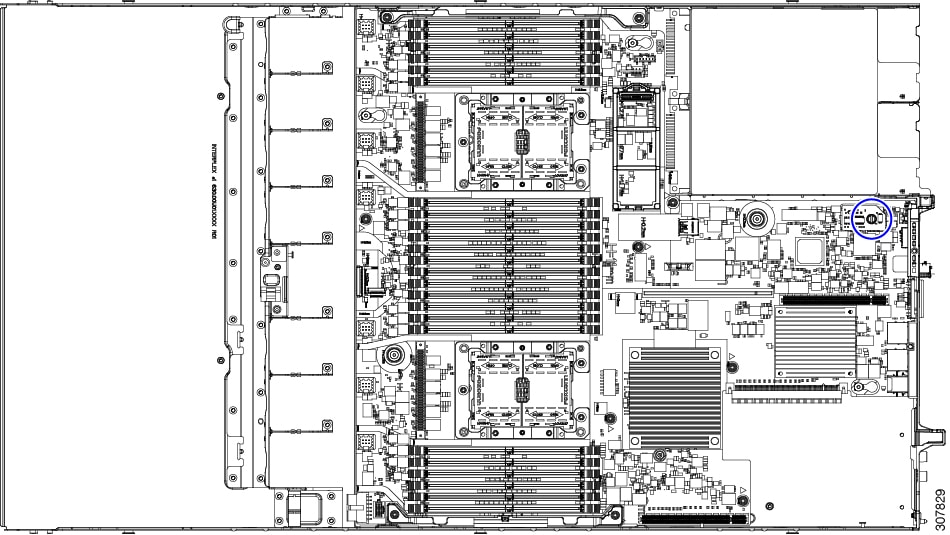

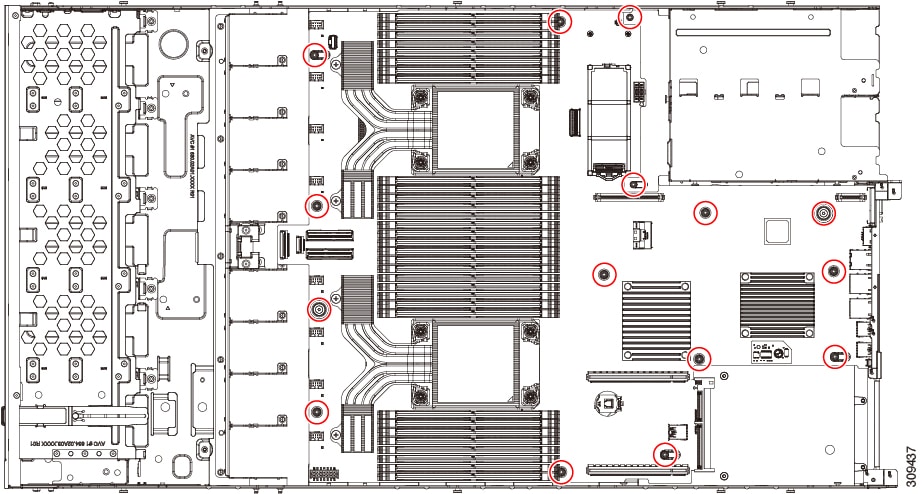

Internal Diagnostic LEDs

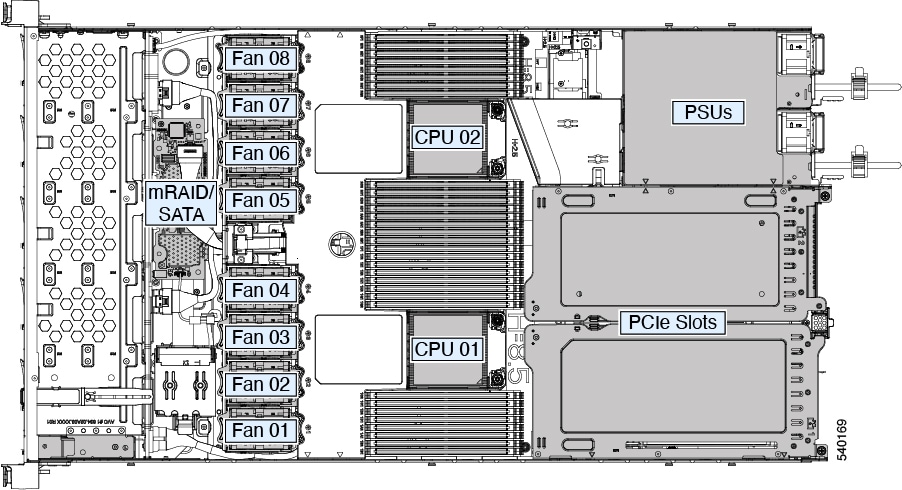

The server has internal fault LEDs for CPUs, DIMMs, and fan modules.

|

1 |

Fan module fault LEDs (one behind each fan connector on the motherboard)

|

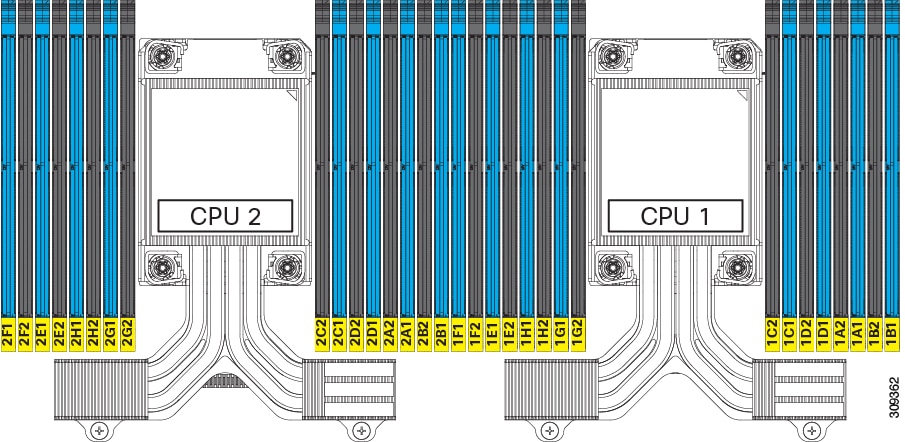

3 |

DIMM fault LEDs (one behind each DIMM socket on the motherboard) These LEDs operate only when the server is in standby power mode.

|

|

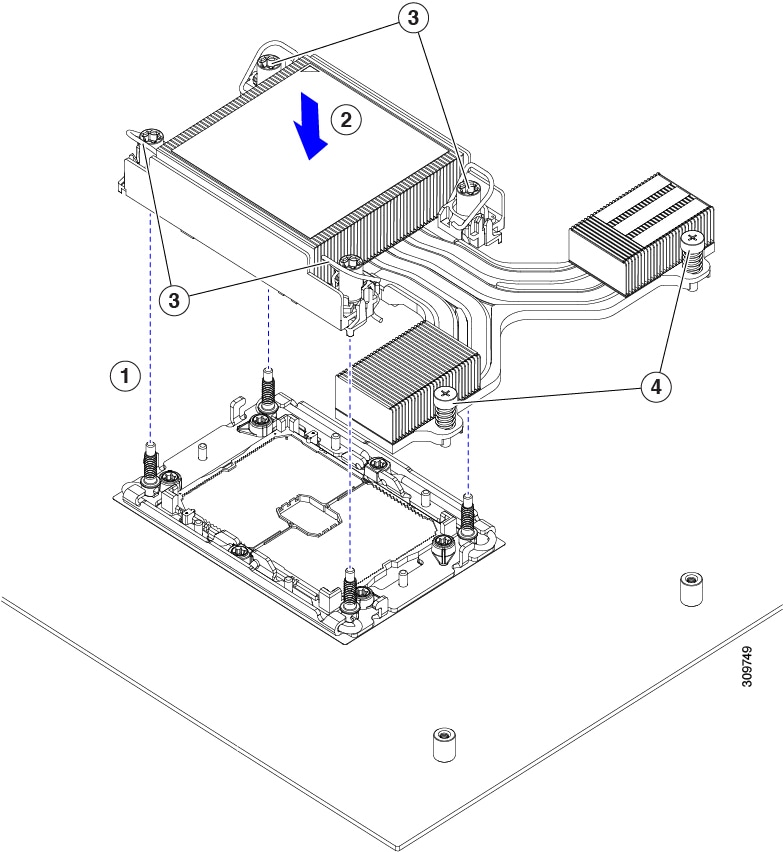

2 |

CPU fault LEDs (one behind each CPU socket on the motherboard). These LEDs operate only when the server is in standby power mode.

|

- |
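
The standby-power restriction on the CPU and DIMM fault LEDs can be captured in a small lookup, for example in a service checklist script. The sketch below is illustrative only: `PowerMode`, `INTERNAL_FAULT_LEDS`, and `led_is_operational` are hypothetical names, and treating the fan module fault LEDs as unrestricted is an assumption based solely on the absence of a standby-power note for them in this section.

```python
from enum import Enum


class PowerMode(Enum):
    """Hypothetical labels for the server power modes."""
    STANDBY = "standby"
    MAIN = "main"


# Callout number -> (LED group, power modes in which the LEDs operate).
# None means this section states no power-mode restriction (an assumption
# that the LEDs are then usable in any mode).
INTERNAL_FAULT_LEDS = {
    1: ("Fan module fault LEDs", None),
    2: ("CPU fault LEDs", {PowerMode.STANDBY}),
    3: ("DIMM fault LEDs", {PowerMode.STANDBY}),
}


def led_is_operational(callout: int, mode: PowerMode) -> bool:
    """Return True if the LED group at this callout operates in the given mode."""
    _name, modes = INTERNAL_FAULT_LEDS[callout]
    return modes is None or mode in modes


if __name__ == "__main__":
    for callout, (name, _modes) in sorted(INTERNAL_FAULT_LEDS.items()):
        for mode in PowerMode:
            state = "operates" if led_is_operational(callout, mode) else "does not operate"
            print(f"{callout}: {name} {state} in {mode.value} power mode")
```

A check like this can remind a technician to put the server in standby power mode before looking for a lit CPU or DIMM fault LED.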
