Overview

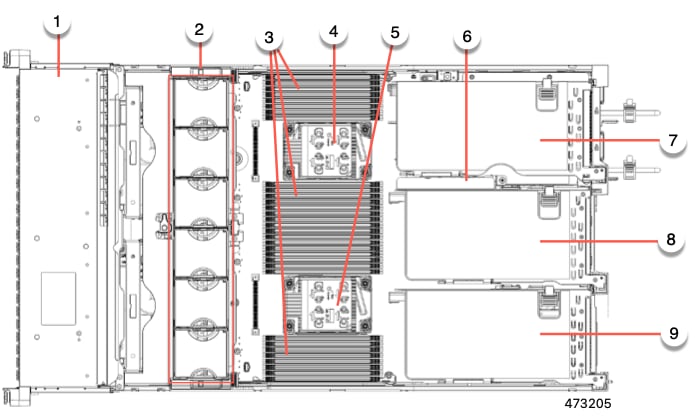

The Cisco UCS C240 M7 is a 2U rack server that can operate either as a standalone server or as part of the Cisco Unified Computing System (Cisco UCS).

Each Cisco UCS C240 M7 has two CPU sockets that can support the following Intel® Xeon® Scalable Processors, in either one or two CPU configurations.

- Fourth Generation Intel Xeon Scalable Server processors

- Fifth Generation Intel Xeon Scalable Server processors

Additionally, the server supports the following features with one CPU or two identical CPUs:

- 32 DDR5 RDIMMs, at up to 5600 MT/s with one DIMM per channel (1DPC) and up to 4400 MT/s with two DIMMs per channel (2DPC).

  16 DIMMs are supported per CPU for a total system memory of 8 TB (up to 256 GB DDR5 DIMMs) on servers with both Intel Fourth and Fifth Generation CPUs.

- DDR5 DIMM capacities vary based on the CPU type for the server. For more information, see DIMM Population Rules and Memory Performance Guidelines.

  - Fourth Generation Intel Xeon Scalable Server processors support 16, 32, 64, 128, and 256 GB DDR5 DIMMs.

  - Fifth Generation Intel Xeon Scalable Server processors support 16, 32, 64, 96, and 128 GB DDR5 DIMMs.

- The server's DIMM configuration differs depending on which generation of CPU is populated on the server:

  - With Fourth Generation Intel Xeon Scalable Server processors, the server supports DDR5 DIMMs up to 4800 MT/s with 1DPC, and up to 4400 MT/s with 2DPC.

  - With Fifth Generation Intel Xeon Scalable Server processors, the server supports DDR5 DIMMs up to 5600 MT/s with 1DPC, and up to 4400 MT/s with 2DPC.

-

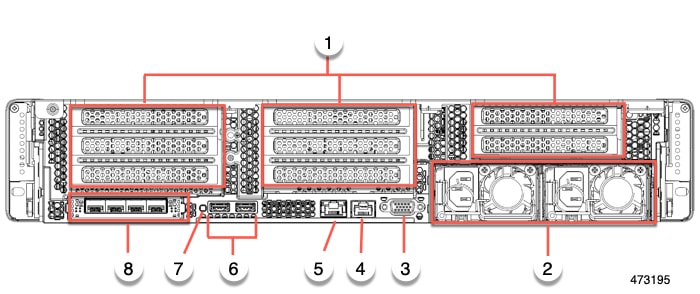

The servers have different supported configurations of small form factor (SFF) front-loading drives.

-

Up to 2 M.2 SATA RAID cards for server boot.

-

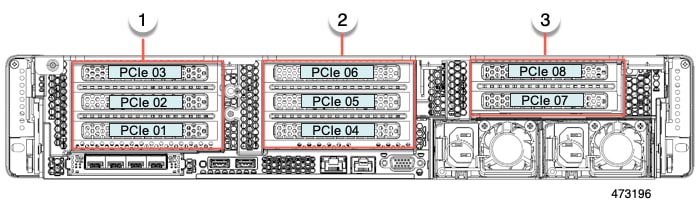

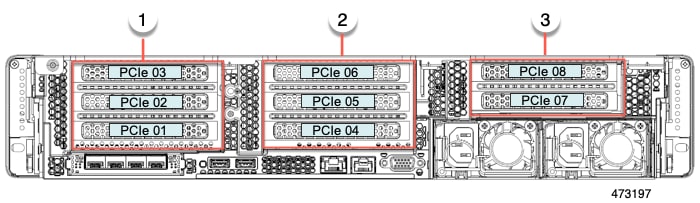

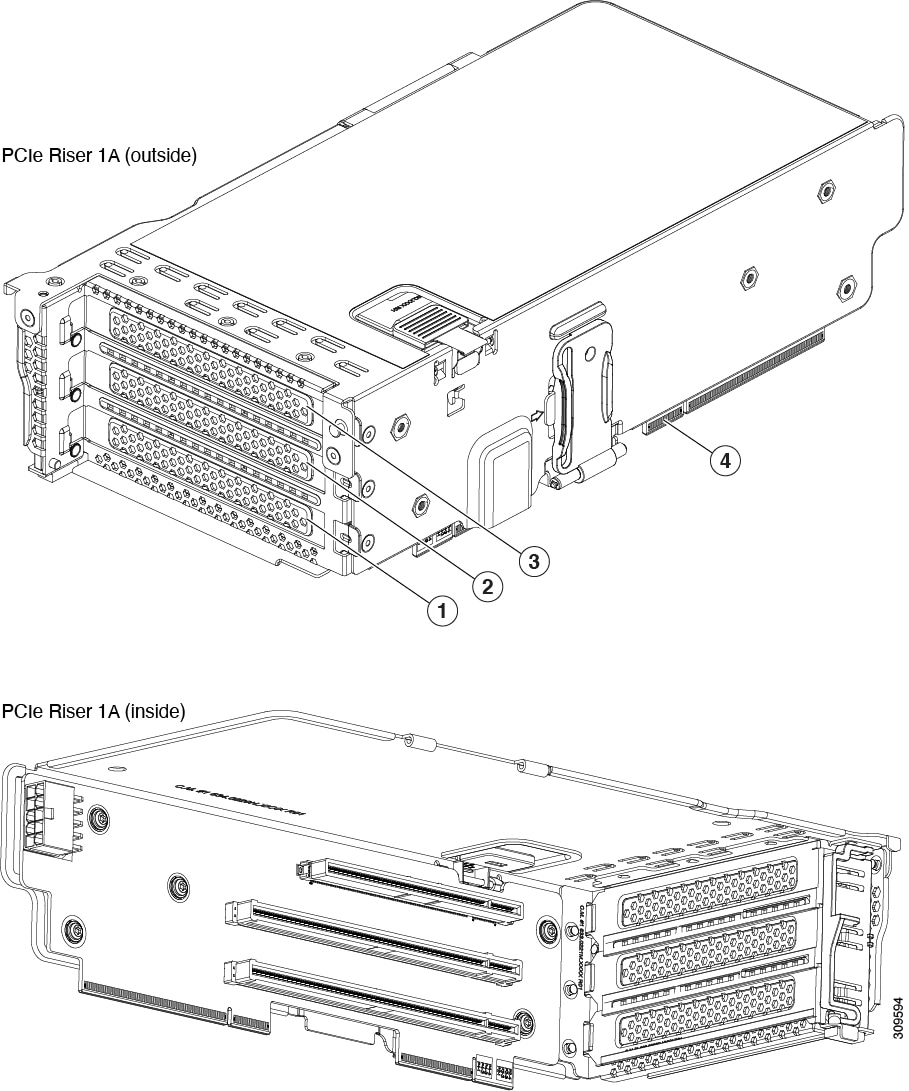

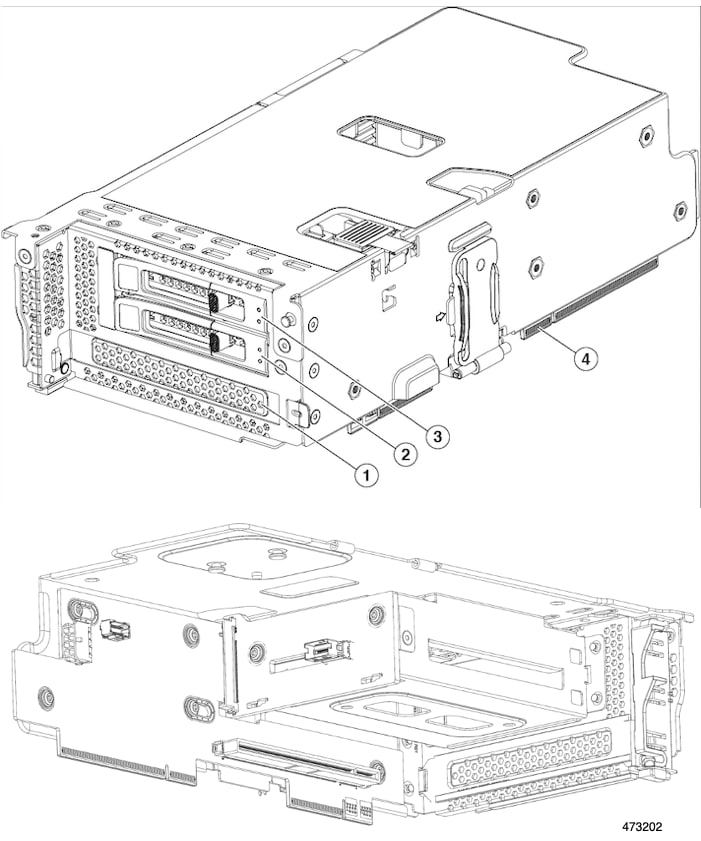

Rear Storage risers (2 slots each)

-

Rear PCIe risers

-

Internal slot for a 24 G Tri-Mode RAID controller with SuperCap for write-cache backup, or for a SAS HBA.

- One mLOM/VIC card provides 10/25/40/50/100/200 Gbps connectivity. The following mLOM and PCIe VIC cards are supported:

  - Cisco UCS VIC 15427 Quad Port CNA MLOM (UCSC-M-V5Q50GV2) supports:

    - A x16 PCIe Gen4 host interface to the rack server
    - Four 10G/25G/50G SFP+/SFP28/SFP56 ports
    - 4 GB DDR4 memory, 3200 MHz
    - Integrated blower for optimal ventilation
    - Secure boot support

  - Cisco UCS VIC 15425 Quad Port 10G/25G/50G SFP56 CNA PCIe (UCSC-P-V5Q50G-D) supports:

    - A x16 PCIe Gen4 host interface to the rack server
    - Four 10G/25G/50G SFP56 ports
    - 4 GB DDR4 memory, 3200 MHz
    - Integrated blower for optimal ventilation

  - Cisco UCS VIC 15237 Dual Port 40G/100G/200G QSFP56 mLOM (UCSC-M-V5D200GV2) supports:

    - A x16 PCIe Gen4 host interface to the rack server
    - Two 40G/100G/200G QSFP/QSFP28/QSFP56 ports
    - 4 GB DDR4 memory, 3200 MHz
    - Integrated blower for optimal ventilation
    - Secure boot support

  - Cisco UCS VIC 15235 Dual Port 40G/100G/200G QSFP56 CNA PCIe (UCSC-P-V5D200G-D) supports:

    - A x16 PCIe Gen4 host interface to the rack server
    - Two 40G/100G/200G QSFP56 ports
    - 4 GB DDR4 memory, 3200 MHz
    - Integrated blower for optimal ventilation

- Two AC power supplies (PSUs) that support N+1 power configuration and cold redundancy.

- Six modular, hot-swappable fans.
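The memory figures in the feature list above can be cross-checked with a little arithmetic. The following is a minimal sketch, not a Cisco tool: the table and function names (`DIMM_SIZES_GB`, `MAX_SPEED_MTS`, `max_memory_gb`, and so on) are hypothetical, and the values are copied only from the list above.

```python
"""Sanity-check the memory figures quoted in the overview (illustrative only)."""

# From the overview: 16 DIMMs are supported per CPU.
DIMM_SLOTS_PER_CPU = 16

# Supported DDR5 DIMM sizes in GB, keyed by Intel Xeon Scalable generation.
DIMM_SIZES_GB = {
    4: (16, 32, 64, 128, 256),
    5: (16, 32, 64, 96, 128),
}

# Maximum DDR5 speed in MT/s, keyed by (CPU generation, DIMMs per channel).
MAX_SPEED_MTS = {
    (4, 1): 4800,
    (4, 2): 4400,
    (5, 1): 5600,
    (5, 2): 4400,
}


def max_memory_gb(cpu_count: int, dimm_gb: int) -> int:
    """Total memory in GB when every DIMM slot holds a DIMM of size dimm_gb."""
    if cpu_count not in (1, 2):
        raise ValueError("the server has two CPU sockets")
    return cpu_count * DIMM_SLOTS_PER_CPU * dimm_gb


def is_supported_dimm(cpu_generation: int, dimm_gb: int) -> bool:
    """True if the overview lists this DIMM size for the CPU generation."""
    return dimm_gb in DIMM_SIZES_GB.get(cpu_generation, ())


def max_speed_mts(cpu_generation: int, dimms_per_channel: int) -> int:
    """Maximum DDR5 speed in MT/s for the generation and DPC population."""
    return MAX_SPEED_MTS[(cpu_generation, dimms_per_channel)]


if __name__ == "__main__":
    # Two CPUs x 16 slots x 256 GB = 8192 GB, the 8 TB quoted above.
    print(max_memory_gb(2, 256) // 1024, "TB")
    print(max_speed_mts(5, 1), "MT/s")
```

With two CPUs and 256 GB DIMMs, `max_memory_gb` returns 8192 GB, which matches the 8 TB total quoted above; the same call with 128 GB DIMMs returns 4096 GB.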

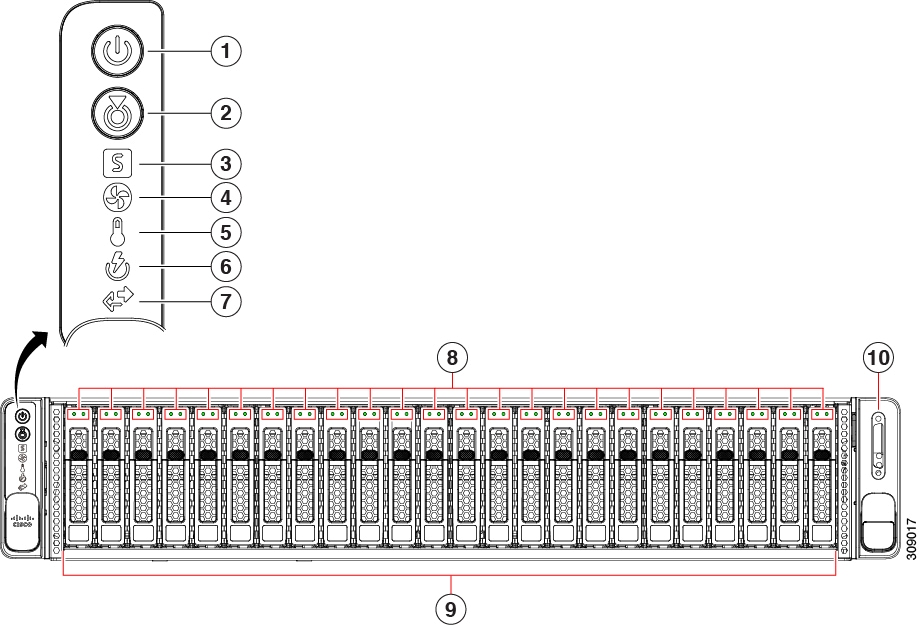

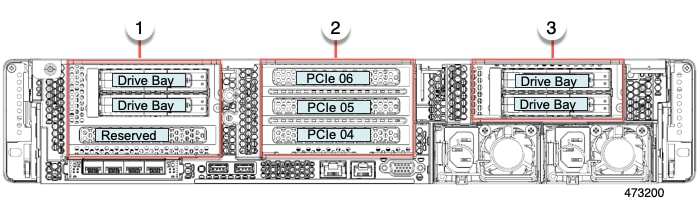

Server Configurations, 24 SFF SAS/SATA

The SFF 24 SAS/SATA/U.3 NVMe configuration (UCSC-C240-M7SX) can be ordered as either an I/O-centric configuration or a storage-centric configuration. This server supports the following:

- A maximum of 24 small form factor (SFF) drives, with a 24-drive backplane.

- Front-loading drive bays 1 through 24 support 2.5-inch SAS/SATA/U.3 drives as SSDs or HDDs.

- Optionally, drive bays 1 through 4 can support 2.5-inch NVMe SSDs. In this configuration, any number of NVMe drives can be installed up to the maximum of 4.

  Note: NVMe drives are supported only on a dual CPU server.

- Drive bays 5 through 24 support SAS/SATA/U.3 SSDs or HDDs only; no U.2 NVMe.

- Optionally, the rear-loading drive bays support four 2.5-inch SAS/SATA or NVMe drives.

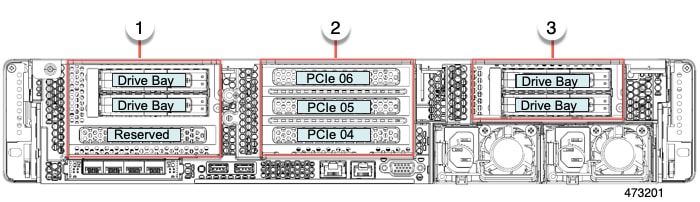
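The front-bay NVMe rules for the UCSC-C240-M7SX configuration can be expressed as a small planning check. This is a hedged sketch with a hypothetical function name (`validate_m7sx_front_nvme`); it assumes that "NVMe" here means the optional NVMe SSDs in bays 1 through 4, and it does not model U.3 drives, rear bays, or controller requirements.

```python
"""Check a planned front-bay NVMe layout against the M7SX rules (illustrative only)."""

FRONT_BAYS = range(1, 25)   # front-loading bays 1 through 24
NVME_BAYS = range(1, 5)     # only bays 1 through 4 can hold NVMe SSDs


def validate_m7sx_front_nvme(cpu_count, nvme_bays):
    """Return a list of rule violations; an empty list means the plan is valid."""
    problems = []
    bays = set(nvme_bays)

    # Bay numbers must exist on the 24-drive backplane.
    unknown = sorted(b for b in bays if b not in FRONT_BAYS)
    if unknown:
        problems.append(f"no such front bay(s): {unknown}")

    # Bays 5 through 24 are SAS/SATA/U.3 only.
    misplaced = sorted(b for b in bays if b in FRONT_BAYS and b not in NVME_BAYS)
    if misplaced:
        problems.append(f"NVMe not supported in bay(s): {misplaced}")

    # NVMe drives are supported only on a dual CPU server.
    if bays and cpu_count != 2:
        problems.append("NVMe drives require a dual CPU server")

    return problems


if __name__ == "__main__":
    print(validate_m7sx_front_nvme(2, [1, 2]))   # valid plan
    print(validate_m7sx_front_nvme(1, [1, 5]))   # two violations
```

Returning a list of messages rather than raising on the first failure lets a planner see every violated rule at once.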

Server Configurations, 24 NVMe

The SFF 24 NVMe configuration (UCSC-C240-M7SN) can be ordered as an NVMe-only server. The NVMe-optimized server requires two CPUs. This server supports the following:

- A maximum of 24 SFF NVMe drives as SSDs with a 24-drive backplane, NVMe-optimized.

- Front-loading drive bays 1 through 24 support 2.5-inch NVMe PCIe SSDs only.

- Optionally, the rear-loading drive bays support four 2.5-inch NVMe SSDs only. These drive bays are on the left and right sides (Riser 1 and Riser 3) of the rear panel.
