FlexPod Datacenter with Red Hat OpenShift Bare Metal Manual Configuration with Cisco UCS X-Series Direct


About the Cisco Validated Design Program

The Cisco Validated Design (CVD) program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, go to: http://www.cisco.com/go/designzone.

The FlexPod Datacenter solution is a validated design for deploying Cisco and NetApp technologies and products to build shared private and public cloud infrastructure. Cisco and NetApp have partnered to deliver a series of FlexPod solutions that enable strategic data center platforms. The success of the FlexPod solution is driven through its ability to evolve and incorporate both technology and product innovations in the areas of management, compute, storage, and networking. This document explains the deployment details of Red Hat OpenShift on FlexPod Bare Metal Infrastructure. Some of the key advantages of FlexPod Datacenter with Red Hat OpenShift Bare Metal are:

● Consistent Configuration: having a standard method for deploying Red Hat OpenShift on FlexPod Bare Metal infrastructure provides a consistent platform to run containers and virtualized workloads, including CPU- and GPU-accelerated AI/ML workloads, software and models, and OpenShift Virtualization, all side by side on the same infrastructure.

● Simpler and programmable infrastructure: the entire underlying infrastructure can be configured using infrastructure as code delivered using Ansible.

● End-to-End 100Gbps Ethernet: utilizing the 5th Generation Cisco UCS VICs and the 5th Generation Cisco UCS S9108 Fabric Interconnects (FIs) to deliver 100Gbps Ethernet from the server through the network to the storage.

● Cisco Intersight Management: Cisco Intersight Managed Mode (IMM) is used to manage the Cisco UCS S9108 FIs and Cisco UCS X-Series Servers. Additionally, Cisco Intersight integrates with NetApp Active IQ Unified Manager and Cisco Nexus switches as described in the following sections.

● Built for investment protection: designed for future technologies such as liquid cooling and high-wattage CPUs; CXL-ready.

In addition to the FlexPod-specific hardware and software innovations, the integration of the Cisco Intersight cloud platform with NetApp Active IQ Unified Manager and Cisco Nexus switches delivers monitoring and orchestration capabilities for different layers (storage and networking) of the FlexPod infrastructure. Implementing this integration at this point in the deployment process requires Cisco Intersight Assist and NetApp Active IQ Unified Manager to be deployed outside of the FlexPod.

For information about the FlexPod design and deployment details, including the configuration of various elements of design and associated best practices, refer to Cisco Validated Designs for FlexPod, here: https://www.cisco.com/c/en/us/solutions/design-zone/data-center-design-guides/flexpod-design-guides.html.

Solution Overview

This chapter contains the following:

● Audience

The intended audience of this document includes but is not limited to IT architects, sales engineers, field consultants, professional services, IT managers, partner engineering, and customers who want to take advantage of an infrastructure built to deliver IT efficiency and enable IT innovation.

This document provides deployment guidance around bringing up the FlexPod Datacenter with Red Hat OpenShift on Bare Metal infrastructure. This configuration is built as a tenant on top of FlexPod Base and assumes FlexPod Base has already been configured. This document introduces various design elements and explains various considerations and best practices for a successful deployment.

The following design elements distinguish this version of FlexPod from previous models:

● Configuration of Red Hat OpenShift Bare Metal as a tenant on top of FlexPod Base. This document is the first example of a FlexPod tenant on top of FlexPod Base that aligns with the tenant defined in the FlexPod Zero Trust Framework Design Guide.

● Configuration of a platform that supports both containerized applications, such as AI applications, and virtual machines on the same platform.

Deployment Hardware and Software


The FlexPod Datacenter with Cisco UCS and Cisco Intersight meets the following general design requirements:

● Resilient design across all layers of the infrastructure with no single point of failure

● Scalable design with the flexibility to add compute capacity, storage, or network bandwidth as needed

● Modular design that can be replicated to expand and grow as the needs of the business grow

● Flexible design that can support different models of various components with ease

● Simplified design with the ability to integrate and automate with external automation tools

● Cloud-enabled design which can be configured, managed, and orchestrated from the cloud using GUI or APIs

To deliver a solution which meets all these design requirements, various solution components are connected and configured as explained in the following sections.

The FlexPod Datacenter with Red Hat OpenShift on Bare Metal infrastructure configuration is built using the following hardware components:

● Cisco UCS X9508 Chassis with six Cisco UCS X210C M7 Compute Nodes and two Cisco UCS X440p PCIe Nodes, each containing two NVIDIA L40S GPUs

● Fifth-generation Cisco UCS S9108 Fabric Interconnects to support 100GbE and 25GbE connectivity from various components

● High-speed Cisco NX-OS-based Nexus 93600CD-GX switching design to support 100GE and 400GE connectivity

● NetApp AFF C800 end-to-end NVMe storage with 25G or 100G Ethernet and (optional) 32G Fibre Channel connectivity

The software components of this solution consist of:

● Cisco Intersight to deploy, maintain, and support the Cisco UCS server components

● Cisco Intersight SaaS platform to maintain and support the FlexPod components

● Cisco Intersight Assist Virtual Appliance to help connect NetApp ONTAP and Cisco Nexus switches with Cisco Intersight

● NetApp Active IQ Unified Manager to monitor and manage the storage and for NetApp ONTAP integration with Cisco Intersight

● Red Hat OpenShift which provides a platform for both containers and VMs

FlexPod Datacenter with Red Hat OpenShift on Bare Metal Infrastructure with Cisco UCS X-Series Direct Topology

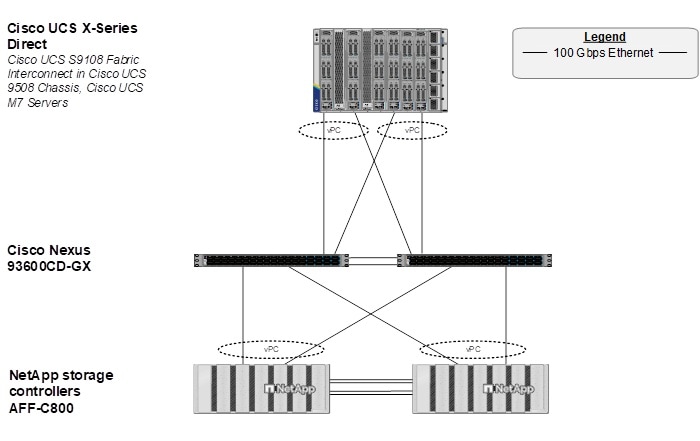

Figure 1 shows various hardware components and the network connections for this IP-based FlexPod design.

The reference hardware configuration includes:

● Two Cisco Nexus 93600CD-GX Switches in Cisco NX-OS mode provide the switching fabric. Other Cisco Nexus Switches are also supported.

● Two Cisco UCS S9108 Fabric Interconnects (FIs) in the chassis provide the chassis connectivity. At least two 100 Gigabit Ethernet ports from each FI, configured as a Port-Channel, are connected to each Nexus 93600CD-GX switch. 25 Gigabit Ethernet connectivity is also supported as well as other versions of the Cisco UCS FI that would be used with Intelligent Fabric Modules (IFMs) in the chassis.

● One Cisco UCS X9508 Chassis contains 6 Cisco UCS X210C M7 servers and 2 Cisco UCS X440p PCIe Nodes each with 2 NVIDIA L40S GPUs. Other configurations of servers with and without GPUs are also supported.

● One NetApp AFF C800 HA pair connects to the Cisco Nexus 93600CD-GX Switches using two 100 GE ports from each controller configured as a Port-Channel. 25 Gigabit Ethernet connectivity is also supported as well as other NetApp AFF, ASA, and FAS storage controllers.

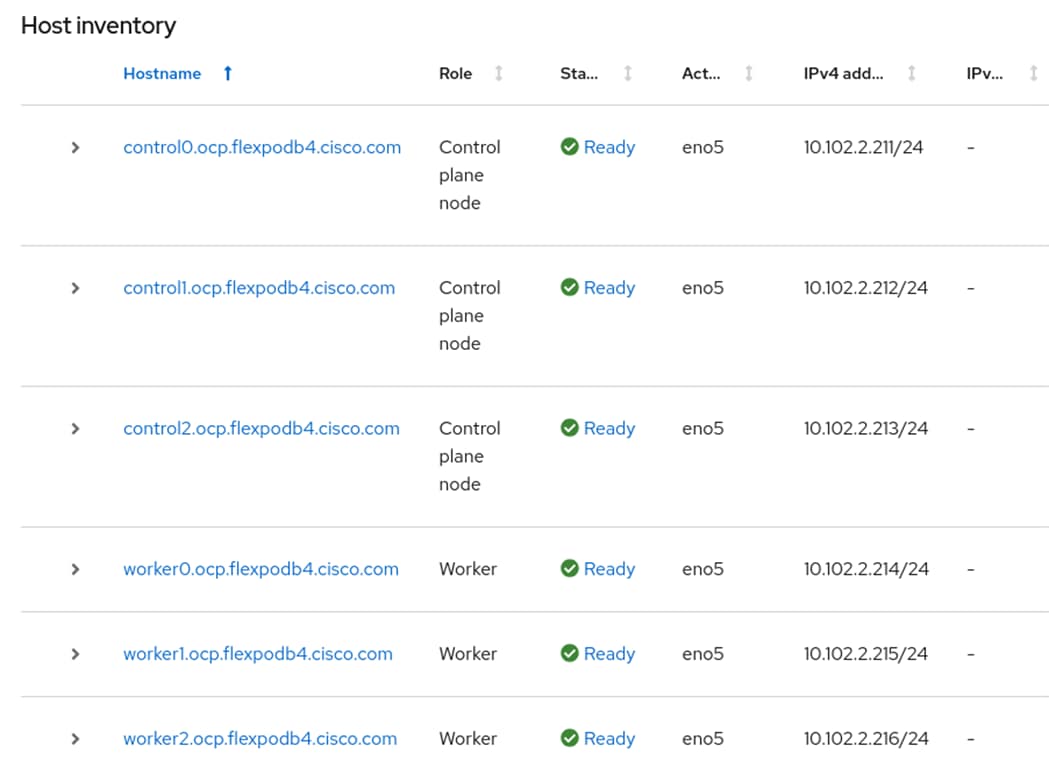

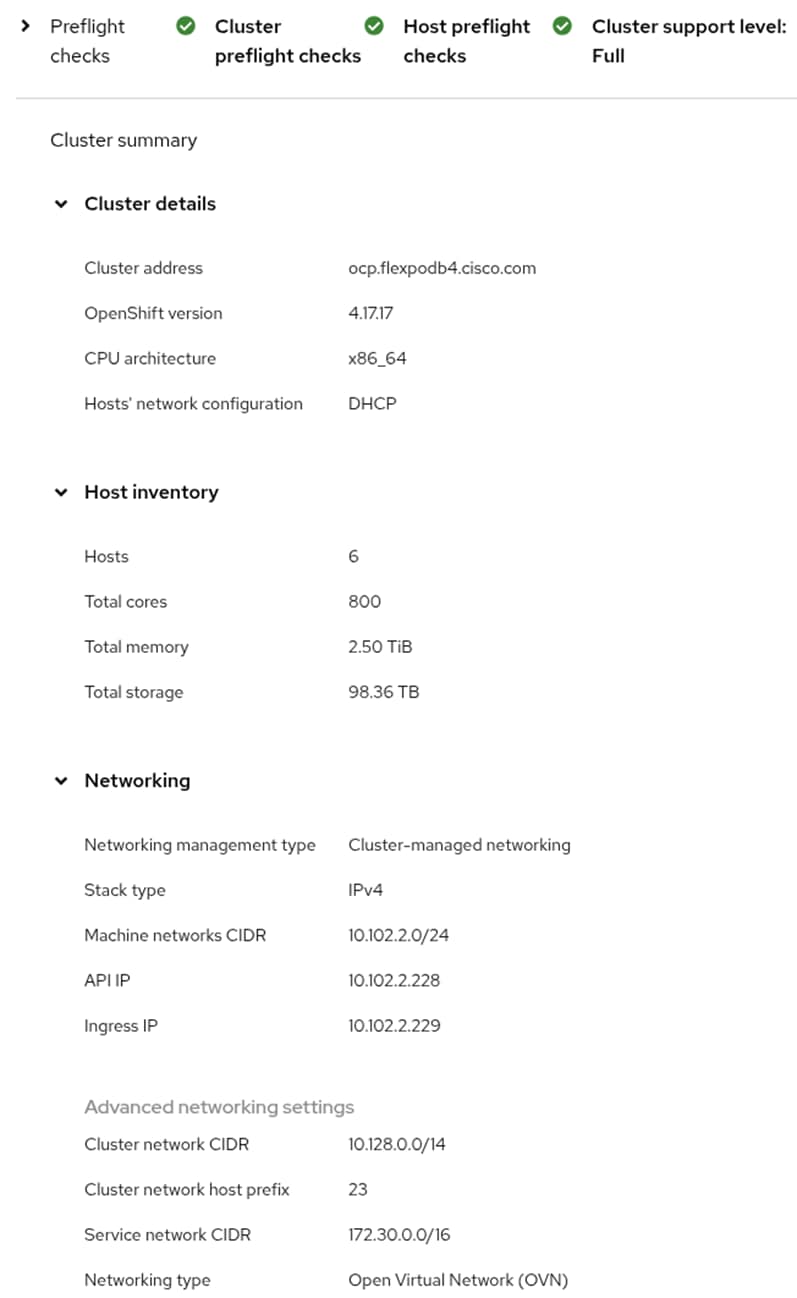

Red Hat OpenShift on Bare Metal Server Configuration

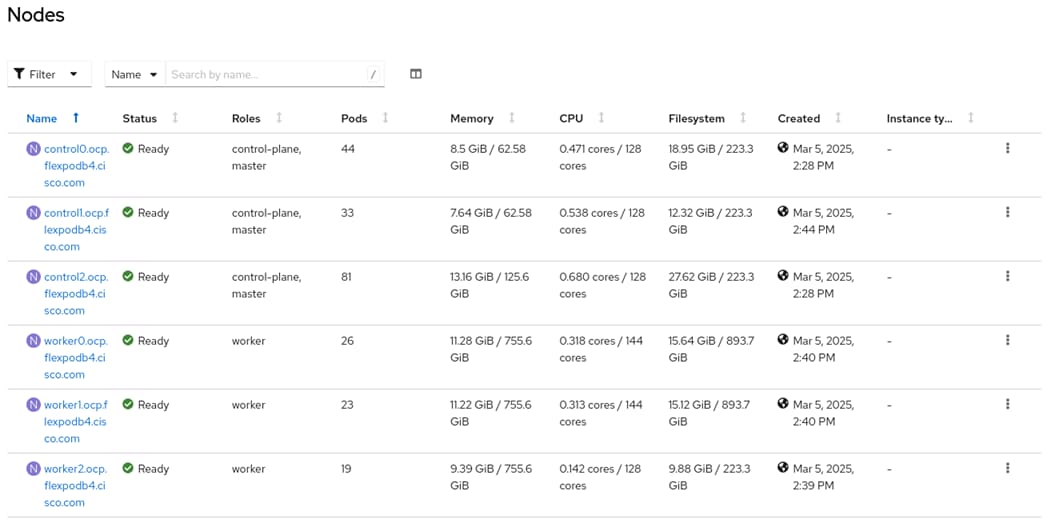

A simple Red Hat OpenShift cluster consists of at least five servers: three Control-Plane Nodes and two or more Worker Nodes, where applications and VMs run. This lab validation used three Worker Nodes. Based on published OpenShift requirements, the three Control-Plane Nodes were configured with 64GB of RAM, and the three Worker Nodes were configured with 768GB of RAM to handle containerized applications and VMs.

An alternative configuration, where all servers have the same amount of memory and CPUs, is to combine the control-plane and worker roles on the first three servers and then assign only the worker role to the remaining servers. This configuration requires a minimum of three servers, and notes throughout the document explain where the process differs for this configuration.

Each Node was booted from M.2. Both a single M.2 module and 2 M.2 modules with RAID1 are supported. Also, the servers paired with X440p PCIe Nodes were configured as Workers. From a networking perspective, both the Control-Plane Nodes and the Workers were configured with a single vNIC with UCS Fabric Failover in the Bare Metal or Management VLAN. The Workers were configured with extra NICs (vNICs) to allow storage attachment to the Workers. Each worker had two additional vNICs with the iSCSI A and B VLANs configured as native to allow iSCSI persistent storage attachment and future iSCSI boot. These same vNICs also had the NVMe-TCP A and B allowed VLANs assigned, allowing tagged VLAN interfaces for NVMe-TCP to be defined on the Workers. Finally, each worker had one additional vNIC with the OpenShift NFS VLAN configured as native to provide NFS persistent storage.

VLAN Configuration

Table 1 lists VLANs configured for setting up the FlexPod environment along with their usage.

| VLAN ID | Name | Usage | IP Subnet used in this deployment |
|---|---|---|---|
| 2* | Native-VLAN | Use VLAN 2 as native VLAN instead of default VLAN (1) | |
| 1020* | OOB-MGMT-VLAN | Out-of-band management VLAN to connect management ports for various devices | 10.102.0.0/24; GW: 10.102.0.254 |
| 1022 | OCP-BareMetal-MGMT | Routable OpenShift Bare Metal VLAN used for OpenShift cluster and node management | 10.102.2.0/24; GW: 10.102.2.254 |
| 3012 | OCP-iSCSI-A | Used for OpenShift iSCSI Persistent Storage | 192.168.12.0/24 |
| 3022 | OCP-iSCSI-B | Used for OpenShift iSCSI Persistent Storage | 192.168.22.0/24 |
| 3032 | OCP-NVMe-TCP-A | Used for OpenShift NVMe-TCP Persistent Storage | 192.168.32.0/24 |
| 3042 | OCP-NVMe-TCP-B | Used for OpenShift NVMe-TCP Persistent Storage | 192.168.42.0/24 |
| 3052 | OCP-NFS | Used for OpenShift NFS RWX Persistent Storage | 192.168.52.0/24 |

Note: *VLAN configured in FlexPod Base.

Note: S3 object storage was also used in this environment but requires a routable subnet. To avoid having two default gateways on the OpenShift nodes, S3 was placed on the OCP-BareMetal-MGMT subnet and VLAN. A separate VLAN and subnet were not defined for S3.
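The addressing plan in Table 1 can be sanity-checked offline before you configure the switches and storage. The following Python sketch is illustrative only. It assumes you have transcribed the plan by hand into a dictionary; the names `VLAN_PLAN` and `validate_vlan_plan` are hypothetical. It checks three things:

- each VLAN ID is in the usable 802.1Q range (1-4094);
- each gateway lies inside its own subnet;
- no two subnets overlap.

The Native VLAN (2) is left out because it carries no subnet.

```python
import ipaddress

# Table 1 transcribed by hand (hypothetical structure): VLAN ID -> (name, subnet, gateway or None).
# Native VLAN 2 carries no subnet, so it is omitted.
VLAN_PLAN = {
    1020: ("OOB-MGMT-VLAN", "10.102.0.0/24", "10.102.0.254"),
    1022: ("OCP-BareMetal-MGMT", "10.102.2.0/24", "10.102.2.254"),
    3012: ("OCP-iSCSI-A", "192.168.12.0/24", None),
    3022: ("OCP-iSCSI-B", "192.168.22.0/24", None),
    3032: ("OCP-NVMe-TCP-A", "192.168.32.0/24", None),
    3042: ("OCP-NVMe-TCP-B", "192.168.42.0/24", None),
    3052: ("OCP-NFS", "192.168.52.0/24", None),
}


def validate_vlan_plan(plan):
    """Return a list of problems; an empty list means the plan passed every check."""
    problems = []
    networks = {}
    for vlan_id, (name, subnet, gateway) in plan.items():
        # 802.1Q VLAN IDs 0 and 4095 are reserved.
        if not 1 <= vlan_id <= 4094:
            problems.append(f"VLAN {vlan_id} ({name}) is outside 1-4094")
        net = ipaddress.ip_network(subnet)
        networks[vlan_id] = net
        if gateway is not None and ipaddress.ip_address(gateway) not in net:
            problems.append(f"VLAN {vlan_id} ({name}): gateway {gateway} is not in {net}")
    # Compare every pair of subnets once; overlapping subnets make routing ambiguous.
    ids = sorted(networks)
    for i, a in enumerate(ids):
        for b in ids[i + 1:]:
            if networks[a].overlaps(networks[b]):
                problems.append(f"VLAN {a} and VLAN {b} have overlapping subnets")
    return problems


if __name__ == "__main__":
    issues = validate_vlan_plan(VLAN_PLAN)
    print("\n".join(issues) if issues else "VLAN plan OK")
```

With the values from Table 1, the check reports no problems. Adding, for example, a 192.168.52.128/25 subnet for another VLAN would be flagged as an overlap with OCP-NFS.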

Table 2 lists the VMs or bare metal servers necessary for deployment as outlined in this document.

| Virtual Machine Description | VLAN | IP Address | Comments |
|---|---|---|---|
| OCP AD1 | 1022 | 10.102.2.249 | Hosted on pre-existing management infrastructure within the FlexPod |
| OCP AD2 | 1022 | 10.102.2.250 | Hosted on pre-existing management infrastructure within the FlexPod |
| OCP Installer | 1022 | 10.102.2.10 | Hosted on pre-existing management infrastructure within the FlexPod |
| NetApp Active IQ Unified Manager | 1021 | 10.102.1.97 | Hosted on pre-existing management infrastructure within the FlexPod |
| Cisco Intersight Assist Virtual Appliance | 1021 | 10.102.1.96 | Hosted on pre-existing management infrastructure within the FlexPod |

Table 3 lists the software revisions for various components of the solution.

| Layer | Device | Image Bundle | Comments |
|---|---|---|---|
| Compute | Cisco UCS Fabric Interconnect S9108 | 4.3(5.240191) | |
| | Cisco UCS X210C M7 | 5.3(5.250001) | |
| Network | Cisco Nexus 93600CD-GX NX-OS | 10.4(4)M | |
| Storage | NetApp AFF C800 | ONTAP 9.16.1 | Latest patch release |
| Software | Red Hat OpenShift | 4.17 | |
| | NetApp Trident | 25.06.2 | |
| | NetApp DataOps Toolkit | 2.5.0 | |
| | Cisco Intersight Assist Appliance | 1.1.1-1 | 1.1.1-0 initially installed and then automatically upgraded |
| | NetApp Active IQ Unified Manager | 9.16 | |
| | NVIDIA L40S GPU Driver | 550.144.03 | |

The information in this section is provided as a reference for cabling the physical equipment in a FlexPod environment. To simplify cabling requirements, a cabling diagram was used.

The cabling diagram in this section contains the details for the prescribed and supported configuration of the NetApp AFF C800 running NetApp ONTAP 9.16.1.

Note: For any modifications of this prescribed architecture, consult the NetApp Interoperability Matrix Tool (IMT).

Note: This document assumes that out-of-band management ports are plugged into an existing management infrastructure at the deployment site. These interfaces will be used in various configuration steps.

Note: Be sure to use the cabling directions in this section as a guide.

The NetApp storage controller and disk shelves should be connected according to best practices for the specific storage controller and disk shelves. For disk shelf cabling, refer to NetApp Support.

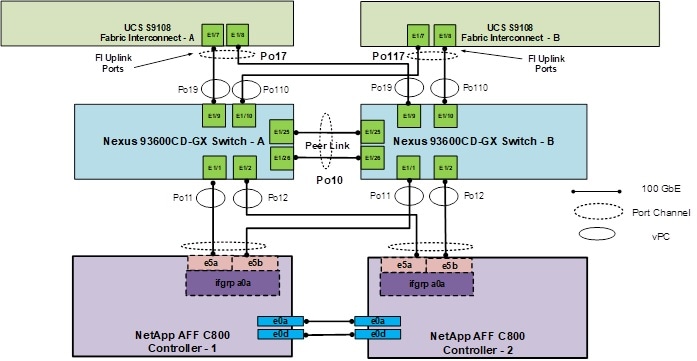

Figure 2 details the cable connections used in the validation lab for the FlexPod topology based on the Cisco UCS S9108 fabric interconnect directly in the chassis. Two 100Gb links connect each Cisco UCS Fabric Interconnect to the Cisco Nexus Switches and each NetApp AFF controller to the Cisco Nexus Switches. Additional 1Gb management connections will be needed for one or more out-of-band network switches that sit apart from the FlexPod infrastructure. Each Cisco UCS fabric interconnect and Cisco Nexus switch is connected to the out-of-band network switches, and each AFF controller has a connection to the out-of-band network switches. Layer 3 network connectivity is required between the Out-of-Band (OOB) and In-Band (IB) Management Subnets.

Network Switch Configuration

This chapter contains the following:

● Cisco Nexus Switch Manual Configuration

This chapter provides a detailed procedure for configuring the Cisco Nexus 93600CD-GX switches for use in a FlexPod environment.

Note: The following procedures describe how to configure the Cisco Nexus switches for use in the OpenShift Bare Metal FlexPod environment. This procedure assumes the use of Cisco Nexus 9000 10.3(4a)M.

● The following procedure includes the setup of NTP distribution on the bare metal VLAN. The interface-vlan feature and ntp commands are used to set this up.

● This procedure adds the tenant VLANs to the appropriate port-channels.

Follow the physical connectivity guidelines for FlexPod as explained in section FlexPod Cabling.

Cisco Nexus Switch Manual Configuration

Procedure 1. Create Tenant VLANs on Cisco Nexus A and Cisco Nexus B

Step 1. Log into both Nexus switches as admin using SSH.

Step 2. Configure the OpenShift Bare Metal VLAN:

config t

vlan <bm-vlan-id for example, 1022>

name <tenant-name>-BareMetal-MGMT

Step 3. Configure OpenShift iSCSI VLANs:

vlan <iscsi-a-vlan-id for example, 3012>

name <tenant-name>-iSCSI-A

vlan <iscsi-b-vlan-id for example, 3022>

name <tenant-name>-iSCSI-B

Step 4. If configuring NVMe-TCP storage access, create the following two additional VLANs:

vlan <nvme-tcp-a-vlan-id for example, 3032>

name <tenant-name>-NVMe-TCP-A

vlan <nvme-tcp-b-vlan-id for example, 3042>

name <tenant-name>-NVMe-TCP-B

exit

Step 5. Add OpenShift NFS VLAN:

vlan <nfs-vlan-id for example, 3052>

name <tenant-name>-NFS

Step 6. Add VLANs to the vPC peer link in both Nexus switches:

int Po10

switchport trunk allowed vlan add <bm-vlan-id>,<iscsi-a-vlan-id>,<iscsi-b-vlan-id>,<nvme-tcp-a-vlan-id>,<nvme-tcp-b-vlan-id>,<nfs-vlan-id>

Step 7. Add VLANs to the storage interfaces in both Nexus switches:

int Po11,Po12

switchport trunk allowed vlan add <bm-vlan-id>,<iscsi-a-vlan-id>,<iscsi-b-vlan-id>,<nvme-tcp-a-vlan-id>,<nvme-tcp-b-vlan-id>,<nfs-vlan-id>

Step 8. Add VLANs to the UCS Fabric Interconnect Uplink interfaces in both Nexus switches:

int Po19,Po110

switchport trunk allowed vlan add <bm-vlan-id>,<iscsi-a-vlan-id>,<iscsi-b-vlan-id>,<nvme-tcp-a-vlan-id>,<nvme-tcp-b-vlan-id>,<nfs-vlan-id>

Step 9. Add the Bare Metal VLAN to the Switch Uplink interface in both Nexus switches:

interface Po127

switchport trunk allowed vlan add <bm-vlan-id>

exit
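Steps 2 through 9 apply the same VLAN list to several port-channels on both switches. Rendering the commands from a single table helps keep the allowed-VLAN lists identical. The Python sketch below is a hypothetical helper, not part of any Cisco tooling. It assumes the tenant name `OCP`, which reproduces the VLAN names in Table 1, and the port-channel numbers used in the steps above. The output is the same for Nexus A and Nexus B; review it before pasting it into either switch.

```python
# Hypothetical helper (not a Cisco tool): render the NX-OS lines for Steps 2-9.
# Tenant VLAN suffix -> VLAN ID; with tenant "OCP" the names match Table 1.
TENANT_VLANS = {
    "BareMetal-MGMT": 1022,
    "iSCSI-A": 3012,
    "iSCSI-B": 3022,
    "NVMe-TCP-A": 3032,
    "NVMe-TCP-B": 3042,
    "NFS": 3052,
}

# vPC peer link, storage port-channels, and UCS FI uplink port-channels (Steps 6-8).
TRUNK_PORT_CHANNELS = ("Po10", "Po11,Po12", "Po19,Po110")
SWITCH_UPLINK = "Po127"  # carries only the Bare Metal VLAN (Step 9)


def render_tenant_vlans(tenant, vlans, include_nvme=True):
    """Return NX-OS lines for Steps 2-9; the same lines apply to Nexus A and Nexus B."""
    if not include_nvme:
        # Step 4 is optional: drop the NVMe-TCP VLANs when NVMe-TCP is not used.
        vlans = {k: v for k, v in vlans.items() if not k.startswith("NVMe-TCP")}
    lines = ["config t"]
    for suffix, vlan_id in vlans.items():
        lines += [f"vlan {vlan_id}", f"name {tenant}-{suffix}"]
    lines.append("exit")
    allowed = ",".join(str(v) for v in vlans.values())
    for port_channel in TRUNK_PORT_CHANNELS:
        lines += [f"int {port_channel}", f"switchport trunk allowed vlan add {allowed}"]
    lines += [
        f"interface {SWITCH_UPLINK}",
        f"switchport trunk allowed vlan add {vlans['BareMetal-MGMT']}",
        "exit",
    ]
    return lines


if __name__ == "__main__":
    print("\n".join(render_tenant_vlans("OCP", TENANT_VLANS)))
```

Passing `include_nvme=False` drops the two optional NVMe-TCP VLANs from both the VLAN definitions and every allowed-VLAN list.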

Step 10. If configuring NTP Distribution in these Nexus Switches, add Tenant VRF and NTP Distribution Interface in Cisco Nexus A:

vrf context <tenant-name>

ip route 0.0.0.0/0 <bm-subnet-gateway>

exit

interface Vlan<bm-vlan-id>

no shutdown

vrf member <tenant-name>

ip address <bm-switch-a-ntp-distr-ip>/<bm-vlan-mask-length>

exit

copy run start

Step 11. If configuring NTP Distribution in these Nexus Switches, add Tenant VRF and NTP Distribution Interface in Cisco Nexus B:

vrf context <tenant-name>

ip route 0.0.0.0/0 <bm-subnet-gateway>

exit

interface Vlan<bm-vlan-id>

no shutdown

vrf member <tenant-name>

ip address <bm-switch-b-ntp-distr-ip>/<bm-vlan-mask-length>

exit

copy run start
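The Step 10 and Step 11 blocks differ only in the switch address. The following minimal Python sketch makes these assumptions:

- the Bare Metal subnet is 10.102.2.0/24 with gateway 10.102.2.254 (Table 1);
- the tenant name is `OCP`;
- the switch addresses 10.102.2.3 and 10.102.2.4 are hypothetical, since this document does not specify them.

The sketch derives `<bm-vlan-mask-length>` from the subnet. It rejects an address that falls outside the subnet or collides with the gateway, network, or broadcast address. The function name is hypothetical.

```python
import ipaddress


def render_ntp_svi(tenant, bm_vlan_id, bm_subnet, gateway, switch_ip):
    """Hypothetical helper: return the NX-OS lines for Step 10 (Nexus A) or Step 11 (Nexus B)."""
    net = ipaddress.ip_network(bm_subnet)
    ip = ipaddress.ip_address(switch_ip)
    gw = ipaddress.ip_address(gateway)
    if ip not in net or gw not in net:
        raise ValueError(f"{switch_ip} and {gateway} must both be inside {net}")
    if ip in (gw, net.network_address, net.broadcast_address):
        raise ValueError(f"{switch_ip} is the gateway, network, or broadcast address")
    return [
        f"vrf context {tenant}",
        f"ip route 0.0.0.0/0 {gw}",
        "exit",
        f"interface Vlan{bm_vlan_id}",
        "no shutdown",
        f"vrf member {tenant}",  # VRF membership is set before the IP address, as in the steps above
        f"ip address {ip}/{net.prefixlen}",
        "exit",
        "copy run start",
    ]


if __name__ == "__main__":
    # 10.102.2.3 and 10.102.2.4 are hypothetical switch addresses.
    for name, addr in (("Nexus A", "10.102.2.3"), ("Nexus B", "10.102.2.4")):
        print(f"--- {name} ---")
        print("\n".join(render_ntp_svi("OCP", 1022, "10.102.2.0/24", "10.102.2.254", addr)))
```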

Step 12. The following commands can be used to see the switch configuration and status.

show run

show vpc

show vlan

show port-channel summary

show ntp peer-status

show cdp neighbors

show lldp neighbors

show run int

show int

show udld neighbors

show int status

NetApp ONTAP Storage Configuration

This chapter contains the following:

● Configure NetApp ONTAP Storage

● Configure S3 access to the OpenShift Tenant

Configure NetApp ONTAP Storage

This section describes how to configure the NetApp ONTAP Storage for the OpenShift Tenant.

Procedure 1. Log into the Cluster

Step 1. Open an SSH connection to either the cluster IP or the host name.

Step 2. Log in as the admin user with the password you provided earlier.

Procedure 2. Configure NetApp ONTAP Storage for the OpenShift Tenant

Note: By default, all network ports are included in a separate default broadcast domain. Network ports used for data services (for example, e5a, e5b, and so on) should be removed from their default broadcast domain and that broadcast domain should be deleted.

Step 1. Delete any Default-N automatically created broadcast domains:

network port broadcast-domain delete -broadcast-domain <Default-N> -ipspace Default

network port broadcast-domain show

Note: Delete the Default broadcast domains with Network ports (Default-1, Default-2, and so on). This does not include Cluster ports and management ports.

Step 2. Create an IPspace for the OpenShift tenant:

network ipspace create -ipspace AA02-OCP

Step 3. Create the OCP-MGMT, OCP-iSCSI-A, OCP-iSCSI-B, OCP-NVMe-TCP-A, OCP-NVMe-TCP-B, and OCP-NFS broadcast domains with the appropriate maximum transmission unit (MTU):

network port broadcast-domain create -broadcast-domain OCP-MGMT -mtu 1500 -ipspace AA02-OCP

network port broadcast-domain create -broadcast-domain OCP-iSCSI-A -mtu 9000 -ipspace AA02-OCP

network port broadcast-domain create -broadcast-domain OCP-iSCSI-B -mtu 9000 -ipspace AA02-OCP

network port broadcast-domain create -broadcast-domain OCP-NVMe-TCP-A -mtu 9000 -ipspace AA02-OCP

network port broadcast-domain create -broadcast-domain OCP-NVMe-TCP-B -mtu 9000 -ipspace AA02-OCP

network port broadcast-domain create -broadcast-domain OCP-NFS -mtu 9000 -ipspace AA02-OCP

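Step 4. Create the OpenShift management VLAN ports and add them to the OpenShift management broadcast domain:

network port vlan create -node AA02-C800-01 -vlan-name a0a-1022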

network port vlan create -node AA02-C800-02 -vlan-name a0a-1022

network port broadcast-domain add-ports -ipspace AA02-OCP -broadcast-domain OCP-MGMT -ports AA02-C800-01:a0a-1022,AA02-C800-02:a0a-1022

Step 5. Create the OpenShift iSCSI VLAN ports and add them to the OpenShift iSCSI broadcast domains:

network port vlan create -node AA02-C800-01 -vlan-name a0a-3012

network port vlan create -node AA02-C800-02 -vlan-name a0a-3012

network port broadcast-domain add-ports -ipspace AA02-OCP -broadcast-domain OCP-iSCSI-A -ports AA02-C800-01:a0a-3012,AA02-C800-02:a0a-3012

network port vlan create -node AA02-C800-01 -vlan-name a0a-3022

network port vlan create -node AA02-C800-02 -vlan-name a0a-3022

network port broadcast-domain add-ports -ipspace AA02-OCP -broadcast-domain OCP-iSCSI-B -ports AA02-C800-01:a0a-3022,AA02-C800-02:a0a-3022

Step 6. Create the OpenShift NVMe-TCP VLAN ports and add them to the OpenShift NVMe-TCP broadcast domains:

network port vlan create -node AA02-C800-01 -vlan-name a0a-3032

network port vlan create -node AA02-C800-02 -vlan-name a0a-3032

network port broadcast-domain add-ports -ipspace AA02-OCP -broadcast-domain OCP-NVMe-TCP-A -ports AA02-C800-01:a0a-3032,AA02-C800-02:a0a-3032

network port vlan create -node AA02-C800-01 -vlan-name a0a-3042

network port vlan create -node AA02-C800-02 -vlan-name a0a-3042

network port broadcast-domain add-ports -ipspace AA02-OCP -broadcast-domain OCP-NVMe-TCP-B -ports AA02-C800-01:a0a-3042,AA02-C800-02:a0a-3042

Step 7. Create the OpenShift NFS VLAN ports and add them to the OpenShift NFS broadcast domain:

network port vlan create -node AA02-C800-01 -vlan-name a0a-3052

network port vlan create -node AA02-C800-02 -vlan-name a0a-3052

network port broadcast-domain add-ports -ipspace AA02-OCP -broadcast-domain OCP-NFS -ports AA02-C800-01:a0a-3052,AA02-C800-02:a0a-3052
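Steps 3 through 7 follow one pattern per tenant VLAN:

1. Create a broadcast domain with the correct MTU.
2. Create a VLAN port on interface group a0a on each controller.
3. Add both ports to the broadcast domain.

The Python sketch below reproduces that pattern, so you can render a different tenant or VLAN set consistently. The helper name is hypothetical. The sketch assumes that interface group a0a already exists on both nodes, as it does in this deployment.

```python
# Hypothetical helper (not a NetApp tool): render the ONTAP CLI commands for Steps 3-7.
# Broadcast domain -> (VLAN ID, MTU), transcribed from the steps above.
BROADCAST_DOMAINS = {
    "OCP-MGMT": (1022, 1500),
    "OCP-iSCSI-A": (3012, 9000),
    "OCP-iSCSI-B": (3022, 9000),
    "OCP-NVMe-TCP-A": (3032, 9000),
    "OCP-NVMe-TCP-B": (3042, 9000),
    "OCP-NFS": (3052, 9000),
}
NODES = ("AA02-C800-01", "AA02-C800-02")


def render_broadcast_domain_cmds(ipspace, nodes, domains, ifgrp="a0a"):
    """Return the commands for Steps 3-7 in the order the steps run them."""
    cmds = []
    # Step 3: one broadcast domain per tenant VLAN, with its MTU.
    for domain, (_vlan_id, mtu) in domains.items():
        cmds.append(
            f"network port broadcast-domain create -broadcast-domain {domain} -mtu {mtu} -ipspace {ipspace}"
        )
    # Steps 4-7: a VLAN port on the interface group of each node, then both ports into the domain.
    for domain, (vlan_id, _mtu) in domains.items():
        port = f"{ifgrp}-{vlan_id}"
        for node in nodes:
            cmds.append(f"network port vlan create -node {node} -vlan-name {port}")
        members = ",".join(f"{node}:{port}" for node in nodes)
        cmds.append(
            f"network port broadcast-domain add-ports -ipspace {ipspace} "
            f"-broadcast-domain {domain} -ports {members}"
        )
    return cmds


if __name__ == "__main__":
    print("\n".join(render_broadcast_domain_cmds("AA02-OCP", NODES, BROADCAST_DOMAINS)))
```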

Step 8. Create the SVM (Storage Virtual Machine) in the IPspace. Run the vserver create command:

vserver create -vserver OCP-SVM -ipspace AA02-OCP

Note: The SVM must be created in the IPspace. An SVM cannot be moved into an IPspace later.

Step 9. Add the required data protocols to the SVM and remove the unused data protocols from the SVM:

vserver add-protocols -vserver OCP-SVM -protocols iscsi,nfs,nvme

vserver remove-protocols -vserver OCP-SVM -protocols cifs,fcp

Step 10. Add the two data aggregates to the OCP-SVM aggregate list and enable and run the NFS protocol in the SVM:

vserver modify -vserver OCP-SVM -aggr-list AA02_C800_01_SSD_CAP_1,AA02_C800_02_SSD_CAP_1

vserver nfs create -vserver OCP-SVM -udp disabled -v3 enabled -v4.1 enabled

Step 11. Create a load-sharing mirror of the SVM root volume. First, identify the aggregate and node where the OCP-SVM root volume is located:

volume show -vserver OCP-SVM

Then create the load-sharing mirror volume on the aggregate of the other node (the node that does not host the root volume):

volume create -vserver OCP-SVM -volume OCP_SVM_root_lsm01 -aggregate AA02_C800_0<x>_SSD_CAP_1 -size 1GB -type DP

Step 12. Create the 15min interval job schedule:

job schedule interval create -name 15min -minutes 15

Step 13. Create the mirroring relationship:

snapmirror create -source-path OCP-SVM:OCP_Trident_SVM_root -destination-path OCP-SVM:OCP_SVM_root_lsm01 -type LS -schedule 15min

Step 14. Initialize and verify the mirroring relationship:

snapmirror initialize-ls-set -source-path OCP-SVM:OCP_Trident_SVM_root

snapmirror show -vserver OCP-SVM

Progress

Source Destination Mirror Relationship Total Last

Path Type Path State Status Progress Healthy Updated

----------- ---- ------------ ------- -------------- --------- ------- --------

AA02-C800://OCP-SVM/OCP_Trident_SVM_root

LS AA02-C800://OCP-SVM/OCP_SVM_root_lsm01

Snapmirrored

Idle - true -

Step 15. Create the iSCSI and NVMe services:

vserver iscsi create -vserver OCP-SVM -status-admin up

vserver iscsi show -vserver OCP-SVM

Vserver: OCP-SVM

Target Name: iqn.1992-08.com.netapp:sn.8442b0854ebb11efb1a7d039eab7b2f3:vs.5

Target Alias: OCP-SVM

Administrative Status: up

vserver nvme create -vserver OCP-SVM -status-admin up

vserver nvme show -vserver OCP-SVM

Vserver Name: OCP-SVM

Administrative Status: up

Discovery Subsystem NQN: nqn.1992-08.com.netapp:sn.8442b0854ebb11efb1a7d039eab7b2f3:discovery

Note: Make sure licenses are installed for all storage protocols used before creating the services.

Step 16. To create the login banner for the SVM, run the following command:

security login banner modify -vserver OCP-SVM -message "This OCP-SVM is reserved for authorized users only!"

Step 17. Create a new rule for the SVM NFS subnet in the default export policy and assign the policy to the SVM’s root volume:

vserver export-policy rule create -vserver OCP-SVM -policyname default -ruleindex 1 -protocol nfs -clientmatch 192.168.52.0/24 -rorule sys -rwrule sys -superuser sys -allow-suid true

volume modify -vserver OCP-SVM -volume OCP_Trident_SVM_root -policy default
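The export rule admits NFS clients by source address, so each worker's NFS interface must have an address inside 192.168.52.0/24. The small Python check below can flag any client the rule would not match. It assumes hypothetical worker NFS addresses, because this document does not list them. It handles only CIDR and single-address clientmatch values. Other clientmatch forms that ONTAP accepts, such as host names or netgroups, are out of scope, and the function names are hypothetical.

```python
import ipaddress


def clientmatch_covers(clientmatch, client_ip):
    """True if a CIDR or single-address clientmatch value covers client_ip."""
    # ip_network("192.168.52.10") yields a /32 network, so single addresses work too.
    return ipaddress.ip_address(client_ip) in ipaddress.ip_network(clientmatch)


def uncovered_clients(clientmatch, client_ips):
    """Return the clients that an export rule with this clientmatch would not match."""
    return [ip for ip in client_ips if not clientmatch_covers(clientmatch, ip)]


if __name__ == "__main__":
    # Hypothetical worker NFS addresses; the last one is deliberately on the wrong subnet.
    workers = ["192.168.52.101", "192.168.52.102", "10.102.2.103"]
    print(uncovered_clients("192.168.52.0/24", workers))
```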

Step 18. Create and enable the audit log in the SVM:

volume create -vserver OCP-SVM -volume audit_log -aggregate AA02_C800_01_SSD_CAP_1 -size 50GB -state online -policy default -junction-path /audit_log -space-guarantee none -percent-snapshot-space 0

snapmirror update-ls-set -source-path OCP-SVM:OCP_Trident_SVM_root

vserver audit create -vserver OCP-SVM -destination /audit_log

vserver audit enable -vserver OCP-SVM

Step 19. Run the following commands to create NFS Logical Interfaces (LIFs):

network interface create -vserver OCP-SVM -lif nfs-lif-01 -service-policy default-data-files -home-node AA02-C800-01 -home-port a0a-3052 -address 192.168.52.51 -netmask 255.255.255.0 -status-admin up -failover-policy broadcast-domain-wide -auto-revert true

network interface create -vserver OCP-SVM -lif nfs-lif-02 -service-policy default-data-files -home-node AA02-C800-02 -home-port a0a-3052 -address 192.168.52.52 -netmask 255.255.255.0 -status-admin up -failover-policy broadcast-domain-wide -auto-revert true

Step 20. Run the following commands to create iSCSI LIFs:

network interface create -vserver OCP-SVM -lif iscsi-lif-01a -service-policy default-data-iscsi -home-node AA02-C800-01 -home-port a0a-3012 -address 192.168.12.51 -netmask 255.255.255.0 -status-admin up

network interface create -vserver OCP-SVM -lif iscsi-lif-01b -service-policy default-data-iscsi -home-node AA02-C800-01 -home-port a0a-3022 -address 192.168.22.51 -netmask 255.255.255.0 -status-admin up

network interface create -vserver OCP-SVM -lif iscsi-lif-02a -service-policy default-data-iscsi -home-node AA02-C800-02 -home-port a0a-3012 -address 192.168.12.52 -netmask 255.255.255.0 -status-admin up

network interface create -vserver OCP-SVM -lif iscsi-lif-02b -service-policy default-data-iscsi -home-node AA02-C800-02 -home-port a0a-3022 -address 192.168.22.52 -netmask 255.255.255.0 -status-admin up

Step 21. Run the following commands to create NVMe-TCP LIFs:

network interface create -vserver OCP-SVM -lif nvme-tcp-lif-01a -service-policy default-data-nvme-tcp -home-node AA02-C800-01 -home-port a0a-3032 -address 192.168.32.51 -netmask 255.255.255.0 -status-admin up

network interface create -vserver OCP-SVM -lif nvme-tcp-lif-01b -service-policy default-data-nvme-tcp -home-node AA02-C800-01 -home-port a0a-3042 -address 192.168.42.51 -netmask 255.255.255.0 -status-admin up

network interface create -vserver OCP-SVM -lif nvme-tcp-lif-02a -service-policy default-data-nvme-tcp -home-node AA02-C800-02 -home-port a0a-3032 -address 192.168.32.52 -netmask 255.255.255.0 -status-admin up

network interface create -vserver OCP-SVM -lif nvme-tcp-lif-02b -service-policy default-data-nvme-tcp -home-node AA02-C800-02 -home-port a0a-3042 -address 192.168.42.52 -netmask 255.255.255.0 -status-admin up
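The iSCSI and NVMe-TCP LIFs in Steps 20 and 21 follow one addressing convention:

- the host part of the address is .51 on AA02-C800-01 and .52 on AA02-C800-02;
- the a/b suffix of the LIF name selects the A or B VLAN.

The following Python sketch renders those commands from that convention and confirms that every address lands inside its subnet. The helper names are hypothetical. The first iSCSI command it produces is identical to the first command in Step 20.

```python
import ipaddress

# Controller -> host part of every SAN data LIF address in this deployment (.51 / .52).
NODES = {"AA02-C800-01": 51, "AA02-C800-02": 52}
# LIF suffix -> (VLAN ID, subnet), from Table 1.
ISCSI = {"a": (3012, "192.168.12.0/24"), "b": (3022, "192.168.22.0/24")}
NVME_TCP = {"a": (3032, "192.168.32.0/24"), "b": (3042, "192.168.42.0/24")}


def render_san_lifs(svm, lif_prefix, service_policy, sides, nodes=NODES, ifgrp="a0a"):
    """Hypothetical helper: one 'network interface create' per controller and per A/B side."""
    cmds = []
    for node, host in nodes.items():
        node_num = node[-2:]  # "01" or "02", used in the LIF name
        for side, (vlan_id, subnet) in sides.items():
            net = ipaddress.ip_network(subnet)
            address = net.network_address + host
            if address not in net or address == net.broadcast_address:
                raise ValueError(f"host {host} does not fit in {net}")
            cmds.append(
                f"network interface create -vserver {svm} -lif {lif_prefix}-{node_num}{side} "
                f"-service-policy {service_policy} -home-node {node} -home-port {ifgrp}-{vlan_id} "
                f"-address {address} -netmask {net.netmask} -status-admin up"
            )
    return cmds


if __name__ == "__main__":
    for cmd in render_san_lifs("OCP-SVM", "iscsi-lif", "default-data-iscsi", ISCSI):
        print(cmd)
    for cmd in render_san_lifs("OCP-SVM", "nvme-tcp-lif", "default-data-nvme-tcp", NVME_TCP):
        print(cmd)
```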

Step 22. Run the following command to create the SVM-MGMT LIF:

network interface create -vserver OCP-SVM -lif svm-mgmt -service-policy default-management -home-node AA02-C800-01 -home-port a0a-1022 -address 10.102.2.50 -netmask 255.255.255.0 -status-admin up -failover-policy broadcast-domain-wide -auto-revert true

Step 23. Run the following command to verify LIFs:

network interface show -vserver OCP-SVM

Logical Status Network Current Current Is

Vserver Interface Admin/Oper Address/Mask Node Port Home

----------- ---------- ---------- ------------------ ------------- ------- ----

OCP-SVM

iscsi-lif-01a

up/up 192.168.12.51/24 AA02-C800-01 a0a-3012

true

iscsi-lif-01b

up/up 192.168.22.51/24 AA02-C800-01 a0a-3022

true

iscsi-lif-02a

up/up 192.168.12.52/24 AA02-C800-02 a0a-3012

true

iscsi-lif-02b

up/up 192.168.22.52/24 AA02-C800-02 a0a-3022

true

nfs-lif-01 up/up 192.168.52.51/24 AA02-C800-01 a0a-3052

true

nfs-lif-02 up/up 192.168.52.52/24 AA02-C800-02 a0a-3052

true

nvme-tcp-lif-01a

up/up 192.168.32.51/24 AA02-C800-01 a0a-3032

true

nvme-tcp-lif-01b

up/up 192.168.42.51/24 AA02-C800-01 a0a-3042

true

nvme-tcp-lif-02a

up/up 192.168.32.52/24 AA02-C800-02 a0a-3032

true

nvme-tcp-lif-02b

up/up 192.168.42.52/24 AA02-C800-02 a0a-3042

true

svm-mgmt up/up 10.102.2.50/24 AA02-C800-01 a0a-1022

true

11 entries were displayed.

Step 24. Create a default route that enables the SVM management interface to reach the outside world:

network route create -vserver OCP-SVM -destination 0.0.0.0/0 -gateway 10.102.2.254

Step 25. Set a password for the SVM vsadmin user and unlock the user:

security login password -username vsadmin -vserver OCP-SVM

Enter a new password:

Enter it again:

security login unlock -username vsadmin -vserver OCP-SVM

Step 26. Add the OpenShift DNS servers to the SVM:

dns create -vserver OCP-SVM -domains ocp.flexpodb4.cisco.com -name-servers 10.102.2.249,10.102.2.250

Configure S3 access to the OpenShift Tenant

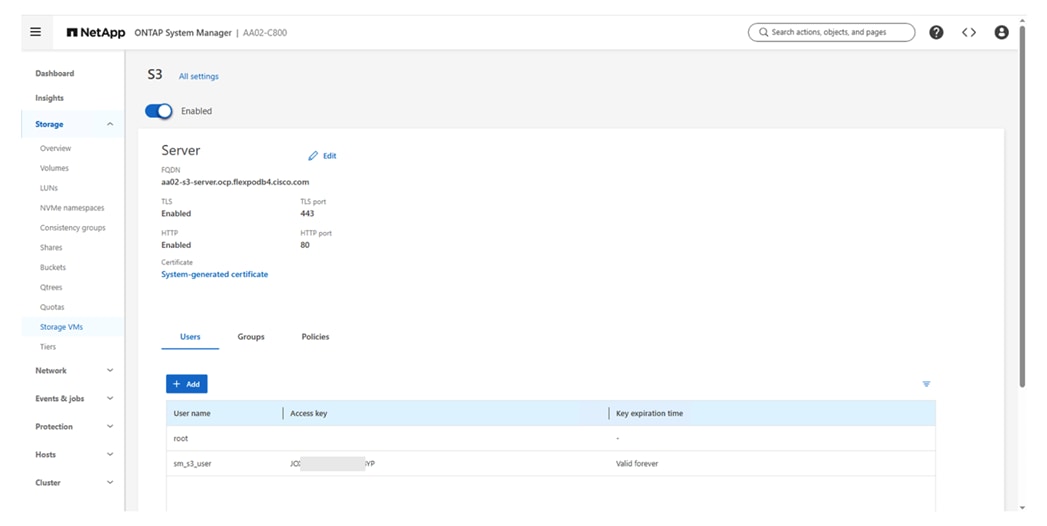

Procedure 1. Enable S3 on the storage VM

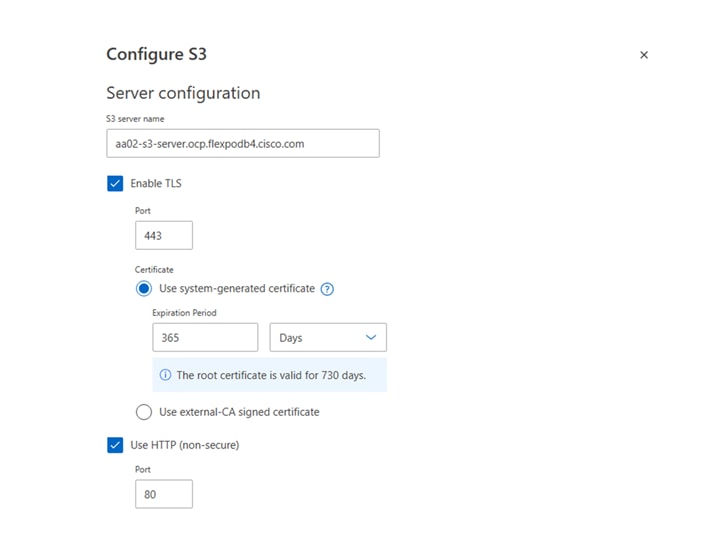

Step 1. In NetApp System Manager, click Storage > Storage VMs, select the storage VM (OCP-SVM), click Settings, and then click the pencil icon under S3.

Step 2. Enter the S3 server name. Make sure to enter the S3 server name as a Fully Qualified Domain Name (FQDN).

Step 3. TLS is enabled by default (port 443). You can enable HTTP if required.

Step 4. Select the certificate type. Whether you select a system-generated certificate or an external-CA-signed certificate, the certificate will be required for client access.

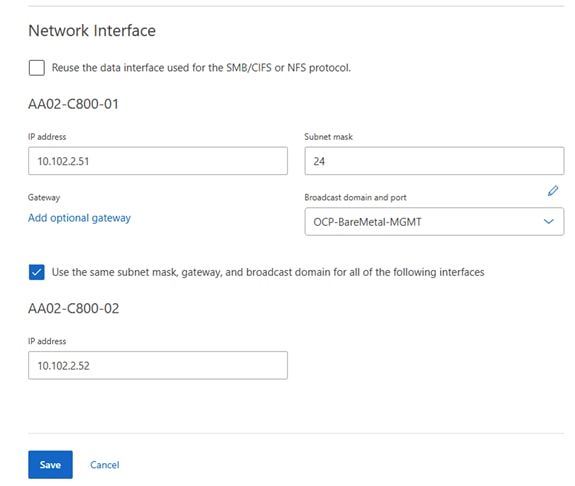

Step 5. Enter the network interfaces. Note that here the S3 object storage will be placed on the OCP-BareMetal-MGMT subnet and VLAN.

Step 6. Click Save.

The ONTAP S3 object store server is now configured as shown in the following figure. There are two users created by default:

1. root user with UID 0 – no access key or secret key is generated for this user

2. sm_s3_user – both access and secret keys are generated for this user

Note: The ONTAP administrator must run the object-store-server users regenerate-keys command to set the access key and secret key for the root user. As a NetApp best practice, do not use this root user. Any client application that uses the access key or secret key of the root user has full access to all buckets and objects in the object store.

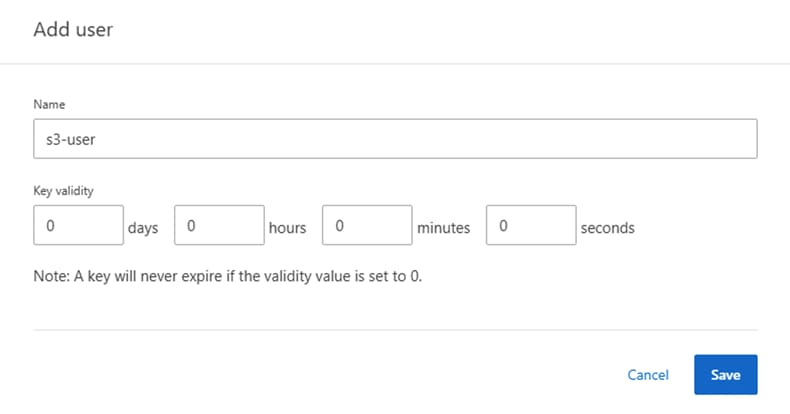

Step 7. You can choose to utilize the default user (sm_s3_user) or create a custom ONTAP S3 user:

a. Click Storage > Storage VMs. Select the storage VM (OCP-SVM) to which you need to add a user, select Settings and then click the pencil icon under S3.

b. To add a user, click Users > Add.

c. Enter a name for the user. Click Save.

d. The user is created, and an access key and a secret key are generated for the user.

e. Download or save the access key and secret key. These will be required for access from S3 clients.

Note: Beginning with ONTAP 9.14.1, you can specify a retention period for the access keys that are created for the user. You can specify the retention period in days, hours, minutes, or seconds, after which the keys automatically expire. The default value is 0, which indicates that the keys never expire.

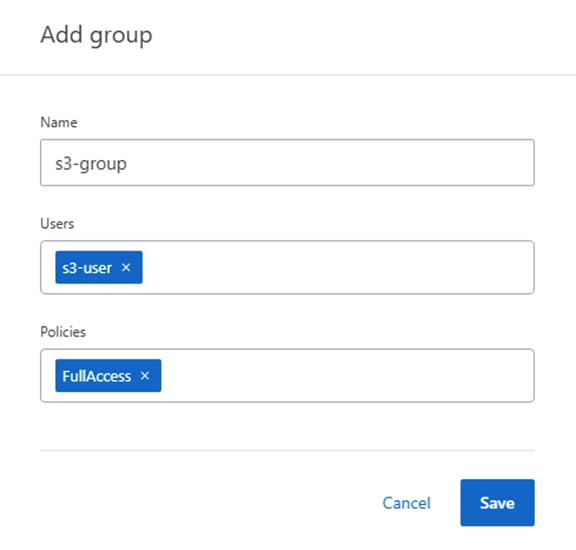

Procedure 2. Create ONTAP S3 user group to control access to buckets

Step 1. Click Storage > Storage VMs. Select the storage VM (OCP-SVM) to which you need to add a group, select Settings and then click the pencil icon under S3.

Step 2. To add a group, select Groups, then click Add.

Step 3. Enter a group name and select from a list of users.

Step 4. You can select an existing group policy or add one now, or you can add a policy later. In this configuration, we have used an existing policy (FullAccess).

Step 5. Click Save.

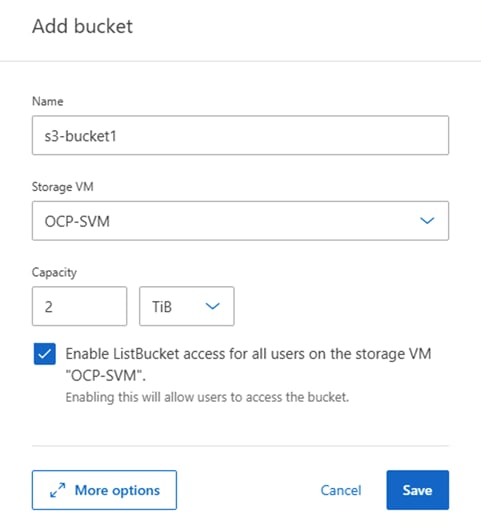

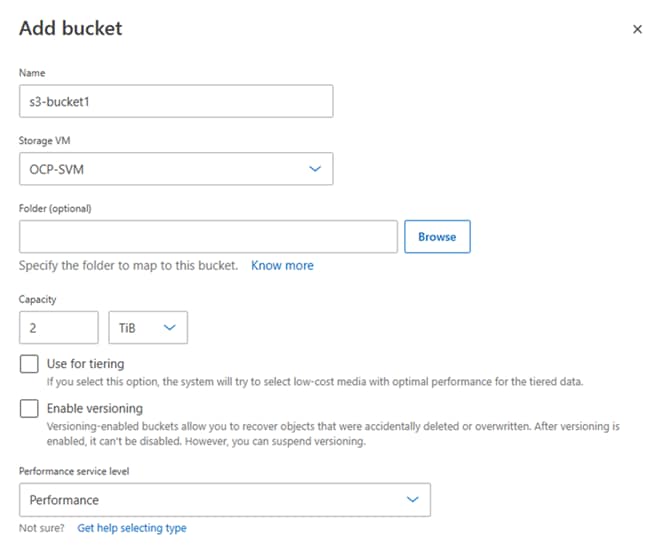

Procedure 3. Create an ONTAP S3 bucket

Step 1. Click Storage > Buckets, then click Add.

Step 2. Enter a name for the bucket, select the storage VM (OCP-SVM), and enter the size.

a. If you click Save at this point, a bucket is created with these default settings:

i. No users are granted access to the bucket unless any group policies are already in effect.

ii. A Quality of Service (performance) level that is the highest available for your system.

b. Click Save if you want to create a bucket with these default values.

Step 3. Click More Options to configure settings for object locking, user permissions, and performance level when you configure the bucket, or you can modify these settings later.

a. If you intend to use the S3 object store for FabricPool tiering, consider selecting Use for tiering rather than a performance service level. In this validation, we are not using S3 for FabricPool tiering.

b. To enable versioning for your objects for later recovery, select Enable Versioning. In this case, we have not enabled versioning.

c. Performance service level – default value (Performance) is used in this configuration.

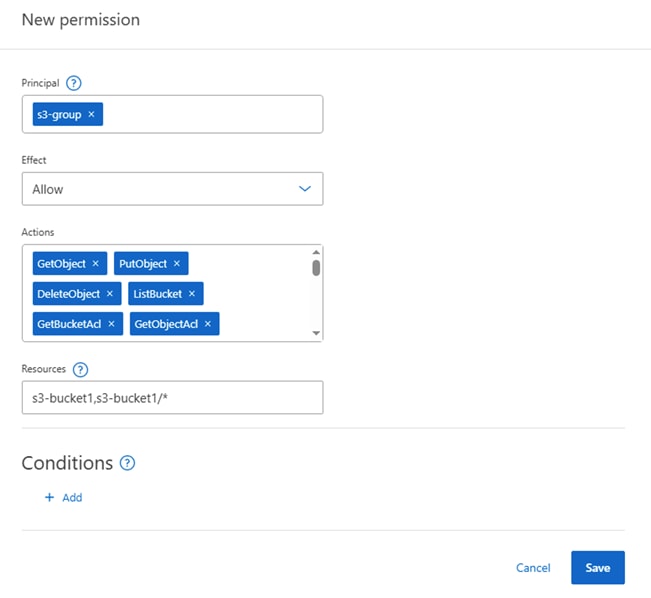

Step 4. Under the Permissions section, click Add to add the relevant permissions for accessing the bucket. Specify the following parameters:

a. Principal: the user or group to whom access is granted. Here, we selected “s3-group”.

b. Effect: allows or denies access to a user or group. Allow is selected here for “s3-group”.

c. Actions: permissible actions in the bucket for a given user or group. Select as required for validation.

d. Resources: paths and names of objects within the bucket for which access is granted or denied. The defaults, bucketname and bucketname/*, grant access to all objects in the bucket. In this solution, we used the default values for resources (s3-bucket1 and s3-bucket1/*).

e. Conditions (optional): expressions that are evaluated when access is attempted. For example, you can specify a list of IP addresses for which access will be allowed or denied. In this case, the field value was empty as no conditions were specified.
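The five permission fields above map naturally onto a small record. The following Python sketch is a hypothetical model of one permission entry, not the ONTAP REST API schema; the class name, dictionary keys, and action names are illustrative assumptions. It shows how the default resources (bucketname and bucketname/*) are derived from the bucket name.

```python
import json
from dataclasses import dataclass, field


@dataclass
class BucketPermission:
    """Hypothetical model of one bucket permission entry (fields a-e)."""

    principals: list[str]  # a. users or groups, for example "s3-group"
    effect: str  # b. "allow" or "deny"
    actions: list[str]  # c. permitted actions (illustrative names)
    bucket: str  # used to derive the default d. resources
    conditions: list[dict] = field(default_factory=list)  # e. optional

    def resources(self) -> list[str]:
        # Default resources: the bucket itself plus every object inside it.
        return [self.bucket, f"{self.bucket}/*"]

    def to_dict(self) -> dict:
        if self.effect not in ("allow", "deny"):
            raise ValueError(f"effect must be 'allow' or 'deny', not {self.effect!r}")
        if not self.principals or not self.actions:
            raise ValueError("at least one principal and one action are required")
        return {
            "principals": list(self.principals),
            "effect": self.effect,
            "actions": list(self.actions),
            "resources": self.resources(),
            "conditions": list(self.conditions),
        }


if __name__ == "__main__":
    entry = BucketPermission(
        principals=["s3-group"],
        effect="allow",
        actions=["GetObject", "PutObject", "ListBucket"],  # hypothetical selection
        bucket="s3-bucket1",
    )
    print(json.dumps(entry.to_dict(), indent=2))
```

An empty conditions list corresponds to leaving the Conditions field blank, as in this validation.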

Step 5. Click Save.

Step 6. Click Save to create the ONTAP S3 bucket.

Note: In this configuration, we did not enable S3 object locking, but you can enable it if your environment requires it.

Note: You can configure protection for the bucket by enabling SnapMirror (ONTAP or cloud) if needed. In this validation, this was not required.

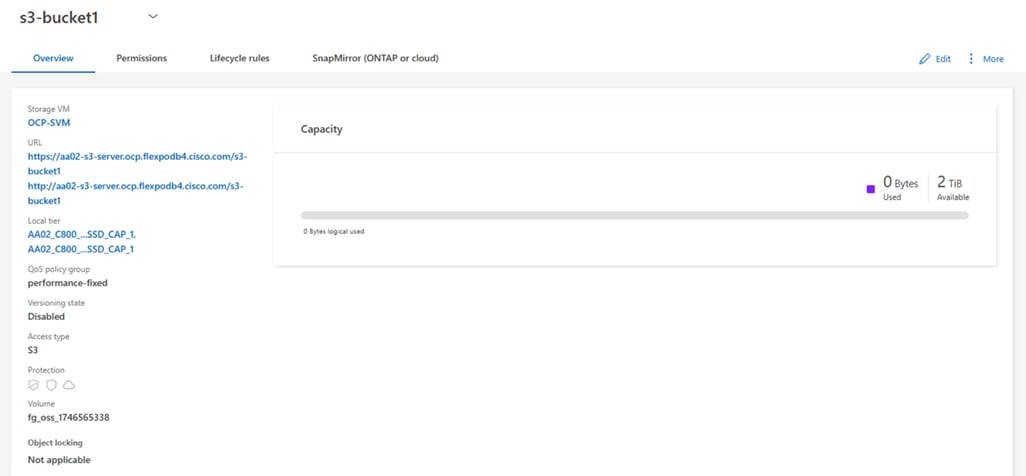

Step 7. The ONTAP S3 bucket is now created, as shown in the following figure. To see detailed information about the bucket, navigate to Storage > Buckets, select the bucket (s3-bucket1), and click the Overview tab.

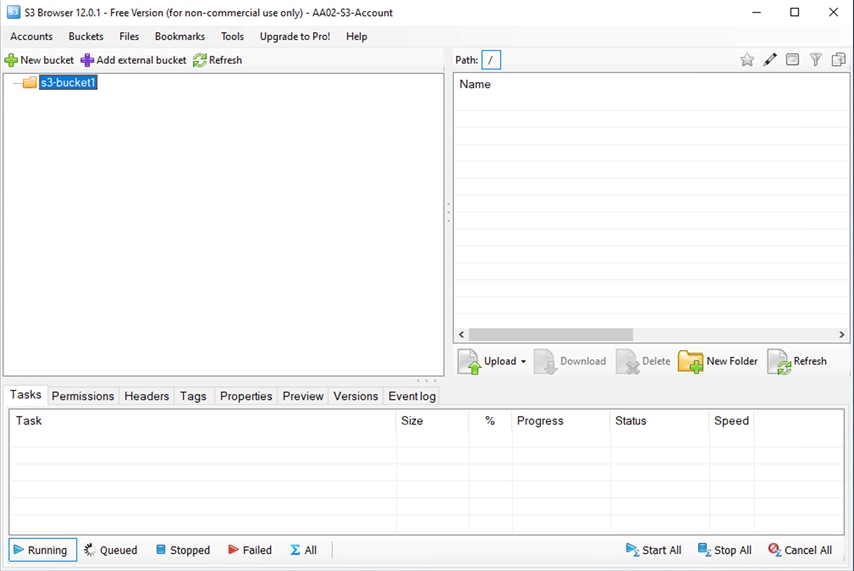

Step 8. From an S3 client application (ONTAP S3 or an external third-party application), verify access to the newly created S3 bucket. In this solution, we used the S3 Browser application to access the bucket, as shown in the following figure.

Note: In the S3 Browser application, first create a new account by providing the S3 user access key and secret key and the REST endpoint (http://<s3-lif-ip>:80). After the account is added, the S3 buckets are fetched automatically, as shown above.
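S3 SDKs can be pointed at the ONTAP S3 LIF in the same way as S3 Browser. The following sketch assumes the third-party boto3 library is installed; the function names, the placeholder region, and the use of path-style addressing are assumptions rather than requirements stated in this guide. Pass the exported server or CA certificate as `ca_bundle` when HTTPS is used with a system-generated certificate.

```python
def s3_endpoint_url(lif_address: str, use_tls: bool = True) -> str:
    """REST endpoint for an ONTAP S3 LIF: HTTPS on 443 (default) or HTTP on 80."""
    scheme, port = ("https", 443) if use_tls else ("http", 80)
    return f"{scheme}://{lif_address}:{port}"


def list_bucket_keys(lif_address, access_key, secret_key, bucket,
                     use_tls=True, ca_bundle=None):
    """List object keys in a bucket; requires boto3 and a reachable S3 LIF."""
    import boto3  # imported lazily so s3_endpoint_url works without boto3
    from botocore.config import Config

    client = boto3.client(
        "s3",
        endpoint_url=s3_endpoint_url(lif_address, use_tls),
        aws_access_key_id=access_key,
        aws_secret_access_key=secret_key,
        region_name="us-east-1",  # placeholder; request signing needs a region string
        verify=ca_bundle,  # path to the exported certificate, or None for defaults
        config=Config(s3={"addressing_style": "path"}),  # assumed: no bucket DNS names
    )
    response = client.list_objects_v2(Bucket=bucket)
    return [obj["Key"] for obj in response.get("Contents", [])]
```

For example, `list_bucket_keys("<s3-lif-ip>", access_key, secret_key, "s3-bucket1", use_tls=False)` targets the same HTTP endpoint that was used with S3 Browser.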

Cisco Intersight Managed Mode Configuration

This chapter contains the following:

● Set up Cisco Intersight Resource Group

● Set up Cisco Intersight Organization

● Add OpenShift VLANs to VLAN Policy

● Cisco UCS IMM Manual Configuration

● Create Control-Plane Node Server Profile Template

● Configure Boot Order Policy for M2

● Configure Firmware Policy (optional)

● Configure Virtual Media Policy

● Configure Cisco IMC Access Policy

● Configure IPMI Over LAN Policy

● Configure Virtual KVM Policy

● Storage Configuration (optional)

● Create Network Configuration - LAN Connectivity for Control-Plane Nodes

● Create MAC Address Pool for Fabric A and B

● Create Ethernet Network Group Policy

● Create Ethernet Network Control Policy

● Create Ethernet Adapter Policy

● Add vNIC(s) to LAN Connectivity Policy

● Complete the Control-Plane Server Profile Template

● Build the OpenShift Worker LAN Connectivity Policy

● Create the OpenShift Worker Server Profile Template

The Cisco Intersight platform is a management solution delivered as a service with embedded analytics for Cisco and third-party IT infrastructures. The Cisco Intersight Managed Mode (also referred to as Cisco IMM or Intersight Managed Mode) is an architecture that manages Cisco Unified Computing System (Cisco UCS) fabric interconnect–attached systems through a Redfish-based standard model. Cisco Intersight managed mode standardizes both policy and operation management for Cisco UCS C-Series M7 and Cisco UCS X210c M7 compute nodes used in this deployment guide.

Cisco UCS B-Series M6 servers, connected and managed through Cisco UCS FIs, are also supported by IMM. For a complete list of supported platforms, go to: https://www.cisco.com/c/en/us/td/docs/unified_computing/Intersight/b_Intersight_Managed_Mode_Configuration_Guide/b_intersight_managed_mode_guide_chapter_01010.html

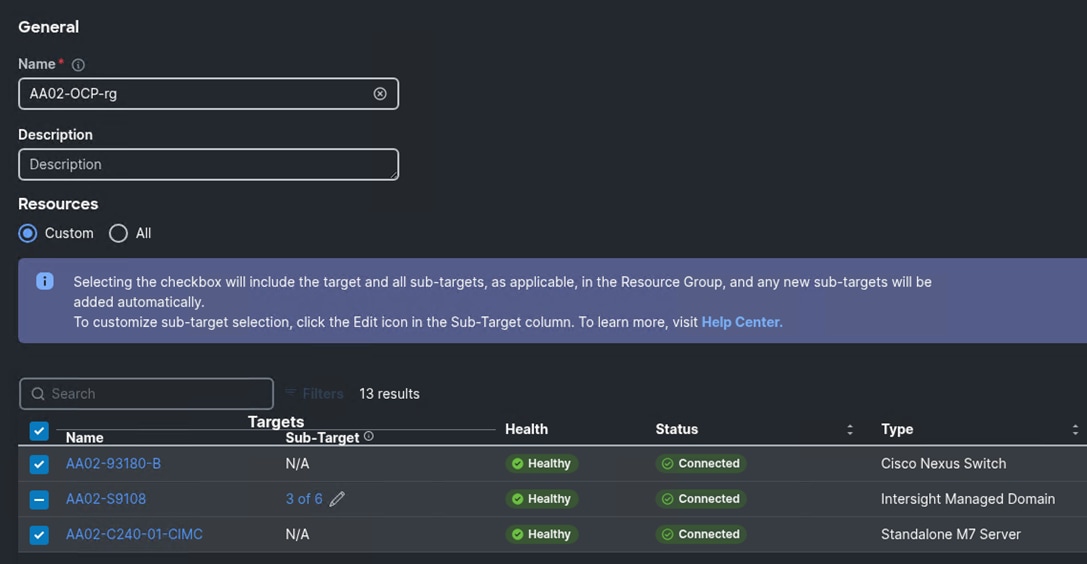

Procedure 1. Set up Cisco Intersight Resource Group

In this procedure, a Cisco Intersight resource group for the Red Hat OpenShift tenant is created where resources such as targets will be logically grouped. In this deployment, a single resource group is created to host all the resources, but you can choose to create multiple resource groups for granular control of the resources.

Step 1. Log into Cisco Intersight.

Step 2. Select System.

Step 3. Click Resource Groups on the left.

Step 4. Click + Create Resource Group in the top-right corner.

Step 5. Provide a name for the Resource Group (for example, AA02-OCP-rg).

Step 6. Under Resources, select Custom.

Step 7. Select all resources that are connected to this Red Hat OpenShift FlexPod tenant.

Note: If more than one FlexPod tenant is sharing the FIs, a subset of the servers can be assigned to the Resource Group.

Step 8. Click Create.

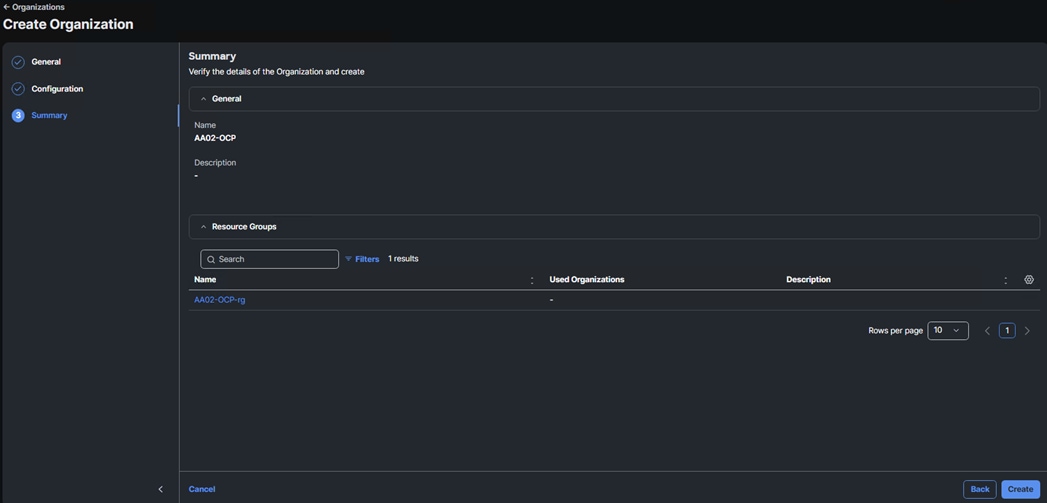

Procedure 2. Set Up Cisco Intersight Organization

In this procedure, an Intersight organization for the Red Hat OpenShift tenant is created where all Cisco Intersight Managed Mode configurations including policies are defined.

Step 1. Log into the Cisco Intersight portal.

Step 2. Select System.

Step 3. Click Organizations on the left.

Step 4. Click + Create Organization in the top-right corner.

Step 5. Provide a name for the organization (for example, AA02-OCP), optionally select Share Resources with Other Organizations, and click Next.

Step 6. Select the Resource Group created in the previous procedure (for example, AA02-OCP-rg) and click Next.

Step 7. Click Create.

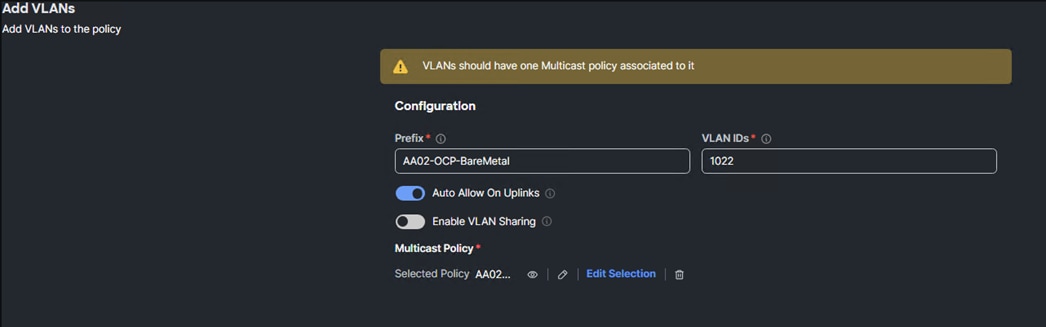

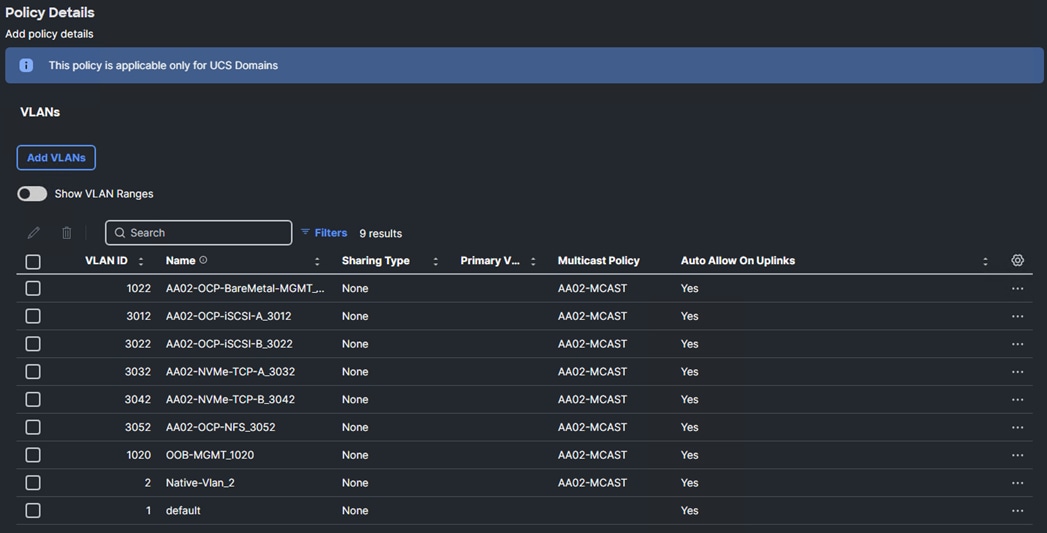

Procedure 3. Add OpenShift VLANs to VLAN Policy

Step 1. Log into the Cisco Intersight portal.

Step 2. Select Configure. On the left, select Profiles then select the UCS Domain Profiles tab.

Step 3. To the right of the UCS Domain Profile used for the OpenShift tenant, click … and select Edit.

Step 4. Click Next to go to UCS Domain Assignment.

Step 5. Click Next to go to VLAN & VSAN Configuration.

Step 6. Under VLAN & VSAN Configuration, click the pencil icon to the left of the VLAN Policy to Edit the policy.

Step 7. Click Next to go to Policy Details.

Step 8. To add the OCP-BareMetal VLAN, click Add VLANs.

Step 9. For the Prefix, enter the VLAN name. For the VLAN ID, enter the VLAN id. Leave Auto Allow on Uplinks enabled and Enable VLAN Sharing disabled.

Step 10. Under Multicast Policy, click Select Policy and select the already configured Multicast Policy (for example, AA02-MCAST).

Step 11. Click Add to add the VLAN to the policy.

Step 12. Repeat the above process to add all the VLANs in Table 1 to the VLAN Policy.

Step 13. Click Save to save the VLAN Policy.

Step 14. Click Next three times to get to the UCS Domain Profile Summary page.

Step 15. Click Deploy and then Deploy again to deploy the UCS Domain Profile.

Cisco UCS IMM Manual Configuration

Configure Server Profile Template

In the Cisco Intersight platform, a server profile enables resource management by simplifying policy alignment and server configuration. Server profiles are derived from a server profile template. A server profile template and its associated policies can be created using the server profile template wizard. After creating the server profile template, customers can derive multiple consistent server profiles from the template.

The server profile templates captured in this deployment guide support Cisco UCS X210c M7 compute nodes with 5th Generation VICs and can be modified to support other Cisco UCS blades and rack-mount servers.

vNIC Placement for Server Profile Template

In this deployment, separate server profile templates are created for OpenShift Worker and Control-Plane Nodes where Worker Nodes have storage network interfaces to support workloads, but Control-Plane Nodes do not. The vNIC layout is covered below. While most of the policies are common across various templates, the LAN connectivity policies are unique and will use the information in the tables below.

Note: If a cluster with combined control-plane and worker nodes is utilized, the Worker Server Profile Template should be used for all nodes.

One vNIC is configured for OpenShift Control-Plane Nodes. This vNIC is manually placed as listed in Table 4.

Four vNICs are configured for OpenShift Worker Nodes. These vNICs are manually placed as listed in Table 5. NVMe-TCP VLAN Interfaces can be added as tagged VLANs to the iSCSI vNICs when NVMe-TCP is being used.

Table 4. vNIC placement for OpenShift Control-Plane Nodes

| vNIC/vHBA Name | Switch ID | PCI Order | Fabric Failover | Native VLAN | Allowed VLANs |
|---|---|---|---|---|---|
| eno5 | A | 0 | Y | OCP-BareMetal-MGMT | OCP-BareMetal-MGMT |

Table 5. vNIC placement for OpenShift Worker Nodes

| vNIC/vHBA Name | Switch ID | PCI Order | Fabric Failover | Native VLAN | Allowed VLANs |
|---|---|---|---|---|---|
| eno5 | A | 0 | Y | OCP-BareMetal-MGMT | OCP-BareMetal-MGMT |
| eno6 | A | 1 | N | OCP-iSCSI-A | OCP-iSCSI-A, OCP-NVMe-TCP-A |
| eno7 | B | 2 | N | OCP-iSCSI-B | OCP-iSCSI-B, OCP-NVMe-TCP-B |
| eno8 | B | 3 | Y | OCP-NFS | OCP-NFS |

Note: OCP-NVMe-TCP-A will be added to eno6 as a VLAN interface. OCP-NVMe-TCP-B will be added to eno7 as a VLAN interface.

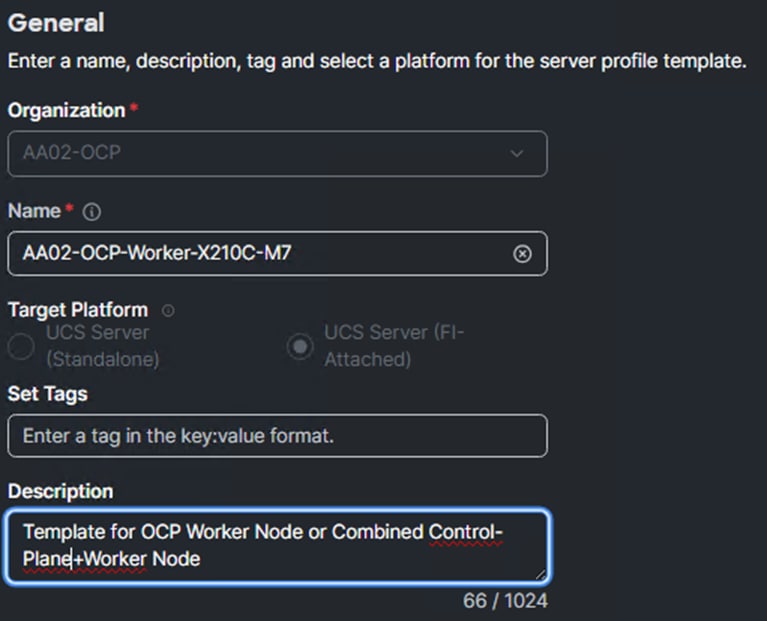

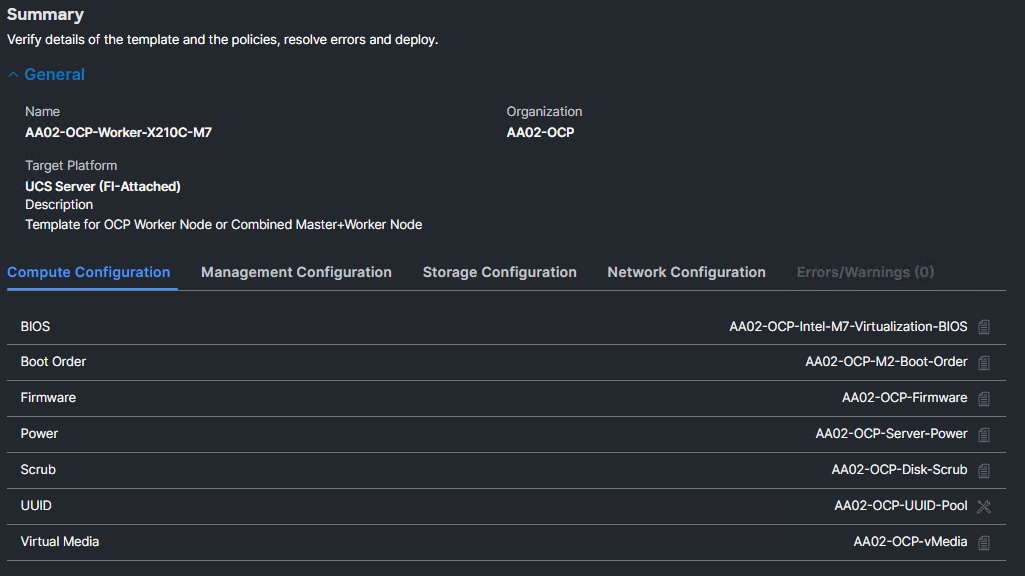

Procedure 4. Create Worker Node Server Profile Template

A Server Profile Template is first created for the OpenShift Worker Nodes. This procedure assumes that a Cisco UCS X210c M7 is being used, but it can be modified for other server types.

Step 1. Log into Cisco Intersight.

Step 2. Go to Configure > Templates in the main window and select UCS Server Profile Templates. Click Create UCS Server Profile Template.

Step 3. Select the organization from the drop-down list (for example, AA02-OCP).

Step 4. Provide a name for the server profile template (for example, AA02-OCP-Worker-X210C-M7)

Step 5. Select UCS Server (FI-Attached).

Step 6. Provide an optional description.

Step 7. Click Next.

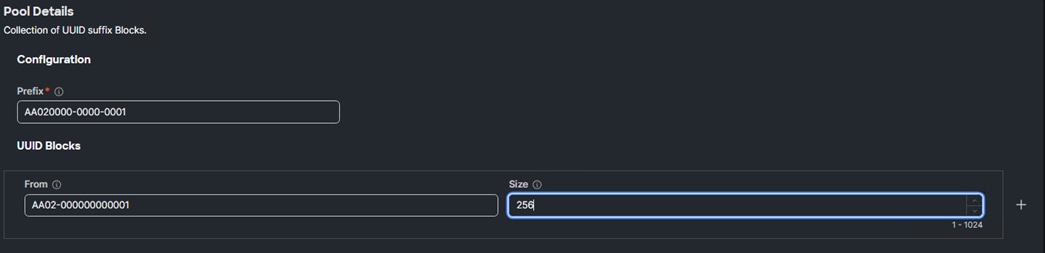

Procedure 5. Compute Configuration – Configure UUID Pool

Step 1. Click Select Pool under UUID Pool and then in the pane on the right, click Create New.

Step 2. Verify the correct organization is selected from the drop-down list (for example, AA02-OCP) and provide a name for the UUID Pool (for example, AA02-OCP-UUID-Pool).

Step 3. Provide an optional Description and click Next.

Step 4. Provide a unique UUID Prefix (for example, a prefix of AA020000-0000-0001 was used).

Step 5. Add a UUID block.

Step 6. Click Create.
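Each server profile derived from the template draws one UUID from this pool. The sketch below assumes the pool prefix supplies the first 16 hex digits of each UUID and the block supplies the remaining 16, incrementing per server; the function name, suffix starting value, and block size are hypothetical.

```python
import uuid


def uuid_block(prefix: str, suffix_start: str, size: int) -> list[uuid.UUID]:
    """Expand a UUID pool block into individual UUIDs.

    Assumes a 16-hex-digit prefix (XXXXXXXX-XXXX-XXXX) shared by the pool and
    a 16-hex-digit suffix (XXXX-XXXXXXXXXXXX) that increments per server.
    """
    if len(prefix.replace("-", "")) != 16 or len(suffix_start.replace("-", "")) != 16:
        raise ValueError("prefix and suffix must each contain 16 hex digits")
    start = int(suffix_start.replace("-", ""), 16)
    if size < 1 or start + size - 1 > 0xFFFF_FFFF_FFFF_FFFF:
        raise ValueError("block size must be positive and fit in the 64-bit suffix")
    ids = []
    for value in range(start, start + size):
        suffix = f"{value:016X}"
        ids.append(uuid.UUID(f"{prefix}-{suffix[:4]}-{suffix[4:]}"))
    return ids


if __name__ == "__main__":
    # Prefix from Step 4; the suffix start and block size are hypothetical.
    for server_uuid in uuid_block("AA020000-0000-0001", "0000-000000000001", 4):
        print(str(server_uuid).upper())
```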

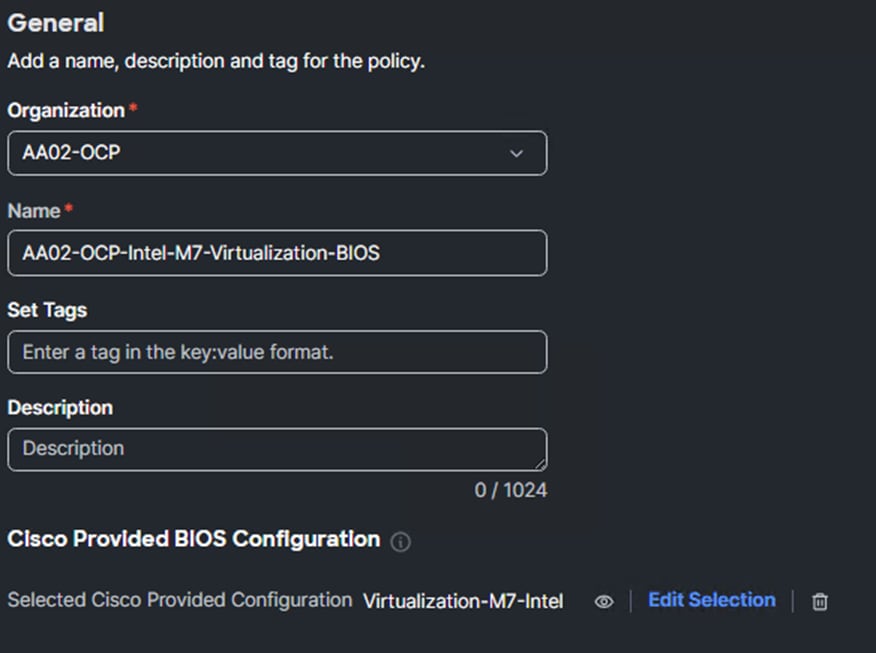

Procedure 6. Configure BIOS Policy

Step 1. Click Select Policy next to BIOS and in the pane on the right, click Create New.

Step 2. Verify correct organization is selected from the drop-down list (for example, AA02-OCP) and provide a name for the policy (for example, AA02-OCP-Intel-M7-Virtualization-BIOS).

Step 3. Enter an optional Description.

Step 4. Click Select Cisco Provided Configuration. In the Search box, type Vir. Select Virtualization-M7-Intel or the appropriate Cisco Provided Configuration for your platform.

Step 5. Click Next.

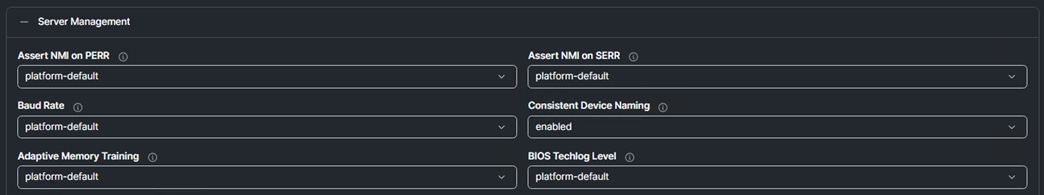

Step 6. On the Policy Details screen, expand Server Management. Use the drop-down list to set the Consistent Device Naming BIOS token to enabled.

Note: The BIOS Policy settings specified here are based on the Virtualization workload settings in the Performance Tuning Best Practices Guide for Cisco UCS M7 Platforms. For other platforms, refer to the appropriate guide:

● Performance Tuning Guide for Cisco UCS M6 Servers

● Performance Tuning Guide for Cisco UCS M5 Servers White Paper

Step 7. Click Create to create the BIOS Policy.

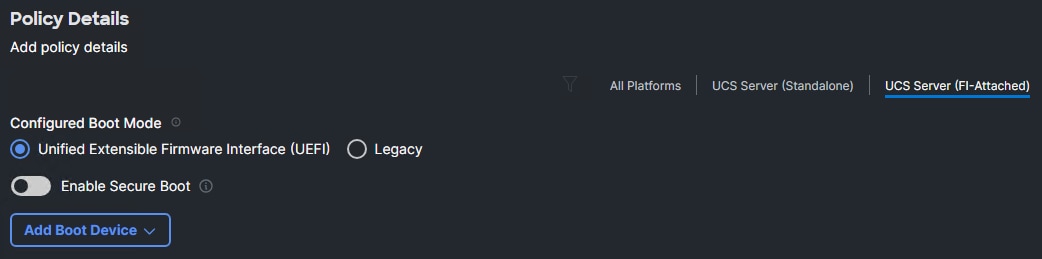

Procedure 7. Configure Boot Order Policy for M2

Step 1. Click Select Policy next to Boot Order and then, in the pane on the right, click Create New.

Step 2. Verify the correct organization is selected from the drop-down list (for example, AA02-OCP) and provide a name for the policy (for example, AA02-OCP-M2-Boot-Order).

Step 3. Click Next.

Step 4. For Configured Boot Mode, select Unified Extensible Firmware Interface (UEFI).

Step 5. Do not turn on Enable Secure Boot.

Note: It is critical to not enable UEFI Secure Boot. If Secure Boot is enabled, the NVIDIA GPU Operator GPU driver will fail to initialize.

Step 6. Click the Add Boot Device drop-down list and select Virtual Media.

Note: We are entering the Boot Devices in reverse order here to avoid having to move them in the list later.

Step 7. Provide a Device Name (for example, KVM-Mapped-ISO) and then, for the subtype, select KVM MAPPED DVD.

Step 8. Click the Add Boot Device drop-down list and select Virtual Media.

Step 9. Provide a Device Name (for example, CIMC-Mapped-ISO) and then, for the subtype, select CIMC MAPPED DVD.

Step 10. Click the Add Boot Device drop-down list and select Local Disk.

Step 11. Provide a Device Name (for example, M2) and MSTOR-RAID for the Slot.

Step 12. Verify the order of the boot devices and adjust the boot order as necessary using arrows next to the Delete button.

Step 13. Click Create.

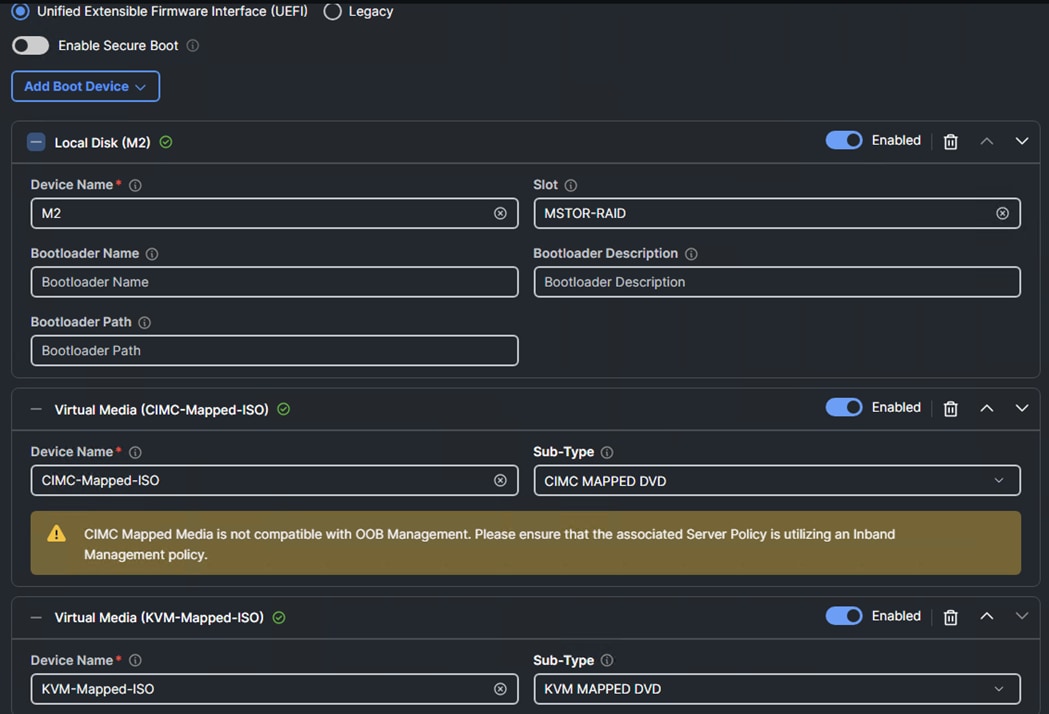

Procedure 8. Configure Firmware Policy (optional)

Since Red Hat OpenShift recommends using homogeneous server types for Control-Plane Nodes (and Workers), a Firmware Policy can ensure that all servers are running the appropriate firmware when the Server Profile is deployed.

Step 1. Click Select Policy next to Firmware and then, in the pane on the right, click Create New.

Step 2. Verify the correct organization is selected from the drop-down list (for example, AA02-OCP) and provide a name for the policy (for example, AA02-OCP-Firmware). Click Next.

Step 3. Select the Server Model (for example, UCSX-210C-M7) and the latest 5.2(2) firmware version.

Step 4. Optionally, other server models can be added using the plus sign on the right.

Step 5. Click Create to create the Firmware Policy.

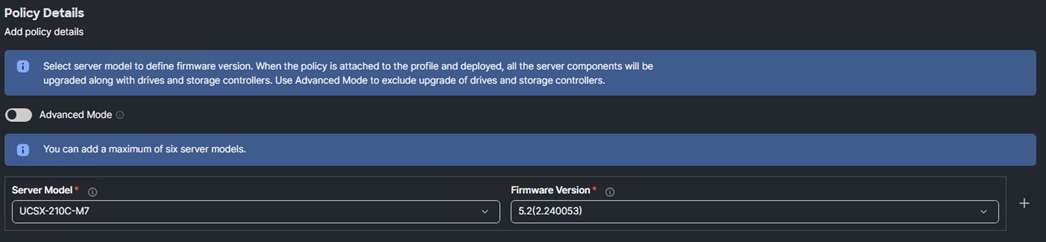

Procedure 9. Configure Power Policy

A Power Policy can be defined and attached to blade servers (Cisco UCS X- and B-Series).

Step 1. Click Select Policy next to Power and then, in the pane on the right, click Create New.

Step 2. Verify the correct organization is selected from the drop-down list (for example, AA02-OCP) and provide a name for the policy (for example, AA02-OCP-Server-Power). Click Next.

Step 3. Make sure UCS Server (FI-Attached) is selected and adjust any of the parameters according to your organizational policies.

Step 4. Click Create to create the Power Policy.

Step 5. Optionally, if you are using Cisco UCS C-Series servers, a Thermal Policy can be created and attached to the profile.

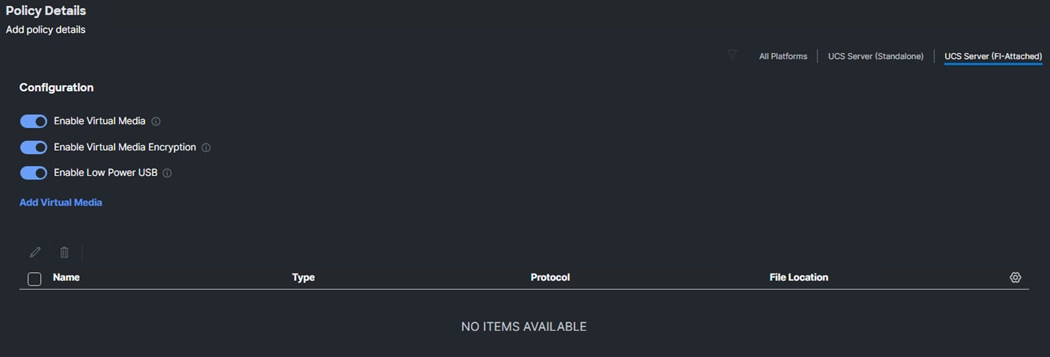

Procedure 10. Configure Virtual Media Policy

Step 1. Click Select Policy next to Virtual Media and then, in the pane on the right, click Create New.

Step 2. Verify the correct organization is selected from the drop-down list (for example, AA02-OCP) and provide a name for the policy (for example, AA02-OCP-vMedia). Click Next.

Step 3. Ensure that Enable Virtual Media, Enable Virtual Media Encryption, and Enable Low Power USB are turned on.

Step 4. Do not add virtual media at this time. The policy can be modified later to map an ISO for a CIMC Mapped DVD.

Step 5. Click Create to create the Virtual Media Policy.

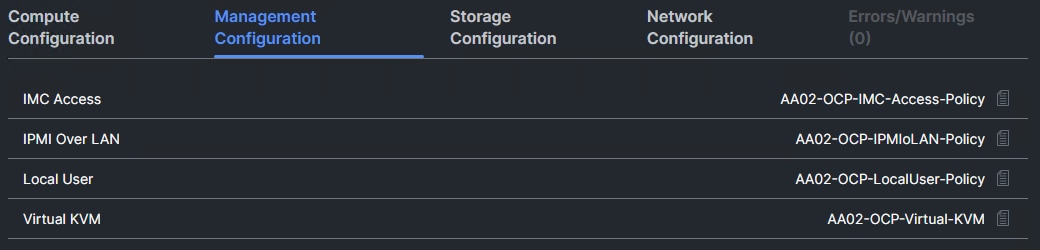

Step 6. Click Next to move to Management Configuration.

Four policies will be added to the management configuration:

● IMC Access to define the pool of IP addresses for compute node KVM access

● IPMI Over LAN to allow Intersight to manage IPMI messages

● Local User to provide a local administrator account for KVM access

● Virtual KVM to allow the Tunneled KVM

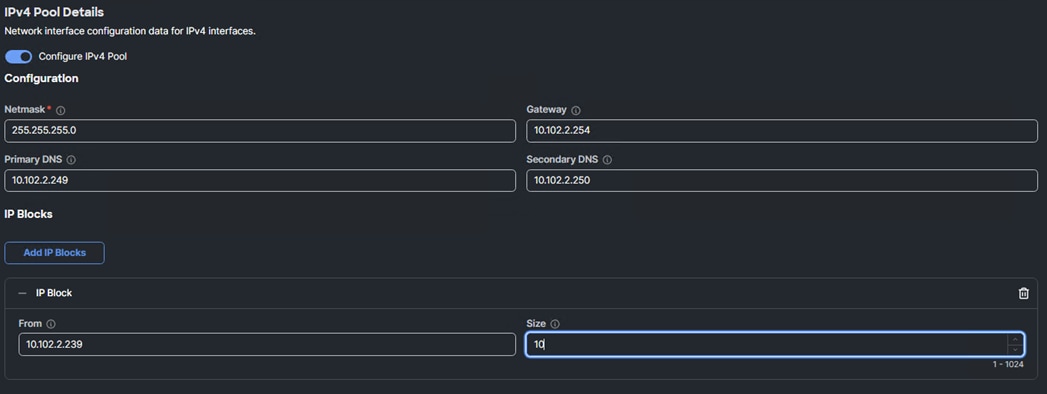

Procedure 1. Configure Cisco IMC Access Policy

The IMC Access Policy can be configured to use either the OOB-MGMT subnet/VLAN or the Baremetal-MGMT subnet/VLAN. The choice is a design decision based on whether this FlexPod tenant has access to the OOB-MGMT subnet/VLAN. This example procedure uses the Baremetal-MGMT subnet/VLAN but can be adjusted to use the OOB-MGMT subnet/VLAN. In either case, set up the IMC Access Policy to use In-Band Management. If the OOB-MGMT subnet/VLAN is used, it has already been configured on the FI switch ports and in the FIs.

Step 1. Click Select Policy next to IMC Access and then, in the pane on the right, click Create New.

Step 2. Verify correct organization is selected from the drop-down list (for example, AA02-OCP) and provide a name for the policy (for example, AA02-OCP-IMC-Access-Policy).

Step 3. Click Next.

Note: Because certain features are not yet enabled for Out-of-Band Configuration (accessed through the Fabric Interconnect mgmt0 ports), when the OOB-MGMT subnet/VLAN is used, the OOB-MGMT VLAN is brought in through the Fabric Interconnect uplinks and mapped as the In-Band Configuration VLAN. This was done in FlexPod Base.

Step 4. Ensure UCS Server (FI-Attached) is selected on the right.

Step 5. Enable In-Band Configuration. Enter the OCP-BareMetal VLAN ID (for example, 1022) and select “IPv4 address configuration.”

Step 6. Under IP Pool, click Select IP Pool and then, in the pane on the right, click Create New.

Step 7. Verify the correct organization is selected from the drop-down list (for example, AA02-OCP) and provide a name for the pool (for example, AA02-OCP-BareMetal-MGMT-IP-Pool). Click Next.

Step 8. Ensure Configure IPv4 Pool is selected and provide the information to define a unique pool for KVM IP address assignment including an IP Block (added by clicking Add IP Blocks).

Note: You will need the IP addresses of the OpenShift DNS servers here.

Note: The management IP pool subnet should be accessible from the host that is trying to open the KVM connection. In the example shown here, the hosts trying to open a KVM connection would need to be able to route to the 10.102.2.0/24 subnet.

Step 9. Click Next.

Step 10. Deselect Configure IPv6 Pool.

Step 11. Click Create to finish configuring the IP address pool.

Step 12. Click Create to finish configuring the IMC access policy.
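Before creating the pool, it can help to confirm that the IP block stays inside the management subnet. The following sketch uses the 10.102.2.0/24 subnet from the note above; the gateway, starting address, block size, and reserved addresses are hypothetical values, and the function is illustrative rather than part of Intersight.

```python
import ipaddress


def check_ip_block(subnet: str, gateway: str, first: str, size: int,
                   reserved: tuple[str, ...] = ()):
    """Return (addresses, problems) for a KVM IP block within a subnet."""
    net = ipaddress.ip_network(subnet)
    gw = ipaddress.ip_address(gateway)
    taken = {ipaddress.ip_address(a) for a in reserved}
    start = ipaddress.ip_address(first)
    block = [start + offset for offset in range(size)]

    problems = []
    if gw not in net:
        problems.append(f"gateway {gw} is outside {net}")
    for addr in block:
        # Each address must be a usable host that does not clash with other uses.
        if addr not in net or addr in (net.network_address, net.broadcast_address):
            problems.append(f"{addr} is not a usable host address in {net}")
        elif addr == gw:
            problems.append(f"{addr} collides with the gateway")
        elif addr in taken:
            problems.append(f"{addr} is already reserved")
    return block, problems


if __name__ == "__main__":
    # Hypothetical block of 20 KVM addresses and gateway .254.
    block, problems = check_ip_block("10.102.2.0/24", "10.102.2.254", "10.102.2.101", 20)
    print(block[0], block[-1], problems or "block OK")
```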

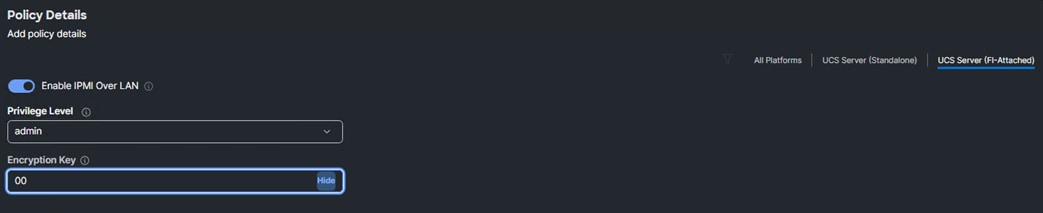

Procedure 2. Configure IPMI Over LAN Policy

The IPMI Over LAN Policy can be used to allow both IPMI and Redfish connectivity to Cisco UCS Servers.

Step 1. Click Select Policy next to IPMI Over LAN and then, in the pane on the right, click Create New.

Step 2. Verify the correct organization is selected from the drop-down list (for example, AA02-OCP) and provide a name for the policy (for example, AA02-OCP-IPMIoLAN-Policy). Click Next.

Step 3. On the right, ensure UCS Server (FI-Attached) is selected.

Step 4. Ensure Enable IPMI Over LAN is selected.

Step 5. From the Privilege Level drop-down list, select admin.

Step 6. For Encryption Key, enter 00 to disable encryption.

Step 7. Click Create to create the IPMI Over LAN policy.

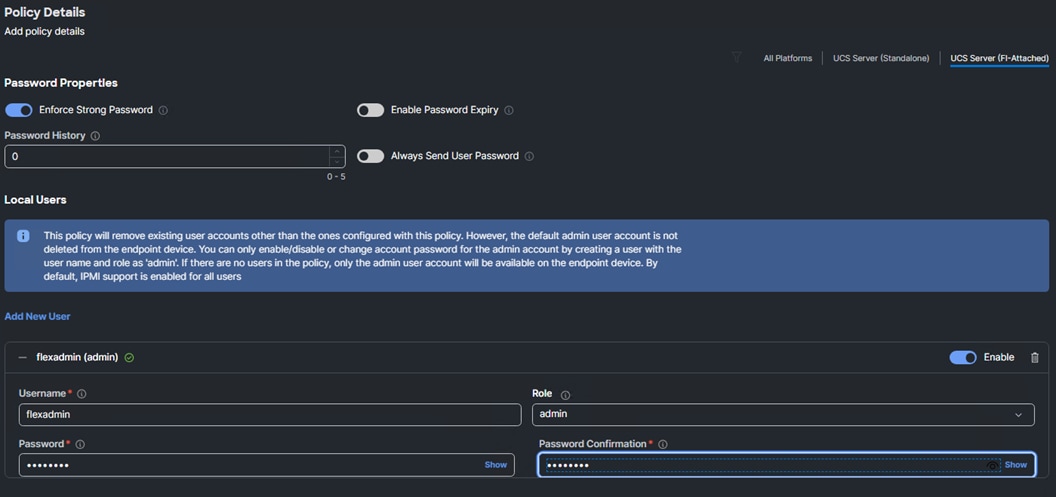

Procedure 3. Configure Local User Policy

Step 1. Click Select Policy next to Local User and then, in the pane on the right, click Create New.

Step 2. Verify the correct organization is selected from the drop-down list (for example, AA02-OCP) and provide a name for the policy (for example, AA02-OCP-LocalUser-Policy). Click Next.

Step 3. Verify that UCS Server (FI-Attached) is selected.

Step 4. Verify that Enforce Strong Password is selected.

Step 5. Enter 0 under Password History.

Step 6. Click Add New User.

Step 7. Provide the username (for example, flexadmin), select a role (for example, admin), and provide a password and password confirmation.

Note: The username and password combination defined here will be used as an alternate to log in to KVMs and can be used for IPMI.

Step 8. Click Create to finish configuring the Local User policy.

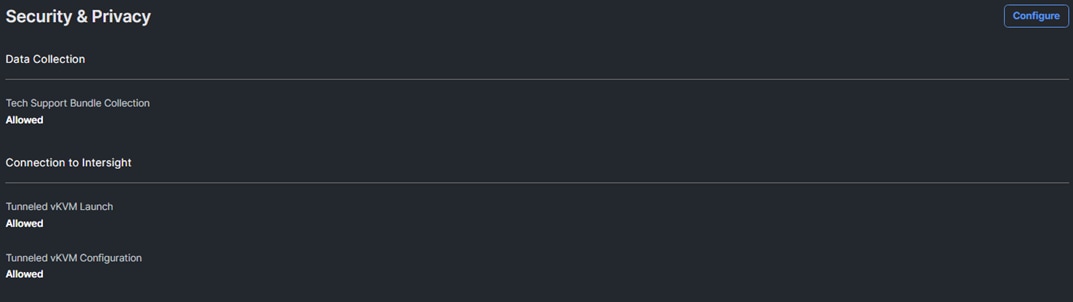

Procedure 4. Configure Virtual KVM Policy

Step 1. Click Select Policy next to Virtual KVM and then, in the pane on the right, click Create New.

Step 2. Verify the correct organization is selected from the drop-down list (for example, AA02-OCP) and provide a name for the policy (for example, AA02-OCP-Virtual-KVM). Click Next.

Step 3. Verify that UCS Server (FI-Attached) is selected.

Step 4. Turn on Allow Tunneled vKVM.

Step 5. Click Create.

Note: To fully enable Tunneled KVM, after the Server Profile Template has been created, go to System > Settings > Security and Privacy. If Tunneled vKVM Launch and Tunneled vKVM Configuration are not allowed, click Configure and turn on "Allow Tunneled vKVM Launch" and "Allow Tunneled vKVM Configuration."

Step 6. Click Next to move to Storage Configuration.

Storage Configuration

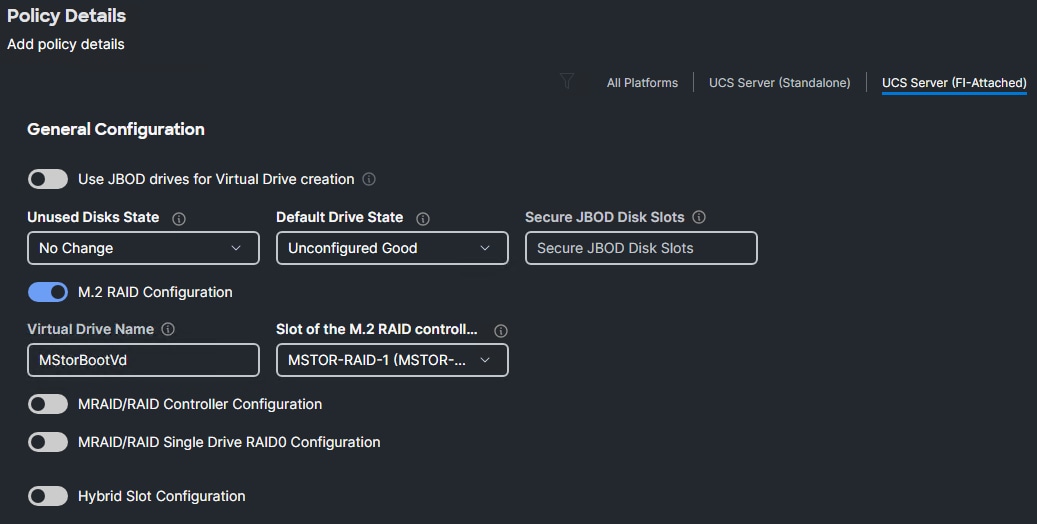

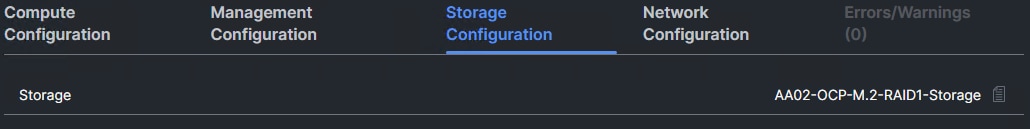

Procedure 1. Storage Configuration (optional)

If your servers have two M.2 drives, you can create an optional policy to mirror these drives using RAID 1.

Step 1. If it is not necessary to configure a Storage Policy, click Next to continue to Network Configuration.

Step 2. Click Select Policy next to Storage and then, in the pane on the right, click Create New.

Step 3. Verify the correct organization is selected from the drop-down list (for example, AA02-OCP) and provide a name for the policy (for example, AA02-OCP-M.2-RAID1-Storage). Click Next.

Step 4. Enable M.2 RAID Configuration and leave the default values for Virtual Drive Name and Slot of the M.2 RAID controller, or enter values appropriate to your environment. Click Create.

Step 5. Click Next.

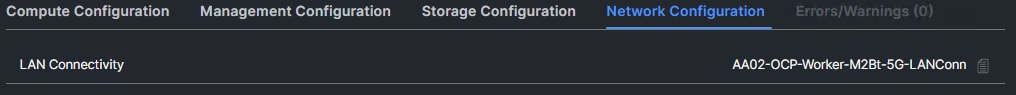

Network Configuration

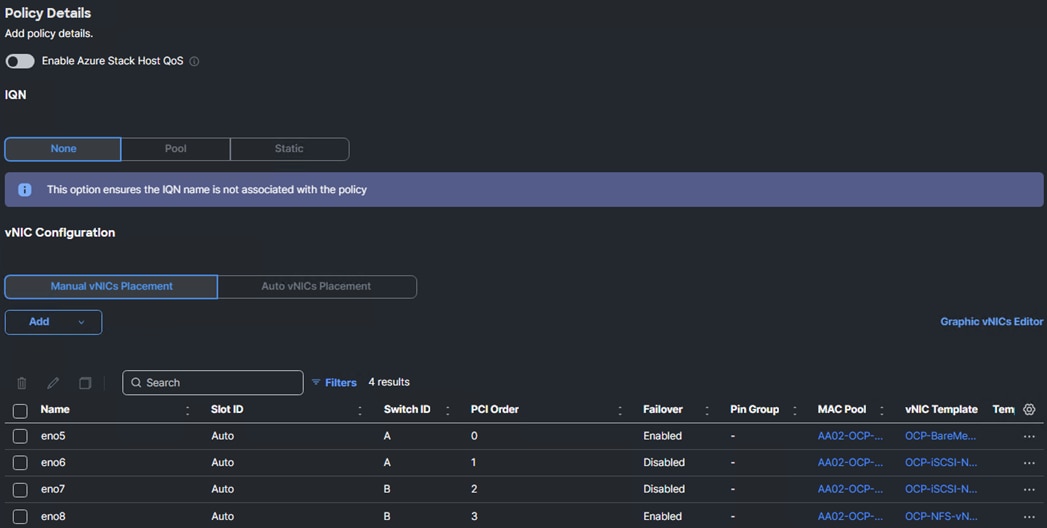

Procedure 1. Create Network Configuration - LAN Connectivity for Worker Nodes

The LAN connectivity policy defines the connections and network communication resources between the server and the LAN. This policy uses pools to assign MAC addresses to servers and to identify the vNICs that the servers use to communicate with the network.

For consistent vNIC placement, manual vNIC placement is used. This procedure also assumes that each server contains only one VIC card and uses Simple placement, which adds vNICs to the first VIC. If a server has more than one VIC, use Advanced placement.

The Worker hosts use 4 vNICs configured as listed in Table 6.

Table 6. vNIC placement for OpenShift Worker Nodes

| vNIC/vHBA Name | Switch ID | PCI Order | Fabric Failover | Native VLAN | Allowed VLANs | MTU |
|---|---|---|---|---|---|---|
| eno5 | A | 0 | Y | OCP-BareMetal-MGMT | OCP-BareMetal-MGMT | 1500 |
| eno6 | A | 1 | N | OCP-iSCSI-A | OCP-iSCSI-A, OCP-NVMe-TCP-A | 9000 |
| eno7 | B | 2 | N | OCP-iSCSI-B | OCP-iSCSI-B, OCP-NVMe-TCP-B | 9000 |
| eno8 | B | 3 | Y | OCP-NFS | OCP-NFS | 9000 |
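Table 6 can also be captured as data and checked before it is entered in Intersight. This hypothetical sketch checks that PCI order runs from 0 without gaps, that each native VLAN is also an allowed VLAN, and that every MTU has a matching Ethernet QoS policy following the Table 9 naming; it also counts vNICs per fabric, which drives MAC pool sizing.

```python
from collections import Counter
from typing import NamedTuple


class VNic(NamedTuple):
    name: str
    switch_id: str
    pci_order: int
    fabric_failover: bool
    native_vlan: str
    allowed_vlans: tuple[str, ...]
    mtu: int


# Worker vNIC layout transcribed from Table 6.
WORKER_VNICS = [
    VNic("eno5", "A", 0, True, "OCP-BareMetal-MGMT", ("OCP-BareMetal-MGMT",), 1500),
    VNic("eno6", "A", 1, False, "OCP-iSCSI-A", ("OCP-iSCSI-A", "OCP-NVMe-TCP-A"), 9000),
    VNic("eno7", "B", 2, False, "OCP-iSCSI-B", ("OCP-iSCSI-B", "OCP-NVMe-TCP-B"), 9000),
    VNic("eno8", "B", 3, True, "OCP-NFS", ("OCP-NFS",), 9000),
]


def layout_errors(vnics: list[VNic]) -> list[str]:
    """Return human-readable problems with a vNIC layout (empty list if none)."""
    errors = []
    orders = sorted(v.pci_order for v in vnics)
    if orders != list(range(len(vnics))):
        errors.append(f"PCI order should run 0..{len(vnics) - 1} without gaps: {orders}")
    for v in vnics:
        if v.switch_id not in ("A", "B"):
            errors.append(f"{v.name}: unknown switch ID {v.switch_id!r}")
        if v.native_vlan not in v.allowed_vlans:
            errors.append(f"{v.name}: native VLAN {v.native_vlan} is not an allowed VLAN")
        if v.mtu not in (1500, 9000):
            errors.append(f"{v.name}: no Ethernet QoS policy defined for MTU {v.mtu}")
    return errors


def qos_policy_name(v: VNic) -> str:
    # Naming convention from Table 9, for example AA02-OCP-MTU9000-EthernetQoS.
    return f"AA02-OCP-MTU{v.mtu}-EthernetQoS"


def vnics_per_fabric(vnics: list[VNic]) -> Counter:
    return Counter(v.switch_id for v in vnics)
```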

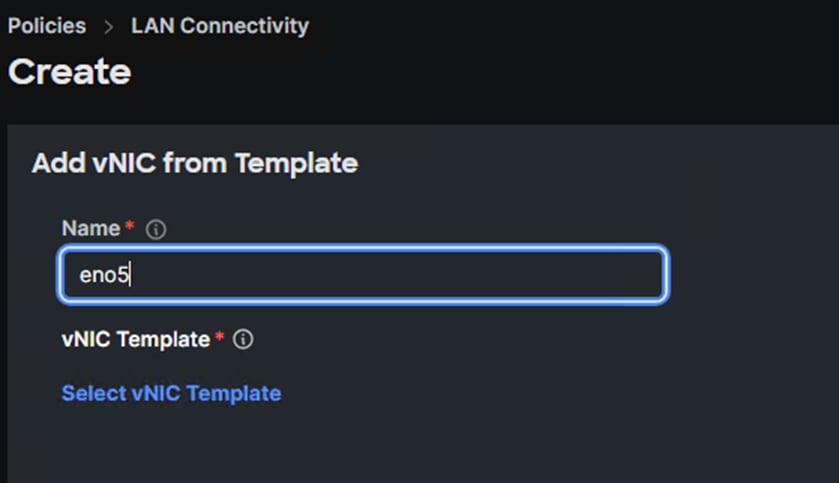

Step 1. Click Select Policy next to LAN Connectivity and then, in the pane on the right, click Create New.

Step 2. Verify the correct organization is selected from the drop-down list (for example, AA02-OCP), provide a name for the policy (for example, AA02-OCP-Worker-M2Bt-5G-LANConn) and select UCS Server (FI-Attached) under Target Platform. Click Next.

Step 3. Leave None selected under IQN and under vNIC Configuration, select Manual vNICs Placement.

Step 4. Use the Add drop-down list to select vNIC from Template.

Step 5. Enter the name for the vNIC from the table above (for example, eno5) and click Select vNIC Template.

Step 6. In the upper right, click Create New.

Step 7. Verify the correct organization is selected from the drop-down list (for example, AA02-OCP) and provide a name for the vNIC Template (for example, AA02-OCP-BareMetal-MGMT-vNIC). Click Next.

Procedure 2. Create MAC Address Pool for Fabric A and B

Note: When creating the first vNIC, the MAC address pool has not been defined yet, therefore a new MAC address pool will need to be created. Two separate MAC address pools are configured, one for each Fabric. MAC-Pool-A will be used for all Fabric-A vNICs, and MAC-Pool-B will be used for all Fabric-B vNICs. Adjust the values in the table for your environment.

Table 7. MAC Address Pools

| Pool Name | Starting MAC Address | Size | vNICs |
|---|---|---|---|
| MAC-Pool-A | 00:25:B5:A2:0A:00 | 64* | eno5, eno6 |
| MAC-Pool-B | 00:25:B5:A2:0B:00 | 64* | eno7, eno8 |

Note: For Control-Plane Nodes, each server requires 1 MAC address from MAC-Pool-A, and for Workers, each server requires 2 MAC addresses from MAC-Pool-A and 2 MAC addresses from MAC-Pool-B. Adjust the size of the pool according to your requirements.
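The sizing rule in the note is simple arithmetic. As a sketch, assuming the default pool size of 64 and the per-server counts above (a cluster with combined control-plane and worker nodes uses the worker counts for every node), the helper names below are illustrative:

```python
def mac_demand(control_plane_nodes: int, worker_nodes: int) -> dict[str, int]:
    """MAC addresses consumed per pool, using the per-server counts in the note."""
    return {
        "MAC-Pool-A": control_plane_nodes * 1 + worker_nodes * 2,
        "MAC-Pool-B": worker_nodes * 2,
    }


def max_workers(control_plane_nodes: int, pool_size: int = 64) -> int:
    """Largest worker count for which both pools still fit in pool_size."""
    # Pool A: cp + 2w <= size; Pool B: 2w <= size.
    return max(0, min((pool_size - control_plane_nodes) // 2, pool_size // 2))


if __name__ == "__main__":
    print(mac_demand(control_plane_nodes=3, worker_nodes=10))  # {'MAC-Pool-A': 23, 'MAC-Pool-B': 20}
    print(max_workers(3))  # 30 with the default size of 64
```

With three control-plane nodes, Pool A is the limiting pool: 3 + 2w must not exceed 64, which allows at most 30 workers.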

Step 1. Click Select Pool under MAC Pool and then, in the pane on the right, click Create New.

Step 2. Verify the correct organization is selected from the drop-down list (for example, AA02-OCP) and provide a name for the pool from Table 7 with the prefix applied depending on the vNIC being created (for example, AA02-OCP-MAC-Pool-A for Fabric A).

Step 3. Click Next.

Step 4. Provide the starting MAC address from Table 7 (for example, 00:25:B5:A2:0A:00).

Note: For ease of troubleshooting FlexPod, some additional information is always coded into the MAC address pool. For example, in the starting address 00:25:B5:A2:0A:00, A2 is the rack ID and 0A indicates Fabric A.

Step 5. Provide the size of the MAC address pool from Table 7 (for example, 64).

Step 6. Click Create to finish creating the MAC address pool.

Step 7. From the Create vNIC Template window, provide the Switch ID from Table 6.

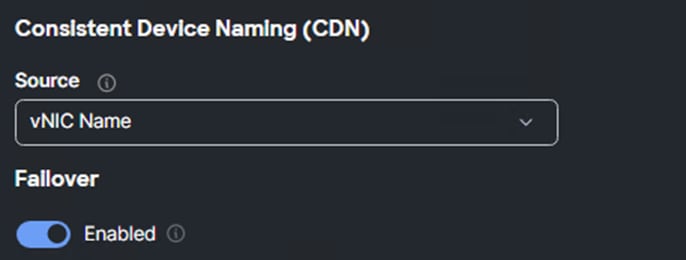

Step 8. For Consistent Device Naming (CDN), from the drop-down list, select vNIC Name.

Step 9. For Failover, set the value from Table 6.
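The MAC address encoding described in the note under Step 4 can be expressed in a short sketch; the helper names are hypothetical. One useful check follows from the encoding: because the fabric indicator sits in the fifth octet, 00:25:B5:A2:0A:FF plus one is 00:25:B5:A2:0B:00, so a Fabric A block that grows past the last octet would overlap the Fabric B pool. The sketch rejects such blocks.

```python
def mac_block(start: str, size: int) -> list[str]:
    """Enumerate a MAC pool block, refusing to roll over into the fabric octet."""
    first = int(start.replace(":", ""), 16)
    last = first + size - 1
    if size < 1 or (last >> 8) != (first >> 8):
        raise ValueError("block must stay within the last octet (at most 256 addresses)")
    macs = []
    for value in range(first, last + 1):
        raw = f"{value:012X}"
        macs.append(":".join(raw[i:i + 2] for i in range(0, 12, 2)))
    return macs


def decode_flexpod_mac(mac: str) -> dict[str, str]:
    """Split a pool MAC into the fields described in the note (rack ID, fabric)."""
    octets = mac.upper().split(":")
    return {
        "prefix": ":".join(octets[:3]),
        "rack_id": octets[3],
        "fabric": octets[4][-1],  # "0A" -> "A", "0B" -> "B"
    }


if __name__ == "__main__":
    pool_a = mac_block("00:25:B5:A2:0A:00", 64)
    print(pool_a[0], pool_a[-1], decode_flexpod_mac(pool_a[-1]))
```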

Procedure 3. Create Ethernet Network Group Policy

Ethernet Network Group policies are created and reused on applicable vNICs as explained below. The Ethernet network group policy defines the VLANs allowed for a particular vNIC; therefore, multiple network group policies are defined for this deployment, as listed in Table 8.

Table 8. Ethernet Group Policy Values

| Group Policy Name | Native VLAN | Apply to vNICs | Allowed VLANs |
|---|---|---|---|
| AA02-OCP-BareMetal-NetGrp | OCP-BareMetal-MGMT (1022) | eno5 | OCP-BareMetal-MGMT |
| AA02-OCP-iSCSI-NVMe-TCP-A-NetGrp | OCP-iSCSI-A (3012) | eno6 | OCP-iSCSI-A, OCP-NVMe-TCP-A* |
| AA02-OCP-iSCSI-NVMe-TCP-B-NetGrp | OCP-iSCSI-B (3022) | eno7 | OCP-iSCSI-B, OCP-NVMe-TCP-B* |
| AA02-OCP-NFS-NetGrp | OCP-NFS | eno8 | OCP-NFS |

Note: *Add the NVMe-TCP VLANs when using NVMe-TCP.

Step 1. Click Select Policy under Ethernet Network Group Policy and then, in the pane on the right, click Create New.

Step 2. Verify the correct organization is selected from the drop-down list (for example, AA02-OCP) and provide a name for the policy from the Table 8 (for example, AA02-OCP-BareMetal-NetGrp).

Step 3. Click Next.

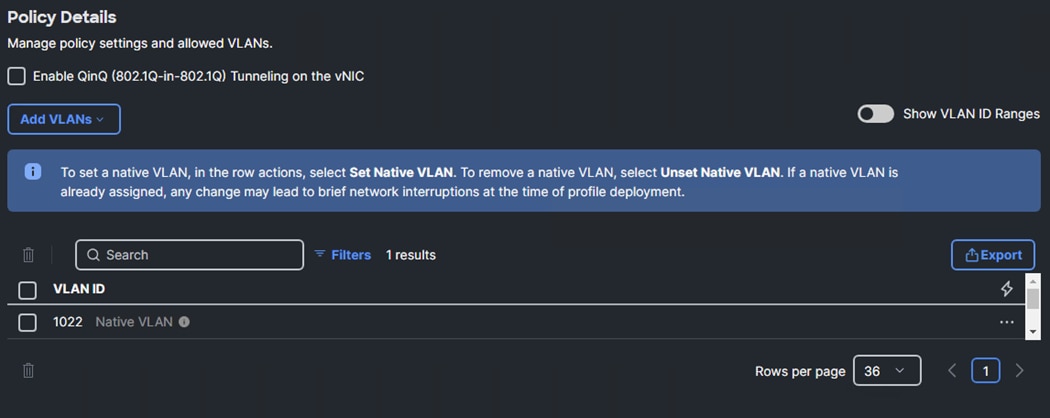

Step 4. From the Add VLANs drop-down list, select Enter Manually.

Step 5. Enter the VLAN IDs of the Allowed VLANs from Table 8 as a comma-separated list (for example, 1022). Click Enter.

Step 6. Click the three dots to the right of the native VLAN for the policy and select Set Native VLAN.

Step 7. Click Create to finish configuring the Ethernet network group policy.

Note: When ethernet group policies are shared between two vNICs, the ethernet group policy only needs to be defined for the first vNIC. For subsequent vNIC policy mapping, click Select Policy and pick the previously defined ethernet network group policy from the list.
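The allowed VLAN string entered in Step 5 can be derived from Table 8. In this sketch, VLAN IDs 1022, 3012, and 3022 come from this guide; the NVMe-TCP and NFS IDs are hypothetical placeholders for the values in Table 1, and the helper is illustrative only.

```python
# VLAN IDs 1022, 3012, and 3022 appear in this guide; the others are
# hypothetical placeholders. Substitute the IDs from Table 1.
VLAN_IDS = {
    "OCP-BareMetal-MGMT": 1022,
    "OCP-iSCSI-A": 3012,
    "OCP-iSCSI-B": 3022,
    "OCP-NVMe-TCP-A": 3032,  # hypothetical
    "OCP-NVMe-TCP-B": 3042,  # hypothetical
    "OCP-NFS": 3052,  # hypothetical
}

# Table 8: policy name -> (native VLAN, allowed VLANs)
NETWORK_GROUPS = {
    "AA02-OCP-BareMetal-NetGrp": ("OCP-BareMetal-MGMT", ["OCP-BareMetal-MGMT"]),
    "AA02-OCP-iSCSI-NVMe-TCP-A-NetGrp": ("OCP-iSCSI-A", ["OCP-iSCSI-A", "OCP-NVMe-TCP-A"]),
    "AA02-OCP-iSCSI-NVMe-TCP-B-NetGrp": ("OCP-iSCSI-B", ["OCP-iSCSI-B", "OCP-NVMe-TCP-B"]),
    "AA02-OCP-NFS-NetGrp": ("OCP-NFS", ["OCP-NFS"]),
}


def vlan_entry(policy: str, use_nvme_tcp: bool = False) -> tuple[str, int]:
    """Return (comma-separated allowed VLAN IDs, native VLAN ID) for a policy."""
    native, allowed = NETWORK_GROUPS[policy]
    # NVMe-TCP VLANs are only added when NVMe-TCP is in use (see the Table 8 note).
    names = [n for n in allowed if use_nvme_tcp or "NVMe-TCP" not in n]
    if native not in names:
        raise ValueError(f"{policy}: native VLAN {native} must also be allowed")
    return ",".join(str(VLAN_IDS[n]) for n in names), VLAN_IDS[native]


if __name__ == "__main__":
    for name in NETWORK_GROUPS:
        print(name, vlan_entry(name, use_nvme_tcp=True))
```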

Procedure 4. Create Ethernet Network Control Policy

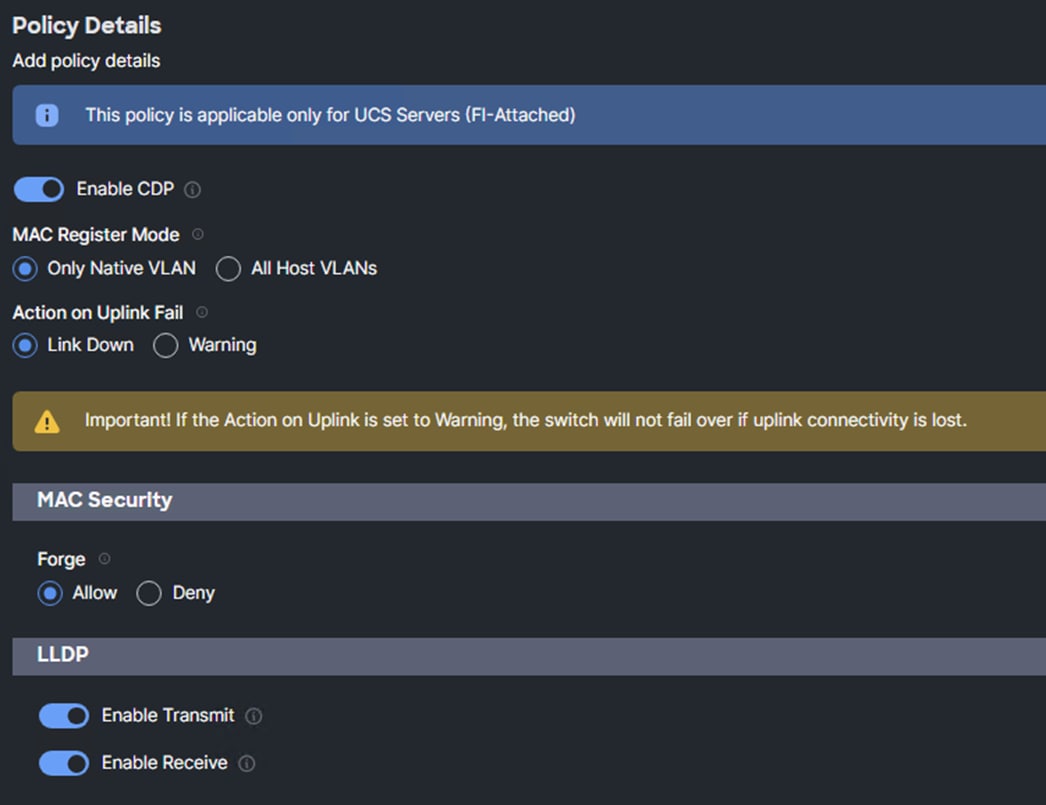

The Ethernet Network Control Policy is used to enable Cisco Discovery Protocol (CDP) and Link Layer Discovery Protocol (LLDP) for the vNICs. A single policy will be created here and reused for all the vNICs.

Step 1. Click Select Policy under Ethernet Network Control Policy and then, in the pane on the right, click Create New.

Step 2. Verify the correct organization is selected from the drop-down list (for example, AA02-OCP) and provide a name for the policy (for example, AA02-OCP-Enable-CDP-LLDP).

Step 3. Click Next.

Step 4. Enable Cisco Discovery Protocol (CDP) and Enable Transmit and Enable Receive under LLDP.

Step 5. Click Create to finish creating Ethernet network control policy.

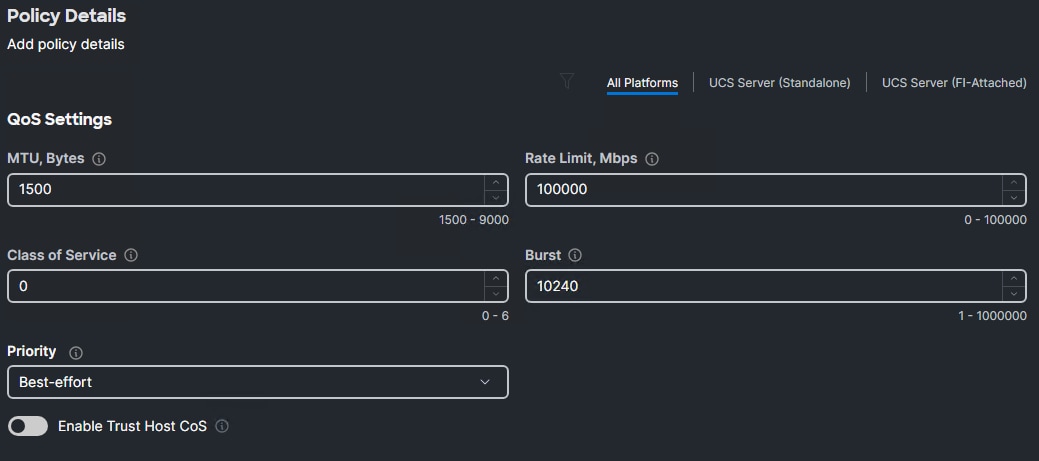

Procedure 5. Create Ethernet QoS Policy

Note: The Ethernet QoS policy is used to set the appropriate maximum transmission unit (MTU) for all the vNICs. Two policies are created (one for MTU 1500 and one for MTU 9000) and reused across all the vNICs.

Table 9. Ethernet QoS Policy association to vNICs

| Policy Name | vNICs |
|---|---|
| AA02-OCP-MTU1500-EthernetQoS | eno5 |
| AA02-OCP-MTU9000-EthernetQoS | eno6, eno7, eno8 |

Step 1. Click Select Policy under Ethernet QoS and in the pane on the right, click Create New.

Step 2. Verify the correct organization is selected from the drop-down list (for example, AA02-OCP) and provide a name for the policy (for example, AA02-OCP-MTU1500-EthernetQoS). The name of the policy should conform to the MTU from Table 9.

Step 3. Click Next.

Step 4. Change the MTU, Bytes value to the MTU in the policy name from Table 9 (1500 or 9000).

Step 5. Set the Rate Limit Mbps to 100000.

Step 6. Click Create to finish setting up the Ethernet QoS policy.

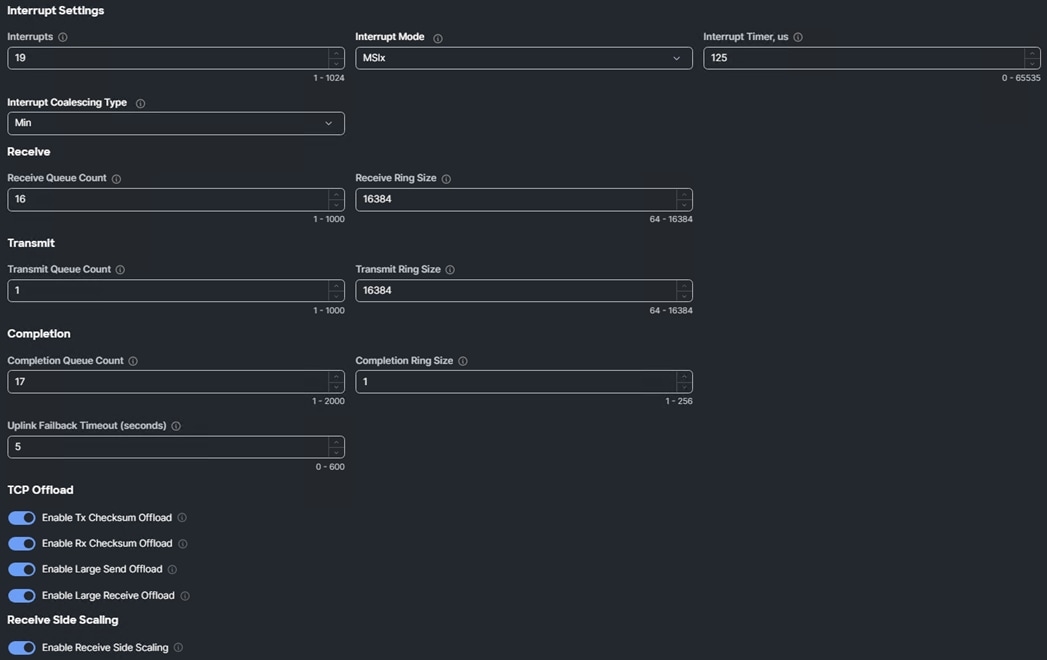

Procedure 6. Create Ethernet Adapter Policy

The Ethernet adapter policy is used to set the interrupts, the transmit and receive queues, and the queue ring sizes. The values are set according to the best-practices guidance for the operating system in use. Cisco Intersight provides a default Linux Ethernet Adapter policy for typical Linux deployments.

You can optionally configure a modified Ethernet adapter policy with additional hardware receive queues handled by multiple CPUs for scenarios with heavy traffic and many flows. In this deployment, a modified Ethernet adapter policy, AA02-OCP-EthAdapter-16RXQs-5G, is created and attached to the storage vNICs. Non-storage vNICs use the default Linux-v2 Ethernet Adapter policy.

Table 10. Ethernet Adapter Policy association to vNICs

| Policy Name | vNICs |
| --- | --- |
| AA02-OCP-EthAdapter-Linux-v2 | eno5 |
| AA02-OCP-EthAdapter-16RXQs-5G | eno6, eno7, eno8 |
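As a planning aid, the assignments in Table 9 and Table 10 can be captured as data and checked so that every vNIC receives exactly one Ethernet QoS policy and one Ethernet adapter policy. This is a minimal Python sketch, not an Intersight API call; the dictionaries simply transcribe the two tables, and the function names `invert` and `plan` are hypothetical.

```python
# vNIC-to-policy assignments transcribed from Table 9 (Ethernet QoS)
# and Table 10 (Ethernet Adapter).
QOS_POLICIES = {
    "AA02-OCP-MTU1500-EthernetQoS": ["eno5"],
    "AA02-OCP-MTU9000-EthernetQoS": ["eno6", "eno7", "eno8"],
}
ADAPTER_POLICIES = {
    "AA02-OCP-EthAdapter-Linux-v2": ["eno5"],
    "AA02-OCP-EthAdapter-16RXQs-5G": ["eno6", "eno7", "eno8"],
}
VNICS = ["eno5", "eno6", "eno7", "eno8"]


def invert(policies):
    """Map each vNIC to the one policy that lists it; fail if a vNIC is listed twice."""
    by_vnic = {}
    for policy, vnics in policies.items():
        for vnic in vnics:
            if vnic in by_vnic:
                raise ValueError(f"{vnic} is assigned to both {by_vnic[vnic]} and {policy}")
            by_vnic[vnic] = policy
    return by_vnic


def plan(vnics=VNICS):
    """Return {vNIC: (QoS policy, adapter policy)}; fail if any vNIC lacks either."""
    qos = invert(QOS_POLICIES)
    adapter = invert(ADAPTER_POLICIES)
    missing = [v for v in vnics if v not in qos or v not in adapter]
    if missing:
        raise ValueError(f"vNICs without a QoS or adapter policy: {missing}")
    return {v: (qos[v], adapter[v]) for v in vnics}


if __name__ == "__main__":
    for vnic, (qos_policy, adapter_policy) in plan().items():
        print(f"{vnic}: {qos_policy}, {adapter_policy}")
```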

Step 1. Click Select Policy under Ethernet Adapter and then, in the pane on the right, click Create New.

Step 2. Verify the correct organization is selected from the drop-down list (for example, AA02-OCP) and provide a name for the policy (for example, AA02-OCP-EthAdapter-Linux-v2).

Step 3. Click Select Cisco Provided Configuration under Cisco Provided Ethernet Adapter Configuration.

Step 4. From the list, select Linux-v2.

Step 5. Click Next.

Step 6. For the AA02-OCP-EthAdapter-Linux-v2 policy, click Create and skip the rest of the steps in this “Create Ethernet Adapter Policy” section.

Step 7. For the AA02-OCP-EthAdapter-16RXQs-5G policy, make the following modifications to the policy:

● Increase Interrupts to 19

● Increase Receive Queue Count to 16

● Increase Receive Ring Size to 16384 (leave at 4096 for 4G VICs)

● Increase Transmit Ring Size to 16384 (leave at 4096 for 4G VICs)

● Increase Completion Queue Count to 17

● Ensure Receive Side Scaling is enabled

Step 8. Click Create.

Procedure 7. Add vNIC(s) to LAN Connectivity Policy

The vNIC template has now been created, and all policies are attached.

Step 1. For PCI Order, enter the number from Table 8. Verify the other values.

Step 2. Click Add to add the vNIC to the LAN Connectivity Policy.

Step 3. If building the Worker LAN Connectivity Policy, return to Step 4 of Procedure 1, Create Network Configuration - LAN Connectivity for Worker Nodes, and repeat the vNIC template and vNIC creation for all four vNICs, selecting existing policies instead of creating new ones where possible.

Step 4. Verify all vNICs were successfully created.

Step 5. Click Create to finish creating the LAN Connectivity policy.

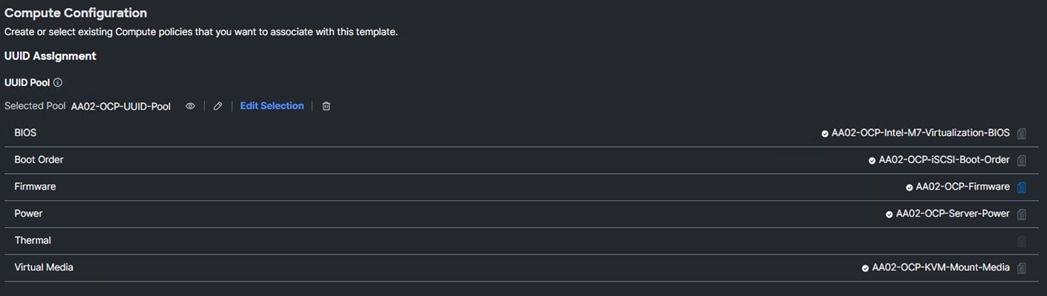

Procedure 8. Complete the Worker Server Profile Template

Step 1. When the LAN connectivity policy is created, click Next to move to the Summary screen.

Step 2. On the Summary screen, verify that the policies are mapped to the appropriate settings. The following screenshots show the summary view for the OpenShift Worker M.2 Boot server profile template.

Step 3. Click Close to close the template.

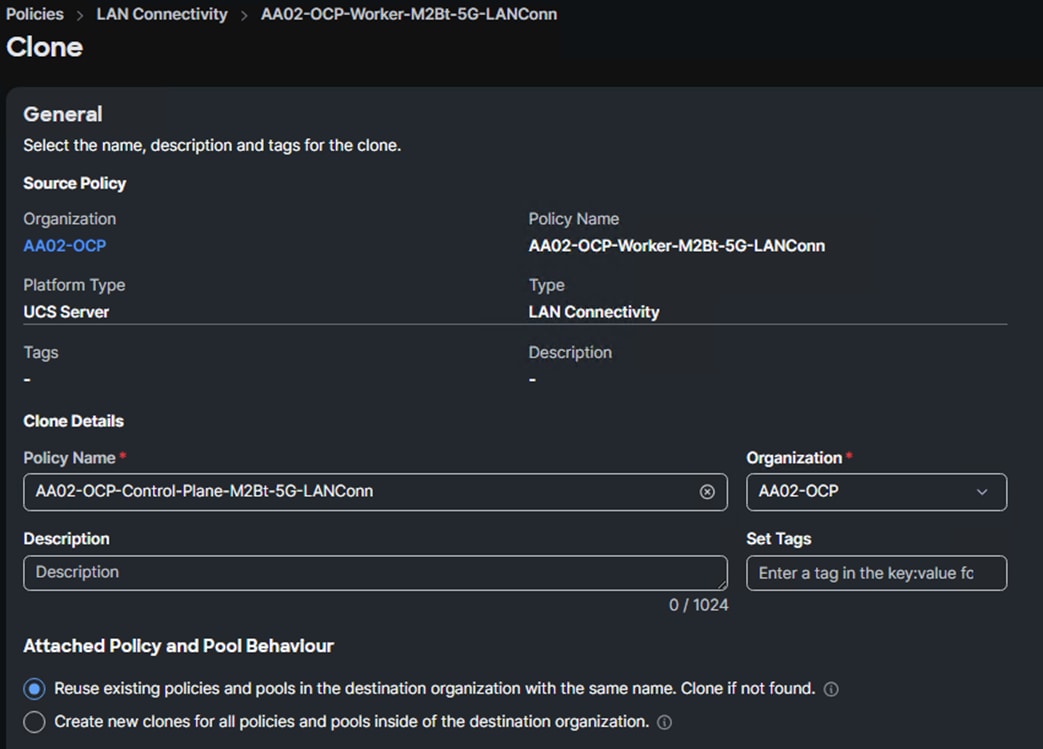

Procedure 9. Build the OpenShift Control-Plane LAN Connectivity Policy

Note: If combined control-plane and worker nodes are being used, it is not necessary to build a Control-Plane Node LAN Connectivity Policy because only the Worker LAN Connectivity Policy will be used.

Step 1. To build the OpenShift Control-Plane Node LAN Connectivity Policy, clone the OpenShift Worker LAN Connectivity Policy and remove vNICs eno6, eno7, and eno8, leaving only eno5. This policy is then used in the OpenShift Control-Plane Node Server Profile Template. Log into Cisco Intersight and select Configure > Policies.

Step 2. In the policy list, look for the <org-name>-Worker-M2Bt-5G-LANConn or the LAN Connectivity policy created above. Click … to the right of the policy and select Clone.

Step 3. Change the name of the cloned policy to something like AA02-OCP-Control-Plane-M2Bt-5G-LANConn and select the correct Organization (for example, AA02-OCP).

Step 4. Click Clone to clone the policy.

Step 5. From the Policies window, click the refresh button to refresh the list. The newly cloned policy should now appear at the top of the list. Click … to the right of the newly cloned policy and select Edit.

Step 6. Click Next.

Step 7. Use the checkboxes to select all vNICs except eno5. Click the trash can icon to delete all vNICs except eno5.

Step 8. Click Save to save the policy.

Procedure 10. Create the OpenShift Control-Plane Node Server Profile Template

The OpenShift Worker Server Profile Template can be cloned and modified to create the OpenShift Control-Plane Node Server Profile Template.

Note: If combined control-plane and worker nodes are being used, it is not necessary to build a Control-Plane Node Server Profile Template because only the Worker Server Profile Template will be used.

Step 1. Log into Cisco Intersight and select Configure > Templates > UCS Server Profile Templates.

Step 2. To the right of the OCP-Worker-X210C-M7 template, click … and select Clone.

Step 3. Ensure that the correct Destination Organization is selected (for example, AA02-OCP) and click Next.

Step 4. Adjust the Clone Name (for example, AA02-Control-Plane-X210C-M7) and Description as needed and click Next.

Step 5. From the Templates window, click the … to the right of the newly created clone and click Edit.

Step 6. Click Next until you get to Storage Configuration. If the Storage Policy needs to be added or deleted, make that adjustment here.

Step 7. Click Next to get to Network Configuration. Click the page icon to the right of the LAN Connectivity Policy and select the Control-Plane Node LAN Connectivity Policy. Click Select.

Step 8. Click Next, and then click Close to save this template.

Complete the Cisco UCS IMM Setup

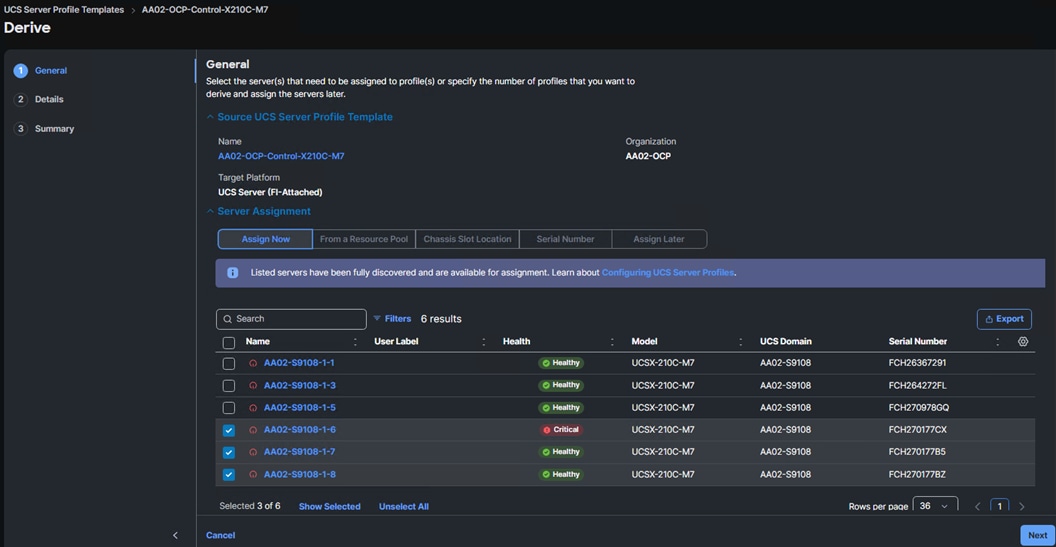

Procedure 1. Derive Server Profiles

Note: If using combined control-plane and worker nodes, use the OCP-Worker template for all nodes.

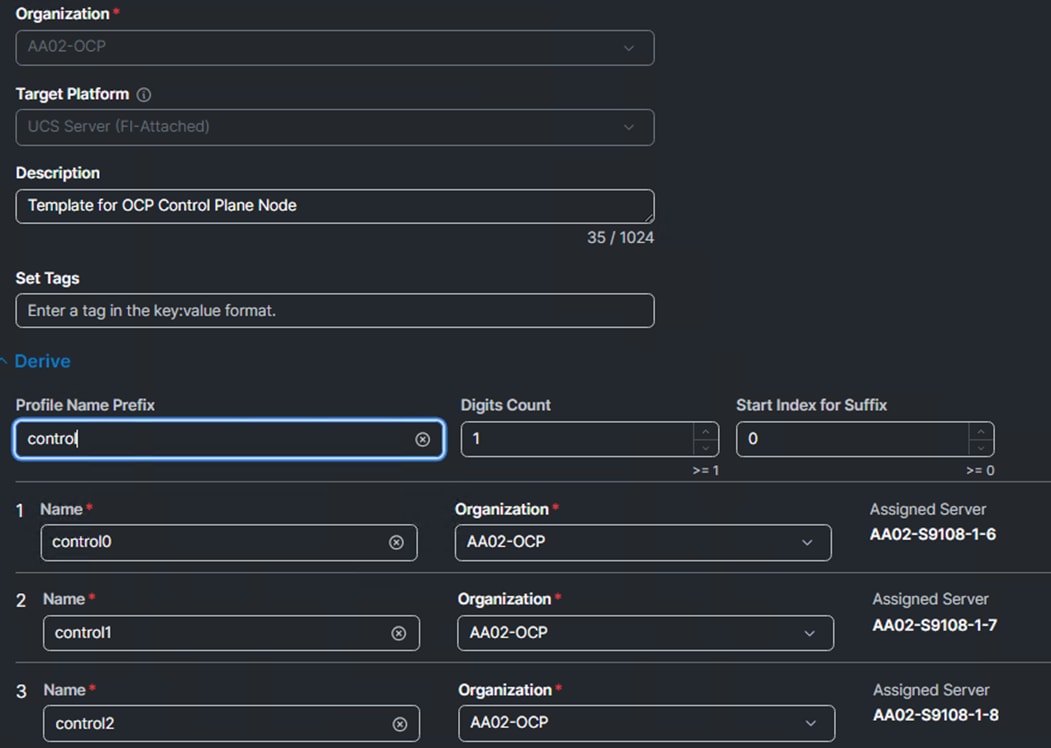

Step 2. Under the Server Assignment, select Assign Now and select the 3 Cisco UCS X210c M7 servers that will be used as OpenShift Control-Plane Nodes.

Step 3. Click Next.

Step 4. For the Profile Name Prefix, enter the first part of the OpenShift Control-Plane Node hostnames (for example, control). Set Start Index for Suffix to 0 (zero). The 3 server names should now correspond to the OpenShift Control-Plane Node hostnames.
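The naming in Step 4 can be previewed before deriving the profiles. This Python sketch assumes Intersight appends the running index directly to the prefix, with no separator or zero padding, as the example profile name ocp-worker2 used later in this guide suggests. The helper names are hypothetical, and the check compares the derived names against the leftmost label of each node's fully qualified hostname.

```python
def derived_profile_names(prefix, count, start_index=0):
    """Names built by appending a running index to the prefix.

    Assumption: no separator and no zero padding between prefix and index.
    """
    return [f"{prefix}{start_index + i}" for i in range(count)]


def short_hostnames(fqdns):
    """Leftmost DNS label of each fully qualified hostname."""
    return [fqdn.split(".", 1)[0] for fqdn in fqdns]


if __name__ == "__main__":
    control_fqdns = [f"control{i}.ocp.flexpodb4.cisco.com" for i in range(3)]
    names = derived_profile_names("control", 3)
    print(names, names == short_hostnames(control_fqdns))
```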

Step 5. Click Next.

Step 6. Click Derive to derive the OpenShift Control-Plane Node Server Profiles.

Step 7. Select Profiles on the left and then select the UCS Server Profiles tab.

Step 8. Select the 3 OpenShift Control-Plane Node profiles and then click the … at the top or bottom of the list and select Deploy.

Step 9. Select Reboot Immediately to Activate and click Deploy.

Step 10. Repeat this process to create 3 OpenShift Worker Node Server Profiles using the OCP-Worker-Template.

OpenShift Installation and Configuration

This chapter contains the following:

● OpenShift – Installation Requirements

● Add an Additional Administrative User to the OpenShift Cluster

● Add a Worker Node to an OpenShift Cluster

● Deploy a Sample Containerized Application

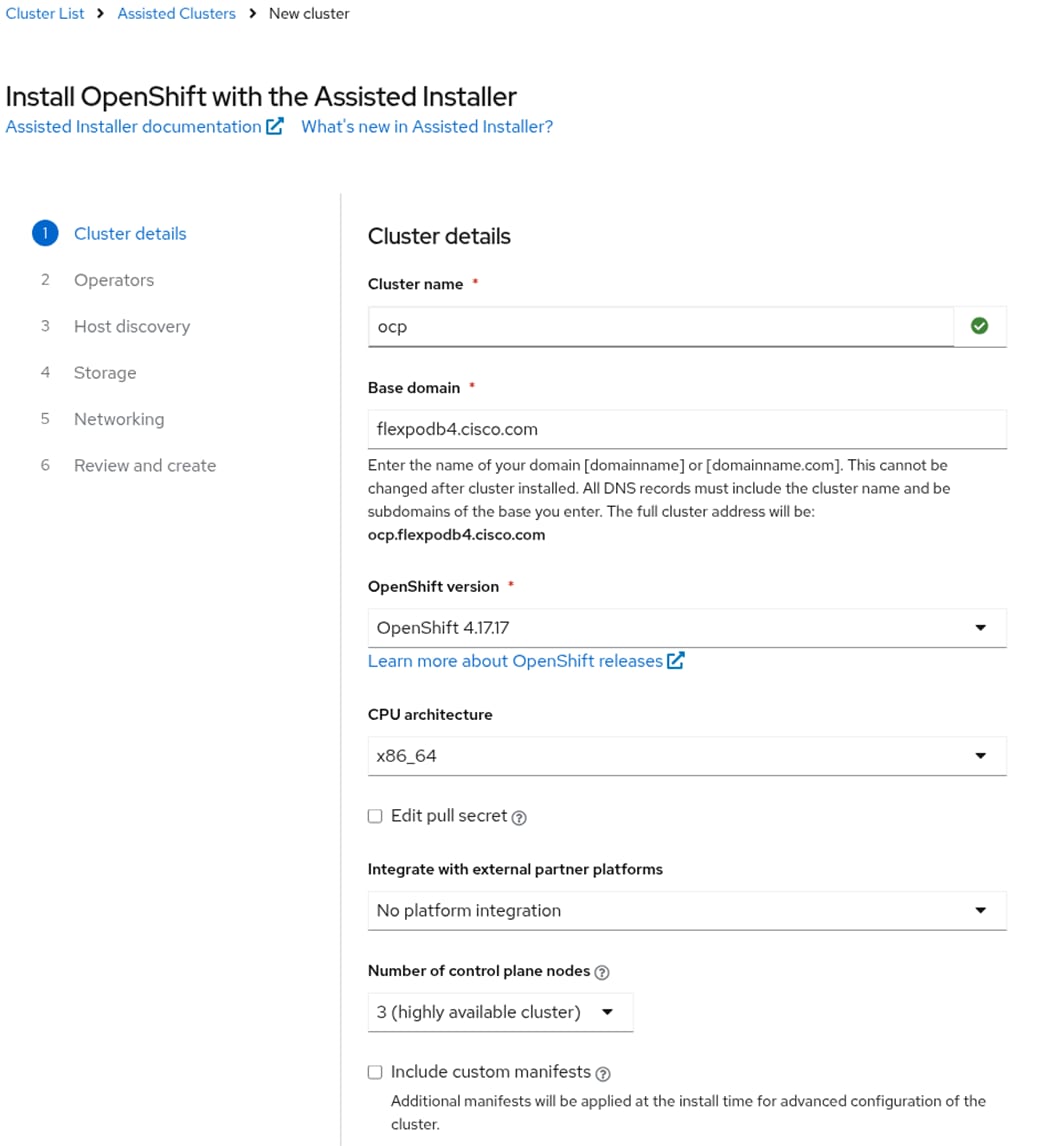

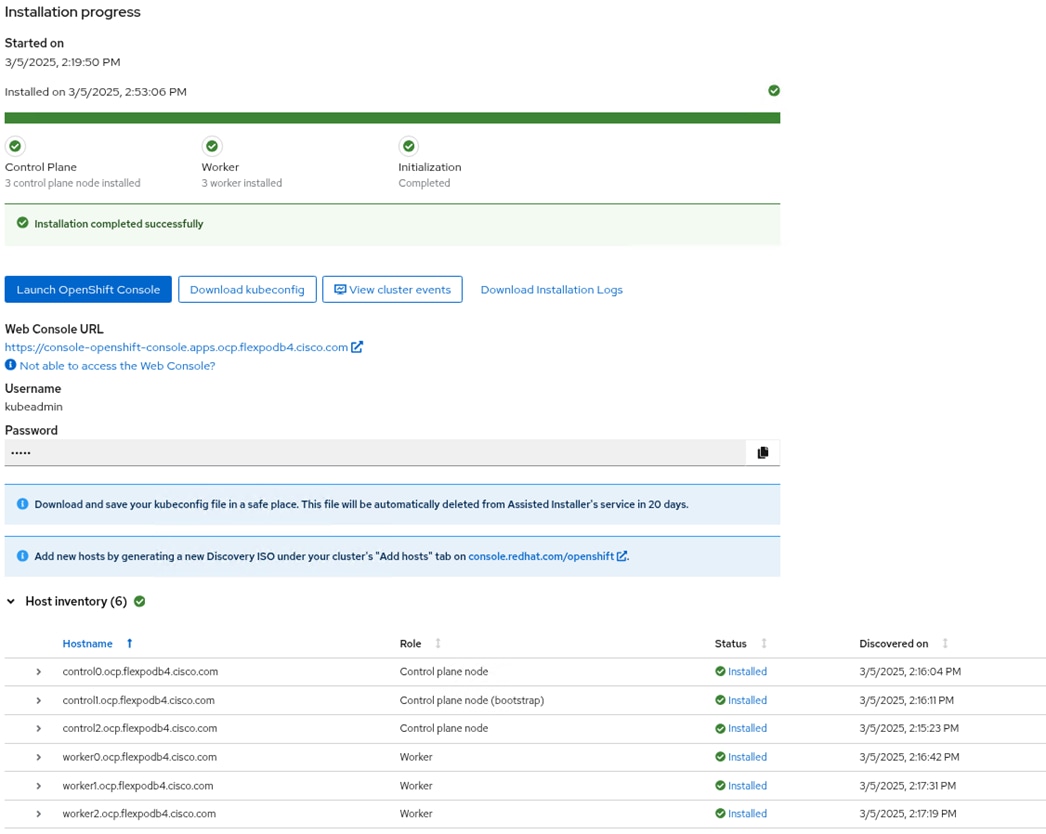

OpenShift 4.17 is deployed on the Cisco UCS infrastructure as M.2-booted bare metal servers. The Cisco UCS X210c M7 servers must be equipped with an M.2 controller card (SATA or NVMe) and either 1 or 2 identical M.2 drives. Three control-plane nodes and three worker nodes are deployed in the validation environment, and additional worker nodes can be added to scale the solution. This document guides you through the process of using the Assisted Installer to deploy OpenShift 4.17.

OpenShift – Installation Requirements

The Red Hat OpenShift Assisted Installer supports installing OpenShift on bare metal nodes. This guide provides a methodology for achieving a successful installation using the Assisted Installer.

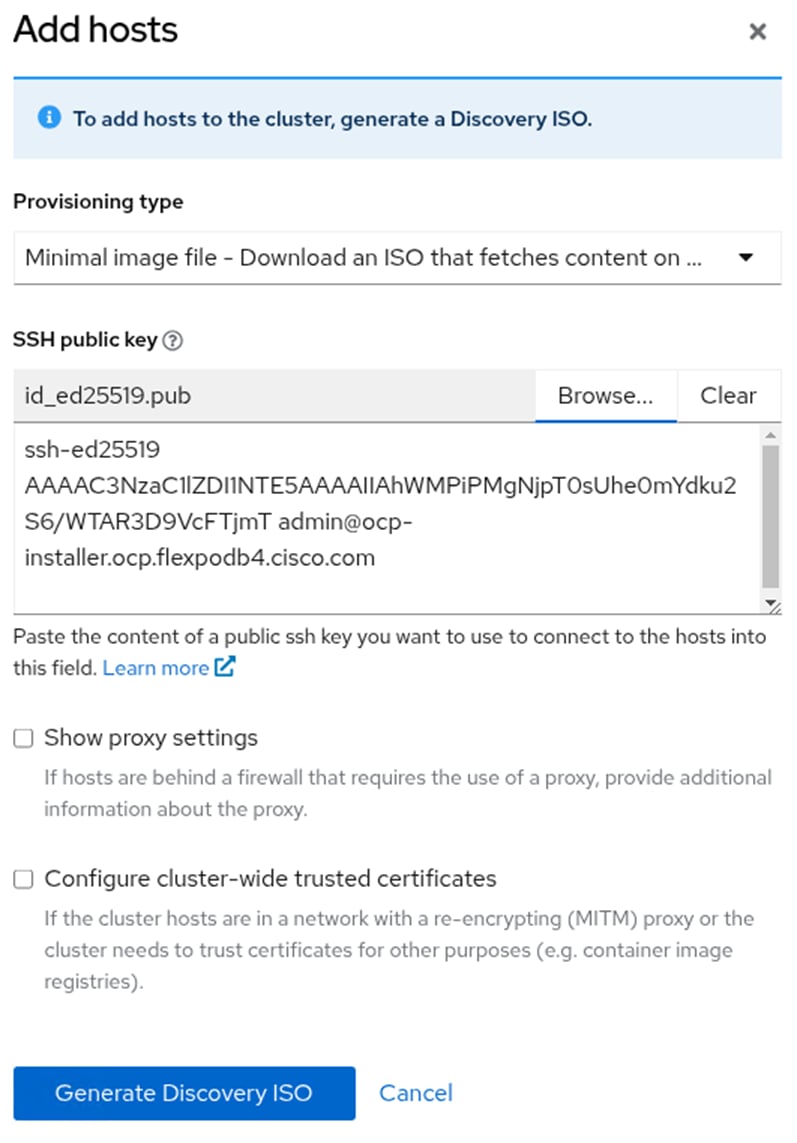

FlexPod for OpenShift uses the Assisted Installer for OpenShift installation. Therefore, when provisioning and managing the FlexPod infrastructure, you must provide all the supporting cluster infrastructure and resources, including an installer VM or host, networking, storage, and individual cluster machines.

The following supporting cluster resources are required for the Assisted Installer installation:

● The control plane and compute machines that make up the cluster

● Cluster networking

● Storage for the cluster infrastructure and applications

● The Installer VM or Host

The following infrastructure services must be deployed to support the OpenShift cluster. During the validation of this solution, these services ran on VMs; you can host them on the hypervisor of your choice, or use existing DNS and DHCP services available in the data center.

The infrastructure service prerequisites for deploying OpenShift 4.17 are as follows:

● DNS and DHCP services – these services were configured on Microsoft Windows Server VMs in this validation

● NTP distribution – provided by the Cisco Nexus switches in this validation

● Specific DNS entries for deploying OpenShift – added to the DNS server

● A Linux VM for initial automated installation and cluster management – a Rocky Linux 9 / RHEL 9 VM with appropriate packages

NTP

Each OpenShift node in the cluster must have access to at least two NTP servers.

NICs

Configure the vNICs on the Cisco UCS servers based on the design previously discussed.

DNS

Clients access the OpenShift cluster nodes over the bare metal network. Configure a subdomain or subzone where the canonical name extension is the cluster name.

The following domain and OpenShift cluster names are used in this deployment guide:

● Base Domain: flexpodb4.cisco.com

● OpenShift Cluster Name: ocp

The DNS domain name for the OpenShift cluster should be the cluster name followed by the base domain, for example, ocp.flexpodb4.cisco.com.
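The naming rule above can be expressed as a small function. This Python sketch composes the cluster domain from the cluster name and base domain, then derives the API name, the wildcard Ingress name, and per-node names following the pattern shown in Table 11. The function names are hypothetical.

```python
def cluster_domain(cluster_name, base_domain):
    """The cluster's DNS domain is the cluster name followed by the base domain."""
    return f"{cluster_name}.{base_domain}"


def cluster_fqdns(cluster_name, base_domain, hosts):
    """API, wildcard Ingress, and per-node names under the cluster domain."""
    domain = cluster_domain(cluster_name, base_domain)
    names = {"api": f"api.{domain}", "ingress": f"*.apps.{domain}"}
    for host in hosts:
        names[host] = f"{host}.{domain}"
    return names


if __name__ == "__main__":
    for key, fqdn in cluster_fqdns("ocp", "flexpodb4.cisco.com", ["control0", "worker0"]).items():
        print(key, fqdn)
```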

Table 11 lists the fully qualified domain names used during validation. The API and Nameserver addresses begin with canonical name extensions. The hostnames of the control plane and worker nodes are examples; you can use any host naming convention you prefer.

| Usage | Hostname | IP Address |
| --- | --- | --- |
| API | api.ocp.flexpodb4.cisco.com | 10.102.2.228 |
| Ingress LB (apps) | *.apps.ocp.flexpodb4.cisco.com | 10.102.2.229 |
| control0 | control0.ocp.flexpodb4.cisco.com | 10.102.2.211 |
| control1 | control1.ocp.flexpodb4.cisco.com | 10.102.2.212 |
| control2 | control2.ocp.flexpodb4.cisco.com | 10.102.2.213 |
| worker0 | worker0.ocp.flexpodb4.cisco.com | 10.102.2.214 |
| worker1 | worker1.ocp.flexpodb4.cisco.com | 10.102.2.215 |
| worker2 | worker2.ocp.flexpodb4.cisco.com | 10.102.2.216 |
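Because this validation used Microsoft Windows Server for DNS, the records in Table 11 could be entered with the Add-DnsServerResourceRecordA cmdlet from the DnsServer PowerShell module. The Python sketch below only prints those commands for review. It assumes a forward lookup zone named ocp.flexpodb4.cisco.com (the cluster subzone described above) and, for -CreatePtr, a matching reverse lookup zone; neither is specified in this guide. The wildcard Ingress record is emitted without a PTR record.

```python
ZONE = "ocp.flexpodb4.cisco.com"  # assumed forward zone (the cluster subzone)

# (record name within the zone, IPv4 address), transcribed from Table 11.
RECORDS = [
    ("api", "10.102.2.228"),
    ("*.apps", "10.102.2.229"),
    ("control0", "10.102.2.211"),
    ("control1", "10.102.2.212"),
    ("control2", "10.102.2.213"),
    ("worker0", "10.102.2.214"),
    ("worker1", "10.102.2.215"),
    ("worker2", "10.102.2.216"),
]


def dns_commands(zone=ZONE, records=RECORDS):
    """Build one Add-DnsServerResourceRecordA command per Table 11 entry."""
    commands = []
    for name, ip in records:
        cmd = (f'Add-DnsServerResourceRecordA -ZoneName "{zone}" '
               f'-Name "{name}" -IPv4Address "{ip}"')
        if not name.startswith("*"):
            # Assumes a reverse lookup zone exists; wildcard records get no PTR.
            cmd += " -CreatePtr"
        commands.append(cmd)
    return commands


if __name__ == "__main__":
    print("\n".join(dns_commands()))
```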

DHCP

For the bare metal network, a network administrator must reserve several IP addresses, including:

● One IP address for the API endpoint

● One IP address for the wildcard Ingress endpoint

● One IP address for each control-plane node (assigned to the node by the DHCP server)

● One IP address for each worker node (assigned to the node by the DHCP server)
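The reservation count follows directly from the list above: two virtual endpoints plus one address per node, so the three control-plane and three worker nodes in this validation need 2 + 3 + 3 = 8 addresses. This Python sketch computes that number and checks a planned address list for invalid or duplicate entries; the function names are hypothetical.

```python
import ipaddress


def reserved_ip_count(control_plane_nodes, worker_nodes):
    """One API address, one wildcard Ingress address, and one address per node."""
    return 2 + control_plane_nodes + worker_nodes


def check_plan(addresses, control_plane_nodes, worker_nodes):
    """Confirm the planned addresses are valid, distinct, and the expected count."""
    parsed = [ipaddress.ip_address(a) for a in addresses]  # raises ValueError if invalid
    if len(set(parsed)) != len(parsed):
        raise ValueError("duplicate address in plan")
    expected = reserved_ip_count(control_plane_nodes, worker_nodes)
    if len(parsed) != expected:
        raise ValueError(f"expected {expected} addresses, got {len(parsed)}")
    return expected


if __name__ == "__main__":
    plan = ["10.102.2.228", "10.102.2.229"] + [f"10.102.2.{n}" for n in range(211, 217)]
    print(check_plan(plan, control_plane_nodes=3, worker_nodes=3))
```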

Note: Obtain the MAC address of the bare metal interface for each node from its UCS server profile. These MAC addresses are used in the DHCP configuration to assign reserved IP addresses (reservations) to the nodes. Also gather the KVM IP address of each control-plane and worker node from its server profile.

Procedure 1. Gather MAC Addresses of Node Bare Metal Interfaces

Step 1. Log into Cisco Intersight.

Step 2. Select Configure > Profiles > Server Profile (for example, ocp-worker2).

Step 3. In the center pane, select Inventory > Network Adapters > Network Adapter (for example, UCSX-ML-V5D200G).

Step 4. In the center pane, select Interfaces.

Step 5. Record the MAC address for NIC Interface eno5.

Step 6. Select the General tab and select Identifiers in the center pane.

Step 7. Record the Management IP assigned from the OCP-BareMetal-IP-Pool.

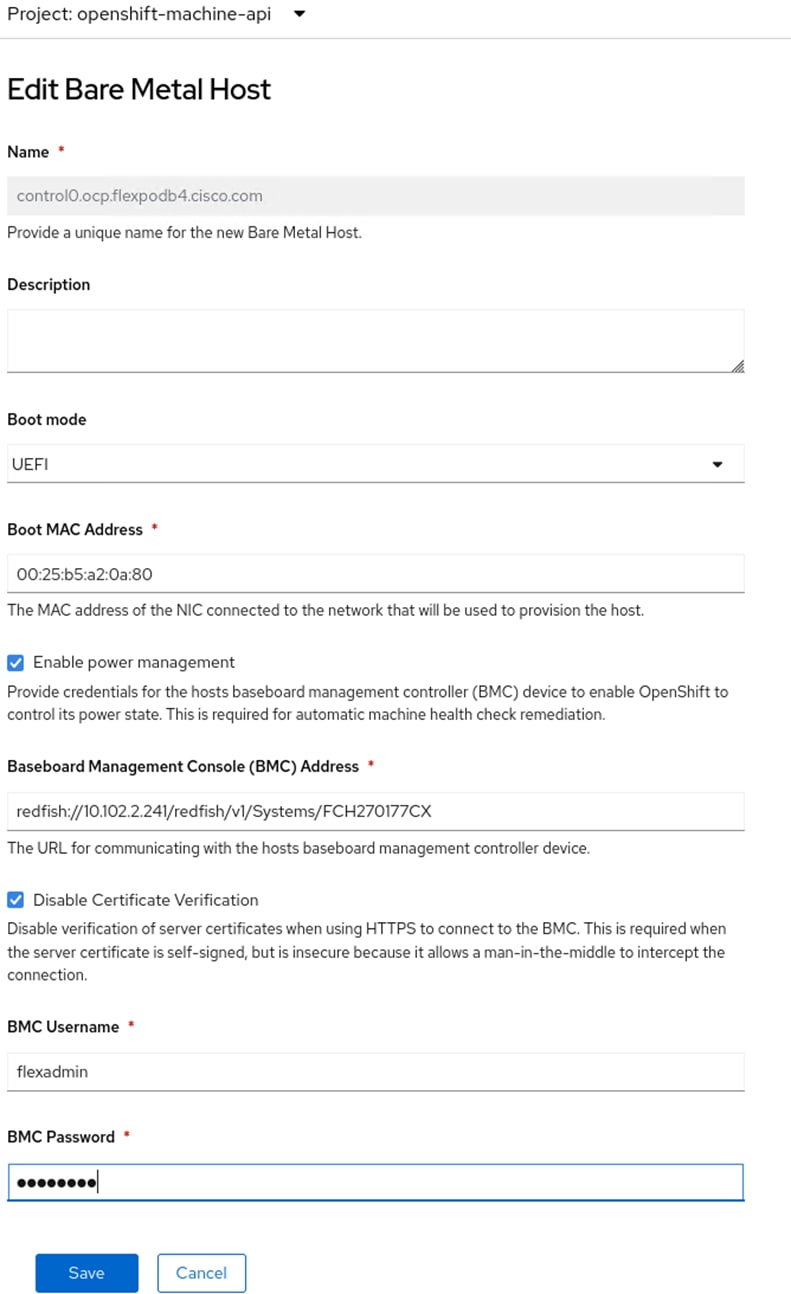

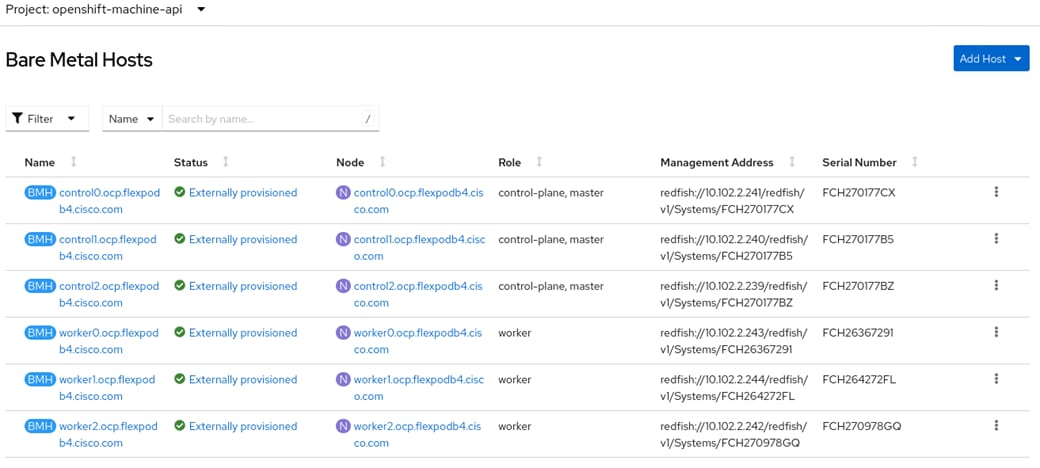

Table 12 lists the IP addresses used for the OpenShift cluster, including the bare metal network IPs and the UCS KVM Management IPs used for IPMI or Redfish access.

Table 12. Host BMC Information

| Hostname | IP Address | UCS KVM Mgmt. IP Address | BareMetal MAC Address (eno5) |
| --- | --- | --- | --- |
| control0.ocp.flexpodb4.cisco.com | 10.102.2.211 | 10.102.2.241 | 00:25:B5:A2:0A:80 |
| control1.ocp.flexpodb4.cisco.com | 10.102.2.212 | 10.102.2.240 | 00:25:B5:A2:0A:81 |
| control2.ocp.flexpodb4.cisco.com | 10.102.2.213 | 10.102.2.239 | 00:25:B5:A2:0A:82 |
| worker0.ocp.flexpodb4.cisco.com | 10.102.2.214 | 10.102.2.243 | 00:25:B5:A2:0A:83 |
| worker1.ocp.flexpodb4.cisco.com | 10.102.2.215 | 10.102.2.244 | 00:25:B5:A2:0A:85 |
| worker2.ocp.flexpodb4.cisco.com | 10.102.2.216 | 10.102.2.242 | 00:25:B5:A2:0A:87 |

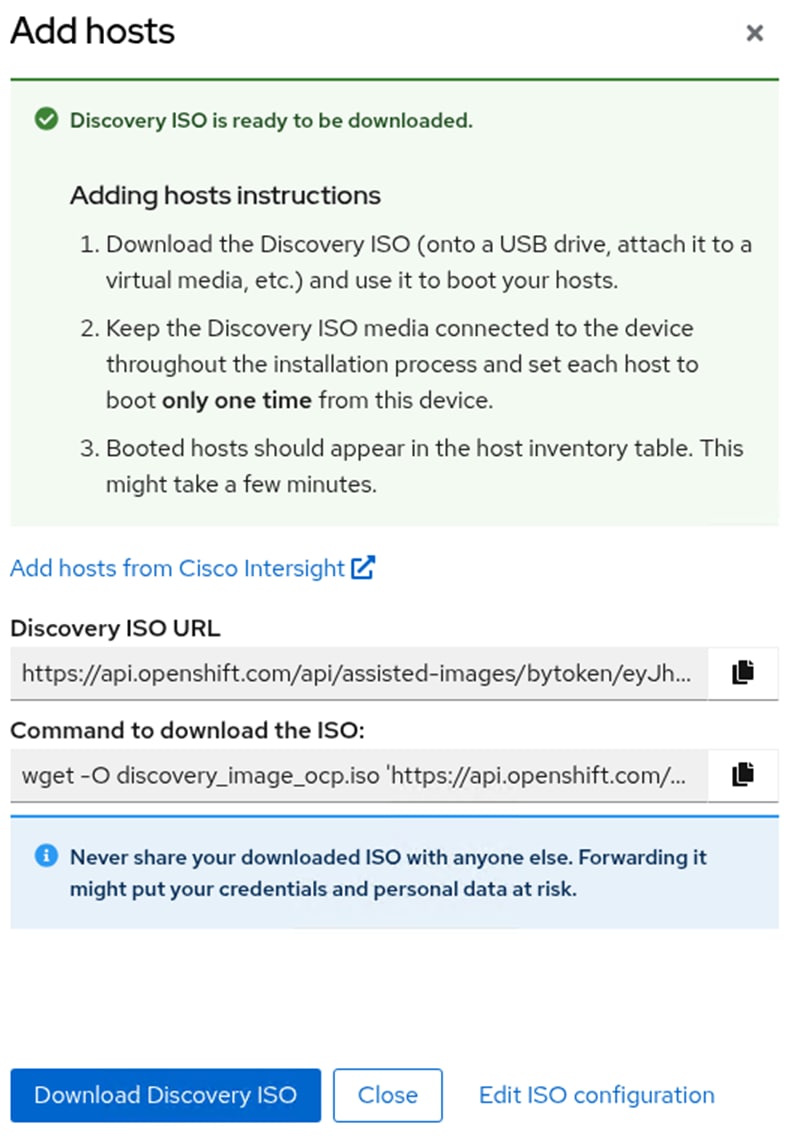

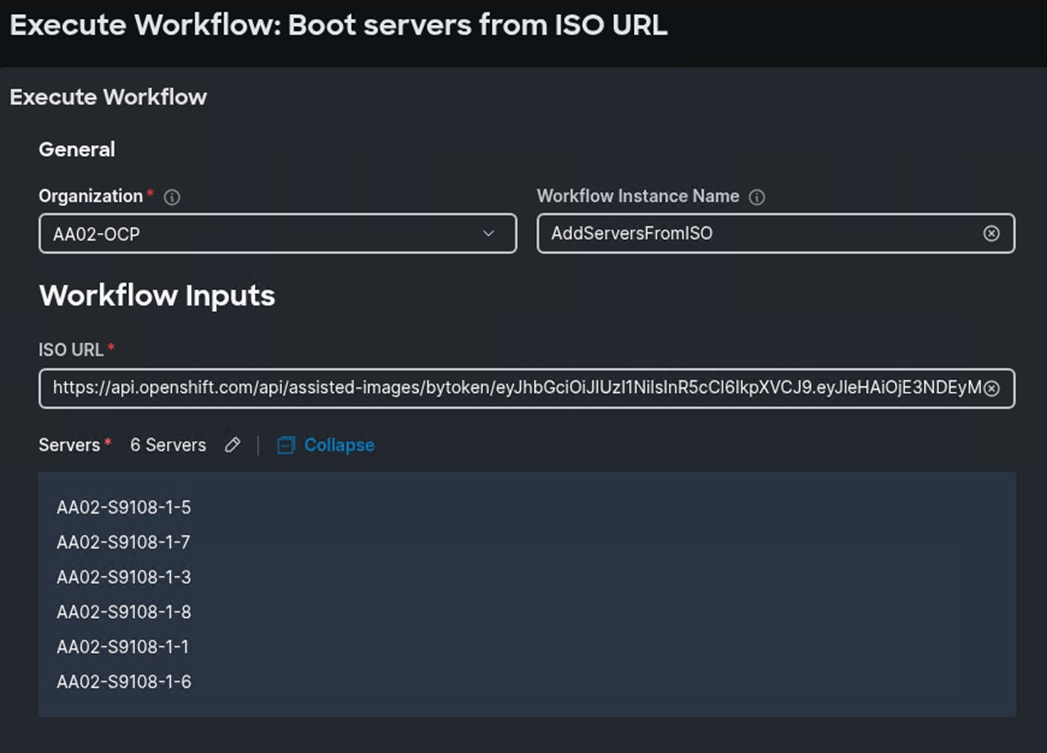

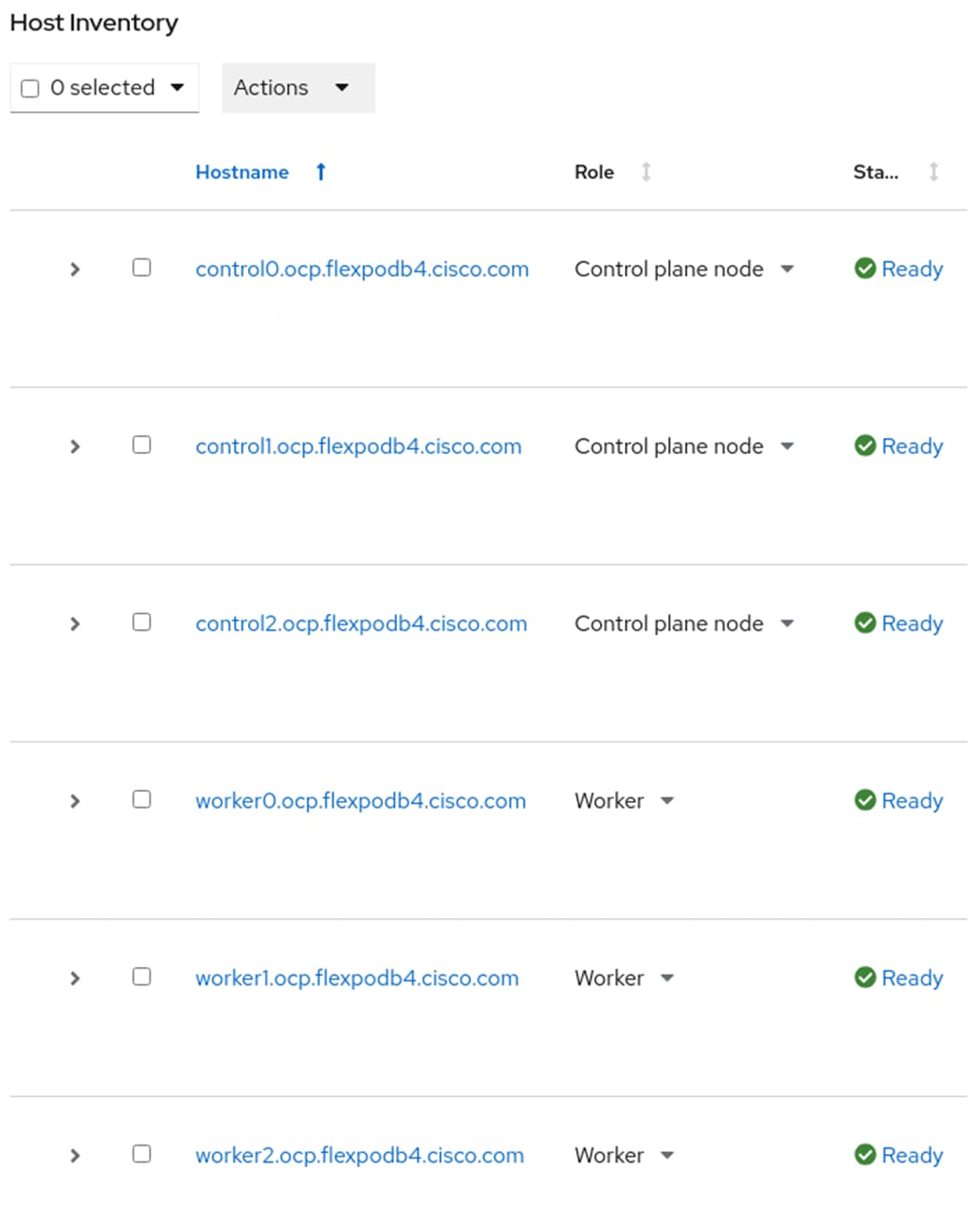

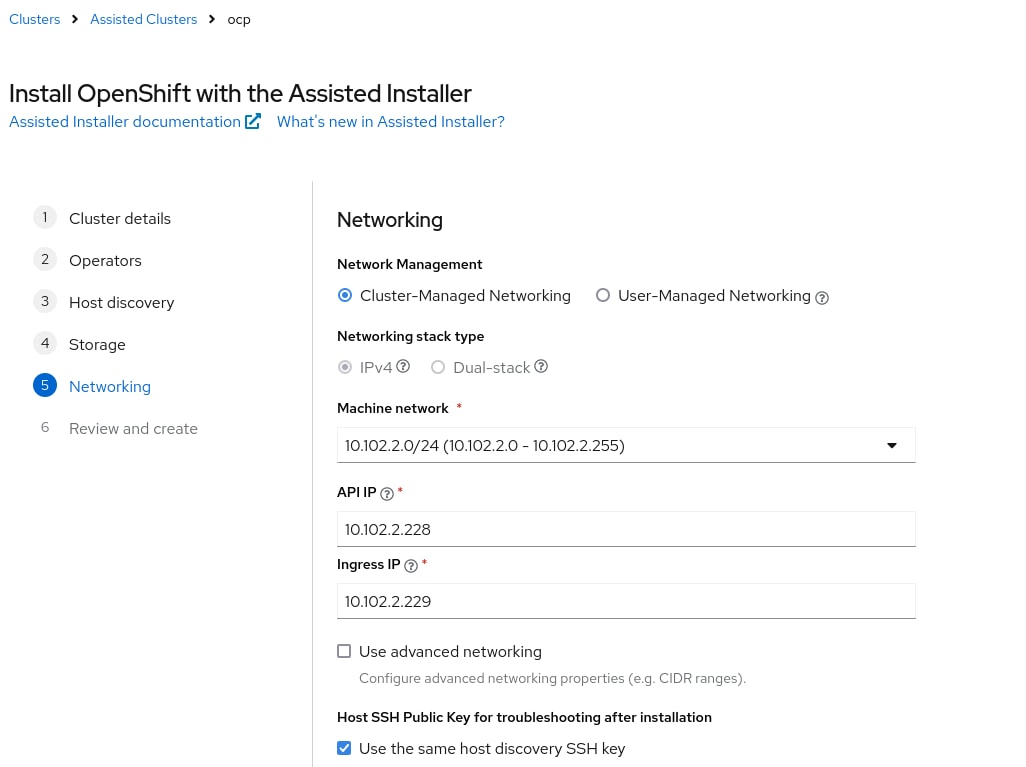

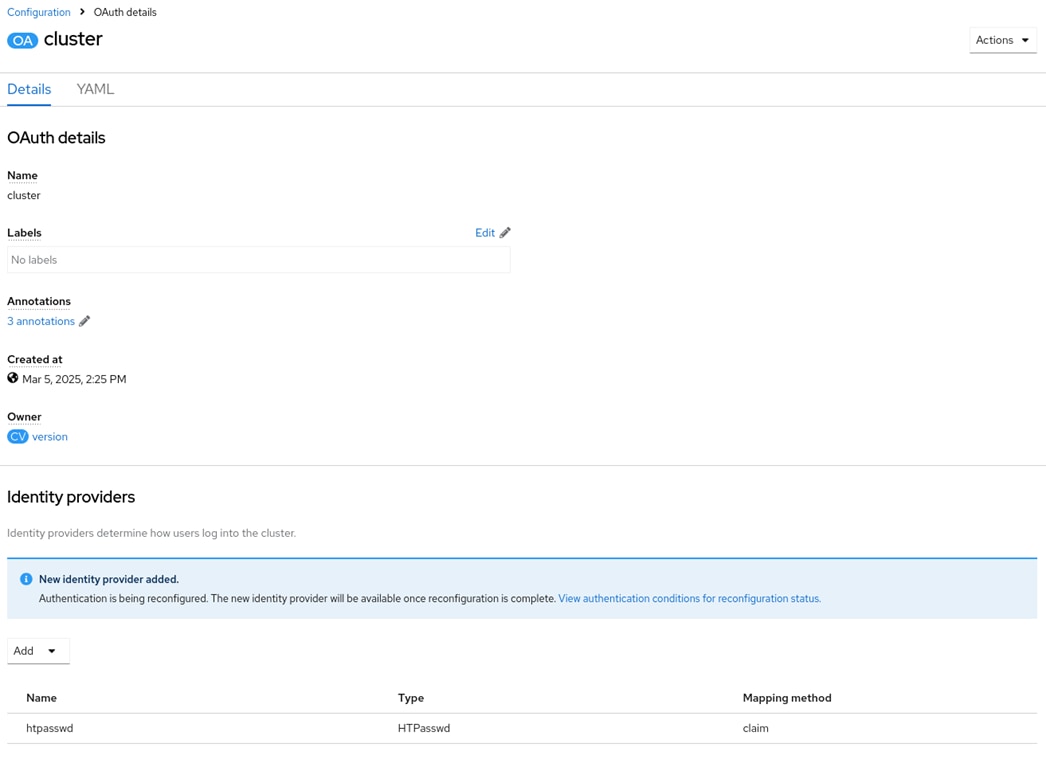

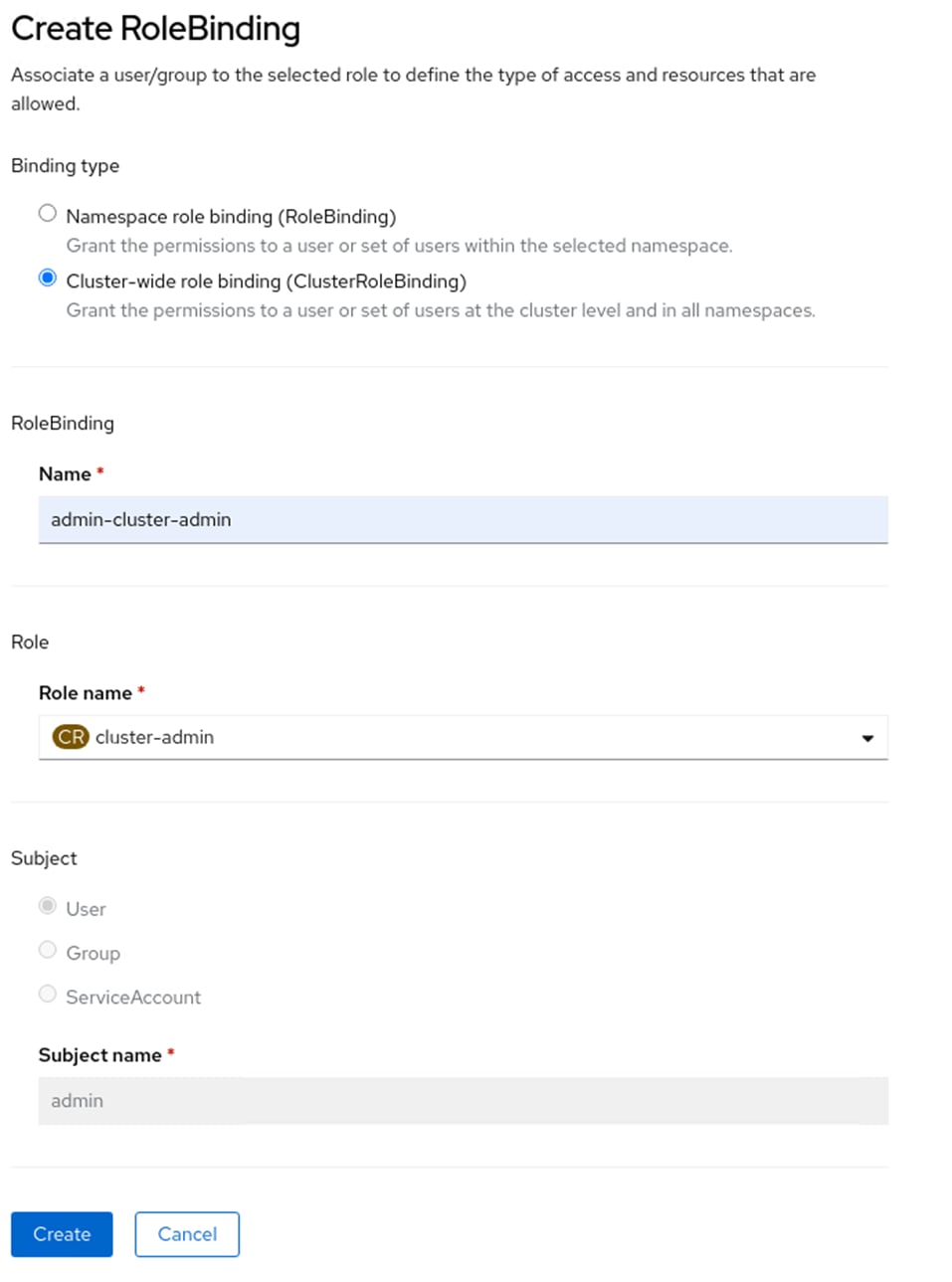

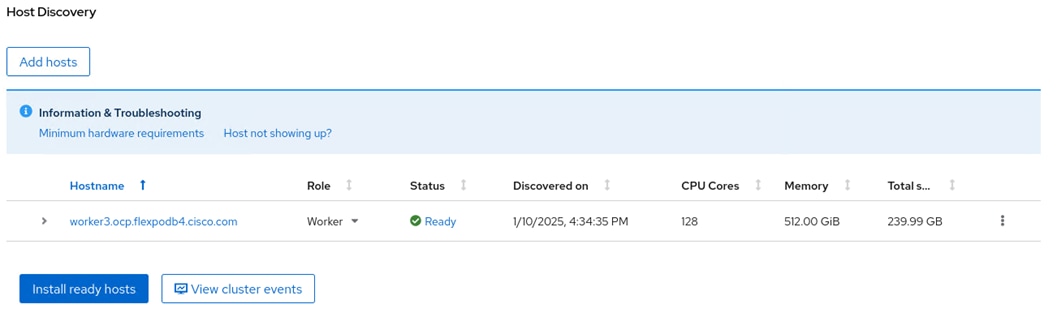

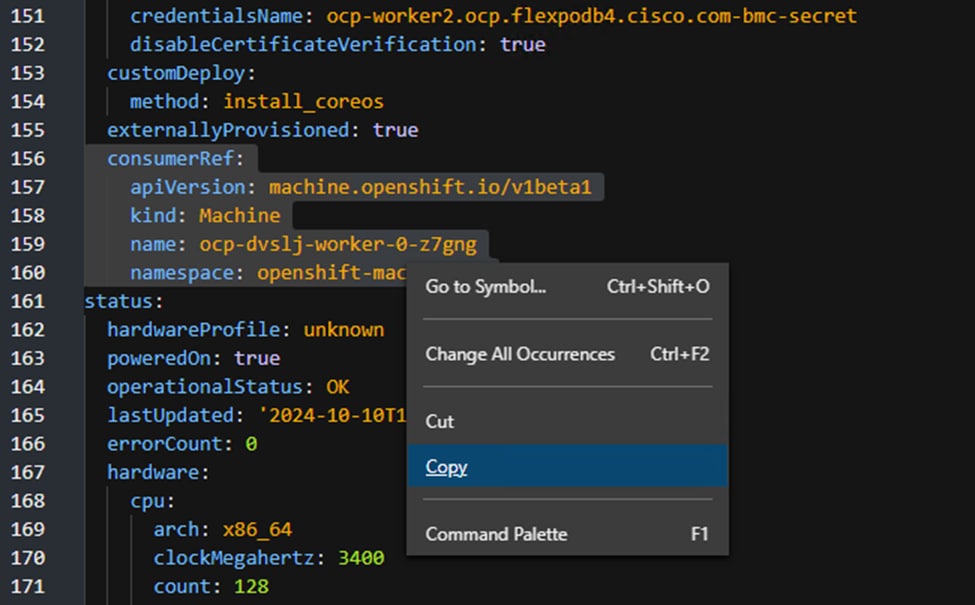

Step 8. From Table 12, enter the hostnames, IP addresses, and MAC addresses as reservations in your DHCP and DNS server(s) or configure the DHCP server to dynamically update DNS.
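For a Windows Server DHCP service like the one used in this validation, the Table 12 reservations could be entered with the Add-DhcpServerv4Reservation cmdlet from the DhcpServer PowerShell module. The Python sketch below first rejects malformed MAC addresses and any address that appears more than once, then prints one command per node for review. The scope ID 10.102.2.0 is an assumption (a /24 bare metal subnet is not stated in this guide); adjust it to your DHCP scope.

```python
import ipaddress
import re

# Rows transcribed from Table 12: (hostname, bare metal IP, UCS KVM mgmt IP, eno5 MAC).
HOSTS = [
    ("control0.ocp.flexpodb4.cisco.com", "10.102.2.211", "10.102.2.241", "00:25:B5:A2:0A:80"),
    ("control1.ocp.flexpodb4.cisco.com", "10.102.2.212", "10.102.2.240", "00:25:B5:A2:0A:81"),
    ("control2.ocp.flexpodb4.cisco.com", "10.102.2.213", "10.102.2.239", "00:25:B5:A2:0A:82"),
    ("worker0.ocp.flexpodb4.cisco.com", "10.102.2.214", "10.102.2.243", "00:25:B5:A2:0A:83"),
    ("worker1.ocp.flexpodb4.cisco.com", "10.102.2.215", "10.102.2.244", "00:25:B5:A2:0A:85"),
    ("worker2.ocp.flexpodb4.cisco.com", "10.102.2.216", "10.102.2.242", "00:25:B5:A2:0A:87"),
]

MAC_RE = re.compile(r"^[0-9A-Fa-f]{2}(:[0-9A-Fa-f]{2}){5}$")


def validate(hosts):
    """Reject malformed MACs and any IP (node or KVM) or MAC used more than once."""
    seen = set()
    for name, ip, kvm_ip, mac in hosts:
        if not MAC_RE.match(mac):
            raise ValueError(f"{name}: malformed MAC {mac}")
        for value in (str(ipaddress.ip_address(ip)),
                      str(ipaddress.ip_address(kvm_ip)),
                      mac.upper()):
            if value in seen:
                raise ValueError(f"{name}: duplicate value {value}")
            seen.add(value)


def dhcp_commands(hosts, scope_id="10.102.2.0"):
    """Emit one Add-DhcpServerv4Reservation command per node (scope ID is assumed)."""
    validate(hosts)
    commands = []
    for name, ip, _kvm_ip, mac in hosts:
        client_id = mac.upper().replace(":", "-")  # dash-separated MAC as the client ID
        commands.append(f'Add-DhcpServerv4Reservation -ScopeId {scope_id} '
                        f'-IPAddress {ip} -ClientId "{client_id}" -Name "{name}"')
    return commands


if __name__ == "__main__":
    print("\n".join(dhcp_commands(HOSTS)))
```

If the DHCP server is instead configured to update DNS dynamically, only the reservations are needed; otherwise the matching A records must also be created on the DNS server.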