FlexPod Datacenter with Generative AI Inferencing


Published: February 2024


In partnership with:

About the Cisco Validated Design Program

The Cisco Validated Design (CVD) program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, go to: http://www.cisco.com/go/designzone.

The FlexPod Datacenter solution is a validated approach for deploying Cisco and NetApp technologies and products to build shared private and public cloud infrastructure. Cisco and NetApp have partnered to deliver a series of FlexPod solutions that enable strategic data-center platforms. The success of the FlexPod solution is driven through its ability to evolve and incorporate both technology and product innovations in the areas of management, compute, storage, and networking. This document explains the design and deployment details of implementing a platform for Generative Artificial Intelligence (AI) Inferencing on the latest FlexPod Datacenter design with Cisco UCS M7 servers with NVIDIA GPUs managed from Cisco Intersight, end-to-end 100 Gbps networking with Cisco Nexus 9000 series switches, NetApp ONTAP 9.13.1 on the NetApp AFF A800 with NetApp Astra Trident for persistent storage, VMware vSphere 8.0, NVIDIA AI Enterprise (NVAIE) software, and the latest release of Red Hat OpenShift Container Platform (OCP). This solution takes the latest FlexPod Datacenter with VMware validated design and layers on NVIDIA GPUs, NVAIE, OCP, and NetApp Astra Trident to produce a powerful platform for running Generative AI Inferencing software and models. Some of the key advantages of this Generative AI Inferencing on FlexPod Datacenter platform are:

● A platform built on a proven, reliable infrastructure: FlexPod Datacenter is an industry-leading Converged Infrastructure built on proven, high-quality components from Cisco and NetApp. The optimal FlexPod configuration is then documented and tested, using only firmware and software that have been tested for interoperability and published.

● High Performance: From the latest Cisco UCS M7 servers with the latest Intel CPUs and memory, to end-to-end 100 Gbps networking, to the latest version of NetApp ONTAP on NetApp’s high-end storage controllers with NVMe drives, to later-model NVIDIA GPUs, this platform is built to provide high performance with Generative AI Inferencing workloads.

● Sustainability: taking advantage of sustainability and power usage monitoring features of all the components of the stack, including GPUs, and utilizing the Cisco UCS X-Series advanced power and cooling policies.

● Simpler and programmable infrastructure: infrastructure as code delivered using Ansible.

● Built for investment protection: design ready for future technologies such as newer or higher performing GPUs.

In addition to the compute-specific hardware and software innovations, the integration of the Cisco Intersight cloud platform with VMware vCenter, NetApp Active IQ Unified Manager, and Cisco Nexus switches delivers monitoring and orchestration capabilities for different layers (virtualization, storage, and networking) of the FlexPod infrastructure. The modular nature of the Cisco Intersight platform also provides an easy upgrade path to additional services as they become available.

For information about the FlexPod design and deployment details, including the configuration of various elements of design and associated best practices, refer to Cisco Validated Designs for FlexPod, here: https://www.cisco.com/c/en/us/solutions/design-zone/data-center-design-guides/flexpod-design-guides.html.

Solution Overview and Design

This chapter contains the following:

● Audience

In simpler terms, think of inferencing as the moment of truth for an AI model. It’s when the trained model takes real-time data, compares it with what it learned during training, and produces an actionable result. For example, it can transcribe speech, identify spam emails, or summarize lengthy documents. The goal is to apply what the model learned and put it into practice.

However, there’s an interesting twist: while training an AI model can be expensive, inferencing is where the real costs lie. Running an AI model for inference consumes energy, costs money, and contributes to carbon emissions. In fact, up to 90% of an AI model’s life is spent in inference mode, making it a critical area for optimization. Tech companies are actively working on speeding up inferencing to improve user experience and reduce operational costs.

The intended audience of this document includes but is not limited to IT architects, sales engineers, field consultants, professional services, IT managers, partner engineering, and customers who want to take advantage of an infrastructure built to deliver IT efficiency and enable IT innovation.

This document provides design and deployment guidance for implementing FlexPod Datacenter with Red Hat OpenShift Container Platform (OCP), NVIDIA GPUs, and NVIDIA AI Enterprise (NVAIE) software as a platform for running Generative AI Inferencing. It also provides best practice configuration of both FlexPod and the additional components to support Generative AI Inferencing.

The following design elements are added to FlexPod Datacenter to build a platform for Generative AI Inferencing:

● Specifying how to add a tenant to FlexPod Datacenter to house the components for Generative AI Inferencing

● NetApp ONTAP 9.13.1

● Layering of OCP on top of a VMware vSphere based FlexPod

● Use of NetApp Astra Trident with OCP to provide persistent storage to containers

● Addition of NVIDIA GPUs to Cisco UCS Servers

● Use of NVAIE GPU and vGPU drivers and software with both VMware vSphere and OCP

● Use of Cisco Intersight and other tools to monitor GPU and server energy consumption, temperatures, and utilization

● Demonstration of how to deploy and monitor performance of various Generative AI Inferencing servers and models

The FlexPod Datacenter solution as a platform for Generative AI Inferencing offers the following key benefits:

● The ability to implement readily available AI Inferencing models quickly and easily on a powerful platform with high-speed persistent storage, reducing customer spending on cloud.

● Save time and reduce errors with deployment ready Ansible playbooks for the base FlexPod setup and for FlexPod AI additions in the future.

● Simplified cloud-based management of solution components

● Hybrid-cloud-ready, policy-driven modular design

● Highly available and scalable platform with flexible architecture that supports various deployment models

● Cooperative support model and Cisco Solution Support

● Easy to deploy, consume, and manage architecture, which saves time and resources required to research, procure, and integrate off-the-shelf components

● Support for component monitoring, solution automation and orchestration, and workload optimization

Like all other FlexPod solution designs, FlexPod Datacenter with Generative AI Inferencing is configurable according to demand and usage. You can purchase exactly the infrastructure you need for your current application requirements and can then scale-up by adding more resources to the FlexPod system or scale-out by adding more FlexPod instances. Since many Generative AI Inferencing applications are containerized, use of OCP provides a single platform for hosting these applications and the corresponding AI models.

This chapter contains the following:

● Cisco Unified Computing System Additions

● Cisco Nexus Switching Fabric

● Red Hat OCP on VMware vSphere

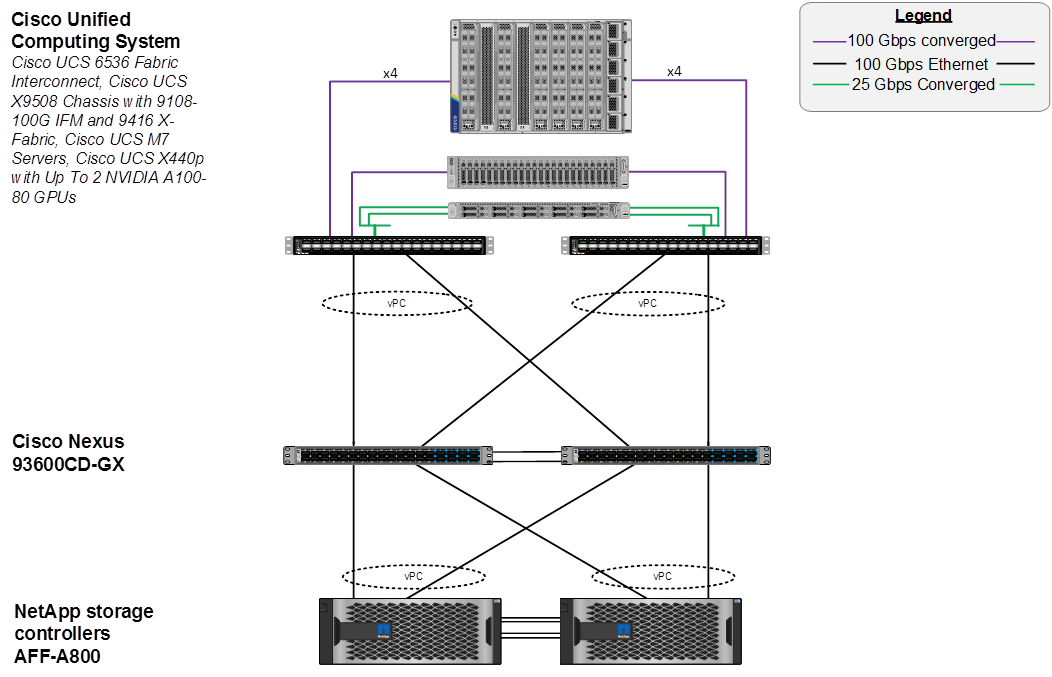

The IP-based end-to-end 100G FlexPod Datacenter was used as the basis for this solution, and is specified here: https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/UCS_CVDs/flexpod_m7_imm_vmware_design.html. This document will not repeat information from that document but will instead present what was updated or added for each component. The base FlexPod used in this validation used the following specific components:

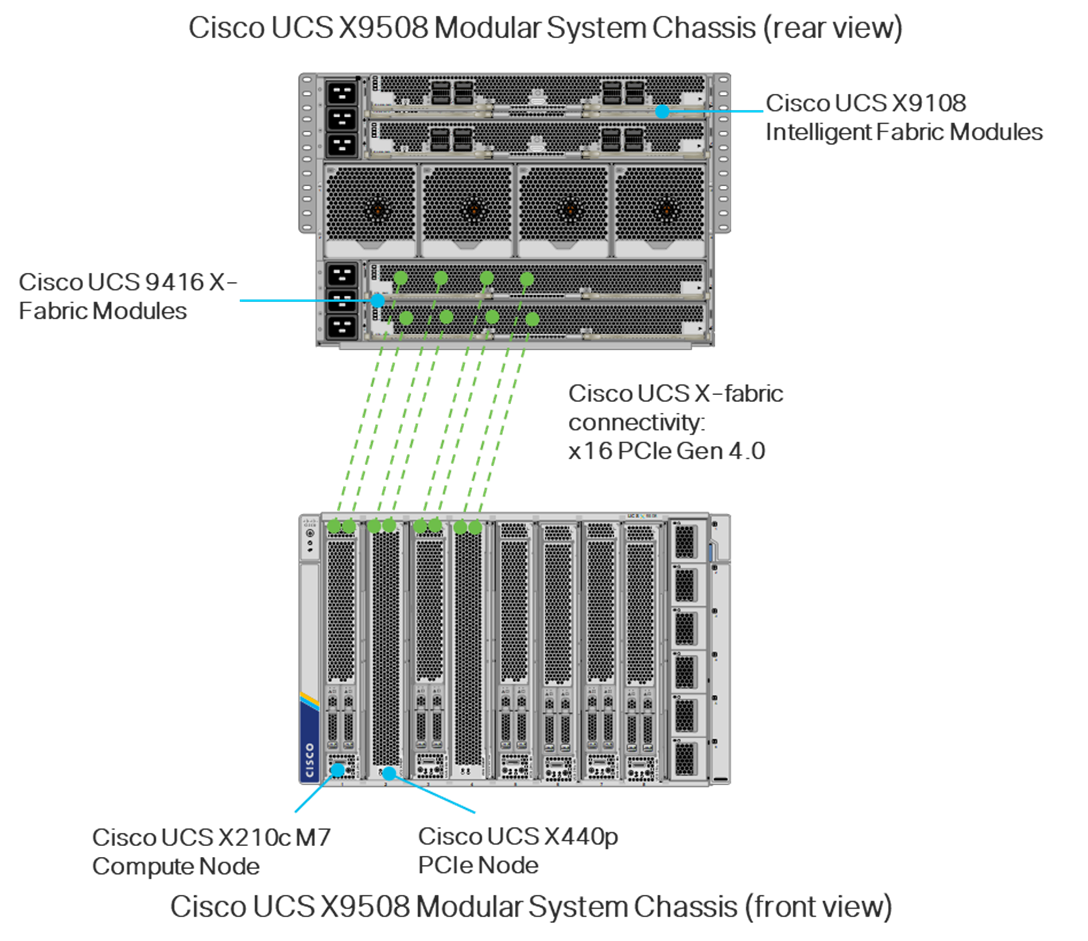

● Cisco UCS X9508 Chassis with Cisco UCSX-I-9108-100G Intelligent Fabric Modules, Cisco UCS 9416 X-Fabric Modules and Cisco UCS X210c M7 and X210c M6 Compute Nodes

● Fifth-generation Cisco UCS 6536 Fabric Interconnects to support 10/25/40/100GbE connectivity from various components

● Cisco UCS C220 M7 and C240 M7 Rack Mount Servers attached directly to the Fabric Interconnects

● High-speed Cisco NX-OS-based Cisco Nexus 93600CD-GX switching designed to support up to 400GE connectivity

● NetApp AFF A800 end-to-end NVMe storage with up to 100GE connectivity

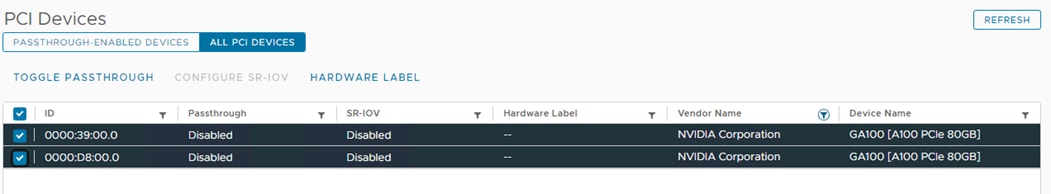

● NVIDIA A100-80 GPUs in Cisco UCS X440p PCIe Nodes connected to Cisco UCS X210c M7 Servers by Cisco UCS X-Fabric

The NVIDIA A100-80 PCIe GPU (UCSX-GPU-A100-80-D) was specifically used for this validation. However, any NVIDIA GPU supported with either the Cisco UCS X210c M7 or UCS C240/C220 M7 servers can be used in this platform. For information on the A100-80, see https://www.nvidia.com/en-us/data-center/a100/.

This validation used the A100-80 in two ways:

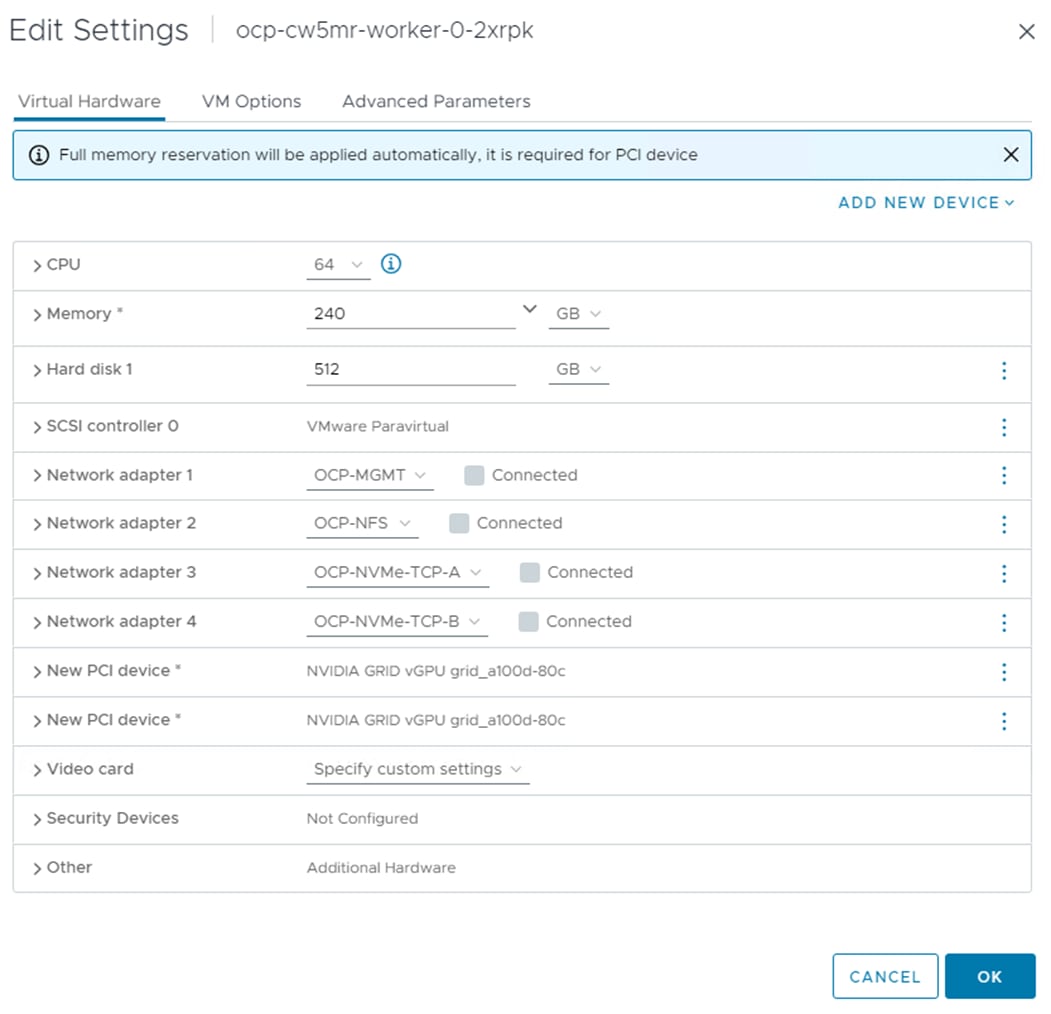

The first way the NVIDIA GPU was used was in NVIDIA Virtual Compute Server (vCS) mode, which took the physical GPU, loaded the NVAIE driver in VMware ESXi, and broke the physical GPU into multiple virtual GPUs (vGPUs) that could be assigned to Red Hat OCP worker VMs with VMware PCI passthrough. The NVAIE driver creates vGPU profiles of multiple sizes that divide up the 80 GB of GPU frame buffer provided by the A100-80 and are used for assignment. In vCS mode, GPU frame buffer memory is divided up, but the entire GPU compute resources are shared by all the vGPUs. This method of assigning vGPUs using profiles is useful when multiple vGPUs can be used to run a larger number of smaller AI models, or replicas of the same smaller AI model, at the same time. vCS mode is supported in all NVIDIA GPUs supported in Cisco UCS, and the functionality used in this CVD can be applied to all supported NVIDIA GPUs. NVIDIA Multi-Instance GPU (MIG), described here: https://docs.nvidia.com/datacenter/tesla/mig-user-guide/index.html, is another option that securely partitions a GPU into up to seven separate GPU Instances, each with its own isolated memory and compute resources.

Note: MIG is only supported in a subset of NVIDIA GPUs and was not validated in this CVD.
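To illustrate how vCS profiles divide the frame buffer described above, the following Python sketch computes how many vGPUs of a single profile size fit on one A100-80. The list of profile sizes is an assumption made for illustration and is not taken from this document; check the NVIDIA vGPU documentation for the profiles offered by your driver release.

```python
# Sketch: how many same-sized vGPUs fit on one physical GPU in vCS mode.
# PROFILE_SIZES_GB is an illustrative assumption, not an authoritative list.
FRAME_BUFFER_GB = 80  # A100-80 frame buffer, as stated in the text
PROFILE_SIZES_GB = [4, 5, 8, 10, 16, 20, 40, 80]


def vgpus_per_gpu(profile_gb: int, frame_buffer_gb: int = FRAME_BUFFER_GB) -> int:
    """Return how many vGPUs of one profile size fit in the frame buffer."""
    if profile_gb <= 0:
        raise ValueError("profile size must be positive")
    return frame_buffer_gb // profile_gb


if __name__ == "__main__":
    for size in PROFILE_SIZES_GB:
        print(f"{size:>2} GB profile: {vgpus_per_gpu(size)} vGPU(s) per A100-80")
```

Only the frame buffer is divided this way. As noted above, GPU compute is shared by all vGPUs in vCS mode, so smaller profiles trade per-model memory for a higher model count, not for dedicated compute.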

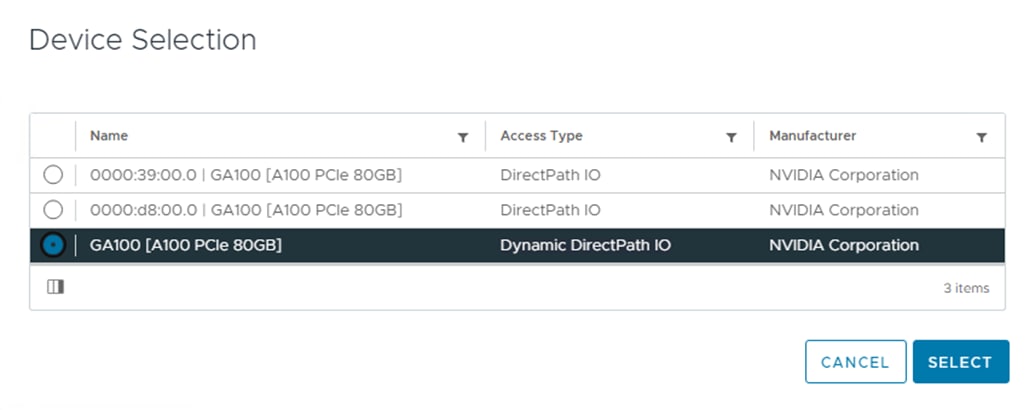

The second way the NVIDIA GPU was used was to not load the VMware NVAIE drivers and to assign the full physical GPU to the Red Hat OCP worker VM with VMware PCI passthrough. This method required a different GPU driver to be installed in OCP instead of the vGPU driver. This method of assigning the entire physical GPU to an OCP worker VM allows larger AI models, such as Llama 2 70B, to be run.
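As a rough guide to why larger models call for full physical GPUs, the sketch below estimates the memory needed for model weights alone. It assumes 16-bit weights (2 bytes per parameter) and ignores KV cache, activations, and framework overhead, so real requirements are higher. The function names are hypothetical.

```python
import math

# Sketch: weight-only memory estimate. Assumes 2 bytes per parameter (FP16/BF16)
# and ignores KV cache, activations, and runtime overhead.


def weight_memory_gb(params_billions: float, bytes_per_param: float = 2.0) -> float:
    """Approximate weight memory in GB (10^9 bytes) for a model."""
    return params_billions * bytes_per_param


def min_gpus_for_weights(params_billions: float,
                         gpu_memory_gb: float = 80.0,
                         bytes_per_param: float = 2.0) -> int:
    """Smallest number of GPUs whose combined memory holds the weights."""
    needed = weight_memory_gb(params_billions, bytes_per_param)
    return math.ceil(needed / gpu_memory_gb)


if __name__ == "__main__":
    for size in (7, 13, 70):
        print(f"{size}B parameters: ~{weight_memory_gb(size):.0f} GB of weights, "
              f"at least {min_gpus_for_weights(size)} x 80 GB GPU(s)")
```

Under these assumptions a 70B-parameter model needs about 140 GB for weights, which exceeds a single 80 GB GPU.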

Cisco Unified Computing System Additions

To support Generative AI Inferencing, NVIDIA GPUs were added to Cisco UCS X210c M7 servers. The Cisco UCS X210c M7 supports up to two NVIDIA T4 GPUs in the front mezzanine GPU adapter. The Cisco UCS X210c M7 supports additional GPUs when used with Cisco UCS X-Fabric and the X440p PCIe Node.

Cisco UCS X-Fabric

The Cisco UCS 9416 X-Fabric Modules, the first generation of X-Fabric technology, connect Cisco UCS X210c and X410c server nodes to Cisco UCS X440p PCIe Nodes. Cisco UCS X-Fabric also requires a Cisco UCS PCI Mezz Card (UCSX-V4-PCIME-D), or a mezzanine Cisco VIC card and Cisco VIC bridge card, in the mezzanine slot to enable connectivity to the X440p PCIe Node described below.

Cisco UCS X440p PCIe Node

The Cisco UCS X440p PCIe Node is the first PCIe resource node to integrate into the Cisco UCS X-Series Modular System. The Cisco UCS X9508 Chassis has eight node slots, up to four of which can be X440p PCIe nodes when paired with a Cisco UCS X210c M7 or M6 Compute Node. The Cisco UCS X440p PCIe Node supports two x16 full-height, full-length dual slot PCIe cards, or four x8 half-height, half-length single slot PCIe cards and requires both Cisco UCS 9416 X-Fabric modules for PCIe connectivity. This provides up to 16 GPUs per chassis to accelerate your applications with the Cisco UCS X440p Nodes. If your application needs even more GPU acceleration, up to two additional GPUs can be added on each Cisco UCS X210c compute node as stated above. For additional information on the Cisco UCS X440p PCIe Node, including the latest updates, see the datasheet here: https://www.cisco.com/c/en/us/products/collateral/servers-unified-computing/ucs-x-series-modular-system/ucs-x440p-pcle-node-ds.html.
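The chassis GPU counts above follow from simple slot arithmetic, sketched below in Python. The function name is hypothetical, and the sketch assumes every slot not occupied by an X440p holds a compute node.

```python
# Sketch: GPU capacity of one Cisco UCS X9508 chassis, using the limits
# stated in the text (8 slots, at most 4 X440p PCIe Nodes).
CHASSIS_SLOTS = 8
MAX_PCIE_NODES = 4


def chassis_gpu_capacity(gpus_per_pcie_node: int,
                         front_mezz_gpus_per_server: int = 0,
                         pcie_nodes: int = MAX_PCIE_NODES) -> int:
    """Total GPUs in a chassis for a given X440p and front-mezzanine loadout."""
    if not 0 <= pcie_nodes <= MAX_PCIE_NODES:
        raise ValueError("a chassis holds between 0 and 4 X440p PCIe Nodes")
    servers = CHASSIS_SLOTS - pcie_nodes  # assumption: remaining slots are servers
    return pcie_nodes * gpus_per_pcie_node + servers * front_mezz_gpus_per_server


if __name__ == "__main__":
    print(chassis_gpu_capacity(4))     # four half-length x8 cards per X440p
    print(chassis_gpu_capacity(2))     # two full-length x16 cards per X440p
    print(chassis_gpu_capacity(4, 2))  # plus two front-mezzanine GPUs per server
```

With four single-slot cards per X440p the result matches the 16 GPUs per chassis quoted above. Dual-slot cards such as the A100-80 are limited to two per X440p, which gives eight per chassis.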

Cisco UCS X440p supports the following GPU options:

● NVIDIA H100 Tensor Core GPU

● NVIDIA L40 GPU

● NVIDIA L4 Tensor Core GPU

● NVIDIA A100 Tensor Core GPU

● NVIDIA A16 GPU

● NVIDIA A40 GPU

● Intel Data Center GPU Flex 140

● Intel Data Center GPU Flex 170

Note: Intel Data Center GPUs, although supported with the X440p, would require a different driver setup and process, and were not validated in this CVD.

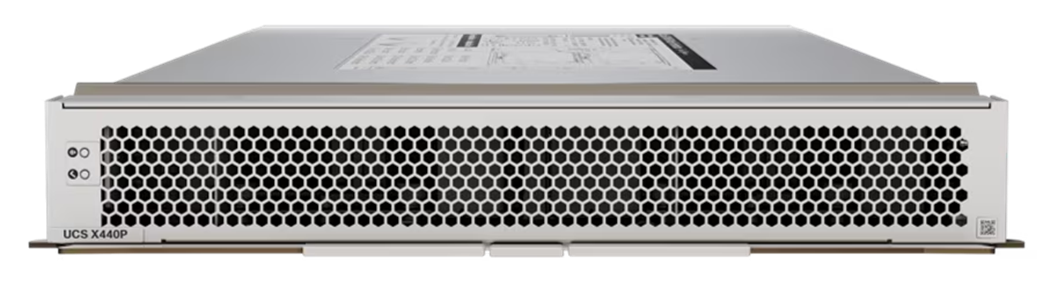

Figure 1. Cisco UCS X440p PCIe Node

Figure 2 shows the typical setup of Cisco UCS X440p modules paired with Cisco UCS X210c M6 and M7 servers. The server is usually placed in an odd-numbered slot and the Cisco UCS X440p is placed next to it in an even-numbered slot. For current information on supported GPUs and associated requirements, see the Cisco UCS X210c M7 spec sheet here: https://www.cisco.com/c/dam/en/us/products/collateral/servers-unified-computing/ucs-x-series-modular-system/x210cm7-specsheet.pdf and the Cisco UCS X410c M7 spec sheet here: https://www.cisco.com/c/dam/en/us/products/collateral/servers-unified-computing/ucs-x-series-modular-system/x410cm7-specsheet.pdf.

Note: In the diagram, even though X-fabric connectivity is shown on four server slots, this connectivity extends across all eight server slots.

Figure 2. Cisco UCS X-Fabric Connectivity

Cisco UCS C220 M7 and C240 M7 NVIDIA GPU Options

The Cisco UCS C220 M7 can support up to 3 NVIDIA L4 GPUs or up to 3 Intel Flex 140 GPUs (Spec Sheet: https://www.cisco.com/c/dam/en/us/products/collateral/servers-unified-computing/ucs-c-series-rack-servers/c220m7-sff-specsheet.pdf). The Cisco UCS C240 M7 can support a wide range of GPUs including up to 3 NVIDIA A100-80 GPUs. For detailed information on the Cisco UCS C240 M7, see the spec sheet here: https://www.cisco.com/c/dam/en/us/products/collateral/servers-unified-computing/ucs-c-series-rack-servers/c240m7-sff-specsheet.pdf. Cisco UCS C-Series servers were not validated with GPUs in this CVD, but they are supported. Go to: https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/UCS_CVDs/flexpod_gpu_aiml.html for an example of Cisco UCS C-Series servers being validated with NVIDIA GPUs.

Cisco Nexus 93600CD-GX

Based on Cisco Cloud Scale technology, the Cisco Nexus 9300-GX switches are the next generation of fixed Cisco Nexus 9000 Series Switches capable of supporting 400 Gigabit Ethernet (GE). With the increase in use cases for applications requiring Artificial Intelligence (AI) and Machine Learning (ML), the platform addresses the need for high-performance, power-efficient, compact switches in the networking infrastructure. These switches are designed to support 100G and 400G fabrics for mobile service provider environments, including the network edge, 5G, IoT, Professional Media Networking platform (PMN), and Network Functions Virtualization (NFV).

The Cisco Nexus 93600CD-GX Switch (Figure 3) is a 1RU switch that supports 12 Tbps of bandwidth and 4.0 bpps across 28 fixed 40/100G QSFP-28 ports and 8 fixed 10/25/40/50/100/200/400G QSFP-DD ports.
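The 12 Tbps figure can be cross-checked against the port counts above. The sketch below assumes that the quoted bandwidth counts both directions of every port at its maximum speed; that interpretation is an assumption, not something stated in this document.

```python
# Sketch: aggregate port bandwidth of the Nexus 93600CD-GX from its port counts.
# Assumption: the quoted switch bandwidth is full duplex (both directions).
PORT_GROUPS = [(28, 100), (8, 400)]  # (port count, maximum speed in Gbps)


def one_way_gbps(port_groups=PORT_GROUPS) -> int:
    """Sum of maximum port speeds in one direction, in Gbps."""
    return sum(count * speed for count, speed in port_groups)


def full_duplex_tbps(port_groups=PORT_GROUPS) -> float:
    """Bandwidth counting both directions, in Tbps."""
    return 2 * one_way_gbps(port_groups) / 1000


if __name__ == "__main__":
    print(one_way_gbps(), "Gbps one way")
    print(full_duplex_tbps(), "Tbps full duplex")
```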

Cisco provides two modes of operation for Cisco Nexus 9000 Series Switches. Organizations can deploy Cisco Application Centric Infrastructure (Cisco ACI) or Cisco NX-OS mode.

Figure 3. Cisco Nexus 93600CD-GX Switch

For more details, go to: https://www.cisco.com/c/en/us/products/collateral/switches/nexus-9000-series-switches/nexus-9300-gx-series-switches-ds.html.

The switching infrastructure in this document utilized the NX-OS switching mode. The switching infrastructure in future iterations of this document will follow the Cisco Data Center Networking Blueprint for AI/ML Applications and will utilize a spine-leaf architecture and VXLAN EVPN Network.

NetApp AFF A800 is mentioned in this document. Detailed information about NetApp AFF A-Series Storage is specified here: https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/UCS_CVDs/flexpod_m7_imm_vmware_design.html#NetApp_AFF_A_Series_Storage

Detailed information about NetApp AFF C-Series Storage is specified here: https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/UCS_CVDs/flexpod_m7_imm_vmware_design.html#NetAppAFFCSeriesStorage

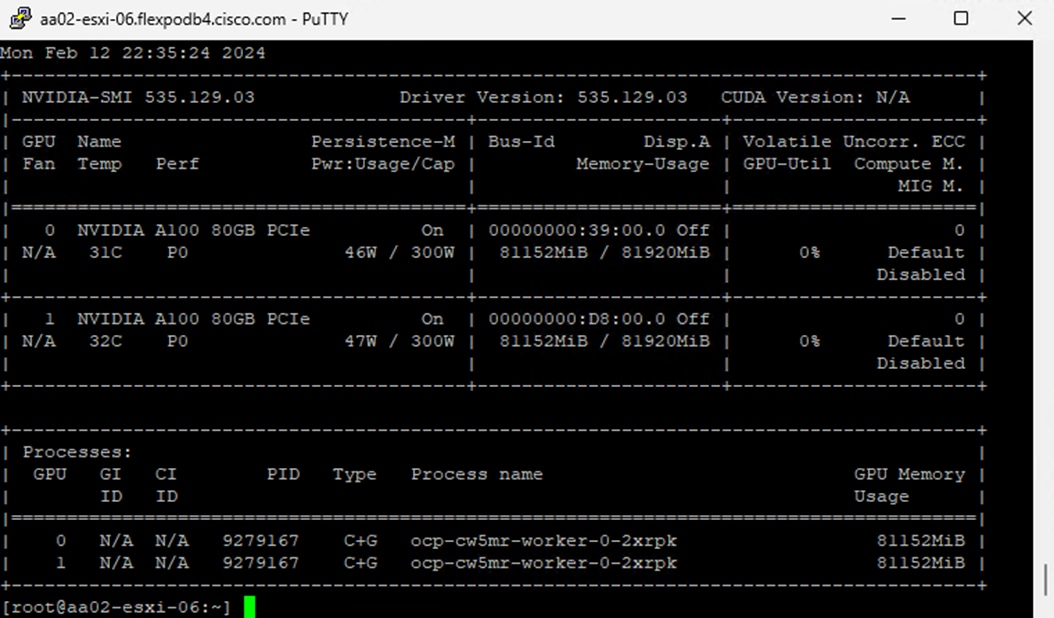

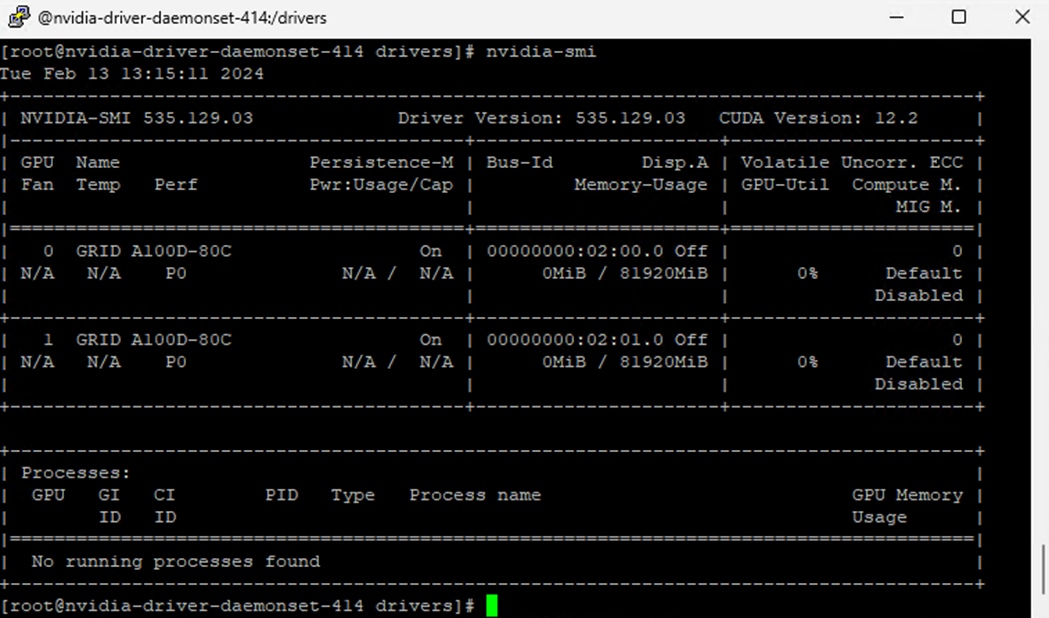

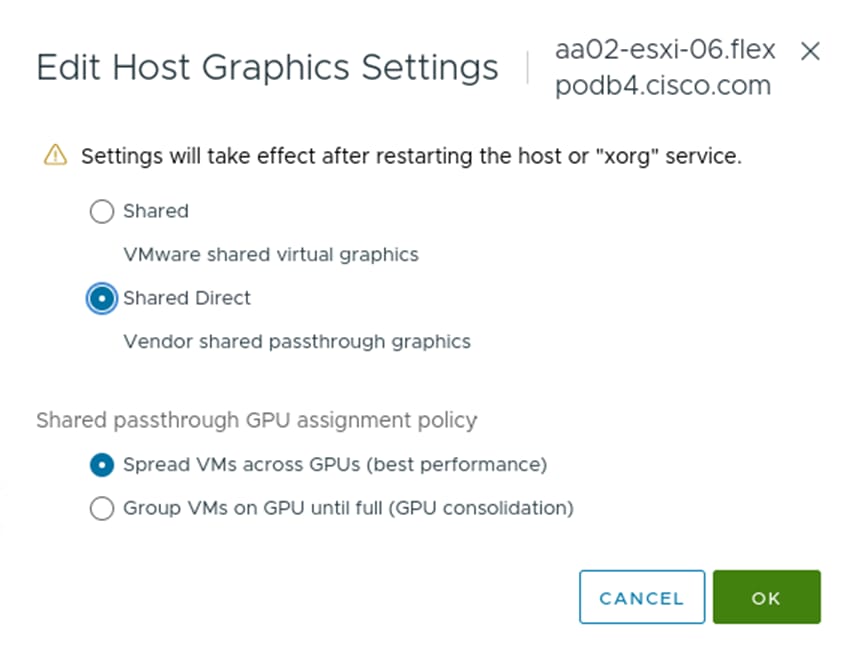

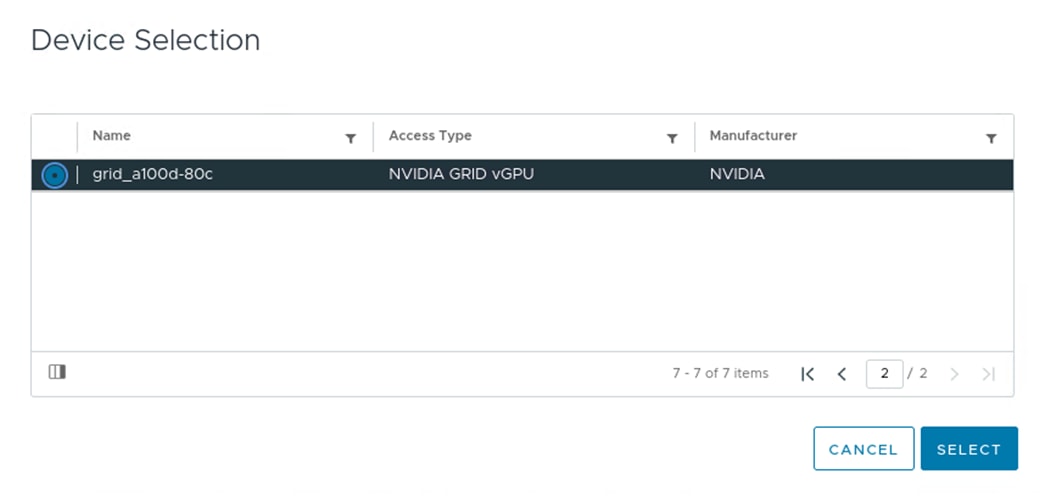

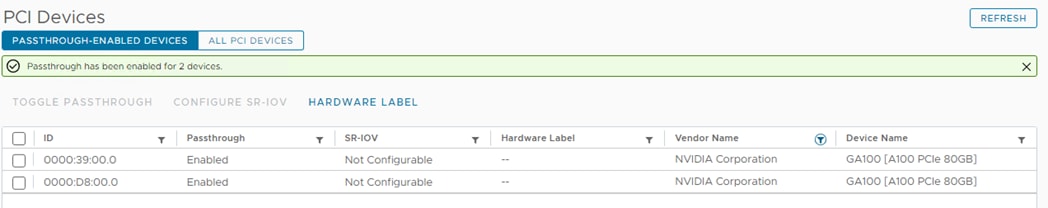

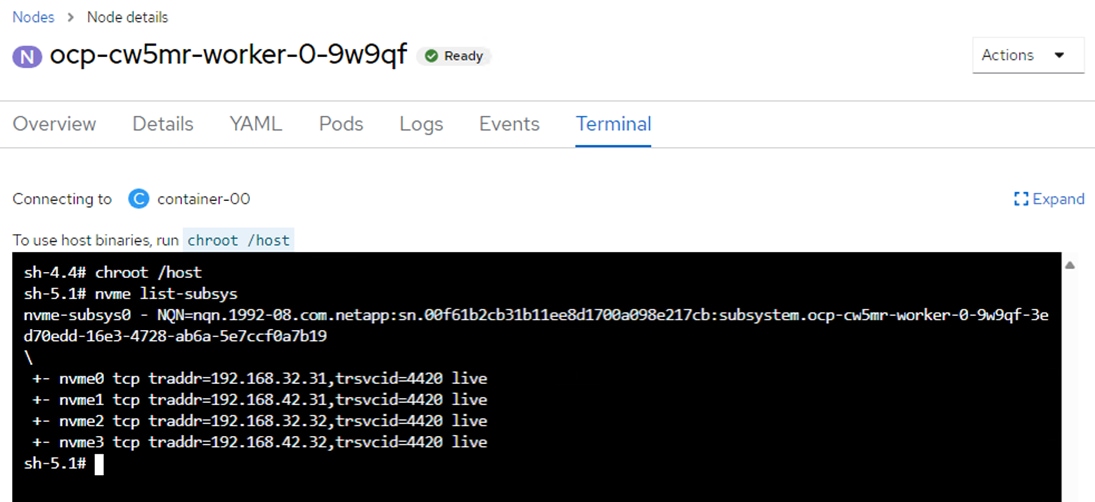

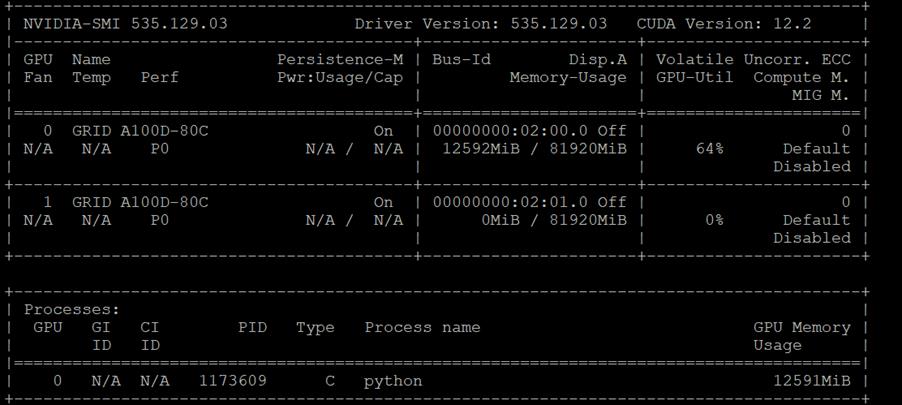

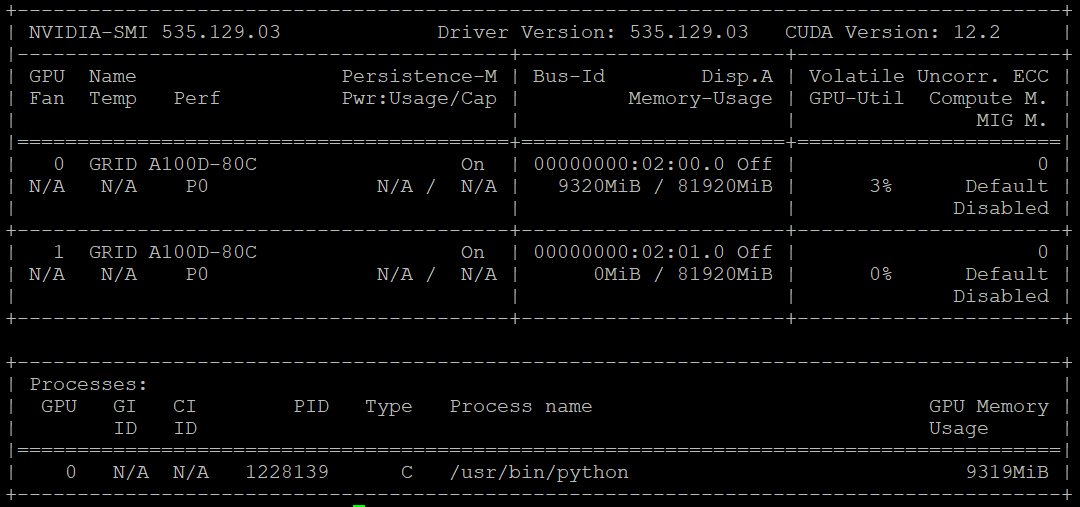

To implement NVIDIA GPU vCS mode, a pair of NVAIE VIBs (drivers) was installed on each VMware ESXi 8.0 host that had GPUs. After installing these drivers and setting the Graphics Device Settings to Shared Direct (vendor shared passthrough graphics), vGPUs could then be assigned to VMs, including Red Hat OCP worker VMs after OCP installation. NVAIE also installs the nvidia-smi application on the ESXi host, providing a tool to monitor and manage installed NVIDIA GPUs. On VMware ESXi, this tool provides physical GPU statistics such as GPU temperature, power consumption, memory usage of assigned vGPUs, and GPU utilization, in addition to how much vGPU memory is assigned to worker VMs.

Figure 4. nvidia-smi Tool on VMware ESXi

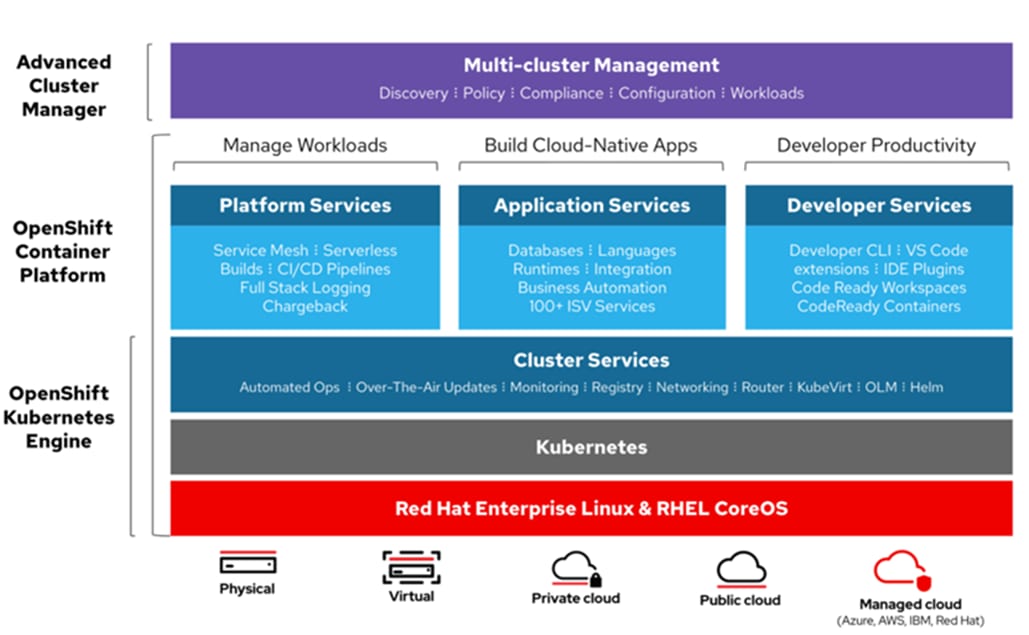

The Red Hat OpenShift Container Platform (OCP) is a container application platform that brings together CRI-O and Kubernetes and provides an API and web interface to manage these services. CRI-O is an implementation of the Kubernetes CRI (Container Runtime Interface) to enable using Open Container Initiative (OCI) compatible runtimes. It is a lightweight alternative to using Docker as the runtime for Kubernetes.

OCP allows you to create and manage containers. Containers are standalone processes that run within their own environment, independent of the operating system and the underlying infrastructure. OCP helps with developing, deploying, and managing container-based applications. It provides a self-service platform to create, modify, and deploy applications on demand, thus enabling faster development and release life cycles. OCP has a microservices-based architecture of smaller, decoupled units that work together. It runs on top of a Kubernetes cluster, with data about the objects stored in etcd, a reliable clustered key-value store.

Figure 5. OpenShift Container Platform Overview

Kubernetes Infrastructure

Within OpenShift Container Platform, Kubernetes manages containerized applications across a set of CRI-O runtime hosts and provides mechanisms for deployment, maintenance, and application-scaling. The CRI-O service packages, instantiates, and runs containerized applications.

A Kubernetes cluster consists of one or more masters and a set of worker nodes. This solution design includes HA functionality at the VMware level as well as the OCP software level. A Kubernetes cluster is designed to run in HA mode with 3 master nodes and a minimum of 2 worker nodes to help ensure that the cluster has no single point of failure.
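The choice of three master nodes follows from how a majority-based store such as etcd stays available. The short Python sketch below shows the quorum arithmetic; it is a general illustration of majority consensus, not a description of how this validation was sized.

```python
# Sketch: majority-quorum arithmetic for a clustered store such as etcd.


def quorum(members: int) -> int:
    """Smallest number of members that forms a majority."""
    if members < 1:
        raise ValueError("a cluster needs at least one member")
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """How many members can fail while a majority remains."""
    return members - quorum(members)


if __name__ == "__main__":
    for n in (1, 2, 3, 5):
        print(f"{n} member(s): quorum {quorum(n)}, tolerates {tolerated_failures(n)} failure(s)")
```

Three members is the smallest cluster size that survives the loss of one member, while two members tolerate none.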

Red Hat Enterprise Linux CoreOS

OpenShift Container Platform uses Red Hat Enterprise Linux CoreOS (RHCOS), a container-oriented operating system that combines some of the best features and functions of the CoreOS and Red Hat Atomic Host operating systems. RHCOS is specifically designed for running containerized applications from OpenShift Container Platform and works with new tools to provide fast installation, Operator-based management, and simplified upgrades.

RHCOS includes the following:

● Ignition, which OpenShift Container Platform uses as a first boot system configuration for initially bringing up and configuring machines.

● CRI-O, a Kubernetes native container runtime implementation that integrates closely with the operating system to deliver an efficient and optimized Kubernetes experience. CRI-O provides facilities for running, stopping, and restarting containers. It fully replaces the Docker Container Engine, which was used in OpenShift Container Platform 3.

● Kubelet, the primary node agent for Kubernetes that is responsible for launching and monitoring containers.

In this CVD, OCP was installed on VMware using the Red Hat OCP Installer-Provisioned-Infrastructure (IPI) installer. The IPI installer connects to VMware vCenter, creates the needed master and worker VMs, and installs and configures RHCOS.

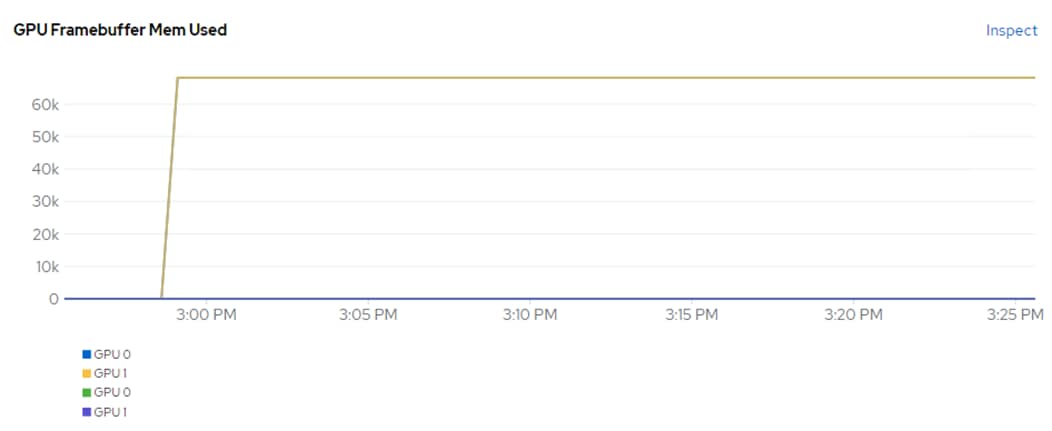

Once OCP is installed on top of VMware vSphere with the NVAIE drivers installed and vGPUs are added to the OCP worker VMs, the NVIDIA GPU Operator can then be installed in OCP. The NVIDIA GPU Operator provides access to the OpenShift vGPU driver, which is used in the containers to which the vGPU(s) are attached. The NVIDIA GPU Operator also pulls vGPU licenses from an NVIDIA license server that can be installed on premises or in the NVIDIA cloud. Proper licensing is required for the vGPUs to perform. A number of pods that support NVIDIA vGPU operation and monitoring are also created in each worker VM that has vGPUs assigned. The nvidia-smi tool, with the vGPU view, is also available in the nvidia-driver-daemonset pod in the nvidia-gpu-operator project. This tool does not report power or temperature data, but does show vGPU memory usage, GPU utilization (since GPU compute resources are shared with vCS), and any pods or containers that are attached to the vGPU. In the figure below, two vGPUs are assigned to the OCP worker VM with the profile that provides 80 GB of memory to each vGPU.

Figure 6. nvidia-smi from the vGPU

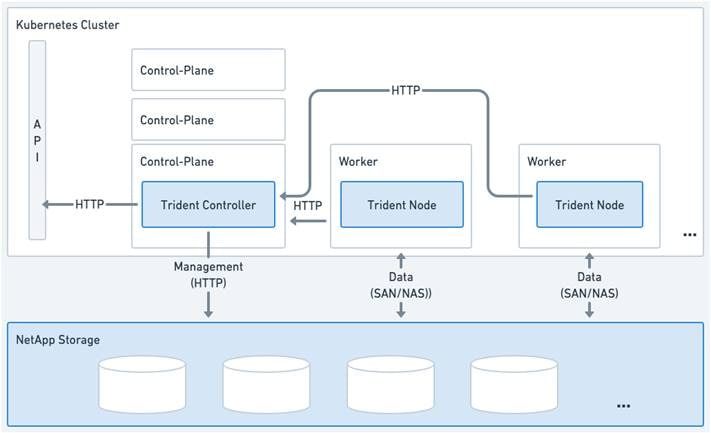

NetApp Astra Trident

Astra Trident is an open-source, fully supported storage orchestrator for containers created by NetApp. It has been designed from the ground up to help you meet your containerized applications' persistence demands using industry-standard interfaces, such as the Container Storage Interface (CSI). With Astra Trident, microservices and containerized applications can take advantage of enterprise-class storage services provided by the full NetApp portfolio of storage systems. In a FlexPod environment, Astra Trident is utilized to allow end users to dynamically provision and manage persistent volumes for containers backed by FlexVols and LUNs hosted on ONTAP-based products such as NetApp AFF and FAS systems.

Astra Trident deploys as a single Trident Controller Pod and one or more Trident Node Pods on the Kubernetes cluster and uses standard Kubernetes CSI Sidecar Containers to simplify the deployment of CSI plugins. Kubernetes CSI Sidecar Containers are maintained by the Kubernetes Storage community.

Kubernetes node selectors, taints, and tolerations are used to constrain a pod to run on a specific or preferred node. You can configure node selectors and tolerations for controller and node pods during Astra Trident installation.

● The controller plugin handles volume provisioning and management, such as snapshots and resizing.

● The node plugin handles attaching the storage to the node.
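From the application side, dynamic provisioning through a CSI orchestrator such as Astra Trident is requested with an ordinary Kubernetes PersistentVolumeClaim. The Python sketch below builds such a claim as a JSON manifest. The storage class name `ontap-nfs`, the claim name, and the namespace are hypothetical placeholders; a real cluster would use the storage class defined for its Trident backend.

```python
import json

# Sketch: build a PersistentVolumeClaim manifest for dynamic provisioning.
# The storage class, claim name, and namespace below are hypothetical.


def build_pvc(name: str, size: str, storage_class: str,
              access_mode: str = "ReadWriteMany",
              namespace: str = "default") -> dict:
    """Return a PVC manifest as a plain dictionary."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "accessModes": [access_mode],
            "resources": {"requests": {"storage": size}},
            "storageClassName": storage_class,
        },
    }


if __name__ == "__main__":
    pvc = build_pvc("model-cache", "500Gi", "ontap-nfs", namespace="inferencing")
    print(json.dumps(pvc, indent=2))
```

The printed JSON can be saved to a file and applied with the cluster's usual tooling. A claim like this is one way to keep downloaded AI models on persistent storage across pod restarts.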

Figure 7. Astra Trident deployed on the Kubernetes cluster

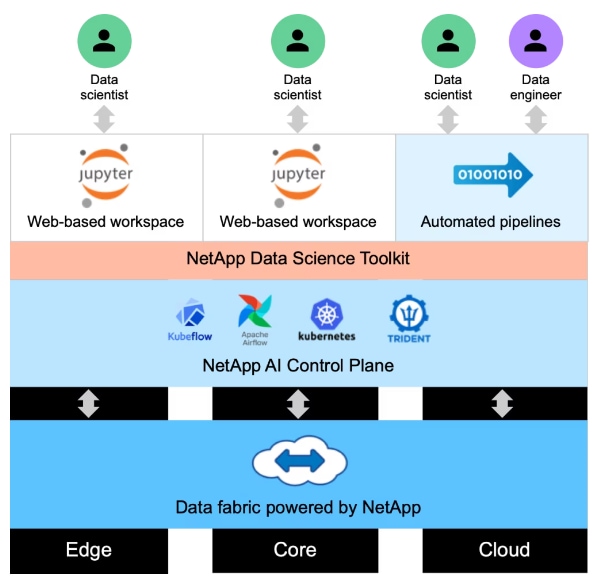

NetApp DataOps Toolkit

The NetApp DataOps Toolkit is a Python library that makes it easy for developers, data scientists, and data engineers to perform numerous data management tasks. These tasks include provisioning a new data volume or development workspace, cloning a data volume or development workspace almost instantaneously, and creating a NetApp Snapshot copy of a data volume or development workspace for traceability and baselining. This Python library can function as either a command-line utility or a library of functions that can be imported into any Python program or Jupyter Notebook.

The DataOps Toolkit supports Linux and macOS hosts. The toolkit must be used in conjunction with a NetApp data storage system or service. It simplifies various data management tasks that are executed by the data storage system or service. To facilitate this simplification, the toolkit communicates with the data storage system or service through an API.

The NetApp DataOps Toolkit for Kubernetes abstracts storage resources and Kubernetes workloads up to the data-science workspace level. These capabilities are packaged in a simple, easy-to-use interface that is designed for data scientists and data engineers. Using the familiar form of a Python program, the Toolkit enables data scientists and engineers to provision and destroy JupyterLab workspaces in just seconds. These workspaces can contain terabytes, or even petabytes, of storage capacity, enabling data scientists to store all their training datasets directly in their project workspaces. Gone are the days of separately managing workspaces and data volumes.

Figure 8. NetApp DataOps Toolkit

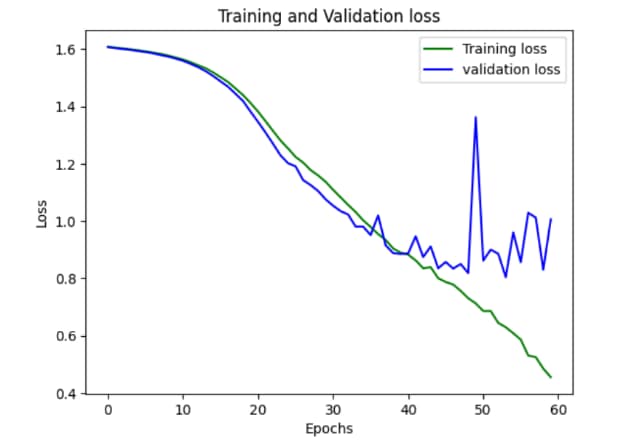

Various Generative AI Inferencing models were run and benchmarked as part of this validation. One method for Generative AI Inferencing is to load an inferencing server with an AI model. The inferencing server then has a listener that waits for inferencing requests and responds to the requests. A second method is to load the model into the GPU(s), run the inferencing request(s), provide the response(s), and unload the model. In this validation, persistent storage provided by NetApp Astra Trident was used to store the AI models, so that the models did not have to be downloaded each time a pod was created or re-created. For this validation, AI software and models were obtained from NVAIE, Hugging Face, GitHub, and other sources. NVAIE provides a wide range of inferencing servers, AI frameworks, and AI models for NVIDIA GPUs.

NVIDIA NeMo Framework Inference

NVIDIA NeMo™ Framework is an end-to-end, cloud-native enterprise framework to build, customize, and deploy generative AI models with billions of parameters. The NeMo Framework Inferencing container utilizes the NVIDIA Triton Inferencing Server to serve NeMo formatted AI inferencing models. The version of the NeMo Framework Inferencing container validated in this CVD has been deprecated by NVIDIA. The container referenced above was pulled from NVIDIA unmodified and placed on quay.io to allow the testing in this CVD to be replicated.

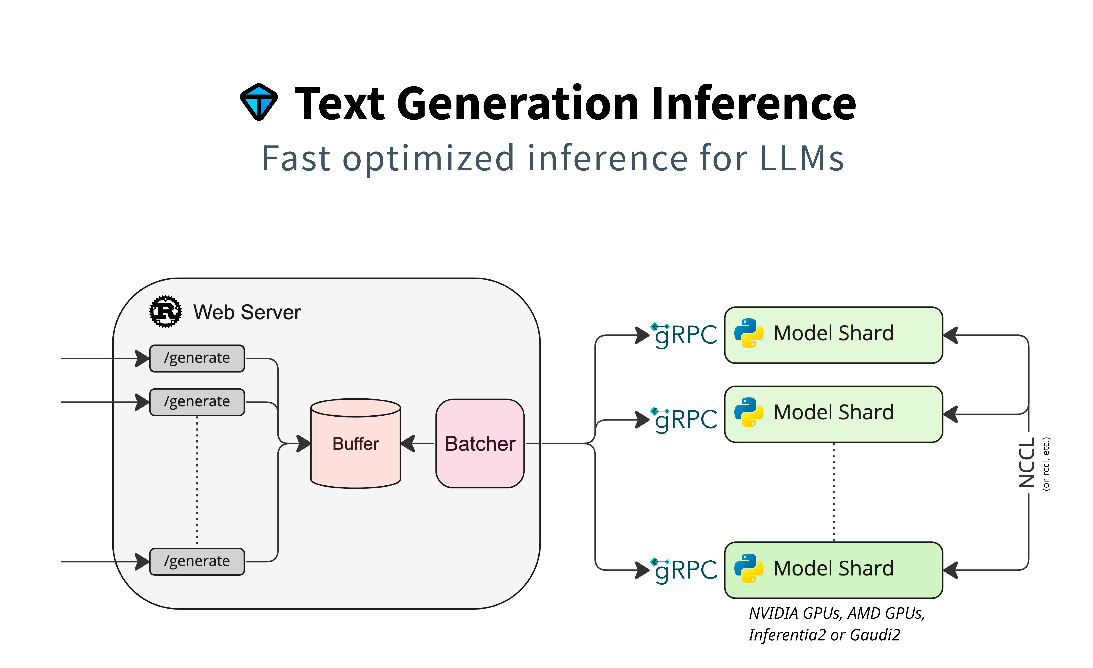

Text Generation Inference (TGI)

TGI is a toolkit for deploying and serving Large Language Models (LLMs). The TGI container is downloaded from Hugging Face and contains all of the necessary software to support usage of NVIDIA GPUs. TGI supports a wide range of AI models, and fourteen different AI Inferencing models were run as part of the validation.
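Once a TGI container is serving a model, clients send prompts to its HTTP listener. The Python sketch below builds and sends a request to TGI's `/generate` endpoint using only the standard library. The base URL is a hypothetical placeholder for wherever the service is exposed in your cluster, and the parameter values are illustrative.

```python
import json
import urllib.request

# Sketch: call a running TGI server's /generate endpoint.
# The base URL used in the example below is a hypothetical placeholder.


def build_generate_request(base_url: str, prompt: str,
                           max_new_tokens: int = 64) -> urllib.request.Request:
    """Build the POST request for TGI's /generate endpoint."""
    payload = {"inputs": prompt, "parameters": {"max_new_tokens": max_new_tokens}}
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(base_url: str, prompt: str, max_new_tokens: int = 64,
             timeout: float = 60.0) -> str:
    """Send the prompt and return the generated text from the JSON response."""
    request = build_generate_request(base_url, prompt, max_new_tokens)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())["generated_text"]


if __name__ == "__main__":
    req = build_generate_request("http://tgi.example.internal:8080", "What is FlexPod?")
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
```

Calling `generate()` requires network access to a live TGI service. The `__main__` section only prints the request that would be sent.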

PyTorch

PyTorch is an optimized tensor library for deep learning using GPUs and CPUs. The PyTorch container is downloaded from the NVIDIA NGC Catalog and is NVAIE supported. When running AI Inferencing models with PyTorch, the model is loaded into the GPU, inferencing is run, response is provided, and the model is unloaded.

Table 1. AI Inferencing Models Run

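| Inferencing Server | Model | Base Container Used for Inferencing |
|---|---|---|
| NeMo Framework Inference | Nemo GPT 2B | quay.io/jogeorg2/nemofw-inference:23.10_fix_v2 |
| NeMo Framework Inference | Nemotron 3 8B QA | quay.io/jogeorg2/nemofw-inference:23.10_fix_v2 |
| NeMo Framework Inference | Llama-2-7B-Chat | quay.io/jogeorg2/nemofw-inference:23.10_fix_v2 |
| NeMo Framework Inference | Llama-2-13B-Chat | quay.io/jogeorg2/nemofw-inference:23.10_fix_v2 |
| NeMo Framework Inference | Llama-2-70B-Chat | quay.io/jogeorg2/nemofw-inference:23.10_fix_v2 |
| NeMo Framework Inference | Llama-2-SteerLM-Chat | quay.io/jogeorg2/nemofw-inference:23.10_fix_v2 |
| TGI | BLOOM 7B | ghcr.io/huggingface/text-generation-inference |
| TGI | Google FLAN-T5 XL 2.85B | ghcr.io/huggingface/text-generation-inference |
| TGI | Google FLAN-T5 XXL 11.3B | ghcr.io/huggingface/text-generation-inference |
| TGI | GALACTICA 30B | ghcr.io/huggingface/text-generation-inference |
| TGI | GPT-NeoX-20B | ghcr.io/huggingface/text-generation-inference |
| TGI | OPT-2.7B | ghcr.io/huggingface/text-generation-inference |
| TGI | MPT-30B | ghcr.io/huggingface/text-generation-inference |
| TGI | Falcon-40B | ghcr.io/huggingface/text-generation-inference |
| TGI | Mistral-7B-v0.1 | ghcr.io/huggingface/text-generation-inference |
| TGI | Code Llama 34B-Base | ghcr.io/huggingface/text-generation-inference |
| TGI | Code Llama 70B-Base | ghcr.io/huggingface/text-generation-inference |
| TGI | Llama-2-70B-Chat-HF | ghcr.io/huggingface/text-generation-inference |
| TGI | Defog SQLCoder-15B | ghcr.io/huggingface/text-generation-inference |
| TGI | Defog SQLCoder-34B | ghcr.io/huggingface/text-generation-inference |
| PyTorch | Llama-2-7B-Chat | nvcr.io/nvidia/pytorch:23.10-py3 |
| PyTorch | Llama-2-13B-Chat | nvcr.io/nvidia/pytorch:23.10-py3 |
| PyTorch | resnet34* | nvcr.io/nvidia/pytorch:23.10-py3 |
| PyTorch | Stable Diffusion 1.4* | nvcr.io/nvidia/pytorch:23.10-py3 |
| PyTorch | Stable Diffusion 1.5* | nvcr.io/nvidia/pytorch:23.10-py3 |
| PyTorch | Stable Diffusion 2* | nvcr.io/nvidia/pytorch:23.10-py3 |
| PyTorch | Stable Diffusion 2.1* | nvcr.io/nvidia/pytorch:23.10-py3 |
| PyTorch | Stable Diffusion XL* | nvcr.io/nvidia/pytorch:23.10-py3 |
| PyTorch | Openjourney* | nvcr.io/nvidia/pytorch:23.10-py3 |
| PyTorch | Dreamlike Diffusion 1.0* | nvcr.io/nvidia/pytorch:23.10-py3 |
| PyTorch | Hotshot-XL* | nvcr.io/nvidia/pytorch:23.10-py3 |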

*Run using NetApp DataOps Toolkit with a Jupyter Notebook.

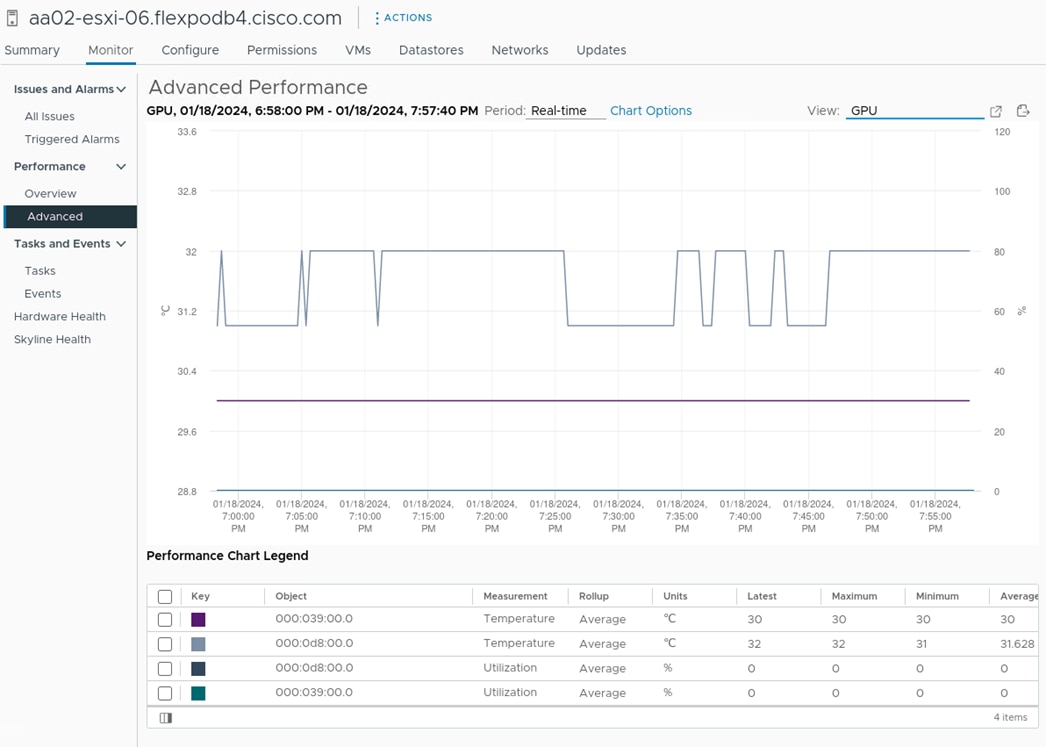

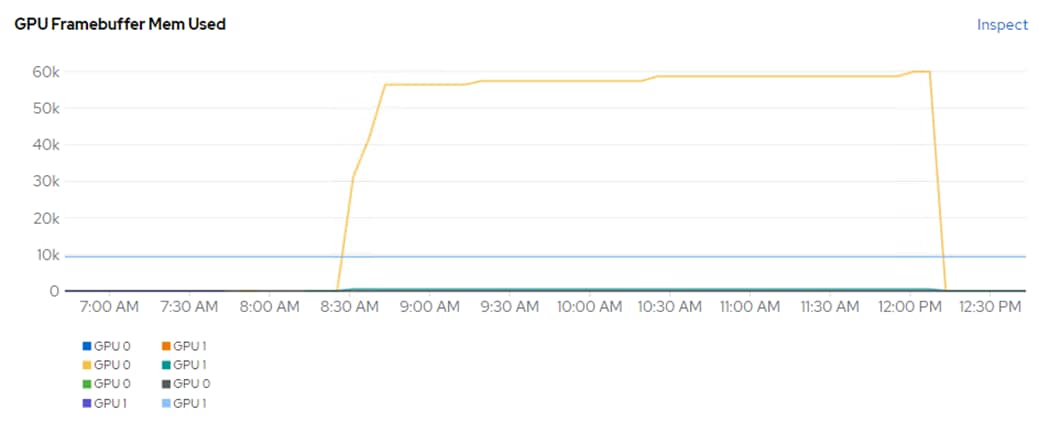

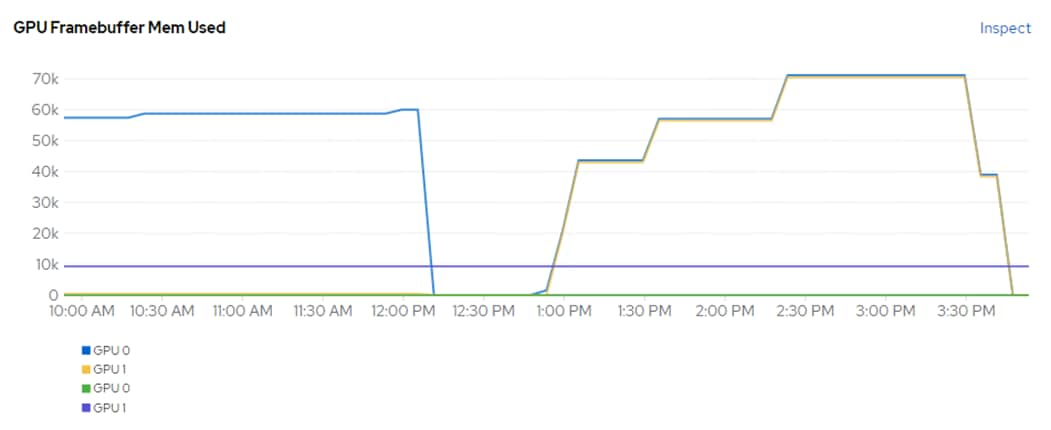

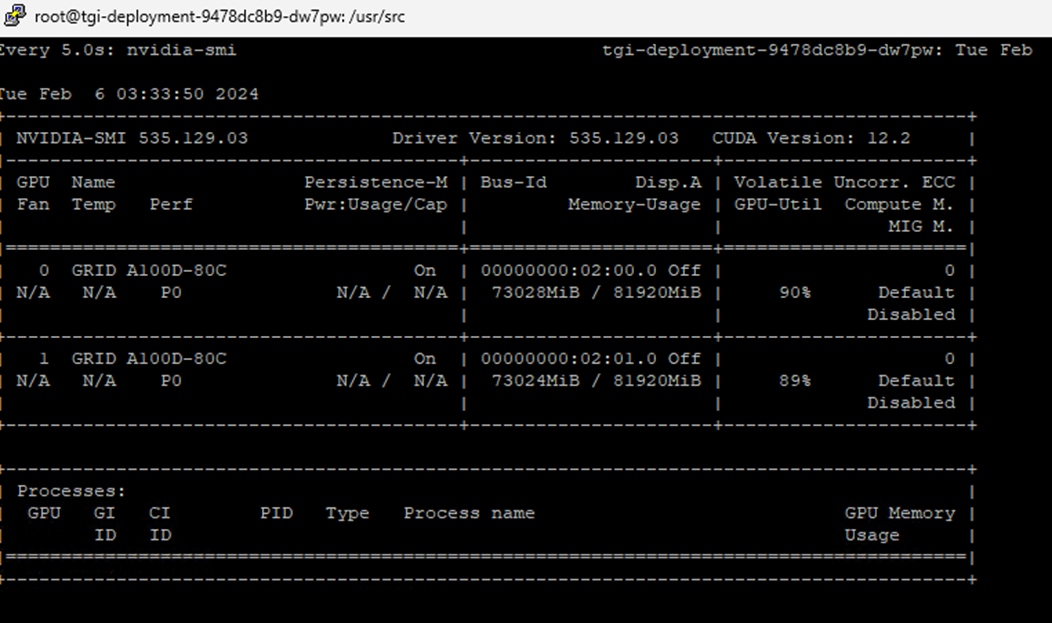

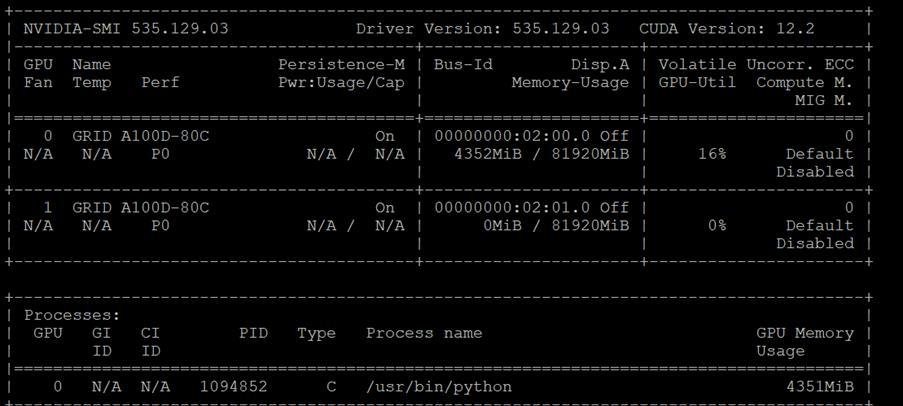

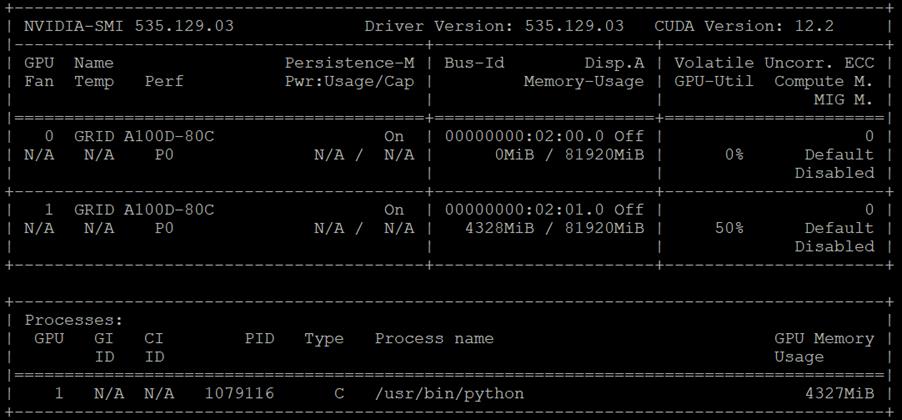

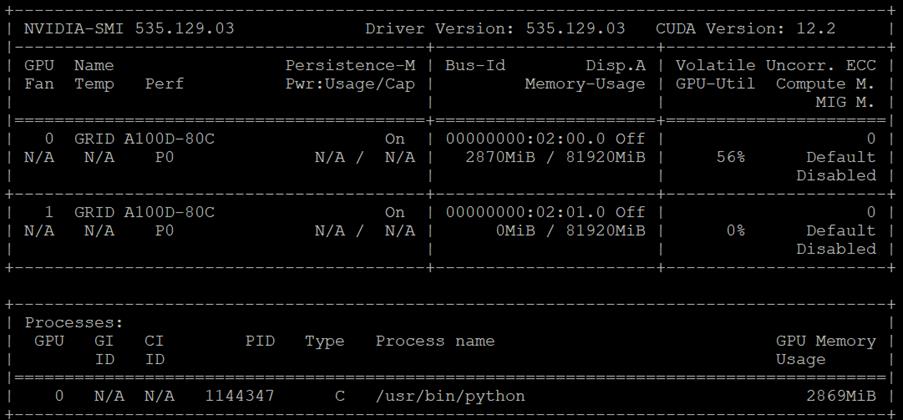

In this solution, GPUs were monitored in three ways:

nvidia-smi

The first was with the nvidia-smi tool that is installed as part of NVAIE and is mentioned above. From a monitoring perspective, the main screen of the tool can be monitored constantly by using the loop command line parameter or by using the Linux watch command. The tool also has numerous command line options to generate data about the GPU and can produce CSV files with data at specified intervals for graphing GPU data. The important thing to remember with nvidia-smi in this solution is where it is being run from. If it is run from VMware ESXi, it returns physical GPU statistics and data. If it is run from a container or pod in OCP, it returns vGPU statistics and data.
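For graphing, the CSV output mentioned above can be collected and parsed with a few lines of Python. The sketch below builds an `nvidia-smi` query command and parses its CSV rows. The chosen fields and the sample row are illustrative, and the sample values are made up. Some fields may come back as non-numeric text when a statistic is unavailable (for example, from inside a vGPU), so the parser maps those to `None`.

```python
import csv
import io

# Sketch: build an nvidia-smi CSV query and parse its output.
# The field selection is illustrative; SAMPLE contains made-up values.
FIELDS = ["timestamp", "name", "temperature.gpu", "power.draw",
          "utilization.gpu", "memory.used"]
NUMERIC_FIELDS = FIELDS[2:]

SAMPLE = "2024/02/01 10:00:00.000, NVIDIA A100 80GB PCIe, 45, 120.50, 87, 40960\n"


def build_command(interval_s: int = 5) -> list:
    """Command line that emits one CSV row per GPU every interval_s seconds."""
    return ["nvidia-smi", "--query-gpu=" + ",".join(FIELDS),
            "--format=csv,noheader,nounits", "-l", str(interval_s)]


def to_number(value: str):
    """Convert a field to float, or None when it is not numeric."""
    try:
        return float(value)
    except ValueError:
        return None


def parse_rows(text: str) -> list:
    """Parse CSV text into dictionaries keyed by field name."""
    rows = []
    for raw in csv.reader(io.StringIO(text), skipinitialspace=True):
        if len(raw) != len(FIELDS):
            continue  # skip blank or malformed lines
        row = dict(zip(FIELDS, (value.strip() for value in raw)))
        for key in NUMERIC_FIELDS:
            row[key] = to_number(row[key])
        rows.append(row)
    return rows


if __name__ == "__main__":
    print(" ".join(build_command()))
    print(parse_rows(SAMPLE))
```

The same parser works whether the command is run on the ESXi host or inside a pod. As described above, only the meaning of the numbers changes (physical GPU versus vGPU).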

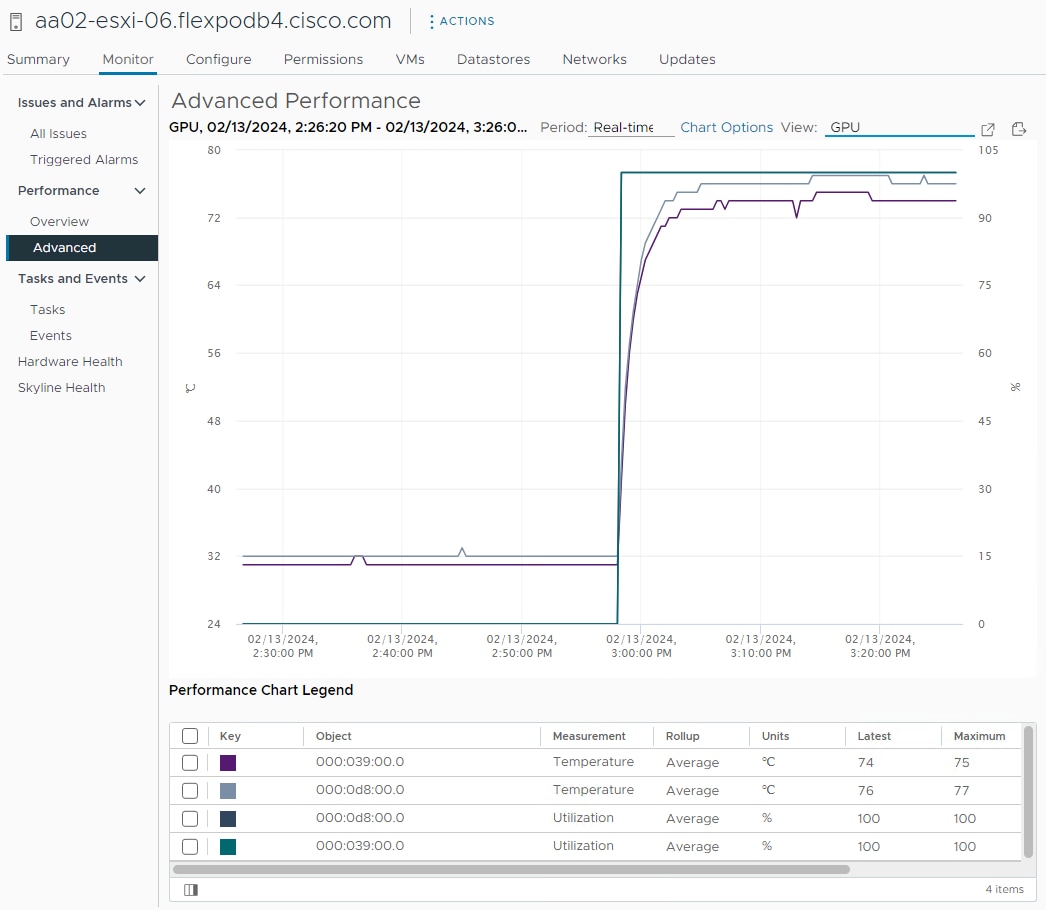

VMware vCenter GPU Statistics

In addition to nvidia-smi, the NVAIE VIBs that are installed on VMware ESXi allow VMware vCenter to collect and show real-time physical GPU data. GPU memory usage, memory used, temperature, and utilization are collected, and up to two of these items can be charted and displayed in VMware vCenter. The figure below shows an example while running GPU Burn.

Figure 9. VMware vCenter GPU Statistics While Running GPU Burn

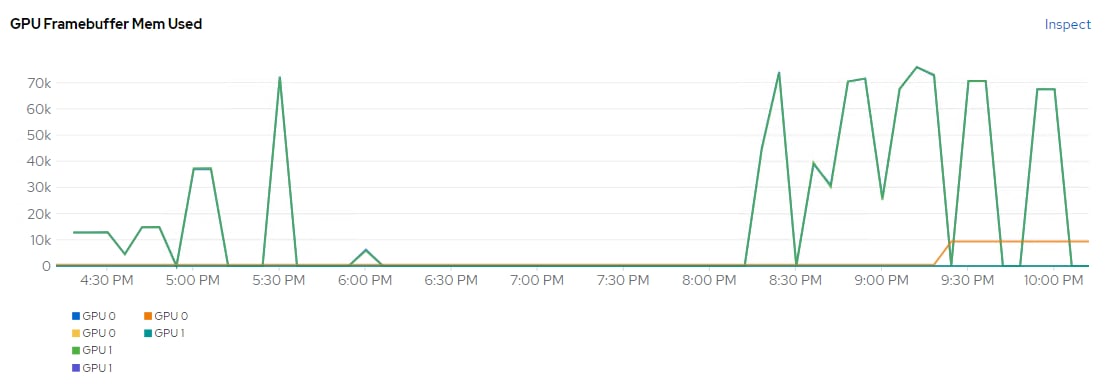

OCP Console NVIDIA DCGM Exporter Dashboard

The NVIDIA GPU Operator exposes vGPU telemetry for Prometheus by using the NVIDIA DCGM Exporter. These metrics can be visualized using a monitoring dashboard based on Grafana. The NVIDIA DCGM Exporter Grafana Dashboard can be installed in OCP and viewed in the OCP console. Figure 10 shows an example while running GPU Burn.

Figure 10. NVIDIA DCGM Exporter Dashboard While Running GPU Burn
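The DCGM Exporter publishes its telemetry in the Prometheus text format, which is simple enough to inspect directly when a dashboard is not available. The Python sketch below parses a small sample of that format. The label values in the sample are made up, and the parser is deliberately minimal: it skips comment lines and any sample line that carries an optional timestamp.

```python
import re

# Sketch: parse Prometheus text-format samples such as those exposed by the
# NVIDIA DCGM Exporter. SAMPLE uses made-up label values and readings.
SAMPLE = """\
# HELP DCGM_FI_DEV_GPU_UTIL GPU utilization (in %).
# TYPE DCGM_FI_DEV_GPU_UTIL gauge
DCGM_FI_DEV_GPU_UTIL{gpu="0",Hostname="worker-0"} 98
DCGM_FI_DEV_FB_USED{gpu="0",Hostname="worker-0"} 40960
"""

LINE_RE = re.compile(
    r'^(?P<name>[A-Za-z_:][A-Za-z0-9_:]*)(?:\{(?P<labels>.*)\})?\s+(?P<value>\S+)$')
LABEL_RE = re.compile(r'(\w+)="([^"]*)"')


def parse_metrics(text: str) -> list:
    """Return (metric name, labels dict, value) tuples for each sample line."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blanks and HELP/TYPE comments
        match = LINE_RE.match(line)
        if not match:
            continue  # minimal parser: unsupported line shapes are ignored
        labels = dict(LABEL_RE.findall(match.group("labels") or ""))
        samples.append((match.group("name"), labels, float(match.group("value"))))
    return samples


if __name__ == "__main__":
    for name, labels, value in parse_metrics(SAMPLE):
        print(name, labels.get("gpu"), value)
```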

Cisco Intersight

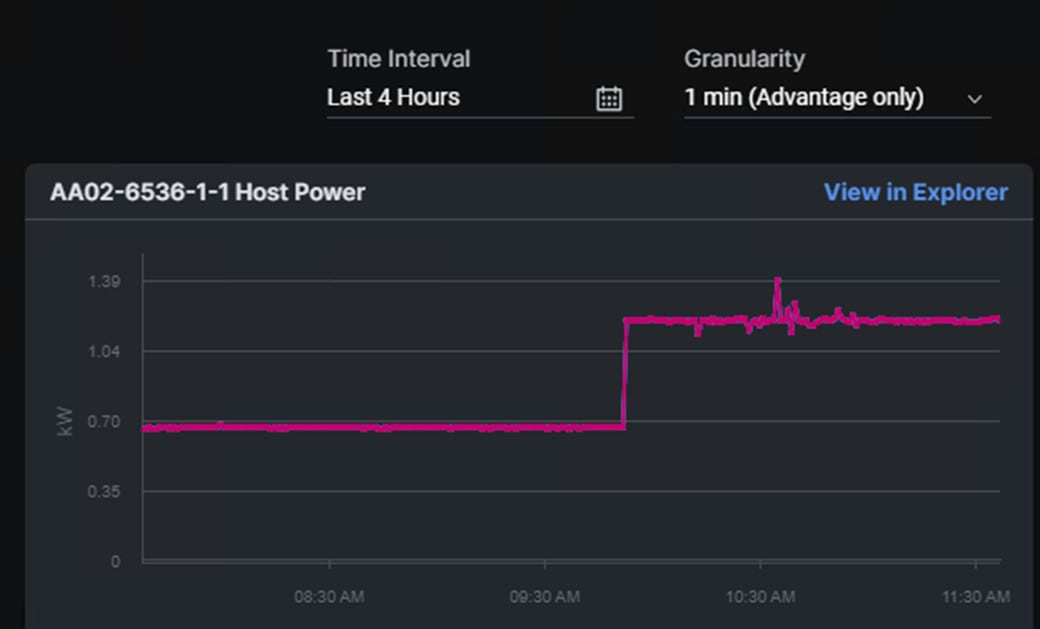

In Cisco Intersight, power consumption of GPUs installed in a Cisco UCS X440p and attached to an X210c M7 server using Cisco UCS X-Fabric is added to the server’s power consumption and shown in the server Metrics screen. In Figure 11, the Cisco UCS X210c M7 was using around 600W before GPU Burn was started. Each of the 2 NVIDIA A100-80 GPUs attached to this server has a maximum usage of 300W. GPU Burn adds 600W of power consumption from the GPUs, bringing the server power consumption to around 1200W.

Figure 11. Cisco UCS Server Power Usage While Running GPU Burn

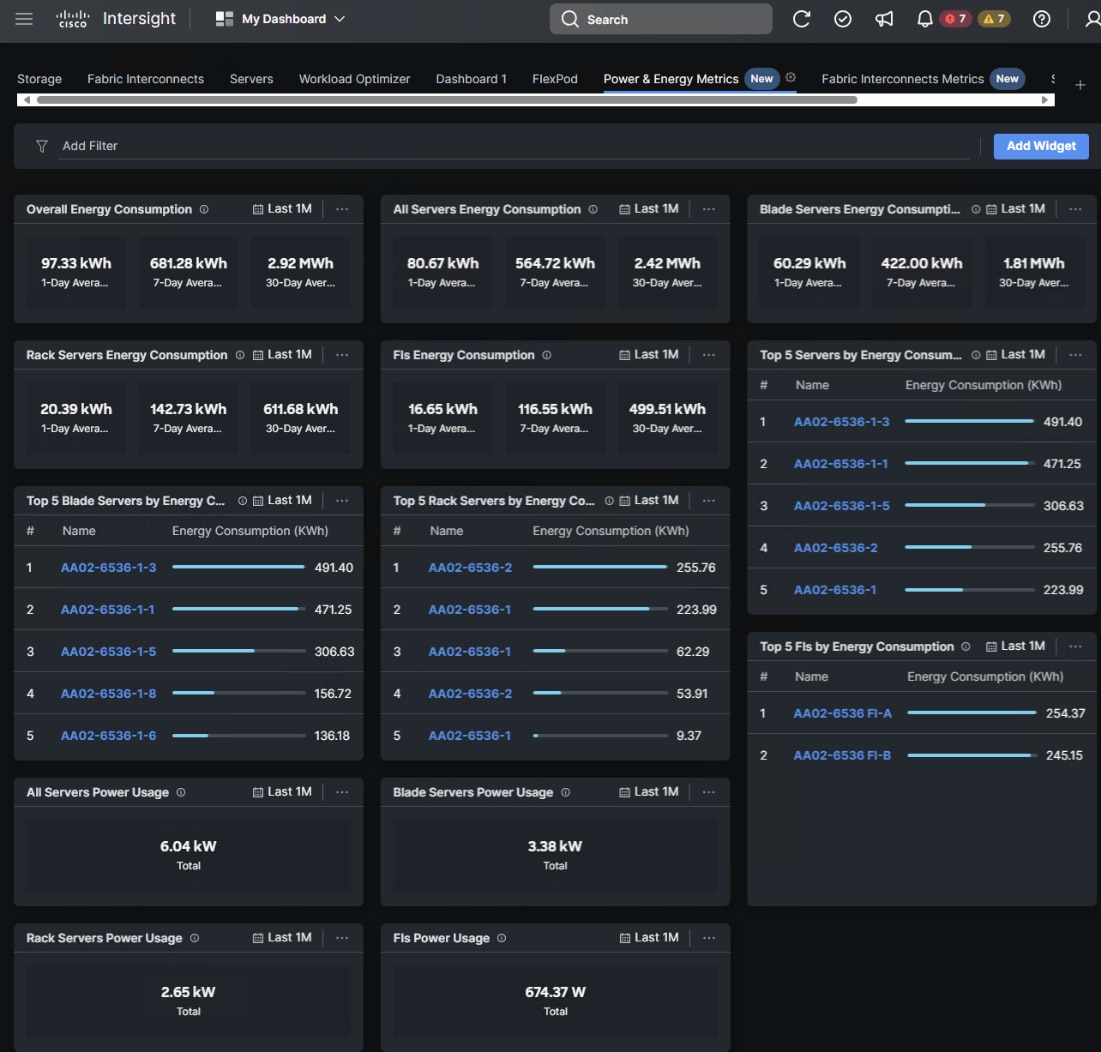

Cisco Intersight has also recently added a Power & Energy Metrics Dashboard under My Dashboard. This dashboard shows summary power and energy metrics for all servers in the Intersight Account.

Figure 12. Cisco Intersight Power and Energy Metrics Dashboard

VMware vSphere

In VMware vSphere, Distributed Power Management (DPM) was tested as part of this validation. The FlexPod-Management cluster in this validation consisted of 7 VMs running on a cluster of 3 VMware ESXi hosts and the OCP cluster consisted of 6 VMs running on a cluster of 5 VMware ESXi hosts. DPM uses IPMI over LAN to power on hosts that have been put into Standby mode when necessary. DPM was enabled in a manual automation mode for each cluster, and it was disabled with an override for hosts with GPUs. Then two hosts (one in each cluster) were suspended (powered off), saving energy consumption.

Solution Design

This chapter contains the following:

● FlexPod Multi-Tenant Configuration

The FlexPod Datacenter with Cisco UCS and Cisco Intersight meets the following general design requirements:

● Resilient design across all layers of the infrastructure with no single point of failure

● Scalable design with the flexibility to add compute capacity, storage, or network bandwidth as needed

● Modular design that can be replicated to expand and grow as the needs of the business grow

● Flexible design that can support different models of various components with ease

● Simplified design with ability to integrate and automate with external automation tools

● Cloud-enabled design which can be configured, managed, and orchestrated from the cloud using GUI or APIs

To deliver a solution which meets all these design requirements, various solution components are connected and configured as covered in the upcoming sections.

The FlexPod Datacenter solution with Cisco UCS IMM M7, VMware 8.0, and NetApp ONTAP 9.13.1 is built using the following hardware components:

● Cisco UCS X9508 Chassis with Cisco UCSX-I-9108-100G intelligent fabric modules (IFMs), up to eight Cisco UCS X210C M7 Compute Nodes with 4th Generation Intel Xeon Scalable CPUs, and up to four Cisco UCS X440p PCIe Nodes each with up to two NVIDIA A100-80 GPUs (each X440p would go in place of an X210c)

● Fourth-generation Cisco UCS 6536 Fabric Interconnects to support 100GbE and 25GbE connectivity from various components

● Cisco UCS C220 M7 and C240 M7 rack mount servers with 4th Generation Intel Xeon Scalable CPUs

● High-speed Cisco NX-OS-based Nexus 93600CD-GX switching design to support 100GE and 400GE connectivity

● NetApp AFF A800 end-to-end NVMe storage with 25G or 100G Ethernet

The software components of this solution consist of:

● Cisco Intersight to deploy, maintain, and support the Cisco UCS server components

● Cisco Intersight SaaS platform to maintain and support the FlexPod components

● Cisco Intersight Assist Virtual Appliance to help connect NetApp ONTAP, VMware vCenter, and Cisco Nexus switches with Cisco Intersight

● NetApp Active IQ Unified Manager to monitor and manage the storage and for NetApp ONTAP integration with Cisco Intersight

● VMware vCenter to set up and manage the virtual infrastructure as well as Cisco Intersight integration

● Red Hat OCP to manage a Kubernetes containerized environment

● NVAIE at both the VMware ESXi and Red Hat OCP layers to manage GPU and vGPU drivers and to provide AI Inferencing software containers

● NetApp Astra Trident to provide persistent storage to OCP containers

● NetApp DataOps Toolkit to provide Jupyter notebooks for running AI Inferencing software

FlexPod Datacenter with Generative AI Inferencing with IP-based Storage Access

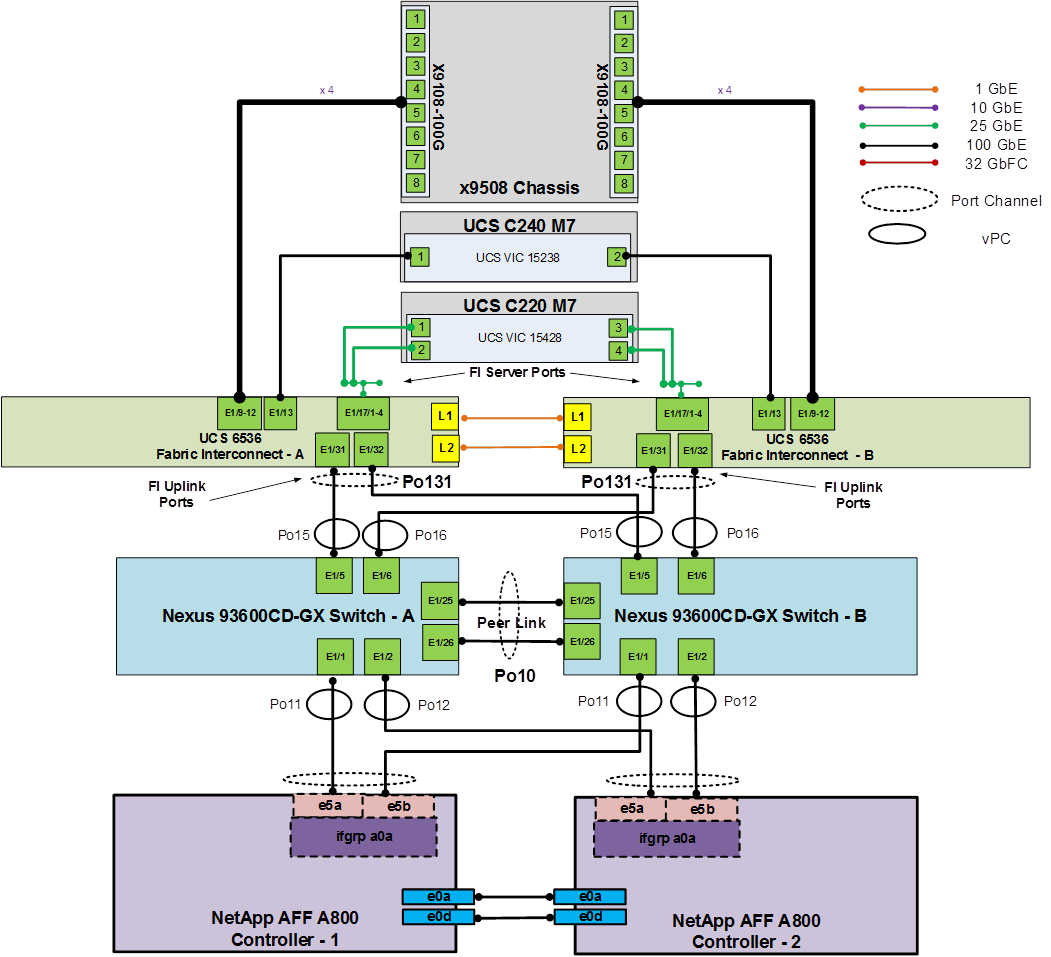

Figure 14 shows various hardware components and the network connections for the IP-based FlexPod design for Generative AI Inferencing.

Figure 14. FlexPod Datacenter Physical Topology for IP-based Storage Access

The reference hardware configuration includes:

● Two Cisco Nexus 93600CD-GX Switches in Cisco NX-OS mode provide the switching fabric.

● Two Cisco UCS 6536 Fabric Interconnects (FI) provide the chassis connectivity. Two 100 Gigabit Ethernet ports from each FI, configured as a Port-Channel, are connected to each Nexus 93600CD-GX.

● One Cisco UCS X9508 Chassis connects to fabric interconnects using Cisco UCS UCSX-I-9108-100G IFMs, where four 100 Gigabit Ethernet ports are used on each IFM to connect to the appropriate FI. If additional bandwidth is required, all eight 100G ports can be utilized.

● The Cisco UCS X9508 Chassis is also equipped with a pair of Cisco UCS 9416 X-Fabric modules.

● One NetApp AFF A800 HA pair connects to the Cisco Nexus 93600CD-GX Switches using two 100 GE ports from each controller configured as a Port-Channel.

● One Cisco UCS C240 M7 rack mount server connects to the Fabric Interconnects using two 100 GE ports per server.

● One Cisco UCS C220 M7 rack mount server connects to the Fabric Interconnects using four 25 GE ports per server via breakout.

● Up to two NVIDIA A100-80 GPUs are installed in the Cisco UCS X440p cards and are connected to the Cisco UCS X210C M7 in the adjacent slot by X-Fabric.

● This reference configuration consists of 2 Cisco UCS X210c M7 servers each with an X440p card with 2 NVIDIA A100-80 GPUs, 2 additional Cisco UCS X210c M7 servers, 2 Cisco UCS X210c M6 servers, 1 Cisco UCS C240 M7, and 1 Cisco UCS C220 M7.

FlexPod Multi-Tenant Configuration

In the deployment section of this document, a base FlexPod is set up using Ansible playbooks by following the latest FlexPod Datacenter using IaC with Cisco IMM M7, VMware vSphere 8, and NetApp ONTAP 9.12.1 Deployment Guide. This validation uses NetApp ONTAP 9.13.1 rather than 9.12.1, and the Ansible playbooks have been successfully tested with ONTAP 9.13.1. In a FlexPod Multi-Tenant Configuration, the base FlexPod setup is the first tenant, the FlexPod Infrastructure tenant. This CVD adds a second tenant (OCP) to the FlexPod, setting up a platform to run Generative AI Inferencing.

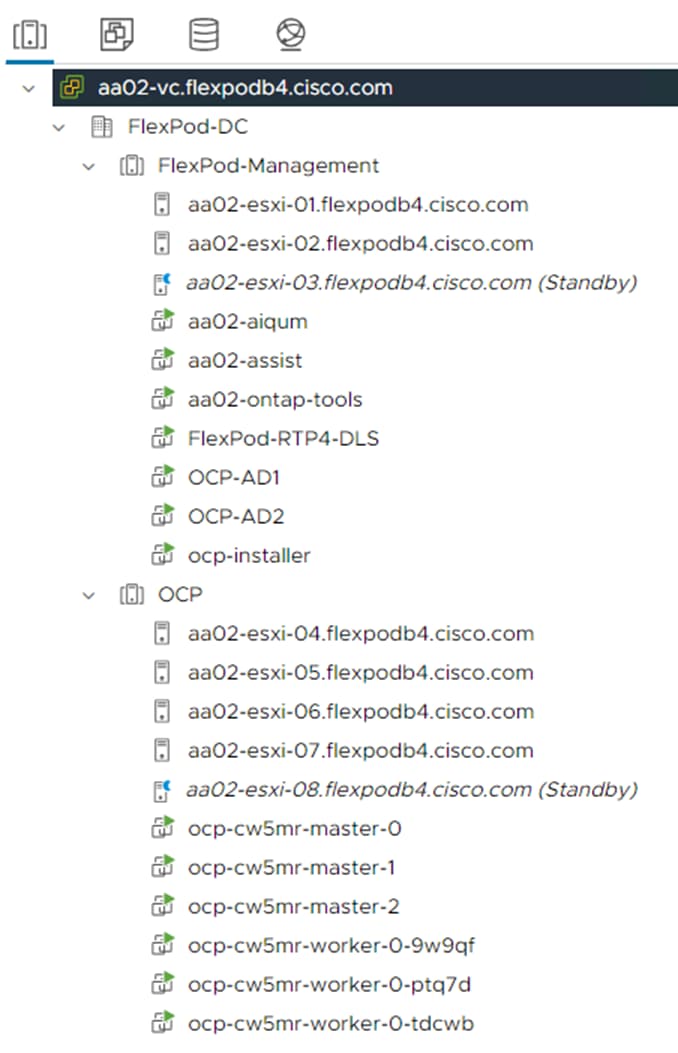

The first question that must be answered in setting up a multi-tenant environment is how the servers are going to be configured and booted. In this FlexPod environment, all servers run the VMware ESXi hypervisor, and there are two tenants.

The first decision to be made is whether to run a fully shared infrastructure where both tenants run on all servers, or to dedicate servers to tenants. In this setup, it made the most sense to dedicate servers to the two tenants instead of setting up a fully shared infrastructure. Because this is a virtualized setup, it can be run on a minimum of six ESXi hosts, three for each tenant cluster. In this setup, eight total servers were available, and the decision was made to dedicate three servers to the FlexPod Infrastructure tenant, where both FlexPod and OCP management VMs reside, and five servers to the OCP cluster, where the three OCP master VMs and three OCP worker VMs reside. The OCP master VMs have minimal requirements, and more than one of these VMs can run on a single ESXi host. The OCP worker VMs can also be sized so that more than one worker, or one worker and one master, can run on a single ESXi host.

Given that a separate NetApp Storage Virtual Machine (SVM) is used for each tenant, the second decision to be made is whether to iSCSI SAN boot all servers from the FlexPod Infrastructure SVM or to boot the three FlexPod Management servers from the Infrastructure SVM and the five OCP servers from the OCP SVM. Booting the OCP servers from the OCP SVM would require a new UCS Server Profile to be created with different iSCSI vNICs, different iSCSI boot IP pools in different subnets, and different iSCSI connection policies. Also, adding a FlexPod tenant mainly involves adding VLANs and a NetApp SVM for the tenant, and the base FlexPod design allows these VLANs to be added to the UCS Domain Profile VLAN Policy, the base UCS Server Profile, and the VMware vDSs without generating a new Server Profile. For these reasons, it made the most sense to boot all of the servers from the FlexPod Infrastructure SVM and use the same Server Profile template for both tenants.

Nexus Switch OCP Tenant Additions

To add the OCP tenant to this FlexPod, we first added four VLANs (one for OCP management, one for OCP NFS, and two for OCP NVMe-TCP) to the switches. We then added those VLANs to the appropriate switchports or port channels. Finally, to simplify OCP NTP setup, we added a switched virtual interface (SVI) in each switch as an OCP-MGMT VLAN interface, because in NX-OS mode, if NTP distribution is enabled, any SVI becomes an NTP distribution interface. We also added an OCP Virtual Routing and Forwarding (VRF) instance and a default gateway in this VRF in case requests come into the OCP SVI from another subnet.

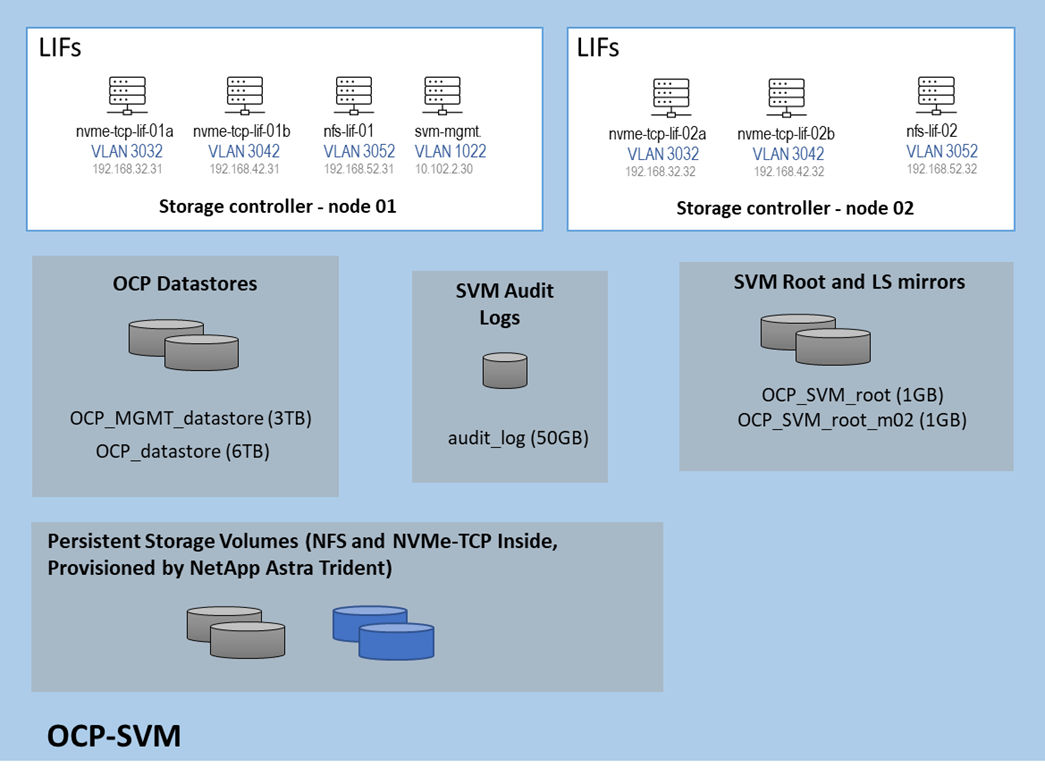

NetApp Storage OCP Tenant Additions

To add the OCP tenant to the NetApp storage we first created VLAN interfaces for the four OCP VLANs, then created corresponding broadcast domains and added the VLAN interfaces to these broadcast domains. After that we followed best practices in creating the OCP SVM and created Logical Interfaces (LIFs) inside that SVM for OCP management and storage interfaces. Next, we added two volumes to become NFS datastores, one for OCP management VMs and the other for OCP master and worker VMs. Finally, we enabled and set a password for the OCP SVM vsadmin user, which was used by NetApp Astra Trident to provision persistent container storage for OCP. The OCP SVM storage layout is shown below. Persistent container storage volumes are also added to this SVM by NetApp Astra Trident.

Figure 15. Initial Storage Layout of NetApp OCP SVM

Cisco UCS OCP Tenant Additions

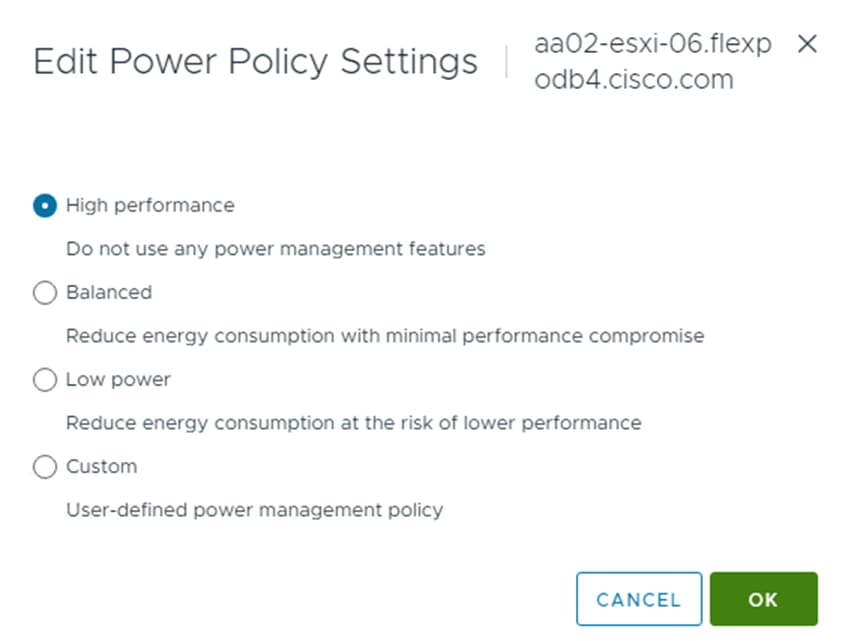

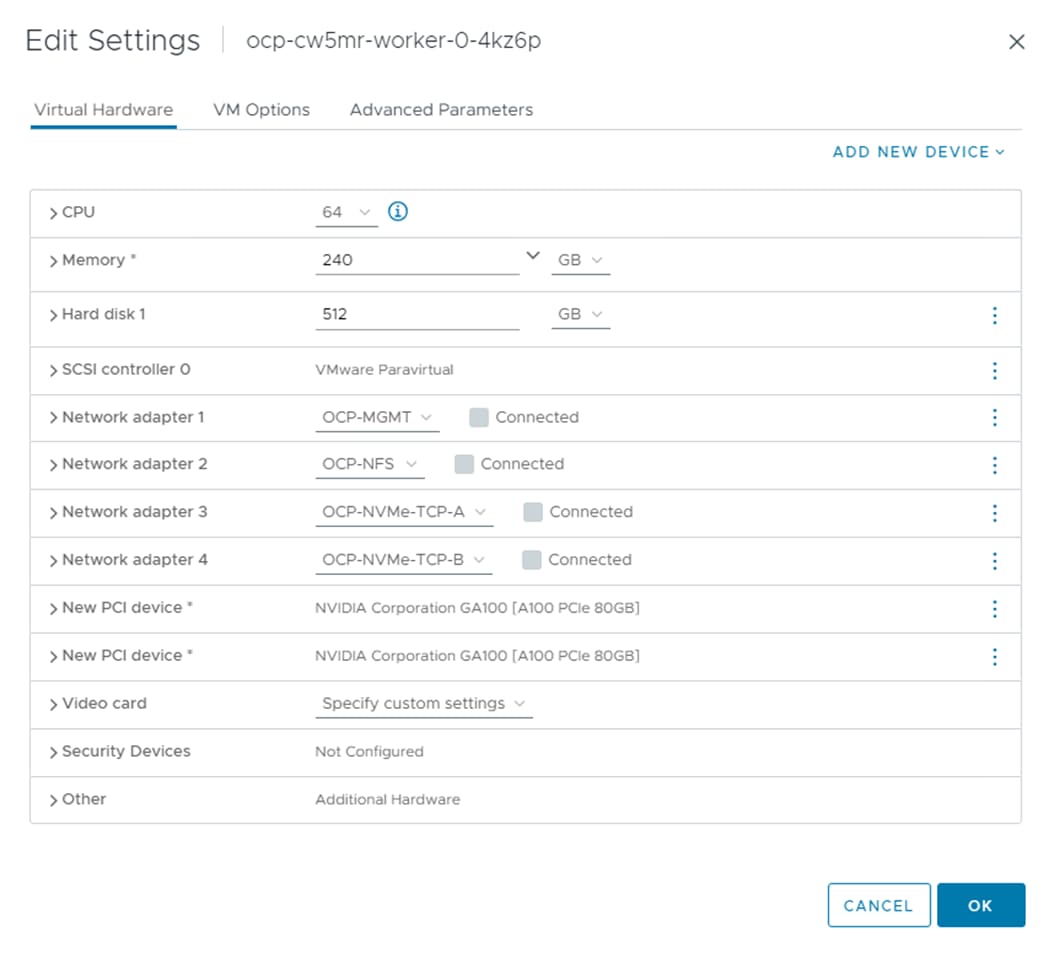

In Cisco UCS IMM, the VLANs were first added to the VLAN policy that is part of the UCS Domain Profile, and the Domain Profile was redeployed. The VLANs were then added to the appropriate Ethernet Network Group policies to add them to the vNICs for vDS0 and the iSCSI-NVMe-TCP-vDS. Next, the standard virtualization BIOS Policy was cloned, and some BIOS tokens were modified for use on the servers with GPUs. The default BIOS and VMware settings for servers in FlexPod use a balanced power profile where power is conserved with very little effect on performance. For servers with GPUs, it is recommended to use a high-performance power profile policy and also to enable the BIOS token for Memory Mapped IO above 4GiB. The appropriate Server Profile template was cloned, and the cloned BIOS policy was added to the cloned template. This new template was then applied to the servers with GPUs. All server profiles were then redeployed, and only the servers with GPUs were rebooted.

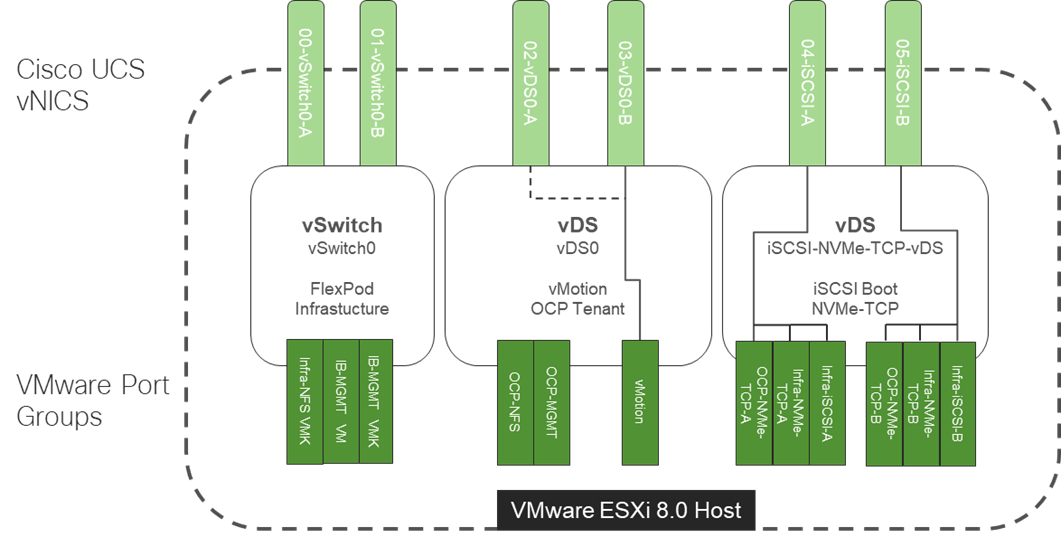

VMware vSphere OCP Tenant Additions

In VMware vCenter, the first step was to create a separate OCP ESXi cluster to hold the OCP ESXi hosts. This cluster, along with the FlexPod-Management cluster, was set up to be managed with a single image. The image for the FlexPod-Management cluster included the standard FlexPod drivers, including the Cisco VIC nenic driver, the NetApp NFS VAAI plugin, and the Cisco UCS tool component. The image for the OCP cluster had all the FlexPod drivers plus the two NVAIE drivers required for the GPUs. The servers used for OCP were then moved to the OCP cluster and remediated to add the NVAIE drivers. Port groups were added to the two vDSs for OCP-MGMT, OCP-NFS, OCP-NVMe-TCP-A, and OCP-NVMe-TCP-B. The Infra-NVMe-TCP VMkernel ports were removed from the OCP servers, and an OCP-NFS VMkernel port was added to each OCP host and to each FlexPod-Management host. It was not necessary to configure OCP NVMe-TCP VMkernel ports since OCP NVMe-TCP was only used to map persistent storage directly to the worker VMs. The OCP-MGMT datastore was mounted on all ESXi hosts, and the OCP datastore was then mounted only on the OCP hosts. This mounting allowed the OCP management VMs (Windows AD and OCP Installer) to run in either the FlexPod-Management or OCP cluster. Finally, the Hardware Power Policy for the ESXi hosts with GPUs was changed to High performance.

VMware VMs can be successfully live migrated between Cisco UCS M7 servers with Intel 4th Generation Xeon Scalable CPUs and Cisco UCS M6 servers with Intel 3rd Generation Xeon Scalable CPUs. This capability allowed us to mix Cisco UCS M7 and M6 servers in the same VMware ESXi cluster and have vMotion without using Enhanced vMotion Compatibility (EVC).

The VMware network design with OCP included is shown in Figure 16. This diagram shows the base FlexPod VMware Network Design with pinning and the OCP tenant port groups added. In many ways, this diagram sums up the network design of the FlexPod. It shows how tenant connectivity can be added to the FlexPod, and it provides a platform to add additional tenants in a consistent way.

Figure 16. VMware Network Design with OCP Tenant

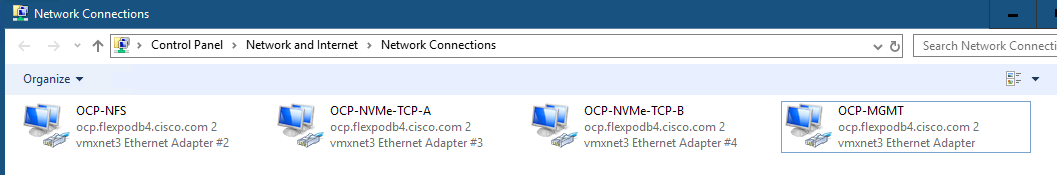

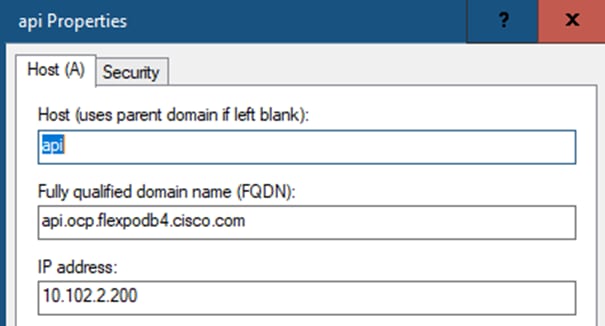

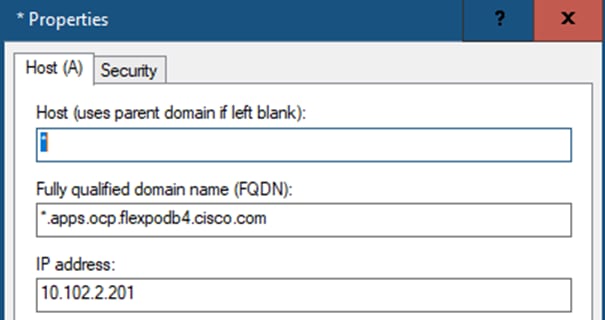

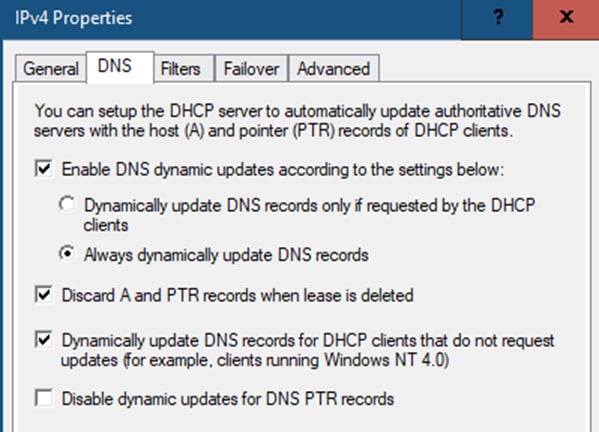

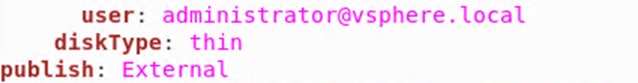

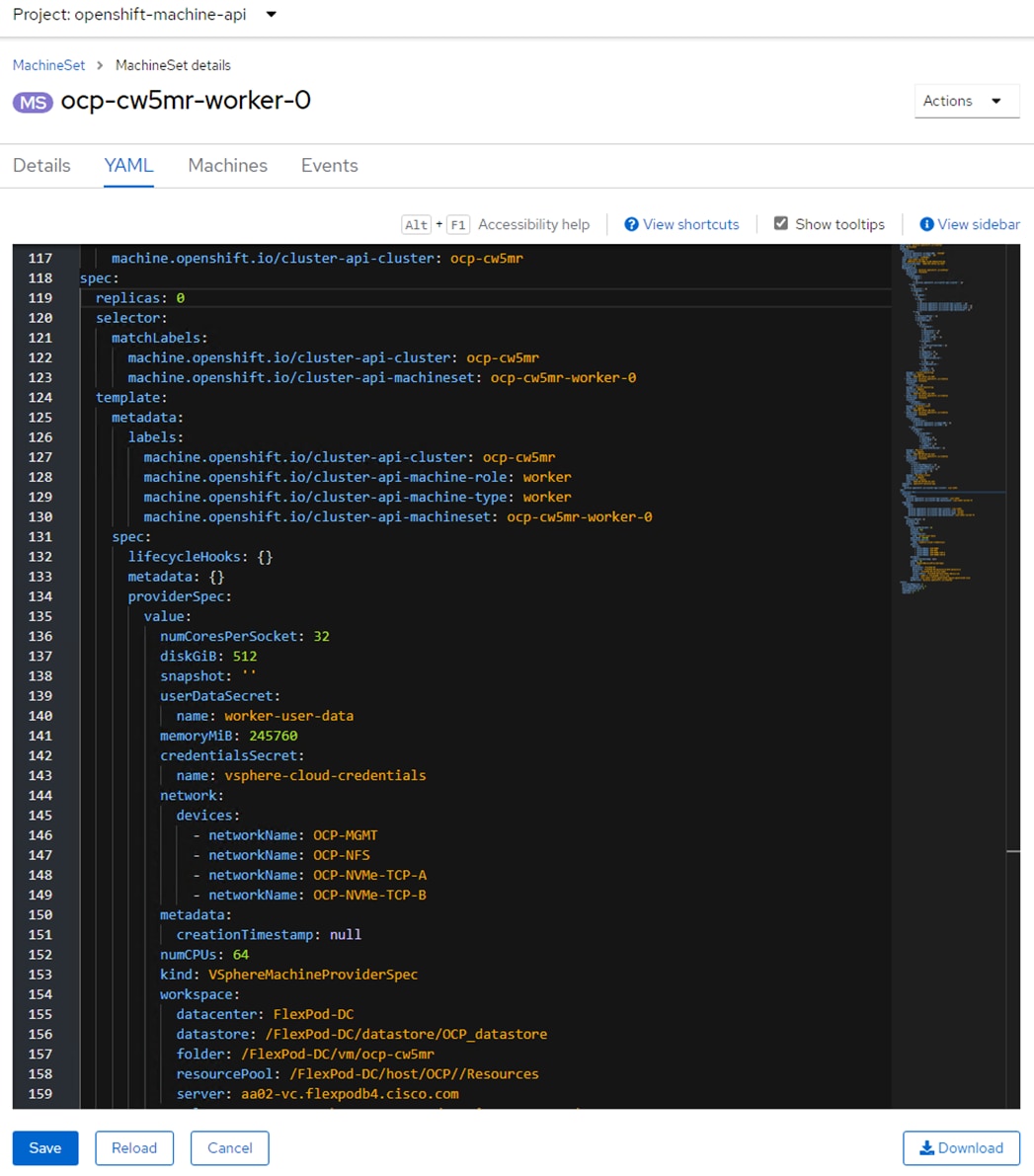

To install Red Hat OCP, two Windows AD/DNS/DHCP server VMs were set up first, each with four network interfaces (OCP-MGMT, OCP-NFS, OCP-NVMe-TCP-A, and OCP-NVMe-TCP-B), a DHCP scope for each network interface, and the DNS entries required for OCP. Next, a Rocky Linux ocp-installer VM was set up. After that, the OCP IPI installer, with a yaml file specifying the VMware vCenter IP address, user ID, password, cluster, and datastore for OCP, was used to install and bring up the OCP cluster. Next, OCP post-configuration was done to properly set up NTP servers and NVMe-TCP. In VMware vCenter, the OCP VM template was modified with some advanced settings for connecting PCI devices to VMs and upgraded to VM version 20 to allow for up to 8 vGPUs per worker VM. The OCP Machineset was then modified to resize the worker VMs and to add NFS and NVMe-TCP network interfaces to the worker VMs. These storage interfaces get their IP addresses via DHCP from the OCP AD/DNS/DHCP servers. The number of Machineset replicas was then set to zero and then back to three to regenerate the workers.

NVIDIA GPU Operator

After OCP was installed and set up, the NVIDIA GPU Operator was installed to provide vGPU drivers and licensing. The first step is to install and configure an NVIDIA licensing server connected to the NVIDIA cloud. In this validation, an on-prem NVIDIA Delegated License Service (DLS) instance was installed from OVA and connected to the NVIDIA cloud to receive licenses. A Cloud License Service (CLS) instance in the NVIDIA cloud can also be used. Next, the VMware ESXi host GPU settings were adjusted to Shared Direct graphics, and vGPUs were assigned to OCP worker nodes. Next, the OCP Node Feature Discovery (NFD) Operator was installed to identify vGPUs connected to worker VMs. Then, the NVIDIA GPU Operator was installed with secure connectivity to the DLS for license retrieval and to the NVIDIA NGC Catalog for retrieval of the NVAIE vGPU driver. Once the NVIDIA GPU Operator was fully up and operational, vGPU licensing was checked, because unlicensed vGPUs run with reduced performance. This step also verified that the vGPUs and drivers were properly set up. The final steps were to enable the vGPU Dashboard in the OCP Console and to enable GPU monitoring in VMware vCenter.

NetApp Astra Trident

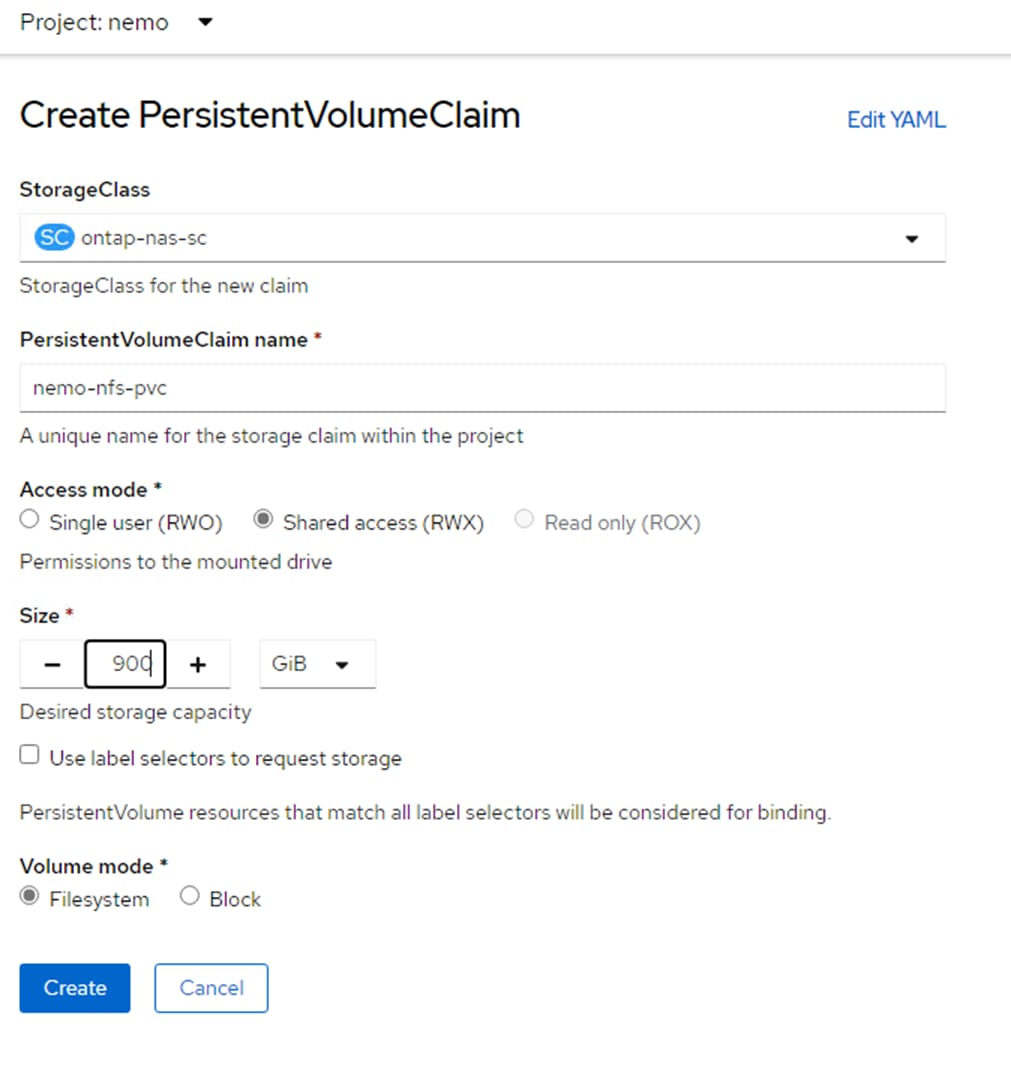

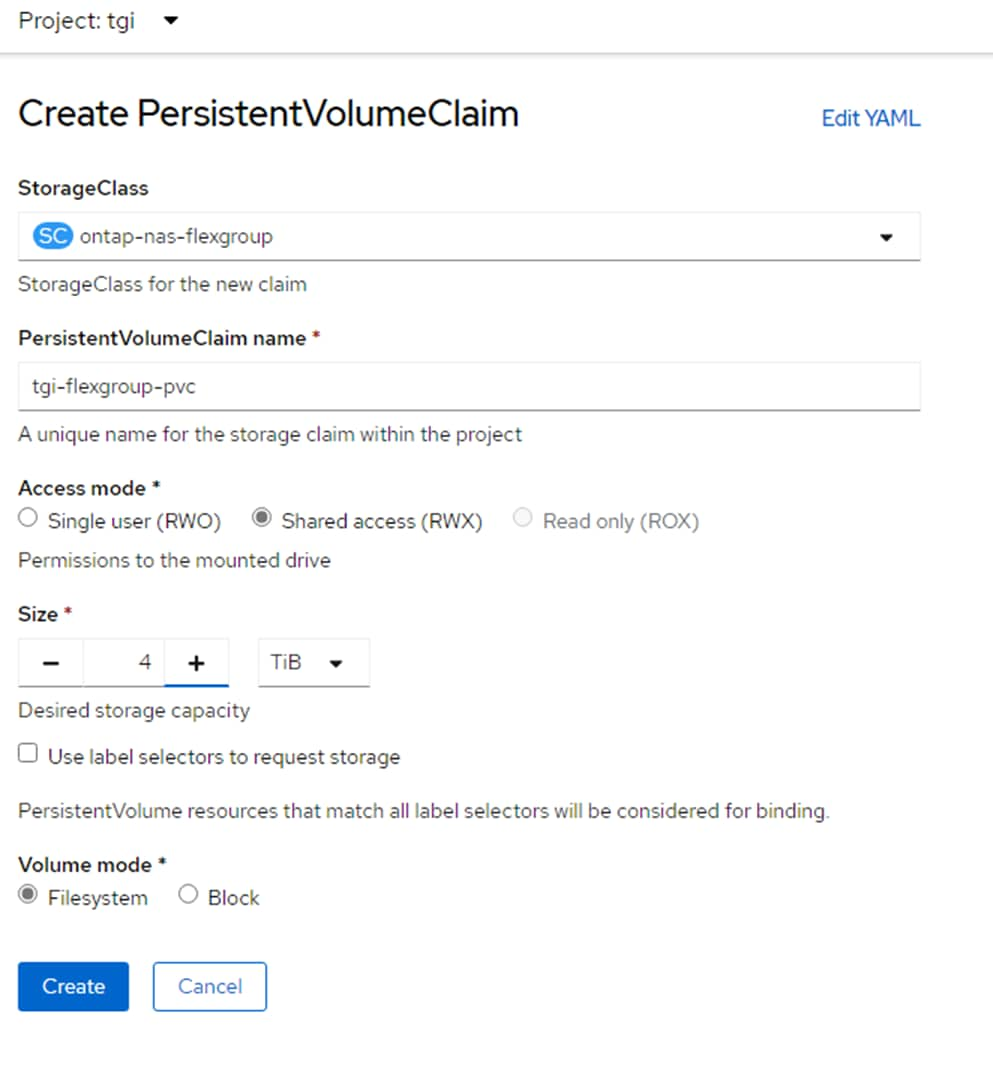

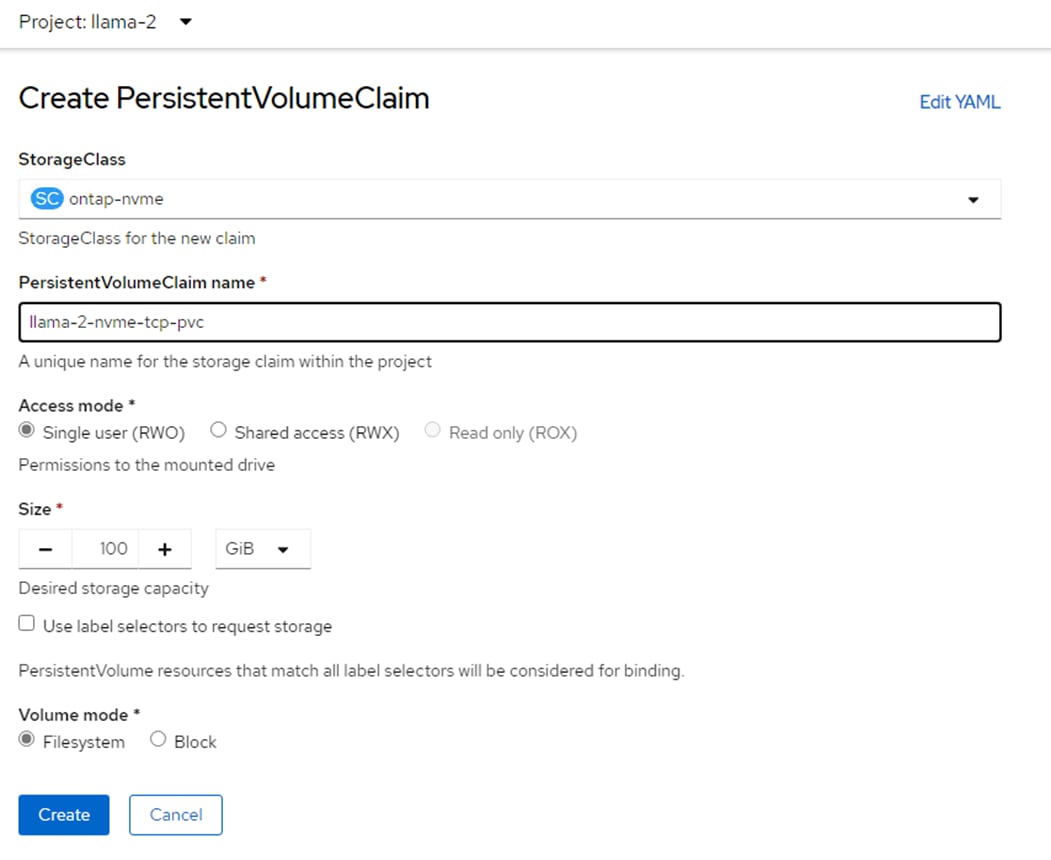

NetApp Astra Trident was then installed into OCP with Helm, and three storage classes for persistent storage were created:

● NFS 4.1 with a Single Volume with Shared or Single User Access

● NFS 4.1 with NetApp FlexGroup with Shared or Single User Access

● NVMe-TCP with Single User Access

Once the storage classes were created, a persistent volume claim (PVC) could be created, with Trident creating the backing storage on the NetApp AFF A800. Then, when a pod or container was deployed, the PVC could be used to attach the persistent storage to the pod or container. Data in the persistent storage is not deleted each time a container is recreated, meaning the pod can be deleted and a replacement pod automatically added without losing any data in the persistent storage. This capability was used in this solution, where the AI Inferencing models were stored in persistent storage and standard containers were used for the inferencing server or software. With limited GPU resources, deployments of different inferencing servers could be deleted and re-added without having to re-download the AI Inferencing models. The other aspect of the storage classes was Shared or Single User Access. With the NFS-based Storage Classes, multiple containers and other devices could be attached to the same persistent storage. This could allow more than one inferencing server to share a single AI model repository. In this validation, NFS-based persistent storage was mounted from the OCP Installer VM to allow direct copying of AI models to persistent storage. All three Storage Classes were tested in this validation.
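To make the Shared versus Single User Access distinction concrete, the sketch below builds PersistentVolumeClaim manifests in Python and prints them as JSON, which can be saved and applied with the `oc` client. The storage class names (`ontap-nfs`, `ontap-nvme-tcp`), claim names, and sizes are hypothetical placeholders and not the names used in this validation. Shared access is expressed with the standard Kubernetes `ReadWriteMany` access mode and single-user access with `ReadWriteOnce`.

```python
import json


def make_pvc(name, storage_class, size, shared):
    """Build a PersistentVolumeClaim manifest as a plain dictionary."""
    access_mode = "ReadWriteMany" if shared else "ReadWriteOnce"
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": [access_mode],
            "resources": {"requests": {"storage": size}},
            "storageClassName": storage_class,
        },
    }


if __name__ == "__main__":
    # Hypothetical names: a shared NFS model repository and a single-user NVMe-TCP volume.
    model_repo = make_pvc("model-repo", "ontap-nfs", "100Gi", shared=True)
    scratch = make_pvc("inference-scratch", "ontap-nvme-tcp", "50Gi", shared=False)
    print(json.dumps(model_repo, indent=2))
    print(json.dumps(scratch, indent=2))
```

A shared claim of this kind is what would allow more than one inferencing server to mount the same model repository.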

Each layer of this platform was built with base security as a requirement. If further security is needed, please refer to FlexPod Datacenter Zero Trust Framework Design Guide. The FlexPod multi-tenant architecture defined in this document aligns with the model in the Zero Trust document.

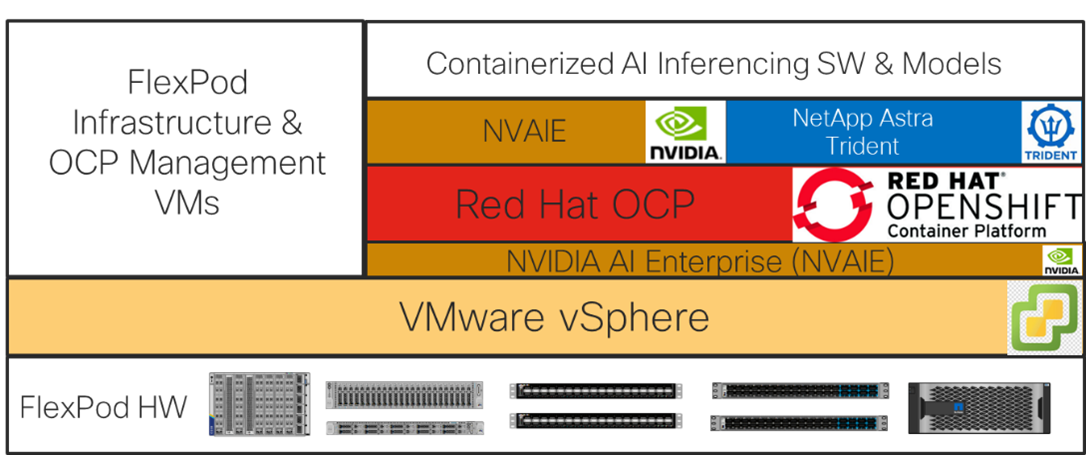

The base FlexPod with VMware vSphere architecture was configured, then NVIDIA GPUs and an OCP tenant were added, followed by NVAIE on VMware, Red Hat OCP, NVAIE on OCP, and NetApp Astra Trident to build a powerful platform for running Generative AI Inferencing. This layered approach is summarized in the figure below. Each layer was configured with best practices and security, resulting in a high-performance, secure platform for Generative AI Inferencing. This platform can be extended for further AI applications such as Training, Fine Tuning, and Retrieval Augmented Generation (RAG), provided that the platform is sized for the application.

Figure 17. FlexPod as a Platform for Generative AI Inferencing

Note: If the NVIDIA physical GPUs are assigned to the Red Hat OCP workers as PCI devices and the NVAIE driver is not installed in VMware ESXi, then the NVAIE layer between VMware vSphere and Red Hat OCP can be removed.

Solution Deployment

This chapter contains the following:

● Deploy the NVIDIA GPU Operator on OCP

Note: The NetApp storage controller and disk shelves should be connected according to best practices for the specific storage controller and disk shelves. For disk shelf cabling, refer to NetApp Support: https://docs.netapp.com/us-en/ontap-systems/index.html

Table 2 lists VLANs configured for setting up the FlexPod environment along with their usage.

| VLAN ID | Name | Usage | IP Subnet used in this deployment |
| --- | --- | --- | --- |
| 2 | Native-VLAN | Use VLAN 2 as native VLAN instead of default VLAN (1). | |
| 1020 | OOB-MGMT-VLAN | Out-of-band management VLAN to connect management ports for various devices | 10.102.0.0/24; GW: 10.102.0.254 |
| 1021 | IB-MGMT-VLAN | In-band management VLAN utilized for all in-band management connectivity - for example, ESXi hosts, VM management, and so on. | 10.102.1.0/24; GW: 10.102.1.254 |
| 1022 | OCP-MGMT | OCP management traffic VLAN – used in place of VM-Traffic VLAN | 10.102.2.0/24; GW: 10.102.2.254 |
| 3050 | NFS-VLAN | NFS VLAN for mounting datastores in ESXi servers for VMs | 192.168.50.0/24 ** |
| 3010 | iSCSI-A | iSCSI-A path for storage traffic including boot-from-san traffic | 192.168.10.0/24 ** |
| 3020 | iSCSI-B | iSCSI-B path for storage traffic including boot-from-san traffic | 192.168.20.0/24 ** |
| 3030 | NVMe-TCP-A | NVMe-TCP-A path when using NVMe-TCP | 192.168.30.0/24 ** |
| 3040 | NVMe-TCP-B | NVMe-TCP-B path when using NVMe-TCP | 192.168.40.0/24 ** |
| 3000 | vMotion | VMware vMotion traffic | 192.168.0.0/24 ** |
| 3052* | OCP-NFS | NFS VLAN for OCP persistent storage and OCP cluster and support VMs | 192.168.52.0/24 ** |
| 3032* | OCP-NVMe-TCP-A | NVMe-TCP-A path when using NVMe-TCP for persistent storage | 192.168.32.0/24 ** |
| 3042* | OCP-NVMe-TCP-B | NVMe-TCP-B path when using NVMe-TCP for persistent storage | 192.168.42.0/24 ** |

* To be added after initial build.

** IP gateway is not needed since no routing is required for these subnets
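Before adapting this VLAN plan to another site, it can help to check it mechanically. The sketch below, which uses only the Python standard library, encodes the VLAN IDs, names, subnets, and gateways from Table 2 and reports duplicate VLAN IDs, overlapping subnets, or gateways that fall outside their subnet. The `check_plan` function is a hypothetical helper written for this illustration.

```python
import ipaddress
from itertools import combinations

# (VLAN ID, name, subnet, gateway or None) taken from Table 2; VLAN 2 has no subnet.
VLAN_PLAN = [
    (1020, "OOB-MGMT-VLAN", "10.102.0.0/24", "10.102.0.254"),
    (1021, "IB-MGMT-VLAN", "10.102.1.0/24", "10.102.1.254"),
    (1022, "OCP-MGMT", "10.102.2.0/24", "10.102.2.254"),
    (3050, "NFS-VLAN", "192.168.50.0/24", None),
    (3010, "iSCSI-A", "192.168.10.0/24", None),
    (3020, "iSCSI-B", "192.168.20.0/24", None),
    (3030, "NVMe-TCP-A", "192.168.30.0/24", None),
    (3040, "NVMe-TCP-B", "192.168.40.0/24", None),
    (3000, "vMotion", "192.168.0.0/24", None),
    (3052, "OCP-NFS", "192.168.52.0/24", None),
    (3032, "OCP-NVMe-TCP-A", "192.168.32.0/24", None),
    (3042, "OCP-NVMe-TCP-B", "192.168.42.0/24", None),
]


def check_plan(plan):
    """Return a list of human-readable problems found in the VLAN plan."""
    problems = []
    vlan_ids = [entry[0] for entry in plan]
    if len(vlan_ids) != len(set(vlan_ids)):
        problems.append("duplicate VLAN ID")

    networks = {name: ipaddress.ip_network(subnet) for _, name, subnet, _ in plan}
    for (name_a, net_a), (name_b, net_b) in combinations(networks.items(), 2):
        if net_a.overlaps(net_b):
            problems.append(f"{name_a} overlaps {name_b}")

    for _, name, subnet, gateway in plan:
        if gateway is None:
            continue  # non-routed storage and vMotion subnets have no gateway
        if ipaddress.ip_address(gateway) not in ipaddress.ip_network(subnet):
            problems.append(f"{name}: gateway {gateway} outside {subnet}")
    return problems


if __name__ == "__main__":
    issues = check_plan(VLAN_PLAN)
    print("VLAN plan OK" if not issues else "\n".join(issues))
```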

Some of the key highlights of VLAN usage are as follows:

● VLAN 1020 allows you to manage and access out-of-band management interfaces of various devices.

● VLAN 1021 is used for in-band management of VMs, ESXi hosts, and other infrastructure services.

● VLAN 1022 is used for OCP management.

● VLAN 3050 provides ESXi hosts access to the NFS datastores hosted on the NetApp Controllers for deploying VMs.

● A pair of iSCSI VLANs (3010 and 3020) is configured to provide access to boot LUNs for ESXi hosts. These VLANs are not needed if you are using FC-only connectivity.

● A pair of NVMe-TCP VLANs (3030 and 3040) are configured to provide access to NVMe datastores when NVMe-TCP is being used.

● VLAN 3000 is used for VM vMotion.

● Additional storage VLANs (3032, 3042, and 3052) are configured for OCP persistent storage and OCP VMs.

Note: iSCSI VLANs are not being configured for OCP persistent storage since NVMe-TCP VLANs are being configured.

Table 3 lists the infrastructure VMs necessary for deployment as outlined in this document.

| Virtual Machine Description | VLAN | IP Address | Comments |
| --- | --- | --- | --- |
| vCenter Server | 1021 | 10.102.1.100 | Hosted on either pre-existing management infrastructure (preferred) or on FlexPod |
| NetApp ONTAP Tools for VMware vSphere | 1021 | 10.102.1.99 | Hosted on FlexPod |
| NetApp SnapCenter Plug-in for VMware vSphere | 1021 | 10.102.1.98 | Hosted with vCenter on either pre-existing management infrastructure (preferred) or on FlexPod |
| NetApp Active IQ Unified Manager | 1021 | 10.102.1.97 | Hosted on FlexPod |
| Cisco Intersight Assist | 1021 | 10.102.1.96 | Hosted on FlexPod |
| FlexPod Ansible | 1021 | 10.102.1.151 | Hosted on pre-existing management infrastructure and used to run Ansible playbooks to set up the FlexPod |
| OCP AD 1 and 2 | 1022 | 10.102.2.249 and 10.102.2.250 | Hosted on FlexPod - Microsoft Windows AD servers to provide DNS and DHCP for the OCP environment. These VMs can also be Linux VMs and would use Linux DNS and DHCP server software |
| OCP Installer | 1022 | 10.102.2.10 | Hosted on FlexPod – Linux VM to install and configure the OCP environment. |
| NVIDIA DLS | 1021 | 10.102.1.17 | Hosted on FlexPod – OVA to issue NVIDIA vGPU licenses. Could also have been in VLAN 1022. |

Table 4 lists the software revisions for various components of the solution.

| Layer | Device | Image Bundle | Comments |
| --- | --- | --- | --- |
| Compute | Cisco UCS | 4.2(3h) | Cisco UCS GA release for infrastructure including FIs and IFM |
| | Cisco UCS X210C M7 | 5.2(0.230092) | |
| | Cisco UCS C220/240 M7 | 4.3(2.230270) | |
| GPU | NVIDIA A100-80 | 535.129.03 | |
| Network | Cisco Nexus 93600CD-GX NX-OS | 10.2(6)M | |
| Storage | NetApp AFF A800 | ONTAP 9.13.1P6 | Latest patch release |
| Software | Cisco Intersight Assist Appliance | 1.0.9-630 | 1.0.9-630 initially installed and then automatically upgraded to latest release |
| | VMware vCenter | 8.0 | Latest 8.0 Build |
| | VMware ESXi | 8.0 | Latest 8.0 Build |
| | VMware ESXi nenic Ethernet Driver | 2.0.11.0 | |
| | NetApp ONTAP Tools for VMware vSphere | 9.13 | Formerly Virtual Storage Console (VSC) |
| | NetApp SnapCenter Plug-in for VMware vSphere | 4.9 | |
| | NetApp Active IQ Unified Manager | 9.14RC1 | |

The information in this section is provided as a reference for cabling the physical equipment in a FlexPod environment. To simplify cabling requirements, a cabling diagram was used.

The cabling diagram in this section contains the details for the prescribed and supported configuration of the NetApp AFF A800 running NetApp ONTAP 9.13.1P6.

Note: For any modifications of this prescribed architecture, consult the NetApp Interoperability Matrix Tool (IMT).

Note: This document assumes that out-of-band management ports are plugged into an existing management infrastructure at the deployment site. These interfaces will be used in various configuration steps.

Note: Be sure to use the cabling directions in this section as a guide.

The NetApp storage controller and disk shelves should be connected according to best practices for the specific storage controller and disk shelves. For disk shelf cabling, refer to NetApp Support.

Figure 18 details the cable connections used in the validation lab for the FlexPod topology based on the Cisco UCS 6536 fabric interconnect. Two 100Gb links connect each Cisco UCS Fabric Interconnect to the Cisco Nexus Switches and each NetApp AFF controller to the Cisco Nexus Switches. Additional 1Gb management connections will be needed for out-of-band network switches that sit apart from the FlexPod infrastructure. Each Cisco UCS fabric interconnect and Cisco Nexus switch is connected to the out-of-band network switches, and each AFF controller has a connection to the out-of-band network switches. Layer 3 network connectivity is required between the Out-of-Band (OOB) and In-Band (IB) Management Subnets. This cabling diagram shows the iSCSI-boot configuration.

Figure 18. FlexPod Cabling with Cisco UCS 6536 Fabric Interconnect

Procedure 1. Deploy FlexPod

Step 1. Using the information in the above tables and diagrams, use FlexPod Datacenter using IaC with Cisco IMM M7, VMware vSphere 8, and NetApp ONTAP 9.12.1 to deploy the FlexPod up until the beginning of the FlexPod Management Tools Setup. Rename the VM-Traffic VLAN with a name like OCP-MGMT. Deploy a minimum of 6 servers with ESXi. In this lab setup, 8 total servers were deployed. Deploy an iSCSI-booted FlexPod with NVMe-TCP. The result of this setup will be all servers in the FlexPod-Management cluster.

Note: Do not use the OCP storage VLANs in the initial configuration. Those VLANs will be added later in the process. At the end of the VMware configuration, only configure NVMe-TCP on the three servers that will be used for management and will stay in the FlexPod-Management cluster.

Step 2. If mapping vGPUs to OCP, using your NVIDIA Enterprise account login, connect to https://ui.licensing.nvidia.com/software and download the NVIDIA AI Enterprise 4.1 Software Package for VMware vSphere 8.0. From the downloaded NVIDIA-AI-Enterprise-vSphere-8.0-535.129.03-535.129.03-537.70.zip file extract the NVD-AIE-800_535.129.03-1OEM.800.1.0.20613240_22670890.zip offline bundle and the nvd-gpu-mgmt-daemon_535.129.03-0.0.0000_22676950.zip file.

Step 3. Create an OCP ESXi cluster, turning on vSphere DRS and vSphere HA. Select Manage all hosts in the cluster with a single image and Compose a new image. Using Create a FlexPod ESXi Custom ISO using VMware vCenter in the Appendix of FlexPod Datacenter using IaC with Cisco IMM M7, VMware vSphere 8, and NetApp ONTAP 9.12.1 as a guide, set up an image for the OCP cluster with the latest version of ESXi 8.0 (not ESXi 8.0 U1 or U2) with Cisco UCS Addon-ESXi version 4.2.3-b Vendor Addon. Click the Updates tab for the cluster, edit the image, and include as Components the two drivers extracted in Step 2 (if mapping vGPUs to OCP) along with all drivers added in the ESXi Ansible scripts. Make sure to set the swap file location to Datastore specified by host under the General setting. Put the hosts to be used for OCP in Maintenance Mode and move these hosts to the OCP cluster. Once the OCP hosts are moved to the OCP cluster, go to the cluster settings and under vSphere Cluster Services, add the vCLS datastore. Select the Updates tab, click REMEDIATE ALL, and follow the prompts to remediate all of the OCP hosts.

Note: If mapping the full physical GPUs to OCP workers as PCI devices, do not include the NVAIE driver or nvd-gpu-mgmt-daemon in the cluster image.

Step 4. Set up an image for the FlexPod-Management cluster with the latest version of ESXi 8.0 (not ESXi 8.0 U1 or U2) with Cisco UCS Addon-ESXi version 4.2.3-b Vendor Addon. Click the Updates tab for the cluster, edit the image and include as Components all drivers added in the ESXi Ansible scripts. It is not necessary to add the NVIDIA drivers to this cluster image. Select the Updates tab, click REMEDIATE ALL and follow the prompts to Remediate all of the FlexPod-Management hosts.

Step 5. Return to FlexPod Datacenter using IaC with Cisco IMM M7, VMware vSphere 8, and NetApp ONTAP 9.12.1 and complete the management tools installation and Cisco Intersight integration, including creating a FlexPod Integrated System.

Note: In order to do a first-time Intersight HCL check, it may be necessary to log into each VMware ESXi host with ssh and run python /opt/ucs_tool_esxi/ucs_host_inventory.py

Use the following procedures and steps to add an OCP tenant to your FlexPod so that OCP can be installed to build a platform for Generative AI Inferencing software.

Procedure 1. Configure Nexus Switches for the OCP Tenant

Run the following commands to add NTP distribution interfaces to the switches and for VLANs used for OCP persistent storage access. Execute these steps in an ssh session on both switches.

config t

vrf context OCP

description VRF for routing OCP subnets/VLANs

ip route 0.0.0.0/0 10.102.2.254

interface VLAN1022

vrf member OCP

ip address 10.102.2.3/24 # Use 10.102.2.4/24 in the second switch

no shutdown

exit

vlan 3032

name OCP-NVMe-TCP-A

vlan 3042

name OCP-NVMe-TCP-B

vlan 3052

name OCP-NFS

exit

int Po10,Po11,Po12,Po15,Po16

switchport trunk allowed vlan add 3032,3042,3052 # Add OCP Storage VLANs to vPC Peer Link, Storage Interfaces, and UCS FI Uplink Interfaces

int Po11,Po12,Po127

switchport trunk allowed vlan add 1022 # Add OCP-MGMT VLAN to Storage Interfaces and make sure it is on the Uplink Interface

copy r s
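Because the listing above differs between the two switches only in the SVI address, it can be rendered from a small template. The following Python sketch reproduces the same command sequence for each switch. The switch labels `switch-a` and `switch-b` and the function name are hypothetical, and the rendered text still has to be pasted into an ssh session or delivered by whatever automation is in use.

```python
# Storage VLAN IDs and names as used in the listing above.
STORAGE_VLANS = {3032: "OCP-NVMe-TCP-A", 3042: "OCP-NVMe-TCP-B", 3052: "OCP-NFS"}

# Hypothetical switch labels mapped to the per-switch OCP-MGMT SVI address.
SWITCH_SVI = {"switch-a": "10.102.2.3/24", "switch-b": "10.102.2.4/24"}


def ocp_tenant_config(svi_ip):
    """Render the OCP tenant additions for one Nexus switch as CLI text."""
    lines = [
        "config t",
        "vrf context OCP",
        "description VRF for routing OCP subnets/VLANs",
        "ip route 0.0.0.0/0 10.102.2.254",
        "interface VLAN1022",
        "vrf member OCP",
        f"ip address {svi_ip}",
        "no shutdown",
        "exit",
    ]
    for vlan_id, name in STORAGE_VLANS.items():
        lines += [f"vlan {vlan_id}", f"name {name}"]
    vlan_list = ",".join(str(vlan_id) for vlan_id in STORAGE_VLANS)
    lines += [
        "exit",
        "int Po10,Po11,Po12,Po15,Po16",
        f"switchport trunk allowed vlan add {vlan_list}",
        "int Po11,Po12,Po127",
        "switchport trunk allowed vlan add 1022",
        "copy r s",
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    for switch, svi_ip in SWITCH_SVI.items():
        print(f"----- {switch} -----")
        print(ocp_tenant_config(svi_ip))
```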

Procedure 2. Configure NetApp ONTAP Storage for the OCP Tenant

Complete the following steps to add VLAN ports and broadcast domains to the NetApp storage for the OCP-MGMT and OCP storage VLANs, and then add and configure the SVM for OCP persistent storage volumes, including adding management, NFS, and NVMe-TCP LIFs and the vsadmin user and password. Execute these steps from the storage cluster ssh interface.

Step 1. Create the OCP-MGMT broadcast domain with a maximum transmission unit (MTU) of 1500 and the OCP-NVMe-TCP-A, OCP-NVMe-TCP-B, and OCP-NFS broadcast domains with an MTU of 9000 by running the following commands in ONTAP:

network port broadcast-domain create -broadcast-domain OCP-MGMT -mtu 1500

network port broadcast-domain create -broadcast-domain OCP-NVMe-TCP-A -mtu 9000

network port broadcast-domain create -broadcast-domain OCP-NVMe-TCP-B -mtu 9000

network port broadcast-domain create -broadcast-domain OCP-NFS -mtu 9000

Step 2. Create the OCP management VLAN ports and add them to the OCP management broadcast domain:

network port vlan create -node AA02-A800-01 -vlan-name a0a-1022

network port vlan create -node AA02-A800-02 -vlan-name a0a-1022

network port broadcast-domain add-ports -broadcast-domain OCP-MGMT -ports AA02-A800-01:a0a-1022,AA02-A800-02:a0a-1022

Step 3. Create the OCP NVMe-TCP VLAN ports and add them to the broadcast domain:

network port vlan create -node AA02-A800-01 -vlan-name a0a-3032

network port vlan create -node AA02-A800-02 -vlan-name a0a-3032

network port broadcast-domain add-ports -broadcast-domain OCP-NVMe-TCP-A -ports AA02-A800-01:a0a-3032,AA02-A800-02:a0a-3032

network port vlan create -node AA02-A800-01 -vlan-name a0a-3042

network port vlan create -node AA02-A800-02 -vlan-name a0a-3042

network port broadcast-domain add-ports -broadcast-domain OCP-NVMe-TCP-B -ports AA02-A800-01:a0a-3042,AA02-A800-02:a0a-3042

Step 4. Create the OCP NFS VLAN ports and add them to the OCP NFS broadcast domain:

network port vlan create -node AA02-A800-01 -vlan-name a0a-3052

network port vlan create -node AA02-A800-02 -vlan-name a0a-3052

network port broadcast-domain add-ports -broadcast-domain OCP-NFS -ports AA02-A800-01:a0a-3052,AA02-A800-02:a0a-3052
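Steps 1 through 4 repeat the same three command patterns for each VLAN and node, so they lend themselves to generation from a small table. The sketch below, offered as an illustration rather than a supported tool, rebuilds the exact command strings shown above from the node names, the `a0a` interface group, and the VLAN-to-broadcast-domain mapping used in this validation. Adjust those values for another environment.

```python
# Node names and interface group as they appear in the commands above.
NODES = ["AA02-A800-01", "AA02-A800-02"]
IFGRP = "a0a"

# (broadcast domain, VLAN ID, MTU) as used in Steps 1 through 4.
BROADCAST_DOMAINS = [
    ("OCP-MGMT", 1022, 1500),
    ("OCP-NVMe-TCP-A", 3032, 9000),
    ("OCP-NVMe-TCP-B", 3042, 9000),
    ("OCP-NFS", 3052, 9000),
]


def ontap_commands():
    """Return the ONTAP CLI commands for Steps 1 through 4, in order."""
    cmds = []
    # Step 1: create every broadcast domain with its MTU.
    for name, _, mtu in BROADCAST_DOMAINS:
        cmds.append(
            f"network port broadcast-domain create -broadcast-domain {name} -mtu {mtu}"
        )
    # Steps 2-4: create the VLAN port on each node, then add the ports to the domain.
    for name, vlan, _ in BROADCAST_DOMAINS:
        for node in NODES:
            cmds.append(f"network port vlan create -node {node} -vlan-name {IFGRP}-{vlan}")
        ports = ",".join(f"{node}:{IFGRP}-{vlan}" for node in NODES)
        cmds.append(
            f"network port broadcast-domain add-ports -broadcast-domain {name} -ports {ports}"
        )
    return cmds


if __name__ == "__main__":
    print("\n".join(ontap_commands()))
```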

Step 5. Create SVM (Storage Virtual Machine). Run the vserver create command:

vserver create -vserver OCP-SVM

Step 6. Add the required data protocols to the SVM and remove the unused data protocols from the SVM:

vserver add-protocols -vserver OCP-SVM -protocols nfs,nvme

vserver remove-protocols -vserver OCP-SVM -protocols cifs,fcp,iscsi,s3

Step 7. Add the two data aggregates to the OCP-SVM aggregate list, and then enable and run the NFS protocol in the SVM:

vserver modify -vserver OCP-SVM -aggr-list AA02_A800_01_NVME_SSD_1,AA02_A800_02_NVME_SSD_1

vserver nfs create -vserver OCP-SVM -udp disabled -v3 enabled -v4.1 enabled -vstorage enabled

Step 8. Create a load-sharing mirror of the SVM root volume. Create the mirror volume for the OCP-SVM root volume only on the node that does not hold the root volume:

volume show -vserver OCP-SVM # Identify the aggregate and node where the root volume is located.

volume create -vserver OCP-SVM -volume OCP_SVM_root_lsm0<x> -aggregate AA02_A800_0<x>_NVME_SSD_1 -size 1GB -type DP # Create the mirror volume on the other node.
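The `<x>` placeholder in the command above stands for the node that does not hold the root volume. As a hedged sketch of that selection, the following Python snippet takes the aggregate reported for the root volume and fills in `<x>` with the other node's number. It assumes the two aggregate names used in Step 7; the `lsm_create_command` helper is a hypothetical name, not an ONTAP utility.

```python
# Illustrative helper: fills in the <x> placeholder from Step 8.
# Aggregate names are the two added to the SVM aggregate list in Step 7.
AGGREGATES = {
    1: "AA02_A800_01_NVME_SSD_1",
    2: "AA02_A800_02_NVME_SSD_1",
}


def lsm_create_command(root_aggregate):
    """Build the mirror-volume command for the node without the root volume."""
    matches = [node for node, aggr in AGGREGATES.items() if aggr == root_aggregate]
    if not matches:
        raise ValueError(f"unknown aggregate: {root_aggregate}")
    other = 2 if matches[0] == 1 else 1  # the node that does not hold the root
    return (
        f"volume create -vserver OCP-SVM -volume OCP_SVM_root_lsm0{other} "
        f"-aggregate {AGGREGATES[other]} -size 1GB -type DP"
    )


if __name__ == "__main__":
    # Example: 'volume show' reported the root volume on the node 02 aggregate.
    print(lsm_create_command("AA02_A800_02_NVME_SSD_1"))
```

With the root volume on the node 02 aggregate, the mirror volume is named OCP_SVM_root_lsm01, which matches the name shown in the verification output of Step 10.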

Step 9. Create the mirroring relationship:

snapmirror create -source-path OCP-SVM:OCP_SVM_root -destination-path OCP-SVM:OCP_SVM_root_lsm0<x> -type LS -schedule 15min

Step 10. Initialize the mirroring relationship and verify it:

snapmirror initialize-ls-set -source-path OCP-SVM:OCP_SVM_root

snapmirror show -vserver OCP-SVM

                                                                       Progress
Source           Destination  Mirror  Relationship   Total             Last
Path        Type Path         State   Status         Progress  Healthy Updated
----------- ---- ------------ ------- -------------- --------- ------- --------
AA02-A800://OCP-SVM/OCP_SVM_root
            LS   AA02-A800://OCP-SVM/OCP_SVM_root_lsm01
                              Snapmirrored
                                      Idle           -         true    -

Step 11. Create NVMe Service:

vserver nvme create -vserver OCP-SVM -status-admin up

vserver nvme show -vserver OCP-SVM

Vserver Name: OCP-SVM

Administrative Status: up

Discovery Subsystem NQN: nqn.1992-08.com.netapp:sn.00f61b2cb31b11ee8d1700a098e217cb:discovery

Step 12. To create a login banner for the SVM, run the following command:

security login banner modify -vserver OCP-SVM -message "This OCP-SVM is reserved for authorized users only!"

Step 13. Remove insecure ciphers from the SVM. Ciphers with the suffix CBC are considered insecure. To remove the CBC ciphers, run the following NetApp ONTAP command:

security ssh remove -vserver OCP-SVM -ciphers aes256-cbc,aes192-cbc,aes128-cbc,3des-cbc

Step 14. Create a new rule for the SVM NFS subnet in the default export policy and assign the policy to the SVM:

vserver export-policy rule create -vserver OCP-SVM -policyname default -ruleindex 1 -protocol nfs -clientmatch 192.168.52.0/24 -rorule sys -rwrule sys -superuser sys -allow-suid true

volume modify -vserver OCP-SVM -volume OCP_SVM_root -policy default
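The export-policy rule in this step admits only clients whose addresses fall in 192.168.52.0/24, so any host that mounts NFS from this SVM needs an interface in that subnet. The following sketch uses Python's standard `ipaddress` module to check candidate client addresses against the rule's client match before they are assigned. The `allowed_by_rule` helper and the sample client addresses are hypothetical and are not taken from the validated environment.

```python
import ipaddress

# Client match taken from the export-policy rule created in Step 14.
CLIENTMATCH = ipaddress.ip_network("192.168.52.0/24")


def allowed_by_rule(client_ip, clientmatch=CLIENTMATCH):
    """Return True when the client address falls inside the rule's subnet."""
    return ipaddress.ip_address(client_ip) in clientmatch


if __name__ == "__main__":
    # Hypothetical client addresses, used only to exercise the check.
    for candidate in ("192.168.52.101", "10.102.2.50"):
        print(candidate, allowed_by_rule(candidate))
```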

Step 15. Create FlexVol Volumes.

The following information is required to create a NetApp FlexVol volume:

● The volume name

● The volume size

● The aggregate on which the volume exists

volume create -vserver OCP-SVM -volume audit_log -aggregate AA02_A800_01_NVME_SSD_1 -size 50GB -state online -policy default -junction-path /audit_log -space-guarantee none -percent-snapshot-space 0

Step 16. Update the set of load-sharing mirrors using the following command:

snapmirror update-ls-set -source-path OCP-SVM:OCP_SVM_root

Step 17. Run the following commands to create NFS LIFs:

network interface create -vserver OCP-SVM -lif nfs-lif-01 -service-policy default-data-files -home-node AA02-A800-01 -home-port a0a-3052 -address 192.168.52.31 -netmask 255.255.255.0 -status-admin up -failover-policy broadcast-domain-wide -auto-revert true

network interface create -vserver OCP-SVM -lif nfs-lif-02 -service-policy default-data-files -home-node AA02-A800-02 -home-port a0a-3052 -address 192.168.52.32 -netmask 255.255.255.0 -status-admin up -failover-policy broadcast-domain-wide -auto-revert true

Step 18. Run the following commands to create NVMe-TCP LIFs:

network interface create -vserver OCP-SVM -lif nvme-tcp-lif-01a -service-policy default-data-nvme-tcp -home-node AA02-A800-01 -home-port a0a-3032 -address 192.168.32.31 -netmask 255.255.255.0 -status-admin up

network interface create -vserver OCP-SVM -lif nvme-tcp-lif-01b -service-policy default-data-nvme-tcp -home-node AA02-A800-01 -home-port a0a-3042 -address 192.168.42.31 -netmask 255.255.255.0 -status-admin up

network interface create -vserver OCP-SVM -lif nvme-tcp-lif-02a -service-policy default-data-nvme-tcp -home-node AA02-A800-02 -home-port a0a-3032 -address 192.168.32.32 -netmask 255.255.255.0 -status-admin up

network interface create -vserver OCP-SVM -lif nvme-tcp-lif-02b -service-policy default-data-nvme-tcp -home-node AA02-A800-02 -home-port a0a-3042 -address 192.168.42.32 -netmask 255.255.255.0 -status-admin up
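The four NVMe-TCP LIFs follow a regular pattern: one LIF per node per fabric, with fabric A on VLAN port a0a-3032 and fabric B on a0a-3042. As a minimal sketch of that pattern, assuming the node names and addressing shown in the commands above, the snippet below regenerates the same four commands. The `nvme_tcp_lif_commands` helper is an illustrative name only.

```python
# Illustrative helper: regenerates the NVMe-TCP LIF commands from Step 18.
NODES = {1: "AA02-A800-01", 2: "AA02-A800-02"}
FABRICS = {
    "a": ("a0a-3032", "192.168.32."),  # fabric A home port and address prefix
    "b": ("a0a-3042", "192.168.42."),  # fabric B home port and address prefix
}


def nvme_tcp_lif_commands(nodes=NODES, fabrics=FABRICS):
    """Return one 'network interface create' command per node and fabric."""
    commands = []
    for index, node in nodes.items():
        for fabric, (port, prefix) in fabrics.items():
            commands.append(
                "network interface create -vserver OCP-SVM "
                f"-lif nvme-tcp-lif-0{index}{fabric} "
                "-service-policy default-data-nvme-tcp "
                f"-home-node {node} -home-port {port} "
                f"-address {prefix}3{index} "
                "-netmask 255.255.255.0 -status-admin up"
            )
    return commands


if __name__ == "__main__":
    print("\n".join(nvme_tcp_lif_commands()))
```

Keeping the node and fabric tables separate makes it easier to confirm that each node ends up with one LIF on each fabric.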

Step 19. Run the following command to create the SVM-MGMT LIF:

network interface create -vserver OCP-SVM -lif svm-mgmt -service-policy default-management -home-node AA02-A800-01 -home-port a0a-1022 -address 10.102.2.30 -netmask 255.255.255.0 -status-admin up -failover-policy broadcast-domain-wide -auto-revert true

Step 20. Run the following command to verify the LIFs:

network interface show -vserver OCP-SVM

            Logical    Status     Network            Current       Current Is
Vserver     Interface  Admin/Oper Address/Mask       Node          Port    Home
----------- ---------- ---------- ------------------ ------------- ------- ----
OCP-SVM
            nfs-lif-01 up/up      192.168.52.31/24   AA02-A800-01  a0a-3052
                                                                           true
            nfs-lif-02 up/up      192.168.52.32/24   AA02-A800-02  a0a-3052
                                                                           true
            nvme-tcp-lif-01a
                       up/up      192.168.32.31/24   AA02-A800-01  a0a-3032
                                                                           true
            nvme-tcp-lif-01b
                       up/up      192.168.42.31/24   AA02-A800-01  a0a-3042
                                                                           true
            nvme-tcp-lif-02a
                       up/up      192.168.32.32/24   AA02-A800-02  a0a-3032
                                                                           true
            nvme-tcp-lif-02b
                       up/up      192.168.42.32/24   AA02-A800-02  a0a-3042
                                                                           true
            svm-mgmt   up/up      10.102.2.30/24     AA02-A800-01  a0a-1022
                                                                           true
7 entries were displayed.
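Because the command output wraps long LIF names across lines, it is easy to miss an absent or down interface when reading it by eye. The following sketch performs a rough check on a saved copy of the output: it confirms that each of the seven expected LIF names appears and counts the interfaces reported as up/up. It is a plain text search rather than a full parser, and the `check_lif_output` helper is a hypothetical name.

```python
# LIF names created in Steps 17 through 19.
EXPECTED_LIFS = [
    "nfs-lif-01", "nfs-lif-02",
    "nvme-tcp-lif-01a", "nvme-tcp-lif-01b",
    "nvme-tcp-lif-02a", "nvme-tcp-lif-02b",
    "svm-mgmt",
]


def check_lif_output(output, expected=EXPECTED_LIFS):
    """Return (missing LIF names, number of interfaces shown as up/up)."""
    missing = [name for name in expected if name not in output]
    return missing, output.count("up/up")


if __name__ == "__main__":
    # Stand-in text; in practice, paste the saved command output here.
    sample = "\n".join(f"{name} up/up" for name in EXPECTED_LIFS)
    missing, up_count = check_lif_output(sample)
    print("missing:", missing, "up/up count:", up_count)
```

An empty missing list together with an up/up count of seven corresponds to the healthy state shown in this step.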

Step 21. Create a default route so that the SVM management interface can reach networks outside its own subnet:

network route create -vserver OCP-SVM -destination 0.0.0.0/0 -gateway 10.102.2.254
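A default route is only usable if its gateway sits in the same subnet as one of the SVM's LIFs, here the svm-mgmt LIF at 10.102.2.30 with netmask 255.255.255.0. As a small sketch of that check, the snippet below uses Python's standard `ipaddress` module; the `gateway_on_link` helper is an illustrative name introduced for this example.

```python
import ipaddress


def gateway_on_link(lif_address, netmask, gateway):
    """Return True when the gateway lies in the LIF's directly attached subnet."""
    interface = ipaddress.ip_interface(f"{lif_address}/{netmask}")
    return ipaddress.ip_address(gateway) in interface.network


if __name__ == "__main__":
    # Values from the svm-mgmt LIF (Step 19) and the route in this step.
    print(gateway_on_link("10.102.2.30", "255.255.255.0", "10.102.2.254"))
```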

Step 22. Set a password for the SVM vsadmin user and unlock the user:

security login password -username vsadmin -vserver OCP-SVM

Enter a new password:

Enter it again:

security login unlock -username vsadmin -vserver OCP-SVM

Step 23. Create and enable auditing configuration for the SVM.

vserver audit create -vserver OCP-SVM -destination /audit_log

vserver audit enable -vserver OCP-SVM

Procedure 3. Configure Cisco UCS IMM for the OCP Tenant

Use the following steps to add VLANs and BIOS Policy settings to IMM. Execute these steps from Cisco Intersight.

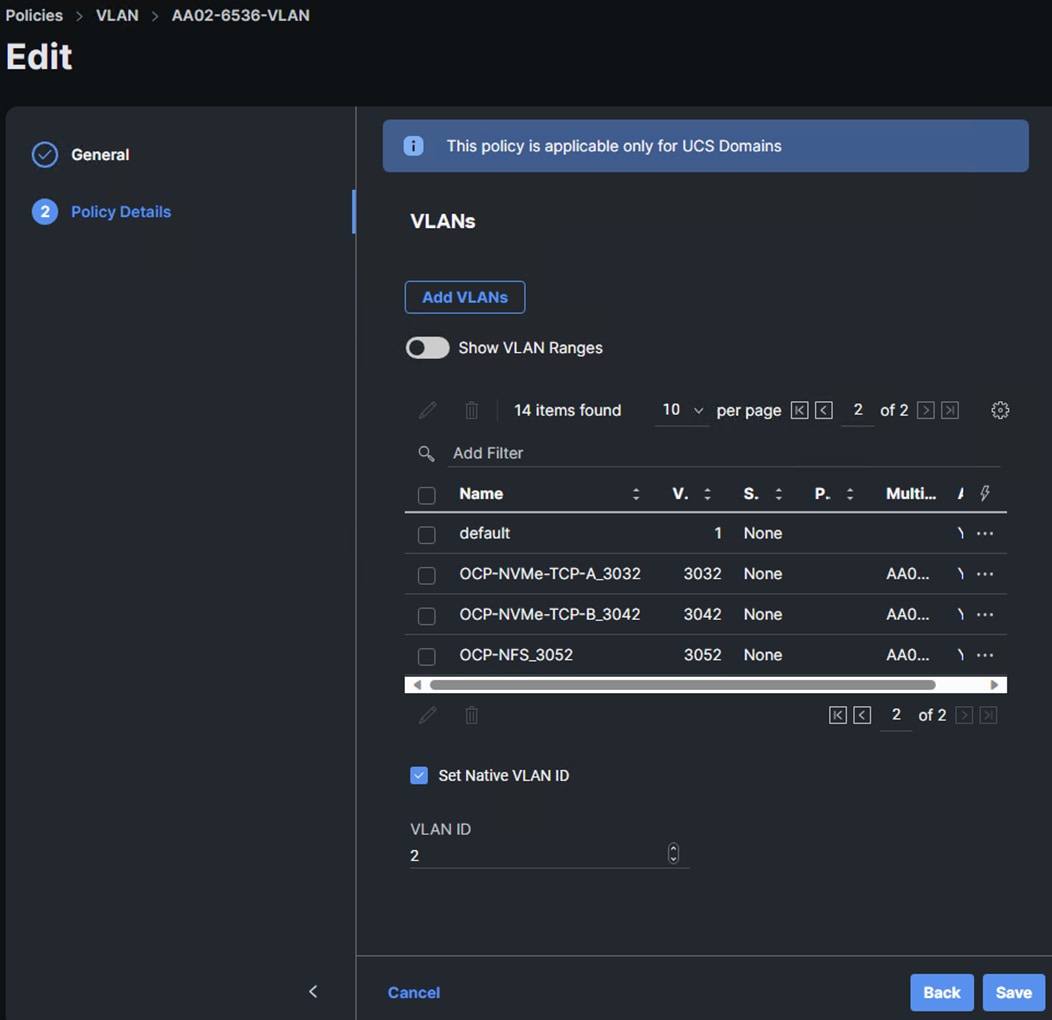

Step 1. In Cisco Intersight, select Infrastructure Service > Policies. Add a Filter of Type VLAN. Select and edit the UCS Domain VLAN policy (for example, AA02-6536-VLAN). Click Next. As you did when building the FlexPod, add the OCP-NVMe-TCP-A, OCP-NVMe-TCP-B, and OCP-NFS VLANs to the policy. Click Save to save the policy.

Step 2. In Cisco Intersight, select Infrastructure Service > Profiles > UCS Domain Profiles. Click the ellipses to the right of the UCS Domain Profile and select Deploy. Click Deploy to deploy the profile.

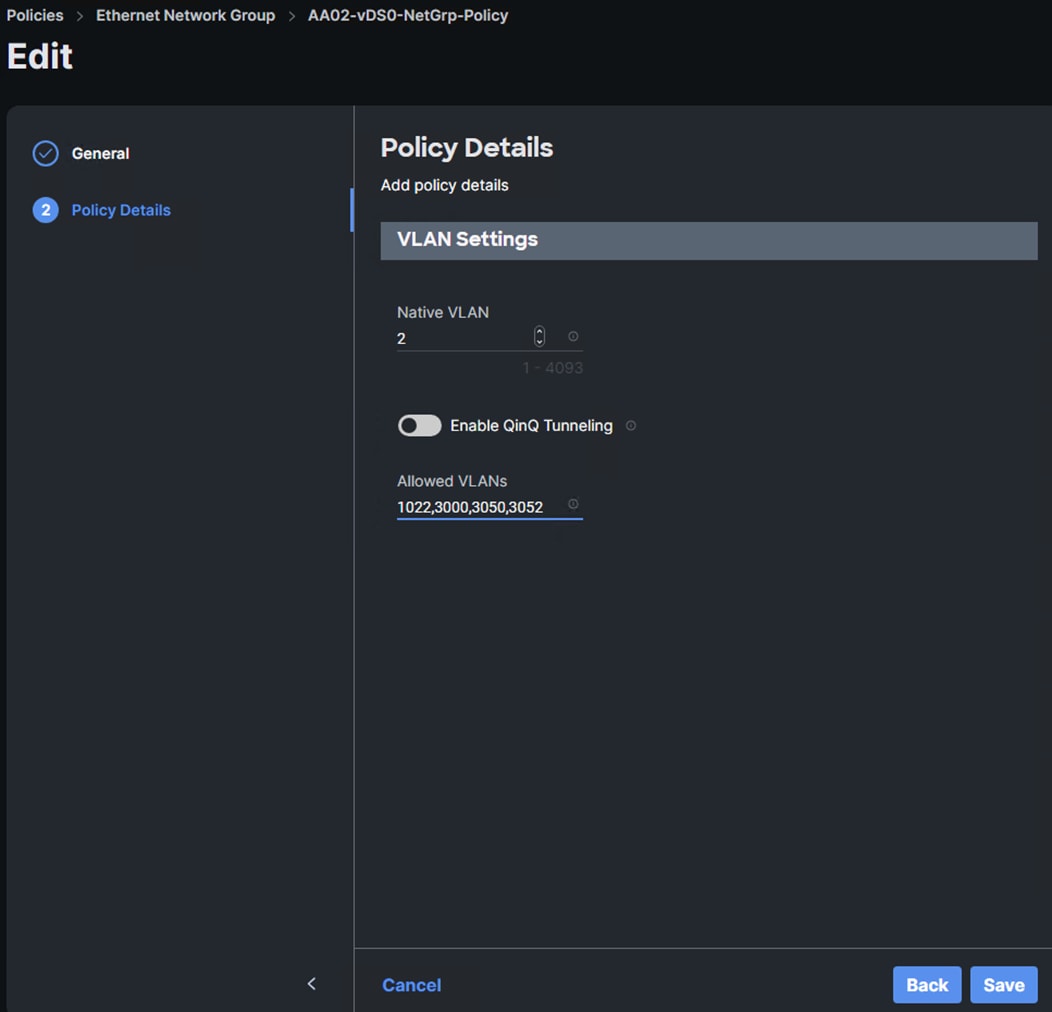

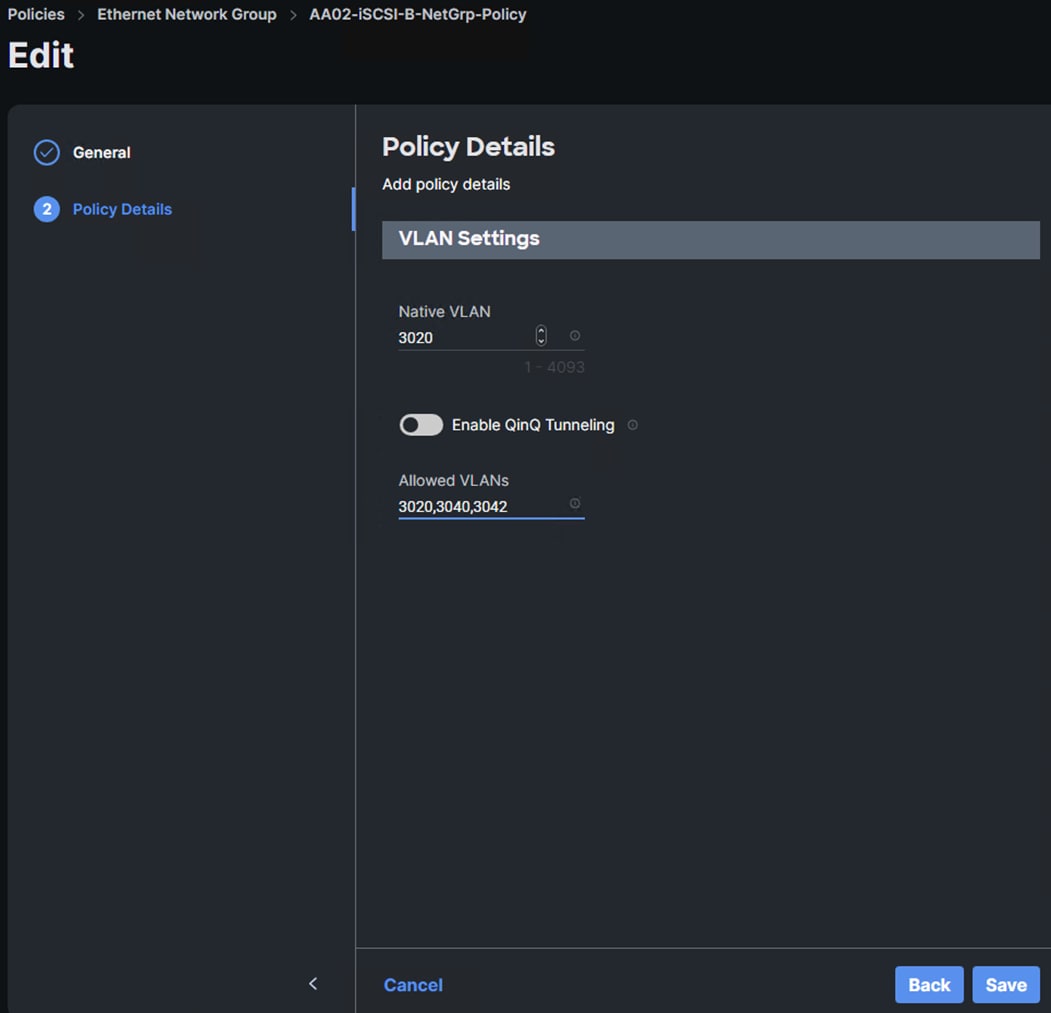

Step 3. In Cisco Intersight, select Infrastructure Service > Policies. Add a Filter of Type Ethernet Network Group. Select and edit the vDS0-NetGrp-Policy. Click Next. Add the OCP-NFS VLAN ID to the Allowed VLANs list. Click Save.

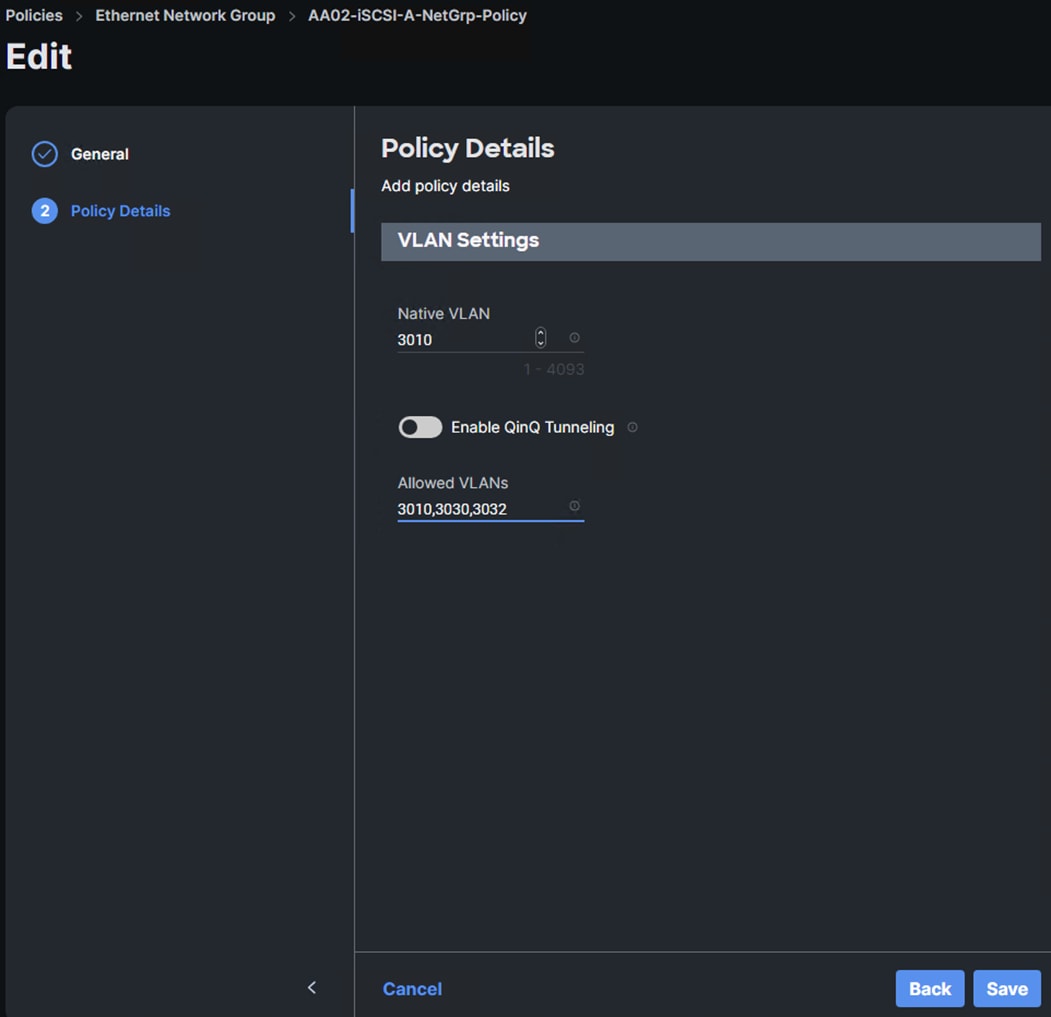

Step 4. In Cisco Intersight, select Infrastructure Service > Policies. Add a Filter of Type Ethernet Network Group. Select and edit the iSCSI-A-NetGrp-Policy. Click Next. Add the OCP-NVMe-TCP-A VLAN ID to the Allowed VLANs list. Click Save.

Step 5. In Cisco Intersight, select Infrastructure Service > Policies. Add a Filter of Type Ethernet Network Group. Select and edit the iSCSI-B-NetGrp-Policy. Click Next. Add the OCP-NVMe-TCP-B VLAN ID to the Allowed VLANs list. Click Save.
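Steps 1 and 3 through 5 add three VLANs to the UCS Domain VLAN policy and then allow each one on exactly one Ethernet Network Group policy. The sketch below records that mapping as data and reports any VLAN that was added to the domain but not yet allowed on a network group. It is a planning aid only and does not call the Intersight API; the table and helper names are hypothetical.

```python
# VLANs added to the UCS Domain VLAN policy in Step 1.
DOMAIN_VLANS = {"OCP-NVMe-TCP-A", "OCP-NVMe-TCP-B", "OCP-NFS"}

# Ethernet Network Group policy -> VLANs allowed on it in Steps 3 through 5.
NETGRP_ADDITIONS = {
    "vDS0-NetGrp-Policy": ["OCP-NFS"],
    "iSCSI-A-NetGrp-Policy": ["OCP-NVMe-TCP-A"],
    "iSCSI-B-NetGrp-Policy": ["OCP-NVMe-TCP-B"],
}


def unassigned_vlans(domain_vlans, additions):
    """Return the VLANs that are not allowed on any network group policy."""
    assigned = {vlan for vlans in additions.values() for vlan in vlans}
    return sorted(domain_vlans - assigned)


if __name__ == "__main__":
    print("unassigned:", unassigned_vlans(DOMAIN_VLANS, NETGRP_ADDITIONS))
```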

Step 6. In Cisco Intersight, select Infrastructure Service > Policies. In the center pane, click Add Filter and add a filter of Type BIOS. To the right of the BIOS Policy that applies to your server(s) with GPUs, click the ellipses and select Clone. Modify the clone’s Policy Name (for example, add -GPU to the original policy name to get Prefix-Intel-M7-Virt-BIOS-GPU). Click Clone to clone the BIOS Policy.

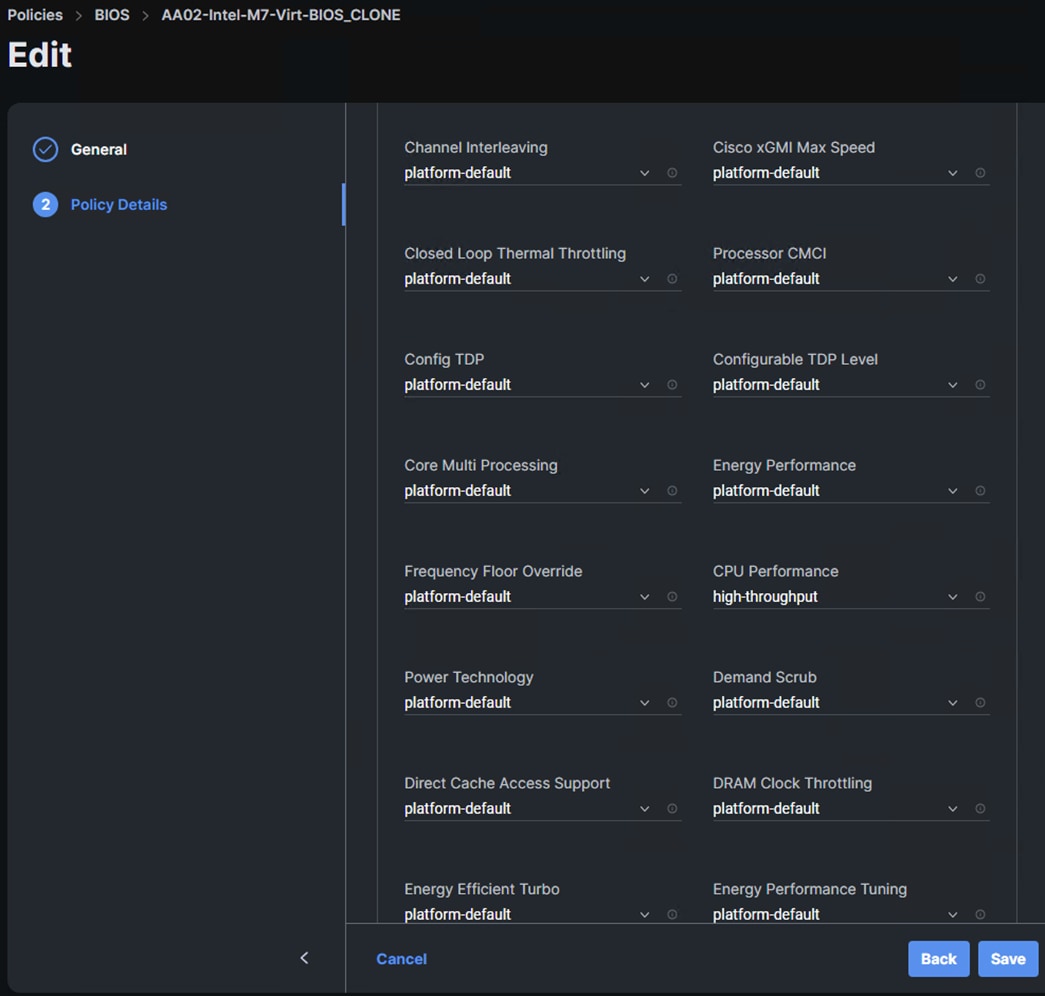

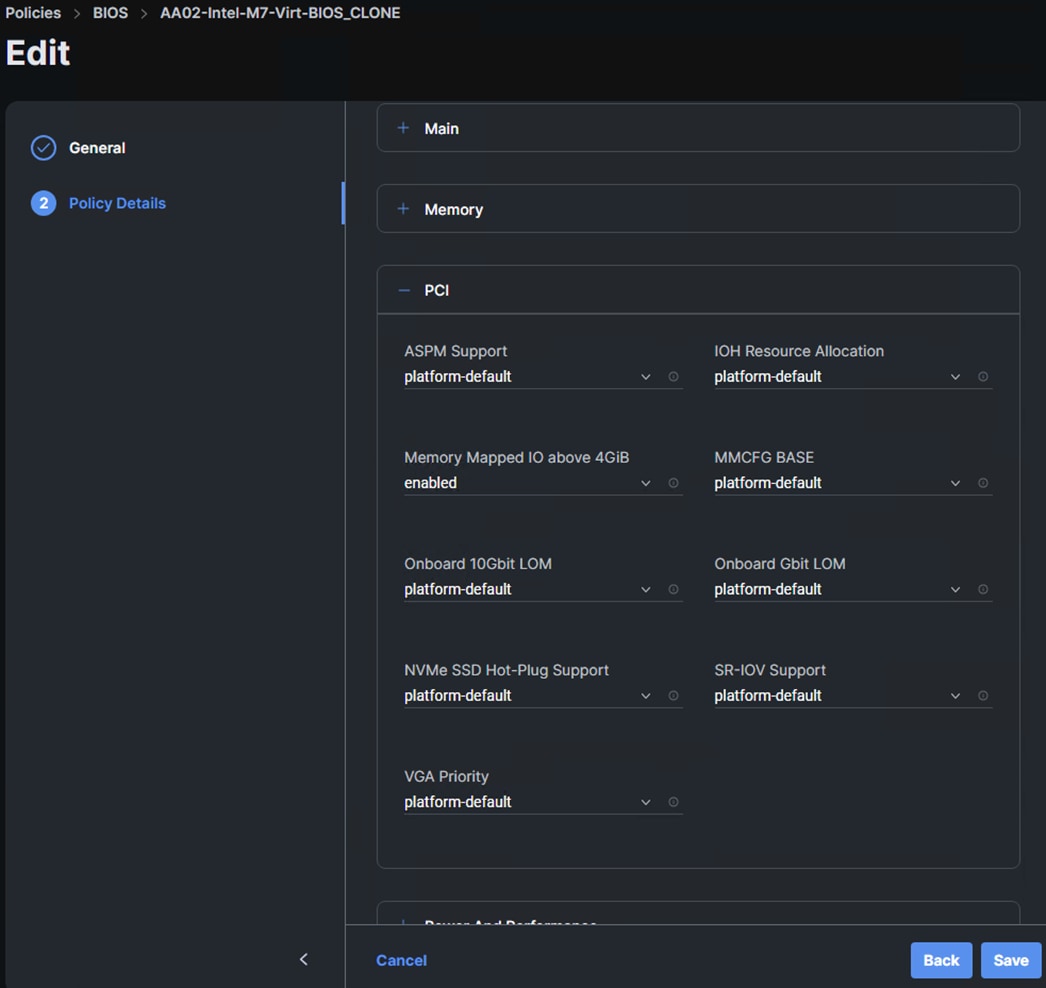

Step 7. Refresh the page to update the list of policies. The cloned policy should now appear at the top of the list. To the right of the cloned policy, click the ellipses and select Edit. Click Next. Expand Processor and set the CPU Performance token to high-throughput. Expand PCI and set the Memory Mapped IO above 4GiB token to enabled. Click Save to save the updated policy.

Step 8. In Cisco Intersight, select Infrastructure Service > Templates. To the right of the template being used on the servers that have GPUs, click the ellipses and select Clone. Leave Number of Clones set at 1 and click Next. Add -GPU- in the template name (for example, Prefix-M7-Intel-5G-VIC-GPU-iSCSI-Boot-Template) and click Clone.

Step 9. In the Templates list, click the ellipses to the right of the newly cloned GPU template and select Edit. Click Next to get to the Compute Configuration window. Place the cursor on the BIOS line and click the x to remove the current BIOS policy. Click Detach to complete the removal. On the BIOS line, click Select Policy and select the GPU BIOS policy. Once the check appears next to the updated BIOS policy, click Close.

Step 10. In Cisco Intersight, select Infrastructure Service > Profiles > UCS Server Profiles. For each server that has GPU(s) installed, on the corresponding profile, click the ellipses to the right of the profile and select Detach from Template. Once the profile is detached from the template, click the ellipses again and select Attach to Template. Select the GPU template and click Next. Click Attach.

Step 11. The UCS IMM Ansible scripts set the VMware-HighTrf Ethernet Adapter policy on the vDS0 vNICs. To change this to the AA02-EthAdapter-16RXQs-5G policy, in Cisco Intersight, select Infrastructure Service > Configure > Policies. Add a filter of Type LAN Connectivity. Click the ellipses to the right of the policy used for the OCP servers and select Edit. Click Next. Select the 02-vDS0-A vNIC and click the pencil icon to edit the vNIC. Scroll down and click the x to remove the existing Ethernet Adapter policy. Click Ethernet Adapter > Select Policy to select a new policy. Choose AA02-EthAdapter-16RXQs-5G and click Update. Repeat to set the AA02-EthAdapter-16RXQs-5G policy for the 03-vDS0-B vNIC. Click Save & Deploy then Save & Proceed to save the change to the UCS Server Profiles.

Step 12. In the Deploy Server Profiles popup, select Reboot Immediately to Activate and click Deploy to deploy the profiles.

Procedure 4. Configure VMware vSphere for the OCP Tenant

Use the following steps to add distributed switch port groups, VMkernel ports, and a datastore to the OCP VMware ESXi hosts. Execute these steps from the VMware vCenter web interface.

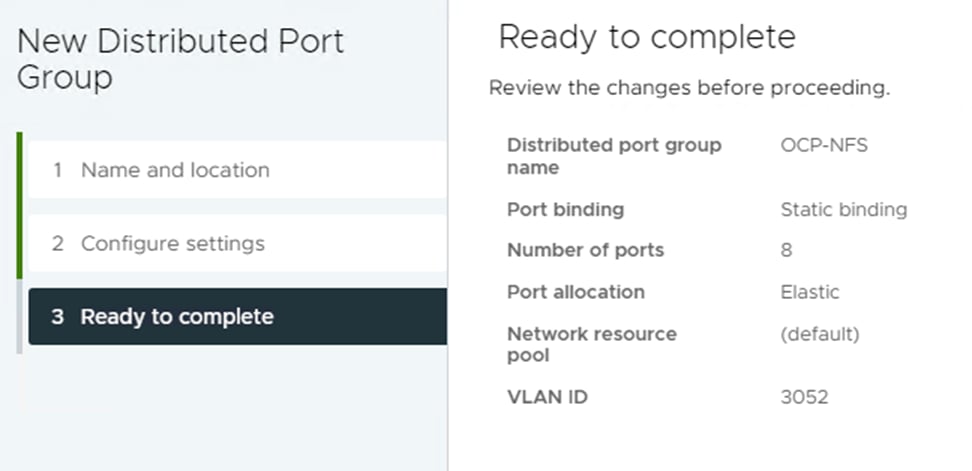

Step 1. In VMware vCenter, select Inventory > Networking, expand the vCenter and Datacenter, and right-click vDS0. Select Distributed Port Group > New Distributed Port Group. Name the port group OCP-NFS and click NEXT. Select VLAN type VLAN and enter the OCP NFS VLAN ID. Click NEXT. Click FINISH to complete adding the port group.

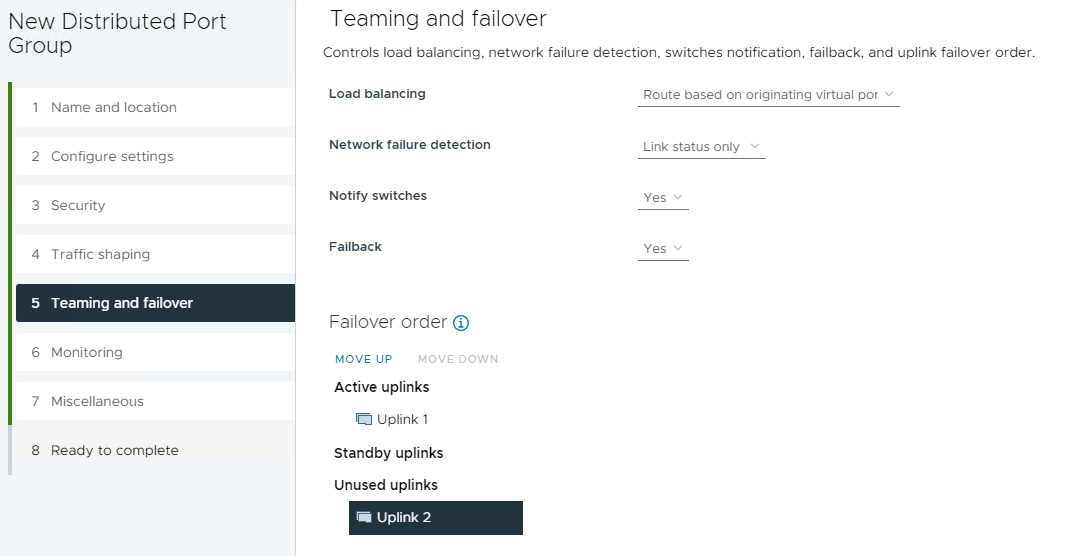

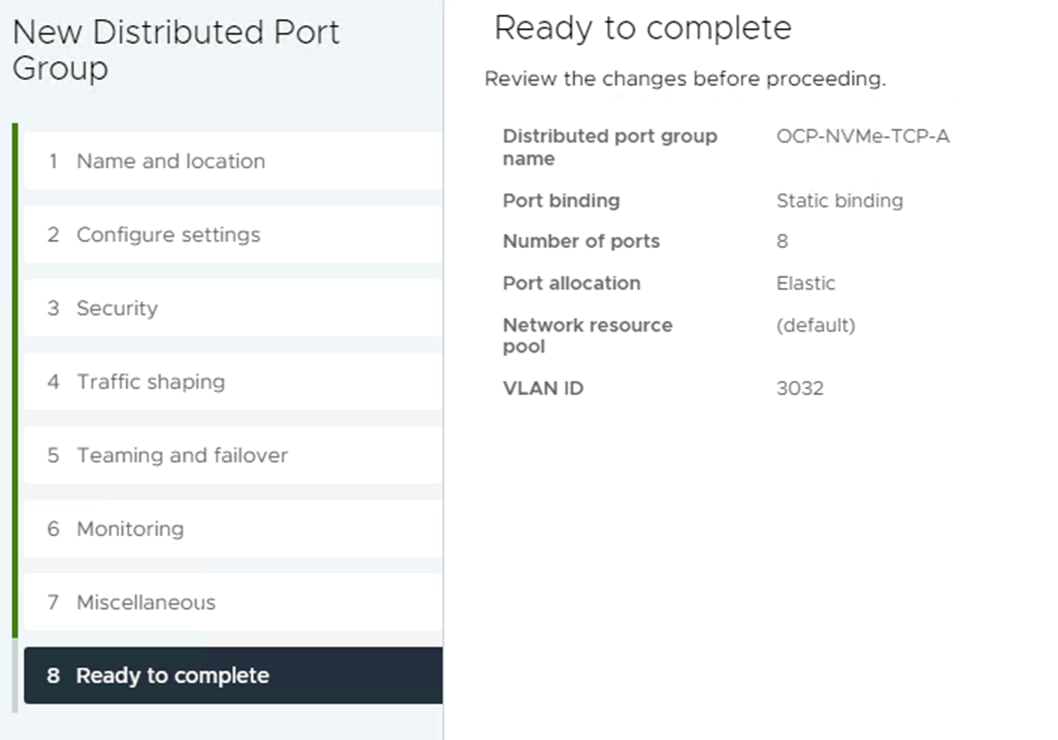

Step 2. Right-click iSCSI-NVMe-TCP-vDS. Select Distributed Port Group > New Distributed Port Group. Name the port group OCP-NVMe-TCP-A and click NEXT. Select VLAN type VLAN and enter the OCP NVMe-TCP-A VLAN ID. Select Customize default policies configuration. Click NEXT through the prompts to get to “5 Teaming and Failover”. Move Uplink 2 under “Unused uplinks” to pin all OCP-NVMe-TCP-A traffic to Fabric A. Click NEXT through the process. Click FINISH to complete adding the port group.

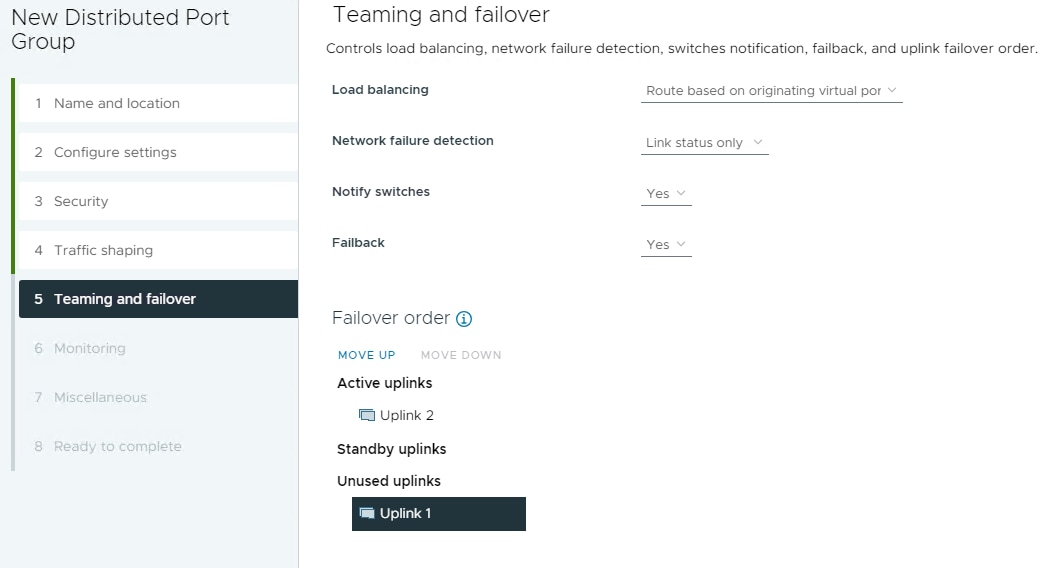

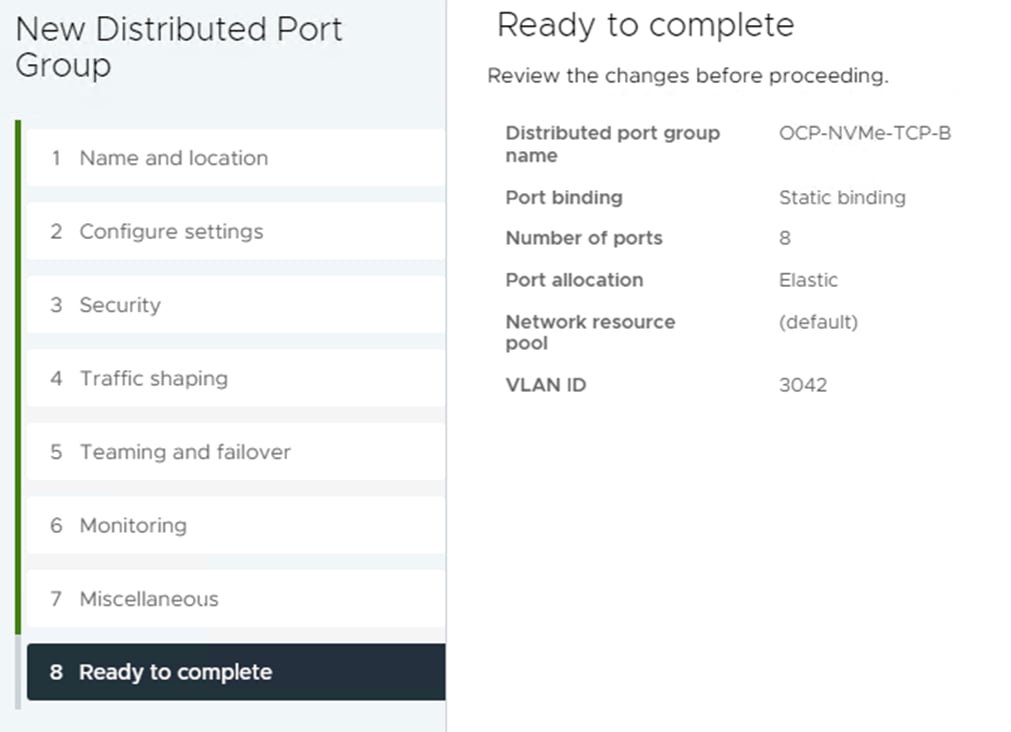

Step 3. Right-click iSCSI-NVMe-TCP-vDS. Select Distributed Port Group > New Distributed Port Group. Name the port group OCP-NVMe-TCP-B and click NEXT. Select VLAN type VLAN and enter the OCP NVMe-TCP-B VLAN ID. Select Customize default policies configuration. Click NEXT through the prompts to get to “5 Teaming and Failover.” Move Uplink 1 under “Unused uplinks” to pin all OCP-NVMe-TCP-B traffic to Fabric B. Click NEXT through the process. Click FINISH to complete adding the port group.

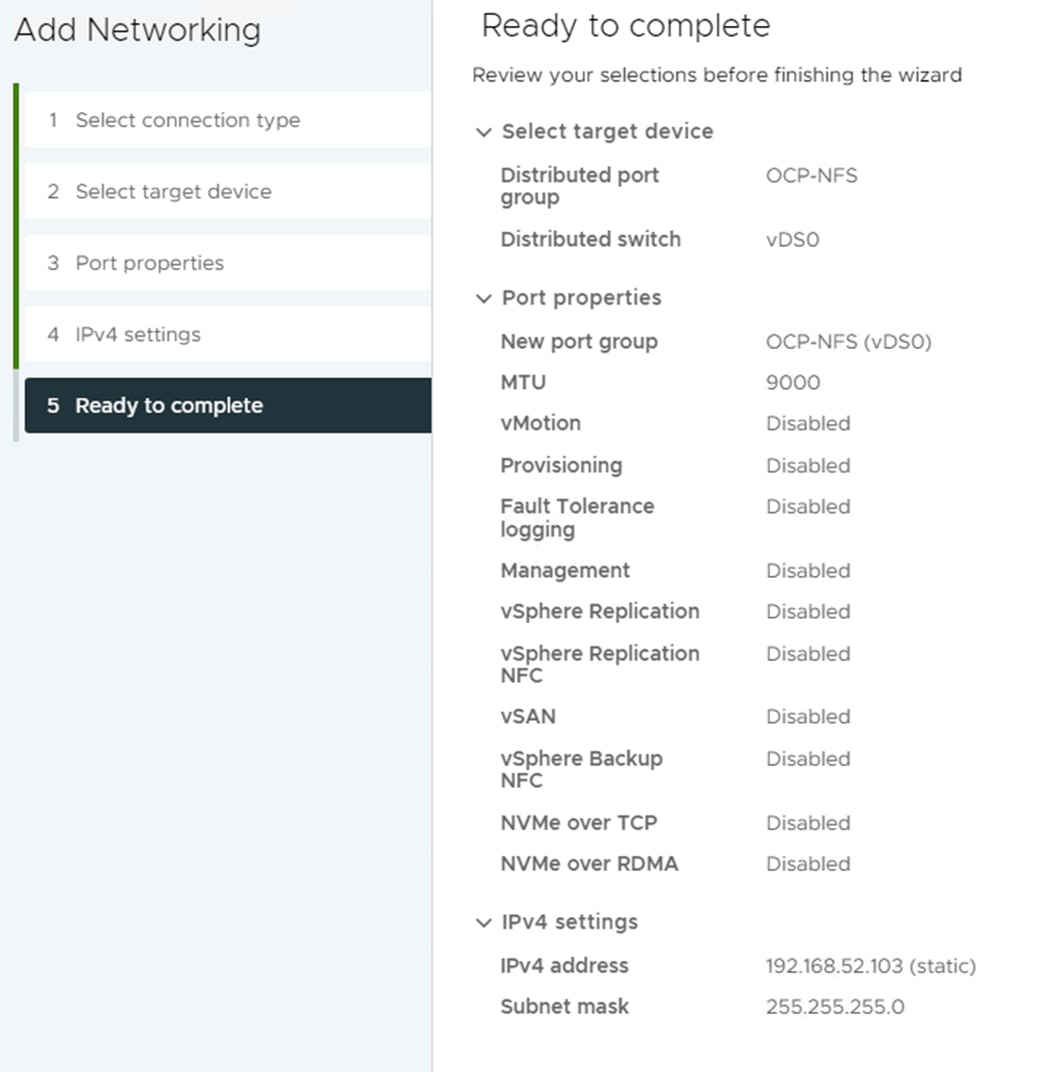

Step 4. Select Hosts and Clusters. Expand the vCenter, Datacenter, and OCP Cluster. Select each OCP ESXi host and select Configure > VMkernel Adapters. Remove the vmk5 (Infra-NVMe-TCP-A) and vmk6 (Infra-NVMe-TCP-B) adapters (not needed for OCP hosts). Click ADD NETWORKING. With “VMkernel Network Adapter” selected, click NEXT. Select the OCP-NFS network and click NEXT. Leave all settings default, including MTU 9000, and click NEXT. Select Use static IPv4 settings and fill in an IPv4 address and Subnet mask. Click NEXT. Click FINISH to complete adding the VMkernel port. Repeat this process for all six OCP ESXi hosts.

Note: It is not necessary to add VMkernel ports for NVMe-TCP since the NVMe-TCP namespaces will be mounted from within OCP.
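Step 4 requires a unique static IPv4 address in the OCP-NFS subnet for each of the six OCP ESXi hosts, and those addresses must not collide with the NFS LIFs at 192.168.52.31 and 192.168.52.32. The following sketch, using Python's standard `ipaddress` module, lays out such a plan. The host names and the starting host number (101) are assumptions made for illustration and are not values from the validated environment.

```python
import ipaddress

NFS_SUBNET = ipaddress.ip_network("192.168.52.0/24")  # OCP-NFS subnet
RESERVED = {  # NFS LIF addresses created on the storage in Step 17
    ipaddress.ip_address("192.168.52.31"),
    ipaddress.ip_address("192.168.52.32"),
}


def plan_vmk_addresses(hosts, first_host_number=101):
    """Assign consecutive subnet addresses to hosts, skipping reserved ones."""
    plan = {}
    candidate = NFS_SUBNET.network_address + first_host_number
    for host in hosts:
        while candidate in RESERVED:
            candidate += 1
        if candidate not in NFS_SUBNET or candidate == NFS_SUBNET.broadcast_address:
            raise ValueError("ran out of addresses in the NFS subnet")
        plan[host] = str(candidate)
        candidate += 1
    return plan


if __name__ == "__main__":
    # Hypothetical host names for the six OCP ESXi hosts.
    hosts = [f"ocp-esxi-{number}" for number in range(1, 7)]
    for host, address in plan_vmk_addresses(hosts).items():
        print(host, address, "255.255.255.0")
```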

Step 5. As detailed in Step 4, add an OCP-NFS VMkernel port to each of the FlexPod-Management VMware ESXi hosts.

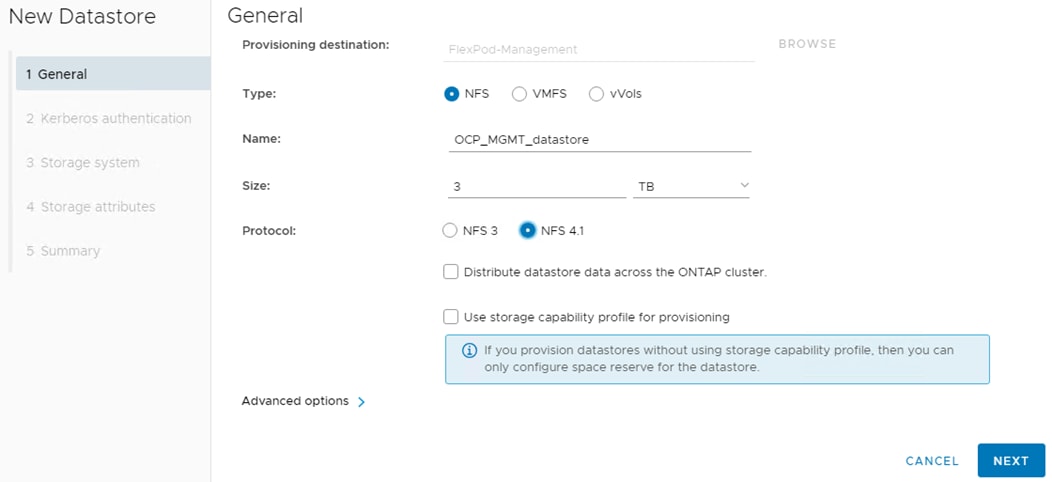

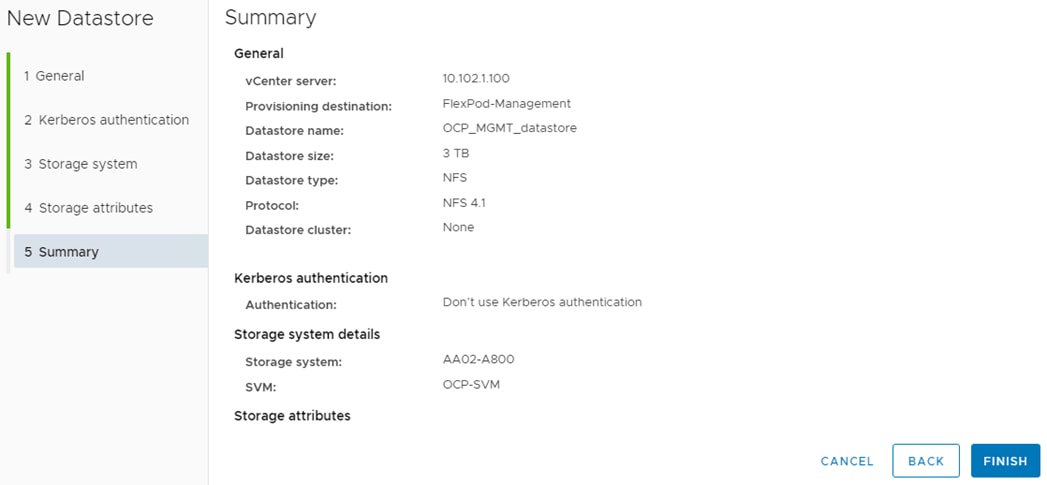

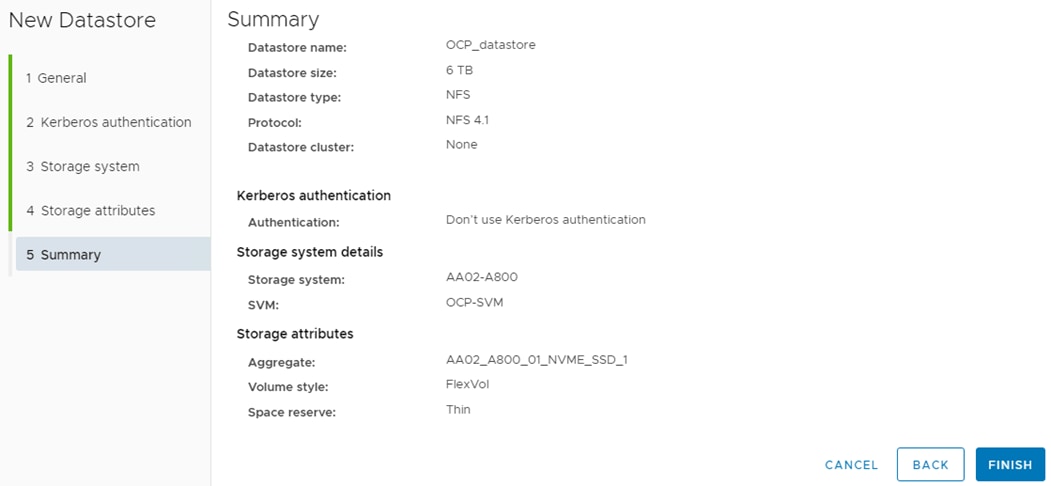

Step 6. In VMware vCenter, select NetApp ONTAP Tools. Select Storage Systems. Click REDISCOVER ALL. Select Inventory > Hosts and Clusters. Right-click the OCP cluster and select NetApp ONTAP tools > Provision Datastore. Leave NFS selected and name the datastore OCP_MGMT_datastore. Set the size to at least 3TB and select NFS 4.1. Uncheck “Use storage capability profile for provisioning.” Click NEXT.

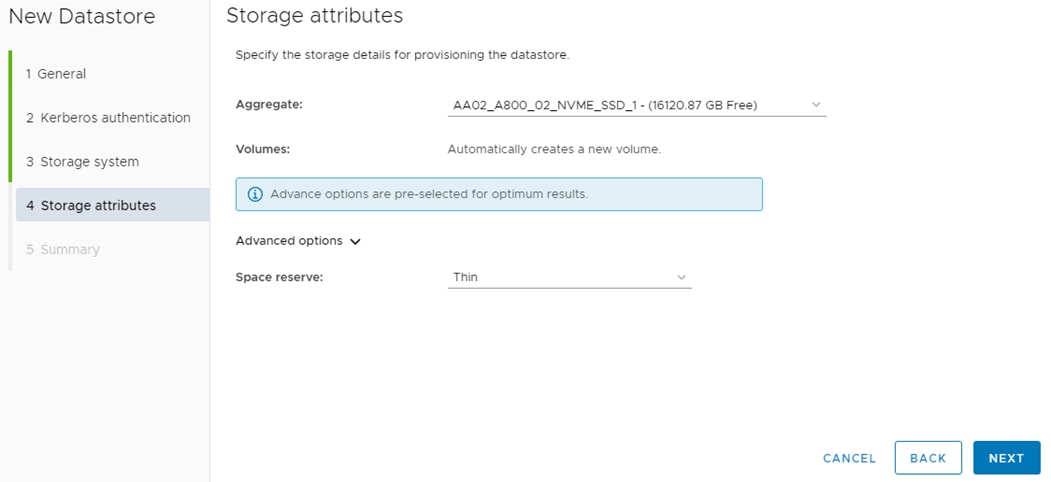

Step 7. Leave “Don’t use Kerberos authentication” selected and click NEXT. Select your Storage system and the OCP-SVM. Click NEXT. Select the aggregate for storage node 02, expand the Advanced options, set Space reserve to Thin, and click NEXT.

Step 8. Click FINISH to complete creating the datastore for OCP management virtual machines (VMs).