Introduction

The Cisco Prime Cable Provisioning (PCP) 7.4 RDU Container Deployment Guide describes the concepts and configuration of the Prime Cable Provisioning Regional Distribution Unit Container (RDU-C).

The PCP 7.4 release provides two Docker container images:

- pcp-rdu-app:7.4 - The authoritative datastore and device configuration generation engine of PCP.

- pcp-rdu-webui:7.4 - The web-based user interface for the RDU Container.

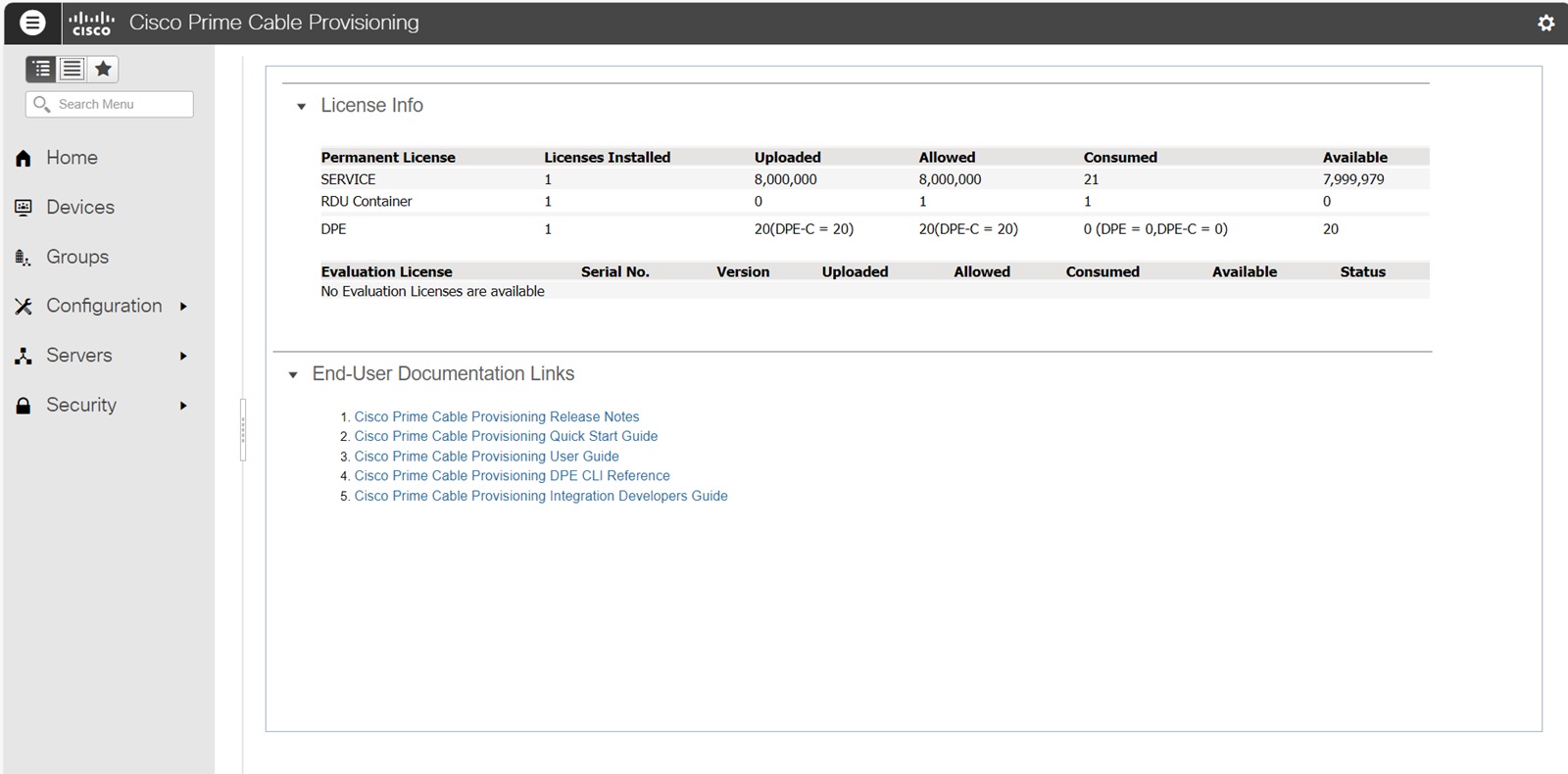

Licensing

Prime Cable Provisioning 7.4 adds support for running multiple RDU Containers in a Kubernetes cluster. Running RDU as a container requires a new license. To use this feature, purchase an RDU_CNT license.

The following figure shows a sample Manage License Keys page of the Admin UI, which displays a summary of the licenses that have been added for the implementation:

Docker Images

RDU can run as a container in a Kubernetes cluster. You can download the Prime Cable Provisioning RDU Container Docker images and the relevant manifests for Prime Cable Provisioning 7.4 from Download Software.

- Download the following software images:

  - pcp-rdu-app_7_4.tar.gz

  - pcp-rdu-webui_7_4.tar.gz

- Extract the files with the .tar.gz extension:

      # tar -zxvf pcp-rdu-app_7_4.tar.gz

  The utility creates the following artifacts:

  a. pcp-rdu-app.tar: A tar archive of the pcp-rdu-app:7.4 Docker image.

  b. manifests: A directory with the manifests needed for the rdu-app container.

      # tar -zxvf pcp-rdu-webui_7_4.tar.gz

  The utility creates the following artifacts:

  a. pcp-rdu-webui.tar: A tar archive of the pcp-rdu-webui:7.4 Docker image.

  b. manifests: A directory with the manifests needed for the rdu-webui container.

- Load the respective Docker images:

      # docker load -i pcp-rdu-app.tar
      # docker load -i pcp-rdu-webui.tar
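The extract and load steps can be scripted. The following bash sketch assumes both archives are in the current directory and that each one unpacks its .tar image and its manifests directory at the top level, as the artifact lists describe. Each archive is extracted into its own directory so that the two manifests directories do not overwrite each other. The function name and directory layout are illustrative and are not part of the PCP release.

```shell
#!/usr/bin/env bash
# Sketch: extract and load both PCP 7.4 RDU Container images.
# Assumption: each archive contains <name>.tar and manifests/ at its top level.
load_rdu_images() {
  local name
  for name in pcp-rdu-app pcp-rdu-webui; do
    # A separate directory per archive keeps the two manifests/ apart.
    mkdir -p "$name" || return 1
    tar -zxvf "${name}_7_4.tar.gz" -C "$name" || return 1
    # Load the Docker image archive that was just extracted.
    docker load -i "${name}/${name}.tar" || return 1
  done
}
```

After running load_rdu_images, docker images should list both pcp-rdu images. docker load only makes an image available to the Docker engine on that host. If the Kubernetes nodes use containerd as their runtime, the images usually need to be pushed to a registry the cluster can pull from, or imported into each node's runtime.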

Verifying Image Signature

Cisco recommends verifying the signature of the downloaded images. The verification script that you run on your premises can contact Cisco to download the root and subCA certificates. Python 3 must be installed.

- Extract the public key from the public certificate:

      $ openssl x509 -pubkey -noout -in PCP_RELEASE_7X-CCO_RELEASE.pem > PCP_RELEASE_7X-CCO_RELEASE.pubkey

- Check the integrity of the verification script itself, using the public key and the script's signature file. The expected output is Verified OK:

      $ openssl dgst -sha512 -verify PCP_RELEASE_7X-CCO_RELEASE.pubkey -signature cisco_x509_verify_release.py3.signature cisco_x509_verify_release.py3
      Verified OK

- Verify the product image:

      $ python3 cisco_x509_verify_release.py3 -e PCP_RELEASE_7X-CCO_RELEASE.pem -s <image signature file> -i <image> -v dgst -sha512

  | Image signature file | Image |
  |---|---|
  | pcp-rdu-app_7_4.tar.gz.signature | pcp-rdu-app_7_4.tar.gz |
  | pcp-rdu-webui_7_4.tar.gz.signature | pcp-rdu-webui_7_4.tar.gz |
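Both images can be checked in one pass. The following bash sketch assumes the certificate, the Cisco verification script, the images, and their .signature files are all in the current directory and are named as in the table above. It stops at the first image that fails verification. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: verify both RDU Container images with the Cisco-provided script.
# Assumption: all files are in the current directory, named as in the table.
verify_rdu_images() {
  local cert="PCP_RELEASE_7X-CCO_RELEASE.pem" img
  for img in pcp-rdu-app_7_4.tar.gz pcp-rdu-webui_7_4.tar.gz; do
    # Same invocation as the manual step, once per image.
    if ! python3 cisco_x509_verify_release.py3 -e "$cert" \
        -s "${img}.signature" -i "$img" -v dgst -sha512; then
      echo "Signature verification failed for ${img}" >&2
      return 1
    fi
  done
  echo "All RDU Container images verified"
}
```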

Requirements

- A Kubernetes cluster:

  - The cluster should be configured with dual-stack support (IPv4 and IPv6). That is, both the pod CIDR and the service CIDR should have subnets of both IP families.

  - The network services offered by RDU use ports 49187 and 49188, which are outside the default NodePort range. By default, service NodePorts are allocated from the range 30000-32767, so the NodePort range has to be expanded. You can do this by configuring kube-apiserver (--service-node-port-range=37-50000).

  Note

  Cisco has tested PCP 7.4 RDU Container with Kubernetes v1.30.

- Calico CNI installed for dual-stack support (IPv4 and IPv6).

  Calico IP pools should have NAT Outgoing set to true (spec.natOutgoing).

  Note

  Cisco has tested PCP 7.4 RDU Container with Calico v3.24 configured with IPIP encapsulation.

- PostgreSQL database server:

  RDU Container acts as a client application that connects to the PostgreSQL database server.

  The database administrator is responsible for configuring and tuning the PostgreSQL database server used by RDU Container.

  a. A database has to be created (SQL: CREATE DATABASE) for RDU Container to store its data. RDU Container uses the 'public' schema.

  b. A database user with a password has to be created (SQL: CREATE USER). RDU Container uses this user to connect to the database created in (a). It is recommended that this user be the owner of the database.

  c. Configure PostgreSQL client authentication (pg_hba.conf) to allow the user created in (b) to connect to the database created in (a) from the IP addresses of the worker nodes in the Kubernetes cluster.

  RDU Container supports the password-based authentication methods (scram-sha-256, md5, password) configured on the PostgreSQL database server.

  Example:

  The following pg_hba.conf entry allows the 'rdudbuser' database user to connect to the 'rdudb' database from worker nodes in the 10.0.0.0/26 network if the password is supplied correctly:

      # TYPE  DATABASE  USER       ADDRESS      METHOD
      host    rdudb     rdudbuser  10.0.0.0/26  scram-sha-256

  Note

  Cisco has tested PCP 7.4 RDU Container with PostgreSQL 16 running on Linux.

- ZooKeeper:

  Apache ZooKeeper is a distributed coordination service. RDU Containers act as client applications of the ZooKeeper server and use it for coordination.

- DNS entries for the IPv4 and IPv6 addresses of the Kubernetes worker nodes.

  For RDU and CPNR Extension Points to connect to DPE Containers running on Kubernetes worker nodes, the IPv4 and IPv6 addresses of every worker node must be available in DNS (A and AAAA records, respectively).
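Two of these requirements can be checked from an administration host before deployment. The following bash sketch assumes dig (from bind-utils) and kubectl are available, and its function names are hypothetical. It checks that the RDU ports fall inside a configured NodePort range and that each node reported by kubectl has both an A record and an AAAA record.

```shell
#!/usr/bin/env bash
# Sketch: preflight checks for the NodePort range and worker-node DNS.
# Assumptions: dig and kubectl are installed; kubectl points at the cluster.

# Succeeds if every given port lies inside a "MIN-MAX" NodePort range.
ports_in_nodeport_range() {
  local range="$1"; shift
  local min="${range%-*}" max="${range#*-}" port
  for port in "$@"; do
    if (( port < min || port > max )); then
      echo "port ${port} is outside the NodePort range ${range}" >&2
      return 1
    fi
  done
  return 0
}

# Succeeds if the host has both an A (IPv4) and an AAAA (IPv6) record.
has_dual_stack_dns() {
  local host="$1"
  [[ -n "$(dig +short A "$host")" ]] || { echo "${host}: no A record" >&2; return 1; }
  [[ -n "$(dig +short AAAA "$host")" ]] || { echo "${host}: no AAAA record" >&2; return 1; }
}

# Checks every node name reported by the cluster; fails if any check fails.
check_worker_dns() {
  local node rc=0
  for node in $(kubectl get nodes -o jsonpath='{.items[*].metadata.name}'); do
    has_dual_stack_dns "$node" || rc=1
  done
  return "$rc"
}
```

For example, ports_in_nodeport_range 37-50000 49187 49188 succeeds, but the same ports fail against the default 30000-32767 range. By default, dig does not apply the resolver search list. If your node names are short host names, check the fully qualified names instead.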

Deploying RDU Container

PCP 7.4 allows you to run multiple rdu-app containers. Typically, a single rdu-webui container is sufficient.

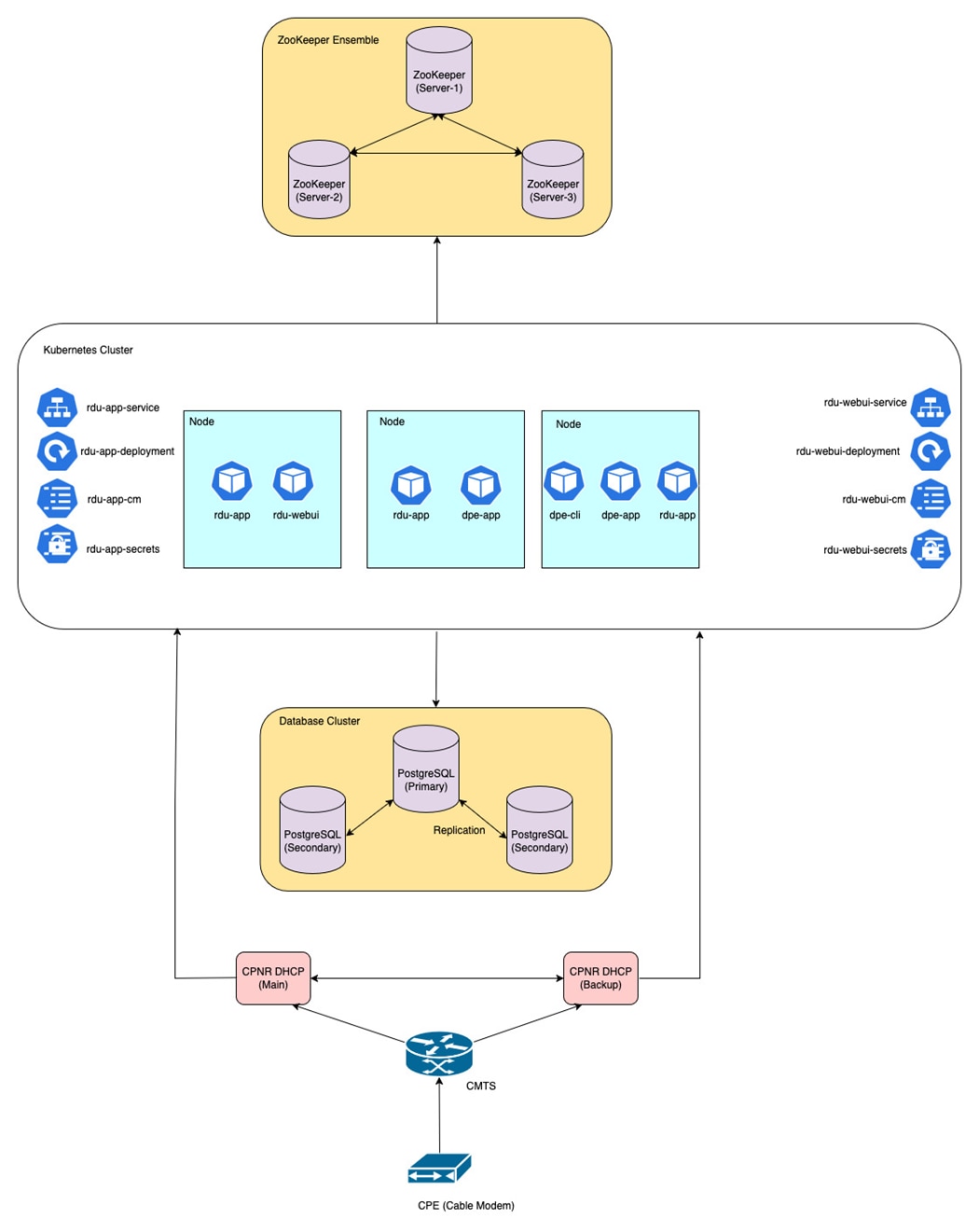

A typical PCP 7.4 deployment with RDU Container looks like this:

- A single Kubernetes cluster with RDU Containers (one rdu-webui pod and multiple rdu-app pods).

- DPE Containers (one dpe-cli pod and multiple dpe-app pods).

- CPNR Extension Points (CNR-EP) Containers running on a Linux node (either standalone or failover enabled).

- A PostgreSQL database cluster running on Linux machines. A single server is a single point of failure, so always run PostgreSQL servers with replication enabled.

- A ZooKeeper ensemble running on Linux machines. A single server is a single point of failure, so always run ZooKeeper servers in an ensemble.

Note

Database and coordination services are not part of the PCP software image. You must download, install, configure, and maintain them yourself for use by RDU Containers. See https://www.postgresql.org/download/linux/redhat/ and https://zookeeper.apache.org/releases.html. We strongly recommend configuring and using highly available deployments of the PostgreSQL and ZooKeeper servers.

RDU Container deployment comprises two containers:

- rdu-app

- rdu-webui

rdu-app Container

The following Kubernetes resources and manifests are used to run the rdu-app container. Create all these resources by applying the manifests:

rdu-app-deployment: Deployment object that runs the rdu-app pod with the pcp-rdu-app:7.4 image.

Manifest: rdu-app-deployment.yaml

The minimum resources that Cisco recommends for running an rdu-app pod are:

- Java heap size of 2048 MB. This can be tuned through the .spec.template.spec.containers[0].env field.

- Memory = 3000Mi, CPU = 1000m. These can be tuned through the .spec.template.spec.containers[0].resources.requests.memory and .spec.template.spec.containers[0].resources.requests.cpu fields.
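You can also change the resource requests, and start additional rdu-app pods, on a running cluster with standard kubectl commands. The following sketch assumes the Deployment object is named rdu-app-deployment, as described above, and runs in the namespace given by the hypothetical NAMESPACE variable (default: default). It does not cover the Java heap setting, because this guide does not name the environment variable that the shipped manifest uses for it.

```shell
#!/usr/bin/env bash
# Sketch: size and scale the rdu-app Deployment.
# Assumptions: Deployment name rdu-app-deployment; namespace from $NAMESPACE.
tune_rdu_app() {
  local replicas="$1" cpu="${2:-1000m}" memory="${3:-3000Mi}"
  local ns="${NAMESPACE:-default}"
  # Set resource requests (defaults are the Cisco-recommended minimums).
  kubectl -n "$ns" set resources deployment/rdu-app-deployment \
    --requests="cpu=${cpu},memory=${memory}" || return 1
  # Run the requested number of rdu-app pods.
  kubectl -n "$ns" scale deployment/rdu-app-deployment --replicas="$replicas"
}
```

For example, tune_rdu_app 3 runs three rdu-app pods with the minimum recommended requests. The next time rdu-app-deployment.yaml is applied, it overwrites changes made this way, so keep your copy of the manifest in sync.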

rdu-app-cm: ConfigMap object that provides the configuration to the rdu-app pod.

Manifest: rdu-app-cm.yaml

The rdu-app pod consumes the ConfigMap as configuration files in a volume. Users of regular RDU can correlate the contents of this manifest with the rdu.properties file.

This ConfigMap object also provides the database configuration (URL, username, and connection pool settings), the ZooKeeper configuration, and the logging configuration to the RDU pod.

All the properties (non-confidential data) that the rdu-app pod requires must be provided in this manifest.

Note

At a minimum, the ConfigMap must provide the following configuration to the rdu-app container:

    /db/postgres/url
    /db/postgres/username
    /zk/connection/url
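Before you apply an edited copy of rdu-app-cm.yaml, you can check that it sets the minimum properties. This is a hypothetical bash sketch. It assumes that the properties appear in the manifest as key=value lines, possibly indented, in the same form as the examples in this guide.

```shell
#!/usr/bin/env bash
# Sketch: confirm a ConfigMap manifest sets the minimum rdu-app properties.
# Assumption: properties appear as (possibly indented) "key=value" lines.
check_rdu_app_cm() {
  local manifest="$1" key missing=0
  for key in /db/postgres/url /db/postgres/username /zk/connection/url; do
    if ! grep -Eq "^[[:space:]]*${key}[[:space:]]*=" "$manifest"; then
      echo "missing property: ${key}" >&2
      missing=1
    fi
  done
  return "$missing"
}
```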

rdu-app-secrets: Secret object that stores sensitive data, such as usernames, passwords, certificates, and keys, used by the rdu-app pod.

Manifest: rdu-app-secrets.yaml

The rdu-app pod consumes the Secret as files and environment variables.

Typically, this Secret object stores:

- RDU application super user credentials, provided through the following keys: superuser-name, superuser-password. These are the credentials of the initial RDU super user, who is created the first time the RDU application runs.

- PostgreSQL database password, provided through the following key: db-password.

- RDU shared secret and secret key, provided through the following keys: rdu-shared-secret, rdu-secret-key.

- Private key and public certificate for RDU secure mode communication, provided through the following keys: rduapp.key, rduapp.pem.

- Credentials for the keystore that stores the secret key and RDU private key mentioned above, provided through the following keys: private-key-password, secure-keystore-password.

- Public certificate of the ZooKeeper server for secure communication (one-way TLS) with RDU, provided through the following key: zkserver-cert-0.pem.

  If individual servers in the ZooKeeper ensemble use different certificates, you can provide each of them to RDU using keys that follow this pattern: zkserver-cert-1.pem, zkserver-cert-2.pem.

  If two-way TLS is needed between the ZooKeeper server and RDU, the private key and public certificate for RDU are provided through the following keys: zkclient.key, zkclient.pem.

- Public (root) certificate of the PostgreSQL server for secure communication (one-way TLS) with RDU, provided through the following key: postgrescert.pem.

The values of all the keys must be provided as base64-encoded strings. For example, the PEM-encoded RDU certificate must itself be base64-encoded.
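The following minimal bash sketch produces those values. It assumes GNU or BSD base64 is available. It removes the line wrapping that base64 may add to long input, and it uses printf rather than echo for literal values, because a trailing newline from echo would otherwise become part of the encoded password. The function names are illustrative.

```shell
#!/usr/bin/env bash
# Sketch: base64-encode values for the data section of a Secret manifest.

# Encode a file (for example rduapp.pem) as a single unwrapped line.
b64_file() {
  base64 < "$1" | tr -d '\n'
}

# Encode a literal value (for example the db-password) with no trailing newline.
b64_value() {
  printf '%s' "$1" | base64 | tr -d '\n'
}
```

For example, printf '  rduapp.pem: %s\n' "$(b64_file rduapp.pem)" prints a data line for the manifest. Alternatively, kubectl create secret generic with --from-file and --from-literal, combined with --dry-run=client -o yaml, performs the encoding itself.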

rdu-app-docsis-options-cm, rdu-app-pktcbl-options-cm, and rdu-app-cablehome-options-cm: ConfigMap objects that provide the DOCSIS, PacketCable, and CableHome option descriptions used by the rdu-app pod.

Users of regular RDU are aware that RDU uses option descriptions defined in XML files (DOCSIS_OptionDesc.xml, PKTCBL_OptionDesc.xml, CABLEHOME_OptionDesc.xml).

To update the option descriptions, modify the following ConfigMap manifests:

- rdu-app-docsis-options-cm.yaml to update DOCSIS_OptionDesc.xml

- rdu-app-pktcbl-options-cm.yaml to update PKTCBL_OptionDesc.xml

- rdu-app-cablehome-options-cm.yaml to update CABLEHOME_OptionDesc.xml

If you do not need to update the option descriptions, you can use these ConfigMap manifest files as is.
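After you edit one of the XML files, you can regenerate the corresponding ConfigMap manifest with kubectl. This sketch assumes that the key inside each shipped ConfigMap is the XML file name. kubectl create configmap --from-file uses the file's base name as the key by default, but this guide does not confirm that the shipped manifests use the same key, so compare the output with the shipped manifest before relying on it. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: rebuild an option-description ConfigMap manifest from an XML file.
# Assumption: the ConfigMap key equals the XML file name, for example
# DOCSIS_OptionDesc.xml inside rdu-app-docsis-options-cm.
regen_option_cm() {
  local cm="$1" xml="$2"
  [[ -f "$xml" ]] || { echo "no such file: ${xml}" >&2; return 1; }
  # --dry-run=client only renders the object; nothing is sent to the cluster.
  kubectl create configmap "$cm" --from-file="$xml" \
    --dry-run=client -o yaml > "${cm}.yaml"
}
```

For example, regen_option_cm rdu-app-docsis-options-cm DOCSIS_OptionDesc.xml writes rdu-app-docsis-options-cm.yaml. You can then apply that file and restart the pods that consume it.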

rdu-app-log4j2-cm: ConfigMap object that provides the logging configuration of rdu_auth.log and rdu_crs.log.

Manifest: rdu-app-log4j2-cm.yaml

Users of regular RDU are aware that the log levels of rdu_auth.log and rdu_crs.log can be configured through log4j2.xml.

Note

RDU Container configuration is externalized to Kubernetes ConfigMap and Secret objects.

To make a configuration change, update these objects and restart the rdu-app pods.

Log level changes (/server/log/*) do not require a restart. They are propagated within a few seconds.
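The apply-then-restart sequence described in the note above can be sketched in bash. The sketch assumes the hypothetical NAMESPACE variable (default: default). It uses kubectl rollout restart, which replaces the pods gradually, and then waits for the rollout to finish. The function name is illustrative.

```shell
#!/usr/bin/env bash
# Sketch: apply changed ConfigMap/Secret manifests, then restart a Deployment.
# Assumption: namespace from $NAMESPACE (default "default").
apply_and_restart() {
  local deploy="$1"; shift
  local ns="${NAMESPACE:-default}" f
  for f in "$@"; do
    kubectl -n "$ns" apply -f "$f" || return 1
  done
  # Replace the pods so that they read the new configuration.
  kubectl -n "$ns" rollout restart "deployment/${deploy}" || return 1
  # Wait until the new pods are available.
  kubectl -n "$ns" rollout status "deployment/${deploy}" --timeout=300s
}
```

For example: apply_and_restart rdu-app-deployment rdu-app-cm.yaml rdu-app-secrets.yaml. For log level changes only, applying the ConfigMap is enough.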

rdu-app-service: Kubernetes Service object of type NodePort that exposes the rdu-app pod for external communication.

Manifest: rdu-app-service.yaml

The network services offered by RDU (non-secure communication on port 49187 and secure communication on port 49188) must be exposed as a NodePort service to any batch client running outside the Kubernetes cluster.

Note

spec.externalTrafficPolicy controls how traffic from external sources is routed to the RDU pods running inside the Kubernetes cluster.

If spec.externalTrafficPolicy = Cluster, external traffic is routed to any RDU pod in the Kubernetes cluster. However, the source IP address of the request can be lost.

If spec.externalTrafficPolicy = Local, external traffic is routed only to an RDU pod on the node that receives it, and the source IP address of the request is retained. However, traffic that reaches a node with no RDU pod running is dropped.
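You can switch the policy on a running Service with kubectl patch, and list the NodePorts that batch clients should use. The following bash sketch assumes the Service is named rdu-app-service, as described above, and uses the hypothetical NAMESPACE variable. The function names are illustrative.

```shell
#!/usr/bin/env bash
# Sketch: set externalTrafficPolicy on rdu-app-service and list its NodePorts.
# Assumptions: Service name rdu-app-service; namespace from $NAMESPACE.
set_rdu_traffic_policy() {
  local policy="$1" ns="${NAMESPACE:-default}"
  case "$policy" in
    Cluster|Local) ;;
    *) echo "policy must be Cluster or Local" >&2; return 1 ;;
  esac
  kubectl -n "$ns" patch service rdu-app-service \
    -p "{\"spec\":{\"externalTrafficPolicy\":\"${policy}\"}}"
}

# Print "port -> nodePort" for each port of rdu-app-service.
show_rdu_node_ports() {
  kubectl -n "${NAMESPACE:-default}" get service rdu-app-service \
    -o jsonpath='{range .spec.ports[*]}{.port}{" -> "}{.nodePort}{"\n"}{end}'
}
```

As with other live changes, reapplying rdu-app-service.yaml restores whatever policy the manifest specifies.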

rdu-webui Container

The following Kubernetes resources and manifests are used to run the rdu-webui container. Create all these resources by applying the manifests:

rdu-webapp-deployment: Deployment object that runs the rdu-webui pod with the pcp-rdu-webui:7.4 image.

Manifest: rdu-webapp-deployment.yaml

rdu-webapp-cm: ConfigMap object that provides the configuration used by the rdu-webui pod.

Manifest: rdu-webapp-cm.yaml

The rdu-webui pod consumes the ConfigMap as configuration files in a volume. Users of regular RDU can correlate the contents of this manifest with the adminui.properties and api.properties files.

The rdu-webui pod communicates with the rdu-app pod to provide the results of various user actions. You configure this communication by providing the rdu-app endpoint in this manifest.

For secure communication between rdu-webui and rdu-app:

    /server/rdu/secure/enabled=true
    /server/rdu/unsecure/enabled=false
    /rdu/secure/servers=<rdu-ip>:49188

For non-secure communication between rdu-webui and rdu-app:

    /server/rdu/secure/enabled=false
    /server/rdu/unsecure/enabled=true
    /rdu/secure/servers=<rdu-ip>:49187

The <rdu-ip> field can be the ClusterIP of the rdu-app service, the DNS name of the ClusterIP service, or a node IP address (when rdu-app is exposed as a NodePort service).
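The following bash sketch prints the property lines for either mode, with the property names and ports copied from the examples above. It also looks up the ClusterIP of rdu-app-service. The function names and the NAMESPACE variable are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: print the rdu-webui endpoint properties for a given mode.
# Property names and ports are copied from the examples in this guide.
rdu_webui_endpoint_props() {
  local mode="$1" host="$2"
  case "$mode" in
    secure)
      printf '%s\n' /server/rdu/secure/enabled=true \
        /server/rdu/unsecure/enabled=false "/rdu/secure/servers=${host}:49188" ;;
    non-secure)
      printf '%s\n' /server/rdu/secure/enabled=false \
        /server/rdu/unsecure/enabled=true "/rdu/secure/servers=${host}:49187" ;;
    *)
      echo "mode must be secure or non-secure" >&2
      return 1 ;;
  esac
}

# Look up the ClusterIP of rdu-app-service (namespace from $NAMESPACE).
rdu_app_cluster_ip() {
  kubectl -n "${NAMESPACE:-default}" get service rdu-app-service \
    -o jsonpath='{.spec.clusterIP}'
}
```

For example: rdu_webui_endpoint_props secure "$(rdu_app_cluster_ip)". To use the DNS name instead, pass rdu-app-service.<namespace>.svc.cluster.local, assuming the cluster uses the default cluster.local domain.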

rdu-webapp-secrets: Secret object that stores the sensitive data used by the rdu-webui pods.

Manifest: rdu-webapp-secrets.yaml

The values of all the keys must be provided as base64-encoded strings.

Admin UI runs with HTTPS enabled, so you must provide the private key and public certificate through the following keys: rduweb.key, rduweb.pem.

Credentials for the keystore that stores this key material are provided through the following keys: private-key-password, secure-keystore-password.

To establish a secure connection with the rdu-app pod, the rdu-webui pod must trust the public certificate of rdu-app. That certificate is provided through the rduapp.pem key of the rdu-webapp-ca-secrets object.

rdu-webapp-ca-secrets: Secret object that stores the public certificate of the rdu-app pod.

Manifest: rdu-webapp-ca-secrets.yaml

To establish a secure connection with the rdu-app pod, the rdu-webui pod must trust the public certificate of rdu-app.

Use the following key to provide the public certificate of rdu-app: rduapp.pem.
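Instead of base64-encoding each value by hand, you can have kubectl generate both rdu-webui Secret manifests and do the encoding itself. This sketch assumes that rduweb.key, rduweb.pem, and rduapp.pem are in the current directory and that the keystore passwords are in the hypothetical variables RDU_WEB_KEY_PASSWORD and RDU_WEB_KEYSTORE_PASSWORD. The output file names are also hypothetical. The shipped rdu-webapp-secrets.yaml and rdu-webapp-ca-secrets.yaml may carry additional metadata, so compare the generated files with them before applying.

```shell
#!/usr/bin/env bash
# Sketch: generate the two rdu-webui Secret manifests with kubectl.
# Assumptions: key material in the current directory; passwords in
# RDU_WEB_KEY_PASSWORD and RDU_WEB_KEYSTORE_PASSWORD (hypothetical names).
make_webui_secrets() {
  if [[ -z "${RDU_WEB_KEY_PASSWORD:-}" || -z "${RDU_WEB_KEYSTORE_PASSWORD:-}" ]]; then
    echo "set RDU_WEB_KEY_PASSWORD and RDU_WEB_KEYSTORE_PASSWORD" >&2
    return 1
  fi
  # kubectl base64-encodes file contents and literals in the generated YAML.
  kubectl create secret generic rdu-webapp-secrets \
    --from-file=rduweb.key --from-file=rduweb.pem \
    --from-literal=private-key-password="$RDU_WEB_KEY_PASSWORD" \
    --from-literal=secure-keystore-password="$RDU_WEB_KEYSTORE_PASSWORD" \
    --dry-run=client -o yaml > rdu-webapp-secrets.generated.yaml || return 1
  kubectl create secret generic rdu-webapp-ca-secrets \
    --from-file=rduapp.pem \
    --dry-run=client -o yaml > rdu-webapp-ca-secrets.generated.yaml
}
```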

tomcat-cm: ConfigMap object that configures the Tomcat web server.

Manifest: tomcat-cm.yaml

If you do not need to update the Tomcat web server configuration, you can use this ConfigMap manifest file as is.

rdu-app-docsis-options-cm, rdu-app-pktcbl-options-cm, and rdu-app-cablehome-options-cm: ConfigMap objects that provide the DOCSIS, PacketCable, and CableHome option descriptions used by the rdu-webui pod.

Users of regular RDU are aware that RDU uses option descriptions defined in XML files (DOCSIS_OptionDesc.xml, PKTCBL_OptionDesc.xml, CABLEHOME_OptionDesc.xml).

rdu-webui uses the same files to decode a cable modem configuration file. The XML files provided to rdu-webui must be exactly the same as those provided to rdu-app.

The following ConfigMap manifests provide the XML files with the option descriptions:

- rdu-app-docsis-options-cm.yaml provides DOCSIS_OptionDesc.xml

- rdu-app-pktcbl-options-cm.yaml provides PKTCBL_OptionDesc.xml

- rdu-app-cablehome-options-cm.yaml provides CABLEHOME_OptionDesc.xml

Note

After you change ConfigMap and Secret objects, restart rdu-webapp-deployment (that is, perform a rollout restart of rdu-webapp-deployment).

rdu-webapp-service: Kubernetes Service object of type NodePort that exposes the rdu-webui pod for external communication.

Manifest: rdu-webapp-service.yaml

Note

These manifests are provided as a reference and have been tested by Cisco. If you need to modify them for your Kubernetes cluster, we recommend that you work on a copy.

Database Connection

The RDU (rdu-app) container is built to use a PostgreSQL database server. See the Requirements section for more details.

The database connection URL is configured in the ConfigMap (rdu-app-cm).

It is recommended to run PostgreSQL database server with streaming replication enabled (e.g. one primary server + two secondary servers).

The database connection URL can be configured with multiple endpoints (host and port) to fully use replication and failover (high-availability) features, if configured on PostgreSQL database servers.

Example

    /db/postgres/url=jdbc:postgresql://rdu-db-01:5432,rdu-db-02:5432,rdu-db-03:5432/rdudb

RDU Container tries to connect to the database servers in the order in which the hosts appear in the URL. It connects to the primary database server if that server is available. It connects to a secondary server only if the connection to the primary is unsuccessful.
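The following bash sketch covers two tasks. It creates the database and user (steps a and b of the PostgreSQL requirements), and it checks which server a multi-host connection reaches. The sketch makes these assumptions:

- A psql client of version 10 or later is available.
- create_rdu_db runs as a PostgreSQL superuser against the primary, with connection settings taken from the usual PGHOST/PGUSER environment variables.
- The function names are hypothetical.

The check uses a libpq multi-host URI with target_session_attrs=read-write, not the JDBC driver, so it exercises the same host list but not RDU Container's own failover logic.

```shell
#!/usr/bin/env bash
# Sketch: create the RDU database and user, then test a multi-host connection.
# Assumptions: psql 10+; create_rdu_db runs as a superuser on the primary.

# The quoted heredoc is passed verbatim; psql itself substitutes and quotes
# the :"ident" and :'literal' variables, so names and passwords stay safe.
create_rdu_db() {
  psql -v ON_ERROR_STOP=1 -v db="$1" -v user="$2" -v pw="$3" <<'SQL'
CREATE USER :"user" WITH PASSWORD :'pw';
CREATE DATABASE :"db" OWNER :"user";
SQL
}

# Connect through a multi-host URI; prints "f" when the server reached is
# not in recovery, that is, when the primary was selected.
check_rdu_db_primary() {
  local user="$1" db="$2"; shift 2
  local hosts
  hosts=$(IFS=,; echo "$*")
  psql "postgresql://${user}@${hosts}/${db}?target_session_attrs=read-write" \
    -Atc 'SELECT pg_is_in_recovery()'
}
```

For example: create_rdu_db rdudb rdudbuser '<password>', then check_rdu_db_primary rdudbuser rdudb rdu-db-01:5432 rdu-db-02:5432 rdu-db-03:5432.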


ZooKeeper Connection

The RDU (rdu-app) container is built to use ZooKeeper as a coordination server. See the Requirements section for more details.

The ZooKeeper connection URL and other ZooKeeper properties are configured in the ConfigMap (rdu-app-cm).

We strongly recommend running a ZooKeeper ensemble (cluster). You can configure the connection URL with multiple endpoints (host and port) to take full advantage of the ensemble's high-availability features.

Example

    /zk/connection/url=zoo-01:2181,zoo-02:2181,zoo-03:2181

Logs

By default, the RDU Container logs are available at the local filesystem of the container and underlying container runtime.

Users of regular RDU are aware that the RDU logs are available at:

/var/CSCObac/rdu/logs/rdu.log

/var/CSCObac/rdu/logs/rdu_auth.log

/var/CSCObac/rdu/logs/rdu_crs.log

/var/CSCObac/rdu/logs/audit.log

The RDU Container (rdu-app) keeps its logs in the same location inside the container: /var/CSCObac/rdu/logs/.

To read a log file in the container:

$ kubectl exec -it <pod-name> -- bash

$ cd /var/CSCObac/rdu/logs/

$ tail -f rdu.log

You can also view RDU Container (rdu-app) logs with the command kubectl logs <pod-name>.

This works because the underlying container runtime captures RDU Container logs. As a result, RDU Container (rdu-app) logs can be aggregated using the EFK (Elasticsearch-Fluentd-Kibana) logging stack in Kubernetes.

Fluentd is a log collector that runs as a DaemonSet on each node of the Kubernetes cluster. It collects logs from the pods running on each node.

Elasticsearch is a log store that can run either as a Deployment or StatefulSet in the Kubernetes cluster or as a VM-based application outside the cluster.

Kibana is a visualization tool for log analysis that can run as a Deployment in the Kubernetes cluster.
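Without an EFK stack, the log commands above can be scripted to collect logs from every rdu-app pod at once. The following bash sketch assumes that the pods carry the usual names generated from the rdu-app-deployment Deployment (rdu-app-deployment-<hash>-<suffix>) and that the hypothetical NAMESPACE variable selects the namespace. The function name and output file names are illustrative.

```shell
#!/usr/bin/env bash
# Sketch: copy logs from every rdu-app pod into a local directory.
# Assumptions: pod names start with "rdu-app-deployment-"; namespace from
# $NAMESPACE (default "default").
collect_rdu_logs() {
  local dest="${1:-./rdu-logs}" ns="${NAMESPACE:-default}" pod
  mkdir -p "$dest" || return 1
  for pod in $(kubectl -n "$ns" get pods -o name | grep '^pod/rdu-app-deployment-'); do
    pod="${pod#pod/}"
    # Output captured by the container runtime (same as kubectl logs).
    kubectl -n "$ns" logs "$pod" > "${dest}/${pod}.runtime.log"
    # rdu.log from the container file system, if file logging is enabled.
    kubectl -n "$ns" exec "$pod" -- cat /var/CSCObac/rdu/logs/rdu.log \
      > "${dest}/${pod}.rdu.log"
  done
}
```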

If you are using the EFK stack for log collection and aggregation and want to stop logs from being written to the local file system of the container, set the following property in the ConfigMap of rdu-app (rdu-app-cm.yaml):

    /server/log/2/enable=false

Getting Metrics from RDU Container

RDU Container (rdu-app) is built to expose the application-specific metrics in Prometheus format. This allows centralized monitoring of the rdu-app pods.

The Prometheus server must be configured to scrape metrics from the RDU-C pods. Each RDU-C pod responds with the current values of its metrics.

Metrics are specific to an instance of the rdu-app pod. You are responsible for configuring Prometheus to scrape metrics from every rdu-app pod at regular intervals.

RDU-C supports both the HTTP and HTTPS schemes for the metrics endpoint, depending on the communication mode enabled:

- If the rdu-app pod is running in non-secure mode (that is, /server/rdu/unsecure/enabled=true), metrics are exposed on an HTTP endpoint on port 8189.

- If the rdu-app pod is running in secure mode (that is, /server/rdu/secure/enabled=true), metrics are exposed on an HTTPS endpoint on port 8190. This HTTPS communication uses the same public certificate that the rdu-app pod uses for secure communication with PACE clients.

The HTTP and HTTPS endpoints are protected with Basic authentication. An RDU administrator (user) with the privilege PRIV_RDU_READ is authorized to make requests to the /metrics endpoint.
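You can check an endpoint by hand before configuring Prometheus. The following bash sketch makes these assumptions:

- HOST can reach the pod's metrics port, for example through kubectl port-forward pod/<pod-name> 8189:8189.
- The credentials belong to an RDU user with PRIV_RDU_READ and are held in the hypothetical variables RDU_METRICS_USER and RDU_METRICS_PASSWORD.
- In HTTPS mode, the rdu-app certificate is trusted through --cacert. curl also checks the host name against the certificate, so connecting through localhost may need extra handling.

The function name is illustrative.

```shell
#!/usr/bin/env bash
# Sketch: fetch /metrics from one rdu-app pod with Basic authentication.
# Assumptions: HOST reaches the pod's metrics port; credentials in the
# hypothetical variables RDU_METRICS_USER and RDU_METRICS_PASSWORD.
fetch_rdu_metrics() {
  local host="$1" mode="${2:-http}"
  local auth="${RDU_METRICS_USER}:${RDU_METRICS_PASSWORD}"
  case "$mode" in
    http)
      curl -fsS -u "$auth" "http://${host}:8189/metrics" ;;
    https)
      # Trust the rdu-app certificate (default path: rduapp.pem).
      curl -fsS -u "$auth" --cacert "${RDU_CA_CERT:-rduapp.pem}" \
        "https://${host}:8190/metrics" ;;
    *)
      echo "mode must be http or https" >&2
      return 1 ;;
  esac
}
```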

Details of the metrics endpoints are:

| Field | HTTP | HTTPS |
|---|---|---|
| scheme | http | https |
| port | 8189 | 8190 |
| endpoint | /metrics | /metrics |
| authorization | Basic | Basic |

If you are running Prometheus in the Kubernetes cluster, you can use the Kubernetes service discovery configuration (which uses the Kubernetes REST API) to discover scrape targets.

A sample job that scrapes rdu-app metrics with Prometheus using the Kubernetes service discovery configuration:

    scrape_configs:
      - job_name: 'rdu-app'
        scrape_interval: 20s
        metrics_path: /metrics
        scheme: http
        basic_auth:
          username: metricsuser
          password: metricspassword
        kubernetes_sd_configs:
          - role: pod
        relabel_configs:
          - source_labels: [__meta_kubernetes_pod_container_port_name]
            action: keep
            regex: metrics
          - source_labels: [__meta_kubernetes_pod_container_name]
            action: keep
            regex: rdu-app
          - source_labels: [__meta_kubernetes_pod_name]
            action: replace
            target_label: kubernetes_pod_name

This sample keeps only container ports named metrics in containers named rdu-app. If the names in your rdu-app-deployment.yaml differ, adjust both regex values.

The following metrics are exported:

| Metric Name | Description |
|---|---|
| pace_connection_count | Number of PACE connections to RDU |
| pace_batches_processed | Number of batches processed |
| pace_average_batch_time | Average batch time [ms] |
| pace_average_processing_time | Average batch processing time [ms] |
| pace_batches_failed | Number of batches failed |
| pace_batches_dropped | Number of batches dropped |
| pace_batches_succeeded | Number of batches succeeded |
| pace_uptime | PACE uptime [ms] |
| db_connection_state | Current state of the database connection [0-UNKNOWN, 1-PRIMARY CONNECTED, 2-SECONDARY CONNECTED, 3-NO DB CONNECTION] |
| zookeeper_connection_state | ZooKeeper connection status [0-UNKNOWN, 1-CONNECTED, 2-RECONNECTED, 3-SUSPENDED, 4-LOST, 5-READ_ONLY] |
| rdu_state | Current state of RDU [0-UNKNOWN, 1-READY, 2-INITIALIZING] |
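The three state metrics at the end of the table are integer codes. The following bash and awk sketch translates them into the names listed in the table, reading Prometheus text output (for example, the output of curl against /metrics) on standard input. It assumes each sample line has the form name value or name{labels} value, with no spaces inside labels and no trailing timestamp. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: translate the RDU state metrics into readable names.
# Assumption: sample lines are "name value" or "name{labels} value".
rdu_health_summary() {
  awk '
    BEGIN {
      # Index 1 corresponds to code 0, as listed in the metrics table.
      split("UNKNOWN READY INITIALIZING", rdu, " ")
      split("UNKNOWN PRIMARY_CONNECTED SECONDARY_CONNECTED NO_DB_CONNECTION", db, " ")
      split("UNKNOWN CONNECTED RECONNECTED SUSPENDED LOST READ_ONLY", zk, " ")
    }
    /^#/ { next }                      # skip HELP and TYPE comments
    {
      name = $1; sub(/[{].*/, "", name) # drop any label set
      code = int($2) + 1
      if (name == "rdu_state") print name "=" rdu[code]
      else if (name == "db_connection_state") print name "=" db[code]
      else if (name == "zookeeper_connection_state") print name "=" zk[code]
    }
  '
}
```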

Obtaining Documentation and Submitting a Service Request

For information on obtaining documentation, using the Cisco Bug Search Tool (BST), submitting a service request, and gathering additional information, see What's New in Cisco Product Documentation.

To receive new and revised Cisco technical content directly to your desktop, you can subscribe to the What's New in Cisco Product Documentation RSS feed. RSS feeds are a free service.

Cisco and the Cisco logo are trademarks or registered trademarks of Cisco and/or its affiliates in the U.S. and other countries. To view a list of Cisco trademarks, go to this URL: https://www.cisco.com/go/trademarks. Third-party trademarks mentioned are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (1721R)

Any Internet Protocol (IP) addresses and phone numbers used in this document are not intended to be actual addresses and phone numbers. Any examples, command display output, network topology diagrams, and other figures included in the document are shown for illustrative purposes only. Any use of actual IP addresses or phone numbers in illustrative content is unintentional and coincidental.

© 2025 Cisco Systems, Inc. All rights reserved.
