
The admin user cannot log in to the Crosswork VM.

OR

The following error is displayed:

```text
Error: Invalid value for variable
  on cluster_vars.tf line 113:
  ├────────────────
This was checked by the validation rule at cluster_vars.tf:115,3-13.

Error: expected length of name to be in the range (1 - 80), got
  with data.vsphere_virtual_machine.template_from_ovf,
  on main.tf line 32, in data "vsphere_virtual_machine" "template_from_ovf":
  32: name = var.Cw_VM_Image

Mon Aug 21 18:52:47 UTC 2023: ERROR: Installation failed. Check installer and the VMs' log by accessing via console and viewing /var/log/firstBoot.log
```


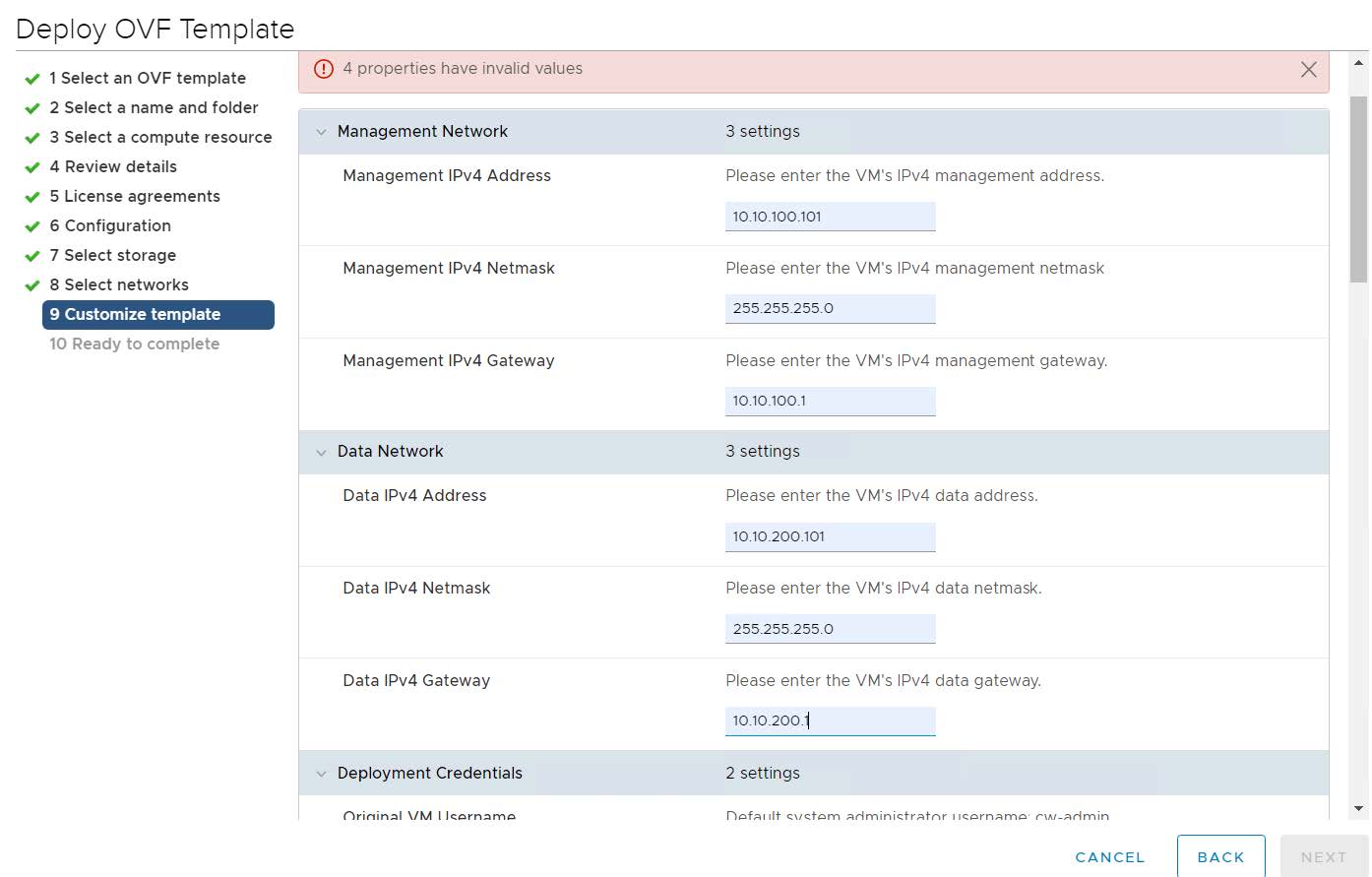

This happens when the VM password is not complex enough. Create a strong password, update it in the configuration manifest, and redeploy.

Use a strong VM password: at least 8 characters long, including upper-case and lower-case letters, numbers, and at least one special character.

Avoid passwords that resemble dictionary words (for example, "Pa55w0rd!") or other easily guessed words. Although such passwords satisfy the criteria above, they are weak and will be rejected, which causes the VM setup to fail.
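You can screen a candidate password against the basic rules above before editing the configuration manifest. The following is a minimal POSIX shell sketch; `check_vm_password` is a hypothetical helper (not part of the Crosswork installer), and it treats 8 characters as the minimum length. It cannot judge similarity to dictionary words, so it accepts "Pa55w0rd!" even though the installer rejects such passwords.

```shell
#!/bin/sh
# check_vm_password: hypothetical pre-flight helper, not part of the Crosswork
# installer. It checks only the basic rules (minimum length of 8, upper case,
# lower case, digit, special character). It cannot detect passwords that
# resemble dictionary words, so a password that passes may still be rejected.
check_vm_password() {
  local pw="$1"
  if [ "${#pw}" -lt 8 ]; then
    echo "fail: shorter than 8 characters"; return 1
  fi
  # Each case pattern tests for at least one character of the given class.
  case $pw in *[[:upper:]]*) ;; *) echo "fail: no upper-case letter"; return 1 ;; esac
  case $pw in *[[:lower:]]*) ;; *) echo "fail: no lower-case letter"; return 1 ;; esac
  case $pw in *[[:digit:]]*) ;; *) echo "fail: no digit"; return 1 ;; esac
  # A special character is anything that is not a letter or a digit.
  case $pw in *[![:alnum:]]*) ;; *) echo "fail: no special character"; return 1 ;; esac
  echo "pass: basic rules met (dictionary similarity not checked)"
}
```

For example, `check_vm_password 'Pa55w0rd!'` passes, which illustrates why meeting the basic rules is not sufficient on its own.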


Scenario

In a cluster with five or more nodes, the databases move to hybrid nodes during a node reload. Users see the following alarm:

“The robot-postgres/cw-timeseries-db pods are currently running on hybrid nodes. Please relocate them to worker nodes if they're available and health.”

Resolution

To resolve the alarm, call the move_services_to_nodes placement API to move the databases to worker nodes.

Send the following request to place the services. Replace <Vip>, <your-jwt-token>, and the node names with the values for your cluster. The response contains a job ID that you can query to confirm that the job has completed.

[Place Services] Request

```shell
curl --request POST --location 'https://<Vip>:30603/crosswork/platform/v2/placement/move_services_to_nodes' \
  --header 'Content-Type: application/json' \
  --header 'Authorization: <your-jwt-token>' \
  --data '{
    "service_placements": [
      {
        "service": {
          "name": "robot-postgres",
          "clean_data_folder": true
        },
        "nodes": [
          { "name": "fded-1bc1-fc3e-96d0-192-168-5-114-worker.cisco.com" },
          { "name": "fded-1bc1-fc3e-96d0-192-168-5-115-worker.cisco.com" }
        ]
      },
      {
        "service": {
          "name": "cw-timeseries-db",
          "clean_data_folder": true
        },
        "nodes": [
          { "name": "fded-1bc1-fc3e-96d0-192-168-5-114-worker.cisco.com" },
          { "name": "fded-1bc1-fc3e-96d0-192-168-5-115-worker.cisco.com" }
        ]
      }
    ]
  }'
```
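The request body above hard-codes two example worker node names. If you script the move, a small helper can generate the same body for your own worker nodes. This is a sketch under stated assumptions: `build_placement_body` is a hypothetical function, it always sets `clean_data_folder` to `true` as in the example, and it does not escape node names, so they must not contain double quotes. `VIP`, `JWT`, and the `node-a`/`node-b` names in the usage comment are placeholders.

```shell
#!/bin/sh
# build_placement_body: hypothetical helper that prints a move_services_to_nodes
# request body placing robot-postgres and cw-timeseries-db on the worker nodes
# passed as arguments. clean_data_folder is always true, as in the example.
# Node names are inserted as-is (no JSON escaping), so avoid double quotes.
build_placement_body() {
  local nodes="" node svc out=""
  for node in "$@"; do
    # Append a {"name": "<node>"} entry, comma-separating after the first.
    nodes="${nodes:+$nodes, }{\"name\": \"$node\"}"
  done
  for svc in robot-postgres cw-timeseries-db; do
    # Both services get the same node list, mirroring the example request.
    out="${out:+$out, }{\"service\": {\"name\": \"$svc\", \"clean_data_folder\": true}, \"nodes\": [$nodes]}"
  done
  printf '{"service_placements": [%s]}\n' "$out"
}

# Example use (placeholders; replace with your own VIP, token, and node names):
# curl --request POST --location "https://${VIP}:30603/crosswork/platform/v2/placement/move_services_to_nodes" \
#   --header 'Content-Type: application/json' \
#   --header "Authorization: ${JWT}" \
#   --data "$(build_placement_body node-a-worker.cisco.com node-b-worker.cisco.com)"
```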

Response

```json
{
  "job_id": "PJ5",
  "result": {
    "request_result": "ACCEPTED",
    "error": null
  }
}
```
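To reuse the job ID in later queries, you can extract it from the response. The following minimal sketch uses only `tr` and `sed`, so jq is not required; `extract_job_id` is a hypothetical helper, and it assumes the response contains a single `job_id` whose value is a plain quoted string, as in the sample response.

```shell
#!/bin/sh
# extract_job_id: hypothetical helper that reads a placement API response on
# stdin and prints its "job_id" value. It joins the input into one line and
# matches with sed, so jq is not required. Assumes a single job_id that is a
# plain quoted string such as "PJ5"; prints nothing if no job_id is found.
extract_job_id() {
  tr -d '\n' | sed -n 's/.*"job_id"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}

# Example use: pipe the Place Services response into the helper, for instance
# JOB_ID=$(curl --silent <same options as the Place Services request> | extract_job_id)
```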

[GetJobs] Request

```shell
curl --request POST --location 'https://<Vip>:30603/crosswork/platform/v2/placement/jobs/query' \
  --header 'Content-Type: application/json' \
  --header 'Authorization: <your-jwt-token>' \
  --data '{"job_id":"PJ5"}'
```

Response

```json
{
  "jobs": [
    {
      "job_id": "PJ1",
      "job_user": "admin",
      "start_time": "1714651535675",
      "completion_time": "1714652020311",
      "progress": 100,
      "job_status": "JOB_COMPLETED",
      "job_context": {},
      "job_type": "MOVE_SERVICES_TO_NODES",
      "error": {
        "message": ""
      },
      "job_description": "Move Services to Nodes"
    }
  ],
  "query_options": {
    "pagination": {
      "page_token": "1714650688679",
      "page_size": 200
    }
  },
  "result": {
    "request_result": "ACCEPTED",
    "error": null
  }
}
```
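Rather than re-running the GetJobs query by hand, you can poll it until `job_status` reports `JOB_COMPLETED`. In this sketch, `VIP` and `JWT` are placeholder environment variables, and both function names are hypothetical. Only `JOB_COMPLETED` appears in the sample output, so the loop does not try to interpret other status values; it simply gives up after a fixed number of attempts.

```shell
#!/bin/sh
# Hypothetical helpers for waiting on a placement job. VIP and JWT are
# placeholder environment variables that must be set before polling.

# Print the "job_status" value from a GetJobs response read on stdin.
# Assumes the response describes a single job (the last match is printed).
job_status_from_response() {
  tr -d '\n' | sed -n 's/.*"job_status"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}

# Poll jobs/query until the job reports JOB_COMPLETED or attempts run out.
# Usage: wait_for_placement_job JOB_ID [ATTEMPTS] [INTERVAL_SECONDS]
wait_for_placement_job() {
  local job_id="$1" attempts="${2:-60}" interval="${3:-10}" i=1 status
  while [ "$i" -le "$attempts" ]; do
    status=$(curl --silent --request POST --location \
      "https://${VIP}:30603/crosswork/platform/v2/placement/jobs/query" \
      --header 'Content-Type: application/json' \
      --header "Authorization: ${JWT}" \
      --data "{\"job_id\":\"${job_id}\"}" | job_status_from_response)
    echo "attempt $i: ${status:-unknown}"
    if [ "$status" = "JOB_COMPLETED" ]; then
      return 0
    fi
    sleep "$interval"
    i=$((i + 1))
  done
  return 1
}
```

For example, `wait_for_placement_job PJ5 30 10` checks every 10 seconds for up to 30 attempts and returns a non-zero status if the job has not completed by then.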

[GetEvents] Request

```shell
curl --request POST --location 'https://<Vip>:30603/crosswork/platform/v2/placement/events/query' \
  --header 'Content-Type: application/json' \
  --header 'Authorization: <your-jwt-token>' \
  --data '{}'
```

Response

```json
{
  "events": [
    {
      "event_tags": [{ "tag_type": "JOB_ID_EVENT", "tag_value": "PJ5" }],
      "message": "Operation done",
      "event_time": "1714725461179"
    },
    {
      "event_tags": [{ "tag_type": "JOB_ID_EVENT", "tag_value": "PJ5" }],
      "message": "Moving replica pod , to targetNodes [fded-1bc1-fc3e-96d0-192-168-6-115-worker.cisco.com fded-1bc1-fc3e-96d0-192-168-6-116-worker.cisco.com]",
      "event_time": "1714725354163"
    },
    {
      "event_tags": [{ "tag_type": "JOB_ID_EVENT", "tag_value": "PJ5" }],
      "message": "robot-postgres - Cleaning up target nodes [fded-1bc1-fc3e-96d0-192-168-6-115-worker.cisco.com fded-1bc1-fc3e-96d0-192-168-6-116-worker.cisco.com] for stale data folder",
      "event_time": "1714725346515"
    },
    {
      "event_tags": [{ "tag_type": "JOB_ID_EVENT", "tag_value": "PJ5" }],
      "message": "Replica pod not found for service robot-postgres",
      "event_time": "1714725346507"
    },
    {
      "event_tags": [{ "tag_type": "JOB_ID_EVENT", "tag_value": "PJ5" }],
      "message": "Started moving leader and replica pods for service robot-postgres",
      "event_time": "1714725346504"
    },
    {
      "event_tags": [{ "tag_type": "JOB_ID_EVENT", "tag_value": "PJ5" }],
      "message": " robot-postgres - Source and target nodes are not subsets, source nodes [fded-1bc1-fc3e-96d0-192-168-6-115-worker.cisco.com] , target nodes [fded-1bc1-fc3e-96d0-192-168-6-115-worker.cisco.com fded-1bc1-fc3e-96d0-192-168-6-116-worker.cisco.com]",
      "event_time": "1714725346293"
    },
    {
      "event_tags": [{ "tag_type": "JOB_ID_EVENT", "tag_value": "PJ5" }],
      "message": "Verified cw-timeseries-db location on target nodes",
      "event_time": "1714725345692"
    },
    {
      "event_tags": [{ "tag_type": "JOB_ID_EVENT", "tag_value": "PJ5" }],
      "message": "Moved leader pod cw-timeseries-db-0, to targetNodes [fded-1bc1-fc3e-96d0-192-168-6-115-worker.cisco.com fded-1bc1-fc3e-96d0-192-168-6-116-worker.cisco.com]",
      "event_time": "1714725345280"
    },
    {
      "event_tags": [{ "tag_type": "JOB_ID_EVENT", "tag_value": "PJ5" }],
      "message": "cw-timeseries-db-0 is ready",
      "event_time": "1714725345138"
    },
    {
      "event_tags": [{ "tag_type": "JOB_ID_EVENT", "tag_value": "PJ5" }],
      "message": "cw-timeseries-db-0 is ready",
      "event_time": "1714725241401"
    },
    {
      "event_tags": [{ "tag_type": "JOB_ID_EVENT", "tag_value": "PJ5" }],
      "message": "Checking for cw-timeseries-db-0 pod is ready",
      "event_time": "1714725211296"
    },
    {
      "event_tags": [{ "tag_type": "JOB_ID_EVENT", "tag_value": "PJ5" }],
      "message": "Moving leader pod cw-timeseries-db-0, to targetNodes [fded-1bc1-fc3e-96d0-192-168-6-115-worker.cisco.com fded-1bc1-fc3e-96d0-192-168-6-116-worker.cisco.com]",
      "event_time": "1714725211256"
    },
    {
      "event_tags": [{ "tag_type": "JOB_ID_EVENT", "tag_value": "PJ5" }],
      "message": "cw-timeseries-db-1 is ready",
      "event_time": "1714725132896"
    },
    {
      "event_tags": [{ "tag_type": "JOB_ID_EVENT", "tag_value": "PJ5" }],
      "message": "Checking for cw-timeseries-db-1 pod is ready",
      "event_time": "1714725131684"
    },
    {
      "event_tags": [{ "tag_type": "JOB_ID_EVENT", "tag_value": "PJ5" }],
      "message": "Moving replica pod cw-timeseries-db-1, to targetNodes [fded-1bc1-fc3e-96d0-192-168-6-115-worker.cisco.com fded-1bc1-fc3e-96d0-192-168-6-116-worker.cisco.com]",
      "event_time": "1714725128203"
    },
    {
      "event_tags": [{ "tag_type": "JOB_ID_EVENT", "tag_value": "PJ5" }],
      "message": "cw-timeseries-db - Cleaning up target nodes [fded-1bc1-fc3e-96d0-192-168-6-115-worker.cisco.com fded-1bc1-fc3e-96d0-192-168-6-116-worker.cisco.com] for stale data folder",
      "event_time": "1714725119505"
    },
    {
      "event_tags": [{ "tag_type": "JOB_ID_EVENT", "tag_value": "PJ5" }],
      "message": "Started moving leader and replica pods for service cw-timeseries-db",
      "event_time": "1714725117684"
    },
    {
      "event_tags": [{ "tag_type": "JOB_ID_EVENT", "tag_value": "PJ5" }],
      "message": "cw-timeseries-db - Source and target nodes are not subsets, source nodes [fded-1bc1-fc3e-96d0-192-168-6-111-hybrid.cisco.com fded-1bc1-fc3e-96d0-192-168-6-113-hybrid.cisco.com] , target nodes [fded-1bc1-fc3e-96d0-192-168-6-115-worker.cisco.com fded-1bc1-fc3e-96d0-192-168-6-116-worker.cisco.com]",
      "event_time": "1714725115883"
    }
  ],
  "query_options": {
    "pagination": {
      "page_token": "1714725115883",
      "page_size": 200
    }
  },
  "result": {
    "request_result": "ACCEPTED",
    "error": null
  }
}
```
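The `event_time` values, and the `start_time` and `completion_time` values in the GetJobs response, look like milliseconds since the Unix epoch; that is an assumption based on their 13-digit format, not something the API output states. Under that assumption, the following sketch converts them to UTC and computes elapsed time. Both function names are hypothetical, and `event_time_to_utc` relies on the GNU `date -d @SECONDS` extension.

```shell
#!/bin/sh
# Hypothetical helpers for reading placement timestamps. They assume the
# 13-digit event_time, start_time, and completion_time values are milliseconds
# since the Unix epoch. event_time_to_utc relies on GNU date's "-d @SECONDS".

# Convert an epoch-milliseconds value to a UTC date and time (milliseconds dropped).
event_time_to_utc() {
  local ms="$1"
  date -u -d "@$((ms / 1000))" '+%Y-%m-%d %H:%M:%S UTC'
}

# Print the whole seconds elapsed between two epoch-milliseconds values.
elapsed_seconds() {
  local start_ms="$1" end_ms="$2"
  echo $(( (end_ms - start_ms) / 1000 ))
}
```

For example, `elapsed_seconds 1714725115883 1714725461179` prints `345`, so the PJ5 events in the sample span just under six minutes. In the sample output the events are listed newest first, so the earliest event of the job is the last one in the list.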
