ClusterName |

Name of the cluster file.

|

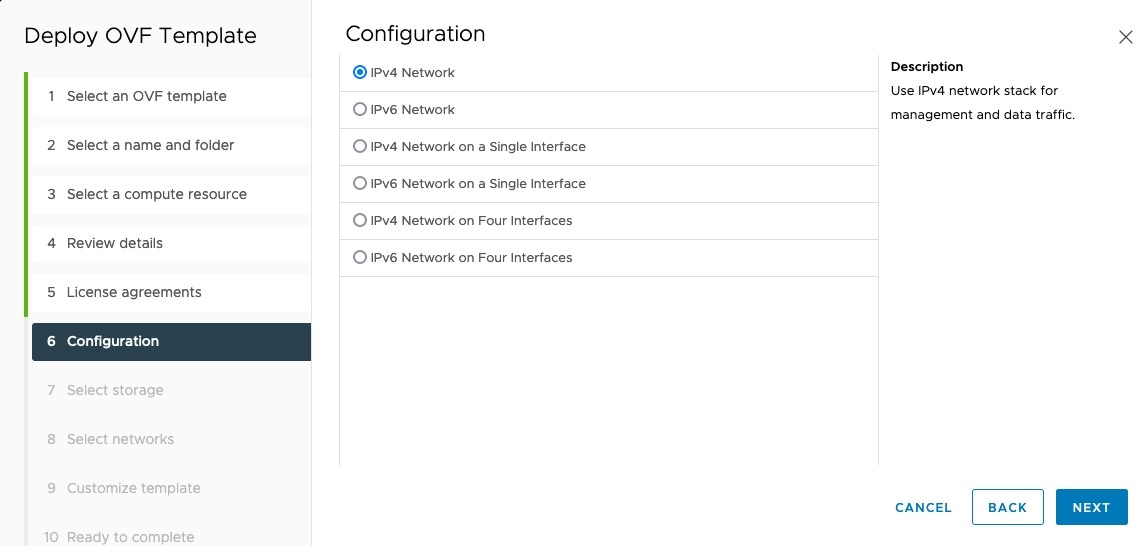

ClusterIPStack |

The IP stack protocol: IPv4 or IPv6.

|

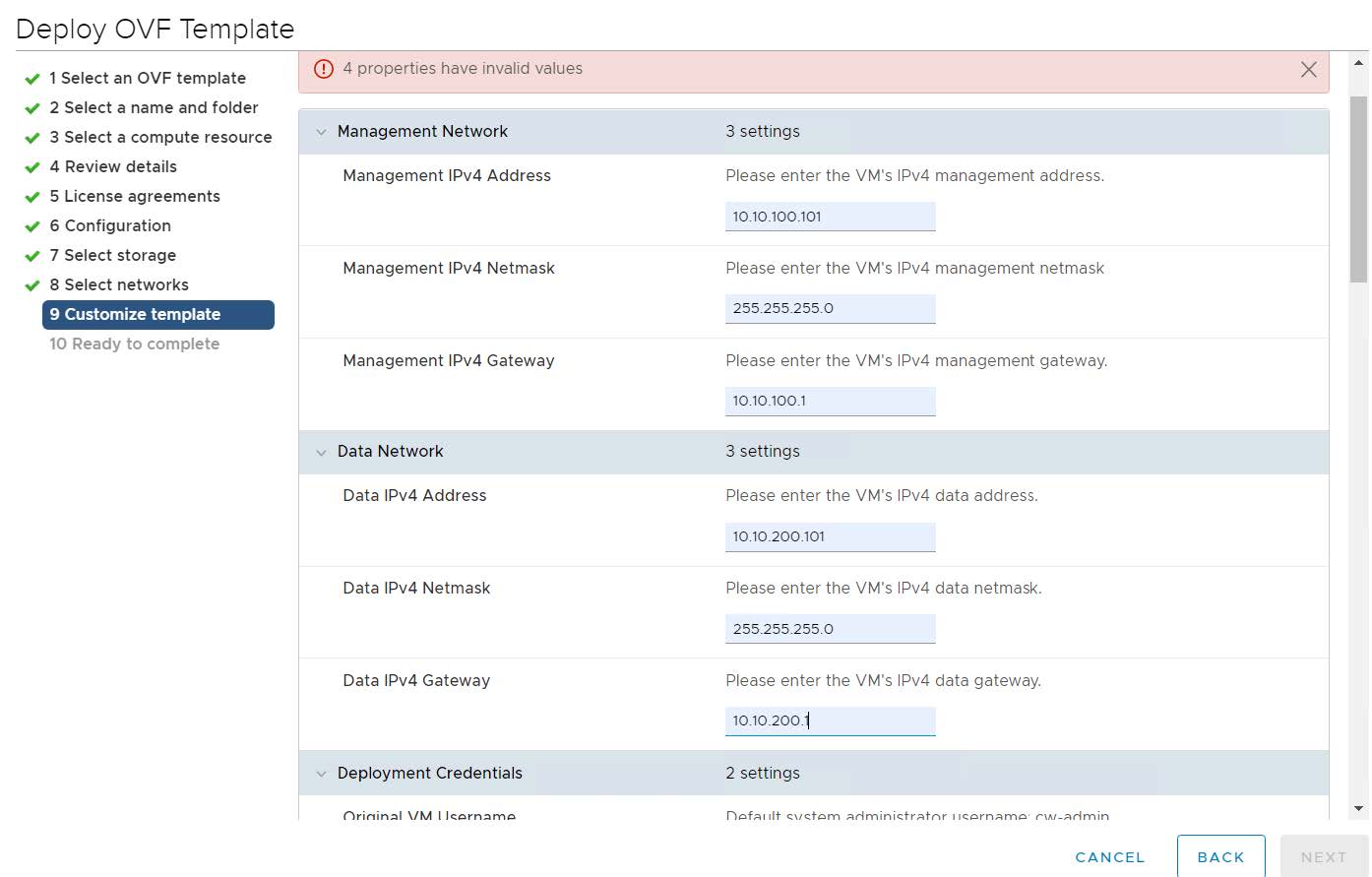

ManagementIPAddress |

The Management IP address of the VM (IPv4 or IPv6).

|

ManagementIPNetmask |

The Management IP subnet mask (IPv4 or IPv6). Enter IPv4 masks in dotted decimal format (for example, 255.255.255.0).

|

ManagementIPGateway |

The Gateway IP on the Management Network (IPv4 or IPv6). The address must be reachable; otherwise, the installation fails.

|
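
The installer requires the Management gateway to be reachable. Reachability depends on your network, but one offline sanity check you can run before deploying is sketched below in Python. It assumes, as is typical for a directly connected default gateway, that ManagementIPGateway should fall inside the subnet formed by ManagementIPAddress and ManagementIPNetmask. The function name `gateway_in_subnet` and the example addresses are hypothetical and not part of the Crosswork installer. The same check applies to the Data network parameters.

```python
import ipaddress


def gateway_in_subnet(ip: str, netmask: str, gateway: str) -> bool:
    """Hypothetical pre-flight check: is the gateway inside the VM's subnet?

    Assumes the gateway must be on the same subnet as the VM address,
    which is typical for a directly connected default gateway.
    """
    # ip_interface accepts a dotted decimal mask for IPv4 ("255.255.255.0")
    # or a prefix length for either family ("24", "64").
    iface = ipaddress.ip_interface(f"{ip}/{netmask}")
    gw = ipaddress.ip_address(gateway)
    # An IPv4 gateway can never be valid for an IPv6 interface (or vice versa).
    if gw.version != iface.version:
        return False
    return gw in iface.network


if __name__ == "__main__":
    # Example values are illustrative only.
    print(gateway_in_subnet("192.0.2.10", "255.255.255.0", "192.0.2.1"))    # True
    print(gateway_in_subnet("192.0.2.10", "255.255.255.0", "198.51.100.1"))  # False
    print(gateway_in_subnet("2001:db8::10", "64", "2001:db8::1"))          # True
```

A passing result only shows the addresses are consistent; the gateway must still actually answer on the network for the installation to succeed.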

ManagementVIP |

The Management Virtual IP for the cluster.

|

ManagementVIPName |

Name of the Management Virtual IP for the cluster. This optional parameter lets you reach the Crosswork cluster Management VIP by DNS name. If you use it, the corresponding DNS record must exist on the DNS server.

|

DataIPAddress |

The Data IP address of the VM (IPv4 or IPv6).

|

DataIPNetmask |

The Data IP subnet mask (IPv4 or IPv6). Enter IPv4 masks in dotted decimal format (for example, 255.255.255.0).

|

DataIPGateway |

The Gateway IP on the Data Network (IPv4 or IPv6). The address must be reachable; otherwise, the installation fails.

|

DataVIP |

The Data Virtual IP for the cluster.

|

DataVIPName |

Name of the Data Virtual IP for the cluster. This optional parameter lets you reach the Crosswork cluster Data VIP by DNS name. If you use it, the corresponding DNS record must exist on the DNS server.

|
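
Because ManagementVIPName and DataVIPName only work if matching DNS records exist, it can help to confirm the records before deploying. The Python sketch below uses the standard `socket.getaddrinfo` call to check that a name resolves to the expected VIP. The helper name `name_resolves_to` is hypothetical, and the result reflects the resolver of the machine running the script, which may differ from the DNS server configured for the cluster.

```python
import ipaddress
import socket


def name_resolves_to(name: str, expected_ip: str) -> bool:
    """Hypothetical pre-flight check: does `name` resolve to `expected_ip`?"""
    expected = ipaddress.ip_address(expected_ip)
    try:
        # Family 0 (AF_UNSPEC) asks for both IPv4 and IPv6 results.
        infos = socket.getaddrinfo(name, None, 0, socket.SOCK_STREAM)
    except socket.gaierror:
        return False  # the name does not resolve at all
    for _family, _type, _proto, _canon, sockaddr in infos:
        # sockaddr[0] is the address string; strip any IPv6 zone suffix ("%eth0").
        addr = ipaddress.ip_address(sockaddr[0].split("%")[0])
        if addr == expected:
            return True
    return False


if __name__ == "__main__":
    # Replace with your ManagementVIPName/ManagementVIP or DataVIPName/DataVIP values.
    print(name_resolves_to("localhost", "127.0.0.1"))
```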

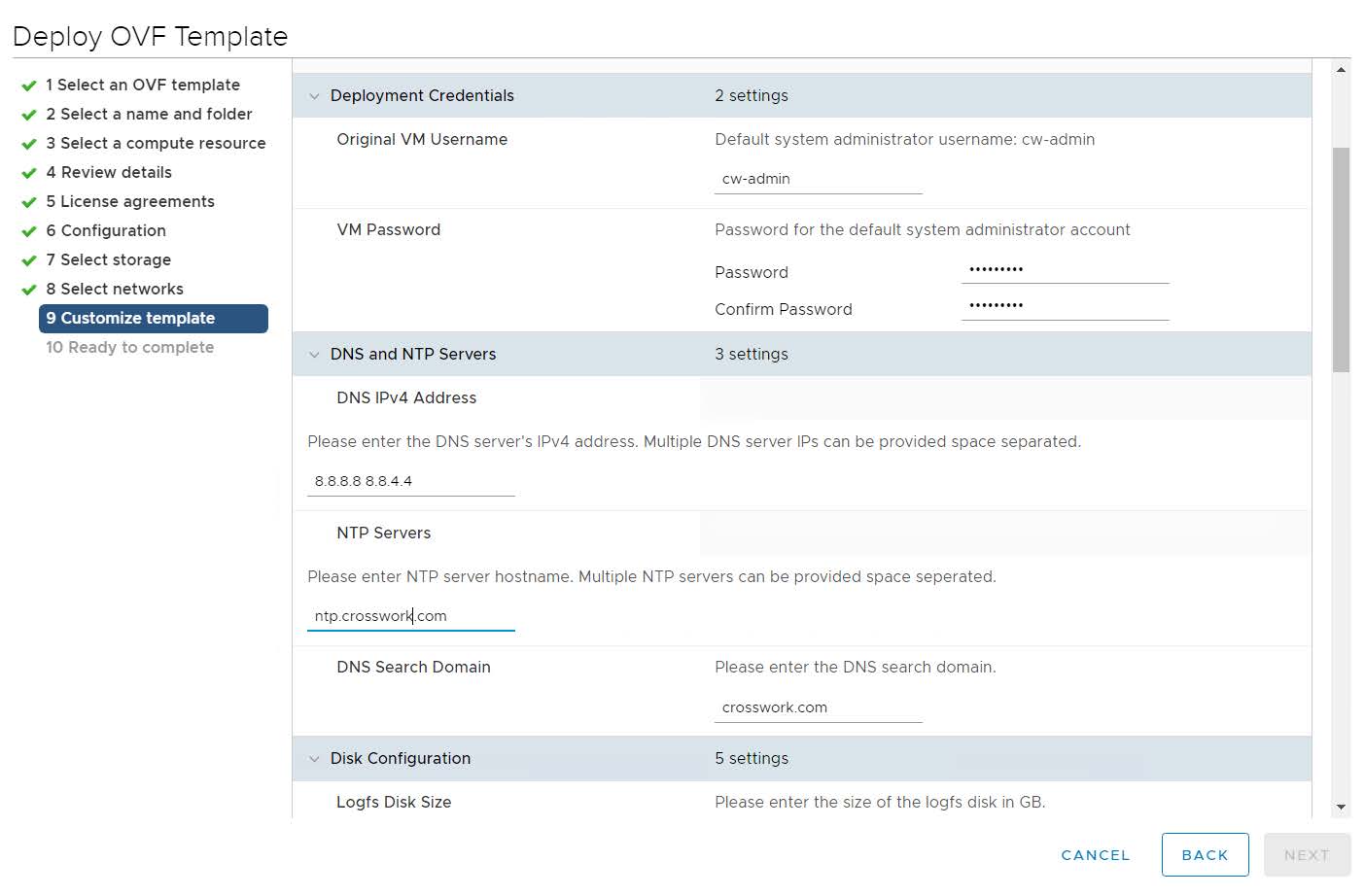

DNS |

The IP address of the DNS server (IPv4 or IPv6). The address must be reachable; otherwise, the installation fails.

|
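
Every single-address parameter above must belong to the IP family selected by ClusterIPStack. The Python sketch below checks a hypothetical dictionary of parameter values against that setting using the standard `ipaddress` module. The dictionary layout and the function name `stack_mismatches` are assumptions for illustration, not the format the installer reads. Netmask, DNS-name, and NTP parameters are left out because they are not necessarily plain IP addresses.

```python
import ipaddress

# Parameters from this table that hold a single IP address.
ADDRESS_PARAMS = (
    "ManagementIPAddress", "ManagementIPGateway", "ManagementVIP",
    "DataIPAddress", "DataIPGateway", "DataVIP", "DNS",
)


def stack_mismatches(params: dict) -> list:
    """Return the names of address parameters that do not match ClusterIPStack."""
    # Raises KeyError if ClusterIPStack is neither IPv4 nor IPv6.
    expected = {"IPV4": 4, "IPV6": 6}[params["ClusterIPStack"].upper()]
    bad = []
    for key in ADDRESS_PARAMS:
        value = params.get(key)
        if value is None:
            continue  # not set in this hypothetical config
        if ipaddress.ip_address(value).version != expected:
            bad.append(key)
    return bad


if __name__ == "__main__":
    cfg = {
        "ClusterIPStack": "IPv4",
        "ManagementIPAddress": "192.0.2.10",
        "DataIPAddress": "2001:db8::10",  # wrong family on purpose
        "DNS": "192.0.2.53",
    }
    print(stack_mismatches(cfg))  # ['DataIPAddress']
```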

NTP |

NTP server address or name. The address must be reachable; otherwise, the installation fails.

|

DomainName |

The domain name used for the cluster.

|

CWusername |

Username to log in to Cisco Crosswork.

|

CWPassword |

Password to log in to Cisco Crosswork.

Use a strong VM password: at least 8 characters long, including uppercase and lowercase letters, numbers, and at least one special character.

Avoid passwords similar to dictionary words (for example, "Pa55w0rd!") or easily guessed words (for example, "C!sco123" or "Cwork321!"). Although such passwords satisfy the criteria above, they are weak and are rejected, which causes the VM setup to fail.

|
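
The character-class rules for CWPassword are easy to pre-check locally. The Python sketch below is a hypothetical helper, not something shipped with Crosswork; it tests only those rules and treats any character in `string.punctuation` as a special character, which is an assumption. As the "Pa55w0rd!" example shows, a password can pass these rules and still be rejected by the installer's weak-password check, so use this only to catch obvious mistakes.

```python
import string


def meets_basic_password_rules(password: str) -> bool:
    """Check only the stated character-class rules (hypothetical helper).

    Passing this check does NOT mean the installer will accept the password:
    dictionary-like or easily guessed passwords are still rejected.
    """
    return (
        len(password) >= 8
        and any(c.isupper() for c in password)
        and any(c.islower() for c in password)
        and any(c.isdigit() for c in password)
        # Assumption: "special character" means ASCII punctuation.
        and any(c in string.punctuation for c in password)
    )


if __name__ == "__main__":
    print(meets_basic_password_rules("Pa55w0rd!"))  # True, yet still rejected as too weak
    print(meets_basic_password_rules("password"))   # False
```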

VMSize |

VM size for the cluster. Set the value to "Large".

|

VMName |

Name of the VM.

A unique VM name is required for each node in the cluster (Hybrid or Worker).

|

NodeType |

Indicates the type of VM. Choose either "Hybrid" or "Worker".

|

Note

|

The Crosswork cluster requires at least three VMs operating in a hybrid configuration.

|

|
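
The VMName and NodeType requirements, together with the three-Hybrid minimum in the note above, can be checked across all planned nodes at once. The Python sketch below validates a hypothetical list of per-node dictionaries: names must be unique, NodeType must be "Hybrid" or "Worker", and at least three nodes must be Hybrid. The data layout and the function name `node_plan_errors` are assumptions for illustration.

```python
from collections import Counter


def node_plan_errors(nodes: list) -> list:
    """Return a list of problems found in a hypothetical node plan."""
    errors = []
    # Each VMName must be unique across the cluster.
    names = Counter(n["VMName"] for n in nodes)
    for name, count in names.items():
        if count > 1:
            errors.append(f"VMName {name!r} is used {count} times")
    # NodeType accepts only the two documented values.
    for n in nodes:
        if n["NodeType"] not in ("Hybrid", "Worker"):
            errors.append(f"{n['VMName']}: invalid NodeType {n['NodeType']!r}")
    # The cluster needs at least three Hybrid VMs.
    hybrids = sum(1 for n in nodes if n["NodeType"] == "Hybrid")
    if hybrids < 3:
        errors.append(f"only {hybrids} Hybrid node(s); at least 3 are required")
    return errors


if __name__ == "__main__":
    plan = [
        {"VMName": "cw-vm1", "NodeType": "Hybrid"},
        {"VMName": "cw-vm2", "NodeType": "Hybrid"},
        {"VMName": "cw-vm2", "NodeType": "Worker"},
    ]
    for err in node_plan_errors(plan):
        print(err)
    # VMName 'cw-vm2' is used 2 times
    # only 2 Hybrid node(s); at least 3 are required
```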

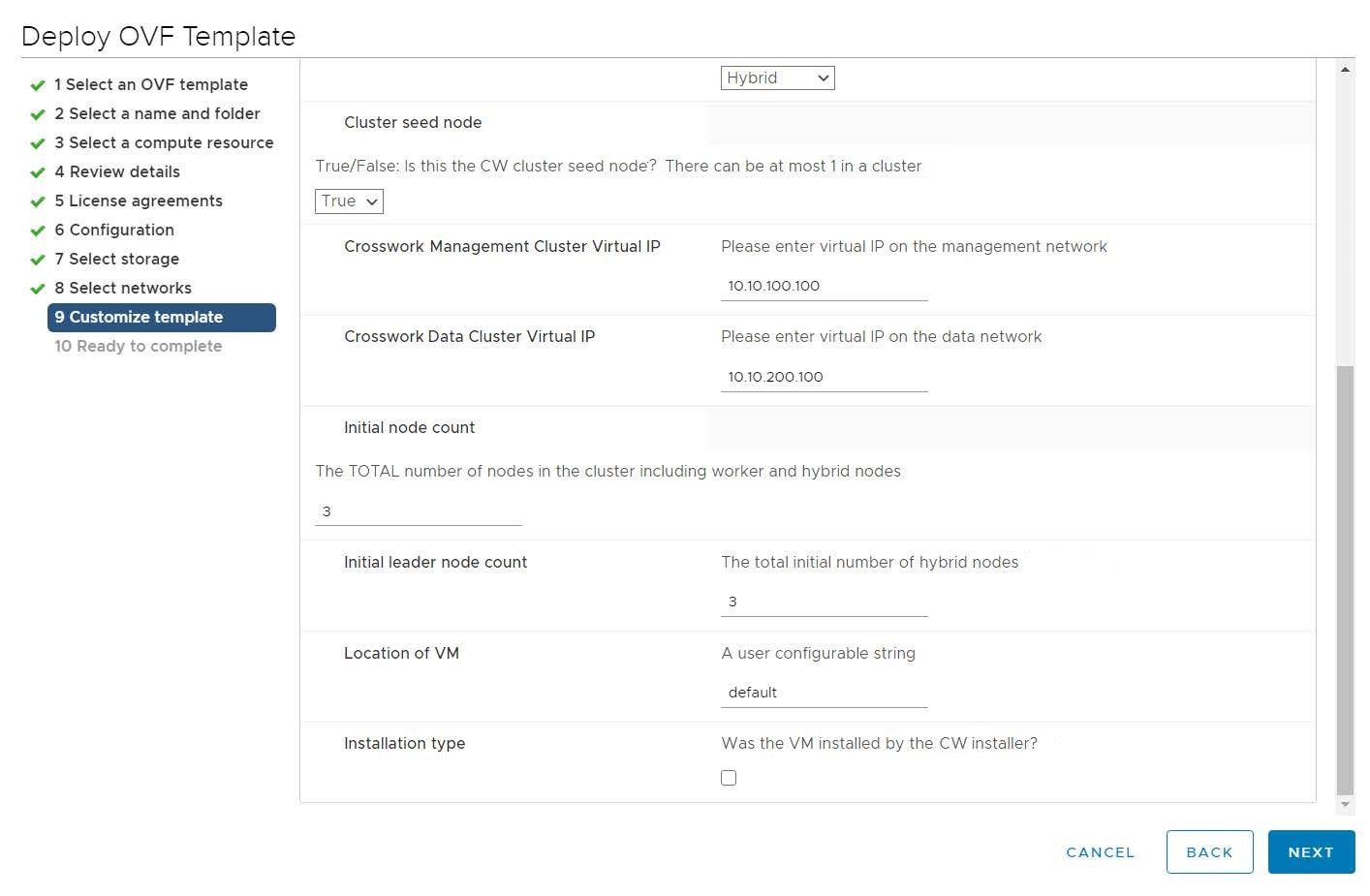

IsSeed |

Choose "True" if this is the first VM being built in a new cluster.

Choose "False" for all other VMs, or when rebuilding a failed VM.

This parameter is optional when installing with the cluster installer tool.

|

InitNodeCount |

Total number of nodes in the cluster, including Hybrid and Worker nodes. The default value is 3. Set this to match the number of VMs (nodes) you are going to deploy. For more information on VM count, see Table 1.

This parameter is optional when installing with the cluster installer tool.

|

InitLeaderCount |

Total number of Hybrid nodes in the cluster. The default value is 3.

This parameter is optional when installing with the cluster installer tool.

|
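
Since InitNodeCount and InitLeaderCount must agree with the VMs you deploy, one option is to derive them from a node plan rather than entering them by hand. The Python sketch below assumes hypothetical per-node dictionaries with VMName, NodeType, and IsSeed keys. It also rejects a new-cluster plan that does not have exactly one seed VM; that rule is an inference from the IsSeed description, not a documented installer check.

```python
def derive_init_counts(nodes: list) -> dict:
    """Derive InitNodeCount/InitLeaderCount from a hypothetical node plan."""
    # Accept IsSeed as either the string "True" or a boolean True.
    seeds = [n["VMName"] for n in nodes if str(n.get("IsSeed")).lower() == "true"]
    if len(seeds) != 1:
        # Assumption: a new cluster is built from exactly one seed VM.
        raise ValueError(f"expected exactly one seed VM, found {seeds}")
    return {
        "InitNodeCount": len(nodes),  # Hybrid + Worker nodes
        "InitLeaderCount": sum(1 for n in nodes if n["NodeType"] == "Hybrid"),
    }


if __name__ == "__main__":
    plan = [
        {"VMName": "cw-vm1", "NodeType": "Hybrid", "IsSeed": "True"},
        {"VMName": "cw-vm2", "NodeType": "Hybrid", "IsSeed": "False"},
        {"VMName": "cw-vm3", "NodeType": "Hybrid", "IsSeed": "False"},
        {"VMName": "cw-vm4", "NodeType": "Worker", "IsSeed": "False"},
    ]
    print(derive_init_counts(plan))  # {'InitNodeCount': 4, 'InitLeaderCount': 3}
```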

BackupMinPercent |

Minimum percentage of the data disk space to allocate to the backup partition. The default value is 50 (valid range: 1 to 80).

Use the default value unless advised otherwise.

|

Note

|

The final backup partition size is calculated dynamically; this parameter sets only the minimum.

|

|
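
As a worked example of what BackupMinPercent means in practice: assuming the data disk in question is the one sized by ManagerDataFsSize or WorkerDataFsSize, the defaults of 450 GB and 50 percent give a backup partition of at least 450 × 50 / 100 = 225 GB. The Python sketch below is a hypothetical helper that performs the same arithmetic and enforces the documented 1 to 80 range. The real partition may be larger, because the final size is calculated dynamically.

```python
def min_backup_partition_gb(data_disk_gb: float, backup_min_percent: int = 50) -> float:
    """Lower bound on the backup partition size (hypothetical helper)."""
    # Documented valid range for BackupMinPercent.
    if not 1 <= backup_min_percent <= 80:
        raise ValueError("BackupMinPercent must be between 1 and 80")
    return data_disk_gb * backup_min_percent / 100


if __name__ == "__main__":
    print(min_backup_partition_gb(450))       # 225.0
    print(min_backup_partition_gb(1000, 30))  # 300.0
```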

ManagerDataFsSize |

Data disk size for Hybrid nodes, in gigabytes (GB). This optional parameter defaults to 450 if not explicitly specified (valid range: 450 to 8000).

Use the default value unless advised otherwise.

|

WorkerDataFsSize |

Data disk size for Worker nodes, in gigabytes (GB). This optional parameter defaults to 450 if not explicitly specified (valid range: 450 to 8000).

Use the default value unless advised otherwise.

|
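
The default-and-range behavior shared by ManagerDataFsSize and WorkerDataFsSize can be expressed as one small function. The Python sketch below is hypothetical: it mirrors only the documented default (450 GB) and valid range (450 to 8000 GB), and does not check whether your datastore actually has that much free space.

```python
DEFAULT_DATA_FS_GB = 450
MIN_DATA_FS_GB, MAX_DATA_FS_GB = 450, 8000


def resolve_data_fs_size(value=None) -> int:
    """Apply the documented default and range to ManagerDataFsSize/WorkerDataFsSize."""
    if value is None:
        return DEFAULT_DATA_FS_GB  # parameter not explicitly specified
    size = int(value)
    if not MIN_DATA_FS_GB <= size <= MAX_DATA_FS_GB:
        raise ValueError(
            f"data disk size {size} GB is outside {MIN_DATA_FS_GB}-{MAX_DATA_FS_GB} GB"
        )
    return size


if __name__ == "__main__":
    print(resolve_data_fs_size())      # 450
    print(resolve_data_fs_size(1000))  # 1000
```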

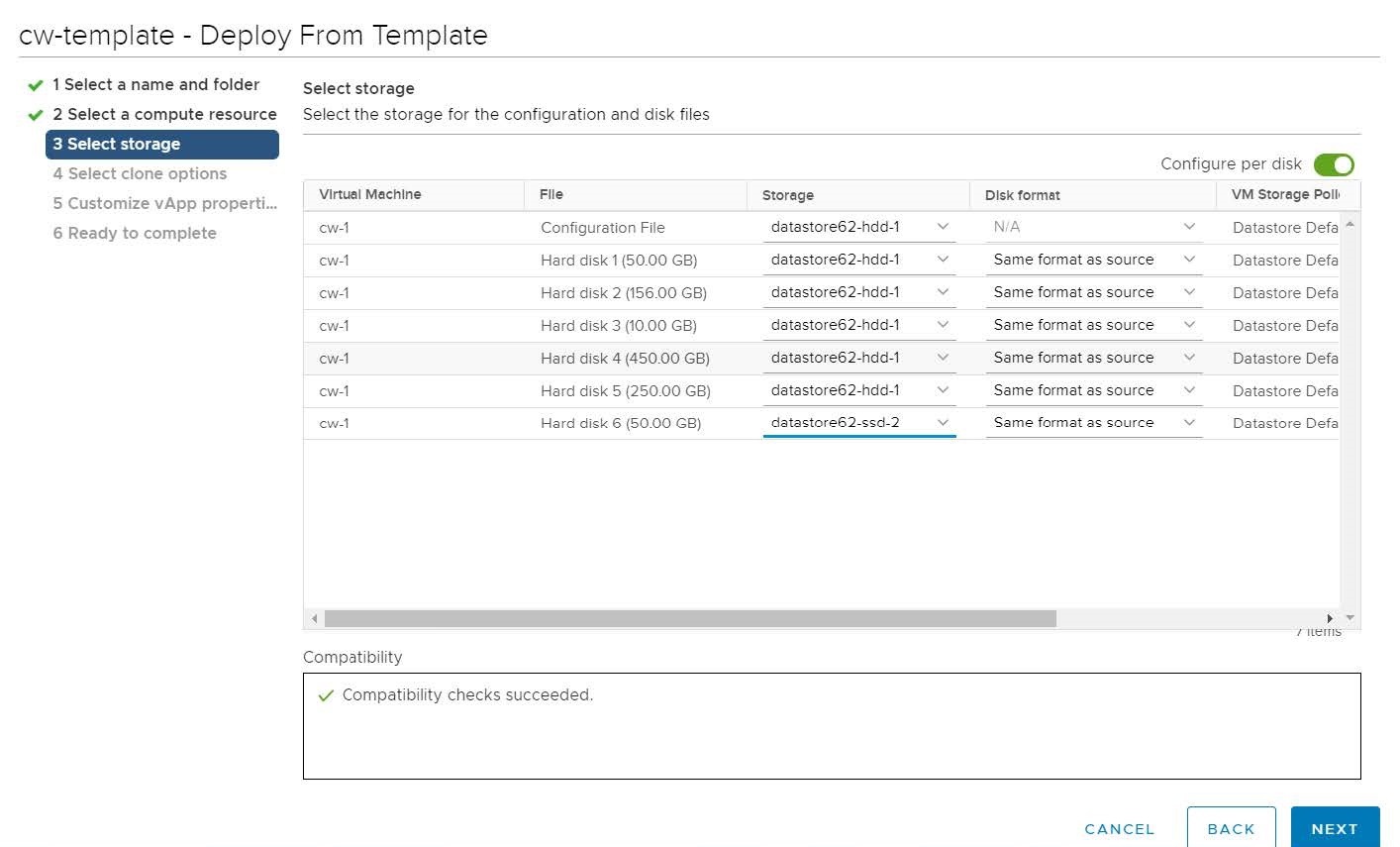

ThinProvisioned |

Set to "false" for production deployments.

|

EnableHardReservations |

Determines whether VM CPU and memory profile reservations are enforced (see Installation Prerequisites for VMware vCenter for more information). This optional parameter defaults to true if not explicitly specified.

If set to true, the VM's resources are reserved exclusively for it. In this state, the installation fails if there are insufficient CPU cores, memory, or CPU cycles.

If set to false (use only for lab installations), the VM's resources are provided on a best-effort basis. In this state, insufficient CPU cores can impact performance or cause the installation to fail.

|

RamDiskSize |

Size of the RAM disk.

Use this parameter only for lab installations (the value must be at least 2). When RamDiskSize is set to a non-zero value, the HSDatastore value is not used.

|

OP_Status |

Uncomment this optional parameter to import inventory after a manual deployment of the Crosswork cluster.

The parameter indicates the state of this VM. To indicate a running status, the value must be 2 (#OP_Status = 2). For more information, see the Import Cluster Inventory topic in the Cisco Crosswork Network Controller 6.0 Administration Guide.

|

SchemaVersion |

The configuration manifest schema version. This indicates the version of the installer to use with this template.

The schema version should match the version packaged with the sample template in the cluster installer tool on cisco.com. Always build a new template from the default template provided with the release you are deploying, because template requirements may change from one release to the next.

|

LogFsSize |

Log partition size, in gigabytes (GB). The minimum value is 10 GB and the maximum value is 1000 GB. We recommend using the default value.

|

Timezone |

Enter the timezone. Input is a standard IANA time zone (for example, "America/Chicago").

If left blank, the default value (UTC) is selected.

This is an optional parameter.

|

Note

|

The timestamp in Kafka log messages represents the NSO server time. If you change the Timezone parameter in Crosswork without updating the NSO server time, the Crosswork server time and the NSO event time will not match.

|

|
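
A Timezone value can be checked against the IANA time zone database before deployment. The Python sketch below uses the standard `zoneinfo` module (Python 3.9 or later) and applies the documented UTC default for a blank value; the helper name `resolve_timezone` is hypothetical. Validation depends on the time zone data available on the machine running the script (the system database or the `tzdata` package), which may differ from what Crosswork uses.

```python
import zoneinfo


def resolve_timezone(value: str = "") -> str:
    """Return the IANA zone name to use, defaulting to UTC when blank (hypothetical helper)."""
    if not value.strip():
        return "UTC"  # documented default when the parameter is left blank
    try:
        # Raises if the key is malformed or unknown to the local time zone database.
        zoneinfo.ZoneInfo(value)
    except (ValueError, zoneinfo.ZoneInfoNotFoundError) as exc:
        raise ValueError(f"{value!r} is not a known IANA time zone") from exc
    return value


if __name__ == "__main__":
    print(resolve_timezone(""))  # UTC
```

With time zone data installed, `resolve_timezone("America/Chicago")` returns the name unchanged.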

EnableSkipAutoInstallFeature |

When enabled, any pods marked as skip auto install are not brought up until a dependent application or pod explicitly requests them.

If left blank, the default value ("False") is selected.

|

EnforcePodReservations |

Enforces minimum resource reservations for the pod.

If left blank, the default value ("True") is selected.

|

K8sServiceNetwork |

The network address for the Kubernetes service network. By default, the CIDR range is fixed to '/16'.

|

K8sPodNetwork |

The network address for the Kubernetes pod network. By default, the CIDR range is fixed to '/16'.

|
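
Because both Kubernetes networks use a fixed /16 range, it is worth confirming before deployment that the two ranges do not overlap each other or the Management and Data subnets. Non-overlap is a general IP routing consideration rather than a rule stated in this table. The Python sketch below uses the standard `ipaddress` module; the function name `k8s_overlaps` and the example addresses are hypothetical, and it assumes IPv4 network addresses.

```python
import ipaddress


def k8s_overlaps(service_net: str, pod_net: str, other_cidrs: list) -> list:
    """Return (k8s_network, other) pairs that overlap; an empty list means no clash."""
    # Both Kubernetes networks use a fixed /16 prefix. ip_network() is strict by
    # default, so an address with host bits set (e.g. 10.96.1.0/16) raises ValueError.
    k8s = {
        "K8sServiceNetwork": ipaddress.ip_network(f"{service_net}/16"),
        "K8sPodNetwork": ipaddress.ip_network(f"{pod_net}/16"),
    }
    clashes = []
    if k8s["K8sServiceNetwork"].overlaps(k8s["K8sPodNetwork"]):
        clashes.append(("K8sServiceNetwork", "K8sPodNetwork"))
    # Compare each Kubernetes network with the Management/Data subnets.
    for name, net in k8s.items():
        for cidr in other_cidrs:
            if net.overlaps(ipaddress.ip_network(cidr, strict=False)):
                clashes.append((name, cidr))
    return clashes


if __name__ == "__main__":
    print(k8s_overlaps("10.96.0.0", "10.244.0.0", ["192.0.2.0/24"]))  # []
    print(k8s_overlaps("10.96.0.0", "10.244.0.0", ["10.96.5.0/24"]))
    # [('K8sServiceNetwork', '10.96.5.0/24')]
```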
