Configure Auto Upgrade of APIC from 6.0(2H)


Introduction

This document describes how the Auto Upgrade feature works for the Cisco Application Policy Infrastructure Controller (APIC).

When Is It Needed?

In the existing process, whenever a new APIC is received via Return Material Authorization (RMA) or newly procured, it has to be running the same APIC version as the one in the ACI fabric to join the cluster. This requires upgrading the new APIC to match the version of the existing cluster before adding it to the fabric, which is time-consuming and requires manual effort.

There are two use cases when you require this feature.

- Replacement of faulty APIC (RMA APIC)

- APIC Cluster Expansion

Prerequisites

To simplify the procedure and make it plug-and-play, the APIC Auto Upgrade feature was introduced starting with ACI version 6.0(2) and subsequent releases.

When a new APIC is introduced into the fabric through replacement or addition, its software version must match the version running on the cluster. This typically requires an upgrade, which adds time before the APIC can join the fabric.

This is an automatic feature that does not require any configuration.
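The version-matching requirement described above can be illustrated with a short Python sketch. It is not part of the APIC software. It assumes only the two version notations that appear in this document, the parenthesised form such as 6.0(5h) and the dotted form such as 5.2.5c, and the function names are hypothetical.

```python
import re
from typing import NamedTuple


class ApicVersion(NamedTuple):
    major: int
    minor: int
    maintenance: int
    patch: str  # trailing letter(s), for example "h" in 6.0(5h)


# Accepts "6.0(5h)", "apic-6.0(5h)" and the dotted form "5.2.5c".
_VERSION_RE = re.compile(r"(\d+)\.(\d+)[.(](\d+)([a-zA-Z]*)\)?")

# Assumption taken from the text: the feature exists from 6.0(2) onward.
AUTO_UPGRADE_MIN = (6, 0, 2)


def parse_version(text: str) -> ApicVersion:
    """Turn a version string in either notation into a comparable tuple."""
    match = _VERSION_RE.search(text)
    if match is None:
        raise ValueError(f"unrecognised APIC version string: {text!r}")
    major, minor, maintenance, patch = match.groups()
    return ApicVersion(int(major), int(minor), int(maintenance), patch.lower())


def cluster_supports_auto_upgrade(cluster_version: str) -> bool:
    """True when the cluster runs 6.0(2) or later."""
    v = parse_version(cluster_version)
    return (v.major, v.minor, v.maintenance) >= AUTO_UPGRADE_MIN


def needs_upgrade(new_apic_version: str, cluster_version: str) -> bool:
    """True when the new APIC's version differs from the cluster's version."""
    return parse_version(new_apic_version) != parse_version(cluster_version)
```

With the versions used in this document, `needs_upgrade("5.2.5c", "6.0(5h)")` is true and `cluster_supports_auto_upgrade("6.0(5h)")` is true, which is the situation the feature is meant to handle.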

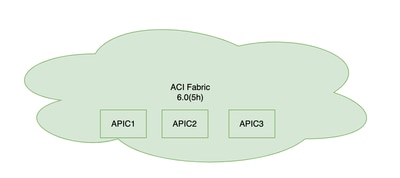

Topology

In this topology, three APICs are running ACI version 6.0(5h). APIC3 has become faulty and requires replacement.

Steps to perform APIC Auto Upgrade

Once you receive the RMA APIC, configure the Cisco Integrated Management Controller (CIMC) IP to match the previous CIMC address.

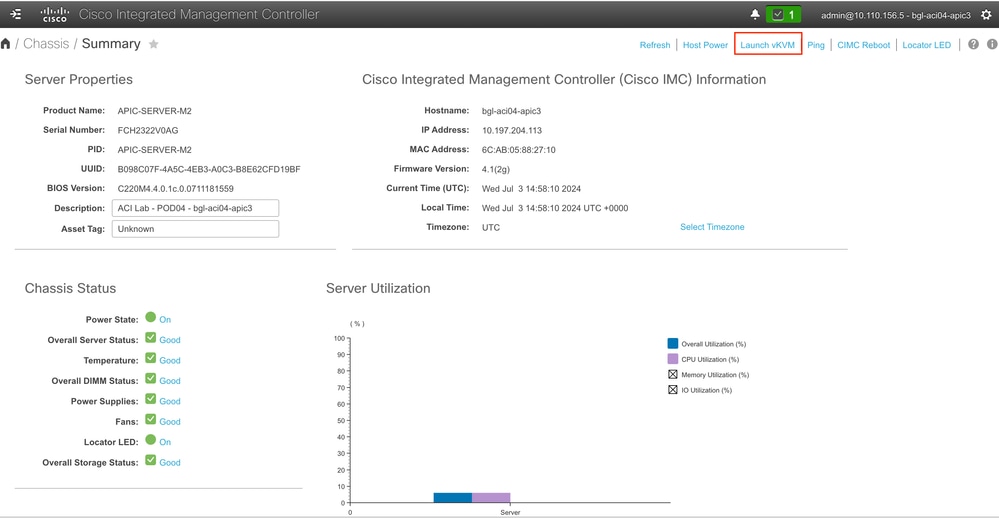

Step 1. Log in to the CIMC GUI.

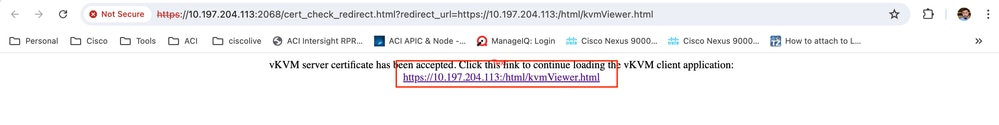

Step 2. Access the APIC through the keyboard, video, and mouse (KVM) console.

Navigate to Launch vKVM and click it. A new browser tab opens. Click the hyperlink shown there.

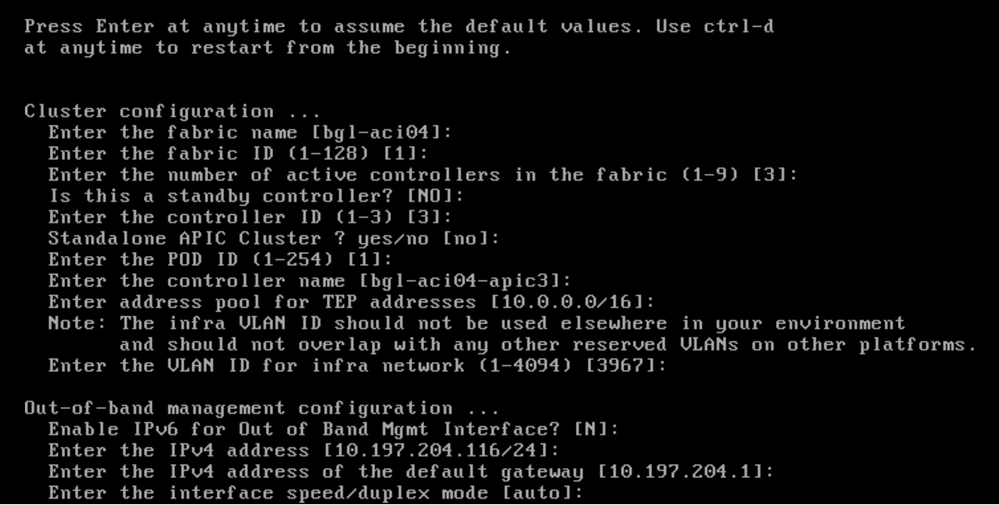

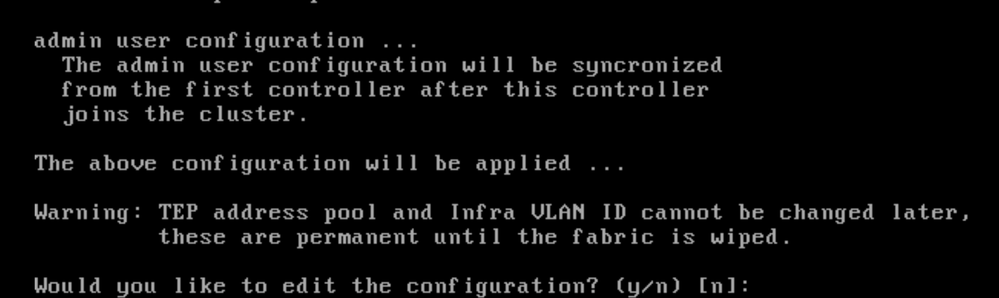

Step 3. Initialize the new APIC to check the APIC version it is running. (You can use any dummy Fabric Discovery parameters, such as Fabric name, Fabric ID, and TEP Pool.)

Step 4. Once the new APIC is initialized, check its version. (In this example, the new APIC is running 5.2(5c), displayed as 5.2.5c.)

apic3# acidiag version <== To check the APIC version

5.2.5c

Step 5. Clean reload the new APIC using the given commands.

acidiag touch clean <<== Commands to remove all configuration and reboot the devices

acidiag touch setup

acidiag reboot

Step 6. Collect sam_exported.config from a working APIC. This information is used during initialization of the new APIC.

apic1# cd /data/data_admin

apic1# pwd

/data/data_admin <=== Location of sam_exported.config file

apic1# ls -l | grep sam

-rw-r--r-- 1 root root 349 Jul 3 07:32 sam_exported.config

apic1# cat sam_exported.config

Setup for Active and Standby APIC

fabricDomain= bgl-aci04

fabricId= 1

systemName= bgl-aci04-apic1 <== APIC1 hostname , change based on APIC3

controllerID= 1

tepPool= 10.0.0.0/16

infraVlan= 3967

GIPo= 225.0.0.0/15

clusterSize= 5

standbyApic= NO

enableIPv4= Y

enableIPv6= N

firmwareVersion= 6.0(5h)

ifcIpAddr= 10.0.0.1

apicX= NO

podId= 1

standaloneApicCluster= no

oobIpAddr= 10.197.204.114/24 <== APIC1 OOB IP, change based on APIC3

Step 7. Initialize the new APIC using the data collected in Step 6.

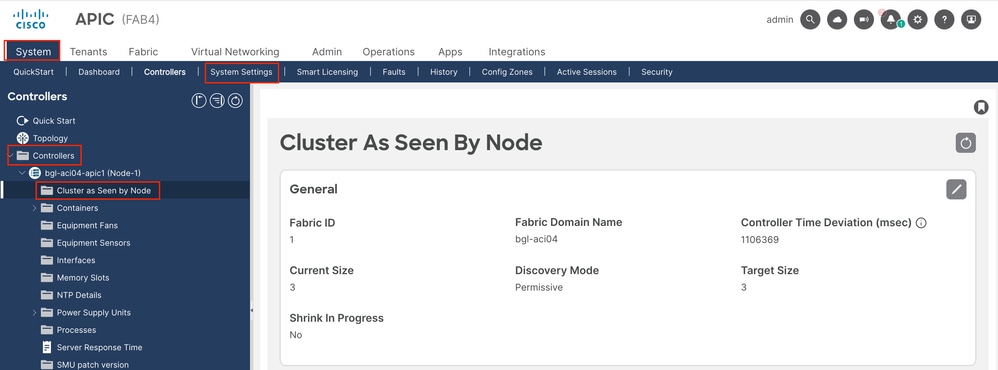

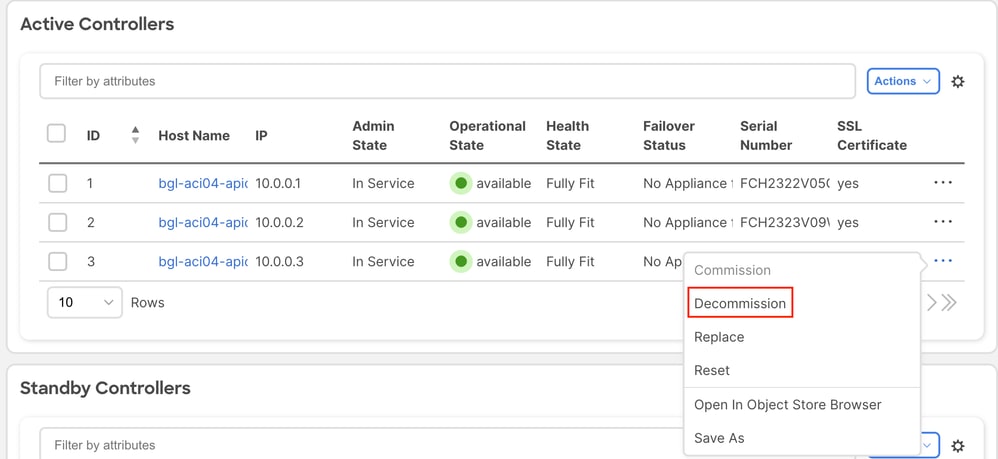
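The values collected in Step 6 follow a simple `key= value` layout, so they can be read into a dictionary and adjusted for the replacement controller. The Python sketch below is only an illustration under that assumption. The function names are hypothetical, and which keys to override is left to the caller, because this document only flags systemName and oobIpAddr as values that change for APIC3.

```python
def parse_sam_config(text: str) -> dict[str, str]:
    """Parse `key= value` lines from sam_exported.config output.

    Lines without '=' (such as the heading line) are skipped, and
    trailing `<==` annotations like the ones in this document are dropped.
    """
    params: dict[str, str] = {}
    for line in text.splitlines():
        line = line.split("<==", 1)[0]  # remove any annotation first
        if "=" not in line:
            continue
        key, value = line.split("=", 1)
        params[key.strip()] = value.strip()
    return params


def params_for_replacement(source: dict[str, str],
                           overrides: dict[str, str]) -> dict[str, str]:
    """Copy the exported values and replace the per-controller ones."""
    unknown = set(overrides) - set(source)
    if unknown:
        raise KeyError(f"keys not present in the exported config: {sorted(unknown)}")
    target = dict(source)
    target.update(overrides)
    return target
```

For example, calling `params_for_replacement(parsed, {"controllerID": "3", "systemName": "bgl-aci04-apic3", "oobIpAddr": "10.197.204.116/24"})` keeps the fabric-wide values (fabricDomain, tepPool, infraVlan, and so on) and swaps in the APIC3 values. The hostname `bgl-aci04-apic3` is an assumed name for illustration.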

Step 8. Decommission faulty APIC3 from the APIC01 GUI

Navigate to System > System Settings > Controllers > Cluster as Seen by Node

Click the three dots on the right-hand side of the device, then click Decommission.

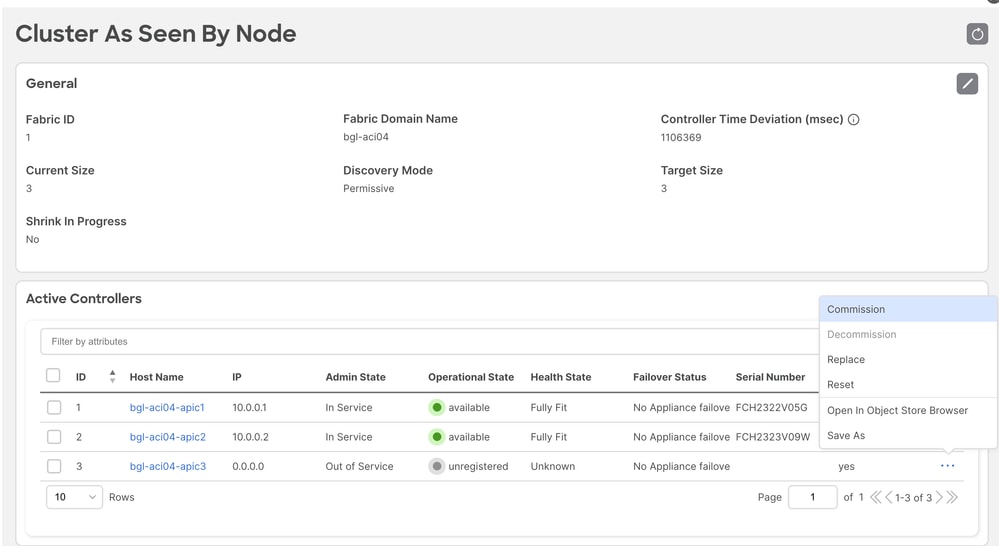

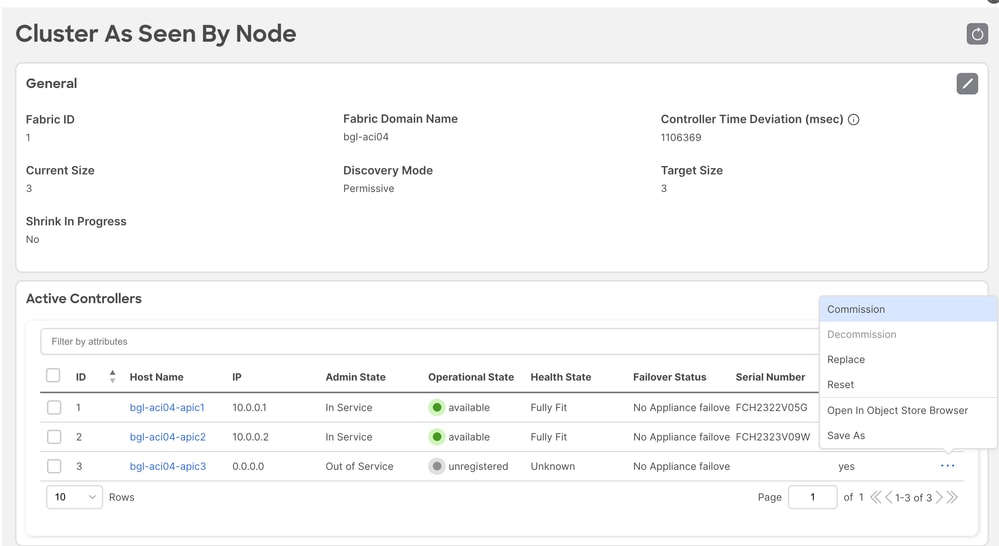

Step 9. Validate the admin state of the node. It shows as "Out of service" and the Decommission option is greyed out.

Step 10. Remove faulty APIC3 from the rack and install new APIC3 in the rack.

Step 11. Once the new APIC is installed in the rack, power on the new APIC3 and connect the cables. The cables include the fabric uplinks and management ports.

Step 12. Wait 10-15 minutes for the new APIC to power on. Validate through the CIMC KVM that the new APIC has powered on and that the login prompt is displayed. (Login works with rescue-user only.)

Step 13. Commission APIC03 from APIC01 GUI.

Navigate to System > System Settings > Controllers > Cluster as Seen by Node

Click the three dots on the right-hand side of the device, then click Commission.

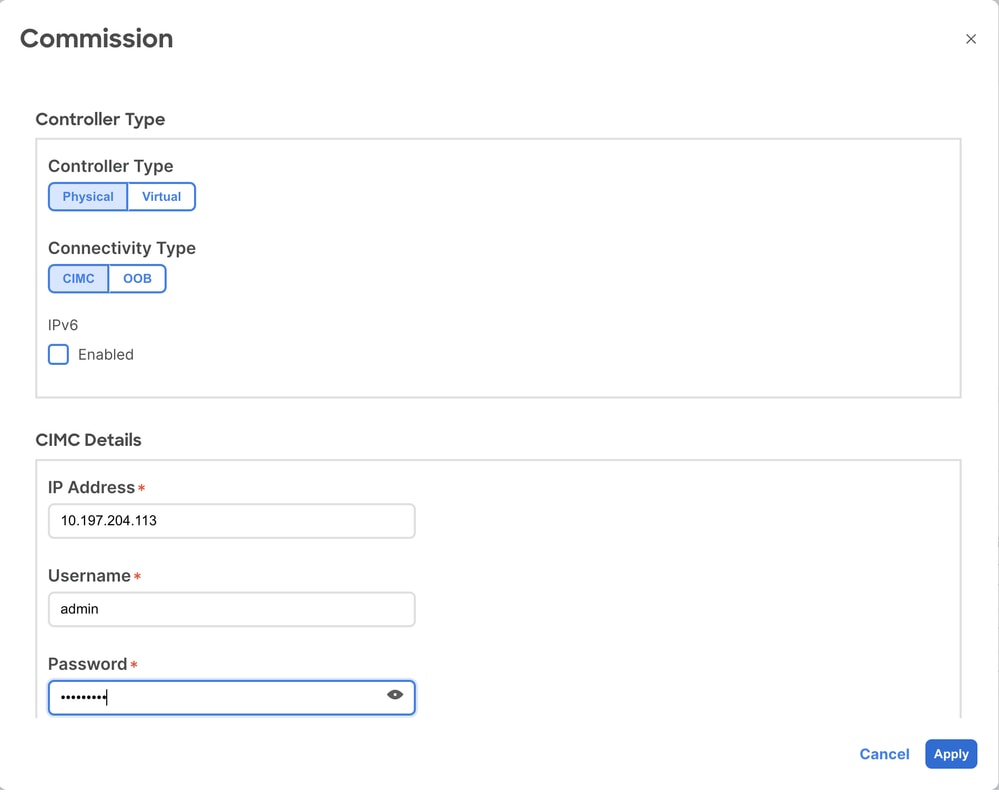

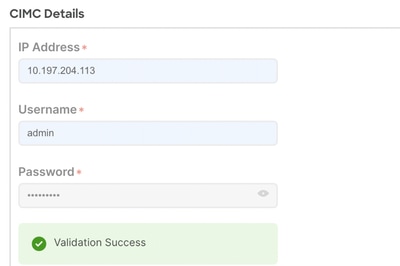

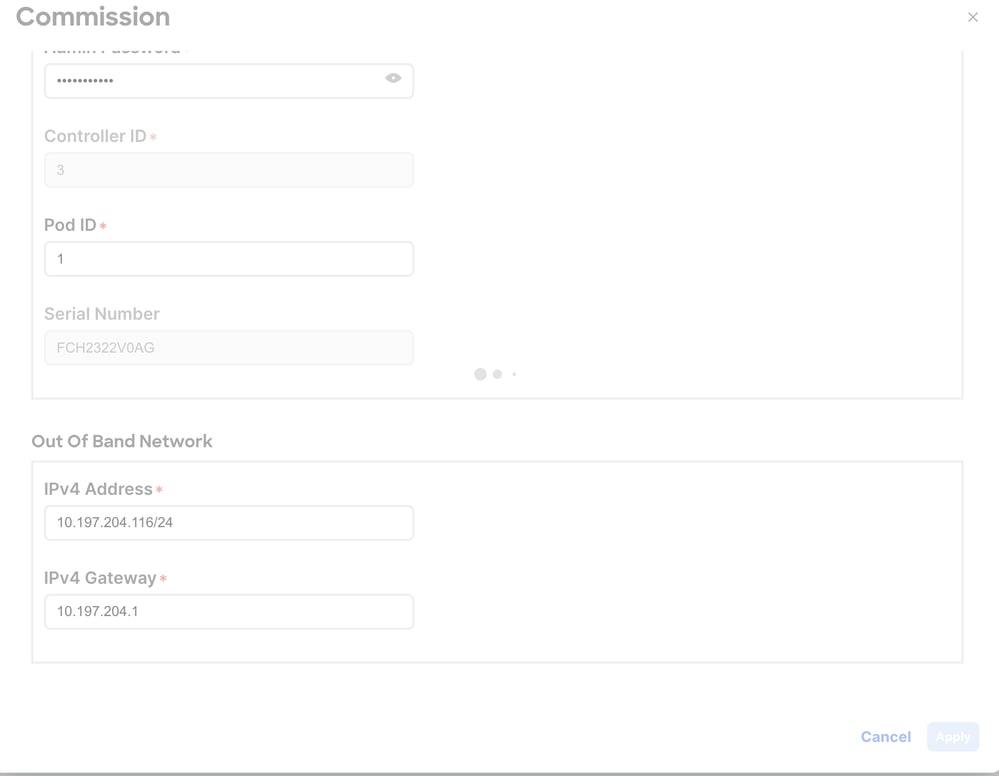

Step14: Add CIMC IP address, username , password which were used in the faulty/old APIC which we are replacing. Click on Validate. Once validate is successful.

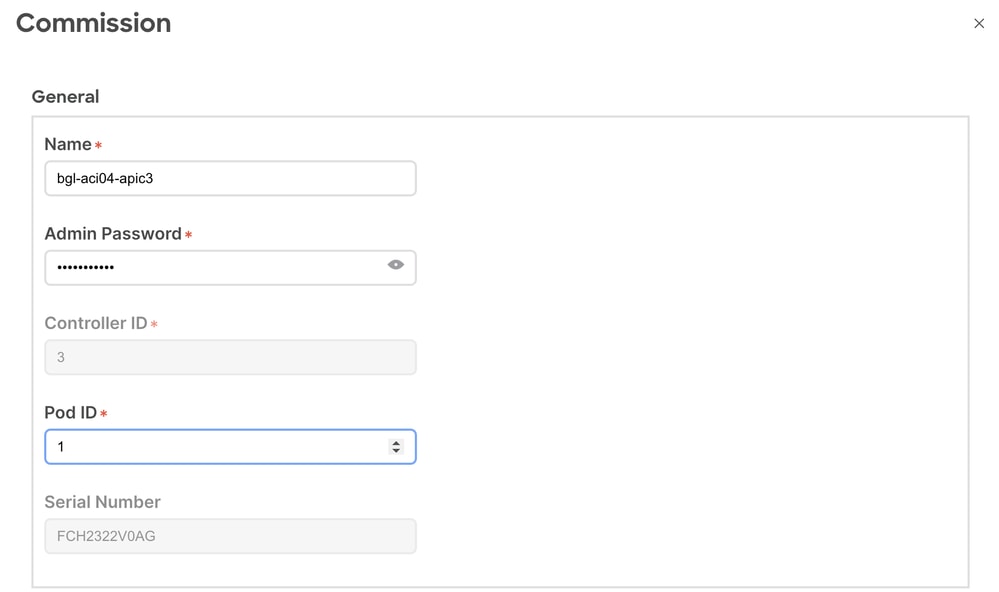

Enter APIC name, admin password (current password used in the fabric) , POD ID , Out of band management address and IPV4 gateway and click on Apply. (All these parameters belongs to old APIC in case of replacement).
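Before typing the commission values into the GUI, it can help to sanity-check them offline. The following Python sketch uses the standard `ipaddress` module to check that the addresses are well formed and that the gateway sits inside the out-of-band subnet. The class and field names are hypothetical and simply mirror the labels described in this step. They are not an APIC API.

```python
import ipaddress
from dataclasses import dataclass


@dataclass
class CommissionParams:
    # Illustrative field names mirroring the GUI labels in this step.
    cimc_ip: str
    apic_name: str
    pod_id: int
    oob_address: str  # address with prefix length, e.g. 10.197.204.116/24
    oob_gateway: str

    def problems(self) -> list[str]:
        """Return a list of human-readable issues; empty means no issue found."""
        issues: list[str] = []
        try:
            ipaddress.ip_address(self.cimc_ip)
        except ValueError:
            issues.append(f"CIMC IP is not a valid address: {self.cimc_ip}")
        try:
            oob = ipaddress.ip_interface(self.oob_address)
            gateway = ipaddress.ip_address(self.oob_gateway)
            if gateway not in oob.network:
                issues.append("OOB gateway is outside the OOB subnet")
        except ValueError as exc:
            issues.append(f"invalid OOB address or gateway: {exc}")
        if not self.apic_name.strip():
            issues.append("APIC name is empty")
        if self.pod_id < 1:
            issues.append("Pod ID must be a positive integer")
        return issues
```

As a usage example, `CommissionParams("192.0.2.10", "apic3", 1, "10.197.204.116/24", "10.197.204.1").problems()` returns an empty list. The CIMC address 192.0.2.10 is a placeholder from the documentation address range, since this document does not list the real CIMC address.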

Step 15. Wait 10-15 minutes for the new APIC to be added to the fabric. At this point it is still running APIC version 5.2(5c).

apic1# acidiag avread

Local appliance ID=1 ADDRESS=10.0.0.1 TEP ADDRESS=10.0.0.0/16 ROUTABLE IP ADDRESS=0.0.0.0 CHASSIS_ID=8ca31168-c4ab-11ee-a6c2-5d61e00b2df0

Cluster of 3 lm(t):1(2024-07-03T07:52:20.791+00:00) appliances (out of targeted 3 lm(t):3(2024-07-03T09:21:10.936+00:00)) with FABRIC_DOMAIN name=bgl-aci04 set to version=apic-6.0(5h) lm(t):3(2024-07-03T07:18:46.821+00:00); discoveryMode=PERMISSIVE lm(t):0(1970-01-01T00:00:00.004+00:00); drrMode=OFF lm(t):0(1970-01-01T00:00:00.004+00:00); kafkaMode=ON lm(t):1(2024-02-06T05:02:19.730+00:00); autoUpgradeMode=ON lm(t):2(2024-07-03T13:18:18.036+00:00); clusterInterface=infra

appliance id=1 address=10.0.0.1 lm(t):1(2024-07-03T07:33:50.078+00:00) tep address=10.0.0.0/16 lm(t):1(2024-07-03T07:33:50.078+00:00) routable address=0.0.0.0 lm(t):1(zeroTime) oob address=10.197.204.114/24 lm(t):1(2024-07-03T07:52:20.955+00:00) version=6.0(5h) lm(t):1(2024-07-03T13:21:01.604+00:00) chassisId=8ca31168-c4ab-11ee-a6c2-5d61e00b2df0 lm(t):1(2024-07-03T13:21:01.604+00:00) capabilities=0X17EEFFFFFFFFF--0X2020--0X3--0X1 lm(t):1(2024-07-03T11:03:37.219+00:00) rK=(stable,present,0X206173722D687373) lm(t):1(2024-07-03T07:52:20.965+00:00) aK=(stable,present,0X206173722D687373) lm(t):1(2024-07-03T07:52:20.965+00:00) oobrK=(stable,present,0X206173722D687373) lm(t):1(2024-07-03T07:52:20.965+00:00) oobaK=(stable,present,0X206173722D687373) lm(t):1(2024-07-03T07:52:20.965+00:00) cntrlSbst=(APPROVED, FCH2322V05G) lm(t):1(2024-07-03T13:21:01.604+00:00) (targetMbSn= lm(t):0(zeroTime), failoverStatus=0 lm(t):0(zeroTime)) podId=1 lm(t):1(2024-07-03T07:33:50.078+00:00) commissioned=YES lm(t):1(zeroTime) registered=YES lm(t):1(2024-07-03T07:33:50.078+00:00) standby=NO lm(t):1(2024-07-03T07:33:50.078+00:00) DRR=NO lm(t):0(zeroTime) apicX=NO lm(t):1(2024-07-03T07:33:50.078+00:00) virtual=NO lm(t):1(2024-07-03T07:33:50.078+00:00) oob gw address=10.197.204.1 lm(t):1(2024-07-03T07:33:50.078+00:00) oob address v6=:: lm(t):1(2024-07-03T07:33:50.078+00:00) oob gw address v6=:: lm(t):1(2024-07-03T07:33:50.078+00:00) active=YES(2024-07-03T07:33:50.078+00:00) health=(applnc:255 lm(t):1(2024-07-03T11:03:39.196+00:00) svc's)

appliance id=2 address=10.0.0.2 lm(t):3(2024-07-03T12:40:59.116+00:00) tep address=10.0.0.0/16 lm(t):2(2024-02-06T05:43:35.885+00:00) routable address=0.0.0.0 lm(t):0(zeroTime) oob address=10.197.204.115/24 lm(t):1(2024-07-03T13:21:01.948+00:00) version=6.0(5h) lm(t):2(2024-07-03T13:21:01.600+00:00) chassisId=557cdb0e-c4b2-11ee-a0a8-93aaf27bee50 lm(t):2(2024-07-03T13:21:01.600+00:00) capabilities=0X17EEFFFFFFFFF--0X2020--0X7--0X1 lm(t):2(2024-07-03T11:03:37.452+00:00) rK=(stable,present,0X206173722D687373) lm(t):1(2024-07-03T13:21:01.948+00:00) aK=(stable,present,0X206173722D687373) lm(t):1(2024-07-03T13:21:01.948+00:00) oobrK=(stable,present,0X206173722D687373) lm(t):1(2024-07-03T13:21:01.948+00:00) oobaK=(stable,present,0X206173722D687373) lm(t):1(2024-07-03T13:21:01.948+00:00) cntrlSbst=(APPROVED, FCH2323V09W) lm(t):2(2024-07-03T13:21:01.600+00:00) (targetMbSn= lm(t):0(zeroTime), failoverStatus=0 lm(t):0(zeroTime)) podId=1 lm(t):2(2024-07-03T08:19:03.478+00:00) commissioned=YES lm(t):3(2024-07-03T12:41:00.239+00:00) registered=YES lm(t):3(2024-07-03T12:41:00.239+00:00) standby=NO lm(t):1(2024-07-03T12:41:00.239+00:00) DRR=NO lm(t):1(2024-07-03T12:41:00.239+00:00) apicX=NO lm(t):1(2024-07-03T12:41:00.239+00:00) virtual=NO lm(t):0(zeroTime) oob gw address=10.197.204.1 lm(t):1(2024-07-03T08:40:04.837+00:00) oob address v6=:: lm(t):1(2024-07-03T13:21:01.948+00:00) oob gw address v6=:: lm(t):1(2024-02-06T05:46:22.175+00:00) active=YES(2024-07-03T13:21:01.694+00:00) health=(applnc:255 lm(t):2(2024-07-03T11:03:39.702+00:00) svc's)

appliance id=3 address=10.0.0.3 lm(t):101(2024-07-03T13:18:17.965+00:00) tep address=10.0.0.0/16 lm(t):3(2024-07-03T12:40:40.900+00:00) routable address=0.0.0.0 lm(t):0(zeroTime) oob address=10.197.204.116/24 lm(t):1(2024-07-03T13:21:01.949+00:00) version=5.2(5c) lm(t):3(2024-07-03T13:21:01.736+00:00) chassisId=1c697d94-3939-11ef-a452-6d7c540421fa lm(t):3(2024-07-03T13:21:01.736+00:00) capabilities=0X17EEFFFFFFFFF--0X2020--0X4--0 lm(t):3(2024-07-03T13:20:59.140+00:00) rK=(stable,present,0X206173722D687373) lm(t):1(2024-07-03T13:21:01.949+00:00) aK=(stable,present,0X206173722D687373) lm(t):1(2024-07-03T13:21:01.949+00:00) oobrK=(stable,present,0X206173722D687373) lm(t):1(2024-07-03T13:21:01.949+00:00) oobaK=(stable,present,0X206173722D687373) lm(t):1(2024-07-03T13:21:01.949+00:00) cntrlSbst=(APPROVED, FCH2322V0AG) lm(t):3(2024-07-03T13:21:01.736+00:00) (targetMbSn= lm(t):0(zeroTime), failoverStatus=0 lm(t):0(zeroTime)) podId=1 lm(t):101(2024-07-03T13:18:17.965+00:00) commissioned=YES lm(t):1(2024-07-03T13:17:21.104+00:00) registered=YES lm(t):2(2024-07-03T13:18:18.036+00:00) standby=NO lm(t):101(2024-07-03T13:18:17.965+00:00) DRR=NO lm(t):1(2024-07-03T13:17:21.104+00:00) apicX=NO lm(t):101(2024-07-03T13:18:17.965+00:00) virtual=NO lm(t):0(zeroTime) oob gw address=0.0.0.0 lm(t):1(2024-07-03T13:21:01.562+00:00) oob address v6=:: lm(t):1(2024-07-03T13:21:01.949+00:00) oob gw address v6=:: lm(t):1(2024-02-06T06:06:24.927+00:00) active=YES(2024-07-03T13:21:01.562+00:00) health=(applnc:112 lm(t):3(2024-07-03T12:41:44.076+00:00) svc's) <<== APIC03 is still on 5.2(5c) version

Step 16. The existing APICs automatically upgrade the new APIC to 6.0(5h) and add it to the cluster. Allow about another 30 minutes, then check the avread output again.
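When comparing avread output before and after the upgrade, only a few fields matter here: the cluster target version, autoUpgradeMode, and each appliance's version and health. The Python sketch below pulls those fields out of the text with regular expressions. It assumes the output layout shown in this document, and the function names are hypothetical.

```python
import re

# Patterns are based solely on the avread output shown in this document.
_CLUSTER_VERSION_RE = re.compile(r"set to version=apic-(\S+)")
_AUTO_UPGRADE_RE = re.compile(r"autoUpgradeMode=(\w+)")
_APPLIANCE_RE = re.compile(
    r"appliance id=(\d+)\b.*?\bversion=(\S+).*?health=\(applnc:(\d+)"
)


def summarize_avread(output: str) -> dict:
    """Extract cluster version, auto-upgrade mode, and per-appliance status."""
    cluster = _CLUSTER_VERSION_RE.search(output)
    mode = _AUTO_UPGRADE_RE.search(output)
    appliances = {}
    for line in output.splitlines():
        match = _APPLIANCE_RE.search(line)
        if match:
            appliances[int(match.group(1))] = {
                "version": match.group(2),
                "health": int(match.group(3)),
            }
    return {
        "cluster_version": cluster.group(1) if cluster else None,
        "auto_upgrade_mode": mode.group(1) if mode else None,
        "appliances": appliances,
    }


def appliances_not_converged(summary: dict) -> list[int]:
    """IDs whose version differs from the cluster's or whose health is not 255."""
    target = summary["cluster_version"]
    return sorted(
        appliance_id
        for appliance_id, info in summary["appliances"].items()
        if info["version"] != target or info["health"] != 255
    )
```

Applied to the first output in this document, the result would list appliance 3 (version 5.2(5c), health 112) as not yet converged. Once the upgrade completes and health reaches 255, the list is expected to be empty.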

apic1# acidiag avread

Local appliance ID=1 ADDRESS=10.0.0.1 TEP ADDRESS=10.0.0.0/16 ROUTABLE IP ADDRESS=0.0.0.0 CHASSIS_ID=8ca31168-c4ab-11ee-a6c2-5d61e00b2df0

Cluster of 3 lm(t):1(2024-07-03T07:52:20.791+00:00) appliances (out of targeted 3 lm(t):3(2024-07-03T13:47:07.520+00:00)) with FABRIC_DOMAIN name=bgl-aci04 set to version=apic-6.0(5h) lm(t):3(2024-07-03T13:47:42.401+00:00); discoveryMode=PERMISSIVE lm(t):0(1970-01-01T00:00:00.004+00:00); drrMode=OFF lm(t):0(1970-01-01T00:00:00.004+00:00); kafkaMode=ON lm(t):1(2024-02-06T05:02:19.730+00:00); autoUpgradeMode=OFF lm(t):1(2024-07-03T13:45:43.249+00:00); clusterInterface=infra

appliance id=1 address=10.0.0.1 lm(t):1(2024-07-03T07:33:50.078+00:00) tep address=10.0.0.0/16 lm(t):1(2024-07-03T07:33:50.078+00:00) routable address=0.0.0.0 lm(t):1(zeroTime) oob address=10.197.204.114/24 lm(t):1(2024-07-03T07:52:20.955+00:00) version=6.0(5h) lm(t):1(2024-07-03T13:45:43.249+00:00) chassisId=8ca31168-c4ab-11ee-a6c2-5d61e00b2df0 lm(t):1(2024-07-03T13:45:43.249+00:00) capabilities=0X17EEFFFFFFFFF--0X2020--0X7--0X1 lm(t):1(2024-07-03T13:45:43.249+00:00) rK=(stable,present,0X206173722D687373) lm(t):1(2024-07-03T07:52:20.965+00:00) aK=(stable,present,0X206173722D687373) lm(t):1(2024-07-03T07:52:20.965+00:00) oobrK=(stable,present,0X206173722D687373) lm(t):1(2024-07-03T07:52:20.965+00:00) oobaK=(stable,present,0X206173722D687373) lm(t):1(2024-07-03T07:52:20.965+00:00) cntrlSbst=(APPROVED, FCH2322V05G) lm(t):1(2024-07-03T13:45:43.249+00:00) (targetMbSn= lm(t):0(zeroTime), failoverStatus=0 lm(t):0(zeroTime)) podId=1 lm(t):1(2024-07-03T07:33:50.078+00:00) commissioned=YES lm(t):1(zeroTime) registered=YES lm(t):1(2024-07-03T07:33:50.078+00:00) standby=NO lm(t):1(2024-07-03T07:33:50.078+00:00) DRR=NO lm(t):0(zeroTime) apicX=NO lm(t):1(2024-07-03T07:33:50.078+00:00) virtual=NO lm(t):1(2024-07-03T07:33:50.078+00:00) oob gw address=10.197.204.1 lm(t):1(2024-07-03T07:33:50.078+00:00) oob address v6=:: lm(t):1(2024-07-03T07:33:50.078+00:00) oob gw address v6=:: lm(t):1(2024-07-03T07:33:50.078+00:00) active=YES(2024-07-03T07:33:50.078+00:00) health=(applnc:255 lm(t):1(2024-07-03T13:47:42.561+00:00) svc's)

appliance id=2 address=10.0.0.2 lm(t):3(2024-07-03T13:24:30.725+00:00) tep address=10.0.0.0/16 lm(t):2(2024-02-06T05:43:35.885+00:00) routable address=0.0.0.0 lm(t):0(zeroTime) oob address=10.197.204.115/24 lm(t):1(2024-07-03T13:45:43.970+00:00) version=6.0(5h) lm(t):2(2024-07-03T13:45:42.995+00:00) chassisId=557cdb0e-c4b2-11ee-a0a8-93aaf27bee50 lm(t):2(2024-07-03T13:45:42.995+00:00) capabilities=0X17EEFFFFFFFFF--0X2020--0X7--0X1 lm(t):2(2024-07-03T13:45:42.994+00:00) rK=(stable,present,0X206173722D687373) lm(t):1(2024-07-03T13:45:43.970+00:00) aK=(stable,present,0X206173722D687373) lm(t):1(2024-07-03T13:45:43.970+00:00) oobrK=(stable,present,0X206173722D687373) lm(t):1(2024-07-03T13:45:43.970+00:00) oobaK=(stable,present,0X206173722D687373) lm(t):1(2024-07-03T13:45:43.970+00:00) cntrlSbst=(APPROVED, FCH2323V09W) lm(t):2(2024-07-03T13:45:42.995+00:00) (targetMbSn= lm(t):0(zeroTime), failoverStatus=0 lm(t):0(zeroTime)) podId=1 lm(t):2(2024-07-03T08:19:03.478+00:00) commissioned=YES lm(t):3(2024-07-03T13:24:30.745+00:00) registered=YES lm(t):3(2024-07-03T13:24:30.745+00:00) standby=NO lm(t):1(2024-07-03T13:24:30.745+00:00) DRR=NO lm(t):1(2024-07-03T13:24:30.745+00:00) apicX=NO lm(t):1(2024-07-03T13:24:30.745+00:00) virtual=NO lm(t):0(zeroTime) oob gw address=10.197.204.1 lm(t):1(2024-07-03T08:40:04.837+00:00) oob address v6=:: lm(t):1(2024-07-03T13:45:43.970+00:00) oob gw address v6=:: lm(t):1(2024-02-06T05:46:22.175+00:00) active=YES(2024-07-03T13:42:57.715+00:00) health=(applnc:255 lm(t):2(2024-07-03T13:47:42.560+00:00) svc's)

appliance id=3 address=10.0.0.3 lm(t):102(2024-07-03T13:42:57.550+00:00) tep address=10.0.0.0/16 lm(t):3(2024-07-03T13:24:21.522+00:00) routable address=0.0.0.0 lm(t):0(zeroTime) oob address=10.197.204.116/24 lm(t):1(2024-07-03T13:45:44.004+00:00) version=6.0(5h) lm(t):3(2024-07-03T13:45:43.443+00:00) chassisId=5803434b-393f-11ef-8e96-2c4f52b32ad0 lm(t):3(2024-07-03T13:45:43.443+00:00) capabilities=0X17EEFFFFFFFFF--0X2020--0X7--0X1 lm(t):3(2024-07-03T13:51:50.477+00:00) rK=(stable,present,0X206173722D687373) lm(t):1(2024-07-03T13:45:43.964+00:00) aK=(stable,present,0X206173722D687373) lm(t):1(2024-07-03T13:45:43.964+00:00) oobrK=(stable,present,0X206173722D687373) lm(t):1(2024-07-03T13:45:43.964+00:00) oobaK=(stable,present,0X206173722D687373) lm(t):1(2024-07-03T13:45:43.964+00:00) cntrlSbst=(APPROVED, FCH2322V0AG) lm(t):3(2024-07-03T13:45:43.443+00:00) (targetMbSn= lm(t):0(zeroTime), failoverStatus=0 lm(t):0(zeroTime)) podId=1 lm(t):102(2024-07-03T13:42:57.550+00:00) commissioned=YES lm(t):1(2024-07-03T13:38:51.799+00:00) registered=YES lm(t):1(2024-07-03T13:42:57.480+00:00) standby=NO lm(t):102(2024-07-03T13:42:57.550+00:00) DRR=NO lm(t):1(2024-07-03T13:38:51.799+00:00) apicX=NO lm(t):102(2024-07-03T13:42:57.550+00:00) virtual=NO lm(t):0(zeroTime) oob gw address=10.197.204.1 lm(t):1(2024-07-03T13:45:43.257+00:00) oob address v6=:: lm(t):1(2024-07-03T13:45:44.004+00:00) oob gw address v6=:: lm(t):1(2024-02-06T06:06:24.927+00:00) active=YES(2024-07-03T13:45:43.045+00:00) health=(applnc:255 lm(t):3(2024-07-03T13:47:42.405+00:00) svc's) <<== APIC03 is upgraded to 6.0(5h) version and health 255 shows it is fully fit

To verify that the auto upgrade is running without errors, check the svc_ifc_appliancedirector.bin.log file on the existing APIC that is responsible for upgrading the newly added APIC.

Location : /var/log/dme/log

grep "ApplianceAutoUpgrade.cpp" svc_ifc_appliancedirector.bin.log

4411||2024-07-03T13:03:02.014060877+00:00||appliance_director||DBG4||||# Registered APICs:3||../appliance/director/./ApplianceAutoUpgrade.cpp||115 << APIC3 is registered to the cluster

4411||2024-07-03T13:03:02.014069812+00:00||appliance_director||DBG4||||# APICs its version is same cluster version:2||../appliance/director/./ApplianceAutoUpgrade.cpp||116 << APIC01/02 have same version 6.0(5h)

4411||2024-07-03T13:03:02.014078887+00:00||appliance_director||DBG4||||# APICs its version is different cluster version:1||../appliance/director/./ApplianceAutoUpgrade.cpp||117 << APIC03 is running different version 5.2(5c)

4442||2024-07-03T13:04:02.025327143+00:00||appliance_director||DBG4||||Try to upgrade Appliance3||../appliance/director/./ApplianceAutoUpgrade.cpp||147 << Auto Upgrade Check Kicks in

4442||2024-07-03T13:04:02.025434226+00:00||appliance_director||DBG4||||ImageFile: /firmware/fwrepos/fwrepo/aci-apic-image-name.bin||../appliance/director/./ApplianceAutoUpgrade.cpp||160 << Image file location on Local APIC

4442||2024-07-03T13:04:02.025661541+00:00||appliance_director||DBG4||||Running rsync -8 -avzk --timeout 600 -e "ssh -p 1022 -i /securedata/ssh/root/id_rsa -o UserKnownHostsFile=/dev/null -o GSSAPIAuthentication=no -o StrictHostKeyChecking=no -o ConnectTimeout=60 -o ServerAliveInterval=60" /mgmt/support/insieme/auto_upgrade.py root@10.0.0.3:/mgmt/support/insieme/auto_upgrade.py||../appliance/director/./ApplianceAutoUpgrade.cpp||202

4442||2024-07-03T13:04:03.001492232+00:00||appliance_director||DBG4||||Done: Copy auto_upgrade script to remote apic||../appliance/director/./ApplianceAutoUpgrade.cpp||220 << Upgrade script is copied to APIC03 from APIC01

4442||2024-07-03T13:04:03.001598593+00:00||appliance_director||DBG4||||Running rsync -8 -avzk --timeout 600 -e "ssh -p 1022 -i /securedata/ssh/root/id_rsa -o UserKnownHostsFile=/dev/null -o GSSAPIAuthentication=no -o StrictHostKeyChecking=no -o ConnectTimeout=60 -o ServerAliveInterval=60" /firmware/fwrepos/fwrepo/aci-apic-image-name.bin root@10.0.0.3:/tmp/myImage.bin||../appliance/director/./ApplianceAutoUpgrade.cpp||226

4442||2024-07-03T13:13:59.287332478+00:00||appliance_director||DBG4||||Done: Copy iimage file to remote apic||../appliance/director/./ApplianceAutoUpgrade.cpp||244 << ISO image 6.0(5h) is copied to APIC03 from APIC01

4442||2024-07-03T13:13:59.287418460+00:00||appliance_director||DBG4||||Running ssh -p 1022 -i /securedata/ssh/root/id_rsa -o UserKnownHostsFile=/dev/null -o GSSAPIAuthentication=no -o StrictHostKeyChecking=no -o ConnectTimeout=60 -o ServerAliveInterval=60 root@10.0.0.3 "/mgmt/support/insieme/auto_upgrade.py 1 10.0.0.1"||../appliance/director/./ApplianceAutoUpgrade.cpp||250

4442||2024-07-03T13:17:55.430111171+00:00||appliance_director||DBG4||||Run remote upgrade done||../appliance/director/./ApplianceAutoUpgrade.cpp||268 << Running upgrade script on APIC03

4442||2024-07-03T13:17:55.430203755+00:00||appliance_director||DBG4||||Decommissioning Appliance 3||../appliance/director/./ApplianceAutoUpgrade.cpp||273 << APIC03 is decommissioned

4442||2024-07-03T13:20:25.430436301+00:00||appliance_director||DBG4||||Commissioning Appliance 3||../appliance/director/./ApplianceAutoUpgrade.cpp||277 << APIC03 is commissioned back to the fabric

Step 17. If the APIC auto upgrade fails, a fault is raised.

Step 18. For failed cases, you can retrigger the APIC auto upgrade by performing a clean reload and adding the new APIC back to the ACI fabric for discovery.

If these steps do not resolve the issue, please collect Techsupport logs and contact Cisco TAC for further assistance.
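When reviewing the appliance director log, it can be useful to turn the `||`-delimited lines shown earlier into a timeline and measure how long each phase took. The Python sketch below assumes the field layout seen in the log excerpt in this document (process ID, timestamp, component, level, an empty field, message, source file, line number). The function names are hypothetical.

```python
import re
from datetime import datetime

# Timestamps carry nanoseconds; trim to microseconds so strptime accepts them.
_TS_RE = re.compile(r"^(.*\.\d{6})\d*([+-]\d{2}:\d{2})$")


def parse_log_line(line: str):
    """Return (timestamp, message) for one log line, or None if it does not fit."""
    parts = line.strip().split("||")
    if len(parts) < 8:
        return None
    match = _TS_RE.match(parts[1])
    if match is None:
        return None
    when = datetime.strptime(match.group(1) + match.group(2),
                             "%Y-%m-%dT%H:%M:%S.%f%z")
    # Everything between the level fields and the trailing file/line is the message.
    return when, "||".join(parts[5:-2])


def auto_upgrade_timeline(lines, source="ApplianceAutoUpgrade.cpp"):
    """Collect (timestamp, message) pairs for lines logged from the given source file."""
    events = []
    for line in lines:
        if source in line:
            parsed = parse_log_line(line)
            if parsed is not None:
                events.append(parsed)
    return events


def seconds_between(events, start_text: str, end_text: str) -> float:
    """Seconds from the first message containing start_text to the next one containing end_text."""
    start = next(t for t, msg in events if start_text in msg)
    end = next(t for t, msg in events if end_text in msg and t >= start)
    return (end - start).total_seconds()
```

For the excerpt in this document, measuring from the rsync line that targets `/tmp/myImage.bin` to the following "file to remote apic" line gives the image copy time, which is a little under ten minutes in that run.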

Revision History

| Revision | Publish Date | Comments |
|---|---|---|
| 1.0 | 08-Jul-2024 | Initial Release |

Contributed by Cisco Engineers

- Piyush Kataria, TAC

- Manoranjan Veer, TAC
