Cisco Multi-Site Deployment Guide for ACI Fabrics

Available Languages

Bias-Free Language

The documentation set for this product strives to use bias-free language. For the purposes of this documentation set, bias-free is defined as language that does not imply discrimination based on age, disability, gender, racial identity, ethnic identity, sexual orientation, socioeconomic status, and intersectionality. Exceptions may be present in the documentation due to language that is hardcoded in the user interfaces of the product software, language used based on RFP documentation, or language that is used by a referenced third-party product. Learn more about how Cisco is using Inclusive Language.

- US/Canada 800-553-2447

- Worldwide Support Phone Numbers

- All Tools

Feedback

Feedback

| Date |

Version |

Modifications |

| 10/03/2021 |

1.0 |

● Initial Version

|

| 10/14/2021 |

2.0 |

● Added the “Integrating ACI Multi-Pod and ACI Multi-Site”

● Fixed some typos and other small edits

|

Table 1. Document Version History

Introduction

The main goal of this document is to provide specific deployment and configuration information for multiple Cisco ACI Multi-Site use cases. ACI Multi-Site is the Cisco architecture commonly used to interconnect geographically dispersed data centers and extend Layer 2 and Layer 3 connectivity between those locations, together with a consistent end-to-end policy enforcement.

This paper is not going to describe in detail the functional components of a Cisco Multi-Site architecture, nor the specifics of how data-plane communication works, and the control-plane protocols used for exchanging reachability information between ACI fabrics. A prerequisite for making the best use of this deployment guide is to have gone through the white paper describing the overall ACI Multi-Site architecture and its main functionalities, available at the link below:

This guide is divided into different sections, each tackling a specific deployment aspect:

● Provisioning the Inter-Site Network connectivity: this section covers the specific configuration required on the network devices building the ISN infrastructure used to interconnect the different ACI fabrics.

● Adding ACI fabrics to a specific Multi-Site domain: this part covers the required infra configuration performed on the Cisco Nexus Dashboard Orchestrator NDO (previously known as Cisco Multi-Site Orchestrator – MSO) to add different ACI fabrics to the same Multi-Site domain.

Note: Most of the considerations contained in this paper apply also for deployment leveraging the original virtual NDO cluster (available up to the software release 3.1(1)). However, given the fact that going forward the Orchestrator is only going to be supported as a service enabled on top of Cisco Nexus Dashboard compute platform, in the rest of this document we’ll solely make reference to Nexus Dashboard Orchestrator (NDO) deployments.

● Nexus Dashboard Orchestrator schema and templates definition: this section provides guidelines on how to configure schema and templates in order to provision specific tenant policies. While Nexus Dashboard Orchestrator by design provides lots of flexibility on how to define those policy elements, the goal is to offer some best practice recommendations.

● Establishing endpoint connectivity across sites (east-west): this section focuses on how to deploy EPGs/BDs in different fabrics and establish Layer 2 and Layer 3 intersite connectivity between them. From a security perspective, it is covered how to define specific policies between EPGs with the use of security contracts, but also how to simplify the establishment of connectivity by initially removing the policy aspect through the use of Preferred Groups and vzAny.

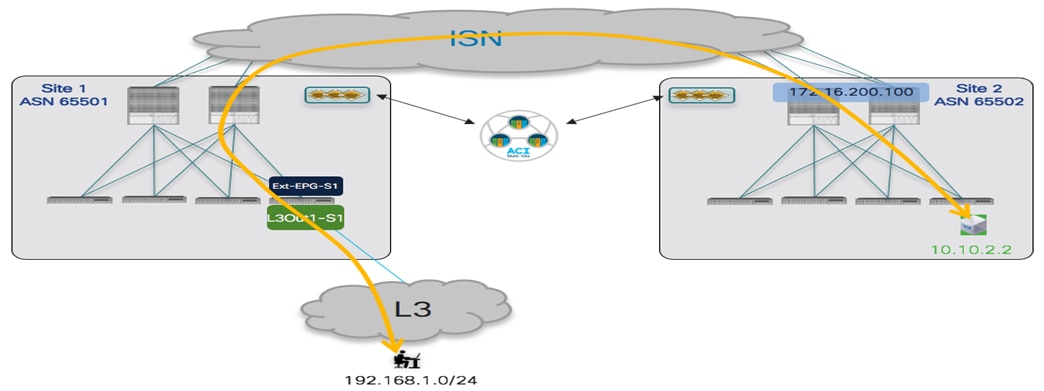

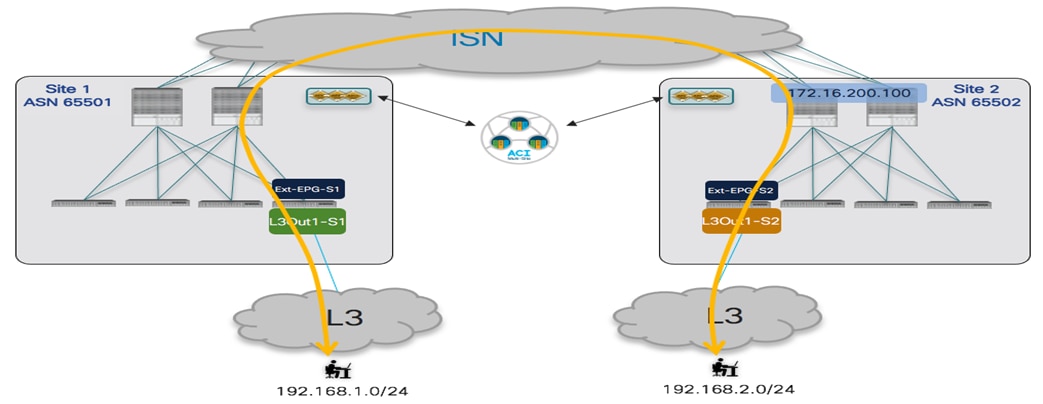

● Establishing endpoint connectivity with the external network domain (North-South): this part focuses on the deployment of L3Out configuration to ensure endpoints connected to the ACI leaf nodes of different fabrics can communicate with external resources, either reachable via a local L3Out or a remote L3Out connection (Intersite L3Out).

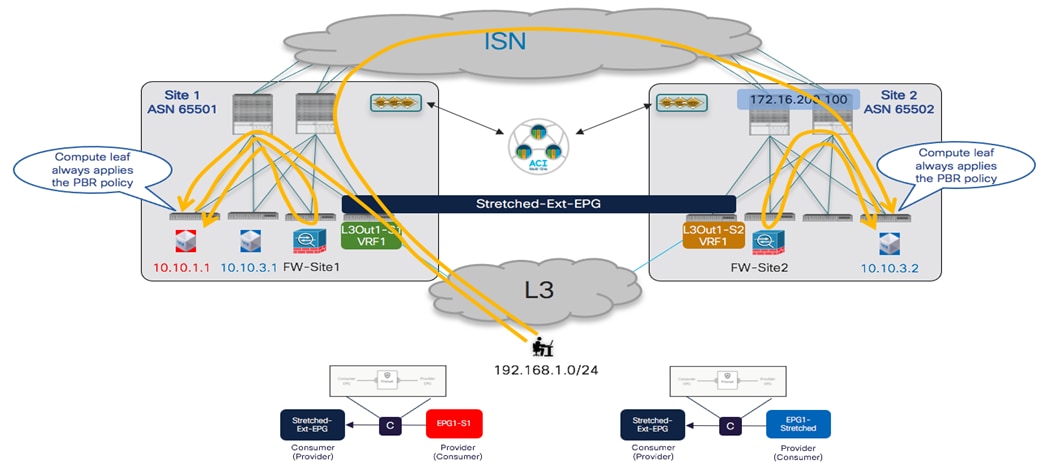

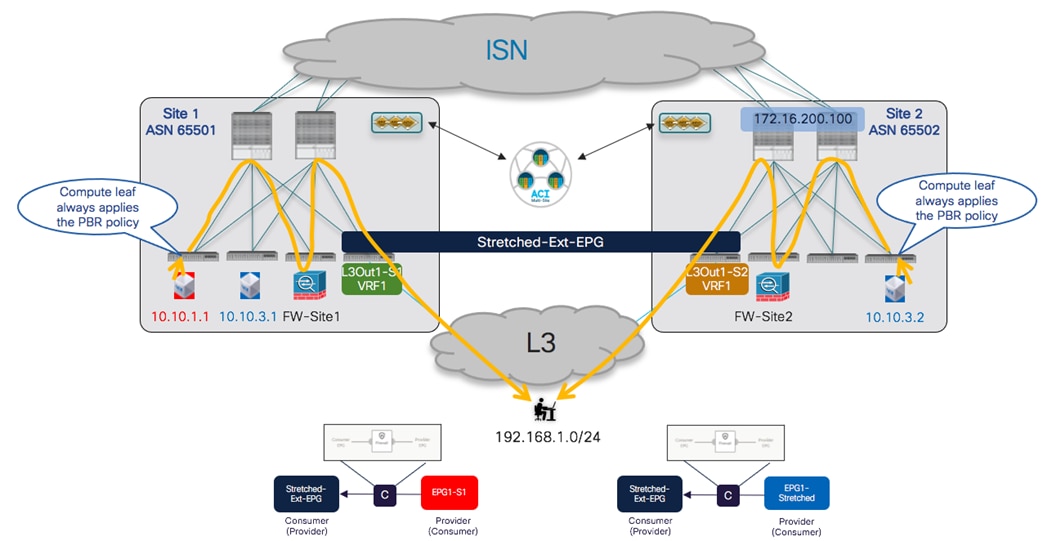

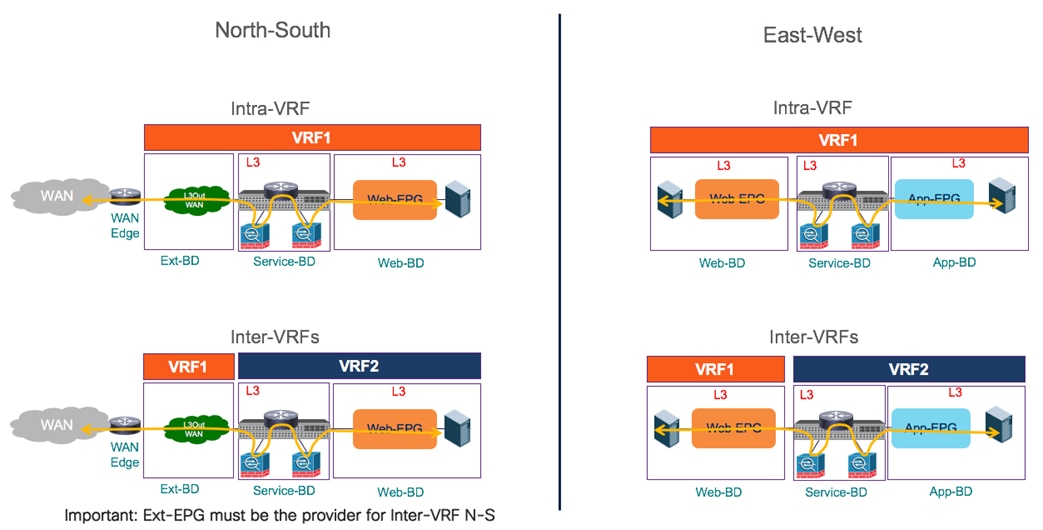

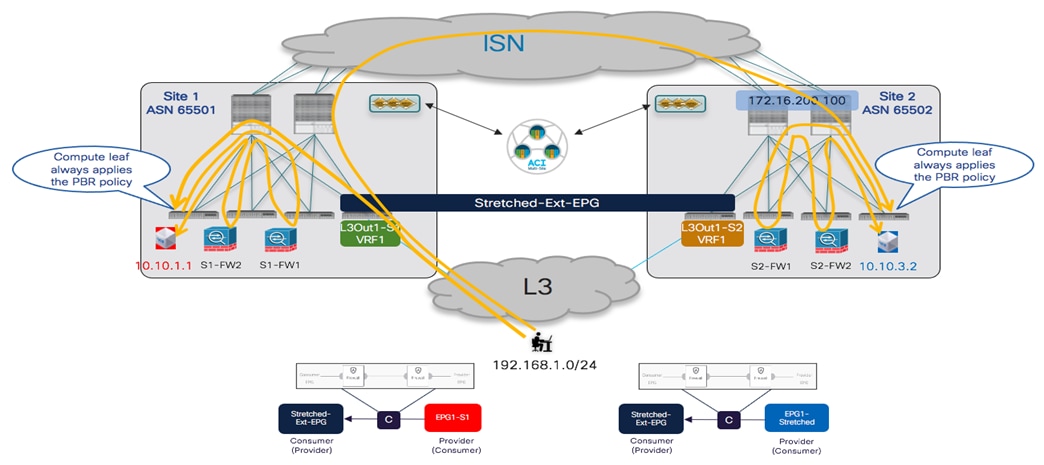

● Network services integration: the focus here is on how to leverage Service-Graph with PBR to ensure network services can be inserted in between communications for EPGs belonging to the same fabric or to separate fabrics (east-west) or for communication between endpoints connected to ACI and the external network domain (north-south).

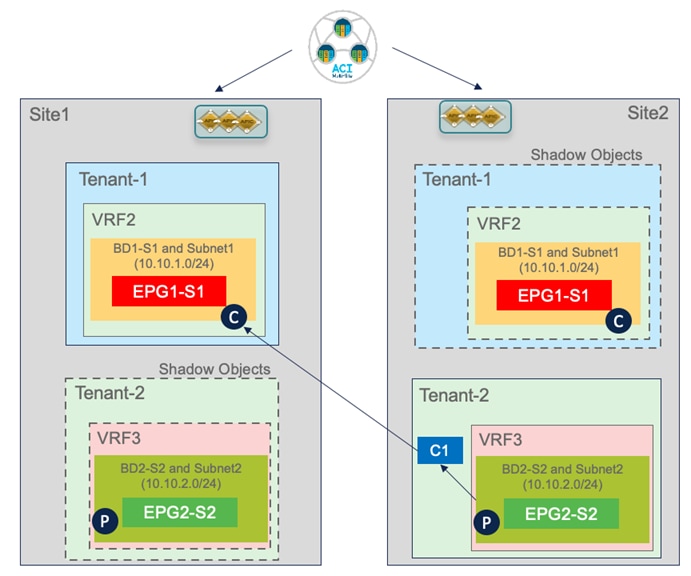

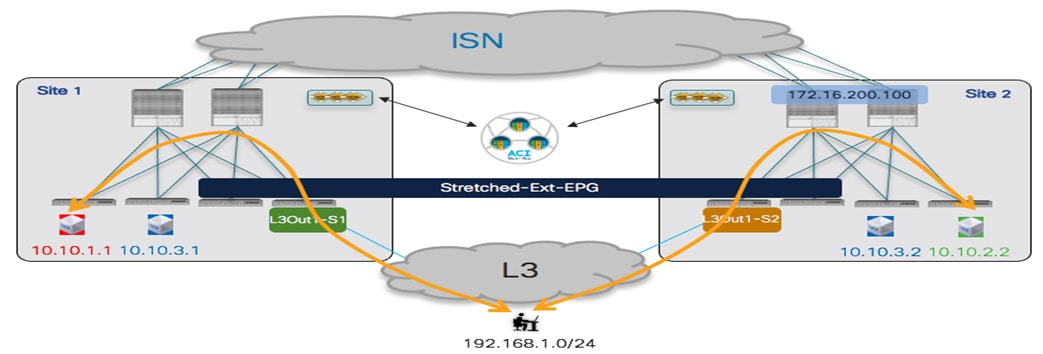

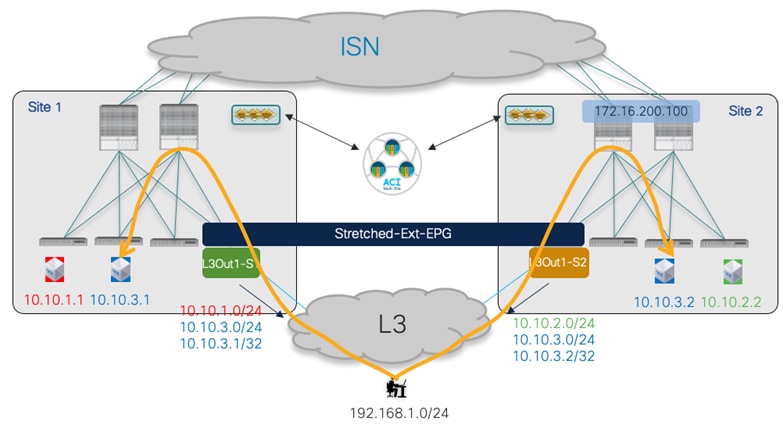

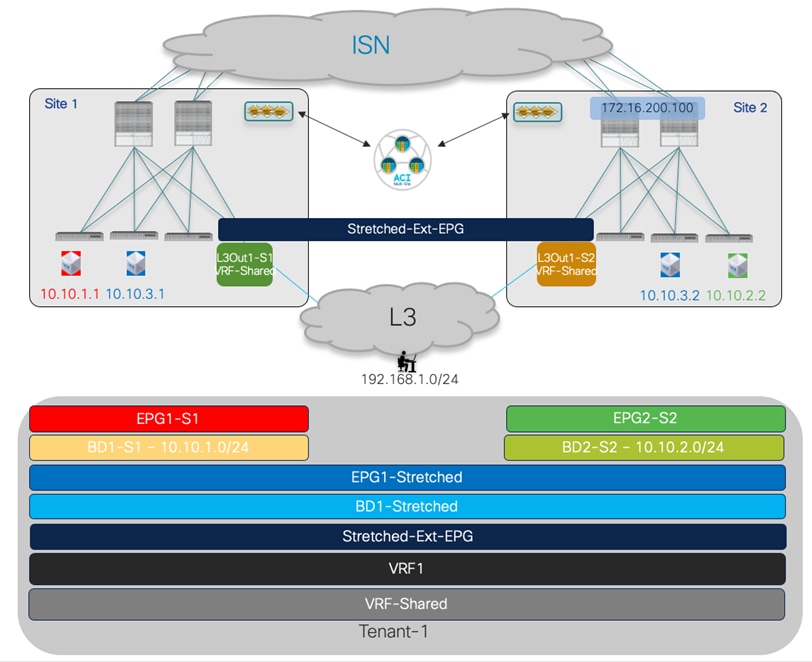

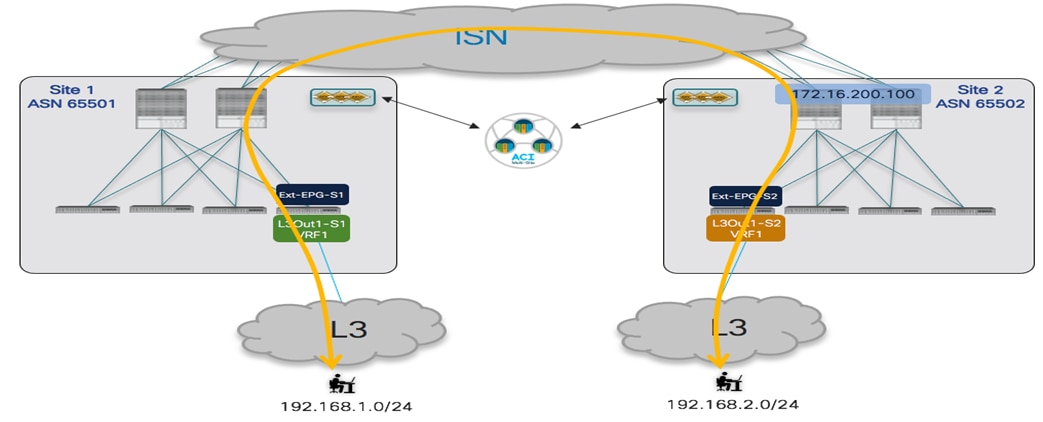

The topology that is going to be used to provision the different use cases mentioned above is the one shown in Figure 1 below:

Two Fabrics Multi-Site Deployment

The ACI fabrics are connected via an Inter-Site Network (ISN) routed domain that represents the transport for VXLAN communications happening between endpoints part of different fabrics. As a reminder, there is no latency limit between the different ACI fabrics part of the same Multi-Site domain. The only latency considerations are:

● Up to 150 ms, RTT is the latency supported between the Nexus Dashboard cluster nodes where the Orchestrator service is enabled.

● Up to 500 msec RTT is the latency between each Nexus Dashboard Orchestrator node and the APIC controller nodes that are added to the Multi-Site domain. This means that the Multi-Site architecture has been designed from the ground up to be able to manage ACI fabrics that can be geographically dispersed around the world.

All the use cases described in this paper have been validated using the latest ACI and Nexus Dashboard Orchestrator software releases available at the time of writing this paper. Specifically, the two ACI fabrics are using ACI 5.1(1) code, whereas for Nexus Dashboard Orchestrator it is used the 3.5(1) release. However, keep in mind that from Nexus Dashboard Orchestrator release 2.2(1), there is not interdependency between Nexus Dashboard Orchestrator and ACI software releases, and a Multi-Site deployment using Nexus Dashboard Orchestrator release 3.2(1) (and later) can have fabrics running a mix of software releases (from ACI 4.2(4), which is the first one supported with Nexus Dashboard) being part of the same Multi-Site domain.

Note: The documentation set for this product strives to use bias-free language. For the purposes of this documentation set, bias-free is defined as language that does not imply discrimination based on age, disability, gender, racial identity, ethnic identity, sexual orientation, socioeconomic status, and intersectionality. Exceptions may be present in the documentation due to language that is hardcoded in the user interfaces of the product software, language used based on RFP documentation, or language that is used by a referenced third-party product.

Provisioning Inter-Site Network Connectivity

The first step in the creation of a Multi-Site domain is the provisioning of the network infrastructure used to interconnect the different ACI fabrics and to carry the VXLAN traffic allowing to establish Layer 2 and Layer 3 connectivity across sites. The ISN is not managed by APIC nor by the Orchestrator service, so it must be independently pre-provisioned before starting the configuration of the spine nodes to connect each fabric to the ISN infrastructure.

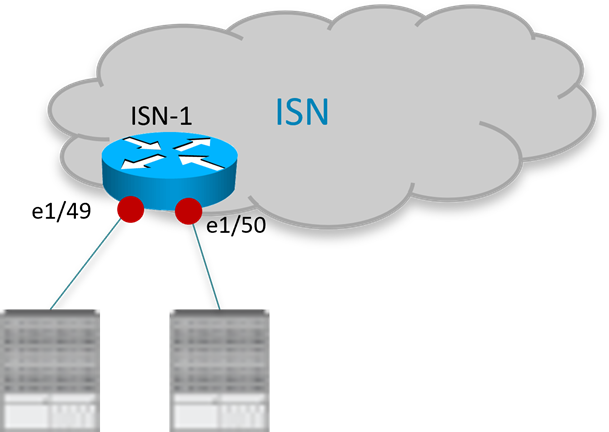

ISN Router Connecting to the Spine Nodes

The interfaces of the ISN devices connecting to the spine nodes in each fabric need to be deployed as point-to-point L3 links establishing routing adjacencies to allow for the exchange of infrastructure (i.e., underlay) prefixes between the spine nodes in different fabrics. The configuration sample below shows a specific example of the interfaces defined on the ISN router in Figure 2 to connect to the spine nodes of the local ACI Pod (the interfaces of other routers would be configured similarly).

Note: The configuration below applies to the deployment of Nexus 9000 switches as ISN nodes. When using different HW platforms, it may be required to slightly modify the specific CLI commands.

ISN-1 Router:

interface Ethernet1/49.4

description L3 Link to Pod1-Spine1

mtu 9150

encapsulation dot1q 4

vrf member ISN

ip address 192.168.1.1/31

ip ospf network point-to-point

ip router ospf ISN area 0.0.0.0

no shutdown

!

interface Ethernet1/50.4

description L3 Link to Pod1-Spine2

mtu 9150

encapsulation dot1q 4

vrf member ISN

ip address 192.168.1.5/31

ip ospf network point-to-point

ip router ospf ISN area 0.0.0.0

no shutdown

● As shown above, sub-interfaces must be created on the physical links connecting the router to the spine nodes. This is because of the specific ACI implementation that mandates leaf and spine nodes to always generate dot1q tagged traffic (the specific VLAN tag 4 is always used by the ACI spine nodes when connecting to the external ISN infrastructure). Please notice that those interfaces remain point-to-point Layer 3 links that must be addressed as part of separate IP subnets (the use of a /30 or /31 mask is commonly recommended) and this imply that the main requirement for the ISN router is to be able to use the same VLAN tag 4 on sub-interfaces configured for different local links. Most of the modern switches and routers offer this capability.

● The MTU of the interfaces should account for the extra overhead of VXLAN traffic (50 Bytes). The 9150B value shown in the configuration sample above matches the default MTU of the spine sub-interfaces connecting to the external ISN infrastructure, which ensures that the OSPF adjacency can be successfully established. However, it is not necessarily required to support such a large MTU on the ISN routers for intersite communication, as the required minimum value mostly depends on the MTU of the traffic generated by the endpoints connected to the ACI fabric. For more information on this, please refer to the “Intersite Network (ISN) deployment considerations” section of the ACI Multi-Site paper:

https://www.cisco.com/c/en/us/solutions/collateral/data-center-virtualization/application-centric-infrastructure/white-paper-c11-739609.html#IntersiteNetworkISNdeploymentconsiderations

● The interfaces on the ISN routers can be deployed as part of a dedicated VRF or in the global table. Using a dedicated VRF, when possible, is a strong best practice recommendation, both from an operational simplification perspective and also preventing to send to the spine nodes more prefixes than strictly required for Multi-Site control and data plane (in case the ISN infrastructure is also shared for providing other connectivity services).

● From ACI release 5.2(3) and Nexus Dashboard Orchestrator release 3.5(1) it is possible to deploy also BGP for establishing underlay adjacencies between the spines and the ISN devices. However, in this paper we focus on the use of OSPF as it has been available since the introduction of the ACI Multi-Site architecture, and it is widely deployed.

Very often, a different routing protocol (usually BGP) is used between the devices building the core of the ISN network, especially when that infrastructure extends across geographically dispersed locations. This implies the need to redistribute from OSPF into BGP the specific prefixes that must be exchanged across fabrics part of the same Multi-Site domain. This allows controlling in a very selective way the prefixes that are exchanged across sites. As it will be discussed in more detail as part of the “Nexus Dashboard Orchestrator Sites Infra Configuration” section, only a handful of prefixes are required for establishing intersite control and data plane connectivity:

● A BGP EVPN Router-ID for each spine node, to establish MP-BGP EVPN adjacencies to remote spine nodes.

● An Overlay Unicast TEP (O-UTEP) anycast address for each Pod part of the same ACI fabric, used for unicast Layer 2 and Layer 3 data plane connectivity with the remote sites.

● An Overlay Multicast TEP (O-MTEP) anycast address shared between all the Pods part of the same ACI fabric, used to receive Layer 2 Broadcast/Unknown Unicast/Multicast (BUM) traffic originated from the remote sites.

● One (or more) external TEP pools are used to enable the intersite L3Out connectivity with the remote sites.

The original infra TEP pools used for each fabric bring-up (10.1.0.0/16 and 10.2.0.0/16 in the example in Figure 1) do not need to be exchanged across sites and should hence not being redistributed between protocols. The sample below shows an example of redistribution allowing the exchange of the few prefixes listed above (once again, this configuration applies to Nexus 9000 switches deployed as ISN devices):

● Define the IP prefix list and route-map to advertise the local prefixes to the remote sites:

ip prefix-list LOCAL-MSITE-PREFIXES seq 5 permit <BGP-EVPN-RID Site1-Spine1>

ip prefix-list LOCAL-MSITE-PREFIXES seq 10 permit <BGP-EVPN-RID Site1-Spine2>

ip prefix-list LOCAL-MSITE-PREFIXES seq 15 permit <O-UTEP-Pod1-Site1>

ip prefix-list LOCAL-MSITE-PREFIXES seq 20 permit <O-MTEP-Site1>

ip prefix-list LOCAL-MSITE-PREFIXES seq 25 permit <EXT-TEP-POOL-Site1>

!

route-map MSITE-PREFIXES-OSPF-TO-BGP permit 10

match ip address prefix-list LOCAL-MSITE-PREFIXES

● Define the IP prefix list and route-map to specify the prefixes to be received from the remote sites:

ip prefix-list REMOTE-MSITE-PREFIXES seq 5 permit <BGP-EVPN-RID Site2-Spine1>

ip prefix-list REMOTE-MSITE-PREFIXES seq 10 permit <BGP-EVPN-RID Site2-Spine2>

ip prefix-list REMOTE-MSITE-PREFIXES seq 15 permit <O-UTEP-Pod1-Site2>

ip prefix-list REMOTE-MSITE-PREFIXES seq 20 permit <O-MTEP-Site2>

ip prefix-list REMOTE-MSITE-PREFIXES seq 25 permit <EXT-TEP-POOL-Site2>

!

route-map MSITE-PREFIXES-BGP-TO-OSPF permit 10

match ip address prefix-list REMOTE-MSITE-PREFIXES

● Apply the route-maps to redistribute prefixes between OSPF and BGP (and vice versa):

router bgp 3

vrf ISN

address-family ipv4 unicast

redistribute ospf ISN route-map MSITE-PREFIXES-OSPF-TO-BGP

!

router ospf ISN

vrf ISN

redistribute bgp 3 route-map MSITE-PREFIXES-BGP-TO-OSPF

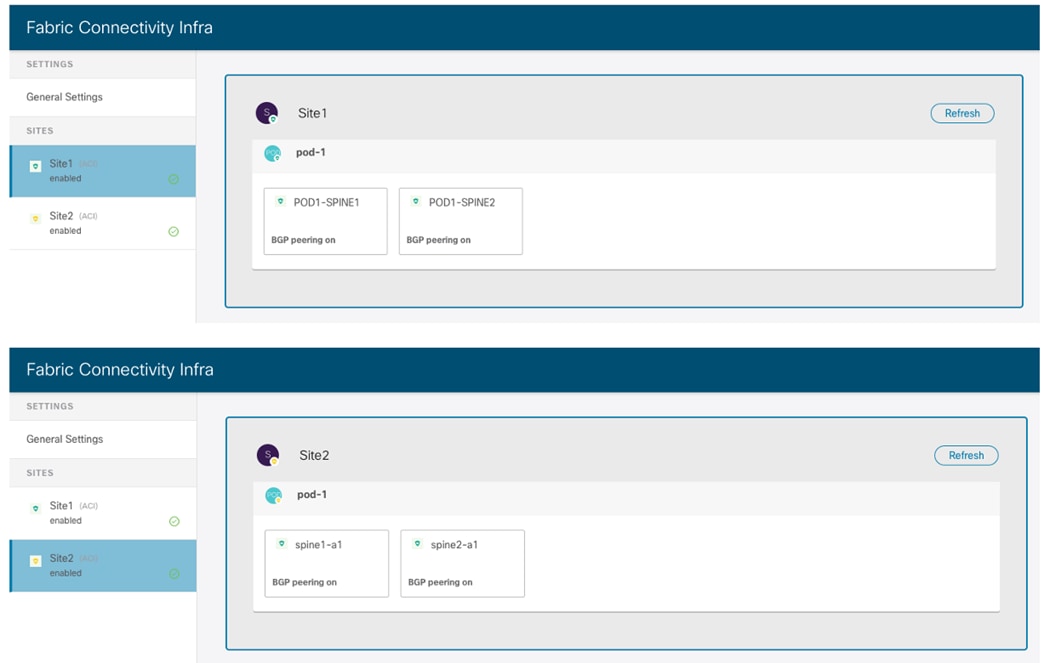

Adding the ACI Fabrics to the Multi-Site Domain

The following sections describes how to add the fabrics that are part of your Multi-Site domain to the Nexus Dashboard Orchestrator.

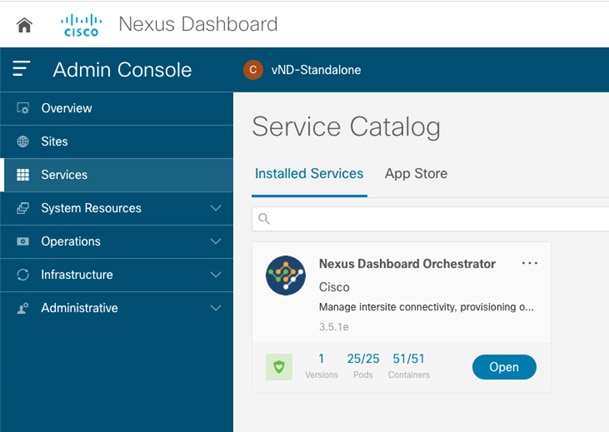

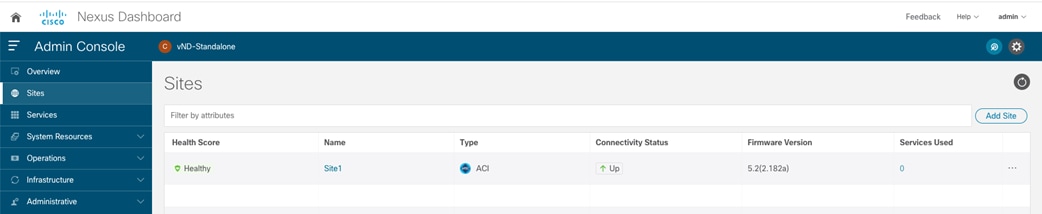

Onboarding ACI Fabrics to Nexus Dashboard Orchestrator

Once the configuration in the ISN is provisioned, it is then possible to onboard the ACI fabrics to Nexus Dashboard Orchestrator and perform the required infra configuration to ensure each site can be successfully connected to the ISN and establish the required control plane peerings. Specifically, each spine establishes OSPF adjacencies with the directly connected first-hop ISN routers and MP-BGP EVPN peerings with the spine nodes in the remote sites.

Up to Multi-Site Orchestrator release 3.1(1), the site onboarding procedure had to be done directly on MSO. From release 3.2(1) and later, the Nexus Dashboard Orchestrator is only supported as a service running on a Nexus Dashboard compute cluster and in that case, the site onboarding procedure must be performed directly on Nexus Dashboard (and the sites are then made available to the hosted services, as it is the case for Nexus Dashboard Orchestrator in our specific example). The required infra configuration steps described in the “Nexus Dashboard Orchestrator Sites Infra Configuration” section remain valid also for deployments leveraging older MSO releases.

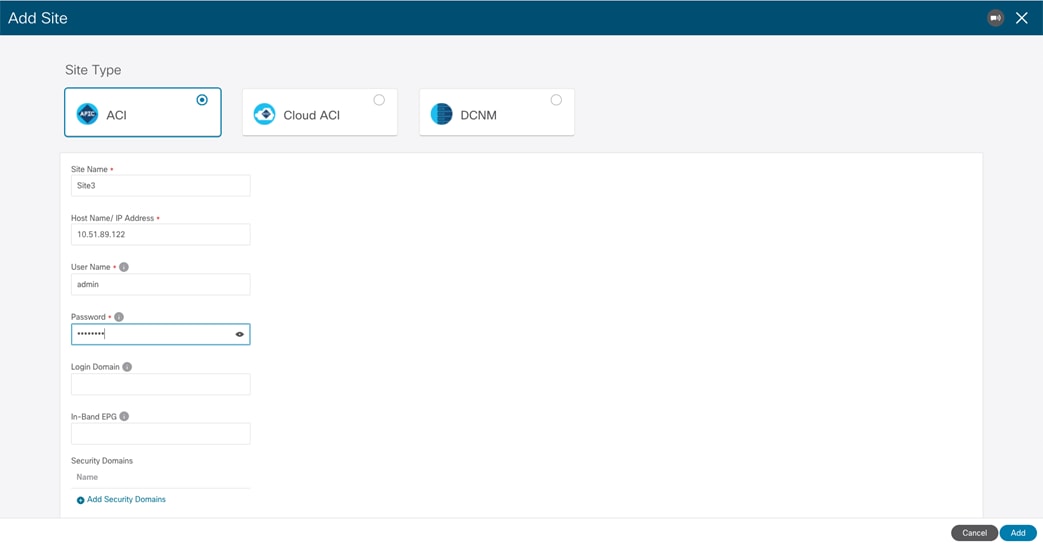

Figure 3 highlights how to start the process used to onboard an ACI fabric to Nexus Dashboard.

Adding a Site to NDO 3.1(1)

After selecting “Add Site”, the following screen opens up allowing to specify the information for the ACI fabric that needs to be onboarded on NDO.

Specify the ACI Fabric Information

The following information is required to onboard an ACI fabric to Nexus Dashboard:

● Site Name: The name used to reference the ACI fabric on Nexus Dashboard.

● Host Name / IP Address: The IP address of one of the APIC cluster nodes managing the fabric that is being added. At the time of writing of this paper, and when running only the Orchestrator service on top of Nexus Dashboard, it is possible to specify here the Out-of-Band (OOB) or In-Band (IB) address of the APIC. When enabling other services on the same ND cluster (as for example Insights), it may be required instead to onboard the fabric using the IB address only, so please refer to the specific service installation guide (available on cisco.com) for more specific information.

● User Name and Password: APIC credentials used to connect to Nexus Dashboard and, through the Single-Signed-On functionality, to the UI of the services hosted on Nexus Dashboard.

● Login Domain: By default, the user will be locally authenticated on Nexus Dashboard. If instead, the desire is to use a specific login domain (Radius, TACACS, etc.), the domain name can be defined on Nexus Dashboard and specified in this field.

● In-Band EPG: this is only required when hosting services on Nexus Dashboard (like Insights) that are using In-Band connectivity for data streaming from this site.

● Security Domains:.

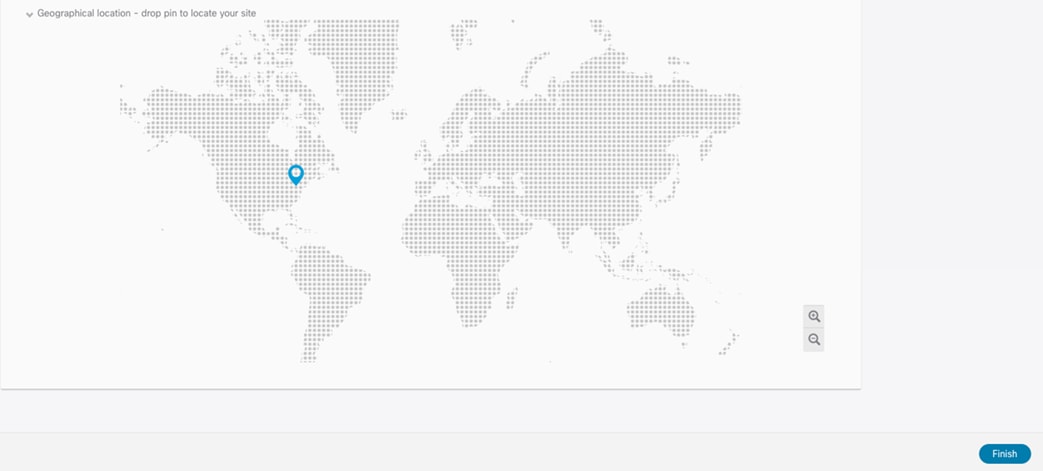

The last optional step of the ACI fabric onboarding process consists in dropping a pin for the site on the map, to represent the geographical location for this specific fabric.

Dropping a Pin on the Map to Locate the Site

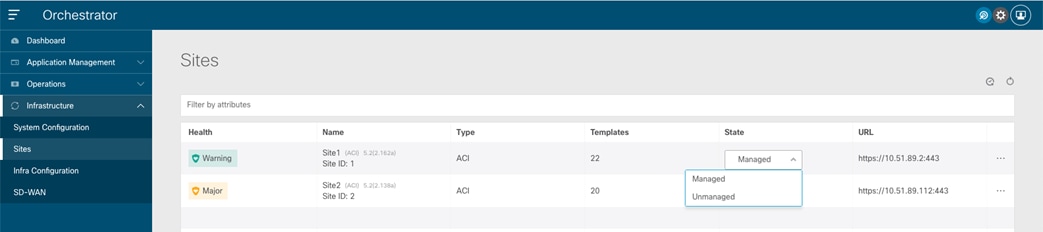

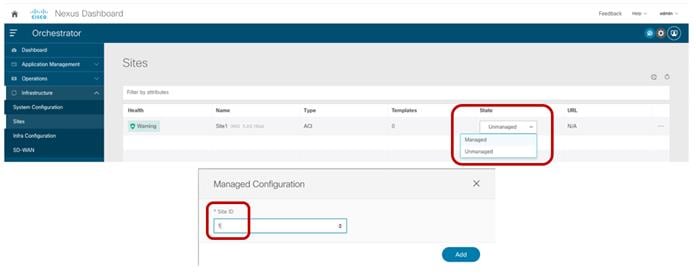

The same procedure can be repeated for every fabric that needs to be onboarded to Nexus Dashboard. At the end, all these sites are displayed on the Nexus Dashboard Orchestrator UI in “Unmanaged” state. The user can the selectively set as “Managed” the fabrics that should become part of the same ACI Multi-Site domain.

When a site is set to “Managed”, the user is also asked to enter a specific Site ID, which must uniquely identify that site.

Note: The Site ID is different than the Fabric-ID that is configured at the APIC level. Fabrics configured with the same Fabric-ID can become part of the same multi-Site domain, as long as they get assigned a unique Site ID.

Displaying the Sites on the NDO UI

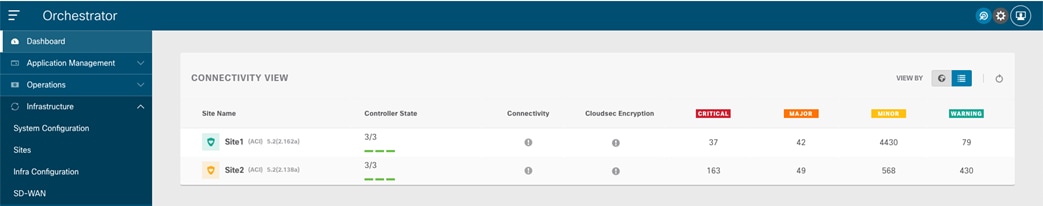

Once the fabrics become “Managed”, they will be displayed on the NDO Dashboard.

Connectivity View on NDO Dashboard

As noticed above, the Orchestrator Service can gather information about the health of each APIC controller node for the onboarded sites and the specific faults raised on each APIC domain (with their associated level of criticality). The connectivity between sites is showing a warning sign at this point, for the simple reason that the fabrics have just been onboarded to the Orchestrator Service, but the infra configuration steps have not been performed yet to connect each site to the external ISN.

Nexus Dashboard Orchestrator Sites Infra Configuration

After the fabrics have been onboarded to Nexus Dashboard and set as “Managed” on the Orchestrator service, it is required to perform the specific infra configuration allowing to connect each site to the ISN. This is needed so the spines in each fabric can first establish the OSPF adjacencies with the directly connected ISN routers and exchange the ‘underlay’ network information required for then successfully establishing intersite control plane and data plane connectivity.

Table 1 below displays the specific information that should be available before starting the infra configuration. For more explanation about the meaning of those different IP prefixes please refer to the “Intersite Network (ISN) deployment considerations” section of the ACI Multi-Site paper:

https://www.cisco.com/c/en/us/solutions/collateral/data-center-virtualization/application-centric-infrastructure/white-paper-c11-739609.html#IntersiteNetworkISNdeploymentconsiderations

| Site |

Node |

Interfaces to ISN |

IP Address for Interface to ISN |

BGP-EVPN Router-ID |

O-UTEP |

O-MTEP |

| 1 |

Spine-1101 |

e1/63 e1/64 |

192.168.1.0/31 192.168.1.2/31 |

172.16.100.1 |

172.16.100.100 |

172.16.100.200 |

| 1 |

Spine-1102 |

e1/63 e1/64 |

192.168.1.4/31 192.168.1.6/31 |

172.16.100.2 |

172.16.100.100 |

172.16.100.200 |

| 2 |

Spine-2101 |

e1/63 e1/64 |

192.168.2.0/31 192.168.2.2/31 |

172.16.200.1 |

172.16.200.100 |

172.16.200.200 |

| 2 |

Spine-2102 |

e1/63 e1/64 |

192.168.2.4/31 192.168.2.6/31 |

172.16.200.2 |

172.16.200.100 |

172.16.200.200 |

Table 2. IP Address Information for the Infra Configuration of the Sites

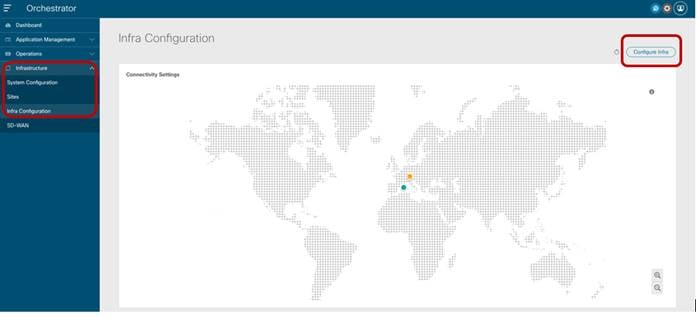

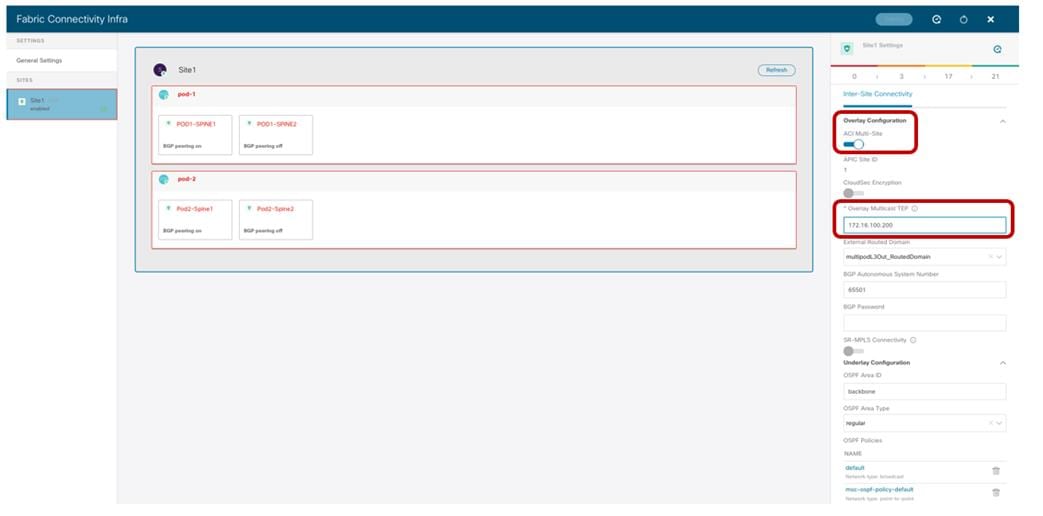

The Infra configuration workflow is started by selecting the “Infrastructure” à “Infra Configuration” option on the Nexus Dashboard Orchestrator left tab.

Starting the Sites Infra Configuration Process

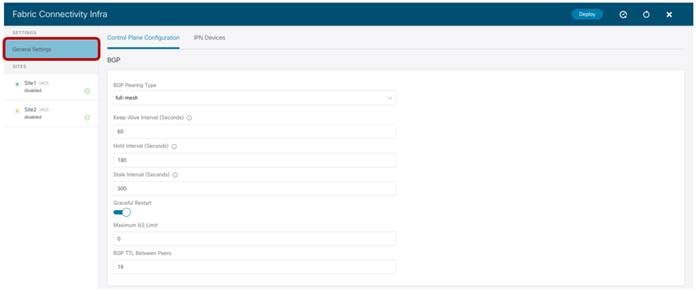

After selecting “Configure Infra”, the “Fabric Connectivity Infra” page is displayed to the user.

Infra Configuration General Settings

In the “General Settings” tab we find the “Control Plane Configuration” section allowing us to tune if desired, some default parameters used for the MP-BGP EVPN control plane used between the spines belonging to different fabrics. It is recommended to keep the default values for those parameters in the majority of the deployment scenarios. This applies also to the “BGP Peering Type” option: by default, it is set as “full-mesh”, which essentially means that the spine nodes deployed in different sites establish a full-mesh of MP-BGP EVPN adjacencies between them. This happens independently from the fact that the different sites are part of the same BGP Autonomous System Number (ASN) or not. The alternative option is to select “route-reflector”, which is effective only if the fabrics are part of the same ASN. Notice also that the “Graceful Helper” knob is on by default: this is to enable the BGP Graceful Restart functionality (documented in IETF RFC 4724) allowing a BGP speaker to indicate its ability to preserve its forwarding state during a BGP restart event.

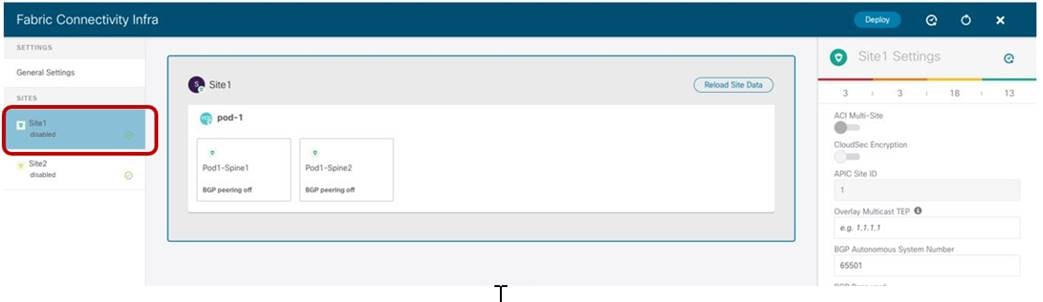

The site infra configuration is instead started by selecting the tab on the left associated with specific fabric.

Starting the Specific Site Infra Configuration

The configuration is performed in three different steps: Site level, Pod level and Spine node level, depending on what is being selected on the Nexus Dashboard Orchestrator UI.

Site-Level Configuration

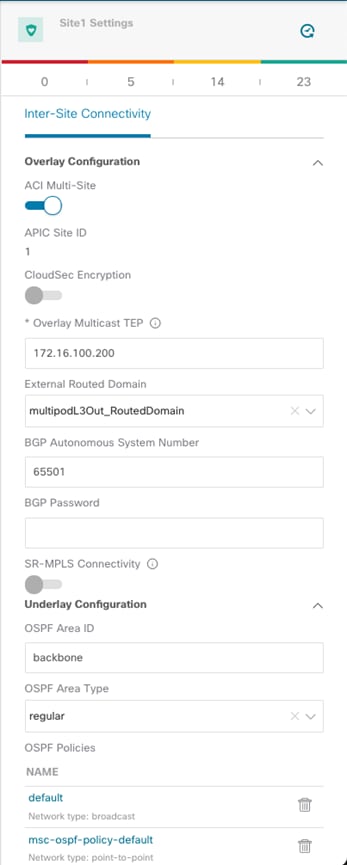

When clicking on the main box identifying the whole ACI Site, The Orchestrator service gives the capability of configuring the parameters shown in Figure 11.

Site Level Configuration

● ACI Multi-Site knob: Turn this on to enable Multi-Site on the fabric and ensure that the control and data plane connectivity with the other sites can be established. This is not required if Nexus Dashboard Orchestrator is deployed only to provide policies locally to each connected site and no intersite communication across the ISN is desired (an ISN is not even needed for this specific scenario).

● Overlay Multicast TEP: The anycast TEP address deployed on all the local spine nodes and used to receive BUM (or Layer 3 multicast) traffic originated from a remote site. A single O-MTEP address is associated with an ACI fabric, no matter if it is a single Pod or a Multi-Pod fabric.

● External Routed Domain: This is the routed domain defined on APIC as part of the Fabric Access policies for connecting the spine nodes to the ISN. While the specification on Nexus Dashboard Orchestrator of this parameter is technically not strictly required, it is a good practice to have the access policies for the spines defined at the APIC level.

● BGP Autonomous System Number: The local ASN value that is dynamically pulled from APIC.

● OSPF settings (Area ID, Area Type, Policies): Those are OSPF parameters required to then establish OSPF adjacencies between the spines and the directly connected ISN routers.

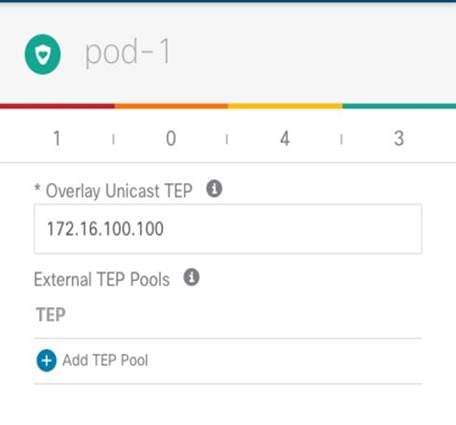

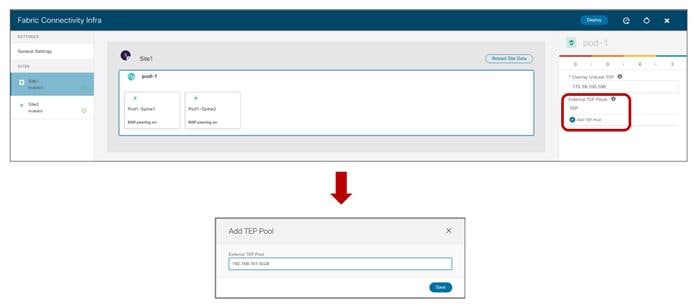

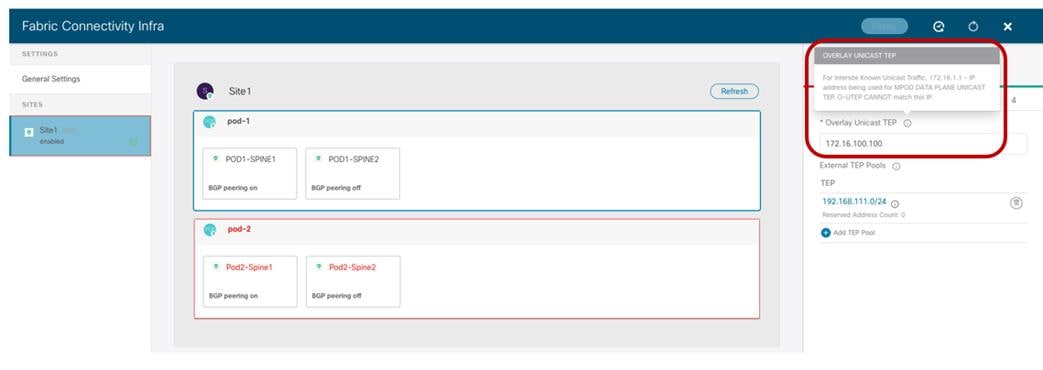

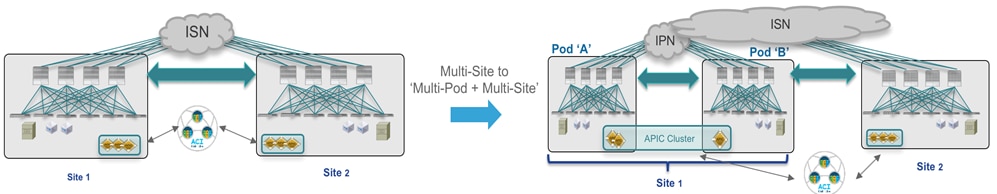

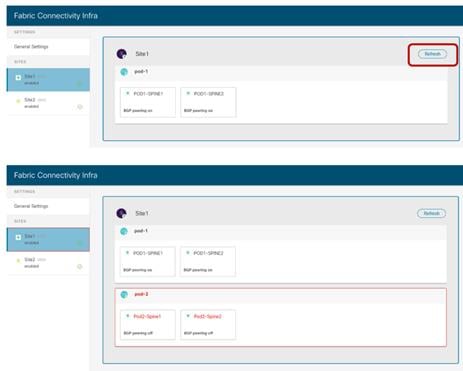

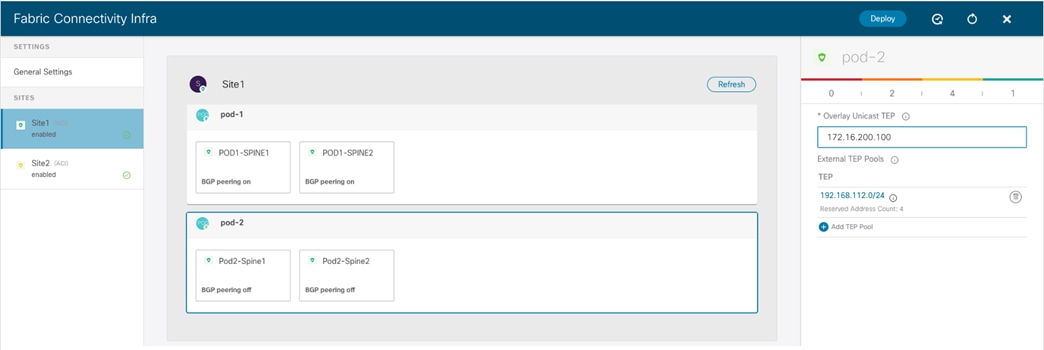

Pod-Level Configuration

Selecting the box identifying a Pod it is then possible to access the Pod’s specific configuration. Those are the settings that are independently applied to all the Pods that are part of the same Multi-Pod fabric (in our specific example we have a single Pod fabric).

Pod Level Configuration

● Overlay Unicast TEP: The anycast TEP address deployed on all the local spine nodes in the Pod and used to send and receive Layer 2 and Layer 3 unicast traffic flows. Every Pod part of the same Multi-Pod fabric would define a unique TEP address.

● External TEP Pools: Prefix(es) required when enabling the intersite L3Out functionality, as discussed in more detail in the “Deploying Intersite L3Out” section.

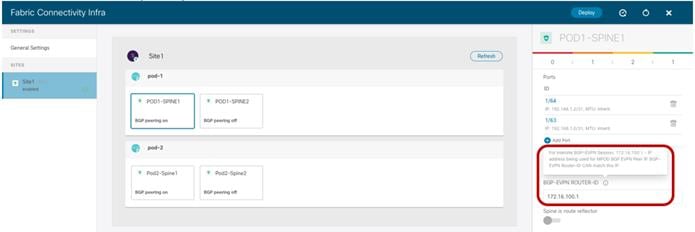

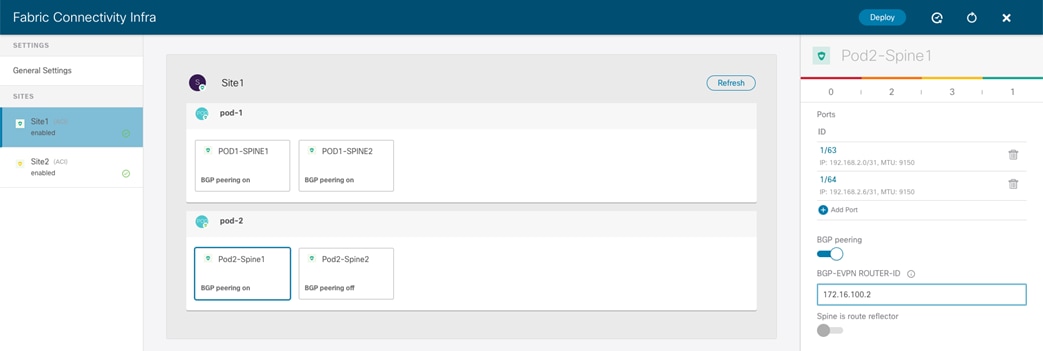

Spine-Level Configuration

Finally, a specific configuration must be applied for each specific spine node.

Spine Level Configuration

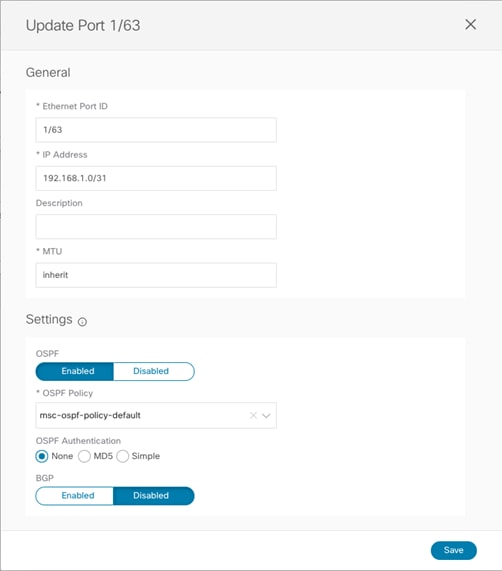

● Ports: Specify the interfaces on the local spine used to connect to the ISN infrastructure. For each interface, the following parameters must be specified.

Port Settings

● Ethernet Port ID: The specific interface connected to the ISN. A sub-interface is provisioned to carry send/receive underlay traffic to/from the ISN.

● IP address: The sub-interface’s IP address.

● Description: An optional description to be associated to this specific interface.

● MTU: The MTU value for the sub-interface. “Inherit” keeps the default value of 9150B (as shown in the CLI output below), but the user can specify a different value if desired.

Spine1011-Site1# show int e1/63.63

Ethernet1/63.63 is up

admin state is up, Dedicated Interface, [parent interface is Ethernet1/63

Hardware: 10000/100000/40000 Ethernet, address: 0000.0000.0000 (bia 780c.f0a2.039f)

Internet Address is 192.168.1.0/31

MTU 9150 bytes, BW 40000000 Kbit, DLY 1 usec

It is recommended to ensure the value used here is matching the MTU configured on the ISN router (please refer to the “Provisioning Inter-Site Network Connectivity” section).

● OSPF Policy: References the specific Policy created/selected during the Site level configuration. Usually, it is required to specify the fact that those are OSPF point-to-point interfaces.

● OSPF Authentication: Allows to enable authentication (disabled by default).

Note: The OSPF parameters would disappear if OSPF is disabled and different BGP parameters would be shown when enabling the use of BGP for underlay peering between the spines and the ISN devices.

● BGP Peering: This knob must be enabled to ensure the spine establishes MP-BGP EVPN peerings with the spines in remote fabrics. At least two spines per fabric (for the sake of redundancy) should be configured with this knob enabled. The other local spines assume the role of “Forwarders”, which essentially means they establish MP-BGP EVPN adjacencies only with the spines in other Pods of the same ACI fabric that have “BGP Peering” on and not with the spines deployed in remote ACI fabrics. This can be done to reduce the number of geographic BGP adjacencies, without compromising the overall redundancy of the intersite peerings.

Note: In our specific example of a single-Pod fabric with two spines, it is required to enable the “BGP Peering” knob on both spines, to ensure remote prefixes continue to be learned in case of a spine’s failure scenario. If there were more than two spines deployed in the same Pod, the knob should be enabled only on two of them. The other two “Forwarders” spines would learn the remote endpoint information from the local BGP-enabled spines via the COOP control plane.

● BGP-EVPN ROUTER-ID: Unique loopback interface deployed on each spine and used to establish MP-BGP EVPN peerings with the local “Forwarders” spines and with the BGP enabled spines deployed in the remote sites. The best practice recommendation is to use an IP address dedicated for the purpose of Multi-Site EVPN peerings, which is routable in the ISN infrastructure.

Important Note: The Router-ID specified here for the spine node will replace the original Router-ID that was allocated from the internal TEP pool during the fabric bring-up. This causes a reset of the MP-BGP VPNv4 sessions established between the spine RRs and the leaf nodes to propagate inside the fabric the external prefixes learned on local L3Out connections, with a consequent temporary outage for the north-south traffic flows. As a consequence, it is recommended to perform this infra configuration task one spine at a time and, preferably, during a maintenance window. The same considerations apply to a site removal scenario when a fabric must be detached from Nexus Dashboard Orchestrator. It is worth noticing that simply deleting a site from Nexus Dashboard Orchestrator does not cause the removal of the infra L3Out configuration, so the Router-ID previously assigned on NDO will continue to be used. If, however, the infra L3Out is deleted directly on APIC, the router-ID will be changed to the original one part of the TEP pool, and that would also cause a temporary north-south traffic outage due to the reset of the BGP VPNv4 sessions.

● The last knob is only required if the fabrics in the Multi-Site domain are all part of the same BGP ASN and there is the desire to configure the spine as Route-Reflector. The best practice recommendation is to keep the default behavior of creating full-mesh peerings since there are no scalability concerns even when deploying the maximum number of fabrics supported in the same Multi-Site domain.

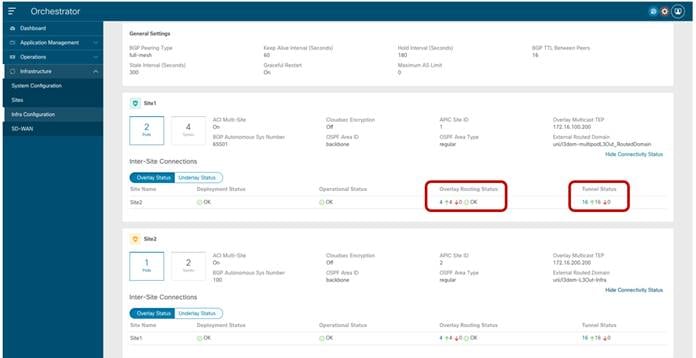

Verifying Intersite Control and Data Plane Connectivity

After completing and deploying the configuration steps described in the previous sections for all the ACI fabrics that have been onboarded to Nexus Dashboard Orchestrator, the MP-BGP EVPN adjacencies should be established across sites and the VXLAN dataplane should also be in a healthy state. This can be verified by looking at the dashboard tab of the NDO UI.

NDO Dashboard

If the connectivity were shown in an unhealthy state, the UI would also provide the information if there is a problem in establishing control-plane adjacencies or a non-healthy VXLAN data-plane or both. This information can be retrieved for each site as part of the “Infra Configuration” section, as shown in figure below.

Display Connectivity Status for Each SIte

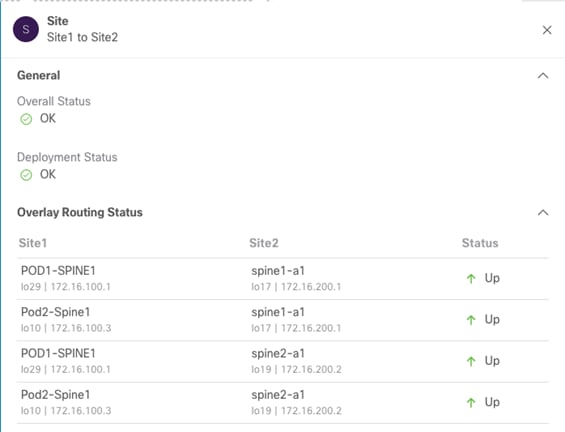

As noticed above, both the “Overlay Status” and “Underlay Status” of each fabric is shown in detail. The user has also the capability of drilling into more details by clicking the specific value highlighting the routing adjacencies or the tunnel adjacencies. The figure below, shows for example the detailed “Overlay Routing Status” information.

Overlay Routing Status Detailed Information

Typically, issues in the establishment of control plane or data plane connectivity are due to configuration errors in the ISN that do not allow the successful exchange of reachability information between fabrics. The first required step is hence ensuring that the spine nodes in a site receive the IP prefixes (BGP EVPN Router-ID, O-UTEP, and O-MTEP) from the remote site and vice versa. This can be done by connecting to one of the spine nodes in site 1 as follows:

Spine 1101 Site1

Spine1011-Site1# show ip route vrf overlay-1

IP Route Table for VRF "overlay-1"

'*' denotes best ucast next-hop

'**' denotes best mcast next-hop

'[x/y]' denotes [preference/metric]

'%<string>' in via output denotes VRF <string>

<snip>

172.16.100.1/32, ubest/mbest: 2/0, attached, direct

*via 172.16.100.1, lo16, [0/0], 01w02d, local, local

*via 172.16.100.1, lo16, [0/0], 01w02d, direct

172.16.100.2/32, ubest/mbest: 4/0

*via 10.1.0.64, eth1/34.69, [115/3], 06:36:58, isis-isis_infra, isis-l1-int

*via 10.1.0.67, eth1/61.72, [115/3], 06:36:58, isis-isis_infra, isis-l1-int

*via 10.1.0.68, eth1/33.71, [115/3], 06:36:58, isis-isis_infra, isis-l1-int

*via 10.1.0.69, eth1/57.70, [115/3], 06:36:58, isis-isis_infra, isis-l1-int

172.16.100.100/32, ubest/mbest: 2/0, attached, direct

*via 172.16.100.100, lo21, [0/0], 06:36:59, local, local

*via 172.16.100.100, lo21, [0/0], 06:36:59, direct

172.16.100.200/32, ubest/mbest: 2/0, attached, direct

*via 172.16.100.200, lo20, [0/0], 06:36:59, local, local

*via 172.16.100.200, lo20, [0/0], 06:36:59, direct

172.16.200.1/32, ubest/mbest: 1/0

*via 192.168.1.3, eth1/64.64, [110/4], 01w02d, ospf-default, intra

172.16.200.2/32, ubest/mbest: 1/0

*via 192.168.1.1, eth1/63.63, [110/4], 01w02d, ospf-default, intra

172.16.200.100/32, ubest/mbest: 2/0

*via 192.168.1.1, eth1/63.63, [110/4], 06:37:51, ospf-default, intra

*via 192.168.1.3, eth1/64.64, [110/4], 00:00:35, ospf-default, intra

172.16.200.200/32, ubest/mbest: 2/0

*via 192.168.1.1, eth1/63.63, [110/4], 06:37:46, ospf-default, intra

*via 192.168.1.3, eth1/64.64, [110/4], 00:00:35, ospf-default, intra

Nexus Dashboard Orchestrator Tenant, Schema and Template Definition

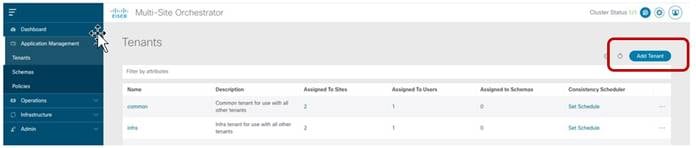

Once the site onboarding and the infra configuration steps are completed, it is possible to start establishing secure communication between endpoints connected to the different ACI fabrics. To do that it is first necessary to create a Tenant and deploy it on all the fabric that requires intersite connectivity. By default, only two tenants (infra and common) are pre-defined on Nexus Dashboard Orchestrator and automatically associated to all the sites that were previously set as “Managed”.

Notice that there are no schemas associated to those tenants by default, so if it is desirable to utilize some of the common/infra policies that are normally available by default on APIC, it is required to import those objects from the different APIC domains into Nexus Dashboard Orchestrator. Covering the import of existing policies from the APIC domains is out of the scope of this paper.

The focus for the rest of this section is on the creation of policies for new tenants and their provisioning across the fabrics that are part of the Multi-Site domain. The first step in doing so is to create a new Tenant and associate it to all the sites where it should be deployed (Figure 18).

Adding a New Tenant

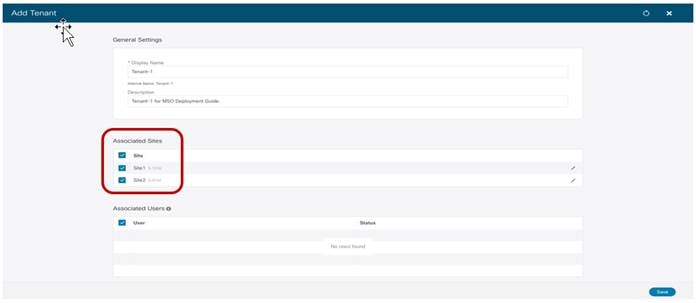

After selecting the “Add Tenant” option, it is possible to configure the tenant’s information and specify the sites where the tenant should be created. In the example in Figure 19 the newly created tenant is mapped to both the sites that were previously onboarded on the Orchestrator Service.

Mapping a Tenant to Different Fabrics

Notice also how the screen above gives you the possibility of associating specific users to this newly created tenant to allow them to manage the tenant’s configuration (by default only the admin user is associated to the tenant). For more information on the supported user roles and configuration, please refer to the configuration guide at the link below:

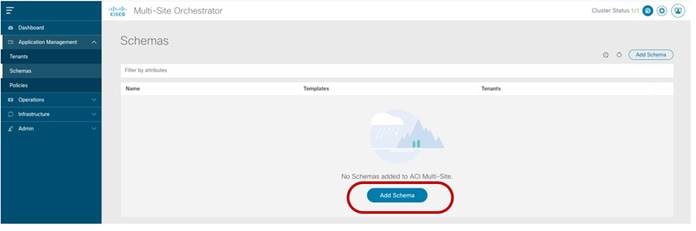

As a result of the configuration shown above, Tenant-1 is created on both Site1 and Site2. However, this is still an “empty shell,” as no policies have been defined yet for this tenant to be provisioned on the fabrics. The definition of tenant policies requires the creation of specific configuration constructs called “Schemas” and “Templates.” For a more detailed discussion on what those constructs represent and associated deployment guidelines, please refer to the “Cisco ACI Multi-Site Architecture” section of the paper below:

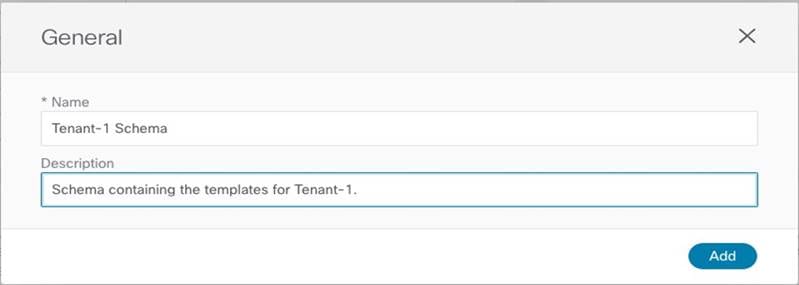

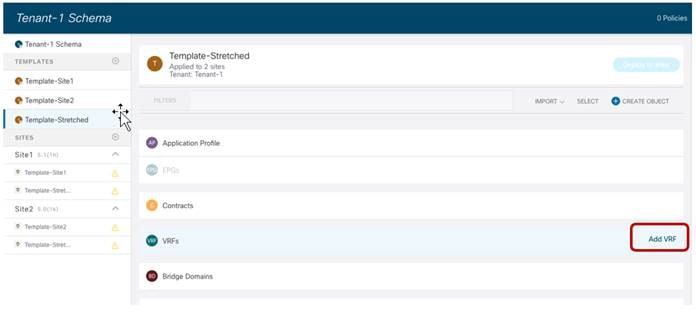

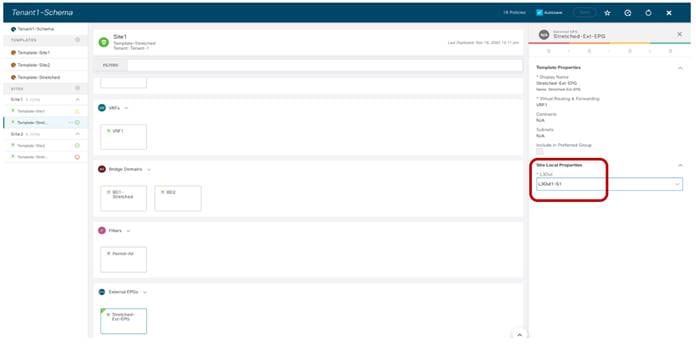

In our example we are going to define a specific schema (named “Tenant-1 Schema”) to be used as a repository of all the templates associated to Tenant-1.

Creating a New Schema

Assigning the Name to the Schema

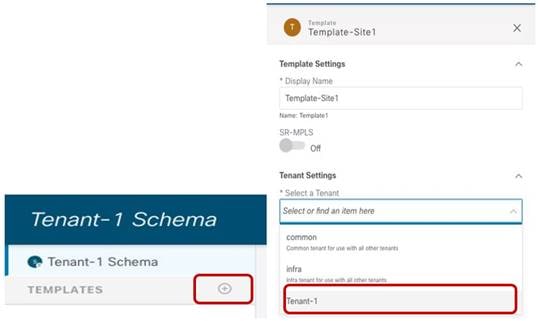

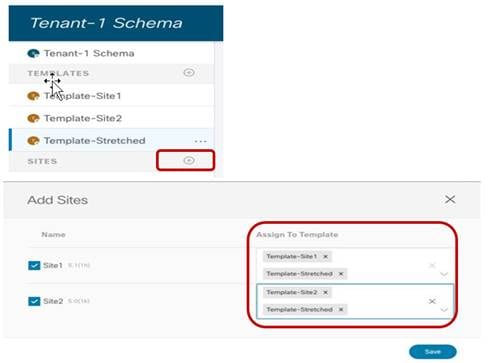

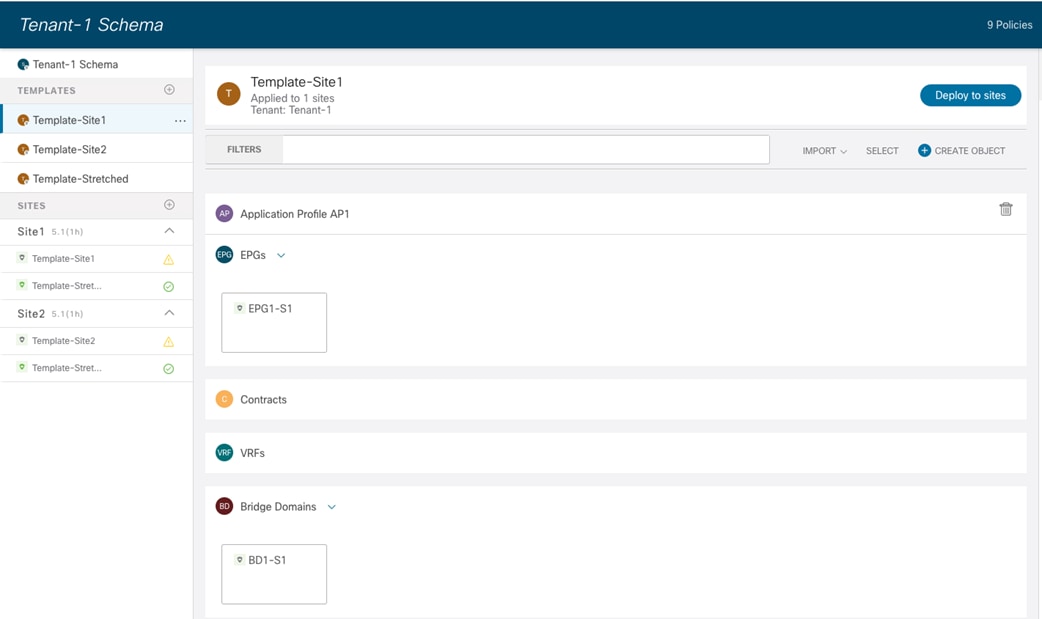

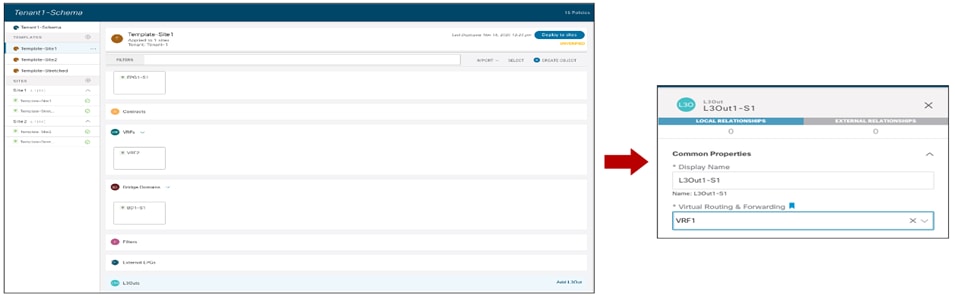

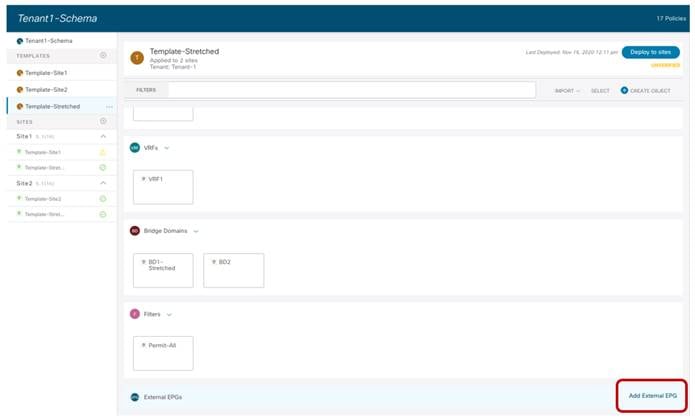

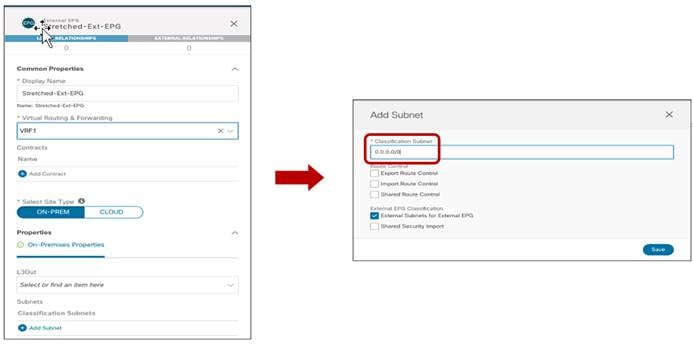

Since for the use cases we are going to discuss in the rest of this paper we would need to deploy policies that are locally available in each site and common to both sites (i.e., ‘stretched’ policies), we are simply going to use three templates:

● Template-Site1 to deploy policies only local to Site1.

● Template-Site2 to deploy policies only local to Site2.

● Template-Stretched to deploy policies common to Site1 and Site2 (stretched policies).

Note: It is important to keep in mind that objects that should exist in different fabrics part of the same ACI Multi-Site domain, when having the same name should always and only be provisioned from templates associated to all those sites. The only exception could be EPGs deployed as part of different Application Profiles, which could have overlapping names. Even in this case, the best practice recommendation is to provision site local EPGs with unique naming, for the sake of operational simplification.

Each of the above templates must be associated to the Tenant-1 tenant, as shown in Figure 22.

Create a Template Mapped to Tenant-1

Once the same operation has been completed for the other templates, it is then possible to associate each template to the corresponding ACI site.

Associate the Templates to the ACI Sites

When this last step is completed, we are ready to start defining the specific configuration policies to be pushed to the different sites to implement the different use cases described in the following sections.

Intersite Connectivity Between Endpoints

The first two use cases that we are considering are the ones allowing to establish intra-EPG and inter-EPGs connectivity between endpoints connected to separate fabrics. We usually refer to those use cases as “east-west” connectivity.

Intra-EPG Connectivity Across Sites

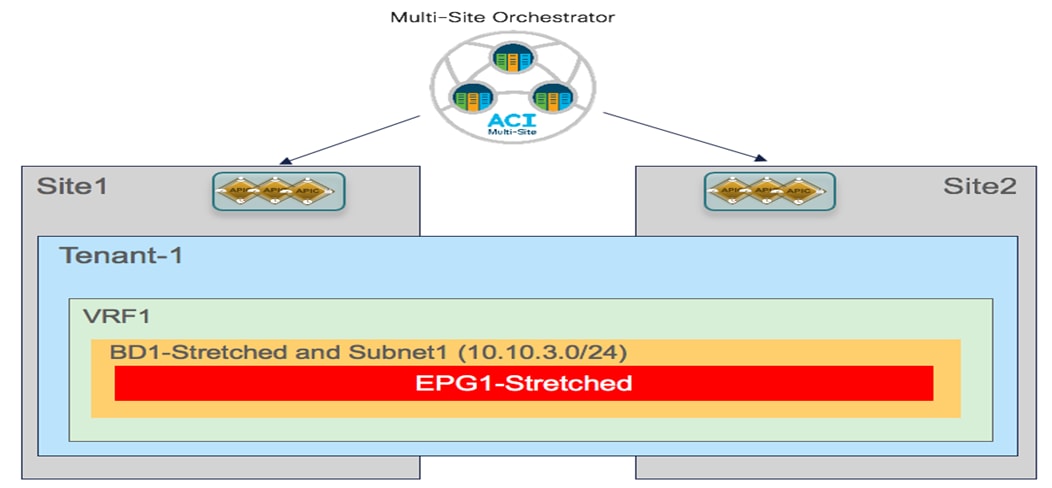

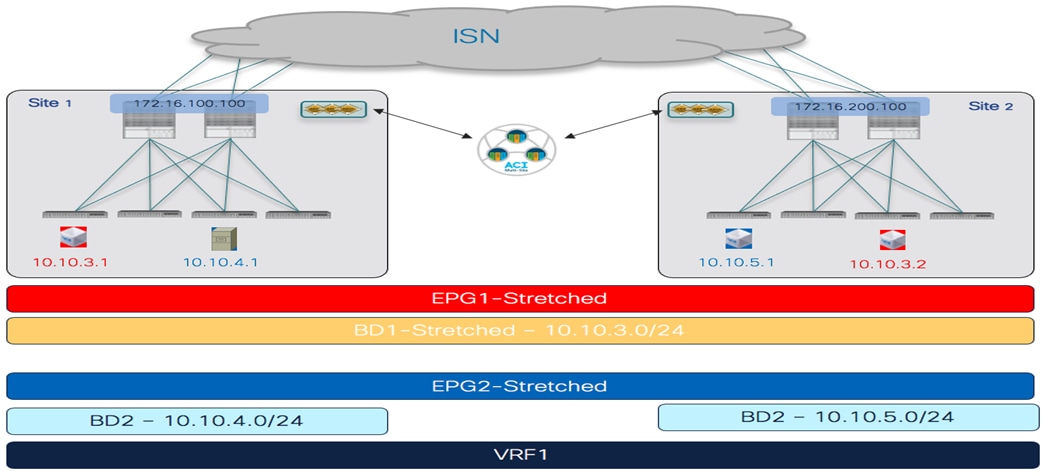

To establish intra-EPG connectivity across sites, it is required to define objects in the Template-Stretched, which allows to render those items in both fabrics. There are a couple of different scenarios that can be deployed. The first one is the one shown in Figure 24, where the EPG is mapped to a stretched BD and the IP subnet(s) associated to the BD is also stretched across sites. This implies that intra-subnet communication can in this case be enabled between endpoints connected to different sites.

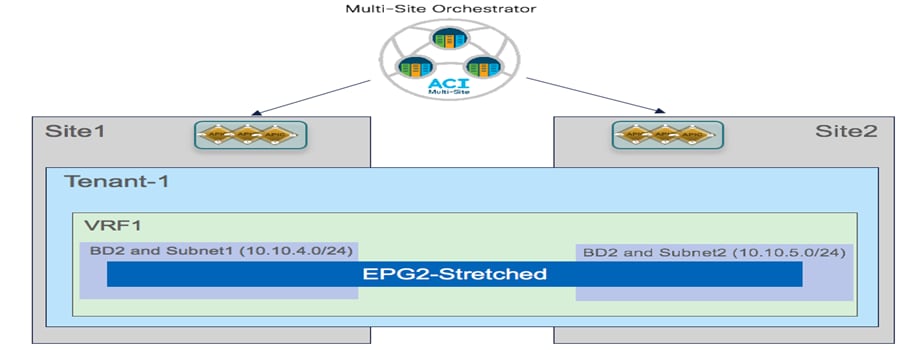

Stretched EPG, Stretched BD and Stretched Subnet

The second scenario is instead depicted in Figure 25. In this case the EPG is still stretched across site, but the BD and the subnet(s) are not stretched, which essentially implies that intra-EPG communication between endpoint connected in separate sites will be Layer 3 and not Layer 2 (as it was in the previous case).

Stretching the EPG without Stretching BD and Subnet

The following sections highlight the specific configuration steps required to provision the policies required to enable the two communication patterns shown above.

Creating a Stretched VRF

The first step for enabling intra-EPG communication across sites consists in creating and deploying the VRF to which the EPG (or better its BD) is associated. This VRF must be configured as part of the Template-Stretched since its configuration must be provisioned in both ACI fabrics.

Creating a new VRF in Template-Stretched

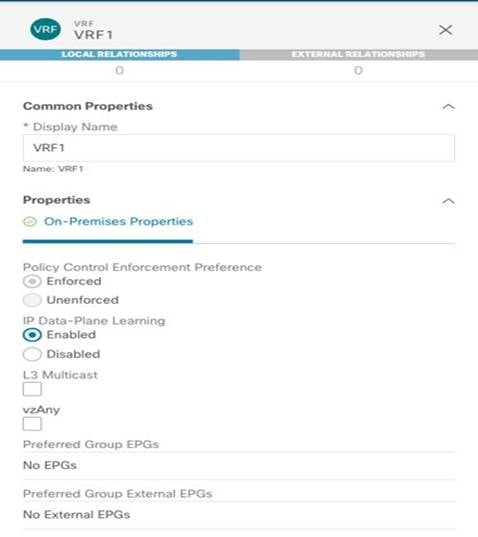

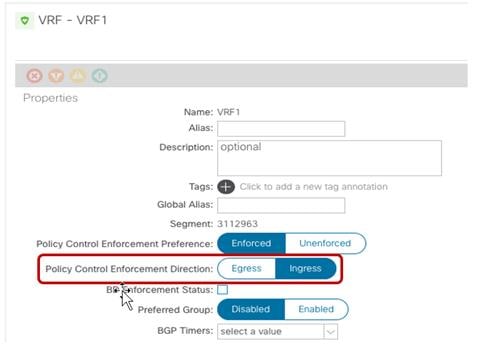

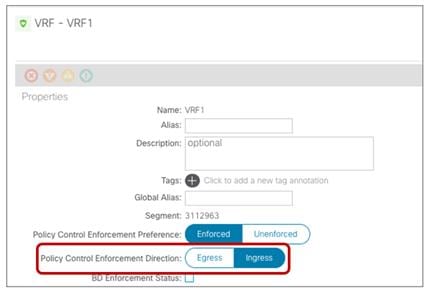

Figure 27 highlights the various configuration parameters available when creating a new VRF on the NDO GUI.

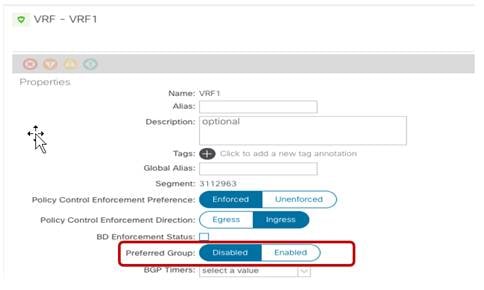

VRF Configuration Parameters

The “Policy Control Enforcement Preference” is always enforced and grayed out, as it is the only VRF configuration supported with Multi-Site. The only reason for exposing the knob is for brownfield scenario where a VRF configuration is imported from APIC into Nexus Dashboard Orchestrator; if the VRF on APIC is configured as “Unenforced”, the user can then have the capability to modify the settings to “Enforced” directly on NDO or keeping it “Unenforced” with the specific understanding that such configuration would not allow establishing intersite communication. There are other supported functionalities (i.e. use of Preferred Groups or vzAny) allowing to remove the policy enforcement for inter-EPG communication, as it will be discussed in more detail in the “Inter-EPGs Connectivity across Sites” section.

The other default setting for a newly created VRF is the enablement of “IP Data-Plane Learning”. There are only specific scenarios where this setting requires to be changed, usually related to use cases where an IP address may get associated with different MAC addresses (active/active server NIC teaming options, application clustered services, certain FW/SLB cluster options, etc.). For more information on this please refer to the ACI design guide available at the link below:

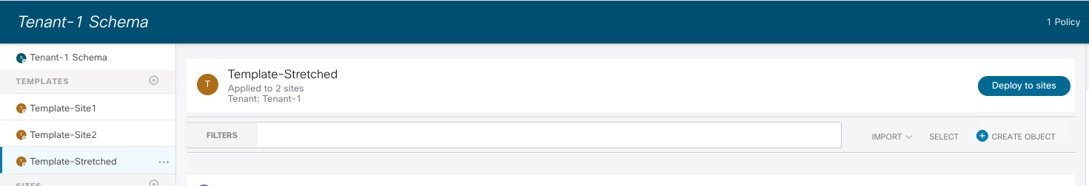

Once the VRF configuration is completed, it is possible to deploy the template to ensure that the VRF is created on both APIC domains that are associated with the Template-Stretched.

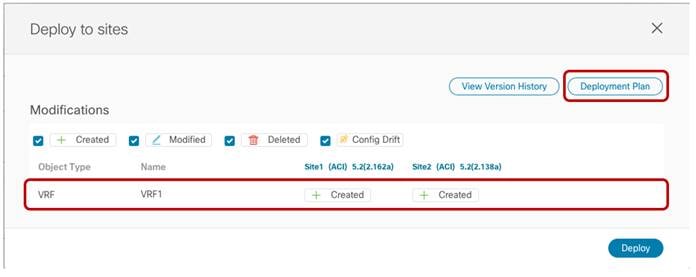

Deploying the Template-Stretched to Create the VRF on the APIC Domains

Before the configuration is pushed to the APIC domains, the NDO GUI provides a summary of the objects that will be created and where (in this case only VRF1 on both Site1 and Site2).

VRF1 being pushed to Site1 and Site2

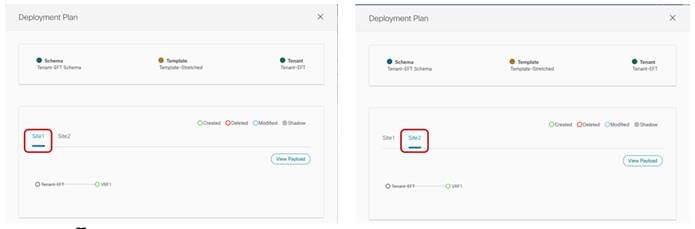

Starting from NDO release 3.4(1), a new functionality named “Template Deployment Plan” became available. By selecting the corresponding button shown in the figure above, graphical (and XML based) information is displayed to show in detail what objects are provisioned by the Orchestrator (and in which sites) as a result of the deployment of the template. In this simple scenario, the Deployment Plan only shows that the VRF has been created in both sites (since the template that is being deployed is associated to both sites).

Template Deployment Plan (Graphical View)

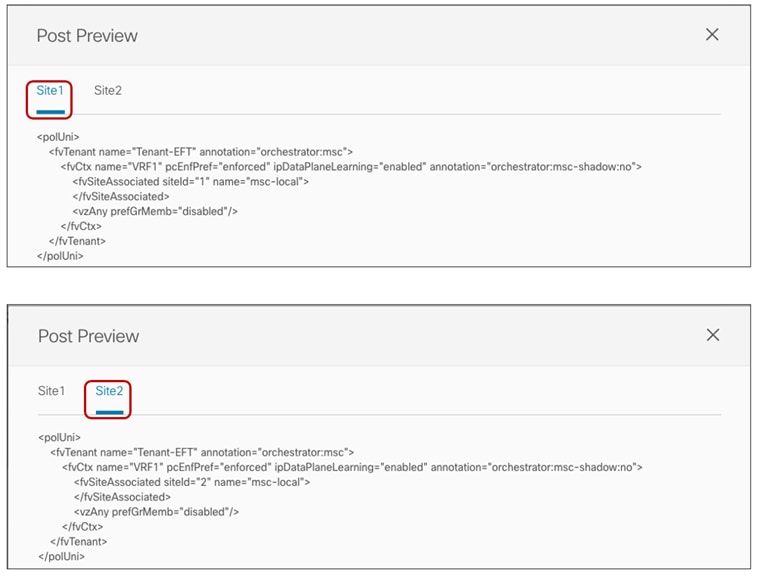

Selecting the “View Payload” option shown above allows you instead to view the XML format of the REST API call that the Orchestrator will make to the APIC controllers in each site as a result of the deployment of the template.

Deployment Plan (XML View)

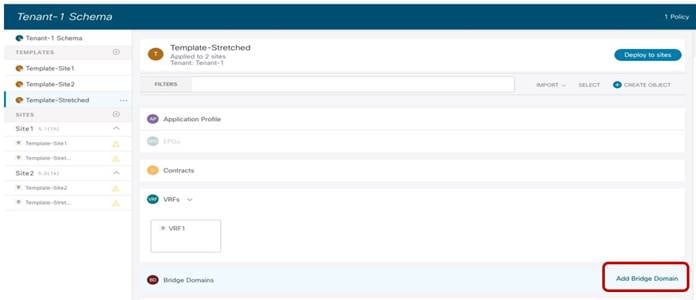

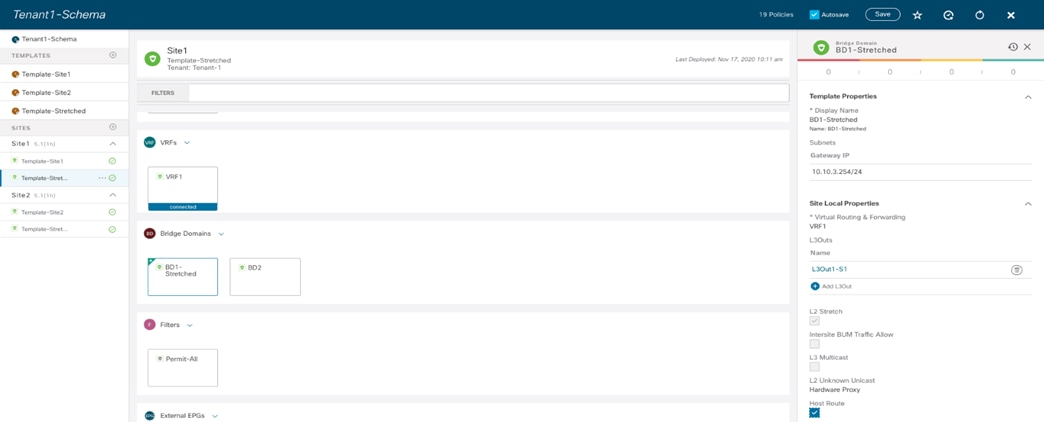

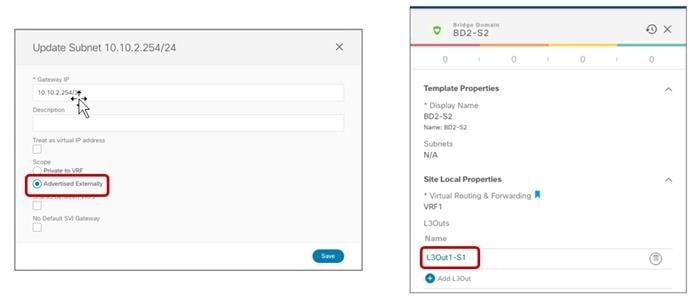

Creating a Stretched Bridge Domain and a Stretched Subnet

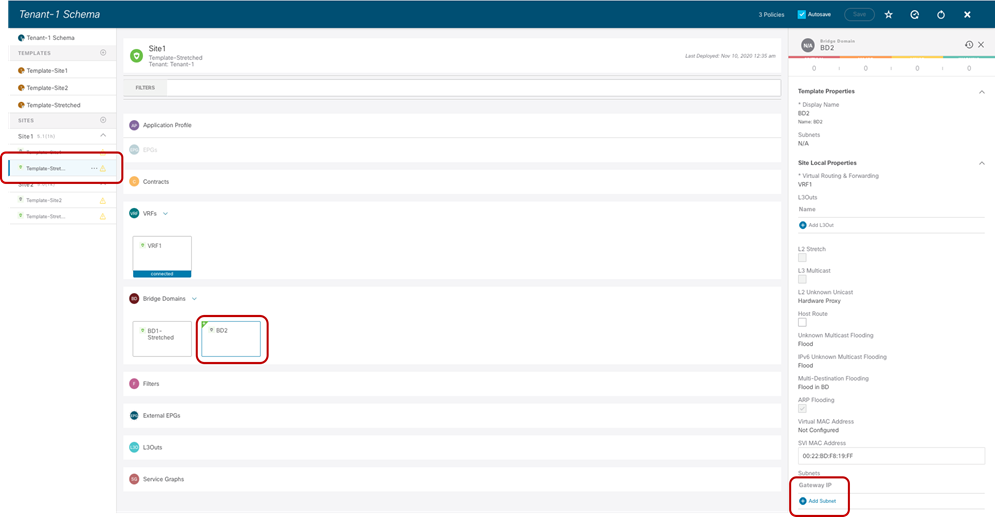

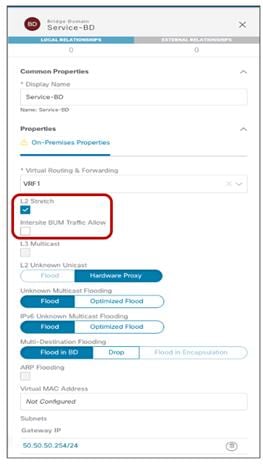

The stretched BD required to implement the use case shown in previous Figure 24 must be defined inside the Template-Stretched.

Creating a Stretched BD in Template-Stretched

Stretched BD (configuration parameters)

● As noticed in Figure 33 above, the BD must be associated with the stretched VRF1 previously defined.

● The BD is stretched by setting the “L2 Stretch” knob. In most of the use cases, the recommendation is to keep the “Intersite BUM Traffic Allow” knob disabled instead, as it is strictly required only in specific scenarios where flooding should be enabled between sites. This is the case for example for legacy-to-ACI migration use cases (until the default gateway for the endpoints is migrated to ACI) or for deployment where L2 multicast stream must be sent across sites. The other knobs to control flooding can usually be kept to the default values.

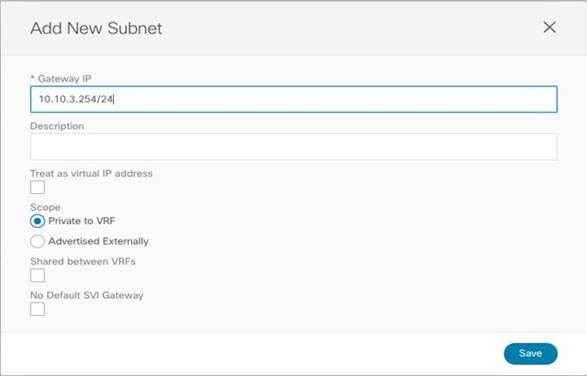

● Since the BD is stretched, the BD subnet is also defined at the template level since it must also be extended across sites.

Define the BD’s Subnet IP Address

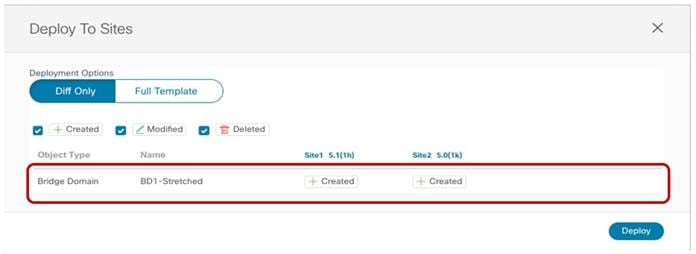

Once the BD configuration is completed, it is possible to deploy the Template-Stretched to the ACI fabrics.

Deploying the Stretched BD to Site 1 and Site2

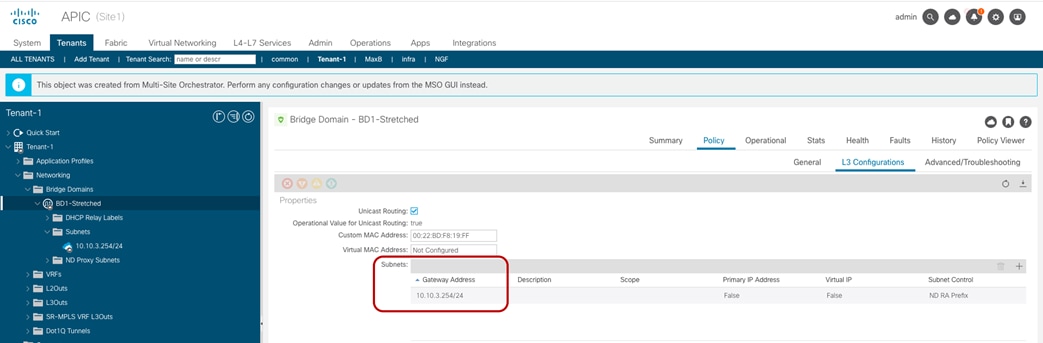

As the end results, the BD is created on both APIC domains, with the same anycast gateway 10.10.3.254/24 defined on all the leaf nodes where VRF1 is deployed.

BD-Stretched with Stretched Subnet Created on APIC in Site1

Creating a Non-Stretched Bridge Domain with a Non-Stretched Subnet

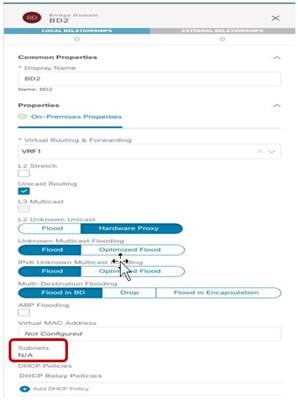

This specific configuration is required to implement the use case previously shown in Figure 25, where the EPG is stretched but the BD is not. Since an EPG can only be associated with a single BD, we need to ensure that the same BD object is created in both sites, even if the forwarding behavior of the BD is to be non-stretched. This can be achieved by deploying the BD inside the Stretched-Template and configure it as shown in Figure 37.

Non-Stretched BD2 Deployed Across Sites (Configuration Parameters)

● The BD is associated with the same stretched VRF1 previously defined.

● The BD must be configured with the “L2 Stretch” knob disabled, as we don’t want to extend the BD subnet nor allow L2 communication across sites.

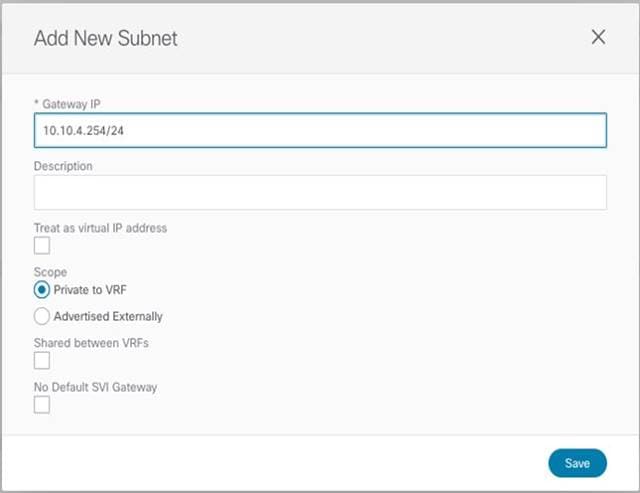

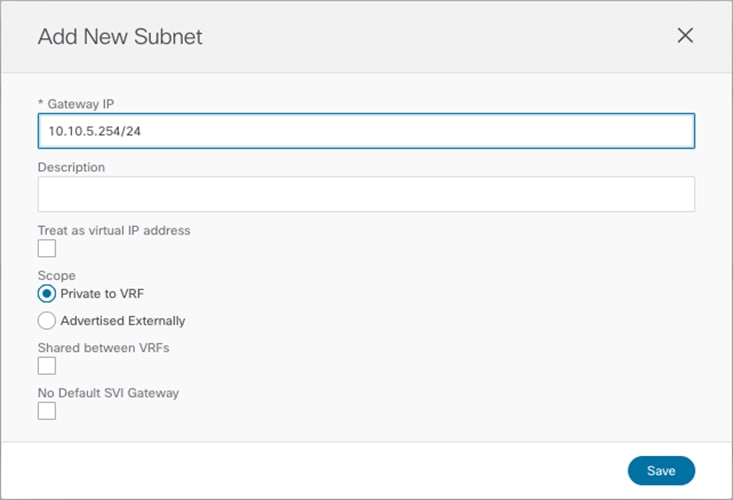

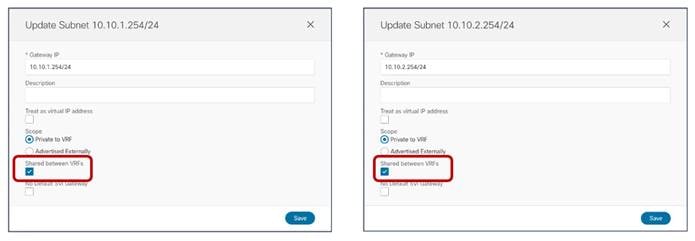

● The BD’s subnet field is grayed out at the template level; this is because for this specific use case the goal is to provide a separate IP subnet to the BD deployed in each site. The subnet is hence configured at the site level (for each site to which the Template-Stretched is associated), as shown in Figure 38 and Figure 39.

Define the BD’s Subnet at the Site1 Level

BD’s Subnet for Endpoints Connected to Site1

The same configuration should be applied for the same BD at the Site2 level, which allows to configure a separate IP subnet to be used for the endpoints that are connected to Site2 (Figure 40).

BD’s Subnet for Endpoints Connected to Site2

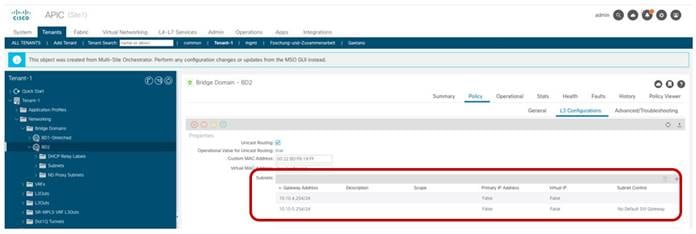

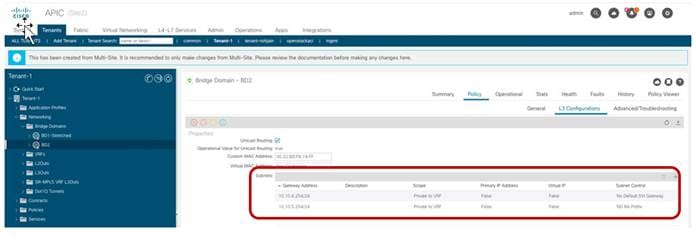

Once a specific subnet has been provisioned at the site level for each ACI fabric and the template has been deployed, it is possible to verify directly on the APIC domains what is the result of the configuration. As noticed in Figure 41, the BD in Site1 is configured with both IP subnets, but only the specific one that was configured at the Site1 level on Nexus Dashboard Orchestrator (10.10.4.0/24) is going to be used to provide default gateway services for the endpoints. The other IP subnet (10.10.5.0/24) (also referred to as “Shadow Subnet”) is automatically provisioned with the “No Default SVI Gateway” parameter since it is only installed on the leaf nodes in Site1 to allow routing to happen across the sites when endpoints part of the same EPG want to communicate (we’ll look at the leaf node routing table in the “Creating the Stretched EPGs” section).

BD’s Subnets Configured on APIC in Site 1

The exact opposite considerations are instead valid for the same BD deployed on the APIC nodes in Site2, as highlighted in Figure 42 below.

BD’s Subnets Configured on APIC in Site 1

Note: The “Shadow Subnet” is always provisioned with the “Private to VRF” scope, independently from the specific settings the same subnet had in the original site. This means that it won’t ever be possible to advertise the “Shadow Subnet” prefix out of an L3Out in the site where it is instantiated. For advertising a BD subnet out of the L3Outs of different sites it is required to deploy the BD with the “L2 Stretch” flag set.

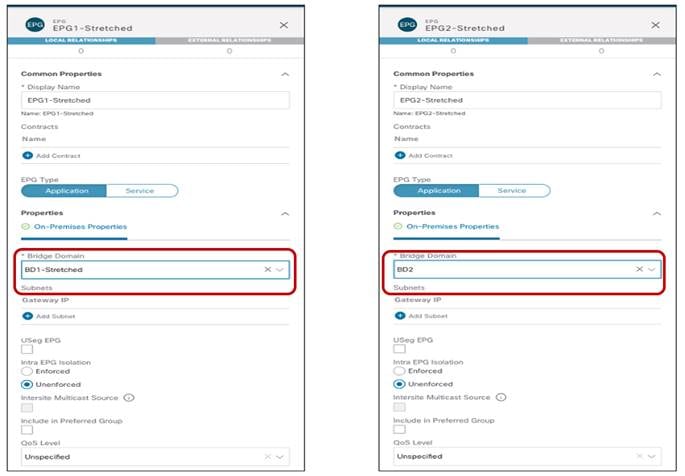

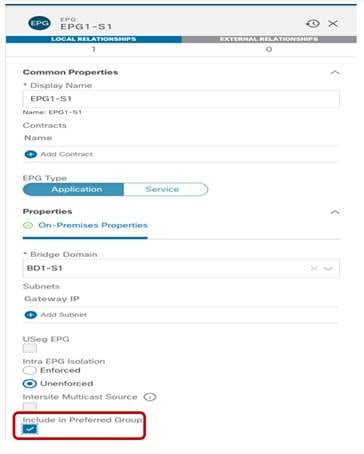

Creating the Stretched EPGs

The last step consists in creating the two EPGs (EPG1-Stretched and EPG2-Stretched) previously shown in Figure 24 and Figure 25. Since those are stretched objects, they will be defined in the Template-Stretched and then pushed to both ACI sites.

Creating Stretched EPGs

As shown above, each EPG is mapped to the BD previously created, depending on the specific use case it needs to be implemented. Once the EPGs have been created, the next logical step is to specify what type of endpoints should become part of those EPGs. ACI allows connecting to the same EPG endpoints of different nature: bare metal servers, virtual machines, containers, etc. The type of endpoints to be used is specified by mapping the EPG to a specific domain (physical domain, VMM domain, etc.). Those domains are created at the APIC level for each fabric that is part of the Multi-Site domain, but they then get exposed to the Orchestrator Service so that the EPG-domain mappings can be provisioned directly through the Orchestrator Service (at the site-specific level, since each fabric can expose its own locally defined domains).

Note: How to create domains on APIC is out of the scope of this paper. For more information, please refer to the ACI configuration guides below:

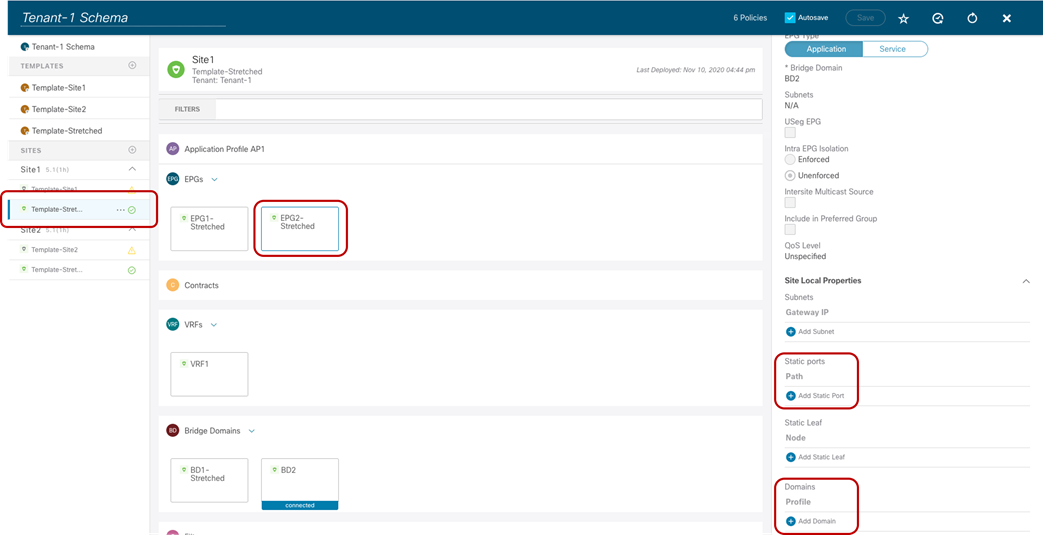

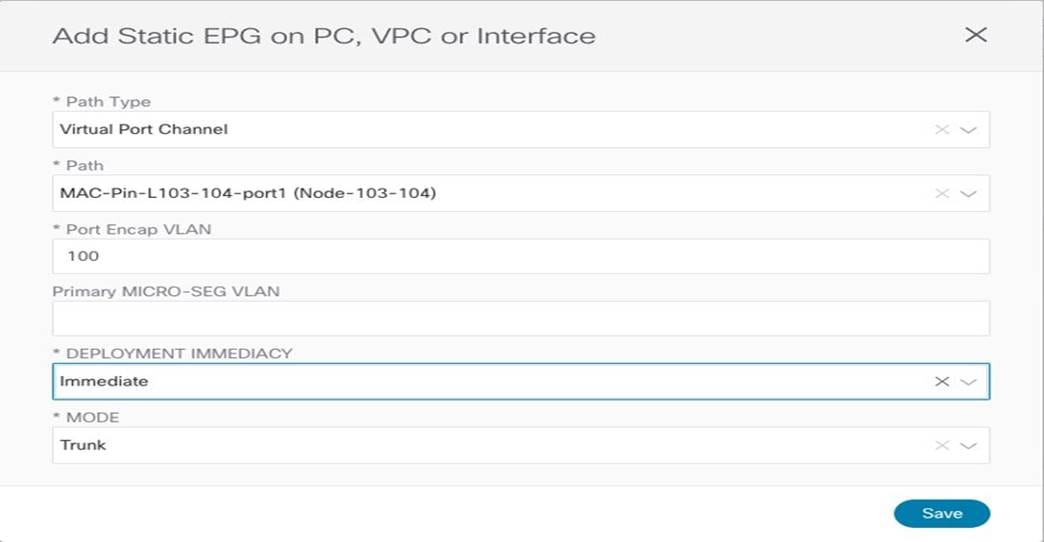

Figure 44 shows the example of the mapping of EPG2-Stretched to a physical domain in Site1 and the corresponding static port configuration required for those physical endpoints. This configuration must be performed at the site level since it specifically references a physical domain that is locally defined in that APIC domain.

Static Port and Physical Domain Configuration for EPG2-Stretched

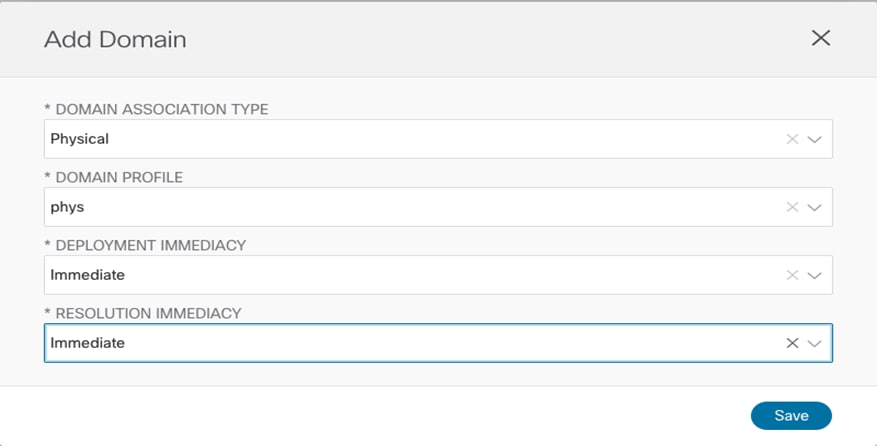

After selecting “Add Domain”, is it then possible to specify the specific physical domain this EPG should be mapped to. There are different options to select for what concerns the “Deployment Immediacy” and “Resolution Immediacy”. For more information on what is the meaning of those options please refer to the ACI configuration guides referenced above.

Mapping EPG2-Stretched to a Physical Domain

The static port configuration allows then to specify the specific port (vPC1) and VLAN encapsulation (VLAN 100) to be used to connect the physical endpoint to the ACI fabric and make it part of the EPG2-Stretched group.

Static Port Configuration for a Physical Endpoint

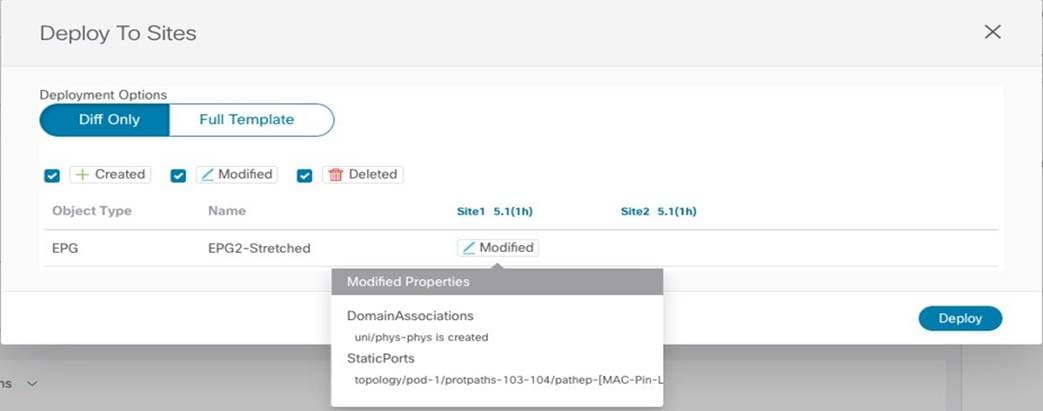

Finally, before the physical domain mapping configuration is pushed to the APIC Site1, the Nexus Dashboard Orchestrator GUI displays the specific changes that will be applied when hitting “Deploy”, just to ensure the admin can verify those actions reflect the desired intent.

Reviewing the Changes to be Deployed to Site1

Following a similar procedure, it is possible to map EPG2-Stretched to a specific domain in Site2, for example, a VMM domain. Doing so, would then automatically provision a corresponding port-group on the ESXi hosts managed by the vSphere server that is peered with APIC so that the virtual machines that represent endpoints part of Stretched-EPG2 can be connected to it.

Verifying Intra-EPG Communication

Once the endpoints are connected, they are locally discovered by the leaf nodes.

Endpoints Connected to Stretched EPGs

This information can be retrieved directly from the APIC in each site (as part of the operational tab of the EPG), and also through the CLI on each specific leaf node, as shown below for Site1 and Site2:

Leaf 103 Site1

Leaf103-Site1# show endpoint vrf Tenant-1:VRF1

Legend:

s - arp H - vtep V - vpc-attached p - peer-aged

R - peer-attached-rl B - bounce S - static M - span

D - bounce-to-proxy O - peer-attached a - local-aged m - svc-mgr

L - local E - shared-service

+--------------------------------+---------------+-----------------+--------------+-------------+

VLAN/ Encap MAC Address MAC Info/ Interface

Domain VLAN IP Address IP Info

+--------------------------------+---------------+-----------------+--------------+-------------+

10 vlan-100 0050.56b9.3e72 LV po1

Tenant-1:VRF1 vlan-100 10.10.4.1 LV po1

Leaf 301 Site2

Leaf301-Site2# show endpoint vrf Tenant-1:VRF1

Legend:

s - arp H - vtep V - vpc-attached p - peer-aged

R - peer-attached-rl B - bounce S - static M - span

D - bounce-to-proxy O - peer-attached a - local-aged m - svc-mgr

L - local E - shared-service

+--------------------------------+---------------+-----------------+--------------+-------------+

VLAN/ Encap MAC Address MAC Info/ Interface

Domain VLAN IP Address IP Info

+--------------------------------+---------------+-----------------+--------------+-------------+

42 vlan-136 0050.5684.48b0 LpV po2

Tenant-1:VRF1 vlan-136 10.10.5.1 LpV po2

Communication between those endpoints can be freely established across sites since they are part of the same EPG2-Stretched group. When looking at the routing table of the leaf nodes where the endpoints are connected, it is possible to notice how the IP subnet for the local endpoint is locally instantiated (with the corresponding anycast gateway address) and also the IP subnet for the endpoints in the remote site is locally instantiated pointing to the proxy-VTEP address of the local spines as next-hop (10.1.112.66).

Leaf 103 Site1

Leaf103-Site1# show endpoint vrf Tenant-1:VRF1

IP Route Table for VRF "Tenant-1:VRF1"

'*' denotes best ucast next-hop

'**' denotes best mcast next-hop

'[x/y]' denotes [preference/metric]

'%<string>' in via output denotes VRF <string>

10.10.4.0/24, ubest/mbest: 1/0, attached, direct, pervasive

*via 10.1.112.66%overlay-1, [1/0], 00:09:58, static, tag 4294967294

10.10.4.254/32, ubest/mbest: 1/0, attached, pervasive

*via 10.10.4.254, vlan10, [0/0], 00:09:58, local, local

10.10.5.0/24, ubest/mbest: 1/0, attached, direct, pervasive

*via 10.1.112.66%overlay-1, [1/0], 00:09:58, static, tag 4294967294

This is the result of the configuration pushed to APIC and shown in previous Figure 41 (the opposite configuration is provisioned on the leaf nodes in Site2); the subnet entry in the routing table is used to forward routed traffic across sites until the leaf nodes can learn the specific IP address of the remote endpoints via data plane learning.

The CLI output below shows instead the endpoint tables on the leaf node in Site1 once the data plane learning of the remote endpoint in Site2 has happened (similar output would be obtained for the leaf node in Site2). The next-hop of the VXLAN tunnel to reach the remote endpoint is represented by the O-UTEP address of the remote fabric (172.16.200.100).

Leaf 103 Site1

Leaf103-Site1# show endpoint vrf Tenant-1:VRF1

Legend:

S - static s - arp L - local O - peer-attached

V - vpc-attached a - local-aged p - peer-aged M - span

B - bounce H - vtep R - peer-attached-rl D - bounce-to-proxy

E - shared-service m - svc-mgr

+-----------------------------------+---------------+-----------------+--------------+-------------+

VLAN/ Encap MAC Address MAC Info/ Interface

Domain VLAN IP Address IP Info

+-----------------------------------+---------------+-----------------+--------------+-------------+

Tenant-EFT:VRF1 10.10.5.1 tunnel39

13 vlan-883 0050.56b9.1bee LV po1

Tenant-EFT:VRF1 vlan-883 10.10.4.1 LV po1

Leaf 103 Site1

Leaf103-Site1# show interface tunnel 39

Tunnel39 is up

MTU 9000 bytes, BW 0 Kbit

Transport protocol is in VRF "overlay-1"

Tunnel protocol/transport is ivxlan

Tunnel source 10.1.0.68/32 (lo0)

Tunnel destination 172.16.200.100/32

Similarly, communication can be freely achieved between endpoints connected to the EPG1-Stretched group. The only difference is that those endpoints are part of the same IP subnet (10.10.3.254/24) that is stretched across sites. As a consequence, Layer 2 bridging and not Layer 3 routing is what allows to establish communication between them, as it can be noticed by looking at the endpoint table below:

Leaf 101 Site1

Leaf101-Site1# show endpoint vrf Tenant-1:VRF1

Legend:

s - arp H - vtep V - vpc-attached p - peer-aged

R - peer-attached-rl B - bounce S - static M - span

D - bounce-to-proxy O - peer-attached a - local-aged m - svc-mgr

L - local E - shared-service

+--------------------------------+---------------+-----------------+--------------+-------------+

VLAN/ Encap MAC Address MAC Info/ Interface

Domain VLAN IP Address IP Info

+--------------------------------+---------------+-----------------+--------------+-------------+

1/Tenant-1:VRF1 vxlan-16154555 0050.56a2.380f tunnel26

3 vlan-886 0050.56b9.54f3 LV po1

In this case, only the MAC addresses of the remote endpoint is learned on the local leaf node, together with the information that it is reachable through a VXLAN tunnel (tunnel26). Not surprisingly, the VXLAN tunnel is established also in this case between the VTEP of the local leaf node and the O-UTEP address of Site2 (172.16.200.100).

Leaf 101 Site1

Leaf101-Site1# show interface tunnel 26

Tunnel26 is up

MTU 9000 bytes, BW 0 Kbit

Transport protocol is in VRF "overlay-1"

Tunnel protocol/transport is ivxlan

Tunnel source 10.1.0.68/32 (lo0)

Tunnel destination 172.16.200.100/32

As you may have noticed, no specific security policy was required to enable communication between endpoints part of the same EPG. This is the default behavior for ACI, which will always allow free intra-EPG communication. Communication between endpoints part of EPG1-Stretched and EPG2-Stretched won’t instead be allowed by default, because of the zero-trust security approach delivered by ACI. The “Inter-EPG Connectivity across Sites” section will cover in great detail how this communication can be allowed.

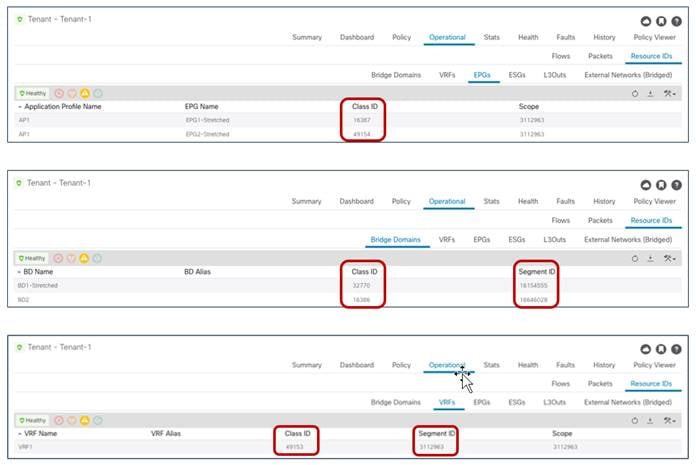

Verifying Namespace Translation Information for Stretched Objects

The ACI Multi-Site architecture allows to interconnect sites representing completely different namespaces, since policies are locally instantiated by different APIC clusters. This essentially means that when specific resources (like L2VLAN IDs for BDs, L3VLAN IDs for VRFs, Class-IDs for EPGs) are assigned by each APIC controller, their values would be different in each site even if the objects are stretched across sites (and hence represent the same logical items).

Since VXLAN traffic used to establish intersite communication carries this type of information in the VXLAN header, a translation (or namespace normalization) function must be performed on the receiving spine to ensure successful end-to-end communication and consistent policy application.

Note: The namespace normalization function is not required only for stretched objects, but also when creating relationships between local objects defined in different sites. for more information, please refer to the “Cisco ACI Multi-Site architecture” section of the paper below:

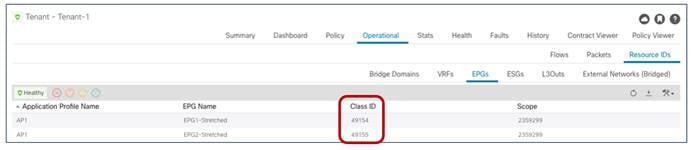

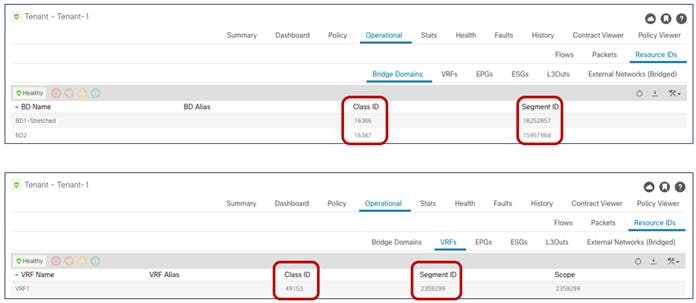

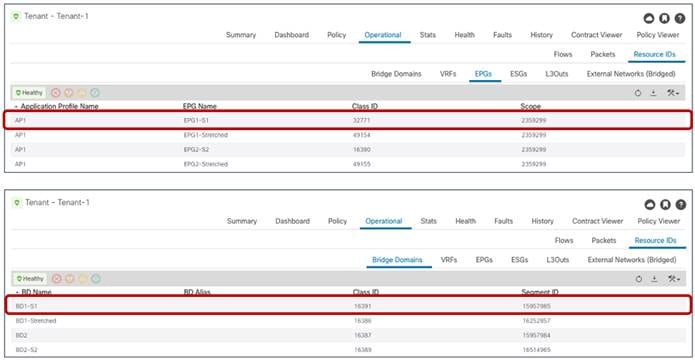

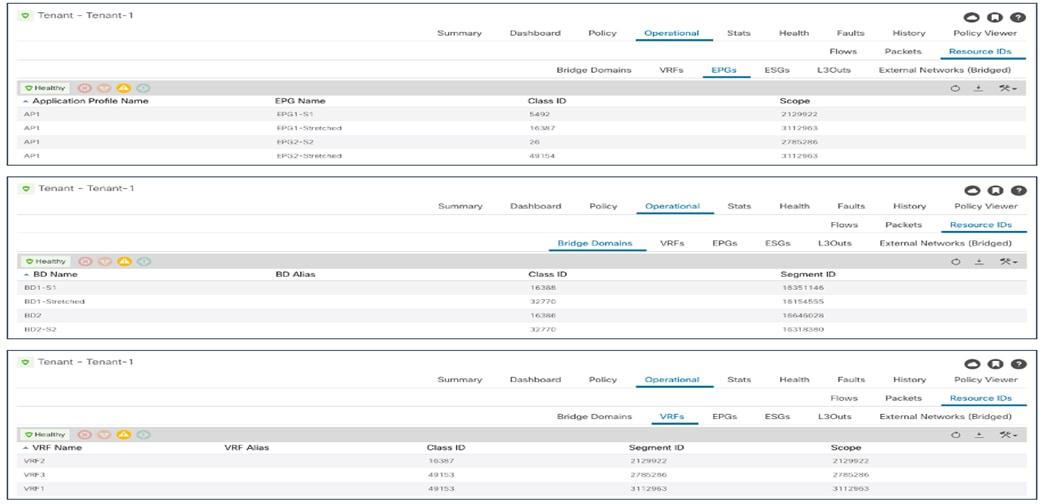

For our specific scenario of inter-site intra-EPG communication, we can then verify how the translation entries are properly configured on the spine nodes receiving VXLAN traffic from the remote site. Figure 49 shows the specific values assigned to the stretched objects created in the APIC domain of Site1.

Class IDs, Segment IDs and Scopes for Objects Created on Site1

Figure 50 displays instead the values for the same objects assigned by the APIC controller in Site2.

Class IDs, Segment IDs and Scopes for Objects Created on Site2

As it can be easily noticed comparing the information in the two figures above, the values assigned to the stretched objects (EPG, BD, or VRF) in Site1 differ from the values provisioned in Site2. This is where the translation function performed by the spine comes into the picture to “normalize” those values and allow successful data plane connectivity across sites.

The outputs below show the entries on the spines of Site1 and a spine of Site 2 that allow translating the Segment ID and Scope of the BD and VRF that are stretched across sites. You can notice how translation mappings are created for VRF1 and BD1-Stretched since those are stretched objects, but not for BD2 that is not “L2 stretched” instead.

Spine 1101 Site1

Spine1101-Site1# show dcimgr repo vnid-maps

--------------------------------------------------------------

Remote | Local

site Vrf Bd | Vrf Bd Rel-state

--------------------------------------------------------------

2 2359299 | 3112963 [formed]

2 2359299 16252857 | 3112963 16154555 [formed]

Spine 401 Site2

APIC-Site2# fabric 401 show dcimgr repo vnid-maps

----------------------------------------------------------------

Node 401 (spine1-a1)

----------------------------------------------------------------

--------------------------------------------------------------

Remote | Local

site Vrf Bd | Vrf Bd Rel-state

--------------------------------------------------------------

1 3112963 | 2359299 [formed]

1 3112963 16154555 | 2359299 16252857 [formed]

The outputs below display instead the translation entries on the spine nodes in Site1 and Site2 for the policy information (i.e., class IDs) of the VRF, BDs, and EPGs. It is worth noticing how in a case (for VRF1) the local and remote class ID values are actually the same (49153). In other cases, the same class ID value is used in the two fabrics for different purposes: for example, 49154 represents the class ID of EPG2-Stretched in Site1 and also the class ID of EPG1-Stretched in Site2. This reinforces the point that each APIC domain assigns values with local significance and hence the namespace normalization function is needed to allow successful intersite communication.

Spine 1101 Site1

Spine1101-Site1# show dcimgr repo sclass-maps

----------------------------------------------------------

Remote | Local

site Vrf PcTag | Vrf PcTag Rel-state

----------------------------------------------------------

2 2916358 16386 | 2129927 32770 [formed]

2 2818056 16387 | 2916360 16386 [formed]

2 2359299 49155 | 3112963 49154 [formed]

2 2359299 49153 | 3112963 49153 [formed]

2 2359299 49154 | 3112963 16387 [formed]

Spine 401 Site2

Spine401-Site2# show dcimgr repo sclass-maps

----------------------------------------------------------

Remote | Local

site Vrf PcTag | Vrf PcTag Rel-state

----------------------------------------------------------

1 3014657 32770 | 2326532 16386 [formed]

1 2916360 16386 | 2818056 16387 [formed]

1 3112963 49154 | 2359299 49155 [formed]

1 3112963 16387 | 2359299 49154 [formed]

1 3112963 49153 | 2359299 49153 [formed]

Inter-EPG Connectivity Across Sites (Intra-VRF)

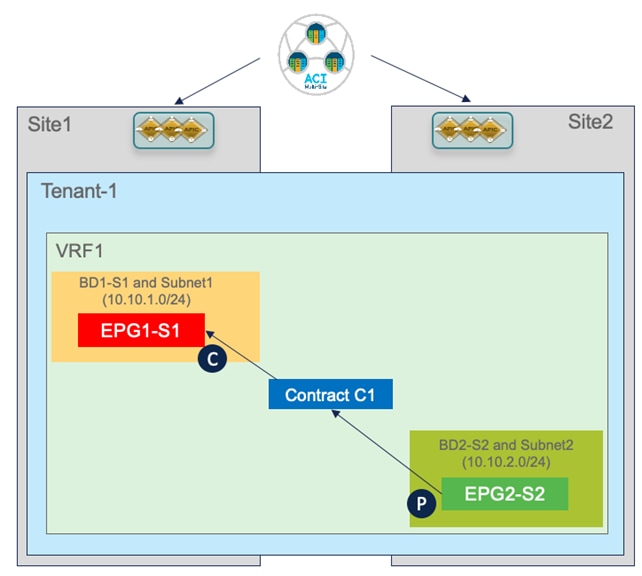

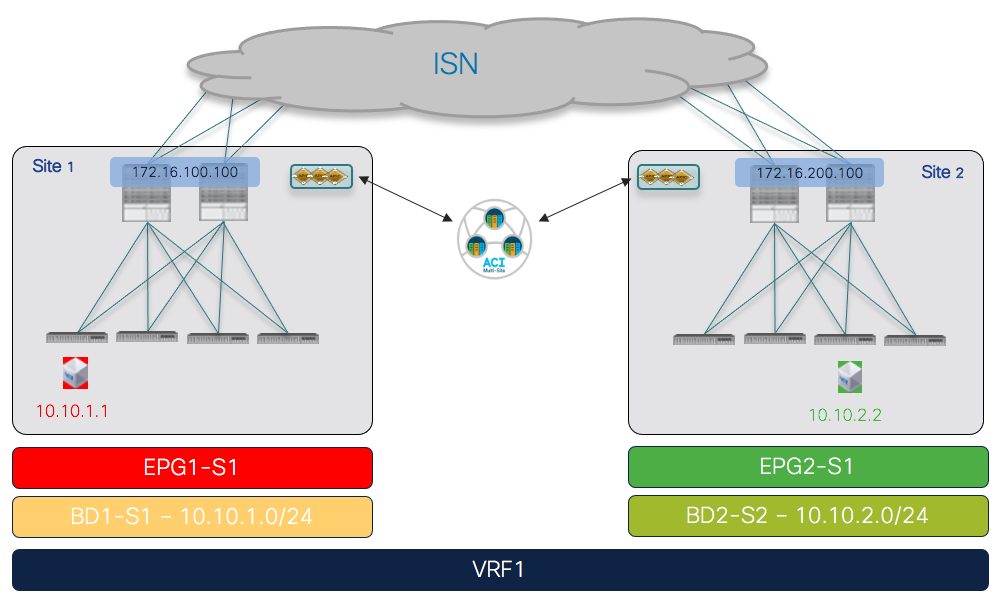

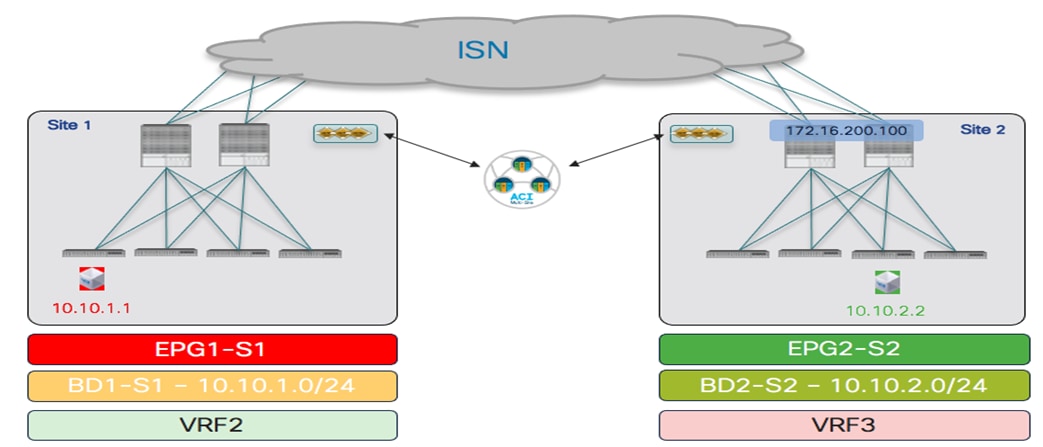

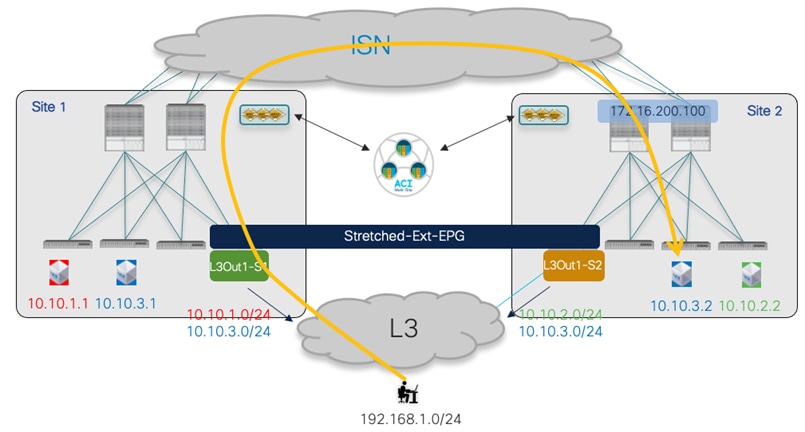

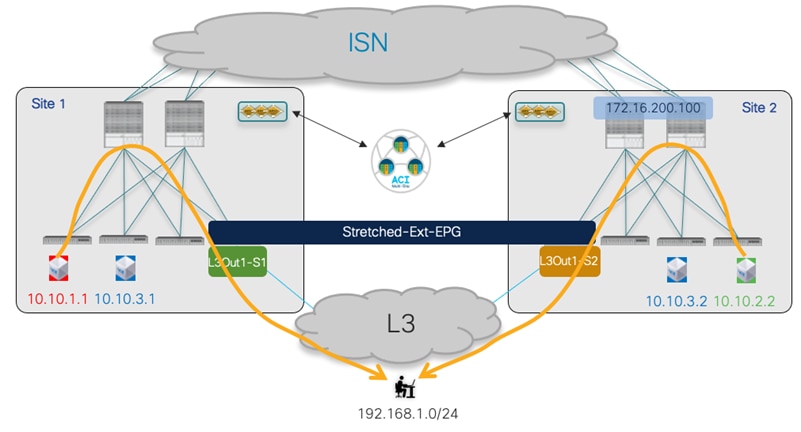

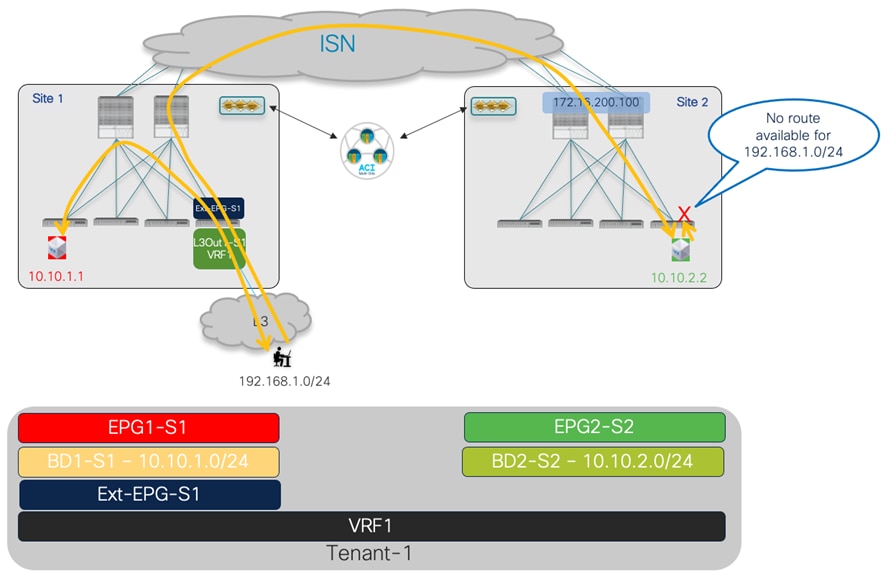

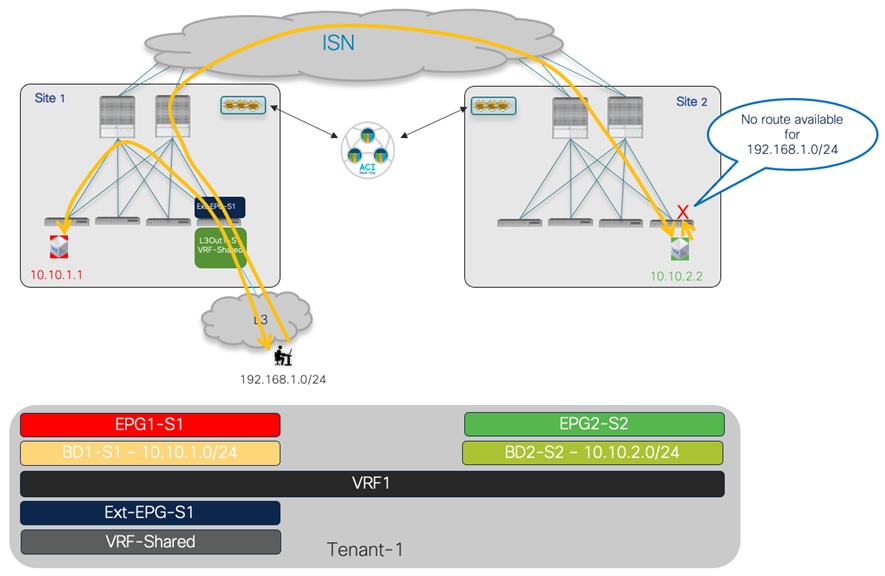

The first use case to consider for the establishment of intersite connectivity between endpoints connected to different EPGs is the one displayed in Figure 51, which applies to the intra-VRF scenario.

Inter-EPGs Connectivity Across Sites (Intra-VRF) Use Case

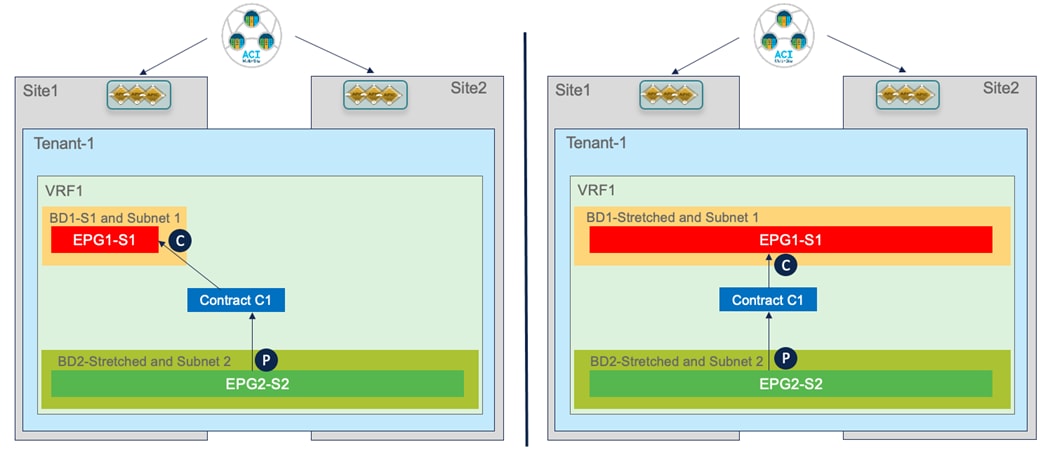

Differently from the stretched EPG use cases previously described, in this case, the EPG/BD objects are locally provisioned in each site and connectivity between them must be established by creating a specific security policy (i.e., contract) specifying what type of communication is allowed. It is worth noticing that similar considerations to what is described in the following section would apply for establishing connectivity between EPGs, independently from the fact that they are locally deployed or stretched (Figure 52).

Inter-EPGs Communication Between Local and/or Stretched EPGs

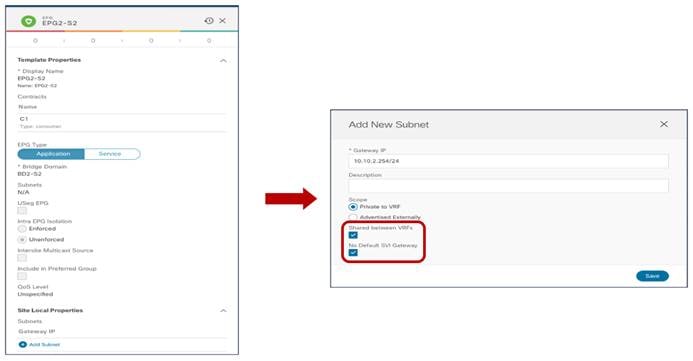

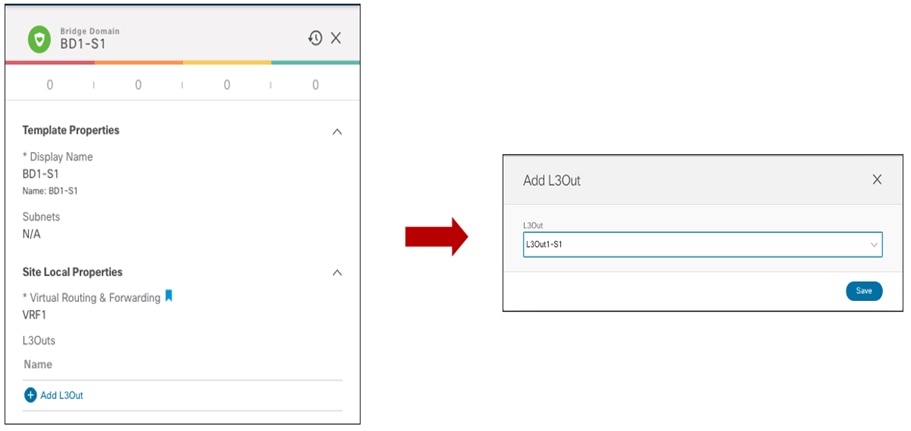

Creating Site Local EPGs/BDs

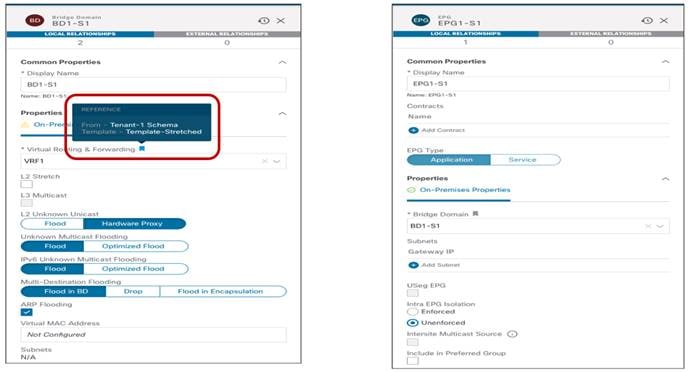

The creation of site-local EPGs/BDs is similar to what is described in the stretched EPG use case. The main difference is that those objects should be defined in templates that are only associated with the specific ACI fabrics where the policies should be provisioned. Figure 53 displays the creation of EPG1-S1 and BD1-S1 that need to be only provisioned to Site1 (a similar configuration is needed in the template associated with Site2 for the local EPGs/BDs objects).

EPGs/BDs Defined in a Site-Specific Template

Notice that inter-template references would hence be needed for example to map local BDs to the previously deployed stretched VRF. Nexus Dashboard Orchestrator allows to cross-reference objects across templates defined in the same schema or even across different schemas.

Local BD and EPG Configuration

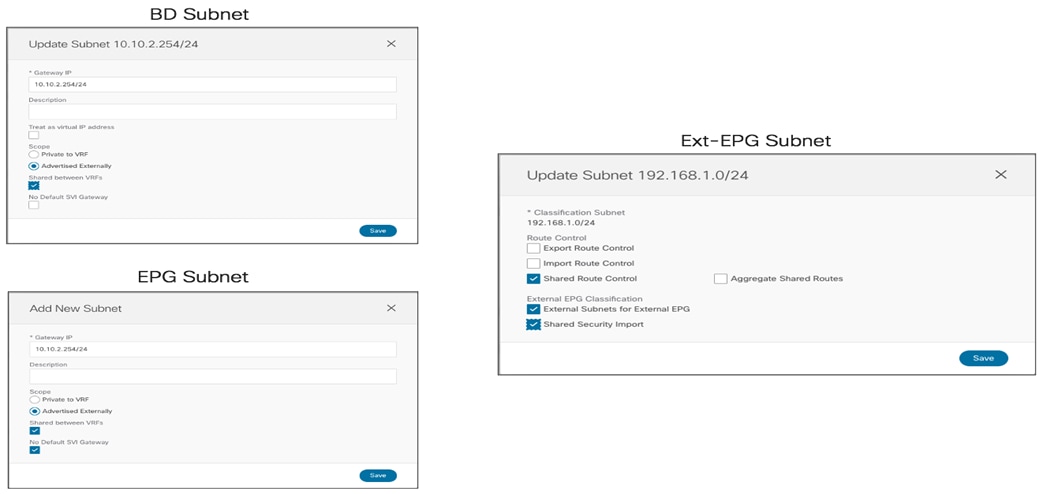

After defining the EPG and the BD local objects, it is required to perform the same site-local configuration discussed for the stretched EPG use cases: the BD subnet is assigned at the site-local level (since the BDs are localized) and there is the requirement to map the local EPG to a local domain (Physical, VMM, etc.).

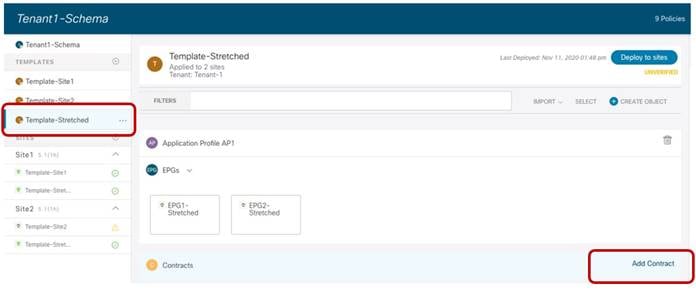

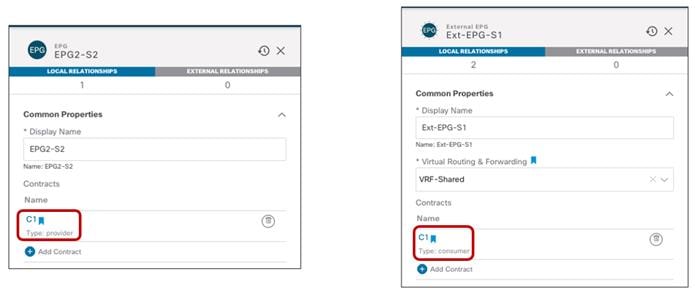

Applying a Security Contract between EPGs

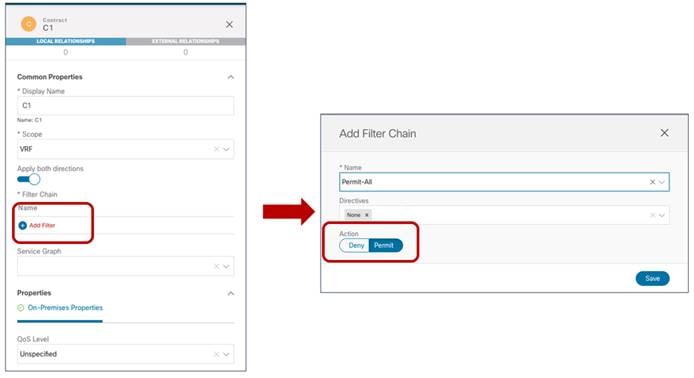

Once the local EPG/BD objects are created in each fabric, to establish communication between them it is required to apply a security policy (contract) allowing all traffic or specific protocols. The contract and the associated filter(s) can be defined in the Template-Stretched, so as to make it available to both fabrics.

Create a Contract in the Template-Stretched

The contract must reference one or more security filters, used to specify what traffic should be allowed. Notice how it is also possible to create a filter with a “Deny” entry (“Permit” was the only option available in older releases).

Define a Deny/Permit Filter Associated to the Contract

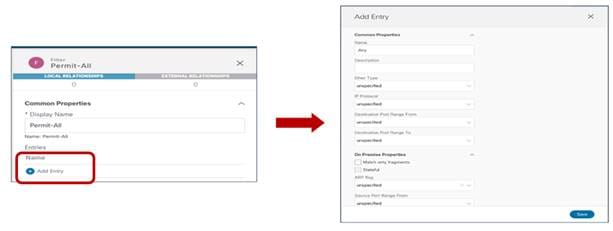

The last step consists in creating the specific filter’s entry used to define the traffic flows that should be permitted (or denied). In the specific example in Figure 57 below we simply use the default settings that translate to match all traffic.

Create the Filter’s Entry to Deny/Permit Traffic

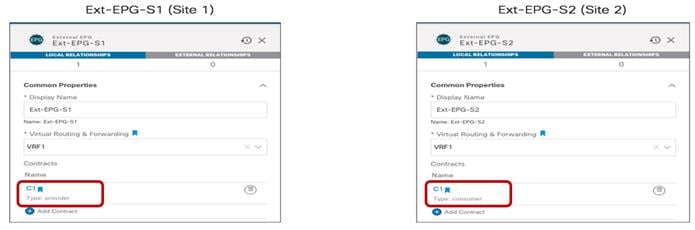

Once the contract with the associated filters is ready, it is possible to define the EPG that “provides” the contract and the EPG that “consumes” it. The best practices recommendation when using contracts with ACI Multi-Site is to always clearly identify a provider and a consumer side for all the contracts that are used. This is critical especially when the goal is to attach a Service-Graph to the contract, as discussed in detail in the “Service Node Integration with ACI Multi-Site” section. For more detailed information on the use of ACI contracts, please refer to the document below:

Verifying EPG-to-EPG Intersite Communication

Once the contract is applied, intersite connectivity between endpoints part of the different EPGs can be established. Before the endpoints start communicating with each other, they are locally learned on the leaf node they connect to, as shown in the outputs below.

Once the contract is applied, intersite connectivity between endpoints part of the different EPGs can be established. Before the endpoints start communicating with each other, they are locally learned on the leaf node they connect to, as shown in the outputs below.

Endpoints Connected to Local EPGs

Leaf 101 Site1

Leaf101-Site1# show endpoint vrf Tenant-1:VRF1

Legend:

s - arp H - vtep V - vpc-attached p - peer-aged

R - peer-attached-rl B - bounce S - static M - span

D - bounce-to-proxy O - peer-attached a - local-aged m - svc-mgr

L - local E - shared-service

+--------------------------------+---------------+-----------------+--------------+-------------+

VLAN/ Encap MAC Address MAC Info/ Interface

Domain VLAN IP Address IP Info

+--------------------------------+---------------+-----------------+--------------+-------------+

55 vlan-819 0050.56b9.1bee LpV po1

Tenant-1:VRF1 vlan-819 10.10.1.1 LpV po1

Leaf 303 Site2

Leaf303-Site2# show endpoint vrf Tenant-1:VRF1

Legend:

s - arp H - vtep V - vpc-attached p - peer-aged

R - peer-attached-rl B - bounce S - static M - span

D - bounce-to-proxy O - peer-attached a - local-aged m - svc-mgr

L - local E - shared-service

+--------------------------------+---------------+-----------------+--------------+-------------+

VLAN/ Encap MAC Address MAC Info/ Interface

Domain VLAN IP Address IP Info

+--------------------------------+---------------+-----------------+--------------+-------------+

34 vlan-118 0050.56b3.e41e LV po4

Tenant-1:VRF1 vlan-118 10.10.2.2 LV po4

On the routing table of the leaf node, as a result of the contract, it is also installed the IP subnet associated with the remote EPG pointing to the proxy-TEP address provisioned on the local spine nodes. The reverse happens on the leaf node in Site2.

Leaf 101 Site1

Leaf101-Site1# show ip route vrf Tenant-1:VRF1

IP Route Table for VRF "Tenant-1:VRF1"

'*' denotes best ucast next-hop

'**' denotes best mcast next-hop

'[x/y]' denotes [preference/metric]

'%<string>' in via output denotes VRF <string>

10.10.1.0/24, ubest/mbest: 1/0, attached, direct, pervasive

*via 10.1.112.66%overlay-1, [1/0], 01:01:38, static, tag 4294967294

10.10.1.254/32, ubest/mbest: 1/0, attached, pervasive

*via 10.10.1.254, vlan54, [0/0], 01:01:38, local, local

10.10.2.0/24, ubest/mbest: 1/0, attached, direct, pervasive

*via 10.1.112.66%overlay-1, [1/0], 00:04:51, static, tag 4294967294

Leaf 303 Site2

Leaf303-Site2# show ip route vrf Tenant-1:VRF1

IP Route Table for VRF "Tenant-1:VRF1"

'*' denotes best ucast next-hop

'**' denotes best mcast next-hop

'[x/y]' denotes [preference/metric]

'%<string>' in via output denotes VRF <string>

10.10.1.0/24, ubest/mbest: 1/0, attached, direct, pervasive

*via 10.0.136.66%overlay-1, [1/0], 00:06:47, static, tag 4294967294

10.10.2.0/24, ubest/mbest: 1/0, attached, direct, pervasive

*via 10.0.136.66%overlay-1, [1/0], 00:06:47, static, tag 4294967294

10.10.2.254/32, ubest/mbest: 1/0, attached, pervasive

*via 10.10.2.254, vlan33, [0/0], 00:06:47, local, local

Once connectivity between the endpoints is established, the leaf nodes in each site learn via data-plane activity the specific information for the remote endpoints. The output below shows for example the endpoint table for the leaf node in Site1.

Leaf 101 Site1

Leaf101-Site1# show endpoint vrf Tenant-1:VRF1

Legend:

s - arp H - vtep V - vpc-attached p - peer-aged

R - peer-attached-rl B - bounce S - static M - span

D - bounce-to-proxy O - peer-attached a - local-aged m - svc-mgr

L - local E - shared-service

+--------------------------------+---------------+-----------------+--------------+-------------+

VLAN/ Encap MAC Address MAC Info/ Interface

Domain VLAN IP Address IP Info

+--------------------------------+---------------+-----------------+--------------+-------------+

Tenant-1:VRF1 10.10.2.2 tunnel26

55 vlan-819 0050.56b9.1bee LpV po1

Tenant-1:VRF1 vlan-819 10.10.1.1 LpV po1

The remote endpoint 10.10.2.2 is learned as reachable via the VXLAN tunnel26. As expected, the destination of such a tunnel is the O-UTEP address for Site2 (172.16.200.100).

Leaf 101 Site1

Leaf101-Site1# show interface tunnel 26

Tunnel26 is up

MTU 9000 bytes, BW 0 Kbit

Transport protocol is in VRF "overlay-1"

Tunnel protocol/transport is ivxlan

Tunnel source 10.1.0.68/32 (lo0)

Tunnel destination 172.16.200.100/32

Verifying Namespace Translation Information

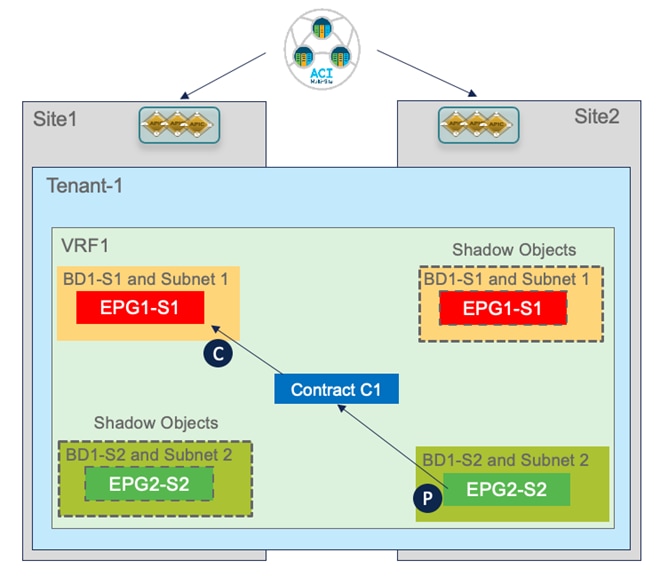

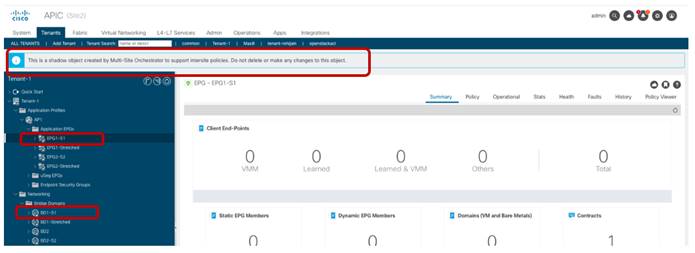

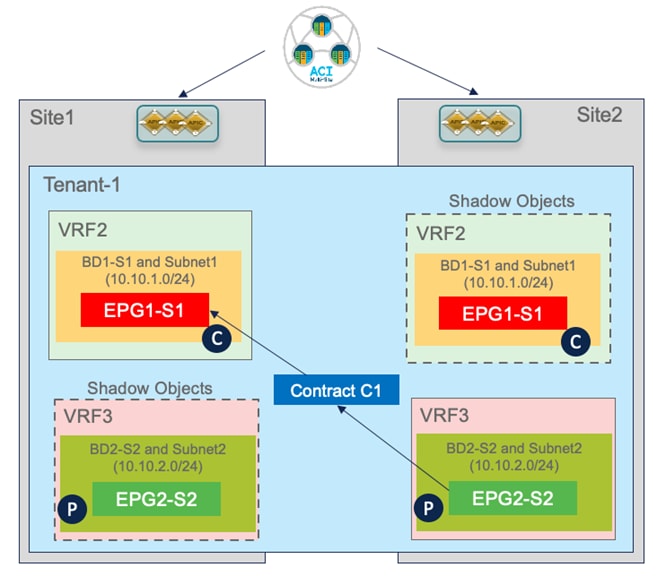

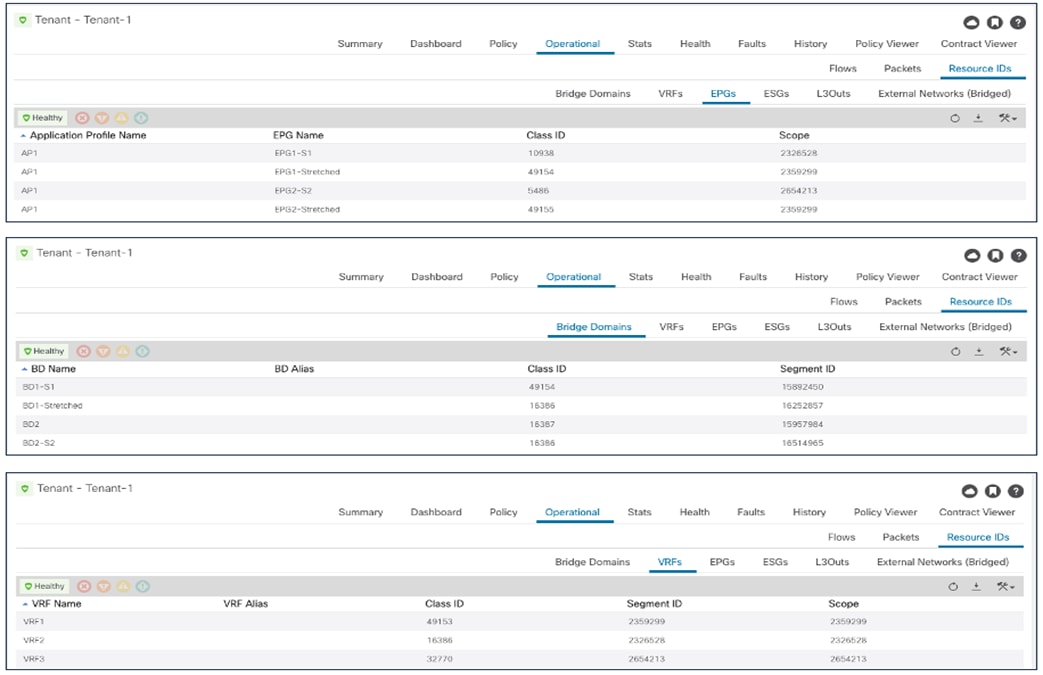

As discussed for the stretched EPG use cases, the creation of translation entries in the spines is required every time an intersite communication must be established using the VXLAN data path. In the specific use case of inter-EPG connectivity between EPGs/BDs that are locally deployed in each fabric, the creation of a security policy between them leads to the creation of the so-called ‘shadow objects’ (Figure 59) in the remote site’s APIC domain.

Creation of Shadow Objects

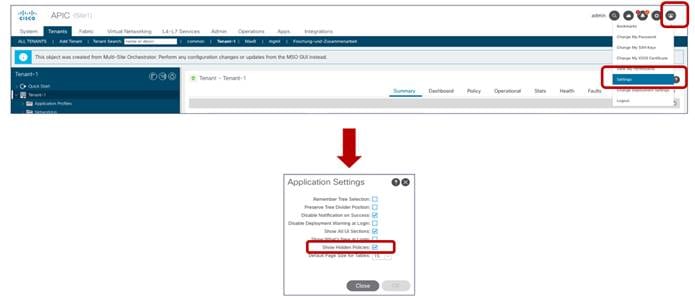

Starting from ACI release 5.0(2), the shadow objects are hidden by default on APIC. To enable their display, it is required to set check the flag for the “Show Hidden Policies” option shown in Figure 60.

Enabling the Display of Shadow Objects

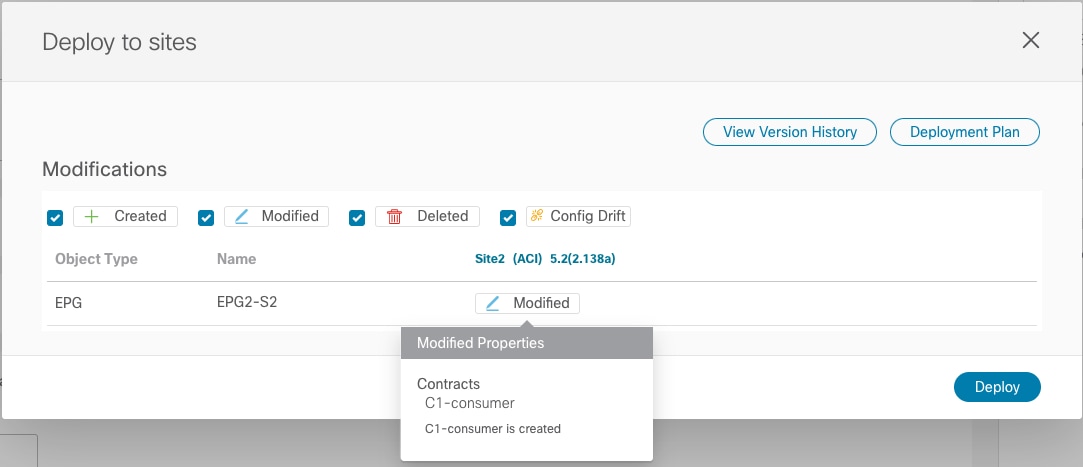

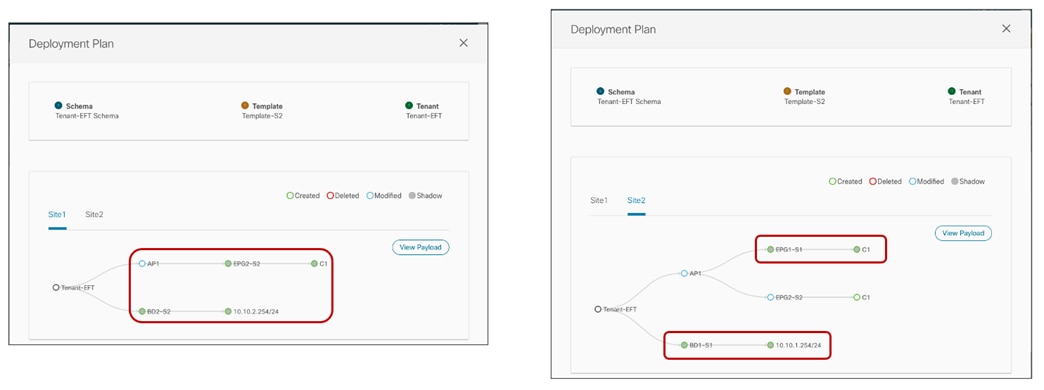

The use of the “Template Deployment Plan” feature, available since Nexus Dashboard Orchestrator release 3.4(1), is quite interesting as it allows to clearly provide the information of what objects are created and where. In our example, when we configure the EPG2-S2 in Site2 to consume the contract provided by EPG1-S1 in Site1 (information provided in the “Deploy to sites” window in Figure 61), the Deployment Plan highlights the creation of those shadow objects in both sites (figure 62).

Adding a Consumed Contract to EPG2-S2

Creation of Shadow Objects Highlighted by the Deployment Plan

The creation of shadow objects is required to be able to assign them the specific resources (Segment IDs, class IDs, etc.) that must be configured in the translation tables of the spines to allow for successful intersite data plane communication.

For example, when looking at the APIC in Site2, we can notice that the EPG and BD locally defined in Site2 are appearing as shadow objects there.

Display of Shadow Objects on APIC

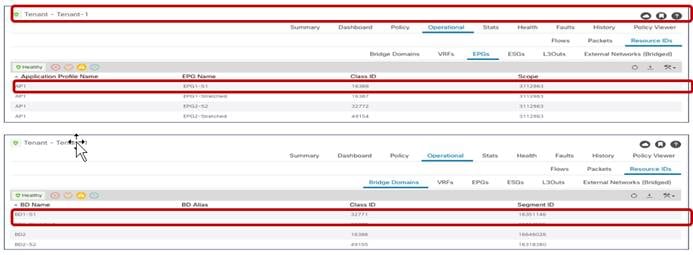

EPG1-S1 and BD1-S1 are objects that were locally created only in Site1 (since they represent site-local objects). However, the establishment of a security policy between EPG1-S1 and EPG2-S2 caused the creation of those objects also on the APIC managing Site2. The same behavior is exhibited for EPG2-S2 and BD2-S2. Figure 64 and Figure 65 display the specific Segment IDs and class IDs values assigned to those objects (the VRF is not shown as the entries are the same previously displayed in Figure 49 and Figure 50).

Segment IDs and Class IDs for Local and Shadow Objects in Site1

Segment IDs and Class IDs for Local and Shadow Objects in Site2

Those values are then programmed in the translation tables of the spines to ensure they can perform the proper translation functions when traffic is exchanged between endpoints in Site1 part of EPG1-S1 and endpoints in Site2 part of EPG2-S2.

For what concerns the Segment IDs, the only translation entry that is required is the one for the VRF. This is because when routing between sites, the VRF L3 VNID value is inserted in the VXLAN header to ensure that the receiving site can then perform the Layer 3 lookup in the right routing domain. There is no need of installing translation entries for the Segment IDs associated with the BDs since there will never be intersite traffic carrying those values in the VXLAN header (given that those BDs are not stretched).

Spine 1101 Site1

Spine1101-Site1# show dcimgr repo vnid-maps

--------------------------------------------------------------

Remote | Local

site Vrf Bd | Vrf Bd Rel-state

--------------------------------------------------------------

2 2359299 | 3112963 [formed]

Spine 401 Site2

Spine401-Site2# show dcimgr repo vnid-maps

--------------------------------------------------------------

Remote | Local

site Vrf Bd | Vrf Bd Rel-state

--------------------------------------------------------------

1 3112963 | 2359299 [formed]

The class IDs for the EPGs and shadow EPGs are instead displayed in the output below.

Spine 1101 Site1

Spine1101-Site1# show dcimgr repo sclass-maps

----------------------------------------------------------

Remote | Local

site Vrf PcTag | Vrf PcTag Rel-state

----------------------------------------------------------

2 2359299 32771 | 3112963 16388 [formed]

2 2359299 16390 | 3112963 32772 [formed]

Spine 401 Site2

Spine401-Site2# show dcimgr repo sclass-maps

----------------------------------------------------------

Remote | Local

site Vrf PcTag | Vrf PcTag Rel-state

----------------------------------------------------------

1 3112963 32772 | 2359299 16390 [formed

1 3112963 16388 | 2359299 32771 [formed]

In addition to the programming of the translation entries on the spine, the assignment of class IDs to the shadow EPGs is also important to be able to properly apply the security policy associated with the contract. As already discussed in the “Verifying EPG-to-EPG Intersite Communication” section, when intra-VRF intersite traffic flows are established, remote endpoint information is learned on the local leaf nodes. This ensures that the contract can always be applied at the ingress leaf node for both directions of the flow.

The output below shows the security rules programmed on leaf 101 in Site1, which is where the endpoint part of EPG1-S1 is locally connected. As you can notice, there is a permit entry associated to the contract C1 for communication between 16388 (the class ID of EPG1-S1) and 32772 (the class ID of the shadow EPG2-S2). There is also an entry for the return flow, which will be used only if for some reason the policy can’t be applied in the ingress direction on the remote leaf node in Site2.

Leaf 101 Site1

Leaf101-Site1# show zoning-rule scope 3112963

+---------+--------+--------+----------+----------------+---------+---------+-------------+----------+----------------------+

| Rule ID | SrcEPG | DstEPG | FilterID | Dir | operSt | Scope | Name | Action | Priority |

+---------+--------+--------+----------+----------------+---------+---------+-------------+----------+----------------------+

| 4151 | 0 | 0 | implicit | uni-dir | enabled | 3112963 | | deny,log | any_any_any(21) |

| 4200 | 0 | 0 | implarp | uni-dir | enabled | 3112963 | | permit | any_any_filter(17) |

| 4198 | 0 | 15 | implicit | uni-dir | enabled | 3112963 | | deny,log | any_vrf_any_deny(22) |

| 4213 | 0 | 32771 | implicit | uni-dir | enabled | 3112963 | | permit | any_dest_any(16) |

| 4219 | 16388 | 32772 | default | uni-dir-ignore | enabled | 3112963 | Tenant-1:C1 | permit | src_dst_any(9) |

| 4220 | 32772 | 16388 | default | bi-dir | enabled | 3112963 | Tenant-1:C1 | permit | src_dst_any(9) |

| 4203 | 0 | 32770 | implicit | uni-dir | enabled | 3112963 | | permit | any_dest_any(16) |

+---------+--------+--------+----------+----------------+---------+---------+-------------+----------+----------------------+

Similar output can be found on leaf 303 in Site2 where the endpoint part of EPG2-S2 is locally connected. Notice how the class IDs values used here are the ones programmed in Site2 for the shadow EPG1-S1 (32771) and local EPG2-S2 (16390).

Leaf 303 Site2

Leaf303-Site2# show zoning-rule scope 2359299

+---------+--------+--------+----------+----------------+---------+---------+-------------+----------+----------------------+

| Rule ID | SrcEPG | DstEPG | FilterID | Dir | operSt | Scope | Name | Action | Priority |

+---------+--------+--------+----------+----------------+---------+---------+-------------+----------+----------------------+

| 4183 | 0 | 0 | implicit | uni-dir | enabled | 2359299 | | deny,log | any_any_any(21) |

| 4182 | 0 | 0 | implarp | uni-dir | enabled | 2359299 | | permit | any_any_filter(17) |

| 4181 | 0 | 15 | implicit | uni-dir | enabled | 2359299 | | deny,log | any_vrf_any_deny(22) |

| 4176 | 0 | 16387 | implicit | uni-dir | enabled | 2359299 | | permit | any_dest_any(16) |

| 4190 | 0 | 16386 | implicit | uni-dir | enabled | 2359299 | | permit | any_dest_any(16) |

| 4205 | 0 | 16389 | implicit | uni-dir | enabled | 2359299 | | permit | any_dest_any(16) |

| 4207 | 16390 | 32771 | default | bi-dir | enabled | 2359299 | Tenant-1:C1 | permit | src_dst_any(9) |

| 4206 | 32771 | 16390 | default | uni-dir-ignore | enabled | 2359299 | Tenant-1:C1 | permit | src_dst_any(9) |

+---------+--------+--------+----------+----------------+---------+---------+-------------+----------+----------------------+

Use of Preferred Group for Enabling Intersite Connectivity

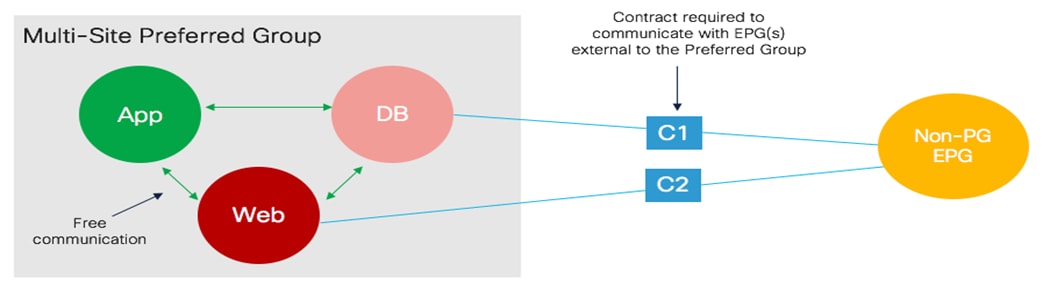

An alternative approach to the use of contracts to allow inter-EPG communication intra-VRF is the use of the Preferred Group functionality.

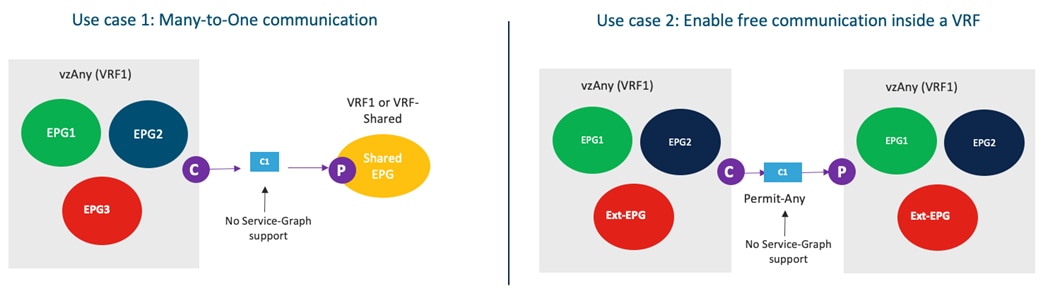

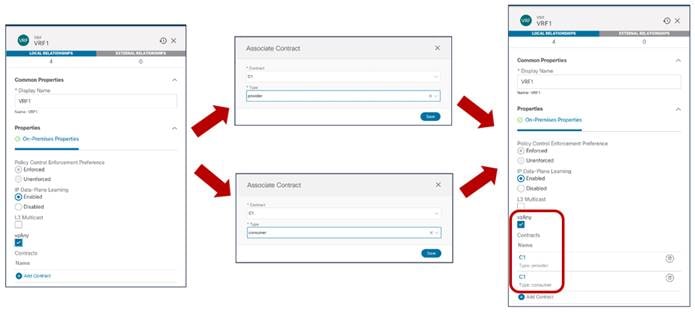

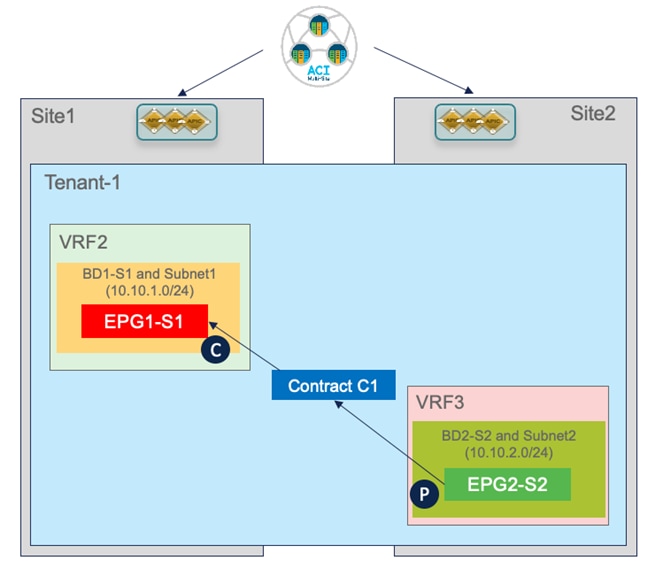

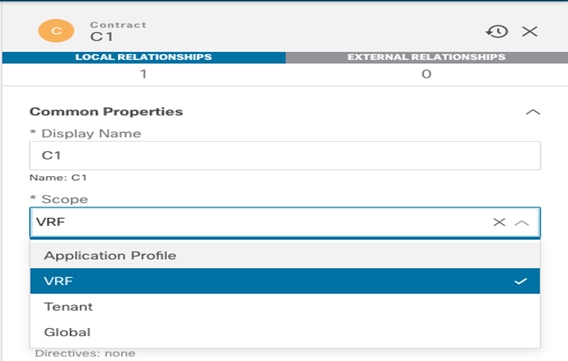

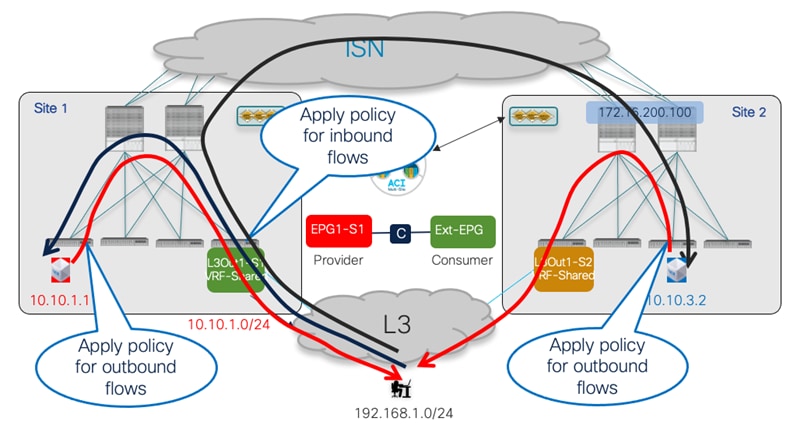

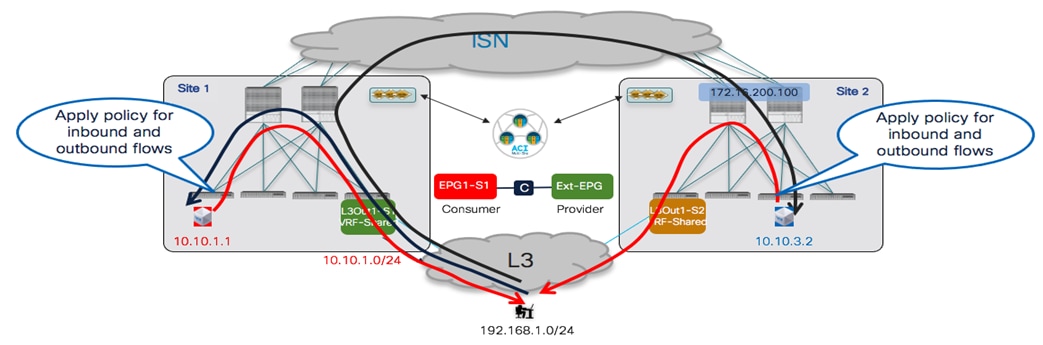

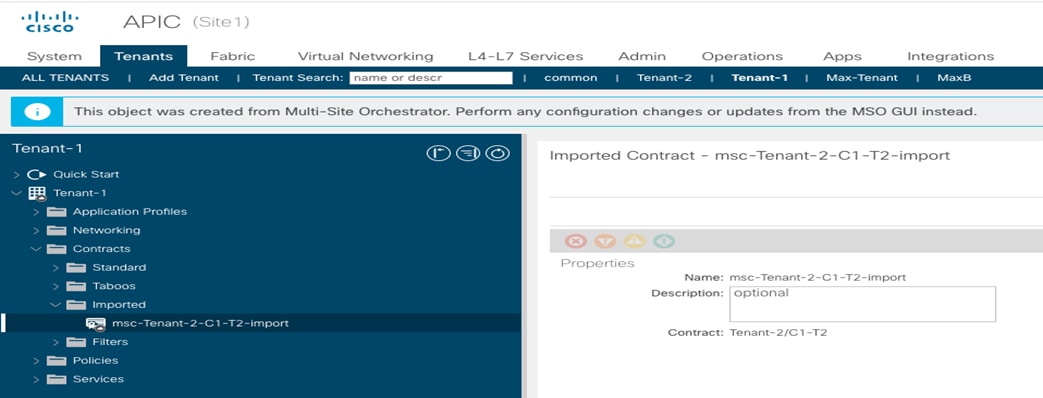

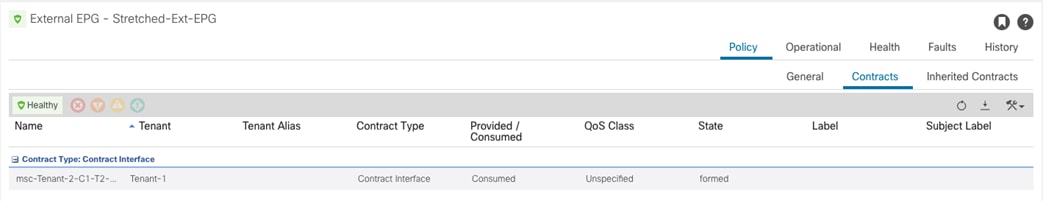

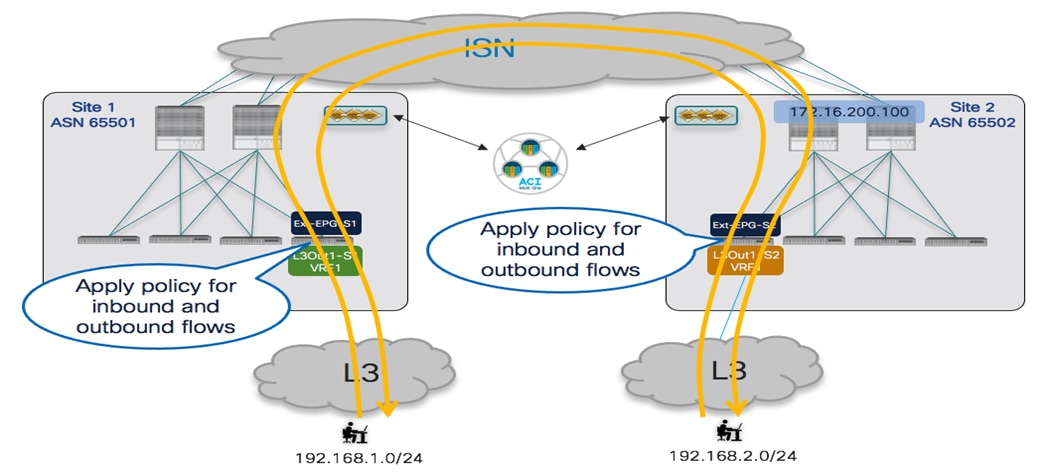

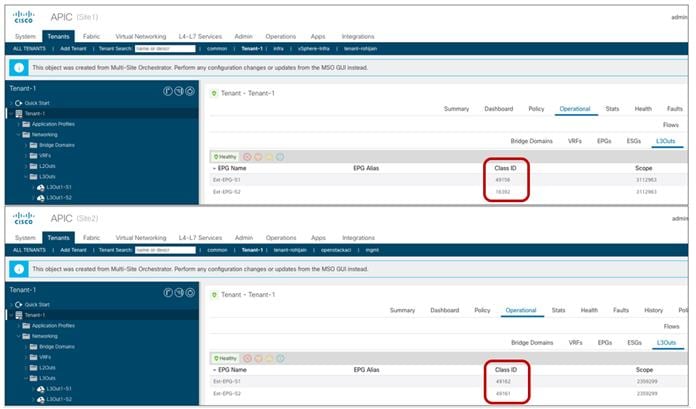

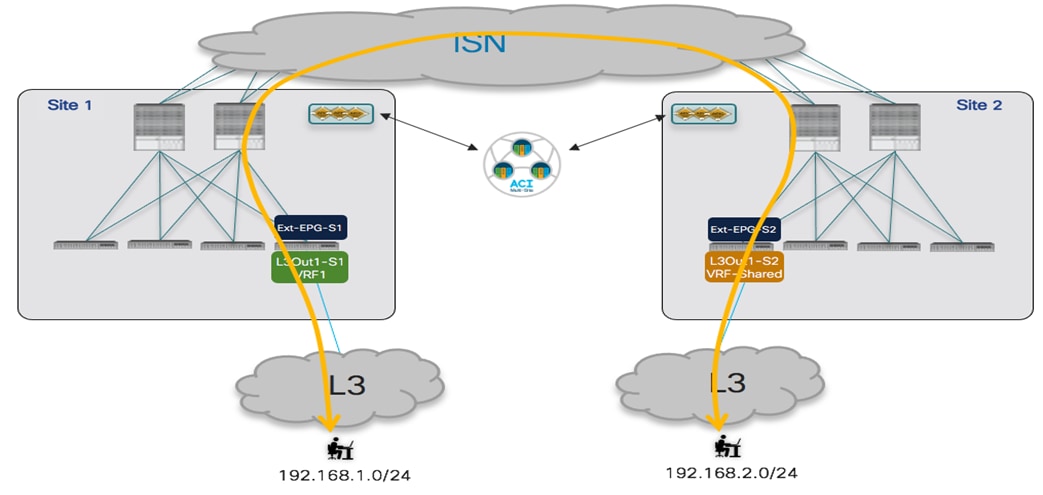

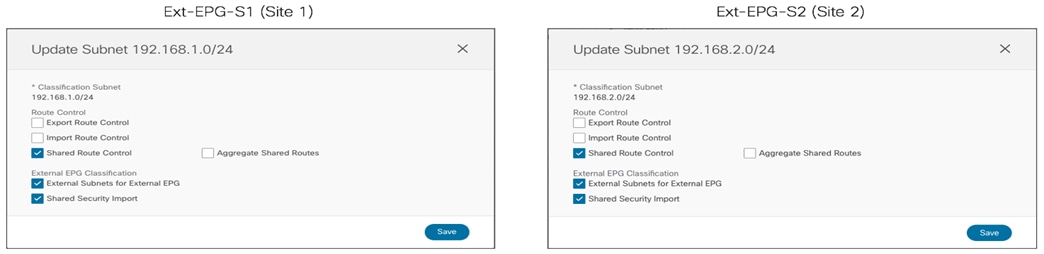

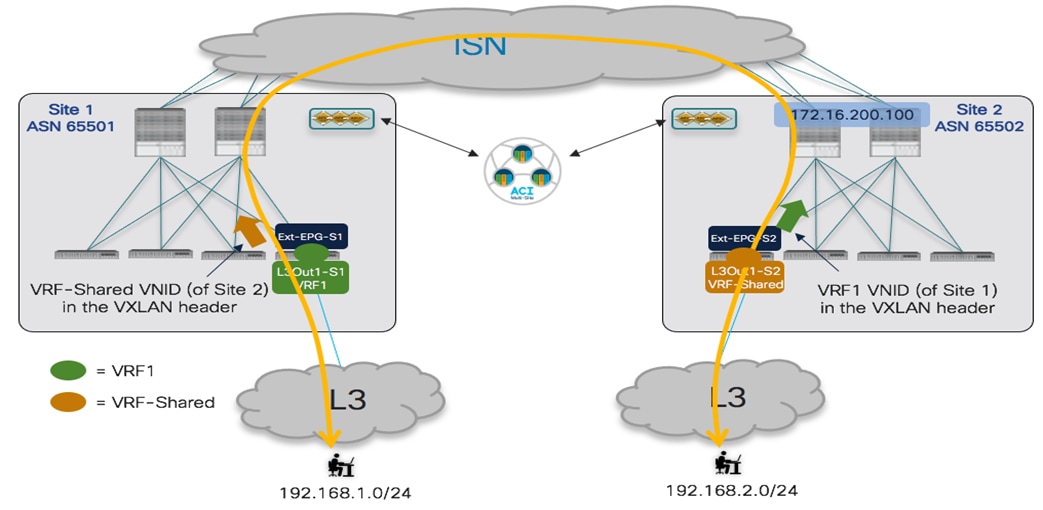

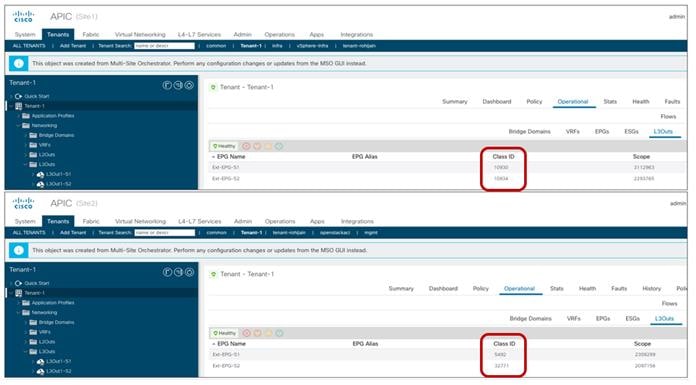

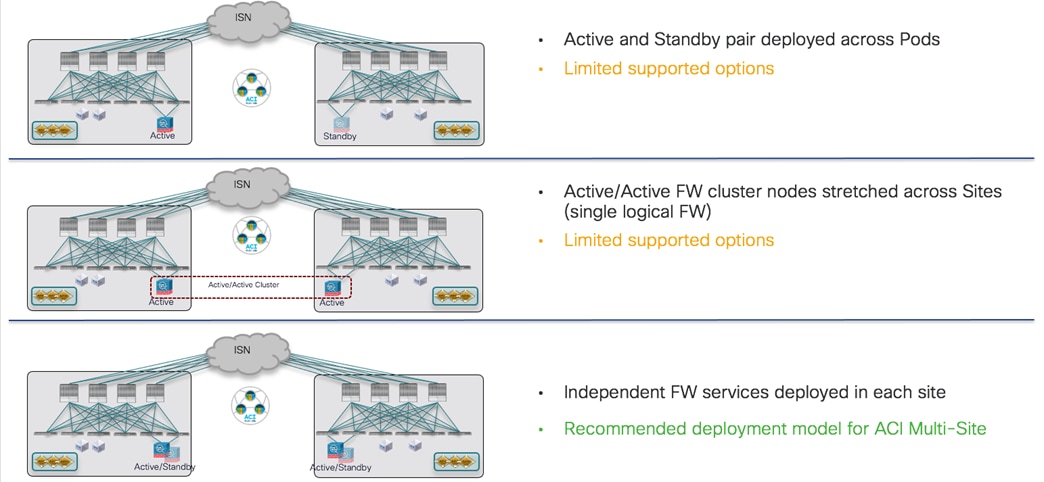

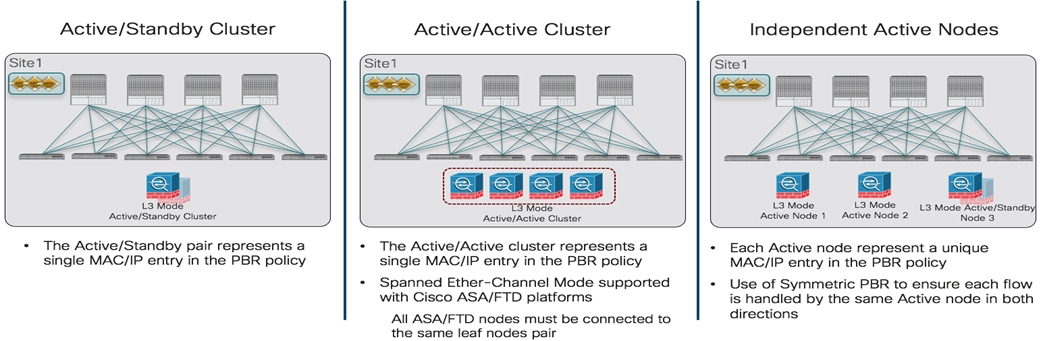

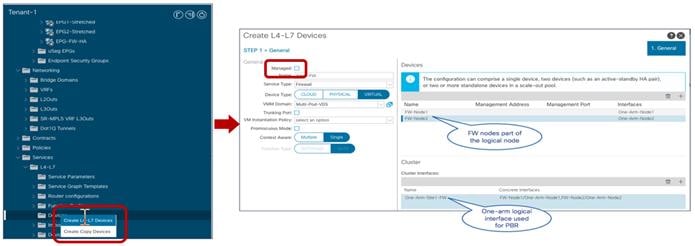

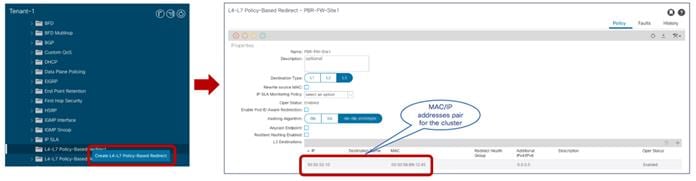

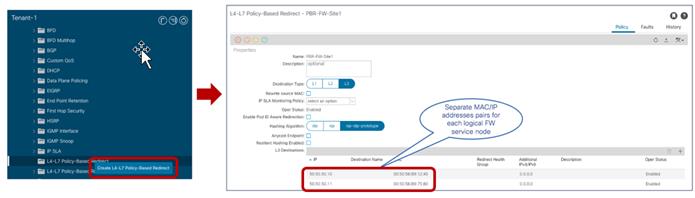

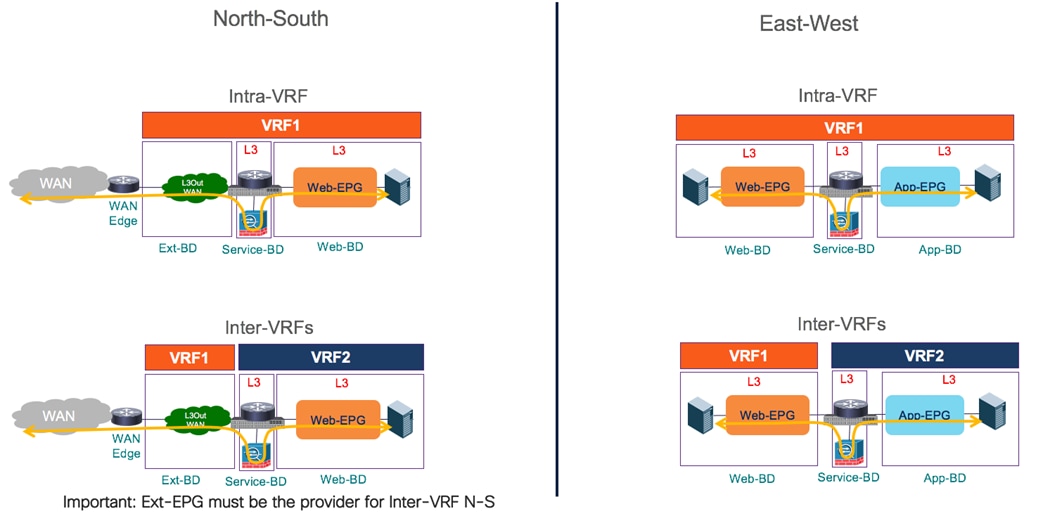

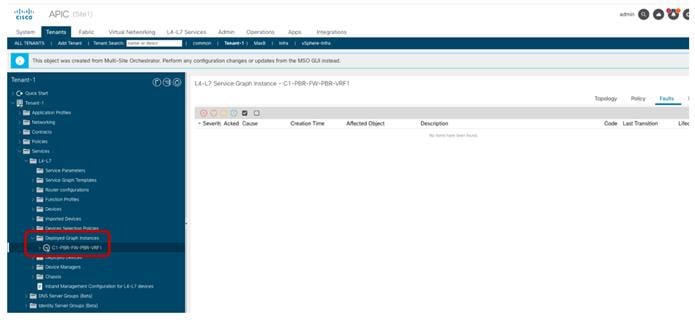

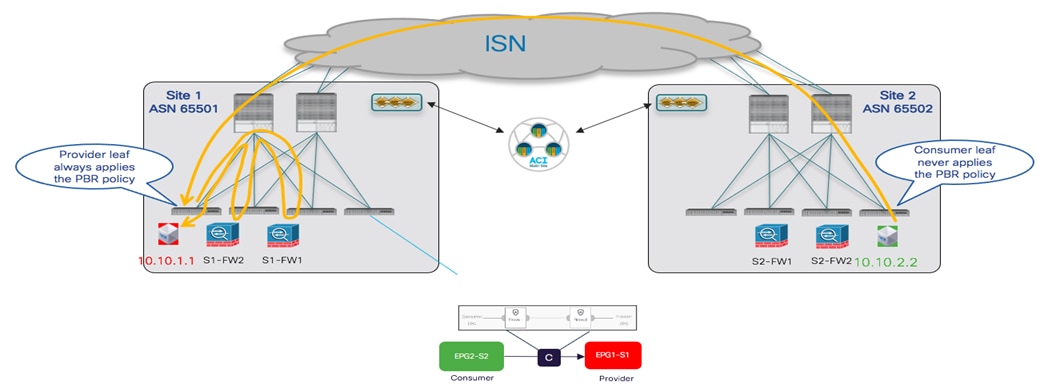

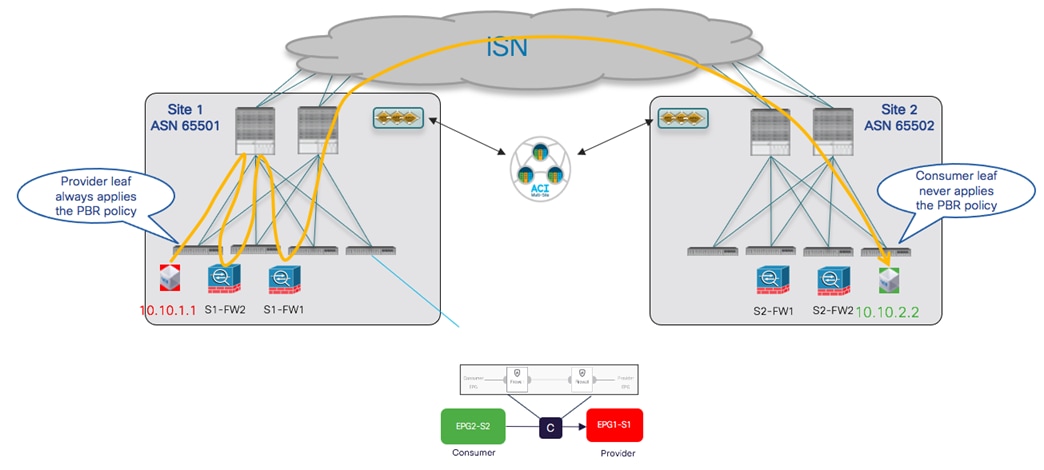

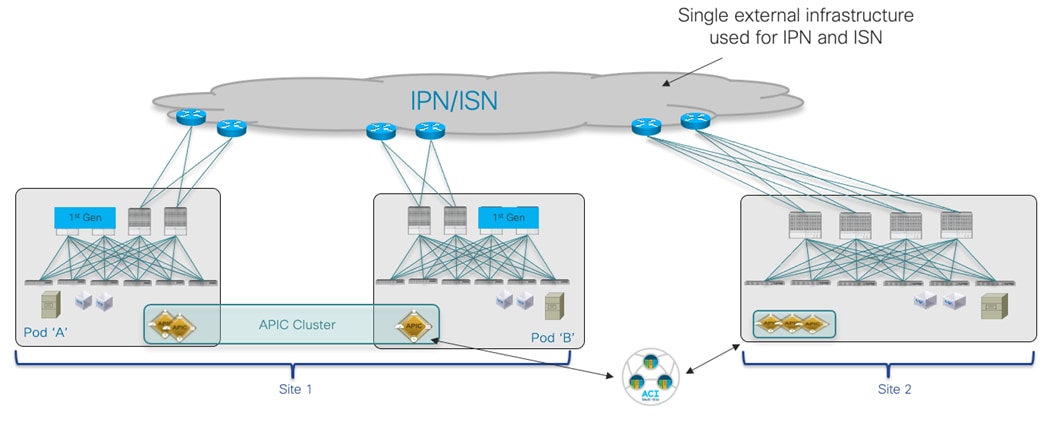

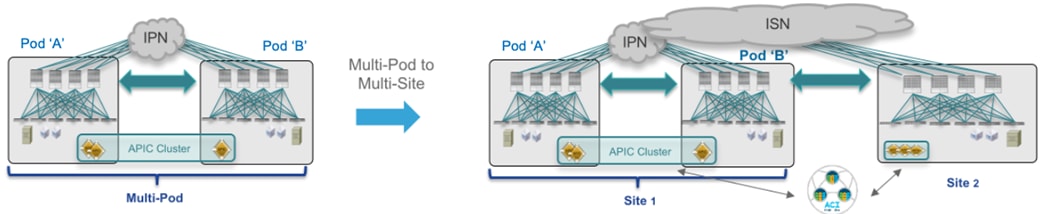

Use of Preferred Group for Free Intra-VRF Communication Between EPGs