FlexPod Datacenter with SUSE Rancher for AI Workloads

Available Languages

Bias-Free Language

The documentation set for this product strives to use bias-free language. For the purposes of this documentation set, bias-free is defined as language that does not imply discrimination based on age, disability, gender, racial identity, ethnic identity, sexual orientation, socioeconomic status, and intersectionality. Exceptions may be present in the documentation due to language that is hardcoded in the user interfaces of the product software, language used based on RFP documentation, or language that is used by a referenced third-party product. Learn more about how Cisco is using Inclusive Language.

- US/Canada 800-553-2447

- Worldwide Support Phone Numbers

- All Tools

Feedback

Feedback

Published Date: May 2024

![]()

In partnership with:

About the Cisco Validated Design Program

The Cisco Validated Design (CVD) program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, go to: http://www.cisco.com/go/designzone.

The FlexPod Datacenter solution is a validated approach for deploying Cisco® and NetApp technologies and products to build shared private and public cloud infrastructure. Cisco and NetApp have partnered to deliver a series of FlexPod solutions that enable strategic data-center platforms. The success of the FlexPod solution is driven by its ability to evolve and incorporate both technology and product innovations in the areas of management, computing, storage, and networking. To help organizations with their digital transformation and application modernization practices, Cisco and NetApp have partnered to produce this Cisco Validated Design (CVD) for the FlexPod™ Datacenter for SUSE Rancher and Rancher Kubernetes Engine (RKE2) solution. As the hybrid-cloud operation is the new de-facto default for many companies, the network connection to the public cloud, the Kubernetes cluster management, and the workload management across on-premises and public clouds are covered as part of this solution design.

FlexPod delivers an integrated architecture that incorporates compute, storage, and network design best practices, thereby minimizing IT risks by validating the integrated architecture to ensure compatibility between various components. The solution also addresses IT pain points by providing documented design guidance, deployment guidance, and support that can be used in various stages (planning, designing, and implementation) of a deployment. FlexPod delivered as Infrastructure as Code (IaC) further eliminates error-prone manual tasks, allowing quicker and more consistent solution deployments.

SUSE® Rancher Enterprise Container Management is an enterprise-ready Kubernetes container platform management with full-stack automated operations to manage hybrid cloud and multi-cloud deployments. SUSE Rancher is optimized to improve developer productivity and promote innovation. The SUSE Rancher gives developers a self-service platform to build and run containerized applications. With SUSE Rancher, you can quickly start creating new cloud-native applications or cloud-enabling existing applications and spawning an environment for a new microservice in minutes.

Combining SUSE Rancher with the FlexPod solution can simplify the deployment and management of the container infrastructure. The Ansible integration with the FlexPod solution automates the deployment of the FlexPod infrastructure enabling you to take advantage of programming and automating the infrastructure at scale with agility, extending the benefits of automation to the entire stack.

Some of the key advantages of integrating Cisco FlexPod Datacenter as a workload domain into SUSE Rancher are:

· Simpler and programmable infrastructure: FlexPod infrastructure delivered as infrastructure-as-a-code through a single partner integrable open API.

· Latest hardware and software compute innovations: policy-based configurations, delivered using Cisco Intersight, to deploy and manage the latest processor, memory, network, and power/cooling improvements.

· Storage Modernization: deliver high-speed, consistent, low latency, multi-tenant storage using a range of NetApp all-flash arrays.

· Innovative cloud operations: continuous feature delivery and no need for maintaining on-premises physical or virtual machines supporting management functions.

· Built for investment protections: design ready for future technologies such as liquid cooling and high-Wattage CPUs; CXL-ready.

The FlexPod solution includes integration of the Cisco Intersight with NetApp Active IQ Unified Manager, and if required VMware vCenter to deliver monitoring, orchestration, and workload optimization capabilities for different layers (virtualization and storage) of the FlexPod infrastructure. The modular nature of the Cisco Intersight platform also provides an easy upgrade path to additional services, such as Intersight Workload Optimization.

The solution provided in this document was validated on distinct types of Cisco UCS servers to ensure functionality in heterogeneous landscapes, like expansions of existing Cisco UCS B-Series blade-based systems with Cisco UCS X-Series nodes or using M5, M6 and M7 generation servers in the same setup. The diagrams shown in this document are focused on X-Series based deployments. The configuration principles are valid for any type of Cisco UCS servers.

If you’re interested in understanding the FlexPod design and deployment details, including the configuration of various elements of design and associated best practices, refer to the Cisco Validated Designs for FlexPod, here: https://www.cisco.com/c/en/us/solutions/design-zone/data-center-design-guides/flexpod-design-guides.html.

Solution Overview – FlexPod Datacenter with SUSE Rancher

This chapter contains the following:

· Audience

The featured FlexPod Datacenter for SUSE Rancher Enterprise Container Manager solution is a pre-designed, integrated, and validated architecture for the data center that combines Cisco UCS servers, the Cisco Nexus family of switches, and NetApp AFF A-series storage into a single, flexible architecture. FlexPod is designed for high availability (HA), with no single point of failure, while maintaining cost-effectiveness and flexibility in the design to support a wide variety of workloads. The SUSE Rancher software provides multiple ways of deployment, this document is focused on only one. The FlexPod solution with SUSE Rancher in this deployment guide was tested and validated with Cisco UCS M5, M6 and M7 servers managed by Intersight Managed Mode (IMM) and leveraging local disks for the SUSE Linux Enterprise (SLE) and SLE Micro operating systems.

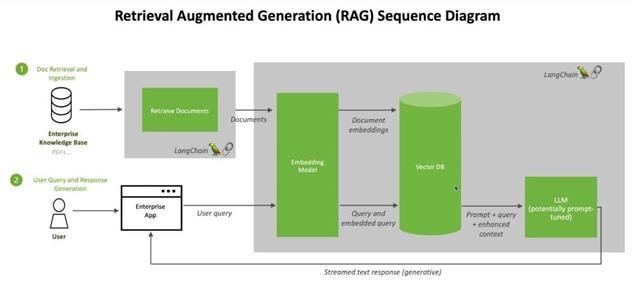

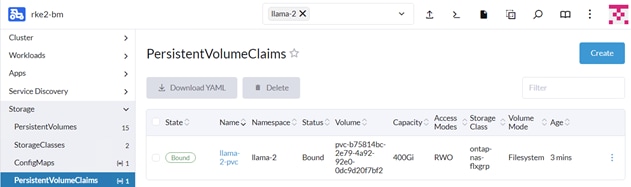

Integration between the SUSE Rancher/RKE2 and the NetApp storage and data management services occurs at several levels. The main storage integration is based on Container Storage Interface (CSI) Astra Trident for Kubernetes Driver for NetApp storage systems, which enables container orchestrators such as Kubernetes to manage the life cycle of persistent storage.

The focus of the FlexPod Datacenter with SUSE Rancher solution is on a bare metal cluster with the RKE2 nodes running SUSE Linux Enterprise on Cisco UCS servers. Virtualized RKE2 clusters and single-node deployments are validated to better support smaller deployments and Test/Dev installations.

The following deployment steps for the FlexPod Datacenter for the SUSE Rancher solution are explained in this document:

· Hardware deployment.

· SUSE Linux Enterprise (SLE) 15

· SUSE Rancher Kubernetes Engine (RKE2) cluster

· SUSE Rancher Enterprise Container Manager

· CSI Astra Trident for Kubernetes

· Optional deployment with SUSE SLE Micro and K3s

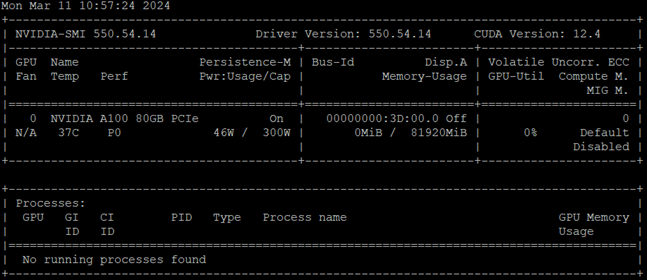

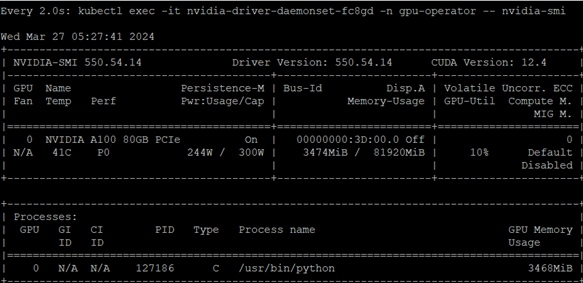

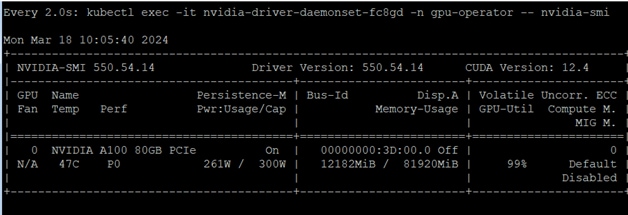

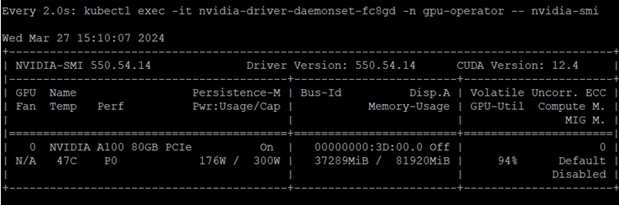

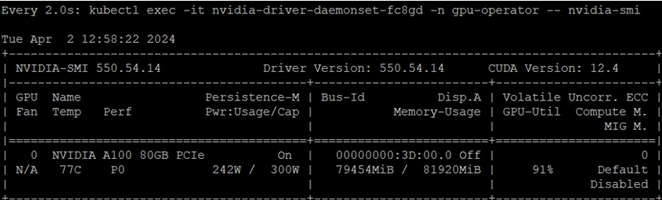

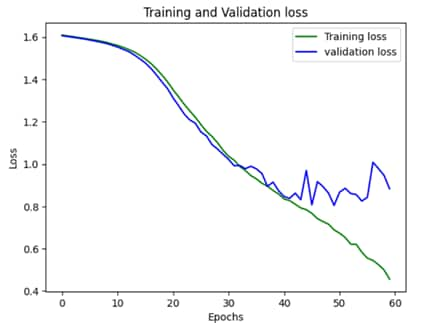

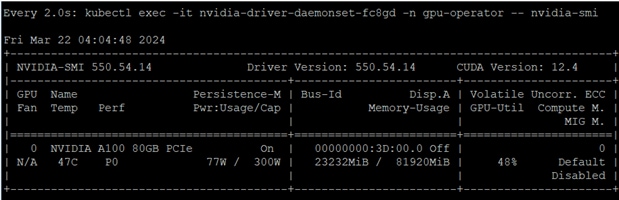

· NVIDIA GPU Driver and CUDA Toolkit

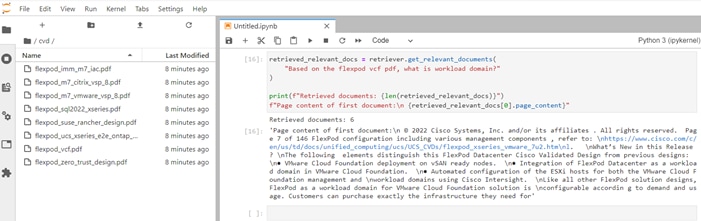

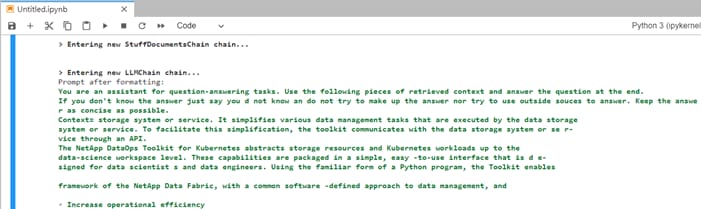

· NetApp DataOps Toolkit

Note: The infrastructure deployment is the same as documented in the FlexPod Datacenter with End-to-End 100G, Cisco Intersight Managed Mode, VMware 7U3, and NetApp ONTAP 9.11 Deployment Guide.

All configurations on the Cisco UCS, Nexus switches, and NetApp AFF storage for SUSE Rancher are in addition to the documented configuration for VMware. This configuration simplifies the deployment of different use-cases on the same FlexPod and in parallel help Cisco TAC support customers in case of an issue.

The intended audience of this document includes but is not limited to IT architects, sales engineers, field consultants, professional services, IT managers, partner engineering, and customers who want to take advantage of an infrastructure built to deliver IT efficiency and enable IT innovation.

This document provides deployment guidance around incorporating the Cisco Intersight—managed UCS platform within FlexPod Datacenter infrastructure to run SUSE Rancher Enterprise Container Manager and SUSE Rancher Kubernetes Engine (RKE) cluster and NVIDIA GPUs to enable AI/ML workloads. The document covers various considerations and best practices for a successful deployment. The document also highlights the product requirements for integrating virtualization and storage systems to Cisco Intersight to deliver a true cloud-based integrated approach for infrastructure management.

The following elements distinguish this version of FlexPod from previous models:

· Cisco Intersight Infrastructure Manager

· NetApp ONTAP 9.13.1 P6

· SUSE Rancher Enterprise Container Manager

· SUSE Rancher Kubernetes Engine (RKE)

· NetApp Astra Trident

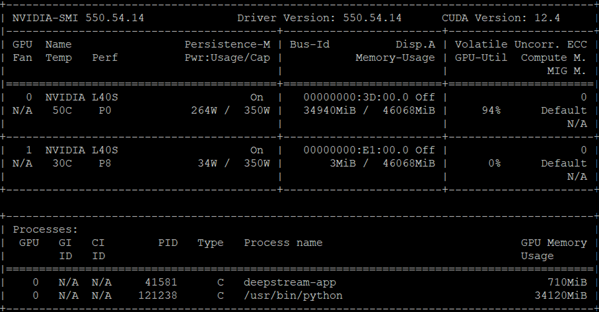

· NVIDIA Datacenter GPUs

· NVIDIA CUDA-Toolkit

· NetApp DataOps Toolkit

This chapter contains the following:

· Ansible Automation for Solution Deployment

This section explains the key design requirements and various prerequisites for delivering this new solution.

The FlexPod Datacenter with SUSE Rancher solution closely aligns with all FlexPod CVDs and meets the following general design requirements:

· Resilient design across all infrastructure layers with no single point of failure.

· Scalable design with the flexibility to add compute capacity, storage, or network bandwidth as needed.

· Modular design that can be replicated to expand and grow as the needs of the business grow.

· Flexible design that can support components beyond what is validated and documented in this guide.

· Simplified design with the ability to automate and integrate with external automation and orchestration tools.

For SUSE Rancher integration into a traditional FlexPod solution, the following specific design considerations are observed:

· Deployment option for one or three master nodes, where the opportunity with one master is only recommended for non-production installations.

· A minimum of 2 worker nodes with the ability to increase the nodes as the load requirements increase.

· Automating the FlexPod infrastructure deployment and RKE2 installation by utilizing Ansible Playbooks to simplify the installation and reduce the deployment time.

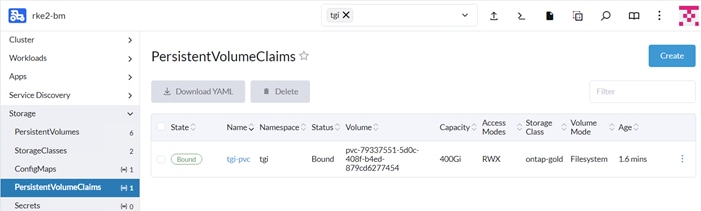

· Present persistent storage (volumes) to the containerized applications using the NetApp Astra Trident CSI framework.

· Dedicated Cisco UCS vNICs for different traffic needs with UCS Fabric Failover for high availability.

This solution uses the same hardware components and initial configuration as the solution documented here: https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/UCS_CVDs/flexpod_ucs_xseries_e2e_ontap_manual_deploy.html.

Note: This document refers to the required information to finish a task. Some VLAN IDs and used names differ between the two documents, as those information are used/defined as variables in both documents, there is no impact to the deployment of the solution.

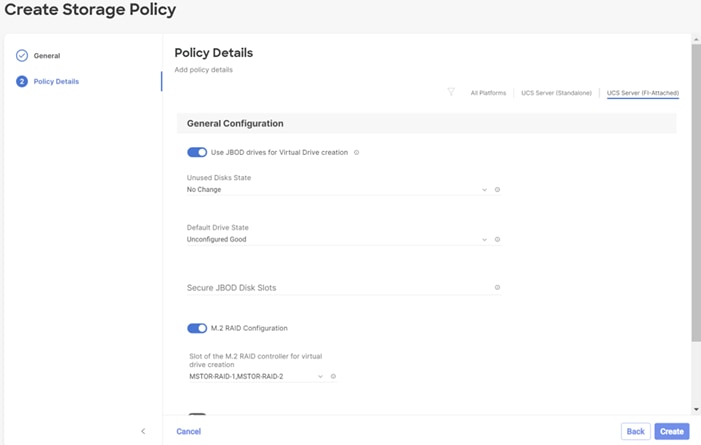

This FlexPod design utilizes Cisco UCS servers connected and managed through Cisco UCS Fabric Interconnects and the Intersight Infrastructure Manager (IMM) to manage the servers. These high-performance servers are configured as compute nodes where SUSE Linux Enterprise Micro is loaded using local disk boot leveraging a RAID1 volume provided by M.2 controller and two SATA SSDs. The persistent storage volumes for containers are provisioned on the NetApp AFF A400 using NFS NAS storage. Optionally, you can use iSCSI storage.

IP- and FC-based Storage Access: FC, iSCSI, and NFS

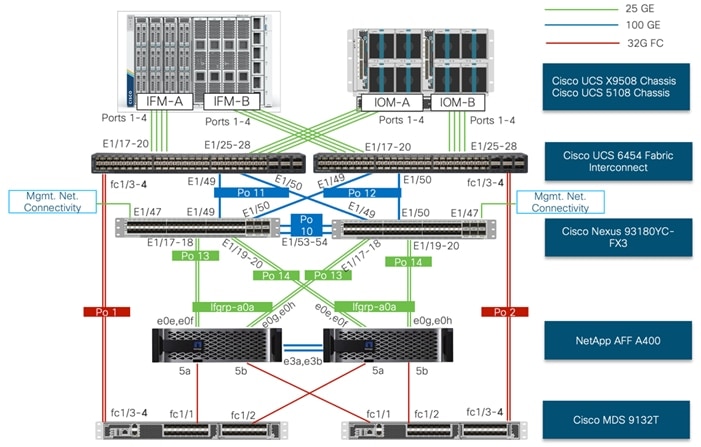

A typical topology for the FlexPod Datacenter is shown in Figure 1.

Figure 1. FlexPod Physical Topology

To build an IP only storage access in a FlexPod configuration, the components are set up as follows:

· Cisco UCS Fabric Interconnects provide chassis and network connectivity.

· The Cisco UCS X9508 Chassis connects to fabric interconnects using Cisco UCS X9108 Intelligent Fabric Module, where four 25 Gigabit Ethernet ports are used on each IFM to connect to the appropriate Fabric Interconnect. If additional bandwidth is required, all eight 25G ports can be utilized.

· Cisco UCS X210c M6 Compute Nodes contain fourth-generation Cisco 14425 virtual interface cards (VICs).

· Cisco UCS C220 or C240 Servers with fourth generation VICs 1457 VICs connect to the fabric interconnects with 25GE.

· Cisco Nexus 93180YC-FX3 Switches in Cisco NX-OS mode provide the switching fabric.

· Optional: Cisco MDS 9132T FC Switches provide the SAN switching fabric.

· Cisco UCS 6454 Fabric Interconnect 25-Gigabit Ethernet uplink ports connect to Cisco Nexus 93180YC-FX3 Switches in a Virtual Port Channel (vPC) configuration. Optional uplink connection to Cisco MDS 9132T FC Switches via Port Channel (PC) configuration.

· The NetApp AFF A400 controllers connect to the Cisco Nexus 93180YC-FX3 Switches using two 100 GE ports from each controller configured as a vPC. Optional connection to the Cisco MDS 9132T SAN Switches.

· SUSE software is installed on Cisco UCS Compute Nodes with local disks to validate the infrastructure.

Note: Since Cisco UCS C-series is being managed and configured by Cisco Intersight Managed Mode, the nodes must satisfy the software and hardware requirements outlined here: https://intersight.com/help/saas/supported_systems

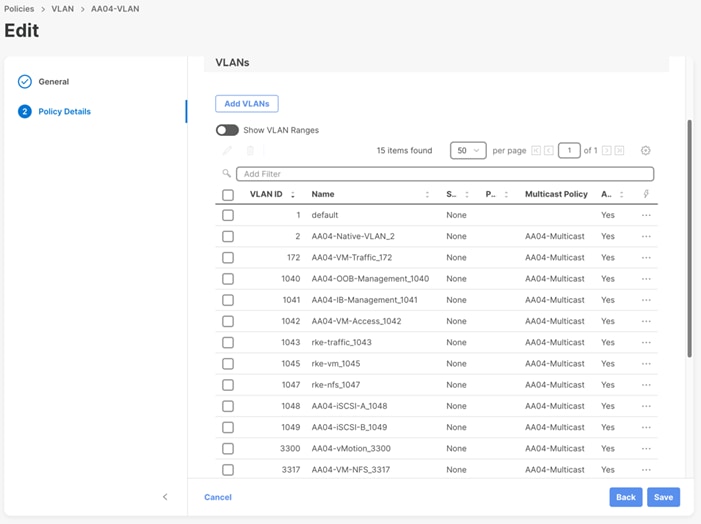

VLAN Configuration

Table 1 lists VLANs configured for setting up the FlexPod environment along with their usage.

| VLAN ID |

Name |

Usage |

| Infrastructure related networks** |

||

| 2 |

Native-VLAN |

Use VLAN 2 as native VLAN instead of default VLAN (1) |

| 3072 |

OOB-MGMT-VLAN |

Out-of-band management VLAN to connect management ports for various devices |

| 17 |

IB-MGMT-VLAN |

In-band management VLAN utilized for all in-band management connectivity - for example, ESXi hosts, VM management, and so on. |

| 3117* |

iSCSI-A |

iSCSI-A path for data traffic |

| 3217* |

iSCSI-B |

iSCSI-B path for data traffic |

| VMware related networks** |

||

| 172 |

VM Traffic |

VM data traffic VLAN |

| 3017 |

NFS-VLAN |

NFS VLAN for Infrastructure components |

| 3317 |

vMotion |

VMware vMotion traffic |

| SUSE Rancher / RKE2 related networks |

||

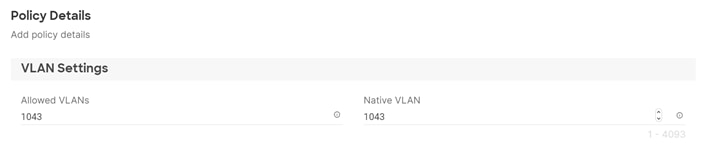

| 1043 |

RKE2-Traffic1 |

Data traffic VLAN from/to RKE2 cluster 1 |

| 1047 |

RKE2-NFS1 |

NFS storage traffic VLAN for RKE2 cluster 1 |

| 2043 |

RKE2-Traffic2 |

Data traffic VLAN from/to RKE2 cluster 2 |

| 2047 |

RKE2-NFS2 |

NFS storage traffic VLAN for RKE2 cluster 2 |

* iSCSI is listed only for the VMware part.

**Infrastructure and VMware related VLANs are listed as reference as they are used to setup the infrastructure with this CVD: https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/UCS_CVDs/flexpod_ucs_xseries_e2e_ontap_manual_deploy.html

Some of the key highlights of VLAN usage are as follows:

· VLAN 3072 allows you to manage and access out-of-band management interfaces of various devices and is brought into the infrastructure to allow CIMC access to the UCS servers and is also available to infrastructure virtual machines (VMs). Interfaces in this VLAN are configured with MTU 1500.

· VLAN 17 is used for in-band management of VMs, hosts, and other infrastructure services. Interfaces in this VLAN are configured with MTU 1500.

· VLAN 3017 provides ESXi hosts, management systems, and other infrastructure services access to the NFS storage hosted on the NetApp Controllers. Interfaces in this VLAN are configured with MTU 9000.

◦ Optional: A pair of iSCSI VLANs (3117 and 3217) is configured to provide access to iSCSI based storage for data access. Interfaces in these VLANs are configured with MTU 9000.

· VLAN 1043 is used as an access network for RKE2 cluster 1 to access all RKE2 hosts, and services deployed on top. Interfaces in this VLAN are configured with MTU 1500.

· VLAN 1047 provides services deployed on top of RKE2 cluster 1 access to the NFS storage hosted on the NetApp Controllers managed by NetApp Astra Trident CSI. Interfaces in this VLAN are configured with MTU 9000.

· VLAN 2043 is used as an access network for RKE2 cluster 2 to access all RKE2 hosts, and services deployed on top. Interfaces in this VLAN are configured with MTU 1500.

· VLAN 2047 provides services deployed on top of RKE2 cluster 2 access to the NFS storage hosted on the NetApp Controllers managed by NetApp Astra Trident CSI. Interfaces in this VLAN are configured with MTU 9000.

Table 2 lists the hardware components used to build the solution in the lab. You are encouraged to review your requirements and adjust the various components as needed.

Table 2. FlexPod Hardware Components

| Component |

Hardware |

Comments |

| Cisco Nexus Switches |

Two Cisco Nexus 93180YC-FX3 switches |

|

| Cisco MDS Switches |

Two Cisco MDS 9132T switches |

Optional components. Only used for FC storage connection. |

| NetApp AFF Storage |

A NetApp AFF A400 HA Pair with appropriate storage and network connectivity. |

Your requirements will determine the amount of storage. The NetApp AFF A400 should support both 25Gbps (or 100 Gbps) ethernet and 32Gbps (or 16 Gbps) FC connectivity. |

| Cisco UCS Fabric Interconnects |

Two Cisco UCS 6454 Fabric Interconnects |

These fabric interconnects will be shared between the management and the workload domain. |

| Management Domain Compute |

||

| Cisco UCS Servers |

Two Cisco UCS nodes |

|

| Workload Domain Compute |

||

| Cisco UCS Chassis |

One UCS X9508 chassis. |

Single chassis can host up to 8 Cisco UCS X210c compute nodes. |

| Cisco UCS Compute Nodes |

Five Cisco UCS X210c compute nodes |

Two generations, M6 and M7, are validated. Two compute nodes are configured with X440p PCI node and GPU cards. |

Table 3 lists software versions of the components, drivers, and software (for example, various NetApp software tools, Cisco Intersight Assist, and so on) used to build this solution.

Table 3. Software Components and Versions

| Component |

Version |

| Cisco Nexus 93180YC-FX3 |

9.3(8) |

| Cisco UCS Fabric Interconnects |

4.3(2.230117) |

| Cisco UCS X-Series blade server |

5.0(2b) |

| Cisco UCS C-Series rack server |

4.3(2.240002) |

| Cisco Intersight Assist Appliance |

1.0.9-342 (will automatically upgrade to latest when claimed) |

| NetApp AFF A400 - ONTAP |

9.13.1 P6 |

| NetApp Astra Trident CSI |

24.02 |

| SUSE Rancher |

|

| SUSE Rancher Enterprise Container Manager |

2.8.2 |

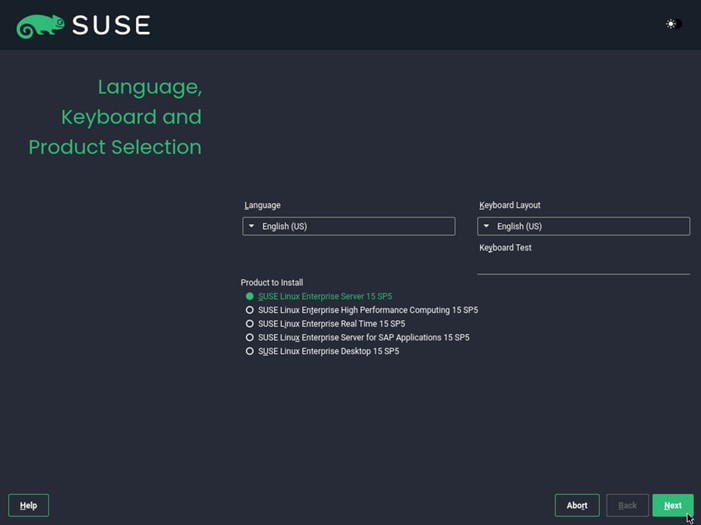

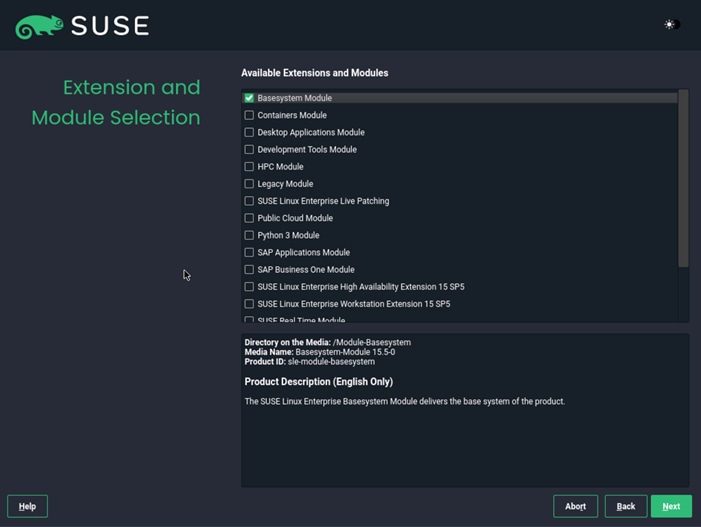

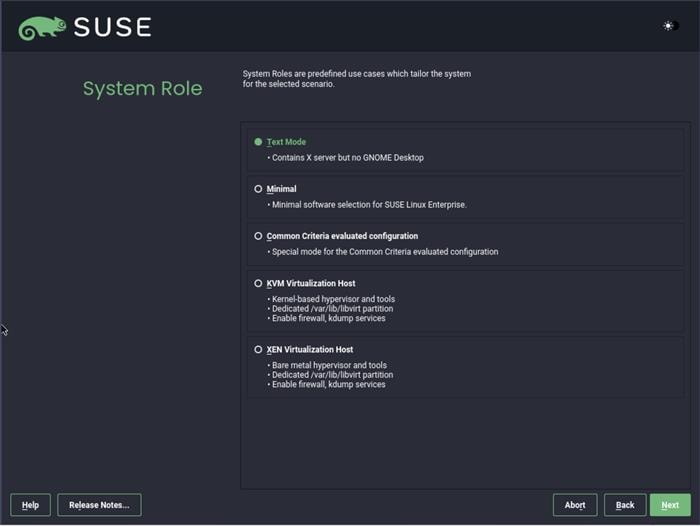

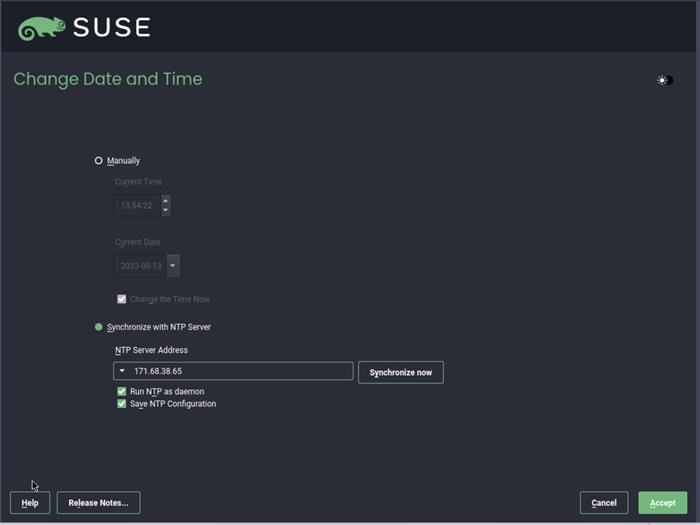

| SUSE Linux Enterprise |

15 SP5 |

| SUSE Linux Enterprise Micro |

5.4 and 5.5 |

| K3s |

v1.26.9+k3s1 and v1.27.10+k3s1 |

| RKE |

V1.26.9.rke2r1 and v1.27.10+rke2r1 |

Table 4 lists the required components deployed as virtual machines to build the validated solution. You are encouraged to review the list and adjust the deployment type, the size or quantity of various components as needed.

Table 4. Virtual Machines were used for this solution

| Component |

Comments |

| vCenter Server |

Hosted on pre-existing infrastructure. |

| Intersight Assist |

Hosted on pre-existing infrastructure. Required to manage some on-premises components, like VMware or NetApp with Intersight. |

| Infrastructure and network services provides by a Linux System |

Hosted on pre-existing infrastructure. Used to provide required services for RKE2: DNS, DHCP, Load-Balancer, and NTP. |

| Management node |

Hosted on pre-existing infrastructure. Used to host the Ansible automation framework and to manage the solution. |

Ansible Automation for Solution Deployment

This section provides information about setting up and running Ansible playbooks to configure the infrastructure for the SUSE Rancher and RKE solution. As the focus of this solution validation was on the software and workload, the infrastructure setup is based on this document: https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/UCS_CVDs/flexpod_imm_m7_iac.html#AnsibleAutomationWorkflowandSolutionDeployment

For the deployment process, see: https://developer.cisco.com/codeexchange/github/repo/ucs-compute-solutions/FlexPod-IMM-VMware/. This document will guide you through the deployment process and highlight the additions required to make the SUSE Rancher and RKE software stack work. Ansible automation requires a management workstation (control machine) to run Ansible playbooks for configuring Cisco Nexus, NetApp ONTAP Storage, Cisco UCS, and SUSE Rancher/RKE.

A management workstation is a VM where Ansible is installed and has access to the Internet to download various packages and clone the playbook repository. Instructions for installing the workstation Operating System (OS) or complete setup of Ansible are not included in this document; however, basic installation and configuration of Ansible are provided as a reference. A guide for installing and getting started with Ansible can be found at: https://docs.ansible.com/ansible_community.html.

Procedure 1. Prepare Management Workstation

In this procedure, the installation steps are performed on a virtual machine running openSUSE Leap 15.5 to prepare the host for automated solution deployment. The automation includes the configuration of Cisco UCS, Cisco Nexus, NetApp Storage, and SUSE Rancher using Ansible Playbooks. The following steps were performed as root user.

Step 1. Install Python 3.11:

zypper install python311

update-alternatives --install /usr/bin/python3 python3 /usr/bin/python3.11 2

Step 2. Verify Python version:

python3 --version

Python 3.11.8

Step 3. Install Ansible:

pip3 install ansible

Step 4. Verify Ansible version:

ansible [core 2.16.5]

config file = None

configured module search path = ['/root/.ansible/plugins/modules', '/usr/share/ansible/plugins/modules']

ansible python module location = /usr/lib/python3.11/site-packages/ansible

ansible collection location = /root/.ansible/collections:/usr/share/ansible/collections

executable location = /usr/bin/ansible

python version = 3.11.8 (main, Feb 29 2024, 12:19:47) [GCC] (/usr/bin/python3.11)

jinja version = 3.1.3

libyaml = True

Step 5. Install git:

zypper install git-core

Step 6. Update pip and setup tools:

pip3 install --upgrade pip

pip3 install --upgrade setuptools

Step 7. Install NetApp specific modules:

pip3 install netapp-lib

Step 8. Install ansible-galaxy collections for Cisco UCS, NX-OS, NetApp, and VMware as follows:

ansible-galaxy collection install cisco.intersight

ansible-galaxy collection install cisco.nxos

pip3 install ansible-pylibssh

ansible-galaxy collection install netapp.ontap

ansible-galaxy collection install community.vmware

pip3 install -r ~/.ansible/collections/ansible_collections/community/vmware/requirements.txt

| Tech tip |

| In some instances, the following error messages might be seen when executing VMware specific ansible playbooks: An exception occurred during task execution. To see the full traceback, use -vvv. The error was: ModuleNotFoundError: No module named 'requests' fatal: [10.101.1.101 -> localhost]: FAILED! => {"changed": false, "msg": "Failed to import the required Python library (requests) on aa01-linux8.vm.vcf.local's Python /usr/bin/python3.11. Please read the module documentation and install it in the appropriate location. If the required library is installed, but Ansible is using the wrong Python interpreter, please consult the documentation on ansible_python_interpreter"}

An exception occurred during task execution. To see the full traceback, use -vvv. The error was: ModuleNotFoundError: No module named 'pyVim' fatal: [10.101.1.101 -> localhost]: FAILED! => {"changed": false, "msg": "Failed to import the required Python library (PyVmomi) on aa01-linux8.vm.vcf.local's Python /usr/bin/python3.11. Please read the module documentation and install it in the appropriate location. If the required library is installed, but Ansible is using the wrong Python interpreter, please consult the documentation on ansible_python_interpreter"} To fix this issue, use the appropriate version of PIP to install “requests” and “pyvmomi:” pip3 install requests pip3 install pyVmomi |

To download the Ansible playbooks for configuring the infrastructure, the management workstation needs a working installation of Git and access to the public GitHub repository. You can also manually download the repository and copy the files to the management workstation. The Ansible playbooks used in this document can be found at the following links:

Cisco DevNet: https://developer.cisco.com/codeexchange/github/repo/ucs-compute-solutions/FlexPod-IMM-VMware/

GitHub repository: https://github.com/ucs-compute-solutions/FlexPod-IMM-VMware

The Cisco Nexus Switches, NetApp Storage and Cisco UCS must be physically racked, cabled, powered, and configured with management IP addresses before the Ansible-based installation procedure can begin. Upgrade the Cisco UCS, Nexus Switches to appropriate software versions listed in Table 3.

Before executing the Ansible playbooks to setup various devices, several variables must be updated based on your specific implementation. These variables contain values such as the interfaces, interface numbering, VLANs, pools, policies and ports on Cisco UCS, IP addresses and interfaces for storage and so on.

Note: Day 2 Configuration tasks such as adding additional VLAN, datastores, Virtual Machines and so on, can be performed manually or with Cisco Intersight Cloud Orchestrator (ICO).

Procedure 1. Clone GitHub Collection

To copy the github repository for the project, clone the collection to a new (empty) folder on the management workstation. Cloning the repository creates a local copy, which is then used to modify and run the playbooks.

Step 1. From the management workstation, create a new folder for the project. The GitHub collection will be cloned to this new folder.

Step 2. Open a command-line or console interface on the management workstation and change directories to the newly created folder.

Step 3. Clone the GitHub collection using the following command:

cd <new folder>

git clone https://github.com/ucs-compute-solutions/FlexPod-IMM-VMware

This section provides the procedure for configuring the Cisco Nexus 93180YC-FX3 switches used for ethernet LAN switching in this solution. The switch configuration for this validated design is based on the switching configuration covered in FlexPod Datacenter with Cisco UCS X-Series Cisco Validated Design (CVD): https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/UCS_CVDs/flexpod_ucs_xseries_e2e_ontap_manual_deploy.html#NetworkSwitchConfiguration therefore this section only explains the changes from the base CVD.

Physical Connectivity

Follow the physical connectivity guidelines for FlexPod as explained in section FlexPod Topology.

Initial Configuration

For setting up the initial switch configuration, complete the steps explained here: https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/UCS_CVDs/flexpod_ucs_xseries_e2e_ontap_manual_deploy.html#InitialConfiguration

Manual Configuration Steps

The required configuration steps for the FlexPod solution are documented here:

https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/UCS_CVDs/flexpod_ucs_xseries_e2e_ontap_manual_deploy.html#CiscoNexusSwitchManualConfiguration.

Procedure 1. Create VLANs

Cisco Nexus A and Cisco Nexus B

In addition to the existing VLANs, follow this step on both switches. Refer to VLAN information in VLAN Configuration for setting up all the required VLANs.

Step 1. From the global configuration mode, run the following commands:

vlan <rke1-traffic-vlan-id e.g. 1043>

name rke1-traffic-vlan

vlan <rke1-nfs-vlan-id e.g. 1047>

name rke1-nfs-vlan

vlan <rke2-traffic-vlan-id e.g. 2043>

name rke2-traffic-vlan

vlan <rke2-nfs-vlan-id e.g. 2047>

name rke2-nfs-vlan

Procedure 2. Create Port Channel Parameters

Cisco Nexus A and Cisco Nexus B

To configure port channel parameters, follow the steps on both Cisco Nexus switches.

Step 1. From the global configuration mode, run the following commands to setup VPC Peer-Link port-channel:

interface Po10

switchport trunk allowed vlan add <rke1-traffic-vlan-id>,<rke1-nfs-vlan-id>,<rke2-traffic-vlan-id>,<rke2-nfs-vlan-id>

Step 2. From the global configuration mode, run the following commands to setup port-channels for UCS FI 6454 connectivity:

interface Po11

switchport trunk allowed vlan add <rke1-traffic-vlan-id>,<rke1-nfs-vlan-id>,<rke2-traffic-vlan-id>,<rke2-nfs-vlan-id>

spanning-tree port type edge trunk

!

interface Po12

switchport trunk allowed vlan add <rke1-traffic-vlan-id>,<rke1-nfs-vlan-id>,<rke2-traffic-vlan-id>,<rke2-nfs-vlan-id>

spanning-tree port type edge trunk

Step 3. From the global configuration mode, run the following commands to setup port-channels for NetApp A400 connectivity:

interface Po113

switchport trunk allowed vlan <ib-mgmt-vlan-id>, <infra-nfs-vlan-id>

switchport trunk allowed vlan add <rke1-nfs-vlan-id>,<rke2-nfs-vlan-id>

!

interface Po114

switchport trunk allowed vlan <ib-mgmt-vlan-id>, <infra-nfs-vlan-id>

switchport trunk allowed vlan add <rke1-nfs-vlan-id>,<rke2-nfs-vlan-id>

Step 4. From the global configuration mode, run the following commands to setup port-channels for connectivity to existing management switch:

interface Po17

switchport trunk allowed vlan <rke1-acccess-vlan-id>,<rke2-access-vlan-id>

interface PoXX

switchport trunk allowed vlan add <rke1-traffic-vlan-id>,<rke2-traffic-vlan-id>

!

exit

copy run start

Procedure 3. Configure IP Gateways

To enable internet access for the installation and the workloads we have enabled IP routing and HSRP function on the Nexus Switches. If IP gateways for the VLANs covered below are present on the upstream switches, the configuration in this step can be skipped. If some or all the gateways are not configured, use Hot Standby Router Protocol (HSRP) and Switched Virtual Interface (SVI) on the Nexus switches to set up gateways for Out-of-band management*, in-band management*, RKE2 access networks.

Note: *Gateways for management networks will be pre-configured in your existing environments.

Configure Nexus-A Switch

Step 1. From the global configuration mode, run the following commands to setup IP addresses and HSRP:

feature interface-vlan

feature hsrp

interface Vlan3072

description GW for Out-of-Band Mgmt 10.104.0.0/24 Network

no shutdown

no ip redirects

ip address 10.101.0.251/24

no ipv6 redirects

hsrp version 2

hsrp 1010

preempt delay minimum 300

priority 105

ip 10.104.0.254

interface Vlan17

description GW for In-band Management 10.104.1.0/24 Network

no shutdown

no ip redirects

ip address 10.104.1.251/24

no ipv6 redirects

hsrp version 2

hsrp 1011

preempt delay minimum 300

priority 105

ip 10.104.1.254

interface Vlan172

description GW for virtual machine 10.104.2.0/24 Network

no shutdown

! MTU should be adjusted based on application requirements

mtu 1500

no ip redirects

ip address 10.104.2.251/24

no ipv6 redirects

hsrp version 2

hsrp 1012

preempt delay minimum 300

priority 105

ip 10.104.2.254

interface Vlan1043

description Gateway for RKE1 Access VLAN

no shutdown

mtu 9216

no ip redirects

ip address 10.104.3.251/24

no ipv6 redirects

hsrp version 2

hsrp 3002

preempt delay minimum 300

priority 105

ip 10.104.3.254

interface Vlan2043

description Gateway for RKE2 Access VLAN

no shutdown

mtu 9216

no ip redirects

ip address 10.104.4.251/24

no ipv6 redirects

hsrp version 2

hsrp 3002

preempt delay minimum 300

priority 105

ip 10.104.4.254

Configure Nexus-B Switch

Step 1. From the global configuration mode, run the following commands to setup IP addresses and HSRP:

feature interface-vlan

feature hsrp

interface Vlan3072

description GW for Out-of-Band Mgmt 10.104.0.0/24 Network

no shutdown

no ip redirects

ip address 10.101.0.252/24

no ipv6 redirects

hsrp version 2

hsrp 1010

preempt delay minimum 300

ip 10.104.0.254

interface Vlan17

description GW for In-band Management 10.104.1.0/24 Network

no shutdown

no ip redirects

ip address 10.104.1.252/24

no ipv6 redirects

hsrp version 2

hsrp 1011

preempt delay minimum 300

ip 10.104.1.254

interface Vlan172

description GW for virtual machine 10.104.2.0/24 Network

no shutdown

! MTU should be adjusted based on application requirements

mtu 1500

no ip redirects

ip address 10.104.2.252/24

no ipv6 redirects

hsrp version 2

hsrp 1012

preempt delay minimum 300

ip 10.104.2.254

interface Vlan1043

description Gateway for RKE1 Access VLAN

no shutdown

mtu 9216

no ip redirects

ip address 10.104.3.252/24

no ipv6 redirects

hsrp version 2

hsrp 3002

preempt delay minimum 300

ip 10.104.3.254

interface Vlan2043

description Gateway for RKE2 Access VLAN

no shutdown

mtu 9216

no ip redirects

ip address 10.104.4.252/24

no ipv6 redirects

hsrp version 2

hsrp 3002

preempt delay minimum 300

ip 10.104.4.254

See the following section (NetApp Hardware Universe) for planning the physical location of the storage systems:

· Site Preparation

· System Connectivity Requirements

· Circuit Breaker, Power Outlet Balancing, System Cabinet Power Cord Plugs, and Console Pinout Requirements

· AFF Series Systems

To confirm that the hardware and software components that you would like to use are supported with the version of ONTAP that you plan to install, follow these steps at the NetApp Support site:

1. Access the HWU application to view the System Configuration guides.

2. Click the Products tab to select Platforms menu to view the compatibility between different versions of the ONTAP software and the NetApp storage appliances with your desired specifications.

3. Alternatively, to compare components by storage appliance, click Utilities and select compare Storage Systems.

Follow the physical installation procedures for the controllers found here: https://docs.netapp.com/us-en/ontap-systems/index.html.

NetApp storage systems support a wide variety of disk shelves and disk drives. The complete list of disk shelves that is supported by the AFF A400 is available at the NetApp Support site.

When using SAS disk shelves with NetApp storage controllers, refer to: https://docs.netapp.com/us-en/ontap-systems/sas3/install-new-system.html for proper cabling guidelines.

When using NVMe drive shelves with NetApp storage controllers, refer to: https://docs.netapp.com/us-en/ontap-systems/ns224/hot-add-shelf.html for installation and servicing guidelines.

Complete the NetApp AFF A400 initial cluster setup explained here: https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/UCS_CVDs/flexpod_imm_m7_iac.html#NetAppONTAPStorageConfiguration

FC, NVMe, iSCSI, and FC Boot have not been explained in the CVD since the current solution deployment contains Boot from Local Disk and implements NFS services to provide IP-based storage access for FlexPod. At the completion of this step, NetApp A400 management connectivity and ONTAP cluster setup is ready.

Procedure 1. Log into the Cluster

Step 1. Open an SSH connection to either the cluster IP or the host name.

Step 2. Log into the admin user with the password provided during cluster setup.

Procedure 2. Verify Storage Failover

To confirm that storage failover is enabled, run the following commands for a failover pair:

Step 1. Verify the status of the storage failover:

AA07-A400::> storage failover show

Takeover

Node Partner Possible State Description

-------------- -------------- -------- -------------------------------------

AA07-A400-01 AA07-A400-02 true Connected to AA07-A400-02

AA07-A400-02 AA07-A400-01 true Connected to AA07-A400-01

2 entries were displayed.

Note: Both <st-node01> and <st-node02> must be capable of performing a takeover. Continue with Step 2 if the nodes can perform a takeover.

Step 2. Enable failover on one of the two nodes if it was not completed during the installation:

storage failover modify -node <st-node01> -enabled true

Note: Enabling failover on one node enables it for both nodes.

Step 3. Verify the HA status for a two-node cluster.

Note: This step is not applicable for clusters with more than two nodes.

AA07-A400::> cluster ha show

High-Availability Configured: true

Step 4. If HA is not configured use the below commands. Only enable HA mode for two-node clusters.

Note: Do not run this command for clusters with more than two nodes because it causes problems with failover.

cluster ha modify -configured true

Do you want to continue? {y|n}: y

Step 5. Verify that hardware assist is correctly configured:

AA07-A400::> storage failover hwassist show

Node

-----------------

AA07-A400-01

Partner: AA07-A400-02

Hwassist Enabled: true

Hwassist IP: 192.x.x.84

Hwassist Port: 162

Monitor Status: active

Inactive Reason: -

Corrective Action: -

Keep-Alive Status: healthy

AA07-A400-02

Partner: AA07-A400-01

Hwassist Enabled: true

Hwassist IP: 192.x.x.85

Hwassist Port: 162

Monitor Status: active

Inactive Reason: -

Corrective Action: -

Keep-Alive Status: healthy

2 entries were displayed.

Procedure 3. Set Auto-Revert on Cluster Management Interface

To set the auto-revert parameter on the cluster management interface, follow this step:

Step 1. Run the following command:

network interface modify -vserver <clustername> -lif cluster_mgmt_lif_1 -auto-revert true

Note: A storage virtual machine (SVM) is referred to as a Vserver or vserver in the GUI and CLI.

Procedure 4. Zero All Spare Disks

Step 1. To zero all spare disks in the cluster, run the following command:

disk zerospares

Note: Advanced Data Partitioning creates a root partition and two data partitions on each SSD drive in an AFF configuration. Disk auto assign should have assigned one data partition to each node in an HA pair. If a different disk assignment is re-quired, disk auto assignment must be disabled on both nodes in the HA pair by running the disk option modify command. Spare partitions can then be moved from one node to another by running the disk removeowner and disk assign commands.

Procedure 5. Set Up Service Processor Network Interface

Step 1. Assign a static IPv4 address to the Service Processor on each node by running the following commands:

system service-processor network modify –node <st-node01> -address-family IPv4 –enable true –dhcp none –ip-address <node01-sp-ip> -netmask <node01-sp-mask> -gateway <node01-sp-gateway>

system service-processor network modify –node <st-node02> -address-family IPv4 –enable true –dhcp none –ip-address <node02-sp-ip> -netmask <node02-sp-mask> -gateway <node02-sp-gateway>

Note: The Service Processor IP addresses should be in the same subnet as the node management IP addresses.

Procedure 6. Create Manual Provisioned Aggregates

An aggregate containing the root volume is created during the ONTAP setup process. To manually create additional aggregates, determine the aggregate name, the node on which to create it, and the number of disks it should contain.

Step 1. To create new aggregates, run the following commands:

storage aggregate create -aggregate <aggr1_node01> -node <st-node01> -diskcount <num-disks> -diskclass solid-state

storage aggregate create -aggregate <aggr1_node02> -node <st-node02> -diskcount <num-disks> -diskclass solid-state

Note: You should have the minimum number of hot spare disks for the recommended hot spare disk partitions for their aggregate.

Note: For all-flash aggregates, you should have a minimum of one hot spare disk or disk partition. For non-flash homogenous aggregates, you should have a minimum of two hot spare disks or disk partitions. For Flash Pool aggregates, you should have a minimum of two hot spare disks or disk partitions for each disk type.

Note: In an AFF configuration with a small number of SSDs, you might want to create an aggregate with all, but one remaining disk (spare) assigned to the controller.

Note: The aggregate cannot be created until disk zeroing completes. Run the storage aggregate show command to display the aggregate creation status. Do not proceed until both aggr1_node01 and aggr1_node02 are online.

Procedure 7. Remove Default Broadcast Domains

By default, all network ports are included in separate default broadcast domain. Network ports used for data services (for example e0e, e0f, and so on) should be removed from their default broadcast domain and that broadcast domain should be deleted.

Step 1. To perform this task, run the following commands:

network port broadcast-domain delete -broadcast-domain <Default-N> -ipspace Default

network port broadcast-domain show

Note: Delete the Default broadcast domains with Network ports.

Procedure 8. Disable Flow Control on 25/100GbE Data Ports

To disable flow control on 25 and 100GbE data ports, follow these steps:

Step 1. Run the following command to configure the ports on node 01:

network port modify -node <st-node01> -port e0e,e0f,e0g,e0h -flowcontrol-admin none

Step 2. Run the following command to configure the ports on node 02:

network port modify -node <st-node02> -port e0e,e0f,e0g,e0h -flowcontrol-admin none

Step 3. Run the following command to verify:

network port show -node * -port e0e,e0f,e0g,e0h -fields speed-admin,duplex-admin,flowcontrol-admin

Procedure 9. Enable Cisco Discovery Protocol

Step 1. To enable the Cisco Discovery Protocol (CDP) on the NetApp storage controllers, run the following command to enable CDP in ONTAP:

node run -node * options cdpd.enable on

Procedure 10. Enable Link-layer Discovery Protocol on all Ethernet Ports

Step 1. Enable Link-layer Discovery Protocol (LLDP) on all ports of all nodes in the cluster:

node run * options lldp.enable on

Procedure 11. Enable FIPS Mode on the NetApp ONTAP Cluster (Optional)

NetApp ONTAP is compliant in the Federal Information Processing Standards (FIPS) 140-2 for all SSL connections. When SSL FIPS mode is enabled, SSL communication from NetApp ONTAP to external client or server components outside of NetApp ONTAP will use FIPS compliant crypto for SSL.

Step 1. To enable FIPS on the NetApp ONTAP cluster, run the following commands:

set -privilege advanced

security config modify -interface SSL -is-fips-enabled true

Note: If you are running NetApp ONTAP 9.8 or earlier manually reboot each node in the cluster one by one. Beginning in NetApp ONTAP 9.9.1, rebooting is not required.

Note: If FIPS is not enabled on the NetApp ONTAP cluster, you will see a warning in AIQUM stating “FIPS Mode Disabled.”

Procedure 12. Configure Timezone

To configure time synchronization on the cluster, follow this step:

Step 1. Set the time zone for the cluster.

timezone -timezone <timezone>

Note: For example, in the eastern United States, the time zone is America/New_York

Procedure 13. Configure Simple Network Management Protocol

Note: If you have enabled FIPS then please look at the following points while configuring SNMP.

· The SNMP users or SNMP traphosts that are non-compliant with FIPS will be deleted automatically. “Configure SNMP traphosts” configuration will be non-compliant with FIPS.

· The SNMPv1 user, SNMPv2c user (After configuring SNMP community) or SNMPv3 user (with none or MD5 as authentication protocol or none or DES as encryption protocol or both) is non-compliant with FIPS.

Step 1. Configure basic SNMP information, such as the location and contact. When polled, this information is visible as the sysLocation and sysContact variables in SNMP.

snmp contact <snmp-contact>

snmp location “<snmp-location>”

snmp init 1

options snmp.enable on

Step 2. Configure SNMP traps to send to remote hosts, such as an Active IQ Unified Manager server or another fault management system.

Note: This step works when FIPS is disabled.

Note: An SNMPv1 traphost or SNMPv3 traphost (configured with an SNMPv3 user non-compliant to FIPS) is non-compliant to FIPS.

snmp traphost add <oncommand-um-server-fqdn>

Step 3. Configure SNMP community.

Note: This step works when FIPS is disabled.

Note: SNMPv1 and SNMPv2c are not supported when cluster FIPS mode is enabled.

system snmp community add -type ro -community-name <snmp-community> -vserver <clustername>

Procedure 14. Configure SNMPv3 Access

SNMPv3 offers advanced security by using encryption and passphrases. The SNMPv3 user can run SNMP utilities from the traphost using the authentication and privacy settings that you specify.

Note: When FIPS is enabled, the following are the supported/compliant options for authentication and privacy protocol:

· Authentication Protocol: sha, sha2-256

· Privacy protocol: aes128

Step 1. To configure SNMPv3 access, run the following commands:

security login create -user-or-group-name <<snmp-v3-usr>> -application snmp -authentication-method usm

Enter the authoritative entity's EngineID [local EngineID]:

Which authentication protocol do you want to choose (none, md5, sha, sha2-256) [none]: <<snmp-v3-auth-proto>>

Enter the authentication protocol password (minimum 8 characters long):

Enter the authentication protocol password again:

Which privacy protocol do you want to choose (none, des, aes128) [none]: <<snmpv3-priv-proto>>

Enter privacy protocol password (minimum 8 characters long):

Enter privacy protocol password again:

Note: Refer to the SNMP Configuration Express Guide for additional information when configuring SNMPv3 security users.

Procedure 15. Remove insecure ciphers from the NetApp ONTAP Cluster

Note: If you do not perform this procedure, you will see the warning in AIQUM “SSH is using insecure ciphers.”

Step 1. Ciphers with the suffix CBC are considered insecure. To remove the CBC ciphers, run the following NetApp ONTAP command:

security ssh remove -vserver <clustername> -ciphers aes256-cbc,aes192-cbc,aes128-cbc,3des-cbc

Procedure 16. Create Management Broadcast Domain

Step 1. If the management interfaces are required to be on a separate VLAN, create a new broadcast domain for those interfaces by running the following command:

network port broadcast-domain create -broadcast-domain IB-MGMT -mtu 1500

Procedure 17. Create NFS Broadcast Domain

Step 1. To create an NFS data broadcast domain with a maximum transmission unit (MTU) of 9000, run the following commands to create a broadcast domain for NFS in ONTAP:

network port broadcast-domain create -broadcast-domain Infra-NFS -mtu 9000

Procedure 18. Create 2 Default Broadcast Domains and Add ifgroups a0a interface on each node to Default Broadcast Domain (applicable for 2-node cluster only)

Step 1. Create default broadcast domain.

broadcast-domain create -broadcast-domain Default-1 -mtu 9000 -ipspace Default

broadcast-domain create -broadcast-domain Default-2 -mtu 9000 -ipspace Default

Step 2. To add ports to default broadcast domains, run the following commands:

AA07-A400::> broadcast-domain add-ports -broadcast-domain Default-1 -ports AA07-A400-01:a0a

(network port broadcast-domain add-ports)

AA07-A400::> broadcast-domain add-ports -broadcast-domain Default-2 -ports AA07-A400-02:a0a

(network port broadcast-domain add-ports)

Procedure 19. Create Interface Groups

Step 1. To create the LACP interface groups for the 25GbE data interfaces, run the following commands:

network port ifgrp create -node <st-node01> -ifgrp a0a -distr-func port -mode multimode_lacp

network port ifgrp add-port -node <st-node01> -ifgrp a0a -port e0e

network port ifgrp add-port -node <st-node01> -ifgrp a0a -port e0f

network port ifgrp add-port -node <st-node01> -ifgrp a0a -port e0g

network port ifgrp add-port -node <st-node01> -ifgrp a0a -port e0h

network port ifgrp create -node <st-node02> -ifgrp a0a -distr-func port -mode multimode_lacp

network port ifgrp add-port -node <st-node02> -ifgrp a0a -port e0e

network port ifgrp add-port -node <st-node02> -ifgrp a0a -port e0f

network port ifgrp add-port -node <st-node02> -ifgrp a0a -port e0g

network port ifgrp add-port -node <st-node02> -ifgrp a0a -port e0h

####To Verify: ####

AA07-A400::> network port ifgrp show

Port Distribution Active

Node IfGrp Function MAC Address Ports Ports

-------- ---------- ------------ ----------------- ------- -------------------

AA07-A400-01

a0a port d2:39:ea:29:d4:4a full e0e, e0f, e0g, e0h

AA07-A400-02

a0a port d2:39:ea:29:ce:d5 full e0e, e0f, e0g, e0h

2 entries were displayed.

Procedure 20. Create VLANs

Step 1. Create the management VLAN ports and add them to the management broadcast domain:

network port vlan create –node <st-node01> -vlan-name a0a-<ib-mgmt-vlan-id>

network port vlan create –node <st-node02> -vlan-name a0a-<ib-mgmt-vlan-id>

network port broadcast-domain add-ports -broadcast-domain IB-MGMT -ports <st-node01>:a0a-<ib-mgmt-vlan-id>,<st-node02>:a0a-<ib-mgmt-vlan-id>

Step 2. To verify, issue the following command:

network port vlan show

Step 3. Create the NFS VLAN ports and add them to the Infra-NFS broadcast domain:

network port vlan create –node <st-node01> -vlan-name a0a-<infra-nfs-vlan-id>

network port vlan create –node <st-node02> -vlan-name a0a-<infra-nfs-vlan-id>

network port broadcast-domain add-ports -broadcast-domain Infra-NFS -ports <st-node01>:a0a-<infra-nfs-vlan-id>,<st-node02>:a0a-<infra-nfs-vlan-id>

Procedure 21. Create SVM (Storage Virtual Machine)

The SVM (AA04-RKE2-BM-SVM) is used to configure NFS services for storage access by NetApp Astra Trident.

Step 1. Run the vserver create command:

vserver create –vserver AA04-RKE2-BM-SVM

Step 2. Add the required data protocols to the SVM:

vserver add-protocols -protocols nfs -vserver AA04-RKE2-BM-SVM

Step 3. Remove the unused data protocols from the SVM:

vserver remove-protocols –vserver AA04-RKE2-BM-SVM -protocols cifs,fcp,iscsi

Note: It is recommended to remove fcp and iscsi protocols if they are not used:

Step 4. Add the two data aggregates to the AA04-RKE2-BM-SVM aggregate list for the NetApp ONTAP Tools:

vserver modify –vserver AA04-RKE2-BM-SVM –aggr-list < AA07_A400_01_NVME_SSD_1>,< AA07_A400_02_NVME_SSD_1>

Step 5. Enable and run the NFS protocol in the AA04-RKE2-BM-SVM:

vserver nfs create -vserver AA04-RKE2-BM-SVM -udp disabled -v3 enabled -v4.1 enabled -vstorage enabled

Note: If the NFS license was not installed during the cluster configuration, make sure to install the license before starting the NFS service.

Procedure 22. Vserver Protocol Verification

Step 1. Verify the required protocols are added to the AA04-RKE2-BM-SVM vserver:

AA07-A400::> vserver show-protocols -vserver AA04-RKE2-BM-SVM

Vserver: AA04-RKE2-BM-SVM

Protocols: nfs

Procedure 23. Create Load-Sharing Mirrors of SVM Root Volume

Step 1. Create a load-sharing mirror volume of the “AA04-RKE2-BM-SVM” SVM root volume on the node that does not have the Root Volume:

volume show -vserver AA04-RKE2-BM-SVM # Note down the aggregate and node for the root volume

volume create –volume AA04_RKE2_SVM_BM_root_lsm0<x> –aggregate <AA07_A400_0x_NVME_SSD_1> –size 1GB –type DP

Step 2. Create a job schedule to update the root volume mirror relationships every 15 minutes:

job schedule interval create -name 15min -minutes 15

Step 3. Create mirroring relationships:

snapmirror create –source-path AA04-RKE2-BM-SVM:AA04_RKE_SVM_root -destination-path AA04-RKE2-BM-SVM: AA04_RKE2_SVM_BM_root_lsm0<x> –type LS -schedule 15min

Step 4. Initialize the mirroring relationship:

AA07-A400::> snapmirror initialize-ls-set -source-path AA04-RKE2-BM-SVM:AA04_RKE2_BM_SVM_root

[Job 19735] Job is queued: snapmirror initialize-ls-set for source "AA07-A400://AA04-RKE2-BM-SVM/AA04_RKE2_BM_SVM_root".

To verify:

AA07-A400::> snapmirror show -vserver AA04-RKE2-BM-SVM

Progressss

Source Destination Mirror Relationship Total Last

Path Type Path State Status Progress Healthy Updated

----------- ---- ------------ ------- -------------- --------- ------- --------

AA07-A400://AA04-RKE2-BM-SVM/AA04_RKE2_BM_SVM_root

LS AA07-A400://AA04-RKE2-BM-SVM/AA04_RKE2_BM_SVM_root_lsm01

Snapmirrored

Idle - true -snapmirror show -type ls

Procedure 24. Configure HTTPS Access

To configure secure access to the storage controller, follow these steps:

Step 1. Increase the privilege level to access the certificate commands:

set -privilege diag

Do you want to continue? {y|n}: y

Step 2. A self-signed certificate is already in place. Verify the certificate and obtain parameters (for example, the <serial-number>) by running the following command:

security certificate show

Step 3. For each SVM shown, the certificate common name should match the DNS fully qualified domain name (FQDN) of the SVM. Delete the two default certificates and replace them with either self-signed certificates or certificates from a certificate authority (CA). To delete the default certificates, run the following commands:

security certificate delete -vserver AA04-RKE2-BM-SVM -common-name AA04-RKE2-BM-SVM -ca AA04-RKE2-BM-SVM -type server -serial <serial-number>

Note: Deleting expired certificates before creating new certificates is best practice. Run the security certificate delete command to delete the expired certificates. In the following command, use TAB completion to select and delete each default certificate.

Step 4. To generate and install self-signed certificates, run the following commands as one-time commands. Generate a server certificate for the AA04-RKE2-BM-SVM and the cluster SVM. Use TAB completion to aid in the completion of these commands.

security certificate create -common-name <cert-common-name> -type server -size 2048 -country <cert-country> -state <cert-state> -locality <cert-locality> -organization <cert-org> -unit <cert-unit> -email-addr <cert-email> -expire-days <cert-days> -protocol SSL -hash-function SHA256 -vserver AA04-RKE2-BM-SVM

Step 5. To obtain the values for the parameters required in step 5 (<cert-ca> and <cert-serial>), run the security certificate show command.

Step 6. Enable each certificate that was just created by using the –server-enabled true and –client-enabled false parameters. Use TAB completion to aid in the completion of these commands.

security ssl modify -vserver <clustername> -server-enabled true -client-enabled false -ca <cert-ca> -serial <cert-serial> -common-name <cert-common-name>

Step 7. Disable HTTP cluster management access.

network interface service-policy remove-service -vserver <clustername> -policy default-management -service management-http

Note: It is normal for some of these commands to return an error message stating that the entry does not exist.

Step 8. Change back to the normal admin privilege level and verify that the system logs are available in a web browser.

set –privilege admin

https://<node01-mgmt-ip>/spi

https://<node02-mgmt-ip>/spi

Procedure 25. Set password for SVM vsadmin user and unlock the user

Step 1. Set a password for the SVM vsadmin user and unlock the user using the following commands:

security login password –username vsadmin –vserver AA04-RKE2-BM-SVM

Enter a new password: <password>

Enter it again: <password>

security login unlock –username vsadmin –vserver AA04-RKE2-BM-SVM

Procedure 26. Configure login banner for the SVM

Step 1. To create login banner for the SVM, run the following command:

security login banner modify -vserver AA04-RKE2-BM-SVM -message "This AA04-RKE2-BM-SVM is reserved for authorized users only!"

Note: If the login banner for the SVM is not configured, users will observe a warning in AIQUM stating “Login Banner Disabled.”

Procedure 27. Remove insecure ciphers from the SVM

Ciphers with the suffix CBC are considered insecure.

Note: If you do not perform the procedure, you will see a warning in AIQUM saying “SSH is using insecure ciphers.”

Step 1. To remove the CBC ciphers from the SVM, run the following NetApp ONTAP command:

security ssh remove -vserver AA04-RKE2-BM-SVM -ciphers aes256-cbc,aes192-cbc,aes128-cbc,3des-cbc

Procedure 28. Configure Export Policy Rule

Note: This step is crucial when using ontap-nas (NFS) storage driver in Astra Trident since this SVM is added as a Trident Backend.

To configure NFS on the SVM, follow these steps:

Step 1. Create a new rule for the infrastructure NFS subnet in the default export policy:

vserver export-policy rule create –vserver AA04-RKE2-BM-SVM -policyname default –ruleindex 1 –protocol nfs -clientmatch <infra-nfs-subnet-cidr> -rorule any –rwrule any -superuser sys –allow-suid true -anon 65534

Note: For more information on configuring NFS Export Policy for Trident, go to: https://docs.netapp.com/us-en/trident/trident-use/ontap-nas-prep.html#requirements.

Step 2. Assign the FlexPod export policy to the infrastructure SVM (AA04-RKE2-BM-SVM) root volume:

volume modify –vserver AA04-RKE2-BM-SVM –volume AA04_RKE2_BM_SVM_root –policy default

Procedure 29. Create FlexVol Volumes

The following information is required to create a NetApp FlexVol® volume:

· The volume name

· The volume size

· The aggregate on which the volume exists

Step 1. Run the following command to create a volume for storing SVM audit log configuration:

volume create -vserver AA04-RKE2-BM-SVM -volume audit_log -aggregate <aggr1_node01> -size 50GB -state online -

policy default -junction-path /audit_log -space-guarantee none -percent-snapshot-space 0

Step 2. Update set of load-sharing mirrors using the command below:

snapmirror update-ls-set -source-path AA04-RKE2-BM-SVM:AA04_RKE2_SVM_BM_root

Procedure 30. Create NFS LIFs

Step 1. To create NFS LIFs, run the following commands:

network interface create -vserver AA04-RKE2-BM-SVM -lif nfs-lif-01 -service-policy default-data-files -home-node <st-node01> -home-port a0a-<rke1-nfs-vlan-id> –address <node01-nfs-lif-01-ip> -netmask <node01-nfs-lif-01-mask> -status-admin up –failover-policy broadcast-domain-wide –auto-revert true

network interface create -vserver AA04-RKE2-BM-SVM -lif nfs-lif-02 -service-policy default-data-files -home-node <st-node02> -home-port a0a-<rke1-nfs-vlan-id> –address <node02-nfs-lif-02-ip> -netmask <node02-nfs-lif-02-mask>> -status-admin up –failover-policy broadcast-domain-wide –auto-revert true

Step 2. To verify, run the following commands:

AA07-A400::> network interface show -vserver AA04-RKE2-BM-SVM -service-policy default-data-files

Logical Status Network Current Current Is

Vserver Interface Admin/Oper Address/Mask Node Port Home

----------- ---------- ---------- ------------------ ------------- ------- ----

AA04-RKE2-BM-SVM

nfs-lif-01 up/up 10.104.7.1/24 AA07-A400-01 a0a-1047 true

nfs-lif-02 up/up 10.104.7.2/24 AA07-A400-02 a0a-1047 true

2 entries were displayed.

Procedure 31. Create SVM Management LIF (Add Infrastructure SVM Administrator)

To add the SVM administrator and SVM administration LIF in the in-band management network, follow these steps:

Step 1. Run the following commands:

network interface create –vserver AA04-RKE2-BM-SVM –lif svm-mgmt –home-node <st-node02> -home-port a0a-<ib-mgmt-vlan-id> –address <svm-mgmt-ip> -netmask <svm-mgmt-mask> -status-admin up -auto-revert true -service-policy default-management –failover-policy broadcast-domain-wide

Step 2. Create a default route that enables the SVM management interface to reach the outside world.

network route create –vserver AA04-RKE2-BM-SVM -destination 0.0.0.0/0 –gateway <svm-mgmt-gateway>

Step 3. To verify, run the following commands:

AA07-A400::> network route show -vserver AA04-RKE2-BM-SVM

Vserver Destination Gateway Metric

------------------- --------------- --------------- ------

AA04-RKE2-BM-SVM

0.0.0.0/0 10.104.0.254 10

Step 4. Add route to reach the corresponding RKE2 master/worker network:

AA07-A400::> net route show -vserver AA04-RKE2-BM-SVM

(network route show)

Vserver Destination Gateway Metric

------------------- --------------- --------------- ------

AA04-RKE2-BM-SVM

0.0.0.0/0 10.104.0.254 10

10.104.3.0/24 10.104.3.254 20

10.104.7.0/24 10.104.7.254 20

3 entries were displayed.

Note: A cluster serves data through at least one and possibly several SVMs. With these steps, you’ve created a single data SVM. You can create additional SVMs depending on your requirement.

Procedure 32. Configure Auto Support

NetApp AutoSupport® sends support summary information to NetApp through HTTPS.

Step 1. To configure AutoSupport, run the following command:

system node autosupport modify -state enable –mail-hosts <mailhost> -from <from-address> -transport https -support enable -to <storage-admin-email>

Procedure 33. Configure DNS for Infrastructure SVM (AA04-RKE2-BM-SVM)

Step 1. To configure DNS for the Infra-SVM, run the following command:

dns create -vserver <vserver-name> -domains <dns-domain> -nameserve <dns-servers>

Example:

dns create -vserver AA04-RKE2-BM-SVM -domains aa04.cspgb4.local -nameservers 10.104.1.4,10.104.1.6

Procedure 34. Create and enable auditing configuration for the SVM

Note: If you do not perform this procedure for the SVM, you will see a warning in AIQUM stating “Audit Log is disabled.”

Step 1. To create auditing configuration for the SVM, run the following command:

vserver audit create -vserver AA04-RKE2-BM-SVM -destination /audit_log

Step 2. Run the following command to enable audit logging for the SVM:

vserver audit enable -vserver AA04-RKE2-BM-SVM

Note: It is recommended that you enable audit logging so you can capture and manage important support and availability information. Before you can enable auditing on the SVM, the SVM’s auditing configuration must already exist.

Procedure 35. Test Auto Support

Step 1. To test the Auto Support configuration by sending a message from all nodes of the cluster, run the following command:

autosupport invoke -node * -type all -message “FlexPod ONTAP storage configuration for RKE2 is completed”

Now the setup of the NetApp storage is completed. Since SLES servers are booted with local disks, storage configuration for boot is not required. The configuration required for NetApp Astra Trident will be done later.

Cisco Intersight Managed Mode – Initial Setup

This chapter contains the following:

· Set up Cisco Intersight Managed Mode on Cisco UCS Fabric Interconnects

· Set up Cisco Intersight Account

· Initial Cisco Intersight Configuration

The Cisco Intersight Managed Mode (also referred to as Cisco IMM or Intersight Managed Mode) is a new architecture that manages Cisco Unified Computing System™ (Cisco UCS®) fabric interconnect–attached systems. Cisco Intersight managed mode standardizes both policy and operation management for Cisco UCS B-series M5 and M6 servers, Cisco UCS X210c M6 and M7 compute nodes used in this deployment guide. For a complete list of supported platforms, go to: https://www.cisco.com/c/en/us/td/docs/unified_computing/Intersight/b_Intersight_Managed_Mode_Configuration_Guide/b_intersight_managed_mode_guide_chapter_01010.html

During the initial setup, Cisco UCS FIs are configured in Intersight Managed Mode and added to a newly created Intersight account. Intersight organization creation, resource group definition and license setup are also part of the initial setup. At the end of this section, you can start creating various chassis and server level policies and profiles to deploy Cisco UCS compute nodes.

Set up Cisco Intersight Managed Mode on Cisco UCS Fabric Interconnects

To set up Cisco UCS 6454 Fabric Interconnects in Intersight Managed Mode, complete the steps explained here: https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/UCS_CVDs/flexpod_ucs_xseries_e2e_ontap_manual_deploy.html#CiscoIntersightManagedModeSetUp

Note: If a software version that supports Intersight Managed Mode (4.1(3) or later) is already installed on Cisco UCS Fabric Interconnects, do not upgrade the software to a recommended recent release using Cisco UCS Manager. The software upgrade will be performed using Cisco Intersight to make sure Cisco UCS X-series firmware is part of the software upgrade.

Set up Cisco Intersight Account

To set up a new Cisco Intersight Account, complete the steps explained here: https://www.intersight.com/help/saas/getting_started/create_cisco_intersight_account

Note: Setting up a new Intersight account is not necessary if you plan to add the Cisco UCS FIs to an existing account.

Initial Cisco Intersight Configuration

When setting up a new Cisco Intersight account (as described in this document), the account needs to be enabled for Cisco Smart Software Licensing. It is also required to configure Organizations, Resource Groups, and to claim the Cisco UCS hardware.

Cisco Intersight Managed Mode – Domain Profile Setup

This chapter contains the following:

· Review and Deploy the Domain Profile

· Configure Cisco UCS Chassis Profile (optional)

A Cisco UCS domain profile configures a fabric interconnect pair through reusable policies, allows configuration of the ports and port channels, and configures the VLANs and VSANs in the network. It defines the characteristics of and configured ports on fabric interconnects. The domain-related policies can be attached to the profile either at the time of creation or later. One Cisco UCS domain profile can be assigned to one fabric interconnect domain.

Domain profile setup has the following steps:

· General configuration – name and organization assignment

· UCS Domain Assignment – assign previously claimed UCS Fabric Interconnects to the domain profile

· VLAN configuration – define required VLANs

· Port configuration – configure server and uplink ports and port-channels for Ethernet traffic

· UCS domain configuration – policies such as NTP, DNS and QoS

· Review and deploy – review the configuration and deploy the UCS domain profile

To configure the name, description, and organization for the UCS domain profile, complete the steps from Procedure 9 and following, explained here: https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/UCS_CVDs/flexpod_ucs_xseries_e2e_ontap_manual_deploy.html#CiscoIntersightManagedModeSetUp

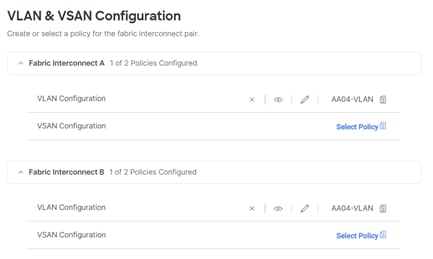

To define the VLANs, complete the steps explained here:

https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/UCS_CVDs/flexpod_ucs_xseries_e2e_ontap_manual_deploy.html#VLANandVSANConfiguration before continuing with the RKE2 specific configurations.

Figure 2. UCS Domain Profile VLAN Policy Mapping

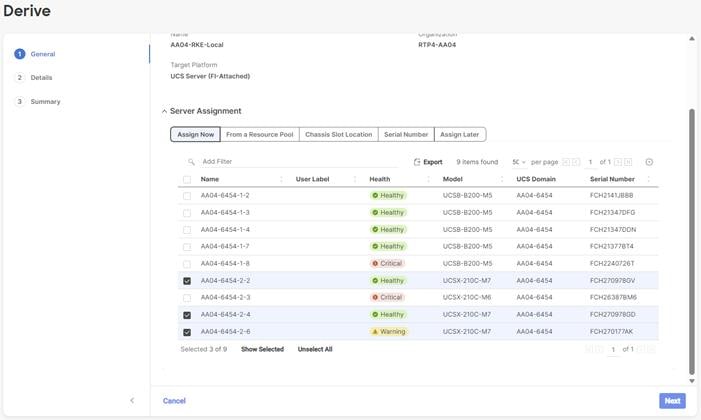

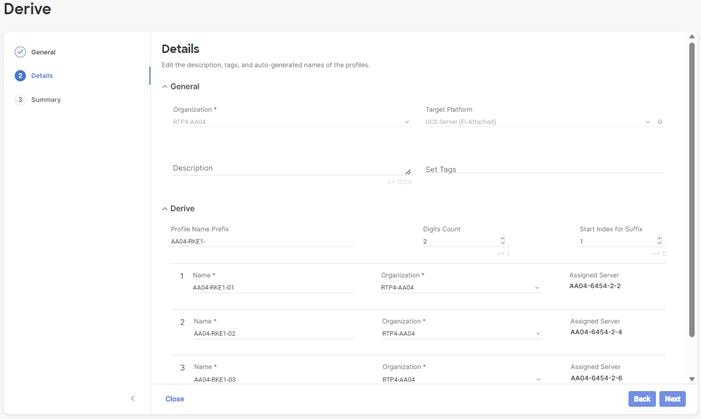

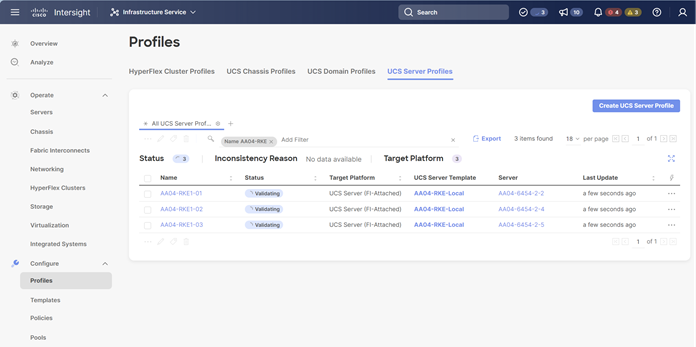

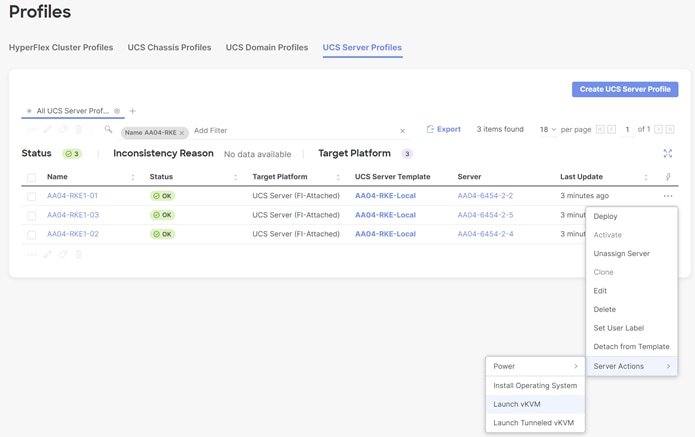

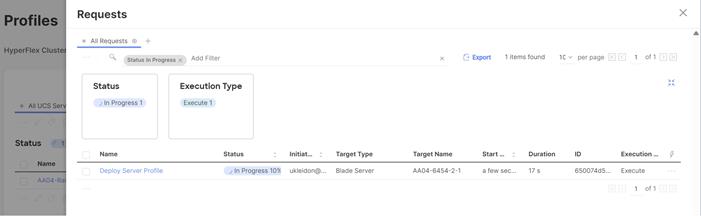

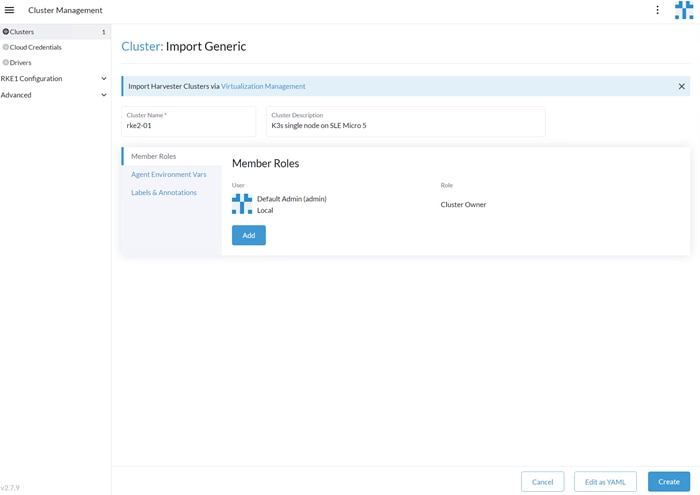

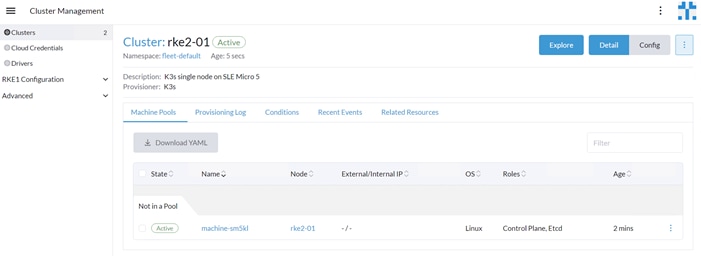

Procedure 1. Add SUSE Rancher/RKE2 VLANs to the Domain Profile

To add the RKE2 VLANs to the Domain Profile, follow the steps shown in the screenshot below:

Review and Deploy the Domain Profile

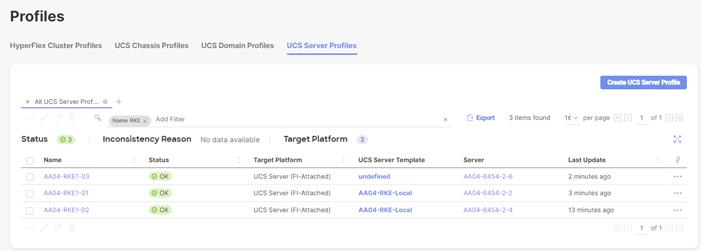

With a successful deployment of the UCS domain profile, the ethernet port channels should be enabled and the Cisco UCS rack servers and compute nodes should be successfully discovered.

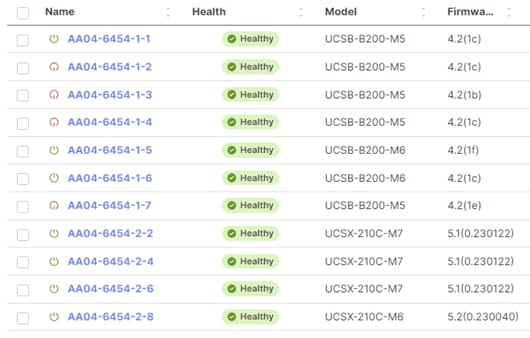

Figure 3. Example of discovered compute nodes and rack server

Configure Cisco UCS Chassis Profile (optional)

Cisco UCS Chassis profile in Cisco Intersight allows you to configure various parameters for the chassis, including:

· IMC Access Policy: IP configuration for the in-band chassis connectivity. This setting is independent of Server IP connectivity and only applies to communication to and from chassis.

· SNMP Policy, and SNMP trap settings.

· Power Policy to enable power management and power supply redundancy mode.

· Thermal Policy to control the speed of FANs.

A chassis policy can be assigned to any number of chassis profiles to provide a configuration baseline for a chassis. In this deployment, no chassis profile was created or attached but you can configure policies to configure SNMP or Power parameters and attach them to the chassis as needed. For more information about configuring UCS chassis policies, go to: https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/UCS_CVDs/flexpod_ucs_xseries_e2e_ontap_manual_deploy.html#CiscoIntersightManagedModeSetUp

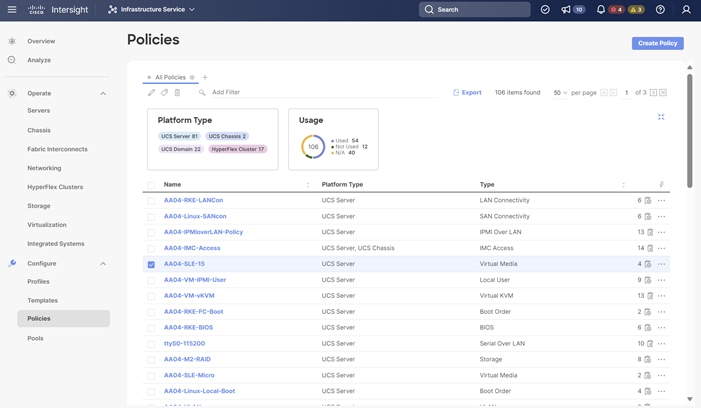

Cisco Intersight Managed Mode – Server Profile Template

This chapter contains the following:

· vNIC Placement for Server Profile Templates

· Server Profile Template Creation

In Cisco Intersight Managed Mode, a server profile enables resource management by simplifying policy alignment and server configuration. The server profiles are derived from a server profile template. Server profile template and its associated policies can be created using the server profile template wizard.

If VMware ESXi hosts will be deployed on the same UCS Domain, complete the steps explained here: https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/UCS_CVDs/flexpod_ucs_xseries_e2e_ontap_manual_deploy.html#CiscoUCSIMMManualConfiguration

In this document, one server profile template is created for SUSE Rancher and RKE2 hosts deployed bare metal.

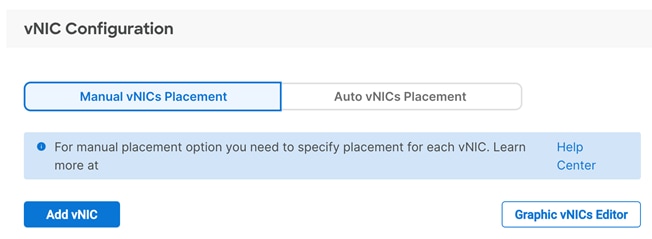

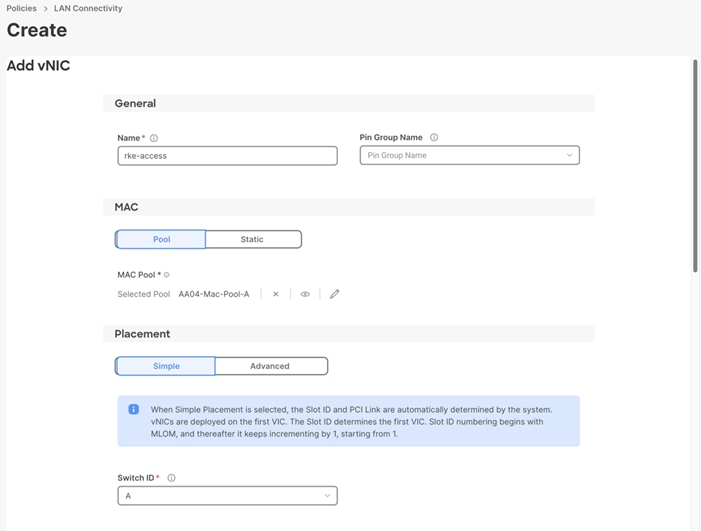

vNIC Placement for Server Profile Templates

This section explains the vNIC definitions and placement for server profile templates.

SUSE RKE2 Host vNIC Placement

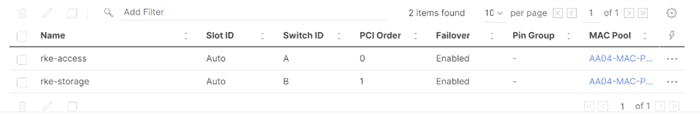

Two vNICs are configured and manually placed as listed in Table 5.

Table 5. vNIC placement for Management Domain hosts

| vNIC/vHBA Name |

Slot |

Switch ID |

PCI Order |

| rke-access |

MLOM |

A |

0 |

| rke-storage |

MLOM |

B |

1 |

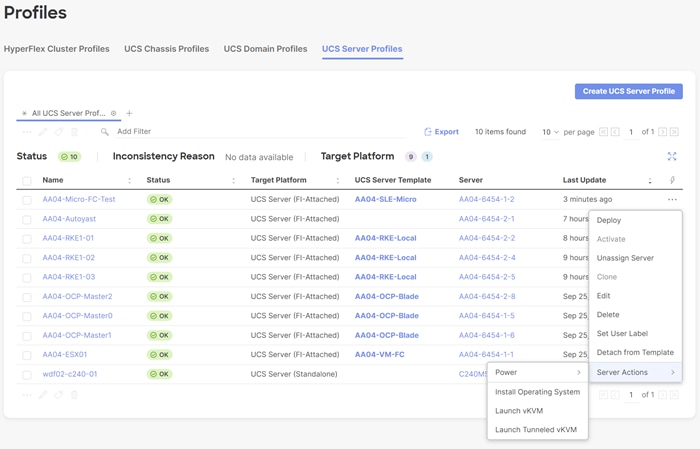

Server Profile Template Creation

Procedure 1. Configure a Server Profile Template

Step 1. Log in to Cisco Intersight.

Step 2. Go to Infrastructure Service > Configure > Templates and in the main window click Create UCS Server Profile Template.

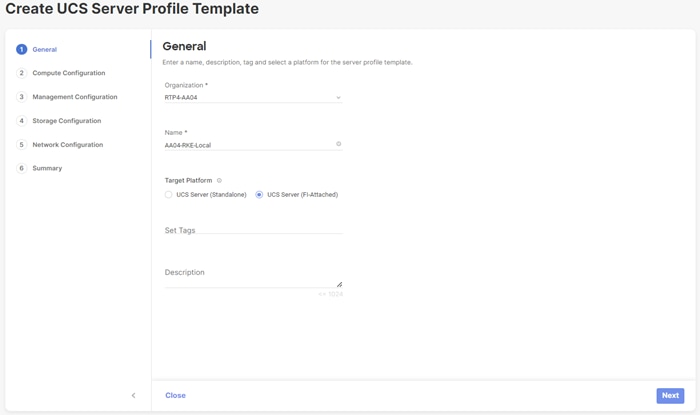

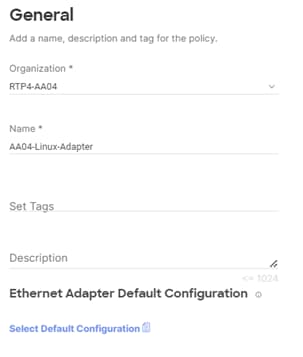

Procedure 2. General Configuration

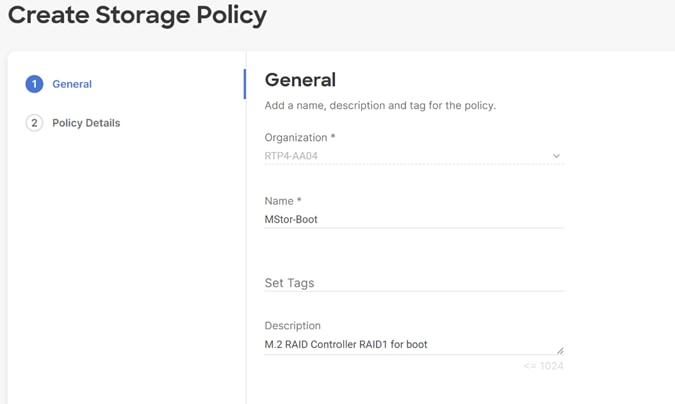

Step 1. Select the organization from the drop-down list (for example RTP4-AA04).

Step 2. Provide a name for the server profile template. The name used in this deployment is AA04-RKE-Local

Step 3. Select UCS Server (FI-Attached).

Step 4. Provide an optional description.

Step 5. Click Next.

Procedure 3. Compute Configuration – UUID Pool

Follow these steps to configure UUID pool under the Compute Configuration.

Note: For additional granularity, you can choose to create different UUID pools for different workloads or departments.

Step 1. Click Select Pool under UUID Pool and then in the pane on the right, click Create New.

Step 2. Verify correct organization is selected from the drop-down list (for example RTP4-AA04) and provide a name for the UUID Pool (for example, AA04-UUID-Pool).

Step 3. Provide an optional Description and click Next.

Step 4. Provide a UUID Prefix (for example, a random prefix of AA040000-0000-0001 was used).

Step 5. Add a UUID block.

Step 6. Click Create.

Procedure 4. Compute Configuration – BIOS policy

Follow these steps to configure BIOS policies under the Compute Configuration.

Step 1. Click Select Policy next to BIOS and in the pane on the right, click Create New.

Step 2. Verify correct organization is selected from the drop-down list (for example, RTP4-AA04) and provide a name for the policy (for example, AA04-RKE-BIOS or AA04-RKE-BIOS-M6).

Step 3. Click Next.

Step 4. On the Policy Details screen, select appropriate values for the BIOS settings. In this deployment, the default BIOS values were selected.

Step 5. Click Create.

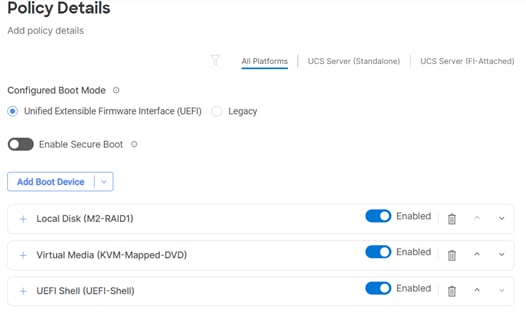

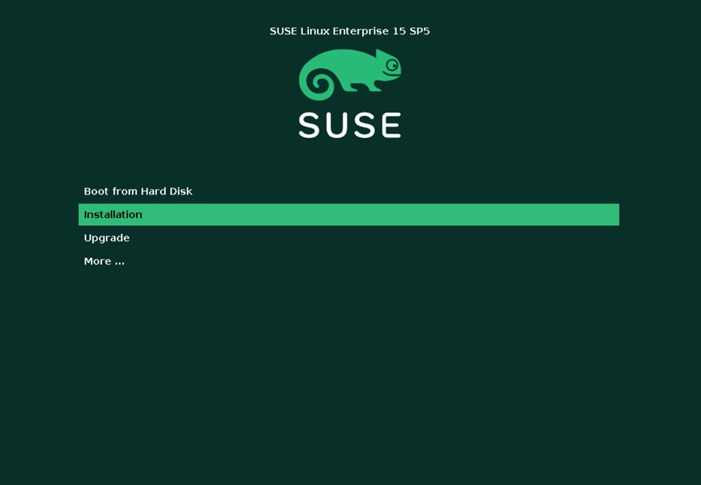

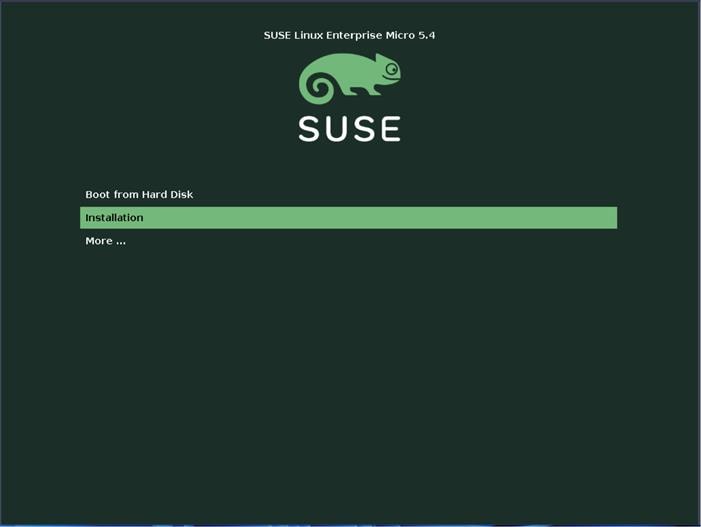

Procedure 5. Compute Configuration - Boot Order policy for Management Domain hosts

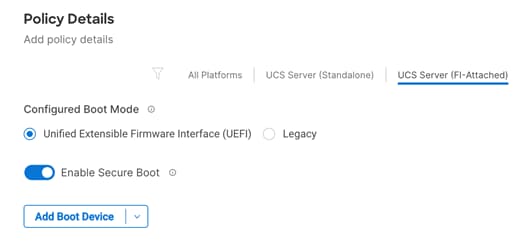

Follow these steps to configure Boot Order policy for RKE2 hosts booting from local disk.

Step 1. Click Select Policy next to Boot Order and then, in the pane on the right, click Create New.

Step 2. Verify correct organization is selected from the drop-down list (for example, RTP4-AA04) and provide a name for the policy (for example, AAO4-RKE-Local-Boot).

Step 3. Click Next.

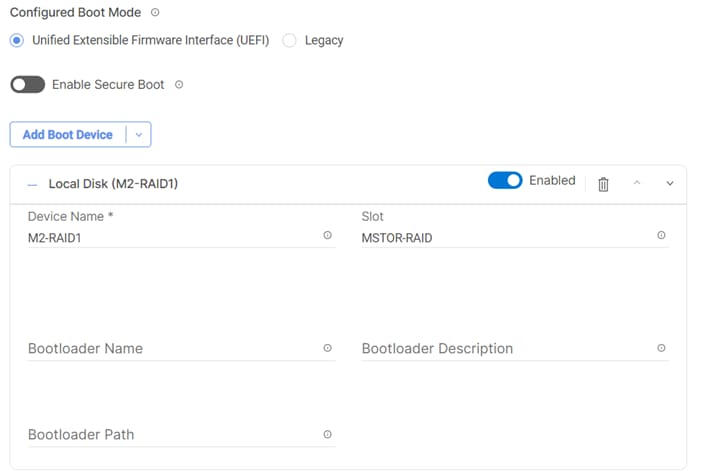

Step 4. For Configured Boot Mode option, select Unified Extensible Firmware Interface (UEFI).

Step 5. Turn on Enable Secure Boot.

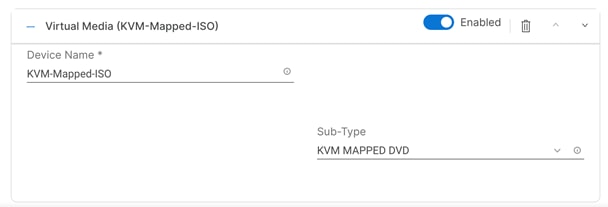

Step 6. Click Add Boot Device drop-down list and select Virtual Media.

Step 7. Provide a device name (for example, KVM-Mapped-ISO) and then, for the subtype, select KVM Mapped DVD.

Step 8. From the Add Boot Device drop-down list, select Local Disk.

Step 9. Provide the Device Name: M2-RAID1 and the Slot: MSTOR-RAID.

Step 10. Verify the order of the boot policies and adjust the boot order as necessary using arrows next to the delete button.

Step 11. Click Create.

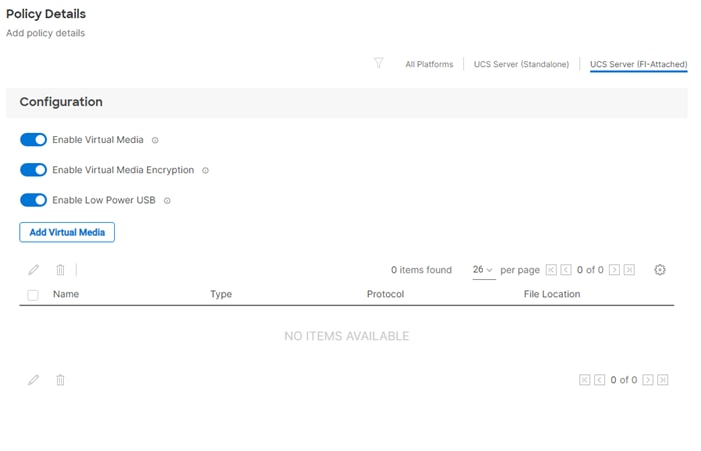

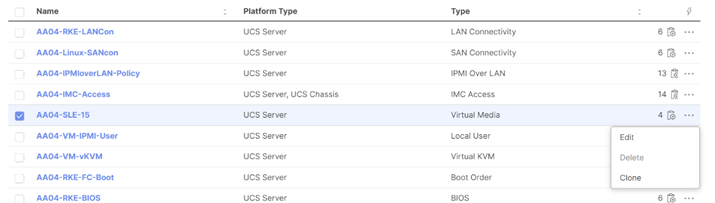

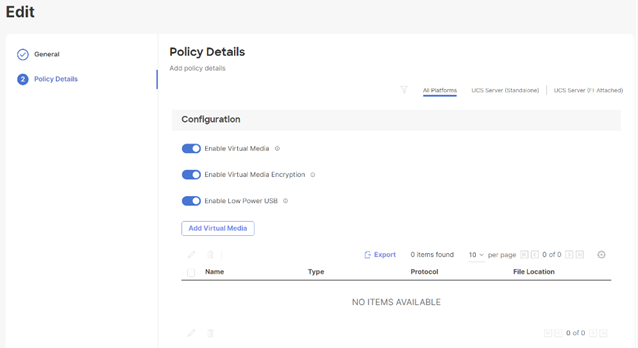

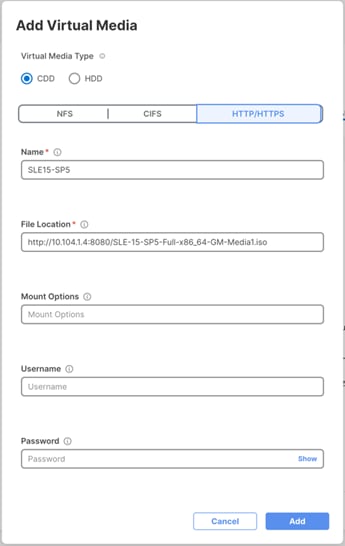

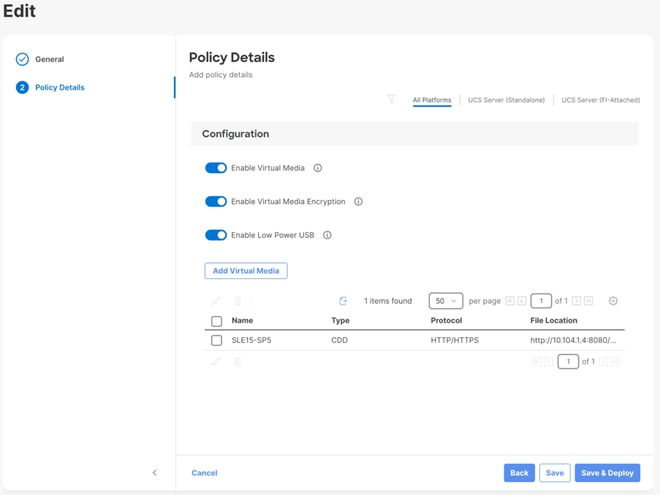

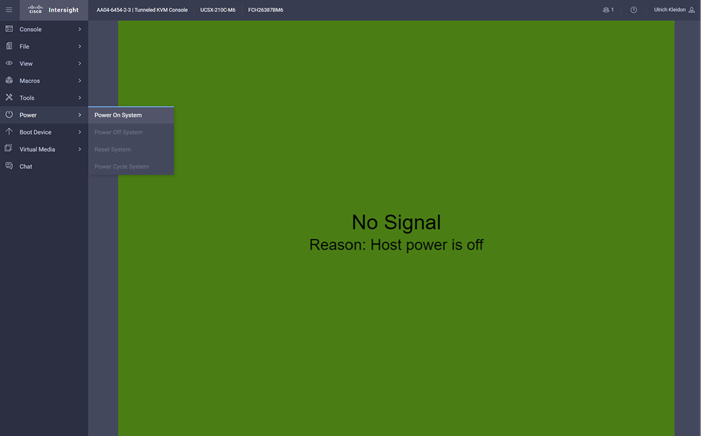

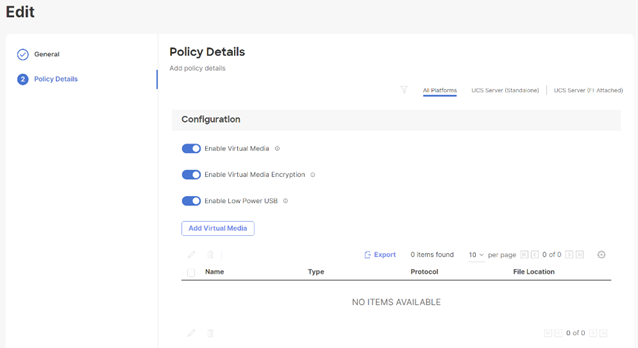

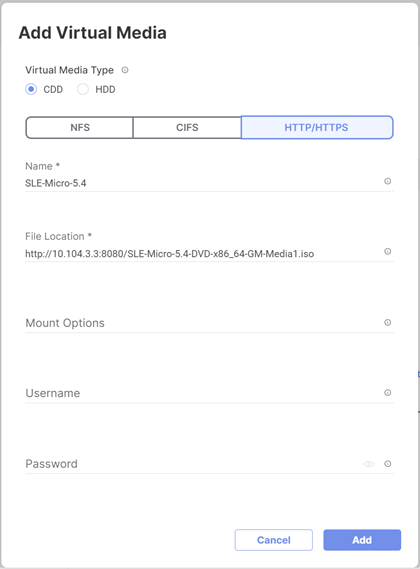

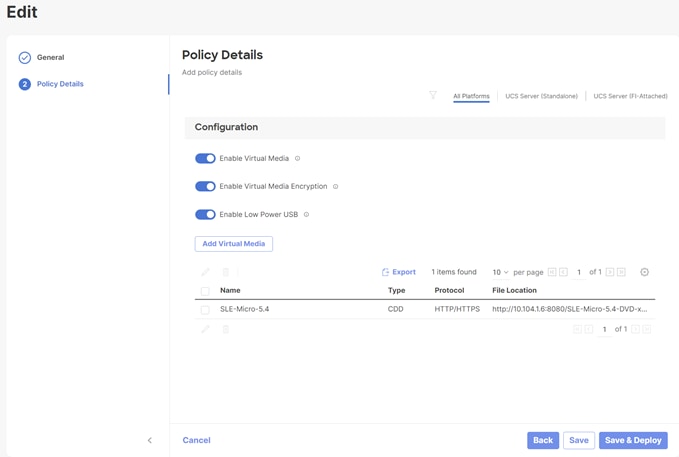

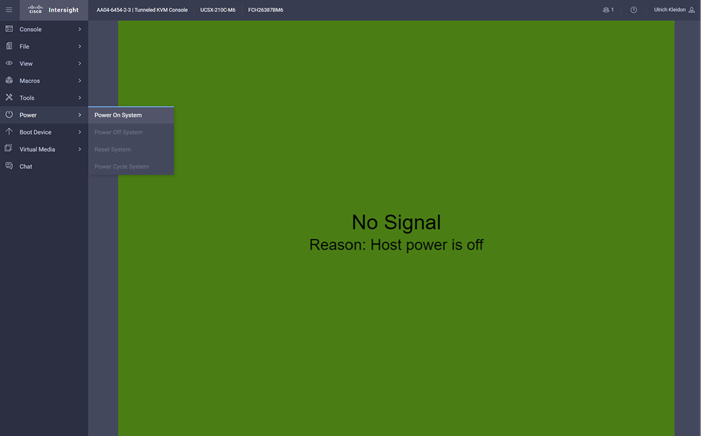

Procedure 6. Compute Configuration - Virtual Media Policy

Follow these steps to configure Virtual Media Policy to allow mapping an ISO files as installation source for operating system.

Step 1. Click Select Policy next to Virtual Media and then, in the pane on the right, click Create New.

Step 2. Verify correct organization is selected from the drop-down list (for example, RTP4-AA04) and provide a name for the policy (for example, AA04-SLE-15).

Step 3. Turn on Enable Virtual Media, Enable Virtual Media Encryption, and Enable Low Power USB.

Step 4. Do not Add Virtual Media at this time.

Step 5. Click Create.

Step 6. Click Next to move to Management Configuration.

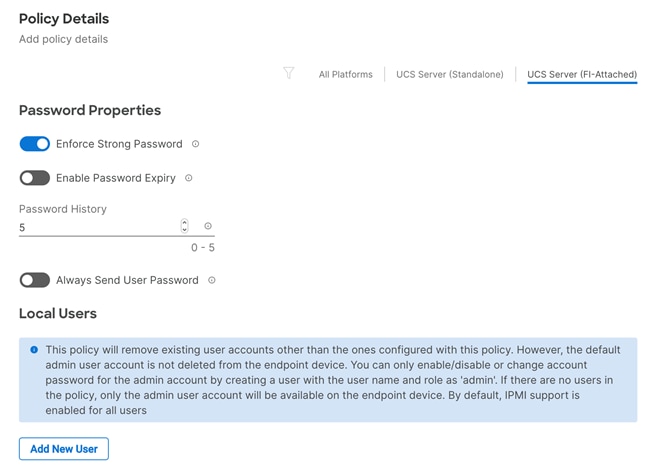

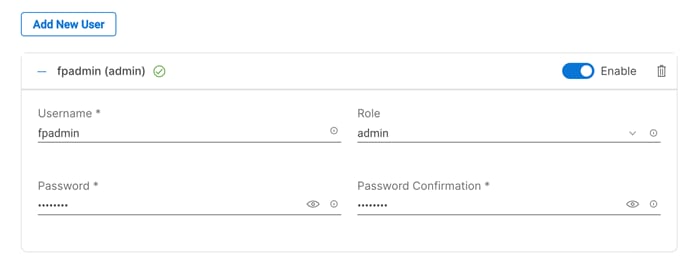

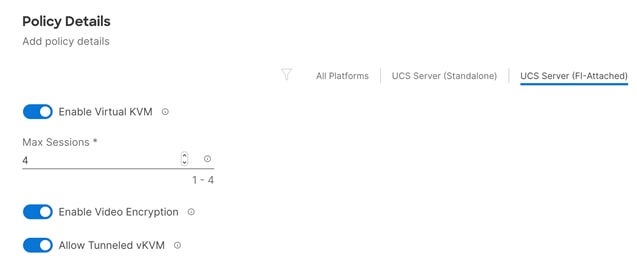

Management Configuration

These policies will be added to the management configuration:

· IMC Access to define the pool of IP addresses for compute node KVM access.

· IPMI Over LAN to allow Intersight to manage IPMI messages.

· Local User to provide local administrator to access KVM.

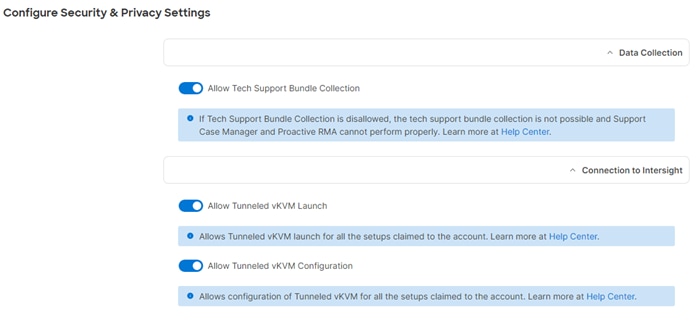

· Virtual KVM to allow the Tunneled KVM.

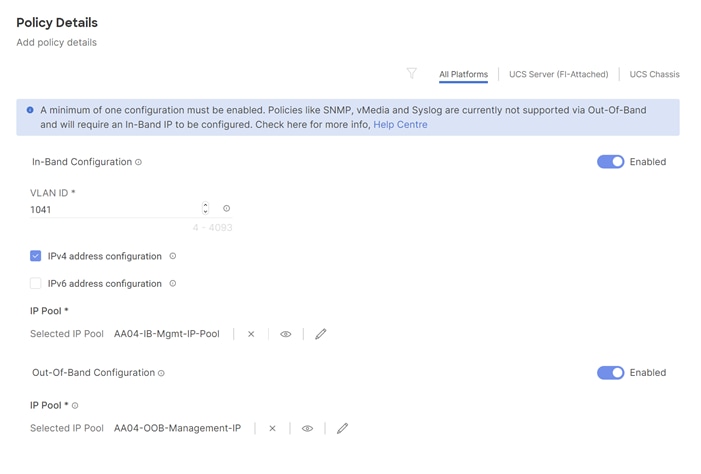

Procedure 1. Management Configuration - Cisco IMC access policy

Follow these steps to configure Cisco IMC access policy:

Step 1. Click Select Policy next to IMC Access and then, in the pane on the right, click Create New.

Step 2. Verify correct organization is selected from the drop-down list (for example, RTP4-AA04) and provide a name for the policy (for example, AA04-IMC-Access).

Step 3. Click Next.

Note: You can select in-band management access to the compute node using an in-band management VLAN (for example, VLAN 17) or out-of-band management access via the Mgmt0 interfaces of the FIs. Policies like SNMP, vMedia and Syslog are currently not supported via Out-Of-Band and will require an In-Band IP to be configured. In-band management access was configured in this deployment guide.

Step 4. Enable In-Band Configuration and provide the in-band management VLAN (for example, 17).

Step 5. Make sure IPv4 address configuration is selected.

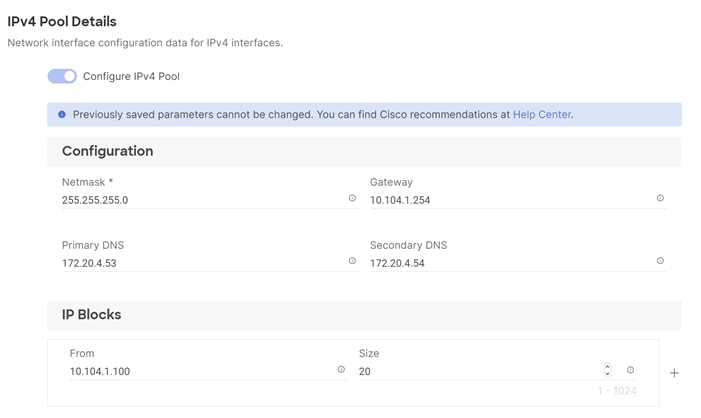

Step 6. Under IP Pool, click Select IP Pool and then, in the pane on the right, click Create New.

Step 7. Verify correct organization is selected from the drop-down list (for example, RTP4-AA04) and provide a name for the pool (for example, AA04-Mgmt-IP-Pool).

Step 8. Select Configure IPv4 Pool and provide the information to define a pool for KVM IP address assignment including an IP Block.

Note: The management IP pool subnet should be accessible from the host that is trying to access the KVM session. In the example shown here, the hosts trying to establish a KVM connection would need to be able to route to 10.101.1.0/24 subnet.

Step 9. Click Next.

Step 10. Unselect Configure IPv6 Pool.

Step 11. Click Create to finish configuring the IP address pool.

Step 12. Click Create to finish configuring the IMC access policy.

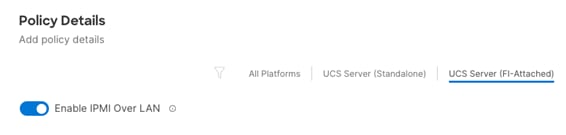

Procedure 2. Management Configuration - IPMI Over LAN policy

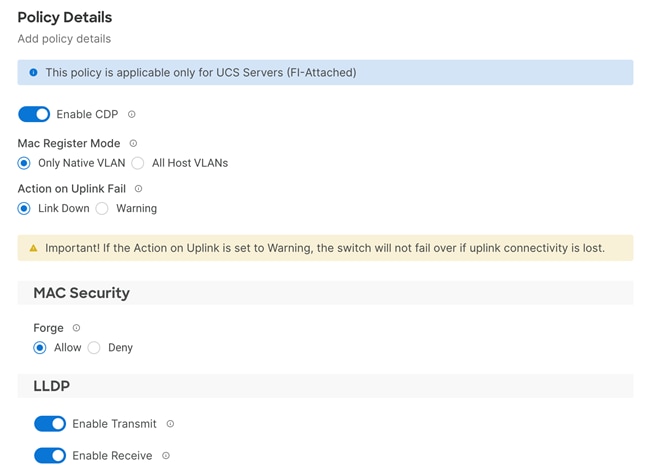

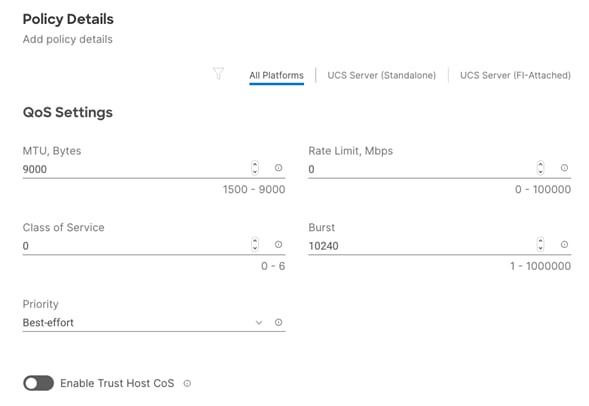

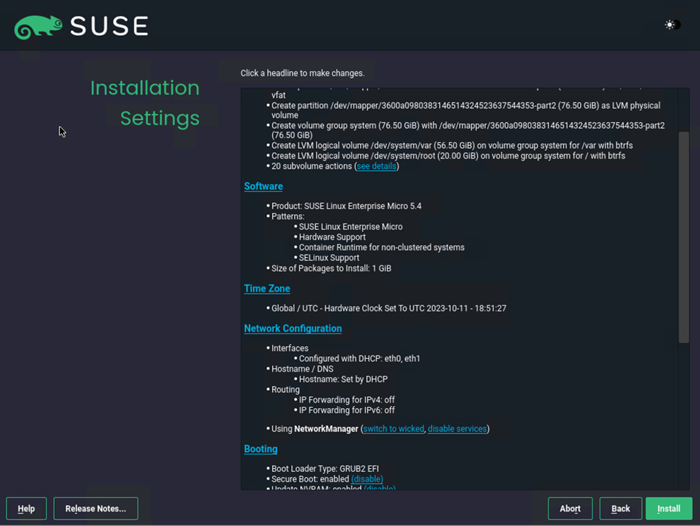

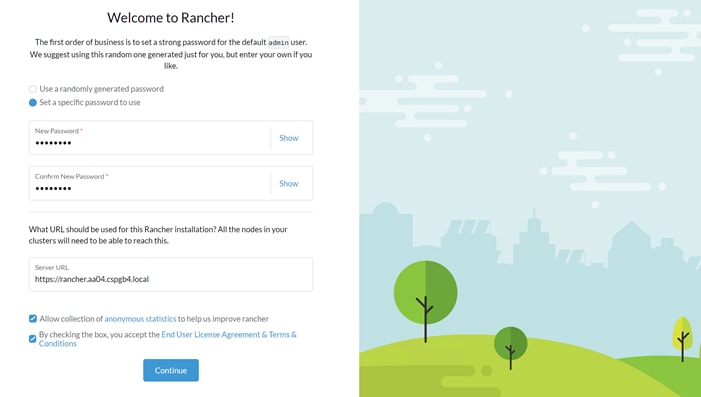

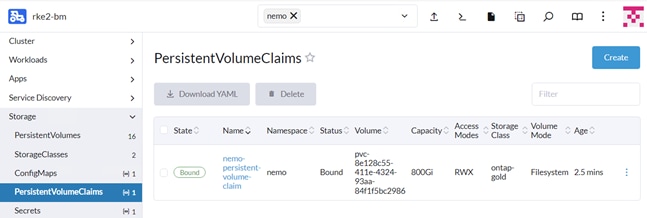

Follow these steps to configure IPMI Over LAN policy.