Security

Note: Operating system (OS) security configurations and host hardening are the responsibility of the deployment team. CIS hardening of the deployed image and the application of OS security patches are mandatory for maintaining the security posture of the reverse proxy. However, these tasks are not included as part of this deployment.

The primary security feature of the Reverse Proxy Installer is authentication of all requests and connections at the edge, so that fraudulent requests never reach the upstream server or threaten the deployment.

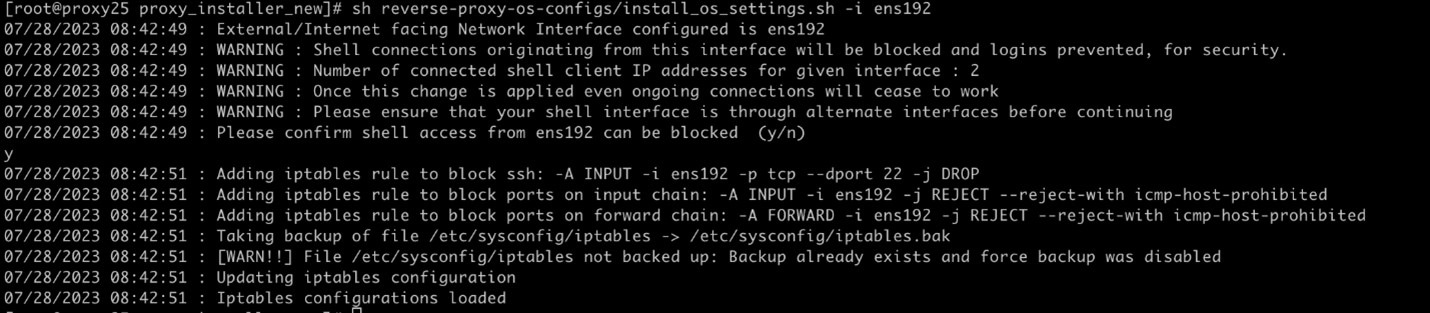

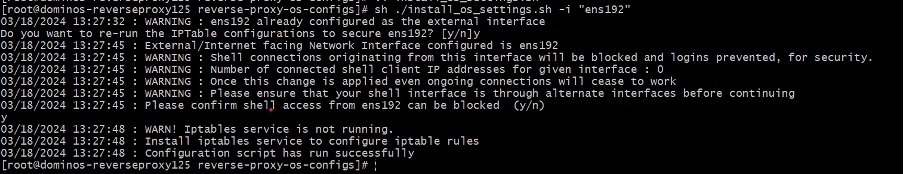

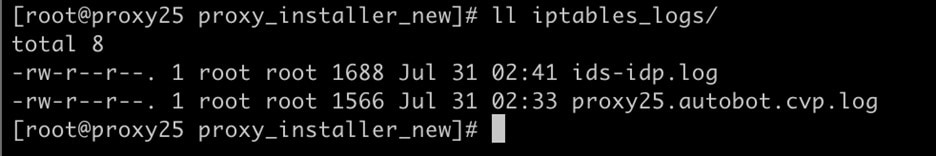

In addition to authentication, the Reverse Proxy Installer automates several mandatory security configurations that are typically tedious to apply and validate. It is pre-configured to apply iptables and SELinux security rules, along with basic kernel configurations for performance and security.

The administrator must enable some of these configurations using the included CLI tool (the install_os_settings.sh script). For details on using the script, refer to Configure Host Operating System.

The following sections describe each security configuration in detail: how it works, the settings it involves, and troubleshooting steps where appropriate.
