Configuration Property Errors

The .env file specifies a template type that the Installer does not recognize.

[ERROR]: Unknown TEMPLATE_TYPE cuic_1230 found in file cuic.env. Exiting.

The .env file doesn't contain the TEMPLATE_TYPE property, which identifies the type of upstream component.

[ERROR]: TEMPLATE_TYPE variable missing in file cuic.env. Exiting.
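The two TEMPLATE_TYPE checks above can be sketched as follows. This is a minimal, hypothetical Python illustration, not the Installer's implementation: it assumes simple `KEY=VALUE` lines with `#` comments, and `KNOWN_TEMPLATE_TYPES` is a made-up placeholder rather than the Installer's real list of supported template types.

```python
# Hypothetical sketch of the TEMPLATE_TYPE checks; not the Installer's code.
# KNOWN_TEMPLATE_TYPES is an illustrative placeholder, not the real list.
KNOWN_TEMPLATE_TYPES = {"cuic", "finesse", "ids"}


def parse_env(text):
    """Parse KEY=VALUE lines into a dict, skipping blank lines and # comments."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"')
    return values


def check_template_type(env_text, filename):
    """Return an Installer-style error string, or None if TEMPLATE_TYPE is valid."""
    template_type = parse_env(env_text).get("TEMPLATE_TYPE")
    if template_type is None:
        return f"[ERROR]: TEMPLATE_TYPE variable missing in file {filename}. Exiting."
    if template_type not in KNOWN_TEMPLATE_TYPES:
        return (f"[ERROR]: Unknown TEMPLATE_TYPE {template_type} "
                f"found in file {filename}. Exiting.")
    return None


print(check_template_type("TEMPLATE_TYPE=cuic_1230\n", "cuic.env"))
```

Checking for the missing key before checking its value keeps the two failure cases distinct, matching the two separate messages.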

A variable defined in an .env file being processed from the custom env directory isn't present in the primary .env file for that template type.

[ERROR]: Below unused variable found in ./sample_envs/. Exiting.

NGX_FIN_TEST_HOSTNAME
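The unused-variable check amounts to a set difference between the variable names in the custom file and those in the primary file. A minimal, hypothetical Python sketch, assuming both files use plain `KEY=VALUE` lines; the file contents in the usage lines are made up for illustration.

```python
def env_keys(text):
    """Return the set of variable names defined on KEY=VALUE lines."""
    keys = set()
    for raw in text.splitlines():
        line = raw.strip()
        if line and not line.startswith("#") and "=" in line:
            keys.add(line.split("=", 1)[0].strip())
    return keys


def unused_variables(primary_text, custom_text):
    """Variables set in the custom .env file but absent from the primary one."""
    return sorted(env_keys(custom_text) - env_keys(primary_text))


# Illustrative file contents, not taken from a real deployment.
primary = "NGX_FIN_HOSTNAME=\nNGX_FIN_PORT=\n"
custom = "NGX_FIN_HOSTNAME=finesse.example.com\nNGX_FIN_TEST_HOSTNAME=test\n"
print(unused_variables(primary, custom))  # ['NGX_FIN_TEST_HOSTNAME']
```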

A mandatory variable isn't configured. (Currently, only the upstream host and port are mandatory.)

[ERROR]: NGX_PRXY_CHAT_HOSTNAME’s value is not configured. Exiting.
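A sketch of the mandatory-variable check in Python. The list of mandatory names is passed in explicitly here as an assumption; per the description, the Installer currently treats the upstream host and port as mandatory. The name `NGX_PRXY_CHAT_PORT` and its value are hypothetical examples.

```python
def unconfigured_mandatory(env, mandatory):
    """Return the mandatory variable names whose values are missing or blank."""
    return [name for name in mandatory if not env.get(name, "").strip()]


def mandatory_errors(env, mandatory):
    """Build one Installer-style error line per unconfigured mandatory variable."""
    return [f"[ERROR]: {name}\u2019s value is not configured. Exiting."
            for name in unconfigured_mandatory(env, mandatory)]


# NGX_PRXY_CHAT_PORT and its value are hypothetical, for illustration only.
env = {"NGX_PRXY_CHAT_HOSTNAME": "", "NGX_PRXY_CHAT_PORT": "443"}
for line in mandatory_errors(env, ["NGX_PRXY_CHAT_HOSTNAME", "NGX_PRXY_CHAT_PORT"]):
    print(line)
```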

The same variable appears more than once in the .env file being processed.

[ERROR]: NGX_PRXY_CLOUDCONNECT_HOSTNAME’s value is configured in multiple places. Exiting.

A mandatory variable is configured more than once.

[ERROR]: NGX_FIN_HOSTNAME’s configured more than one time. Exiting.

The .env file contains duplicate environment variables.

[ERROR]: Following variables were found to be duplicate in file sample_env/finesse.env. Exiting.
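The duplicate checks above need a parser that remembers every assignment; a dictionary-based parser would silently keep only the last value and hide the problem. A minimal, hypothetical Python sketch, assuming `KEY=VALUE` lines and `#` comments; it reports each duplicated name once, in the order the name first appears.

```python
from collections import Counter


def duplicate_variables(env_text):
    """Return variable names assigned more than once, in first-seen order."""
    names = []
    for raw in env_text.splitlines():
        line = raw.strip()
        if line and not line.startswith("#") and "=" in line:
            names.append(line.split("=", 1)[0].strip())
    counts = Counter(names)
    # dict.fromkeys keeps first-seen order while dropping repeats.
    return [name for name in dict.fromkeys(names) if counts[name] > 1]


sample = "NGX_FIN_HOSTNAME=a\nNGX_FIN_PORT=443\n# NGX_FIN_PORT=1\nNGX_FIN_HOSTNAME=b\n"
print(duplicate_variables(sample))  # ['NGX_FIN_HOSTNAME']
```

Commented-out lines are ignored, so a disabled copy of a variable is not reported as a duplicate.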

The .env file does not exist or is not readable.

[ERROR]: File sample_env/core.env does not exist or does not have appropriate permissions. Exiting.

The primary template has been altered. This is only a warning; the installation continues.

[!!! WARNING !!!] Primary templates have been altered. Note: Some of the pre-install checks that are based on the templates configurations will be skipped.

The primary .env file has been altered. This is only a warning; the installation continues.

[!!! WARNING !!!] Primary master_env have been altered. Note: Some of the pre-install checks that are based on the templates configurations will be skipped.

The custom env directory passed to the Installer as a runtime option does not exist.

[ERROR]: Directory sample_env/core doesn’t exist. Exiting.

|

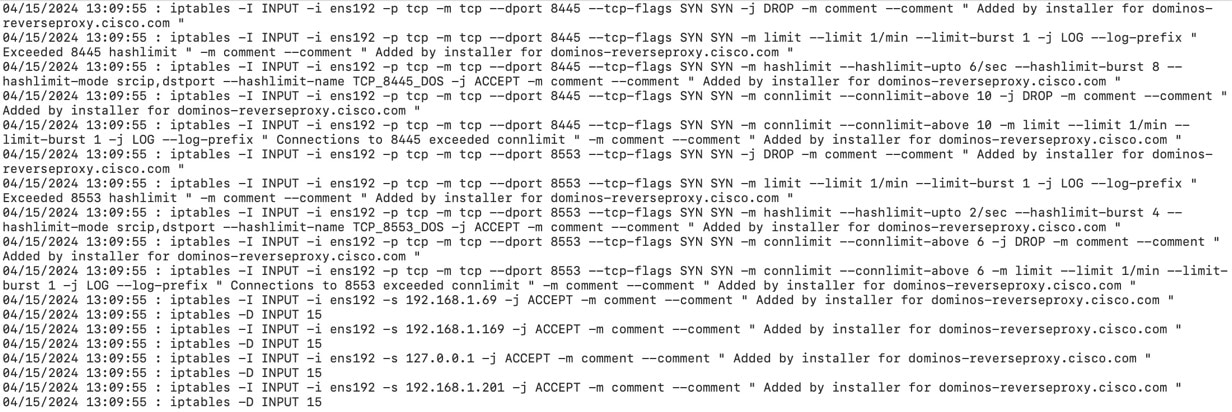

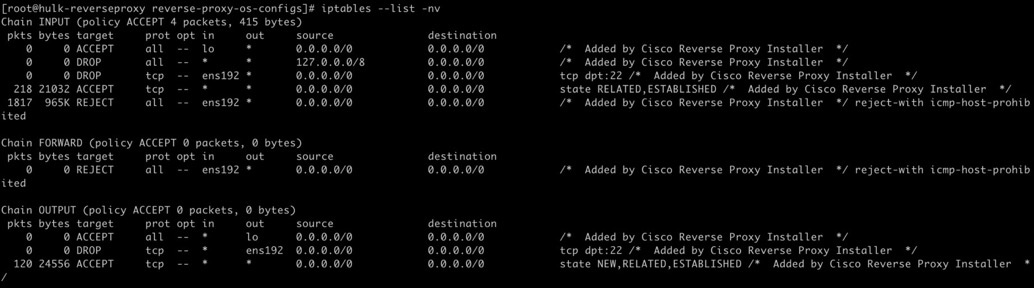

Running multiple proxy containers on the same host is supported by using multiple DNS hostnames mapped to distinct IP addresses

on that host. These addresses must be configured on the same external NIC used for the reverse proxy container during the

install_os_settings.sh configuration. Specify one of the external NIC's IP addresses as the PROXY_BINDING_IP. This setup ensures that traffic intended

for a specific hostname is directed to the container bound to the corresponding IP address.

For example, if the external NIC is ens192 with IP addresses 192.168.1.69 and 192.168.1.70, use 192.168.1.69 as the PROXY_BINDING_IP

for one container and 192.168.1.70 for the other container.

An error occurs when CONTAINER_NETWORK_MODE is set to bridge and the external NIC has more than one IP address configured.

|

[ERROR]: PROXY_BINDING_IP is not configured on the external NIC configured. Exiting.

|

By default, the Installer binds the proxy to the IP address of the configured external NIC. However, if the external NIC has multiple IP addresses configured, PROXY_BINDING_IP must be set to one of those addresses.

[ERROR]: External NIC configured has multiple IPs. Please provide a valid IP to bind from this list as the value for PROXY_BINDING_IP in installer.env configuration file.
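The binding-IP rules described above, together with the empty-NIC case listed a few rows below, can be expressed as one decision function. This Python sketch is hypothetical: it takes the NIC's addresses as a list instead of querying the operating system, and raises `ValueError` carrying the Installer's message text.

```python
import ipaddress


def choose_binding_ip(nic_name, nic_ips, proxy_binding_ip=""):
    """Pick the IP the proxy binds to, following the rules described above."""
    if not nic_ips:
        raise ValueError(f"[ERROR]: No IP address configured on the NIC {nic_name}. Exiting.")
    if proxy_binding_ip:
        # Compare parsed addresses so equivalent spellings still match.
        wanted = ipaddress.ip_address(proxy_binding_ip)
        if wanted not in {ipaddress.ip_address(ip) for ip in nic_ips}:
            raise ValueError("[ERROR]: PROXY_BINDING_IP is not configured on the "
                             "external NIC configured. Exiting.")
        return str(wanted)
    if len(nic_ips) > 1:
        raise ValueError("[ERROR]: External NIC configured has multiple IPs. Please provide "
                         "a valid IP to bind from this list as the value for "
                         "PROXY_BINDING_IP in installer.env configuration file.")
    # Default: a NIC with a single address binds to that address.
    return nic_ips[0]


print(choose_binding_ip("ens192", ["192.168.1.69", "192.168.1.70"], "192.168.1.70"))
```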

An invalid reverse proxy log level is specified in NGX_ERR_LOG_LEVEL.

[ERROR] Invalid log level "<Invalid value>" specified for variable NGX_ERR_LOG_LEVEL. Allowed values are debug|info|warn|error|crit|alert|emerg.
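The NGX_ERR_LOG_LEVEL check is a simple membership test. A minimal Python sketch; the allowed list is copied from the error message, and exact, case-sensitive matching is an assumption rather than confirmed Installer behavior.

```python
ALLOWED_LOG_LEVELS = ("debug", "info", "warn", "error", "crit", "alert", "emerg")


def check_log_level(value):
    """Return an Installer-style error for a bad NGX_ERR_LOG_LEVEL, else None."""
    if value in ALLOWED_LOG_LEVELS:
        return None
    allowed = "|".join(ALLOWED_LOG_LEVELS)
    return (f'[ERROR] Invalid log level "{value}" specified for variable '
            f"NGX_ERR_LOG_LEVEL. Allowed values are {allowed}.")


print(check_log_level("verbose"))
```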

Multiple internal IP addresses are configured on the VM, and the Installer cannot determine which one to bind to.

[ERROR] Multiple IPs are available in the system. Please provide the exact IP to bind to in PROXY_BINDING_INTERNAL_IP property, in installer.env configuration file.

No IP address is configured on the external NIC.

[ERROR]: No IP address configured on the NIC <nic name>. Exiting.

An invalid value is configured for NGX_USE_REGEX_TO_VALIDATE_USER_AGENT. The allowed values are true and false.

[ERROR]: Invalid NGX_USE_REGEX_TO_VALIDATE_USER_AGENT value configured in core.env.
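Flags such as NGX_USE_REGEX_TO_VALIDATE_USER_AGENT (and NGX_AUTHENTICATE_WEBSOCKET, under Certificate Configuration Errors) accept only true or false. A strict parser, sketched in Python below, rejects look-alikes such as `yes`, `1`, or `TRUE` instead of guessing. Whether the Installer itself matches case-sensitively is an assumption here.

```python
def parse_strict_bool(name, value):
    """Accept exactly 'true' or 'false'; anything else is a configuration error."""
    if value == "true":
        return True
    if value == "false":
        return False
    raise ValueError(f"Invalid {name} value {value!r}: allowed values are true or false.")


print(parse_strict_bool("NGX_USE_REGEX_TO_VALIDATE_USER_AGENT", "true"))  # True
```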

The crond service could not be started on the reverse proxy host machine.

[ERROR]: Failed to start the cron service 'crond'.

The Installer is unable to create the group (NGX_USER_USERGROUP) for the user ID specified in installer.env.

[ERROR]: Failed to create group '<user_group>'.

The Installer is unable to create the user ID (NGX_USER_USERID) specified in installer.env because that user already exists with a UID other than the one required by NGX_USER_UID.

[ERROR]: User 'userid' exists but has UID <existing uid for userid given> instead of <given uid>.
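The UID conflict can be detected up front with Python's standard `pwd` module (Unix only). This sketch is hypothetical and read-only: it reports the conflict in the Installer's message format but does not create users or groups.

```python
import pwd


def existing_user_conflict(userid, expected_uid):
    """Return an Installer-style error if `userid` exists with another UID."""
    try:
        entry = pwd.getpwnam(userid)
    except KeyError:
        return None  # No such user yet, so it can be created with expected_uid.
    if entry.pw_uid != expected_uid:
        return (f"[ERROR]: User '{userid}' exists but has UID {entry.pw_uid} "
                f"instead of {expected_uid}.")
    return None  # The user already exists with the required UID.


print(existing_user_conflict("root", 0))  # None on typical Linux hosts
```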

The Installer is unable to create the user ID (NGX_USER_USERID) with the group name (NGX_USER_USERGROUP) and UID (NGX_USER_UID).

[ERROR]: Failed to create user 'userid' with UID <given uid> and groupname <given groupname>.

Deployment Configuration Errors

More than one version of Unified Intelligence Center or LiveData is configured.

[ERROR]: Multiple versions of env files detected for Unified Intelligence Center, retain one type and retry. Exiting.

More than one Cisco IdS instance is configured. (Each side of the proxy should have only a single IdS instance configured.)

[ERROR]: Number of Cisco IdS instance should not be more than 1. Exiting.

Load Balancer Configuration Errors

NGX_LOAD_BALANCER_IPS contains values that can't be parsed as valid IP addresses.

[ERROR]: NGX_LOAD_BALANCER_IPS should contain only IP addresses. Exiting.

NGX_LOAD_BALANCER_REAL_IP_HEADER is configured, but NGX_LOAD_BALANCER_IPS isn't configured.

[ERROR]: NGX_LOAD_BALANCER_REAL_IP_HEADER should be configured only when NGX_LOAD_BALANCER_IPS is configured. Exiting.

NGX_LOAD_BALANCER_REAL_IP_HEADER is empty, but NGX_LOAD_BALANCER_IPS is configured.

[ERROR]: NGX_LOAD_BALANCER_REAL_IP_HEADER is empty. It should contain header details when NGX_LOAD_BALANCER_IPS is configured, Exiting.
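The three load balancer rules above can be combined into one check. A minimal, hypothetical Python sketch using the standard `ipaddress` module; it assumes NGX_LOAD_BALANCER_IPS is a comma-separated list of plain addresses (CIDR ranges are rejected here), which may differ from the format the Installer actually accepts. The header name in the usage line is only an example.

```python
import ipaddress


def check_load_balancer(lb_ips, real_ip_header):
    """Return the first Installer-style error for the load balancer settings, or None."""
    # Assumed format: comma-separated addresses, e.g. "10.0.0.5, 10.0.0.6".
    entries = [e.strip() for e in lb_ips.split(",") if e.strip()]
    for entry in entries:
        try:
            ipaddress.ip_address(entry)
        except ValueError:
            return "[ERROR]: NGX_LOAD_BALANCER_IPS should contain only IP addresses. Exiting."
    header = real_ip_header.strip()
    if header and not entries:
        return ("[ERROR]: NGX_LOAD_BALANCER_REAL_IP_HEADER should be configured only "
                "when NGX_LOAD_BALANCER_IPS is configured. Exiting.")
    if entries and not header:
        return ("[ERROR]: NGX_LOAD_BALANCER_REAL_IP_HEADER is empty. It should contain "
                "header details when NGX_LOAD_BALANCER_IPS is configured, Exiting.")
    return None


print(check_load_balancer("10.0.0.5, 10.0.0.6", "X-Forwarded-For"))  # None
```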

Proxymap Configuration Errors

Duplicate upstream entries are present in the proxymap.txt file.

[ERROR]: There is more than one entry populated for this upstream entry <Duplicate hostname>. Exiting.

The upstream hostname configured in the .env file is incorrect or cannot be resolved using the configured DNS servers.

[ERROR]: Hostname configured is not reachable using DNS server entries. Hostname: <Hostname>, DNS server: <DNS entries>. Exiting.

Hardware Configuration Errors

The container doesn't have enough resources to run all of its configured upstreams.

[ERROR] Not enough resources configured for the container. Available CPUs <>, Available Memory <>GB. Recommended to have minimum <>vCPU and <>GB of available memory. Exiting.
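A rough CPU and memory pre-check can use standard library calls. This Python sketch is hypothetical: it reads host-wide totals on Linux (total physical memory, not free memory or container limits), and the minimums are parameters because the required values depend on the configured upstreams and are not given here.

```python
import os


def host_resources():
    """Return (logical CPU count, total physical memory in GB) on Linux."""
    cpus = os.cpu_count() or 0
    mem_bytes = os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES")
    return cpus, mem_bytes / 1024 ** 3


def resources_sufficient(cpus, mem_gb, min_cpus, min_mem_gb):
    """True when both the CPU count and the memory meet the given minimums."""
    return cpus >= min_cpus and mem_gb >= min_mem_gb


cpus, mem_gb = host_resources()
print(f"Available CPUs {cpus}, Available Memory {mem_gb:.1f}GB")
```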

The NOFILE_LIMIT value in installer.env is less than the recommended minimum of 102400.

[ERROR] NOFILE_LIMIT in installer.env should be more than or equal to 102400.

The NIC speed is less than 1 Gbps (1000 Mbps).

[ERROR] <NIC_NAME> does not have a connection speed greater than or equal to 1000 Mbps. Exiting.
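Both host checks above are numeric comparisons. In this hypothetical Python sketch, the NIC speed is read from the Linux sysfs file `/sys/class/net/<nic>/speed`, which reports Mbps; using that file is an assumption about how such a check could be done, not a description of how the Installer does it.

```python
from pathlib import Path

MIN_NOFILE_LIMIT = 102400   # from the NOFILE_LIMIT error message
MIN_NIC_SPEED_MBPS = 1000   # 1 Gbps


def nofile_limit_ok(value):
    """NOFILE_LIMIT from installer.env must be an integer >= 102400."""
    return int(value) >= MIN_NOFILE_LIMIT


def read_nic_speed_mbps(nic, sysfs_root="/sys/class/net"):
    """Return the link speed Linux reports for `nic`, or None if unknown."""
    try:
        speed = int((Path(sysfs_root) / nic / "speed").read_text().strip())
    except (OSError, ValueError):
        return None  # Missing NIC, link down, or a device that reports no speed.
    return speed if speed > 0 else None


def nic_speed_ok(speed_mbps):
    """True when the reported speed is at least 1000 Mbps."""
    return speed_mbps is not None and speed_mbps >= MIN_NIC_SPEED_MBPS


print(nofile_limit_ok("102400"), nic_speed_ok(read_nic_speed_mbps("ens192")))
```

Treating an unreadable speed as a failure errs on the side of flagging the NIC for manual inspection.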

Certificate Configuration Errors

Certificate-based authentication is enabled for an upstream server (using NGX_PRXY_SSL_VERIFY="on"), but the upstream server certificate is missing, not readable, or empty.

[ERROR]: Mutual TLS validation is enabled for Finesse, but the upstream server certificate /root/reverse_proxy/contactcenter-reverseproxy/ssl/upstreams_finesse_trust.crt is not present, not readable or invalid. Exiting.

Certificate-based authentication is enabled for an upstream server (using NGX_PRXY_SSL_VERIFY="on"), but the certificate path is not defined in NGX_PRXY_SSL_TRUST_CRT.

[ERROR]: Mutual Transport Layer Security validation is enabled for finesse, but the upstream server certificate path in NGX_PRXY_SSL_TRUST_CRT is empty. Exiting.
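The two certificate rows above check the same conditions in sequence: the path is set, and the file exists, is readable, is non-empty, and looks valid. A minimal, hypothetical Python sketch; treating "valid" as "contains a PEM certificate header" is a simplifying assumption, since it neither parses nor verifies the certificate.

```python
import os

PEM_CERT_MARKER = "-----BEGIN CERTIFICATE-----"


def certificate_problem(path):
    """Return why the certificate at `path` is unusable, or None if it looks usable."""
    if not path:
        return "certificate path is empty"
    if not os.path.isfile(path):
        return "certificate file is not present"
    if not os.access(path, os.R_OK):
        return "certificate file is not readable"
    if os.path.getsize(path) == 0:
        return "certificate file is empty"
    with open(path, encoding="ascii", errors="replace") as handle:
        if PEM_CERT_MARKER not in handle.read():
            return "certificate file does not contain a PEM certificate"
    return None


print(certificate_problem("ssl/upstreams_finesse_trust.crt"))
```

The same shape of check fits the certificate files used for live log shipping.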

NGX_AUTHENTICATE_WEBSOCKET is set to a value other than 'true' or 'false'.

[ERROR]: NGX_AUTHENTICATE_WEBSOCKET must be 'true' or 'false'.

Upgrade Errors

The upgrade script of each component must contain the functions that upgrade the configuration from the current version to the latest version. The Installer reports an error when one or more components are missing these upgrade functions.

[ERROR]: The required function name <function_name> is not present in upgrade script <script_path>. Exiting upgrade.
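Checking that an upgrade script defines its required functions can be approximated with a regular expression over the script text. A hypothetical Python sketch: it recognizes the common bash forms `name() {` and `function name {`, and the function names in the usage lines are made up for illustration.

```python
import re


def missing_functions(script_text, required):
    """Return the required names that have no bash function definition."""
    missing = []
    for name in required:
        n = re.escape(name)
        # Matches "name() {", "name () {" and "function name {" at line start.
        pattern = rf"^\s*(?:function\s+{n}\b|{n}\s*\(\s*\))"
        if not re.search(pattern, script_text, re.MULTILINE):
            missing.append(name)
    return missing


# Hypothetical script and function names, for illustration only.
script = "#!/bin/bash\nupgrade_config_a() {\n  :\n}\n"
print(missing_functions(script, ["upgrade_config_a", "upgrade_config_b"]))
```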

The VERSION_PATH in a component's upgrade script must list versions in ascending order for the configuration upgrade to work. The Installer reports an error when the versions in VERSION_PATH are not sorted.

[ERROR]: VERSION_PATH value in <script_path> is not sorted. It must be in ascending order.
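VERSION_PATH ordering is best checked numerically: compared as plain strings, 12.10 sorts before 12.9. A hypothetical Python sketch that assumes VERSION_PATH holds space-separated dotted version numbers; the real variable format may differ.

```python
def parse_version(text):
    """Turn '12.6.2' into (12, 6, 2) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def version_path_sorted(version_path):
    """True when the versions in VERSION_PATH are in ascending order."""
    versions = [parse_version(v) for v in version_path.split()]
    return all(a <= b for a, b in zip(versions, versions[1:]))


print(version_path_sorted("12.6.1 12.6.2 12.9 12.10"))  # True
print(version_path_sorted("12.6.2 12.6.1"))  # False
```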

The upgrade script for the component is missing.

[ERROR]: Upgrade file not present for the given component: {<component_name>}, upgrade script path: {migration/component_upgrade.sh}. Exiting.

Hot Reload Errors

Hot reload must be performed on a currently running container, identified by its name. The Installer reports this error when no container name is provided for the reload.

[ERROR]: No container name specified to reload. Exiting.

No container is running with the name that you provided.

[ERROR]: Container <GIVEN_NAME> is not running, hence cannot be reloaded. Exiting.

During hot reload, you must not change the image name of the running container. The Installer reports an error when the image name in the .env file is changed before hot reload.

[ERROR]: Image name should be same as running one, Exiting.

The Installer tests a configuration reload functionally by running a temporary container of the proxy image with the provided changes. At times, this temporary container might not exit cleanly, which causes a conflict when the operation is retried. To resolve this, manually stop the container named TMP_<GIVEN_NAME>, as given in the error message, and retry the operation.

[ERROR]: Fake container with name TMP_<GIVEN_NAME> is already running, stop it and try reload. Exiting.

Hot reload requires at least one configuration change in the .env file. The Installer reports an error when the .env file has not changed.

[ERROR]: There is no config change to reload. Exiting.
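The two configuration prechecks for hot reload (the image name is unchanged, and at least one setting has changed) amount to comparing the running configuration with the new one. A hypothetical Python sketch over plain dictionaries; the `IMAGE_NAME` key is a placeholder for whichever .env variable actually holds the image name.

```python
def reload_changes(running_env, new_env, image_key="IMAGE_NAME"):
    """Return the names of changed settings, or raise if reload isn't allowed."""
    # image_key is a hypothetical variable name, not confirmed by the Installer.
    if new_env.get(image_key) != running_env.get(image_key):
        raise ValueError("[ERROR]: Image name should be same as running one, Exiting.")
    changed = sorted(key for key in running_env.keys() | new_env.keys()
                     if running_env.get(key) != new_env.get(key))
    if not changed:
        raise ValueError("[ERROR]: There is no config change to reload. Exiting.")
    return changed


running = {"IMAGE_NAME": "proxy:1.0", "NGX_ERR_LOG_LEVEL": "warn"}
print(reload_changes(running, {**running, "NGX_ERR_LOG_LEVEL": "debug"}))
```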

The OpenResty configuration in the reverse proxy container couldn't be fetched for download.

[ERROR]: Not able to get the openresty config from container ${container_name}. Exiting.

The user declines to hot reload the changes when asked for confirmation.

[ERROR]: Config reload rejected by user, Exiting.

Log Shipping Errors

mTLS is enabled between the reverse proxy and the logging server, but the logging server certificate path is not configured in the NGX_LIVE_LOG_SHIPPING_SERVER_CERT property.

[ERROR] Secured live log shipping is enabled, but the logging server certificate path is empty. Configure the variable NGX_LIVE_LOG_SHIPPING_SERVER_CERT. Exiting.

mTLS is enabled between the reverse proxy and the logging server, but the logging server certificate file defined by the NGX_LIVE_LOG_SHIPPING_SERVER_CERT property is missing, empty, or not readable.

[ERROR] Secured live log shipping is enabled, but the certificate for the logging server is missing, not readable, or invalid. Exiting.

mTLS is enabled between the reverse proxy and the logging server, but the client certificate path is not configured in the NGX_LIVE_LOG_SHIPPING_CLIENT_CERT property.

[ERROR] Secured live log shipping is enabled, but the reverse proxy client certificate path is empty. Configure the variable NGX_LIVE_LOG_SHIPPING_CLIENT_CERT. Exiting.

mTLS is enabled between the reverse proxy and the logging server, but the reverse proxy client certificate file defined by the NGX_LIVE_LOG_SHIPPING_CLIENT_CERT property is missing, empty, or not readable.

[ERROR] Secured live log shipping is enabled, but the certificate for the reverse proxy client is missing, not readable, or invalid. Exiting.

mTLS is enabled between the reverse proxy and the logging server, but the client private key path is not configured in the NGX_LIVE_LOG_SHIPPING_CLIENT_KEY property.

[ERROR] Secured live log shipping is enabled, but the reverse proxy client certificate key is empty. Configure the variable NGX_LIVE_LOG_SHIPPING_CLIENT_KEY. Exiting.

mTLS is enabled between the reverse proxy and the logging server, but the reverse proxy client private key file defined by the NGX_LIVE_LOG_SHIPPING_CLIENT_KEY property is missing, empty, or not readable.

[ERROR] Secured live log shipping is enabled, but the certificate key for the reverse proxy client is missing, not readable, or invalid. Exiting.

Live log shipping is enabled, but the logging server is not configured in the NGX_LIVE_LOG_SHIPPING_SERVER_HOST property.

[ERROR] Live log shipping server is not configured when live log shipping is enabled. Configure the variable NGX_LIVE_LOG_SHIPPING_SERVER_HOST. Exiting.

Live log shipping is enabled, but the logging server port is not configured in the NGX_LIVE_LOG_SHIPPING_SERVER_PORT property.

[ERROR] Live log shipping server port is not configured when live log shipping is enabled. Configure the variable NGX_LIVE_LOG_SHIPPING_SERVER_PORT. Exiting.

The NGX_LIVE_LOG_SERVER_CRT_AUTH property enables or disables mTLS between the reverse proxy and the logging server. The only allowed values are 0 (disabled) and 1 (enabled). This error is displayed when the property contains any other value.

[ERROR] Invalid value for the property NGX_LIVE_LOG_SERVER_CRT_AUTH. It should be 1 for secured live log shipping and 0 otherwise. Exiting.
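The live log shipping configuration checks listed above can be gathered into one function. This Python sketch is hypothetical: it assumes live log shipping is already enabled (the enabling setting is not named in this section), treats a missing NGX_LIVE_LOG_SERVER_CRT_AUTH as invalid, and only checks that values are present; it does not open the certificate or key files. The host and port in the usage lines are illustrative.

```python
SECURED_PATH_VARS = (
    "NGX_LIVE_LOG_SHIPPING_SERVER_CERT",
    "NGX_LIVE_LOG_SHIPPING_CLIENT_CERT",
    "NGX_LIVE_LOG_SHIPPING_CLIENT_KEY",
)


def live_log_shipping_problems(env):
    """List configuration problems; assumes live log shipping is enabled."""
    problems = []
    for name in ("NGX_LIVE_LOG_SHIPPING_SERVER_HOST", "NGX_LIVE_LOG_SHIPPING_SERVER_PORT"):
        if not env.get(name, "").strip():
            problems.append(f"{name} is not configured")
    crt_auth = env.get("NGX_LIVE_LOG_SERVER_CRT_AUTH", "").strip()
    if crt_auth not in ("0", "1"):
        problems.append("NGX_LIVE_LOG_SERVER_CRT_AUTH must be 1 (mTLS) or 0")
    elif crt_auth == "1":
        # mTLS needs the server certificate plus the client certificate and key.
        for name in SECURED_PATH_VARS:
            if not env.get(name, "").strip():
                problems.append(f"{name} path is empty")
    return problems


print(live_log_shipping_problems({
    "NGX_LIVE_LOG_SHIPPING_SERVER_HOST": "logs.example.com",
    "NGX_LIVE_LOG_SHIPPING_SERVER_PORT": "6514",
    "NGX_LIVE_LOG_SERVER_CRT_AUTH": "1",
}))
```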

The logging server configured in the NGX_LIVE_LOG_SHIPPING_SERVER_HOST property cannot be resolved by the DNS server.

[ERROR] Hostname configured is not reachable using DNS server entries. Hostname: invalidserver.autobot.cvp, DNS server: 192.168.1.3|72.163.128.140. Exiting.

Live log shipping is not secured using TLS.

[WARN] TLS disabled for live log shipping.
