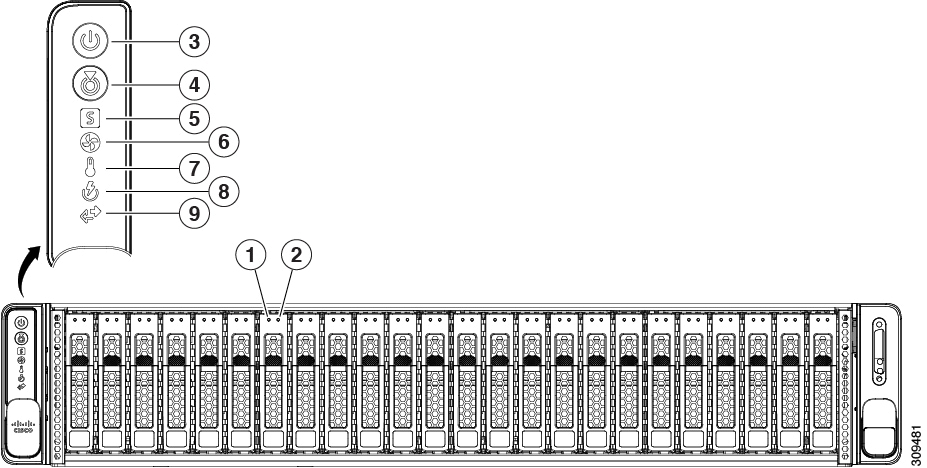

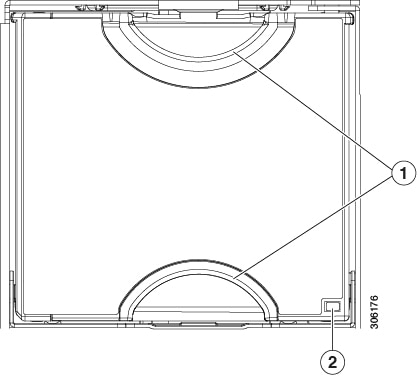

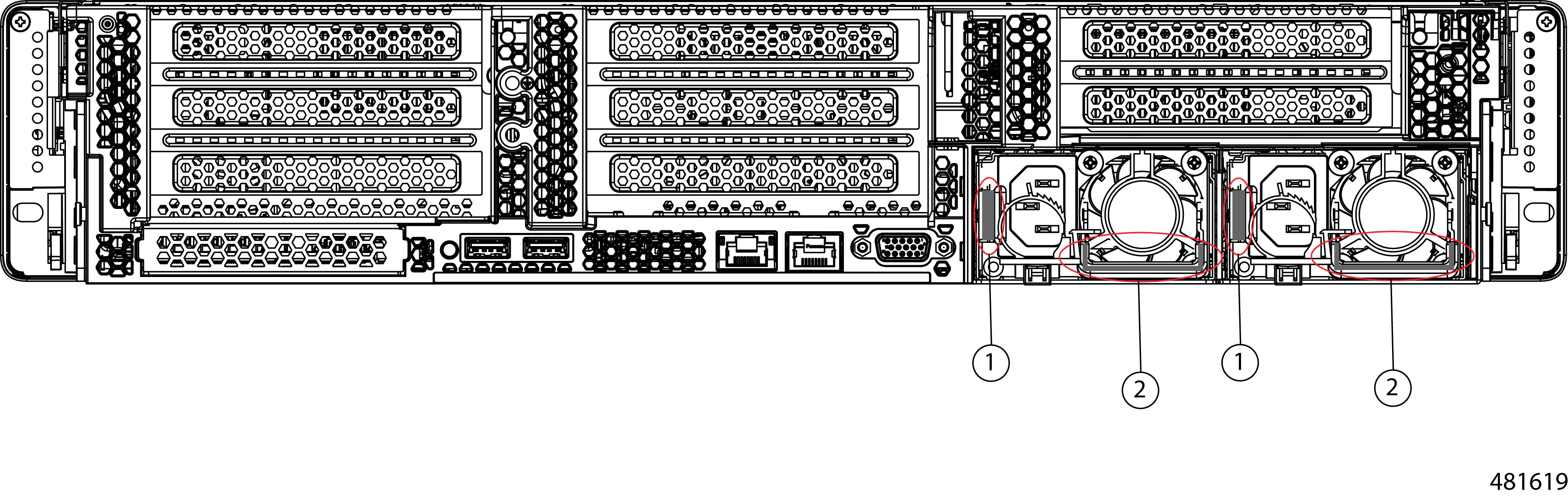

Front-Panel LEDs

| | LED Name | States |
|---|---|---|
| 1 SAS | SAS/SATA drive fault | |
| 2 SAS | SAS/SATA drive activity LED | |
| 1 NVMe | NVMe SSD drive fault | |
| 2 NVMe | NVMe SSD activity | |
| 3 | Power button/LED | |
| 4 | Unit identification | |
| 5 | System health | |
| 6 | Fan status | |
| 7 | Temperature status | |
| 8 | Power supply status | |
| 9 | Network link activity | |
