Cisco and Hitachi Adaptive Solutions with Red Hat OCP Al Ready Infrastructure

Available Languages

Bias-Free Language

The documentation set for this product strives to use bias-free language. For the purposes of this documentation set, bias-free is defined as language that does not imply discrimination based on age, disability, gender, racial identity, ethnic identity, sexual orientation, socioeconomic status, and intersectionality. Exceptions may be present in the documentation due to language that is hardcoded in the user interfaces of the product software, language used based on RFP documentation, or language that is used by a referenced third-party product. Learn more about how Cisco is using Inclusive Language.

- US/Canada 800-553-2447

- Worldwide Support Phone Numbers

- All Tools

Feedback

Feedback

Published: October 2024

In partnership with:

About the Cisco Validated Design Program

The Cisco Validated Design (CVD) program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, go to: http://www.cisco.com/go/designzone.

Cisco Validated Designs consist of systems and solutions that are designed, tested, and documented to facilitate and improve customer deployments. These designs incorporate a wide range of technologies and products into a portfolio of solutions that have been developed to address the business needs of our customers.

The development of container technology resulted in a significant contribution to the evolution of modern computing application architecture. Virtual Machines previously replaced bare metal for efficiency in infrastructure utilization, and today containers do this same thing to VMs, in most cases providing reduced CPU utilization, reduced storage needs, and decreased latency for applications positioned as a container versus as a VM.

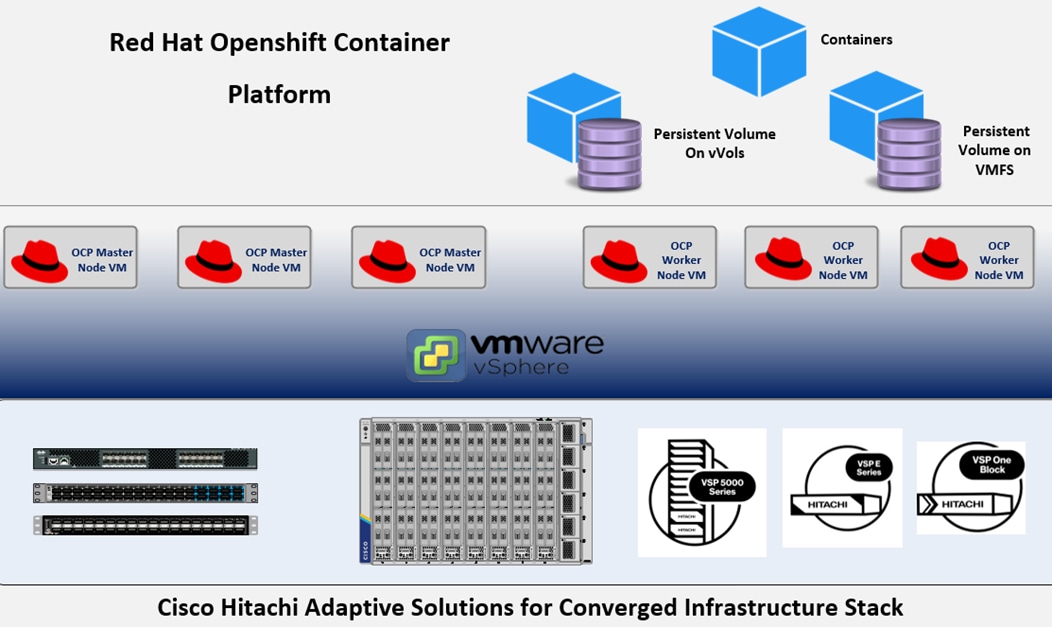

In this document Cisco and Hitachi will discuss the deployment and use of Red Hat’s OpenShift Container Platform (OCP) positioned within the Cisco and Hitachi Adaptive Solutions for Converged Infrastructure (CI). This CI is a robust, flexible, and AI ready foundation for today’s businesses. The recommended solution architecture incorporates NVIDIA GPUs and is built on Cisco Unified Computing System (Cisco UCS) using the unified software release to support the Cisco UCS hardware platforms for Cisco UCS X-Series Servers, Cisco UCS 6500 Fabric Interconnects, Cisco Nexus 9000 Series Switches, Cisco MDS Fibre Channel Switches, and the Hitachi Virtual Storage Platform (VSP) 5600. The VSP integrates with OCP and supports container persistent storage, facilitates new business opportunities and provides efficient and rapid deployments. This OCP virtualized architecture is implemented on VMware vSphere 8.0 U2 to support the leading virtual server platform of enterprise customers.

Additional Cisco Validated Designs created in a partnership between Cisco and Hitachi can be found here: https://www.cisco.com/c/en/us/solutions/design-zone/data-center-design-guides/data-center-design-guides-all.html#Hitachi

This chapter contains the following:

▪ Audience

Modernizing your data center can be overwhelming, and it’s vital to select a trusted technology partner with proven expertise. With Cisco and Hitachi as partners, companies can build for the future by enhancing systems of record, supporting systems of innovation, and facilitating business opportunities. Organizations need an agile solution, free from operational inefficiencies, to deliver continuous data availability, meet SLAs, and prioritize innovation.

Cisco and Hitachi are taking the Adaptive Solutions for Converged Infrastructure as a Virtual Server Infrastructure (VSI) and reimagining it as a bridge for the data center journey toward containers. This implements Red Hat OpenShift Container Platform (OCP) on a vSphere-based architecture that is composed of the Hitachi Virtual Storage Platform (VSP) 5000 series connecting through the Cisco MDS multilayer switches supporting both FC-SCSI and FC-NVMe protocols to Cisco Unified Computing System X-Series Servers managed through Cisco Intersight, and further enabled with the Cisco Nexus family of switches.

These deployment instructions are based on the buildout of the Cisco and Hitachi Adaptive Solutions for Converged Infrastructure validated reference architecture, which describes the specifics of the products utilized within the Cisco validation lab, but the solution is considered relevant for equivalent supported components listed within Cisco and Hitachi Vantara’s published compatibility matrixes. Supported adjustments from the example validated build must be evaluated with care as their implementation instructions may differ.

This design and implementation guide shows containers within a validated reference architecture and describes the specifics of the products used within the Cisco validation lab. The steps and design followed are not prescriptive but are a validated example of a deployment following best practices. Care should be followed in making adjustments to this design by referencing the compatibility matrixes of Cisco and Hitachi, as well as support documentation of Cisco, Hitachi, and Red Hat.

The intended audience of this document includes but is not limited to IT architects, sales engineers, field consultants, professional services, IT managers, partner engineering, and customers who want to take advantage of an infrastructure built to deliver IT efficiency and enable IT innovation.

This document provides a comprehensive design and detailed implementation guide for the Cisco and Hitachi Adaptive Solutions for the Converged Infrastructure solution using a VMware vSphere based environment to host Red Hat OpenShift Container Platform (OCP). This solution features a validated reference architecture composed of:

▪ Cisco UCS Compute

▪ Cisco Nexus Switches

▪ Cisco Multilayer SAN Switches

▪ Hitachi Virtual Storage Platform

▪ VMware vSphere

▪ Red Hat OCP

For the design decisions and technology discussion of the solution, please refer to the Cisco and Hitachi Adaptive Solutions for Converged Infrastructure Design Guide: https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/UCS_CVDs/hitachi_adaptive_vmware_vsp_design.html

The following design elements distinguish this version of the Adaptive Solutions for Converged Infrastructure from previous models:

▪ Cisco UCS X210c M7 servers with 5th Generation Intel Xeon Scalable Processors with up to 64 cores per processor and up to 8TB of DDR-5600 DIMMs

▪ Cisco UCS X440p PCIe Nodes

▪ Cisco UCS 9416 X-Fabric Module

▪ NVIDIA L40 GPUs

▪ Red Hat OpenShift Container Platform 4.15

▪ Ansible orchestration of Cisco UCS and Hitachi VSP resources

▪ Hitachi Storage Provider for VMware vCenter 3.7.4

▪ Hitachi Ops Center release version 11.0.1

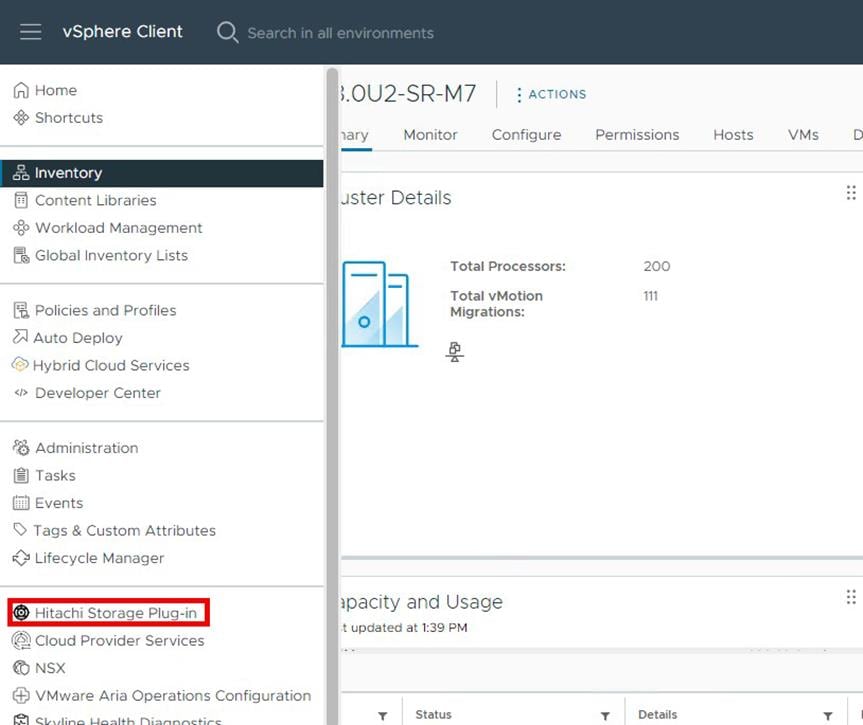

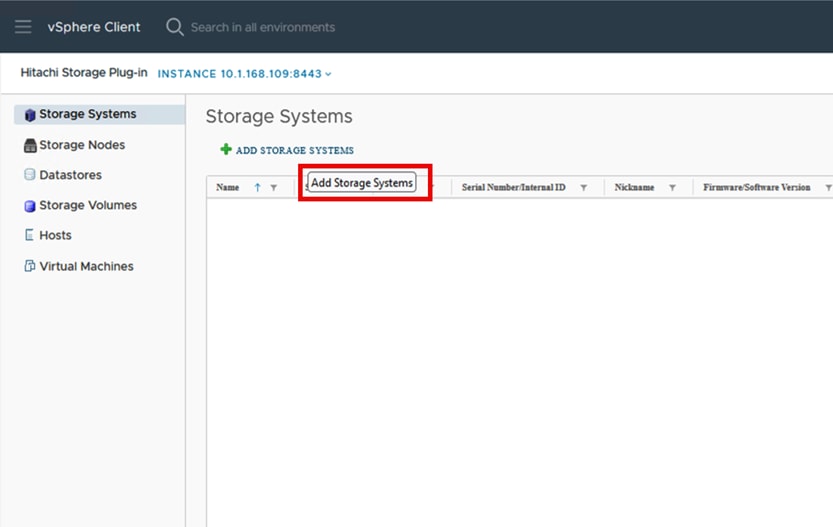

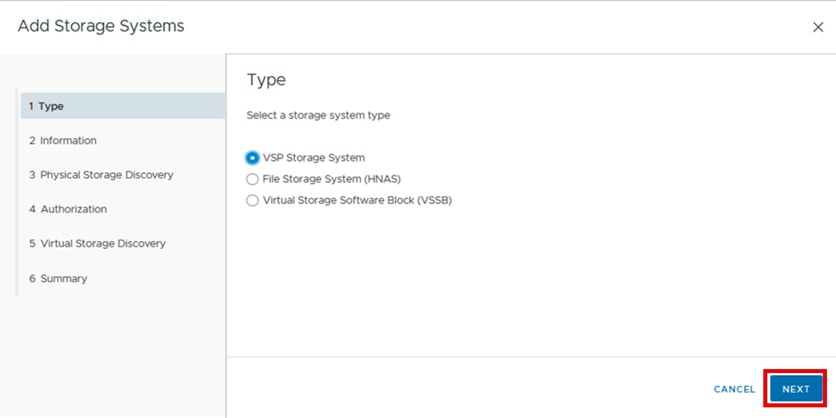

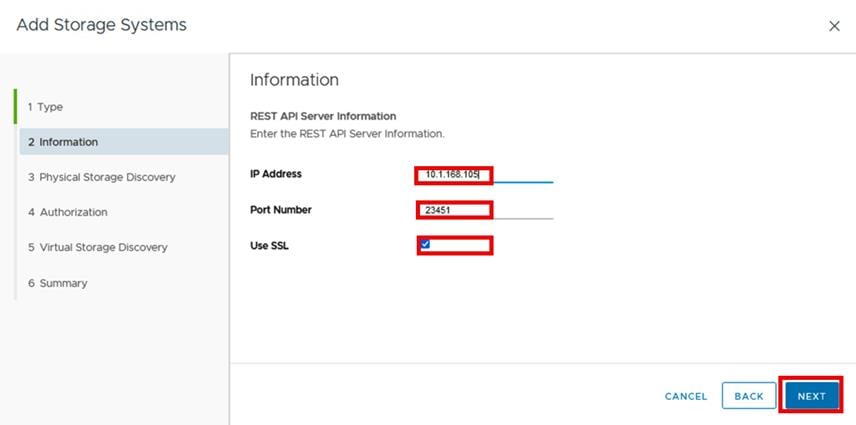

▪ Hitachi Storage Plug-in version 4.10

Deployment Hardware and Software

This chapter contains the following:

The deployment hardware and software incorporate the base architecture defined in the Cisco and Hitachi Adaptive Solutions with Cisco UCSX, VMware 8U1, and Hitachi VSP 5600 Design Guide with some additions and upgrades.

The Adaptive Solutions Virtual Server Infrastructure consists of a high-performance Fibre Channel network built using the following hardware components:

▪ Cisco UCS X9508 Chassis with Cisco UCSX-I-9108-100G Intelligent Fabric Modules (IFMs) and up to eight Cisco UCS X210c M7 Compute Nodes with options for 4th and 5th Generation Intel Xeon Scalable CPUs.

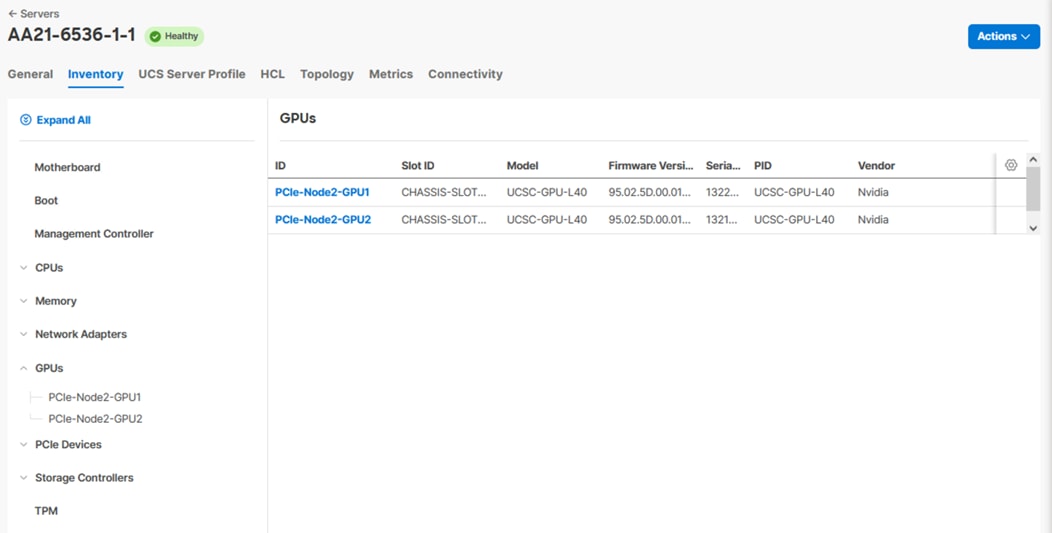

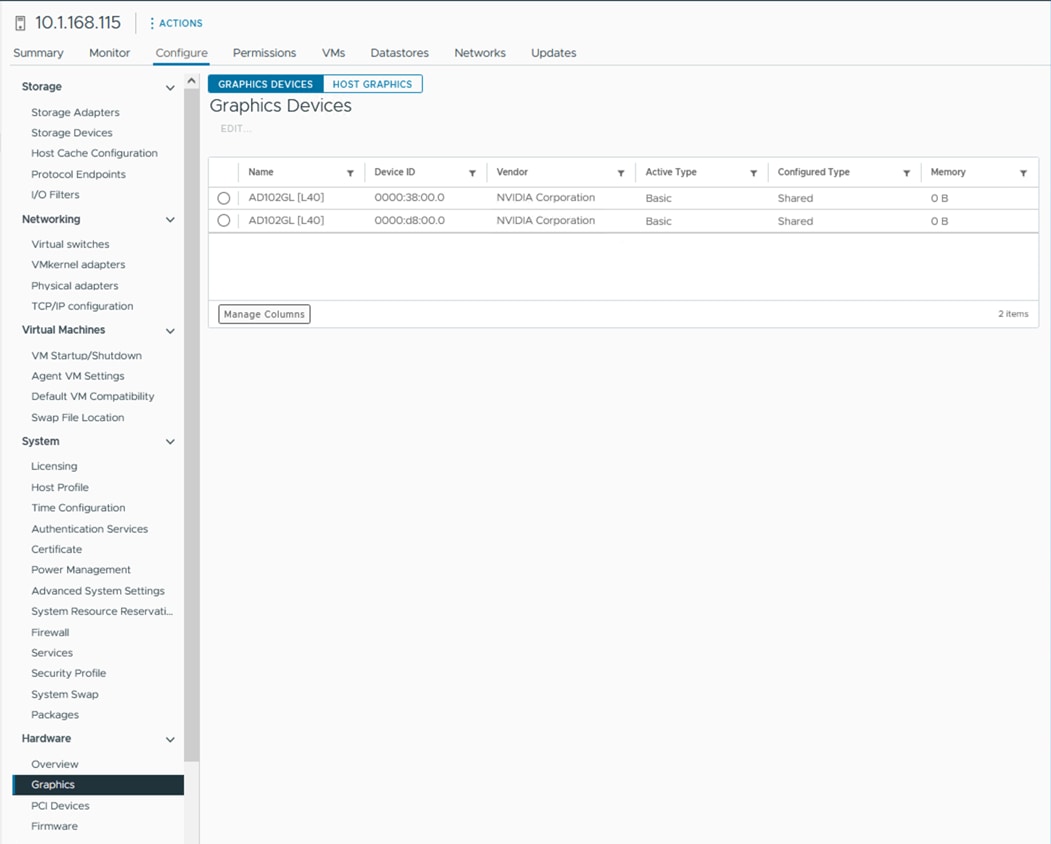

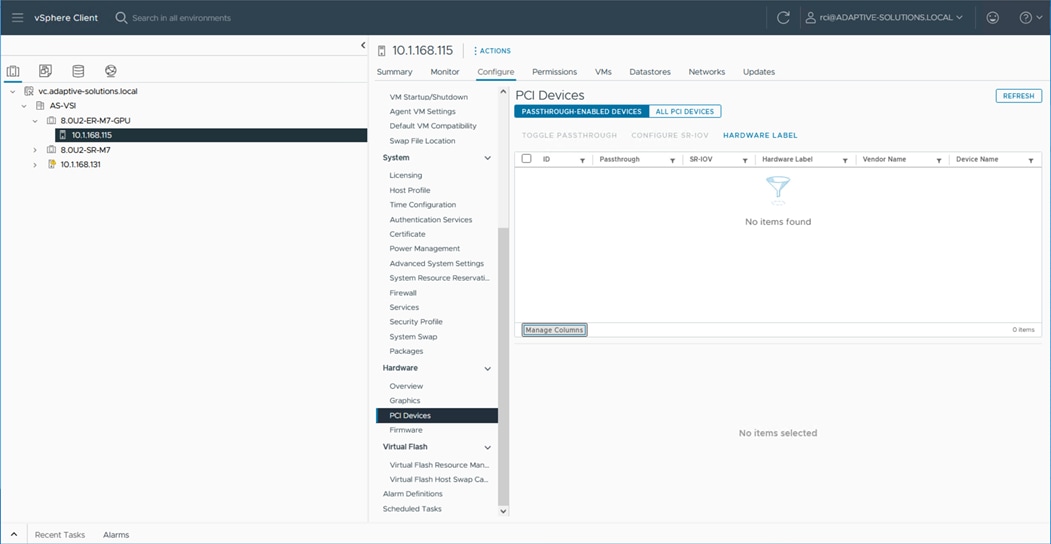

▪ Cisco UCS X440p PCIe Nodes with NVIDIA L40 GPUs.

▪ Fifth-generation Cisco UCS 6536 Fabric Interconnects to support 100GbE, 25GbE, and 32GFC connectivity as needed.

▪ High-speed Cisco NX-OS-based Nexus 93600CD-GX switching design to support up to 100GE.

▪ Hitachi 5600 Virtual Storage Platform all flash storage system with 32G Fibre Channel connectivity.

▪ Cisco MDS 9124V switches to support Fibre Channel storage configuration.

The software components of the solution consist of:

▪ Cisco Intersight SaaS platform to deploy, maintain, and support the Adaptive Solutions infrastructure, giving visibility to the compute, network and storage in the architecture.

▪ Cisco Intersight Assist Virtual Appliance to connect the Hitachi VSP 5600, VMware vCenter, and Cisco Nexus and MDS switches with Cisco Intersight.

▪ Hitachi Ops Center Administrator is an infrastructure management solution that unifies storage provisioning, data protection, and storage management.

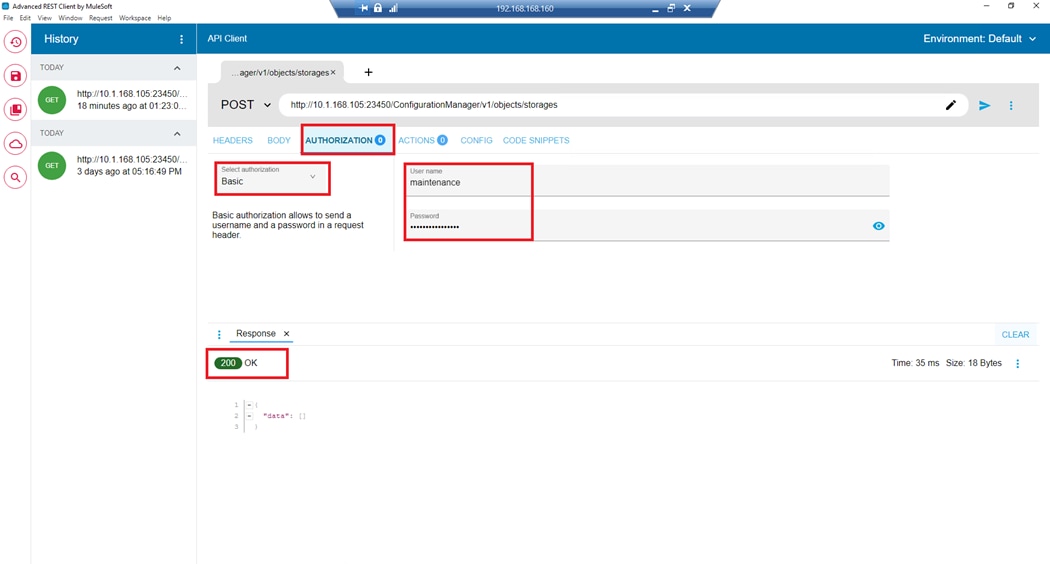

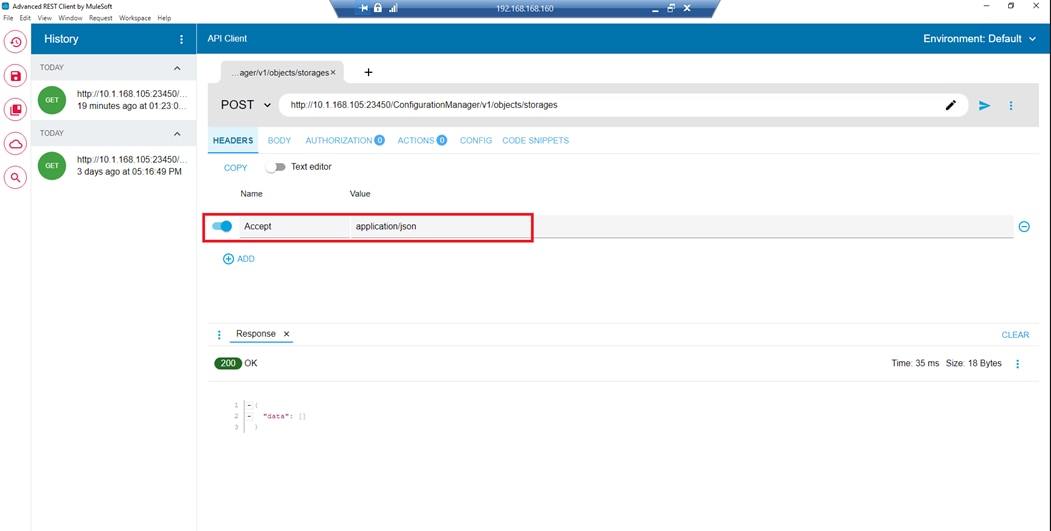

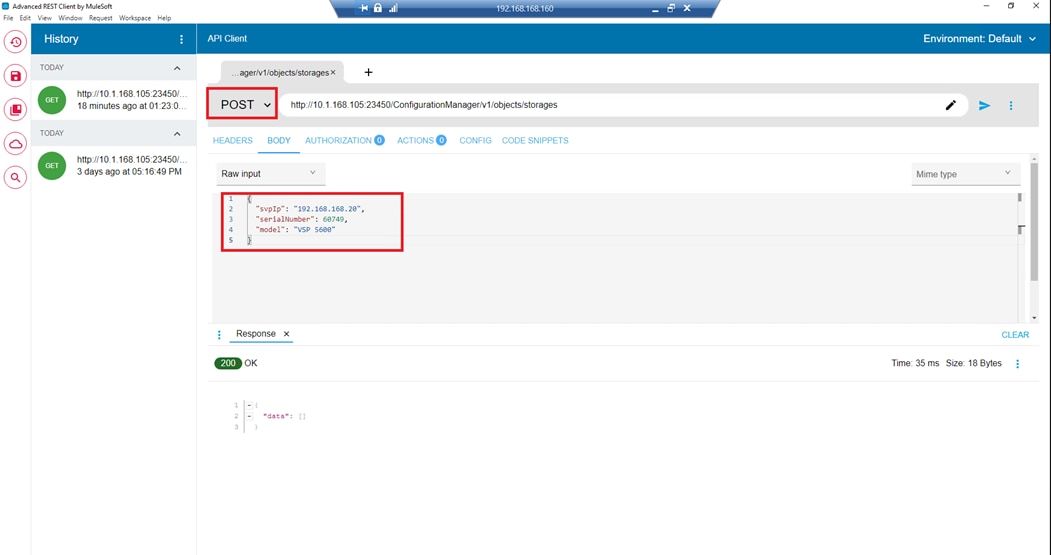

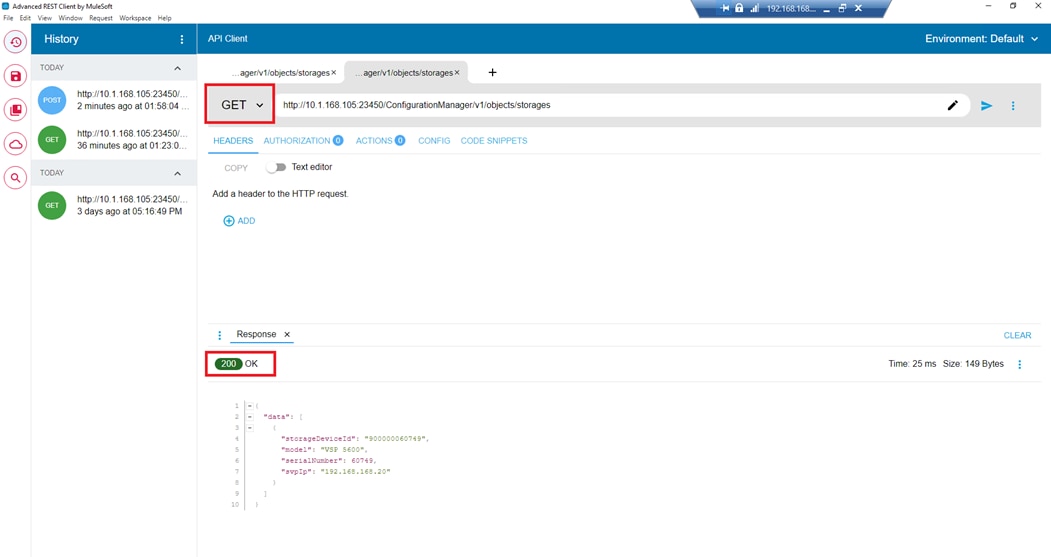

▪ Hitachi Ops Center API Configuration Manager to help connect the Hitachi VSP 5600 to the Intersight platform and to use Hitachi Storage Plug-in for vCenter.

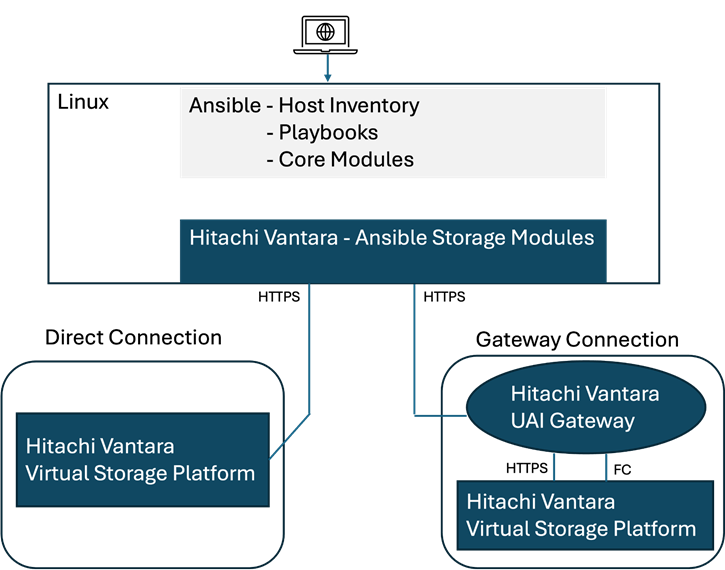

▪ Hitachi Storage Modules for Red Hat Ansible to help provision VSP host groups and volumes.

▪ Hitachi Storage Plug-in for VMware vCenter to integrate VSP system information and provisioning operations into the vSphere Web Client.

▪ VMware vCenter to set up and manage the virtual infrastructure as well as Cisco Intersight integration.

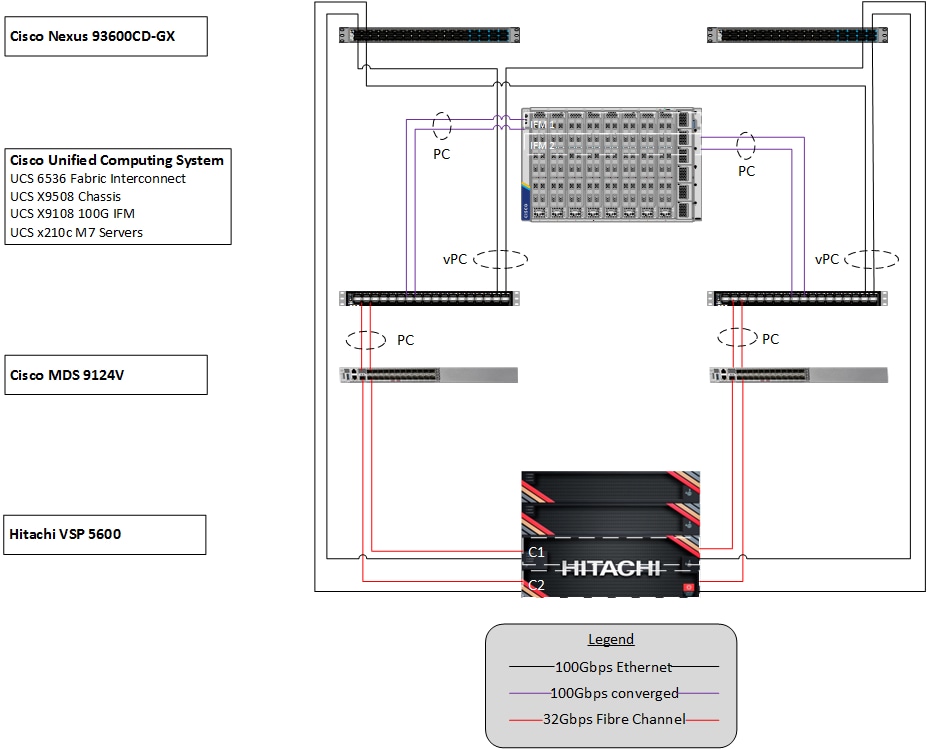

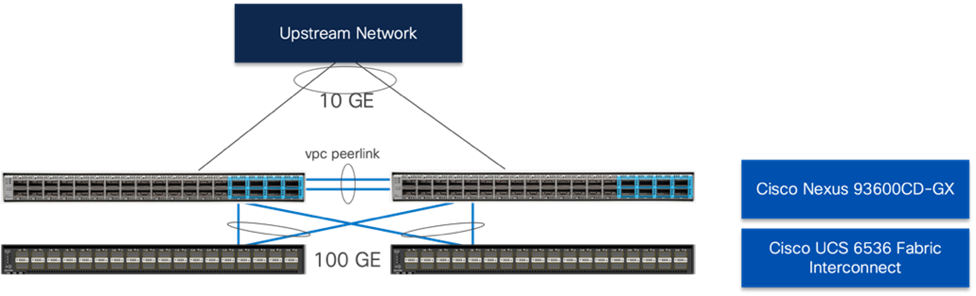

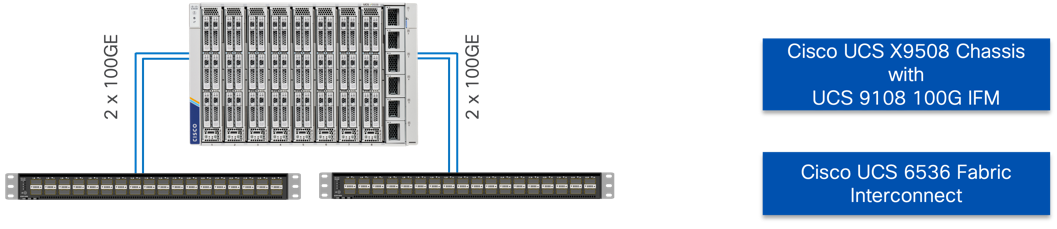

Figure 1 shows the validated hardware components and connections used in the Adaptive Solutions Virtual Server Infrastructure design.

Figure 1. Adaptive Solutions Virtual Server Infrastructure Physical Topology

The reference hardware configuration includes:

▪ Two Cisco Nexus 93600CD-GX Switches in Cisco NX-OS mode provide the switching fabric.

▪ Two Cisco UCS 6536 Fabric Interconnects (FI) provide chassis connectivity. One 100 Gigabit Ethernet port from each FI, configured as a Port-Channel, is connected to each 93600CD-GX. Four FC ports are connected to the Cisco MDS 9124V switches via breakout using 32-Gbps Fibre Channel connections configured as a single port channel for SAN connectivity.

▪ One Cisco UCS X9508 Chassis connects to fabric interconnects using Cisco UCSX 9108-100G Intelligent Fabric Modules (IFMs), where four 100 Gigabit Ethernet ports are used on each IFM to connect to the appropriate FI. If additional bandwidth is required, all eight 100G ports can be utilized.

▪ The Cisco MDS 9124V sits between the compute and storage delivering 32Gbps Fibre Channel connectivity, as well as interfacing to resources present in an existing data center.

▪ The Hitachi VSP 5600 controllers connect with two 32Gbps FC ports from each controller to each Cisco MDS 9124V for delivering data to the SAN network.

Table 1 lists the software revisions for various components of the solution.

| Layer |

Device |

Image |

Comments |

| Network |

Cisco Nexus 93600CD-GXNX-OS |

10.3(5)M |

|

| Cisco MDS 9124V |

9.4(1a) |

Requires SMART Licensing |

|

| Compute |

Cisco UCS Fabric Interconnect 6536 and UCS 9108-100G IFM |

4.3(4) |

|

| Cisco UCS X210c M7 |

5.2(1.240010) |

|

|

| Cisco UCS Tools |

1.3.3-1OEM |

|

|

| VMware ESXi nfnic FC Driver |

5.0.0.43 |

Supports FC-NVMe |

|

| VMware ESXi nenic Ethernet Driver |

2.0.11.0 |

|

|

| VMware ESXi |

8.0 Update 2 |

Build 21813344 included in Cisco Custom ISO, updated with patch 8.0 Update 1c |

|

| VMware vCenter Appliance |

8.0 Update 2c |

Build 23504390 |

|

| Cisco Intersight Assist Appliance |

1.0.9-588 |

1.0.9-588 initially installed and then automatically upgraded |

|

| Storage |

Hitachi VSP 5600 |

SVOS 90-09-22-00/00 |

|

| Hitachi Ops Center Administrator/CM Rest |

11.01 |

|

|

| Hitachi Storage Provider for VMware vCenter |

3.7.4 |

|

|

| Hitachi Storage Plugin for VMware vCenter |

4.10 |

|

|

| Hitachi Storage Modules for Red Hat Ansible |

3.0.x |

|

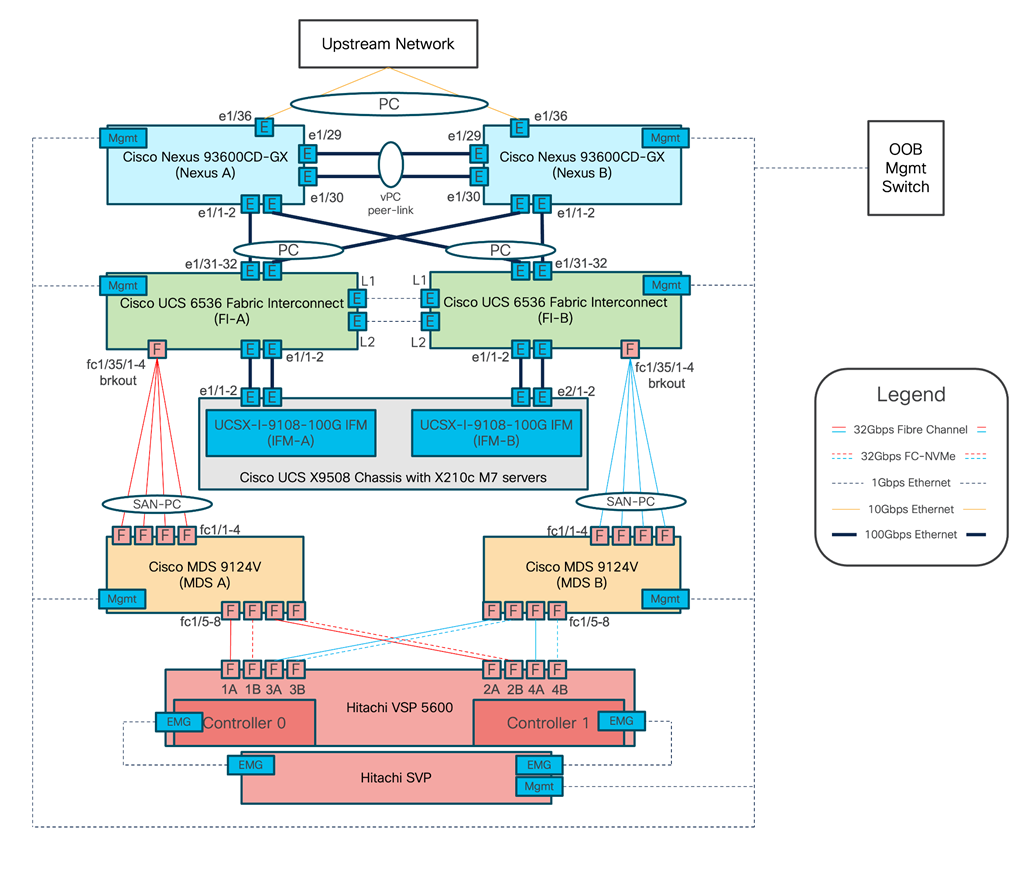

The information in this section is provided as a reference for cabling the physical equipment in the environment. This includes a diagram for each layer of infrastructure detailing the local and remote port locations.

Note: If you modify the validated architecture, see the Cisco Hardware Compatibility Matrix and the Hitachi Product Compatibility Guide for guidance.

This document assumes that out-of-band management ports are plugged into an existing management infrastructure at the deployment site. These interfaces will be used in various configuration steps.

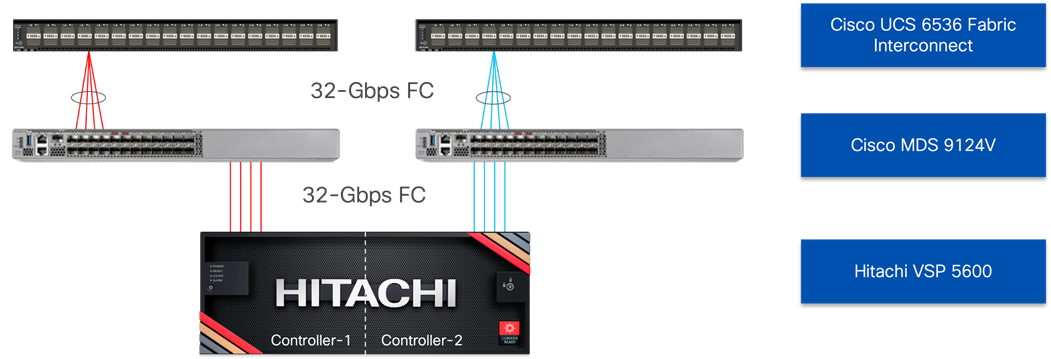

Figure 2 details the cable connections used in the validation lab for the Adaptive Solutions VSI topology based on the Cisco UCS 6536 fabric interconnect and the Hitachi VSP 5600. Four 32Gb uplinks via breakout connect as SAN port-channels from each Cisco UCS Fabric Interconnect to the MDS switches, and a total of eight 32Gb links connect the MDS switches to the VSP controller ports. 100Gb links connect the Cisco UCS Fabric Interconnects as port-channels to the Cisco Nexus 93600CD-GX switch pair’s vPCs, while upstream of the Nexus switches, 400G uplink connections are possible for the model. Additional 1Gb management connections will be needed for an out-of-band network switch that sits apart from the Adaptive Solutions infrastructure. Each Cisco UCS fabric interconnect and Cisco Nexus switch is connected to the out-of-band network switch, and the VSP is front-ended by the SVP, which has a connection to the out-of-band network switch. Layer 3 network connectivity is required between the Out-of-Band (OOB) and In-Band (IB) Management Subnets.

Figure 2. Adaptive Solutions Cabling with Cisco UCS 6536 Fabric Interconnect

Technology Overview of New Components

This chapter contains the following:

▪ 5th Gen Intel Xeon Scalable Processors

▪ Cisco UCS X9416 X-Fabric Module

▪ OpenShift Container Platform

▪ Hitachi Storage Modules for Red Hat Ansible

▪ Hitachi Storage Plug-in for VMware vCenter

This architecture directly extends from the Virtual Server Infrastructure defined in the Cisco and Hitachi Adaptive Solutions with Cisco UCSX, VMware 8U1, and Hitachi VSP 5600 Design Guide. The full list of solution elements found in the base architecture can be found here: https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/UCS_CVDs/hitachi_adaptive_vmware_vsp_design.html#TechnologyOverview.

The following are new elements to the design discussed in this document.

5th Gen Intel Xeon Scalable Processors

The Intel 5th generation Xeon Scalable processors expand the power and deployment options of the Cisco UCS X210c Compute Nodes. You can see a 21% average performance gain and a 36% increase in performance for watts used with these new processors when compared to previous generations of Intel Xeon processors.

More information on the 5th Gen Intel Xeon Scalable Processors can be found here: https://www.intel.com/content/www/us/en/products/docs/processors/xeon/5th-gen-xeon-scalable-processors.html

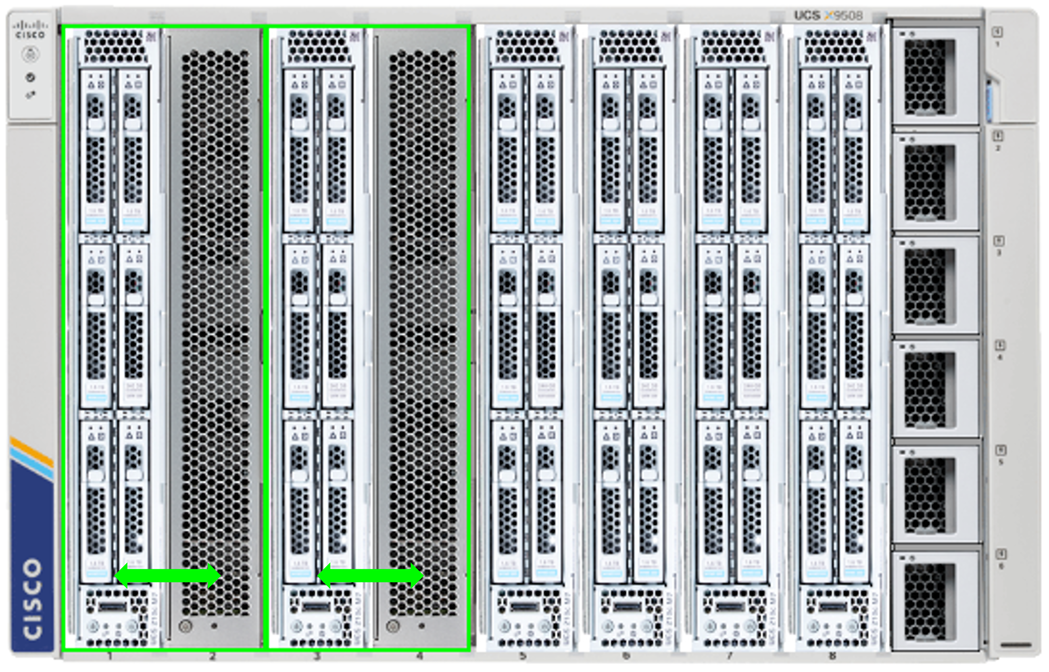

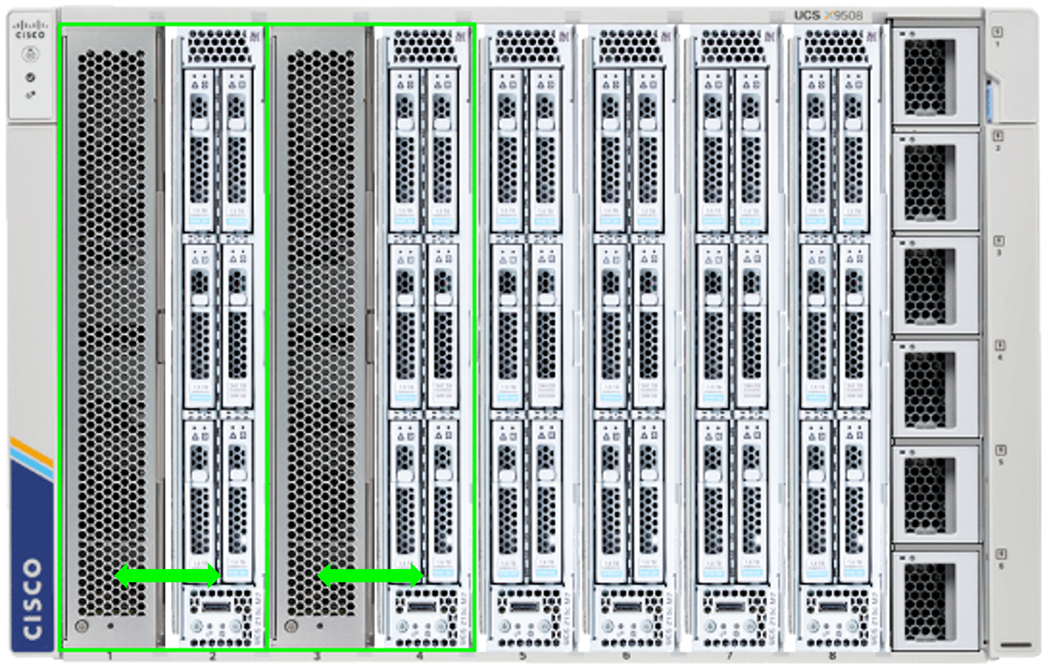

Cisco UCS X9416 X-Fabric Module

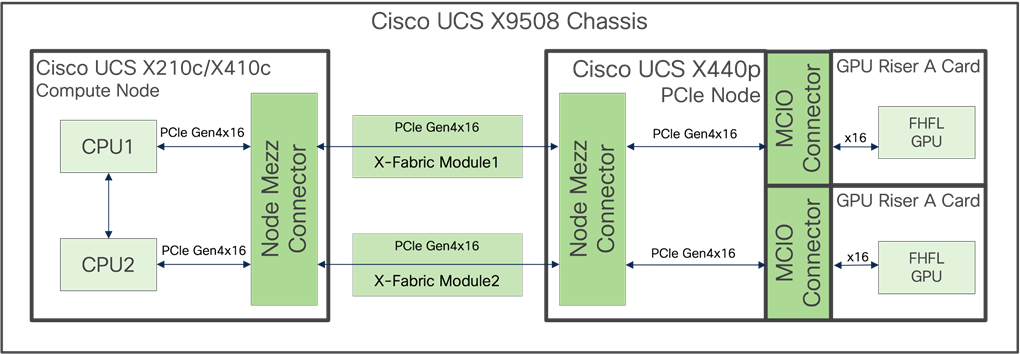

The Cisco UCS X9416 X-Fabric modules provide high speed PCIe connectors to Cisco UCS X210c M6 and M7 Compute Nodes. This allows the Cisco UCS X-Series Servers to have the density and efficiency of a blade solution but have expandability options as needed.

Computing nodes are connected through rear mezzanine ports into the fabric module slots directly for PCIe connectivity to expansion nodes without a midplane. More information on the Cisco UCS X9416 X-Fabric can be found here: https://www.cisco.com/c/en/us/products/collateral/servers-unified-computing/ucs-x-series-modular-system/ucs-x-fabric-here-aag.html

The Cisco UCS X440p PCIe Node provides 2 or 4 PCIe slots for GPUs that will connect to an adjacent compute node through the Cisco UCS X-Fabric. All GPUs within the PCIe node must be of the same type.

The PCIe nodes must be positioned in alternating chassis slots with the compute nodes in a consistent pattern of the PCIe nodes residing on either all even, or all odd slot IDs. More information on the Cisco UCS X440p PCIe Node can be found here: https://www.cisco.com/c/en/us/products/collateral/servers-unified-computing/ucs-x-series-modular-system/ucs-x440p-pcle-node-ds.html

The NVIDIA L40 supports the latest hardware-accelerated ray tracing, revolutionary AI features, advanced shading, and powerful simulation capabilities for a wide range of graphics and compute use cases in data center and edge server deployments.

More information on the NVIDIA L40 GPU can be found here: https://www.nvidia.com/content/dam/en-zz/Solutions/Data-Center/datasheets/L-40/product-brief-L40.pdf

Red Hat OpenShift Container Platform (OCP) is a hybrid cloud platform as a service for building and managing containerized applications. Deployment, visibility, and orchestration is available through API, CLI, and console tools.

More information on Red Hat OCP can be found here: https://www.redhat.com/en/technologies/cloud-computing/openshift/container-platform

Red Hat Ansible is an open-source tool for automation, configuration management, application software deployment, and for Infrastructure as Code (IaC). When used for IaC, Ansible manages endpoints and infrastructure components in an inventory file, formatted in YAML or INI.

Red Hat Ansible is free to use and can be extended for greater enterprise level value with the subscription based Red Hat Ansible Automation Platform. More information on Ansible can be found here: https://www.ansible.com/

Hitachi Storage Modules for Red Hat Ansible

You can enhance your day 1 storage management experience with the integration of Hitachi Vantara Storage Modules into Ansible. These modules include configuration and management modules for on-premises storage and public cloud storage. Additional information on Hitachi Storage Modules can be found here: https://docs.hitachivantara.com/v/u/en-us/adapters-and-drivers/3.0.x/mk-92adptr149

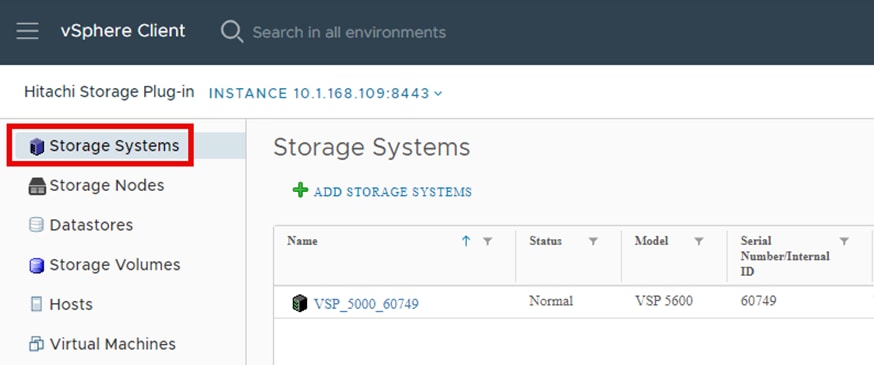

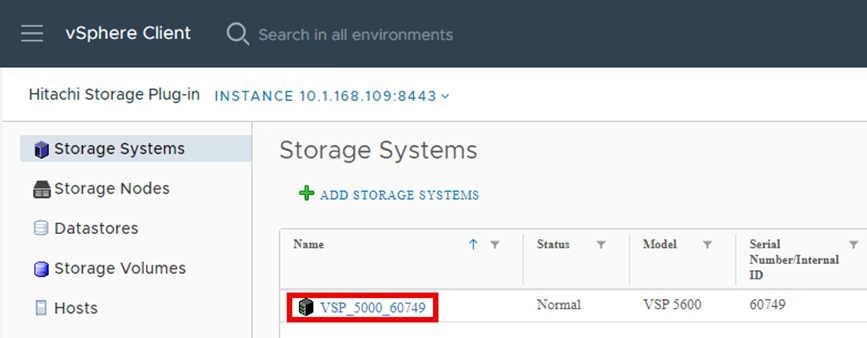

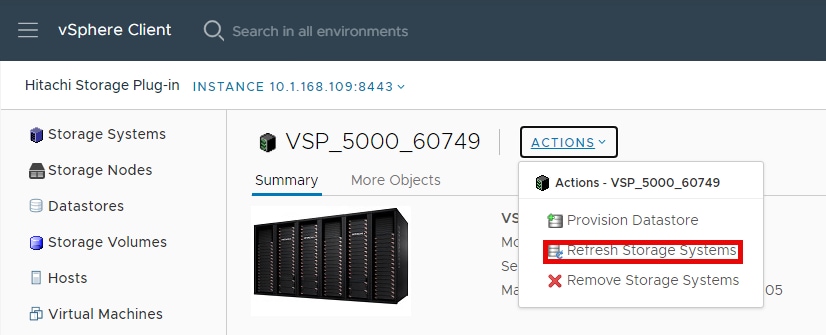

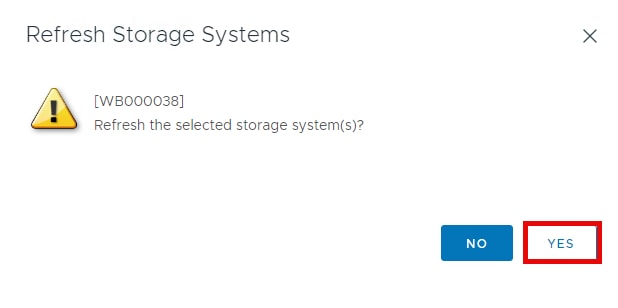

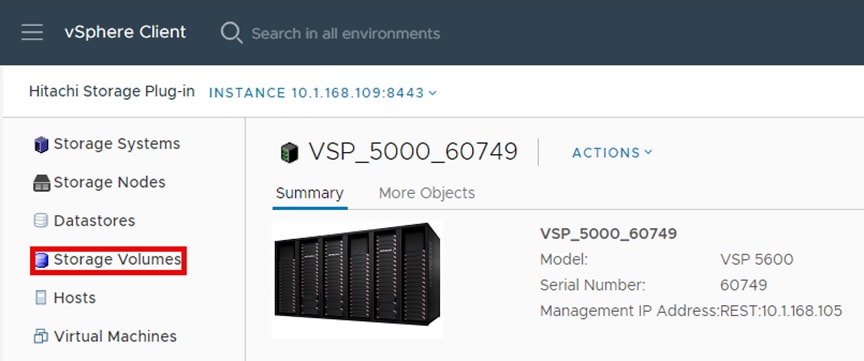

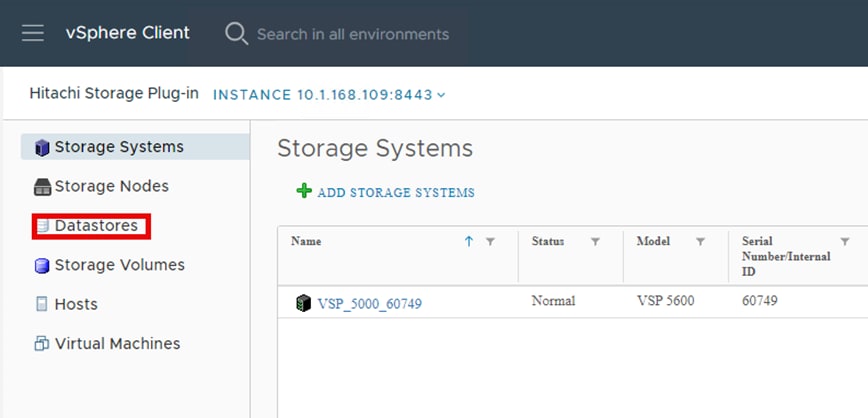

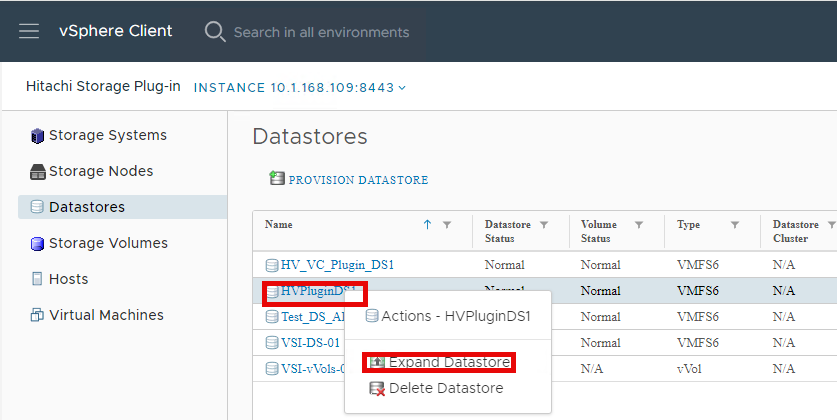

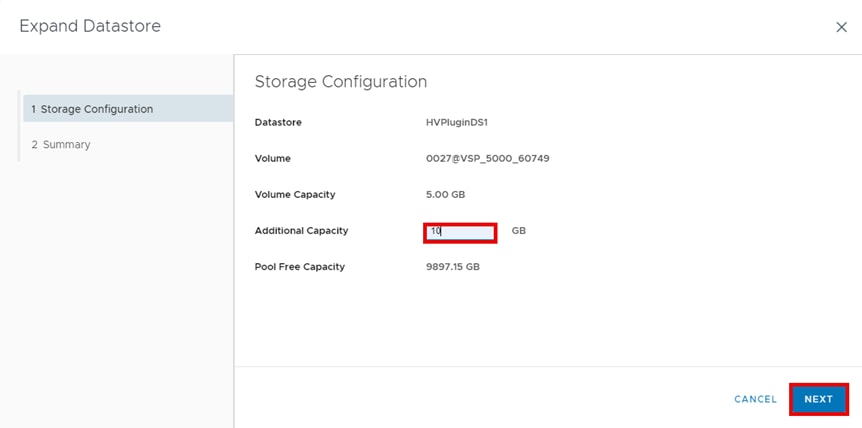

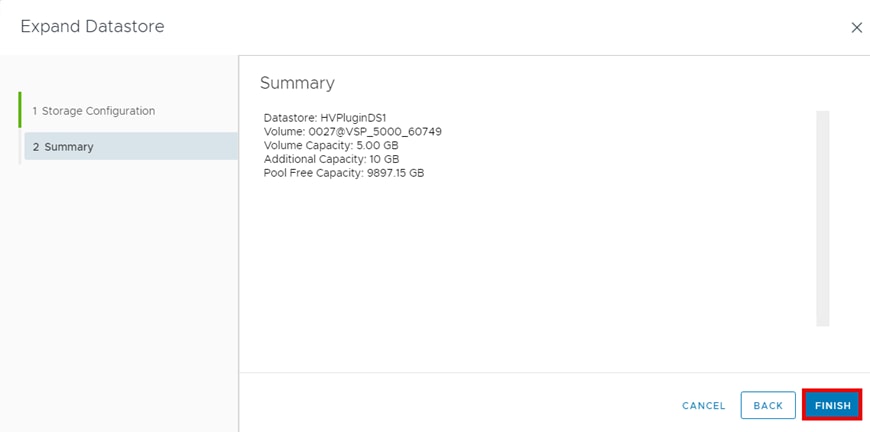

Hitachi Storage Plug-in for VMware vCenter

Hitachi Storage Plug-in for VMware vCenter integrates Hitachi Storage system information and provisioning operations into the vSphere Client from a common user interface. Additional information can be found here: https://docs.hitachivantara.com/v/u/en-us/adapters-and-drivers/4.10.x/mk-92adptr047

Solution Design

This chapter contains the following:

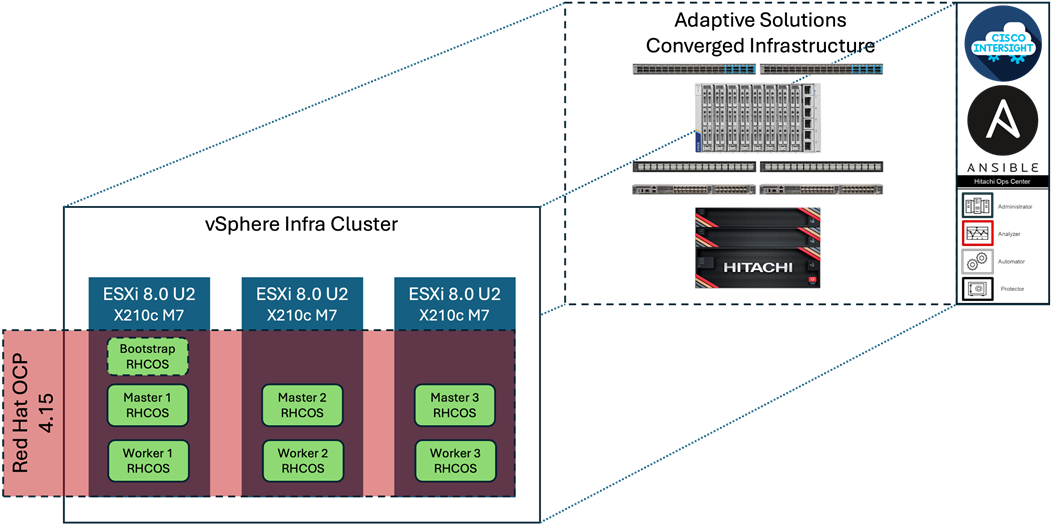

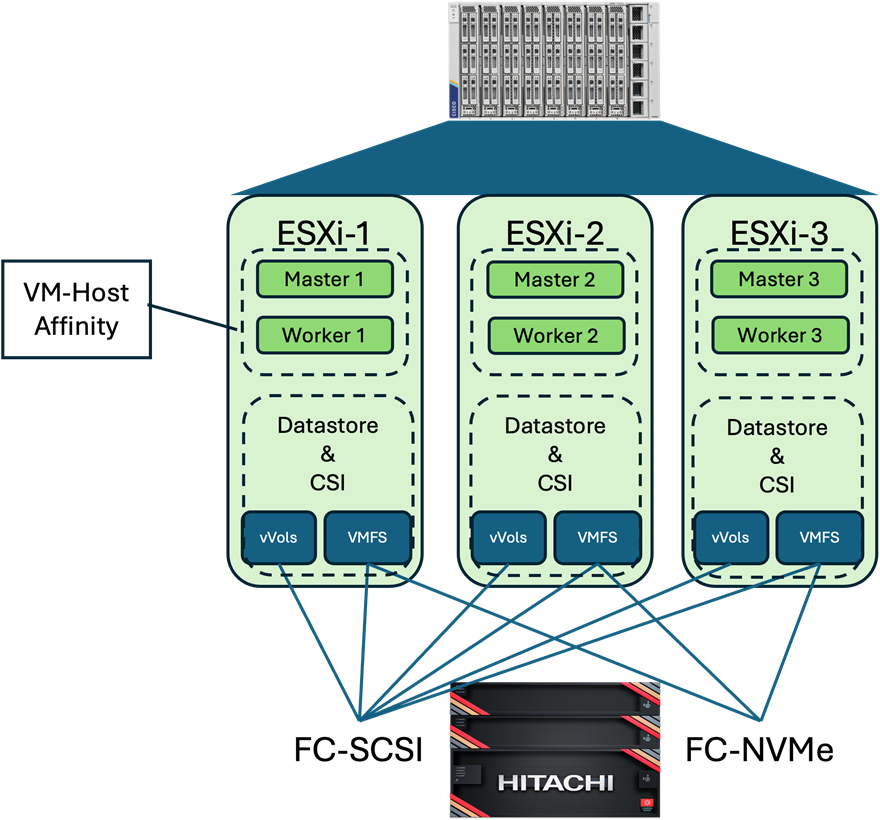

This Adaptive Solutions OpenShift Container Platform design implements a virtualized OCP deployment on top of the previously discussed Virtual Server Infrastructure (VSI) design for Cisco UCS receiving Fibre Channel based storage from the Hitachi VSP 5600 shown in Figure 3. Cisco Intersight and Hitachi Ops Center are the primary components for configuration and visibility into the infrastructure. The Hitachi Storage Plug-in for VMware vCenter has been added to the CI infrastructure and enables the VMware vSphere Web Client to provision and manage VSP volumes. For more information, see the appendix in the Hitachi Storage Plug-in for VMware vCenter. The appendix also includes configuration examples using Ansible for both compute and storage.

Figure 3. Red Hat OCP hosted within Adaptive Solutions VSI

The IP network is based on the Cisco Nexus 9600CD-GX switch and delivers resilient 100GE connectivity utilizing NX-OS features to include:

▪ Feature interface-vans—Allows for VLAN IP interfaces to be configured within the switch as gateways.

▪ Feature HSRP—Allows for Hot Standby Routing Protocol configuration for high availability.

▪ Feature LACP—Allows for the utilization of Link Aggregation Control Protocol (802.3ad) by the port channels configured on the switch.

▪ Feature VPC—Virtual Port-Channel (vPC) presents the two Nexus switches as a single “logical” port channel to the connecting upstream or downstream device.

▪ Feature LLDP—Link Layer Discovery Protocol (LLDP), a vendor-neutral device discovery protocol, allows the discovery of both Cisco devices and devices from other sources.

▪ Feature NX-API—NX-API improves the accessibility of CLI by making it available outside of the switch by using HTTP/HTTPS. This feature helps with configuring the Cisco Nexus switch remotely using the automation framework.

▪ Feature UDLD—Enables unidirectional link detection for various interfaces.

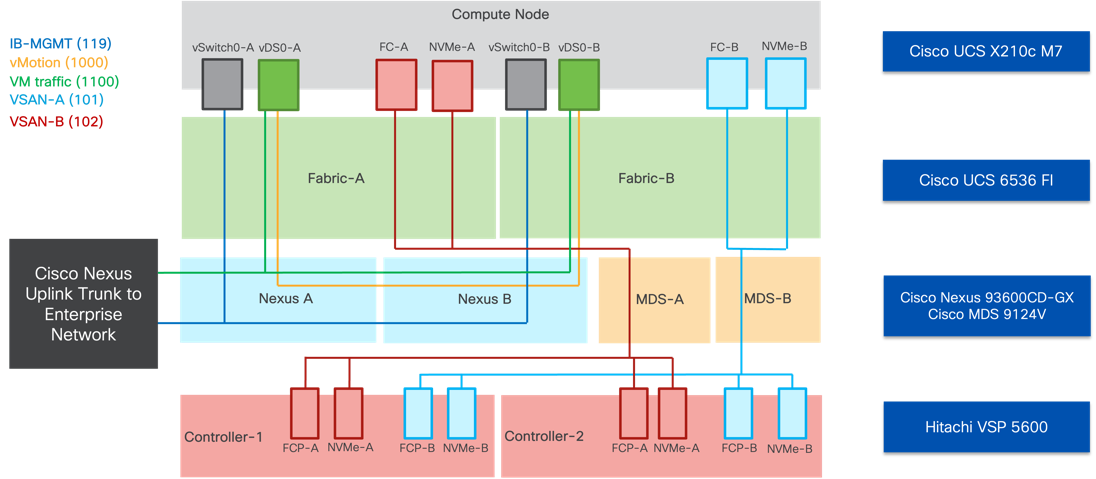

Connectivity from the Cisco Nexus switches is through the Cisco UCS 6536 Fabric Interconnects as shown in Figure 4. This figure shows the configuration of the validated environment, when considering a production environment, redundant upstream switches could be supported.

Figure 4. Cisco Nexus connectivity to the Cisco UCS 6536 Fabric Interconnects and upstream network

Within certain L2 traffic patterns between servers within the UCS domain, the traffic will be pinned to stay within one side of the fabric interconnects to avoid unnecessary northbound hops up to the Nexus.

The storage network implements Fibre Channel for FC-SCSI and FC-NVMe traffic with the 64G capable Cisco MDS 9124V. Some of the features incorporated with the MDS in this design include:

▪ Feature NPIV — N port identifier virtualization (NPIV) provides a means to assign multiple FC IDs to a single N port.

▪ Feature fport-channel-trunk — F-port-channel-trunks allow for the fabric log-ins from the NPV switch to be virtualized over the port channel. This provides nondisruptive redundancy should individual member links fail.

▪ Enhanced Device Alias – a feature that allows device aliases (a name for a WWPN) to be used in zones instead of WWPNs, making zones more readable. Also, if a WWPN for a vHBA or Hitachi VSP port changes, the device alias can be changed, and this change will carry over into all zones that use the device alias instead of changing WWPNs in all zones.

▪ Smart-Zoning — a feature that reduces the number of TCAM entries and administrative overhead by identifying the initiators and targets in the environment.

Connections between the MDS bridging the compute to the storage are shown in Figure 5.

Figure 5. Fibre Channel connectivity through the MDS 9124V

The logical view of the IP and FC network extends the physical into the virtual connections as they are positioned to support the OCP solution shown in Figure 6. The VIC adapters of the Cisco UCS X210c present virtual NICs and virtual HBAs as it carries the converged traffic within the solution. Primary IP network traffic is passed through the ingress and egress IPs that were set on the IB-Mgmt network, but this can be changed to fit the customer implementation.

Figure 6. Logical view of LAN and SAN connectivity

For the tested environment, all OCP traffic was carried through the underlay of the physical network through the IB-MGMT network shown above. Customizations can be made to incorporate other dedicated networks, but the Control Nodes, Worker Nodes, Ingress and Egress were accommodated by IB-MGMT.

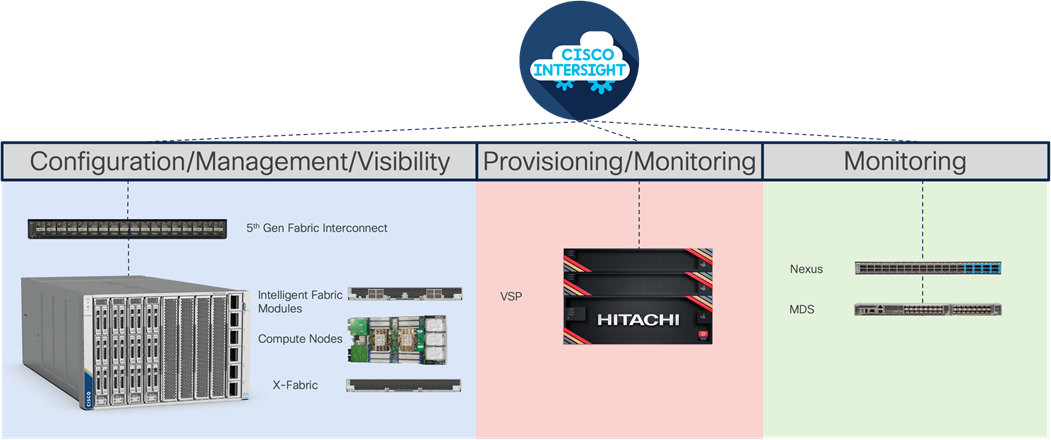

The Cisco UCS X-Series Modular System delivers a high-density compute system configured and managed through Cisco Intersight. This SaaS delivered platform gives scalability to UCS at a global level with the potential for a unified view and control of all compute for an organization through a single interface that also gives that same visibility to Hitachi storage and Cisco networking with Nexus and MDS switches. Intersight is accessible through secure SSO and a REST API to automate the management of Intersight connected devices across multiple data centers. Figure 7 provides a summary of Cisco Intersight within this design.

Figure 7. Cisco Intersight control and visibility across the architecture

The Cisco UCS X-Series M7 X210c Compute Nodes in this design incorporate offerings of both 4th Generation along with 5th Generation Intel Xeon Scalable processors. The density of computing power available in these CPUs provides the opportunity to deliver more computing power per physical rack space and power consumed compared to previous generations of computing. The Intelligent Fabric Module (IFM) connects up to 8 100G connections of converged traffic to each fabric interconnect, which are automatically configured as port channels for resiliency. Figure 8 illustrates the connections from the IFM to the servers are direct, without a need for a backplane.

Figure 8. FI connectivity to Chassis through IFM

Through Cisco Intersight, the fabric interconnects oversee the configuration and control of the compute nodes through server policies and pools of identifying information, such as UUIDs, WWPNs, and MAC addresses that are incorporated as server profiles that can be provisioned uniformly and at scale through server profile templates. Along with the configuration, the fabric interconnects control the converged traffic sent to the servers that it receives from either the upstream network, east-west between other servers, or through the MDS SAN network from the Hitachi VSP.

This converged traffic is received by the 5th Generation UCS VIC adapters that present virtual adapters of NICs that can receive VLAN tagged traffic and virtual HBAs. The WWPN identities held by these virtual HBAs are registered in the zoning on the MDS as well as in host groups within Ops Center to associate them as initiators for the targets held by the VSP for the association of FC boot LUNs and data LUNs of both FC-SCSI and FC-NVMe.

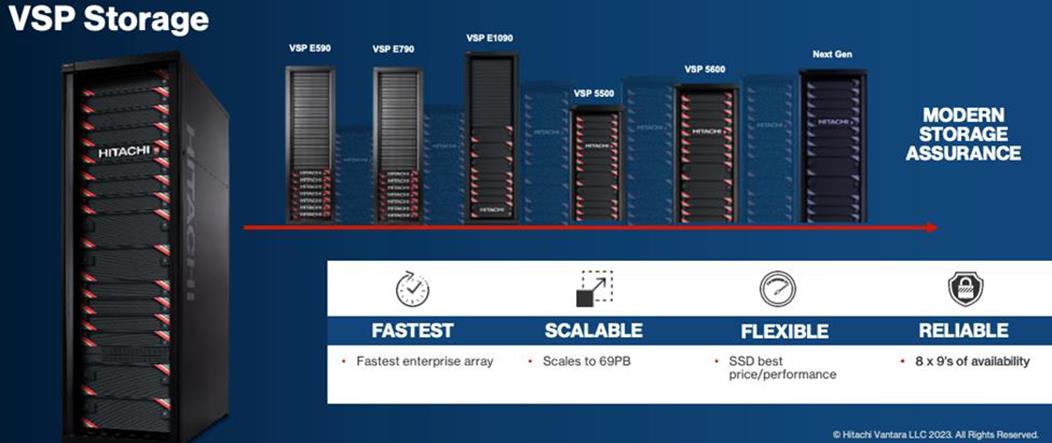

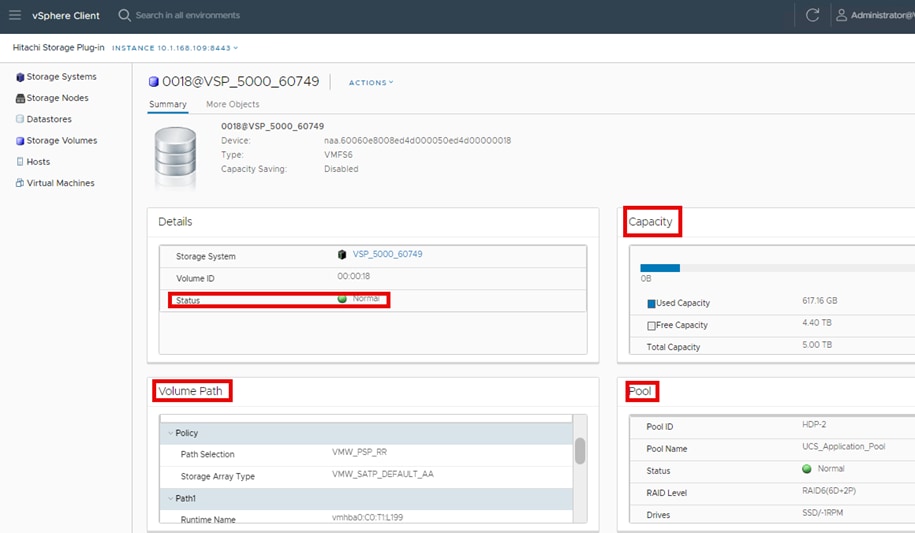

A key element in the successful deployment of a container platform is having a robust and flexible infrastructure that can meet the wide variety of requirements in a highly dynamic environment. The Cisco and Hitachi Adaptive Solution for CI with Red Hat OpenShift Container Platform (OCP) provides highly available, predictable, and high-performance infrastructures for container applications running on virtual nodes with persistent storage on the Hitachi VSP. The Hitachi VSP is a highly scalable, true enterprise-class storage system that can virtualize external storage and provide virtual partitioning, and quality of service for diverse workload consolidation. With the industry’s only 100 percent data availability guarantee, the Virtual Storage Platform delivers the highest uptime and flexibility for your block-level storage needs.

Figure 9. Hitachi VSP Storage Family

For more information on Hitachi Vantara Virtual Storage Platform, see: https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/UCS_CVDs/hitachi_adaptive_vmware_vsp_design.html

In addition to configuring the VSP 5600 through Ops Center Administrator, two additional tools are now available to provide flexibility for the provisioning and management of storage for the Adaptive Solution for CI. These tools include:

▪ Hitachi Storage Modules for Red Hat Ansible

▪ Hitachi Storage Plug-in for VMware vCenter

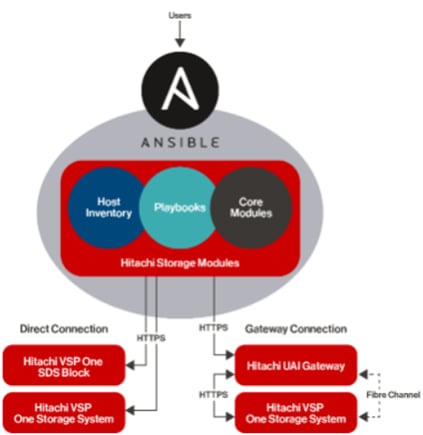

The Hitachi Storage Modules for Red Hat Ansible enables IT and data center administrators to consistently and reliably provision and manage the Hitachi Virtual Storage Platform (VSP) from Red Hat Ansible playbooks. You can use these storage modules with playbooks that you create to manage your infrastructure with either a direct connection to the storage system or using a Unified API Infrastructure (UAI) gateway connection. Within the context of this CVD, the Hitachi VSP 5600 was managed through a direct connection.

Figure 10 shows the available Ansible Control Node connectivity options to the Hitachi VSP 5600.

Figure 10. Hitachi Vantara Storage Modules for Red Hat Ansible Connectivity Options

The Hitachi Storage Modules for Red Hat include various software modules supporting storage configuration (host groups and LUNs) and storage management. Refer to the Hitachi Vantara Virtual Storage Platform Ansible Support Appendix for deployment and usage information.

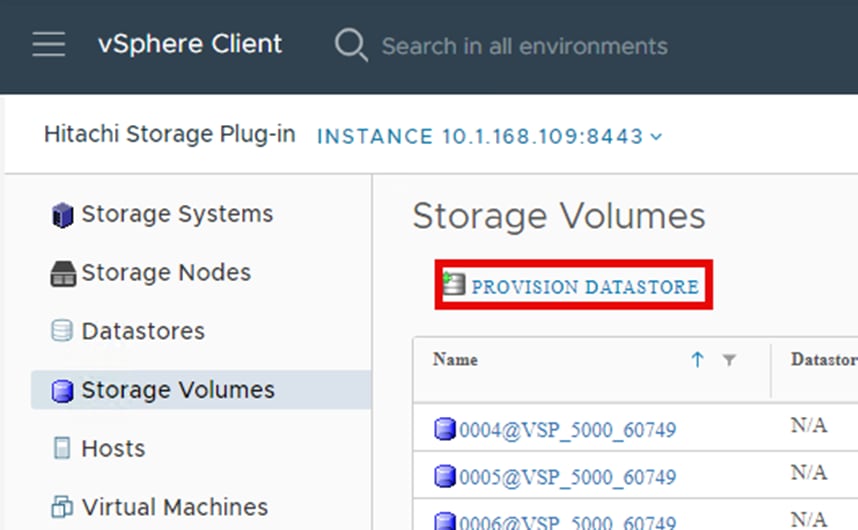

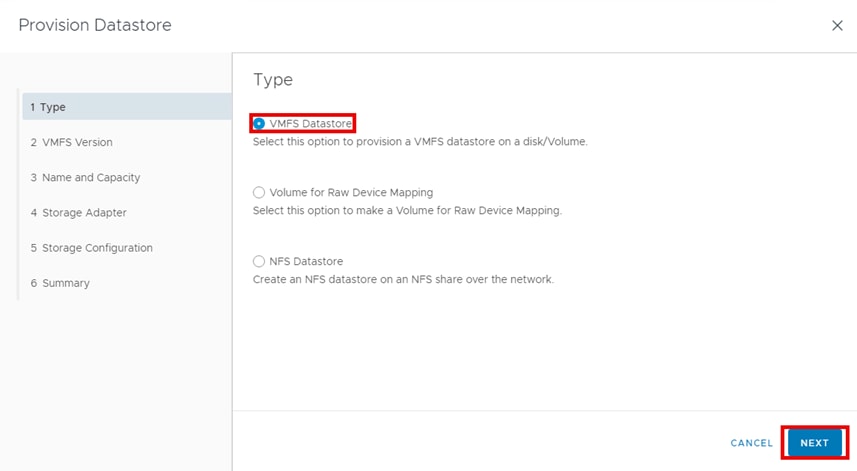

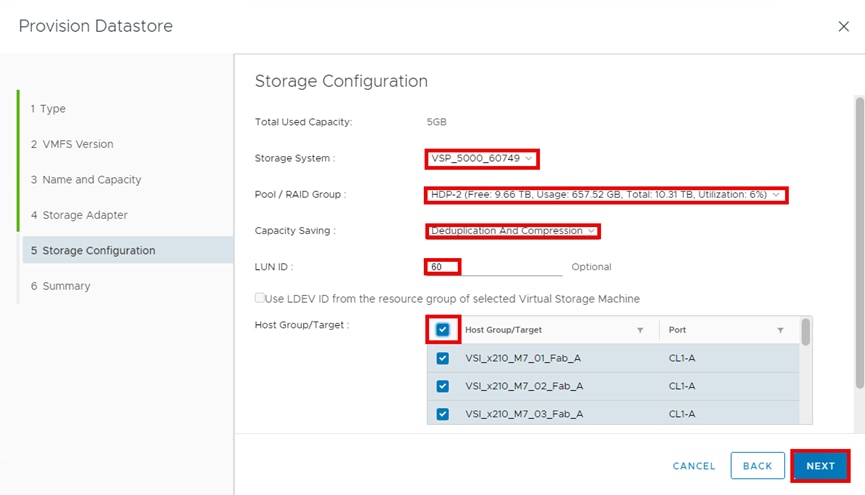

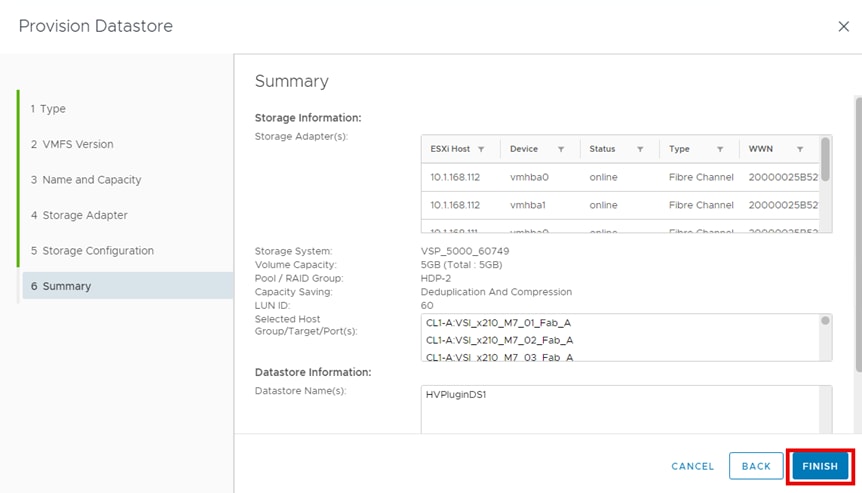

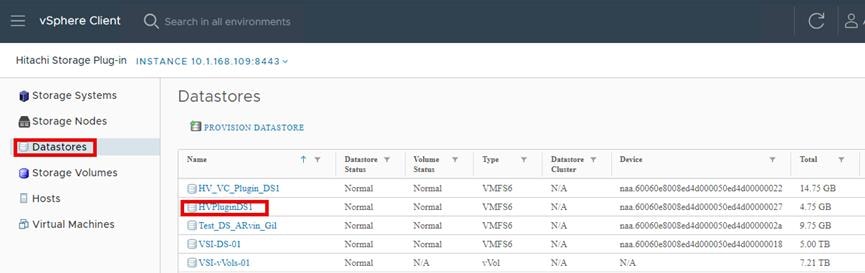

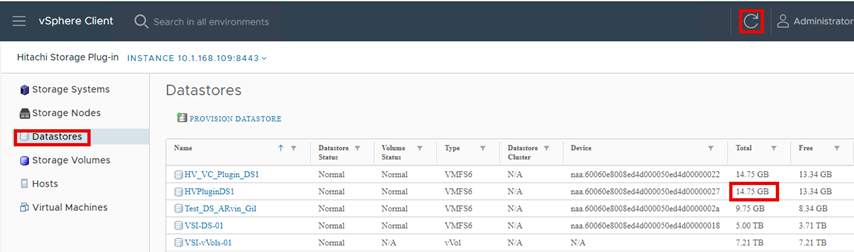

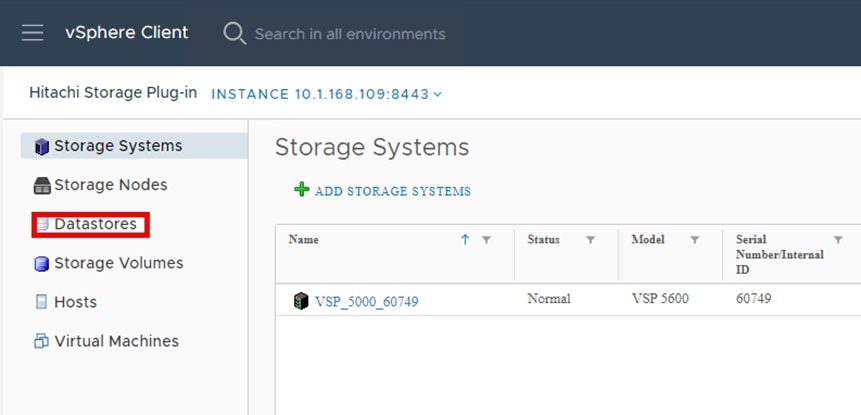

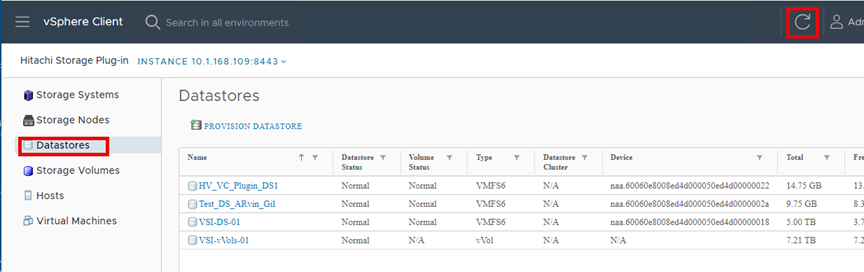

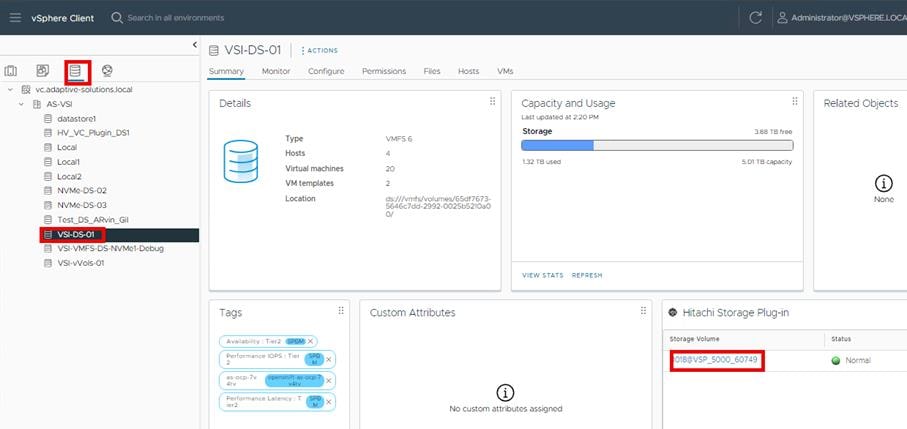

Hitachi Storage Plug-in for VMware vCenter (referred to as Hitachi Storage Plug-in in this document) integrates Hitachi storage system information and provisioning operations into the vSphere Web Client. This integration allows VMware product administrators to perform storage provisioning operations from within the VMware user interface, which offers greater convenience than switching between VMware and Hitachi management software to perform common operations involving both. The Hitachi Storage Plug-In simplifies the Day-1 configuration and management of the VSP using the FC-SCSI protocol to provide Virtual Machine File System (VMFS) datastore volumes.

The Hitachi Storage Plug-in provides the following features:

▪ View: The View function displays the storage system information registered in Hitachi Storage Plug-in, the datastore on the ESXi host using the storage system, and virtual machine information.

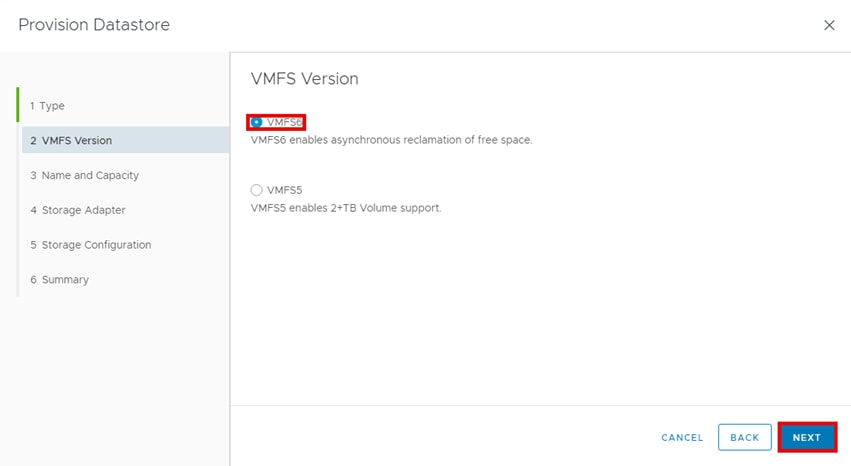

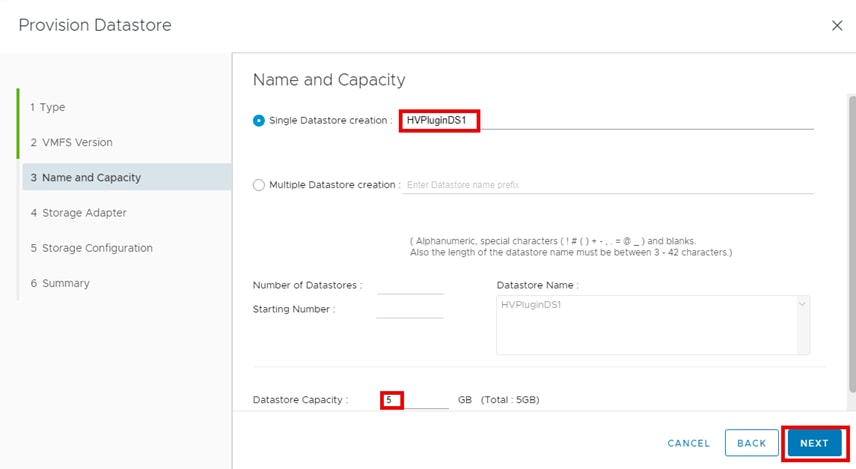

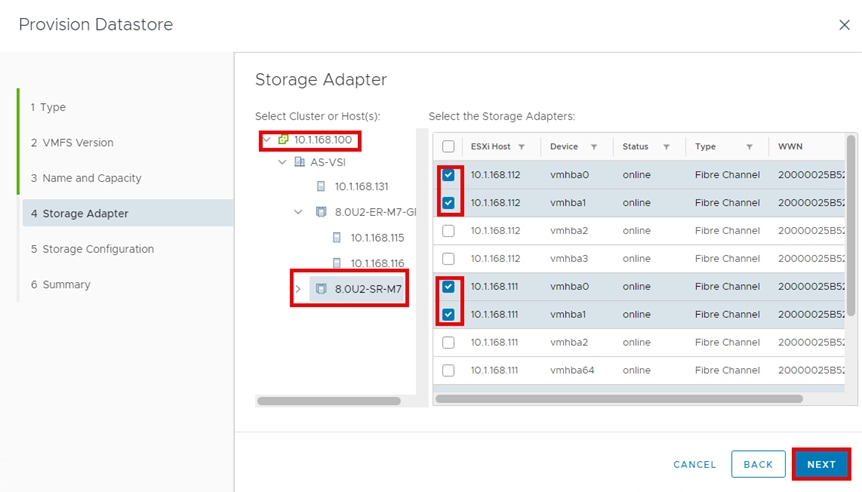

▪ Provision Datastore: The Provision Datastore function creates an LDEV/volume used as a datastore for a Virtual Machine File System (VMFS), a Network File System (NFS), and for Raw Device Mapping objects (RDMs) by a storage system registered in Hitachi Storage Plug-in.

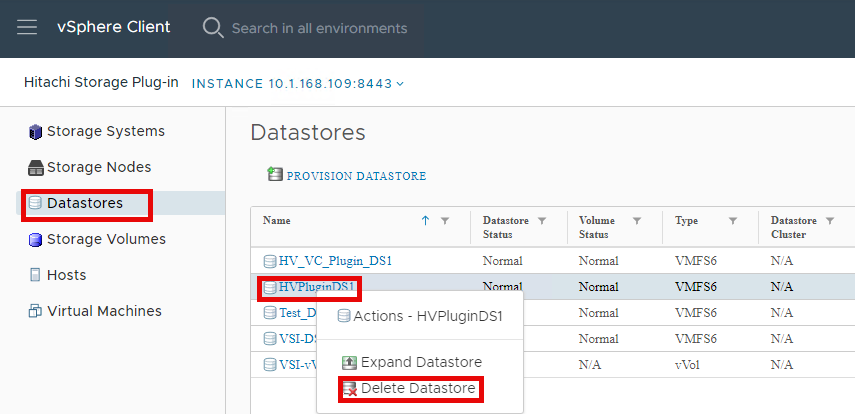

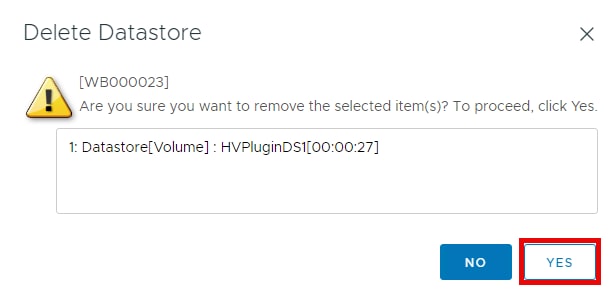

▪ Delete Datastore: The Delete Datastore function removes datastores created using Hitachi Storage Plug-in and the LDEVs or volumes of storage systems corresponding to a datastore. This feature does not support datastores and LDEVs/volumes created without using Hitachi Storage Plug-in.

For deployment and usage information, go to section Hitachi Storage Plug-in for VMware vCenter in the Appendix.

Figure 11 illustrates the capability overview of Red Hat OCP backed by Hitachi VSP storage on top of Cisco and Hitachi Adaptive Solutions for CI.

Figure 11. Red Hat OCP on Cisco Hitachi Adaptive Solutions

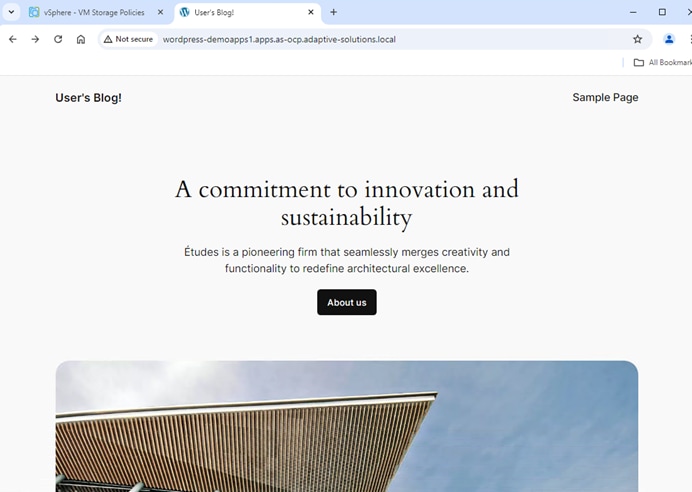

This guide offers a VSP storage solution that can be used to meet most deployment configurations that require persistent storage service for containers (applications) in a Red Hat OCP virtualized environment. This solution integrates the VMware Container Storage Interface (CSI) with Cloud Native Storage (CNS) using the Hitachi Storage Provider for VMware vCenter (VASA) software. The Hitachi VSP provides Fibre Channel connectivity in the form of FC-SCSI (VMFS and vVols), and FC-NVMe (VMFS).

vSphere Cloud Native Storage

Cloud Native Storage (CNS) integrates vSphere and OCP and offers capabilities to create and manage container volumes deployed in a vSphere environment. CNS consists of two components, a CNS component in vCenter Server and vSphere CSI driver in OCP. CNS enables vSphere and vSphere storage (VMFS and vVols) to run stateful applications. CNS enables access to this data path for OCP and brings information about OCP volume and pod abstractions to vSphere. CNS uses several components to work with vSphere storage including VMFS or vVols provided by the Hitachi Storage Provider for VMware vCenter. After you create persistent volumes (PVs), you can review them and their backing virtual disks in the vSphere Client and monitor their storage policy compliance.

vSphere Cloud Storage Interface

The Container Storage Interface (CSI) driver is installed in the Kubernetes cluster and can provide persistent storage to worker nodes within the OCP cluster. Administrators can use VMware through the csi.vsphere.vmware.com driver which enables PV creation from VMFS datastores backed by the Hitachi VSP. A Persistent Volume Claim (PVC) is created that references an available StorageClass, which maps to a vSphere storage policy-based management (SPBM) policy. A first-class disk (FCD) is created within vSphere, and a resultant PV is presented to the OpenShift layer from the CSI driver. The FCD is then mounted to the pod when requested for use as a PV. The vSphere CSI driver has different components that provide an interface used by the Container Orchestrators such as OpenShift to manage the lifecycle of vSphere volumes. It is also used to create volumes, expand and delete volumes, snapshot volumes and restore, attach, and detach volumes to the cluster worker node VMs, and use bind mounts for the volumes inside the pods.

Note: The vSphere CSI driver does not support cloning PVCs.

Hitachi Storage Provider for VMware vCenter (VASA)

Hitachi Storage Provider for VMware vCenter (VASA) enables VASA APIs for storage awareness to be used with Hitachi storage systems. VASA enables policies to be made by making the storage attribute information available in vSphere. VASA enables organizations to deploy Hitachi storage infrastructure with VMware vSphere Virtual Volumes (vVols) to bring customers on a reliable enterprise journey to a software-defined, policy-controlled datacenter. Hitachi storage policy-based management (SPBM) enables automated provisioning of virtual machines (VMs) and quicker adjustment to business changes. Virtual infrastructure (VI) administrators can make changes to policies to reflect changes in their business environment, dynamically matching storage policy requirements for VMs to available storage pools and services. The vVols solution reduces the operational burden between VI administrators and storage administrators with an efficient collaboration framework leading to faster and better VM and application services provisioning.

VASA makes this possible in two ways:

▪ VMware vSphere vVols

This function is the VASA component of VMware vVols that enables vVols to be used with supported Hitachi storage systems in a 1-to-1 mapping, enabling greater insight into virtual machine performance.

▪ VMware VMFS

VASA enables storage capability information and alert notifications related to VMFS file systems to be generated automatically and displayed in vCenter Server.

Hitachi VSP vSphere OCP Persistent Storage Options

Table 2 lists the supported Hitachi VSP integration beginning with the OCP Deployment Type, supported Storage Type, and the supported storage for container persistent storage.

Table 2. Supported Hitachi VSP Integration

| Red Hat OpenShift Container Platform |

||

| Deployment Type |

Storage Type |

Hitachi Persistent Storage Provider Compatibility |

| VM (all virtualized OCP Infrastructure |

Cloud Native Storage (CNS) |

Container Storage Interface (vVols + VMFS) |

Red Hat OCP will have a requirement of DNS and DHCP to bring up the cluster. Internet access is needed for the Installer Provisioned Infrastructure (IPI) method followed in our example, but the cluster can be built from Red Hat using an offline method for local/air gapped placements. An LDAP source like Active Directory can be used for setting up the OAuth, as well as an internal OAuth server can optionally be setup.

The physical underlay of the converged infrastructure as it maps to the OCP installation is shown in Figure 12.

Figure 12. Cisco UCS compute and VSP storage underlay mapping to OCP

The OCP environment in this design is hosted within three VMware vSphere hosts residing on Cisco UCS X210c Compute Nodes. With the deployment completed, nodes are distributed across the hosts, keeping the respective master and worker nodes isolated from each other using vSphere DRS VM-Host Affinity rules as shown in Figure 12. Within the deployment, the storage is allocated for the OCP components from the Hitachi VSP 5600. This places the nodes on VM datastores, and provisions Persistent Virtual Storage (PVC) using the vSphere Container Storage Integration (CSI) on either vVols or VMFS for persistent storage needs of the deployed containers.

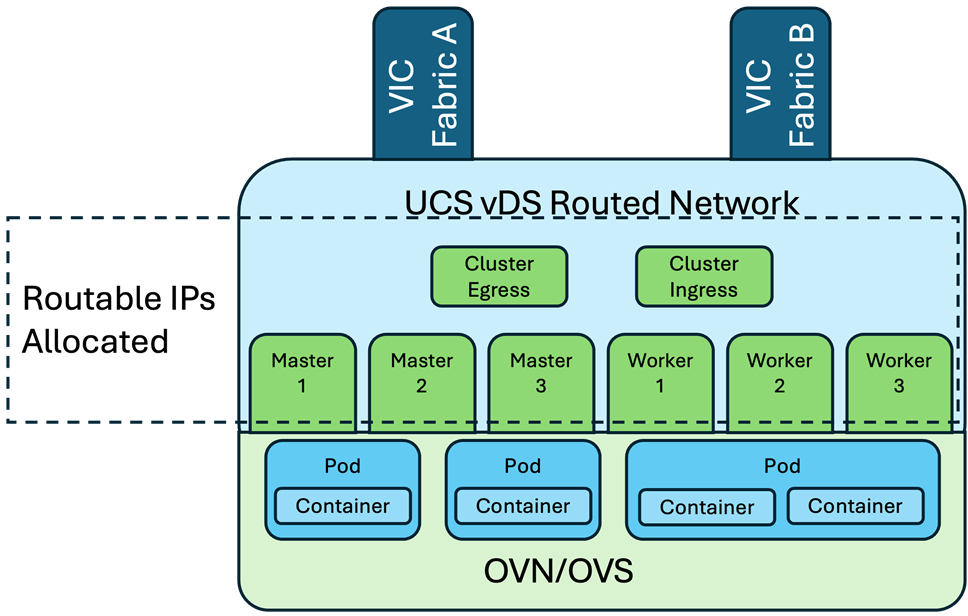

The network design used by OCP is shown in Figure 13. It receives and sends traffic through the Cisco UCS VIC adapters, that are teamed with the route based on originating virtual port algorithm, for any traffic external to the cluster, or must travel between nodes that are not residing on the same host. The cluster uses the OCP default Container Network Interface (CNI) specification, with the nodes implementing Open Virtual Networking (OVN) with an Open Virtual Switch (OVS) that spans across the nodes. Pods receive an OVN allocated IP, and containers residing within the pod will share that IP.

Figure 13. Container networking mapping to the connectivity provided by the Cisco UCS VIC and VMware vSphere installation

The East/West traffic between nodes will pass with an overlay created with Generic Network Virtualization Encapsulation (GENEVE) implemented by OVN. Connectivity and services for pods and containers is handled with OVN constructs giving a potentially more human readable than presentation than was found with the previous OpenShift SDN. Alternate CNI options are available, including one from Cisco that is incorporated through Cisco ACI to give a common interface to datacenter networking down into containers.

Red Hat recommendations for OpenShift v4 on VMware vSphere include the following:

▪ The overall CPU over-commitment ratio for all pods to physical cores available in the cluster should not exceed 1.8.

▪ Memory usage for all pods should not exceed .9 of the physical memory available in a cluster.

▪ Etcd hosted on the control-plane nodes is used to store the OCP cluster state and is usually the most latency sensitive component. For etcd, it is important to always have low latency.

▪ Deploy OpenShift on a dedicated vSphere cluster to reduce competition for physical resources between different applications.

▪ Apply anti-affinity rules for the master node and worker nodes.

▪ OCP supports compute only vMotion and should follow VMware best practices. vMotion has a short duration during which the VM is not available and is a risk for a latency sensitive workload. vMotion for a VM hosting an OCP node should only be triggered when unavoidable. Only a single OCP master node should be migrated to a different host at a time. Ensure sufficient time for all etcd cluster members to synchronize before initiating vMotion for another master node.

▪ Storage vMotion is not supported. If you are using vSphere datastores in your pods, migrating a VM across datastores can cause invalid references within the OCP persistent volume (PV) objects that can result in data loss.

▪ Worker nodes should have spare capacity for failover situations. In the event of a node failure, OCP will attempt to schedule the lost pods to another node. It is important to test fail-over scenarios. especially if a vSphere cluster is used for OCP and VMs are configured for HA, which could lead to latency.

Implementation

This chapter contains the following:

▪ Preparation for Installation

▪ Configure vSphere VM Storage Policies

▪ Red Hat OCP Storage Configuration

▪ Red Hat OCP Storage Management

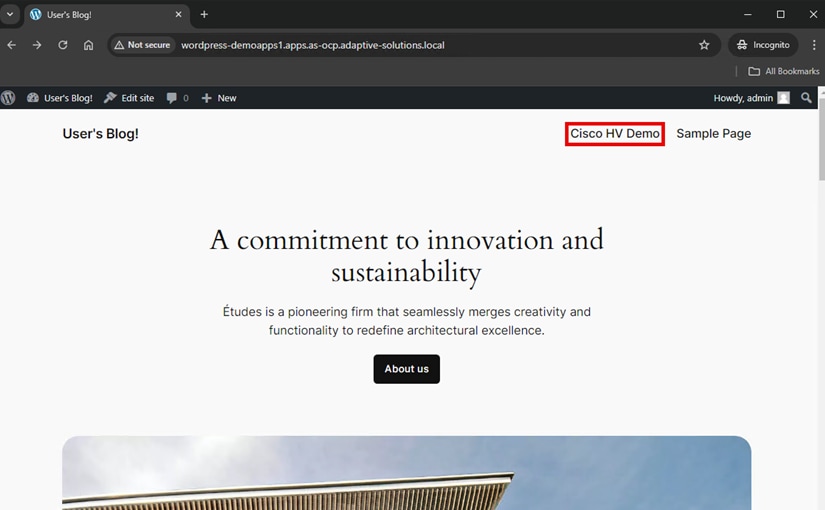

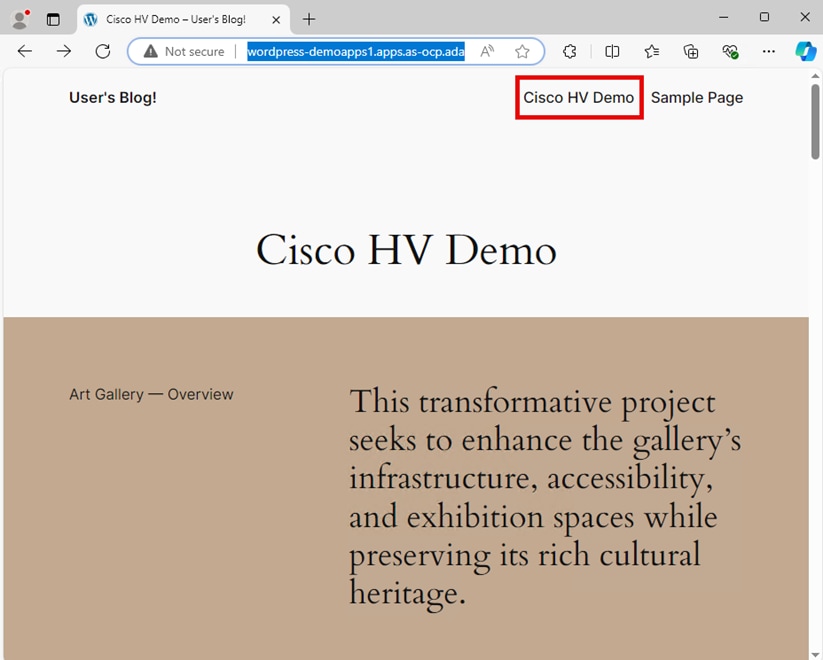

This chapter explains the deployment of the Red Hat OCP on an existing Adaptive Solutions Converged Infrastructure VSI placement, detailed in this CVD deployment guide: https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/UCS_CVDs/hitachi_adaptive_vmware_vsp.html. The primary Red Hat source for installing OCP for this architecture can be found here: https://docs.redhat.com/en/documentation/openshift_container_platform/4.15/html/installing/installing-on-vsphere#preparing-to-install-on-vsphere

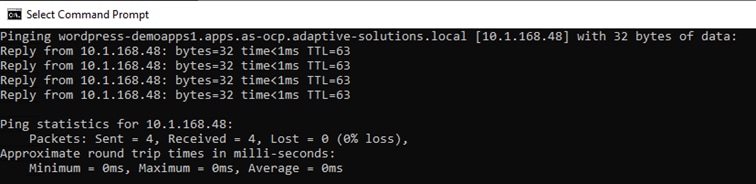

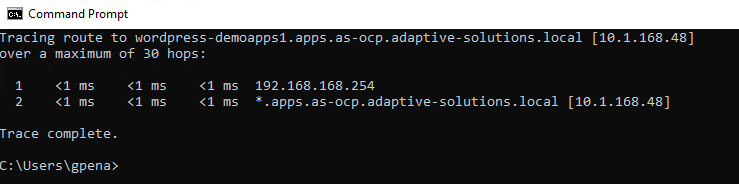

The OCP placement has these additional dependencies in place at installation time:

▪ DNS Server

From a primary DNS domain in our setup of adaptive-solutions.local, a subdomain is created of:

as-ocp.adaptive-solutions.local

◦ Within this subdomain there are two initial A record (Host) entries of:

api.as-ocp.adaptive-solutions.local

*.apps.as-ocp.adaptive-solutions.local

▪ DHCP Server

A DHCP scope will be needed to at least support the provisioning of the master and worker nodes of the cluster. Additionally, the following scope options will be needed:

▪ 003 Router

▪ 004 Timer Server

▪ 006 DNS Servers

▪ 015 DNS Domain Name

▪ Red Hat Credentials

Red Hat credentials are needed to log in to https://console.redhat.com for provisioning OCP. A subscription is not needed for a 60 day trial but will be needed for any production placement.

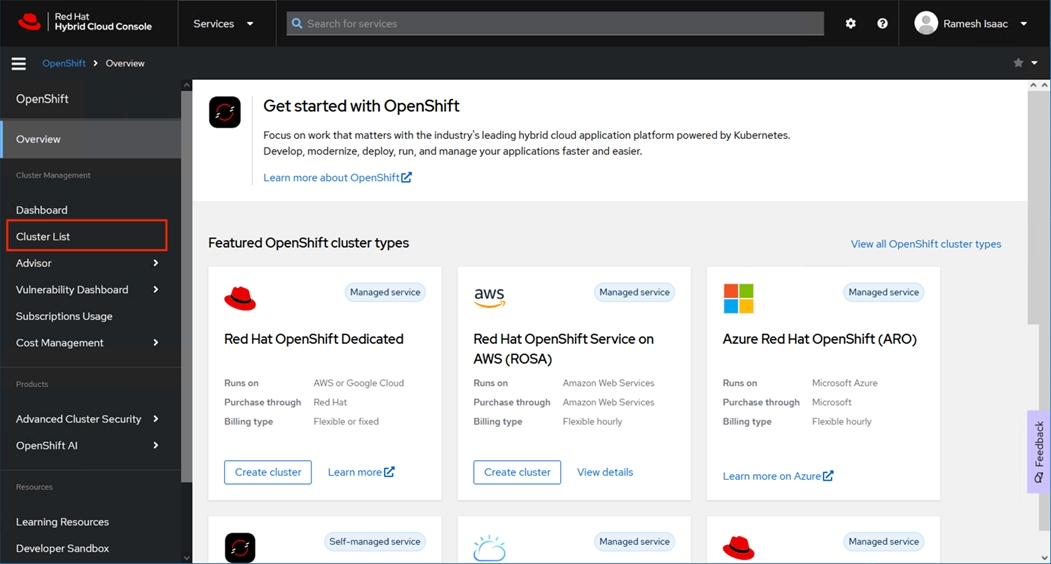

The installation for Red Hat OCP on VMware vSphere will go through some of the initial setup from https://console.redhat.com and the deployment being invoked from an installation host.

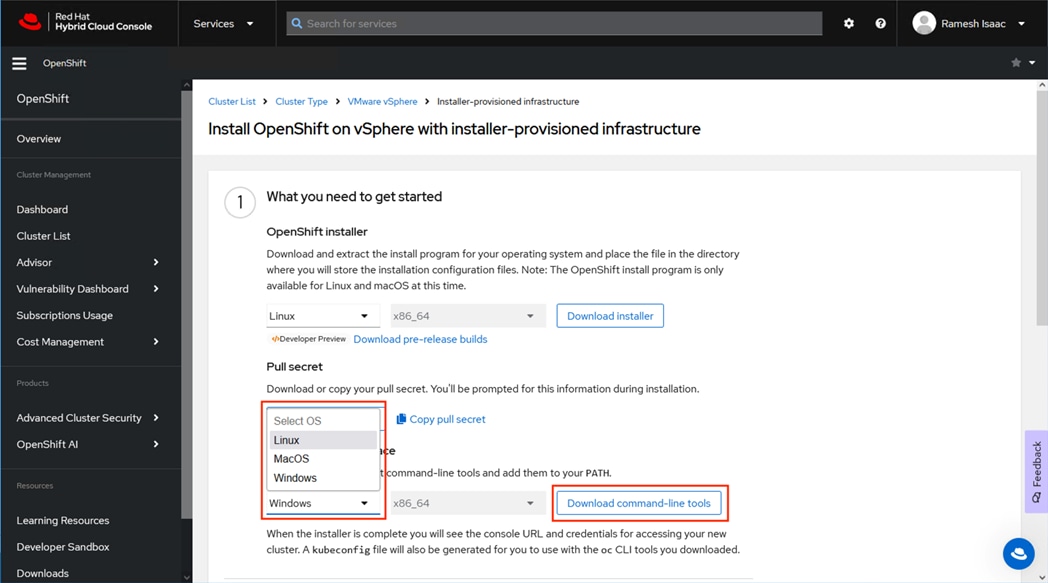

Procedure 1. Start installation

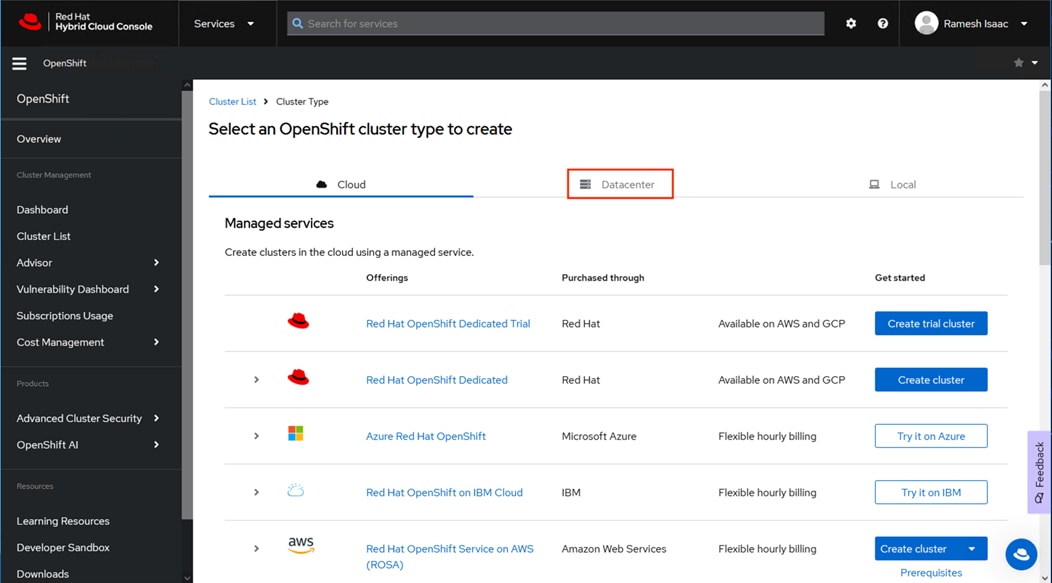

Step 1. Open a browser and log in to https://console.redhat.com.

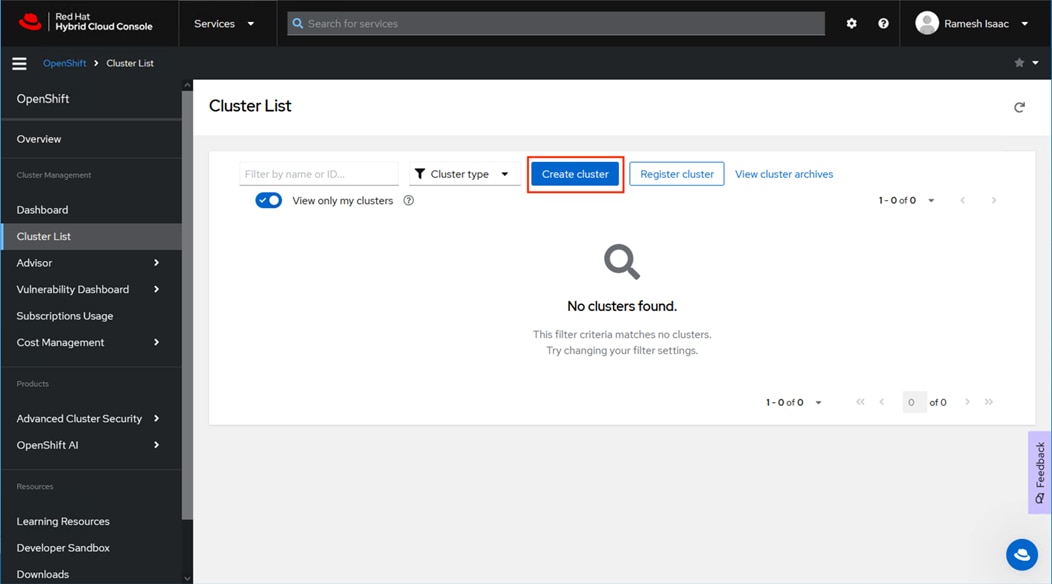

Step 2. Click Cluster List from the options in the left pane and click Create cluster.

Step 3. Click Datacenter.

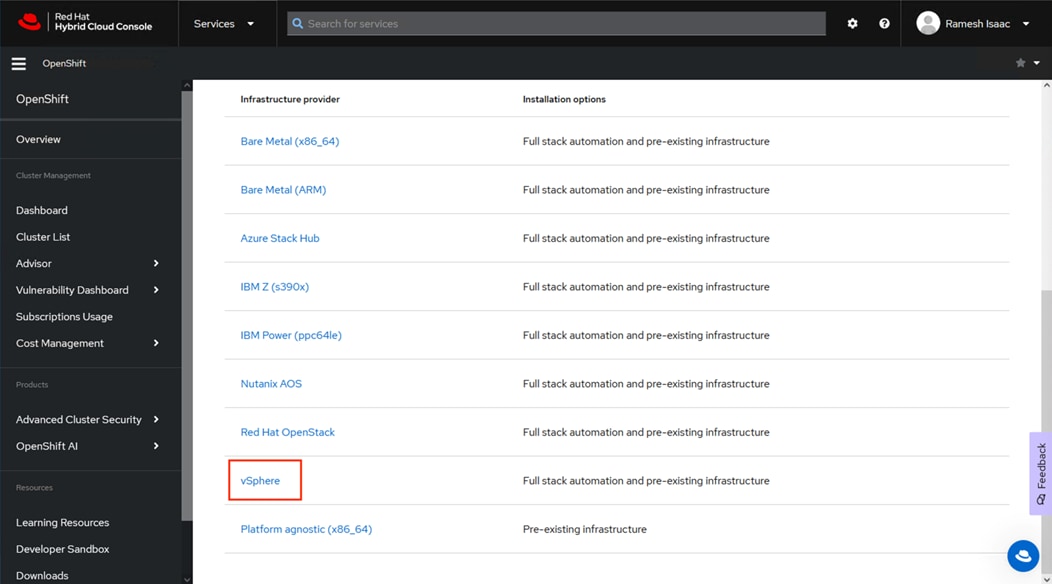

Step 4. Scroll down in the resulting page and click the vSphere option.

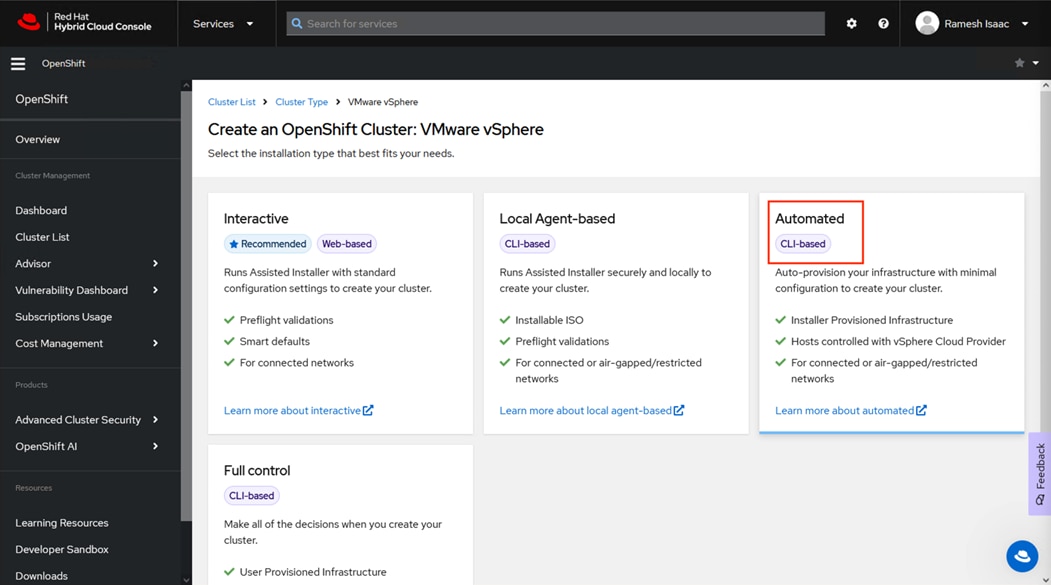

Step 5. Click the Automated option.

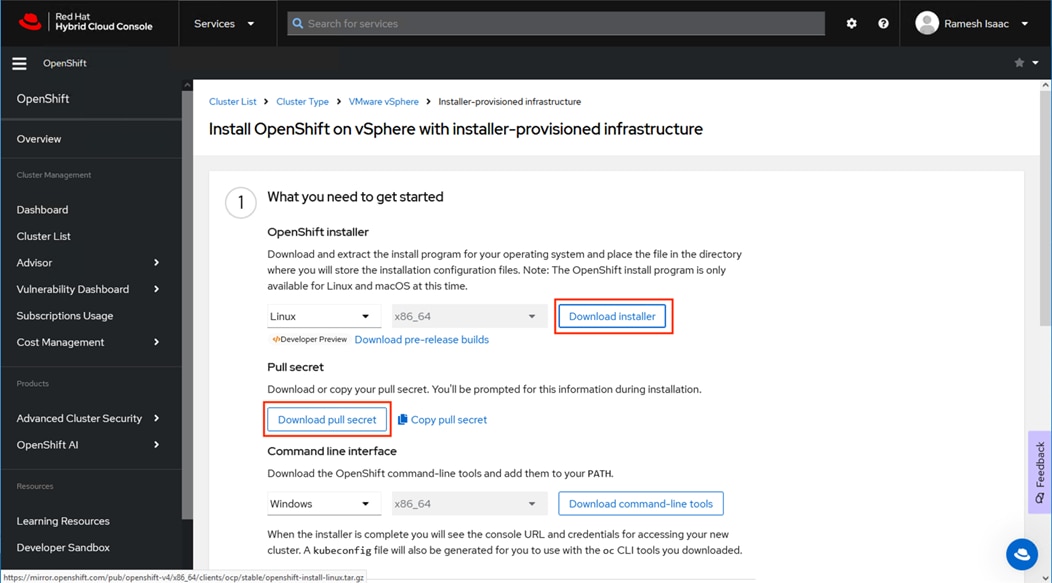

Step 6. Click Download installer to save the compressed tar bundle for the installer and click Download pull secret to save a text copy of the pull secret. If manually recording, click Copy pull secret.

Step 7. Select the appropriate OS from the drop-down list for the Command line interface and click Download command-line tools to save.

Step 8. Copy the installer, pull secret, and command-line tools to the installation host.

Step 9. Connect to the installation host and run the following commands, changing destinations and names as needed:

mkdir bin install

gunzip openshift-client-linux.tar.gz openshift-install-linux.tar.gz

tar xf openshift-install-linux.tar -C install

tar xf openshift-client-linux.tar -C bin

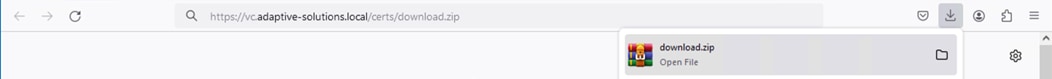

Step 10. Gather the X.509 certificates from vCenter: https://vc.adaptive-solutions.local/certs/download.zip substituting the appropriate vCenter address for vc.adaptive-solutions.local.

Step 11. Copy the download.zip file to the installer and unzip it.

Step 12. From the installer, run the following commands:

sudo cp lin/* /etc/pki/ca-trust/source/anchors

sudo update-ca-trust extract

Step 13. From the install host, run openshift-install to create the cluster, providing options for the dialogue when prompted:

[as-control@as-control ~]$ openshift-install create cluster

? SSH Public Key /home/as-control/.ssh/id_rsa.pub

? Platform vsphere

? vCenter vc.adaptive-solutions.local

? Username administrator@vsphere.local

? Password [? for help] *********

INFO Connecting to vCenter vc.adaptive-solutions.local

INFO Defaulting to only available datacenter: AS-VSI

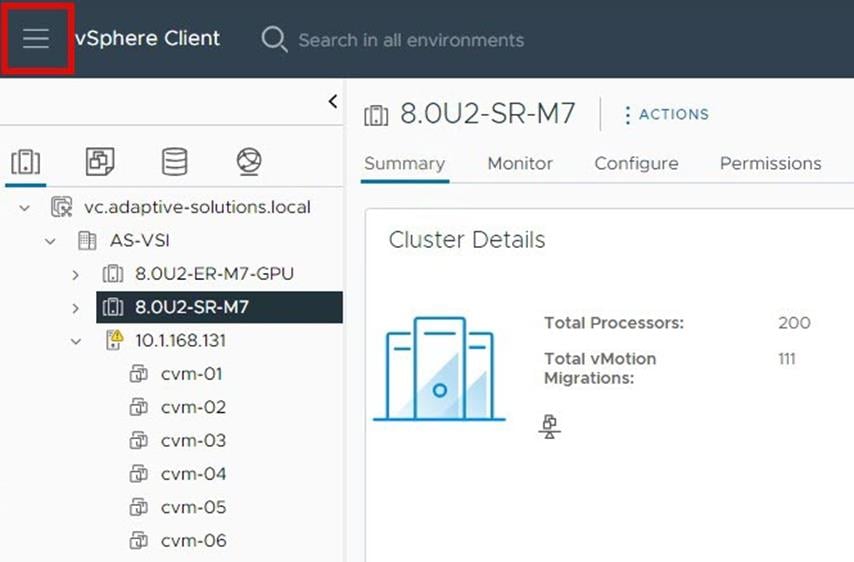

? Cluster /AS-VSI/host/8.0U1-M7

? Default Datastore /AS-VSI/datastore/DS1

? Network IB-Mgmt-119

? Virtual IP Address for API 10.1.168.49

? Virtual IP Address for Ingress 10.1.168.48

? Base Domain adaptive-solutions.local

? Cluster Name as-ocp

? Pull Secret [? for help] *************************************************************************INFO Creating infrastructure resources...

INFO Waiting up to 20m0s (until 5:21PM EDT) for the Kubernetes API at https://api.as-ocp.adaptive-solutions.local:6443...

INFO API v1.28.9+416ecaf up

INFO Waiting up to 1h0m0s (until 6:03PM EDT) for bootstrapping to complete...

INFO Destroying the bootstrap resources...

INFO Waiting up to 40m0s (until 5:55PM EDT) for the cluster at https://api.as-ocp.adaptive-solutions.local:6443 to initialize...

INFO Waiting up to 30m0s (until 5:53PM EDT) to ensure each cluster operator has finished progressing...

INFO All cluster operators have completed progressing

INFO Checking to see if there is a route at openshift-console/console...

INFO Install complete!

INFO To access the cluster as the system:admin user when using 'oc', run 'export KUBECONFIG=/home/as-control/auth/kubeconfig'

INFO Access the OpenShift web-console here: https://console-openshift-console.apps.as-ocp.adaptive-solutions.local

INFO Login to the console with user: "kubeadmin", and password: "pXXXX-sXXXX-hXXXX-nXXXX"

INFO Time elapsed: 30m35s

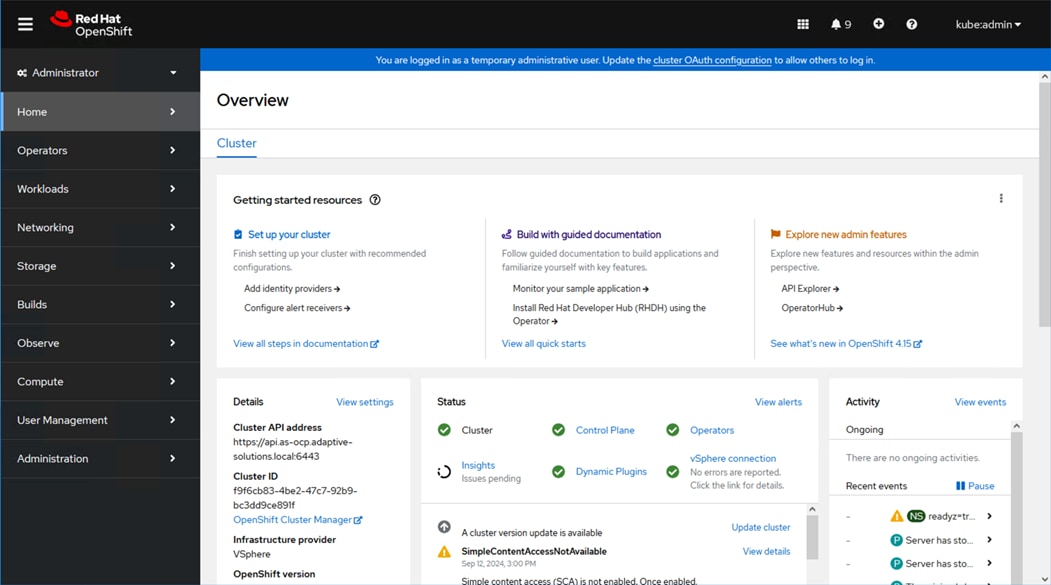

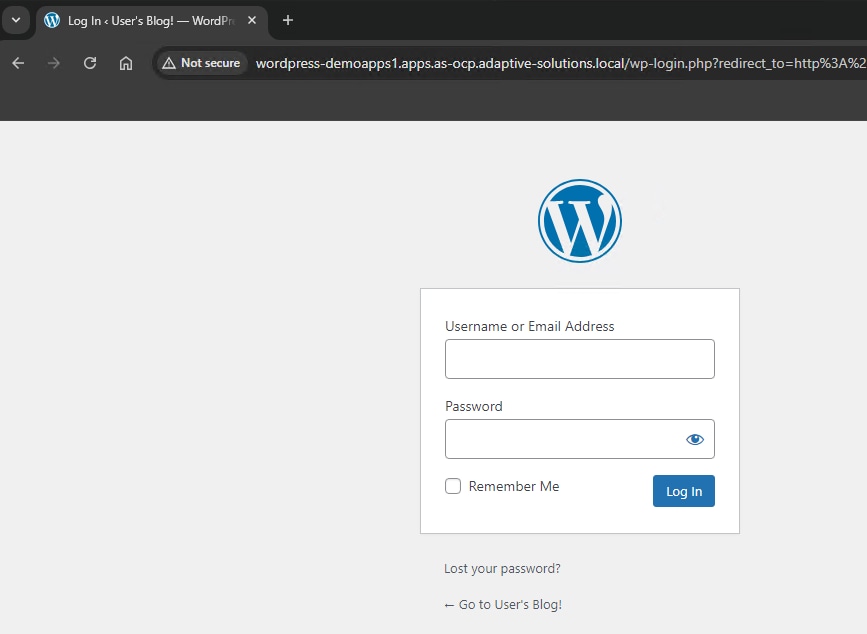

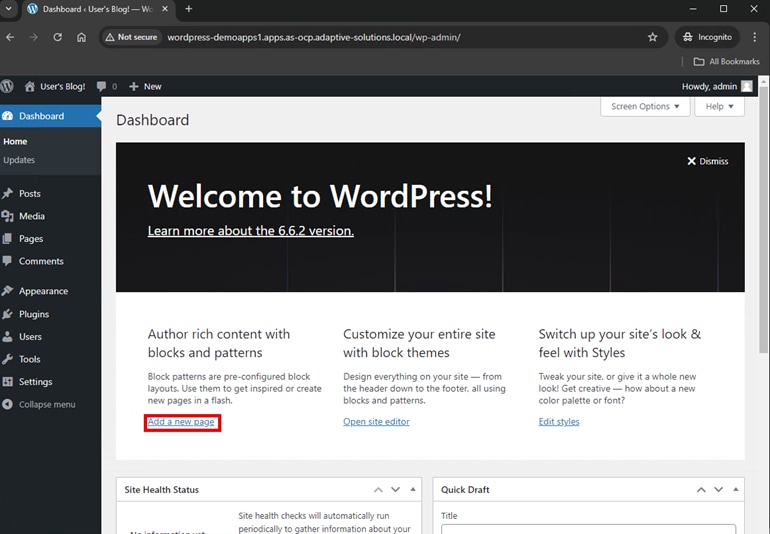

Step 14. Connect using CLI with the log in information provided at the end of the installation dialogue or connect to https://console-openshift-console.apps.as-ocp.adaptive-solutions.local and log in with the kubeadmin user.

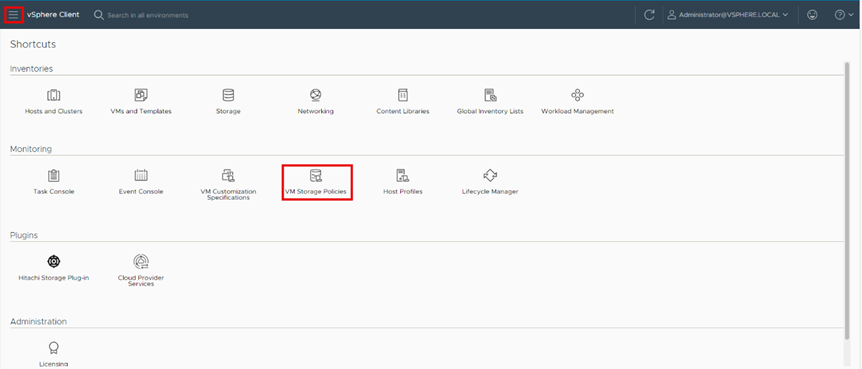

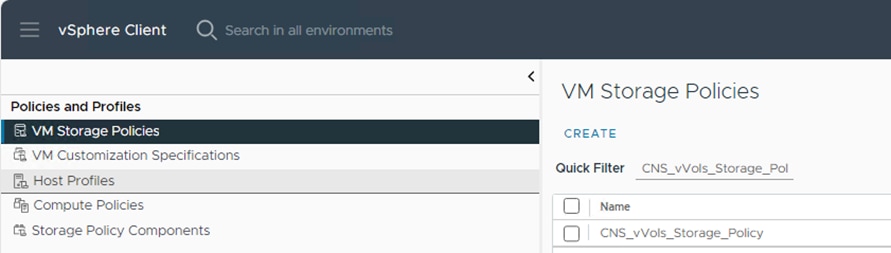

Configure vSphere VM Storage Policies

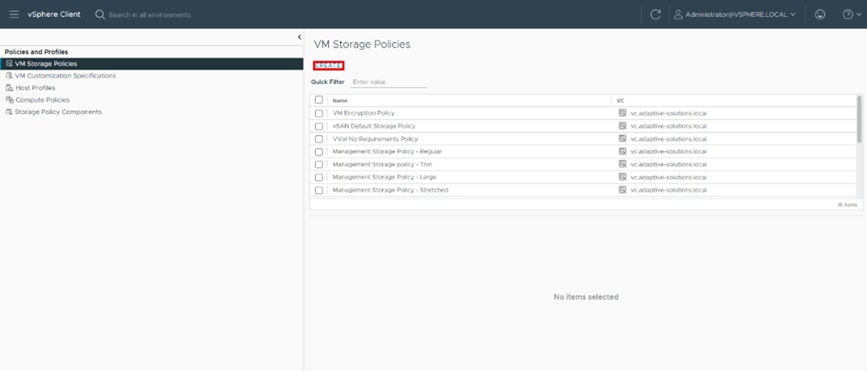

VMware vSphere VM storage policies must be configured before creating a storage class within Red Hat OpenShift Container Management Platform (OCP). This section covers how to create storage policies for both VMFS and vVols datastores backed by Hitachi Virtual Storage Platform (VSP) with capabilities translated down using the VASA APIs.

Storage managed capabilities for both VMFS and vVols Datastores can be defined using the Hitachi Storage Provider for VMware vCenter. These managed capabilities identify the characteristics for a VMFS LDEV or a vVols storage container and are visible within vCenter. Defined capabilities include Performance IOPs and Performance Latency and Availability. For defining the managed capabilities for Hitachi Vantara VSP 5600 LDEVs or vVols Storage Containers, see: https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/UCS_CVDs/hitachi_adaptive_vmware_vsp.html

VM Storage Policy for VMFS

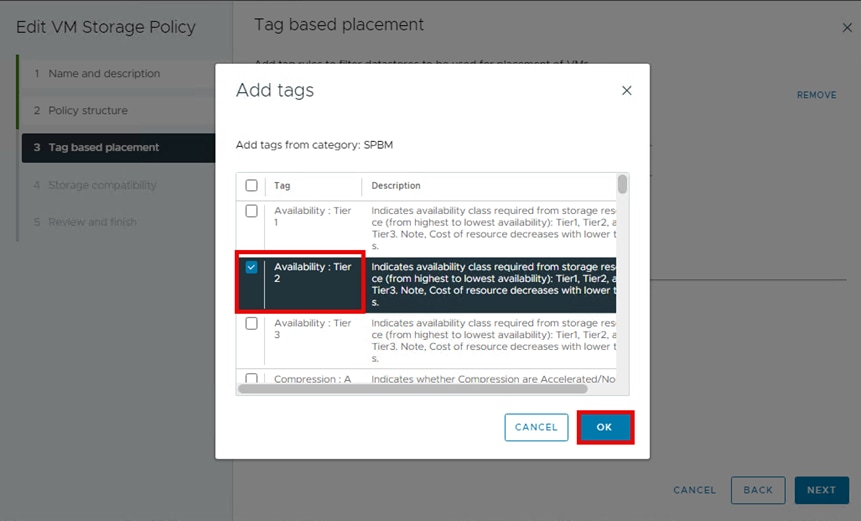

Procedure 1. Create a VMware vSphere VM storage policy for a VMFS datastore

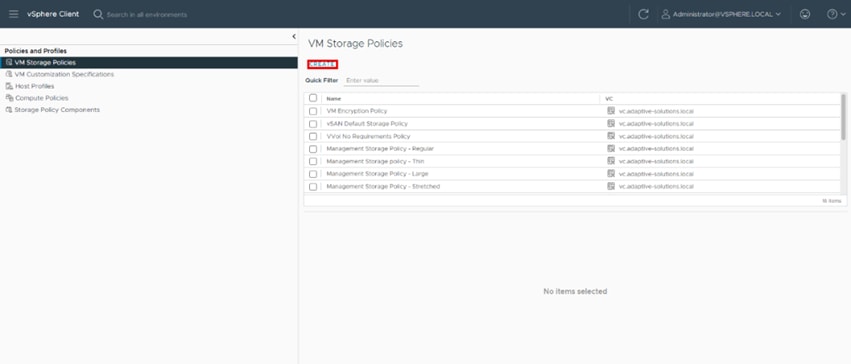

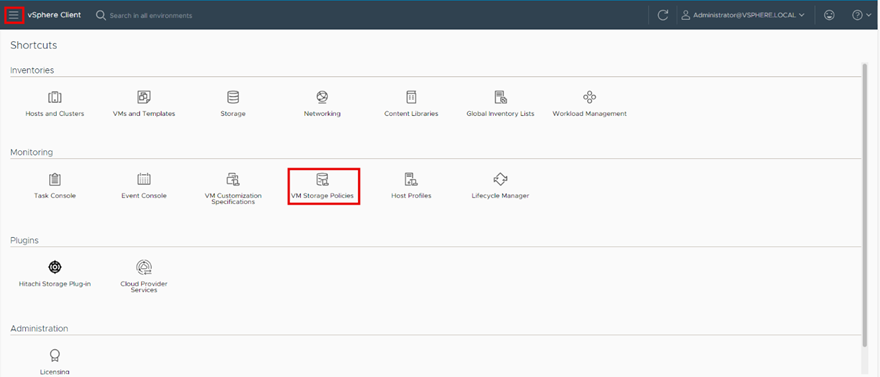

Step 1. Log in to the VMware vSphere Client.

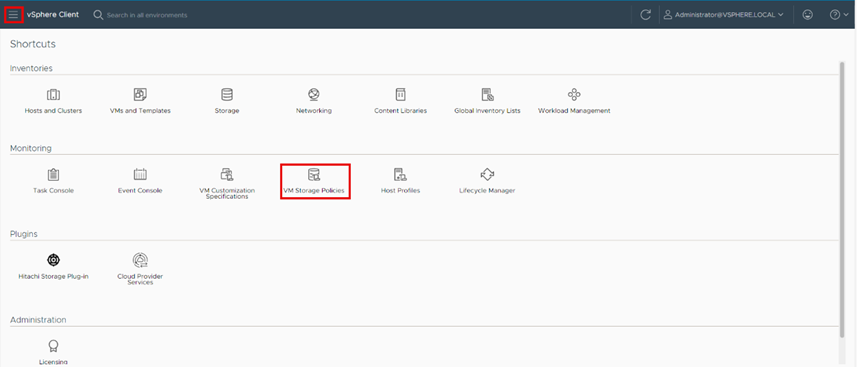

Step 2. Select Shortcuts and click VM Storage Policies.

Step 3. Click Create.

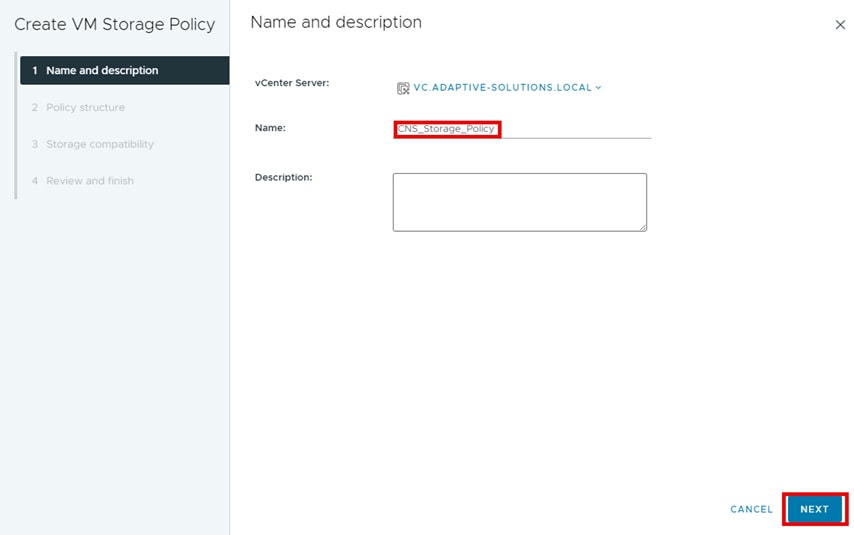

Step 4. Define a policy Name and click NEXT.

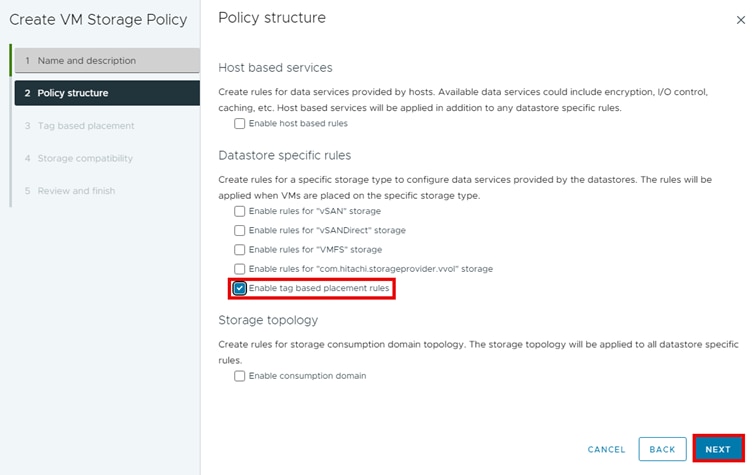

Step 5. Under Datastore specific rules, select Enable tag-based placement rules and click NEXT.

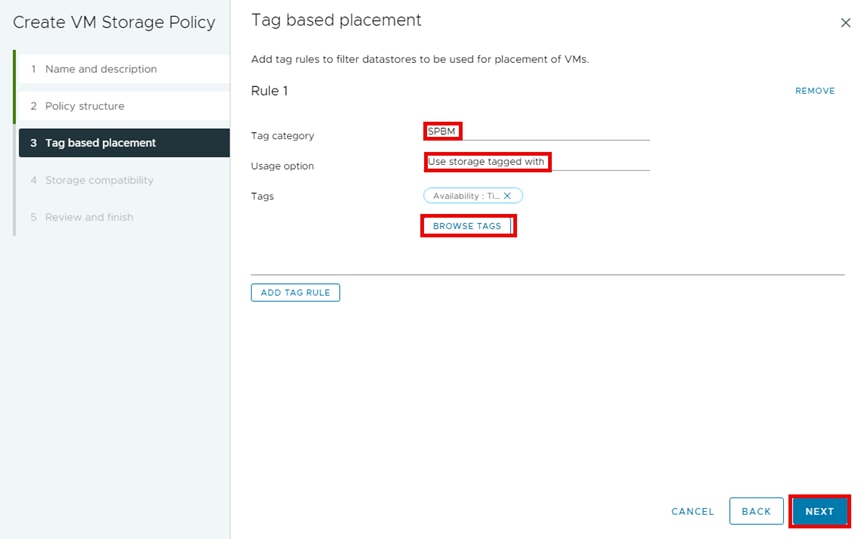

Step 6. From Tag based placement, select the following and then click NEXT.

a. Tag category: SPBM

b. Usage options: Use storage tagged with

c. Tags: BROWSE TAGS

Step 7. Select the applicable storage tags for a VMFS Datastore that the storage administrator has defined using the Storage Provider for VMware vCenter, or that has been natively tagged using vCenter and then click OK.

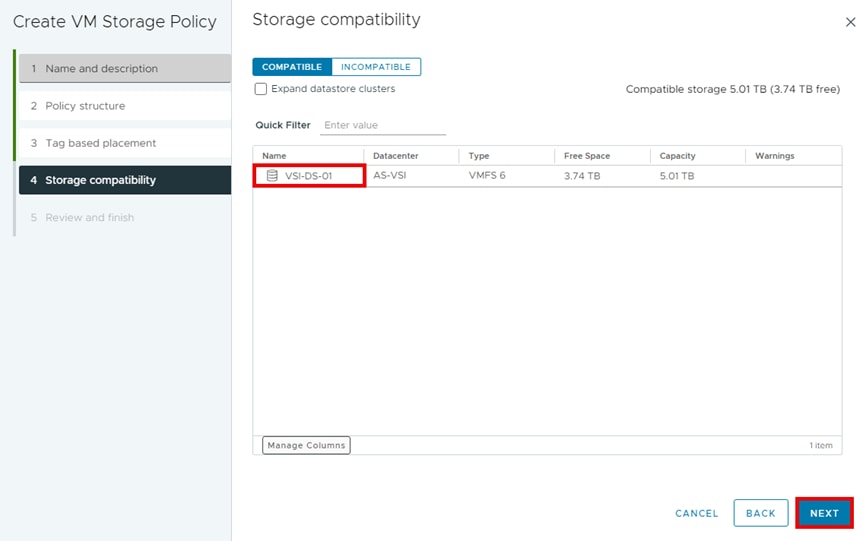

Step 8. From the Storage compatibility window, you will see the datastores that match the tags that you enabled in the previous step. Under Name, select the datastore and click NEXT.

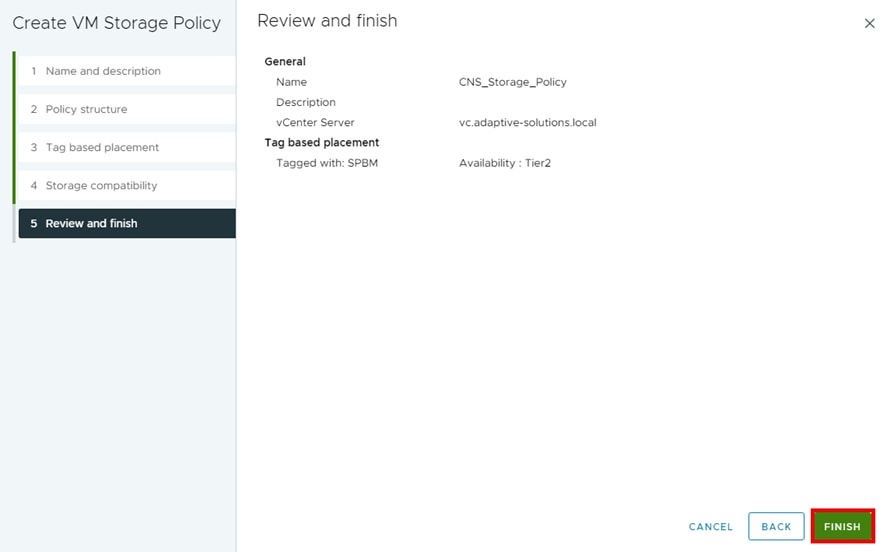

Step 9. From the Review and finish tab, click FINISH.

Step 10. Select Shortcuts and click VM Storage Policies.

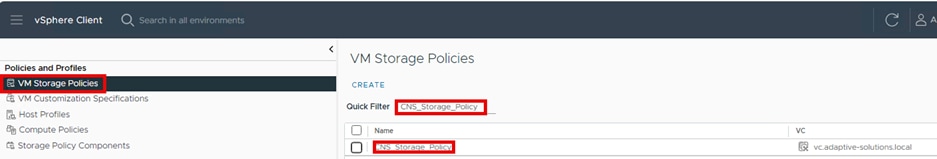

Step 11. Confirm the creation of the new Storage Policy by selecting VM Storage Polices and using the Quick Filter and searching for CNS_Storage_Policy.

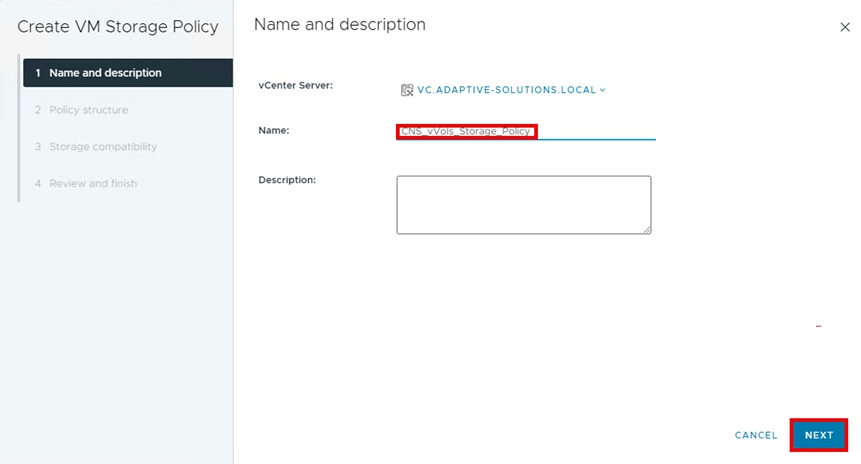

Procedure 1. Create a VMware VM storage policy for a vVols datastore

Step 1. Log in to the VMware vSphere Client.

Step 2. Select Shortcuts and click VM Storage Policies.

Step 3. Click Create.

Step 4. Define a Name and click NEXT.

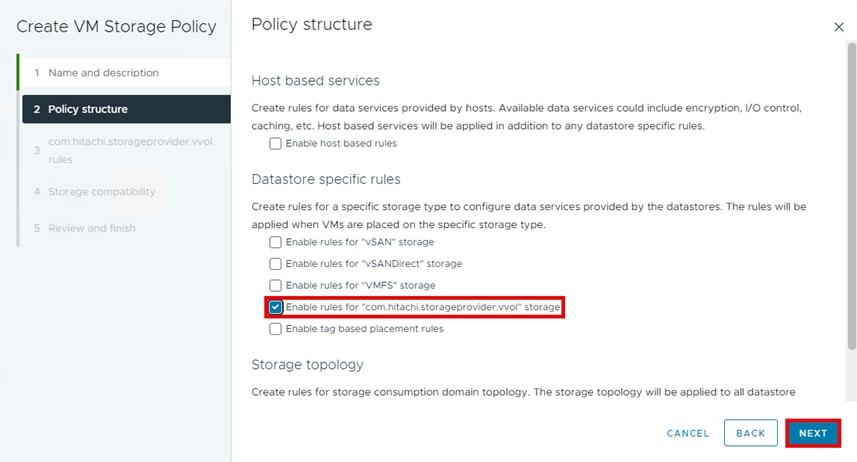

Step 5. For Datastore specific rules, select Enable rules for “com.hitachi.storageprovider.vvol” storage and click NEXT.

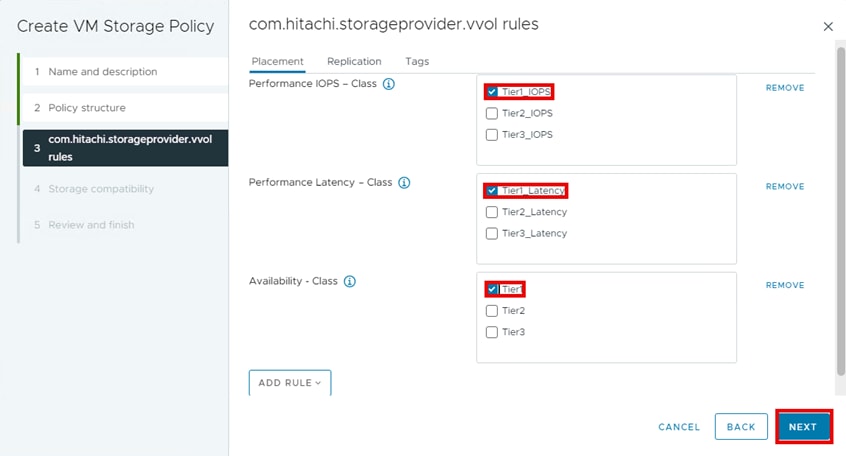

Step 6. From the com.hitachi.storageprovider.vvol rules pane, from the Placement tab, select Tier1_IOPS, Tier1_Latency, Tier1, and click NEXT.

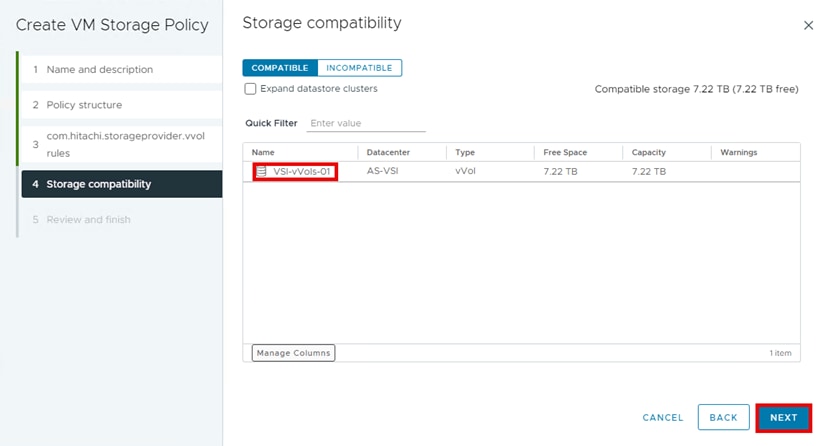

Step 7. From the Storage compatibility window, you will see datastores that match the com.hitachi.storageprovider.vvol placement requirements that you selected in the previous step. Under Name, select the datastore and click NEXT.

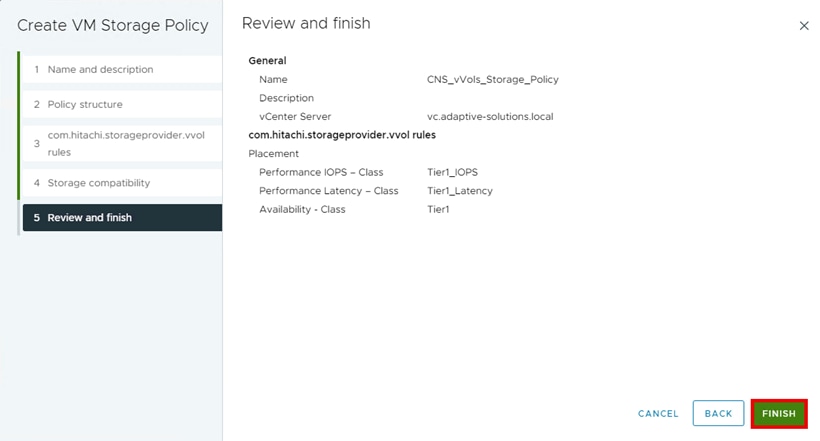

Step 8. From the Review and finish pane, click FINISH.

Step 9. Confirm the vSphere Storage Policy has been created.

Red Hat OCP Storage Configuration

Red Hat OCP provides a CLI for administrators to deploy Persistent Volumes (PVs) with containerized applications. This section describes prerequisite storage operations to provide persistent storage to the environment backed by Hitachi VSP.

OCP persistent storage is supported on the Hitachi VSP with FC-SCSI, FC-NVMe, and vVols storage. Within OCP, Storage Classes are created to match a specific vCenter Storage Policy name assigned to a datastore with the suitable type of storage (FC-SCSI, FC-NVMe, or vVols).

Note: Verify that you have completed the previous procedures in this guide before continuing.

The following OCP CLI sections steps document configuring the storage requirements for an OCP Pod and are common across the different storage protocols:

▪ Create an OCP Storage Class for each of the vSphere Storage Policy associated with a specific type of Datastore (VMFS or vVols)

A Storage Class provides a way for administrators to describe the classes of storage they offer which can be requested through the OCP interface. Each class contains fields for administrators to define the provisioner, parameter, and reclaim policy which are used for Persistent Volume (PV) creation via Persistent Volume Claims (PVCs). The provisioner parameter in a virtual environment backed by Hitachi storage on top of VMware would use the CSI provisioner csi.vsphere.vmware.com. Storage Classes also have specific names and are called out when creating PVCs. When administrators create Storage Class objects, these objects cannot be updated once they have been created.

▪ Create a PVC associated with each OCP Storage Class

One of the storage resources that the OCP platform orchestrates is Persistent Storage via Persistent Volumes (PV). PVs are resources in the Kubernetes cluster that have a lifecycle independent of any pod that uses a PV. This is a type of volume on the host machine that stores persistent data. PVs provide storage resources in a cluster, which allows the storage resource to persist even when the pods that use them are removed. PVs can be statically or dynamically provisioned, and they can be customized for performance, size, and access mode. PVs can be attached to pods via a Persistent Volume Claim (PVC), that is, a request for the resource that acts as a claim to check for available resources.

▪ Create a Pod associated with each PVC. In OCP, a pod consists of either a single or multiple containers and are packaged together to maximize resource sharing benefits

OCP Storage Configuration Section for vSphere VMFS Datastores

The directions in the section apply to VMFS datastores supported via FC-SCSI as well as FC-NVMe.

Procedure 1. Create Storage Class - VMFS

Step 1. Confirm that the VMware storage policy backed by a VMFS datastore has been created and has compatible storage.

Step 2. Log in to the OCP CLI using valid user credentials.

Step 3. Use the parameters listed below and create the following file “StorageClass_VMFS.yaml” to configure the Storage Class for a vCenter VMFS datastore.

Parameters:

a. Define the kind parameter as StorageClass.

b. Define the API version used.

c. Define an applicable StorageClass name for OCP “csi-sc”.

d. Optionally, select whether this is a default StorageClass.

e. Define the provisioner used. For CNS, the default is “csi.vsphere.vmare.com”.

f. Set the StoragePolicyName to the VMware storage policy name defined in vCenter for VMFS datastore “CNS_Storage_Policy”.

g. Set the reclaim Policy as “Delete”.

h. Set the volumeBindingMode as “Immediate”.

StorageClass_VMFS.yaml file:

kind: StorageClass (a)

apiVersion: storage.k8s.io/v1 (b)

metadata:

name: csi-sc (c)

annotations:

storageclass.kubernetes.io/is-default-class: 'false' (d)

provisioner: csi.vsphere.vmware.com (e)

parameters:

StoragePolicyName: CNS_Storage_Policy (f)

allowVolumeExpansion: true

reclaimPolicy: Delete (g)

volumeBindingMode: Immediate (h)

Step 4. Create the Storage Class for the VMFS datastore using the following command:

oc create -f StorageClass_VMFS.yaml

Step 5. Verify the Storage Class for the VMFS datastore “csi-sc” has been created with the following command:

oc get sc

[as-control@as-control ~]$ oc get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

csi-sc csi.vsphere.vmware.com Delete Immediate true 4s

thin-csi (default) csi.vsphere.vmware.com Delete WaitForFirstConsumer true 5d19h

Procedure 2. Create PVC -VMFS

Before creating a PVC, verify that an appropriate StorageClass exists. Additionally, confirm that the VMware storage policy backed by a VMFS datastore is created and has compatible storage. Use the following OCP CLI procedure to deploy a PVC.

Step 1. Use the parameters listed below and create the following file “pvc.yaml” to configure the PVC for a vCenter VMFS datastore.

Parameters:

a. Define the API version used.

b. Define the kind parameter as “PersistentVolumeClaim”.

c. Select an applicable PersistentVolumeClaim name “pvc-vmfs”.

d. Set the accessModes to “ReadWriteOnce”.

e. Set the storage size to “10Gi”.

f. Set the storageClassName to the OCP Storage Class defined for VMFS “csi-sc”.

pvc.yaml file:

apiVersion: v1 (a)

kind: PersistentVolumeClaim (b)

metadata:

name: pvc-vmfs (c)

spec:

accessModes:

- ReadWriteOnce (d)

resources:

requests:

storage: 10Gi (e)

storageClassName: csi-sc (f)

Step 2. Create the PVC for the VMFS datastore using the following command:

oc create -f pvc.yaml

Step 3. Verify the PVC using the VMFS datastore has been created with the following command:

oc get pvc

[as-control@as-control home]$ oc get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

pvc-vmfs Bound pvc-26c28f19-ce53-4f3c-97c2-6f27d142e5e1 10Gi RWO csi-sc 17s

Procedure 3. Create Pod - VMFS

Before creating a PVC verify that an appropriate Storage Class, and PVC exist. Confirm that the VMware storage policy backed by a VMFS datastore is created and has compatible storage. Use the following OCP CLI procedure to configure a Pod.

Step 1. Use the parameters listed below and create the following file “PODBox_vmfs.yaml” to configure an OCP Pod for a vCenter VMFS datastore:

Parameters:

a. Define the API version used.

b. Define the kind parameter as “Pod”.

c. Assign a name to the Pod “pod-box”.

d. Assign a name to the container “my-busybox”.

e. Use the following image for the container “busybox”.

f. Under volumeMounts - Specify the mountPath “/data”.

g. Under volumeMounts – Specify name “sample-volume”.

h. Set command to ["sleep", "1000000"].

i. For imagePullPolicy, pull the image if it does not already exist on the node “IfNotPresent”.

j. Under volumes, assign the name “sample-volume”.

k. Set the claimName to the PVC for VMFS “pvc-vmfs”.

PODBox_vmfs.yaml file

apiVersion: v1 (a)

kind: Pod (b)

metadata:

name: pod-box (c)

spec:

containers:

- name: my-busybox (d)

image: busybox (e)

volumeMounts:

- mountPath: "/data" (f)

name: sample-volume (g)

command: ["sleep", "1000000"] (h)

imagePullPolicy: IfNotPresent (i)

volumes:

- name: sample-volume (j)

persistentVolumeClaim:

claimName: pvc-vmfs (k)

Step 2. Create the Pod using the following command:

oc create -f PODBox_vmfs.yaml

Step 3. Verify the POD has been created using the following command:

oc get pod

[as-control@as-control ~]$ oc get pod

NAME READY STATUS RESTARTS AGE

pod-box 1/1 Running 0 33s

OCP Storage Configuration Section for vVols

Procedure 1. Create Storage Class – vVols

Use the following OCP CLI procedure to create a Storage Class.

Step 1. Confirm that the VMware storage policy backed by a vVols datastore has been created and has compatible storage.

Step 2. Log in to the OCP CLI using valid user credentials.

Step 3. Use the parameters listed below and create the following file “StorageClass_vvols.yaml” to configure the Storage Class for a vCenter VMFS datastore.

Parameters:

a. Define the kind parameter as “StorageClass”.

b. Define the API version used.

c. Define an applicable StorageClass name “csi-vvols-sc”.

d. Optionally select whether this is a default StorageClass “false”.

e. Define the provisioner used. For CNS, the default is “csi.vsphere.vmare.com”.

f. Set the StoragePolicyName to the VMware storage policy within vCenter for a vVols datastore “CNS_vVols_Storage_Policy”.

g. Set allowVolumeExpansion to “true”.

h. Set the reclaim Policy as “Delete”.

i. Set the volumeBindingMode as “Immediate”.

StorageClass_vvols.yaml file:

kind: StorageClass (a)

apiVersion: storage.k8s.io/v1 (b)

metadata:

name: csi-vvols-sc (c)

annotations:

storageclass.kubernetes.io/is-default-class: 'false' (d)

provisioner: csi.vsphere.vmware.com (e)

parameters:

StoragePolicyName: CNS_vVols_Storage_Policy (f)

allowVolumeExpansion: true (g)

reclaimPolicy: Delete (h)

volumeBindingMode: Immediate (i)

Step 4. Create the Storage Class for the vVols datastore using the following command:

oc create -f StorageClass_vvols.yaml

Step 5. Verify the Storage Class for the vVols datastore “csi-vvols-sc” has been created with the following command:

oc get sc

[as-control@as-control ~]$ oc get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

csi-sc csi.vsphere.vmware.com Delete Immediate true 37m

csi-vvols-sc csi.vsphere.vmware.com Delete Immediate true 3s

thin-csi (default) csi.vsphere.vmware.com Delete WaitForFirstConsumer true 5d20h

Procedure 2. Create PVC - vVols

Before creating a PVC verify that an appropriate StorageClass exists. Confirm that the VMware storage policy backed by a vVols datastore is created and has compatible storage. Use the following OCP CLI procedure to deploy a PVC.

Step 1. Use the parameters listed below and create the following file “pvc_vvols.yaml” to configure the PVC for a vCenter VMFS datastore.

Parameters:

a. Define the API version used.

b. Define the kind parameter as “PersistentVolumeClaim”.

c. Select an applicable PersistentVolumeClaim name “pvc-vvols”.

d. Set the accessModes to “ReadWriteOnce”.

e. Set the storage size to “10Gi”.

f. Set the storageClassName to the OCP Storage Class defined for vVols “csi-vvols-sc”.

File pvc_vvols.yaml

apiVersion: v1 (a)

kind: PersistentVolumeClaim (b)

metadata:

name: pvc-vvols (c)

spec:

accessModes:

- ReadWriteOnce (d)

resources:

requests:

storage: 10Gi (e)

storageClassName: csi-vvols-sc (f)

Step 2. Create the PVC for the VMFS datastore using the following command:

oc create -f pvc_vvols.yaml

Step 3. Verify the PVC using the vVols datastore has been created with the following command:

oc get pvc

[as-control@as-control ~]$ oc get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

pvc-from-snapshot Bound pvc-2ca034e8-cf96-451c-88c2-244a8c100968 20Gi RWO csi-sc 28m

pvc-vmfs Bound pvc-ff2f3539-7e08-4dbd-a883-6ecaafa5017b 20Gi RWO csi-sc 58m

pvc-vvols Bound pvc-4556b046-8945-46d7-a36a-b13dbc942a93 10Gi RWO csi-vvols-sc 12s

Procedure 3. Create Pod - vVols

Before creating a PVC verify that an appropriate Storage Class and PVC exist. Confirm that the VMware storage policy backed by a vVols datastore is created and has compatible storage. Use the following OCP CLI procedure to configure a Pod.

Step 1. Use the parameters listed below and create the following file “PODBox1_vvols.yaml” to configure an OCP Pod for a vCenter vVols datastore.

Parameters:

a. Define the API version used.

b. Define the kind parameter as Pod.

c. Assign a name to the Pod “pod-box1”.

d. Assign a name to the container “my-busybox1”.

e. Use the following image for the container “busybox”.

f. Under volumeMounts, specify where external storage volumes are mounted within the container “/data”.

g. Under volumeMounts, assign a name to the volume “sample-volume”.

h. Set command to “["sleep", "1000000"]”.

i. For imagePullPolicy, pull the image if it does not already exist on the node “IfNotPresent”.

j. Under volumes, assign the name “sample-volume”.

k. Assign a name to the volume.

l. Set the persistent volume to the PVC for vVols “pvc-vvols”.

PODBox1_vvols.yaml file

apiVersion: v1 (a)

kind: Pod (b)

metadata:

name: pod-box1 (c)

spec:

containers:

- name: my-busybox1 (d)

image: busybox (e)

volumeMounts:

- mountPath: "/data" (f)

name: sample-volume (g)

command: ["sleep", "1000000"] (h)

imagePullPolicy: IfNotPresent (i)

volumes:

- name: sample-volume (j)

persistentVolumeClaim:

claimName: pvc-vvols (k)

Step 2. Create the Pod using the following command:

oc create -f PODBox1_vvols.yaml

Step 3. Display the POD using the following command:

oc get pod

[as-control@as-control ~]$ oc get pod

NAME READY STATUS RESTARTS AGE

pod-box 1/1 Running 0 59m

pod-box1 1/1 Running 0 11s

Red Hat OCP Storage Management

The following OCP CLI sections document managing a PV and are common across the different storage protocols:

▪ Expand a PVC

The vSphere CSI Driver allows volume expansion of deployed PVs. Before expanding a PV, confirm that the StorageClass has volume expansion parameter allowVolumeExpansion: true. Kubernetes supports offline and online modes of volume expansion. When the PVC is used by a pod and is mounted on a node, the volume expansion operation is categorized as online. In all other cases, it is an offline expansion.

Note: PV capacity cannot be reduced.

Note: When expanding volume capacity there is no need to delete and redeploy Pods.

▪ Snapshot a PVC

A snapshot is a point-in-time image of a volume and can be used to duplicate the previous state of an existing volume or to provision a new pre-populated volume. A Snapshot Class must be created before creating the PVC snapshot.

▪ Restore a PVC to a new PVC

OCP Storage Management for VMFS

Procedure 1. Expand an OCP Persistent Volume - VMFS

Step 1. Display the PVC by using the following command:

oc get pvc

[as-control@as-control ~]$ oc get pvc

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pvc-ff2f3539-7e08-4dbd-a883-6ecaafa5017b 10Gi RWO Delete Bound default/pvc-vmfs csi-sc 10s

Step 2. Display the Pod by using the following command:

oc get pod

[as-control@as-control ~]$ oc get pod

NAME READY STATUS RESTARTS AGE

pod-box 1/1 Running 0 33s

Step 3. Patch the PVC in online mode to increase the storage size from 10Gi to 20Gi using the following command:

oc patch pvc pvc-vmfs -p '{"spec": {"resources": {"requests": {"storage": "20Gi"}}}}'

Step 4. Display the PVC with the following command to verify the expansion “oc get pvc”:

[as-control@as-control ~]$ oc get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

pvc-vmfs Bound pvc-ff2f3539-7e08-4dbd-a883-6ecaafa5017b 20Gi RWO csi-sc 4m53s

Procedure 2. Create Persistent Volume Snapshots - VMFS

Step 1. Create the snapshot class yaml file named “volumesnapshotclass_vmfs.yaml”.

Step 2. Edit the “volumesnapshotclass_vmfs.yaml” using the following parameters:

Parameters:

a. Define the API version.

b. Define the kind variable as “VolumeSnapShotClass”.

c. Assign a name to the snapshot class “snapshotclass-csi”.

d. Set the driver to “csi.vpshere.vmware.com”.

e. Set the deletionPolicy to “Delete”.

volumesnapshotclass_vmfs.yaml file

apiVersion: snapshot.storage.k8s.io/v1 (a)

kind: VolumeSnapshotClass (b)

metadata:

name: snapshotclass-csi (c)

driver: csi.vsphere.vmware.com (d)

deletionPolicy: Delete (e)

Step 3. Create the snapshot class for the VMFS PVC using the following command:

oc create -f volumesnapshotclass_vmfs.yaml

Step 4. Display the snapshot class using the following command:

oc get volumesnapshotclass

[as-control@as-control ~]$ oc get volumesnapshotclass

NAME DRIVER DELETIONPOLICY AGE

csi-vsphere-vsc csi.vsphere.vmware.com Delete 5d19h

snapshotclass-csi csi.vsphere.vmware.com Delete 13m

Step 5. Create the PVC snapshot yaml file named “volumesnapshot_vmfs.yaml”.

Step 6. Edit the “volumesnapshot_vmfs.yaml” using the following parameters:

Parameters:

a. Define the API version.

b. Define the kind variable as “VolumeSnapShot”.

c. Assign a name to the snapshot.

d. Set the volumeSnapshotClassName to “snapshotclass-csi”.

e. Set the persistentVolumeClaimName to “pvc-vmfs”.

volumesnapshot_vmfs.yaml file

apiVersion: snapshot.storage.k8s.io/v1 (a)

kind: VolumeSnapshot (b)

metadata:

name: snapshot-csi (c)

spec:

volumeSnapshotClassName: snapshotclass-csi (d)

source:

persistentVolumeClaimName: pvc-vmfs (e)

Step 7. Create the persistent volume snapshot with the following command:

oc create -f volumesnapshot_vmfs.yaml

Step 8. Verify the volume snapshot using the following command:

oc get volumesnapshot

[as-control@as-control ~]$ oc get volumesnapshot

NAME READYTOUSE SOURCEPVC SOURCESNAPSHOTCONTENT RESTORESIZE SNAPSHOTCLASS SNAPSHOTCONTENT CREATIONTIME AGE

snapshot-csi true pvc-vmfs 20Gi snapshotclass-csi snapcontent-88de34a8-7d7c-4a24-9418-2923c6e50d6d 10s 14s

Procedure 3. Restore Persistent Volume Snapshots to a new PVC – VMFS

Step 1. Restore the PV snapshot as a new PVC by creating a yaml file named “pvc_from_snapshot_vmfs.yaml” using the following parameters:

Parameters:

a. Define the API version.

b. Define the kind variable as “PersistentVolumeClaim”.

c. Assign a name for the new PVC “pvc-from-snapshot”.

d. Set the datasource name to “snapshotclass-csi”.

e. Set the datasource kind to “VolumeSnapshot”.

f. Set the datasource apiGroup to “snapshot.storage.k8s.io”.

g. Set accessModes to “ReadWriteOnce”.

h. Set storage to “20Gi”.

i. Set StorageClassName to “csi-sc”.

pvc_from_snapshot_vmfs.yaml file

apiVersion: v1 (a)

kind: PersistentVolumeClaim (b)

metadata:

name: pvc-from-snapshot (c)

spec:

dataSource:

name: snapshot-csi (d)

kind: VolumeSnapshot (e)

apiGroup: snapshot.storage.k8s.io (f)

accessModes:

- ReadWriteOnce (g)

resources:

requests:

storage: 20Gi (h)

storageClassName: csi-sc (i)

Step 2. Restore the snapshot using the following command:

oc create -f pvc pvc_from_snapshot_vmfs.yaml

Step 3. Verify the new PVC has been created by using the following command:

oc get pvc

[as-control@as-control ~]$ oc get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

pvc-from-snapshot Bound pvc-2ca034e8-cf96-451c-88c2-244a8c100968 20Gi RWO csi-sc 8s

pvc-vmfs Bound pvc-ff2f3539-7e08-4dbd-a883-6ecaafa5017b 20Gi RWO csi-sc 30m

OCP Storage Management for vVols

Procedure 1. Expand an OCP Persistent Volume - vVols

Step 1. Display the PVC by using the following command:

oc get pvc

[as-control@as-control ~]$ oc get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

pvc-from-snapshot Bound pvc-2ca034e8-cf96-451c-88c2-244a8c100968 20Gi RWO csi-sc 28m

pvc-vmfs Bound pvc-ff2f3539-7e08-4dbd-a883-6ecaafa5017b 20Gi RWO csi-sc 58m

pvc-vvols Bound pvc-4556b046-8945-46d7-a36a-b13dbc942a93 10Gi RWO csi-vvols-sc 12s

Step 2. Display the Pod by using the following command:

oc get pod

[as-control@as-control ~]$ oc get pod

NAME READY STATUS RESTARTS AGE

pod-box 1/1 Running 0 59m

pod-box1 1/1 Running 0 11s

Step 3. Patch the PVC in online mode to increase the storage size from 10Gi to 20Gi using the following command:

oc patch pvc pvc-vvols -p '{"spec": {"resources": {"requests": {"storage": "20Gi"}}}}'

Step 4. Display the PVC with the following command to verify the expansion:

oc get pvc

[as-control@as-control ~]$ oc get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

pvc-from-snapshot Bound pvc-2ca034e8-cf96-451c-88c2-244a8c100968 20Gi RWO csi-sc 33m

pvc-vmfs Bound pvc-ff2f3539-7e08-4dbd-a883-6ecaafa5017b 20Gi RWO csi-sc 63m

pvc-vvols Bound pvc-4556b046-8945-46d7-a36a-b13dbc942a93 20Gi RWO csi-vvols-sc 4m31s

Procedure 2. Create Persistent Volume Snapshots - vVols

Step 1. Create the snapshot class yaml file named “volumesnapshotclass_vvols.yaml”.

Step 2. Edit the “volumesnapshotclass_vvols.yaml” using the following parameters:

Parameters:

a. Define the API version.

b. Define the kind variable as “VolumeSnapShotClass”.

c. Assign a name to the snapshot class “snapshotclass-csi-vvols”.

d. Set the driver to “csi.vpshere.vmware.com”.

e. Set the deletionPolicy to “Delete”.

volumesnapshotclass_vvols.yaml file

apiVersion: snapshot.storage.k8s.io/v1 (a)

kind: VolumeSnapshotClass (b)

metadata:

name: snapshotclass-csi-vvols (c)

driver: csi.vsphere.vmware.com (d)

deletionPolicy: Delete (e)

Step 3. Create the snapshot class for the VMFS PVC using the following command:

oc create -f volumesnapshotclass_vvols.yaml

Step 4. Display the snapshot class using the following command:

oc get volumesnapshotclass

[as-control@as-control ~]$ oc get volumesnapshotclass

NAME DRIVER DELETIONPOLICY AGE

csi-vsphere-vsc csi.vsphere.vmware.com Delete 5d20h

snapshotclass-csi csi.vsphere.vmware.com Delete 56m

snapshotclass-csi-vvols csi.vsphere.vmware.com Delete 12s

[as-control@as-control ~]$ oc create -f volumesnapshot_vvols.yaml

Step 5. Create the PVC snapshot yaml file named “volumesnapshot_vvols.yaml”.

Step 6. Edit the “volumesnapshot_vvols.yaml” using the following parameters:

Parameters:

a. Define the apiVersion

b. Define the kind variable as “VolumeSnapShot”.

c. Assign a name to the snapshot “snapshot-csi-vvols”.

d. Set the volumeSnapshotClassName to “snapshotclass-csi-vvols”.

e. Set the persistentVolumeClaimName to “pvc-vvols”.

volumesnapshot_vvols.yaml file

apiVersion: snapshot.storage.k8s.io/v1 (a)

kind: VolumeSnapshot (b)

metadata:

name: snapshot-csi-vvols (c)

spec:

volumeSnapshotClassName: snapshotclass-csi-vvols (d)

source:

persistentVolumeClaimName: pvc-vvols (e)

Step 7. Create the persistent volume snapshot with the following command:

oc create -f volumesnapshot_vvols.yaml

Step 8. Verify the volume snapshot using the following command:

oc get volumesnapshot

[as-control@as-control ~]$ oc get volumesnapshot

NAME READYTOUSE SOURCEPVC SOURCESNAPSHOTCONTENT RESTORESIZE SNAPSHOTCLASS SNAPSHOTCONTENT CREATIONTIME AGE

snapshot-csi true pvc-vmfs 20Gi snapshotclass-csi snapcontent-88de34a8-7d7c-4a24-9418-2923c6e50d6d 35m 35m

snapshot-csi-vvols true pvc-vvols 20Gi snapshotclass-csi-vvols snapcontent-93478f44-0e73-436d-99d8-d93efa82dafc 20s 31s

Procedure 3. Restore Persistent Volume Snapshots to a new PVC – vVols

Step 1. Restore the PV snapshot as a new PVC by creating a yaml file named “pvc_from_snapshot_vvols.yaml” using the following parameters:

Parameters:

a. Define the API version.

b. Define the kind variable as “PersistentVolumeClaim”.

c. Assign a name for the new PVC “pvc-from-snapshot-vvols”.

d. Set the datasource name to “snapshotclass-csi-vvols”.

e. Set the datasource kind to “VolumeSnapshot”.

f. Set the datasource apiGroup to “snapshot.storage.k8s.io”.

g. Set accessModes to “ReadWriteOnce”

h. Set storage to “20Gi”

i. Set StorageClassName to “csi-vvols-sc”

pvc_from_snapshot_vvols.yaml file

apiVersion: v1 (a)

kind: PersistentVolumeClaim (b)

metadata:

name: pvc-from-snapshot-vvols (c)

spec:

dataSource:

name: snapshot-csi-vvols (d)

kind: VolumeSnapshot (e)

apiGroup: snapshot.storage.k8s.io (f)

accessModes:

- ReadWriteOnce (g)

resources:

requests:

storage: 20Gi (h)

storageClassName: csi-vvols-sc (i)

Step 2. Restore the snapshot using the following command:

oc create -f pvc pvc_from_snapshot_vvols.yaml

Step 3. Verify the new PVC has been created by using the following command:

oc get pvc

[as-control@as-control ~]$ oc get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

pvc-from-snapshot Bound pvc-2ca034e8-cf96-451c-88c2-244a8c100968 20Gi RWO csi-sc 38m

pvc-from-snapshot-vvols Bound pvc-8fb5c950-8fa8-4ee7-8f93-66652abce102 20Gi RWO csi-vvols-sc 20s

pvc-vmfs Bound pvc-ff2f3539-7e08-4dbd-a883-6ecaafa5017b 20Gi RWO csi-sc 68m

pvc-vvols Bound pvc-4556b046-8945-46d7-a36a-b13dbc942a93 20Gi RWO csi-vvols-sc 9m57s

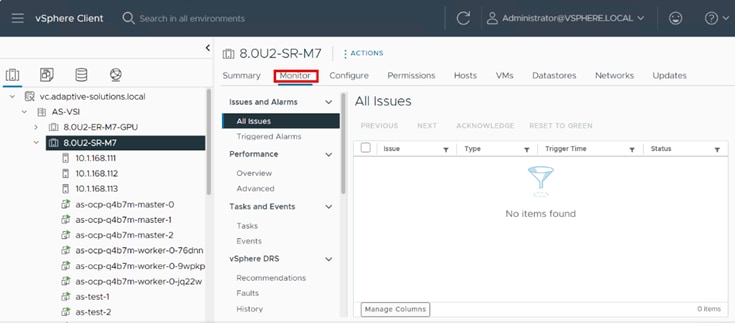

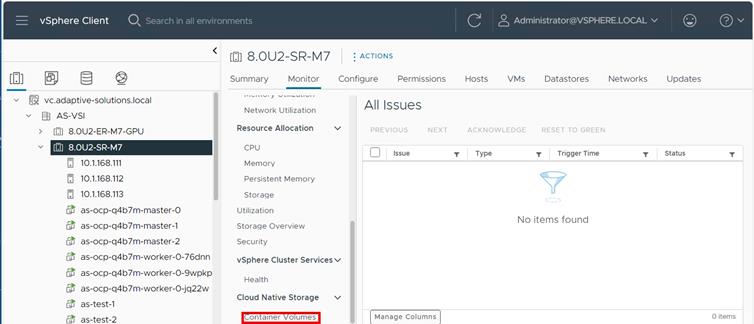

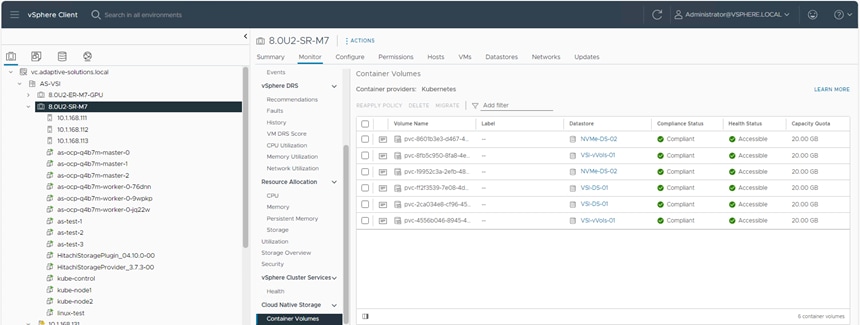

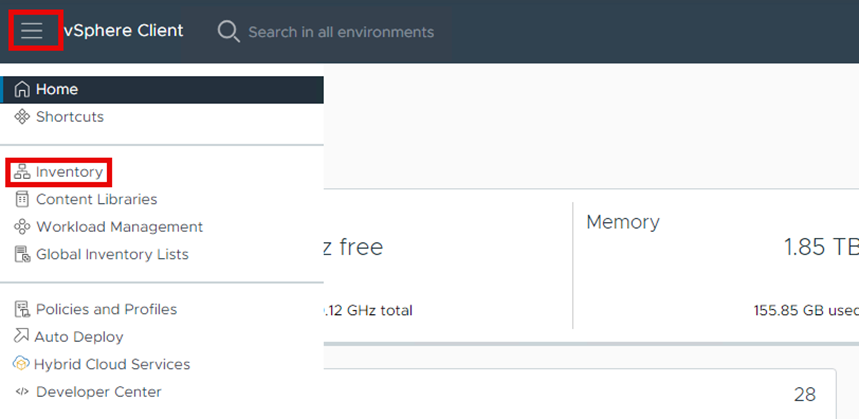

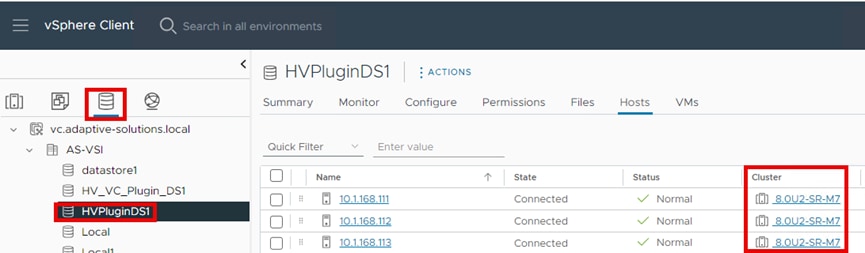

Procedure 4. View Persistent Storage on VMware vCenter

After you have deployed the PVCs, you can view them natively within VMware vCenter. From this vantage point, administrators can view other information about the object such as PVC ID, PVC name, as well as namespace information which relates to the OCP deployment. Use the following procedure to view container volumes within vCenter.

Step 1. Log into the VMware vSphere Client.

Step 2. Select Inventory.

Step 3. Click your vCenter cluster.

Step 4. Select the Monitor tab.

Step 5. From the Monitor navigation tree, go to Cloud Native Storage > Container Volumes.

The workspace presents the PVCs deployed using OCP, and you can view the Volume Name, Datastore, Compliance Status, Health Status, and Capacity Quota.

Ramesh Isaac, Technical Marketing Engineer, Cisco Systems, Inc.

Ramesh Isaac is a Technical Marketing Engineer in the Cisco UCS Data Center Solutions Group. Ramesh has worked in the data center and mixed-use lab settings for over 25 years. He started in information technology supporting UNIX environments and focused on designing and implementing multi-tenant virtualization solutions in Cisco labs before entering Technical Marketing where he has supported converged infrastructure and virtual services as part of solution offerings as Cisco. Ramesh has held certifications from Cisco, VMware, and Red Hat.

Gilberto Pena Jr, Virtualization Solutions Architect, Hitachi Vantara

Gilberto Pena Jr. Is a Virtualization Solutions Architect in the Hitachi Vantara in the Engineering Converged UCP Group. Gilberto has over 25 years of experience with Enterprise financial customers focusing on LAN and WAN design and most recently converged and hyperconverged virtualization designs. Gilberto has held certifications from Cisco.

Acknowledgements

For their support and contribution to the design, validation, and creation of this Cisco Validated Design, the authors would like to thank:

▪ Archana Sharma, Technical Marketing Engineer, Cisco Systems, Inc.

▪ John George, Technical Marketing Engineer, Cisco Systems, Inc.

▪ Paniraja Koppa, Technical Marketing Engineer, Cisco Systems, Inc.

▪ Arvin Jami, Solutions Architect, Hitachi Vantara

This appendix contains the following:

▪ Ansible Deployment and Configuration

▪ Hitachi VSP Provisioning with Ansible

▪ Hitachi Storage Plug-in for VMware vCenter

▪ OCP Persistent Storage and Networking Verification

Ansible Deployment and Configuration

Ansible by Red Hat is a popular open-source infrastructure and application automation tool, giving speed and consistency to deployments and configuration. Ansible is designed around these principles:

▪ Agent-less architecture - Low maintenance overhead by avoiding the installation of additional software across IT infrastructure.

▪ Simplicity - Automation playbooks use straightforward YAML syntax for code that reads like documentation. Ansible is also decentralized, using SSH existing OS credentials to access to remote machines.

▪ Scalability and flexibility - Easily and quickly scale the systems you automate through a modular design that supports a large range of operating systems, cloud platforms, and network devices.

▪ Idempotence and predictability - When the system is in the state your playbook describes Ansible does not change anything, even if the playbook runs multiple times.

Ansible runs on many Linux platforms, Apple OSX, and MS Windows. Installation instructions will vary between platforms, and for this environment, the instructions and the control host used for playbook invocation will be for a RHEL 9 VM. Ansible can be installed at the system level, but convention and instructions from the Ansible site steer users to install with pip to create a user specific instance.

With the base RHEL VM installed as a control host, Ansible was set up for the invoking user account with:

sudo dnf install pip

sudo dnf install git

pip install ansible

Cisco UCS IMM Deployment with Ansible

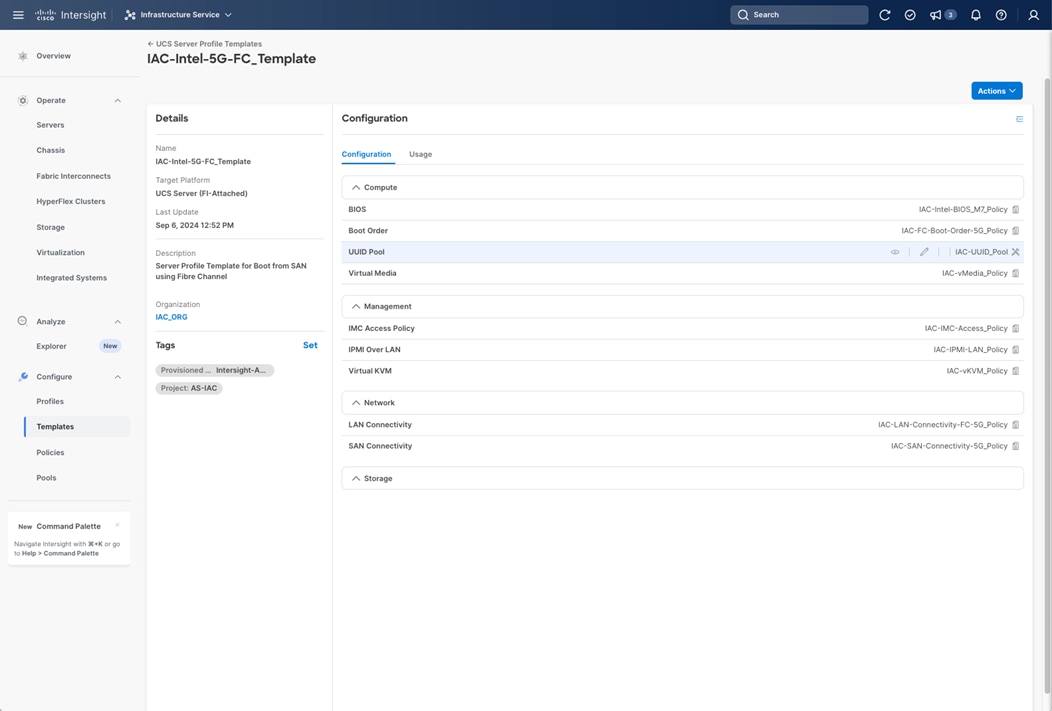

The Cisco UCS IMM deployment will show the example setup of Cisco UCS Server Profiles configured through the creation of a Server Template. This will require the installation of an Ansible Galaxy collection for Intersight, and the invocation will occur through an API interaction.

Specific to the Intersight IMM configuration, the following will need to be added to the control host:

ansible-galaxy collection install cisco.intersight --force

The Intersight API key and Secrets.txt will need to be gathered using the information discussed here: https://community.cisco.com/t5/data-center-and-cloud-documents/intersight-api-overview/ta-p/3651994

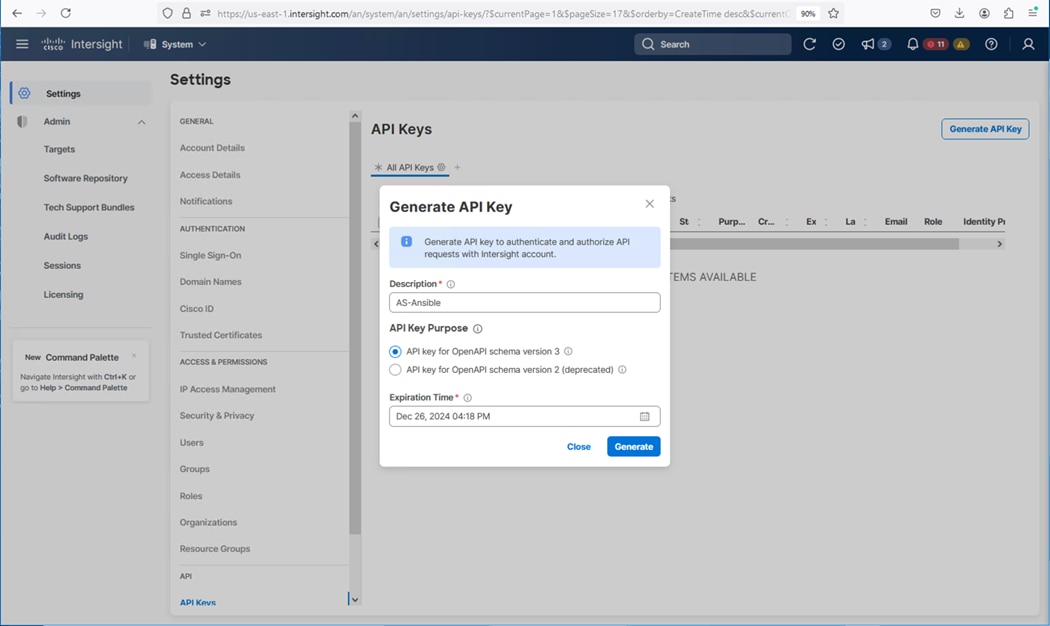

Procedure 1. Obtain Intersight API Key and Secrets.txt

Step 1. Log in to Cisco Intersight and go to System > Settings > API > API Keys.

Step 2. Click Generate API Key.

Step 3. Under Generate API Key, enter a Description and select API key for OpenAPI schema version 3. Select a date for the Expiration Time and click Generate.

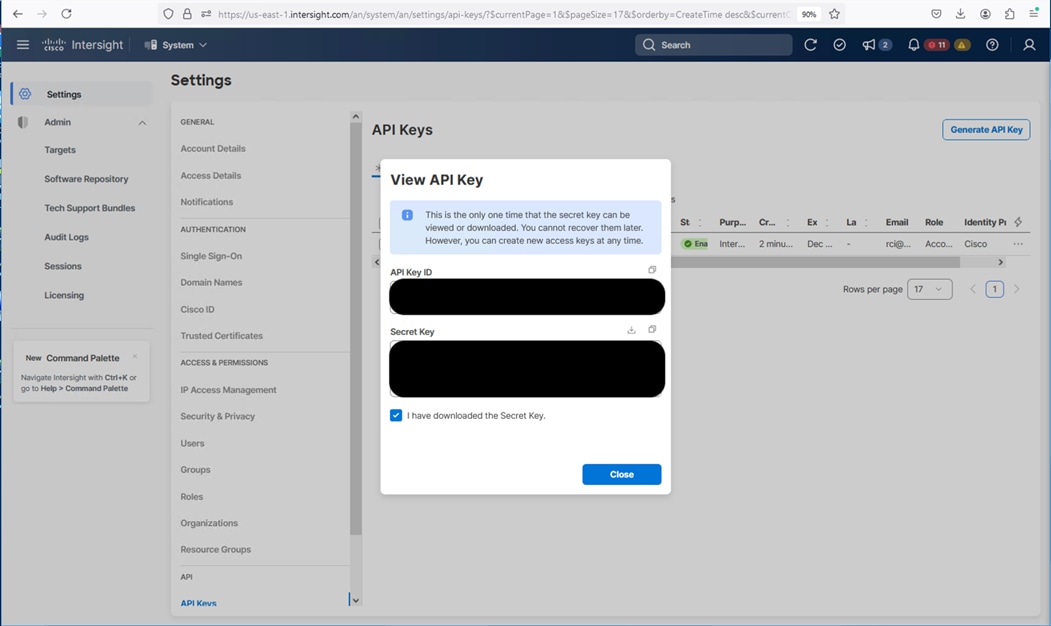

Step 4. Record the API Key ID, download the Secret Key, and click Close.

Step 5. With the API Key ID and the Secret Key properly recorded, they can be inserted under the group_vars folder in the github repo at https://github.com/ucs-compute-solutions/AdaptiveSolutions_IMM_FC_M7.

Step 6. Clone the repository to the intended Ansible control host, in the working directory:

The cloned repository will make available the following structure of files:

.

├── Setup_IMM_Pools.yml

├── Setup_IMM_Server_Policies.yml

├── Setup_IMM_Server_Profile_Templates.yml

├── group_vars

│ ├── all.yml

│ ├── secrets.sample

│ └── ucs.yml

└── roles

├── create_pools

│ ├── defaults

│ │ └── main.yml

│ └── tasks

│ ├── create_fc_ww_pools.yml

│ ├── create_ip_pools.yml

│ ├── create_mac_pools.yml

│ ├── create_uuid_pool.yml

│ └── main.yml

├── create_server_policies

│ ├── defaults

│ │ └── main.yml

│ └── tasks

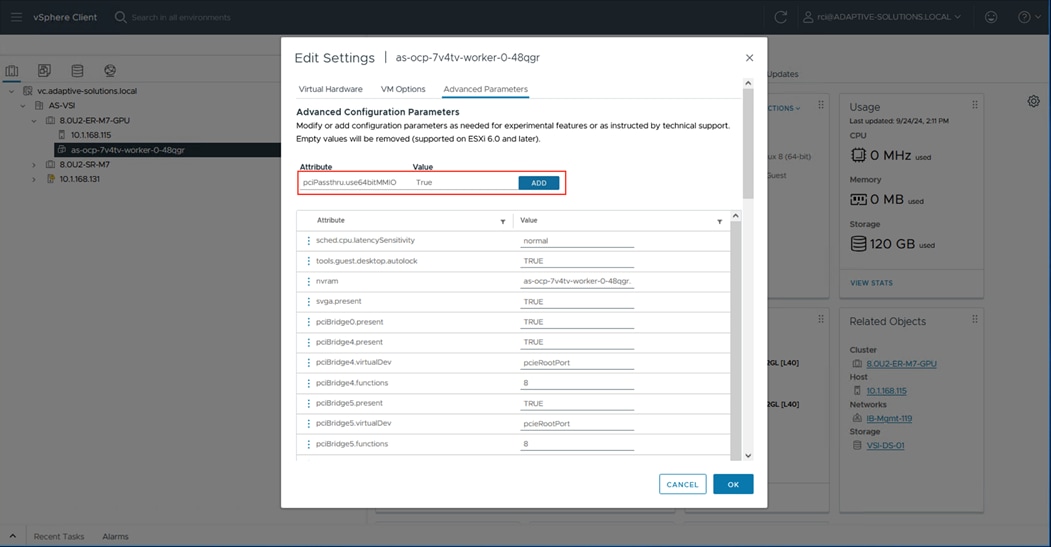

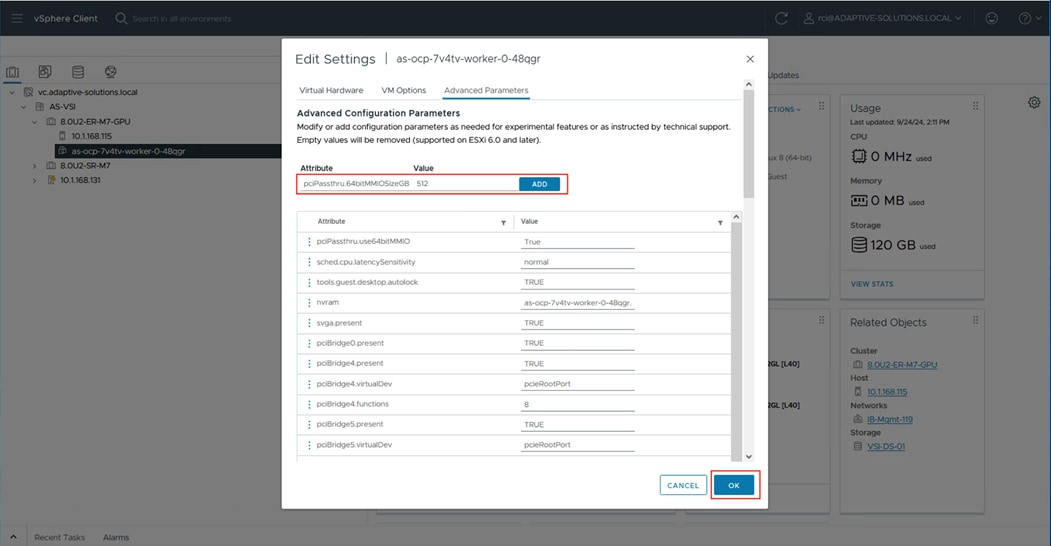

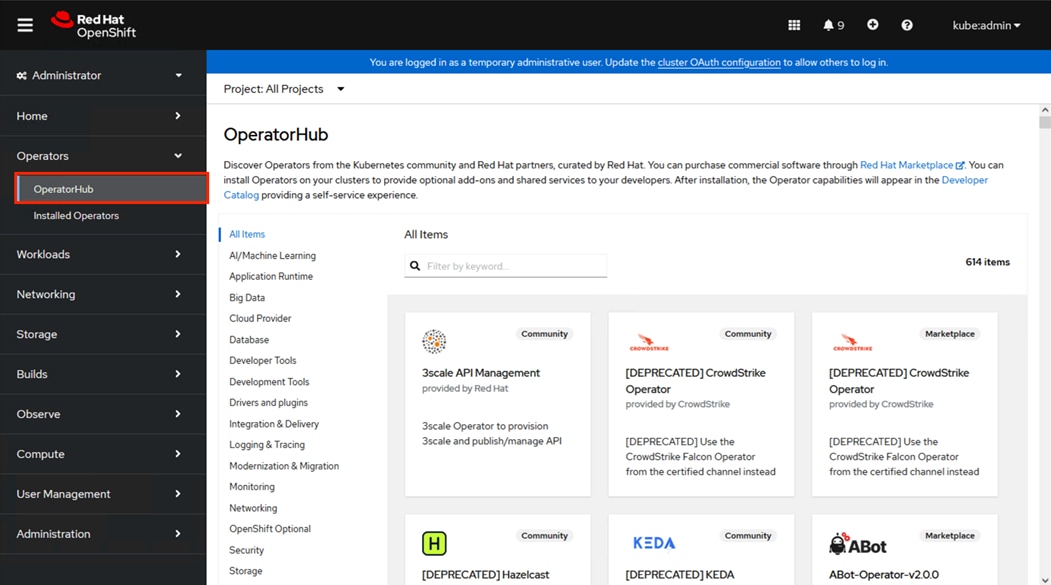

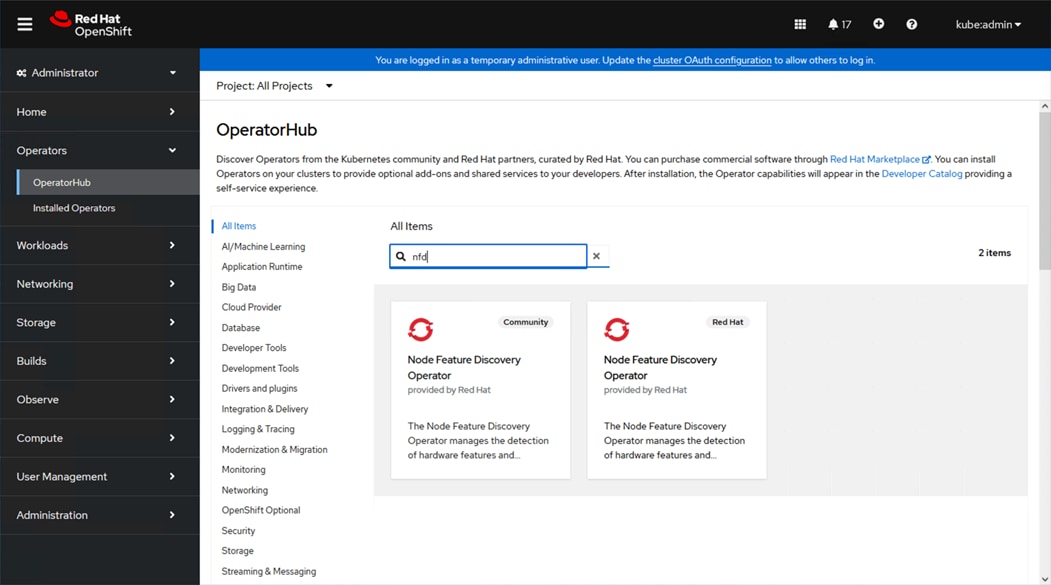

│ ├── create_bios_policies.yml