The current solution for a cnBNG Control Plane consists of two racks working together to provide high availability. Each rack contains four Kubernetes (K8s) clusters and is in the process of scaling up to a fifth Kubernetes cluster.

This MOP outlines the steps to scale a cnBNG Control Plane deployment from Half-Rack to Full-Rack.

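The procedure includes adding and wiring new servers, scaling up the Kubernetes cluster, scaling up CDL, and scaling up services.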

Before you begin

Perform these steps prior to the actual maintenance window.

Estimated Time for Procedure

| Task | Time taken |
|---|---|
| Adding new servers, wiring up the new servers, and configuring the switches | 1 day |
| Scale up – Rack1 – CDL+Service | 90 minutes |
| Scale up – Rack2 – CDL+Service | 90 minutes |
| Scale up – CDL | 60 to 90 minutes |

Software Used

| Software | Version |
|---|---|
| cnBNG | CNBNG-2024.03.0 |

Adding and Wiring New Servers

- Update the firmware. Ensure that new servers have the same firmware version as existing nodes.
  Note: Complete this step before the maintenance window and before racking the servers in the pod.
- Install and wire servers.
- Power up servers.
- Verify that there are no existing boot or virtual disks on these new servers.
- Ensure that disks appear as Unconfigured-good.
- Check the CIMC IP addresses of these new servers for connectivity.
- Configure switch ports.
- Configure leaf switch ports for new servers. Use the same configuration as other leaf ports connected to existing worker nodes.
- Verify from the Cluster Manager Linux shell that you can ping the CIMC IP addresses of the new nodes.
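The final connectivity check can be scripted when several servers are added at once. The following is a minimal sketch, not part of the documented procedure: it assumes a Linux `ping` binary is available on the Cluster Manager, and the function names are illustrative. The `runner` parameter exists only so the logic can be exercised without sending real traffic.

```python
import subprocess
from typing import Callable, Dict, Iterable, List


def build_ping_command(ip: str, count: int = 3, timeout_s: int = 2) -> List[str]:
    """Build a Linux ping command line for one address."""
    # -c limits the number of echo requests, -W is the per-reply timeout in seconds.
    return ["ping", "-c", str(count), "-W", str(timeout_s), ip]


def run_ping(command: List[str]) -> bool:
    """Run the command; exit code 0 means at least one reply was received."""
    result = subprocess.run(
        command, stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL
    )
    return result.returncode == 0


def check_cimc_reachability(
    ips: Iterable[str], runner: Callable[[List[str]], bool] = run_ping
) -> Dict[str, bool]:
    """Map each CIMC address to True (reachable) or False (unreachable)."""
    return {ip: runner(build_ping_command(ip)) for ip in ips}
```

Calling `check_cimc_reachability(["10.81.103.78", "10.81.103.58"])` with the CIMC addresses from the configuration examples returns a dictionary that can be reviewed before the maintenance window starts.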

Scale Up Kubernetes Cluster

You must scale up the Kubernetes cluster in each rack of the pod.

Follow these steps to scale up the Kubernetes cluster:

-

Perform a planned GR switchover to move all cnBNG instances to the RackInService as primary.

Note

|

RackInService refers to the rack hosting all primary GR instances of all cnBNGs in the pod.

|

-

Reset states of cnBNG GR instances in STANDBY_ERROR state to STANDBY state in RackBeingScaled.

Note

|

RackBeingScaled refers to the rack undergoing K8s node scaling.

|

-

Verify that all GR instances in RackInService are in PRIMARY state and those in RackBeingScaled are in STANDBY state.

-

Set geo-maintenance mode in all cnBNGs in both RackBeingScaled and RackInService.

-

Shut down all cnBNGs in RackBeingScaled.

-

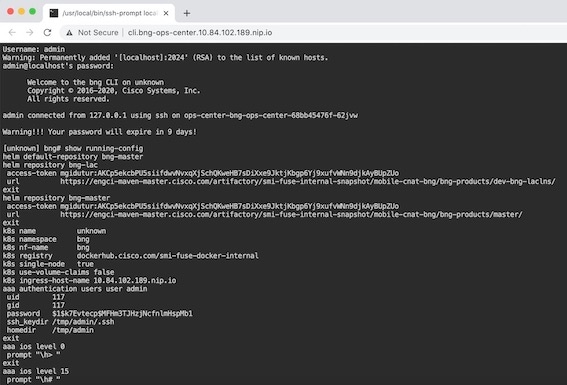

Add the configuration in Deployer via CLI or NSO load merge config change payload files to scale up RackBeingScaled.

See the section "K8s Cluster scale-up config example" for example configuration.

-

Manually add networks in the netplan on the new server.

Note

|

If the server is new, you don't have to add netplan manually. If you are reusing a server, write a netplan manually.

|

-

[Optional] If you are reusing an existing server, reset it using the CLI command:

-

Issue a cluster sync to scale up RackBeingScaled.

-

Monitor the cluster sync progress by logging into the Cluster Manager.

-

Verify if all nodes in the K8s cluster in RackBeingScaled are in ready state.

-

Verify if all pods in the K8s cluster in RackBeingScaled are in good running state.

-

Start up all cnBNGs in RackBeingScaled.

-

Verify if the added nodes appear in the cnBNG Ops Center configurations.

-

Verify if both GR instances of all cnBNGs in RackBeingScaled are in STANDBY state.

-

Unset geo-maintenance mode in all cnBNGs in both RackBeingScaled and RackInService

Post-Requirements:

Repeat this procedure to scale up K8s cluster in each rack of the Pod.
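The node and pod verification steps in this procedure can be automated by inspecting Kubernetes API output. The sketch below assumes the JSON comes from `kubectl get nodes -o json` and `kubectl get pods -A -o json` run against the RackBeingScaled cluster. The helper names and the sample node names are illustrative and do not come from the product.

```python
import json
from typing import List


def not_ready_nodes(nodes_json: str) -> List[str]:
    """Return names of nodes whose Ready condition is not "True"."""
    names = []
    for node in json.loads(nodes_json).get("items", []):
        conditions = node.get("status", {}).get("conditions", [])
        ready = any(
            c.get("type") == "Ready" and c.get("status") == "True"
            for c in conditions
        )
        if not ready:
            names.append(node["metadata"]["name"])
    return names


def unhealthy_pods(pods_json: str) -> List[str]:
    """Return namespace/name of pods that are neither Running nor Succeeded."""
    bad = []
    for pod in json.loads(pods_json).get("items", []):
        phase = pod.get("status", {}).get("phase")
        if phase not in ("Running", "Succeeded"):
            meta = pod["metadata"]
            bad.append(f"{meta.get('namespace', 'default')}/{meta['name']}")
    return bad


# Hypothetical sample: server-5 has joined the cluster but is not Ready yet.
SAMPLE_NODES = json.dumps({"items": [
    {"metadata": {"name": "server-4"},
     "status": {"conditions": [{"type": "Ready", "status": "True"}]}},
    {"metadata": {"name": "server-5"},
     "status": {"conditions": [{"type": "Ready", "status": "False"}]}},
]})
```

An empty list from both helpers indicates that it is reasonable to proceed with starting the cnBNGs in RackBeingScaled. A pod in the Running phase can still have containers that are not ready, so treat this as a first-pass check only.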

Scale Up CDL

Follow these steps to scale up the CDL:

- Verify that all cnBNGs in the pod are in good state.
- Update the CDL Grafana dashboard to monitor the total number of index keys that have been rebalanced.
  - Query: sum(index_rebalanced_keys_total{namespace="$namespace"}) by (cdl_slice, shardId, pod)
  - Legend: irkt-{{cdl_slice}}-{{shardId}}-{{pod}}

  Note: CDL rebalancing happens once the CDL rebalance query is issued.
- Make Rack-1 ACTIVE and Rack-2 STANDBY.
  - Unset geo maintenance mode in all cnBNGs in both racks (if previously set).
  - Make both GR instances of all cnBNGs in Rack1 PRIMARY.
  - Make both GR instances of all cnBNGs in Rack2 STANDBY.
  - Set geo maintenance mode in all cnBNGs in both racks.
- Add configuration changes to Rack2 (STANDBY).
  - Shut down all cnBNGs in Rack2.
  - Add configuration changes to all cnBNGs in Rack2 (see the "CDL Configuration Changes" section).
  - Start up all cnBNGs in Rack2.
- Verify the cnBNG state.
  - Ensure that all cnBNGs are in good state.
  - Both GR instances in all cnBNGs in Rack2 should be in STANDBY state.
  - Both GR instances in all cnBNGs in Rack1 should be in PRIMARY state.
- Verify CDL indices and slots sync.
  - Verify that CDL indices and slots in Rack2 can sync with remote peers (may take approximately 15 minutes).
- Switch rack states.
  - Unset geo maintenance mode in all cnBNGs in both racks.
  - Make both GR instances of all cnBNGs in Rack2 PRIMARY.
  - Make both GR instances of all cnBNGs in Rack1 STANDBY.
  - Set geo maintenance mode in all cnBNGs in both racks.
- Add configuration changes to Rack1 (STANDBY).
  - Shut down all cnBNGs in Rack1.
  - Add configuration changes to all cnBNGs in Rack1 (see the "CDL Configuration Changes" section).
  - Start up all cnBNGs in Rack1.
- Verify the state of all cnBNGs.
  - Ensure that all cnBNGs are in good state.
  - Both GR instances in all cnBNGs in Rack1 should be in STANDBY state.
  - Both GR instances in all cnBNGs in Rack2 should be in PRIMARY state.
- Verify that CDL indices and slots in Rack1 can sync with remote peers (may take approximately 15 minutes).
- Trigger CDL index rebalancing.
- Verify CDL index rebalancing completion.
  - Monitor progress with the following command in the STANDBY rack cnBNG:

        cdl rebalance-index status

  - Validate rebalancing with the following command in the STANDBY rack cnBNG:

        cdl rebalance-index validate

- Remove the CDL scale-up mode configuration:

      config
      cdl datastore session
      no mode
      commit
      end

- Unset geo maintenance mode in all cnBNGs in both racks.
- Initiate GR switchovers. Switch all cnBNGs in the pod to ACTIVE-ACTIVE mode.
- Verify that all cnBNGs are operating in sunny day mode.
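The Grafana query from the dashboard step can also be evaluated directly against the Prometheus instant-query HTTP endpoint (`/api/v1/query`) to capture rebalancing progress in a log. The sketch below assumes a reachable Prometheus base URL and a concrete namespace in place of the `$namespace` dashboard variable. Both values and the function names are illustrative.

```python
import json
from typing import Dict
from urllib.parse import urlencode

# Same expression as the dashboard query; {namespace} replaces Grafana's $namespace.
QUERY_TEMPLATE = (
    'sum(index_rebalanced_keys_total{{namespace="{namespace}"}}) '
    "by (cdl_slice, shardId, pod)"
)


def build_query_url(base_url: str, namespace: str) -> str:
    """Return an instant-query URL for the rebalanced-keys expression."""
    query = QUERY_TEMPLATE.format(namespace=namespace)
    return f"{base_url.rstrip('/')}/api/v1/query?{urlencode({'query': query})}"


def rebalanced_keys_by_series(response_text: str) -> Dict[str, float]:
    """Parse an instant-query response into {legend: value}."""
    body = json.loads(response_text)
    if body.get("status") != "success":
        raise ValueError(f"query failed: {body.get('error', 'unknown error')}")
    totals = {}
    for series in body["data"]["result"]:
        metric = series["metric"]
        # Mirrors the dashboard legend irkt-{{cdl_slice}}-{{shardId}}-{{pod}}.
        legend = (
            f"irkt-{metric.get('cdl_slice')}-"
            f"{metric.get('shardId')}-{metric.get('pod')}"
        )
        # Instant vectors carry [timestamp, "value-as-string"].
        totals[legend] = float(series["value"][1])
    return totals
```

The URL can be fetched with any HTTP client that has access to the monitoring network. Values that stop increasing between polls suggest that rebalancing has finished, which should then be confirmed with the rebalance validation command.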

Scale Up Services

You can now scale up cnBNG Services.

Note: You do not have to scale up Cluster1 before Cluster2. You can scale up either cluster first, depending on the existing role set on the cluster.

Follow these steps to scale up cnBNG services:

- Make Rack-1 ACTIVE and Rack-2 STANDBY.
  - Unset geo maintenance mode in all cnBNGs in both racks (if previously set).
  - Make both GR instances of all cnBNGs in Rack1 PRIMARY.
  - Make both GR instances of all cnBNGs in Rack2 STANDBY.
  - Set geo maintenance mode in all cnBNGs in both racks.
- Add configuration changes in Rack-2 (STANDBY).
  - Shut down all cnBNGs in Rack2.
  - Add the configuration changes to all cnBNGs in Rack2 (see the "Service Scale up Configuration" section).
  - Start up all cnBNGs in Rack2.
- Verify the cnBNG state.
  - Ensure that all cnBNGs are in good state.
  - Both GR instances in all cnBNGs in Rack2 should be in STANDBY state.
  - Both GR instances in all cnBNGs in Rack1 should be in PRIMARY state.
- Verify that the scale-up configuration changes are applied (may take approximately 15 minutes).
- Make Rack-2 ACTIVE and Rack-1 STANDBY.
  - Unset geo maintenance mode in all cnBNGs in both racks.
  - Make both GR instances of all cnBNGs in Rack2 PRIMARY.
  - Make both GR instances of all cnBNGs in Rack1 STANDBY.
  - Set geo maintenance mode in all cnBNGs in both racks.
- Add configuration changes in Rack-1 (STANDBY).
  - Shut down all cnBNGs in Rack1.
  - Add the configuration changes to all cnBNGs in Rack1 (see the "Service Scale up Configuration" section).
  - Start up all cnBNGs in Rack1.
- Verify the cnBNG state.
  - Ensure that all cnBNGs are in good state.
  - Both GR instances in all cnBNGs in Rack1 should be in STANDBY state.
  - Both GR instances in all cnBNGs in Rack2 should be in PRIMARY state.
- Verify that the scale-up configuration changes are applied (may take approximately 15 minutes).
- Remove the geo maintenance mode in both clusters.
- Move both CP-GR clusters to their original state.
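The service scale-up follows a rolling pattern: the rack being changed is always the STANDBY rack, and the two racks swap roles between passes. The sketch below generates that ordered checklist so it can be pasted into a change ticket. It only produces text and does not run any commands, and the function name is illustrative.

```python
from typing import List, Tuple


def plan_rolling_scale_up(racks: Tuple[str, str] = ("Rack1", "Rack2")) -> List[str]:
    """Return the ordered steps for changing one rack at a time while it is STANDBY."""
    first, second = racks
    steps: List[str] = []
    # Pass 1 changes the second rack while the first is PRIMARY; pass 2 swaps roles.
    for standby, primary in ((second, first), (first, second)):
        steps += [
            "Unset geo maintenance mode in all cnBNGs in both racks",
            f"Make both GR instances of all cnBNGs in {primary} PRIMARY",
            f"Make both GR instances of all cnBNGs in {standby} STANDBY",
            "Set geo maintenance mode in all cnBNGs in both racks",
            f"Shut down all cnBNGs in {standby}",
            f"Add the service scale-up configuration to all cnBNGs in {standby}",
            f"Start up all cnBNGs in {standby}",
            f"Verify {standby} is STANDBY, {primary} is PRIMARY, and the changes are applied",
        ]
    steps += [
        "Remove the geo maintenance mode in both clusters",
        "Move both CP-GR clusters to their original state",
    ]
    return steps
```

Printing the result with `enumerate(plan_rolling_scale_up(), start=1)` gives a numbered runbook in which no rack is shut down while it holds the PRIMARY role.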

Configuration Examples

K8s Cluster Scale Up:

Scale-up configurations for the new server in each cluster are available in the following combinations:

- Node Scaling for Service only
- Node Scaling for CDL only
- Node Scaling for both CDL and Service

Node Scaling for Service Only

| Cluster1 Node5 Deployer Configuration |

Cluster2 Node5 Deployer Configuration |

clusters svi-cnbng-gr-tb1

nodes server-5

host-profile bng-ht-sysctl-enable

k8s node-type worker

k8s ssh-ip 1.1.111.15

k8s ssh-ipv6 2002:4888:1:1::111:15

k8s node-ip 1.1.111.15

k8s node-ipv6 2002:4888:1:1::111:15

k8s node-labels smi.cisco.com/svc-type service

exit

ucs-server cimc ip-address 10.81.103.78

initial-boot netplan vlans bd0.mgmt.3103

addresses [ 10.81.103.119/24 ]

gateway4 10.81.103.1

exit

initial-boot netplan vlans bd1.k8s.111

addresses [ 1.1.111.15/24 2002:4888:1:1::111:15/112 ]

routes 203.203.203.50/32 1.1.111.1

exit

routes 2002:4888:203:203::203:50/128 2002:4888:1:1::111:1

exit

exit

initial-boot netplan vlans bd1.inttcp.104

dhcp4 false

dhcp6 false

addresses [ 1.1.104.15/24 2002:4888:1:1::104:15/112 ]

id 104

link bd1

routes 2.2.104.0/24 1.1.104.1

exit

routes 2002:4888:2:2::104:0/112 2002:4888:1:1::104:1

exit

exit

os tuned enabled

exit

exit

|

clusters svi-cnbng-gr-tb2

nodes server-5

host-profile bng-ht-sysctl-enable

k8s node-type worker

k8s ssh-ip 2.2.112.15

k8s ssh-ipv6 2002:4888:2:2::112:15

k8s node-ip 2.2.112.15

k8s node-ipv6 2002:4888:2:2::112:15

k8s node-labels smi.cisco.com/svc-type service

exit

ucs-server cimc ip-address 10.81.103.58

initial-boot netplan vlans bd0.mgmt.3103

addresses [ 10.81.103.68/24 ]

gateway4 10.81.103.1

exit

initial-boot netplan vlans bd1.inttcp.104

dhcp4 false

dhcp6 false

addresses [ 2.2.104.15/24 2002:4888:2:2::104:15/112 ]

id 104

link bd1

routes 1.1.104.0/24 2.2.104.1

exit

routes 2002:4888:1:1::104:0/112 2002:4888:2:2::104:1

exit

exit

initial-boot netplan vlans bd1.k8s.112

addresses [ 2.2.112.15/24 2002:4888:2:2::112:15/112 ]

routes 203.203.203.50/32 2.2.112.1

exit

routes 2002:4888:203:203::203:50/112 2002:4888:2:2::112:1

exit

exit

os tuned enabled

exit

exit

|
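Before loading a node configuration like the one above, it can help to confirm that each route's next hop is on-link for one of the addresses configured on the same VLAN. The following is a minimal sketch using Python's standard `ipaddress` module. The function name is illustrative, and the sample values in the usage note are taken from the Cluster1 `bd1.k8s.111` VLAN.

```python
import ipaddress
from typing import Iterable


def next_hop_on_link(interface_addresses: Iterable[str], next_hop: str) -> bool:
    """Return True if next_hop falls inside the subnet of any interface address."""
    hop = ipaddress.ip_address(next_hop)
    for text in interface_addresses:
        # ip_interface accepts "address/prefix" and exposes the enclosing network.
        iface = ipaddress.ip_interface(text)
        if iface.version == hop.version and hop in iface.network:
            return True
    return False
```

For example, `next_hop_on_link(["1.1.111.15/24", "2002:4888:1:1::111:15/112"], "1.1.111.1")` returns `True`, while a next hop taken from the other cluster's subnet, such as `2.2.112.1`, returns `False` and points to a copy-and-paste error between the two cluster configurations.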

Node Scaling for CDL Only

| Cluster1 Node5 Deployer Configuration |

Cluster2 Node5 Deployer Configuration |

clusters svi-cnbng-gr-tb1

nodes server-5

host-profile bng-ht-sysctl-enable

k8s node-type worker

k8s ssh-ip 1.1.111.15

k8s ssh-ipv6 2002:4888:1:1::111:15

k8s node-ip 1.1.111.15

k8s node-ipv6 2002:4888:1:1::111:15

k8s node-labels smi.cisco.com/sess-type cdl-node

exit

ucs-server cimc ip-address 10.81.103.78

initial-boot netplan vlans bd0.mgmt.3103

addresses [ 10.81.103.119/24 ]

gateway4 10.81.103.1

exit

initial-boot netplan vlans bd1.cdl.103

dhcp4 false

dhcp6 false

addresses [ 1.1.103.15/24 2002:4888:1:1::103:15/112 ]

id 103

link bd1

routes 2.2.103.0/24 1.1.103.1

exit

routes 2002:4888:2:2::103:0/112 2002:4888:1:1::103:1

exit

exit

initial-boot netplan vlans bd1.k8s.111

addresses [ 1.1.111.15/24 2002:4888:1:1::111:15/112 ]

routes 203.203.203.50/32 1.1.111.1

exit

routes 2002:4888:203:203::203:50/128 2002:4888:1:1::111:1

exit

exit

os tuned enabled

exit

exit

|

clusters svi-cnbng-gr-tb2

nodes server-5

host-profile bng-ht-sysctl-enable

k8s node-type worker

k8s ssh-ip 2.2.112.15

k8s ssh-ipv6 2002:4888:2:2::112:15

k8s node-ip 2.2.112.15

k8s node-ipv6 2002:4888:2:2::112:15

k8s node-labels smi.cisco.com/sess-type cdl-node

exit

ucs-server cimc ip-address 10.81.103.58

initial-boot netplan vlans bd0.mgmt.3103

addresses [ 10.81.103.85/24 ]

gateway4 10.81.103.1

exit

initial-boot netplan vlans bd1.cdl.103

dhcp4 false

dhcp6 false

addresses [ 2.2.103.15/24 2002:4888:2:2::103:15/112 ]

id 103

link bd1

routes 1.1.103.0/24 2.2.103.1

exit

routes 2002:4888:1:1::103:0/112 2002:4888:2:2::103:1

exit

exit

initial-boot netplan vlans bd1.k8s.112

addresses [ 2.2.112.15/24 2002:4888:2:2::112:15/112 ]

routes 203.203.203.50/32 2.2.112.1

exit

routes 2002:4888:203:203::203:50/112 2002:4888:2:2::112:1

exit

exit

os tuned enabled

exit

exit

|

Node Scaling for both CDL and Services

| Cluster1 Node5 Deployer configuration |

Cluster2 Node5 Deployer configuration |

clusters svi-cnbng-gr-tb1

nodes server-5

host-profile bng-ht-enable

k8s node-type worker

k8s ssh-ip 1.1.111.15

k8s ssh-ipv6 2002:4888:1:1::111:15

k8s node-ip 1.1.111.15

k8s node-ipv6 2002:4888:1:1::111:15

k8s node-labels smi.cisco.com/sess-type cdl-node

exit

k8s node-labels smi.cisco.com/svc-type service

exit

ucs-server cimc ip-address 10.81.103.78

initial-boot netplan vlans bd0.mgmt.3103

addresses [ 10.81.103.119/24 ]

gateway4 10.81.103.1

exit

initial-boot netplan vlans bd1.inttcp.104

dhcp4 false

dhcp6 false

addresses [ 1.1.104.15/24 2002:4888:1:1::104:15/112 ]

id 104

link bd1

routes 2.2.104.0/24 1.1.104.1

exit

routes 2002:4888:2:2::104:0/112 2002:4888:1:1::104:1

exit

exit

initial-boot netplan vlans bd1.cdl.103

dhcp4 false

dhcp6 false

addresses [ 1.1.103.15/24 2002:4888:1:1::103:15/112 ]

id 103

link bd1

routes 2.2.103.0/24 1.1.103.1

exit

routes 2002:4888:2:2::103:0/112 2002:4888:1:1::103:1

exit

exit

initial-boot netplan vlans bd1.k8s.111

addresses [ 1.1.111.15/24 2002:4888:1:1::111:15/112 ]

routes 203.203.203.50/32 1.1.111.1

exit

routes 2002:4888:203:203::203:50/128 2002:4888:1:1::111:1

exit

exit

os tuned enabled

exit

exit

|

clusters svi-cnbng-gr-tb2

nodes server-5

host-profile bng-ht-enable

k8s node-type worker

k8s ssh-ip 2.2.112.15

k8s ssh-ipv6 2002:4888:2:2::112:15

k8s node-ip 2.2.112.15

k8s node-ipv6 2002:4888:2:2::112:15

k8s node-labels smi.cisco.com/sess-type cdl-node

exit

k8s node-labels smi.cisco.com/svc-type service

exit

ucs-server cimc ip-address 10.81.103.58

initial-boot netplan vlans bd0.mgmt.3103

addresses [ 10.81.103.85/24 ]

gateway4 10.81.103.1

exit

initial-boot netplan vlans bd1.inttcp.104

dhcp4 false

dhcp6 false

addresses [ 2.2.104.15/24 2002:4888:2:2::104:15/112 ]

id 104

link bd1

routes 1.1.104.0/24 2.2.104.1

exit

routes 2002:4888:1:1::104:0/112 2002:4888:2:2::104:1

exit

exit

initial-boot netplan vlans bd1.cdl.103

dhcp4 false

dhcp6 false

addresses [ 2.2.103.15/24 2002:4888:2:2::103:15/112 ]

id 103

link bd1

routes 1.1.103.0/24 2.2.103.1

exit

routes 2002:4888:1:1::103:0/112 2002:4888:2:2::103:1

exit

exit

initial-boot netplan vlans bd1.k8s.112

addresses [ 2.2.112.15/24 2002:4888:2:2::112:15/112 ]

routes 203.203.203.50/32 2.2.112.1

exit

routes 2002:4888:203:203::203:50/112 2002:4888:2:2::112:1

exit

exit

os tuned enabled

exit

exit

|

CDL Configuration Changes

|

CDL Scale-up Configuration on Cluster1

|

CDL Scale-up Configuration on Cluster2

|

cdl datastore session

label-config session

geo-remote-site [ 2 ]

mode scale-up

slice-names [ 1 2 ]

overload-protection disable true

endpoint go-max-procs 16

endpoint replica 3

endpoint copies-per-node 2

endpoint settings slot-timeout-ms 750

endpoint external-ip 1.1.103.100

endpoint external-ipv6 2002:4888:1:1::103:100

endpoint external-port 8882

index go-max-procs 8

index replica 2

index prev-map-count 2

index map 3

features instance-aware-notification enable true

features instance-aware-notification system-id 1

slice-names [ 1 ]

exit

features instance-aware-notification system-id 2

slice-names [ 2 ]

exit

slot go-max-procs 8

slot replica 2

slot map 6

slot notification limit 1500

slot notification max-concurrent-bulk-notifications 20

exit

cdl label-config session

endpoint key smi.cisco.com/sess-type

endpoint value cdl-node

slot map 1

key smi.cisco.com/sess-type

value cdl-node

exit

slot map 2

key smi.cisco.com/sess-type

value cdl-node

exit

slot map 3

key smi.cisco.com/sess-type

value cdl-node

exit

slot map 4

key smi.cisco.com/sess-type

value cdl-node

exit

slot map 5

key smi.cisco.com/sess-type

value cdl-node

exit

slot map 6

key smi.cisco.com/sess-type

value cdl-node

exit

index map 1

key smi.cisco.com/sess-type

value cdl-node

exit

index map 2

key smi.cisco.com/sess-type

value cdl-node

exit

index map 3

key smi.cisco.com/sess-type

value cdl-node

exit

exit

|

cdl datastore session

label-config session

geo-remote-site [ 1 ]

mode scale-up

slice-names [ 1 2 ]

overload-protection disable true

endpoint go-max-procs 16

endpoint replica 3

endpoint copies-per-node 2

endpoint settings slot-timeout-ms 750

endpoint external-ip 2.2.103.100

endpoint external-ipv6 2002:4888:2:2::103:100

endpoint external-port 8882

index go-max-procs 8

index replica 2

index prev-map-count 2

index map 3

features instance-aware-notification enable true

features instance-aware-notification system-id 1

slice-names [ 1 ]

exit

features instance-aware-notification system-id 2

slice-names [ 2 ]

exit

slot go-max-procs 8

slot replica 2

slot map 6

slot notification limit 1500

slot notification max-concurrent-bulk-notifications 20

exit

cdl label-config session

endpoint key smi.cisco.com/sess-type

endpoint value cdl-node

slot map 1

key smi.cisco.com/sess-type

value cdl-node

exit

slot map 2

key smi.cisco.com/sess-type

value cdl-node

exit

slot map 3

key smi.cisco.com/sess-type

value cdl-node

exit

slot map 4

key smi.cisco.com/sess-type

value cdl-node

exit

slot map 5

key smi.cisco.com/sess-type

value cdl-node

exit

slot map 6

key smi.cisco.com/sess-type

value cdl-node

exit

index map 1

key smi.cisco.com/sess-type

value cdl-node

exit

index map 2

key smi.cisco.com/sess-type

value cdl-node

exit

index map 3

key smi.cisco.com/sess-type

value cdl-node

exit

exit

|

The commands that are required for node scaling are highlighted.
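In the examples above, the datastore section declares a slot map count and an index map count, and the `cdl label-config session` section carries one labelled entry per map. A quick consistency check is sketched below. It assumes the configuration is available as plain text in the same layout as the examples; the parser is deliberately simple and is not a general parser for the Ops Center CLI.

```python
import re
from typing import Dict, List

# Matches "slot map 6" or "index map 3" but not "index prev-map-count 2".
MAP_LINE = re.compile(r"^(slot|index) map (\d+)$")


def missing_label_maps(config_text: str) -> Dict[str, List[int]]:
    """Return map numbers declared in the datastore but absent from label-config."""
    counts: Dict[str, int] = {}
    labelled = {"slot": set(), "index": set()}
    section = None
    for raw in config_text.splitlines():
        line = raw.strip()
        if line.startswith("cdl datastore "):
            section = "datastore"
        elif line.startswith("cdl label-config "):
            section = "label-config"
        match = MAP_LINE.match(line)
        if match is None or section is None:
            continue
        kind, number = match.group(1), int(match.group(2))
        if section == "datastore":
            counts[kind] = number          # declared map count
        else:
            labelled[kind].add(number)     # labelled map entry
    return {
        kind: [n for n in range(1, total + 1) if n not in labelled[kind]]
        for kind, total in counts.items()
    }
```

With the scale-up configuration shown above (slot map 6, index map 3, and label entries for slot maps 1 to 6 and index maps 1 to 3), both lists come back empty. A non-empty list names the map numbers that still need a `key` and `value` label entry.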

Service Scale up Configuration

Scaling up services by increasing replica counts and nodes is supported. Reducing replicas or nodes is not supported and impacts the system.

| Existing Configuration |

Service Scale Up Configuration in Both Clusters |

instance instance-id 1

endpoint dhcp

replicas 4

nodes 2

exit

exit

endpoint sm

replicas 6

nodes 2

exit

exit

instance instance-id 2

endpoint dhcp

replicas 4

nodes 2

exit

exit

endpoint sm

replicas 6

nodes 2

exit

exit

|

instance instance-id 1

endpoint dhcp

replicas 4

nodes 3

exit

exit

endpoint sm

replicas 6

nodes 3

exit

exit

instance instance-id 2

endpoint dhcp

replicas 4

nodes 3

exit

exit

endpoint sm

replicas 6

nodes 3

exit

exit

|
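Because reducing replicas or nodes is not supported, a proposed service configuration can be compared against the existing one before it is committed. The sketch below models each endpoint as a small dictionary keyed by instance ID and endpoint name. This data model and the function name are assumptions made for illustration, and the sample values mirror the table above.

```python
from typing import Dict, List, Tuple

EndpointKey = Tuple[int, str]        # (instance-id, endpoint name)
EndpointCfg = Dict[str, int]         # {"replicas": ..., "nodes": ...}


def scale_up_violations(
    existing: Dict[EndpointKey, EndpointCfg],
    proposed: Dict[EndpointKey, EndpointCfg],
) -> List[str]:
    """List every endpoint whose replicas or nodes would be reduced or removed."""
    problems = []
    for (instance_id, endpoint), old in existing.items():
        label = f"instance {instance_id} endpoint {endpoint}"
        new = proposed.get((instance_id, endpoint))
        if new is None:
            problems.append(f"{label}: missing from proposed configuration")
            continue
        for field in ("replicas", "nodes"):
            if new[field] < old[field]:
                problems.append(
                    f"{label}: {field} reduced from {old[field]} to {new[field]}"
                )
    return problems


EXISTING = {
    (1, "dhcp"): {"replicas": 4, "nodes": 2},
    (1, "sm"): {"replicas": 6, "nodes": 2},
    (2, "dhcp"): {"replicas": 4, "nodes": 2},
    (2, "sm"): {"replicas": 6, "nodes": 2},
}
# Scale-up from the table: replica counts unchanged, nodes raised from 2 to 3.
PROPOSED = {key: {"replicas": cfg["replicas"], "nodes": 3} for key, cfg in EXISTING.items()}
```

An empty result from `scale_up_violations(EXISTING, PROPOSED)` means the change only increases or preserves capacity, which is consistent with the supported scale-up direction.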
