| Step 1 |

configure

RP/0/RSP0/CPU0:router# configure

|

Enters global configuration mode.

|

| Step 2 |

vrf vpn1

RP/0/RSP0/CPU0:router(config)# vrf vpn1

|

Configures the VRF and enters VRF configuration mode.

|

| Step 3 |

address-family ipv4 unicast

RP/0/RSP0/CPU0:router(config-vrf)# address-family ipv4 unicast

|

Configures the IPv4 unicast address family for the VRF and enters VRF address-family configuration mode.

|

| Step 4 |

import route-target as-number:nn

RP/0/RSP0/CPU0:router(config-vrf-af)# import route-target 20:1

|

Specifies the import route-target extended community in as-number:nn format, where as-number is a 2-byte AS number.

|

| Step 5 |

export route-target as-number:nn

RP/0/RSP0/CPU0:router(config-vrf-af)# export route-target 10:1

|

Specifies the export route-target extended community in as-number:nn format, where as-number is a 2-byte AS number.

|

| Step 6 |

router bgp as-number

RP/0/RSP0/CPU0:router(config)# router bgp 100

|

Enables BGP routing for the specified 2-byte AS number and enters router BGP configuration mode.

|

| Step 7 |

bgp router-id ipv4 address

RP/0/RSP0/CPU0:router(config-bgp)# bgp router-id 10.10.10.1

|

Configures the BGP router ID using an IPv4 address.

| Note

|

Steps 1 to 7 are common to both Option B and Option C.

|

|

| Step 8 |

address-family ipv4 unicast

RP/0/RSP0/CPU0:router(config-bgp)# address-family ipv4 unicast

|

Configures the IPv4 unicast address family.

|

| Step 9 |

allocate-label all

RP/0/RSP0/CPU0:router(config-bgp-af)# allocate-label all

|

Allocates labels for all prefixes.

| Note

|

Steps 8 and 9 apply only to the Option C configuration.

|

|

| Step 10 |

address-family vpnv4 unicast

RP/0/RSP0/CPU0:router(config-bgp)# address-family vpnv4 unicast

|

Configures the VPNv4 unicast address family.

|

| Step 11 |

address-family ipv4 mvpn

RP/0/RSP0/CPU0:router(config-bgp)# address-family ipv4 mvpn

|

Configures the IPv4 MVPN address family.

|

| Step 12 |

neighbor neighbor_address

RP/0/RSP0/CPU0:router(config-bgp)# neighbor 10.10.10.2

|

Configures a BGP neighbor at the specified address and enters neighbor configuration mode.

|

| Step 13 |

remote-as as-number

RP/0/RSP0/CPU0:router(config-bgp-nbr)# remote-as 100

|

Specifies the 2-byte AS number of the neighbor (the remote AS).

|

| Step 14 |

update-source type interface-path-id

RP/0/RSP0/CPU0:router(config-bgp-nbr)# update-source Loopback 0

|

Uses the address of the specified loopback interface as the source address for BGP updates to this neighbor.

| Note

|

Steps 10 to 14 are common to both Option B and Option C.

|

|

| Step 15 |

address-family ipv4 labeled-unicast

RP/0/RSP0/CPU0:router(config-bgp-nbr)# address-family ipv4 labeled-unicast

|

Configures the IPv4 labeled-unicast address family for the neighbor.

| Note

|

Step 15 applies only to the Option C configuration.

|

|

| Step 16 |

address-family vpnv4 unicast

RP/0/RSP0/CPU0:router(config-bgp-nbr)# address-family vpnv4 unicast

|

Configures the VPNv4 unicast address family for the neighbor.

| Note

|

Step 16 is common to both Option B and Option C.

|

|

| Step 17 |

inter-as install

RP/0/RSP0/CPU0:router(config-bgp-nbr)# inter-as install

|

Installs the Inter-AS option.

| Note

|

Step 17 applies only to the Option B configuration.

|

|

| Step 18 |

address-family ipv4 mvpn

RP/0/RSP0/CPU0:router(config-bgp)# address-family ipv4 mvpn

|

Configures the IPv4 MVPN address family.

|

| Step 19 |

vrf vpn1

RP/0/RSP0/CPU0:router(config-bgp)# vrf vpn1

|

Configures the VRF under BGP and enters BGP VRF configuration mode.

|

| Step 20 |

rd as-number:nn

RP/0/RSP0/CPU0:router(config-bgp-vrf)# rd 10:1

|

Configures the route distinguisher (RD) in as-number:nn format, where as-number is a 2-byte AS number.

|

| Step 21 |

address-family ipv4 unicast

RP/0/RSP0/CPU0:router(config-bgp-vrf)# address-family ipv4 unicast

|

Configures the IPv4 unicast address family for the VRF and enters VRF address-family configuration mode.

|

| Step 22 |

route-target download

RP/0/RSP0/CPU0:router(config-bgp-vrf-af)# route-target download

|

Installs the route targets in the RIB.

|

| Step 23 |

address-family ipv4 mvpn

RP/0/RSP0/CPU0:router(config-bgp-vrf)# address-family ipv4 mvpn

|

Configures the IPv4 MVPN address family for the VRF.

| Note

|

Steps 18 to 23 are common to both Option B and Option C.

|

|

| Step 24 |

inter-as install

RP/0/RSP0/CPU0:router(config-bgp-nbr)# inter-as install

|

Installs the Inter-AS option.

| Note

|

Step 24 applies only to the Option C configuration.

|

|

| Step 25 |

mpls ldp

RP/0/RSP0/CPU0:router(config)# mpls ldp

|

Enables the MPLS Label Distribution Protocol (LDP) and enters LDP configuration mode.

| Note

|

Steps 25 to 44 are common to both Option B and Option C.

|

|

| Step 26 |

router-id ip address

RP/0/RSP0/CPU0:router(config-ldp)# router-id 10.10.10.1

|

Configures the LDP router ID using an IP address.

|

| Step 27 |

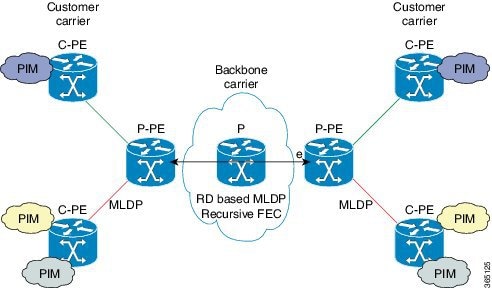

mldp recursive-fec

RP/0/RSP0/CPU0:router(config-ldp)# mldp recursive-fec

|

Enables mLDP recursive FEC support.

|

| Step 28 |

interface type interface-path-id

RP/0/RSP0/CPU0:router(config-ldp)# interface GigabitEthernet 0/1/0/0

|

Enables LDP on the specified interface (GigabitEthernet 0/1/0/0 in this example).

|

| Step 29 |

multicast-routing

RP/0/RSP0/CPU0:router(config)# multicast-routing

|

Enables IP multicast forwarding and enters multicast-routing configuration mode.

|

| Step 30 |

address-family ipv4

RP/0/RSP0/CPU0:router(config-mcast)# address-family ipv4

|

Configures the IPv4 address family and enters IPv4 address-family configuration mode.

|

| Step 31 |

mdt source type interface-path-id

RP/0/RSP0/CPU0:router(config-mcast-default-ipv4)# mdt source Loopback 0

|

Specifies the interface whose address is used as the MDT source address for MVPN.

|

| Step 32 |

interface all enable

RP/0/RSP0/CPU0:router(config-mcast-default-ipv4)# interface all enable

|

Enables multicast routing and forwarding on all new and existing interfaces. You can also enable individual interfaces.

|

| Step 33 |

vrf vpn1

RP/0/RSP0/CPU0:router(config-mcast)# vrf vpn1

|

Configures the VRF under multicast routing and enters multicast VRF configuration mode.

|

| Step 34 |

address-family ipv4

RP/0/RSP0/CPU0:router(config-mcast-vpn1)# address-family ipv4

|

Configures the IPv4 address family and enters IPv4 address-family configuration mode.

|

| Step 35 |

bgp auto-discovery mldp inter-as

RP/0/RSP0/CPU0:router(config-mcast-vpn1-ipv4)# bgp auto-discovery mldp inter-as

|

Enables BGP MVPN auto-discovery for mLDP core trees, with inter-AS support.

|

| Step 36 |

mdt partitioned mldp ipv4 mp2mp

RP/0/RSP0/CPU0:router(config-mcast-vpn1-ipv4)# mdt partitioned mldp ipv4 mp2mp

|

Enables an mLDP MP2MP-signaled partitioned distribution tree for the IPv4 core.

| Note

|

This configuration depends on the core tree type in use.

For example, this step enables an mLDP MP2MP core tree. If you use a P2MP core tree instead, configure an mLDP P2MP core tree here.

|

|

| Step 37 |

interface all enable

RP/0/RSP0/CPU0:router(config-mcast-vpn1-ipv4)# interface all enable

|

Enables multicast routing and forwarding on all new and existing interfaces. You can also enable individual interfaces.

|

| Step 38 |

router pim

RP/0/RSP0/CPU0:router(config)# router pim

|

Enters PIM configuration mode.

|

| Step 39 |

vrf vpn1

RP/0/RSP0/CPU0:router(config-pim)# vrf vpn1

|

Configures the VRF under PIM and enters PIM VRF configuration mode. Use the same VRF name as in the earlier steps.

|

| Step 40 |

address-family ipv4

RP/0/RSP0/CPU0:router(config-pim-vpn1)# address-family ipv4

|

Configures the IPv4 address family and enters IPv4 address-family configuration mode.

|

| Step 41 |

rpf topology route-policy policy_name

RP/0/RSP0/CPU0:router(config-pim-vpn1-ipv4)# rpf topology route-policy MSPMSI_MP2MP

|

Applies the specified route policy to select the RPF topology.

|

| Step 42 |

route-policy policy_name

RP/0/RSP0/CPU0:router(config)# route-policy MSPMSI_MP2MP

|

Creates the route policy referenced in the previous step and enters route-policy configuration mode.

|

| Step 43 |

set core-tree mldp-partitioned-mp2mp

RP/0/RSP0/CPU0:router(config-rpl)# set core-tree mldp-partitioned-mp2mp

|

Sets the core multicast distribution tree type to mLDP partitioned MP2MP. Close the policy definition with the end-policy command.

|

| Step 44 |

commit

|

Commits the target configuration to the running configuration.

|
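The steps above can be summarized as one consolidated configuration sketch. The hierarchy is an assumption inferred from the step order and the mode prompts, not output captured from a device, and commands are shown in the form used in the steps (for example, mldp recursive-fec on one line). Comment lines starting with ! mark the steps that apply only to Option B or Option C. Two placements are inferred: Step 18 is shown at the neighbor level (Step 11 already enables the address family at the router level), and Step 24 is shown under the VRF IPv4 MVPN address family following the step order, although its example prompt shows config-bgp-nbr. The VRF name vpn1 is used throughout, and the end-policy line is the standard RPL terminator for a route policy. Verify the result with show running-config on your release.

```text
! Consolidated sketch of Steps 1-44 (hierarchy inferred, not device output)
vrf vpn1
 address-family ipv4 unicast
  import route-target 20:1
  export route-target 10:1
 !
!
router bgp 100
 bgp router-id 10.10.10.1
 ! Steps 8-9: Option C only
 address-family ipv4 unicast
  allocate-label all
 !
 address-family vpnv4 unicast
 !
 address-family ipv4 mvpn
 !
 neighbor 10.10.10.2
  remote-as 100
  update-source Loopback 0
  ! Step 15: Option C only
  address-family ipv4 labeled-unicast
  !
  address-family vpnv4 unicast
   ! Step 17: Option B only
   inter-as install
  !
  ! Step 18: assumed to be configured at the neighbor level
  address-family ipv4 mvpn
  !
 !
 vrf vpn1
  rd 10:1
  address-family ipv4 unicast
   route-target download
  !
  address-family ipv4 mvpn
   ! Step 24: Option C only (placement assumed; the step's prompt shows config-bgp-nbr)
   inter-as install
  !
 !
!
mpls ldp
 router-id 10.10.10.1
 mldp recursive-fec
 interface GigabitEthernet 0/1/0/0
 !
!
multicast-routing
 address-family ipv4
  mdt source Loopback 0
  interface all enable
 !
 vrf vpn1
  address-family ipv4
   bgp auto-discovery mldp inter-as
   ! MP2MP core tree; this line changes if a P2MP core tree is used
   mdt partitioned mldp ipv4 mp2mp
   interface all enable
  !
 !
!
router pim
 vrf vpn1
  address-family ipv4
   rpf topology route-policy MSPMSI_MP2MP
  !
 !
!
route-policy MSPMSI_MP2MP
  set core-tree mldp-partitioned-mp2mp
end-policy
!
```

After entering the equivalent commands in configuration mode, apply them with commit (Step 44). The route policy and the PIM configuration that references it can be committed together in the same commit.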
