Installation Workflow

Note: If the HyperFlex cluster nodes were previously part of another HyperFlex cluster (or were not factory shipped), follow the node cleanup procedure before starting the cluster deployment. For more information, see the HyperFlex Customer Cleanup Guides for FI and Edge.

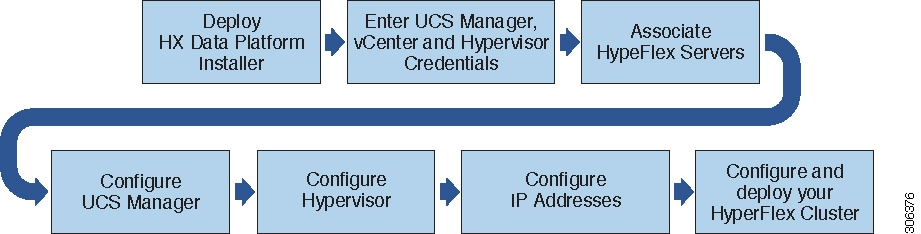

The following installation workflow summarizes the steps for creating a Standard Cluster with the HX Data Platform Installer.

Follow this workflow during installation:

- Deploy the HX Data Platform Installer OVA using the vSphere Web Client. If your hypervisor wizard defaults to DHCP for assigning IP addresses to new VMs, deploy the HX Data Platform Installer OVA with a static IP address instead. See Deploy HX Data Platform Installer OVA Using vSphere Web Client or Deploy the HX Data Platform Installer OVA with a Static IP Address for more information.

- Configure syslog to send all logging information to a centralized syslog repository. See Configure Syslog for more information.

- Enter the UCS Manager, vCenter, and Hypervisor credentials.

- Configure server ports and associate HyperFlex servers. See Associate HyperFlex Servers for more information.

- Configure the VLANs, the MAC pool, the 'hx-ext-mgmt' IP pool for out-of-band CIMC, iSCSI storage, and FC storage. See Configure UCS Manager for more information.

- Configure the Hypervisor. See Configure Hypervisor for more information.

- Configure the IP addresses. See Configure IP Addresses for more information.

- Configure and deploy the HyperFlex cluster. See Configure Your HyperFlex Cluster for more information.
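Before deployment, it can help to confirm that the centralized syslog repository used in the syslog step is reachable from the management network. The following Python sketch is a hypothetical pre-check, not part of the HX Data Platform Installer: it uses the standard library `logging.handlers.SysLogHandler` to send a single test message over UDP. The function name `send_test_message` and the default port value are assumptions for illustration.

```python
import logging
import logging.handlers
import socket


def send_test_message(host: str, text: str, port: int = 514) -> None:
    """Send one INFO-level message to a syslog collector over UDP.

    Hypothetical helper: host and port are placeholders for your own
    centralized syslog repository (514 is the conventional syslog UDP port).
    """
    logger = logging.getLogger("hx-syslog-check")
    logger.setLevel(logging.INFO)
    handler = logging.handlers.SysLogHandler(
        address=(host, port), socktype=socket.SOCK_DGRAM
    )
    logger.addHandler(handler)
    try:
        logger.info(text)
    finally:
        # Detach and close the handler so repeated calls do not stack handlers.
        logger.removeHandler(handler)
        handler.close()
```

For example, `send_test_message("192.0.2.10", "hx syslog test")` sends one message to a collector at the documentation address 192.0.2.10. Because UDP provides no delivery confirmation, check the collector's own log to verify that the message arrived.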
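The 'hx-ext-mgmt' IP pool hands out the out-of-band CIMC addresses for the HyperFlex servers, so checking the planned pool before entering it in UCS Manager can catch typos early. The following Python sketch is hypothetical: the `CimcPoolPlan` record and its field names are illustrative and do not correspond to installer or UCS Manager fields. It assumes IPv4 addresses and one CIMC address per server.

```python
import ipaddress
from dataclasses import dataclass, field


@dataclass
class CimcPoolPlan:
    """Hypothetical planning record for the 'hx-ext-mgmt' IP pool.

    Field names are illustrative only, not installer or UCS Manager fields.
    """
    subnet: str          # e.g. "10.1.10.0/24"
    gateway: str         # default gateway for the CIMC addresses
    pool_start: str      # first address in the pool block
    pool_end: str        # last address in the pool block
    node_count: int      # servers that each need one CIMC address (assumption)
    other_ips: list[str] = field(default_factory=list)  # e.g. installer VM IP


def check_cimc_pool(plan: CimcPoolPlan) -> list[str]:
    """Return human-readable problems; an empty list means none were found."""
    problems: list[str] = []
    net = ipaddress.ip_network(plan.subnet)  # strict: rejects host bits set
    gateway = ipaddress.ip_address(plan.gateway)
    start = ipaddress.ip_address(plan.pool_start)
    end = ipaddress.ip_address(plan.pool_end)
    reserved = (net.network_address, net.broadcast_address)

    # Gateway and pool bounds must be usable host addresses in the subnet.
    for name, addr in (("gateway", gateway), ("pool start", start), ("pool end", end)):
        if addr not in net:
            problems.append(f"{name} {addr} is outside {net}")
        elif addr in reserved:
            problems.append(f"{name} {addr} is the network or broadcast address")

    if start > end:
        problems.append(f"pool start {start} is after pool end {end}")
        return problems

    # Assumes one CIMC address per server.
    size = int(end) - int(start) + 1
    if size < plan.node_count:
        problems.append(f"pool holds {size} addresses but {plan.node_count} nodes need one each")

    # The gateway and any already-assigned address must not fall inside the pool.
    for raw in [plan.gateway, *plan.other_ips]:
        addr = ipaddress.ip_address(raw)
        if start <= addr <= end:
            problems.append(f"{addr} overlaps the pool {start}-{end}")
    return problems
```

For a four-node cluster, `check_cimc_pool(CimcPoolPlan("10.1.10.0/24", "10.1.10.1", "10.1.10.50", "10.1.10.53", 4, ["10.1.10.20"]))` returns an empty list, while shrinking the pool or listing an address inside it produces one message per problem.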
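VLAN IDs and the MAC pool prefix are also easy to mistype when configuring UCS Manager. The following hypothetical Python sketch checks a role-to-VLAN map against the IEEE 802.1Q usable range (1 to 4094) and flags any ID reused across roles. It also checks a MAC pool prefix, assuming the form `00:25:B5:XX` (the Cisco UCS `00:25:B5` range plus one administrator-chosen hex byte); confirm the exact format your installer version expects. The one-VLAN-per-role rule and the role names in the example are assumptions, and the sketch does not check the VLAN IDs that UCS Manager reserves for internal use.

```python
import re

# Assumption: MAC pool prefix of the form 00:25:B5:XX (one extra hex byte).
MAC_PREFIX_RE = re.compile(r"00:25:B5:[0-9A-F]{2}", re.IGNORECASE)


def check_vlans(vlans: dict[str, int]) -> list[str]:
    """Check a hypothetical role -> VLAN ID map for range errors and reuse."""
    problems: list[str] = []
    seen: dict[int, str] = {}
    for role, vlan_id in vlans.items():
        # IEEE 802.1Q reserves VLAN IDs 0 and 4095, leaving 1-4094 usable.
        if not 1 <= vlan_id <= 4094:
            problems.append(f"{role}: VLAN {vlan_id} is outside 1-4094")
        elif vlan_id in seen:
            # Assumes each role in this plan should get its own VLAN.
            problems.append(f"{role}: VLAN {vlan_id} is already used by {seen[vlan_id]}")
        else:
            seen[vlan_id] = role
    return problems


def check_mac_prefix(prefix: str) -> bool:
    """Return True if the prefix matches the assumed 00:25:B5:XX form."""
    return MAC_PREFIX_RE.fullmatch(prefix.strip()) is not None
```

For example, `check_vlans({"hx-inband-mgmt": 10, "hx-storage-data": 20, "hx-vm-network": 30, "hx-inband-vmotion": 40})` returns an empty list, and `check_mac_prefix("00:25:B5:A0")` returns `True`.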
