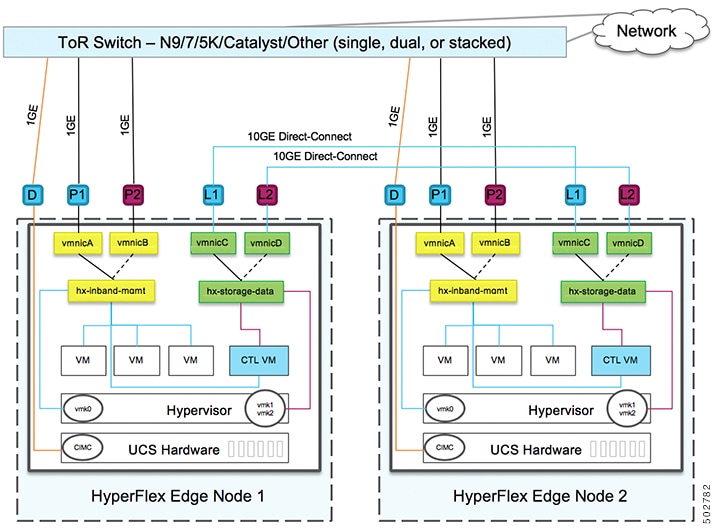

Select your 2-Node Network Topology

When selecting your 2-Node topology, keep in mind that the network topology chosen during initial deployment cannot be changed or upgraded without a full reinstallation. Choose your network topology carefully, with future needs in mind, and take into account the following Cisco HyperFlex offerings:

-

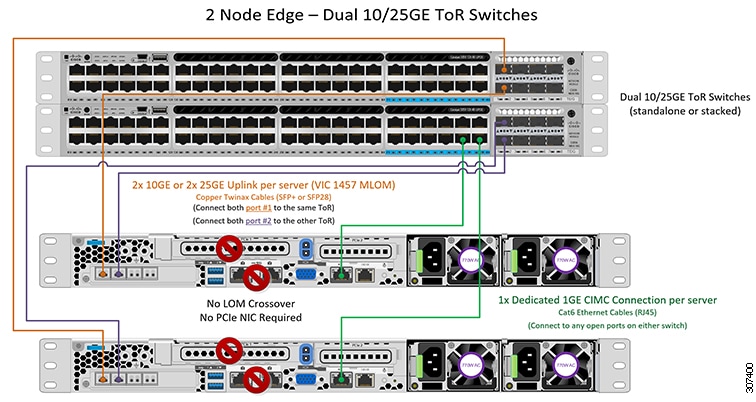

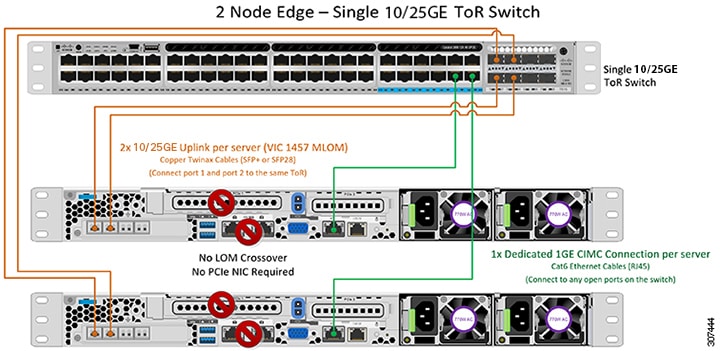

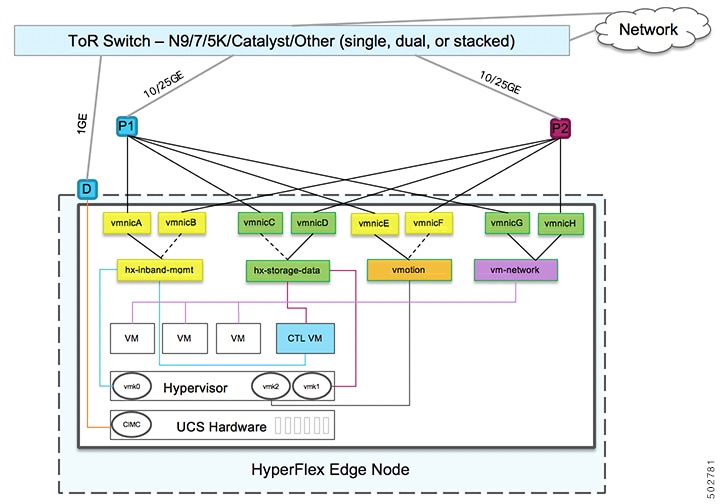

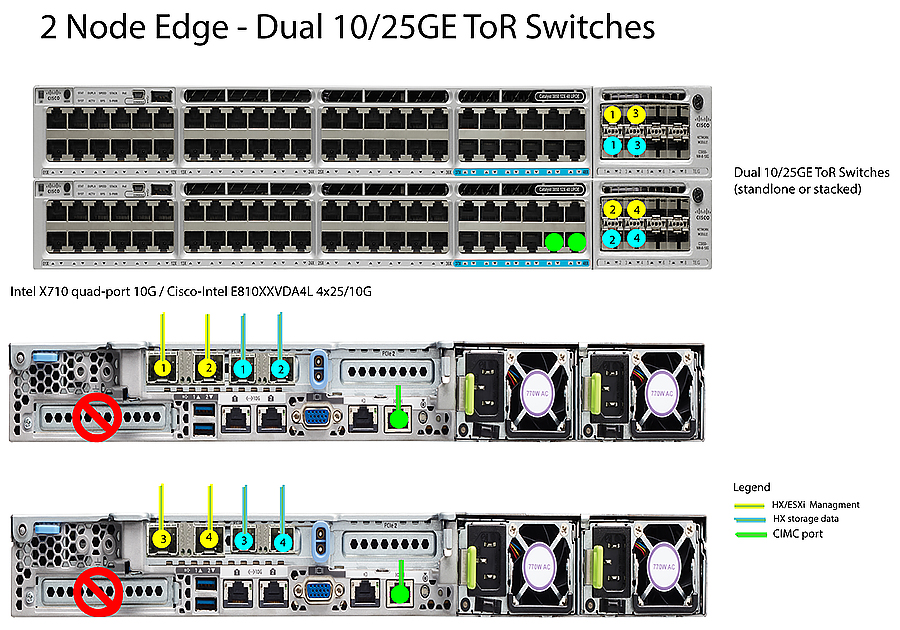

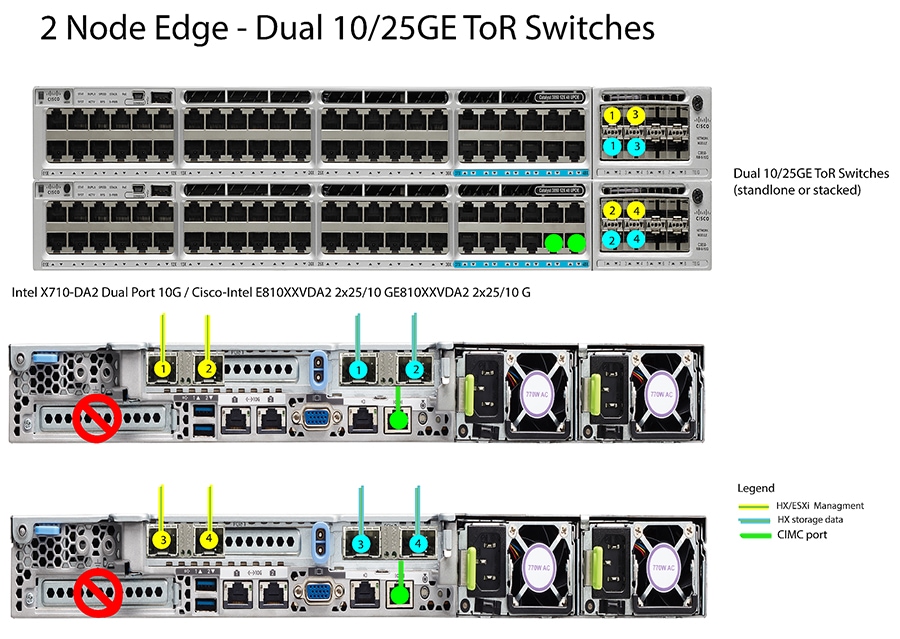

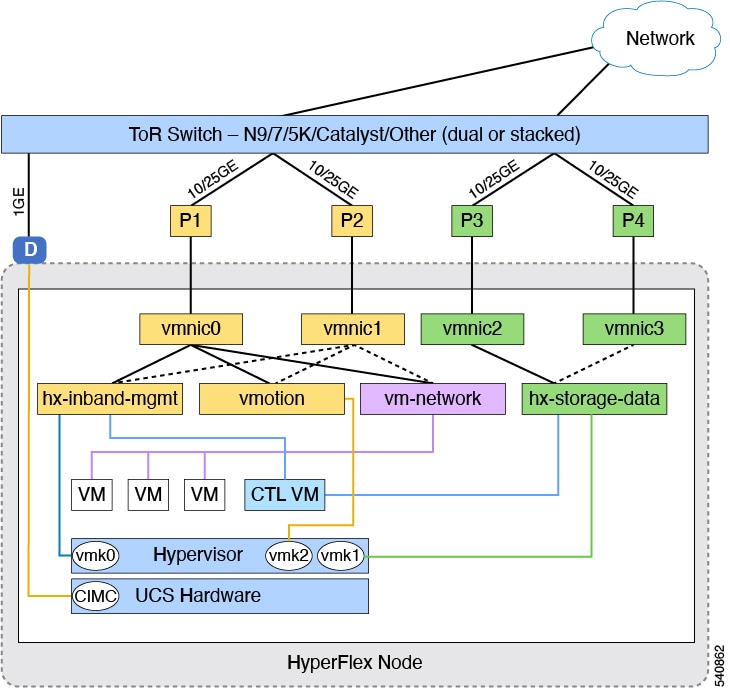

10/25Gigabit (GE) topology with Cisco VIC-based hardware or Intel NIC-Based adapters.

-

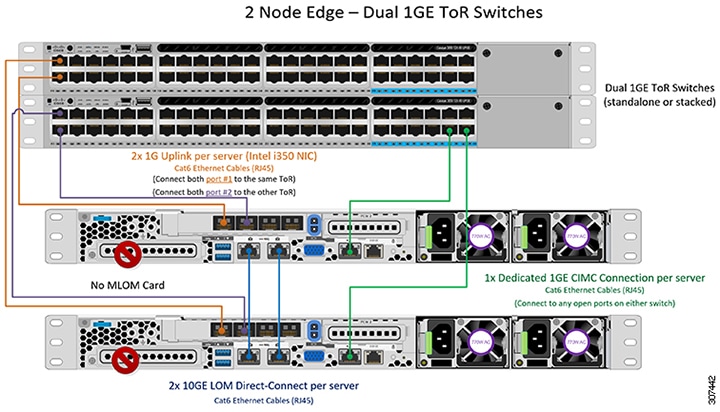

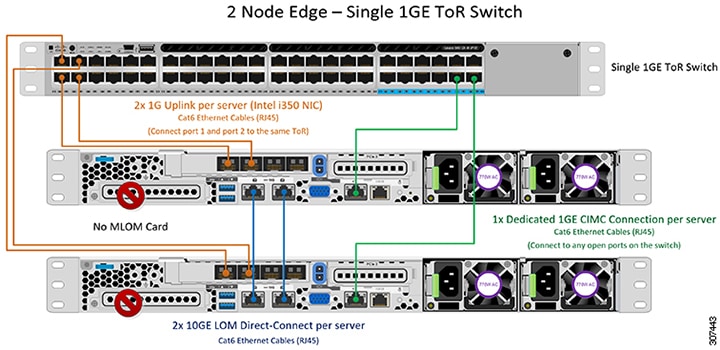

1GE topology, for clusters that will not need node expansion and where the top-of-rack ToR switch does not have 10GE ports available.

For more specific information on Cisco IMC connectivity, physical cabling, network design, and configuration guidelines, select from the following list of available topologies:

After completing the 10/25GE or 1GE ToR physical network and cabling section, continue with the Common Network Requirement Checklist.
