About backup and restore

The backup and restore functions let you create backup files and, if your network configuration requires it, restore them on a different appliance.

Backup

- You can back up automation data only, or both automation and Assurance data.

Important

NetFlow data is not backed up when you back up Catalyst Center's automation and Assurance data.

- Automation data consists of Catalyst Center databases, credentials, file systems, and files. The automation backup is a full backup.

- Assurance data consists of network assurance and analytics data. The first backup of Assurance data is a full backup; subsequent backups are incremental.

Important

- Do not modify or delete the backup files. If you do, you might not be able to restore them to Catalyst Center.

- A backup can be restored only on a Catalyst Center cluster that has the same FIPS mode setting as the source cluster. Restoring a backup to a cluster with a different FIPS mode setting fails, because Catalyst Center labels such backups as incompatible.


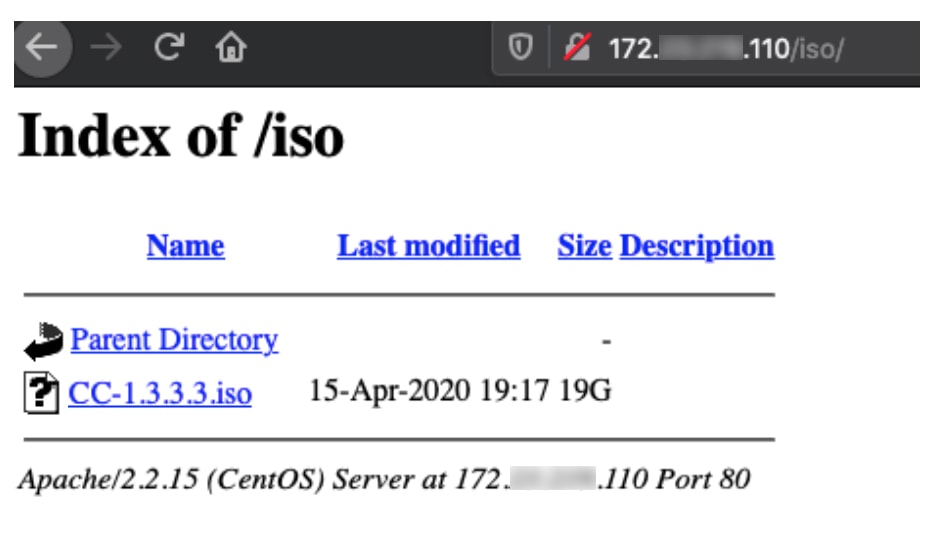

- Catalyst Center creates the backup files and posts them to a remote server. Each backup is stored in its own directory, which is named with the backup's UUID. For information about the remote server requirements, see Backup server requirements.

- Only one backup can run at a time. Running multiple backups concurrently is not supported.

- While a backup is in progress, you cannot delete files that have been uploaded to the file service, and any changes you make to these files might not be captured by the backup.

- We recommend that you:

  - Perform a daily backup to keep a current copy of your database and files.

  - Perform a backup after you change your configuration, for example, after you create or change a policy on a device.

  - Perform backups only during a low-impact or maintenance period.

- You can schedule weekly backups to run on a specific day of the week at a specific time.
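Catalyst Center runs scheduled backups itself. When you choose a weekly day and time, though, it can help to check when that slot will next occur so that it lands in a low-impact or maintenance period. The following Python sketch is a planning aid only, not a Catalyst Center API. `next_weekly_run` is a hypothetical helper. It uses Python's weekday numbering (Monday = 0) and assumes that `now` and the chosen time share one time zone, and it ignores DST transitions.

```python
from datetime import datetime, time, timedelta


def next_weekly_run(now: datetime, weekday: int, at: time) -> datetime:
    """Return the first datetime strictly after `now` that falls on `weekday`
    (0 = Monday ... 6 = Sunday, matching datetime.weekday()) at time `at`.

    Hypothetical planning helper: the result reuses now's tzinfo and
    does not account for DST transitions.
    """
    if not 0 <= weekday <= 6:
        raise ValueError("weekday must be between 0 (Monday) and 6 (Sunday)")
    # Number of days from today until the requested weekday (0 if today).
    days_ahead = (weekday - now.weekday()) % 7
    run_date = now.date() + timedelta(days=days_ahead)
    candidate = datetime.combine(run_date, at, tzinfo=now.tzinfo)
    if candidate <= now:
        # This week's slot has already passed (or is exactly now): use next week's.
        candidate += timedelta(days=7)
    return candidate


if __name__ == "__main__":
    # Illustrative values: a weekly backup on Sunday at 02:00.
    now = datetime(2024, 5, 15, 10, 0)  # a Wednesday
    print(next_weekly_run(now, 6, time(2, 0)))  # 2024-05-19 02:00:00
```

The sketch treats a slot that equals `now` as already passed. This keeps repeated calls from returning the same run twice.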

Restore

- You can restore the backup files from the remote server using Catalyst Center.

- When you restore the backup files, Catalyst Center removes the existing database and files and replaces them with the backup database and files. Catalyst Center is unavailable while a restore is in progress.

- You cannot take a backup on one version of Catalyst Center and restore it on another version. You can restore a backup only to an appliance that runs the same Catalyst Center software release (with the same first four digits) and the same application versions as the appliance from which the backup was taken. To view the current applications and versions, choose and click Currently Installed Applications.

- You can restore a backup to a Catalyst Center appliance that has a different IP address. This situation can occur if the IP address of Catalyst Center has changed and you need to restore from an older system.

Important

After a backup and restore of Catalyst Center:

- You must open the Integration Settings window and, if necessary, update the Callback URL Host Name or IP Address. For more information, see Configure Integration Settings.

- You can restore a backup file to an appliance with the same machine profile, for example, from a medium appliance to another medium appliance.

- You can restore a backup file from a lower-end appliance to a higher-end appliance. For example, you can restore the backup file from a medium appliance to a large or extra-large appliance.

- You cannot restore a backup file from a higher-end appliance to a lower-end appliance. The following scenarios are not supported:

  - Restoring a large appliance's backup file to a medium appliance.

  - Restoring an extra-large appliance's backup file to a large or medium appliance.

- You can restore a standalone node's backup file to a three-node cluster, or vice versa, provided that the target appliance has the same machine profile or a higher-end one. The one exception is that you cannot restore a backup file from a three-node cluster of extra-large appliances to a standalone extra-large appliance.
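The restore preconditions in this section can be collected into a pre-flight checklist: a matching FIPS mode setting, a matching software release and application versions, and the machine-profile and cluster rules. The following Python sketch encodes them. Everything in it is an assumption for illustration and not a Catalyst Center API: the `Deployment` type, the `restore_blockers` helper, the medium < large < extra-large ranking, and reading "same first four digits" as the first four dot-separated fields of the release string. Catalyst Center performs its own compatibility checks, so an empty result from this sketch does not guarantee that a restore will succeed.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Assumed ordering of machine profiles, lowest-end first, based on the
# medium/large/extra-large examples in this section.
PROFILE_RANK = {"medium": 0, "large": 1, "extra-large": 2}


@dataclass
class Deployment:
    """Hypothetical summary of a Catalyst Center deployment (not a product API)."""
    release: str            # assumed dotted form, for example "2.3.7.6"
    apps: Dict[str, str]    # application name -> installed version
    fips_enabled: bool
    profile: str            # "medium", "large", or "extra-large"
    nodes: int              # 1 = standalone, 3 = three-node cluster


def release_prefix(release: str) -> Tuple[str, ...]:
    # Assumption: "same first four digits" means the first four
    # dot-separated fields of the release string.
    return tuple(release.split(".")[:4])


def restore_blockers(source: Deployment, target: Deployment) -> List[str]:
    """List the documented rules that would block restoring a backup taken on
    `source` onto `target`. An empty list means no documented rule is violated."""
    reasons = []
    if source.fips_enabled != target.fips_enabled:
        reasons.append("FIPS mode setting differs")
    if release_prefix(source.release) != release_prefix(target.release):
        reasons.append("software release differs")
    if source.apps != target.apps:
        reasons.append("application versions differ")
    if PROFILE_RANK[target.profile] < PROFILE_RANK[source.profile]:
        reasons.append("target has a lower-end machine profile")
    # Documented exception: extra-large three-node cluster -> standalone extra-large.
    if (source.profile == target.profile == "extra-large"
            and source.nodes == 3 and target.nodes == 1):
        reasons.append("XL three-node cluster to standalone XL is unsupported")
    return reasons


if __name__ == "__main__":
    apps = {"Assurance": "2.3.7.6"}  # illustrative name and version only
    medium_standalone = Deployment("2.3.7.6", apps, False, "medium", 1)
    large_cluster = Deployment("2.3.7.6", dict(apps), False, "large", 3)
    print(restore_blockers(medium_standalone, large_cluster))  # []
    print(restore_blockers(large_cluster, medium_standalone))  # ['target has a lower-end machine profile']
```

The sketch returns every violated rule rather than stopping at the first one. This lets you see all mismatches before you plan a restore.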

Backup and restore event notifications

You can receive a notification whenever a backup or restore event takes place. To configure and subscribe to these notifications, complete the steps described in the "Work with Event Notifications" topic of the Cisco Catalyst Center Platform User Guide. When completing this procedure, ensure that you select and subscribe to the SYSTEM-BACKUP-v2 and SYSTEM-RESTORE-v2 events.

A notification is generated and sent whenever an event listed in this table occurs:

| Operation | Event |
|---|---|
| Backup | The process to create a backup file for your system has started. |
| | A backup file could not be created for your system. This event typically happens because: |
| Restore | The process to restore a backup file has started. |
| | The restoration of a backup file failed. This event typically happens because: |
