FlexPod Datacenter Zero Trust Framework Design Guide

Bias-Free Language

The documentation set for this product strives to use bias-free language. For the purposes of this documentation set, bias-free is defined as language that does not imply discrimination based on age, disability, gender, racial identity, ethnic identity, sexual orientation, socioeconomic status, and intersectionality. Exceptions may be present in the documentation due to language that is hardcoded in the user interfaces of the product software, language used based on RFP documentation, or language that is used by a referenced third-party product. Learn more about how Cisco is using Inclusive Language.

In partnership with:

About the Cisco Validated Design Program

The Cisco Validated Design (CVD) program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, go to: http://www.cisco.com/go/designzone.

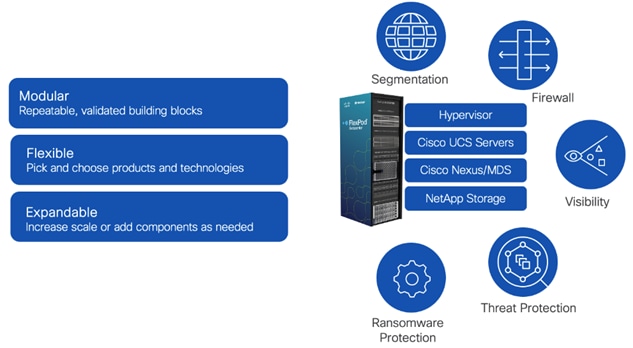

The FlexPod Datacenter solution is a validated approach for deploying Cisco and NetApp technologies and products to build shared private and public cloud infrastructure. Cisco and NetApp have partnered to deliver a series of FlexPod solutions that enable strategic data-center platforms. The success of the FlexPod solution is driven by its ability to evolve and incorporate both technology and product innovations in the areas of management, computing, storage, networking, and security. This document explains the design details of incorporating and implementing various tools, technologies, and products to deliver a Zero Trust security framework for FlexPod Datacenter.

FlexPod delivers an integrated architecture that incorporates compute, storage, and network design best practices, thereby minimizing IT risks by validating the integrated architecture to ensure compatibility between various components. The solution also addresses IT pain points by providing documented design guidance, deployment guidance, and support that can be used in various stages (planning, designing, and implementation) of a deployment.

FlexPod Datacenter delivers the following key benefits:

● Simpler and programmable infrastructure: FlexPod infrastructure delivered as infrastructure-as-code through a single partner integrable open API.

● Latest hardware and software compute innovations: policy-based configurations, delivered using Cisco Intersight, to deploy and manage the latest processor, memory, network, and power/cooling improvements.

● Storage Modernization: deliver high-speed, consistent, low latency, multi-tenant storage using a range of NetApp storage arrays.

● Innovative cloud operations: continuous feature delivery and no need for maintaining on-premises physical or virtual machines supporting management functions.

● Built for investment protection: design ready for future compute technologies such as liquid cooling and high-wattage CPUs; CXL-ready.

A Zero Trust Security Framework is a comprehensive approach to network security that assumes no user, system, or device can be trusted by default, regardless of its location relative to the network perimeter. It operates under the principle of "never trust, always verify": every access request is thoroughly verified before access is granted, irrespective of where it originates. The Zero Trust Framework strives to protect modern digital environments through network segmentation, prevention of lateral movement, Layer 7 threat prevention, and simplified granular user-access control.

Implementing a Zero Trust framework on FlexPod infrastructure provides the following additional benefits:

● Enhanced Security: By treating every access request as a potential threat, Zero Trust significantly reduces the risk of data breaches and other security incidents.

● Greater Visibility: Constant monitoring of network activities provides a comprehensive view of the network, enabling quick identification and response to any unusual or suspicious activities.

● Reduced Attack Surface: By enforcing least privilege access and micro-segmentation, Zero Trust minimizes the potential points of vulnerability in the network.

● Improved Compliance: The stringent security controls in Zero Trust can help organizations meet compliance requirements for data protection and privacy.

● Efficient Incident Response: Quickly detect, block, and respond to threats. Verify data integrity and implement data loss prevention.

● Protection Against Internal Threats: Zero Trust considers the possibility of threats coming from inside the network, offering protection against insider threats as well as external ones.

The Zero Trust Framework for the FlexPod solution incorporates various additional security components by Cisco and NetApp including Cisco Secure Firewall Threat Defense (FTD), Cisco Secure Network Analytics (previously Stealthwatch), Cisco Secure Workload (previously Tetration), and NetApp Ransomware Protection.

If you’re interested in understanding the FlexPod design and deployment details, including the configuration of various elements of design and associated best practices, see the Cisco Validated Designs for FlexPod: https://www.cisco.com/c/en/us/solutions/design-zone/data-center-design-guides/flexpod-design-guides.html.

This chapter contains the following:

● Audience

The FlexPod Datacenter solution incorporates various security technologies from Cisco and NetApp and follows security design best practices to deliver a hardened platform. The Zero Trust framework introduces several secure design principles that require additional security and visibility products. The Zero Trust framework design requirements can be summarized as follows:

● Never assume trust, always verify, and enforce least privilege access.

● Establish and enforce trust on platforms. Implement device and protocol hardening.

● Reduce the attack surface using segmentation and control traffic flow between segments.

● Gain complete visibility into processes, devices, and workloads.

● Quickly detect, block, and respond to threats. Verify data integrity and implement data loss prevention.

● Provide an automated, flexible, standardized, and layered approach to security.
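The principles above can be reduced to a default-deny rule between segments. A flow is permitted only when an explicit rule allows it, and its origin grants no implicit trust. The following Python sketch shows only that evaluation logic. The segment names and ports are hypothetical. In this design, enforcement happens on Cisco Secure Firewall Threat Defense, not in application code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    """A connection attempt between two segments (hypothetical names)."""
    src_segment: str
    dst_segment: str
    dst_port: int


# Explicit allow-list of (source segment, destination segment, port).
# Anything not listed is denied, including traffic inside the same tenant.
ALLOWED_FLOWS = {
    ("tenant-a-web", "tenant-a-app", 8443),
    ("tenant-a-app", "tenant-a-db", 5432),
}


def is_permitted(flow: Flow) -> bool:
    """Default deny: permit a flow only if it matches an explicit rule.

    The source location grants no implicit trust; every flow is checked.
    """
    return (flow.src_segment, flow.dst_segment, flow.dst_port) in ALLOWED_FLOWS


if __name__ == "__main__":
    print(is_permitted(Flow("tenant-a-web", "tenant-a-app", 8443)))  # True
    print(is_permitted(Flow("tenant-a-web", "tenant-a-db", 5432)))   # False: skips the app tier
    print(is_permitted(Flow("tenant-b-app", "tenant-a-db", 5432)))   # False: cross-tenant
```

An allow-list keeps least privilege explicit. Adding a new tenant or tier grants nothing until a rule is written for it.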

To deliver a Zero Trust framework on FlexPod, several technologies and security products are introduced in the following key areas:

● Platform Resilience: device and protocol hardening including traffic isolation, role-based access control (RBAC), and utilizing secure connectivity.

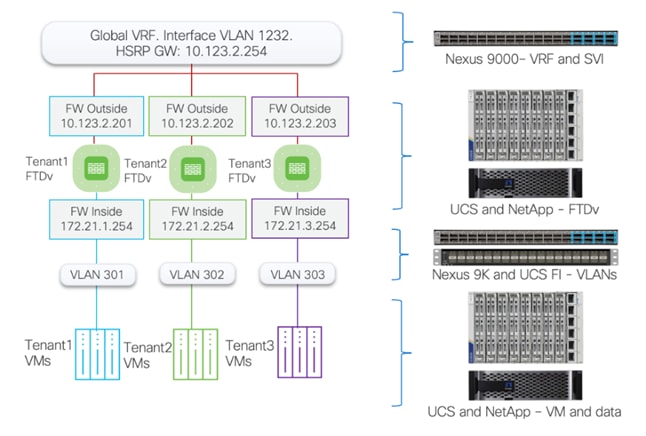

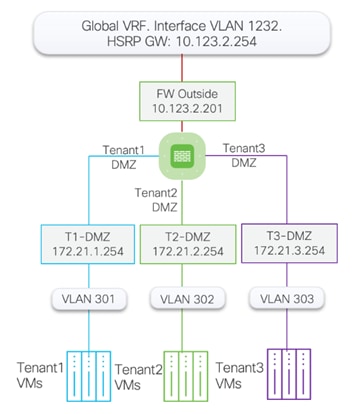

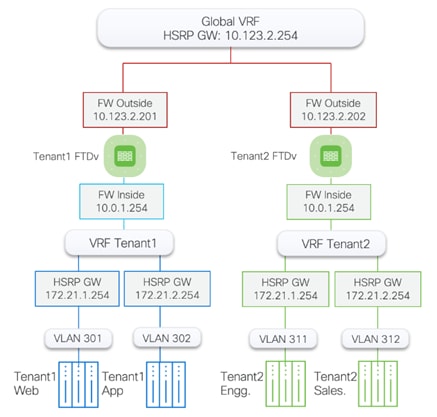

● Segmentation and Control: multi-tenancy design using virtual routing and forwarding (VRF), VLANs, and Cisco Secure Firewall Threat Defense.

● Visibility and Monitoring: network and OS level visibility and anomaly detection using Cisco Secure Network Analytics and Cisco Secure Workload.

● Threat Protection and Response: contain the breach and recover quickly using Cisco Secure Workload and NetApp Ransomware Protection.

● Device Automation: automated infrastructure deployment including day-2 tenant setup using Ansible.

The intended audience of this document includes but is not limited to IT architects, sales engineers, field consultants, professional services, IT managers, partner engineering, and customers who want to take advantage of an infrastructure built to deliver IT efficiency and enable IT innovation.

This document provides design guidance around incorporating the Zero Trust framework design principles in a FlexPod Datacenter environment. The document introduces various design elements and describes various considerations and best practices for a successful deployment of additional security technologies and products such as Cisco Secure Firewall Threat Defense, Cisco Secure Network Analytics, Cisco Secure Workload, and NetApp Ransomware Protection.

These design elements distinguish this FlexPod Datacenter CVD from previous designs:

● Enhanced platform security by implementing additional device and protocol hardening.

● Multi-tenant design where data traffic between various tenants is controlled using Cisco Secure Firewall Threat Defense.

● Cisco Secure Workload (formerly Tetration) and Cisco Secure Network Analytics (formerly Stealthwatch) for network and process level visibility.

● Threat protection using Cisco Secure Workload.

● Data loss prevention using NetApp Ransomware Protection.

The Zero Trust framework for FlexPod solution offers the following key benefits for securing your infrastructure:

● Cisco security technologies: FlexPod leverages Cisco's advanced security technologies, such as segmentation, firewalls, intrusion detection/prevention systems, Cisco Secure Workload, and Cisco Secure Network Analytics, to identify, mitigate, and protect against various cyber threats.

● NetApp security features: NetApp storage solutions offer encryption at rest and in transit, data lifecycle management, and role-based access control to secure sensitive data. NetApp Autonomous Ransomware Protection uses machine learning to provide comprehensive data protection with negligible performance impact.

● FlexPod security features: FlexPod designs follow various security best practices such as secure boot and firmware updates, disabling insecure users and protocols, and employing role-based access control to prevent unauthorized modifications and vulnerabilities.

FlexPod designs are modular and scalable in nature. The integrated security framework design therefore preserves that modularity and scalability while providing an automated, flexible, standardized, and layered approach to security.

This chapter contains the following:

● Cisco Unified Computing System X-Series

● Cisco Nexus 93600CD-GX Ethernet Switch

● Infrastructure as Code with Ansible

● Cisco Secure Firewall Threat Defense Virtual

● Cisco Secure Firewall Management Center

● Cisco Secure Network Analytics

● Intel Confidential Computing

● NetApp Security and Ransomware Protection

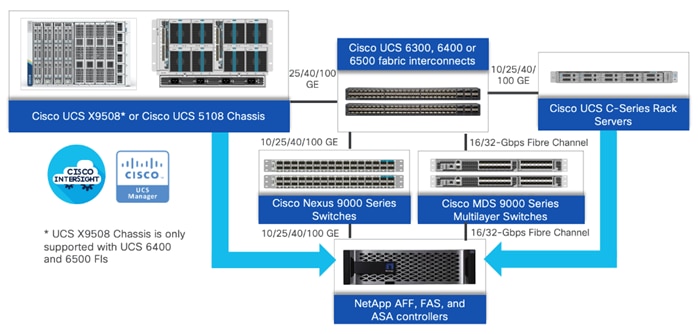

FlexPod Datacenter architecture is built using the following infrastructure components for compute, network, and storage:

● Cisco Unified Computing System (Cisco UCS)

● Cisco Nexus and Cisco MDS switches

● NetApp All Flash FAS (AFF), FAS, and All SAN Array (ASA) storage systems

All the FlexPod components have been integrated so that you can deploy the solution quickly and economically while eliminating many of the risks associated with researching, designing, building, and deploying similar solutions from the foundation. One of the main benefits of FlexPod is its ability to maintain consistency at scale. Each of the component families shown in Figure 2 (Cisco UCS, Cisco Nexus, Cisco MDS, and NetApp controllers) offers platform and resource options to scale up or scale out the infrastructure while supporting the same features.

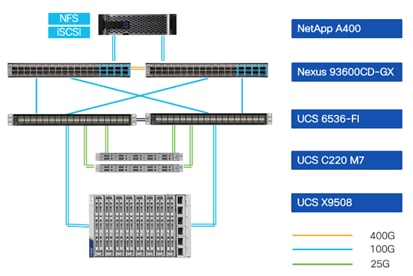

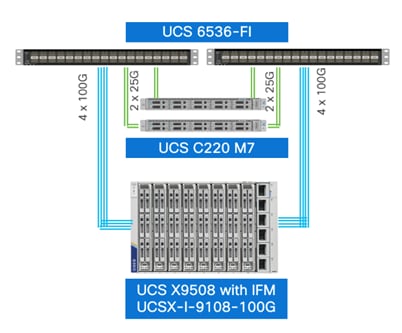

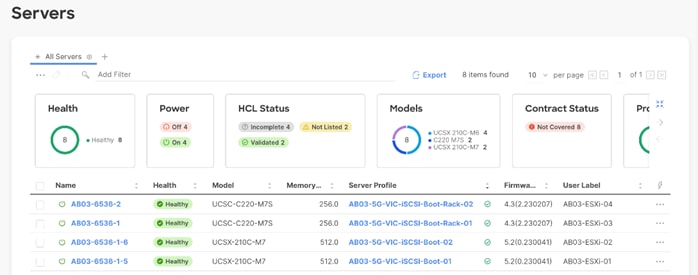

The Zero Trust framework for FlexPod Datacenter solution is built using the following hardware components:

● Cisco UCS X9508 Chassis with Cisco UCSX-I-9108-100G Intelligent Fabric Modules and up to eight Cisco UCS X210c M7 Compute Nodes.

● Fifth-generation Cisco UCS 6536 Fabric Interconnects to support 10/25/40/100GbE and 16/32GbFC connectivity from various components.

● Cisco UCS C220 M7 or C240 M7 Rack Mount Servers attached directly to the Fabric Interconnects.

● High-speed Cisco NX-OS-based Cisco Nexus C93600CD-GX switching to support up to 400GE Ethernet connectivity.

● NetApp AFF A400 end-to-end NVMe storage with up to 100GE connectivity and 32G FC connectivity.

The management software components of the Zero Trust framework for FlexPod consist of:

● Cisco Intersight platform to deploy the Cisco UCS components and maintain and support the FlexPod components.

● Cisco Intersight Assist Virtual Appliance to help connect NetApp AIQUM, Cisco Nexus (and MDS) Switches, and VMware vCenter to Cisco Intersight.

● NetApp Active IQ Unified Manager to monitor and manage the storage and for NetApp ONTAP integration with Cisco Intersight.

● VMware vCenter to set up and manage the virtual infrastructure as well as Cisco Intersight integration.

FlexPod Security Hardening

FlexPod has always been built with security at its core, providing a safe and secure platform for users. The design emphasizes not only system functionality but also data protection and traffic isolation. The security fundamentals of FlexPod are documented in NetApp technical report TR-4984-1123, which provides detailed insight into secure FlexPod operations. TR-4984-1123 describes a comprehensive approach to safeguarding FlexPod infrastructure by implementing security controls and best practices across all layers: compute, network, storage, and virtualization. This multi-layered defense aims to minimize the attack surface and mitigate the risk of data breaches, unauthorized access, and other security incidents. Highlights of the FlexPod Hardening Technical Report (TR) include:

● Integrated Security: an integrated approach to security hardening covers all layers of the solution stack and ensures that all aspects of the infrastructure are secure.

● Best Practices Guidance: provides detailed guidance based on hardening guides from VMware, NetApp, and Cisco across the FlexPod infrastructure.

● Secure by Design: solution designed with security in mind from the ground up where security isn't just an afterthought or add-on, but a fundamental part of the solution's design and implementation.

Note: FlexPod hardening is an ongoing process that should be reviewed and updated regularly to reflect changes in security threats and best practices.

This Cisco Validated Design (CVD) enhances the existing FlexPod security fundamentals by incorporating additional software components for improved visibility, segmentation, threat mitigation, and ransomware protection.

Cisco Unified Computing System X-Series

Cisco UCS X-Series simplifies data center design by providing flexible server options. A single server type that supports a broad range of workloads results in fewer data center products to manage and maintain. Cisco Intersight manages Cisco UCS X-Series and also integrates with Cisco and third-party devices, including Cisco Nexus and MDS switches, VMware vCenter, and NetApp storage, to provide visibility, optimization, and orchestration from a single platform.

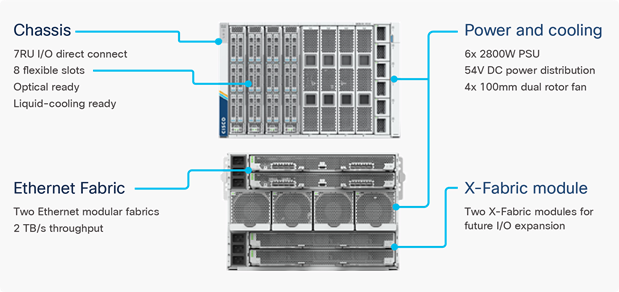

Cisco UCS X9508 Chassis

The Cisco UCS X-Series chassis is engineered to be adaptable and flexible. As shown in Figure 4, the Cisco UCS X9508 chassis has only a power-distribution midplane. This midplane-free design provides fewer obstructions for better airflow. For I/O connectivity, vertically oriented compute nodes intersect with horizontally oriented fabric modules, allowing the chassis to support future fabric innovations. The superior packaging of the Cisco UCS X9508 Chassis enables larger compute nodes, providing more space for actual compute components such as memory, GPUs, drives, and accelerators. Improved airflow through the chassis enables support for higher-power components, and more space allows for future thermal solutions such as liquid cooling.

The Cisco UCS X9508 7-Rack-Unit (7RU) chassis has eight flexible slots. These slots can house a combination of compute nodes and a pool of current and future I/O resources that includes GPU accelerators, disk storage, and nonvolatile memory. At the top rear of the chassis are two Intelligent Fabric Modules (IFMs) that connect the chassis to upstream Cisco UCS 6400 or 6500 Series Fabric Interconnects. At the bottom rear of the chassis are slots to house X-Fabric modules that can flexibly connect the compute nodes with I/O devices. Six 2800W Power Supply Units (PSUs) provide 54V power to the chassis in multiple redundancy configurations. A higher voltage allows efficient power delivery with reduced power loss. Efficient, 100mm, dual counter-rotating fans deliver industry-leading airflow and power efficiency, and optimized thermal algorithms enable different cooling modes to best support your environment.

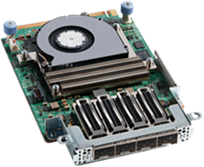

Cisco UCSX-I-9108-100G Intelligent Fabric Modules

The Cisco UCS X9508 Chassis receives 100-Gbps network connectivity from a pair of Cisco UCSX-I-9108-100G Intelligent Fabric Modules (IFMs). Like the fabric extenders used in the Cisco UCS 5108 Blade Server Chassis, these modules carry all network traffic to a pair of Cisco UCS 6536 Fabric Interconnects (FIs). IFMs also host the Chassis Management Controller (CMC) for chassis management. In contrast to systems with fixed networking components, the midplane-free design of the Cisco UCS X9508 enables easy upgrades to new networking technologies and network speeds as they emerge.

Each IFM supports eight 100-Gbps uplink ports for connecting the Cisco UCS X9508 Chassis to the FIs, and eight 100-Gbps or thirty-two 25-Gbps server ports for the eight compute nodes. IFM server ports can provide up to 200 Gbps of unified fabric connectivity per compute node across the two IFMs. The uplink ports connect the chassis to the Cisco UCS FIs, providing up to 1600 Gbps of connectivity across the two IFMs. The unified fabric carries management, VM, and Fibre Channel over Ethernet (FCoE) traffic to the FIs. At the FIs, server management traffic is routed to the Cisco Intersight cloud operations platform. FCoE traffic is forwarded to native Fibre Channel interfaces through unified ports on the FI (to Cisco MDS switches). Data Ethernet traffic is forwarded upstream to the data center network through Cisco Nexus switches.
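The bandwidth figures above follow from simple port arithmetic. The following Python sketch reproduces them from the per-IFM port counts stated in the text. The oversubscription ratio assumes that all eight uplinks on each IFM are cabled. That ratio is derived here and is not a figure quoted by the design.

```python
# Figures from the text: each IFM has eight 100-Gbps uplinks and one 100-Gbps
# server port per compute node; a chassis has two IFMs and up to eight nodes.
IFMS_PER_CHASSIS = 2
UPLINKS_PER_IFM = 8
UPLINK_GBPS = 100
SERVER_PORT_GBPS_PER_NODE_PER_IFM = 100
NODES_PER_CHASSIS = 8


def chassis_uplink_gbps() -> int:
    """Aggregate chassis-to-FI bandwidth across both IFMs."""
    return IFMS_PER_CHASSIS * UPLINKS_PER_IFM * UPLINK_GBPS


def per_node_gbps() -> int:
    """Unified fabric bandwidth available to one compute node across both IFMs."""
    return IFMS_PER_CHASSIS * SERVER_PORT_GBPS_PER_NODE_PER_IFM


def oversubscription_ratio() -> float:
    """Total server-facing bandwidth divided by total uplink bandwidth."""
    return (NODES_PER_CHASSIS * per_node_gbps()) / chassis_uplink_gbps()


if __name__ == "__main__":
    print(chassis_uplink_gbps())     # 1600
    print(per_node_gbps())           # 200
    print(oversubscription_ratio())  # 1.0
```

With every uplink connected, server-facing and uplink bandwidth match (1:1). Cabling fewer uplinks raises the ratio proportionally.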

Cisco UCS X210c M7 Compute Node

The Cisco UCS X9508 Chassis is designed to host up to 8 Cisco UCS X210c M7 Compute Nodes. Figure 6 shows the hardware details of the Cisco UCS X210c M7 Compute Nodes.

The Cisco UCS X210c M7 features:

● CPU: Up to 2x 4th or 5th Gen Intel Xeon Scalable Processors with up to 64 cores per processor.

● Memory: Up to 32 x 256 GB DDR5-5600 DIMMs for a maximum of 8 TB of main memory.

● Disk storage: Up to 6 SAS or SATA drives or NVMe drives can be configured with the choice of an internal RAID controller or passthrough controllers. Up to two 960GB M.2 memory cards can be added to the Compute Node with optional hardware RAID.

● GPUs: The optional front mezzanine GPU module allows support for up to two HHHL GPUs. Adding a mezzanine card and a Cisco UCS X440p PCIe Node allows up to four more GPUs to be supported with an X210c M7.

● Virtual Interface Card (VIC): Up to 2 VICs can be installed in a Compute Node. These include an mLOM Cisco UCS VIC 15230/15231 or an mLOM Cisco UCS VIC 15420, and a mezzanine Cisco UCS VIC 15422.

● Security: The server supports an optional Trusted Platform Module (TPM). Additional security features include a secure boot FPGA and ACT2 anticounterfeit provisions.

Cisco UCS C220 M7 Rack Server

Cisco UCS Virtual Interface Cards (VICs)

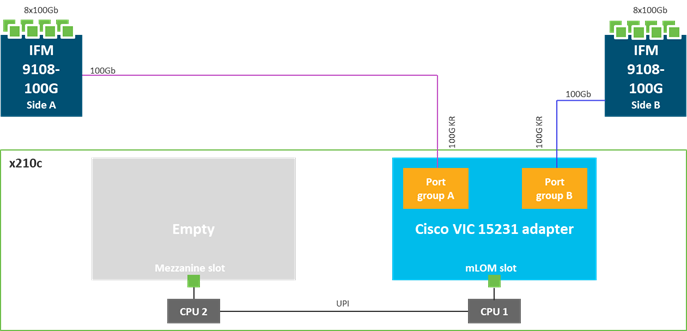

During this validation, the following VICs were installed in Cisco UCS X210c M7 and Cisco UCS C220 M7 servers:

Cisco UCS VIC 15230 and Cisco UCS VIC 15231

Cisco UCS VIC 15230 and 15231 fit in the mLOM slot in the Cisco UCS X210c Compute Node. They enable up to 100 Gbps of unified fabric connectivity to each of the chassis IFMs, for a total of 200 Gbps of connectivity per server. Cisco UCS VIC 15230 is functionally equivalent to Cisco UCS VIC 15231 (used during this validation) but incorporates secure boot technology. Connectivity from Cisco UCS VIC 15230 and 15231 to the IFM and up to the fabric interconnects is delivered through 100-Gbps KR lanes. Cisco UCS VIC 15230 and 15231 support 512 virtual interfaces (both Fibre Channel and Ethernet) along with the latest networking innovations such as NVMe over Fabrics, VXLAN/NVGRE offload, and so forth.

Note: Since Cisco UCS VIC 15230 incorporates secure boot technology, for additional security, Cisco UCS VIC 15230 is recommended for Cisco UCS X210c M7 compute nodes.

Cisco UCS VIC 15427 and 15428

The Cisco UCS VIC 15427/15428 is a quad-port small-form-factor pluggable (SFP+/SFP28/SFP56) mLOM card designed for Cisco UCS C-series M6/M7 rack servers. The Cisco UCS VIC 15427 is functionally equivalent to the 15428 (used during this validation) but incorporates secure boot technology. The card supports 10/25/50-Gbps Ethernet or FCoE. The card can present PCIe standards-compliant interfaces to the host, and these can be dynamically configured as either NICs or HBAs. When a UCS rack server with VIC 15427/15428 is connected to a fabric interconnect, the VIC is provisioned through Cisco Intersight™ Managed Mode (IMM) or Cisco UCS Manager (UCSM) policies.

Note: Since Cisco UCS VIC 15427 incorporates secure boot technology, for additional security, Cisco UCS VIC 15427 is recommended for Cisco UCS C-Series M7 rack servers.

Secure Boot mLOM VICs

The Cisco UCS VIC 15230 and VIC 15427 provide the Secure Boot feature for Cisco UCS X-Series compute nodes and Cisco UCS C-Series rack servers, respectively, which significantly enhances system security. To protect against tampering with the bootloader code, the Cisco VIC employs a Trust Anchor Module (TAM) in its hardware. The TAM is a secure component that ensures the integrity of the device's firmware and its boot process. The Cisco VIC checks digitally signed images to verify their authenticity. This process ensures that only genuine, unmodified code boots on a Cisco device, significantly reducing the risk of malicious code execution.

Cisco UCS 6536 Fabric Interconnects

The Cisco UCS Fabric Interconnects (FIs) provide a single point for connectivity and management for the entire Cisco Unified Computing System. Typically deployed as an active/active pair, the system’s FIs integrate all components into a single, highly available management domain controlled by Cisco Intersight or Cisco UCS Manager. Cisco UCS FIs provide low-latency, lossless, cut-through switching that supports LAN, SAN, and management traffic using a single set of cables.


The Cisco UCS 6536 used in the current design is a 36-port Fabric Interconnect. This single-RU device includes up to 36 10/25/40/100-Gbps Ethernet ports. Four of these 36 ports (33-36) can be configured to provide 16 8/16/32-Gbps Fibre Channel ports using 128-Gbps to 4x32-Gbps breakouts. All 36 ports support Ethernet breakout cables or QSA interfaces.
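The Fibre Channel port count follows from the breakout arithmetic. Each of the four FC-capable ports splits into four 32-Gbps lanes. The following Python sketch uses only the figures stated above. It assumes that all four ports are converted and that every lane runs at its 32-Gbps maximum.

```python
# Figures from the text above (Cisco UCS 6536).
TOTAL_PORTS = 36
FC_CAPABLE_PORTS = range(33, 37)  # ports 33, 34, 35, 36
FC_PORTS_PER_BREAKOUT = 4         # one 128-Gbps port split into 4x32 Gbps
FC_MAX_GBPS = 32


def fc_port_count() -> int:
    """Fibre Channel ports available when all FC-capable ports are broken out."""
    return len(FC_CAPABLE_PORTS) * FC_PORTS_PER_BREAKOUT


def fc_aggregate_gbps() -> int:
    """Aggregate FC bandwidth with every breakout lane running at 32 Gbps."""
    return fc_port_count() * FC_MAX_GBPS


def remaining_ethernet_ports() -> int:
    """Front-panel ports left for Ethernet once ports 33-36 carry FC."""
    return TOTAL_PORTS - len(FC_CAPABLE_PORTS)


if __name__ == "__main__":
    print(fc_port_count())             # 16
    print(fc_aggregate_gbps())         # 512
    print(remaining_ethernet_ports())  # 32
```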

Cisco UCS Server Security Highlights

Cisco UCS offers a comprehensive security approach that is built into its design from the ground up. Unlike systems where security is an afterthought, with Cisco UCS, every supplier is held to the highest standards, ensuring robust security from the start. Cisco UCS has security controls and policies at every level of infrastructure. These controls provide hardware attestation, integrity, and seamless integration with partner solution security features, offering a secure and reliable computing environment.

Secure Operational Model

In terms of operation, Cisco UCS fits seamlessly into security best practices. It supports multi-factor authentication, single sign-on, and secure APIs, enhancing the system's overall security while providing you with a secure and efficient user experience. Additionally, Cisco UCS reduces the opportunity for malicious or erroneous exposures and alterations by restricting access to sensitive data and controls, ensuring that only authorized individuals can access and modify critical information.

Secure Internal and External Communication

Cisco UCS provides customizable, secure, and auditable internal communication. This is achieved using encryption, detailed logging, and ensuring that all communication within the system is secure and traceable. All the external communication between various Cisco UCS components is authenticated, authorized, and uses secure, encrypted traffic and protocols.

UEFI Secure Boot

This validation of FlexPod uses Unified Extensible Firmware Interface (UEFI) Secure Boot. UEFI is a specification that defines a software interface between an operating system and platform firmware. With UEFI secure boot enabled, all executables, such as boot loaders and adapter drivers, are authenticated by the BIOS before they can be loaded.
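UEFI Secure Boot keeps an allowed database (db) and a forbidden database (dbx), and firmware consults both before it runs an executable. The following Python sketch is a toy model of that load decision. It uses image hashes only, which is one of the entry types UEFI supports. Real firmware mainly verifies signatures against certificates enrolled in db, and this sketch does not model signature verification. The image contents are made up for illustration.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def may_load(image: bytes, db: set, dbx: set) -> bool:
    """Toy UEFI Secure Boot decision using image hashes only.

    dbx (forbidden) is checked first, so a revoked image is rejected even if
    it also appears in db (allowed). Anything not in db is also rejected.
    """
    digest = sha256_hex(image)
    if digest in dbx:
        return False
    return digest in db


if __name__ == "__main__":
    bootloader = b"trusted bootloader"
    driver = b"trusted adapter driver"
    revoked = b"revoked bootloader"
    db = {sha256_hex(bootloader), sha256_hex(driver), sha256_hex(revoked)}
    dbx = {sha256_hex(revoked)}
    print(may_load(bootloader, db, dbx))          # True
    print(may_load(revoked, db, dbx))             # False: listed in dbx
    print(may_load(b"unsigned driver", db, dbx))  # False: not in db
```

Checking dbx before db means a revocation always wins. This is why revoking a compromised bootloader does not require removing it from db.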

Trusted Platform Module (TPM)

Cisco UCS compute servers also contain an optional Trusted Platform Module (TPM). VMware ESXi 7.0 U3 supports UEFI Secure Boot and VMware vCenter 7.0 U3 supports UEFI Secure Boot Attestation between the TPM module and ESXi, validating that UEFI Secure Boot has properly taken place.

Digitally Signed Redundant Firmware

Cisco UCS firmware images are digitally signed by the vendor, and secure boot checks the digital signature during boot before allowing the firmware update to proceed. The CIMC, I/O modules, BIOS, and Cisco adapters each have two firmware slots in flash, and each slot holds a version of the firmware. One slot is active at any time, while the other is the backup slot. These components boot from the active slot. If the primary image in the active slot is compromised, the system can be set to boot from the non-active slot, which marks the backup firmware as known good.
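The dual-slot behavior described above can be sketched as a small state machine. In this Python toy model, a SHA-256 digest comparison stands in for the vendor signature check, and the version strings are hypothetical. The model also simplifies the behavior in one respect. Here the fallback to the backup slot happens automatically, whereas the text says the system *can be set* to boot from the non-active slot.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class FirmwareSlot:
    version: str
    image: bytes


@dataclass
class DualSlotComponent:
    """Toy model of a component with an active and a backup firmware slot."""
    active: FirmwareSlot
    backup: FirmwareSlot
    # Stand-in for vendor signature checks: SHA-256 digests of known-good images.
    trusted_digests: set = field(default_factory=set)

    def _verified(self, slot: FirmwareSlot) -> bool:
        return hashlib.sha256(slot.image).hexdigest() in self.trusted_digests

    def boot(self) -> str:
        """Boot the active slot, falling back to the backup if verification fails."""
        if self._verified(self.active):
            return self.active.version
        if self._verified(self.backup):
            # Swap slots so the verified backup becomes the active, known-good image.
            self.active, self.backup = self.backup, self.active
            return self.active.version
        raise RuntimeError("no verified firmware image in either slot")


if __name__ == "__main__":
    v1 = FirmwareSlot("v1", b"signed image v1")
    v2 = FirmwareSlot("v2", b"signed image v2")
    trusted = {hashlib.sha256(s.image).hexdigest() for s in (v1, v2)}
    component = DualSlotComponent(active=v2, backup=v1, trusted_digests=trusted)
    print(component.boot())                                   # v2
    component.active = FirmwareSlot("v2", b"tampered image")  # simulate a breach
    print(component.boot())                                   # v1
```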

Threat Mitigation

Cisco UCS helps increase situational awareness and shorten vulnerability windows through targeted advisories and mitigation automation, allowing for quick and efficient responses to potential threats. This proactive approach to security significantly enhances the system's resilience and reduces the likelihood of successful cyber-attacks.

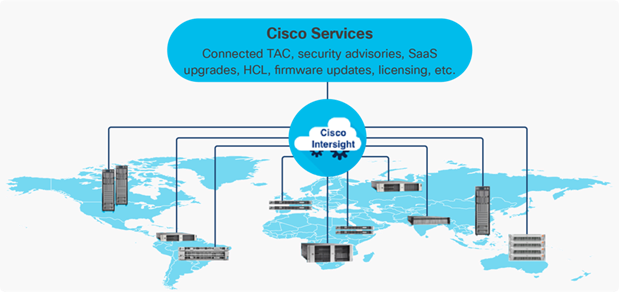

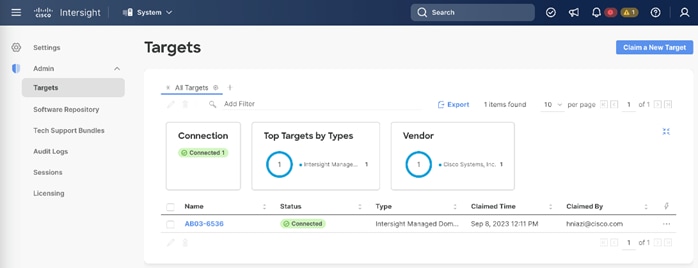

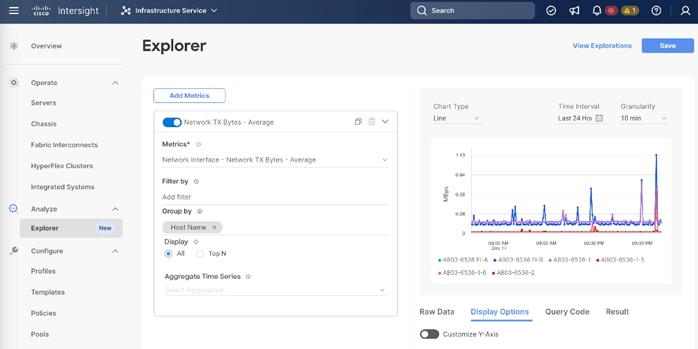

The Cisco Intersight platform is an infrastructure lifecycle management platform that delivers simplified configuration, deployment, maintenance, and support. The Cisco Intersight platform is designed to be modular, so you can adopt services based on your individual requirements. The platform significantly simplifies IT operations by bridging applications with infrastructure, providing visibility and management from bare-metal servers and hypervisors to serverless applications, thereby reducing costs and mitigating risk. This unified SaaS platform uses an Open API design that natively integrates with third-party platforms and tools. Cisco Intersight offers flexible deployment either as Software as a Service (SaaS) on Intersight.com or running on your premises as Cisco Intersight Virtual Appliance.

The main benefits of Cisco Intersight infrastructure services are as follows:

● Simplify daily operations by automating many daily manual tasks.

● Combine the convenience of a SaaS platform with the capability to connect from anywhere and manage infrastructure through a browser or mobile app.

● Stay ahead of problems and accelerate trouble resolution through advanced support capabilities.

● Gain global visibility of infrastructure health and status along with advanced management and support capabilities.

● Upgrade to add workload optimization services when needed.

Cisco Intersight Virtual Appliance and Private Virtual Appliance

In addition to the SaaS deployment model running on Intersight.com, on-premises options can be purchased separately. The Cisco Intersight Virtual Appliance and Cisco Intersight Private Virtual Appliance are available for organizations that have additional data locality or security requirements for managing systems. The Cisco Intersight Virtual Appliance delivers the management features of the Cisco Intersight platform in an easy-to-deploy VMware Open Virtualization Appliance (OVA) or Microsoft Hyper-V Server virtual machine that allows you to control the system details that leave your premises. The Cisco Intersight Private Virtual Appliance is provided in a form factor specifically designed for those who operate in disconnected (air gap) environments. The Private Virtual Appliance requires no connection to public networks or back to Cisco to operate.

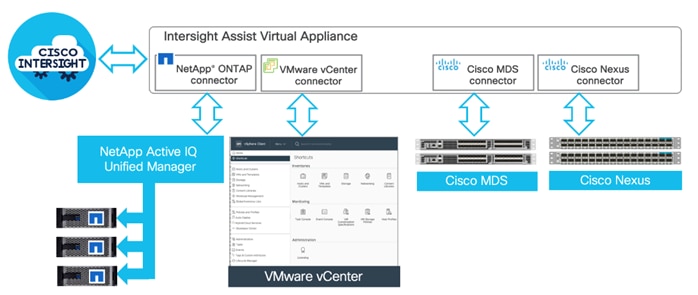

Cisco Intersight Assist

Cisco Intersight Assist helps you add endpoint devices to Cisco Intersight. A data center could have multiple devices that do not connect directly with Cisco Intersight. Any such device that Cisco Intersight supports needs a connection mechanism, and Cisco Intersight Assist provides it. In FlexPod, VMware vCenter, NetApp Active IQ Unified Manager, Cisco Nexus switches, and Cisco MDS switches (if deployed) connect to Intersight through the Intersight Assist VM.

Cisco Intersight Assist is available within the Cisco Intersight Virtual Appliance, which is distributed as a deployable virtual machine contained within an Open Virtual Appliance (OVA) file format. A single Cisco Intersight Assist virtual appliance can support NetApp ONTAP storage, VMware vCenter, and Cisco switches.

Licensing Requirements

The Cisco Intersight platform uses a subscription-based license with multiple tiers. You can purchase a subscription duration of one, three, or five years and choose the required Cisco UCS server volume tier for the selected subscription duration. Each Cisco endpoint automatically includes a Cisco Intersight Base license at no additional cost when you access the Cisco Intersight portal and claim a device. You can purchase any of the following higher-tier Cisco Intersight licenses using the Cisco ordering tool:

● Cisco Intersight Essentials: Essentials includes Lifecycle Operations features, including Cisco UCS Central and Cisco UCS Manager entitlements, policy-based configuration with server profiles (IMM), firmware management, Global Monitoring and Inventory, Custom Dashboards, and evaluation of compatibility with the Cisco Hardware Compatibility List (HCL). Essentials also includes Proactive Support features, including Proactive RMA, Connected TAC, Advisories, and Sustainability.

● Cisco Intersight Advantage: Advantage offers all the features of the Essentials tier plus In-Platform Automation features such as Tunneled KVM, Operating System Install Automation, Storage/Virtualization/Network Automation, and Workflow Designer. It also includes Ecosystem Integrations for Ecosystem Visibility, Operations, and Automation, and ServiceNow Integration.

Servers in the Cisco Intersight managed mode require at least the Essentials license. For detailed information about the features provided in the various licensing tiers, see: https://intersight.com/help/saas/getting_started/licensing_requirements/lic_infra.

Cisco Intersight Assist Device Connector for VMware vCenter, NetApp ONTAP, Cisco Nexus, and Cisco MDS Switches

Cisco Intersight integration with VMware vCenter, NetApp ONTAP, and Cisco switches enables you to perform the following tasks right from the Intersight dashboard:

● Monitor the virtualization, storage, and switching environment.

● Add various dashboard widgets to obtain useful at-a-glance information.

● Perform common Virtual Machine tasks such as power on/off, remote console and so on.

● Orchestrate the virtual, storage, and switching environments to perform common configuration tasks.

● Define FlexPod as an Intersight Integrated System to view various system properties such as capacity, usage, physical and virtual components, and Interoperability status of various devices using Interoperability Matrix Tool (IMT).

Since Cisco Intersight is a SaaS platform, the monitoring and orchestration capabilities are constantly being added and delivered seamlessly from the cloud. Cisco Intersight integrates with VMware vCenter, NetApp storage and Cisco Nexus and MDS switches as follows:

● Cisco Intersight uses the device connector running within Cisco Intersight Assist virtual appliance to communicate with the VMware vCenter.

● Cisco Intersight uses the device connector running within a Cisco Intersight Assist virtual appliance to integrate with NetApp Active IQ Unified Manager. The NetApp AFF A400 should be added to NetApp Active IQ Unified Manager.

● Cisco Intersight uses the device connector running within the Cisco Intersight Assist virtual appliance to communicate with Cisco Nexus 9000 and MDS switches.

The device connector provides a secure way for connected targets to send information and receive control instructions from the Cisco Intersight portal using a secure internet connection. The integration brings the full value and simplicity of Cisco Intersight infrastructure management service to VMware hypervisor and ONTAP data storage environments.

Key Security Highlights

The layered security architecture of Cisco Intersight incorporates several key elements to ensure maximum protection. It uses industry-standard protocols such as HTTPS for secure communication, and all data is encrypted in transit, which ensures confidentiality and prevents unauthorized access. A clear separation is maintained between the management and production networks to eliminate any potential interference. No production network data flows to or from Intersight, which adds another layer of safety. The system identifies, authenticates, and authorizes all devices during the claim process and for all subsequent transfers, ensuring secure transactions. Furthermore, all management communication is initiated from the device side, which eliminates the need for inbound connections to the device.

Access and Authentication

Cisco Intersight accounts form the authentication domain for users. The accounts control all resource access, and authenticated users are restricted from seeing any data in accounts where they are not authorized. With the SaaS platform, Cisco login IDs can be used for authentication with the identity provider for Cisco.com, which includes support for multifactor authentication. Both SaaS and on-premises Intersight implementations allow integration with external identity management systems to meet existing customer authentication requirements. The Cisco Intersight framework uses granular access control with privileges managed per resource. Intersight software allows configuration of users and groups into several roles, and each user or group can be a member of multiple roles.

Role Based Access Control

Resource Groups in Cisco Intersight consist of a collection of managed resources or targets, providing a structured and organized way to manage different assets. Organizations enable multi-tenancy by placing devices into logically separated resource groups, which makes each set of resources easier to manage and control. Access control in Cisco Intersight is built on roles and privileges. Each role is tied to a specific set of privileges for the operations related to that role, so every user has defined responsibilities and access rights. Privileges can be based on specific areas of responsibility, such as UCS Domain, Virtualization, Storage, and Network. Each area requires a particular skill set, so associating privileges with specific roles helps ensure that the most qualified personnel handle each task. This improves both the operational efficiency and the security of the system.
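The following is a minimal Python sketch of the role and resource-group model. It assumes a simplified model: a user is scoped to a set of resource groups and holds one or more roles, and each role grants a set of privileges. The class names, the role name, privilege strings such as `storage:write`, and the resource-group names are hypothetical and are not Intersight API objects. The sketch only shows that an operation is allowed when both the resource-group scope and a role privilege match.

```python
from dataclasses import dataclass


@dataclass
class Role:
    name: str
    privileges: set[str]  # hypothetical privilege strings, e.g. "storage:write"


@dataclass
class User:
    name: str
    roles: list[Role]  # a user can be a member of multiple roles
    resource_groups: set[str]  # resource groups visible to the user's organization


@dataclass
class Resource:
    name: str
    resource_group: str


def is_authorized(user: User, resource: Resource, privilege: str) -> bool:
    """Allow an operation only if the resource is in one of the user's resource
    groups AND at least one of the user's roles grants the privilege."""
    if resource.resource_group not in user.resource_groups:
        return False  # resource belongs to another tenant: not visible at all
    return any(privilege in role.privileges for role in user.roles)


# Usage: a storage administrator scoped to a single tenant's resource group.
storage_admin = Role("storage-admin", {"storage:read", "storage:write"})
alice = User("alice", [storage_admin], {"tenant-a"})
own_volume = Resource("vol1", "tenant-a")
other_volume = Resource("vol9", "tenant-b")

print(is_authorized(alice, own_volume, "storage:write"))   # True
print(is_authorized(alice, other_volume, "storage:read"))  # False
print(is_authorized(alice, own_volume, "network:write"))   # False
```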

Policy Driven Control

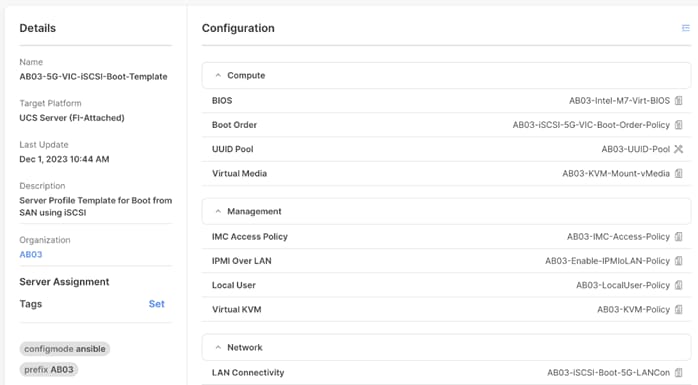

Cisco Intersight and Cisco UCS Manager deliver significant advantages through a policy-driven control plane. Using server profile templates associated with several policies, UCS server deployments support physical and logical abstraction. Because server identities are abstracted from the physical resources, hardware identities stay secured (hidden) during deployment. The system also remains robust against potential threats, because new server profiles and identities can be created quickly to replace compromised servers.

Using server profile templates, Cisco Intersight associates repeatable secure baselines to physical servers. This allows for the standardization of security measures across various systems, thereby bolstering overall security and reducing potential vulnerabilities. Additionally, Cisco Intersight provides a centralized add/change/delete functionality. This centralized control not only simplifies system management but also significantly reduces the audit footprint. By consolidating all changes in one place, tracking, reviewing, and auditing changes become much more manageable and efficient.
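The template-and-identity idea described above can be sketched in a few lines of Python. This minimal illustration assumes a template that carries a fixed tuple of policy names and a pool that hands out MAC addresses sequentially. The policy names, the MAC prefix, and the classes are hypothetical and do not reflect the Intersight object model. Every derived profile inherits the same secure baseline. Each new profile, including one created to replace a compromised server, receives a fresh identity from the pool.

```python
from dataclasses import dataclass
from itertools import count


class MacPool:
    """Hypothetical identity pool: allocates MAC addresses sequentially, never reusing one."""

    def __init__(self, prefix: str):
        self.prefix = prefix  # first four octets, e.g. "00:25:B5:A1"
        self._counter = count()

    def allocate(self) -> str:
        n = next(self._counter)
        return f"{self.prefix}:{(n >> 8) & 0xFF:02X}:{n & 0xFF:02X}"


@dataclass(frozen=True)
class ServerProfile:
    name: str
    policies: tuple[str, ...]
    mac: str


@dataclass(frozen=True)
class ProfileTemplate:
    name: str
    policies: tuple[str, ...]  # the repeatable secure baseline

    def derive(self, profile_name: str, pool: MacPool) -> ServerProfile:
        # Same policies for every profile; a new identity from the pool each time.
        return ServerProfile(profile_name, self.policies, pool.allocate())


pool = MacPool("00:25:B5:A1")
template = ProfileTemplate("esxi-baseline", ("secure-boot", "bios-baseline", "ipmi-disabled"))
original = template.derive("esxi-01", pool)
replacement = template.derive("esxi-01-rebuilt", pool)  # e.g. after a compromise
print(original.mac, replacement.mac)  # 00:25:B5:A1:00:00 00:25:B5:A1:00:01
```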

Cisco Intersight also offers drift protection as part of its deployment design. Drift protection ensures that system configurations remain consistent over time. It automatically identifies (and sometimes rectifies) any deviations from the defined configurations, thereby maintaining system security and optimal performance levels.
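Conceptually, drift detection compares the intended state with the deployed state. The Python sketch below assumes both states are available as flat dictionaries that map setting names to values. The setting names are hypothetical, and the sketch does not describe how Intersight itself detects or remediates drift. `find_drift` only reports deviations, and `remediate` re-applies the intended values. This mirrors the distinction in the text between identifying drift and (sometimes) rectifying it.

```python
def find_drift(intended: dict, actual: dict) -> dict:
    """Return {setting: (intended_value, actual_value)} for every managed setting
    whose deployed value differs from the template. A setting missing on the
    device is reported with actual_value None. Settings that exist only on the
    device are not managed by the template and are ignored."""
    return {
        key: (want, actual.get(key))
        for key, want in intended.items()
        if actual.get(key) != want
    }


def remediate(intended: dict, actual: dict) -> dict:
    """Return a copy of the deployed settings with the intended values re-applied."""
    fixed = dict(actual)
    for key, (want, _) in find_drift(intended, actual).items():
        fixed[key] = want
    return fixed


intended = {"secure_boot": True, "ipmi_over_lan": False, "ntp_server": "10.1.1.1"}
deployed = {"secure_boot": False, "ipmi_over_lan": False, "ntp_server": "10.1.1.1", "asset_tag": "rack4"}

print(find_drift(intended, deployed))  # {'secure_boot': (True, False)}
```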

Endpoint Security Advisories

Cisco's Intersight platform serves as a comprehensive tool for managing security advisories. It is equipped to display devices that are impacted by Cisco Security Advisories, ensuring users are aware of potential vulnerabilities or threats. These advisories are conveniently available in the menu bar of the user interface, making them easily accessible for users. Each advisory comes with associated Common Vulnerabilities and Exposures (CVE) IDs and links to more detailed information. This feature allows users to explore the nature of the security advisories in more detail, enabling them to understand the potential impact and implement necessary mitigation strategies.
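At its core, this feature matches a device inventory against the affected-version data in each advisory. The Python sketch below assumes exact matching of version strings against a set of affected versions. Real advisories usually describe affected and fixed releases in more nuanced ways. The advisory ID, CVE ID, platform names, and versions are placeholders, not real Cisco advisory data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Advisory:
    advisory_id: str
    cve_ids: tuple[str, ...]
    platform: str
    affected_versions: frozenset[str]


@dataclass(frozen=True)
class Device:
    name: str
    platform: str
    firmware: str


def impacted_devices(advisory: Advisory, inventory: list[Device]) -> list[str]:
    """Names of inventory devices whose platform and firmware match the advisory."""
    return [
        d.name
        for d in inventory
        if d.platform == advisory.platform and d.firmware in advisory.affected_versions
    ]


# All identifiers below are placeholders, not real advisories or CVEs.
advisory = Advisory("example-sa-0001", ("CVE-XXXX-0001",), "switch-model-x", frozenset({"1.0", "1.1"}))
inventory = [
    Device("leaf-a", "switch-model-x", "1.1"),
    Device("leaf-b", "switch-model-x", "1.2"),
    Device("fi-a", "fabric-model-y", "1.1"),
]
print(impacted_devices(advisory, inventory))  # ['leaf-a']
```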

Cisco Nexus 93600CD-GX Ethernet Switch

The Cisco Nexus 9000 series switch featured in this design is the Cisco Nexus 93600CD-GX configured in NX-OS standalone mode. NX-OS is a purpose-built data-center operating system designed for performance, resiliency, scalability, manageability, and programmability at its foundation. It provides a robust and comprehensive feature set that meets the demanding requirements of virtualization and automation.


The Cisco Nexus 93600CD-GX Switch is a 1RU switch that supports 12 Tbps of bandwidth and 4.0 bpps. The 28 downlink ports on the 93600CD-GX support 40/100-Gbps Ethernet, offering deployment flexibility and investment protection. The eight uplink ports can be configured as 10/25/40/50/100/200/400-Gbps Ethernet using appropriate optics and breakout cables, offering flexible migration options.
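The following is a rough consistency check on these figures. It assumes that the 12 Tbps rating counts traffic in both directions, as switch bandwidth ratings commonly do. At the maximum port speeds, the numbers add up as follows:

```latex
\begin{aligned}
B_{\text{one-way}} &= 28 \times 100\ \text{Gbps} + 8 \times 400\ \text{Gbps} = 2800 + 3200 = 6000\ \text{Gbps} = 6\ \text{Tbps} \\
B_{\text{bidirectional}} &= 2 \times B_{\text{one-way}} = 12\ \text{Tbps}
\end{aligned}
```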

Note: For detailed information on port speeds and breakout options, refer to: https://www.cisco.com/c/en/us/td/docs/switches/datacenter/nexus9000/hw/n93600cd-gx-hig/guide/b_c93600cd-gx-nxos-mode-hardware-installation-guide/m_overview1.html

The NetApp AFF A-Series controller lineup provides industry-leading performance while continuing to provide a full suite of enterprise-grade data services for a shared environment across on-premises data centers and the cloud. Powered by NetApp ONTAP data management software, NetApp AFF A-Series systems deliver the industry’s highest performance, superior flexibility, and best-in-class data services and cloud integration to help you accelerate, manage, and protect business-critical data on-prem and across hybrid clouds. As the first enterprise-grade storage systems to support both NVMe over Fibre Channel (NVMe/FC) and NVMe over TCP (NVMe/TCP), AFF A-Series systems boost performance with modern network connectivity. These systems deliver the industry’s lowest latency for an enterprise all-flash array, making them a superior choice for running the most demanding workloads and applications. With a simple software upgrade to the modern NVMe/FC or NVMe/TCP SAN infrastructure, you can run more workloads, with faster response times, and without disruption or data migration.

NetApp offers a wide range of AFF A-Series controllers to meet the varying demands of the field. This solution design uses the midrange NetApp AFF A400, the most versatile system in the lineup. The A400 features hardware acceleration technology that significantly enhances performance and storage efficiency.

For more information about the NetApp AFF A-series controllers, see the AFF product page: https://www.netapp.com/us/products/storage-systems/all-flash-array/aff-a-series.aspx.

NetApp AFF A400

The NetApp AFF A400 offers full end-to-end NVMe support. The frontend NVMe/FC connectivity makes it possible to achieve optimal performance from an all-flash array for workloads that include artificial intelligence, machine learning, and real-time analytics as well as business-critical databases. On the back end, the A400 supports both serial-attached SCSI (SAS) and NVMe-attached SSDs, offering the versatility for current customers to move up from their legacy A-Series systems and satisfying the increasing interest that all customers have in NVMe-based storage.

The NetApp AFF A400 offers greater port availability, network connectivity, and expandability. It has 10 PCIe Gen3 slots per high-availability pair. It offers 25GbE or 100GbE, as well as 32Gb FC and NVMe/FC network connectivity. This model was created to keep up with changing business needs and performance and workload requirements by merging the latest technology for data acceleration and ultra-low latency in an end-to-end NVMe storage system.

Note: Cisco UCS X-Series is supported with all NetApp AFF systems running a NetApp ONTAP 9 release.

NetApp ONTAP 9.13.1

NetApp storage systems harness the power of ONTAP to simplify the data infrastructure across edge, core, and cloud with a common set of data services and 99.9999 percent availability. NetApp ONTAP 9 data management software enables you to modernize your infrastructure and transition to a cloud-ready data center. ONTAP 9 has a host of features to simplify deployment and data management, accelerate and protect critical data, and make infrastructure future-ready across hybrid-cloud architectures.

NetApp ONTAP 9 is the data management software that is used with the NetApp AFF A400 all-flash storage system in this solution design. ONTAP software offers secure unified storage for applications that read and write data over block- or file-access protocol storage configurations. These storage configurations range from high-speed flash to lower-priced spinning media or cloud-based object storage. ONTAP implementations can run on NetApp engineered FAS, AFF, or ASA series arrays and in private, public, or hybrid clouds (NetApp Private Storage and NetApp Cloud Volumes ONTAP). Specialized implementations offer best-in-class converged infrastructure, featured here as part of the FlexPod Datacenter solution or with access to third-party storage arrays (NetApp FlexArray virtualization). Together these implementations form the basic framework of the NetApp Data Fabric, with a common software-defined approach to data management, and fast efficient replication across systems. FlexPod and ONTAP architectures can serve as the foundation for both hybrid cloud and private cloud designs.

Read more about all the capabilities of ONTAP data management software here: https://www.netapp.com/us/products/data-management-software/ontap.aspx.

See the ONTAP 9 release notes for more details on specific features and what’s new: ONTAP 9 Release Notes (netapp.com)

NetApp Active IQ Unified Manager

NetApp Active IQ Unified Manager is a comprehensive monitoring and proactive management tool for NetApp ONTAP systems to help manage the availability, capacity, protection, and performance risks of the storage systems and virtual infrastructure. The Unified Manager can be deployed on a Linux server, a Windows server, or as a virtual appliance on a VMware host.

Active IQ Unified Manager enables monitoring ONTAP storage clusters from a single redesigned, intuitive interface that delivers intelligence from community wisdom and AI analytics. It provides comprehensive operational, performance, and proactive insights into the storage environment and the virtual machines running on it. When an issue occurs on the storage infrastructure, Unified Manager can notify storage admins about the details of the issue to help identify the root cause. The virtual machine dashboard provides performance statistics for the VM so that users can investigate the entire I/O path from the vSphere host down through the network and finally to the storage. Some events also provide remedial actions that can be taken to rectify the issue. Custom alerts can be configured for events so that when issues occur, notifications are sent via email or using SNMP Traps. Active IQ Unified Manager enables planning for the storage requirements by forecasting capacity and usage trends to proactively act before issues arise.
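Capacity forecasting of this kind relies on trend extrapolation. The Python sketch below uses an ordinary least-squares linear fit as a stand-in. This is an assumption for illustration, not the model Active IQ Unified Manager actually uses, which is not described here. The sketch estimates how many days remain before an aggregate fills, and that number could drive a custom alert. The sample values are hypothetical.

```python
from typing import Optional


def days_until_full(used_tib: list[float], capacity_tib: float) -> Optional[float]:
    """Fit a least-squares line to daily used-capacity samples (oldest first) and
    return the projected number of days after the latest sample until the
    capacity is reached, or None if usage is flat or shrinking."""
    n = len(used_tib)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_x = (n - 1) / 2  # x values are the day indices 0 .. n-1
    mean_y = sum(used_tib) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(used_tib))
    slope = sxy / sxx  # growth in TiB per day
    if slope <= 0:
        return None
    intercept = mean_y - slope * mean_x
    day_full = (capacity_tib - intercept) / slope  # solve intercept + slope*x = capacity
    return day_full - (n - 1)


# Hypothetical samples: an aggregate growing about 1 TiB per day toward 20 TiB.
print(days_until_full([10.0, 11.0, 12.0, 13.0, 14.0], 20.0))  # 6.0
```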

For more information on NetApp Active IQ Unified Manager, refer to the following link: https://docs.netapp.com/us-en/active-iq-unified-manager/

NetApp ONTAP Tools for VMware vSphere

The ONTAP tools for VMware vSphere provide end-to-end life cycle management for virtual machines in VMware environments that use NetApp storage systems. They simplify storage and data management by enabling administrators to manage storage directly within the vCenter Server.

Note: Each component in ONTAP tools provides capabilities to help manage storage more efficiently.

Virtual Storage Console (VSC)

NetApp Virtual Storage Console enables you to perform the following tasks:

● Add storage controllers, assign credentials, and set up permissions for storage controllers.

● Provision datastores.

● Monitor the performance of the datastores and virtual machines in the vCenter Server environment.

● View and update the host settings of the ESXi hosts that are connected to NetApp storage.

● Control administrator access to the vCenter Server objects by using role-based access control (RBAC).

VASA Provider

VASA Provider for NetApp ONTAP uses VMware vSphere APIs for Storage Awareness (VASA) to send information about storage used by VMware vSphere to the vCenter Server. NetApp ONTAP tools has VASA Provider integrated with VSC. VASA Provider enables you to perform the following tasks:

● Provision VMware Virtual Volumes (vVols) datastores.

● Create and use storage capability profiles that define different storage service level objectives (SLOs) for your environment.

● Verify compliance between the datastores and the storage capability profiles.

● Set alarms to warn you when volumes and aggregates are approaching the threshold limits.

● Monitor the performance of virtual machine disks (VMDKs) and the virtual machines that are created on vVols datastores.
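The compliance check and threshold alarm in the list above reduce to two simple comparisons. In this Python sketch, the capability keys, the profile name, and the 80 percent default threshold are assumptions for illustration. They are not the VASA Provider's actual capability names or default alarm values.

```python
from dataclasses import dataclass


@dataclass
class CapabilityProfile:
    name: str
    required: dict  # capability name -> required value (hypothetical keys)


def noncompliant_capabilities(profile: CapabilityProfile, datastore: dict) -> list[str]:
    """Capabilities whose value on the datastore differs from the profile."""
    return [cap for cap, want in profile.required.items() if datastore.get(cap) != want]


def near_threshold(used: float, total: float, threshold: float = 0.8) -> bool:
    """True when utilization has reached the alarm threshold (80% by default, an assumption)."""
    return used / total >= threshold


gold = CapabilityProfile("gold", {"protocol": "nfs", "compression": True, "deduplication": True})
datastore = {"protocol": "nfs", "compression": False, "deduplication": True}
print(noncompliant_capabilities(gold, datastore))  # ['compression']
print(near_threshold(used=850, total=1000))         # True
```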

Storage Replication Adapter (SRA)

SRA enables you to use array-based replication (ABR) for protected sites and recovery sites for disaster recovery in the event of a failure. When SRA is enabled and used in conjunction with VMware Site Recovery Manager (SRM), you can recover the vCenter Server datastores and virtual machines in the event of a failure.

NetApp SnapCenter

SnapCenter Software is a simple, centralized, scalable platform that provides application-consistent data protection for applications, databases, host file systems, and VMs running on NetApp ONTAP systems anywhere, on premises or in the hybrid cloud.

SnapCenter leverages NetApp Snapshot, SnapRestore, FlexClone, SnapMirror, and SnapVault technologies to provide:

● Fast, space-efficient, application-consistent, disk-based backups.

● Rapid, granular restore, and application-consistent recovery.

● Quick, space-efficient cloning.

SnapCenter includes both SnapCenter Server and individual lightweight plug-ins. You can automate deployment of plug-ins to remote application hosts, schedule backup, verification, and clone operations, and monitor all data protection operations. Data protection is supported for Microsoft Exchange Server, Microsoft SQL Server, Oracle Databases on Linux or AIX, SAP HANA database, and Windows Host Filesystems running on NetApp ONTAP systems. It is also supported for other standard or custom applications and databases by providing a framework to create user-defined SnapCenter plug-ins. You may install only the plug-ins that are appropriate for the data that you want to protect.

Note: For more information on SnapCenter, refer to the SnapCenter software documentation: https://docs.netapp.com/us-en/snapcenter/index.html

NetApp BlueXP

NetApp BlueXP is a unified control plane that provides a hybrid multi-cloud experience for storage and data services across on-premises and cloud environments. NetApp BlueXP is an evolution of Cloud Manager and enables the management of your NetApp storage and data assets from a single interface. NetApp BlueXP is used to move, protect, and analyze data, to control on-prem storage devices such as NetApp ONTAP, E-Series, and StorageGRID, and to create and administer cloud storage (for example, Cloud Volumes ONTAP and Azure NetApp Files).

The NetApp BlueXP backup and recovery service provides efficient, secure, and cost-effective data protection for NetApp ONTAP data, Kubernetes persistent volumes, databases, and virtual machines, both on premises and in the cloud. Backups are automatically generated and stored in an object store in a public or private cloud account. NetApp BlueXP ransomware protection provides a single point of visibility and control to manage and refine data security across various working environments and infrastructure layers, so that you can better respond to threats as they occur.

Note: For more information on NetApp BlueXP, go to: https://docs.netapp.com/us-en/cloud-manager-family/

vSphere uses virtualization to transform individual data centers into aggregated computing infrastructures that include CPU, storage, and networking resources. VMware vSphere manages these infrastructures as a unified operating environment and provides you with the tools to administer your data centers that participate in that environment. The two core components of VMware vSphere are ESXi and vCenter Server. ESXi is the virtualization platform which allows you to create and run virtual machines and virtual appliances. vCenter Server is the service through which virtualization administrators manage multiple hosts.

The latest FlexPod infrastructure validated design supports vSphere 8.0. However, at the time of this validation, Cisco Secure Firewall Threat Defense Virtual (FTDv) was not supported on VMware ESXi 8.0. Because FTDv is installed on the FlexPod infrastructure being validated, VMware vSphere 7.0 U3 was selected for this validation.

Note: If you are deploying physical FTD devices or are using an existing (separate) VMware vSphere 7.0 based management infrastructure to deploy FTDv, VMware vSphere 8.0 can be used on the FlexPod infrastructure to deploy applications.

VMware vSphere 7.0 U3

VMware vSphere is a virtualization platform for holistically managing large collections of infrastructures (resources including CPUs, storage, and networking) as a seamless, versatile, and dynamic operating environment. Unlike traditional operating systems that manage an individual machine, VMware vSphere aggregates the infrastructure of an entire data center to create a single powerhouse with resources that can be allocated quickly and dynamically to any application in need.

VMware vSphere 7.0 U3 has several improvements and simplifications including, but not limited to:

● Support for the NVMe-TCP storage protocol with VMFS6 datastores.

● Improvements to vSphere Cluster Services (vCLS), including the ability to designate a datastore to store vCLS virtual machines.

● Improved Maintenance Mode Reliability and Workload Placement.

● Enhanced Performance Statistics for Memory.

● vSphere Lifecycle Management (vLCM) with Hardware Support Manager (HSM) Integration with Cisco Intersight.

● A VMware-recommended 128GB SAN boot LUN for VMware ESXi.

For more information about VMware vSphere and its components, see: https://www.vmware.com/products/vsphere.html.

VMware vSphere vCenter

VMware vCenter Server provides unified management of all hosts and VMs from a single console and aggregates performance monitoring of clusters, hosts, and VMs. VMware vCenter Server gives administrators a deep insight into the status and configuration of compute clusters, hosts, VMs, storage, the guest OS, and other critical components of a virtual infrastructure. VMware vCenter manages the rich set of features available in a VMware vSphere environment.

Infrastructure as Code with Ansible

This FlexPod solution provides a fully automated solution deployment that covers all components of the infrastructure. The configuration of the Cisco network and compute, NetApp ONTAP storage, and VMware vSphere is automated by using Ansible playbooks developed to set up the components according to the solution best practices. The automated deployment using Ansible provides a well-defined sequence of steps across the different elements of this solution. It involves the exchange of parameters or attributes between compute, network, storage, and virtualization, and it requires some level of manual intervention. The workflow is clearly defined and documented. The Ansible playbooks that configure the different sections of the solution invoke a set of roles, which consume several user-configurable variables. Depending on the installation environment, you can modify the variables to suit your needs and proceed with the automated installation.
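Ansible resolves each variable from several sources in a fixed precedence order. Role defaults (`defaults/main.yml`) sit near the bottom of that order, inventory variables sit higher, and extra vars passed with `-e` have the highest precedence. The Python sketch below models only those three layers with `collections.ChainMap`, where the leftmost mapping wins. The variable names and values are hypothetical and are not taken from the FlexPod playbooks. With Ansible's default `hash_behaviour=replace`, a dictionary supplied at a higher level replaces the lower-level dictionary entirely rather than being merged into it, and the sketch reproduces that behavior.

```python
from collections import ChainMap

# Hypothetical variables; real role variables are defined in the playbook repository.
role_defaults = {"ntp_server": "192.168.1.1", "jumbo_mtu": 9000, "vlans": {"ib_mgmt": 1010, "nfs": 3050}}
inventory_vars = {"ntp_server": "10.10.10.1"}
extra_vars = {"vlans": {"ib_mgmt": 1110}}

# Lookups search left to right, so higher-precedence sources shadow lower ones.
# A dict found at a higher level is returned as-is, i.e. it replaces, not merges.
effective = ChainMap(extra_vars, inventory_vars, role_defaults)

for name in ("ntp_server", "jumbo_mtu", "vlans"):
    print(name, "=", effective[name])
# ntp_server = 10.10.10.1
# jumbo_mtu = 9000
# vlans = {'ib_mgmt': 1110}
```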

After the FlexPod infrastructure is set up, NetApp management tools such as ONTAP Tools for VMware vSphere (formerly Virtual Storage Console), SnapCenter Plug-in for VMware vSphere, and Active IQ Unified Manager can also be deployed in an automated fashion.

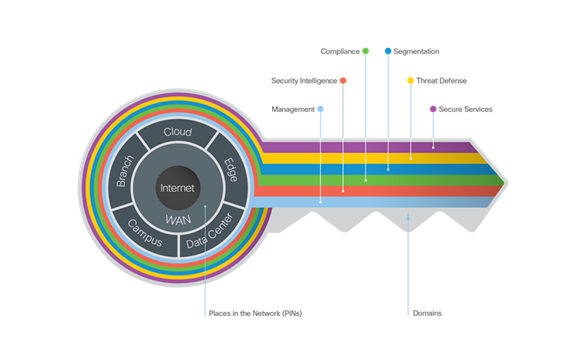

Today, attacks such as phishing, ransomware, and advanced persistent threats are common. No single product can successfully secure a business from these risks. An architectural approach that addresses the full range from people, to devices, to applications is needed. Secure Architecture for Everyone (SAFE) can help simplify security strategy and deployment. This Cisco security reference architecture features easy-to-use visual icons that help you design a secure infrastructure for the edge, branch, data center, campus, cloud, and WAN. The framework encompasses operational domains such as management, security intelligence, compliance, segmentation, threat defense, and secure services. SAFE solutions have been deployed, tested, and validated at Cisco and provide guidance, best practices, and configuration steps.

The Key to SAFE simplifies cybersecurity by organizing it into Secure Places in the Network (PINs) for infrastructure and Secure Domains for operational guidance. SAFE includes:

● Business use cases illustrating the surface that fraudsters can attack.

● Security capabilities mapped to common threats within business use cases.

● Reference architectures that logically arrange the security capabilities into blueprints.

● Designs using the reference architectures for common deployment scenarios and solutions delivered as Cisco Validated Designs (CVDs).

Zero Trust Framework for FlexPod

The Zero Trust framework for FlexPod validated design aligns with the Cisco SAFE architecture guidelines for the data center. Various capabilities are necessary to protect the workloads running on FlexPod Datacenter and to build appropriate layers of defense that protect your applications.

Three (of several) core pillars of the Zero Trust framework explained in this design guide are:

● Segmentation - Reduce the attack surface by preventing attackers from moving laterally, with consistent security policy enforcement, application access control and micro segmentation.

● Visibility - Complete visibility of users, devices, networks, applications, workloads, and processes.

● Threat Protection - Stop the breach by quickly detecting, blocking, and dynamically responding to threats.
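Segmentation of this kind is usually expressed as a default-deny allow-list between segments. The Python sketch below is a minimal illustration that assumes rules keyed on source and destination subnets plus a port and protocol. The three-tier subnets and ports are hypothetical. The sketch shows why lateral movement is blocked: a compromised web server cannot reach the database tier, because no rule permits that path.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class AllowRule:
    src: str  # source segment (CIDR)
    dst: str  # destination segment (CIDR)
    port: int
    proto: str = "tcp"


def is_allowed(rules: list[AllowRule], src_ip: str, dst_ip: str, port: int, proto: str = "tcp") -> bool:
    """Default deny: traffic passes only if an explicit allow rule matches it."""
    src, dst = ip_address(src_ip), ip_address(dst_ip)
    return any(
        src in ip_network(r.src) and dst in ip_network(r.dst) and r.port == port and r.proto == proto
        for r in rules
    )


# Hypothetical three-tier application: web -> app -> db, nothing else.
rules = [
    AllowRule("10.1.10.0/24", "10.1.20.0/24", 8443),  # web tier to app tier
    AllowRule("10.1.20.0/24", "10.1.30.0/24", 1433),  # app tier to database tier
]
print(is_allowed(rules, "10.1.10.5", "10.1.20.7", 8443))  # True
print(is_allowed(rules, "10.1.10.5", "10.1.30.9", 1433))  # False: web cannot reach db directly
```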

These Cisco security products and solutions are deployed to satisfy the requirements:

● Cisco Secure Firewall Threat Defense – for enforcing traffic control and segmentation.

● Cisco Secure Network Analytics – for network traffic visibility and anomaly detection.

● Cisco Secure Workload – for device and process-level visibility and threat mitigation.

For more details on Cisco SAFE architecture and validated designs, refer to: https://www.cisco.com/c/en/us/solutions/enterprise/design-zone-security/landing_safe.html.

Cisco Secure Firewall Threat Defense Virtual

Cisco Secure Firewall Threat Defense Virtual (FTDv) combines Cisco's proven next-generation firewall (NGFW) technology with threat defense features like Snort intrusion prevention system (IPS), URL filtering, and Advanced Malware Protection (AMP). It simplifies threat protection with consistent security policies across physical, private, and public cloud environments.

Cisco Secure Firewall Threat Defense - Key Features

● Stateful firewall: Inspects traffic at both the Layer 3 and Layer 4 levels to detect and block unauthorized traffic.

● Intrusion prevention system (IPS): Protects against known and unknown attacks using Snort technology.

● URL filtering: Blocks access to malicious websites and other unwanted content.

● Advanced Malware Protection: Detects and blocks malware before it can infect your systems.

● Application visibility and control: Provides granular control over applications and their traffic.

● Threat intelligence: Provides real-time updates about the latest threats.
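Stateful inspection, the first feature in the list above, works by remembering connections so that return traffic is permitted without a separate inbound rule. A toy Python sketch of that idea follows. It assumes a single inside network and a permissive outbound policy, and it does not show how FTDv is implemented. The external addresses come from reserved documentation ranges.

```python
from ipaddress import ip_address, ip_network


class StatefulFilter:
    """Toy stateful L3/L4 filter. Assumes an 'allow all outbound' policy:
    outbound flows are recorded, and inbound packets are permitted only when
    they are replies to a recorded flow. Real firewalls also track TCP state,
    timeouts, and NAT, which this sketch omits."""

    def __init__(self, inside_net: str):
        self.inside = ip_network(inside_net)
        self.connections: set[tuple] = set()

    def permit(self, src: str, sport: int, dst: str, dport: int, proto: str = "tcp") -> bool:
        if ip_address(src) in self.inside:
            self.connections.add((proto, src, sport, dst, dport))  # remember outbound flow
            return True
        # Inbound: allow only the reverse direction of a tracked flow.
        return (proto, dst, dport, src, sport) in self.connections


fw = StatefulFilter("10.1.0.0/16")
print(fw.permit("10.1.10.5", 50000, "203.0.113.7", 443))  # True: outbound request
print(fw.permit("203.0.113.7", 443, "10.1.10.5", 50000))  # True: matching reply
print(fw.permit("198.51.100.9", 4444, "10.1.10.5", 22))   # False: unsolicited inbound
```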

Cisco Secure Firewall Threat Defense - Key Benefits

● Simplified security management: Deploy and manage your firewall from a single pane of glass, with unified policy and consistent enforcement across all your environments.

● Flexibility: Deploy the firewall anywhere you need it, whether it's in your data center, public cloud, or private cloud.

● Scalability: Easily scale your firewall up or down to meet your changing needs.

● Comprehensive security: Get protection from a wide range of threats, including malware, phishing, and botnets.

● Integration with other Cisco security solutions: Integrate Threat Defense Virtual with other Cisco security solutions, such as SecureX and Umbrella, to create a comprehensive security posture.

For more details: https://www.cisco.com/c/en/us/products/collateral/security/firepower-ngfw-virtual/threat-defense-virtual-ngfwv-ds.html

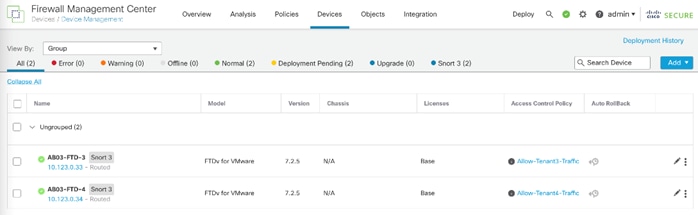

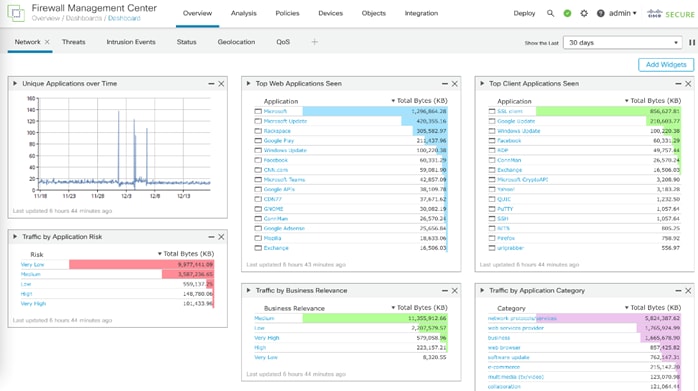

Cisco Secure Firewall Management Center

The Cisco Secure Firewall Management Center (FMC) provides complete and unified management of firewalls, application control, intrusion prevention, URL filtering, and advanced malware protection. It is a key part of the broad Cisco Secure portfolio, delivering in-depth analysis, streamlined security management across the network and cloud, and accelerated incident investigation and response, working across your Cisco and third-party technologies. FMC is ideal for organizations with large deployments of Cisco Secure firewalls, as it simplifies management and reduces operational costs.

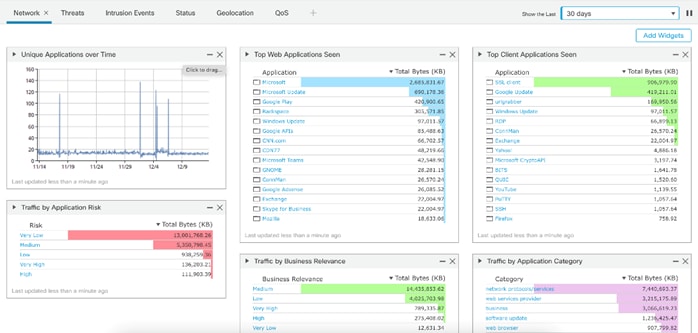

The Firewall Management Center (FMC) provides contextual network and security information so you can make quick and informed decisions. See Figure 18.

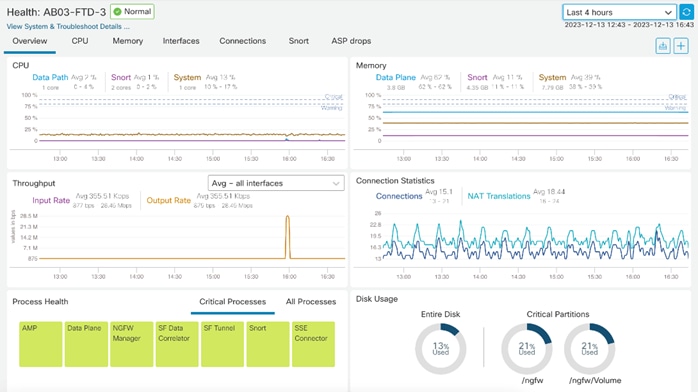

FMC also provides real-time device health monitoring and status across multiple devices. See Figure 19.

Cisco Secure Firewall Management Center - Key Features

● Policy management: Create and manage firewall policies with a drag-and-drop interface.

● Object management: Manage objects, such as networks, addresses, and users, in a central location.

● Configuration management: Manage firewall configurations and settings from a single console.

● Monitoring and reporting: Monitor security events and traffic in real time and generate reports to track trends and identify security issues.

● Threat intelligence integration: Integrate with Cisco Talos to receive threat intelligence updates and automatically generate policies to protect against the latest threats.

Cisco Secure Firewall Management Center - Key Benefits

● Centralized management: Manage all your Cisco Secure firewalls from a single console, simplifying security administration and reducing operational costs. FMC also supports multitenancy and policy inheritance through up to 100 management domains.

● Automated workflows: Automate repetitive tasks, such as configuration changes and policy updates, to improve efficiency and reduce errors.

● Open APIs: Integrate with third-party technologies through powerful APIs.

● Real-time visibility: Get real-time visibility into your security posture with dashboards and reports that provide insights into traffic, threats, and events.

● Scalability: Manage large deployments of Cisco Secure firewalls with ease.

FMC is the preferred method of managing multiple firewalls in the Zero Trust framework for FlexPod design. For more details: https://www.cisco.com/c/en/us/products/collateral/security/firesight-management-center/datasheet-c78-736775.html

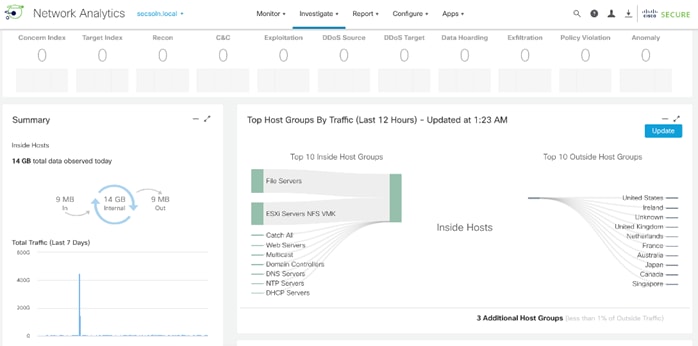

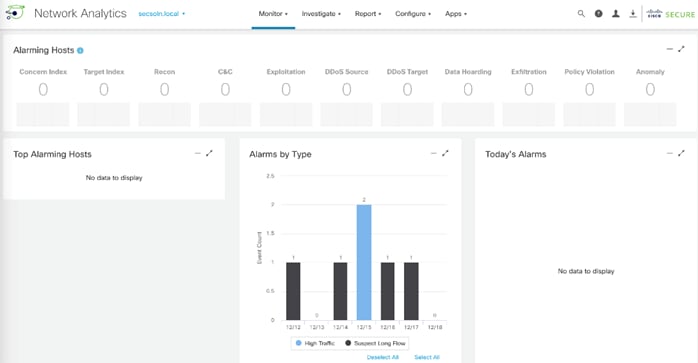

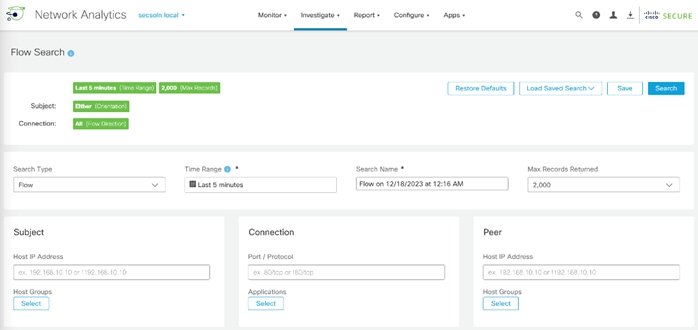

Cisco Secure Network Analytics

Cisco Secure Network Analytics (formerly known as Stealthwatch) is a comprehensive network traffic analysis (NTA) and network detection and response (NDR) solution that uses telemetry from the existing network infrastructure to provide deep visibility into network activity and detect threats across private networks, public clouds, and even encrypted traffic.

The solution continuously analyzes network activities to create a baseline of normal network behavior. It then uses this baseline, along with non–signature-based advanced analytics that include behavioral modeling and machine learning algorithms, as well as global threat intelligence to identify anomalies and detect and respond to threats in real-time. Secure Network Analytics can quickly and with high confidence detect threats such as Command-and-Control (C&C) attacks, ransomware, Distributed-Denial-of-Service (DDoS) attacks, illicit crypto mining, unknown malware, and insider threats. With an agentless solution, you get comprehensive threat monitoring across the entire network traffic, even if it’s encrypted.
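A baseline-and-deviation check can be illustrated with a single statistic. The Python sketch below uses a simple z-score against a per-host baseline. It is only a stand-in for illustration: Secure Network Analytics relies on richer behavioral modeling and machine learning, whose details are not described here. The metric, the sample values, and the threshold of three standard deviations are assumptions.

```python
from statistics import mean, stdev


def is_anomalous(baseline: list[float], observed: float, threshold: float = 3.0) -> bool:
    """Flag an observation more than `threshold` standard deviations from the
    baseline mean (a z-score test). Requires at least two baseline samples."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        return observed != mu  # perfectly stable baseline: any change is unusual
    return abs(observed - mu) / sigma > threshold


# Hypothetical baseline: megabytes per hour a host normally sends to external addresses.
baseline_mb = [100.0, 110.0, 90.0, 105.0, 95.0]
print(is_anomalous(baseline_mb, 108.0))   # False: within normal variation
print(is_anomalous(baseline_mb, 5000.0))  # True: possible data exfiltration
```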

Cisco Secure Network Analytics monitors network traffic using data collected from NetFlow-enabled devices across the network. This complements the signature-based IPS detection of Secure Firewall.

Cisco Secure Network Analytics key features

● Traffic analysis: Analyzes network traffic to identify applications, users, and devices.

● Threat detection: Uses machine learning and behavioral analysis to detect threats in real-time.

● Incident investigation: Provides tools to investigate security incidents quickly and efficiently.

● Security automation: Automates security tasks, such as incident response and threat hunting.

● Advanced reporting: Provides detailed reports on network activity, threats, and compliance.

Required Components of the System

Manager

The Secure Network Analytics Manager aggregates, organizes, and presents analyses from up to 25 Flow Collectors, Cisco Secure Network Access (formerly Cisco Identity Services Engine), and other sources. It uses graphical representations of network traffic, identity information, customized summary reports, and integrated security and network intelligence for comprehensive analysis. Secure Network Analytics Manager provides graphical views of the current state of the organization’s traffic. Administrators can easily construct maps of their network based on any criteria, such as location, function, or virtual environment.

Flow Collector

The Flow Collector collects and stores enterprise telemetry types such as NetFlow from existing infrastructure such as routers, switches, firewalls, endpoints, and other network infrastructure devices. The Flow Collector can also collect telemetry from proxy data sources, which can be analyzed by the cloud-based machine learning engine (global threat alerts). The telemetry data is analyzed to provide a complete picture of network activity. Months or even years of data can be stored, creating an audit trail that can be used to improve forensic investigations and compliance initiatives.

Data Store (optional)

The Data Store provides a solution for environments requiring high data ingest capacity levels or long-term retention times that exceed the capacity of one or more Flow Collectors. The Data Store cluster can be added between the Secure Network Analytics Manager and Flow Collectors. For these larger and more extensive networks, one or more Flow Collectors ingest and de-duplicate flow data, perform analyses, and then send the flow data and its results directly to the Data Store.
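When one conversation crosses several exporters, such as a Nexus switch and a firewall, each device reports its own copy of the flow. The sketch below shows one simple way a collector could de-duplicate those copies. It keys each record on the 5-tuple and keeps the copy with the highest byte count. This keying rule is an illustrative assumption and not the documented Flow Collector algorithm. The `Flow` type and `deduplicate` function are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Flow:
    exporter: str  # device that reported this copy of the flow
    src: str
    dst: str
    src_port: int
    dst_port: int
    protocol: int
    octets: int


def deduplicate(flows: Iterable[Flow]) -> list[Flow]:
    """Collapse copies of the same 5-tuple reported by different exporters."""
    best: dict[tuple, Flow] = {}
    for flow in flows:
        key = (flow.src, flow.dst, flow.src_port, flow.dst_port, flow.protocol)
        current = best.get(key)
        # Keep one record per conversation; prefer the copy that saw the most bytes.
        if current is None or flow.octets > current.octets:
            best[key] = flow
    return list(best.values())
```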

In this guide, Secure Network Analytics is deployed as two virtual machines: a Flow Collector and a Manager. For more information, go to: https://www.cisco.com/c/en/us/products/collateral/security/stealthwatch/datasheet-c78-739398.html

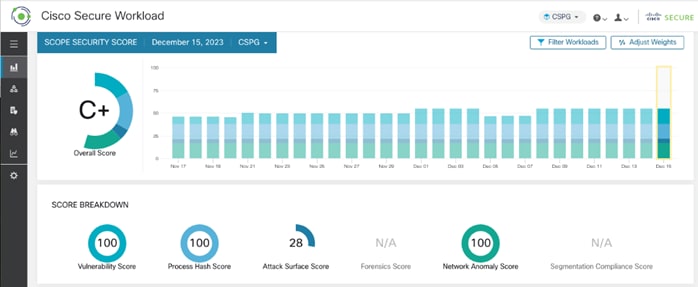

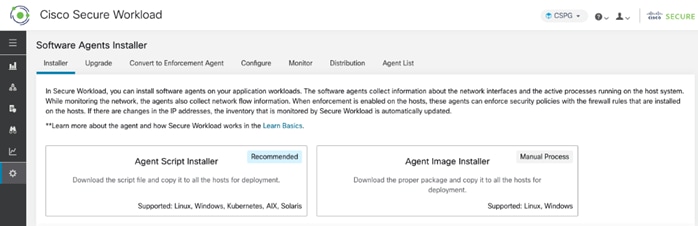

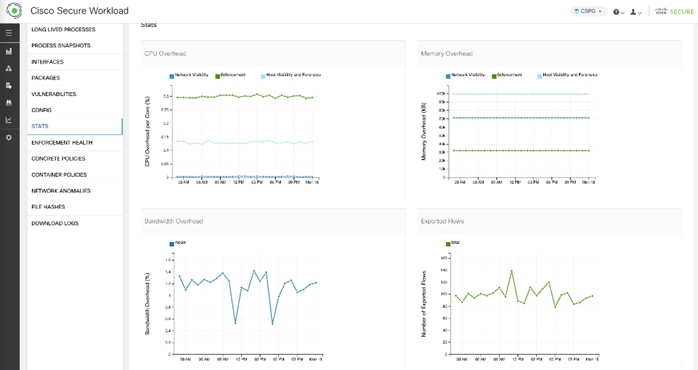

Cisco Secure Workload (formerly known as Cisco Tetration) is a comprehensive security platform that helps you achieve micro-segmentation and implement a zero-trust security model across your entire application landscape, regardless of location or workload type. Cisco Secure Workload offers a unified view of the network, applications, and workloads, enabling you to detect and respond to threats quickly and effectively.

Cisco Secure Workload has a SaaS offering that provides highly flexible micro-segmentation along with in-depth visibility into the workloads. Cisco Secure Workload offers visibility and enforcement agents that are installed on the workloads, and the enforcement agents can also enforce policies. Cisco Secure Workload dynamically learns ongoing changes in the cloud workload environment and enforces adaptive micro-segmentation. Its user portal allows you to create workspaces and graphical views for applications and to enforce security from the web application point of view. Once the agent on a new workload is registered with Cisco Secure Workload, it starts exporting network flow and process information for analysis.
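Micro-segmentation policies are commonly expressed as an allowlist between workload groups, and everything not explicitly allowed is denied. The sketch below evaluates a connection against such a policy for a hypothetical three-tier application. The group names, the `Rule` structure, and the default-deny behavior are illustrative assumptions. They do not reproduce the Secure Workload policy model or its API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    consumer: str  # workload group initiating the connection
    provider: str  # workload group receiving the connection
    protocol: str
    port: int


# Hypothetical three-tier application policy: web -> app -> db only.
POLICY = {
    Rule("web", "app", "tcp", 8443),
    Rule("app", "db", "tcp", 5432),
}


def evaluate(consumer: str, provider: str, protocol: str, port: int) -> str:
    """Return ALLOW only for explicitly permitted flows (default deny)."""
    if Rule(consumer, provider, protocol, port) in POLICY:
        return "ALLOW"
    return "DENY"
```

With this policy, the web tier cannot reach the database directly, because only the app tier is permitted on the database port. This is the lateral-movement restriction that micro-segmentation aims for.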

Cisco Secure Workload Key Features

Cisco Secure Workload supports Cisco's Zero Trust model with the following key features:

● Policy enforcement (Micro-segmentation).

● Visibility into workload process activity.

● Network flow visibility.

● Software vulnerability reports.

● Forensic analysis.

● Behavior deviations.

Cisco Secure Workload Deployment Options

Cisco Secure Workload offers two deployment options: on-premises and SaaS.

The on-premises option is a hardware-based appliance that comes in two sizes: small and large. It is suitable for any business type and size, and it offers high performance, high availability, and on-premises control of apps and data.

The SaaS option is a fully managed service that is suitable for customers of any size. It has a low barrier to entry and a flexible pricing model, and it enables secure migration to cloud and multi-cloud environments.

In this guide, Secure Workload SaaS is utilized. For more information, go to: https://www.cisco.com/site/us/en/products/security/secure-workload/index.html#tabs-ca9b217826-item-9e6cde6a19-tab

Intel Confidential Computing is a security technology built into Intel processors that enables protected execution of sensitive data within a hardware-isolated environment called a Trusted Execution Environment (TEE). This TEE acts as a secure enclave, shielding data and computations from unauthorized access, even from privileged software like the operating system or the hypervisor.

Enterprise services often run in hybrid and multi-cloud environments, so it is critical to protect enterprise applications that handle confidential data such as usernames, passwords, database credentials, API keys for third-party services, and credentials for service-oriented architecture communication. Intel Xeon Scalable processors have multiple security features that can significantly boost the security posture of a Zero Trust solution architecture, including Intel Total Memory Encryption (Intel TME) for memory encryption and Intel Software Guard Extensions (Intel SGX) for confidential computing.

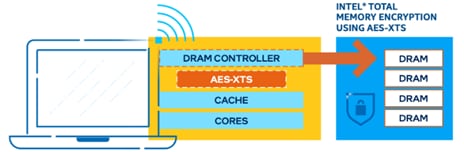

Intel Total Memory Encryption (Intel TME)

Intel TME can encrypt the entire physical memory of a server. This capability is typically enabled very early in the boot process through a UEFI/BIOS setting. After it is configured and locked, the CPU encrypts all data written to system memory. Intel TME is based on the National Institute of Standards and Technology (NIST) standard AES-XTS algorithm with 128-bit or 256-bit keys, depending on algorithm availability and selection. The Intel TME encryption key is generated by a hardware random number generator implemented in the Intel CPU, and the key is not accessible to software or through external interfaces. The AES-XTS encryption engine sits in the direct data path to the external memory buses, so all memory data entering or leaving the CPU on those buses is encrypted using AES-XTS. A new Intel TME key is generated at every boot. If the system is resuming from standby, Intel TME can restore the key from storage.

Intel TME is transparent to software, including operating systems, hypervisors, containers, applications, and microservices, and it does not require any specific Linux kernel support. The performance impact of this capability is nearly negligible when running software workloads such as Vault.

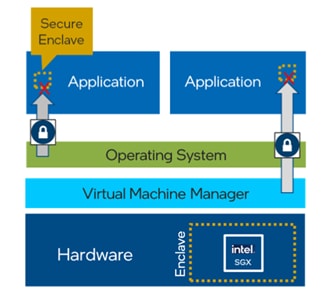

Intel Software Guard Extensions (Intel SGX)

Intel SGX is a set of instructions incorporated in Intel Xeon Scalable processors. Software developers can place security-sensitive code and data into an Intel SGX enclave, which is then executed in a CPU-protected region. Traditionally, when a system's BIOS, hypervisor, or operating system is compromised by a malicious attack, the attacker's code can gain visibility into and access to everything higher in the system stack, such as applications and data. Intel SGX uses memory encryption and hardware-enforced access controls to change how data is accessed, providing enclaves of protected memory in which to run applications and data. Secure enclaves can be created on untrusted platforms not owned by the enterprise. Intel processor-based attestation can verify the integrity of a secure enclave. After the enclave is verified through remote attestation, the application can securely push secrets into the enclave. Even if the system is hosted in a third-party facility such as a cloud, edge, or point-of-presence (POP) site, the application can rely on Intel SGX to help secure the data and reduce the attack surface exposed to threats such as malicious insiders or misconfiguration.

Compared to other confidential computing technologies, Intel SGX currently provides the smallest trust boundary in data center confidential computing: only the code or functions inside the protected enclave can access confidential data.
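The attestation step described in this section can be modeled as a relying party that releases a secret only when two conditions hold: the report is authentic, and the enclave's reported measurement matches an expected value. This is a toy sketch. An HMAC over the measurement stands in for the hardware-signed quote that real Intel SGX attestation produces. All names (`ATTESTATION_KEY`, `EXPECTED_MEASUREMENT`, `make_report`, `release_secret`) are hypothetical. The sketch illustrates the control flow only and does not implement the SGX attestation protocol.

```python
import hashlib
import hmac
from typing import Optional

# Toy stand-in for the hardware attestation key. Real SGX quotes are signed by
# platform keys and verified through Intel's attestation infrastructure.
ATTESTATION_KEY = b"demo-attestation-key"

# Measurement (hash) of the enclave build that the relying party trusts.
EXPECTED_MEASUREMENT = hashlib.sha256(b"trusted-enclave-v1").hexdigest()


def make_report(enclave_code: bytes) -> tuple[str, str]:
    """Simulate an enclave producing (measurement, signature)."""
    measurement = hashlib.sha256(enclave_code).hexdigest()
    signature = hmac.new(ATTESTATION_KEY, measurement.encode(), hashlib.sha256).hexdigest()
    return measurement, signature


def release_secret(measurement: str, signature: str, secret: bytes) -> Optional[bytes]:
    """Provision the secret only to an authentic report of the expected enclave."""
    expected_sig = hmac.new(ATTESTATION_KEY, measurement.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(signature, expected_sig):
        return None  # report was not produced with the trusted attestation key
    if not hmac.compare_digest(measurement, EXPECTED_MEASUREMENT):
        return None  # enclave code differs from the trusted build
    return secret
```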

For further details of Intel Confidential Computing, go to: https://www.intel.com/content/www/us/en/security/confidential-computing.html.

Secure Virtual Machines with Intel SGX

VMware vSphere enables you to configure Virtual Intel Software Guard Extensions (vSGX) for virtual machines, which lets them use Intel SGX technology when it is available on the hardware. To use vSGX, the ESXi host must run on an SGX-capable CPU, such as an Intel Xeon Scalable processor, and SGX must be enabled in the BIOS of the ESXi host. The VMware vSphere Client can then be used to enable SGX for a virtual machine.
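After vSGX is enabled for a virtual machine, you can confirm from inside a Linux guest that SGX is exposed. Look for the `sgx` CPU flag in `/proc/cpuinfo`, optionally `sgx_lc` (Flexible Launch Control), and, on Linux kernels 5.11 and later, the `/dev/sgx_enclave` device node created by the in-kernel driver. The sketch below assumes a Linux guest. The helper names are hypothetical, and the parser is kept separate from file access so that it can be run against sample text.

```python
import os


def cpu_flags(cpuinfo_text: str) -> set[str]:
    """Collect the CPU feature flags from /proc/cpuinfo-formatted text."""
    flags: set[str] = set()
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            flags.update(value.split())
    return flags


def sgx_status(cpuinfo_text: str, dev_path: str = "/dev/sgx_enclave") -> dict[str, bool]:
    """Report whether the guest advertises SGX and exposes the kernel SGX device."""
    flags = cpu_flags(cpuinfo_text)
    return {
        "sgx_flag": "sgx" in flags,
        "launch_control": "sgx_lc" in flags,
        "enclave_device": os.path.exists(dev_path),
    }
```

On a guest, pass the contents of `/proc/cpuinfo` to `sgx_status`. If `sgx_flag` is false, check the host BIOS setting and the VM's vSGX configuration first.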

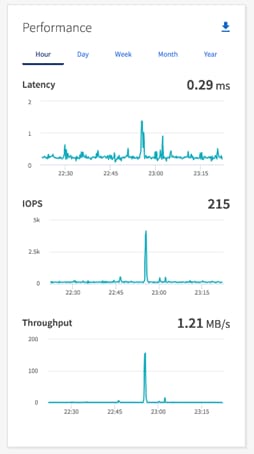

NetApp Security and Ransomware Protection

NetApp Autonomous Ransomware Protection (ARP) takes a proactive and automated approach to safeguarding your data from the ever-evolving threat of ransomware attacks. Beginning with ONTAP 9.10.1, ARP uses workload analysis in NAS (NFS and SMB) environments to proactively detect and warn about abnormal activity that might indicate a ransomware attack. When an attack is suspected, ARP also creates new Snapshot copies in addition to the existing protection from scheduled Snapshot copies. ARP bases its anti-ransomware detection on:

● Identification of the incoming data as encrypted or plaintext.

● Analytics, which detects:

◦ Entropy: An evaluation of the randomness of data in a file.

◦ File extension types: An extension that does not conform to the normal extension type.

◦ File IOPS: A surge in abnormal volume activity with data encryption.
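The entropy signal can be illustrated with Shannon entropy over a file's byte distribution. Encrypted or well-compressed data approaches 8 bits per byte, while typical plaintext scores much lower. The sketch below computes this value. The 7.5-bit threshold is an arbitrary illustrative assumption. ARP's actual analytics combine several signals, including file extensions and IOPS, rather than relying on a single entropy cutoff.

```python
import math
from collections import Counter


def shannon_entropy(data: bytes) -> float:
    """Shannon entropy of a byte string, in bits per byte (0.0 to 8.0)."""
    if not data:
        return 0.0
    total = len(data)
    return -sum((n / total) * math.log2(n / total) for n in Counter(data).values())


def looks_encrypted(data: bytes, threshold: float = 7.5) -> bool:
    """Flag data whose byte distribution is close to uniformly random."""
    return shannon_entropy(data) >= threshold
```

For example, a repeated text phrase scores well under 4 bits per byte. A buffer in which every byte value occurs equally often scores exactly 8.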

ARP can detect the spread of most ransomware attacks after only a small number of files are encrypted, act automatically to protect data, and alert admins that a suspected attack is happening.

NetApp Autonomous Ransomware Protection Key Benefits

● Reduced risk of data loss: Proactive and automated intervention minimizes the potential damage from ransomware attacks.

● Faster recovery times: Quick identification and isolation of threats enable swift restoration of affected data.

● Simplified security management: ARP automates many security tasks, freeing up IT teams to focus on strategic initiatives.

● Improved security posture: The multi-layered protection approach significantly strengthens overall security posture.

Data Recovery

When an attack is suspected, the system takes a volume Snapshot copy at that point in time and locks that copy. If the attack is confirmed later, the volume can be restored to this Snapshot, minimizing data loss. Locked Snapshot copies cannot be deleted by normal means. With the knowledge of the affected files and the time of attack, it is possible to selectively recover the affected files from various Snapshot copies, rather than simply reverting the whole volume to one of the snapshots. ARP builds on proven ONTAP data protection and disaster recovery technology to respond to ransomware attacks.
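Selective recovery comes down to choosing, for each affected file, the most recent Snapshot copy taken before that file was compromised. The sketch below shows only that selection logic. It uses hypothetical snapshot names and timestamps and does not call ONTAP. The actual restore would be performed with ONTAP or SnapCenter tooling.

```python
from datetime import datetime
from typing import Optional


def pick_snapshot(snapshots: dict[str, datetime], compromised_at: datetime) -> Optional[str]:
    """Return the newest snapshot created strictly before the compromise time."""
    candidates = [(ts, name) for name, ts in snapshots.items() if ts < compromised_at]
    if not candidates:
        return None  # no clean copy exists for this file
    return max(candidates)[1]


def recovery_plan(snapshots: dict[str, datetime],
                  affected: dict[str, datetime]) -> dict[str, Optional[str]]:
    """Map each affected file to the snapshot it should be restored from."""
    return {path: pick_snapshot(snapshots, ts) for path, ts in affected.items()}
```

Files encrypted at different times can therefore be restored from different Snapshot copies, which keeps more recent clean data than reverting the whole volume would.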

For more information on NetApp ARP, go to: https://docs.netapp.com/us-en/ontap/anti-ransomware/ and see technical report TR-4961, FlexPod ransomware protection & recovery with NetApp Cloud Insights and SnapCenter.

This chapter contains the following:

● Validated Infrastructure – Secure Foundation

● Visibility and Continuous Monitoring

● Threat Protection and Response

The Zero Trust framework for FlexPod Datacenter design incorporates security products and components into a robust framework that extends to all layers of the infrastructure, including network, compute, hypervisor, and storage, and that implements tenant-based segmentation. The design follows security best practices such as segmentation, authentication, and secure transport protocols. In the Zero Trust Framework for FlexPod validated design:

● Cisco Secure Firepower Threat Defense devices are utilized to ensure secure communication across application tiers and tenants.

● Cisco Secure Workload is used for visibility into workload VMs' operating systems and processes and for providing micro-segmentation.

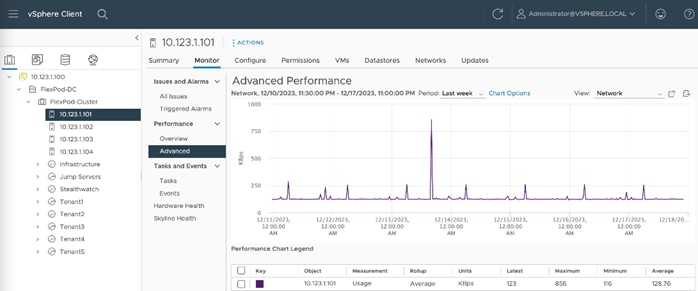

● Cisco Secure Network Analytics, combined with NetFlow export from various sources, provides application and tenant visibility.

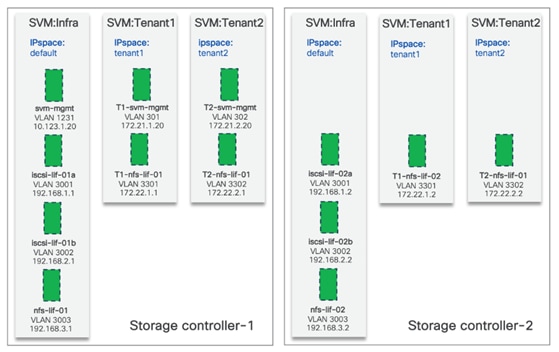

● NetApp Autonomous Ransomware Protection delivers data classification, protection, and recovery. Additionally, data isolation on NetApp is achieved using IP Spaces and Storage Virtual Machines.

The Zero Trust framework for FlexPod Datacenter design meets the following core FlexPod infrastructure design requirements:

● Resilient design across all layers of the infrastructure with no single point of failure.

● Scalable design with the flexibility to add compute capacity, storage, or network bandwidth as needed.

● Modular design that can be replicated to expand and grow as the needs of the business grow.

● Flexible design that can support different models of various components with ease.

● Simplified design with the ability to integrate and automate.

● Cloud-enabled infrastructure design that can be configured, managed, and orchestrated from the cloud using GUI or APIs.

Additionally, the Zero Trust framework for FlexPod must satisfy the following security and trust requirements:

● Follow cybersecurity best practices, including device and protocol hardening, to reduce the risk of configuration errors and vulnerabilities.

● Reduce the attack surface available to malicious actors through designs that support enhanced segmentation and control.

● Continuously monitor the infrastructure at every layer to identify and mitigate threats.

● Utilize tools that allow for centralized device and security management and policy enforcement.

Note: The FC FlexPod design is supported but was not validated as part of this effort.

FlexPod Configuration

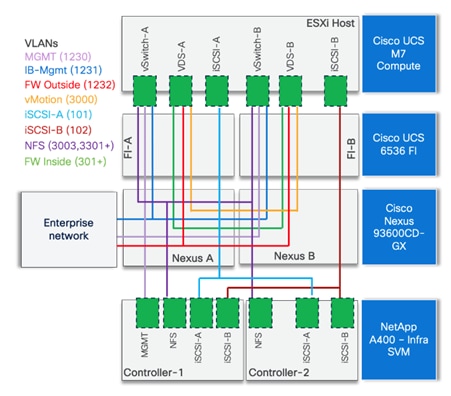

The FlexPod physical topology used in this design guide is shown in Figure 24.

The validated configuration in this design guide showcases the Cisco UCS X-Series chassis and Cisco UCS M7 servers. The Cisco UCS B-Series chassis and Cisco UCS B200 M6 servers are also supported.

● Cisco Nexus 93600CD-GX Switches in Cisco NX-OS mode provide the switching fabric. The two Nexus switches connect to each other using two 400-Gbps ports configured as a port channel (vPC peer-link).

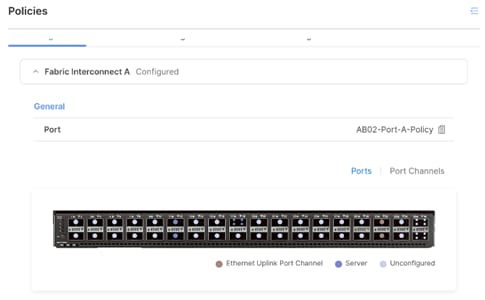

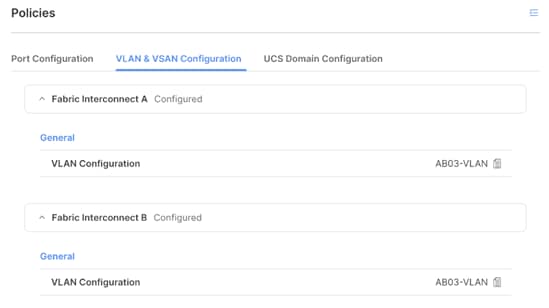

● Cisco UCS 6536 Fabric Interconnects provide the Cisco UCS X-Series chassis, C-Series servers, and network switch connectivity:

◦ Cisco UCS 6536 Fabric Interconnect (FI) 100 Gigabit Ethernet uplink ports connect to Cisco Nexus 93600CD-GX Switches in a vPC configuration.

◦ Cisco UCS X9508 Chassis connects to Cisco UCS 6536 FIs using Cisco UCSX 9108-100G Intelligent Fabric Modules (IFMs), where two or more 100 Gigabit Ethernet ports are used on each IFM to connect to the appropriate FI.

◦ The Cisco UCS C220 M7 servers connect to Cisco UCS 6536 FIs using four 25G connections. 100G to 4x25G breakout cables are used for this connectivity.

◦ Cisco UCS X210c M7 compute nodes contain fifth-generation Cisco UCS 15231 virtual interface cards.

◦ Cisco UCS C220 M7 servers contain fifth-generation Cisco UCS 15428 virtual interface cards.

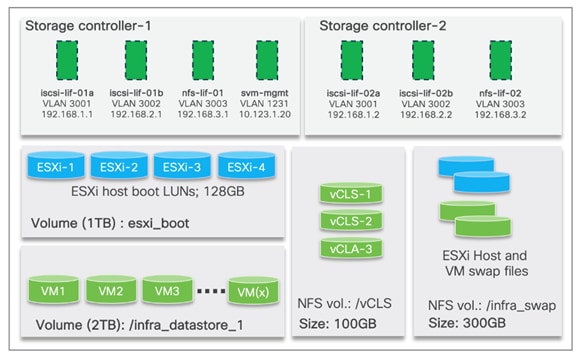

● Each NetApp AFF A400 controller connects to the Cisco Nexus 93600CD-GX Switches using two 100-GbE ports configured as a vPC for iSCSI boot and NFS traffic.

● VMware ESXi 7.0 Update 3 is installed on the Cisco UCS compute nodes and rack servers.
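The link counts above translate into minimum raw aggregate bandwidth figures, which the sketch below works out by multiplying link count by link speed. The counts come from the topology description: two 400-Gbps peer-link ports, four 25G links per C220 M7, and two 100-GbE ports per AFF A400 controller. For the X9508 chassis, the sketch assumes the stated minimum of two 100-GbE ports per IFM and two IFMs per chassis (one per fabric). The figures ignore oversubscription and protocol overhead.

```python
# Minimum raw aggregate link bandwidth (Gbps) implied by the topology above.
# Each entry: (number of links, speed per link in Gbps).
LINKS = {
    "Nexus vPC peer-link": (2, 400),
    "X9508 chassis uplinks (2 IFMs x 2 ports)": (2 * 2, 100),
    "C220 M7 server (4 x 25G breakout)": (4, 25),
    "AFF A400 controller vPC": (2, 100),
}


def aggregate_gbps(links: int, speed_gbps: int) -> int:
    """Raw aggregate bandwidth; ignores oversubscription and protocol overhead."""
    return links * speed_gbps


def bandwidth_table(links: dict[str, tuple[int, int]]) -> dict[str, int]:
    """Compute the aggregate bandwidth for every entry in the link table."""
    return {name: aggregate_gbps(n, s) for name, (n, s) in links.items()}
```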

VLAN Configuration

Table 1 lists VLANs configured for setting up the environment along with their usage.

| VLAN ID |