| Cache-POD-Replication-Immediate-Local | critical | 1 | Communication Alarm |
| Expression: `(sum by (namespace) (increase(geo_replication_total{ReplicationNode='CACHE_POD',ReplicationSyncType='Immediate',ReplicationReceiver='local',ReplicationRequestType='Response',status='success'}[1m]))/sum by (namespace) (increase(geo_replication_total{ReplicationNode='CACHE_POD',ReplicationSyncType='Immediate',ReplicationReceiver='local',ReplicationRequestType='Request'}[1m])))*100 < 90` Description: This alert is generated when the success rate of CACHE_POD replication with sync type Immediate and replication receiver Local is below the threshold value. |

| Cache-POD-Replication-Immediate-Remote | critical | 1 | Communication Alarm |
| Expression: `(sum by (namespace) (increase(geo_replication_total{ReplicationNode='CACHE_POD',ReplicationSyncType='Immediate',ReplicationReceiver='remote',ReplicationRequestType='Response',status='success'}[1m]))/sum by (namespace) (increase(geo_replication_total{ReplicationNode='CACHE_POD',ReplicationSyncType='Immediate',ReplicationReceiver='remote',ReplicationRequestType='Request'}[1m])))*100 < 90` Description: This alert is generated when the success rate of CACHE_POD replication with sync type Immediate and replication receiver Remote is below the threshold value. |

| Cache-POD-Replication-PULL-Remote | critical | 1 | Communication Alarm |
| Expression: `(sum by (namespace) (increase(geo_replication_total{ReplicationNode='CACHE_POD',ReplicationSyncType='PULL',ReplicationReceiver='remote',ReplicationRequestType='Response',status='success'}[1m]))/sum by (namespace) (increase(geo_replication_total{ReplicationNode='CACHE_POD',ReplicationSyncType='PULL',ReplicationReceiver='remote',ReplicationRequestType='Request'}[1m])))*100 < 90` Description: This alert is generated when the success rate of CACHE_POD replication with sync type PULL and replication receiver Remote is below the threshold value. |

| ETCD-Replication-Immediate-Local | critical | 1 | Communication Alarm |
| Expression: `(sum by (namespace) (increase(geo_replication_total{ReplicationNode='ETCD',ReplicationSyncType='Immediate',ReplicationReceiver='local',ReplicationRequestType='Response',status='success'}[1m]))/sum by (namespace) (increase(geo_replication_total{ReplicationNode='ETCD',ReplicationSyncType='Immediate',ReplicationReceiver='local',ReplicationRequestType='Request'}[1m])))*100 < 90` Description: This alert is generated when the success rate of ETCD replication with sync type Immediate and replication receiver Local is below the threshold value. |

| ETCD-Replication-Immediate-Remote | critical | 1 | Communication Alarm |
| Expression: `(sum by (namespace) (increase(geo_replication_total{ReplicationNode='ETCD',ReplicationSyncType='Immediate',ReplicationReceiver='remote',ReplicationRequestType='Response',status='success'}[1m]))/sum by (namespace) (increase(geo_replication_total{ReplicationNode='ETCD',ReplicationSyncType='Immediate',ReplicationReceiver='remote',ReplicationRequestType='Request'}[1m])))*100 < 90` Description: This alert is generated when the success rate of ETCD replication with sync type Immediate and replication receiver Remote is below the threshold value. |

| ETCD-Replication-PULL-Remote | critical | 1 | Communication Alarm |
| Expression: `(sum by (namespace) (increase(geo_replication_total{ReplicationNode='ETCD',ReplicationSyncType='PULL',ReplicationReceiver='remote',ReplicationRequestType='Response',status='success'}[1m]))/sum by (namespace) (increase(geo_replication_total{ReplicationNode='ETCD',ReplicationSyncType='PULL',ReplicationReceiver='remote',ReplicationRequestType='Request'}[1m])))*100 < 90` Description: This alert is generated when the success rate of ETCD replication with sync type PULL and replication receiver Remote is below the threshold value. |

|

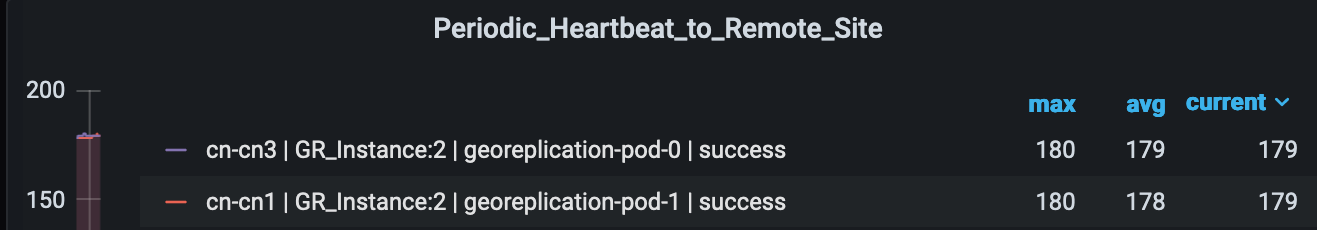

Heartbeat-Remote

-Site

|

critical

|

-

|

Communication Alarm

|

|

Expression: sum by (namespace)

(increase(geo_monitoring_total{ControlActionNameType=

'RemoteMsgHeartbeat',status!='success'}[1m])) > 0

Description: This alert is triggerd when periodic Heartbeat to remote site fails.

|

| Local-Site-POD-Monitoring | critical | - | Communication Alarm |
| Expression: `sum by (namespace,AdminNode) (increase(geo_monitoring_total{ControlActionNameType='MonitorPod'}[1m])) > 0` Description: This alert is triggered when local site pod monitoring failures breach the configured threshold for the pod mentioned in Label:{{$labels.AdminNode}}. |

| PEER-Replication-Immediate-Local | critical | 1 | Communication Alarm |
| Expression: `(sum by (namespace) (increase(geo_replication_total{ReplicationNode='PEER',ReplicationSyncType='Immediate',ReplicationReceiver='local',ReplicationRequestType='Response',status='success'}[1m]))/sum by (namespace) (increase(geo_replication_total{ReplicationNode='PEER',ReplicationSyncType='Immediate',ReplicationReceiver='local',ReplicationRequestType='Request'}[1m])))*100 < 90` Description: This alert is generated when the success rate of PEER replication with sync type Immediate and replication receiver Local is below the threshold value. |

| PEER-Replication-Immediate-Remote | critical | 1 | Communication Alarm |
| Expression: `(sum by (namespace) (increase(geo_replication_total{ReplicationNode='PEER',ReplicationSyncType='Immediate',ReplicationReceiver='remote',ReplicationRequestType='Response',status='success'}[1m]))/sum by (namespace) (increase(geo_replication_total{ReplicationNode='PEER',ReplicationSyncType='Immediate',ReplicationReceiver='remote',ReplicationRequestType='Request'}[1m])))*100 < 90` Description: This alert is generated when the success rate of PEER replication with sync type Immediate and replication receiver Remote is below the threshold value. |

| RemoteCluster-PODFailure | critical | - | Communication Alarm |
| Expression: `sum by (namespace,AdminNode) (increase(geo_monitoring_total{ControlActionNameType='RemoteClusterPodFailure'}[1m])) > 0` Description: This alert is generated when pod failure is detected on the Remote site for the pod mentioned in Label:{{$labels.AdminNode}}. |

| RemoteMsgNotifyFailover | critical | 1 | Communication Alarm |
| Expression: `sum by (namespace,status) (increase(geo_monitoring_total{ControlActionNameType='RemoteMsgNotifyFailover',status!='success'}[1m])) > 0` Description: This alert is generated when transient role RemoteMsgNotifyFailover has failed for the reason mentioned in Label:{{$labels.status}}. |

| RemoteMsgNotifyPrepareFailover | critical | 1 | Communication Alarm |
| Expression: `sum by (namespace,status) (increase(geo_monitoring_total{ControlActionNameType='RemoteMsgNotifyPrepareFailover',status!='success'}[1m])) > 0` Description: This alert is generated when transient role RemoteMsgNotifyPrepareFailover has failed for the reason mentioned in Label:{{$labels.status}}. |

| RemoteSite-RoleMonitoring | critical | - | Communication Alarm |
| Expression: `sum by (namespace,AdminNode) (increase(geo_monitoring_total{ControlActionNameType='RemoteSiteRoleMonitoring'}[1m])) > 0` Description: This alert is generated when RemoteSiteRoleMonitoring detects role inconsistency for an instance on the partner rack and accordingly changes the role of the respective instance on local rack to Primary. The impacted instance is in Label:{{$labels.AdminNode}}. |

| ResetRoleApi-Initiated | critical | - | Communication Alarm |
| Expression: `sum by (namespace,status) (increase(geo_monitoring_total{ControlActionNameType='ResetRoleApi'}[1m])) > 0` Description: This alert is generated when ResetRoleApi is initiated with the state transition of roles mentioned in Label:{{$labels.status}}. |

| TriggerGRApi-Initiated | critical | - | Communication Alarm |
| Expression: `sum by (namespace,status) (increase(geo_monitoring_total{ControlActionNameType='TriggerGRApi'}[1m])) > 0` Description: This alert is generated when TriggerGRApi is initiated for the reason mentioned in Label:{{$labels.status}}. |

| VIP-Monitoring-Failures | critical | - | Communication Alarm |
| Expression: `sum by (namespace,AdminNode) (increase(geo_monitoring_total{ControlActionNameType='MonitorVip'}[1m])) > 0` Description: This alert is generated when GR is triggered upon detecting VIP monitoring failures for the VIP and Instance mentioned in the Label:{{$labels.AdminNode}}. |
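
The replication alerts in the table above all share one shape: successful responses divided by requests over a one-minute window, scaled to a percentage and compared against 90. The monitoring alerts instead fire on any non-zero increase of an event counter. The following Python sketch only illustrates that arithmetic; the function names are hypothetical and not part of the product, and treating a window with zero requests as "no alert" is an assumption based on how PromQL comparison filters drop NaN results.

```python
from typing import Optional

# Taken from the "< 90" comparison in the replication expressions above.
THRESHOLD_PERCENT = 90.0


def success_rate_percent(success_responses: float, requests: float) -> Optional[float]:
    """Return (success_responses / requests) * 100, or None if no requests were seen.

    Hypothetical helper: the inputs stand in for the one-minute increase of
    geo_replication_total for Response/success and for Request.
    """
    if requests == 0:
        # Assumption: no traffic in the window means no computable rate.
        return None
    return success_responses * 100.0 / requests


def replication_alert_fires(success_responses: float, requests: float,
                            threshold: float = THRESHOLD_PERCENT) -> bool:
    """True when the success rate is strictly below the threshold."""
    rate = success_rate_percent(success_responses, requests)
    return rate is not None and rate < threshold


def monitoring_alert_fires(event_increase: float) -> bool:
    """True when the monitored event counter increased in the window ("> 0")."""
    return event_increase > 0
```

For example, 85 successful responses out of 100 requests gives a rate below 90 and would raise the alert, while 95 out of 100 would not. A rate of exactly 90 does not fire, because the comparison in the expressions is strict.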
