Important: Currently, SNMP and statistics are not supported on the third-site arbiter.

Do not install the arbiter on a third site if no third site exists or if the arbiter is already installed on the primary site.

Additionally, if the third-site blades are accessible from one of the GR sites, you can spawn the arbiter VM from that site (for example, Site1), and the installer will reside on the third-site blades. In that case as well, this section does not apply; just make the appropriate configuration changes on the primary site so that the destination VM is placed on a third-site blade.

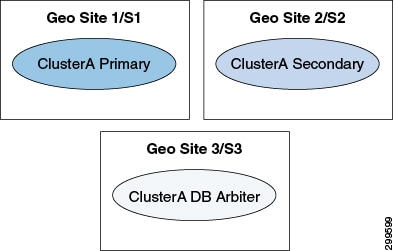

Automatic GR site failover happens only when the arbiters are placed on a third site. We therefore recommend placing the MongoDB arbiter on the third site, that is, S3.
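The reason for this recommendation follows from MongoDB's election rule: a primary can be elected only while a strict majority of the replica set's voting members is reachable. The following is a minimal sketch, assuming one voting data member per GR site plus a single arbiter; the site layouts and the function name are hypothetical and do not reflect the actual CPS replica-set configuration.

```python
# Sketch: why automatic GR failover needs the arbiter on a third site.
# Assumption (hypothetical layout): each site hosts the listed number of
# voting members of one MongoDB replica set. A primary can be elected only
# while a strict majority of all voting members survives.


def can_elect_primary(members_by_site: dict[str, int], failed_site: str) -> bool:
    """Return True if a strict majority of votes survives losing failed_site."""
    total = sum(members_by_site.values())
    surviving = total - members_by_site.get(failed_site, 0)
    return surviving * 2 > total


# One data member per GR site plus one arbiter, placed in two different ways.
arbiter_on_third_site = {"Site1": 1, "Site2": 1, "S3": 1}
arbiter_on_primary_site = {"Site1": 2, "Site2": 1}

for site in ("Site1", "Site2"):
    print(
        site, "down ->",
        "third-site arbiter:", can_elect_primary(arbiter_on_third_site, site),
        "| primary-site arbiter:", can_elect_primary(arbiter_on_primary_site, site),
    )
```

With the arbiter on the primary site, losing Site1 removes two of the three votes, so Site2 cannot elect a primary on its own. With the arbiter on S3, either GR site can fail and the remaining two votes still form a majority.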

Note |

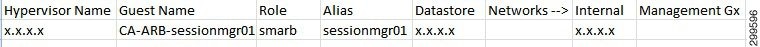

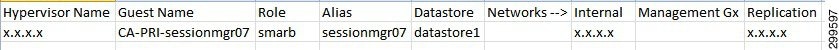

Arbiter VM name

should be sessionmgrxx.

|
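A name check can be scripted before deployment. This is a hypothetical sketch: it assumes that `xx` stands for exactly two digits and that an optional hyphen-separated prefix, as in the `S3-CA-ARB-sessionmgr01` example in the naming table, is allowed before `sessionmgrxx`. Neither rule is taken from CPS tooling.

```python
import re

# Hypothetical pattern: optional "PREFIX-" followed by "sessionmgr" and
# exactly two digits (the assumed meaning of "xx").
ARBITER_NAME = re.compile(r"(?:.*-)?sessionmgr\d{2}")


def is_valid_arbiter_name(vm_name: str) -> bool:
    """Return True if vm_name ends in sessionmgrxx, where xx is two digits."""
    return ARBITER_NAME.fullmatch(vm_name) is not None


for name in ("sessionmgr01", "S3-CA-ARB-sessionmgr01", "S3-ARB-cm", "sessionmgr1"):
    print(name, is_valid_arbiter_name(name))
```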

The Site3 HA configuration looks like the following (for reference only):

Table 4. Naming Convention

| Blade | Virtual Machines | vCPU | Memory (GB) |
|---|---|---|---|
| CPS Blade 1 | S3-ARB-cm | 1 | 8 |
| | S3-CA-ARB-sessionmgr01 | 4 | 8 |

For more information about deploying VMs, refer to the CPS Installation Guide for VMware.
