Service Provider IP Infrastructure

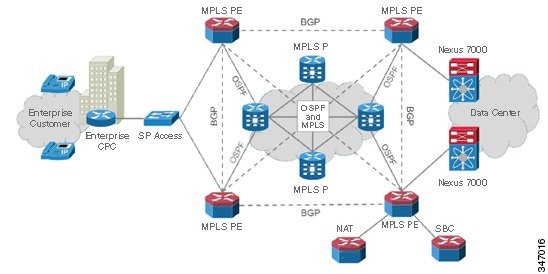

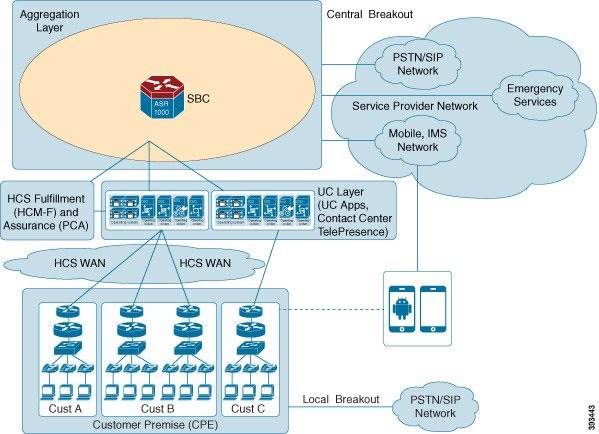

This section covers the configuration of the service provider (SP) MPLS/IP core used to transport traffic from the customer sites to the data center hosting HCS. The PE devices establish an L3VPN for each customer to ensure traffic isolation, while the P devices provide efficient transport across the SP backbone.

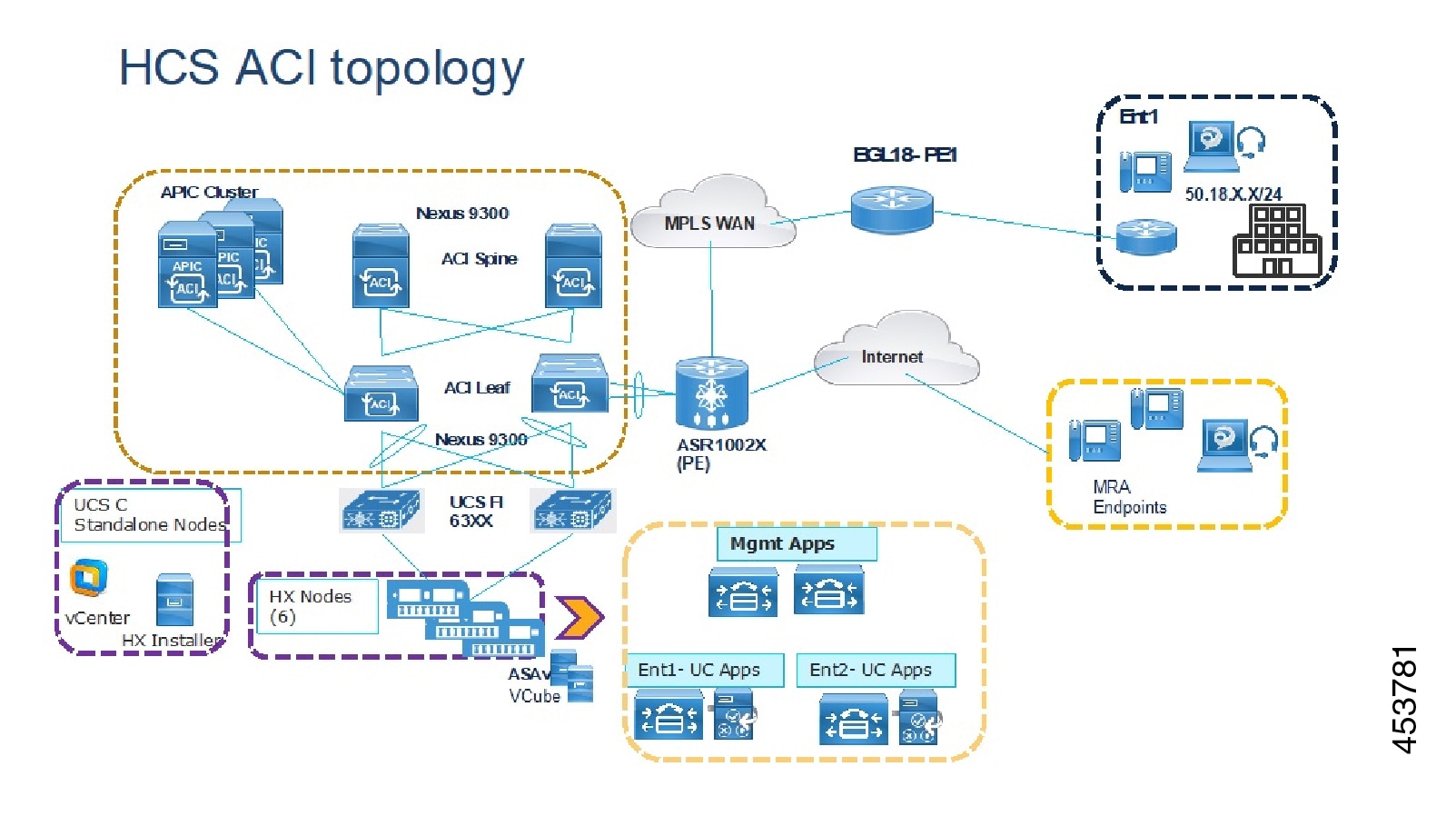

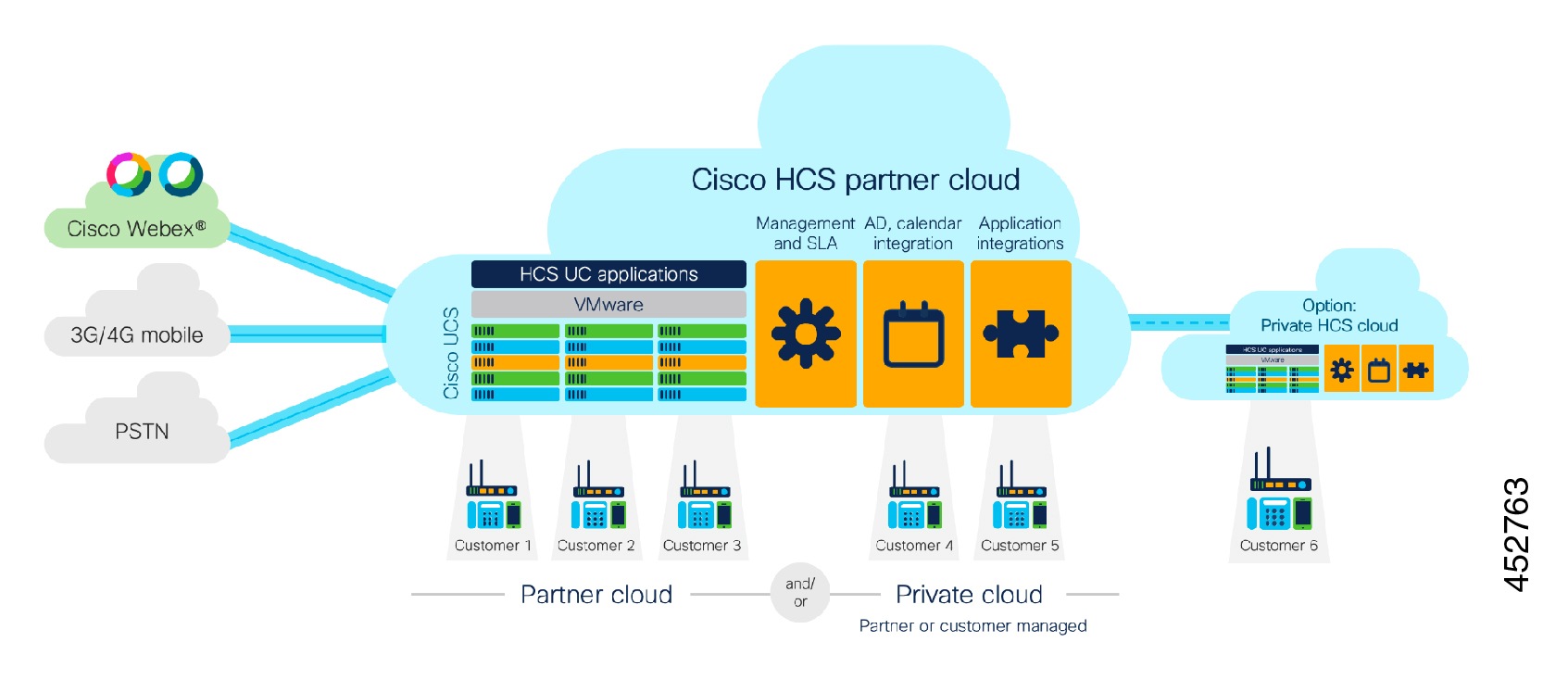

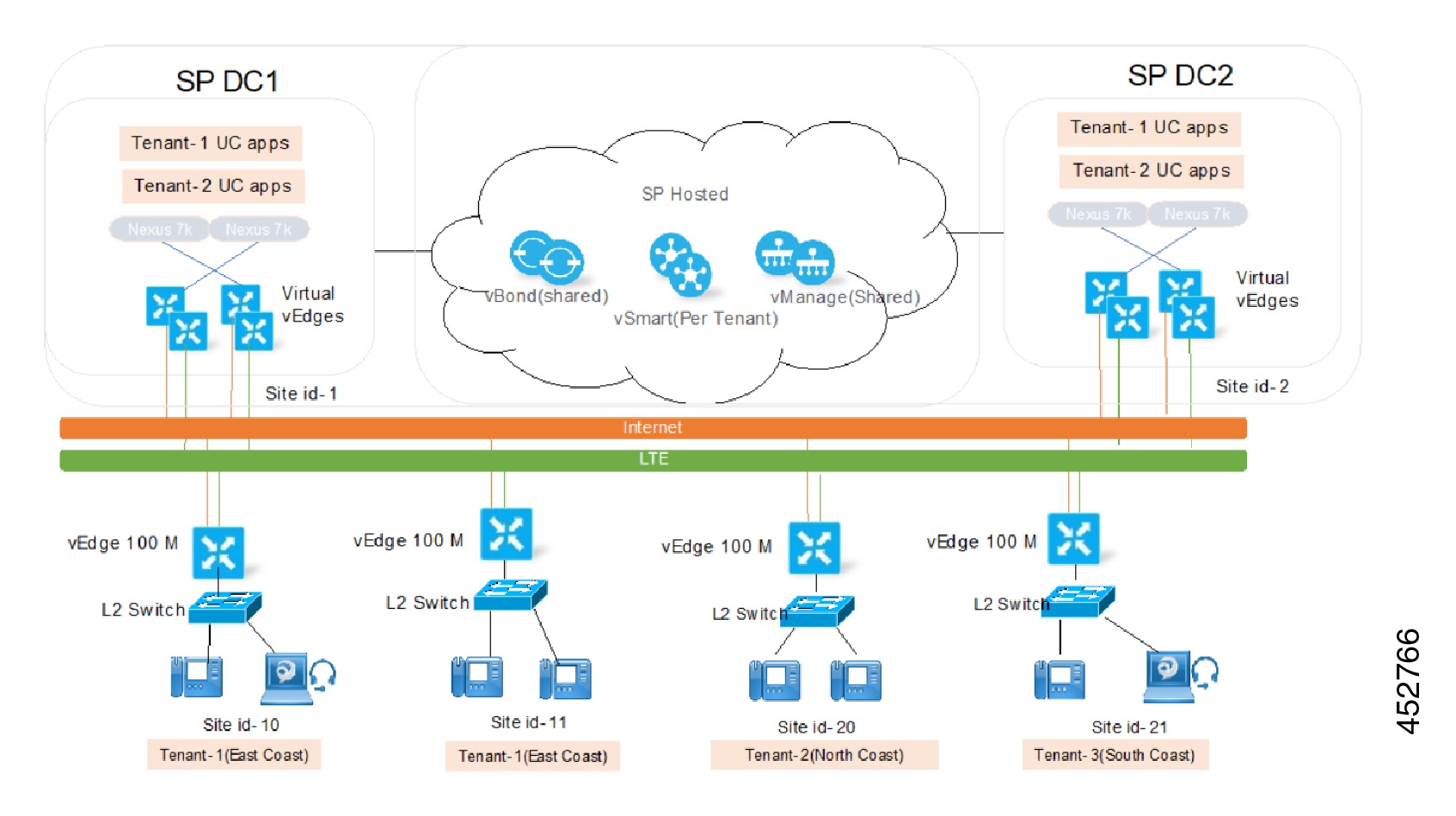

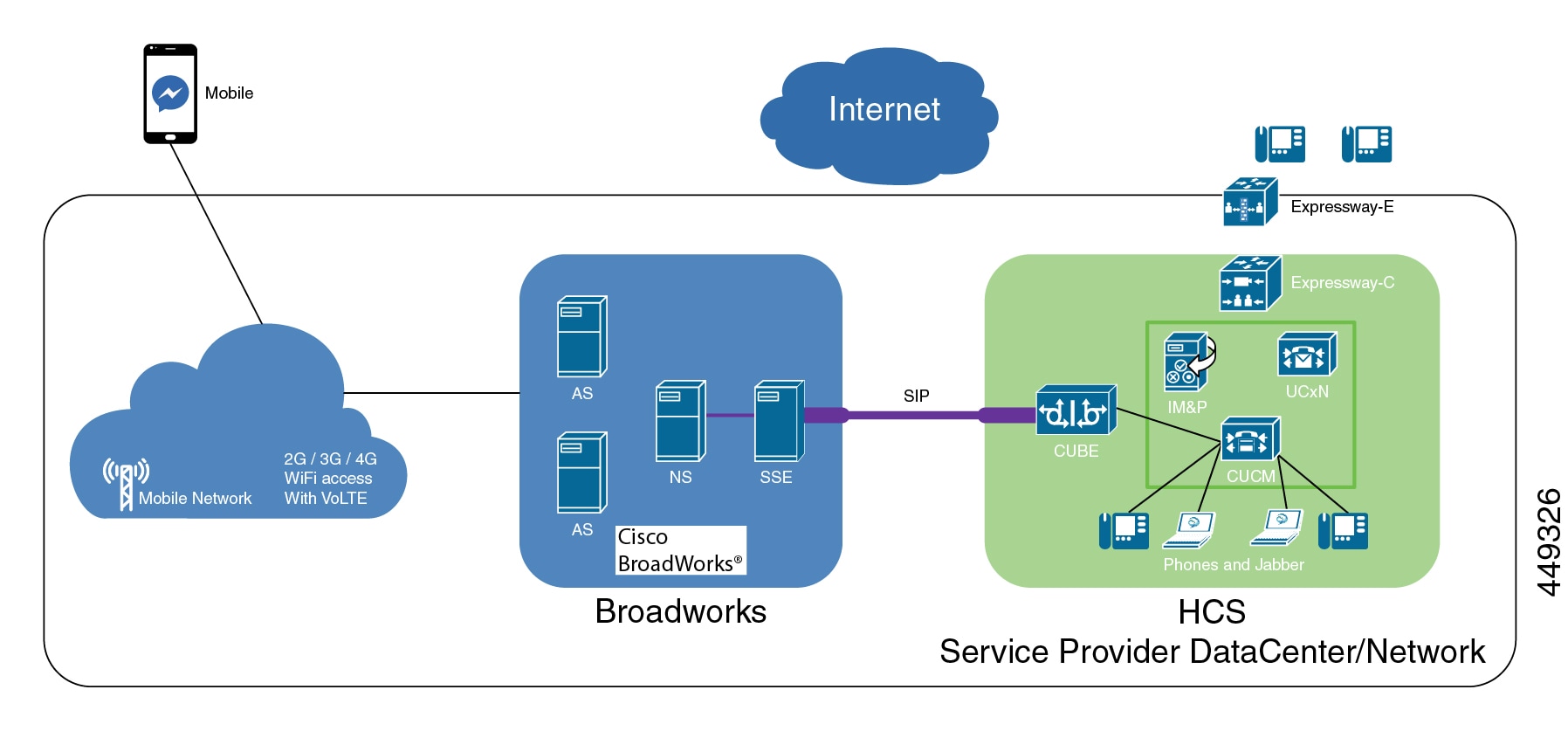

Hosted Collaboration Solution (HCS) defines an environment where customer-specific applications are hosted in the service provider data centers (DCs) rather than on premises. Inevitably, this also means that applications for multiple customers are hosted in the same SP DC. Customer premises equipment (CPE) and IP endpoints within the customer premises require connectivity to the service provider data center, which is provided through a robust SP IP infrastructure. You can also implement NAT services for service assurance, management, or security.

This section examines basic IP connectivity requirements and outlines the HCS IP deployment model and the functions of devices at each layer of the SP IP infrastructure. It also outlines design considerations for IP-based services such as Dynamic Host Configuration Protocol (DHCP), network address translation (NAT), Domain Name System (DNS), and Network Time Protocol (NTP), and provides more details on connectivity requirements, maximum transmission unit (MTU) size, and addressing recommendations.

Service Provider IP Connectivity Requirements

To understand overall service provider IP Infrastructure connectivity, you must understand the following:

- What devices require IP connectivity for an end-to-end service

- What traffic traverses service provider and enterprise customer networks

The following table outlines the set of components and their intended placement within the end-to-end system architecture.

| Device category | Network placement | Sample device |
|---|---|---|
| IP Endpoints | Customer Premises | IP phones (voice and video), Presence/IM Clients (Cisco Unified Personal Communicator) |
| SP Managed components | Customer Premises | SRST CPE, Media Resources CPE, Media Resources Managed CPE |
| Per-Customer Servers | SP Data Center | Cisco Unified Communications Manager, Cisco Unity Connection, Cisco Unified Communications IM and Presence Service |
| Shared Management Components | SP Data Center | Cisco Prime Collaboration Assurance (PCA) for HCS |
| Multitenant signaling aggregation | SP VoIP aggregation within IP Infrastructure | Third-party SBC |

Each of the devices shown in the preceding table requires IP connectivity to one or more other devices.

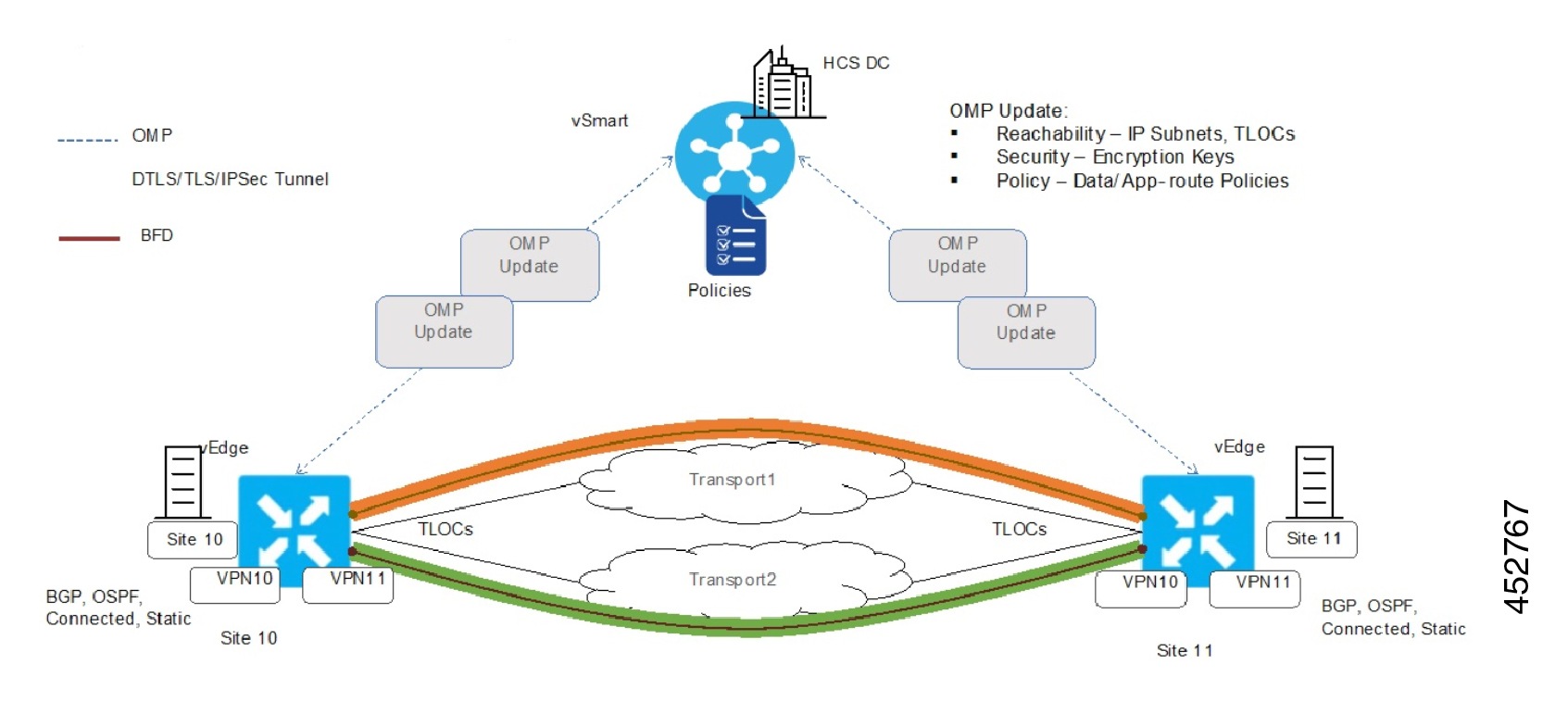

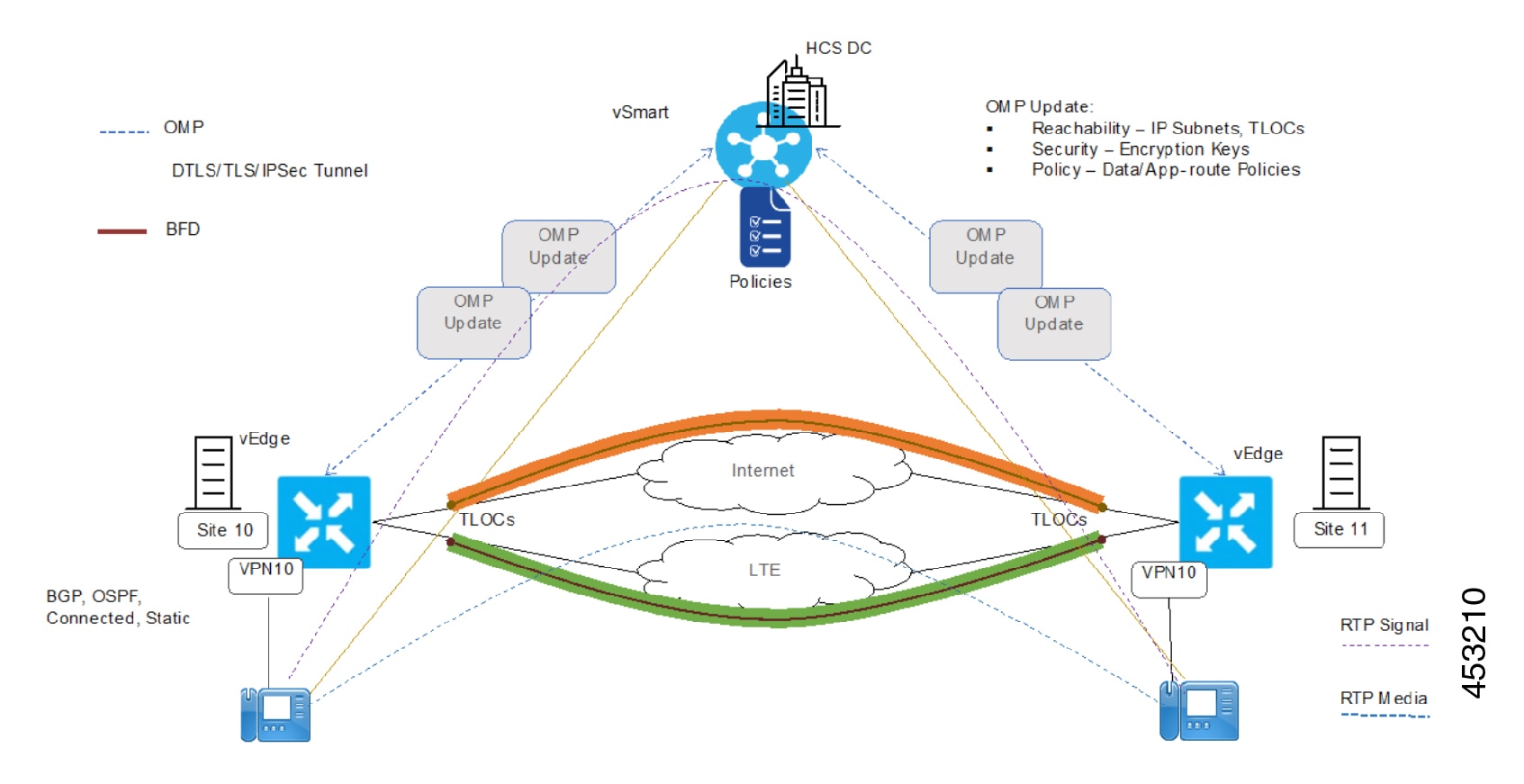

HCS Traffic Types

There are typically multiple traffic flows for different components within the service provider infrastructure, each with a distinct purpose and requirement. These traffic flows are documented as follows.

Traffic Type and Requirements

Signaling

- For each customer, on-premises endpoints must have reachability to that customer's per-customer services components in the service provider's data center.

- Each per-customer instance of Unified Communications Manager in the service provider data center must have reachability to a multitenant signaling aggregation component in the service provider's data center or VoIP network.

- On-premises components of one customer must not have reachability to the per-customer services components of another customer.

Media

- On-premises endpoints of one customer must have reachability to on-premises endpoints of another customer for interenterprise on-net calls.

- On-premises endpoints must have reachability to the PSTN media gateway (MGW) in the service provider's data center.

Management

- Per-customer management components in the service provider's data center must have reachability to multitenant management components in the service provider's data center.

- In the case of managed CPE or SRST routers, the on-premises CPE management address must have reachability to per-customer management components in the service provider's data center.

- There must be connectivity between the on-premises LDAP server and the customer's IM and Presence Service server instance in the service provider's data center.

Data

- Connectivity between multiple sites within an enterprise customer.

- No direct connectivity between sites of different enterprise customers.

Because multiple enterprise customers share the service provider IP (and data center) infrastructure as a transport medium, some fundamental design and security constraints must be addressed:

- On-premises components of one enterprise must not negatively impact hosted components of other enterprises or the service provider network in general.

- Customer traffic must be segregated as it passes through the service provider IP (and data center) infrastructure, because multiple customers use the same infrastructure to access applications hosted in the service provider data center.

- While providing overall traffic segregation, the service provider must support some intercustomer communication. For example, media for intercustomer on-net calls can be sent over an IP network between endpoints in two different enterprises without being sent to the PSTN.

- IP network design must consider potentially overlapping address spaces of both on-premises and hosted components for multiple enterprises.
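The reachability rules above can be summarized as a small policy check. This is a hypothetical model, not an HCS component: roles such as `endpoint`, `uc_app`, `sbc`, `mgw`, `customer_mgmt`, and `shared_mgmt` are illustrative labels, shared (multitenant) components have no customer, and only the direction in which each flow is described above is modeled.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Component:
    role: str                # hypothetical role label, e.g. "endpoint", "uc_app", "sbc"
    customer: Optional[str]  # None for shared, multitenant components


# Hypothetical (source role, destination role, traffic type) flows that may
# reach a shared component, taken from the requirement lists above.
SHARED_ALLOWED = {
    ("uc_app", "sbc", "signaling"),                  # UCM to signaling aggregation
    ("endpoint", "mgw", "media"),                    # endpoints to PSTN media gateway
    ("customer_mgmt", "shared_mgmt", "management"),  # per-customer to multitenant mgmt
}


def is_allowed(src: Component, dst: Component, traffic: str) -> bool:
    """Return True if src may reach dst for the given traffic type."""
    if src.customer is not None and dst.customer is not None:
        if src.customer == dst.customer:
            return True  # intracustomer traffic stays inside the tenant
        # Intercustomer traffic: only endpoint-to-endpoint media (on-net calls).
        return traffic == "media" and src.role == dst.role == "endpoint"
    # At least one side is shared: permit only the explicitly listed flows.
    return (src.role, dst.role, traffic) in SHARED_ALLOWED
```

For example, an Acme phone may send media to a Globex phone, but it may not send signaling to Globex's Unified Communications Manager instance.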


HCS Management IP Addressing Scheme

You must deploy the following Cisco HCS and HCS-related management components within a global address space:

- Hosted Collaboration Mediation Fulfillment Layer (HCM-F)

- Cisco Prime Collaboration Assurance

- Unified Communications Manager

- vCenter

- Prime License Manager

These components must be directly accessed from the individual customer domains without network address translation of the management components.

Note

The use of network address translation (NAT) address space is not recommended for management applications such as Cisco HCM-F when they are accessed from customer Unified Communications applications.

The deployment scheme shown in the preceding figure is the preferred and validated method, which enables all management features to work correctly.

Note

Some deployments do not follow the recommended best practice above, and problems with some features have been encountered, for example, platform upgrade manager or automation of assurance provisioning. We highly recommend that you migrate noncompliant deployments to the Cisco HCS supported and validated deployment, in which the addresses of management applications such as HCM-F are directly accessible (without NAT) from the UC applications, whereas the UC applications can have their addresses translated (NAT) when accessed from management applications.

Service Provider NAT/PAT Design

With per-customer MPLS VPNs, Cisco HCS end customers can end up using overlapping IP addresses. Alternatively, a service provider may deliberately use a fixed, overlapping subnet, possibly with the same IP addresses, for all customers to reduce operational complexity.

While MPLS makes it possible to use overlapping subnets across multiple customers, it also complicates out-of-band management of those overlapping customer subnets. The HCS recommended design therefore uses NAT between the management systems and the customer UC applications that use overlapping addresses.
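Because management applications must stay reachable without NAT while UC applications may be translated, one way to picture this design is a static one-to-one NAT that maps each customer's overlapping UC subnet into a unique block seen by the management systems. The sketch assumes a hypothetical address plan (every customer reusing 10.10.10.0/24, and a 172.16.0.0/16 pool carved into one /24 per customer); in practice this translation is configured on the NAT device, not computed in Python.

```python
import ipaddress

# Hypothetical address plan: every customer reuses the same UC subnet, and the
# SP carves one unique block per customer out of a management-side NAT pool.
CUSTOMER_UC_SUBNET = ipaddress.ip_network("10.10.10.0/24")
NAT_POOL = ipaddress.ip_network("172.16.0.0/16")


def translate(customer_index: int, inside_addr: str) -> str:
    """Static one-to-one NAT of a UC application address into the customer's unique block."""
    addr = ipaddress.ip_address(inside_addr)
    if addr not in CUSTOMER_UC_SUBNET:
        raise ValueError(f"{addr} is not in {CUSTOMER_UC_SUBNET}")
    block = CUSTOMER_UC_SUBNET.num_addresses  # 256 addresses per customer for a /24
    if not 0 <= customer_index < NAT_POOL.num_addresses // block:
        raise ValueError("customer index does not fit in the NAT pool")
    # Keep the host part, move the network part into the customer's own block.
    host_offset = int(addr) - int(CUSTOMER_UC_SUBNET.network_address)
    outside = NAT_POOL.network_address + customer_index * block + host_offset
    return str(outside)
```

With this plan, customers 5 and 7 can both run a UC server at 10.10.10.20, and the management systems reach them as 172.16.5.20 and 172.16.7.20. The management applications' own addresses are never translated.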

Grouping VLANs and VLAN Numbering

Cisco recommends that when you design Layer 2 for a Cisco HCS deployment, you group the VLANs based on their usage. The current Service Provider Cisco HCS data center design assumes that each end customer consumes only two VLANs; however, it is possible to configure four VLANs for each end customer.

Use the following VLAN numbering scheme if four VLANs are configured for each end customer:

- 0100 to 0999: UC Apps (100 to 999 are the customer IDs)

- 1100 to 1999: outside VLANs (100 to 999 are the customer IDs)

- 2100 to 2999: hcs-mgmt (100 to 999 are the customer IDs)

- 3100 to 3999: Services (100 to 999 are the customer IDs)

- Use all the unused VLANs x000 to x099 (where x is 1, 2, or 3) and VLANs 4000 to 4095 for other purposes.

Use the following numbering scheme if only two VLANs are configured for each end customer:

- 0100 to 0999: UC Apps (100 to 999 are the customer IDs for Group 1)

- 1100 to 1999: outside VLANs (100 to 999 are the customer IDs for Group 1)

Use the following numbering scheme for additional end customers:

- 2100 to 2999: UC Apps (100 to 999 are the customer IDs for Group 2)

- 3100 to 3999: outside VLANs (100 to 999 are the customer IDs for Group 2)

- Use the unused VLANs for other purposes.

While this is the recommended grouping of VLANs to help you scale the number of customers that can be hosted on a Cisco HCS platform, you may reach the upper limit of customers because of limitations in other areas of the Cisco HCS solution.
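The VLAN numbering schemes reduce to simple arithmetic on the customer ID. This sketch assumes that, in the two-VLAN scheme, Group 1 uses 0100 to 0999 for UC apps and 1100 to 1999 for outside VLANs, and Group 2 uses 2100 to 2999 and 3100 to 3999; the function and key names are hypothetical.

```python
from typing import Dict


def _check_customer_id(customer_id: int) -> None:
    # Each thousand-block reserves IDs 100 to 999 for customers.
    if not 100 <= customer_id <= 999:
        raise ValueError("customer ID must be between 100 and 999")


def four_vlan_plan(customer_id: int) -> Dict[str, int]:
    """VLAN IDs for one customer when four VLANs are configured per customer."""
    _check_customer_id(customer_id)
    return {
        "uc_apps": customer_id,          # 0100 to 0999
        "outside": 1000 + customer_id,   # 1100 to 1999
        "hcs_mgmt": 2000 + customer_id,  # 2100 to 2999
        "services": 3000 + customer_id,  # 3100 to 3999
    }


def two_vlan_plan(customer_id: int, group: int = 1) -> Dict[str, int]:
    """VLAN IDs when only two VLANs are configured; Group 2 reuses the same customer IDs."""
    _check_customer_id(customer_id)
    if group not in (1, 2):
        raise ValueError("group must be 1 or 2")
    base = 0 if group == 1 else 2000  # Group 2 lives in the 2xxx and 3xxx blocks
    return {"uc_apps": base + customer_id, "outside": base + 1000 + customer_id}
```

Because customer IDs run from 100 to 999, each group holds 900 customers, so the two-VLAN scheme addresses up to 1,800 customers versus 900 for the four-VLAN scheme.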

VPN Options

- MPLS VPN

- Site-to-Site IPsec VPN

- FlexVPN

- AnyConnect VPN

- For access options that do not require VPN, see Cisco Expressway Over-the-Top Solution Overview

Service Provider IP Infrastructure Design for MPLS VPN

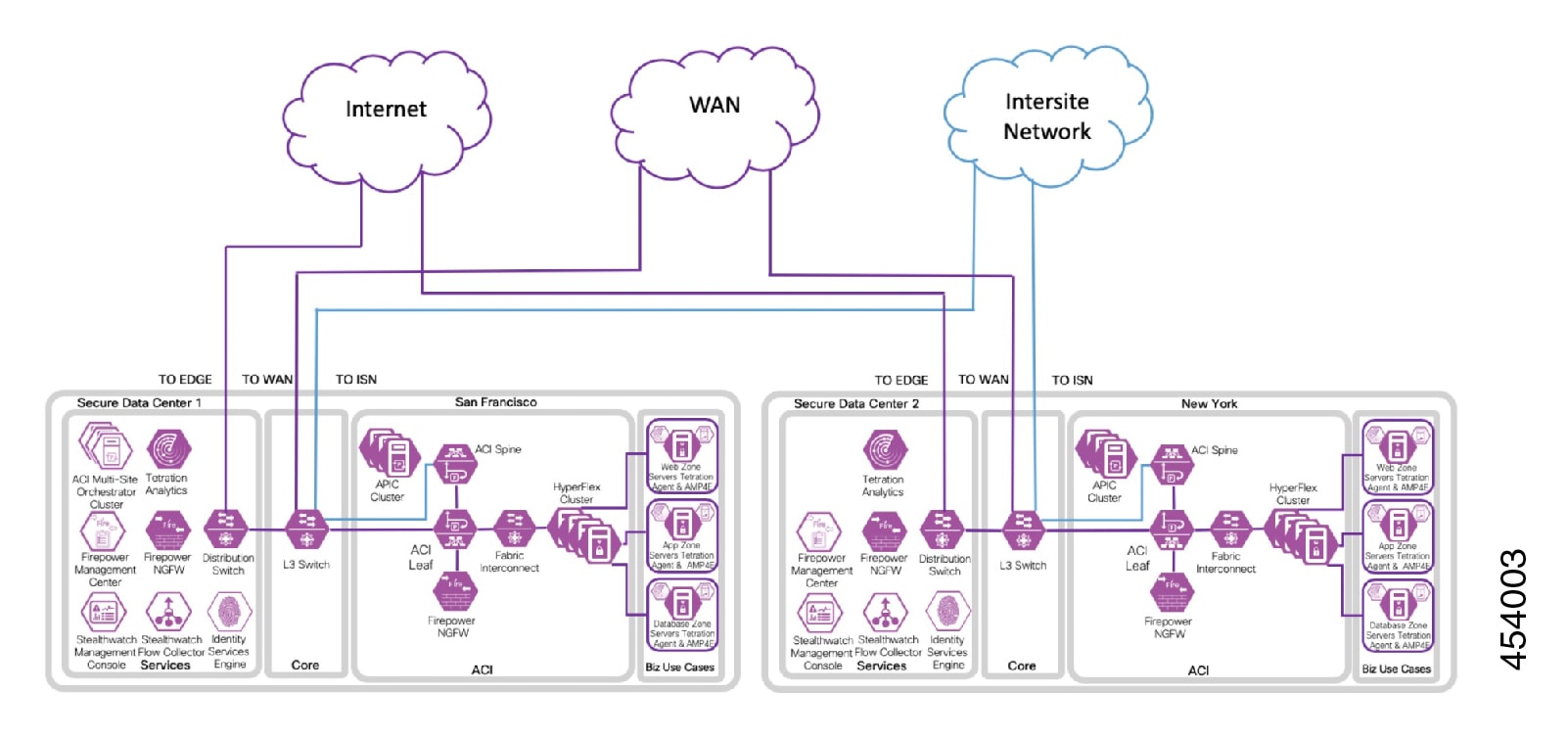

The Cisco HCS recommended IP infrastructure design aims to satisfy all the connectivity requirements for both services and management outlined in an earlier section, securely and with complete segregation between customers in multitenant service provider data centers.

Cisco HCS reference IP infrastructure design revolves around the following two key principles:

- Use of MPLS VPN and VLAN to provide customer traffic isolation and segregation

Endpoints in individual customer sites connect to the service provider network through MPLS provider edge (PE) devices. Customer traffic may be untagged, in which case physical interfaces are used on the MPLS PE devices. Alternatively, the service provider may use a bump-in-the-wire device and aggregate multiple customers on the same physical MPLS PE interface; in this case, each customer is assigned its own VLAN and is terminated on a customer-specific subinterface with 802.1Q encapsulation that matches the VLAN sent by the customer.

The customer-facing MPLS PE device is responsible for implementing per-customer MPLS Layer 3 Virtual Private Network (VPN), which provides customer traffic separation through the service provider MPLS-IP infrastructure.

As an MPLS VPN PE node, this device is responsible for the following:

- Defining the customer-specific VRF

- Assigning customer-facing interfaces to the VRF

- Implementing the PE-CE routing protocol for route exchange

- Implementing Multiprotocol BGP (M-BGP) for VPN route exchange through the MPLS core

- Route redistribution between the PE-CE routing protocol and M-BGP

MPLS provider (P) routers are core service provider routers, responsible for high-speed data transfer through the service provider backbone. Depending on the overall service provider design, a P router may or may not be part of the M-BGP deployment. Beyond regular service provider routing and MPLS operations, there is no specific Cisco HCS-related requirement for P routers.

Per-customer MPLS VPN services initiated at the customer-facing MPLS PE devices are terminated at the data center-facing MPLS PEs. The implementation on the data center-facing MPLS PEs is the same as on the customer-facing PE devices. This effectively means that MPLS L3 VPN is used only in the service provider MPLS/IP core for customer data transport.

Note

Each MPLS label adds 4 bytes, so the label stack used for MPLS VPN may push the packet size beyond the default maximum of 1500 bytes, which can cause fragmentation in some cases. A good practice is to increase the MTU size to accommodate these added bytes.
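The MTU arithmetic is straightforward: each MPLS label stack entry is 4 bytes. This sketch assumes a two-label stack (transport label plus VPN label), which is typical for MPLS L3VPN; features such as traffic engineering fast reroute can add more labels. It counts only the label stack, not Layer 2 header overhead, and the function name is hypothetical.

```python
MPLS_LABEL_BYTES = 4  # each MPLS label stack entry is 32 bits


def required_mpls_mtu(ip_mtu: int = 1500, labels: int = 2) -> int:
    """MTU needed to carry an ip_mtu-byte IP packet plus its MPLS label stack."""
    if labels < 0:
        raise ValueError("label count cannot be negative")
    return ip_mtu + labels * MPLS_LABEL_BYTES
```

With the defaults, `required_mpls_mtu()` returns 1508; a third label raises the requirement to 1512.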

The data center core-facing interfaces on the MPLS PE implement a per-customer subinterface, which is configured for the customer VRF and uses a VLAN unique to each customer. In other words, customer traffic handoff from the service provider core to the data center core devices is based on a per-customer VLAN. The data center infrastructure uses this VLAN to implement VRF-Lite for customer traffic separation.
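To illustrate why per-customer VLAN handoff plus VRF-Lite keeps overlapping customer prefixes apart, the following sketch selects a VRF from the ingress subinterface VLAN and then performs a longest-prefix match only inside that VRF. The VLAN numbers, VRF names, and next hops are hypothetical.

```python
import ipaddress

# Hypothetical handoff: each customer arrives on its own subinterface VLAN.
VLAN_TO_VRF = {101: "cust-a", 102: "cust-b"}

# Hypothetical per-VRF routes; both customers use the same 10.1.1.0/24 prefix.
VRF_ROUTES = {
    "cust-a": {
        ipaddress.ip_network("10.1.1.0/24"): "cust-a-uc-gateway",
        ipaddress.ip_network("0.0.0.0/0"): "cust-a-mpls-pe",
    },
    "cust-b": {
        ipaddress.ip_network("10.1.1.0/24"): "cust-b-uc-gateway",
        ipaddress.ip_network("0.0.0.0/0"): "cust-b-mpls-pe",
    },
}


def next_hop(ingress_vlan: int, dst: str) -> str:
    """Select the VRF from the ingress VLAN, then longest-prefix match inside it."""
    table = VRF_ROUTES[VLAN_TO_VRF[ingress_vlan]]
    addr = ipaddress.ip_address(dst)
    candidates = [net for net in table if addr in net]
    if not candidates:
        raise LookupError(f"no route to {dst} in the VRF for VLAN {ingress_vlan}")
    return table[max(candidates, key=lambda net: net.prefixlen)]
```

Both customers use 10.1.1.0/24, yet `next_hop(101, "10.1.1.10")` and `next_hop(102, "10.1.1.10")` resolve to different gateways, because the lookup never leaves the VRF selected by the ingress VLAN.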

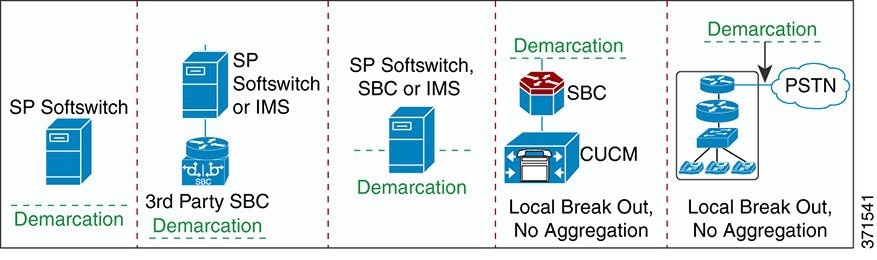

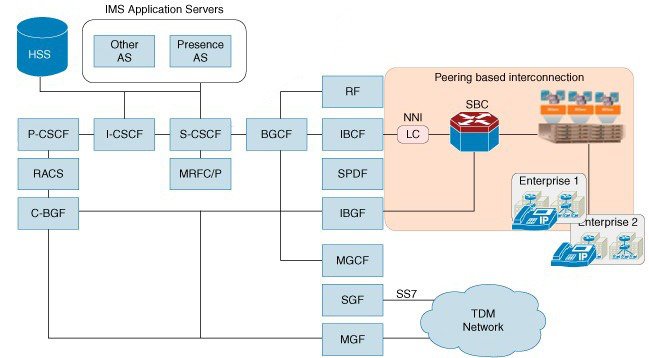

A similar approach is used to hand over customer traffic to the Session Border Controller (SBC). All intercustomer calls and all calls to the PSTN go through the SBC. The Nexus 7000 device hands off customer traffic to the SBC using a per-customer subinterface, similar to the data center handoff. The SBC is responsible for correctly routing customer calls, based on its configuration.

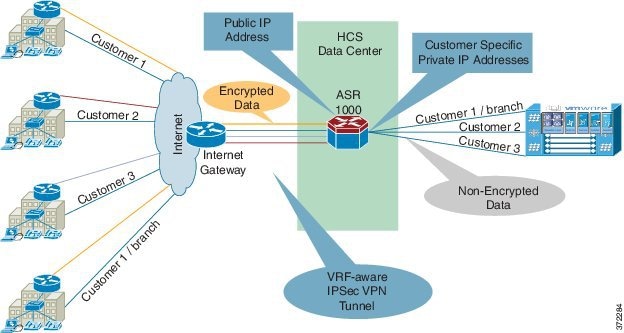

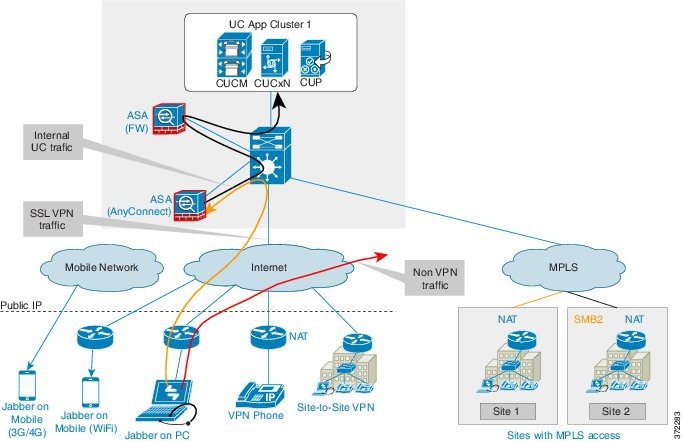

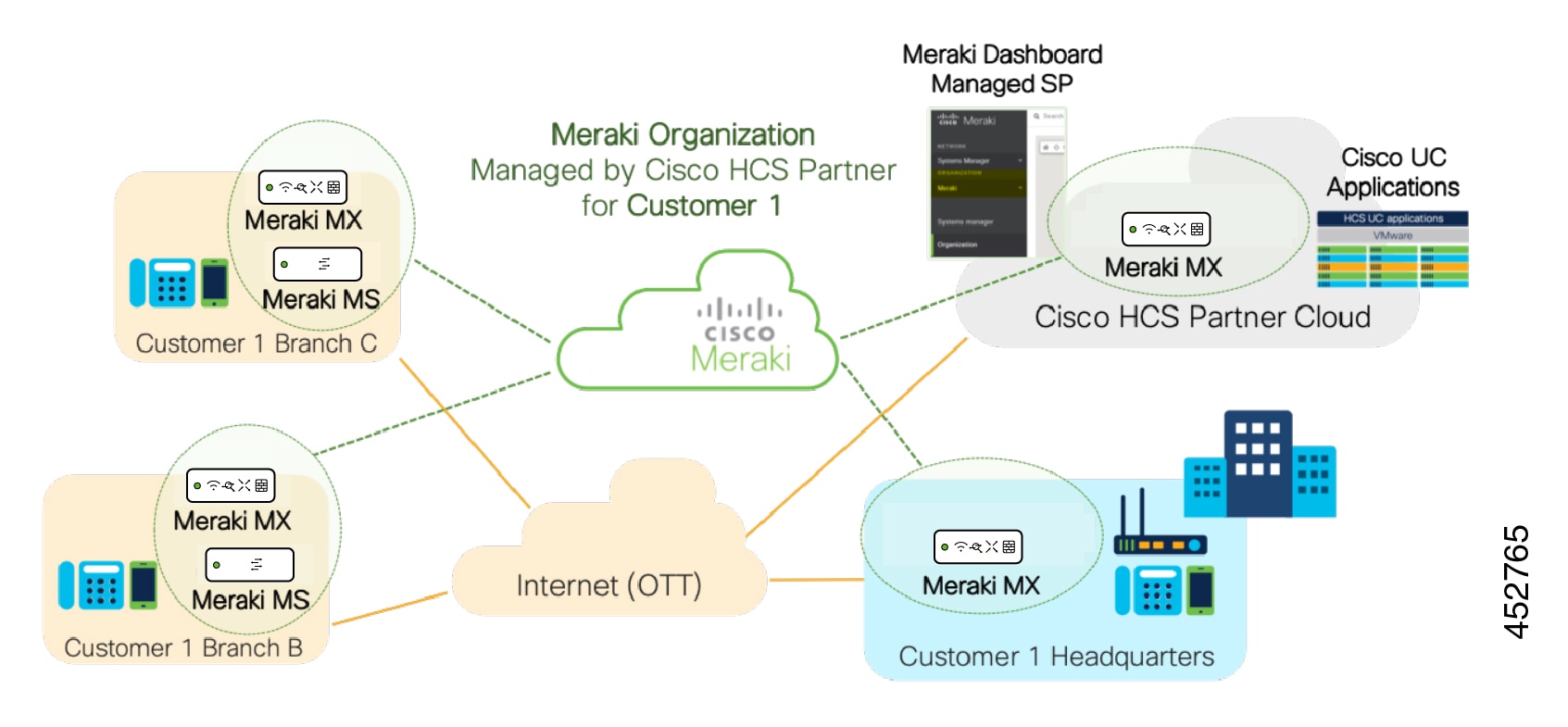

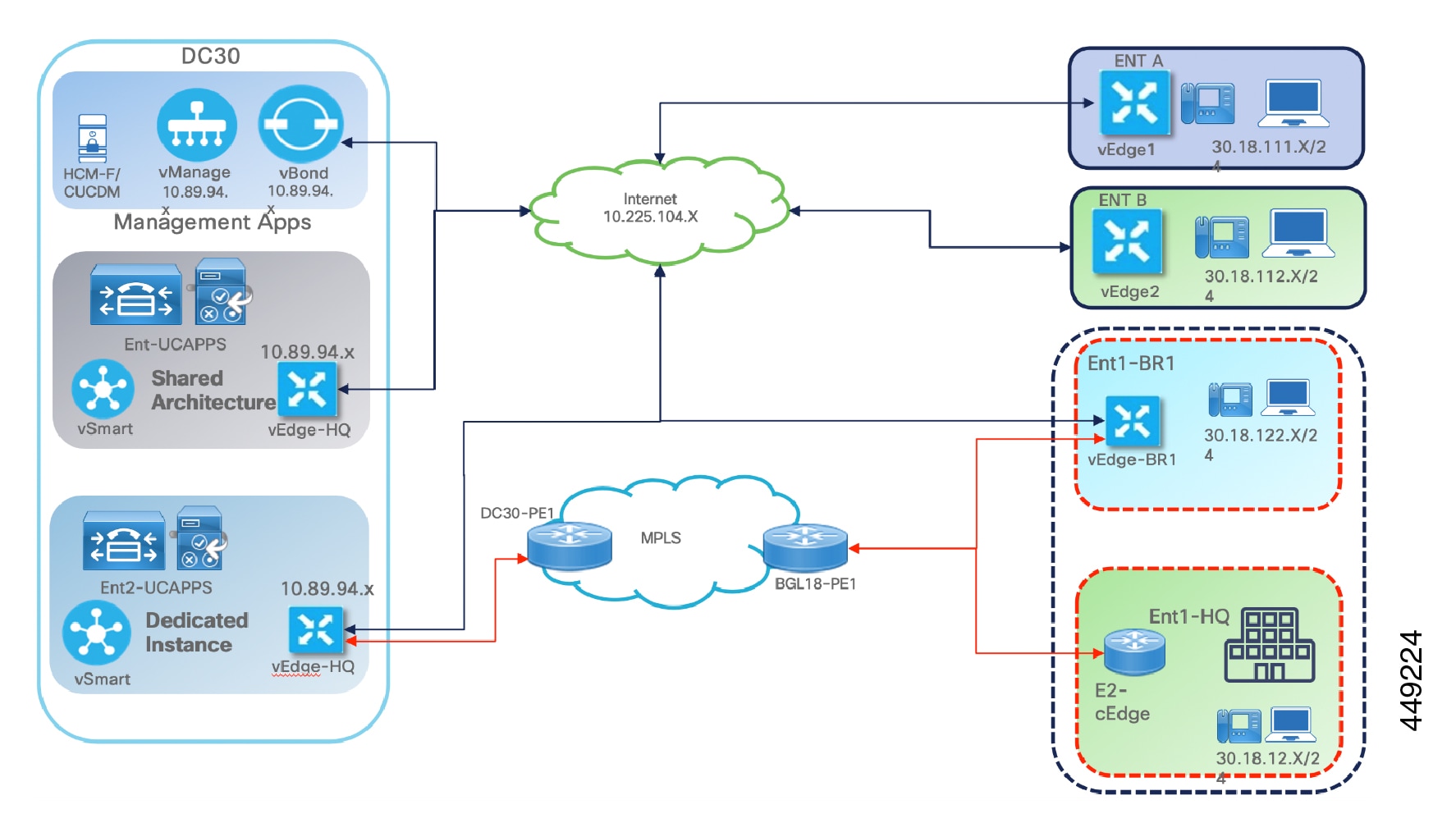

HCS Tenant Connectivity Over Internet Model

Using IPsec VPN technology, Cisco HCS provides an alternative option in the HCS Productized Validated Solution (PVS): small and medium-sized businesses (SMBs) without MPLS VPN connectivity get a secure, low-cost way to connect to the service provider's data center over the Internet. This setup does not require any VRF configuration on the customer premises side, which eliminates the need for costly MPLS VPN.

Note

This solution is meant to enable a Cisco HCS tenant site, not a single user.

IPsec is a framework of open standards. It provides security for the transmission of sensitive information over unprotected networks such as the Internet. IPsec acts at the network layer, protecting and authenticating IP packets between participating IPsec devices or peers, such as Cisco routers.

In the preceding diagram, the IP gateway is the device that the service provider typically has in its IP cloud for Internet connectivity. There is no mandate on which IP router to use, as long as it can route the incoming IPsec traffic to the appropriate VPN concentrator in the service provider's HCS data center, where the IPsec VPN tunnel terminates. As shown in the diagram, the VPN concentrator recommended for this kind of deployment is the ASR 1000, which sits inside the service provider Cisco HCS data center as a centralized VPN concentrator. This is called a site-to-site IPsec VPN tunnel on the ASR router.

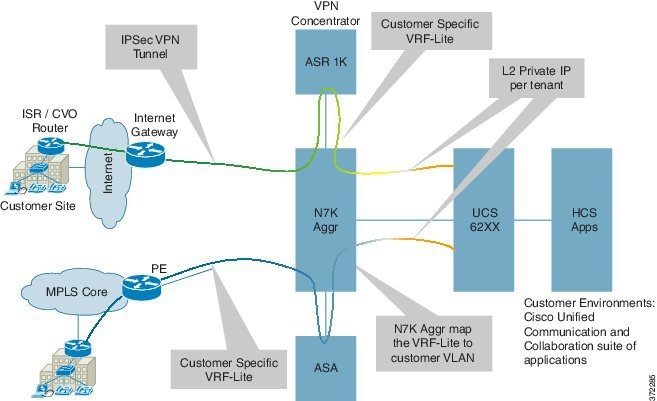

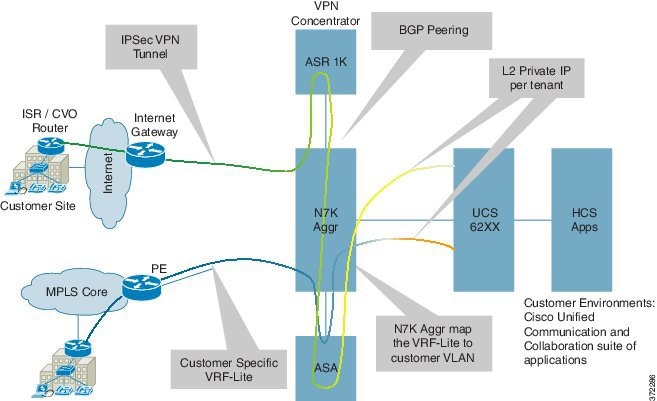

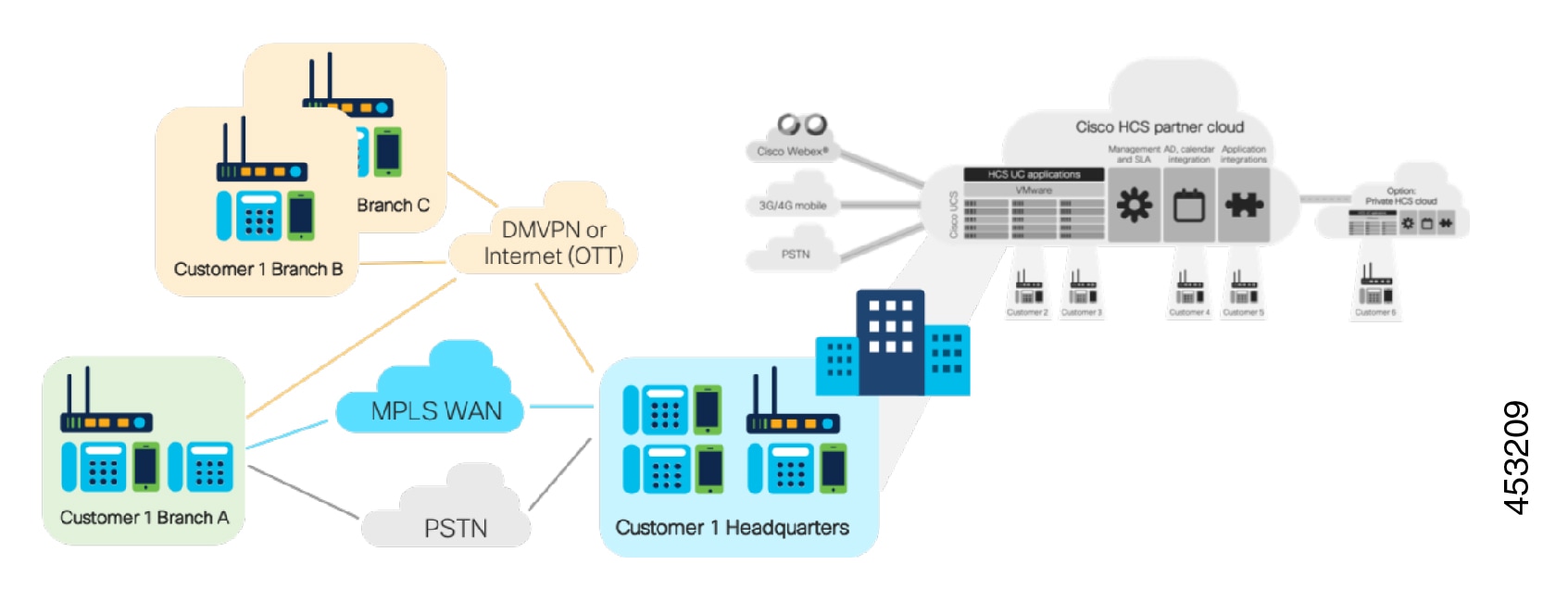

As shown above, the cloud for MPLS traffic and the cloud for Internet traffic differ in how they ingress to the service provider's network. For traffic coming from the Internet, the IP gateway is the ingress point, whereas for traffic coming from the MPLS cloud, the PE is the ingress point.

This architecture applies to the aggregation-only layer of the preceding data center design.

Deploy the VPN concentrator in this layer, where other services are typically deployed. Dedicate the ASR 1000 to the VPN concentrator role, because encryption and decryption happen on the ASR 1000 and running other services on it may impact overall performance.

You can deploy this solution within the service provider Cisco HCS data center using either of two techniques:

- Use Layer 3 between the IP gateway and the ASR 1000. In this case, the Nexus 7000 switch is used as a router, and it acts as the default gateway for ingress and egress encrypted traffic in the global routing table.

- Use Layer 2 between the IP gateway and the ASR 1000. In this case, the Nexus 7000 switch is transparent to the traffic and to the ASR 1000. The ASR 1000 acts as the ingress default gateway, and the IP gateway acts as the egress default gateway, for encrypted traffic in the global routing table.

Deploying with Layer 2 connectivity between the IP gateway and the ASR 1000 keeps this interconnection architecture as an overlay network on top of the Cisco HCS VPN-based network.

There are two ways to deploy this over-the-Internet solution within the SP data center:

- Static routing: Terminate the IPsec tunnel directly on the ASR 1000 (VPN concentrator), which decrypts the traffic into the tenant VRF and connects to the southbound VRF on the Nexus 7000 using a static route per tenant. In each tenant VRF, this static route points to the Nexus 7000 aggregation; similarly, configure a static route per tenant on the Nexus 7000 for outgoing traffic. You also need one more static route on the Nexus 7000 toward the SBC for inter-SMB or PSTN traffic.

- Dynamic routing: Terminate the IPsec tunnel directly on the ASR 1000 (VPN concentrator) and connect it to the Nexus 7000 aggregation using BGP. BGP also has the advantage of automatically redistributing the IPsec reverse route injection (RRI) routes from the ASR 1000 to the Nexus 7000.

In the diagram below, the ASR 1000 VPN concentrator decrypts traffic into a VRF, and this VRF is connected to the northbound VRF on the Nexus 7000. Traffic then goes to the ASA outside interface, and from the ASA inside interface to the southbound VRF on the Nexus 7000, and then to the UC applications.

The IP addresses of the customer premises equipment (CPE) and the VPN concentrator must be publicly reachable. All customer sites use one common public IP address to connect.

IPsec tunnels are sets of security associations (SAs) that are established between two IPsec peers. The SAs define the protocols and algorithms to be applied to sensitive packets and specify the keying material to be used by the two peers. SAs are unidirectional and are established per security protocol (AH or ESP).

Note

The access lists used for IPsec only determine which traffic should be protected by IPsec, not which traffic should be blocked or permitted through the interface. Separate access lists define blocking and permitting at the interface.

Access lists associated with IPsec crypto map entries also represent the traffic that a device requires to be protected by IPsec. Inbound traffic is processed against the crypto map entries: if an unprotected packet matches a permit entry in an access list associated with an IPsec crypto map entry, that packet is dropped because it was not sent as an IPsec-protected packet.
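The inbound drop rule can be modeled as follows. This is a simplified sketch, not the full router behavior: it assumes a single hypothetical crypto ACL entry written from the data center side, evaluates inbound packets against the mirror image of that entry (remote to local), and ignores the post-decryption selector checks a real router also performs.

```python
import ipaddress
from typing import List, NamedTuple


class CryptoAclEntry(NamedTuple):
    local: ipaddress.IPv4Network   # source as written in the ACL (data center side)
    remote: ipaddress.IPv4Network  # destination as written in the ACL (tenant site)


# Hypothetical crypto ACL: protect data center UC subnet <-> tenant site subnet.
CRYPTO_ACL: List[CryptoAclEntry] = [
    CryptoAclEntry(ipaddress.ip_network("10.10.10.0/24"),
                   ipaddress.ip_network("192.168.1.0/24")),
]


def inbound_decision(src: str, dst: str, arrived_encrypted: bool) -> str:
    """Return 'drop' for unprotected inbound packets that the crypto ACL says must be protected."""
    s, d = ipaddress.ip_address(src), ipaddress.ip_address(dst)
    # Inbound packets travel remote -> local, so match against the mirrored entry.
    must_be_protected = any(s in e.remote and d in e.local for e in CRYPTO_ACL)
    if must_be_protected and not arrived_encrypted:
        return "drop"
    # Not dropped by the crypto map; the separate interface ACL still decides.
    return "pass"
```

A cleartext packet from 192.168.1.5 to 10.10.10.20 is dropped, while cleartext traffic from an unrelated Internet host is left to the interface access lists, which is exactly the separation of roles described in the note.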

Cisco recommends static IP addresses on the CPE device and on the VPN concentrator to avoid teardown of the IPsec tunnel. If the CPE device uses DHCP or another dynamic IP addressing scheme, there is no way to establish the tunnel from the central site to the remote site.

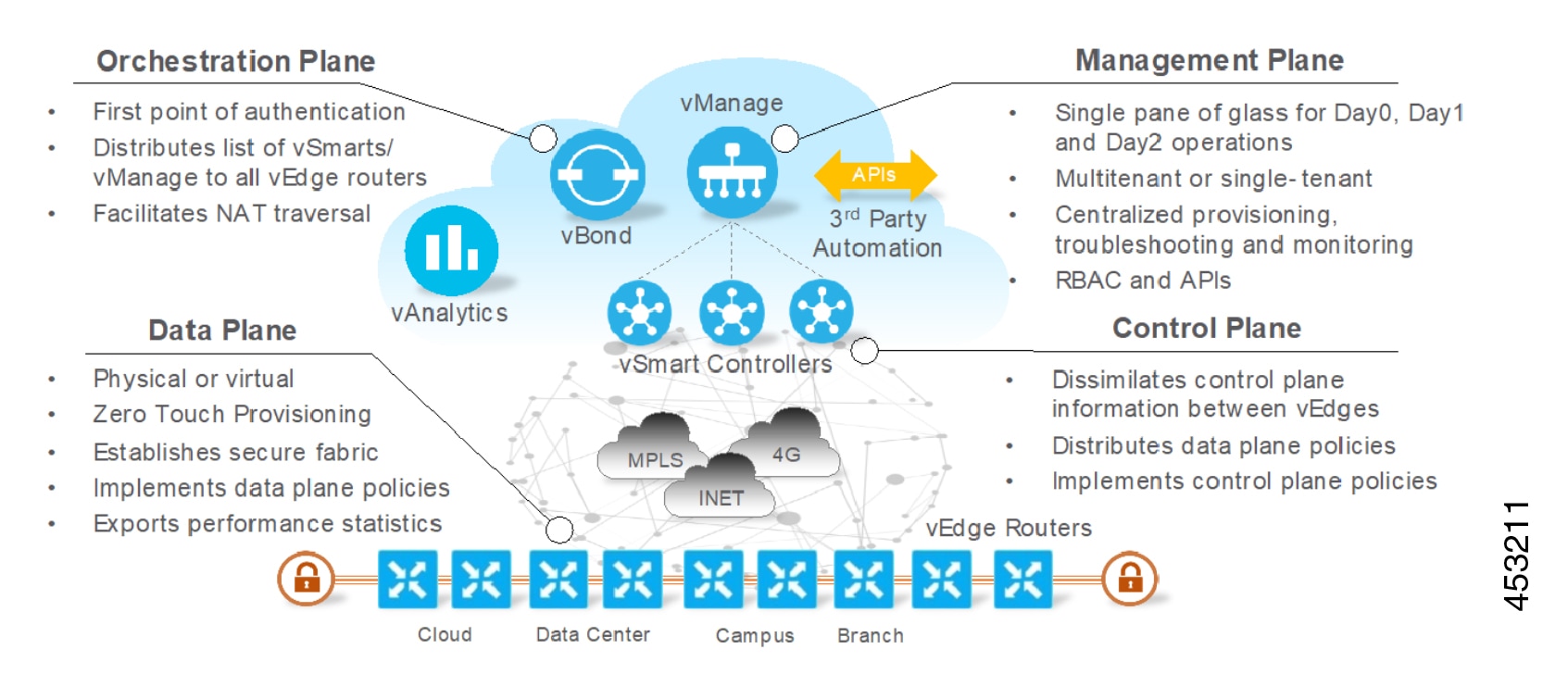

FlexVPN

FlexVPN is deployed in HCS as a site-to-site VPN between the customer site and the hosted HCS data center. A FlexVPN-based site-to-site VPN is easy to configure with the IKEv2 smart defaults feature. The deployment model requires only that a customer have Internet access and FlexVPN-capable routers from HCS. Either a dedicated or a shared Cisco Unified Communications Manager can be used to offer HCS to customers behind the FlexVPN. The following key assumptions are made with regard to FlexVPN support:

- Endpoints deployed in the customer premises are directly accessible at Layer 3 from UC applications deployed in the HCS data center.

- No NAT is assumed between the customer endpoints and the UC applications.

- The customer VPN client router may be connected to the Internet from behind a NAT-enabled, Internet-facing router.

- The VPN client router’s WAN-facing address may be private and may be dynamically assigned.

- The VPN server for HCS may need to support a configuration in which a common public IP address is used for all customer VPN client router connectivity.

- Dual tunnels can be established to two different FlexVPN server routers, with tracking enabled on the client side for failover.
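The dual-tunnel assumption can be pictured as a client that tracks both FlexVPN servers and always prefers the primary. This sketch is hypothetical: it models tracking as per-interval probe results and uses an assumed `fail_after` threshold of consecutive failed probes before a server is declared down; actual tracking objects and timers are configured on the client router.

```python
from typing import Iterable, List, Optional, Tuple


def active_tunnels(samples: Iterable[Tuple[bool, bool]],
                   fail_after: int = 3) -> List[Optional[str]]:
    """Report which tunnel carries traffic after each (primary_ok, secondary_ok) probe sample."""
    misses = {"primary": 0, "secondary": 0}  # consecutive failed probes per server
    in_use: List[Optional[str]] = []
    for primary_ok, secondary_ok in samples:
        for name, ok in (("primary", primary_ok), ("secondary", secondary_ok)):
            misses[name] = 0 if ok else misses[name] + 1
        if misses["primary"] < fail_after:
            in_use.append("primary")      # primary still considered up
        elif misses["secondary"] < fail_after:
            in_use.append("secondary")    # fail over to the second server
        else:
            in_use.append(None)           # both servers declared down
    return in_use
```

For the samples `[(True, True), (False, True), (False, True), (False, True), (True, True)]`, the function returns `['primary', 'primary', 'primary', 'secondary', 'primary']`: traffic fails over after three missed probes and returns to the primary as soon as it answers again.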

AnyConnect VPN

Cisco AnyConnect VPN Client provides secure SSL connections for remote users. You can secure connections through the Cisco ASA 5500 Series using the SSL and DTLS protocols. AnyConnect supports a broad range of desktop and mobile OS platforms.

The ASA for AnyConnect is independent of the existing firewall ASA in Cisco HCS. You need one ASA per cluster, because multi-context SSL VPN support is not yet available on the ASA. AnyConnect split tunneling allows only the configured applications to go through the VPN tunnel, while other Internet traffic from that endpoint goes outside the VPN.
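Split tunneling is commonly configured as a list of networks whose traffic enters the tunnel. This sketch assumes that the configured applications are identified by the HCS data center subnets that host them; the subnets and the function name are hypothetical.

```python
import ipaddress

# Hypothetical split-include list: HCS data center subnets hosting the UC applications.
SPLIT_INCLUDE = [
    ipaddress.ip_network("10.10.0.0/16"),
    ipaddress.ip_network("172.16.0.0/16"),
]


def route_for(dst: str) -> str:
    """'tunnel' for destinations on the split-include list, 'local' for everything else."""
    addr = ipaddress.ip_address(dst)
    return "tunnel" if any(addr in net for net in SPLIT_INCLUDE) else "local"
```

Traffic to a hosted UC server such as 10.10.5.1 enters the VPN, while general Internet traffic such as a request to 8.8.8.8 leaves directly from the endpoint.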
