FlexPod Datacenter with Cisco UCS X-Series, VMware 7.0 U2, and NetApp ONTAP 9.9

Deployment Guide for FlexPod with VMware vSphere 7.0 U2, Cisco UCS X9508 Chassis with Cisco UCS X210c M6 Compute Nodes and Cisco UCS 5108 Chassis with Cisco UCS B200 M6 Blade Servers, deployed using Cisco Intersight Managed Mode, NetApp AFF Storage and NetApp Active IQ Unified Manager

Published: January 2022

About the Cisco Validated Design Program

The Cisco Validated Design (CVD) program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, go to:

http://www.cisco.com/go/designzone.

ALL DESIGNS, SPECIFICATIONS, STATEMENTS, INFORMATION, AND RECOMMENDATIONS (COLLECTIVELY, "DESIGNS") IN THIS MANUAL ARE PRESENTED "AS IS," WITH ALL FAULTS. CISCO AND ITS SUPPLIERS DISCLAIM ALL WARRANTIES, INCLUDING, WITHOUT LIMITATION, THE WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THE DESIGNS, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

THE DESIGNS ARE SUBJECT TO CHANGE WITHOUT NOTICE. USERS ARE SOLELY RESPONSIBLE FOR THEIR APPLICATION OF THE DESIGNS. THE DESIGNS DO NOT CONSTITUTE THE TECHNICAL OR OTHER PROFESSIONAL ADVICE OF CISCO, ITS SUPPLIERS OR PARTNERS. USERS SHOULD CONSULT THEIR OWN TECHNICAL ADVISORS BEFORE IMPLEMENTING THE DESIGNS. RESULTS MAY VARY DEPENDING ON FACTORS NOT TESTED BY CISCO.

CCDE, CCENT, Cisco Eos, Cisco Lumin, Cisco Nexus, Cisco StadiumVision, Cisco TelePresence, Cisco WebEx, the Cisco logo, DCE, and Welcome to the Human Network are trademarks; Changing the Way We Work, Live, Play, and Learn and Cisco Store are service marks; and Access Registrar, Aironet, AsyncOS, Bringing the Meeting To You, Catalyst, CCDA, CCDP, CCIE, CCIP, CCNA, CCNP, CCSP, CCVP, Cisco, the Cisco Certified Internetwork Expert logo, Cisco IOS, Cisco Press, Cisco Systems, Cisco Systems Capital, the Cisco Systems logo, Cisco Unified Computing System (Cisco UCS), Cisco UCS B-Series Blade Servers, Cisco UCS C-Series Rack Servers, Cisco UCS S-Series Storage Servers, Cisco UCS Manager, Cisco UCS Management Software, Cisco Unified Fabric, Cisco Application Centric Infrastructure, Cisco Nexus 9000 Series, Cisco Nexus 7000 Series. Cisco Prime Data Center Network Manager, Cisco NX-OS Software, Cisco MDS Series, Cisco Unity, Collaboration Without Limitation, EtherFast, EtherSwitch, Event Center, Fast Step, Follow Me Browsing, FormShare, GigaDrive, HomeLink, Internet Quotient, IOS, iPhone, iQuick Study, LightStream, Linksys, MediaTone, MeetingPlace, MeetingPlace Chime Sound, MGX, Networkers, Networking Academy, Network Registrar, PCNow, PIX, PowerPanels, ProConnect, ScriptShare, SenderBase, SMARTnet, Spectrum Expert, StackWise, The Fastest Way to Increase Your Internet Quotient, TransPath, WebEx, and the WebEx logo are registered trademarks of Cisco Systems, Inc. and/or its affiliates in the United States and certain other countries. (LDW_U3)

All other trademarks mentioned in this document or website are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (0809R)

© 2022 Cisco Systems, Inc. All rights reserved.

Contents

Deployment Hardware and Software

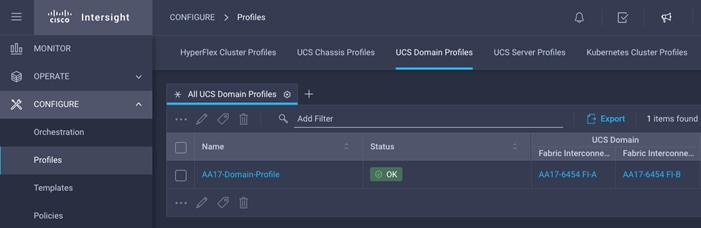

Cisco Intersight Managed Mode Configuration

Storage Configuration – ONTAP Boot Storage Setup

FlexPod Management Tools Setup

The FlexPod Datacenter solution is a validated approach for deploying Cisco and NetApp technologies and products to build shared private and public cloud infrastructure. Cisco and NetApp have partnered to deliver a series of FlexPod solutions that enable strategic data-center platforms. The success of the FlexPod solution is driven through its ability to evolve and incorporate both technology and product innovations in the areas of management, compute, storage, and networking. This document covers deployment details of incorporating the Cisco X-Series modular platform into the FlexPod Datacenter and the ability to manage and orchestrate FlexPod components from the cloud using Cisco Intersight. Some of the key advantages of integrating Cisco UCS X-Series into the FlexPod infrastructure are:

● Simpler and programmable infrastructure: infrastructure as code delivered through a single partner-integrable open API

● Power and cooling innovations: higher power headroom and lower energy loss due to 54V DC power delivery to the chassis

● Better airflow: midplane-free design with fewer barriers and therefore lower impedance

● Fabric innovations: PCIe/Compute Express Link (CXL) topology for heterogeneous compute and memory composability

● Innovative cloud operations: continuous feature delivery and no need to maintain on-premises virtual machines supporting management functions

● Built for investment protection: design ready for future technologies such as liquid cooling and high-wattage CPUs; CXL-ready

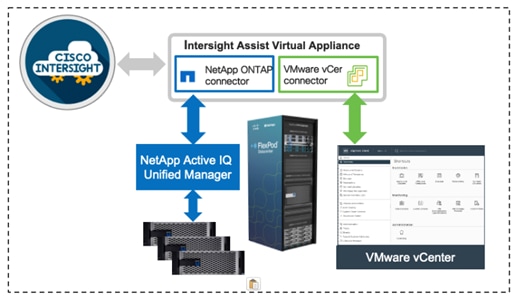

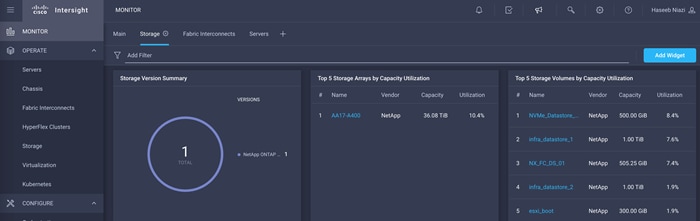

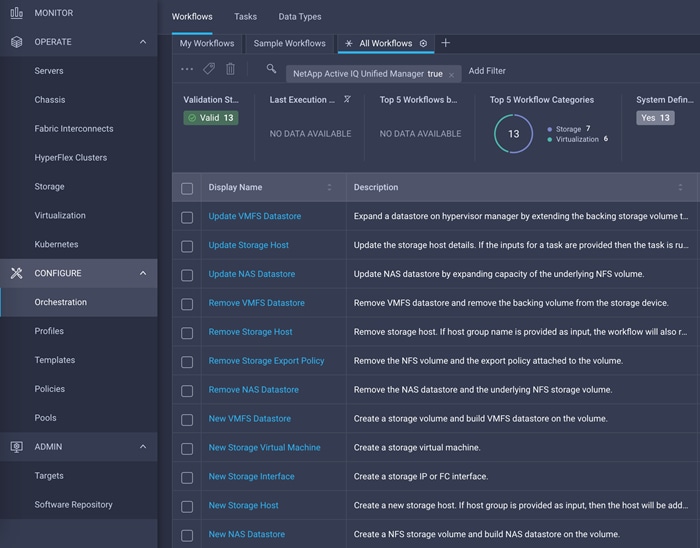

In addition to the compute-specific hardware and software innovations, the integration of the Cisco Intersight cloud platform with VMware vCenter and NetApp Active IQ Unified Manager delivers monitoring, orchestration, and workload optimization capabilities for different layers (virtualization and storage) of the FlexPod infrastructure. The modular nature of the Cisco Intersight platform also provides an easy upgrade path to additional services, such as workload optimization and Kubernetes.

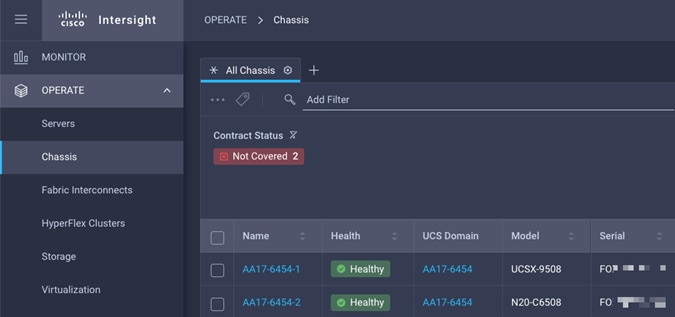

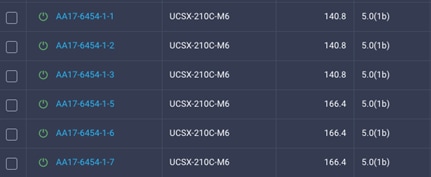

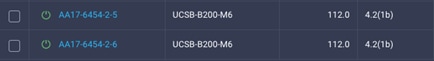

This deployment guide also showcases configuring and managing Cisco UCS 5108 chassis equipped with Cisco UCS B200 M6 blades using Cisco Intersight. Both Cisco UCS B200 M6 blades and Cisco UCS X210c compute nodes fit seamlessly in the FlexPod architecture and can be deployed and managed using common server profiles and configuration policies.

Customers interested in understanding the FlexPod design and deployment details, including the configuration of various elements of design and associated best practices, should refer to Cisco Validated Designs for FlexPod, here: https://www.cisco.com/c/en/us/solutions/design-zone/data-center-design-guides/flexpod-design-guides.html.

Introduction

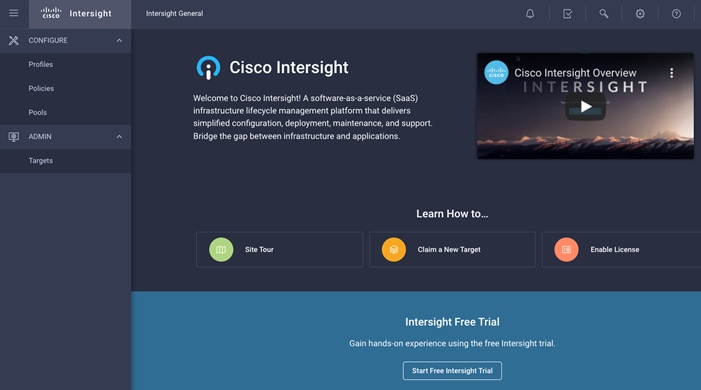

The Cisco Unified Computing System (Cisco UCS) X-Series is a brand-new modular compute system, configured and managed from the cloud. It is designed to meet the needs of modern applications and to improve operational efficiency, agility, and scale through an adaptable, future-ready, modular design. The Cisco Intersight platform is a Software-as-a-Service (SaaS) infrastructure lifecycle management platform that delivers simplified configuration, deployment, maintenance, and support.

Powered by the Cisco Intersight cloud-operations platform, the Cisco UCS X-Series enables the next-generation cloud-operated FlexPod infrastructure that not only simplifies data-center management but also allows the infrastructure to adapt to the unpredictable needs of modern applications as well as traditional workloads. With the Cisco Intersight platform, customers get all the benefits of SaaS delivery and the full lifecycle management of Intersight-connected distributed servers and integrated NetApp storage systems across data centers, remote sites, branch offices, and edge environments. Cisco Intersight can provide uniform management policies for both Cisco UCS X9508 chassis with Cisco UCS X210c M6 compute nodes and Cisco UCS 5108 chassis with Cisco UCS B200 M6 blades in the FlexPod environment.

Audience

The intended audience of this document includes but is not limited to IT architects, sales engineers, field consultants, professional services, IT managers, partner engineering, and customers who want to take advantage of an infrastructure built to deliver IT efficiency and enable IT innovation.

Purpose of this Document

This document provides deployment guidance for incorporating the Cisco Intersight-managed UCS X-Series platform and Cisco UCS B200 M6 blades within the FlexPod Datacenter infrastructure. The document covers both configurations and best practices for a successful deployment. This deployment guide also highlights the integration of VMware vCenter and NetApp Active IQ Unified Manager with Cisco Intersight to deliver a true cloud-based integrated approach to infrastructure management.

What’s New in this Release?

The following design elements distinguish this version of FlexPod from previous models:

● Integration of Cisco UCS X-Series into FlexPod Datacenter

● Deploying and managing Cisco UCS X9508 chassis equipped with Cisco UCS X210c M6 compute nodes from the cloud using Cisco Intersight

● Deploying and managing Cisco UCS 5108 chassis equipped with Cisco UCS B200 M6 blades using Cisco Intersight

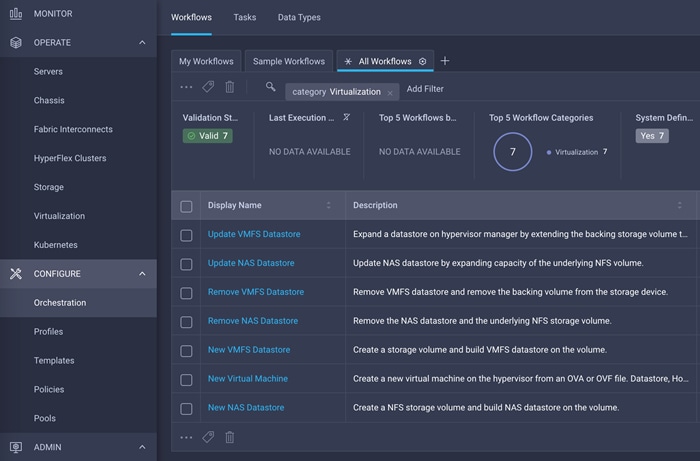

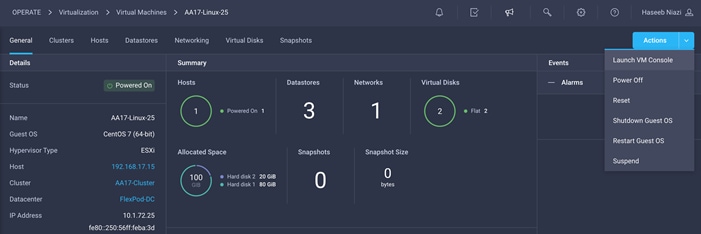

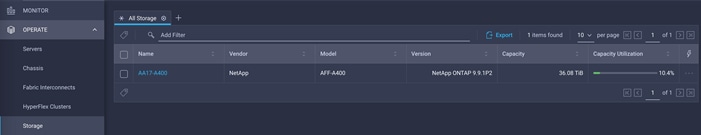

● Integration of Cisco Intersight with NetApp Active IQ Unified Manager for storage monitoring and orchestration

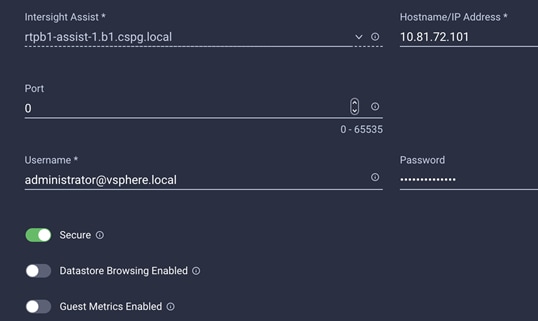

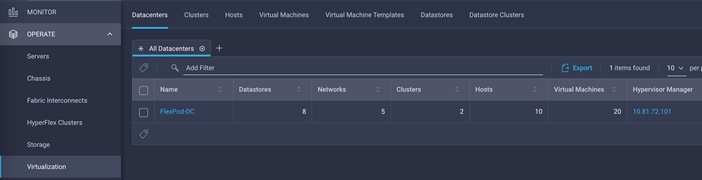

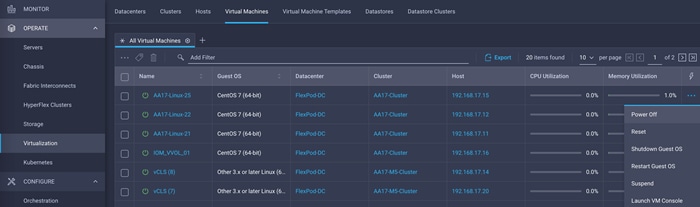

● Integration of Cisco Intersight with VMware vCenter for interacting with, monitoring, and orchestrating the virtual environment

Deployment Hardware and Software

Design Requirements

The FlexPod Datacenter with Cisco UCS and Intersight meets the following general design requirements:

● Resilient design across all layers of the infrastructure with no single point of failure

● Scalable design with the flexibility to add compute capacity, storage, or network bandwidth as needed

● Modular design that can be replicated to expand and grow as the needs of the business grow

● Flexible design that can support different models of various components with ease

● Simplified design with ability to integrate and automate with external automation tools

● Cloud-enabled design which can be configured, managed, and orchestrated from the cloud using GUI or APIs

To deliver a solution which meets all these design requirements, various solution components are connected and configured as covered in the upcoming sections.

The FlexPod Datacenter solution with Cisco UCS and Intersight is built using the following hardware components:

● Cisco UCS X9508* Chassis with up to eight Cisco UCS X210c M6 Compute Nodes

● Cisco UCS 5108* Chassis with up to eight Cisco UCS B200 M6 blade servers

● Fourth-generation Cisco UCS 6454 Fabric Interconnects to support 25GbE and 100GbE connectivity from various components

● High-speed Cisco NX-OS-based Nexus 93180YC-FX3 switching design to support up to 100GE connectivity

● NetApp AFF A400 end-to-end NVMe storage with high-speed Ethernet and (optional) Fibre Channel connectivity

● Cisco MDS 9132T** switches to support Fibre Channel storage configuration

* This document covers Cisco UCS X9508 chassis and Cisco UCS 5108 chassis connected to the same set of fabric interconnects to show common management and compatibility. Customers can choose to deploy either one or both of these platforms in their environment depending on their requirements.

** Cisco MDS 9132T and FC connectivity are not needed when implementing an IP-based connectivity design supporting iSCSI boot from SAN and NFS.

The software components of the solution consist of:

● Cisco Intersight SaaS platform to deploy, maintain and support the FlexPod components

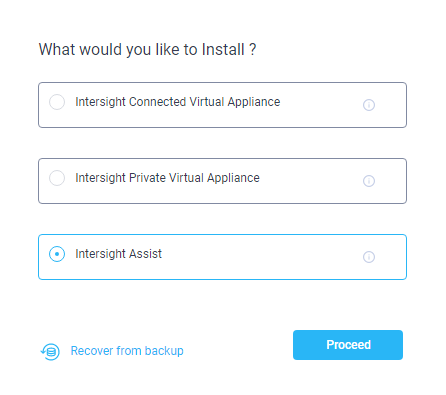

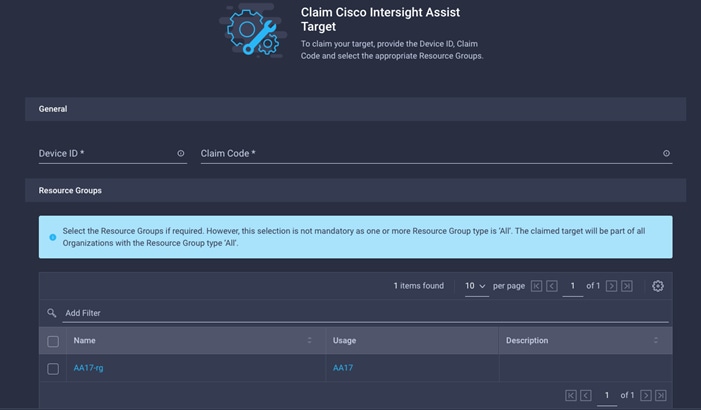

● Cisco Intersight Assist Virtual Appliance to help connect NetApp ONTAP and VMware vCenter with Cisco Intersight

● NetApp Active IQ Unified Manager to monitor and manage the storage and for NetApp ONTAP integration with Cisco Intersight

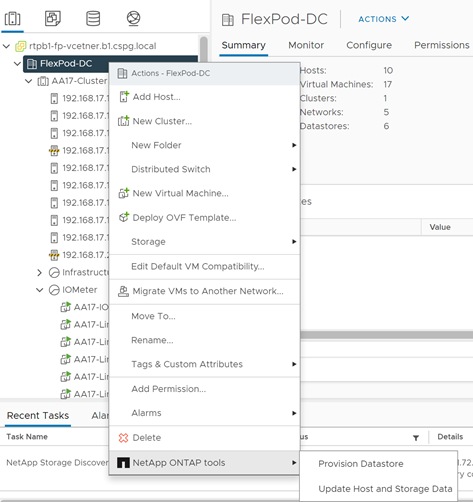

● VMware vCenter to set up and manage the virtual infrastructure as well as Cisco Intersight integration

FlexPod Datacenter for IP-based Storage Access

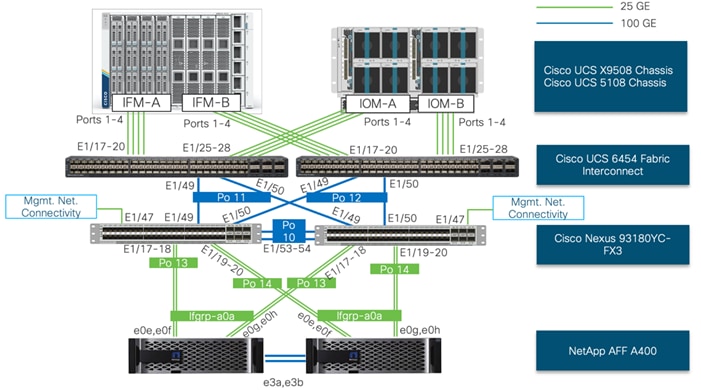

Figure 1 shows various hardware components and the network connections for IP-based FlexPod design.

Figure 1. FlexPod Datacenter Physical Topology for IP-based storage access

The reference hardware configuration includes:

● Two Cisco Nexus 93180YC-FX3 Switches in Cisco NX-OS mode provide the switching fabric.

● Two Cisco UCS 6454 Fabric Interconnects (FI) provide the chassis connectivity. One 100 Gigabit Ethernet port from each FI, configured as a Port-Channel, is connected to each Nexus 93180YC-FX3.

● One Cisco UCS X9508 Chassis connects to fabric interconnects using Cisco UCSX 9108-25G Intelligent Fabric Modules (IFMs), where four 25 Gigabit Ethernet ports are used on each IFM to connect to the appropriate FI. If additional bandwidth is required, all eight 25G ports can be utilized.

● One Cisco UCS 5108 Chassis connects to fabric interconnects using Cisco UCS 2408 Fabric Extender (FEX), where four 25 Gigabit Ethernet ports are used on each FEX to connect to the appropriate FI. If additional bandwidth is required, all eight 25G ports can be utilized.

● One NetApp AFF A400 HA pair connects to the Cisco Nexus 93180YC-FX3 Switches using four 25 GE ports from each controller configured as a Port-Channel.
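The chassis uplink arithmetic implied by the list above can be sketched in a few lines of Python. The function name is hypothetical, and the default of two modules per chassis (one IFM or FEX per fabric interconnect) is an assumption made for illustration rather than something the list states outright.

```python
def chassis_uplink_gbps(links_per_module: int,
                        link_speed_gbps: int = 25,
                        modules: int = 2) -> dict:
    """Return per-fabric and total uplink bandwidth for one chassis.

    Assumption: one IFM/FEX per fabric interconnect (modules=2).
    """
    if not 1 <= links_per_module <= 8:
        raise ValueError("each IFM/FEX offers at most eight 25G ports")
    per_fabric = links_per_module * link_speed_gbps
    return {"per_fabric": per_fabric, "total": per_fabric * modules}


# Four 25G links per module, as cabled in this deployment.
print(chassis_uplink_gbps(4))
# All eight 25G ports per module, if more bandwidth is required.
print(chassis_uplink_gbps(8))
```

Under these assumptions, the four-link cabling gives 100 Gbps to each fabric, and using all eight ports doubles that figure.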

FlexPod Datacenter for FC-based Storage Access

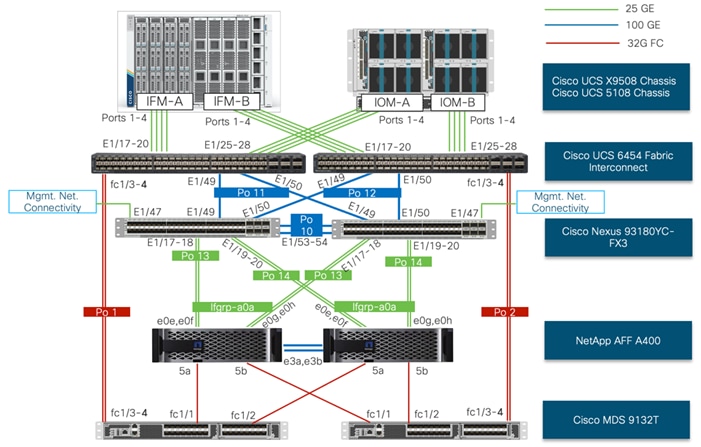

Figure 2 shows various hardware components and the network connections for FC-based FlexPod design.

Figure 2. FlexPod Datacenter Physical Topology for FC-based storage access

The reference hardware configuration includes:

● Two Cisco Nexus 93180YC-FX3 Switches in Cisco NX-OS mode provide the switching fabric.

● Two Cisco UCS 6454 Fabric Interconnects (FI) provide the chassis connectivity. One 100 Gigabit Ethernet port from each FI, configured as a Port-Channel, is connected to each Nexus 93180YC-FX3. Two FC ports are connected to the Cisco MDS 9132T switches using 32-Gbps Fibre Channel connections configured as a single port channel for SAN connectivity.

● One Cisco UCS X9508 Chassis connects to fabric interconnects using Cisco UCSX 9108-25G Intelligent Fabric Modules (IFMs), where four 25 Gigabit Ethernet ports are used on each IFM to connect to the appropriate FI. If additional bandwidth is required, all eight 25G ports can be utilized.

● One Cisco UCS 5108 Chassis connects to fabric interconnects using Cisco UCS 2408 Fabric Extender (FEX), where four 25 Gigabit Ethernet ports are used on each FEX to connect to the appropriate FI. If additional bandwidth is required, all eight 25G ports can be utilized.

● One NetApp AFF A400 HA pair connects to the Cisco Nexus 93180YC-FX3 Switches using four 25 GE ports from each controller configured as a Port-Channel. One 32Gbps FC port from each controller is connected to each Cisco MDS 9132T for SAN connectivity.

The NetApp storage controller and disk shelves should be connected according to best practices for the specific storage controller and disk shelves. For disk shelf cabling, refer to NetApp Support: https://docs.netapp.com/us-en/ontap-systems/index.html

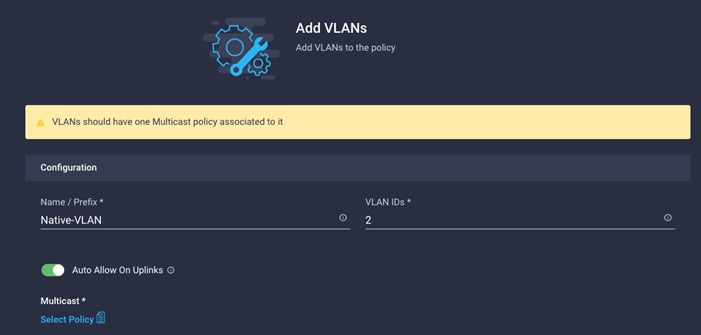

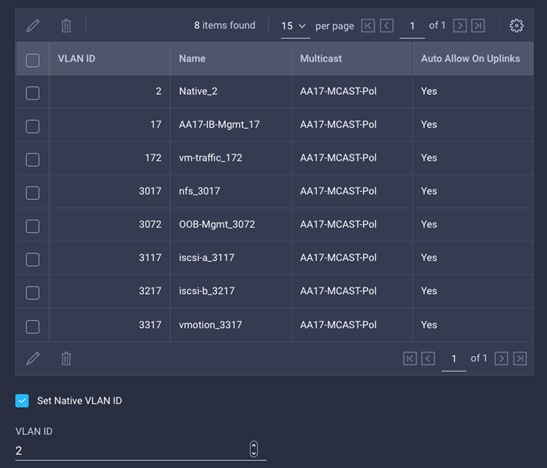

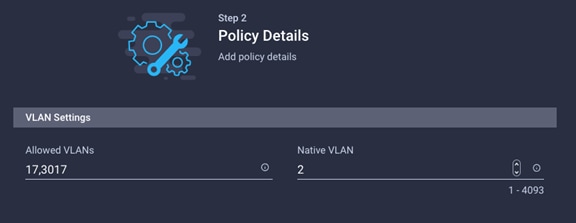

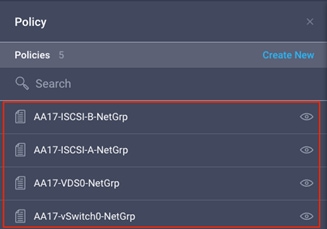

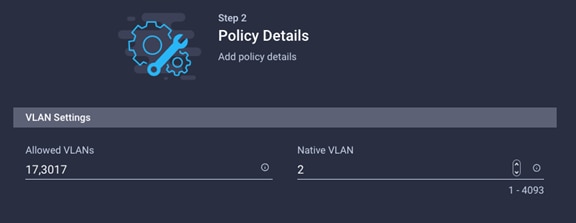

Table 1 lists VLANs configured for setting up the FlexPod environment along with their usage.

| VLAN ID | Name | Usage | IP Subnet used in this deployment |
| --- | --- | --- | --- |
| 2 | Native-VLAN | Use VLAN 2 as native VLAN instead of default VLAN (1). | |
| 3072 | OOB-MGMT-VLAN | Out-of-band management VLAN to connect management ports for various devices | 10.81.72.0/24; GW: 10.81.72.254 |
| 17 | IB-MGMT-VLAN | In-band management VLAN utilized for all in-band management connectivity - for example, ESXi hosts, VM management, and so on. | 192.168.17.0/24; GW: 192.168.17.254 |
| 172 | VM-Traffic | VM data traffic VLAN | 10.1.72.0/24; GW: 10.1.72.254 |
| 3017 | NFS-VLAN | NFS VLAN for mounting datastores in ESXi servers for VMs | 192.168.30.0/24 ** |
| 3117* | iSCSI-A | iSCSI-A path for storage traffic including boot-from-san traffic | 192.168.31.0/24 ** |
| 3217* | iSCSI-B | iSCSI-B path for storage traffic including boot-from-san traffic | 192.168.32.0/24 ** |
| 3317 | vMotion | VMware vMotion traffic | 192.168.33.0/24 ** |

* iSCSI VLANs are not required if using FC storage access.

** IP gateway is not needed since no routing is required for these subnets

Some of the key highlights of VLAN usage are as follows:

● VLAN 3072 allows customers to manage and access out-of-band management interfaces of various devices.

● VLAN 17 is used for in-band management of VMs, ESXi hosts, and other infrastructure services.

● VLAN 3017 provides ESXi hosts access to the NFS datastores hosted on the NetApp controllers for deploying VMs.

● A pair of iSCSI VLANs (3117 and 3217) is configured to provide access to boot LUNs for ESXi hosts. These VLANs are not needed if customers are using FC-only connectivity.

● VLAN 3317 is used for VMware vMotion traffic.
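Before the VLANs are configured on the switches, the addressing plan can be sanity-checked offline. The following is a minimal sketch using Python's standard `ipaddress` module; the `VLAN_PLAN` structure and the `check_vlan_plan` function are hypothetical names introduced here, and the values are the example subnets from Table 1.

```python
import ipaddress
from itertools import combinations

# VLAN ID -> (name, subnet, gateway or None), example values from Table 1.
VLAN_PLAN = {
    3072: ("OOB-MGMT-VLAN", "10.81.72.0/24", "10.81.72.254"),
    17: ("IB-MGMT-VLAN", "192.168.17.0/24", "192.168.17.254"),
    172: ("VM-Traffic", "10.1.72.0/24", "10.1.72.254"),
    3017: ("NFS-VLAN", "192.168.30.0/24", None),   # not routed
    3117: ("iSCSI-A", "192.168.31.0/24", None),    # not routed
    3217: ("iSCSI-B", "192.168.32.0/24", None),    # not routed
    3317: ("vMotion", "192.168.33.0/24", None),    # not routed
}


def check_vlan_plan(plan):
    """Return a list of problems; an empty list means the plan is consistent."""
    problems = []
    networks = {}
    for vlan_id, (name, subnet, gateway) in plan.items():
        if not 1 <= vlan_id <= 4094:
            problems.append(f"VLAN {vlan_id} ({name}) is outside 1-4094")
        network = ipaddress.ip_network(subnet)
        networks[vlan_id] = network
        if gateway is not None and ipaddress.ip_address(gateway) not in network:
            problems.append(f"gateway {gateway} is not inside {subnet} ({name})")
    # No two VLANs should share or overlap an IP subnet.
    for (id_a, net_a), (id_b, net_b) in combinations(networks.items(), 2):
        if net_a.overlaps(net_b):
            problems.append(f"VLAN {id_a} and VLAN {id_b} use overlapping subnets")
    return problems


print(check_vlan_plan(VLAN_PLAN))
```

Substituting site-specific subnets into `VLAN_PLAN` gives a quick check for mistyped gateways or overlapping ranges before any switch is touched.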

Table 2 lists the infrastructure VMs necessary for deployment as outlined in this document.

| Virtual Machine Description |

VLAN |

IP Address |

Comments |

| vCenter Server |

|

10.81.72.101 |

Hosted on pre-existing management infrastructure |

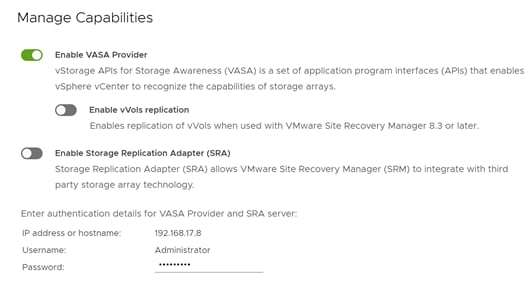

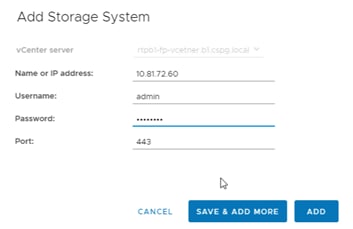

| NetApp ONTAP Tools |

17 |

192.168.17.8 |

Hosted on FlexPod |

| NetApp SnapCenter for vSphere |

17 |

192.168.17.10 |

Hosted on FlexPod |

| Active IQ Unified Manager |

17 |

192.168.17.9 |

Hosted on FlexPod |

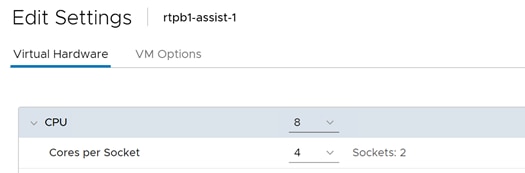

| Cisco Intersight Assist |

|

10.81.72.99 |

Hosted on pre-existing management Infrastructure |

Table 3 lists the software revisions for various components of the solution.

| Layer |

Device |

Image Bundle |

Comments |

| Compute |

Cisco UCS |

4.2(1h) |

Cisco UCS X-series GA release for infrastructure including FIs and IOM/IFM. |

| Network |

Cisco Nexus 93180YC-FX3 NX-OS |

9.3(8) |

|

|

|

Cisco MDS 9132T |

8.4(2c) |

|

| Storage |

NetApp AFF A400 |

ONTAP 9.9.1P2 |

|

| Software |

Cisco UCS X210c |

5.0(1b) |

Cisco UCS X-series GA release for compute nodes |

|

|

Cisco UCS B200 M6 |

4.2(1b) |

|

|

|

Cisco Intersight Assist Appliance |

1.0.9-342 |

|

|

|

VMware vSphere |

7.0 Update 2b |

|

|

|

VMware ESXi nfnic FC Driver |

5.0.0.15 |

Supports FC-NVMe |

|

|

VMware ESXi nenic Ethernet Driver |

1.0.35.0 |

|

|

|

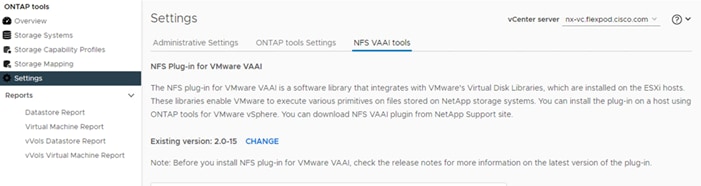

NetApp ONTAP Tools for VMware vSphere |

9.8P1 |

Formerly Virtual Storage Console (VSC) |

|

|

NetApp NFS Plug-in for VMware VAAI |

2.0 |

|

|

|

NetApp SnapCenter for vSphere |

4.5 |

Includes the vSphere plug-in for SnapCenter |

|

|

NetApp Active IQ Unified Manager |

9.9P1 |

|
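One way to use the software revision table is to compare it against versions collected from the environment. The sketch below assumes the running versions have already been gathered by hand into a dictionary. The `version_drift` function, the dictionary keys, and the sample inventory are hypothetical and exist only for illustration. Only a subset of the table is encoded.

```python
# Validated revisions, copied from the software revision table (subset).
VALIDATED_VERSIONS = {
    "Cisco UCS infrastructure": "4.2(1h)",
    "Cisco Nexus 93180YC-FX3 NX-OS": "9.3(8)",
    "Cisco MDS 9132T": "8.4(2c)",
    "NetApp ONTAP": "9.9.1P2",
    "VMware vSphere": "7.0 Update 2b",
}


def version_drift(inventory, validated=VALIDATED_VERSIONS):
    """Return {component: (found, expected)} for each mismatch.

    A component missing from the inventory is reported with found=None.
    """
    drift = {}
    for component, expected in validated.items():
        found = inventory.get(component)
        if found != expected:
            drift[component] = (found, expected)
    return drift


# Hypothetical inventory in which the MDS switches run a different release.
inventory = {**VALIDATED_VERSIONS, "Cisco MDS 9132T": "8.4(2b)"}
print(version_drift(inventory))
```

A mismatch does not by itself mean a configuration is unsupported; it only flags components that differ from the versions used in this validation.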

Physical Connectivity

Follow the physical connectivity guidelines for FlexPod as explained in section Physical Topology.

Initial Configuration

The following procedures describe the basic configuration of the Cisco Nexus switches for use in the FlexPod environment. These procedures assume the use of Cisco Nexus 9000 release 9.3(8), the Cisco-suggested Nexus switch release at the time of this validation.

Set Up Initial Configuration

To set up the initial configuration for the Cisco Nexus A switch on <nexus-A-hostname>, follow these steps from a serial console:

1. Configure the switch.

On initial boot, the NX-OS setup should automatically start and attempt to enter Power on Auto Provisioning.

Abort Power On Auto Provisioning [yes - continue with normal setup, skip - bypass password and basic configuration, no - continue with Power On Auto Provisioning] (yes/skip/no)[no]: yes

Disabling POAP.......Disabling POAP

poap: Rolling back, please wait... (This may take 5-15 minutes)

---- System Admin Account Setup ----

Do you want to enforce secure password standard (yes/no) [y]: Enter

Enter the password for "admin": <password>

Confirm the password for "admin": <password>

Would you like to enter the basic configuration dialog (yes/no): yes

Create another login account (yes/no) [n]: Enter

Configure read-only SNMP community string (yes/no) [n]: Enter

Configure read-write SNMP community string (yes/no) [n]: Enter

Enter the switch name: <nexus-A-hostname>

Continue with Out-of-band (mgmt0) management configuration? (yes/no) [y]: Enter

Mgmt0 IPv4 address: <nexus-A-out_of_band_mgmt0-ip>

Mgmt0 IPv4 netmask: <nexus-A-mgmt0-netmask>

Configure the default gateway? (yes/no) [y]: Enter

IPv4 address of the default gateway: <nexus-A-mgmt0-gw>

Configure advanced IP options? (yes/no) [n]: Enter

Enable the telnet service? (yes/no) [n]: Enter

Enable the ssh service? (yes/no) [y]: Enter

Type of ssh key you would like to generate (dsa/rsa) [rsa]: Enter

Number of rsa key bits <1024-2048> [1024]: Enter

Configure the ntp server? (yes/no) [n]: Enter

Configure default interface layer (L3/L2) [L2]: Enter

Configure default switchport interface state (shut/noshut) [noshut]: shut

Enter basic FC configurations (yes/no) [n]: n

Configure CoPP system profile (strict/moderate/lenient/dense) [strict]: moderate

Would you like to edit the configuration? (yes/no) [n]: Enter

2. Review the configuration summary before enabling the configuration.

Use this configuration and save it? (yes/no) [y]: Enter

To set up the initial configuration of the Cisco Nexus B switch, repeat the steps above with appropriate host and IP address information.

Cisco Nexus A and Cisco Nexus B

1. Log in as admin using ssh.

2. Run the following commands:

config t

feature nxapi

feature udld

feature interface-vlan

feature lacp

feature vpc

feature lldp

Cisco Nexus A and Cisco Nexus B

To set global configurations, follow this step on both switches:

1. Run the following commands to set global configurations:

spanning-tree port type network default

spanning-tree port type edge bpduguard default

spanning-tree port type edge bpdufilter default

port-channel load-balance src-dst l4port

ntp server <global-ntp-server-ip> use-vrf management

ntp master 3

clock timezone <timezone> <hour-offset> <minute-offset>

(For Example: clock timezone EST -5 0)

clock summer-time <timezone> <start-week> <start-day> <start-month> <start-time> <end-week> <end-day> <end-month> <end-time> <offset-minutes>

(For Example: clock summer-time EDT 2 Sunday March 02:00 1 Sunday November 02:00 60)

copy run start

For more information on configuring the timezone and daylight savings time or summer time, please see Cisco Nexus 9000 Series NX-OS Fundamentals Configuration Guide, Release 9.3(x).

Cisco Nexus A and Cisco Nexus B

To create the necessary virtual local area networks (VLANs), follow this step on both switches:

1. From the global configuration mode, run the following commands:

vlan <oob-mgmt-vlan-id for example, 3072>

name oob-mgmt

vlan <ib-mgmt-vlan-id for example, 17>

name ib-mgmt

vlan <native-vlan-id for example, 2>

name Native-Vlan

vlan <vmotion-vlan-id for example, 3317>

name vmotion

vlan <vm-traffic-vlan-id for example, 172>

name vm-traffic

vlan <infra-nfs-vlan-id for example, 3017>

name nfs-vlan

2. If configuring iSCSI storage access, create the following two additional VLANs:

vlan <iscsi-a-vlan-id for example, 3117>

name iscsi-a

vlan <iscsi-b-vlan-id for example, 3217>

name iscsi-b
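The VLAN stanzas above follow a regular pattern, so they can be generated from a small table rather than typed by hand. The following sketch is one way to do that. The function name `render_vlan_config` is hypothetical, and the VLAN IDs are the example values used in this document.

```python
# (VLAN ID, name) pairs in the order used above; IDs are the example values.
BASE_VLANS = [
    (3072, "oob-mgmt"),
    (17, "ib-mgmt"),
    (2, "Native-Vlan"),
    (3317, "vmotion"),
    (172, "vm-traffic"),
    (3017, "nfs-vlan"),
]
ISCSI_VLANS = [(3117, "iscsi-a"), (3217, "iscsi-b")]


def render_vlan_config(iscsi=False):
    """Return NX-OS VLAN configuration lines; add iSCSI VLANs only on request."""
    vlans = BASE_VLANS + (ISCSI_VLANS if iscsi else [])
    lines = []
    for vlan_id, name in vlans:
        lines.append(f"vlan {vlan_id}")
        lines.append(f"  name {name}")
    return lines


print("\n".join(render_vlan_config(iscsi=True)))
```

Keeping the iSCSI VLANs behind a flag mirrors the procedure: FC-only deployments simply leave `iscsi` at its default.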

Cisco Nexus A and Cisco Nexus B

To create the necessary port channels between devices, follow these steps on both switches:

1. From the global configuration mode, run the following commands:

interface Po10

description vPC peer-link

interface Eth1/53-54

channel-group 10 mode active

no shutdown

!

! UCS Connectivity

!

interface Po11

description <ucs-hostname>-a

!

interface Eth1/49

channel-group 11 mode active

no shutdown

!

interface Po12

description <ucs-hostname>-b

!

interface Eth1/50

channel-group 12 mode active

no shutdown

!

! Storage Connectivity

!

interface Po13

description <st-clustername>-01

!

interface Eth1/17-18

channel-group 13 mode active

no shutdown

!

interface Po14

description <st-clustername>-02

!

interface Eth1/19-20

channel-group 14 mode active

no shutdown

!

! Management Switch Connectivity

!

interface Po17

description MGMT-Uplink

!

interface Eth1/47

channel-group 17 mode active

no shutdown

exit

copy run start

Configure Port Channel Parameters

Cisco Nexus A and Cisco Nexus B

To configure port channel parameters, follow this step on both switches.

iSCSI VLANs in these steps are only configured when setting up iSCSI storage access.

1. From the global configuration mode, run the following commands to set up the vPC peer-link port-channel:

interface Po10

switchport mode trunk

switchport trunk native vlan <native-vlan-id>

switchport trunk allowed vlan <oob-mgmt-vlan-id>, <ib-mgmt-vlan-id>, <infra-nfs-vlan-id>, <vmotion-vlan-id>, <vm-traffic-vlan-id>, <iscsi-a-vlan-id>, <iscsi-b-vlan-id>

spanning-tree port type network

speed 100000

duplex full

2. From the global configuration mode, run the following commands to set up port-channels for UCS FI 6454 connectivity:

interface Po11

switchport mode trunk

switchport trunk native vlan <native-vlan-id>

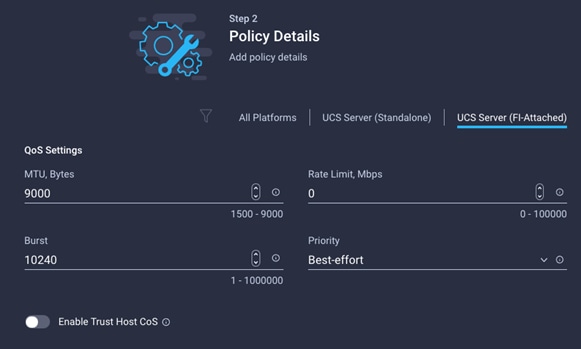

switchport trunk allowed vlan <oob-mgmt-vlan-id>, <ib-mgmt-vlan-id>, <infra-nfs-vlan-id>, <vmotion-vlan-id>, <vm-traffic-vlan-id>, <iscsi-a-vlan-id>, <iscsi-b-vlan-id>

spanning-tree port type edge trunk

mtu 9216

!

interface Po12

switchport mode trunk

switchport trunk native vlan <native-vlan-id>

switchport trunk allowed vlan <oob-mgmt-vlan-id>, <ib-mgmt-vlan-id>, <infra-nfs-vlan-id>, <vmotion-vlan-id>, <vm-traffic-vlan-id>, <iscsi-a-vlan-id>, <iscsi-b-vlan-id>

spanning-tree port type edge trunk

mtu 9216

3. From the global configuration mode, run the following commands to set up port-channels for NetApp A400 connectivity:

interface Po13

switchport mode trunk

switchport trunk native vlan <native-vlan-id>

switchport trunk allowed vlan <ib-mgmt-vlan-id>, <infra-nfs-vlan-id>, <iscsi-a-vlan-id>, <iscsi-b-vlan-id>

spanning-tree port type edge trunk

mtu 9216

!

interface Po14

switchport mode trunk

switchport trunk native vlan <native-vlan-id>

switchport trunk allowed vlan <ib-mgmt-vlan-id>, <infra-nfs-vlan-id>, <iscsi-a-vlan-id>, <iscsi-b-vlan-id>

spanning-tree port type edge trunk

mtu 9216

4. From the global configuration mode, run the following commands to set up port-channels for connectivity to the existing management switch:

interface Po17

switchport mode trunk

switchport trunk native vlan <native-vlan-id>

switchport trunk allowed vlan <oob-mgmt-vlan-id>, <ib-mgmt-vlan-id>

spanning-tree port type network

mtu 9216

!

exit

copy run start
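Each port-channel above carries a different subset of VLANs, and the iSCSI VLANs are included only for iSCSI deployments. The sketch below captures that mapping and builds the `switchport trunk allowed vlan` line for a given port-channel. The helper name and dictionaries are hypothetical, and the VLAN IDs are the example values from Table 1. The output is written as a comma-separated list without spaces, which is an assumption about the form the switch accepts and should be checked against your NX-OS release.

```python
# Example VLAN IDs from Table 1; replace them with site-specific values.
VLAN_IDS = {
    "oob-mgmt": 3072,
    "ib-mgmt": 17,
    "infra-nfs": 3017,
    "vmotion": 3317,
    "vm-traffic": 172,
    "iscsi-a": 3117,
    "iscsi-b": 3217,
}

ALL_VLANS = list(VLAN_IDS)
STORAGE_VLANS = ["ib-mgmt", "infra-nfs", "iscsi-a", "iscsi-b"]

# VLANs carried by each port-channel, mirroring the commands above.
TRUNK_MEMBERSHIP = {
    "Po10": ALL_VLANS,       # vPC peer-link
    "Po11": ALL_VLANS,       # UCS FI A
    "Po12": ALL_VLANS,       # UCS FI B
    "Po13": STORAGE_VLANS,   # NetApp controller 01
    "Po14": STORAGE_VLANS,   # NetApp controller 02
    "Po17": ["oob-mgmt", "ib-mgmt"],  # management uplink
}


def allowed_vlan_line(port_channel, iscsi=True):
    """Build the trunk allowed-VLAN command for one port-channel."""
    names = TRUNK_MEMBERSHIP[port_channel]
    if not iscsi:
        names = [n for n in names if not n.startswith("iscsi-")]
    ids = sorted(VLAN_IDS[n] for n in names)
    return "switchport trunk allowed vlan " + ",".join(str(i) for i in ids)


for po in TRUNK_MEMBERSHIP:
    print(po, "->", allowed_vlan_line(po, iscsi=False))
```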

For fibre-optic connections between Cisco UCS Fabric Interconnects and Cisco Nexus 93180YC-FX3 switches, UDLD configuration is automatically enabled, and no additional configuration is required on either device.

Configure Virtual Port Channels

Cisco Nexus A

To configure virtual port channels (vPCs) for switch A, follow this step:

1. From the global configuration mode, run the following commands:

vpc domain <nexus-vpc-domain-id for example, 10>

role priority 10

peer-keepalive destination <nexus-B-mgmt0-ip> source <nexus-A-mgmt0-ip>

peer-switch

peer-gateway

auto-recovery

delay restore 150

ip arp synchronize

!

interface Po10

vpc peer-link

!

interface Po11

vpc 11

!

interface Po12

vpc 12

!

interface Po13

vpc 13

!

interface Po14

vpc 14

!

interface Po17

vpc 17

!

exit

copy run start

Cisco Nexus B

To configure vPCs for switch B, follow this step:

1. From the global configuration mode, run the following commands:

vpc domain <nexus-vpc-domain-id for example, 10>

role priority 20

peer-keepalive destination <nexus-A-mgmt0-ip> source <nexus-B-mgmt0-ip>

peer-switch

peer-gateway

auto-recovery

delay restore 150

ip arp synchronize

!

interface Po10

vpc peer-link

!

interface Po11

vpc 11

!

interface Po12

vpc 12

!

interface Po13

vpc 13

!

interface Po14

vpc 14

!

interface Po17

vpc 17

!

exit

copy run start
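The two vPC configurations above differ only in the role priority and in the direction of the peer-keepalive addresses. The sketch below makes that symmetry explicit by rendering both from one function. The function name `render_vpc` is hypothetical, and the management addresses are placeholder values from the 192.0.2.0/24 documentation range, not addresses from this deployment.

```python
def render_vpc(local_mgmt_ip, peer_mgmt_ip, role_priority,
               domain_id=10, vpc_ids=(11, 12, 13, 14, 17), peer_link="Po10"):
    """Return the vPC configuration lines for one switch of the pair."""
    lines = [
        f"vpc domain {domain_id}",
        f"role priority {role_priority}",
        f"peer-keepalive destination {peer_mgmt_ip} source {local_mgmt_ip}",
        "peer-switch",
        "peer-gateway",
        "auto-recovery",
        "delay restore 150",
        "ip arp synchronize",
        f"interface {peer_link}",
        "vpc peer-link",
    ]
    # Each member port-channel uses a vPC number equal to its own ID.
    for vpc_id in vpc_ids:
        lines.append(f"interface Po{vpc_id}")
        lines.append(f"vpc {vpc_id}")
    return lines


# Placeholder mgmt0 addresses; substitute the real switch addresses.
nexus_a = render_vpc("192.0.2.1", "192.0.2.2", role_priority=10)
nexus_b = render_vpc("192.0.2.2", "192.0.2.1", role_priority=20)
print("\n".join(nexus_a))
```

Generating both sides from the same inputs reduces the chance of swapping the keepalive source and destination on one switch.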

See the following sections of the NetApp Hardware Universe (HWU) for planning the physical location of the storage systems:

● Site Preparation

● System Connectivity Requirements

● Circuit Breaker, Power Outlet Balancing, System Cabinet Power Cord Plugs, and Console Pinout Requirements

● AFF Series Systems

To confirm that the hardware and software components that you would like to use are supported with the version of ONTAP that you plan to install, follow these steps at the NetApp Support site.

1. Access the HWU application to view the System Configuration guides. Click the Platforms menu to view the compatibility between different versions of the ONTAP software and the NetApp storage appliances with your desired specifications.

2. Alternatively, to compare components by storage appliance, click Compare Storage Systems.

Follow the physical installation procedures for the controllers found here: https://docs.netapp.com/us-en/ontap-systems/index.html.

NetApp storage systems support a wide variety of disk shelves and disk drives. The complete list of disk shelves that are supported by the AFF A400 and AFF A800 is available at the NetApp Support site.

When using SAS disk shelves with NetApp storage controllers, refer to: https://docs.netapp.com/us-en/ontap-systems/sas3/index.html for proper cabling guidelines.

When using NVMe drive shelves with NetApp storage controllers, refer to: https://docs.netapp.com/us-en/ontap-systems/ns224/index.html for installation and servicing guidelines.

NetApp ONTAP 9.9.1P2

Complete Configuration Worksheet

Before running the setup script, complete the Cluster setup worksheet in the ONTAP 9 Documentation Center. You must have access to the NetApp Support site to open the cluster setup worksheet.

Before running the setup script, review the configuration worksheets in the Software setup section of the ONTAP 9 Documentation Center to learn about configuring ONTAP. Table 4 lists the information needed to configure two ONTAP nodes. Customize the cluster-detail values with the information applicable to your deployment.

Table 4. ONTAP Software Installation Prerequisites

| Cluster Detail | Cluster Detail Value |
| --- | --- |
| Cluster node 01 IP address | <node01-mgmt-ip> |
| Cluster node 01 netmask | <node01-mgmt-mask> |
| Cluster node 01 gateway | <node01-mgmt-gateway> |
| Cluster node 02 IP address | <node02-mgmt-ip> |
| Cluster node 02 netmask | <node02-mgmt-mask> |
| Cluster node 02 gateway | <node02-mgmt-gateway> |
| ONTAP 9.9 URL (http server hosting ONTAP software) | <url-boot-software> |
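Before the values in Table 4 are typed at the console, a quick consistency check can catch a mistyped gateway or netmask. The following is a minimal sketch that uses Python's standard `ipaddress` module; the function name `gateway_in_subnet` and all addresses are hypothetical and are not part of this validated design.

```python
import ipaddress


def gateway_in_subnet(ip: str, netmask: str, gateway: str) -> bool:
    """Return True when the gateway lies in the subnet defined by ip/netmask."""
    network = ipaddress.ip_interface(f"{ip}/{netmask}").network
    return ipaddress.ip_address(gateway) in network


# Hypothetical worksheet values (assumptions, not taken from this guide).
worksheet = {
    "node01": ("192.168.10.11", "255.255.255.0", "192.168.10.1"),
    "node02": ("192.168.10.12", "255.255.255.0", "192.168.10.1"),
}

for node, (ip, mask, gw) in worksheet.items():
    result = "OK" if gateway_in_subnet(ip, mask, gw) else "gateway outside subnet"
    print(node, result)
```

The same function could be reused for the service processor addresses collected later in the procedure.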

Configure Node 01

To configure node 01, follow these steps:

1. Connect to the storage system console port. You should see a Loader-A prompt. However, if the storage system is in a reboot loop, press Ctrl-C to exit the autoboot loop when the following message displays:

Starting AUTOBOOT press Ctrl-C to abort…

2. Allow the system to boot up.

autoboot

3. Press Ctrl-C when prompted.

If ONTAP 9.9.1P2 is not the version of software being booted, continue with the following steps to install new software. If ONTAP 9.9.1P2 is the version being booted, choose option 8 and y to reboot the node. Then continue with section Set Up Node.

4. To install new software, select option 7 from the menu.

5. Enter y to continue the installation.

6. Select e0M for the network port for the download.

7. Enter n to skip the reboot.

8. Select option 7 from the menu: Install new software first

9. Enter y to continue the installation.

10. Enter the IP address, netmask, and default gateway for e0M.

Enter the IP address for port e0M: <node01-mgmt-ip>

Enter the netmask for port e0M: <node01-mgmt-mask>

Enter the IP address of the default gateway: <node01-mgmt-gateway>

11. Enter the URL where the software can be found.

The e0M interface should be connected to the management network, and the web server must be reachable (using ping) from node 01.

<url-boot-software>

12. Press Enter for the user name, indicating no user name.

13. Enter y to set the newly installed software as the default to be used for subsequent reboots.

14. Enter yes to reboot the node.

When installing new software, the system might perform firmware upgrades to the BIOS and adapter cards, causing reboots and possible stops at the Loader-A prompt. If these actions occur, the system might deviate from this procedure.

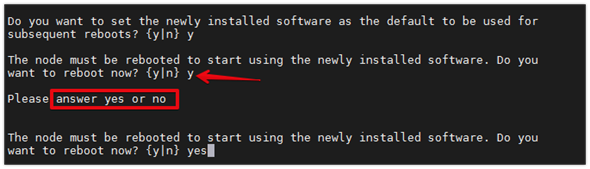

During the ONTAP installation a prompt to reboot the node requests a Y/N response. The prompt requires the entire Yes or No response to reboot the node and continue the installation.

15. Press Ctrl-C when the following message displays:

Press Ctrl-C for Boot Menu

16. Select option 4 for Clean Configuration and Initialize All Disks.

17. Enter y to zero disks, reset config, and install a new file system.

18. Enter yes to erase all the data on the disks.

The initialization and creation of the root aggregate can take 90 minutes or more to complete, depending on the number and type of disks attached. When initialization is complete, the storage system reboots. Note that SSDs take considerably less time to initialize. You can continue with the configuration of node 02 while the disks for node 01 are zeroing.

Configure Node 02

To configure node 02, follow these steps:

1. Connect to the storage system console port. You should see a Loader-B prompt. However, if the storage system is in a reboot loop, press Ctrl-C to exit the autoboot loop when the following message displays:

Starting AUTOBOOT press Ctrl-C to abort…

2. Allow the system to boot up.

autoboot

3. Press Ctrl-C when prompted.

If ONTAP 9.9.1P2 is not the version of software being booted, continue with the following steps to install new software. If ONTAP 9.9.1P2 is the version being booted, choose option 8 and y to reboot the node, then continue with section Set Up Node.

4. To install new software, select option 7.

5. Enter y to continue the installation.

6. Select e0M for the network port you want to use for the download.

7. Enter n to skip the reboot.

8. Select option 7: Install new software first

9. Enter y to continue the installation.

10. Enter the IP address, netmask, and default gateway for e0M.

Enter the IP address for port e0M: <node02-mgmt-ip>

Enter the netmask for port e0M: <node02-mgmt-mask>

Enter the IP address of the default gateway: <node02-mgmt-gateway>

11. Enter the URL where the software can be found.

The web server must be reachable (ping) from node 02.

<url-boot-software>

12. Press Enter for the username, indicating no user name.

13. Enter y to set the newly installed software as the default to be used for subsequent reboots.

14. Enter yes to reboot the node.

When installing new software, the system might perform firmware upgrades to the BIOS and adapter cards, causing reboots and possible stops at the Loader-B prompt. If these actions occur, the system might deviate from this procedure.

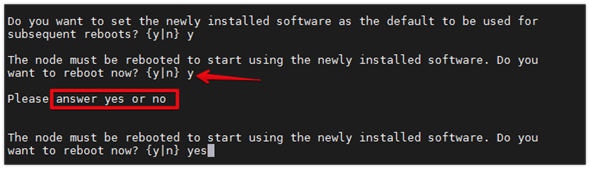

During the ONTAP installation a prompt to reboot the node requests a Y/N response. The prompt requires the entire Yes or No response to reboot the node and continue the installation.

15. Press Ctrl-C when you see this message:

Press Ctrl-C for Boot Menu

16. Select option 4 for Clean Configuration and Initialize All Disks.

17. Enter y to zero disks, reset config, and install a new file system.

18. Enter yes to erase all the data on the disks.

The initialization and creation of the root aggregate can take 90 minutes or more to complete, depending on the number and type of disks attached. When initialization is complete, the storage system reboots. Note that SSDs take considerably less time to initialize.

Set Up Node

From a console port program attached to the storage controller A (node 01) console port, run the node setup script. This script appears when ONTAP 9.9.1P2 boots on the node for the first time. To set up the node, follow these steps:

1. Follow the prompts to set up node 01.

Welcome to node setup.

You can enter the following commands at any time:

"help" or "?" - if you want to have a question clarified,

"back" - if you want to change previously answered questions, and

"exit" or "quit" - if you want to quit the setup wizard.

Any changes you made before quitting will be saved.

You can return to cluster setup at any time by typing "cluster setup".

To accept a default or omit a question, do not enter a value.

This system will send event messages and weekly reports to NetApp Technical Support.

To disable this feature, enter "autosupport modify -support disable" within 24 hours.

Enabling AutoSupport can significantly speed problem determination and resolution should a problem occur on your system.

For further information on AutoSupport, see:

http://support.netapp.com/autosupport/

Type yes to confirm and continue {yes}: yes

Enter the node management interface port [e0M]: Enter

Enter the node management interface IP address: <node01-mgmt-ip>

Enter the node management interface netmask: <node01-mgmt-mask>

Enter the node management interface default gateway: <node01-mgmt-gateway>

A node management interface on port e0M with IP address <node01-mgmt-ip> has been created

Use your web browser to complete cluster setup by accessing https://<node01-mgmt-ip>

Otherwise press Enter to complete cluster setup using the command line interface:

2. To complete cluster setup, open a web browser and navigate to https://<node01-mgmt-ip>.

Table 5. Cluster Create in ONTAP Prerequisites

| Cluster Detail | Cluster Detail Value |
| --- | --- |
| Cluster name | <clustername> |
| Cluster Admin SVM | <cluster-adm-svm> |
| Infrastructure Data SVM | <infra-data-svm> |
| ONTAP base license | <cluster-base-license-key> |
| Cluster management IP address | <clustermgmt-ip> |
| Cluster management netmask | <clustermgmt-mask> |
| Cluster management gateway | <clustermgmt-gateway> |
| Cluster node 01 IP address | <node01-mgmt-ip> |
| Cluster node 01 netmask | <node01-mgmt-mask> |
| Cluster node 01 gateway | <node01-mgmt-gateway> |
| Cluster node 02 IP address | <node02-mgmt-ip> |
| Cluster node 02 netmask | <node02-mgmt-mask> |
| Cluster node 02 gateway | <node02-mgmt-gateway> |
| Node 01 service processor IP address | <node01-sp-ip> |
| Node 01 service processor network mask | <node01-sp-mask> |
| Node 01 service processor gateway | <node01-sp-gateway> |
| Node 02 service processor IP address | <node02-sp-ip> |
| Node 02 service processor network mask | <node02-sp-mask> |
| Node 02 service processor gateway | <node02-sp-gateway> |
| Node 01 node name | <st-node01> |
| Node 02 node name | <st-node02> |
| DNS domain name | <dns-domain-name> |
| DNS server IP address | <dns-ip> |
| NTP server A IP address | <switch-a-ntp-ip> |
| NTP server B IP address | <switch-b-ntp-ip> |
| SNMPv3 User | <snmp-v3-usr> |
| SNMPv3 Authentication Protocol | <snmp-v3-auth-proto> |
| SNMPv3 Privacy Protocol | <snmpv3-priv-proto> |

Cluster setup can also be performed using the CLI. This document describes the cluster setup using the NetApp ONTAP System Manager guided setup.

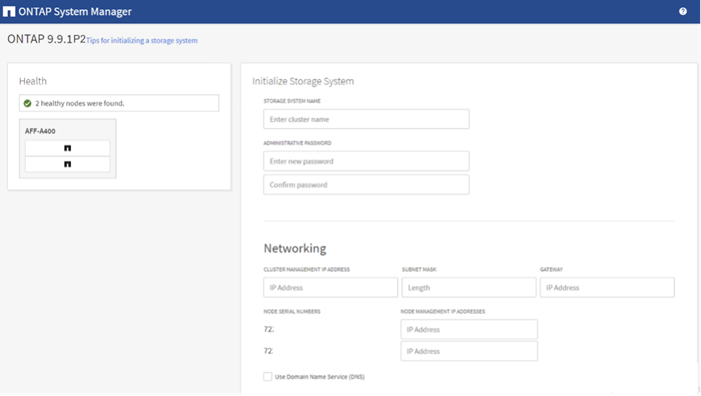

3. Complete the required information on the Initialize Storage System screen:

4. In the Cluster screen, follow these steps:

a. Enter the cluster name and administrator password.

b. Complete the Networking information for the cluster and each node.

c. Check the box for Use time services (NTP) and enter the IP addresses of the time servers in a comma-separated list.

The nodes should be discovered automatically; if they are not, refresh the browser page. By default, the cluster interfaces are created on all new storage controllers shipped from the factory.

If not all of the nodes are discovered, configure the cluster using the command line.

The node management interface can be on the same subnet as the cluster management interface, or it can be on a different subnet. In this document, we assume that it is on the same subnet.

5. Click Submit.

6. A few minutes will pass while the cluster is configured. When prompted, log in to ONTAP System Manager to continue the cluster configuration.

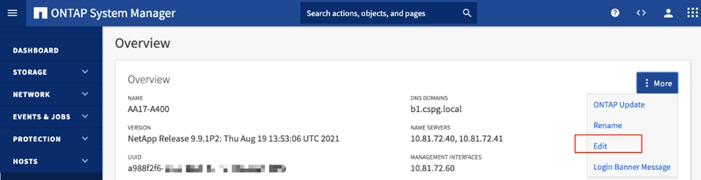

7. From the Dashboard click the Cluster menu on the left and select Overview.

8. Click the More ellipsis button in the Overview pane at the top right of the screen and select Edit.

9. Add the following cluster configuration details:

a. Cluster location

b. DNS domain name

c. DNS server IP addresses

DNS server IP addresses can be added individually or as a comma-separated list on a single line.

10. Click Save to make the changes persistent.

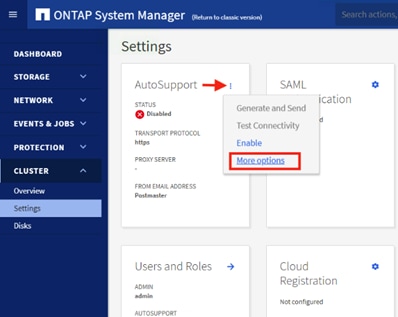

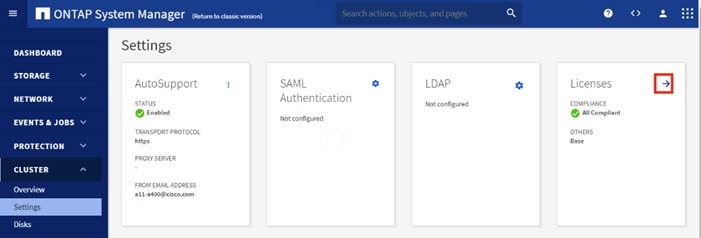

11. Select the Settings menu under the Cluster menu.

12. If AutoSupport was not configured during the initial setup, click the ellipsis in the AutoSupport tile and select More options.

13. To enable AutoSupport click the slider.

14. Click Edit to change the transport protocol, add a proxy server address and a mail host as needed.

15. Click Save to enable the changes.

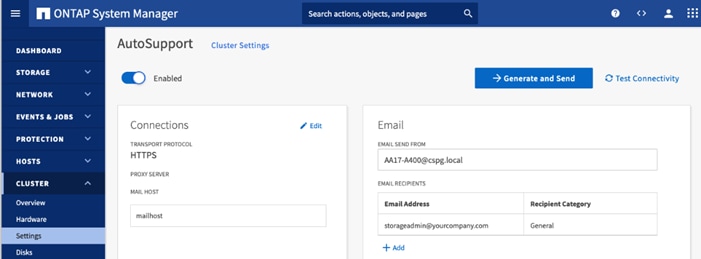

16. In the Email tile to the right, click Edit and enter the desired email information:

a. Email send from address

b. Email recipient addresses

c. Recipient category

17. Click Save when complete.

18. Select CLUSTER > Settings at the top left of the page to return to the cluster settings page.

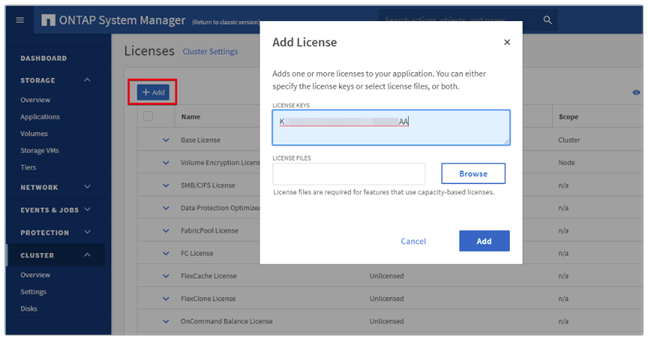

19. Locate the Licenses tile on the right and click the detail arrow.

20. Add the desired licenses to the cluster by clicking Add and entering the license keys in a comma-separated list.

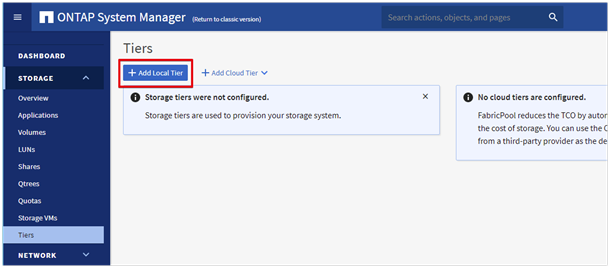

21. Configure storage aggregates by selecting the Storage menu on the left and selecting Tiers.

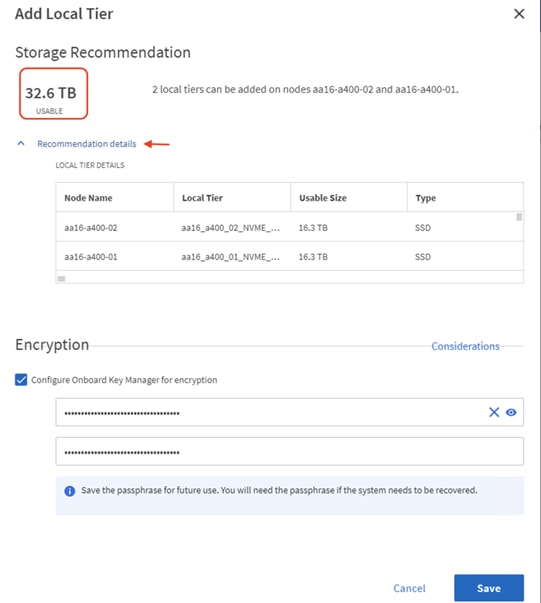

22. Click Add Local Tier and allow ONTAP System Manager to recommend a storage aggregate configuration.

23. ONTAP will use best practices to recommend an aggregate layout. Click the Recommended details link to view the aggregate information.

24. Optionally, enable NetApp Aggregate Encryption (NAE) by checking the box for Configure Onboard Key Manager for encryption.

25. Enter and confirm the passphrase and save it in a secure location for future use.

26. Click Save to make the configuration persistent.

Aggregate encryption may not be supported for all deployments. Please review the NetApp Encryption Power Guide and the Security Hardening Guide for NetApp ONTAP 9 (TR-4569) to help determine if aggregate encryption is right for your environment.

To log into the cluster, follow these steps:

1. Open an SSH connection to either the cluster IP or the host name.

2. Log in as the admin user with the password you provided earlier.

To confirm that storage failover is enabled, run the following commands for a failover pair:

1. Verify the status of the storage failover.

AA17-A400::> storage failover show

Takeover

Node Partner Possible State Description

-------------- -------------- -------- -------------------------------------

AA17-A400-01 AA17-A400-02 true Connected to AA17-A400-02

AA17-A400-02 AA17-A400-01 true Connected to AA17-A400-01

2 entries were displayed.

Both <st-node01> and <st-node02> must be capable of performing a takeover. Continue with step 2 if the nodes can perform a takeover.

2. Enable failover on one of the two nodes if it was not completed during the installation.

storage failover modify -node <st-node01> -enabled true

Enabling failover on one node enables it for both nodes.

3. Verify the HA status for a two-node cluster.

This step is not applicable for clusters with more than two nodes.

AA17-A400::> cluster ha show

High-Availability Configured: true

4. If HA is not configured, run the following command. Enable HA mode only for two-node clusters. Do not run this command for clusters with more than two nodes because it causes problems with failover.

cluster ha modify -configured true

Do you want to continue? {y|n}: y

5. Verify that hardware assist is correctly configured.

AA17-A400::> storage failover hwassist show

Node

-----------------

AA17-A400-01

Partner: AA17-A400-02

Hwassist Enabled: true

Hwassist IP: 192.x.x.84

Hwassist Port: 162

Monitor Status: active

Inactive Reason: -

Corrective Action: -

Keep-Alive Status: healthy

AA17-A400-02

Partner: AA17-A400-01

Hwassist Enabled: true

Hwassist IP: 192.x.x.85

Hwassist Port: 162

Monitor Status: active

Inactive Reason: -

Corrective Action: -

Keep-Alive Status: healthy

2 entries were displayed.

6. If hwassist storage failover is not enabled, enable it by running the following commands.

storage failover modify -hwassist-partner-ip <node02-mgmt-ip> -node <st-node01>

storage failover modify -hwassist-partner-ip <node01-mgmt-ip> -node <st-node02>
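The takeover check in step 1 can also be scripted when the output of `storage failover show` is captured to a text file or variable. The following is a minimal sketch; the function name `takeover_status` is hypothetical, and the parser assumes each data row fits on one line as in the sample output above. Longer state descriptions may wrap on a real system and would need extra handling.

```python
# Data rows copied from the sample "storage failover show" output above.
SAMPLE = """\
AA17-A400-01   AA17-A400-02   true     Connected to AA17-A400-02
AA17-A400-02   AA17-A400-01   true     Connected to AA17-A400-01
2 entries were displayed.
"""


def takeover_status(output: str) -> dict[str, bool]:
    """Map each node name to its Takeover Possible value."""
    status = {}
    for line in output.splitlines():
        fields = line.split()
        # Data rows carry: node, partner, true/false, then a description.
        if len(fields) >= 3 and fields[2] in ("true", "false"):
            status[fields[0]] = fields[2] == "true"
    return status


status = takeover_status(SAMPLE)
print(status)
print("takeover possible on all nodes" if status and all(status.values())
      else "check storage failover")
```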

Set Auto-Revert on Cluster Management

To set the auto-revert parameter on the cluster management interface, follow this step:

A storage virtual machine (SVM) is referred to as a Vserver or vserver in the GUI and CLI.

1. Run the following command:

net interface modify -vserver <clustername> -lif cluster_mgmt_lif_1 -auto-revert true

To zero all spare disks in the cluster, run the following command:

disk zerospares

Advanced Data Partitioning creates a root partition and two data partitions on each SSD drive in an AFF configuration. Disk autoassign should have assigned one data partition to each node in an HA pair. If a different disk assignment is required, disk autoassignment must be disabled on both nodes in the HA pair by running the disk option modify command. Spare partitions can then be moved from one node to another by running the disk removeowner and disk assign commands.

Set Up Service Processor Network Interface

To assign a static IPv4 address to the Service Processor on each node, run the following commands:

system service-processor network modify -node <st-node01> -address-family IPv4 -enable true -dhcp none -ip-address <node01-sp-ip> -netmask <node01-sp-mask> -gateway <node01-sp-gateway>

system service-processor network modify -node <st-node02> -address-family IPv4 -enable true -dhcp none -ip-address <node02-sp-ip> -netmask <node02-sp-mask> -gateway <node02-sp-gateway>

The Service Processor IP addresses should be in the same subnet as the node management IP addresses.
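The commands in this section use `<placeholder>` tokens that map to the worksheet values. One way to avoid pasting a command with a token left unfilled is to render the commands from a dictionary first. The following is a minimal sketch; the helper `render` and the address values are hypothetical, and the node name is taken from the sample output earlier in this section.

```python
import re

PLACEHOLDER = re.compile(r"<([A-Za-z0-9_-]+)>")


def render(template: str, values: dict[str, str]) -> str:
    """Replace each <name> token with values[name]; fail if one is missing."""
    missing = sorted({name for name in PLACEHOLDER.findall(template)
                      if name not in values})
    if missing:
        raise KeyError(f"worksheet is missing: {', '.join(missing)}")
    return PLACEHOLDER.sub(lambda match: values[match.group(1)], template)


TEMPLATE = (
    "system service-processor network modify -node <st-node01> "
    "-address-family IPv4 -enable true -dhcp none -ip-address <node01-sp-ip> "
    "-netmask <node01-sp-mask> -gateway <node01-sp-gateway>"
)

# Hypothetical worksheet values for illustration only.
values = {
    "st-node01": "AA17-A400-01",
    "node01-sp-ip": "192.168.10.21",
    "node01-sp-mask": "255.255.255.0",
    "node01-sp-gateway": "192.168.10.1",
}

print(render(TEMPLATE, values))
```

Raising an error on a missing key is a deliberate choice in this sketch: a half-rendered command is harder to spot than a failed render.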

Create Manual Provisioned Aggregates (Optional)

An aggregate containing the root volume is created during the ONTAP setup process. To manually create additional aggregates, determine the aggregate name, the node on which to create it, and the number of disks it should contain.

To create new aggregates, run the following commands:

storage aggregate create -aggregate <aggr1_node01> -node <st-node01> -diskcount <num-disks> -disktype SSD-NVM

storage aggregate create -aggregate <aggr1_node02> -node <st-node02> -diskcount <num-disks> -disktype SSD-NVM

Keep at least the recommended minimum number of hot spare disks or hot spare disk partitions for each aggregate.

For all-flash aggregates, you should have a minimum of one hot spare disk or disk partition. For non-flash homogeneous aggregates, you should have a minimum of two hot spare disks or disk partitions. For Flash Pool aggregates, you should have a minimum of two hot spare disks or disk partitions for each disk type.

In an AFF configuration with a small number of SSDs, you might want to create an aggregate with all but one remaining disk (spare) assigned to the controller.

The aggregates cannot be created until disk zeroing completes. Run the storage aggregate show command to display the aggregate creation status. Do not proceed until both aggr1_node01 and aggr1_node02 are online.
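The hot spare guidance above reduces to simple arithmetic when choosing a value for `<num-disks>`. The following is a minimal sketch under stated assumptions: the function names and the aggregate-kind labels are hypothetical, and `available` means the disks or partitions owned by the node that are not already in use (for example, by the root aggregate).

```python
def minimum_hot_spares(aggregate_kind: str, disk_types: int = 1) -> int:
    """Minimum hot spare disks or partitions, following the notes above."""
    if aggregate_kind == "all-flash":
        return 1
    if aggregate_kind == "non-flash":
        return 2
    if aggregate_kind == "flash-pool":
        return 2 * disk_types  # two spares for each disk type
    raise ValueError(f"unknown aggregate kind: {aggregate_kind}")


def data_disk_budget(available: int, aggregate_kind: str = "all-flash",
                     disk_types: int = 1) -> int:
    """Largest disk count that still leaves the minimum spares unassigned."""
    spares = minimum_hot_spares(aggregate_kind, disk_types)
    if available <= spares:
        raise ValueError("not enough disks to keep the minimum hot spares")
    return available - spares


# Hypothetical example: 11 unused SSD partitions owned by one AFF node.
print(data_disk_budget(11))
```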

Remove Default Broadcast Domains

By default, all network ports are included in separate default broadcast domains. Network ports used for data services (for example, e0e, e0f, and so on) should be removed from their default broadcast domain, and that broadcast domain should be deleted.

To perform this task, run the following commands:

network port broadcast-domain delete -broadcast-domain <Default-N> -ipspace Default

network port broadcast-domain show

Disable Flow Control on 25/100GbE Data Ports

To disable flow control on 25 and 100GbE data ports, follow these steps:

1. Run the following commands to configure the ports on node 01:

network port modify -node <st-node01> -port e3a,e3b -flowcontrol-admin none

network port modify -node <st-node01> -port e0e,e0f,e0g,e0h -flowcontrol-admin none

2. Run the following commands to configure the ports on node 02:

network port modify -node <st-node02> -port e3a,e3b -flowcontrol-admin none

network port modify -node <st-node02> -port e0e,e0f,e0g,e0h -flowcontrol-admin none

AA17-A400::> net port show -node * -port e0e,e0f,e0g,e0h -fields speed-admin,duplex-admin,flowcontrol-admin

(network port show)

node port duplex-admin speed-admin flowcontrol-admin

------------ ---- ------------ ----------- -----------------

AA17-A400-01 e0e auto auto none

AA17-A400-01 e0f auto auto none

AA17-A400-01 e0g auto auto none

AA17-A400-01 e0h auto auto none

AA17-A400-02 e0e auto auto none

AA17-A400-02 e0f auto auto none

AA17-A400-02 e0g auto auto none

AA17-A400-02 e0h auto auto none

8 entries were displayed.

AA17-A400::> net port show -node * -port e3a,e3b -fields speed-admin,duplex-admin,flowcontrol-admin

(network port show)

node port duplex-admin speed-admin flowcontrol-admin

------------ ---- ------------ ----------- -----------------

AA17-A400-01 e3a auto auto none

AA17-A400-01 e3b auto auto none

AA17-A400-02 e3a auto auto none

AA17-A400-02 e3b auto auto none

4 entries were displayed.
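The verification output above can be checked programmatically when many ports are involved. The following is a minimal sketch that locates columns through the header row and reports any port whose flowcontrol-admin value is not none; the function name `ports_with_flow_control` is hypothetical, and the parser assumes the whitespace-separated layout produced by the `-fields` option.

```python
# Sample based on the e3a/e3b verification output above.
SAMPLE = """\
node         port duplex-admin speed-admin flowcontrol-admin
------------ ---- ------------ ----------- -----------------
AA17-A400-01 e3a  auto         auto        none
AA17-A400-01 e3b  auto         auto        none
AA17-A400-02 e3a  auto         auto        none
AA17-A400-02 e3b  auto         auto        none
4 entries were displayed.
"""


def ports_with_flow_control(output: str) -> list[tuple[str, str]]:
    """Return (node, port) pairs whose flowcontrol-admin is not 'none'."""
    rows = [line.split() for line in output.splitlines() if line.strip()]
    header = next(row for row in rows if "flowcontrol-admin" in row)
    node_col = header.index("node")
    port_col = header.index("port")
    flow_col = header.index("flowcontrol-admin")
    offenders = []
    for row in rows[rows.index(header) + 1:]:
        # Skip the dashed separator and the trailing "N entries" summary.
        if len(row) != len(header) or set(row[0]) == {"-"}:
            continue
        if row[flow_col] != "none":
            offenders.append((row[node_col], row[port_col]))
    return offenders


print(ports_with_flow_control(SAMPLE) or "flow control disabled on all listed ports")
```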

Disable Auto-Negotiate on Fibre Channel Ports (Required only for FC configuration)

In accordance with the best practices for FC host ports, to disable auto-negotiate on each FCP adapter in each controller node, follow these steps:

1. Disable each FC adapter in the controllers with the fcp adapter modify command.

fcp adapter modify -node <st-node01> -adapter 5a -status-admin down

fcp adapter modify -node <st-node01> -adapter 5b -status-admin down

fcp adapter modify -node <st-node02> -adapter 5a -status-admin down

fcp adapter modify -node <st-node02> -adapter 5b -status-admin down

2. Set the desired speed on the adapter and return it to the online state.

fcp adapter modify -node <st-node01> -adapter 5a -speed 32 -status-admin up

fcp adapter modify -node <st-node01> -adapter 5b -speed 32 -status-admin up

fcp adapter modify -node <st-node02> -adapter 5a -speed 32 -status-admin up

fcp adapter modify -node <st-node02> -adapter 5b -speed 32 -status-admin up

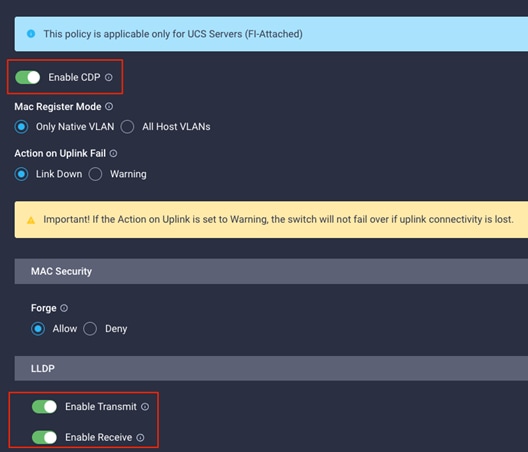

Enable Cisco Discovery Protocol

To enable the Cisco Discovery Protocol (CDP) on the NetApp storage controllers, run the following command:

node run -node * options cdpd.enable on

Enable Link-layer Discovery Protocol on all Ethernet Ports

To enable the exchange of Link-layer Discovery Protocol (LLDP) neighbor information between the storage and network switches, follow this step:

Enable LLDP on all ports of all nodes in the cluster.

node run * options lldp.enable on

Create Management Broadcast Domain

If the management interfaces are required to be on a separate VLAN, create a new broadcast domain for those interfaces by running the following command:

network port broadcast-domain create -broadcast-domain IB-MGMT -mtu 1500

To create an NFS data broadcast domain with a maximum transmission unit (MTU) of 9000, run the following command:

network port broadcast-domain create -broadcast-domain Infra-NFS -mtu 9000

Create iSCSI Broadcast Domains (Required only for iSCSI configuration)

To create the iSCSI-A and iSCSI-B data broadcast domains with a maximum transmission unit (MTU) of 9000, run the following commands:

network port broadcast-domain create -broadcast-domain Infra-iSCSI-A -mtu 9000

network port broadcast-domain create -broadcast-domain Infra-iSCSI-B -mtu 9000

Create Interface Groups

To create the LACP interface groups for the 25GbE data interfaces, run the following commands:

network port ifgrp create -node <st-node01> -ifgrp a0a -distr-func port -mode multimode_lacp

network port ifgrp add-port -node <st-node01> -ifgrp a0a -port e0e

network port ifgrp add-port -node <st-node01> -ifgrp a0a -port e0f

network port ifgrp add-port -node <st-node01> -ifgrp a0a -port e0g

network port ifgrp add-port -node <st-node01> -ifgrp a0a -port e0h

network port ifgrp create -node <st-node02> -ifgrp a0a -distr-func port -mode multimode_lacp

network port ifgrp add-port -node <st-node02> -ifgrp a0a -port e0e

network port ifgrp add-port -node <st-node02> -ifgrp a0a -port e0f

network port ifgrp add-port -node <st-node02> -ifgrp a0a -port e0g

network port ifgrp add-port -node <st-node02> -ifgrp a0a -port e0h

To verify, run the following command:

AA17-A400::> network port ifgrp show

Port Distribution Active

Node IfGrp Function MAC Address Ports Ports

-------- ---------- ------------ ----------------- ------- -------------------

AA17-A400-01

a0a port d2:39:ea:29:d4:4a full e0e, e0f, e0g, e0h

AA17-A400-02

a0a port d2:39:ea:29:ce:d5 full e0e, e0f, e0g, e0h

2 entries were displayed.

Change MTU on Interface Groups

To change the MTU size on the base interface-group ports before creating the VLAN ports, run the following commands:

network port modify -node <st-node01> -port a0a -mtu 9000

network port modify -node <st-node02> -port a0a -mtu 9000

To create VLANs, follow these steps:

1. Create the management VLAN ports and add them to the management broadcast domain.

network port vlan create -node <st-node01> -vlan-name a0a-<ib-mgmt-vlan-id>

network port vlan create -node <st-node02> -vlan-name a0a-<ib-mgmt-vlan-id>

network port broadcast-domain add-ports -broadcast-domain IB-MGMT -ports <st-node01>:a0a-<ib-mgmt-vlan-id>,<st-node02>:a0a-<ib-mgmt-vlan-id>

To verify, issue the following command:

AA17-A400::> network port vlan show

Network Network

Node VLAN Name Port VLAN ID MAC Address

------ --------- ------- -------- -----------------

AA17-A400-01

a0a-17 a0a 17 d2:39:ea:29:d4:4a

a0a-3017

a0a 3017 d2:39:ea:29:d4:4a

a0a-3117

a0a 3117 d2:39:ea:29:d4:4a

a0a-3217

a0a 3217 d2:39:ea:29:d4:4a

AA17-A400-02

a0a-17 a0a 17 d2:39:ea:29:ce:d5

a0a-3017

a0a 3017 d2:39:ea:29:ce:d5

a0a-3117

a0a 3117 d2:39:ea:29:ce:d5

a0a-3217

a0a 3217 d2:39:ea:29:ce:d5

8 entries were displayed.

2. Create the NFS VLAN ports and add them to the Infra-NFS broadcast domain.

network port vlan create -node <st-node01> -vlan-name a0a-<infra-nfs-vlan-id>

network port vlan create -node <st-node02> -vlan-name a0a-<infra-nfs-vlan-id>

network port broadcast-domain add-ports -broadcast-domain Infra-NFS -ports <st-node01>:a0a-<infra-nfs-vlan-id>,<st-node02>:a0a-<infra-nfs-vlan-id>

3. If configuring iSCSI, create VLAN ports for the iSCSI LIFs on each storage controller and add them to the broadcast domain:

network port vlan create -node <st-node01> -vlan-name a0a-<infra-iscsi-a-vlan-id>

network port vlan create -node <st-node01> -vlan-name a0a-<infra-iscsi-b-vlan-id>

network port vlan create -node <st-node02> -vlan-name a0a-<infra-iscsi-a-vlan-id>

network port vlan create -node <st-node02> -vlan-name a0a-<infra-iscsi-b-vlan-id>

network port broadcast-domain add-ports -broadcast-domain Infra-iSCSI-A -ports <st-node01>:a0a-<infra-iscsi-a-vlan-id>

network port broadcast-domain add-ports -broadcast-domain Infra-iSCSI-B -ports <st-node01>:a0a-<infra-iscsi-b-vlan-id>

network port broadcast-domain add-ports -broadcast-domain Infra-iSCSI-A -ports <st-node02>:a0a-<infra-iscsi-a-vlan-id>

network port broadcast-domain add-ports -broadcast-domain Infra-iSCSI-B -ports <st-node02>:a0a-<infra-iscsi-b-vlan-id>
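The VLAN steps above follow one pattern: a VLAN port named `a0a-<vlan-id>` is created on each node and then added to the matching broadcast domain. The following is a minimal sketch that generates those commands from a mapping; the function name `vlan_commands` is hypothetical, and the pairing of VLAN IDs with broadcast domains is an assumption for illustration (the IDs appear in the sample output, but this section does not state which ID belongs to which domain). The sketch adds both nodes' ports in a single add-ports command, as the management and NFS steps do.

```python
# Assumed mapping of broadcast domain to VLAN ID; substitute your own values.
VLANS = {
    "IB-MGMT": 17,
    "Infra-NFS": 3017,
    "Infra-iSCSI-A": 3117,
    "Infra-iSCSI-B": 3217,
}


def vlan_commands(nodes, vlans, ifgrp="a0a"):
    """Build the vlan create and broadcast-domain add-ports commands."""
    commands = []
    for domain, vlan_id in vlans.items():
        port = f"{ifgrp}-{vlan_id}"  # VLAN port name: <ifgrp>-<vlan-id>
        for node in nodes:
            commands.append(
                f"network port vlan create -node {node} -vlan-name {port}"
            )
        members = ",".join(f"{node}:{port}" for node in nodes)
        commands.append(
            "network port broadcast-domain add-ports "
            f"-broadcast-domain {domain} -ports {members}"
        )
    return commands


for command in vlan_commands(["AA17-A400-01", "AA17-A400-02"], VLANS):
    print(command)
```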

Configure Timezone

To configure the time zone on the cluster, follow this step:

1. Set the time zone for the cluster.

timezone -timezone <timezone>

For example, in the eastern United States, the time zone is America/New_York.

Configure Simple Network Management Protocol

To configure the Simple Network Management Protocol (SNMP), follow these steps:

1. Configure basic SNMP information, such as the location and contact. When polled, this information is visible as the sysLocation and sysContact variables in SNMP.

snmp contact <snmp-contact>

snmp location "<snmp-location>"

snmp init 1

options snmp.enable on

2. Configure SNMP traps to send to remote hosts, such as an Active IQ Unified Manager server or another fault management system.

snmp traphost add <oncommand-um-server-fqdn>

SNMPv3 offers advanced security by using encryption and passphrases. The SNMPv3 user can run SNMP utilities from the traphost using the authentication and privacy settings that you specify. To configure SNMPv3 access, run the following commands:

security login create -user-or-group-name <snmp-v3-usr> -application snmp -authentication-method usm

Enter the authoritative entity's EngineID [local EngineID]:

Which authentication protocol do you want to choose (none, md5, sha, sha2-256) [none]: <snmp-v3-auth-proto>

Enter the authentication protocol password (minimum 8 characters long):

Enter the authentication protocol password again:

Which privacy protocol do you want to choose (none, des, aes128) [none]: <snmpv3-priv-proto>

Enter privacy protocol password (minimum 8 characters long):

Enter privacy protocol password again:

Refer to the SNMP Configuration Express Guide for additional information when configuring SNMPv3 security users.
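The interactive prompts above accept a fixed set of protocol names and require passwords of at least 8 characters. As a minimal sketch, the planned answers could be checked before starting the dialog. The function name check_snmpv3_answers and the sample values are hypothetical. The accepted protocol names and the minimum length are taken from the prompts shown above; the rule that privacy requires authentication is general SNMPv3 behavior rather than something this guide states.

```python
AUTH_PROTOCOLS = {"none", "md5", "sha", "sha2-256"}  # from the first prompt
PRIV_PROTOCOLS = {"none", "des", "aes128"}           # from the second prompt
MIN_PASSWORD_LENGTH = 8                              # from the password prompts


def check_snmpv3_answers(auth_proto, auth_password, priv_proto, priv_password):
    """Return a list of problems with the planned answers (empty if none)."""
    problems = []
    if auth_proto not in AUTH_PROTOCOLS:
        problems.append(f"unknown authentication protocol: {auth_proto}")
    elif auth_proto != "none" and len(auth_password) < MIN_PASSWORD_LENGTH:
        problems.append("authentication password is shorter than 8 characters")
    if priv_proto not in PRIV_PROTOCOLS:
        problems.append(f"unknown privacy protocol: {priv_proto}")
    elif priv_proto != "none":
        if len(priv_password) < MIN_PASSWORD_LENGTH:
            problems.append("privacy password is shorter than 8 characters")
        # General SNMPv3 rule: privacy is only defined together with authentication.
        if auth_proto == "none":
            problems.append("privacy requires an authentication protocol")
    return problems


if __name__ == "__main__":
    # Example values only.
    print(check_snmpv3_answers("sha", "example-auth-pass", "aes128", "short"))
```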

Create SVM

To create an infrastructure SVM, follow these steps:

1. Run the vserver create command.

vserver create -vserver Infra-SVM -rootvolume infra_svm_root -aggregate aggr1_node01 -rootvolume-security-style unix

2. Remove the unused data protocols from the SVM:

vserver remove-protocols -vserver Infra-SVM -protocols cifs

It is recommended to remove iSCSI or FCP protocols if the protocol is not in use.

3. Add the two data aggregates to the Infra-SVM aggregate list for the NetApp ONTAP Tools.

vserver modify -vserver Infra-SVM -aggr-list <aggr1_node01>,<aggr1_node02>

4. Enable and run the NFS protocol in the Infra-SVM.

vserver nfs create -vserver Infra-SVM -udp disabled -v3 enabled -v4.1 enabled -vstorage enabled

If the NFS license was not installed during the cluster configuration, make sure to install the license before starting the NFS service.

5. Verify that the NFS vstorage parameter for the NetApp NFS VAAI plug-in is enabled.

AA17-A400::> vserver nfs show -fields vstorage

vserver vstorage

--------- --------

Infra-SVM enabled
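The verification in step 5 can also be done programmatically on captured CLI output. The sketch below assumes the output has the two-column layout shown above; the function name vstorage_status is hypothetical, and the sample text is copied from this guide rather than read from a live cluster.

```python
SAMPLE_OUTPUT = """\
AA17-A400::> vserver nfs show -fields vstorage
vserver   vstorage
--------- --------
Infra-SVM enabled
"""


def vstorage_status(show_output):
    """Map each vserver name to its vstorage value from the CLI output."""
    status = {}
    for line in show_output.splitlines():
        fields = line.split()
        # Data rows have exactly two columns; skip the prompt line, the
        # column header, the dashed rule, and blank lines.
        if len(fields) != 2 or fields[0] == "vserver" or set(fields[0]) == {"-"}:
            continue
        status[fields[0]] = fields[1]
    return status


if __name__ == "__main__":
    for vserver, value in vstorage_status(SAMPLE_OUTPUT).items():
        print(f"{vserver}: vstorage {value}")
```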

Create Load-Sharing Mirrors of SVM Root Volume

To create a load-sharing mirror of an SVM root volume, follow these steps:

1. Create a volume to be the load-sharing mirror of the infrastructure SVM root volume on each node.

volume create -vserver Infra-SVM -volume infra_svm_root_m01 -aggregate <aggr1_node01> -size 1GB -type DP

volume create -vserver Infra-SVM -volume infra_svm_root_m02 -aggregate <aggr1_node02> -size 1GB -type DP

2. Create a job schedule to update the root volume mirror relationships every 15 minutes.

job schedule interval create -name 15min -minutes 15

3. Create the mirroring relationships.

snapmirror create -source-path Infra-SVM:infra_svm_root -destination-path Infra-SVM:infra_svm_root_m01 -type LS -schedule 15min

snapmirror create -source-path Infra-SVM:infra_svm_root -destination-path Infra-SVM:infra_svm_root_m02 -type LS -schedule 15min

4. Initialize the mirroring relationship.

snapmirror initialize-ls-set -source-path Infra-SVM:infra_svm_root

To verify:

AA17-A400::> snapmirror show -type ls
                                                                       Progress
Source            Destination Mirror  Relationship   Total             Last
Path        Type  Path        State   Status         Progress  Healthy Updated
----------- ---- ------------ ------- -------------- --------- ------- --------
AA17-A400://Infra-SVM/Infra_SVM_root
            LS   AA17-A400://Infra-SVM/infra_svm_root_m01
                              Snapmirrored
                                      Idle           -         true    -
                 AA17-A400://Infra-SVM/infra_svm_root_m02
                              Snapmirrored
                                      Idle           -         true    -
2 entries were displayed.

Create FC Block Protocol Service (required only for FC configuration)

Run the following command to create the FCP service. This command also starts the FCP service and sets the worldwide name (WWN) for the SVM:

vserver fcp create -vserver Infra-SVM -status-admin up

To verify:

AA17-A400::> vserver fcp show

Status

Vserver Target Name Admin

---------- ---------------------------- ------

Infra-SVM 20:00:d0:39:ea:29:ce:d4 up

If the FC license was not installed during the cluster configuration, make sure to install the license before creating the FC service.

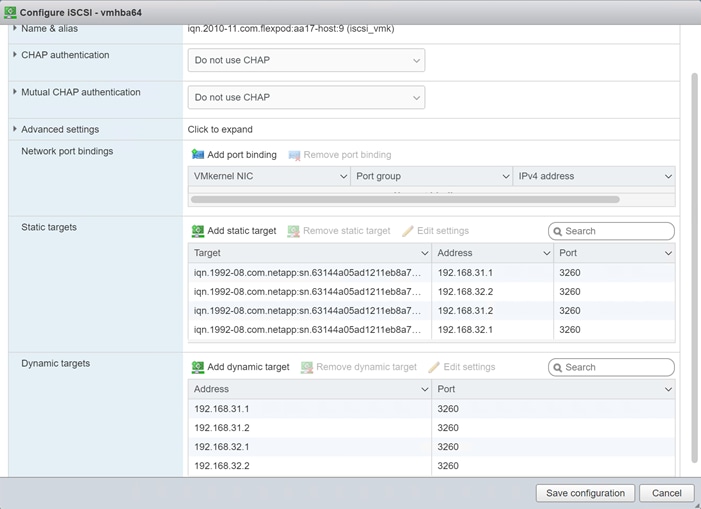

Create iSCSI Block Protocol Service (required only for iSCSI configuration)

Run the following command to create the iSCSI service:

vserver iscsi create -vserver <infra-data-svm>

To verify:

AA17-A400::> vserver iscsi show
           Target                           Target                       Status
Vserver    Name                             Alias                        Admin
---------- -------------------------------- ---------------------------- ------
Infra-SVM  iqn.1992-08.com.netapp:sn.63144a05ad1211eb8a7ad039ea29d44a:vs.3
                                            Infra-SVM                    up

If the iSCSI license was not installed during the cluster configuration, make sure to install the license before creating the iSCSI service.

Vserver Protocol Verification

Verify that the required protocols have been added to the Infra-SVM vserver:

AA17-A400::> vserver show-protocols -vserver Infra-SVM

Vserver: Infra-SVM

Protocols: nfs, fcp, iscsi, ndmp, nvme

If a protocol is not present, use the following command to add the protocol to the vserver:

vserver add-protocols -vserver <infra-data-svm> -protocols <iscsi or fcp>
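The comparison described above, between the protocols an SVM reports and the ones the deployment needs, can be sketched in a few lines of Python. This is an illustration under stated assumptions: the function name missing_protocols is hypothetical, the required list is an example, and the parser expects the "Protocols:" line format shown in the sample output above.

```python
SAMPLE_OUTPUT = """\
AA17-A400::> vserver show-protocols -vserver Infra-SVM
Vserver: Infra-SVM
Protocols: nfs, fcp, iscsi, ndmp, nvme
"""


def missing_protocols(show_output, required):
    """Return the required protocols absent from the 'Protocols:' line."""
    present = set()
    for line in show_output.splitlines():
        label, _, value = line.partition(":")
        if label.strip() == "Protocols":
            present = {item.strip() for item in value.split(",") if item.strip()}
    return sorted(set(required) - present)


if __name__ == "__main__":
    # Example requirement: NFS plus both block protocols.
    print(missing_protocols(SAMPLE_OUTPUT, ["nfs", "fcp", "iscsi"]))
```

Any names the function returns are candidates for the -protocols argument of the vserver add-protocols command.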

Configure HTTPS Access

To configure secure access to the storage controller, follow these steps:

1. Increase the privilege level to access the certificate commands.

set -privilege diag

Do you want to continue? {y|n}: y

2. Generally, a self-signed certificate is already in place. Verify the certificate and obtain parameters (for example, the <serial-number>) by running the following command:

security certificate show

3. For each SVM shown, the certificate common name should match the DNS fully qualified domain name (FQDN) of the SVM. Delete the two default certificates and replace them with either self-signed certificates or certificates from a certificate authority (CA). To delete the default certificates, run the following commands:

security certificate delete -vserver Infra-SVM -common-name Infra-SVM -ca Infra-SVM -type server -serial <serial-number>

Deleting expired certificates before creating new certificates is a best practice. Run the security certificate delete command to delete the expired certificates. In the preceding command, use TAB completion to select and delete each default certificate.

4. To generate and install self-signed certificates, run the following commands as one-time commands. Generate a server certificate for the Infra-SVM and the cluster SVM. Use TAB completion to aid in the completion of these commands.

security certificate create -common-name <cert-common-name> -type server -size 2048 -country <cert-country> -state <cert-state> -locality <cert-locality> -organization <cert-org> -unit <cert-unit> -email-addr <cert-email> -expire-days <cert-days> -protocol SSL -hash-function SHA256 -vserver Infra-SVM

5. To obtain the values for the parameters required in step 6 (<cert-ca> and <cert-serial>), run the security certificate show command.

6. Enable each certificate that was just created by using the -server-enabled true and -client-enabled false parameters. Use TAB completion to aid in the completion of these commands.

security ssl modify -vserver <clustername> -server-enabled true -client-enabled false -ca <cert-ca> -serial <cert-serial> -common-name <cert-common-name>

7. Disable HTTP cluster management access.

system services firewall policy delete -policy mgmt -service http -vserver <clustername>

It is normal for some of these commands to return an error message stating that the entry does not exist.

8. Change back to the normal admin privilege level and verify that the system logs are available in a web browser.

set -privilege admin

https://<node01-mgmt-ip>/spi

https://<node02-mgmt-ip>/spi

Configure NFSv3 and NFSv4.1

To configure NFS on the SVM, follow these steps:

1. Create a new rule for the infrastructure NFS subnet in the default export policy.

vserver export-policy rule create -vserver Infra-SVM -policyname default -ruleindex 1 -protocol nfs -clientmatch <infra-nfs-subnet-cidr> -rorule sys -rwrule sys -superuser sys -allow-suid true

2. Assign the FlexPod export policy to the infrastructure SVM root volume.

volume modify -vserver Infra-SVM -volume infra_svm_root -policy default
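The export policy rule in step 1 grants access by subnet, so any NFS client whose address falls outside <infra-nfs-subnet-cidr> will not match the rule. The following sketch uses Python's standard ipaddress module to check a list of client addresses (for example, the NFS interfaces of the ESXi hosts) against the subnet before the rule is created. The function name and the addresses are hypothetical examples. Using strict=True is a choice made in this sketch to catch a CIDR typed with host bits set.

```python
import ipaddress


def hosts_outside_subnet(subnet_cidr, host_ips):
    """Return the addresses that a clientmatch on subnet_cidr would not cover."""
    # strict=True raises ValueError for input such as 192.168.50.1/24.
    subnet = ipaddress.ip_network(subnet_cidr, strict=True)
    return [ip for ip in host_ips if ipaddress.ip_address(ip) not in subnet]


if __name__ == "__main__":
    # Example values only; substitute the real NFS subnet and client addresses.
    outside = hosts_outside_subnet(
        "192.168.50.0/24", ["192.168.50.11", "192.168.50.12", "192.168.51.13"]
    )
    print("Not covered by the export rule:", outside)
```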

The following information is required to create a NetApp FlexVol® volume:

● The volume name

● The volume size

● The aggregate on which the volume exists

To create a FlexVol volume, run the following commands:

volume create -vserver Infra-SVM -volume infra_datastore_1 -aggregate <aggr1_node01> -size 1TB -state online -policy default -junction-path /infra_datastore_01 -space-guarantee none -percent-snapshot-space 0

volume create -vserver Infra-SVM -volume infra_datastore_2 -aggregate <aggr1_node02> -size 1TB -state online -policy default -junction-path /infra_datastore_02 -space-guarantee none -percent-snapshot-space 0

volume create -vserver Infra-SVM -volume infra_swap -aggregate <aggr1_node01> -size 100GB -state online -policy default -junction-path /infra_swap -space-guarantee none -percent-snapshot-space 0 -snapshot-policy none

volume create -vserver Infra-SVM -volume esxi_boot -aggregate <aggr1_node01> -size 320GB -state online -policy default -space-guarantee none -percent-snapshot-space 0

snapmirror update-ls-set -source-path Infra-SVM:infra_svm_root

If you are going to set up and use SnapCenter to back up the infra_datastore volume, add "-snapshot-policy none" to the end of the volume create command for the infra_datastore volume.
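Because the volumes above are created with -space-guarantee none, they are thin provisioned, and it can be useful to know how much capacity has been promised on each aggregate. The short Python sketch below totals the sizes from the volume create commands per aggregate. The names VOLUMES, size_in_gb, and provisioned_gb_per_aggregate are hypothetical, and the conversion of 1TB to 1024GB is an assumption of this sketch.

```python
UNIT_GB = {"GB": 1, "TB": 1024}  # assumption: binary multiples

# (volume, aggregate, size) as given in the volume create commands above.
VOLUMES = [
    ("infra_datastore_1", "aggr1_node01", "1TB"),
    ("infra_datastore_2", "aggr1_node02", "1TB"),
    ("infra_swap", "aggr1_node01", "100GB"),
    ("esxi_boot", "aggr1_node01", "320GB"),
]


def size_in_gb(size):
    """Convert a size string such as '320GB' or '1TB' to whole gigabytes."""
    number, unit = size[:-2], size[-2:].upper()
    return int(number) * UNIT_GB[unit]


def provisioned_gb_per_aggregate(volumes):
    """Sum the provisioned (not consumed) volume sizes for each aggregate."""
    totals = {}
    for _name, aggregate, size in volumes:
        totals[aggregate] = totals.get(aggregate, 0) + size_in_gb(size)
    return totals


if __name__ == "__main__":
    for aggregate, total in sorted(provisioned_gb_per_aggregate(VOLUMES).items()):
        print(f"{aggregate}: {total} GB provisioned")
```

These totals describe provisioned size only; the space actually consumed on each aggregate depends on the data written and on storage efficiency.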

On NetApp AFF systems, deduplication is enabled by default. To disable the efficiency policy on the infra_swap volume, run the following command:

volume efficiency off -vserver Infra-SVM -volume infra_swap

To create NFS LIFs, run the following commands:

network interface create -vserver Infra-SVM -lif nfs-lif-01 -role data -data-protocol nfs -home-node <st-node01> -home-port a0a-<infra-nfs-vlan-id> -address <node01-nfs-lif-01-ip> -netmask <node01-nfs-lif-01-mask> -status-admin up -failover-policy broadcast-domain-wide -firewall-policy data -auto-revert true

network interface create -vserver Infra-SVM -lif nfs-lif-02 -role data -data-protocol nfs -home-node <st-node02> -home-port a0a-<infra-nfs-vlan-id> -address <node02-nfs-lif-02-ip> -netmask <node02-nfs-lif-02-mask> -status-admin up -failover-policy broadcast-domain-wide -firewall-policy data -auto-revert true

To verify:

AA17-A400::> network interface show -vserver Infra-SVM -data-protocol nfs

Logical Status Network Current Current Is

Vserver Interface Admin/Oper Address/Mask Node Port Home

----------- ---------- ---------- ------------------ ------------- ------- ----

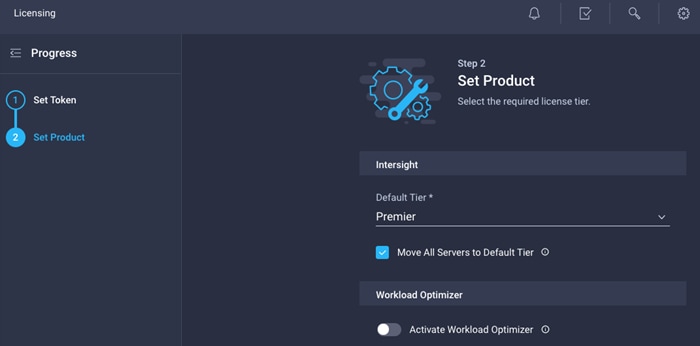

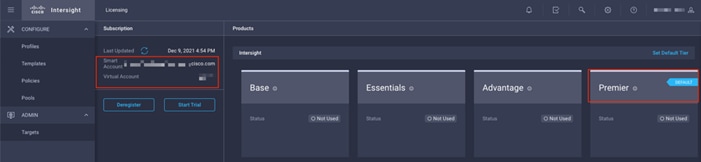

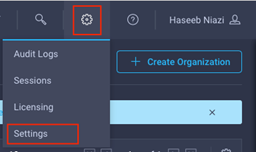

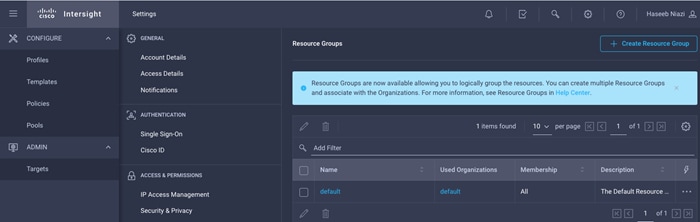

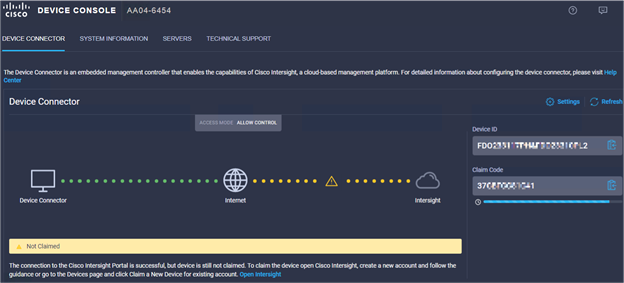

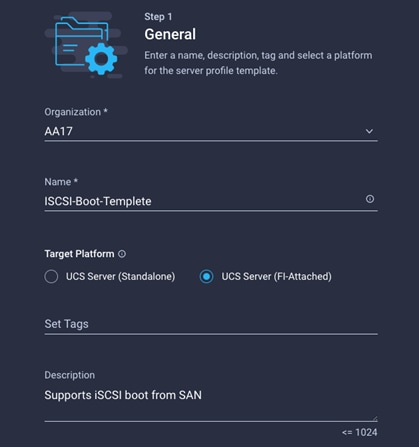

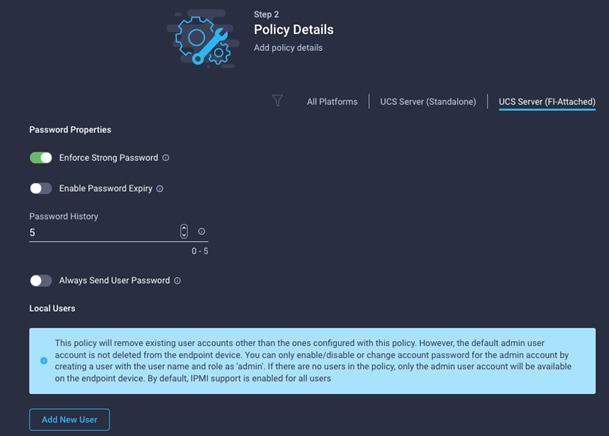

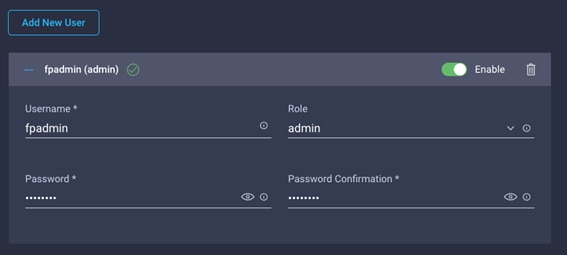

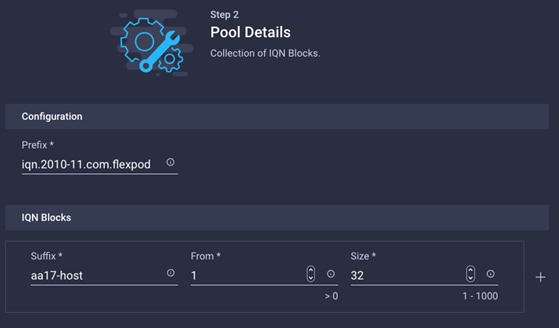

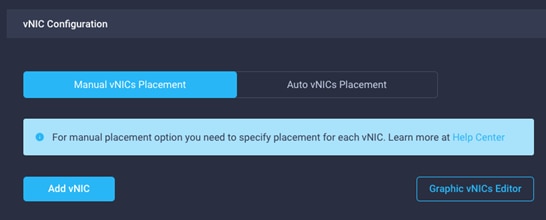

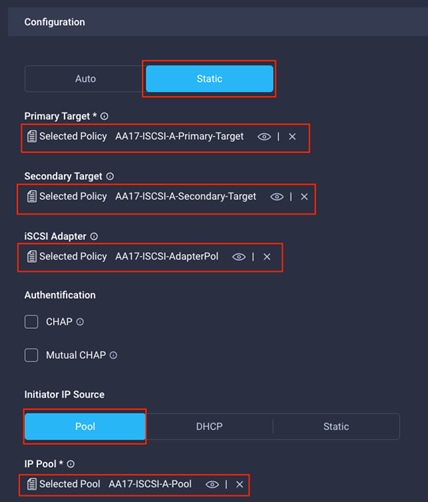

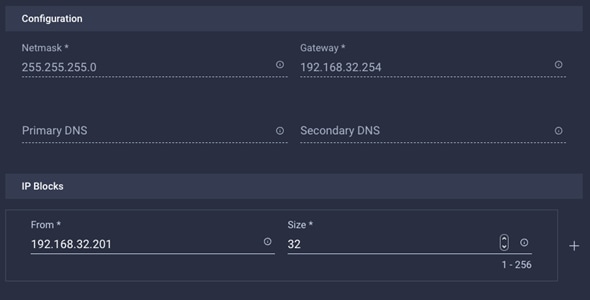

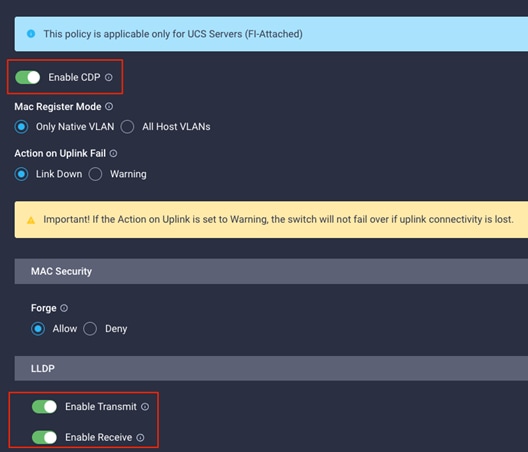

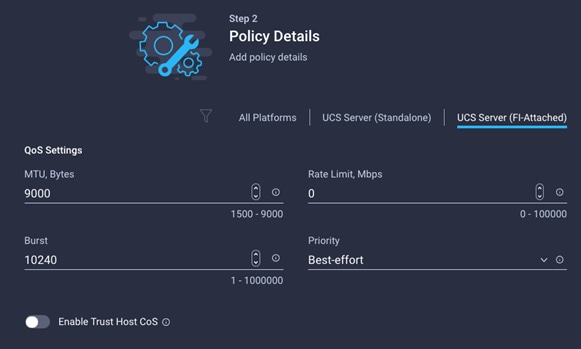

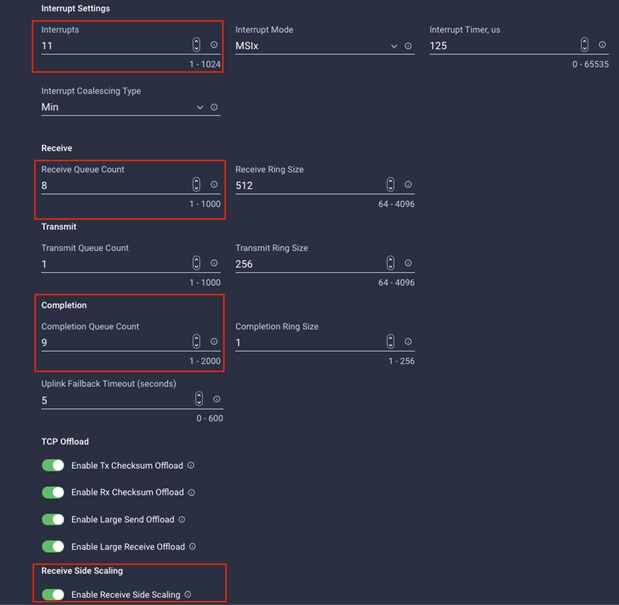

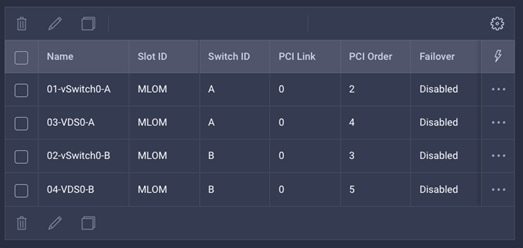

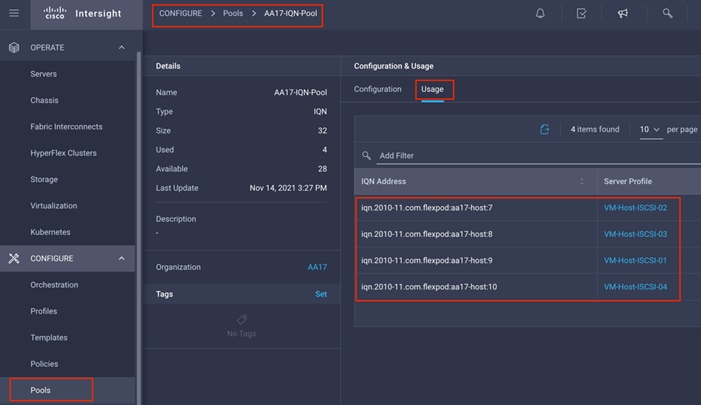

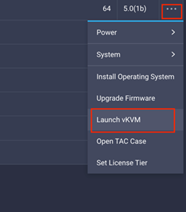

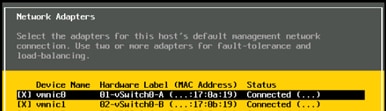

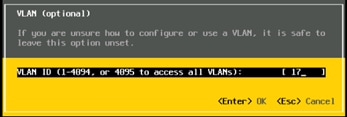

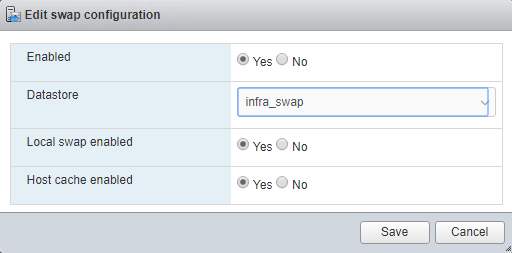

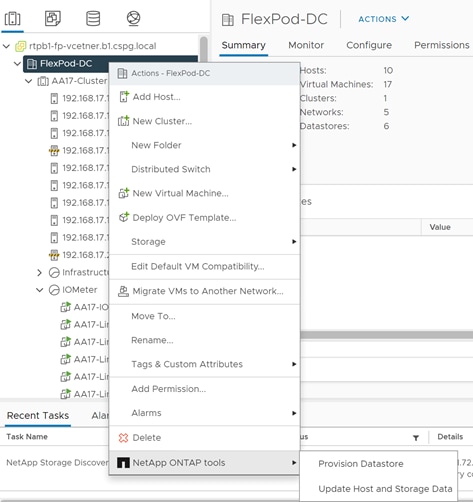

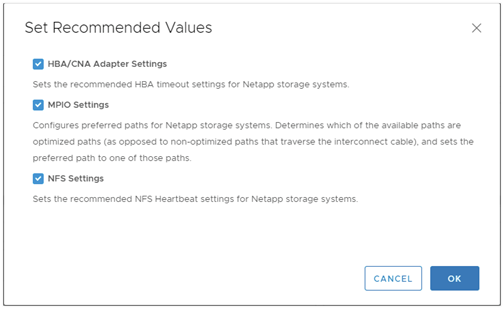

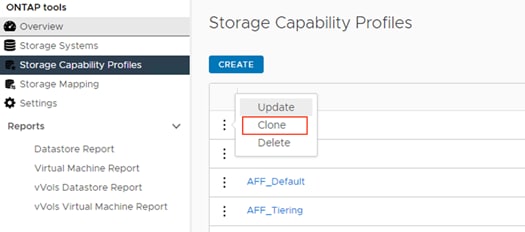

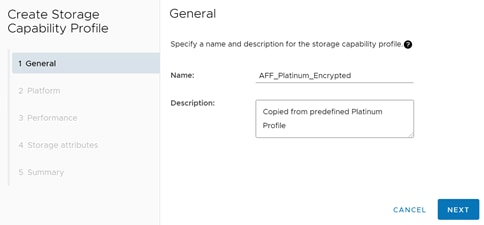

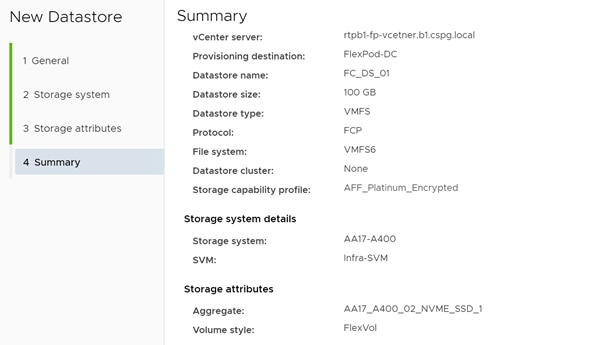

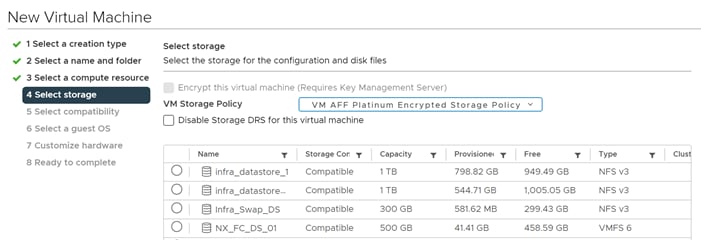

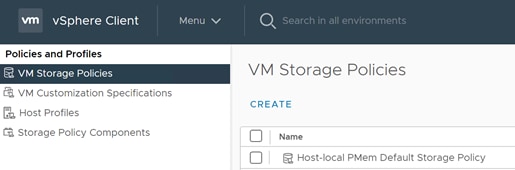

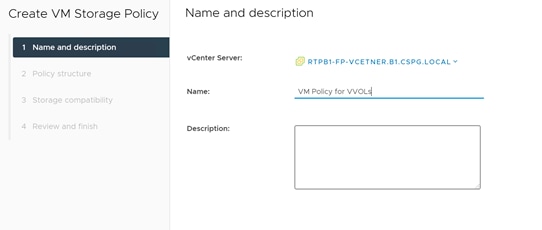

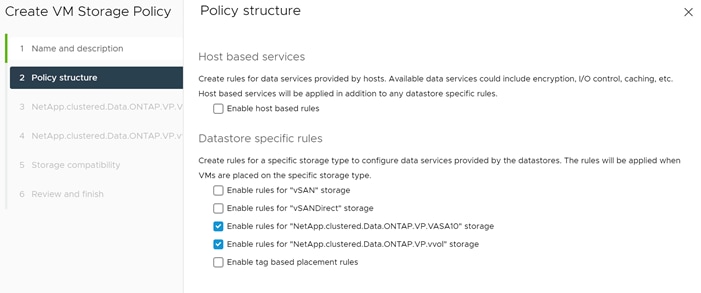

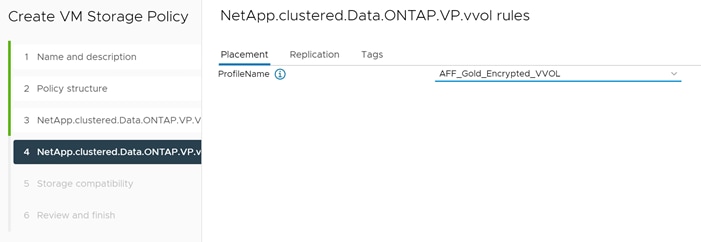

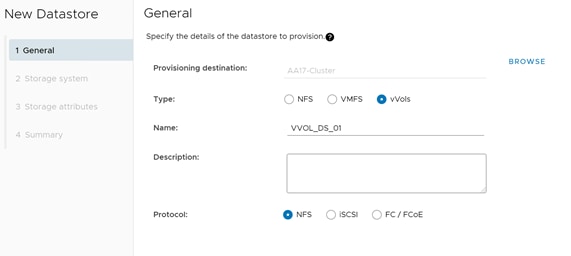

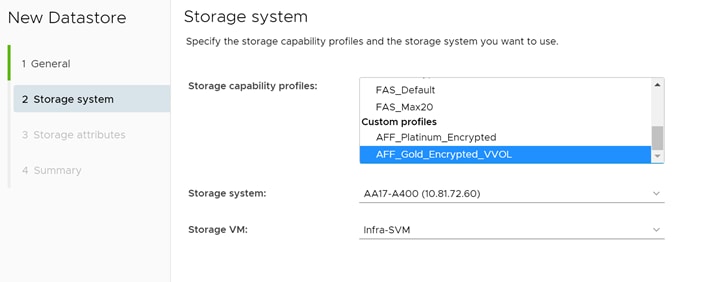

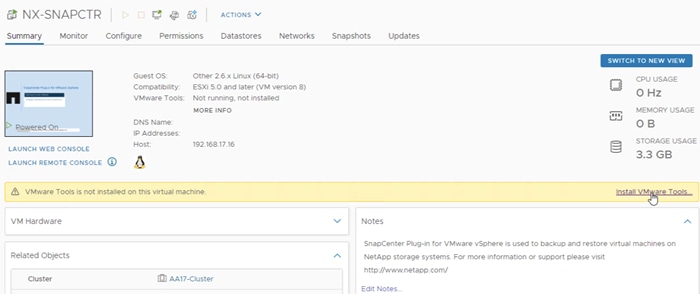

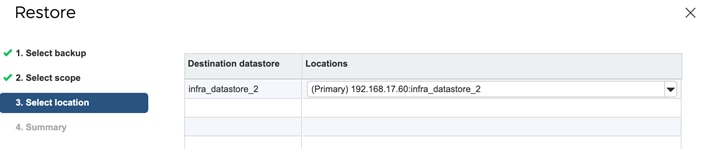

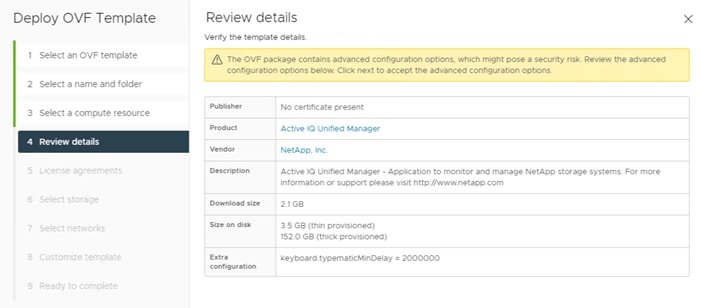

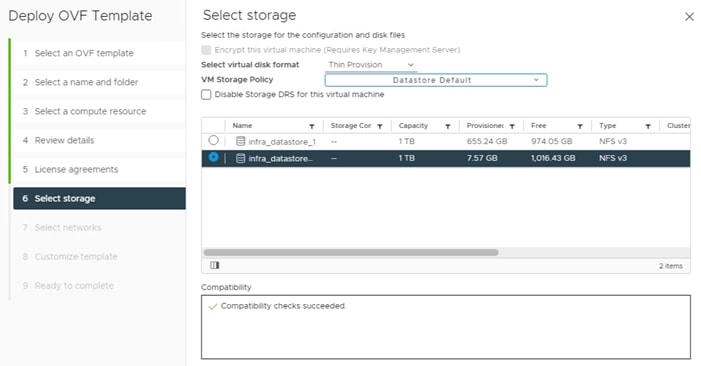

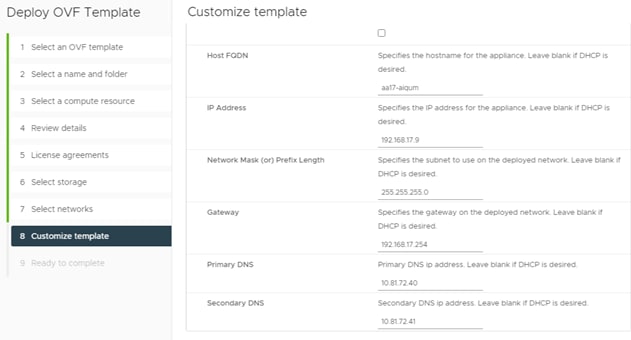

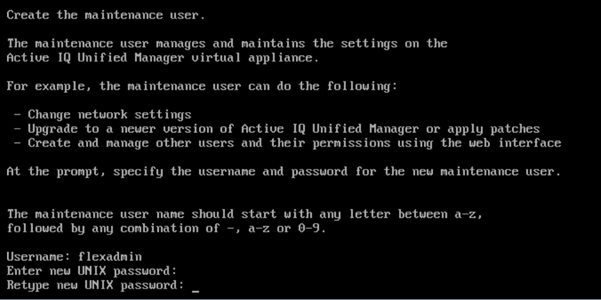

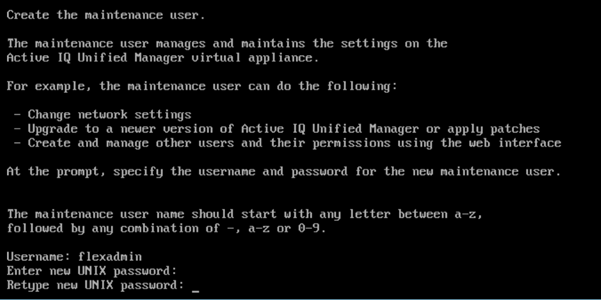

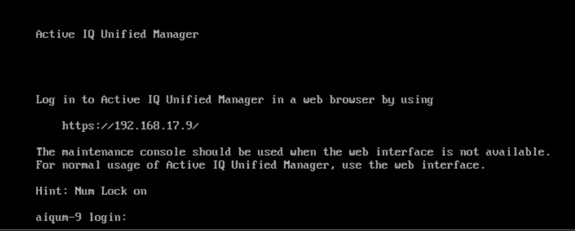

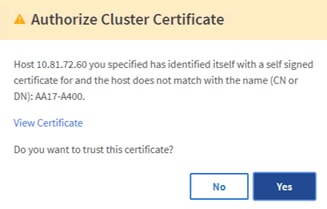

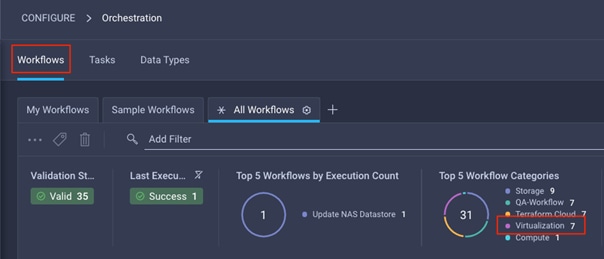

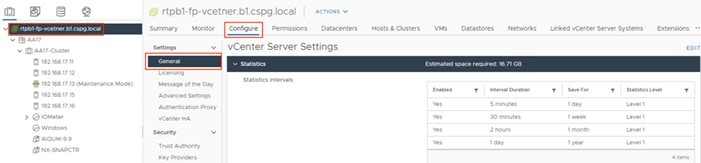

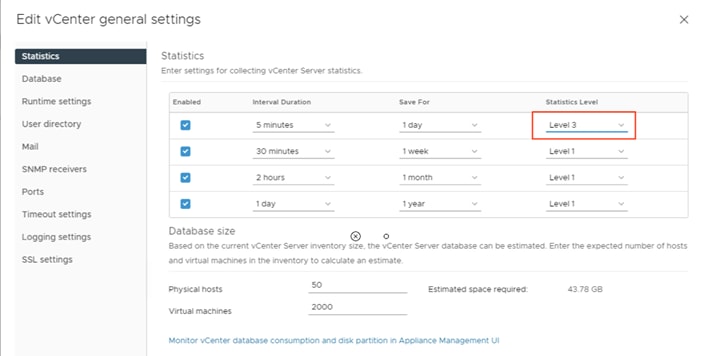

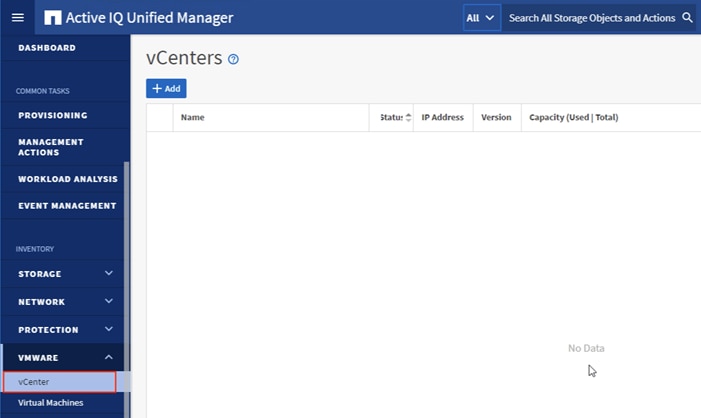

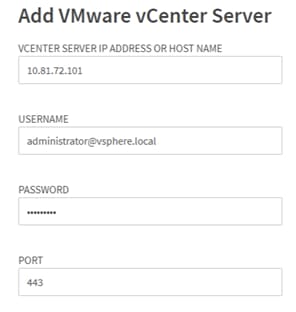

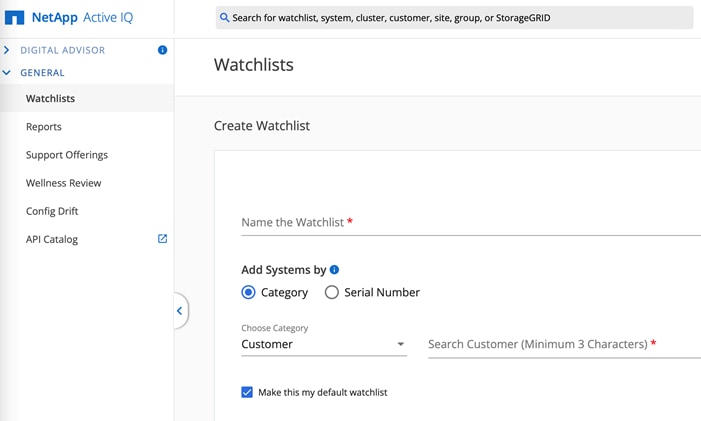

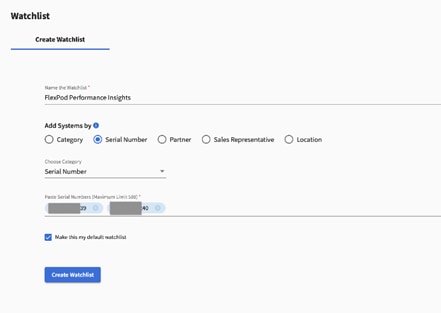

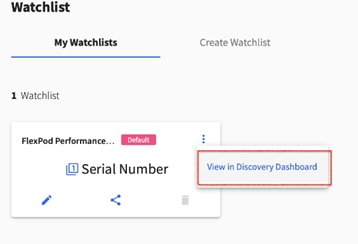

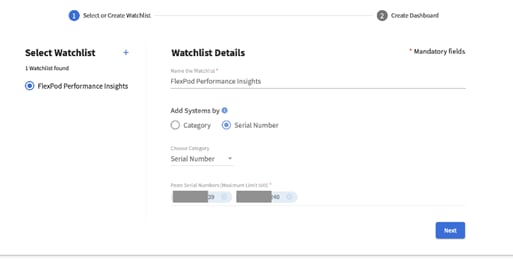

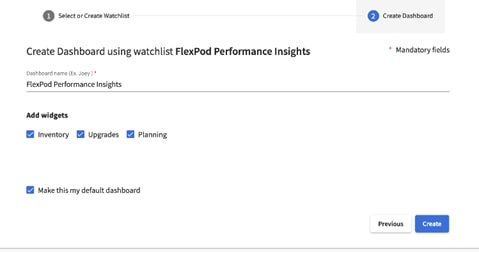

Infra-SVM