Cisco UCS Integrated Infrastructure for Splunk Enterprise

Available Languages

Bias-Free Language

The documentation set for this product strives to use bias-free language. For the purposes of this documentation set, bias-free is defined as language that does not imply discrimination based on age, disability, gender, racial identity, ethnic identity, sexual orientation, socioeconomic status, and intersectionality. Exceptions may be present in the documentation due to language that is hardcoded in the user interfaces of the product software, language used based on RFP documentation, or language that is used by a referenced third-party product. Learn more about how Cisco is using Inclusive Language.

- US/Canada 800-553-2447

- Worldwide Support Phone Numbers

- All Tools

Feedback

Feedback

Published: June 2025

In partnership with:

About the Cisco Validated Design Program

The Cisco Validated Design (CVD) program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, go to: http://www.cisco.com/go/designzone

Executive Summary

The exponential growth in data generation from diverse digital sources such as servers, applications, devices, and user interactions present significant challenges for organizations seeking to analyze, troubleshoot, and monitor their environments effectively. Traditional monitoring and analytics tools often struggle to keep pace, resulting in delayed detection of issues, incomplete visibility, and inefficient troubleshooting processes. These challenges are further amplified by the demand for real-time monitoring and the complexity of integrating data from multiple, siloed sources, making it increasingly difficult to maintain operational reliability and security.

To gain valuable insights from these vast amounts of data generated by IT systems and applications, organizations require powerful analytics platforms. By transforming this data into actionable insights, organizations can improve operational efficiency, enhance security, and make better decisions. Splunk Enterprise is a leading software platform for operational intelligence, focusing on centralized data collection, indexing, and analysis. It offers real-time visibility into IT infrastructure along with advanced search, monitoring, and reporting capabilities. Splunk deployment allows users to gain insights into their infrastructure across hybrid as well as multi-cloud environments.

The performance and scalability of Splunk depends heavily on the underlying infrastructure. Traditional IT architectures can often struggle to meet the demands of Splunk’s data heavy workloads, operational bottlenecks, and escalating costs as data volumes continue to grow. The Cisco Unified Computing System™ (Cisco UCS®) is a next-generation data center platform that unites computing, network, storage access, and virtualization into a single cohesive system. It is designed to simplify data center operations, increase efficiency, and provide centralized management for enterprise application workloads.

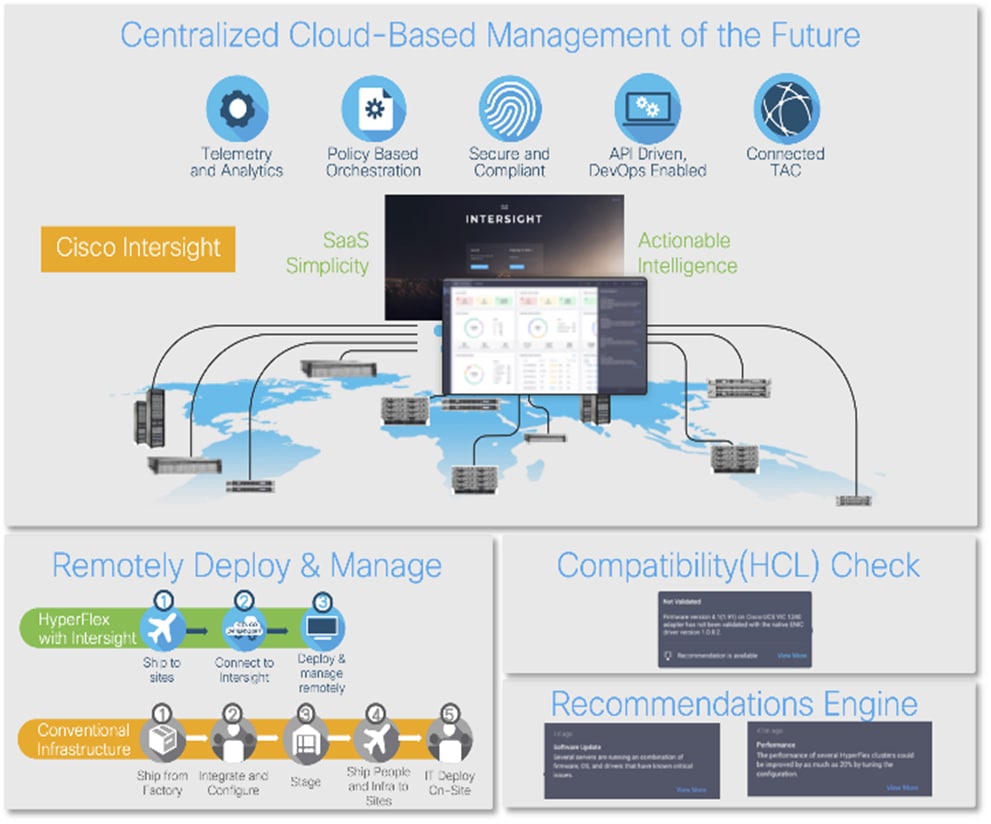

Cisco Validated Designs consist of systems and solutions that are designed, tested, and documented to facilitate and improve customer deployments. This CVD document describes the steps for configuring and implementing Splunk Enterprise solution on a Distributed Clustered model with a Search Head Cluster (SHC) for a Single Site Deployment (C3/C13) on Cisco UCS Integrated Infrastructure with the ability to monitor and manage components from the cloud using Cisco Intersight. By moving the management from the fabric interconnects into the cloud, the solution can respond to the speed and scale of your deployments with a constant stream of new capabilities delivered from Cisco Intersight software-as-a-service model at cloud-scale. For those that require management within a secure datacenter, Cisco Intersight is also offered as an on-site appliance with both connected and internet disconnected options.

Solution Overview

This chapter contains the following:

● Audience

Most enterprise IT infrastructures have been built over time and include a wide variety of multivendor networks, compute platforms, operating systems, storage resources, and applications. This diversity creates management complexity that increases costs and hampers agility. Consequently, IT managers need real-time, comprehensive visibility across all applications and every aspect of their infrastructure, so they can identify underlying issues and resolve a problem as soon as possible.

Traditional tools for managing and monitoring IT infrastructures have become outdated considering the constant changes occurring in today's data centers. When issues arise, pinpointing the root cause and achieving visibility across the infrastructure to proactively identify and prevent outages can be nearly impossible. Splunk is a powerful platform that offers a wide range of capabilities, particularly in the areas of data monitoring, infrastructure management, and observability. Splunk is extremely beneficial for data analysis since it allows organizations to search, analyze, and visualize all types of data—both structured and unstructured—from a single platform, delivering actionable insights in real time. Splunk Enterprise can be deployed on-premises, in the cloud, or as a hybrid solution, providing a unified view of data and operational insights for IT, security, DevOps, and business analytics use cases.

Organizations typically start with Splunk to solve a specific problem and then expand from there to address a broad range of use cases, such as application troubleshooting, IT infrastructure monitoring, security, business analytics and many others. As operational analytics become increasingly critical to day-to-day decision-making and Splunk deployments expand to terabytes of data, a high-performance, highly scalable infrastructure is critical to ensuring rapid and predictable delivery of insights. Cisco UCS's ability to expand to thousands of servers allows the Splunk deployments to scale horizontally while continuously delivering exceptional performance.

The Cisco Unified Computing System with Intersight Managed Mode (IMM) is a modular compute system, configured and managed from the cloud. It is designed to meet the needs of modern applications and to improve operational efficiency, agility, and scale through an adaptable, future-ready, modular design. The Cisco Intersight platform is a Software-as-a-Service (SaaS) infrastructure lifecycle management platform that delivers simplified configuration, deployment, maintenance, and support of your UCS resources.

By leveraging Cisco UCS Integrated Infrastructure for Splunk, organizations can focus on deriving insights from their data rather than managing the underlying infrastructure. This solution combines leading innovation in software with Splunk Enterprise and hardware, with the Cisco UCS Integrated Infrastructure. This CVD document offers a deployment model for Splunk Enterprise which can be customized and implemented rapidly to meet Splunk workload requirements.

The intended audience for this document includes, but is not limited to, sales engineers, field consultants, professional services, IT managers, IT engineers and IT architects, partners, and customers who are interested in deploying Splunk Enterprise platform on Cisco UCS.

The purpose of this document is to provide step-by-step configuration and implementation guide for deploying Splunk Enterprise software on Cisco UCS Compute Servers, Cisco Fabric Interconnect Switches and Cisco Nexus Switches. The document also highlights the design and product requirements for integrating compute, network, and storage systems to Cisco Intersight to deliver a true cloud-based integrated approach to infrastructure management.

This design and implementation guide shows a validated reference architecture and describes the specifics of the products used within the Cisco validation lab. This document introduces various design elements and explains various considerations and best practices for successful deployments.

Deploying Splunk Enterprise on Cisco UCS infrastructure delivers a powerful, scalable, and efficient solution for organizations seeking operational intelligence, real-time analytics, and robust data management. Cisco UCS and Splunk team have carefully validated and verified solution architecture and its many use cases while creating detailed documentation, information, and references to assist customers in transforming their data centers to this shared infrastructure model.

Some of the key benefits of this solution are:

● Exceptional Performance and Scalability: Cisco UCS provides industry-leading performance and linear scalability, allowing Splunk Enterprise deployments to efficiently handle massive data volumes, including multiple petabytes of machine data across cloud, on-premises, and hybrid environments. The architecture supports horizontal scaling, so organizations can expand Splunk deployments as data and user demands grow, without sacrificing speed or reliability.

● Simplified and Unified Management: The architecture supports horizontal scaling, so organizations can expand Splunk deployments as data and user demands grow, without sacrificing speed or reliability.

● High Availability and Reliability: The reference architectures are designed for high availability, with redundant components and active-active configurations to ensure continuous operations and minimize downtime. Automated health monitoring and advanced system maintenance features help maintain system integrity and performance.

● Real Time Insights and Proactive Monitoring: Splunk Enterprise, running on Cisco UCS, enables real-time monitoring, analysis, and visualization of data from any source—such as customer transactions, network activity, and application logs—turning raw machine data into actionable business insights. This capability allows organizations to troubleshoot issues rapidly, often reducing investigation times from hours or days to just minutes.

● Flexibility and Storage Optimization: Cisco UCS supports a variety of storage options (NVMe, SSD, HDD), allowing organizations to optimize storage for different Splunk data tiers (hot, warm, cold, archive) based on performance and cost requirements. The infrastructure can be tailored to meet the needs of both medium and large-scale Splunk deployments

● Accelerated Deployment and Reduced Risk: Validated reference architectures and Cisco Validated Designs (CVDs) provide prescriptive, step-by-step guidance for deploying Splunk Enterprise on Cisco UCS, accelerating time-to-value, and minimizing deployment risks

● Integrated Security and Compliance: Splunk and Cisco together provide comprehensive visibility across IT and security environments, accelerating threat detection, investigation, and response to reduce the impact of security breaches. Integrations with Cisco’s security portfolio and Splunk’s analytics-driven security features help organizations improve their security posture and meet compliance requirements.

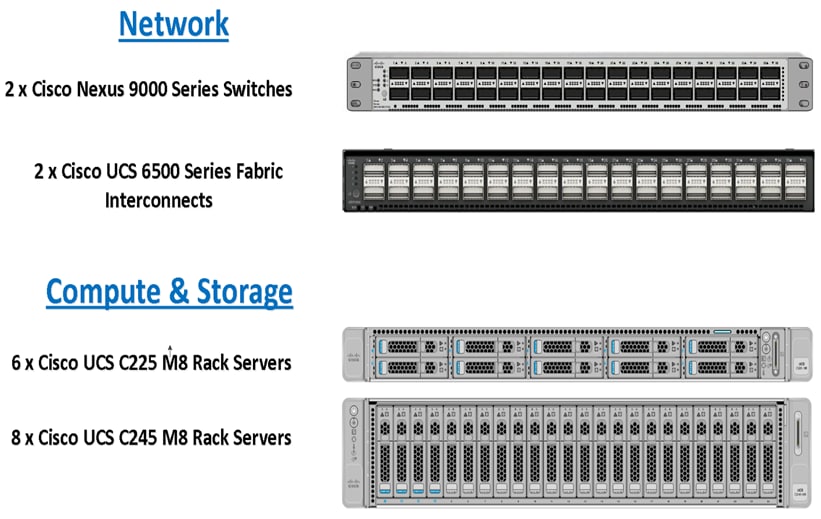

This reference architecture for running Splunk Enterprise on Cisco UCS is built using the following infrastructure components for compute, network, and storage as:

● Cisco UCS Nexus 9000 Switches

● Cisco UCS 5th Generation Fabric Interconnects

● Cisco UCS C-Series M8 Series C225 and C245 Rack Servers

These components have been integrated so that you can deploy the solution quickly and economically while eliminating many of the risks associated with researching, designing, building, and deploying similar solutions from the foundation. Cisco UCS Servers, Cisco FI and Cisco Nexus component families, shown in Figure 1, offer options when solutioning and through implementation that are designed to allow you to scale up or scale out the infrastructure while supporting the same features. There are two modes to configure Cisco UCS, one is UCSM (UCS Managed), and the other is IMM (Intersight Managed Mode). This reference solution was deployed using Intersight Managed Mode (IMM)and the associated best practices and setup recommendations are described later in this document.

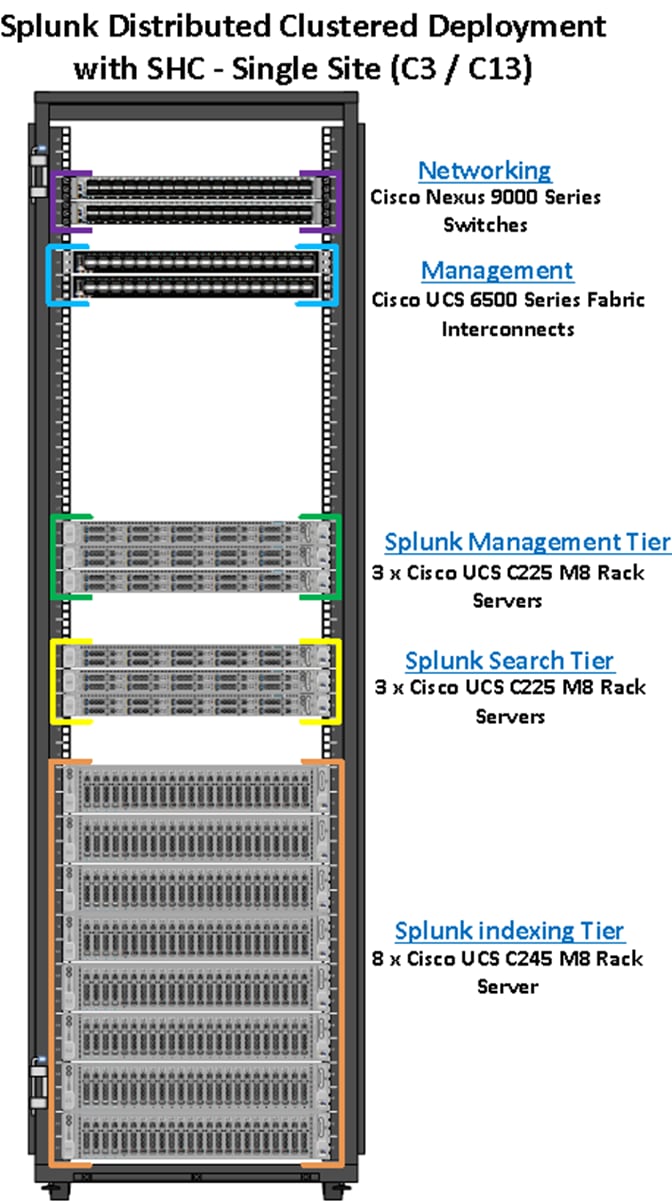

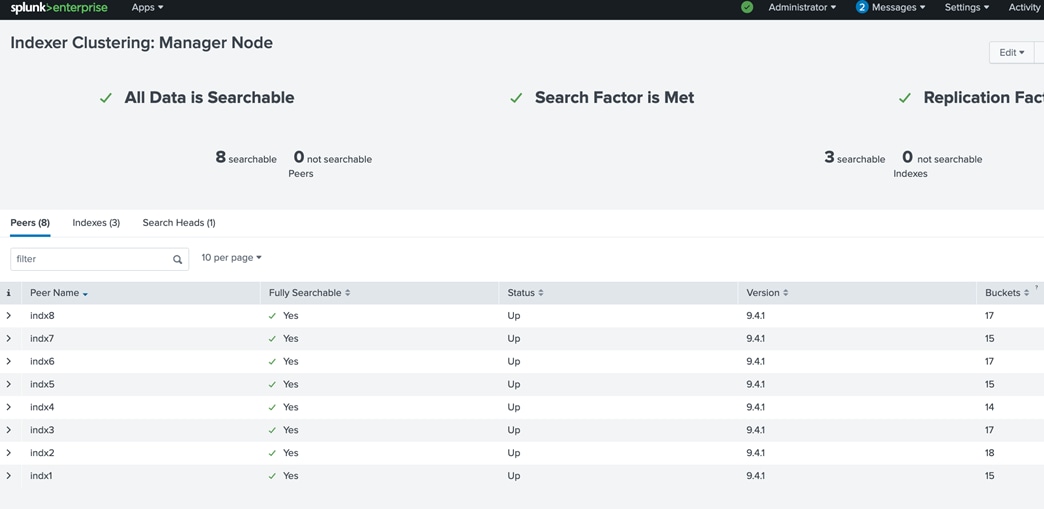

This CVD describes architecture and deployment procedures for Splunk Enterprise using eight (8) Cisco UCS C245 M8 rack servers as indexers, three (3) Cisco UCS C225 M8 rack servers as search heads, and three (3) Cisco UCS C225 M8 rack servers to perform administrative functions.

In this CVD, eight Splunk Indexers provide capacity to index up to 2.4 TB of data per day. This configuration can scale to index hundreds of terabytes to petabytes of data every 24 hours, delivering real-time search results and meeting Splunk application demands with seamless data integration and analytics to multiple users across the globe.

Technology Overview

This chapter contains the following:

● Cisco Unified Computing System

● Cisco UCS Fabric Interconnect

● Cisco UCS C-Series Rack Servers

● Cisco UCS Virtual Interface Card

The Cisco UCS solution for Splunk Enterprise is based on Cisco UCS Integrated Infrastructure for Big Data and Analytics, a highly scalable architecture designed to meet a variety of scale-out application demands with seamless data integration and management integration capabilities built using the components described in the following sections.

Cisco Unified Computing System

Cisco Unified Computing System™ (Cisco UCS®) is an integrated computing infrastructure with intent-based management to automate and accelerate deployment of all your applications, including virtualization and cloud computing, scale-out and bare-metal workloads, and in-memory analytics, as well as edge computing that supports remote and branch locations and massive amounts of data from the Internet of Things (IoT).

Cisco UCS is based on the concept that infrastructure is code. Servers are designed to be stateless, with their identity, configuration, and connectivity extracted into variables that can be set through software. This enables management tools to help guarantee consistent, error-free, policy-based alignment of server personalities with workloads. Through automation, transforming the server and networking components of your infrastructure into a complete solution is fast and error-free because programmability eliminates the error-prone manual configuration of servers and integration into solutions. Server, network, and storage administrators are now free to focus on strategic initiatives rather than spending their time performing tedious tasks.

For more details, go to: https://www.cisco.com/site/us/en/products/computing/servers-unified-computing-systems/resources.html

Cisco UCS Differentiators

Cisco Unified Computing System is revolutionizing the way servers are managed in the datacenter. The following are the unique differentiators of Cisco Unified Computing System and Cisco UCS Manager:

● Embedded Management—In Cisco UCS, the servers are managed by the embedded firmware in the Fabric Inter-connects, eliminating the need for any external physical or virtual devices to manage the servers.

● Unified Fabric—In Cisco UCS, from blade server chassis or rack servers to FI, there is a single Ethernet cable used for LAN, SAN, and management traffic. This converged I/O results in reduced cables, SFPs and adapters – reducing capital and operational expenses of the overall solution.

● Auto Discovery—By simply inserting the blade server in the chassis or connecting the rack server to the fabric interconnect, discovery and inventory of compute resources occurs automatically without any management intervention. The combination of unified fabric and auto-discovery enables the wire-once architecture of Cisco UCS, where compute capability of Cisco UCS can be extended easily while keeping the existing external connectivity to LAN, SAN, and management networks.

Cisco Intersight

Cisco Intersight is the premier management platform for Cisco UCS. It connects to the systems’ fabric interconnects when they are configured for Intersight managed mode. Because it is cloud based, Software as a Service (SaaS), it has a broad scope that extends to complete infrastructure and application lifecycle management. It has limitless scale so you can manage all your infrastructure from a single control point with role- and policy-based automation. Cisco Intersight can usher your IT operations into today’s hybrid cloud world with your on-premises infrastructure acting as the foundation, with the capability to move and optimize workload performance across multiple clouds. Because it is SaaS, you don’t have to support management servers or worry about updates: you are always accessing the latest software, and you benefit from a continuous stream of new capabilities and features.

Cisco Intersight Software as a Service (SaaS) unifies and simplifies your experience of the Cisco Unified Computing System (Cisco UCS) (Figure 2).

With Intersight, you get all the benefits of SaaS delivery and full lifecycle management of distributed infrastructure across data centers, remote sites, branch offices, and edge environments. This empowers you to deploy, configure, secure, update, maintain, automate, and scale your environment through a cloud-operating model in ways that were not previously possible. As a result, your organization can operate with consistency and control, stay in compliance, and strengthen your security posture to deliver IT infrastructure, resources, and applications faster to support business growth.

For more information, see the following:

● https://www.cisco.com/c/en_sg/products/cloud-systems-management/intersight/index.html

The Cisco UCS 6536 Fabric Interconnect (FI) is a core part of the Cisco Unified Computing System, providing both network connectivity and management capabilities for the system. The Cisco UCS 6536 Fabric Interconnect offers line-rate, low-latency, lossless 10/25/40/100 Gigabit Ethernet, Fibre Channel, NVMe over Fabric, and Fibre Channel over Ethernet (FCoE) functions.

The Cisco UCS 6536 Fabric Interconnect provides the communication backbone and management connectivity for the Cisco UCS X-Series compute nodes, Cisco UCS X9508 X-Series chassis, Cisco UCS B-Series blade servers, Cisco UCS 5108 B-Series server chassis, and Cisco UCS C-Series rack servers. All servers attached to a Cisco UCS 6536 Fabric Interconnect become part of a single, highly available management domain. Additionally, by supporting a unified fabric, Cisco UCS 6536 Fabric Interconnect provides both LAN and SAN connectivity for all servers within its domain.

The Cisco UCS 6536 Fabric Interconnect is built to consolidate LAN and SAN traffic onto a single unified fabric, saving on Capital Expenditures (CapEx) and Operating Expenses (OpEx) associated with multiple parallel networks, different types of adapter cards, switching infrastructure, and cabling within racks.

The Cisco UCS 6536 Fabric Interconnect can be managed through Cisco Intersight. The UCS 6536 Fabric Interconnect supports Intersight Managed Mode (IMM), which enables full manageability of Cisco UCS elements behind the UCS 6536 FI through Cisco Intersight. UCS 6536 Fabric Interconnect in Intersight managed mode will support Cisco UCS product models, including Cisco UCS X-Series Servers, Cisco UCS B-Series Blade Servers, and C-Series Rack Servers, as well as the associated storage resources and networks. For more detail, go to: https://www.cisco.com/c/en/us/products/collateral/servers-unified-computing/ucs6536-fabric-interconnect-ds.html

Cisco UCS C-Series Rack Servers

The Cisco UCS C-Series is a family of rack-mount servers designed to deliver high performance, scalability, and flexibility for a wide range of data center workloads. These servers can be deployed as standalone systems or integrated into the broader Cisco Unified Computing System (UCS) architecture for centralized management and automation. For more information, go to: https://www.cisco.com/site/us/en/products/computing/servers-unified-computing-systems/ucs-c-series-rack-servers/resources.html

For this solution, we deployed Cisco’s M8 generation of UCS C-Series rack servers C225 and C245 as explained below.

Cisco UCS C225 M8 Rack Server

The Cisco UCS C225 M8 Rack Server is a versatile general-purpose infrastructure and application server. This high-density, 1RU, single-socket rack server delivers industry-leading performance and efficiency for a wide range of workloads, including virtualization, collaboration, and bare-metal applications. The Cisco UCS C225 M8 Rack Server extends the capabilities of the Cisco UCS rack server portfolio. It powers 5th Gen and 4th Gen AMD EPYC™ Processors with 150 percent more cores per socket designed using AMD’s chiplet architecture. With advanced features such as AMD Infinity Guard, compute-intensive applications will see significant performance improvements and reap other benefits such as power and cost efficiencies.

You can deploy the Cisco UCS C-Series rack servers as standalone servers or as part of the Cisco Unified Computing System™ managed by Cisco Intersight® or Cisco UCS Manager to take advantage of Cisco® standards-based unified computing innovations that can help reduce your Total Cost of Ownership (TCO) and increase your business agility.

The Cisco UCS C225 M8 rack server brings many new innovations to the Cisco UCS AMD rack server portfolio. With the introduction of PCIe Gen 5.0 for high-speed I/O, a DDR5 memory bus, and expanded storage capabilities, the server delivers significant performance and efficiency gains that will improve your application performance. For more details, go to: https://www.cisco.com/c/en/us/products/collateral/servers-unified-computing/ucs-c-series-rack-servers/ucs-c225-m8-rack-server-ds.html

Cisco UCS C245 M8 Rack Server

The Cisco UCS C245 M8 Rack Server is perfectly suited for a wide range of storage and I/O-intensive applications such as big data analytics, databases, collaboration, virtualization, consolidation, AI/ML, and high-performance computing supporting up to two AMD CPUs in a 2RU form factor.

The Cisco UCS C245 M8 Rack Server extends the capabilities of the Cisco UCS rack server portfolio. It powers 5th Gen and 4th Gen AMD EPYC™ Processors with up to 160 cores per socket designed using AMD’s chiplet architecture. With advanced features like AMD Infinity Guard, compute-intensive applications will see significant performance improvements and will reap other benefits such as power and cost efficiencies.

Cisco UCS C245 M8 Rack Servers can be deployed as part of a Cisco UCS–managed environment, through Cisco Intersight, or standalone. When used in combination with Cisco Intersight, the C245 M8 brings the power and automation of unified computing to enterprise applications, including Cisco Single Connect technology, drastically reducing switching and cabling requirements.

For more details, go to: https://www.cisco.com/c/en/us/products/collateral/servers-unified-computing/ucs-c-series-rack-servers/ucs-c245-m8-rack-server-ds.html

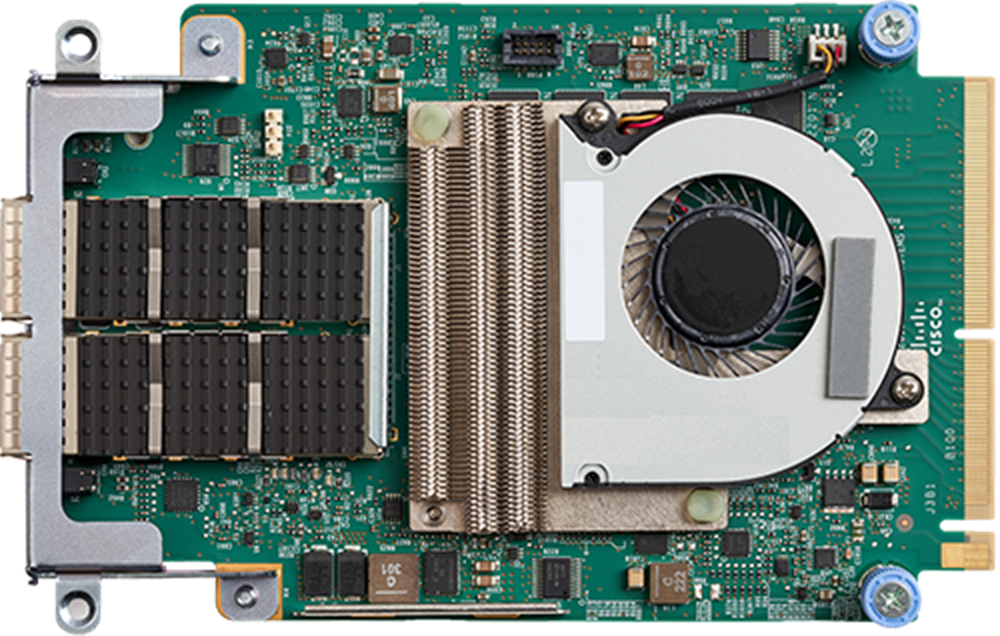

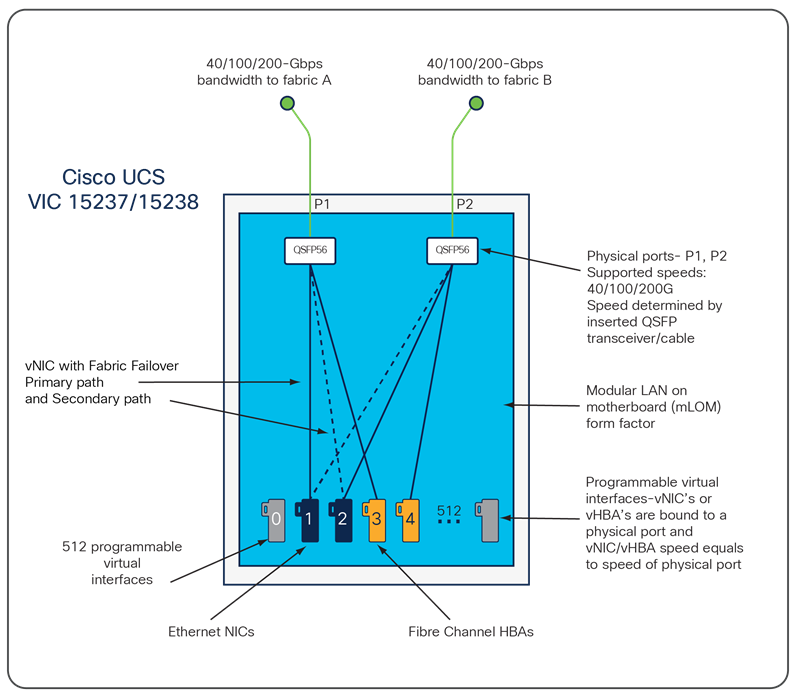

Cisco UCS Virtual Interface Card

The Cisco UCS Virtual Interface Card (VIC) 15000 Series extends the network fabric directly to both servers and virtual machines so that a single connectivity mechanism can be used to connect both physical and virtual servers with the same level of visibility and control. Cisco® VICs provide complete programmability of the Cisco UCS I/O infrastructure, with the number and type of I/O interfaces configurable on demand with a zero-touch model.

Cisco VICs can support 512 PCI Express (PCIe) virtual devices, either virtual Network Interface Cards (vNICs) or virtual Host Bus Adapters (vHBAs), with a high rate of I/O Operations Per Second (IOPS), support for lossless Ethernet, and 10/25/40/50/100/200-Gbps connection to servers. Cisco VICs supports NIC teaming with fabric failover for increased reliability and availability. In addition, it provides a policy-based, stateless, agile server infrastructure for your data center.

Cisco VIC 15237

The Cisco UCS VIC 15237 and 15238 are dual-port quad small-form-factor pluggable (QSFP/QSFP28/QSFP56) mLOM cards designed for Cisco UCS C-Series M6/M7/M8 rack servers. The card supports 40/100/200-Gbps Ethernet or FCoE. The card can present PCIe standards-compliant interfaces to the host, and these can be dynamically configured as either NICs or HBAs.

When a Cisco UCS rack server with VIC 15237 is connected to a fabric interconnect (FI-6536), the VIC adapter is provisioned through Cisco Intersight Managed Mode (IMM) or Cisco UCS Manager (UCSM) policies. When the UCS rack server with VIC 15237 is connected to a ToR switch such as Cisco Nexus 9000 Series, the VIC adapter is provisioned through the Cisco IMC or Intersight policies for a UCS standalone server.

For more information, go to: https://www.cisco.com/c/en/us/products/collateral/interfaces-modules/unified-computing-system-adapters/ucs-vic-15000-series-ds.html

Splunk Enterprise is a software product that enables you to search, analyze, and visualize the data gathered from the components of your IT infrastructure or business. Splunk Enterprise collects data from any source, including metrics, logs, clickstreams, sensors, stream network traffic, web servers, custom applications, hypervisors, containers, social media, and cloud services. It enables you to search, monitor and analyze that data to discover powerful insights across multiple use cases like security, IT operations, application delivery and many more. With Splunk enterprise, everyone from data and security analyst to business users can gain insights to drive operational performance and business results. Splunk makes it easy to input data from virtually any source — without the limitations of database structures.

Splunk Enterprise scales hundreds of terabytes per day to meet the needs of any organization, and supports clustering, high availability, and disaster recovery configurations. It achieves all of this while helping with security and compliance. You can deploy Splunk Enterprise on-premises or in the cloud, use it as a SaaS service via Splunk Cloud Platform, or in any combination you like.

Splunk Enterprise performs three main functions as it processes data:

● It ingests data from files, the network, or other sources.

● It parses and indexes the data.

● It runs searches on the indexed data.

Depending on your needs, you can deploy Splunk Enterprise as a single instance, or you can create deployments that span multiple instances, ranging from just a few to hundreds or even thousands of instances. In small deployments, one instance of Splunk Enterprise handles all aspects of processing data, from input through indexing to search. A single-instance deployment can be useful for testing and evaluation purposes and might serve the needs of department-sized environments.

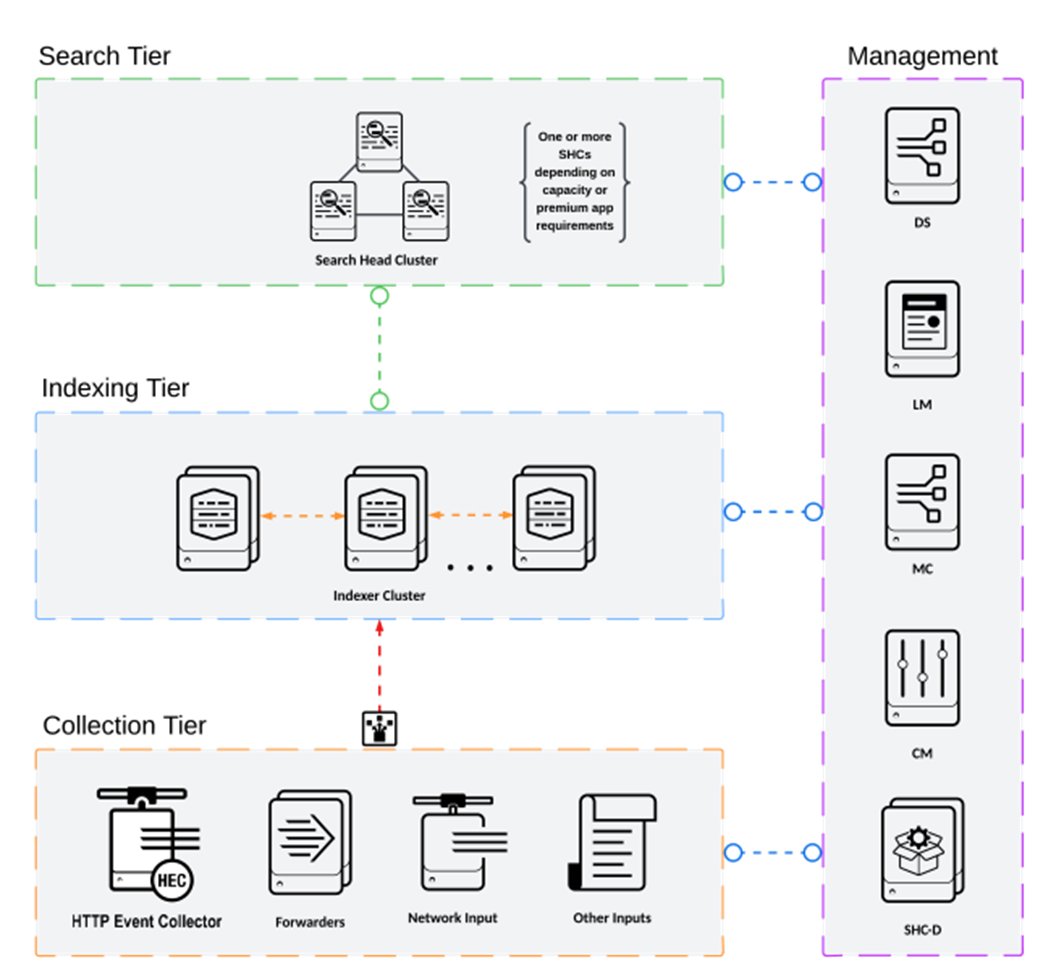

To support larger environments where data originates on many machines, where you need to process large volumes of data, or where many users need to search the data, you can scale the deployment by distributing Splunk Enterprise instances across multiple machines. This is known as a "distributed deployment". In a typical distributed deployment, each Splunk Enterprise instance performs a specialized task and resides on one of three processing tiers corresponding to the main processing functions:

● Data input tier

● Indexer tier

● Search management tier

Specialized instances of Splunk Enterprise are known collectively as components. With one exception, components are full Splunk Enterprise instances that have been configured to focus on one or more specific functions, such as indexing or search. The exception is the universal forwarder, which is a lightweight version of Splunk Enterprise with a separate executable. There are several types of Splunk Enterprise components. Table 1 lists the tiers and components of Splunk software deployments.

Table 1. Splunk software deployment tiers and components

| Tier |

Components |

Description |

Note |

| Management |

Deployment Server (DS) |

The deployment server manages configuration of forwarder configuration |

Should be deployed on a dedicated instance. |

| License Manager (LM) |

The license manager is required by other Splunk software components to enable licensed features and track daily data ingest volume. |

The license manager role has minimal capacity and availability requirements and can be collocated with other management functions. |

|

| Monitoring Console (MC) |

The monitoring console provides dashboards for usage and health monitoring of your environment. It also contains a number of prepackaged platform alerts that can be customized to provide notifications for operational issues |

In clustered environments, the MC can be collocated with the Manager Node, in addition to the License Manager and Deployment server function in non-clustered deployments. |

|

| Cluster Manager (CM) |

The cluster manager is the required coordinator for all activity in a clustered deployment. |

In clusters with a large number of index buckets (high data volume/retention), the cluster manager will likely require a dedicated server to run on. |

|

| Search |

Search Head (SH) |

The search head provides the UI for Splunk users and coordinates scheduled search activity. |

Search heads are dedicated Splunk software instances in distributed deployments. |

| Search Head Cluster (SHC) |

A search head cluster is a pool of at least three clustered Search Heads. It provides horizontal scalability for the search head tier and transparent user failover in case of outages. |

Search head clusters require dedicated servers of ideally identical system specifications. |

|

| Indexing |

Indexer |

Indexers are the heart and soul of a Splunk deployment. They process and index incoming data and serve as search peers to fulfill search requests initiated on the search tier. |

Indexers must always be on dedicated servers in distributed or clustered deployments. Indexers perform best on bare metal servers or in dedicated, high-performance virtual machines, if adequate resources can be guaranteed. |

| Data Collection |

Forwarders |

General icon for any component involved in data collection. |

This includes universal and heavy forwarders, network data inputs and other forms of data collection |

For more detail information, go to: https://docs.splunk.com/Documentation/SVA/current/Architectures/Topology

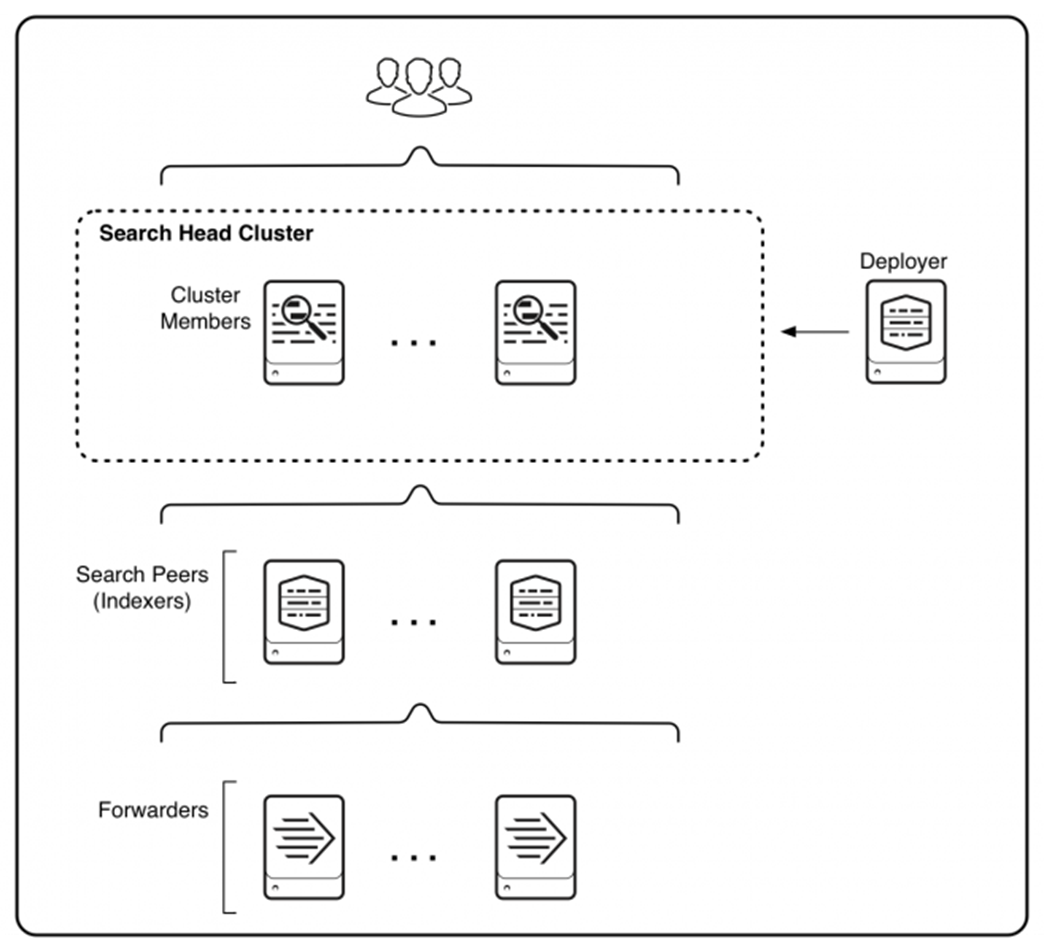

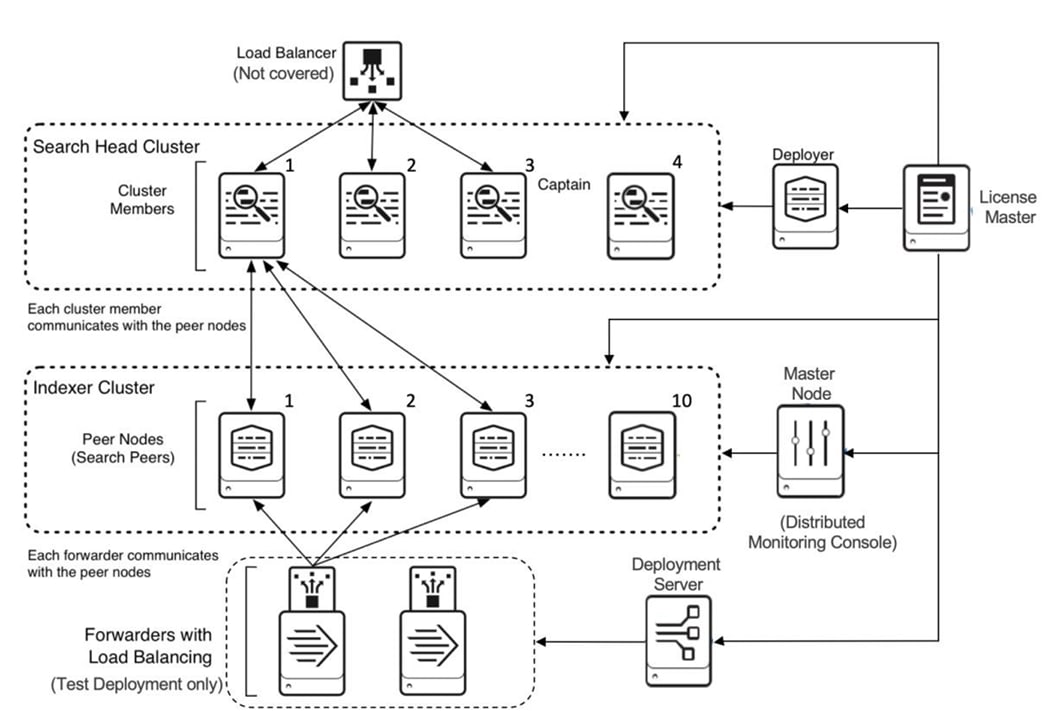

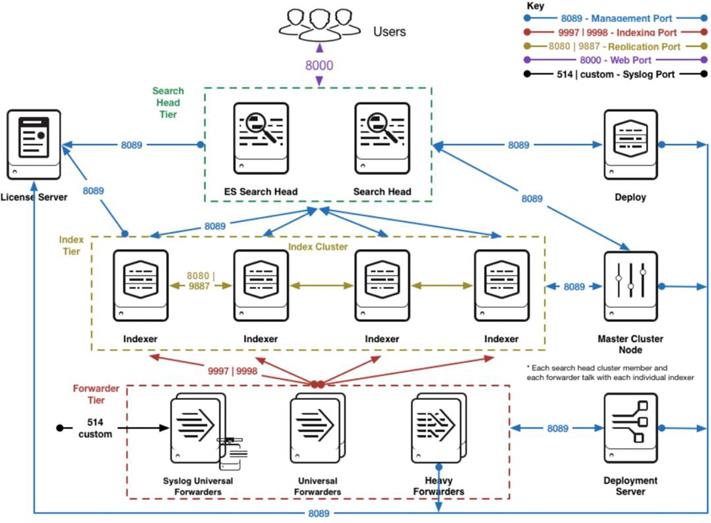

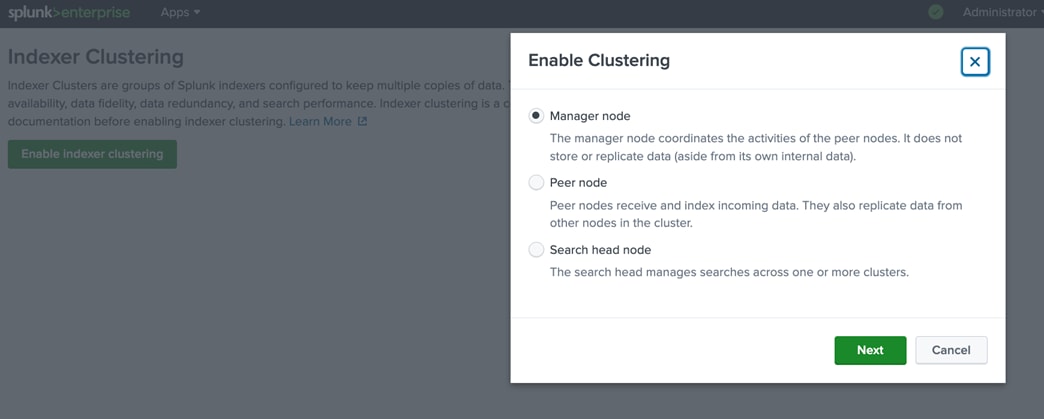

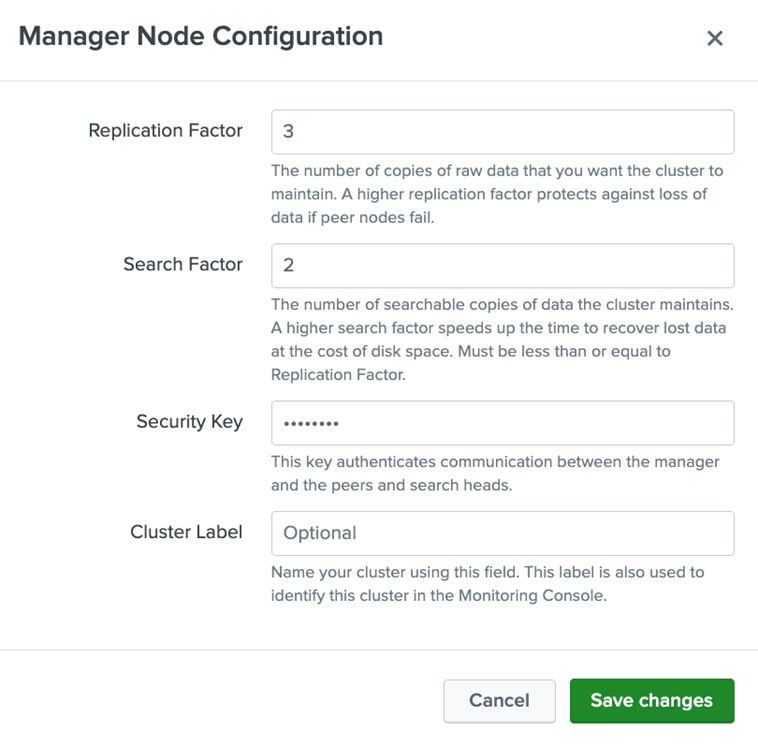

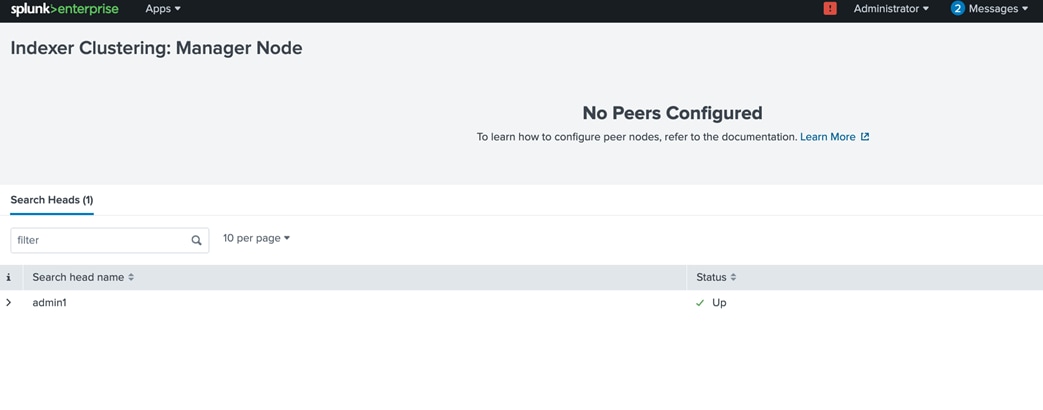

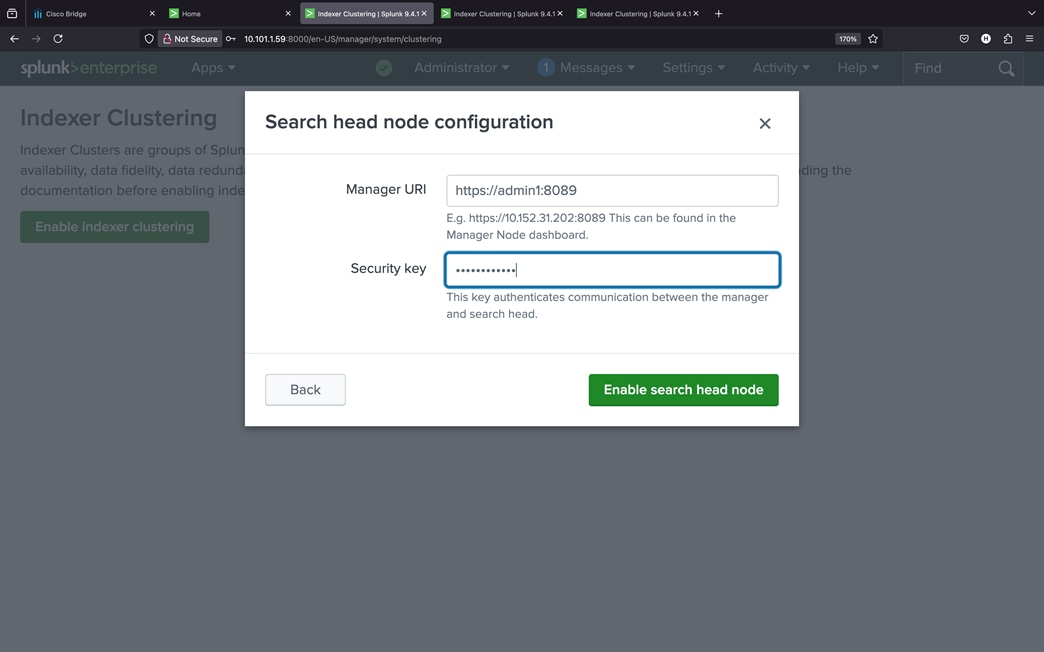

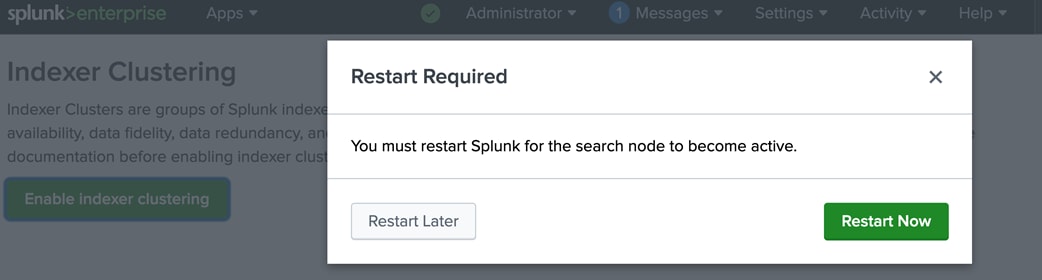

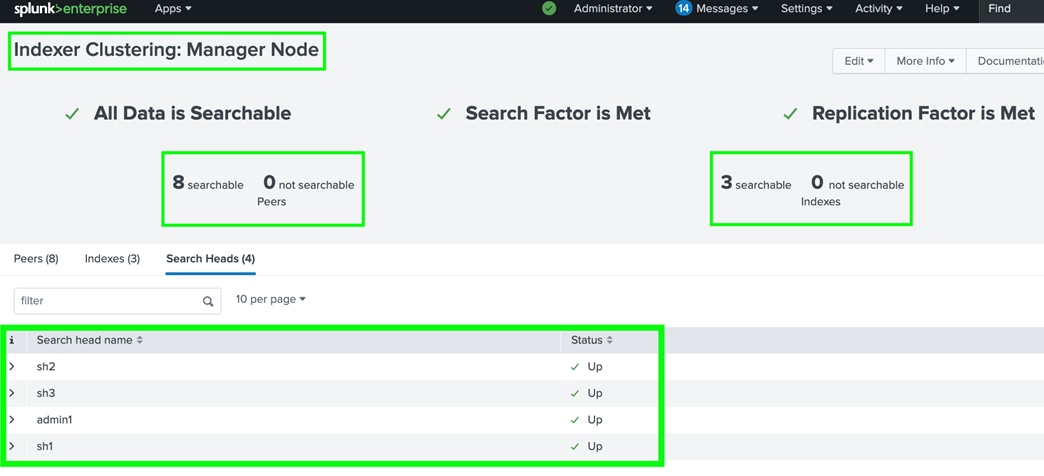

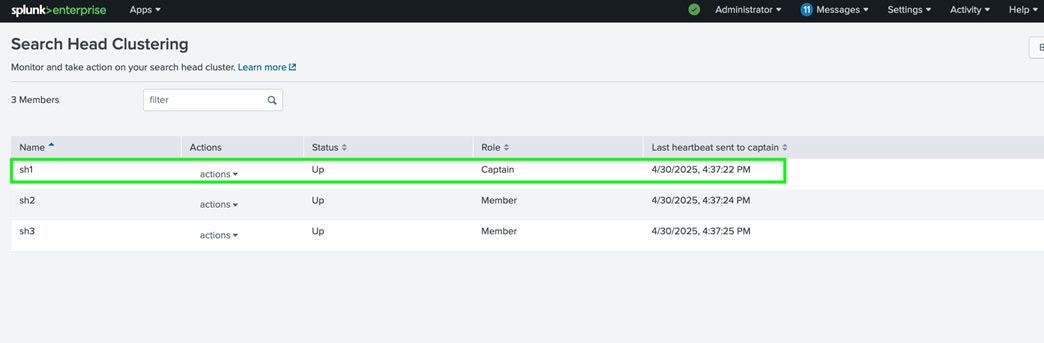

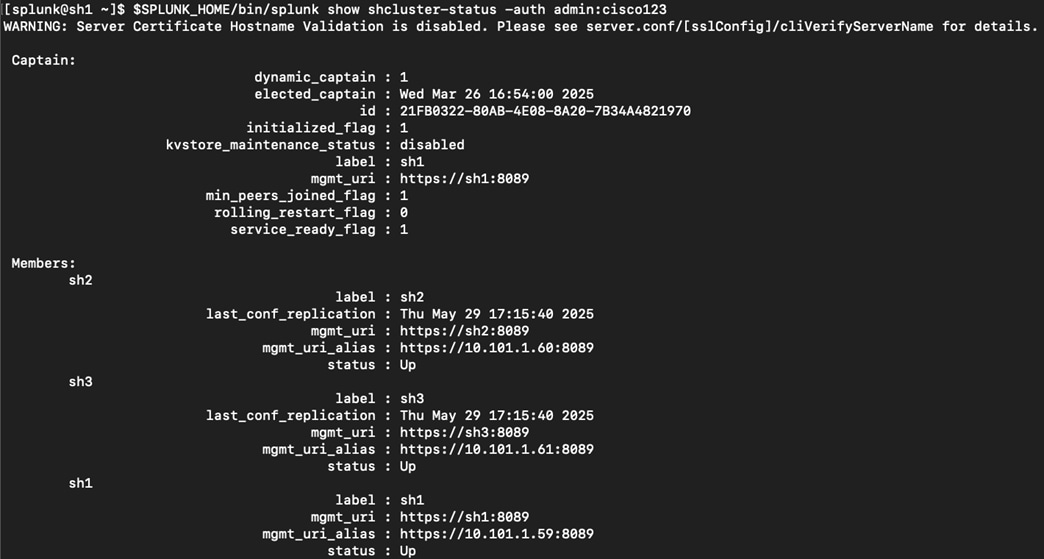

For this solution, we deployed Splunk enterprise on Single Site Distributed Clustered mode with Search Head Cluster (SHC) topology. The Single Site Distributed Clustered Deployment with a Search Head Cluster (SHC) topology uses clustering to add horizontal scalability and removes the single point of failure from the search tier. Figure 3 illustrates a single site distributed clustered deployment with a search head cluster (SHC) topology.

For more information, go to the Splunk Validated Architecture reference here: https://docs.splunk.com/Documentation/SVA/current/Architectures/C3C13

Note: If you’re considering deploying Enterprise Security (ES) in a C13 category code, review the guidance to install ES in search head cluster environments. Splunk strongly recommends engaging with Splunk Professional Services when deploying ES in a HA/DR environment.

Solution Design

This chapter contains the following:

The following sections detail the physical hardware, software revisions, and firmware versions required for deploying Splunk Enterprise on Cisco UCS.

Physical components

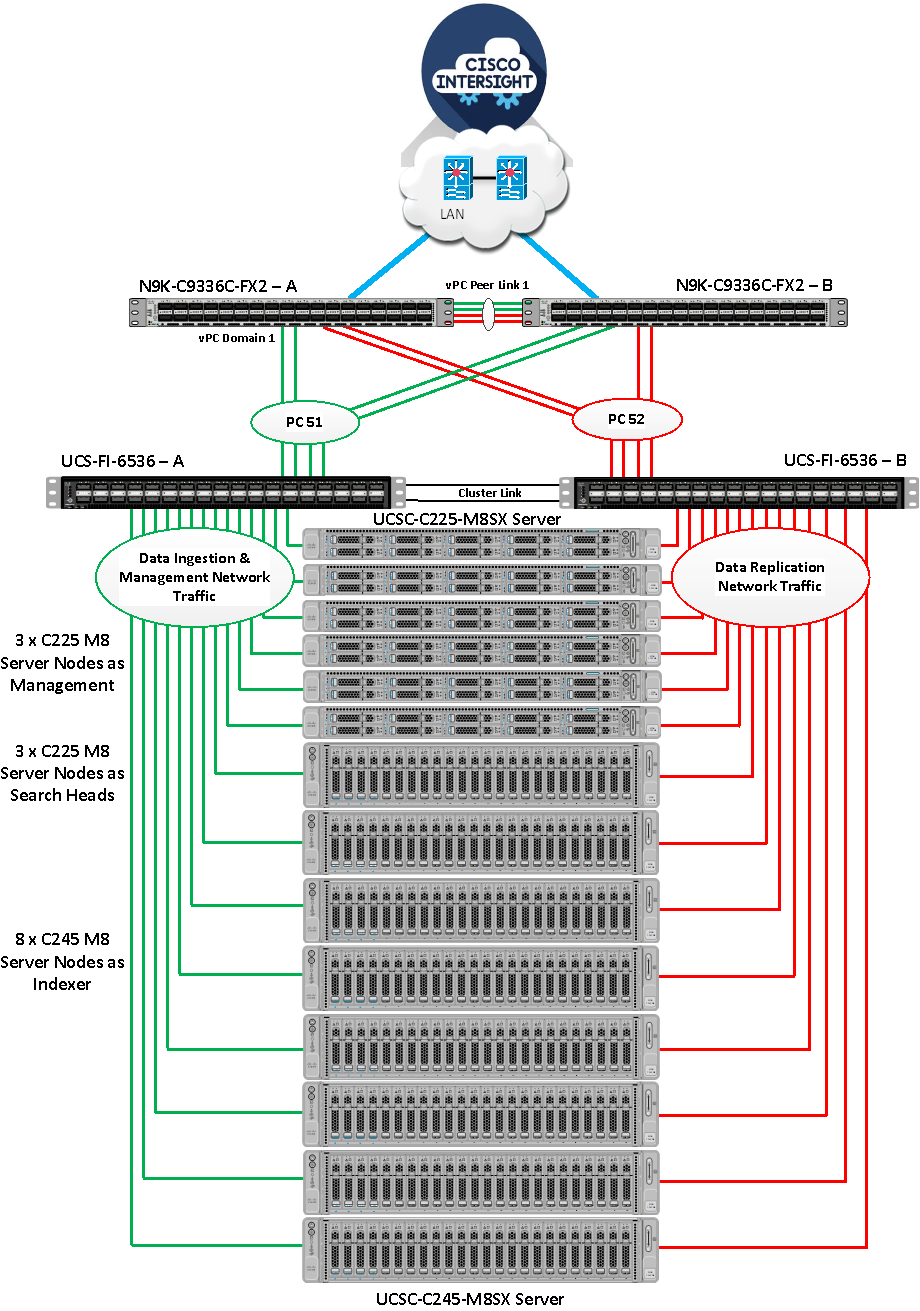

Figure 4 illustrates the single rack configuration containing six Cisco UCS C225 and eight Cisco UCS C245 M8 servers along with networking and management switches. Each server is then connected to each of the Fabric Interconnect (FI) by means of dedicated 100G ethernet link. This solution provides an end-to-end 100Gbps Ethernet capable architecture to demonstrate the benefits for running Splunk enterprise environment with superior performance, scalability and high availability using NVMe High Perf Endurance local storage drives.

The reference architecture highlighted in this deployment guide can be further extended to multiple racks catering to specific data storage and processing for the large-scale enterprise deployment with healthy data-movement.

Note: 2.4 TB/day is computed based on indexer’s capacity of indexing 300G per day for core IT operational analytics use cases.

The inventory of the hardware components used in this solution architecture is listed in Table 2.

Table 2. Hardware Inventory and Bill of Material

| Name |

Description |

PID |

Quantity |

| Cisco UCS 6536 Fabric Interconnects |

Cisco UCS 6536 Fabric Interconnect providing both network connectivity and management capabilities for the system |

UCS-FI-6536 |

2 |

| Cisco UCS Nexus Switch |

Cisco Nexus 9336C-FX2 Switch for uplink network connectivity |

N9K-9336C-FX2 |

2 |

| Cisco UCS C225 M8 |

Cisco UCS C-Series 1RU C225 M8 Compute Server Node |

UCSC-C225-M8S |

6 |

| Cisco UCS C245 M8 |

Cisco UCS C-Series 2RU C245 M8 Compute Server Node |

UCSC-C245-M8SX |

8 |

Note: To run Splunk enterprise management tier nodes (admin nodes), we used identical three Cisco UCSC C225 M8 Servers with the following configuration as described in the tables below.

Table 3. Hardware Inventory and Bill of Material

| Name |

Model |

Description |

PID |

| CPU |

16 Cores Processor (1 x AMD EPYC 9124) |

AMD 9124 3.0GHz 200W 16C/64MB Cache DDR5 4800MT/s |

UCS-CPU-A9124 |

| Memory |

192 GB (12 x 16G DDR5) |

16GB DDR5-5600 RDIMM 1Rx8 (16Gb) |

UCS-MRX16G1RE3 |

| Network Adapter |

1 x Cisco VIC 15237 |

Cisco VIC 15237 2x 40/100/200G mLOM C-Series w/Secure Boot |

UCSC-M-V5D200GV2D |

| RAID Controller |

HWRAID |

Cisco Boot optimized M.2 Raid controller. Support for RAID1 |

UCS-M2-HWRAID |

| Cisco Tri-Mode 24G SAS RAID Controller for Local Storage |

Cisco Tri-Mode 24G SAS RAID Controller w/4GB Cache. Support for RAID0, RAID1, RAID5, RAID6, RAID10, RAID50, RAID60 |

UCSC-RAID-HP |

|

| Boot |

2 x 480GB M.2 SATA SSD configured in RAID 1 for OS |

480GB M.2 SATA SSD |

UCS-M2-480G |

| Storage |

2 x 960G NVMe local drives for data storage |

960GB 2.5in U.3 Micron 7450 NVMe High Perf Medium Endurance |

UCS-NVMEG4-M960 |

Note: To run Splunk enterprise search tier nodes (search heads), we used identical three Cisco UCSC C225 M8 Servers with the following configuration as described in the table below.

Table 4. Hardware Inventory and Bill of Material

| Name |

Model |

Description |

PID |

| CPU |

24 Cores Processor (1 x AMD EPYC 9224) |

AMD 9224 2.5GHz 200W 24C/64MB Cache DDR5 4800MT/s |

UCS-CPU-A9224 |

| Memory |

384 GB (12 x 32G DDR5) |

32GB DDR5-5600 RDIMM 1Rx4 (16Gb) |

UCS-MRX32G1RE3 |

| Network Adapter |

1 x Cisco VIC 15237 |

Cisco VIC 15237 2x 40/100/200G mLOM C-Series w/Secure Boot |

UCSC-M-V5D200GV2D |

| RAID Controller |

HWRAID |

Cisco Boot optimized M.2 Raid controller. Support for RAID1 |

UCS-M2-HWRAID |

| Cisco Tri-Mode 24G SAS RAID Controller for local storage |

Cisco Tri-Mode 24G SAS RAID Controller w/4GB Cache. Support for RAID0, RAID1, RAID5, RAID6, RAID10, RAID50, RAID60 |

UCSC-RAID-HP |

|

| Boot |

2 x 480GB M.2 SATA SSD configured in RAID 1 for OS |

480GB M.2 SATA SSD |

UCS-M2-480G |

| Storage |

2 x 960G NVMe local drives for data storage |

960GB 2.5in U.3 Micron 7450 NVMe High Perf Medium Endurance |

UCS-NVMEG4-M960 |

Note: To run Splunk enterprise indexing tier nodes (indexers), we used identical eight Cisco UCSC C245 M8 Servers with the following configuration as described in the table below.

Table 5. Hardware Inventory and Bill of Material

| Name |

Model |

Description |

PID |

| CPU |

48 Cores Processor (2 x AMD EPYC 9224 24-Core) |

AMD 9224 2.5GHz 200W 24C/64MB Cache DDR5 4800MT/s |

UCS-CPU-A9224 |

| Memory |

384 GB (12 x 32G DDR5) |

32GB DDR5-5600 RDIMM 1Rx4 (16Gb) |

UCS-MRX32G1RE3 |

| Network Adapter |

1 x Cisco VIC 15237 |

Cisco VIC 15237 2x 40/100/200G mLOM C-Series w/Secure Boot |

UCSC-M-V5D200GV2D |

| RAID Controller |

HWRAID |

Cisco Boot optimized M.2 Raid controller. Support for RAID1 |

UCS-M2-HWRAID |

| Cisco Tri-Mode 24G SAS RAID Controller for local storage |

Cisco Tri-Mode 24G SAS RAID Controller w/4GB Cache. Support for RAID0, RAID1, RAID5, RAID6, RAID10, RAID50, RAID60 |

UCSC-RAID-HP |

|

| Boot |

2 x 480GB M.2 SATA SSD configured in RAID 1 for OS |

480GB M.2 SATA SSD |

UCS-M2-480G |

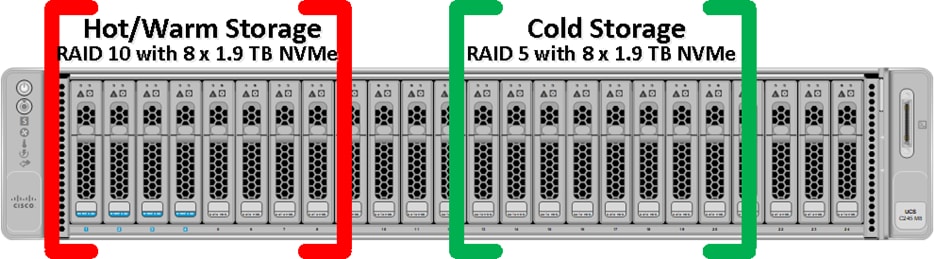

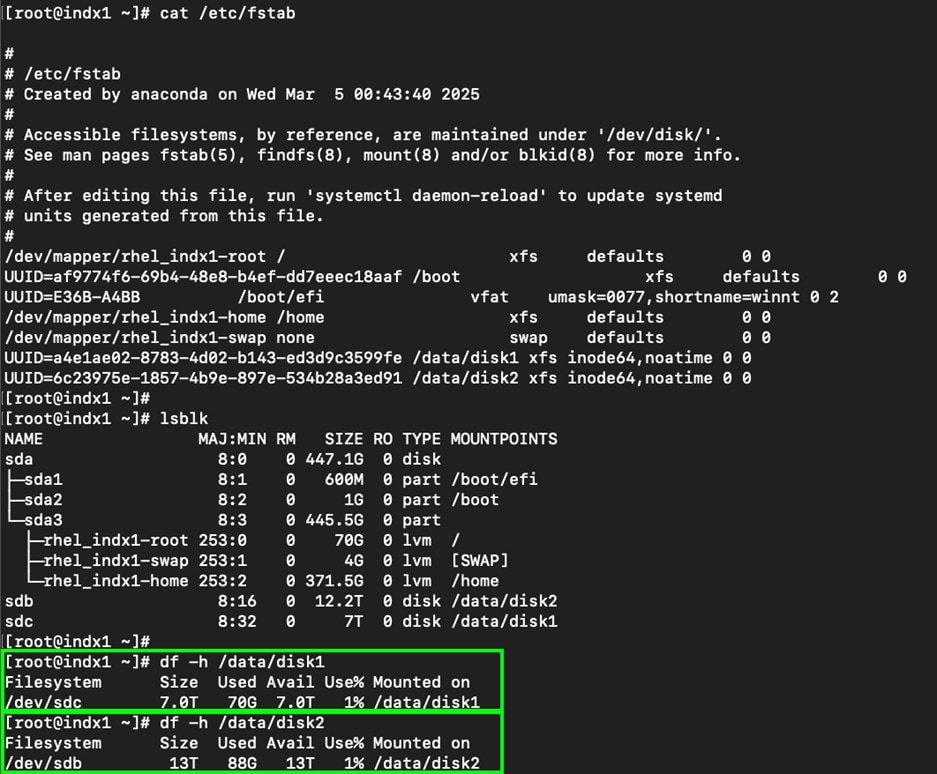

| Storage |

16 x 1.9TB NVMe local drives for data storage Hot/Warm Local Storage: 7 TB (8 x 1.9 TB Drives in RAID-10) per Indexer Cold Local Storage: 12.2 TB (8 x 1.9 TB Drives in RAID-5) per Indexer |

1.9TB 2.5in U.3 Micron 7450 NVMe High Perf Medium Endurance |

UCS-NVMEG4-M1920 |

Software components

Table 6 lists the software and firmware version used to validate and deploy this solution.

Table 6. software and firmware revision

| Devices |

Version |

| Cisco UCS FI 6536 |

Bundle Version 4.3(5.250004) |

| Cisco Nexus 9336C-FX2 NXOS |

NXOS System Version - 9.3(7) & BIOS Version – 05.45 |

| Cisco UCS C225 & C245 M8 Server |

Bundle Version 4.3(5.250001) & Firmware Version 4.3(5.250001) |

| Cisco UCS Adapter VIC 15237 |

Firmware Version 5.3(4.84) |

| M.2 Controller |

Firmware Version 2.3.17.1014 |

| MRAID Controller |

Firmware Version 8.6.2.0-00065-00001 |

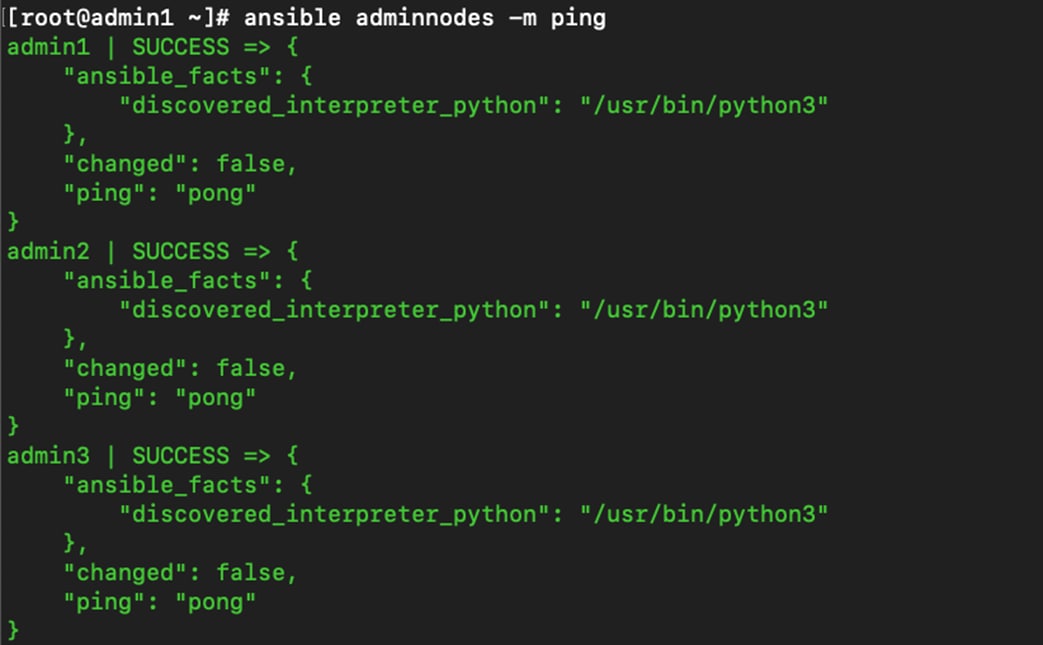

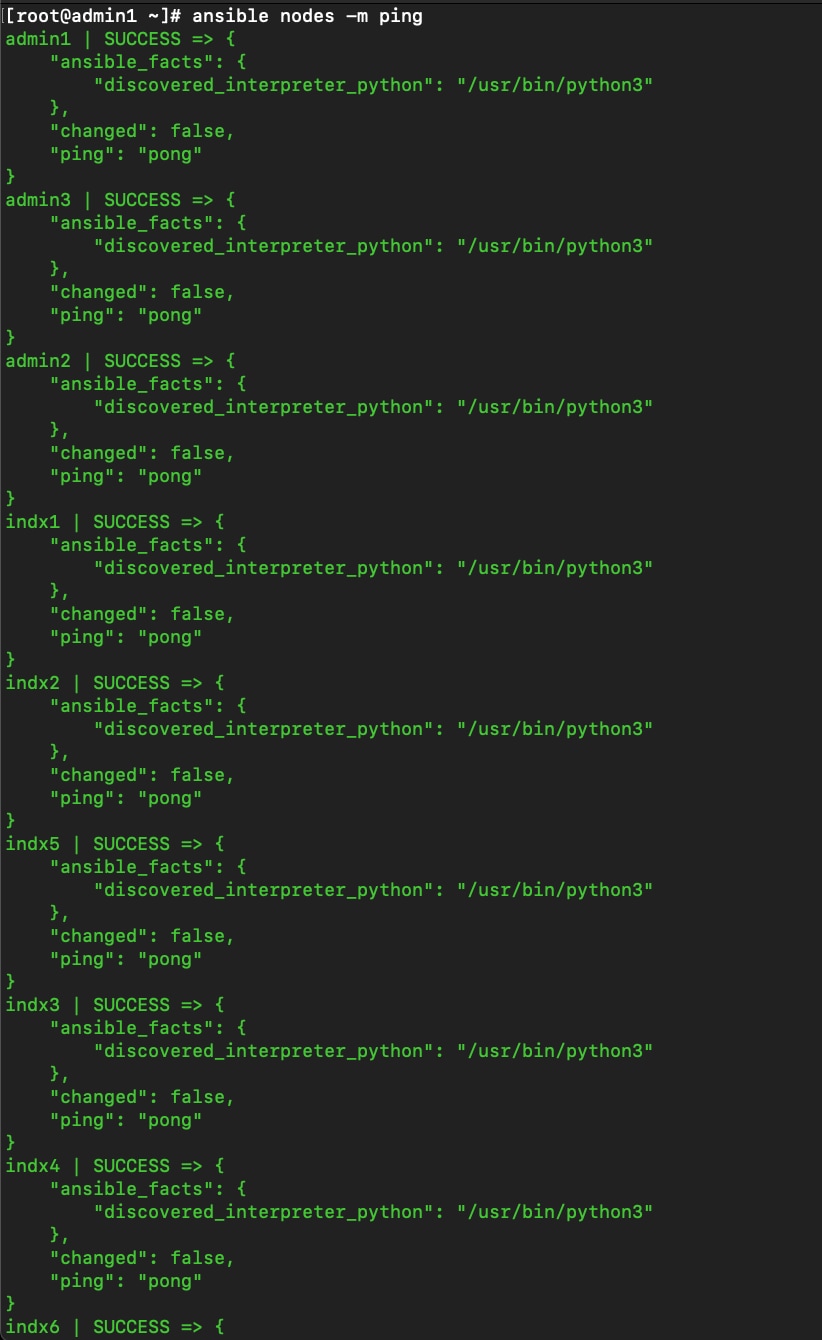

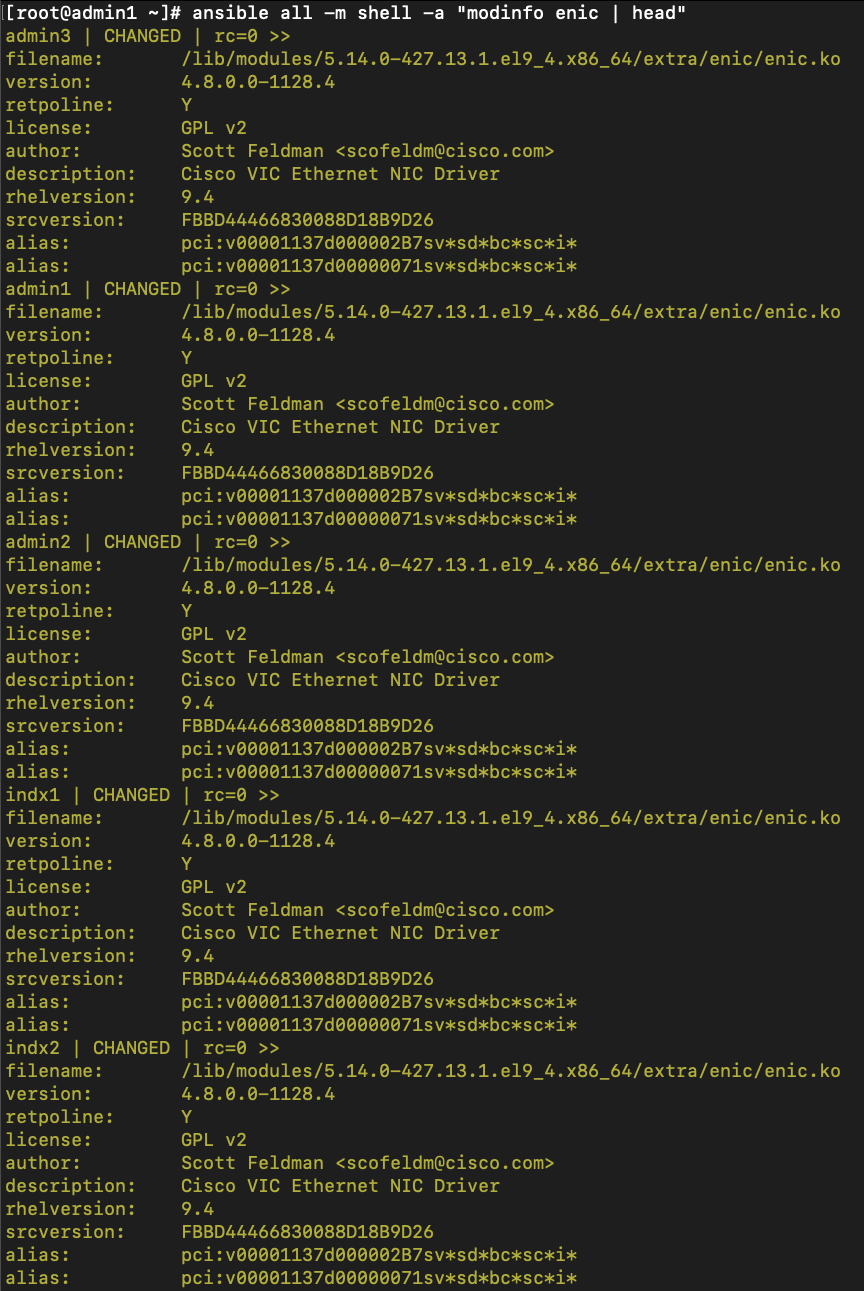

| Cisco eNIC (Cisco VIC Ethernet NIC Driver) (modinfo enic) |

4.8.0.0-1128.4 (kmod-enic-4.8.0.0-1128.4.rhel9u4_5.14.0_427.13.1.x86_64.rpm) |

| Red Hat Enterprise Linux Server |

Red Hat Enterprise Linux release 9.4 (5.14.0-427.13.1.el9_4.x86_64) |

| Splunk Enterprise Software |

9.4.1 |

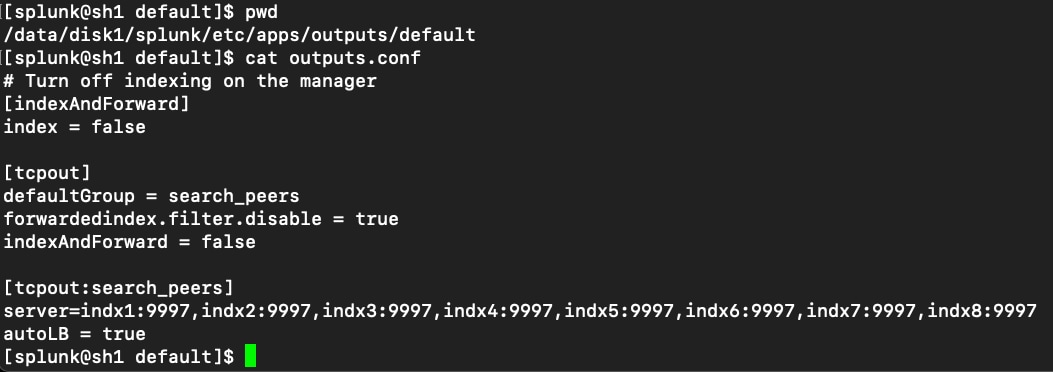

Deploying Splunk enterprise on bare metal Cisco UCS rack servers running Red Hat Linux will be carried out on this comprehensive end-to-end 100G architecture, as illustrated in Figure 5. This reference design is a typical network configuration that can be deployed in your environment. You can scale your solution as your workload demands, including expansion to thousands of servers. The configurations vary in disk capacity, bandwidth, price, and performance characteristic.

As shown in Figure 5, all the servers are directly connected to the ports of UCS Fabric Interconnects. These ports are configured as server ports in Intersight Managed Mode (IMM). A pair of Cisco UCS 6536 Fabric Interconnects (FI) carries both data ingestion, data replication and management network traffic from the Cisco UCS M8 C225 and C245 servers with the help of Cisco Nexus 9336C-FX2 switches. The Fabric Interconnects and the Cisco Nexus Switches are clustered with the peer link between them to provide high availability. Each server node is equipped with a 5th Gen VIC which offers an aggregate throughput of 200G ethernet connectivity (100G through each FI) in total to the fabric interconnects.

Fabric Interconnect – A links are used for Data Ingestion as well as Management Network Traffic Storage (VLAN1) and shown here as green lines while Fabric Interconnect – B links are used for data replication interconnect network traffic (VLAN 11) and shown here as red lines. This enables Splunk to take advantage of the UCS dual 100G links to isolate the inter server traffic from the ingress (data ingestion from forwards) on separate 10g links. Both VLANs must be trunked to the upstream distribution switch connecting the Fabric Interconnects. Two virtual Port-Channels (vPCs) are configured to provide public network and private network traffic paths for the server blades to northbound Nexus switches.

Additional 1Gb management connections are needed for an out-of-band network switch that is apart from this infrastructure. Each Cisco UCS FI and Cisco Nexus switch is connected to the out-of-band network switch. Although this is the base design, each of the components can be scaled easily to support specific business requirements. For example, more rack servers or even blade chassis can be deployed to increase compute capacity, additional local disk storage capacity can be deployed to improve I/O capability and throughput, and special software features can be added to introduce new features. This document guides you through the detailed steps for deploying the base architecture, as shown in the above figure. These procedures cover everything from physical cabling to network, compute, and storage device configurations.

Figure 6 illustrates the high-level view of the architecture for a Splunk medium or large enterprise deployment.

Starting from the bottom, Figure 6 illustrates three tiers of processing:

● Data input. Data enters the system through forwarders, which consume external data and forward the data to the indexers. You configure the forwarders to use their built-in load-balancing capabilities to spread the data across the set of indexers.

● Indexing. Indexers receive, index, and store incoming data from the forwarders.

● Search management. A search head cluster, consisting of three or more search head members, performs the search management function. The search heads in the cluster coordinate their activities to handle search requests, such as ad hoc requests from users and saved search requests, and to distribute the requests across the set of indexers. A deployer distributes apps to the members of the search head cluster.

In the transition from a small enterprise to a medium enterprise deployment, you need to increase both indexing and search capacity. For indexing, you can continue to add indexers. For search, you can add search heads to service more users and more search activity. The recommended approach for deploying multiple search heads is to combine the search heads in a search head cluster. Search head clusters allow users and searches to share resources across the set of search heads. They are also easier to manage than groups of individual search heads. Search head clusters require a minimum of three search heads.

The differences between a medium and a large enterprise deployment are mainly issues of scale and management. The fundamental deployment topologies are similar. They both employ a search head cluster with multiple indexers. A medium to large enterprise deployment provides even greater horizontal scaling. Characteristics of this type of deployment include:

● Indexing volume ranging from 300GB to many TBs per day.

● Many users, potentially numbering several hundred.

● Many thousands of forwarders.

There are several types of components to match the types of tasks in a deployment. Components fall into two broad categories:

● Processing components: These components handle the data.

● Management components: These components support the activities of the processing components.

Processing Components

The types of processing components are:

● Forwarders: Forwarders ingest raw data and forward the data to another component, either another forwarder or an indexer. Forwarders are usually co-located on machines running applications that generate data, such as web servers. The universal forwarder is the most common type of forwarder.

● Indexers: Indexers index and store data. They also search across the data. Indexers usually reside on dedicated machines. Indexers can be either independent (non-clustered) indexers or clustered indexers. Clustered indexers, also known as peer nodes, are nodes in an indexer cluster.

● Search heads: Search heads manage searches. They handle search requests from users and distribute the requests across the set of indexers, which search their local data. The search head then consolidates the results from all the indexers and serves them to the users. The search head provides the user with various tools, such as dashboards, to assist the search experience. Search heads can be independent search heads, search head cluster members, search head nodes in an indexer cluster or search head pool members.

Management Components

Management components are specially configured versions of Splunk Enterprise instances that support the activities of the processing components. A deployment usually includes one or more of these management components:

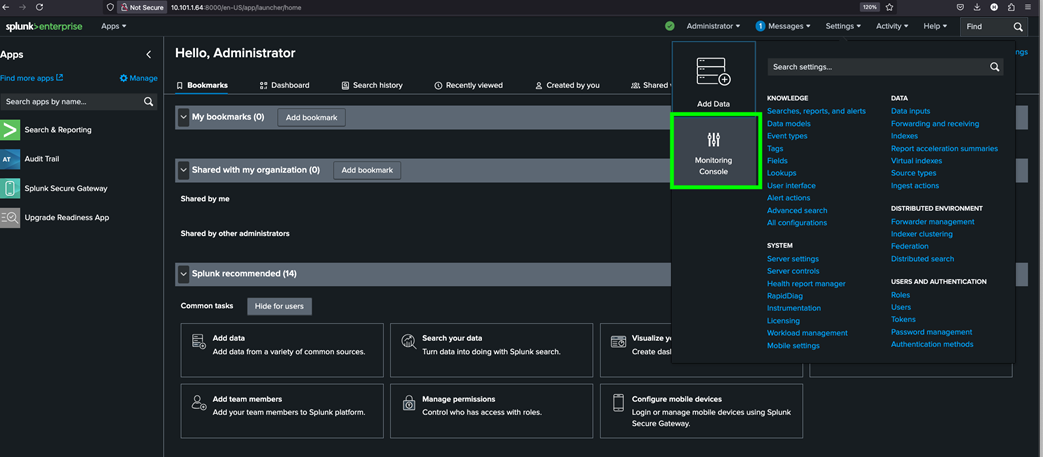

● Monitoring Console: Performs centralized monitoring of the entire deployment.

● Deployment Server: The deployment server distributes configuration updates and apps to some processing components, primarily forwarders.

● License Manager: The license manager handles Splunk Enterprise licensing

● Cluster Manager: The indexer cluster manager node coordinates the activities of an indexer cluster. It also handles updates for the cluster.

● Search Head Cluster Deployer: The search head cluster deployer handles updates for a search head cluster.

Your deployment might include all or none of these components, depending on the scale and specifics of your deployment topology. Multiple management components sometimes share a single Splunk Enterprise instance, perhaps along with a processing component. In large-scale deployments, however, each management component might reside on a dedicated instance.

Cisco UCS Install and Configure

This chapter contains the following:

● Cisco UCS Configuration – Intersight Managed Mode

● Configure Policies for Cisco UCS Domain

● Configure Cisco UCS Domain Profile

● Configure Policies for Server Profile

This chapter details the Cisco Intersight deployed Cisco UCS C225 and C245 M8 rack server connected to Cisco UCS Fabric Interconnect 6536 as part of the infrastructure build out. For detailed installation information, refer to the Cisco Intersight Managed Mode Configuration Guide

Cisco UCS Configuration – Intersight Managed Mode

This section contains the following procedures:

● Procedure 1. Configure Cisco UCS Fabric Interconnect for Cisco Intersight Managed Mode

● Procedure 2. Claim Fabric Interconnect in Cisco Intersight Platform

● Procedure 3. Configure Cisco Intersight Account and System Settings

● Procedure 5. Configure IMC Access Policy

Cisco Intersight Managed Mode (IMM) standardizes policy and operation management for Cisco UCS Servers. The compute nodes in Cisco UCS are configured using server profiles defined in Cisco Intersight. These server profiles derive all the server characteristics from various policies and templates. Figure 7 illustrates the high-level steps to configure Cisco UCS using Intersight Managed Mode.

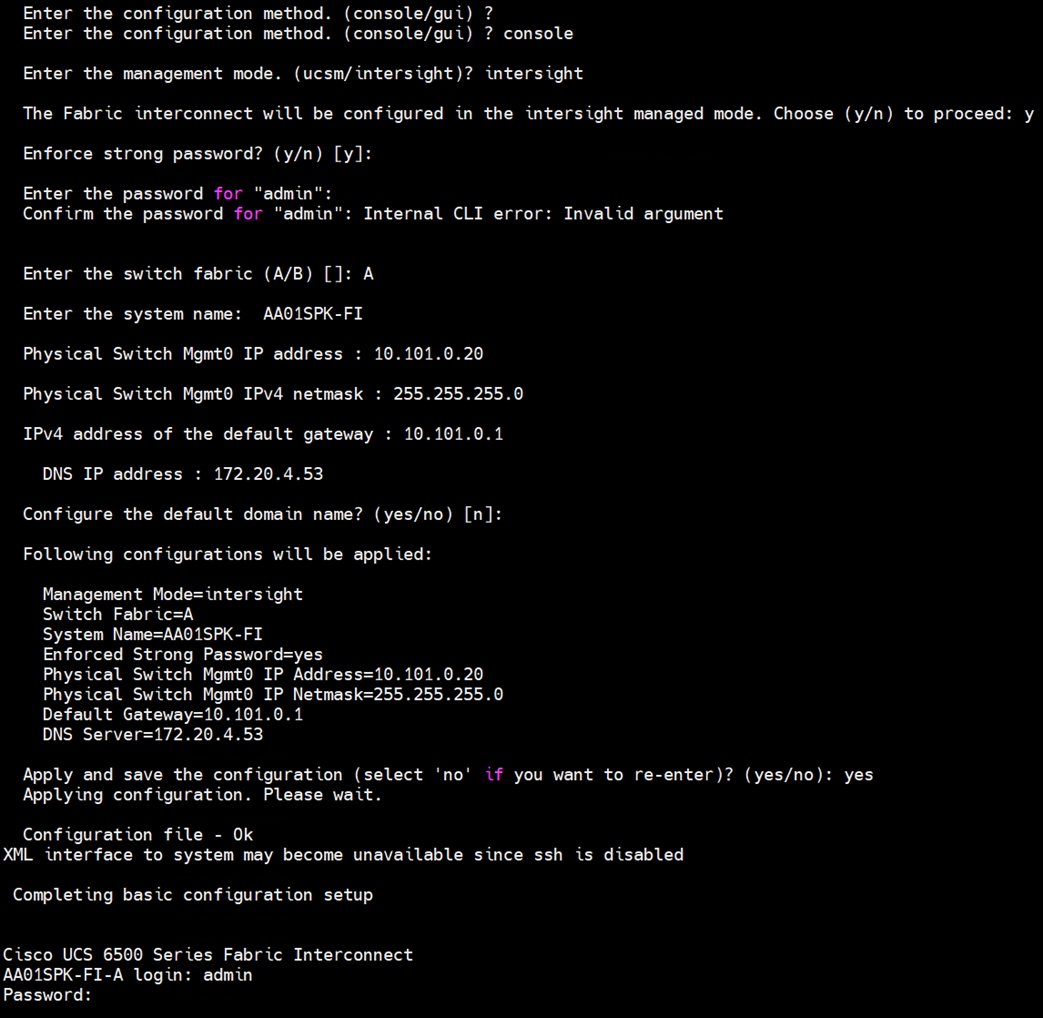

Procedure 1. Configure Cisco UCS Fabric Interconnects for Cisco Intersight Managed Mode

During the initial configuration, for the management mode, the configuration wizard enables you to choose whether to manage the fabric interconnect through Cisco UCS Manager or the Cisco Intersight platform. For this solution, we choose Intersight Managed Mode (IMM) for validating this Splunk Enterprise deployment.

Step 1. Verify the following physical connections on the fabric interconnect:

a. The management Ethernet port (mgmt0) is connected to an external hub, switch, or router.

b. The L1 ports on both fabric interconnects are directly connected to each other.

c. The L2 ports on both fabric interconnects are directly connected to each other.

Step 2. Connect to the console port on the first fabric interconnect and configure the first FI as shown below:

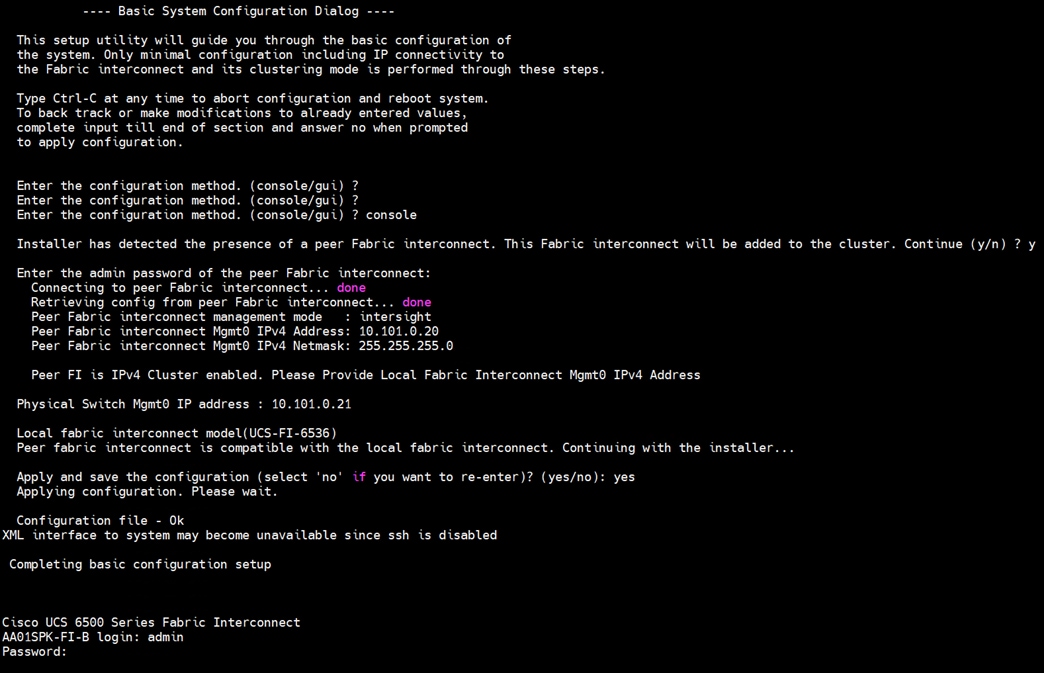

Step 3. Connect the console port on the second fabric interconnect B and configure it as shown below:

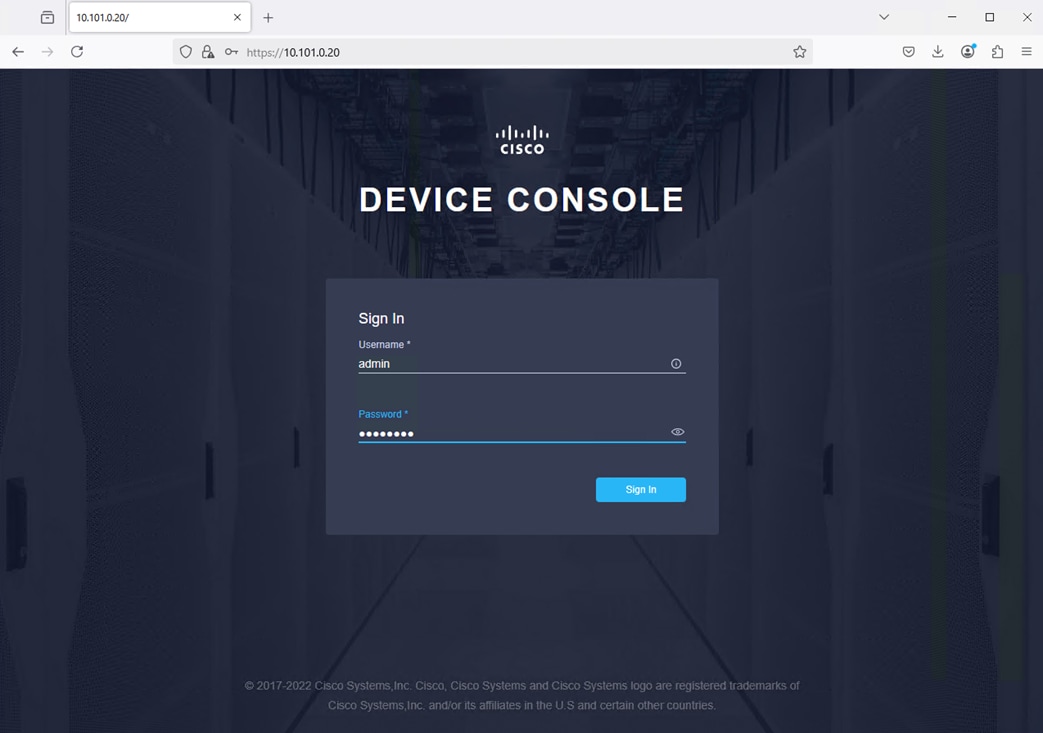

Step 4. After configuring both the FI management addresses, open a web browser and navigate to the Cisco UCS fabric interconnect management address as configured. If prompted to accept security certificates, accept, as necessary.

Step 5. Log into the device console for FI-A by entering your username and password.

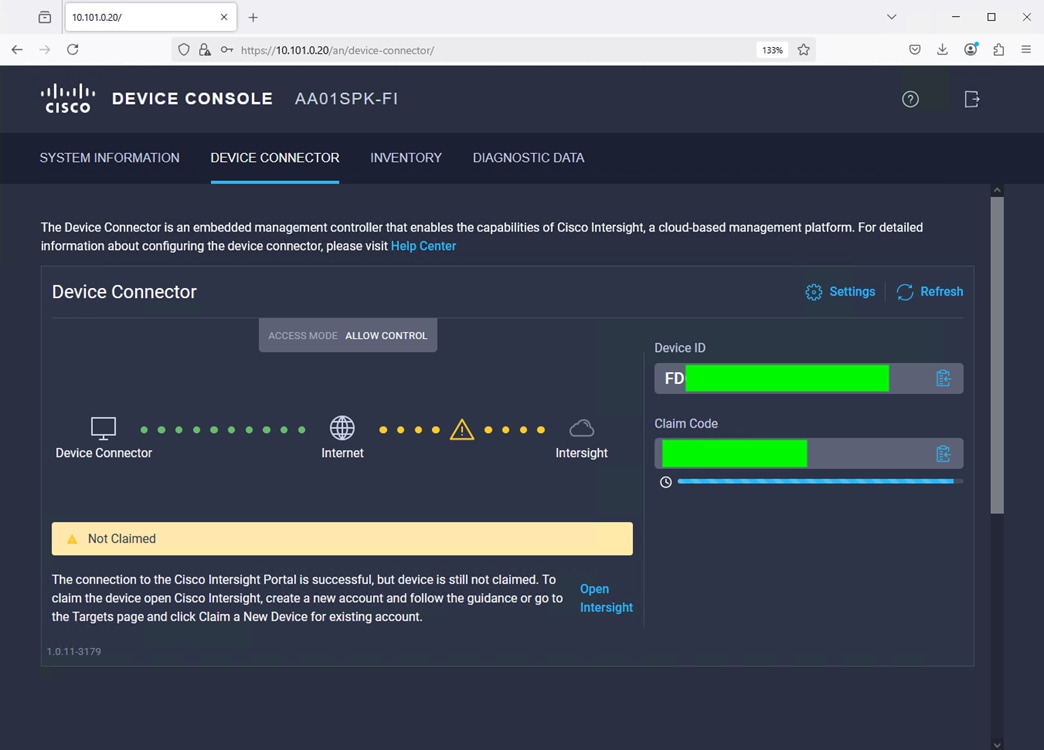

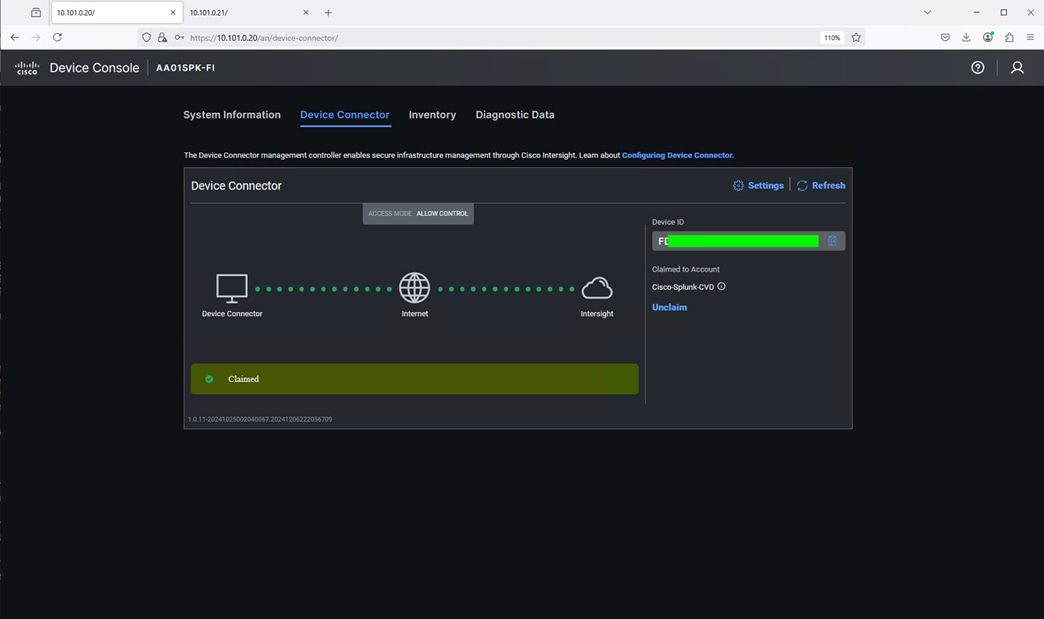

Step 6. Go to the Device Connector tab and get the DEVICE ID and CLAIM Code as shown below:

Note: After setting up the Cisco UCS fabric interconnect for Cisco Intersight Managed Mode, FIs can be claimed to a new or an existing Cisco Intersight account. When a Cisco UCS fabric interconnect is successfully added to the Cisco Intersight platform, all subsequent configuration steps are completed in the Cisco Intersight portal.

Procedure 2. Claim Fabric Interconnects in Cisco Intersight Platform

Step 1. After getting the device id and claim code of FI, go to https://intersight.com/.

Step 2. Sign in with your Cisco ID or if you don’t have one, click Sing Up and setup your account.

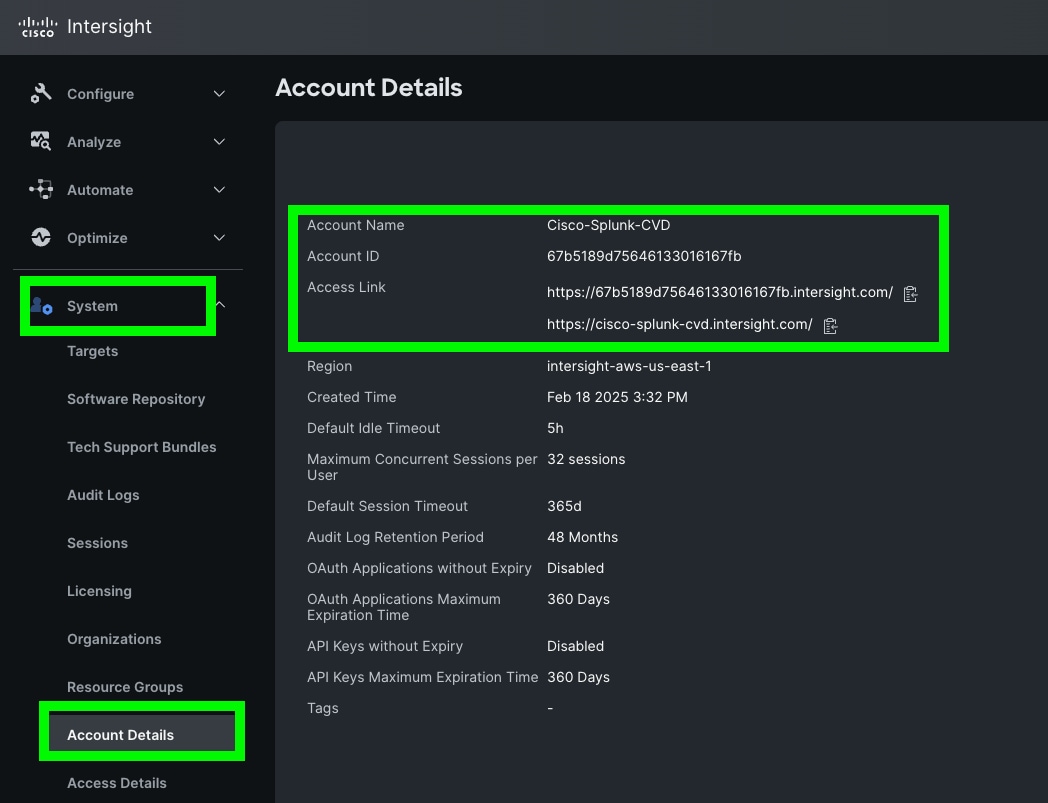

Note: We created the “Cisco-Splunk-CVD” account for this solution.

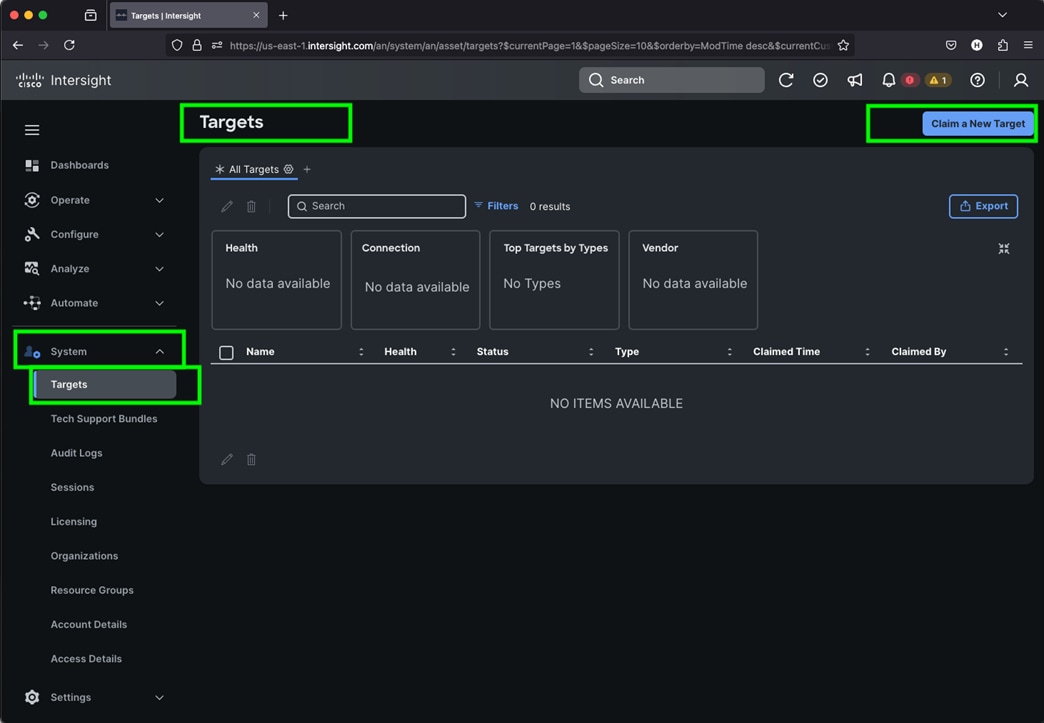

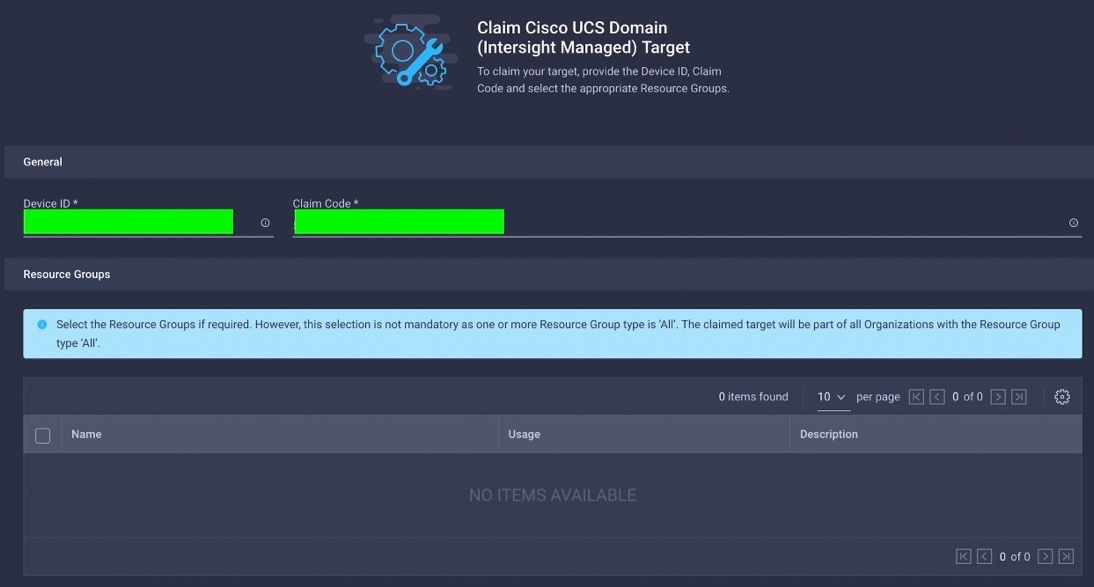

Step 3. After logging into your Cisco Intersight account, go to > System > Targets > Claim a New Target.

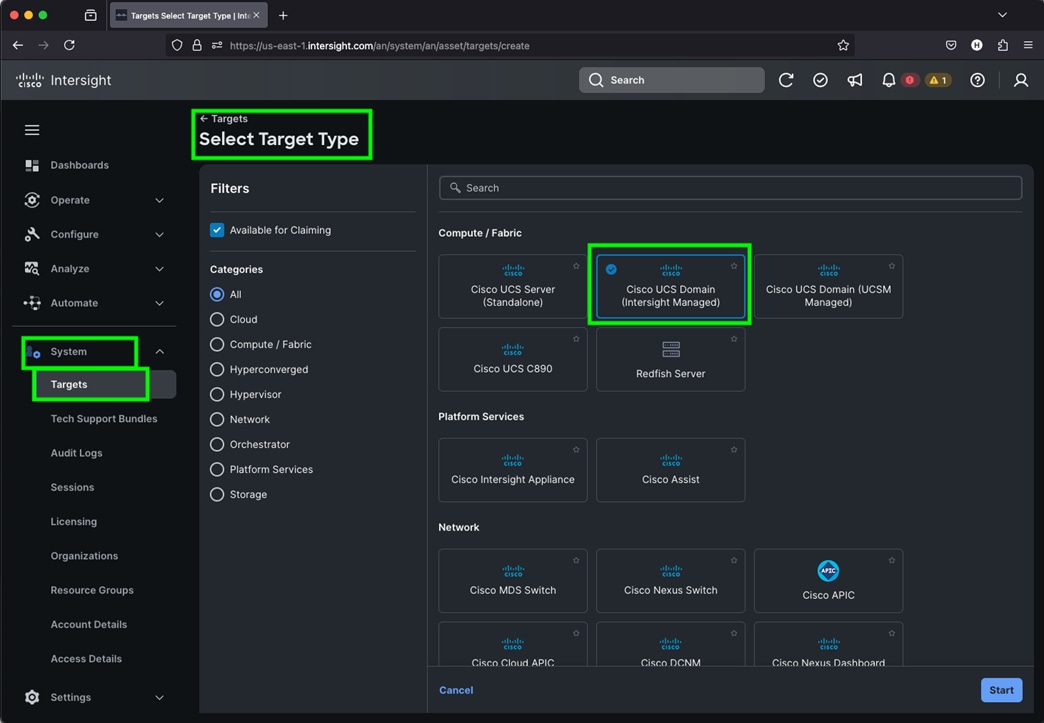

Step 4. For the Select Target Type, select “Cisco UCS Domain (Intersight Managed)” and click Start.

Step 5. Enter the Device ID and Claim Code which was previously captured. Click Claim to claim this domain in Cisco Intersight.

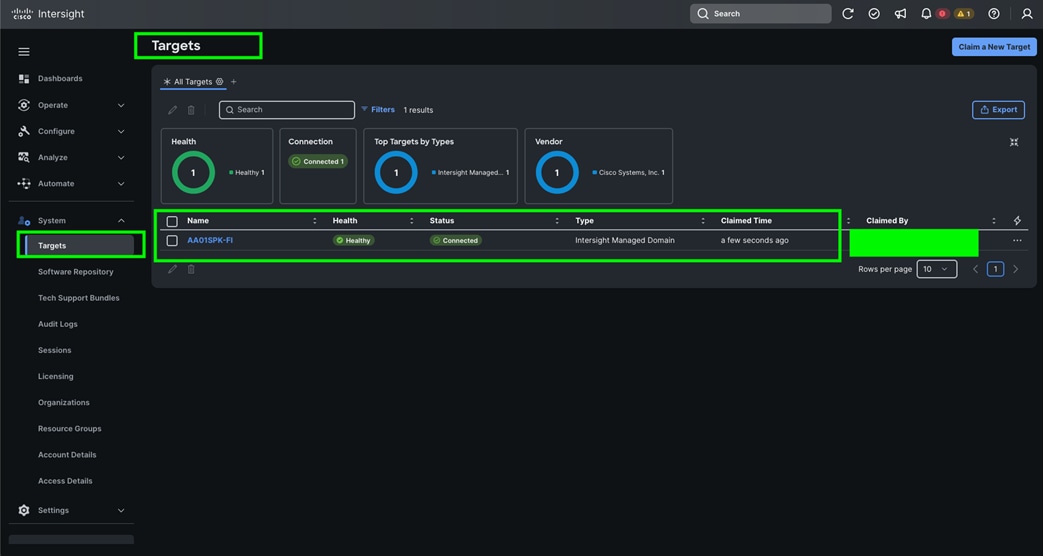

When you claim this domain, you can see both FIs under this domain and verify it’s under Intersight Managed Mode:

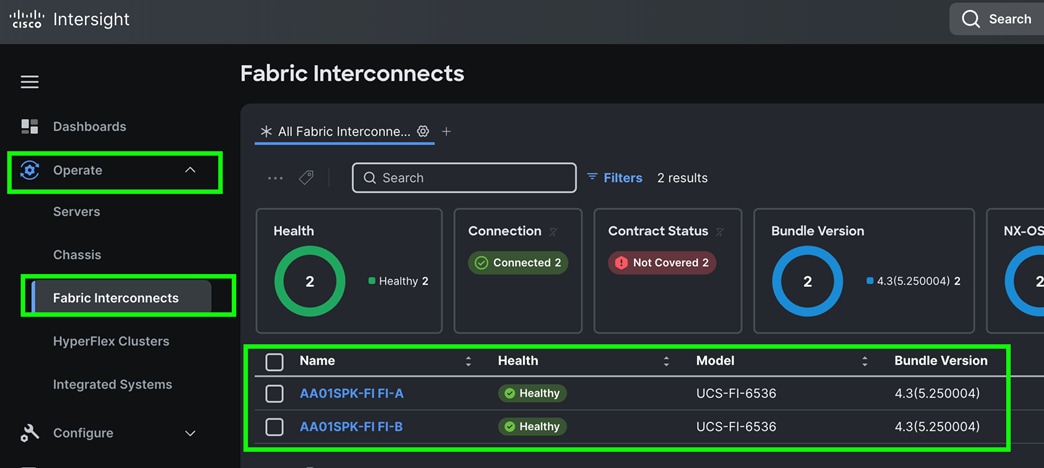

Cisco UCS Fabric Interconnect from the OPERATE tab shows details and Management Mode as shown below:

Cisco UCS fabric interconnect Device Console WebUI > Device Connector tab shows claimed account name as shown below:

Procedure 3. Configure Cisco Intersight Account and System Settings

Step 1. Go to System > Account Details. For more details: https://intersight.com/help/saas/system/settings

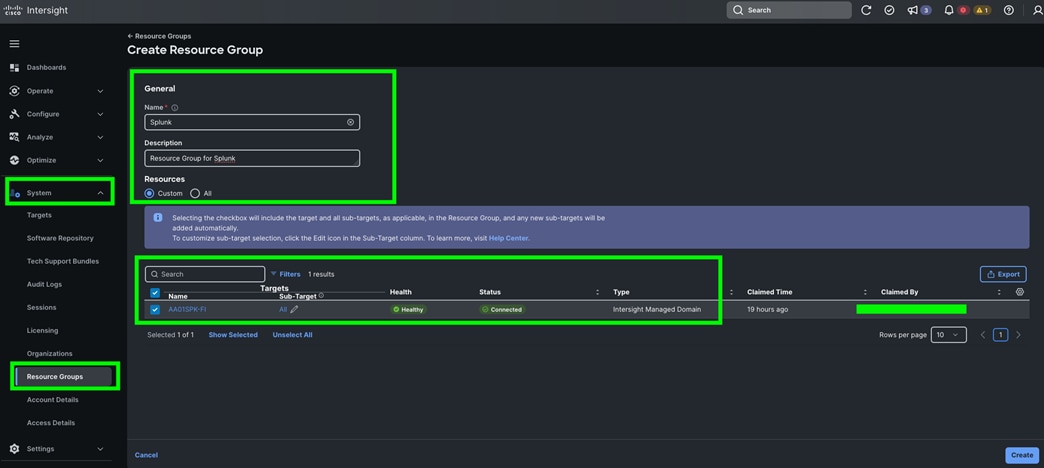

Step 2. In the System tab > Select Resource Group. Create New resource group.

Step 3. Select Targets to be part of this resource group and click Create. For this solution, we created new resource group as “Spk-Resource” and selected all the sub-targets as shown below.

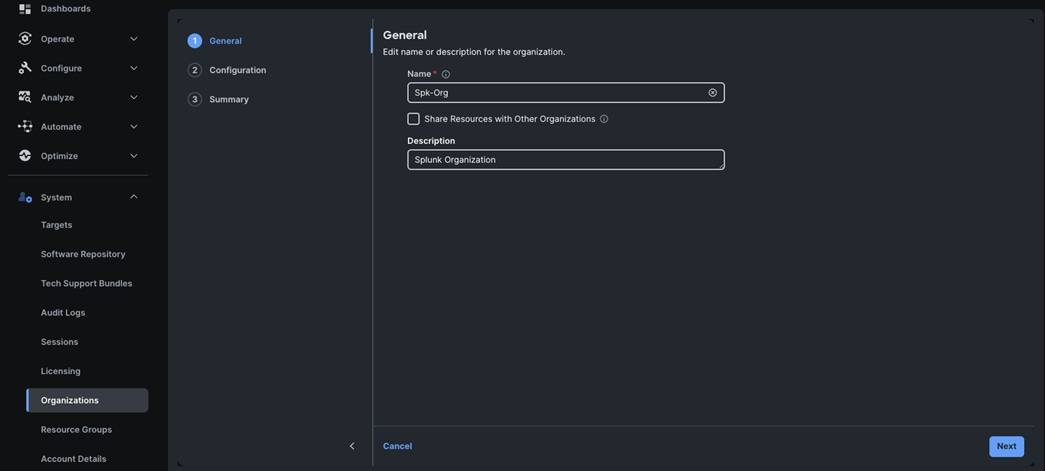

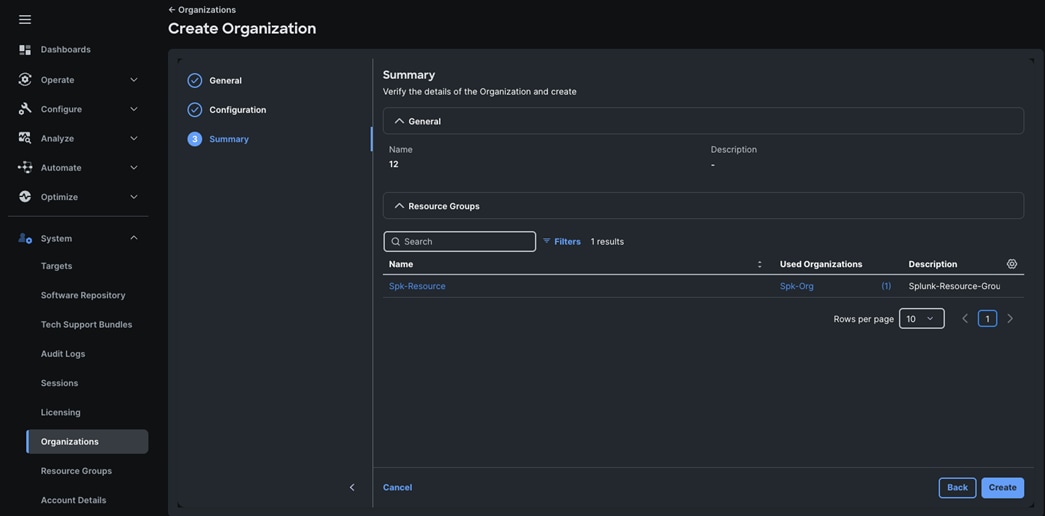

Step 4. Use the “Spk-Resource” group for this solution. Go to System menu, select Organizations then click Create Organization.

Step 5. Enter the name for the new Organization creation.

Step 6. (Optional) Check the box to share resources with other organizations. Click Next.

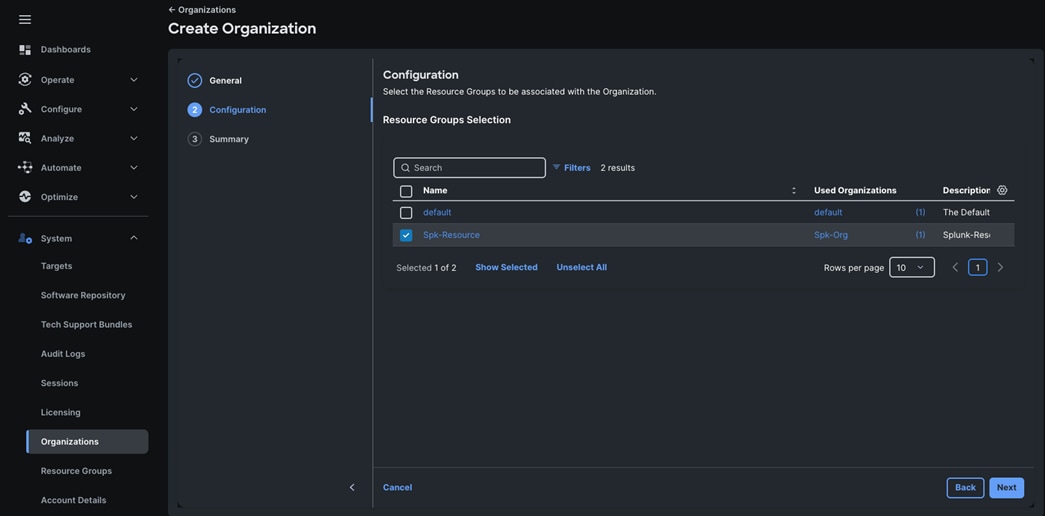

Step 7. In the configuration option, select the “Spk-Resource” configured earlier and click Next.

Step 8. Verify the summary page and then click Create to create organization with resource group for this deployment as shown below:

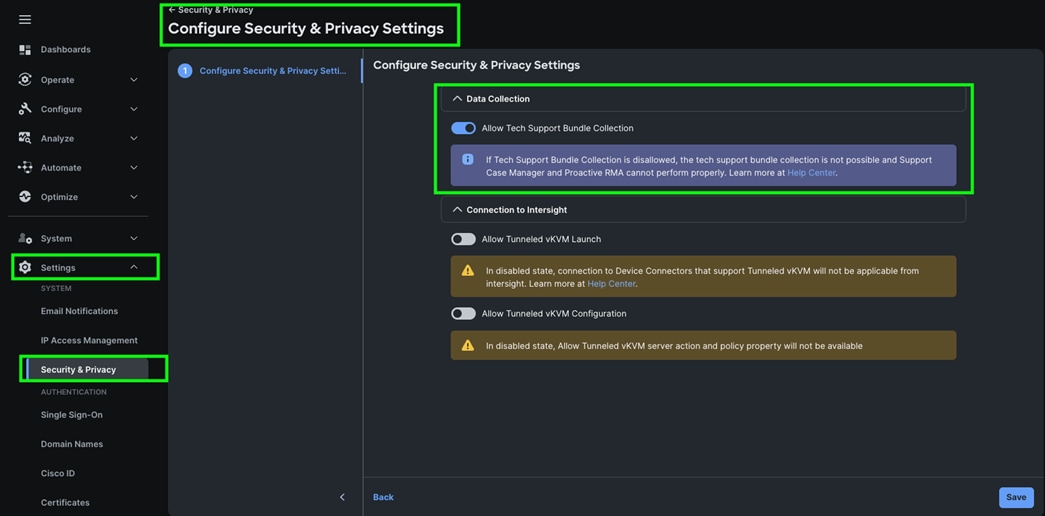

Step 9. To configure allowing tech support bundle collection, go to Settings > Security & Privacy > and enable the option and then click Save.

Note: For this solution we disabled Tunneled vKVM Launch and configuration.

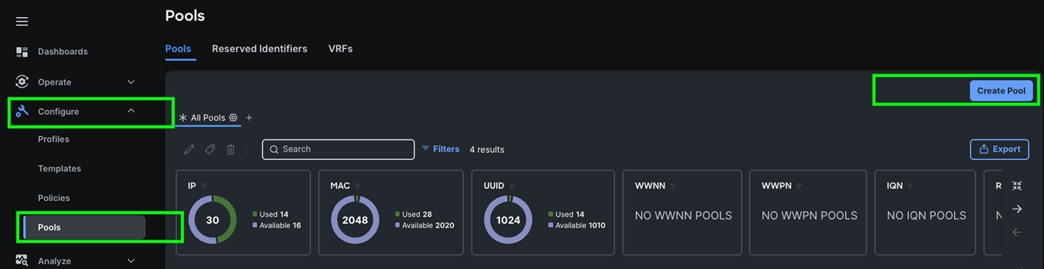

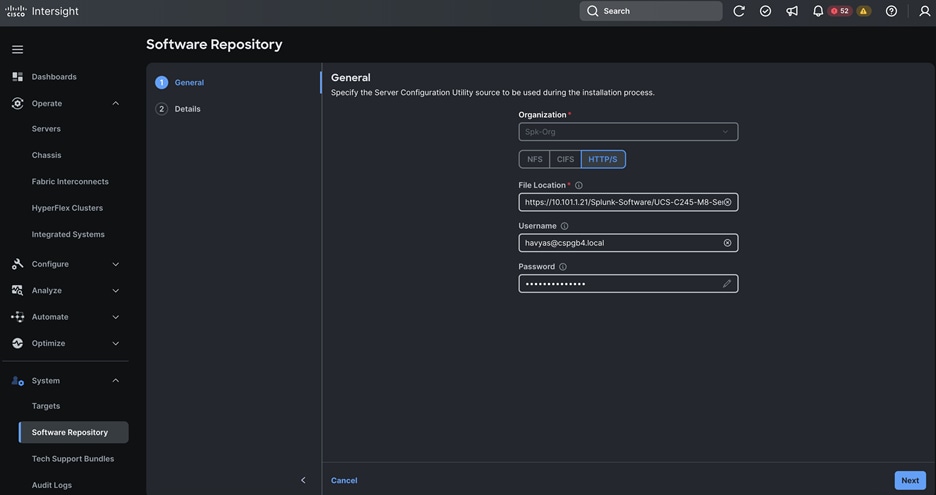

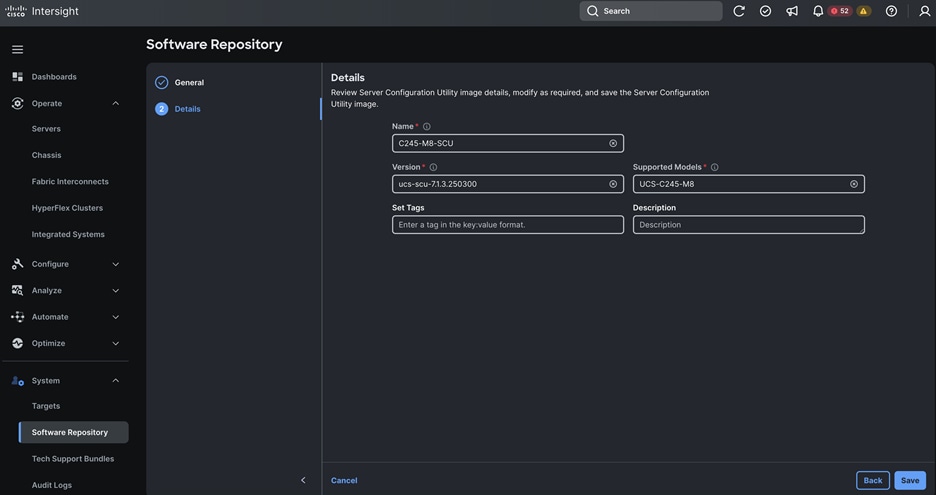

Note: We configured the IP Pool, IMC Access Policy, and Power Policy for the Cisco UCS Chassis profile as explained below.

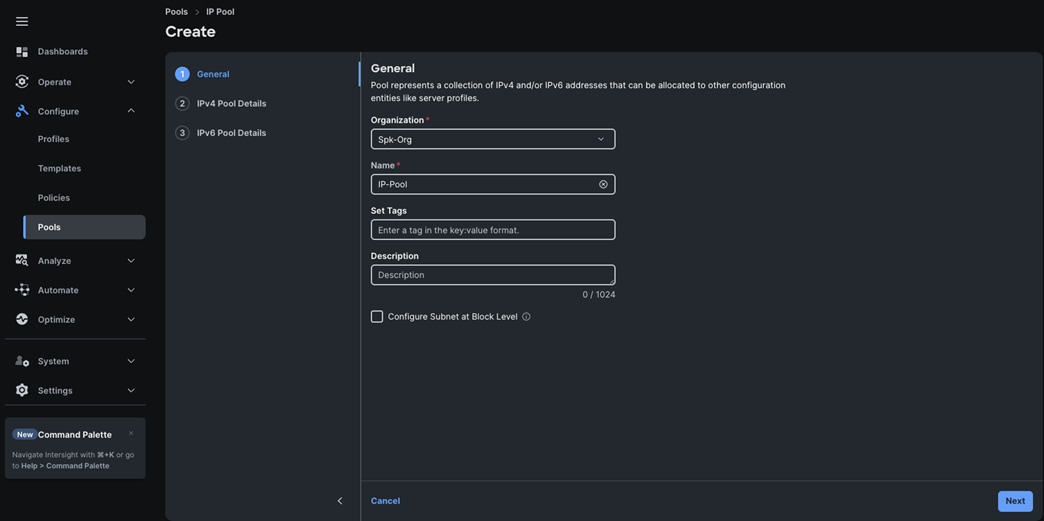

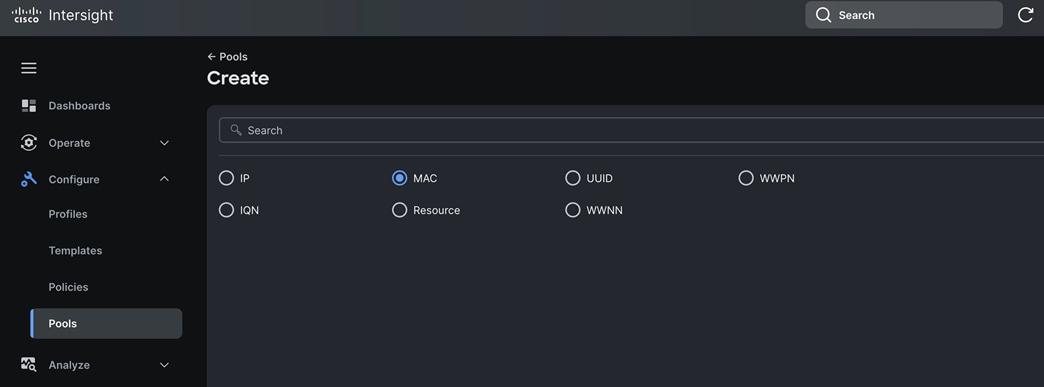

Step 1. Go to > Configure > Pools > and then select “Create Pool” on the top right corner.

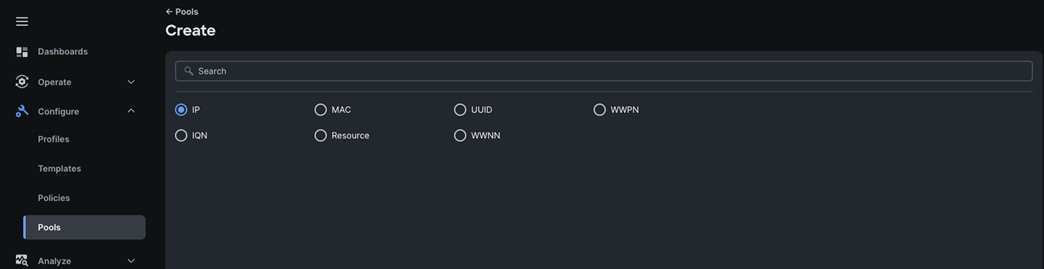

Step 2. Select option “IP” as shown below to create the IP Pool.

Step 3. In the IP Pool Create section, for Organization select “Spk-Org” and enter the Policy name “IP-Pool” and click Next.

Step 4. Enter Netmask, Gateway, Primary DNS, IP Blocks and Size according to your environment and click Next.

Note: For this solution, we did not configure the IPv6 Pool. Keep the Configure IPv6 Pool option disabled and click Create to create the IP Pool.

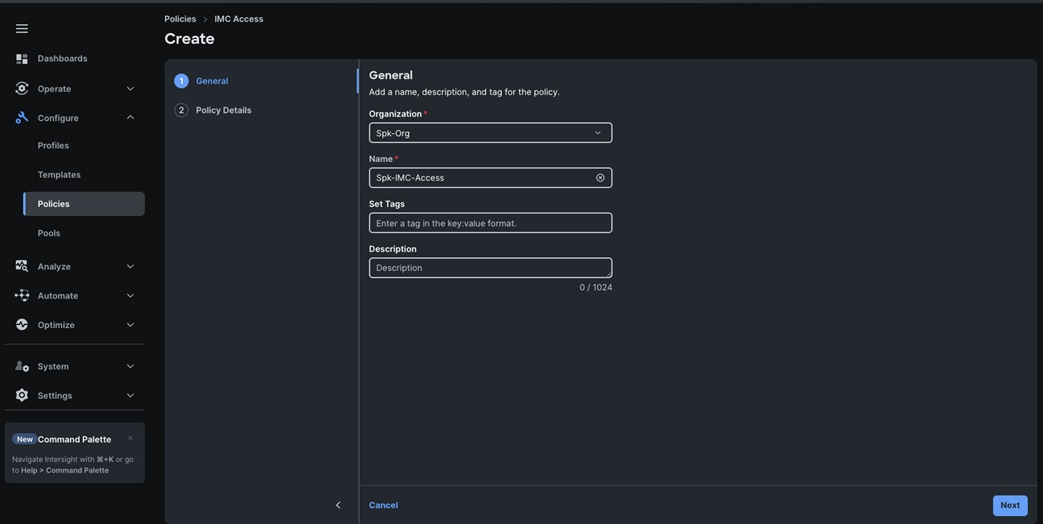

Procedure 5. Configure IMC Access Policy

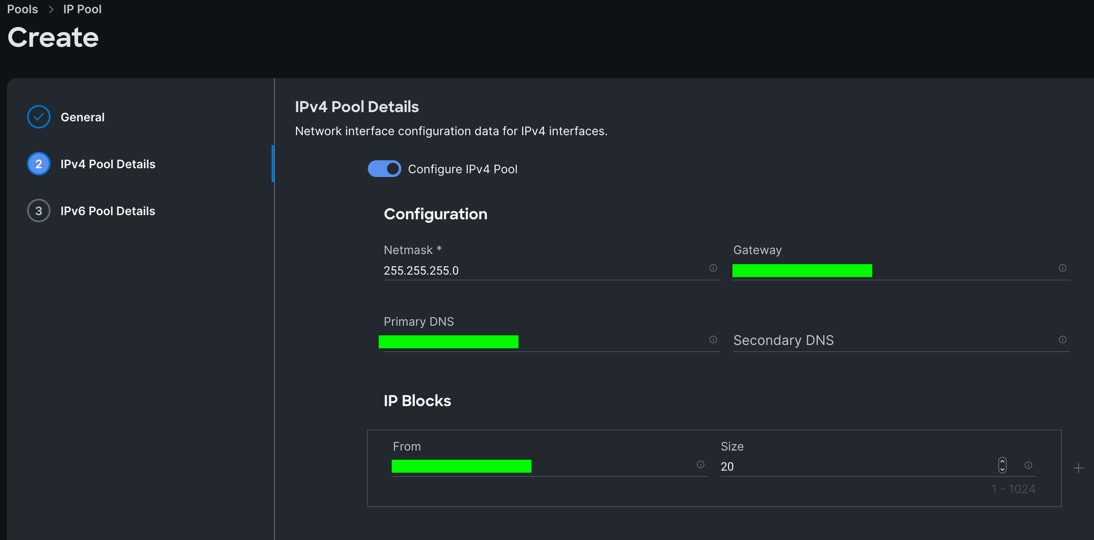

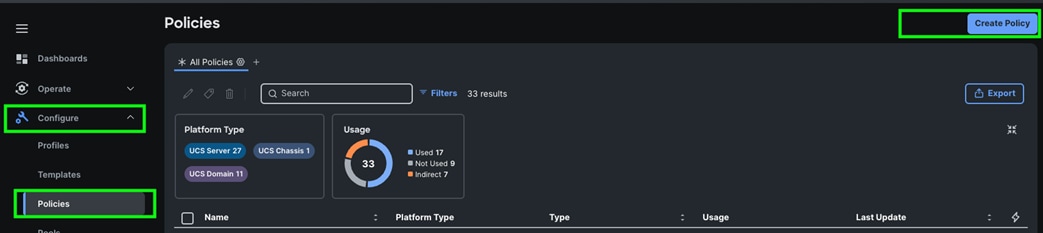

Step 1. Go to > Configure > Polices > and click Create Policy.

Step 2. Select the “IMC Access” policy from the list of policy.

Step 3. In the IMC Access Create section, for Organization select “Spk-Org” and enter the Policy name “Spk-IMC-Access” and click Next.

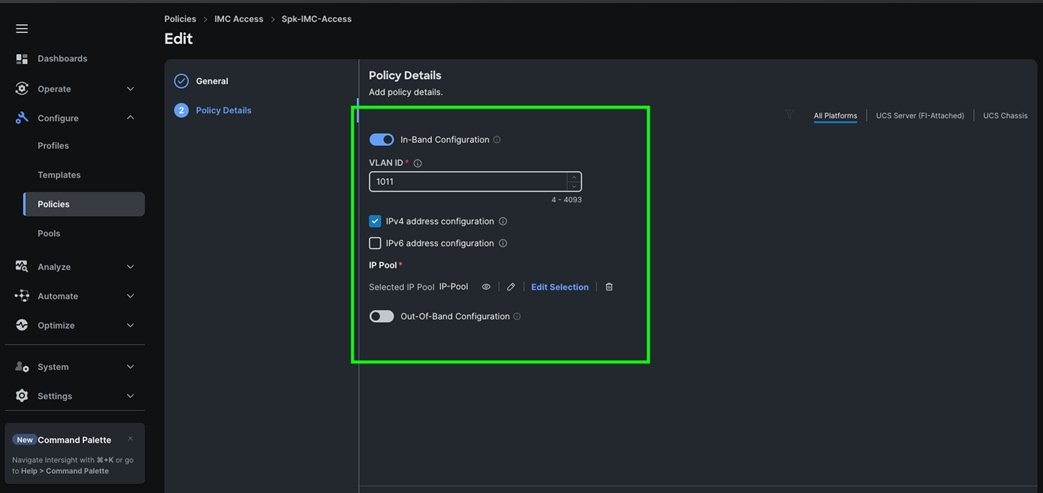

Step 4. In the Policy Details section, enable In-Band Configuration, enter the VLAN ID as 1011 and select the IP Pool “IP-Pool” as shown below:

Step 5. Click Create to create this policy.

Configure Policies for Cisco UCS Domain

This section contains the following procedures:

Procedure 1. Configure Multicast Policy

Procedure 3. Configure Port Policy

Procedure 4. Configure NTP Policy

Procedure 5. Configure Network Connectivity Policy

Procedure 6. Configure System QoS Policy

Procedure 7. Configure Switch Control Policy

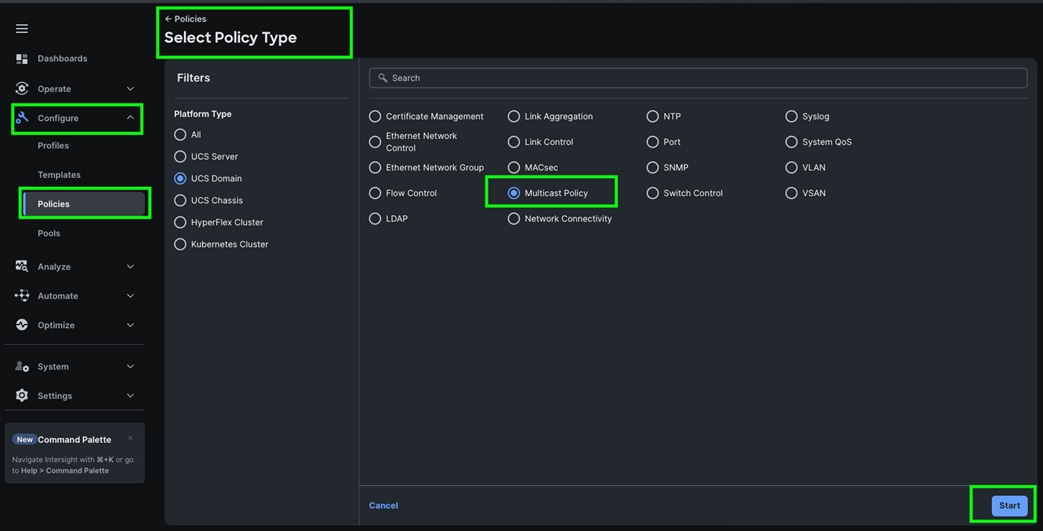

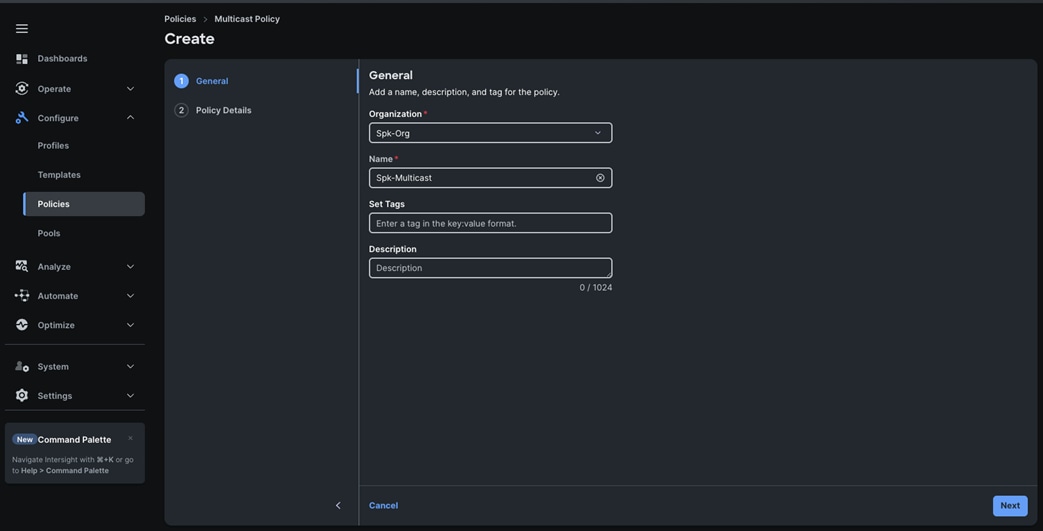

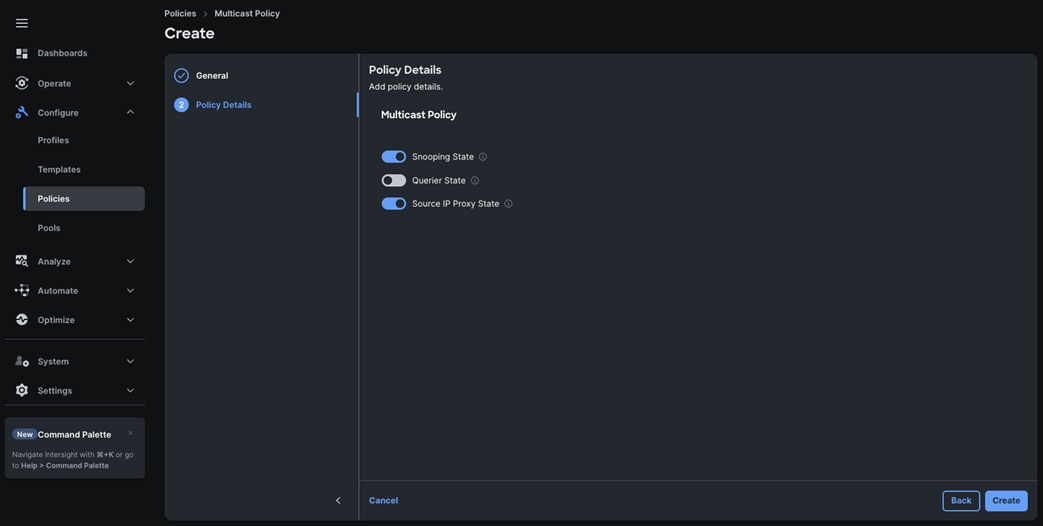

Procedure 1. Configure Multicast Policy

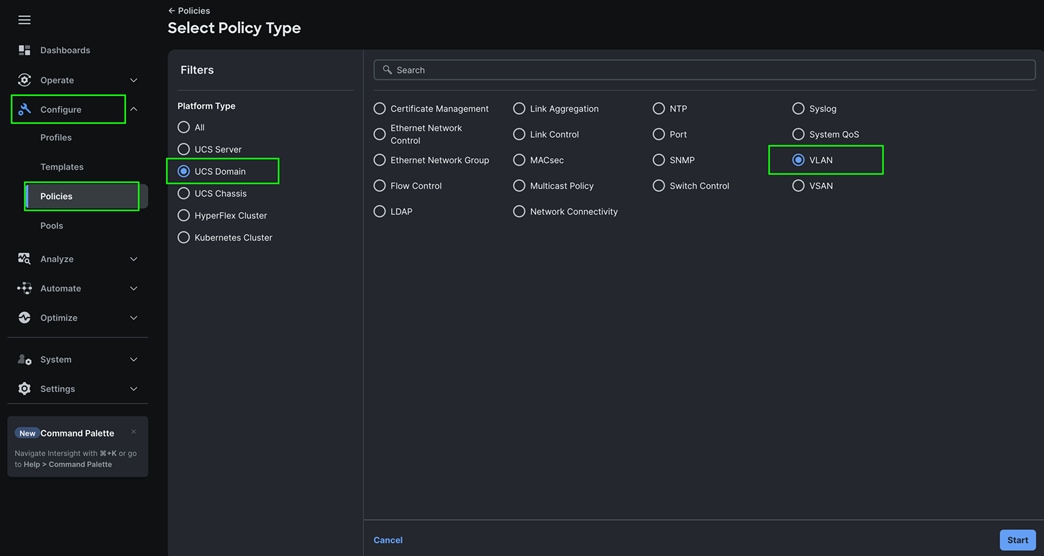

Step 1. To configure Multicast Policy for a Cisco UCS Domain profile, go to > Configure > Polices > and click Create Policy. For the platform type select “UCS Domain” and for Policy, select “Multicast Policy.”

Step 2. In the Multicast Policy Create section, for the Organization select “Spk-Org” and for the Policy name “Spk-Multicast.” Click Next.

Step 3. In the Policy Details section, select Snooping State and Source IP Proxy State.

Step 4. Click Create to create this policy.

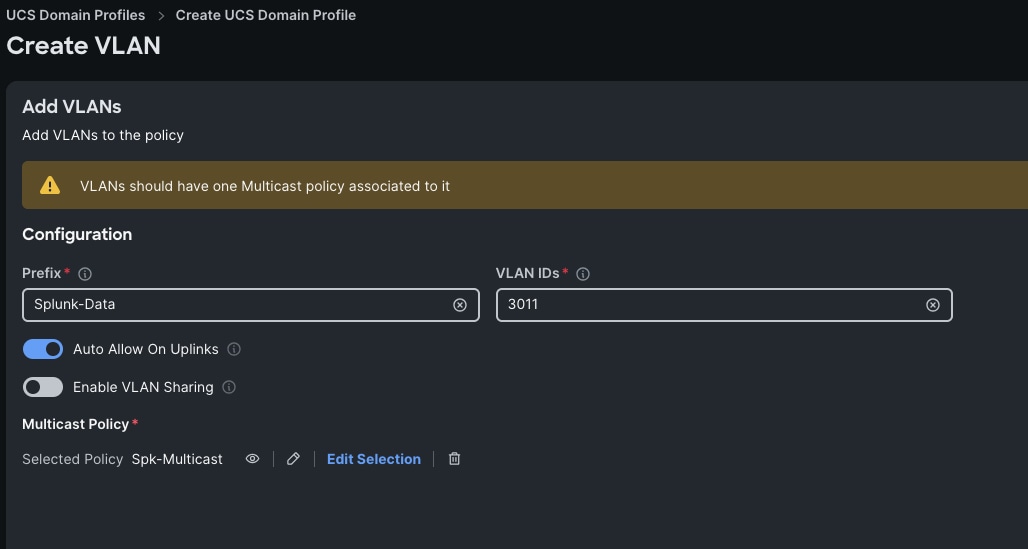

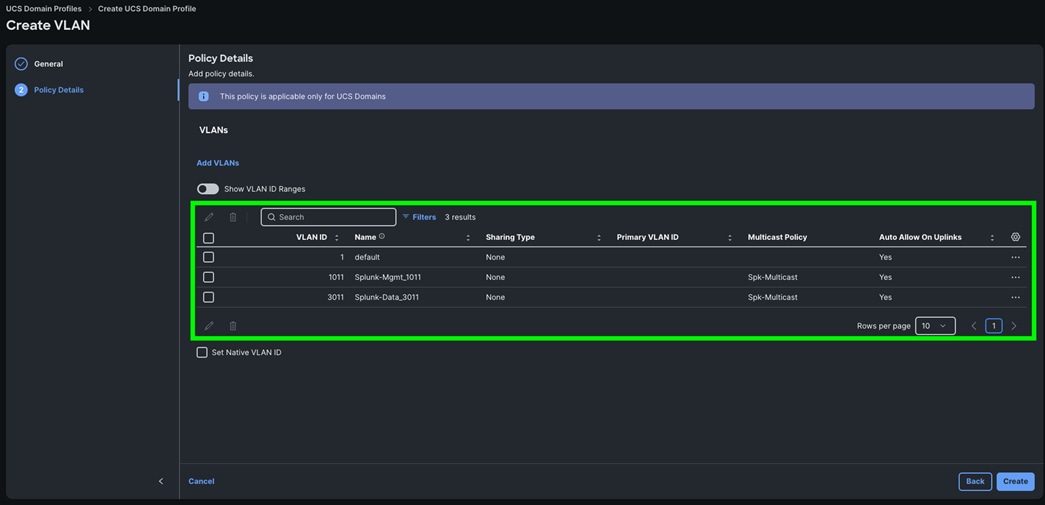

| VLAN Configuration |

||

| VLAN |

||

| Name |

ID |

Description |

| Default VLAN |

1 |

Native VLAN |

| Splunk-Mgmt |

1011 |

VLAN for Management and Data Ingestion Network Traffic |

| Splunk-Data |

3011 |

VLAN for Data Replication Network Traffic |

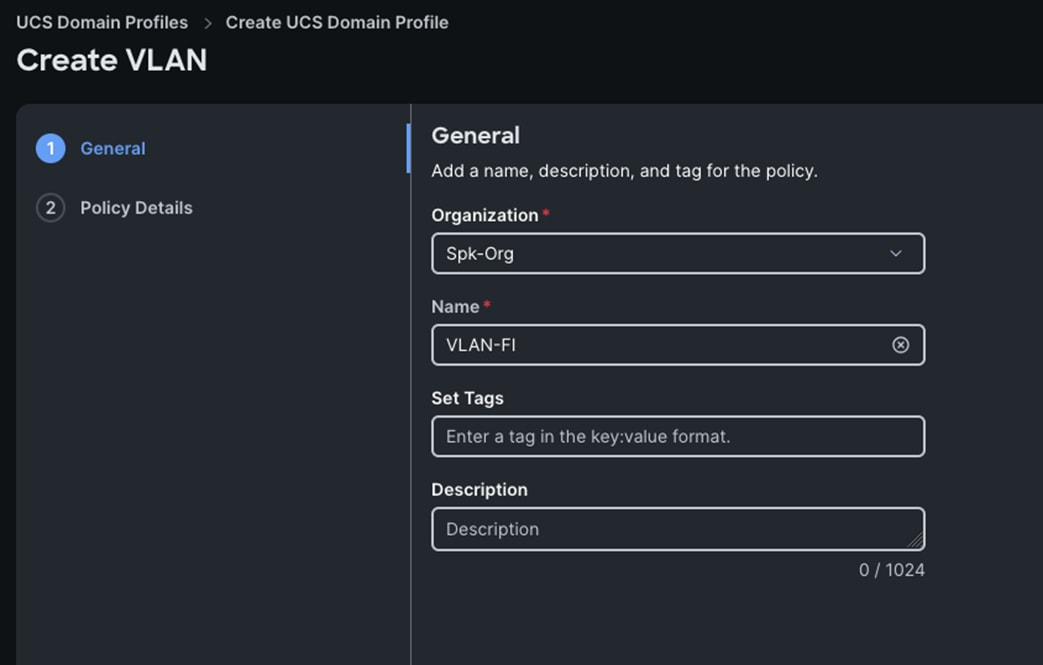

Step 1. To configure the VLAN Policy for the Cisco UCS Domain profile, go to > Configure > Polices > and click Create Policy. For the platform type select “UCS Domain” and for the Policy select “VLAN.”

Step 2. In the VLAN Policy Create section, for the Organization select “Spk-Org” and for the Policy name select “VLAN-FI.” Click Next.

Step 3. In the Policy Details section, to configure the individual VLANs, select "Add VLANs."

Step 4. Provide a name, VLAN ID for the VLAN and select the Multicast Policy as shown below:

Step 5. Click Add to add this VLAN to the policy.

Step 6. Add another VLAN 1011 and provide the names as Splunk-Mgmt and select the same Multicast Policy as configured previously.

Step 7. Click Create to create this policy.

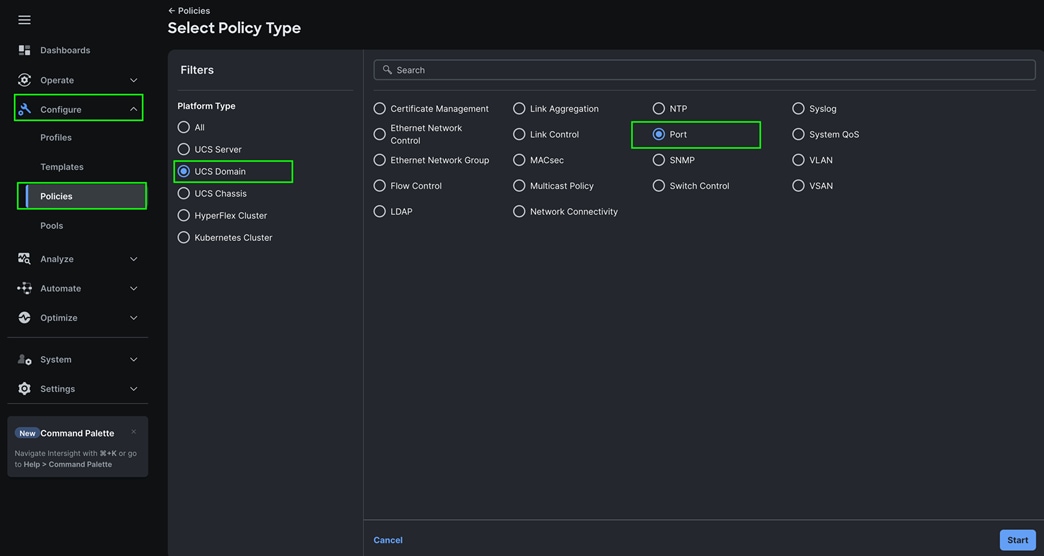

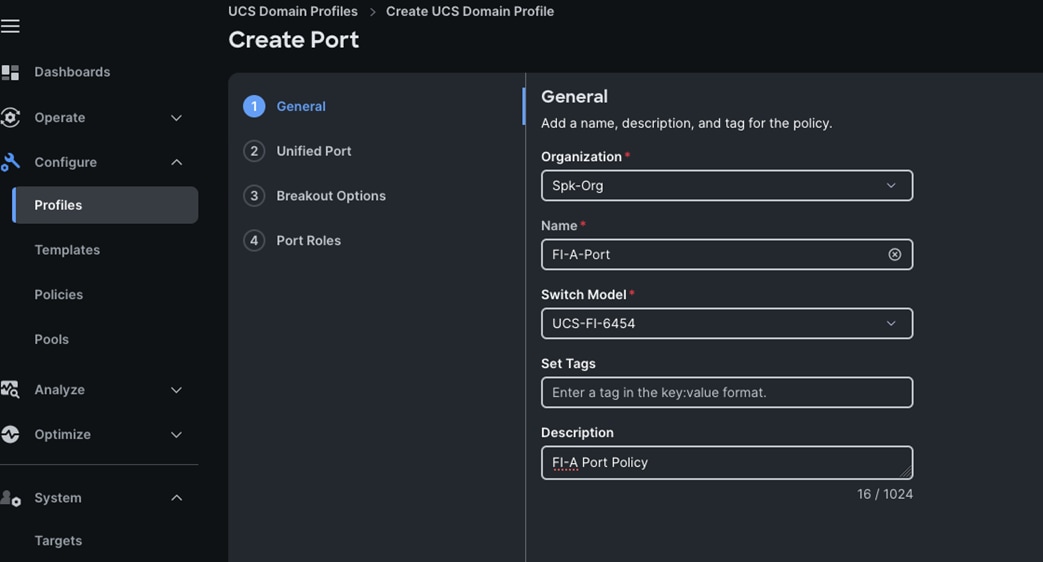

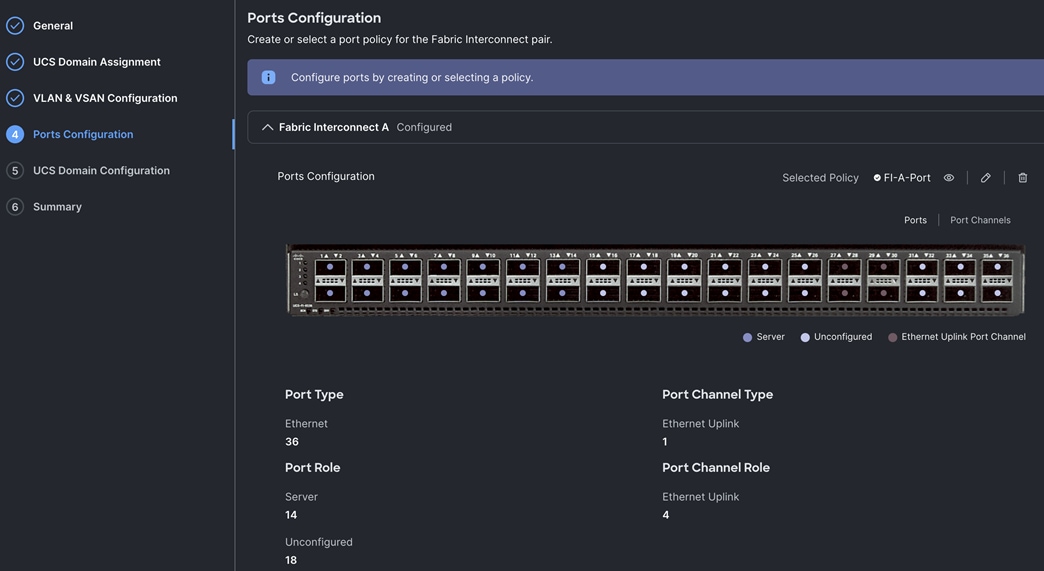

Procedure 3. Configure Port Policy

Step 1. Go to Configure > Polices > and click Create Policy.

Step 2. For the platform type select “UCS Domain” and for the policy, select “Port.”

Step 3. In the Port Policy Create section, for the Organization, select “Spk-Org,” for the policy name select “FI-A-Port” and for the Switch Model select "UCS-FI-6536.” Click Next.

Note: We did not configure the Fibre Channel Ports for this solution. In the Unified Port section, leave it as default and click Next.

Note: We did not configure the Breakout options for this solution. Leave it as default and click Next.

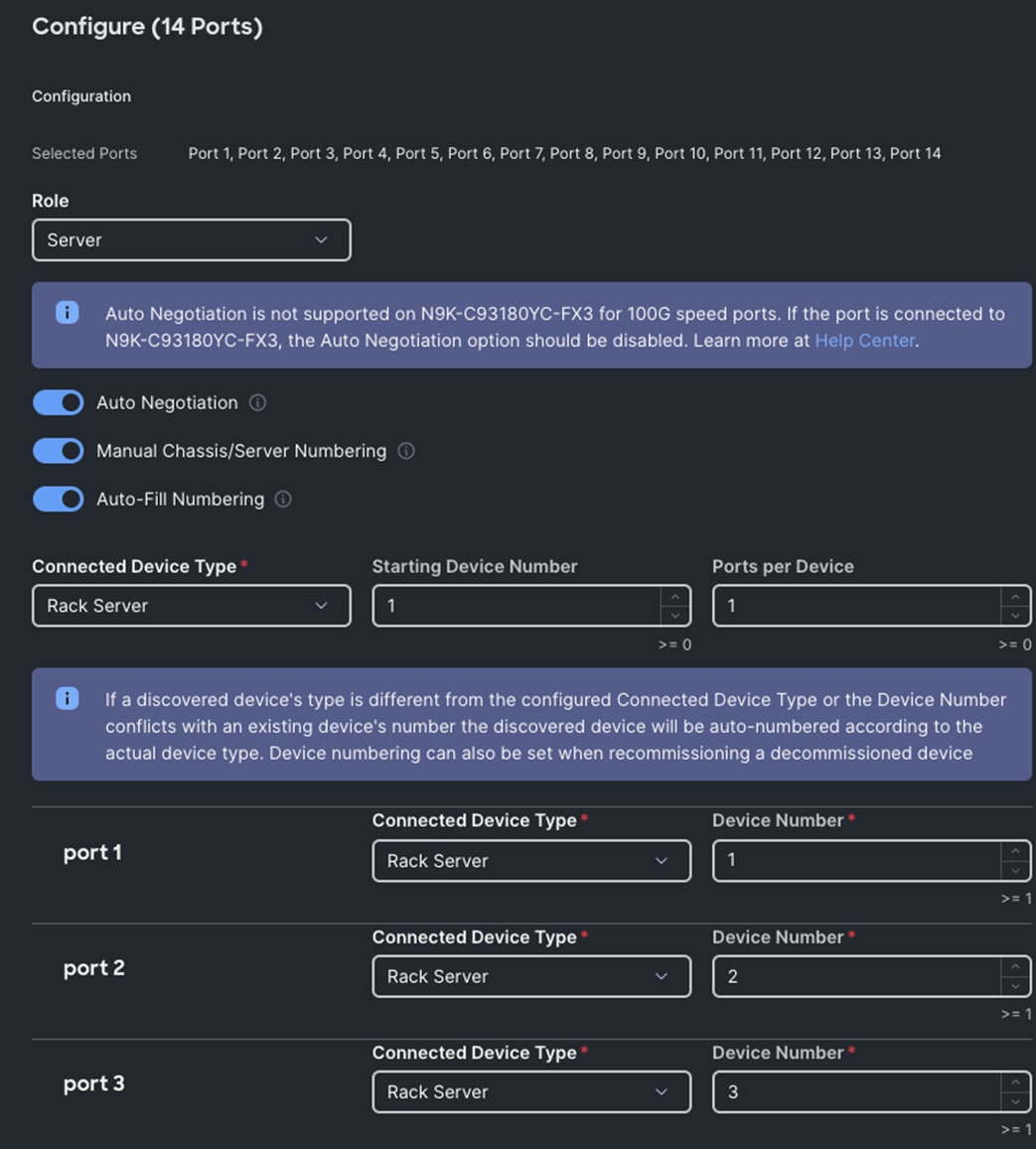

Step 4. In the Port Role section, select port 1 to 14 and click Configure.

Step 5. In the Configure section, for Role select Server and keep the Auto Negotiation ON, Manual Server Numbering and Auto Fill Numbering as shown below:

Step 6. Click SAVE to add this configuration for port roles.

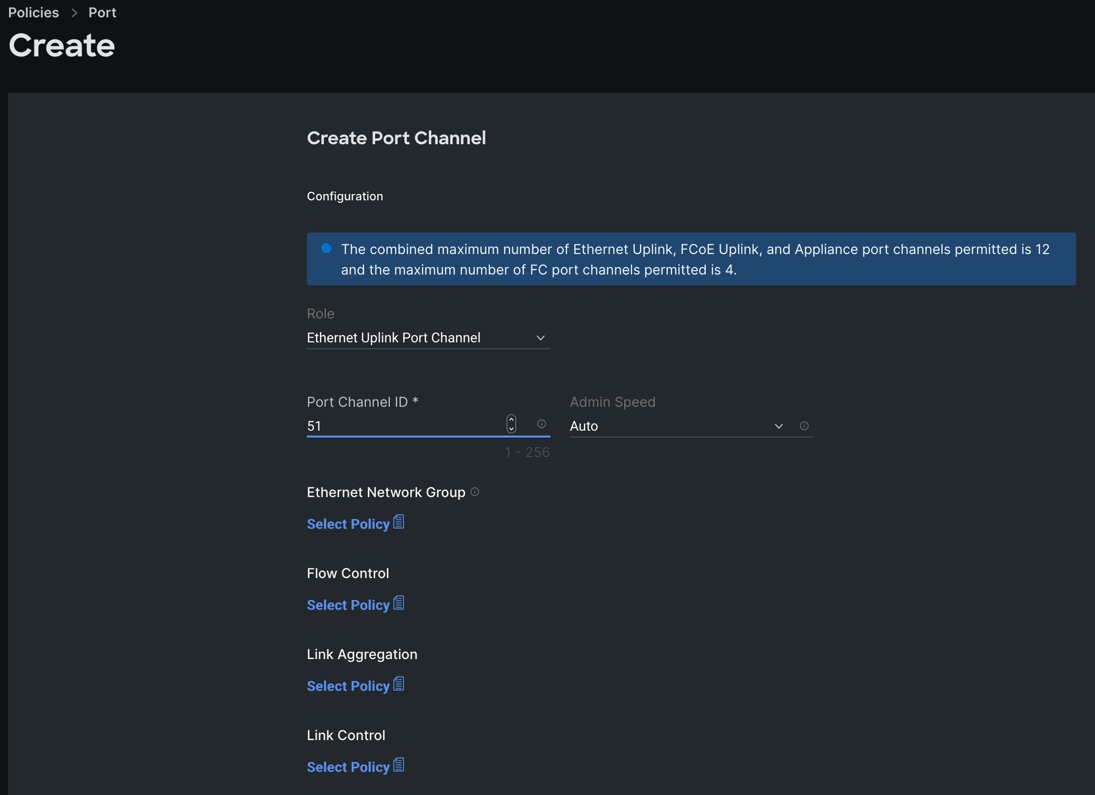

Step 7. Go to the Port Channels tab and select Port 27 to 30 and click Create Port Channel between FI-A and both Cisco Nexus Switches.

Step 8. In the Create Port Channel section, for Role select Ethernet Uplinks Port Channel, and for the Port Channel ID select 51 and select Auto for the Admin Speed.

Step 9. Click SAVE to add this configuration for uplink port roles.

Step 10. Click SAVE to complete this configuration for all the server ports and uplink port roles.

Note: We configured the FI-B ports and created a Port Policy for FI-B, “FI-B-Port.”

Note: As configured for FI-A, we configured the port policy for FI-B. For FI-B, configured port 1 to 14 for server ports and ports 27 to 30 as the ethernet uplink port-channel ports.

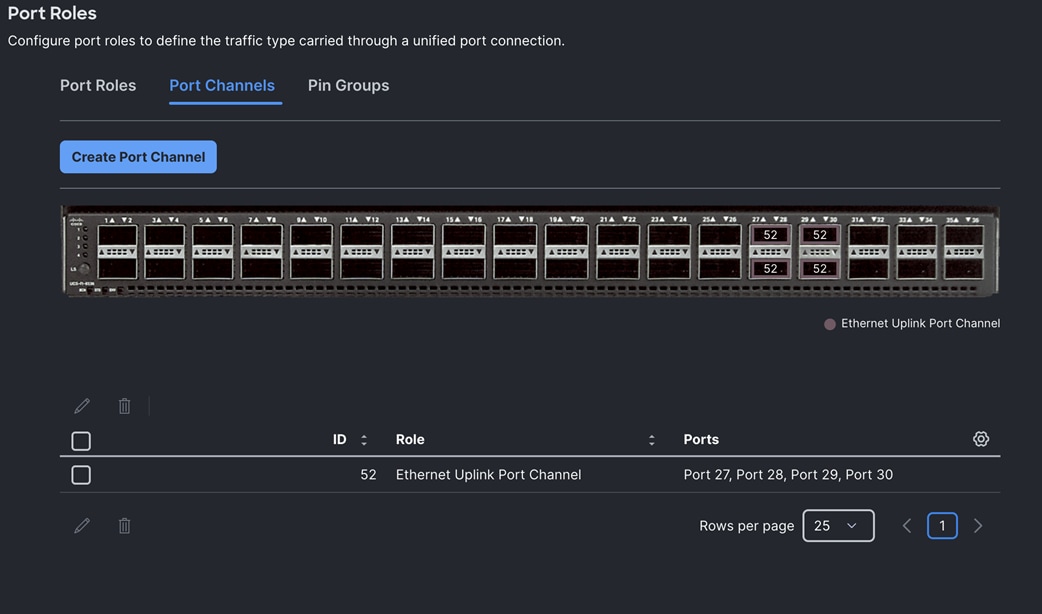

For FI-B, we configured Port-Channel ID as 52 for Ethernet Uplink Port Channel as shown below:

This completes the Port Policy for FI-A and FI-B for Cisco UCS Domain profile.

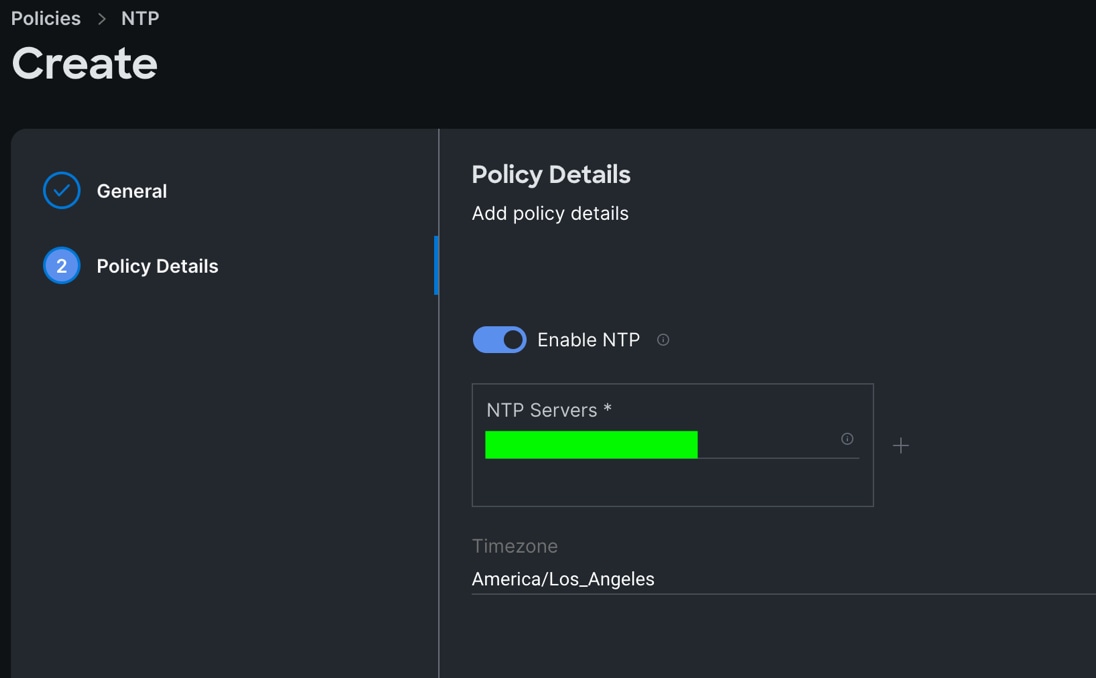

Procedure 4. Configure NTP Policy

Step 1. To configure the NTP Policy for the Cisco UCS Domain profile, go to > Configure > Polices > and click Create Policy. For the platform type select “UCS Domain” and for the policy select “NTP.”

Step 2. In the NTP Policy Create section, for the Organization select “Spk-Org” and for the policy name select “NTP-Policy.” Click Next.

Step 3. In the Policy Details section, select the option to enable the NTP Server and enter your NTP Server details as shown below:

Step 4. Click Create.

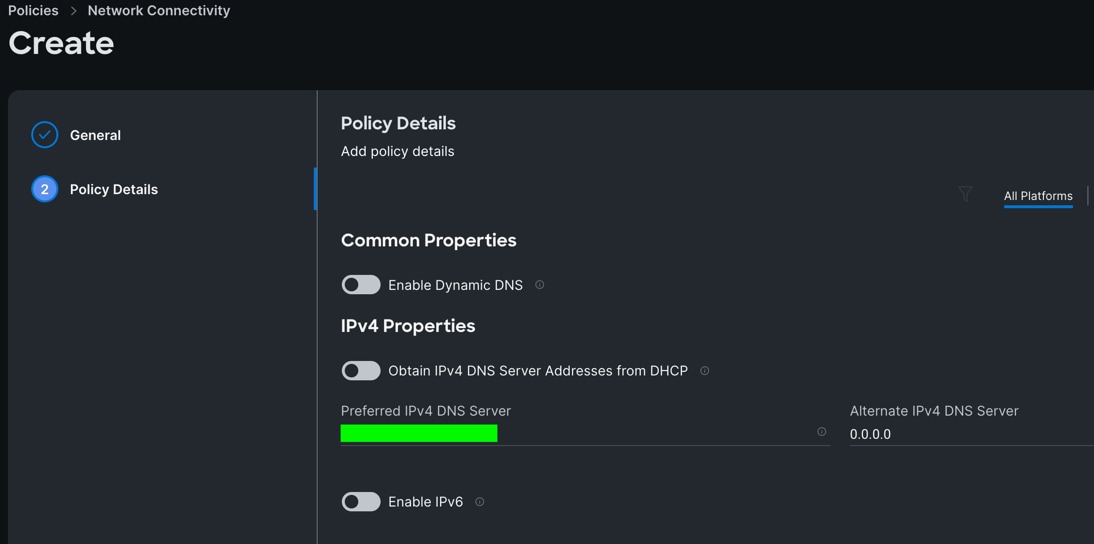

Procedure 5. Configure Network Connectivity Policy

Step 1. To configure to Network Connectivity Policy for the Cisco UCS Domain profile, go to > Configure > Polices > and click Create Policy. For the platform type select “UCS Domain” and for the policy select “Network Connectivity.”

Step 2. In the Network Connectivity Policy Create section, for the Organization select “Spk-Org” and for the policy name select “Network-Connectivity-Policy.” Click Next.

Step 3. In the Policy Details section, enter the IPv4 DNS Server information according to your environment details as shown below:

Step 4. Click Create.

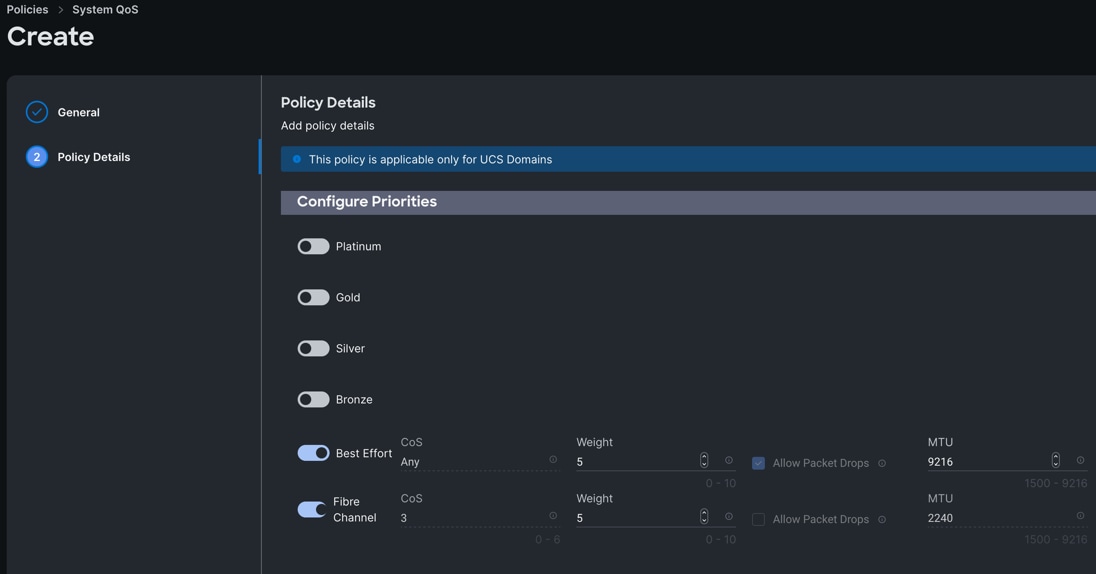

Procedure 6. Configure System QoS Policy

Step 1. To configure the System QoS Policy for the Cisco UCS Domain profile, go to > Infrastructure Service > Configure > Polices > and click Create Policy. For the platform type select “UCS Domain” and for the policy select “System QoS.”

Step 2. In the System QoS Policy Create section, for the Organization select “Spk-Org” and for the policy name select “Spk-QoS.” Click Next.

Step 3. In the Policy Details section under Configure Priorities, select Best Effort and set the MTU size to 9216.

Step 4. Click Create.

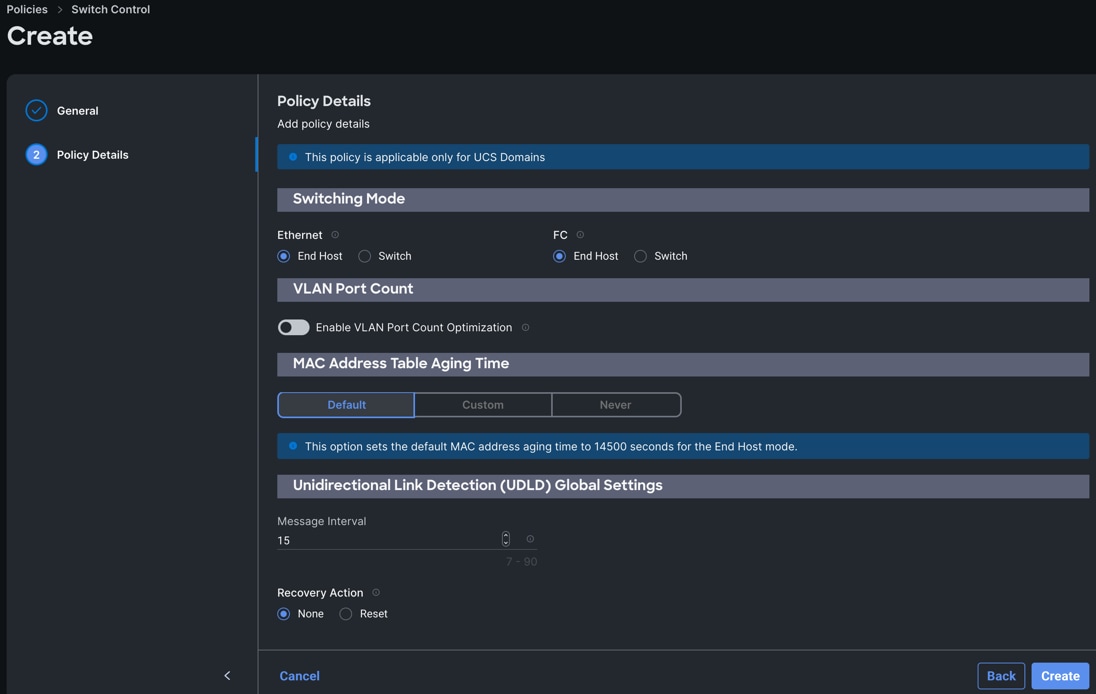

Procedure 7. Configure Switch Control Policy

Step 1. To configure the Switch Control Policy for the UCS Domain profile, go to > Infrastructure Service > Configure > Polices > and click Create Policy. For the platform type select “UCS Domain” and for the policy select “Switch Control.”

Step 2. In the Switch Control Policy Create section, for the Organization select “Spk-Org” and for the policy name select “Spk-Switch-Control.” Click Next.

Step 3. In the Policy Details section, for the Switching Mode for Ethernet as well as FC, select and keep "End Host" Mode.

Step 4. Click Create to create this policy.

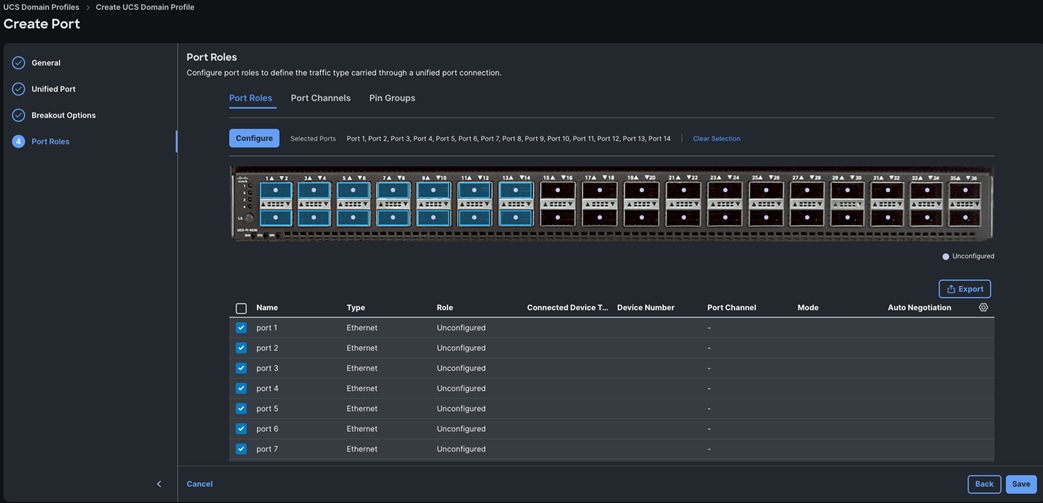

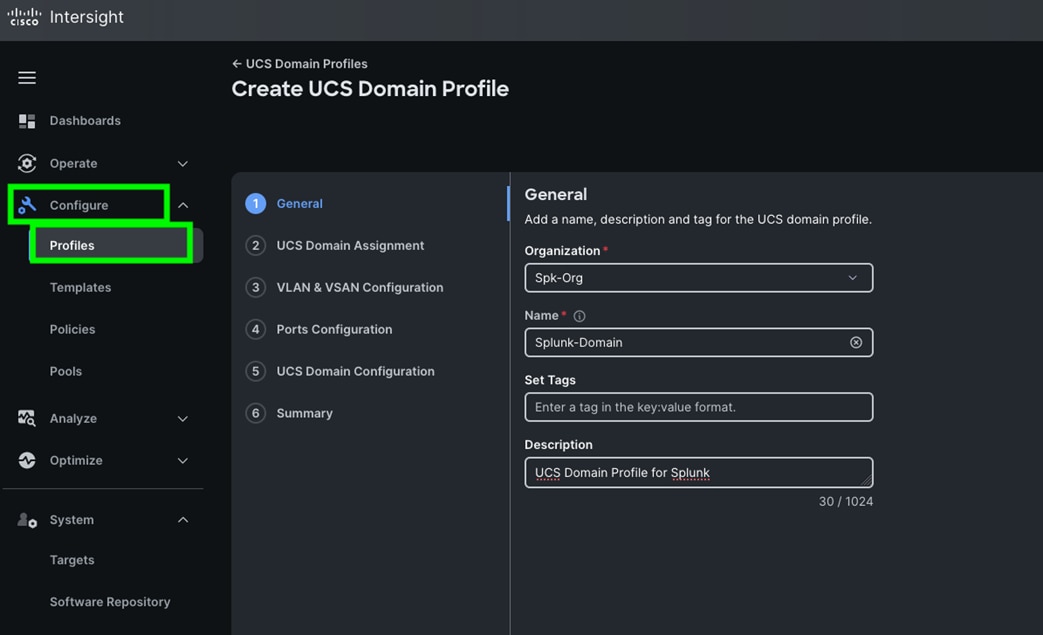

Configure Cisco UCS Domain Profile

This section contains the following procedures:

Procedure 1. Create a domain profile

With Cisco Intersight, a domain profile configures a fabric interconnect pair through reusable policies, allows for configuration of the ports and port channels, and configures the VLANs and VSANs in the network. It defines the characteristics of and configures ports on fabric interconnects. You can create a domain profile and associate it with a fabric interconnect domain. The domain-related policies can be attached to the profile either at the time of creation or later. One UCS Domain profile can be assigned to one fabric interconnect domain. For more information, go to: https://intersight.com/help/saas/features/fabric_interconnects/configure#domain_profile

Some of the characteristics of the Cisco UCS domain profile are:

● A single domain profile (Splunk-Domain) is created for the pair of Cisco UCS fabric interconnects.

● Unique port policies are defined for the two fabric interconnects.

● The VLAN configuration policy is common to the fabric interconnect pair because both fabric interconnects are configured for the same set of VLANs.

● The VSAN configuration policy is different to each of the fabric interconnects because both fabric interconnects are configured to carry separate storage traffic through separate VSANs.

● The Network Time Protocol (NTP), network connectivity, and system Quality-of-Service (QoS) policies are common to the fabric interconnect pair.

Procedure 1. Create a Domain Profile

Step 1. To create a domain profile, go to Configure > Profiles > then go to the UCS Domain Profiles tab and click Create UCS Domain Profile

Step 2. For the domain profile name, enter “Splunk-Domain” and for the Organization select what was previously configured. Click Next.

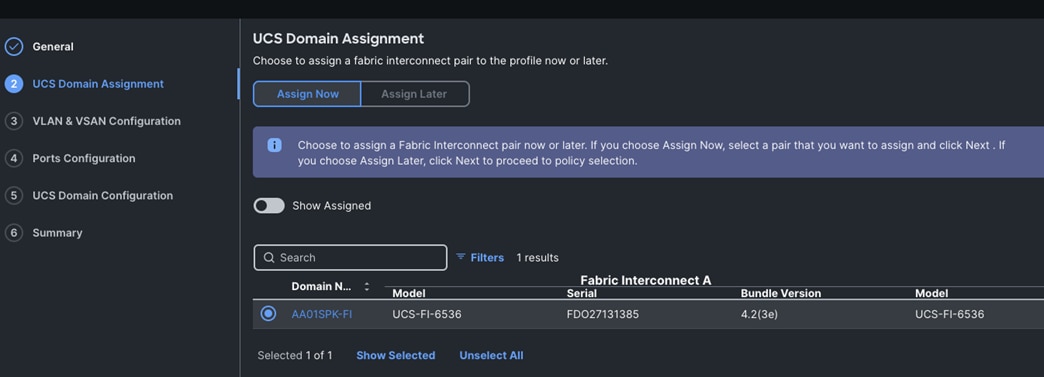

Step 3. In the UCS Domain Assignment menu, for the Domain Name select “Spk-OrgC-FI” which was added previously into this domain and click Next.

Step 4. In the VLAN Configuration screen, for the VLAN Configuration for both FIs, select VLAN-FI and click Next.

Step 5. In the Port Configuration section, for the Port Configuration Policy for FI-A select FI-A-Port.

Step 6. For the port configuration policy for FI-B select FI-B-Port.

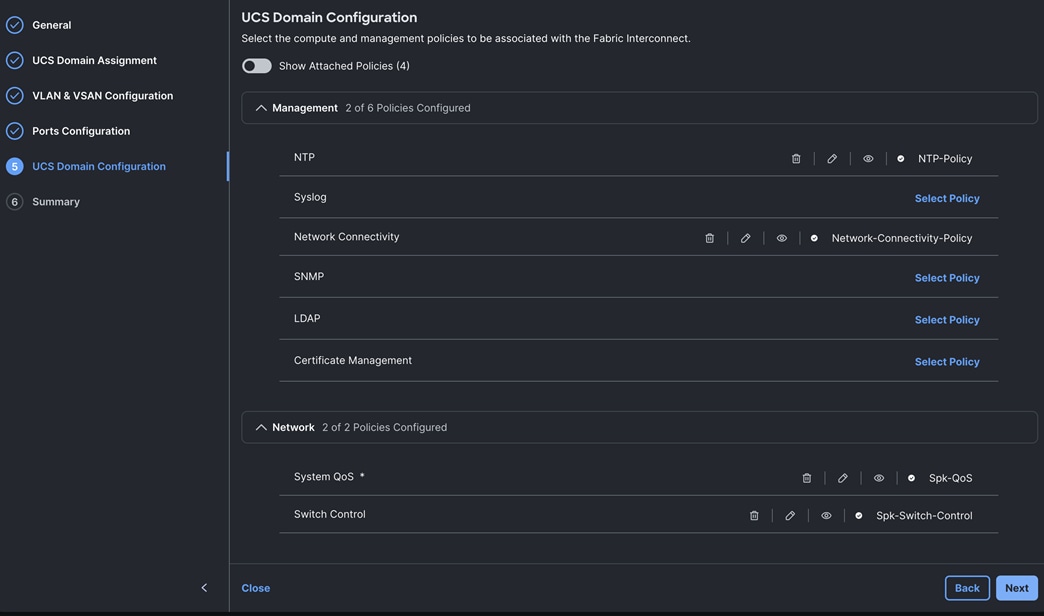

Step 7. In the UCS Domain Configuration section, select the policy for NTP, Network Connectivity, System QoS and Switch Control as shown below:

Step 8. In the Summary window, review the policies and click Deploy to create Domain Profile.

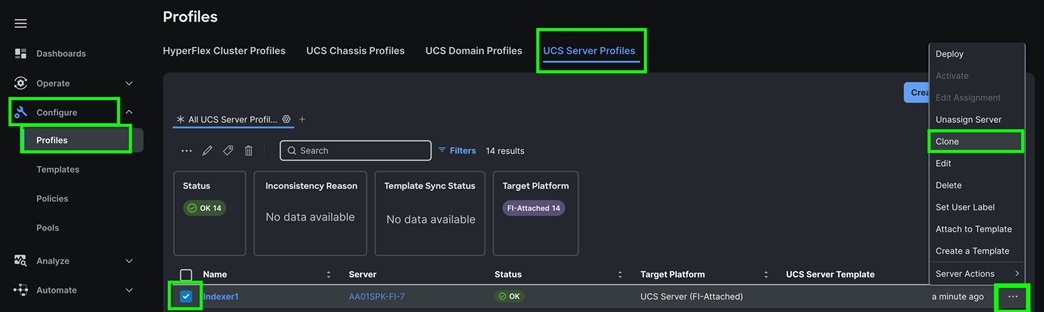

Note: After the Cisco UCS domain profile has been successfully created and deployed, the policies including the port policies are pushed to the Cisco UCS fabric interconnects. The Cisco UCS domain profile can easily be cloned to install additional Cisco UCS systems. When cloning the Cisco UCS domain profile, the new Cisco UCS domains utilize the existing policies for the consistent deployment of additional Cisco UCS systems at scale.

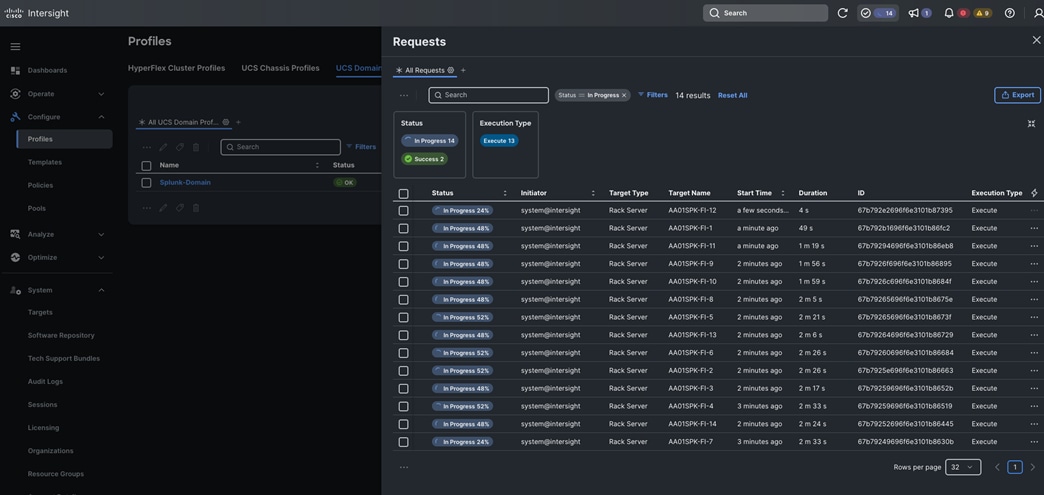

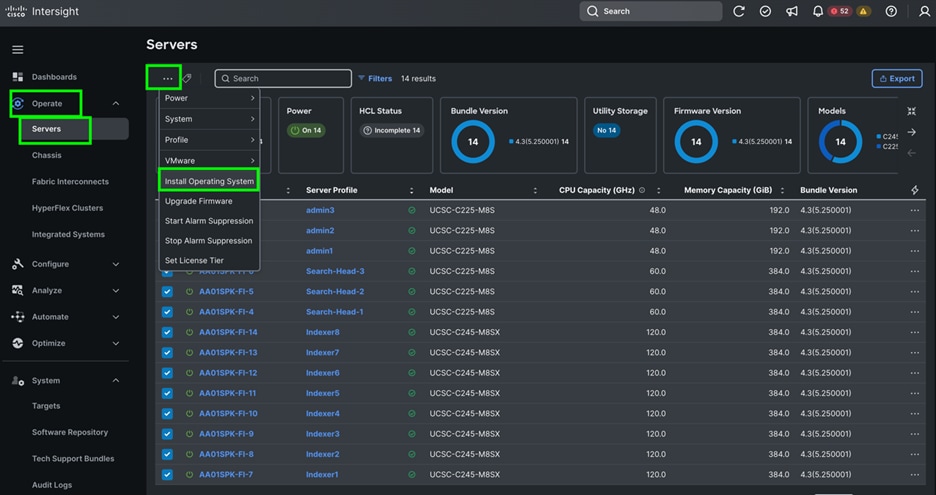

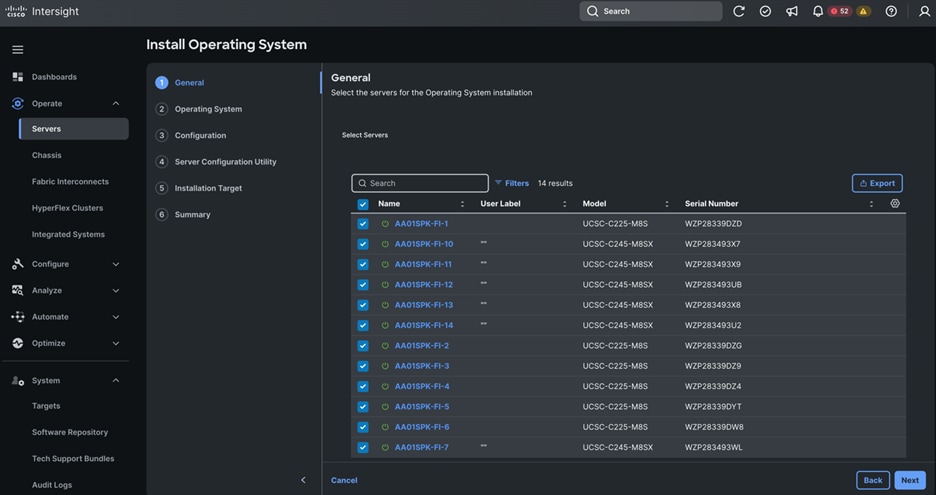

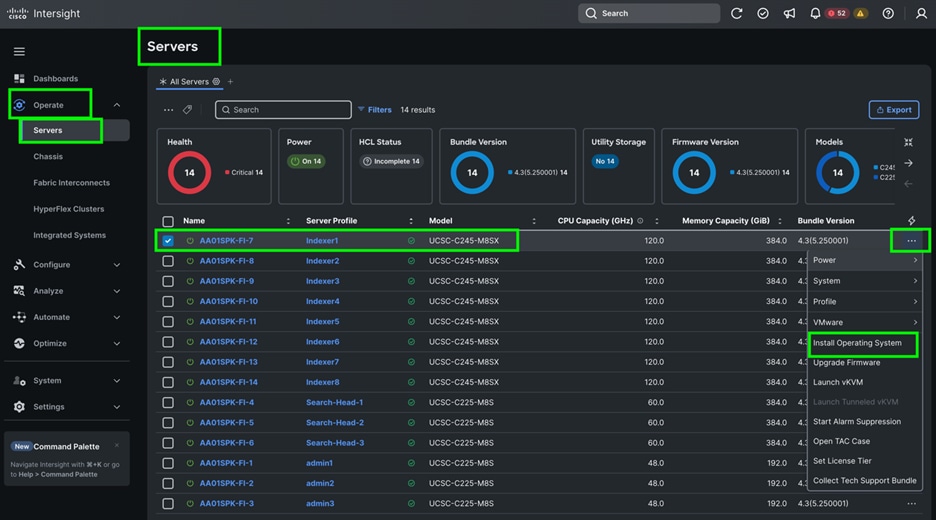

Step 9. The Cisco UCS C225 and C245 M8 Compute Nodes are automatically discovered when the ports are successfully configured using the domain profile as shown below. You can check the status of this discovery by clicking Request next to the Refresh page option.

After discovering the servers successfully, you will find all the servers as shown below.

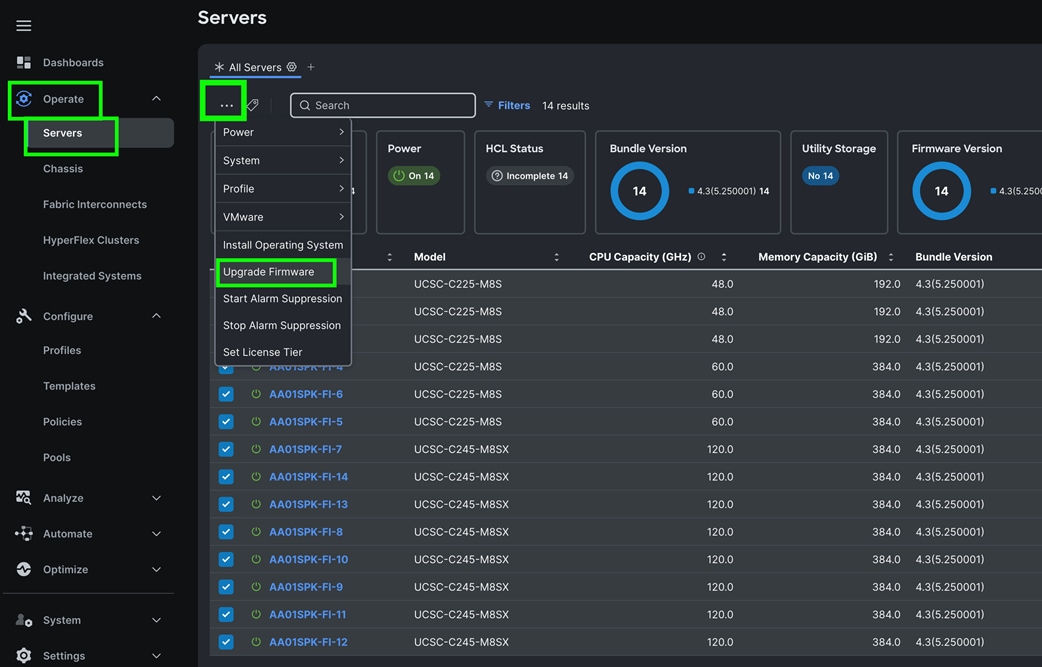

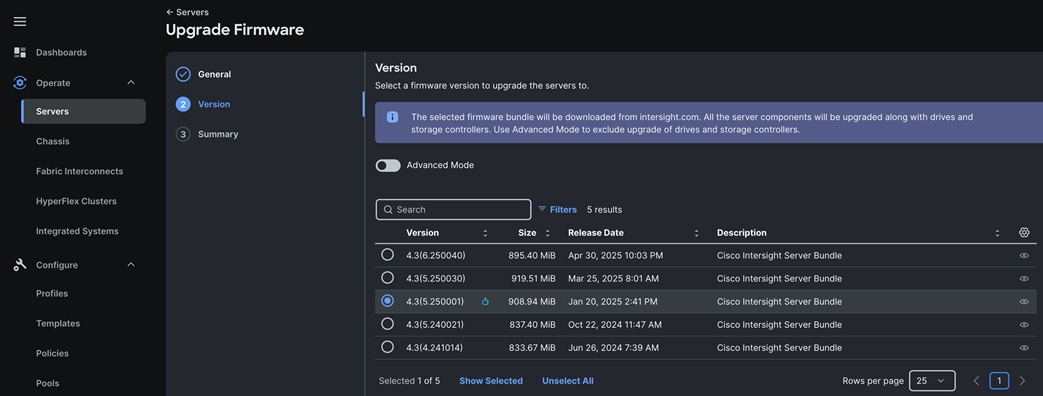

Step 10. After discovering the servers successfully, upgrade all server firmware through IMM to the supported release. To do this, check the box for All Servers and then click the ellipses and from the drop-down list, select Upgrade Firmware.

Step 11. In the Upgrade Firmware section, select all servers and click Next. In the Version section, for the supported firmware version release select “4.3 (5.250001)” and click Next, then click Upgrade to upgrade the firmware on all servers simultaneously.

Step 12. After the successful firmware upgrade, you can create a server profile template and a server profile for IMM configuration.

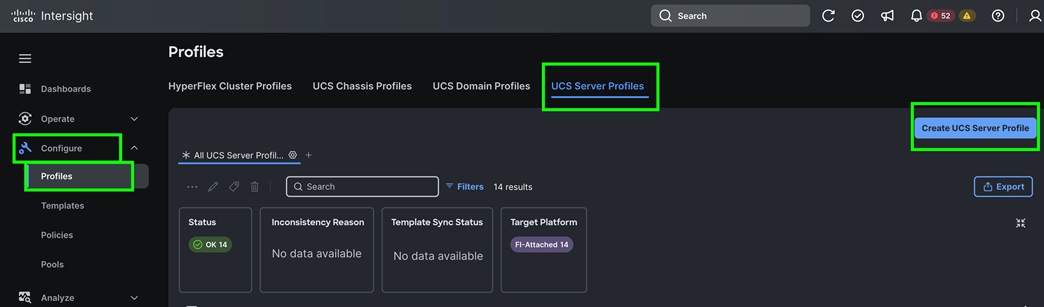

Configure Policies for Server Profile

This section contains the following procedures:

Procedure 1. Configure UUID Pool

Procedure 2. Configure BIOS Policy

Procedure 4. Configure Ethernet Network Control Policy

Procedure 5. Configure Ethernet Network Group Policy

Procedure 6. Configure Ethernet Adapter Policy

Procedure 7. Create Ethernet QoS Policy

Procedure 8. Configure LAN Connectivity Policy

Procedure 9. Configure Boot Order Policy

Procedure 10. Configure Storage Policy

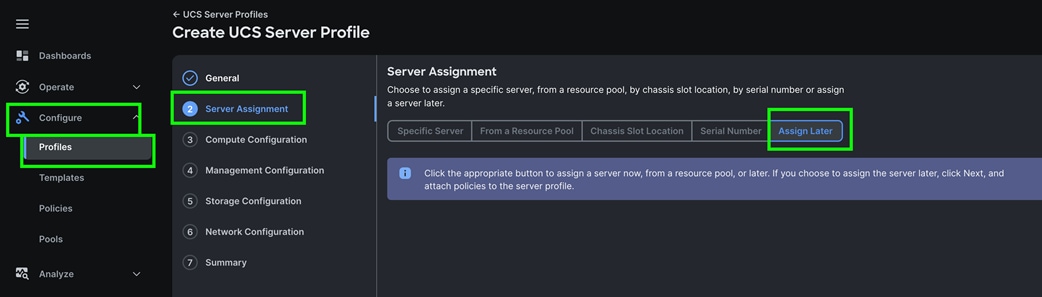

Procedure 11. Configure Server Profiles

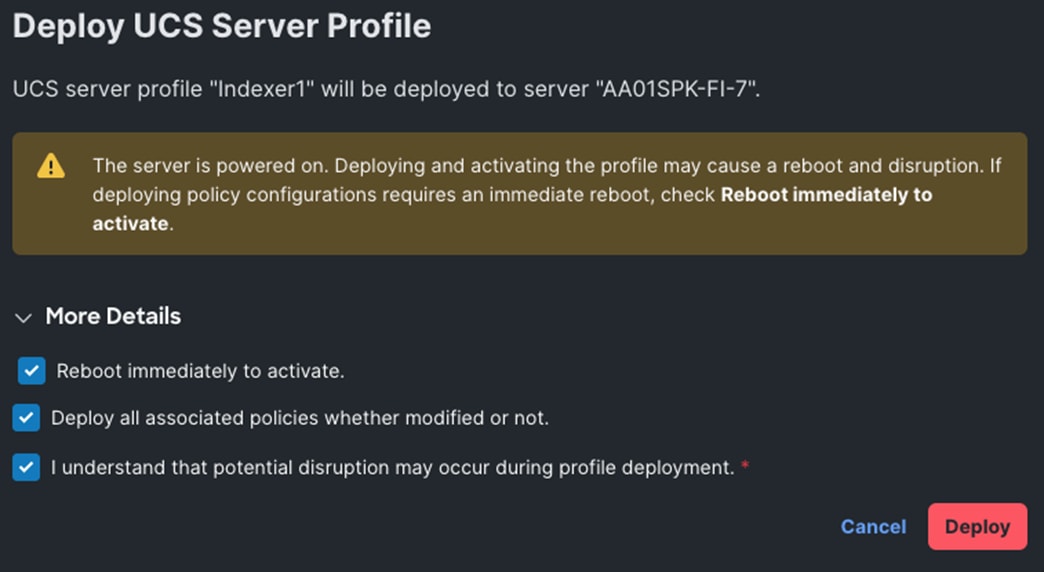

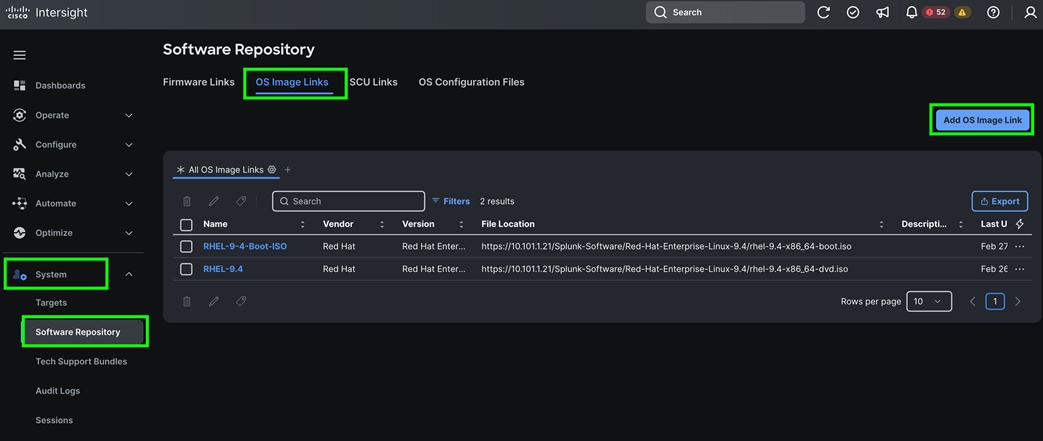

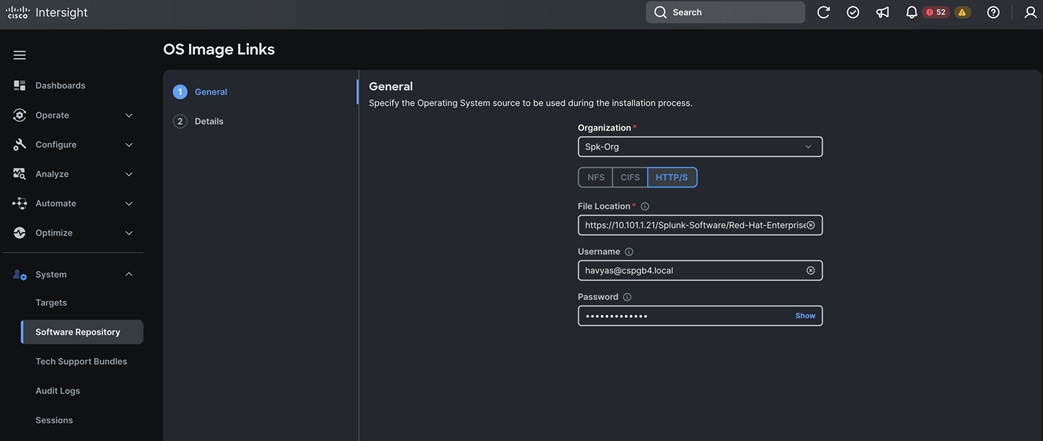

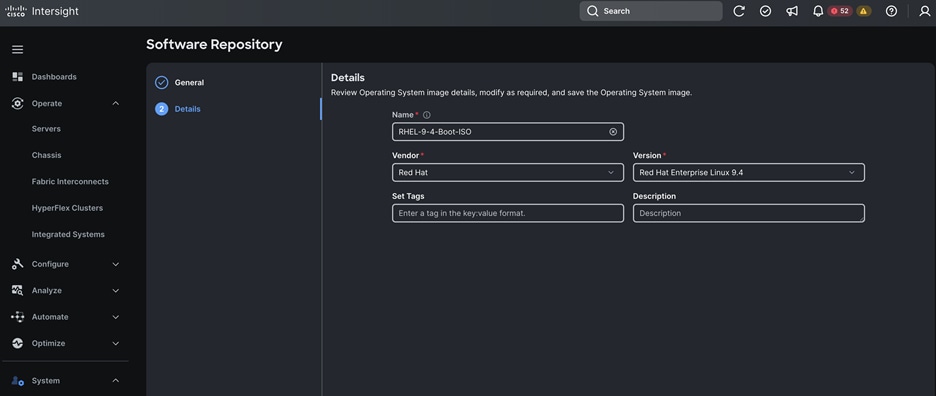

Procedure 12. Assign and Deploy Server Profiles

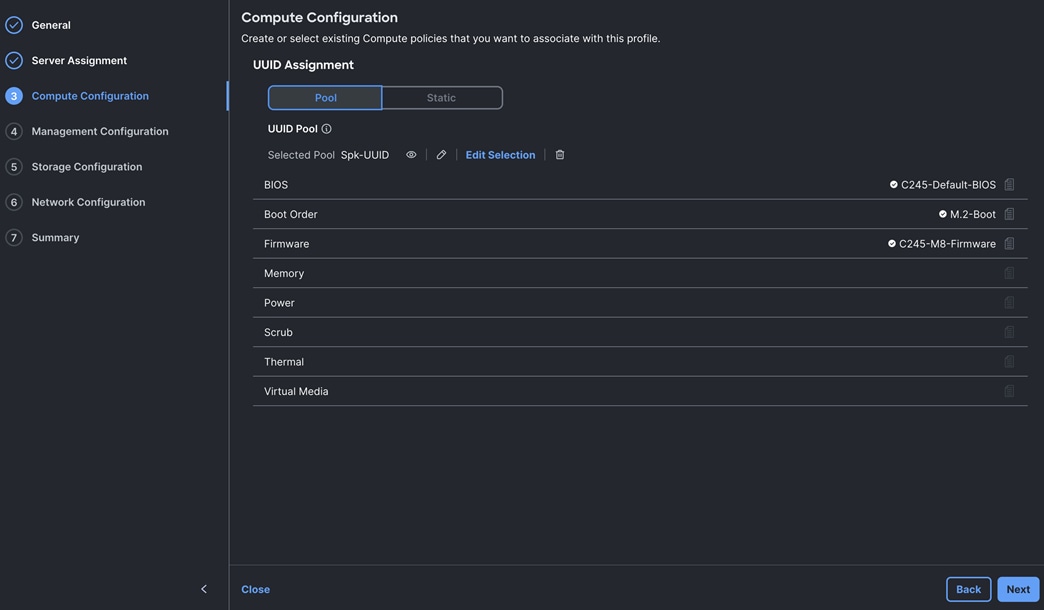

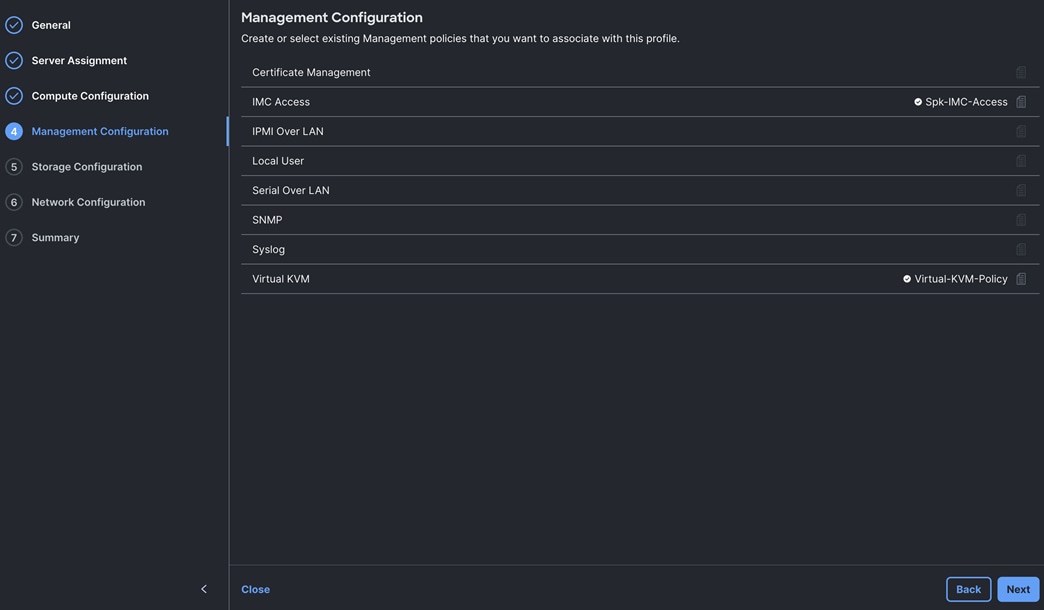

A server profile enables resource management by simplifying policy alignment and server configuration. The server profile wizard groups the server policies into the following categories to provide a quick summary view of the policies that are attached to a profile:

● Compute Configuration: BIOS, Boot Order, and Virtual Media policies.

● Management Configuration: Certificate Management, IMC Access, IPMI (Intelligent Platform Management Interface) Over LAN, Local User, Serial Over LAN, SNMP (Simple Network Management Protocol), Syslog and Virtual KVM (Keyboard, Video, and Mouse).

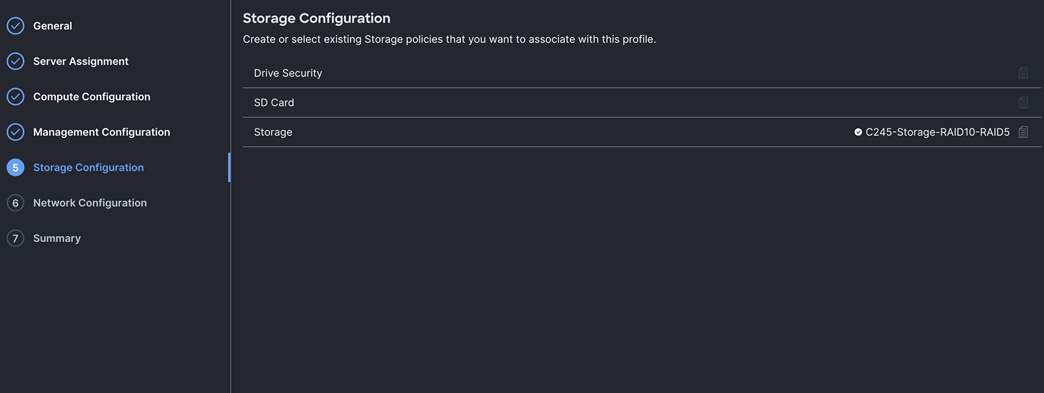

● Storage Configuration: SD Card, Storage.

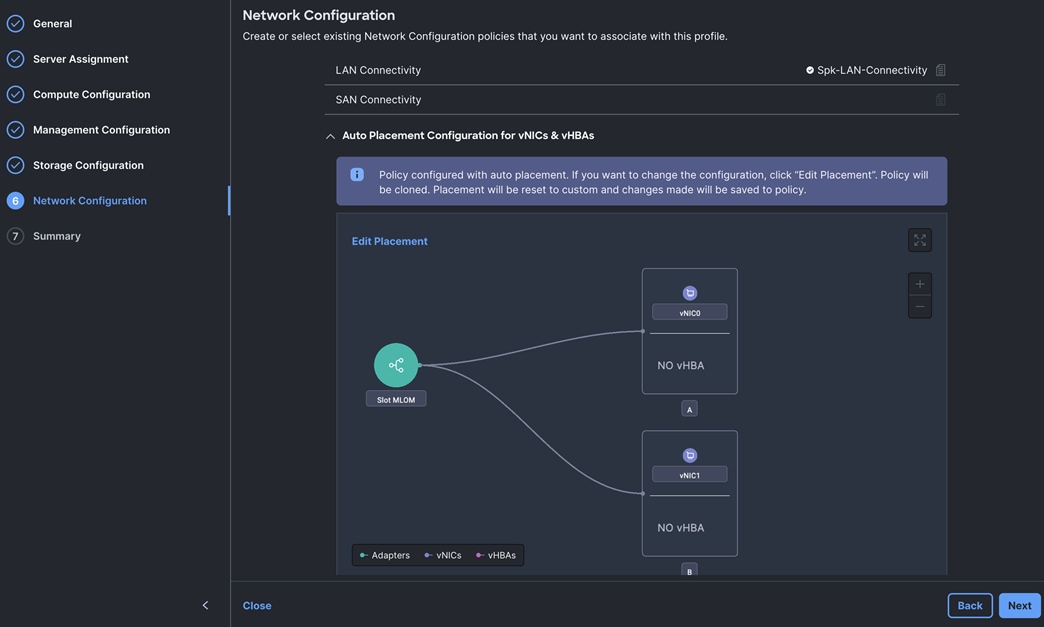

● Network Configuration: LAN connectivity and SAN connectivity policies.

Some of the characteristics of the server profile template for this solution are as follows:

● BIOS policy is created to specify various server parameters in accordance with AMD CPU’s best practices.

● Boot order policy defines virtual media (KVM mapper DVD) and local boot through M.2 SSD.

● IMC access policy defines the management IP address pool for KVM access.

● LAN connectivity policy is used to create two virtual network interface cards (vNICs) – One vNIC for Server Node Management and Splunk Data Ingestion Network Traffic, second vNIC for Splunk Indexing Server-to-Server Network Traffic Interface.

Procedure 1. Configure UUID Pool

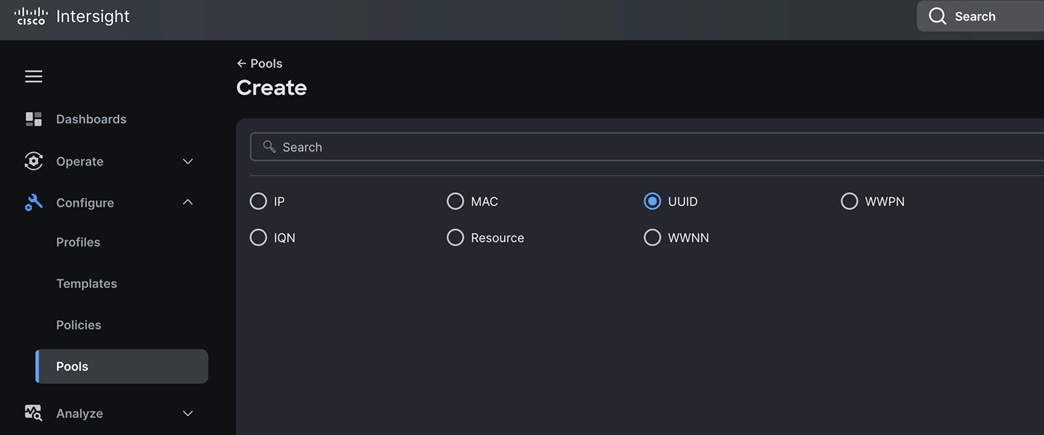

Step 1. To create UUID Pool for a Cisco UCS, go to > Configure > Pools > and click Create Pool. Select option UUID.

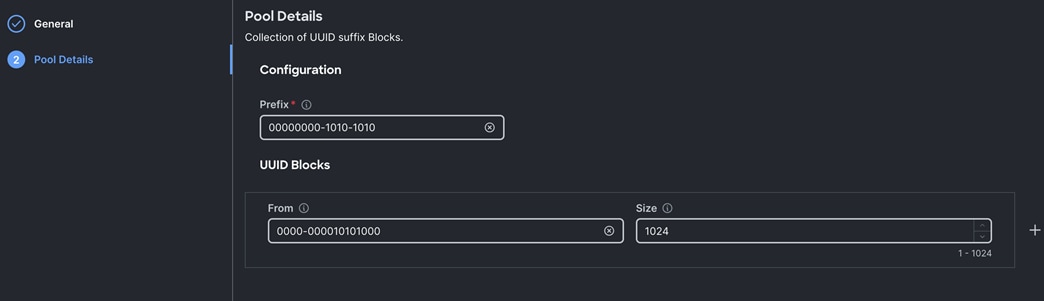

Step 2. In the UUID Pool Create section, for the Organization, select “Spk-Org” and for the Policy name Spk-UUID. Click Next.

Step 3. Select Prefix, UUID block and size according to your environment and click Create as shown below:

Procedure 2. Configure BIOS Policy

Note: For more information, see Performance Tuning for Cisco UCS M8 Platforms with AMD EPYC 4th Gen and 5th Gen Processors here: https://www.cisco.com/c/en/us/products/collateral/servers-unified-computing/ucs-c-series-rack-servers/ucs-c245-m8-rack-ser-4th-gen-amd-epyc-pro-wp.html

Note: For this specific solution, we created a single BIOS policy (for both single socket C225 and two socket C245 M8 Server node) and used the setting as mentioned in Table 7.

Table 7. BIOS recommendations for Splunk Analytical Enterprise Workloads

| BIOS options |

BIOS values (platform default) |

Big Data analytics (Splunk Analytical Workload) |

| Processor |

||

| SMT mode |

Enabled |

Disabled |

| SVM mode |

Auto (Enabled) |

Auto |

| DF C-states |

Auto (Enabled) |

Auto |

| ACPI SRAT L3 Cache as NUMA Domain |

Auto (Disabled) |

Auto |

| APBDIS |

Auto (0) |

1 |

| Fixed SOC P-State SP5F 19h |

Auto (P0) |

Auto |

| 4-link xGMI max speed* |

Auto (32Gbps) |

Auto |

| Enhanced CPU performance* |

Disabled |

Disabled |

| Memory |

||

| NUMA nodes per socket |

Auto (NPS1) |

Auto |

| IOMMU |

Auto (Enabled) |

Auto |

| Memory interleaving |

Auto (Enabled) |

Auto |

| Power/Performance |

||

| Core performance boost |

Auto (Enabled) |

Auto |

| Global C-State control |

Auto (Enabled) |

Auto |

| L1 Stream HW Prefetcher |

Auto (Enabled) |

Auto |

| L2 Stream HW Prefetcher |

Auto (Enabled) |

Auto |

| Processor |

||

| Determinism slider |

Auto (Power) |

Auto |

| CPPC |

Auto (Disabled) |

Enabled |

| Power profile selection F19h |

High-performance mode |

High-performance mode |

Note: BIOS tokens with *highlighted are not applicable only for single socket optimized platform like Cisco UCS C225 M8 1U Rack Server.

Step 1. To create BIOS Policy, go to > Configure > Policies > and select Platform type as UCS Server and select on BIOS and click Start.

Step 2. In the BIOS create general menu, for the Organization, select Spk-Org and for the Policy name Spk-BIOS-Policy. Click Next.

Step 3. Apply the mentioned parameters from the above table to configure the BIOS for C225 and C245 M8 Server running as Big-Data Analytical workloads.

Step 4. Click Create to create the BIOS policy.

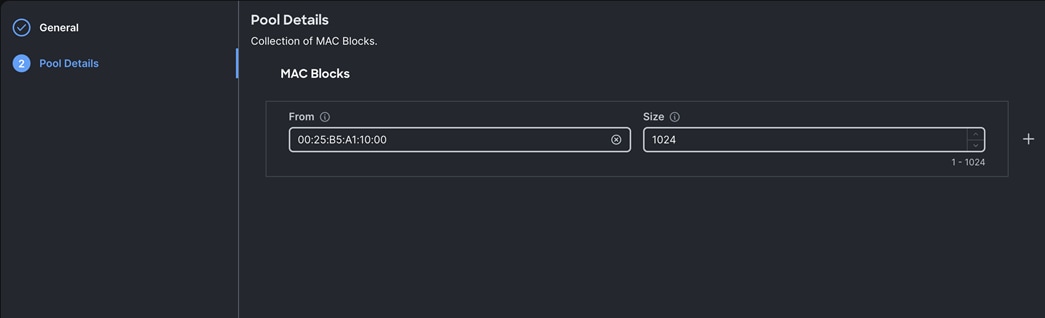

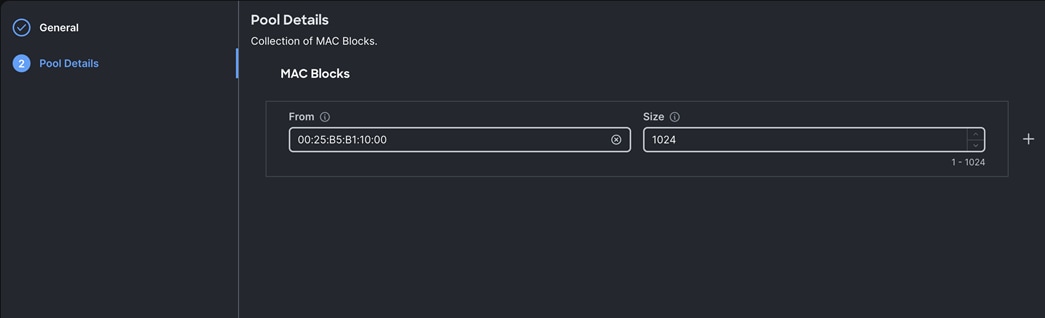

Step 1. To configure a MAC Pool for a Cisco UCS Domain profile, go to > Configure > Pools > and click Create Pool. Select option MAC to create MAC Pool.

Step 2. In the MAC Pool Create section, for the Organization, select Spk-Org and for the Policy name Spk-MAC-Pool-A. Click Next.

Step 3. Enter the MAC Blocks from and Size of the pool according to your environment and click Create.

Note: For this solution, we configured two MAC Pools. Spk-MAC-Pool-A for vNICs MAC Address VLAN 1011 on all servers through FI-A Side. Spk-MAC-Pool-B for vNICs MAC Address VLAN 3011 on all servers through FI-B Side

Step 4. Create a second MAC Pool to provide MAC addresses to all vNICs running on VLAN 3011.

Step 5. Go to > Configure > Pools > and click Create Pool. Select option MAC to create MAC Pool.

Step 6. In the MAC Pool Create section, for the Organization, select Spk-Org and for the Policy name “Spk-MAC-Pool-B.” Click Next.

Step 7. Enter the MAC Blocks from and Size of the pool according to your environment and click Create.

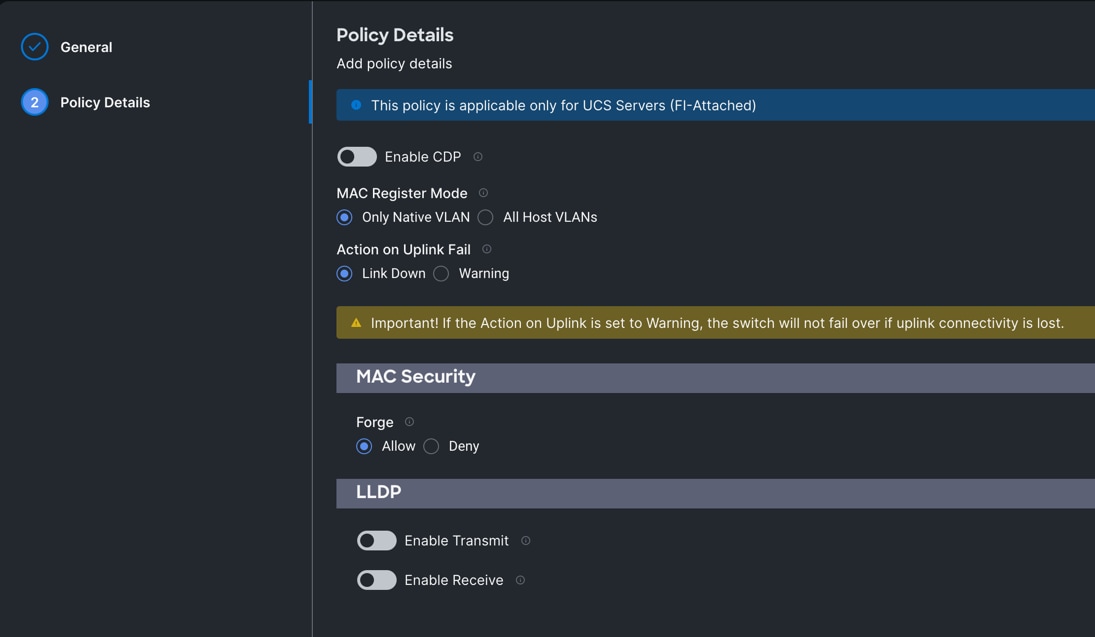

Procedure 4. Configure Ethernet Network Control Policy

Step 1. To configure the Ethernet Network Control Policy for the UCS server profile, go to > Configure > Polices > and click Create Policy.

Step 2. For the platform type select UCS Server and for the policy select Ethernet Network Control.

Step 3. In the Switch Control Policy Create section, for the Organization select Spk-Org and for the policy name enter “Eth-Network-Control.” Click Next.

Step 4. In the Policy Details section, keep the parameter as shown below:

Step 5. Click Create to create this policy.

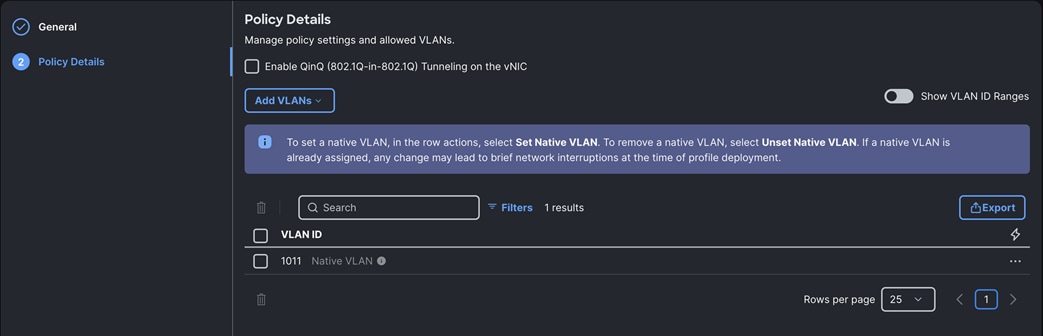

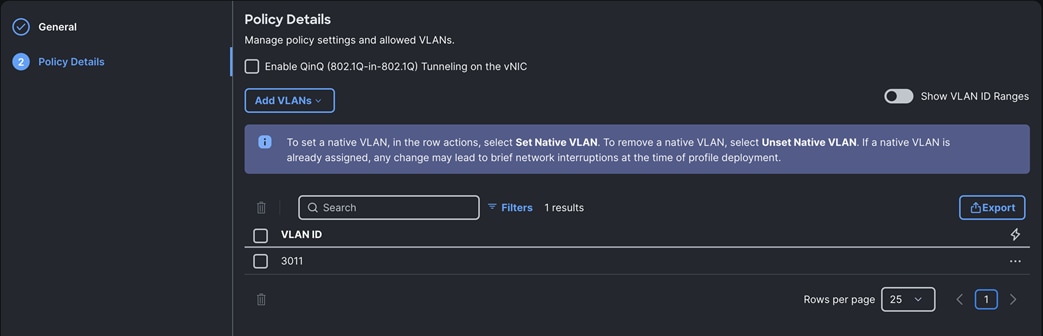

Procedure 5. Configure Ethernet Network Group Policy

Note: We configured two Ethernet Network Groups to allow two different VLAN traffic for this solution (one for VLAN 1011 and the other for VLAN 3011).

Step 1. To configure the Ethernet Network Group Policy for the UCS server profile, go to > Configure > Polices > and click Create Policy.

Step 2. For the platform type select UCS Server and for the policy select Ethernet Network Group.

Step 3. In the Switch Control Policy Create section, for the Organization select Spk-Org and for the policy name enter “Eth-Network-1011.” Click Next.

Step 4. In the Policy Details section, click Add VLANs and enter VLANs 1011 manually.

Step 5. For this VLAN, set the native VLAN as shown below:

Step 6. Click Create to create this policy for VLAN 1011.

Step 7. Create another “Eth-Network-3011” and add VLAN 3011.

Note: For this solution, we used these two Ethernet Network Group policies and applied them on different vNICs to carry individual both the VLAN traffic.

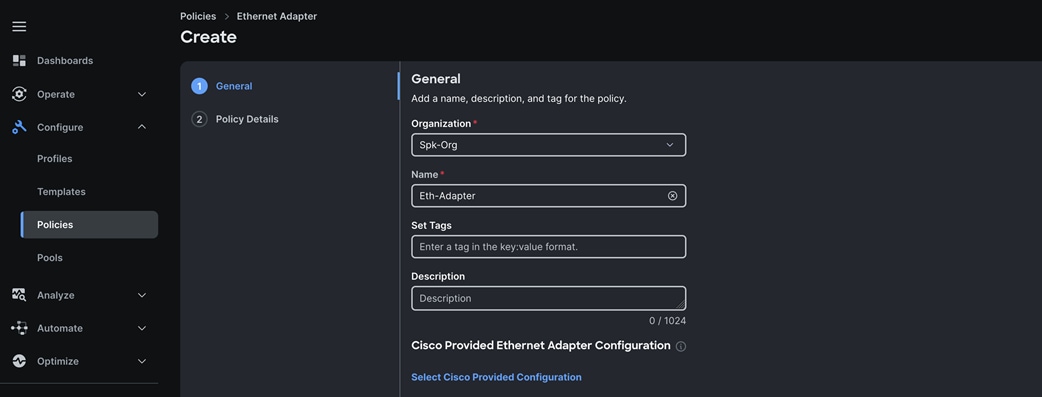

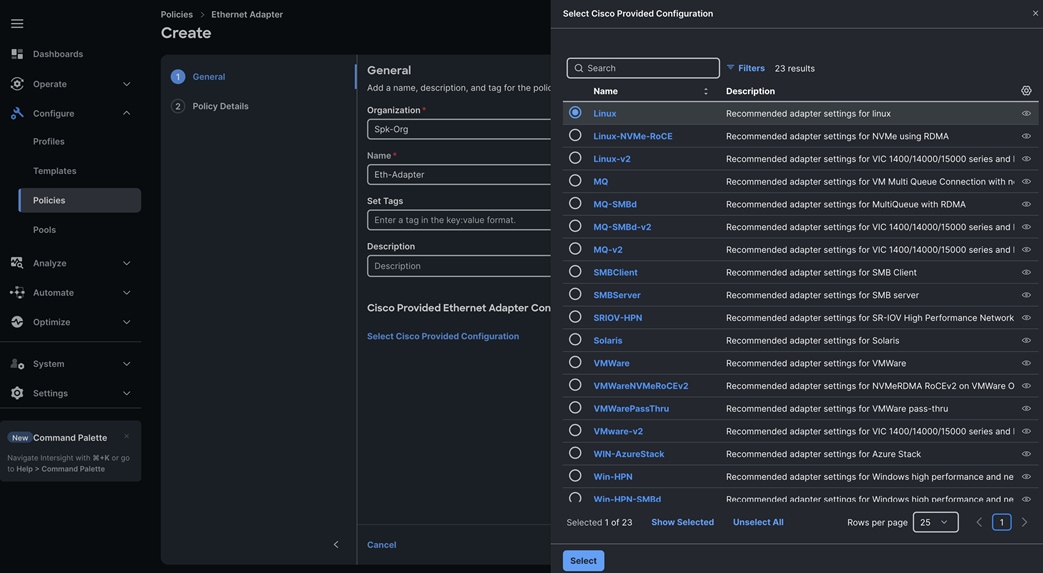

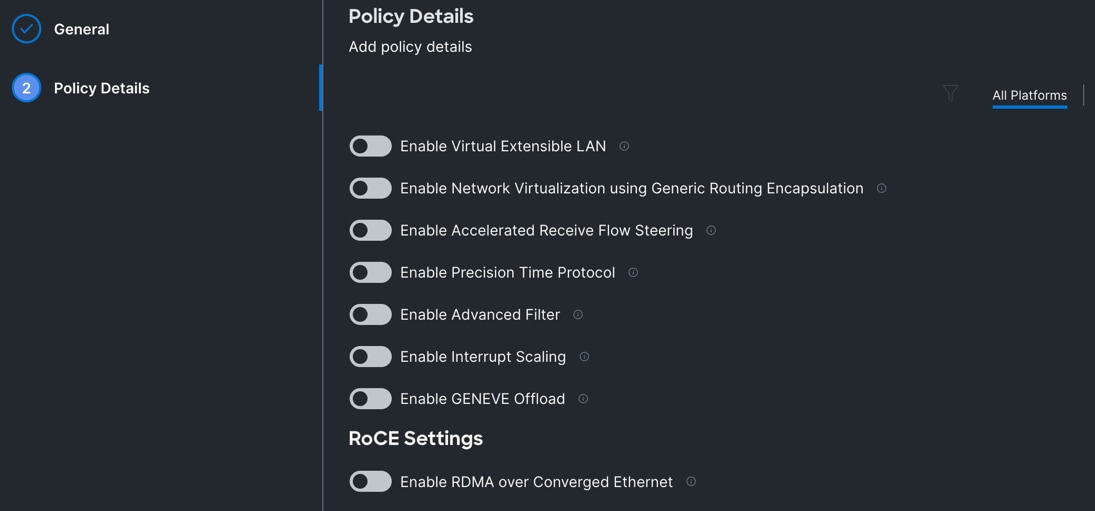

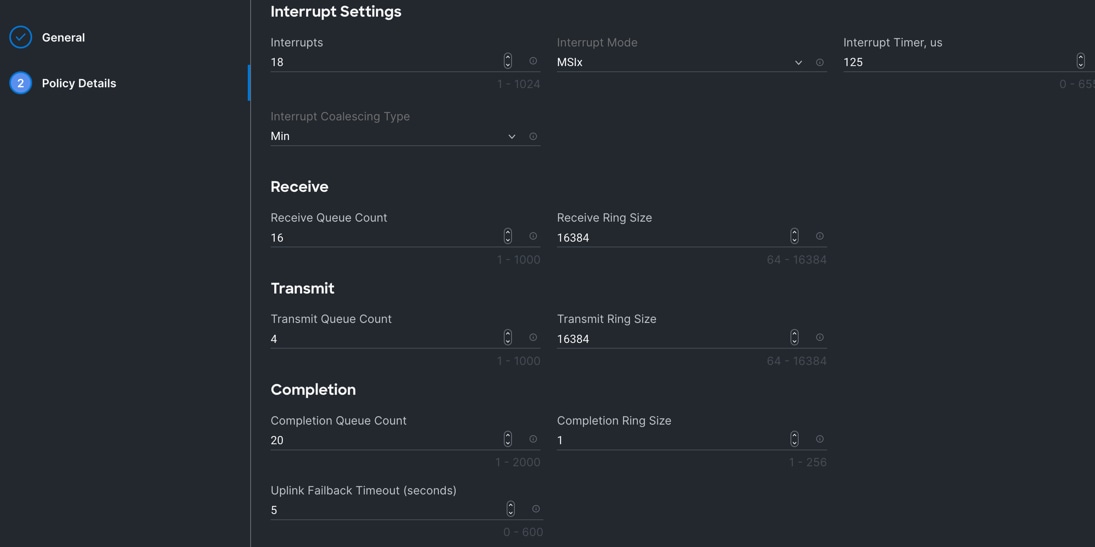

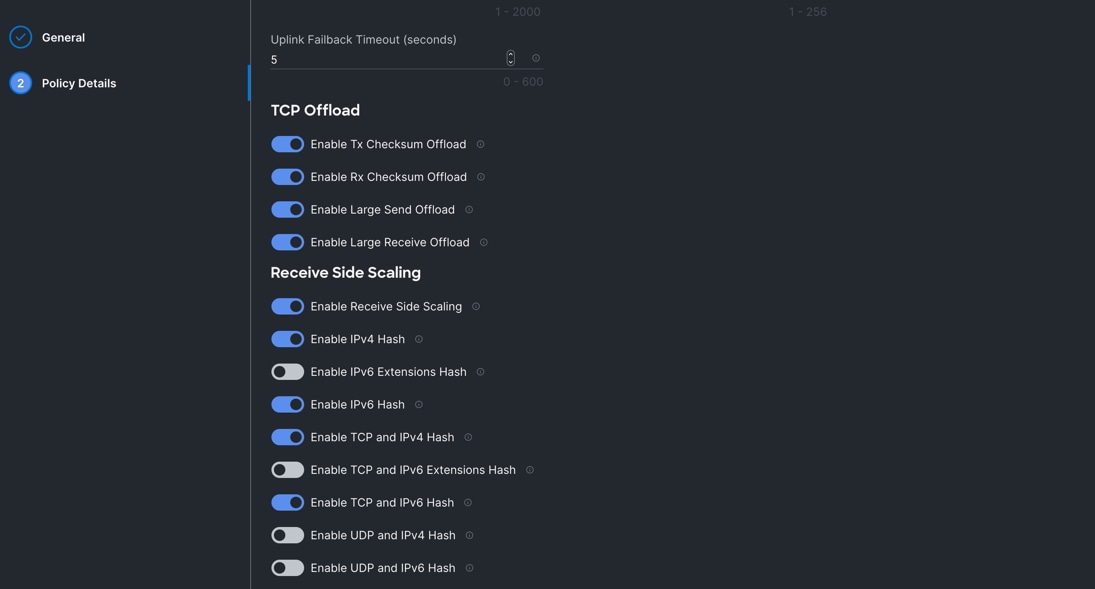

Procedure 6. Configure Ethernet Adapter Policy

Step 1. To configure the Ethernet Adapter Policy for the UCS Server profile, go to > Configure > Polices > and click Create Policy.

Step 2. For the platform type select UCS Server and for the policy select Ethernet Adapter.

Step 3. In the Ethernet Adapter Configuration section, for the Organization select Spk-Org and for the policy name enter Eth-Adapter.

Step 4. Select the “Cisco Provided Ethernet Adapter Configuration” and click the option “Select Cisco Provided Configuration” and then click Linux as shown below:

Step 5. In the Policy Details section, for the recommended performance on the ethernet adapter, keep the “Interrupt Settings” parameter.

Step 6. Click Create to create this policy.

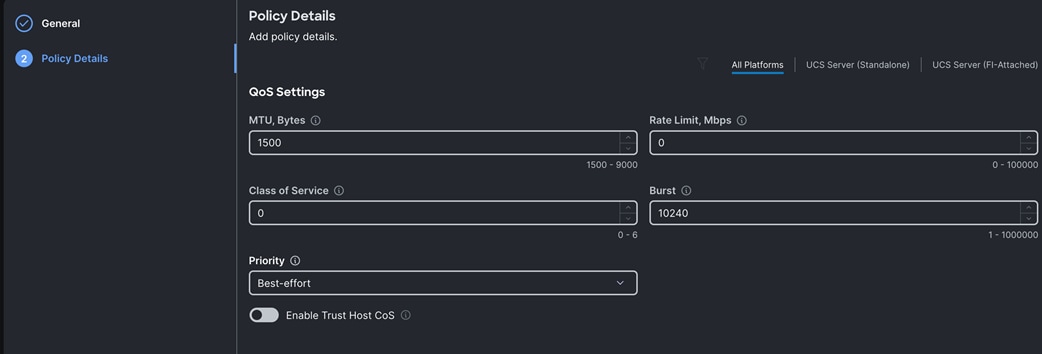

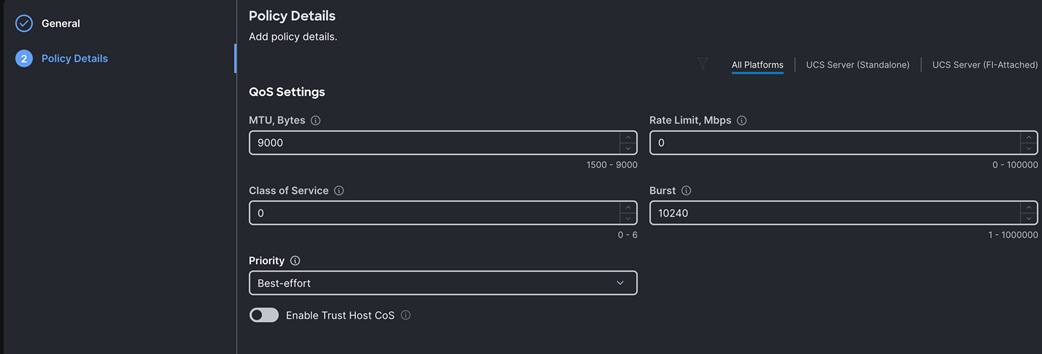

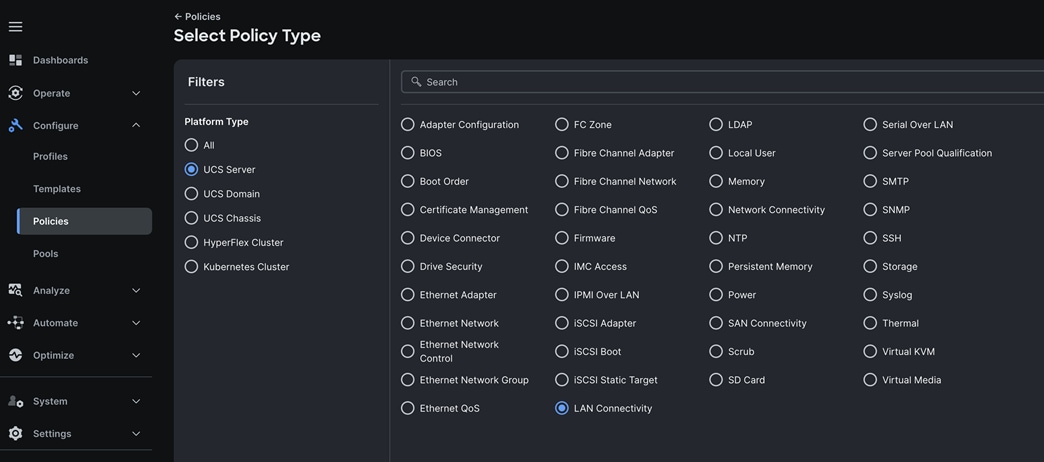

Procedure 7. Create Ethernet QoS Policy

Step 1. To configure the Ethernet QoS Policy for the UCS Server profile, go to > Configure > Polices > and click Create Policy.

Step 2. For the platform type select UCS Server and for the policy select Ethernet QoS.

Step 3. In the Create Ethernet QoS Configuration section, for the Organization select Spk-Org and for the policy name enter “Eth-QoS-1500” click Next.

Step 4. Enter QoS Settings as shown below to configure 1500 MTU for management vNIC:

Step 5. Click Create to create this policy for vNIC0.

Step 6. Create another QoS policy for second vNIC running indexing network traffic.

Step 7. In the Create Ethernet QoS Configuration section, for the Organization select Spk-Org and for the policy name enter “Eth-QoS-9000.” Click Next.

Step 8. Enter QoS Settings as shown below to configure 9000 MTU for replication vNIC traffic.

Step 9. Click Create to create this policy for vNIC1.

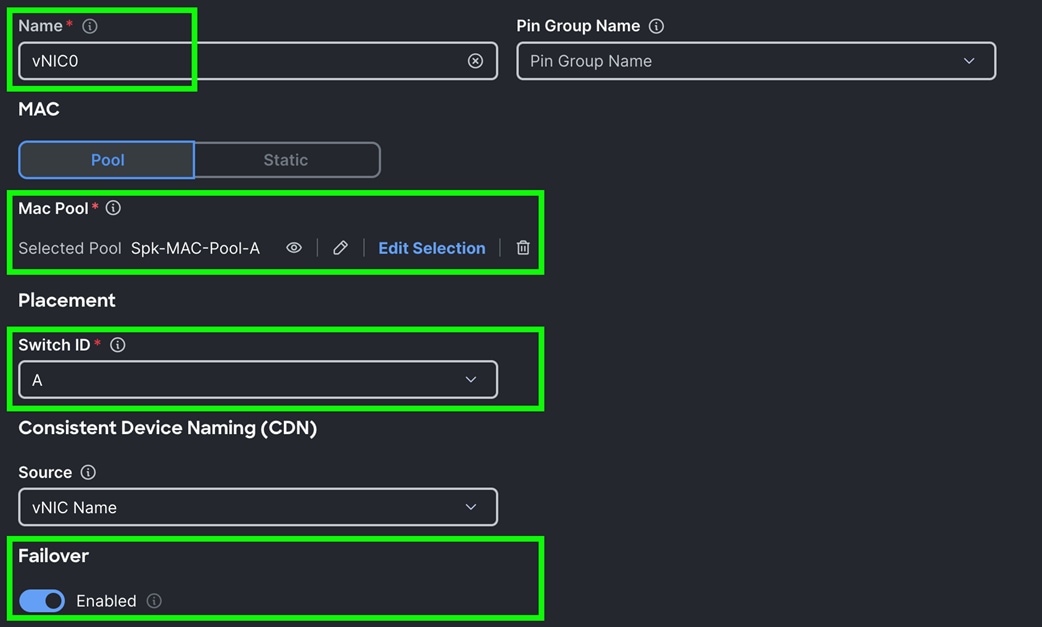

Procedure 8. Configure LAN Connectivity Policy

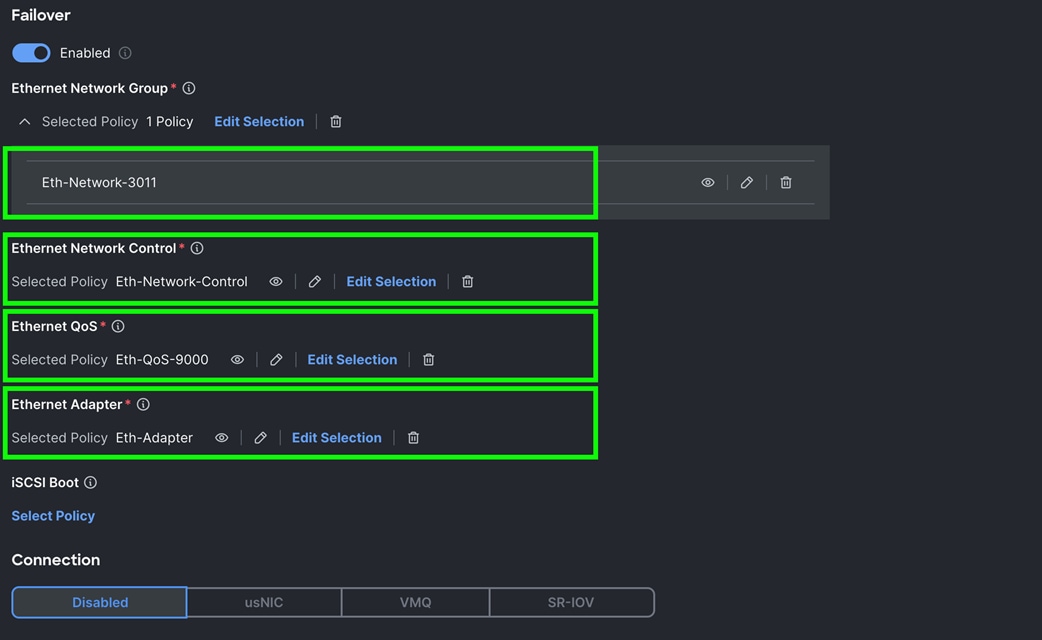

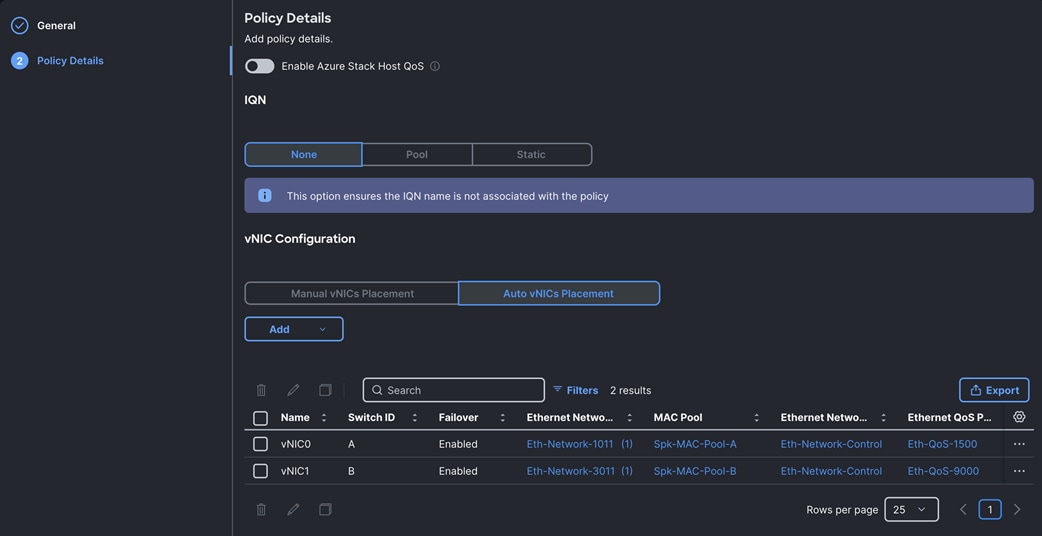

Two vNICs were configured per server as listed in Table 8.

| Name |

Switch ID |

Failover |

MAC Pool |

Ethernet QoS Policy |

Ethernet Network Group |

| vNIC0 |

FI – A |

Enabled |

Spk-MAC-Pool-A |

Eth-QoS-1500 |

Eth-Network-1011 |

| vNIC1 |

FI – B |

Enabled |

Spk-MAC-Pool-B |

Eth-QoS-9000 |

Eth-Network-3011 |

Step 1. Go to > Configure > Polices > and click Create Policy. For the platform type select “UCS Server” and for the policy select “LAN Connectivity.”

Step 2. In the LAN Connectivity Policy Create section, for the Organization select “Spk-Org” for the policy name enter “Spk-LAN-Connectivity” and for the Target Platform select UCS Server (FI-Attached). Click Next.

Step 3. In the Policy Details section, for vNIC Configuration select “Auto vNICs Placement” and then click Add vNIC.

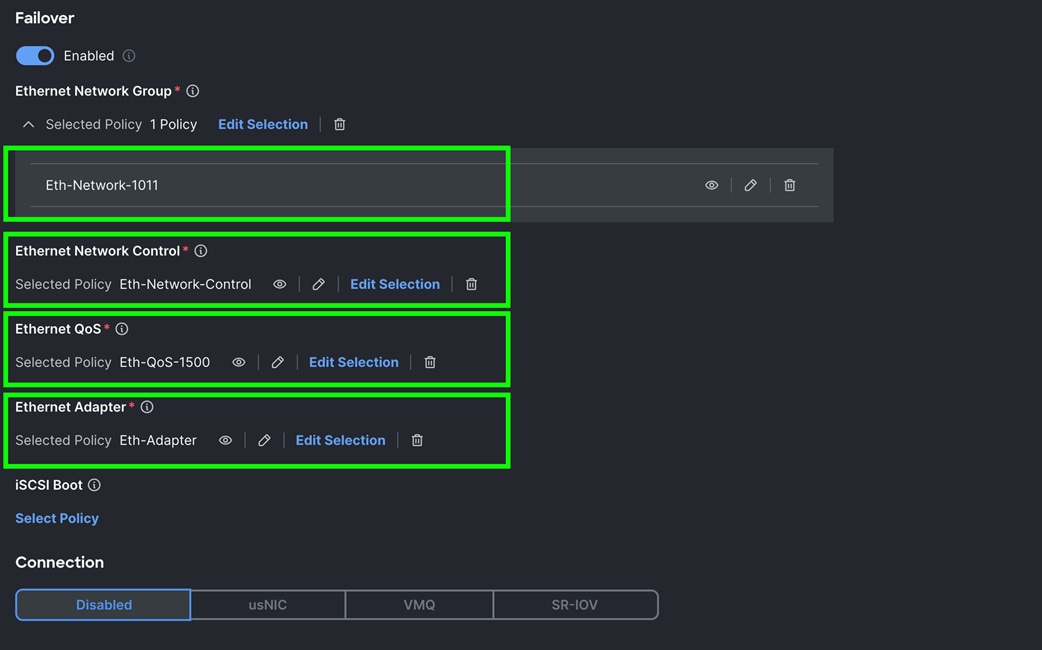

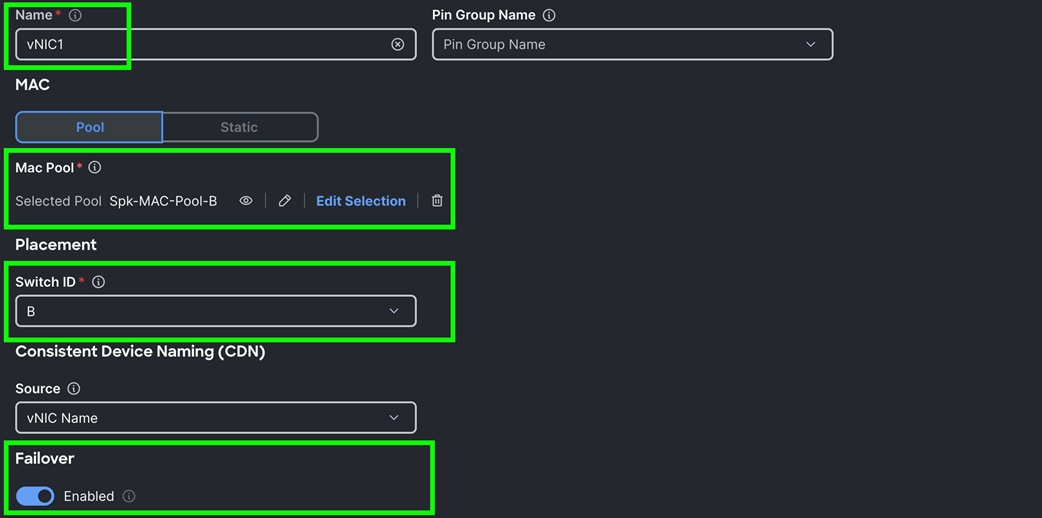

Step 4. In the Add vNIC section, for the first vNIC enter vNIC0. In the Edit vNIC section, for the vNIC name enter "vNIC0" and for the MAC Pool select Spk-MAC-Pool-A. In the Placement option, select Switch ID as A. Also enable the Failover option as shown below. This enables the vNIC to failover to another FI.

Step 5. For the Ethernet Network Group Policy, select Eth-Network-1011. For the Ethernet Network Control Policy select Eth-Network-Control. For Ethernet QoS, select Eth-QoS-1500, and for the Ethernet Adapter, select Eth-Adapter. Click Add to add vNIC0 to this policy.

Step 6. Add a second vNIC. For the name enter "vNIC1" and for the MAC Pool select Spk-MAC-Pool-B.

Step 7. In the Placement option, for Switch ID select B and enable Failover option for this vNIC configuration. This enables the vNIC to failover to another FI.

Step 8. For the Ethernet Network Group Policy, select Eth-Network-3011. For the Ethernet Network Control Policy, select Eth-Network-Control. For the Ethernet QoS, select Eth-QoS-9000, and for the Ethernet Adapter, select Eth-Adapter. Click Add to add vNIC1 to this policy.

Step 9. Click Add to add vNIC1 into this policy.

Step 10. After adding these two vNICs, review and make sure the Switch ID, PCI Order, Failover Enabled, and MAC Pool are as shown below:

Step 11. Click Create to create this policy.

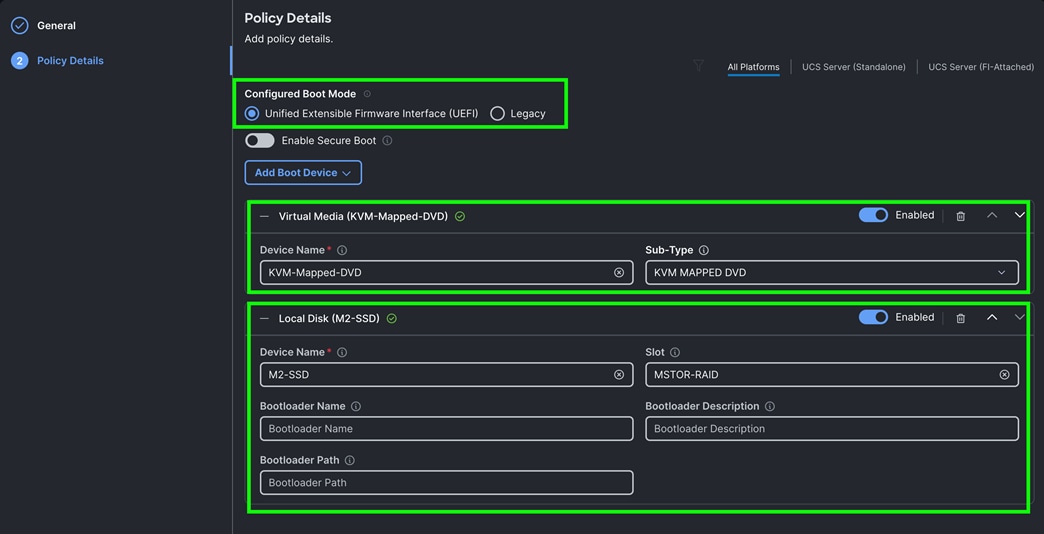

Procedure 9. Configure Boot Order Policy

For this solution, two local server nodes M.2 SSD were used, and the virtual drive was configured to install the OS locally on all of the server nodes.

Step 1. To configure Boot Order Policy for UCS Server profile, go to > Configure > Polices > and click Create Policy.

Step 2. For the platform type select “UCS Server” and for the policy select “Boot Order.”

Step 3. In the Boot Order Policy Create section, for the Organization select “Spk-Org” and for the name of the Policy select “M.2-Boot.” Click Next.

Step 4. In the Policy Details section, click Add Boot Device and for the boot order add “Virtual Media” (KVM-DVD) and “Local Disk” (M2-SSD) as shown below:

Step 5. Click Create to create this policy

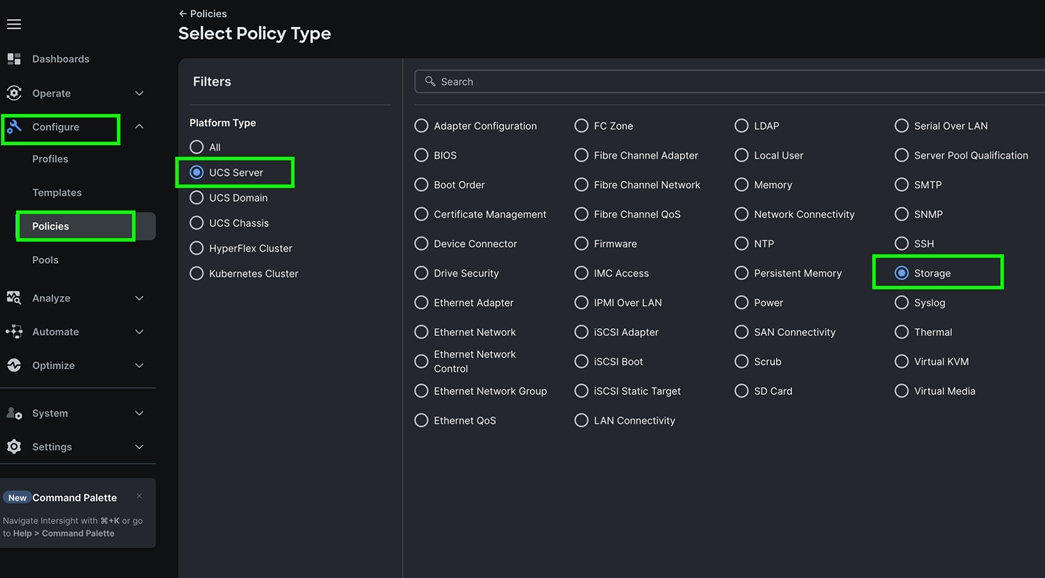

Procedure 10. Configure Storage Policy

For this solution, we configured two types of local storage policy. First storage policy was configured for all the indexer server nodes and second storage policy was configured for all the search head and admin server nodes.

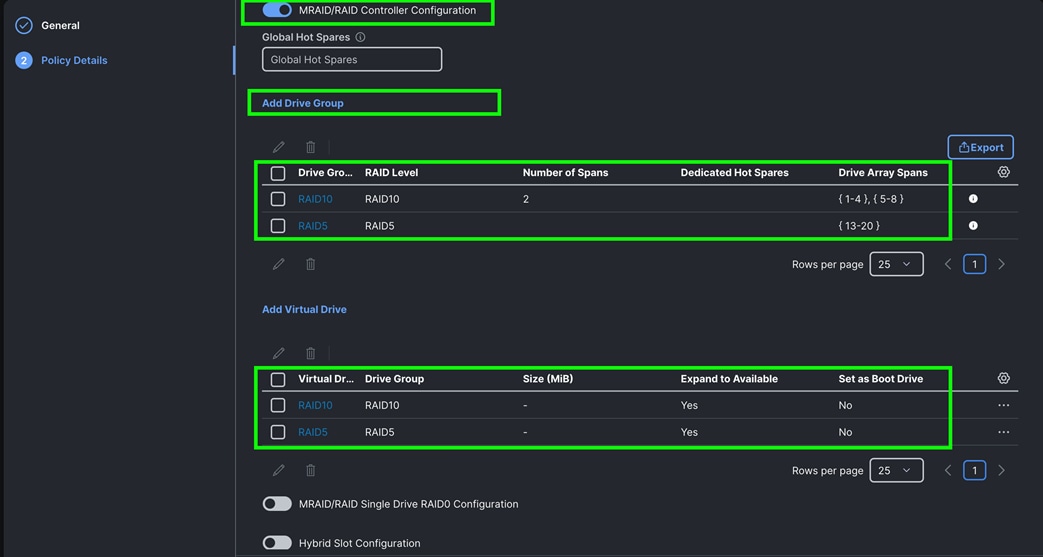

As documented earlier in UCS hardware inventory table, we used 16 NVMe drives for the indexer server nodes. And for the remaining search head and admin nodes, we used two local NVMe drives for local storage. We configured and created local storage and RAID configuration on each of the indexer as shown below.

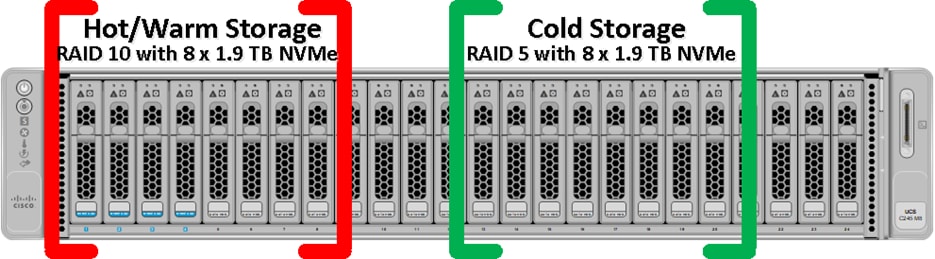

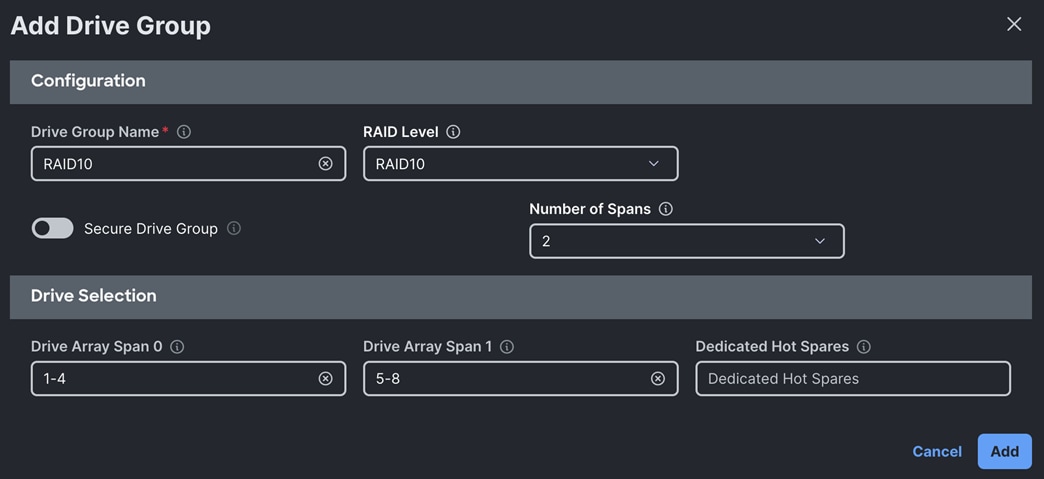

All the indexer Cisco UCS C245 M8 server nodes having 2 x Cisco Tri-Mode 24G SAS RAID controller and to take advantage of this RAID controllers for better read/write performance and even drive distribution, we spread 16 NVMe drives across two groups with each group having 8 drives for storage. First set of 8 NVMe drives were placed on RAID controller 1 (drive slot 1 to 8) as highlighted in red here and then RAID10 was configured for storing Hot/Warm local storage. Second set of 8 drives were distributed on RAID controller 2 (drive slot 13 to 20) as shown in green and then RAID5 was configured for storing Cold local storage.

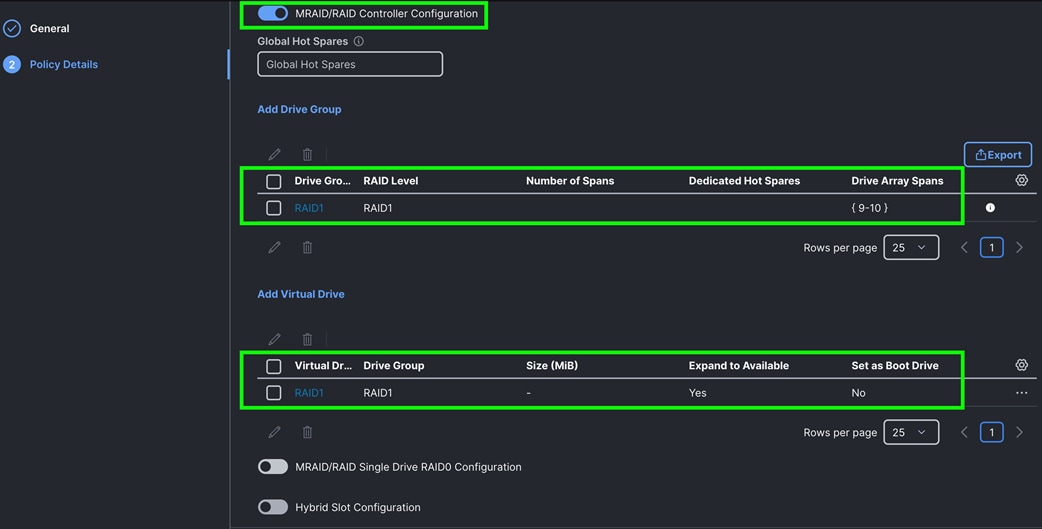

For all other management and search head server nodes, we used two local NVMe drives and configured RAID1 for storage policy as explained below.

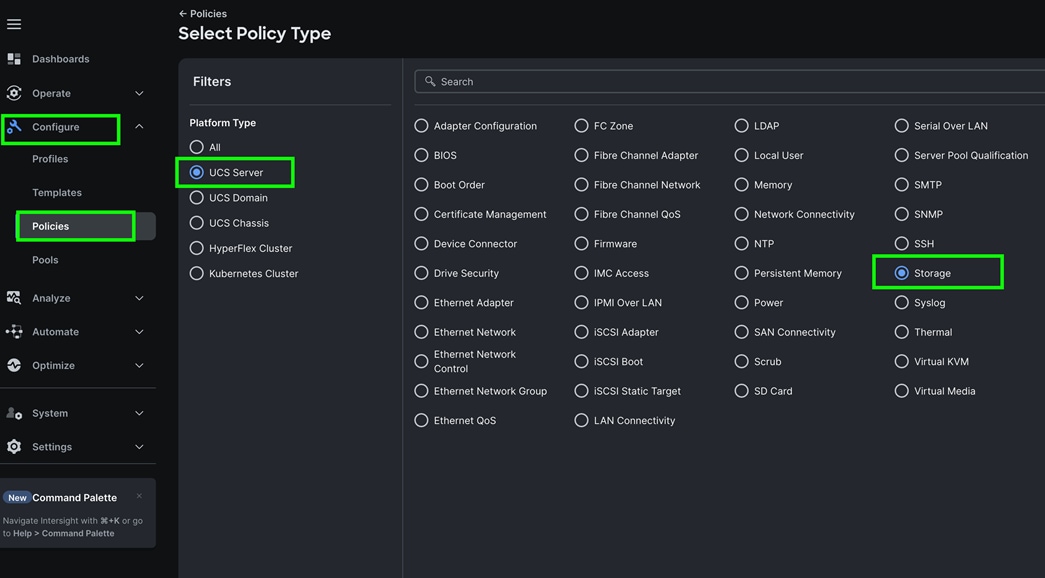

Step 1. To configure Storage Policy for UCS Server profile, go to > Configure > Polices > and then select the tab for UCS Server > Storage > and click Start.

Step 2. In Create Storage policy general menu, for the Organization select “Spk-Org” and for the Name for the policy as “C245-Storage-RAID10-RAID5” and then click Next.

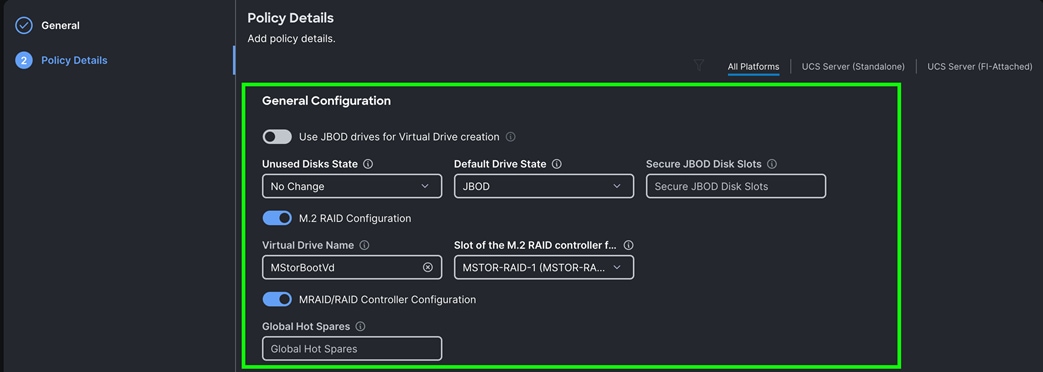

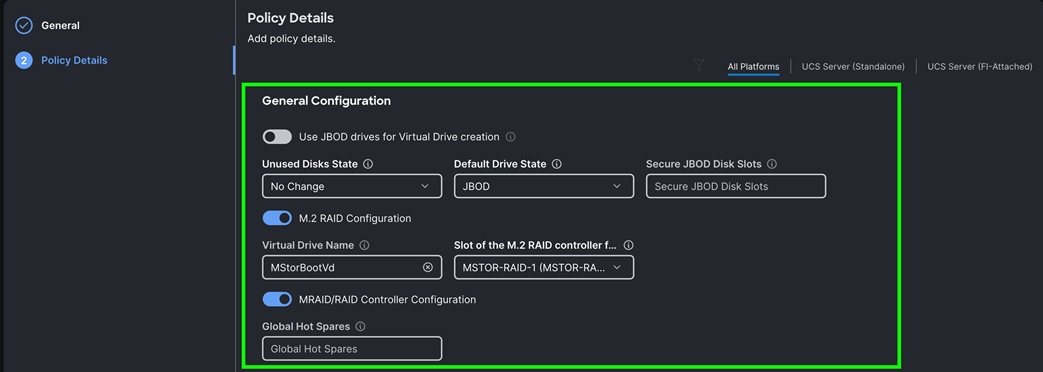

Step 3. Choose Default Drive State as JBOD, enabled M.2 RAID Configuration and selected appropriate slot as MSTOR-RAID-1 (MSTOR-RAID) as shown below:

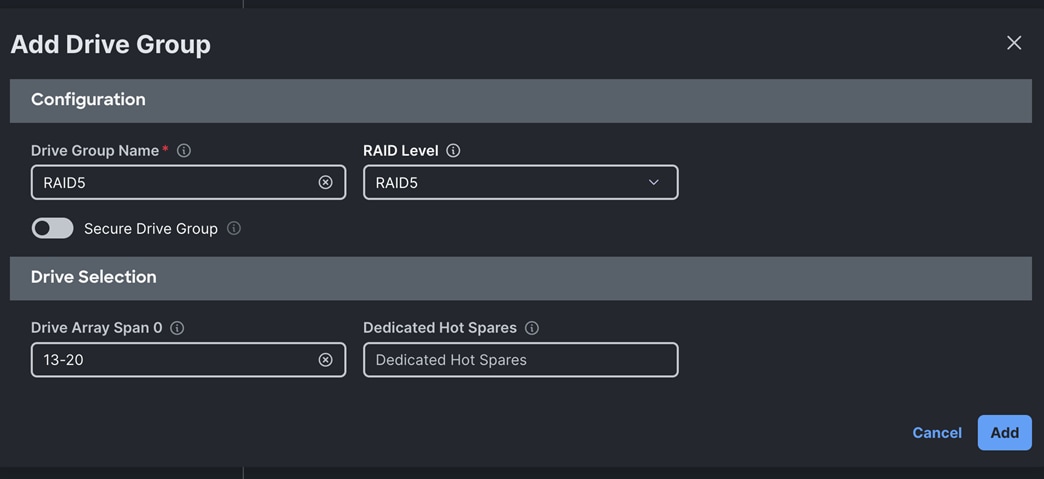

Step 4. Enable MRAID/RAID Controller configuration to create virtual drives. Click Add Drive Group.

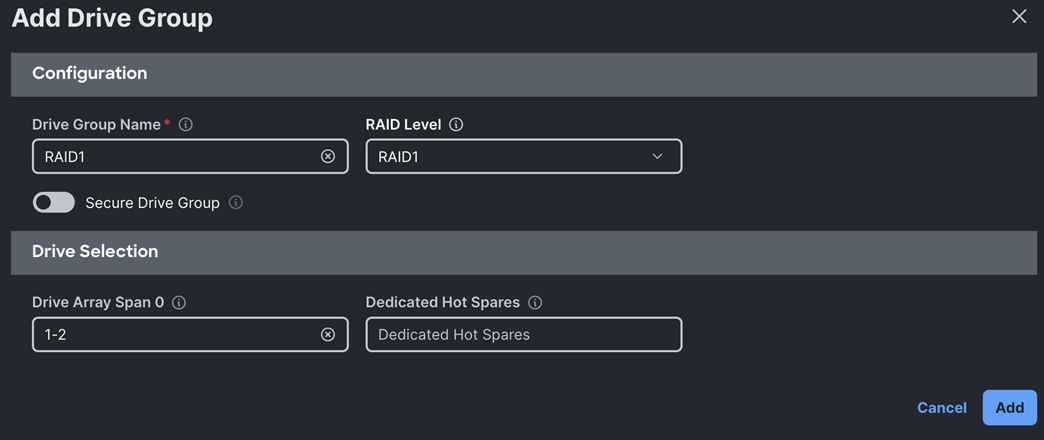

Note: We configured two Drive Group as RAID 10 and RAID5.

RAID10 drive group was configured for the drive span 1 to 8 as shown below:

RAID5 drive group was configured for the drive span 13 to 20 as shown below:

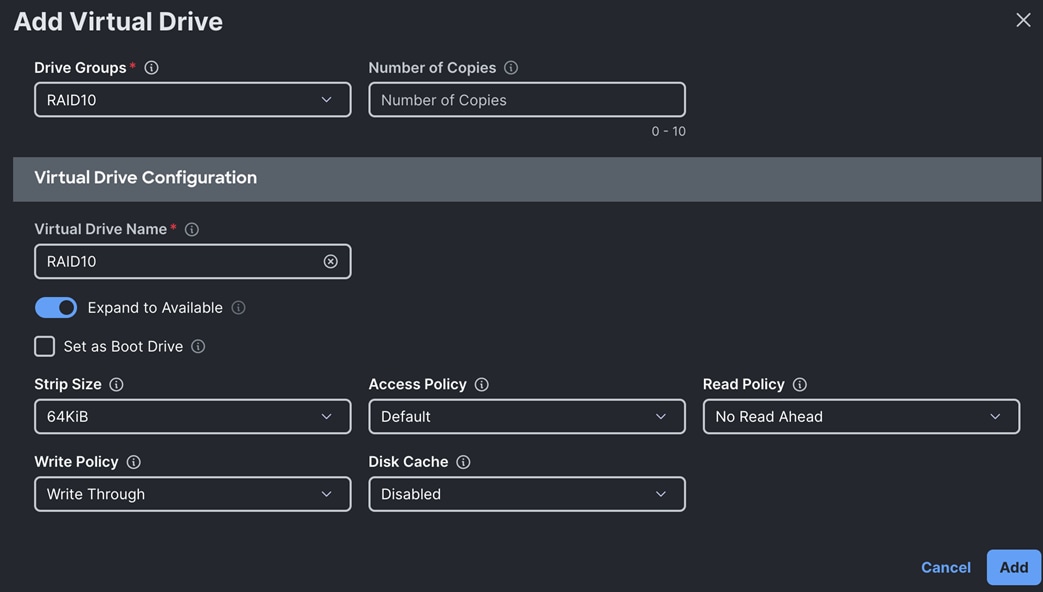

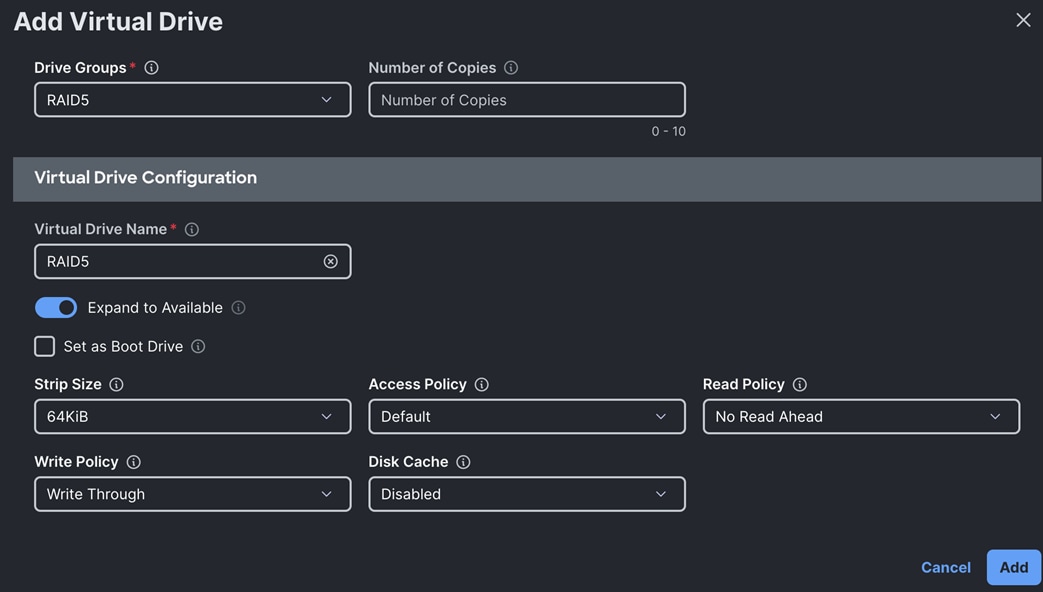

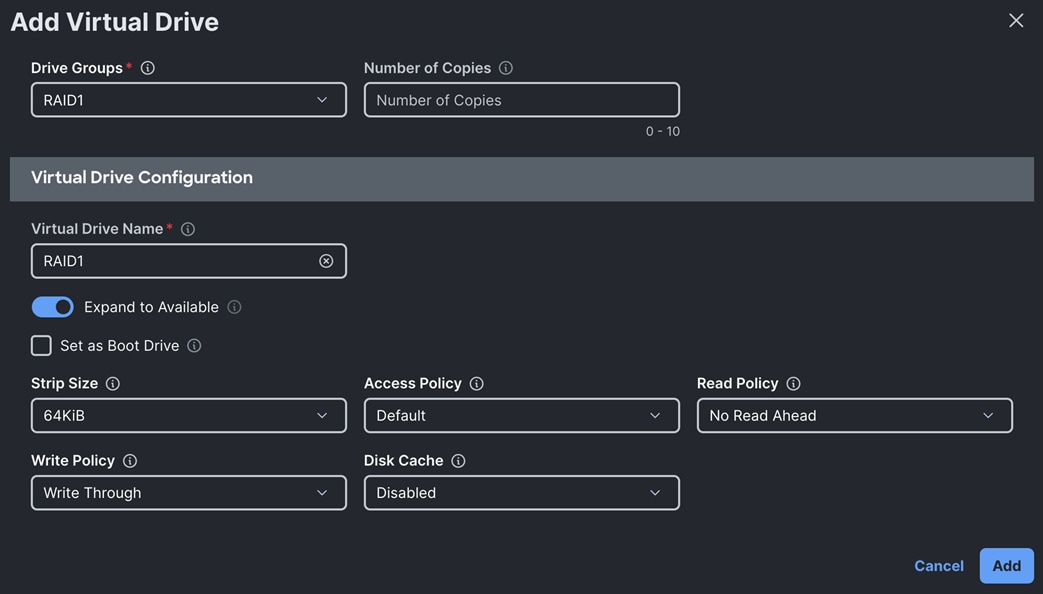

Step 5. After creating these two separate drive groups, click Add Virtual Drive to create virtual drive.

The first Virtual Drive for RAID10 was configured with using the Drive Group RAID10 as shown below:

The second Virtual Drive for RAID5 was configured with using the Drive Group RAID5 as shown below:

Step 6. After configuring the drive group and virtual drive, click Create.

The Virtual Drive Group and Virtual Drives for an indexer node is shown below:

Step 7. Create one virtual drive as RAID1 for admin and search head nodes.

Step 8. Go to > Configure > Polices > and then select the tab for UCS Server > Storage > and click Start.

Step 9. In Create Storage policy general menu, for the Organization select “Spk-Org” and for the Name for the policy as “C225-Storage-RAID1” and then click Next.

Step 10. For the Default Drive State choose JBOD, enabled M.2 RAID Configuration and select the appropriate slot as MSTOR-RAID-1 (MSTOR-RAID) as shown below:

Step 11. Enable MRAID/RAID Controller configuration to create virtual drives. Click Add Drive Group.

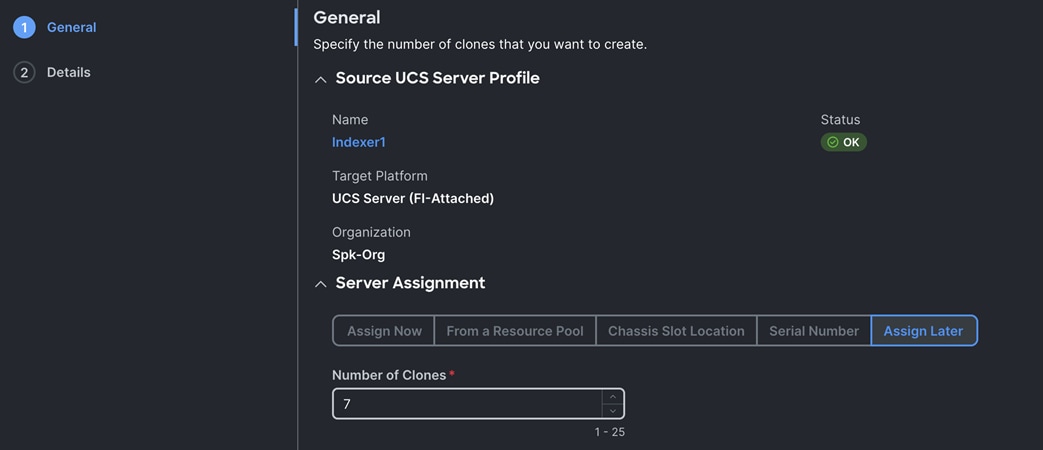

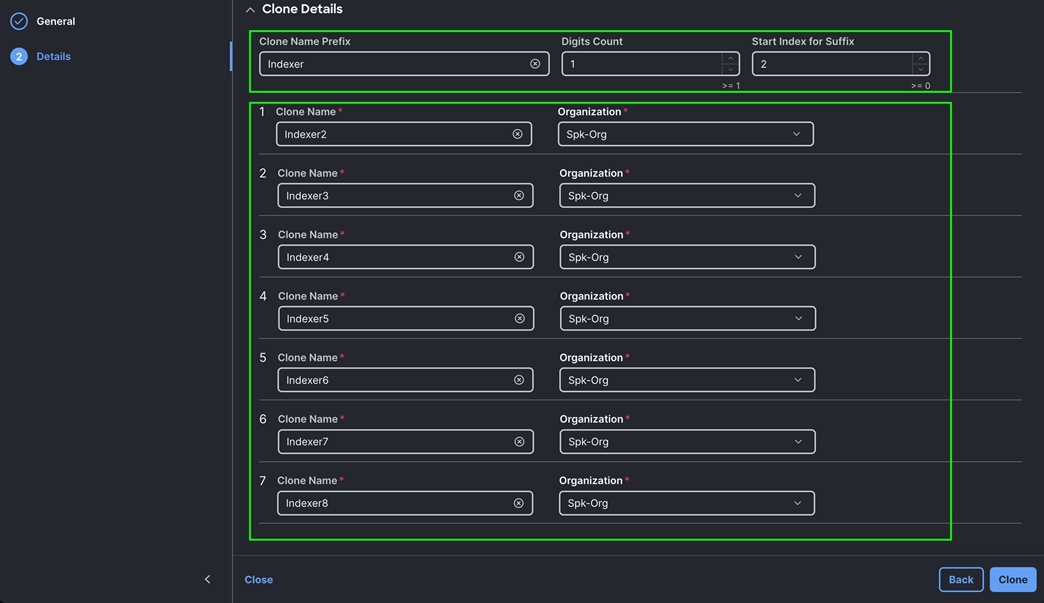

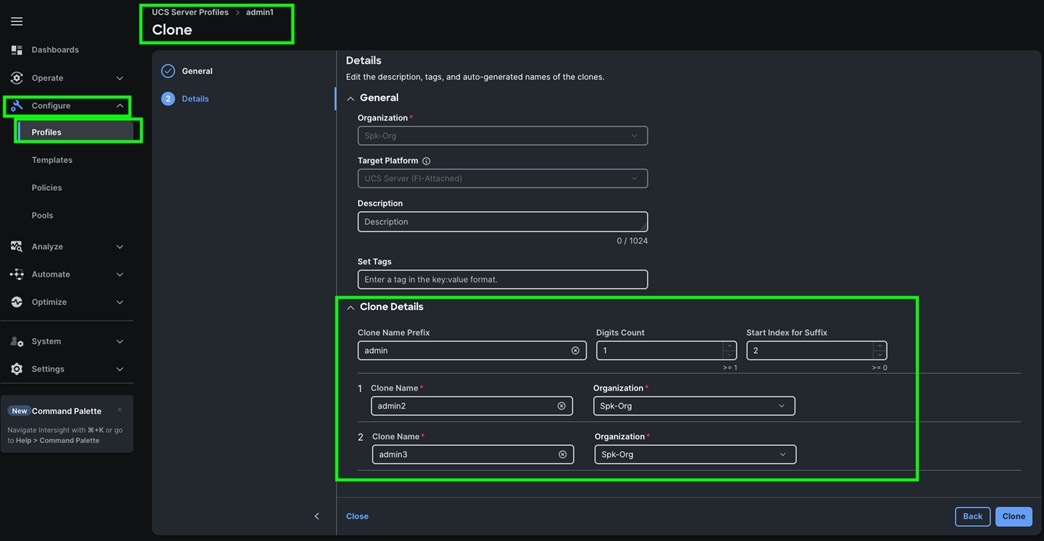

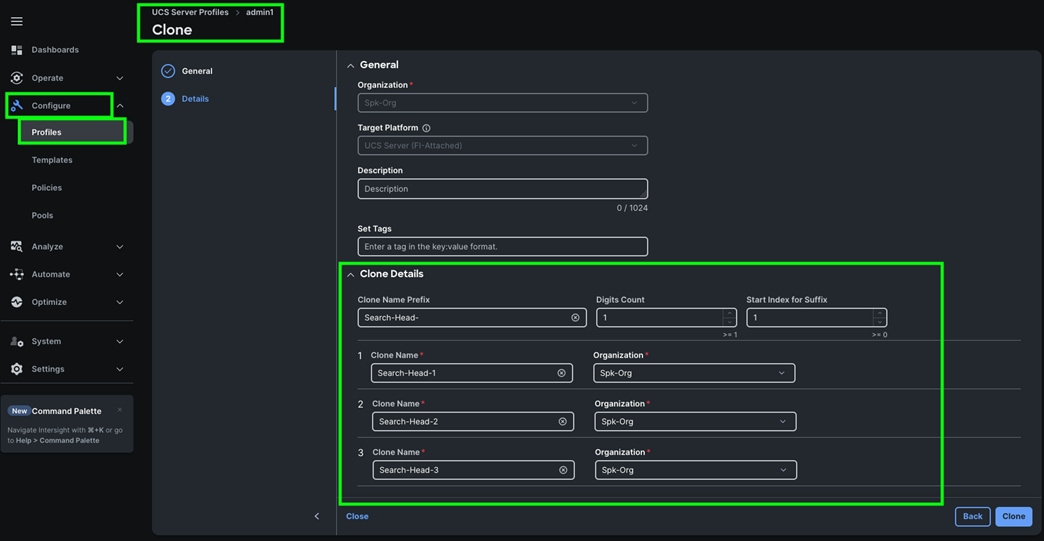

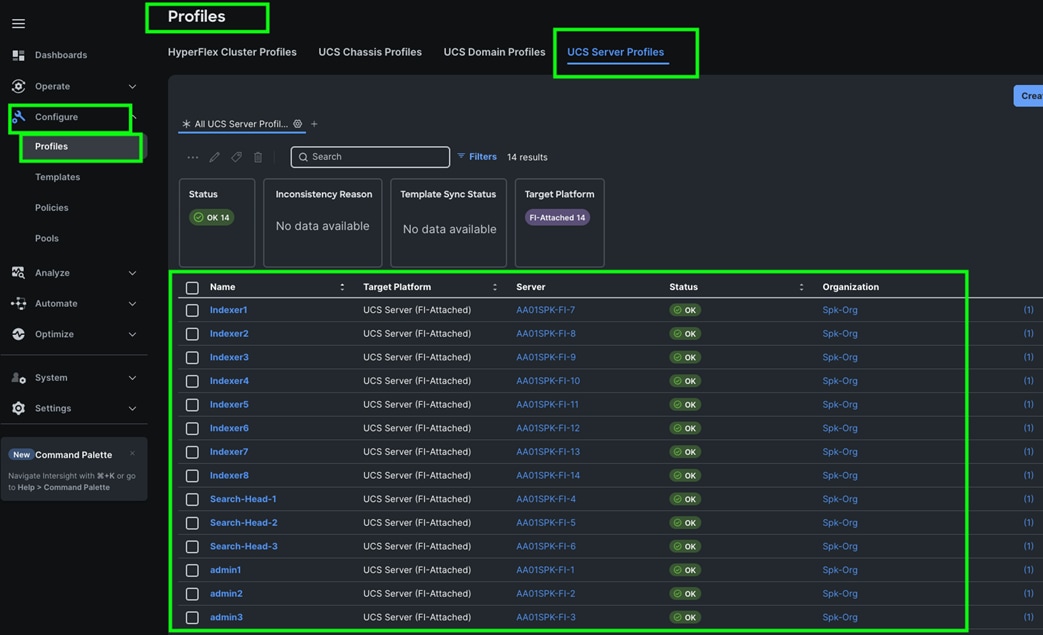

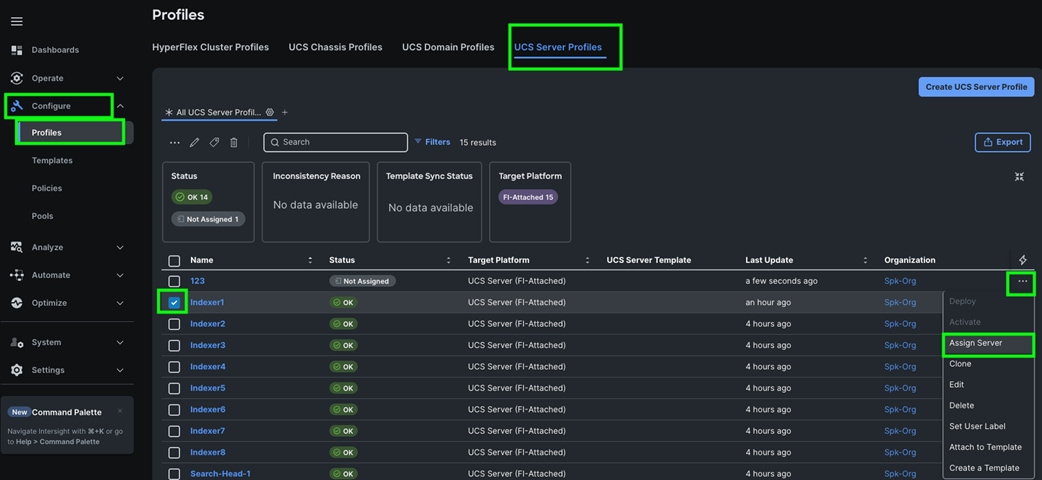

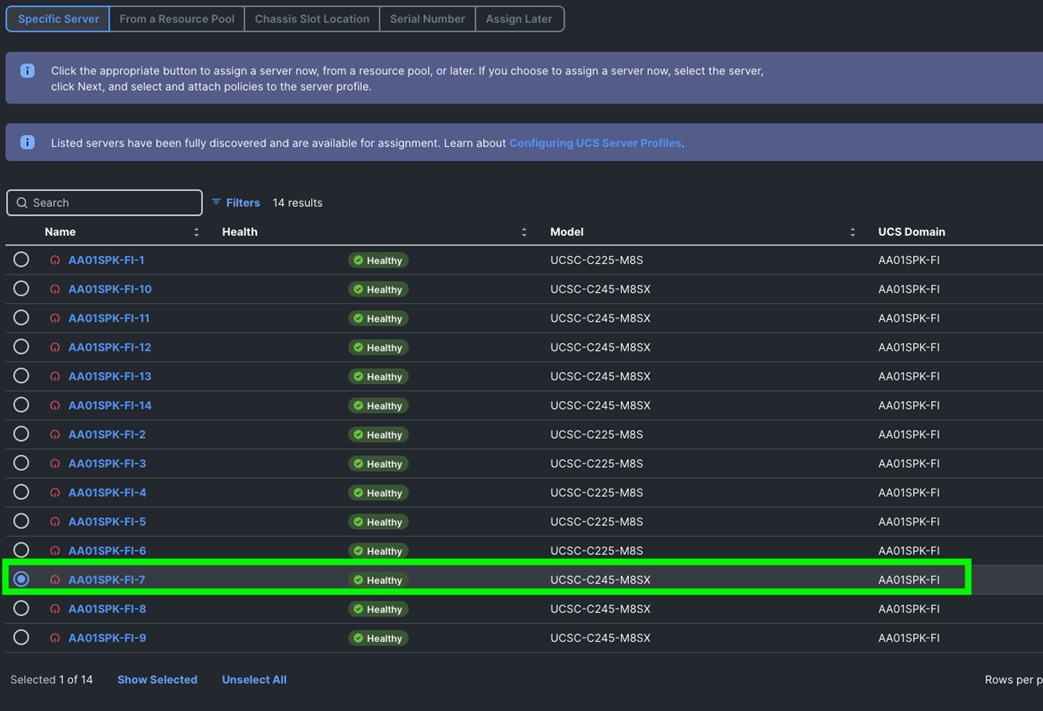

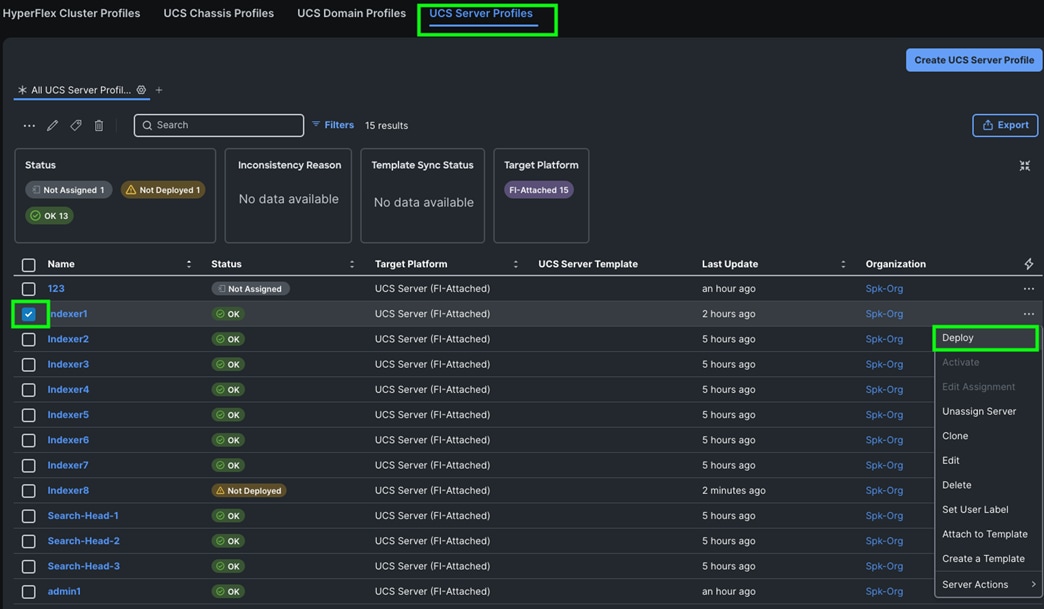

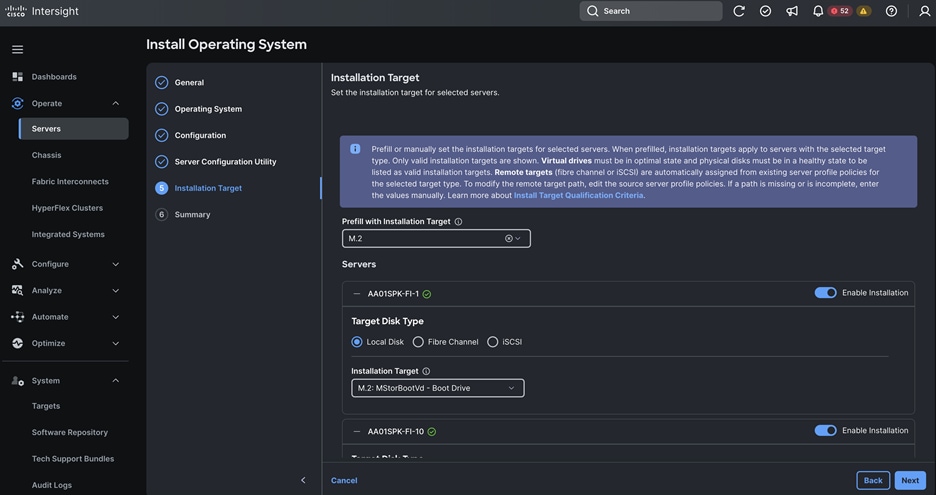

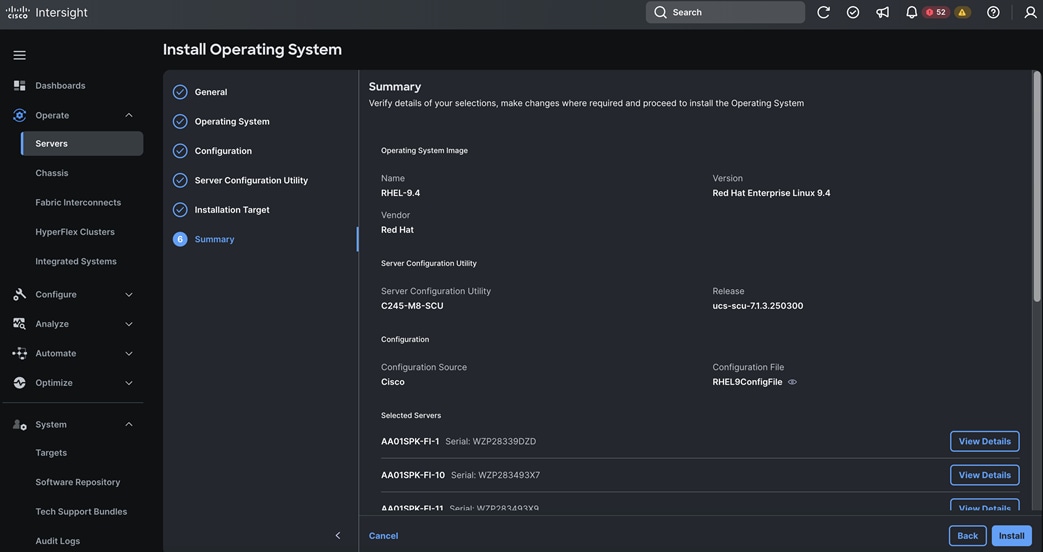

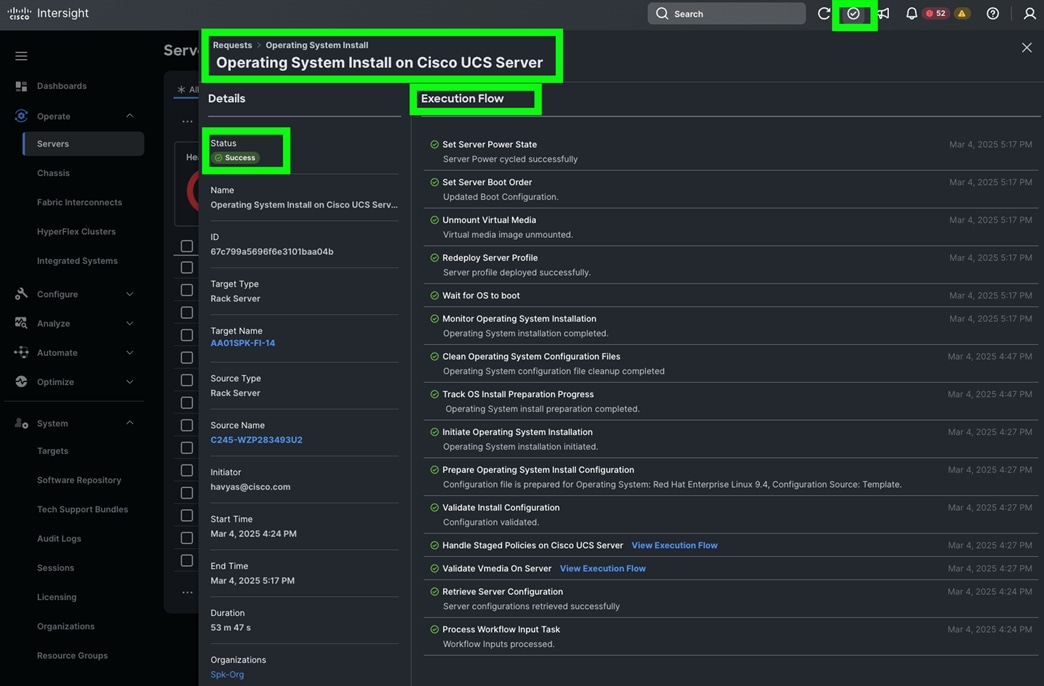

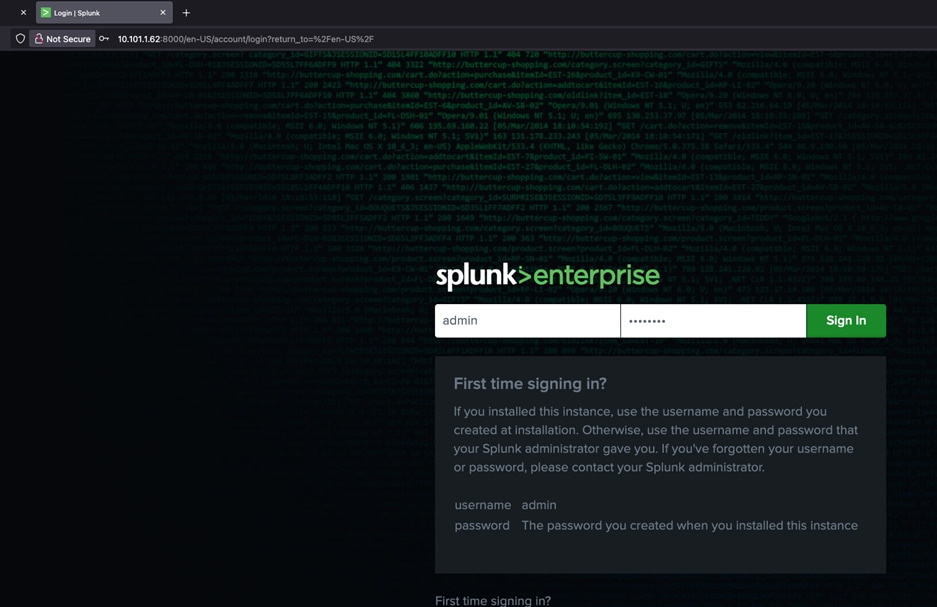

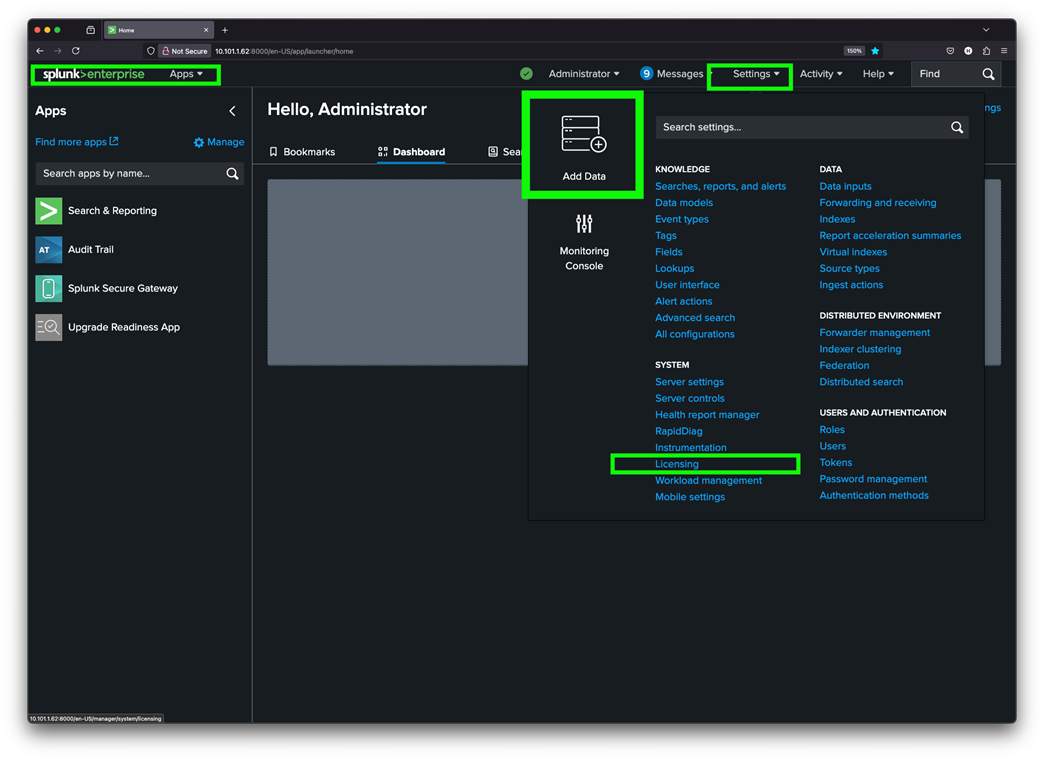

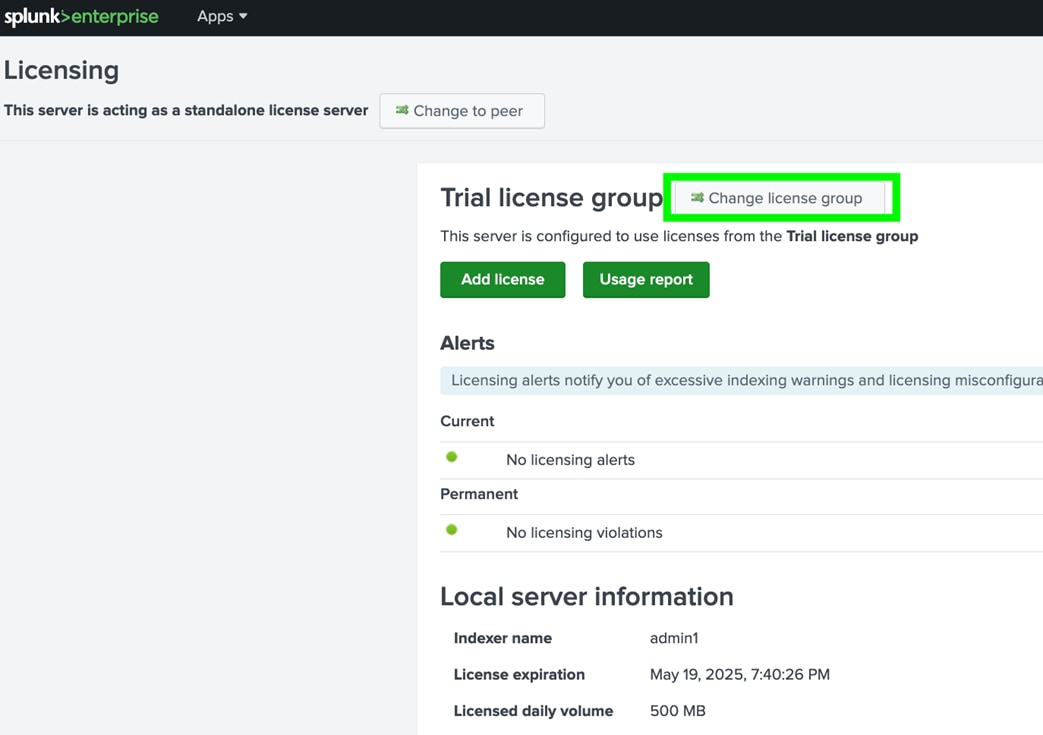

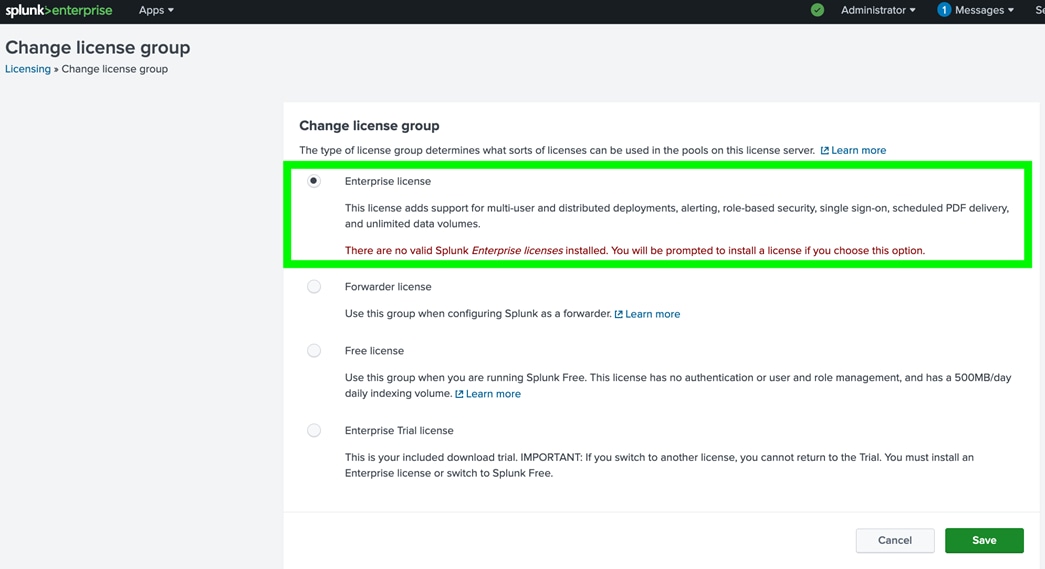

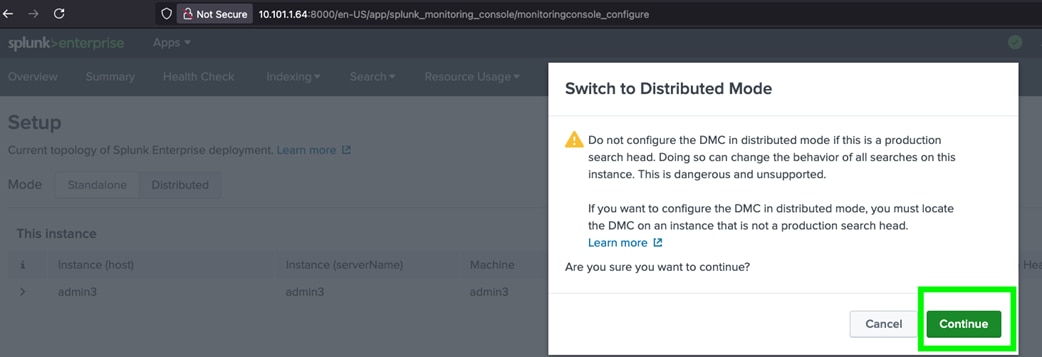

Note: We configured one Drive Group as RAID1.