Cisco Data Intelligence Platform on Cisco UCS M6 with Cloudera Data Platform Private Cloud



About the Cisco Validated Design Program

The Cisco Validated Design (CVD) program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, go to: http://www.cisco.com/go/designzone.

Today, leading enterprises use artificial intelligence/machine learning (AI/ML) to discover insights hidden in massive amounts of data through data processing and data engineering. As enterprises adopt newer AI/ML-enabled use cases to support problem solving and progress toward their business intelligence goals, increased computing power, vast amounts of data storage, and better algorithms are not enough on their own to address AI/ML-enabled business challenges. Enterprises also need to adopt, develop, and scale a cohesive data strategy built on a data management platform that provides centralized data access to existing and emerging workloads.

Data scientists are utilizing data sets on a magnitude and scale never seen before, implementing use cases such as transforming supply chain models, responding to increased levels of fraud, predicting customer churn, and developing new product lines. To be successful, data scientists need the tools and underlying processing power to train, evaluate, iterate, and retrain their models to obtain highly accurate results. The sheer size of the data to be processed and analyzed has a direct impact on the cost and speed at which companies can train and operate their AI/ML models with dynamic scalability. Data set size can also heavily influence where to deploy infrastructure—whether in a public, private, or hybrid cloud.

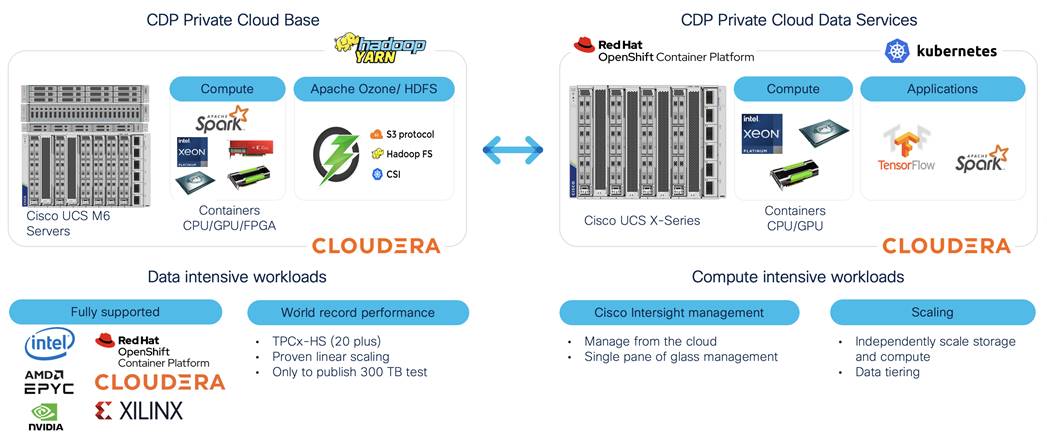

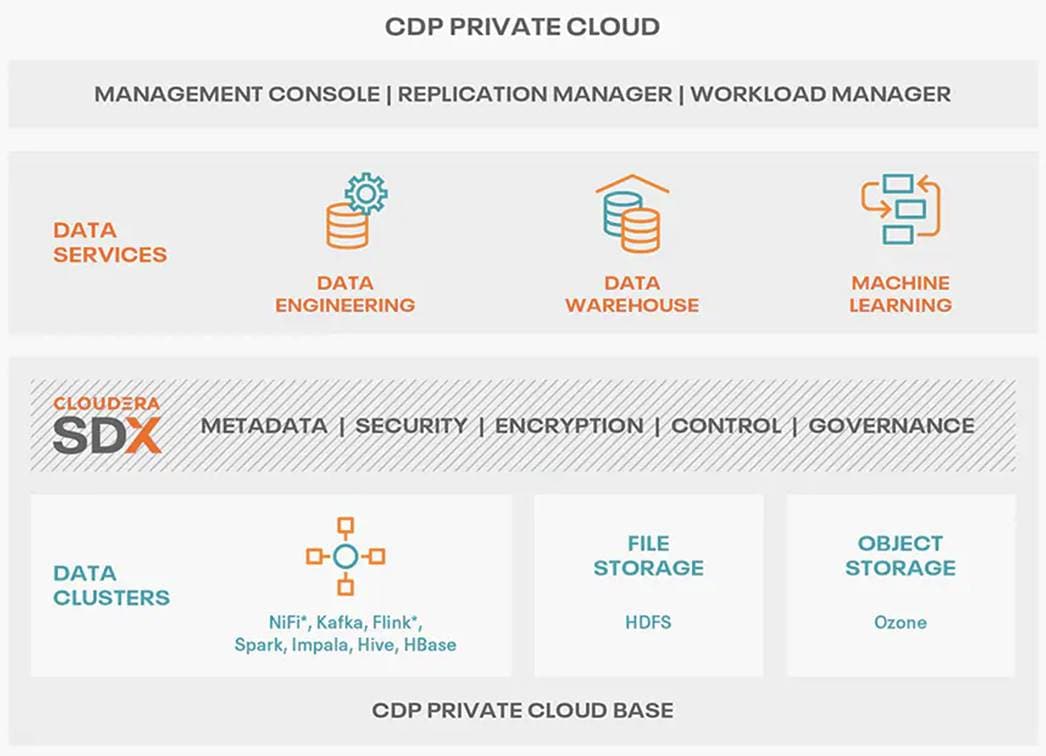

Cloudera Private Cloud enables a unified data fabric with a broad set of tools and management capabilities for data analytics and AI/ML use cases, along with secure user access and data governance, through:

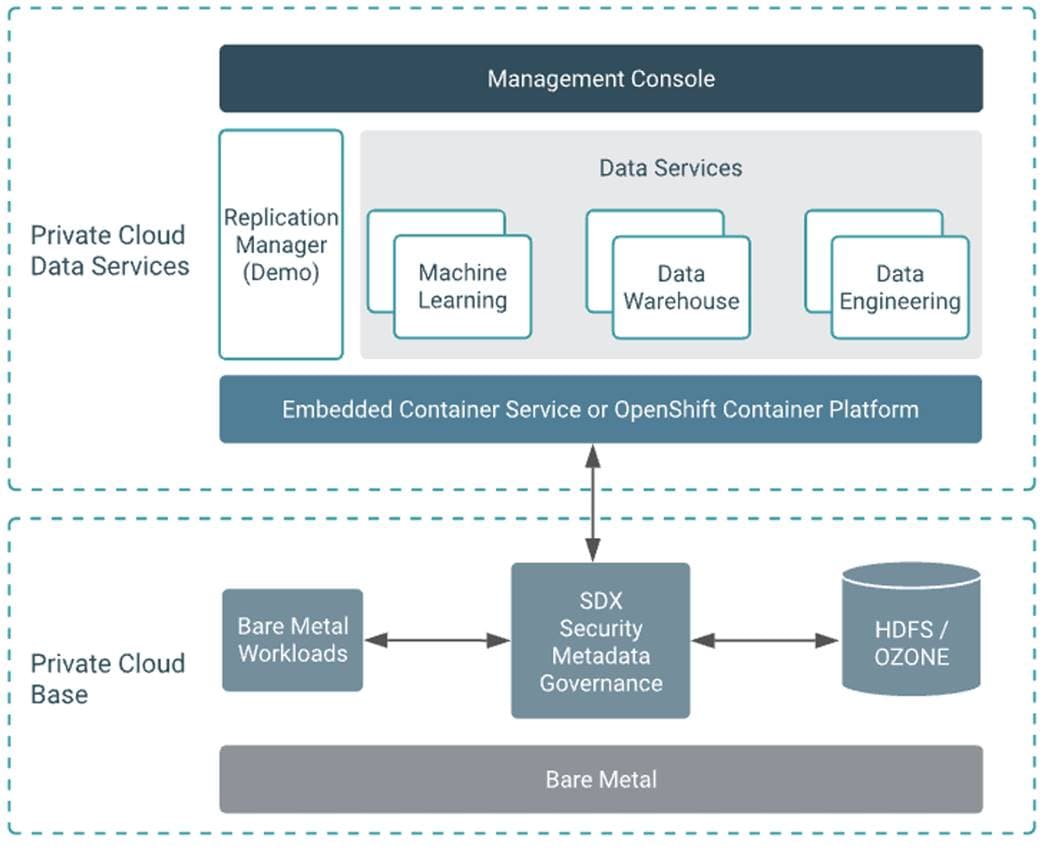

● Cloudera Data Platform Private Cloud Base (CDP PvC Base) - provides storage and supports traditional data lake environments. It also introduces Apache Ozone, the next-generation file system for the data lake.

● Cloudera Data Platform Private Cloud Data Services (CDP PvC DS) - provides persona-driven (such as data analyst, data scientist, and data engineer) data services from private and hybrid data lakes.

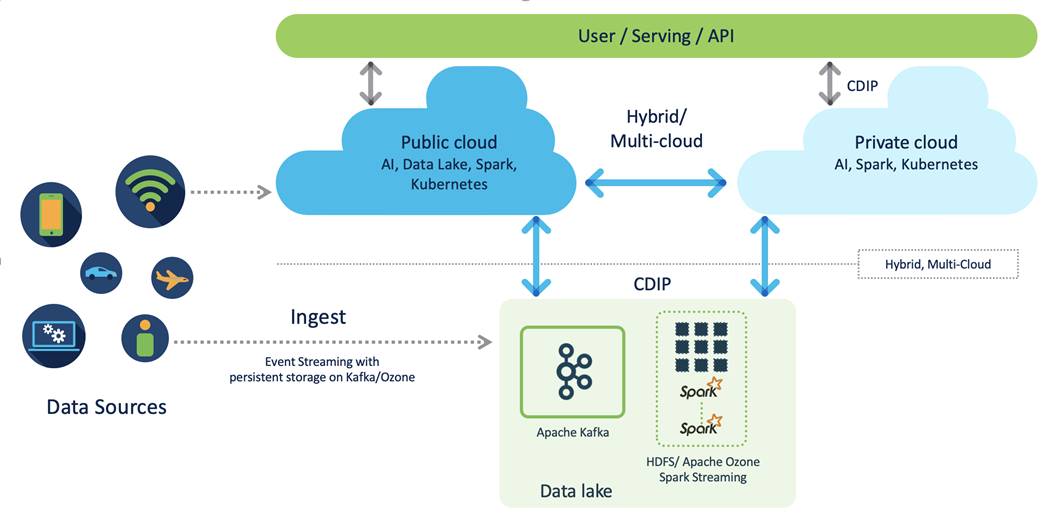

Cisco Data Intelligence Platform (CDIP) is a thoughtfully designed private cloud for the data lake. It supports data-intensive workloads with Cloudera Data Platform Private Cloud Base, and compute-rich (AI/ML) and compute-intensive workloads with Cloudera Data Platform Private Cloud Data Services. CDIP further provides storage consolidation with Apache Ozone on Cisco UCS infrastructure, which enables an object store implementation to support several new use cases and higher scale. The infrastructure is fully managed by Cisco Intersight, which simplifies management and moves management of computing resources from the network to the cloud.

This CVD implements CDIP with cloud advantage in mind for private and hybrid cloud. It is based on the Cisco UCS M6 family of servers, which support 3rd Gen Intel Xeon Scalable family processors with PCIe Gen 4 capabilities. These servers include the following:

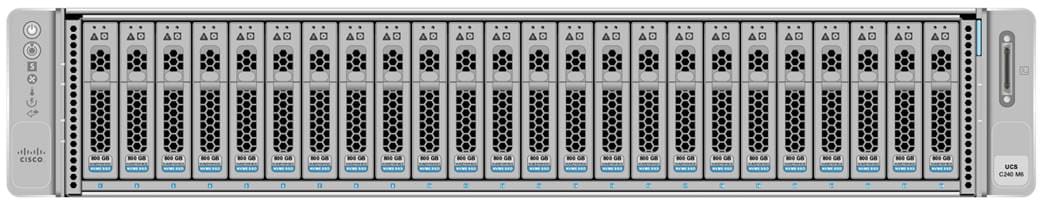

● The Cisco UCS C240 M6 Server for Storage (Apache Ozone and HDFS) - Extends the capabilities of the Cisco UCS rack server portfolio supporting more than 43 percent more cores per socket and 33 percent more memory when compared with the previous generation.

● The Cisco UCS® X-Series with Cisco Intersight - A modular system managed from the cloud. It is designed to meet the needs of modern applications and improve operational efficiency, agility, and scale through an adaptable, future-ready, and modular design.

Furthermore, with Cisco Intersight you get all the benefits of SaaS delivery and full life cycle management of network and compute. This empowers you to analyze, update, fix, and automate your environment in ways that were not possible before.

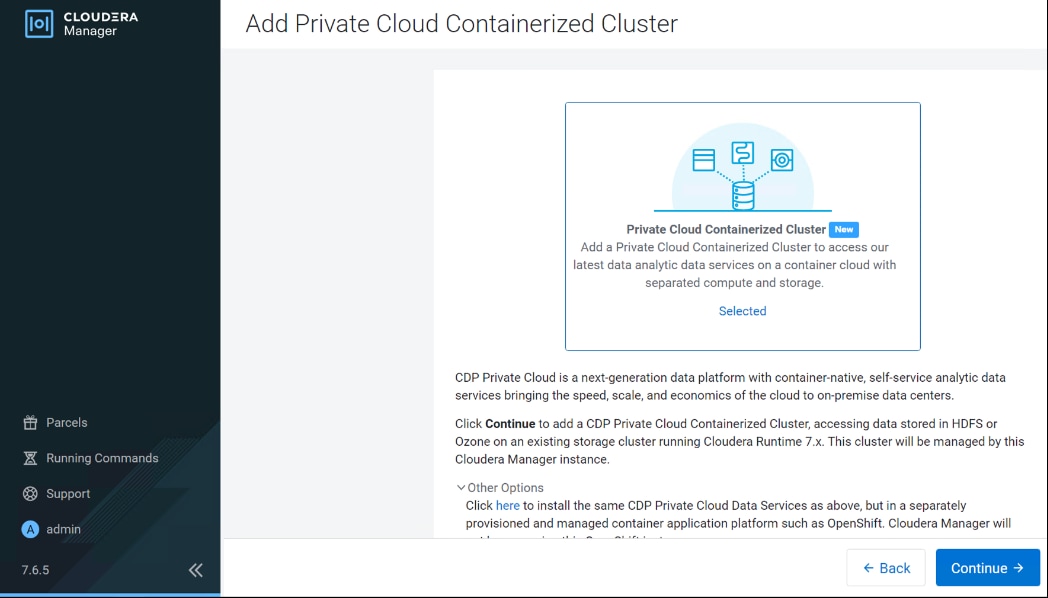

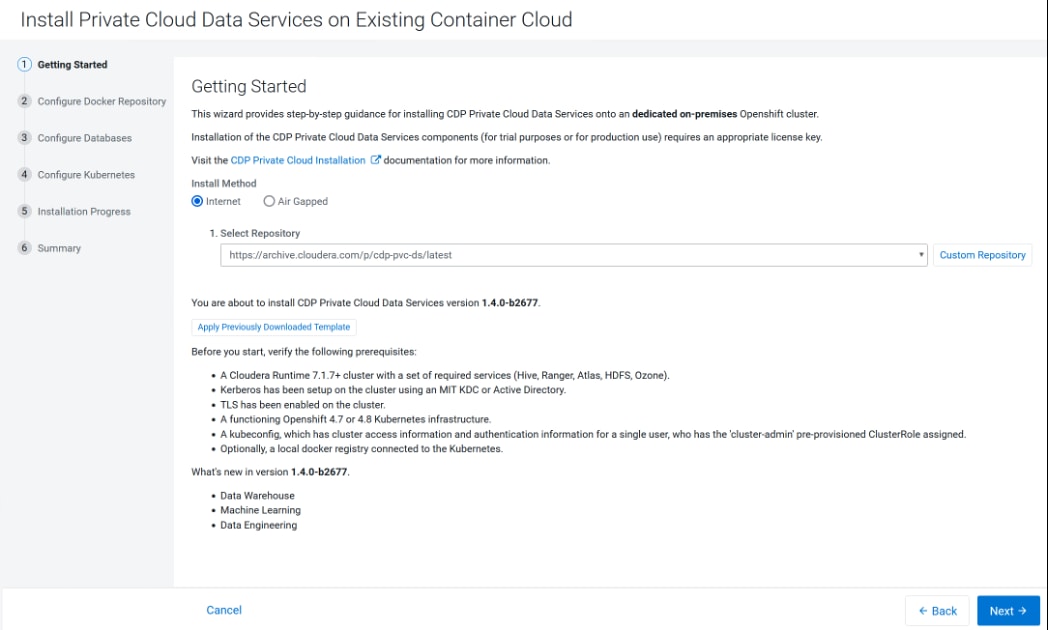

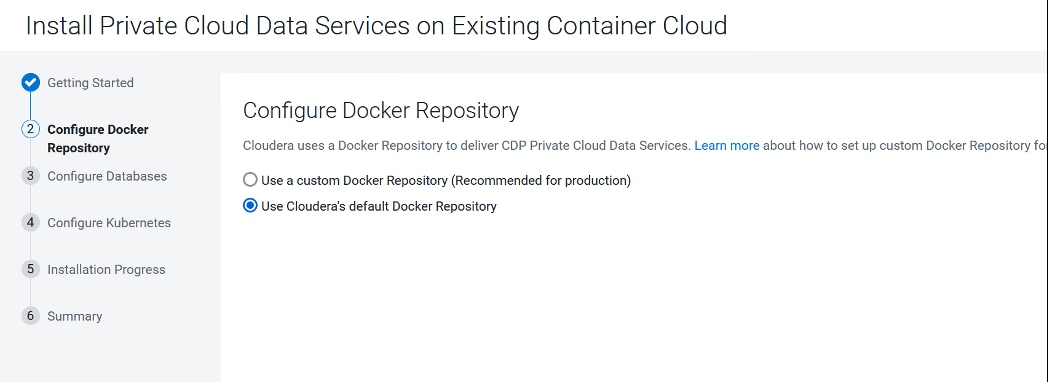

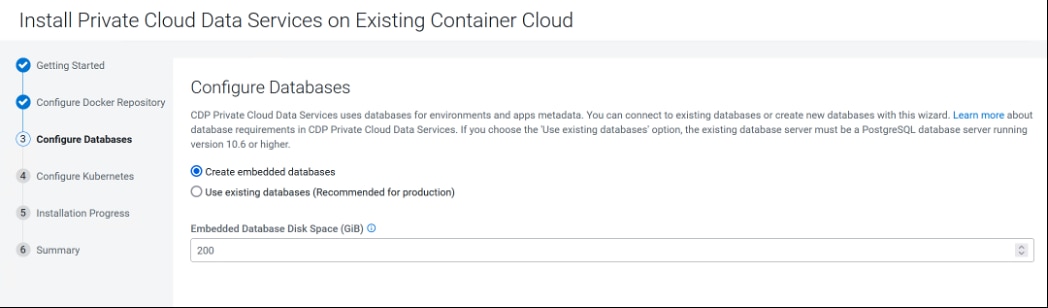

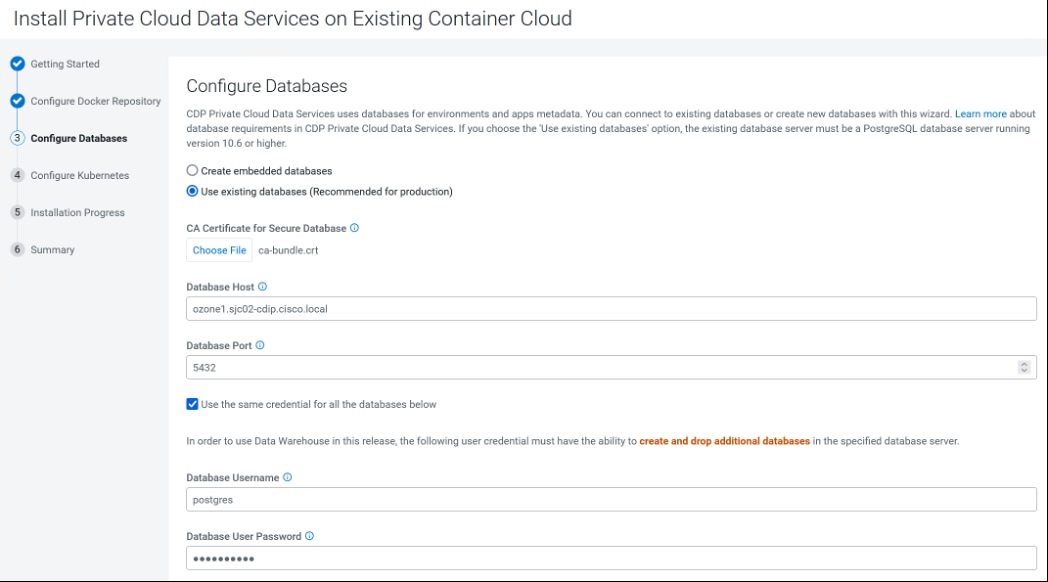

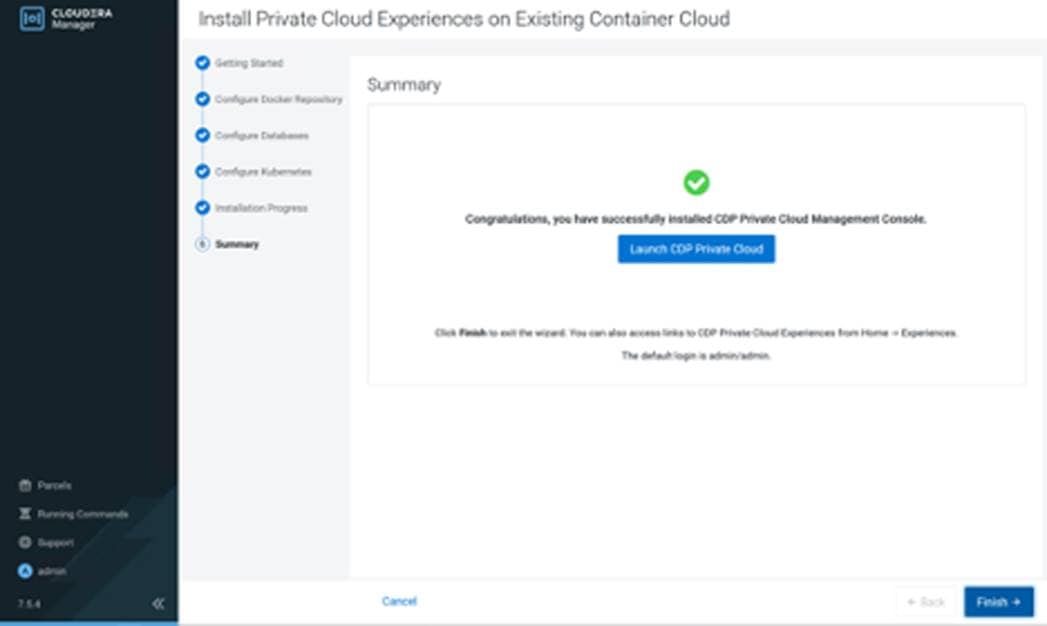

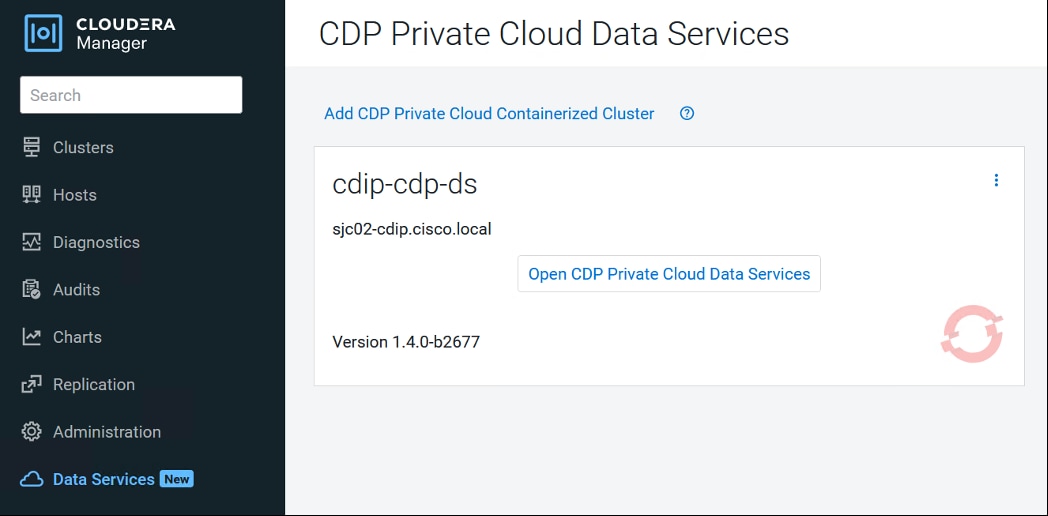

This CVD explains the implementation of Cloudera Data Platform Private Cloud Base (CDP PvC) 7.1.7 with CDP Private Cloud Data Services 1.4 running on Red Hat OpenShift Container Platform 4.8.

CDIP with Cloudera Data Platform enables customers to independently scale storage and computing resources as needed while offering an exabyte scale with low total cost of ownership (TCO). It offers future-proof architecture with the latest technologies provided by Cloudera.

This chapter contains the following:

● Audience

Both big data and machine learning technology have progressed to a point where they are being implemented in production systems running 24x7. There is a need for a proven, dependable, and high-performance platform for the ingestion, processing, storage, and analysis of the data, as well as the seamless dissemination of the outputs, results, and insights of the analysis.

This solution implements Cloudera Data Platform Private Cloud Base (CDP PvC Base) and Cloudera Data Platform Private Cloud Data Services (CDP PvC DS) on Cisco Data Intelligence Platform (CDIP) architecture, a world-class platform specifically designed for demanding workloads that is both easy to scale and easy to manage, even as the requirements grow to thousands of servers and petabytes of storage.

Today, many companies recognize the immense potential of big data and machine learning technologies. It is also evident that an enormous amount of data is being ingested every day into on-premises or cloud-enabled data lakes at very high velocity. IT leaders are challenged to find ways to maximize the ROI of their data, extract valuable insights, and make informed business decisions to gain a competitive edge. Furthermore, apps have transformed how IT is perceived: they are becoming the “business” rather than just supporting business functions. As a result, modernizing apps, adopting cloud-native architectures, creating microservices, and utilizing advanced analytics with AI/ML frameworks are becoming de facto standards for digital transformation. Amid those challenges, siloed monolithic apps and data further slow the pace of innovation and limit the transformation journey toward modern digitization.

Corporations are leveraging new capabilities, building out departments and increasing hiring. However, these efforts have a new set of challenges:

● Making the data available to the diverse set of engineers (Data engineers, analysts, data scientists) who need it

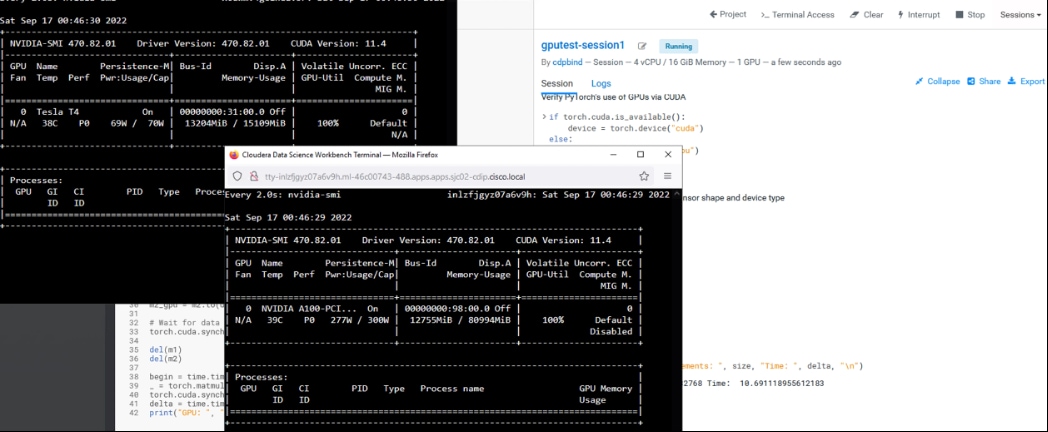

● Enabling access to high-performance computing resources, such as GPUs, that also scale with the data growth

● Allowing people to work with the data using the environments in which they are familiar

● Publishing their results so the organization can make use of them

● Enabling the automated production of those results

● Managing the data for compliance and governance

● Scaling the system as the data grows

● Managing and administering the system in an efficient, cost-effective way

This solution is based on the Cisco Data Intelligence Platform, which includes computing, storage, and connectivity capabilities built on Cisco Unified Computing System (Cisco UCS) infrastructure. It uses Cisco UCS C-Series and S-Series Rack Servers and unified management with Cisco Intersight to help companies manage the entire infrastructure from a single pane of glass, along with Cloudera Data Platform to provide the software for fast data ingest and for managing and processing the exabyte-scale data being collected. This architecture is specifically designed for performance and linear scalability for big data and machine learning workloads.

The intended audience for this document includes, but is not limited to, sales engineers, field consultants, professional services, IT managers, IT engineers, partners, and customers who are interested in learning about and deploying the Cloudera Data Platform Private Cloud (CDP PvC) on the Cisco Data Intelligence Platform on Cisco UCS M6 Rack-Mount servers and Cisco UCS X-Series for digital transformation through cloud-native modern data analytics and AI/ML.

This document describes the architecture, installation, configuration, and validated use cases for the Cisco Data Intelligence Platform using Cloudera Data Platform Private Cloud Base and Cloudera Data Platform Private Cloud Data Services on Cisco UCS M6 Rack-Mount servers and Cisco UCS® X-Series. A reference architecture is provided to configure the Cloudera Data Platform on Cisco UCS X210c Compute Nodes and X440p PCIe node with NVIDIA A100 GPU.

This solution extends the portfolio of Cisco Data Intelligence Platform (CDIP) architecture with Cloudera Data Platform Private Cloud Data Services (CDP PvC DS), a state-of-the-art platform built on top of Red Hat OpenShift Container Platform (RHOCP) that provides a data cloud for demanding workloads and is easy to deploy, scale, and manage. Furthermore, as the enterprise’s requirements and needs change over time, the platform can grow to thousands of servers, exabytes of storage, and tens of thousands of cores to process this data.

The following will be implemented in this validated design:

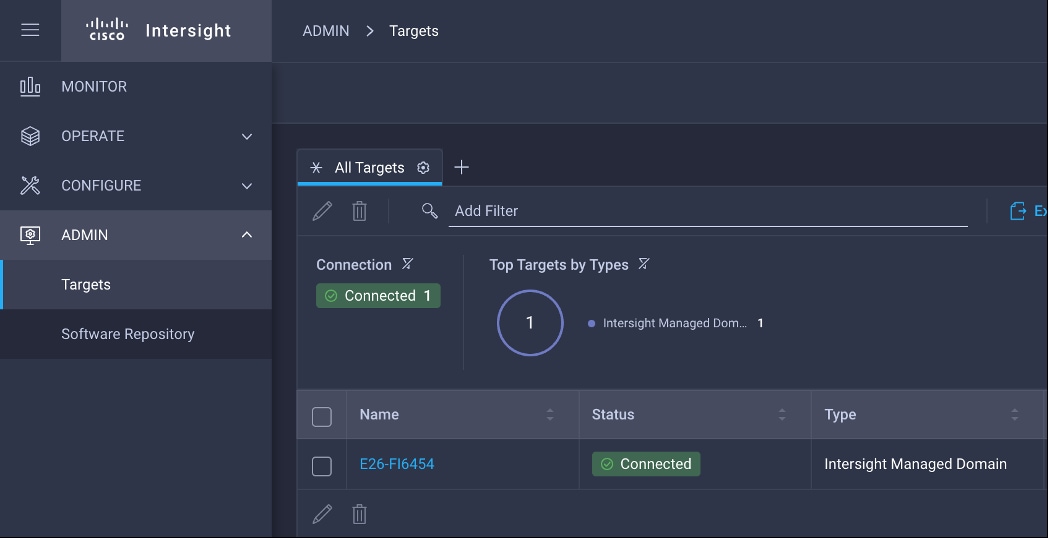

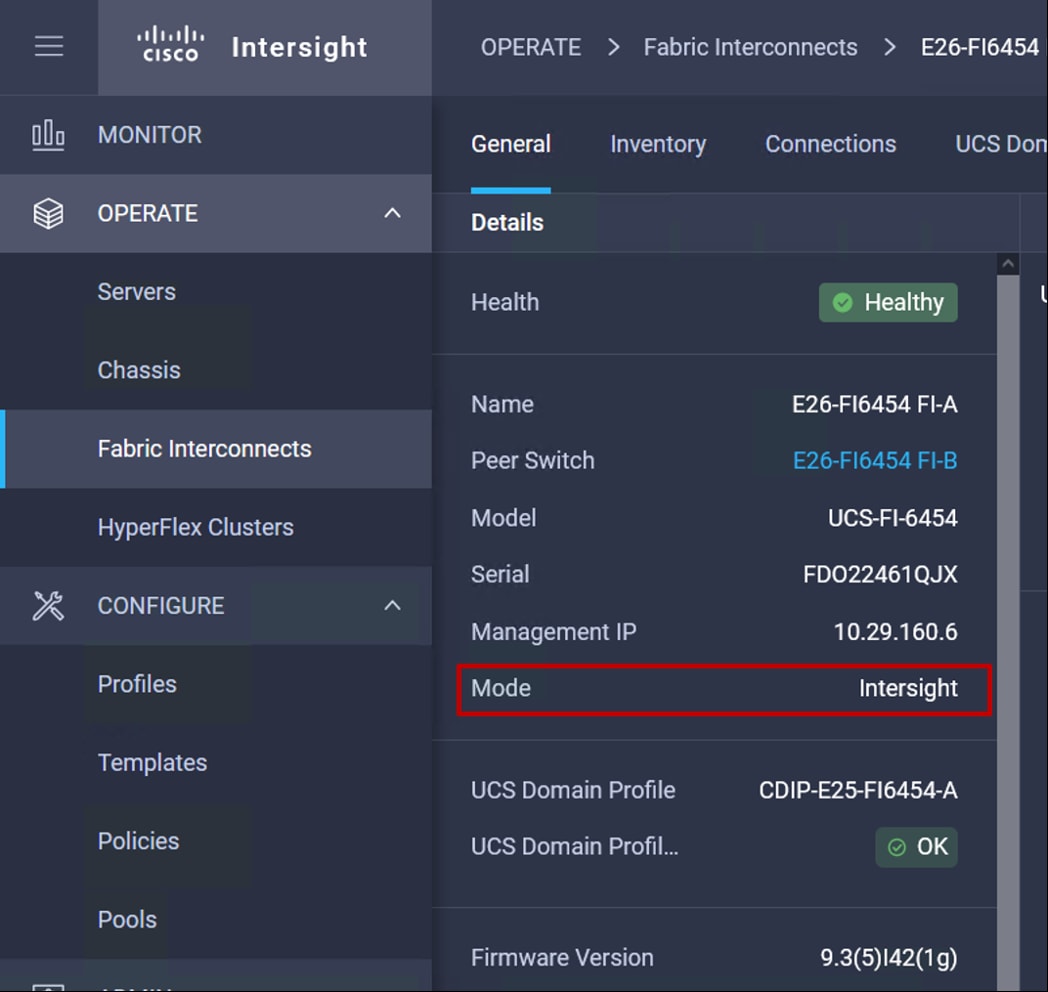

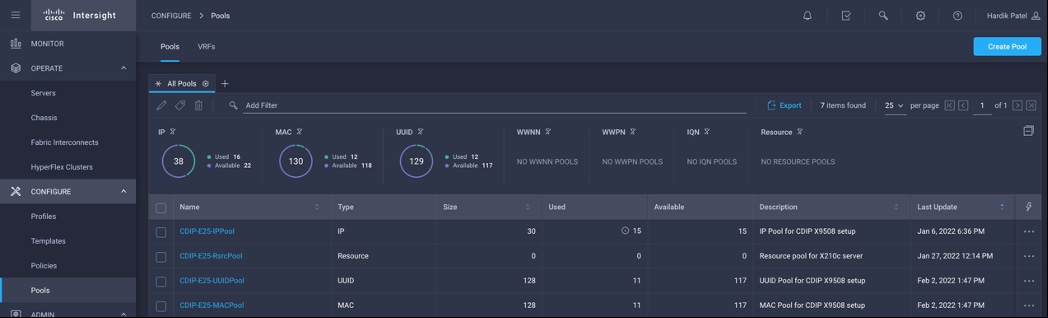

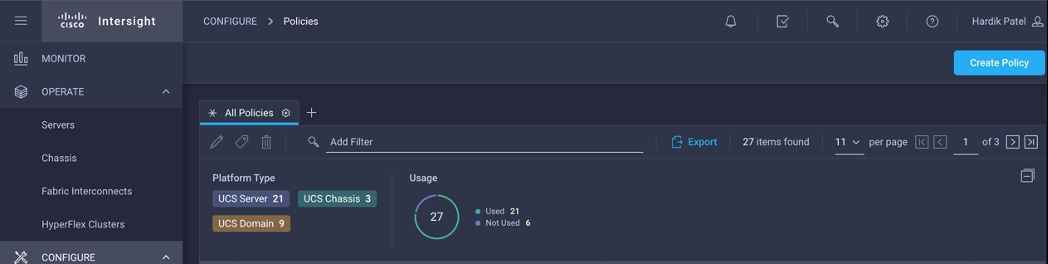

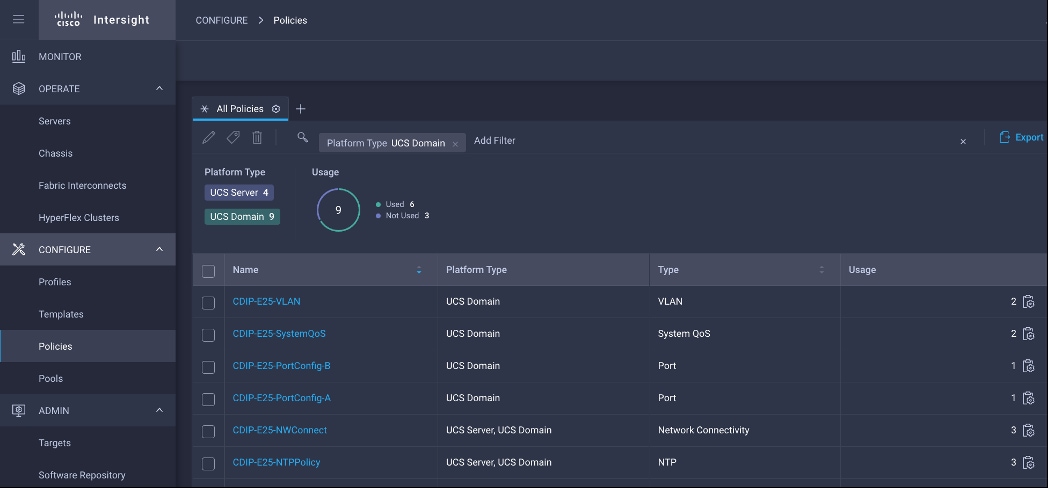

● Cisco Intersight to configure and manage Cisco Infrastructure

● Data lake provided by Cloudera Data Platform Private Cloud Base on Cisco UCS servers

● Compute Farm running

◦ Red Hat OpenShift Container Platform (RHOCP) or Embedded Container Service (ECS) to provide the Kubernetes and container platform for the private cloud

◦ Cloudera Data Platform Private Cloud Data Services as the application providing data processing, auto scaling and self-service onboarding of the user

In this release, you will primarily explore Cloudera Machine Learning, the data service that caters to data scientists. This release of Cloudera Private Cloud Data Services also includes Cloudera Data Warehouse, which is not the subject of this document.

This chapter contains the following:

● Cisco Data Intelligence Platform

This CVD details the process of installing CDP Private Cloud including the installation of Red Hat OpenShift Container Platform 4.8, the prerequisites for CDP Private Cloud Data Services and the configuration details of the cluster.

Cisco Data Intelligence Platform

Cisco Data Intelligence Platform (CDIP) is a cloud-scale architecture, primarily for a private cloud data lake which brings together big data, AI/compute farm, and storage tiers to work together as a single entity while also being able to scale independently to address the IT issues in the modern data center. This architecture provides the following:

● Extremely fast data ingest, and data engineering done at the data lake.

● An AI compute farm that makes it easy for different personas to work with AI/ML frameworks while achieving auto-scalability across different compute types (GPU, CPU, FPGA) for further analytics on this data.

Note: Cloudera Private Cloud Data Services 1.4 supports GPU only for Cloudera Machine Learning (CML). Cloudera Data Engineering (CDE) will support GPU in a future release.

● A storage tier that allows data which has been worked on to be gradually retired to a storage-dense system with a lower $/TB, providing a better TCO. It uses the next-generation Apache Ozone file system for storage in a data lake.

● Seamlessly scale the architecture to thousands of nodes with a single pane of glass management using Cisco Intersight and Cisco Application Centric Infrastructure (ACI).

Cisco Data Intelligence Platform caters to the evolving architecture, bringing together a fully scalable infrastructure with centralized management and a fully supported software stack (in partnership with industry leaders in the space) for each of the three independently scalable components of the architecture: data lake, AI/ML, and object stores.

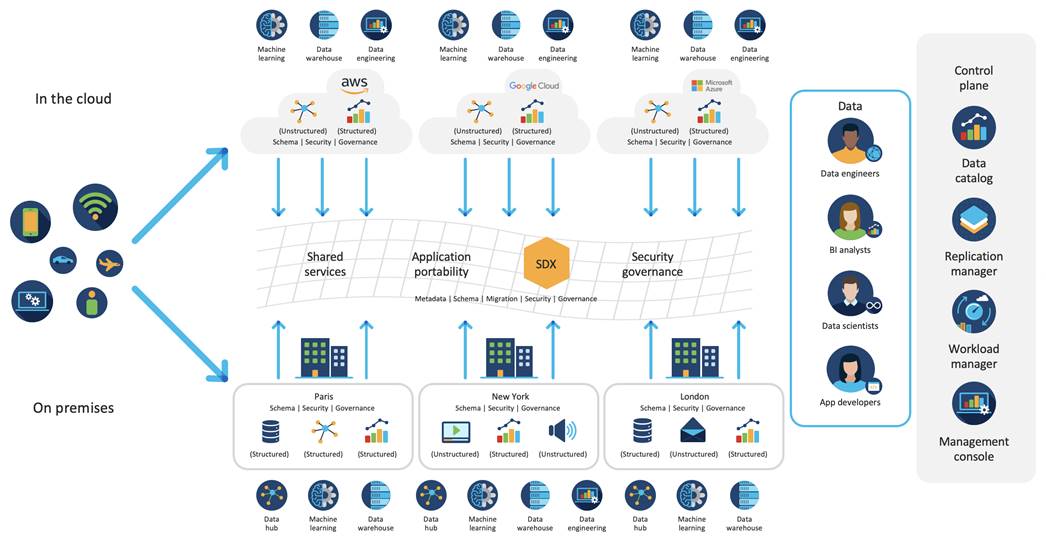

CDIP offers a private cloud that can become a hybrid cloud for data lakes and apps, providing a unified user experience with a common identity and a single API framework that stretches from private cloud to public cloud and auto-scales when app demand grows. It also enables tighter control over sensitive data with data governance and compliance, and integrates a common data serving layer for data analytics, business intelligence, AI inferencing, and so on.

CDIP with CDP private cloud is built to meet the needs of enterprises for their hybrid cloud with unmatched choices such as any data, any analytics, and engineering anywhere. This solution includes:

● Flexibility to run workload anywhere for quick and easy insights

● Security that is consistent across all clouds provided by Cloudera’s SDX. Write centrally controlled compliance and governance policies once and apply everywhere, enabling safe, secure, and compliant end-user access to data and analytics

● Performance and scale to optimize TCO across your choices. It brings unparalleled scale and performance to your mission-critical applications while securing future readiness for evolving data models

● Single pane of glass visibility for your infrastructure and workloads. Register multiple clouds, both public and private, in a single management console and launch virtual analytic workspaces or virtual warehouses within each environment as needed

● Secure data and workload migration to protect your enterprise data and deliver it where it is needed. Securely manage data and metadata migration across all environments

● Unified and multi-function Analytics for cloud-native workloads whether real-time or batch. Integrates data management and analytics experiences across the entire data lifecycle for data anywhere.

● Hybrid and multi-cloud data warehouse service for all modern, self-service, and advanced analytics use cases, at scale.

● Track and audit everything across the entire ecosystem of CDIP deployments

CDIP with CDP Private Cloud Hybrid Use Cases

With increasing hybrid cloud adoption driven by growing data volume and variety, CDIP addresses use cases that cater to today’s demand for hybrid data platforms, such as the following:

● Hybrid Workload – Offload workloads from on-premises to the cloud or vice versa as required, or auto-scale during peak hours due to real-time urgency or seasonality, with Cloudera Replication Manager and Cloudera Workload Manager

● Hybrid Pipelines – Implement and optimize data pipelines for easier management. Automate and orchestrate your data pipelines on demand or where they are needed the most. Implement secure data exchange between your choice of cloud and on-premises data hub at scale

● Hybrid Data Integration – Integrate data sources among clouds. Simplify application development or ML model training that needs on-premises data sources or cloud-native data stores

● Hybrid DevOps – Accelerate development with dev sandboxes in the cloud while production runs on-premises

● Hybrid Data Applications – Build applications that run anywhere for cost, performance, and data residency

Cisco Data Intelligence Platform with Cloudera Data Platform

Cisco has developed numerous industry-leading Cisco Validated Designs (reference architectures) in the areas of big data, compute farm with Kubernetes (CVD with Red Hat OpenShift Container Platform), and object store.

A CDIP architecture as a private cloud can be fully enabled by the Cloudera Data Platform with the following components:

● Data lake enabled through CDP PvC Base

● Private Cloud with compute on Kubernetes can be enabled through CDP Private Cloud Data Services

● Exabyte storage enabled through Apache Ozone

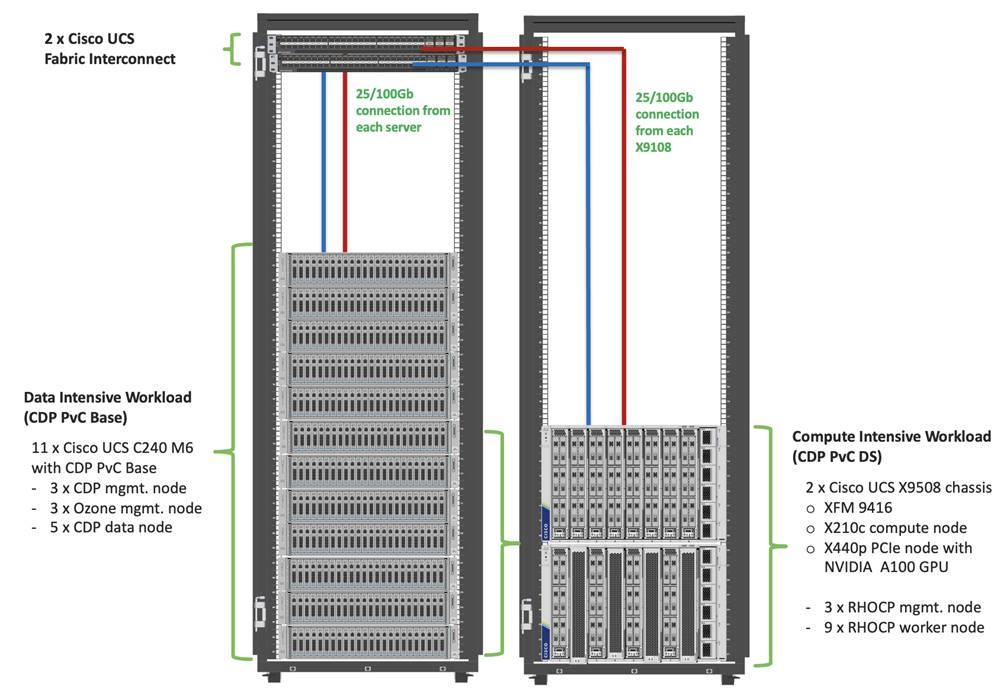

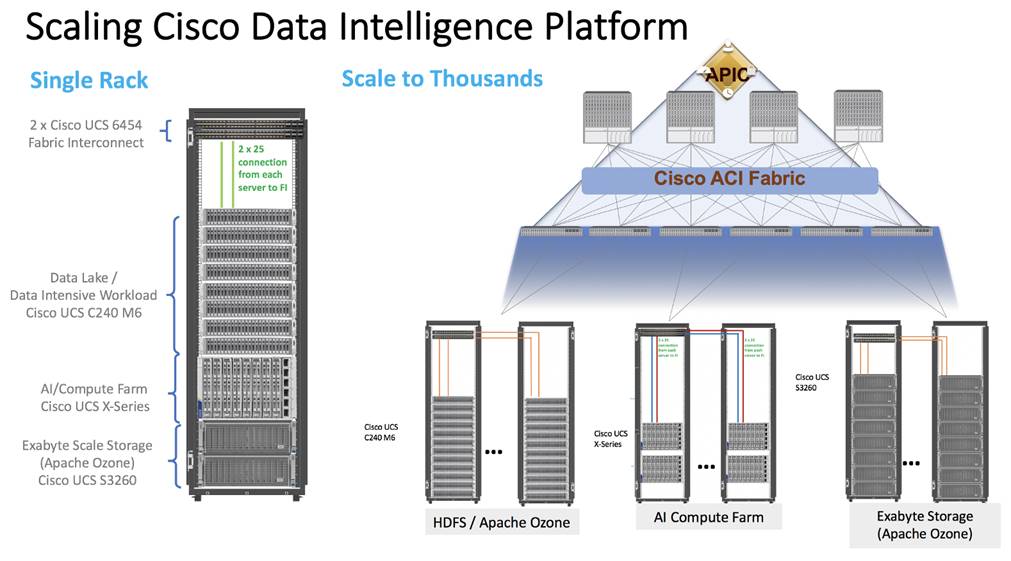

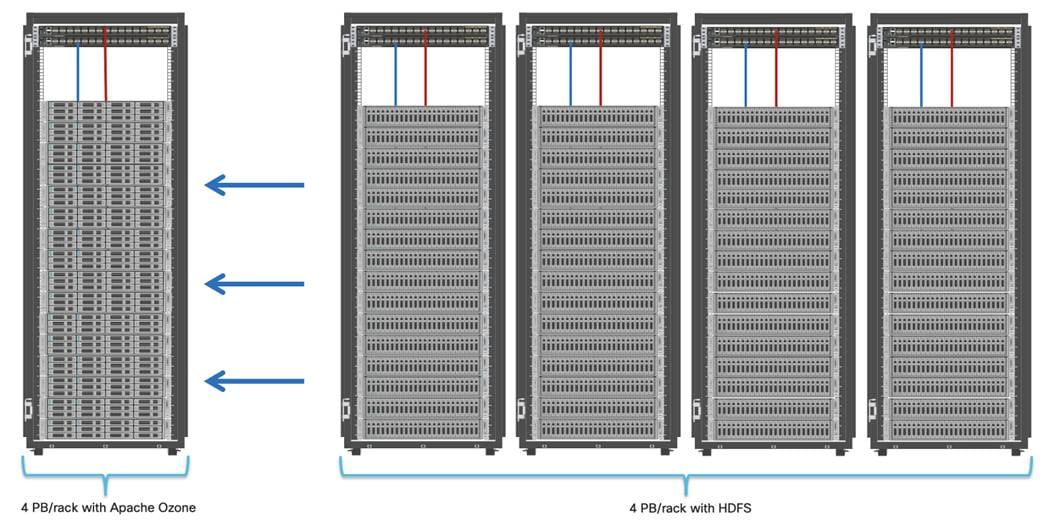

This architecture can start from a single rack (Figure 4) and scale to thousands of nodes with a single pane of glass management with Cisco Application Centric Infrastructure (ACI) (Figure 5).

Cisco Data Intelligence Platform reference architectures are carefully designed, optimized, and tested with the leading big data and analytics software distributions to achieve a balance of performance and capacity to address specific application requirements. You can deploy these configurations as is or use them as templates for building custom configurations. You can scale your solution as your workloads demand, including expansion to thousands of servers using Cisco Nexus 9000 Series Switches. The configurations vary in disk capacity, bandwidth, price, and performance characteristics.

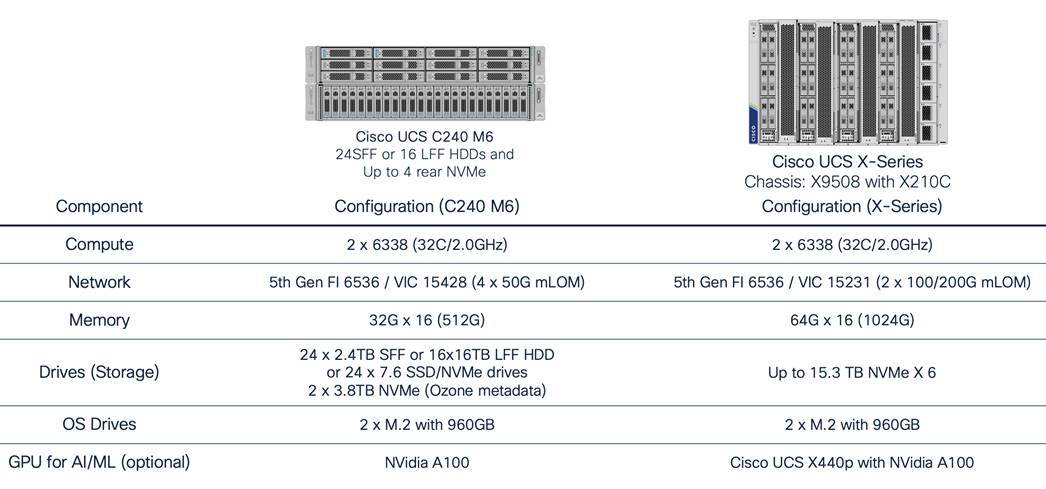

Data Lake (CDP PvC Base) Reference Architecture

Table 1 lists the CDIP with CDP PvC data lake and dense storage with Apache Ozone reference architecture.

Table 1. Cisco Data Intelligence Platform with CDP Private Cloud Base (Apache Ozone) Configuration on Cisco UCS M6

|

|

High Performance |

Performance |

Capacity |

| Server |

16 x Cisco UCS C240 M6SN Rack Servers with small-form-factor (SFF) drives |

16 x Cisco UCS C240 M6 Rack Servers with small-form-factor (SFF) drives |

16 x Cisco UCS C240 M6 Rack Servers with large-form-factor (LFF) drives |

| CPU |

2 x 3rd Gen Intel® Xeon® Scalable Processors 6338 processors (2 x 32 cores, at 2.0 GHz) |

2 x 3rd Gen Intel® Xeon® Scalable Processors 6338 processors (2 x 32 cores, at 2.0 GHz) |

2 x 3rd Gen Intel® Xeon® Scalable Processors 6338 processors (2 x 32 cores, at 2.0 GHz) |

| Memory |

16 x 32 GB RDIMM DRx4 3200 MHz (512 GB) |

16 x 32 GB RDIMM DRx4 3200 MHz (512 GB) |

16 x 32 GB RDIMM DRx4 3200 MHz (512 GB) |

| Boot |

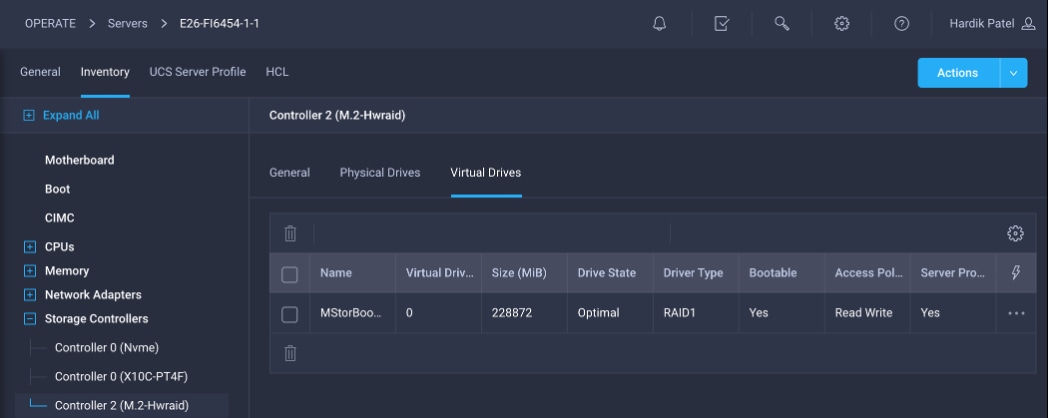

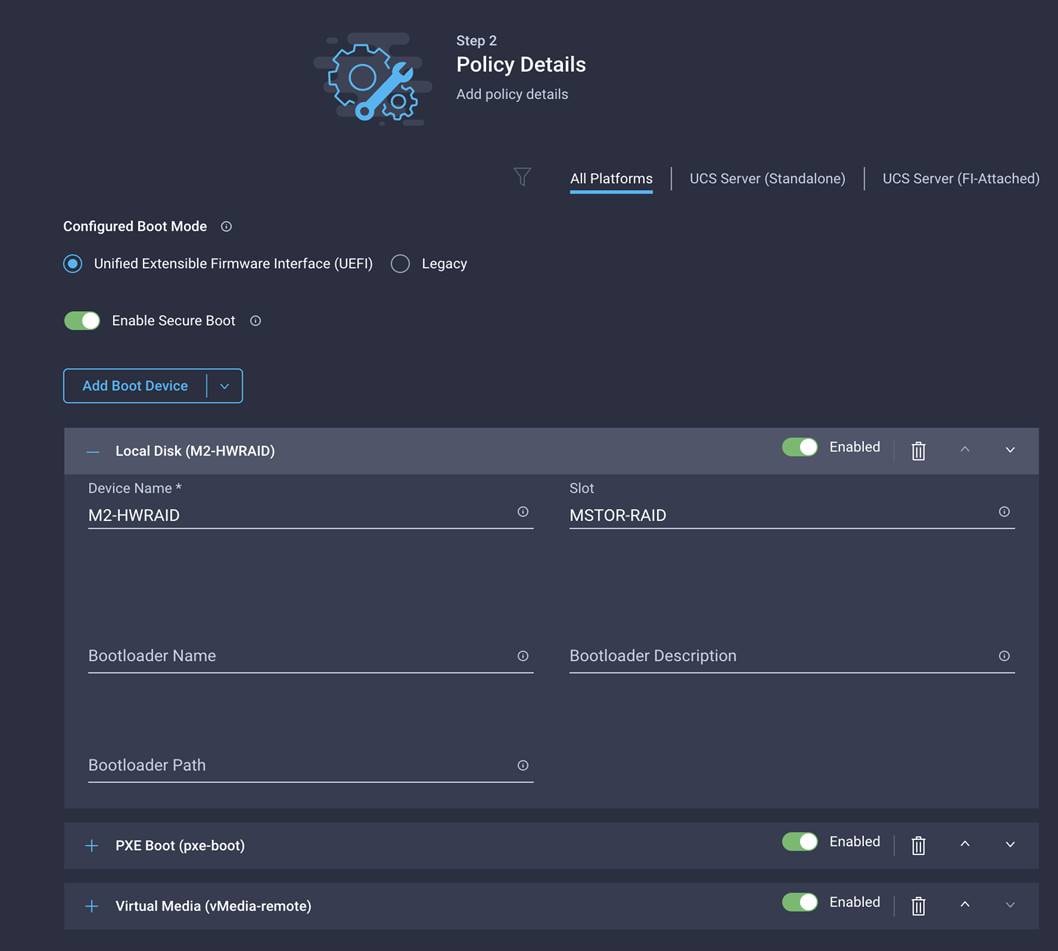

M.2 with 2 x 960-GB SSDs |

M.2 with 2 x 960-GB SSDs |

M.2 with 2 x 960-GB SSDs |

| Storage |

24 x 6.4TB 2.5in U2 NVMe and 2 x 3.2TB NVMe |

24 x 2.4TB 12G SAS 10K RPM SFF HDD (4K) (or 24 x 7.6TB Enterprise Value 12G SATA SSDs) and 2 x 3.2TB NVMe |

16 x 16TB 12G SAS 7.2K RPM LFF HDD(4K) and 2 x 3.2TB NVMe |

| Virtual Interface Card (VIC) |

Cisco UCS VIC 1467 (4 x 10/25G) Cisco UCS VIC 1477 (2 x 40/100G) Cisco UCS VIC 15428 (4 x 10/25/50G) |

Cisco UCS VIC 1467 (4 x 10/25G) Cisco UCS VIC 1477 (2 x 40/100G) Cisco UCS VIC 15428 (4 x 10/25/50G) |

Cisco UCS VIC 1467 (4 x 10/25G) Cisco UCS VIC 1477 (2 x 40/100G) Cisco UCS VIC 15428 (4 x 10/25/50G) |

| Storage Controller |

NA |

Cisco 12-Gbps SAS modular RAID controller with 4-GB flash-based write cache (FBWC) or Cisco 12-Gbps modular SAS host bus adapter (HBA) |

Cisco 12-Gbps SAS modular RAID controller with 4-GB FBWC or Cisco 12-Gbps modular SAS host bus adapter (HBA) |

| Network Connectivity |

Cisco UCS 6400 or 6500 Fabric Interconnect |

Cisco UCS 6400 or 6500 Fabric Interconnect |

Cisco UCS 6400 or 6500 Fabric Interconnect |

| GPU (optional) |

NVIDIA GPU A100 |

NVIDIA GPU A100 |

NVIDIA GPU A100 |

Note: The reference architecture highlighted here is the sizing guide for Apache Ozone-based deployments. When sizing a data lake for HDFS, Cloudera does not support exceeding 100TB per data node or drives larger than 8TB. For more information, see the HDFS and Ozone section in the CDP PvC Base hardware requirements: https://docs.cloudera.com/cdp-private-cloud-base/7.1.7/installation/topics/cdpdc-runtime.html
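The HDFS limits in the note above can be checked against the Table 1 drive layouts with a few lines of arithmetic. The following is a minimal sketch, not a Cloudera or Cisco tool: the class and function names are hypothetical, it assumes the 100TB limit applies to the raw capacity of the data drives, and it ignores the 2 x 3.2TB NVMe drives listed alongside them.

```python
from dataclasses import dataclass
from typing import List

# Limits quoted in the note above for HDFS data nodes.
HDFS_MAX_NODE_TB = 100.0
HDFS_MAX_DRIVE_TB = 8.0


@dataclass(frozen=True)
class DataNodeConfig:
    """Data-drive layout of one server (hypothetical helper type)."""
    name: str
    drive_count: int
    drive_tb: float

    @property
    def raw_tb(self) -> float:
        # Raw capacity of the data drives only (assumption: the extra
        # 2 x 3.2TB NVMe drives are not counted).
        return self.drive_count * self.drive_tb


def hdfs_violations(cfg: DataNodeConfig) -> List[str]:
    """Return the quoted HDFS limits that this layout would exceed."""
    problems = []
    if cfg.drive_tb > HDFS_MAX_DRIVE_TB:
        problems.append(f"drive size {cfg.drive_tb} TB exceeds {HDFS_MAX_DRIVE_TB} TB")
    if cfg.raw_tb > HDFS_MAX_NODE_TB:
        problems.append(f"node capacity {cfg.raw_tb:.1f} TB exceeds {HDFS_MAX_NODE_TB} TB")
    return problems


# Data-drive layouts taken from Table 1.
TABLE_1 = [
    DataNodeConfig("High Performance", 24, 6.4),
    DataNodeConfig("Performance (HDD option)", 24, 2.4),
    DataNodeConfig("Capacity", 16, 16.0),
]

if __name__ == "__main__":
    for cfg in TABLE_1:
        issues = hdfs_violations(cfg) or ["within the quoted HDFS limits"]
        print(f"{cfg.name}: {cfg.raw_tb:.1f} TB raw -> {'; '.join(issues)}")
```

Under these assumptions, only the Performance option with 2.4TB HDDs stays inside both HDFS limits, which is consistent with the note that Table 1 is sized for Apache Ozone.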

Compute Farm (CDP PvC DS) Reference Architecture

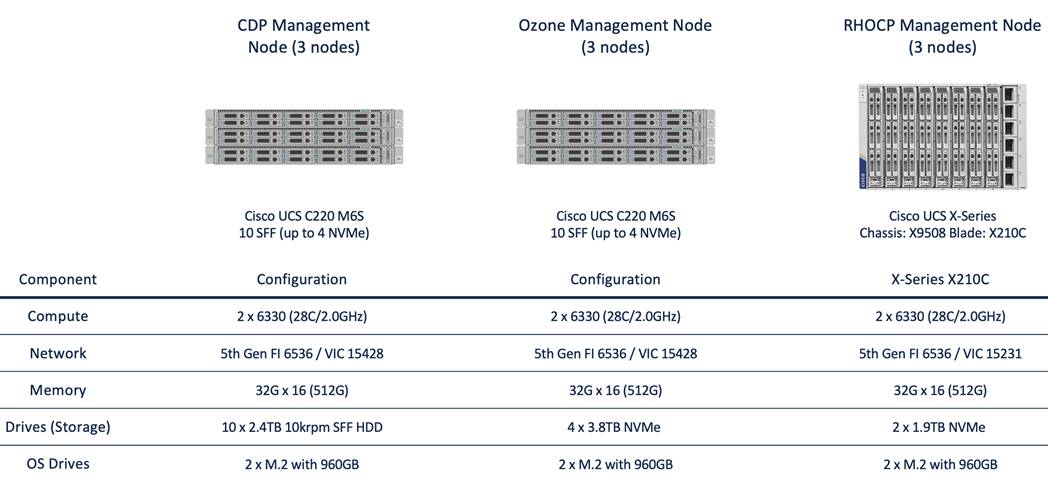

Table 2 lists the CDIP with CDP PvC DS configuration for master and worker nodes with RHOCP reference architecture.

Table 2. Cisco Data Intelligence Platform with CDP Private Cloud Data Services Configuration

| | High Core Option |
| --- | --- |
| Servers | Cisco UCS X-Series 9508 chassis with X210C Blades (Up to 8 Per chassis) |
| CPU | 2 x 3rd Gen Intel® Xeon® Scalable Processors 6338 processors (2 x 32 cores, at 2.0 GHz) |
| Memory | 16 x 64GB RDIMM DRx4 3200 MHz (1TB) |
| Boot | M.2 with 2 x 960GB SSD |
| Storage | 4 x 3.2TB 2.5in U2 NVMe* (Red Hat OpenShift Container Storage (RHOCS)/Portworx [2 drives], Local storage [2 drives]) |
| VIC | Cisco UCS VIC 14425 4x25G mLOM or Cisco UCS VIC 15231 2x100/200G mLOM |
| Storage controller | Cisco UCS X210c Compute Node compute pass through controller |
| Network connectivity | Cisco UCS 6400 or 6500 Fabric Interconnect |
| GPU (optional) | Cisco UCS X440p with NVIDIA A100 GPU |

Note: NVMe storage capacity and quantity need to be adjusted based on the dataset requirements. For more information, see the CDP PvC DS with RHOCP hardware requirements: https://docs.cloudera.com/cdp-private-cloud-data-services/1.4.0/installation/topics/cdppvc-installation-openshift-requirements.html

Note: This deployment guide was tested with Cisco UCS Fabric Interconnect 6454 connected to Cisco UCS X9508 chassis via UCS 9108-25G IFM. Cisco UCS X9416 X-Fabric Module for 9508 chassis provides native PCIe Gen4 x16 connectivity to the X210c compute and Cisco UCS X440p PCIe node. For more details: https://www.cisco.com/c/dam/en/us/products/collateral/servers-unified-computing/ucs-x-series-modular-system/x9508-specsheet.pdf

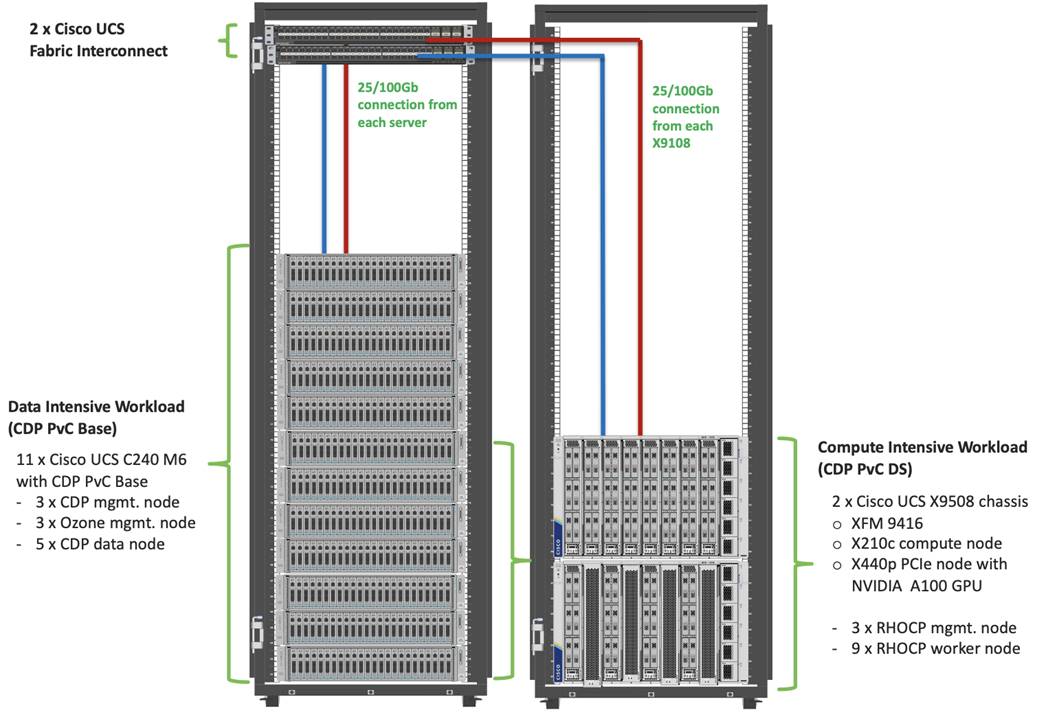

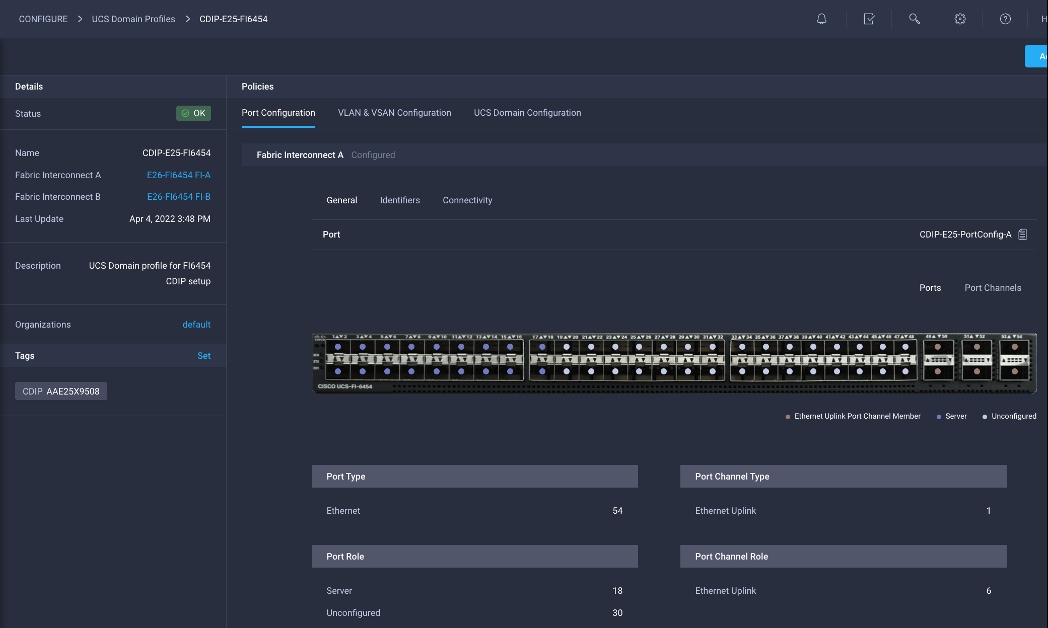

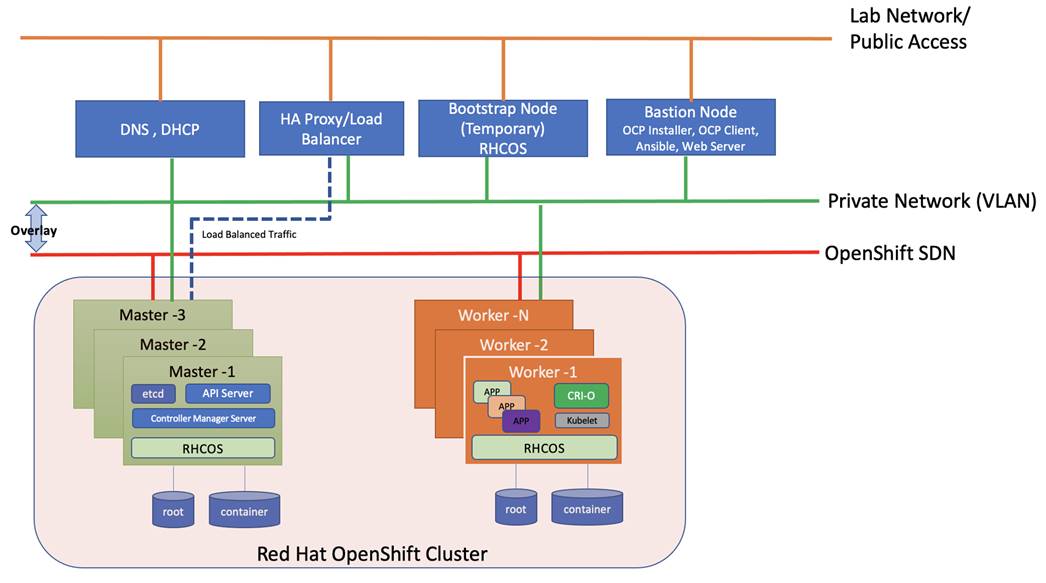

As illustrated in Figure 4, this CVD was designed with the following:

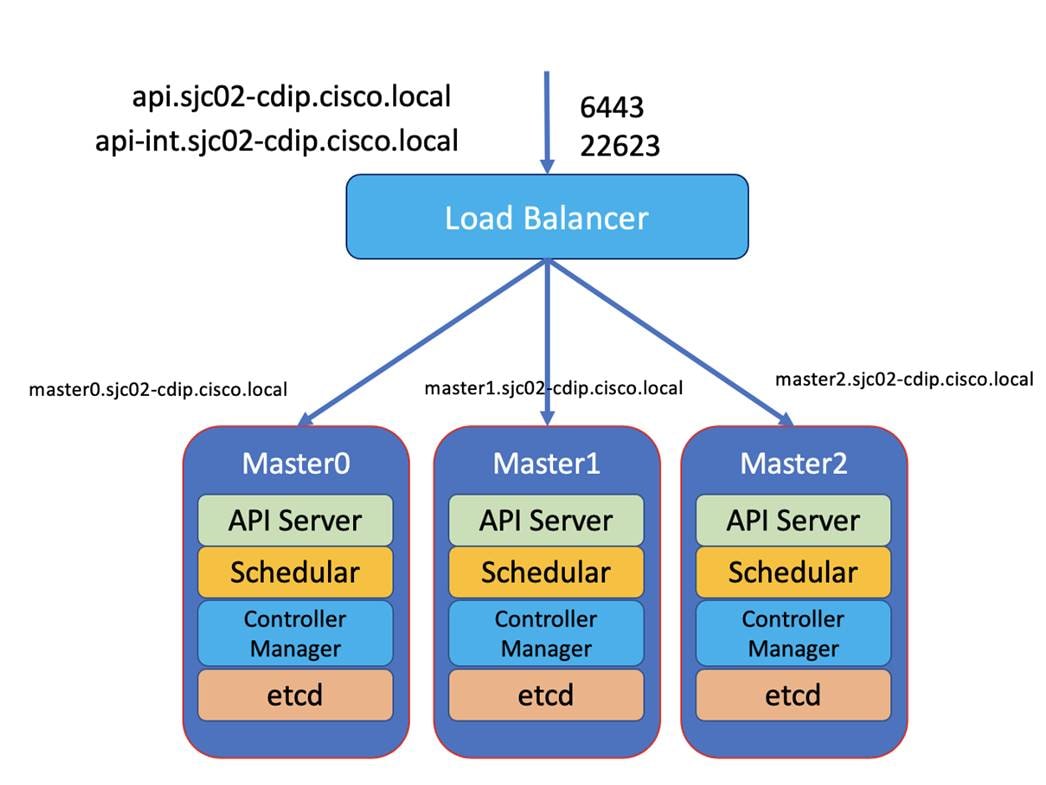

● 3 x Cisco UCS X210c compute nodes as Red Hat OpenShift Container Platform master nodes

● 5 x Cisco UCS X210c compute nodes as Red Hat OpenShift Container Platform worker nodes

● 4 x Cisco UCS X210c compute nodes and 4 x Cisco UCS X440p PCIe nodes, each hosting 2 x NVIDIA A100 GPUs, as Red Hat OpenShift Container Platform worker nodes

● Cloudera Data Platform Private Cloud Data Services running on Red Hat OpenShift Container Platform

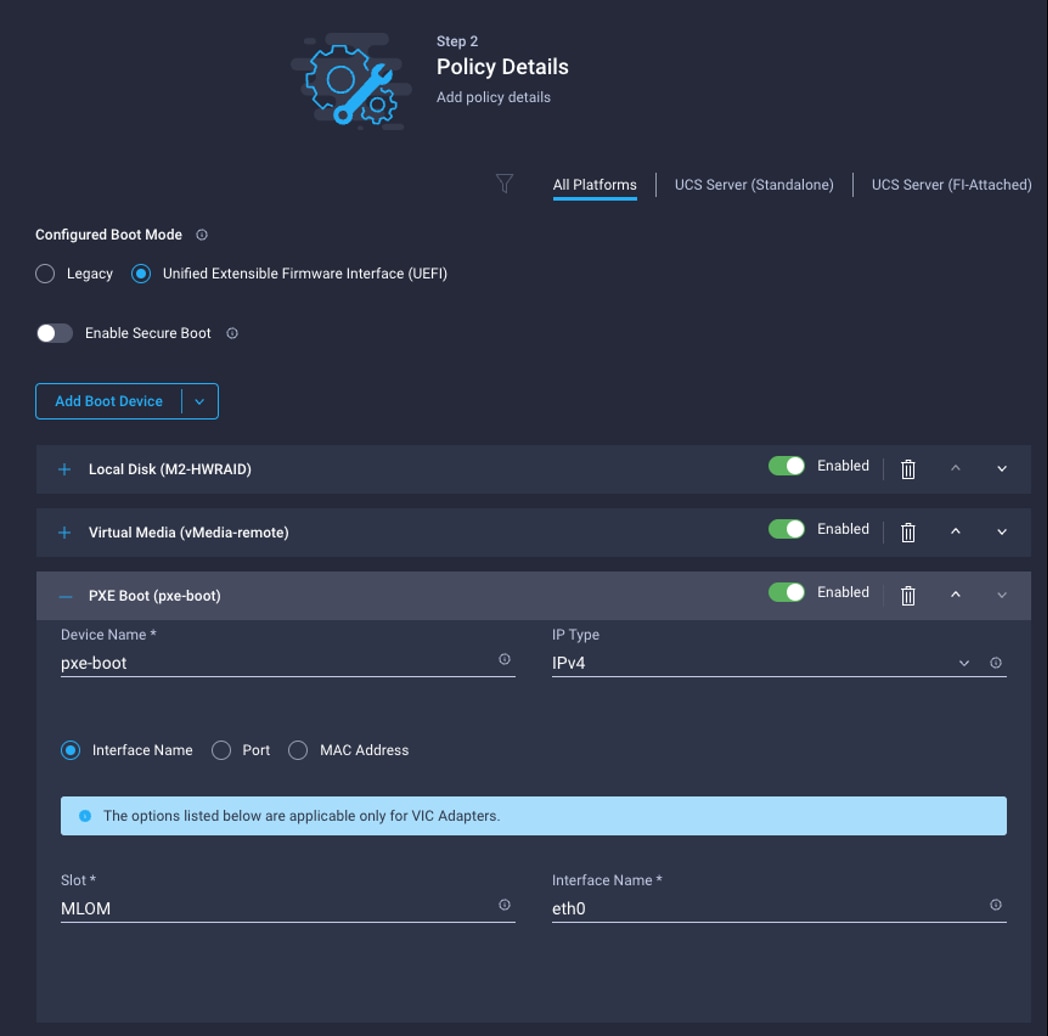

● 1 x Cisco UCS C240 M6 bootstrap node for Red Hat OpenShift Container Platform

● 1 x Cisco UCS C240 M6 running HAProxy

● Cloudera Data Platform Private Cloud Base running Cloudera Manager
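The aggregate compute in the design listed above can be tallied with a short calculation. This is only a sketch: the role names and helper function are hypothetical, and it assumes every X210c node uses the Table 2 "High Core Option" configuration (2 x 32 cores and 16 x 64GB of memory).

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Set

# Per-node figures from Table 2 (assumed to apply to every X210c node).
CORES_PER_NODE = 2 * 32        # 2 x Intel Xeon 6338, 32 cores each
MEMORY_GB_PER_NODE = 16 * 64   # 16 x 64GB RDIMM


@dataclass(frozen=True)
class NodeGroup:
    """One group of identical OpenShift nodes (hypothetical helper type)."""
    role: str
    count: int
    gpus_per_node: int = 0


# Node counts from the design list above.
GROUPS = [
    NodeGroup("master", 3),
    NodeGroup("worker", 5),
    NodeGroup("gpu-worker", 4, gpus_per_node=2),  # X210c + X440p with 2 x A100
]


def totals(groups: Iterable[NodeGroup], roles: Set[str]) -> Dict[str, int]:
    """Sum nodes, cores, memory, and GPUs for the selected roles."""
    selected = [g for g in groups if g.role in roles]
    nodes = sum(g.count for g in selected)
    return {
        "nodes": nodes,
        "cores": nodes * CORES_PER_NODE,
        "memory_gb": nodes * MEMORY_GB_PER_NODE,
        "gpus": sum(g.count * g.gpus_per_node for g in selected),
    }


if __name__ == "__main__":
    print("workers:", totals(GROUPS, {"worker", "gpu-worker"}))
    print("cluster:", totals(GROUPS, {"master", "worker", "gpu-worker"}))
```

The figures are raw hardware totals; capacity that is actually schedulable for CDP Private Cloud Data Services workloads will be lower once operating system and platform overhead is reserved.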

Refer to http://www.cisco.com/go/bigdata_design to build a fully supported CDP Private Cloud Base on CDIP reference architecture. This CVD does not provide the details to build a CDP Private Cloud Base. For detailed instructions, see the following guides:

Cisco Data Intelligence Platform on Cisco UCS M6 with Cloudera Data Platform Ozone Design Guide

Cisco Data Intelligence Platform with All NVMe Storage, Cisco Intersight, and Cloudera Data Platform

Cisco Data Intelligence Platform on Cisco UCS S3260 with Cloudera Data Platform

Note: The bootstrap controller node is not shown in the reference architecture (Figure 4). The bootstrap node is temporary and is used to deploy the OpenShift control plane; once the OpenShift masters are up, it can be removed.

Note: An HAProxy server is used for load balancing OpenShift control and application traffic. In a production environment, it is recommended to use an external load balancer or to implement high availability for the HAProxy load balancers with a keepalived VIP.
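To illustrate what the HAProxy node balances, the sketch below renders TCP `listen` sections for the control plane and application traffic. It is an illustration under stated assumptions, not the configuration validated in this CVD: the ports (6443 for the Kubernetes API, 22623 for the machine config server, 443 and 80 for ingress) follow Red Hat's general load balancer requirements for user-provisioned OpenShift installs, and all host names, addresses, and function names are hypothetical. The output is a fragment without `global` or `defaults` sections.

```python
from typing import Dict, Optional


def render_listener(name: str, port: int, servers: Dict[str, str]) -> str:
    """Render one HAProxy TCP 'listen' section for the given backend servers."""
    lines = [
        f"listen {name}",
        f"    bind *:{port}",
        "    mode tcp",
        "    balance roundrobin",
    ]
    for host, ip in servers.items():
        lines.append(f"    server {host} {ip}:{port} check")
    return "\n".join(lines)


def render_haproxy(
    masters: Dict[str, str],
    workers: Dict[str, str],
    bootstrap: Optional[Dict[str, str]] = None,
) -> str:
    """Build the listener fragment; pass bootstrap only during installation."""
    control = dict(masters)
    if bootstrap:
        # The temporary bootstrap node serves control-plane ports only.
        control.update(bootstrap)
    sections = [
        render_listener("api-server", 6443, control),
        render_listener("machine-config-server", 22623, control),
        render_listener("ingress-https", 443, workers),
        render_listener("ingress-http", 80, workers),
    ]
    return "\n\n".join(sections) + "\n"


if __name__ == "__main__":
    # Hypothetical names and documentation-range addresses.
    masters = {f"master{i}": f"192.0.2.{11 + i}" for i in range(3)}
    workers = {f"worker{i}": f"192.0.2.{21 + i}" for i in range(9)}
    print(render_haproxy(masters, workers, bootstrap={"bootstrap": "192.0.2.10"}))
```

Calling `render_haproxy` again without the `bootstrap` argument produces the fragment for the steady state, after the bootstrap node has been removed as described in the note above.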

This chapter contains the following:

● Cisco Data Intelligence Platform

● Cisco Unified Computing System

● Cisco UCS Fabric Interconnect

● Cloudera Data Platform (CDP)

● Cloudera Machine Learning (CML)

● Cloudera Data Warehouse (CDW)

● Cloudera Data Engineering (CDE)

Cisco Data Intelligence Platform

This section describes the components used to build Cisco Data Intelligence Platform, a highly scalable architecture designed to meet a variety of scale-out application demands with seamless data integration and management integration capabilities.

Cisco Data Intelligence Platform powered by Cloudera Data Platform delivers:

● Latest generation of CPUs from Intel (3rd Gen Intel Xeon Scalable family, with Ice Lake CPUs).

● Cloud scale and fully modular architecture where big data, AI/compute farm, and massive storage tiers work together as a single entity and each CDIP component can also scale independently to address the IT issues in the modern data center.

● World record Hadoop performance for both MapReduce and Spark frameworks, published with the TPCx-HS benchmark.

● AI compute farm offers different types of AI frameworks and compute types (GPU, CPU, FPGA) to work on data for analytics.

● A massive storage tier that enables data to be gradually retired, with quick retrieval when needed, on a storage-dense subsystem with a lower $/TB, providing a better TCO.

● Data compression with FPGA: offload compute-heavy compression tasks to the FPGA, freeing the CPU to perform other tasks and gaining significant performance.

● Seamlessly scale the architecture to thousands of nodes.

● Single pane of glass management with Cisco Intersight.

● ISV Partner ecosystem – Top-notch ISV partner ecosystem, offering best-of-breed, end-to-end validated architectures.

● Pre-validated and fully supported platform.

● Disaggregated architecture supports separation of storage and compute for a data lake.

● Container cloud: a Kubernetes compute farm backed by the industry-leading container orchestration engine, offering the very first container cloud plugged into the data lake and object store.

Cloudera Data Platform Private Cloud Base (CDP PvC Base)

With the merger of Cloudera and Hortonworks, a new “Cloudera” software named Cloudera Data Platform (CDP) combined the best of Hortonworks’ and Cloudera’s technologies to deliver the industry’s first enterprise data cloud. CDP Private Cloud Base is the on-premises version of CDP, and CDP Private Cloud Data Services is the on-premises private cloud offering that enables compute on Kubernetes with Red Hat OpenShift Container Platform. This unified distribution is a scalable and customizable platform where workloads can be securely provisioned. CDP provides a clear path for extending or refreshing your existing HDP and CDH deployments and sets the stage for a cloud-native architecture.

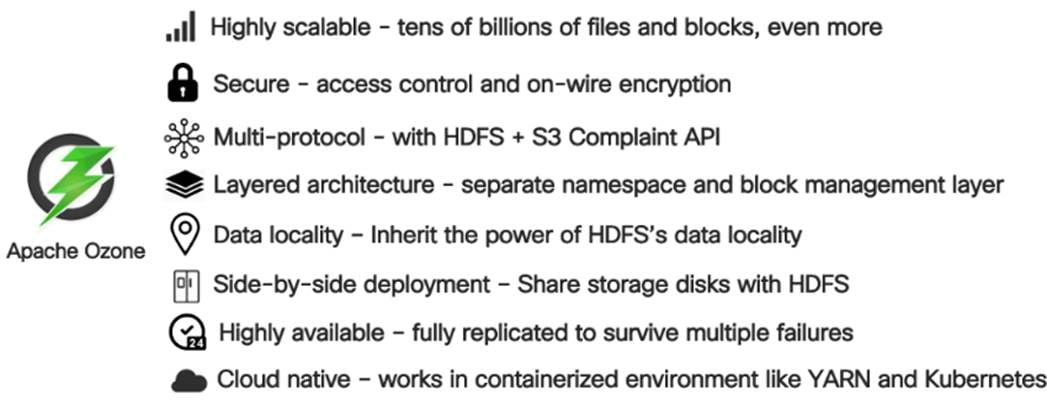

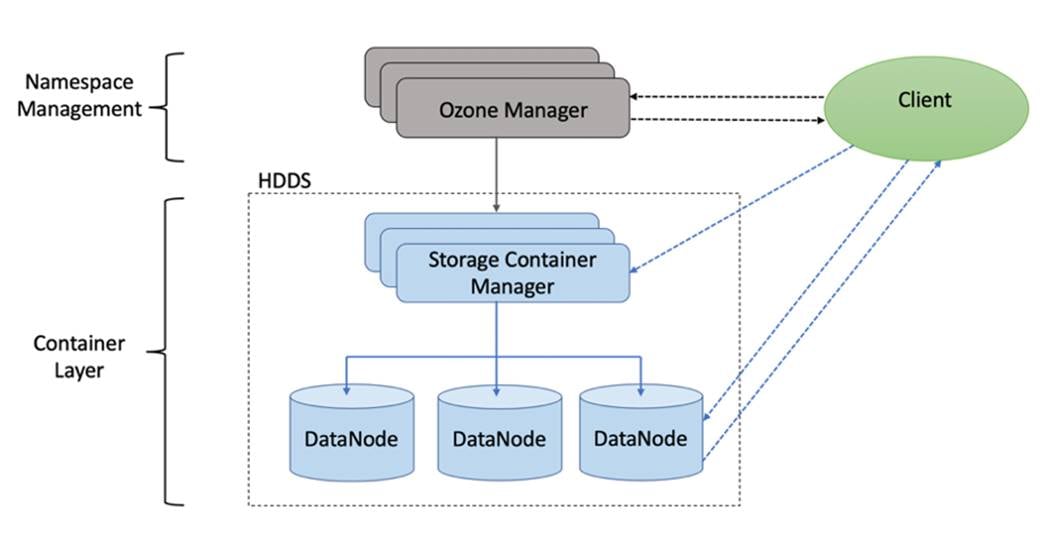

Apache Ozone Object Store

Apache Ozone is a scalable, redundant, and distributed object store for Hadoop. Apart from scaling to billions of objects of varying sizes, Ozone can function effectively in containerized environments such as Kubernetes and YARN. Applications using frameworks like Apache Spark, YARN, and Hive work natively without any modifications. Ozone is built on a highly available, replicated block storage layer called Hadoop Distributed Data Store (HDDS).

Ozone is a scale-out architecture with minimal operational overhead and long-term maintenance effort. Ozone can be co-located with HDFS with single security and governance policies for easy data exchange or migration, and it offers seamless application portability. Ozone enables separation of compute and storage via the S3 API and, like HDFS, also supports data locality for applications that choose to use it.
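The two access styles described above map to different URI schemes on the client side. The helper below is a small sketch of how the same object might be addressed through Ozone's Hadoop-compatible file system schemes (`ofs://` and `o3fs://`) and through an S3-compatible client (`s3a://`). The function names, the Ozone Manager service ID, and the volume, bucket, and key values are hypothetical; how a given S3 bucket maps onto an Ozone volume depends on the cluster configuration and is not covered here.

```python
def ofs_uri(om_service: str, volume: str, bucket: str, key: str = "") -> str:
    """Rooted Ozone file system URI: ofs://<om>/<volume>/<bucket>/<key>."""
    return f"ofs://{om_service}/{volume}/{bucket}/{key.lstrip('/')}"


def o3fs_uri(om_service: str, volume: str, bucket: str, key: str = "") -> str:
    """Bucket-scoped Ozone file system URI: o3fs://<bucket>.<volume>.<om>/<key>."""
    return f"o3fs://{bucket}.{volume}.{om_service}/{key.lstrip('/')}"


def s3a_uri(bucket: str, key: str = "") -> str:
    """URI used by Hadoop's S3A connector when going through an S3 endpoint."""
    return f"s3a://{bucket}/{key.lstrip('/')}"


if __name__ == "__main__":
    # Hypothetical service ID, volume, bucket, and object key.
    print(ofs_uri("ozone1", "vol1", "bucket1", "data/part-0.parquet"))
    print(o3fs_uri("ozone1", "vol1", "bucket1", "data/part-0.parquet"))
    print(s3a_uri("bucket1", "data/part-0.parquet"))
```

Applications such as Spark and Hive would typically use the `ofs://` form when they want HDFS-like semantics and locality, and the S3 form when compute is fully separated from storage.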

The design of Ozone was guided by the following key principles:

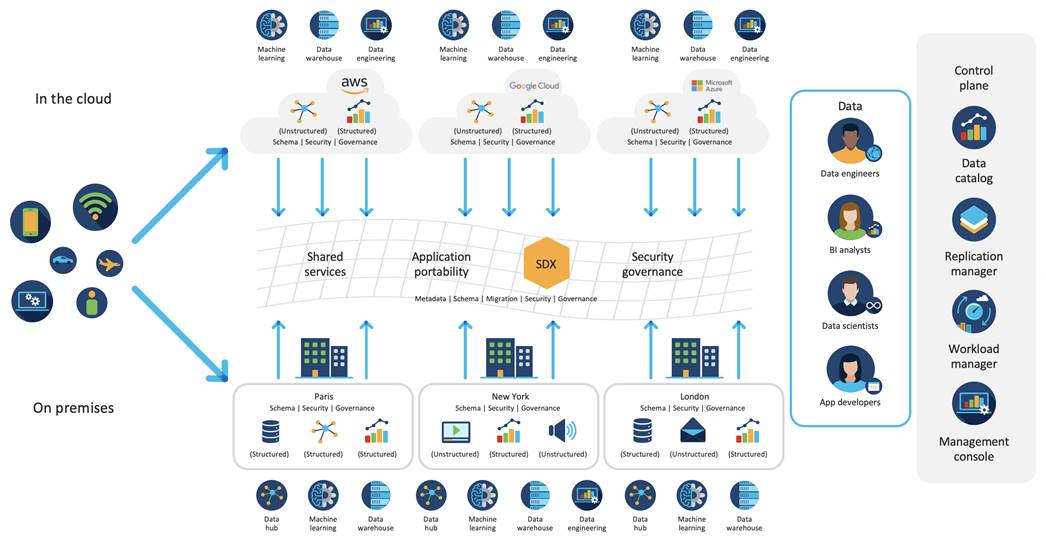

CDIP with CDP Hybrid Cloud Architecture

Cisco Data Intelligence Platform (CDIP) with Cloudera Data Platform (CDP) integrates different domains, such as specific layers of compute infrastructure, between on-premises environments and public clouds. Integrations can include moving a Kubernetes-based application or establishing secure connectivity, user access, or per-workload policies between environments. These hybrid cloud architecture frameworks and operating models are better described by the more encompassing term hybrid IT, which also includes multi-cloud scenarios. Hybrid IT enables a distributed infrastructure that can assure elasticity, scalability, performance, and efficiency, and bring apps closer to their intended users with the ability to cloud burst.

Red Hat OpenShift, the market-leading Kubernetes-powered container platform, or Embedded Container Service (ECS) is the preferred container cloud platform for CDP Private Cloud, and therefore for CDIP. This combination is the first enterprise data cloud with a powerful hybrid architecture that decouples compute and storage for greater agility, ease of use, and more efficient use of private and multi-cloud infrastructure resources. With Cloudera’s Shared Data Experience (SDX), security and governance policies can be easily and consistently enforced across data and analytics in private as well as multi-cloud deployments. This hybridity opens myriad opportunities for seamless portability of workloads and applications and for multi-function integration with other frameworks such as streaming data, batch workloads, analytics, data pipelining/engineering, and machine learning.

Cloud Native Architecture for Data Lake and AI

Cisco Data Intelligence Platform with CDP Private Cloud accelerates the process of becoming cloud-native for your data lake and AI/ML workloads. By leveraging a Kubernetes-powered container cloud, enterprises can quickly break the silos in monolithic application frameworks and embrace continuous innovation through a microservices architecture with a CI/CD approach. With a cloud-native ecosystem, enterprises can build scalable and elastic modern applications that extend the boundaries from private cloud to hybrid.

Containerization

Hadoop 3.0 introduced production-ready Docker container support on YARN with GPU isolation and scheduling. This created a plethora of opportunities for modern applications, such as microservices and distributed application frameworks comprising thousands of containers, to execute AI/ML algorithms on petabytes of data quickly and with ease.

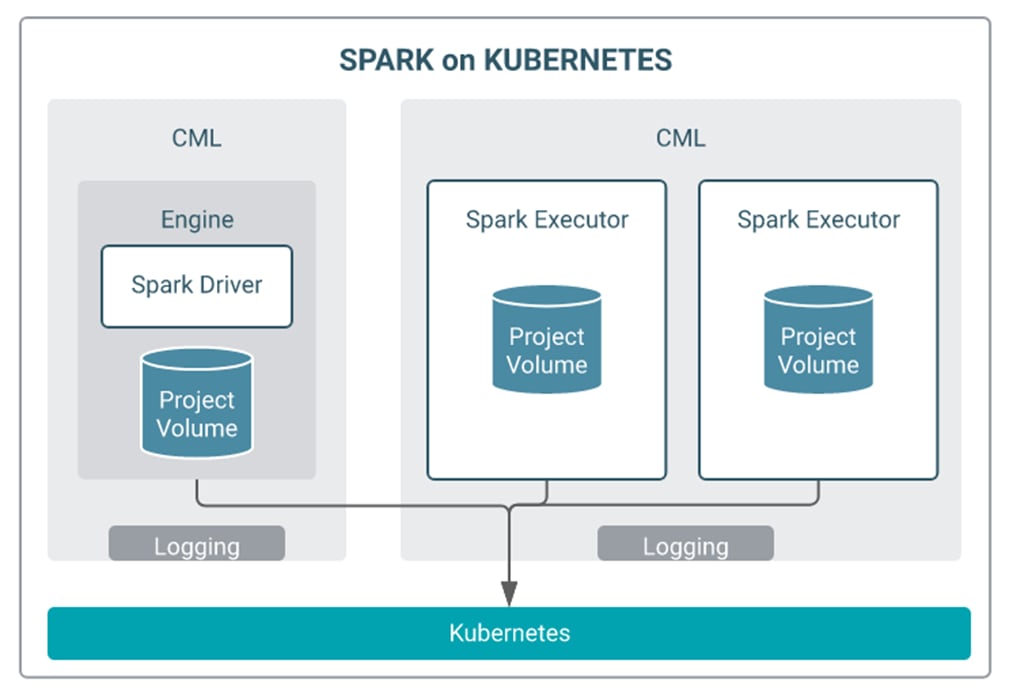

Apache Spark 3.0

Apache Spark 3.0 is a highly anticipated release. To meet this expectation, Spark is no longer limited to CPUs for its workloads; it now offers GPU isolation and can pool GPUs from different servers to accelerate compute. To simplify management of the deep learning environment, YARN launches Spark 3.0 applications with GPUs. This prepares other workloads, such as machine learning and ETL, to be accelerated by GPUs for Spark workloads. For more information, see the Cisco Blog on Apache Spark 3.0.
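GPU-aware scheduling in Spark 3.0 is driven by a handful of resource properties. The sketch below assembles them into `spark-submit` arguments. The property names are the standard Spark 3 resource settings, while the helper functions, the discovery script path, and the chosen values are assumptions for illustration rather than settings validated in this CVD.

```python
from typing import Dict, List


def gpu_spark_conf(gpus_per_executor: int, tasks_per_gpu: int,
                   discovery_script: str) -> Dict[str, str]:
    """Build Spark 3 GPU resource properties for one executor profile."""
    if gpus_per_executor < 1 or tasks_per_gpu < 1:
        raise ValueError("gpus_per_executor and tasks_per_gpu must be >= 1")
    return {
        # Number of GPUs requested for each executor.
        "spark.executor.resource.gpu.amount": str(gpus_per_executor),
        # Share of a GPU each task needs; 0.25 lets four tasks share one GPU.
        "spark.task.resource.gpu.amount": f"{1 / tasks_per_gpu:g}",
        # Script the executor runs to report the GPU addresses it can see.
        "spark.executor.resource.gpu.discoveryScript": discovery_script,
    }


def to_submit_args(conf: Dict[str, str]) -> List[str]:
    """Flatten properties into '--conf key=value' pairs for spark-submit."""
    args: List[str] = []
    for key, value in conf.items():
        args += ["--conf", f"{key}={value}"]
    return args


if __name__ == "__main__":
    # Hypothetical script location; 2 GPUs per executor matches the
    # 2 x A100 per GPU worker node described earlier in this document.
    conf = gpu_spark_conf(2, 4, "/opt/spark/getGpusResources.sh")
    print(" ".join(["spark-submit"] + to_submit_args(conf) + ["app.py"]))
```

Keeping the properties in a dictionary makes it straightforward to pass the same values to a session builder instead of the command line.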

Cisco Unified Computing System

Cisco Unified Computing System (Cisco UCS) is a next-generation data center platform that integrates computing, networking, storage access, and virtualization resources into a cohesive system designed to reduce total cost of ownership and increase business agility. The system integrates a low-latency, lossless 10-100 Gigabit Ethernet unified network fabric with enterprise-class, x86-architecture servers. The system is an integrated, scalable, multi-chassis platform with a unified management domain for managing all resources.

Cisco Unified Computing System is revolutionizing the way servers are managed in the datacenter. The following are the unique differentiators of Cisco Unified Computing System and Cisco UCS Manager:

● Embedded Management - In Cisco UCS, the servers are managed by the embedded firmware in the Fabric Interconnects, eliminating the need for any external physical or virtual devices to manage the servers.

● Unified Fabric - In Cisco UCS, from blade server chassis or rack servers to FI, there is a single Ethernet cable used for LAN, SAN, and management traffic. This converged I/O results in fewer cables, SFPs, and adapters, which reduces the capital and operational expenses of the overall solution.

● Auto Discovery - By simply inserting the blade server in the chassis or connecting the rack server to the fabric interconnect, discovery and inventory of compute resources occurs automatically without any management intervention. The combination of unified fabric and auto-discovery enables the wire-once architecture of Cisco UCS, where compute capability of Cisco UCS can be extended easily while keeping the existing external connectivity to LAN, SAN, and management networks.

Cisco UCS Manager (UCSM) provides unified, integrated management for all software and hardware components in Cisco UCS. Using Cisco Single Connect technology, it manages, controls, and administers multiple chassis for thousands of virtual machines. Administrators use the software to manage the entire Cisco Unified Computing System as a single logical entity through an intuitive graphical user interface (GUI), a command-line interface (CLI), or a robust application programming interface (API).

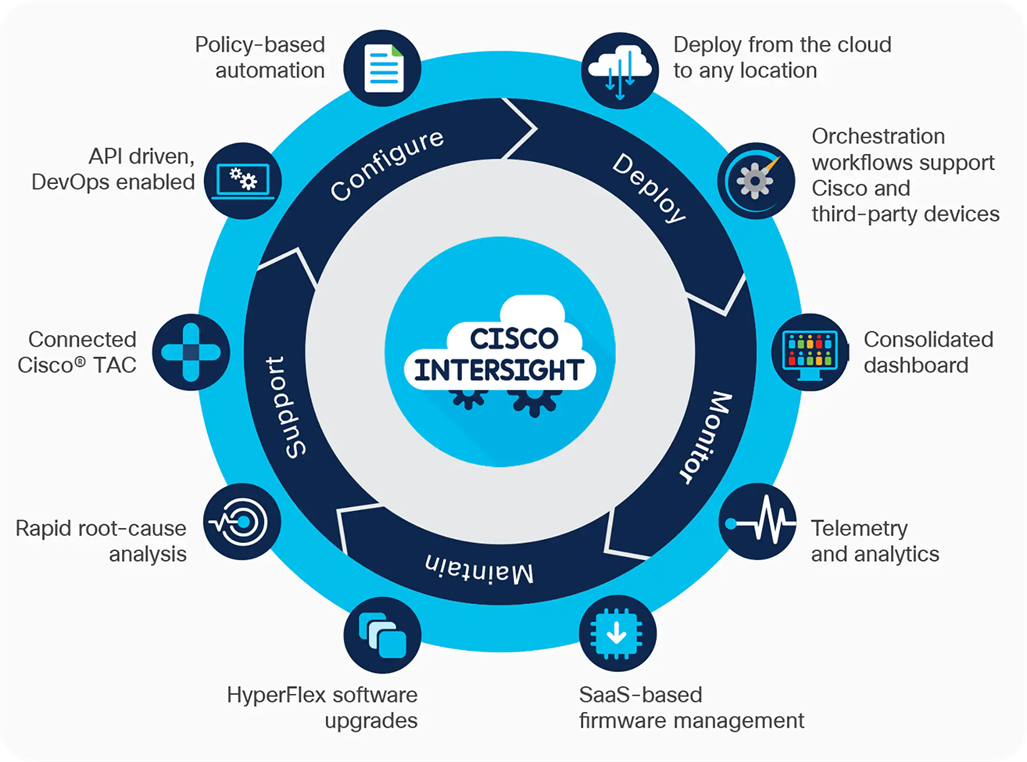

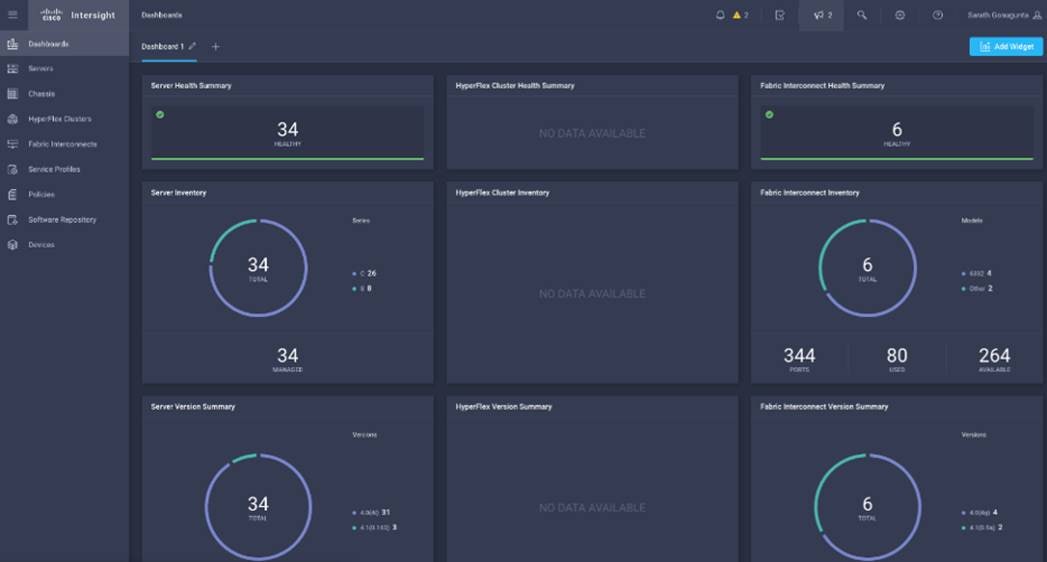

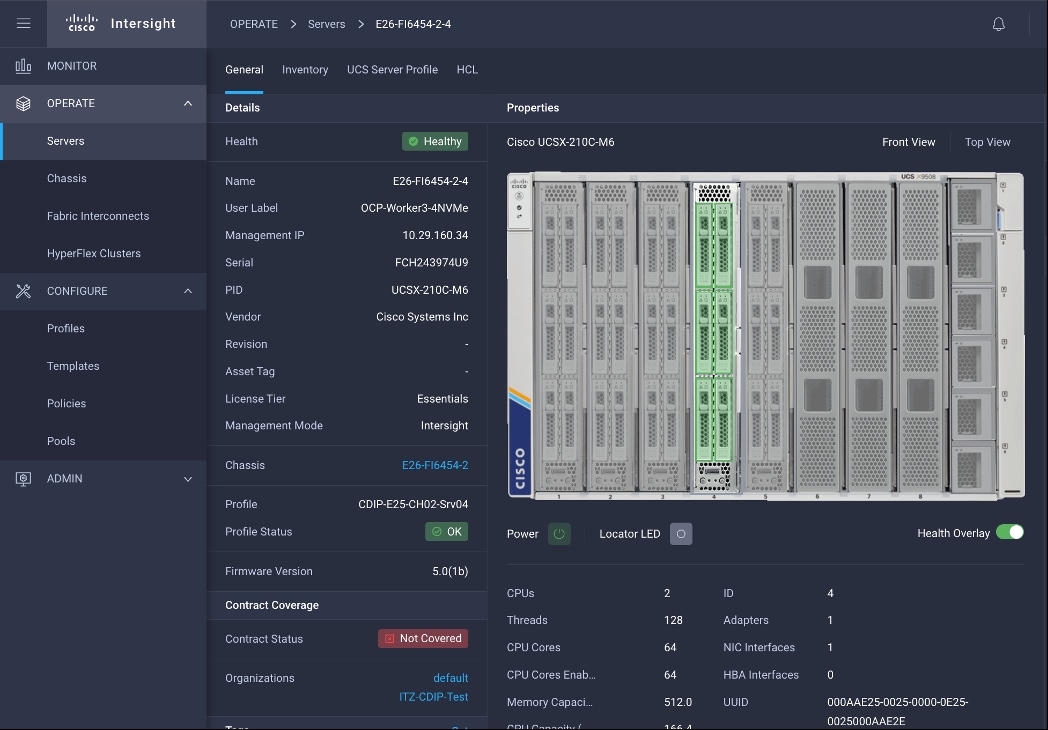

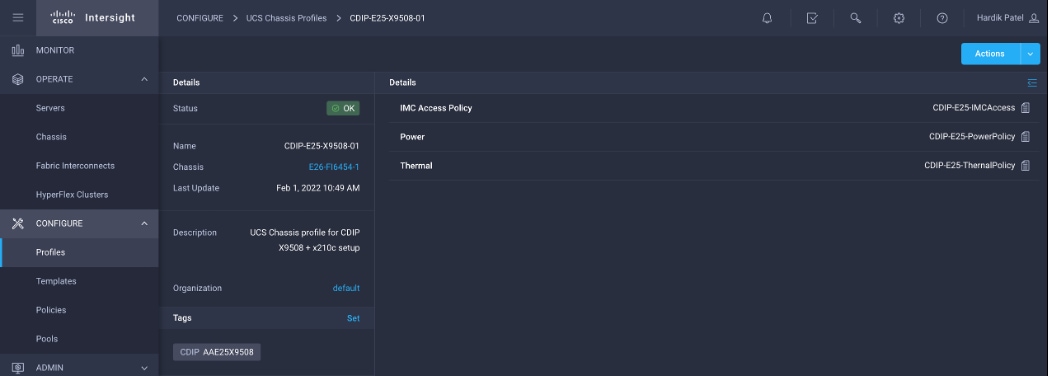

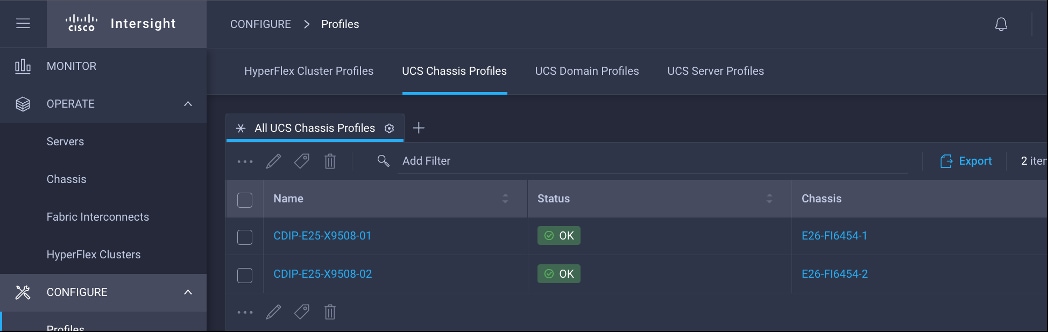

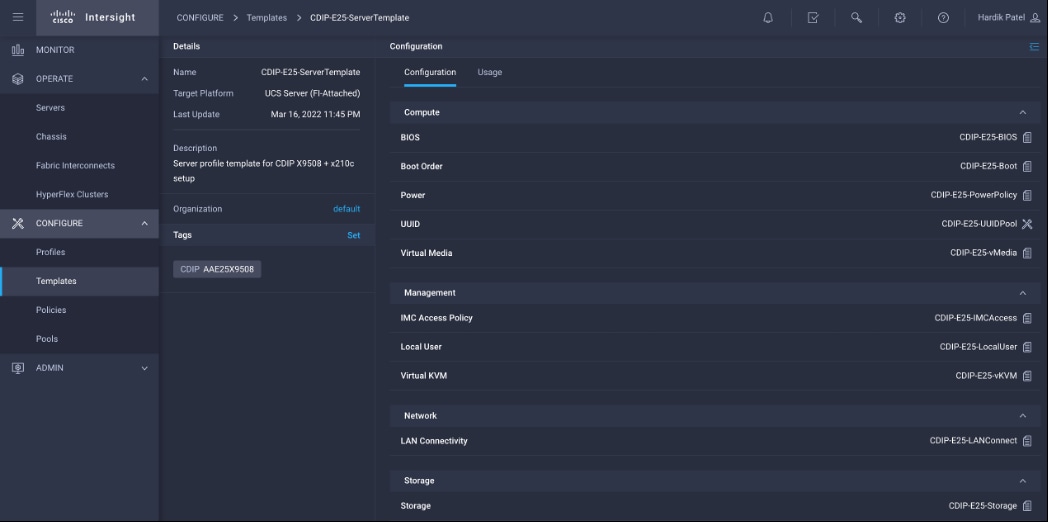

Cisco Intersight is a lifecycle management platform for your infrastructure, regardless of where it resides. In your enterprise data center, at the edge, in remote and branch offices, at retail and industrial sites—all these locations present unique management challenges and have typically required separate tools. Cisco Intersight Software as a Service (SaaS) unifies and simplifies your experience of the Cisco Unified Computing System (Cisco UCS) and Cisco HyperFlex systems. See Figure 11.

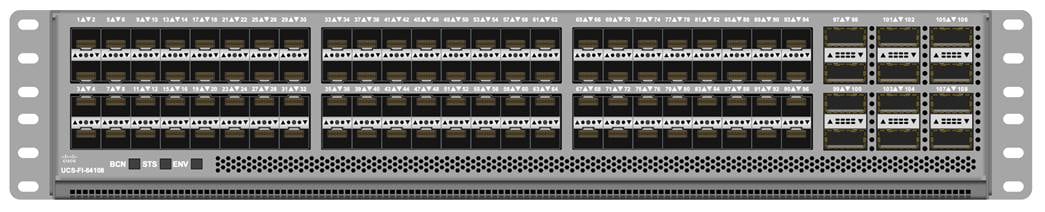

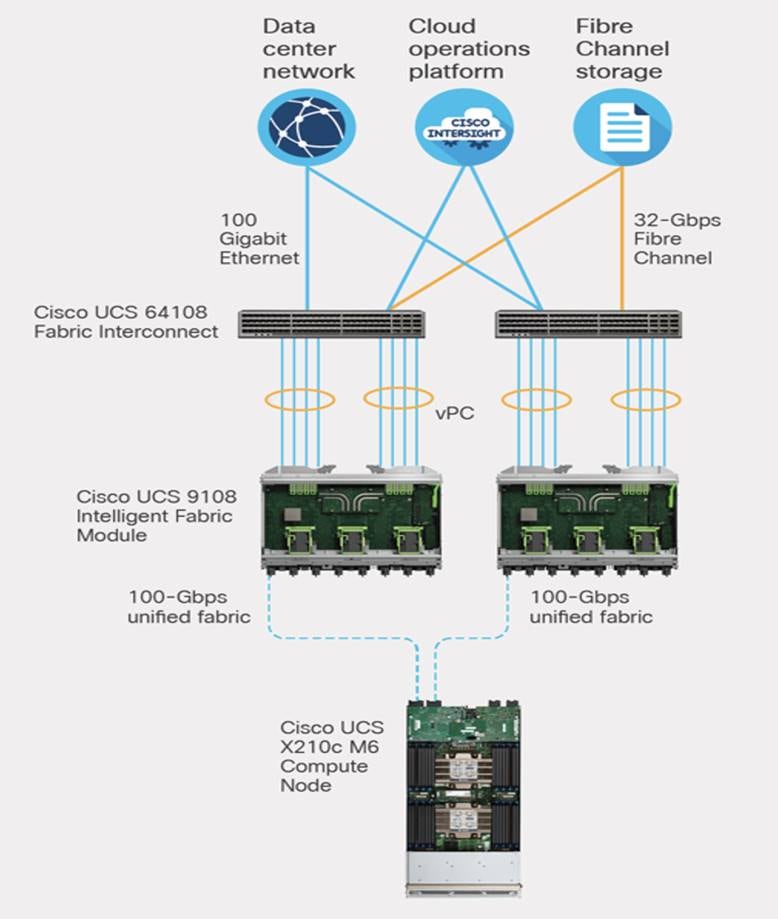

The Cisco UCS Fabric Interconnect (FI) is a core part of the Cisco Unified Computing System, providing both network connectivity and management capabilities for the system. Depending on the model chosen, the Cisco UCS Fabric Interconnect offers line-rate, low-latency, lossless 10/25/40/100 Gigabit Ethernet, Fibre Channel over Ethernet (FCoE) and Fibre Channel connectivity. Cisco UCS Fabric Interconnects provide the management and communication backbone for the Cisco UCS C-Series, B-Series and X-Series Blade Servers, and 9508 Series Blade Server Chassis. All servers and chassis, and therefore all blades, attached to the Cisco UCS Fabric Interconnects become part of a single, highly available management domain. In addition, by supporting unified fabrics, the Cisco UCS Fabric Interconnects provide both the LAN and SAN connectivity for all servers within their domain.

The Cisco UCS 6454 54-Port Fabric Interconnect (Figure 12) is a One-Rack-Unit (1RU) 10/25/40/100 Gigabit Ethernet, FCoE, and Fibre Channel switch offering up to 3.82 Tbps throughput and up to 54 ports. The switch has 28 10/25-Gbps Ethernet ports, 4 1/10/25-Gbps Ethernet ports, 6 40/100-Gbps Ethernet uplink ports, and 16 unified ports that can support 10/25-Gbps Ethernet ports or 8/16/32-Gbps Fibre Channel ports. All Ethernet ports are capable of supporting FCoE.

The Cisco UCS 64108 Fabric Interconnect (Figure 13) is a 2-RU top-of-rack switch that mounts in a standard 19-inch rack such as the Cisco R Series rack. The 64108 is a 10/25/40/100 Gigabit Ethernet, FCoE and Fibre Channel switch offering up to 7.42 Tbps throughput and up to 108 ports. The switch has 16 unified ports (port numbers 1-16) that can support 10/25-Gbps SFP28 Ethernet ports or 8/16/32-Gbps Fibre Channel ports, 72 10/25-Gbps Ethernet SFP28 ports (port numbers 17-88), 8 1/10/25-Gbps Ethernet SFP28 ports (port numbers 89-96), and 12 40/100-Gbps Ethernet QSFP28 uplink ports (port numbers 97-108). All Ethernet ports are capable of supporting FCoE.
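The port breakdowns above can be cross-checked with a few lines of Python. This is only a sketch that restates the figures from the two preceding paragraphs as data; the dictionary layout and the `total_ports` helper are illustrative names, not part of any Cisco tool.

```python
# Port groups as (count, description), taken from the text above.
FI_PORT_GROUPS = {
    "UCS 6454": [
        (28, "10/25-Gbps Ethernet"),
        (4, "1/10/25-Gbps Ethernet"),
        (6, "40/100-Gbps Ethernet uplink"),
        (16, "unified: 10/25-Gbps Ethernet or 8/16/32-Gbps Fibre Channel"),
    ],
    "UCS 64108": [
        (16, "unified: 10/25-Gbps Ethernet or 8/16/32-Gbps Fibre Channel (ports 1-16)"),
        (72, "10/25-Gbps Ethernet SFP28 (ports 17-88)"),
        (8, "1/10/25-Gbps Ethernet SFP28 (ports 89-96)"),
        (12, "40/100-Gbps Ethernet QSFP28 uplink (ports 97-108)"),
    ],
}


def total_ports(model):
    """Sum the port counts of every group for one fabric interconnect model."""
    return sum(count for count, _ in FI_PORT_GROUPS[model])
```

The groups add up to the stated totals: 28 + 4 + 6 + 16 = 54 for the 6454, and 16 + 72 + 8 + 12 = 108 for the 64108.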

Cisco UCS C-Series Rack-Mount Servers

Cisco UCS C-Series Rack-Mount Servers keep pace with Intel Xeon processor innovation by offering the latest processors with increased processor frequency and improved security and availability features. With the increased performance provided by the Intel Xeon Scalable Family Processors, Cisco UCS C-Series servers offer an improved price-to-performance ratio. They also extend Cisco UCS innovations to an industry-standard rack-mount form factor, including a standards-based unified network fabric, Cisco VN-Link virtualization support, and Cisco Extended Memory Technology.

Designed to operate both in standalone environments and as part of a Cisco UCS managed configuration, these servers enable organizations to deploy systems incrementally, using as many or as few servers as needed, on a schedule that best meets the organization’s timing and budget. Cisco UCS C-Series servers offer investment protection through the capability to deploy them either as standalone servers or as part of Cisco UCS. One compelling reason that many organizations prefer rack-mount servers is the wide range of I/O options available in the form of PCIe adapters. Cisco UCS C-Series servers support a broad range of I/O options, including interfaces supported by Cisco and adapters from third parties.

Cisco UCS C240 M6 Rack-Mount Server

The Cisco UCS C240 M6 Rack Server is well-suited for a wide range of storage and I/O-intensive applications such as big data analytics, databases, collaboration, virtualization, consolidation, and high-performance computing in its two-socket, 2RU form factor.

The Cisco UCS C240 M6 Server extends the capabilities of the Cisco UCS rack server portfolio with 3rd Gen Intel Xeon Scalable Processors supporting more than 43 percent more cores per socket and 33 percent more memory when compared with the previous generation.

You can deploy the Cisco UCS C-Series rack servers as standalone servers or as part of the Cisco Unified Computing System managed by Cisco Intersight or Intersight Managed Mode to take advantage of Cisco® standards-based unified computing innovations that can help reduce your Total Cost of Ownership (TCO) and increase your business agility.

These improvements deliver significant performance and efficiency gains that will improve your application performance. The Cisco UCS C240 M6 Rack Server delivers outstanding levels of expandability and performance.

The Cisco UCS C220 M6 Rack Server is the most versatile general-purpose infrastructure and application server in the industry. This high-density, 1RU, 2-socket rack server delivers industry-leading performance and efficiency for a wide range of workloads, including virtualization, collaboration, and bare-metal applications. You can deploy the Cisco UCS C-Series Rack Servers as standalone servers or as part of the Cisco Unified Computing System managed by Cisco Intersight, Cisco UCS Manager, or Intersight Managed Mode to take advantage of Cisco® standards-based unified computing innovations that can help reduce your Total Cost of Ownership (TCO) and increase your business agility.

The Cisco UCS C220 M6 Rack Server extends the capabilities of the Cisco UCS rack server portfolio. The Cisco UCS C220 M6 Rack Server delivers outstanding levels of expandability and performance.

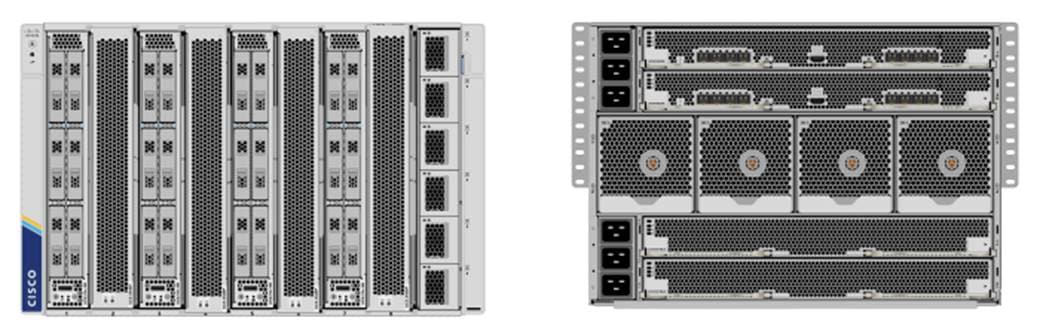

Cisco UCS X-Series Modular System

The Cisco UCS® X-Series with Cisco Intersight is a modular system managed from the cloud. It is designed to meet the needs of modern applications and improve operational efficiency, agility, and scale through an adaptable, future-ready, modular design.

Designed to deploy and automate hybrid cloud environments:

● Simplify with cloud-operated infrastructure

● Simplify with an adaptable system designed for modern applications

● Simplify with a system engineered for the future

For more details, visit https://www.cisco.com/c/dam/en/us/products/collateral/servers-unified-computing/ucs-x-series-modular-system/x9508-specsheet.pdf

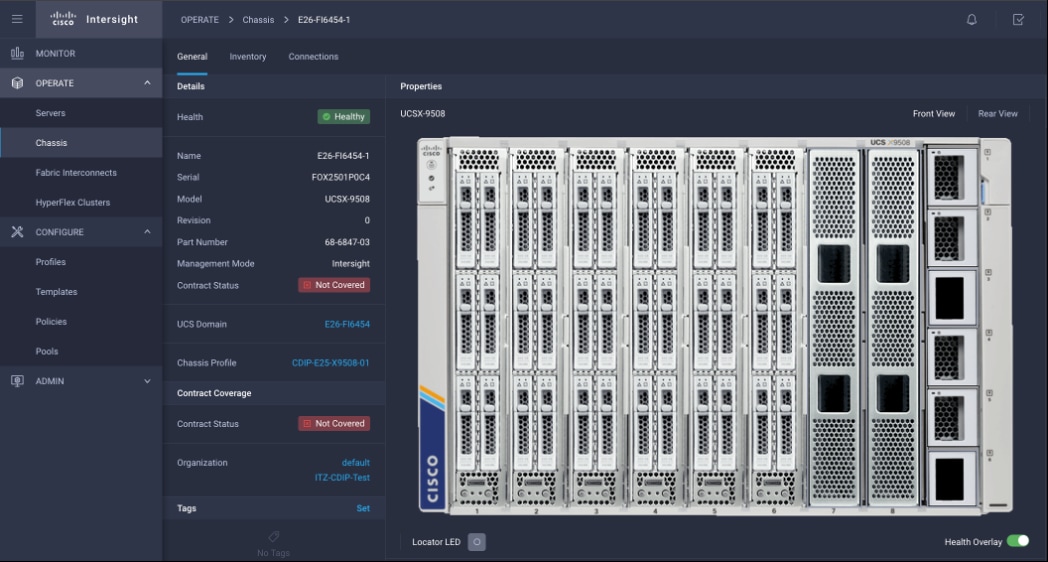

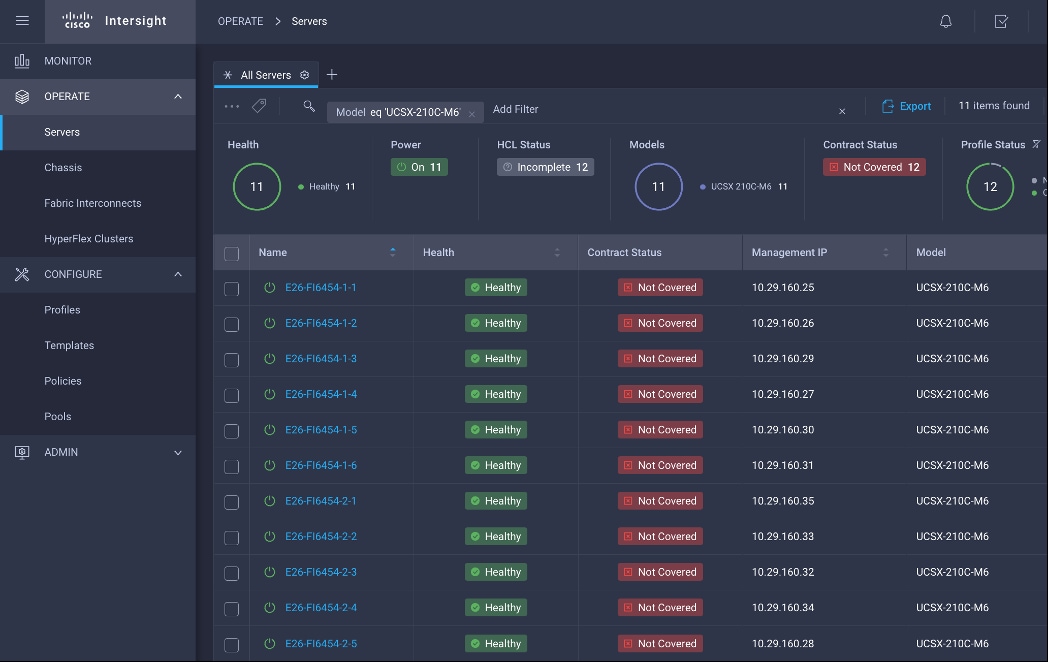

Cisco UCS X210c Compute Node

The Cisco UCS X210c M6 Compute Node is the first computing device to integrate into the Cisco UCS X-Series Modular System. Up to eight compute nodes can reside in the 7-Rack-Unit (7RU) Cisco UCS X9508 Chassis, offering one of the highest densities of compute, IO, and storage per rack unit in the industry.

The Cisco UCS X210c M6 server form factor offers more I/O, more storage, better cooling, and seamless upgrades to connectivity technologies. Its features include the following:

● The 14000 and 15000 Series VICs supply more aggregate bandwidth with up to 200 Gbps per server.

● With six large-capacity drives, the UCS X210c M6 can be used for many workloads that used to require a rack server simply because of the storage requirements.

● Optionally, if you run workloads that require graphical acceleration, you can have two drives and up to two GPUs.

● The X210c node supports Cisco UCS X-Fabric Technology for additional GPUs and future storage and memory options.

● Its vertical orientation and design allow for better airflow, increasing cooling for better reliability.

A unified fabric interconnects all devices in the system. It securely carries all traffic to the fabric interconnects where it can be broken out into IP networking, Fibre Channel SAN, and management connectivity.

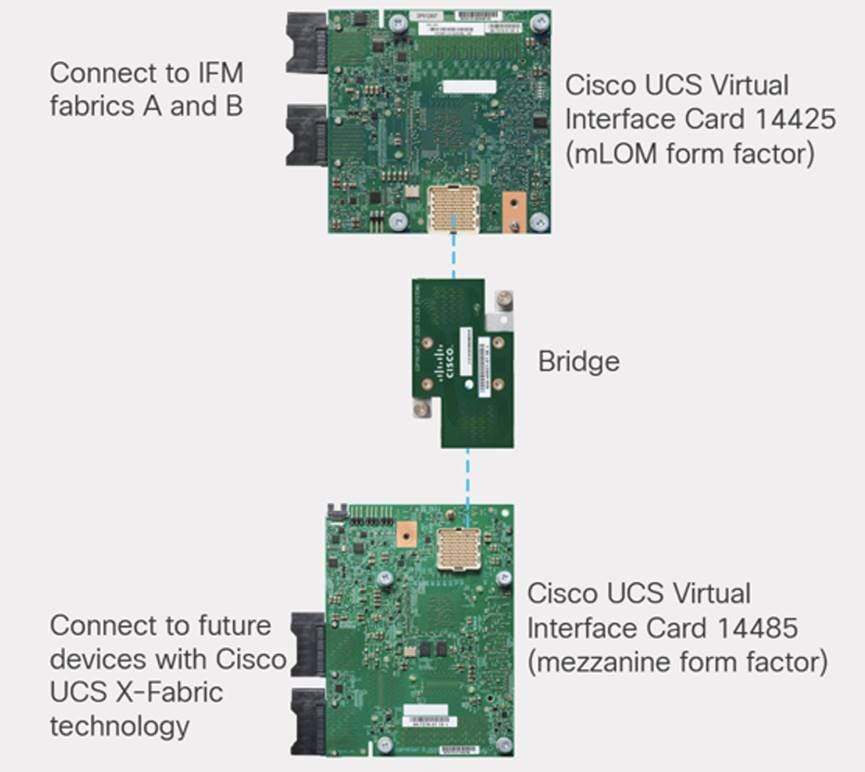

Cisco UCS Virtual Interface Card

Configuring your Cisco UCS X210c M6 Compute Node with both mLOM- and mezzanine-form-factor virtual interface cards delivers up to 200 Gbps of network bandwidth to the node and prepares it for future devices with Cisco UCS X-Fabric technology.

The number and types of I/O devices are configured on demand through Intersight management.

The Cisco UCS X440p PCIe Node is the first PCIe resource node to integrate into the Cisco UCS X-Series Modular System. The Cisco UCS X9508 Chassis has eight node slots, up to four of which can be X440p PCIe nodes when paired with a Cisco UCS X210c M6 Compute Node. The Cisco UCS X440p PCIe Node supports two x16 full-height, full-length dual slot PCIe cards, or four x8 full-height, full-length single slot PCIe cards and requires both Cisco UCS 9416 X-Fabric modules for PCIe connectivity. This provides up to 16 GPUs per chassis to accelerate your applications with the Cisco UCS X440p Nodes. If your application needs even more GPU acceleration, up to two additional GPUs can be added on each Cisco UCS X210c compute node.

Benefits include:

● Accelerate more workloads with up to four GPUs

● Make it easy to add, update, and remove GPUs to Cisco UCS® X210c M6 Compute Nodes

● Get a zero-cable solution for improved reliability and ease of installation

● Have industry standard PCIe Gen 4 connections for compatibility
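The GPU counts quoted above follow from simple arithmetic, sketched below. The constants restate figures from the text (up to four X440p nodes per chassis, four single-slot or two dual-slot cards per node, up to two GPUs on each X210c). The function name and the assumption that only compute nodes paired with a PCIe node are counted are illustrative. Any total beyond the 16 GPUs stated in the text is derived arithmetic, not a figure validated in this design.

```python
MAX_PCIE_NODES = 4  # X440p nodes per X9508 chassis, each paired with an X210c
CARDS_PER_PCIE_NODE = {"single_slot_x8": 4, "dual_slot_x16": 2}
MAX_GPUS_PER_COMPUTE_NODE = 2  # optional GPUs on the X210c itself


def chassis_gpu_count(pcie_nodes=MAX_PCIE_NODES, card_type="single_slot_x8",
                      gpus_per_compute_node=0):
    """Count GPUs in one chassis under the assumptions stated above."""
    if not 0 <= pcie_nodes <= MAX_PCIE_NODES:
        raise ValueError("a chassis holds at most four X440p PCIe nodes")
    if not 0 <= gpus_per_compute_node <= MAX_GPUS_PER_COMPUTE_NODE:
        raise ValueError("an X210c holds at most two GPUs")
    pcie_gpus = pcie_nodes * CARDS_PER_PCIE_NODE[card_type]
    # Assumption: only compute nodes paired with a PCIe node are counted.
    node_gpus = pcie_nodes * gpus_per_compute_node
    return pcie_gpus + node_gpus
```

With the defaults this gives 4 × 4 = 16 GPUs per chassis, matching the text, and 4 × 2 = 8 with dual-slot cards. Which GPU models fit which slot type should be confirmed against the X440p specification sheet.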

Ready for a Hybrid Cloud World

The Cisco Intersight cloud operations platform is the force that transforms the Cisco UCS X-Series Modular System from a set of components into a flexible server platform to propel your most important workloads.

The Cisco UCS X-Series with Intersight is built with a common purpose: to make hardware think like software so that you can easily adapt to a rapidly changing world. Through server profiles, Intersight defines the identity, connectivity, and I/O configuration of your servers and automates the entire infrastructure lifecycle. It’s easy to imagine how, as more features are released, the modular system supports a pool of I/O resources: banks of nonvolatile memory, GPU accelerators, specialized ASICs, and massive amounts of NVMe storage. Just as the chassis and Cisco UCS X-Fabric technology are designed to incorporate a constant flow of new capabilities, Cisco Intersight is designed to automatically integrate those technologies into servers along with a constant flow of new, higher-level management capabilities. Software as a service (SaaS) meets modular, infrastructure as code, and the line between hardware and software dissolves.

With Cisco Intersight and the Cisco UCS X-Series you can:

● Define desired system configurations based on policies that use pools of resources provided by the Cisco UCS X-Series. Let Cisco Intersight assemble the components and set up everything from firmware levels to which I/O devices are connected. Infrastructure is code, so your IT organization can use the Intersight GUI, and your DevOps teams can use the Intersight API, the Intersight Service for HashiCorp Terraform, or the many API bindings from languages such as Python and PowerShell.

● Deploy from the cloud to any location. Anywhere the cloud reaches, Intersight can automate your IT processes. We take the guesswork out of implementing new services with a curated set of services we bundle with the Intersight Kubernetes Service, for example.

● Visualize the interdependencies between software components and how they use the infrastructure that supports them with Intersight Workload Optimizer.

● Optimize your workload by analyzing runtime performance and make resource adjustments and workload placements to keep response time within a desired range. If your first attempt at matching resources to workloads doesn’t deliver the results you need, you can reshape the system quickly and easily. Cisco Intersight facilitates deploying workloads into your private cloud and into the public cloud. Now one framework bridges your core, cloud, and edge infrastructure, managing infrastructure and workloads wherever they are deployed.

● Maintain your infrastructure with a consolidated dashboard of infrastructure components regardless of location. Ongoing telemetry and analytics give early detection of possible failures. Reduce risk of configuration drift and inconsistent configurations through automation with global policy enforcement.

● Support your infrastructure with AI-driven root-cause analysis and automated case support for the always-connected Cisco Technical Assistance Center (Cisco TAC). Intersight watches over you when you update your solution stack, helping to prevent incompatible hardware, firmware, operating system, and hypervisor configurations.
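To make the idea of policy-based configuration drawing on resource pools more concrete, here is a small, purely conceptual Python model. It does not use the Intersight API or SDK. The class names, fields, and firmware label are hypothetical and only illustrate how one template plus a pool can yield consistent but uniquely identified server profiles.

```python
from dataclasses import dataclass


@dataclass
class Pool:
    """A pool of identities (for example, MAC addresses) handed out in order."""
    name: str
    free: list

    def allocate(self):
        if not self.free:
            raise RuntimeError(f"pool {self.name!r} is exhausted")
        return self.free.pop(0)


@dataclass
class ProfileTemplate:
    """Hypothetical template: shared policy settings plus a pool reference."""
    name: str
    firmware: str
    vnics: int
    mac_pool: Pool

    def derive(self, server):
        # Policy values are copied as-is; identities come from the pool.
        return {
            "server": server,
            "template": self.name,
            "firmware": self.firmware,
            "macs": [self.mac_pool.allocate() for _ in range(self.vnics)],
        }
```

Deriving profiles for `server-1` and `server-2` from the same template gives both the same firmware setting but disjoint MAC addresses, which is the property that reduces configuration drift.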

Modular Management Architecture

Cisco Intersight is a unified, secure, modular platform that consists of a set of services that bridge applications and infrastructure to meet your specific needs, including:

● Intersight Infrastructure Service

Manage your infrastructure lifecycle, including Cisco data center products, Cisco converged infrastructure solutions, and third-party endpoints

● Intersight Workload Optimizer

Revolutionize how you manage application resources across any environment with real-time, full-stack visibility to help ensure performance and better cost control

● Intersight Kubernetes Service

Simplify Kubernetes with automated lifecycle management across your multi-cloud environment

● Intersight Virtualization Service

Deploy and manage virtual machines on premises or in the cloud

● Intersight Cloud Orchestrator

Standardize application lifecycle management across multiple clouds

Cisco Intersight is Cisco’s systems management platform that delivers intuitive computing through cloud-powered intelligence. This platform offers a more intelligent level of management that enables IT organizations to analyze, simplify, and automate their environments in ways that were not possible with prior generations of tools. This capability empowers organizations to achieve significant savings in Total Cost of Ownership (TCO) and to deliver applications faster, so they can support new business initiatives.

Cisco Intersight is a Software as a Service (SaaS) infrastructure management platform that provides single-pane-of-glass management of CDIP infrastructure in the data center. Cisco Intersight scales easily, and frequent updates are implemented without impact to operations. Cisco Intersight Essentials enables customers to centralize configuration management through a unified policy engine, determine compliance with the Cisco UCS Hardware Compatibility List (HCL), and initiate firmware updates. Enhanced capabilities and tight integration with Cisco TAC enable more efficient support. Cisco Intersight automates uploading files to speed troubleshooting. The Intersight recommendation engine provides actionable intelligence for IT operations management. The insights are driven by expert systems and best practices from Cisco.

Cisco Intersight offers flexible deployment either as Software as a Service (SaaS) on Intersight.com or running on your premises with the Cisco Intersight virtual appliance. The virtual appliance provides users with the benefits of Cisco Intersight while allowing more flexibility for those with additional data locality and security requirements.

Cisco Intersight provides the following features for ease of operations and administration for the IT staff:

● Connected TAC

● Security Advisories

● Hardware Compatibility List (HCL)

To learn more about all the features of Cisco Intersight, go to: https://www.cisco.com/c/en/us/products/servers-unified-computing/intersight/index.html

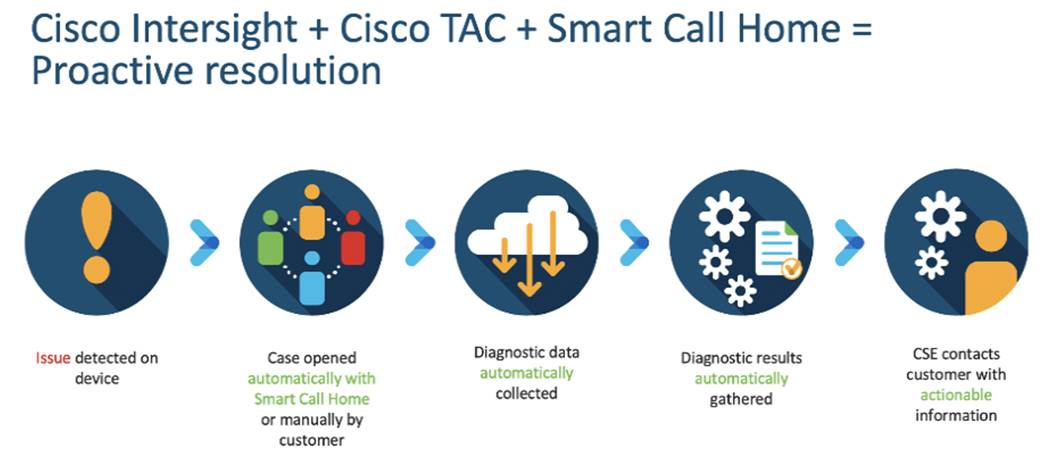

Connected TAC is an automated transmission of technical support files to the Cisco Technical Assistance Center (TAC) for accelerated troubleshooting.

Cisco Intersight enables Cisco TAC to automatically generate and upload Tech Support Diagnostic files when a Service Request is opened. If you have devices that are connected to Intersight but not claimed, Cisco TAC can only check the connection status and will not be permitted to generate Tech Support files. When enabled, this feature works in conjunction with the Smart Call Home service and with an appropriate service contract. Devices that are configured with Smart Call Home and claimed in Intersight can use Smart Call Home to open a Service Request and have Intersight collect Tech Support diagnostic files.

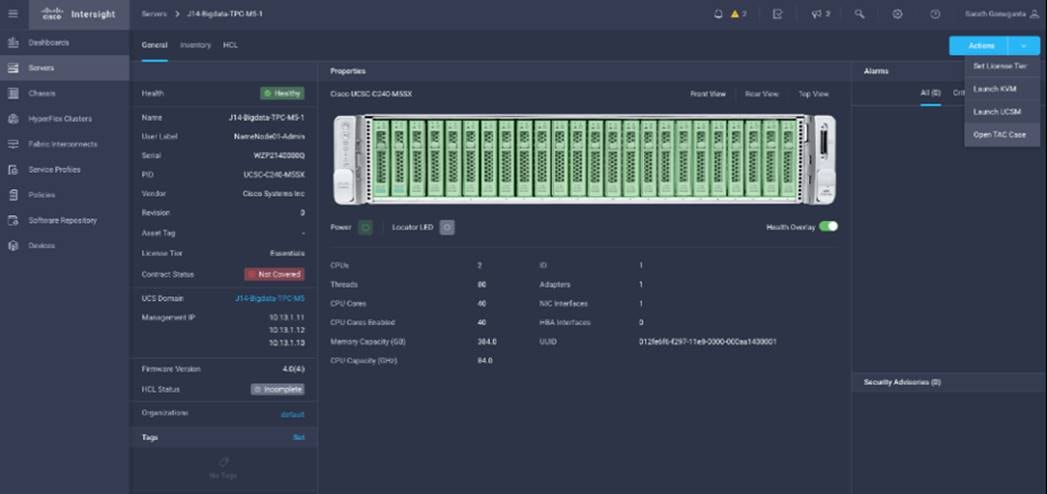

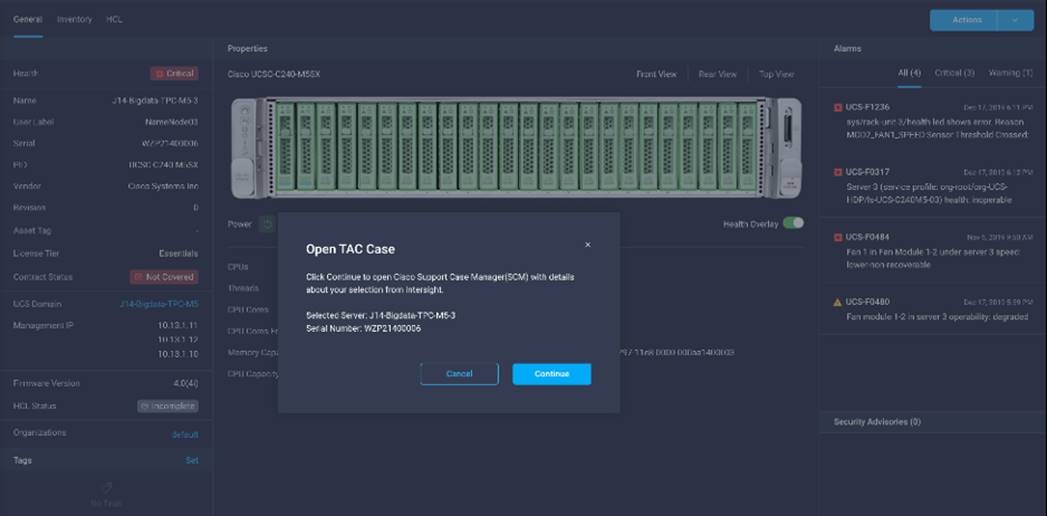

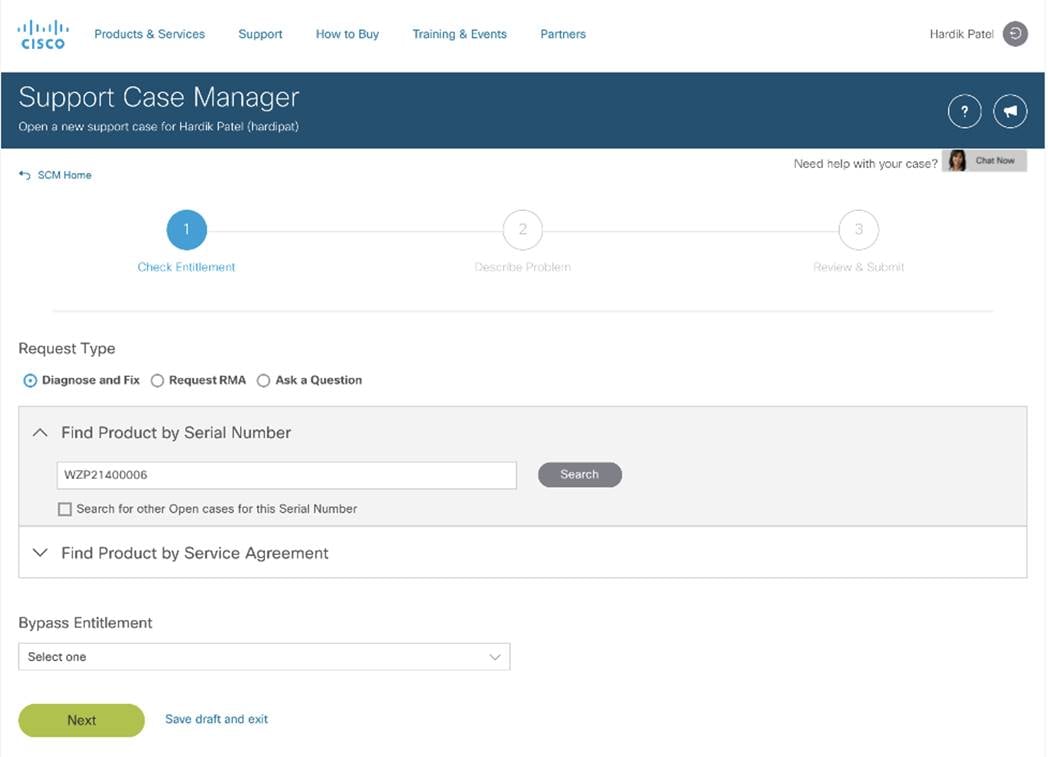

Procedure 1. Enable Connected TAC

Step 1. Log into Intersight.com.

Step 2. Click the Servers tab. Go to Server > Actions tab. From the drop-down list, click Open TAC Case.

Step 3. Click Open TAC Case to launch the Cisco URL for the support case manager, where the associated service contracts for the Server or Fabric Interconnect are displayed.

Step 4. Click Continue.

Step 5. Follow the procedure to Open TAC Case.

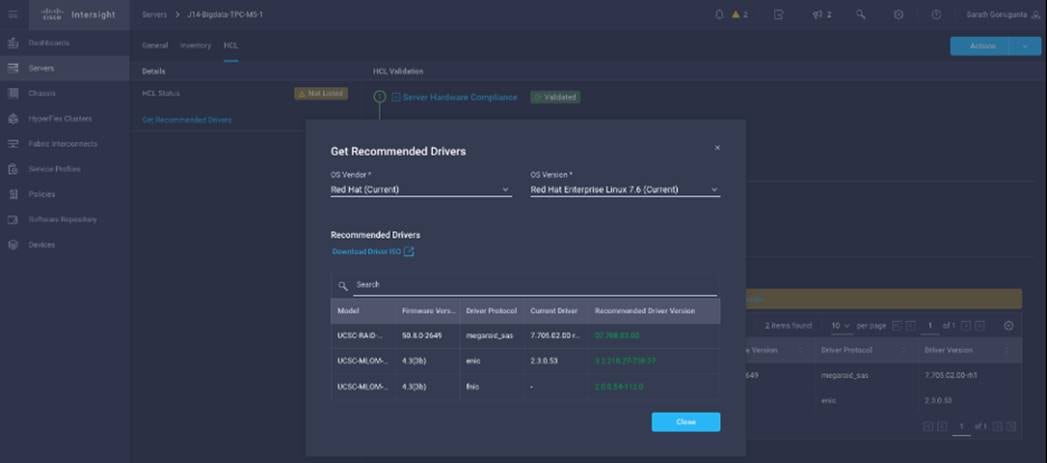

Cisco Intersight Integration for HCL

Cisco Intersight evaluates the compatibility of your Cisco UCS and HyperFlex systems to check if the hardware and software have been tested and validated by Cisco or Cisco partners. Cisco Intersight reports validation issues after checking the compatibility of the server model, processor, firmware, adapters, operating system, and drivers, and displays the compliance status with the Hardware Compatibility List (HCL).

You can use Cisco UCS Tools, a host utility vSphere Installation Bundle (VIB), or OS Discovery Tool, an open-source script, to collect OS and driver information to evaluate HCL compliance.

In Cisco Intersight, you can view the HCL compliance status in the dashboard (as a widget), the Servers table view, and the Server details page.

Note: For more information, go to: https://www.intersight.com/help/features#compliance_with_hardware_compatibility_list_(hcl)
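The compliance check described above can be pictured as a lookup of the server's attribute combination against a list of validated combinations. The sketch below is not Intersight's implementation. The field names, status strings, and sample values are assumptions chosen for illustration; only the six checked attributes come from the text.

```python
# Attributes the text says are checked for HCL compliance.
HCL_FIELDS = ("server_model", "processor", "firmware", "adapter", "os", "driver")


def hcl_status(inventory, validated_combinations):
    """Return (status, missing_fields) for one server's inventory dict."""
    missing = [name for name in HCL_FIELDS if not inventory.get(name)]
    if missing:
        # OS and driver details usually require a host-side collection tool.
        return "incomplete", missing
    combination = tuple(inventory[name] for name in HCL_FIELDS)
    if combination in validated_combinations:
        return "validated", []
    return "not listed", []
```

A server whose OS and driver details have not yet been collected reports `incomplete` with the missing fields, which mirrors why a host utility is needed before compliance can be evaluated.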

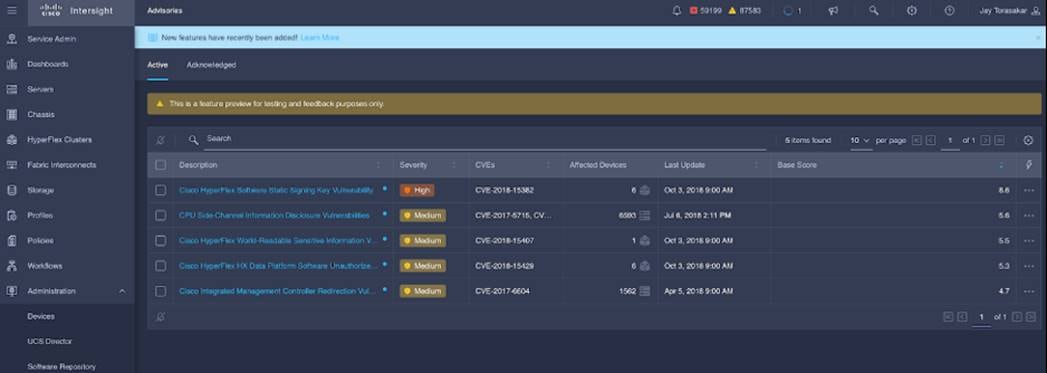

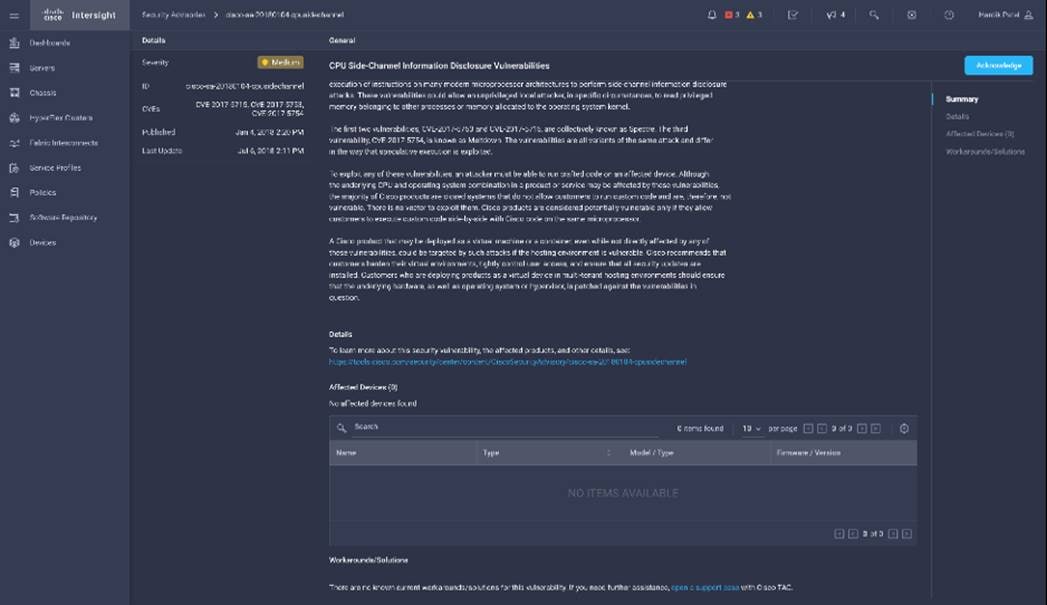

Cisco Intersight sources critical security advisories from the Cisco Security Advisory service to alert users about the endpoint devices that are impacted by the advisories and deferrals. These alerts are displayed as Advisories in Intersight. The Cisco Security Advisory service identifies, monitors, and updates the status of the advisories to provide the latest information on the impacted devices, the severity of the advisory, the impacted products, and any available workarounds. If there are no known workarounds, you can open a support case with Cisco TAC for further assistance. A list of the security advisories is shown in Intersight under Advisories.

Cloudera Data Platform Private Cloud (CDP PvC) is the on-premises version of Cloudera Data Platform. CDP Private Cloud delivers powerful analytic, transactional, and machine learning workloads in a hybrid data platform, combining the agility and flexibility of public cloud with the control of the data center. With a choice of traditional as well as elastic analytics and scalable object storage, CDP Private Cloud modernizes traditional monolithic cluster deployments into a powerful and efficient platform.

An integral part of CDP Hybrid Cloud, CDP Private Cloud provides the first step for data center customers toward true data and workload mobility, managed from a single pane of glass and with consistent data security and governance across all clouds, public and private. CDP is an integrated data platform that is easy to deploy, manage, and use. By simplifying operations, CDP reduces the time to onboard new use cases across the organization.

With CDP Private Cloud, organizations benefit from:

● Unified Distribution: CDP offers rapid time to value through simplified provisioning of easy-to-use, self-service analytics enabling onboarding of new use cases at higher velocity.

● Hybrid & On-prem: A hybrid and multi-cloud experience; on-premises, it offers the best performance, cost, and security. It is designed for data centers with optimal infrastructure.

● Management: It provides consistent management and control points for deployments.

● Consistency: Security and governance policies can be configured once and applied across all data and workloads.

● Portability: Policies stay attached to the data, even as it moves across all supported infrastructure.

● Improved cost efficiency with optimized resource utilization and the decoupling of compute and storage, lowering data center infrastructure costs up to 50%.

● Predictable performance thanks to workload isolation and managed multi-tenancy, eliminating the impact of spikes on critical workloads and the resulting missed SLAs and SLOs.

Cloudera Data Platform Private Cloud Base (CDP PvC Base)

CDP Private Cloud Base is the on-premises version of Cloudera Data Platform. This new product combines the best of Cloudera Enterprise Data Hub and Hortonworks Data Platform Enterprise along with new features and enhancements across the stack. This unified distribution is a scalable and customizable platform where you can securely run many types of workloads.

CDP Private Cloud Base supports a variety of hybrid solutions where compute tasks are separated from data storage and where data can be accessed from remote clusters, including workloads created using CDP Private Cloud Data Services. This hybrid approach provides a foundation for containerized applications by managing storage, table schema, authentication, authorization, and governance.

CDP Private Cloud Base is comprised of a variety of components such as Apache HDFS, Apache Hive 3, Apache HBase, and Apache Impala, along with many other components for specialized workloads. You can select any combination of these services to create clusters that address your business requirements and workloads. Several pre-configured packages of services are also available for common workloads.

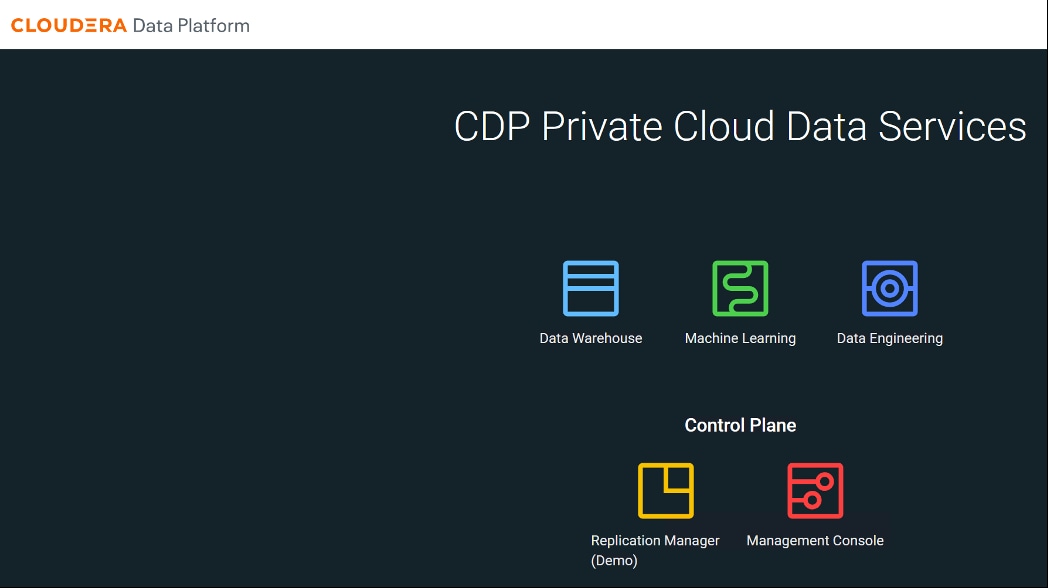

Cloudera Data Platform Private Cloud Data Services (CDP PvC DS)

Cloudera Data Platform (CDP) Private Cloud is the newest on-prem offering of CDP that brings many of the benefits of the public cloud deployments to the on-prem CDP deployments.

CDP Private Cloud provides a disaggregation of compute and storage and allows independent scaling of compute and storage clusters. Using containerized applications deployed on Kubernetes, CDP Private Cloud brings both agility and predictable performance to analytic applications. CDP Private Cloud gets unified security, governance, and metadata management through Cloudera Shared Data Experience (SDX), which is available on a CDP Private Cloud Base cluster.

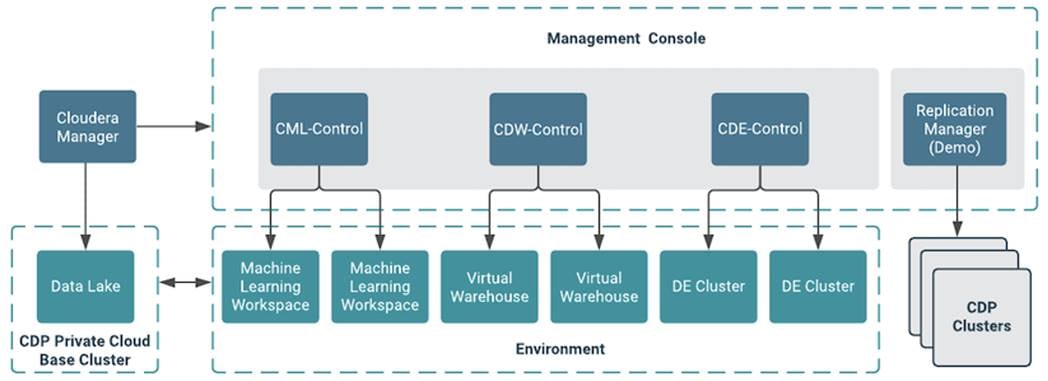

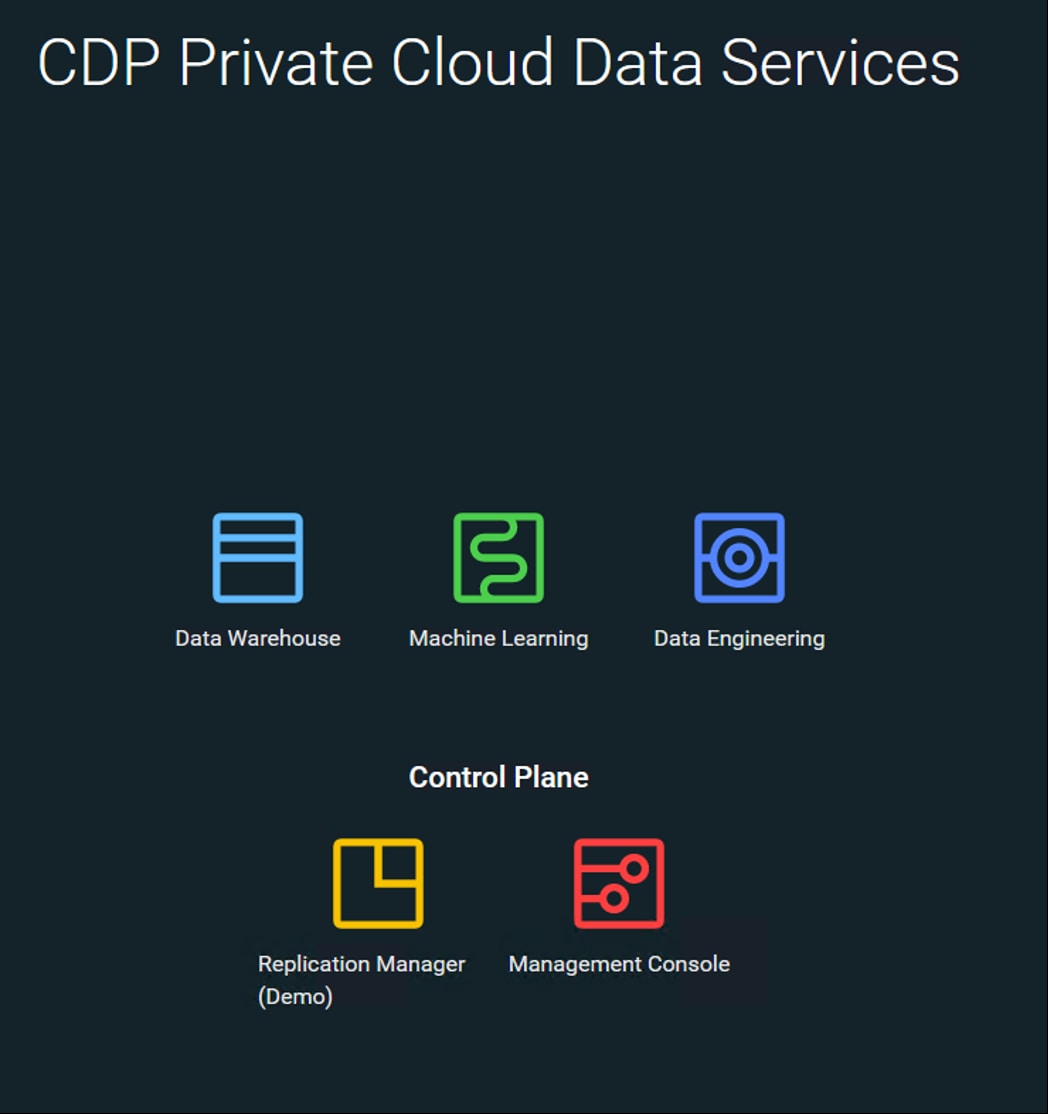

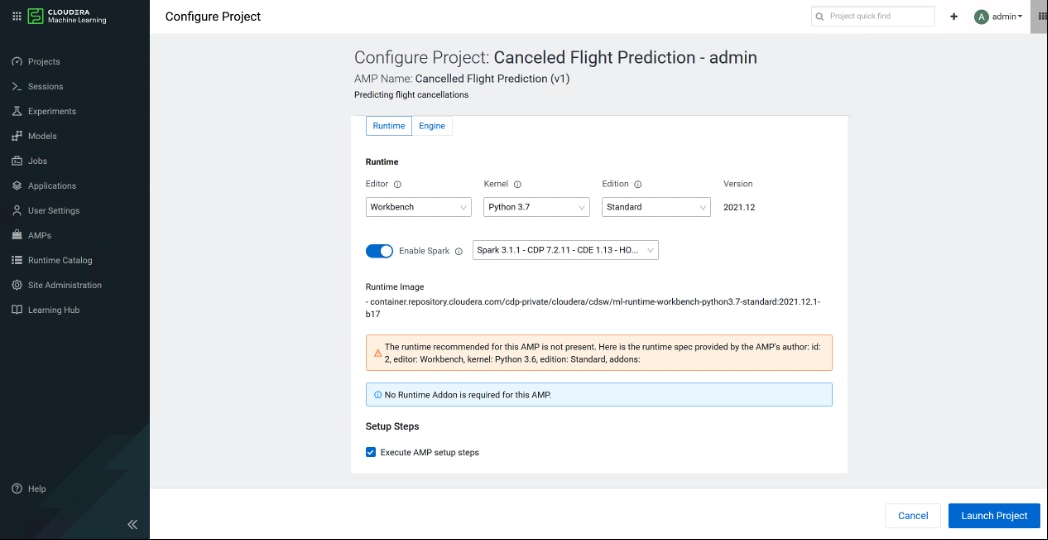

CDP Private Cloud users can rapidly provision and deploy Cloudera Data Engineering (CDE), Cloudera Data Warehousing (CDW) and Cloudera Machine Learning (CML) services through the Management Console, and easily scale them up or down as required.

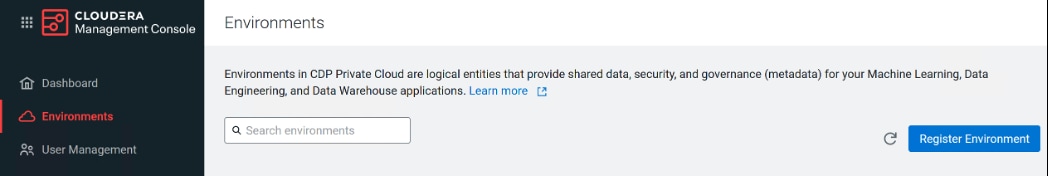

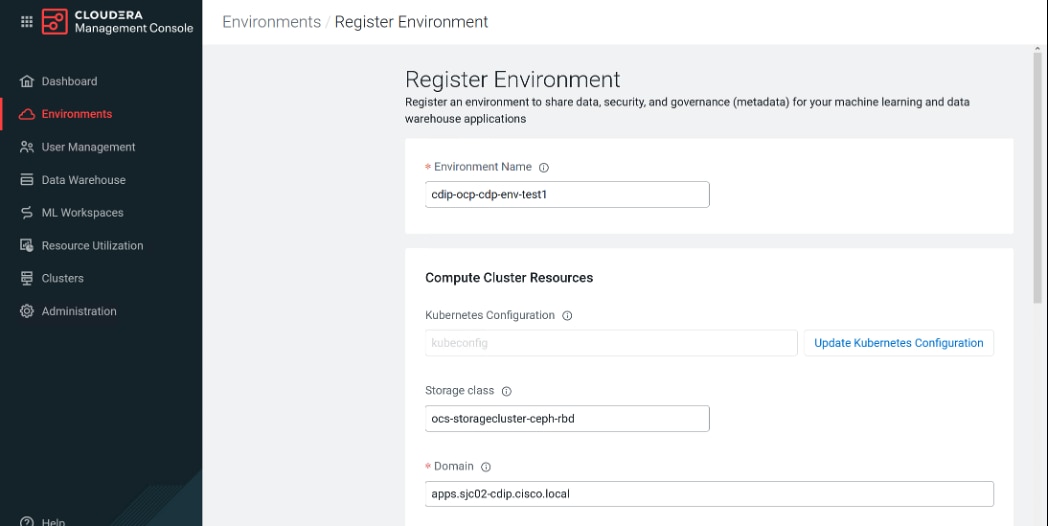

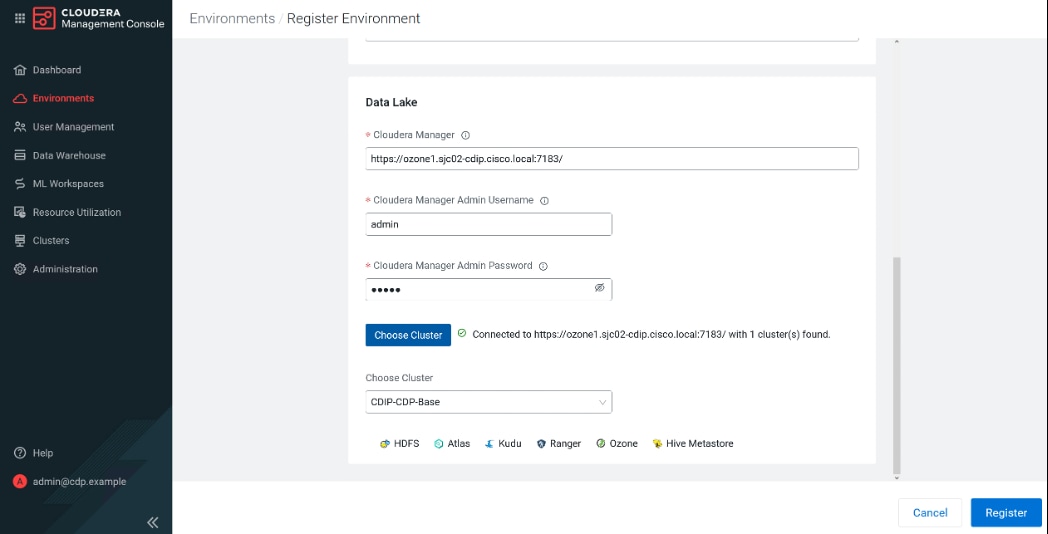

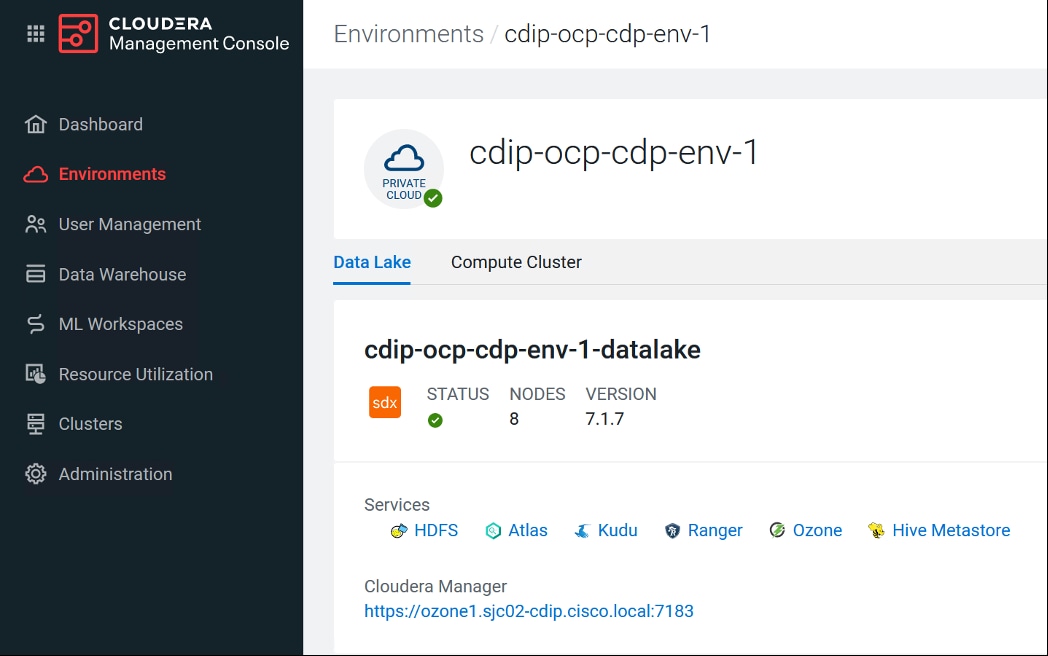

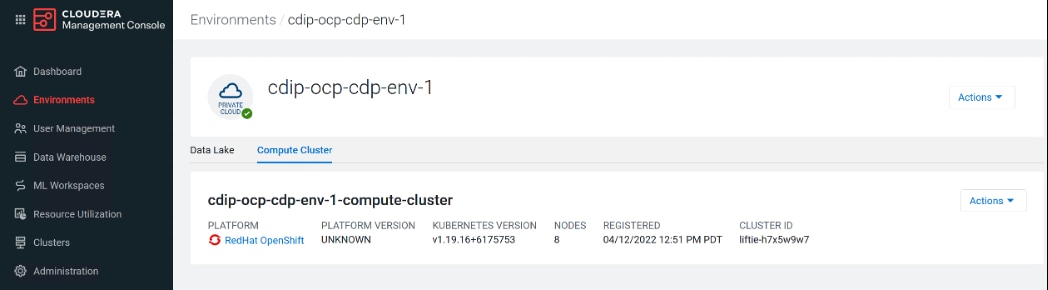

A CDP Private Cloud deployment requires you to have a Private Cloud Base cluster and a Red Hat OpenShift Kubernetes cluster. The OpenShift cluster is set up on a bare-metal deployment. The Private Cloud deployment process involves configuring the Management Console on the OpenShift cluster, registering an environment by providing details of the Data Lake configured on the Base cluster, and then creating the workloads.

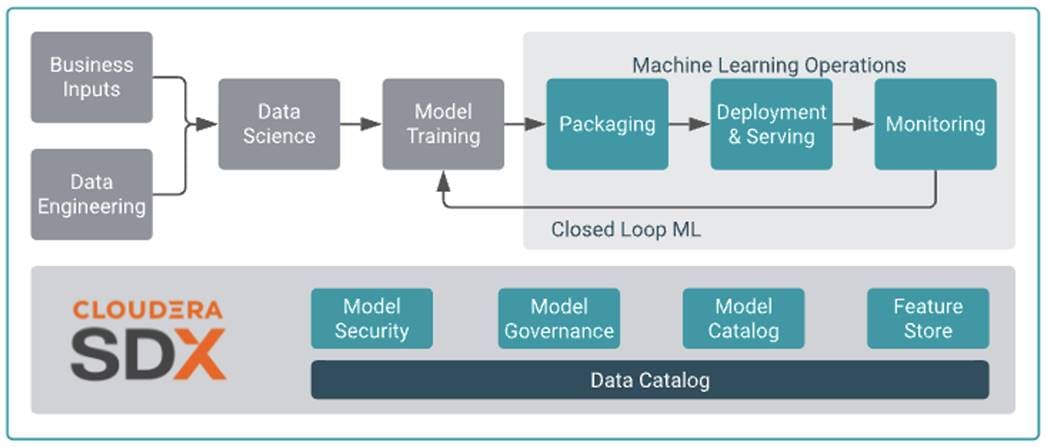

Cloudera Shared Data Experience (SDX)

SDX is a fundamental part of Cloudera Data Platform architecture, unlike other vendors’ bolt-on approaches to security and governance. Independent from compute and storage layers, SDX delivers an integrated set of security and governance technologies built on metadata and delivers persistent context across all analytics as well as public and private clouds. Consistent data context simplifies the delivery of data and analytics with a multi-tenant data access model that is defined once and seamlessly applied everywhere.

SDX reduces risk and operational costs by delivering consistent data context across deployments. IT can deploy fully secured and governed data lakes faster, giving more users access to more data, without compromise.

Key benefits and features of SDX include:

● Insightful metadata - Trusted, reusable data assets and efficient deployments need more than just technical and structural metadata. CDP’s Data Catalog provides a single pane of glass to administer and discover all data, profiled and enhanced with rich metadata that includes the operational, social, and business context, turning data into valuable information.

● Powerful security - Eliminate business and security risks and ensure compliance by preventing unauthorized access to sensitive or restricted data across the platform with full auditing. SDX enables organizations to establish multi-tenant data access with ease through standardization and seamless enforcement of granular, dynamic, role- and attribute-based security policies on all clouds and data centers.

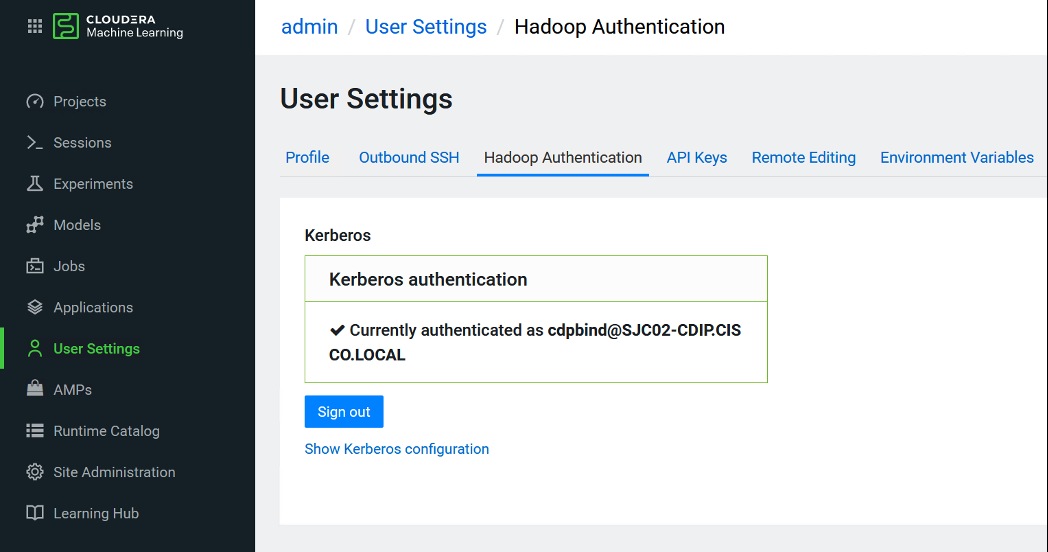

● Full encryption - Enjoy ultimate protection as a fundamental part of your CDP installation. Clusters are deployed and automatically configured to use Kerberos and to encrypt network traffic with Auto-TLS. Data at rest, both on-premises and in the cloud, is protected with enterprise-grade cryptography, supporting best-practice, tried-and-tested configurations.

● Hybrid control - Meet the ever-changing business needs to balance performance, cost, and resilience. Deliver true infrastructure independence. SDX enables it all with the ability to move data, together with its context, as well as workloads between CDP deployments. Platform operational insight into aspects like workload performance delivers intelligent recommendations for optimal resource utilization.

● Enterprise-grade governance - Prove compliance and manage the complete data lifecycle from the edge to AI and from ingestion to purge with data management across all analytics and deployments. Identify and manage sensitive data, and effectively address regulatory requirements with unified, platform-wide operations, including data classification, lineage, and modeling.

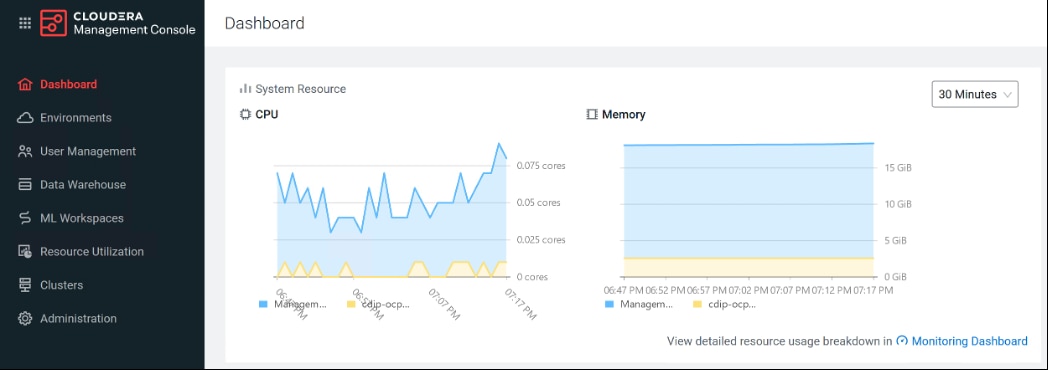

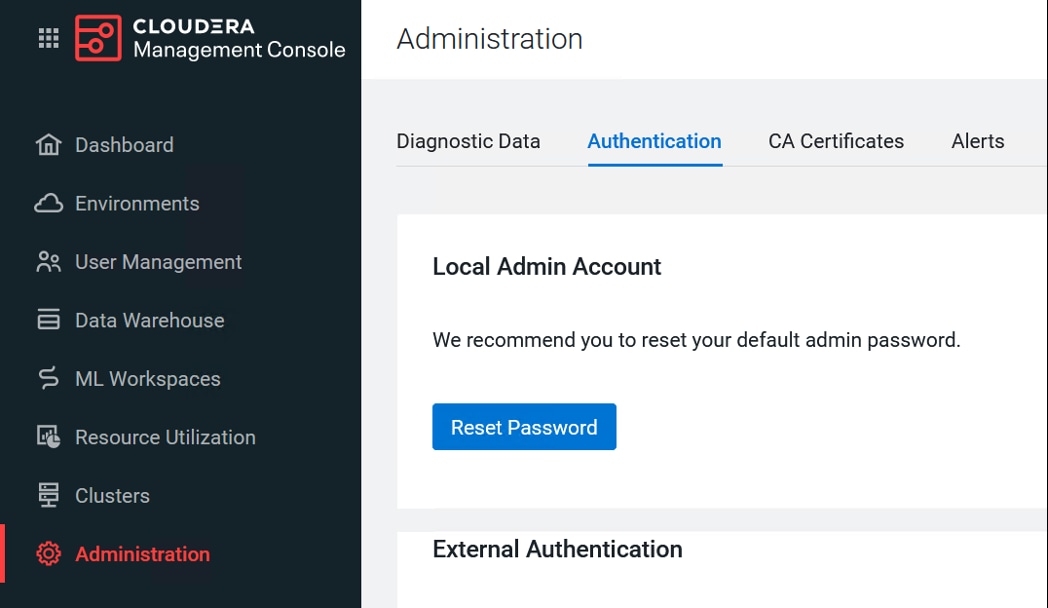

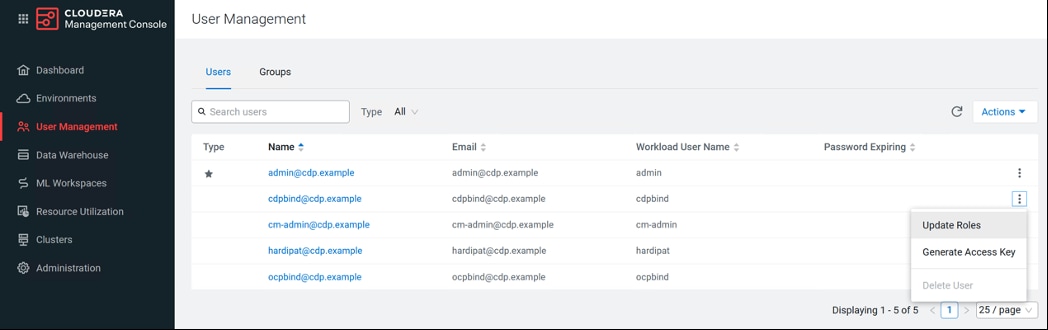

CDP Private Cloud Management Console

The Management Console is a service used by CDP administrators to manage environments, users, and services.

The Management Console allows you to:

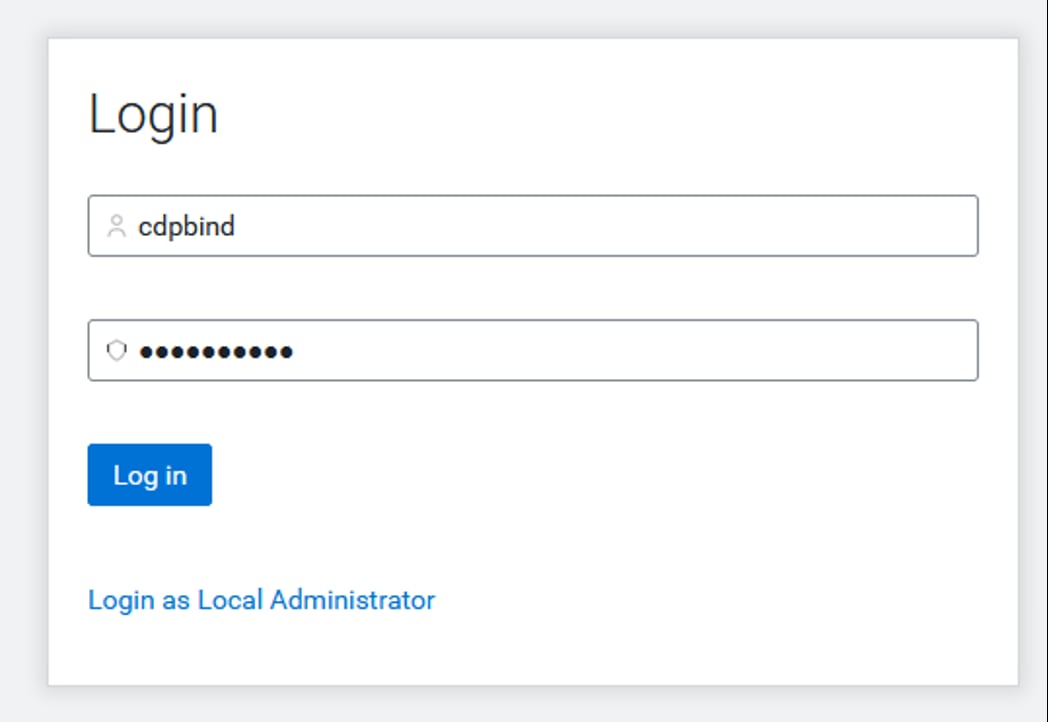

● Enable user access to CDP Private Cloud Data Services, onboard and set up authentication for users, and determine access rights for the various users to the available resources.

● Register an environment, which represents the association of your user account with the compute resources that you use to manage and provision workloads such as Data Warehouse and Machine Learning. When registering the environment, you must specify a Data Lake residing on the Private Cloud Base cluster to provide security and governance for the workloads.

● View information about the resources consumed by the workloads for an environment.

● Collect diagnostic information from the services for troubleshooting purposes.

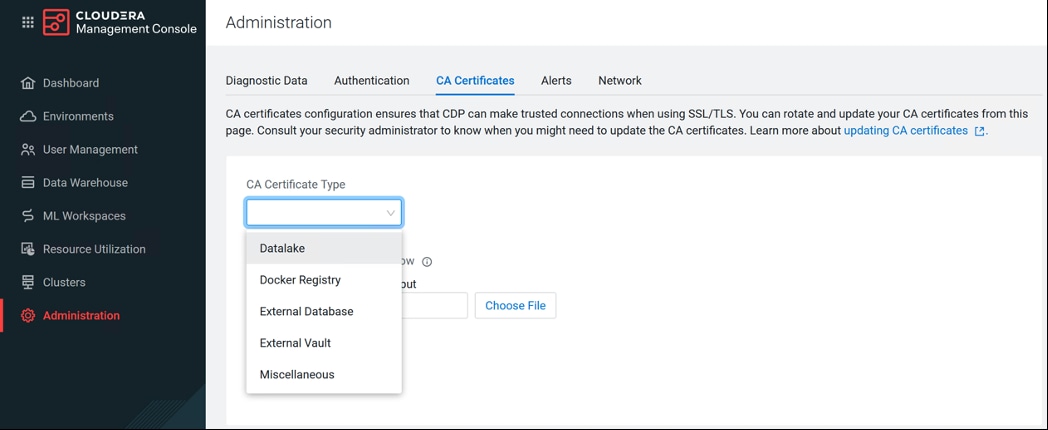

Figure 27 shows a basic architectural overview of the CDP Private Cloud Management Console.

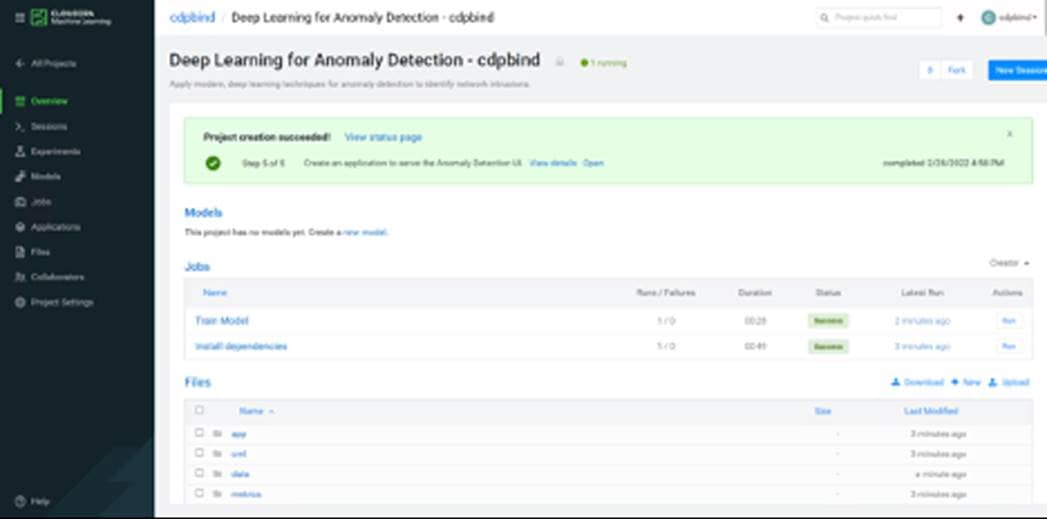

Cloudera Machine Learning (CML)

Cloudera Machine Learning enables data scientists to develop and operationalize ML models. From automating internal processes to optimizing the design, creation, and marketing processes behind virtually every product consumed, ML models have permeated almost every aspect of our work and personal lives. Machine learning has become one of the most critical capabilities for modern businesses to grow and stay competitive today.

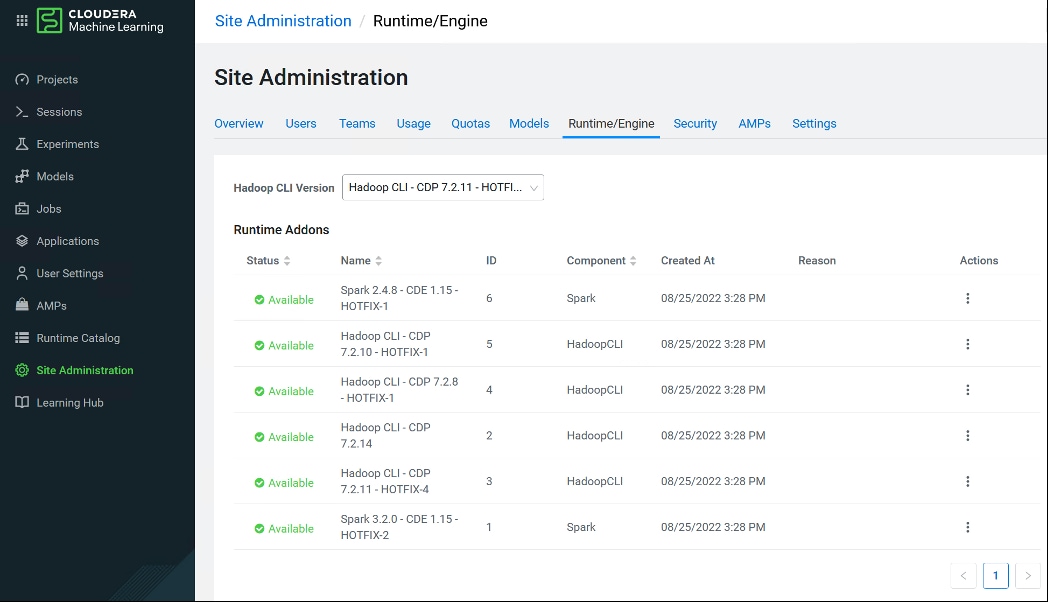

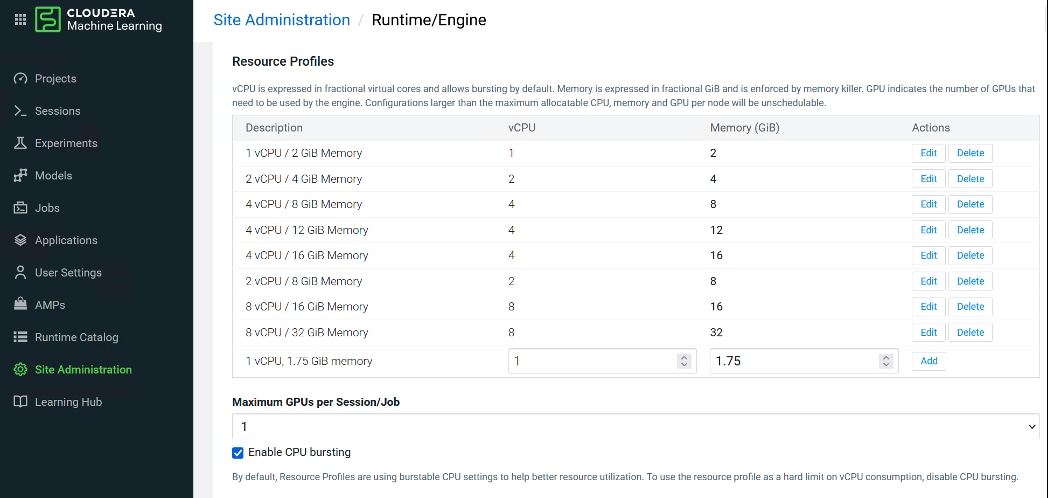

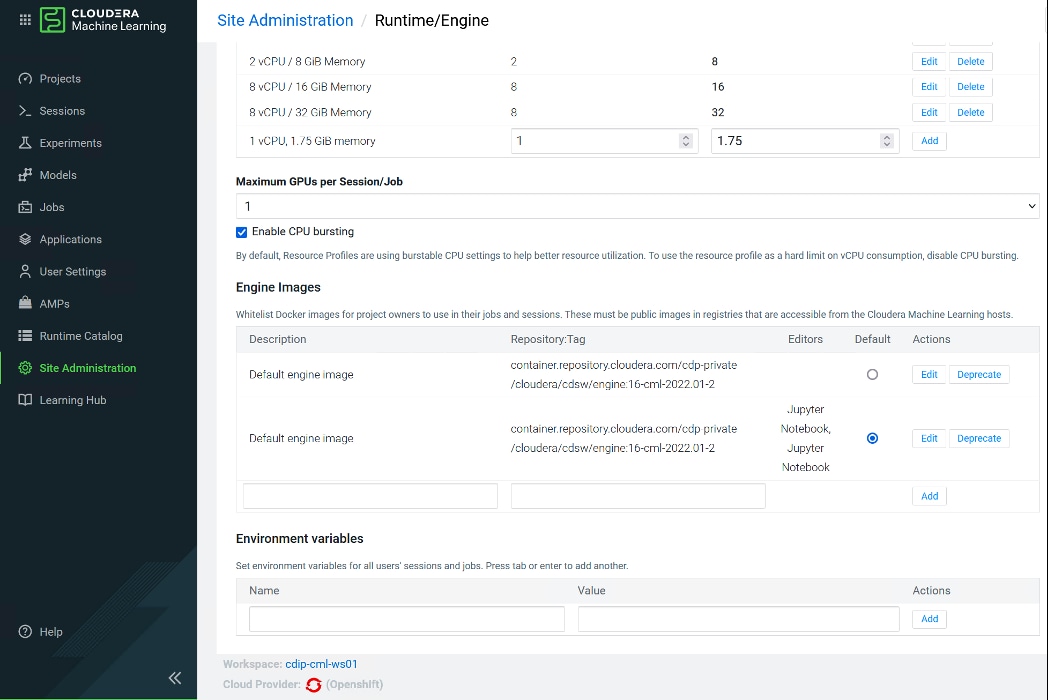

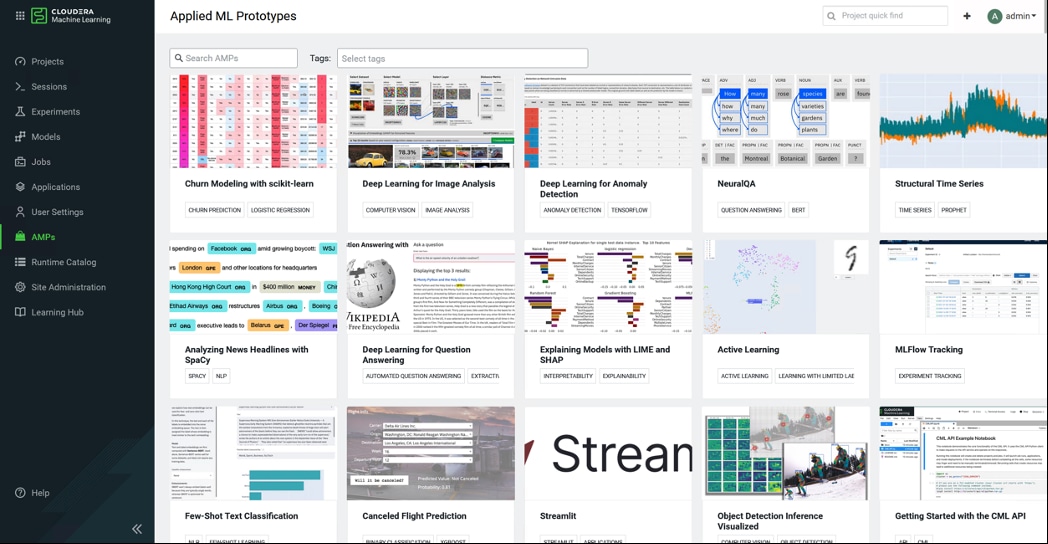

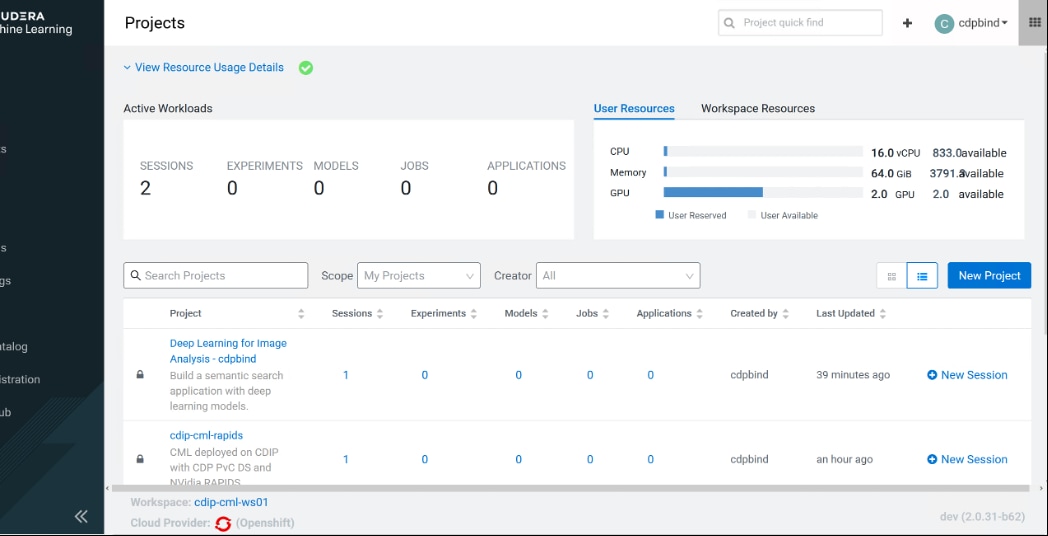

Cloudera Machine Learning (CML) is Cloudera’s new cloud-native machine learning service, built for CDP. The CML service provisions clusters, also known as ML workspaces, which run natively on Kubernetes.

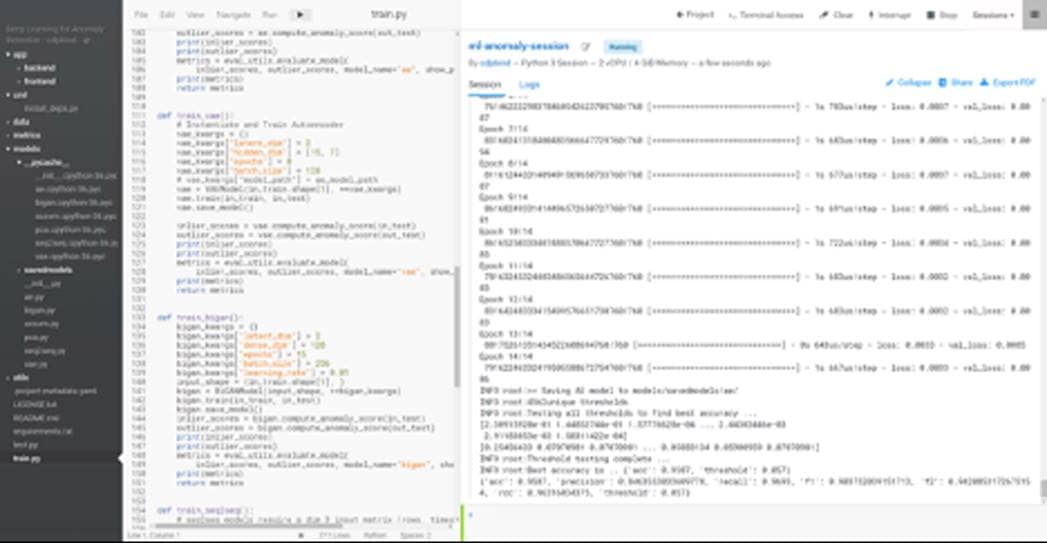

Each ML workspace enables teams of data scientists to develop, test, train, and ultimately deploy machine learning models for building predictive applications, all on the data under management within the enterprise data cloud. ML workspaces are ephemeral, allowing you to create and delete them on demand. ML workspaces support fully containerized execution of Python, R, Scala, and Spark workloads through flexible and extensible engines.

Cloudera Machine Learning covers the end-to-end machine learning workflow, enabling fully isolated and containerized workloads - including Python, R, and Spark-on-Kubernetes - for scale-out data engineering and machine learning with seamless distributed dependency management.

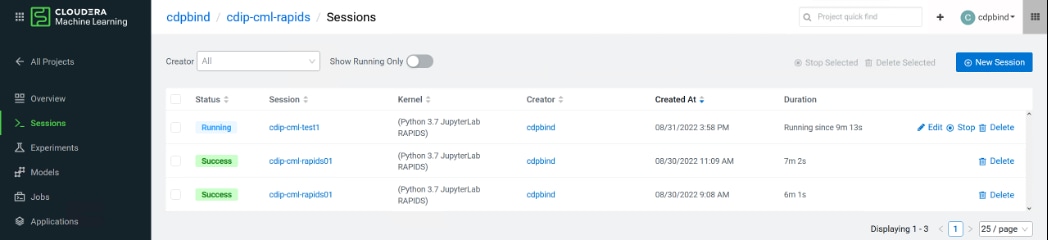

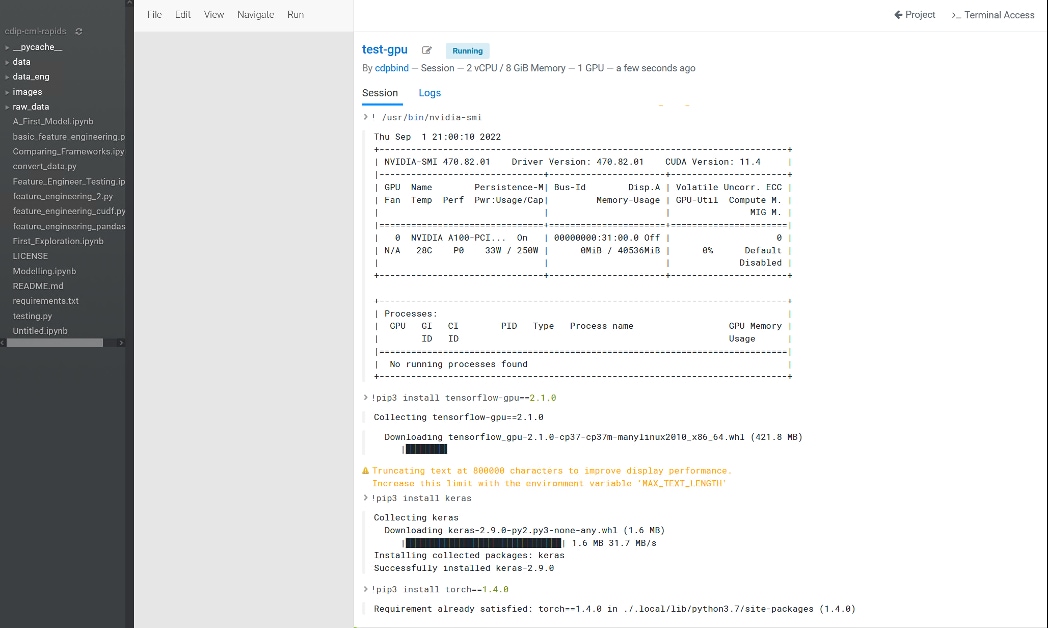

● Sessions enable Data Scientists to directly leverage the CPU, memory, and GPU compute available across the workspace, while also being directly connected to the data in the data lake.

● Experiments enable Data Scientists to run multiple variations of model training workloads, tracking the results of each Experiment to train the best possible Model.

● Models can be deployed in a matter of clicks, removing any roadblocks to production. They are served as REST endpoints in a high availability manner, with automated lineage building and metric tracking for MLOps purposes.

● Jobs can be used to orchestrate an entire end-to-end automated pipeline, including monitoring for model drift, and automatically kicking off model re-training and re-deployment as needed.

● Applications deliver interactive experiences for business users in a matter of clicks. Frameworks such as Flask and Shiny can be used in development of these Applications, while Cloudera Data Visualization is also available as a point-and-click interface for building these experiences.
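Because deployed Models are served as REST endpoints, a client only needs to send an HTTP POST with a JSON body. The sketch below builds such a request with the Python standard library without sending it. The endpoint URL, access key, feature names, and the `accessKey`/`request` payload shape are assumptions for illustration; check the model's own overview page in CML for the exact URL and sample payload.

```python
import json
import urllib.request


def build_model_request(endpoint, access_key, features, api_key=None):
    """Build (but do not send) a POST request for a deployed model.

    The payload layout is an assumed convention, not a guaranteed contract.
    """
    payload = {"accessKey": access_key, "request": features}
    headers = {"Content-Type": "application/json"}
    if api_key:
        # Only needed when the model is deployed with authentication enabled.
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        endpoint,
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )
```

Passing the returned object to `urllib.request.urlopen` would perform the call. Separating request construction from sending keeps the example testable without a live workspace.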

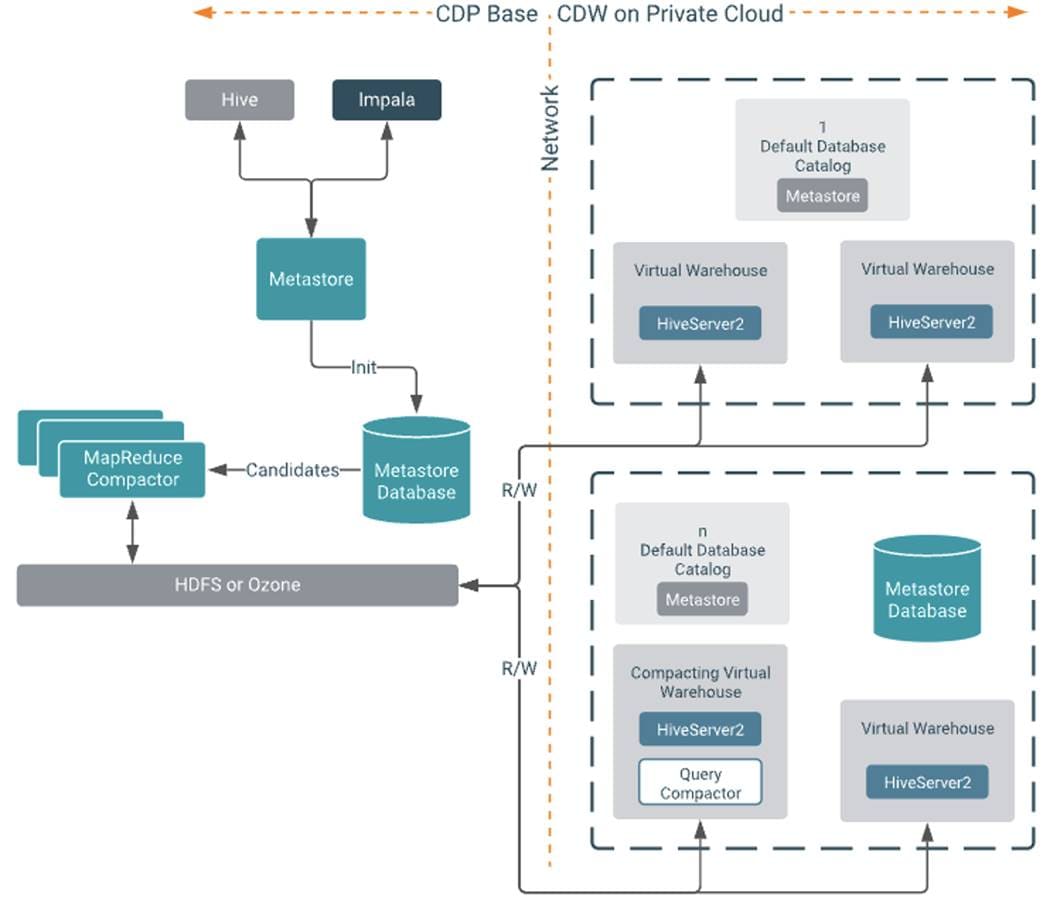

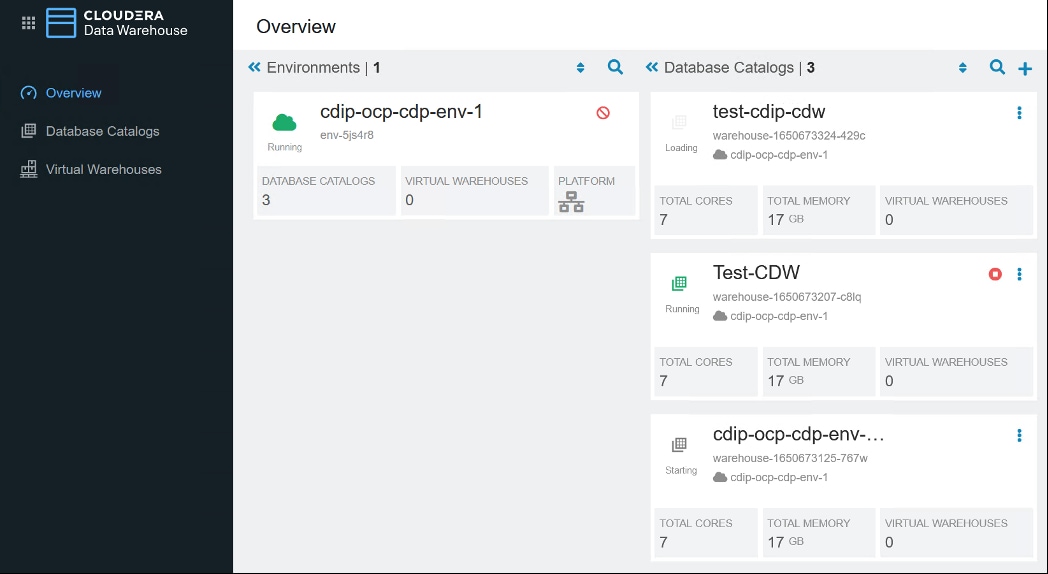

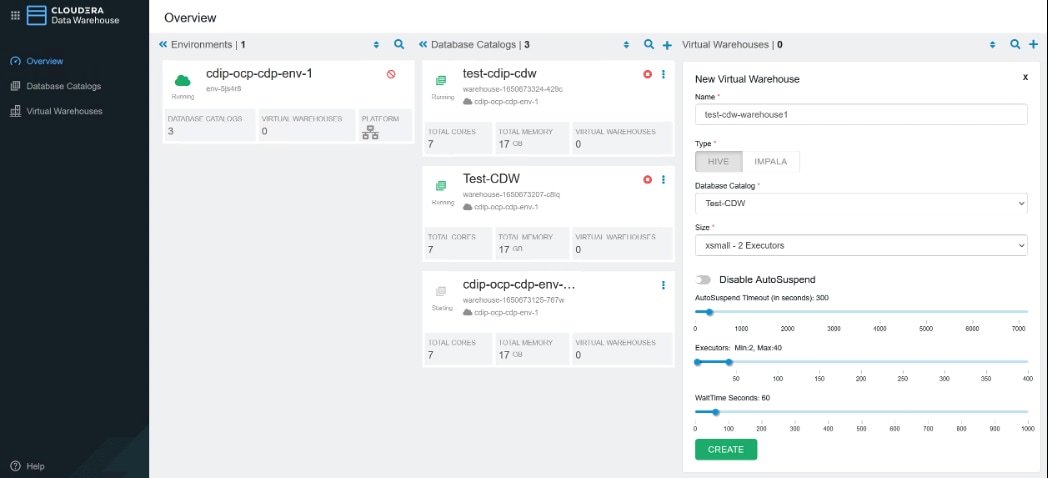

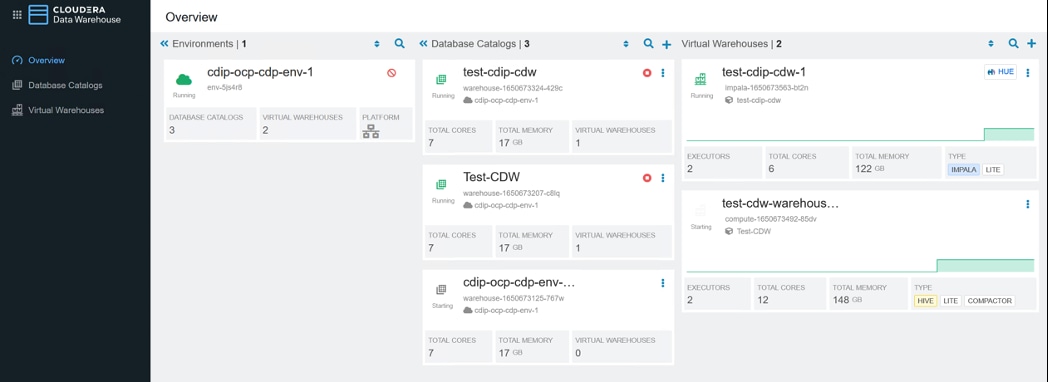

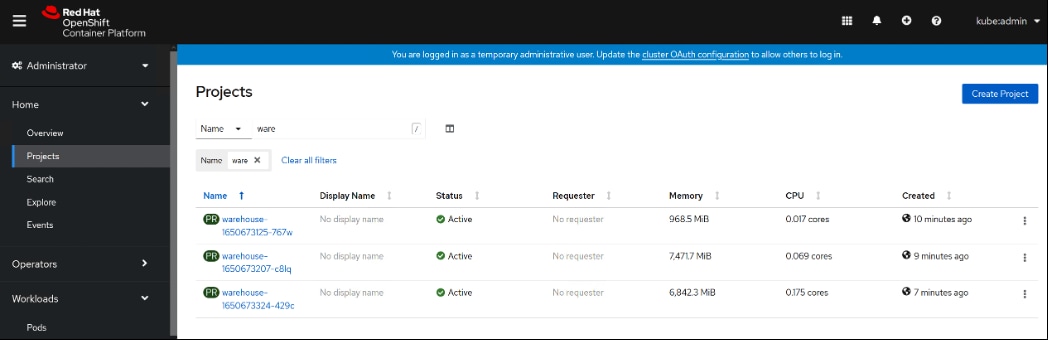

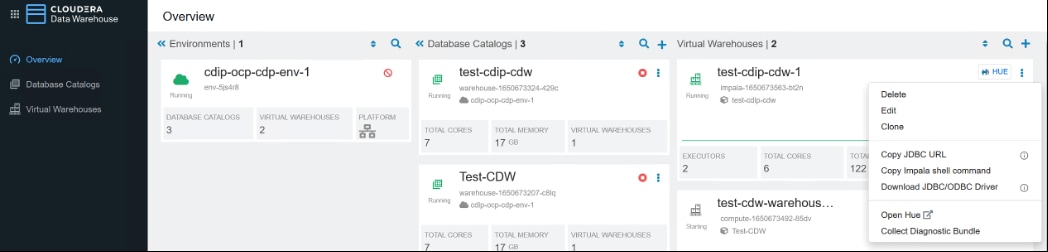

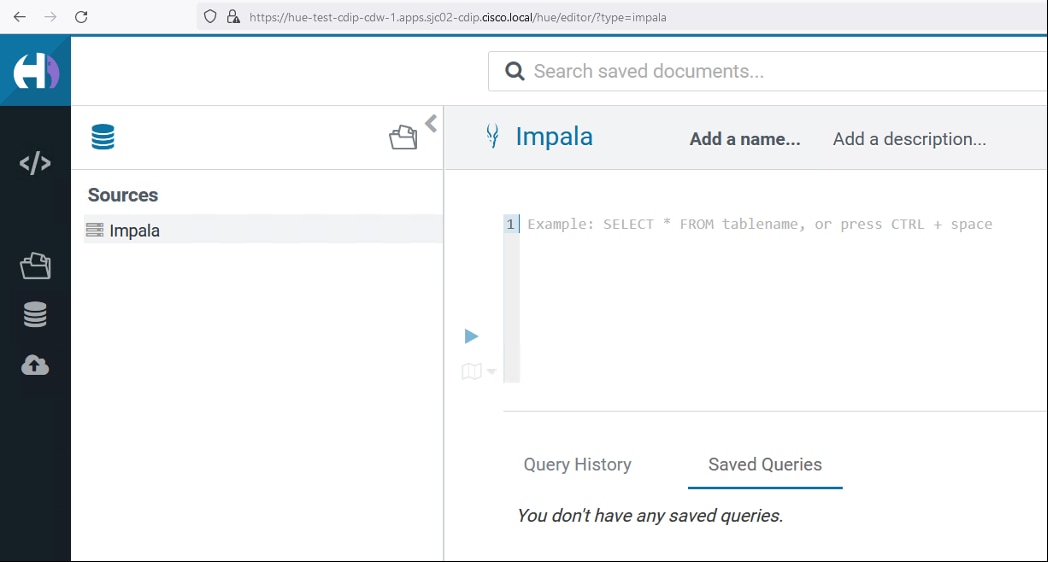

Cloudera Data Warehouse (CDW)

Data Warehouse is a CDP Private Cloud service for self-service creation of independent data warehouses and data marts that auto-scale up and down to meet your varying workload demands. The Data Warehouse service provides isolated compute instances for each data warehouse/mart, automatic optimization, and enables you to save costs while meeting SLAs. In the CDW Private Cloud service, your data is stored in HDFS in the base cluster. The service is composed of the following:

● Database Catalogs

A logical collection of metadata definitions for managed data with its associated data context. The data context is comprised of table and view definitions, transient user and workload contexts from the Virtual Warehouse, security permissions, and governance artifacts that support functions such as auditing. One Database Catalog can be queried by multiple Virtual Warehouses.

Database Catalogs are Hive MetaStore (HMS) instances and include references to the cloud storage where the data lives. An environment can have multiple Database Catalogs.

The default Database Catalog shares the HMS database with HMS in the base cluster. This enables you to access any objects or data sets created in the base clusters from CDW Virtual Warehouses and vice versa.

● Virtual Warehouses

An instance of compute resources that is equivalent to a cluster. A Virtual Warehouse provides access to the data in tables and views that correlate to a specific Database Catalog. Virtual Warehouses bind compute and storage by executing queries on tables and views that are accessible through the Database Catalog that they have been configured to access.

The Cloudera Data Warehouse service provides data warehouses and data marts that are:

● Automatically configured and isolated

● Optimized for your existing workloads when you move them to your private cloud

● Auto-scaled up and down to meet your workloads' varying demands

● Auto-suspended and resumed to allow optimal usage of resources

● Compliant with the security controls associated with your base cluster

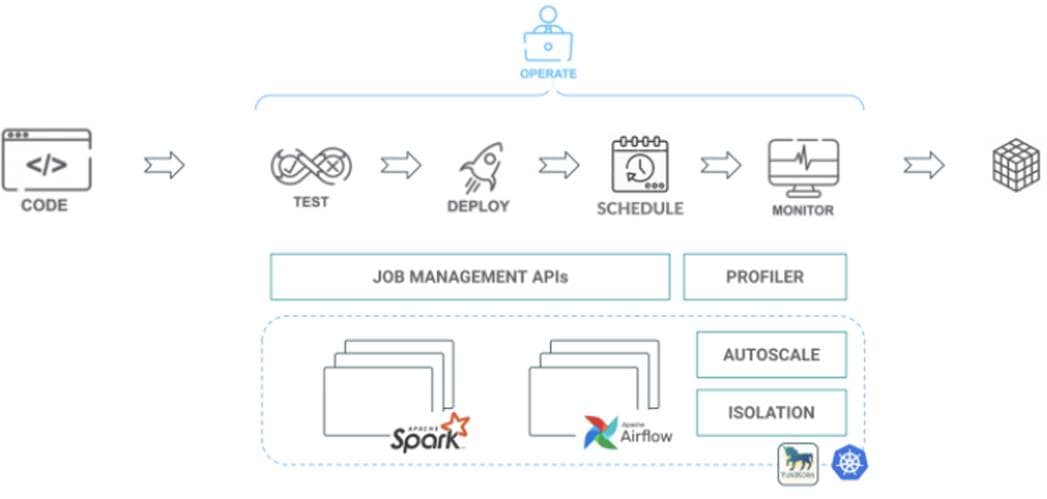

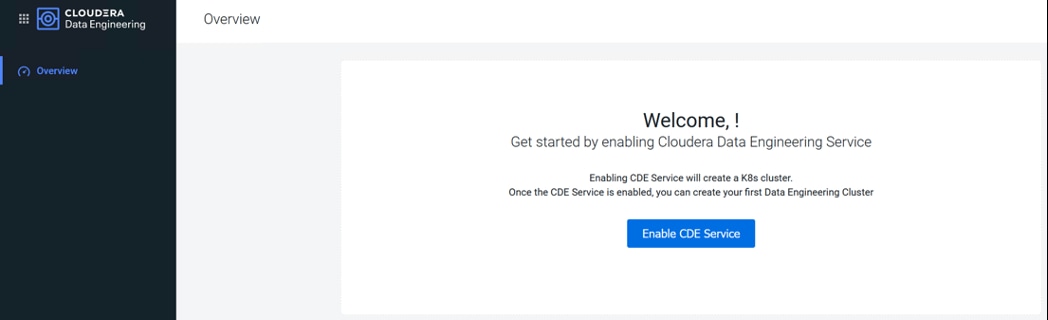

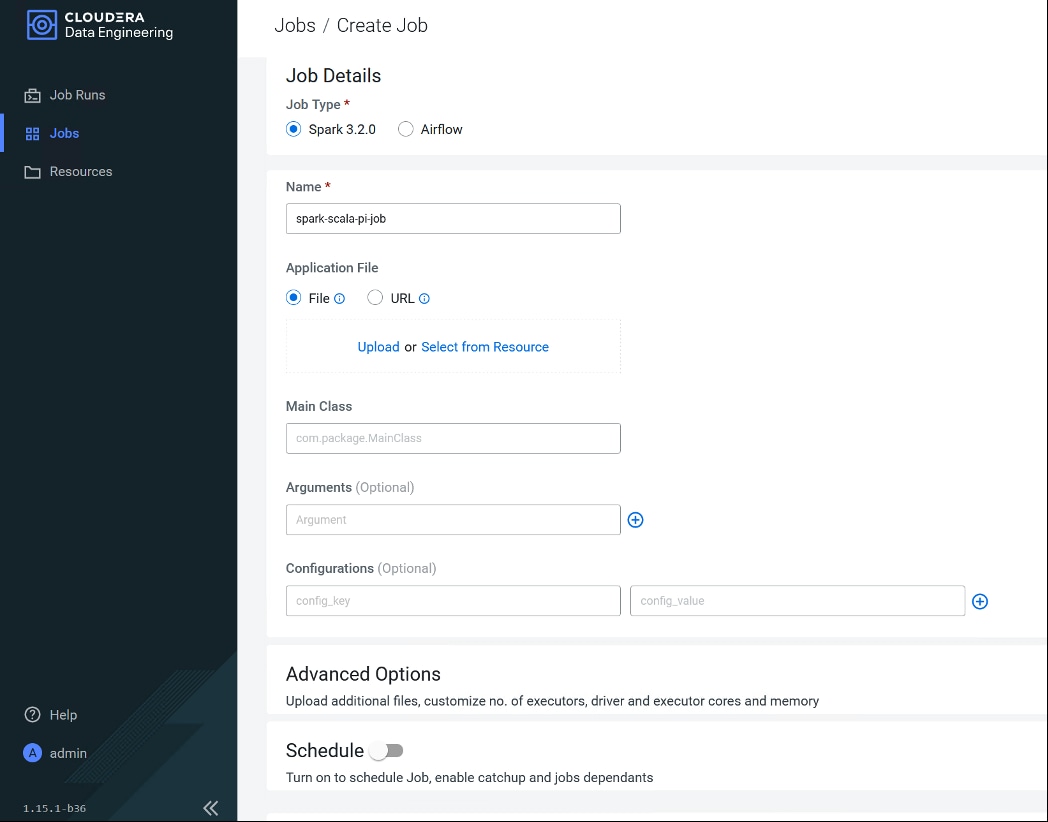

Cloudera Data Engineering (CDE)

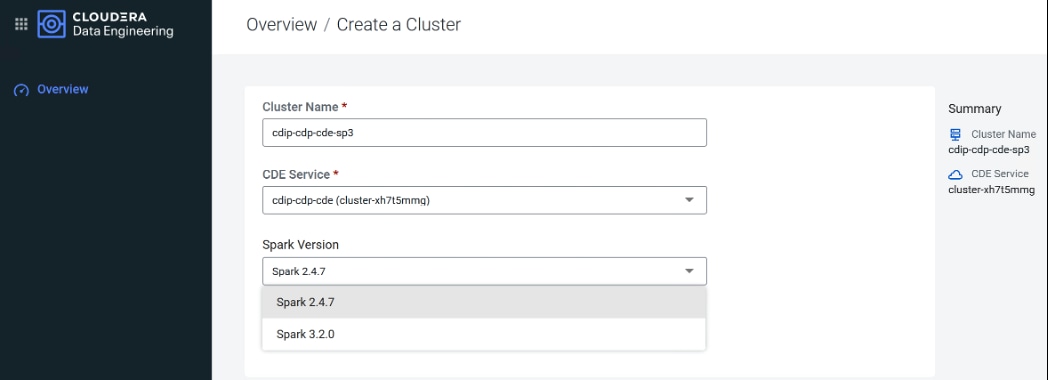

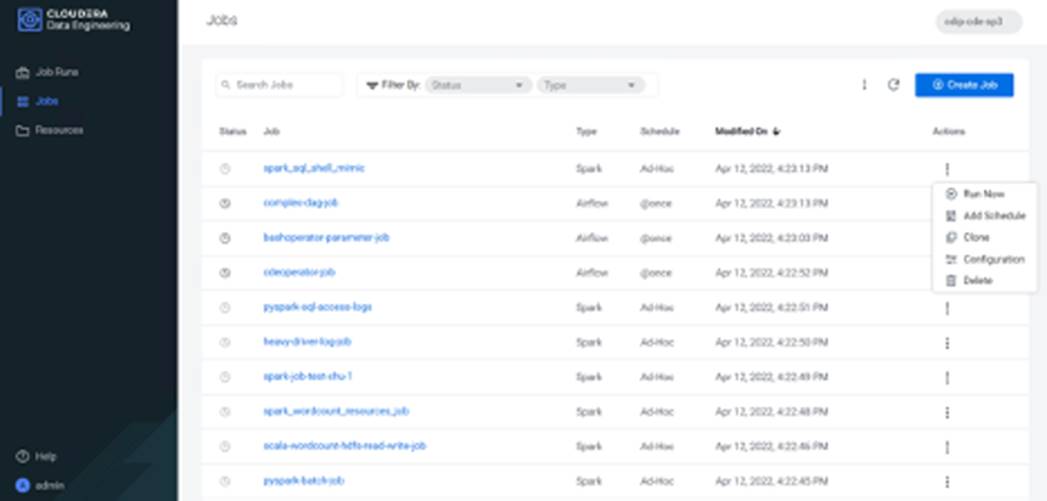

Cloudera Data Engineering is a CDP Private Cloud service that lets data engineers operationalize their data pipelines. It allows you to create, manage, and schedule Apache Spark jobs without the overhead of creating and maintaining Spark clusters. With Cloudera Data Engineering, you define virtual clusters with a range of CPU and memory resources, and each cluster scales up and down as needed to run your Spark workloads.

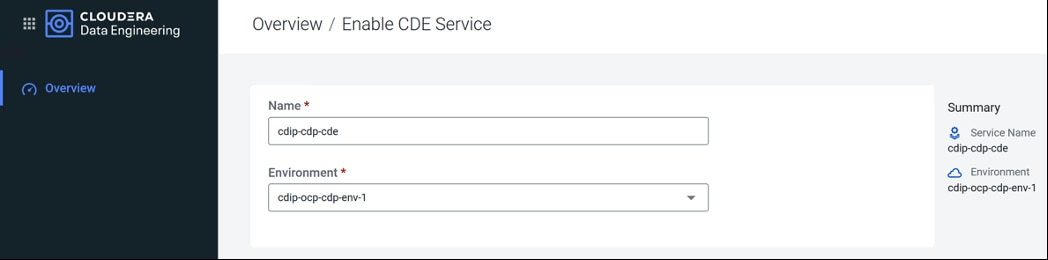

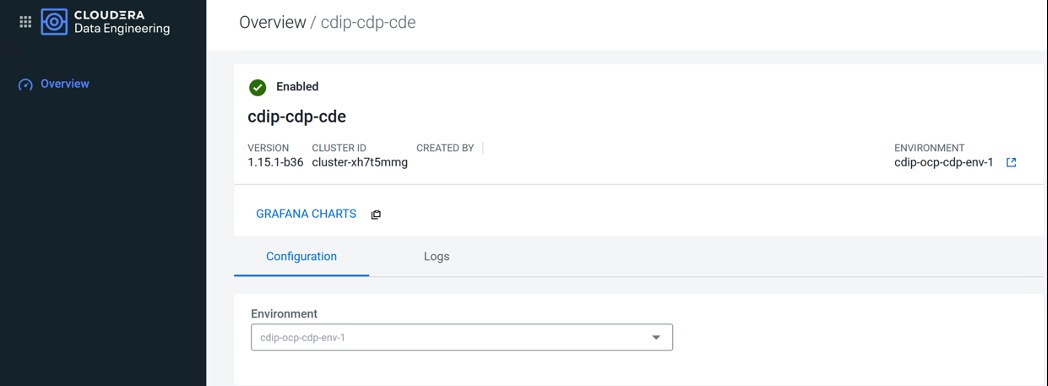

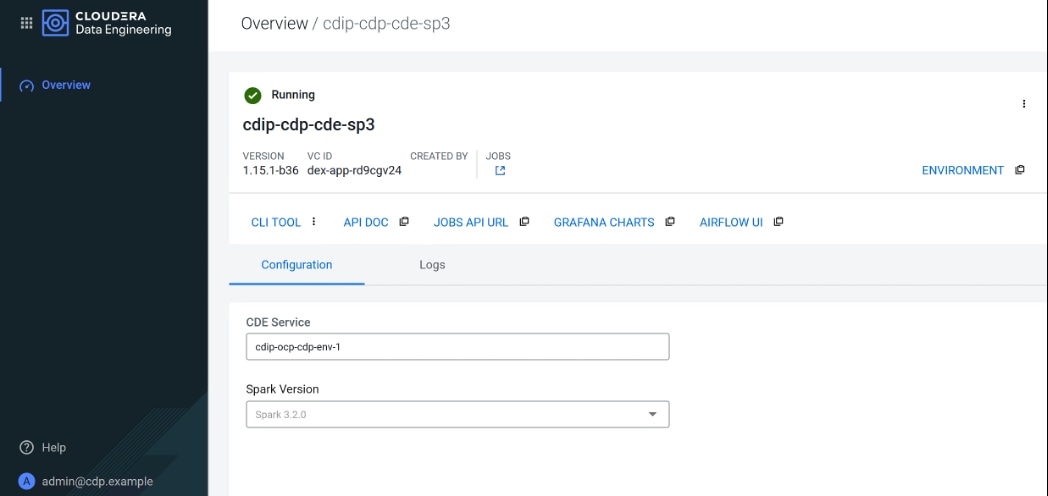

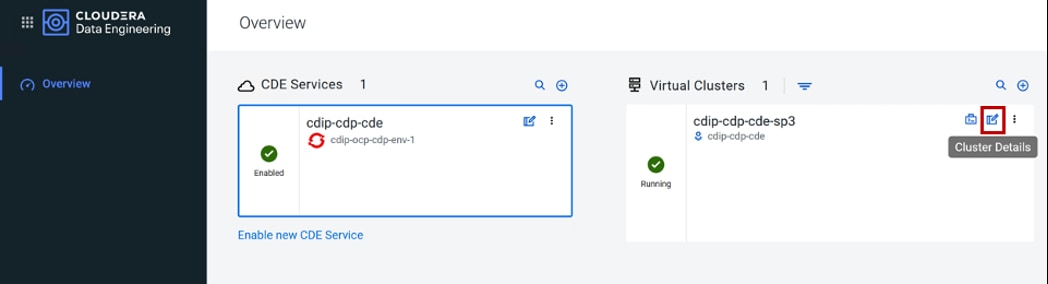

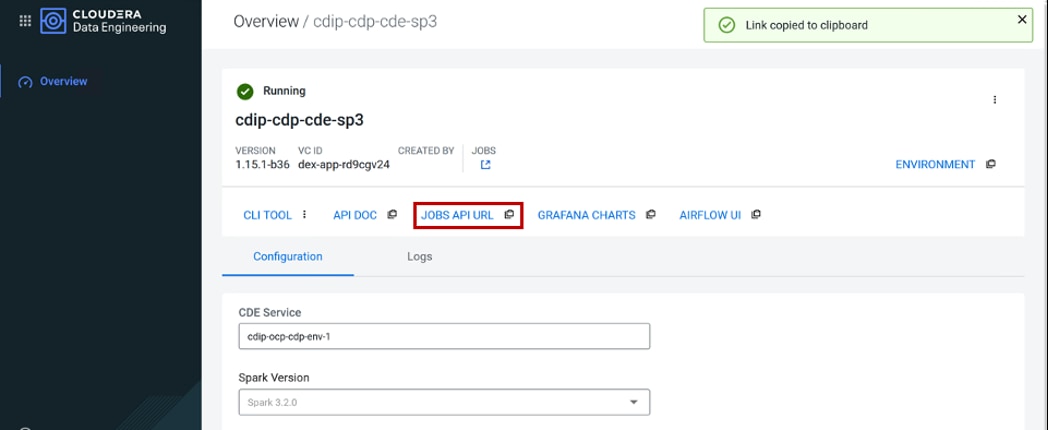

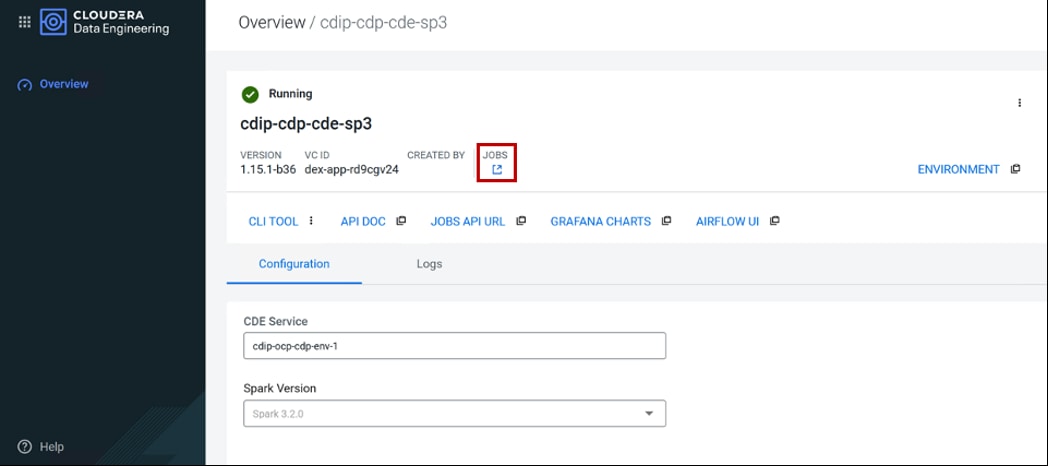

The CDE service involves several components:

● Environment – A logical subset of your cloud provider account including a specific virtual network. For more information, see Environments.

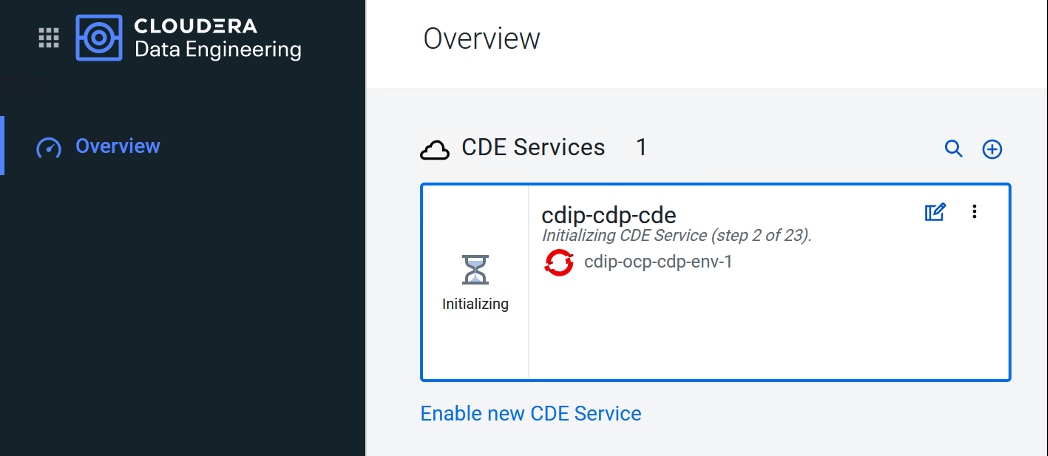

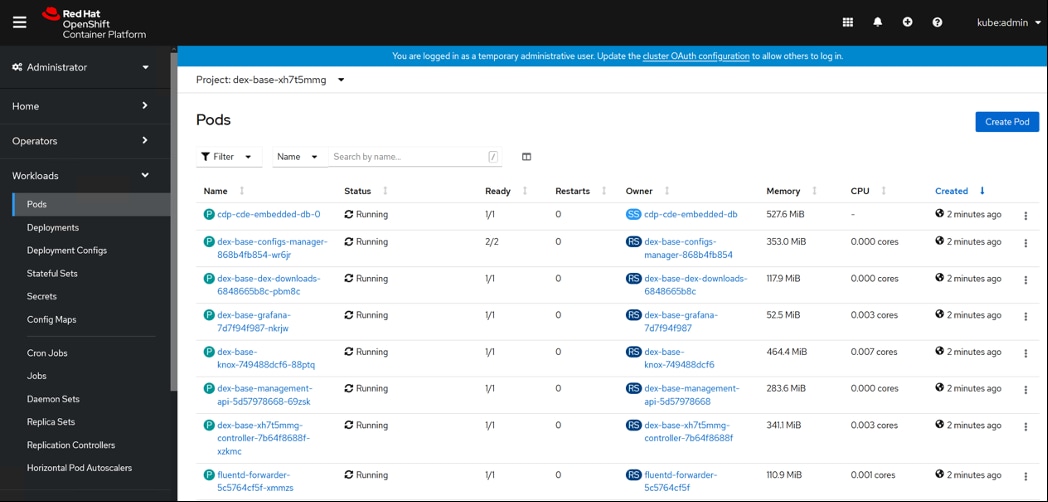

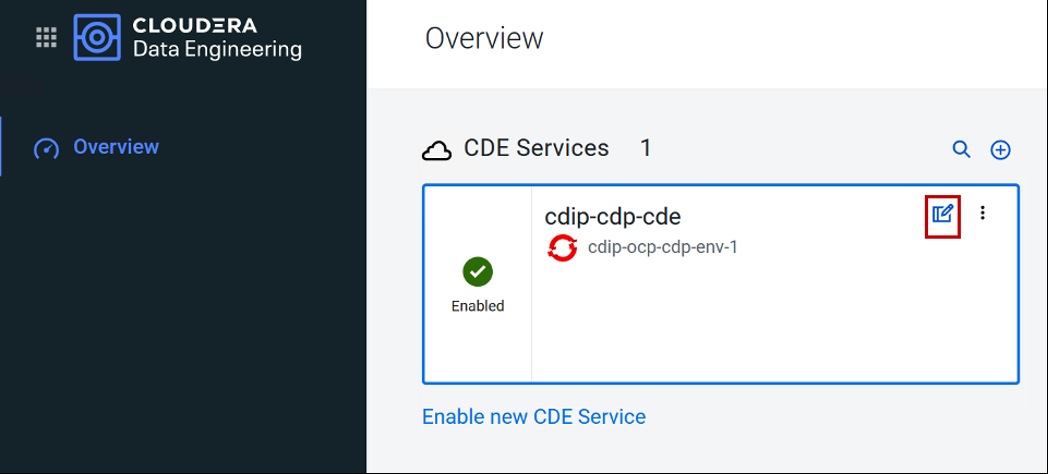

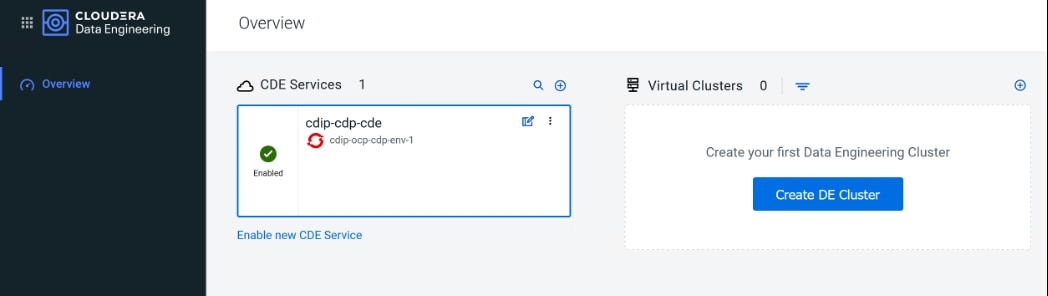

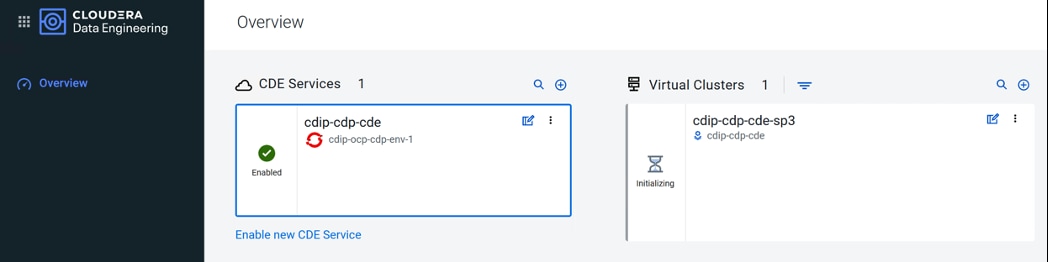

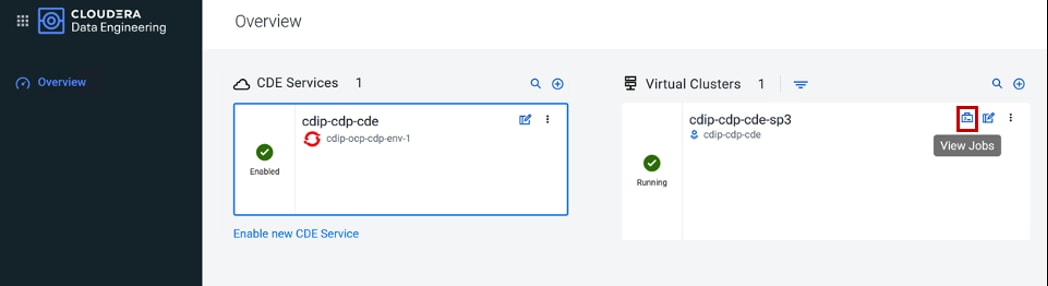

● CDE Service – The long-running Kubernetes cluster and services that manage the virtual clusters. The CDE service must be enabled on an environment before you can create any virtual clusters.

● Virtual Cluster – An individual auto-scaling cluster with defined CPU and memory ranges. Virtual Clusters in CDE can be created and deleted on demand. Jobs are associated with clusters.

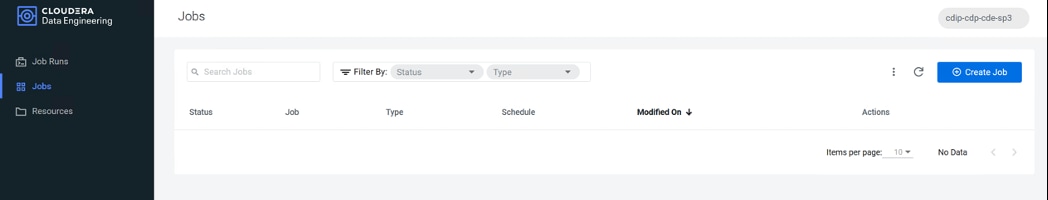

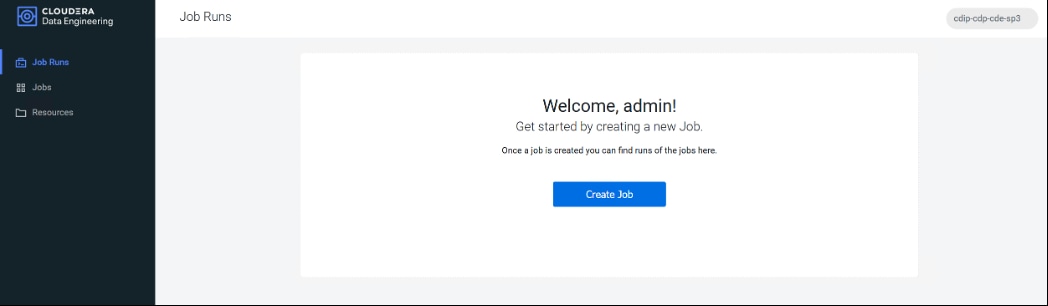

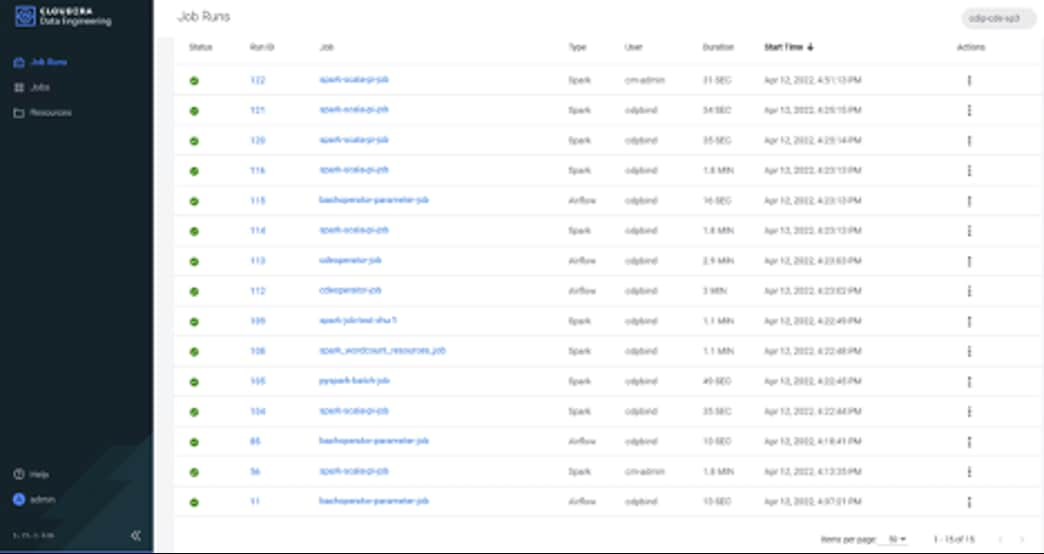

● Jobs – Application code along with defined configurations and resources. Jobs can be run on demand or scheduled. An individual job execution is called a job run.

● Resource – A defined collection of files such as a Python file or application JAR, dependencies, and any other reference files required for a job. A resource can be used by multiple jobs, and jobs can use multiple resources. The resource types supported by CDE are files and python-env.

● Job run – An individual execution of a job.
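The relationships among these components can be sketched as a small data model. The following Python sketch is illustrative only: the class and field names are hypothetical and do not correspond to the CDE API. It encodes only the relationships stated above (jobs belong to a virtual cluster, a resource can be shared by several jobs, and each execution of a job is a job run).

```python
from dataclasses import dataclass, field
from typing import List, Tuple

# Hypothetical names for illustration only; this is not the CDE API.
RESOURCE_TYPES = {"files", "python-env"}  # resource types named in the text


@dataclass
class Resource:
    name: str
    type: str = "files"

    def __post_init__(self) -> None:
        if self.type not in RESOURCE_TYPES:
            raise ValueError(f"unsupported resource type: {self.type}")


@dataclass
class JobRun:
    job_name: str
    run_id: int


@dataclass
class Job:
    name: str
    resources: List[Resource] = field(default_factory=list)
    runs: List[JobRun] = field(default_factory=list)

    def run(self) -> JobRun:
        """Record one execution of this job (a job run)."""
        job_run = JobRun(job_name=self.name, run_id=len(self.runs) + 1)
        self.runs.append(job_run)
        return job_run


@dataclass
class VirtualCluster:
    name: str
    cpu_range: Tuple[int, int]        # (min, max) cores
    memory_range_gb: Tuple[int, int]  # (min, max) memory in GB
    jobs: List[Job] = field(default_factory=list)


# One resource shared by two jobs on the same virtual cluster.
shared = Resource("etl-code", "files")
ingest = Job("ingest", [shared])
report = Job("report", [shared])
cluster = VirtualCluster("vc-example", (1, 20), (4, 100), [ingest, report])

first = ingest.run()
second = ingest.run()
print(first, second)
```

Keeping job runs as separate records from the job definition mirrors the distinction the text draws between a job and a job run.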

Apache Ozone

Apache Ozone is a scalable, redundant, and distributed object store for Hadoop. Apart from scaling to billions of objects of varying sizes, Ozone can function effectively in containerized environments such as Kubernetes and YARN. Applications using frameworks like Apache Spark, YARN, and Hive work natively without any modifications. Apache Ozone is built on a highly available, replicated block storage layer called Hadoop Distributed Data Store (HDDS).

Apache Ozone consists of volumes, buckets, and keys:

● Volumes are similar to user accounts. Only administrators can create or delete volumes.

● Buckets are similar to directories. A bucket can contain any number of keys, but buckets cannot contain other buckets.

● Keys are similar to files. Each key is part of a bucket, which, in turn, belongs to a volume. Ozone stores data as keys inside these buckets.

When a key is written to Apache Ozone, the associated data is stored on the Data Nodes in chunks called blocks. Therefore, each key is associated with one or more blocks. Within the Data Nodes, a series of unrelated blocks is stored in a container, allowing many blocks to be managed as a single entity.
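To make the key-to-block relationship concrete, the following minimal Python sketch computes how a key of a given size would be divided into blocks. The 256 MB block size is an assumption chosen for illustration (block size is configurable in a real cluster), and the function name is hypothetical; this is not Ozone client code.

```python
from typing import List

MB = 1024 * 1024
ASSUMED_BLOCK_SIZE = 256 * MB  # assumption for illustration; configurable in practice


def split_into_blocks(key_size: int, block_size: int = ASSUMED_BLOCK_SIZE) -> List[int]:
    """Return the sizes (in bytes) of the blocks needed to hold a key."""
    if key_size <= 0 or block_size <= 0:
        raise ValueError("key_size and block_size must be positive")
    full_blocks, remainder = divmod(key_size, block_size)
    sizes = [block_size] * full_blocks
    if remainder:
        sizes.append(remainder)  # the last block is only partially filled
    return sizes


blocks = split_into_blocks(600 * MB)
print(len(blocks), [size // MB for size in blocks])
```

Under the assumed block size, a 600 MB key occupies two full blocks and one partial block, while a small key occupies a single block.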

Apache Ozone separates management of namespaces and storage, helping it to scale effectively. Apache Ozone Manager manages the namespaces while Storage Container Manager handles the containers.

Apache Ozone is a distributed key-value store that can manage both small and large files alike. While HDFS provides POSIX-like semantics, Apache Ozone looks and behaves like an Object Store.

Apache Ozone has the following cost savings and benefits due to storage consolidation:

● Lower Infrastructure cost

● Lower software licensing and support cost

● Lower lab footprint

● New use cases, with support for HDFS and S3 and for billions of objects, handling large and small files in a similar fashion.

For more information about Apache Ozone, go to: https://blog.cloudera.com/apache-ozone-and-dense-data-nodes/

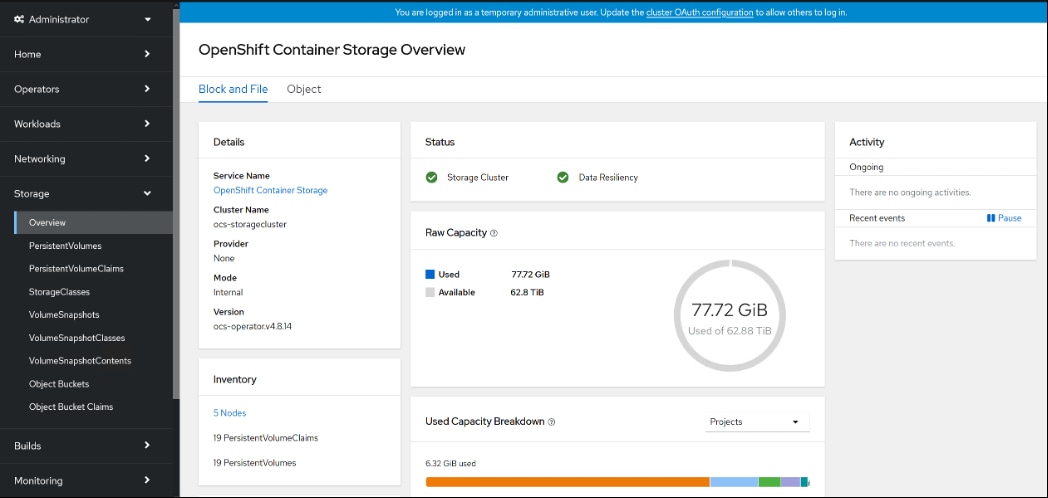

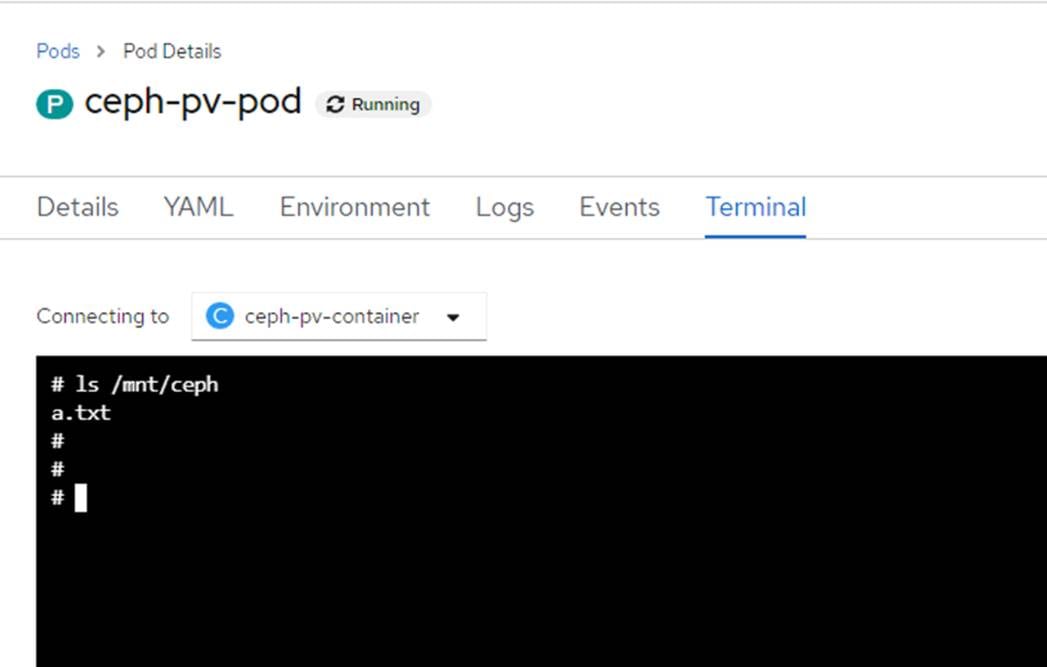

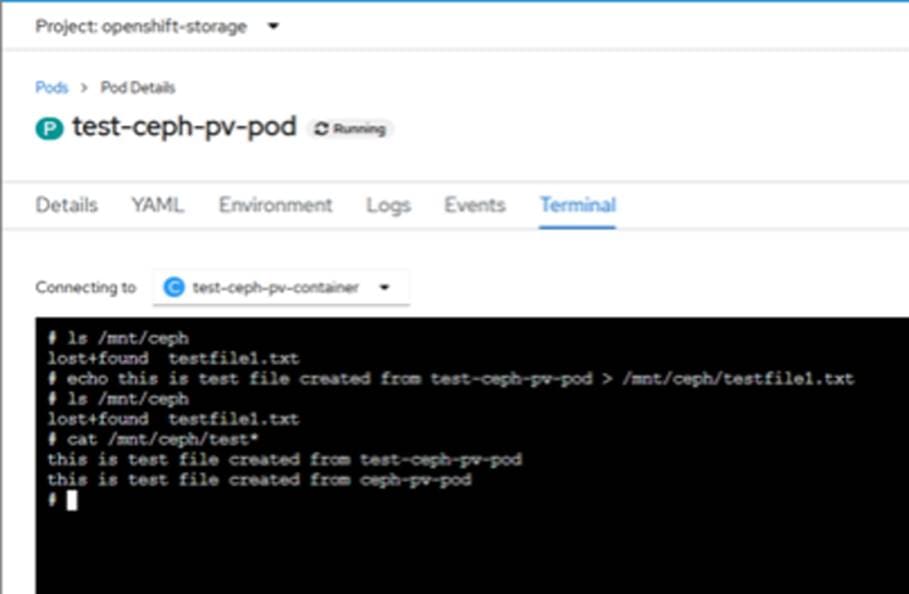

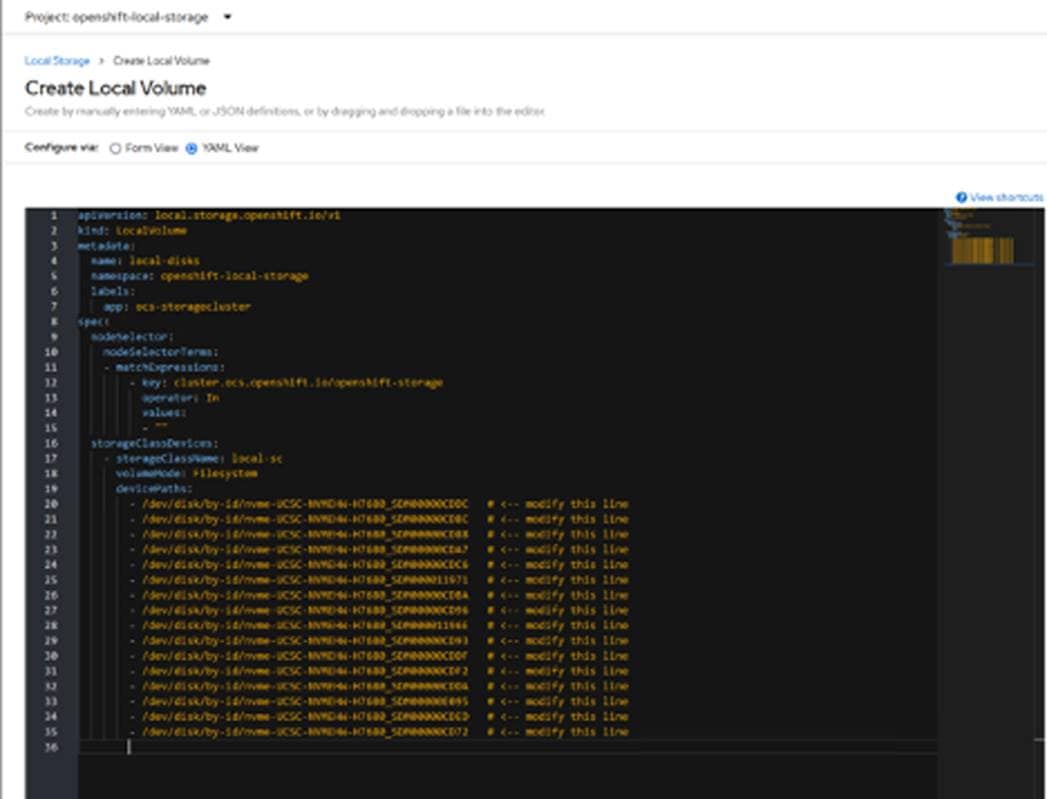

Persistent Storage for Kubernetes

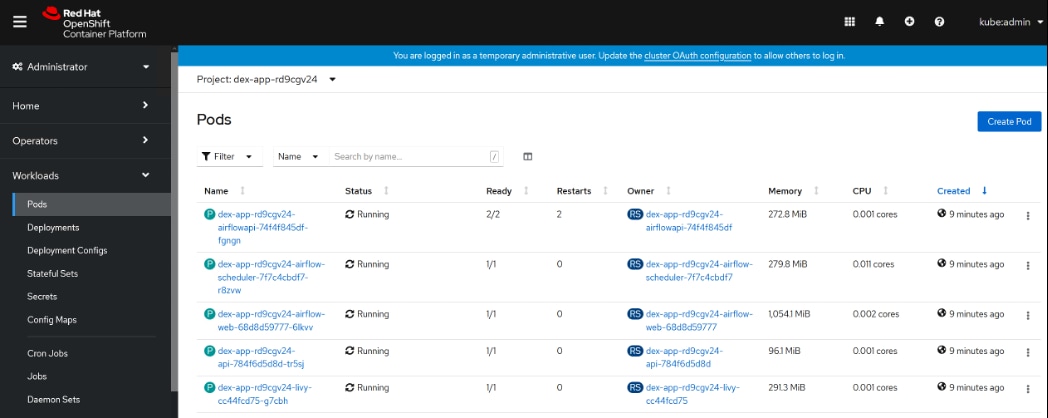

Workloads deployed in containers and orchestrated by Kubernetes (K8s) are either stateless or stateful. By default, Kubernetes workloads are stateless. A stateless application does not persist data: it uses temporary storage provided within Kubernetes, and that storage is destroyed once the application or pod is terminated. This is why containers are described as ephemeral: data associated with a container can be lost when the container is terminated or crashes. Furthermore, this data cannot be shared with other containers.

For stateful applications, persistent storage is the first “must have” requirement. Kubernetes supports various persistent storage solutions that help address this problem and support stateful workloads in a containerized environment. Kubernetes introduces the concept of Persistent Volumes, which exist independently of containers, survive even after containers shut down, and can be requested and consumed by containerized workloads.

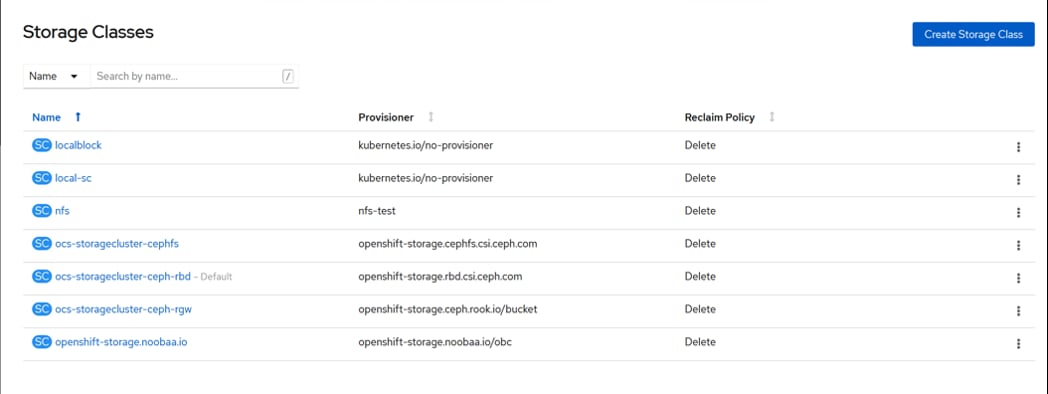

There are various methods of providing persistent storage to containers. In this reference design, Red Hat OpenShift Container Storage is used to provide persistent volumes for the Cloudera Private Cloud control plane and Cloudera Machine Learning backed by Red Hat OpenShift Container Platform.

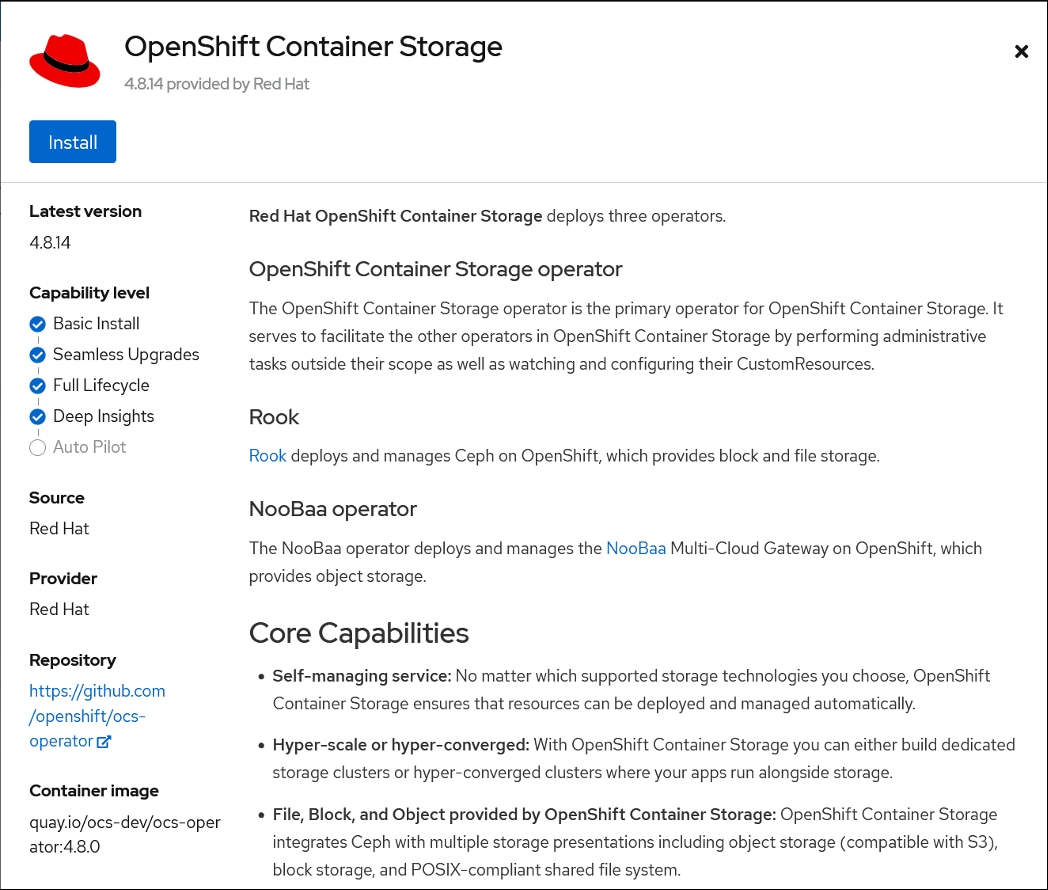

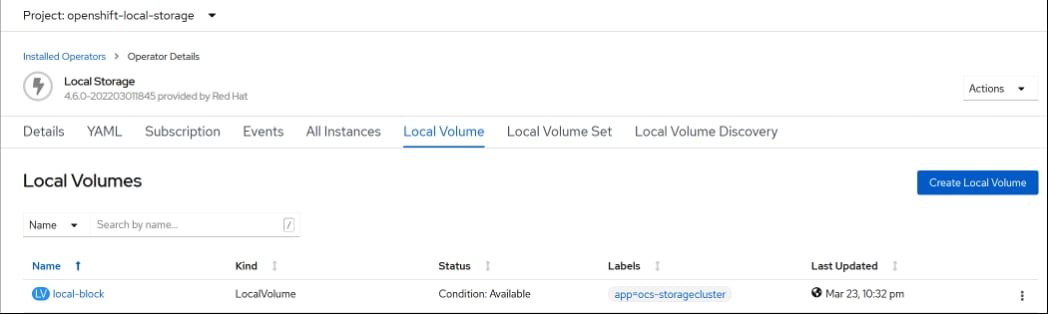

Red Hat OpenShift Container Storage (OCS)

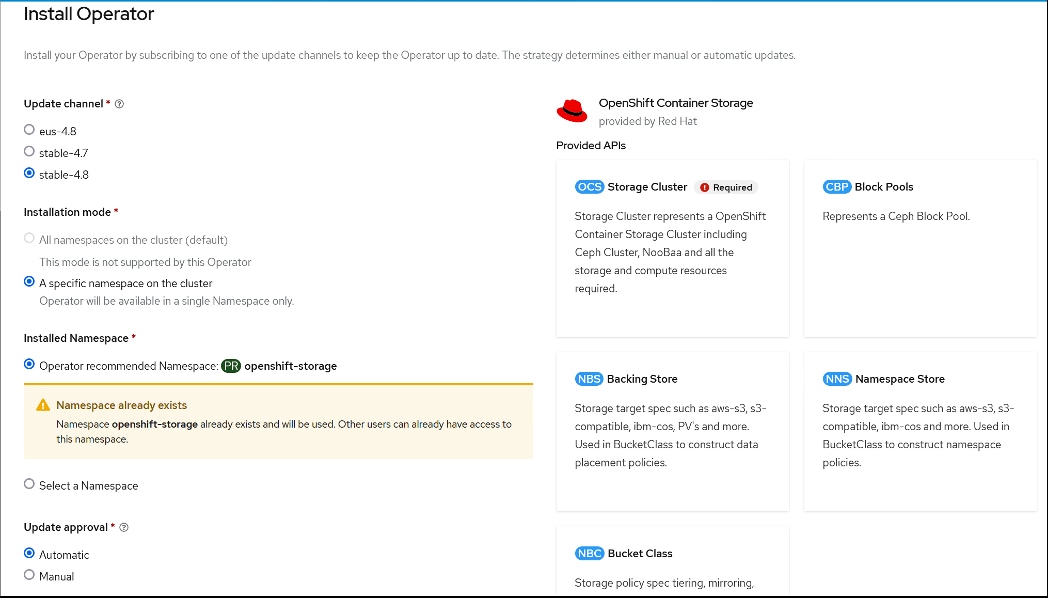

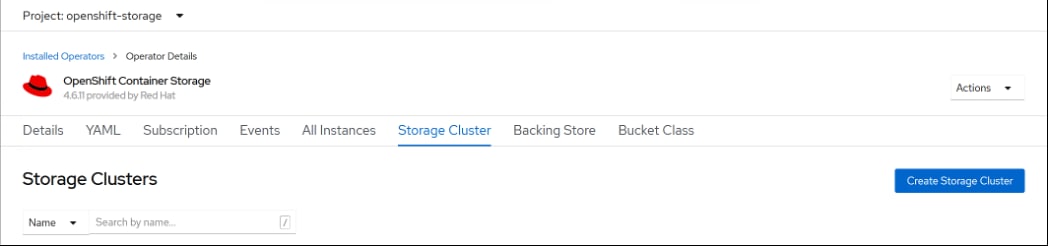

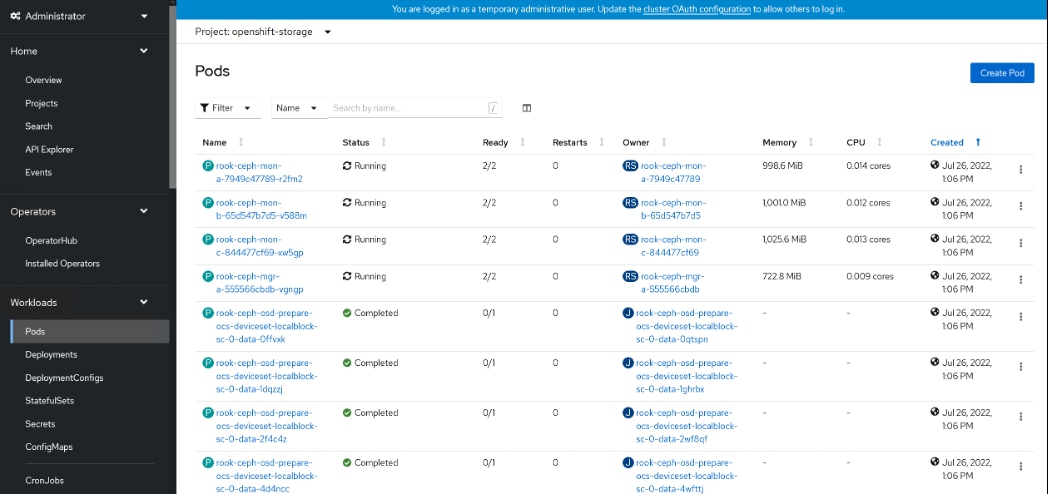

OCS is software-defined storage integrated with and optimized for Red Hat OpenShift Container Platform. OpenShift Container Storage 4.8 is built on Red Hat Ceph® Storage, Rook, and NooBaa to provide container native storage services that support block, file, and object services.

Leveraging the Kubernetes Operator framework, OCS automates much of the complexity involved in providing cloud native storage for OpenShift. OCS integrates deeply into cloud native environments by providing a seamless experience for scheduling, lifecycle management, resource management, security, monitoring, and user experience of the storage resources.

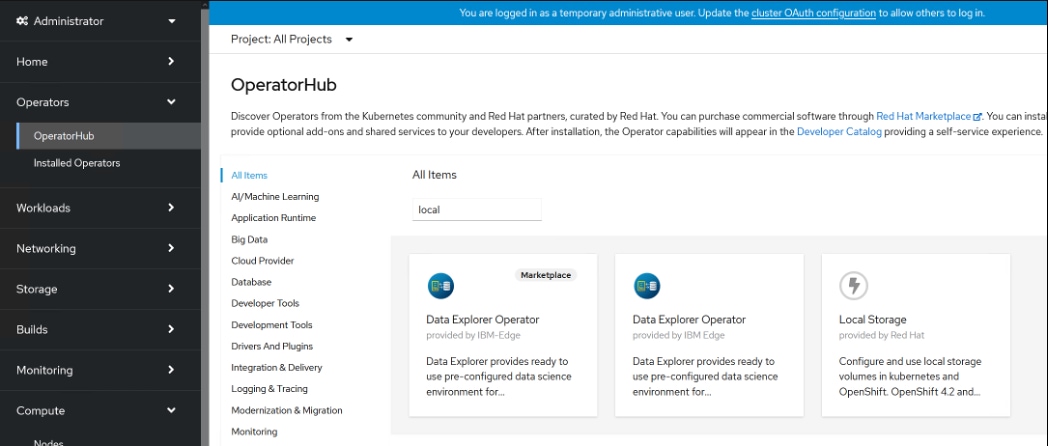

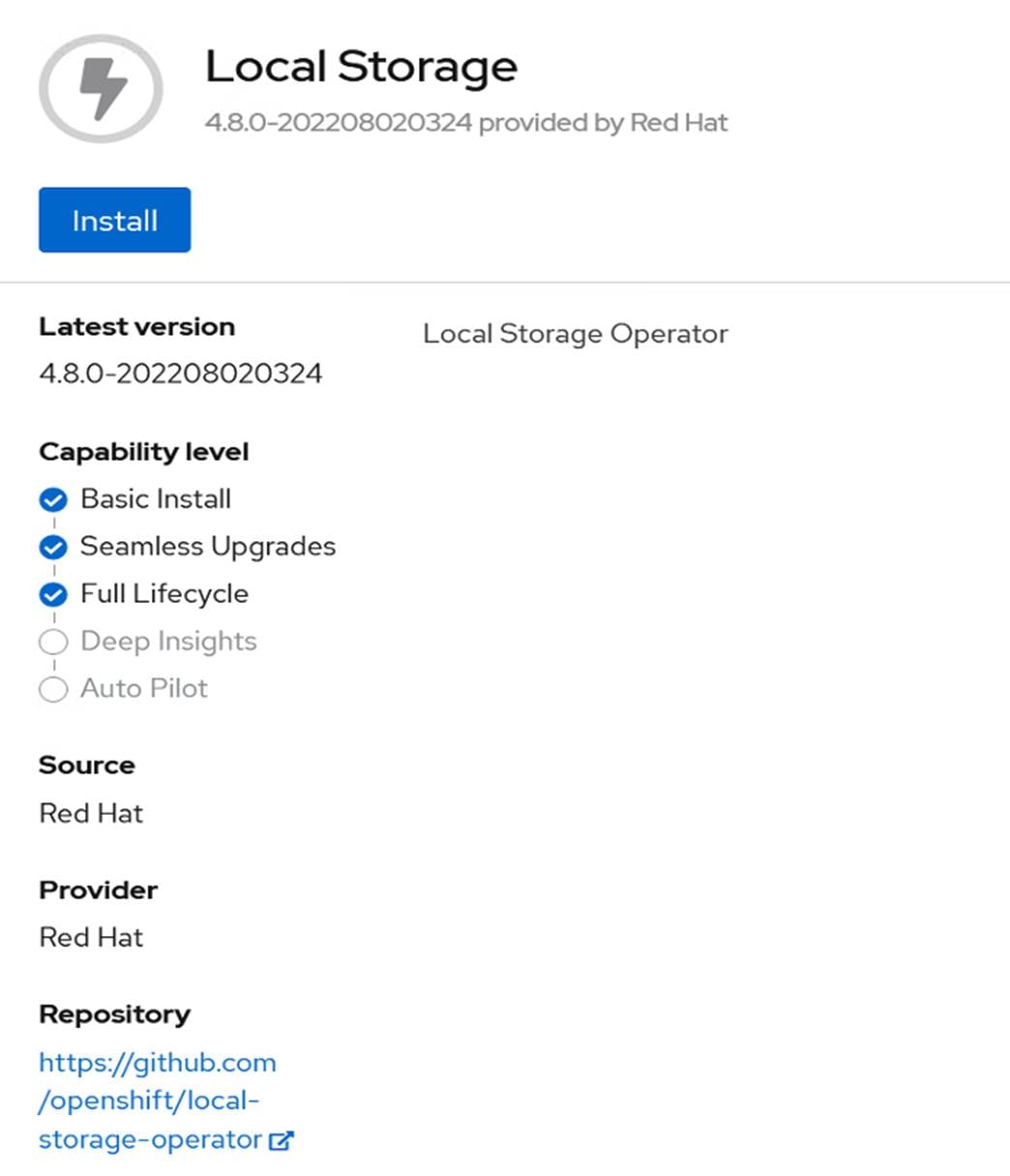

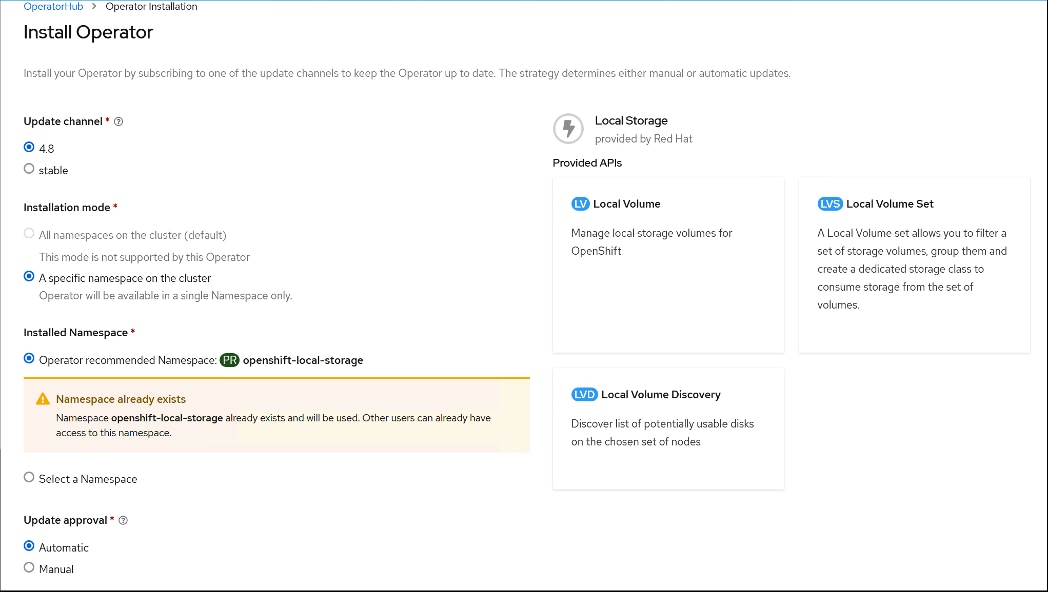

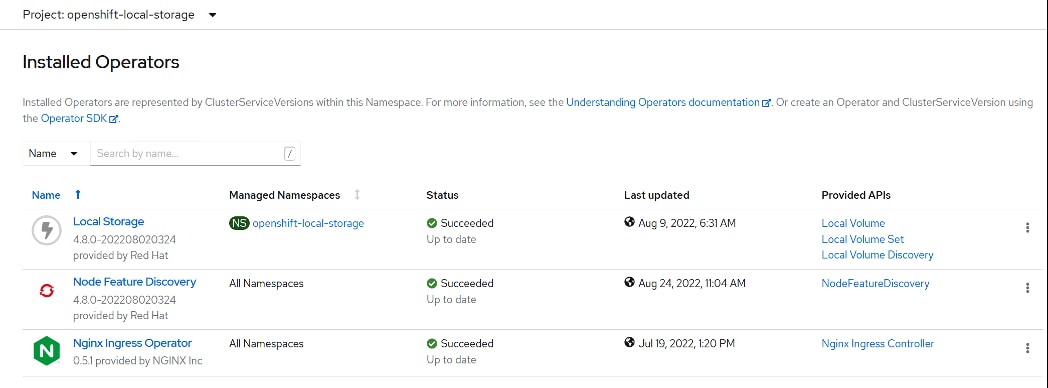

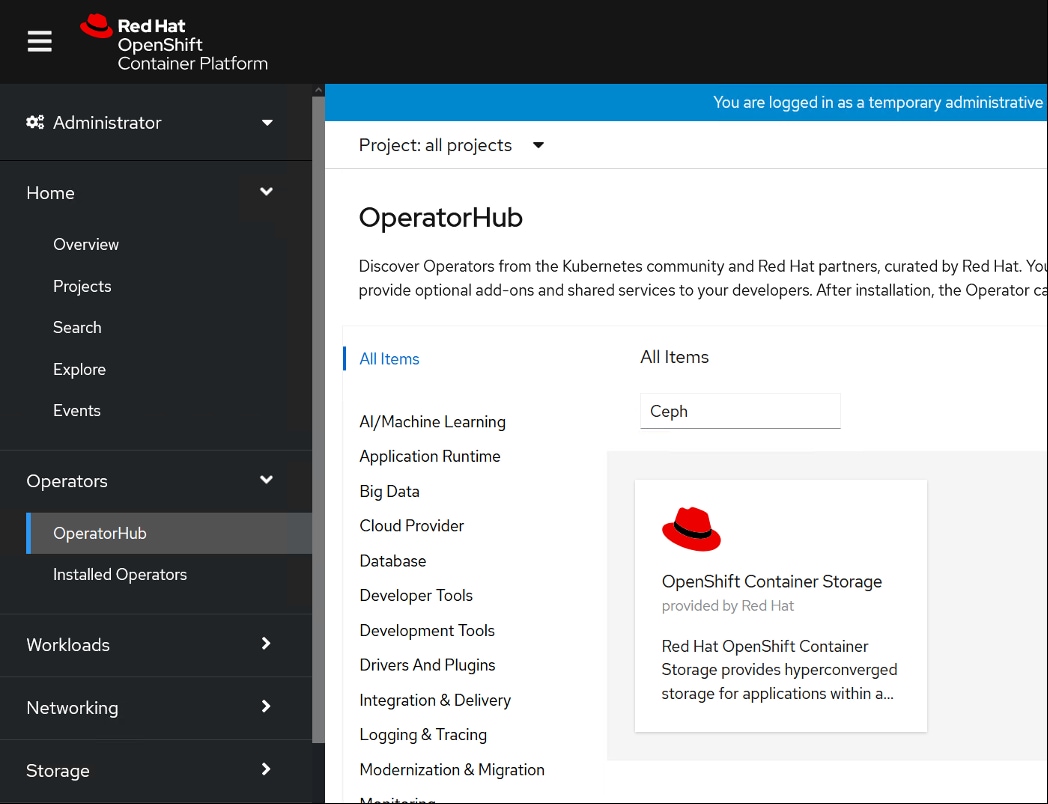

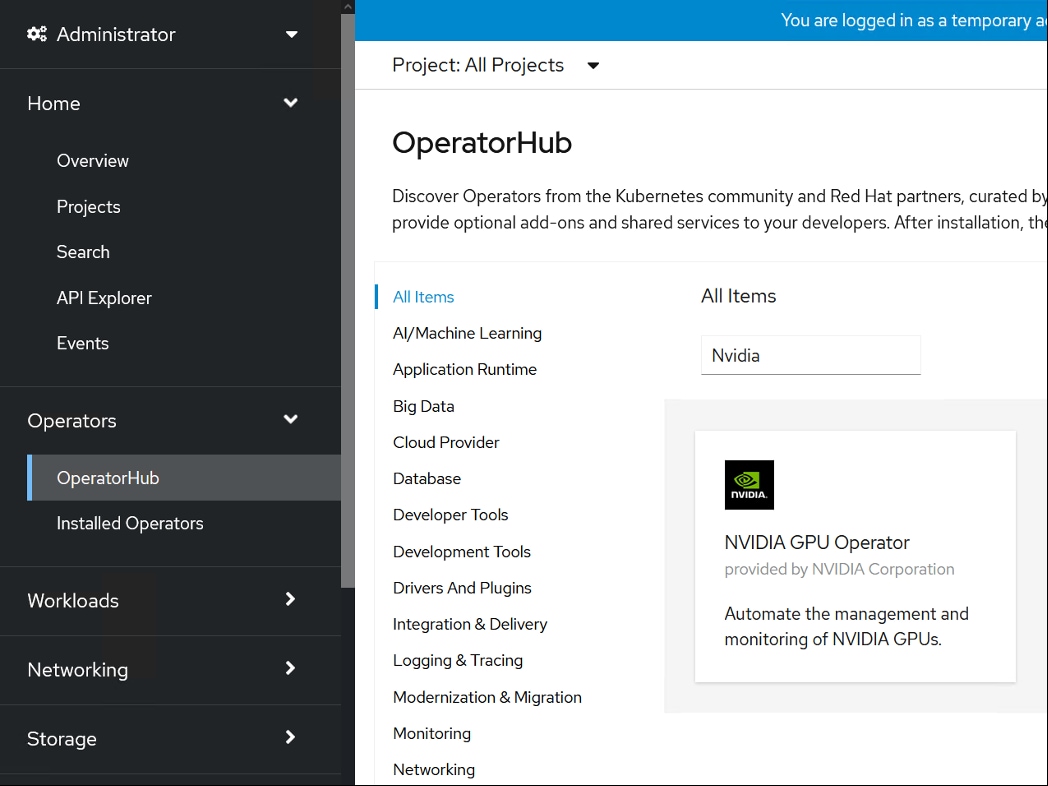

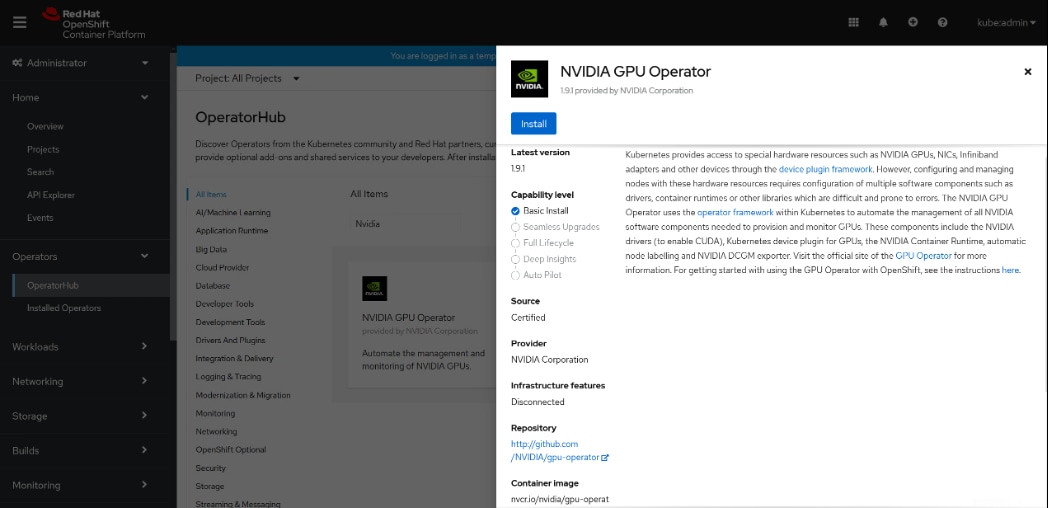

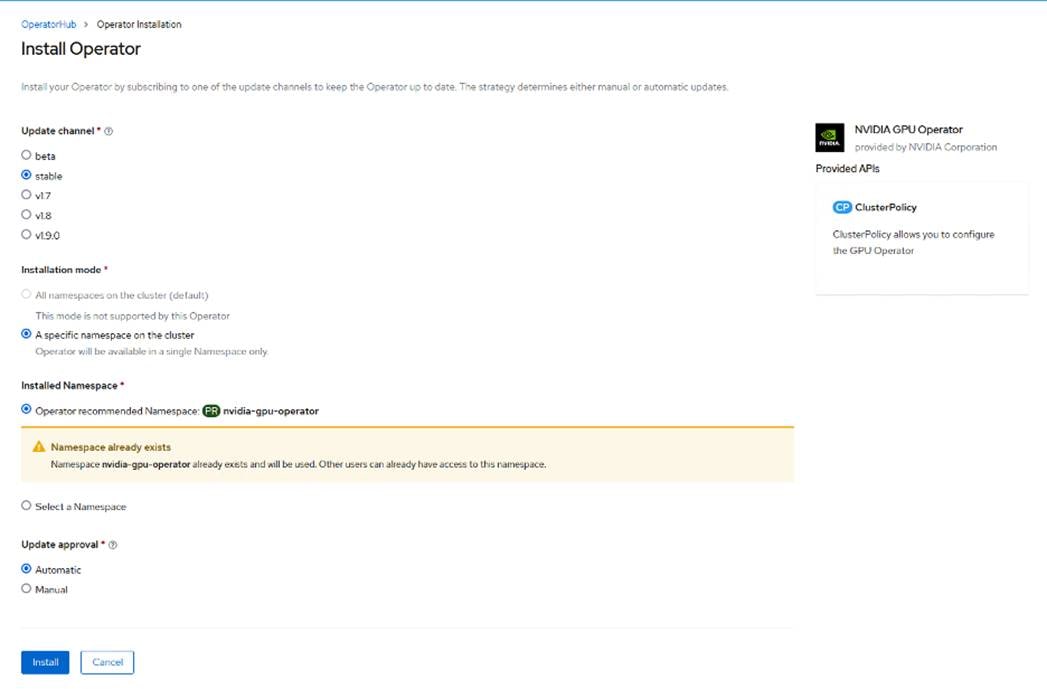

To deploy OpenShift Container Storage, the administrator can go to the OpenShift Administrator Console and navigate to the “Operator Hub” to find the OpenShift Container Storage Operator.

OpenShift Container Storage may be used to provide storage for several workloads:

● Block storage for application development and testing environments that include databases, document stores, and messaging systems.

● File storage for CI/CD build environments, web application storage, and for ingest and aggregation of datasets for machine learning.

● Multi-cloud object storage for CI/CD build artifacts, origin storage, data archives, and pre-trained machine learning models that are ready for serving.

To enable user provisioning of storage, OCS provides storage classes that are ready-to-use when OCS is deployed.
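As a minimal sketch of how a workload might request storage from one of these storage classes, the following Python snippet builds a Kubernetes PersistentVolumeClaim manifest and prints it as JSON. The claim name, namespace, and size are hypothetical, and the storage class name `ocs-storagecluster-ceph-rbd` is an assumption; list the classes actually present in your cluster (for example, with `oc get storageclass`) before using it.

```python
import json
from typing import Any, Dict


def build_pvc(name: str, namespace: str, size_gi: int,
              storage_class: str, access_mode: str = "ReadWriteOnce") -> Dict[str, Any]:
    """Build a PersistentVolumeClaim manifest as a plain dictionary."""
    if size_gi <= 0:
        raise ValueError("size_gi must be positive")
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "accessModes": [access_mode],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": f"{size_gi}Gi"}},
        },
    }


# Hypothetical values; the storage class name is an assumption to verify.
pvc = build_pvc("example-data", "example-project", 50, "ocs-storagecluster-ceph-rbd")
print(json.dumps(pvc, indent=2))
```

The printed JSON can be saved to a file and applied with `oc apply -f <file>`. Generating the manifest from code keeps claim sizes and class names in one reviewable place.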

OpenShift Container Storage uses the following operators:

● The OpenShift Container Storage (OCS) Operator

A meta-operator that codifies and enforces the recommendations and requirements of a supported Red Hat OpenShift Container Storage deployment by drawing on other operators in specific, tested ways. This operator provides the storage cluster resource that wraps resources provided by the Rook-Ceph and NooBaa operators.

● The Rook-Ceph Operator

This operator automates the packaging, deployment, management, upgrading, and scaling of persistent storage provided to container applications, and infrastructure services provided to OpenShift Container Platform. It provides the Ceph cluster resource, which manages the pods that host services such as the Object Storage Daemons (OSDs), monitors, and the metadata server for the Ceph file system.

● The NooBaa Operator

This operator provides the Multi-cloud Object Gateway, an S3 compatible object store service that allows resource access across multiple cloud environments.

This chapter contains the following:

● Cloudera Data Platform Private Cloud Requirements

● Host OS Firewall for Required Ports

● Cloudera Private Cloud Storage Requirements

● Consistent Linux Storage Device Naming and Order

● CDP PvC DS Storage, Memory, and Cores

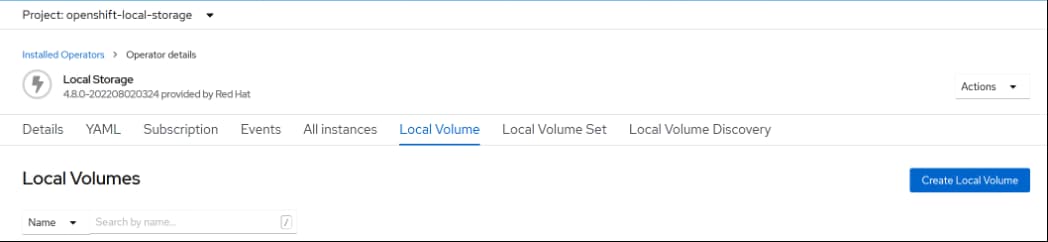

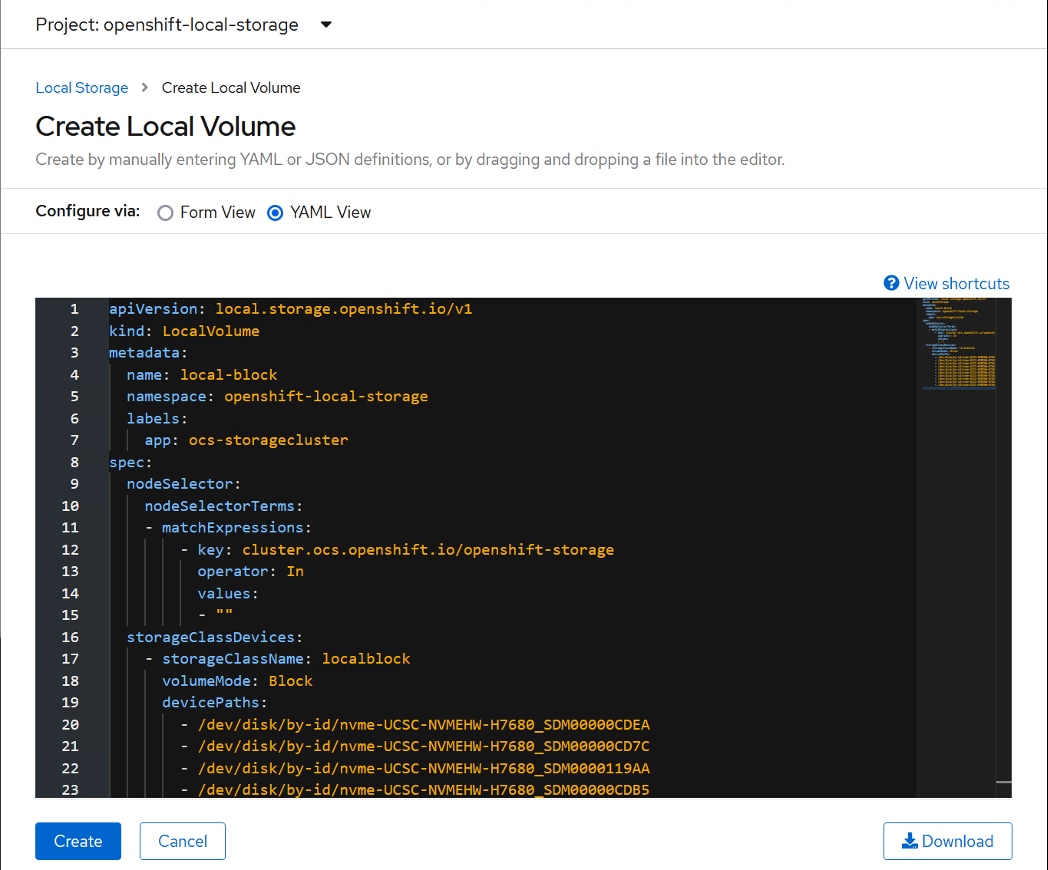

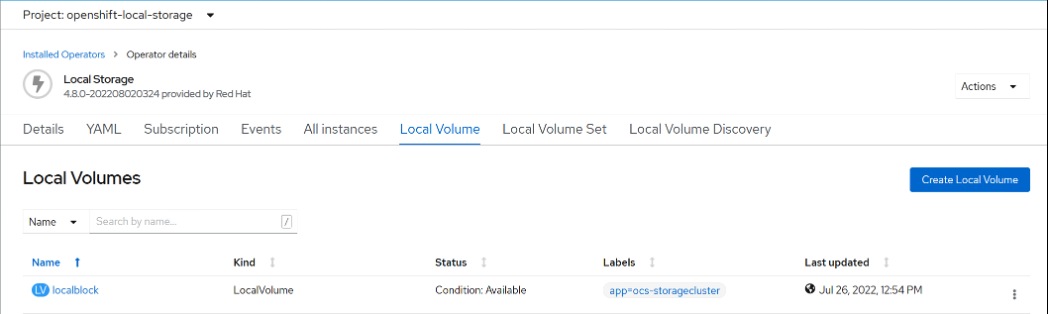

● Persistent Storage using Local Volumes

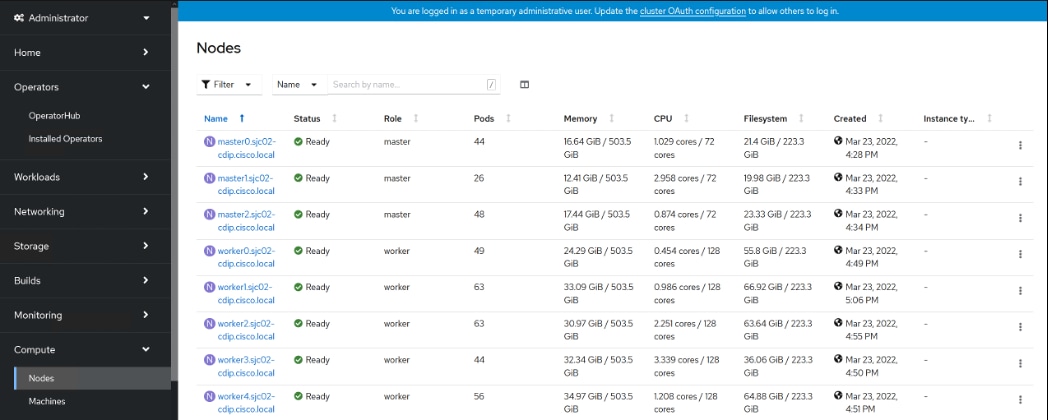

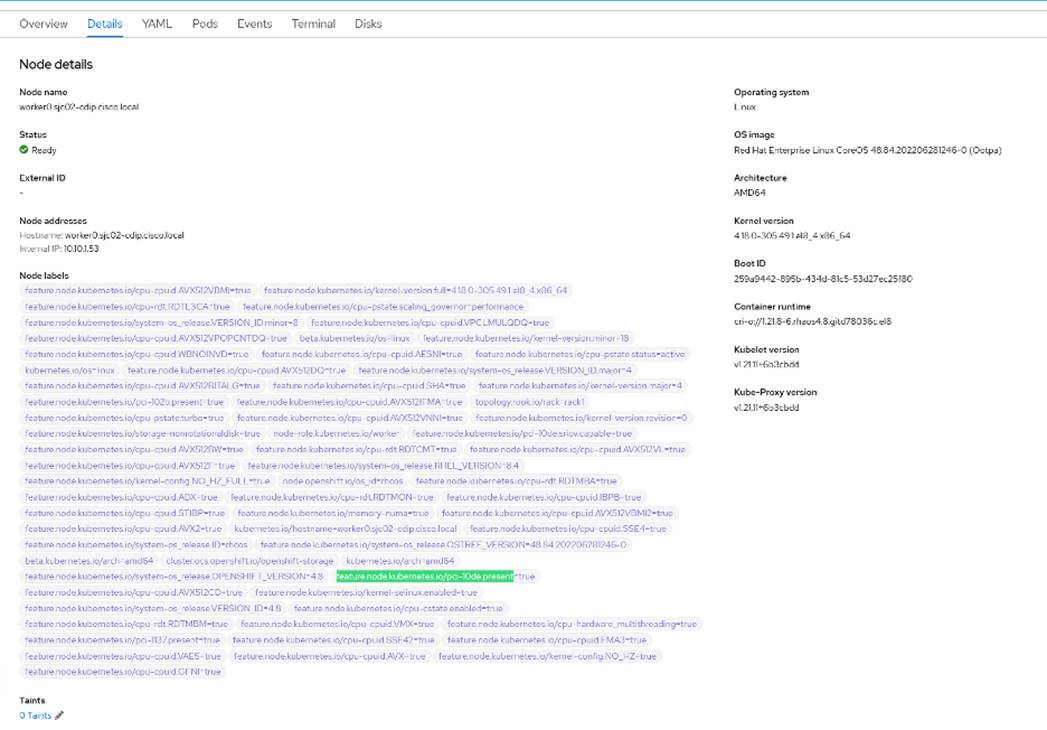

This CVD was designed with the following:

● 3 x Cisco UCS X210c compute nodes serving as Red Hat OpenShift Container Platform master nodes

● 5 x Cisco UCS X210c compute nodes serving as Red Hat OpenShift Container Platform worker nodes

● 4 x Cisco UCS X210c compute nodes and 4 x Cisco UCS X440p PCIe nodes hosting 2 x NVIDIA A100 GPUs per node, serving as Red Hat OpenShift Container Platform worker nodes

● Cloudera Data Platform Private Cloud Data Services running on the Red Hat OpenShift Container Platform

● 1 x Cisco UCS C240 M6 bootstrap node for Red Hat OpenShift Container Platform

● 1 x Cisco UCS C240 M6 running HAProxy

● Cloudera Data Platform Private Cloud Base (the data lake) which is not detailed in this CVD but is extensively explained in the CVDs published here: http://www.cisco.com/go/bigdata_design.

Table 3 lists the required physical components and hardware.

Table 3. CDIP with CDP PvC DS System Components

| Component | Hardware |
| --- | --- |
| Fabric Interconnects | 2 x Cisco UCS 6454 Fabric Interconnects |
| Servers | Cisco UCS 9508 chassis - Cisco UCS X210c compute node - Cisco UCS X440p PCIe node |

Table 4 lists the software components and the versions required for Cloudera Data Platform Private Cloud running on Cisco UCS, as tested and validated in this document.

Table 4. Software Components and Hardware

| Layer | Component | Version or Release |
| --- | --- | --- |
| Compute | Cisco UCS X210C | 5.0(2b) |
| Network | Cisco UCS Fabric Interconnect 6454 | 9.3(5)I42(2a) |
| Network | Cisco UCS VIC 14425 4x25G mLOM for X Compute Node | 5.2(2b) |
| Network | UCS 9108-25G IFM for X9508 chassis | 4.2(2a) |
| Network | UCS 9416 X-Fabric module for 9508 chassis | |
| Software | Cloudera Data Platform Private Cloud Base | 7.1.7 SP1 |
| Software | Cloudera Manager | 7.6.5 |
| Software | Cloudera Data Platform Private Cloud Data Services | 1.4.0 |
| Software | Postgres | 12.11 |
| Software | Hadoop (Includes YARN and HDFS) | 3.1.1 |
| Software | Spark | 2.4.7 |
| Software | Red Hat Enterprise Linux Server (CDP Private Cloud Base) | 8.4 |
| Software | Red Hat CoreOS (CDP Private Cloud Data Services) | 4.8.14 |
| Software | Red Hat OpenShift Container Platform/Kubernetes | stable-4.8 channel |
| Software | OpenShift Container Storage | 4.8.14 |

Note: The latest Cisco drivers can be downloaded here: https://software.cisco.com/download/home.

Note: Please check the CDP PvC requirements and supported versions for information about hardware, operating system, and database requirements, as well as product compatibility matrices, here: https://supportmatrix.cloudera.com/ and here: https://docs.cloudera.com/cdp-private-cloud-upgrade/latest/release-guide/topics/cdpdc-requirements-supported-versions.html

Note: CDP PvC DS version 1.4.0 supports CentOS 8.4 and 7.9, Red Hat Enterprise Linux 8.4 and 7.9, Oracle Linux 7.9, and CentOS 8.2 (CDW only). For the complete list of supported versions, go to: https://docs.cloudera.com/cdp-private-cloud-data-services/1.4.0/installation-ecs/topics/cdppvc-installation-ecs-software-requirements.html

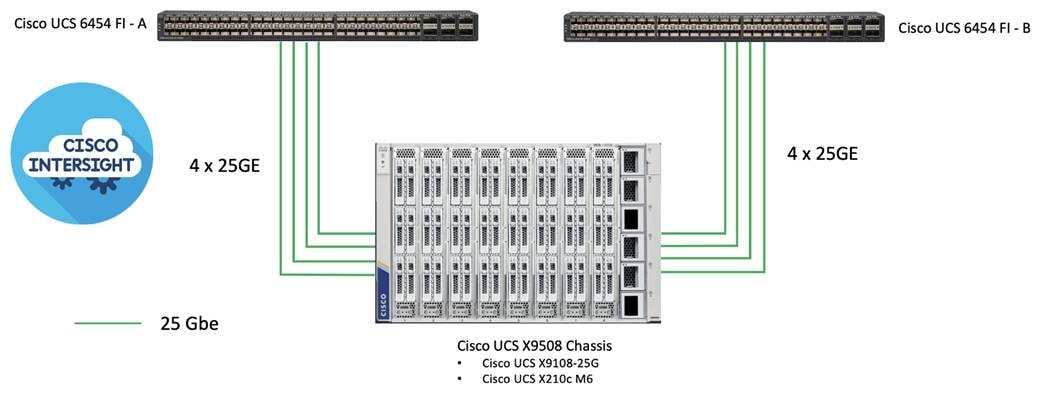

Physical Topology

The physical topology consists of two Cisco UCS X-Series 9508 chassis, each with a pair of X9108 Intelligent Fabric Modules and a pair of XFM9416 X-Fabric modules. Together, the two chassis host 12 x X210C compute nodes and 4 x X440p PCIe nodes and are connected to a pair of Cisco UCS 6400 Series Fabric Interconnects.

Note: Please contact your Cisco representative for country-specific information.

Figure 34 shows the logical topology.

● Cisco UCS 6454 Fabric Interconnects provide the chassis and network connectivity.

● The Cisco UCS X9508 Chassis connects to fabric interconnects using Cisco UCSX 9108-25G Intelligent Fabric Modules (IFMs), where four or eight 25 Gigabit Ethernet ports are used on each IFM to connect to the appropriate FI.

● Cisco UCS X210c M6 Compute Nodes contain fourth-generation Cisco 14425 virtual interface cards.
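The uplink options described above translate into aggregate chassis bandwidth as follows. This is a minimal arithmetic sketch in Python; the function name is hypothetical, and the figures follow only from the port counts and speeds stated in the text (two IFMs per chassis, four or eight 25 Gigabit Ethernet ports per IFM).

```python
PORT_SPEED_GBPS = 25   # each IFM uplink port is 25 Gigabit Ethernet (from the text)
IFMS_PER_CHASSIS = 2   # a pair of IFMs per chassis (from the text)


def chassis_uplink_gbps(ports_per_ifm: int) -> int:
    """Aggregate uplink bandwidth of one chassis across both IFMs, in Gbps."""
    if ports_per_ifm not in (4, 8):
        raise ValueError("the text describes four or eight ports per IFM")
    return IFMS_PER_CHASSIS * ports_per_ifm * PORT_SPEED_GBPS


for ports in (4, 8):
    total = chassis_uplink_gbps(ports)
    print(f"{ports} ports per IFM -> {total} Gbps per chassis")
```

With four ports per IFM the chassis has 200 Gbps of uplink bandwidth in total (100 Gbps to each fabric interconnect); with eight ports it has 400 Gbps.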

There are many platform dependencies to enable Cloudera Data Platform Private Cloud Data Services running on Red Hat OpenShift Container Platform. The containers need to access data stored on HDFS in Cloudera Data Platform Private Cloud Base in a fully secure manner.

The following are the prerequisites needed to enable this solution:

● Network requirements

● Security requirements

● Operating System requirements

● Red Hat OpenShift Container Platform requirements

● Cloudera Private Cloud persistent storage requirements

● Cloudera Data Warehouse local storage requirements

● NFS requirements for Cloudera Machine Learning workspaces

● Cloudera requirements

Network Requirements

The Cloudera Private Cloud Base cluster that houses HDFS storage and the Cloudera Private Cloud compute-only clusters should be reachable with no more than 3:1 oversubscription, so that the compute clusters can read from and write to the base HDFS cluster. The recommended network architecture is spine-leaf. Additional routing hops should be avoided in production; ideally, both HDFS/Ozone storage and Cloudera Private Cloud Data Services are on the same network.

For more information, go to: https://docs.cloudera.com/cdp-private-cloud-upgrade/latest/release-guide/topics/cdpdc-networking-security-requirements.html
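The 3:1 oversubscription guideline above can be checked with simple arithmetic: divide the total server-facing (downlink) bandwidth of a leaf switch by its total uplink bandwidth. The following Python sketch uses hypothetical port counts chosen purely for illustration; they are not taken from this design.

```python
def oversubscription_ratio(downlink_gbps: float, uplink_gbps: float) -> float:
    """Ratio of server-facing bandwidth to uplink bandwidth on a switch."""
    if downlink_gbps <= 0 or uplink_gbps <= 0:
        raise ValueError("bandwidth values must be positive")
    return downlink_gbps / uplink_gbps


def within_guideline(ratio: float, limit: float = 3.0) -> bool:
    """True when the ratio does not exceed the 3:1 guideline from the text."""
    return ratio <= limit


# Hypothetical leaf switch: 48 x 25G server-facing ports, 6 x 100G uplinks.
leaf_downlink = 48 * 25
leaf_uplink = 6 * 100
ratio = oversubscription_ratio(leaf_downlink, leaf_uplink)
status = "within guideline" if within_guideline(ratio) else "exceeds 3:1"
print(f"{ratio:.1f}:1 ({status})")
```

In this hypothetical case the ratio is 2:1, which satisfies the guideline; the same leaf with only two 100G uplinks would be at 6:1 and would not.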

Both the CDP Private Cloud Base and CDP Private Cloud Data Services clusters should have their time synchronized from the same NTP source. Also make sure that the Active Directory server where Kerberos is set up for the data lake and other services is synchronized with the same NTP source.

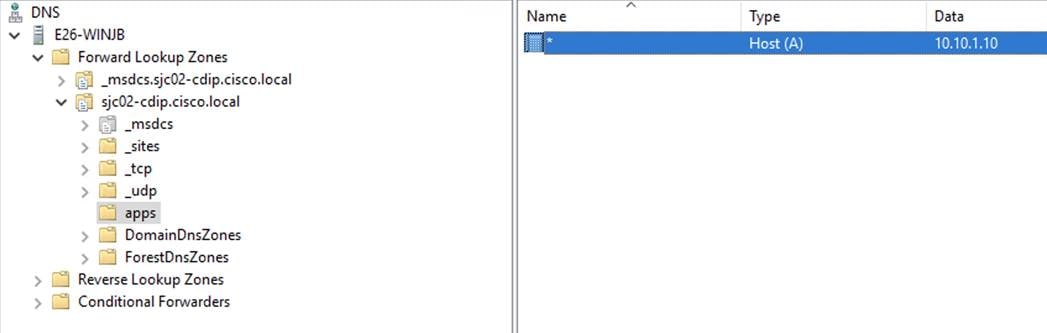

Note: DNS is required for this solution. It is a requirement for setting up Active Directory, Kerberos, Cloudera Manager authentication, and OpenShift.

DNS must have the following:

● Each host, whether Cloudera Manager or Red Hat OpenShift, must be accessible via DNS.

● DNS must be configured for forward and reverse lookup for each host. Reverse lookup is required for Kerberos authentication to the Base Cloudera cluster.

● The Cloudera Manager host must be able to resolve the hostname of the Red Hat OpenShift ingress/route through a wildcard DNS entry that points to the load balancer of OpenShift Container Platform.

● A service (SRV) DNS entry must be configured for the etcd cluster. For each control plane machine, OpenShift Container Platform also requires an SRV DNS record for the etcd server on that machine with priority 0, weight 10, and port 2380.

A wildcard DNS entry is required for resolving the ingress/route for applications.
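The DNS requirements above can be sketched as zone-file style records plus a forward/reverse lookup check. In the Python sketch below, the cluster name, base domain, and IP address are hypothetical. The record names (`_etcd-server-ssl._tcp`, `etcd-<index>`, and `*.apps`) follow the naming convention used in Red Hat's user-provisioned infrastructure documentation and are an assumption here; verify them against the documentation for your OpenShift release.

```python
import socket
from typing import List


def etcd_srv_records(cluster: str, base_domain: str, control_plane_count: int) -> List[str]:
    """One SRV record per control plane machine: priority 0, weight 10, port 2380."""
    zone = f"{cluster}.{base_domain}."
    return [
        f"_etcd-server-ssl._tcp.{zone} IN SRV 0 10 2380 etcd-{index}.{zone}"
        for index in range(control_plane_count)
    ]


def wildcard_record(cluster: str, base_domain: str, load_balancer_ip: str) -> str:
    """Wildcard A record that resolves application routes to the load balancer."""
    return f"*.apps.{cluster}.{base_domain}. IN A {load_balancer_ip}"


def forward_and_reverse_match(hostname: str) -> bool:
    """Check that a hostname resolves to an address that resolves back to it."""
    try:
        address = socket.gethostbyname(hostname)
        reverse_name, _aliases, _addresses = socket.gethostbyaddr(address)
    except OSError:
        return False  # forward or reverse lookup failed
    return reverse_name.rstrip(".").lower() == hostname.rstrip(".").lower()


# Hypothetical cluster name, domain, and load balancer address.
records = etcd_srv_records("ocp", "example.com", 3)
records.append(wildcard_record("ocp", "example.com", "192.0.2.10"))
for record in records:
    print(record)
```

`forward_and_reverse_match` is not called in the example because its result depends on the local resolver; it could be run against each Cloudera Manager and OpenShift host name before installation.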

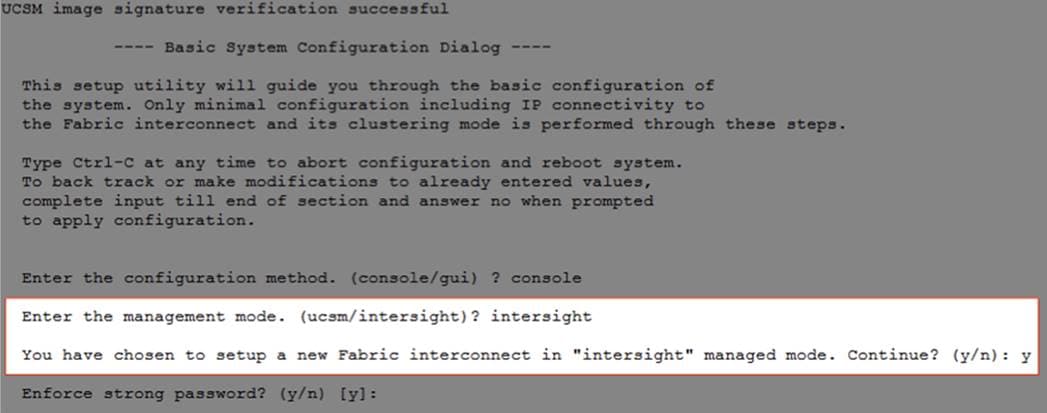

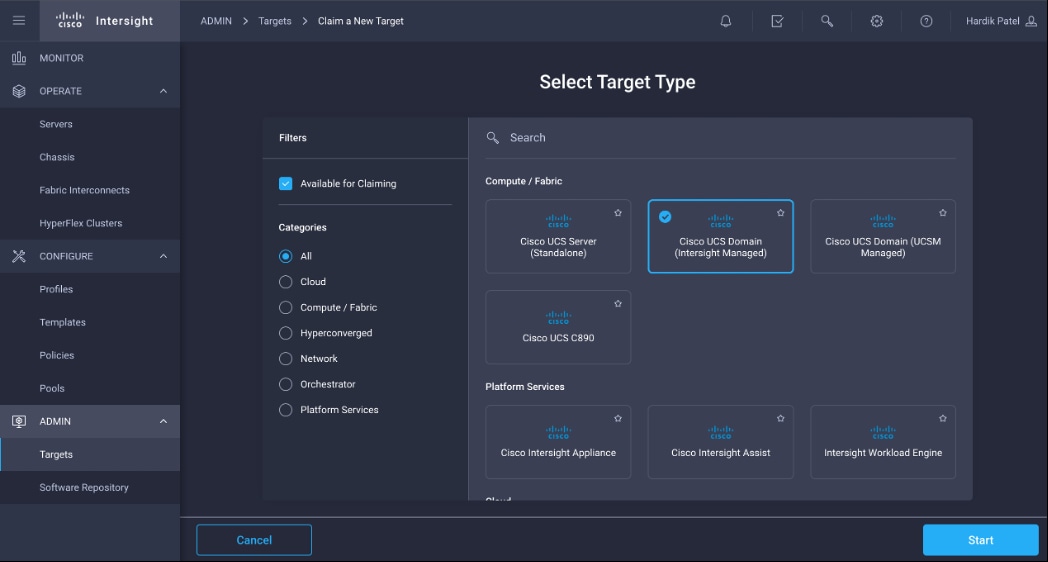

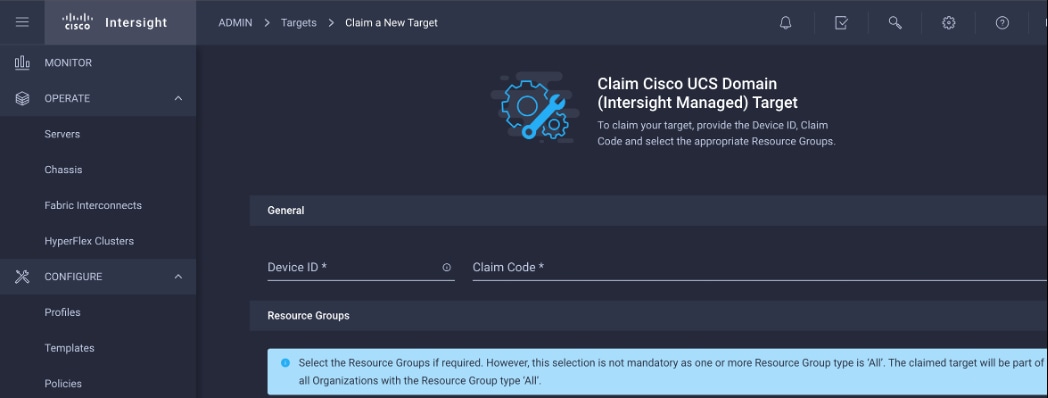

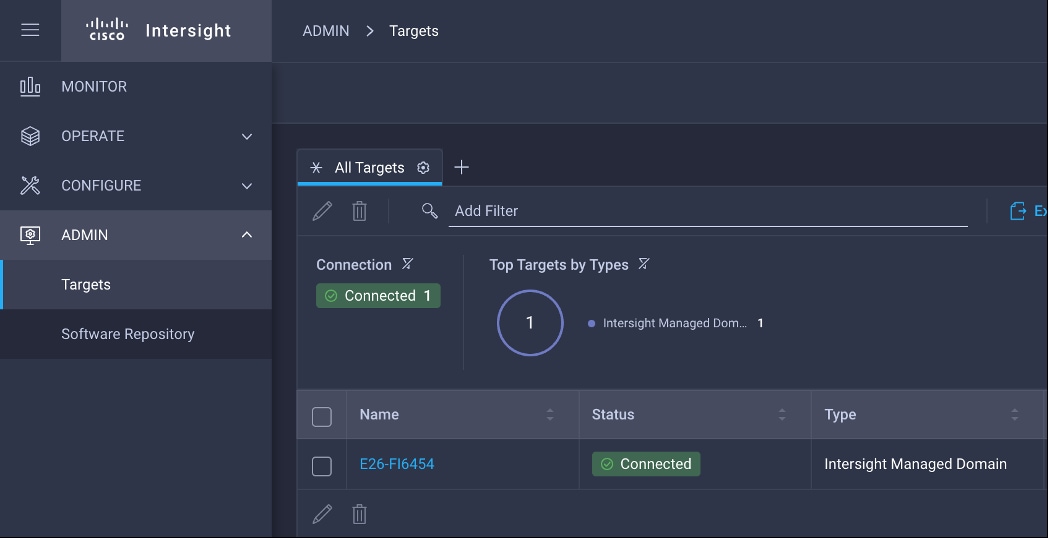

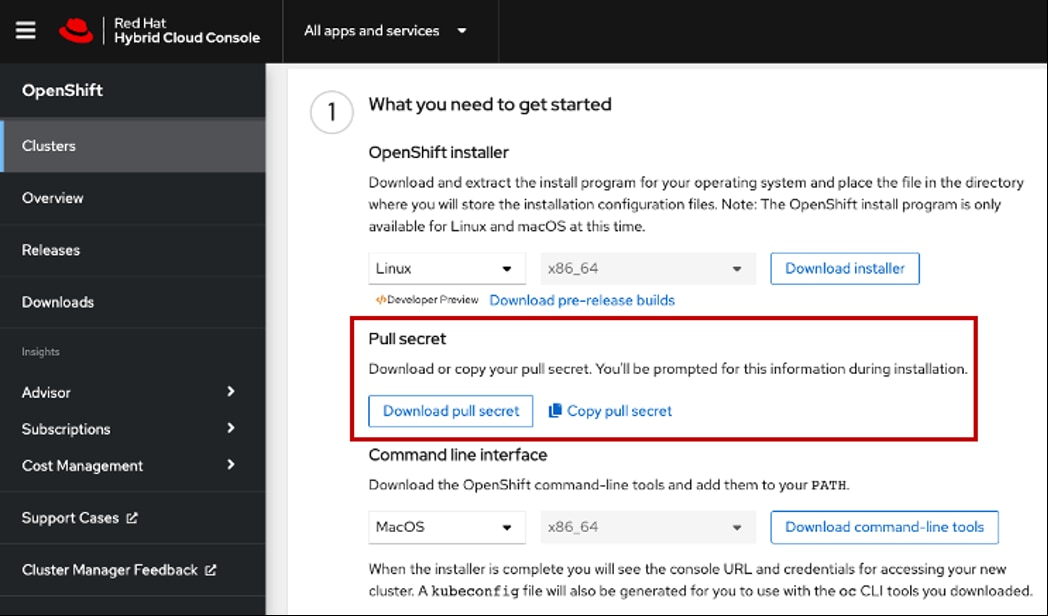

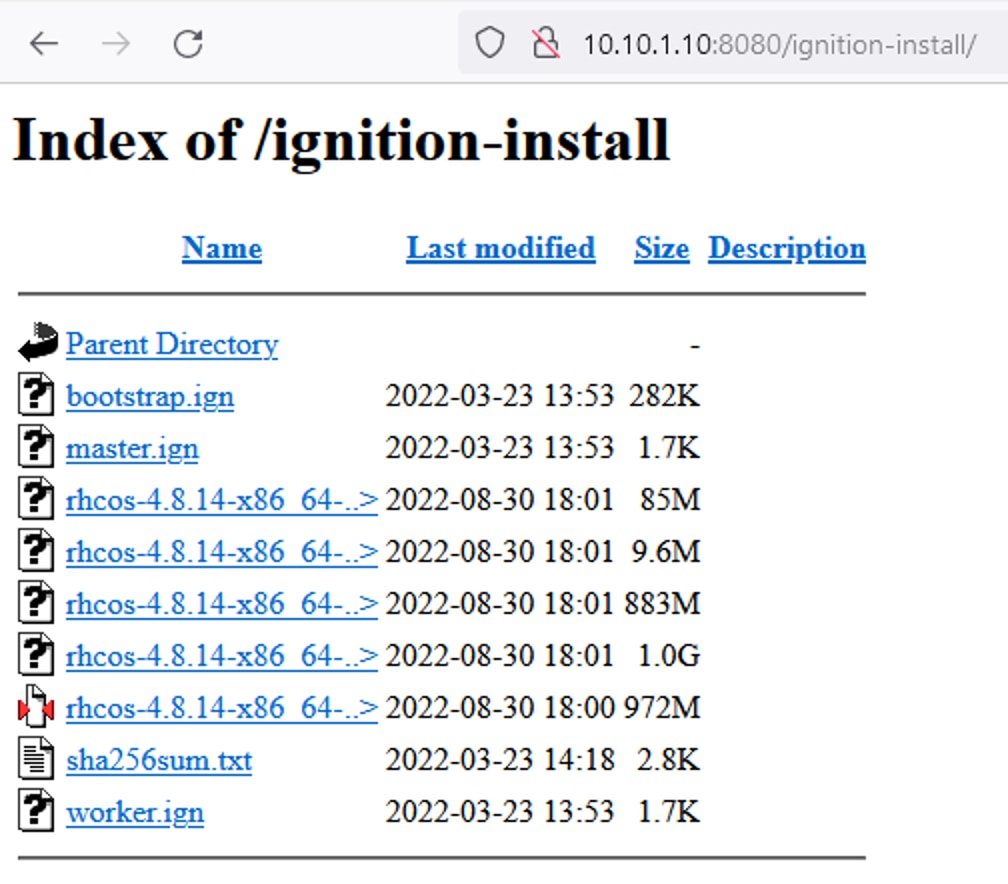

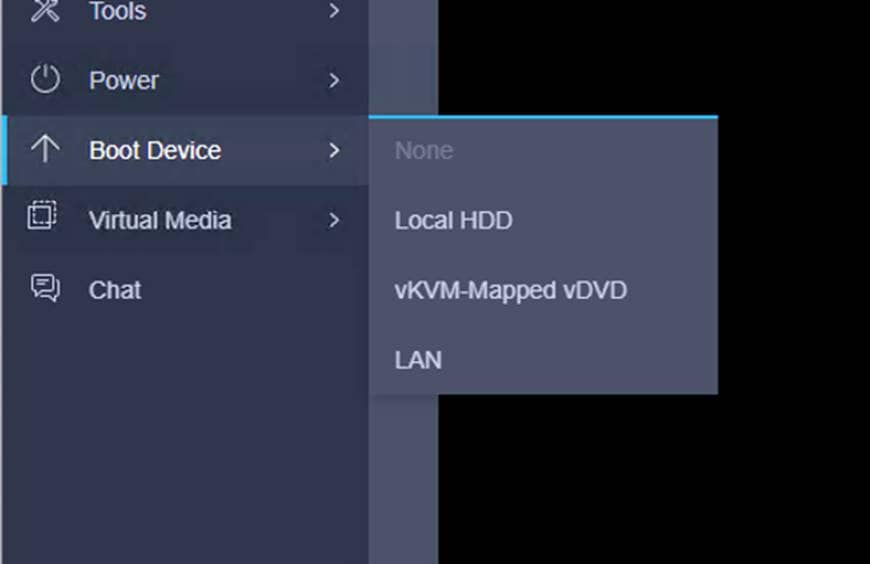

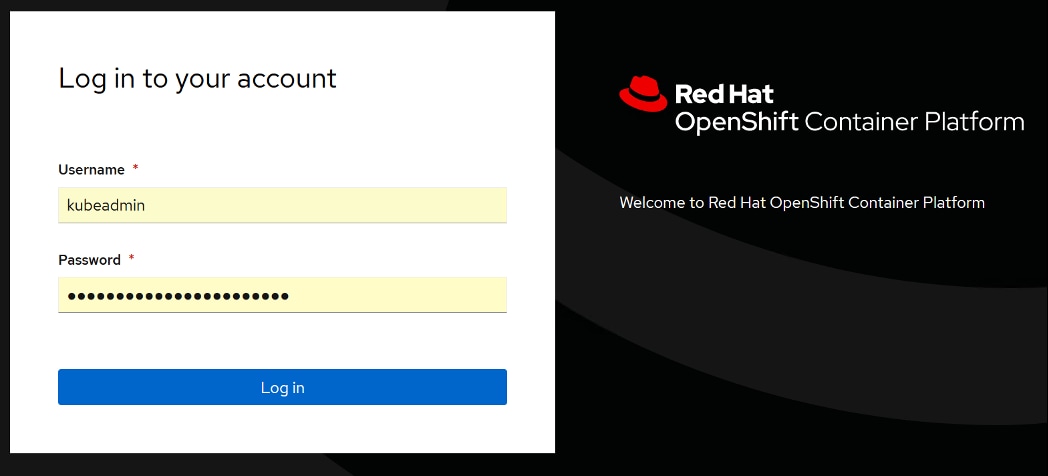

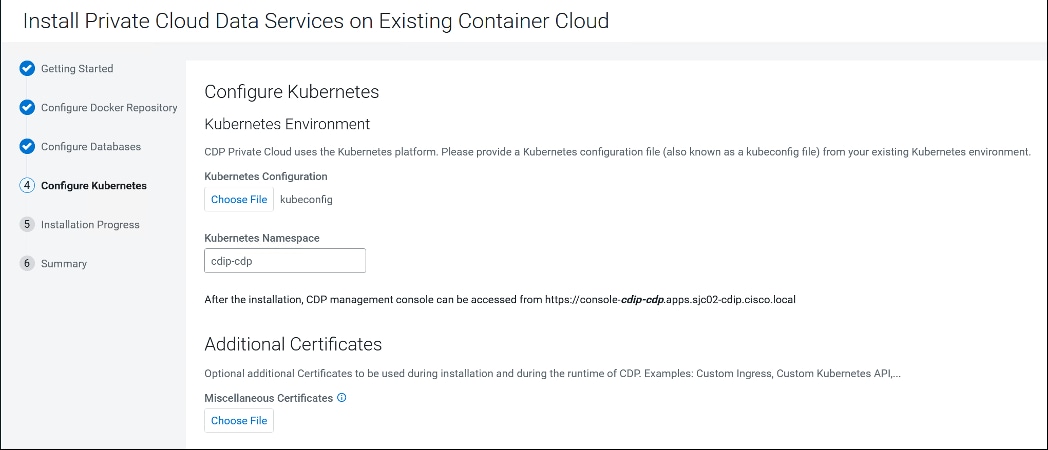

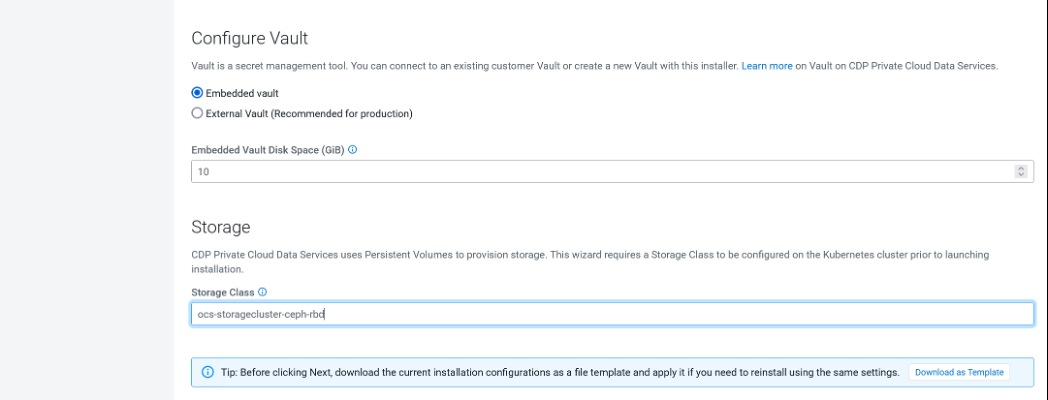

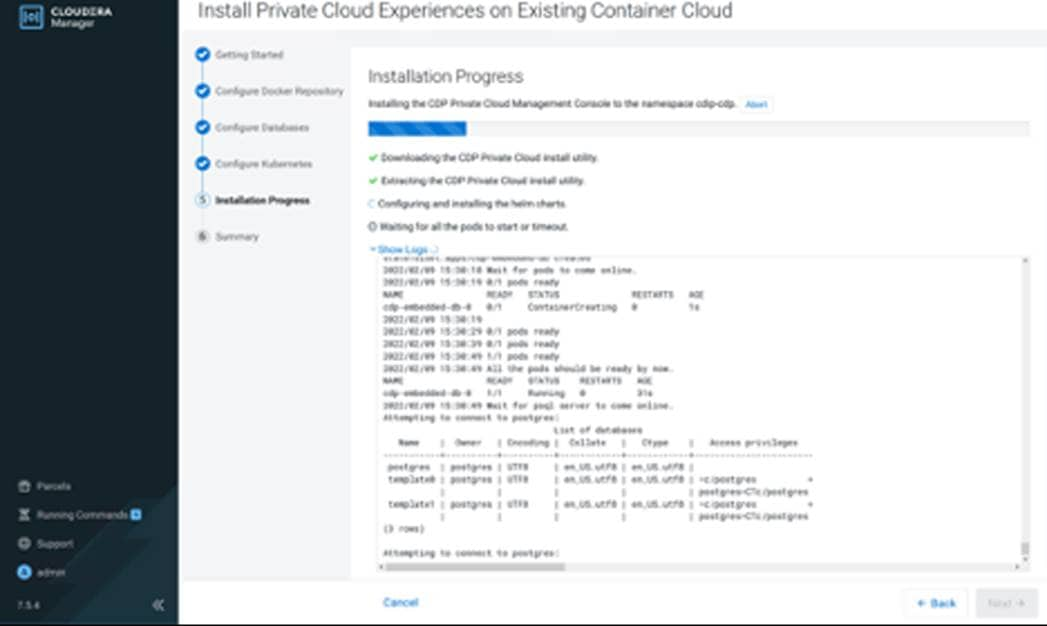

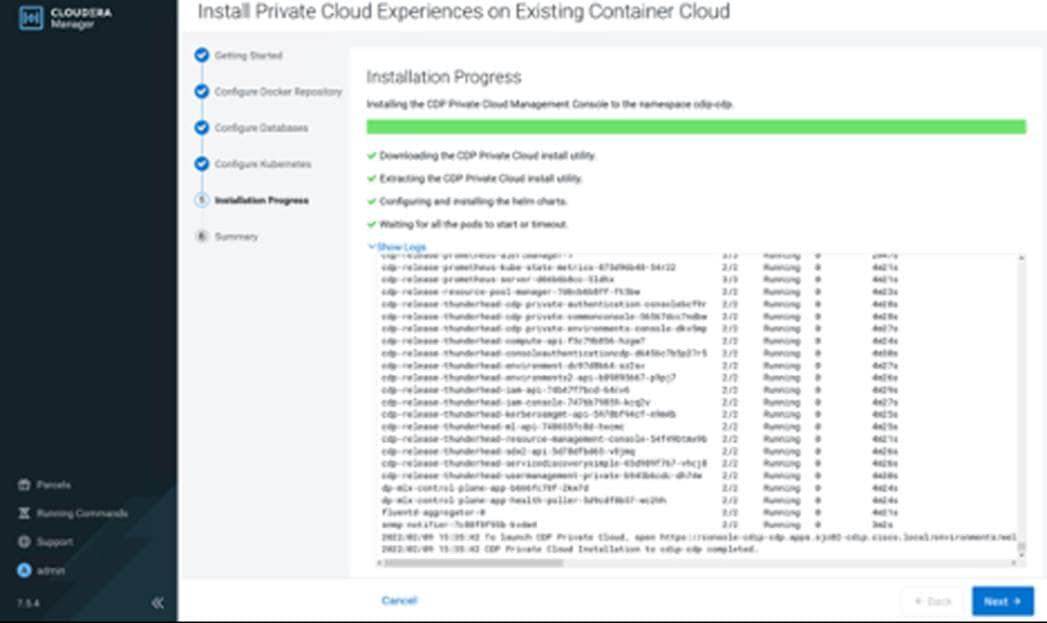

Cloudera Data Platform Private Cloud Requirements